| Issue |

A&A

Volume 644, December 2020

|

|

|---|---|---|

| Article Number | A99 | |

| Number of page(s) | 53 | |

| Section | Catalogs and data | |

| DOI | https://doi.org/10.1051/0004-6361/201936794 | |

| Published online | 14 December 2020 | |

Planck intermediate results

LV. Reliability and thermal properties of high-frequency sources in the Second Planck Catalogue of Compact Sources

1

AIM, CEA, CNRS, Université Paris-Saclay, Université Paris-Diderot, Sorbonne Paris Cité, 91191 Gif-sur-Yvette, France

2

APC, AstroParticule et Cosmologie, Université Paris Diderot, CNRS/IN2P3, CEA/lrfu, Observatoire de Paris, Sorbonne Paris Cité, 10 Rue Alice Domon et Léonie Duquet, 75205 Paris Cedex 13, France

3

African Institute for Mathematical Sciences, 6-8 Melrose Road, Muizenberg, Cape Town, South Africa

4

Astrophysics Group, Cavendish Laboratory, University of Cambridge, J J Thomson Avenue, Cambridge CB3 0HE, UK

5

Astrophysics & Cosmology Research Unit, School of Mathematics, Statistics & Computer Science, University of KwaZulu-Natal, Westville Campus, Private Bag X54001, Durban 4000, South Africa

6

CITA, University of Toronto, 60 St. George St., Toronto, ON M5S 3H8, Canada

7

CMDLABS, Cambridge Machines Deep Learning and Bayesian Systems Ltd, 22 Wycombe End, Beaconsfield, Buckinghamshire HP9 1NB, UK

8

CNRS, IRAP, 9 Av. colonel Roche, BP 44346, 31028 Toulouse Cedex 4, France

9

California Institute of Technology, Pasadena, CA, USA

10

Computational Cosmology Center, Lawrence Berkeley National Laboratory, Berkeley, CA, USA

11

Département de Physique Théorique, Université de Genève, 24, Quai E. Ansermet, 1211 Genève 4, Switzerland

12

Département de Physique, École normale supérieure, PSL Research University, CNRS, 24 Rue Lhomond, 75005 Paris, France

13

Departamento de Astrofísica, Universidad de La Laguna (ULL), 38206 La Laguna, Tenerife, Spain

14

Departamento de Física, Universidad de Oviedo, C/ Federico García Lorca, 18, Oviedo, Spain

15

Department of Astrophysics/IMAPP, Radboud University, PO Box 9010, 6500 GL Nijmegen, The Netherlands

16

Department of Physics & Astronomy, University of British Columbia, 6224 Agricultural Road, Vancouver, BC, Canada

17

Department of Physics & Astronomy, University of the Western Cape, Cape Town 7535, South Africa

18

Department of Physics, Gustaf Hällströmin katu 2a, University of Helsinki, Helsinki, Finland

19

Department of Physics, Princeton University, Princeton, NJ, USA

20

Dipartimento di Fisica e Astronomia G. Galilei, Università degli Studi di Padova, Via Marzolo 8, 35131 Padova, Italy

21

Dipartimento di Fisica e Scienze della Terra, Università di Ferrara, Via Saragat 1, 44122 Ferrara, Italy

22

Dipartimento di Fisica, Università La Sapienza, P. le A. Moro 2, Roma, Italy

23

Dipartimento di Fisica, Università degli Studi di Milano, Via Celoria, 16, Milano, Italy

24

Dipartimento di Fisica, Università degli Studi di Trieste, Via A. Valerio 2, Trieste, Italy

25

Dipartimento di Fisica, Università di Roma Tor Vergata, Via della Ricerca Scientifica, 1, Roma, Italy

26

European Space Agency, ESAC, Planck Science Office, Camino bajo del Castillo, s/n, Urbanización Villafranca del Castillo, Villanueva de la Cañada, Madrid, Spain

27

European Space Agency, ESTEC, Keplerlaan 1, 2201 AZ Noordwijk, The Netherlands

28

Gran Sasso Science Institute, INFN, Viale F. Crispi 7, 67100 L’Aquila, Italy

29

HEP Division, Argonne National Laboratory, Lemont, IL 60439, USA

30

Haverford College Astronomy Department, 370 Lancaster Avenue, Haverford, PA, USA

31

Helsinki Institute of Physics, Gustaf Hällströmin katu 2, University of Helsinki, Helsinki, Finland

32

INAF – OAS Bologna, Istituto Nazionale di Astrofisica – Osservatorio di Astrofisica e Scienza dello Spazio di Bologna, Area della Ricerca del CNR, Via Gobetti 101, 40129 Bologna, Italy

33

INAF – Osservatorio Astronomico di Padova, Vicolo dell’Osservatorio 5, Padova, Italy

34

INAF – Osservatorio Astronomico di Trieste, Via G.B. Tiepolo 11, Trieste, Italy

35

INAF, Istituto di Radioastronomia, Via Piero Gobetti 101, 40129 Bologna, Italy

36

INAF/IASF Milano, Via E. Bassini 15, Milano, Italy

37

INFN – CNAF, Viale Berti Pichat 6/2, 40127 Bologna, Italy

38

INFN, Sezione di Bologna, Viale Berti Pichat 6/2, 40127 Bologna, Italy

39

INFN, Sezione di Ferrara, Via Saragat 1, 44122 Ferrara, Italy

40

INFN, Sezione di Roma 2, Università di Roma Tor Vergata, Via della Ricerca Scientifica, 1, Roma, Italy

41

Institut d’Astrophysique Spatiale, CNRS, Univ. Paris-Sud, Université Paris-Saclay, Bât. 121, 91405 Orsay Cedex, France

42

Institut d’Astrophysique de Paris, CNRS (UMR7095), 98 bis Boulevard Arago, 75014 Paris, France

43

Institute Lorentz, Leiden University, PO Box 9506, Leiden 2300 RA, The Netherlands

44

Institute of Astronomy, University of Cambridge, Madingley Road, Cambridge CB3 0HA, UK

45

Institute of Theoretical Astrophysics, University of Oslo, Blindern, Oslo, Norway

46

Instituto de Astrofísica de Canarias, C/Vía Láctea s/n, La Laguna, Tenerife, Spain

47

Instituto de Física de Cantabria (CSIC-Universidad de Cantabria), Avda. de los Castros s/n, Santander, Spain

48

Istituto Nazionale di Fisica Nucleare, Sezione di Padova, Via Marzolo 8, 35131 Padova, Italy

49

Jet Propulsion Laboratory, California Institute of Technology, 4800 Oak Grove Drive, Pasadena, CA, USA

50

Jodrell Bank Centre for Astrophysics, Alan Turing Building, School of Physics and Astronomy, The University of Manchester, Oxford Road, Manchester M13 9PL, UK

51

Kavli Institute for Cosmology Cambridge, Madingley Road, Cambridge CB3 0HA, UK

52

Laboratoire d’Océanographie Physique et Spatiale (LOPS), Univ. Brest, CNRS, Ifremer, IRD, Brest, France

53

Laboratoire de Physique Subatomique et Cosmologie, Université Grenoble-Alpes, CNRS/IN2P3, 53 Rue des Martyrs, 38026 Grenoble Cedex, France

54

Laboratoire de Physique Théorique, Université Paris-Sud 11 & CNRS, Bâtiment 210, 91405 Orsay, France

55

Lawrence Berkeley National Laboratory, Berkeley, CA, USA

56

Low Temperature Laboratory, Department of Applied Physics, Aalto University, Espoo, 00076 Aalto, Finland

57

Max-Planck-Institut für Astrophysik, Karl-Schwarzschild-Str. 1, 85741 Garching, Germany

58

Mullard Space Science Laboratory, University College London, Surrey RH5 6NT, UK

59

NAOC-UKZN Computational Astrophysics Centre (NUCAC), University of KwaZulu-Natal, Durban 4000, South Africa

60

National Centre for Nuclear Research, ul. L. Pasteura 7, 02-093 Warsaw, Poland

61

Purple Mountain Observatory, No. 8 Yuan Hua Road, 210034 Nanjing, PR China

62

SISSA, Astrophysics Sector, Via Bonomea 265, 34136 Trieste, Italy

63

San Diego Supercomputer Center, University of California, San Diego, 9500 Gilman Drive, La Jolla, CA 92093, USA

64

School of Chemistry and Physics, University of KwaZulu-Natal, Westville Campus, Private Bag X54001, Durban 4000, South Africa

65

School of Physics and Astronomy, Cardiff University, Queens Buildings, The Parade, Cardiff CF24 3AA, UK

66

School of Physics and Astronomy, Sun Yat-sen University, 2 Daxue Rd, Tangjia, Zhuhai, PR China

67

School of Physics, Indian Institute of Science Education and Research Thiruvananthapuram, Maruthamala PO, Vithura, Thiruvananthapuram, 695551 Kerala, India

68

Simon Fraser University, Department of Physics, 8888 University Drive, Burnaby BC, Canada

69

Sorbonne Université, CNRS, UMR 7095, Institut d’Astrophysique de Paris, 98 bis bd Arago, 75014 Paris, France

70

Sorbonne Université, Observatoire de Paris, Université PSL, École normale supérieure, CNRS, LERMA, 75005 Paris, France

71

Space Science Data Center – Agenzia Spaziale Italiana, Via del Politecnico snc, 00133 Roma, Italy

72

Space Sciences Laboratory, University of California, Berkeley, CA, USA

73

The Oskar Klein Centre for Cosmoparticle Physics, Department of Physics, Stockholm University, AlbaNova, 106 91 Stockholm, Sweden

74

Université de Toulouse, UPS-OMP, IRAP, 31028 Toulouse Cedex 4, France

75

Warsaw University Observatory, Aleje Ujazdowskie 4, 00-478 Warszawa, Poland

Received:

26

September

2019

Accepted:

18

July

2020

We describe an extension of the most recent version of the Planck Catalogue of Compact Sources (PCCS2), produced using a new multi-band Bayesian Extraction and Estimation Package (BeeP). BeeP assumes that the compact sources present in PCCS2 at 857 GHz have a dust-like spectral energy distribution (SED), which leads to emission at both lower and higher frequencies, and adjusts the parameters of the source and its SED to fit the emission observed in Planck’s three highest frequency channels at 353, 545, and 857 GHz, as well as the IRIS map at 3000 GHz. In order to reduce confusion regarding diffuse cirrus emission, BeeP’s data model includes a description of the background emission surrounding each source, and it adjusts the confidence in the source parameter extraction based on the statistical properties of the spatial distribution of the background emission. BeeP produces the following three new sets of parameters for each source: (a) fits to a modified blackbody (MBB) thermal emission model of the source; (b) SED-independent source flux densities at each frequency considered; and (c) fits to an MBB model of the background in which the source is embedded. BeeP also calculates, for each source, a reliability parameter, which takes into account confusion due to the surrounding cirrus. This parameter can be used to extract sub-samples of high-frequency sources with statistically well-understood properties. We define a high-reliability subset (BeeP/base), containing 26 083 sources (54.1% of the total PCCS2 catalogue), the majority of which have no information on reliability in the PCCS2. We describe the characteristics of this specific high-quality subset of PCCS2 and its validation against other data sets, specifically for: the sub-sample of PCCS2 located in low-cirrus areas; the Planck Catalogue of Galactic Cold Clumps; the Herschel GAMA15-field catalogue; and the temperature- and spectral-index-reconstructed dust maps obtained with Planck’s Generalized Needlet Internal Linear Combination method. The results of the BeeP extension of PCCS2, which are made publicly available via the Planck Legacy Archive, will enable the study of the thermal properties of well-defined samples of compact Galactic and extragalactic dusty sources.

Key words: catalogs / cosmology: observations / submillimeter: general

© ESO 2020

1. Introduction

The Planck1 satellite (Planck Collaboration I 2016) was designed to image the temperature anisotropies of the cosmic microwave background (CMB) with a precision limited only by astrophysical foregrounds. To achieve its objectives, Planck observed the entire sky in nine broadband channels between 30 and 857 GHz. The Planck all-sky maps contain not only the CMB, but also a variety of diffuse sources of “foreground” emission – especially the Milky Way from radio to far-infrared wavelengths, as well as extragalactic backgrounds such as the cosmic infrared background (CIB) and Sunyaev–Zeldovich emission from clusters of galaxies. In addition to diffuse emission, the Planck maps contain emission from compact Galactic objects (cold dense clumps, supernova remnants, etc.) and a wide variety of unresolved external galaxies.

The Planck Catalogue of Compact Sources (PCCS; Planck Collaboration XXVIII 2014) contains compact sources extracted from the Planck maps using the first 15 months of data. The source-detection algorithm was independent at each frequency and consequently the PCCS comprises nine independent lists. The second version of the catalogue (PCCS2; Planck Collaboration XXVI 2016) was produced using the full-mission data, obtained between 13 August 2009 and 3 August 2013.

At the frequencies observed by the High Frequency Instrument (HFI; 100–857 GHz), the diffuse sky background consists mainly of cirrus, i.e., dust emission from our own Galaxy, which covers a large part of the sky, is bright, and spatially fluctuates in a complex way (Low et al. 1984). The presence of this cirrus significantly complicates the detection and validation of compact sources, particularly because the statistical properties of this background are poorly understood, and since this cirrus contains localized structures that can be easily confused with genuinely compact sources. In addition, most of the compact sources expected in the frequency range 217–857 GHz, both Galactic and extra-galactic, have a dust-dominated spectrum similar to that of the cirrus.

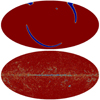

The approach of PCCS2 to this problem was the cautious but simple one of defining a set of masks within which the cirrus emission was bright or complex, and labelling all compact sources found within these masks as “suspicious”. The masks were derived at each frequency from: (a) brightness-thresholded total emission maps; and (b) maps of filamentary emission derived from a difference-of-Gaussians technique. All the compact sources detected in the union of these two masks were put into separate lists, referred to as PCCS2E (E for “Excluded”), and their reliability was not determined. The Exclusion masks include the Galactic plane and the low-Galactic-latitude regions, and cover from 15% of the sky at 100 GHz to 66% of the sky at 857 GHz. PCCS2E contains 2487 (43 290) sources at 100 GHz (857 GHz), to be compared to 1742 (4891) sources in the PCCS2 “proper”2. The vast majority of compact sources detected in the HFI maps therefore reside in the PCCS2E. While it is likely that many of the sources within PCCS2E are not genuine compact sources, but rather bumps or filaments in the cirrus background, inspection by eye of the maps clearly reveals that many of the sources are very probably genuine. Figure 1 shows a 10° × 10° patch of sky on which the locations of both PCCS2 and PCCS2E sources are displayed. The lack of information on the reliability of the PCCS2E sources diminishes the overall utility of the PCCS2+2E. This new study addresses that problem.

|

Fig. 1. Left: a 10° × 10° mid-Galactic-latitude (bII ≈ 45°) region of the Planck 857 GHz map superimposed on the PCCS2+2E filament mask (grey contours). PCCS2 sources are yellow diamonds and PCCS2E sources are red triangles. The selected region contains complex backgrounds with localized features such as filaments and cirrus, causing the mask to break up into numerous islands. Many PCCS2+2E sources trace these structures, suggesting that some of the sources are parts of filamentary structures broken up by the source-finder and not genuine compact sources. Right: central 4.9° × 4.9° of the picture on the left, showing more clearly the spatial distribution of the PCCS2 and PCCS2E sources relative to the mask. |

We do this by making use of two kinds of information available in the Planck maps but not used by PCCS2. First, we use data from multiple frequencies simultaneously. The vast majority of high-frequency compact sources in PCCS2+2E, both Galactic and extragalactic, radiate thermal dust emission, which can be adequately modelled with a modified blackbody (MBB) spectral energy distribution (SED) characterized by a temperature and a spectral index (T, β). This smooth spectral behaviour can be used to improve the detectability and reliability of individual sources at high frequencies, while at the same time determining the parameters of the corresponding SEDs. This technique has been used to construct several previous Planck catalogues, including: the Catalogue of Galactic Cold Clumps (Planck Collaboration XXVIII 2016); the Catalogue of Sunyaev–Zeldovich Sources (Planck Collaboration XXVII 2016); the List of High-Redshift Source Candidates (Planck Collaboration Int. XXXIX 2016); the band-merged version of the Early Release Catalogue of Compact Sources (Chen et al. 2016); and the Multi-frequency Catalogue of Non-thermal Sources (Planck Collaboration Int. LIV 2018).

The second piece of information is that the brightness distribution of the diffuse cirrus emission varies relatively slowly and smoothly across the sky. This implies that its spatial-statistical properties are likely to be homogeneous within relatively large patches. In addition, since the cirrus itself has an SED of the MBB type, its spatial distribution is correlated across frequency channels. The statistical properties of the background can therefore be determined locally with good precision, and this information can be used to help separate sources from backgrounds.

We have carried out a re-analysis of all the sources contained in PCCS2+2E at 857 GHz3, which assumes that a single compact source is responsible for the emission observed across a range of frequencies, both below and above 857 GHz. We further assume that each source can be distinguished from the diffuse background in which it is embedded, either by being an outlier (in the sense that its spatial distribution does not match the statistical properties of the background) or by exhibiting a significantly different SED. We combine multi-channel information re-extracted from Planck and IRAS maps to: (a) assess the reliability of detection of each source, taking into account potential confusion with the background; (b) re-determine the flux density of each source at frequencies from 353 to 857 GHz; (c) evaluate the spatial parameters (location and extension) of the compact source; and (d) estimate the parameters of an MBB fit to the emission across all the frequencies considered.

The results of this re-analysis are included in the Planck Legacy Archive4 (PLA) as an extension of the PCCS2 and PCCS2E 857 GHz catalogues, appending the values of the new parameters to the original files. This extension of PCCS2 enables extraction of sub-samples that have well understood statistical properties, which in turn enables the study of the thermal properties of compact Galactic and extragalactic sources.

The outline for this paper is as follows. In Sect. 2, we present the data that we use as input to the analysis. In Sect. 3, we detail the model that we use to describe the sources and associated backgrounds, and we outline the Bayesian algorithm that we use to analyse each source and the main parameters that it outputs (details are given in Appendix A). In Sect. 4, we describe the simulations that we have built and used to tune and validate the algorithm and some of the main results. In Sect. 5, we describe how we produce and filter the new information added to the PCCS2+2E catalogue. In Sect. 6, we carry out a global characterization of the results of this analysis. In Sect. 7, we validate the results of this analysis against PCCS2 and other catalogues, and (for diffuse emission parameters) against dust maps derived from Planck data. In Sect. 8, we summarize our results, and provide recommendations for users of the new source information.

We have also included several appendices as follows. In Appendix A we detail the statistical machinery that we use. In Appendix B we describe how we have used our simulations to characterize and test the results. In Appendix C we comment on our Bayesian approach to contamination analysis, as opposed to a more classical frequentist approach. Finally, in Appendix D we include for reference the resulting SEDs that we obtain for a small number of well-known sources.

Parts of this paper describe details of our methods, and are necessarily long and technical. For readers whose main interests are the use of our results, we recommend to focus on Sects. 3.1 and 3.2, which describe our source and background models, and Sects. 5 and 6, which describe how we generate catalogue information, and how we then select a “base” catalogue of reliable sources. Section 7 compares our results to other catalogues, and can be skimmed unless such comparisons are important to the reader. Our main results are summarized in the final section, and Appendix D provides some specific examples of well-studied or interesting sources extracted from our catalogue.

2. Data

We use the 857 GHz source list of the Second Planck Catalogue of Compact Sources (Planck Collaboration XXVI 2016) to provide the initial source locations for our multifrequency Bayesian analysis. The angular resolution of Planck was highest at 857 GHz (corresponding to 4 7), and this list contains the largest number of sources of any individual frequency in PCCS2. The 857 GHz source list contains flux densities for each source detected at 857 GHz, as well as estimates of flux densities at 545 and 353 GHz at the same locations. We note that the 857 GHz list does not contain any indication of the reliability of individual sources; the highest frequency at which such an indication is given is 353 GHz.

7), and this list contains the largest number of sources of any individual frequency in PCCS2. The 857 GHz source list contains flux densities for each source detected at 857 GHz, as well as estimates of flux densities at 545 and 353 GHz at the same locations. We note that the 857 GHz list does not contain any indication of the reliability of individual sources; the highest frequency at which such an indication is given is 353 GHz.

Our analysis then uses the Planck all-sky temperature maps at 353, 545, and 857 GHz from the Planck 2015 release (Planck Collaboration I 2016) to derive the characteristics of sources and their surrounding background. These maps are provided in the Planck Legacy Archive in HEALPix (Górski et al. 2005) format with Nside = 2048. The description of these maps can be found in Planck Collaboration VII (2016). In addition, we use the 3000 GHz IRIS map, a reprocessed IRAS map described in Miville-Deschênes & Lagache (2005), with the same pixelization as the Planck maps5.

Since the start of this work, a new generation of Planck maps has been released, which is referred to as the 2018 or Legacy release (Planck Collaboration I 2020). However, a new catalogue of compact sources has not been extracted from the Legacy maps. Therefore, we continue using the Planck 2015 maps that are the source of PCCS2.

3. Methodology

There is a long history of astronomers constructing catalogues, and many different approaches have been implemented, depending on the source and background properties. When the sources are unresolved and the background has no correlations, then the optimal approach is simply to use a point-spread-function filter (e.g., Stetson 1987) or thresholding methods appropriate for isolated sources, perhaps with varying noise levels, using software such as SExtractor (Bertin & Arnouts 1996). When the statistical properties of the background are known, one can instead use a matched-filter approach (e.g., Tegmark & de Oliveira-Costa 1998; Barreiro et al. 2003). If the background is more complex, if the sources themselves are partially resolved, or if the observed fields are crowded, the task of making a reliable catalogue becomes much more difficult. Several methods have been used to extract compact sources from confused Galactic regions, for example, using second derivatives and multi-Gaussian fitting as in CuTEx (Molinari et al. 2011), using higher-resolution data and multi-scale extraction as in getdist (Men’shchikov 2013) applied to Herschel data, a similar multi-scale approach with Gaussclumps applied to LABOCA data (Csengeri et al. 2014), or associating contiguous bright regions as a single source in Clumpfind (Williams et al. 1995) or FellWalker (e.g., Nettke et al. 2017) for SCUBA-2 data. A completely different strategy focuses on estimating the background properties simulaltaneously with the source properties, and that is the approach we follow here.

We carry out an independent Bayesian likelihood analysis (see e.g., Hobson et al. 2009) for each source contained in the 857 GHz catalogue of PCCS2+2E, and for the background surrounding it. The likelihood analysis takes as input four maps (353, 545, and 857 GHz from Planck 2015, and 3000 GHz from IRIS). We implement this analysis in software called the Bayesian Estimation and Extraction Package, and refer to it as BeeP. The analysis of each source assumes a model of the signal due to the source, and another due to the background.

3.1. Source model

We model the signal sj due to the jth source as

where Aj is an overall amplitude for the source at some chosen reference frequency, which we take to be 857 GHz6, f contains the emission coefficients at each frequency, which depend on the emission-law parameter vector ϕj of the source (see below), and τ(x − Xj; aj) is the convolved spatial template at each frequency of a source centred at the position Xj ≡ {Xj,Yj} and characterized by the shape parameter vector aj. Thus, the parameters to be determined for the jth source are its overall amplitude, position, shape, and emission law, which we denote collectively by Θj = {Aj, Xj, aj, ϕj}.

If we make explicit the dependence of the source signal with the frequency channel (i), we have

where Bi(x) is the beam point-spread function of channel i. In this study we are mostly targeting completely unresolved objects, i.e., beam-shaped “point sources”; however, since PCCS2+2E also includes extended objects, we model the intrinsic shape of a source as a symmetrical two-dimensional Gaussian,

where a ≡ r is the source radius.

The intrinsic spatial profile of the source  (before any instrumental distortion) is assumed to remain unchanged across frequencies7. To allow the intrinsic source size to vary with frequency would require more parameters and increased uncertainties to account for a situation that corresponds to a minority of sources. We have therefore chosen to impose a single, constant size parameter for a given source.

(before any instrumental distortion) is assumed to remain unchanged across frequencies7. To allow the intrinsic source size to vary with frequency would require more parameters and increased uncertainties to account for a situation that corresponds to a minority of sources. We have therefore chosen to impose a single, constant size parameter for a given source.

As mentioned in Sect. 1, the frequency spectra of most of the compact objects found in the Planck-HFI maps can be well-represented by an MBB spectrum (Planck Collaboration XXVI 2016); however, the SEDs of a minority of sources, for instance blazars, are not well-described by a modified blackbody. Therefore, we fit all sources with both MBB and “Free” models. In the latter, the emission coefficient fνi at each channel is a free parameter. The MBB spectrum is written as

where the spectral parameters ϕ = {β,T} are the dust emissivity spectral index and temperature, respectively, Bν(T) is the Planck law of blackbody radiation, and ν0 is once again the reference frequency. We normalize f so that fν = 1 at ν = ν0.

The Free model is written as

where the emission coefficients fνi are free parameters. In effect, this model is a way to estimate source flux densities in each channel without imposing an SED, but still assuming that there is a single source at all frequencies. This extra flexibility comes at the cost of a larger model complexity, since it requires more free parameters. The flux-density estimates for the Free model are those that can most closely be compared to the ones already present in PCCS2+2E.

The location of the centre of the source is represented in Eq. (1) by Xj. Our analysis initially assumes that the source is centred at the location defined in the 857 GHz list of PCCS2+2E. However, the source centre may be expected to vary slightly from channel to channel, and for this reason we allow our method to deviate from the initial values in an attempt to find the best overall location. Furthermore, during this investigation we realized that many of the source locations listed in PCCS2+2E are not well determined: in many cases we see that the centres of one or more sources are located around the edge of a well-defined blob of emission (e.g., Fig. 2). This problem affects about 10% of all sources in PCCS2+2E for the higher-frequency channels, and is inherent to the Mexican-hat wavelet 2 (MHW2) algorithm used to perform the detection. This wavelet, when used as a filter, is known to maximize the S/N of the objects, but it is also known to produce artefacts at a fixed distance from the centre of the source, Such artefacts related to the shape of the filter can be identified and removed particularly well in the cleaner regions of the sky. This additional cleaning step was performed for the lower-frequency channels of PCCS2, where the beamwidths are larger and these ringing effects are more prominent, but it was not performed for the higher-frequency channels because it was not considered necessary. Moreover, the MHW2 algorithm is well suited for the detection of point-like objects; however, when dealing with slightly extended structures such as those found at 857 GHz, the artefacts introduced by this filter are more evident, and a two-step cleaning procedure is definitely needed.

|

Fig. 2. Small patch ( |

In our analysis we allow a new location to be determined from all the frequencies considered. As a result, in a number of cases several PCCS2+2E sources will be associated with the same physical source location8. However, there are also many genuinely independent sources that are relatively close to each other, and there is a risk that the algorithm would “merge” them. We have therefore compromised by allowing our algorithm to move the location by at most 3 pixels (4 5) away from its starting point. If this extreme is reached without an optimal solution being found, a flag indicating this is set in the final parameters.

5) away from its starting point. If this extreme is reached without an optimal solution being found, a flag indicating this is set in the final parameters.

3.2. Background model

We now need to account for the astronomical background b(x) and the instrumental noise n(x). A strong assumption of our framework is that the joint background in which the sources are immersed (b(x) + n(x)) is a two-dimensional, statistically isotropic Gaussian random field. Such a field is fully defined by its covariance matrix, which we use in our method as the mathematical representation of the background. The full-sky maps observed by Planck however, are neither statistically isotropic nor Gaussian. At high Galactic latitudes, the diffuse emission from Galactic dust is faint, and the (mostly extra-galactic) brighter compact sources stand out easily against it. However, the situation changes rapidly at low Galactic latitudes, as the diffuse emission competes in brightness with even the brightest compact sources. In this situation, confusion between “genuine” sources and the diffuse emission leads to difficulties in estimating the statistical properties of the background alone.

To improve our estimation of the properties of the background, we first reduce the size of the sky patch analysed around the source such that we can assume that statistical isotropy applies locally9. Second, we use the covariance matrix of the cross-power spectra across frequency channels. This improves the situation, since the instrumental noise n(x) is mostly uncorrelated across channels, and the astronomical background b(x) is better-determined by the larger data volume. The determination of an accurate cross-spectrum covariance matrix turns out to be a key element in our method. To improve the estimation of the off-diagonal components of this matrix, we filter out the noise component using the theory of random covariance matrices (Bouchaud & Potters 2004, Chap. 9). We have found that we also need to weight the off-diagonal elements (which represent the correlated part of the background) with respect to the diagonal elements (which represent the “noise”) in order to accommodate the very large dynamic range of sources. The weighting factor that we use is tuned on simulations to reduce bias in the recovery of source parameters. More details on these analysis choices are described in Appendix A.

In practice, PCCS2+2E provides a list of potentially genuine sources that are embedded in the background whose properties we are estimating. For each of these sources, we create “background” maps (see Appendix A.2) by masking all surrounding PCCS2+2E sources10 and inpainting the masked areas (see Sect. 5.3 of Casaponsa et al. 2013). We use a 7′ masking and inpainting radius to provide a good balance between effective source-brightness removal and preservation of the statistical properties of the background (see Figs. A.1 and A.2, and the discussion in Appendix A.1.4), especially at low Galactic latitudes where the density of sources is very high. Close to the Galactic plane, a large fraction of the background patch (up to 74% near the Galactic centre) is masked and inpainted, which might be expected to have a significant effect on the estimation of the detection significance11. More generally, we expect that inpainting may bias the estimation of the background properties, but it cannot be avoided because the effect of unremoved bright sources or of corresponding holes would certainly be much higher. The impact of inpainting cannot be modelled analytically, and the only way to assess it is through simulations. Simulations with different degrees of inpainting are discussed in Sect. 4, and show that the effect on source parameters is indeed small (as discussed further in Appendix B.2).

3.3. Combined model and its analysis

In this section we present the principles of our Bayesian analysis methodology. Appendix A gives technical details of the approach and its practicalities.

We first combine our models for sources and background into a model of the observed maps. A realistic model would have to include the entirety of sources and the full sky together. However, as described in Appendix A.1, under the assumption that the sources do not blend together, it is possible to simplify the problem and model each source independently:

where dj is the data vector (pixel values), and bj and nj represent astrophysical and noise backgrounds in the neighbourhood of the source (j).

We can now build the likelihood of a single compact object as

where  is the generalized background (b + n), N is the generalized background covariance matrix, and all individual source parameters have been concatenated into Θ for convenience. For clarity we have dropped the source index here.

is the generalized background (b + n), N is the generalized background covariance matrix, and all individual source parameters have been concatenated into Θ for convenience. For clarity we have dropped the source index here.

The above expression allows us to consider the likelihood of a “no-source” model ℒ0, when A, the source amplitude is 0. ℒ0 is a constant, since it does not contain any parameters. The expression that we seek to maximize is the log of the ℒ(Θ)/ℒ0 ratio, which represents the likelihood that there is a source in addition to the background.

If  is the parameter set that maximizes the likelihood ratio (Eq. (7)), then we define the quantity ℛ, corresponding to NPSNR in the catalogue12 (the Neyman–Pearson signal-to-noise ratio), by

is the parameter set that maximizes the likelihood ratio (Eq. (7)), then we define the quantity ℛ, corresponding to NPSNR in the catalogue12 (the Neyman–Pearson signal-to-noise ratio), by

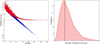

ℛ is the detection significance level that expresses the number of sigmas of the detection, and is given in the NPSNR column of the BeeP catalogues. In the case that all of our assumptions hold, and all source parameters are known except amplitude, A, then ℛ would in fact be the inverse of the fractional error on the amplitude, A/ΔA. However, in practice, as we shall see, typical values of ℛ are considerably higher than A/ΔA. This is the result of either broken assumptions or uncertainties on the other estimated parameters that propagate into the source amplitude. In particular, the presence of cirrus produces strong positive-tail events in the likelihood, and this might be interpreted (erroneously) as generated by the source of interest (see Fig. 3 for examples).

|

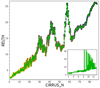

Fig. 3. Examples of potential analysis fields. Upper panel: high significance source (PCCS2 857 G172.20+32.04). The histogram (shown in the inset; Y-scale is log) is a mixture of a Gaussian component from the background pixels, plus a strong upper tail generated by the source in the centre. Lower panel: field with no detected sources in it (PCCS2 857 G172.20+32.04; Y-scale is linear). This time only the Gaussian component is present. The tails of the distribution are compatible with “just background”. Each field is 25 × 25 pixels (1 pixel |

To account for this effect, we build an estimate of the non-Gaussianity of the background that is independent of the likelihood, which we refer to as RELTH. Essentially we look in the background patch for outliers to a white-noise, unitary (σ = 1) Gaussian random field in pixel space (X), which is what we would expect if all our assumptions hold, in other words, under the null hypothesis of our model. We assume that the positive outlier pixels created by the source itself are no more than a small fraction of the total number of pixels in a small patch around the source. Using the definition of quantiles, one would expect that

where RELTH is the 1 − α distribution quantile, and σ is the width of the Gaussian. Using simulations, we have verified that the fraction of outlier pixels created by the source is less than 5% of the total, so we use α = 5%.

RELTH can be read directly from the histogram of the actual field, and then Eq. (9) solved for σ. If the background pixels ([1 − α]% of the patch pixels) comply with the assumptions of the background model, then they will follow a unitary Gaussian distribution and the solution of Eq. (9) is σ = 1. However, as a result of the intrinsic non-Gaussianity of the background, the tails of the background histogram are expected to be larger than those of the unitary Gaussian distribution. This distribution of background pixel brightness with extended tails can then be approximated by a Gaussian, but with σ > 1 to account for the larger tails. Solving Eq. (9),

where k is a pure numerical constant given by

and erfc−1 is the inverse complementary error function.

We can now correct our “naive” significance NPSNR and define a new source significance variable as

where k is a constant given by Eq. (11), which is the same for all sources. SRCSIG expresses the likelihood that there is a source in the patch being analysed. If the histogram of the background patch is Gaussian, then  by definition and SRCSIG = NPSNR. If our initial assumptions hold, as predicted, then NPSNR is the detection significance. However when there is non-Gaussianity in the background, either from diffuse components or localized features, then RELTH increases and a penalty is applied to the Gaussian criterion. The penalty is reduced towards high Galactic latitudes away from cirrus, where the isotropy and Gaussian assumptions hold well. In the neighbourhood of the Galactic plane, or inside cirrus structures, the criterion becomes more stringent in order to avoid false positives induced by the non-Gaussianity of the background13.

by definition and SRCSIG = NPSNR. If our initial assumptions hold, as predicted, then NPSNR is the detection significance. However when there is non-Gaussianity in the background, either from diffuse components or localized features, then RELTH increases and a penalty is applied to the Gaussian criterion. The penalty is reduced towards high Galactic latitudes away from cirrus, where the isotropy and Gaussian assumptions hold well. In the neighbourhood of the Galactic plane, or inside cirrus structures, the criterion becomes more stringent in order to avoid false positives induced by the non-Gaussianity of the background13.

Finally, we note that RELTH depends on the detailed statistics of the field brightness. Therefore its ability to provide an estimate of the relative level of non-Gaussianity in the background is not uniform across the sky. However, tests using simulations show that it is effective both at low and high Galactic latitudes, and it can safely be used to correct NPSNR. On the other hand, it should probably not be used to directly compare levels of non-Gaussianity in regions that differ significantly in complexity.

4. Simulations

We have tested our method extensively using simulations. These tests have allowed us to tune parameters intrinsic to the method, and to assess the quality of the extracted source descriptors. There are four types of simulations, as follows.

-

1.

Synthetic simulations (Appendix B.1) comprise data that mimic a basic assumption of the method as closely as possible, namely that the background is a homogeneous Gaussian random process. To make these simulations, we combine CMB map realizations based on the Planck 2015 best-fit cosmological model, with noise consistent with that of the Planck detectors as described in Planck Collaboration XII (2016). To these we add Gaussian sources whose thermal emission characteristics are taken from a preliminary BeeP extraction. We use these simulations to test the algorithm, and fix some of its basic parameters, such as the optimal size of the patch analysed around each source, and to check the impact of some systematics such as projection distortions.

-

2.

Injection simulations (Appendix B.2) attempt to reproduce the properties of the diffuse backgrounds that are seen by Planck. The basic principle is to use the 2015 Planck maps and add to them a known set of sources. We have produced three distinct types of these simulations: (a) we remove from the observed maps the sources present in PCCS2+2E, inpaint the holes, and inject at the same locations point-like sources whose thermal emission parameters are those of the original source (as extracted by BeeP in a preliminary run); (b) as in (a), but the fake sources are injected in the vicinity of the original ones rather than at the PCCS2+2E location; and (c) the locations of the fake sources are randomly drawn from a uniform distribution over the high-latitude sky, and their thermal properties are drawn from the distribution present in PCCS2. In this case the original PCCS2+2E sources are not removed from the maps. In addition, we have also produced realizations of the above three types that include known source extensions. As detailed further in Appendix B.2, these simulations allow us to:

-

assess the effect of inpainting on the results;

-

determine an optimal level for the covariance matrix cross-correlation factor;

-

assess biases in the recovered source parameters, e.g., temperature and spectral index;

-

assess the accuracy of the estimated source locations, and on this basis establish a correction to the estimated location uncertainties; and

-

assess biases and establish corrections to both the estimated flux densities and their uncertainties (see Sect. 6.2.4).

-

-

3.

FFP8 simulations (Appendix B.4) are the most realistic realizations of the all-sky maps as observed by Planck and processed through the PR2 pipelines14, and are fully independent of the observed maps. In particular they reproduce the variation across the sky and in frequency of the Planck beams, which is something that we do not include in our injection simulations. However, an important drawback is that a corresponding simulation of the IRIS sky is not available and therefore we cannot extract thermal-emission parameters in order to compare them directly to BeeP’s results on Planck maps. Nonetheless, we are able to use these simulations to assess the impact of the beam variation on the recovery of flux densities and on the positional error, and on this basis we establish a correction to the flux-density estimates.

-

4.

No-source simulations (see Sect. 5.1) use a list of locations that are not present in PCCS2+2E, and on which we run BeeP. Under the assumption that such locations contain only background emission15, these simulations allow us to estimate the number of spurious sources generated by BeeP, i.e., the background-related contamination fraction of the resulting catalogue. The empty locations are selected in the neighbourhood of the catalogue positions in order to preserve the distribution of sources on the sky. We have placed the sources at a random location within an annulus of radii 12′ and 14′, enforcing that each injection location is at least 12′ from any other. We then mask and inpaint the original source.

All of the above tests and their results are described in detail in the Sects. 5.1 and 5.2, as well as Appendix B.

5. Catalogue production

The basic principles of the production methodology for the catalogue are described in Sect. 3 and implementation details in Appendix A. The BeeP software takes as input a catalogue of sources and associated maps, and processes all sources. The output is an extension of the input catalogue, in effect adding to each source a number of new parameter fields.

As described in Sect. 2, the input catalogue is the union of the 857 GHz PCCS2 and PCCS2E (PCCS2+2E) source lists, which contains 48 181 entries. The input data are the 2015 Planck full-mission frequency maps between 353 and 857 GHz, and the IRIS map. The IRIS map does not cover the full sky, and therefore a small subset of sources (650) has been processed with Planck channels only. This restriction seriously impairs the constraining capabilities of the likelihood, and hence a downgraded quality status has been assigned to these sources. As a consequence, the output catalogue contains 47 531 complete entries. Of those, 42 869 (about 90%) are in the PCCS2E, and only 4662 (10%) in the PCCS2.

5.1. Reliability assessment

Once we have processed the entire input catalogue through BeeP, we can apply filters to select subsets of sources. The first and most critical filter is reliability. For this purpose, we interpret our detection significance statistic SRCSIG in terms of reliability.

In a classical frequentist framework, we would draw the test receiver operational characteristic curve (ROC, Trees 2001, Chap. 2). The ROC curve shows the balance between “completeness”, or true positive rate, and the false positive or “spurious” rate, when varying the threshold of the detection significance statistic. However, since we are not adding any new entries to the PCCS2+2E catalogue, we will always be limited by the initial catalogue’s completeness. Our focus will therefore be on the spurious error rate or “contamination”. The spurious error rate is the probability of classifying a source as real when only background is present for a given SRCSIG16,

Owing to the complexity of the data, the most practical way of estimating contamination is through simulations. For this purpose, we use the no-source simulations described in Sect. 4. We run the BeeP algorithm on the no-source catalogues, and compute the SRCSIG statistic. We then compute the percentage of locations where there is not a source for which the SRCSIG statistic is larger than a certain threshold. This gives an estimate of the contamination (Eq. (13))17, under the assumption that there are no sources.

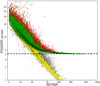

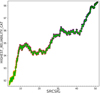

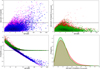

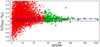

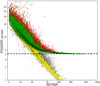

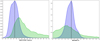

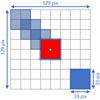

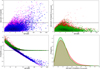

Figure 4 shows how this estimate of the contamination varies with the SRCSIG threshold, for two different thresholds of NPSNR. Solid blue lines are full-sky results, and dashed lines correspond to a catalogue restricted to PCCS2 sources. The solid (full-sky) green line is obtained similarly, but the original source is not removed. This test is carried out to show that the presence of the original source in the background significantly and systematically modifies the non-Gaussianity of the background in the area being analysed, reducing in a systematic way the SRCSIG distribution. As can be seen in Fig. 4, this effect would artificially (and incorrectly) reduce the contamination for a given SRCSIG threshold.

|

Fig. 4. Contamination (Eq. (13) and Appendix C) when SRCSIG ≥ x, in the cases of NPSNR > 3 (left) and NPSNR > 5 (right). The blue curves, solid for full sky (PCCS2+2E) and dashed for high Galactic latitudes (PCCS2), display the contamination for simulations when the “no sources” are located in the neighbourhood of actual catalogue positions. The original sources are masked and inpainted. The solid green line shows the estimated contamination of a simulation exactly like that of the solid blue curve (full sky), but this time the original sources are not removed and inpainted. In this figure and several others in this paper, we label the axis with the name of the corresponding field in the output of BeeP analysis (in capital roman letters), e.g., here “SRCSIG”. |

Figure 4 shows that if the catalogue is restricted to the more reliable sources, there is very little difference in the contamination levels of the PCCS2+2E full catalogue (solid line) and the PCCS2 subset (dashed line); this indicates that BeeP accounts adequately for the non-Gaussianity of the background. We select SRCSIG > 3.7 as an interesting threshold, which leads to a contamination level between 5% and 10% (Fig. 4).

Our simulation-based estimate of contamination relies on the prior assumption that there are no sources at the locations analysed, which is probably not correct for PCCS2+2E where crowding becomes significant. This makes the estimate of Eq. (13) a conservative one. The curves in Fig. 4 should then be read as the maximum contamination for a given SRCSIG threshold. To make it more realistic, the estimate should be reduced taking into account the catalogue completeness, as described in Appendix C. However, for high values of NPSNR, the correction is very small18; in this case one can safely use Fig. 4 as a reasonable estimate of the catalogue contamination. Comparison of the solid and dashed lines in Fig. 4 also shows the effect of crowding on contamination, which is at most 10% for low SRCSIG.

With the above considerations, a catalogue can be selected to have a given reliability level by adopting thresholds in SRCSIG and NPSNR. For example, if we define the condition

where the symbol “∧” means “logical and”, the resulting catalogue has a maximum contamination between 5 and 10%19. The reliability condition in Eq. (14) is one of the important components for building the “BeeP/base” catalogue (see Sect. 5.5).

5.2. Rejection of outliers

As a result of the large range of source flux densities and the background conditions, it is reasonable to expect that under extreme conditions the simplified data model, and the likelihood, become a sub-optimal description of the statistical properties of the data, and that significant outliers will arise. As one of our goals is to have a well-defined set of statistical descriptors for the catalogue estimates, these extreme outliers need to be identified and removed to avoid biasing or distorting the characterization.

The extensive set of simulations described in Sect. 4 was used to identify such cases (see Appendix B.2 for more details). We find that any sources whose estimates do not meet the following “outlier-rejection criterion” must be considered unreliable:

where EXT, TEMP, and BETA are the estimated source extension, temperature, and spectral index, respectively. The differences (TH2SB − TL2SB) and (BETAH2SB − BETAL2SB) are the estimated uncertainties of the temperature and spectral index20. The value of EXT that we use to create the filter is the “uncorrected” source size parameter (see Sect. 6.2.2 and Appendices A.2.3, A.2.4).

The criterion of Eq. (15) selects a very small fraction of the catalogue sources (2462, or about 5%). Of those, 1463 would also have been rejected by the reliability criterion (Eq. (14)). Thus only 999 or 2% of the sources that pass the reliability criterion are rejected by the outlier-rejection criterion (Eq. (15)).

5.3. Convergence filter

Our logical framework assumes a binary classification scheme, such that each region of interest is either diffuse background or a compact source. However, a binary classification model, regardless of the significant advantage of its simplicity, is not complete enough to explain the full complexity of the data set. In fact, as described in Sect. 5.1 (see also Appendix C), we compute the probability of a set of pixels not being part of the diffuse background (rejection of the null hypothesis), and the SRCSIG statistic acts as the discriminating variable. This mathematical machinery requires us to find a likelihood maximum in the proximity of the source position. However, in some cases, e.g., at low Galactic latitudes or along very extended sources, that condition may not be met. For example, in Fig. 5 there are some PCCS2E positions (blue triangles) that are well separated from the actual centre of the compact object, which coincides with the likelihood maximum. Since we have limited the likelihood “travel” distance to three pixels from the original PCCS2E+2E location (see Sect. 3.1 and Appendix A.2), in some of these cases BeeP fails to find a maximum. The code then assumes that the original PCCS2+2E position is correct, and samples the likelihood field around it. For extended sources where BeeP could not find a likelihood maximum, such as those shown in Fig. 5, SRCSIG can still attain a high value because the location does not have background-like properties. For this reason we have introduced a new catalogue field MAXFOUND, that flags when a likelihood maximum was found. Considering that being above a given SRCSIG threshold means, it is likely that this is not part of the background. MAXFOUND then allows one to discriminate between a compact object (value 1, Fig. 5, green squares) or something else (value 0, Fig. 5, red squares).

|

Fig. 5. Patch of |

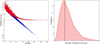

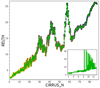

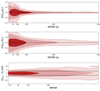

In Fig. 6 we show the total fraction of PCCS2+2E sources with NPSNR> 5 and above a given SRCSIG. The dashed curves in Fig. 6 show the impact of adding the condition of MAXFOUND = 1. The intersection of the curves with the SRCSIG = 0 axis shows the fraction of sources with NPSNR> 5.

|

Fig. 6. Fraction of PCCS2+2E sources with NPSNR > 5 and above a given SRCSIG threshold. Green curves show PCCS2 sources, blue curves show PCCS2E sources, and red curves show the full PCCS2+2E. Dashed lines are the result of imposing MAXFOUND = 1. The dashed black vertical line (SRCSIG = 3.7) is the reliability criterion threshold that we have selected for the BeeP/base catalogue. |

5.4. Quality filter

We summarize the quality of the source parameter estimates in a new field, EST_QUALITY, which assigns five points to each source and subtracts penalties from this maximum value if certain quality criteria are not met. EST_QUALITY = 5 means that the estimates of source parameters are highly reliable. Penalties subtracted if specific quality criteria are not met are listed in Table 1. When MAXFOUND ≠1 (no likelihood maximum), it is not possible to guarantee an optimal extraction of source parameter estimates. However, sources that fail only the MAXFOUND condition may still be used in many cases where a rigorous statistical characterization is not required. For this reason the associated penalty was set to half of the other criteria. Source estimates not meeting the “outliers criterion”, or that were examined in only the Planck channels (because they are located in the IRAS gaps), should be used with great caution.

Penalties applied to sources whose parameter estimates do not meet the quality criteria (note that the maximum quality level is 5).

5.5. BeeP/base catalogue

Let us now examine the sub-catalogue defined by the conditions given in Eq. (14). If we require EST_QUALITY ≥ 4, this sub-catalogue contains 24 511 of the 43 290 objects in the PCCS2E (56.6%). If we require EST_QUALITY = 5, however, we still find 21 997 sources (50.8% of the PCCS2E objects). We therefore add this condition and define a “reliable and accurate” sub-catalogue based on the three following conditions:

This sub-catalogue, which we shall refer to as BeeP/base, contains 26 083 (54.1% of the full PCCS2+2E) objects. Unless otherwise stated, all figures in the rest of this paper are based on it. If we require a more stringent contamination level, say below 1%, (SRCSIG> 7.0 and EST_QUALITY = 5), there remain 5077 (11.7%) compact objects in the PCCS2E.

Although in the PCCS2+2E there is no indication of the source-detection significance, for comparison we computed one by dividing the MHW2 estimates of the source flux density and its uncertainty, DETFLUX/DETFLUX_ERR. The median value of the PCCS2+2E-estimated S/N (8.96) is considerably lower than the equivalent value of NPSNR in the BeeP catalogue (12.82). However, one must remember that BeeP is a multi-channel method, and jointly analysing more than one frequency strengthens the background-rejection criterion.

5.6. Beyond BeeP/base

In Sect. 5.5 we have described how we have extracted a subset of the sources in PCCS2+2E (BeeP/base) that we consider to be “reliable and accurate”. Based on our analysis, this means that:

-

the uncertainties on the extracted model parameters are realistic;

-

the number of false detections is low.

We caution the user of BeeP/base that the parameter uncertainties for many sources in this catalogue are relatively large. For example, Fig. 11 (supported by simulations in Appendix B, see e.g., Fig. B.7) shows that sources with the lowest NPSNRs have flux-density extraction uncertainties larger than about 40%. At first glance this does not seem consistent with a naive interpretation of NPSNR as an “SNR-like” quantity, but we remind the reader that NPSNR reflects the uncertainties of all model parameters, not only flux-density determination. Figure 8 shows in particular that the flux-density determination is correlated with other parameters (in particular the size, temperature, and spectral index), and this certainly contributes significantly to increasing the uncertainties.

We have selected BeeP/base as a good approach for studying the broad characteristics of the results of our analysis. However, we expect that each user of these results will select a specific subset of sources based on their own needs. For example, if low flux-extraction uncertainties are required, then the threshold on NPSNR should be correspondingly increased, and we suggest using Fig. 11 as a guideline. Similarly, Fig. 4 can be used to set a threshold related to contamination by false detections. Each user of our results should determine the specific criteria that need to be applied to meet their objectives.

6. Base catalogue characteristics

We now describe and characterize the BeeP/base catalogue. As mentioned previously, all the results of this analysis (i.e., for all PCCS2+2E sources, not only those in BeeP/base) are available online via the Planck Legacy Archive. The Explanatory Supplement (Planck Collaboration ES 2018), which accompanies the results, includes an annotated list of all the parameters provided for each source. In this paper, we provide a summary of the key parameters in Table 2. Some of these are described in more detail in this section.

Summary of the key parameters generated by BeeP for each source in PCCS2+2E and available online via the Planck Legacy Archive.

6.1. Reliability and quality parameters

The set of reliability and quality parameters includes:

-

NPSNR, which measures the S/N of the combined detection (Eq. (8));

-

SRCSIG, which measures the likelihood that the source is a real compact object distinct from the background (Eq. (12));

-

EST_QUALITY, which measures the trustworthiness of the source descriptor estimates extracted by BeeP (see Sect. 5.4).

It is important not to confuse the roles of SRCSIG and EST_QUALITY. SRCSIG indicates the likelihood of a source being real, whereas EST_QUALITY provides an assessment of the quality of the estimated source parameters, given that the source is real. For instance, a bright nearby object may have a very large SRCSIG because we are sure it is a real object. Nonetheless it might still fail the EST_QUALITY criteria if, for example, BeeP cannot find the likelihood peak. In that case there is no guarantee that the recovered parameter estimates are optimal.

6.2. Source properties

This set of parameters gives the position and properties of the sources and their uncertainties.

6.2.1. Thermal properties

We fit the multifrequency data for a given source with two SED models (see Fig. 7), each of which requires an independent run of the likelihood.

-

Modified Blackbody (MBB) model. The source brightness levels are colour-corrected to account for the detector bandpasses. The following parameters are optimized by the likelihood:

-

X and Y position coordinates, with origin at the PCCS2+2E position;

-

EXT, source extension;

-

SREF, source reference flux density;

-

TEMP, source temperature;

-

BETA, source spectral index.

Fig. 7. Example of fitting the MBB (upper panel) and Free (middle panel) SED models to the data for one source (NGC 895). The background is given in the bottom panel. The yellow and red dashed curves are the median and maximum-likelihood fits, respectively. The purple and black bands are the ±1 σ and ±2 σ regions, respectively, of the posterior density. Blue diamonds are the PCCS2+2E flux-density estimates (APERFLUX). The green diamonds are: in the upper panel BeeP’s estimate of the flux density at 857 GHz, and in the middle panel BeeP’s Free estimates of the flux density at each frequency. In the lower panel, dark green diamonds are the background brightness estimates at each frequency, and the green curves are the maximum likelihood (dashed) and the median (solid) models. Red diamonds are the average source brightness divided by the background rms brightness in that patch, i.e., raw S/N. The data points are slightly displaced from their nominal frequencies to avoid overlaps. A similar plot is provided in the Planck Legacy Archive for each source in the BeeP catalogue; see the Planck Explanatory Supplement for further information (http://www.cosmos.esa.int/web/planck/pla/). We note that this figure is reproduced exactly as it will be delivered to the user from the online archive. In Appendix D we provide some representative examples of spectra for different kinds of sources, to show some of the results obtained by BeeP.

All source parameters, geometrical and physical, are sampled jointly. The reference flux density is given at 857 GHz. The reference flux density at 857 GHz is not the flux density measured in the 857 GHz channel; it is rather a scaling factor for the model that could be specified at any frequency. We have chosen 857 GHz for convenience (see Eq. (4)). For this model we also provide the flux densities in the individual channels, computed from the fitted model.

-

-

Free model. The FREE columns are developed in two steps. First, samples are drawn from the geometrical parameters and flux densities at each channel. The flux densities at individual channels are optimized by the likelihood. All source parameters, geometrical and physical, are sampled jointly. From the flux-density samples at each frequency we compute a best-fit value and an uncertainty. The following parameters are optimized by the likelihood:

-

X and Y position coordinates, with the origin at the PCCS2+2E position;

-

EXT, source extension;

-

FREES3000, flux density at 3000 GHz;

-

FREES857, flux density at 857 GHz;

-

FREES545, flux density at 545 GHz;

-

FREES353, flux density at 353 GHz.

We then fit an MBB model to the four data pairs (Sν, σSν), using a Gaussian likelihood with colour-correction, resulting in a source reference flux density given at 857 GHz.

-

BeeP also provides, as an output, plots of the source-parameter posterior distributions for the MBB model (see e.g., Fig. 8).

|

Fig. 8. Corner plot (Foreman-Mackey 2016) of parameter posterior distributions for one source (NGC 895). Off-diagonal positions show marginalized bi-dimensional posterior distributions of the parameter samples defining the row and the column. Diagonal positions contain posterior marginalized distributions. The magenta lines mark the PCCS2+2E catalogue flux density in the 857 GHz channel. There is one such plot for each source in BeeP’s catalogue. The source extension (EXT) samples shown have not been corrected for the narrower beams employed in the likelihood. See the Planck Explanatory Supplement for further information (http://www.cosmos.esa.int/web/planck/pla/). This figure is reproduced exactly as it will be delivered to the user from the online archive. |

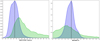

6.2.2. Size

The spatial extent of source-related emission peaks in the maps results from the convolution of the source size and the beam. These are degenerate variables over the relatively narrow range of variation of beam size in the Planck maps. BeeP uses a source-extension parameter EXT which represents the intrinsic radius of the source in Eq. (3). However, in Appendix A.2.3, we explain that BeeP artificially narrows the beams to allow for emission bumps in the maps that are narrower than the beam size. Therefore EXT does not correspond to the actual intrinsic source size; however, EXT is easily corrected to a new parameter R, which is the intrinsic source radius corresponding to the real beam sizes. Both parameters are provided in the BeeP results. Furthermore, we remind the reader that we have simplified the source model by assuming that it is a symmetrical 2D Gaussian. The parameter R thus gives a useful indication of whether the source is extended, but it does not reflect any potential source elongation and should therefore be used with appropriate caution.

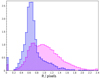

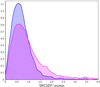

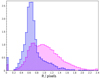

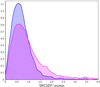

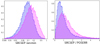

The distribution of source radii (R) found by BeeP is shown in Fig. 9. The PCCS2 subset (shown in blue), is compatible with a population overwhelmingly dominated by unresolved sources (the size distribution peaks at 1 2). Instead, the full PCCS2+2E (purple) set peaks at 1

2). Instead, the full PCCS2+2E (purple) set peaks at 1 7. This is expected, since a large fraction of the PCCS2E objects are nearby and Galactic, and many of them show more extended shapes.

7. This is expected, since a large fraction of the PCCS2E objects are nearby and Galactic, and many of them show more extended shapes.

|

Fig. 9. Normalized histograms of the recovered source size R PCCS2 sources are shown in blue and the full catalogue in purple. R has been corrected for the excess resulting from using narrower beams in the likelihood. Beam-sized objects appear in the figure at R ∼ 0. One pixel here corresponds to 1 |

6.2.3. Position

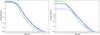

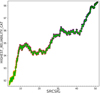

One of the important characteristics of BeeP is its ability to determine an effective sub-pixel source position. Since the position is determined from a multifrequency analysis, it does not in general correspond to any of the positions found in PCCS2+2E. POSERR is the uncertainty radius around the position. Its probability density function is a Rayleigh distribution with a scaling parameter equal to POSERR. If  and {X, Y} are independent and both normally distributed with a standard deviation σ, then Z follows a Rayleigh distribution with a scaling parameter equal to σ. BeeP’s sub-pixel accuracy significantly reduces the large negative kurtosis usually imposed by the pixelization on the error distributions, as can be seen in Fig. 8. POSERR is computed as the 95th percentile of the samples’ radial offset distribution divided by 2.45, to give σ, the Rayleigh scale factor. The probability that the true source position is inside a radius of (1×, 2×, 3×) POSERR is (39.3%, 86.5%, 98.9%). Figure 10 shows the dependence of POSERR on NPSNR.

and {X, Y} are independent and both normally distributed with a standard deviation σ, then Z follows a Rayleigh distribution with a scaling parameter equal to σ. BeeP’s sub-pixel accuracy significantly reduces the large negative kurtosis usually imposed by the pixelization on the error distributions, as can be seen in Fig. 8. POSERR is computed as the 95th percentile of the samples’ radial offset distribution divided by 2.45, to give σ, the Rayleigh scale factor. The probability that the true source position is inside a radius of (1×, 2×, 3×) POSERR is (39.3%, 86.5%, 98.9%). Figure 10 shows the dependence of POSERR on NPSNR.

|

Fig. 10. Radial position error POSERR versus NPSNR. Grey and yellow points mark sources in the PCCS2+2E and PCCS2, respectively, before correction. Red and green points mark sources in the PCCS2+2E and PCCS2, respectively, after correction. The horizontal dashed line is the saturation constant added to correct the position uncertainty, 4 |

Simulations show that POSERR is significantly underestimated in a subset of cases, predominantly those with high values of NPSNR. A detailed description of this issue is given in Appendix B.2 and shown in Fig. B.6. To address this problem, we correct the position errors using the procedure developed in Appendices B.2 and B.4, which follows closely that used for PCCS2 (see Eq. (7) and Table 8 of Planck Collaboration XXVI 2016). The correction consists of adding a term in quadrature to POSERR, which causes small values to saturate at a minimum level of  (see Fig. 10). This level was determined through simulations, as described in Appendices B.2 and B.4.

(see Fig. 10). This level was determined through simulations, as described in Appendices B.2 and B.4.

To verify that the correction determined through simulations applies to the BeeP/base catalogue, we examined the PCCS2 subset. The correlation seen in Fig. 10 (yellow dots) is very high (−0.98), and its slope a = −1.09 is very close to what is seen in the simulations. This high degree of consistency between the simulated data and the real data justifies application of the correction to the data.

The median positional error of the full corrected catalogue is 11 5 (1/9 of a Planck pixel). For the PCCS2 subset it is 7

5 (1/9 of a Planck pixel). For the PCCS2 subset it is 7 9, or less than 1/12 of a pixel.

9, or less than 1/12 of a pixel.

6.2.4. Flux density

To obtain an unbiased estimate of a flux density, one must know the shape of the instrumental beam and the morphology of the source. By using a constant Gaussian shape to model the beam, equal to the average Planck Gaussian effective beam (Mitra et al. 2011), we introduce a systematic bias in estimates of the flux density (see, e.g., Planck Collaboration XXVI 2016, Sect. 2 and Table 2). Furthermore, in any multi-channel analysis such as BeeP, the beam shape is not as clearly defined as in the case of a single-channel catalogue. The effective beam is in fact a combination of the individual channel beams, and it changes with the beam spatial Fourier mode (via the covariance) and source SED parameters. A simple correction such as the one suggested in Planck Collaboration XXVI (2016) is insufficient in this case. Instead, our approach is to “calibrate” the bias in the output of BeeP using simulations. This is explained in detail in Appendix B.4 (see also Appendix A.2.3). The simulations that we use are the Planck FFP8 simulations, which are the most complete and realistic for Planck 2015 data, and which contain accurate sky and instrument models. Using the FFP8 simulations (Appendix B.4), and comparing recovered values to input values, we estimate that BeeP’s reference flux-density estimator is biased high by about 11.0%, which reflects the lack of realism of our model regarding source extension. An 11% reduction in the reference flux densities produced by BeeP is therefore applied to both SED models (MBB and Free). Specifically, flux densities in all four channels are reduced by this same factor for the Free model.

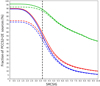

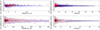

The estimated flux-density accuracy is also subject to systematic effects caused by beam and source shapes. Figure 11 displays the variation of the relative flux-density error bar  , defined as

, defined as

|

Fig. 11. Flux density uncertainties ( |

where S is the estimated flux density, ΔS is the estimated flux-density uncertainty, and  is the inverse of the measured S/N. For reference, the black dashed line on the left lower corner is the NPSNR−1 line. This is the theoretical lower boundary for

is the inverse of the measured S/N. For reference, the black dashed line on the left lower corner is the NPSNR−1 line. This is the theoretical lower boundary for  that would be expected if the only unknown parameter were the flux density. Figure 11 shows that the catalogue’s flux-density uncertainties are much higher (

that would be expected if the only unknown parameter were the flux density. Figure 11 shows that the catalogue’s flux-density uncertainties are much higher ( ≫ NPSNR−1) than the lower boundary, which should be expected from the fact that there are five more unknown parameters, whose individual uncertainties propagate into the flux-density estimate. However, not all of the additional parameters contribute equally. Inspecting the posteriors in Fig. 8, it becomes clear that EXT and the MBB parameters {T, β} have a much larger contribution than the position parameters. The correlation between the flux errors and the other parameter uncertainties explains the gap between the black dashed line and the green points in the figure. However, with the help of simulations (see Appendices B.3 and B.4), we find that the estimated flux-density errors are overly optimistic for a fraction of the high NPSNR population. The situation is similar to that for the positional accuracy estimates (see Sect. 6.2.3). For most purposes the (uncorrected) flux-density estimates and uncertainties found in the catalogue can be used without concern. But if a more rigorous statistical characterization is required, we suggest correcting the flux-density uncertainty estimates using the procedure developed in Appendix B.3. There is a modest penalty in flux-density accuracy for applying this correction (Fig. 11, red contours).

≫ NPSNR−1) than the lower boundary, which should be expected from the fact that there are five more unknown parameters, whose individual uncertainties propagate into the flux-density estimate. However, not all of the additional parameters contribute equally. Inspecting the posteriors in Fig. 8, it becomes clear that EXT and the MBB parameters {T, β} have a much larger contribution than the position parameters. The correlation between the flux errors and the other parameter uncertainties explains the gap between the black dashed line and the green points in the figure. However, with the help of simulations (see Appendices B.3 and B.4), we find that the estimated flux-density errors are overly optimistic for a fraction of the high NPSNR population. The situation is similar to that for the positional accuracy estimates (see Sect. 6.2.3). For most purposes the (uncorrected) flux-density estimates and uncertainties found in the catalogue can be used without concern. But if a more rigorous statistical characterization is required, we suggest correcting the flux-density uncertainty estimates using the procedure developed in Appendix B.3. There is a modest penalty in flux-density accuracy for applying this correction (Fig. 11, red contours).

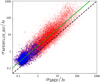

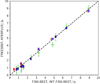

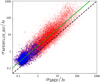

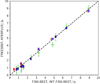

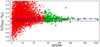

BeeP produces two sets of flux-density estimates: the MBB SREF and the Free FREESREF. In Fig. 12 we compare their values to test the consistency between the two models. Instead of simply calculating percentage differences, we plot the logarithm of the output to input ratio. If out/in ≈ 1, then ln(out/in)∼(out − in)/in, which corresponds closely to percentages. But when out/in is far from 1, then ln(out/in) keeps the symmetry between in and out, which would not be the case with the more common (out − in)/in formula. We find this feature very convenient for visually identifying biases in the differences.

|

Fig. 12. Ratio of free flux density to MBB model flux density at 857 GHz, as a function of SRCSIG. PCCS2 sources are shown in green, while PCCS2+2E are in red. Only sources whose Free and MBB positions are within 0 |

As expected, there is higher dispersion for sources drawn from the PCCS2E catalogue (shown in red), as a result of generally more complex backgrounds at low Galactic latitudes. Sources from the PCCS2 (shown in green) are less affected by this issue. We note the small (3.5%) bias towards negative values of ln(SFree/SMBB). This bias becomes more pronounced at lower values of SRCSIG. A possible source of this bias is that inclusion of inter-frequency cross-correlations in the likelihood for the background model allows for better removal of background emission, on average raising SMBB.

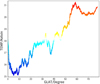

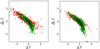

6.2.5. Spatial distribution of the source properties

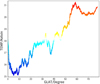

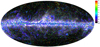

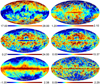

Figure 13 shows the spatial distribution on the sphere of MBB prameters T and β for the compact sources. High Galactic latitudes show a larger percentage of warmer objects (see Fig. 14) and very few cold sources (dark blue). Cold sources are mostly Galactic in nature, and aligned with filaments of gas and dust. They also match regions of intense star formation. As a result of the strong correlation between T and β (see Sect. 6.2.6 and Fig. 8), a higher density of sources with low β is expected at higher Galactic latitudes.

|

Fig. 13. Top: temperatures of sources in the catalogue (colour scale in thermodynamic kelvins). Bottom: spectral indices β of the MBB SED model. The catalogue was filtered using the condition of Eq. (16). The size of each circle representing an object is proportional to the logarithm of the source flux density in janskys. This figure also makes clear the extent that sources in PCCS2+2E trace cirrus; see also the smaller region in Fig. 1. |

|

Fig. 14. Boxcar average (window 500 samples) temperature of sources ordered by absolute Galactic latitude. There is a clear trend. |