| Issue | A&A Volume 628, August 2019 |
|---|---|
| Article Number | A78 |
| Number of page(s) | 35 |
| Section | Numerical methods and codes |
| DOI | https://doi.org/10.1051/0004-6361/201935519 |
| Published online | 08 August 2019 |

GAUSSPY+: A fully automated Gaussian decomposition package for emission line spectra⋆

1 Max-Planck Institute for Astronomy, Königstuhl 17, 69117 Heidelberg, Germany (e-mail: riener@mpia.de)

2 Chalmers University of Technology, Department of Space, Earth and Environment, 412 93 Gothenburg, Sweden

3 Department of Physics & Astronomy, Johns Hopkins University, 3400 N. Charles Street, Baltimore, MD 21218, USA

Received: 22 March 2019 / Accepted: 25 June 2019

Our understanding of the dynamics of the interstellar medium is informed by the study of the detailed velocity structure of emission line observations. One approach to study the velocity structure is to decompose the spectra into individual velocity components; this leads to a description of the data set that is significantly reduced in complexity. However, this decomposition requires full automation lest it become prohibitive for large data sets, such as Galactic plane surveys. We developed GAUSSPY+, a fully automated Gaussian decomposition package that can be applied to emission line data sets, especially large surveys of HI and isotopologues of CO. We built our package upon the existing GAUSSPY algorithm and significantly improved its performance for noisy data. New functionalities of GAUSSPY+ include: (i) automated preparatory steps, such as an accurate noise estimation, which can also be used as stand-alone applications; (ii) an improved fitting routine; (iii) an automated spatial refitting routine that can add spatial coherence to the decomposition results by refitting spectra based on neighbouring fit solutions. We thoroughly tested the performance of GAUSSPY+ on synthetic spectra and a test field from the Galactic Ring Survey. We found that GAUSSPY+ can deal with cases of complex emission and even low to moderate signal-to-noise values.

Key words: methods: data analysis / radio lines: general / ISM: kinematics and dynamics / ISM: lines and bands

© M. Riener et al. 2019

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access funding provided by Max Planck Society.

1. Introduction

Observations of emission lines are of fundamental importance in radio astronomy. Starting with the first detections of neutral hydrogen (HI) via the 21 cm line at 1420.4 MHz by Ewen & Purcell (1951) and the first detection of interstellar carbon monoxide (CO) in the Orion nebula by Wilson et al. (1970), the study of emission lines at radio wavelengths has led to groundbreaking astrophysical insights. Our knowledge about the interstellar medium (ISM) is in large part shaped by observations of the emission of its gas molecules. In particular, we can use the radial velocity – corresponding to Doppler shifts of the emission line with respect to its rest frequency – to gain information about the kinematics and dynamics of the gas.

In our Milky Way, large Galactic surveys of HI (e.g. Stil et al. 2006; Murray et al. 2015; Beuther et al. 2016) and isotopologues of CO (e.g. Dame et al. 2001; Jackson et al. 2006; Dempsey et al. 2013; Barnes et al. 2015; Rigby et al. 2016; Umemoto et al. 2017; Schuller et al. 2017; Su et al. 2019) have been used, for example, to study Galactic structure (e.g. Dame et al. 2001; Nakanishi & Sofue 2006) and construct catalogues of molecular clouds and clumps (e.g. Rathborne et al. 2009; Miville-Deschênes et al. 2017; Colombo et al. 2019). Such studies are usually more focused on the average properties of the gas on Galactic scales or on the scales of molecular clouds or clumps. However, there is a tremendous wealth of physically interesting information that can be gleaned from studying the detailed velocity structure of the gas, among them fundamental insights about turbulence properties in the ISM and molecular clouds (e.g. Larson 1981; Ossenkopf & Mac Low 2002; Heyer & Brunt 2004; Burkhart et al. 2010; Orkisz et al. 2017; for reviews, see Elmegreen & Scalo 2004 and Hennebelle & Falgarone 2012) and dense cores (e.g. Falgarone et al. 2009; Pineda et al. 2010; Keto et al. 2015; Chen et al. 2019), inference about imprints of shear in molecular clouds (e.g. Hily-Blant & Falgarone 2009), and the internal velocity structure of filaments (e.g. Arzoumanian et al. 2013, 2018; Hacar et al. 2013; Henshaw et al. 2014; Orkisz et al. 2019).

While the gas dynamics on smaller scales has already been well studied, the detailed velocity structure of the gas on Galactic scales remains as yet unexplored. We currently do not know whether the velocity structure across large scales shows properties that could serve as diagnostics of phenomena such as molecular cloud formation and evolution or the impact of the Galactic structure on the ISM. To facilitate such analyses, we would ideally like to apply the methods and techniques of the small-scale studies to the large surveys of the Galactic plane.

One approach with substantial potential is to quantify and analyse the complex spectra taken through the Galactic plane by decomposing them into velocity components and then analysing the properties and statistics of these components. In such analyses, the components are usually assumed to have Gaussian shapes, as random thermal and non-thermal motions in the gas produce a Gaussian distribution of gas velocities and hence of Doppler shifts. Moreover, the Gaussian shape is mathematically simple: adopting it significantly reduces the complexity of the data and facilitates post-analysis steps through the rich set of available statistical tools for Gaussian functions.

Recently, several semi-automatic (e.g. Ginsburg & Mirocha 2011; Hacar et al. 2013; Henshaw et al. 2016, 2019) and fully automated (e.g. Haud 2000; Lindner et al. 2015; Miville-Deschênes et al. 2017; Clarke et al. 2018; Marchal et al. 2019) spectral fitting techniques have been introduced. The semi-automated techniques require user interaction, usually in deciding how many velocity components to fit. This can be achieved, for instance, by using spatially smoothed spectra to inform the fit. However, the user-dependent decisions introduce subjectivity to the fitting procedure that reduces reproducibility of the results. The required interactivity with the user can also make it difficult to distribute the analysis to multiple processors. Therefore, while semi-automated approaches are well-suited for small data sets (individual molecular clouds or nearby galaxies at high or low spatial resolution, respectively), they can become prohibitively time-consuming for the analysis of big surveys with millions of spectra and components.

The automated methods overcome these drawbacks by removing the user interaction. The initial number of components can either be a guess (Miville-Deschênes et al. 2017; Marchal et al. 2019) or be based on the derivatives of the spectrum (Lindner et al. 2015; Clarke et al. 2018). However, the current automated routines either fit the spectra independently of each other (Lindner et al. 2015; Clarke et al. 2018), which might introduce unphysical differences between the fit results of neighbouring spectra; use a fixed number of velocity components as initial guesses (Miville-Deschênes et al. 2017; Marchal et al. 2019), which can be computationally expensive; or are not freely available to the community. Moreover, the current versions of the automated methods listed above are of the “first generation”; there is still potential to improve the decomposition techniques and their applicability to different data sets.

In this work, we present GAUSSPY+, an automated decomposition package that is based on the existing GAUSSPY algorithm (Lindner et al. 2015), but with physically-motivated developments specifically designed for analysing the dynamics of the ISM. We developed GAUSSPY+ with the specific aim of analysing CO surveys of the Galactic plane, such as the Galactic Ring Survey (GRS; Jackson et al. 2006) and SEDIGISM (Schuller et al. 2017). However, GAUSSPY+ should be easily adaptable to other emission line surveys for which Gaussian shapes provide a good approximation of the line shapes. Some of the line-analysis tasks of GAUSSPY+, such as the estimation of noise and the identification of signal peaks, can also be used as independent stand-alone modules to serve more specific purposes.

In this paper, we present the algorithm and test it thoroughly on synthetic spectra and a GRS test field. A full application of GAUSSPY+ on the entire GRS data set is in preparation and will be presented in a subsequent paper.

2. Archival data and methods

2.1. The GAUSSPY algorithm

In this work we extend and modify the GAUSSPY algorithm (Lindner et al. 2015), which is an autonomous Gaussian decomposition technique for automatically decomposing spectra into Gaussian components. While GAUSSPY was developed for the decomposition of HI spectra (e.g. Murray et al. 2018; Dénes et al. 2018) it can in principle be used for the decomposition of any spectra that can be approximated well by Gaussian functions (e.g. CO).

One of the strengths of the GAUSSPY algorithm is that it automatically determines the initial guesses for Gaussian fit components for each spectrum with a technique called derivative spectroscopy. This technique is based on finding functional maxima and minima in the spectrum to gauge which of the features are real signal peaks. Since the estimation of maxima and minima requires the calculation of higher derivatives (up to the fourth order), an essential preparatory step in GAUSSPY is to smooth the spectra in such a way as to get rid of the noise peaks without smoothing over signal peaks (cf. Fig. 2 in Lindner et al. 2015). If the data set contains signal peaks that show a limited range in widths, smoothing with a single parameter α1 may already lead to good results in the fitting. In the original GAUSSPY algorithm users can choose between two different versions of denoising the spectrum before derivatives of the data are calculated: a total variation regularisation algorithm and filtering with a Gaussian kernel. We use exclusively the latter approach, in which the parameter α1 refers to the size of the Gaussian kernel that is used to Gaussian-filter the spectrum. The decomposition of data sets that show a mix of both narrow and broad linewidths likely requires an additional smoothing parameter α2 to yield good fitting outcomes. The fitting procedure using a single or two smoothing parameters is referred to as one-phase or two-phase decomposition, respectively.
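To illustrate the idea behind derivative spectroscopy, the following Python sketch smooths a spectrum with a Gaussian kernel and flags channels where the second derivative has a negative local minimum and the fourth derivative is positive. These criteria are our reading of Lindner et al. (2015), not a copy of the GAUSSPY code; we also assume that `alpha` is the standard deviation of the kernel in channels (GAUSSPY's own parameterisation may differ). The function name `derivative_peaks` and its `threshold` argument are hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter1d


def derivative_peaks(spectrum, alpha, threshold=0.0):
    """Candidate component centres via derivative spectroscopy (simplified sketch).

    Assumption: `alpha` is the standard deviation (in channels) of the Gaussian
    smoothing kernel.
    """
    smoothed = gaussian_filter1d(np.asarray(spectrum, dtype=float), alpha)
    # Numerical derivatives of the smoothed spectrum up to fourth order.
    d1 = np.gradient(smoothed)
    d2 = np.gradient(d1)
    d3 = np.gradient(d2)
    d4 = np.gradient(d3)

    peaks = []
    for i in range(len(smoothed) - 1):
        # A negative-to-positive sign change of d3 marks a local minimum of d2.
        if d3[i] < 0 <= d3[i + 1]:
            j = i if abs(d3[i]) < abs(d3[i + 1]) else i + 1
            # Keep curvature minima that look like emission peaks above the threshold.
            if d2[j] < 0 and d4[j] > 0 and smoothed[j] > threshold:
                peaks.append(j)
    return peaks


# Noise-free mock spectrum with two components centred on channels 30 and 70.
channels = np.arange(100)
spectrum = (5.0 * np.exp(-0.5 * ((channels - 30) / 3.0) ** 2)
            + 3.0 * np.exp(-0.5 * ((channels - 70) / 3.0) ** 2))
peaks = derivative_peaks(spectrum, alpha=2.0, threshold=0.5)
```

The threshold on the smoothed spectrum stands in for the S/N cut that GAUSSPY applies; without it, round-off fluctuations in signal-free channels could produce spurious sign changes.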

For the derivative spectroscopy technique to perform best, it is essential to find the optimal smoothing parameters for the original spectra. The GAUSSPY algorithm achieves this via an incorporated supervised machine learning technique, for which the user has to supply a few hundred well-fitted spectra, from which the algorithm then deduces the best smoothing parameters.

More specifically, GAUSSPY uses the gradient descent technique – a first-order iterative optimisation algorithm – to find values for α1 and α2 that yield the most accurate decomposition of the training set. This accuracy is measured via the F1 score, which is defined as:

$$F_1 = 2\,\frac{\mathrm{precision} \times \mathrm{recall}}{\mathrm{precision} + \mathrm{recall}},$$

where precision refers to the fraction of fit components that are correct and recall refers to the fraction of true components that were found in the decomposition of the training set with guesses for α1 and α2. See Lindner et al. (2015) for more details on how the training set is evaluated.
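As a small worked example, the F1 score can be computed from component counts using the precision and recall described above. The helper `f1_score` and its arguments are hypothetical, and the matching of fitted to true components (see Lindner et al. 2015) is assumed to have been done already.

```python
def f1_score(n_correct, n_fit, n_true):
    """F1 score from counts of correct, fitted, and true components (hypothetical helper)."""
    if n_correct == 0:
        return 0.0
    precision = n_correct / n_fit  # fraction of fitted components that are correct
    recall = n_correct / n_true    # fraction of true components that were recovered
    return 2.0 * precision * recall / (precision + recall)


# 10 fitted components, 8 of them correct; the training set contains 8 true components.
score = f1_score(8, 10, 8)  # precision = 0.8, recall = 1.0
```

Here the extra two fitted components lower the precision but not the recall, giving F1 = 1.6/1.8 ≈ 0.89.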

2.2. 13CO data

We test GAUSSPY+ on data from the Boston University–Five College Radio Astronomy Observatory GRS (Jackson et al. 2006) that we downloaded from the online repository of the Boston University Astronomy Department1. This survey covered the lowest rotational transition of the 13CO isotopologue with an angular resolution of 46″, a pixel sampling of 22″, and a spectral resolution of 0.21 km s−1. The values in the GRS data set are given in antenna temperatures, which we converted to main beam temperatures by dividing them by the main beam efficiency of ηmb = 0.481.

The lowest rotational transition of 12CO can show strong self-absorption that can severely affect the lineshape (e.g. Hacar et al. 2016). A decomposition of the spectrum can therefore lead to incorrect results, as strong self-absorption features can be erroneously fit with multiple components. We do not expect such strong opacity effects for 13CO observations, but 13CO can still become optically thick in very bright regions (e.g. Hacar et al. 2016). Optical depth effects are also expected for 13CO observations of nearby regions or observations with high spatial resolution, for which the opacity effects are not smoothed out as in a larger physical beam. For the moderate spatial resolution of the GRS one would thus not expect severe optical depth effects, even though the analysis by Roman-Duval et al. (2010) suggests that opacity effects do indeed have to be taken into account for the GRS data set. In this work we do not address the potential problems of optical depth effects or self-absorption on the decomposition results, but we caution that fitting 13CO peaks with Gaussian components might lead to incorrect fits of multiple components for a single self-absorbed emission line if regions of optically thick 13CO are expected to be present in the data set.

Even though in this paper we demonstrate the functionality of GAUSSPY+ only for a small GRS test field, we used the entire data set in testing and developing the algorithm. A forthcoming paper will present and discuss the decomposition results of GAUSSPY+ for the whole GRS data set and will also discuss the effects and implications of possible optical depth effects for the 13CO emission and the fitting results.

3. New decomposition package: GAUSSPY+

The methods and procedures described in this section are all either new preparatory steps for, or extensions to, the original GAUSSPY algorithm. They aim at either improving the performance of GAUSSPY or automating required preparatory steps. Figure 1 presents a schematic outline of the GAUSSPY+ algorithm.

Fig. 1. Schematic outline describing new automated methods and procedures included in GAUSSPY+, along with corresponding sections in this paper.

The main shortcomings of the original GAUSSPY algorithm that we aim to improve are: (i) the noise values are calculated from a fixed fraction of channels in the spectrum, which is not ideal in cases where signal peaks might occur at any spectral channel; (ii) the user has to supply the training set; (iii) there is no in-built quality control of the fit results; (iv) the fit of each spectrum is treated independently of its neighbours. The last point might lead to drastic jumps in the number of Gaussian components between neighbouring spectra. From a physical point of view we would not expect such component jumps for resolved extended objects with sizes larger than the beam. Moreover, observations are often Nyquist sampled, in which case the beam size or resolution element is larger than the pixel size. Neighbouring pixels will therefore contain part of the same emission, which also introduces coherence in the number of components of neighbouring spectra.

To develop a fitting algorithm that improves on the above points, we have included in GAUSSPY+: (i) automated preparatory steps for the noise calculation and creation of the training set (see Sect. 3.1); (ii) automated quality checks for the decomposition, some of which can be customised by the user and are used to flag and refit unphysical or unwanted fit solutions (see Sect. 3.2); (iii) automated routines that check the spatial coherence of the decomposition and in case of conflicting results try to refit the spectrum based on neighbouring fits (see Sect. 3.3).

In the following, the GAUSSPY+ algorithm is described in detail, following the outline presented in Fig. 1. A description of GAUSSPY+ keywords, including their default values, and of other symbols used throughout the paper can be found in Appendix F.2.

3.1. Preparatory steps

3.1.1. Noise estimation

The original GAUSSPY algorithm either requires the user to supply noise estimates or uses a certain fraction of the spectral channels, assumed to contain no signal, for the noise estimation. However, the latter approach only leads to correct noise estimates if one can exclude the presence of signal peaks in the spectral channels used to calculate the noise.

A reliable noise estimation is of fundamental importance for the decomposition – key steps of GAUSSPY depend on the noise value, and also the new procedures in GAUSSPY+ rely on accurate noise estimation: the signal-to-noise (S/N) threshold is used for the initial guesses for the number of components in GAUSSPY and the noise estimate is needed for the quality assessments of the fit components in GAUSSPY+. Because of the key role of the noise, we developed a new, automated noise estimation routine as a preparatory step for the decomposition.

The fundamental, underlying assumptions in our noise estimation process are: i) the noise statistics are Gaussian, meaning “white noise”; ii) the spectral channels are uncorrelated; and iii) the noise is fluctuating around a baseline of zero. These assumptions enable us to make use of the number statistics of negative and positive channels in the noise estimation process (elaborated further in item 1 below).

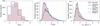

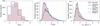

In the following, we describe how our automated noise estimation proceeds. The overall idea is to identify the spectral channels that can be used for noise estimation and maximise their number. To do so, the routine has to identify as many channels as possible that are free from signal and instrumental effects. We demonstrate the steps of the process for a mock spectrum in Fig. 2. The spectrum has 100 channels and contains two challenging features for the noise estimation: a negative noise spike in the first few channels and a broad signal feature with a maximum amplitude of two times the root-mean-square noise σrms.

Fig. 2. Illustration of our automated noise estimation routine for a mock spectrum containing two signal peaks and a negative noise spike. Hatched red areas indicate spectral channels that are masked out and hatched blue areas indicate all remaining spectral channels used in the noise calculation. Right panel: Comparison of the true noise value (σrms, true; black dash-dotted lines) with the noise value estimated by our automated routine (σrms; blue solid lines). See Sect. 3.1.1 for more details.

The steps to estimate the noise are the following:

1. Mask out broad features in the spectrum; such features are likely to be either positive signal or instrumental artefacts due to, for instance, insufficient baseline corrections. Given our basic assumptions (see above), spectra containing only noise have, on average, the same number of positive and negative spectral channels. We can use this fact to determine the probability of having a given number of consecutive positive or negative channels in the spectrum, that is, the probability that a given feature is noise (instead of a signal peak or an artefact). This provides a means to mask out features that are likely not noise. We estimate the probability that a run of consecutive positive or negative channels is due to noise with a Markov chain (see Appendix A for more details). We then mask out all features whose probability of being caused by noise is below a user-defined threshold PLimit. For the example spectrum in Fig. 2 we used the default value of PLimit = 2%2. From the Markov chain calculations for a spectrum with 100 spectral channels we find that all features with more than twelve consecutive positive or negative channels have a probability of less than PLimit = 2% of being the result of random noise fluctuations and are thus masked out (one feature; see left panel in Fig. 2). In many cases, peaks will continue on both sides of the identified consecutive channels. To take this into account, the user can specify the number of additional channels Npad to be masked out on both sides of the identified feature. In the example spectrum (Fig. 2) we set Npad = 2, so two additional channels on both sides of the identified feature were masked out.

2. Use the unmasked negative channels to calculate their median absolute deviation (MAD). We use the MAD statistic because it is very robust against outliers in the data set, such as noise spikes. For Gaussian noise, the MAD is related to the standard deviation σ by MAD ≈ 0.67σ. We restrict the calculation of the MAD to spectral channels with negative values, since the positive channels can still contain several narrow, strong signal peaks that were not identified in the previous step. Narrow negative spikes will still be included in this calculation, but we assume that they are sufficiently uncommon not to affect the estimate of the MAD significantly.

3. Identify intensity values whose absolute value exceeds 5 × MAD. We then mask out all consecutive negative or positive channels of every feature that contains an intensity value whose absolute value exceeds 5 × MAD3. The mask is again extended on both sides by the user-defined number of channels Npad. In the example spectrum, two regions are masked out in this step (middle panel in Fig. 2), corresponding to the second positive signal and the negative noise spike in the spectrum.

4. Use all remaining unmasked channels to calculate the rms noise. The example spectrum is left with 51 unmasked channels (blue hatched areas in the right panel of Fig. 2) from which the noise is estimated.
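The probability used in step 1 can be sketched with a simple Markov chain whose state is the length of the current run of same-sign channels, under the stated assumptions that channels are independent and positive or negative with probability 1/2. This is one possible formulation, not necessarily the one given in Appendix A; the function names are hypothetical.

```python
import numpy as np


def prob_run_at_least(n_channels, k):
    """Probability that pure noise contains a run of >= k same-sign channels.

    Assumes each channel is independently positive or negative with probability 1/2.
    """
    if k <= 1:
        return 1.0 if n_channels >= 1 else 0.0
    # States 0..k-2: current run length 1..k-1; state k-1: a run of length >= k occurred.
    transition = np.zeros((k, k))
    for s in range(k - 1):
        transition[s, s + 1] = 0.5  # next channel has the same sign: the run grows
        transition[s, 0] = 0.5      # sign flips: a new run of length 1 starts
    transition[k - 1, k - 1] = 1.0  # absorbing state
    state = np.zeros(k)
    state[0] = 1.0  # the first channel starts a run of length 1
    for _ in range(n_channels - 1):
        state = state @ transition
    return float(state[-1])


def min_masked_run(n_channels, p_limit):
    """Shortest run length whose probability of arising from noise is below p_limit (> 0)."""
    k = 1
    while prob_run_at_least(n_channels, k) >= p_limit:
        k += 1
    return k


print(min_masked_run(100, 0.02))
```

For 100 channels and a 2% limit this gives 13, i.e. runs of more than twelve channels are masked, which agrees with the number quoted in step 1.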

The right panel of Fig. 2 shows the determined σrms value (blue solid line), which is very close to the true value σrms, true (black dash-dotted line) that was used to generate the noise. This example represents a case in which estimating the noise from a fixed fraction of channels in the beginning or the end of the spectrum would obviously not work well. Had we estimated the noise with the first or last 20% of spectral channels, we would have overestimated the noise by factors of 2.3 and 1.3, respectively.
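Steps 1–4 can be sketched compactly in Python. This is an illustration under our own assumptions, not the GAUSSPY+ implementation: the run length `max_run` is passed in directly (13 corresponds to the 100-channel, PLimit = 2% case quoted above, while other spectrum lengths need their own value), the MAD is measured about the zero baseline as the median of the absolute values of the negative channels, and all function names are hypothetical.

```python
import numpy as np


def same_sign_runs(values):
    """Return (start, stop) pairs, stop exclusive, of runs of equal sign."""
    signs = np.sign(values)
    edges = np.flatnonzero(np.diff(signs) != 0) + 1
    starts = np.concatenate(([0], edges))
    stops = np.concatenate((edges, [len(values)]))
    return list(zip(starts.tolist(), stops.tolist()))


def estimate_noise(spectrum, max_run=13, n_pad=2, mad_factor=5.0):
    """Return (sigma_rms, noise_channels) following steps 1-4 (simplified sketch)."""
    x = np.asarray(spectrum, dtype=float)
    n = x.size
    masked = np.zeros(n, dtype=bool)
    runs = same_sign_runs(x)

    def mask_run(start, stop):
        # Mask the run plus n_pad channels on either side.
        masked[max(0, start - n_pad):min(n, stop + n_pad)] = True

    # Step 1: mask broad features (runs of at least max_run same-sign channels).
    for start, stop in runs:
        if stop - start >= max_run:
            mask_run(start, stop)

    # Step 2: MAD of the unmasked negative channels, measured about the
    # zero baseline (our assumption about how the deviation is taken).
    negative = x[~masked & (x < 0)]
    if negative.size == 0:
        return float("nan"), ~masked
    mad = np.median(np.abs(negative))

    # Step 3: mask every same-sign run containing a channel with |value| > mad_factor * MAD.
    for start, stop in runs:
        if np.abs(x[start:stop]).max() > mad_factor * mad:
            mask_run(start, stop)

    # Step 4: rms of all remaining channels around the zero baseline.
    remaining = x[~masked]
    if remaining.size == 0:
        return float("nan"), ~masked
    return float(np.sqrt(np.mean(remaining ** 2))), ~masked


# Mock spectrum: white noise (sigma = 1), one strong peak, and one negative spike.
rng = np.random.default_rng(1)
channels = np.arange(1000)
spectrum = rng.normal(0.0, 1.0, channels.size)
spectrum += 20.0 * np.exp(-0.5 * ((channels - 500) / 5.0) ** 2)
spectrum[200] = -15.0
sigma_rms, noise_channels = estimate_noise(spectrum)
```

Restricting the MAD to negative channels keeps narrow positive peaks that survived step 1 from inflating the step-3 threshold, which mirrors the reasoning given in step 2.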

In case of residual continuum in the spectrum or signal peaks covering almost all of the spectral channels, the noise estimation can be skewed and biased towards low values. To circumvent this problem, the user can supply an average noise value ⟨σrms⟩ or calculate ⟨σrms⟩ directly from the datacube by randomly sampling a specified number of spectra throughout the cube. This ⟨σrms⟩ value is adopted instead of the value resulting from steps 1−4 above, if (1) the fraction of spectral channels available for noise calculation from steps 1−4 is less than a user-defined value (default: 10%), and (2) the noise value resulting from steps 1−4 is less than a user-defined fraction of ⟨σrms⟩ (default: 10%)4. If no ⟨σrms⟩ value is supplied or calculated, the spectra that do not reach the required minimum fraction of spectral channels for the noise calculation are masked out.
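The fallback rule can be written as a small decision function. This sketch follows our literal reading of the two conditions stated above (footnote 4 may add details not reproduced here); the function name and argument names are hypothetical, and returning `None` stands for masking the spectrum out.

```python
def adopt_noise(sigma_est, frac_available, mean_rms=None, min_frac=0.1, rel_limit=0.1):
    """Choose the noise value for a spectrum; None means the spectrum is masked out."""
    if mean_rms is None:
        # No average noise available: keep the estimate only if enough channels were usable.
        return sigma_est if frac_available >= min_frac else None
    # Both conditions must hold before the average noise replaces the estimate.
    if frac_available < min_frac and sigma_est < rel_limit * mean_rms:
        return mean_rms
    return sigma_est


adopted = adopt_noise(0.05, 0.05, mean_rms=1.0)  # few usable channels, suspiciously low estimate
```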

We performed thorough testing of the effects of random noise fluctuations on our noise estimation routine. A detailed description of the tests is given in Appendix B.2. The tests showed that the routine is robust in typical situations (pure white noise, white noise with signal, white noise with signal and negative noise spikes, white noise with weak signal and negative noise spikes).

3.1.2. Identification of signal intervals

If a spectrum contains a high fraction of signal-free spectral channels, goodness-of-fit calculations can be completely dominated by noise, so their values may decrease to acceptable levels even in cases where the fit failed. Therefore, we added a routine to GAUSSPY+ that automatically identifies intervals of spectral channels that contain signal; goodness-of-fit calculations are subsequently restricted to these channels5. However, the fitting itself is still performed on all spectral channels.

As part of our automated noise estimation routine (outlined in Sect. 3.1.1) we already identify consecutive positive spectral channels that can potentially contain signal (see Fig. 2). We identify these features as signal intervals using a criterion that takes both the S/N and the extent of the feature into account (this criterion is described in more detail in Sect. 3.2.1). For spectra that contain a single narrow peak, only a small fraction of the spectrum might be identified as a signal interval. To ensure that in such cases the goodness-of-fit values are not artificially increased by too small a number of spectral channels, the user can require a minimum number of spectral channels to be adopted as signal intervals (Nmin; default value: 100). If the signal intervals identified in the spectrum contain fewer channels than required by Nmin, all individual signal intervals identified in the spectrum are incrementally extended on both sides by Npad channels until Nmin is reached. This incremental padding does not include regions masked out as negative noise spikes (see next section). If no signal intervals could be identified in the spectrum, all channels are used for goodness-of-fit calculations, even though in this case it is unlikely that the spectrum contains peaks that will be fit. We tested the performance of the signal interval identification on synthetic spectra and found that it reliably identifies weak and strong signal peaks without being sensitive to smaller peaks caused by random noise fluctuations (see Appendix B.3).
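The incremental padding of signal intervals can be sketched as repeated one-channel dilations of a boolean mask that are not allowed to enter channels flagged as noise spikes. The sketch assumes the signal intervals have already been identified; it uses `scipy.ndimage.binary_dilation` with its `mask` argument as a convenient stand-in, and the function name is hypothetical rather than part of GAUSSPY+.

```python
import numpy as np
from scipy.ndimage import binary_dilation


def pad_signal_mask(signal_mask, n_min=100, n_pad=2, spike_mask=None):
    """Grow signal intervals by n_pad channels per side until >= n_min channels are covered."""
    signal_mask = np.asarray(signal_mask, dtype=bool)
    if not signal_mask.any():
        # No signal interval found: use all channels for goodness-of-fit statistics.
        return np.ones_like(signal_mask)
    if spike_mask is None:
        allowed = np.ones_like(signal_mask)
    else:
        allowed = ~np.asarray(spike_mask, dtype=bool)
    current = signal_mask.copy()
    while current.sum() < n_min:
        # Each iteration adds one channel per side; channels flagged as spikes stay excluded,
        # so the padding cannot grow into or across a spike region.
        grown = binary_dilation(current, iterations=n_pad, mask=allowed)
        if grown.sum() == current.sum():
            break  # nothing left to grow into
        current = grown
    return current


signal = np.zeros(200, dtype=bool)
signal[95:105] = True            # one narrow signal interval of 10 channels
spikes = np.zeros(200, dtype=bool)
spikes[110:113] = True           # a negative noise spike to the right of it
padded = pad_signal_mask(signal, n_min=50, n_pad=2, spike_mask=spikes)
```

In this example the padding grows freely to the left but stops at channel 109 on the right, so the minimum of 50 channels is reached mostly through left-side growth.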

3.1.3. Masking noise artefacts

Spectra can sometimes contain negative noise spikes, which can bias the goodness of fit calculations. In principle, candidate regions with negative noise spikes are already identified in the automated noise estimation routine (Sect. 3.1.1). However, since the MAD-based threshold is set to a conservative value to exclude most of the narrow signal peaks from the noise estimation, it will also incorrectly remove an increased fraction of regular noise peaks or false positives (see the distribution for sample A of our synthetic spectra in Fig. B.2). To avoid such contamination of identified noise artefacts by regular noise peaks, the user can decide below which negative value features get masked out by supplying the value in terms of the S/N (S/Nspike; default value: 5). Setting S/Nspike = 5 means that any region of consecutive negative channels that contains at least one channel with a value lower than −5 × σrms will get masked out. We tested the performance of the identification of noise spikes on synthetic spectra and found that we are able to reliably mask such features out (see Appendix B.4).
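The spike criterion above can be sketched as follows: every run of consecutive negative channels whose minimum lies below −S/Nspike × σrms is masked. The function name is hypothetical, and this is an illustration rather than the GAUSSPY+ code.

```python
import numpy as np


def negative_spike_mask(spectrum, sigma_rms, snr_spike=5.0):
    """Mask runs of consecutive negative channels that dip below -snr_spike * sigma_rms."""
    x = np.asarray(spectrum, dtype=float)
    mask = np.zeros(x.size, dtype=bool)
    negative = (x < 0).astype(int)
    # Pad with zeros so that +1/-1 steps mark the start/stop (exclusive) of negative runs.
    edges = np.diff(np.concatenate(([0], negative, [0])))
    starts = np.flatnonzero(edges == 1)
    stops = np.flatnonzero(edges == -1)
    for start, stop in zip(starts, stops):
        if x[start:stop].min() < -snr_spike * sigma_rms:
            mask[start:stop] = True
    return mask


example = np.array([0.5, -0.2, -6.0, -0.3, 0.4, -1.0, 0.2])
spike_channels = negative_spike_mask(example, sigma_rms=1.0)
```

The whole negative run around the −6σ channel is masked, while the shallow negative run at −1σ is kept.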

3.1.4. Creation of the training set

As described in Sect. 2.1, GAUSSPY needs a sample of already decomposed spectra to determine the smoothing parameters used in the decomposition. In principle, this training set can be composed of synthetic spectra whose noise and emission properties are similar to the data set the user wants to analyse. Another approach is to use actual spectra from the data set for which the user can supply a reliable decomposition. We added a routine to GAUSSPY+ that adopts the latter approach and automatically decomposes a user-defined number of spectra from the data set. These decomposition results are then supplied to GAUSSPY, which uses its machine learning functionality to infer the most appropriate smoothing parameters for the data set.

In principle, we could use GAUSSPY itself to construct decompositions for this training sample by first guessing the smoothing parameters and correcting them accordingly to get good fitting results. However, since it can be tricky and time-consuming to guess the correct smoothing parameters for a data set we added a routine to GAUSSPY+ that decomposes spectra for a training set.

Our key requirement for this decomposition routine was that it should produce high-quality fits for a small subset of the data set. We recommend using training sets of about 200−500 decomposed spectra, as these should already yield very good values for the smoothing parameters. Larger training sets can in principle be created, but users should be aware that training GAUSSPY can then become time-consuming, as it might be necessary to use different starting values for the smoothing parameters α1 and α2 to make sure that the search for optimal smoothing parameters explores the parameter space properly and does not get stuck in a local minimum (see Fig. 3 in Lindner et al. 2015). Training sets containing < 200 spectra bear the risk of higher uncertainties in the resulting smoothing parameter values, as incorrectly fitted features in the training set may have a large negative impact on the F1 score. While deviations of the smoothing parameters from their optimal values will affect the decomposition with GAUSSPY, the improved fitting (Sect. 3.2.3) and spatially coherent refitting (Sect. 3.3) routines in GAUSSPY+ should be able to mitigate such incorrect or insufficient decomposition results. The decomposition with GAUSSPY+ thus tolerates larger deviations of the smoothing parameters from their optimal values than the decomposition with GAUSSPY, which allows the use of smaller training sets.

For the decomposition of the spectra for the training set we use the SLSQP optimisation algorithm and least squares statistic (SLSQPLSQFITTER) of the ASTROPY.MODELING package, which produced good fits to the spectra in our tests of the routine. The SLSQPLSQFITTER routine has to be supplied with initial guesses for possible Gaussian fit components. We determine the number of Gaussian fit component candidates and their initial guesses by estimating how many local maxima are present in the spectrum. To find these local maxima, we first set all values below a user-defined S/N threshold (S/Nmin; default value: 3) to zero. The remaining positive values are then searched for local maxima. We define a local maximum as a peak that exceeds all values within a minimum number of neighbouring spectral channels on either side of the peak. This minimum number of spectral channels on either side can be defined by the user with the ξ parameter (default value: 6). To infer a good value for ξ, users are advised to check the shape of the components present in the spectra or to make a test run with a small training set and check the decomposition results (routines for plotting the spectra, decomposition results, and residuals are included in GAUSSPY+).
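The search for initial guesses can be sketched with `scipy.signal.argrelmax`, whose `order` argument requires a maximum to be strictly larger than `order` neighbouring channels on each side, which matches the role of ξ described above. The sketch assumes the S/N threshold is applied as S/Nmin × σrms; the function name is hypothetical.

```python
import numpy as np
from scipy.signal import argrelmax


def initial_guess_channels(spectrum, sigma_rms, snr_min=3.0, xi=6):
    """Channels of local maxima used as initial guesses (simplified sketch)."""
    x = np.asarray(spectrum, dtype=float).copy()
    # Set all values below the S/N threshold to zero.
    x[x < snr_min * sigma_rms] = 0.0
    # A local maximum must exceed all values within xi channels on either side.
    candidates = argrelmax(x, order=xi)[0]
    return [int(c) for c in candidates if x[c] > 0]


channels = np.arange(100)
spectrum = (5.0 * np.exp(-0.5 * ((channels - 30) / 3.0) ** 2)
            + 4.0 * np.exp(-0.5 * ((channels - 70) / 3.0) ** 2))
guesses = initial_guess_channels(spectrum, sigma_rms=1.0)
```

Because the comparison is strict, the flat zero-valued regions left after thresholding never produce spurious maxima.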

Our routine then fits one Gaussian component per identified local maximum, so we likely start out with the maximum possible number of Gaussian fit components for the spectrum. The fit parameters of each Gaussian component (amplitude ai, mean position μi, standard deviation σi) are then checked against the following criteria:

- amplitude ai ≥ S/Nmin × σrms;
- significance 𝒮fit ≥ 𝒮min (see Sect. 3.2.1 for more information about this criterion);
- the standard deviation σi lies between user-defined limits, σmin ≤ σi ≤ σmax, where the limits can be specified in terms of the full width at half maximum (FWHM) given in numbers of spectral channels (Θmin and Θmax; default values: 1 and None, respectively).

We do not check whether components are blended in the creation of the training set. If any of the individual Gaussian components do not satisfy all these requirements, their values are removed from the list of initial guesses and a new fit is performed. These checks and the subsequent refitting are repeated until all remaining Gaussian components satisfy all the criteria or no Gaussian components remain. In the process of refitting a spectrum we do not add any new fit component candidates.
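The check-and-refit loop can be sketched as below. The names `passes_criteria` and `prune_and_refit` are hypothetical. `fit` and `significance_of` are placeholder callables standing in for the actual fitter (SLSQPLSQFITTER in the text) and the 𝒮fit calculation. We assume that the fitter returns components in the same order as the guesses.

```python
def passes_criteria(amp, fwhm, significance, rms,
                    snr_min=3.0, sig_min=5.0, fwhm_min=1.0, fwhm_max=None):
    """Check one component against the three training-set criteria."""
    if amp < snr_min * rms:
        return False
    if significance < sig_min:
        return False
    if fwhm < fwhm_min or (fwhm_max is not None and fwhm > fwhm_max):
        return False
    return True


def prune_and_refit(guesses, fit, significance_of, rms, **criteria):
    """Iteratively drop failing components and refit.

    guesses: list of (amp, mean, fwhm) initial guesses.
    fit: hypothetical callable mapping a list of guesses to a list of fitted
         (amp, mean, fwhm) tuples in the same order.
    significance_of: hypothetical callable returning S_fit for a fitted tuple.
    """
    params = fit(guesses) if guesses else []
    while params:
        keep = [i for i, (amp, _, fwhm) in enumerate(params)
                if passes_criteria(amp, fwhm, significance_of(params[i]), rms, **criteria)]
        if len(keep) == len(params):
            break  # every remaining component satisfies all criteria
        # Remove failing components from the initial guesses and refit;
        # no new component candidates are added during this loop.
        guesses = [guesses[i] for i in keep]
        params = fit(guesses) if guesses else []
    return params


# Usage with stand-in callables: an identity "fitter" and a toy significance
identity_fit = lambda g: list(g)
toy_sig = lambda p: p[0] * p[2]
print(prune_and_refit([(6, 10, 3), (2, 30, 3), (5, 50, 0.5)], identity_fit, toy_sig, 1.0))
```

The loop terminates because each iteration removes at least one component.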

We thoroughly tested the routine outlined in this section on samples of synthetic spectra and found that it is able to create reliable training sets that allow inferring optimal smoothing parameters with GAUSSPY (see Appendix B.5). However, we did not optimise the SLSQPLSQFITTER decomposition routine for speed, which is why we recommend using this fitting technique only for the creation of training sets. See Appendix C.1 for a quantitative comparison between the SLSQPLSQFITTER fitting routine and the improved fitting routine of GAUSSPY+ (Sect. 3.2.3) in terms of execution time and performance of the decomposition.

3.2. Improving the GAUSSPY decomposition

3.2.1. In-built quality control

In this section, we describe the automated quality checks for the decomposition results that we implemented in GAUSSPY+. Figure 3 illustrates how the in-built quality controls for individual fit components are used to improve the fit results for a spectrum. Individual Gaussian components that fail any of the criteria outlined in Fig. 3 are discarded. This refitting procedure using the in-built quality controls is applied to all fit solutions obtained in the decomposition steps of GAUSSPY+ (Sects. 3.2.3–3.3.2). The corrected Akaike information criterion is used to decide between different fit solutions of a spectrum, and normality tests for the normalised residual are used to assess whether a spectrum needs to be refitted. See also Appendix C.4 for a discussion of the performance of the in-built quality controls on the decomposition results of the synthetic spectra (Sect. 4) and the GRS test field (Sect. 5).

Fig. 3. Flowchart outlining how in-built quality controls from Sect. 3.2.1 are applied to fit results of a spectrum.

FWHM value. If users supply limits for the lower and upper values of the FWHM (Θmin and Θmax, respectively), all fitted components with FWHM values outside this range are removed. In the GAUSSPY+ default settings Θmin = 1, which means that the FWHM of a fit component has to span at least one spectral channel. By default, GAUSSPY+ does not set any value for Θmax. Users are advised to use the Θmax parameter with caution, as it can produce artefacts in the decomposition, such as an increase in the number of fit components whose widths are close to or exactly at this predefined upper limit.

Signal-to-noise ratio. In the default settings, the user-defined minimum S/N of S/Nmin (default value: 3) is used as the S/N threshold for: (i) the original spectrum and the second derivative of the smoothed spectrum in the GAUSSPY decomposition (i.e. SNR1 = S/Nmin and SNR2 = S/Nmin); (ii) the search for new peaks in the residual (Sect. 3.2.3); (iii) the search for negative residual peaks (i.e. S/Nmin, neg = S/Nmin, Sect. 3.2.2); (iv) the decomposition of the training set (Sect. 3.1.4). These parameters can all be set to different values to improve the fitting results, but we advise keeping them at the same value for consistency.

The minimum required amplitude of Gaussian fit components is determined by the S/Nmin, fit parameter, whose default value is half the value of S/Nmin. All Gaussian components with ai < S/Nmin, fit × σrms are removed from the fit. We recommend setting S/Nmin, fit < S/Nmin to allow fit components to also converge to an amplitude value below S/Nmin, as such smaller peaks would otherwise remain unfitted and might negatively influence the fitting results of nearby higher-signal peaks (cf. panel b in Fig. 5). A smaller value for S/Nmin, fit can also be beneficial if it cannot be excluded that some of the spectra are affected by insufficient baseline subtraction, in which case the spectra would show a very broad but low-amplitude feature that can stretch over all spectral channels. Alternatively, users can supply S/Nmin, fit directly in case the default setting does not yield good results.
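The default threshold settings described in the two paragraphs above can be summarised in a small configuration sketch. The dictionary keys and the helper `keep_component` are hypothetical labels chosen for illustration. They are not the parameter names used in the GAUSSPY+ configuration files.

```python
def default_thresholds(snr_min=3.0):
    """S/N thresholds derived from S/Nmin in the default settings.

    The dictionary keys are hypothetical labels, not GaussPy+ parameter names.
    """
    return {
        "snr1": snr_min,           # original spectrum (GaussPy decomposition)
        "snr2": snr_min,           # second derivative of the smoothed spectrum
        "snr_residual": snr_min,   # new peaks in the residual
        "snr_negative": snr_min,   # negative residual peaks
        "snr_training": snr_min,   # training-set decomposition
        "snr_fit": 0.5 * snr_min,  # minimum amplitude of fit components
    }


def keep_component(amplitude, rms, snr_fit):
    """Amplitude criterion for fit components: a_i >= S/Nmin,fit * rms."""
    return amplitude >= snr_fit * rms


thresholds = default_thresholds()
print(thresholds["snr_fit"])  # 1.5
```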

Significance. To further check the validity of fitted Gaussian components, we use the integrated area of the Gaussian as a proxy for the significance of the component. Assuming that the noise properties are Gaussian (white noise), random noise fluctuations are more likely to cause narrow features with a higher amplitude than broader, extended features with a lower amplitude. With this significance criterion we require that the fit components, or data peaks, either have a very high intensity or extend over a wide channel range.

The integrated area Wi of a Gaussian component can be calculated from its amplitude ai and FWHM value Θi in terms of spectral channels:

$$W_i = \frac{a_i \, \Theta_i}{c},$$

with the parameter c defined as

$$c = \frac{2\sqrt{2\ln 2}}{\sqrt{2\pi}} \approx 0.94.$$

For the calculation of the significance value, we compare the area of the Gaussian component to the integrated σrms interval of the channels from the interval μi ± Θi, which gives a good approximation for the total width of the emission line:

$$\mathcal{S}_\mathrm{fit} = \frac{W_i}{\sqrt{2\,\Theta_i}\;\sigma_\mathrm{rms}}.$$

The 𝒮fit value is then compared to a user-defined minimum 𝒮min (default value: 5) and the Gaussian component is discarded if 𝒮fit < 𝒮min. This check helps to remove noise peaks that might have been fit and were not discarded in the checks for the S/N.
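As a worked example of the significance measure, the sketch below computes the area of a Gaussian as Wi = ai Θi / c, with c = 2√(2 ln 2)/√(2π), and divides it by the noise accumulated over the 2Θi channels of the interval μi ± Θi, i.e. √(2Θi) σrms. The function names are hypothetical.

```python
import numpy as np

# W = a * FWHM / c with c = 2 sqrt(2 ln 2) / sqrt(2 pi) ≈ 0.939,
# equivalent to the familiar a * sigma * sqrt(2 pi).
C = 2.0 * np.sqrt(2.0 * np.log(2.0)) / np.sqrt(2.0 * np.pi)


def integrated_area(amp, fwhm):
    """Area of a Gaussian with amplitude `amp` and FWHM `fwhm` (in channels)."""
    return amp * fwhm / C


def significance_fit(amp, fwhm, rms):
    """S_fit: Gaussian area over the noise integrated across mu ± FWHM."""
    return integrated_area(amp, fwhm) / (np.sqrt(2.0 * fwhm) * rms)


def is_significant(amp, fwhm, rms, sig_min=5.0):
    return significance_fit(amp, fwhm, rms) >= sig_min


# A 5-sigma component only one channel wide falls below S_min = 5
print(round(significance_fit(5.0, 1.0, 1.0), 2))  # 3.76
```

The example illustrates the intent of the criterion: a narrow component can have a high peak S/N and still fail the significance check.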

We can use the significance parameter also as a threshold to decide whether peaks in the data are valid signal peaks. For this estimate of the significance (𝒮data), we first search for peaks in the data above the user-defined S/N threshold and then compare the integrated intensity of all positive consecutive channels belonging to this feature to the integrated σrms interval of the channels spanned by this feature. We discard the peak as a valid signal feature if 𝒮data < 𝒮min.
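For data peaks, the same ratio can be computed from the channels themselves. The sketch below, with the hypothetical function `significance_data`, grows the feature outward from a given peak channel over consecutive positive channels. It then divides the summed intensity by √N σrms, the noise integrated over the N channels of the feature. We assume that the caller has already checked that the peak exceeds the S/N threshold.

```python
import numpy as np


def significance_data(spectrum, rms, peak):
    """S_data for the feature containing channel `peak`.

    The feature is the run of consecutive positive channels around `peak`;
    its summed intensity is divided by sqrt(N) * rms.
    """
    lo = peak
    while lo > 0 and spectrum[lo - 1] > 0:
        lo -= 1
    hi = peak
    while hi < len(spectrum) - 1 and spectrum[hi + 1] > 0:
        hi += 1
    feature = spectrum[lo:hi + 1]
    return feature.sum() / (np.sqrt(feature.size) * rms)


spec = np.array([-1.0, 0.0, 2.0, 3.0, 2.0, 0.0, -1.0])
print(significance_data(spec, rms=1.0, peak=3))  # 7 / sqrt(3)
```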

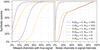

Figure 4 illustrates this significance measure for three different cases. Panel a shows a signal peak and fit component that very likely correspond to a true signal, with the significance measures for both the data peak and the fit above the critical default value of 5. Panel b shows a data peak with a narrow linewidth that might be caused by random noise fluctuations. The 𝒮data value of this feature passes the threshold value 𝒮min = 5, but the depicted Gaussian fit component for this data feature only has an 𝒮fit value of 3.8. This low 𝒮fit value would cause the algorithm to reject this fit component even though its peak has a high S/N of about 5. Panel c shows a broader feature, which has only low S/N values. However, since this feature is spread over more spectral channels than the feature shown in panel b, we would accept it based on its 𝒮data value. With the default settings of GAUSSPY+ we would also keep the depicted fit component. As mentioned above, it can be beneficial to keep Gaussian components with such low S/N in the decomposition results, so as not to negatively influence the fitting of nearby data peaks (cf. panel b in Fig. 5).

Fig. 4. Calculation of the significance for Gaussian fit components (𝒮fit; blue dashed lines) or peaks in the data (𝒮data; red-shaded areas). The dotted and dash-dotted lines indicate the σrms value and S/N thresholds of 3, respectively.

Fig. 5. Optional criteria used to flag fits in the improved fitting routine and in the spatially coherent refitting stage: (a) negative residual features introduced by the fit, (b) broad components, (c) blended components.

For a fitted feature or signal peak containing Nfeat spectral channels, the 𝒮min parameter implies an average S/N of

$$\langle \mathrm{S/N} \rangle = \frac{\mathcal{S}_\mathrm{min}}{\sqrt{N_\mathrm{feat}}}.$$

Users can apply this relation to judge which value for 𝒮min is most suitable for their data set. For the default value of 𝒮min = 5, Gaussian fits or signal peaks spanning 4 or 9 spectral channels would require ⟨S/N⟩ values across the feature of 2.5 and ∼1.7, respectively. See Appendix C.3 for a discussion of how varying the S/Nmin and 𝒮min parameters affects the decomposition results.
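The relation follows from writing the significance of an N-channel feature as 𝒮 = Σ yi / (√N σrms) = N⟨S/N⟩/√N = √N ⟨S/N⟩. A short check of the numbers quoted above, using the hypothetical helper `mean_snr_required`:

```python
import math


def mean_snr_required(sig_min, n_feat):
    """Average S/N per channel implied by S_min for a feature of n_feat channels."""
    return sig_min / math.sqrt(n_feat)


for n in (4, 9):
    print(n, round(mean_snr_required(5.0, n), 2))
# 4 2.5
# 9 1.67
```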

Mean position outside channel range or signal intervals. All Gaussian components whose mean positions μi lie outside the channel range [0, Nchan] are automatically discarded from the fit. If the mean position of a fit component is located outside the estimated signal intervals (Sect. 3.1.2), we check the significance value of the fitted data peak 𝒮data (Sect. 3.2.1). We discard the corresponding fit component if 𝒮data is smaller than the user-defined significance threshold 𝒮min.

Estimation of the goodness of fit. When we fit a model to data whose errors are Gaussian distributed and homoscedastic, we can arrive at a good fit solution by minimising the chi-squared (χ²), which is defined as the weighted sum of the squared residuals:

$$\chi^2 = \sum_{i=1}^{N} \frac{(y_i - Y_i)^2}{\sigma_\mathrm{rms}^2},$$

with yi and Yi denoting the data and fit value at channel position i, respectively. The reduced chi-squared (χ²red) value is often used as an estimate for the goodness of fit, since it also takes the sample size (in our case the number of spectral channels) and the number of fit parameters into account. χ²red is defined as the chi-squared per degree of freedom:

$$\chi^2_\mathrm{red} = \frac{\chi^2}{N - k},$$

with N being the sample size (in our case the number of considered spectral channels) and k denoting the number of fitted parameters, which in the case of a Gaussian decomposition is three times the number of fitted Gaussian components. It may thus seem straightforward to use the χ²red value to judge whether all signal peaks in a spectrum were fitted, as one would expect χ²red ≈ 1 in this case. However, as Andrae et al. (2010) pointed out, for non-linear models such as a combination of Gaussian functions the number of degrees of freedom cannot be reliably determined; it can vary between 0 and N − 1 and need not even stay constant during the fit. The χ²red estimate is thus not the best metric to decide between different fit solutions for a spectrum⁶.

A more suitable criterion for model selection is the Akaike information criterion (AIC; Akaike 1973), which aims for a compromise between the goodness of fit of a model and its simplicity by penalising the use of a large number of fit components that do not significantly improve the fit quality. The AIC is defined as

$$\mathrm{AIC} = 2k - 2\ln\hat{L},$$

with L̂ being the maximum value of the likelihood function for the model. If the parameters of a model are estimated using the least squares statistic, as in our case, the AIC is given as⁷:

$$\mathrm{AIC} = N \ln\left(\frac{1}{N}\sum_{i=1}^{N} (y_i - Y_i)^2\right) + 2k.$$

For small sample sizes, the AIC tends to select models that have too many parameters, meaning that it will overfit the data. Therefore a correction to the AIC was introduced for small sample sizes⁸, the corrected Akaike information criterion (AICc; Hurvich & Tsai 1989), which is defined as:

$$\mathrm{AICc} = \mathrm{AIC} + \frac{2k(k+1)}{N - k - 1}.$$

We employ the AICc as our model selection criterion to decide between different fit solutions. The AICc value is meaningful only in relative terms, that is, when the AICc values of two different fit solutions are compared with each other. In such a comparison, the fit solution with the lower AICc value is preferred, as it provides a better trade-off between the number of components used and the goodness of fit of the model.
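The model comparison can be sketched as follows. The sketch assumes the least-squares form AIC = N ln(RSS/N) + 2k, with RSS the sum of squared residuals and k = 3 parameters per Gaussian, plus the small-sample correction 2k(k + 1)/(N − k − 1). The helper names and the synthetic two-line spectrum are hypothetical.

```python
import numpy as np


def reduced_chi2(data, model, rms, k):
    """chi^2 / (N - k) with k the number of fitted parameters."""
    chi2 = np.sum((data - model) ** 2 / rms ** 2)
    return chi2 / (data.size - k)


def aicc(data, model, n_components):
    """Corrected AIC of a least-squares fit with 3 parameters per Gaussian.

    AIC = N ln(RSS / N) + 2k, AICc = AIC + 2k(k + 1) / (N - k - 1).
    RSS must be positive (a perfect fit would give ln(0)).
    """
    n = data.size
    k = 3 * n_components
    rss = np.sum((data - model) ** 2)
    aic = n * np.log(rss / n) + 2 * k
    return aic + 2 * k * (k + 1) / (n - k - 1)


def gauss(x, amp, mean, sigma):
    return amp * np.exp(-0.5 * ((x - mean) / sigma) ** 2)


x = np.arange(200)
rng = np.random.default_rng(1)
one = gauss(x, 10, 80, 5)                # model missing the second line
two = one + gauss(x, 6, 120, 5)          # model with both lines
data = two + rng.normal(0.0, 0.5, x.size)
# The two-component model has the lower AICc and is preferred.
print(aicc(data, two, 2) < aicc(data, one, 1))  # True
```

Only differences in AICc between fits of the same spectrum are meaningful, which is why the function returns a raw value rather than a normalised score.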

As an alternative to goodness of fit determinations based on the χ²red value, Andrae et al. (2010) suggest checking whether the normalised residuals show a Gaussian distribution. We implement this additional goodness of fit criterion in GAUSSPY+ by subjecting the normalised residuals to two different normality tests: SCIPY.STATS.KSTEST, which is a two-sided Kolmogorov-Smirnov test (Kolmogorov 1933; Smirnov 1939); and SCIPY.STATS.NORMALTEST, which is based on D’Agostino (1971) and D’Agostino & Pearson (1973) and analyses the skew and kurtosis of the data points. Both normality tests examine the null hypothesis that the residual follows a normal distribution, as would be expected if only Gaussian noise is left after we subtract the fit solution from the data. If the p-value from either of these tests is less than a user-defined threshold (default: 1%), we reject the null hypothesis and try to refit the spectrum. We found that the combined results of these two hypothesis tests allow a robust conclusion about whether the residual is consistent with Gaussian noise (see Appendix D for more details).
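The combined normality check can be sketched with the two SciPy tests named above. We assume that the normalised residual (data − model)/σrms is compared against a standard normal distribution. The wrapper `residual_is_normal` is hypothetical.

```python
import numpy as np
from scipy import stats


def residual_is_normal(data, model, rms, alpha=0.01):
    """True if the normalised residual passes both normality tests.

    Null hypothesis: (data - model) / rms follows a standard normal
    distribution. The spectrum would be flagged if either p-value < alpha.
    """
    resid = (data - model) / rms
    p_ks = stats.kstest(resid, "norm").pvalue        # two-sided KS test vs N(0, 1)
    p_normal = stats.normaltest(resid).pvalue        # D'Agostino-Pearson skew/kurtosis test
    return bool(min(p_ks, p_normal) >= alpha)


n = 1000
zeros = np.zeros(n)
normal_like = stats.norm.ppf((np.arange(n) + 0.5) / n)  # ideal normal quantiles
flat = np.linspace(-1.7, 1.7, n)                        # uniform, strongly platykurtic
print(residual_is_normal(normal_like, zeros, 1.0))  # True
print(residual_is_normal(flat, zeros, 1.0))         # False
```

Requiring both tests to pass makes the check sensitive both to shifts in the overall distribution (Kolmogorov-Smirnov) and to asymmetric or heavy or light tails (skew and kurtosis).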

3.2.2. Optional quality control

The automated checks described in the previous section should already help to reject many fit components that do not satisfy our quality requirements. However, depending on the data set, the user might want to flag and refit the decomposition based on further criteria, which we outline in this section⁹. The quality criteria discussed in this section are used to flag and refit spectra in the improved fitting and spatially coherent refitting routines discussed in Sects. 3.2.3 and 3.3, respectively¹⁰. See Appendix C.4 for a discussion of the performance of the optional quality controls on the fitting results of the synthetic spectra (Sect. 4) and the GRS test field (Sect. 5).

Negative peaks in the residual. The first quality check examines negative peaks in the residual, since these can indicate a poor fit. Panel a in Fig. 5 presents a scenario in which a double-peaked profile (shown in dashed grey lines) is fit with a single Gaussian component (red line), leading to a significant negative peak in the residual (dash-dotted black line) at the position between the two data peaks. The search for negative peaks in the residual can be controlled by the user with the S/Nmin, neg parameter, which defines the minimum S/N of the negative peak (in the default settings S/Nmin, neg = S/Nmin). To be flagged as a negative residual feature, a negative peak has to satisfy |yi − Yi| ≥ S/Nmin, neg × σrms, with yi and Yi denoting the data and corresponding fit value at channel position i. This requirement takes into account that negative peaks could already have been present in the original spectrum, and requires that a significant part of the negative peak was introduced by the fit.
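A minimal sketch of this flag follows. The hypothetical function `negative_residual_channels` reports local minima of the residual y − Y that reach −S/Nmin, neg × σrms. Using local minima to localise each feature is our own simplification. GAUSSPY+ may delimit negative features differently.

```python
import numpy as np


def negative_residual_channels(data, model, rms, snr_neg=3.0):
    """Channels of negative residual peaks with |y - Y| >= snr_neg * rms.

    A channel is reported if its residual is at or below -snr_neg * rms and
    it is a local minimum of the residual (<= both neighbours).
    """
    resid = data - model
    hits = []
    for i in range(len(resid)):
        if resid[i] > -snr_neg * rms:
            continue
        left = resid[i - 1] if i > 0 else np.inf
        right = resid[i + 1] if i < len(resid) - 1 else np.inf
        if resid[i] <= left and resid[i] <= right:
            hits.append(i)
    return hits


# A fit that overshoots the (flat) data around channel 10
data_ex = np.zeros(20)
model_ex = np.zeros(20)
model_ex[9:12] = [3.0, 5.0, 3.0]
print(negative_residual_channels(data_ex, model_ex, rms=1.0))  # [10]
```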

Gaussian components with a broad FWHM. A single, broad Gaussian component can be fit over multiple peaks in the spectrum, which is usually undesirable. A broad feature can be caused by peaks close to the noise limit, multiple blended components, or issues in the data reduction, for instance, insufficient baseline corrections or unsubtracted continuum emission. Panel b in Fig. 5 shows an example of a broad component that was incorrectly fit over multiple data peaks without introducing significant residual features as in panel a. This would lead to a wrong estimate of the total number of components present in this spectrum, a severe overestimate of the linewidth for the two smaller peaks incorrectly fit with one component, and an underestimate of the amplitude of the rightmost component. The example presented in panel b also highlights why it can be beneficial to set the minimum S/N threshold for fitted components, S/Nmin, fit, to a lower value than the S/N threshold for data peaks, S/Nmin (see Sect. 3.2.1). If S/Nmin, fit were set equal to S/Nmin, the fit component for the leftmost peak in panel b would be discarded, forcing a broad component to be fit over the two leftmost peaks to minimise the residual.

Unfortunately, it can be difficult to set a maximum allowed FWHM value for the Gaussian components, as the range of expected values in the data may not be known. Setting a strict limit for the maximum FWHM value might also lead to a large number of components whose linewidths equal the limiting value. To prevent such an undesired effect, we flag a component as broad if it is broader than the second broadest fit component by a user-defined factor fΘ, max (default value: 2). This criterion cannot be applied to spectra with only one fitted Gaussian component, but this case is taken into account during the spatially coherent refitting (Sect. 3.3.1).
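The relative width criterion can be sketched as below. The hypothetical helper `broad_component_index` returns the index of the broadest component if its FWHM exceeds fΘ, max times that of the second broadest component. It returns None otherwise, including for single-component fits, which this criterion cannot judge.

```python
def broad_component_index(fwhms, f_max=2.0):
    """Index of the broadest component if it is more than f_max times wider
    than the second broadest one; None otherwise (or if < 2 components)."""
    if len(fwhms) < 2:
        return None
    order = sorted(range(len(fwhms)), key=lambda i: fwhms[i], reverse=True)
    broadest, second = order[0], order[1]
    if fwhms[broadest] > f_max * fwhms[second]:
        return broadest
    return None


print(broad_component_index([3.0, 20.0, 5.0]))  # 1
print(broad_component_index([3.0, 9.0, 5.0]))   # None
```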

Another physical cause for the broadening of the lines could be opacity broadening, which is especially relevant for optically thick emission lines such as the 12CO(1-0) rotational transition (Hacar et al. 2016). In case the user expects opacity broadening for a significant number of spectra in the data set, we recommend not flagging or refitting broad fit components.

Blended Gaussian components. We define a Gaussian component i as blended with a neighbouring component j if the distance between their mean positions μi and μj is less than the minimum required separation μsep. This minimum required separation is determined by multiplying the lower FWHM value of the two components by a user-defined factor fsep:

$$\mu_\mathrm{sep} = f_\mathrm{sep} \times \min(\Theta_i, \Theta_j).$$

The default value of fsep is 1/√(2 ln 2) ≈ 0.85. This value was chosen so that the required separation between two identical Gaussian components defaults to two times their standard deviation. If two identical Gaussian fit components are separated by more than two times their standard deviation, their combined signal has a local minimum between the two peak positions, which we define as a requirement for well-resolved Gaussian fit components. Panel c in Fig. 5 shows a case in which the minimum separation between the peak positions of the two identical Gaussian fit components is not reached. The combined signal of the fit components (shown in orange) shows no local minimum between the peak positions, and a single Gaussian component corresponding to the sum of the two individual components would thus be evaluated as a better fit.
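The blending test and the reasoning behind the default separation factor can be checked numerically. Because FWHM = 2√(2 ln 2) σ, the factor fsep = 1/√(2 ln 2) turns the FWHM into 2σ. The sum of two identical unit Gaussians has a dip at its midpoint only for separations above 2σ. The function names below are hypothetical.

```python
import numpy as np

# f_sep * FWHM = 2 sigma for identical components, since FWHM = 2 sqrt(2 ln 2) sigma
F_SEP = 1.0 / np.sqrt(2.0 * np.log(2.0))  # ≈ 0.85


def are_blended(mean_i, fwhm_i, mean_j, fwhm_j, f_sep=F_SEP):
    """True if |mu_i - mu_j| < mu_sep = f_sep * min(FWHM_i, FWHM_j)."""
    return abs(mean_i - mean_j) < f_sep * min(fwhm_i, fwhm_j)


def midpoint_is_dip(separation_sigma, eps=1e-3):
    """Check whether two identical unit-sigma Gaussians separated by
    `separation_sigma` have a local minimum at their midpoint."""
    def total(x):
        return (np.exp(-0.5 * (x - separation_sigma / 2) ** 2)
                + np.exp(-0.5 * (x + separation_sigma / 2) ** 2))
    return bool(total(0.0) < total(eps))


print(are_blended(0.0, 10.0, 5.0, 10.0))           # True: 5 < 8.49
print(midpoint_is_dip(2.2), midpoint_is_dip(1.8))  # True False
```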

Without additional information from neighbouring spectra it can be very difficult to reliably conclude whether a two-component fit is a better choice than the fit of a single component. If this quality criterion is selected by the user we will therefore always try to replace two blended components with a single bigger component in the improved fitting routine (Sect. 3.2.3), where each spectrum is still treated independently.

Residuals not normally distributed. This flag checks whether the normalised residuals show a Gaussian distribution. We subject the normalised residual to two different tests for normality (see Sect. 3.2.1 for more details), with the null hypothesis that the residual values are normally distributed. We reject this null hypothesis if the p-value of at least one of the normality tests is less than a user-defined threshold (default: 1%), in which case the spectrum gets flagged.

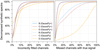

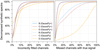

Different number of components compared to neighbouring spectra. This quality criterion compares the number of fitted Gaussian components of a spectrum with its immediate neighbouring spectra. We include the fit solutions of all neighbouring spectra in this comparison, irrespective of whether they were already flagged by another optional quality criterion. There are two conditions for which a spectrum can be flagged by this check:

– The number of components Ncomp in the spectrum differs by more than a user-defined value ΔNmax (default value: 1) from the weighted median number of components determined from all its immediate neighbours. For a sequence of n ordered elements x1, x2, …, xn with corresponding positive weights w1, w2, …, wn that sum up to wtot, the weighted median is defined as the element xk for which

$$\sum_{i=1}^{k-1} w_i \le \frac{w_\mathrm{tot}}{2} \quad \text{and} \quad \sum_{i=k+1}^{n} w_i \le \frac{w_\mathrm{tot}}{2}.$$

Panel a in Fig. 6 shows the weights we apply to the immediate neighbours, which are inversely proportional to their distance to the central spectrum.

Fig. 6. Illustration of the flagging of spectra based on their number of components with the default settings of our algorithm. Each 3 × 3 square shows the central spectrum (in white) and the surrounding immediate neighbours coloured according to their weights. Panel a: Weights we apply to each neighbouring fit solution to calculate their weighted median. Panels b and c: Two cases where the fitted number of components of the central spectrum would be flagged as incompatible with the fitted number of components of their neighbours. See Sect. 3.2.2 for more details.

– The spectrum shows differences in Ncomp towards individual neighbours that exceed a user-defined value ΔNjump (default value: 2). We flag a spectrum if these differences occur towards more than Njump (default value: 1) of its neighbouring spectra.

We illustrate this criterion in Fig. 6 for two cases with the default settings of GAUSSPY+. Panel b shows an instance where the fit solution of the central spectrum shows no component jumps > 2 to any of its neighbours. However, we would still flag the central spectrum for its number of fitted components, since it differs by more than ΔNmax from the weighted median number of components inferred from the neighbouring fit solutions (2 components). Panel c shows the opposite case, where the weighted median number of components (5) is still compatible with the actual number of components, but the fit solution of the central spectrum would be flagged as inconsistent with its neighbours, as it shows component jumps > 2 towards two of its neighbours.
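Both conditions of this flag can be sketched for a 3 × 3 grid of component counts. We assume weights of 1/d, with d the distance in pixels: 1 for direct neighbours and 1/√2 for diagonal ones. This follows "inversely proportional to their distance", but the exact values shown in Fig. 6 are not reproduced here. The function names are hypothetical.

```python
import numpy as np


def weighted_median(values, weights):
    """Element x_k with sum(w_i, i < k) <= W/2 and sum(w_i, i > k) <= W/2."""
    order = np.argsort(values, kind="stable")
    v = np.asarray(values)[order]
    w = np.asarray(weights, dtype=float)[order]
    cum = np.cumsum(w)
    k = np.searchsorted(cum, 0.5 * cum[-1])  # first index with cum >= W/2
    return v[k]


def flag_ncomp(grid, dn_max=1, dn_jump=2, n_jump=1):
    """Flag the central spectrum of a 3x3 grid of component counts."""
    grid = np.asarray(grid)
    centre = int(grid[1, 1])
    neighbours, weights = [], []
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dy == 0 and dx == 0:
                continue
            neighbours.append(int(grid[1 + dy, 1 + dx]))
            weights.append(1.0 / np.hypot(dy, dx))  # inverse pixel distance
    median = weighted_median(neighbours, weights)
    jumps = sum(abs(centre - n) > dn_jump for n in neighbours)
    return bool(abs(centre - median) > dn_max or jumps > n_jump)


print(flag_ncomp([[2, 2, 2], [2, 4, 2], [2, 2, 2]]))  # True: differs from median 2 by 2
print(flag_ncomp([[5, 5, 5], [5, 5, 1], [2, 5, 5]]))  # True: two jumps > 2
```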

3.2.3. Improved fitting routine

The improved fitting routine in GAUSSPY+ aims to improve the fitting results of the original GAUSSPY algorithm via the use of the quality controls described in Sects. 3.2.1 and 3.2.2. The original version of GAUSSPY hands over its initial guesses to a least squares minimisation routine without restricting the fitting parameters, apart from a requirement of positive amplitude values. This means that the individual Gaussian components are allowed to freely vary their FWHM and mean positions. Moreover, the number of Gaussian components is set and fixed by the initial guesses, so if GAUSSPY determined that the fit should contain a certain number of Gaussian components, it will try to fit all those components even if one of them does not contribute to improving the fit or is making the fit worse. This unrestricted fitting can lead to unphysical results or conflicting fit solutions between neighbouring spectra (see the quality flags discussed in Sect. 3.2.2).

The general idea of our routine is to try to improve the fit based on the residual and optional user-selected quality criteria (Sect. 3.2.2). This improved fitting phase is applied to every spectrum. The steps of this routine proceed as follows (see also Fig. 7):

Fig. 7. Flowchart outlining basic steps of our improved fitting routine. The conditional stages in red correspond to optional stages that can be selected by the user. See Sect. 3.2.3 for more details.

1. Check the best fit result of GAUSSPY against the quality criteria outlined in Sect. 3.2.1 (see Fig. 3). All Gaussian components that fail any of these criteria are removed from the best fit solution of GAUSSPY, and the spectrum is refit with the remaining fit components; this procedure is repeated until all of the leftover fit components satisfy all quality criteria.

2. Try to iteratively improve the fit by adding new Gaussian components based on positive peaks in the residual of the best fit solution. Requirements for the acceptance of residual peaks as additional Gaussian component candidates are that: (i) the maximum value of the residual peak is higher than S/Nmin × σrms; (ii) the consecutive positive spectral channels of the residual peak satisfy the significance criterion 𝒮data ≥ 𝒮min outlined in Sect. 3.2.1. If one or multiple peaks that satisfy these requirements are found in the residual, the spectrum is refit with all of these new candidates added. For the refit, the initial Gaussian parameter guesses for the accepted residual peaks are set to: the maximum positive value of the residual peak for the amplitude; the spectral channel containing the maximum positive value of the residual peak for the mean position; the number of consecutive positive channels of the residual peak for the FWHM parameter. If the new fit passes all quality criteria and its AICc value is lower than the AICc value of the previous best fit solution, we adopt it as the new best fit. If a new best fit was chosen, a new iteration with a search for peaks in the residual of the new best fit solution follows. We proceed to the next step if no new positive peaks are found in the residual or no new best fit could be assigned.

3. Optional: Check whether a negative residual feature (Sect. 3.2.2) was introduced by the fit components. This check is only performed in the first pass through the main loop or if a new best fit was assigned. Negative residual features can indicate a poor fit in which multiple signal peaks are fit by a single broad component. If such a feature is present, we try to replace the broadest Gaussian component at the position of the residual feature with two narrower components. The initial guesses for the two new narrow components are estimated from the residual obtained when the broad component is removed, in a similar way as in the previous step. If the new fit with the two narrow components passes all quality requirements and its AICc value is lower than the AICc value of the current best fit, we assign it as the new best fit and repeat the search for negative residual peaks. If multiple negative residual features are present in a spectrum, we deal with them in order of their minimum residual value, that is, we first try to replace the Gaussian component causing the residual feature with the most negative value. We proceed to the next step if no new negative peaks are found in the residual or no new best fit could be assigned.

4. Optional: Check for broad components (Sect. 3.2.2). If a broad Gaussian component is present we will try to replace it in this step with multiple narrower components. The number of narrow components and their initial parameter guesses are estimated from the residual we get if the broadest component is removed from the fit. If this results in a new best fit we will repeat this procedure with the resulting next broadest component. We proceed to the next step if no excessively broad component is identified anymore, or no new best fit could be assigned.

5. Optional: Check for blended components (Sect. 3.2.2). If blended components are present, we try to refit the spectrum by omitting one of the blended components in turn and checking whether the AICc value of the resulting fit is lower than the AICc value of the current best fit. Blended components are omitted in order of increasing amplitude, that is, we first try to refit the spectrum by excluding the blended component with the lowest amplitude. If no new best fit is assigned or no blended components are present in the spectrum, we exit the improved fitting procedure and finalise the fitting results, provided that the normalised residuals of the best fit solution show a normal distribution, which we verify with two different normality tests (Sect. 3.2.1). If this is not the case, we repeat the whole improved fitting procedure beginning with step 2, the search for positive peaks in the residual.
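The initial guesses of step 2 can be sketched as follows. The hypothetical function `residual_peak_guesses` scans the residual for runs of consecutive positive channels and accepts a run if its maximum exceeds S/Nmin × σrms and its 𝒮data reaches 𝒮min. It then returns (amplitude, mean channel, FWHM in channels) taken from the run's maximum, the position of that maximum, and the run length. The subsequent refit and AICc comparison are not included.

```python
import numpy as np


def residual_peak_guesses(residual, rms, snr_min=3.0, sig_min=5.0):
    """Initial guesses (amp, mean, fwhm) for new components from residual peaks."""
    guesses = []
    positive = residual > 0
    i = 0
    while i < len(residual):
        if not positive[i]:
            i += 1
            continue
        j = i
        while j < len(residual) and positive[j]:
            j += 1
        run = residual[i:j]  # consecutive positive channels
        s_data = run.sum() / (np.sqrt(run.size) * rms)
        if run.max() > snr_min * rms and s_data >= sig_min:
            guesses.append((float(run.max()), i + int(np.argmax(run)), run.size))
        i = j
    return guesses


r = np.zeros(30)
r[10:15] = [1.0, 3.0, 5.0, 3.0, 1.0]  # S_data = 13 / sqrt(5) ≈ 5.8: accepted
r[20:22] = [4.0, 1.0]                 # S_data = 5 / sqrt(2) ≈ 3.5: rejected
print(residual_peak_guesses(r, rms=1.0))  # [(5.0, 12, 5)]
```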

We tested the performance of our improved fitting routine on synthetic spectra and found that it yields a significant improvement in the decomposition compared to the original GAUSSPY algorithm. In Sect. 4 and Appendix B.6 we give a detailed discussion about the decomposition results for the synthetic spectra.

3.3. Spatially coherent refitting

So far all steps of the fitting routine treated each spectrum separately and independently from its neighbours. Here we describe a new routine that aims to also incorporate the information from neighbouring spectra and tries to refit spectra according to this information. Our routine proceeds iteratively and starts from the fitting results obtained with the method outlined in the previous section (Sect. 3.2.3). This is different from algorithms such as SCOUSEPY, which start with an averaged spectrum and use its decomposition result to fit the individual spectra. We proceed in reverse: we first produce a sample of high-quality fits for each spectrum without regarding their neighbours and then, if deemed necessary, refit them using the fit solutions of the immediate neighbouring spectra¹¹.

The spatial refitting proceeds in two phases. In phase 1, we try to improve the fit solutions based on a flagging system, for which the fitting results from the previous stage are checked and flagged according to user-selected criteria. We subsequently try to refit each flagged spectrum with the fit solutions from its neighbours and thereby already introduce a limited form of local spatial coherence. In phase 2, we use a weighting system to try to enforce spatial coherence more globally. For the entire data set, we check whether the Gaussian components of each spectrum are spatially consistent with the neighbouring spectra by comparing the centroid positions of the Gaussian components. We then try to refit spectra whose Gaussian components show centroid velocity values that are inconsistent with the fit solutions from neighbouring spectra.

3.3.1. Phase 1: Refitting of the flagged fits

The steps of the first phase of the spatially coherent refitting method are outlined in Fig. 8. The idea is to determine which spectra need to be refit based on flags selected by the user. We try to refit all spectra that show features failing the quality requirements imposed on the fits (these features are also retained as flags indicating bad-quality fits in case the spectrum cannot be successfully refit). Depending on the data set, the user might not always want to flag or refit spectra that show one or more of these features, so each of the following flags can be selected as required. In the current version of GAUSSPY+, the user can flag the following features (see Sect. 3.2.2 for more details on the flags):

Fig. 8. Flowchart outlining the steps of the first phase of our spatially coherent refitting routine. See Sect. 3.3.1 for more details.

- (i) ℱneg. res. peak: the presence of negative peaks in the residual.

- (ii) ℱΘ: Gaussian components with a broad FWHM value. For the spatial refitting, we additionally flag a component as broad if it is broader by a user-defined factor (fΘ,max) than the broadest component in more than half of its neighbours.

- (iii) ℱblended: the presence of blended Gaussian components in the fit.

- (iv) ℱresidual: fits whose normalised residual values do not pass the tests for normality.

- (v) ℱNcomp: the number of components, Ncomp, differs significantly from that of its neighbours.
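The neighbour-based part of flag (ii) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the GAUSSPY+ code: the helper name `is_broad_relative_to_neighbours` and its input layout (one list of FWHM values per neighbour) are hypothetical, "broader by a factor" is read as FWHM > fΘ,max times the broadest neighbouring FWHM, and neighbours without fitted components are skipped.

```python
from typing import Sequence


def is_broad_relative_to_neighbours(
    fwhm: float,
    neighbour_fwhms: Sequence[Sequence[float]],
    f_theta_max: float,
) -> bool:
    """Hypothetical helper for the neighbour-based part of flag (ii).

    A component counts as broad if its FWHM exceeds f_theta_max times the
    FWHM of the broadest component in more than half of the neighbours.
    Neighbours without any fitted component are skipped (an assumption).
    """
    # FWHM of the broadest component of each neighbour that has components
    broadest = [max(widths) for widths in neighbour_fwhms if len(widths) > 0]
    if not broadest:
        return False
    # Number of neighbours whose broadest component is exceeded by the factor
    n_exceeded = sum(fwhm > f_theta_max * b for b in broadest)
    return n_exceeded > len(broadest) / 2
```

For example, a component with FWHM 12 and fΘ,max = 2 is flagged against neighbours whose broadest components have FWHMs of 5, 4, 6, and 4.5, because it exceeds twice the width in three of the four neighbours.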

Flags (i)–(v) are recomputed in each new iteration. We then try to refit each flagged spectrum using one or all of the best fit solutions of its unflagged neighbouring spectra. In the default settings of the algorithm, we try to refit all flagged spectra using the fit solutions of unflagged neighbouring spectra. This provides at most eight different new fit solutions for the flagged spectrum (if all eight of its neighbouring spectra are unflagged). If there are multiple unflagged neighbours, they are ranked according to their  values, and the neighbouring fit solution with the lowest  value is used first.
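The ranking and the sequential use of neighbouring templates can be sketched as a simple loop. This is a hypothetical illustration, not the GAUSSPY+ API: the `NeighbourFit` container, the generic `score` used for ranking (lower is better), and the `try_refit` callback, which stands in for the actual fitting and acceptance step, are all assumptions.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence


@dataclass
class NeighbourFit:
    """Hypothetical container for the best fit solution of one neighbour."""
    params: List[float]  # Gaussian parameters used as initial guesses
    score: float         # ranking statistic of the fit (lower is better)
    flagged: bool        # True if this neighbour's fit is itself flagged


def refit_from_neighbours(
    neighbours: Sequence[NeighbourFit],
    try_refit: Callable[[List[float]], Optional[List[float]]],
) -> Optional[List[float]]:
    """Try unflagged neighbour solutions as initial guesses, best-ranked first.

    try_refit is a hypothetical callback that refits the flagged spectrum from
    the given guesses and returns the new parameters if the fit improved,
    or None otherwise.
    """
    # At most eight candidates: the unflagged immediate neighbours
    candidates = sorted((n for n in neighbours if not n.flagged), key=lambda n: n.score)
    for neighbour in candidates:
        new_params = try_refit(neighbour.params)
        if new_params is not None:
            return new_params  # stop at the first improved fit
    return None  # no neighbour template improved the fit
```

Stopping at the first improvement keeps the number of refit attempts small, at the cost of not comparing all possible templates.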

Users can also choose to only flag fit solutions without refitting them. This might be useful, for instance, if users want to exclude neighbouring fit solutions whose normalised residuals did not pass the normality tests from serving as templates for the refit, but do not want to refit these spectra themselves.

The refitting of an individual flagged spectrum proceeds in the following way (see right part of Fig. 8):

1. Use the fit solutions of unflagged neighbouring spectra to refit individual components of the flagged spectrum. Spectra flagged for negative residual features, broad components, or blended components might have a good fit solution apart from the flagged features. We therefore first try to replace the Gaussian components associated with such flagged features, using as new initial guesses the Gaussian components of neighbouring unflagged fit solutions that cover the same region of the spectrum. The refit is then performed for the entire spectrum by combining these new initial guesses from a neighbouring fit solution with the remaining components of the old fit solution that were not affected by the flagged feature. If multiple regions of a spectrum are flagged with different flags, we try to refit the flagged features in the following order: negative residual features, broad components, and blended components. As soon as a flagged feature is successfully refit, we stop the refitting iteration, even if other flagged features are still present in the spectrum. We impose no selection criteria on the neighbouring Gaussian components; that is, we use all unflagged neighbouring fit solutions in turn as new initial guesses, starting with the fit solution that has the lowest  value. If one of the input guesses from the unflagged neighbours leads to an improved fit, the refitting of the flagged spectrum terminates successfully; otherwise we proceed with the next step.

2. Use the fit solutions of unflagged neighbouring spectra to refit the complete flagged spectrum. In this step, all fit components of a neighbouring spectrum are used as new input guesses for refitting the entire spectrum. We again loop through all unflagged neighbouring fit solutions, starting with the one that has the lowest  value. The refitting of the flagged spectrum terminates successfully as soon as one of the neighbouring fit solutions leads to an improved fit; otherwise we continue with the next step.

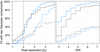

3. Obtain a new set of fit parameters from the fit solutions of all unflagged neighbouring spectra by grouping and averaging the parameters of all their Gaussian components in the parameter space spanned by the fitted centroid velocities and FWHM values. Figure 9 illustrates how the grouping proceeds. First, the grouping is performed only for the μ values (blue shaded areas). The requirement for group membership is that a data point lies at most Δμmax (default value: 2 channels) from any other point of the group. We require a minimum group membership of two points, which means that single points that do not belong to any group are treated as outliers. The blue points and shaded areas show the new fitting constraints used for the refitting. As initial guesses for the amplitude, FWHM value, and centroid position, we use the corresponding average values of all data points belonging to a group. The fitting constraints for the centroid positions are based on the extent of the groups along the μ axis. Each amplitude is required to be positive, and its upper limit is set to the maximum data value of the original spectrum within the range spanned by the μ values of the group, multiplied by a user-defined factor fa. FWHM values are not allowed to be smaller than the user-defined parameter Θmin, but there is no upper limit on their values. If this first grouping approach does not lead to a successful refit, we use a second grouping approach that additionally groups the data points according to their FWHM values (red shaded areas in Fig. 9). A data point becomes a group member if its μ and Θ values lie at most Δμmax (default value: 2 channels) and ΔΘmax (default value: 4 channels), respectively, from any other point of the group. The points in each group are then averaged as in the first grouping approach and supplied as new fit parameters for the refitting.
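Both grouping approaches of step 3 can be sketched with NumPy. This is an illustration under assumptions, not the GAUSSPY+ implementation: we read "at most Δμmax from any other point" as friends-of-friends (single-linkage) grouping, assume μ and Θ are given in channel units, and the function names as well as the placeholder defaults for `f_a` and `theta_min` are hypothetical.

```python
import numpy as np


def group_components(mu, theta, dmu_max=2.0, dtheta_max=None, min_members=2):
    """Friends-of-friends grouping of neighbouring Gaussian components.

    Two components are linked if |Δμ| <= dmu_max and, when dtheta_max is
    given (second approach), also |ΔΘ| <= dtheta_max. Groups are connected
    sets of linked components; groups smaller than min_members are outliers.
    """
    mu = np.asarray(mu, dtype=float)
    theta = np.asarray(theta, dtype=float)
    linked = np.abs(mu[:, None] - mu[None, :]) <= dmu_max
    if dtheta_max is not None:
        linked &= np.abs(theta[:, None] - theta[None, :]) <= dtheta_max
    labels = np.full(mu.size, -1)
    for start in range(mu.size):
        if labels[start] >= 0:
            continue
        labels[start] = start
        stack = [start]
        while stack:  # flood fill over the link graph
            i = stack.pop()
            for j in np.flatnonzero(linked[i] & (labels < 0)):
                labels[j] = start
                stack.append(j)
    groups = [np.flatnonzero(labels == lab) for lab in np.unique(labels)]
    return [g for g in groups if g.size >= min_members]


def guesses_and_bounds(amp, mu, theta, spectrum, groups, f_a=1.0, theta_min=1.0):
    """Average each group into an initial guess and derive fit bounds.

    Assumes every group lies within the channel range of the spectrum.
    Returns (amp, fwhm, mean) guesses and
    ((amp_lo, amp_hi), (fwhm_lo, fwhm_hi), (mean_lo, mean_hi)) bounds.
    """
    amp, mu, theta = (np.asarray(a, dtype=float) for a in (amp, mu, theta))
    spectrum = np.asarray(spectrum, dtype=float)
    guesses, bounds = [], []
    for g in groups:
        lo, hi = mu[g].min(), mu[g].max()
        i0 = max(int(np.floor(lo)), 0)
        i1 = min(int(np.ceil(hi)), spectrum.size - 1)
        amp_max = f_a * spectrum[i0:i1 + 1].max()  # fa times local data maximum
        guesses.append((amp[g].mean(), theta[g].mean(), mu[g].mean()))
        bounds.append(((0.0, amp_max), (theta_min, np.inf), (lo, hi)))
    return guesses, bounds
```

Calling `group_components(mu, theta)` gives the first approach; passing `dtheta_max=4.0` gives the second, which can split a group of similar centroids into components of different widths.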

Fig. 9. Illustration of the grouping routine. Black points indicate centroid (μ) and FWHM (Θ) values of Gaussian components from the best fit solutions of unflagged neighbouring spectra. Blue shaded areas indicate the results of the first grouping, in which data points are only separated according to their μ values. Red shaded areas mark the results of the second grouping, in which data points are additionally separated according to their Θ values. Blue squares and red stars indicate the initial guesses for the refitting with the first and second grouping approach, respectively.

Grouping only by the centroid values has the advantage that the refit uses the smallest number of components inferred from the neighbours. A disadvantage is that outliers in the FWHM values can negatively affect the initial fit values. The second grouping approach should better preserve the fidelity of the data, even though some of its initial guesses for Gaussian components could overlap heavily.

To decide whether to accept a refit as the new fit solution, we define a total flag value ℱtot that increases by one for each user-selected flag that the fit solution does not satisfy. For a proposed new fit solution, the total flag value additionally increases by one for each flagged criterion that got worse than in the current best fit solution, that is, for an increase in the number of blended components or negative residual features, broad components that got broader, smaller p-values for the null hypothesis of normally distributed residuals, and a greater difference in the number of components compared to the neighbouring fit solutions.
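The bookkeeping for ℱtot can be sketched as below. This is a hypothetical illustration, not GAUSSPY+ code: the function name and the dictionary keys (for example `"n_blended"`, `"broad_fwhm"`, `"p_value"`) are our own, and we assume that for every compared quantity a larger value is worse, except for the p-value, where a smaller value is worse.

```python
from typing import Mapping, Optional


def total_flag_value(
    raised: Mapping[str, bool],
    stats: Optional[Mapping[str, float]] = None,
    best_stats: Optional[Mapping[str, float]] = None,
) -> int:
    """Sketch of the total flag value F_tot.

    raised maps each user-selected flag to True if the fit violates it. For a
    proposed refit, stats and best_stats hold the compared quantities of the
    new and the current best fit (hypothetical keys such as "n_blended",
    "n_neg_res_peaks", "broad_fwhm", "ncomp_diff" and "p_value").
    """
    # One point per raised user-selected flag
    f_tot = sum(1 for is_raised in raised.values() if is_raised)
    if stats is not None and best_stats is not None:
        # One extra point per criterion that got worse than in the best fit
        for key, value in stats.items():
            if key == "p_value":
                worse = value < best_stats[key]  # residual looks less normal
            else:
                worse = value > best_stats[key]  # more/broader/larger difference
            f_tot += int(worse)
    return f_tot
```

For a refit that raises two flags, has one more blended component than the current best fit, and yields a smaller p-value, this sketch gives ℱtot = 4.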

In the stage where all spectra were treated independently (Sect. 3.2.3), the decision to accept a fit model was made via the AICc. In the spatial refitting phase, this decision is mainly guided by comparing the total flag value of the new fit solution ($\mathcal{F}_{\rm tot}^{\rm new}$) with that of the old best fit solution ($\mathcal{F}_{\rm tot}^{\rm old}$). There are three possible scenarios:

- $\mathcal{F}_{\rm tot}^{\rm new} > \mathcal{F}_{\rm tot}^{\rm old}$. In this case the new fit solution is rejected.

- $\mathcal{F}_{\rm tot}^{\rm new} = \mathcal{F}_{\rm tot}^{\rm old}$. The new fit solution is accepted if its AICc value is smaller than the AICc value of the best fit solution we started out with.

- $\mathcal{F}_{\rm tot}^{\rm new} < \mathcal{F}_{\rm tot}^{\rm old}$. The new fit solution is accepted if the data points of the normalised residual pass the normality tests.

In the last case, we have to test whether the new fit solution incorrectly decreased ℱtot by removing valid fit components. For example, both ℱblended and ℱΘ could be reduced by one if a broad component is deleted. To prevent such incorrect fit solutions, we require that the normalised residual resembles a Gaussian distribution, which we check with two different normality tests (see Sect. 3.2.1). The null hypothesis of normally distributed residual values is rejected if the p-value is less than a user-defined threshold (default: 1%), in which case we do not accept the new fit solution.
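The three-way acceptance rule can be sketched with SciPy. The two normality tests of Sect. 3.2.1 are not named in this section, so the pairing used here (a Kolmogorov-Smirnov test against a standard normal distribution via `scipy.stats.kstest` and the D'Agostino-Pearson test via `scipy.stats.normaltest`) is our assumption, as are the function names; rejecting normality when either test falls below the threshold is likewise assumed.

```python
import numpy as np
from scipy import stats


def residual_is_normal(norm_residual, p_limit=0.01):
    """Assumed version of the residual check: reject normality if either test
    (Kolmogorov-Smirnov against N(0, 1) or D'Agostino-Pearson) gives p < p_limit."""
    r = np.asarray(norm_residual, dtype=float)
    p_ks = stats.kstest(r, "norm").pvalue
    p_dp = stats.normaltest(r).pvalue
    return bool(min(p_ks, p_dp) >= p_limit)


def accept_refit(f_tot_new, f_tot_old, aicc_new, aicc_old, norm_residual, p_limit=0.01):
    """Decision rule for a proposed refit in phase 1 (hypothetical helper)."""
    if f_tot_new > f_tot_old:
        return False  # more flags than the current best fit: reject
    if f_tot_new == f_tot_old:
        return aicc_new < aicc_old  # same flag value: AICc decides
    # Fewer flags: guard against valid components that were removed
    return residual_is_normal(norm_residual, p_limit)
```

Because the residual is normalised by the noise, comparing it against a standard normal distribution is a reasonable choice for the Kolmogorov-Smirnov part of this sketch.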

3.3.2. Phase 2: Refitting of the spatially incoherent fits

In the second phase of the spatially coherent refitting, we check for coherence of the centroid positions of the fitted Gaussian components for all spectra. The motivation for this step is that we would expect coherence in the centroid positions of the fitted Gaussian components for resolved extended objects, especially for oversampled observations where the size of a pixel is smaller than the beam size or resolution element.

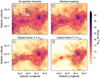

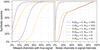

The spatial consistency check, in which we determine from the fitting results of neighbouring spectra whether a spectrum should contain Gaussian components in specific spectral ranges, proceeds iteratively. For this check, we use 16 neighbours along the four principal directions (see panel a in Fig. 11)¹². For simplicity, we do not consider the off-diagonal pixels.
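The neighbourhood used here can be written down explicitly as pixel offsets. In this sketch (our own illustration, with a hypothetical `DIRECTIONS` table and function name), each principal direction contributes the neighbours one and two pixel steps away on both sides of the central spectrum, which gives the 16 neighbours of a 5 × 5 square; the remaining eight off-diagonal pixels never appear.

```python
# Hypothetical (row, column) unit steps for the four principal directions
DIRECTIONS = {
    "horizontal": (0, 1),
    "vertical": (1, 0),
    "diagonal": (1, 1),
    "anti-diagonal": (1, -1),
}


def neighbour_offsets(max_distance=2):
    """Offsets of the neighbours along each principal direction, at pixel
    steps 1..max_distance on both sides of the central spectrum."""
    return {
        name: [
            (sign * step * dy, sign * step * dx)
            for step in range(1, max_distance + 1)
            for sign in (-1, 1)
        ]
        for name, (dy, dx) in DIRECTIONS.items()
    }
```

For the horizontal direction this yields the offsets (0, -1), (0, 1), (0, -2), and (0, 2).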

Users can specify the ratio of the weight of the closest neighbour (w1) to the weight of the neighbour located one pixel farther away (w2) with the parameter fw = w1/w2 (default value: 2). With the default setting, the contribution of a neighbour is inversely proportional to its distance from the central spectrum (see left panel of Fig. 11). The weights w1 and w2 are normalised so that 2w1 + 2w2 = 1, which means that along the horizontal and vertical directions the weights sum to 1. Setting fw to values higher than the default decreases the contribution of neighbours located at a distance of two pixels and thus puts even more emphasis on the closest neighbours. If the central spectrum has Gaussian components whose centroid positions do not match those expected from the fit results of its neighbouring spectra, we try to refit the spectrum with a better-matching fit solution from one of its neighbours.
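Solving w1 = fw w2 together with the normalisation 2w1 + 2w2 = 1 gives w2 = 1/[2(fw + 1)] and w1 = fw/[2(fw + 1)], so the default fw = 2 yields w1 = 1/3 and w2 = 1/6. A minimal helper (the name `neighbour_weights` is hypothetical) that computes these weights:

```python
def neighbour_weights(f_w=2.0):
    """Return (w1, w2) with w1 / w2 = f_w and 2*w1 + 2*w2 = 1."""
    w2 = 1.0 / (2.0 * (f_w + 1.0))  # weight of the neighbours two pixels away
    w1 = f_w * w2                   # weight of the closest neighbours
    return w1, w2
```

Raising `f_w` to 4 gives w1 = 0.4 and w2 = 0.1, which illustrates how larger values shift weight towards the closest neighbours.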

In the following, we outline the spatial consistency check of the centroid positions in more detail (see also Fig. 10):

Fig. 10. Flowchart outlining the basic steps of the second phase of the spatial refitting routine. See Sect. 3.3.2 for more details.

1. Check for a consistent feature in the neighbouring spectra along any of the principal directions indicated in the left panel of Fig. 11. For each of the four directions, we group the centroid positions of the fitted Gaussian components as described in Sect. 3.3.1 and shown schematically in Fig. 9 (blue shaded areas). We perform the grouping separately for each direction rather than globally because considering all 16 neighbours together could make the grouping too ambiguous.

|

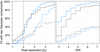

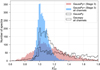

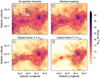

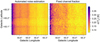

Fig. 11. Illustration of phase two of the spatial refitting routine of GAUSSPY+. Each 5 × 5 square shows a central spectrum (in white) and its surrounding neighbours. White squares that are crossed out are not considered. Left panel: Principal directions for which we check for consistency of the centroid positions and shows the applied weights w1 and w2 attached to the neighbouring spectra. Middle and right panels: Two different example cases with simple fits of one and two Gaussian components shaded in blue and red, respectively. Based on the fits of the neighbouring spectra we would try to refit the central spectrum in the first case (panel b) with one Gaussian component, whereas the central spectrum in the second case (panel c) is already consistent with what we would expect from our spatial consistency check of the centroid positions. See Sect. 3.3.2 for more details. |