| Issue |

A&A

Volume 693, January 2025

|

|

|---|---|---|

| Article Number | A178 | |

| Number of page(s) | 18 | |

| Section | Cosmology (including clusters of galaxies) | |

| DOI | https://doi.org/10.1051/0004-6361/202450575 | |

| Published online | 20 January 2025 | |

Informed total-error-minimizing priors: Interpretable cosmological parameter constraints despite complex nuisance effects

1

University Observatory, Faculty of Physics, Ludwig-Maximilians-Universität, Scheinerstraße 1, 81679 Munich, Germany

2

Department of Physics, Stanford University, 382 Via Pueblo Mall, Stanford, CA 94305, USA

3

Kavli Institute for Particle Astrophysics & Cosmology, 452 Lomita Mall, Stanford, CA 94305, USA

4

SLAC National Accelerator Laboratory, 2575 Sand Hill Road, Menlo Park, CA 94025, USA

5

Excellence Cluster ORIGINS, Boltzmannstraße 2, 85748 Garching, Germany

⋆ Corresponding author; bried@stanford.edu

Received:

1

May

2024

Accepted:

5

December

2024

While Bayesian inference techniques are standard in cosmological analyses, it is common to interpret resulting parameter constraints with a frequentist intuition. This intuition can fail, for example, when marginalizing high-dimensional parameter spaces onto subsets of parameters, because of what has come to be known as projection effects or prior volume effects. We present the method of informed total-error-minimizing (ITEM) priors to address this problem. An ITEM prior is a prior distribution on a set of nuisance parameters, such as those describing astrophysical or calibration systematics, intended to enforce the validity of a frequentist interpretation of the posterior constraints derived for a set of target parameters (e.g., cosmological parameters). Our method works as follows. For a set of plausible nuisance realizations, we generate target parameter posteriors using several different candidate priors for the nuisance parameters. We reject candidate priors that do not accomplish the minimum requirements of bias (of point estimates) and coverage (of confidence regions among a set of noisy realizations of the data) for the target parameters on one or more of the plausible nuisance realizations. Of the priors that survive this cut, we select the ITEM prior as the one that minimizes the total error of the marginalized posteriors of the target parameters. As a proof of concept, we applied our method to the density split statistics measured in Dark Energy Survey Year 1 data. We demonstrate that the ITEM priors substantially reduce prior volume effects that otherwise arise and that they allow for sharpened yet robust constraints on the parameters of interest.

Key words: methods: data analysis / methods: numerical / methods: statistical / cosmological parameters

© The Authors 2025

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1. Introduction

Present and near-future wide-area surveys of galaxies will provide an unprecedented volume of data over most of the extragalactic sky. Examples of these surveys are the Dark Energy Survey (https://www.darkenergysurvey.org/; DESI Collaboration 2016; Sevilla-Noarbe et al. 2021), the Vera C. Rubin Observatory’s Legacy Survey of Space and Time (http://www.lsst.org/lsst; LSST Science Collaboration 2009; Ivezić et al. 2019), the Dark Energy Spectroscopic Instrument (https://www.desi.lbl.gov/) survey (Dark Energy Survey Collaboration 2016), the space mission www.cosmos.esa.int/web/euclid, www.euclid-ec.org (Laureijs et al. 2011), the 4 m Multi-Object Spectroscopic Telescope (https://www.4most.eu/cms/) (de Jong et al. 2012), the Kilo-Degree Survey (http://kids.strw.leidenuniv.nl/; de Jong et al. 2012), the Hyper Suprime-Cam (https://hsc.mtk.nao.ac.jp/ssp/; Aihara et al. 2017), and the https://roman.gsfc.nasa.gov/ Space Telescope (Eifler et al. 2021). The promise of precision cosmology with the resulting data can only be realized by accurately accounting for the nuisance effects – both astrophysical and in the calibration uncertainty of data – that become relevant in increasingly complex models. Examples of astrophysical effects include intrinsic alignments of galaxies as a nuisance effect for weak lensing analyses (see Troxel & Ishak 2015 and Lamman et al. 2024 for recent reviews and Secco et al. 2022; Samuroff et al. 2023; Asgari et al. 2021 and Dalal et al. 2023 for the latest analyses); baryonic physics that impact the statistics of the cosmic matter density field, such as star formation, radiative cooling, and feedback (e.g., Cui et al. 2014; Velliscig et al. 2014; Mummery et al. 2017; Tröster et al. 2022); galaxy bias and other parameters describing the galaxy-matter connection (see Schmidt 2021 for a recent review, Scherrer & Weinberg 1998; Dekel & Lahav 1999; Baldauf et al. 2011; Heymans et al. 2021; Ishikawa et al. 2021 and Pandey et al. 2022); and systematic effects on the baryon acoustic oscillation signal (Seo & Eisenstein 2007; Ding et al. 2018; Rosell et al. 2021 and Lamman et al. 2023). Calibration-related nuisance effects include measurement biases on galaxy shapes (see Mandelbaum et al. 2019 for a review, MacCrann et al. 2021; Georgiou et al. 2021 and Li et al. 2022), the estimation of redshift distributions of photometric galaxy samples (see Newman & Gruen 2022 for a review and Tanaka et al. 2017; Huang et al. 2017; Hildebrandt et al. 2021; Myles et al. 2021; Cordero et al. 2022), and systematic clustering of galaxies induced by observational effects (e.g. Cawthon et al. 2022; Baleato Lizancos & White 2023).

Cosmological inference from survey data is performed through likelihood analyses. The first step is to predict a model data vector as a function of parameters (both cosmological and nuisance) using a theoretical modeling pipeline. The second step is to infer the posterior probability distribution of the parameters using the observed data vector as a noisy measurement. Finally, one obtains a marginalized posterior for a subset of parameters by projecting out the remaining parameters. The results are summarized in the form of point estimates (best estimate of a parameter value) with their confidence errors. It might be desirable for these marginalized posteriors to have certain properties:

-

Unbiasedness: we require the point estimate of each cosmological parameter to have a bias, with respect to the fiducial parameters, smaller than some fraction of the width of the confidence interval (e.g., to be inside the 1σ confidence interval or region if the space is two or more dimensions). In Sect. 2.3.1 we review the point estimators that are currently used in cosmology.

-

Reliability: in repeated trials (with the same realization of the nuisance, independent noise, and the same fiducial values for the cosmological parameters), we impose that the “true” values of a set of cosmological parameters be within a derived confidence region of those parameters for at least some desired fraction of time. In other words, the frequentist coverage of the independent realizations should be consistent with the confidence level (the idea of matching Bayesian and frequentist coverage probabilities has been discussed extensively in Percival et al. 2021).

-

Robustness: we demand that the previous two requirements hold across a list of plausible nuisance and physical realizations of the fiducial configuration (e.g., under two different fiducial nuisance parameters, we still can recover unbiased and reliable marginal posteriors on the cosmology).

-

Precision: among all the possible priors whose resulting confidence regions fulfill the above requirements, we prefer the one that, on average, results in the smallest total (i.e., statistical and systematic) uncertainty on the cosmological parameters.

There are two ways in which the treatment of nuisance parameters can cause systematic errors and therefore violate these statements. The first one is to assume a nuisance parameter model that is overly simplistic, for instance, by fixing a nuisance parameter that indeed needs to be free to accurately describe the observed data. In the left panel of Fig. 1, we present a simple 2D sketch of this situation. There, the assumption is that a nuisance parameter, θNuisance, equivalent to zero leads to an unavoidable bias on the cosmological parameter θTarget1. We refer to this effect as “underfitting” of the nuisance, following Bernstein (2010). With the coming cosmological surveys, the complexity of the data is expected to increase, and therefore, unknown or not modeled nuisance effects could become underfitted.

|

Fig. 1. Sketches on how the treatment of nuisance parameters can cause systematic errors on the target parameter posteriors. For both panels, we assume a 2D space where the parameter θ = (θTarget, θNuisance). Left: bias due to underfitting a nuisance effect. In a 2D model, let 0 be the real value of the parameter θTarget, despite the true value of θNuisance. When θNuisance is a free parameter of the 2D Gaussian model presented in the panel, the true value of the target parameter is recovered with the 2D Maximum a Posteriori (2D MAP) corresponding to the red mark. With a simpler nuisance model that fixes θNuisance = 0 and thus underfits the nuisance, the 1D MAP (blue mark) of θTarget is biased towards negative values as a result. Right: prior volume effect. In this toy illustration, we show the 2D and 1D marginalized posteriors of a distribution with the 1σ and 2σ confidence contours with blue and light blue respectively. Such posteriors can, for example, result from a model that is highly non-Gaussian in the full posterior space. The black dashed line represents the fiducial value, and the intersection of them is inside the 1σ contour. If we only have access to the 1D marginalized posterior of θTarget, we would hardly estimate the fiducial value with our point estimators. In other words, even if the posterior in the entire parameter space is centered around the correct parameters, the posterior marginalized over the nuisance parameters can be off of the correct target parameters. This bias error is called the prior volume effect, and in this paper, we propose a pipeline to overcome it by constraining systematically the priors on the nuisance parameters. |

The second treatment involves assuming a prior distribution such that the estimated parameters on the marginalized posterior are biased with respect to the “true” values. This systematic error is known as prior volume effect or projection effect, and it is shown in the right panel of Fig. 1. We emphasize that the prior volume effect is a continuous one (i.e., there are cases in which this error is larger than others), and it is not limited to cases where distributions are non-symmetric or exclusively non-Gaussian. To avoid confusion, we limit ourselves to the definition of prior volume effect stated above, in which the point estimates calculated from the marginalized posteriors will be substantially offset from the true value being predicted (for real-life examples of the effect, see, e.g., Secco et al. 2022; Amon et al. 2022 on intrinsic alignments, Sugiyama et al. 2020; Pandey et al. 2022 on galaxy bias, or Sartoris et al. 2020 on galaxy cluster mass profiles. For studies on overcoming this effect in weak lensing analyses, see Joachimi et al. 2021; Chintalapati et al. 2022, and Campos et al. 2023).

Another consequence of the prior volume effect is that the marginalized coverage fraction (the fraction of marginalized confidence intervals in repeated trials that encompass the true target parameters) may not be consistent with the associated credible level. The Bayesian and frequentist interpretations imply that the full posterior’s confidence region does not necessarily have the same credible region. If this is the case, these two effects combine when marginalizing, and the marginalized coverage fraction is less intuitively characterized. This can be rephrased as follows: the marginalized 1σ confidence contours obtained with repeated Bayesian analyses in an ensemble of realizations do not necessarily encloses the fiducial parameter configuration with a frequency of ∼68%. The discrepancy may not constitute a problem from a purely Bayesian standpoint since Bayesian statistics do not claim to satisfy frequentist expectations. However, this does not change the fact that one may want to make probabilistic statements about the true value of a physical parameter and that marginalized parameter constraints quoted in cosmological publications are interpreted in a frequentist way by a large fraction (if not the majority) of the cosmology audience. Projection effects in high-dimensional nuisance parameter spaces may hence cause a buildup of wrong intuitions in the inferred parameters. Therefore, in the absence of projections, we would only find the general mismatch between the confidence and credible regions of the marginalized posteriors, and this mismatch can be overcome by using solutions such as the one presented in Percival et al. (2021) and Chintalapati et al. (2022).

The situation is further complicated by the fact that a precise model for nuisance effects with a finite set of parameters and well-motivated Bayesian priors is often not available. Commonly, at best, some plausible configurations of the nuisance effect are known. These could, for example, be a set of summary statistics measured from a range of plausible hydrodynamical simulations (van Daalen et al. 2019; Walther et al. 2021) or a compilation of different models and parameters that have been found to approximately describe the nuisance, as is the case for intrinsic alignment (Hirata & Seljak 2004; Kiessling et al. 2015; Blazek et al. 2019), the galaxy-matter connection (Szewciw et al. 2022; Voivodic & Barreira 2021; Friedrich et al. 2022; Britt et al. 2024), and in the calibration of redshift distributions (Cordero et al. 2022). Additionally, simulation-based inference techniques, such as simulation-based calibration (SBC; Talts et al. 2018), have offered an alternative way to discriminate among priors, and the cosmology community has recently applied SBC in a number of studies (Novaes et al. 2024; Ho et al. 2024; Nguyen et al. 2024; Ivanov et al. 2024; Yao et al. 2023). SBC does not make any assumptions about the analytical likelihood functions (Cranmer et al. 2020), yet it evaluates whether a probabilistic model accurately infers parameters from data by comparing the inferred posterior distributions with the priors. However, it has limitations, such as the fact that the SBC method quantifies biases in a one-dimensional posterior relative to a baseline prior distribution using a rank histogram (Yao & Domke 2023). Current high-dimensional cosmological models, however, include multiple nuisance parameters, which requires us to diagnose biases in the joint distribution.

In this paper, our objective is to make statements about the marginalized posteriors of the target parameters that fulfill the above criteria of unbiasedness, reliability, robustness, and precision. These are pragmatic objectives and not necessarily consistent with common Bayesian approaches. We chose to do this by defining the prior on nuisance effects in such a way that our objectives are met instead of by directly using our prior knowledge of their parameter values. Since the nuisance priors we constructed to satisfy these criteria are informed by knowledge of possible configurations of the nuisance effects and they minimize the systematic and statistical errors, we call them informed total-error-minimizing (ITEM) priors. How we constructed these priors has two important consequences for the interpretation of the resulting parameter constraints:

-

In the presence of limited information about the nuisance effect, the considered realizations can be used to tune the priors of the nuisance parameters to ensure certain properties of the resulting constraints on the target parameters. Hence, we do not assume the ITEM prior directly represents information about the nuisance effect or our knowledge about it. It is merely a tool for interpretable inference of the target parameters. As a consequence, we relinquish the ability to derive meaningful posterior constraints on the nuisance parameters.

-

The coverage fraction and bias of any procedure to derive parameter constraints in a set of repeated experiments will be sensitive to the “true” values of cosmological and nuisance parameters that underlie those experiments. Given a set of plausible realizations of cosmology and nuisances, we can therefore only ensure a minimum coverage and a maximum bias of the constraints derived from ITEM priors.

This paper is structured as follows: we start by describing the general methodology for obtaining ITEM priors in Sect. 2. In Sect. 3, we investigate the performance of the method in a test case: cosmological parameter constraints marginalized over models for non-Poissonian shot-noise in density split statistics (Friedrich et al. 2018; Gruen et al. 2018). We explore the change in simulated and DES Y1 results of these statistics when using ITEM priors defined from a simplified set of plausible nuisance realizations. Finally, a discussion and conclusions are given in Sect. 4.

2. Methodology

Assume that some observational data can be described in a data vector  of Nd data points. Let ξ[θ] be a model for this data vector that depends on a parameter vector θ of Np parameters, and let C be the covariance matrix of

of Nd data points. Let ξ[θ] be a model for this data vector that depends on a parameter vector θ of Np parameters, and let C be the covariance matrix of  . In many cases, it is reasonable to assume that the likelihood ℒ of finding

. In many cases, it is reasonable to assume that the likelihood ℒ of finding  given the parameters θ is a multivariate Gaussian distribution:

given the parameters θ is a multivariate Gaussian distribution:

where  are the elements of the inverse of the covariance matrix C, and 𝒞 is a constant as long as the covariance does not depend on the model parameters. We derived the posterior distribution

are the elements of the inverse of the covariance matrix C, and 𝒞 is a constant as long as the covariance does not depend on the model parameters. We derived the posterior distribution  using Bayes’ theorem:

using Bayes’ theorem:

with Π(θ) being a prior probability distribution incorporating a priori knowledge or assumptions on θ.

In many situations, parameters θ can be divided into two types: target parameters θT and nuisance parameters θN, as done in Fig. 1 (θTarget and θNuisance respectively). This distinction is subjective and depends on which parameters one would like to make well-founded statements about (i.e., robust, valid, reliable, and/or precise estimates). Also, this division is based on which parameters are needed to describe the complexities of the experiment but are not considered intrinsically of interest. For example, in a cosmological experiment, the target parameters are usually those describing the studied cosmological model, such as the Λ-Cold Dark Matter (ΛCDM) and its variations. This could, for example, include the present-day density parameters (Ωx, with x representing a specific content of the universe), the Hubble constant (H0), and the equation of state parameter of dark energy (w). On the other hand, the nuisance parameters account for observational and astrophysical effects, or uncertainties in the modeling of the data vector.

Recent cosmological analyses have chosen an approximate nuisance model with a limited number of free parameters for all these complex effects. These either assume a wide flat prior distribution over the nuisance parameters or an informative prior distribution set by simulation and/or calibration measurements (Aghanim et al. 2020; Asgari et al. 2021; Sugiyama et al. 2022; Abbott et al. 2022). As we explained in Sect. 1, precarious modeling of nuisance parameters can cause systematic errors, leading to the following consequences:

-

Limited modeling of the nuisance effects: the assumed model ξ[θ] may not sufficiently describe the nuisance effects. For example, there could be an oversight of one underlying source of the nuisance, the model of a nuisance effect may be truncated at too low an order, or it can be entirely neglected. This results in an intrinsic bias on the inferred target parameters, as would, for instance, be revealed by performing the analysis on simulated data that include the full nuisance effect.

-

Prior-volume effects: the prior distribution Π(θN) over one or multiple nuisance parameters could result in biases in the marginalized posterior when projecting to lower dimensions.

In this work, we introduce a procedure that determines a prior distribution on the nuisance parameters that ensures the criteria presented in Sect. 1 for marginalized posteriors of the target parameters. We will call the resulting priors Informed Total-Error-Minimizing priors, or ITEM priors. In Sect. 2.1, we describe how to obtain different data vectors given a model and plausible realizations of the nuisance effect. In Sect. 2.2, we generate posteriors for each data vector given three ingredients: the likelihood of the model, a set of fixed prior distributions for the target parameters, and a collection of nuisance candidate priors. In Sect. 2.3 we determine the ITEM prior after requiring the posteriors to account for specific criteria based on maximum biases, a minimum coverage fraction, and the minimization of the posterior uncertainty. The steps are summarized in Fig. 2.

|

Fig. 2. Basic steps and concepts present in our ITEM prior methodology. In light blue, we summarize the steps of our pipeline, and in green, we highlight the ingredients of the methodology. |

2.1. Step 1: Obtain a set of data vectors that represent plausible realizations of the nuisance effect

We assumed that at fixed target (e.g. cosmological) parameters θT, the range of plausible realizations of a nuisance effect is well represented by a set of n different resulting data vectors:

These could, for example, be based on a model for the nuisance effect with different nuisance parameter values. Alternatively, the  could result from a set of simulations with different assumptions that produce realizations of the nuisance effect (e.g., the impact of baryonic physics on the power spectrum from a variety of hydrodynamical simulations, as done in Chisari et al. 2018 and Huang et al. 2019). In some other cases, direct measurements of the nuisance effect may be used, and for example a bootstrapping of those measurements could yield a set of possible realizations. The ITEM prior is constructed such (cf. Sects. 2.3.1, 2.3.2, and 2.3.3) that if the most extreme among the reasonable nuisance realizations are included in the process, our desired properties of the posterior derived with the resulting ITEM prior are likely to hold for any intermediate nuisance realizations as well.

could result from a set of simulations with different assumptions that produce realizations of the nuisance effect (e.g., the impact of baryonic physics on the power spectrum from a variety of hydrodynamical simulations, as done in Chisari et al. 2018 and Huang et al. 2019). In some other cases, direct measurements of the nuisance effect may be used, and for example a bootstrapping of those measurements could yield a set of possible realizations. The ITEM prior is constructed such (cf. Sects. 2.3.1, 2.3.2, and 2.3.3) that if the most extreme among the reasonable nuisance realizations are included in the process, our desired properties of the posterior derived with the resulting ITEM prior are likely to hold for any intermediate nuisance realizations as well.

For the sake of notation, let us assume that the nuisance realizations are given in terms of particular nuisance parameter combinations θNi of the considered nuisance model: ![$ \boldsymbol{\hat{\xi}}^i = \boldsymbol{\hat{\xi}}[\boldsymbol{\theta}_{T}, \boldsymbol{\theta}_{N}^i] $](/articles/aa/full_html/2025/01/aa50575-24/aa50575-24-eq10.gif) . However, we note that this is not an assumption that a model to be used in the analysis shares those same nuisance parameters. For the following tests to be meaningful, we require these data vectors to contain no noise, or noise that is negligible compared to the covariance considered in the likelihood analysis.

. However, we note that this is not an assumption that a model to be used in the analysis shares those same nuisance parameters. For the following tests to be meaningful, we require these data vectors to contain no noise, or noise that is negligible compared to the covariance considered in the likelihood analysis.

In principle, it is possible to consider more than one realization of the target parameters as well (e.g., more than one cosmology for the same nuisance parametrization). This extra layer of complexity can be added to the pipeline, but as a first pass, we will limit ourselves to a fixed target parameter vector for simplicity, yet suggest doing this in future works.

2.2. Step 2: Generate posteriors for each data vector, with each of a set of nuisance priors, with and without a set of noise realizations

We chose our ITEM prior among the space of nuisance priors, that is, the space of the parameters that describe a family of considered prior distributions Π(θN). The details of that family may depend on: the preferred functional form of the priors, on consistency relations that are a priori known, and on practical considerations such as limits on the computational power. As mentioned before, the distinction between target and nuisance is subjective, and these labels may change between iterations of the pipeline (i.e., a prior on a target parameter can be fixed for one analysis, but it can be studied and optimized as a nuisance in another).

For example, we may search for an ITEM prior among a set of uniform prior distributions for one particular nuisance parameter, Π(θNuisance)∼𝒰(a, b), where a and b are the lower and upper bounds, respectively. In that case, we propose to sample uniformly and independently over both a and b, subject to the consistency relation that a < b. In practice, we can for example sample ma lower bounds (ai ∈ [a1, a2, …, ama]) and mb upper bounds (bj ∈ [b1, b2, …, bmb]). Besides requiring that ai < bj for all tested pairs one could demand, if there was some reason to, that other requirements are met, for example a minimum and maximum value for the prior width bj − ai, or that some nuisance parameter values are contained within the considered priors. More generally, we aim to use candidate priors that cover a reasonable range of nuisance realizations, ensuring that these realizations are enclosed within a region of non-negligible probability and can therefore be sampled. A Kullback-Leibler divergence test (Kullback & Leibler 1951) could also be used to help determine the ranges these priors could span. When combining all these configurations, we will have up to ma ⋅ mb possible priors with different widths and midpoints.

This idea can be extended to many dimensions, and the combination of all possible priors that could be used will lead to a total number of priors * denote by m. Therefore, for any given measurement of the data  , this will result in n ⋅ m different posteriors on the full set of parameters θ.

, this will result in n ⋅ m different posteriors on the full set of parameters θ.

for i = 1, …, n and j = 1, …, m.

In the next steps of our pipeline, we generate such sets of posteriors for different data vector realizations to determine which priors meet the criteria we outlined in Sect. 1. To obtain all these posteriors, instead of running a large number of Monte-Carlo-Markov-Chains (MCMCs) for the n ⋅ m total posteriors, we propose to do an importance sampling (Siegmund 1976; Hesterberg 1988) over the posterior derived from the widest prior (i.e., the prior on the nuisance that encloses all the other priors on its prior volume). We refer to Sect. 3.4.2 from Campos et al. (2023) for a summary on importance sampling. This sampling technique is straightforward when using uniform priors, but becomes highly time-consuming with more complex priors. In such cases, neural networks and importance-weighted variational autoencoders can help accelerate the process. Recent work on likelihood emulators in cosmology has been proposed to speed up inference (see, e.g., To et al. 2023). Additionally, we generate a collection of nnoise realizations by adding multi-variate Gaussian noise (according to our fiducial covariance) to each noiseless  . For this paper, we focus on a family of uniform priors; however, it is straightforward to generalize our pipeline to, for example, Gaussian prior distributions or other functional forms commonly used in cosmological analyses.

. For this paper, we focus on a family of uniform priors; however, it is straightforward to generalize our pipeline to, for example, Gaussian prior distributions or other functional forms commonly used in cosmological analyses.

2.3. Step 3: Determine the ITEM prior

Our goal is to find a prior on the nuisance parameters that returns robust, valid, reliable, and precise posteriors for the target parameters. To determine the ITEM prior, we apply filters to the considered family of nuisance prior distributions. The first and second ones are to set an upper limit for the bias and a lower limit for the coverage fraction of the posterior respectively. The third and final one is an optimization that minimizes the uncertainty on the target parameters. Figure 3 synthesizes these steps in a diagram.

|

Fig. 3. Flowchart of the procedure to obtain the ITEM prior. First, a set of m candidate prior distributions is proposed for the nuisance parameters. Then, the first criterion filters some of the priors by requiring that the bias on the target parameters in a simulated likelihood analysis be less than x* times its posterior’s statistical uncertainty (see Eq. (6)). Second, we require that the remaining priors result in a minimum coverage y* (see Eq. (8)) over the target quantities. This leads to m′≤m candidate priors, which are finally optimized by minimizing the target parameters’ uncertainty (see Sect. 2.3.3). |

2.3.1. Criterion 1: Maximum bias for the point estimate

Constraints on target parameters are commonly reported as confidence intervals around a point estimate for those parameters. For example, that point estimate may be the maximum a posteriori probability (MAP) estimator, the maximum of a marginalized posterior on the target parameters, or the 1D marginalized mean (that we will refer as mean for simplicity). To devise a measure for the bias with respect to the true values of the target parameters let us return to the exercise that was already mentioned in Sect. 1: consider a noise-free realization ξ of a data vector, and a data vector model ξ[θ], which we assume to model the data perfectly, and the fiducial parameters θ are known. We use this model to run an MCMC around ξ as if the latter was a noisy measurement of the data. If the model ξ[θ] is non-linear in the nuisance parameters, then for example the MAP of the marginalized posterior for the target parameters obtained from that MCMC can be biased with respect to the true parameters (even though ξ is noiseless and the model ξ[θ] is assumed to be perfect).

In Sect. 2.1 we have obtained a set of realizations  (with i = 1, … , n) of the data, corresponding to n different realizations of the considered nuisance effects. For each marginalized posterior from

(with i = 1, … , n) of the data, corresponding to n different realizations of the considered nuisance effects. For each marginalized posterior from  we can obtain a point estimator. The difference between these estimates and the true target parameters underlying our data vectors will then act as a measure of how much projection effects bias our inference.

we can obtain a point estimator. The difference between these estimates and the true target parameters underlying our data vectors will then act as a measure of how much projection effects bias our inference.

If θT is the true target vector and  is the vector estimate from the nuisance realization i, we then use the Mahalanobis distance (Mahalanobis 1936; Etherington 2019) to quantify the bias between the two, namely:

is the vector estimate from the nuisance realization i, we then use the Mahalanobis distance (Mahalanobis 1936; Etherington 2019) to quantify the bias between the two, namely:

where Ci−1 is the inverse covariance matrix of the parameter posterior, which we directly estimate from the chain for the i-th nuisance realization. We note that Eq. (5) is related to the χ-square test.

The quantity xi measures how much the point estimate deviates from the truth compared to the overall extension and orientation of the posterior constraints. The first filter we apply to our family of candidate priors demands all xi to be smaller than a maximum threshold, which we denote as x*. This means that we calculate xi for each realization of the nuisance, and we then determine the maximum:

of those Mahalanobis distances. All candidate priors that do not meet the requirement

will be excluded. In other words, we demand that the prior results in less than x* bias for any realization of the nuisance with respect to the target parameter uncertainties. That leaves us with an equal or smaller number of candidate priors, depending on the considered threshold quantity x*. For example, in the DES Y3 2-point function analyses, this threshold was chosen as 0.3σ2D (with the subscript “2D” standing for the 68% credible interval) for the joint, marginalized posterior of S8 and Ωm (Krause et al. 2021). If none of the considered priors meet the bias requirement, this suggests that, given the setup of the problem, a less stringent bias threshold should be chosen and taken into account when interpreting the posterior.

2.3.2. Criterion 2: Minimum frequentist coverage

We now apply a second filter to the candidate priors: a minimum coverage probability for the 1σ confidence region of the marginalized posterior of the target parameters (i.e. the smallest sub-volume of target parameter space that still encloses about 68% of the posterior). This coverage probability measures how often the true target parameters would be found within that confidence region in independent repeated trials. In a Bayesian analysis, it can in general not be expected that this coverage matches the confidence level of the considered region (in our case 68%). This has been extensively discussed in Percival et al. (2021), for example. The presence of projection effects does impact this additionally when studying the marginalized posteriors.

To measure the coverage probability, we proceed as follows for a given prior: for each of the nnoise noise realizations per data vector  described in Sect. 2.2, we determine the 1σ confidence region (e.g., the contour in the target parameter space or the 1D confidence limit of each parameter). The fraction of these confidence regions that enclose the true target parameters is an estimate for the coverage probability. We will denote with yi the fraction corresponding to the i-th nuisance realization. With an approach similar to our previous filter, we considered the minimum coverage per nuisance realization,

described in Sect. 2.2, we determine the 1σ confidence region (e.g., the contour in the target parameter space or the 1D confidence limit of each parameter). The fraction of these confidence regions that enclose the true target parameters is an estimate for the coverage probability. We will denote with yi the fraction corresponding to the i-th nuisance realization. With an approach similar to our previous filter, we considered the minimum coverage per nuisance realization,

and then we required each nuisance realization to have a coverage of at least y*,

in order for a candidate nuisance prior to pass our coverage criterion. In other words, we demanded that a prior result in a coverage of at least y*.

It is worth mentioning that the value of y* is not necessarily the probability associated with a confidence level of 1σ (i.e., ∼68%) because the coverage fraction will scatter around that value following a binomial distribution. As an example, for 100 independent noisy realizations, the probability of having z out of the 100 realizations covering the true parameters will be P(z) = Bin(z; n = 100, p = 0.68). Therefore, ensuring a minimum coverage such that the cumulative binomial distribution at that value is statistically meaningful should be enough. Using our same previous example, a coverage of 62 or more out of 100 realizations will happen with 90% probability. The larger the number of nuisance realizations used to derive an ITEM prior, the smaller the coverage threshold should be chosen to avoid false positives of low coverage. The smaller the number of nuisance realizations used to derive an ITEM prior, the higher the coverage threshold should be chosen to avoid false positives of low coverage.

In the presence of a bias on the target parameters, however, there will be a mismatch between the coverage probability and the confidence level of the posterior. If an otherwise accurate multivariate Gaussian posterior is not, on average, centered on the true parameter values, any of its confidence regions will have a lower coverage than nominally expected. To account for this effect in the coverage test without double-penalizing bias, and later to find the prior that minimizes posterior volume in a total error sense, we propose to appropriately raise the confidence level to be used. For our implementation of this, we made the assumption of a Gaussian posterior. For an unbiased posterior, this means that among many realizations of the noisy data vector, the log-posterior value of the true parameters is a χ2 distributed random variable with the number of degrees of freedom equal to the number of target parameters. In the case of a biased analysis, the log-posterior of the true parameters instead follows a non-central χ2 distribution.

To calculate the required confidence level, we proceeded as follows:

-

We calculated for which value the cumulative distribution function of a non-central χ2-distribution representing the biased marginalized posterior takes a value of 68%.

-

At that value, we evaluated the cumulative distribution function of a central χ2-distribution (with the same number of degrees of freedom). This will always be higher than 68%.

It is this higher confidence level that we use to calculate the enlarged “total error” 1σ confidence region for the noisy realizations of our data vectors ξi and to measure the coverage probabilities.

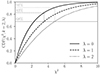

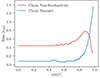

The χ2-distribution with k degrees of freedom is the distribution of a sum of the squares of k independent standard normal random variables. Its cumulative distribution function (CDF) is

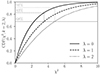

where γ is the lower incomplete gamma function and Γ is the gamma function. In Fig. 4, we plot with a continuous line the CDF of a χ2-distribution with two degrees of freedom (k = 2) for visualization purposes.

|

Fig. 4. Cumulative distribution functions of two degrees of freedom central and non-central χ2-distributions. The plotted non-central χ2-distribution shows that for the same significance threshold, the central χ2-distribution will always have a larger CDF. |

The non-central χ2-distribution with k degrees of freedom is an extension of the central χ2-distribution, and it has a non-centrality parameter λi defined as

where θT is the true target parameter vector,  is the target parameter estimate for nuisance realization i, and Ci−1 is the associated inverse covariance matrix. We point out that λi = xi2 from Eq. (5). In Fig. 4 we show the CDF of two non-central χ2-distributions with two degrees of freedom, but different non-centrality parameters. For CDF(χ2; k = 2, λ = 1, 2) = 0.68, it is clear that CDF(χ2; k = 2) > 0.68. This augmented cumulative probability accounts for the overall bias, including the one induced by projection effects.

is the target parameter estimate for nuisance realization i, and Ci−1 is the associated inverse covariance matrix. We point out that λi = xi2 from Eq. (5). In Fig. 4 we show the CDF of two non-central χ2-distributions with two degrees of freedom, but different non-centrality parameters. For CDF(χ2; k = 2, λ = 1, 2) = 0.68, it is clear that CDF(χ2; k = 2) > 0.68. This augmented cumulative probability accounts for the overall bias, including the one induced by projection effects.

2.3.3. Criterion 3: Minimizing the posterior volume

The candidate priors that have passed our previous two filters follow our definitions of unbiasedness, reliability, and robustness. The ITEM prior corresponds to the one among them that yields the smallest posterior uncertainty on the target parameters. This final selection step is necessary because it is in fact easier to meet our previous criteria with wide posteriors that result from unconstrained nuisance priors.

We quantify the target parameter random error by approximating the volume that the 1σ confidence contours (68%) enclose. For a Gaussian posterior of any dimensionality, the volume would be given in terms of the parameter covariance matrix Ci as (see Huterer & Turner 2001 for a derivation)

and for simplicity, we used that formula also for our (potentially non-Gaussian) posteriors.

We let m′ be the number of candidate priors that passed the filters listed in Sects. 2.3.1 and 2.3.2. We let Vi, j be the volume enclosed by the 1σ ellipsoid of the i-th posterior distributions at the j-th prior, j = 1, …, m′. Then, we considered the maximum of these volumes across all noiseless data vectors:

As done in Sect. 2.3.2, we accounted for the bias when optimizing the posterior uncertainty by considering the volume enclosed by the extended central χ2-distribution. This allows us to minimize the total error, including random and systematic contributions. The re-scaled volume includes a factor equivalent to (see Appendix A)

We chose the prior that has the minimum volume  as the ITEM prior.

as the ITEM prior.

3. ITEM priors for density split statistics

In this section we examine the performance of the ITEM prior when analyzing a higher-order statistic of the matter density field called Density Split Statistics. We do this as a proof of concept under simplified assumptions, particularly on the nuisance realization deemed plausible. Readers are referred to Britt et al. (2024) for more careful analysis of potential nuisance effects relevant for those statistics.

3.1. Density split statistics

We test our ITEM prior methodology in considering as the relevant nuisance effect the galaxy-matter connection models employed for the Density Split Statistics (DSS, Friedrich et al. 2018; Gruen et al. 2018 for the Dark Energy Survey Year 1, DES Y1, analysis). The data vector of DSS is a compressed version of the joint PDF of projected matter density and galaxy count. These studies consider two ways of generalizing a linear, deterministic galaxy-matter connection, which is their most relevant nuisance effect. The first one accounts for an extension of the Poissonian shot-noise in the distribution of galaxy counts by adding two free parameters: α0 and α1. For simplicity, we call this the α model. The second one more simply parametrizes galaxy stochasticity via one correlation coefficient r. We refer to this as the r model (for a derivation and explanation of these models, see Appendix B).

3.2. Simulated likelihood analysis with wide priors

In this subsection, we present results of simulated likelihood analyses of DSS with the wide prior distributions used in the original DES Y1 analysis (Friedrich et al. 2018; Gruen et al. 2018). Our study focuses on the five quantities listed in Table 1. The cosmological parameters are Ωm2 and σ83. The parameters describing the galaxy-matter connection are the linear bias b4, and the stochasticity parameters α0 and α1. These two last parameters were introduced in the DSS to accurately model the galaxy-matter connection. However, the study did not introduce a physically motivated prior distribution of these parameters. For a more detailed explanation on the α parameters, including their physical meaning and origin, we refer to Friedrich et al. (2022). As a derived parameter, we also considered S85:

Parameters used in the simulated likelihood analyses for the α model.

Both Ωm and S8 are the target parameters (as presented in, e.g., Krause et al. 2021), while α0 and α1 are the nuisance parameters whose priors we are optimizing. The prior on b remains unchanged, since we find its joint posterior with cosmological parameters to be symmetric and well constrained, at least in the case without modeling the excess skewness of the matter density distribution ΔS3 as an additional free parameter, which is the only case we consider here.

The first step of the ITEM prior method is the use of different realizations of the nuisance. We limit our analysis by considering two realizations of the stochasticity listed in the second and third columns of Table 1. The first one corresponds to a configuration in which there is no stochasticity in the distribution of galaxies, that is where the shot-noise of galaxies follows a Poisson distribution, which is often assumed to be correct at sufficiently large scales (but which has been shown to fail at exactly those scales, see e.g. Friedrich et al. 2018; MacCrann et al. 2018). That is equivalent to setting α0 = 1.0 and α1 = 0.0, since these two values turn Eq. (B.5) into a Poisson distribution. We call this the Non-stochasticity case. The second corresponds to the best-fit shot-noise parameters found by Friedrich et al. (2018) on REDMAGIC mock catalogs (Rozo et al. 2016), constructed given realistic DES Y1-like survey simulations called Buzzard-v1.1 (DeRose et al. 2019). These Buzzard mock galaxy catalogs (Wechsler et al. 2022) have been used extensively in DES analyses (DeRose et al. 2022). The values for the stochasticity parameters are α0 = 1.26 and α1 = 0.29. We call this the Buzzard stochasticity case.

These two realizations should be considered as a simple test case for the methodology. This work, as mentioned previously, emphasizes that the resulting ITEM prior is limited because of the few, and potentially unrealistic, nuisance realizations considered. There is a wide range of plausible stochasticity configurations that could be taken into account (see e.g. the findings of Friedrich et al. 2022). In a companion paper (Britt et al. 2024) we consider diverse realizations of halo occupation distributions (HODs) to derive such a set of stochasticity configurations.

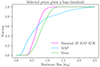

For our simulated likelihood analysis, we obtain noiseless data vectors for each nuisance realization from the model prediction (Friedrich et al. 2018) for the Buzzard-measured values of the stochasticity parameters. Additionally, we used the priors presented in the last column of Table 1, which are the ones used originally in the DES Y1 analysis of DSS (Gruen et al. 2018). To obtain the corresponding posterior, we run MCMCs using the EMCEE algorithm (Foreman-Mackey et al. 2013), leaving the stochasticity parameters free with the original broad priors from the last column in Table 1. We point to Abbott et al. (2023) for a discussion of the use of posterior samplers. We also run two additional MCMCs fixing the stochasticity parameters to the values listed in Table 1 for comparison purposes. In Fig. 5, we show the four estimated marginalized posteriors of Ωm and S8. We find that in the cases in which we considered free stochasticity parameters, the posterior returns much larger uncertainties over both cosmological parameters, with a somewhat shifted mean.

|

Fig. 5. Marginalized (Ωm, S8) posteriors with 1σ and 2σ contours from the simulated DSS α model of synthetic, noiseless data vectors using the fiducial values and priors from Table 1. The red and blue contours show the case with 5 degrees of freedom, while the purple and black contours show the case with 3 degrees of freedom, with α0 = [1.0, 1.26] and α1 = [0.0, 0.29] respectively. |

Subsequently, we fit a 2D Gaussian distribution centered at the 2D marginalized MAP and with the chains’ parameter covariance matrix, and evaluate the off-centering of the chains from the true cosmological parameters the model was evaluated for when generating the data vector. Figure 6 illustrates the biases for each chain obtained with the original wide priors on stochasticity parameters. Both cases are biased: the bias of the Buzzard stochasticity case (in blue) reaches a value of 0.42σ2D, more than the defined DES threshold of 0.3σ2D. The bias, in both cases, is systematically pointing towards lower and higher values on Ωm and S8, respectively.

|

Fig. 6. Parameter biases in simulated likelihood analyses for the α model (top) and the r model (bottom). In red and blue, we show the ellipses for the 2D marginalized constraints of the non-stochasticity and the Buzzard stochasticity configurations, respectively. These figures follow Fig. 4 from Krause et al. (2021). For the α model, these are centered on their corresponding 2D marginal MAP. Because of prior volume effects, the marginalized constraints are not centered on the input cosmology. For the r model, there is a systematic bias in the non-stochasticity case that is explained by the sharp upper bound from the prior on r. |

In an additional case study, we assume an alternative parametrization of galaxy stochasticity (the r model, introduced in Appendix B) as a basis, yet still use the data vectors obtained previously with the α model. The r model is simpler than the α model because it only considers one correlation parameter r for the effect of stochasticity. This test checks whether the conditions demanded by the ITEM formalism can be met even when a model does not produce the data, which happens often with real data, and how much information the ITEM prior can recover in such a case.

The original DSS prior used on r follows a uniform distribution: Π(r)∼𝒰[0.0, 1.0], where r = 1 recovers the non-stochasticity case (see Appendix B). The sharp upper bound results in a projection effect as seen in Fig. 7, with posteriors biased towards large values of S8, particularly in the non-stochastic case. We also illustrate the bias for both chains in the bottom panel of Fig. 6 with the original wide prior on r.

|

Fig. 7. Marginalized posteriors (Ωm, S8, r) from the simulated DSS r model using a non-stochastic parameter vector from the α model. The fiducial values are represented by the dashed lines. Hence for rfiducial = max[r] = 1.0, it is in the right edge of the plot. Because r > 1 is unphysical, projection effects cause a systematic offset between the mean or peak of the 1D marginalized posteriors, particularly in r and S8, and their input values. |

3.3. ITEM priors on the density split statistics

In this subsection we detail the procedure to obtain ITEM priors for two nuisance models: first in Sect. 3.3.1, a simple approach considering the α model as a baseline, and second in Sect. 3.3.2, a case when the data vectors are simulated with the α model, but analyzed with the r model, leading to a systematic error.

3.3.1. ITEM prior for the α model

In the original DSS work, the nuisance parameters α0 and α1 had uniform priors. We made the same choice here and only varied the limits of these priors6.

We started by sampling the candidate priors as done in the example in Sect. 2.2. We shrank the bounds of the priors (min[α0], max[α0], min[α1] and max[α1]) with a step of 0.1 units per cut until they reached the closest rounded fiducial value of the parameter vector:

The final number of candidate priors corresponds to 58 140 when combining each different bound. Next, we apply an importance sampling to the original 5D wide posteriors described in Sect. 3.2 and follow the three steps described in Sect. 2 to obtain the ITEM priors:

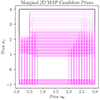

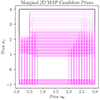

First, we estimate, for each candidate prior, the maximum Mahalanobis distance x from Eq. (6) for the different posteriors. We set the upper threshold to 0.4σ2D for the first filter of the ITEM priors. A total of 1980 priors had a bias of less than 0.4σ2D (∼3% of the original set, indicating that prior volume related biases are prevalent in this inference problem). In Fig. 8, we show their distribution on the prior space. These mainly preferred lower values for the upper prior bound on α1, indicating that the original asymmetric prior caused a prior volume related bias.

|

Fig. 8. Distribution of the candidate priors for the α model that pass the first filter. Each rectangle represents a combination of lower and upper bounds of α0 and α1. |

Second, we run 50 noisy simulated realizations, 25 for each nuisance realization, following the criterion from Sect. 2.3.2. These noisy realizations were simulated by adding multivariate Gaussian noise with the covariance matrix from Gruen et al. (2018) to the noiseless data vectors. We choose the threshold z = 14 such that the cumulative binomial distribution for independent 25 realizations is at least 90% (this corresponds to a 14/25 = 56% coverage). Only a small fraction of priors should thus be excluded due to chance fluctuations in the number of contours that contain the input parameter values. In this step, we considered the systematic bias as explained in Sect. 2.3.2. A total of 1868 out of the 1980 remaining candidate priors qualified under the 1D coverage requirement for both nuisance realizations. In Fig. C.3 we show the distribution of the coverages among the tested priors.

The final step corresponds to the minimization of the error from Sect. 2.3.3. The resulting ITEM priors for α0 and α1 are listed in the second row of Table 2. We first note that the prior widths were reduced to ∼48% and 42% their original ones respectively. In particular, the prior in α0 remained centered around 1.3, while all upper bounds such that max[α1]> 1.1 were rejected. The biases obtained with the original and ITEM priors are displayed in Fig. 9. While the bias in Ωm slightly increased, the one on S8 decreased as expected from the imposed threshold. The coverages for both nuisance realizations did not change more than ±12% with respect to the coverages from the original priors. In the last three columns of Table 2, we present the average standard deviations and the statistical uncertainty of the target parameters. These were reduced, corresponding to an improvement that is formally equivalent to an experiment surveying more than three times the volume. Finally, the marginalized distributions are shown in Fig. 10.

|

Fig. 9. Parameter biases of the data vectors with the original and ITEM priors. The red and blue ellipses show wide contours (same from Fig. 6), while the yellow and green ones were obtained after enforcing the 0.4σ2D bias threshold and 1D coverage threshold for the 2D marginalized constraints. Both are centered in their respective 2D marginalized MAP. Due to parameter volume effects, the marginalized constraints from the baseline prior analysis are not centered on the input cosmology. Top: simulated likelihood analysis for the data vector without shot-noise (α0 = 1.0, α1 = 0.0). Bottom: simulated likelihood analysis for the data vector with Buzzard stochasticity (α0 = 1.26, α1 = 0.29). The dashed horizontal and vertical lines indicate the fiducial parameter values. |

Ranges of the original and ITEM priors for the α model with a maximum bias of 0.4σ2D and a minimum coverage of 56% for both Ωm and S8.

|

Fig. 10. Marginalized posterior distributions for the chains from the α and r models from DSS. These have been plotted with the widened 1σ and 2σ confidence levels to account for the bias as presented in Sect. 2.3.2. In the top and bottom panels, we illustrate the non-stochasticity and the Buzzard stochasticity respectively. The larger contours correspond to the posterior simulated using the original DSS α model priors. The narrow continuous contours illustrate the one with the ITEM prior on the α model, and the discontinuous contours for the r model. |

We note that in a proper implementation of the ITEM prior program one should consider an exhaustive list of possible nuisance realizations, instead of just the two realizations considered in our example. In the companion study (Britt et al. 2024), we include shot-noise realizations generated from a range of plausible HOD descriptions to obtain fiducial stochasticity parameters and derive a more realistic result than the one presented here. The nuisance realizations derived there will be used in a more thorough application of the ITEM prior method in the future.

Due to recent discussions on the impact of using different point estimators in cosmological analyses (e.g. Amon et al. 2022), in Appendix C we explore the ITEM priors obtained when, instead of using the marginalized MAP, we use the MAP and means as point estimators.

3.3.2. ITEM prior for r model with a different basis model

In this subsection we investigate how the ITEM priors can help in cases where the assumed nuisance models are not the generators of the data. We use the same two-parameter vectors from Sect. 3.3.1 (the non-stochasticity and the Buzzard stochasticity) and follow the same procedure. However, we use the r model presented in Appendix B.2 to obtain posteriors. The parameters considered are Ωm, σ8, b and r. We use the same prior distribution over Ωm, σ8 and b, and change the prior on r. We then obtain two posteriors using the non-stochasticity and the Buzzard realization of the nuisance in the simulated data. Next, we sample different priors for r: we changed the lower bound min[r] and kept max[r] fixed to 1.0:

The total number of priors is 99. Then, we repeat the three steps of the ITEM prior pipeline.

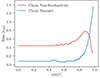

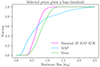

In this case there is a larger systematic bias due to the sharp upper bound on r and the nuisance realization from a different model. In Fig. 11 we show the resulting bias for different lower bounds on min[r]. Because of the systematic error present in the data vector, there is a clear trade-off. On one side, for the non-stochasticity case (red line in Fig. 11), priors with a higher lower bound on r (from min[r] = 0.9 and above) will be substantially less biased. This is expected as priors with higher min[r] will exclusively select values close to r = 1, near the fiducial values of the parameter vector (we recall that α0 = 1.0, α1 = 0.0 is algebraically equivalent to r = 1 as shown in Appendix B). On the other side, the Buzzard stochasticity case (blue line in Fig. 11) becomes highly biased for min[r]> 0.9. Those priors on r restrict the parameter space substantially to a “more” Poissonian configuration of the galaxy matter connection (see Appendix B), increasing their bias drastically. To account for the systematic bias, we choose a flexible bias threshold of 0.5σ2D, which reduces the number of candidate priors to 68.

|

Fig. 11. Biases of the non-stochasticity and Buzzard chains for different lower bounds of Π(r). After a min[r] ∼ 0.9, we see a trade-off between the two stochasticity cases. |

Subsequently, and as we did in Sect. 3.3.1, we run 50 noisy simulated realizations, 25 for each nuisance realization using the same data vectors. We require the noisy realizations to have at least a 56% 1D coverage for both Ωm and S8 out of the 25 simulated realizations. This second filter did not reduce the number of candidate priors because all of them fulfilled the requirement.

Finally, we determine the ITEM prior by optimizing the total error from Sect. 2.3.3. In Table 3, we summarize the resulting prior, with the corresponding biases, coverage, and uncertainties. We find similar statistics, but we used a prior that is substantially less wide (reduction of 68%). For comparison purposes, in Fig. 10 we show the marginalized posterior distributions.

Ranges of the original and ITEM priors for the r model with a maximum bias of 0.5σ2D and a minimum coverage of 56% for both Ωm and S8.

4. Discussion and conclusion

In the era of precision cosmology, observations require increasingly complex models that account for systematic astrophysical and observational effects. Prior distributions are assigned to the nuisance parameters modeling these phenomena based on limits in which they are plausible, ranges of simulation results, or actual calibration uncertainty. However, the analyses using such priors often yield biased or overly uncertain marginalized constraints on cosmological parameters due to so-called projection or prior volume effects. In this paper, we have presented a procedure to overcome projection effects through a pragmatic choice of nuisance parameter priors based on a series of simulated likelihood analyses. We named this the ITEM priors method.

The ITEM approach performs these simulated likelihood tests in order to find a prior that, when applied, leads to a posterior with some desired statistical properties and interpretability. We proposed to divide the parameters of a given model into two types: the target parameters (the ones of interest) and the nuisance parameters (the ones to optimize the prior for). Our approach then finds an equilibrium between the underfitting and overly free treatment of nuisance effects. This is done by enforcing three requirements on the target parameter posteriors found when applying the nuisance parameter prior in question in the analysis:

-

The biases on the target parameters need to be lower than a certain fraction of their statistical uncertainties.

-

In the presence of noise, we still need to cover the original fiducial parameter values a certain fraction of times in some desired confidence region.

-

With these two criteria being met, we prefer nuisance parameter priors that lead to small target parameter posterior volumes.

We tested the ITEM prior pipeline using two different nuisance realizations from the density split statistics analysis framework (Friedrich et al. 2018; Gruen et al. 2018). We simulated two realistic data vectors, where one does not include stochasticity between galaxy counts and the underlying matter density contrast, and the other considers a specific stochasticity found in an N-body simulation based on a mock catalog. For both cases, we applied the above criteria to a list of candidate priors in order to limit bias, ensure coverage, and minimize uncertainties. The result from applying the ITEM prior to the constraining power on target parameters corresponds to an improvement equivalent to an experiment surveying more than three times the cosmic volume. Additionally, we studied the case in which the model used to treat the nuisance effect is not the same as the one used to generate the data, a common situation in practice. The range of the resulting ITEM prior is less than half of the original prior’s size, resulting in a smaller total uncertainty of the target parameters with a similar level of bias and coverage as obtained with the original prior.

One of the main limitations of this method is that its results may depend on the fiducial values that are assigned to the parameter of interest and that it formally requires a complete set of plausible realizations of the nuisance effect. In this first study, we fixed the target parameters and chose just two nuisance realizations; however, one can extend our method to explore a variety of target and nuisance realizations in future works that apply it to actual inference.

Some potential extensions to our work include incorporating Bayesian evidence to compare and/or select priors in the different ITEM steps. Modern sampling techniques, such as the nested sampling algorithm (Skilling 2004) and its extensions (Chopin & Robert 2010; Brewer et al. 2010; Habeck 2015), provide the Bayes factor as part of their analysis pipelines. Well-known samplers, such as MultiNest (Feroz et al. 2009) and more recently dynesty (Speagle 2020) and Nautilus (Lange 2023), have become popular tools within the cosmology community. We anticipate their usage will continue to grow, and therefore implementing the ITEM procedure should not incur more computational cost than what is usually invested.

To summarize, in this work, we aimed at exploring an alternative approach to assigning prior distributions to nuisance parameters. The method we have presented is flexible, as it allows us to define requirements and propose priors, given some set of plausible nuisance realizations, for a reliable and information-maximizing statistical inference.

Data availability

The Dark Energy Survey Year 1 data (Density Split Statistics) used in this article is publicly available at https://des.ncsa.illinois.edu/releases/y1a1/density.

The parameter S8 has been widely used because it removes degeneracies between Ωm and σ8 present in analysis of cosmic structure. Some analyses have found there to be a tension between the S8 measured in the late universe (Aghanim et al. 2020; Heymans et al. 2021; Lemos et al. 2021 and Dalal et al. 2023) and from the CMB (Raveri & Hu 2019; Asgari et al. 2021 and Anchordoqui et al. 2021), rendering it of particular interest to have an interpretable posterior for.

Acknowledgments

We would like to thank Elisabeth Krause, Steffen Hagstotz, Raffaella Capasso, Elisa Legnani, and members of the Astrophysics, Cosmology, and Artificial Intelligence (ACAI) group at LMU Munich for helpful discussions and feedback. BRG was funded by the Chilean National Agency for Research and Development (ANID) – Subdirección de Capital Humano / Magíster Nacional / 2021 – ID 22210491, the German Academic Exchange Service (DAAD, Short-Term Research Grant 2021 No. 57552337) and by the U.S. Department of Energy through grant DE-SC0013718 and under DE-AC02-76SF00515 to SLAC National Accelerator Laboratory, and by the Kavli Institute for Particle Astrophysics and Cosmology (KIPAC). BRG also gratefully acknowledges support from the Program for Astrophysics Visitor Exchange at Stanford (PAVES). DB acknowledges support provided by the KIPAC Giddings Fellowship, the Graduate Research & Internship Program in Germany (operated by The Europe Center at Stanford), the German Academic Exchange Service (DAAD, Short-Term Research Grant 2023 No. 57681230), and the Bavaria California Technology Center (BaCaTec). DG and OF were supported by the Excellence Cluster ORIGINS, which is funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Germany’s Excellence Strategy (EXC 2094-390783311). OF also gratefully acknowledges support through a Fraunhofer-Schwarzschild-Fellowship at Universitätssternwarte München (LMU observatory). This work used the following packages: MATPLOTLIB (Hunter 2007), CHAINCONSUMER (Hinton 2016), NUMPY (Harris et al. 2020), REBACK2020PANDAS (The pandas development team 2020), SCIPY (Virtanen et al. 2020), INCREDIBLE and EMCEE (Foreman-Mackey et al. 2013) software packages.

References

- Abbott, T., Aguena, M., Alarcon, A., et al. 2022, Phys. Rev. D, 105, 023520 [NASA ADS] [CrossRef] [Google Scholar]

- Abbott, T. M. C., Aguena, M., Alarcon, A., et al. 2023, Open J. Astrophys., 6, 36 [NASA ADS] [Google Scholar]

- Aghanim, N., Akrami, Y., Ashdown, M., et al. 2020, A&A, 641, A6 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Aihara, H., Arimoto, N., Armstrong, R., et al. 2017, PASJ, 70, S4 [NASA ADS] [Google Scholar]

- Amon, A., Gruen, D., Troxel, M., et al. 2022, Phys. Rev. D, 105, 023514 [NASA ADS] [CrossRef] [Google Scholar]

- Anchordoqui, L. A., Di Valentino, E., Pan, S., & Yang, W. 2021, J. High Energy Astrophys., 32, 28 [NASA ADS] [CrossRef] [Google Scholar]

- Asgari, M., Lin, C.-A., Joachimi, B., et al. 2021, A&A, 645, A104 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Baldauf, T., Seljak, U., Senatore, L., & Zaldarriaga, M. 2011, JCAP, 2011, 031 [CrossRef] [Google Scholar]

- Baleato Lizancos, A., & White, M. 2023, JCAP, 2023, 044 [CrossRef] [Google Scholar]

- Bernstein, G. M. 2010, MNRAS, 406, 2793 [NASA ADS] [CrossRef] [Google Scholar]

- Blazek, J. A., MacCrann, N., Troxel, M., & Fang, X. 2019, Phys. Rev. D, 100, 103506 [NASA ADS] [CrossRef] [Google Scholar]

- Brewer, B. J., Pártay, L. B., & Csányi, G. 2010, Stat. Comput., 21, 649 [Google Scholar]

- Britt, D., Gruen, D., Friedrich, O., Yuan, S., & Ried Guachalla, B. 2024, A&A, 689, A253 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Campos, A., Samuroff, S., & Mandelbaum, R. 2023, MNRAS, 525, 1885 [NASA ADS] [CrossRef] [Google Scholar]

- Cawthon, R., Elvin-Poole, J., Porredon, A., et al. 2022, MNRAS, 513, 5517 [NASA ADS] [CrossRef] [Google Scholar]

- Chintalapati, P., Gutierrez, G., & Wang, M. 2022, Phys. Rev. D, 105, 043515 [CrossRef] [Google Scholar]

- Chisari, N. E., Richardson, M. L. A., Devriendt, J., et al. 2018, MNRAS, 480, 3962 [Google Scholar]

- Chopin, N., & Robert, C. P. 2010, Biometrika, 97, 741 [CrossRef] [Google Scholar]

- Cordero, J. P., Harrison, I., Rollins, R. P., et al. 2022, MNRAS, 511, 2170 [NASA ADS] [CrossRef] [Google Scholar]

- Cranmer, K., Brehmer, J., & Louppe, G. 2020, PNAS, 117, 30055 [NASA ADS] [CrossRef] [Google Scholar]

- Cui, W., Borgani, S., & Murante, G. 2014, MNRAS, 441, 1769 [NASA ADS] [CrossRef] [Google Scholar]

- Dalal, R., Li, X., Nicola, A., et al. 2023, Phys. Rev. D, 108, 123519 [CrossRef] [Google Scholar]

- Dark Energy Survey Collaboration (Abbott, T., et al.) 2016, MNRAS, 460, 1270 [Google Scholar]

- de Jong, R. S., Bellido-Tirado, O., Chiappini, C., et al. 2012, SPIE, https://doi.org/10.1117/12.926239 [Google Scholar]

- Dekel, A., & Lahav, O. 1999, ApJ, 520, 24 [Google Scholar]

- DeRose, J., Wechsler, R. H., Becker, M. R., et al. 2019, arXiv e-prints [arXiv:1901.02401] [Google Scholar]

- DeRose, J., Wechsler, R., Becker, M., et al. 2022, Phys. Rev. D, 105, 123520 [NASA ADS] [CrossRef] [Google Scholar]

- DESI Collaboration (Aghamousa, A., et al.) 2016, arXiv e-prints [arXiv:1611.00036] [Google Scholar]

- Ding, Z., Seo, H.-J., Vlah, Z., et al. 2018, MNRAS, 479, 1021 [NASA ADS] [Google Scholar]

- Eifler, T., Miyatake, H., Krause, E., et al. 2021, MNRAS, 507, 1746 [NASA ADS] [CrossRef] [Google Scholar]

- Etherington, T. R. 2019, PeerJ, 7, e6678 [CrossRef] [Google Scholar]

- Feroz, F., Hobson, M. P., & Bridges, M. 2009, MNRAS, 398, 1601 [NASA ADS] [CrossRef] [Google Scholar]

- Foreman-Mackey, D., Hogg, D. W., Lang, D., & Goodman, J. 2013, PASP, 125, 306 [Google Scholar]

- Friedrich, O., Gruen, D., DeRose, J., et al. 2018, Phys. Rev. D, 98, 023508 [Google Scholar]

- Friedrich, O., Halder, A., Boyle, A., et al. 2022, MNRAS, 510, 5069 [NASA ADS] [CrossRef] [Google Scholar]

- Georgiou, C., Hoekstra, H., Kuijken, K., et al. 2021, A&A, 647, A185 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Gruen, D., Friedrich, O., Krause, E., et al. 2018, Phys. Rev. D, 98, 023507 [Google Scholar]

- Habeck, M. 2015, AIP Conf. Proc., 1641, 121 [NASA ADS] [CrossRef] [Google Scholar]

- Harris, C. R., Millman, K. J., van der Walt, S. J., et al. 2020, Nature, 585, 357 [NASA ADS] [CrossRef] [Google Scholar]

- Hesterberg, T. C. 1988, Advances in Importance Sampling [Google Scholar]

- Heymans, C., Tröster, T., Asgari, M., et al. 2021, A&A, 646, A140 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Hildebrandt, H., van den Busch, J. L., Wright, A. H., et al. 2021, A&A, 647, A124 [EDP Sciences] [Google Scholar]

- Hinton, S. R. 2016, J. Open Source Softw., 1, 00045 [NASA ADS] [CrossRef] [Google Scholar]

- Hirata, C. M., Seljak, U. C. V., 2004, Phys. Rev. D, 70, 063526 [NASA ADS] [CrossRef] [Google Scholar]

- Ho, M., Bartlett, D. J., Chartier, N., et al. 2024, Open J. Astrophys., 7, 54 [NASA ADS] [CrossRef] [Google Scholar]

- Huang, S., Leauthaud, A., Murata, R., et al. 2017, PASJ, 70, S6 [Google Scholar]

- Huang, H.-J., Eifler, T., Mandelbaum, R., & Dodelson, S. 2019, MNRAS, 488, 1652 [NASA ADS] [CrossRef] [Google Scholar]

- Hunter, J. D. 2007, Comput. Sci. Eng., 9, 90 [NASA ADS] [CrossRef] [Google Scholar]

- Huterer, D., & Turner, M. S. 2001, Phys. Rev. D, 64, 123527 [Google Scholar]

- Ishikawa, S., Okumura, T., Oguri, M., & Lin, S.-C. 2021, ApJ, 922, 23 [NASA ADS] [CrossRef] [Google Scholar]

- Ivanov, M. M., Cuesta-Lazaro, C., Mishra-Sharma, S., Obuljen, A., & Toomey, M. W. 2024, Phys. Rev. D, 110, 063538 [CrossRef] [Google Scholar]

- Ivezić, v., Kahn, S. M., Tyson, J. A., et al. 2019, ApJ, 873, 111 [NASA ADS] [CrossRef] [Google Scholar]

- Joachimi, B., Lin, C.-A., Asgari, M., et al. 2021, A&A, 646, A129 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Kiessling, A., Cacciato, M., Joachimi, B., et al. 2015, Space Sci. Rev., 193, 67 [Google Scholar]

- Krause, E., Fang, X., Pandey, S., et al. 2021, arXiv e-prints [arXiv:2105.13548] [Google Scholar]

- Kullback, S., & Leibler, R. A. 1951, Ann. Math. Stat., 22, 79 [CrossRef] [Google Scholar]

- Lamman, C., Eisenstein, D., Aguilar, J. N., et al. 2023, MNRAS, 522, 117 [NASA ADS] [CrossRef] [Google Scholar]

- Lamman, C., Tsaprazi, E., Shi, J., et al. 2024, Open J. Astrophys., 7, 34 [NASA ADS] [CrossRef] [Google Scholar]

- Lange, J. U. 2023, MNRAS, 525, 3181 [NASA ADS] [CrossRef] [Google Scholar]

- Laureijs, R., Amiaux, J., Arduini, S., et al. 2011, arXiv e-prints [arXiv:1110.3193] [Google Scholar]

- Lemos, P., Raveri, M., Campos, A., et al. 2021, MNRAS, 505, 6179 [NASA ADS] [CrossRef] [Google Scholar]

- Li, X., Miyatake, H., Luo, W., et al. 2022, PASJ, 74, 421 [NASA ADS] [CrossRef] [Google Scholar]

- LSST Science Collaboration (Abell, P. A., et al.) 2009, arXiv e-prints [arXiv:0912.0201] [Google Scholar]

- MacCrann, N., DeRose, J., Wechsler, R. H., et al. 2018, MNRAS, 480, 4614 [NASA ADS] [CrossRef] [Google Scholar]

- MacCrann, N., Becker, M. R., McCullough, J., et al. 2021, MNRAS, 509, 3371 [CrossRef] [Google Scholar]

- Mahalanobis, P. C. 1936, Proc. Natl. Acad. Sci. India, 2, 49 [Google Scholar]

- Mandelbaum, R., Blazek, J., Chisari, N. E., et al. 2019, BAAS, 51, 363 [NASA ADS] [Google Scholar]

- Mummery, B. O., McCarthy, I. G., Bird, S., & Schaye, J. 2017, MNRAS, 471, 227 [NASA ADS] [CrossRef] [Google Scholar]

- Myles, J., Alarcon, A., Amon, A., et al. 2021, MNRAS, 505, 4249 [NASA ADS] [CrossRef] [Google Scholar]

- Newman, J. A., & Gruen, D. 2022, ARA&A, 60, 363 [NASA ADS] [CrossRef] [Google Scholar]

- Nguyen, N.-M., Schmidt, F., Tucci, B., Reinecke, M., & Kostić, A. 2024, Phys. Rev. Lett., 133, 221006 [CrossRef] [Google Scholar]

- Novaes, C. P., Thiele, L., Armijo, J., et al. 2024, arXiv e-prints [arXiv:2409.01301] [Google Scholar]

- Pandey, S., Krause, E., DeRose, J., et al. 2022, Phys. Rev. D, 106, 043520 [NASA ADS] [CrossRef] [Google Scholar]

- Percival, W. J., Friedrich, O., Sellentin, E., & Heavens, A. 2021, MNRAS, 510, 3207 [Google Scholar]

- Raveri, M., & Hu, W. 2019, Phys. Rev. D, 99, 043506 [NASA ADS] [CrossRef] [Google Scholar]

- Rosell, A. C., Rodriguez-Monroy, M., Crocce, M., et al. 2021, MNRAS, 509, 778 [CrossRef] [Google Scholar]

- Rozo, E., Rykoff, E. S., Abate, A., et al. 2016, MNRAS, 461, 1431 [NASA ADS] [CrossRef] [Google Scholar]

- Samuroff, S., Mandelbaum, R., Blazek, J., et al. 2023, MNRAS, 524, 2195 [Google Scholar]

- Sartoris, B., Biviano, A., Rosati, P., et al. 2020, A&A, 637, A34 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Scherrer, R. J., & Weinberg, D. H. 1998, ApJ, 504, 607 [NASA ADS] [CrossRef] [Google Scholar]

- Schmidt, F. 2021, JCAP, 2021, 033 [CrossRef] [Google Scholar]

- Secco, L., Samuroff, S., Krause, E., et al. 2022, Phys. Rev. D, 105, 023515 [NASA ADS] [CrossRef] [Google Scholar]

- Seo, H., & Eisenstein, D. J. 2007, ApJ, 665, 14 [CrossRef] [Google Scholar]

- Sevilla-Noarbe, I., Bechtol, K., Carrasco Kind, M., et al. 2021, ApJS, 254, 24 [NASA ADS] [CrossRef] [Google Scholar]

- Siegmund, D. 1976, Ann. Stat., 4, 673 [CrossRef] [Google Scholar]

- Skilling, J. 2004, AIP Conf. Proc., 735, 395 [Google Scholar]

- Speagle, J. S. 2020, MNRAS, 493, 3132 [Google Scholar]

- Sugiyama, S., Takada, M., Kobayashi, Y., et al. 2020, Phys. Rev. D, 102, 083520 [Google Scholar]

- Sugiyama, S., Takada, M., Miyatake, H., et al. 2022, Phys. Rev. D, 105, 123537 [CrossRef] [Google Scholar]

- Szewciw, A. O., Beltz-Mohrmann, G. D., Berlind, A. A., & Sinha, M. 2022, ApJ, 926, 15 [NASA ADS] [CrossRef] [Google Scholar]

- Talts, S., Betancourt, M., Simpson, D., Vehtari, A., & Gelman, A. 2018, arXiv e-prints [arXiv:1804.06788] [Google Scholar]

- Tanaka, M., Coupon, J., Hsieh, B.-C., et al. 2017, PASJ, 70, S9 [NASA ADS] [Google Scholar]

- The pandas development team 2020, https://doi.org/10.5281/zenodo.3509134 [Google Scholar]

- To, C.-H., Rozo, E., Krause, E., et al. 2023, JCAP, 2023, 016 [CrossRef] [Google Scholar]

- Tröster, T., Mead, A. J., Heymans, C., et al. 2022, A&A, 660, A27 [CrossRef] [EDP Sciences] [Google Scholar]

- Troxel, M., & Ishak, M. 2015, Phys. Rep., 558, 1 [NASA ADS] [CrossRef] [Google Scholar]

- van Daalen, M. P., McCarthy, I. G., & Schaye, J. 2019, MNRAS, 491, 2424 [Google Scholar]

- Velliscig, M., van Daalen, M. P., Schaye, J., et al. 2014, MNRAS, 442, 2641 [Google Scholar]

- Virtanen, P., Gommers, R., Oliphant, T. E., et al. 2020, Nat. Methods, 17, 261 [Google Scholar]

- Voivodic, R., & Barreira, A. 2021, JCAP, 2021, 069 [Google Scholar]

- Walther, M., Armengaud, E., Ravoux, C., et al. 2021, JCAP, 2021, 059 [Google Scholar]

- Wechsler, R. H., DeRose, J., Busha, M. T., et al. 2022, ApJ, 931, 145 [NASA ADS] [CrossRef] [Google Scholar]

- Yao, Y., & Domke, J. 2023, arXiv e-prints [arXiv:2305.14593] [Google Scholar]