| Issue |

A&A

Volume 699, July 2025

|

|

|---|---|---|

| Article Number | A213 | |

| Number of page(s) | 19 | |

| Section | Numerical methods and codes | |

| DOI | https://doi.org/10.1051/0004-6361/202453339 | |

| Published online | 08 July 2025 | |

Simulation-based inference has its own Dodelson–Schneider effect (but it knows that it does)

1

University Observatory, Faculty of Physics, Ludwig-Maximilians-Universität,

Scheinerstr. 1,

81677

Munich,

Germany

2

Munich Center for Machine Learning (MCML),

Germany

3

Excellence Cluster ORIGINS,

Boltzmannstr. 2,

85748

Garching,

Germany

★ Corresponding author: jed.homer@physik.lmu.de

Received:

6

December

2024

Accepted:

7

May

2025

Context. Making inferences about physical properties of the Universe requires knowledge of the data likelihood. A Gaussian distribution is commonly assumed for the uncertainties with a covariance matrix estimated from a set of simulations. The noise in such covariance estimates causes two problems: it distorts the width of the parameter contours, and it adds scatter to the location of those contours that is not captured by the widths themselves. For non-Gaussian likelihoods, an approximation may be derived via simulation-based inference (SBI). It is often implicitly assumed that parameter constraints from SBI analyses, which do not use covariance matrices, are not affected by the same problems as parameter estimation with a covariance matrix estimated from simulations.

Aims. We aim to measure the coverage and marginal variances of the posteriors derived using density-estimation SBI over many identical experiments to investigate whether SBI suffers from effects similar to those of covariance estimation in Gaussian likelihoods.

Methods. We used a neural-posterior and likelihood estimation with continuous and masked autoregressive normalising flows for density estimation. We fitted our approximate posterior models to simulations drawn from a Gaussian linear model, so the SBI result can be compared to the true posterior, and effects related to noise in the covariance estimate are known analytically. We tested linear and neural-network-based compression, demonstrating that neither method circumvents the issues of covariance estimation.

Results. SBI suffers an inflation of posterior variance that is equal to or greater than the analytical result in covariance estimation for Gaussian likelihoods for the same number of simulations. This inflation of variance is captured conservatively by the reported confidence intervals, leading to an acceptable coverage regardless of the number of simulations. The assumption that SBI requires a smaller number of simulations than covariance estimation for a Gaussian likelihood analysis is inaccurate. The limitations of traditional likelihood analysis with simulation-based covariance remain for SBI with finite simulation budget. Despite these issues, we show that SBI correctly draws the true posterior contour when there are enough simulations.

Key words: methods: data analysis / methods: statistical / cosmological parameters / large-scale structure of Universe

© The Authors 2025

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1 Introduction

Current- and next-generation cosmological experiments such as the Dark Energy Survey (DES; Dark Energy Survey Collaboration 2016), Euclid (Laureijs et al. 2011), the Nancy Grace Roman Space Telescope (Eifler et al. 2021), the Vera C. Rubin Observatory Legacy Survey of Space and Time (LSST; Ivezić et al. 2019), the 4m Multi-Object Spectroscopic Telescope (4MOST; de Jong et al. 2019), and the Dark Energy Spectroscopic Instrument (DESI; Levi et al. 2019) will return a huge volume of observational data of the large-scale structure in the Universe. The purpose of this effort is to constrain the values of fundamental physical parameters as accurately and precisely as possible. The limit of the cosmological information that can be extracted from a measurement ultimately depends on the typically unknown likelihood function of the data. The likelihood function compares the data to a theoretical model, and this comparison may be inaccurate and imprecise because

the model prediction for the expectation value as a function of the parameters may not be known analytically, or it may be inaccurately predicted in numerical simulations;

in addition to the expectation value, the likelihood function, which gives the distribution of the measurement around the expectation, is not known.

The likelihood functions for observables of the large-scale structure are often unknown, and only approximate expressions for them exist (Uhlemann et al. 2020). This is particularly the case for higher order statistics. These obtain information beyond the amount extracted by traditional two-point functions (2pt Collaboration 2024) – and can potentially break parameter degeneracies, with many approaches based on measuring higher order correlations of cosmological fields having been proposed (Kacprzak et al. 2016; Uhlemann et al. 2020; Gruen et al. 2018; Friedrich et al. 2018; Hamaus et al. 2020; Contarini et al. 2023; Halder et al. 2021; Valogiannis & Dvorkin 2022; Davies et al. 2022; Hou et al. 2024). This promise but lack of analytical expressions for their likelihood promotes the use of SBI (or ‘likelihood-free’; Cranmer et al. 2020) methods to extract information from such statistics.

Simulation-based inference (SBI; Cranmer et al. 2020) covers a broad class of statistical techniques (e.g. Akeret et al. 2015; Cranmer et al. 2016; Papamakarios 2019; Lueckmann et al. 2017; Alsing et al. 2018; Cole et al. 2022) that derive an approximate likelihood or posterior model from a set of simulations paired with their model parameters. The likelihood is fitted with no assumptions on the data-generating process and allows for complex effects in the measurement process to be forward-modelled. This is in contrast with classical or explicit likelihood methods that require an analytic model for the expectation value and statistical uncertainties in the data. An additional claimed benefit of SBI methods is the ease of using multiple probes simultaneously without analytically modelling cross-correlations between the measurements (Fang et al. 2023; Reeves et al. 2024). The density estimation techniques used for SBI (Alsing et al. 2018; Alsing & Wandelt 2019; Lueckmann et al. 2017; Papamakarios 2019; Glöckler et al. 2022) apply generative models fitted with either maximum-likelihood optimisation or variational inference.

The use of SBI is now established in many branches of cosmology, with a variety of different methods for deriving posteriors from sets of simulations. These include density estimation of the likelihood or posterior (Alsing et al. 2018; Akeret et al. 2015; Makinen et al. 2021; Leclercq & Heavens 2021; Leclercq 2018); ABC (Akeret et al. 2015; Weyant et al. 2013); neural-ratio estimation (Cranmer et al. 2016; Cole et al. 2022), which has been applied to galaxy clustering (Tucci & Schmidt 2024; Modi et al. 2025; Hahn et al. 2023a,b; Lemos et al. 2024; Hou et al. 2024); weak lensing (Lin & Kilbinger 2015; Jeffrey et al. 2020; Gatti et al. 2024; Jeffrey et al. 2025); cosmic shear (Lin et al. 2023); cluster abundance (Tam et al. 2022); cosmic microwave background radiation (Cole et al. 2022); gravitational wave sirens (Gerardi et al. 2021); type Ia supernovae (Weyant et al. 2013); and the cosmic 21cm signal (Prelogović & Mesinger 2023), emerging as a fast and efficient method for deriving posteriors from measurements after fitting to forward-modelled simulations of data. The potential of SBI methods is said to allow for Bayesian inference with high-dimensional data when it comes to unknown or inaccurate models; this is for an expectation value and likelihood that are intractable or difficult to analyse using traditional likelihood-based methods.

Due to the issues surrounding modern observational cosmology, the potential of SBI methods for these problems, and the rapid innovations within the machine learning literature to implement the analyses, we would like to know if SBI methods can return posterior estimates whose locations scatter less compared to the true posterior than those of a Gaussian likelihood with simulation-estimated covariance for the same number of simulations. Moreover, if this is the case, we endeavour to find out whether SBI can determine the additional scatter in the location of its posterior estimates; that is, if SBI inflates its contours sufficiently (compared to the true posterior) to still achieve good parameter coverage in repeated experiments.

To answer these questions, we tested the SBI framework for Gaussian-data vectors with a parameter-independent covariance matrix and linear parameter dependence for the expectation value of the data. This allowed us to compare our SBI posterior estimates, in which the likelihood or data covariance is not known, to both the true posterior and to the posteriors that would be derived from covariance estimation within a Gaussian likelihood assumption. As a data vector, we assumed a tomographic cosmic-shear data vector, and we tested different techniques to compress it: score compression (Alsing & Wandelt 2018) with and without knowledge of the data vectors’ covariance as well as a neural-network-based compression. We then investigated how SBI combined with either of these compression techniques performs with respect to the scatter, width, and coverage of resulting parameter contours as a function of the number of simulations used for training (again, compared to the true posterior and posteriors derived from covariance estimation). These experiments test the common assertion that SBI is not affected by the errors that make covariance estimation impracticable for high-dimensional data vectors and computationally expensive simulations (e.g. Jeffrey et al. 2020; Jeffrey et al. 2025; Gatti et al. 2024). In Fig. 1, we illustrate the answers to our questions with a set of posteriors derived with a Gaussian-likelihood analysis – accounting for the unknown covariance – contrasted with SBI analyses with either compression method.

Our data were generated from a Gaussian likelihood with a model that is linear in its parameters, where a Fisher analysis, the noise bias correction of Hartlap et al. (2006), and the derivation of the excess scatter of the best-fit parameters in Dodelson & Schneider (2013) – known as the Dodelson–Schneider effect – are all valid. This allowed us to analytically determine the bestfit parameters and the confidence contours in each likelihood analysis for comparison with the posteriors derived with SBI.

In Sect. 2, we review the main issues for estimating data covariances and methods used to account for the noise in covariances estimated from simulations, highlighting where SBI is claimed to be advantageous. In Sect. 3, we outline the methods for density estimation, SBI, data compression used in this work, and a simple experiment in which we tested these methods1. In Sect. 4, we present results from the experiments, and in Sect. 6, we conclude.

|

Fig. 1 Effects of estimated data error distribution on posterior contours and their locations. We show estimates |

2 Parameter estimation with noisy covariance matrices

We studied a simple inference problem in which the likelihood is not known. A common ansatz in cosmological analyses is to assume a Gaussian likelihood for a given set of statistics of the large-scale structure. Typically, this statistical model for the likelihood depends on a parametrised expectation value, ξ[π], and a covariance matrix, Σ[π] where π physical parameters need to be estimated from the data. If the true covariance is not known, then an estimate ![$\[\hat{\Sigma}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq2.png) may be derived from simulated data. Often, this estimator is derived for one fiducial set of parameters, and the parameter dependence of the covariance is assumed to be negligible. Note, however, that this is not in principle a limitation of covariance estimation within the Gaussian likelihood assumption. It is possible to derive estimates,

may be derived from simulated data. Often, this estimator is derived for one fiducial set of parameters, and the parameter dependence of the covariance is assumed to be negligible. Note, however, that this is not in principle a limitation of covariance estimation within the Gaussian likelihood assumption. It is possible to derive estimates, ![$\[\hat{\Sigma}[\boldsymbol{\pi}]\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq3.png) , at different values of the parameters, π (and e.g. interpolate between them). We emphasise this because the parameter dependence of the (width of the) likelihood function is not the main reason why SBI may be preferable to covariance estimation paired with a Gaussian-likelihood assumption. Instead, SBI is most needed in cases where the Gaussian assumption itself (whether with or without parameter-dependent covariance) is insufficient.

, at different values of the parameters, π (and e.g. interpolate between them). We emphasise this because the parameter dependence of the (width of the) likelihood function is not the main reason why SBI may be preferable to covariance estimation paired with a Gaussian-likelihood assumption. Instead, SBI is most needed in cases where the Gaussian assumption itself (whether with or without parameter-dependent covariance) is insufficient.

The Gaussian likelihood assumption can be justified when the data are made up of measurements in independent sub-volumes of a survey area so that their sum is Gaussian distributed via the central limit theorem. In practice, the validation of this ansatz is demanding (Joachimi et al. 2021; Friedrich et al. 2021) and can result in scale cuts to the data vector, thus reducing the information return of the analysis.

However, even when the Gaussian-likelihood ansatz is justified, a covariance estimation only yields noisy versions of the true covariance, Σ, and its inverse Σ−1 (the precision matrix). If independent and accurately drawn realisations, ξi (i = 1 , ... , ns), of the data vector in simulated data are available, then an unbiased estimate for the data covariance Σ is calculated as

![$\[S=\frac{1}{n_s-1} \sum_{i=1}^{n_s}\left(\boldsymbol{\xi}_i-\overline{\boldsymbol{\xi}}\right)\left(\boldsymbol{\xi}_i-\overline{\boldsymbol{\xi}}\right)^T,\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq4.png) (1)

(1)

is the mean calculated over all available data. The inversion of this matrix is a non-linear process that causes S−1 to be a biased estimator of Σ−1 and to have significantly poorer noise properties than S (Taylor et al. 2013).

If the true likelihood function is indeed Gaussian, then the bias of S−1 can be corrected by applying a factor h as (Kaufman 1967; Hartlap et al. 2006)

![$\[\hat{\Sigma}^{-1}=(h S)^{-1},\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq6.png) (3)

(3)

The above correction only de-biases ![$\[\hat{\Sigma}^{-1}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq8.png) and not the full likelihood function, which even in the Gaussian case is a non-linear function of

and not the full likelihood function, which even in the Gaussian case is a non-linear function of ![$\[\hat{\Sigma}^{-1}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq9.png) . Surprisingly, though, at least the width of the likelihood function and of the parameter posteriors that might be derived from it are usually quite well approximated just by inserting (hS)−1 into the Gaussian likelihood function (Friedrich & Eifler 2017; Percival et al. 2021). The main problem of covariance estimation is not the width of parameter posteriors, but the location of the latter. The noise in (hS)−1 causes additional scatter in the location of parameter contours, which can make the overall scatter of maximum-posterior parameters in repeated experiments much larger than indicated by the posterior width itself (Dodelson & Schneider 2013; Friedrich & Eifler 2017).

. Surprisingly, though, at least the width of the likelihood function and of the parameter posteriors that might be derived from it are usually quite well approximated just by inserting (hS)−1 into the Gaussian likelihood function (Friedrich & Eifler 2017; Percival et al. 2021). The main problem of covariance estimation is not the width of parameter posteriors, but the location of the latter. The noise in (hS)−1 causes additional scatter in the location of parameter contours, which can make the overall scatter of maximum-posterior parameters in repeated experiments much larger than indicated by the posterior width itself (Dodelson & Schneider 2013; Friedrich & Eifler 2017).

It is this effect of increased parameter scatter that we were in search of. Naive covariance estimation together with the correction factor h does not understand that it suffers from this scatter (e.g. Fig. 1 of Friedrich & Eifler 2017). This raises the question as to why SBI – via which we estimated not only the covariance, but the full likelihood shape from simulations – would not suffer from this effect. If it does suffer from it, we want to know why SBI automatically adjusts its own contour size to account for this additional scatter in maximum-posterior parameters.

In the case of covariance estimation, Dodelson & Schneider (2013) found that the scatter of maximum-posterior parameters (or rather their parameter covariance) is enhanced compared to inverse Fisher matrix ![$\[F_{\Sigma}^{-1}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq10.png) (i.e. compared to the naive expectation) via

(i.e. compared to the naive expectation) via

![$\[\left\langle(\hat{\boldsymbol{\pi}}-\boldsymbol{\pi})(\hat{\boldsymbol{\pi}}-\boldsymbol{\pi})^T\right\rangle_{\xi, S}=\left[1+B\left(n_{\xi}-n_\pi\right)\right] F_{\Sigma}^{-1},\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq11.png) (5)

(5)

where nξ is the number of data points, nπ is the number of parameters, and the factor B is given by

![$\[B=\frac{n_s-n_{\xi}-2}{\left(n_s-n_{\xi}-1\right)\left(n_s-n_{\xi}-4\right)}.\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq12.png) (6)

(6)

In order for parameter contours to take into account this additional uncertainty, the covariance of parameter posteriors should be enhanced by the Dodelson–Schneider factor:

![$\[f_{\mathrm{DS}}=1+\frac{\left(n_{\xi}-n_\pi\right)\left(n_s-n_{\xi}-2\right)}{\left(n_s-n_{\xi}-1\right)\left(n_s-n_{\xi}-4\right)}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq13.png) (7)

(7)

![$\[\approx 1+\frac{n_{\xi}-n_\pi}{n_s-n_{\xi}},\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq14.png) (8)

(8)

where the approximation in the last line is valid if ns ≫ nξ ≫ nπ. A concrete recipe for how to widen parameter posterior in order to correct for the Dodelson–Schneider effect (and other, usually subdominant effects) was provided by Percival et al. (2021). There, the authors marginalised over the unknown, true covariance Σ in a Bayesian approach that takes into account the likelihood function, p(S|Σ), given by the Wishart distribution in the Gaussian case, and a prior distribution p(Σ) ∝ |Σ|m. They provided the coefficient m in the prior distribution that leads to the desired frequentist coverage of the resulting Bayesian parameter constraints (note in particular that the coefficient m considered by Sellentin & Heavens (2015) does not lead to that desired coverage).

As mentioned before, we want to investigate whether SBI suffers from problems analogous to the ones described above, and if it does, whether it self-corrects to obtain the desired coverage properties or whether a manual widening of posteriors as in the case of Gaussian covariance estimation is required. Note, however, that even if SBI automatically corrects for its own Dodelson–Schneider effect, this correction still means that posteriors are widened and this parameter information is diluted. This may significantly hinder SBI approaches from delivering on the promised improvements of parameter constraints compared to likelihood full analyses of summary statistics whose uncertainties can be modelled analytically.

One more comment to emphasise that the Dodelson–Schneider effect cannot be easily circumvented is that it is not possible to beat down fDS by simply compressing a given set of statistics to a smaller data vector. For example, for the popular MOPED compression (Heavens et al. 2000, or equivalently score compression; Alsing & Wandelt 2018), this would require knowledge of the covariance, Σ, in the first place. If that is approximated by an estimate, S, then this is just shifting the problem from one side of the statistical analysis to another. In fact, Dodelson & Schneider (2013, hereafter DS13) derived fDS exactly by considering the scatter of optimally compressed statistics that use noisy covariances.

Given the described assumptions, and upon acquiring a measurement, ![$\[\hat{\boldsymbol{\xi}}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq15.png) , inference on the values of model parameters is based on a Gaussian likelihood and the estimated precision matrix

, inference on the values of model parameters is based on a Gaussian likelihood and the estimated precision matrix ![$\[\hat{\Sigma}^{-1}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq16.png) :

:

![$\[p\left(\hat{\boldsymbol{\xi}} \mid \boldsymbol{\pi}, \hat{\Sigma}^{-1}\right) \propto \exp \left[-\frac{1}{2} \chi^2\left(\hat{\boldsymbol{\xi}}, \boldsymbol{\pi}, \hat{\Sigma}^{-1}\right)\right],\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq17.png) (9)

(9)

A posterior distribution for the parameters in light of the measurement, using a prior density p(π), is expressed as

![$\[p(\boldsymbol{\pi} \mid \hat{\boldsymbol{\xi}}) \propto p\left(\hat{\boldsymbol{\xi}} \mid \boldsymbol{\pi}, \hat{\Sigma}^{-1}\right) p(\boldsymbol{\pi}),\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq19.png) (11)

(11)

ignoring a parameter-independent normalisation factor.

For a given S and ![$\[\hat{\boldsymbol{\xi}}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq20.png) , the maximum a posteriori (MAP) or best-fit parameter estimates

, the maximum a posteriori (MAP) or best-fit parameter estimates ![$\[\hat{\boldsymbol{\pi}}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq21.png) are obtained with an estimated precision matrix of

are obtained with an estimated precision matrix of ![$\[\hat{\Sigma}^{-1}=(h S)^{-1}=\Sigma^{-1}+\Delta_{\Sigma^{-1}}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq22.png) as

as

![$\[\hat{\boldsymbol{\pi}}=\boldsymbol{\pi}+\left[F_{\Sigma}+\Delta_F\right]^{-1} \partial_{\boldsymbol{\pi}} \boldsymbol{\xi}[\boldsymbol{\pi}]^T\left[\Sigma^{-1}+\Delta_{\Sigma^{-1}}\right](\hat{\boldsymbol{\xi}}-\overline{\boldsymbol{\xi}}).\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq23.png) (12)

(12)

Here, FΣ is the Fisher matrix, which is a function of the likelihood written as

![$\[F_{\Sigma}(\boldsymbol{\pi})=\partial_\boldsymbol{\pi} \boldsymbol{\xi}[\boldsymbol{\pi}]^T \Sigma^{-1} \partial_\boldsymbol{\pi} \boldsymbol{\xi}[\boldsymbol{\pi}]\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq24.png) (13)

(13)

for a Gaussian likelihood parametrised with the true precision matrix, Σ−1. The quantity ΔF is of the same form with the error on the precision matrix ΔΣ−1 instead of the unknown true precision matrix Σ−1. For a model ξ[π] – linear in π with Gaussian error bars on the data – the Fisher matrix defined above precisely quantifies the information content of the data upon the model at a fixed point in parameter space.

With the increase in the dimensionality of measurements returned from cutting-edge cosmological surveys, it may not be possible to obtain a precision matrix due to the singularity of the estimated covariance matrix (Hartlap et al. 2006; Dodelson & Schneider 2013; White & Padmanabhan 2015). In particular, this is true for data vectors that combine multiple probes that are critical to breaking parameter degeneracies and calibrate systematic effects independently (Kacprzak & Fluri 2022; Fang et al. 2023; Reeves et al. 2024). In this case, estimating one from simulations requires an unfeasible amount of computation (Taylor & Joachimi 2014; Friedrich & Eifler 2017).

However, if it is possible, a covariance matrix Σ may be estimated from data realisations themselves (e.g. Jackknifing or sub-sampling (Norberg et al. 2009; Friedrich et al. 2015; Mohammad & Percival 2022); a set of accurate numerical simulations that assumed an underlying cosmological model (Percival et al. 2014; Uhlemann et al. 2020); a theoretical covariance model (Schneider et al. 2002; Friedrich et al. 2021; Joachimi et al. 2021; Aghanim et al. 2020; Krause & Eifler 2017; Linke et al. 2023; Fang et al. 2023; Reeves et al. 2024), or some hybrid method combining these techniques (Friedrich & Eifler 2017; Hall & Taylor 2018)). Alternatively, covariance matrices computed from an analytical covariance model are noiseless and easily invertible, but they are only accurate given a good understanding of the statistical properties of the data. Ultimately, obtaining an analytic covariance may be an insurmountable task.

3 Methods

In the following subsections, we describe the data likelihood with which we ran our analyses, the SBI experiments, the data compression, and the normalising flow models we used for density estimation and hyperparameter optimisation.

3.1 Model

We ran an inference of cosmological parameters estimated from a measurement, drawn from a linearised model of the DES-Y3 cosmic-shear two-point function data vector, where noise is sampled from a fiducial covariance matrix. This data covariance is calculated with an analytic halo-based model from Krause & Eifler (2017). The linear model is a Taylor expansion of the full model around the fiducial point in parameter space π0, written as

![$\[\boldsymbol{\xi}[\boldsymbol{\pi}]=\boldsymbol{\xi}\left[\boldsymbol{\pi}^0\right]+\left.\left(\boldsymbol{\pi}-\boldsymbol{\pi}^0\right)^T \partial_\boldsymbol{\pi} \boldsymbol{\xi}[\boldsymbol{\pi}]\right|_{\boldsymbol{\pi}=\boldsymbol{\pi}^0.}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq25.png) (14)

(14)

The true data likelihood from which we sampled data in our experiments is written ![$\[\mathcal{G}[\hat{\boldsymbol{\xi}} {\mid} \boldsymbol{\xi}[\boldsymbol{\pi}], \boldsymbol{\Sigma}]\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq26.png) . We used a uniform prior on the parameters where Ωm ∈ [0.05, 0.55], σ8 ∈ [0.45, 1.0], and w0 ∈ [−1.4, −0.1]. The parameter set that maximises the likelihood (and therefore the posterior in this case) is given by Eq. (12).

. We used a uniform prior on the parameters where Ωm ∈ [0.05, 0.55], σ8 ∈ [0.45, 1.0], and w0 ∈ [−1.4, −0.1]. The parameter set that maximises the likelihood (and therefore the posterior in this case) is given by Eq. (12).

The data vector consists of two-point functions of cosmicshear measurements (Schneider et al. 2002; Schneider 2006; Kilbinger 2015; Amon et al. 2022). The shear field is a map of coherent distortions from weak gravitational lensing of galaxy images in the large-scale structure matter distribution. The measurement is sensitive to the density fluctuations projected along the line of sight and weighted by the lens galaxy distribution. It is known to measure a degenerate combination of σ8 and the matter-density parameter Ωm.

Describing the experiments we ran.

3.2 Experimental setup

We ran a simple inference on a set of parameters from a noisy data vector with a linear compression. Note that since we assume a linear expectation value and constant covariance, this is equivalent to score compression (Alsing & Wandelt 2018, 2019). This experiment repeated when the data covariance is known and done separately when it is estimated from a set of ns simulations. When the data covariance is estimated, the linear compression increases the scatter in the estimated parameters. This affects the compression on the noisy data vector as well as the simulated data that were used to fit the normalising flow-density estimators. We also ran separate SBI experiments that use a neural network for the compression – this would be assumed to be a simple fix to the issues of covariance matrix estimation.

We measured the marginal uncertainty on the inferred parameters by calculating the variance of samples from the posteriors estimated with SBI conditioned on the noisy data vectors. We also measured the coverage of the posteriors for each data vector. Each analysis consists of separately applying neural-posterior estimation (NPE; Greenberg et al. 2019) and neural-likelihood estimation (NLE; Papamakarios et al. 2019) to fit a posterior when given a set of simulations and their model parameters (see Table 1 for a description of the separate analyses we ran). The total number of simulations available in the experiment mimics the number of available N-body simulations in a cosmological analysis. We repeated the NPE and NLE analyses 200 times for experiments where the data covariance is known and another 200 times for where it is estimated from simulations.

In one experiment, we sampled a set of ‘true’ parameters from a uniform prior π ~ p(π), initialised the density estimator parameters randomly, and generated a set of ns simulations. We did so either at the fiducial parameters, π, sampling ![$\[\left\{\boldsymbol{\xi}_{i}\right\}_{i=1}^{n_{s}} \sim \mathcal{G}[\boldsymbol{\xi} {\mid} \boldsymbol{\xi}[\boldsymbol{\pi}], \Sigma]\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq31.png) , and calculated the sample covariance matrix S if Σ is unknown; or at a set of parameters sampled from the prior p(π) to train a neural network to compress our data. In the same experiment, we sampled physics parameters from a uniform prior

, and calculated the sample covariance matrix S if Σ is unknown; or at a set of parameters sampled from the prior p(π) to train a neural network to compress our data. In the same experiment, we sampled physics parameters from a uniform prior ![$\[\left\{\boldsymbol{\pi}_{i}\right\}_{i=1}^{n_{s}} \sim p(\boldsymbol{\pi})\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq32.png) and generated ns simulations from the ns prior samples using the linearised model with noise sampled from the true covariance matrix, Σ. We did this to compress the simulations either with a linear compression

and generated ns simulations from the ns prior samples using the linearised model with noise sampled from the true covariance matrix, Σ. We did this to compress the simulations either with a linear compression ![$\[\hat{\boldsymbol{\pi}}=\boldsymbol{\pi}+F_{\hat{\Sigma}^{-1}}^{-1} \partial_\boldsymbol{{\pi}} \boldsymbol{{\xi}}[\pi] \hat{\Sigma}^{-1}(\hat{\boldsymbol{\xi}}- \overline{\boldsymbol{\xi}})\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq33.png) , parametrised by true expectation ξ[π], the true or estimated precision (Σ−1 or (hS)−1), and the theory derivatives ∂πξ[π]; or with a neural network trained on the first set of ns simulations and parameter pairs. Finally, we fitted a normalising flow to the set of compressed simulations and parameters

, parametrised by true expectation ξ[π], the true or estimated precision (Σ−1 or (hS)−1), and the theory derivatives ∂πξ[π]; or with a neural network trained on the first set of ns simulations and parameter pairs. Finally, we fitted a normalising flow to the set of compressed simulations and parameters ![$\[\left\{\hat{\boldsymbol{\xi}}_{i}, \boldsymbol{\pi}_{i}\right\}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq34.png) by maximising the log-probability (of the likelihood or posterior) with stochastic gradient descent, and we sampled the posterior given a measurement,

by maximising the log-probability (of the likelihood or posterior) with stochastic gradient descent, and we sampled the posterior given a measurement, ![$\[\hat{\boldsymbol{\xi}}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq35.png) , compressed to,

, compressed to, ![$\[\hat{\boldsymbol{\pi}}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq36.png) , from the true data-generating likelihood.

, from the true data-generating likelihood.

This experiment is idealised in the following ways:

the measurement errors upon our data

![$\[\hat{\boldsymbol{\xi}}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq37.png) were drawn from the same distribution from which the simulations used to fit the density estimation models were drawn;

were drawn from the same distribution from which the simulations used to fit the density estimation models were drawn;there are no nuisance parameters in our modelling of the data to marginalise over;

the analytic compression of our data is (given enough simulations at the fiducial parameters) lossless, so the posterior given the summary is identical to the posterior given the data;

the true expectation value,

![$\[\overline{\boldsymbol{\xi}}=\langle\hat{\boldsymbol{\xi}}\rangle\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq38.png) , lies in the parameter space, meaning there are parameters π such that

, lies in the parameter space, meaning there are parameters π such that ![$\[\boldsymbol{\xi}[\boldsymbol{\pi}]=\overline{\boldsymbol{\xi}}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq39.png) ;

;the Dodelson–Schneider correction factor (Eq. (5)) is exact given that the Fisher information of a Gaussian likelihood (with a model that is linear in the parameters) quantifies the posterior covariance.

Note that NLE requires a specific prior in order to calculate the posterior, whereas NPE implicitly uses the prior defined by the distribution of parameters that were used to generate the training data. We therefore adopted the priors used to sample the parameters (in the NLE analyses) to generate the training data of the flows and ensure both methods use the same prior and allow for a comparison of equivalent analyses.

The number of simulations ns input into an experiment using either NPE or NLE depends on the compression method being used. This is shown in Table 1 for reference. To compress our data, we used either a linear compression with ![$\[\hat{\Sigma}=\Sigma, \hat{\Sigma}=S\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq40.png) , or

, or ![$\[\hat{\Sigma}=S_{\text {diag.}}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq41.png) and, separately, a neural network fitted with a mean-squared error loss. Either option, except when Σ is known, requires 2ns simulations in total – ns for the covariance estimation, or neural-network training and ns for fitting the density estimator of the likelihood or posterior. The comparison of the posteriors from SBI should therefore be compared, for our Gaussian linear model, with a Gaussian-likelihood analysis using 2ns simulations.

and, separately, a neural network fitted with a mean-squared error loss. Either option, except when Σ is known, requires 2ns simulations in total – ns for the covariance estimation, or neural-network training and ns for fitting the density estimator of the likelihood or posterior. The comparison of the posteriors from SBI should therefore be compared, for our Gaussian linear model, with a Gaussian-likelihood analysis using 2ns simulations.

Simulation-based inference methods fit the model, ξ[π], covariance, Σ, and likelihood shape simultaneously from simulations. In Appendix D, we use the same Gaussian linear model to test if fitting the expectation alone, with a known covariance, introduces significant uncertainty in the posterior as a function of ns. Since the sample’s mean and covariance are independent, this would only affect analyses for which ![$\[n_{\xi} \sim n_{\xi}^{2}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq42.png) , which is a concern for future experiments. However, this shows that the tests presented in this work are fair for SBI (the fitting of the model alongside the covariance has almost no effect), even for the lowest number of simulations we considered in our experiments. The experiments were run with eight CPU cores taking about 2–3 minutes for each, depending on if a neural network was trained for the data compression.

, which is a concern for future experiments. However, this shows that the tests presented in this work are fair for SBI (the fitting of the model alongside the covariance has almost no effect), even for the lowest number of simulations we considered in our experiments. The experiments were run with eight CPU cores taking about 2–3 minutes for each, depending on if a neural network was trained for the data compression.

3.3 Compressing the data

For density estimation it is advantageous to reduce the dimensionality of the data. In the case of a linear compression (Tegmark et al. 1997; Heavens et al. 2000; Alsing & Wandelt 2018) one implicitly assumes a model to derive the statistics (Heavens et al. 2020), and the sampling distribution of the statistics is Gaussian only if the data errors are Gaussian. Neural-network-based summary statistics (Fluri et al. 2021; Kacprzak & Fluri 2022; Charnock et al. 2018; Prelogović & Mesinger 2024; Villanueva-Domingo & Villaescusa-Navarro 2022) can easily be fit to data (which are typically non-standard summary statistics), though they have no analytic likelihood for the summary given the input, and so extracting credible intervals is not currently possible, except with the use of SBI. Additionally, there is no guarantee that fitting a neural network to regress the model parameters of input data will produce an unbiased estimator of the parameters, since the MSE estimator is only the maximum-likelihood estimator for Gaussian-distributed data with unit covariance (Murphy 2022).

In the experiments in which ![$\[\hat{\Sigma}=\Sigma\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq43.png) or

or ![$\[\hat{\Sigma}=S\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq44.png) , the data are linearly compressed via Eq. (12) to nπ summaries, so the normalising-flow-likelihood model is fitted to a Gaussian likelihood with a Fisher matrix; this is given by either FΣ or FS depending on whether the covariance is known or not. The noisy data covariance (as a function of ns) in our experiments limits the amount of information the normalising-flow posterior or likelihoods can extract about the model parameters. In the case that the precision matrix is known exactly and the model, ξ[π], is linear in the parameters, the linear compression conserves the information content of the data,

, the data are linearly compressed via Eq. (12) to nπ summaries, so the normalising-flow-likelihood model is fitted to a Gaussian likelihood with a Fisher matrix; this is given by either FΣ or FS depending on whether the covariance is known or not. The noisy data covariance (as a function of ns) in our experiments limits the amount of information the normalising-flow posterior or likelihoods can extract about the model parameters. In the case that the precision matrix is known exactly and the model, ξ[π], is linear in the parameters, the linear compression conserves the information content of the data, ![$\[\hat{\boldsymbol{\xi}}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq45.png) .

.

We also tested the use of a neural network, fψ, in compressing the data. The neural network consists of simple linear layers and non-linear activations. A simulation is input to the network, and the parameters of the network, ψ, are obtained by stochastic gradient descent of the mean-squared error loss:

![$\[\Lambda(\boldsymbol{\xi}, \boldsymbol{\pi} ; \psi)=\left\|f_\psi(\boldsymbol{\xi})-\boldsymbol{\pi}\right\|_2^2.\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq46.png) (15)

(15)

Whilst many neural-network architectures exist for parametrising the compression function that can exploit symmetries in the data in different ways, they ultimately cannot invent or extract more information than a simple neural MLP network in this experiment: the optimal compression with either a noisy or the true data covariance is the best one can do. Given the Gaussian data likelihood in these experiments, the expectation value of the MAE loss is the same as that for the MSE loss for any neural network – given by ![$\[f_{\psi}(\hat{\xi})=\langle\pi\rangle_{p(\pi \mid \hat{\xi})}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq47.png) .

.

One option that may help avoid the issues of covariance estimation would be to fit density estimators without compression of the simulations and data. This would, for the optimal and linear change of variables (e.g. in a normalising flow), still depend on the inverse of a data covariance that would be fitted from simulations.

3.4 Density estimation with normalizing flows

In order to derive posteriors from a measurement using density-estimation SBI methods, a density estimator was fitted to pairs of model parameters and simulated data to estimate the likelihood or posterior directly (Cranmer et al. 2020; Lueckmann et al. 2017; Papamakarios 2019; Alsing et al. 2018). Normalizing flows (Tabak & Vanden-Eijnden 2010; Tabak & Turner 2013; Jimenez Rezende & Mohamed 2016; Papamakarios et al. 2021) are a class of generative models that fit a sequence of bijective transformations from a simple base distribution to a complex data distribution. The transformation is estimated directly from the simulation and parameter pairs via minimising the KL-divergence between the unknown likelihood (posterior) and the flow likelihood (posterior). We used masked autoregressive flows (MAFs; Papamakarios et al. 2018) and continuous normalizing flows (CNFs; Grathwohl et al. 2018; Chen et al. 2019). We used CNFs in order to adopt some of the latest density estimation techniques from the machine-learning literature2. Two different models were used to validate and compare the performance of the density estimation of either flow.

Normalising flows transform data, x, to Gaussian-distributed samples, y. This mapping, when conditioned on physics parameters, π, and parameters, ϕ, of a neural network, fϕ, is written as

![$\[\boldsymbol{y}=f_\phi(\boldsymbol{x} ; \boldsymbol{\pi}),\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq48.png) (16)

(16)

where y ~ 𝒢[y|0, 𝕀] and 𝕀 is the identity matrix. An exact log-likelihood estimate of the probability of a data point conditioned on physics parameters can be calculated with a normalising flow using a change of variables between y and x expressed as

![$\[\log p_\phi(\boldsymbol{x} {\mid} \boldsymbol{\pi})=\log \mathcal{G}\left[f_\phi(\boldsymbol{x} ; \boldsymbol{\pi}) \mid \mathbf{0}, \mathbb{I}\right]+\log \left|\mathbf{J}_{f_\phi}(\boldsymbol{x} ; \boldsymbol{\pi})\right|,\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq49.png) (17)

(17)

where Jfϕ is the Jacobian of the normalising flow transform, fϕ, in Eq. (16).

These bijective transformations between two densities can be composed to produce more complex distributions by using separate transformations in a sequence. For a normalising flow with K transforms ![$\[\left\{g_{k}\right\}_{k=0}^{K}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq50.png) in sequence parametrised as

in sequence parametrised as ![$\[\phi=\left\{\phi_{k}\right\}_{k=0}^{K}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq51.png) , the log-likelihood of the flow is written

, the log-likelihood of the flow is written

{\mid} \mathbf{0}, \mathbb{I}\right) \\& +\sum_{k=0}^K \log \left|\mathbf{J}_{g_{\phi_k}}(\boldsymbol{x} ; \boldsymbol{\pi})\right|.\end{aligned}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq52.png) (18)

(18)

We note that while it is common to use ensembles of density estimators together to diagnose the fit of the likelihood models (Alsing et al. 2018; Jeffrey et al. 2020; Gatti et al. 2024; Jeffrey et al. 2025), this should not be necessary for the simple Gaussian linear model we used here.

To obtain an approximate likelihood pϕ(π|x) (for NLE) or posterior model pϕ(x|π) (for NPE), we fitted a normalising flow to a set of simulations and parameters. The parameters of the normalizing flow model, ϕ, which maximise the log-likelihood pϕ(x|π) (or log-posterior), were obtained by minimising the forward KL-divergence between the unknown likelihood (posterior) q(x|pi) and the normalising-flow likelihood (posterior) pϕ(x|π).

The loss function for the normalising flow is then given by

![$\[\begin{aligned}\left\langle D_{K L}\left(q \| p_\phi\right)\right\rangle_\boldsymbol{\pi} & =\int \mathrm{d} \boldsymbol{\pi} ~p(\boldsymbol{\pi}) \int \mathrm{d} \boldsymbol{x} ~q(\boldsymbol{x} {\mid} \boldsymbol{\pi}) ~\log~ \frac{q(\boldsymbol{x} {\mid} \boldsymbol{\pi})}{p_\phi(\boldsymbol{x} {\mid} \boldsymbol{\pi})}, \\& =\int \mathrm{d} \boldsymbol{\pi} \int \mathrm{~d} \boldsymbol{x} ~p(\boldsymbol{\pi}, \boldsymbol{x})\left[\log~ q(\boldsymbol{x} {\mid} \boldsymbol{\pi})-\log~ p_\boldsymbol{\phi}(\boldsymbol{x} {\mid} \boldsymbol{\pi})\right], \\& \geq-\int \mathrm{d} \boldsymbol{\pi} \int \mathrm{d} \boldsymbol{x} ~p(\boldsymbol{x}, \boldsymbol{\pi}) ~\log~ p_\phi(\boldsymbol{x} {\mid} \boldsymbol{\pi}), \\& \approx-\frac{1}{N} \sum_i^N ~\log~ p_\phi\left(\boldsymbol{x}_i {\mid} \boldsymbol{\pi}_i\right).\end{aligned}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq53.png) (19)

(19)

Note that terms independent of ϕ are dropped since their derivative with respect to ϕ is zero. This implies the loss function for the normalising flow is given by

![$\[\Lambda(\{\boldsymbol{x}, \boldsymbol{\pi}\} ; \phi)=-\frac{1}{N} \sum_{i=1}^N \log p_\phi\left(\boldsymbol{x}_i {\mid} \boldsymbol{\pi}_i\right)\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq54.png) (20)

(20)

for NPE (since the derivation of Eq. (19) applies in the same way to the posterior). The CNF and MAF models we used for our experiments are described in detail in Appendix A.

Note that whilst diffusion (Song et al. 2021; Ho et al. 2020; Kingma et al. 2023) and flow-matching (Lipman et al. 2023) models avoid simulating the ODE-trajectory over t (e.g. from Gaussian variates to data samples), they inject noise at each time step to diffuse the data to noise. This stochastic process means that their density-estimation capabilities are limited in comparison to normalising flows due to the fact that diffusion only lower-bounds the log-likelihood of the data (Song et al. 2021; Ho et al. 2020; Kingma et al. 2023).

3.5 Architecture and fitting

Our CNF model has one hidden layer of eight hidden units with tanh(·) activation functions and an ODE solver time step of 0.1. We trained it using early stopping with a patience value of 40 epochs (Advani & Saxe 2017). The MAF models had five MADE transforms, which were parametrised by neural networks with two layers and 32 hidden units using tanh(·) activation functions. The MAFs used a patience value of 50 epochs. We used the ADAM (Kingma & Ba 2017) optimiser with a learning rate of 10−3 for a stochastic gradient descent of the negative log-likelihood loss with both density estimator models.

3.6 Hyperparameter optimisation

It is not computationally feasible in SBI analyses to optimise simultaneously for the architecture and parametrisation of a density estimation model when fitting the likelihood or posterior. Since the reconstructed posterior depends on these hyperparameters implicitly, we had to run our experiment separately to obtain the best settings when tested on data that were not part of the training set.

The parameters of the architecture and optimisation procedure are tuned by experimentation using optuna (Akiba et al. 2019) to find the parameters that minimise an optimisation function over repeated experiments where the true data covariance is known exactly. The experiment uses a further 104 data samples for training. The average log likelihood (or log posterior) of the flow was calculated on a separate test set of 104 simulation and parameter pairs. The architecture and training parameters that maximised this average log likelihood were chosen (see Appendix B for details of the hyperparameter optimisation).

4 Results

In the next section, we discuss the quantitative results of measuring the posterior widths (Sect. 4.1) and the coverage over repeated experiments (Sect. 4.2).

4.1 Posterior widths

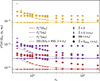

Figure 2 shows the reconstructed posterior widths σ2[π] from repeated identical experiments as a function of the number of simulations ns used to fit the normalising flows (and where appropriate – estimating the data covariance or training a neural network for compression – which requires another ns simulations). The results for the other experiments (noted in Table 1) are shown in Appendix C.

The DS13 factor (Eq. (7)) sets the expected width of the scatter in the parameter estimators when both the model, ξ[π], and covariance, Σ, are determined by data. The factor is calculated for 2ns simulations (the size of the training set plus those used to estimate the data covariance that is used for the compression), with the number of bins in the data equal to the uncompressed data dimension of nξ = 450 bins. For each value of ns, the factor is plotted to compare the expected posterior width in a Gaussian-likelihood analysis – where the covariance is estimated from a set of simulations – to the SBI analyses. By default, every SBI analysis uses ns simulations to train the normalising flows.

The SBI analyses plotted for this comparison were repeated with four compressions. The first is a linear compression (Eq. (12)) in which the true covariance is known (e.g. ![$\[\hat{\Sigma}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq56.png) = Σ). The second is a linear compression with the simulationestimated covariance

= Σ). The second is a linear compression with the simulationestimated covariance ![$\[\hat{\Sigma}=S\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq57.png) (calculated with ns simulations). The third is a linear compression with only the diagonal elements of this same covariance

(calculated with ns simulations). The third is a linear compression with only the diagonal elements of this same covariance ![$\[\hat{\Sigma}=S_{\text {diag.}}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq58.png) . Last is a compression parametrised with a neural network that is trained on an additional ns simulations.

. Last is a compression parametrised with a neural network that is trained on an additional ns simulations.

For the experiments where the true covariance is known, SBI can obtain posterior widths equal to the Fisher errors for all ns values as expected. The posterior widths for the ![$\[\hat{\Sigma}=S\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq59.png) compression converge closely to the DS13 factor at around 2 × 104 simulations (for the training set of the flows plus the covariance estimation), which means the flow models fit the correct likelihood shape. The

compression converge closely to the DS13 factor at around 2 × 104 simulations (for the training set of the flows plus the covariance estimation), which means the flow models fit the correct likelihood shape. The ![$\[\hat{\Sigma}=S_{\text {diag.}}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq60.png) experiments (plotted with diamonds) return posterior widths that are less optimal, over all values of ns, compared to the marginal Fisher variances and the SBI analyses that use

experiments (plotted with diamonds) return posterior widths that are less optimal, over all values of ns, compared to the marginal Fisher variances and the SBI analyses that use ![$\[\hat{\Sigma}=\Sigma\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq61.png) . Despite the posterior width being significantly increased, for low values of ns of around a few hundred simulations, the posterior widths obtained when using

. Despite the posterior width being significantly increased, for low values of ns of around a few hundred simulations, the posterior widths obtained when using ![$\[\hat{\Sigma}=S_{\text {diag}}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq62.png) are below the Fisher variances expanded by the DS13 factor; that is, the posterior width when estimating the full covariance – for the same ns input to the experiment. This is simply due to the fact that the inversion of a diagonal matrix Sdiag. does not combine the noisy, off-diagonal elements (of the matrix S that is inverted) in a non-linear way. Nonetheless, the structure of the estimated covariance is incorrect, which inflates the variance of the summaries. This increases the marginal variances of the posteriors obtained by the flows.

are below the Fisher variances expanded by the DS13 factor; that is, the posterior width when estimating the full covariance – for the same ns input to the experiment. This is simply due to the fact that the inversion of a diagonal matrix Sdiag. does not combine the noisy, off-diagonal elements (of the matrix S that is inverted) in a non-linear way. Nonetheless, the structure of the estimated covariance is incorrect, which inflates the variance of the summaries. This increases the marginal variances of the posteriors obtained by the flows.

The experiments involving a neural network for the compression function show a significantly different relation of the posterior width to the number of simulations compared to the ![$\[\hat{\Sigma}=\Sigma\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq63.png) and

and ![$\[\hat{\Sigma}=S\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq64.png) experiments. The widths from the neural network compression experiments are not the same as any of the widths using linear compression since the network does not invert the true (or estimated) data covariance. Rather, if the network calculates a compression close to an optimal linear compression – it is possible that the network down-weights the noisier data-vector elements to minimise the MSE loss for a smaller number of simulations. This is not the same as the inverse-variance weighting of the linear compression in Eq. (12). Curiously, this effect – within the regime of ns ≤ nξ for a given value of nπ – allows one to obtain an average posterior width that is smaller than that of a posterior using an estimated covariance adjusted with the DS13 factor (calculated with 2ns simulations), despite the fact that the covariance is not known. How optimal the summary by a neural network is strongly depends on the training hyperparameters, optimisation method, and choice of loss function – though quantifying the information content is only possible with an analytic posterior such as in this work.

experiments. The widths from the neural network compression experiments are not the same as any of the widths using linear compression since the network does not invert the true (or estimated) data covariance. Rather, if the network calculates a compression close to an optimal linear compression – it is possible that the network down-weights the noisier data-vector elements to minimise the MSE loss for a smaller number of simulations. This is not the same as the inverse-variance weighting of the linear compression in Eq. (12). Curiously, this effect – within the regime of ns ≤ nξ for a given value of nπ – allows one to obtain an average posterior width that is smaller than that of a posterior using an estimated covariance adjusted with the DS13 factor (calculated with 2ns simulations), despite the fact that the covariance is not known. How optimal the summary by a neural network is strongly depends on the training hyperparameters, optimisation method, and choice of loss function – though quantifying the information content is only possible with an analytic posterior such as in this work.

|

Fig. 2 Average model parameter posterior variance reported by methods compared in this work, conditioned on noisy data vectors estimated with neural-likelihood estimation using a masked autoregressive flow. The colour-coded dashed lines show the (Fisher) variances that would have been measured if the exact data covariance had been known and used in a Gaussian likelihood ansatz with a flat prior. Cross points label posterior variances from SBI analyses where the exact data covariance was known for linear compression (Eq. (12)). Dotted lines show the expected variances of the maximum a posteriori that would have been measured when using a data covariance estimated from a set of ns simulations. Note that these lines multiply the Fisher variance with the factor (Eq. (7)) Dodelson & Schneider (2013) calculated using 2 × ns simulations. Circle points label posterior variances from SBI analyses where the data covariance was estimated from ns simulations and used in a linear compression. Diamond points label posterior variances obtained when using a compression with the diagonal elements of the estimated covariance matrix. Square points show variances obtained using a neural network for the compression, trained on ns simulations. All points include a 1-σ error bar on the variances estimated over all of the experiments. The additional simulations, not labelled on the x-axis but required for the separate compressions (where the true covariance is unknown), are noted for each method. When the true data covariance is not known, necessitating the use of double the number of simulations, the reconstructed posterior errors from SBI are significantly higher than the Dodelson & Schneider (2013) corrected errors when that correction is substantial. |

|

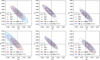

Fig. 3 Coverage Fω; i.e. how often the true cosmology in the experiment is found inside the 68 (1 − σ) and 95 (2 − σ) credible regions of the estimated posterior (see Eq. (23)) against the number of simulations ns used for the training set. Shown is the result for a neural-likelihood estimation (with a MAF model) for independently sampled data vectors and data covariance matrices in a series of repeated experiments with the number of simulations for each experiment on the horizontal axis. The expected coverage of a Gaussian posterior with a debiased estimate of the precision matrix (using the Hartlap correction; Eq. (3)) and posterior covariance corrected with the Dodelson & Schneider (2013) factor is plotted for both coverage intervals with dashed lines. The grey lines show the expected coverage of the common approach using a Gaussian posterior with a precision matrix corrected applying the Hartlap factor (i.e. using the IJ prior). The additional simulations, not labelled on the x-axis but required for the separate compressions (where the true covariance is unknown), are noted for each method. The SBI posteriors obtain the correct coverage to within errors for all numbers of simulations ns and each compression method. |

4.2 Coverage

The expected coverage probability of the SBI posterior estimators measures the proportion of repeated identical experiments where a credible region of the posterior estimator contains the true parameter set used to generate the data vector. The coverage probability quantifies how conservative or overconfident the posterior estimator is compared to the true posterior.

We define Fω as the fraction of experiments where the true cosmology π is inside the 68.3% (ω = 0.68) or 95.4% (ω = 0.95) confidence contour around the MAP ![$\[\boldsymbol{\hat{\pi}}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq65.png) . The fraction of J posterior samples with posterior probability under the SBI estimator less than that of the true data-generating parameters πi for the i-th experiment is the empirical coverage probability of the i-th posterior; this is written as

. The fraction of J posterior samples with posterior probability under the SBI estimator less than that of the true data-generating parameters πi for the i-th experiment is the empirical coverage probability of the i-th posterior; this is written as

![$\[f\left(\boldsymbol{x}_i, \boldsymbol{\pi}_i, \phi_i\right)=\frac{1}{J} \sum_{j=1}^J \mathbb{1}\left[p_{\phi_i}\left(\boldsymbol{\pi}_i {\mid} \boldsymbol{x}_i\right)>p_{\phi_j}\left(\boldsymbol{\pi}_j {\mid} \boldsymbol{x}_i\right)\right],\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq66.png) (22)

(22)

where ![$\[\mathbb{1}[\cdot]\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq67.png) is the indicator function and πj is the j-th posterior sample from the i-th posterior. The fraction of experiments that obtain a coverage probability ω is calculated as

is the indicator function and πj is the j-th posterior sample from the i-th posterior. The fraction of experiments that obtain a coverage probability ω is calculated as

![$\[F_\omega=\frac{1}{n_e} \sum_{i=1}^{n_e} \mathbb{1}\left[f\left(\boldsymbol{x}_i, \boldsymbol{\pi}_i, \phi_i\right)>\omega\right]\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq68.png) (23)

(23)

over a set of independent but identical experiments with independently sampled data, ![$\[\hat{\boldsymbol{\xi}}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq69.png) , data covariance matrices, S, and density estimator model parameters, ϕi. If the true covariance is known, then Fω should be equal to 0.68 for the 1 − σ region, or to 0.95 for the 2 − σ region if the data are sampled from the true likelihood and the posterior estimator has converged.

, data covariance matrices, S, and density estimator model parameters, ϕi. If the true covariance is known, then Fω should be equal to 0.68 for the 1 − σ region, or to 0.95 for the 2 − σ region if the data are sampled from the true likelihood and the posterior estimator has converged.

For the NLE experiments using an MAF, Figure 3 (see Appendix C for the remaining experiments in Table 1) shows the coverages measured over the repeated experiments. Either density estimation method obtains the correct coverage to within errors, regardless of the model used and whether the data covariance is known or not. This suggests that the normalising-flow likelihoods correctly expand their contours to account for the MAP scatter when using an estimated covariance or a trained neural network for compression, which would be expected given the hierarchical modelling by the normalising flow of the functional form of the likelihood (including the expectation and covariance) used to calculate the other likelihoods.

It should be noted that, as found in Friedrich & Eifler (2017) and Percival et al. (2021) for the same nξ and nπ as in our experiments, the use of the independence Jeffreys prior on true covariance matrix in Sellentin & Heavens (2015) gives a posterior with a model parameter covariance that matches a Gaussian posterior after scaling the data covariance matrix by the Hartlap factor and applying the factor from Dodelson & Schneider (2013) (Eq. (5)). Importantly, however, this posterior does not take into account the Dodelson–Schneider factor. Hence, in Fig. 3 (see also Appendix C) we plot the analytic expected coverage for the Gaussian posterior with a scaled parameter variance and debiased precision matrix, accounting for the simulations used to estimate the covariance as well as the training-set simulations. The SBI methods are both able to obtain the correct coverage for all ns values, regardless of whether the true covariance is known or not.

5 Discussion

Based on the results of this work – the widths of the SBI posteriors and their coverage fractions measured over many repeated experiments – the good news is that SBI functions as well as covariance estimation methods possibly can. The bad news is that the number of simulations required to obtain errors close to the true posterior variance exceeds the computational budget of existing simulation suites, even for data of modest dimensions from Gaussian linear models in which the true expectation, ξ[π], is known. The coverages and posterior widths for SBI presented in this work show that SBI – for modest nξ and nπ – does not obtain smaller widths than a Gaussian-likelihood analysis with access to an accurate model ξ[π].

When considering the posterior widths obtained with SBI – using both NLE and NPE – there is a discrepancy between each compression method. Within the limits of a low number of simulations, the linear compression parametrised with the diagonal elements of a simulation-estimated covariance, denoted ![$\[\hat{\Sigma}=S_{\text {diag}}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq70.png) , is closer to optimal (i.e. a smaller posterior width) in our example than a neural network and the

, is closer to optimal (i.e. a smaller posterior width) in our example than a neural network and the ![$\[\hat{\Sigma}=S\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq71.png) compression (for lower numbers of simulations, ns). This is because Sdiag. correctly (though limited by ns) estimates the variances of the data but not the covariances, thus reducing the noise propagated by the additional off-diagonal elements that would be estimated with S. This shows that the increase in posterior width obtained by ignoring the off-diagonal elements of the data covariance (using

compression (for lower numbers of simulations, ns). This is because Sdiag. correctly (though limited by ns) estimates the variances of the data but not the covariances, thus reducing the noise propagated by the additional off-diagonal elements that would be estimated with S. This shows that the increase in posterior width obtained by ignoring the off-diagonal elements of the data covariance (using ![$\[\hat{\Sigma}=S_{\text {diag.}}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq72.png) ) is smaller than the increase in width when estimating the off-diagonal elements (using

) is smaller than the increase in width when estimating the off-diagonal elements (using ![$\[\hat{\Sigma}=S\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq73.png) ).

).

The posterior variance, when using a neural network for the compression, should be between ![$\[\hat{\Sigma}=S\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq74.png) and

and ![$\[\hat{\Sigma}=S_{\text {diag.}}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq75.png) since the assumption that all of the data-vector components are independent is very strong. Ultimately, this depends on the covariance structure of the statistic at hand. How optimal each of the compressions that we tested is with respect to the others is not fixed in order. The effects of one particularly unfavourable covariance structure are examined in Appendix F. The fact that the posterior variances derived with neural-network summaries follow – though are biased above – the Fisher variances (corrected for an estimated covariance via the DS13 factor) stems from the fact that the neural network does not invert any covariance; therefore, it cannot be biased by minimising the incorrect likelihood in the same way as the

since the assumption that all of the data-vector components are independent is very strong. Ultimately, this depends on the covariance structure of the statistic at hand. How optimal each of the compressions that we tested is with respect to the others is not fixed in order. The effects of one particularly unfavourable covariance structure are examined in Appendix F. The fact that the posterior variances derived with neural-network summaries follow – though are biased above – the Fisher variances (corrected for an estimated covariance via the DS13 factor) stems from the fact that the neural network does not invert any covariance; therefore, it cannot be biased by minimising the incorrect likelihood in the same way as the ![$\[\hat{\Sigma}=S_{\text {diag.}}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq76.png) compression. This will depend on the covariance structure of the data. Analysing a DS13-like effect for neural networks remains an objective for future work. Nonetheless, the mean-squared error loss, when minimised in training, does not guarantee an unbiased estimator with the correct variance. It is not clear exactly how the network compression affects the resulting posterior width when optimised with stochastic gradient descent (for low ns); this problem would not be detected in an analysis that is not directly comparable to an analytic posterior, in particular for the cases in which SBI is needed most.

compression. This will depend on the covariance structure of the data. Analysing a DS13-like effect for neural networks remains an objective for future work. Nonetheless, the mean-squared error loss, when minimised in training, does not guarantee an unbiased estimator with the correct variance. It is not clear exactly how the network compression affects the resulting posterior width when optimised with stochastic gradient descent (for low ns); this problem would not be detected in an analysis that is not directly comparable to an analytic posterior, in particular for the cases in which SBI is needed most.

Our results, based on commonly adopted compression methods, show a concerning inflation of the posterior width compared to the true posterior. This is particularly true for low-ns values, which are comparable to existing simulation budgets available to current analyses and where the widths are greater than those inflated by accounting for an unknown data covariance via the DS13 factor. One exception is the case where the compression only estimates the diagonal elements of true data covariance. It is possible that current analyses based on SBI methods fall in a regime of ns, nξ and nπ in which posteriors may only be derived with SBI via compression using a neural network since the data covariance is singular for ns < nξ. This shrouds the amount of information that is lost, because the comparison with a linear compression cannot be made, but it does not change the fact that for ns − nξ < nξ − nπ any compression at low ns is severely sub-optimal. Whilst this is true for our linear model and Gaussian errors, the problem will likely be worse for non-Gaussian errors and non-linear models; i.e. for applications that require SBI. As shown in our posterior width results (Sect. 4.1), the neural network can progress the information return relatively to the posterior obtained with a simulation-estimated covariance at a given low value of ns. Despite this improvement, the posterior errors are inflated by a factor of between two and four relatively to the true posterior – which increases significantly as a function of nξ (see Eq. (7)) under the assumption that the covariance structure is fixed for increasing nξ. However, if the data covariance structure stays fixed with increasing nξ, the optimality of the neural-network summary may be constant, whereas the DS13 factor would increase, thus decreasing the information in linear summaries using a simulation-estimated covariance compared to those from a neural network.

The structure of the data covariance significantly affects the information content in summaries from a linear compression (with an estimated covariance) compared to those from a neural network. In Appendix F, we display results of NPE experiments using an MAF density estimator repeated with a data covariance, Σr, that has large off-diagonal elements (unlike the covariance Σ used in the other sections of this work). For a covariance with strong correlations between elements in the data vector, a neural network compression is far less optimal – due to the network being ignorant of the data errors – compared to a linear compression with an estimated data covariance of ![$\[\hat{\Sigma}_{r}=S\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq77.png) . The same holds for a linear compression-parametrised

. The same holds for a linear compression-parametrised ![$\[\hat{\Sigma}_{r}=S_{\text {diag}}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq78.png) . (which minimises the correct χ2 using the correct model ξ[π], but given the wrong data covariance). This highlights the fact that the issue of covariance matrix estimation is not alleviated by data compression. For some statistics with covariances that are unfavourable in this way, the covariance estimation effects on the posterior widths are significant. Using a neural network to compress the data – so as to avoid the estimation of the covariance – not only not reduces the posterior width relatively to the DS13 factor, it actually substantially deteriorates the returned parameter constraints.

. (which minimises the correct χ2 using the correct model ξ[π], but given the wrong data covariance). This highlights the fact that the issue of covariance matrix estimation is not alleviated by data compression. For some statistics with covariances that are unfavourable in this way, the covariance estimation effects on the posterior widths are significant. Using a neural network to compress the data – so as to avoid the estimation of the covariance – not only not reduces the posterior width relatively to the DS13 factor, it actually substantially deteriorates the returned parameter constraints.

In the posterior widths of Fig. 2 (see also Appendix C), there is a shift towards lower variance for the reported widths. It should be noted that, as with other machine-learning approaches, the posterior density estimators in the NPE experiments absorb the prior defined by the training set. This requires us to force the same prior for the NLE experiments to obtain a direct comparison between the two approaches. That said, the NLE posteriors (for all compression methods) show a bias to slightly lower posterior widths, which can be seen by comparing the ![$\[\hat{\Sigma}=\Sigma\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq79.png) points to the Fisher variance (in Fig. 2 and Appendix C). For the NLE estimators, the likelihood function is more biased when the posterior width is low. This is because, similarly to the NPE estimator, the data likelihood is also informed by the prior from which the simulations used to fit the normalising flows are drawn. It should also be noted that some of the posteriors for NPE and NLE are truncated near the prior edges. This occurs more for lower ns since the estimated covariance causes additional scatter of the MAP – around which the contours are drawn – towards the prior edges.

points to the Fisher variance (in Fig. 2 and Appendix C). For the NLE estimators, the likelihood function is more biased when the posterior width is low. This is because, similarly to the NPE estimator, the data likelihood is also informed by the prior from which the simulations used to fit the normalising flows are drawn. It should also be noted that some of the posteriors for NPE and NLE are truncated near the prior edges. This occurs more for lower ns since the estimated covariance causes additional scatter of the MAP – around which the contours are drawn – towards the prior edges.

Compared to analytic methods for either deriving covariance estimators or posteriors that account for a noisy covariance, SBI returns posteriors with correct coverage but larger errors. The posterior errors tend towards the Fisher errors at around 4 × 104 simulations – for compression and likelihood fitting – meaning that the SBI methods can correctly recover the true posterior given a noisy data vector, though this will depend on the size of the data vector. Comparing the results to the PME estimator (Friedrich & Eifler 2017), we see that SBI density estimation requires many more simulations to obtain a similar error width and coverage. For a DES-like data vector, Friedrich & Eifler (2017) found that 400 N-body simulations are sufficient to achieve negligible additional statistical uncertainties on parameter constraints from a noisy covariance estimate. Our results show that SBI appears to require many more simulations than the PME estimator. This number will be far greater for LSST and other next-generation surveys – which will also increase further in the presence of nuisance parameters.

Whilst interpreting our results, we noted that the DS13 correction term inflates the posterior covariance for a Gaussian likelihood and linear model for the expectation, whereas in density-estimation SBI approaches, the contour is drawn by a generative model. This estimator for the likelihood shape may suffer from an additional DS13-like term such that the contours drawn in the SBI analyses are inflated on average with respect to the Dodelson–Schneider factor for half the number of simulations (2ns simulations are required for compression and fitting the flow when Σ is unknown). In addition, the scatter of the posterior mean with respect to the true parameters may be biased above or below the DS13 scatter of the MAP around the truth. The unknown form of the flow likelihoods may contribute an additional scatter of the posterior mean ⟨π⟩π|xi to the true parameters π. In Appendix E, we measure the scatter of the SBI posterior mean around the true parameters. We fixed the true parameters at values in the centre of our prior to minimise effects from it that would affect the results of the SBI posterior widths and coverages. There appears to be some minimal scatter for ns < 2 × 103, which includes simulations to estimate ![$\[\hat{\Sigma}\]$](/articles/aa/full_html/2025/07/aa53339-24/aa53339-24-eq80.png) as well as train the posterior or likelihood estimator.

as well as train the posterior or likelihood estimator.

6 Conclusions