| Issue | A&A, Volume 690, October 2024 |
|---|---|
| Article Number | A211 |
| Number of page(s) | 42 |
| Section | Numerical methods and codes |
| DOI | https://doi.org/10.1051/0004-6361/202449548 |
| Published online | 09 October 2024 |

YOLO-CIANNA: Galaxy detection with deep learning in radio data

I. A new YOLO-inspired source detection method applied to the SKAO SDC1

1 LERMA, Observatoire de Paris, Université PSL, Sorbonne Université, CNRS, 75014 Paris, France

2 Canadian Institute for Theoretical Astrophysics, University of Toronto, 60 St. George Street, Toronto, ON M5S 3H8, Canada

3 Research School of Astronomy & Astrophysics, Australian National University, Canberra ACT 2610, Australia

4 Université de Strasbourg, CNRS UMR 7550, Observatoire astronomique de Strasbourg, 67000 Strasbourg, France

5 DIO, Observatoire de Paris, CNRS, PSL, 75014 Paris, France

6 IDRIS, CNRS, 91403 Orsay, France

7 Collège de France, 11 Place Marcelin Berthelot, 75005 Paris, France

8 GEPI, Observatoire de Paris, CNRS, Université Paris Diderot, 5 Place Jules Janssen, 92190 Meudon, France

9 Department of Physics & Electronics, Rhodes University, PO Box 94, Grahamstown 6140, South Africa

★ Corresponding author; david.cornu@observatoiredeparis.psl.eu

Received: 8 February 2024

Accepted: 19 August 2024

Context. The upcoming Square Kilometer Array (SKA) will set a new standard regarding data volume generated by an astronomical instrument, which is likely to challenge widely adopted data-analysis tools that scale inadequately with the data size.

Aims. The aim of this study is to develop a new source detection and characterization method for massive radio astronomical datasets based on modern deep-learning object detection techniques. For this, we seek to identify the specific strengths and weaknesses of this type of approach when applied to astronomical data.

Methods. We introduce YOLO-CIANNA, a highly customized deep-learning object detector designed specifically for astronomical datasets. In this paper, we present the method and describe all the elements introduced to address the specific challenges of radio astronomical images. We then demonstrate the capabilities of this method by applying it to simulated 2D continuum images from the SKA observatory Science Data Challenge 1 (SDC1) dataset.

Results. Using the SDC1 metric, we improve the challenge-winning score by +139% and the score of the only other post-challenge participation by +61%. Our catalog has a detection purity of 94% while detecting 40–60% more sources than previous top-score results, and exhibits strong characterization accuracy. The trained model can also be forced to reach 99% purity in post-process and still detect 10–30% more sources than the other top-score methods. It is also computationally efficient, with a peak prediction speed of 500 images of 512×512 pixels per second on a single GPU.

Conclusions. YOLO-CIANNA achieves state-of-the-art detection and characterization results on the simulated SDC1 dataset and is expected to transfer well to observational data from SKA precursors.

Key words: methods: data analysis / methods: numerical / methods: statistical / galaxies: statistics / radio continuum: galaxies

© The Authors 2024

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1 Introduction

Modern astronomical instruments generate ever-increasing data volumes in response to the need for better resolution, sensitivity, and wider wavelength intervals. Astronomical datasets are often highly dimensional and require precise encoding of the measurements due to a high dynamic range. Also, it is often necessary to preserve the raw data so they can be re-analyzed with new versions of the processing pipelines. Radio-astronomy is strongly affected by this explosion of data volumes, especially regarding giant radio interferometers. The upcoming Square Kilometer Array (SKA, Braun et al. 2015) is expected to have an unprecedented real-time data-production rate, and will provide 700 PB of archived data per year (Scaife 2020). This instrument is foreseen to have the necessary sensitivity to set constraints on the cosmic dawn and the epoch of reionization and to trace the evolution of astronomical objects over cosmological times. Faced with data of such volume and complexity, some classical analysis methods and tools employed in radio astronomy will struggle to keep up due to their limited scalability.

In this context, the SKA Observatory (SKAO) began organizing recurrent Science Data Challenges (SDCs) in order to gather astronomers from the international community around simulated datasets that resemble future SKA data products. The objective is to evaluate the suitability of existing analysis methods and encourage the development of new ones. It is also an opportunity for astronomers to become familiar with the nature of such datasets and to gain experience in their exploration.

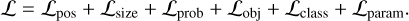

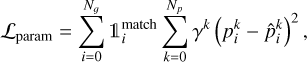

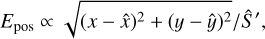

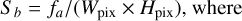

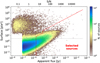

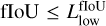

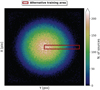

The first edition, SDC1 (Bonaldi et al. 2021), focused on a source detection and characterization task in simulated continuum radio images at different frequencies and integration times. Figure 1 shows a cutout from one of the SDC1 images, illustrating the source density and high dynamic range. Source finding is a common task in astronomy and is often the first analysis carried out on a newly acquired image product; it is already performed by a variety of classical methods, such as Source-Extractor (Bertin & Arnouts 1996), SFIND (Hopkins et al. 2002), CUTEX (Molinari et al. 2011), BLOBCAT (Hales et al. 2012), DUCHAMP (Whiting 2012), SELAVY (Whiting & Humphreys 2012), AEGEAN (Hancock et al. 2018), PyBDSF (Mohan & Rafferty 2015), PROFOUND (Robotham et al. 2018), PySE (Carbone et al. 2018), CAESAR (Riggi et al. 2019), and CERES (Lucas et al. 2019). The obtained source catalogs can then be augmented with characterization information and used as primary data for subsequent studies. This task is especially affected by increases in volume and dimensionality, making it a good probe of the upcoming data-handling challenges.

The past decade has seen a rapid increase in the use of machine learning (ML) methods in all fields, including astronomy and astrophysics (Huertas-Company & Lanusse 2023). One of the advantages of ML methods is their superior scaling with data size and dimensionality. There is a considerable variety of ML approaches, but we focus here on methods based on deep artificial neural networks (LeCun et al. 2015). These approaches have been extensively used for computer vision tasks, including object detection in everyday-life images (Russakovsky et al. 2015; Everingham et al. 2010; Lin et al. 2014). While detection models have been used in other domains for several years, they are not yet widely adopted in the astronomical community.

Deep-learning object-detection methods are usually separated into three families (Zhao et al. 2019): segmentation models, region-based detectors, and regression-based detectors. The main advantage of the segmentation models is their ability to identify all the pixels that belong to a given class or even individual objects. They can also be used as a convenient structure for denoising tasks. Their main drawback is their symmetric structure (encoder and decoder) and the high level of expressivity required at near-input resolution, making them computationally intensive for high-resolution images. This family is mainly represented by the U-Net (Ronneberger et al. 2015) method. Given their proximity with classical source detection approaches, they have been employed for a variety of astronomical applications (e.g., Akeret et al. 2017; Vafaei Sadr et al. 2019; Lukic et al. 2019; Paillassa et al. 2020; Bianco et al. 2021; Makinen et al. 2021; Sortino et al. 2023; Håkansson et al. 2023).

The second family, the region-based detectors, mostly comprises multi-stage neural networks that split the detection task into a region-proposal step and a detection-refinement step. They are the most popular method for mission-critical tasks due to their accuracy. While faster than segmentation methods, these high-detection-accuracy models are computationally intensive due to the multi-stage process. This family is mainly represented by the R-CNN method (Girshick et al. 2013) and all its derivatives (e.g., Fast R-CNN, Faster R-CNN). Examples of astronomical applications with these methods are more limited (e.g., Wu et al. 2019; Jia et al. 2020; Lao et al. 2021; Yu et al. 2022; Sortino et al. 2023). There is also a subfamily that combines the region-based detection formalism with a mask prediction used to perform instance segmentation. This subfamily is mainly represented by the Mask R-CNN method (He et al. 2017), which is increasingly used in astronomy (e.g., Burke et al. 2019; Farias et al. 2020; Riggi et al. 2023; Sortino et al. 2023). We note that region-based methods are commonly combined with some flavor of pyramidal feature hierarchy construction (Lin et al. 2017), which helps represent multiple scales in the detection task.

The last family, the regression-based detectors, are often based on single-stage neural networks, making them computationally efficient. Therefore, they are frequently used for real-time object detection. The most popular regression-based detector method is the You Only Look Once (YOLO) method and its descendants (Redmon et al. 2016; Redmon & Farhadi 2017; Redmon & Farhadi 2018), but we can also cite the Single Shot Detector (SSD) method (Liu et al. 2015). There have only been a few astronomical applications of regression-based detectors, mainly in the visible domain (González et al. 2018; He et al. 2021; Wang et al. 2021; Grishin et al. 2023; Xing et al. 2023).

We highlight that methods based on transformers (Vaswani et al. 2017) are now common in computer vision (Carion et al. 2020), and astronomical applications are just starting to be published (Gupta et al. 2024; He et al. 2023). We also note that some methods include deep learning parts in more classical source detection tools, which can improve the detection purity and the source characterization (e.g., Tolley et al. 2022). Further references regarding deep learning methods for source detection can be found in Sortino et al. (2023) and Ndung’u et al. (2023).

The present paper is the first of a series, the aim of which is to present a new source detection and characterization method called YOLO-CIANNA. It was developed and used in the context of the MINERVA (MachINe lEarning for Radioastronomy at Observatoire de Paris) team participation in the SDC2 (Hartley et al. 2023), where we obtained first place. The primary objective of this first paper is to provide a complete description of the method and to justify several design choices regarding the specific properties of astronomical images. We then illustrate its capability by presenting the results of its application to simulated 2D continuum images from the SDC1 dataset. A second paper will present an application to simulated 3D cubes of HI emission using the SDC2 dataset. The series will then continue by applying the method to observational data from surveys of several SKA precursors.

The present paper is organized as follows:

In Sect. 2, we present the YOLO-CIANNA method in a complete and comprehensive manner, targeting readers unfamiliar with deep-learning object detectors. Details regarding the most complex aspects of the method and the in-depth differences from a classical YOLO implementation are given in Appendix A along with details adapted to readers familiar with these families of methods.

In Sect. 3, we present the SDC1 dataset, which is composed of comprehensive 2D images, and describe how we used it as a benchmark to evaluate the detection and characterization capabilities of YOLO-CIANNA.

In Sect. 4, we present the detection results of our method, as well as a detailed analysis of the detection catalog we obtained from the SDC1.

In Sect. 5, we use these results to highlight the strengths and weaknesses of our source-detector and also discuss the impact of some design choices of the SDC1. We then elaborate on how this new approach could be applied to real observational data from SKA precursor instruments.

There are four Appendices. In Appendix A, we detail the differences between our method and the classical YOLO implementation, as well as benchmarks over classical computer vision datasets. In Appendix B, we evaluate the performance of a classical YOLO backbone architecture on the SDC1 and compare it to our custom backbone. In Appendix C, we present an alternative training area definition for the SDC1. In Appendix D, we evaluate whether performing the detection and characterization using a single unified network is beneficial or detrimental to detection-only performance.

Fig. 1 Cutout of 512 square pixels in the SDC1 560 MHz 1000 h simulated field. Minimum and maximum cutting values are those used for our object detector, and the displayed intensity is the raw image flux.

2 Method

Our method is inspired by the classical YOLO object detector (Redmon et al. 2016; Redmon & Farhadi 2017; Redmon & Farhadi 2018), but it is also very similar to the SSD method (Liu et al. 2015); both are regression-based deep-learning object detectors. While region-based approaches like R-CNN (Girshick et al. 2013) are often considered the most accurate object detectors, regression-based methods present a straightforward single-network architecture, making them more computationally efficient at a given detection accuracy. Both families can reach state-of-the-art accuracy depending on implementation details and architecture design, but regression methods are usually preferred for real-time detection applications. In this context, our choice to design a regression-based approach was driven by (i) fewer implementation constraints, (ii) a strong emphasis on computational performance considering the data volume of current and future radio-astronomical surveys, and (iii) the single-stage network structure, on top of which it is easier to add more predictive capabilities.

In this section, we present the main design of our method. Despite being depicted for an astronomical application, our method remains suitable for general-purpose object detection (Appendix A.8). Instead of listing all the subtle differences with various descendant versions of the classical YOLO approach or other detectors, we describe the main components of our method from scratch in a comprehensive manner. The description of the most technical parts of the method is done in the dedicated Appendix A, which also discusses the differences with the classical YOLO approach. As a result, the main method description should remain accessible to readers unfamiliar with deep-learning object detectors. Even though our method differs significantly from the YOLO algorithm in some critical aspects, we chose to refer to our approach as YOLO-CIANNA for simplicity and as a legacy for its inspiration.

The implementation was made inside the custom high-performance deep learning framework CIANNA (Cornu 2024b). The implementation and usage details can be found on the CIANNA wiki pages. For reproducibility purposes, we provide example scripts for training and applying the method to the SDC1 dataset in the CIANNA git repository.

To ease the understanding of the technical parts of the paper, we list a few ML-specific terms along with the definitions we adopt for them. The most common ML terms are not redefined, as they can be found in any ML textbook or review (LeCun et al. 2015).

Bounding box: in classical computer vision, the smallest rectangular box that includes all the visible pixels belonging to a specific object in a given image.

Expressivity: refers to the predictive strength of a network. The higher the expressivity, the more complex or diverse the predictions can be. The expressivity increases with the number of weights and layers in a network.

Receptive field: corresponds to all the input pixels that can contribute to the activation of a neuron at a specific point in the network. It represents the maximum size of the patterns that can be identified in the input space.

Reduction factor: the ratio between the input layer spatial dimension and the output layer spatial dimension.
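The last two definitions can be made concrete with a short sketch. It assumes a purely sequential backbone described by hypothetical `(kernel_size, stride)` pairs and ignores padding effects at the image borders; the layer list below is illustrative only and is not the backbone used in this paper.

```python
def reduction_and_receptive_field(layers):
    """Return (reduction factor, receptive field in input pixels).

    layers: list of (kernel_size, stride) tuples, ordered from input to output.
    Border and padding effects are ignored in this sketch.
    """
    reduction = 1   # product of the strides seen so far
    receptive = 1   # input pixels seen by one neuron of the current layer
    for kernel, stride in layers:
        # Each extra kernel element reaches `reduction` input pixels further.
        receptive += (kernel - 1) * reduction
        reduction *= stride
    return reduction, receptive


# Hypothetical stack: 3x3 convolutions interleaved with 2x2 pooling of stride 2.
example_layers = [(3, 1), (2, 2), (3, 1), (2, 2), (3, 1)]
reduction, receptive = reduction_and_receptive_field(example_layers)
```

With this toy stack, each output element maps to a 4 × 4 pixel area of the input but is influenced by an 18 × 18 pixel region, which illustrates why the receptive field is generally much larger than the area a single output element represents.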

2.1 Bounding boxes for object detection

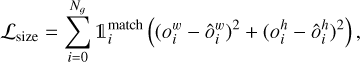

Our method uses a fully convolutional neural network (CNN) to construct a mapping from a 2D input image to a regular output grid. Each output grid cell represents a fixed area of the input image with a size that depends on the reduction factor of the chosen CNN backbone. Each grid cell is tasked to detect all possible objects whose center is located inside the input region it represents. To characterize an object, we rely on the bounding box formalism that encodes an object as a four-dimension vector composed of the box center and its size (x, y, w, h). The grid cells are tasked to predict these quantities. As a supervised learning approach, our method relies on a training set composed of images associated with a list of visible objects to be detected. Each object can be encoded as a target bounding box that the CNN is tasked to predict from the raw input image. A training phase is used to optimize the network parameters to minimize a loss function ℒ that compares the target boxes with the predicted boxes from all grid cells at the current step. This loss should encompass all the object properties to be predicted. To ease the method description, we first write an abstract loss as

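$$\mathcal{L} = \mathcal{L}_{\rm pos} + \mathcal{L}_{\rm size} + \mathcal{L}_{\rm prob} + \mathcal{L}_{\rm obj} + \mathcal{L}_{\rm class} + \mathcal{L}_{\rm param} \tag{1}$$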

The aim of Sects. 2.1–2.4 is to describe each of the loss subparts. Our complete detailed loss function is presented in Sect. 2.7 with Eq. (14).

For now, we only describe the case of a single box prediction per grid cell. The more realistic case of multiple objects per grid cell is presented in Sect. 2.5. We consider that the grid is composed of gw columns and gh lines and that each grid cell is represented by its coordinate in the grid (gx,gy). To represent a bounding box, a grid cell predicts a 4-element vector (ox, oy, ow, oh) that maps to the geometric properties of the box following

$$x = o_x + g_x \tag{2}$$

$$y = o_y + g_y \tag{3}$$

$$w = p_w \, e^{o_w} \tag{4}$$

$$h = p_h \, e^{o_h} \tag{5}$$

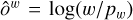

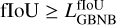

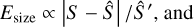

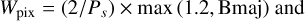

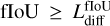

Each grid cell is only tasked to position the object center inside its dedicated area using two sigmoid-activated values (ox, oy). The position of the grid cell in the image (gx, gy) is added to obtain the relative global position of the object. These coordinates must then be multiplied by the mapped width and height of a grid cell, corresponding to the reduction factor of the backbone network, to obtain pixel coordinates in the input image. Object size is obtained by an exponential transform of the predicted values (ow, oh) that acts as a scaling on a predefined size prior (pw, ph), which can be expressed in pixels directly. This is equivalent to an anchor-box formalism (Ren et al. 2015) as discussed in Sect. 2.5. The corresponding bounding box construction on the output grid is illustrated in Fig. 2.
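The box construction described above can be sketched in a few lines. This is a minimal illustration rather than the CIANNA implementation: the function and argument names are hypothetical, the positions are passed as pre-activation values so that the sigmoid is applied explicitly, and a single reduction factor is assumed for both axes.

```python
import math


def sigmoid(value):
    return 1.0 / (1.0 + math.exp(-value))


def decode_box(raw, cell, prior, reduction):
    """Convert the raw outputs of one grid cell into a box in input pixels.

    raw:       (rx, ry, ow, oh), pre-activation positions and raw size outputs
    cell:      (gx, gy), integer coordinates of the grid cell
    prior:     (pw, ph), size prior in pixels
    reduction: number of input pixels covered by one grid cell along each axis
    """
    rx, ry, ow, oh = raw
    gx, gy = cell
    pw, ph = prior
    # Center: sigmoid offset inside the cell plus the cell index, in grid
    # units, then scaled to input pixels.
    x = (sigmoid(rx) + gx) * reduction
    y = (sigmoid(ry) + gy) * reduction
    # Size: exponential scaling of the prior, already in pixels.
    w = pw * math.exp(ow)
    h = ph * math.exp(oh)
    return x, y, w, h


# Null raw outputs place the box at the cell center with the prior size.
x, y, w, h = decode_box((0.0, 0.0, 0.0, 0.0), (3, 5), (6.0, 6.0), 16)
```

Because the exponential is unbounded, the decoded size is not limited by the size of a grid cell; only the center is confined to it.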

With this formalism, it is possible to construct a detector with an output of size 〈gw, gh, 4〉 that can position and scale one bounding box per output grid cell. For each prediction-target pair, we use a sum-of-square error to compute the loss function for center coordinates and sizes (Sect. 2.7). The error is computed on the sigmoid-activated outputs for the positions, but on the raw outputs for the sizes, after target conversion using $\hat{o}_w = \log(\hat{w}/p_w)$ and $\hat{o}_h = \log(\hat{h}/p_h)$. This results in the following loss terms

$$\mathcal{L}_{\rm pos} = \sum_{i=1}^{N_g} \mathbb{1}_i^{\rm match} \left[ \left(o_x^i - \hat{o}_x^i\right)^2 + \left(o_y^i - \hat{o}_y^i\right)^2 \right] \tag{6}$$

$$\mathcal{L}_{\rm size} = \sum_{i=1}^{N_g} \mathbb{1}_i^{\rm match} \left[ \left(o_w^i - \hat{o}_w^i\right)^2 + \left(o_h^i - \hat{o}_h^i\right)^2 \right] \tag{7}$$

where the hat values represent the target for the corresponding predicted value, the sum over i represents all the grid cells with Ng = gw × gh, and $\mathbb{1}_i^{\rm match}$ is a mask to identify the predicted boxes that have an associated target box (Sect. 2.6). The grid cells that do not contain any object have no contribution to these loss terms. We discuss the possible limitations of using bounding boxes to describe astronomical objects in Sect. 5.2.2.
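As an illustration of the position and size loss terms, the sketch below converts a target size into the raw output space using the size prior and then accumulates the sum-of-square errors over matched grid cells only. The function names and the flat list layout are assumptions made for this example, and any global scaling factor applied in the complete loss of Sect. 2.7 is left out.

```python
import math


def size_target(w_hat, h_hat, prior):
    """Convert a target size in pixels to raw-output space using the prior."""
    pw, ph = prior
    return math.log(w_hat / pw), math.log(h_hat / ph)


def position_and_size_loss(predicted, target, match):
    """Sum-of-square position and size errors over matched grid cells.

    predicted, target: lists of (ox, oy, ow, oh), one entry per grid cell,
                       with sigmoid-activated positions and raw sizes
    match:             list of 0/1 flags, 1 when the cell has a target box
    """
    loss_pos = 0.0
    loss_size = 0.0
    for (ox, oy, ow, oh), (tx, ty, tw, th), flag in zip(predicted, target, match):
        if not flag:
            continue  # empty cells do not contribute to these terms
        loss_pos += (ox - tx) ** 2 + (oy - ty) ** 2
        loss_size += (ow - tw) ** 2 + (oh - th) ** 2
    return loss_pos, loss_size


# One matched cell and one empty cell, with a hypothetical 4x4 pixel prior.
tw, th = size_target(8.0, 2.0, (4.0, 4.0))
predicted = [(0.5, 0.5, 0.0, 0.0), (0.1, 0.9, 1.0, 1.0)]
target = [(0.25, 0.75, tw, th), (0.0, 0.0, 0.0, 0.0)]
loss_pos, loss_size = position_and_size_loss(predicted, target, [1, 0])
```

Working in log space for the sizes means that a target twice as large as the prior and a target half as large produce errors of the same magnitude.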

A common misconception about gridded detection is that the predicted size can only be as large as a grid element, which is wrong. The predicted size can be as large as necessary, up to the size of the full image. Each grid cell receives information from a large area corresponding to the backbone network receptive field. The receptive fields of nearby grid cells usually overlap, but a target box center can only lie in one grid cell, hence the attribution of the detection to a single grid cell. Due to the fully convolutional structure required for our method, each grid cell performs a localized prediction using identical weights but using a different subpart of the image as input. More details about the effect of the fully convolutional architecture and the output grid encoding are provided in Appendices A.1 and A.2.

|

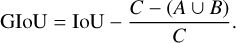

Fig. 2 Illustration of the YOLO bounding box representation following Eqs. (2)–(5). The dashed black box represents the theoretical prior (ow = oh = 0), while the red box is the scaled predicted size. |

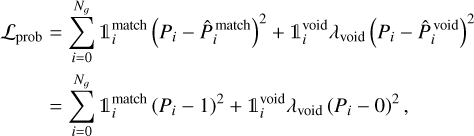

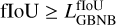

2.2 Detection probability and objectness score

Only predicting bounding boxes is insufficient to obtain an object detector. We also need to evaluate the chances that they contain an object. For this, we add a self-assessed detection probability prediction P to each grid cell, which is constrained during training. This term uses a sigmoid activation and adds a sum-of-square error contribution to the loss. Due to our grid structure, the detector always outputs box properties for every grid cell. In a context where only a few target boxes are present in the image, most grid cells only map irrelevant background regions. During training, we identify the predicted boxes that best represent each target box and attribute them a target probability of $\hat{P} = 1$. For all the remaining empty predicted boxes, we attribute them a target probability of $\hat{P} = 0$. To compensate for the likely imbalance between the number of matching and empty predictions, we must define a λvoid factor to apply to the loss term corresponding to the empty case. The resulting loss term can be written as

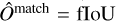

$$\mathcal{L}_{\rm prob} = \sum_{i=1}^{N_g} \left[ \mathbb{1}_i^{\rm match} \left(P^i - \hat{P}^i\right)^2 + \lambda_{\rm void} \, \mathbb{1}_i^{\rm void} \left(P^i - \hat{P}^i\right)^2 \right] \tag{8}$$

where the sum over i represents all the grid cells, $\mathbb{1}_i^{\rm match}$ is a mask to identify the predicted boxes that match a target box, and $\mathbb{1}_i^{\rm void}$ a mask to identify the empty predicted boxes. Through the stochasticity of the training process, the network will learn to predict a continuous probability score that reflects the detection confidence. At prediction time, it is used to identify the grid cells that should contain an object.
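The role of the λvoid factor can be illustrated with a small sketch of the probability loss term. The function name, the list-based layout, and the value λvoid = 0.5 are hypothetical choices for this example and are not taken from the paper.

```python
def probability_loss(probabilities, match, void, lambda_void):
    """Sum-of-square probability loss with down-weighted empty boxes.

    probabilities: sigmoid-activated probability of each predicted box
    match, void:   0/1 masks for matched and empty predicted boxes
    lambda_void:   weight applied to the empty-box contribution
    """
    loss = 0.0
    for prob, is_match, is_void in zip(probabilities, match, void):
        loss += is_match * (prob - 1.0) ** 2               # target probability 1
        loss += lambda_void * is_void * (prob - 0.0) ** 2  # target probability 0
    return loss


# One matched box among three empty ones.
probabilities = [0.9, 0.2, 0.1, 0.4]
match = [1, 0, 0, 0]
void = [0, 1, 1, 1]
loss_prob = probability_loss(probabilities, match, void, lambda_void=0.5)
```

In an image dominated by background, the empty boxes far outnumber the matches, so a factor below one keeps them from dominating the gradient. A box flagged in neither mask contributes nothing to the loss.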

One limit of this probability definition is that it contains no information about the quality of the predicted box. For this, we must define a metric that measures the proximity and resemblance between two bounding boxes. The classical object detection metric for this is the intersection over union (IoU, Everingham et al. 2010; Lin et al. 2014). It is defined as the surface area of the intersection between two boxes, A and B, divided by the surface area of their union, which is expressed as

$$\mathrm{IoU} = \frac{|A \cap B|}{|A \cup B|} \tag{9}$$

This quantity takes values between 0 and 1 depending on the amount of overlap. This classical IoU is the most commonly used in computer vision, but it presents some weaknesses for astronomical applications. We present a few alternative matching metrics better suited to our application case in Appendix A.4. Since several hyperparameters of our method depend on this choice of metric, we use a generic fIoU term that can be replaced by the selected matching metric in all the following equations. The default choice for our detector is the distance-IoU (DIoU, Zheng et al. 2020), as it includes information about the distance between the centers of the two boxes being compared. For consistency across the equations, the selected matching metric is always considered to be linearly rescaled to the 0 to 1 range.
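To make the difference between the two metrics concrete, the sketch below computes the classical IoU and a DIoU for boxes given as (x, y, w, h) with (x, y) the box center. The DIoU follows the usual definition of Zheng et al. (2020), that is, the IoU minus the squared center distance divided by the squared diagonal of the smallest enclosing box. The rescaling (DIoU + 1)/2 is our assumption of what a linear rescaling to the 0 to 1 range looks like; the exact form used in CIANNA may differ. Boxes are assumed to have non-zero area.

```python
def corners(box):
    """Convert a center-size box (x, y, w, h) to (x_min, y_min, x_max, y_max)."""
    x, y, w, h = box
    return x - w / 2, y - h / 2, x + w / 2, y + h / 2


def iou_and_rescaled_diou(box_a, box_b):
    """Return (IoU, DIoU linearly rescaled from [-1, 1] to [0, 1])."""
    ax0, ay0, ax1, ay1 = corners(box_a)
    bx0, by0, bx1, by1 = corners(box_b)

    # Intersection and union areas.
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h
    union = box_a[2] * box_a[3] + box_b[2] * box_b[3] - inter
    iou = inter / union

    # Squared diagonal of the smallest box enclosing both boxes.
    diag2 = (max(ax1, bx1) - min(ax0, bx0)) ** 2 + (max(ay1, by1) - min(ay0, by0)) ** 2
    # Squared distance between the two centers.
    dist2 = (box_a[0] - box_b[0]) ** 2 + (box_a[1] - box_b[1]) ** 2

    diou = iou - dist2 / diag2
    return iou, 0.5 * (diou + 1.0)


same = iou_and_rescaled_diou((0, 0, 2, 2), (0, 0, 2, 2))
shifted = iou_and_rescaled_diou((0, 0, 2, 2), (1, 0, 2, 2))
apart = iou_and_rescaled_diou((0, 0, 2, 2), (10, 0, 2, 2))
```

For the two non-overlapping boxes, the IoU is exactly zero regardless of their separation, while the rescaled DIoU still decreases as the boxes move apart. This is relevant for small sources, where a slight offset can be enough to remove all overlap.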

From this, we add a self-assessed score called objectness, O, to each predicted box, which is also constrained during training. The objectness is defined as the combination of an object presence probability P and the fIoU between the predicted box and the target box, expressed as

$$O = P \times f_{\rm IoU} \tag{10}$$

This term also uses a sigmoid activation and adds a sum-of-square error contribution to the loss. During training, the objectness is constrained like the probability by considering that $\hat{P} = 1$ for prediction-target matches, while $\hat{P} = 0$ for empty predictions. Therefore, following Eq. (10), the target objectness for prediction-target matches is $\hat{O} = f_{\rm IoU}$, using the fIoU between the target and predicted boxes. For predictions with no associated matches, the target objectness is $\hat{O} = 0$. The resulting loss term can be written as

$$\mathcal{L}_{\rm obj} = \sum_{i=1}^{N_g} \left[ \mathbb{1}_i^{\rm match} \left(O^i - \hat{O}^i\right)^2 + \lambda_{\rm void} \, \mathbb{1}_i^{\rm void} \left(O^i - \hat{O}^i\right)^2 \right] \tag{11}$$

using the same notations as Eq. (8). We stress that fIoU is used as a scalar in this equation. Therefore, the derivative of the corresponding matching function is not computed for gradient propagation, so ℒobj does not contribute to updating the position and the size. After training, we obtain a continuous objectness prediction representing a global detection score that accounts for the predicted geometrical box quality. Probability and objectness can be used independently or in association to construct advanced prediction filtering conditions (Sect. 2.8).

With this formalism, we formulate only two states for a predicted box, either a match or empty. In practice, multiple predicted boxes can try to represent the same target simultaneously. This is common if the target box center is positioned at the edge of a grid cell or if the boxes are large. This will be even more common with multiple detections per grid cell (Sect. 2.5). In such a case, only the best-predicted box will be considered a match. The remaining plausible detections are called good-but-not-best (GBNB) predictions. The previous formalism would result in a loss that lowers the objectness of these GBNB predictions, actively forcing relevant features to fade. To prevent this, we define a representation quality threshold above which the corresponding boxes are excluded from both the matching-box and empty-box masks. In summary, there are three types of contribution to the loss: (i) the best detection for each target updates its box position and size while increasing its probability and objectness, (ii) the background boxes lower their probability and objectness, and (iii) the GBNB boxes are ignored.
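The three cases listed above can be sketched for the simplified situation of a single target box. In this toy version the best prediction is taken to be the one with the highest fIoU and the GBNB threshold is applied to the same fIoU value; both are assumptions made for illustration, since the actual association process is described in Sect. 2.6. The function name and the threshold value of 0.5 are hypothetical.

```python
def assign_states(fiou_to_target, gbnb_threshold):
    """Label each predicted box and give its objectness target.

    fiou_to_target: fIoU of every predicted box with the single target box
    gbnb_threshold: quality above which a non-best box is ignored
    Returns (states, objectness_targets); the target is None when the box
    is excluded from the loss.
    """
    best = max(range(len(fiou_to_target)), key=lambda i: fiou_to_target[i])
    states, targets = [], []
    for index, fiou in enumerate(fiou_to_target):
        if index == best:
            states.append("match")
            targets.append(fiou)   # target probability 1, so objectness = fIoU
        elif fiou > gbnb_threshold:
            states.append("gbnb")
            targets.append(None)   # good-but-not-best: no loss contribution
        else:
            states.append("void")
            targets.append(0.0)    # background: objectness pushed toward 0
    return states, targets


states, targets = assign_states([0.1, 0.8, 0.65, 0.3], gbnb_threshold=0.5)
```

Here the third box overlaps the target well but is not the best one, so it is neither rewarded nor penalized.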

|

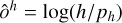

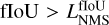

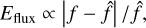

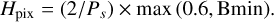

Fig. 3 Illustration of the output vector of a single detection unit. The elements are colored according to the corresponding loss subpart. For multiple detection units per grid cell, this vector structure is repeated on the same axis (Sect. 2.5). |

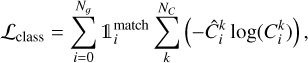

2.3 Classification

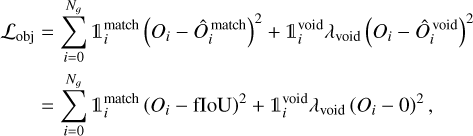

The detected box can be enriched with a classification capability. This can be done by adding Nc components, corresponding to all the possible classes, to the output vector of the detected boxes (Fig. 3). The activation of these components can either be (i) a sigmoid for all classes using a sum-of-square error, which allows multi-labeling, or (ii) a soft-max activation, which corresponds to exponentiating all the outputs and normalizing them so their sum is equal to 1, with a cross-entropy error. These two options are available in our method. In both cases, only the best detection for each target box updates its classes by comparing the target class vector with the predicted one. There is no contribution to the class loss from either GBNB or background predictions. The resulting loss term for a soft-max activation with a cross-entropy error can be written as

$$\mathcal{L}_{\rm class} = - \sum_{i=1}^{N_g} \mathbb{1}_i^{\rm match} \sum_{k=1}^{N_c} \hat{c}_k^i \, \log\left(c_k^i\right) \tag{12}$$

where the sum over k represents all the classes for a given predicted box, and $c_k^i$ is the corresponding class output for the k-th class of the predicted box i.
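The soft-max option can be illustrated as follows. The sketch applies a soft-max to the raw class outputs of each predicted box and accumulates the cross-entropy for matched boxes only; function names and the list layout are assumptions made for this example, and no loss scaling factor is included.

```python
import math


def softmax(outputs):
    """Exponentiate and normalize so that the values sum to 1."""
    shift = max(outputs)  # subtracting the maximum avoids overflow
    exps = [math.exp(value - shift) for value in outputs]
    total = sum(exps)
    return [value / total for value in exps]


def class_loss(raw_outputs, targets, match):
    """Cross-entropy over the classes, summed over matched boxes only.

    raw_outputs: list of raw class vectors, one per predicted box
    targets:     list of target class vectors (for example one-hot)
    match:       0/1 flag per box, 1 for the best detection of a target
    """
    loss = 0.0
    for raw, target, flag in zip(raw_outputs, targets, match):
        if not flag:
            continue  # GBNB and background boxes do not update the classes
        probabilities = softmax(raw)
        loss -= sum(t * math.log(p) for t, p in zip(target, probabilities) if t > 0)
    return loss


raw_outputs = [[0.0, 0.0], [5.0, 1.0]]
targets = [[1.0, 0.0], [0.0, 1.0]]
loss_class = class_loss(raw_outputs, targets, match=[1, 0])
```

Only the first box is matched in this example, so the second box does not contribute, whatever its class outputs are.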

2.4 Additional parameters prediction

For astrophysical applications, we often need to predict the characteristics of the sources, such as the flux or some geometric properties not described by a bounding box formalism. For this, we propose to add Np components to the output vector of the detected boxes, corresponding to all the additional parameters to predict. The activation of these components is linear with a sum-of-square error contribution to the loss. The respective contribution of these parameters to the loss can be scaled with a set of γp factors. The resulting loss term can be written as

$$\mathcal{L}_{\rm param} = \sum_{i=1}^{N_g} \mathbb{1}_i^{\rm match} \sum_{k=1}^{N_p} \gamma_p^k \left(p_k^i - \hat{p}_k^i\right)^2 \tag{13}$$

where the sum over k represents all the independent parameters for a given predicted box, and $p_k^i$ is the corresponding parameter output for the k-th parameter of the predicted box i. This is a strong added value of our YOLO-CIANNA method compared to other approaches, allowing it to predict an arbitrary number of additional properties per detection for any application while preserving the one-stage formalism specific to regression-based object detectors.

2.5 Multiple boxes per grid-cell and detection unit definition

With the present definition, the detector output would have a shape of 〈gw, gh, (6 + Nc + Np)〉, where gw and gh are the grid dimensions, the six static parameters are the box coordinates, probability, and objectness (x, y, w, h, P, O), Nc is the number of classes, and Np is the number of additional parameters (Fig. 3). While the geometric and detection score outputs are always predicted, both Nc and Np are problem-dependent and user-defined.

In theory, the network reduction factor could be adjusted to the average object density of the application to have no more than one target object in each grid cell. However, for many use cases, it would result in an output grid resolution close to the input resolution, which is very computationally intensive (Appendix A.2). To overcome this, we can expand the output vector at each grid cell to contain multiple boxes by stacking their independent vectors as a longer 1D vector. The new output shape is then 〈gw, gh, Nb×(6 + Nc + Np)〉, with Nb the number of independent boxes predicted at each grid cell, which is a hyperparameter of the detector. For each possible box in a grid cell, we define an individual size-prior (pw, ph), which impacts the size scaling in Eqs. (4) and (5). This definition helps to distribute objects over the available boxes on a given grid cell based on box sizes and shapes. The prior list is the same for all grid cells as they only represent different positions at which the same detector is applied through the fully convolutional architecture (Appendix A.1). The size priors are user-defined hyperparameters but are often automatically defined through clustering of the size distribution of targets from the training sample. In the following, we refer to the independent predictive elements for a single grid cell as detection units. For example, a detector capable of predicting up to six independent boxes per grid cell comprises six detection units. Some detection units can have the same size prior, but they still represent independent predictions.
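The stacked output layout can be made concrete with a short sketch that splits the last axis of the raw output into its detection units. It assumes that the units are stored contiguously along that axis, which follows from the stacking description but is not confirmed in detail here; the function name is hypothetical.

```python
import numpy as np

def split_detection_units(output, n_boxes, n_classes, n_params):
    """Reshape (gw, gh, Nb*(6+Nc+Np)) into (gw, gh, Nb, 6+Nc+Np).

    Assumes each detection unit occupies a contiguous slice of the last axis.
    """
    unit_len = 6 + n_classes + n_params  # x, y, w, h, P, O + classes + params
    gw, gh, depth = output.shape
    if depth != n_boxes * unit_len:
        raise ValueError(
            f"last axis is {depth}, expected {n_boxes} x {unit_len}"
        )
    return output.reshape(gw, gh, n_boxes, unit_len)
```

With six detection units, no classes, and five extra parameters, for instance, each grid cell would hold 6 × (6 + 0 + 5) = 66 values.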

Because scales or shapes are unlikely to be evenly represented in the training sample, the detection units should adapt their positive-to-negative detection ratio to rebalance their probability and objectness distribution (Sect. 2.2). For this, a $\lambda^{b}_{\rm void}$ factor is defined for each detection unit. These factors must be adjusted so the objectness and probability responses are similar enough for all detection units to be comparable during prediction filtering (Sect. 2.8).

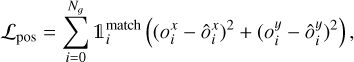

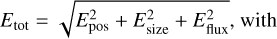

2.6 Target-prediction association function

With multiple box predictions per grid cell, deciding which detection unit should be associated with each target box is critical. With our YOLO-CIANNA method, we introduce a prediction-aware association process. Our approach differs significantly from the one used in the classical YOLO formalism, which only uses size priors to define theoretical best matches. Our method is expected to be more robust for images with a high density of small objects, which is typical for astronomical data products. An in-depth discussion about the motivation behind our implementation and a comparison with the classical YOLO association process are both presented in Appendix A.3.

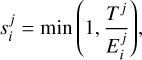

The main objective of our association process is to find the best target-prediction pairs regarding a specific fIoU matching metric. We start by setting 𝟙void = 1 for all detection units. Then, we identify all predicted boxes that are a good enough representation of at least one target regarding our GBNB fIoU threshold. This comparison is made for all detection units and all targets regardless of their center position. All predictions that meet this criterion are removed from 𝟙void. The rest of the association algorithm aims to find the best match for each target box through an iterative process. First, matching scores for all possible target-prediction pairs in a grid cell are stored in a score matrix. Then, the best current score in the matrix is used to define a new target-prediction pair that is added to the 𝟙match mask and removed from 𝟙void if it was not already the case. The full row and column corresponding to the target and detection unit of the best match are masked in the score matrix. This search process is repeated until the score matrix is empty or fully masked. A full example of the association in a grid cell, with the evolution of the score matrix, is presented in Fig. A.4, associated with Appendix A.5. Interestingly, and although this was not anticipated, we observed that our approach presents many similarities with the Kuhn–Munkres algorithm (Kuhn 1955; Munkres 1957), which tackles the problem of optimal score-based association.
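The iterative search over the score matrix can be sketched as follows. This is a simplified illustration for a single grid cell: it leaves out the 𝟙void bookkeeping and all the refinements of Appendix A.6, and the function name and the row and column convention (targets as rows, detection units as columns) are assumptions of this example.

```python
import numpy as np

def greedy_association(scores):
    """Pair targets and detection units by repeatedly taking the best score.

    scores: (n_targets, n_units) matching scores, for example fIoU values.
    Returns the (target, unit) pairs in the order they were selected.
    """
    work = np.array(scores, dtype=float)
    masked = np.zeros(work.shape, dtype=bool)
    pairs = []
    while work.size and not masked.all():
        # Ignore entries whose row or column already belongs to a pair.
        candidate = np.where(masked, -np.inf, work)
        t, u = np.unravel_index(np.argmax(candidate), work.shape)
        pairs.append((int(t), int(u)))
        masked[t, :] = True  # this target is now associated
        masked[:, u] = True  # this detection unit is now used
    return pairs
```

For the scores `[[0.9, 0.8], [0.85, 0.1]]`, this sketch selects (0, 0) and then (1, 1), whereas an optimal assignment in the Kuhn–Munkres sense would maximize the summed score by choosing (0, 1) and (1, 0). A greedy search of this kind therefore resembles, but is not identical to, an optimal assignment solver.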

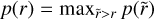

As presented in Sect. 2.2, the best match associations contribute to all subparts of the loss, while the detection units that remained in 𝟙void contribute only to the probability and objectness following Eq. (11). The remaining GBNB detection units, which are part of neither 𝟙match nor 𝟙void, do not contribute to the loss. To account for edge cases, size imbalance, or training instability, we added several specific refinements inside our new association process, which we detail in an appendix section dedicated to advanced subtleties of our method (Appendix A.6). The global association function algorithm is presented in Fig. 4 in a way that separates the simplified association process and the advanced association with refinements.

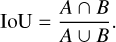

2.7 YOLO-CIANNA complete loss function

Depending on the application, it might be necessary to balance the relative importance of the predicted quantities. For example, when detecting small objects, the center coordinates become the main estimate of prediction quality, while a high precision of the predicted size becomes mostly irrelevant. Therefore, we use loss scaling factors for the box position λpos, the box size λsize, the probability λprob, the objectness λobj, the classes λclass, and the extra parameters λparam.

While loss scaling balances the general importance of each predicted quantity, it does not allow the network to dynamically guide its expressivity according to its current prediction quality. For example, adjusting the predicted class of a detection unit that does not yet properly position or detect the corresponding object is irrelevant and can result in reinforced wrong features or noisy training. Therefore, we chose to add prediction quality limits over some predicted properties directly into the loss function. While the position and the size are always updated for a match, we added quality conditions, each expressed as an fIoU threshold, for the objectness, the probability, the classification, and the extra parameters. Each loss subpart is set to zero if the current fIoU between the target and predicted boxes is below its specific quality threshold.

This quality limit principle, combined with other association refinements, results in what we call a “cascading loss” that varies during training to guide the network expressivity toward the important aspects, not adjusting currently irrelevant properties. A complete description of this process and the effect it has on training performances and loss monitoring is given in Appendix A.7.

Combining all the previously introduced loss subparts, conditional masks, and scalings, we can define our complete YOLO-CIANNA loss function for one image as

$$
\begin{aligned}
\mathcal{L} ={}& \sum_{i=0}^{N_g} \sum_{j=0}^{N_b} \mathbb{1}_{ij}^{\rm match} \Bigg( \lambda_{\rm pos} \left[ \left( o_{ij}^{x} - \hat{o}_{ij}^{x} \right)^2 + \left( o_{ij}^{y} - \hat{o}_{ij}^{y} \right)^2 \right] \\
&+ \lambda_{\rm size} \left[ \left( o_{ij}^{w} - \hat{o}_{ij}^{w} \right)^2 + \left( o_{ij}^{h} - \hat{o}_{ij}^{h} \right)^2 \right] \\
&+ \lambda_{\rm class}\, \mathbb{1}_{ij}^{C} \sum_{k}^{N_C} \left( -\hat{C}_{ij}^{k} \log\left( C_{ij}^{k} \right) \right) \\
&+ \lambda_{\rm param}\, \mathbb{1}_{ij}^{p} \sum_{k}^{N_p} \gamma^{k} \left( p_{ij}^{k} - \hat{p}_{ij}^{k} \right)^2 \\
&+ \lambda_{\rm prob}\, \mathbb{1}_{ij}^{P} \left( P_{ij} - 1 \right)^2 \\
&+ \lambda_{\rm obj}\, \mathbb{1}_{ij}^{O} \left( O_{ij} - {\rm fIoU}_{ij} \right)^2 \Bigg) \\
&+ \sum_{i=0}^{N_g} \sum_{j=0}^{N_b} \mathbb{1}_{ij}^{\rm void}\, \lambda_{\rm void}^{j} \left( \lambda_{\rm prob} \left( P_{ij} - 0 \right)^2 + \lambda_{\rm obj} \left( O_{ij} - 0 \right)^2 \right).
\end{aligned}
\tag{14}
$$

In this equation, in addition to the already defined parameters, the first sum runs over all the elements Ng of the output grid for a single image, and the second sum runs over all the detection units Nb in a grid cell. All the values with a hat represent the targets for the corresponding predicted values, and the $\mathbb{1}_{ij}^{P}$, $\mathbb{1}_{ij}^{O}$, $\mathbb{1}_{ij}^{C}$, and $\mathbb{1}_{ij}^{p}$ are masks of predictions that pass the quality limit of each subpart. This loss is written for a classification based on a soft-max activation with a cross-entropy error, but it can be modified for a sigmoid activation with a sum-of-square error.
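To make the structure of Eq. (14) easier to follow, the sketch below evaluates it over a flat list of detection units for which the association has already been done. The dictionary keys, the flat layout, the per-entry `lam_void` array, and the `eps` guard are all assumptions of this example, so it should be read as an illustration of the equation rather than as the CIANNA implementation.

```python
import numpy as np

def complete_loss(pred, target, match, void, fiou,
                  lam, lam_void, limits, gamma, eps=1e-12):
    """Evaluate a loss with the structure of Eq. (14) for n detection units.

    pred, target: dicts of arrays with 'pos' and 'size' of shape (n, 2),
                  'cls' of shape (n, Nc), and 'par' of shape (n, Np);
                  pred also holds 'prob' and 'obj' of shape (n,).
    match, void:  (n,) 0/1 association masks.
    fiou:         (n,) fIoU between each prediction and its target.
    limits:       fIoU quality limits for 'prob', 'obj', 'cls', and 'par'.
    """
    # Quality masks: a subpart only contributes above its fIoU limit.
    q = {key: (fiou >= limit).astype(float) for key, limit in limits.items()}

    pos = ((pred["pos"] - target["pos"]) ** 2).sum(axis=1)
    size = ((pred["size"] - target["size"]) ** 2).sum(axis=1)
    cls = -(target["cls"] * np.log(pred["cls"] + eps)).sum(axis=1)
    par = (gamma * (pred["par"] - target["par"]) ** 2).sum(axis=1)

    matched = (lam["pos"] * pos
               + lam["size"] * size
               + lam["class"] * q["cls"] * cls
               + lam["param"] * q["par"] * par
               + lam["prob"] * q["prob"] * (pred["prob"] - 1.0) ** 2
               + lam["obj"] * q["obj"] * (pred["obj"] - fiou) ** 2)
    # Background units are pushed toward zero probability and objectness.
    background = lam_void * (lam["prob"] * pred["prob"] ** 2
                             + lam["obj"] * pred["obj"] ** 2)
    return float((match * matched + void * background).sum())
```

Units that belong to neither mask (the GBNB case) have `match` and `void` both equal to zero and therefore drop out of the sum.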

Fig. 4 Summary of the target-prediction association algorithm of YOLO-CIANNA. All steps are performed independently by each grid cell. All the elements are executed in order from top to bottom. The A, B, and C blocks represent the general case for the association. All the refinement steps of the association process corresponding to the B.2 block are described in Appendix A.6.

Fig. 5 Illustration of the NMS process. Dashed boxes are the targets, and solid boxes are the predictions. The line width of each box is scaled according to its objectness score. The colors indicate the state of the box in the NMS process at different steps. Frame (a) shows the targets and the remaining detector predictions after objectness filtering. Frames (b) and (c) represent two successive steps of the NMS process with a different best current box. Frame (d) shows the selected boxes after the NMS.

2.8 Prediction filtering and non-maximum suppression

We expect a properly trained detector to order its predictions by quality based on the objectness score for each detection unit. The raw detector output is always a static list of boxes of size 〈gw, gh, Nb×(6 + Nc + Np)〉 regardless of the input content. Consequently, the predicted boxes must be filtered based on their objectness score to remove those unlikely to represent an object. By design, the number of detectable objects in the image should be low compared to the total number of detection units. Therefore, most predicted boxes belong to the background type with a low objectness score.

While the continuous objectness score for all the predictions is the best direct representation of the sensitivity of the detector, it is incompatible with some final metrics that need a list of detections considered “good”. Visualizing the predicted boxes also requires filtering to preserve only the plausible detections. In such cases, an objectness threshold can be used to remove low-confidence detections. The threshold is usually optimized to maximize a metric score on a validation or test dataset not used for training but for which the targets are known.

Due to the fully convolutional structure of the network, objectness scores from the same detection unit can be compared over the full grid, meaning that the same threshold can be used. On the contrary, the predicted objectness between two independent detection units is not comparable as it depends on the type and frequency of targets associated with each of them during training. A possible solution is to fit an individual objectness threshold for each detection unit. However, it relies on the assumption that predictions from different detection units are independent, which is not true for most applications. In practice, it is still a good solution to remove the vast majority of false positives. To achieve the best results, the objectness regimes must be homogenized between the different detection units from the start by adjusting the individual $\lambda^{b}_{\rm void}$ factors (Sect. 2.5). This is done by balancing the ratio between detection and background cases based on how the objects from the training sample are expected to distribute over the detection units.

With most false positives removed, there can still be multiple high-objectness predictions that represent the same underlying object in the image. To preserve only the best-detected box for each object, we use a classical post-processing step called non-maximum suppression (NMS; Felzenszwalb et al. 2010; Girshick et al. 2013). It consists of an iterative search for the box with the highest objectness score in the image, which is then used to remove all the overlapping predicted boxes. Two boxes are considered to overlap when the fIoU computed between them exceeds the NMS threshold. The best box is then stored in a static list of selected detections. This process is repeated until no boxes are left in the raw-prediction list. It is illustrated in Fig. 5.
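The NMS loop described above can be written compactly. The sketch below uses the classical IoU as a stand-in for the fIoU, which is defined earlier in the paper and not reproduced here; the overlap function is therefore passed as a parameter, and the function names and the corner-based box format are assumptions of this example.

```python
def iou(a, b):
    """Classical IoU for boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def nms(boxes, objectness, overlap_threshold, overlap_fn=iou):
    """Return the indices of the boxes kept after non-maximum suppression."""
    # Raw-prediction list, sorted by decreasing objectness.
    remaining = sorted(range(len(boxes)), key=lambda i: objectness[i], reverse=True)
    selected = []
    while remaining:
        best = remaining.pop(0)  # highest objectness still in the list
        selected.append(best)
        # Drop every remaining box that overlaps the current best box.
        remaining = [i for i in remaining
                     if overlap_fn(boxes[best], boxes[i]) <= overlap_threshold]
    return selected
```

The loop never looks at which detection unit produced a box, which is why comparable objectness distributions across detection units matter for the outcome.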

The NMS is done regardless of what detection unit generated the predicted boxes, demonstrating that they are not independent as they can remove each other based on their respective objectness. This is one of the main reasons we force all detection units to have similar objectness distributions. The detection quality can only be evaluated after the NMS, so the search for the best $\lambda^{b}_{\rm void}$ factors depends on the objectness filtering and NMS thresholds.

3 Dataset description and network training

In this section, we present the main properties of the SDC1 data along with the expected products and the associated metrics. A complete description of the SDC1 challenge can be found in Bonaldi et al. (2021), while the underlying T-RECS simulation is detailed in Bonaldi et al. (2019). We also present the preprocessing of the data to construct our training sample. From this, we describe our best-performing network architecture and specify the corresponding setup and hyperparameters for our detector.

3.1 Subchallenge definition

The SDC1 is a source detection and characterization task in simulated SKA-like data products (Sect. 1) that comprises nine 4 GB images (three frequencies, with three integration times each) of the same field. The SDC1 is only modestly challenging regarding data volume, especially compared to the SDC2 with its 1 TB data cube. Still, it represents significant challenges for detection methods in many other aspects. All the images have an identical size of 32 768 × 32 768 pixels. As the frequency increases, the angular resolution improves while the field of view reduces. Therefore, images at different frequencies only partially overlap, meaning that the problem to solve varies with the position in the field. In addition, the number of detectable sources varies significantly with the integration time and frequency (Table 2 in Bonaldi et al. 2021). All the images are considered noise-limited, even at the highest 1000 h integration time. As a benchmark for our YOLO-CIANNA method, we used only the 560 MHz–1000 h image due to its wider field of view, higher total number of sources, and higher source density per square pixel. This image is a fair example of a typical astronomical detection context with a high dynamic range and a high density of small sources with occasional blending. While our method could technically work for the other SDC1 images, it would not be more informative regarding its capabilities. The consequences and limits of this choice are further discussed in Sect. 5.2.1.

In the original challenge, participating teams were provided a True catalog for a small fraction of the image. This was supposed to facilitate method development and tuning but also allow training of supervised ML approaches. At the time, the full underlying True catalog was unavailable, and the teams had to submit their results to a remote scoring service. After the challenge, the organizers released the full True catalog, the scoring code in open source (Clarke & Collinson 2021), and the submitted source catalogs from the participating teams. In this study, we use only the training catalog to constrain our detector so our results can be compared to those published in the context of the challenge. We then use the full True catalog only to present an in-depth analysis of our trained detector performances. Since the scorer allows individual image scoring (for all frequencies and exposures), we can produce a detection catalog for the 560 MHz–1000 h image that is directly comparable to other team submissions during and after the challenge.

3.2 Image and source catalog description

The 560 MHz–1000 h image has a field of view of 5.5 × 5.5 degrees, which contains the primary beam out to the first null. It has a full size of 32 768 × 32 768 pixels, a pixel size of 0.6 arcsec, and an imaging resolution in the Gaussian approximation of 1.5 arcsec full width at half-maximum. A subfield of 512 × 512 pixels from this image is presented in Fig. 1. The simulated image contains no systematic instrumental effects like calibration, pointing, or deconvolution errors, making it unrealistically clean (Sect. 5.3). The image is not primary-beam corrected, but the corresponding primary beam image is available as ancillary data. With this setup, the instrument sensitivity decreases toward the edges, but the noise level remains uniform over the full field. The thermal noise level reported for this image in Bonaldi et al. (2021) is 2.55×10−7 Jy beam−1; however, a direct estimate of the noise using the provided image is about 3.45×10−7 Jy beam−1. We use this second value for the rest of the paper when necessary. While we could convert the image to a beam-corrected one, it is better for the detector to work on the constant noise image and to detect sources from their apparent brightness (Sect. 3.5). The apparent flux of a source can be obtained by multiplying the flux from the True catalog by the interpolated primary beam value at the central position of the source.
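The apparent flux computation can be sketched as below. The text only states that the primary beam value is interpolated at the source position; the bilinear scheme, the function names, and the assumption that the beam array is sampled on the same pixel grid as the source coordinates are choices made for this example.

```python
import numpy as np

def bilinear(image, x, y):
    """Bilinear interpolation of image[row=y, col=x] at fractional pixel coordinates."""
    x0 = int(np.clip(np.floor(x), 0, image.shape[1] - 2))
    y0 = int(np.clip(np.floor(y), 0, image.shape[0] - 2))
    dx, dy = x - x0, y - y0
    top = (1 - dx) * image[y0, x0] + dx * image[y0, x0 + 1]
    bottom = (1 - dx) * image[y0 + 1, x0] + dx * image[y0 + 1, x0 + 1]
    return (1 - dy) * top + dy * bottom

def apparent_flux(true_flux, beam, x, y):
    """Scale the catalog flux by the primary beam response at the source center."""
    return true_flux * bilinear(beam, x, y)
```

If the ancillary beam image has a coarser sampling than the science image, the source coordinates would first have to be converted to beam-image pixels, a step this sketch does not cover.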

The SDC1 challenge task is to detect and characterize the sources. The expected parameters for each source are the central coordinates (RA, Dec), the integrated source flux f, the core fraction cf if different from zero, the major and minor axis (Bmaj, Bmin), the major axis position angle PA, and a classification C (one of AGN-steep, AGN-flat, or star-forming galaxy). The provided True catalog supplies all these properties for each source, allowing the training of supervised methods. From Sect. 5.3 in Bonaldi et al. (2021), the classification part of the challenge was considered problematic a posteriori, as it is only feasible on the tiny fraction of the field where all frequencies are available. For this reason, we focused on getting the best performances on a detection and characterization problem only.

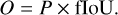

3.3 SDC1 scoring metric

The first element of the scorer is a match criterion. Due to source density, relying only on the central positions of the sources for matching predictions with the underlying True catalog is likely to result in many false positives. The SDC1 scorer uses a combination of the position, the size, and the predicted flux accuracies to represent a global matching score defined as

(15)

(16)

(17)

(18)

where the true values from the catalog are indicated with a hat, (x, y) are the central coordinates in pixels corresponding to (RA, Dec), S is the average value of Bmaj and Bmin, S′ is the largest axis convolved with the synthesized beam, and f is the source intrinsic flux. We note that the difference between S and S′ is present in the scorer code but not specified in either Bonaldi et al. (2021) or in the challenge documentation. To prevent false detections due to the high source density, the scorer uses a strict match threshold value for Etot. Each subpart of the error is normalized to a value considered representative of a 1σ error. In the scorer, the normalization coefficients are set to 0.93 for the position, 0.36 for the flux, and 4.38 for the size, all using the catalog units defining a global 1σ error. These individual limits were obtained by fitting the distribution of the corresponding values on the combination of all the submitted catalogs at the challenge time. A match is defined if Etot < 5σ, which was optimized to reduce the average random association chance of all submitted catalogs below 10%. We note that the scorer separates false detections into two categories: either “False” if there is no target source closer than 1.5 × S′ or “bad” if it passes this distance limit but has a too-high Etot.

The list of identified matches is then converted to a global score that captures the detection and characterization quality. The SDC1 scorer attributes a score of up to one point for each match depending on its characterization quality and penalizes every false detection with a strict minus one point. The characterization evaluation is decomposed into seven individual subscores that all respect the following scoring rule (based on the scorer code) for a single source

(19)

(19)

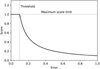

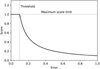

where j represents the subscore part and i a single source from the match list, Tj is a threshold for this subscore part, and the two remaining terms correspond to the error term and the final subscore part for this source. We list all the subscore parts and their corresponding Ej function and Tj values in Table 1, and we illustrate the typical score as a function of the error regarding a given threshold value in Fig. 6.

We note that all subpart error functions are based on a relative error, which has an asymmetric behavior. When overestimating the value, the error can rise infinitely, while underestimating a strictly positive prediction will never lower the relative error below −1. The issue is that these errors are associated with symmetric score response functions. Consequently, the score will be higher when underestimating the predictions, which is unlikely to naturally happen on quantities with intrinsic minimum values linked to instrumental limits like the flux, Bmaj, and Bmin. While the effect is minor for the subpart scoring, we note that for the matching criteria, it will result in excluding properly detected faint sources for which the noise caps the minimum predictable flux (Sects. 4.5 and 5.1).
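The asymmetry of a relative error is easy to verify numerically. The snippet below uses the generic definition (predicted − true) / true, which is an assumption for illustration since the exact error functions of Table 1 are not reproduced here.

```python
def relative_error(predicted, true):
    """Generic relative error; unbounded above, bounded below by -1 for positive values."""
    return (predicted - true) / true

# Overestimating by a factor of ten gives an error close to 9,
# while underestimating by the same factor gives an error close to -0.9.
over = relative_error(10.0, 1.0)
under = relative_error(0.1, 1.0)
```

A symmetric score response applied to these two values penalizes the overestimate far more than the underestimate, even though both are wrong by the same factor.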

The final score for a given source and the average subpart score for all sources are

(20)

To obtain the full SDC1 score, there is a scaling between the different frequencies and integration times. However, since we limit this study to a single image, there is no need for these definitions. The average source score for matching sources is

(21)

and the final SDC1 score for a single image is then

(22)

When scoring a submitted catalog, the training area is excluded, so detection performances are evaluated only for examples that were not used to constrain the detector.
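Following the verbal description of the scorer given above (up to one point per match, minus one point per false detection), the single-image score can be sketched as below. The function name and the input format are hypothetical, and the sketch follows the prose rather than the exact expressions of Eqs. (20)–(22).

```python
def image_score(match_scores, n_false):
    """Sum of per-match scores, each in [0, 1], minus one point per false detection.

    n_false counts every unmatched prediction, whether the scorer labels it
    "False" or "bad".
    """
    if any(s < 0.0 or s > 1.0 for s in match_scores):
        raise ValueError("each match score must lie between 0 and 1")
    return sum(match_scores) - n_false
```

Under this rule, a catalog with three matches scored 1.0, 0.5, and 0.75 and a single false detection would obtain 1.25 points, which shows how strongly purity weighs in the final score.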

Table 1. Error functions and threshold values for all subscores.

Fig. 6 Generic scoring function response for a source parameter with T = 0.1 as a function of the prediction error.

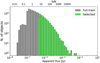

3.4 Selection function

The full simulation of the SDC1 contains more than five million sources. Due to the added simulated noise, only a fraction of these sources are detectable in the image. Bonaldi et al. (2021) estimated that only around 758 000 sources are above the noise level by 5σ in the 560 MHz–1000 h image. This construction is problematic for supervised ML methods as they are sensitive to wrong labeling during training. For an object detector, it goes two ways: (i) if a source is detectable but not labeled as a target box, the network lowers the detection objectness of all predicted boxes that try to detect sources with similar features; (ii) if there is a target box for a source that the network is incapable of detecting, it increases the objectness of nonrepresentative features, likely background noise. To achieve good detection performances, we must construct a training sample that is both complete and pure regarding the detectability of the sources it contains. For this, we defined a selection function based on the source properties of the provided True catalog.

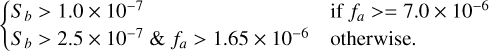

Our selection function combines the apparent source flux fa in Jy (Sect. 3.2), and the surface brightness Sb computed as

(23)

(24)

(25)

The size saturation values are in arcsec and correspond to a two and one-pixel size, respectively, and Ps represents the pixel size in arcsec used to obtain the box size in pixels. The selection function to keep a source in our training sample is then defined as

(26)

(26)

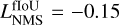

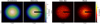

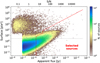

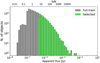

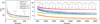

The fa > 1.65 × 10−6 Jy hard cut in apparent flux is equivalent to a signal-to-noise-ratio (S/N) selection with S/N > 4.8. However, we observed that S/N cuts were insufficient to achieve good results as too-low cuts failed to properly remove extended faint sources, while too-high cuts tended to remove detectable point sources. Consequently, we added the surface brightness cuts that helped to remove undetectable objects. We represent our selection cuts over a 2D histogram of the source surface against apparent flux for the training area in Fig. 7. In this figure, the top left patchy distribution corresponds to steep spectrum AGN. The flat spectrum AGN and star-forming galaxies distribute similarly and occupy the rest of the distribution, but they are strongly unbalanced with only a few flat spectrum AGN. In practice, both types of AGNs are mostly undetectable with the 560 MHz–1000 h setup, and are therefore strongly under-represented after our selection function. This also contributes to the identified difficulty of the classification task in the SDC1 result paper (Sect. 5.3 in Bonaldi et al. 2021). The hard cut at the bottom left of the distribution results from a per-class S/N prefiltering done directly by SKAO, characterized by the “selected” flag in the training catalog. Due to our size clipping, most of the surface range of this space is collapsed at a minimum surface, represented by the dotted gray line. We observed that lowering the clipping limits below the current values increases the number of nonvisible objects that pass our selection, which should be avoided.
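The quoted S/N equivalence can be checked with the directly estimated noise level from Sect. 3.2. The symbol σ for this noise level is introduced here for convenience only:

```latex
\frac{S}{N} = \frac{f_a}{\sigma}
            = \frac{1.65 \times 10^{-6}\ \mathrm{Jy}}{3.45 \times 10^{-7}\ \mathrm{Jy\,beam^{-1}}}
            \approx 4.78 \approx 4.8 .
```

With the thermal noise value of 2.55×10−7 Jy beam−1 reported in Bonaldi et al. (2021), the same cut would instead correspond to S/N ≈ 6.5, which confirms that the equivalence is expressed with the directly estimated noise.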

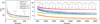

We represent the effect of our selection function on the source apparent flux distribution in Fig. 8. We also illustrate our selection on a small field in Fig. 9. We see that it misses some apparent compact signals, which can result from several effects: (i) the local noise contribution can increase the perceived apparent flux of a faint source, (ii) blended faint sources can add their flux at the same location, and (iii) it can be a bright and compact part of an extended faint source below the surface brightness limit. Regardless of their origin, these nonlabeled compact signals will likely confuse the detector training. We tried to adapt the selection process and our threshold values, but the current formulation produced the best results. We also tried to define our surface brightness using a more common astronomical size definition by convolving the Bmaj and Bmin with the synthesized beam. Still, it resulted in lower detector scores after training.

We emphasize that this hand-made selection function relies on parameters from the True source catalog. Therefore, applying the method to observed data instead of simulated ones will require either using estimates from another approach to define the target or constructing a selection function that does not rely on these source properties (Sect. 5.3). In Appendix C.2, we discuss an alternative way to construct a selection function iteratively using the prediction of a naively trained detector to evaluate the detectability of the sources.

Fig. 7 Two-dimensional histogram of the source surfaces as a function of their apparent flux. The dotted gray line indicates the saturated minimum surface with clipping. The red dashed line represents our selection function, with all the sources on the right of the line being selected.

Fig. 8 Source apparent flux distribution histogram in log scale and using log-bins for the True catalog and the selected sources.

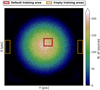

3.5 Training area

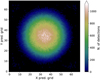

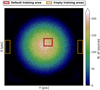

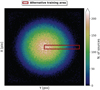

Using the challenge setup, the provided training area only spans a small part of the image center, corresponding to about 0.308 square degrees. The associated training catalog contains 190 552 sources (using the predefined “selected” flag), corresponding to roughly 3.6% of the sources from the full True catalog. Applying our custom selection function to this region drops the number of sources to 33 813. Due to the effect of the primary beam sensitivity over the image field, the central area is not a good representation of other parts of the image. The learned features and context awareness of a detector trained on this region are unlikely to generalize properly to other parts of the image. We represent the distribution of sources that pass our selection function for the whole image field using the full True catalog in Fig. 10. The footprint of the primary beam sensitivity is clearly visible. The provided training area corresponds to the red box. A better-suited training area definition could have been a narrow band over a complete beam radius of the image field, which we explore in Appendix C. To mitigate the generalization issue while still following the original challenge definition, the detector could be constrained to either be flux agnostic and only perform morphological detection or to reject all sources outside a given radius from the image center.

Instead, we propose another approach that uses other regions of the image without adding any target sources. We observed that detecting an object beyond a given radius from the image center becomes very unlikely. From this, we added two “noise only” regions to our training sample that are sufficiently far from the image center to be considered devoid of any detectable source. We selected two identical rectangular regions, 2000 pixels wide and 5600 pixels high, that are both vertically centered in the image but on opposite sides horizontally, with a margin to the image edge of 250 pixels (Fig. 10). These regions lie between the main lobe and the first sidelobe of the primary beam. We note that these regions are, in fact, not fully empty, which results in a few unlabeled visible sources. Still, this approach remains highly beneficial for the overall detector performance. During training, examples are drawn randomly in the default training area but also at a low 5% rate in one of the two noise-only regions. This forces the detector to understand the input intensity dynamic of parts of the image where it is not expected to detect anything. We observed that detectors trained with this process can interpolate between the two regimes and provide much better results for the full image without manually excluding difficult regions. Other things being equal, the best achievable challenge score is increased by more than 10% with this approach compared to training only with the default region.
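The sampling scheme described above can be sketched as follows. This is a minimal illustration and not the actual training code: the function names, the image size of 32768 pixels, and the coordinates of the default training box are assumptions made for the example, while the region dimensions, the 250-pixel margin, and the 5% rate come from the text.

```python
import random

def noise_only_regions(image_size, width=2000, height=5600, margin=250):
    """Return two (x_min, y_min, x_max, y_max) boxes for a square image.

    Both boxes are vertically centered; one sits near the left edge and
    the other near the right edge, each `margin` pixels from the border.
    """
    y_min = (image_size - height) // 2
    y_max = y_min + height
    left = (margin, y_min, margin + width, y_max)
    right = (image_size - margin - width, y_min, image_size - margin, y_max)
    return left, right

def draw_patch_origin(train_box, noise_boxes, patch=256, noise_rate=0.05, rng=random):
    """Pick the top-left corner of one training patch.

    With probability `noise_rate` the patch is drawn in one of the
    noise-only boxes, otherwise in the default training box.
    Returns (x, y, is_noise_only).
    """
    is_noise = rng.random() < noise_rate
    x_min, y_min, x_max, y_max = rng.choice(noise_boxes) if is_noise else train_box
    # randint is inclusive on both ends, so the patch stays inside the box.
    x = rng.randint(x_min, x_max - patch)
    y = rng.randint(y_min, y_max - patch)
    return x, y, is_noise

# ASSUMPTION: image size and training box are placeholders for illustration.
IMAGE_SIZE = 32768
TRAIN_BOX = (14000, 14000, 18000, 18000)
NOISE_BOXES = noise_only_regions(IMAGE_SIZE)
x, y, is_noise = draw_patch_origin(TRAIN_BOX, NOISE_BOXES)
```

Patches drawn in a noise-only box would be presented to the detector with an empty target list, so that any detection made there is penalized during training.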

Fig. 9 Illustration of the selection function over a typical input field. The background image represents an identical 256×256 input patch in all frames, centered on RA = 0.1 deg, Dec = −30.2 deg, using the renormalized input intensity but saturated at half the maximum value to increase visual contrast. The displayed grid corresponds to the detector output grid mapping for a network reduction factor of 16. The left frame is provided as an image reference, the middle frame represents all the target boxes from the True catalog, and the right frame represents the remaining target boxes after our selection function.

Fig. 10 Two-dimensional histogram of the central coordinates of the sources from the full True catalog that pass our selection function. The red box indicates the default SDC1 training area, and the orange boxes indicate our additional noise-only regions.

3.6 Network backbone architecture

As stated in Sect. 2.1, our method requires a fully convolutional neural network backbone to create a mapping from a 2D input image to a regular output grid. Efficient neural network architectures can be built by stacking convolutional layers while progressively reducing the spatial dimension to filter the relevant information and construct higher-level representations of the input content (Simonyan & Zisserman 2015; LeCun et al. 2015). With a fully convolutional structure, the last layer is responsible for encoding the output grid of the detector. Its spatial dimension represents the output grid, and the filters encode all the output vector elements for each grid cell. It also results in the output grid size being controlled by the reduction factor of the backbone network, which corresponds to the total spatial reduction of the network from its input to the output. The properties of a fully convolutional architecture and its impact on our method design are further detailed in Appendix A.1.

With a fully convolutional architecture, the input size does not impact the model structure and number of parameters. In this section, we consider the input size to be 256×256 pixels (Sect. 3.8). Regarding the reduction factor, we found that a value of 16 resulted in the best detection accuracy, which means that the training output grid is composed of 16×16 cells. Considering the source density of the 560 MHz–1000 h image, each grid cell has to detect multiple sources, which was the motivation for using a prediction-aware association process (Appendix A.3).

At this point, it would be tempting to adopt the classical darknet-19 backbone network introduced in YOLO-V2 (Redmon & Farhadi 2017). We notably used it successfully with our custom association process in other contexts (Appendix A.8). We discuss this possibility and explain why it would lead to poor performance here in Appendix B. Instead, we meticulously explored increasingly complex custom backbone architectures. We specifically looked for an architecture that is both computationally efficient and capable of high detection accuracy. An illustration of our final architecture is presented in Fig. 11.

Our final architecture was based on a few educated guesses, but it also required exploration through score optimization. We note that the spatial dimension is always reduced by convolution operations instead of pooling operations, which helps preserve the apparent flux information and better represents continuous objects with no sharp edges. The first layer has larger filters to better extract continuous luminosity profiles. The second layer performs a local compression (both spatially and in the number of filters), mainly acting as a local noise filter. Then, for a few layers, we progressively increase the number of filters while decreasing the spatial dimension at a rate that maximizes computing efficiency. Starting with the seventh layer, we begin alternating large layers with 3×3 filters and smaller layers with 1×1 filters, which is typical of the YOLO darknet architecture. This structure alternates searches for local spatial coherency with representation compressions. It improves compute performance and reduces the overall number of parameters compared to a more classical stacking of identically sized 3×3 layers. We note that our third spatial-dimension reduction layer (the eighth layer overall) is also considered a compression layer in this scheme. The second-to-last layer has a 25% dropout rate, which is used for regularization (Srivastava et al. 2014).
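The parameter saving obtained by alternating 3×3 and 1×1 layers can be illustrated with a simple weight count. The sketch below is only indicative: the filter counts (512 and 256) are hypothetical values chosen for the example and are not the ones of the actual architecture (Fig. 11), and bias terms are ignored.

```python
def conv_weights(kernel, c_in, c_out):
    """Number of weights of one convolutional layer, biases excluded."""
    return kernel * kernel * c_in * c_out

def stack_weights(layers, c_in):
    """Total weights of a stack of layers given as (kernel, n_filters)."""
    total = 0
    for kernel, c_out in layers:
        total += conv_weights(kernel, c_in, c_out)
        c_in = c_out  # the output depth feeds the next layer
    return total

# ASSUMPTION: hypothetical filter counts, for illustration only.
uniform = stack_weights([(3, 512), (3, 512), (3, 512)], c_in=512)
alternating = stack_weights([(3, 512), (1, 256), (3, 512)], c_in=512)
saving = 1.0 - alternating / uniform
```

With these hypothetical values, the alternating stack needs roughly half the weights of the uniform 3×3 stack, since the 1×1 layer is cheap itself and also halves the input depth of the following 3×3 layer.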

We tested adding group normalization (Wu & He 2018) at various places in the network, but it almost always degraded the best achievable score by a few percent (Appendix B). This is likely because in-network normalization tends to lose the absolute values of the input pixels, making it more difficult to predict the flux accurately. Since group normalization works at the scale of the whole spatial dimension, it might also affect the input dynamic in a way that makes faint sources more challenging to detect in the presence of bright sources in the image. The only place where it produces a beneficial effect is near the end of the network, after the last spatial correlation, where the flux value is likely fully re-encoded in the high-level features. This specific normalization layer has several beneficial effects, including a speed-up and stabilization of the training process and a slight improvement of about 2% in the best achievable score.

The complete architecture contains 17 convolutional layers totaling around 12.62 million weights, but 76% of these weights are concentrated at the end of the network in the connections between layers 14 and 16. With this architecture, the receptive field for a given grid cell at the output layer is about 100 pixels. It limits both the maximum size of the sources that can be detected with this architecture and the context window accessible to each detection unit. We discuss the limits of the current network structure and potential architecture improvements in Sect. 5.2.

Fig. 11 Illustration of our final CNN backbone architecture. The left column provides the layer structural properties, while the right column indicates the spatial output dimension for each layer starting from a 256×256 input size. The input image is on the top, and layers are stacked in order vertically. The width of a layer represents its number of filters. The green color indicates a layer that preserves the spatial dimension, while the red indicates a reduction. The output grid dimension and the list of the predicted parameters for each box are also indicated.

3.7 YOLO-CIANNA configuration for the SDC1

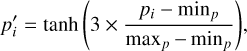

3.7.1 Input normalization

Input normalization is critical to obtain good detection performance in our specific context. This aspect is key for astronomical images due to their very high dynamic range. Everyday-life images are usually encoded using three 8-bit integers, resulting in 256 possible values for each color. Converting an astronomical image to a similar format often induces a significant information loss. We note that some off-the-shelf deep learning detectors or frameworks implicitly convert images to this format without warning, which sometimes explains poor performance. We found that 16-bit floating-point quantization was sufficient for the SDC1 image after other input transformations, but some astronomical datasets are likely to require 32-bit floating-point encoding. It is also useful to offset the raw dynamic to better represent the low flux regime. In our case, we redefine our minimum and maximum values as min_p = 4×10⁻⁷ Jy beam⁻¹ and max_p = 4×10⁻⁵ Jy beam⁻¹, and apply a scaled hyperbolic tangent to all pixel values, which can be summarized as

(27)

where p_i is the raw pixel value in Jy beam⁻¹ clipped with the two limits, and p′_i is the pixel value as it is presented to the detector. This normalization remaps all input data in the 0–1 range and grants most of this range to low signal values using an almost linear regime to help distinguish faint sources from the noise. The counterpart is a flattening of the dynamic for high fluxes, but bright sources require less accuracy on the pixel values to obtain a good relative flux estimate.
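A minimal sketch of this type of normalization is given below. The two clipping limits are those quoted in the text. The factor applied inside the hyperbolic tangent (`SCALE`) and the function name are assumptions made for the example; the value of 3 was chosen here only because it maps the upper limit close to 1 while keeping an almost linear response at low flux.

```python
import math

MIN_P = 4e-7  # Jy/beam, lower clipping limit quoted in the text
MAX_P = 4e-5  # Jy/beam, upper clipping limit quoted in the text
SCALE = 3.0   # ASSUMPTION: illustrative factor inside the tanh

def normalize_pixel(p, min_p=MIN_P, max_p=MAX_P, scale=SCALE):
    """Clip a raw pixel value, rescale it linearly, then apply a scaled tanh."""
    clipped = min(max(p, min_p), max_p)
    unit = (clipped - min_p) / (max_p - min_p)  # linear rescaling to [0, 1]
    return math.tanh(scale * unit)              # compresses the bright end

# Usage on a few raw pixel values in Jy/beam, from noise level to saturation.
raw_pixels = [0.0, 4e-7, 2e-6, 1e-5, 4e-5, 1e-3]
normalized = [normalize_pixel(p) for p in raw_pixels]
```

All values below the lower limit map to 0, and all values above the upper limit map to the same value slightly below 1, which is the flattening of the bright end mentioned above.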

Fig. 12 Target distribution over the closest box size prior. The association uses the Euclidean distance in the 2D box size space.

3.7.2 Detection units settings

To configure our detection units, we must first define our target boxes. The True catalog does not contain the necessary information to define them in the classical computer vision way. Instead, we define our boxes as centered on the source central coordinates and with a size that is scaled on its major and minor axes. For each target source, we define a rectangular box whose width and height are scaled on these axes, corresponding to PA = 0. This box is then rotated to correspond to the actual position angle (PA) of the source, and we search for the smallest square box that contains the four rotated vertices. The resulting dimensions are clipped in the 5–64 pixels range to obtain the final width and height that are used to define our target box for the corresponding source (Fig. 9). The minimum clipping implies that all unresolved point sources get the same minimum size, which was calibrated to optimize the association process of our detection layer. We stress that box sizes do not have to be very accurate in our specific context. Firstly, they do not constrain the receptive field in any way, meaning that the detector can use information outside the box to detect or characterize the source. Secondly, they are only used during association so the detector can be trained and are not used in the scorer matching criteria and characterization score. The important Bmaj and Bmin values are instead predicted as additional parameters (Sect. 3.7.3).
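The construction of a target box from the source shape can be sketched as follows. This is an illustration under stated assumptions and not the actual implementation: the function name, the `scale` factor linking the box size to Bmaj and Bmin, the convention that the major axis lies along the horizontal axis at PA = 0, and the interpretation of the enclosing box as an axis-aligned rectangle are all assumptions. Only the 5–64 pixel clipping range comes from the text.

```python
import math

def target_box_size(bmaj_pix, bmin_pix, pa_deg, scale=1.0,
                    min_size=5.0, max_size=64.0):
    """Width and height of the axis-aligned box enclosing a rotated rectangle.

    bmaj_pix, bmin_pix: source major and minor axes in pixels.
    pa_deg: position angle in degrees.
    scale: ASSUMPTION, hypothetical factor between source axes and box size.
    """
    # Unrotated rectangle, corresponding to PA = 0.
    w0 = scale * bmaj_pix
    h0 = scale * bmin_pix
    theta = math.radians(pa_deg)
    c, s = abs(math.cos(theta)), abs(math.sin(theta))
    # Extent of the four rotated vertices along each image axis.
    w = w0 * c + h0 * s
    h = w0 * s + h0 * c
    # Clip both dimensions to the allowed range.
    w = min(max(w, min_size), max_size)
    h = min(max(h, min_size), max_size)
    return w, h

# An unresolved source ends up with the minimum box size in both dimensions.
point_like = target_box_size(1.0, 1.0, 30.0)
```

With this construction, all sources whose rotated extent is below 5 pixels share the same minimum box, consistent with the statement that unresolved point sources all get the same size.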

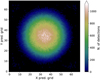

We define the number of detection units and their size priors based on the target source density and the box-size distribution. We chose to have three size regimes: i) a small regime that is composed of several identical units with a 6×6 size prior, ii) an intermediate regime with two units of identical surface but different aspect ratios, with size priors of 9×12 and 12×9, respectively, and iii) a large regime with a single unit with a 24×24 size prior. We illustrate how the target sources distribute over these size priors based on the smallest Euclidean distance to their respective size in Fig. 12. This indicates that most sources would theoretically be associated with the smallest size regime. We tried an alternative setup with all our detection units in the small regime, but it resulted in lower detection performance for all size regimes. This confirms that the source size remains an appropriate first-order criterion for distributing the network expressivity over the detection units, even with such a massive target size regime imbalance (Appendix A.3).
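The theoretical distribution of targets over the size priors, as used for Fig. 12, can be sketched as a nearest-neighbor search in the 2D box size space. The function name is an assumption made for the example, and this simple rule only illustrates the size-based view: the association used during training is prediction-aware (Appendix A.3).

```python
import math

# Size priors (width, height) in pixels for the three regimes of the text.
SIZE_PRIORS = [(6, 6), (9, 12), (12, 9), (24, 24)]

def closest_prior(w, h, priors=SIZE_PRIORS):
    """Index of the size prior closest to a (w, h) target box.

    The distance is the Euclidean distance in the 2D box size space.
    """
    return min(range(len(priors)),
               key=lambda i: math.hypot(w - priors[i][0], h - priors[i][1]))

# Count how a few example target boxes distribute over the priors.
targets = [(5, 5), (5, 7), (10, 13), (13, 10), (40, 40)]
counts = [0] * len(SIZE_PRIORS)
for w, h in targets:
    counts[closest_prior(w, h)] += 1
```

Since boxes are clipped to a minimum of 5 pixels, every unresolved source falls closest to the 6×6 prior, which explains the strong imbalance toward the small regime.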

To detect multiple small sources in the same grid element, we must populate the small-size regime with several identical detection units. We observed good results for all models trained using four to eight small units, with the best results for six. Too few detection units limit the number of detectable objects. It can also force some units to encapsulate multiple contexts, preventing them from being detected simultaneously at prediction time. Conversely, too many boxes dilute the context diversity and increase the training difficulty. Combined with the three detection units from the two other size regimes, our detector predicts nine independent boxes in the same grid cell. In practice, all these detection units are never used simultaneously. We discuss how the actual predictions are distributed among them in Sect. 4.2.

3.7.3 Source properties to predict