| Issue | A&A Volume 694, February 2025 |
|---|---|
| Article Number | A253 |
| Number of page(s) | 29 |
| Section | Cosmology (including clusters of galaxies) |
| DOI | https://doi.org/10.1051/0004-6361/202450977 |
| Published online | 19 February 2025 |

Towards an optimal marked correlation function analysis for the detection of modified gravity

1 Aix-Marseille Université, CNRS, CNES, LAM, Marseille, France

2 Aix-Marseille Université, Université de Toulon, CNRS, CPT, Marseille, France

⋆ Corresponding author; martin.karcher@lam.fr

Received: 3 June 2024

Accepted: 13 December 2024

Modified gravity (MG) theories have emerged as a promising alternative to explain the late-time acceleration of the Universe. However, the detection of MG in observations of the large-scale structure remains challenging due to the screening mechanisms that obscure any deviations from general relativity (GR) in high-density regions. The marked two-point correlation function, which is particularly sensitive to the surrounding environment, offers a promising approach to enhancing the discriminating power in clustering analyses and to potentially detecting MG signals. This work investigates novel marks based on large-scale environment estimates, which also exploit the anti-correlation between objects in low- and high-density regions. This is the first time that the propagation of discreteness effects in marked correlation functions is investigated in depth. In contrast to standard correlation functions, the density-dependent marked correlation function estimated from catalogues is affected by shot noise in a non-trivial way. We assess the performance of various marks in distinguishing GR from MG. This is achieved through the use of the ELEPHANT suite of simulations, which comprise five realisations of GR and two different MG theories: f(R) and nDGP. In addition, discreteness effects are thoroughly studied using the high-density Covmos catalogues. We have established a robust method to correct for shot-noise effects that can be used in practical analyses. This method allows the recovery of the true signal with an accuracy better than 5% on scales from 5 h−1 Mpc up to 150 h−1 Mpc. We find that such a correction is crucial to measure the amplitude of the marked correlation function in an unbiased manner. Furthermore, we demonstrate that marks that anti-correlate objects in low- and high-density regions are among the most effective in distinguishing between MG and GR; they also uniquely provide visible deviations on large scales, up to about 80 h−1 Mpc. 
We report differences in the marked correlation function between f(R) with |fR0| = 10−6 and GR simulations of the order of 3–5σ in real space. The redshift-space monopole of the marked correlation function in this MG scenario exhibits features and performance similar to those of the real-space marked correlation function. The combination of the proposed tanh-mark with shot-noise correction paves the way towards an optimal approach for the detection of MG in current and future spectroscopic galaxy surveys.

Key words: large-scale structure of Universe

© The Authors 2025

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1. Introduction

The seminal works of Riess et al. (1998) and Perlmutter et al. (1999) revived the cosmological constant Λ as a form of dark energy to explain the late-time accelerated expansion of the Universe. Together with cold dark matter (CDM), this established the ΛCDM model as the current concordance model of cosmology. Upon closer examination, however, the ΛCDM model exhibits certain inherent problems. On the theoretical side, the fine-tuning problem of Λ, as extensively studied in Martin (2012), represents a significant challenge. On the observational side, recent cosmological results show a growing tension between early- and late-time measurements of the Hubble constant H0, extracted respectively from the cosmic microwave background (CMB) anisotropies (Planck Collaboration VI 2020) and local distance ladders (Riess et al. 2022). Another, less significant, source of contention is an apparent mismatch in the measured amplitude of matter fluctuations, σ8, between early- (Planck Collaboration VI 2020) and late-time large-scale structure measurements (Tröster et al. 2020).

To address the aforementioned issues, numerous attempts have been made at the theoretical level. Modified gravity (MG) theories (see Clifton et al. 2012) are of particular interest, as they can circumvent the introduction of a cosmological constant or dark energy component in the first place. One of the most popular modifications is the theory of inflation by Guth (1981), accommodated with scalar-tensor models to resolve the classical flatness and horizon problems in ΛCDM. MG theories are commonly defined and compared through their respective actions. Possibly the most straightforward extension of the Einstein-Hilbert action of general relativity (GR) consists of replacing the Ricci scalar by a free function of it, dubbed f(R) theories (see De Felice & Tsujikawa 2010, for a review). The latter have been subjected to comprehensive analysis, resulting in tight constraints on viable f(R) functions that ensure accelerated expansion without the need for a cosmological constant, while simultaneously satisfying Solar System tests of GR (Cognola et al. 2008). The f(R) model proposed by Hu & Sawicki (2007) is of particular importance, as it simultaneously realises accelerated expansion and evades Solar System tests through a so-called screening mechanism.

For MG theories to be a viable replacement for, or extension of, GR, they have to pass very stringent tests from Solar System observations (see Bertotti et al. 2003; Williams et al. 2004, 2012). On larger scales, the situation is more complex, but there is an increasing effort to tighten constraints on MG theories by using CMB data (Planck Collaboration XIV 2016) in combination with large-scale structure and supernova observables (Lombriser et al. 2009; Battye et al. 2018). Screening mechanisms are invoked so that a modification of standard gravity hides its effects on small scales and recovers GR there. In general terms, a screening mechanism suppresses any fifth force to a negligible level such that gravity follows GR in certain environments. Screening can happen in different ways, such as chameleon screening in scalar-tensor theories of gravity (see Khoury & Weltman 2004a,b). It also emerges in some f(R) theories owing to the equivalence between scalar-tensor and f(R) theories (Sotiriou & Faraoni 2010). The DGP gravity model, originally developed by Dvali et al. (2000), exhibits the screening mechanism first introduced by Vainshtein (1972). A third popular screening mechanism, by Damour & Polyakov (1994), is present in the symmetron model (Hinterbichler & Khoury 2010). For a detailed description of screening mechanisms, we refer the reader to Brax et al. (2022). Intuitively, screening mechanisms in the cosmological context, particularly the chameleon one, can be understood as a density dependence: modifications to GR should appear only in regions whose density is low compared to the mean density of the Universe. In high-density regions, inside galaxies or stellar systems for instance, any modification should be negligible. This imprints a fundamental environmental dependence on the clustering of matter predicted in those theories.

Since the modifications to GR are expected to be small, the observational detection of MG on cosmological scales poses a major challenge. Guzzo et al. (2008) advocated the use of the growth rate of structure f, measured from redshift-space distortions (RSD) in the galaxy clustering pattern, as a test of the validity of GR on large scales. Since then, f has become a quantity of major interest and has been measured in large galaxy redshift surveys (e.g. Blake et al. 2011; Beutler et al. 2012; de la Torre et al. 2013; Bautista et al. 2021). It is now a standard probe that will be measured with exquisite precision by ongoing surveys, in particular the Dark Energy Spectroscopic Instrument (DESI; DESI Collaboration 2016) and the Euclid mission (Euclid Collaboration: Mellier et al. 2025). It is also worth mentioning the Eg statistic developed by Zhang et al. (2007), which combines galaxy clustering and weak lensing measurements to probe the properties of the underlying theory of gravity and has been measured in several studies (e.g. Reyes et al. 2010; de la Torre et al. 2017; Jullo et al. 2019; Blake et al. 2020). Other quantities that can in principle be measured from observations are the gravitational slip parameter η and the growth index γ (see Ishak 2019, for a review). At present, none of the aforementioned observables has enabled the detection of a deviation from standard gravity.

To improve on existing approaches and to exploit the additional environmental dependence of MG in clustering analyses, White (2016) proposed the marked correlation function as a tool to enhance the difference in the clustering signal between MG and GR. In this approach, the marked correlation function is a weighted correlation function normalised by the unweighted one, where the object weights, or marks, are a function of the local density. The latter is estimated from the density field traced by dark matter or galaxies. With this methodology, Hernández-Aguayo et al. (2018), Armijo et al. (2018), and Alam et al. (2021) investigated marked correlation functions in N-body simulations of MG. In addition to examining different density-based mark functions, they also considered marks based on the local gravitational potential or the host halo mass of the galaxy. They observed significant differences between MG and GR for density-based marks on small scales, below about 20 h−1 Mpc. White & Padmanabhan (2009) also showed the potential of marked correlation functions to break the degeneracy between the halo occupation distribution (HOD) and cosmological parameters. Similar approaches using weighted statistics or transformations of the density field have also been proposed. Llinares & McCullagh (2017) applied logarithmic transformations to the density field and computed power spectra of the transformed field in N-body simulations to improve the detection of MG. Valogiannis & Bean (2018) showed with Fisher forecasts that such approaches boost the constraining power of power spectra in cosmological parameter inference, comparing the field transformation of Llinares & McCullagh (2017), the clipping strategy that masks out high-density regions (Simpson et al. 2011, 2013), and the mark proposed in White (2016). Lombriser et al. (2015) also applied clipping to the power spectrum in order to better detect f(R) theories with chameleon screening. Recently, the use of marked power spectra has been extended to constrain massive neutrinos (Massara et al. 2021) and to tighten constraints on cosmological parameters (Yang et al. 2020; Xiao et al. 2022).
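To make the idea of a density-dependent mark concrete, the following Python sketch evaluates a mark of the form we attribute to White (2016), m = [(1 + ρ*)/(1 + ρ* + δR)]^p, where δR is the local density contrast smoothed on some scale R. The function name `white_mark` and the values ρ* = 4 and p = 10 are illustrative assumptions, not necessarily the choices made in the works cited above. For p > 0, objects in underdense environments are up-weighted and objects in overdense environments are down-weighted, which is what makes such marks sensitive to screening.

```python
import numpy as np

def white_mark(delta_R, rho_star=4.0, p=10.0):
    """Density-dependent mark, assumed form m = [(1 + rho*) / (1 + rho* + delta_R)]**p.

    delta_R is the density contrast smoothed on a scale R (hypothetical input).
    For p > 0: m > 1 in underdense regions, m = 1 at the mean density,
    and m < 1 in overdense regions.
    """
    delta_R = np.asarray(delta_R, dtype=float)
    return ((1.0 + rho_star) / (1.0 + rho_star + delta_R)) ** p

# Illustrative local density contrasts: void-like, mean density, cluster-like
marks = white_mark([-0.8, 0.0, 5.0])
print(marks)
```

Raising p or lowering ρ* sharpens the contrast between low- and high-density objects; which combination is optimal is exactly the kind of question studied in the following sections.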

While most of the work on marked statistics for MG has been carried out on simulations, there have been several applications to observational data. Satpathy et al. (2019) were the first to measure marked correlation functions from observations in the context of MG. They used the original mark introduced by White (2016) and investigated the monopole and quadrupole of the marked correlation function measured from the LOWZ sample of the Sloan Digital Sky Survey (SDSS) data release 12 (DR12; Alam et al. 2015). They did not detect significant differences between MG and GR and attributed this to modelling uncertainties in the two-point correlation function (2PCF) on scales of 6 h−1 Mpc < s < 69 h−1 Mpc. Armijo et al. (2024b) applied the strategy introduced in Armijo et al. (2024a) to the LOWZ and CMASS catalogues of SDSS, thereby propagating uncertainties of the HOD into the projected weighted clustering. They compared predictions from GR and f(R) but found no significant differences: both fit the LOWZ data within the uncertainties over the investigated scales, between 0.5 h−1 Mpc and 40 h−1 Mpc. For the CMASS catalogue, the predictions of both the GR and f(R) models fail to properly reproduce the data in the first place.

A number of the issues encountered in the literature when using marked correlation functions to distinguish MG from GR can be traced back to two main sources. The first is the choice of the mark function, which, in the majority of cases, yields significant differences on small scales only. On those scales, theoretical modelling is difficult, as it also requires a proper treatment of the non-linear effects of redshift-space distortions. The second is the propagation of discreteness effects in the mark estimation, namely those arising from computing the local density from a finite point set, into the measurement of the marked correlation function. To the best of our knowledge, this propagation has not been studied so far, and it can lead to biased measurements if not accounted for. The present work therefore aims at identifying an optimal mark function that is able to significantly discriminate GR from MG on larger scales, where theoretical modelling is more tractable. For this, we develop new ways to include environmental information in weighted statistics and investigate new algebraic functions of the density contrast to be used as marks. Furthermore, we investigate the discreteness effects and devise a new methodology to correct marked correlation function measurements for the bias induced by estimating density-dependent marks on discrete point sets. We demonstrate that by applying this methodology we are able to robustly measure the amplitude of the marked correlation function and to mitigate possible artefacts in the subsequent analysis of MG signatures.
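Why discreteness biases density-dependent marks can be illustrated with a deliberately simple toy model, which is our own and not the correction method developed in this work. In the hypothetical Python sketch below, a field that is exactly uniform (true δ = 0, hence true mark m = 1 everywhere) is Poisson-sampled in cells, the density contrast is estimated from the counts, and a White-type mark m = [(1 + ρ*)/(1 + ρ* + δ)]^p (form and parameters assumed here) is evaluated on it. Because this mark is a convex function of δ for p > 0, Jensen's inequality implies that shot-noise fluctuations in the estimated δ push the mean mark above its true value of one, and the bias shrinks as the mean number of objects per cell grows.

```python
import numpy as np

def mean_mark_uniform_field(nbar_per_cell, n_cells=200_000, rho_star=4.0, p=10.0, seed=0):
    """Mean estimated mark for a Poisson-sampled, exactly uniform field.

    The true density contrast is zero everywhere, so the true mark is 1.
    Any departure of the returned mean from 1 is caused by shot noise.
    """
    rng = np.random.default_rng(seed)
    counts = rng.poisson(nbar_per_cell, size=n_cells)      # objects per cell
    delta_hat = counts / counts.mean() - 1.0                # estimated contrast, zero mean
    marks = ((1.0 + rho_star) / (1.0 + rho_star + delta_hat)) ** p
    return marks.mean()

for nbar in (5, 50, 500):
    print(nbar, mean_mark_uniform_field(nbar))
```

The same mechanism also distorts the pair-weighted sums entering marked correlation functions, which is why a dedicated correction is needed rather than a simple rescaling of the mean mark.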

This article is structured as follows. Section 2 describes the f(R) and nDGP gravity models that are investigated and tested later. Section 3 introduces the basics of weighted two-point statistics and the marked correlation function. Section 4 presents the MG simulations used in this work and measurements of unweighted statistics, which serve as a reference for comparison with the marked correlation function. Section 5 presents new marks to be used in the analysis of MG. This is followed, in Sect. 6, by the study of the effects of shot noise on weighted two-point statistics. Section 7 shows the main results of this article, which are obtained by applying the previously defined methodology to MG simulations. Section 8 discusses the optimal methodology for the marked correlation function, and the conclusions are provided in Sect. 9.

2. Modified gravity

We provide in this section a brief review of the theory behind the two classes of MG models used later in this work. In particular, we report the respective actions alongside the equation of motion for the additional scalar degree of freedom, which elucidates the different screening mechanisms incorporated in those gravity theories.

2.1. f(R) Gravity

A general extension to the Einstein-Hilbert action in GR is accomplished by adding a general function of the Ricci scalar f(R), which then takes the form

when including a matter Lagrangian ℒm. This leads to the field equations

where □ denotes the d’Alembertian operator and Greek indices run from 1 to 4. From these field equations an equation of motion for the scalaron field fR = ∂f/∂R can be deduced by taking the trace. The Ricci scalar is given by

where a prime denotes differentiation with respect to the natural logarithm of the scale factor. In a ΛCDM universe, today's Ricci scalar is

Although the f(R) function is completely general, its derivatives with respect to R must satisfy several constraints for the theory to be free of ghost instabilities (see Tsujikawa 2010, for a derivation of those stability conditions). Furthermore, specific functions can be chosen depending on the context of the theory. Here we focus on a cosmological model with late-time accelerated expansion, for which the Hu-Sawicki theory (Hu & Sawicki 2007) is the most promising. The f(R) function in this model takes the form

with m² = 8πGρ̄0/3 = H0²Ωm, where ρ̄0 is the mean matter density today, and with c1, c2, and n constants. In the simulations presented in the next sections, a value of n = 1 was used. To produce a background expansion as dictated by ΛCDM, the ratio between c1 and c2 has to be chosen such that

It follows that, in the R ≫ m² limit where f(R) ≈ −m²c1/c2, the gravitational sector has a Lagrangian of the form ℒ = R/16πG − Λ, which corresponds to the well-known Einstein-Hilbert action with a cosmological constant. Furthermore, by expanding the f(R) function in the same limit while keeping the next-to-leading-order term, we arrive at

where, in the second equality, we used the expression of the scalaron field,

evaluated for the background Ricci scalar value today (fR0). We replaced c1/c2 with the previous expression to obtain a ΛCDM background. In this approximation, and by fixing n = 1, the f(R) function depends solely on the cosmological parameters and fR0, the latter encoding the strength of the modification to GR.
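A small numerical check of these Hu-Sawicki relations may help. The Python sketch below works in units where H0 = 1, so that m² = Ωm, and assumes the standard Hu & Sawicki (2007) form f(R) = −m²c1(R/m²)ⁿ/[c2(R/m²)ⁿ + 1], the ΛCDM-background condition c1/c2 = 6ΩΛ/Ωm, and the flat-ΛCDM value of the background Ricci scalar today, R0 = 3m²(1 + 4ΩΛ/Ωm). Given fR0, it fixes c2 from the large-curvature expansion fR ≈ −n(c1/c2²)(m²/R)ⁿ⁺¹ of the scalaron, then compares the exact f(R) with the n = 1 approximation f(R) ≈ −m²c1/c2 − fR0R0²/R. The values Ωm = 0.3, ΩΛ = 0.7, and fR0 = −10⁻⁶ (negative sign convention assumed) are illustrative and are not the ELEPHANT cosmology; the function names are hypothetical.

```python
import numpy as np

def hu_sawicki_constants(omega_m=0.3, omega_l=0.7, f_r0=-1e-6, n=1):
    """Return (m2, c1, c2, R0) in units H0 = 1 for a flat LCDM background."""
    m2 = omega_m                                      # m^2 = H0^2 Omega_m
    c1_over_c2 = 6.0 * omega_l / omega_m              # reproduces the LCDM background
    r0 = 3.0 * m2 * (1.0 + 4.0 * omega_l / omega_m)   # background Ricci scalar today
    # Large-curvature limit: f_R0 = -n (c1 / c2**2) (m2 / R0)**(n + 1); solve for c2.
    c2 = -n * c1_over_c2 * (m2 / r0) ** (n + 1) / f_r0
    return m2, c1_over_c2 * c2, c2, r0

def f_of_r(r, m2, c1, c2, n=1):
    """Exact Hu-Sawicki function f(R)."""
    x = (r / m2) ** n
    return -m2 * c1 * x / (c2 * x + 1.0)

def f_r_exact(r, m2, c1, c2, n=1):
    """Exact derivative df/dR of the Hu-Sawicki function."""
    x = r / m2
    return -n * c1 * x ** (n - 1) / (c2 * x ** n + 1.0) ** 2

f_r0 = -1e-6
m2, c1, c2, r0 = hu_sawicki_constants(f_r0=f_r0)
r_test = 2.0 * r0                                     # any curvature with R >> m^2
approx = -m2 * c1 / c2 - f_r0 * r0 ** 2 / r_test      # n = 1 expansion
print(f_of_r(r_test, m2, c1, c2), approx)
print(f_r_exact(r0, m2, c1, c2), f_r0)
```

The leading term m²c1/c2 = 6ΩΛH0² equals 2Λ, so the first term alone reproduces the cosmological constant, while fR0 sets the size of the correction.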

An f(R) modification of the Lagrangian introduces additional force terms in the Poisson equation in the quasi-static and weak-field limit, as can be derived from the perturbed field equations (Bose et al. 2015)

and

where δρ = ρ − ρ̄ and δR = R − R̄, with ρ̄ and R̄ the matter density and Ricci scalar at the background level. These additional terms should be suppressed in the vicinity of massive objects; otherwise, Solar System tests would have detected the fifth force. When f(R) gravity is rewritten as a scalar-tensor theory, the potential of the scalar field receives a contribution from the matter density (Khoury & Weltman 2004a) as

with β a dimensionless constant and Mpl² = (8πG)⁻¹. This in turn leads to a modified equation of motion for the scalar field φ with a density-dependent effective potential. In this context, a thin-shell condition can be derived, stating that the difference between the scalar field far away from the source, φ∞, and inside the object, φc, should be small compared to the gravitational potential at the surface of the object (Khoury & Weltman 2004a). Exterior solutions for φ around compact objects satisfying the thin-shell condition reach the value φ∞ at larger distances, thereby suppressing the effect of the scalar field close to the object.

2.2. nDGP Gravity

The modification to standard gravity devised by Dvali, Gabadadze and Porrati (Dvali et al. 2000), hereafter DGP gravity, is of a radically different kind compared to f(R) gravity. The setup is a 4D brane embedded in a 5D bulk and the modification to gravity comes from the fifth-dimensional contribution. The action is given by (Clifton et al. 2012)

where g5 and g4 are the 5D and 4D metrics, respectively. Both the matter Lagrangian ℒm and the brane tension σ, which can act as a cosmological constant, live on the 4D brane. Furthermore, there is both a 5D Ricci scalar R5 and its 4D counterpart R4, and the brane has an extrinsic curvature term K. In general, the brane and the bulk have their own mass scales, M4 and M5, which give rise to a specific cross-over scale rc defined as

which regulates the contribution of 4D with respect to 5D gravity.

The modified Poisson equation for the gravitational potential and the equation for the additional scalar degree of freedom φ (also called brane-bending mode as it describes the displacement of the brane) lead to the fifth force. They are given in the quasi-static approximation by (see Koyama & Silva 2007)

where β is

The dot refers to a derivative with respect to cosmic time t. One important feature of the DGP model is the existence of a normal branch and of a self-accelerating branch, indicated respectively by the + and − signs in the equation for β. While the self-accelerating branch appears appealing for cosmology at first sight, as it can generate accelerated expansion without a cosmological constant (the limit of vanishing brane tension), it contains unphysical ghost instabilities (Clifton et al. 2012). Hence the model used in the simulations analysed in this work implements the normal branch, which needs a non-vanishing brane tension to produce accelerated expansion. Normal-branch DGP models are interesting to study because they exhibit the Vainshtein screening mechanism (see Schmidt 2009; Barreira et al. 2015). To illustrate that mechanism, the equation for the scalar field has to be studied around a mass source. Far away from the source, the linear term ∇²φ dominates and φ scales as ∝ 1/r, like the Newtonian potential, so that the fifth force contributes substantially to the usual gravitational force. However, non-linear terms start to dominate once we are closer to the source than the Vainshtein radius rV, defined by

with rs being the Schwarzschild radius of the source. Once the non-linear terms dominate, the resulting fifth force is suppressed by a factor ∝ $(r/r_V)^{3/2}$ with respect to the gravitational force. A derivation of the solution for φ in the general case, including both linear and non-linear terms, can be found in Koyama & Silva (2007), where the same scalings are recovered in the respective regimes.

At fixed Schwarzschild radius, the cross-over scale determines the Vainshtein radius, so running simulations with different values of rc yields different strengths of the Vainshtein screening. Varying rc therefore allows the amount of deviation from GR to be tuned.
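How H0rc controls the size of the modification can be illustrated numerically. The Python sketch below assumes the normal-branch expression β(a) = 1 + 2Hrc[1 + Ḣ/(3H²)] (the + sign associated with the normal branch), a flat ΛCDM background with an illustrative Ωm = 0.3, and the standard linear-theory result that the effective gravitational constant on large, unscreened scales is Geff/G = 1 + 1/(3β). It only shows that a larger H0rc, as in N5 compared with N1, gives a larger β and hence a weaker modification; it ignores the Vainshtein screening that operates on small scales, and the function names are hypothetical.

```python
import numpy as np

def beta_normal_branch(a, h0_rc, omega_m=0.3):
    """beta(a) for normal-branch DGP on a flat LCDM background (assumption)."""
    e2 = omega_m * a ** -3 + (1.0 - omega_m)         # (H / H0)^2
    hdot_over_h2 = -1.5 * omega_m * a ** -3 / e2       # dH/dt / H^2 for matter + Lambda
    h_rc = h0_rc * np.sqrt(e2)                         # H(a) * r_c
    return 1.0 + 2.0 * h_rc * (1.0 + hdot_over_h2 / 3.0)

def geff_over_g_linear(a, h0_rc, omega_m=0.3):
    """Linear-theory enhancement of the effective gravitational constant."""
    return 1.0 + 1.0 / (3.0 * beta_normal_branch(a, h0_rc, omega_m))

for h0_rc in (1.0, 5.0):    # H0 r_c values of the N1 and N5 models
    print(h0_rc, beta_normal_branch(1.0, h0_rc), geff_over_g_linear(1.0, h0_rc))
```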

3. Weighted statistics and estimators

In Table 1 we summarise the notation used throughout this work to ease distinguishing between the different discrete and continuous quantities.

Table 1. Notations used in this article.

3.1. Unweighted Statistics

The density contrast δ(x), which encodes the relative change of the density field ρ(x), is defined as

$$\delta(\mathbf{x}) = \frac{\rho(\mathbf{x})}{\bar{\rho}} - 1,$$

where ρ̄ is the mean density. To study matter clustering in the cosmological context, one of the most common summary statistics of the density field is the two-point correlation function ξ(x, y), or its Fourier counterpart, the power spectrum. The 2PCF is the cumulant ⟨δ(x)δ(y)⟩c of the density contrast at positions x and y. For two-point correlations, the cumulant and the standard ensemble average are the same quantity. They remain the same up to three-point correlations but start to differ from four-point correlations onwards. Owing to the assumed statistical invariance under translations, the correlation function depends only on the separation vector r = x − y. By inserting the definition of the density contrast, we have that

$$\xi(\mathbf{r}) = \langle \delta(\mathbf{x})\,\delta(\mathbf{y}) \rangle = \frac{\langle \rho(\mathbf{x})\,\rho(\mathbf{y}) \rangle}{\bar{\rho}^{2}} - 1.$$

From the last equation, one can see that the 2PCF is zero if the field is totally uncorrelated at two different positions.

To estimate the 2PCF, we can use the commonly used pair-counting estimator proposed by Landy & Szalay (1993) to minimise the variance, which takes the form

$$\hat{\xi}(r) = \frac{DD(r) - 2DR(r) + RR(r)}{RR(r)}.$$

The terms DD(r) and RR(r) are the normalised pair counts measured in the data sample and in a random sample following the geometry of the data sample, respectively. In addition, the cross term DR(r) counts pairs consisting of one point in the data sample and the other in the random sample. In this work, we only compute two-point correlation functions in periodic boxes without a selection function. In this case, the term DR converges to the term RR in the limit of many realisations of random catalogues, and we can use the natural estimator given by Peebles & Hauser (1974)

$$\hat{\xi}(r) = \frac{DD(r)}{RR(r)} - 1.$$

The distribution of pairs in real space is isotropic, which, together with periodic boundary conditions, makes the correlation function depend only on the modulus r of the pair separation vector.
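The natural estimator with analytic RR counts in a periodic box can be sketched in a few lines of NumPy. This brute-force version is only an illustrative stand-in, since the measurements in this work rely on Corrfunc. It counts each unique pair once with the minimum-image convention (valid for separations below half the box side), normalises DD by the total number of unique pairs N(N − 1)/2, and replaces RR by the fraction of the box volume occupied by each spherical shell. The function names are hypothetical, and the O(N²) memory cost restricts the sketch to small catalogues.

```python
import numpy as np

def pair_separations_periodic(pos, box):
    """Separations of all unique pairs (i < j) using the minimum-image convention."""
    diff = pos[:, None, :] - pos[None, :, :]
    diff -= box * np.round(diff / box)            # wrap into [-box/2, box/2]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    iu = np.triu_indices(len(pos), k=1)
    return dist[iu]

def xi_natural_periodic(pos, box, edges):
    """Natural estimator xi = DD/RR - 1 with analytic RR for a periodic box."""
    n = len(pos)
    dd, _ = np.histogram(pair_separations_periodic(pos, box), bins=edges)
    dd_norm = dd / (0.5 * n * (n - 1))            # normalised DD counts
    shell = 4.0 / 3.0 * np.pi * (edges[1:] ** 3 - edges[:-1] ** 3)
    rr_norm = shell / box ** 3                    # normalised analytic RR counts
    return dd_norm / rr_norm - 1.0

rng = np.random.default_rng(42)
box = 100.0
pos = rng.uniform(0.0, box, size=(1000, 3))       # unclustered points: xi should be close to 0
edges = np.array([5.0, 10.0, 15.0, 20.0])
xi = xi_natural_periodic(pos, box, edges)
print(xi)
```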

In redshift space, it is useful to compute the anisotropic correlation function ξ(s, μ), binned in the norm s of the pair separation vector and in the cosine μ of the angle between the line of sight (LOS) and the pair separation vector. The 2PCF estimator for a periodic box is hence

$$\hat{\xi}(s,\mu) = \frac{DD(s,\mu)}{RR(s,\mu)} - 1,$$

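and the normalised RR counts are given by

$$RR(s_i,\mu_j) = \frac{4\pi}{3}\,\frac{\left(s_{i+1}^{3} - s_{i}^{3}\right)\left(\mu_{j+1} - \mu_{j}\right)}{V},$$

where $[s_i, s_{i+1}]$ and $[\mu_j, \mu_{j+1}]$ are the bin edges, with 0 ≤ μ ≤ 1, and V is the box volume. This can be derived by calculating the volume covered by the respective bins in s and μ relative to the total volume of the box. For real-space measurements, the RR counts can be evaluated analytically in a similar fashion as in Eq. (22).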

The 2PCF in redshift space, ξ(s, μ), can be decomposed into multipole moments, which form a basis encoding the different angular dependencies of the full 2PCF. Usually the decomposition is restricted to the first three non-vanishing multipole moments, namely the monopole, quadrupole, and hexadecapole. In the following, we focus on the first two, since the hexadecapole can be quite noisy for small point sets. The multipole moments of the correlation function are obtained by decomposing ξ(s, μ) in the basis of Legendre polynomials Lℓ(μ) as

$$\xi_\ell(s) = \frac{2\ell + 1}{2}\int_{-1}^{1} \xi(s,\mu)\,L_\ell(\mu)\,\mathrm{d}\mu,$$

yielding for the monopole and quadrupole

$$\xi_0(s) = \frac{1}{2}\int_{-1}^{1} \xi(s,\mu)\,\mathrm{d}\mu, \qquad \xi_2(s) = \frac{5}{4}\int_{-1}^{1} \xi(s,\mu)\left(3\mu^{2} - 1\right)\mathrm{d}\mu.$$

In practice, these integrals are discretised and we measure ξ(s, μ) in 100 bins from μ = 0 to μ = 1 using the symmetry under interchange of galaxies for a given pair, which is fulfilled in our periodic box simulations. The discretised correlation function is then integrated by approximating the integral as a Riemann sum.
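The analytic RR(s, μ) counts and the Riemann-sum evaluation of the multipoles described above can be sketched as follows, assuming μ bins that cover [0, 1] (pairs folded with |μ|) and Legendre polynomials evaluated at the bin midpoints; the midpoint choice and the function names are our assumptions. With μ restricted to [0, 1], the symmetry of ξ(s, μ) turns the prefactor (2ℓ + 1)/2 of the integral over [−1, 1] into (2ℓ + 1) for even ℓ. The check at the end feeds in a toy ξ(s, μ) = a + b L2(μ), whose monopole and quadrupole should come out as a and b.

```python
import numpy as np
from numpy.polynomial import Legendre

def rr_s_mu(s_edges, mu_edges, box):
    """Normalised analytic RR(s, mu) for |mu| in [0, 1] in a periodic box of side `box`."""
    ds3 = s_edges[1:] ** 3 - s_edges[:-1] ** 3
    dmu = np.diff(mu_edges)
    return 4.0 / 3.0 * np.pi * np.outer(ds3, dmu) / box ** 3

def multipole(xi_s_mu, mu_edges, ell):
    """Even multipole ell of xi(s, mu) sampled on |mu| bins, via a midpoint Riemann sum."""
    mu_mid = 0.5 * (mu_edges[1:] + mu_edges[:-1])
    dmu = np.diff(mu_edges)
    leg = Legendre.basis(ell)(mu_mid)               # L_ell at bin midpoints
    return (2 * ell + 1) * np.sum(xi_s_mu * leg * dmu, axis=-1)

s_edges = np.linspace(0.0, 150.0, 31)
mu_edges = np.linspace(0.0, 1.0, 101)               # 100 mu bins, as in the text
mu_mid = 0.5 * (mu_edges[1:] + mu_edges[:-1])
a, b = 0.3, -0.2                                    # toy monopole and quadrupole
xi_toy = np.tile(a + b * 0.5 * (3 * mu_mid ** 2 - 1), (len(s_edges) - 1, 1))
xi0 = multipole(xi_toy, mu_edges, 0)
xi2 = multipole(xi_toy, mu_edges, 2)
rr = rr_s_mu(s_edges, mu_edges, 1000.0)
print(xi0[0], xi2[0], rr.sum())
```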

3.2. Weighted statistics

Let us now define the weighted density contrast

$$\delta_M(\mathbf{x}) = \frac{\rho_M(\mathbf{x})}{\bar{\rho}_M} - 1,$$

where the weighted density field is given by ρM(x) = m(x)ρ(x), ρ̄M = ⟨m(x)ρ(x)⟩ is its mean, and m(x) is the mark field. The weighted correlation function is the ensemble average of the weighted density contrast correlation,

$$W(\mathbf{r}) = \langle \delta_M(\mathbf{x})\,\delta_M(\mathbf{y}) \rangle,$$

which, after substituting the definition of the weighted density contrast, takes the form

$$W(\mathbf{r}) = \frac{\langle m(\mathbf{x})\rho(\mathbf{x})\,m(\mathbf{y})\rho(\mathbf{y}) \rangle}{\bar{\rho}_M^{2}} - 1.$$

The mark field m(x) can be continuous in space, or defined on the point set (galaxy or halo catalogue) in a discrete way. Each object in the catalogue can be assigned a mark from the mark field, e.g. the i-th object has a mark mi. The normalised weighted pair counts are obtained as

where the sum is computed over all pairs with a separation inside the bin centred on r. The marked correlation function is then defined as (Beisbart & Kerscher 2000; Sheth 2005)

$$\mathcal{M}(r) = \frac{1 + W(r)}{1 + \xi(r)}.$$

It converges to ℳ(r) = 1 on large scales as W(r) and ξ(r) approach zero.

To estimate the weighted correlation function from a catalogue, the natural estimator can be generalised by simply replacing the DD(r) counts with the weighted WW(r) counts, arriving at

$$\hat{W}(r) = \frac{WW(r)}{RR(r)} - 1.$$

Inserting this into the definition of the marked correlation function ℳ(r), we have

$$\hat{\mathcal{M}}(r) = \frac{WW(r)}{DD(r)}.$$

If the LS estimator is employed instead, one has to compute WR(r) and DR(r) terms in addition.
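A brute-force sketch of the marked correlation function with the natural estimator, ℳ(r) = WW(r)/DD(r), is given below. We assume here that the weighted pair counts are normalised by the total weighted number of unique pairs, Σi<j mi mj = [(Σ mi)² − Σ mi²]/2, and the unweighted counts by N(N − 1)/2, so that constant marks give ℳ(r) = 1 exactly; this normalisation choice and the function names are ours. Pairs are found by brute force with the minimum-image convention, which limits the sketch to small catalogues.

```python
import numpy as np

def unique_pairs_periodic(pos, box):
    """Separations and indices (i < j) of all unique pairs, minimum-image convention."""
    diff = pos[:, None, :] - pos[None, :, :]
    diff -= box * np.round(diff / box)
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    i, j = np.triu_indices(len(pos), k=1)
    return dist[i, j], i, j

def marked_cf_natural(pos, marks, box, edges):
    """M(r) = WW(r) / DD(r) with both pair counts normalised (natural estimator)."""
    marks = np.asarray(marks, dtype=float)
    dist, i, j = unique_pairs_periodic(pos, box)
    n = len(pos)
    dd, _ = np.histogram(dist, bins=edges)
    ww, _ = np.histogram(dist, bins=edges, weights=marks[i] * marks[j])
    dd_norm = dd / (0.5 * n * (n - 1))
    ww_norm = ww / (0.5 * (marks.sum() ** 2 - (marks ** 2).sum()))
    return ww_norm / dd_norm

rng = np.random.default_rng(7)
box = 100.0
pos = rng.uniform(0.0, box, size=(1000, 3))
edges = np.array([5.0, 10.0, 15.0, 20.0])
m_const = marked_cf_natural(pos, np.full(1000, 2.0), box, edges)            # constant marks
m_rand = marked_cf_natural(pos, rng.uniform(0.5, 1.5, 1000), box, edges)    # position-independent marks
print(m_const, m_rand)
```

Any departure of ℳ(r) from unity therefore reflects a correlation between the marks and the pair separation, which is the environmental signal the statistic is designed to pick up.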

Computing the multipoles of the weighted correlation function is analogous to the unweighted case. However, the multipoles of the marked correlation function can be defined in two ways. The most intuitive definition is obtained by decomposing the marked correlation function ℳ(s, μ) in the basis of Legendre polynomials, yielding

The second approach uses the following definition

which is motivated by the fact that the denominator is the actual multipole of the unweighted 2PCF. This is not the case in the first definition in Eq. (32). The second definition has been used for instance by White (2016) and Satpathy et al. (2019). Throughout this work we use the form given in Eq. (32).

4. Simulations

4.1. Characteristics

To investigate different marked correlation functions and assess their discriminating power regarding GR and MG, we use the Extended LEnsing PHysics using ANalytic ray Tracing (ELEPHANT) simulation suite, thoroughly discussed in Sect. II B. of Alam et al. (2021). We only provide a brief description of it in the following. This simulation suite consists of five realisations of GR with ΛCDM cosmology, f(R) gravity with three different values of |fR0|=[10−6, 10−5, 10−4], and nDGP gravity with H0rc = [5.0, 1.0]. Henceforth, we refer to the different simulations as GR, F6, F5, F4, N5, and N1, respectively. The background cosmology is summarised in Table 2 and resembles the best-fitting cosmology obtained from the nine-year Wilkinson Microwave Anisotropy Probe (WMAP) CMB analysis presented in Hinshaw et al. (2013).

Table 2. Reference cosmology of the ELEPHANT (first column) and DEMNUni (second column) simulations.

Key simulation parameters are summarised in Table 3. The dark matter halos were identified with the ROCKSTAR algorithm (Behroozi et al. 2013) and subsequently populated with galaxies using the five-parameter HOD model of Zheng et al. (2007). For each realisation, redshift-space coordinates were calculated by fixing the LOS to each of the three simulation box axes in turn and by 'observing' the box from a distance equal to 100 times the box side length. One crucial property of this simulation suite, which makes it particularly suitable for our study, is that the projected galaxy 2PCF wp(rp) of the MG simulations matches that predicted by GR. This was achieved by tuning the HOD parameters of the MG simulations. For the GR simulation, the best-fit HOD parameters were taken from Manera et al. (2013).

Table 3. Characteristics of the ELEPHANT simulation suite.

We use a second set of simulations to assess discreteness effects in the estimation of the mark and how they propagate into the marked correlation function. For this, we make use of the Covmos realisations from Baratta et al. (2023). These are not full N-body simulations; rather, they reproduce the one- and two-point statistics of dark-matter particles following the technique described in Baratta et al. (2020). This procedure consists of applying a local transformation to a Gaussian density field such that it follows a target PDF and power spectrum. The point set is then obtained by local Poisson sampling of the linearly interpolated density values. For this set of Covmos realisations, the target PDF and power spectrum were set by the statistics of the DEMNUni N-body simulation (Castorina et al. 2015). The DEMNUni simulation assumes a ΛCDM cosmology with the parameters presented in Table 2. The Covmos catalogues contain about 20 × 10⁶ points in a box of 1 h−1 Gpc side length, resulting in a number density of about 0.02 h³ Mpc⁻³. This high density enables us to treat those catalogues as (almost) free from shot noise.
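The two ingredients of the Covmos procedure mentioned above, a local transformation of a Gaussian field followed by Poisson sampling, can be illustrated with the toy Python sketch below. It is not the Covmos algorithm: it uses a white-noise Gaussian field (no target power spectrum is imposed), an assumed lognormal target PDF, and Poisson sampling of cell counts rather than of linearly interpolated density values; the grid size, σ, and function name are arbitrary choices of ours. The mean number density is set to the 0.02 h³ Mpc⁻³ quoted for the Covmos catalogues, which corresponds to a Poisson shot-noise level of 1/n̄ = 50 h⁻³ Mpc³.

```python
import numpy as np

def lognormal_poisson_counts(n_grid=32, box=1000.0, nbar=0.02, sigma_g=0.5, seed=1):
    """Toy field: local lognormal transform of Gaussian noise, then Poisson cell counts.

    box is in h^-1 Mpc and nbar in h^3 Mpc^-3.
    """
    rng = np.random.default_rng(seed)
    g = rng.normal(0.0, sigma_g, size=(n_grid,) * 3)        # Gaussian field, no P(k) imposed
    delta = np.exp(g - 0.5 * sigma_g ** 2) - 1.0            # local transform: lognormal, <delta> = 0
    cell_volume = (box / n_grid) ** 3
    counts = rng.poisson(nbar * cell_volume * (1.0 + delta))  # Poisson sampling per cell
    return delta, counts

delta, counts = lognormal_poisson_counts()
print(counts.sum(), 0.02 * 1000.0 ** 3)    # total number of objects vs. expected value
print(1.0 / 0.02)                          # shot-noise level 1/nbar in h^-3 Mpc^3
```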

4.2. Two-point correlation function

Although the galaxy projected correlation function is matched in the ELEPHANT suite, it is instructive to assess residual deviations in other statistics, particularly for the interpretation of differences arising in the analysis of marked correlation functions.

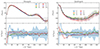

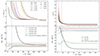

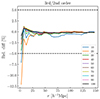

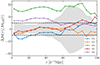

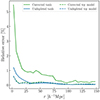

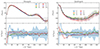

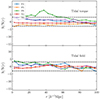

We measured both the real- and redshift-space correlation functions in 30 linear bins in r and s, respectively, ranging from 10⁻³ h−1 Mpc to 150 h−1 Mpc. For the redshift-space measurements, we used the ELEPHANT catalogues with the LOS fixed along the x-direction. All correlation functions in this work were measured with the publicly available package Corrfunc (Sinha & Garrison 2019, 2020). The upper panel of Fig. 1 shows the standard correlation function in real space for the different gravity simulations. The measurements agree within their uncertainties on all scales, although on very small scales a more careful assessment of possible deviations is advisable because the error bars are very small. The upper panels of Fig. 2 present the monopole (left) and quadrupole (right) of the anisotropic 2PCF in redshift space. As for the real-space correlation function, the multipoles agree within their uncertainties on large scales, although the N1 quadrupole appears to deviate from the others. On smaller scales, discrepancies seem to appear as the uncertainties become very small, and visual inspection is not sufficient to quantify them.

To assess the difference between MG and GR in weighted or unweighted correlation functions, we define it as the mean

where i runs over the realisations. However, the mean difference alone says nothing about its significance, since the measurements may fluctuate much more than the difference itself. We therefore divide the mean difference by its standard deviation,

The factor 1/(N − 1) yields an unbiased estimate of the variance, which matters here since we only have five realisations at hand. The additional factor 1/N arises because we want the error on the mean rather than that of a single measurement. Similarly, we compute the standard deviation of a single marked correlation function as

In the end, the ratio of interest is the signal-to-noise ratio (S/N) defined by

which directly expresses the difference in units of standard deviations. If the absolute value of this S/N exceeds 3, we consider the deviation between MG and GR to be significant.

Another quantity of interest that we use throughout this work is the ratio between the error on a single measurement of the marked correlation function and the noise σavg, as used in the signal-to-noise ratio. We refer to this ratio as

and include it in the figures as shaded regions. Here, the error of a single measurement σs(r) is taken from the GR case. This ratio indicates the statistical significance of a difference if only one simulation or measurement were available. To assess this, we compare S/N(r) with α(r): if S/N(r) > 3α(r), a 3σ difference would be detectable with a single measurement. Care must be taken, however, if the error of a single measurement differs significantly between GR and MG, since α(r) then depends on which simulations are used to estimate the error, which could affect the conclusions.
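A minimal NumPy sketch of these diagnostics is given below. It assumes that row i of the MG and GR arrays comes from the same realisation (paired initial conditions), that σavg is the Bessel-corrected standard error of the per-realisation differences, and that σs is the Bessel-corrected scatter of the GR measurements. The function name `mg_gr_significance` is hypothetical, and this is our reading of the definitions rather than the exact estimator used.

```python
import numpy as np

def mg_gr_significance(xi_mg, xi_gr):
    """Per-bin S/N and alpha from paired MG/GR realisations.

    xi_mg, xi_gr: arrays of shape (N_realisations, N_bins); row i of both
    arrays is assumed to share the same initial conditions.
    """
    xi_mg = np.asarray(xi_mg, dtype=float)
    xi_gr = np.asarray(xi_gr, dtype=float)
    n = xi_mg.shape[0]
    diff = xi_mg - xi_gr                      # per-realisation difference
    mean_diff = diff.mean(axis=0)             # mean difference over realisations
    # Error on the mean: Bessel-corrected variance (1/(N-1)) times an extra 1/N
    sigma_avg = np.sqrt(np.sum((diff - mean_diff) ** 2, axis=0) / (n * (n - 1)))
    # Error of a single GR measurement (Bessel-corrected scatter)
    sigma_s = np.std(xi_gr, axis=0, ddof=1)
    snr = mean_diff / sigma_avg
    alpha = sigma_s / sigma_avg
    return snr, alpha
```

A bin would then count as significant if |S/N| > 3, and as detectable with a single measurement if |S/N| > 3α.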

The lower panel of Fig. 1 displays S/N(r) as introduced above. The differences rarely exceed 3σ, except on the smallest scales below 20 h−1 Mpc, or for F4 on intermediate scales where deviations reach up to 6σ. However, these large deviations occur only in isolated bins and follow no general trend. This suggests that the crossings of the 3σ threshold may be caused by sample variance, as the statistical power is limited with only five realisations. In addition, when the error of a single realisation volume is considered, as displayed by the blue shaded region, the deviations for F4 are within the uncertainty.


Fig. 1. Difference in the measured standard correlation function ξ(r) between GR and MG in real space. The upper panel shows the correlation functions themselves, with colours indicating the underlying gravity theory. The curves show the average over five realisations, and the error bars correspond to the mean standard deviation over these realisations. The lower panel quantifies possible differences in terms of the S/N, as introduced in Sect. 4. Black dashed lines indicate an S/N of ±3. The shaded region shows the error of a single measurement divided by the mean error of the difference, as described in Eq. (38).

The lower panels of Fig. 2 show S/N(s) for the redshift-space multipoles. Except on the smallest scales, the F5, F6 and N5 simulations generally show no significant differences from GR. For the monopole, the S/N is close to 0 on almost all scales. For F4 and N1, significant differences are present, although mainly on scales below 20 h−1 Mpc. On larger scales, the S/N of both simulations fluctuates around 3σ. These differences are mostly within the uncertainty if the error of a single volume is considered. This confirms that the standard correlation function alone, in real or redshift space, cannot really distinguish between GR and these MG models.


Fig. 2. Differences between GR and MG in the measured standard correlation function multipoles in redshift space. The upper panels present the mean multipoles over five realisations, with the monopole on the left and the quadrupole on the right. The error bars correspond to the mean standard deviation over five realisations. The lower panels show the respective S/N, with ±3σ indicated by the black dashed lines. The colour coding refers to the different gravity simulations, and the corresponding shaded regions show the error of a single measurement divided by the mean error of the difference (see Eq. (38)).

5. Marks for modified gravity

There is a vast space of possible marks, and the specific choice strongly depends on the context in which the marked correlation function is studied. The most popular mark function M[ρ(x)] in the literature on detecting MG was introduced by White (2016) and takes the form

where ρ* and p are free parameters that control how the mark upweights low- versus high-density regions. In the following, we refer to this mark as the White mark and denote it with the subscript W. The White mark can be seen as a local transformation of the density field. Choices for the free parameters range from (ρ*, p) = (10.0, 7.0) (Aviles et al. 2020) and (ρ*, p) = (4.0, 10.0) (Valogiannis & Bean 2018; Alam et al. 2021) to (ρ*, p) = (10⁻⁶, 1.0) (Hernández-Aguayo et al. 2018). Upweighting galaxies in high-density regions using (ρ*, p) = (1.0, −1.0) has also been explored by Alam et al. (2021). Other values have been investigated by Satpathy et al. (2019) and Massara et al. (2021) for the marked power spectrum. This underlines the wide range of possible mark functions and configurations, and the amount of freedom they introduce into the analysis.
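For illustration, the sketch below implements the White mark in the form commonly quoted from White (2016), M_W = [(ρ* + 1)/(ρ* + ρ)]^p, assuming ρ is the smoothed density in units of the mean (ρ = 1 + δ). The function name `white_mark` is hypothetical. The sketch shows that the mark equals one at the mean density for any (ρ*, p), upweights low densities for p > 0 and upweights high densities for p < 0.

```python
import numpy as np

def white_mark(rho, rho_star, p):
    """White-type mark; rho is the local density in units of the mean (rho = 1 + delta)."""
    rho = np.asarray(rho, dtype=float)
    return ((rho_star + 1.0) / (rho_star + rho)) ** p

# p > 0: underdense regions receive weights above one, overdense regions below one
low, high = white_mark(0.2, 4.0, 10.0), white_mark(5.0, 4.0, 10.0)
# (rho*, p) = (1, -1) reverses this and upweights dense regions: M = (1 + rho) / 2
dense_up = white_mark(3.0, 1.0, -1.0)
```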

Marks based on the local density first require an estimate of that density from a finite point set, and several approaches exist. While we use an estimate based on mass assignment schemes (MAS), adaptive approaches such as Delaunay (Schaap & van de Weygaert 2000) or Voronoi tessellations, as used in void finders (e.g. Neyrinck 2008), could also be used. Applying a MAS to a discrete density field (subscript f for finite),

where the i-th point is located at position xi, the estimated density field on the grid takes the form (Sefusatti et al. 2016)

where  is the density of points per grid cell, N is the number of points, a is the size of one grid cell, and F(x) is the MAS kernel. The coordinate x is evaluated only at grid points but could in principle be placed anywhere. In this derivation, we assumed that all points have the same mass m, and we used the shorthand F(x) ≡ F(x1)F(x2)F(x3). The density field obtained in this way is the true density field convolved with the MAS kernel. In this work, we mainly use a piecewise cubic spline (PCS) as the MAS, but higher- and lower-order kernels are employed for specific tests. The explicit forms of the kernels up to septic order can be found in the appendix of Chaniotis & Poulikakos (2004)1.


5.1. Beyond local density

One way to include information beyond the local density field is to use the large-scale environment, which can be divided into clusters, filaments, walls and voids. There are various ways to identify these structures in a galaxy catalogue, ranging from the sophisticated approach of Sousbie (2011), based on topological considerations, to that of Falck et al. (2012), which uses phase-space information. One of the most straightforward approaches is the T-web formalism (Forero-Romero et al. 2009), based on the Hessian of the gravitational potential. For a thorough comparison of these and many other cosmic web classifications, we refer the reader to Libeskind et al. (2018). In this analysis, we use the T-web classification, which relies on the relation between the eigenvalues λ1, λ2 and λ3 of the tidal tensor and the density evolution, as given in Cautun et al. (2014)

where D(t) is the growth factor. This expression can be derived from first-order Lagrangian perturbation theory (Zel’dovich 1970). The dimensionality of the structure then depends on the number of positive eigenvalues. Three positive eigenvalues correspond to a cluster, as they encode collapse along all three spatial directions. Two or one positive eigenvalues correspond to a filament or a wall, respectively. If all eigenvalues are negative, ρ(x) never diverges, and we interpret the region as a void. A pitfall of this classification arises when some eigenvalues are positive but very small, since the corresponding structure might not collapse within a Hubble time. To circumvent this issue without relying on eigenvalue thresholds, we use the scheme proposed by Cautun et al. (2013), who define environmental signatures 𝒮 for clusters, filaments, walls and voids in terms of the ordered eigenvalues λ1 ≤ λ2 ≤ λ3 (their Eqs. (6) and (7)). We adapted this scheme to the eigenvalues of the tidal tensor instead of the Hessian of the density contrast used by Cautun et al. (2013).
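The sign-counting rule above can be sketched as follows, together with the first-order Lagrangian density in its standard Zel'dovich form ρ/ρ̄ = 1/∏i(1 − Dλi), which we assume here. This is the naive, threshold-free version whose pitfall is described above, not the Cautun et al. (2013) signature scheme adopted in this work; the function names are hypothetical.

```python
import numpy as np

ENVIRONMENTS = np.array(["void", "wall", "filament", "cluster"])

def zeldovich_density(eigenvalues, growth):
    """rho / rho_mean = 1 / prod_i (1 - D * lambda_i), first-order Lagrangian PT."""
    lam = np.asarray(eigenvalues, dtype=float)
    return 1.0 / np.prod(1.0 - growth * lam, axis=-1)

def classify_by_sign(eigenvalues):
    """Naive T-web: number of positive eigenvalues -> 0 void, 1 wall, 2 filament, 3 cluster."""
    n_positive = np.sum(np.asarray(eigenvalues) > 0, axis=-1)
    return ENVIRONMENTS[n_positive]

lams = np.array([[0.5, 0.8, 1.2],      # collapse along all three axes
                 [-0.3, 0.4, 0.9],     # collapse along two axes
                 [-0.6, -0.2, 0.7],    # collapse along one axis
                 [-0.9, -0.5, -0.1]])  # expansion along all axes
print(classify_by_sign(lams))          # ['cluster' 'filament' 'wall' 'void']
```

With all eigenvalues negative, every factor 1 − Dλi exceeds one, so the density only decreases; a positive λi makes the density diverge once D approaches 1/λi.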

To obtain the tidal tensor in a simulation, we follow the same grid-based approach as for the density field. With the density field on a grid, the gravitational potential and the tidal tensor can be obtained straightforwardly through a series of fast Fourier transforms (FFTs). For this, we use the Poisson equation to relate the density field to the gravitational potential

We absorb the spatial constant  into the definition of the gravitational potential. The expression is singular at zero wavevector; we avoid this by setting the zeroth mode of Φ(k) to zero, since we expect the gravitational potential sourced by the density contrast to have zero mean. The components of the tidal tensor Tij are then obtained by taking successive derivatives in the respective directions:


On a finite grid, the off-diagonal terms break the Fourier symmetry between pairs of modes, which leads to a non-vanishing imaginary part once the tidal tensor is transformed back to configuration space by an inverse FFT. We circumvent this issue by applying a filter that sets the symmetry-breaking modes at the Nyquist frequency to zero, so that the imaginary part vanishes. In our implementation, we evaluate the environmental signatures on the same grid on which the eigenvalues of the tidal tensor have been computed. For each grid cell, the largest signature defines the corresponding environment; if all signatures are zero, the environment is set to void.
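A minimal NumPy sketch of the FFT route from a gridded density contrast to Tij is shown below. It assumes ∇²Φ = δ with all constants absorbed (so Φ(k) = −δ(k)/k² and Tij(k) = kikjδ(k)/k²), a periodic cubic grid with an even number of cells per side, and a Nyquist filter that drops the unpaired Nyquist wavenumber in the single-derivative factors of the off-diagonal terms. The function name and this particular filter implementation are our assumptions, not necessarily the authors' code.

```python
import numpy as np

def tidal_tensor(delta, box_size):
    """T_ij = d_i d_j Phi on a periodic cubic grid, assuming nabla^2 Phi = delta.

    Returns the real tensor of shape (3, 3, n, n, n) and the largest discarded
    imaginary part (a check that the Nyquist filter restored Hermitian symmetry).
    """
    n = delta.shape[0]
    kf = 2.0 * np.pi / box_size
    k1d = np.fft.fftfreq(n, d=1.0 / n) * kf          # Nyquist mode sits at index n // 2
    k1d_single = k1d.copy()
    if n % 2 == 0:
        k1d_single[n // 2] = 0.0                     # unpaired Nyquist wavenumber -> 0
    full = np.meshgrid(k1d, k1d, k1d, indexing="ij")
    single = np.meshgrid(k1d_single, k1d_single, k1d_single, indexing="ij")

    k2 = full[0] ** 2 + full[1] ** 2 + full[2] ** 2
    k2[0, 0, 0] = 1.0                                # avoid 0/0 for the zero mode
    phi_k = -np.fft.fftn(delta) / k2                 # Poisson equation in Fourier space
    phi_k[0, 0, 0] = 0.0                             # zero-mean potential

    T = np.empty((3, 3) + delta.shape)
    max_imag = 0.0
    for i in range(3):
        for j in range(i, 3):
            # diagonal: k_i^2 is even in k; off-diagonal: product of two single derivatives
            kk = full[i] ** 2 if i == j else single[i] * single[j]
            t = np.fft.ifftn(-kk * phi_k)            # (i k_i)(i k_j) = -k_i k_j
            max_imag = max(max_imag, float(np.abs(t.imag).max()))
            T[i, j] = T[j, i] = t.real
    return T, max_imag
```

Because the diagonal components are unaffected by the filter, the trace of the returned tensor reproduces δ minus its mean, which provides a quick consistency check. Eigenvalues per cell can then be obtained with `np.linalg.eigvalsh` after moving the two tensor axes to the end.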

Once each galaxy has been classified, this information can be used to enhance the effects of MG in clustering measurements. The simplest use of the environmental classification is to divide the catalogue into sub-catalogues of galaxies located in voids, walls, filaments and clusters, and to compute their auto-correlation functions. The difference in clustering amplitude between environments is expected to be stronger in MG, particularly in the correlation function of void galaxies. Bonnaire et al. (2022) computed the power spectra of density fields obtained by splitting the original density field into the contributions from galaxies in voids, walls, filaments and clusters. They applied this approach to the dark matter particles of the Quijote simulations (Villaescusa-Navarro et al. 2020) and thus worked with a much larger set of points. A drawback when analysing these galaxy mock catalogues, and also limited survey samples, is the loss of information caused by discarding many galaxies, which increases the uncertainty of the measurements. Computing environmental correlation functions is equivalent to computing weighted correlation functions with a mark set to one for all galaxies in the respective environment and zero otherwise. We refer the interested reader to Appendix A for some notes on the structure of weighted correlation functions with such weights. In the following, we name such marked correlation functions, defined as the environmental weighted correlation function divided by the total unweighted correlation function as in Eq. (29), after their respective environment; for instance, the void marked correlation function.

Alternatively, we can use the full catalogue and assign progressively larger weights to progressively less screened galaxies, as done by the following mark field

where LEM stands for linear environment mark. This approach is similar to the density-split technique used by Paillas et al. (2021), who investigated cross-correlation functions of galaxies in regions of different density. Our proposed mark can also be decomposed into a specific combination of auto- and cross-correlations. This mark could in principle be extended to a WallLEM mark, in which wall galaxies are assigned a weight of 4 and void galaxies a weight of 3, and similarly peaked functions could be defined for filaments and clusters. However, since we expect MG effects to be strongest in low-density regions, we restrict ourselves to upweighting void or wall galaxies only.

Yet another way of using the environmental classification as a mark is to further enhance the anti-correlation present in low-density regions. This can be achieved with the following mark

where AC abbreviates anti-correlation. In principle, switching the sign of this mark makes no difference, because Eq. (28) makes clear that any overall factor of the marks cancels in the division by the normalisation. This mark leaves unweighted both the pairs in which both galaxies are in voids and the pairs in which neither is. However, if one galaxy is in a void and the other is not, the pair weight is −1, thereby creating an anti-correlation.
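The sketch below (hypothetical function names) shows the pair weights produced by a VoidAC-type mark of +1 for void galaxies and −1 otherwise, and checks that rescaling or flipping the mark leaves the normalised weights unchanged. It assumes that the normalisation in Eq. (28) divides the pair weights by the squared mean mark, which therefore has to be non-zero.

```python
import numpy as np

def void_ac_mark(environment):
    """+1 for void galaxies, -1 otherwise (the overall sign is arbitrary)."""
    return np.where(np.asarray(environment) == "void", 1.0, -1.0)

def normalised_pair_weights(marks):
    """Pair weights m_i m_j divided by the squared mean mark (assumed normalisation)."""
    m = np.asarray(marks, dtype=float)
    return np.outer(m, m) / m.mean() ** 2

env = ["void", "wall", "filament", "void", "cluster"]
m = void_ac_mark(env)
w = normalised_pair_weights(m)
# void-void and non-void/non-void pairs get +1 (before normalisation),
# mixed void/non-void pairs get -1
```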

Marks based on the tidal tensor components appear promising for going beyond the local density. An interesting quantity, first introduced by Heavens & Peacock (1988) and later used by Alam et al. (2019), is the tidal torque, defined as

where λ1, λ2 and λ3 are the eigenvalues of the tidal tensor. The larger the differences between the eigenvalues, the more anisotropic the structure. Hence, we expect the tidal torque to be large for filaments and walls and small for clusters or voids. Another field depending directly on the tidal tensor components is the tidal field, also known as the second Galileon 𝒢2 (Nicolis et al. 2009), which has been used extensively in the modelling of non-local bias between galaxies and dark matter (Chan et al. 2012). The tidal field is defined as 𝒢2 = (∂i∂jΦ)² − (∂i∂iΦ)², in which we can identify the components of the tidal tensor introduced in Eq. (44). In practice, we investigate two separate marks, given by the tidal field and the tidal torque as they are. Using these fields in this way should give insight into the suitability of the tidal field and the tidal torque for disentangling MG from GR.
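As a worked check of the definition of 𝒢2 (with summation over repeated indices), the sketch below evaluates 𝒢2 = TijTij − (Tii)² from a tensor and compares it with its eigenvalue form, Σλi² − (Σλi)² = −2(λ1λ2 + λ1λ3 + λ2λ3). The tidal torque is left out because we do not assume its exact form here; the function names are hypothetical.

```python
import numpy as np

def tidal_field_g2(T):
    """G2 = sum_ij T_ij T_ij - (sum_i T_ii)^2 for tensors of shape (..., 3, 3)."""
    T = np.asarray(T, dtype=float)
    return np.einsum("...ij,...ij->...", T, T) - np.trace(T, axis1=-2, axis2=-1) ** 2

def g2_from_eigenvalues(lam):
    """Same quantity from the eigenvalues: -2 (l1 l2 + l1 l3 + l2 l3)."""
    l1, l2, l3 = np.moveaxis(np.asarray(lam, dtype=float), -1, 0)
    return -2.0 * (l1 * l2 + l1 * l3 + l2 * l3)

print(tidal_field_g2(np.diag([1.0, 2.0, 3.0])))   # -22.0
```

The eigenvalue form makes explicit that 𝒢2 is rotation invariant and vanishes for a purely one-dimensional (single non-zero eigenvalue) tidal field.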

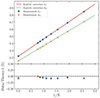

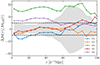

Figure 3 presents the different marked correlation functions introduced in this section. The cluster marked correlation function shows a strong signal on very small scales, which relates to the correlation between galaxies inside clusters. The compensation feature on scales between 20 h−1 Mpc and 60 h−1 Mpc arises from the less clustered regions around clusters and is similar, although reversed, to the compensation seen in the void-galaxy cross-correlation function (e.g. Aubert et al. 2022; Hamaus et al. 2022). The filament and wall marked correlation functions show progressively less signal, as the clustering of galaxies inside walls and filaments is closer to the total clustering of all galaxies. Notably, for voids, the signal below unity implies that void galaxies are less clustered than the galaxy sample as a whole. The large signal of the cluster marked correlation function comes at the cost of larger errors, owing to the small number of galaxies residing in clusters. In general, we aim for marked correlation functions whose signal differs from unity over a wide range of scales, as this may lead to differences between MG and GR on those scales. Conversely, if the marked correlation function stays very close to unity on most scales, and that of MG is also close to unity, then any difference between MG and GR can only originate from the clustering itself. In the ELEPHANT simulations, however, the projected 2PCF is matched between MG and GR to fit observations, as described in Sect. 4. For these reasons, the VoidAC mark and particularly the WallAC mark are of strong interest, as they exhibit a signal up to large scales. The lower panel of Fig. 3 shows a strong signal for the tidal field, extending to large scales. The same is found for the tidal torque, although with a considerably smaller amplitude on small scales.
Hence, these marks are also interesting candidates for discriminating between MG and GR. Although a visible impact of the mark is a necessary prerequisite, it is not sufficient to disentangle GR from MG, because the signal could nevertheless be the same in both.


Fig. 3. Summary of the different marked correlation functions using marks based on the large-scale environment (upper panel) or on the tidal field and tidal torque (lower panel). Both panels show measurements from the GR ELEPHANT simulations. The black dashed line indicates an amplitude of 1. Curves represent the mean over five realisations, and the error bars correspond to the mean standard deviation over five realisations. The measurements have not been corrected for any bias due to shot-noise effects.

Note that the marked correlation functions shown in Fig. 3 are not corrected for a possible bias arising from estimating the mark on a discrete catalogue, which we discuss in the next section. Hence, the exact amplitude of the measurements may change once such a correction is applied. For the marks based on the environmental classification, we do not expect this bias to be particularly strong, because possible misclassifications, originating from a biased estimate of the density field, should not affect every galaxy in a catalogue.

5.2. Anti-correlating galaxies using local density

So far, we have seen that marks based on the local density are particularly simple, and that introducing an anti-correlation through the mark appears promising for discriminating between GR and MG. We therefore propose the following mark function based on the hyperbolic tangent, which combines both advantages,

where a and b control how steeply the transition from −1 to 1 takes place and where it happens, respectively. In general, we could include a third parameter c as an overall factor in front of the hyperbolic tangent, but constant factors can be pulled out of the mean and are hence cancelled by the normalisation in Eq. (28). Note that the theoretical modelling of marks based on the environmental classification, such as the VoidAC mark, may be particularly challenging, as it is not straightforward to express such marks in terms of the density contrast. From a theoretical perspective, marked correlation functions with density-based marks are more tractable. Furthermore, discreteness effects arising in the density estimation itself can be corrected for more easily in the measurement of the marked correlation function, as elucidated in the next section.
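The sketch below assumes the parametrisation M = c tanh[a(δ − b)]; this form and the function name are our assumptions, chosen to match the description of a, b and c above. It illustrates that a galaxy below the transition and one above it produce a negative pair weight, and that the overall factor c, including its sign, drops out once the pair weights are divided by the squared mean mark, as assumed for the normalisation in Eq. (28).

```python
import numpy as np

def tanh_mark(delta, a, b, c=1.0):
    """Hypothetical form c * tanh[a (delta - b)]: a = steepness, b = transition point."""
    return c * np.tanh(a * (np.asarray(delta, dtype=float) - b))

deltas = np.array([-0.8, -0.5, 0.3, 1.0, 2.0])   # illustrative density contrasts
m = tanh_mark(deltas, a=5.0, b=0.0)
weights = np.outer(m, m) / m.mean() ** 2          # normalised pair weights
# Pair (0, 4) mixes an underdense and an overdense galaxy: its weight is negative
```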

6. Propagation of discreteness effects of the mark estimation into weighted correlation functions

When dealing with finite point sets, we can assume the sampling process to be locally Poissonian (Layzer 1956). This means that the number of points found in sufficiently small grid cells can be regarded as drawn from a Poisson distribution with some expectation value. However, the expectation value of the local Poisson process itself follows a PDF. This PDF, from which the expectation values are drawn, is continuous and describes the density field globally. If this PDF is a Dirac delta function, the expectation value of the Poisson process is the same everywhere, and the moments estimated from the sample points coincide with those of the continuous PDF. If, however, the continuous PDF is not a Dirac delta function, as is the case for the cosmological density field, then the estimated moments are biased with respect to the true moments of the continuous PDF. This bias is usually called shot noise or Poisson noise in the literature. In power spectrum estimation, the shot noise appears as an additive constant on all scales in k-space. In the 2PCF, by contrast, the shot noise emerges only at zero lag, that is, at zero pair separation. Hence, shot noise is inherently a problem of correlating a point with itself. When we use the density field inside a mark function, we use a smoothed version of the true field. Smoothing spreads each point over a finite volume, which leads to self-correlations at non-zero pair separations as well, and in turn to shot-noise effects. Intuitively, in the unsmoothed case all points are infinitely small dots, while in the smoothed case they are represented by circles of non-zero radius, within which a point can be correlated with itself.
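The bias described above can be illustrated with a short Monte Carlo sketch: a lognormal density contrast (a hypothetical choice) sets the local Poisson expectation in each cell, and the variance of the sampled contrast exceeds the true variance by approximately 1/n̄, where n̄ is the mean number of points per cell. All parameter values are illustrative.

```python
import numpy as np

rng = np.random.default_rng(42)
n_cells, nbar, s = 200_000, 5.0, 0.5

# Continuous field: lognormal density contrast with zero mean
g = rng.normal(0.0, s, n_cells)
delta_true = np.exp(g - s**2 / 2) - 1.0

# Local Poisson sampling with expectation nbar * (1 + delta) in each cell
counts = rng.poisson(nbar * (1.0 + delta_true))
delta_f = counts / counts.mean() - 1.0

# Shot noise: the sampled variance is biased high by roughly 1/nbar
excess = delta_f.var() - delta_true.var()
print(f"measured excess variance {excess:.3f}, expected 1/nbar = {1 / nbar:.3f}")
```

Setting the lognormal width s to zero (a Dirac delta PDF for the expectation value) leaves only the Poisson term, which is the limiting case discussed in the text.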

To understand precisely how shot noise affects marked correlation functions, we need to make a brief detour and carefully distinguish between the statistical properties of the true density contrast δ(x), the smoothed true density contrast δR(x), and the respective quantities estimated from finite point sets, denoted with an f in the subscript, δf(x) and δRf(x). The weighted correlation function estimated from a finite point set can be written as

where we defined the quantity wf(r) as

and where the mean mark is taken over the points, that is, weighted by the density. In Eq. (49), both wf(r) and the mean mark are expected to be sensitive to the noise induced by the auto-correlation of objects with themselves, and we refer to the corresponding shot-noise-free quantities as w and the true mean mark. Indeed, Eq. (50) shows that a mark function M of the smoothed density field is multiplied by the density field itself. This constitutes the main source of shot noise, which is expected to arise even at large separations r, where the smoothing kernels do not overlap. In this section, we first show the effect of shot noise on the marked correlation function for a specific mark and then devise a general method to correct for it.

6.1. A toy model

To understand how shot noise propagates into wf(r) and the mean mark, we focus on a very simple mark function defined by M[δRf(x)] = δRf(x) and M[δRf(x + r)] = 1. This corresponds to a marked correlation function in which only one point of each pair is weighted by the density contrast while the other point remains unweighted. In this instructive case, we can split wf(r) into three terms

where the individual contributions are given by

and consist of correlators between the smoothed and unsmoothed density fields estimated on a finite point set. Given that the smoothed density field δRf(x) is related to the density field δf(x) through the convolution

one can immediately see that the three contributions in Eq. (51) involve integrals over two- and three-point correlation functions of the density field. In general, N-point correlation functions are affected by shot noise as (see Chan & Blot 2017)

where the function 𝒜m(n) contains the full scale dependence of the shot-noise contribution to the N-point correlation function at the respective order in  , the mean density of points in the volume V. Therefore, the shot noise takes the form of a power series in  . In particular, for the 2PCF we have

and for the three-point correlation function

As a result, one can express each individual term of Eq. (51) in terms of the true signal and a shot-noise contribution (depending on the number density of objects) as

where we introduce  , which corresponds to the mean number of objects per grid cell. These noise contributions are obtained by using Eq. (53) in Eq. (52), inserting Eqs. (55) and (56), and integrating out the Dirac delta functions where applicable. Note that in Eq. (57) we only report noise contributions that are not proportional to Dirac delta functions, since the latter appear at zero lag only and are hence irrelevant for our considerations. Using this splitting into signal and noise, we can write Eq. (51) as

where w is the true signal and the shot-noise contribution is formally expressed as

Equation (59) shows that even on scales r larger than the smoothing scale (where F(r/a) = 0), a large-scale contribution to the shot noise remains, due to F(0). This large-scale contribution is expected to decrease with increasing MAS order, since F(0) decreases. This is why, in general, increasing the order of the MAS reduces the intrinsic shot-noise contribution to the signal.
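To make the F(0) argument concrete, the sketch below evaluates the central value of the one-dimensional kernel for the first few MAS orders, assuming the standard centred B-spline family (NGP, CIC, TSC, PCS, quartic); the 3D value follows from the product form F(x) ≡ F(x1)F(x2)F(x3). NGP and CIC share F(0) = 1, so the decrease sets in from CIC onwards. The function and dictionary names are hypothetical.

```python
from fractions import Fraction
from math import comb, factorial

MAS_NAMES = {1: "NGP", 2: "CIC", 3: "TSC", 4: "PCS", 5: "quartic"}

def bspline_at_zero(order):
    """Centre value F(0) of the 1D centred B-spline MAS kernel of the given order."""
    degree = order - 1
    if degree == 0:
        return Fraction(1)                 # NGP: top-hat of unit width
    half = Fraction(order, 2)
    total = Fraction(0)
    for k in range(order + 1):
        t = half - k                       # truncated power (x + order/2 - k)_+ at x = 0
        if t > 0:
            total += (-1) ** k * comb(order, k) * t ** degree
    return total / factorial(degree)

for order in range(1, 6):
    f1 = bspline_at_zero(order)
    print(f"{MAS_NAMES[order]:8s} F(0) = {f1}  (3D product: {float(f1) ** 3:.4f})")
```

Exact fractions are used so that the values (for example 2/3 for PCS and 115/192 for the quartic kernel) can be compared directly with tabulated kernels.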

Following the same reasoning, it is straightforward to show that, for the toy model, the shot-noise-affected mean mark estimated from a discrete set of objects can be related to the true mean mark via

where

Finally, by combining Eqs. (58) and (60) we can show that the shot-noise-corrected weighted correlation function 1 + W(r) can be expressed as

This demonstrates that correcting the marked correlation function for shot noise requires correcting both the numerator wf(r) and the denominator (the mean mark) to properly extract the true signal. This is at odds with the usual shot-noise corrections of N-point correlation functions, which are purely additive.

There is another subtlety, due to the fact that we assign the mark field back to the galaxies to measure the weighted correlation function. This back-assignment is done by determining the grid cell in which a galaxy is located and assigning it the mark of that cell, which introduces an additional smoothing of the field with a nearest-grid-point (NGP) kernel. In our computation, this leads to an additional convolution of the field δRf(x). We show in Appendix B that this additional convolution is equivalent to a single convolution with a kernel given by the convolution of a PCS and an NGP kernel, that is, a quartic kernel. Hence, the analytic shot noise in Eqs. (59) and (61) has to be computed with a quartic kernel, whose explicit expression can be found in the appendix of Chaniotis & Poulikakos (2004).
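The kernel equivalence stated above can be checked numerically. The sketch below convolves a PCS kernel with a unit-width NGP top-hat on a fine grid and compares the result with the quartic B-spline. It assumes the standard B-spline expressions for both kernels (Chaniotis & Poulikakos 2004 may write them in a different but equivalent form); all function names are hypothetical.

```python
import numpy as np

def pcs_kernel(x):
    """Piecewise cubic spline (cubic B-spline) kernel, support |x| < 2 grid cells."""
    ax = np.abs(x)
    return np.where(ax < 1.0, (4.0 - 6.0 * ax**2 + 3.0 * ax**3) / 6.0,
                    np.where(ax < 2.0, (2.0 - ax) ** 3 / 6.0, 0.0))

def ngp_kernel(x):
    """Nearest-grid-point top-hat of unit width, with half weight exactly at the edges."""
    ax = np.abs(x)
    return np.where(np.isclose(ax, 0.5), 0.5, np.where(ax < 0.5, 1.0, 0.0))

def quartic_kernel(x):
    """Quartic B-spline kernel, support |x| < 5/2 grid cells."""
    ax = np.abs(x)
    inner = (115.0 - 120.0 * ax**2 + 48.0 * ax**4) / 192.0
    middle = (55.0 + 20.0 * ax - 120.0 * ax**2 + 80.0 * ax**3 - 16.0 * ax**4) / 96.0
    outer = (5.0 - 2.0 * ax) ** 4 / 384.0
    return np.where(ax < 0.5, inner, np.where(ax < 1.5, middle, np.where(ax < 2.5, outer, 0.0)))

h = 1e-3
x = np.arange(-3000, 3001) * h              # symmetric grid of odd length, in grid-cell units
numerical = np.convolve(pcs_kernel(x), ngp_kernel(x), mode="same") * h
max_error = np.max(np.abs(numerical - quartic_kernel(x)))
print(f"max |PCS * NGP - quartic| = {max_error:.2e}")
```

The half weight at the top-hat edges turns the discrete sum into a trapezoidal rule, so the residual shrinks quadratically with the step h.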

To validate the analytic prediction of the noise in wf(r) and  we use five realisations of Covmos as introduced in Sect. 4. The goal is to have realisations at different number densities to assess the behaviour of shot noise as a function of  . Therefore, for each realisation we deplete the catalogue by randomly discarding points down to the desired density. The exact densities are motivated by the later application of the shot-noise correction to the ELEPHANT simulation suite, which has much lower point densities than Covmos. Hence, by depleting the Covmos realisations down to {1.7%, 1.53%, 1.36%, 1.19%, 1.02%, 0.85%, 0.68%, 0.51%}, we generate catalogues with the same  as the ELEPHANT suite would have if depleted down to {100%, 90%, 80%, 70%, 60%, 50%, 40%, 30%}, with 64 grid cells per dimension. The depletion is done to match the density of points in grid cells rather than in the full volume, because the shot-noise behaviour is a power series in  . The depletion is repeated 100 times and the results are averaged to minimise the sample variance arising from the stochasticity of the random depletion process. We need to carefully distinguish the five independent realisations of Covmos from the depletion realisations used to obtain a converged result for a depleted catalogue. The latter have to be generated for each of the five independent realisations.
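
The depletion bookkeeping can be sketched as follows. The mapping of depletion levels is taken from the text: keeping 1.7% of the Covmos points matches the per-cell density of the full ELEPHANT catalogue on a 64^3 grid. Several choices are assumptions of this sketch: independent Bernoulli thinning as the way to randomly discard points, the seed handling, the toy uniform catalogue and the generic `statistic` argument (in practice the weighted correlation function).

```python
import numpy as np

# Depletion levels quoted in the text: keeping 1.7% of the Covmos points matches the
# per-cell density of the full ELEPHANT catalogue on a 64^3 grid.
ELEPHANT_FRACTIONS = np.array([1.0, 0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3])
COVMOS_FRACTIONS = 0.017 * ELEPHANT_FRACTIONS  # 1.7%, 1.53%, ..., 0.51%


def deplete(positions, keep_fraction, rng):
    """Keep each point independently with probability keep_fraction (Bernoulli thinning)."""
    keep = rng.random(len(positions)) < keep_fraction
    return positions[keep]


def mean_over_depletions(positions, keep_fraction, statistic, n_depletions=100, seed=0):
    """Average a statistic over independent random depletions of ONE realisation."""
    rng = np.random.default_rng(seed)
    values = [statistic(deplete(positions, keep_fraction, rng)) for _ in range(n_depletions)]
    return np.mean(values, axis=0)


# Toy stand-in for a single realisation; the loop would be repeated per realisation.
toy_rng = np.random.default_rng(1)
catalogue = toy_rng.uniform(0.0, 1000.0, size=(100_000, 3))
for f in COVMOS_FRACTIONS:
    n_kept = mean_over_depletions(catalogue, f, len)
    print(f"keep {100 * f:.2f}%: mean number of points kept = {n_kept:.1f}")
```

The averaging over depletions and the averaging over independent realisations are kept separate, mirroring the distinction drawn in the text.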

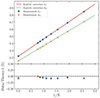

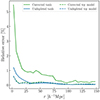

As we have seen in Eq. (62) we need to measure  as well as

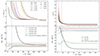

as well as  and those can be straightforwardly computed from the weighted correlation function at each level of depletion. In the upper panel of Fig. 4 we present the measurements in one Covmos realisation of wf(r) (blue points) and

and those can be straightforwardly computed from the weighted correlation function at each level of depletion. In the upper panel of Fig. 4 we present the measurements in one Covmos realisation of wf(r) (blue points) and  (orange points) as a function of

(orange points) as a function of  . The scale at which we plot wf(r) is fixed to a bin close to 20 h−1 Mpc. We can already see that we there is a linear relation with

. The scale at which we plot wf(r) is fixed to a bin close to 20 h−1 Mpc. We can already see that we there is a linear relation with  as predicted by the expression in Eqs. (59) and (61). The solid and dashed curve refer to the analytical prediction using the depletion case to 1.7% (the second data point from the left) as an anchor. That anchor is needed to obtain a noiseless signal by correcting for shot noise and then add to the true signal the noise contribution, as a function of

as predicted by the expression in Eqs. (59) and (61). The solid and dashed curve refer to the analytical prediction using the depletion case to 1.7% (the second data point from the left) as an anchor. That anchor is needed to obtain a noiseless signal by correcting for shot noise and then add to the true signal the noise contribution, as a function of  , to obtain the curve. By doing so, the relative difference between the prediction and the measurement is exactly zero by construction for this depletion as can be seen in the lower panel of Fig. 4. Moreover, even for the other data points at different levels of depletion we can predict the expected signal with high accuracy. The relative difference is at the sub-percent level for both wf(r) and

, to obtain the curve. By doing so, the relative difference between the prediction and the measurement is exactly zero by construction for this depletion as can be seen in the lower panel of Fig. 4. Moreover, even for the other data points at different levels of depletion we can predict the expected signal with high accuracy. The relative difference is at the sub-percent level for both wf(r) and  .

.

Fig. 4. Analytical correction for wf(r) and  .
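
The anchoring procedure used for Fig. 4 can be sketched for a single separation bin. The sketch assumes the linear dependence on the inverse density x stated in the text and an analytically known slope. The slope value, the synthetic data and the function name are hypothetical stand-ins for the toy-model expressions.

```python
import numpy as np


def anchored_prediction(inv_nbar, measured, anchor, slope):
    """Predict a measurement at every depletion level from a single anchor.

    Assumes measured(x) = true + slope * x with x = 1/nbar and an analytically
    known slope (a stand-in for the toy-model shot-noise amplitude)."""
    inv_nbar = np.asarray(inv_nbar, dtype=float)
    measured = np.asarray(measured, dtype=float)
    true_signal = measured[anchor] - slope * inv_nbar[anchor]  # shot-noise-free estimate
    prediction = true_signal + slope * inv_nbar                 # add the noise back per level
    rel_diff = prediction / measured - 1.0                      # zero at the anchor by construction
    return true_signal, prediction, rel_diff


# Synthetic example: eight depletion levels, x proportional to the inverse kept fraction.
fractions = np.array([1.0, 0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3])
x = 0.05 / fractions
rng = np.random.default_rng(0)
data = 0.8 + 0.1 * x + rng.normal(0.0, 1e-4, size=x.size)
true_signal, prediction, rel_diff = anchored_prediction(x, data, anchor=0, slope=0.1)

# Correcting the anchor to a denser catalogue reuses the same line: the undepleted
# catalogue is 1/0.017 times denser than the 1.7% anchor.
x_undepleted = x[0] * 0.017
print(true_signal, true_signal + 0.1 * x_undepleted, np.max(np.abs(rel_diff)))
```

The last line mirrors the fair comparison made for Fig. 5: the anchor is shifted to the finite density of the undepleted catalogue rather than to infinite density.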

Now that we have established the correctness of our analytical predictions for wf(r) and  individually, we can check how well they perform when combined into 1 + Wf(r), as shown in Fig. 5. In the upper panel we present the mean over the five Covmos realisations at different levels of depletion, indicated by the colours in the legend. As expected, since the kernel and the 2PCF drop off at large separations, differences between the curves are only evident on small scales. This is further underlined by the lower panel, where the relative difference between the depletion down to 1.7% and the undepleted case is shown in black. This curve corresponds to the difference between the two if we had not applied any correction and only data with a depleted number density of 1.7% were available. For 1 + Wf(r), the relative difference can exceed 10% on small scales, but at scales above around 60 h−1 Mpc the depleted and undepleted cases lie within 1% of each other and the effect of shot noise becomes negligible. This is expected given the smoothing of the density field, as the quartic kernel decreases to zero over 2.5 grid cells, which in Covmos corresponds to ≈40 h−1 Mpc. It is important to note that, although we expect shot noise to be stronger when correlating within the volumes of the smoothing kernels, it is peculiar to the toy model that the shot-noise contribution only contains terms linear in the kernel, both with and without the 2PCF. It can be shown that in the more general case, where both galaxies in a pair are weighted by the associated density field, the shot noise contains contributions from a convolution of two quartic kernels, resulting in a nonic kernel that is much more extended in configuration space. In contrast to the black curve, which has no correction, the red curve shows the relative difference between the analytically corrected 1 + Wf(r) and the undepleted case.
To obtain a fair comparison, we corrected the 1.7% case to the density of the undepleted realisations, as these still have a finite, albeit very high, density. The analytical correction reproduces the undepleted measurements to within 1% relative difference on all scales. We conclude that for the toy model we are able to predict the shot noise analytically. Moreover, we show that even in this simple toy model the shot noise acquires a non-trivial scale dependence. In the next section we extend this formalism to general weighted correlation functions and describe a procedure to estimate the signal without having to rely on an analytical model.

Fig. 5. Results of the analytic shot-noise correction applied to the toy model, in which only one point in a pair is weighted by the density contrast δRf. The upper panel shows the full weighted correlation function 1 + Wf as a mean over the five Covmos realisations, where different colours denote different levels of depletion. Error bars are computed as the mean standard deviation over the five realisations. To obtain the depleted catalogues, we took the mean over 100 depletions. The lower panel shows, as a red dashed line, the relative difference between the analytically corrected result and the undepleted case. The solid black line shows the relative difference between the 1.7% depletion level and the undepleted case, which illustrates the effect of applying no correction. Horizontal black dashed lines indicate relative differences of ±1%, and the vertical grey dashed line marks the side length of one grid cell. We used 64 grid cells per dimension and a PCS MAS to obtain the density field on the grid.

6.2. A general model

Building on the results obtained with the toy model, we can devise a general model in which the mark function is expandable in powers of the density contrast as

where ci are the coefficients of the Taylor series. Inserting the series expansion of Eq. (63) into Eq. (49), we arrive at

and

It is evident from these expressions that, by weighting both galaxies in a given pair, the resulting marked correlation function contains auto-correlation contributions of the mark with itself. If, for example, the weight is constructed from an external catalogue of voids, then the weighted correlation function consists of a smoothed version of the void auto-correlation and void-galaxy cross-correlation functions. In Appendix A, we give further insight into how the weighted correlation function can be split into two auto-correlation functions and one cross-correlation function for certain weighting schemes.
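
Here is a schematic sketch of where the mark auto-correlation terms come from. If each galaxy in a pair carries a weight m(δ) = Σi ci δ^i, the pair weight expands into a double sum of terms ci cj δ1^i δ2^j. The coefficients below are hypothetical, and the sketch only checks this algebraic identity. It does not reproduce Eq. (64) itself.

```python
import numpy as np
from numpy.polynomial import polynomial as P

# Hypothetical Taylor coefficients c_0..c_3 of a mark m(delta) = sum_i c_i delta^i.
c = np.array([1.0, -0.5, 0.25, -0.125])


def mark(delta, coeffs=c):
    """Evaluate the truncated Taylor series of the mark."""
    return P.polyval(delta, coeffs)


def pair_weight_terms(delta1, delta2, coeffs=c):
    """Matrix of terms c_i c_j delta1^i delta2^j obtained when both galaxies are weighted."""
    powers = np.arange(len(coeffs))
    return np.outer(coeffs * delta1 ** powers, coeffs * delta2 ** powers)


d1, d2 = 0.3, -0.2  # smoothed density contrast at the two ends of a pair (illustrative)
terms = pair_weight_terms(d1, d2)
print(terms.sum(), mark(d1) * mark(d2))  # identical up to rounding
# Terms with i >= 1 and j >= 1 involve the smoothed field at both ends of the pair;
# after averaging over pairs they give the mark auto-correlation contributions.
print(terms[1:, 1:].sum())
```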

To work out the shot-noise contributions to wf(r) and  we can use, analogously to Eq. (54), the relation

where ℬp(i, j)(r) contains the shot-noise contribution for a given (i, j) proportional to the inverse of  to the power of p. Inserting this expression into Eq. (64), we obtain

where in the second equality we identified the first sum as the desired true signal w(r) and the second sum as the shot-noise contribution ϵw(r). Similarly, for  we obtain

It is now clear that the double sum can be written as a power series in  such that

with correspondingly defined ϵw,p(r) and  . The correction in the general case is therefore, analogously to Eq. (62),

The power series in  (which in principle extends to infinite order), together with the fact that, following Eqs. (55) and (56), the shot noise of N-point correlation functions is scale-dependent and contains (N − 1)-point correlation functions, makes an analytic correction intractable in the general case. In particular, it would require the computation of higher order correlation functions, which are computationally expensive. A simple truncation of the Taylor expansion might at first glance appear to solve the problem, but avoiding four-point correlators and above would require cutting the Taylor expansion already at linear order. Moreover, the conversion of moments into cumulants might lead to significant contributions from higher order correlators at low order in  . In the following we outline an approach that circumvents the analytical computation and instead uses the resummation of contributions into a power series in  .
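
The resummation idea can be illustrated with a minimal sketch. The text only states that a polynomial fit is used, so the following are assumptions of this sketch: each quantity is fitted per separation bin as a truncated polynomial in x = 1/n̄ across depletion levels, the zeroth-order coefficient is kept as the noiseless value, the fit degree is chosen freely, and numerator and denominator are treated separately before the ratio is formed. The separate treatment follows the requirement stated above that both be corrected. The function names and synthetic data are hypothetical.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def extrapolate_to_zero_noise(inv_nbar, measurements, degree=2):
    """Fit measurements(x) = sum_p a_p x^p with x = 1/nbar and return a_0.

    measurements has shape (n_levels,) or (n_levels, n_bins); each column
    (separation bin) is fitted independently."""
    coeffs = P.polyfit(inv_nbar, measurements, degree)
    return coeffs[0]


def corrected_ratio(inv_nbar, numerator, denominator, degree=2):
    # Correct numerator and denominator separately, then form the ratio.
    return (extrapolate_to_zero_noise(inv_nbar, numerator, degree)
            / extrapolate_to_zero_noise(inv_nbar, denominator, degree))


# Synthetic data: three separation bins, eight depletion levels.
fractions = np.array([1.0, 0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3])
x = 1.0 / fractions
true_num = np.array([1.5, 1.2, 1.05])
numerator = true_num + 0.04 * x[:, None] + 0.003 * x[:, None] ** 2
denominator = 1.0 + 0.02 * x + 0.001 * x ** 2
print(corrected_ratio(x, numerator, denominator))  # recovers true_num (true denominator is 1)
print(numerator[0] / denominator[0])               # uncorrected ratio at the highest density
```

Because the series in principle extends to infinite order, the fit degree acts as a truncation. With real measurements it would have to be chosen against the available number of depletion levels and their noise.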

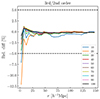

The quantities  as well as  are directly measurable from simulations for a given mark. We therefore propose an algorithm consisting of a polynomial fit through measurements of