| Issue |

A&A

Volume 691, November 2024

|

|

|---|---|---|

| Article Number | A221 | |

| Number of page(s) | 27 | |

| Section | Catalogs and data | |

| DOI | https://doi.org/10.1051/0004-6361/202450503 | |

| Published online | 15 November 2024 | |

J-PLUS: Bayesian object classification with a strum of BANNJOS

1

Centro de Estudios de Física del Cosmos de Aragón (CEFCA), Unidad Asociada al CSIC,

Plaza San Juan 1,

44001

Teruel,

Spain

2

Instituto de Astrofísica de Andalucía, IAA-CSIC,

Glorieta de la Astronomía s/n,

18008

Granada,

Spain

3

Instituto de Física, Universidade Federal da Bahia,

40170-155,

Salvador,

BA,

Brazil

4

PPGCosmo, Universidade Federal do Espírito Santo,

29075-910

Vitória,

ES,

Brazil

5

Instituto de Astronomia, Geofísica e Ciências Atmosféricas, Universidade de São Paulo,

05508-090

São Paulo,

Brazil

6

Centro de Astrobiología, CSIC-INTA,

Camino bajo del castillo s/n,

28692

Villanueva de la Canãda,

Madrid,

Spain

7

Departamento de Física, Universidade Federal do Espírito Santo,

29075-910

Vitória,

ES,

Brazil

8

INAF, Osservatorio Astronomico di Trieste,

via Tiepolo 11,

34131

Trieste,

Italy

9

IFPU, Institute for Fundamental Physics of the Universe,

via Beirut 2,

34151

Trieste,

Italy

10

Instituto de Física, Universidade Federal do Rio de Janeiro,

21941-972,

Rio de Janeiro,

RJ,

Brazil

11

Observatório do Valongo, Universidade Federal do Rio de Janeiro,

20080-090,

Rio de Janeiro,

RJ,

Brazil

12

Observatório Nacional – MCTI (ON),

Rua Gal. José Cristino 77, São Cristóvão,

20921-400

Rio de Janeiro,

Brazil

13

Donostia International Physics Centre (DIPC),

Paseo Manuel de Lardizabal 4,

20018

Donostia-San Sebastián,

Spain

14

IKERBASQUE, Basque Foundation for Science,

48013,

Bilbao,

Spain

15

University of Michigan, Department of Astronomy,

1085 South University Ave.,

Ann Arbor,

MI

48109,

USA

16

University of Alabama, Department of Physics and Astronomy,

Gallalee Hall,

Tuscaloosa,

AL

35401,

USA

17

Instituto de Astrofísica de Canarias,

La Laguna,

38205

Tenerife,

Spain

18

Departamento de Astrofísica, Universidad de La Laguna,

38206

Tenerife,

Spain

19

Centro de Estudios de Física del Cosmos de Aragón (CEFCA),

Plaza San Juan 1,

44001

Teruel,

Spain

★ Corresponding author; andresdelpinomolina@gmail.com

Received:

25

April

2024

Accepted:

23

September

2024

Context. With its 12 optical filters, the Javalambre-Photometric Local Universe Survey (J-PLUS) provides an unprecedented multicolor view of the local Universe. The third data release (DR3) covers 3192 deg2 and contains 47.4 million objects. However, the classification algorithms currently implemented in the J-PLUS pipeline are deterministic and based solely on the morphology of the sources.

Aims. Our goal is to classify the sources identified in the J-PLUS DR3 images as stars, quasi-stellar objects (QSOs), or galaxies. For this task, we present BANNJOS, a machine learning pipeline that utilizes Bayesian neural networks to provide the full probability distribution function (PDF) of the classification.

Methods. BANNJOS has been trained on photometric, astrometric, and morphological data from J-PLUS DR3, Gaia DR3, and CatWISE2020, using over 1.2 million objects with spectroscopic classification from SDSS DR18, LAMOST DR9, the DESI Early Data Release, and Gaia DR3. Results were validated on a test set of about 1.4 × 105 objects and cross-checked against theoretical model predictions.

Results. BANNJOS outperforms all previous classifiers in terms of accuracy, precision, and completeness across the entire magnitude range. It delivers over 95% accuracy for objects brighter than r = 21.5 mag and ~ 90% accuracy for those up to r = 22 mag, where J-PLUS completeness is ≲ 25%. BANNJOS is also the first object classifier to provide the full PDF of the classification, enabling precise object selection for high purity or completeness, and for identifying objects with complex features, such as active galactic nuclei with resolved host galaxies.

Conclusions. BANNJOS effectively classified J-PLUS sources into around 20 million galaxies, one million QSOs, and 26 million stars, with full PDFs for each, which allow for later refinement of the sample. The upcoming J-PAS survey, with its 56 color bands, will further enhance BANNJOS’s ability to detail the nature of each source.

Key words: methods: data analysis / catalogs / Galaxy: stellar content / quasars: general / galaxies: statistics

© The Authors 2024

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1 Introduction

Public photometric large digital sky surveys are revolutionizing our view of the Universe. Covering large areas of the sky (≳5000 deg2), surveys such as the Second Palomar Observatory Sky Survey(POSS-II, three optical gri broad bands; Gal et al. 2004), the Sloan Digital Sky Survey (SDSS, five optical ugriz broad bands; Abazajian et al. 2009), and the VISTA Hemisphere Survey (VHS, three near-infrared HJKs bands; McMahon et al. 2013) have provided crucial information about the large-scale structures that dominate the Universe. This has allowed astronomers to better understand the nature of celestial objects and to study a wide range of phenomena, from star formation to the evolution of galaxy groups. The next generation of surveys such as the Dark Energy Survey (DES, five optical ugriz broad bands; Flaugher 2012) and the UKIRT Hemisphere Survey (UHS, two near-infrared JKs bands; Dye et al. 2018) as well as those of Euclid (three near-infrared YJH broad bands; Laureijs et al. 2011) and the Large Synoptic Survey Telescope (LSST, six optical ugrizY broad bands; Ivezić et al. 2019) will push the current sensitivity limits and open the door to new fields, including time-domain astronomy.

Among the recent additions to the family of public photometric surveys is the Javalambre Photometric Local Universe Survey1 (J-PLUS; Cenarro et al. 2019), carried out at the Observatorio Astrofísico de Javalambre (OAJ; Teruel, Spain; Cenarro et al. 2014) using the 83 cm Javalambre Auxiliary Survey Telescope (JAST80) and T80Cam, a panoramic camera of 9.2k × 9.2k pixels that provides a 2 deg2 field of view (FoV) with a pixel scale of 0.55 arsec pix−1 (Marín-Franch et al. 2015). J-PLUS aims to cover 8500 deg2 of the northern sky hemisphere with an unprecedented set of 12 filters: five broad ugriz bands plus seven narrow optical bands (refer to Table 1). The vast dataset produced by J-PLUS has broad astrophysical applications that can enhance our understanding of the Universe. The third data release (DR3) of J-PLUS spans 3192 deg2 (2881 deg2 after masking) and catalogs 47.4 million objects with improved photometric calibration (López-Sanjuan et al. 2024), offering an unprecedented multicolor view of the local Universe2.

Due to its photometric flux-limited nature, J-PLUS images all astronomical sources down to its limiting magnitude without pre-selection. Identifying and classifying the observed objects within its footprint, such as stars and galaxies, is crucial. As with any photometric survey, this is one of the first steps in creating science-ready data products from J-PLUS data. Reliable object classification enables the study of specific astronomical sources and aids in the discovery of uncommon or new types of objects. Classification algorithms typically employ two complementary approaches: color-based and morphological. Color classifiers leverage the distinct positions of stars, galaxies, and quasi-stellar objects (QSOs) in color-color diagrams (e.g., Huang et al. 1997; Elston et al. 2006; Baldry et al. 2010; Saglia et al. 2012; Małek et al. 2013), while morphological classifiers distinguish between point-like and extended sources based on isophotal concentration (e.g., Kron 1980; Reid et al. 1996; Odewahn et al. 2004; Vasconcellos et al. 2011). J-PLUS utilizes the CLASS_STAR morphological classifier from the SExtractor photometry package (Bertin & Arnouts 1996). However, this algorithm, which considers only object elongation, extension, and peak brightness, simplistically categorizes objects as “star” or “not star.” Such a method is prone to misclassification, particularly when distinguishing between compact sources such as stars, distant active galactic nuclei, and compact galaxies. Refined classification through manual inspection is possible but requires significant time and resources.

Some of these problems can be eased by incorporating prior information within a Bayesian framework (e.g., Sebok 1979; Scranton et al. 2002; Henrion et al. 2011; Molino et al. 2014). In López-Sanjuan et al. (2019), the authors introduced the sglc_prob_star classification, which imposes priors based on concentration, broad-band colors, object counts, and distance to the Galactic plane to achieve more reliable results, especially for sources with a low S/N. However, this method does not utilize the valuable multifilter color information available in J-PLUS, and its output remains bimodal, distinguishing only between “compact” and “extended” sources. To achieve a reliable classification based on an object’s nature, not just its morphology, it is essential to utilize all photometric information in J-PLUS. Prior works have attempted this by using various classification methods. For instance, Wang et al. (2022) employed the 12 photometric bands to include the QSO class. Yet, their method was limited to high S/N sources, classifying only about 3.5 million sources out of the ~13 million in J-PLUS’s first data release.

To classify the entire catalog, methods capable of handling missing data are necessary. Techniques based on machine learning, which has been successfully implemented in other surveys, offer classification into two (e.g., Ball et al. 2006; Miller et al. 2017) or three categories (e.g., Małek et al. 2013). In the particular case of J-PLUS, in von Marttens et al. (2024) the authors applied extreme Gradient Boosting (XGBoost; Chen & Guestrin 2016) to classify objects as stars, galaxies, and QSOs. Notably, it was the first classifier to approach a three-class classification for this survey. Unlike Bayesian approaches, this method provides a deterministic classification for each object. In the best case, classifiers would offer deterministic probabilities for an object’s class membership (e.g., star, QSO, galaxy) without uncertainty or correlation between the probabilities of each class. However, models and training data carry inherent uncertainties, and the distinctions between classes are sometimes not clear, as in the case of partially resolved active galaxies blurring the lines between QSOs and galaxies. Reliable classification across the entire catalog requires models that accommodate these uncertainties, leveraging morphological, photometric, and external information to provide confidence intervals and correlations in their predictions. The inclusion of uncertainty and degeneration among classes is also critical in order to control the purity and completeness of object selections as well as biases in samples containing objects of mixed characteristics.

In this paper, we introduce BANNJOS, a pipeline utilizing Bayesian artificial neural networks (BANNs) to classify J-PLUS objects into one of three categories: stars, QSOs, and galaxies. We evaluate the classification’s quality and suggest methods to enhance the purity of the selected object samples. The paper is structured as follows: Section 2 outlines the BANNJOS pipeline; Sect. 3 discusses its application to J-PLUS, highlighting specific execution details; Sect. 4 presents classification results and model validation; Sect. 5 presents examples of selection criteria; Sect. 6 compares our method with three existing classifications for J-PLUS; Sect. 7 presents the results for the entire J-PLUS catalog and extra statistical validation tests; Sect. 8 explains known caveats, and finally, Sect. 9 summarizes our findings and conclusions.

J-PLUS photometric system and limiting magnitudes.

2 BANNJOS

The BANNs for the Javalambre Observatory Surveys, or BANNJOS, is a publicly available3 machine learning pipeline designed to derive any desired property of an astronomical object using supervised learning techniques. It is coded in Python using the Tensorflow and Tensorflow probability libraries (Abadi et al. 2015). BANNJOS works as a general-purpose regres-sor and is designed to operate at a variety of user input levels. It can run nearly automatically once provided with the minimum input files, but allows for extensive customization through optional keywords, giving users greater control over the process.

In essence, BANNJOS trains a model, ƒ(x), based on the relation between a dependent variable ytIìie and a set of independent variables, x, using a training sample. It then predicts this variable,  , in any given x’. Its most critical components include:

, in any given x’. Its most critical components include:

Reading and preprocessing the data,

Training the model,

Computing ypred.

A flowchart showcasing the working flow of BANNJOS can be found in Fig. 1. In the following we explain the details of the preprocessing of the data and the different models available.

2.1 Preprocessing of the data

The training sample consists of a table containing x and ytrue. The nature of these variable sets may vary; for instance, ytrue can include categorical, continuous, or both types of information, whereas x may encompass diverse types of data, such as photometric magnitudes or the positions of sources on the detector’s focal plane. Adapting all available information for the training process is a critical step with a notable impact on the final results. Initially, BANNJOS shuffles the training set and constructs x and ytrue based on user-defined options and criteria. The data are then divided into training and test sets, with the latter containing a subset of the training data that will not be exposed to the model during its training. This test sample, denoted as x′ and  in Fig. 1, will serve as a validation sample to assess the quality of the results.

in Fig. 1, will serve as a validation sample to assess the quality of the results.

Next, BANNJOS normalizes x, an essential step prior to training a neural network. Outliers can artificially widen the dynamic range of the training sample, thereby compressing the range containing actual information. To mitigate this, normalization is performed individually to each variable using the 0.005 and 0.995 quantiles of x as the minimum and maximum, respectively, and then clipping the normalized set to the range [0,1]. Any missing data in x are assigned a default value of −0.1. This approach allows BANNJOS to handle missing data within the training sample. Lastly, BANNJOS converts any categorical data into continuous numerical values, enabling it to treat classification problems as regression problems by assigning probabilities to each class.

|

Fig. 1 Flowchart illustrating the processing flow of BANNJOS. Central components are represented by yellow rhombuses, while inputs and outputs are indicated by rounded rectangles. The procedure commences with the training data (depicted in blue, located at the top-left) and the options (illustrated in light blue, positioned at the top-right), which govern the behavior of BANNJOS. Throughout the chart, colors show the data type: light blue for options or variables controlling the process, green for training data, orange for the test sample, and red for the data on which predictions are intended to be made. An general description of BANNJOS can be found in Sect. 2. More detailed information about the main processing stages of BANNJOS applied to J-PLUS can be found in Section. |

2.2 Dealing with uncertainties with different models

BANNJOS provides users the option to choose between six different regression models: three deterministic and three probabilistic. The deterministic models include a k-neighbors regressor (kNN), a random forest regressor (RF), and a multilayer feed-forward artificial neural network (ANN). The probabilistic models consist of an ANN with a Gaussian posterior and two Bayesian ANNs (BANNs): a variational inference ANN and a dropout ANN.

Each model has its own set of advantages and disadvantages. Simple models like k-neighbors and random forest regressors are highly scalable and perform quickly on large, well-distributed samples, with few hyperparameters requiring tuning. However, they may not be the best choice for highly complex problems and are incapable of extrapolating data. ANNs based regressors may provide better results for complex problems but require more hyperparameters to be fine-tuned and demand greater computational resources.

Measuring reliable uncertainties associated with each model’s predictions is crucial for most scientific cases. BANNJOS computes these uncertainties, σ(ypred), in different ways depending on the model’s capabilities.

2.2.1 Deterministic models

Three of the six models implemented in BANNJOS are unable to predict the uncertainty associated with a prediction’s nominal value. These models include kNN, RF, and the basic ANN. In such cases, BANNJOS calculates the expected uncertainty using a k-fold cross-validation scheme. In brief, the training sample is randomly divided into k equal-sized subsamples. A nominal model is then trained on k − 1 subsamples and used to predict values in the remaining subsample, which acts as the validation data. This process is repeated k times4, with each of the k sub-samples used once as validation data. After completing the k-fold cross-validation, the results are used by BANNJOS to train a second model (the variance model) based on the relation between |ytrue − ypred| and x. This variance model predicts the uncertainties for the nominal model’s predictions. In our trials, this method proved to be faster than using k models trained during the k-fold cross-validation to predict uncertainty, specially when predicting in large datasets, while providing very similar results.

2.2.2 Probabilistic models

BANNJOS includes three probabilistic neural network models, two of which operate as Bayesian approximations. The first model, an ANN with a Gaussian posterior, fits and predicts both the nominal result and its aleatoric uncertainty, that is, the uncertainty associated with randomness observed in the training sample. The variational inference BANN and dropout BANN models, however, can estimate both aleatoric and epistemic uncertainties, the latter arising from insufficient information in the training sample.

If a probabilistic model is used, ypred and its associated uncertainty are derived by sampling the model’s posterior multiple times (N). This process is equivalent to sampling the PDF of the prediction for each instance (astronomical object), ypred,i. BANNJOS computes several useful statistics from these PDFs such as the mean and several percentiles (see Sect. 3.3 and Appendices C and F).

3 Using BANNJOS on J-PLUS for object classification

BANNJOS has been designed as a general-purpose regressor capable of fitting and predicting continuous variables. Since categorical variables can be transformed into continuous ones, the regressor nature of BANNJOS provides additional versatility, allowing it to perform both regression and classification tasks. In this work, we have utilized BANNJOS for object classification in J-PLUS. We adopted a systematic approach to construct the training sample, select the optimal model and its corresponding hyperparameters, and derive the PDFs for each object’s likelihood of belonging to a specific class.

3.1 Training sample

We compiled an extensive training sample consisting of a selection of objects with J-PLUS photometry and available spectroscopic classification. We first downloaded the entire J-PLUS catalog, with over 47 million sources, taking information from several of the main scientific tables available at CEFCA’s archive5. We combined the resulting table with data from ongoing and past all-sky surveys that contain additional information about the observed sources. These data include astrometric and photometric information from Gaia DR3 (Gaia Collaboration 2016, 2023) and CatWISE2020 (Marocco et al. 2021), as well as reddening information from dust maps of Schlafly & Finkbeiner (2011). In order combine all the data, we took advantage of the catalogues matches already available at the J-PLUS archive. Details on the specific J-PLUS archive query and the variables utilized during the training are available in Appendix A. Broadly, the training list encompasses data on:

J-PLUS photometry, that is, fluxes measured across eight apertures for the 12 bands using forced photometry (SExtractor dual mode);

J-PLUS position on the CCD and morphology (i.e., ellipticity, effective radius);

J-PLUS photometry and masking flags;

J-PLUS Tile observation details, for example, seeing, zero-point, and noise;

CatWISE2020 photometry (i.e., W1mpro_pm and W2mpro_pm bands);

Gaia and CatWISE2020 astrometry (i.e., parallaxes and the absolute value of the one-dimensional proper motions6.)

Flux measurements across different apertures provide additional insights into the source’s morphology. Its position in the detector can also be relevant, as it may help identify geometric distortions or other image-affecting cosmetic effects. Furthermore, details regarding the tile image’s quality, such as average seeing during acquisition, can be crucial for determining whether the source is extended. In total, we included 445 variables in our analysis.

In order to obtain the spectroscopic classification, we performed a cross-match of the resultant J-PLUS+Gaia+CatWISE2020 table with the SpecObj table from SDSS DR18 (Almeida et al. 2023), the Low Resolution spectroscopy catalog from LAMOST DR9 (Large Sky Area Multi-Object Fiber Spectroscopic Telescope; Cui et al. 2012), and the Early Data Release (EDR) from DESI (Dark Energy Spectroscopic Instrument; DESI Collaboration 2023). The match is based on the sky position of sources across the catalogs, considering a source identical if its registered positions differ between catalogs by less than a specified angular separation. This maximum separation was determined in two steps. In the first step, a very large radius of 2 arcsec was used, ensuring all possible matches were included. In the second step, the maximum separation was determined based on finding 99% of matches within it. For J-PLUS and SDSS, the maximum allowed separation was found to be 0.6 arcseconds, while for J-PLUS with LAMOST and DESI, it was set to 0.65 arcseconds. Lastly, we included a set of unequivocally classified Gaia sources, where the probability of belonging to a particular class is 1 and 0 for the other two classes. The resulting catalog underwent cleaning and consistency checks. Initially, we filtered out poorly measured sources by applying recommended criteria from SDSS:

0.864 <RCHI2 < 1.496,

Z_ERR > 0,

Z_ERR/(1+Z) < 3 × 10−4,

PLATEQUALITY not “bad”,

(SPECPRIMARY = 1) or (SPECLEGACY = 1),

SN_MEDIAN_ALL ≥ 2,

ZWARNING_NOQSO = 0.

The clipping levels for RCHI2 were set to the 0.1 and 0.9 quantiles of its distribution, respectively. For sources from LAMOST, the following criteria were applied:

FIBERMASK = 0,

Z_ERR > 0,

Z_ERR/(1+Z) < 3 × 10−4,

SN_MEDIAN_ALL ≥ 3.

For the reliable sources classified in DESIEDR, we selected those with:

TARGETID > 0,

SV_PRIMARY = 1,

ZWARN = 0.

Lastly, Gaia sources were chosen based solely on their renormal-ized unit weight error, with RUWE ≤ 1.3. The applied quality filters resulted in 586 641 sources from SDSS, 508 432 from LAMOST, 256 532 from DESI, and 152 566 from Gaia, totaling 1 504 171 sources.

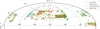

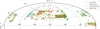

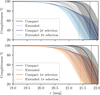

The spatial distribution of these sources is illustrated in Fig. 2. Histograms displaying the magnitude and color ranges covered by each of the surveys in the training list are shown in Fig. 3. Although the training list spans the entire J-PLUS footprint, the levels of completeness and color coverage vary depending on the contributing survey. For instance, Gaia (depicted in blue) provides uniform sampling across the footprint but is limited to relatively blue sources (ɡ − r ≲ 1.6) and does not reach the depths of some of its spectroscopic counterparts. Conversely, surveys such as SDSS or DESI reach deeper magnitudes and sample the less populated galactic poles, offering insights into distant galaxies and QSOs. Incorporating various catalogs into the training list maximizes the coverage across the parameter space spanned by J-PLUS, minimizing instances where the model must extrapolate predictions, which in theory should enhance results. However, due to the disparate selection criteria of the spectroscopic surveys, the training sample exhibits a spurious correlation between the coordinates and the physical properties of the sources, which results from selection biases and does not reflect an actual correlation in the target population. To help the model generalize and mitigate this bias, it is essential to remove any positional information from the training data. For example, if sky coordinates were used during training, the model could erroneously learn that there is a higher density of QSOs within the footprint of surveys aimed at observing this particular kind of object and predict a higher density of QSOs in such areas.

A significant number of sources have multiple measurements in two or more of the four classification catalogs. Confusion matrices for the classification of such objects, shown in Fig. 4, illustrate the consistency (or lack thereof) between the surveys, indicating the relative number of objects classified identically or differently between pairs of surveys. These matrices were constructed using all objects shared between each survey, with SDSS serving as the reference. Although inter-survey consistency is generally good, it is not without discrepancies. For example, LAMOST tends to classify fewer objects as stars compared to SDSS (~91%), and Gaia often classifies many of SDSS’s galaxies as QSOs (~15%). Despite these differences, the overall agreement is satisfactory, especially considering that Gaia contributes only around 9% of the galaxies and QSOs to the final list.

We removed all but one instance from repeated objects with consistent spectroscopic classes across catalogs (1160 in total), retaining duplicates where classifications differed depending on the catalog. We identified a small subset of objects (747) with varying classifications across two or more input lists. After visually inspecting the spectra of some of these objects, we found they were primarily galaxies misidentified as stars and vice versa (233 cases), and distant active galaxies classified either as QSOs or galaxies (467 cases). We refined this last group further by excluding nearby sources (stars) with parallax ϖ/σ(ϖ) > 1, meaning objects with parallax different from zero at a 1σ confidence level. Since the dichotomy between galaxies and QSOs is not always clear, we decided to keep those objects (426), but removed those with implausible class combinations, such as Galaxy-Star or QSO-Star. Notably, we found no objects classified differently across three or more catalogs, meaning no objects were assigned all three possible classes.

The final list comprises 1 365 700 objects (1 365 274 unique), distributed into 480267 galaxies, 127 633 QSOs, and 757 800 stars. Each object’s record in the catalog includes photometric, astrometric, and morphological data from J-PLUS, Gaia DR3, and CatWISE2020, alongside spectroscopic classification from SDSS DR18 (585 336 objects in common), LAMOST DR9 (507737 objects in common), DESI EDR (254586 objects in common), and spectrophotometric classifications from Gaia DR3 (151 974 objects in common). The details about the composition of the training set can be found in Table 2. We reserved 10% of these sources to compose the ‘test’ set, which we utilize later to validate and assess our results.

The compiled training list is disproportionately biased toward the “star” class, with galaxies being the second most prevalent. Indeed, within the J-PLUS footprint, stars are the most numerous due to the survey’s depth limitations, but the class ratios in the training list might be artificially skewed by the design of the contributing surveys. To evaluate and mitigate potential biases, we tested our models using three different versions of the training list. The first version retained the original composition after removing 10% for the test sample, resulting in 1 229 130 sources. The second, a downsampled version, randomly reduced the numbers of the two most abundant classes to match those of the least populated class, the QSOs, resulting in a list of 344 322 equally distributed sources among the “Star”, “QSO”, and “Galaxy” classes. The third, a balanced version of the original list, employed oversampling for the less populated classes using a Synthetic Minority Over-sampling Technique (SMOTE) (Chawla et al. 2002), which uses a k-neighbor interpolator to generate new instances (sources) based on the averages of neighboring properties. This “augmented” list, balanced among classes, contains 2046123 sources.

|

Fig. 2 Aitoff projection of the training list sources. The positions of the sources are represented by small dots, color-coded according to their originating survey. These are sources identified in J-PLUS with available spectroscopic or photospectroscopic classification. The small squares with 1.4-degree sides reflect the J-PLUS tiles. For a reference on the number of sources, see Table 2. |

|

Fig. 3 Distribution of the r magnitude (top panel) and ɡ − r color (bottom panel) for the sources in J-PLUS and the training list. The distribution of sources from each contributing survey is depicted by thin lines in various colors (as in Fig. 2). The aggregate training list is represented by a thick gray line, while the entirety of J-PLUS sources, 47 751 865 objects, is shown by a thick black line. Histograms related to the training list have been normalized to the peak of the total training list (gray line), whereas the J-PLUS histograms have been normalized to their own peak. A vertical thin dashed line shows the limiting magnitude of J-PLUS DR3 (r = 21.8 mag, see Table 1). The extended tail of objects with ɡ − r ≳ 1.5 is mostly composed by low S/N sources with poorly determined ɡ magnitude. |

|

Fig. 4 Confusion matrices between the four surveys used to compile the training list. The proportion of objects in each bin relative to the total number of objects in SDSS is indicated by varying shades of blue and is presented as a real number ∈ [0,1]. The confusion matrices also specify the total number of objects used in their computation. |

Composition of the training set.

3.2 Model selection and hyperparameter tuning

To achieve optimal classification, we evaluated the models described in Sect. 2.2, identifying the model and hyperparameter configuration yielding the best results. Additionally, we assessed the three variations of our training sample outlined in Sect. 3.1. The performance of each configuration was measured on the test sample through cross-validation, comparing the spectro-scopic/spectrophotometric class of an object, ytrue, against the predicted one, ypred. For BANNs, which provide the PDF for each class, we designated the class corresponding to the highest median value (quantile 0.5) from the three PDFs as the predicted class class = max [PCGalaxy(50), PCQSO(50), PCStar(50)]. We started evaluating the models in a coarse grid of hyperparameters in order to gain a general idea about their performance. All the ANN based models were trained using a validation sample of 40%7 In general, ANNs significantly surpassed RF and kNN in performance, with a deep dropout BANN emerging as the superior model. This result is somewhat expected, as dropout BANNs are less susceptible to overfitting compared to traditional and varia-tional inference ANNs, especially when the model architecture is sufficiently deep. With the exception of RF, the augmented training list consistently yielded slightly better results across all models. After selecting the dropout BANN as the best overall model, we evaluated its performance across an extensive grid of potential hyperparameter configurations:

Number of hidden layers: [8,7,6,5,4,3,2]

Batch size: [32,64,128,256,512]

Ln: [1600,1300,1000,700,500,300,200,100,50,5]

Dropout ratio at L1−8: [0.1,0.2,0.3,0.4,0.5]

Dropout ratio at L0: [0.0,0.1,0.2]

Loss function: MSE, Huber

Initial learning rate: [10−3,5 × 10−4,10−4, 5 × 10−5]

Step decay in learning rate: [10,20,30,50, ∞].

Here, “Number of hidden layers” denotes the quantity of hidden layers between the input and output. “Batch size” refers to the number of samples processed before updating the model. The term Ln indicates the number of neurons in the n-th layer for 1 ≤ n ≤ 8. The “Dropout ratios” represent the fraction of randomly dropped neurons in each epoch at hidden layers (n > 0) or at the input layer (n = 0). The “Loss function” is the metric minimized during the fitting process. “Initial learning rate” denotes the step size at each epoch during optimization, and “Step decay in learning rate” specifies the epoch count before the learning rate updates to half of its previous value, with ∞ indicating a constant learning rate.

Given the vast number of possible hyperparameter combinations, exceeding 1011, testing all configurations was computationally unfeasible. Therefore, we employed a Random Search Cross-Validation approach, randomly selecting and testing 800 configurations against the test sample. We limited each model’s training to 2000 epochs and incorporated an early stopping mechanism that halts training if the loss does not improve over 50 epochs8. To make the predictions, we sampled the posterior only 128 times. This allows us to keep a reasonable computational cost for all the optimization tests, while realistically sampling the hyperparameter space. Upon completing cross-validation for the 800 models, we utilized a Histogram-based Gradient Boosting Regression Tree to identify the optimal model architecture and training hyperparameters. Model evaluation was based on three metrics: balanced accuracy, average precision, and their quadratic sum. The found optimal hyperparameters for the dropout BANN are:

Number of hidden layers: 4

Batch size: 256

L1−4 = [700,1300,500,300]

Dropout ratio at L1−4: 0.4

Dropout ratio at L0: 0

Loss function: MSE

Initial learning rate: 10−4

Step decay in learning rate: 30

Where now L1–4 is the number of neurons in the hidden layers 1 to 4. Despite this being the best configuration, our testing indicates that model performance is largely invariant to hyperparameter configuration, provided the values are reasonable. For instance, the accuracies of our top 10 models differ by less than 0.1%. More details on the impact on the accuracy that the model hyperparam-eters have, the correlation between some hyperparamenters, and the individual conditional expectation (ICE) curves, can be found in Appendix B.

3.3 Training the model and sampling the posterior

We used the hyperparameters derived in the previous Sect. 3.2 to build our model. To maximize results, we extended the training stopping criteria to 10 000 epochs and updated the early stop function to 500 epochs. The model converged after 8963 epochs with a loss function precision of 10−7.

A significant advantage of using a BANN model is its capability to provide the full PDF for ypred. Here, the total PDF for a specific object is the sum of the individual class PDFs, defined within the 3-dimensional space delineated by orthogonal axes P(class = Galaxy), P(class = QSO), and P(class = Star). Sampling the model multiple times, N, in a Monte-Carlo fashion, BANNJOS outputs three probabilities for each sample, corresponding to the likelihood of the object belonging to each class. Due to the model’s stochastic nature, different samples yield unique outcomes, resulting in N × 3 data points. Figure C.1 shows a sampling example with N = 5000.

While maintaining and analyzing all points permits comprehensive PDF reconstruction and facilitates informed class determination, extensive posterior sampling (large N) is computationally intensive and data-heavy, becoming impractical for the entire J-PLUS catalog. To alleviate computation time and storage, we employed a reduced sampling count (N = 300, denoted as classBANNJOS,lo) and projected the 3-dimensional probabilities onto the plane defined by P(class = Galaxy) + P(class = QSO) + P(class = Star) = 1, thereby reducing the dimensionality to two. We then applied a 2-dimensional Gaussian Mixture Model (GMM) with three components to model the projected probabilities. The GMM parameters – covariance matrices, means, and weights – are compactly stored, effectively capturing the posterior’s essence with significantly fewer parameters. BANNJOS additionally computes mean, median absolute deviation (MAD), and specified percentiles for each class’s cumulative distribution, along with Pearson’s correlation coefficients between classes. The compression method and its efficacy are explained in more detail in Appendix C.

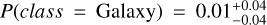

In Fig. 5, we showcase a faint source (r = 21.12 mag) classified by BANNJOS. Due to its high photometric and astrometric uncertainties, BANNJOS assigns a low-confidence QSO classification to the source with  . The derived PDF exhibits multiple peaks and a pronounced anticorrelation between P(class = QSO) and P(class = Galaxy), indicating a considerable probability that the object could also be a galaxy with

. The derived PDF exhibits multiple peaks and a pronounced anticorrelation between P(class = QSO) and P(class = Galaxy), indicating a considerable probability that the object could also be a galaxy with  . This ambiguity underscores the intricate nature of distinguishing between QSOs and active galaxies, particularly when faced with unresolved targets. Indeed, when checking the SDSS’S SUBCLASS field for this an other similar objects, we found all to be unresolved active galaxies or QSOs. Interestingly, in this particular case the sglc_prob_star classifier wrongly assigned a very high probability of being a star to the object, showcasing how the combination of photometric, astrometric and morphological information used by BANNJOS can help distinguish sources based on their nature, rather than just their apparent aspect, even in low S/N conditions.

. This ambiguity underscores the intricate nature of distinguishing between QSOs and active galaxies, particularly when faced with unresolved targets. Indeed, when checking the SDSS’S SUBCLASS field for this an other similar objects, we found all to be unresolved active galaxies or QSOs. Interestingly, in this particular case the sglc_prob_star classifier wrongly assigned a very high probability of being a star to the object, showcasing how the combination of photometric, astrometric and morphological information used by BANNJOS can help distinguish sources based on their nature, rather than just their apparent aspect, even in low S/N conditions.

The implemented GMM compression method was tested against an additional validation sample with N = 5000 BANN posterior samples (classBANNJOS,hi). The GMM faithfully reproduces the original results. The high-quality sampling (black histograms in Fig. 5), and the ones using N = 300 compressed with the GMM (classBANNJOS,GMM, blue histograms in Fig. 5) are consistent across the entire test sample, barring rare exceptions. Only in 4 objects with very low S/N (0.003% of the cases, see Appendix C), we obtained a different classification using the highest median probability of the three classes, indicating that the implemented compression method provides a reliable classification.

In contrast with the low S/N example, in Fig. 6 we show an example of a source classified by BANNJOS with high- confidence. The relatively bright source (r = 17.06 mag), is unequivocally identified as a star by BANNJOS:  ,

,  ,

,  . Most sources brighter than r ~ 20 mag will show posteriors that tightly distribute around the predicted class with very little dispersion or correlation to other classes probabilities.

. Most sources brighter than r ~ 20 mag will show posteriors that tightly distribute around the predicted class with very little dispersion or correlation to other classes probabilities.

|

Fig. 5 Example of results obtained with BANNJOS for the source Tile Id = 101797, Number = 25111. Upper left: J-PLUS color image composed using the r, 𝑔, and i bands. The fluxes in each band have been normalized between the 1st and 99th percentile of the total flux. The classified source is at the center of the reticle, marked by an orange open circle, while other sources detected in the J-PLUS catalog are marked with white open circles. Lower left: SDSS spectrum undersampled by a factor of ten for improved visibility (in gray) alongside J-PLUS photometry across its 12 bands. Right: corner plot illustrating the three-dimensional posterior probability distributions for P(class = Galaxy), P(class = QSO), and P(class = Star). Orange lines denote the spectroscopic class, ytrue. Black contours and histograms represent the posterior probability distribution sampled 5000 times (classBANNJOS,hi), with black lines indicating the median probability for each class and the gray-shaded area covering the 2nd to 98th (lighter gray) and the 16th to 84th (darker gray) percentile ranges. Blue contours, histograms, and shaded areas depict the reconstructed posterior probability distribution from the GMM model fitted to N = 300 points. A text box in the upper right corner lists the complete source ID, its r magnitude, the ’true’ classification from spectroscopy (classspec), its CLASS_STAR and sglc_prob_star scores, and the BANNJOS classifications from the high-quality posterior sampling (N = 5000, classBANNJOS,hi), the regular-quality posterior sampling (N = 300, classBANNJOS,lo), and the classification from the reconstructed PDF following the GMM compression method, classBANNJOS,GMM. The classification is determined as the one with the highest median probability value. Despite its complexity, the PDFs obtained from sampling 5000 times and the one after reconstruction from the GMM are nearly indistinguishable, with gray and blue contours and shaded areas covering the same areas. The corresponding classifications, classBANNJOS,hi (black) and classBANNJOS,GMM (blue), also match the spectroscopic classification. The GMM compression procedure is described in Appendix C. |

|

Fig. 6 Example of results obtained with BANNJOS for the source Tile Id = 85560, Number = 1088. At r = 17.06 mag. BANNJOS classifies this source as a star with high confidence. The markers correspond with those used in Fig. 5. Most sources classified with BANNJOS will show PDFs similar to this one, with very little dispersion around the predicted class. |

4 Validation of the model

In this section, we validate the classification outcomes of BANNJOS using the identical test sample and criteria established in Sect. 3.2. This involves assigning the predicted class of the object, ypred, and comparing these with the spectroscopic classifications, ytrue. Since BANNJOS provides the full PDF of the classification, there are multiple criteria that could be used in order to assign a class to each source. However, we will focus now on the most simple approach that is assigning classes based on the highest median probability value across the three PDFs. In Sect. 5 we explore how the purity of the selected samples can be improved using more sophisticated selection criteria. It is worth mentioning that the test sample was subtracted from the training list before any preprocesing of the data, and thus it is unbalanced with proportions very similar to those of the original training set (see Table 2).

4.1 Average validation

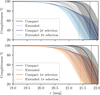

To gauge the model’s average efficacy, we compared the predicted and true classifications for objects with r ≤ 21.8 mag, corresponding to the limiting magnitude of J-PLUS DR3 (López-Sanjuan et al. 2024, 5σ, 3 arcsec diameter aperture). This magnitude limit is also close to the median 50% completeness threshold of J- PLUS for compact sources across 1642 tiles (r ~ 21.9 mag). In Fig. 7 we show the normalized counts for each predicted class in pairs and their respective receiver operating characteristic (ROC) curves. Each pair shows the statistics of true and false positives (TPs and FPs, respectively), therefore showcasing the ability of the model to avoid confusion between species. For example, the galaxy versus QSO pair shows how many real galaxies were classified as such (TPs) and how many as QSOs (FPs), while the QSO versus galaxy shows the same statistics but for actual QSOs that are classified as such or as a galaxy.

An ideal classification would yield an area under the curve (AUC) of one with no intermixing between classes in the count histograms. For example, objects with ytrue = Galaxy would exhibit a probability P(class = Galaxy) = 1, while probabilities for other classes would be zero. Our model’s results are nearly perfect, with all ROC AUC values exceeding 0.99 for all six possible inter-class combinations. The results also seem to be nearly symmetric between switching classes, with differences below 10−3 in number counts and very similar ROC AUC values, indicating that the rate of FPs and false negatives (FNs) is close to one. Asymmetric results are not desirable, since they reflect biases in the model’s prediction toward specific classes. For contrast, BANNJOS shows its ability to recover well the ratios between classes in all possible combinations, even though the distribution of the test sample is not balanced. The histograms in the upper panels further corroborate the accuracy of BANNJOS, displaying nearly all objects congregating at the extremes, indicating P(class = x) ~ 1 for the evaluated predicted class and P(class = y) ~ 0 for the other class. As anticipated, the pairs exhibiting the lowest accuracy are galaxy versus QSO and vice versa, with ROC AUCs of 0.993 and 0.995, respectively, due to the actual dual nature of these sources. The small increase (≲10−3 in normalized number counts) of galaxies classified as stars and vice versa consist of galaxies with a bright foreground star in front of them. These sources can be classified as either of the two classes by both BANNJOS and the spectroscopic surveys, depending on factors such as which source dominates the spectrum, the presence of astrometric measurements, and other criteria.

|

Fig. 7 Model performance evaluated up to the limiting magnitude of J-PLUS DR3 (r = 21.8 mag, see Table 1). The performance was assessed on the test sample, consisting of 136 570 objects. The top panels illustrate the distribution of probabilities (in logarithmic scale) for objects being classified according to their spectroscopic category. The difference in bar heights reflects the different amount of sources present in the test sample. The bottom panels depict the ROC curves in blue for each class combination. These curves approach the maximum True Positive Rate (1) almost immediately, demonstrating excellent model performance. The AUC exceeds 0.99 for all six class combinations. |

4.2 Signal-to-noise dependence

The predictive capability of BANNJOS is expected to be influenced by the quality of the data and its signal-to-noise ratio (S/N). We studied the potential impact of lower S/Ns by evaluating ROC curves across various magnitude bins, as shown in Fig. 8. This figure also displays Precision-Recall (PR) curves, which balance the purity and completeness of the model’s classifications. Ideally, predictions should be both 100% pure and complete (full Recall). A decline in predictive power results in a compromise between these metrics. As anticipated, the S/N of source measurements in x markedly affects prediction quality, with dimmer objects proving more challenging to classify accurately. Deeper magnitude bins, indicated by darker shades, exhibit progressively deviating ROC curves from the ideal upper-left corner as magnitudes increase. This reduction in accuracy is mirrored in the PR curves, with fainter magnitudes resulting in a more obvious trade-off between purity and completeness. The third row in Fig. 8 illustrates the AUC for both ROC and PR curves as a function of magnitude, alongside the median completeness for both compact and extended J-PLUS sources, depicted in light and darker gray, respectively (do not confuse with the Recall of the classification). The model’s classification remains nearly flawless up to r ~ 21 mag (AUC ≳ 0.99 for both curves), before experiencing a decline, coinciding roughly with J-PLUS’s limiting magnitude due to diminished S/N and information loss in certain bands. Despite these challenges, even in the least favorable scenarios, such as differentiating QSOs from galaxies or stars, the model maintains high ROC and PR AUC values (~0.98 and ~0.93 at r = 22 mag, respectively), underscoring its exceptional performance. Also noteworthy is the increased confusion between QSOs and galaxies at magnitudes brighter than r ~ 18 mag, likely stemming from the presence of active galaxies in both the test and training samples, classified differently across surveys.

Further insight into the model’s classification performance across different brightness levels is provided by Fig. 9, which illustrates the confusion matrices between ytrue and ypred for varying magnitude ranges. Overall, the model achieves an average accuracy of approximately 95% for objects with r ~ 21.5 mag. However, it faces challenges in accurately classifying QSOs at fainter magnitudes, a foreseeable issue since QSOs are morphologically similar to stars and their color differentiation becomes less reliable at r ≥ 21 mag due to the lower S/Ns. Nevertheless, 81% of QSOs were correctly identified at r ≥ 21.5 mag, a good result given that J-PLUS’s nominal limiting magnitude is around r ~ 21.8 mag. Additionally, since the sample is predominantly composed of stars and galaxies, the average error rate at the faint end remains below 12–13%.

Similar to observations from Fig. 8, there is a noticeable increase in the misclassification of QSOs as galaxies at magnitudes brighter than r ~ 18 mag. This trend is attributed to the inclusion in both the test and training samples of active galaxies, which exhibit similar characteristics but are categorized differently as either galaxies or QSOs, depending on the spectroscopic survey in question.

4.3 Position dependence

To ensure our model remains unbiased, we deliberately excluded all positional information (e.g., positions in the sky, twodimensional proper motions) during its training phase. Nevertheless, due to the incorporation of dust attenuation data from Schlafly & Finkbeiner (2011), some positional data (albeit significantly degraded) may have influenced the model. To identify and mitigate potential biases and to study the presence of contaminants from other classes, we analyzed the prediction errors relative to position and class. This is shown in Fig. 10, where the diagonal panels show the object surface density for each class in the test sample, and the off-diagonal panels the relative classification error for each possible combination between the three classes, i.e. the percentage of pollutants from each other class. Here, errors are calculated as the number of incorrectly classified objects divided by the total in the corresponding true class for each spatial bin, approximately 3.5° on each side.

Our analysis reveals no significant correlation between source positions and classification error for any of the six class combinations, with error variations across different sky regions consistent with a normal distribution. This includes areas near the Galactic plane, where we might expect an increased prediction of stars if the model were biased by position. The existence of areas with higher surface density, clearly visible in the diagonal panels, is also noteworthy. These correspond to areas that were covered by more surveys or to deeper magnitudes (see Fig. 2), hence the higher number of observed sources. This inhomogeneity in the training list is the key reason why positional information must not be passed to the model. Otherwise it could learn the specific classification from each spectroscopic survey within their footprints, without generalizing the solution to the entire sky.

We further examined potential Galactic plane proximity effects by assessing the relative error solely as a function of Galactic latitude, b, and class. In Fig. 11, histograms for each predicted class display the number counts and the average classification error across 10° intervals from 20° to 90°. Similar to Fig. 10, errors are defined as the quantity of objects incorrectly assigned to each class, FPs, ordered by Galactic latitude b. Mild trends emerge here; notably, the misclassification rate of QSOs as stars increases with b, suggesting the model does not entirely compensate for the declining stellar density relative to QSOs. We also notice an increase in wrongly predicted galaxies that are spectroscopically identified as stars at low Galactic latitudes, consistent with higher stellar densities. Conversely, QSO predictions remain relatively stable across latitudes, indicating consistent contamination levels in QSO-predicted samples across all Galactic latitudes. Nonetheless, it’s crucial to highlight the scale of demonstrated errors, with the highest error rates being approximately 5% for predicted QSOs and only 0.8% and 0.5% for the galaxies and stars categories, respectively.

|

Fig. 8 Dependence of model performance on the S/N of the sources. The top panels present the ROC curves for objects within different magnitude bins, shaded in varying tones of blue. Magnitude bins range from r = 17.5 to r = 22.5 mag in 0.5 mag increments (10 bins). The middle panels exhibit the Precision-Recall (PR) curves, illustrating the trade-off between sample purity and completeness, shaded in different red tones according to magnitude bin. Both quantities are related by ƒ 1, the harmonic mean of precision and recall, for which several values are plotted. Notice that the axes are a zoomed version of those in Fig. 7, spanning rates from 0 to 0.3 for FPs and 0.7 to 1 for TPs, Precision, and Recall. The bottom panels summarize the results, depicting the evolution of the AUC for both ROC and PR curves relative to the central magnitude of each bin. Blue and red colors denote the AUCs for ROC and PR, respectively. The median photometric completeness for compact and extended sources within J-PLUS is shown by dark and light gray curves, respectively, with surrounding gray shaded areas indicating the 16th and 84th percentiles of completeness. Classification remains near-perfect up to r ~ 21 mag (AUC ≳ 0.99 for both curves), thereafter beginning to diminish, aligning with J-PLUS’s limiting magnitude and the ensuing loss of information in some bands. However, even under the most challenging conditions, such as distinguishing QSOs from galaxies or stars, ROC and PR AUC values maintain levels of ~0.98 and ~0.93 at r = 22 mag, respectively, showcasing outstanding model performance. Notably, BANNJOS exhibits a propensity for mistaking QSOs for galaxies and vice versa at magnitudes brighter than r ~ 18 mag, possibly due to active galaxies within the test (and training) sample being variably classified as galaxies or QSOs based on the specific spectroscopic survey. |

5 Refining your selection

Throughout the paper, we have conventionally assumed that the object’s class corresponds to the one with the highest median probability, i.e., class = max [PCGalaxy(50), PCQSO (50), PCStar (50)]. This approach, employed in Sects. 3.2 and 4, does not account for prediction uncertainties. Leveraging the complete PDF for the three classes enables more refined object selection. For instance, applying specific probability thresholds can enhance the purity of the selected sample. Moreover, apart from the median probability, BANNJOS provides the mean probability and the PDF compressed through the GMM model. These metrics can be used to select classes at any given threshold.

If the model works well, the classification success ratio (true probability) should match the probability used to select the objects (predicted probability). Specifically, the proportion of objects truly belonging to a selected class should correspond to their assigned probability of class membership. This is shown in Fig. 12, where the probability predicted by BANNJOS using three different statistics is compared to the true probability for each class across ten probability bins ranging from 0 to 1. The true probability for each class and probability bin is calculated as the ratio of the number of objects correctly classified according to their BANNJOS predicted probability and their actual spectroscopic class to the total number of objects with that particular predicted probability.

The three statistical measures yield similar results, with higher predicted probability thresholds correlating to increased true probabilities. The difference between the predicted and true probabilities remains consistently below ~0.1 across all cases, indicating a good level of accuracy. However, there are some departures from the one-to-one relation, especially when using the median of the probability, that we should point out. For example, BANNJOS appears to be slightly underconfident when classifying stars with low probability 0.1 ≲ PCStar(50) ≲ 0.5, and slightly overconfident when classifying galaxies at 0.5 ≲ PCGalaxy (50) ≲ 0.9. This might result in purer and more contaminated samples of stars and galaxies, depending on the used probability threshold for the median. In contrast, the mean and the reconstructed mean from the GMM model appear to adhere more closely to a one- to-one relation. Figure 12 also highlights the probabilistic nature of our findings, with shaded areas representing the sample purity using the 16th and 84th percentiles of the PDF for source selection and the confidence regions derived from the GMM model.

We further investigate the effect of the selection threshold in Fig. 13, which illustrates the impact of varying selection thresholds on the 2nd, 16th, 50th, 84th, and 98th percentiles of the cumulative PDFs, x, for each class: PCGalaxy(x), PCQSO(x), PCStar (x). These curves, similar to the PR curves in Fig. 8, demonstrate the purity-completeness trade-off based on the selection threshold.

Increasing the probability threshold typically yields purer samples but reduces completeness. The chosen percentile for selection significantly affects the outcome. For example, selecting objects based on the 98th percentile being a QSO with ≥0.5 results in a sample with approximately 87% purity and 98% completeness. Opting for the 2nd percentile increases purity to roughly 98% at the expense of dropping completeness to about 91%. Setting higher thresholds further improves sample purity but invariably reduces completeness. Achieving over 99% purity in QSO selection is possible by selecting sources whose 2nd percentile exceeds 0.8. Conditions for galaxies and stars are more lenient, with the model classifying them with greater ease. For instance, a purity above 99.5% is achievable for stars with the 2nd percentile of their PDF exceeding 33%.

Combining probabilities for the three classes enables the creation of purer samples. Typically, high purity and substantial completeness are attainable by setting the 16th and 84th percentiles above or below the random classification probability (1/3 in this case). By combining these 1σ confidence intervals, we can define the object’s 1σ class as follows:

Star ⇔ PCGalaxy (84) < 1/3 & PCQSO (84) < 1/3 & PCStar(16) > 1/3

Galaxy ⇔ PCStar(84) < 1/3 & PCQSO (84) < 1/3 & PCGalaxy (16) > 1/3

QSO ⇔ PCGalaxy (84) < 1/3 & PC Star(84) < 1/3 & PCQSO(16) > 1/3.

Adopting the stricter 2σ confidence intervals, that is, the 2nd and 98th percentiles instead of the 16th and 84th, yields even purer but less complete samples.

In Fig. 14, confusion matrices between ytrue and ypred are presented for sources selected using the 2σ criteria for different magnitude ranges. Comparison with Fig. 9 reveals enhanced sample purity, particularly for QSO classification, which achieves 91% accuracy in the 21.5 < r ≤ 22.5 mag range and 96% for the other classes. However, this precision is gained at the expense of excluding low-confidence classified sources, evidenced by the reduced count of objects in the same magnitude bin (only 1 311 from 1 744 objects). The persistent galaxy-QSO confusion at r ≳ 18 mag corroborates that these objects are confidently classified by BANNJOS, suggesting that variation in classification stems from the disparate spectroscopic surveys constituting the training and test sets. The drop from 15% to 7% in galaxy-QSO misclassifications at fainter magnitudes supports this notion, as most active galaxies at such depths are identified as QSOs by the employed surveys.

The three selection strategies discussed serve merely as examples of the possible approaches. Higher (lower) selection thresholds can produce more pure (more complete) samples. Even more refined object selections can be achieved by exploiting the full covariance matrix and various correlation coefficients between the classes. For instance, active galaxies could be identified as objects with high probabilities of being either a galaxy or a QSO, accompanied by a significant negative correlation between these probabilities, as discussed in Sect. 3.3. If very pure samples are required, the correlation coefficients between probabilities can be useful too, by selecting sources with low uncertainties and little degeneration between species. Lastly, since the full PDF is recoverable from BANNJOS’s output, users can also opt to use it for weighting the PDFs, finding particular objects, etc.

In Appendix D, we provide examples on how to query BANNJOS data to obtain pure samples of specific objects. In particular, we demonstrate how to select a pure sample of QSOs that could be candidates for spectroscopic follow up.

|

Fig. 9 Confusion matrices depicting the correlation between the true classes and the predicted classes for objects in the test sample across different magnitude bins. These bins range from r = 16.5 to r = 22.5 mag in 1 mag increments, totaling six bins. The proportion of objects within each bin relative to the true class is indicated by varying shades of blue, with values represented by real numbers within the interval [0, 1]. Additionally, the confusion matrices include the count of objects contributing to each calculation. |

|

Fig. 10 Sky maps illustrating the surface density and expected contamination ratios for each class from the test sample. Panels are arranged as in a confusion matrix, with true classes, ytrue, on the vertical axis and predicted classes, ypred, on the horizontal axis. Diagonal panels display the sky surface density of sources classified into each category – galaxies, QSOs, and stars – uniformly scaled in color. The off-diagonal panels show the relative classification error for given true and predicted classes, calculated as the number of misclassified sources divided by the total number of objects in the true class. A minimum of 4 sources is required in order to compute the classification error. The off-diagonal panels share a distinct color scale from the diagonal ones. No apparent trends are visible based on sky position. |

|

Fig. 11 Number counts and contamination ratios as a function of Galactic latitude, b. Top panels: object counts for each class (star, galaxy, QSO, from left to right) of object classified spectroscopically (black) and by BANNJOS (red). Bottom panels: contamination ratios (FPs) in each class predicted by BANNJOS. The error is computed as the number of objects incorrectly assigned to each class by BANNJOS (one of the other two potential classes), divided by the number of objects of the spectroscopic class (i.e., star, galaxy, QSO) from left to right. Bins are computed in 10° increments from 20° to 90° for each class. The bin [10°,20°) is excluded from the analysis due to its very low number of galaxies (47). |

6 Comparisons with other classifiers

At the moment of writing this paper, there are three other classifications available for J-PLUS. All of them are deterministic, meaning that they provide a single value for the probability of each object belonging to a certain class. The most important difference between them lies in the basis of their classification (morphological or nature based) and thus in the number of classes they manage. In the following subsections, we compare BANNJOS’s predictions to these previous works by using the highest median as the assigned class.

6.1 Two-class classifiers

Two classifications are currently available along J-PLUS tables at CEFCA’s archive. The first, CLASS_STAR, is derived from the SExtractor photometry package. The second, sglc_prob_star, introduced by López-Sanjuan et al. (2019), applies Bayesian priors to enhance the classification accuracy beyond that provided by SExtractor. Both classifiers categorize sources into two morphological classes: compact or extended, thereby assigning the probability of a source being star-like. However, this binary scheme complicates direct comparisons with our three-class approach. To address this, we recategorized the true and predicted classes from our test sample (ytrue and ypred) into these two morphological categories. We classified objects as compact if their CLASS_STAR, sglc_prob_star scores, or their median PCStar|QSO(50) exceeded 0.5. Conversely, objects were deemed extended otherwise. This approach enables direct comparison between our results and those of the pre-existing binary classifiers.

Figure 15 presents confusion matrices for these morphological classification derived from the three classifiers. All three classifiers perform commendably in classifying compact sources, with correct classification rates of approximately 98%. However, CLASS_STAR exhibits notable shortcomings in identifying extended sources, correctly classifying only about 89% as galaxies. The sglc_prob_star classifier significantly improves upon this, correctly identifying around 96% of galaxies. When examining the misclassification of galaxies as compact (starlike) sources, sglc_prob_star shows a threefold improvement over CLASS_STAR. Nevertheless, BANNJOS demonstrates superior accuracy, significantly reducing the misclassification rate of extended objects compared to sglc_prob_star.

The left part of Fig. 16 (blue) illustrates the classification error rates for the three classifiers across various magnitude bins.

Errors for each classifier are quantified as the ratio of misclassified objects to the total number of objects per bin, and are further categorized as FPs or FNs. Since only two classes are involved, the FNs in compact sources correspond to the FPs in extended sources. BANNJOS consistently outperforms the other classifiers across all bins, maintaining lower error rates. This holds true except possibly for FPs (Compact) at the faintest magnitudes, where the error rates for all three classifiers converge. A notable aspect in this comparison is the significant asymmetry displayed by the sglc_prob_star and CLASS_STAR classifiers between FPs and FNs, indicating a tendency to misclassify QSOs or stars as extended sources as the S/N decreases, with CLASS_STAR being the most pronounced in this regard.

Adding FPs and FNs yields the total classification error, where BANNJOS outperforms the other two classifiers by a significant margin. For example, CLASS_STAR incorrectly classifies approximately 30% of objects beyond r > 20.5 mag, resulting in a cumulative error rate of about 11% at r = 22.5 mag. In contrast, sglc_prob_star reduces the error rate to approximately 12–13% at similar magnitudes, with an accumulated error of about 5% at r = 22.5 mag. BANNJOS, however, achieves an error rate of merely around 3.5% in the same range, with a cumulative error below 2% for all objects. Remarkably, BANNJOS maintains near-perfect performance for objects brighter than r = 19.5 mag, with error rates around 0.3% and cumulative errors below 0.1%. While the purity of the classification could potentially be enhanced by adjusting the probability thresholds for the three classifiers, our experiments indicate that the relative differences between the classifiers remained almost unchanged, or favored BANNJOS even more.

|

Fig. 12 Predicted versus true probability for the selection based on different thresholds for each class. From top to bottom, the panels show the galaxy, QSO, and star class. For each class, the true probability is derived as the amount of objects with confirmed spectroscopic class divided by the total number of objects in each probability interval. The probability intervals are measured on the predicted probability from zero to one in steps of 0.1 (ten in total). The predicted probability is the probability derived with BANNJOS using three different methods: The mean probability, the median probability, and the mean probability from the reconstructed PDF using the GMM model. The light shaded areas show the true probability of the sample if the 16th or the 84th percentile is used to select the sample. The uncertainties from the GMM model are shown by darker shaded areas and are computed by sampling the model 2000 times. The diagonal dashed line shows the one-to-one relation. Due to the binning used to create the figure, there is only data in the [0.05, 0.95] range. |

|

Fig. 13 Purity versus completeness for the selection based on different thresholds for each class From top to bottom, the panels show the galaxy, QSO, and star class. The curves represent purity (blue) and completeness (red) of the sample as functions of the chosen threshold and percentile used to select the class. Vertical dashed lines in corresponding colors indicate the probability thresholds required to achieve 99% purity (blue) and 99% completeness (red), respectively. |

6.2 Three-class classifiers

Differently from CLASS_STAR and sglc_prob_star, the classification introduced in von Marttens et al. (2024), hereafter referred to as vM24, bases its results not only on the morphology of the source but also on its colors. This approach, coupled with the utilization of a more sophisticated algorithm, XGBoost, enables the authors to differentiate sources based on their inherent nature, adding the QSO class to their classification. Consequently, their classification encompasses the three categories of galaxy, QSO, and stars, making it a natural counterpart for comparison with our classification.

A new test sample is required for this comparison, one that includes only objects never seen during the training phases of any of the models (i.e., BANNJOS and XGBoost). This new test sample was composed by cross-matching our own test sample with the training sample used in vM24, retaining only sources present in our test sample but absent in their training sample. From our original test sample of 136 570 objects, we identified 52 105 sources not included in the vM24 training sample. While significantly reduced, this number of objects is still sufficiently large to conduct a comparative analysis. This dataset, composed entirely of sources unfamiliar to both models, allows us to assess their general performance on independent data, thus facilitating a fair comparison between the two. As in previous sections, we assign the class predicted by BANNJOS as the one with the highest median probability, max[PCclass(50)]. In vM24, the classification is presented similarly to that of BANNJOS, albeit with a single probability value per class. We assigned the class in vM24 as the one with the highest probability. While simple, this is the only criterion ensuring 100% completeness in the sample selection.

Following the analysis from the previous section, we proceeded to bin the new test sample according to the r-magnitude of its sources. We then computed the relative and cumulative errors as in the left sub-figure of Fig. 16, i.e., the relative classification error per class for each classifier is defined as the ratio between misclassified objects and the total number of objects, determined by their spectroscopic class, within each magnitude bin. The results are shown in the right part of Fig. 16 (orange), where upper panels detail the relative FP and FN errors for each classifier by class, and bottom panels present cumulative errors for FPs and FNs.

BANNJOS significantly outperforms the performance presented in vM24 across all magnitude bins, with accuracies varying from 2 to 10 times better, depending on the class and magnitude bin. The higher error rate of vM24 is clearly visible as a generally higher rate of FPs and FNs in the vM24 results compared to those of BANNJOS. For instance, the typical total classification error (FPs+FNs) for Galaxies at r = 21 mag is around 27% in vM24, while BANNJOS reduces this to merely 3%. These differences diminish at the faintest magnitudes, where BANNJOS still typically yields classification accuracies twice as higher as those obtained in vM24. To provide some figures, the average total classification error for QSOs in vM24 is approximately 26% at r ~ 22, compared to around 12% for BANNJOS, while cumulative errors typically escalate to 8% at r ~ 22.5 in vM24 but remain at ~ 1.5% for BANNJOS.

Asymmetries between FPs and FNs could indicate a bias in the model’s predictions toward a specific class. Notably, stars exhibit a very high rate of FNs in vM24, which seems to translate into FPs for galaxies and QSOs, indicating that at magnitudes fainter than r ~ 18 mag, the XGBoost used in vM24 tends to misclassify a significant number of stars as galaxies and QSOs. Confusion also seems to occur between the galaxy and QSO classes at the faintest magnitudes. This effect is also observable in BANNJOS’s results, albeit with a lower error rate and more symmetry between FPs, FNs, and the classes themselves. However, as discussed in Sect. 4.1, this is expected and likely caused by the dual nature of active galaxies.

We conducted an additional test using a refined sample, specifically selecting sources classified with over 95% probability in vM24 in any of the three classes. This criterion reduced the sample size to 37 355 objects (approximately 72% of the original sample) that are classified with high confidence in vM24. Upon reevaluating both models with this high-probability sample, improvements were noticeable, although BANNJOS continued to exhibit superior performance across all magnitude bins. In vM24, typical error rates peaked at around 8% at r ~ 21 mag for both stars and galaxies, with QSOs remaining lower at 2% at the same magnitude. However, the error distribution between these classes remained very asymmetric, with classification errors for stars being almost entirely FNs and for galaxies mostly FPs. The high asymmetry shown between the galaxy and star classes at magnitudes fainter than r ~ 19 mag indicates that the vM24 classification systematically misclassifies stars as galaxies at these magnitudes when applying high-probability selection criteria. Interestingly, the numbers of FPs and FNs are consistent for QSOs under this high-probability selection. In contrast, BANNJOS exhibits its maximum error at the faintest magnitudes, r = 22.5 mag, at around 2% for galaxies and around 1% for the other classes, demonstrating higher consistency between FPs and FNs across the entire magnitude range. In summary, using this particular high-confidence sample from the vM24 catalog, BANNJOS still outperforms XGBoost significantly.

The largest difference between the training of the models stems from the addition of DESI data to our training list. The test sample used for this comparison also contains sources classified by DESI, which could potentially represent and advantage for BANNJOS. To test whether the presence of these sources in the test sample was beneficial to BANNJOS, we removed all the DESI-only-measured sources from the list, leaving a total of 31 124 sources, and repeated the experiment. While BANNJOS’s errors remained the same or even decreased in some cases, the classification errors in vM24’s results increased, particularly in the star and galaxy classes.