| Issue |

A&A

Volume 681, January 2024

|

|

|---|---|---|

| Article Number | A62 | |

| Number of page(s) | 26 | |

| Section | Cosmology (including clusters of galaxies) | |

| DOI | https://doi.org/10.1051/0004-6361/202348329 | |

| Published online | 16 January 2024 | |

First upper limits on the 21 cm signal power spectrum from cosmic dawn from one night of observations with NenuFAR

1

Kapteyn Astronomical Institute, University of Groningen, PO Box 800, 9700 AV Groningen, The Netherlands

e-mail: munshi@astro.rug.nl

2

LERMA, Observatoire de Paris, Université PSL, CNRS, Sorbonne Université, 75014 Paris, France

3

ASTRON, PO Box 2, 7990 AA Dwingeloo, The Netherlands

4

Université de Strasbourg, CNRS, Observatoire astronomique de Strasbourg (ObAS), 11 rue de l’Université, 67000 Strasbourg, France

5

School of Physics and Astronomy, Tel Aviv University, Tel Aviv 69978, Israel

6

Institute for Advanced Study, 1 Einstein Drive, Princeton, New Jersey 08540, USA

7

Department of Astronomy and Astrophysics, University of California, Santa Cruz, CA 95064, USA

8

Laboratoire de Physique de l’Ecole Normale Supérieure, ENS, Université PSL, CNRS, Sorbonne Université, Université de Paris, 75005 Paris, France

9

LESIA, Observatoire de Paris, CNRS, PSL, Sorbonne Université, Université Paris Cité, 92195 Meudon, France

10

ORN, Observatoire de Paris, CNRS, PSL, Université d’Orléans, 18330 Nançay, France

11

Kavli Institute for Cosmology, Madingley Road, Cambridge CB3 0HA, UK

12

Institute of Astronomy, University of Cambridge, Madingley Road, Cambridge CB3 0HA, UK

13

ARCO (Astrophysics Research Center), Department of Natural Sciences, The Open University of Israel, 1 University Road, PO Box 808, Ra’anana 4353701, Israel

14

AIM, CEA, CNRS, Université Paris Cité, Université Paris-Saclay, 91191 Gif-sur-Yvette, France

15

LPC2E, Université d’Orléans, CNRS, 45071 Orléans, France

16

LUTh, Observatoire de Paris, Université PSL, Université de Paris Cité, CNRS, 92190 Meudon, France

Received:

19

October

2023

Accepted:

3

November

2023

The redshifted 21 cm signal from neutral hydrogen is a direct probe of the physics of the early universe and has been an important science driver of many present and upcoming radio interferometers. In this study we use a single night of observations with the New Extension in Nançay Upgrading LOFAR (NenuFAR) to place upper limits on the 21 cm power spectrum from cosmic dawn at a redshift of z = 20.3. NenuFAR is a new low-frequency radio interferometer, operating in the 10–85 MHz frequency range, currently under construction at the Nançay Radio Observatory in France. It is a phased array instrument with a very dense uv coverage at short baselines, making it one of the most sensitive instruments for 21 cm cosmology analyses at these frequencies. Our analysis adopts the foreground subtraction approach, in which sky sources are modeled and subtracted through calibration and residual foregrounds are subsequently removed using Gaussian process regression. The final power spectra are constructed from the gridded residual data cubes in the uv plane. Signal injection tests are performed at each step of the analysis pipeline, the relevant pipeline settings are optimized to ensure minimal signal loss, and any signal suppression is accounted for through a bias correction on our final upper limits. We obtain a best 2σ upper limit of 2.4 × 107 mK2 at z = 20.3 and k = 0.041 h cMpc−1. We see a strong excess power in the data, making our upper limits two orders of magnitude higher than the thermal noise limit. We investigate the origin and nature of this excess power and discuss further improvements to the analysis pipeline that can potentially mitigate it and consequently allow us to reach thermal noise sensitivity when multiple nights of observations are processed in the future.

Key words: methods: data analysis / techniques: interferometric / dark ages / reionization / first stars

Note to the reader: Bottom panels of Figures 9 and 13 were not correctly published. The figures were corrected on 17 January 2024.

© The Authors 2024

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1. Introduction

The redshifted 21 cm signal arising out of the hyperfine transition of neutral hydrogen (HI) is a very sensitive probe of the astrophysical processes active during the early stages of cosmic evolution (Madau et al. 1997; Shaver et al. 1999). Cosmic dawn is a particularly interesting epoch in the early universe for which the 21 cm signal can potentially give us rich insights. This is the period when the gas aggregated in dark-matter halos became dense enough to undergo gravitational collapse and form the first luminous objects in the universe. The two main processes believed to dominate during cosmic dawn are Lyman-α coupling and X-ray heating. The Lyman-α radiation emitted by the first sources couples the spin temperature of HI to the kinetic temperature of the intergalactic medium (IGM) through the Wouthuysen-Field effect (Wouthuysen 1952; Field 1958). Due to large spatial fluctuations in the distribution of early galaxies, this Lyman-α coupling is believed to have occurred inhomogeneously, leading to observable 21 cm signal intensity fluctuations (Barkana & Loeb 2005). At later stages, X-ray radiation by stellar remnants heats the IGM (Venkatesan et al. 2001; Chen & Miralda-Escudé 2004; Pritchard & Furlanetto 2007), and this in turn increases the spin temperature of the 21 cm transition, which is coupled to the IGM temperature. Since ionization can still be largely neglected at the high redshifts of interest, these physical processes guide the magnitude and spatial distribution of the brightness temperature of the 21 cm signal against the cosmic microwave background radiation (CMBR) during this era. Radio-wave observations by interferometric arrays targeting the redshifts corresponding to cosmic dawn can directly probe the fluctuations in the three-dimensional brightness temperature distribution of the 21 cm signal during this epoch. Thus, these observations have the immense potential of providing us with crucial constraints on the properties of the IGM and early star formation histories (e.g., Furlanetto et al. 2006; Pritchard & Loeb 2012; Fialkov & Barkana 2014; Mebane et al. 2020; Reis et al. 2020; Gessey-Jones et al. 2022; Hibbard et al. 2022; Munoz et al. 2022; Monsalve et al. 2019; Adams et al. 2023; Bevins et al. 2024).

The prospect of probing cosmic dawn and the subsequent epoch of reionization (EoR) has led to many interferometric experiments devoting significant observing time to 21 cm cosmology programs. Most of these experiments have focused on the EoR, and over the years increasingly stringent upper limits on the redshifted 21 cm signal power spectrum from the EoR have been set by the GMRT1 (Paciga et al. 2013), PAPER2 (Kolopanis et al. 2019), MWA3 (Barry et al. 2019; Li et al. 2019; Trott et al. 2020), LOFAR4 (Patil et al. 2017; Mertens et al. 2020), and HERA5 (Abdurashidova et al. 2022). Many current and upcoming interferometric experiments are also trying to detect or place limits on the 21 cm signal from cosmic dawn, with some of them having already set upper limits on the 21 cm power spectrum at z > 15. The first attempt at measuring the 21 cm power spectrum during cosmic dawn was by Ewall-Wice et al. (2016), who used 6 h of MWA data to set upper limits of  at z = 15.35 and k = 0.21 h Mpc−1, and

at z = 15.35 and k = 0.21 h Mpc−1, and  at z = 17.05 and k = 0.22 h Mpc−1. Later, Yoshiura et al. (2021) used 5.5 h of MWA data to set an upper limit of

at z = 17.05 and k = 0.22 h Mpc−1. Later, Yoshiura et al. (2021) used 5.5 h of MWA data to set an upper limit of  at k = 0.14 h Mpc−1 and z = 15.2. The OVRO-LWA6 was used first by Eastwood et al. (2019) to provide upper limits of

at k = 0.14 h Mpc−1 and z = 15.2. The OVRO-LWA6 was used first by Eastwood et al. (2019) to provide upper limits of  at z ≈ 18.4 and k ≈ 0.1 Mpc−1 using 28 h of data and later by Garsden et al. (2021), who reported an upper limit of

at z ≈ 18.4 and k ≈ 0.1 Mpc−1 using 28 h of data and later by Garsden et al. (2021), who reported an upper limit of  at z = 28 and k = 0.3 h Mpc−1 using 4 h of data. Gehlot et al. (2019) set 2σ upper limits for the 21 cm power spectrum at

at z = 28 and k = 0.3 h Mpc−1 using 4 h of data. Gehlot et al. (2019) set 2σ upper limits for the 21 cm power spectrum at  at z = 19.8–25.2 and k = 0.038 h cMpc−1 using 14 h of data obtained using the LOFAR LBA7 system. The ACE program (Gehlot et al. 2020), which uses the LOFAR AARTFAAC8, placed 21 cm power spectrum upper limits of

at z = 19.8–25.2 and k = 0.038 h cMpc−1 using 14 h of data obtained using the LOFAR LBA7 system. The ACE program (Gehlot et al. 2020), which uses the LOFAR AARTFAAC8, placed 21 cm power spectrum upper limits of  at z = 17.9–18.6 and k = 0.144 h cMpc−1 using 2 h of data. Second-generation experiments such as HERA (DeBoer et al. 2017) and SKA-Low (Koopmans et al. 2015) are expected to have higher sensitivities in these redshifts, and SKA-Low has the potential to detect the 21 cm signal from cosmic dawn in tomographic images.

at z = 17.9–18.6 and k = 0.144 h cMpc−1 using 2 h of data. Second-generation experiments such as HERA (DeBoer et al. 2017) and SKA-Low (Koopmans et al. 2015) are expected to have higher sensitivities in these redshifts, and SKA-Low has the potential to detect the 21 cm signal from cosmic dawn in tomographic images.

In a complementary approach, several single dipole experiments have attempted to detect the sky-averaged (global) 21 cm signal at high redshifts. Among these, the EDGES9 (Bowman et al. 2008) and SARAS10 (Singh et al. 2017; Subrahmanyan et al. 2021) are the only ones currently in the sensitivity range of a detection. In 2018, the EDGES collaboration reported an absorption trough in the global 21 cm signal at the redshifts corresponding to cosmic dawn (Bowman et al. 2018a). However, the feature detected by EDGES is unusually deep and flat (Hills et al. 2018; Bowman et al. 2018b), and it becomes necessary to introduce “exotic” nonstandard models of the 21 cm signal to explain it if it is cosmological. One such approach to modeling the EDGES signal involves supercooling of gas by scattering off cold dark-matter particles (e.g., Barkana 2018; Fialkov et al. 2018; Berlin et al. 2018; Muñoz & Loeb 2018; Liu et al. 2019). Another alternative class of models suggests that the background against which we observe the 21 cm signal could have a component in addition to the CMBR, thus explaining the unusual depth of the trough (e.g., Feng & Holder 2018; Ewall-Wice et al. 2018; Dowell & Taylor 2018; Fialkov & Barkana 2019). These models, in turn, predict stronger fluctuations in the 21 cm signal from cosmic dawn, implying that the 21 cm power spectrum could potentially be easier to detect. However, as of now, no independent confirmation of the EDGES result has been obtained by other experiments, and a recent measurement by the SARAS team claims to disprove the EDGES detection with a 95% confidence level (Singh et al. 2022).

In this work we present the first upper limits on the 21 cm signal power spectrum from cosmic dawn using NenuFAR11 (Zarka et al. 2012), a radio interferometer nearing completion at the Nançay Radio Observatory in France. NenuFAR is a low-frequency phased array instrument that will have 1976 receiving antennas, with a very dense core and a large collecting area, making it extremely sensitive to the large scales necessary for constraining the 21 cm signal (Joseph 2012). It is one of the most sensitive instruments for cosmic dawn observations and is currently the most sensitive instrument below 50 MHz. In this study we performed an end-to-end analysis of a single night of observations made with an incomplete array composed of 79 active interferometric elements. We used an analysis method that adopts many of the strategies that have been developed and improved over the years during the evolution of the LOFAR EoR analysis pipeline (Chapman et al. 2012, 2013; Patil et al. 2014, 2016, 2017; Asad et al. 2015, 2016, 2018; Mertens et al. 2018; Gehlot et al. 2018, 2019; Offringa et al. 2019b; Hothi et al. 2021; Mevius et al. 2022; Gan et al. 2023). We demonstrate the performance of the NenuFAR calibration pipeline on a single night of observations made with the current incomplete array and present its first upper limits on the 21 cm signal power spectrum. This pilot analysis also reveals the limitations of the current processing pipeline and helps shape our strategy for overcoming them in the future.

The paper is organized as follows: Sect. 2 introduces the NenuFAR instrument and its Cosmic Dawn Key Science Program (CD KSP). In Sect. 3 we describe the data acquisition process and the preprocessing steps. The different steps in the data calibration procedure are described in Sect. 4. In Sect. 5 we describe an additional step of post-calibration flagging. Section 6 describes the power spectrum estimation, and Sect. 7 describes the first application of the machine learning (ML) Gaussian process regression (GPR) foreground removal technique (Mertens et al. 2024) to our data. Section 8 summarizes our results and presents the robustness tests that were performed to estimate the level of signal suppression due to the analysis pipeline. In Sect. 9 we discuss the limitations and future improvements. A short summary of the main conclusions from this analysis is presented in Sect. 10. Throughout this paper, a flat Λ cold dark matter cosmology is used, with the cosmological parameters (H0 = 67.7, Ωm = 0.307) consistent with results from Planck observations (Planck Collaboration XIII 2016).

2. NenuFAR Cosmic Dawn Key Science Program

The NenuFAR12 CD KSP13 (KP01, P.I.: L. V. E. Koopmans, B. Semelin and F. G. Mertens) is one of the programs that started in the early scientific phase of NenuFAR, which aims to detect or place stringent limits on the 21 cm power spectrum from cosmic dawn in the redshift range of z = 15 − 31 (Mertens et al. 2021). The project has already been allocated 1267 h of observations, which started in September 2020 and are still going on with an array that is progressively more sensitive as more core and remote stations are being added. With NenuFAR’s sensitivity, detection of the 21 cm signal predicted by EDGES-inspired exotic models is possible within 100 h of integration. Moreover, with 1000 h of integration, NenuFAR is expected to reach a thermal noise sensitivity comparable to the levels of the signal predicted by more standard models (Mesinger et al. 2011; Murray et al. 2020; Semelin et al. 2023).

2.1. The instrument

NenuFAR (Zarka et al. 2015, 2020) is a large low-frequency radio interferometer. The primary receiving antennas for NenuFAR are crossed inverted V-shaped dual polarization dipoles, similar to the antenna elements employed by the LWA (Hicks et al. 2012), but equipped with an original custom-made low noise amplifier (Charrier & the CODALEMA Collaboration 2007). In total, 19 such antennas, arranged in a hexagonal array, form a single mini-array (MA), whose station beam is analog steerable in different directions in the sky. The hexagonal MA has a six-fold symmetry, resulting in considerable grating lobes in an individual MA primary beam. In order to reduce the level of these grating lobes, the MAs are rotated with respect to each other by angles that are multiples of 10 degrees, with only 6 such non-redundant orientations being necessary due to their hexagonal symmetry. At completion, NenuFAR will have 96 MAs distributed in a 400 m diameter core and 8 remote MAs located at distances up to a few kilometers from the center. Figure 1 presents a schematic representation of the NenuFAR configuration used for obtaining the data used in this analysis. The dense core of NenuFAR with a large number of short baselines makes it extremely sensitive to large scales and especially well suited to constraining the 21 cm signal. The longer baselines involving the remote stations, combined with pointing observations and Earth rotation synthesis, yield a good uv coverage out to several kilometers (3.5–5 km at completion, currently 1.4 km) and thus enable efficient point source foreground modeling and subtraction.

|

Fig. 1. Schematic representation of the NenuFAR array configuration used in the observation. |

2.2. Observations and data selection

The primary target field for the NenuFAR CD KSP is the north celestial pole (NCP). The NCP is a particularly favorable field since it is visible at night throughout the year, allowing long observations on winter nights. The NCP is in a fixed direction, thus requiring no beam or phase tracking with time. Moreover, extensive sky models of the NCP, made using deep integrations with LOFAR, are readily available as a byproduct of the LOFAR EoR Key Science Project (KSP) and these aid the analysis and calibration efforts. The NCP observations with NenuFAR are preceded or succeeded by a 30 min observation of a calibrator – Cygnus A (Cyg A) or Cassiopeia A (Cas A), depending on which one of the two sources is at a high enough altitude to yield good quality observations.

Phase I observations of the NCP with NenuFAR started in September 2019 with the primary goal of understanding the instrument and monitoring its performance. Deep integrations of the NCP field commenced with the start of Phase II observations in September 2020 and as of May 2022, 1080 h of NCP data have been gathered, with the newer observations being carried out with more core and remote stations. In July 2021 the number of core MAs was increased from 56 to 80 and in September 2021, the third remote MA was installed. The night used in this analysis was selected on the basis of considerations related to low ionospheric activity among the observations that were available with three remote MAs active as of May 2022 when this pilot survey was initiated. Calibration solutions for the calibrator (Cyg A or Cas A) observations were obtained for all these nights and the temporal fluctuations in these solutions were used as a proxy for ionospheric activity. The trend in the temporal fluctuations as a function of observation nights was verified to be roughly consistent with the trend in the total electron content values for these nights obtained independently from GPS measurements using RMextract14 (Mevius 2018). The night of 12–13 December 2021 had mild ionospheric activity and coincidentally relatively low level of flagged data due to radio-frequency interference (RFI). This night was selected for the current analysis.

The 11.4 h NCP observation was preceded by a 30 min observation of Cas A, which was used for bandpass calibration. Though the observations are recorded in 4 spectral windows covering a wide frequency range of 37–85 MHz, we selected a single spectral window ranging from 61 to 72 MHz for this analysis on the basis of superior RFI statistics (Appendix A). The main observation specifications are summarized in Table 1. Figure 2 shows the baseline coverage of the observation.

|

Fig. 2. Baseline coverage in the uv plane for the NCP observation. The baseline tracks for the core-remote baselines are indicated with different colors for the baselines involving the three remote MAs. For visual clarity, only the U,V points are plotted here, not the -U,-V points. The full coverage including the -U,-V points is much more symmetric. |

Observation specifications for the raw data (L0) used in the analysis.

3. Preprocessing

Observational data obtained with NenuFAR undergo several routine steps of preliminary processing, which prepare it for further stages of precise calibration and foreground subtraction. These steps are described in this section.

3.1. Data acquisition

The NenuFAR correlator is a replica of the COBALT-2 correlator used by LOFAR (Broekema et al. 2018) and is called NenuFAR Imager Correlation Kluster Elaborated from LOFAR’s (NICKEL). During a NenuFAR observation, the two linear polarization components of the signal from all MAs reach the receiver where they are sampled at a frequency of 200 MHz, followed by channelization into 512 sub-bands of 195.3 kHz width each using a polyphase filter bank. The data for each second is digitally phased to the target direction and cross correlated across the different polarizations to yield full polarization visibilities in 384 sub-bands with a total bandwidth of 75 MHz. Each sub-band is further channelized into 64 channels with a 3.05 kHz spectral resolution. The raw correlated visibilities are finally exported into a measurement set (MS) format.

3.2. RFI mitigation, bandpass calibration, data averaging, and compression

The preprocessing of NenuFAR data is performed using the nenucal-cd package15, which in turn uses the various tasks for calibration, flagging and averaging included in the default preprocessing pipeline (DP3; van Diepen et al. 2018) used for analysis of LOFAR data. NenuFAR visibility data during preprocessing is available at three data levels: L0, L1, and L2 in the order of decreasing time resolution, frequency resolution, and data volume. The raw visibilities output by the correlator (the L0 data) have a time resolution of 1 s and frequency resolution of 3.05 kHz with 64 channels per sub-band. To capture intermittent and narrowband RFI, the software AOFlagger (Offringa et al. 2012) is used directly at this highest time and frequency resolution to flag the data affected by strong RFI. To avoid the edge effects of the polyphase filter used in channelization, two channels at both ends of the sub-band are flagged, and the remaining data are averaged into 4 s time resolution and 15.26 kHz frequency resolution with 12 frequency channels per sub-band. This is followed by data compression with Dysco (Offringa 2016) to produce the L1 data, leading to the reduction in data volume by a factor of ≈80 compared to the L0 data. Though this compression introduces some noise into the data, it has been verified through simulations that the compression noise is significantly lower than the expected 21 cm signal. Moreover, the noise is uncorrelated across separate observations taken on different days, and hence averaging the data across different days decreases this compression noise just like thermal noise. The L1 data of the targeted observation of Cas A were calibrated using the software DDECal (described in Sect. 4) against a sky model of Cas A consisting of point sources and Gaussians created previously using LOFAR LBA observations. The parameters used in the bandpass calibration with DDECal are listed in Table 2. The bad calibration solutions were flagged based on a threshold-clipping algorithm and the remaining solutions were averaged in time to produce one solution per MA, per channel, and per polarization. These bandpass solutions were then applied to the L1 NCP data. The bandpass calibration sets the approximate amplitude scale of the target visibilities and more importantly, accounts for the cable reflections that can produce rapid frequency fluctuations in the data. The results of bandpass calibration for a few example MAs are shown in Appendix B.1. The bandpass calibration is followed by another step of RFI flagging with AOFlagger and averaging to a frequency resolution of 61.03 kHz with three channels per sub-band, to get the L2 data product. These data are now suitable for the next steps of calibration.

Calibration parameters used in DDECal at different stages of the analysis.

4. Calibration

The dataset after the preprocessing was divided into 13 time segments (twelve 52 min segments and one 56 min segment), which were processed in parallel, in 13 computational nodes of the DAWN cluster (Pandey et al. 2020). This decreased the computation time and enabled us to conduct multiple calibration runs that allowed us to optimize the calibration settings for this dataset.

Calibration of NenuFAR data is done using DDECal, a calibration software that is part of the DP3. DDECal employs a directional solving algorithm similar to the scalar algorithm described by Smirnov & Tasse (2015). In addition to the fulljones mode in which all four elements of the gain matrix are solved for, DDECal can also be used in the diagonal mode in which the off-diagonal elements are set to zero. A detailed description of the fulljones algorithm of DDECal is presented by Gan et al. (2023). DDECal also has a feature to solve for spectrally smooth solutions by applying constraints to the solutions, using a Gaussian kernel of a chosen full width at half maximum (FWHM). The calibration parameters that were used in DDECal at different stages of the data processing are listed in Table 2.

The frequency smoothing kernel prevents rapid nonphysical frequency fluctuations in the calibration solutions and ensures that the gain solutions do not over-fit the thermal noise and sources that are not part of the incomplete sky model used in calibration, including the 21 cm signal (Mouri Sardarabadi & Koopmans 2019; Mevius et al. 2022). The smoothing kernel FWHM was chosen by performing multiple signal injection tests with different kernel widths to find a compromise between maximizing the accuracy of source subtraction and minimizing the signal loss (described in Sect. 8.1). At every stage of calibration, we applied a baseline cut of at least 20λ and only used longer baselines for the calibration. This was done because the shorter baselines are especially sensitive to the diffuse Galactic emission, which is not present in the sky model used for calibration. If these baselines were used in calibration, this additional diffuse emission would affect the gain solutions and could reappear elsewhere in the final image or on different spatial and frequency scales (Patil et al. 2016). A similar calibration strategy is used by the LOFAR EoR KSP, but the difference is that LOFAR uses a much higher baseline cut (250λ) and computes the power spectrum using only the shorter baselines (between 5 and 250λ) that are not used in calibration. However, this is not feasible with NenuFAR for all the calibration steps since there are not enough long baselines to get reliable calibration solutions for all MAs, and the baselines used for generating power spectra are also used in one of the calibration steps. This creates a risk of signal suppression during this calibration step and this was assessed during signal injection tests (described in Sect. 8.1). Figure 3 summarizes all the different steps in the processing pipeline used in this analysis, except for the signal suppression tests.

|

Fig. 3. Data processing pipeline with the different steps of preprocessing, calibration, foreground subtraction, and power spectrum estimation. The calibration settings used in the different steps in this pipeline are summarized in Table 2. Note that the signal injection tests performed on the calibration and foreground removal steps are not described in this flowchart (see Fig. 12). |

4.1. Bright source subtraction and direction-independent correction

The sources Cas A, Cyg A, Taurus A (Tau A), and Virgo A (Vir A), collectively referred to as the A-team, are the brightest astronomical radio sources in the northern celestial hemisphere at low radio frequencies. In addition to these, there are several other radio sources in the northern sky with a high enough flux to have a significant impact on the NCP field through their point spread function (PSF) sidelobes. The spectra of most of these sources are steep, which means that they become extremely bright at the low frequencies that we are interested in. The result is that even though some of these sources are far away from the NCP and are attenuated by the primary beam, the PSF sidelobes of these sources leave a considerable imprint on the NCP field. Naturally, precise subtraction of these bright sources and mitigation of their PSF sidelobes is crucial for our analysis.

4.1.1. A-team subtraction and direction-independent correction

The NenuFAR primary beam model is not yet a part of DDECal. Since the four A-team sources are well away (at least 30 degrees) from the NCP, we do not expect the MA gains in the direction of these sources to be the same as those in the direction of the NCP, mainly because of the primary beam. Hence, as these sources move through the stationary primary beam, the MA gain solutions in the direction of these sources will carry an imprint of the primary beam pattern. It is, therefore, necessary to perform direction-dependent (DD) calibration in order to obtain separate time and frequency-dependent gain calibration solutions for the NCP field and the individual A-team sources.

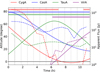

We used intrinsic sky models for all the A-team sources, obtained previously using the LOFAR LBA and consisting of point sources (delta-functions) and Gaussians. Not all the A-team sources are above the horizon for all 13 time segments into which we have divided the dataset. So for a given data segment, if a particular A-team source is below the horizon for most of the time, we excluded it from the sky model. This is illustrated in Fig. 4. For the NCP, we used an intrinsic model obtained from the global sky model16 that was created using data from The GMRT Sky Survey (TGSS; Intema et al. 2017), NRAO VLA Sky Survey (NVSS; Condon et al. 1998), WEsterbork Northern Sky Survey (WENSS; Rengelink et al. 1997), and VLA Low-frequency Sky Survey (VLSS; Cohen et al. 2007). For each data segment, the primary beam17, averaged over all stations, was computed at three equally spaced time points within the segment (corresponding to 1/4, 1/2 and 3/4 of the time duration of the segment) and averaged together to give the station and time averaged beam. The intrinsic sky model components were then attenuated by the beam values at the positions of the components. These “apparent” sky models (as seen by NenuFAR) for the different A-team sources and the NCP were subsequently put in separate sky-model-component clusters to get the final apparent sky model for each data segment. Each such cluster forms a direction for which a separate calibration solution is solved. It should be noted that this kind of attenuation by a simulated beam model accounts for the rotation of the primary beam against the sky on timescales longer than the duration of the time segments (since we used a single sky model for a segment), and up to the accuracy of the preliminary simulated beam model that was used to obtain the apparent sky model used in calibration. The inaccuracy of this preliminary beam model and the spatially averaged effect of the rotation of the beam on timescales as low as the gain solution intervals will be accounted for by the gain calibration solutions. However, since the NenuFAR primary beam model is currently not integrated into DDECal, the differences between the actual sky and the apparent sky model within a solution time interval and inside one direction cannot be accounted for and will lead to errors in the calibration.

|

Fig. 4. Altitude- and beam-attenuated flux values for the four A-team sources, Cyg A, Cas A, Tau A, and Vir A, that are subtracted in the analysis. The vertical dotted lines demarcate the 13 different segments into which the data are divided. The solid lines indicate the altitude of the A-team and are to be read using the scale on the left-hand side axis. The dashed lines connect the intrinsic flux values for the A-team attenuated by the averaged preliminary primary beam model value for each data segment and are to be read using the axis on the right-hand side. The four horizontal bands on top indicate the data segments in which each A-team was included in the sky model and hence subtracted through the method described in Sect. 4.1.1. |

DDECal was used to calibrate the L2 data against the apparent sky model for each time segment. It returns separate calibration solutions for the NCP and each of the A-team sources per MA, polarization, time, and frequency step. Next, the visibilities corresponding to each of the A-team sky models were predicted and the calculated gain solutions were applied to the visibilities. These corrupted predicted visibilities were then subtracted from the data. This removes the A-team from the data if both the source model and the calibration are perfect. The gain solutions in the direction of the NCP were then applied to the data. We note that this is a single calibration solution for the entire NCP field, so this is similar to a direction-independent (DI) correction step if only the NCP field is concerned and this sets the absolute flux scale of the data. This completes the A-team subtraction and DI gain correction on the NCP field using a catalog sky model. The final parameters used for this calibration step were selected after multiple calibration trial runs (described in Appendix C). We find that including all four A-team sources when they are above the horizon, flagging MAs 6, 18, 62, 64, 72, and using diagonal mode gives us the optimal results for this dataset.

The next step is to make a sky model of the NCP field using this calibrated NenuFAR data, which will serve as an updated version of the preliminary catalog sky model. This is similar to a self-calibration loop in which the DI gains and the sky model are iteratively updated. First, the A-team subtracted and DI-corrected data were used to make a deconvolved multifrequency synthesis (MFS) image with WSClean (Offringa et al. 2014). The parameters used in making the cleaned image are given in Table 3 under the column “High Res Clean”. The image was made using multi-scale clean (Offringa & Smirnov 2017) in which both point sources and Gaussians are part of the model. The clean model is given as an output by WSClean in the form of a component list, and this provides an updated sky model of the NCP field as observed by NenuFAR. This is an apparent sky model of the NCP because the NenuFAR primary beam, averaged over the observation, is not corrected for in the image. We then used this updated sky model to calibrate the L2 dataset, using the same calibration settings, which gave the best results for the A-team subtraction and DI correction using the catalog sky model. The only difference here is that for each data segment, in place of the intrinsic NCP sky model attenuated by the averaged primary beam, we used this apparent sky model of the NCP. This A-team subtraction and DI correction run provides slightly improved results compared to when the catalog NCP sky model is used, with the final residual image from WSClean having 1% lower rms. The corresponding clean model from a WSClean run on the DI corrected data now provides a third iteration model of the NCP field and has a few more sources than the previous sky model. The upper panel of Table 4 lists the three different sky models used at different stages: the initial catalog sky model from the Global Sky Model (“Initial”), the updated model after A-team subtraction and DI correction with the catalog model (“2nd Iteration”) and the third iteration model after A-team subtraction and DI correction with the 2nd Iteration model (“3rd Iteration”).

Imaging parameters used for producing the high-resolution clean images (High Res Clean) and the dirty image cubes for the power spectrum (Dirty Cube).

NCP sky model used at different analysis stages.

To assess the level of residuals at each stage of calibration and subtraction, the visibilities at each stage were imaged using WSClean and the MFS images of the data at different stages are presented in Fig. 5. The left panels of Fig. 5 show dirty wide-field images, which were made with the same parameters reported in the “High Res Clean” column of Table 3, except with an image size of 2400 pixels and without the last five parameters, which are needed only for cleaning. The wide-field image in the top-left panel clearly shows the level of contamination from the A-team sources (particularly Cas A and Cyg A), which are well subtracted in the A-team subtraction step (mid-left panel). The narrow-field cleaned images (right panels of Fig. 5) show how the PSF sidelobes of the A-team running through the NCP field, are also well subtracted. To assess the impact of the PSF sidelobes of wide-field sources on the uv plane, the standard deviation of the data in system equivalent flux density (SEFD) units was calculated from the frequency-channel-differenced noise for each uv cell in the gridded data cube (the gridding procedure is described in Sects. 6.1 and 6.2). This was repeated at different stages of source subtraction and the results are presented in Fig. 6. It should be noted that only the central 16 degrees of the field was used in constructing these data cubes as well as the final power spectra. This figure illustrates the impact of the PSF sidelobes of the A-team sources, which appear as straight lines in the spatial frequency domain18 and are well subtracted using this method. However, the subtraction of Cyg A is not perfect and the PSF sidelobes of the Cyg A residuals can still be seen after the A-team subtraction step (middle and right panels of Fig. 6).

|

Fig. 5. Images of the L2, L3, and L4 data. Top: wide-field dirty image (left) and narrow-field cleaned image (right) of the L2 data. Middle: same but for the L3 data. Bottom left: the seven clusters that the sky model is divided into for the NCP subtraction step. Bottom right: narrow-field cleaned image of the L4 data. |

4.1.2. Subtraction of 3C sources

In addition to the A-team, there are several other compact sources in the northern sky that have fluxes of hundreds of janskys at the low frequencies of interest in our observations. In general, most of these sources will be highly attenuated by the primary beam and hence their PSF sidelobes will not be bright enough to contaminate the NCP field. However, in our data, we find that the PSF sidelobes of seven sources19 do have a strong effect on the NCP field. This is because all these sources pass through the grating lobes of the MA beam, and the primary attenuation that their fluxes get is due to the dipole beam and not due to the array factor of the MA, which is very high at the grating lobes. From Fig. 6, it is clear that the PSF sidelobes of the 3C sources have a strong signature on the uv plane. To subtract these sources, we performed a DD calibration in eight directions: the seven 3C sources and the NCP. The time solution interval of 4 min was selected to be small enough to allow the gain solutions to model the grating lobes and large enough such that there is sufficient signal-to-noise ratio (S/N) to obtain reliable calibration solutions. The sky model used for each of these sources is a single delta function at the location of the source, obtained from catalogs. The sources were included in the sky model for all segments where they are above 10 degrees altitude. This results in 3C 438 being excluded for the last seven segments, 3C 452 for the last six segments, 3C 48 for the last two segments, and 3C 84 for the last segment. The three other sources were included in the calibration for all segments. The third iteration NCP sky model made from the A-team subtracted and DI-corrected data was used as the model in the direction of the NCP field. Once the calibration solutions were obtained, the visibilities for the 3C sources were predicted, the calculated gains in the respective direction were applied and the corrupted predicted visibilities were subtracted from the data. This largely removes these seven sources and their PSF sidelobes from the data. The impact of this step on the image and the uv plane are shown in Figs. 5 and 6, respectively. The impact of the PSF sidelobes is particularly prominent in Fig. 6 and the sidelobes are seen to be well subtracted using this method (right panel). The bright source subtracted and DI-corrected data will hereafter be referred to as the L3 data.

|

Fig. 6. Data standard deviation in SEFD units calculated from the channel-differenced noise at each uv cell of the Stokes-I data cube. The three panels correspond to the data after preprocessing (left), after A-team subtraction (middle), and after 3C subtraction (right). The dotted and dashed circles correspond to the 20λ and 40λ limits, respectively, between which the power spectrum is finally computed. |

4.2. NCP subtraction

The sources in the NCP field can now be subtracted from the L3 data. Here it is important to account for the contaminating effects of ionospheric phase shifts and a non-axisymmetric central lobe of the primary beam of NenuFAR, both of which are expected to introduce DD effects on the data within the field of view (FOV). Currently, it is not possible to solve for small timescale ionospheric effects because the solution time interval needed to have a high enough S/N to yield reliable calibration solutions is much longer than the timescale on which these effects occur on short baselines (a few seconds to 1 min, following Vedantham & Koopmans 2015). Ideally one would want to obtain separate gain matrices for all the different components in the NCP sky model. However, this is infeasible because the flux for each component is not sufficient to yield reliable calibration solutions and it would also be extremely computationally expensive. Additionally, solving for a large number of directions would also increase the degrees of freedom, thus increasing the risk of absorption of the noise and the 21 cm signal. Therefore, we used an alternative approach, employed by the LOFAR EoR KSP, in which the NCP sky model is divided into several clusters and a separate calibration solution is obtained for each cluster under the assumption that DD effects do not vary strongly over each cluster (Kazemi et al. 2013). For this purpose, we used the third iteration sky model made from the data after DI correction (Table 4). The sources outside the primary beam beyond 13.4 degrees from the phase center were first removed from the sky model since they have a low flux density. The remaining sources were divided into seven clusters using a k-means-based clustering algorithm. The number seven was chosen to maximize the number of clusters and retain a sufficiently large S/N for the faintest cluster. The flux in the faintest cluster is 33 Jy, which is at a 6σ level when compared to the expected thermal noise in the visibilities for the chosen 8 min and 183.1 kHz solution interval. The details of the clustered sky model are presented in the bottom half of Table 4 and the clusters are shown in the bottom-left panel of Fig. 5. The L3 data were then calibrated against this clustered sky model, with separate solutions calculated for the direction of each cluster. Once the calibration solutions were obtained, the visibilities corresponding to each cluster were predicted, then corrupted by the calculated gains and finally subtracted from the data. We performed multiple runs of the DD calibration in conjunction with signal injection simulations using a fiducial simulated 21 cm signal (described in Sect. 8.1) to converge to the final calibration settings, which gives the best compromise between optimal results in calibration solutions and images and minimum signal suppression. The data after NCP subtraction will hereafter be referred to as the L4 data.

In the bottom-right panel of Fig. 5 we can see that most sources are subtracted well until we reach the confusion noise level (of 0.9 Jy beam−1 20) beyond which we do not have sky model components and hence they are not subtracted. The rms of the fluxes in the image pixels in the central 5 degrees radius is 0.15 Jy beam−1, with a maximum and minimum flux of 1.05 Jy beam−1 and −0.45 Jy beam−1, respectively. The remaining poorly subtracted sources at the edge of the clusters are likely due to the fact that the DD effects are not accounted for on scales smaller than the cluster sizes. As a result, the average gain solution for each cluster does not correctly represent the gains in the direction of these sources and is a complex flux-weighted average over the cluster.

5. Post-calibration RFI flagging

The data after the different stages of calibration and point source subtraction still have significant residual power at high delay (Fourier dual of frequency) modes. Strong features are seen in the delay and fringe rate (Fourier dual of time) power spectra of multiple baselines (Fig. 7), which are likely due to near-field RFI.

|

Fig. 7. Delay and fringe rate power spectra of an example baseline where a strong RFI feature is seen. Delay vs. time plot (top-left) and delay vs. fringe rate plot (top-right) for the Stokes-I data after NCP subtraction. The dashed horizontal lines indicate the expected delay corresponding to the local RFI source at the building. Bottom left: power as a function of fringe rate at the expected delay for the local RFI source. The vertical dotted lines correspond to a fringe rate of 18 min−1. Bottom right: power as a function of delay at the expected fringe rate of 18 min−1. The vertical dashed line is the expected delay. |

To identify local sources of RFI, we constructed a near-field image (Paciga et al. 2011) from the L4 data. This image was created by coherently summing all visibilities after their amplitudes had been set to unity and assuming that phase differences are only due to sources on the ground. Thus, the amplitude in the image does not necessarily correspond to the strength of the local RFI source. It only allows us to pinpoint its location. The near field image reveals that the buildings within the NenuFAR core, housing the electronic containers, are producing significant RFI (left panel of Fig. 8). We performed a comprehensive study of the impact of such near-field sources of RFI on the power spectrum. The details of this study will be published in a separate paper. To mitigate this near-field RFI, we adopted a simple approach of examining the delay power spectra of individual baselines and selecting baselines that are most severely affected by the RFI source. The RFI source at the two buildings within the array is seen to have a periodic fluctuation in intensity with a periodicity of 18 min, leading to a well-defined signature in the delay-fringe rate space (Fig. 7). The origin of this periodicity is still unknown. Any baseline showing a similar feature in the delay-fringe rate power spectra can be easily identified and flagged. This can potentially allow us to filter it out from the data instead of manually flagging entire baselines, but we defer this to future analysis. We examined the Stokes-I delay power spectra for all baselines manually and identified those that show a strong periodic feature. For each such baseline, a feature is always also present in the Stokes-V delay spectra, where it is usually more prominent because the power from sky sources is negligible. The histogram of the MAs contributing to the flagged baselines reveals that baselines involving MAs close to the building are more strongly affected by the RFI (middle panel of Fig. 8). In addition to this periodic RFI signature, the delay spectra of many baselines also show other unusually strong features beyond the horizon delays. The histogram of the MAs contributing to these baselines shows a peak near the northeast of the array (right panel of Fig. 8) in a region where we see a strong RFI source in the near-field image. These baselines were flagged as well. In this process, we flagged 7.5% of the data. After this additional flagging step, the data are deemed sufficiently clean, calibrated and sky model subtracted, and can be used for residual foreground subtraction using ML-GPR and power spectrum estimation.

|

Fig. 8. Local RFI sources at the NenuFAR site and their impact on the MAs. Left: normalized near-field image of the data. The locations of the buildings housing the electronic containers are indicated with black triangles, and the MAs are indicated with gray circles. Center: histogram of the MAs contributing to the baselines that exhibit a periodic amplitude fluctuation in time. Right: histogram of the MAs contributing to all other baselines, which have been flagged based on unusual features seen in the delay power spectra. The size and color of the circles in the middle and right panels indicate the number of baselines involving that MA that have been flagged. |

6. Power spectra estimation

The estimation of power spectra was done using the power spectrum pipeline pspipe21, which is also used to generate the power spectrum for the LOFAR EoR KSP.

6.1. Image cubes

The data need to be gridded in a uvν grid in order to construct the power spectra. For this purpose, WSClean was used to construct dirty image cubes from the visibilities. WSClean uses a w-stacking algorithm while making the image, which accounts for wide-field effects due to the w-term. The imaging parameters are specified in the column named “Dirty Cube” in Table 3. Separate image cubes were made for alternating odd and even time samples and these were used at a later stage in the estimation of the noise level in the data. A Hann filter with an FOV of 16 degrees was applied to the dirty image cubes in the image plane in order to suppress primary-beam effects on sources far away from the phase center as well as aliasing artifacts. These dirty image cubes were subsequently used to obtain the power spectra.

6.2. Conversion to visibility cubes

The gridded image cubes (ID) produced as described above were first converted from units of Jy/PSF to units of Kelvin using the relation (Offringa et al. 2019a; Mertens et al. 2020)

where kB is the Boltzmann constant, δl, δm are the pixel sizes in the l and m directions (in units of radians), respectively, ℱl, m denotes a Fourier transform and  is its inverse, IPSF is the point spread function and ⊘ is the element-wise division operator. These image cubes in the (l, m, ν) space were then Fourier transformed in the spatial direction to get the data cube in the uvν-space:

is its inverse, IPSF is the point spread function and ⊘ is the element-wise division operator. These image cubes in the (l, m, ν) space were then Fourier transformed in the spatial direction to get the data cube in the uvν-space:  . A uv range of 15–50λ was chosen for further analysis, and the remaining data were nulled for all frequencies. This avoids baselines shorter than 15λ, which could have strong contaminating effects from mutual coupling, and those longer than 50λ, which have a higher thermal noise contribution. We note that though we constructed the final spherical power spectra in the 20 − 40λ baseline range, here we tried to retain as many baselines as possible since it allows better foreground modeling and removal through ML-GPR. A final outlier rejection was done on the gridded visibility cubes to flag potentially remaining low-level RFI using a simple threshold-clipping method. The uv cells were flagged based on outliers in the uv weights, Stokes-V variance, and channel-differenced Stokes-I variance. About 15% of the uv cells were flagged in this procedure.

. A uv range of 15–50λ was chosen for further analysis, and the remaining data were nulled for all frequencies. This avoids baselines shorter than 15λ, which could have strong contaminating effects from mutual coupling, and those longer than 50λ, which have a higher thermal noise contribution. We note that though we constructed the final spherical power spectra in the 20 − 40λ baseline range, here we tried to retain as many baselines as possible since it allows better foreground modeling and removal through ML-GPR. A final outlier rejection was done on the gridded visibility cubes to flag potentially remaining low-level RFI using a simple threshold-clipping method. The uv cells were flagged based on outliers in the uv weights, Stokes-V variance, and channel-differenced Stokes-I variance. About 15% of the uv cells were flagged in this procedure.

6.3. Cylindrically and spherically averaged power spectra

The data cube  at any stage of calibration and foreground subtraction can now be Fourier transformed along the frequency axis (after applying a Blackman-Harris filter to suppress aliasing) to obtain

at any stage of calibration and foreground subtraction can now be Fourier transformed along the frequency axis (after applying a Blackman-Harris filter to suppress aliasing) to obtain  where η is the Fourier dual of frequency, commonly referred to as the delay. The corresponding power spectrum as a function of the wave numbers kl, km, k∥ is given by

where η is the Fourier dual of frequency, commonly referred to as the delay. The corresponding power spectrum as a function of the wave numbers kl, km, k∥ is given by

The wave modes are related to u, v, η as (Morales & Hewitt 2004; McQuinn et al. 2006)

where ν21 = 1420 MHz, H0 is the Hubble constant, E(z) is the dimensionless Hubble parameter with E(z) = H(z)/H0 where H(z) is the Hubble parameter. Ωlm denotes the angular extent of the image cube and Bbw denotes the frequency bandwidth of the data cube. DM(z) and ΔD are conversion factors to go to comoving distance units from angular and frequency units, respectively. The denominator in Eq. (2) accounts for the limitation of the angular extent due to the primary beam Apb(l, m) and the spatial tapering function Aw(l, m) and also the limitation in the frequency extent due to the frequency filter Bw(ν) used before the Fourier transform along the frequency direction. ⟨⋯⟩ denotes an average over the respective domains. The power values P(kl, km, k∥) are next averaged in cylindrical and spherical shells to yield the cylindrical (two-dimensional) power spectrum P(k⊥, k∥) and the spherical power spectrum P(k), respectively, where  and

and  .

.

We computed the Stokes-I cylindrical power spectra at all the stages of calibration as a diagnostic to compare the power levels after each step. The cylindrical power spectra for each calibration step and the ratio of the power spectra in successive steps are presented in Fig. 9. The gray lines correspond to the horizon delays and the foreground wedge is clearly visible to have several orders of magnitude higher power than the “EoR window”. The bright horizontal feature at k‖ ≈ 1.84 h cMpc−1 is the result of the flagging of sets of 2 channels at the ends of each sub-band during preprocessing, in order to avoid the edge effect of the polyphase filter used in forming the sub-bands. This feature at such a high k mode does not affect our power spectrum analysis significantly, since it is focused on k modes typically much smaller than this. It is evident that a significant amount of power near the wedge’s horizon is removed during the A-team and 3C subtraction steps, while the NCP subtraction removes power at the lowest k∥ modes corresponding to the low delay values of the NCP field at the phase center. Post-calibration flagging decreases the power by a factor of more than 10 in the high k∥-modes beyond the horizon line (0.2 − 0.6 h cMpc−1). In some k-modes this factor is less than unity because the thermal noise level is higher after flagging due to the lower volume of remaining data. It should be noted that the data prior to the DI correction stage is not absolutely calibrated. The noise power spectrum is estimated by taking the difference of the Stokes-V dirty image cubes of the even and odd time samples and then forming the power spectra as usual, accounting for the extra factor of 2 increase in variance in the process. The panel on the bottom right in Fig. 9 shows the power spectrum of the data after post-calibration RFI flagging divided by the noise power. We see that within the wedge, there is still more than three orders of magnitude of power beyond the thermal noise limit, likely due to Galactic diffuse emission and confusion noise due to extragalactic sources. The power far beyond the wedge could be due to a variety of factors such as residual RFI and polarization leakage. Well away from the wedge, at k‖ > 1 h cMpc−1, the residual power approaches the thermal noise limit.

|

Fig. 9. Cylindrical power spectra at different stages of calibration and source subtraction. Top row: cylindrical power spectra after preprocessing (“Data”), after A-team subtraction (“Ateam Sub”), after DI correction (“DI Corr”), after 3C subtraction (“3C Sub”), after NCP Subtraction (“NCP Sub”), and after post-calibration RFI flagging (“Postflag”). Bottom row: ratio of successive power spectra in the top row. This shows how much power has been subtracted and from which part of the k space at the different calibration stages. The rightmost plot in the bottom row is the ratio of the power spectrum of the data after post-calibration RFI flagging to the noise power spectrum obtained from time-differenced Stokes-V data. Note that the color bars for all plots in the same row are the same, except for the plot on the extreme right in the bottom row. |

7. Residual foreground removal

The data, after the different steps of calibration and compact source subtraction, are still dominated by residual foregrounds, such as the diffuse Galactic emission and extragalactic point sources, which have a flux density at or below the confusion noise limit. However, the fact that foregrounds have a larger frequency coherence scale than thermal noise or the 21 cm signal can be utilized to model and subtract the foregrounds from the data. One approach for subtracting foregrounds from the data is through GPR (Mertens et al. 2018).

7.1. Gaussian process regression

In GPR, the data are modeled as a sum of Gaussian processes describing the foreground, thermal noise, and the 21 cm signal components. Each Gaussian process is characterized by a certain frequency covariance function and zero mean. The covariance function is parametrized by a set of adjustable hyperparameters that control properties such as the variance, coherence scale, and the shape of the covariance function. Using a Bayesian approach, the maximum a posteriori values of the hyperparameters are derived from their posterior probability distribution conditioned on the observed data. The expectation value of the foreground component at each data point is subtracted from the data to yield the residual foreground subtracted data.

To limit the computational requirements, we applied GPR to the gridded visibility data cube  before power spectrum generation. Performing GPR along the frequency direction in the uv space allows us to easily take into account the baseline dependence of the frequency coherence scale of the different components, such as the foreground wedge and the thermal noise. Following the same approach as taken by Mertens et al. (2018), the data d is modeled as a sum of different components, namely the foregrounds ffg, the 21 cm signal f21 and the noise n as functions of frequency ν,

before power spectrum generation. Performing GPR along the frequency direction in the uv space allows us to easily take into account the baseline dependence of the frequency coherence scale of the different components, such as the foreground wedge and the thermal noise. Following the same approach as taken by Mertens et al. (2018), the data d is modeled as a sum of different components, namely the foregrounds ffg, the 21 cm signal f21 and the noise n as functions of frequency ν,

The different GP components should in principle be possible to separate by virtue of their having different spectral behavior. This spectral behavior is specified by the covariance of the components between frequency channels, with the total covariance matrix of the data being a sum of the individual GP covariances:

Here K is the total covariance matrix of the data whose entries are a function of two frequencies νp and νq, and is given by the sum of the foregrounds covariance matrix Kfg, the 21 cm covariance matrix K21 and the noise covariance matrix Kn.

In an earlier approach to implementing GPR, which was employed by Gehlot et al. (2019, 2020), and Mertens et al. (2020), both the foreground and 21 cm covariances have a specific functional form along with hyperparameters that guide their respective variance and frequency coherence scale. However, one concern with this method is that there is a risk of signal loss if the 21 cm covariance function is not a good match to the frequency covariance of the 21 cm signal in actual data (Kern & Liu 2021). This becomes particularly important when the objective is to use the 21 cm power spectrum upper limits to rule out astrophysical models that have a 21 cm power spectrum that is not well described by the covariance function adopted for the 21 cm signal in GPR. Addressing these concerns, a novel approach to GPR-based foreground subtraction called ML-GPR has been developed by Mertens et al. (2024), which employs ML methods to build a covariance model of the 21 cm signal directly from simulations. The main steps in the ML-GPR approach are summarized in the following section.

7.2. Training

In ML-GPR, a variational auto-encoder (VAE) kernel is trained to build a low dimensional representation of the 21 cm signal covariance from a large number of simulations of the 21 cm signal. We used the 21cmFAST (Mesinger et al. 2011; Murray et al. 2020) code to produce simulations of the 21 cm signal at our redshift of interest (z = 20), with a comoving box size of 600 Mpc and a resolution of 2 Mpc per pixel. We followed the parameterization introduced by Park et al. (2019) with the following range of astrophysical parameters:

-

f*,10

the normalization of the fraction of Galactic gas in stars at high z, evaluated for halos of mass 1010 M⊙: log10(f*, 10) = [−3, 0].

-

α*

the power-law scaling of f* with halo mass: α* = [ − 0.5, 1].

-

fesc, 10

the normalization of the ionizing UV escape fraction of high z galaxies, evaluated for halos of mass 1010 M⊙: log10(fesc, 10) = [−3, 0].

-

αesc

the power-law scaling of fesc with halo mass: αesc = [ − 0.5, 1].

-

t*

the star formation timescale taken as a fraction of the Hubble time: t* = [0, 1].

-

Mturn

the turnover halo mass below which the abundance of active star forming galaxies is exponentially suppressed: log10(Mturn/M⊙) = [8, 10].

-

E0

the minimum X-ray photon energy capable of escaping the galaxy, in keV: E0 = [0.1, 1.5].

-

LX/SFR

the normalization of the soft-band X-ray luminosity per unit star formation computed over the band E0 − 2 keV.: log10(LX/SFR) = [38, 42].

Generating meaningful simulations of cosmic dawn made it necessary to perform IGM spin temperature fluctuations. Inhomogeneous recombination was also turned on in the simulations. Latin hypercube sampling was used to sample 1000 sets of parameters in this eight-dimensional space and 21cmFAST was used to perform 1000 simulations and obtain the corresponding brightness temperature cubes. We note that this relatively sparsely sampled parameter space is sufficient since the VAE, being a generative model, is able to interpolate between the training samples. This has been shown by Mertens et al. (2024). Additionally, since we used a VAE instead of an auto-encoder (AE), the added regularization allowed us to avoid overfitting the sparse sample (Kingma & Welling 2013). Next, the spherically averaged power spectra for all 21 cm brightness temperature cubes were computed and normalized in the k range 0.03 − 2.0 h cMpc−1 to have a variance of unity. This was done because we aim to use the VAE to learn only the shape of the 21 cm power spectrum and keep its variance as a separate free parameter, thus allowing it to account for boosted signals predicted by exotic models. Though this provides more freedom to the models, in the case of a detection it would be necessary to check if the converged model is physically plausible. The normalized spherically averaged power spectra of all simulations used in the training set can be seen in Fig. 10, showing the large variety of power spectrum shapes.

|

Fig. 10. Normalized spherically averaged power spectra of the 1000 21cmFAST simulations at z = 20 used as the training set for the 21 cm VAE kernel. |

These power spectra were then used to train a VAE with a two-dimensional latent space, meaning that we want to capture the shape of the 21 cm signal using two parameters. It should be noted that due to the 21 cm signal power spectrum being isotropic (to first order, ignoring peculiar velocities), the spherical power spectrum contains all information about the signal under the assumption of Gaussianity. So it is sufficient to train the VAE on the spherical power spectra, rather than the covariance matrix itself. The VAE has two components, an encoder that maps the normalized power spectra to the latent space, and a decoder that can be used to recover the normalized power spectra corresponding to any point in the two-dimensional latent space. Both the encoder and decoder were trained, and the optimization was performed by minimizing the reconstruction loss between the training power spectrum used as input to the encoder, and the recovered power spectrum given as output by the decoder. We divided the simulated power spectra into a training set of 950 power spectra and a validation set of 50 power spectra. The reconstruction loss, defined as the mean squared error (MSE) between the output and the input power spectra, stabilized after 500 out of a total of 4000 iterations. When comparing the behavior of the reconstruction loss for the training and validation sets, no over-fitting was observed. After training, we also checked the ratio between the input and output. A median value of 1 and rms of 0.1 was observed for both the training and validation sets. This rms is well below what is typically expected in terms of measurement errors with the first-generation detection experiments. The power spectrum obtained from the trained decoder at any given point in the latent space can now be used to calculate the frequency-frequency covariance matrix, thus effectively capturing the covariance of the 21 cm signal from the simulations into two latent space quantities. We also tested training on a higher dimension of the latent space but did not find any improvement in the reconstruction. This is likely due to the sparse sampling of the eight-dimensional parameter space using 1000 simulations and a denser sample with a higher latent space dimension could possibly capture subtler changes in the power spectra. However, we defer that to future analyses with higher sensitivities, where such small effects on the power spectrum will be more important.

7.3. Covariance model

The trained VAE kernel serves as the 21 cm covariance (K21) with three parameters: the two latent space dimensions x1 and x2 and a scaling factor for the 21 cm signal variance  . For the foregrounds, we used an analytical covariance model, which is a good description of the spectral structure we see in the data based on multiple trials using different combinations of covariance functions. The form of the functions used in our covariance model can be described using the Matern class functions,

. For the foregrounds, we used an analytical covariance model, which is a good description of the spectral structure we see in the data based on multiple trials using different combinations of covariance functions. The form of the functions used in our covariance model can be described using the Matern class functions,

Here, σ2 is the variance, l is the frequency coherence scale, r = |νp − νq| is the frequency separation, Γ is the Gamma function and Kη is the modified Bessel function of the second kind. Different values of η correspond to different functional forms that are special cases of the Matern class functions. The GP covariance has a foreground, 21 cm, and noise components (Eqs. (4) and (5)). However, we find that it is necessary to use two components to model the foregrounds: an “intrinsic” and a “mode-mixing” component. In addition, an “excess” component was used to account for the excess power seen in the data. The different components used in the GP covariance model are:

Intrinsic foregrounds –  . Diffuse Galactic emission and extragalactic point sources below the confusion limit within the FOV constitute the intrinsic foregrounds after sky model subtraction. These foregrounds are expected to have a very large frequency coherence scale due to the smooth spectrum of the synchrotron emission mechanism. We modeled the covariance of the intrinsic foregrounds using a radial basis function (RBF; obtained by setting η = ∞ in Eq. (6)), which yields very smooth models along frequency (Mertens et al. 2018). We used a uniform prior 𝒰(20, 40) MHz on lint to capture the very large frequency coherence scale features due to intrinsic foregrounds. We note that a wider prior does not affect the converged value of lint and the specific range 𝒰(20, 40) was chosen to have a narrow prior enclosing the converged value, which sped up the ML-GPR runs for the 100 signal injection tests that were performed on ML-GPR (described in Sect. 8.3).

. Diffuse Galactic emission and extragalactic point sources below the confusion limit within the FOV constitute the intrinsic foregrounds after sky model subtraction. These foregrounds are expected to have a very large frequency coherence scale due to the smooth spectrum of the synchrotron emission mechanism. We modeled the covariance of the intrinsic foregrounds using a radial basis function (RBF; obtained by setting η = ∞ in Eq. (6)), which yields very smooth models along frequency (Mertens et al. 2018). We used a uniform prior 𝒰(20, 40) MHz on lint to capture the very large frequency coherence scale features due to intrinsic foregrounds. We note that a wider prior does not affect the converged value of lint and the specific range 𝒰(20, 40) was chosen to have a narrow prior enclosing the converged value, which sped up the ML-GPR runs for the 100 signal injection tests that were performed on ML-GPR (described in Sect. 8.3).

Mode-mixing foregrounds –  . Interferometers are chromatic instruments and flat-spectrum sources far away from the phase center till the horizon occupy a region in the k⊥, k∥ space known as the foreground wedge (Morales et al. 2012; Vedantham et al. 2012). Apart from this, additional frequency modulations can be imparted on the foreground data by the chromatic primary beam, especially near nulls and sidelobes, the instrumental bandpass, and other systematic effects. To account for these effects, we used a mode-mixing foreground component. The covariance model used for this component is an RBF but with a 𝒰(0.1, 0.5) MHz prior on lmix accounting for the smaller frequency scale fluctuations due to the mode mixing. An RBF was chosen here since it has a rapid fall in power at high delay due to the smooth models it yields. This also makes it easier to separate it from the 21 cm signal without signal loss and this has been tested through simulations and signal injection tests.

. Interferometers are chromatic instruments and flat-spectrum sources far away from the phase center till the horizon occupy a region in the k⊥, k∥ space known as the foreground wedge (Morales et al. 2012; Vedantham et al. 2012). Apart from this, additional frequency modulations can be imparted on the foreground data by the chromatic primary beam, especially near nulls and sidelobes, the instrumental bandpass, and other systematic effects. To account for these effects, we used a mode-mixing foreground component. The covariance model used for this component is an RBF but with a 𝒰(0.1, 0.5) MHz prior on lmix accounting for the smaller frequency scale fluctuations due to the mode mixing. An RBF was chosen here since it has a rapid fall in power at high delay due to the smooth models it yields. This also makes it easier to separate it from the 21 cm signal without signal loss and this has been tested through simulations and signal injection tests.

Excess –  . We find that the data cannot be adequately described by just a foreground and a 21 cm component, and there is additional power in the data with a small coherence scale that is difficult to differentiate from the 21 cm signal. This could be caused by small-scale frequency fluctuations introduced into the data by instrumental effects and RFI. Suboptimal calibration and polarization leakage could also be contributing factors to this additional power. This “excess power” is seen to be well described by an exponential covariance model. An exponential function is obtained by setting η = 0.5 in Eq. (6). We used a 𝒰(0.2, 2) MHz prior on lex for this component. We note that even though this prior range is similar to the prior range for l in Kmix, an exponential kernel does not have a sharp drop in power at large k∥ like the RBF, making it considerably more difficult to separate from the 21 cm signal component. Hence, this excess component was not subtracted from the data. This avoids a potential signal loss due to the absorption of the 21 cm signal into this component.

. We find that the data cannot be adequately described by just a foreground and a 21 cm component, and there is additional power in the data with a small coherence scale that is difficult to differentiate from the 21 cm signal. This could be caused by small-scale frequency fluctuations introduced into the data by instrumental effects and RFI. Suboptimal calibration and polarization leakage could also be contributing factors to this additional power. This “excess power” is seen to be well described by an exponential covariance model. An exponential function is obtained by setting η = 0.5 in Eq. (6). We used a 𝒰(0.2, 2) MHz prior on lex for this component. We note that even though this prior range is similar to the prior range for l in Kmix, an exponential kernel does not have a sharp drop in power at large k∥ like the RBF, making it considerably more difficult to separate from the 21 cm signal component. Hence, this excess component was not subtracted from the data. This avoids a potential signal loss due to the absorption of the 21 cm signal into this component.

Noise – Kn. The noise covariance is calculated from the time-differenced Stokes-V image cubes as a proxy for the thermal noise. We find that using a fixed noise covariance is sufficient, and multiplying the noise covariance by a scaling factor does not affect our results since such a scaling factor converges to a value very close to 1.

7.4. Application to data

The data used as input to ML-GPR are the gridded visibility cubes described in Sect. 6.2. The optimal parameters for our covariance model were obtained using Monte Carlo-based algorithms, Markov chain Monte Carlo (MCMC) or nested sampling, to generate samples from the posterior distribution. Monte Carlo-based methods offer an advantage over simple gradient-based optimization techniques since the former yield both the optimal parameters and the uncertainties associated with them, which can be propagated down to obtain the corresponding uncertainties on the final power spectrum. We get very similar results with both MCMC and nested sampling as the optimization method, but nested sampling, while being more computationally expensive, yields a more complete sampling of the parameter space within the prior bounds and also provides an evidence value. Hence, we used nested sampling with 100 live points to obtain the optimal set of parameters. The prior ranges and converged values for the parameters in our covariance model are listed in Table 5. Figure 11 shows a corner plot of the posterior probability distribution of the parameters. The parameters x1 and x2, which describe the 21 cm signal shape, do not converge, and the variance  hits the lower bound of the prior range, as we would expect for data in which the thermal noise level is well above the 21 cm signal. All other parameters for the foreground and excess components converge to well-constrained l and σ2 values, within the prior bounds. Finally, multiple realizations of foreground cubes were sampled from the posterior probability distribution of the parameters and subtracted from the data to yield residual data cubes, which were then used to calculate the residual power spectrum (Fig. 15) along with its uncertainties, representing the spread in the distribution of samples in the parameter space.

hits the lower bound of the prior range, as we would expect for data in which the thermal noise level is well above the 21 cm signal. All other parameters for the foreground and excess components converge to well-constrained l and σ2 values, within the prior bounds. Finally, multiple realizations of foreground cubes were sampled from the posterior probability distribution of the parameters and subtracted from the data to yield residual data cubes, which were then used to calculate the residual power spectrum (Fig. 15) along with its uncertainties, representing the spread in the distribution of samples in the parameter space.

|

Fig. 11. Corner plot showing the posterior probability distributions for the different parameters used in ML-GPR. The dashed contours correspond to the 68%, 95%, and 99.7% confidence levels. The vertical dashed lines in the one-dimensional histogram enclose the central 68% of the probability. |

Components of the covariance model used in ML-GPR along with the priors and converged values of the parameters.

8. Robustness tests and residual power spectra