| Issue |

A&A

Volume 652, August 2021

|

|

|---|---|---|

| Article Number | A62 | |

| Number of page(s) | 23 | |

| Section | Numerical methods and codes | |

| DOI | https://doi.org/10.1051/0004-6361/202140383 | |

| Published online | 11 August 2021 | |

Catalog-free modeling of galaxy types in deep images

Massive dimensional reduction with neural networks

Institut d’Astrophysique de Paris, Sorbonne Université, CNRS, UMR 7095, 98 bis boulevard Arago, 75014 Paris, France

e-mail: florian.livet@iap.fr, livetflorian@gmail.com

Received:

20

January

2021

Accepted:

5

May

2021

Context. Current models of galaxy evolution are constrained by the analysis of catalogs containing the flux and size of galaxies extracted from multiband deep fields. However, these catalogs contain inevitable observational and extraction-related biases that can be highly correlated. In practice, taking all of these effects simultaneously into account is difficult, and therefore the derived models are inevitably biased as well.

Aims. To address this issue, we use robust likelihood-free methods to infer luminosity function parameters, which is made possible by the massive compression of multiband images using artificial neural networks. This technique makes the use of catalogs unnecessary when observed and simulated multiband deep fields are compared and model parameters are constrained. Because of the efficient data compression, the method is not affected by the required binning of the observables inherent to the use of catalogs.

Methods. A forward-modeling approach generates galaxies of multiple types depending on luminosity function parameters rendered on photometric multiband deep fields that include instrumental and observational characteristics. The simulated and the observed images present the same selection effects and can therefore be properly compared. We trained a fully convolutional neural network to extract the most model-parameter-sensitive summary statistics out of these realistic simulations, shrinking the dimensionality of the summary space to the number of parameters in the model. Finally, using the trained network to compress both observed and simulated deep fields, the model parameter values were constrained through population Monte Carlo likelihood-free inference.

Results. Using synthetic photometric multiband deep fields similar to previously reported CFHTLS and WIRDS D1/D2 deep fields and massively compressing them through the convolutional neural network, we demonstrate the robustness, accuracy, and consistency of this new catalog-free inference method. We are able to constrain the parameters of luminosity functions of different types of galaxies, and our results are fully compatible with the classic catalog-extraction approaches.

Key words: methods: numerical / methods: statistical / galaxies: elliptical and lenticular, cD / galaxies: spiral / galaxies: evolution / galaxies: luminosity function, mass function

© F. Livet et al. 2021

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

1. Introduction

Traditionally, the study of galaxy evolution is based on the analysis of large sets of photometric surveys with long exposure times and a wide range of bands. These data are used with the goal of answering specific questions about galaxy evolution, such as the star-formation histories, the accretion histories, the morphological transformations, and the merging rates for galaxies of various types and masses, and the role of environment in these various characteristics.

In a classic approach, photometric catalogs of galaxies are extracted from the images and the catalogs are used to model the joint evolution of their luminosities, colors, and sizes with redshift. However, catalogs are subject to a variety of incompleteness effects. Rigorous studies based on flux-limited samples can be performed, but they are not optimal: in flux-limited catalogs, the very faint galaxies near the background noise are ignored by construction, which induces a Malmquist bias if this effect is not dealt with properly (Malmquist 1922, 1925). Moreover, some galaxies can overlap, and confusion is often a limiting effect at low luminosities (Condon 1974), mainly at large wavelengths. Poor segmentation, morphological biases, or even the lack of a detection of galaxies can be a consequence of cosmological dimming, which decreases the surface brightness of an object by a factor (1 + z)−4, where z is the redshift (Tolman 1934; Calvi et al. 2014). Another statistical selection effect is the Eddington bias (Eddington 1913): at a given apparent flux, the number of fainter galaxies with a flux overestimation is larger than the number of brighter galaxies with a flux underestimation. The stellar contamination of deep surveys (Pearson et al. 2014) is another limitation of faint galaxy catalogs because some stars can be misidentified as galaxies and contaminate the results. Finally, redshift affects the luminosities and colors of objects, and K-correction must be applied (Hogg et al. 2002, 2003) to correct for these effects.

Moreover, these various selection effects are correlated in subtle ways that are very difficult to express analytically, and some may be spatially variable within a survey (Bernardi et al. 2017, Appendix B). As a result, the classic approach of extracting catalogs is a complex inverse problem of trying to correct for the effects of correlated biases of these catalogs (Marzke 1998; Taghizadeh-Popp et al. 2015). If this is not successful, the models derived from these catalogs tend to be biased in a nontrivial way.

In order to avoid solving the hard inverse problem of model inference from catalogs, forward modeling coupled with approximate Bayesian computation (ABC) can be used. This technique has been used in many fields and for various applications, for example, in cosmology (Akeret et al. 2015; Kacprzak et al. 2018), in modeling the initial mass function (Cisewski-Kehe et al. 2019), to study type Ia supernovae (Weyant et al. 2013), and in modeling galaxy evolution (Carassou et al. 2017; Tortorelli et al. 2020). In the specific case of galaxy evolution, the forward-modeling approach simulates realistic deep multiband images and compares them to observed data in order to constrain the model parameters.

Placing constraints on the parameters of galaxy population models is difficult when catalogs are extracted from the deep surveys that contain a huge number of galaxies with various fluxes, shapes, and sizes. Several source-extraction packages are available, for example, SExtractor (Bertin & Arnouts 1996, 2010). In a recent paper, Carassou et al. (2017) have developed a method for binning extracted catalogs in fluxes and sizes in order to infer the parameters of the luminosity functions used in their model. They successfully measured the evolution parameters of one and two galaxy-type luminosity functions. However, the binning of the apparent magnitude distributions had to be limited to ten intervals per band (of eight bands), which nevertheless meant that the study took place in a very sparsely populated 108 dimension space. Moreover, catalogs are affected by a number of extraction biases: the model point spread function (PSF), the type of magnitude used to perform the extraction (isophotal, aperture, or model), the determination of the sky background, the segmentation process, and the confusion of sources. Consequently, the analytical form for the likelihood is unknown for the parameters of the luminosity functions of the various populations of galaxies, and the information from such deep surveys could be lost by compressing it into catalogs.

To circumvent all of these problems simultaneously, we describe here a novel method for massive data compression using neural networks in order to enable a direct comparison of observation to model in the multiwavelength images. This completely bypasses the biases induced by source extraction. We use the algorithm called information maximizing neural network (IMNN; Charnock et al. 2018), which fits a neural network that is only sensitive to the effects of the model parameters in the simulations that are obtained from our forward model. We implement this method for the first time in deep and large multiwavelength images of galaxies: the 1 deg2 Canada–France–Hawaii Telescope Legacy Survey (CFHTLS) D1 deep field observed in the optical, using the MegaPrime instrument in the u′, g′, r′, i′, z′ filters, and in the near-infrared (IR) using the WIRCam instrument in the J, H, Ks filters. We used the final releases of the TERAPIX processed data for each survey, T0007 for CFHTLS (Hudelot et al. 2012) and T0002 for WIRDS (Bielby et al. 2012); the WIRDS images are rebinned to match the 0.186 arcsec pixel−1 of the optical images. A red, green, blue (RGB) image of part of the D1 deep field in the optical is shown in Fig. 1. It highlights the complexity of the data that are available for studying the various populations of galaxies. A huge wealth of information is distributed such that it is impossible to construct a likelihood that describes the probability of obtaining such a field.

|

Fig. 1. 3 × 6 arcmin2 of the 1 deg2 CFHTLS D1 deep field in RGB (using the i′, r′, g′ filters). This shows the diversity of sizes, shapes, and colors that we can use to perform a deep statistical study of the various galaxy populations. This image reveals the complexity of the object distribution and the huge wealth of information that is available in such a field. This complexity presents a barrier in the methods we used to constrain the parameters of the luminosity functions for each population of galaxies. |

The paper is structured as follows: Sect. 2 introduces the forward model we used to create simulated multiband deep fields and discusses its features. These are the luminosity function parameterization, the spectral energy distributions (SED) of a bulge+disk decomposition, internal dust extinction, stars, reddening, etc. In Sects. 3 and 4 we present the compression of deep fields using IMNN and discuss its characteristics. These are Fisher information, summary statistics, Gaussian and non-Gaussian likelihoods, and quasi-maximum likelihood estimators. This includes the description of the neural network architecture and the loss function we used to fit the network. Section 5 presents a toy application with only two parameters (ϕ* and M*) of the luminosity functions of one spiral population. Section 6 demonstrates a more realistic application to constrain the density parameter ϕ* of both elliptical and spiral galaxies in parts of the observed CFHTLS and WIRDS D1 deep field in eight photometric bands. It also validates the methods on virtual data. As a key comparison, we use in Sect. 6 the recent results of López-Sanjuan et al. (2017). In this paper, a Λ cold dark matter (ΛCDM) cosmology is adopted with ΩΛ = 0.7, ΩM = 0.3, and H0 = 70 km s−1 Mpc−1.

2. Forward model

In this section, we describe in detail the forward model that we used to produce mock images. These images were then used (Sect. 4.3) to train the IMNN and also to perform the Bayesian inference in the two applications of Sects. 5 and 6.

2.1. Basis of the model

We created mock photometric catalogs of galaxies with a code based on the Stuff program (Bertin 2010) rewritten from C to Python by F. Livet. It now accounts for (i) distinct extinction in the bulge and disk components, and (ii) suppression of sample variance, which allows generating images with variations in the luminosity function parameters by only adding or suppressing galaxies depending on the sign of the change in the luminosity function at each absolute magnitude. This therefore results in common galaxies at the same coordinates and with identical bulge and disk parameters (see Sect. 5.2). The aim of this code is to produce realistic photometric multiwavelength catalogs of properties of galaxies from various populations. Each galaxy of these mock catalogs is decomposed as a sum of a bulge and a disk with the following properties: its apparent magnitudes in each observational band (the Sloan Digital Sky Survey (SDSS) u′, g′, r′, i′, z′, and the near-IR J, H, Ks used for the CFHTLS and WIRDS surveys), its apparent bulge-to-total light ratio in the g′ band used as reference (defining its Hubble type), the characteristic sizes of its bulge and disk, its disk inclination, which is used to determine its internal extinction, and its redshift. The code uses a set of galaxy types defined by a specific luminosity function, an intrinsic bulge-to-total luminosity ratio B/T, the SED of the bulge and disk, the bulge and the disk scale lengths satisfying (with some dispersion) the observed scaling laws, and extinction laws. The galaxies of each type are then generated with Poisson draws in small redshift bins of 5h−1 Mpc (where h = H0/100 is the reduced Hubble constant) from z = 0.001 to z = 20.

2.2. Luminosity functions per galaxy type

Each of the galaxy populations we modeled is described by its luminosity function, that is, the number of galaxies per unit comoving volume of the Universe and per magnitude interval, as a function of absolute magnitude, M. This probability distribution function is commonly fit by a Schechter profile (Schechter 1976),

with three parameters: ϕ* the normalization density (in Mpc−3 mag−1), α the faint-end slope (e.g., for α < −1, the density of faint galaxies increases with magnitude), and M* a characteristic absolute magnitude. The luminosity function is redshift dependent (Lilly et al. 1995), thus two other parameters are introduced,  and

and  , defined as

, defined as

where z is the redshift at which the luminosity function is measured. It has been shown that the luminosity function may evolve differently for different populations of galaxies (Zucca et al. 2006), which means that these five parameters may be different for each galaxy population. In Sects. 5 and 6 we focus on constraining M* and ϕ* for spirals and  for spirals and ellipticals.

for spirals and ellipticals.

2.3. Spectral energy distributions (SED)

The SEDs we used to model the diversity of galaxies were sampled from the template library (Coleman et al. 1980): “E” and “Irr”. These two SEDs were assigned to the bulge and disk of a galaxy for a given morphological type in variable proportions (using B/T), which led to a wide range of colors for the individual galaxies: an example is shown in Fig. 2. In the current implementation, we keep the SEDs and the B/T ratios fixed with redshift for all galaxy types. However, the evolution of the SEDs of individual galaxies is allowed through the evolution of the type-dependent luminosity functions and the different extinction effects (see Sect. 2.6).

|

Fig. 2. Example of the evolution of the bulge and disk SEDs from far-UV to near-IR. The plain red curve shows the generic E template SED of an elliptical galaxy of Coleman et al. (1980) at redshift z = 0 and without extinction. The dashed red curve shows the same SED at redshift z = 0.5 and with extinction (where ω = 1.68 and i = 45°, see Sect. 2.6). The plain blue curve shows the generic Irr template SED of Coleman et al. (1980) used here for the disk modeling of spirals at redshift z = 0 and without extinction. The dashed blue curve shows the same SED at redshift z = 0.65 and with extinction (where ω = 1.68 and i = 45°). This shows that even though these SEDs do not evolve explicitly, the evolution of the type-dependent luminosity functions and the different extinction effects implicitly allow for an SED evolution of individual galaxies. The plain curves are normalized so that the integral of the template SED multiplied by the SED of the reference SDSS g′ band equals 1. The eight filters used in this paper (u′, g′, r′, i′, z′, J, H, Ks) are shown at the bottom, and their responses are multiplied by a factor 0.0003. |

2.4. Bulge component

The de Vaucouleurs profile (de Vaucouleurs 1953) describes how the surface brightness IB (in cd.m−2) of a bulge or elliptical varies as a function of the apparent distance R (in kpc) from the center,

where Re (in kpc) is the half-light radius or effective radius (i.e., the isophote containing half of the total luminosity of the bulge), and Ie (in cd.m−2) is the surface brightness at Re. The same profile can be written in terms of magnitude as

where μB(R) is the bulge surface brightness (in mag.kpc−2) at radius R, and MB is the bulge absolute magnitude. It has been shown that the average effective radius follows an empirical relation with the absolute magnitude of the bulge (Binggeli et al. 1984),

where R0 = 1.58h−1kpc, M0 = −20.5, and

We allowed the effective bulge radius to evolve with redshift by a (1 + z)γB factor, where γB is a constant (Trujillo et al. 2006; Williams et al. 2010). Furthermore, it has been shown that the intrinsic flattening, q, of the bulge follows a normal distribution with mean μ = 0.65 and standard deviation σ = 0.18 (Sandage et al. 1970). Finally, the absolute magnitude of the bulge MB is related to the total absolute magnitude of the galaxy M through the relation

where B/T is the bulge-to-total light ratio, which is assumed not to evolve for galaxies of a given type.

2.5. Disk component

The exponential profile describes how the surface brightness ID (in cd.m−2) of a disk varies as a function of the apparent distance R (in kpc) from the center,

where hD (in kpc) is the disk scale length, and I0 (in cd.m−2) is the surface brightness in the center. As for the bulge, the same profile can be written in terms of magnitude as

where μD(R) is the disk surface brightness (in mag.kpc−2) at radius R, and MD = M − 2.5log(1 − B/T) is the disk absolute magnitude. A log-normal relation linking the disk scale length to its absolute magnitude can be fit (de Jong & Lacey 2000),

where h0 = 3.85h−1kpc, β = −0.214, and 𝒩(0, σ) is a random number following a normal distribution with zero-centered mean and standard deviation σ = 0.36. We allowed the disk scale-length to evolve with redshift by a (1 + z)γD factor, where γD is a constant (Trujillo et al. 2006; Williams et al. 2010).

2.6. Internal extinction

The internal extinction by dust was applied separately for the bulge and disk using the interstellar extinction curve (Fitzpatrick & Massa 2007) of the Milky Way in the wavelength range from the ultraviolet (UV) to the near-IR,

where SED(λ) is the extincted SED of the bulge and disk, SED0(λ) is the face-on non-extincted SED of the bulge and disk, τ(λ) is the extinction curve, and κ(i, ω, λ) is a coefficient depending on the wavelength λ, the inclination i, and the total central opacity ω of the disk.

In order to determine the value of κ(i, ω, λ), we used the attenuation-inclination relations of Popescu et al. (2011) to compute the differences of extinct and non-extinct magnitudes for the bulge and the disk. Then, we interpolated these relations to obtain the values of κ(i, ω, λ) for a random inclination i between 0 and π/2 rad. To determine the total central opacity ω of the galaxy, we used the values obtained from a Markov chain Monte Carlo (MCMC) analysis (Chevallard et al. 2013) of the SDSS Data Release 7 and of Abazajian et al. (2009),

In our applications in Sects. 5 and 6, we only use  because we consider elliptical and/or spiral populations of galaxies, and we model both populations with a bulge (B/T = 1 for the elliptical and B/T = 0.2 for the spiral galaxies). Finally, in each redshift bin, we extincted the magnitude of the bulge and of the disk for the effect of the intergalactic medium on a source (Madau et al. 1996).

because we consider elliptical and/or spiral populations of galaxies, and we model both populations with a bulge (B/T = 1 for the elliptical and B/T = 0.2 for the spiral galaxies). Finally, in each redshift bin, we extincted the magnitude of the bulge and of the disk for the effect of the intergalactic medium on a source (Madau et al. 1996).

2.7. Stars

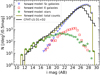

In order to obtain realistic deep fields, we added stars using the Besançon model (Robin et al. 2003) web service, which includes their recent improvements (Robin et al. 2012, 2014; Bienaymé et al. 2015; Amôres et al. 2017). This model was run at the coordinates of the CFHTLS D1 and D2 deep fields in the eight photometric bands. When a smaller image than the 1 deg2 deep field was used, a Poisson random draw was performed to select only the relative number of stars in the subarea. Figure 3 shows the total differential counts for the D1+D2 deep fields and our forward model including stars in all bands, and Fig. 4 the decomposition into stars and both types of galaxies in the i′ band alone. The similarity of the model and the observations is clear in the total density of objects. The stars represent only a few percent of the objects below the completeness limit of g ≃ 26.5 mag.

|

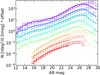

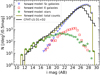

Fig. 3. Differential source counts in u′, g′, r′, i′, z′, J, H, Ks (from top to bottom). Histograms show CFHTLS observations: star+galaxy counts built from u′, g′, r′, i′, z′ source catalogs from MegaPrime D1+D2 (Hudelot et al. 2012), and J, H, Ks galaxy counts taken from WIRCam D1+D2+D3+D4 (Bielby et al. 2012). Our fiducial model is shown as empty circles (without stars for near-IR bands, like the observations). For clarity, the counts in each band are regularly offset vertically downward by 1 dex from u′ to Ks. This graph shows that the magnitudes of the galaxies in the forward model agree well with the observations down to their completeness limits in all eight photometric bands. |

|

Fig. 4. Differential counts (stars and galaxies) in the i′ band for the CFHTLS D1+D2 fields matching our fiducial model decomposed into stars (Besançon model), elliptical galaxies, and spiral galaxies down to the completeness limit. This graph shows the dominance of stars and spiral galaxies at the bright and faint end, respectively, and the difficulty of constraining the modeling of elliptical galaxies because there are so few of them. At the faint end, the completeness of the CFHTLS source extractions is limited to i ≃ 26, whereas the fiducial model shows the fainter input source distribution. |

2.8. Milky Way reddening

In order to correct the apparent magnitudes of the galaxies for the effect of dust in the Milky Way at the specific location of the CFHTLS D1 deep field, we corrected each observed magnitude with the parameterization of Fitzpatrick & Massa (1990) in the UV and spline fitting in the optical and IR with the RV factor of 3.1 and an average E(B − V) of 0.02 using the Python extinction package1. These correction values are given in Table 3.

1D confidence intervals for the five images of the virtual data and the initial values at which the virtual data were generated.

1D confidence intervals for the five insets of the D1 observed data and the joint data.

Parameters for the SkyMaker program.

2.9. Image generation

The catalogs of galaxy magnitudes and sizes generated following the procedure described above were converted into images using SkyMaker (Bertin 2009). SkyMaker renders the modeled galaxies on a high-resolution pixel grid and convolves them with an auto-computed realistic PSF (Bertin 2011). The PSF is not completely suitable for very bright stars or galaxies that are above the saturation level, which explains why the simulations do not show diffraction patterns. In addition, for each simulation, we used the weight maps provided by Hudelot et al. (2012) and Bielby et al. (2012) to realistically model the dead pixels and the differences between the charge-coupled device (CCD) detectors. The model was then subsampled at the final image resolution and centered at some specified coordinates. SkyMaker finally adds the sky background, saturation, photon (Poisson) noise and read-out (Gaussian) noise. The various parameters we used for each passband are shown in Table 3 (the long exposure times of the CFHTLS deep fields are reflected in the high effective gains with a 1 s exposure time).

2.10. Data conditioning

Fluxes from galaxies and/or stars may have a wide dynamic range that reaches the saturation level in the observed and simulated deep-field images. This can be problematic when a neural network is fit on these fluxes because it will have more effect on very high input values than it does on the bulk. Therefore we applied the following inverse hyperbolic sine transform to each pixel of the images:

This function reduces the dynamic range of the fluxes and allows successful fitting of the neural network with the observed and simulated deep-field images, see Sect. 3.

3. Compression of deep fields

In this section, D is a set of n independent observations or simulations (in the form of multiband deep-field images where all pixels have been assembled into a single long vector), di (i = 1, …, n) is one specific observation/simulation of D (i.e. a vector with millions of components), and θ is a vector with p components θα (α = 1, …, p).

3.1. Compression through neural networks

In the past two decades, progress in the field of pattern recognition or classification on images has been tremendous. It was shown in 2004 that standard neural networks can be greatly accelerated by using graphics processing units (GPUs), whose implementation is 20 times faster than the same implementation on central processing units (CPUs; Oh & Jung 2004). Convolutional neural networks were shown to outperform more traditional machine-learning methods for images (Cireşan et al. 2010): a deep convolutional neural network won the ImageNet Large Scale Visual Recognition Challenge in 2012 (Krizhevsky et al. 2012).

To compress the images, we used a neural network whose parameters were fit via the maximization of the logarithm of the determinant of the Fisher information matrix (Charnock et al. 2018). Because of the multiscale properties and the various shapes of the galaxies, we decided to use a fully convolutional inception architecture; see Sect. 4 for a full description.

3.2. Fisher information

The Fisher information is the amount of information on the unknown model parameters that the set of observations or simulations, D, contains when considered from a particular position θ of the parameters space.

The probability of the data given the model is described by a likelihood function, ℒ, whose value, ℒ(D|θ), describes how likely the observations or simulations D are, given particular values for the model parameters, θ.

We define the score of a set of observations, D, with likelihood function ℒ depending on the parameters θ,

The score indicates the sensitivity of the likelihood function to small changes in the parameters of the model. The variance of the score is the Fisher information matrix at some value of the parameters, θ,

3.3. Gaussian likelihood function

If we assume that the data set D for parameters θ satisfies a Gaussian likelihood function, ∀i ∈ {1, …, n},

where ℒ(di|θ) is the likelihood function describing the probability of a single data observation, di, given parameters θ,  is the mean vector over all the data depending on θ and

is the mean vector over all the data depending on θ and  is the covariance matrix of the data. Assuming the covariance to be independent of the parameters θ (see Sect. 4.2 for a full explanation) and replacing the Gaussian likelihood of Eq. (17) in Eq. (16) for the data set, D, yields the Fisher information matrix,

is the covariance matrix of the data. Assuming the covariance to be independent of the parameters θ (see Sect. 4.2 for a full explanation) and replacing the Gaussian likelihood of Eq. (17) in Eq. (16) for the data set, D, yields the Fisher information matrix,

By maximizing the likelihood function with respect to the parameters, that is, finding the parameters  at which the score is zero, we have a quasi-maximum likelihood estimator for any unknown observation or simulation d,

at which the score is zero, we have a quasi-maximum likelihood estimator for any unknown observation or simulation d,

where here, S(d, θ) = (∇θμ)TC−1(d−μ).

3.4. General case: Unknown likelihood

For a problem such as infering model parameters from deep galactic fields, the likelihood is not Gaussian, and worse, is not known. Developing the idea of score compression (Alsing & Wandelt 2018) and the MOPED algorithm (Heavens et al. 2000) in parallel, the proposed method (Charnock et al. 2018) used here considers a neural network, f : D → T, which compresses the data set, D = (d1, …, dn), to a set of summary statistics, T = (t1, …, tn), where each ti is a vector of p components, under the constraint of maximizing the Fisher information. Each piece of data, di, can be very large (e.g., in our application, each simulation is a large multiband deep-field image with 1024 × 1024 pixels) but can be compressed, in practice without any loss, down to a vector of p components, the number of model parameters (p = 2 in the applications of Sects. 5 and 6).

Because each piece of data, di, is described in our model by the parameters θ, the corresponding summary statistic, ti, is also dependent on θ. The neural network f can be seen as a function that transforms the original unknown likelihood function ℒ(di|θ) of the data into an asymptotically Gaussian likelihood function ℒ(ti|θ) of the summary statistics, ∀i ∈ {1, …, n},

where, with the same formalism as the Sect. 3.3, f(di) = ti, ℒ(ti|θ) is the likelihood function for a single summary statistic ti obtained from the data di via the transformation f given parameters θ, μf is the mean vector of the summary statistics and Cf is the covariance matrix of the summary statistics. Consequently, the Fisher information matrix, ignoring covariance dependence on the parameters, is

As shown in Alsing & Wandelt (2018), and following the MOPED algorithm (Heavens et al. 2000), a compression scheme can be designed to be optimal in terms of lossless data compression for data where the likelihood function is known. To do so, we note that the information inequality states that the variance of some observable statistic, ti(θ), of parameters θ, is bounded by

By equating the summary statistic with the score, ti(θ) = S(di, θ), we note that the left-hand side of Eq. (22) is equivalent to the Fisher information defined in Eq. (25). Because the gradient with respect to the parameters commutes with the expectation value,

Substituting this back into Eq. (22) shows that using the score function as a summary statistic saturates the information inequality (Lehmann & Casella 1998) and therefore constitutes an optimal, lossless compression. This transformation yields the same quasi-maximum likelihood estimator  as Eq. (19), but for the summary statistics t of an unknown observation or simulation d,

as Eq. (19), but for the summary statistics t of an unknown observation or simulation d,

where here,  .

.

By fitting the parameters of a neural network to maximize the logarithm of the determinant of Eq. (21), we can therefore approximate the score compression for general, nonlinear likelihoods and thereby efficiently compress our large multiband images to approximately sufficient statistic vectors.

4. Training the inception network

4.1. Inception network

Galaxies can be of different sizes and shapes, hence salient parts in the image can have extremely large variation in size. Because of this huge variation in the distribution in scale of the information, choosing the correct kernel size for the convolution operation is delicate but critical. Moreover, very deep networks (several layers with many neurons) are prone to overfitting, and naively stacking large convolution operations is computationally expensive. A way to circumvent these problems was developed (Szegedy et al. 2015) using a so-called inception block: a parallel mixture of convolutions and pooling layers (see an example in Fig. 5 with a series of five consecutive inception blocks). Inception blocks allow studying the input data at different scales in parallel and to extract features of different sizes from the same input. A succession of inception blocks can be used to create a full network architecture, which is called an inception network. The network architecture we used in the applications of Sects. 5 and 6 is shown in Fig. 5.

|

Fig. 5. Fully convolutional inception network to perform the compression, see Table 4 for a full description. Each inception block is composed of parallelized convolutions that simultaneously process the same input at different scales to extract different features, then concatenates the full output. After each inception block, the input is compressed with a 4 × 4 pooling layer to decrease the resolution by a factor 4, then we indicate the current size. Finally, the output layer allows a compression down to the number of parameters of the model and is the summary statistics vector of Sect. 3.4. |

Because distant galaxies are generally spread over only a few pixels in size, we used kernels with pixel size 1 × 1, 3 × 3 (equivalent to) and 5 × 5 (equivalent to) in each of the layers2. As part of the inception architecture, 1 × 1 convolutions were performed before each 3 × 3 or 5 × 5 kernel sized convolutions to reduce the depth, hence the number of kernels, of each block. Each convolution had eight channels (i.e., depths), which kept the total number of parameters relatively low (12 800 parameters in our applications) compared to traditional inception networks (with millions of parameters). At the end of each inception block, we concatenated (in depth) each output of the convolutions and then applied an average pooling using the mean over 4 × 4 patches of the output to decrease the resolution of the image by 4. This process was applied several times until the output was of the expected dimension, that is, the number of parameters of the model. Table 4 gives a complete description of each component of the inception network. Specifically, the full 1024 × 1024 × 8 input data used in the applications described in Sects. 5 and 6 were massively compressed by the network down to the number of parameters in the corresponding luminosity function model. These compressed summaries were then used to compute the quantities defined in Sect. 3.4: μ, C, and finally, F. Our inception network is fully convolutional, we do not use any fully connected layer so that complete translational invariance is incorporated.

4.2. Loss function

Normally, the loss function is a measure of the fit of the neural network to the data. However, with the IMNN, the loss function is a measure of the amount of information that is extracted from the data. We used IMNN to maximize the Fisher information of the output summaries obtained from the network. As a consequence, during the training, we measured the determinant of the Fisher matrix, which we wished to maximize. However, because the Fisher information is invariant under linear scaling of the summaries, the magnitude of the summaries needs to be controlled, which can be done by constraining the covariance matrix (see below). We therefore defined two loss functions,

where ∥ ⋅ ∥F is the Frobenius norm. The first loss function ΛF measures the Fisher information, and the second loss function ΛC measures the difference of the covariance from the identity matrix. We tried to find a network parameter configuration such that both loss functions are minimal. Minimizing ΛF maximizes the information, while minimizing ΛC provides a covariance of the summaries that is close to the identity matrix. Keeping the covariance relatively close to identity fixes the scale of the summary statistics while providing a covariance that is mostly independent of the parameters, which justifies dropping the covariance term in Eqs. (18) and (21). Several techniques (Hwang et al. 1979) exist to achieve the minimization process of such a multiobjective problem (e.g., linear scalarization and ϵ-constraint). We used a continuously penalized optimization in which we combined the loss functions ΛF and ΛC as follows:

where

is a sigmoid function with user-defined parameters λ and α. As a result, when the covariance is far from identity, the rΛC function is large and the optimization concentrates on bringing the covariance and its inverse back to identity.

4.3. Training of the network

Following the description of the IMNN code3 (Charnock et al. 2018) to train the network when exact gradients of the simulator are not available, we need a vector of fiducial parameter values, θfid, and a vector of deviation values about the fiducial, Δθ. From these values, we generate a training set of images consisting of a first collection of n images generated with values at the fiducial parameters θfid, a second collection of m images generated with values θfid + Δθα4, for each α ∈ {1, …, p} and a third collection of m images generated with values θfid − Δθα, for each α ∈ {1, …, p}. As illustrated in Fig. 6, we passed the training set of images through the network to obtain the summary statistics, and we computed the quantities of interest: the covariance matrix from the fiducial set of images, the numerical derivative of the mean of the summary statistics vector for each parameter from the perturbed parameter sets, and with these, the determinant of the Fisher information matrix. For the simulations for the numerical derivatives, each parameter was varied independently, keeping the other variable parameters fixed at their fiducial values. Furthermore, for the second and third collections of images, the initial seeds were fixed to be the same for each individual image, such that the galaxies were generated at the same location in the image and with identical flux and shape. This ensured that the only changes from one image of the second collection to the corresponding image of the third collection were galaxies that appeared or disappeared according to the luminosity function. The suppression of this variance allowed us to make fewer simulations (i.e., m < n) for the derivatives than for the fiducial simulations used to calculate the covariance matrix.

|

Fig. 6. Schematic description of the training of the network to obtain the covariance matrix and the derivative of the mean vector that are used in Eq. (21) to obtain the Fisher information matrix. The top, middle, and bottom lists of images form what we call the training set and are generated with fiducial parameters θfid (with θfid + Δθα and θfid − Δθα, for each α ∈ {1, …, p}). From the first collection of images, we compute the covariance matrix, and from the second and third collections of images, we compute the derivative of the mean vector for each α ∈ {1, …, p}. |

At each iteration of the training phase, the training set of images was passed through the network before the back-propagation was run, that is, before the gradient of the loss function was calculated. We used the chain rule to calculate the effect of each network parameter on the value of the loss function such that they could be updated, thereby minimizing the loss. Consequently, as the training advanced, the network summarized the data while retaining increasingly more information about the model parameters. Furthermore, the network became increasingly insensitive to parameter-independent noise, providing a function that calculated extremely robust summary statistics. We used another independent set of different images that the network did not train on in order to validate the performance: the validation set.

To update the network parameters at each iteration, we used the Adam optimizer (Kingma & Ba 2014) and/or the stochastic gradient descent method. In this training process, we did not know the expected Fisher information of the images. We therefore recommend to continue the training until the loss reaches a plateau (or starts to decrease) when evaluated on the validation set, indicating that the network has compressed the data with the maximum information available. At this point, the summaries are Gaussianly distributed in regions close to the fiducial choice of parameter values, even if the likelihood of the actual data is non-Gaussian (Charnock et al. 2018).

4.4. Choice of fiducial values: Iterative method

If the values of the inferred parameters are far (and/or completely unknown) from the fiducial choice of training parameters, then there is a risk that the summaries are not Gaussianly distributed in the regions close to the inferred values. This can lead to very wide or flat posterior distributions when the Bayesian likelihood-free inference techniques of Appendix A are applied. To fix this problem, the following iterative method can be applied to choose a good set of fiducial training values:

-

Train the IMNN with any guess (or random choice) for the fiducial values.

-

Compute the quasi-maximum likelihood estimate of the data for which the parameter values are to be inferred, using Eq. (24).

-

Use this estimate as the new fiducial values and train a new IMNN with these values.

This iterative process was applied until the quasi-maximum likelihood estimate was relatively constant between iterations. It generally took very few iterations for convergence. By iterating toward the quasi-maximum likelihood, more information about the model parameters can potentially be extracted. In our applications of Sects. 5 and 6, we did not need to apply this iterative method because our choice of fiducial values for the training of the network was relatively similar (or identical) to the inferred parameter values.

5. Application to M* and ϕ* for spirals alone

This section is only meant to explain the method for nonrealistic deep-field simulations with only one spiral population and does not allow a comparison with the CFHTLS data. However, the full description of the forward model of Sect. 2 applies and stars are included as well. The goal here is to prove that the network is able to retrieve the strong correlation between the two parameters M* and ϕ* of a spiral population.

5.1. Description

We used simulated deep fields with only one spiral population of galaxies with two free parameters of the luminosity function, M* and ϕ*, while the slope was fixed to α = −1.3 and there was no redshift evolution in the luminosity function,  = 0 and

= 0 and  = 0 (but the bulge or disk redshift evolutions of Sects. 2.4 and 2.5 still applied). We used a B/T = 0.2, with the “E” SED for the bulge and the “Irr” SED for the disk, see Fig. 2. As explained in Sect. 4.3, we chose the following fiducial parameter values and their offsets for the numerical derivatives to fit the network:

= 0 (but the bulge or disk redshift evolutions of Sects. 2.4 and 2.5 still applied). We used a B/T = 0.2, with the “E” SED for the bulge and the “Irr” SED for the disk, see Fig. 2. As explained in Sect. 4.3, we chose the following fiducial parameter values and their offsets for the numerical derivatives to fit the network:

where ϕ* is given for H0 = 100 km s−1 Mpc−1. Table 6 gives an overview of the values that were used to generate the simulations according to the description of the forward model of Sect. 2.

Figure 7 shows the five theoretical luminosity functions we used when making the images to train the network. Figure 8 shows the effect on the RGB image (using the i′, r′, g′ filters) of a simulation when only one of the two parameters M* or ϕ* was changed. The density of spirals is boosted for the Δlog10(ϕ*) increase in log10(ϕ*) and for the ΔM* decrease in M*. Consequently, we expect a strong correlation between these two parameters.

|

Fig. 7. Theoretical luminosity functions of the spiral population with the perturbed values of the parameters used to train the network. The parameters used for each curve are listed in Eq. (29). The explicit features in the data, generated from these functions, provide the differences that the IMNN will become sensitive to when training, therefore mapping the effect of log10(ϕ*) and M* to informative summaries. |

|

Fig. 8. Color images (g′, r′, i′ filters) showing the effect of the perturbed values from fiducial for each parameter, as listed in Eq. (29). For each subplot we only added or removed the offset for one parameter listed in Eq. (29) and kept the other at its fiducial value. Decreasing the value of M* (top right) increases the number of galaxies. Decreasing the value of log10(ϕ*) decreases the number of galaxies. This shows that these two parameters are highly correlated. |

5.2. Training the network

To fit the network parameters (i.e., weights and biases), we followed the prescription described in Sect. 4.3 and generated n = 200 simulations (in the eight photometric bands u′, g′, r′, i′, z′, J, H, Ks) with parameters at fiducial values. For the images used for the numerical derivatives, we generated m = 50 simulations (in each of the eight bands) for each parameter, that is, M* and log10(ϕ*), at values θfid, α + Δθα, α = 1, 2, and another m = 50 simulations per parameter at values θfid, α − Δθα, α = 1, 2, see Eq. (29). This yielded a total of 200 + 2 × 2 × 50 = 400 images (in the eight bands) for the training set, and we used the same number for the validation set. Each simulation was a 1024 × 1024 pixel deep-field image in the eight photometric bands. The optimizer techniques and the corresponding learning rates used during the training are listed in Table 5. We used this training scheme to quickly find a transformation that forces the covariance matrix of the summaries to be approximately the identity matrix, and then explore the more complex landscape of the parameter-dependent information. The architecture of the network is that of Fig. 5, which yields a total of 12 170 network parameters to be fitted. Because of the number and size of the images, with this configuration, one epoch of training (i.e., when the whole training and validation sets are passed through the network and the network parameters are updated) took about 40 s to run (on a NVIDIA QUADRO RTX 8000 45 GB GPU).

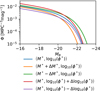

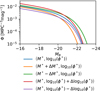

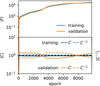

We trained the network for almost 14 000 epochs (≈6 days). Figure 9 shows the evolution of the determinant of the Fisher information matrix (top) and the determinants of the covariance and inverse covariance matrices (bottom). The determinant of the Fisher information matrix increased during the training, and the determinant of the covariance matrix and its inverse became constant after a few hundred epochs. This shows that the covariance has very little parameter dependence, and the sensitivity in the network instead is due to the derivative of the mean of the summaries with respect to the parameters. The determinant of the covariance matrix (and its inverse) are not precisely the identity, as the regularization attempts to enforce in the optimization routine, because the parameters are extremely highly correlated, and so the summaries are as well. However, the only requirement for the IMNN is that the covariance matrix is parameter independent, and only the arbitrary scale of the summaries is set by the value of the covariance, which can be seen by the constant value in Fig. 9. The determinant of the Fisher information begins to plateau (in the training and validation data), and we decided that this is sufficient to stop the training and accept this as the amount of information we are willing to extract from the data given the training time.

|

Fig. 9. Top panel: evolution of the determinant of the Fisher information matrix during almost 14 000 epochs. The blue curve represents the information extracted from the training set, and the orange curve the information from the validation set. The determinant of the Fisher information is maximized on the training set and is comparable to the determinant of the Fisher information calculated with the validation set. This confirms that the two sets are representative samples. The training is stopped when the Fisher information of the validation set becomes relatively constant. Bottom panel: evolution of the determinants of the covariance matrix (solid line) and of the inverse of the covariance matrix (dashed line) for the training set (blue) and for the validation set (orange). The values quickly become constant, which shows that the network suppresses the parameter dependence of the covariance. Because the correlation between the parameters is strong, the covariance matrix cannot exactly diagonalize to the identity matrix, but the fact that it is constant shows that the parameter dependence is weak, which is the only requirement for the IMNN. |

5.3. ABC posteriors

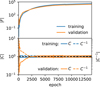

After the network was trained, we ran an ABC procedure to test its reliability, see Appendix A.1 for more details. Here, we replaced what is referred to as “observed data” in Appendix A.1 with a simulation called “virtual data”, generated with parameters set to the fiducial parameter values in Eq. (29),

where ϕ* is given for H0 = 100 km s−1 Mpc−1. We used the formalism of Appendix A.1 and chose a uniform prior for the two parameters,

From these prior intervals, we uniformly drew an initial sample of 5000 pairs of parameters and generated a simulated deep field (using the forward model of Sect. 2) for each pair. We then passed both the virtual data and the 5000 simulated fields through the network in order to compress them. The bottom left plot of Fig. 10 shows the values of these parameters for the entire 5000 prior simulations in orange. We decided to only keep the 50 simulations in blue for which the distance to the virtual data is minimal. The dotted red lines are the parameter values used to generate the virtual data. The ABC procedure retrieves the expected strong correlation between the two parameters. However, because we do not know which value of ϵ should be used, the posterior is not properly approximated here, therefore we used the population Monte Carlo (PMC) procedure of Appendix A.2 to do it automatically. However, the ABC procedure works as well and gives a clear indication of why the method works.

|

Fig. 10. Results of the ABC procedure. Bottom left panel: values of the parameters for the 5000 prior simulations (orange) and for the 50 simulations with minimum distance (blue) to the virtual data. The dotted black lines are the parameter values at which the virtual data were generated. We retrieve the strong correlation between the two parameters. Top left and bottom right panels: 1D marginal distributions of the parameter values. |

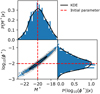

5.4. PMC posteriors

For the PMC, we used the same prior for the two parameters as in Eq. (31), and we applied the PMC procedure of Appendix A.2 with N = 1000, M = 50 000, and Q = 75% for resampling the N sets of parameters. This yielded about 232 577 draws and generated simulations in total. The results are shown in Fig. 11. The 2D distribution (bottom left) and the 1D projected distributions are concentrated around the values of the parameters we used to generate the virtual data (i.e., M* = −20 and log10(ϕ*) = − 2.01). The procedure clearly identifies the strong correlation between the two parameters, both affecting the density of objects, and is able to infer that the parameter values we used to generate the virtual data lie in the bulk of the probability density. This application illustrates that the network is able to summarize the effects in the images of the two correlated parameters, and that it is able to robustly constrain the possible values of the parameters we used to generate the virtual data.

|

Fig. 11. Results of the PMC procedure. Bottom left panel: distribution of the parameter values for final 1000 points obtained by the PMC. The black and dark, gray and light, and gray show the 68%, 95%, and 99% contours, and the dotted red line shows the values of the parameters that were used to generate the virtual data. Top left and bottom right panel: 1D marginal distributions of the parameters and their KDE in black. The procedure converged around the parameter values we used to generate the virtual data. There is evidence here, in 2D, that the uncertainty due to the correlation between the parameters whose effect is evident in the data is large, see Fig. 8. |

6. Application to  for ellipticals and spirals

for ellipticals and spirals

In this section, we consider a more realistic model with two populations of galaxies, ellipticals and spirals, which can then be compared to the observed data for the CFHTLS and WIRDS D1 deep field. We only infer the density parameter  of the luminosity functions for these two populations, and we use the recent results of López-Sanjuan et al. (2017) as a reference because they provided a deep statistical analysis of the luminosity function parameters for these two populations of galaxies.

of the luminosity functions for these two populations, and we use the recent results of López-Sanjuan et al. (2017) as a reference because they provided a deep statistical analysis of the luminosity function parameters for these two populations of galaxies.

6.1. Description

We used the following fiducial parameter values from López-Sanjuan et al. (2017) and offsets for the numerical derivatives to fit the network:

where ϕ* is given for H0 = 100 km s−1 Mpc−1. The other luminosity function parameters were set to be constant with the values from López-Sanjuan et al. (2017), see Table 6. We considered an offset value of  a factor of 10 smaller for the spiral galaxies than

a factor of 10 smaller for the spiral galaxies than  for the ellipticals when we generated the simulations for the numerical derivatives, see Eq. (32) because the density of spirals is higher than that of ellipticals, see Fig. 13. We used the reference SDSS g′ band for the absolute magnitude, therefore we applied a correction of B − g′ = 0.78 for the elliptical population and B − g′ = 0.40 for the spiral population, as suggested by Table 7 of Fukugita et al. (1995), to express the magnitudes in the reference B band.

for the ellipticals when we generated the simulations for the numerical derivatives, see Eq. (32) because the density of spirals is higher than that of ellipticals, see Fig. 13. We used the reference SDSS g′ band for the absolute magnitude, therefore we applied a correction of B − g′ = 0.78 for the elliptical population and B − g′ = 0.40 for the spiral population, as suggested by Table 7 of Fukugita et al. (1995), to express the magnitudes in the reference B band.

For computational efficiency, we only used a 3.17 arcmin2 images (corresponding to 1024 × 1024 pixels of 0.186 arcsec) of the full 1 deg2 D1 deep field in the eight photometric bands u′, g′, r′, i′, z′, J, H, Ks to infer the values of  for the elliptical and spiral populations. Figure 12 shows the effects on a color image of the g′, r′, i′ simulations for the elliptical population (left column) and the spiral population (right column) of changing the fiducial parameters by their respective positive (top images) and negative (middle images) offset from the fiducials that were used to calculate the numerical derivatives. The bottom image of each column is the difference of the above images and shows the galaxies that appear in the images we used to calculate the numerical derivatives of the summaries using the IMMN. The bottom left panel shows that only elliptical galaxies remain when only

for the elliptical and spiral populations. Figure 12 shows the effects on a color image of the g′, r′, i′ simulations for the elliptical population (left column) and the spiral population (right column) of changing the fiducial parameters by their respective positive (top images) and negative (middle images) offset from the fiducials that were used to calculate the numerical derivatives. The bottom image of each column is the difference of the above images and shows the galaxies that appear in the images we used to calculate the numerical derivatives of the summaries using the IMMN. The bottom left panel shows that only elliptical galaxies remain when only  for the elliptical is changed (these galaxies appear as yellow or red because of the SED used to model them), as opposed to the blue galaxies of the spiral population remaining in the bottom right panel. Table 6 gives an overview of the values that were used to generate the simulations according to the description of the forward model of Sect. 2.

for the elliptical is changed (these galaxies appear as yellow or red because of the SED used to model them), as opposed to the blue galaxies of the spiral population remaining in the bottom right panel. Table 6 gives an overview of the values that were used to generate the simulations according to the description of the forward model of Sect. 2.

|

Fig. 12. Left panel: difference of the two upper images is shown in the bottom panel. The two upper images are simulated by only changing the fiducial parameter of the elliptical population to the perturbed parameter values by +Δθ1 (top) and −Δθ1 (middle). Only yellow or red elliptical galaxies remain, which confirms that |

Figure 13 shows the six theoretical luminosity functions, at z = 0 and z = 2, that we used to train the network. The density of bright red ellipticals is lower at z = 2 than at z = 0: fewer galaxies with early-type SEDs existed in the past than today because they were still forming their stars and therefore had bluer SEDs. We also expect a relatively higher density of faint spirals compared to ellipticals at z = 0 and z = 2. The curves for the perturbed luminosity functions of spirals are closer together because the offset Δθ is a factor 10 lower than for the ellipticals.

|

Fig. 13. Theoretical luminosity functions of the elliptical (blue, orange, and green) and spiral (red, purple, and brown) populations at redshift z = 0 (top) and z = 2 (bottom). The legend applies to both panels. The parameters used for each luminosity function are given in Table 6. These curves show the higher integrated density of spiral galaxies than of elliptical galaxies. The steep faint-end slope of the spiral population implies that the images contain many faint spiral galaxies, which is not the case for the elliptical population. There is also a redshift effect that decreases the proportion of ellipticals or spirals when looking at distant objects. |

6.2. Training of the network

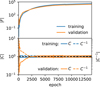

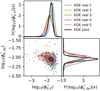

As in the previous application of Sect. 5.2, we generated simulations in the same way, with n = 200 fiducial images of 3.17 arcmin2 and 1024 × 1024 pixels in the eight photometric bands, and 2 × 2 × 50 = 200 (m = 50) seed-matched images for the numerical derivatives. We used the same inception architecture with the Adam optimizer (learning rate of 0.04) for the first 4000 epochs of the training, then the stochastic gradient descent optimizer (learning rate of 5 × 10−6) for the remaining epochs. We trained the network on the GPU NVIDIA TITAN X 12Go for almost 10 000 epochs (≈8 days), and we show in Fig. 14 the convergence of the determinant of the Fisher matrix (top) and the determinant of the covariance and inverse covariance matrices (bottom) for the training set (blue) and the validation set (orange). During the fitting, the training and validation curves remain close to each other, which indicates that the training and validation sets contain approximately the same amount of information about the two parameters. Consequently, both sets seem representative enough of the diversity of realizations of a deep field of spiral and elliptical galaxies with these fiducial parameters.

|

Fig. 14. Top panel: evolution of the determinant of the Fisher information matrix during almost 10 000 epochs. The blue curve represents the information extracted from the training set, and the orange curve shows the same from the validation set. The curves increase, which means that the network learns more about the two parameters as the training continues. The training was stopped when the validation curve flattened, suggesting that the network has converged. Bottom panel: evolution of the determinant of the covariance matrix (solid line) and the inverse of the covariance matrix (dashed line). The blue curves show the training set, and the orange curves show the validation set. The training curves reach 1 very fast, which shows that the loss is stabilized and that the magnitude of the summaries is under control. The validation curve oscillates while being still very close to identity, which is a sign that there is some weak parameter dependence on the covariance. |

6.3. Observed and virtual data

Our ultimate goal is to infer the parameters on the 1 deg2 D1 deep field. However, we limited the analysis to deep fields of size 3.17 arcmin2 (i.e., 1024 × 1024 pixels) for computational efficiency, and randomly chose five such regions of the D1, see Fig. 16. In order to confirm that the network is trained properly and performs well, we also generated five simulations with the fiducial parameters of Table 6 and the same angular size as the CFHTLS data, which we consider as virtual data (see Fig. 15). The goal is to obtain the individual posterior distributions of the five observed data images, then to compute a joint posterior to constrain the values of the parameters and extract the confidence intervals. The same procedure was applied to the five virtual data. The difference was that we knew the values of the parameters that were used to generate the virtual data. We chose five images in both cases as a good compromise between the computational time and the accuracy of the joint posterior.

|

Fig. 15. RGB images using the g′, r′, i′ filters for the five virtual data of 3.17 arcmin2 generated with fiducial values of the luminosity function parameters (see Table 6), used to validate the method. |

|

Fig. 16. RGB images using the g′, r′, i′ filters for five random regions of 3.17 arcmin2 within the 1 deg2 CFHTLS D1 deep field that are used to infer the luminosity function parameters of the elliptical and spiral galaxies, namely the logarithm of their amplitudes log10( |

6.4. ABC posteriors

We applied the ABC procedure of Appendix A.1 and used a uniform prior distribution with the same lower bound for the amplitudes of the luminosity functions of the elliptical and spirals galaxies, but different upper bounds:

The choice of a smaller upper bound of the prior for the spirals was made because the luminosity function for this population has a steep faint end slope (see Table 6 and Fig. 13), and it can lead to very long generation times of the simulations with high values of the amplitude  . Moreover, previous studies such as López-Sanjuan et al. (2017) have shown that log10(

. Moreover, previous studies such as López-Sanjuan et al. (2017) have shown that log10( ) > −1 is very unlikely, and indeed, this is what we also find from Fig. 19. We wish to note that cutting possible regions of parameter space due to computational resources is not a statistically rigorous process, but the information from López-Sanjuan et al. (2017) gives us reason to believe that it is acceptable to truncate here.

) > −1 is very unlikely, and indeed, this is what we also find from Fig. 19. We wish to note that cutting possible regions of parameter space due to computational resources is not a statistically rigorous process, but the information from López-Sanjuan et al. (2017) gives us reason to believe that it is acceptable to truncate here.

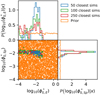

Figure 17 shows the results of the ABC procedure with 5000 draws of the parameters following the uniform prior of Eq. (33). A distance threshold can be chosen from the data to accept or reject some of the parameters, as shown by the green, blue, and red points in the bottom left plot. The number of accepted points decreases when this threshold is low, but their 2D region also becomes more concentrated. As a consequence, the corresponding 1D marginalized distribution of log10( ) (top left) varies significantly depending on this choice for a distance threshold. This is because we did not densely sample from a stationary distribution, a problem that can occur when sparsely drawing from the prior and not choosing a small enough ϵ-ball in which to accept simulations. In order to properly approximate the posterior, the distance threshold must approach ϵ = 0 and more simulations need to be done for the ABC until a steady distribution is reached. This highlights the need for a PMC that automatically approaches the posterior, which we describe in the following.

) (top left) varies significantly depending on this choice for a distance threshold. This is because we did not densely sample from a stationary distribution, a problem that can occur when sparsely drawing from the prior and not choosing a small enough ϵ-ball in which to accept simulations. In order to properly approximate the posterior, the distance threshold must approach ϵ = 0 and more simulations need to be done for the ABC until a steady distribution is reached. This highlights the need for a PMC that automatically approaches the posterior, which we describe in the following.

|

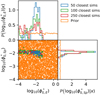

Fig. 17. Results of the ABC procedure. Bottom left panel: parameter values corresponding to 5000 simulations (dots) drawn from our random uniform prior (orange) of Eq. (33). The colored points are those with a small distance ρ (Eq. (A.3)) to the observed data (frame “b” of Fig. 16 from the CFHTLS D1 Deep field): the 50 closest points are shown in blue, the 100 closest points are shown in green, and the 250 closest points are shown in red. Top left and bottom right: marginalized distributions of the distance selections in the bottom left panel. The points with the smallest distances appear to be around |

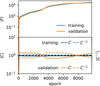

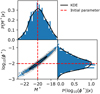

6.5. PMC posteriors and confidence intervals

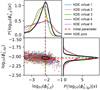

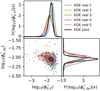

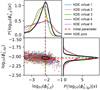

We applied a parallelized version of the PMC procedure to the five images of the virtual and observed data. We used the same priors as in Eq. (33) and applied the PMC procedure of Appendix A.2 with N = 500, M = 5000, and a 25% threshold for resampling the N sets of parameters. This yielded about 60 000 draws and generated simulations per image.

The results are shown in Fig. 18 for the virtual data and Fig. 19 for the observed data. As shown by the 2D distribution of points (bottom left) and the 1D projected Gaussian kernel density estimates, the posteriors are concentrated around similar regions of parameter values for the five insets of each sample for the observed and virtual data because the data come from the same generative process, while the differences in the posteriors are due to the independent environment (or realization) containing more or less constraining power. We provide the 68%, 95%, and 99% confidence intervals for the 1D marginal distributions. Because the distribution is not Gaussian in 2D, its covariance (nor the 1D marginal standard deviations) are sufficient to wholly encapsulate the information in this posterior distribution. This gives us further good reason to consider such likelihood-free inference methods. The parameter values used to generate the virtual data images are shown with dotted red lines in Fig. 18 and can be compared to the posterior distributions. All results, namely the parameter values used to generate the data (for the virtual data alone) and the 68%, 95%, and 99% 1D confidence intervals for  and

and  are listed in Tables 1 and 2 for the five individual images.

are listed in Tables 1 and 2 for the five individual images.

|

Fig. 18. Posterior distributions of the two parameters log10( |

We emphasize that for the observed and virtual data, Figures 18 and 19 show that the results are narrower 1D marginalized distributions for the spiral population than for the elliptical population. This comes from the steep faint-end slope of the luminosity function for the spirals: a small change in log10( ) has a strong effect on the number of faint spirals, which can be more easily distinguished. This is not exactly the case for the other parameters: a strong change in log10(

) has a strong effect on the number of faint spirals, which can be more easily distinguished. This is not exactly the case for the other parameters: a strong change in log10( ) is needed to considerably alter the number of elliptical galaxies, which equates to greater uncertainty on this parameter.

) is needed to considerably alter the number of elliptical galaxies, which equates to greater uncertainty on this parameter.

|

Fig. 19. Posterior distributions of the two parameters log10( |

Comparison of Figs. 18 and 19 show that if the posterior distributions of the spirals have a similar dispersion for the virtual and observed data, the posterior distributions of the elliptical population is narrower for the observed than for the virtual data. This is an effect of the small number of ellipticals in an image: with a low value for log10( ), there are very few elliptical galaxies in the image, and this lack of a statistically significant sample increases the width of the posterior due to the lack of available information. In comparison, the posterior is narrower for the observed data because more elliptical galaxies appear to be present: the peak of the joint posterior is at log10(

), there are very few elliptical galaxies in the image, and this lack of a statistically significant sample increases the width of the posterior due to the lack of available information. In comparison, the posterior is narrower for the observed data because more elliptical galaxies appear to be present: the peak of the joint posterior is at log10( ) = − 1.7, compared to a lower value of log10(

) = − 1.7, compared to a lower value of log10( ) = − 2.1 for the virtual data (see Tables 1 and 2). The summaries provided by the network encode information about the relation of the number of ellipticals and spirals in the images to the model parameters, and therefore can be used for the inference of this property using the PMC.

) = − 2.1 for the virtual data (see Tables 1 and 2). The summaries provided by the network encode information about the relation of the number of ellipticals and spirals in the images to the model parameters, and therefore can be used for the inference of this property using the PMC.

For the observed data frame labeled “real 3” in Fig. 19, the 1D confidence interval of the parameter log10( ) is slightly displaced compared to the other observed images, and moreover toward lower densities of spirals. This can be explained by the presence of a large spiral galaxy in the image of frame “c” in Fig. 16. This galaxy covers ∼100 × 100 pixels, an area of the image (i.e., ∼1% of the full 1024 × 1024 image) that reduces the amount of informative data with which to correlate the number of detected spirals, which therefore appear to be fewer because the area in which they are visible is smaller. This illustrates the fact that the inference is correct even if the PMC procedure happens to be biased because of statistically anomalous data. This is the reason why we used five insets of the D1 deep field, with the goal to improve the robustness of the results.

) is slightly displaced compared to the other observed images, and moreover toward lower densities of spirals. This can be explained by the presence of a large spiral galaxy in the image of frame “c” in Fig. 16. This galaxy covers ∼100 × 100 pixels, an area of the image (i.e., ∼1% of the full 1024 × 1024 image) that reduces the amount of informative data with which to correlate the number of detected spirals, which therefore appear to be fewer because the area in which they are visible is smaller. This illustrates the fact that the inference is correct even if the PMC procedure happens to be biased because of statistically anomalous data. This is the reason why we used five insets of the D1 deep field, with the goal to improve the robustness of the results.

6.6. Joint posterior and confidence intervals

Because we only had individual posteriors for each image, we combined to derive a unique posterior. Unfortunately, posterior chains cannot be combined in a simple way. A rigorous way to achieve such a posterior is to use the Bayesian chain rule,

where θ is a set of parameters, and X1, …, Xn are the n individual pieces of data. The chain rule allows obtaining the posteriors sequentially: ∀i ∈ {1, …, n − 1} we assumed that obtained the posterior distribution (via PMC) of p(θ|X1, …, Xi), then

-

Consider p(θ|X1, …, Xi), derived from the pieces of data X1, …, Xi, as an approximate proposal distribution for θ.

-

Use that proposal distribution of θ for the inference, using ABC or PMC, given the new piece of data, Xi + 1.

-

Derive a new posterior distribution from p(θ|X1, …, Xi + 1) that can be used in turn as the proposal distribution for the next piece of data.

We ran a parallelized version of such a sequential-PMC for the five images for the virtual and observed data. The resulting joint posteriors are shown as black lines (called kernel density estimate ”KDE joint”) in Figs. 18 and 19 and can be compared to the posterior distributions obtained for the individual images. In these figures, the 68%, 95% and 99% contours are drawn using the joint posterior in black, gray, and light gray, respectively. The 1D 68%, 95%, and 99% confidence intervals for the joint posteriors are listed at the end of Table 1 for the virtual data and in Table 2 for the observed data. The confidence intervals are narrower for the joint posteriors because more information is available, which allows us to draw tighter constraints on the parameter values.

6.7. Comparison with other studies

We compared our results with other measurements of the luminosity functions for the elliptical (or red, or quiescent) galaxy population and for the spiral (or blue, or star-forming) population obtained from deep multiband galaxy surveys: López-Sanjuan et al. (2017), Brown et al. (2007), Beare et al. (2015), Salimbeni et al. (2008), Drory et al. (2009), and Faber et al. (2007). In these studies, the two populations are identified either by comparing the observed magnitudes in different bands or by finding the best-fit SEDs to each galaxy. These methods are affected by several biases because of the catalog extraction process (see Sect. 1) and because of the choice of threshold used to split galaxies into red or blue.

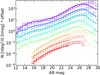

Figure 20 shows the luminosity function using the 68% confidence intervals of the joint posterior over the five insets of the CFHTLS+WIRDS D1 field in blue for the elliptical (top) and spiral populations (bottom) compared to the results from the other articles. Figure 20 shows a good agreement between our results and the other surveys for the spiral population (bottom panel) for which the network was able to precisely constrain the luminosity function (narrow 68% confidence interval). Despite a different sample and no catalog extraction, when our results are compared to those of López-Sanjuan et al. (2017), we obtain for the dominant spiral population a 1σ confidence interval on ϕ* comparable to theirs. The top panel of Fig. 20 shows that for the elliptical population, there are larger differences between all analyses, including ours. This is likely partly due to Poisson noise: the number density of galaxies in the elliptical population is typically a factor of 10–100 lower than for the spiral population in a given magnitude bin, introducing more dispersion. Moreover, as pointed out in several of the analyses we quoted, the chosen samples of red or blue galaxies contain misidentified galaxies, which affect the smaller elliptical population more.

|

Fig. 20. Inferred luminosity functions derived for the elliptical (top) and the spiral (bottom) populations using the 68% confidence intervals (blue). Our results are compared with the results of López-Sanjuan et al. (2017) in green, Brown et al. (2007) in pink, Beare et al. (2015) in red, Salimbeni et al. (2008) in brown, Drory et al. (2009) in purple, and Faber et al. (2007) in orange. Our g′ magnitudes are converted into the Johnson B-band absolute magnitude, at redshift z = 0.5 and for H0 = 100 km s−1 Mpc−1 using B − g′ = 0.78 for ellipticals and B − g′ = 0.40 for spirals, listed in Table 7 of Fukugita et al. (1995). |

The top panel of Fig. 20 also indicates evidence for an excess of red faint galaxies: López-Sanjuan et al. (2017), Drory et al. (2009), and Salimbeni et al. (2008) have used the sum of two Schechter functions to better model the luminosity function of the elliptical population (and its rising faint end). We suspect that this apparently observed excess of faint elliptical galaxies to which our method is in principle quite sensitive to tends to pull our single-Schechter luminosity function upward for the elliptical population and is therefore systematically higher than the luminosity functions derived by Brown et al. (2007), Beare et al. (2015), and Faber et al. (2007). In this case, our limited model of two galaxy populations with single evolving Schechter luminosity functions for each population is too coarse. Nevertheless, the rising of the elliptical luminosity function at the faint end could be caused by some of the faint spiral galaxies, which are numerous, being categorized as red galaxies by some source extraction method.

Conversely, some of the elliptical galaxies in the other analyses may have been misclassified by some source extraction method as blue galaxies and might lead to a systematically too low amplitude for the elliptical luminosity function. Moreover, dusty star-forming galaxies (which have red colors) may be modeled as ellipticals in our analysis (due to the resemblance of the strong starburst to a bulge-dominated object in the image), whereas they may be classified as spirals in the other analyses. Both effects would affect only the luminosity function of elliptical galaxies because spiral types vastly dominate the elliptical types in number.

7. Conclusions and perspectives