| Issue | A&A Volume 687, July 2024 |
|---|---|
| Article Number | A238 |
| Number of page(s) | 28 |
| Section | Cosmology (including clusters of galaxies) |
| DOI | https://doi.org/10.1051/0004-6361/202449447 |
| Published online | 16 July 2024 |

The SRG/eROSITA All-Sky Survey

X-ray selection function models for the eRASS1 galaxy cluster cosmology

1 IRAP, CNRS, UPS, CNES, 14 Avenue Edouard Belin, 31400 Toulouse, France
e-mail: nicolas.clerc@irap.omp.eu

2 Max Planck Institute for Extraterrestrial Physics, Giessenbachstrasse 1, 85748 Garching, Germany

3 Dr. Karl-Remeis-Sternwarte and ECAP, Friedrich-Alexander-Universität Erlangen-Nürnberg, Sternwartstr. 7, 96049 Bamberg, Germany

4 Universität Innsbruck, Institut für Astro- und Teilchenphysik, Technikerstr. 25/8, 6020 Innsbruck, Austria

5 Argelander-Institut für Astronomie (AIfA), Universität Bonn, Auf dem Hügel 71, 53121 Bonn, Germany

Received: 1 February 2024

Accepted: 2 May 2024

Aims. Characterising galaxy cluster populations from a catalogue of sources selected in astronomical surveys requires knowledge of sample incompleteness, known as the selection function. The first All-Sky Survey (eRASS1) by eROSITA on board Spectrum Roentgen Gamma (SRG) has enabled the collection of large samples of galaxy clusters detected in the soft X-ray band over the western Galactic hemisphere. The driving goal consists in constraining cosmological parameters, which puts stringent requirements on the accuracy and flexibility of explainable selection function models.

Methods. We used a large set of mock observations of the eRASS1 survey and we processed simulated data identically to the real eRASS1 events. We matched detected sources to simulated clusters and we associated detections to intrinsic cluster properties. We trained a series of models to build selection functions depending only on observable surface brightness data. We developed a second series of models relying on global cluster characteristics such as X-ray luminosity, flux, and the expected instrumental count rate as well as on morphological properties. We validated our models using our simulations and we ranked them according to selected performance metrics. We validated the models with datasets of clusters detected in X-rays and via the Sunyaev–Zeldovich effect. We present the complete Bayesian population modelling framework developed for this purpose.

Results. Our results reveal the surface brightness characteristics most relevant to cluster selection in the eRASS1 sample, in particular the ambiguous role of central surface brightness at the scale of the instrument resolution. We have produced a series of user-friendly selection function models and demonstrated their validity and their limitations. Our selection function for bright sources reproduces the catalogue matches with external datasets well. We discuss potential inconsistencies in the selection models at a low signal-to-noise revealed by comparison with a deep X-ray sample acquired by eROSITA during its performance verification phase.

Conclusions. Detailed modelling of the eRASS1 galaxy cluster selection function is made possible by reformulating selection into a classification problem. Our models are used in the first eRASS1 cosmological analysis and in sample studies of eRASS1 cluster and groups. These models are crucial for science with eROSITA cluster samples and our new methods pave the way for further investigation of faint cluster selection effects.

Key words: methods: statistical / catalogs / surveys / X-rays: galaxies: clusters

© The Authors 2024

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1. Introduction

Galaxy clusters sit at the most massive nodes of the cosmic web. They form last in the cosmic evolution by accreting groups and smaller structures. Their distribution is sensitive to the underlying cosmological model and for this reason they are recognised as a key cosmological probe (Clerc & Finoguenov 2023). Being rare objects, their census requires surveys spanning a large fraction of the sky with sensitive instrumentation. The diffuse X-ray emitting gas, filling the megaparsec-wide intracluster medium (ICM), signposts virialised halos and enables their discovery up to large distances. In the context of X-ray cluster studies, accurate knowledge of a selection function has been required for cosmological abundance studies (e.g. Böhringer & Chon 2015; Mantz et al. 2015; Finoguenov et al. 2020; Garrel et al. 2022), cluster clustering studies (e.g. Marulli et al. 2018; Lindholm et al. 2021), scaling relation studies (e.g. Pacaud et al. 2006; Mantz 2019; Bahar et al. 2022), and for the investigation of extreme objects (e.g. Hoyle et al. 2012).

The eROSITA instrument on board the Spectrum Roentgen Gamma Mission (SRG/eROSITA, Predehl et al. 2021) surveyed the entire sky during its first six months of operations (Merloni et al. 2024), collecting enough photons to discover several thousand galaxy clusters in the western Galactic hemisphere (Bulbul et al. 2024). Those extended sources are identified in multi-band optical surveys and their redshifts are measured, which provides their distances. The first catalogues of clusters discovered in the first eROSITA All-Sky Survey (eRASS1) western Galactic hemisphere (Bulbul et al. 2024; Kluge et al. 2024) support detailed individual cluster studies (e.g. Liu et al. 2023; Veronica et al. 2024) and population studies revealing for the first time the large-scale evolution of clusters and groups up to z ≃ 1 and beyond. Among them, the cosmological analyses particularly stand out, being a driver of the eROSITA mission design and of the construction of cluster catalogues. The first cosmological results based on cluster number counts are presented in Ghirardini et al. (2024) and Artis et al. (2024). In this article, we explain how the selection function model supporting these results was constructed. In fact, virtually any cluster population study based on the published eRASS1 catalogues needs to account for incompleteness at some stage. It is indeed inefficient, if not ill-defined, to require complete samples for population analyses (Rix et al. 2021); modelling incompleteness is in general more fruitful and, in fact, rather inevitable. The current blooming of large astronomical surveys fosters the development of accurate selection models with powerful statistical methods. The recent Gaia survey is an illustrative instance where selection functions for the parent catalogue (Boubert & Everall 2020) and for its subsets (Rix et al. 2021; Boubert & Everall 2021) are modelled using detailed accounting for the magnitudes, positions, and parallaxes (and uncertainties) of the stars.

This paper is organised as follows. We first present the detailed motivation for this work in Sect. 2, where we also introduce our notations. We present the twin eRASS1 simulations in Sect. 3, in particular we discuss our procedure matching detections and simulated clusters. We describe a first class of selection function models uniquely relying on X-ray count profiles in Sect. 4, highlighting some salient features of cluster selection in the observable space. In Sect. 5 we introduce a second class of models relying on intermediate variables. We show the outcome of a validation of our intermediate models using external catalogues (X-ray and millimetre-band detections) in Sect. 6. We discuss our results in Sect. 7 and summarise our findings in Sect. 8.

Unless stated otherwise, we assume a flat ΛCDM cosmological model with parameters from Planck Collaboration XIII (2016). We use ln for natural logarithm, and the base ten logarithm is written as log10. The notation 𝒩(μ;σ) indicates a normal distribution centred on μ with standard deviation σ. We use the ″ symbol for arcsecond units.

2. Motivation

Let us follow an illustrative example based on cluster count cosmology studies, although this demonstration may be generalised to other kinds of population analyses. Most cosmology analyses involve a numerical likelihood:

This quantity represents the probability that a set of observed data derives from a certain model. One assumes the model to be dependent on a set of parameters Θmodel fully describing it (see Table 1):

Table 1. Glossary of symbols and conventions used throughout this paper.

Posterior distributions p(Θmodel|data) are final products of cosmology analyses, obtained by application of the Bayes theorem involving the likelihood (Eq. (1)). Most often the required integrals are computed by repeated samplings of the parameter space, and by formulating as many queries to the likelihood function value L. A practical consequence is that likelihood evaluation must be computationally efficient.
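For reference, the relation between the posterior and the likelihood can be written out explicitly. The expression below is a sketch in the notation of this section: it assumes that the likelihood of Eq. (1) is L = p(data|Θmodel), and the prior symbol π(Θmodel) is introduced here for illustration only.

```latex
p(\Theta_{\rm model}\,|\,\mathrm{data})
  = \frac{\mathcal{L}(\Theta_{\rm model})\,\pi(\Theta_{\rm model})}
         {\int \mathcal{L}(\Theta')\,\pi(\Theta')\,\mathrm{d}\Theta'},
\qquad
\mathcal{L}(\Theta_{\rm model}) \equiv p(\mathrm{data}\,|\,\Theta_{\rm model}).
```

Sampling methods evaluate the numerator at many points of the parameter space, which is why each likelihood call has to be cheap.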

In experiments involving counts of galaxy clusters – which include cluster abundance and cluster clustering studies – objects are grouped in bins of one or several measured quantities. The bins are drawn in a predefined parameter space Θbin: measured mass, redshift, flux, pairwise distances, etc. The likelihood functions L combine observed numbers and model-predicted numbers nj by means of a statistic (j is an index for the bins). A famous example is the Poisson log-likelihood (see also Cash 1979), adequate for shot-noise dominated bins. The size of the bins is part of the likelihood design and it is usual practice to consider infinitesimally small bins containing 0 or 1 object. In such a case, nj transforms into dn/dΘbin. In the presence of additional variance terms, the Poisson likelihood must be modified. For instance, Garrel et al. (2022) present a likelihood accounting for sample variance (e.g. Hu & Kravtsov 2003). This component is an important source of variance when dealing with large numbers of objects (relative to Poisson noise, which affects small samples).
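To make the Poisson case concrete, here is a minimal sketch of a binned Poisson log-likelihood and of its limit for infinitesimally small bins. It is an illustration, not the likelihood used in the eRASS1 analysis; the function names and arguments are hypothetical, and sample variance is ignored.

```python
import math


def poisson_log_likelihood(observed, predicted):
    """Binned Poisson log-likelihood.

    observed  : observed counts per bin (non-negative integers)
    predicted : model-predicted counts n_j per bin (non-negative floats)
    Returns sum_j [ obs_j * ln(n_j) - n_j - ln(obs_j!) ].
    """
    total = 0.0
    for n_obs, n_mod in zip(observed, predicted):
        if n_mod <= 0.0:
            # A bin predicted empty cannot contain objects.
            if n_obs > 0:
                return -math.inf
            continue
        total += n_obs * math.log(n_mod) - n_mod - math.lgamma(n_obs + 1.0)
    return total


def unbinned_log_likelihood(density_at_objects, total_expected):
    """Limit of bins holding 0 or 1 object.

    density_at_objects : model density dn/dTheta_bin evaluated at each observed object
    total_expected     : integral of dn/dTheta_bin over the whole parameter space
    """
    return sum(math.log(d) for d in density_at_objects) - total_expected
```

In the unbinned form the factorial term vanishes, since each occupied bin holds exactly one object.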

A model should then predict the value of nj. In the ideal case of unrestricted computational power, excellent fidelity may be achieved by means of end-to-end models of the eRASS1 sky, and by retrieving the number nj from the simulated catalogues. However it is prohibitive to run one new end-to-end simulation each time a value is queried by the likelihood function (Eq. (1)). The cosmological analysis process necessarily resorts to approximations, interpolations and emulators. For instance, a parameterised halo mass function (HMF) model would replace series of full N-body simulations; intra-cluster medium (ICM) scaling relations replace detailed hydrodynamical simulations; while a selection function model replaces mock image generation and processing.

We write p(I|Θsel) for the probability of selecting an object in the sample given the value of parameters Θsel. These values must be a prediction of the model. The expression for the modelled counts nj may be written formally:

In the latter expression we have introduced dn/dΘmodel, the predicted number distribution of galaxy clusters under a certain model assumption. The indicator function 1 ensures only those objects with Θbin taking values in bin indexed by j are counted. The three sets of parameters may share part or all of their members.
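As a reading aid, the factors named in the text can be assembled as follows. This is a hedged reconstruction from the surrounding description, not a verbatim copy of Eq. (2): the ordering of the factors and the integration variables are assumptions.

```latex
n_j = \int
  \frac{\mathrm{d}n}{\mathrm{d}\Theta_{\rm model}}\;
  p(\Theta_{\rm bin}, \Theta_{\rm sel}\,|\,\Theta_{\rm model})\;
  p(I\,|\,\Theta_{\rm sel})\;
  \mathbb{1}_j(\Theta_{\rm bin})\;
  \mathrm{d}\Theta_{\rm model}\,\mathrm{d}\Theta_{\rm bin}\,\mathrm{d}\Theta_{\rm sel}
```

Written this way, the selection function p(I|Θsel) enters as a weight between 0 and 1 applied to the predicted population before it is counted in bin j.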

An important preparatory task in the cosmological analysis consists in carefully selecting Θbin and Θsel so that the model is accurate and the modelling effort is well balanced between p(I|Θsel) and p(Θbin, Θsel|Θmodel). Depending on how close Θsel is to purely observable quantities, selection function models involve a varying amount of astrophysical modelling. It is also important to recognise that some aspects of selection may result from human intervention and are thus difficult to model with analytic formulae. A certain degree of empiricism may then be introduced in building selection models. We will discuss several options in this paper and compare their advantages and shortcomings.

3. The eROSITA galaxy cluster survey and its simulated twin

3.1. The eRASS1 galaxy cluster samples

The primary eRASS1 cluster catalogue in the western Galactic hemisphere (hereafter eRASS1-primary or eRASS1-main) is described in detail in Bulbul et al. (2024) and Kluge et al. (2024). This catalogue builds upon the eRASS1 X-ray source detection list in the soft band (0.2–2.3 keV) presented in Merloni et al. (2024). The source detection is achieved with the eROSITA Science Analysis Software (eSASS in version 010), and it incorporates two essential steps in its final stages (see Brunner et al. 2022, for further information). First, sources are detected by searching counts significantly above the background level; those sources are individually characterised using a point-spread function (PSF) fitting algorithm. The detection likelihood (DET_LIKE_0 in the parent catalogue, hereafter shortened to ℒdet) and the extent likelihood (EXT_LIKE, hereafter ℒext) are among the most relevant parameters for selecting extended sources. In particular, the eRASS1-primary catalogue is compiled based on a low threshold of ℒext > 3 to maximise completeness. A rigorous cleaning process is applied to the X-ray source catalogue to ensure clean and uncontaminated images are suitable for extended source identification. A series of additional cleaning steps remove spurious split detections; we will assume their impact on completeness is null, as suggested by the number of secondary matches found in the digital twin matching the fraction of split sources in real data (Seppi et al. 2022, and next section).

The eRASS1 cosmology sample (hereafter eRASS1-cosmo) is described in detail in Bulbul et al. (2024). To maximise purity, it is compiled with a more conservative cut on the measured extent likelihood, ℒext > 6, than the eRASS1-primary sample. Only galaxy clusters with measured photometric redshifts between 0.1 and 0.8 are selected, and only in areas of the sky where the photometry used for redshift measurements is uniform, within the Legacy DR10-south area (Kluge et al. 2024). The cosmology sample comprises 5259 identified clusters with a contamination level below 5%.

This paper focuses only on X-ray selection function models. However, both the eRASS1-primary and eRASS1-cosmo catalogues are derived from extensive optical follow-up designed for identification and redshift measurement. This identification may act as an additional selection filter impacting completeness and purity estimates and should be accounted for in science analyses with these catalogues (see Ghirardini et al. 2024). Most of the discussion in the paper neglects this effect, and we refer to Kluge et al. (2024) for a detailed description of the optical selection function.

3.2. The eRASS1 digital twin

Understanding selection effects requires mock observations reproducing as many of the characteristics of the actual data as possible. We briefly summarise here the main features of the eRASS1 digital twin depicted in Seppi et al. (2022). The parent halo catalogue originates from UNIT1i dark-matter simulations (Chuang et al. 2019). A full sky light cone is created by replicating shells of the individual snapshots of the simulation (Comparat et al. 2020). The large, albeit finite, box size prevents simulating the very nearby, massive halos that constitute a tiny fraction of the sources in eRASS1 and require a separate treatment. X-ray sources are associated to the light cone using models for the emission of AGN (Comparat et al. 2019) and galaxy clusters and groups (Comparat et al. 2020). The cluster model is generated from a set of real clusters by accounting for the covariances between the surface brightness profile, halo mass, temperature, and redshift. The baryon profiles are painted onto halos based on observations; therefore, the predicted emissivity profiles are representative of observations present in the literature, possibly biased against low-luminosity and low-mass objects. Therefore, low-mass groups (M500c < 5 × 10¹³ M⊙) require a flux rescaling correction (see appendix of Seppi et al. 2022). This mass-dependent correction is derived by displacing simulated objects along the observed stellar mass–X-ray luminosity relation of groups and clusters (Anderson et al. 2015; Comparat et al. 2022; Zhang et al. 2024). Stellar masses for distinct haloes are predicted via the stellar to halo mass relation (and its scatter) from Moster et al. (2013), see Eq. (1) in Comparat et al. (2019). The correction factor so obtained is negligible at high mass, while it can reach down to 0.1 at 10¹³ M⊙. This correction procedure preserves scatter in the X-ray luminosity at fixed halo mass. This approach is validated a posteriori by the good agreement between the observed and simulated galaxy cluster flux distributions.

The AGN model (Comparat et al. 2019) reproduces by construction the observed hard X-ray AGN luminosity function from Aird et al. (2015) and the number density as a function of AGN flux at redshifts 0 < z < 4. AGN are associated to dark matter halos and subhalos by combining a stellar mass to halo mass relation with a duty cycle, and an abundance matching between stellar mass and X-ray luminosity. The observed rate of AGN in galaxies as a function of stellar mass and redshift (i.e. the AGN duty cycle, Georgakakis et al. 2017) is thus naturally reproduced. As the algorithm matches halos to AGN sources, a parametric scatter in stellar mass at fixed AGN luminosity is tuned to a value ensuring the distribution of specific accretion rates follows observed data. Since AGN and clusters are painted onto halos drawn from the same N-body simulation, these two populations are spatially correlated. Except for the link to stellar mass, our simulations do not account for any environmental dependence of AGN, nor for a dependence on the star formation rate of galaxies (e.g. Aird et al. 2018). Despite being simple, this model produces AGN Halo Occupation Distributions in good agreement with eFEDS data in group- and cluster-size halos, and this is true in particular for central galaxies in those halos (Comparat et al. 2023). Brightest Central Galaxies (BCG) are not treated separately from other halos in our simulation. Because they are hosted in higher mass halos, the stellar mass associated to BCG is higher than that in average field or satellite galaxies; so is their associated AGN X-ray luminosity. In practice, due to the scatter in the abundance matching procedure and to the low value of the duty cycle of high-LX AGN, it is rare to find a luminous AGN at the centre of a simulated cluster. In fact, only 0.4% of the M > 10¹⁴ M⊙ simulated halos host a central AGN more X-ray luminous than the cluster itself.

A simulated half-sky twin contains more than 10⁶ simulated halos up to z ∼ 1.5. Besides AGN and clusters, foreground X-ray emitting stars and galactic foregrounds also have their share of simulated photons. A total of 373 316 simulated stars have a flux assigned according to the X-ray flux cumulative distribution measured in eFEDS (Schneider et al. 2022); their positions are selected at random among true Gaia DR2 positions to follow the observed density of stars. Diffuse foregrounds include mainly emission from the local hot bubble, eROSITA bubble, and the galactic halo (Predehl et al. 2020; Ponti et al. 2023; Locatelli et al. 2024; Zheng et al. 2024a,b). Normal galaxies, whose X-ray emission is driven by hot gas and X-ray binaries, also contribute to the unresolved background component. The sky density of X-ray emitting galaxies is well below the number of AGN and stars at the limiting flux of eRASS1 (Lehmer et al. 2012), hence we do not simulate these sources individually; rather, their contribution is entirely considered as part of the eRASS1 unresolved background component. Our practical implementation relies on resampling real eRASS1 background events obtained after conservatively masking each eRASS1 detected source region. This approach enables the generation of a spatially varying background (at the angular resolution of 0.9 deg) that correctly reproduces actual eRASS1 data.

The SIXTE software (Dauser et al. 2019) generates mock event lists and images of these photons, as would be seen by eROSITA in the eRASS1 survey. In particular, instrumental characteristics and scanning laws enable reproducing the response of the telescopes to the X-ray simulated sky. In order to examine variability due to stochastic Poisson noise, several tens of simulations are produced (using the same X-ray parent population). Processing of event files in tiles of size 3.6 × 3.6 deg² took place in a similar way as for real data. In particular, the eSASS (version eSASSusers_201009) source finding algorithm runs over each tile and delivers a list of detections with associated measurements ℒdet and ℒext.

All clusters simulated in a twin mock are associated to a set of properties, such as mass M500, true redshift z, X-ray luminosity LX, flux fX or count-rate CR (all within R500, see Table 1). These “true” properties will serve in establishing selection models in Sect. 5. However, we consider it important at this stage to recall that such properties are “labels” associated to simulated sources. The link between those labels and the mock events depends on the models imprinted in the twin simulation.

3.3. Matching input and detected sources

The next step consists in attributing a binary flag to each simulated halo stating whether it is selected in the cluster sample. This operation relies on matching the input catalogue to entries in the detection list. We explore and compare two different procedures. The first method, hereafter “photon-based”, takes advantage of the SIXTE simulator as it individually tracks simulated CCD events back to the source that emitted the photon. The second method, hereafter “position-based”, uses positions and sizes of sources on sky, aided with prior knowledge of the flux distribution of selected sources.

3.3.1. Photon-based matching

The photon-based matching is in fact our baseline method. We refer the reader to Seppi et al. (2022) for a comprehensive explanation of the procedure. The input object contributing most photons to the counts making a detection is identified as the best matching counterpart. If a detection is split into several sources by the detection software, again preference is given to the source comprising most photons originating from the identified counterparts. This enables assigning a unique match (ID_Uniq) to a given detection and to a given simulated object. This is the definition we take for matches in the following. Sources with a unique match are flagged as selected (Imain = 1). We note that this procedure involves sources of all types: simulated AGN, clusters, stars; and detected point-like and extended sources.
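The two steps described above (best counterpart per detection, then one detection per counterpart) can be sketched as follows. This is an illustrative simplification, not the procedure of Seppi et al. (2022): it assumes each simulated event has already been attributed to a detection, and the function name and input layout are hypothetical.

```python
from collections import Counter, defaultdict


def photon_based_match(events):
    """Return unique matches {detection_id: source_id}.

    events : iterable of (detection_id, source_id) pairs, one pair per
             simulated photon falling within a detection (assumed input).
    """
    # Count photons contributed by each input source to each detection.
    counts = defaultdict(Counter)
    for det_id, src_id in events:
        counts[det_id][src_id] += 1

    # Step 1: the source contributing most photons is the best counterpart.
    best = {}
    for det_id, per_source in counts.items():
        src_id, n_photons = max(
            per_source.items(), key=lambda kv: (kv[1], str(kv[0]))
        )
        best[det_id] = (src_id, n_photons)

    # Step 2: if a source is split into several detections, keep the
    # detection holding most photons from that source.
    winner = {}
    for det_id, (src_id, n_photons) in best.items():
        if src_id not in winner or n_photons > winner[src_id][1]:
            winner[src_id] = (det_id, n_photons)

    return {det_id: src_id for src_id, (det_id, _) in winner.items()}
```

A simulated halo would then be flagged as selected when it appears among the values of the returned mapping and its detection passes the extent likelihood threshold.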

3.3.2. Positional matching

The positional matching technique is a two-way match between the input and detection catalogues. It was employed in the context of establishing a selection function for the eROSITA Final Equatorial-Depth Survey (eFEDS, see Liu et al. 2022). This technique does not assume a fixed cross-matching radius; instead it takes into account the sizes of sources. Each simulated halo is listed with a central coordinate and an angular extent (we take 10% of the virial radius). Each extended detection is reported with a coordinate, a 1-σ error circle, and an angular extent (we take the core-radius of the best-fit β-model). We combine source extents with the source positional uncertainty; effectively, this is similar to spreading the location of the source over a small region. We use the NWAY algorithm (Salvato et al. 2018), since it is well-suited to cross-matching catalogues in the presence of positional uncertainties. We first take the input catalogue as reference and look for matches in the detection catalogue. For each reference source, the algorithm returns a list of all matches located within a large buffer region of radius 3 arcmin. Each of the matches is assigned a probability pi that it is indeed a valid association, based on its proximity and positional uncertainties. A second value pany is computed, representing the probability for the input source to have at least one counterpart among the detections. The probabilities pi and pany account for chance associations, through the source density estimated over a degree-scale region. Sorting matches by the value of pi provides a ranking of most likely counterparts. More often than not our catalogues enclose complex configurations with multiple input sources projected along neighbouring lines of sight, and detections split in multiple sources. To deal with these effects we update the probabilities with a prior distribution of the flux of selected sources, as enabled by the NWAY formalism. The exact shape of this prior is unimportant in this context, and we simply take it from the simpler pre-launch selection functions derived in Clerc et al. (2018). As a consequence, NWAY will preferentially up-weight the probability of brighter sources. Converting probabilities into binary flags requires setting thresholds on pi and pany. Our experiments showed that the result is rather insensitive to their exact values. For this first pass (input catalogue as reference) we kept only pairs with pany > 0.9 and pi > 0.1. Pairs with the highest pi among all associations are called primary matches. We repeat the above procedure after exchanging the roles of the input and detection catalogues. For this second pass (detection catalogue as reference) we do not input any prior and we chose pany > 0.5 and pi > 0.1 to select valid pairs and primary matches. Finally, matches that are primary in both runs were selected as solid matches. An input source is flagged as a selected cluster (Imain = 1) if it belongs to a solid match. Such a double matching procedure is more conservative than a single-pass procedure, as a primary matched source will not be available for a second pair.
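The thresholding and two-pass logic can be sketched as below. The sketch does not call NWAY: it assumes the probabilities pi and pany have already been computed for each candidate pair, and the record layout (`ref`, `other`, `p_i`, `p_any`) and function names are hypothetical. The thresholds are those quoted in the text.

```python
def primary_matches(candidates, p_any_min, p_i_min):
    """Keep, for each reference source, the valid pair with the highest p_i.

    candidates : list of dicts with keys 'ref', 'other', 'p_i', 'p_any'
    Returns {ref_id: other_id}.
    """
    best = {}
    for c in candidates:
        if c["p_any"] > p_any_min and c["p_i"] > p_i_min:
            if c["ref"] not in best or c["p_i"] > best[c["ref"]][1]:
                best[c["ref"]] = (c["other"], c["p_i"])
    return {ref: other for ref, (other, _) in best.items()}


def solid_matches(pass_input_ref, pass_detection_ref):
    """Pairs (input_id, detection_id) that are primary in both passes.

    pass_input_ref     : candidates with the input catalogue as reference
    pass_detection_ref : candidates with the detection catalogue as reference
    """
    first = primary_matches(pass_input_ref, p_any_min=0.9, p_i_min=0.1)
    second = primary_matches(pass_detection_ref, p_any_min=0.5, p_i_min=0.1)
    return {
        (inp, det) for inp, det in first.items() if second.get(det) == inp
    }
```

Requiring agreement between both passes is what makes the procedure conservative: a detection claimed by two halos in the first pass is kept only for the halo it designates in return.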

3.3.3. Comparison of matching techniques

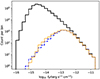

Figure 1 illustrates the comparison between both matching procedures. They agree well over a wide range of fluxes. Deviations are visible at low flux, that is, below a few 10⁻¹⁴ erg s⁻¹ cm⁻². One possible cause (not unique) stems from the presence of a bright AGN near a relatively dim cluster. An example is shown in Fig. 2. In photon-based matching, even if there is an extended detection nearby, it is matched to the input simulated AGN due to the large amount of photons (and events) it deposits in the vicinity of the extended detection. In the position-based procedure, the extended source is matched to the input cluster, since it does not include input AGN sources in the analysis. The problem of characterising a source as detected in such a case is ill-posed. Another subtle difference in the treatment of the catalogues plays a role, due to the definition of an extended detection. The position-based matching takes as input the list of sources with extent likelihood greater than a predefined threshold (6 for the cosmology sample). The photon-based matching takes as input the entire list of sources, associates a detection in the list and only then flags as selected those halos with a detection having extent likelihood above threshold. Reversing the order of operations has an impact in crowded regions with multiple split sources, or multiple halos along neighbouring lines of sight.

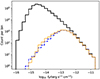

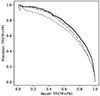

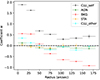

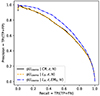

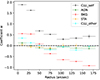

|

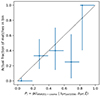

Fig. 1. Comparing the outcome of two matching procedures relating simulated halos (plain black histogram) to detected sources (photon-based matching is a dashed blue line, and position-based matching is a thin orange line). A simulated halo is flagged as selected (Imain = 1) whenever it is (solidly) matched to an extended source in the detection catalogue. The details of the matching algorithms impact the flux distribution of detected sources, especially at flux below a few 10−14 erg s−1 cm−2, with deviations up to a factor 2 in certain bins. |

|

Fig. 2. Example case of blending, where a bright AGN drives the detection and the presence of a faint extended source favours its classification as extended. This figure is a cut-out of a simulated count image, smoothed with a Gaussian. The red 2- and 5-arcmin circles are centred on a faint simulated cluster (filled triangle symbol) with flux ∼5 × 10−14 erg s−1 cm−2. Detected sources appear with blue squares. A detection close to the cluster centre is classified as extended (magenta circle). It also coincides with a bright simulated AGN (orange “x”). The position-based matching algorithm does consider the simulated cluster as selected (Imain = 1), contrary to the photon-based matching procedure. Two other faint simulated clusters are shown with open triangles, they have no impact on the detection. |

The comparison exercise described in this section serves to bracket the uncertainty associated with the matching procedure. In practice, model uncertainties in the low count regime are expected to dominate over such variations. The photon-based matching is taken as a baseline for the rest of the paper.

3.4. Training and test samples

Each realisation of the twin eRASS1 sky comprises about 10⁶ halos, with less than 4% being detected (IeRASS1 = 1) and less than 1% being selected (Imain = 1) according to the matching algorithm. The sample is split into two parts: two thirds are saved for training a model, the other third is left untouched and will serve to test the validity of the model. Splitting is performed after shuffling the list of halos at random.

We also create supersets of the simulated sky by concatenating training sets of eleven realisations, and randomly shuffling their content. Although the realisations are not independent stricto sensu (they share the exact same population of objects), this procedure helps in reducing the impact of photon noise. Each super-training set is 11 × 2/3 ≃ 7 times larger than the actual eRASS1 sky.
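A minimal sketch of the shuffling, splitting and concatenation steps is given below. Function names are hypothetical. One design choice here is an assumption rather than something stated in the text: the same random split is reused for every realisation, so that a halo held out for testing never appears in the super-training set.

```python
import random


def split_train_test(halos, train_fraction=2 / 3, seed=0):
    """Shuffle a list of halos and split it into (train, test)."""
    rng = random.Random(seed)
    shuffled = list(halos)
    rng.shuffle(shuffled)
    n_train = round(train_fraction * len(shuffled))
    return shuffled[:n_train], shuffled[n_train:]


def build_super_training_set(realisations, seed=0):
    """Concatenate the training parts of several realisations and shuffle.

    realisations : list of halo lists, one per simulated sky, all listing
                   the same halos in the same order (assumption).
    Returns a list of (realisation_index, halo) pairs.
    """
    merged = []
    for k, halos in enumerate(realisations):
        # Same seed for every realisation: identical split of the halo list.
        train, _ = split_train_test(halos, seed=seed)
        merged.extend((k, halo) for halo in train)
    random.Random(seed).shuffle(merged)
    return merged
```

With eleven realisations the merged set holds 11 × 2/3 of one sky's worth of halos, consistent with the factor of about 7 quoted above.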

4. Selection models with surface brightness profiles

Equation (2) involves three important factors that require modelling. The task of modelling the selection function consists in building p(I|Θsel), which is the main focus of this paper. The choice of Θsel usually results from a compromise between precision of the selection function and complexity of the model p(Θbin,Θsel|Θmodel).

A natural choice for the selection parameters Θsel is the collection of pixel values that form the images of clusters. Then p(I|image) is obtained by feeding eSASS with an image and applying thresholds on the values (ℒext, EXT) returned by the algorithm; because eSASS is deterministic, p(I|image) takes either value 0 or 1. The selection function model is thus very precise – it is actually the most precise model since it exactly reproduces the actual data processing. However, analysing a typical 10′×10′ image with eSASS takes about three seconds with standard CPUs. Computing a likelihood (Eq. (1)) for about 12 000 clusters would then amount to about three CPU⋅hours, which is unrealistically long for a cosmological analysis. To this cost must be added the time to compute a model cluster image given Θmodel.

Realising that galaxy cluster images are almost circularly symmetric, we may accept a loss of precision in exchange for a reduced complexity in modelling a cluster. Let us consider the number of photon hits (counts) deposited by sources onto the detectors, split into several annular sky apertures around the centre of a putative dark matter halo. We emphasise that the number of counts should be a prediction of the model p(counts|Θmodel). It is not the outcome of a measurement process, which is available for detected sources only. It is beyond the scope of this paper to discuss how such a generative model is constructed; we simply assume it exists. For constructing our selection model we rely on the twin simulations, which have kept a record of the origin of each count deposited in the image.

4.1. Illustration with a single feature: the 90″ cluster counts

Let us initiate our procedure by involving only one feature, namely the number of cluster counts received in a circular aperture around the (RA, Dec) centre of the simulated halo. The aperture radius is set to R = 90″. We transform the counts (integer values N) with the following:

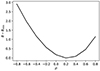

We set Σ = N/(πR²) and c = 0.0002 counts arcsec⁻². In our training set, N ranges from 0 to 3194 counts within the 90″ aperture. Figure 3 displays the histogram of η for all halos in the training set, and for those flagged as selected (Imain = 1). The ratio of the two histograms provides an empirical estimate of the probability of detecting a halo given the number of counts deposited on the detectors. The empirical probability follows a characteristic “S-shaped” curve. We perform logistic regression to fit a model to the probability of detecting a cluster as extended (ℒext > 3) given the value η. We use the scikit-learn implementation (Pedregosa et al. 2011) with the Broyden–Fletcher–Goldfarb–Shanno optimizer and fit two coefficients, namely the intercept w0 and the slope wη of the linear model f(η) = w0 + wηη. The model probabilities are such that

$$p(I_{\rm main} = 1 \mid \eta) = \frac{1}{1 + e^{-f(\eta)}}. \tag{4}$$

Fig. 3. Distribution of clusters in the training set as a function of Σ (in counts arcsec⁻²), the average surface brightness within the 90″ radius around their centre. The x-axis is rescaled with c = 2 × 10⁻⁴ counts arcsec⁻². Top panel: histogram of all simulated clusters (blue) and histogram of the subset of those found as extended by eSASS (orange). Bottom panel: dots indicate the ratio of the histograms (empirical selection rate). Error bars are the 68% confidence range estimated according to Appendix C. The vertical dashed line indicates the transition of the S-shaped curve.

The cost function to minimise during the fitting procedure is the log-loss 𝒞(w0, wη), whose expression is given in Eq. (A.2).

Thanks to the one-to-one relation between η and N (Eq. (3)), we thus obtain a model p(Imain|N). We can interpret the values of the best-fit coefficients as follows: wη governs the sharpness of the transition in the S-shaped curve, and −w0/wη is the value of η at which this transition occurs. In our experiment, we find wη = 4.59 ± 0.04 and w0 = −8.92 ± 0.06, leading to −w0/wη ≃ 1.94. This corresponds to a transition of the S-shaped curve taking place at N ≃ 30 cluster counts in the 90″ circular region. The positive sign of wη reflects the fact that the detection probability increases with the number of counts N. The computation of coefficient uncertainties is detailed in Appendix A.
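The numbers quoted in this section can be cross-checked with a short sketch. It assumes that the transform of Eq. (3) takes the form η = ln(1 + Σ/c), a form inferred here from the quoted values (N ≃ 30 at the transition, N ≃ 60–270 for η ≃ 2.5–4) rather than taken from the equation itself. Function names are illustrative.

```python
import math

R_ARCSEC = 90.0            # aperture radius quoted in the text
C_SB = 2e-4                # rescaling constant c, in counts per arcsec^2
W0, W_ETA = -8.92, 4.59    # best-fit intercept and slope quoted in the text

def eta_from_counts(n_counts):
    """Assumed transform: eta = ln(1 + Sigma / c), with Sigma = N / (pi R^2)."""
    sigma = n_counts / (math.pi * R_ARCSEC**2)
    return math.log1p(sigma / C_SB)

def counts_from_eta(eta):
    """Inverse of the assumed transform."""
    return math.expm1(eta) * C_SB * math.pi * R_ARCSEC**2

def p_selected(n_counts):
    """Logistic model p = 1 / (1 + exp(-(w0 + w_eta * eta)))."""
    f = W0 + W_ETA * eta_from_counts(n_counts)
    return 1.0 / (1.0 + math.exp(-f))

eta_half = -W0 / W_ETA               # value of eta where p = 0.5
n_half = counts_from_eta(eta_half)   # corresponding number of counts
```

Under this assumption, `n_half` evaluates to roughly 30 counts, in line with the transition quoted above.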

4.2. A model using light profiles from all sources

The model presented so far involves only one feature and is too simplistic to explain subtle variations in the probability of selecting a given cluster. The model improves by involving more of the quantities available from the twin simulations, namely the number of detector hits deposited by each of the five sky source components: the cluster of interest, Active Galactic Nuclei (AGN), stars, back- and foreground, and other clusters. Each of these contributes to the count image and deposits photons into seven annular regions around the central coordinate of the cluster. Radial boundaries (in arcseconds) are 0–20, 20–40, 40–60, 60–90, 90–120, 120–150 and 150–180. For each simulated cluster, we thus construct a 5 × 7 = 35-element feature vector η = {ηj}j = 1…35 and perform logistic regression, involving a total of 36 coefficients {wj}j = 0…35 (one for each feature, plus the intercept). Introducing Σj, the average surface brightness of one component in one annulus, we write the following:

$$\eta_j = \ln\left(1 + \frac{\Sigma_j}{c}\right), \qquad p(I_{\rm main} = 1 \mid \boldsymbol{\eta}) = \frac{1}{1 + \exp\left[-\left(w_0 + \sum_{j=1}^{35} w_j \eta_j\right)\right]}. \tag{5}$$

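One may interpret the value of the coefficients wj as the sensitivity of the detection rate p(Imain|η) to a small variation of the surface brightness of one component in one annulus. Indeed we have

$$\frac{\partial p}{\partial \eta_j} = w_j \, p \, (1 - p),$$

where the derivative is performed at constant ηk (k ≠ j). It yields the following:

$$\Delta p \simeq w_j \, p \, (1 - p) \, \frac{\Sigma_j}{\Sigma_j + c} \, \frac{\Delta \Sigma_j}{\Sigma_j}. \tag{6}$$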

In other words, everything else being fixed, a small relative variation ϵ = ΔΣj/Σj of the surface brightness of one component in one given annulus leads to a variation of the detection probability proportional to wjϵ. In particular, positive coefficients relate to those features that marginally contribute to selecting a cluster, and conversely for negative coefficients. Figure 4 conveniently represents the value of the 35 coefficients wj (j ≠ 0) split into each component and annulus; the last coefficient, not shown in this figure, is w0 = −8.82 ± 0.08.
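The construction of the 35 features and the sensitivity argument can be illustrated with a short sketch. It assumes ηj = ln(1 + Σj/c) with the same constant c as in Sect. 4.1. The counts and the weights below are hypothetical placeholders, not the fitted values of Fig. 4; only the intercept is the one quoted in the text.

```python
import math

C_SB = 2e-4  # rescaling constant c (counts per arcsec^2), as quoted in Sect. 4.1
EDGES = [0, 20, 40, 60, 90, 120, 150, 180]  # annulus boundaries in arcsec
COMPONENTS = ["cluster", "agn", "stars", "background", "other_clusters"]

def feature_vector(counts):
    """Map counts[component][annulus] to the 35 features eta_j.

    Assumes eta_j = ln(1 + Sigma_j / c), with Sigma_j the counts per unit area.
    """
    areas = [math.pi * (r_out**2 - r_in**2)
             for r_in, r_out in zip(EDGES[:-1], EDGES[1:])]
    return [math.log1p(n / area / C_SB)
            for comp in COMPONENTS
            for n, area in zip(counts[comp], areas)]

def probability(eta, weights, intercept):
    """Logistic model: p = 1 / (1 + exp(-(w0 + sum_j w_j eta_j)))."""
    f = intercept + sum(w * x for w, x in zip(weights, eta))
    return 1.0 / (1.0 + math.exp(-f))

# Hypothetical inputs, for illustration only (not values from the paper).
counts = {comp: [3, 6, 8, 10, 9, 8, 7] for comp in COMPONENTS}
weights = [0.2] * 35
intercept = -8.82  # intercept quoted in the text

eta = feature_vector(counts)
p = probability(eta, weights, intercept)

# Compare the analytic sensitivity w_j p (1 - p) with a central finite difference.
j, step = 1, 1e-5
eta_up = eta[:j] + [eta[j] + step] + eta[j + 1:]
eta_down = eta[:j] + [eta[j] - step] + eta[j + 1:]
numeric = (probability(eta_up, weights, intercept)
           - probability(eta_down, weights, intercept)) / (2 * step)
analytic = weights[j] * p * (1 - p)
```

The finite-difference and analytic sensitivities agree, which is the property used when reading the coefficients wj as feature importances.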

Fig. 4. Representation of the 35 coefficients wj of a logistic regression model p(Imain|counts), trained to predict whether a cluster was selected in the primary cluster sample. The 35 features are surface brightness values in 7 radial annuli, associated with the 5 components indicated in the legend (counts from the galaxy cluster of interest, from neighbouring AGN, fore- and background counts, counts from foreground stars, counts from other neighbouring galaxy clusters). A high absolute value of a coefficient indicates high importance of the associated feature (Eq. (6)).

Keeping in mind that the wj are proportional to the marginal increase of the detection probability, comparing the values of the coefficients with one another provides an approximate way of assessing the “importance” of a feature in promoting the detectability of a cluster. For instance, it appears that a small increase of surface brightness in the 20–40″ annulus from a given cluster has the strongest impact on the detectability of this cluster. The fact that it is twice as important as the 0–20″ surface brightness is not surprising. A detected source (IeRASS1 = 1) must be classified as extended in order to be selected (Imain = 1); from this perspective, it is much more profitable to increase the counts beyond the eRASS point-spread function radius (∼30″). This argument may appear more clearly from Fig. B.1, which shows the coefficients wj of a model trained to predict the detection (not the selection) of a cluster. Clearly, in this case the central 20″ surface brightness is the most relevant feature contributing to the detectability of a cluster.

The effect of star-emitted photons is barely constrained, mostly due to their paucity in the simulation. AGN photons within 1 arcmin of the centre tend to marginally increase the detection rate, while those located beyond 1 arcmin tend to decrease it. This is because the source detection algorithm would put a mask and remove photons useful for cluster detection. An excess of photons from instrumental and astrophysical fore- and background within 1.5′ of a cluster tends to increase its probability of being selected, while at larger distances it decreases this probability by a large factor. The impact of neighbouring clusters is similar, although less pronounced.

4.3. Internal validation of the selection models

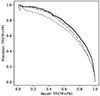

The previous interpretation of the wj coefficients in terms of marginal probabilities should not hide the fact that it is only relevant to a specific model, here a logistic model, which is not a perfect classifier. We assess the performance of this model on the held-out test sample. Figure 5 represents the so-called “precision-recall” curve. This curve is obtained by scanning values of a threshold set to convert probabilities p(Imain|counts) into a binary classification Imain. Once this threshold is set, one retrieves the number of true positives (TP), false positives (FP) and false negatives (FN) by comparison with the actual detection flag in the test sample. The higher the recall TP/(TP + FN) and the precision TP/(TP + FP), the better the performance of a model. For any value of the threshold, the model using 35 surface brightness indicators performs significantly better than the model using only the 1.5-arcmin cluster counts as input. We have also displayed the curve obtained from a logistic model using two features, namely the 1.5-arcmin cluster and background counts. Its performance lies between the simple and the sophisticated (7 × 5) models. This agrees with common sense, in that adding more detailed information provides more precise models.
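The threshold scan behind a precision-recall curve can be sketched as follows. The probabilities and flags are toy values chosen for illustration, and the convention adopted when no object passes the threshold (precision set to 1) is an assumption of this sketch.

```python
def precision_recall(probabilities, flags, threshold):
    """Return (precision, recall) after thresholding model probabilities."""
    predicted = [p >= threshold for p in probabilities]
    tp = sum(1 for pred, flag in zip(predicted, flags) if pred and flag)
    fp = sum(1 for pred, flag in zip(predicted, flags) if pred and not flag)
    fn = sum(1 for pred, flag in zip(predicted, flags) if not pred and flag)
    precision = tp / (tp + fp) if tp + fp else 1.0  # convention for empty selections
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Toy test sample: model probabilities and actual selection flags.
probs = [0.95, 0.80, 0.60, 0.40, 0.20, 0.05]
flags = [1, 1, 0, 1, 0, 0]

# One (threshold, precision, recall) point per threshold value.
curve = [(t, *precision_recall(probs, flags, t)) for t in (0.1, 0.3, 0.5, 0.7, 0.9)]
```

Lowering the threshold raises the recall at the expense of precision, which traces the curve from one end to the other.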

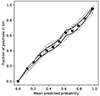

Fig. 5. Performance of three different logistic models p(Imain|counts) to predict a cluster as selected given the values of surface brightness in radial bins. The plain black line is obtained with a model using surface brightness values in 7 annuli for each of the 5 sky components. The dot-dashed line is for a model taking as input both cluster and background counts within 90″. The dashed grey line stands for a model using as input only the 90″ cluster counts. Each precision-recall curve results from model evaluations on the test sample. It is obtained by varying the threshold over which a cluster should be considered as selected and counting the number of true positives (TP), false negatives (FN) and false positives (FP).

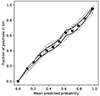

A second test is shown in Fig. 6. This evaluation is performed by binning test clusters by their value of p(Imain|counts) returned by the model. The actual fraction of objects selected in the sample is reported on the vertical axis. The 35-feature model produces the plain envelope, in satisfactory agreement with the one-to-one line. This result indicates that the probabilities delivered by the model are reliable in a statistical sense. The probabilities from the model using only one cluster count indicator (round dots and dashed envelope) are less reliable. This is reflected in Fig. 3, where the S-shaped curve does not reach unity around values of η ≃ 2.5–4 (corresponding to N ≃ 60–270 counts in the 90″ aperture).
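The binning behind such a reliability (calibration) curve can be sketched as follows. The number of bins and the toy sample are assumptions made for the example; the uncertainty envelopes of Appendix C are not reproduced here.

```python
def calibration_curve(probabilities, flags, n_bins=5):
    """Bin objects by predicted probability and return, for each non-empty bin,
    (mean predicted probability, actual selected fraction, number of objects)."""
    sums = [[0.0, 0, 0] for _ in range(n_bins)]  # [sum of p, selected, total]
    for p, flag in zip(probabilities, flags):
        k = min(int(p * n_bins), n_bins - 1)     # p = 1 falls in the last bin
        sums[k][0] += p
        sums[k][1] += int(bool(flag))
        sums[k][2] += 1
    return [(s / n, sel / n, n) for s, sel, n in sums if n > 0]

# Toy, perfectly calibrated sample: 10% / 50% / 90% of objects are selected
# in the groups with predicted probabilities 0.1 / 0.5 / 0.9.
probs = [0.1] * 10 + [0.5] * 10 + [0.9] * 10
flags = [1] + [0] * 9 + [1] * 5 + [0] * 5 + [1] * 9 + [0]
curve = calibration_curve(probs, flags)
```

A well-calibrated model yields points close to the one-to-one line, as is the case by construction for this toy sample.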

Fig. 6. Reliability of two different logistic models p(Imain|counts) to predict the probability of a cluster being selected given the values of surface brightness in radial bins. Each series of points is obtained with the test sample, by comparing probabilities predicted by the model (horizontal axis) with the actual fraction of selected objects (vertical axis) in each bin of probability. The envelopes materialise the 68% uncertainties (Appendix C). An ideal model would align along the 1:1 curve (thin dotted line). Black squares and plain lines correspond to the model taking 7 × 5 parameters as input, grey dots and dashed lines to the model using only cluster counts within 90″ to make its prediction.

Despite its apparent simplicity, a linear logistic model using circularly averaged surface brightness as training features does output probabilities that are representative of the actual selection probabilities. It is therefore well-suited to selection function problems involving populations of clusters. However, an average precision of 77% (Fig. 5) indicates that it is not well-suited to classification on an individual basis. This performance floor is due to the use of circularly averaged profiles, which neglects two-dimensional effects (e.g. blending, masking, gradients) in the selection process. Furthermore, logistic regression is a simple linear model. An interesting development would involve more sophisticated models such as neural networks (e.g. building upon the multi-layer perceptron). Their use may add the right level of non-linear feedback and complexity to accurately reproduce the selection process, at the expense of clarity and simplicity.

A selection function model based on surface brightness as above is attractive due to its use of observable features. In the context of cosmological studies (as idealised in Eq. (2)), it is necessary to construct a second, independent model able to predict the radial surface brightness profile of five components: clusters, AGN, stars, background and neighbouring clusters. Such a complex model p(counts|Θmodel) represents a computational bottleneck.

5. Selection models with intermediate variables

We may instead choose Θsel so as to reduce this complexity, at the cost of a less precise selection model. We consider variables (i.e. selection model features) such as cluster mass, redshift, luminosity, size, central emissivity, etc. We also consider exposure time, background level, and galactic hydrogen column density. For some of these variables (e.g. flux, luminosity) it is more convenient to manipulate their logarithmic values.

Having fixed the set of selection variables Θsel, we build a model assuming that the detection probability varies slowly over the range of interest of these (rescaled) features. However, we make no prior assumption on the exact shape, nor on the monotonicity, of the function. A kernel-based model is well-suited to this problem, as it reflects the fact that similar-looking clusters should have similar detection probabilities. We use Gaussian process classification (GPC) to build our model, using the SVGP implementation in the GPy package (GPy 2012). Details on the procedure and algorithm are discussed in Appendix D. The very nature of GPC makes it possible to deliver not only an estimate of p(I|Θsel), but also a range of statistical uncertainty. Our implementation does not provide extrapolation properties: a model returns a default value (p = 0.5) when Θsel lies outside the domain covered by the training set. For the purpose of cosmological analysis this is not deemed an issue, as long as care is taken in handling the integration bounds in Eq. (2).

In the following we highlight three models relevant to this paper. In particular we demonstrate the interest of a model based on count-rate CR, which was selected for the eRASS1 cosmological analysis (Ghirardini et al. 2024). In Appendix E we describe several other models, notably those including cluster morphology in their input parameters.

5.1. Mass and redshift

Setting Θsel = {M500c, z} provides insights into the selection process in the fundamental parameter space of cluster cosmology. Figure 7 is a visualisation of a model adjusted to predict the selection of a cluster with extent likelihood above 3. The probability of selecting a cluster located at z = 0.3 monotonically increases with mass, with a transition taking place at M500c ≃ 5 × 10¹⁴ M⊙.

Fig. 7. Representation of the model p(Imain|M500c, z) predicting a cluster to be detected and selected with an extent likelihood above three. The explanatory variables (features) are labels attached to simulated clusters in the twin simulation, standing for galaxy cluster M500c mass and cosmological redshift. The bottom-left panel represents contours of equal detection probability, labelled in steps of 0.1. Both one-dimensional curves (top-left and bottom-right panels) are slices through the function displayed in the bottom-left corner, at fixed z = 0.3 and M500c = 3 × 10¹⁴ M⊙ (indicated with dotted lines).

Despite its formal interest, this model is not of practical use in the context of cosmology studies. The two features entering this model (mass and redshift) are mere labels attached to clusters in the twin simulation. The model is therefore valid only under the assumptions of the physical models implemented in the simulation. In particular, it is only valid for the set of scaling relations and cosmological parameters (Planck Collaboration XIII 2016) imprinted in the simulation.

5.2. Luminosity and redshift

Setting Θsel = {LX, z} advantageously removes the strong dependence of the model upon the M500 → LX relation that is imprinted in the twin simulation. It is therefore up to the cosmologist to model the relation p(LX|Θmodel) with (most likely) parameterised scaling laws. A visual representation of the model is shown in Fig. 8. As expected, clusters brighter and closer to us are more likely to be selected.

Fig. 8. Representation of the model p(Imain|LX, z) predicting a cluster to be detected and selected with an extent likelihood above three. The explanatory variables (features) are labels attached to simulated clusters in the twin simulation, standing for galaxy cluster luminosity LX measured in the 0.5–2 keV energy band at the cluster rest-frame (units erg s⁻¹, rescaled in log10) and for cosmological redshift z. Other details are as in Fig. 7.

Although useful, this model cannot account for variations of the detection probability as a function of sky position: galactic absorption, background and exposure time values all have an impact on cluster detection. Offering these degrees of freedom in the selection function allows one to detach from the assumptions imprinted in the twin simulation. We have trained a second model including those three additional features, using the GPC formalism. Figure 9 is a partial visualisation of the model output, with all quantities but the exposure time Texp being fixed: z = 0.3, NH = 3 × 10²⁰ cm⁻², background brightness 5.2 × 10⁻¹⁵ erg s⁻¹ cm⁻² arcmin⁻² in the 0.3–2.3 keV energy band, and LX = 10⁴³ or 10⁴⁴ erg s⁻¹. The dependence of the model output on the exposure time appears clearly in this figure, and the model is thus more precise in delivering a selection probability for a given cluster.

Fig. 9. Representation of the models p(Imain|LX, z, NH, Texp, bkg) and p(Icosmo|LX, z, NH, Texp, bkg) for fixed values of cluster redshift z, two different values for the luminosity LX, and local galactic absorption NH, in addition to a nominal background level and a range of exposure times Texp (along the x-axis). The shaded regions indicate the approximate 68% confidence range output of the model.

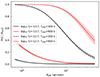

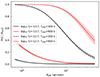

5.3. Unabsorbed count rate and redshift

In the course of the development of the eROSITA cosmology pipeline, trade-off discussions led to setting Θsel as a five-component feature, namely: the cluster redshift z, the sky position-dependent quantities ℋ ≡ (NH, Texp, background) and the cluster CR. Here CR represents the unabsorbed, 0.2–2.3 keV survey-average count-rate collected within an R500 aperture and unaffected by shot noise. It derives from the formula:

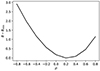

In this expression, ECF is the Energy Conversion Factor from the 0.5–2 keV band in the cluster rest-frame to the 0.2–2.3 keV band in the observer frame. It is computed for an APEC emission spectrum at temperature kBT, redshift z, unabsorbed (hence NH = 0) and folded through the survey-averaged instrumental response of the 7 telescopes. This quantity is readily available for each simulated cluster in the twin simulation, since we know its redshift and temperature. The luminosity-distance dL is computed using the same cosmology as for the twin simulation. Figure 10 represents a few slices through the multi-dimensional model p(Icosmo|CR, z, NH, Texp, bkg), fixing the value of NH and background level as previously, for two different redshift values and a range of count-rate (CR) values. The dependence on count-rate shows the usual S-shaped curve and larger exposure times imply higher sensitivities. The slight dependence on redshift is less intuitive: all other quantities being fixed, a source at higher redshift is more compact, thus its surface brightness is concentrated over a smaller area, making its detection comparatively easier.

Fig. 10. Representation of a model p(Icosmo|CR,z,NH,Texp,bkg) for two values of cluster redshift z = 0.2 and z = 0.5, fixed local galactic absorption NH, a nominal background level, two values of exposure times Texp (units s), and a range of count-rate values (x-axis). The shaded regions indicate the approximate 68% confidence range output of the model.

The selection model obtained this way is quasi-independent of the cosmology imprinted in the twin simulation. It is also capable of reflecting the variations of selection depth over the sky. The cluster model is relatively simple (one cluster is represented by one count-rate and one redshift), which makes the computation of p(CR,z|Θmodel) fast and easy in likelihood (Eq. (2)). However, this simplicity comes at a cost, since all variations of the selection due to cluster morphology, for example, are marginalised over, relying on the distribution of morphologies in our twin simulation.

5.4. Internal validation of the selection models

We now quantify the absolute performance of models relying on intermediate variables Θsel. By doing so we also assess the relative performance of models involving various parameters as input. We use our test sample to make such tests and compare the predicted model outcomes to the actual detection flags in the sample. As in Sect. 4, we highlight two performance tests: the precision-recall curve and the calibration curve.

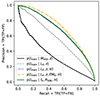

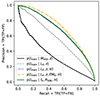

Figure 11 compares five of our models predicting the presence of a cluster in the eRASS1-main sample. They differ from each other by their input parameters (features Θsel). The model involving only mass and redshift has quite a low precision and recall (average precision of 34%). Predicting the detectability of a cluster indeed requires a more detailed characterisation. Changing to a model taking luminosity and redshift notably improves the overall performance, since luminosity is more tightly linked to photon counts than mass. However, it still has an average precision of 56%, which is insufficient for detailed analyses. Including sky position-dependent parameters ℋ = (NH, Texp, bkg) dramatically boosts the classification performance (average precision of 67%). As expected, adding information on the local background, exposure time and foreground absorption increases the capability of the model. An additional performance gain is obtained by adding a morphological parameter, here the EM0 parameter (as presented in Appendix E). The average precision increases to 71%, closer to that obtained with the 7 × 5 surface brightness model depicted in Fig. 5. Finally, a model involving (absorbed) flux, apparent R500 radius and ℋ shows good performance with an average precision reaching 68%.

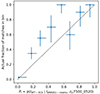

A similar assessment is provided in Fig. 12, now for models predicting the presence of a cluster in the eRASS1 cosmology sample. The baseline model used for the eRASS1 cosmological analysis relies on CR (count-rate) and redshift, as well as on sky local information. Its good performance (average precision 70%) is comparable to a model involving luminosity and redshift. Again, adding morphological information (here via EM0, see Appendix E) provides a noticeable enhancement of the model precision (average precision 74%).

Fig. 11. Precision-recall curves obtained with selection models trained to predict the selection of a cluster in the primary sample. The input parameters vary from one model to the other, as indicated in the legend. A model involving luminosity, redshift, morphological features and local sky information performs best (yellow dot-dashed curve).

Fig. 12. Similar to Fig. 11, but comparing three models predicting the presence of a cluster in the cosmology sample. The model represented with the black curve corresponds to the baseline selection model used in the analysis of Ghirardini et al. (2024).

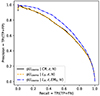

We now assess the reliability of the probabilistic output of the models. In Fig. 13 we compare the same five models for the primary catalogue selection function. All curves lie very close to the one-to-one line: the probability outputs reflect well the actual fraction of positive detections in the test catalogue. The results for the cosmology sample classifiers appear in Fig. 14; they are all very well aligned along the one-to-one line.

Fig. 13. Evaluating the reliability of five models predicting the probability of a cluster to appear in the eRASS1 primary cluster catalogue (x-axis). The y-axis represents the actual fraction of selected objects in the test sample. The bottom panel shows the residuals (difference) of each curve with respect to the one-to-one line. Shaded regions indicate 68% confidence intervals in each bin of probability.

Fig. 14. Similar to Fig. 13, but comparing three models predicting the presence of a cluster in the cosmology sample.

The tests depicted above illustrate the good prediction performance of the models, in the sense that probability outputs correctly reflect the actual selection probabilities. This performance indicator is valid for the ensemble of clusters in the simulated eRASS1 sky. In the course of our model production efforts, we have monitored several other indicators in order to assess the performance and reliability of the models. Among them were: the Receiver Operating Characteristic (ROC) curve comparing recall and fall-out rates FP/(FP + TN); the Detection Error Tradeoff (DET) curve comparing fall-out and miss rates FN/(FN + TP); and the correlation coefficient suggested by Matthews (1975). Altogether, these performance indicators helped in selecting the best models to be used in the cosmological analysis.
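For a single classification threshold, the quantities entering these indicators follow directly from the confusion counts, as in the sketch below. The toy classification is hypothetical; scanning the threshold would trace the full ROC and DET curves.

```python
import math

def confusion_counts(predicted, flags):
    """Return (TP, FP, FN, TN) for binary predictions against true flags."""
    tp = sum(1 for p, f in zip(predicted, flags) if p and f)
    fp = sum(1 for p, f in zip(predicted, flags) if p and not f)
    fn = sum(1 for p, f in zip(predicted, flags) if not p and f)
    tn = sum(1 for p, f in zip(predicted, flags) if not p and not f)
    return tp, fp, fn, tn

def rates(tp, fp, fn, tn):
    """Recall TP/(TP+FN), fall-out FP/(FP+TN) and miss rate FN/(FN+TP)."""
    return tp / (tp + fn), fp / (fp + tn), fn / (fn + tp)

def matthews(tp, fp, fn, tn):
    """Matthews correlation coefficient, set to 0 when undefined."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0

# Toy classification at one threshold.
predicted = [1, 1, 1, 0, 0, 0]
flags = [1, 1, 0, 1, 0, 0]
counts = confusion_counts(predicted, flags)
recall, fall_out, miss_rate = rates(*counts)
mcc = matthews(*counts)
```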

6. External validation of the selection function

We have described several models and validated their performance on a simulated test set. This test set is produced in a manner very similar to the training sample, by splitting the original twin simulation set in two parts. Additional tests are required using new samples independent from the twin simulation. We aim to test eRASS1 selection models with (i) a sample of clusters selected from a much deeper dataset (eFEDS) and (ii) two SZ-selected samples drawn from South Pole Telescope surveys of different depths: SPTpol-100d and the SPTpol Extended Cluster Survey (SPT-ECS). Selection biases affecting these samples must be taken into account; moreover, we have to link their measured properties to eRASS1 selection variables. We present our formalism in the most generic manner, then we apply it to the specific cases of eFEDS and SPT-SZ.

6.1. General presentation of the formalism

Given two surveys, R taken as a reference and the other T to be tested, we wish to validate our selection function models for both R and T. We do so by comparing the populations selected by each of the surveys. In the optimal case where R is much more complete (e.g. deeper) than T, the problem does not require precise knowledge of the selection process leading to catalogue R. In general, both catalogues comprise a biased selection of the true underlying population of clusters, which we take as a prior in the following analysis.

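Extending the formalism laid out in previous work (e.g. Grandis et al. 2020) we calculate for each object i in the reference catalogue an associated probability:

$$P_i = p\left(I_T = 1 \mid I_R = 1, \Theta_{\rm meas}^{(i)}\right) = \frac{p\left(I_T = 1, I_R = 1, \Theta_{\rm meas}^{(i)}\right)}{p\left(I_R = 1, \Theta_{\rm meas}^{(i)}\right)}. \tag{8}$$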

There, IT and IR are random boolean variables indicating whether an object is listed in catalogue T and R, respectively. Θmeas is a collection of measured properties attached to the cluster: its measured flux, redshift, luminosity, extent, etc. are likely candidates. Using Bayes’ rule, Pi is written as the ratio of two joint probability distributions, the denominator being interpreted as the number of objects expected in the reference catalogue with measured properties $\Theta_{\rm meas}^{(i)}$; the numerator as the number of objects expected in both the reference and test catalogues with these same measured properties. In this perspective, Pi is a model for the fraction of reference objects listed with some measured properties $\Theta_{\rm meas}^{(i)}$ that are also present in the test sample. Introducing Θmodel and Θsel enables hierarchical Bayesian expansion of the joint probabilities into elementary factors. The numerator specifically involves the conditional probability distribution:

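$$p\left(I_T, I_R, \Theta_{\rm meas} \mid \Theta_{\rm model}\right) = \int p\left(I_T \mid \Theta_{{\rm sel},T}\right) p\left(I_R \mid \Theta_{{\rm sel},R}\right) p\left(\Theta_{\rm meas} \mid \alpha\right) p\left(\Theta_{{\rm sel},T}, \Theta_{{\rm sel},R}, \alpha \mid \Theta_{\rm model}\right) \, {\rm d}\Theta_{{\rm sel},T} \, {\rm d}\Theta_{{\rm sel},R} \, {\rm d}\alpha,$$

and similarly for the denominator:

$$p\left(I_R, \Theta_{\rm meas} \mid \Theta_{\rm model}\right) = \int p\left(I_R \mid \Theta_{{\rm sel},R}\right) p\left(\Theta_{\rm meas} \mid \alpha\right) p\left(\Theta_{{\rm sel},R}, \alpha \mid \Theta_{\rm model}\right) \, {\rm d}\Theta_{{\rm sel},R} \, {\rm d}\alpha.$$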

The last two expressions are valid as long as selection in the test survey only depends on variable Θsel, T and selection in the reference survey only depends on variable Θsel, R. We have introduced a new, intermediate variable α to reflect the dependence of the measurement vector. For instance, if the measurement vector is X-ray flux, α may represent the cluster luminosity and redshift. The interest of this decomposition lies in it involving the selection functions p(I|Θsel), which are tabulated for each survey. It also accounts for covariances in the selection and measurement processes, through the multivariate distribution of (Θsel, T, Θsel, R, α) given the values of Θmodel.

The probability Pi (Eq. (8)) is the main outcome of this model. It is calculated independently for each object listed in the reference catalogue. By comparing this probability to the actual presence of a match in the test catalogue, we obtain powerful diagnostics on the validity of the models and on the presence of outliers in the population. In particular, we will denote by “surprising detections” those objects listed in R and detected in the test catalogue despite their low forecast probability (Pi < 0.025 and a match is found). We will denote by “missed objects” those listed in R and not detected in the test catalogue despite their high forecast probability (Pi > 0.925 and no match is found).

Our procedure to find matches between the reference and the test catalogues relies on a simple positional match. For efficiency reasons we trim the reference catalogue to the approximate sky footprint of the test sample, and conversely. We then search for symmetric 2-arcmin matches between the samples, keeping only the best match, that is, the one at the closest distance. Such a procedure does not require redshifts to be identical in both catalogues. Our choice of the maximal separation corresponds to the most sensible cross-matching radius for the surveys and instruments considered in this study (Bulbul et al. 2022).
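One possible reading of the symmetric positional match is a mutual nearest-neighbour search within the maximal separation, sketched below. This interpretation, the function names and the toy coordinates are assumptions of the example; the brute-force search is only suitable for small catalogues.

```python
import math

def separation_arcmin(ra1, dec1, ra2, dec2):
    """Angular separation from the haversine formula; inputs in degrees."""
    ra1, dec1, ra2, dec2 = map(math.radians, (ra1, dec1, ra2, dec2))
    h = (math.sin((dec2 - dec1) / 2) ** 2
         + math.cos(dec1) * math.cos(dec2) * math.sin((ra2 - ra1) / 2) ** 2)
    return math.degrees(2 * math.asin(math.sqrt(min(1.0, h)))) * 60.0

def nearest(source, catalogue):
    """Index of the catalogue entry closest to a source given as (ra, dec)."""
    return min(range(len(catalogue)),
               key=lambda j: separation_arcmin(*source, *catalogue[j]))

def symmetric_matches(reference, test, max_sep_arcmin=2.0):
    """Pairs (i, j) that are mutual nearest neighbours within max_sep_arcmin."""
    matches = []
    if not reference or not test:
        return matches
    for i, src in enumerate(reference):
        j = nearest(src, test)
        mutual = nearest(test[j], reference) == i
        if mutual and separation_arcmin(*src, *test[j]) <= max_sep_arcmin:
            matches.append((i, j))
    return matches

# Toy catalogues of (RA, Dec) positions in degrees.
reference = [(150.0, 2.0), (150.5, 2.0), (151.0, 2.5)]
test = [(150.01, 2.0), (150.5, 2.1), (150.012, 2.0)]
matches = symmetric_matches(reference, test)
```

In this toy case only the first reference object is matched: the second has a mutual neighbour at 6′, beyond the 2′ limit, and the third has no mutual neighbour.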

Beyond the interpretation of individual Pi values, we also base our evaluation on three aggregated diagnostics. First, from repeated Bernoulli samples of Pi values, we obtain a distribution for the expected number of matching entries between both catalogues, and its associated uncertainty. Comparing the actual number of matches to this distribution provides a bulk validation test of the model. The second diagnostic consists in grouping clusters by their Pi values in bins of finite width, and in computing the actual fraction of matches in each bin. This diagnostic informs on the reliability of the Pi values, hence on the underlying model. Our third diagnostic empirically tests for leakage in our model, by introducing two numbers representing the fraction of objects that should be in the test catalogue but are not (δ1) and the fraction of objects that should not be in the test catalogue but are present (δ2). We compute posterior distributions for δ1 and δ2 by sampling the following likelihood (product of independent Bernoulli likelihoods):

$$\mathcal{L}(\delta_1, \delta_2) = \prod_i \left[P_i (1 - \delta_1) + (1 - P_i) \, \delta_2\right]^{\hat{I}_T^{(i)}} \left[1 - P_i (1 - \delta_1) - (1 - P_i) \, \delta_2\right]^{1 - \hat{I}_T^{(i)}}.$$

The product runs over all clusters in the reference catalogue, and the match indicator equals 1 if the cluster is matched in the test catalogue and 0 otherwise. If the posterior distribution for δ1 significantly excludes zero, it indicates that part of the clusters predicted to be in the test catalogue actually escape our model and are not detected for some reason. Similarly, a distribution of δ2 significantly away from zero hints towards our model not properly capturing the number of undetected objects. In this computation we assume flat prior distributions for δ1 and δ2, bounded in the [0, 1] interval. We sample the posterior with a Markov chain Monte Carlo algorithm provided in the emcee package (Foreman-Mackey et al. 2013).
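The leakage test can be illustrated with a short numerical sketch. The per-object match probability used below, q_i = P_i (1 − δ1) + (1 − P_i) δ2, is our assumption about a form consistent with the verbal definitions of δ1 and δ2; it is not necessarily the exact likelihood of the paper. To stay dependency-free, the sketch evaluates the posterior on a regular grid instead of running emcee. All names and the synthetic data are hypothetical.

```python
import numpy as np

def log_likelihood(delta1, delta2, p, matched):
    """Product of independent Bernoulli likelihoods, in log form.

    ASSUMED per-object match probability:
        q_i = P_i * (1 - delta1) + (1 - P_i) * delta2
    """
    q = p * (1.0 - delta1) + (1.0 - p) * delta2
    q = np.clip(q, 1e-12, 1.0 - 1e-12)          # avoid log(0) at the prior edges
    return np.sum(np.where(matched, np.log(q), np.log1p(-q)))

def grid_posterior(p, matched, n_grid=51):
    """Posterior on a (delta1, delta2) grid with flat priors on [0, 1]."""
    grid = np.linspace(0.0, 1.0, n_grid)
    logpost = np.array(
        [[log_likelihood(d1, d2, p, matched) for d2 in grid] for d1 in grid]
    )
    post = np.exp(logpost - logpost.max())      # subtract the maximum for stability
    post /= post.sum()
    return grid, post

# Synthetic population with no leakage (true delta1 = delta2 = 0)
rng = np.random.default_rng(0)
p_sim = rng.uniform(0.0, 1.0, size=500)
matched_sim = rng.uniform(size=500) < p_sim

grid, post = grid_posterior(p_sim, matched_sim)
delta1_marginal = post.sum(axis=1)              # marginalised over delta2
delta2_marginal = post.sum(axis=0)              # marginalised over delta1
delta1_mean = float((grid * delta1_marginal).sum())
delta2_mean = float((grid * delta2_marginal).sum())
```

For a population generated without leakage, both marginal posteriors concentrate near zero, which is the behaviour expected of a model that describes the catalogues correctly.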

Using the formalism depicted in this section, we have run several study cases:

- eFEDS as reference, eRASS1-cosmo as test (Sect. 6.2);
- eRASS1-cosmo as reference, eFEDS as test (Sect. 6.3);
- eFEDS as reference, eRASS1-primary as test (Sect. 6.4);
- SPTpol-100d as reference, eRASS1-cosmo as test (Sect. 6.5);
- eRASS1-cosmo as reference, SPTpol-100d as test (Sect. 6.6);
- SPTpol-ECS as reference, eRASS1-cosmo as test (Sect. 6.7);
- eRASS1-cosmo as reference, SPTpol-ECS as test (Sect. 6.8).

Table 2 provides a summary of the setup for each test, along with the number of matched entries between the catalogues.

Table 2. Setup summary of the external validation tests.

6.2. Testing the eRASS1 cosmological cluster sample against eFEDS

The deep eFEDS survey consists of a 140 deg2 area scanned by eROSITA during the CalPV phase with exposure about 10 times that of the average eRASS1 survey. Specifically, the average net exposure of eFEDS is about 1200 s, while in eRASS1 it amounts to a median 80 s in the same field (in both cases accounting for vignetting).

We opt for a population model described by Θmodel = (M500, z) representing the (true) mass within R500c and the (true) redshift of halos. The prior distribution p(Θmodel) is the halo mass function dn/dM500dz. Its exact shape in this specific experiment is not of critical relevance, given the wide sensitivity difference between eFEDS and eRASS1. We assume it follows the fit of Tinker et al. (2008).

The eFEDS cluster sample (Liu et al. 2022) comprises 542 candidate galaxy groups and clusters, of which 531 lie in the eRASS1 catalogue footprint. Each entry is associated with a measured redshift zeFEDS (column z in the catalogue) and with an estimated 0.5–2 keV flux within a 300 kpc aperture (column F_300kpc, expressed in units erg s−1 cm−2). These two elements constitute our vector of measured features Θmeas. We construct a generative model for flux and redshift that depends on α = (LX, z, M500), where LX is the (true) 0.5–2 keV luminosity of a cluster integrated in a cylinder of radius R500. Our generative model further assumes independence of flux and redshift measurements, that is:

We take redshift measurements to be unbiased, with Gaussian scatter at fixed z whose standard deviation is assumed equal to 0.01(1 + z) for all clusters:

We suppose a log-normally distributed flux F_300kpc given the values of α, that is:

In this expression, both μF_300kpc and σlnF_300kpc depend on (LX, z, M500) as follows. We first convert any set of values (LX, z) into a 0.5–2 keV flux within R500 by assuming an APEC plasma model with temperature set by a standard luminosity–temperature relation (Bulbul et al. 2019). Foreground absorption is taken into account using a position-dependent value of the hydrogen column density (HI4PI Collaboration 2016). Aperture correction (from R500 to 300 kpc) requires knowledge of an emissivity profile for the ICM. We take an isothermal, isometallicity gas density profile obtained from local X-COP clusters (Ghirardini et al. 2019). The aperture correction thus depends solely on the value of R500 (itself deriving from M500 and z). We assume unbiased flux measurements and set μF_300kpc to the value of this aperture-corrected flux. We build a simple model for measurement errors by setting a power-law model, with an extra term ϵi:

Numerical coefficients in this expression were obtained by fitting a power-law model to the flux uncertainties reported in Liu et al. (2022). In order to account for cluster-to-cluster variability of the error, the extra term ϵi is specific to each cluster (labelled by i). As an assumption, ϵi is the deviation of the reported error from the value predicted by the empirical power-law model. The eFEDS cluster catalogue contains some clusters for which only an upper limit is reported on the measured 300 kpc flux. For these objects, we still assume a log-normal model. Its central value μF_300kpc is taken to be half of the upper limit value; the dispersion is such that ϵi is the deviation of the reported upper limit from the fixed value of 2.5 × 10−14 erg s−1 cm−2 (median of all upper limits in the catalogue). Although imperfect, the generative model depicted here should capture the main trends underlying the flux measurement process. It is aimed at predicting what would be the reported flux F_300kpc and redshift zeFEDS of an eFEDS-detected cluster, for any set of (true) values α = (LX, z, M500).
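The structure of this generative model (independent Gaussian redshift and log-normal flux measurements) can be sketched as follows. The sketch takes the aperture-corrected flux and the log-flux dispersion as inputs, since the luminosity-to-flux conversion and the fitted power-law coefficients are not reproduced here. We also assume that the "unbiased" central value μ is the median of the log-normal distribution. Function names and demonstration values are hypothetical.

```python
import numpy as np

def draw_measurements(z_true, flux_true, sigma_lnf, rng, sigma_z0=0.01):
    """Draw (z_meas, flux_meas) given true redshift and aperture-corrected flux.

    sigma_lnf : dispersion of ln(flux); supplied by the caller (ASSUMPTION:
                in the text it comes from a power-law error model plus epsilon_i)
    sigma_z0  : redshift scatter is sigma_z0 * (1 + z), as stated in the text
    """
    z_true = np.asarray(z_true, dtype=float)
    flux_true = np.asarray(flux_true, dtype=float)
    z_meas = rng.normal(z_true, sigma_z0 * (1.0 + z_true))
    # Log-normal flux: Gaussian in ln(flux), centred on ln(flux_true)
    flux_meas = np.exp(rng.normal(np.log(flux_true), sigma_lnf))
    return z_meas, flux_meas

def log_prob_measurements(z_meas, flux_meas, z_true, flux_true, sigma_lnf, sigma_z0=0.01):
    """Log of p(z_meas | z) * p(flux_meas | alpha) under the independence assumption."""
    sigma_z = sigma_z0 * (1.0 + z_true)
    log_p_z = -0.5 * ((z_meas - z_true) / sigma_z) ** 2 - np.log(sigma_z * np.sqrt(2.0 * np.pi))
    resid = (np.log(flux_meas) - np.log(flux_true)) / sigma_lnf
    log_p_f = -0.5 * resid**2 - np.log(flux_meas * sigma_lnf * np.sqrt(2.0 * np.pi))
    return log_p_z + log_p_f

# Demonstration with arbitrary values: z = 0.3, flux = 1e-13 erg/s/cm2, sigma_lnf = 0.4
rng = np.random.default_rng(42)
z_demo = np.full(20000, 0.3)
f_demo = np.full(20000, 1e-13)
z_meas, f_meas = draw_measurements(z_demo, f_demo, sigma_lnf=0.4, rng=rng)
```

With these inputs the simulated redshifts scatter by about 0.013 around the true value, and the median simulated flux recovers the input flux.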

The reference survey selection function is the eFEDS selection function p(IeFEDS|LX,z,EM0,Texp). It is constructed as described in Liu et al. (2022), with addition of the morphological parameter EM0 characterising the central emissivity of the clusters (Appendix E). The exposure time Texp varies from one cluster to the other and its value is read from the eFEDS exposure map (Brunner et al. 2022).

The eRASS1 selection p(Icosmo|Θsel, T) is the main model ingredient we wish to validate in this work. We present results for the model used in the eRASS1 cosmological analysis (Ghirardini et al. 2024, see also Fig. 10), involving the count rate CR, the redshift z, and position-dependent, cluster-independent selection features ℋi. These features are the Galactic absorption column density, the local background brightness and the exposure time; their values are read from static sky maps at each cluster position. The eRASS1 cosmology sample is restricted to clusters with eRASS1-measured redshift 0.1 < zλ < 0.8. We assume Gaussian-distributed errors for the eRASS1 redshifts with standard deviation σz/(1 + z) = 0.015 (see also Eq. (14)). The criterion IN_ZVLIM = True, recommended by Kluge et al. (2024), is present in our model through the implementation of a sky mask.
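The effect of the redshift cut combined with Gaussian redshift errors can be illustrated by computing the probability that a cluster at true redshift z is assigned a measured redshift inside the interval. This is a minimal sketch of that single ingredient; how it is folded into the full selection model is not specified here, and the function name is hypothetical.

```python
from math import erf, sqrt

def prob_in_redshift_range(z_true, z_min=0.1, z_max=0.8, sigma0=0.015):
    """P(z_min < z_measured < z_max | z_true) for Gaussian errors of width sigma0 * (1 + z)."""
    sigma = sigma0 * (1.0 + z_true)

    def cdf(x):
        # Gaussian cumulative distribution centred on z_true
        return 0.5 * (1.0 + erf((x - z_true) / (sigma * sqrt(2.0))))

    return cdf(z_max) - cdf(z_min)

p_low_edge = prob_in_redshift_range(0.1)    # cluster sitting exactly on the lower bound
p_middle = prob_in_redshift_range(0.4)      # cluster well inside the interval
p_outside = prob_in_redshift_range(1.0)     # cluster well above the upper bound
```

A cluster located exactly on a boundary has a probability close to one half of passing the cut, while clusters far from the boundaries are essentially unaffected.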

The last ingredient required in the model is the distribution p(Θsel, T,Θsel, R,α|Θmodel). Given the assumptions enumerated in this section, it reduces to p(LX|M500,z). We take the scaling relation of Bulbul et al. (2019) relating the core-included 0.5–2 keV luminosity within R500 to the M500 mass and redshift z. It follows a log-normal distribution with scatter 0.27 in the luminosity direction. In practice, this operation implies computing a probabilistic weight on a three-dimensional grid representing the values of mass, redshift and luminosity.
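The probabilistic weight on a (mass, redshift, luminosity) grid can be sketched as below. The power-law scaling relation and its coefficients are hypothetical placeholders, not the values of Bulbul et al. (2019). We further assume that the scatter of 0.27 is expressed in natural-log units. Grid bounds and resolutions are arbitrary.

```python
import numpy as np

def median_luminosity(m500, z, l0=1e44, m0=3e14, slope=1.5, evol=2.0):
    """HYPOTHETICAL power-law L-M relation (placeholder coefficients, erg/s and Msun)."""
    return l0 * (m500 / m0) ** slope * (1.0 + z) ** evol

def lognormal_pdf(x, median, sigma_ln):
    """Log-normal density in x with the given median and natural-log dispersion."""
    resid = (np.log(x) - np.log(median)) / sigma_ln
    return np.exp(-0.5 * resid**2) / (x * sigma_ln * np.sqrt(2.0 * np.pi))

# Grid axes: log10 mass, redshift, log10 luminosity
log_m = np.linspace(13.5, 15.5, 21)
z_grid = np.linspace(0.05, 1.0, 20)
log_l = np.linspace(41.5, 47.5, 241)
M, Z, L = np.meshgrid(10.0**log_m, z_grid, 10.0**log_l, indexing="ij")

# p(L_X | M500, z) on the grid, with scatter 0.27 (assumed to be in ln L)
pdf_l = lognormal_pdf(L, median_luminosity(M, Z), sigma_ln=0.27)

# Probability mass per luminosity cell: p(L) dL = p(L) * L * dlnL
dln_l = (log_l[1] - log_l[0]) * np.log(10.0)
weights = pdf_l * L * dln_l
```

By construction the weights sum to approximately one along the luminosity axis for every (mass, redshift) node, provided the luminosity grid is wide and fine enough relative to the scatter.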

Putting together the model ingredients, we are able to predict, for each cluster in eFEDS associated with a given flux and redshift measurement, its probability of appearing in the eRASS1 cosmological catalogue of clusters. By performing a 2 arcmin match between the eFEDS and eRASS1 catalogues, we find 35 actual clusters in common between both samples (see also Bulbul et al. 2024), and 90% of the matches are separated by less than 30″.

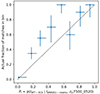

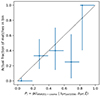

Figure 15 summarises the outcome of this exercise. As expected, low-flux clusters are associated to a low Pi value. Reassuringly, none of the “bright” (rigorously, the high-Pi) eFEDS clusters is missed in the eRASS1 cosmology sample. The model predicts 19.5 ± 3.2 eFEDS clusters to be in the eRASS1 cosmology sample, while the actual number is 35 (that is, a 5-σ underestimate). We find the empirical parameters δ1 and δ2 have posterior distributions compatible with zero. However, the Pi values appear systematically lower than the actual fraction of matches in each bin of Pi values (Fig. 16). Overall these results point to a slight mismatch between the predicted detection rates and the actual number of matches. We will discuss this issue later in the paper (Sect. 7.2).
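The first two aggregated diagnostics used above can be sketched as follows: repeated Bernoulli draws give the expected number of matches and its dispersion, and binning by Pi gives the observed match fraction per bin. The function names and the synthetic probabilities are hypothetical; only the numbers 19.5, 3.2 and 35 entering the final line are taken from the text.

```python
import numpy as np

def expected_matches(p, n_draws=10000, seed=0):
    """Mean and standard deviation of the number of matches from Bernoulli draws of p."""
    rng = np.random.default_rng(seed)
    p = np.asarray(p, dtype=float)
    draws = rng.uniform(size=(n_draws, p.size)) < p   # one row per simulated catalogue
    counts = draws.sum(axis=1)
    return counts.mean(), counts.std()

def reliability(p, matched, edges):
    """Number of objects and observed match fraction in bins of forecast probability."""
    p = np.asarray(p, dtype=float)
    matched = np.asarray(matched, dtype=bool)
    n_bins = len(edges) - 1
    idx = np.digitize(p, edges[1:-1])                 # bin index in 0 .. n_bins - 1
    n_in_bin = np.bincount(idx, minlength=n_bins)
    n_match = np.bincount(idx, weights=matched.astype(float), minlength=n_bins)
    with np.errstate(invalid="ignore", divide="ignore"):
        fraction = n_match / n_in_bin                 # NaN where a bin is empty
    return n_in_bin, fraction

# Synthetic probabilities for 531 objects (illustration only, not the paper's values)
p_demo = np.random.default_rng(1).uniform(0.0, 0.2, size=531)
mean_n, std_n = expected_matches(p_demo)

edges = np.array([0.0, 0.25, 0.5, 0.75, 1.0])
n_in_bin, fraction = reliability([0.1, 0.2, 0.6, 0.9, 1.0], [False, True, True, True, True], edges)

# Significance of the offset quoted in the text: (35 - 19.5) / 3.2
n_sigma = (35 - 19.5) / 3.2
```

The simulated mean and dispersion agree with the analytic values Σ Pi and [Σ Pi (1 − Pi)]^1/2, so the Bernoulli draws mainly serve when the full distribution of counts is needed.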

Fig. 15. Testing the models with eRASS1-cosmo as a test catalogue, and eFEDS as a reference catalogue. Each dot corresponds to one of the 531 eFEDS clusters in the plane of measured 300 kpc flux (F_300kpc in units erg s−1 cm−2) and measured redshift (zeFEDS). eFEDS clusters with only flux upper limits are placed at the bottom with star symbols. Black circles indicate clusters also found in the eRASS1 cosmology sample. Colour reflects the model probability Pi (Eq. (8)) computed for each eFEDS cluster.

Fig. 16. Testing the models with eRASS1-cosmo as a test catalogue, and eFEDS as a reference catalogue. The 531 eFEDS entries are grouped in bins of the model output probabilities Pi (horizontal axis). In each bin, the actual fraction of matches in the eRASS1 catalogue is reported on the vertical axis. Error bars represent the estimated 68% confidence range (Appendix C). The dotted line indicates the one-to-one relation that a perfectly reliable model would follow. In general, the model predicts slightly lower probabilities than actually observed.

6.3. Testing the eFEDS cluster sample against eRASS1 cosmology sample

By exchanging the roles of eFEDS and eRASS1 in the test presented above, we can use the eRASS1 cosmology catalogue as a reference sample and test the eFEDS selection model. The interest of this operation is limited to sanity checks, because eRASS1 is 15 times shallower than eFEDS in their overlapping area. We consider only the 85 eRASS1 clusters extracted from the cosmology sample located in the fiducial eFEDS area, bounded in Right Ascension by 8 h and 10 h and in Declination by −5 deg and 8 deg.

To describe each of the eRASS1 detections, we constructed a model for the flux and redshift measurements of each entry. We assume independence of the estimated redshift zλ (column Z_LAMBDA in the eRASS1 cosmology catalogue) and the estimated R500 flux in the band 0.5–2 keV (F500_0520, units erg s−1 cm−2) and we form the model:

This model expresses normally distributed redshift uncertainties with the error increasing with redshift. For flux measurements, we assume a log-normal distribution:

In this equation, μF500_0520 and σF500_0520 depend on LX the true luminosity within R500 and on the true redshift z. Assuming unbiased flux measurements, μF500_0520 is obtained by converting the cluster rest-frame 0.5–2 keV luminosity into the observer-frame 0.5–2 keV flux, assuming an isothermal ICM and a position-dependent hydrogen column density taken from the HI4PI survey (HI4PI Collaboration 2016). As for the uncertainty model, we proceed by fitting a simple model linking the catalogue flux and errors reported in Bulbul et al. (2024). We take:

The quantity ϵi is specific to each cluster in the eRASS1 sample and equals the deviation of the catalogue uncertainty relative to the power-law model.

Figure 17 illustrates the outcome of this comparison exercise. The model predicts 35.7 ± 1.3 eRASS1 clusters to be found in eFEDS. The actual number of matches is 36. A number of eRASS1 clusters fall in the non-exposed eFEDS area, hence their value of Pi = 0. Most of the clusters covered by eFEDS have very high probabilities of being detected (Pi ≃ 1), as a consequence of its much deeper exposure. Reassuringly, the eFEDS survey does not miss any eRASS1 cluster with a very high predicted probability, highlighting in turn the low level of spurious contamination in the eRASS1 cosmology sample. In particular, it is not considered problematic that the two clusters 1eRASS J094023.3+022824 and 1eRASS J084147.8−031154 have no corresponding match in eFEDS despite their respective predicted values Pi = 0.79 and 0.55. At their location on sky, the eFEDS survey is very shallow (with 140 s and 10 s effective exposure time, respectively) and the eFEDS selection model is not well calibrated in this regime.

Fig. 17. Similar to Fig. 15, but now taking eRASS1-cosmo as the reference sample (85 entries) and eFEDS as the test sample. The vertical axis represents F500_0520, the 0.5–2 keV flux within R500 reported in the eRASS1 catalogue (units 10−14 erg s−1 cm−2). The horizontal axis is the catalogue redshift zλ. Clusters falling in zones of zero eFEDS exposure have vanishing probability (Pi = 0). Other eRASS1 clusters are very likely to be detected in eFEDS (Pi ≃ 1); in fact they are, as shown by the black circles. The isolated point at the bottom of the figure only has a flux upper limit of 1.5 × 10−13 erg s−1 cm−2.

The model correctly explains the outcome of matching eFEDS to the eRASS1 cosmology catalogue. There is no evidence for a bias in the value of the probabilities Pi; most of them are close to zero or one, in agreement with the cluster being absent or present in the eFEDS catalogue (respectively). The values of δ1 and δ2 are themselves compatible with zero, indicating again consistency between the model and the present catalogues being tested.

6.4. Testing the eRASS1-main sample against eFEDS