| Issue | A&A Volume 691, November 2024 |
|---|---|
| Article Number | A331 |
| Number of page(s) | 23 |
| Section | Numerical methods and codes |
| DOI | https://doi.org/10.1051/0004-6361/202349113 |
| Published online | 25 November 2024 |

CLAP

I. Resolving miscalibration for deep learning-based galaxy photometric redshift estimation

1 Pengcheng Laboratory, Nanshan District, Shenzhen, Guangdong 518000, P.R. China

2 Aix-Marseille Université, CNRS/IN2P3, CPPM, Marseille 13009, France

3 School of Physics and Microelectronics, Zhengzhou University, Zhengzhou, Henan 450001, P.R. China

4 Department of Physics E. Pancini, University Federico II, Via Cinthia 6, 80126 Naples, Italy

5 School of Physics and Astronomy, Sun Yat-sen University, Zhuhai Campus, 2 Daxue Road, Tangjia, Zhuhai, Guangdong 519082, P.R. China

6 CSST Science Center for Guangdong-Hong Kong-Macau Great Bay Area, Zhuhai, Guangdong 519082, P.R. China

7 Department of Astronomy, The Ohio State University, Columbus, OH 43210, USA

8 Center for Cosmology and AstroParticle Physics (CCAPP), The Ohio State University, Columbus, OH 43210, USA

9 Research School of Astronomy & Astrophysics, Australian National University, Cotter Rd., Weston, ACT 2611, Australia

10 School of Computing, Australian National University, Acton, ACT 2601, Australia

★ Corresponding author; linqf@pcl.ac.cn

Received: 28 December 2023

Accepted: 22 September 2024

Obtaining well-calibrated photometric redshift probability densities for galaxies without a spectroscopic measurement remains a challenge. Deep learning discriminative models, typically fed with multi-band galaxy images, can produce outputs that mimic probability densities and achieve state-of-the-art accuracy. However, several previous studies have found that such models may be affected by miscalibration, an issue that would result in discrepancies between the model outputs and the actual distributions of true redshifts. Our work develops a novel method called the Contrastive Learning and Adaptive KNN for Photometric Redshift (CLAP) that resolves this issue. It leverages supervised contrastive learning (SCL) and k-nearest neighbours (KNN) to construct and calibrate raw probability density estimates, and implements a refitting procedure to resume end-to-end discriminative models ready to produce final estimates for large-scale imaging data, bypassing the intensive computation required for KNN. The harmonic mean is adopted to combine an ensemble of estimates from multiple realisations for improving accuracy. Our experiments demonstrate that CLAP takes advantage of both deep learning and KNN, outperforming benchmark methods on the calibration of probability density estimates and retaining high accuracy and computational efficiency. With reference to CLAP, a deeper investigation on miscalibration for conventional deep learning is presented. We point out that miscalibration is particularly sensitive to the method-induced excessive correlations among data instances in addition to the unaccounted-for epistemic uncertainties. Reducing the uncertainties may not guarantee the removal of miscalibration due to the presence of such excessive correlations, yet this is a problem for conventional methods rather than CLAP. 
These discussions underscore the robustness of CLAP for obtaining photometric redshift probability densities required by astrophysical and cosmological applications. This is the first paper in our series on CLAP.

Key words: methods: data analysis / techniques: image processing / surveys / galaxies: distances and redshifts

© The Authors 2024

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1 Introduction

In cosmological and extragalactic studies, the cosmological redshift ɀ characterises the cosmic distance, and it is a crucial quantity for probing the properties of galaxies and the evolution of the Universe. The most direct and accurate way to measure redshift is through spectroscopy. However, spectroscopic redshifts (spec-ɀ) are highly time intensive to obtain. There have been multiple ongoing or planned galaxy imaging surveys in recent years, such as the Kilo-Degree Survey (KiDS; de Jong et al. 2013), the Dark Energy Survey (DES; Dark Energy Survey Collaboration 2016), the Euclid survey (Laureijs et al. 2011), the Hyper Suprime-Cam (HSC; Aihara et al. 2018), the Nancy Grace Roman Space Telescope (Spergel et al. 2015), the Vera C. Rubin Observatory Legacy Survey of Space and Time (LSST; Ivezić et al. 2019), and the China Space Station Telescope (CSST; Zhan 2018). They require redshift estimates for hundreds of millions or billions of galaxies for which spectroscopic measurements are infeasible. Given such extremely rich datasets, photometric redshifts (photo-ɀ), measured typically using photometry, have become an alternative in order to meet the needs for large imaging surveys.

The idea behind photometric redshift estimation for individual galaxies lies in the mapping between the observed galaxy photometric or morphological properties and the redshift. Broadly speaking, two categories of methods are commonly leveraged for deriving individual redshift estimates (see Salvato et al. 2019 and Newman & Gruen 2022). Template-fitting methods (e.g. Arnouts et al. 1999; Benítez 2000; Feldmann et al. 2006; Ilbert et al. 2006; Greisel et al. 2015; Leistedt et al. 2019) determine redshifts by finding the best fit between the galaxy spectral energy distribution (SED) and a library of SED templates covering different physical and morphological properties such as galaxy types. Data-driven or empirical methods determine redshifts by empirically learning the mapping from photometric data to redshift estimates without any theoretical modelling. These include unsupervised learning approaches in which known redshifts are given as external information, such as k-nearest neighbours (KNN; e.g. Zhang et al. 2013; Beck et al. 2016; De Vicente et al. 2016; Speagle et al. 2019; Han et al. 2021; Luken et al. 2022) and self-organising maps (SOMs; e.g. Way & Klose 2012; Carrasco Kind & Brunner 2014; Speagle & Eisenstein 2017; Buchs et al. 2019; Wilson et al. 2020), and supervised learning approaches in which known redshifts are used as labels, such as artificial neural networks (ANNs; e.g. Collister & Lahav 2004; Brescia et al. 2014; Bonnett 2015; Cavuoti et al. 2015; Hoyle 2016; Sadeh et al. 2016; Cavuoti et al. 2017; Bilicki et al. 2018; D’Isanto & Polsterer 2018; Pasquet et al. 2019; Mu et al. 2020; Schuldt et al. 2021; Dey et al. 2022a; Ait Ouahmed et al. 2024; Treyer et al. 2024), decision trees and random forests (e.g. Carliles et al. 2010; Carrasco Kind & Brunner 2013; Luken et al. 2022), boosted decision trees (e.g. Gerdes et al. 2010), support vector machines (SVMs; e.g. Jones & Singal 2017), and Gaussian mixture models (GMMs; e.g. D’Isanto & Polsterer 2018; Jones & Heavens 2019; Hatfield et al. 2020; Ansari et al. 2021).

Thanks to the recent advances in deep learning, a few studies (e.g. D’Isanto & Polsterer 2018; Pasquet et al. 2019; Schuldt et al. 2021; Dey et al. 2022a; Ait Ouahmed et al. 2024; Treyer et al. 2024) have developed deep neural networks (DNNs) to predict photometric redshifts and achieve state-of-the-art predictive accuracy. These discriminative models, developed in a supervised end-to-end manner and usually having a large number of trainable weights, directly take multi-band stamp images of galaxies as inputs and produce redshift estimates. They are trained iteratively with mini-batches of training data, minimising a loss function via gradient descent in which spectroscopic redshifts are used as labels. In this way, the models can automatically establish a mapping from input images to the target redshifts. Studies such as Henghes et al. (2022), Li et al. (2022a), Zhou et al. (2022a), and Zhou et al. (2022b) explored hybrid networks that are fed with both images and multi-band photometry or fluxes. The outperformance of such image-based methods in accuracy over photometry-only methods suggests that other than photometry there may be extra information such as galaxy morphology encoded in images that helps improve redshift estimation (Soo et al. 2018).

Photometric redshift estimates for individual galaxies are usually considered in two forms: (a) the point estimate ɀphoto derived from photometric data d (i.e. photometry or images) and (b) the (posterior) probability density estimate p(ɀ|d) over possible values given photometric data d, accounting for imperfect knowledge in determining redshifts. This work concentrates on probability density estimation via deep learning.
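To make the two forms concrete, the following minimal sketch represents p(ɀ|d) as a piecewise-constant density over redshift bins and collapses it to a point estimate. The bin values, the function names, and the choice of the mean and the mode as point estimates are illustrative assumptions and are not taken from this work.

```python
def normalise(weights, bin_width):
    """Scale non-negative bin weights so that the density integrates to one."""
    total = sum(weights) * bin_width
    return [w / total for w in weights]


def point_estimates(density, z_centres, bin_width):
    """Return the mean and the mode of a binned density p(z|d)."""
    mean = sum(p * z for p, z in zip(density, z_centres)) * bin_width
    mode = z_centres[max(range(len(density)), key=density.__getitem__)]
    return mean, mode


# Hypothetical four-bin density for a single galaxy (illustration only).
bin_width = 0.1
z_centres = [0.05, 0.15, 0.25, 0.35]
density = normalise([1.0, 4.0, 3.0, 2.0], bin_width)
z_mean, z_mode = point_estimates(density, z_centres, bin_width)
```

The density retains the spread over the four bins, whereas either point estimate discards it.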

Obtaining well-calibrated redshift probability density estimates is a challenging task, as reported for a large array of redshift estimation methods (e.g. Wittman et al. 2016; Tanaka et al. 2018; Amaro et al. 2019; Euclid Collaboration 2020; Schmidt et al. 2020; Kodra et al. 2023). There have been several techniques coupled with deep learning models used to express redshift probability densities, including the softmax function (Pasquet et al. 2019; Treyer et al. 2024), GMMs (D’Isanto & Polsterer 2018), and Bayesian neural networks (BNNs; Zhou et al. 2022b), outputting vectors covering a redshift range and normalised to unity. Despite their high accuracy, the calibration of such estimates produced by neural networks remains an open issue. From a statistical viewpoint, a well-calibrated probability density estimate should fulfil the frequentist definition: for a given sample of galaxies, the integrated probability within any arbitrary redshift range must describe the relative frequency of true redshifts falling in this range (Dey et al. 2021, 2022b). While there are proofs that ANNs are capable of providing reliable posterior probability estimates (Richard & Lippmann 1991; Rojas 1996), several studies have shown evidence that the probability density outputs from discriminative models especially those with high capacity may suffer from miscalibration, that is, a model’s confidence is not consistent with its accuracy (e.g. Guo et al. 2017; Thulasidasan et al. 2019; Minderer et al. 2021; Wen et al. 2021). In other words, miscalibration would lead to a deviation between the estimated p′(ɀ|d) and the empirically correct p(ɀ|d). Guo et al. (2017) pointed out that the extent of miscalibration generally grows with the model capacity for typical neural networks. As we show, neural networks similar to the one developed by Treyer et al. (2024) (with ~60 layers and ~7 million trainable parameters) already exhibit clear miscalibration behaviours. 
Furthermore, the variability stemming from the iterative training procedure would contribute additional uncertainties and increase the potential risk of miscalibration (Huang et al. 2021, 2023).
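The frequentist definition above can be checked numerically with the probability integral transform (PIT): for each galaxy, the estimated cumulative probability is evaluated at the true redshift, and the resulting values should be uniformly distributed on [0, 1] if the estimates are calibrated. The sketch below is a toy illustration under stated assumptions: all galaxies share one binned density (in practice each galaxy has its own estimate), the 'sharpened' density is an artificial over-confident estimate, and the helper names are hypothetical.

```python
import random


def binned_density(weights, bin_edges):
    """Normalise bin weights into a piecewise-constant density."""
    widths = [hi - lo for lo, hi in zip(bin_edges[:-1], bin_edges[1:])]
    total = sum(w * dz for w, dz in zip(weights, widths))
    return [w / total for w in weights]


def pit_value(density, bin_edges, z_true):
    """Cumulative probability of a piecewise-constant density up to z_true."""
    cdf = 0.0
    for p, lo, hi in zip(density, bin_edges[:-1], bin_edges[1:]):
        if z_true >= hi:
            cdf += p * (hi - lo)
        elif z_true > lo:
            cdf += p * (z_true - lo)
    return min(cdf, 1.0)


def uniformity_distance(pits):
    """Kolmogorov-Smirnov-type distance between the PIT sample and U(0, 1)."""
    ordered = sorted(pits)
    n = len(ordered)
    return max(max((i + 1) / n - u, u - i / n) for i, u in enumerate(ordered))


rng = random.Random(1)
bin_edges = [0.0, 0.1, 0.2, 0.3, 0.4]
true_weights = [1.0, 4.0, 3.0, 2.0]
true_density = binned_density(true_weights, bin_edges)
# Artificially over-confident estimate: the squared weights exaggerate the peak.
sharp_density = binned_density([w * w for w in true_weights], bin_edges)

# Draw toy "true" redshifts from the true density.
z_true = []
for _ in range(5000):
    b = rng.choices(range(4), weights=true_weights)[0]
    z_true.append(rng.uniform(bin_edges[b], bin_edges[b + 1]))

d_calibrated = uniformity_distance(
    [pit_value(true_density, bin_edges, z) for z in z_true])
d_sharpened = uniformity_distance(
    [pit_value(sharp_density, bin_edges, z) for z in z_true])
```

In this toy setting the PIT values of the correct density stay close to uniform, while those of the over-confident density deviate from uniformity, which is the signature of miscalibration discussed in the text.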

Unlike point estimates that only give the collapsed values, well-calibrated probability density estimates are capable of describing the intrinsic dispersions due to the complex many-to-many mapping from photometric data to redshift, and describing the uncertainties due to the method implemented for redshift estimation and errors in photometric data. They are thus preferred for precision cosmology, in which one needs to understand and incorporate the full uncertainties in photometric redshift estimation into analysis (e.g. Mandelbaum et al. 2008; Myers et al. 2009; Bonnett et al. 2016; Abruzzo & Haiman 2019; Ruiz-Zapatero et al. 2023; Zhang et al. 2023). On the other hand, this leads to a strong requirement for the calibration of probability density estimates. The potential miscalibration issue associated with conventional deep learning would result in unreliable characterisation of uncertainties and would bias the photometric redshift estimation, consequently degrading the cosmological inference. In particular, miscalibrated probability densities for individual galaxies may induce biases on the mean redshifts estimated in tomographic bins and lead to a poorly reconstructed redshift distribution over a galaxy sample, both of which are severe problems for weak lensing tomography (e.g. Ma et al. 2006; Huterer et al. 2006; Laureijs et al. 2011; Joudaki et al. 2020; Hildebrandt et al. 2020; Euclid Collaboration 2021).

In our previous work (Lin et al. 2022) we developed a set of consecutive steps to correct biases in photometric redshift estimation with deep learning, potentially overcoming the impact of miscalibration on the mean redshifts in tomographic bins. However, this approach is limited to point estimate analysis, and suffers from a trade-off between constraining estimation errors and correcting biases, which originates from retraining a fraction of a network using a subset of training data and soft labels that enlarges estimation errors. We thus attempt to find a better solution to tackle the miscalibration issue for probability density estimation.

In this work we propose the Contrastive Learning and Adaptive KNN for Photometric Redshift (CLAP), a novel method that resolves the miscalibration encountered by conventional deep learning in the context of photometric redshift probability density estimation. CLAP leverages the gains from KNN and retains the advantages of deep learning simultaneously. It turns a discriminative model into a contrastive learning framework that projects galaxy images and additional input data to redshift-sensitive latent vectors, followed by an adaptive KNN algorithm and a KNN-enabled recalibration procedure to produce locally calibrated probability density estimates, which leverage diagnostics with the probability integral transform (PIT; Gass & Harris 2001). The contrastive learning is coupled with supervised learning in which spectroscopic redshifts are used as labels. We give a solution for alleviating the reliance on the expensive computation required for KNN, and suggest a proper way to combine an ensemble of probability density estimates for reducing uncertainties. We demonstrate CLAP using data separately from the Sloan Digital Sky Survey (SDSS; Eisenstein et al. 2011), the Canada-France-Hawaii Telescope Legacy Survey (CFHTLS; Gwyn 2012), and the Kilo-Degree Survey (KiDS; de Jong et al. 2013). As we show, the main advantages of CLAP include the following:

- a substantial improvement on the calibration of probability density estimates thanks to KNN;
- a high accuracy comparable to that obtained by conventional image-based deep learning methods, better than photometry-only KNN approaches such as Beck et al. (2016);
- computational efficiency of end-to-end deep learning models retained by bypassing the intensive computation required for KNN.

This is in line with other works that demonstrate the merits of combining deep learning with KNN (e.g. Papernot & McDaniel 2018; Chen et al. 2020; Dwibedi et al. 2021; Sun et al. 2022; Liao et al. 2023). CLAP is analogous to likelihood-free inference approaches for parameter estimation and inference (e.g. Charnock et al. 2018; Fluri et al. 2021; Livet et al. 2021), but instead of exploiting simulated data for inference, we perform KNN on real data and labels to ensure that the probability density estimates are locally calibrated.

With CLAP as a reference, we delve into the miscalibration issue and showcase that it is a common shortcoming of conventional deep learning methods for probability density estimation. In particular, we present an illustration of miscalibration over different representative discriminative models, and reveal its association with uncertainties and correlations between data instances. In light of these findings, suggestions are given on circumventing miscalibration in deep learning in order to obtain reliable photometric redshift estimates applicable to actual astrophysical and cosmological analysis.

As a further note, another common issue for conventional methods is the mismatch between the spectroscopic sample used to train a model and the target (or test) sample to which the model is applied. As the learned distribution p(ɀ, d) is fixed in the model once the model is trained, applying it to a target sample with a different distribution would induce biases. In contrast, CLAP has the flexibility to match the target distribution by assigning different weights to the nearest neighbours for each data instance, offering a possible way to circumvent mismatches. This is another advantage of CLAP over conventional methods. We note that a mismatch may also lead to inconsistency between a model’s confidence and accuracy for the target sample, though we use ‘miscalibration’ to exclusively refer to the issue internal to the method implementation irrespective of mismatches. We will discuss the approaches built on CLAP for resolving mismatches in the next paper of our CLAP series, while in the current work we present the details of CLAP and solely focus on miscalibration.

In addition, we note that probability density estimates are denoted with probability density functions (PDFs) in most studies. From a rigorous statistical point of view, a PDF should characterise the dispersions intrinsic to a physical phenomenon, free from model assumptions, implementations, and noise in data. In actual applications, however, probability density estimates inevitably depend on the particular estimation method and the data quality. It is challenging to get rid of all those extra uncertainties, even after resolving miscalibration. We thus do not refer to probability density estimates as PDFs in order to avoid misuse of the terminology. For brevity, we adopt the expression p(ɀ|d) without adding extra terms to the condition unless otherwise noted.

This paper is organised as follows. Section 2 describes the data used in this work, primarily galaxy images and spectroscopic redshifts. Section 3 introduces our method, CLAP. The information about the network architectures is provided in Appendix A. In Sect. 4 we show our results on probability density estimation using CLAP. An investigation on miscalibration is presented in Sect. 5. Finally, we summarise our results and give concluding remarks in Sect. 6. In the section dedicated to Data and Code Availability, we provide supplementary appendices that contain more results and discussions; we also provide the photometric redshift catalogues produced by CLAP and the code used in this work.

2 Data

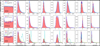

We took data from three imaging surveys, the Sloan Digital Sky Survey (SDSS), the Canada-France-Hawaii Telescope Legacy Survey (CFHTLS), and the Kilo-Degree Survey (KiDS), each of which covers a different redshift and magnitude range. The distributions of spectroscopic redshift and r-band magnitude for each dataset are shown in Fig. 1. The redshift coverage and sample division are detailed in Table 1.

2.1 Sloan Digital Sky Survey (SDSS)

The SDSS dataset used in this work contains 516 524 galaxies from the Main Galaxy Sample available from SDSS Data Release 12 (Alam et al. 2015). These data were retrieved by Pasquet et al. (2019) and also used in Lin et al. (2022), with detailed information provided in Pasquet et al. (2019). This dataset has a low-redshift coverage ɀ < 0.4 and a cut at 17.8 on dereddened r-band Petrosian magnitude, providing a rich reservoir of data in a restricted parameter space. The galactic reddening E(B – V) for each galaxy along the line of sight is obtained using the dust map from Schlegel et al. (1998). Each galaxy is associated with a spectroscopically measured redshift. Five stamp images, one for each of the optical bands u, g, r, i, ɀ, were made with the galaxy located at the centre; each image encompasses 64 × 64 pixels in spatial dimensions, with a pixel scale of 0.396 arcsec. The five images together with the galactic reddening E(B – V) are regarded as a data instance and used as input data for CLAP. While previous work noticed that inputting the information of point spread functions (PSFs) to deep learning models would improve the estimation of galaxy properties (e.g. Umayahara et al. 2020; Li et al. 2022b), we did not find a strong impact of PSFs on photometric redshift estimation for the data used in our work. Therefore, we did not include the PSF information in the input data for CLAP.

Via complete random sampling, we selected 393 219 galaxies as a training sample, 20 000 galaxies as a validation sample, and 103 305 galaxies as a target sample, similar to the sample division by Pasquet et al. (2019). Such division imposes that all the samples follow the same parent redshift-data distribution in spite of different sample sizes. This is the assumption we hold in this work without considering mismatches.
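The sample division can be reproduced in spirit with a single random permutation of the galaxy indices, as in the sketch below. The sample sizes are those quoted above; the function name and the seed are hypothetical, and the actual selection used in this work is not reproduced here.

```python
import random


def split_indices(n_total, n_train, n_valid, seed=0):
    """Randomly partition range(n_total) into training, validation, and target sets."""
    indices = list(range(n_total))
    random.Random(seed).shuffle(indices)
    train = indices[:n_train]
    valid = indices[n_train:n_train + n_valid]
    target = indices[n_train + n_valid:]  # everything left over
    return train, valid, target


# SDSS sample sizes quoted in the text: 393 219 / 20 000 / 103 305.
train, valid, target = split_indices(516_524, 393_219, 20_000)
```

Because the three subsets come from one permutation, they are disjoint and are drawn from the same parent redshift-data distribution, which is the assumption stated in the text.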

Fig. 1 Distributions of spectroscopic redshift and r-band magnitude for the SDSS, CFHTLS, and KiDS datasets used in our work.

Table 1 Data coverage in spectroscopic redshift and sample division.

2.2 Canada-France-Hawaii Telescope Legacy Survey (CFHTLS)

We took the CFHTLS dataset that was described in detail in Treyer et al. (in prep.) and also used in Lin et al. (2022). It contains 158 193 galaxies observed in either the CFHTLS-Deep survey or the CFHTLS-Wide survey (Hudelot et al. 2012), with spectroscopic redshift up to ɀ ~ 4.0 and dereddened r-band Petrosian magnitude up to r ~ 27.0.

Since CFHTLS had no spectroscopic observations, the spectroscopic redshifts were retrieved from a few other spectroscopic surveys. The majority is a collection of high-quality redshifts measured with high S/N spectra or multiple spectral features, obtained from the COSMOS Lyman-Alpha Mapping And Tomography Observations survey (CLAMATO; Data Release 1; Lee et al. 2018), the DEEP2 Galaxy Redshift Survey (Data Release 4; Newman et al. 2013), the Galaxy And Mass Assembly survey (GAMA; Data Release 3; Baldry et al. 2018), the SDSS survey (Data Release 12), the UKIDSS Ultra-Deep Survey (UDS; McLure et al. 2013; Bradshaw et al. 2013), the VANDELS ESO public spectroscopic survey (Data Release 4; Garilli et al. 2021), the VIMOS Public Extragalactic Redshift Survey (VIPERS; Data Release 2; Scodeggio et al. 2018), the VIMOS Ultra-Deep Survey (VUDS; Le Fèvre et al. 2015), the VIMOS VLT Deep Survey (VVDS; Le Fèvre et al. 2013), the WiggleZ Dark Energy Survey (Final Release; Drinkwater et al. 2018), and the zCOSMOS survey (Lilly et al. 2007). There is also a collection of low-resolution redshifts, acquired from the secure low-resolution prism redshift measurements from the PRIsm MUlti-object Survey (PRIMUS; Data Release 1; Coil et al. 2011; Cool et al. 2013) and the grism redshift measurements from the 3D-HST survey (Data Release v4.1.5; Skelton et al. 2014; Momcheva et al. 2016). Of the 158 193 CFHTLS galaxies, 134 759 have high-quality spectroscopic redshifts and 23 434 have low-resolution redshifts. Similar to the SDSS images, the stamp images of each CFHTLS galaxy cover five optical bands u, g, r, i, ɀ and have 64 × 64 pixels with a pixel scale of 0.187 arcsec. The galactic reddening E(B – V) is also included as a part of the data.

We randomly selected 14 759 galaxies as a validation sample and 20 000 galaxies as a target sample, both from the high-quality collection. The remaining 100 000 galaxies and the low-resolution collection of 23 434 galaxies form a training sample. That is, for testing the results we only use the high-quality redshifts that are assumed to be secure, while the low-resolution redshifts are just used to increase statistics in training. In addition, we do not treat the CFHTLS-Deep sample and the CFHTLS-Wide sample separately as in Lin et al. (2022).

|

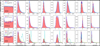

Fig. 2 Graphic illustration of our method CLAP for photometric redshift probability density estimation. The development of a CLAP model consists of several procedures, including supervised contrastive learning (SCL), adaptive KNN, reconstruction, and refitting. The SCL framework is based on neural networks. It uses an encoder network to project multi-band galaxy images and additional input data (i.e. galactic reddening E(B – V), magnitudes) to low-dimensional latent vectors, which form a latent space that encodes redshift information and has a distance metric defined. The spectroscopic redshift labels are leveraged to supervise the redshift outputs predicted by an estimator network for extracting redshift information, but the trained estimator and its outputs are no longer used once the latent space is established (indicated by the shaded region). These outputs are uncalibrated, and should not be regarded as the final estimates produced by CLAP. The adaptive KNN and the KNN-enabled recalibration are implemented locally on the latent space via diagnostics with the probability integral transform (PIT), constructing raw probability density estimates using the known redshifts of the PIT-selected nearest neighbours. The raw estimates are then used as labels to retrain the estimator from scratch in a refitting procedure with the trained encoder fixed, resuming an end-to-end model ready to process imaging data and produce the desired estimates. Lastly, we combine the estimates from an ensemble of CLAP models developed following these procedures (not shown in the figure). |

2.3 Kilo-Degree Survey (KiDS)

We used the KiDS dataset retrieved by Li et al. (2022a) from the KiDS Data Release 4 (Kuijken et al. 2019). There are 134 147 galaxies in this dataset, with spectroscopic redshift up to ɀ ~ 3.0 and dereddened r-band ‘Gaussian Aperture and Point spread function (GAaP)’ magnitude up to r ~ 24.5. The detailed description can be found in Li et al. (2022a). Similar to the CFHTLS dataset, the spectroscopic redshifts of the KiDS galaxies were acquired from other surveys including the Chandra Deep Field South (CDFS; Szokoly et al. 2004), the DEEP2 Galaxy Redshift Survey, the Galaxy And Mass Assembly survey (GAMA), and the zCOSMOS survey. The stamp images from KiDS cover four optical bands u, g, r, i and have a pixel scale of 0.2 arcsec. Again, we set the spatial dimensions to be 64 × 64.

In addition to the KiDS images, this dataset includes the dereddened GAaP magnitudes in the five near-infrared (NIR) bands Z, Y, J, H, Ks from the VISTA Kilo-degree Infrared Galaxy Survey (VIKING; Edge et al. 2014), as well as the galactic reddening E(B – V). Nonetheless, we refer to this dataset as ‘the KiDS dataset’ for brevity throughout the paper.

Similar to Li et al. (2022a), we randomly selected 100 000 galaxies as a training sample, 14 147 galaxies as a validation sample, and 20 000 galaxies as a target sample.

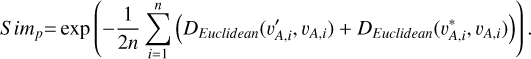

Algorithm 1

1. Supervised contrastive learning: compress high-dimensional input data to low-dimensional redshift-sensitive latent vectors so that the subsequent KNN can be performed.
2. Adaptive KNN: obtain the optimal number of nearest neighbours for each data instance via local PIT diagnostics, and use the known spectroscopic redshifts of these neighbours to construct an initial probability density estimate.
3. Recalibration: ensure that the obtained probability density estimates are locally calibrated.
4. Refitting: resume an end-to-end model in order to bypass the expensive computation of KNN and regain the computational efficiency of deep learning.
5. Combining an ensemble of estimates: use the harmonic mean to combine the probability density estimates from an ensemble of models developed following the procedures above.

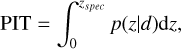

3 Our method: CLAP

3.1 Overview

This section introduces our method CLAP. As illustrated in Fig. 2 and summarised in Algorithm 1, a CLAP model is developed via supervised contrastive learning (SCL), adaptive KNN, reconstruction, and refitting, based on deep neural networks. For the model inputs, we prefer multi-band galaxy images rather than magnitudes or colours alone, because the images contain more information than photometry and potentially enable better accuracy to be achieved in photometric redshift estimation. On the other hand, as we demonstrate, the KNN algorithm is a key component of CLAP in resolving miscalibration, while the multi-band images have such a large number of dimensions that directly implementing the KNN on the images is infeasible. Therefore, CLAP first leverages contrastive learning to project the multi-band images and additional input data (i.e. galactic reddening E(B – V), magnitudes) to low-dimensional redshift-sensitive latent vectors that form a latent space with a defined distance metric, enabling the subsequent KNN to be performed. It is essentially a deep learning-based compression of complex high-dimensional data. The contrastive learning is coupled with supervised learning using the spectroscopic redshift labels for better extraction of redshift information.

The adaptive KNN is implemented on the obtained latent space. For each data instance from the target sample or the validation sample, the optimal k value (i.e. the number of the nearest neighbours), which defines its neighbourhood, is determined via diagnostics based on the local PIT distributions. The PIT values are computed using the known spectroscopic redshifts of the selected nearest neighbours from the training sample. Once the k value is determined, the known redshifts of the k nearest neighbours within the neighbourhood are used to construct a probability density estimate, which is then recalibrated via local PIT diagnostics on the nearest neighbours again. The PIT values used for recalibration may be computed using the known redshifts from the validation sample. In essence, with the adaptive KNN and the KNN-enabled recalibration, the joint distribution p(ɀ, d) for the given dataset is modelled locally by leveraging the neighbourhood of the projection Φ(d) in the latent space, where Φ stands for the implemented model for projecting data in SCL that has been omitted in the expression p(ɀ|d) for brevity.
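The core KNN step can be sketched as follows: given latent vectors and known redshifts for the training sample, the redshifts of the k nearest neighbours of a query vector are histogrammed into a normalised density. This is a simplified illustration under several assumptions: k is fixed rather than chosen adaptively via local PIT diagnostics, the recalibration step is omitted, the Euclidean distance stands in for the distance metric of the latent space, and the toy vectors, redshifts, and function name are hypothetical.

```python
import math


def knn_density(query, train_vectors, train_redshifts, k, bin_edges):
    """Binned density built from the redshifts of the k nearest training neighbours."""
    # Rank training instances by Euclidean distance to the query (assumed metric).
    order = sorted(range(len(train_vectors)),
                   key=lambda i: math.dist(query, train_vectors[i]))
    neighbours = [train_redshifts[i] for i in order[:k]]

    n_bins = len(bin_edges) - 1
    counts = [0] * n_bins
    for z in neighbours:
        for b in range(n_bins):
            is_last = b == n_bins - 1
            if bin_edges[b] <= z < bin_edges[b + 1] or (is_last and z == bin_edges[-1]):
                counts[b] += 1
                break

    total = sum(counts)
    if total == 0:
        raise ValueError("no neighbour redshift falls inside the binning range")
    # Divide by the bin width so that the result integrates to one.
    return [c / (total * (bin_edges[b + 1] - bin_edges[b]))
            for b, c in enumerate(counts)]


# Toy two-dimensional latent space with two well-separated groups.
train_vectors = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (1.0, 1.0), (1.1, 1.0)]
train_redshifts = [0.05, 0.07, 0.12, 0.31, 0.33]
bin_edges = [0.0, 0.1, 0.2, 0.3, 0.4]
density = knn_density((0.02, 0.02), train_vectors, train_redshifts,
                      k=3, bin_edges=bin_edges)
```

The brute-force sort over all training vectors also illustrates why running KNN for every instance of a large target sample is computationally expensive.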

The computational complexity of KNN precludes its use for processing large amounts of data envisioned by future imaging surveys. In order to avoid running computationally expensive KNN for every data instance from a potentially large target sample, a refitting procedure is implemented to resume an end-to-end model ready to directly produce the desired probability density estimates given input images, which regains the efficiency of deep learning.

Finally, to reduce uncertainties and improve accuracy, we develop an ensemble of CLAP models following the procedures above and combine the estimates from the ensemble. We propose the harmonic mean as an appropriate way to perform this combination.
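As a minimal sketch of this combination, the harmonic mean can be taken bin by bin across the ensemble. The renormalisation to unit integral, the handling of empty bins, the toy densities, and the function name are assumptions made for illustration and may differ from the implementation used in this work.

```python
def harmonic_mean_combine(densities, bin_width):
    """Bin-wise harmonic mean of several binned densities, renormalised to unity."""
    n_models = len(densities)
    combined = []
    for values in zip(*densities):
        if min(values) <= 0.0:
            # The harmonic mean vanishes as soon as one member assigns zero density.
            combined.append(0.0)
        else:
            combined.append(n_models / sum(1.0 / v for v in values))
    total = sum(combined) * bin_width
    return [c / total for c in combined]


# Two hypothetical ensemble members defined on the same four redshift bins.
bin_width = 0.1
member_a = [2.0, 6.0, 2.0, 0.0]
member_b = [4.0, 4.0, 2.0, 0.0]
combined = harmonic_mean_combine([member_a, member_b], bin_width)
```

Compared with the arithmetic mean, the harmonic mean is dominated by the smaller values in each bin, so a bin retains high density only if all ensemble members agree on it.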

The details of CLAP are elaborated in the following subsections.

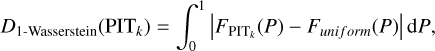

3.2 Supervised contrastive learning

We adopted a contrastive learning technique to establish a latent space that encodes redshift information (see Huertas-Company et al. 2023 for a review of contrastive learning in astrophysics). The essence of contrastive learning is to have positive pairs and negative pairs simultaneously, minimising the contrast between positive pairs and maximising the contrast between negative pairs. A naive choice of generating positive pairs would be to modify the images on the pixel level such as colour jittering, resizing, smoothing, and adding noise, such that contrastive learning is performed in an unsupervised manner (e.g. Hayat et al. 2021; Wei et al. 2022). However, our initial experiments showed that unsupervised contrastive learning with such pixel-level operations failed to produce a good representation for redshift, presumably because redshift is complex high-order information in a multi-dimensional spectroscopic and photometric parameter space, and may have exquisite dependence on pixel intensities (Campagne 2020). Therefore, we propose to incorporate supervised learning in the contrastive learning technique using spectroscopic redshift labels, so that meaningful redshift information can propagate from input data to the latent space. We also consider implementing a deep learning-based reconstruction of redshift-informed images to provide positive counterparts for the original images to avoid performing pixel-level operations.

In addition, we do not perturb input data to account for the noise in data (or ‘aleatoric’ uncertainties) like that applied in MLPQNA (Brescia et al. 2014; Cavuoti et al. 2015, 2017), because, again, this may destroy redshift information. In fact, the errors in the input data from the training sample can be encoded in the latent space in the training process, and then encapsulated in the obtained probability density estimates by KNN for the target sample. Assuming that the errors in the training data are representative of those in the target data, these errors can be well accounted for without any particular treatment. While we assume that the spectroscopic redshifts used in this work are noiseless (except the low-resolution redshifts from the CFHTLS dataset), the possible errors in the spectroscopic redshifts from the training sample can also be encapsulated in the same way.

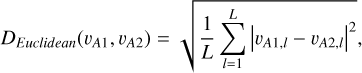

With these considerations, we developed a supervised contrastive learning (SCL) framework customised for the given problem (illustrated in Fig. 3). It consists of an encoder network, an estimator network, and a decoder network. The encoder is fed with multi-band galaxy images (denoted with I) and additional input data, and outputs two low-dimensional vectors vA and vB. The vector vA is used to encode redshift information, which we refer to as the ‘latent vector’ that is taken to form a latent space, while the vector vB is used to encode other information extracted from the input data needed for reconstructing images. The vector vA is inputted to the estimator that produces a redshift output having the form of a probability density (denoted with q(ɀ|d)), which is expressed in a series of bins given by the softmax function applied on the last fully connected layer and trained in a classification setting (i.e. considering each redshift bin as a class). The vectors vA and vB are concatenated and inputted to the decoder, reconstructing images that resemble the original inputs I. The reconstructed images (denoted with Ir) together with the original additional input data are re-inputted to the encoder, producing vectors v′A and v′B, and subsequently a redshift output q′(ɀ|d) and images I′r. In this second pass, the encoder, the estimator, and the decoder all use shared weights. Furthermore, we inputted the images I* that are original but reshaped with random flipping and rotation by 90 deg steps, yet the redshift is not modified under the assumption of spatial invariance. Correspondingly, v*A, v*B, q*(ɀ|d), and I*r were obtained.
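The random flipping and rotation by 90 deg steps can be illustrated with a short NumPy sketch. The function name `random_flip_rotate`, the (height, width, bands) array layout, and the toy cutout size are assumptions made for illustration and are not taken from the CLAP code.

```python
import numpy as np

def random_flip_rotate(image, rng):
    """Apply a random flip and a random rotation by a multiple of 90 deg.

    `image` is assumed to have shape (height, width, bands). Only the two
    spatial axes are transformed, so pixel values and band order are kept.
    """
    if rng.integers(2):                      # flip left-right half of the time
        image = np.flip(image, axis=1)
    n_quarter_turns = int(rng.integers(4))   # 0, 90, 180 or 270 deg
    return np.rot90(image, k=n_quarter_turns, axes=(0, 1))

rng = np.random.default_rng(0)
original = rng.normal(size=(64, 64, 5))      # toy 5-band cutout
augmented = random_flip_rotate(original, rng)
```

Because these operations only permute pixels, the redshift label of the transformed image can be kept unchanged, in line with the spatial invariance assumed in the text.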

In this framework, we regarded the images Ir and I (or equivalently, the latent vectors v′A and vA) for the same galaxy as a positive pair on condition that redshift information has been passed to vA, v′A, and Ir. I* and I (or v*A and vA) for the same galaxy were regarded as another positive pair, though this would not extract meaningful redshift information but only disentangle spatially variant information such as galaxy morphology. Lastly, the original images I1 and I2 for any two different galaxies (or the corresponding latent vectors vA1 and vA2) were regarded as a negative pair.

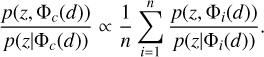

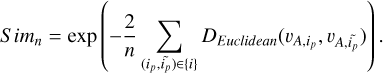

We adopted the Euclidean distance as a metric to characterise the contrast between two latent vectors vA1 and vA2,

$$D(v_{A1}, v_{A2}) = \sqrt{\sum_{l=1}^{L}\left(v_{A1,l}-v_{A2,l}\right)^{2}}, \tag{1}$$

where the index l runs over the L dimensions of the latent vectors. For a mini-batch of n data instances from the training sample, we obtained the latent vectors {vA,i}, {v′A,i}, and {v*A,i}, where the index i runs over all the data instances. Both (v′A,i, vA,i) and (v*A,i, vA,i) for each data instance were used as positive pairs, having 2n pairs in total. The similarity function for all the positive pairs was defined as

(2)

We generated n/2 negative pairs from {vA,i}, each consisting of two different galaxies. The similarity function for all the negative pairs was defined as

(3)

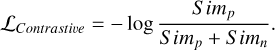

We then defined the contrastive loss function as

(4)
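The pair bookkeeping described above can be sketched as follows: 2n positive pairs and n/2 negative pairs per mini-batch, each characterised by a Euclidean distance. This sketch stops at the distances and does not attempt to reproduce the similarity functions or the contrastive loss. The helper names and the random toy vectors are assumptions, and splitting one random permutation into two halves is only one possible way to draw negative pairs of different galaxies.

```python
import numpy as np

def euclidean_distance(v1, v2):
    """Euclidean distance along the last axis (the L latent dimensions)."""
    return np.sqrt(np.sum((v1 - v2) ** 2, axis=-1))

def pair_distances(v_a, v_a_prime, v_a_star, rng):
    """Distances for 2n positive pairs and n/2 negative pairs in a mini-batch."""
    n = v_a.shape[0]
    # Positive pairs: each galaxy with its reconstructed-image and its
    # flipped/rotated counterparts.
    d_pos = np.concatenate([euclidean_distance(v_a_prime, v_a),
                            euclidean_distance(v_a_star, v_a)])
    # Negative pairs: two disjoint halves of a random permutation, so each
    # pair is guaranteed to contain two different galaxies.
    perm = rng.permutation(n)
    half = n // 2
    d_neg = euclidean_distance(v_a[perm[:half]], v_a[perm[half:2 * half]])
    return d_pos, d_neg

rng = np.random.default_rng(0)
v_a = rng.normal(size=(64, 16))                        # toy latent vectors
v_a_prime = v_a + 0.1 * rng.normal(size=v_a.shape)     # stand-in for the second pass
v_a_star = v_a + 0.1 * rng.normal(size=v_a.shape)      # stand-in for flipped/rotated inputs
d_pos, d_neg = pair_distances(v_a, v_a_prime, v_a_star, rng)
```

With a mini-batch of 64 instances this yields 128 positive-pair distances and 32 negative-pair distances, matching the counts quoted in the text.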

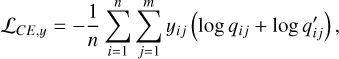

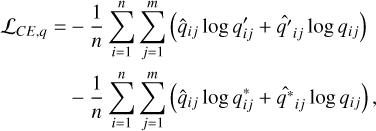

We converted the spectroscopic redshifts into one-hot labels using the same binning as the softmax output, assuming no spectroscopic errors beyond the bin size. The same one-hot label (denoted with y) was used to supervise both q(ɀ| d) and q′(ɀ| d) via the cross-entropy loss function

(5)

where the index i runs over the n data instances in the mini-batch; the index j runs over the m redshift bins for each data instance. We also used the cross-entropy to impose mutual consistency between q(ɀ|d), q′ (ɀ|d), and q* (ɀ|d),

(6)

where the hat ˆ on top of q(ɀ|d), q′(ɀ|d), and q*(ɀ|d) refers to gradient stopping in training when they are used as labels in front of the log terms. Equations (5) and (6) ensure that redshift information is encoded in vA, v′A, and Ir.
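As an illustration of the supervision described above, the following sketch converts spectroscopic redshifts into one-hot labels on a regular grid of bins and evaluates a cross-entropy against a binned output. The function names, the averaging over the mini-batch, and the small `eps` floor inside the logarithm are assumptions made here, and the exact normalisation used in the paper may differ.

```python
import numpy as np

def one_hot_labels(z_spec, z_max, n_bins):
    """One-hot labels on n_bins equal-width redshift bins spanning [0, z_max]."""
    idx = np.clip((z_spec / z_max * n_bins).astype(int), 0, n_bins - 1)
    labels = np.zeros((len(z_spec), n_bins))
    labels[np.arange(len(z_spec)), idx] = 1.0
    return labels

def cross_entropy(labels, q, eps=1e-12):
    """Cross-entropy between labels and binned outputs q, averaged over instances."""
    return float(-np.mean(np.sum(labels * np.log(q + eps), axis=1)))

z_spec = np.array([0.05, 0.39])
labels = one_hot_labels(z_spec, z_max=0.4, n_bins=4)
uniform_output = np.full((2, 4), 0.25)
loss_uniform = cross_entropy(labels, uniform_output)   # equals log(4) for 4 bins
loss_perfect = cross_entropy(labels, labels)           # vanishes for a perfect match
```

The same function can be called with a soft (non one-hot) array as `labels`, which is how a consistency term between two outputs could be evaluated once the label side is treated as a constant.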

We adopted the pixel-wise mean square error (MSE) to constrain the reconstructed images Ir, I′r, and I*r,

(7)

where the index k runs over a total number of K pixels of the images over multiple bands. The different MSE constraints for Ir and I*r ensure that spatially variant information is encoded in the vector vB. We note that Ir is required to be not identical to I, otherwise vA and v′A would collapse to a single point in the latent space, making the contrast between any different data instances uncontrollable and nullifying the contrastive learning. This requirement is naturally fulfilled since the random noise on I cannot be fully recovered given a compression of information by the encoder.

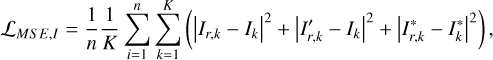

Finally, we had the total loss function to perform SCL by summing up all the aforementioned loss functions,

(8)

where the coefficients λCE and λMSE,I define the relative weights among different terms. With a trained SCL framework, we can obtain the projection of each data instance (i.e. the latent vector) from a sample and establish a latent space.

As an additional note, the redshift outputs q(ɀ|d) produced by the estimator in SCL are not intended as estimates; they serve only to let redshift information propagate to the latent vectors. These outputs are not used once the latent space is established. As we show, they are typically not calibrated, and should not be confused with the final estimates given by the complete implementation of CLAP that we introduce later, although the two are compared in our analysis.

Fig. 3 Supervised contrastive learning (SCL) framework. It contains an encoder, an estimator, and a decoder. The encoder, the same as that shown in Fig. 2, takes multi-band galaxy images and additional data as inputs, and produces two vectors vA and vB. The vector vA is used to encode redshift information and is referred to as the ‘latent vector’ throughout this work. It is inputted to the estimator that produces a redshift output supervised by the spectroscopic redshift label for extracting redshift information. The concatenation of vA and vB is inputted to the decoder to reconstruct images that resemble the input images. With the reconstructed images as inputs, this process is conducted again using the three networks with shared weights, producing the vector v′A. Furthermore, the images reshaped with random flipping and rotation by 90 deg steps are exploited as inputs, producing the vector v*A. For contrastive learning, the contrast between v′A and vA and the contrast between v*A and vA for the same galaxy are minimised (i.e. positive pairs), which are characterised by the Euclidean distance. The contrast between the latent vectors for any two different galaxies is maximised (i.e. a negative pair).

3.3 Adaptive KNN

We performed KNN to retrieve a group of nearest neighbours for the projection of any query data instance in an established latent space, whose known redshifts were used to construct an initial probability density estimate. This idea is based on the topology of the latent space, that is, the data instances with neighbouring projections would have similar redshift-related properties and can be approximated as random draws from a common redshift probability density. The neighbourhood for a data instance in the latent space is represented by its nearest neighbours. We note that a neighbourhood would typically encapsulate different redshifts; at the same time, different neighbourhoods would overlap, having shared nearest neighbours that provide the same redshifts. In other words, the topology of the latent space naturally depicts the many-to-many mapping between photometric data and redshift.

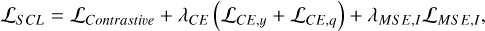

Since the data are not uniformly distributed, the conventional KNN with a constant k value as performed by Wei et al. (2022) may not define the neighbourhood properly for every query data instance. Therefore, we proposed an ‘adaptive’ KNN algorithm with varying k values for different data instances. To be specific, we relied on local PIT diagnostics to determine the optimal k for each data instance. The PIT for a data instance is defined as

$$\mathrm{PIT} = \int_{0}^{z_{\rm spec}} p(z|d)\,\mathrm{d}z, \tag{9}$$

where ɀspec stands for its spectroscopic redshift. If a neighbourhood is properly defined that best describes the local properties and gives well-calibrated probability density estimates, the distribution of the PIT values computed for the data instances within the neighbourhood should be uniform between 0 and 1 (see also Dey et al. 2021; Zhao et al. 2021; Dey et al. 2022b). Therefore, the optimal k can be found when the PIT distribution constructed by the corresponding number of nearest neighbours is closest to uniformity (i.e. via PIT diagnostics).
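To make the PIT diagnostic concrete, the sketch below evaluates the PIT of a binned probability density as its cumulative probability at the spectroscopic redshift, and checks that redshifts drawn from the density itself give PIT values spread uniformly between 0 and 1. The function name, the linear interpolation within bins, and the toy Gaussian-shaped density are assumptions made for illustration.

```python
import numpy as np

def pit_from_binned_density(p, bin_edges, z_spec):
    """Cumulative probability of a binned density at z_spec.

    `p` holds the probability per bin and sums to one. The cumulative
    distribution is interpolated linearly between bin edges.
    """
    cdf = np.concatenate([[0.0], np.cumsum(p)])
    return np.interp(z_spec, bin_edges, cdf)

rng = np.random.default_rng(1)
edges = np.linspace(0.0, 0.4, 41)
centres = 0.5 * (edges[:-1] + edges[1:])
p = np.exp(-0.5 * ((centres - 0.15) / 0.03) ** 2)
p /= p.sum()

# Draw "true" redshifts from the density itself: pick a bin according to p,
# then a uniform position inside that bin.
bins = rng.choice(len(p), size=20000, p=p)
z_true = edges[bins] + rng.uniform(size=bins.size) * np.diff(edges)[bins]
pits = pit_from_binned_density(p, edges, z_true)
```

For a well-calibrated density such as this toy case, a histogram of `pits` is flat; systematic departures from flatness are what the local diagnostics are designed to detect.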

The adaptive KNN algorithm consists of two rounds of grid search over a grid of k values. The first round is performed to find the k nearest neighbours for each query data instance with each k selected from the grid. The query data instances and the nearest neighbours are all from the training sample. We adopt the Euclidean distance for KNN, the distance metric used in SCL. The known spectroscopic redshifts of the k nearest neighbours provide a probability density; then with the known redshift of the query data instance, a PIT value for each given k can be computed (denoted with PITk). The second round of search, for each query data instance from the target sample or the validation sample, is performed to find its k nearest neighbours from the training sample with each given k. Then with the PITk values of the k nearest neighbours obtained from the first round, a distribution of PITk can be constructed that represents the local PIT distribution for the query data instance within a neighbourhood whose scale is characterised by the given k.

To quantify the discrepancy between uniformity and the PITk distribution for each k, we adopted the 1-Wasserstein distance (Villani 2009; Panaretos & Zemel 2019) defined as

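$$D_{1\text{-Wasserstein}}(\mathrm{PIT}_k) = \int_{0}^{1}\left|F_{\mathrm{PIT}_k}(P) - F_{\rm uniform}(P)\right|\,\mathrm{d}P, \tag{10}$$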

where FPITk(P) and Funiform(P) stand for the cumulative versions of the normalised PITk distribution and the uniform distribution, respectively. The 1-Wasserstein distance mainly captures the deviation between the broad shapes of the two distributions and is insensitive to random fluctuations in the PITk distribution. Using it as a metric, we searched for the optimal k for each query data instance from the target sample or the validation sample, for which the corresponding D1-Wasserstein(PITk) reaches the minimum. Then the spectroscopic redshifts of the k nearest neighbours were collected to construct an initial probability density estimate pini(ɀ|d), using the same binning as the softmax output in SCL.
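The two rounds of grid search and the selection of the optimal k can be sketched with a brute-force NumPy implementation. This is a simplified illustration under several assumptions: the PITk value is computed as the empirical fraction of neighbour redshifts not exceeding the query redshift (an unbinned stand-in for the binned density), each training instance is excluded from its own neighbourhood in the first round, and all function names and toy data are hypothetical.

```python
import numpy as np

def wasserstein_to_uniform(pit_values, n_bins=100):
    """1-Wasserstein distance between a binned PIT distribution and uniformity."""
    hist, _ = np.histogram(pit_values, bins=n_bins, range=(0.0, 1.0))
    cdf = np.cumsum(hist) / hist.sum()
    cdf_uniform = np.arange(1, n_bins + 1) / n_bins
    return float(np.sum(np.abs(cdf - cdf_uniform)) / n_bins)

def neighbour_order(queries, references):
    """Reference indices sorted by Euclidean distance to each query (brute force)."""
    d2 = ((queries[:, None, :] - references[None, :, :]) ** 2).sum(axis=-1)
    return np.argsort(d2, axis=1)

def adaptive_k(train_v, train_z, query_v, k_grid):
    # Round 1: PIT_k of every training instance from its k training neighbours.
    # Assumption: the instance itself (first column, zero distance) is dropped.
    order_tt = neighbour_order(train_v, train_v)[:, 1:]
    pit_k = {k: np.mean(train_z[order_tt[:, :k]] <= train_z[:, None], axis=1)
             for k in k_grid}
    # Round 2: for each query, collect the PIT_k values of its k training
    # neighbours and keep the k whose local PIT_k distribution is most uniform.
    order_qt = neighbour_order(query_v, train_v)
    best_k = np.array([
        k_grid[int(np.argmin([wasserstein_to_uniform(pit_k[k][row[:k]])
                              for k in k_grid]))]
        for row in order_qt])
    return best_k, order_qt

rng = np.random.default_rng(2)
train_v = rng.normal(size=(400, 2))                      # toy latent vectors
train_z = np.clip(0.2 + 0.05 * train_v[:, 0]
                  + 0.01 * rng.normal(size=400), 0.0, 0.4)
query_v = rng.normal(size=(8, 2))
k_grid = [5, 10, 20, 40, 80]
best_k, order_qt = adaptive_k(train_v, train_z, query_v, k_grid)

# Initial density estimate for the first query from its optimal neighbourhood.
z_edges = np.linspace(0.0, 0.4, 41)
p_ini, _ = np.histogram(train_z[order_qt[0, :best_k[0]]], bins=z_edges)
p_ini = p_ini / p_ini.sum()
```

For samples of realistic size, the brute-force distance matrix would normally be replaced by a tree-based or approximate neighbour search; the selection logic stays the same.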

3.4 Recalibration

A PIT distribution closest to uniformity is not necessarily uniform. To ensure good calibration of the to-be-obtained probability density estimates, after determining the optimal k and constructing pini(ɀ|d), we performed a (first-order) recalibration procedure similar to Bordoloi et al. (2010) using the local PITk distribution for the given k,

(11)

where pr(ɀ|d) is the recalibrated probability density estimate; Fini(ɀ|d) stands for the cumulative density estimate given by pini(ɀ|d); and the remaining term in Eq. (11) stands for the PITk number density at Fini(ɀ|d). We note that this is a local recalibration procedure for every data instance enabled by KNN, rather than global calibration over the whole sample as performed by Bordoloi et al. (2010).
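A first-order recalibration in the spirit of Bordoloi et al. (2010) can be sketched by reweighting each redshift bin with the PIT number density evaluated at the cumulative probability of that bin. The multiplicative form, the evaluation of the cumulative distribution at bin centres, and the final renormalisation are assumptions of this sketch, which is not claimed to reproduce Eq. (11) exactly.

```python
import numpy as np

def recalibrate(p_ini, pit_density):
    """Reweight a binned density by the PIT number density at its own CDF.

    p_ini:       probability per redshift bin, summing to one.
    pit_density: relative number density of PIT values on equal bins of [0, 1].
    """
    n_pit = len(pit_density)
    f_ini = np.cumsum(p_ini) - 0.5 * p_ini              # CDF at bin centres
    idx = np.clip((f_ini * n_pit).astype(int), 0, n_pit - 1)
    p_r = p_ini * pit_density[idx]
    return p_r / p_r.sum()

centres = np.linspace(0.004, 0.396, 50)
p_ini = np.exp(-0.5 * ((centres - 0.2) / 0.04) ** 2)
p_ini /= p_ini.sum()

p_same = recalibrate(p_ini, np.ones(100))               # flat PIT: no change
p_low = recalibrate(p_ini, np.linspace(2.0, 0.0, 100))  # excess of low PIT values
```

A flat PIT distribution leaves the estimate unchanged, while an excess of low PIT values (true redshifts tending to fall in the lower tails) shifts probability towards lower redshift.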

A robust recovery of the PITk number density is crucial for recalibration. Naturally, it can be approximated using the training sample alone,

(12)

Given possible overfitting in SCL, the distributions of the projections p(Φ(d)|ɀ) for the data seen and unseen in the training process may mildly differ, thus the estimate from Eq. (12) may not perfectly reflect the actual PIT distribution within the defined neighbourhood of an unseen data instance. Therefore, we re-computed the PITk value for each data instance from the training sample, but with ɀspec in Eq. (9) being the redshift of its nearest instance from the validation sample. With the distribution of such PITk values, we propose another estimate of the PITk number density as

(13)

Combining the training-based and the validation-based PITk distributions is due to the consideration that using the validation-based distribution alone would introduce large errors because of limited statistics. For each dataset, we checked the recalibration results with either Eq. (12) or Eq. (13) using the validation sample, and applied the better one for the target sample. The KNN-calibrated raw probability density estimates pr(ɀ|d) for the target sample produced by Eq. (11) are used for refitting described in the next subsection.

Essentially, this recalibration procedure determines the weights assigned to the nearest neighbours of each data instance that better characterise the local redshift-data distribution p(ɀ, d). An assessment on recalibration is presented in the supplementary appendices (see Data and Code Availability).

3.5 Refitting

To bypass the intensive computation required for KNN, we implemented a refitting procedure that resumed an end-to-end discriminative model ready to be applied directly to imaging data and produce probability density estimates p(ɀ|d). To be specific, for an SCL framework developed in Sect. 3.2, we discarded the decoder, fixed the trained encoder, and refit the estimator from scratch in a simple supervised manner with the KNN-calibrated raw probability density estimates pr(ɀ|d) as labels. This is equivalent to retraining only the estimator using the obtained latent vectors as inputs. Both the inputs and the KNN-calibrated labels for refitting are now from the target sample. We note that the KNN-calibrated labels have no access to the actual spectroscopic redshifts from the target sample, thus there is no leakage of target redshift information to the model.

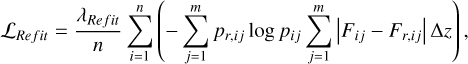

To better constrain the shapes of the probability density estimates p(ɀ|d), we adopted a hybrid loss function that consists of the cross-entropy and the 1-Wasserstein distance between p(ɀ|d) and pr(ɀ|d),

(14)

where the index i runs over the n data instances in a mini-batch; the index j runs over the m redshift bins for each data instance. Fij and Fr,ij stand for the two cumulative density estimates. ∆ɀ is the size of a redshift bin (i.e. ∆ɀ = (ɀmax – 0)/m). The coefficient λRefit controls the overall amplitude of the loss.
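A hybrid loss of this kind can be sketched as the sum of a cross-entropy term and a discretised 1-Wasserstein term built from the two cumulative estimates, scaled by an overall coefficient. The equal relative weighting of the two terms, the averaging over the mini-batch, and the `eps` floor are assumptions of this sketch; only the ingredients, the bin size, and the overall coefficient are taken from the text.

```python
import numpy as np

def refit_loss(p, p_r, delta_z, lam=100.0, eps=1e-12):
    """Cross-entropy plus 1-Wasserstein distance between binned densities.

    p, p_r: arrays of shape (n, m); each row sums to one. p_r plays the
    role of the KNN-calibrated label and p that of the model output.
    """
    cross_entropy = -np.sum(p_r * np.log(p + eps), axis=1)
    cdf_gap = np.abs(np.cumsum(p, axis=1) - np.cumsum(p_r, axis=1))
    wasserstein = np.sum(cdf_gap, axis=1) * delta_z
    return lam * float(np.mean(cross_entropy + wasserstein))

delta_z = 0.4 / 40
centres = (np.arange(40) + 0.5) * delta_z
means = np.array([[0.15], [0.25]])
labels = np.exp(-0.5 * ((centres[None, :] - means) / 0.03) ** 2)
labels /= labels.sum(axis=1, keepdims=True)
shifted = np.roll(labels, 3, axis=1)          # same shape, displaced by 3 bins

loss_matched = refit_loss(labels, labels, delta_z)
loss_shifted = refit_loss(shifted, labels, delta_z)
```

The cross-entropy term alone is insensitive to how far misplaced probability lies from the label, whereas the cumulative term grows with the displacement, which is one way to understand why the two are combined to constrain the shapes.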

The model resumed this way has no difference from conventional end-to-end discriminative models but its outputs have been calibrated thanks to KNN. We demonstrate that refitting using the KNN-calibrated labels rather than the one-hot labels does not introduce apparent biases (Sect. 4.6) or miscalibration (Sect. 5).

The output probability density estimates may be unfavourably discretised due to sparse data coverage in redshift bins. Hence, once a model was resumed, each output estimate was further smoothed with a Gaussian filter, whose dispersion was determined as 5% of the standard deviation of the unsmoothed estimate. We found that this choice would effectively reduce the degree of discretisation but have negligible impact on the width of each probability density estimate (see Data and Code Availability for a few examples).
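The smoothing step can be illustrated as follows: a Gaussian kernel whose dispersion equals 5% of the standard deviation of the unsmoothed estimate is convolved with the binned density. The kernel truncation at four dispersions, the handling of the edges, and the renormalisation are assumptions of this sketch.

```python
import numpy as np

def smooth_density(p, bin_centres, fraction=0.05):
    """Smooth a binned density with a Gaussian of width fraction * its own std."""
    mean = np.sum(p * bin_centres)
    std = np.sqrt(np.sum(p * (bin_centres - mean) ** 2))
    sigma = fraction * std
    if sigma <= 0.0:
        return p.copy()
    dz = bin_centres[1] - bin_centres[0]
    half = int(np.ceil(4.0 * sigma / dz))
    half = min(max(half, 1), (len(p) - 1) // 2)   # keep the kernel shorter than p
    offsets = np.arange(-half, half + 1) * dz
    kernel = np.exp(-0.5 * (offsets / sigma) ** 2)
    kernel /= kernel.sum()
    smoothed = np.convolve(p, kernel, mode="same")
    return smoothed / smoothed.sum()

def density_std(p, bin_centres):
    mean = np.sum(p * bin_centres)
    return float(np.sqrt(np.sum(p * (bin_centres - mean) ** 2)))

rng = np.random.default_rng(3)
bin_centres = (np.arange(200) + 0.5) * 0.002
samples = rng.normal(0.2, 0.04, size=300)      # sparse sampling gives a spiky histogram
raw, _ = np.histogram(samples, bins=200, range=(0.0, 0.4))
raw = raw / raw.sum()
smoothed = smooth_density(raw, bin_centres)
```

In this toy case the bin-to-bin roughness drops while the standard deviation of the estimate changes by well under one per cent, consistent with the behaviour described in the text.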

In practice, when a target sample is too large to perform KNN, we can select a representative subset from the target sample as a reference subsample, implement the refitting procedure, and apply the resumed model to the remaining data for inference. We test the outcomes of inference using the SDSS dataset in Sect. 4.6.

3.6 Combining probability density estimates

Combining probability density estimates from an ensemble of measurements would reduce uncertainties and improve accuracy (Treyer et al. 2024). Pasquet et al. (2019) took the arithmetic mean over individual probability density estimates from six realisations of the same network to obtain the final estimates. Though seemingly intuitive, such combination tends to produce overdispersed probability density estimates. This is because the equality between the combined estimate and the arithmetic mean of the individual estimates does not hold true in general for any models {Φi} to be combined (see Sect. 5.3 for more discussions).

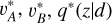

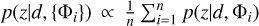

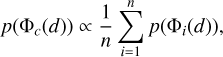

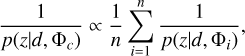

In this work, we suggest that a proper way to combine estimates from an ensemble of models is via the harmonic mean. To prove this, we first assume that the projection of a data instance d (with a particular redshift z) via any individual model Φi to the latent space (i.e. Φi(d)) is a random draw from the hypothetical neighbourhood of the projection via the combined model Φc, though these individual projections do not need to be enclosed in an actual common neighbourhood. We also assume that the probability of getting the projection Φc (d) can be computed by averaging the probabilities of getting each individual projection Φi(d) under the linear approximation,

(15)

Since the data instance d with the given redshift z falls in the neighbourhoods of all the projections {Φi(d)} and Φc(d), the probability of ɀ belonging to any neighbourhood remains constant, i.e. p(Φc(d)|ɀ) = p(Φi(d)|ɀ) for any model Φi. This leads to the conclusion that

(17)

for which p(ɀ|Φc(d)) and p(ɀ|Φi(d)) have been rewritten as p(ɀ|d, Φc) and p(ɀ|d, Φi), respectively, where ɀ is now considered as any redshift bins. Therefore, we propose to use the harmonic mean for combining an ensemble of probability density estimates. The combined estimates should be renormalised to unity if using the harmonic mean. For brevity in the text, we do not mention this explicitly hereafter. We show a few examples of probability densities before and after combination in the supplementary appendices (see Data and Code Availability).
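The combination step can be sketched as a bin-wise harmonic mean followed by renormalisation. The function name and the small `eps` floor, which avoids division by zero in empty bins, are assumptions of this sketch; the toy comparison with the arithmetic mean uses two Gaussian-shaped estimates with slightly different centres.

```python
import numpy as np

def harmonic_mean_combine(densities, eps=1e-12):
    """Bin-wise harmonic mean over the first axis, renormalised to unity.

    densities: array of shape (n_models, ..., n_bins).
    """
    densities = np.asarray(densities, dtype=float)
    combined = densities.shape[0] / np.sum(1.0 / (densities + eps), axis=0)
    return combined / combined.sum(axis=-1, keepdims=True)

def density_std(p, bin_centres):
    mean = np.sum(p * bin_centres)
    return float(np.sqrt(np.sum(p * (bin_centres - mean) ** 2)))

bin_centres = (np.arange(400) + 0.5) * 0.001
ensemble = np.stack([np.exp(-0.5 * ((bin_centres - mu) / 0.03) ** 2)
                     for mu in (0.14, 0.16)])
ensemble /= ensemble.sum(axis=1, keepdims=True)

combined_harmonic = harmonic_mean_combine(ensemble)
combined_arithmetic = ensemble.mean(axis=0)
```

In this example the arithmetic mean is broader than either input, whereas the harmonic mean is dominated in each bin by the smaller of the individual values and therefore yields a narrower combined estimate.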

3.7 Implementing CLAP

We implemented CLAP following all the procedures introduced in the previous subsections, using the SDSS, CFHTLS, and KiDS datasets separately. With each dataset, we used the training sample and the validation sample to develop the SCL framework, then included the target sample in the downstream procedures. For the CFHTLS dataset, both the galaxies with high-quality and low-resolution spectroscopic redshifts from the training sample were used in SCL, while only those with high-quality spectroscopic redshifts were used after SCL. This removes the mismatch between the initial training sample and the target sample.

For the encoder and the estimator in SCL, we adopted a modified version of the inception network developed by Treyer et al. (2024). It falls in the category of convolutional neural networks (CNNs), featuring a set of multi-scale inception modules (Szegedy et al. 2015) accompanied by convolutional layers and pooling layers. They produce feature maps that are then flattened and fed into a set of fully connected layers for predicting redshifts. Between the first two fully connected layers in this network, we inserted two new parallel layers to produce the vectors vA and vB. The whole part of the network before the vectors is regarded as the encoder. The last fully connected layers, only linked to vA, form the estimator. For the estimator, we discarded the regression branch and only kept the classification branch (with a softmax function) from the original implementation by Treyer et al. (2024). The redshift bin size we used in the softmax output and the one-hot spectroscopic redshift labels for each dataset is listed in Table 1. The concatenation of vA and vB is fed into the decoder that has convolutional layers and bilinear interpolation for upsampling and reconstructing images. The latent vector vA has 16 dimensions for encoding redshift information, and vB has 512 dimensions. These numbers were chosen to ensure computational efficiency and no major loss of information. For the SDSS dataset and the CFHTLS dataset, we included the galactic reddening E(B – V) as an extra channel to the multi-band images inputted to the encoder. For the KiDS dataset, the 5-band NIR magnitudes from VIKING were further included as extra channels. More details about the network architectures can be found in Appendix A.

The raw SDSS and CFHTLS images have AB magnitude zeropoints of 22.5 and 30, respectively. The KiDS images were redefined to have a zeropoint of 25. In order to lessen the significance of the galaxy peak flux, we then rescaled the images from all the datasets using the formula

(18)

where I and I0 stand for the rescaled and the original pixel intensities, respectively.

In Eq. (8), we set the coefficient λMSE,I = 100 for the SDSS and KiDS datasets while λMSE,I = 1 for the CFHTLS dataset. λCE = 1 was set for all the datasets. With each dataset, we trained our SCL framework from scratch using the mini-batch gradient descent implemented with the default Adam optimiser (Kingma & Ba 2015). In each training iteration, a mini-batch of 64 image-label pairs was randomly selected from the training sample. The images were randomly flipped and rotated with 90 deg steps before being used as the initial inputs I. We adopted an initial learning rate of 10⁻⁴, which was reduced by a factor of 5 after 60 000 training iterations. By monitoring the loss with the validation sample, we decided to conduct 150 000, 120 000, and 120 000 training iterations in total for the SDSS, CFHTLS, and KiDS datasets, respectively. The SCL framework for each dataset was properly trained with these numbers, while we note that mild underfitting or overfitting would not have a significant impact on the results.

For the adaptive KNN and the recalibration procedures, we used 100 bins from 0 to 1 to express the PIT distributions for all the datasets. We note that a small number of bins would enlarge quantisation errors, while a large number of bins would make the data insufficient to fill in the bins, leading to shot noise. An intermediate number such as 100 is a compromise between reducing quantisation errors and limiting shot noise. For efficient grid search, we selected discrete k values from four consecutive ranges: 5–200 with a step of 5, 200–600 with a step of 10, 600–1000 with a step of 20, and 1000–2000 with a step of 50. The maximum k is set to be 2000, large enough to avoid finding too small (sub-optimal) k values while still ensuring locality of each obtained neighbourhood and circumventing unnecessary intensive computation. The medians of the distributions of the obtained optimal k are 660, 110, and 145 for the SDSS, CFHTLS, and KiDS target samples, respectively. The majority of data have optimal k well below 2000, while only a minor fraction of the obtained optimal k values (2.9, 0.2, and 0.5%, respectively) are equal to this maximum value and thus may potentially exceed the defined k range. We note that small k values may be disfavoured because of large shot noise, but this potential bias can be corrected by recalibration (see Data and Code Availability for an assessment on recalibration).
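The grid of k values quoted above can be written down explicitly. How the boundary values 200, 600, and 1000 are assigned to the adjacent ranges is an assumption made here to avoid duplicates; the variable name is hypothetical.

```python
import numpy as np

# Four consecutive ranges with increasing step sizes, as listed in the text.
k_grid = np.concatenate([
    np.arange(5, 200, 5),       # 5, 10, ..., 195
    np.arange(200, 600, 10),    # 200, 210, ..., 590
    np.arange(600, 1000, 20),   # 600, 620, ..., 980
    np.arange(1000, 2001, 50),  # 1000, 1050, ..., 2000
])
```

This gives 120 candidate values in total, with fine sampling at small k, where the neighbourhood changes quickly, and coarse sampling towards the maximum of 2000.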

In order to obtain the PITk distributions for recalibration, by checking the validation samples, we chose to use Eq. (13) for the SDSS and CFHTLS datasets, and Eq. (12) for the KiDS dataset. We performed a second-order polynomial fit to remove random fluctuations in these distributions and used the fit in Eq. (11) for recalibration.
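The second-order polynomial fit of a binned PIT distribution can be sketched with `numpy.polyfit`. The use of 100 bins follows the text, while the clipping of the fit to positive values and its normalisation to unit mean are assumptions of this sketch.

```python
import numpy as np

def fit_pit_distribution(pit_values, n_bins=100, degree=2):
    """Polynomial fit to a binned PIT distribution on [0, 1]."""
    hist, edges = np.histogram(pit_values, bins=n_bins, range=(0.0, 1.0),
                               density=True)
    centres = 0.5 * (edges[:-1] + edges[1:])
    coeffs = np.polyfit(centres, hist, deg=degree)
    fitted = np.clip(np.polyval(coeffs, centres), 1e-6, None)  # keep it positive
    return fitted / fitted.mean()                               # unit mean density

rng = np.random.default_rng(4)
pit_uniform = rng.uniform(size=50000)
fitted_uniform = fit_pit_distribution(pit_uniform)
```

For uniformly distributed PIT values the fit stays close to one in every bin, so the bin-to-bin shot noise of the histogram is not propagated into the recalibration weights.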

In the refitting procedure, in order to test whether the resumed end-to-end models are capable of making inference for held-out data, we split the SDSS target sample into a reference subsample and an inference subsample, used for refitting and inference, respectively, while the CFHTLS and KiDS target samples are only used as reference samples due to limited statistics. We set λRefit = 100 in Eq. (14). For each dataset, we set the mini-batch size to be 128, took 40 000 training iterations in total, and adopted a constant learning rate of 10⁻⁴. The mini-batch gradient descent with the default Adam optimiser was used again.

For the hyperparameters and coefficients involved in the implementation of CLAP, we tested a few different values around the aforementioned chosen values and found negligible impact on the results, indicating that the CLAP models established with the current implementation have converged. We refer to Lin et al. (2022) for detailed discussions on the impacts of varying implementing conditions, involving, for example, the training sample size, the redshift bin size, the number of training iterations, and the mini-batch size.

Finally, we leveraged the strategy of combining an ensemble of models to improve accuracy. Following the aforementioned procedures, we implemented CLAP ten times. Each time, the SCL and the refitting were conducted in the same way but with a different initialisation of trainable weights and different selections of mini-batches. The outcomes of adaptive KNN and recalibration may consequently be different due to such stochasticity. The output probability density estimates from the ten CLAP models were combined using the harmonic mean.

We compared the models developed with the full range of data for each dataset illustrated in Fig. 1 and those developed with segmented ranges of redshift and r-band magnitude. The results produced by these ‘sub-models’ generally follow the evolution trends of biases and accuracy obtained by the ‘full-models’ shown in Sect. 4.5. Therefore, we only discuss the results from the full-models in the remainder of the paper.

4 Results on probability density estimation

Throughout this section, we present our results on photometric redshift estimation by CLAP using the target samples from the SDSS, CFHTLS, and KiDS datasets. Comparisons are made with a few benchmark methods. In Sects. 4.3, 4.4, and 4.5, we use the whole SDSS target sample without separating the reference and the inference subsamples, while in Sect. 4.6 we make this separation when assessing the resumption of end-to-end models by refitting. We demonstrate that the reference and the inference subsamples have consistent results produced by CLAP and thus they can be combined. A few examples of probability density estimates obtained by CLAP are presented in the supplementary appendices (see Data and Code Availability).

4.1 Assessment techniques

Before further discussions in the next subsections, we present the techniques used for assessing the probability density estimates and the density-derived point estimates.

Firstly, we discuss our choice of point estimates, which is important for our analysis. There are several possible definitions of point estimates that can be derived from probability density estimates, such as ɀmean (i.e. the probability-weighted mean redshift), ɀmode (i.e. the redshift at the peak probability), and ɀmedian (i.e. the median redshift at which the cumulative probability is 0.5). Different definitions have been discussed in many studies such as Tanaka et al. (2018), Pasquet et al. (2019), Lin et al. (2022), Treyer et al. (2024), and Treyer et al. (in prep.). For template-fitting methods, the best-fitting redshifts are usually adopted as point estimates, analogous to ɀmode. In our work, we chose ɀmean as point estimates. Our consideration for this links back to the frequentist definition: if a probability density accurately describes the actual rate of occurrence of true redshifts, the empirical mean of the true redshifts should be equal to the expected mean redshift weighted by the probability density, i.e. ɀmean. In other words, with sufficient statistics, perfect probability density estimates lead to zero mean redshift residuals if estimated with ɀmean (though the reverse is not necessarily true). Other choices of point estimates do not have such property in general. Therefore, ɀmean is taken as our exclusive choice of point estimates ɀphoto for CLAP hereafter.
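The three point estimates can be computed from a binned probability density as in the sketch below. Taking the median as the centre of the first bin whose cumulative probability reaches 0.5 is a coarse assumption made here, and the right-skewed toy density is purely illustrative.

```python
import numpy as np

def point_estimates(p, bin_centres):
    """Mean, mode, and median of a binned probability density."""
    z_mean = float(np.sum(p * bin_centres))
    z_mode = float(bin_centres[np.argmax(p)])
    cdf = np.cumsum(p)
    z_median = float(bin_centres[np.searchsorted(cdf, 0.5)])
    return z_mean, z_mode, z_median

bin_centres = (np.arange(400) + 0.5) * 0.001
p = bin_centres * np.exp(-bin_centres / 0.05)   # right-skewed toy density
p /= p.sum()
z_mean, z_mode, z_median = point_estimates(p, bin_centres)
```

For a skewed density the three estimates differ noticeably (here the mode lies below the median, which lies below the mean), which is why the choice of definition matters for the analysis of residuals.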

To inspect the properties of the probability density estimates, we checked a few summary statistics defined using the individual probability densities and the corresponding spectroscopic redshifts ɀspec, including the probability integral transform (PIT), the 1-Wasserstein distance, the continuous ranked probability score (CRPS), the cross-entropy, the entropy, the standard deviation, the skewness, and the kurtosis. Regarding the point estimates, we characterised the mean redshift biases using δ<ɀ>, the residuals of mean redshifts estimated in redshift or magnitude bins, and quantified the estimation accuracy using σMAD, the estimate of deviation based on the median absolute deviation (MAD). The definitions of all these metrics are shown in Table 2.
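Two of these metrics can be sketched in a few lines. The example below assumes that the PIT is the cumulative probability evaluated at ɀspec (with linear interpolation inside a bin) and that σMAD follows the widely used convention 1.4826 × median(|Δɀ − median(Δɀ)|) with Δɀ = (ɀphoto − ɀspec)/(1 + ɀspec). Both are assumptions for illustration; the exact definitions adopted in this work are those of Table 2. The helper names and toy numbers are hypothetical.

```python
from statistics import median


def pit(z_edges, prob, z_spec):
    """Cumulative probability at z_spec for a density given as bin probabilities.

    Assumption: probability is spread uniformly within each bin, so the
    cumulative distribution is linear between consecutive bin edges.
    """
    if z_spec <= z_edges[0]:
        return 0.0
    cum = 0.0
    for lo, hi, p in zip(z_edges[:-1], z_edges[1:], prob):
        if z_spec < hi:
            return cum + p * (z_spec - lo) / (hi - lo)
        cum += p
    return 1.0


def sigma_mad(z_photo, z_spec):
    """1.4826 x MAD of the normalised residuals (assumed convention)."""
    dz = [(zp - zs) / (1.0 + zs) for zp, zs in zip(z_photo, z_spec)]
    centre = median(dz)
    return 1.4826 * median(abs(d - centre) for d in dz)


# Toy inputs (hypothetical values).
pit_value = pit([0.0, 0.1, 0.2, 0.3], [0.2, 0.6, 0.2], z_spec=0.15)
s_mad = sigma_mad([0.11, 0.19, 0.32], [0.10, 0.20, 0.30])
```

A symmetric density evaluated at its centre gives a PIT of 0.5, as in the toy case here; over a well-calibrated sample the PIT values would be uniformly distributed between 0 and 1.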

Knowing that each individual probability density has only one realisation (i.e. a unique spectroscopic redshift for a given galaxy), we also considered a distribution offset analysis with a stacking technique to assess the global behaviour of the probability density estimates over a sample. Specifically, we recentred each probability density estimate by shifting the point estimate ɀphoto to ɀ = 0 and stacked all such recentred estimates over a sample (denoted by pΣ(ɀ – ɀphoto)); we also drew the histogram of ɀspec – ɀphoto. If the probability density estimates are well-calibrated, these two distributions should be consistent given sufficient statistics (though the reverse is not necessarily true). Hence, we checked the cumulative offset of the stacked recentred probability density pΣ(ɀ – ɀphoto) relative to the ɀspec – ɀphoto histogram (denoted by ∆FpΣ–h). Such a cumulative offset is sensitive to any inconsistency between the two distributions being compared.
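The stacking procedure can be sketched as follows. This is a minimal illustration under stated assumptions: densities are tabulated on shared bin centres, recentred probabilities are deposited into the nearest offset bin without interpolation, and the cumulative offset is taken as the cumulative distribution of the stack minus that of the histogram. The sign convention, the function names, and the binning are choices made for this example rather than details taken from the paper.

```python
from itertools import accumulate


def offset_bin(delta, lo, width, n_bins):
    """Index of the offset bin containing delta, or None if out of range."""
    k = int((delta - lo) // width)
    return k if 0 <= k < n_bins else None


def cumulative_offset(z_grid, pdfs, z_photo, z_spec, lo, hi, n_bins):
    """Cumulative distribution of the stacked recentred densities minus
    that of the (z_spec - z_photo) histogram, on a common offset grid."""
    width = (hi - lo) / n_bins
    stacked = [0.0] * n_bins
    hist = [0.0] * n_bins
    for pdf, zp, zs in zip(pdfs, z_photo, z_spec):
        total = sum(pdf)
        # Recentre the density on its point estimate and add it to the stack.
        for z, p in zip(z_grid, pdf):
            k = offset_bin(z - zp, lo, width, n_bins)
            if k is not None:
                stacked[k] += p / total
        # Histogram of the actual residual for the same galaxy.
        k = offset_bin(zs - zp, lo, width, n_bins)
        if k is not None:
            hist[k] += 1.0
    s_tot, h_tot = sum(stacked), sum(hist)
    cdf_stack = accumulate(s / s_tot for s in stacked)
    cdf_hist = accumulate(h / h_tot for h in hist)
    return [a - b for a, b in zip(cdf_stack, cdf_hist)]


# Toy case: two sharply peaked densities at z = 0.2, true redshifts 0.1 and 0.3.
z_grid = [0.1, 0.2, 0.3]
pdfs = [[0.0, 1.0, 0.0], [0.0, 1.0, 0.0]]
offset = cumulative_offset(z_grid, pdfs, z_photo=[0.2, 0.2], z_spec=[0.1, 0.3],
                           lo=-0.55, hi=0.55, n_bins=11)
```

In this toy case the stack is narrower than the residual histogram, so the offset is negative just below zero and positive just above it, and returns to zero at both ends of the grid.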

Although many studies stacked the original probability density estimates and made a comparison with the actual ɀspec distribution, we note that such a stacked probability density and the ɀspec distribution are usually not directly comparable. In other words, for probability densities p(ɀ|d1), p(ɀ|d2),…, p(ɀ|dn) to be stacked, there may exist a data instance with any particular redshift ɀ enclosed in the neighbourhoods of d1, d2,…, dn simultaneously, making the selections of (ɀ, d1), (ɀ, d2),…, (ɀ, dn) not mutually exclusive, so that the individual densities cannot simply be summed to recover the ɀspec distribution (see also Malz 2021). However, the connections among d1, d2,…, dn through the shared data instance with ɀ can be broken by instead using ∆ɀi = ɀ – ɀphoto,i for each di. This is because (ɀ, d1), (ɀ, d2),…, (ɀ, dn) that initially had the shared ɀ are now reassigned to different {∆ɀi} bins since {ɀphoto,i} are different. Now the selections of (∆ɀ, d1), (∆ɀ, d2),…, (∆ɀ, dn) for the same ∆ɀ are statistically mutually exclusive, since ∆ɀ is no longer contributed by a shared data instance in the neighbourhoods of d1, d2,…, dn. Hence, the stacked recentred probability density pΣ(ɀ – ɀphoto) and the ɀspec – ɀphoto distribution are now comparable given sufficient statistics. Admittedly, this stacking technique is not mathematically rigorous, but it serves as a tool to check the reliability of the probability density estimates globally over a sample.

Similarly, we checked the cumulative offset between the zspec − zphoto histograms for two methods (denoted by ∆Fh–h), which can be used to demonstrate their relative accuracy and biases, irrespective of whether they provide probability density estimates.

Table 2. Estimates and summary statistics derived from probability densities.

4.2 Benchmark methods

We compared CLAP with a few benchmark methods for the three datasets. The results predicted by our SCL framework (i.e. the softmax outputs q(z|d) from SCL rather than refitting) are shown for the first comparison. We reiterate that the SCL prediction is only regarded as a baseline and should not be confused with the results from the complete implementation of CLAP. For the KiDS dataset, we also show the results produced by CLAP but without including the 5-band NIR magnitudes from VIKING as inputs, demonstrating the impact of the NIR data on photometric redshift estimation. For both CLAP and the SCL prediction, the ensemble of ten probability density estimates is combined using the harmonic mean unless otherwise noted.
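A minimal sketch of combining an ensemble of binned probability density estimates with the harmonic mean is given below. The bin-by-bin application, the final renormalisation, and the small floor `eps` that guards against division by zero are assumptions made for this illustration; the function name is hypothetical and the text above does not specify these details.

```python
def harmonic_mean_pdf(pdfs, eps=1e-12):
    """Combine binned densities with a bin-wise harmonic mean.

    Assumptions: all densities share the same bins, values below eps are
    floored to eps, and the result is renormalised to sum to 1.
    """
    n = len(pdfs)
    combined = [
        n / sum(1.0 / max(p, eps) for p in column)
        for column in zip(*pdfs)  # one column per redshift bin
    ]
    total = sum(combined)
    return [c / total for c in combined]


# Toy ensemble of two estimates on three bins (hypothetical values).
ensemble = [[0.2, 0.6, 0.2], [0.4, 0.4, 0.2]]
combined = harmonic_mean_pdf(ensemble)
```

Because the harmonic mean in each bin never exceeds the arithmetic mean and is pulled towards the smallest member, a bin receives substantial probability only when all ensemble members agree on it.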

For further comparison, we present the results obtained by several image-based deep learning methods collected from different studies, including inception networks from Pasquet et al. (2019) and Treyer et al. (in prep.), a bias correction approach based on inception networks from Lin et al. (2022), a capsule network from Dey et al. (2022a), as well as a VGG network (using the 4-band KiDS images as inputs) and a hybrid network (using the 4-band KiDS images and the 5-band VIKING photometry as inputs) from Li et al. (2022a). Regarding photometry-only methods, we show the results from Beck et al. (2016) who applied KNN and local regression, the results obtained using Le Phare (Arnouts et al. 1999; Ilbert et al. 2006), as well as those retrieved from the public KiDS catalogues1 produced by MLPQNA (Brescia et al. 2014; Cavuoti et al. 2015, 2017), ANNz2 (Sadeh et al. 2016; Bilicki et al. 2018), and BPZ (Benítez 2000).

We show the results from Lin et al. (2022), Pasquet et al. (2019), Dey et al. (2022a), Treyer et al. (in prep.), and Li et al. (2022a) using their test samples. All but the CFHTLS test sample from Lin et al. (2022) essentially have the same redshift-data distributions as our target samples in spite of different sample divisions. We use the results provided by Lin et al. (2022) for which the zphoto-dependent mean redshift biases have been corrected, bypassing the impact of the mismatch in distributions. The results from Beck et al. (2016) and Le Phare are shown using our target samples, while those from MLPQNA, ANNz2, and BPZ are shown using the test sample provided by Li et al. (2022a). These results are directly compared even though different studies adopted different choices of point estimates. Pasquet et al. (2019), MLPQNA, and BPZ have probability density estimates available for comparison, while the other methods only provide point estimates. The probability density estimates provided by Pasquet et al. (2019) have the same redshift coverage and binning as ours. For consistency with our analysis on the KiDS dataset selected with zspec < 3, the original probability density estimates given by MLPQNA and BPZ were truncated at z = 3, rebinned, and renormalised. We further caution that the VGG network, MLPQNA, ANNz2, and BPZ for the KiDS dataset did not use the five NIR bands; they can therefore be treated as a direct reference for the ‘No-NIR’ CLAP implementation, but not for the default implementation with the NIR data included. These results are all shown to enrich the comparison among various methods.
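The truncation, rebinning, and renormalisation of an external probability density can be sketched as follows. The text does not specify how the rebinning was carried out, so this example assumes the simplest case in which an integer number of adjacent bins is merged by summing their probabilities; the function name, the grid, and the merging factor are hypothetical.

```python
def truncate_rebin(z_edges, prob, z_max, factor):
    """Drop bins above z_max, merge every `factor` adjacent bins, renormalise.

    Assumptions: z_edges is ascending, prob holds one probability per bin,
    and any trailing bins that do not fill a complete merged bin are dropped.
    """
    # Keep only bins whose upper edge does not exceed z_max.
    kept = [p for hi, p in zip(z_edges[1:], prob) if hi <= z_max + 1e-9]
    n_new = len(kept) // factor
    merged = [sum(kept[i * factor:(i + 1) * factor]) for i in range(n_new)]
    total = sum(merged)
    new_edges = z_edges[:n_new * factor + 1:factor]
    return new_edges, [m / total for m in merged]


# Toy density on bins of width 0.5 up to z = 4 (hypothetical values).
z_edges = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
prob = [0.1, 0.2, 0.2, 0.1, 0.1, 0.1, 0.1, 0.1]
new_edges, new_prob = truncate_rebin(z_edges, prob, z_max=3.0, factor=2)
```

In the toy case the probability above z = 3 is discarded and the remaining bins are rescaled to sum to one, so such a truncation is only harmless when little probability lies beyond the cut.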

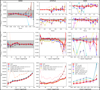

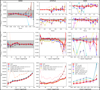

4.3 Calibration of probability density estimates

In the upper panels of Fig. 4, the dashed curves show the zspec – zphoto histograms for CLAP and the benchmark methods for the SDSS, CFHTLS, and KiDS datasets. The solid curves show the stacked recentred probability densities pΣ(z − zphoto) when the probability density estimates are available. Their cumulative offsets ∆FpΣ–h are shown as the solid curves in the lower panels. The first column of Fig. 5 shows the PIT distributions. The cumulative offset ∆FpΣ–h and the PIT distribution are complementary and offer a cross-check over the calibration of a given set of probability density estimates.

For all three datasets, the cumulative offsets ∆FpΣ–h given by CLAP (including the No-NIR implementation for the KiDS dataset) are constrained within low levels (i.e. 0.005, 0.01, and 0.01, respectively), better than those given by the SCL prediction and the other benchmark methods. CLAP also produces nearly flat PIT distributions, though the PIT distributions obtained for the CFHTLS dataset and by the No-NIR implementation for the KiDS dataset are slightly convex, in addition to some fluctuations probably as a result of estimation errors. The exclusion of the NIR data for the KiDS dataset degrades the estimation accuracy (see Sect. 4.5), but hardly influences the calibration of probability densities. In particular, No-NIR CLAP outperforms the SCL prediction for which the NIR data were used. Both the small offsets and the nearly flat PIT distributions provide evidence that the probability density estimates obtained by CLAP are well-calibrated.

In contrast, for either the CFHTLS dataset or the KiDS dataset, the tilde-shaped feature around zero in the cumulative offset ∆FpΣ–h given by the SCL prediction indicates that the stacking of the recentred probability density estimates is narrower than the zspec − zphoto histogram, reflecting that the probability density estimates are underdispersed (or overconfident), as also implied by the convex PIT distributions. This is a sign of miscalibration caused internally by the estimation method. The probability density estimates given by MLPQNA and BPZ are significantly miscalibrated, producing large cumulative offsets ∆FpΣ–h. For the SDSS dataset, the trough centred at zero in the cumulative offset ∆FpΣ–h given by the SCL prediction indicates that the probability density estimates are on average shifted towards higher redshift, resulting in overestimated zphoto and the slightly tilted PIT distribution, though the PIT distribution is already close to flat thanks to the high accuracy. For Pasquet et al. (2019), the reversed tilde-shaped feature and the concave PIT distribution indicate that the probability density estimates are overdispersed, which is an artefact erroneously introduced by combining probability density estimates using the arithmetic mean (discussed in Sect. 5.3).
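The link between dispersion and the shape of the PIT distribution can be illustrated with a toy experiment that is independent of the datasets used here. The sketch below assumes unit-variance Gaussian residuals and Gaussian predicted densities of width `pred_sigma` centred on zero; it then measures the fraction of PIT values falling in the outer tails (below 0.1 or above 0.9), which is 0.2 for perfectly calibrated densities. All names and numbers are hypothetical.

```python
import math
import random


def normal_cdf(x, sigma):
    """Cumulative distribution of a zero-mean Gaussian with width sigma."""
    return 0.5 * (1.0 + math.erf(x / (sigma * math.sqrt(2.0))))


def tail_fraction(pred_sigma, n=20000, seed=0):
    """Fraction of PIT values below 0.1 or above 0.9 in a Gaussian toy model.

    True residuals are drawn from N(0, 1); each predicted density is
    N(0, pred_sigma), so pred_sigma = 1 corresponds to perfect calibration.
    """
    rng = random.Random(seed)
    pits = [normal_cdf(rng.gauss(0.0, 1.0), pred_sigma) for _ in range(n)]
    return sum(p < 0.1 or p > 0.9 for p in pits) / n


under = tail_fraction(0.5)       # densities too narrow (overconfident)
calibrated = tail_fraction(1.0)  # densities of the correct width
over = tail_fraction(2.0)        # densities too wide
```

Densities that are too narrow push PIT values towards 0 and 1, giving a convex (U-shaped) distribution with an excess in the tails, whereas densities that are too wide concentrate PIT values near 0.5, giving a concave distribution with depleted tails.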

These results indicate that CLAP is more robust than the other methods in obtaining well-calibrated probability density estimates. We present a deeper investigation of miscalibration in Sect. 5. We note that the adaptive KNN algorithm and recalibration are both key parts of CLAP for ensuring good calibration for each data instance. An assessment of recalibration is presented in the supplementary appendices (see Data and Code Availability).
