| Issue |

A&A

Volume 640, August 2020

|

|

|---|---|---|

| Article Number | A42 | |

| Number of page(s) | 21 | |

| Section | Numerical methods and codes | |

| DOI | https://doi.org/10.1051/0004-6361/202037722 | |

| Published online | 10 August 2020 | |

RASSINE: Interactive tool for normalising stellar spectra

I. Description and performance of the code

1

Astronomy Department of the University of Geneva, 51 Ch. des Maillettes, 1290 Versoix, Switzerland

e-mail: michael.cretignier@unige.ch

2

Département de Physique Théorique, Université de Genève, 24 quai Ansermet, 1211 Genève 4, Switzerland

e-mail: jeremie.francfort@unige.ch

Received:

13

February

2020

Accepted:

3

June

2020

Aims. We provide an open-source code allowing an easy, intuitive, and robust normalisation of spectra.

Methods. We developed RASSINE, a Python code for normalising merged 1D spectra through the concepts of convex hulls. The code uses six parameters that can be easily fine-tuned. The code also provides a complete user-friendly interactive interface, including graphical feedback, that helps the user to choose the parameters as easily as possible. To facilitate the normalisation even further, RASSINE can provide a first guess for the parameters that are derived directly from the merged 1D spectrum based on previously performed calibrations.

Results. For HARPS spectra of the Sun that were obtained with the HELIOS solar telescope, a continuum accuracy of 0.20% on line depth can be reached after normalisation with RASSINE. This is three times better than with the commonly used method of polynomial fitting. For HARPS spectra of α Cen B, a continuum accuracy of 2.0% is reached. This rather poor accuracy is mainly due to molecular band absorption and the high density of spectral lines in the bluest part of the merged 1D spectrum. When wavelengths shorter than 4500 Å are excluded, the continuum accuracy improves by up to 1.2%. The line-depth precision on individual spectrum normalisation is estimated to be ∼0.15%, which can be reduced to the photon-noise limit (0.10%) when a time series of spectra is given as input for RASSINE.

Conclusions. With a continuum accuracy higher than the polynomial fitting method and a line-depth precision compatible with photon noise, RASSINE is a tool that can find applications in numerous cases, for example stellar parameter determination, transmission spectroscopy of exoplanet atmospheres, or activity-sensitive line detection.

Key words: techniques: spectroscopic / methods: numerical / methods: data analysis

© ESO 2020

1. Introduction

A spectrum is a fundamental observable that is used to study astronomical objects such as galaxies, stars, and exoplanets. It describes the distribution of photons per wavelength bins and can either be used in terms of absolute quantity to determine the luminosity of the objects, or colours can be analysed separately through photometric bands. A rich content of information is also brought by the absorption or emission lines, for which the spectrum has to be normalised by a continuum. This happens for instance in the framework of stellar abundances studies (e.g. Blanco-Cuaresma et al. 2014; Sousa et al. 2015; Adibekyan et al. 2016) or exoplanet atmospheres (e.g. Wyttenbach et al. 2015; Allart et al. 2017). For radial velocity (RV), the spectrum does not need to be continuum-normalised, but a colour correction has to be applied (e.g. Bourrier & Hébrard 2014; Malavolta et al. 2017), which is itself related to the continuum of the spectrum. Normalised spectra are also necessary to construct the binary masks used in Doppler spectroscopy to extract RV using the cross-correlation technique (Pepe et al. 2003). Finally, a precise determination of the continuum level also allows observing the stellar line variability that is induced by the stellar activity (Thompson et al. 2017; Wise et al. 2018; Dumusque 2018; Cretignier et al. 2020).

When absorption lines are studied, a non-trivial step consists of normalising the spectrum by its continuum, where the latter can differ from a black-body curve due to Rayleigh scattering reddening. This differential chromatic response can also be directly induced by the spectrograph itself through the optical elements and the CCD quantum efficiency, which varies with wavelength. An effective process for normalising high-resolution spectra obtained by various surveys in a unified and coherent way appears as an important step. These surveys include ESPRESSO (Pepe et al. 2014), EXPRES (Fischer et al. 2017), NEID (Schwab et al. 2016), PEPSI (Strassmeier et al. 2015), CRIRES, NIRPS (Bouchy et al. 2017), and previous surveys, such as CORALIE (Queloz et al. 2000), HARPS (Pepe et al. 2002a, 2003; Mayor et al. 2003), HIRES (Pasquini et al. 2010), or HARPS-N (Cosentino et al. 2012).

Current methods often deal with the individual orders of 1D extracted echelle-order spectra because they represent a narrower band of the spectrum where the continuum presents fewer inflection points. A filter is often used in order to smooth the data and remove as many stellar lines as possible. This filter can be a rolling maximum or moving average, an asymmetric sigma clipping, or even a Fourier filtering. The continuum is then estimated by fitting a low-order polynomial on the filtered spectrum (Tody 1986, 1993).

When the orders are blaze-corrected and merged to produce a single merged 1D spectrum with a large wavelength coverage, a model with many free parameters is necessary to account for the numerous inflection points in the continuum, which are induced by atmospheric absorption and CCD response. A high-order polynomial is often not flexible enough. When a stellar template is available for the star or spectral type for which we wish to normalise the spectrum, it is possible to calculate the ratio between the spectrum we wish to normalise and the template, and fit any trend observed in this ratio. This trend is then removed from the spectrum to normalise it. The main advantage of this method consists of considerably reducing the required order of the polynomial, but the intrinsic disadvantages of polynomial fitting remain (Skoda 2008). A method like this allows for instance to correct for colour variation induced by different airmass observations (Malavolta et al. 2017).

The rolling alpha shape for a spectrally improved normalisation estimation (RASSINE) is an open-source1 Python code (Van Rossum & Drake 1995, 2009; Python Core Team 2019) for normalising merged 1D spectra, which are obtained by merging blaze-corrected 1D echelle-order spectra. The code provides interactive graphical user interfaces (GUI) to help users through the different steps that are required to efficiently normalise a spectrum. The code uses standard Python libraries such as Numpy (Oliphant 2006; van der Walt et al. 2011), Matplotlib (Hunter 2007), Scipy (Virtanen et al. 2020), Pandas (McKinney 2010), and Astropy (Astropy Collaboration 2018).

RASSINE uses the convex-hull and alpha-shape theories to model the upper envelope of a spectrum, which is often equivalent to the stellar continuum for solar-type stars. We note that the algorithm used by RASSINE to normalise spectra is similar to the AFS code that was recently published in Xu et al. (2019), where the authors showed the higher performance of the alpha-shape method compared to classical iterative methods. RASSINE and AFS are different, however, because the former uses merged 1D spectra as input while the latter uses 1D echelle-order extracted spectra. We note that RASSINE uses six free parameters to model the spectrum continuum. An automatic mode of the algorithm, described in Appendix B, can provide first guesses for five of them.

The theory behind RASSINE is described in Sect. 2. The continuum accuracy and line-depth precision of the code on spectral line depth, as well as its ability to extract the correct continuum around broad absorption lines, are described in Sect. 3. We then conclude in Sect. 4.

2. Theory

2.1. Convex hull and alpha shape

Determining the upper envelope of a spectrum is closely related to the concepts of convex hull and alpha shape, the latter being a generalisation of the former. We briefly review these ideas here and restrict our description to the 2D case.

The convex hull of a set of points S in the plane can be understood instinctively as follows: take an elastic band and stretch it around the set S. The final shape that the band will take is the convex hull of the set. Mathematically speaking, if the set is made of points xi, the convex hull C(S) can be written as (Asaeedi et al. 2013)

The convexity of the convex hull forbids the existence of inflexion points. Because a black-body radiation curve presents inflexion points, we need to consider the concept of alpha shapes, which is a generalisation of the convex-hull theory and allows for concave hulls.

When a convex hull is considered, all the internal angles are at most 180°. In the alpha-shape framework, this condition is relaxed such that each internal angle is at most 180 ° +α. This allows modelling the inflexion points in the upper envelope of a spectrum. This upper envelope is then modelled by a shape that is intermediate between a convex hull (α = 0°) and a full alpha shape (α = 180°).

2.2. Outline and structure of the code

2.2.1. Brief overview

The main assumption behind the code is that the local maxima of the spectrum correspond to the continuum. This assumption is relatively well satisfied for solar-type spectra, except perhaps in the bluest part of the visible because of atmosphere extinction and the high density of spectral lines. However, this assumption no longer holds for M-dwarf spectra, for which the extremely high density of lines completely erases the stellar continuum at nearly all wavelengths.

RASSINE can be subdivided into five steps known as the SNAKE sequence:

-

Smoothing of the spectrum,

-

neighborhood maxima detection,

-

alpha-shape algorithm,

-

killing outliers,

-

envelope interpolation.

The smoothing is necessary to increase the signal-to-noise ratio (S/N) per bin element, which allows handling spectra at different noise levels. This step is also relevant to prevent adjusting the continuum to the upper envelope of the noise in spectra with low S/N. After the smoothing, all the local maxima are first flagged. The maxima corresponding to the upper envelope are then chosen using a modified gift-wrapping algorithm, which removes the local maxima formed by blended lines. This alpha-shape algorithm can be compared to a rolling pin that rolls over the local maxima. The code finally rejects remaining outliers that exist in the final selection of local maxima and performs different types of interpolation on the remaining maxima to obtain the stellar continuum.

2.2.2. Smoothing of the spectrum

This first step of smoothing consists of removing the high-frequency noise from the stellar continuum to prevent contamination by it when the best fit to the continuum is determined. RASSINE proposes two different smoothing options, either degrading the spectrum resolution, or using a Savitzky-Golay filter (Savitzky & Golay 1964).

For the first smoothing option, the spectral resolution is degraded by convolving the spectrum with either a rectangular or Gaussian kernel. We note that this convolution will change the depth of the spectral line, but because the goal is to best fit the stellar continuum, this is not a problem. The kernels can be chosen with the parameter par_smoothing_kernel. We use the convention of the bold and italic font for the parameters from here on, where the name of the parameter is written as in the config file of the code. The strength of the smoothing is controlled by the width of the kernel, which can be tuned by the par_smoothing_box parameter (in units of wavelength sampling).

The second smoothing option consists of a Savitzky-Golay filter, which can be described as a low-order polynomial rolling fit on the data. The polynomial is chosen to be of the third degree. The advantage of this filter is its ability to keep the global shape of the spectrum almost invariant, whereas it filters the oscillations on a scale smaller than the window very well. However, this filter remains more sensitive to strong flux variation, which can be due to cosmic rays, lamp contamination, or hot pixels, for example. To counteract this problem, an asymmetric sigma-clipping is performed on the absolute difference between the smoothed and initial spectrum, and outliers in the smoothed spectrum are brought back to their initial flux values to detect and reject these points more easily at a later stage.

2.2.3. Local maxima

The second step performed by the code is to search for the local maxima. A point is called a local maximum if its value is the highest in its closest neighbourhood, which can be tuned by the parameter par_vicinity that corresponds to the size of the half-window defining the neighbourhood (in units of the wavelength sampling, e.g. 0.01 Å for HARPS spectra). If the previous smoothing step was performed correctly, this parameter remains quite irrelevant, but it becomes important when low S/N spectra are studied to speed up the code.

2.2.4. Penalty for broad absorption regions

As we show in the next sub-section, the idea behind RASSINE is to roll a rolling pin, of a size larger than typical spectral lines, on top of the spectrum to adjust its continuum. At first order, all the spectral lines should have the same width in velocity space, determined by the projected stellar rotation, macroturbulence, and instrumental resolution (Gray 2005). It is therefore important to determine an optimal value for the average line width, which is stored in the par_fwhm variable (units of km s−1). This can be done by using the full width at half-maximum (FWHM) of a Gaussian fitted to the cross-correlation function (CCF, Baranne et al. 1996; Pepe et al. 2002b).

However, if the size of the rolling pin is always the same, the continuum will be poorly fitted in region of strong absorption. When lines begin to saturate, for example for the Hα, CaII H&K, sodium doublet NaD or triplet magnesium MgIb absorption lines, the wings of the Voigt profile begin to dominate and the line width diverges from the typical value of the weak regime. To prevent the rolling pin from falling in regions of broad absorption, a penalty map is used to increase the radius of the rolling pin as soon as such a region is reached. The GUI for this step is presented in Fig. 1. First of all, we adjust two approximated continua of the spectrum using a rolling maximum algorithm. The first, S1 (red curve in the top left panel of Fig. 1), using a small window of 40 times the FWHM of the CCF (converted into Å using λmin, the minimum wavelength of the analysed spectrum), and the second, S2, using a window 10 times larger than S1 (black curve in the top left panel of Fig. 1). Even when both windows are fixed in wavelength, it is not necessary to increase them in size with the dispersion because both of them are already far more extended than the typical line width. To prevent the rolling maximum algorithm to be sensitive to anomalous flux intensities (cosmic rays or contamination between fibers), we first reject outliers using a rolling sigma-clipping on a 5 Å window, with the specificity that in our sigma-clipping, the mean is replaced by the median and the standard deviation by 1.5 time the interquartile range (1.5 time the 75%−25% quartiles, Upton & Cook 1996). Then, for each wavelength, the penalty p = (S2 − S1)/S2 is computed and normalised by the minimum and maximum value over the entire spectrum to lie between 0 and 1. This penalty curve (grey curve in the centre left panel of Fig. 1) is then transformed into a step function (black curve in the same panel) to make the code more efficient.

|

Fig. 1. Second GUI of RASSINE that is used to define the varying radius of the alpha shape. In this case, the values for the parameters are bad (ν too high), but they are chosen to highlight the dependence of the radius on wavelength. Top left: local maxima (blue dots) extracted from the smoothed spectrum (grey curve). A continuum S1 using a small window (red curve) and S2 using a large window (black curve) are computed with a rolling maximum on the spectrum. Middle left: penalty computed from the relative difference between S1 and S2 (grey curve). The penalty is rounded and looks like a step function (black curve). Bottom left: final radius values for the rolling pin along the wavelength axis. Broader absorption lines such as Hβ at 4861 Å or the MgI triplet around 5172 Å are penalized such that the rolling pin increases in size. The observed linear trend corresponds to the dispersion because all spectral lines at first order have the same line width in velocity space. Top right: penalty map relation between the radius of the rolling pin and the penalty value. The curve is parametrised with a minimum radius R, a maximum radius Rmax, and an exponent ν that represents the exponent of the power law. The second parameter μ is only useful when the sigmoid function is selected for the penalty map and represents the centre of the sigmoid (ν being proportional to the sigmoid width). |

The next step consists of choosing the parameter par_reg_nu, which appears in the function used to map the penalty p to the radius r of the rolling pin (black curve in the bottom left panel of Fig. 1). Two functions to map the penalty to the radius of the rolling pin are provided: a polynomial function, and a sigmoid one. For polynomial mapping (black curve in the top right panel of Fig. 1), the radius is given by

where C(λ) is the chromatic law. As line widths are similar at first order in velocity space, the classical Doppler effect formula tells us that line width in wavelength-space will be a linear function of wavelength. Therefore the radius of the rolling pin in wavelength should satisfy the chromatic law C(λ) = λ/λmin. ν is a real positive parameter specified directly with par_reg_nu, and R, Rmax are two parameters of the model: par_R and par_Rmax. They define the minimum and maximum radii in wavelength units (generally Å). Both parameters must be specified at the minimum wavelength of the spectrum because the values will be scaled by the C(λ) in the penalty law. The values for par_R and par_Rmax have to be provided by the user regarding the typical line width of its spectrum as well as the broadest absorption gap, but both parameters can be estimated using the automatic mode (see Appendix B). If ν > 1, the penalty map is convex, and only high penalties will modify the radius substantially. In contrast, if ν < 1, the map is concave, and only low penalties will leave the radius unchanged. The second available law, the sigmoid (see Fig. D.2 for an example), allows us to produce a two-radius regime because its shape is step-like. The transition from the smallest and largest radius is given by the sigmoid centre μ, and the smoothness of the transition is given by the sigmoid width ν. The best value for a given spectrum depends on its shape. Smoothed spectra can be effectively reduced with ν < 1, whereas spectra with long oscillations due to instrumental effects or poor merging of 1D extracted echelle-order spectra are better reduced with ν > 1. A good value for several cases was found using the polynomial mapping and 0.7 < ν < 1.3. This parameter is the only one for which no calibration was performed to adjust it automatically.

2.2.5. Alpha shape (rolling pin)

This part is the main step of RASSINE. At this stage, we have a collection of selected local maxima from which we would like to extract a continuum. Moreover, for each of these local maxima, a radius for the alpha shape was assigned in the previous section (Sect. 2.2.4). The idea is the following: the alpha shape, which can be seen as a rolling pin, starts on the maxima with the smallest wavelength. It then rolls clockwise, being anchored to this maximum, until it touches another maximum. This maximum is then taken to be the next anchor point, the radius is updated, and the code continues iteratively. An algorithm like this is called gift wrapping or Jarvis walk (Jarvis 1973) and is presented in Appendix A. Other algorithms for a convex hull exist (e.g. Graham 1972; Eddy 1977; Phan 2007), but the Jarvis walk remains the most intuitive.

The alpha shape, or more intuitively, the rolling pin, is a circle that rolls on top of the spectrum. However, when we take the raw spectrum, we have a problem because flux units, normally expressed in analog-to-digital units (ADU), are much larger than the wavelength units, which are generally expressed in Å. We therefore have to renormalise the flux so that variations in the wavelength and flux directions are comparable. This is done by transforming the flux according to the following relation:  , with fraw the flux of the raw spectrum, and Δλ and Δfraw the difference between the maximum and minimum wavelength and raw flux values, respectively. After this first scaling, the following equality is satisfied: λmax − λmin = fmax − fmin. A second stretching is then applied using the parameter par_stretching, which is positive and larger than 1, which leads to the following relation:

, with fraw the flux of the raw spectrum, and Δλ and Δfraw the difference between the maximum and minimum wavelength and raw flux values, respectively. After this first scaling, the following equality is satisfied: λmax − λmin = fmax − fmin. A second stretching is then applied using the parameter par_stretching, which is positive and larger than 1, which leads to the following relation:

The scaling of the axes is critical for the success of the alpha-shape step performed later. An inappropriate value can lead to unsuitable normalisation where the continuum is not on top of the spectrum, but is rather going through it (see the blue curve in Fig. 2). This can be explained by the fact that the alpha shape rolls on the spectrum from the left to the right and that the algorithm is only applied to local maxima. If the stretching parameter is too small, the good local maxima are beyond reach and the alpha shape chooses local maxima created by blended lines to continue rolling. When the stretching is well selected, the alpha shape rolls on top of the spectrum, thereby selecting local maxima corresponding to the continuum. Details about this process and the selection of local maxima are described in Appendix A. Hopefully, as was concluded in Xu et al. (2019), a wide range of values for the stretching produces good enough results and incorrect values are easily recognisable in the final product. For intuition, the par_stretching parameter can be seen as the inverse tension of a veil that would cover the spectrum; the lower its value, the higher the tension.

|

Fig. 2. Effect of different values for par_stretching on the normalisation of the spectrum (grey curve). When par_stretching is too small (blue curve), the alpha shape falls on the blended local maxima. As soon as par_stretching is sufficiently high (orange curve), the alpha-shape approach provides a good normalisation. A wide range of values (orange and green curves) can provide a good normalisation below the 1% level. |

2.2.6. Outlier detection

Algorithms for selecting an upper envelope remain very sensitive to outliers with anomalous flux intensities. Even though much attention has been expended so far into suppressing as many of these points as possible, we perform here a final check by considering three types of outliers: (1) edge maxima, (2) high or low extrema, and (3) maxima that are too close.

1. At the blue and red edges of the spectrum, the rolling pin touches spurious maxima simply because it starts and ends on them. If the spectrum were measured on an infinite wavelength grid, the continuum would touch other maxima, which are unfortunately not available. To prevent selecting those spurious maxima as part of the continuum, we associate with the count_cut_lim first and count_cut_lim last maxima the value of the count_cut_lim + 1 and N − count_cut_lim − 1 maxima, where N is the number of selected maxima. By default, count_cut_lim is set to three, therefore RASSINE flattens the first and last three maxima. This step can be performed with the GUI (see Fig. D.3).

2. Sharp peaks are removed by computing the left and right derivative at each selected maximum with respect to the two neighbouring maxima. By default, the five points with the highest difference between the left and right absolute derivatives are displayed by the GUI, and the user can choose to retain or delete them (see Fig. D.4). If one point is suppressed, the next one with the highest absolute derivative difference will replace it. By default, RASSINE suppresses the points that are higher than the 99.5 percentiles of the distribution. The process can be run iteratively, with the number of iterations controlled by the optional parameter count_out_lim.

3. Maxima that are too close to each other are problematic for the cubic interpolation used at the end to define the continuum (see Sect. 2.2.7). Moreover, because these points do not add any relevant information, they are removed automatically. To detect them, the code computes the distance between each pair of neighbouring maxima that is still present in the final selection. Then, an asymmetric sigma-clipping is performed on the distribution of the distance difference to identify outliers. For each group of detected close maxima, the algorithm retains the point that maximises the equidistance with the two neighbouring maxima.

2.2.7. Envelope interpolation

We are now left with several reliable local maxima, none or very few of them being outliers. To generate the continuum, RASSINE interpolates linearly and cubically on them, which produces two distinct continua. Some points may still be too close, producing wiggles in some cases for the reason explained in Sect. 2.2.6 regarding item 3. RASSINE also provides two other continua that can be a better match for noisy spectra, for which the upper envelope is always more or less correlated with the noise level of the spectrum. This tends to produce a continuum that is slightly too high, as already mentioned by Xu et al. (2019). In this case, instead of taking the flux value of the selected local maxima to build the continuum, an average of the flux around each selected local maximum is used. The average is performed using a window of half-size denoising_dist, which by default is set to five wavelength elements.

In summary, RASSINE produces four continua (linear and cubic, and denoised and undenoised) and the users are free to decide which of them they use. In Sect. 3.4 we show that in most cases, the linear interpolation gives the best results, therefore we recommend to use this continuum. For some applications, it might be interesting to have a continuum that can be differentiated everywhere, and thus the cubic interpolated continuum might be better. An example of the final result of RASSINE, performed in complete automatic mode (see Appendix B), is shown for a HARPS solar spectrum in Fig. 3 and for four other spectra in Appendix E, Fig. E.1.

|

Fig. 3. Final plot of the GUI. Top: initial solar spectrum given as input to RASSINE (green curve), as well as the continuum (red curve) as computed by RASSINE in complete automatic mode (see Appendix B). This continuum is derived using a cubic interpolation over a selection of relevant local maxima that are also called anchor points (black dots). The continuum avoids three spurious peaks due to simulated cosmic rays at 6550, 6600, and 6750 Å and jumps above the large absorption regions. Bottom: spectrum normalised with RASSINE. |

2.3. Comparison with the AFS code (Xu et al. 2019)

A similar alpha-shape strategy for normalising spectra has been developed by Xu et al. (2019) with a code called AFS. It is written in R. The authors also showed that the alpha-shape strategy outperforms iterative methods. Interestingly enough, our codes, which are rather similar in approach, were not thought to be used on the same objects. Whereas the purpose of RASSINE initially was to normalise merged 1D spectra, AFS was developed to remove the blaze function of the extracted echelle-order spectra. Both codes are then quite different in their conception.

Another important difference between the two codes is that in our case, the radius of the alpha shape varies according to a penalty law, which is not the case in AFS. As a consequence, even if their code contains only three parameters, they have necessarily to be fine-tuned depending on the spectral features present in the 1D extracted echelle-order spectrum that is analysed. In comparison, RASSINE contains six parameters for the full spectrum, but five of them are calibrated and can be automatically adjusted to provide a first guess.

AFS is by nature more sensitive to broad absorption lines than RASSINE. As an example, Fig. 10 in Xu et al. (2019) shows that for the sodium doublet at 5890 Å, their alpha-shape process selects the flux between the two lines as part of the continuum, whereas this location is in fact still a wing absorption. The two broad lines therefore appear slightly shallower. The same issue occurred in our case for the solar spectrum, but we resolved it by increasing the tension (see Sect. 3.1). This trouble emerges in their case precisely because the authors work with 1D extracted echelle-order spectra that are not corrected for blaze. As the interline region is precisely situated at the maximum of the blaze, the alpha shape is forced to select the region as part of the continuum. We note that Xu et al. (2019) described a more sophisticated correction, called ALSFS, which appears to resolve this issue. This is not clearly demonstrated in their manuscript, however.

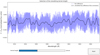

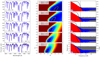

2.4. Normalisation of a spectral time series

When several spectra of the same star are considered, for instance during a series of consecutive nights, it is expected that the position in wavelength of the local maxima forming the continuum remains the same. Different local maxima from one night to the next would be due to instrumental or atmospheric effects, but not be caused by the star. When different local maxima are used from one spectrum to the next, wiggles are induced in spectral ratios or differences, which are not desired (blue curves in Fig. 4). These numerical artefacts are mostly produced by the cubic interpolation (blue curve in the bottom panel of the same figure) and can already be mitigated using the linear interpolation (blue curve in the top panel of the same figure). Nevertheless, some of them are still present and need to be removed.

|

Fig. 4. Visualisation of the wiggles produced in a difference of normalised spectra (blue curve) with an arbitrary zero-point. The two spectra come from the same star and are taken one night apart. Top: difference between the spectra normalised by the linearly interpolated continuum. Bottom: same as the top for the cubic interpolation. The green curve shows the improved stability that is reached with the clustering algorithm (see main text). The black curve shows the remaining fluctuations, and the red curve plots the residuals after those fluctuations are suppressed by the low-pass filter. |

To solve this problem, RASSINE provides an optional function in its library that is called intersect_all_continuum. This function takes a series of spectra as input and extracts the anchor points for each of them. For each of these anchor points, the function calculates the fraction of spectra in which this anchor point has been detected. The function then selects only the anchor points that appear more frequently throughout the series of spectra than a threshold fixed with the GUI (see the screenshot of the GUI in Fig. D.7). When two maxima fall in the same cluster for one spectrum, the maximum farthest from the cluster centre is removed. Maxima that are missing from the cluster are added. In this way, we ensure that all continua are formed with the same anchor points. This considerably reduces the wiggles under the 1% level (see the green curves in Fig. 4). We note that when the user has to work with time series of spectra at moderate S/N (from 75 up to 150), there is a risk for the clustering algorithm to fail to work properly if the smoothing step is not efficient enough because the local maxima will be spuriously positioned. In this specific case, the user can follow the procedure described in Appendix C.

A second function can be used, called matching_diff_continuum, to reduce the wiggles even further. This function searches for the spectrum with the highest S/N at 5500 Å and uses it as reference. The spectrum difference is computed with all the other spectra, one by one, the wiggles are fitted on each spectrum difference using a Savitzky-Golay filtering (black curves in Fig. 4) and are removed from the spectra (red curve in Fig. 4). The window of the filter is chosen in the GUI (see Fig. D.8). Because this step can also suppress true variations, we advise to use it with caution. Nevertheless, this option seems to produce the most precise spectral time series (see Sect. 3.4 and Fig. 8).

3. Results

3.1. Broad absorption lines

As a first test, we investigated whether broad absorption lines were correctly normalised when RASSINE modified their profile. To do so, we chose as test cases 61 Cyg A, the Sun, and HD142, which are three stars that probe a wide range of spectral types and CCF FWHM values (see Table B.1). The spectra of 61 Cyg A were measured with the HARPS-N spectrograph, whereas the specra of the Sun and HD142 were obtained with HARPS2. The HARPS and HARPS-N spectra were normalised in automatic mode (see Appendix B) with intermediate tension (par_stretching = “auto_0.5”) and polynomial law (ν = 1). The 1D spectra of both instruments are already corrected for the blaze function, and orders are merged.

We focused on two broad lines of first interest, which are the sodium doublet NaD and Hα. In Fig. 5 we compare the spectrum normalised by the alpha shape with a more classical method that is described as follows. Two spectral windows, as free of stellar lines as possible, were selected by eye on either side of each broad line, each window being different for each star. The average flux over each window was measured before the continuum was estimated as being the straight line connecting the two obtained points.

|

Fig. 5. Comparison between the RASSINE normalisation and the normalisation obtained with a classical approach (linear fit between pseudo-continuum windows on either side of the selected line, set text) for two broad absorption lines for three stars with different spectral types: K5V, G2V, and F7V, from left to right: 61 Cyg A, the Sun, and HD142, respectively. Top: spectral region corresponding to the sodium doublet NaD. Bottom: spectral region corresponding to Hα. Both 61 Cyg A and HD142 were normalised in automatic mode with intermediate tension (par_stretching = “auto_0.5”) and polynomial mapping ν = 1. For the Sun, a higher tension (par_stretching = “auto_0.0”) and smaller polynomial mapping (ν = 0.7) were needed to exclude the interline region of the sodium doublet from being part of the continuum. A comparison with the IAG solar atlas (Reiners et al. 2016) degraded at the HARPS resolution R ∼ 115 000 is also displayed. The deeper lines in the IAG atlas are tellurics. |

For 61 Cyg A, which is a K5V star, we observe that the classical method leads to a continuum level that is too low for the two broad absorption lines. This produces a normalised spectrum with several values higher than 1 because the regions that are selected as continuum for cool stars are necessarily contaminated by absorption lines because of the high density of spectral lines. The normalisation produced by RASSINE appears to be better for this star because the continuum level is higher. We note that it might still be too low regarding the true stellar continuum.

For the Sun, we observed at first a strong discrepancy of 2% for the sodium doublet between the RASSINE continuum and the continuum derived using the classical method. This latter is compatible with the Kitt peak (Wallace et al. 2011) and IAG (Reiners et al. 2016) solar atlases, therefore we concluded that the normalisation was not performed correctly by RASSINE. This discrepancy was explained by the fact that the interline region between the sodium doublet was considered by RASSINE as being part of the continuum in the automatic mode. To solve this problem, we increased the tension (par_stretching = “auto_0.0”) and decreased the coefficient ν to 0.7 for the polynomial mapping. After doing so, the continuum found by RASSINE was similar to the continuum found using the classical method. However, our reduction leads to a smaller line width for Hα than is listed in IAG. This might be explained by instrument systematics, a different activity level, or by the selection of a different continuum region in which the normalisation is performed. In any case, the continuum obtained by RASSINE is similar to the continuum obtained with the classical approach on the same spectrum.

For HD142, an F7 dwarf, a good agreement between RASSINE and the classical method is found for Hα. However, a discrepancy is found in the continuum obtained with the classical method in the sodium doublet. A clear quadratic drift is visible in the right part of the spectra window, which is not induced by the method itself because a linear fit was performed. Such low-frequency variations are thus inherent to the merged 1D spectrum and are either due to the instrument systematics or are produced during the construction of the merged 1D spectrum. This latter option is more likely because this wavelength range is precisely situated in the overlapping region between the HARPS orders 56 and 57 (echelle orders 104 and 103, respectively).

3.2. Evaluation of the continuum accuracy of RASSINE

Even though it is not the primary interest of the RASSINE code, we tested whether the normalisation returned accurate line depths. Because the alpha-shape method implemented in RASSINE is an upper-envelope approach, it is known that for low S/N spectra the continuum level may be too high (Xu et al. 2019), which will provide overestimated line depths. Obtaining accurate line depths is a difficult task. Probably the best method is to rely on stellar templates. However, these templates have been generated using a few atmospheric parameters that are themselves derived from spectra that have been normalised in some way. Another strong difficulty is obtaining a stellar template with atmospheric parameters as close as possible to the observed star. Because of the high dimensionality of the stellar atmospheric parameter space, template libraries often mainly focus on a good coverage of the effective temperature and gravity surface parameters, at the expense of abundance diversity. Moreover, the resolution of an observed spectrum is generally not uniform, which might introduce incorrect line depths because a stellar template is convoluted with a kernel at a fixed resolution. As an example, the resolution for HARPS typically varies between 95 000 and 110 000. For this reason, the continuum accuracy cannot be computed by measuring a difference in line depth.

To measure the continuum accuracy, we compared a spectrum normalised by RASSINE, using the linearly interpolated continuum, with a normalised synthetic spectrum. We used the POLLUX database (Palacios et al. 2010), which is a library of spectra containing normalised high-resolution stellar templates. We evaluated the RASSINE continuum accuracy on two stars: α Cen B, and the Sun. We used the spectra from the α Cen B 2008 data set, but the same results are obtained with the 2010 dataset. The atmospheric parameters chosen for the templates are given in Table 1 and were derived from MARCS (de Laverny et al. 2012) and ATLAS (Kurucz 2005) models. For the Sun, it is also possible to use the IAG solar atlas (Reiners et al. 2016) in order to compare the continuum accuracy of the alpha-shape strategy with an iterative polynomial fitting method. The solar atlas and the synthetic spectra were degraded at the HARPS resolution, shifted to match the rest frame of the stellar spectra, and interpolated on the same wavelength grid. Because the IAG spectrum begins at 4047 Å, we removed the shorter wavelength range from the HARPS spectrum. Conversely, because the HARPS spectrum ends at 6910 Å, we suppressed the longer wavelength in the IAG atlas.

Atmospheric parameters chosen to generate our reference synthetic spectra.

To measure the continuum accuracy, we extracted flux values fi of the normalised stellar template, or IAG atlas, at the same wavelength positions as the anchor points we used to build our continuum. As by definition our continuum takes a value of unity at these locations, the standard deviation of the continuum difference 1 − fi provides a good metric for the RASSINE continuum accuracy, which is defined here as the 2σ width of the standard deviation distribution. We note that because this metric is only based on the anchor points (selected local maxima), no conclusion can be reached about the accuracy value of the continuum between anchor points, and thus the accuracy for the linear and cubic interpolated continuum are the same. Considering that the continuum is a smooth function between two anchor points, which is a good approximation here as the average distance between consecutive anchors points is small (∼4.5 Å), the derived continuum accuracy can be considered as an average value representative of the alpha-shape strategy for the whole spectrum between 4047 and 6910 Å. In addition, this metric is insensitive to a global offset between our continuum and the continuum from the template because it relies on computing the standard deviation of the distribution, but this is strongly unlikely for high S/N spectra.

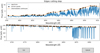

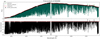

Additional spectral lines were sometimes present in the synthetic spectrum and thus produced easily identifiable outliers in the continuum difference (see the grey dots in Fig. 6). We rejected these points by performing a rolling median on 20 adjacent points, and rejected all points in the residuals that were ten times the median absolute deviation (MAD) farther away from zero. This process was performed iteratively until no more outliers were detected. Furthermore, because the IAG atlas was taken at a different barycentric Earth RV (BERV) value, a few local maxima were situated in telluric lines. We removed these points by performing the same outlier rejection.

|

Fig. 6. Computation of the RASSINE continuum accuracy by measuring the continuum difference level between our spectra and synthetic stellar templates. First row: difference of the continuum for α Cen B in the MARCS template and the RASSINE normalised spectrum as a function of wavelength. A few continuum differences cannot be extracted correctly in the synthetic template because some stellar lines are absent from our spectra (grey dots). The distribution of the continuum difference is plotted on the right (grey histogram), as is the distribution for λ > 4500 Å (green histogram). Second row: difference of the continuum for the Sun in the ATLAS template and the RASSINE normalised spectrum. Third row: difference of the continuum for the Sun in the ATLAS template and the IAG normalised solar atlas. The IAG solar atlas was normalised with a low-order polynomial fit. |

Figure 6 shows that our distribution of the continuum difference is always positive, meaning that the continuum fitted by RASSINE remains always below the synthetic continuum, in absolute flux. This observation is coherent with the alpha-shape strategy because the only possibility for our continuum level to be higher than the synthetic would be that one of the anchor points used to obtain the continuum were a spurious local maximum, for example due to a cosmic hit. However, as seen in Sect. 2.2.6, such outliers should be removed during the cleaning process. Overall, RASSINE tends to select too many anchor points as being part of the continuum, an effect that is enhanced when the tension parameter is reduced (par_stretching increased). As an example, in the case of the continuum fitted to α Cen B and the solar spectrum, RASSINE considers the maximum of the inter-region between the CaII H & K lines as being part of the continuum, which is clearly not the case in the synthetic template because of the strong absorption in this region. This discrepancy produces the strong excursion of 0.35 around 3900 Å, seen for α Cen B in Fig. 6. For the Sun, the discrepancy is as high as 0.2 at the same wavelength, but it is not shown in the same figure as the HARPS spectrum was truncated at 4047 Å to match the IAG solar atlas.

A summary of the continuum accuracy statistics can be found in Table 2. The continuum accuracy is lower for α Cen B in the blue part of the spectrum than for the Sun. This is due to the CH molecular band at 4300 Å, also called G band, and to the CN violet molecular band at 3883 Å. These bands are deeper in cool stars and sensitive to stellar activity (Berdyugina & Usoskin 2003). The continuum accuracy for α Cen B is estimated to be 2.0%, which is six times lower than the continuum accuracy measured on the Sun, 0.29%. This conclusion is expected because cooler stars present more blended lines, in addition to molecular bands, which implies that fewer local maxima probe the continuum. For this reason, we also computed the continuum accuracy without wavelengths shorter than 4500 Å. In this more restrictive spectral range, the continuum accuracy is 1.3% for α Cen B and 0.26% for the Sun.

Summary of the different statistics reached with the automatic mode of RASSINE.

When the continuum of the IAG solar atlas, which is fitted using a low-order polynomial fit, is compared with the synthetic continuum, the distribution of the differences gives values above and below zero, meaning that sometimes the flux continuum level is higher and sometimes lower than the reference. The continuum accuracy we found is twice lower that the accuracy obtained with RASSINE, which is 0.67% compared to 0.29%. When wavelengths shorter than 4500 Å are excluded, the continuum accuracy is 0.58%, which is three times lower than the 0.20% obtained with RASSINE. Finally, a clear excursion of 3% around Hβ at 4861 Å is visible in both IAG and HARPS spectra, which indicates that the difference is induced by the synthetic template. It does not model this spectral feature properly.

3.3. Evaluation of the line-depth precision of RASSINE

Another important statistics to estimate is the precision on line depth that the code provides. For this purpose, we normalised several spectra with very high S/N of α Cen B that were taken during successive nights. We selected consecutive 9-night binned spectra at minimum activity in 2008, from BJD = 2454550 to BJD = 2454558. We also selected 13 consecutive nights in 2010, from BJD = 2455288 to BJD = 2455301, keeping in mind that this data set is known to be contaminated by a large magnetically active region (Dumusque et al. 2015), which might significantly change the depth of spectral lines (Thompson et al. 2017; Wise et al. 2018; Dumusque 2018). Because we study night-to-night variations in line depth and because the rotational period is about 36 days (DeWarf et al. 2010), we expect the change induced by stellar activity to be negligible. This is confirmed below because both data sets give the same precision on line depth. We also selected 11 consecutive days of HARPS solar spectra from BJD = 2458507 to BJD = 2458518.

All the nightly stacked spectra have an S/N at 5500 Å that is higher than 1000. For each of these spectra, line depths were measured and compared between adjacent days. Because spectra are close in time and the HARPS spectrograph is extremely stable on this timescale, the line depths are not expected to change from one night to the next. The measured variation therefore is a direct measurement of the line-depth precision of RASSINE. The spectra were reduced in complete automatic mode with a Savitzky-Golay filtering, a par_smoothing_box of six wavelength elements, a polynomial penalty mapping (ν = 1.0), and using an intermediate tension (par_stretching = “auto_0.5”).

Line depths were estimated by fitting a parabola on the points present inside a ±2.5 km s−1 window around the core of each spectral line. The uncertainty in depth was derived considering photon noise. For α Cen B, we fitted the lines defined in Cretignier et al. (2020), and all lines contaminated by a telluric by more than 2% were rejected. The telluric spectrum we used was generated by Molecfit (Smette et al. 2015) as described in Cretignier et al. (2020). For the Sun, we fitted the lines defined in the HARPS G2 mask used for cross correlation.

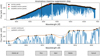

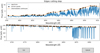

Two examples of line-depth differences between two adjacent nights are displayed in Fig. 7. The line depth precision is computed by measuring the weighted rms of the line-depth differences and displayed in Fig. 8. Because of the poor constraint of the interpolation at the edges of the spectrum, the weighted rms was computed excluding the first and last 20 Å of the HARPS spectra. The photon noise is higher in 2008 than in 2010 and induces an average systematics on the line-depth measurement of 0.12% and 0.09%, respectively, whereas for the Sun, this is 0.05%. A summary of the line depth precision can be found in Table 2. The line-depth precision is lower for the cubic interpolation than for the linear interpolation. For the former, the rms significantly changes depending on the pair of nights that is studied, which is related to the number of wiggles that is produced in the spectral difference. On average, the RASSINE precision in line depth for the linearly interpolated continuum is 0.17% and 0.15% for 2008 and 2010, which is approximately 50% higher than photon noise, respectively. A slightly better line depth precision of 0.12% is obtained for the Sun.

|

Fig. 7. Line-depth difference between two α Cen B spectra taken one night apart (BJD = 2454557 and BJD = 2454558). The uncertainties from the photon noise are not displayed for clarity, but their median value σphot is indicated, as is the weighted rms used to measure the dispersion. Both spectra are normalised by the RASSINE cubic continuum, which is the least precise continuum (see Fig. 8). Top: line-depth difference when both spectra are normalised by the cubic continuum. This result matches the eighth square blue data point in the left panel of Fig. 8 and was the least precise result, with a line-depth precision of 0.21%. Part of the jitter is not Gaussian, as displayed in the inner plot, which is a zoom at 4100 Å, where the discrepancy is observed to be about 1.0%. The structures observed are induced by the cubic spline interpolation. Bottom: same as the top panel after performing clustering and low-frequency filtering (see Sect. 2.4). This result matches the eighth square red data point in the right panel of Fig. 8. The remaining jitter of 0.15% is dominated by the interference pattern (see Fig. 9). |

As shown in Fig. 4 and in the top panel of Fig. 7, the continuum fitted by RASSINE presents correlated noise. These structures are produced when a local maximum is selected in a zone where no local maxima were detected for the other spectrum (see Sect. 2.4), and this constitutes a major limitation for achieving high line-depth precision. We discuss ways below to improve this line-depth precision further.

3.4. Evaluation of the line-depth precision of RASSINE for a spectral time series

We so far investigated the line-depth precision and continuum accuracy of RASSINE for the normalisation of individual spectra. Each spectrum was thus reduced independently, without sharing any information between the different nights. However, when all the spectra are obtained from the same star and thus form a spectral time series, it is possible to gather the information of all continua to improve the normalisation. We have presented this aspect in Sect. 2.4.

We performed the previous analysis of the line-depth measurement on the 9- and 13-night binned adjacent spectra of the 2008 and 2010 α Cen B data sets, and also on the 11-day binned adjacent solar spectra, and this time, we applied the intersect_all_continuum and matching_diff_continuum functions on the three data sets separately. A summary of the line-depth precision we obtained can be found in Table 2.

As described in Sect. 2.4, the first function selects only local maxima that are present in most of the individual spectra, whereas the second function applies a low-pass filter to the difference between all the spectra and a reference, and individually corrects all spectra for the filtered signal (see Fig. 4). The precision in line depth that is obtained after these additional corrections are implemented is displayed in green and red in Fig. 8, respectively. Again, the linear interpolation appears to be more precise than the cubic interpolation because the wiggles are produced by the latter. When the clustering is performed on local maxima (i.e. the intersect_all_continuum function is applied) the precision in line depth significantly improves down to 0.12%, and when in addition low-frequency filtering is performed (i.e. the matching_diff_continuum function is applied), the line-depth precision is improved even more, down to 0.10%. This level is compatible with photon noise for α Cen B. For the Sun, the line-depth precision is always higher than photon noise, except for the eighth night pair (BJD = 2458514 and BJD = 2458515), for which the line-depth precision is compatible with it. After investigation, it turns out that this different behaviour arises because for this pair, the same flat field was used to reduce both spectra. The other night pairs are thus limited by flat fielding, which on HARPS has an S/N of ∼1000, which limits the line-depth precision to 0.10%. When this is taken into account, the RASSINE precision on line depth is similar to the photon noise.

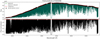

|

Fig. 8. Measurement of the RASSINE line-depth precision using series of adjacent nights. The date of the first night forming a pair is indicated on the x-axis. For each night, the line depth was computed on the full spectrum, rejecting telluric regions. The weighted rms (coloured dots) was extracted from the distribution of the line-depth difference, where the difference is taken between two adjacent nights (see e.g. Fig. 7). The average rms ⟨rms⟩ on the night-to-night differences is measured and shown as horizontal solid lines. Left: 2008 α Cen B dataset. Middle: 2010 α Cen B dataset. Right: solar dataset. |

In addition, we note that regardless of the algorithm used to build the continuum of the two last night pairs for α Cen B in 2008, the precision in line depth is clearly lower than for the other night pairs. We found that in 2008, HARPS spectra were contaminated by an interference pattern produced by a filter of 1.5 mm width, that was placed in the parallel beam of the tungsten lamp that was used to perform flat fielding. The width of the interference filter and the periodicity Δλ of the observed pattern are linked by the formula  . This pattern affects the flat field and is introduced in the stellar spectrum when correcting for it. We note that this pattern remains stable for some nights and then changes significantly, producing the interference pattern in the spectral difference seen in Fig. 9, with a peak-to-peak difference of 1% in normalised flux units. In the data we analysed from 2008, the interference pattern remains stable for the first seven nights, and then changes for night 8 and changes again for night 9. The variation of this interference pattern during the two last nights is responsible for the degradation of the line-depth precision observed in Fig. 8. An upgrade of the instrument in August 2009 modified the position of this filter from parallel to diverging beam, which solved this problem.

. This pattern affects the flat field and is introduced in the stellar spectrum when correcting for it. We note that this pattern remains stable for some nights and then changes significantly, producing the interference pattern in the spectral difference seen in Fig. 9, with a peak-to-peak difference of 1% in normalised flux units. In the data we analysed from 2008, the interference pattern remains stable for the first seven nights, and then changes for night 8 and changes again for night 9. The variation of this interference pattern during the two last nights is responsible for the degradation of the line-depth precision observed in Fig. 8. An upgrade of the instrument in August 2009 modified the position of this filter from parallel to diverging beam, which solved this problem.

|

Fig. 9. Top: zoom into the red 6640 Å region of the normalised spectral difference corresponding to the last red dot of the 2008 data set shown in the left panel of Fig. 8. A clear interference pattern is visible in the spectral difference (red curve) and is even more pronounced when the difference is smoothed (black curve). Bottom: fourier transform of the spectral difference. A power excess is visible and corresponds to an interference pattern created by an optical element of width 1.51 mm. |

4. Conclusion

We have presented the concepts on which the RASSINE Python code is based. The code is a tool developed to facilitate the normalisation of stellar spectra. The realisation of the code was motivated by the challenge to develop a coherent and robust normalisation algorithm of merged 1D spectra, allowing us to deal with different spectral types and S/N, presenting a limited number of free parameters to adjust. RASSINE proposes a GUI (see Appendix D) that allows the user to choose the best parameter values. In addition, calibrations based on the S/N level of the spectrum and the FWHM of the stellar CCF were performed to obtain first guesses for five of the six relevant RASSINE parameters (described in Appendix B). The code was tested with CORALIE, HARPS, HARPS-N, and ESPRESSO, four instruments that span different instrumental resolutions. The code produced visually satisfying results each time (see Appendix E). We focused on high S/N spectra (S/N > 500) to test the continuum accuracy and line-depth precision of RASSINE, which are thought to be the objects for which the alpha-shape strategy is expected to be efficient. This does not mean that spectra with a lower S/N cannot be reduced with RASSINE. If the smoothing step is performed correctly, the code can theoretically perform well on low S/N spectra (S/N < 100). However, the nature of the algorithm means that determining the continuum for spectra with S/N lower than 50 remains challenging.

We tested the continuum accuracy and the line-depth precision of the automatic mode of the code on the 2008 and 2010 α Cen B data set, keeping in mind that this mode is not guaranteed to provide the best values in continuum accuracy. We showed that the linear interpolation provided a better continuum that the cubic interpolation in all cases because cubic interpolation produces undesired artefacts. The accuracy on the continuum level was measured on the 2008 α Cen B data set and on solar spectra by comparing our continuum with stellar templates from MARCS and ATLAS models. A continuum accuracy of 2.0% was derived for the former star, whereas a value a 0.29% was found for the Sun. This is 2.3 times better than the 0.67% obtained on the IAG solar atlas, which uses low-order polynomial fitting. By considering wavelengths larger than 4500 Å, the accuracy of the continuum improved to 1.3% and 0.20%, which is three times better than for the IAG (about 0.59%). For α Cen B, the line-depth precision on the 2010 data set, which does not contain the interference pattern, is about 0.14%. Additional algorithms were developed in order to stabilise the continuum even more, in particular when the goal is to study a spectral time series. A clustering algorithm that always allows selecting the same local maxima on all spectra improved the line-depth precision down to ∼0.12%. The line-depth precision can be improved even more when a low-frequency filtering is applied on the spectral difference, which seems to produce a line-depth precision limit for RASSINE of ∼0.10%. This value was reached for α Cen B and solar spectra. This limit is the photon-noise limit on α Cen B spectrum and the photon-noise limit of the flat fields used to reduce solar spectra. We therefore conclude that the RASSINE normalisation was always found compatible with photon noise. All the continuum accuracy and line-depth precision statistics are summarised in Table 2.

A tool like this can find applications in numerous situations, such as computations of stellar atmospheric parameters (Blanco-Cuaresma 2019), the development of a tailored cross-correlation mask for each star in the context of radial velocities (Bourrier et al. 2020), line-by-line variability related to stellar activity (Thompson et al. 2017; Wise et al. 2018; Dumusque 2018; Cretignier et al. 2020), or correcting for the interference observed in transmission spectra. This normalisation algorithm might also improve the radial velocity derived from high-resolution spectra by reducing the jitter in radial velocity time series by providing a better colour correction and facilitating further correction algorithms for mitigating stellar activity or telluric contamination.

The Sun is observed using the HELIOS solar telescope http://www.sc.eso.org/~pfigueir/HELIOS.html

Acknowledgments

We are grateful to Nathan Hara and his constructive comments. We thank Lila Chergui and Yannick Demets for their help regarding English. This work has made use of the VALD database, operated at Uppsala University, the Institute of Astronomy RAS in Moscow, and the University of Vienna, and data coming from the ESO archive (Alpha Cen B) and from the HELIOS solar telescope at La Silla Observatory. This work has been carried out within the frame of the National Centre for Competence in Research “PlanetS” supported by the Swiss National Science Foundation (SNSF). M.C., J.F. and R.A. acknowledges the financial support of the SNSF. F.P. greatly acknowledges the support provided by the Swiss National Science Foundation through grant Nr. 184618. X.D is grateful to the Branco-Weiss Fellowship for continuuous support. This project has received funding from the European Research Council (ERC) under the European Unionś Horizon 2020 research and innovation program (grant agreement No. 851555).

References

- Adibekyan, V., Delgado-Mena, E., Figueira, P., et al. 2016, A&A, 592, A87 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Allart, R., Lovis, C., Pino, L., et al. 2017, A&A, 606, A144 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Asaeedi, S., Didehvar, F., & Mohades, A. 2013, ArXiv e-prints [arXiv:1309.7829] [Google Scholar]

- Astropy Collaboration (Price-Whelan, A. M., et al.) 2018, AJ, 156, 123 [Google Scholar]

- Baranne, A., Queloz, D., Mayor, M., et al. 1996, A&AS, 119, 373 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Berdyugina, S. V., & Usoskin, I. G. 2003, A&A, 405, 1121 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Blanco-Cuaresma, S. 2019, MNRAS, 486, 2075 [NASA ADS] [CrossRef] [Google Scholar]

- Blanco-Cuaresma, S., Soubiran, C., Heiter, U., & Jofré, P. 2014, A&A, 569, A111 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Bouchy, F., Doyon, R., Artigau, É., et al. 2017, The Messenger, 169, 21 [NASA ADS] [Google Scholar]

- Bourrier, V., & Hébrard, G. 2014, A&A, 569, A65 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Bourrier, V., Ehrenreich, D., Lendl, M., et al. 2020, A&A, 635, A205 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Cosentino, R., Lovis, C., Pepe, F., et al. 2012, in Ground-based and Airborne Instrumentation for Astronomy IV, Proc. SPIE, 8446, 84461V [NASA ADS] [Google Scholar]

- Cretignier, M., Dumusque, X., Allart, R., Pepe, F., & Lovis, C. 2020, A&A, 633, A76 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- de Laverny, P., Recio-Blanco, A., Worley, C. C., & Plez, B. 2012, A&A, 544, A126 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- DeWarf, L. E., Datin, K. M., & Guinan, E. F. 2010, ApJ, 722, 343 [NASA ADS] [CrossRef] [Google Scholar]

- Dumusque, X. 2018, A&A, 620, A47 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Dumusque, X., Glenday, A., Phillips, D. F., et al. 2015, ApJ, 814, L21 [NASA ADS] [CrossRef] [Google Scholar]

- Eddy, W. F. 1977, ACM Trans. Math. Softw., 3, 398 [CrossRef] [Google Scholar]

- Fischer, D., Jurgenson, C., McCracken, T., et al. 2017, Am. Astron. Soc. Meet. Abstr. #229, 229, 126.04 [Google Scholar]

- Graham, L. 1972, Inf. Process. Lett., 1, 132 [CrossRef] [Google Scholar]

- Gray, D. F. 2005, The Observation and Analysis of Stellar Photospheres (Cambridge, UK: Cambridge University Press) [CrossRef] [Google Scholar]

- Hunter, J. D. 2007, Comput. Sci. Eng., 9, 90 [NASA ADS] [CrossRef] [Google Scholar]

- Jarvis, R. 1973, Inf. Process. Lett., 2, 18 [CrossRef] [Google Scholar]

- Kurucz, R. L. 2005, Mem. Soc. Astron. It. Suppl., 8, 14 [Google Scholar]

- Malavolta, L., Lovis, C., Pepe, F., Sneden, C., & Udry, S. 2017, MNRAS, 469, 3965 [NASA ADS] [CrossRef] [Google Scholar]

- Mayor, M., Pepe, F., Queloz, D., et al. 2003, The Messenger, 114, 20 [NASA ADS] [Google Scholar]

- McKinney, W. 2010, in Proceedings of the 9th Python in Science Conference, eds. S. van der Walt, & J. Millman, 51 [Google Scholar]

- Oliphant, T. 2006, NumPy: A Guide to NumPy (USA: Trelgol Publishing) [Google Scholar]

- Palacios, A., Gebran, M., Josselin, E., et al. 2010, A&A, 516, A13 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Pasquini, L., Cristiani, S., García López, R., et al. 2010, in Ground-based and Airborne Instrumentation for Astronomy III, Proc. SPIE, 7735, 77352F [NASA ADS] [Google Scholar]

- Pepe, F., Mayor, M., Rupprecht, G., et al. 2002a, The Messenger, 110, 9 [NASA ADS] [Google Scholar]

- Pepe, F., Mayor, M., Galland, F., et al. 2002b, A&A, 388, 632 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Pepe, F., Bouchy, F., Queloz, D., & Mayor, M. 2003, in Scientific Frontiers in Research on Extrasolar Planets, eds. D. Deming, & S. Seager, ASP Conf. Ser., 294, 39 [Google Scholar]

- Pepe, F., Molaro, P., Cristiani, S., et al. 2014, Astron. Nachr., 335, 8 [NASA ADS] [CrossRef] [Google Scholar]

- Phan, T. 2007, Annal. Math. Inf., 34, 3 [Google Scholar]

- Python Core Team 2019, Python: A Dynamic, Open Source Programming Language, Python Software Foundation, Python Version 3.7 [Google Scholar]

- Queloz, D., Mayor, M., Weber, L., et al. 2000, A&A, 354, 99 [NASA ADS] [Google Scholar]

- Reiners, A., Mrotzek, N., Lemke, U., Hinrichs, J., & Reinsch, K. 2016, A&A, 587, A65 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Savitzky, A., & Golay, M. J. E. 1964, Anal. Chem., 36, 1627 [NASA ADS] [CrossRef] [Google Scholar]

- Schwab, C., Rakich, A., Gong, Q., et al. 2016, in Ground-based and Airborne Instrumentation for Astronomy VI, Proc. SPIE, 9908, 99087H [Google Scholar]

- Skoda, P. 2008, in Astronomical Spectroscopy and Virtual Observatory, eds. M. Guainazzi, & P. Osuna, 97 [Google Scholar]

- Smette, A., Sana, H., Noll, S., et al. 2015, A&A, 576, A77 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Sousa, S. G., Santos, N. C., Adibekyan, V., Delgado-Mena, E., & Israelian, G. 2015, A&A, 577, A67 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Strassmeier, K. G., Ilyin, I., Järvinen, A., et al. 2015, Astron. Nachr., 336, 324 [NASA ADS] [CrossRef] [Google Scholar]

- Thompson, A. P. G., Watson, C. A., de Mooij, E. J. W., & Jess, D. B. 2017, MNRAS, 468, L16 [NASA ADS] [CrossRef] [Google Scholar]

- Tody, D. 1986, in Instrumentation in Astronomy VI, ed. D. L. Crawford, Proc. SPIE, 627, 733 [NASA ADS] [CrossRef] [Google Scholar]

- Tody, D. 1993, in Astronomical Data Analysis Software and Systems II, eds. R. J. Hanisch, R. J. V. Brissenden, & J. Barnes, ASP Conf. Ser., 52, 173 [Google Scholar]

- Upton, G., & Cook, I. 1996, Understanding Statistics (Oxford: Oxford University Press), 443 [Google Scholar]

- van der Walt, S., Colbert, S. C., & Varoquaux, G. 2011, Comput. Sci. Eng., 13, 22 [Google Scholar]

- Van Rossum, G., & Drake, F. L., Jr. 1995, Python Tutorial (The Netherlands: Centrum voor Wiskunde en Informatica Amsterdam) [Google Scholar]

- Van Rossum, G., & Drake, F. L. 2009, Python 3 Reference Manual (Scotts Valley, CA: CreateSpace) [Google Scholar]

- Virtanen, P., Gommers, R., Oliphant, T. E., et al. 2020, Nat. Meth., 17, 261 [Google Scholar]

- Wallace, L., Hinkle, K. H., Livingston, W. C., & Davis, S. P. 2011, ApJS, 195, 6 [NASA ADS] [CrossRef] [Google Scholar]

- Wise, A. W., Dodson-Robinson, S. E., Bevenour, K., & Provini, A. 2018, AJ, 156, 180 [NASA ADS] [CrossRef] [Google Scholar]

- Wyttenbach, A., Ehrenreich, D., Lovis, C., Udry, S., & Pepe, F. 2015, A&A, 577, A62 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Xu, X., Cisewski-Kehe, J., Davis, A. B., Fischer, D. A., & Brewer, J. M. 2019, AJ, 157, 243 [CrossRef] [Google Scholar]

Appendix A: Alpha-shape algorithm

Rolling the alpha shape, or rolling pin, on top of the spectrum given our conditions is only a problem of trigonometry. Assuming our rolling pin is situated on a local maximum (see Fig. B.1), we first obtain all the local maxima (to the right) such that the distance d to the current local maximum satisfies d < 2r. If no such points exist, the radius is increased by a factor 1.5 until a point is found that is close enough. We call the current point P and the potential next point N. We compute the vector from P to N, called δ, whose norm is δ. We wish to determine the coordinates of the centre C of a circle of radius r (the current value) that touches P and N. The distance h between this centre and the segment δ is given by the Pythagoras theorem,

The vectorial components of the centre C are then given by

The second term extends from P to the middle of the segment δ, for instance, the point M. We note that PCN is isosceles and CM is perpendicular to PN. The last term of the equation is a vector perpendicular to δ that is normalised to have norm h. In components terms,  .

.

When the coordinates of the centre are found, we need to compute the rotation angle, that is, the angle the rolling pin has to roll to touch this particular point. Let (px, py) and (cx, cy) be the coordinates of the current point and of the circle centre, respectively. Trigonometric considerations show that this angle is given by

This angle is computed for every candidate point, that is, those that are closer than 2r to the current point (N and N′ in the figure). The next selected maximum is the first candidate touched by the rolling pin, mathematically speaking, the maximum with the smallest θ, N in this case. This process repeats iteratively until the code reaches the end of the spectrum.

Appendix B: Description of the automatic procedure

Even though RASSINE is a code with a complete interactive Python interface taking advantage of widgets and sliders, which facilitates choosing the parameters, it can still be technical for a user who is not familiar with this to determine the best parameters when they have several spectra of different stars of different spectral types or several spectra of the same star with different S/N. Hopefully, these parameters can often be approximated directly from the spectra. All of them, except for the penalty law, can be replaced by the keyword “auto”. In this case, several algorithms that we describe below can automatically guess initial values. We recall that there is no guarantee for the quality of the final product in automatic mode. This analysis should only be used to help the user make a first guess for the continuum, or when many spectra of the same star have to be reduced. Moreover, even though theoretically nothing prevents RASSINE from being used on extracted echelle-order spectra, the user has to be aware that the automatic mode presented below was calibrated on merged 1D spectra.

The first information that can be obtained from a spectrum is the typical line width, which is determined by the instrumental resolution, projected stellar velocity, and macroturbulence (Gray 2005). As said previously, the easiest method to determine its value consists of computing the FWHM of the CCF, which represents roughly an average line profile. If the parameter CCF_mask of RASSINE is put in “master” mode, the code computes the CCF and its corresponding FWHM. The first step is to build the cross-correlation mask that will then be used to produce the CCF by correlating this mask with the stellar spectrum. This is done by first obtaining a crude estimate of the spectral continuum using a rolling maximum in a window of 30 Å. Because the continuum we obtain has a step-like shape, we smoothed with a rectangular kernel of 15 Å. We then find all the local minima with a derivative criterion on a Savitsky-Golay smoothed version of the spectrum. This local minimum corresponds to spectral lines, and the difference between these local minima and the neighbour-fitted continuum provides an approximate depth for each spectral line. The final mask is a collection of the wavelength of each minimum with its respective depth, as defined in Pepe et al. (2002b). The CCF obtained from cross-correlating the mask with the stellar spectrum is then fitted with a Gaussian and the FWHM is extracted. A list of wavelength bands can be given as parameters mask_telluric in order to exclude some regions that are contaminated by telluric lines from the obtained CCF mask. A mask can also be given directly as input in the CCF_mask parameter, in which case the user also has to specify the systemic velocity of the star so that the stellar spectrum and binary mask are in the same rest frame. This is more complex, and we do not recommend using this option for users unfamiliar with RASSINE.

When the FWHM is known, the first step consists of smoothing the spectra. If the par_smoothing_box parameter is set to “auto”, a high-frequency filter is applied in Fourier space to suppress the frequencies above the sigma width. Two filters are available: an error function, and a top hat with an exponentially decreasing tail. Their expressions are given by

where C is a normalisation constant (the filters should all satisfy f(0) = 1), ω0 is the centre of the filter, which can be understood as the cut-off starting at which high-frequency modes are suppressed, and δω is the width of the filter (a low value of δω produces a sharp filter, and a high value produces a smooth filter).

By default, the error function filter is used if the par_smoothing_kernel parameter is set to “auto”. Some care is then needed to derive the correct values for ω0 and δω. As mentioned before, a typical wavelength scale is given by the width of the Gaussian fitted on the CCF. This implies the existence of a given frequency scale σ−1. Hence, we can parametrise ω0 = α1σ−1 and δω = α2σ−1, where the αi values are two dimensionless parameters. To adjust them to the best values, a calibration curve was constructed using a high S/N (∼12 000) spectrum of α Cen B and of the Sun, which were constructed by stacking 1000 observations together. We took care of correcting the spectra for long radial velocity trends in both cases in order to allow an optimal stacking.

We then simulated spectra with different S/N by adding several levels of Poison noise to the spectrum (see Fig. B.2). The Fourier filter was performed in an optimisation grid of α1 and α2. The best values of the α parameters were determined by measuring the standard deviation in the spectral difference between the noise-free and smoothed spectrum. By minimising this quantity, we determined the best values for α1 and α2 for each S/N value, which was extrapolated for every value of the S/N (see Fig. B.3) by fitting a polynomial function. The error bars Δα were defined as Δα1, 2 = 0.5 * (max(α1, 2)j − min(α1, 2)j), with j the collections of the 5% best simulations delimited by the white contour in Fig. B.3. The centre of the filter is more important than its width because a clear dependence is observed for α1, but not for α2. If the automatic mode for the spectral smoothing is chosen, the flux units of the input file have to be in analogue-to-digital (ADU) units such that the S/N value can be extracted by taking the square root of the flux. We advise using this automatic smoothing mode only when several spectra that span different order of magnitude in S/N have to be reduced.

|

Fig. B.1. Schema of the gift-wrapping algorithm. The rolling pin rotates around the point P. N and N′ are two local maxima. The selected maximum is N because the rolling pin falls on it before it falls on N′. Mathematically speaking, this is represented by the condition θ < θ′. |

|