| Issue |

A&A

Volume 686, June 2024

|

|

|---|---|---|

| Article Number | A80 | |

| Number of page(s) | 29 | |

| Section | Extragalactic astronomy | |

| DOI | https://doi.org/10.1051/0004-6361/202348152 | |

| Published online | 30 May 2024 | |

Total and dark mass from observations of galaxy centers with machine learning

1

School of Physics and Astronomy, Sun Yat-sen University, Zhuhai Campus, 2 Daxue Road, Tangjia, Zhuhai, Guangdong 519082, PR China

e-mail: napolitano@mail.sysu.edu.cn

2

CSST Science Center for Guangdong-Hong Kong-Macau Great Bay Area, Zhuhai, Guangdong 519082, PR China

3

Department of Physics E. Pancini, University Federico II, Via Cinthia 6, 80126 Naples, Italy

4

INAF – Osservatorio Astronomico di Capodimonte, Salita Moiariello 16, 80131 Naples, Italy

5

Instituto de Física, Universidade Federal da Bahia, 40210-340 Salvador-BA, Brazil

6

PPGCosmo, Universidade Federal do Espírito Santo, 29075-910 Vitória, ES, Brazil

7

Department of Physics, Federal University of Sergipe, Avenida Marechal Rondon s/n, Jardim Rosa Elze, São Cristovão, SE 49100-000, Brazil

8

National Astronomical Observatories, Chinese Academy of Sciences, 20A Datun Road, Chaoyang District, Beijing 100012, PR China

9

School of Astronomy and Space Science, University of Chinese Academy of Sciences, Beijing 100049, PR China

Received:

4

October

2023

Accepted:

15

February

2024

Context. The galaxy total mass inside the effective radius is a proxy of the galaxy dark matter content and the star formation efficiency. As such, it encodes important information on the dark matter and baryonic physics.

Aims. Total central masses can be inferred via galaxy dynamics or gravitational lensing, but these methods have limitations. We propose a novel approach based on machine learning to make predictions on total and dark matter content using simple observables from imaging and spectroscopic surveys.

Methods. We used catalogs of multiband photometry, sizes, stellar mass, kinematic measurements (features), and dark matter (targets) of simulated galaxies from the Illustris-TNG100 hydrodynamical simulation to train a Mass Estimate machine Learning Algorithm (MELA) based on random forests.

Results. We separated the simulated sample into passive early-type galaxies (ETGs), both normal and dwarf, and active late-type galaxies (LTGs) and showed that the mass estimator can accurately predict the galaxy dark masses inside the effective radius in all samples. We finally tested the mass estimator against the central mass estimates of a series of low-redshift (z ≲ 0.1) datasets, including SPIDER, MaNGA/DynPop, and SAMI dwarf galaxies, derived with standard dynamical methods based on the Jeans equations. We find that MELA predictions are fully consistent with the total dynamical mass of the real samples of ETGs, LTGs, and dwarf galaxies.

Conclusions. MELA learns from hydro-simulations how to predict the dark and total mass content of galaxies, provided that the real galaxy samples overlap with the training sample or show similar scaling relations in the feature and target parameter space. In this case, dynamical masses are reproduced within 0.30 dex (∼2σ), with a limited fraction of outliers and almost no bias. This is independent of the sophistication of the kinematical data collected (fiber vs. 3D spectroscopy) and the dynamical analysis adopted (radial vs. axisymmetric Jeans equations, virial theorem). This makes MELA a powerful alternative to predict the mass of galaxies of massive stage IV survey datasets using basic data, such as aperture photometry, stellar masses, fiber spectroscopy, and sizes. We finally discuss how to generalize these results to account for the variance of cosmological parameters and baryon physics using a more extensive variety of simulations and the further option of reverse engineering this approach and using model-free dark matter measurements (e.g., via strong lensing), plus visual observables, to predict the cosmology and the galaxy formation model.

Key words: methods: data analysis / galaxies: fundamental parameters / galaxies: kinematics and dynamics / dark matter

© The Authors 2024

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1. Introduction

Galaxies originate within the gravitational confines of dark matter halos. They consist of baryonic matter, mostly in the form of stars and gas, as well as dark matter (DM). The spatial distribution and interplay of these two components play a major role in shaping the process of galaxy formation and evolution. Due to the elusiveness of the DM, a compelling characterization of its properties, from the very basic, such as the total dark mass (e.g., using virial theorem, Busarello et al. 1997), to the more complex, such as their mass density profiles (Burkert 1995; Navarro et al. 1996), remains unattainable.

The only approach to constrain DM distribution in galaxies relies on gravitational effects. Ever since the initial discoveries indicating the presence of DM in galaxies, rotation curves have been extensively utilized to investigate the mass distribution of (rotation-supported) spiral systems (e.g., Rubin et al. 1970; Lelli et al. 2016). For ellipticals, instead, typical probes are stellar velocity dispersion profiles and higher-order velocity moments (Kronawitter et al. 2000; Gerhard et al. 2001; Thomas et al. 2007; Romanowsky et al. 2003; Cappellari et al. 2006; Napolitano et al. 2009; Tortora et al. 2009), and strong gravitational lensing (Treu & Koopmans 2004; Koopmans et al. 2006; Auger et al. 2010; Tortora et al. 2010; Sonnenfeld et al. 2013).

Our comprehension of the physical processes contributing to the assembling of baryons and DM received a burst thanks to the advent of new techniques, allowing us to collect data for large galaxy samples or resolve their kinematics in detail. Multiobject spectrographs have been used to simultaneously obtain spectra from up to hundreds of objects in a single observation (e.g., SDSS; Blanton et al. 2003) and Integral Field Spectrographs (IFS) have provided full two-dimensional kinematical maps, including galaxy rotation, velocity dispersion and even higher-velocity moments, (see, e.g., ATLTAS3D, Cappellari et al. 2011; MaNGA, Bundy et al. 2015; SAMI, Croom et al. 2012)

The next-generation (photometric and spectroscopic) sky surveys (or stage IV surveys), such as the Chinese Survey Space Telescope (CSST; Zhan 2011), Vera-Rubin/Large Synoptic Survey Telescope (VR-LSST; Ivezić et al. 2019), Euclid mission (Laureijs et al. 2011), Dark Energy Spectroscopic Instrument (DESI; Levi et al. 2013; DESI Collaboration 2016), and 4-metre Multi-Object Spectrograph Telescope (4MOST; de Jong 2011; de Jong et al. 2019) will provide us with even more massive amounts of data, posing tremendous challenges for data modeling. Hence, finding methods that can swiftly obtain reliable results (e.g., to serve as crucial reference values for further analysis and more complex observations) without the need for complex modeling is very necessary.

Machine learning (ML) has become, in the last decade, an efficient solution in various astronomical applications. For example, it has been successfully applied in complex tasks where conventional analytical methods often proved to be challenging or computationally expensive. It has been used for predicting photometric redshifts (e.g., Amaro et al. 2019; Li et al. 2021b, 2022a); deriving structural parameters of galaxies from light profiles (Li et al. 2022b); performing star, galaxy, and quasar separation in both images and catalogs (Li et al. 2021a; von Marttens et al. 2024; Baqui et al. 2021); identifying and modeling strong gravitational lensing events (Li et al. 2020; Gentile et al. 2022); determining DM distribution of galaxies (de los Rios et al. 2023); and connecting properties of galaxies and DM halos (Moster et al. 2021).

Recently, we have proposed using ML algorithms, trained on cosmological simulations, to estimate the DM content of galaxies (von Marttens et al. 2022, vM+22 hereafter). Cosmological simulations are based on physical principles and realistic recipes for feedback processes, producing seemingly realistic galaxy distributions and scaling relations. Therefore they can be used to train ML algorithms to estimate the DM content of galaxies. In the first paper in this series, we used a Tree-based Pipeline Optimization Tool (TPOT; Olson et al. 2016) to find the optimal ML pipeline. We demonstrated that we can infer the DM properties of galaxies (e.g., central DM mass, DM half-mass radius) starting from general catalog properties, such as luminosity, size, kinematics, colors, and stellar masses in the IllustrisTNG simulation (Nelson et al. 2019, N+19 hereafter). There are other studies following a similar strategy: using the Cosmology and Astrophysics with MachinE Learning Simulation (CAMELS; Villaescusa-Navarro et al. 2023, VN+23 hereafter). For example, Villanueva-Domingo et al. (2022) infer halo mass given the positions, velocities, stellar masses, and radii of the hosted galaxies using graph neural networks. Shao et al. (2022) predict the total mass of a subhalo from their internal properties, such as velocity dispersion, radius, or star formation rate, using neural network and symbolic regression. However, these studies are solely conducted on simulations and have not been tested using real observational data1. On the other hand, utilizing cosmological simulations for replicating and comprehending observational data via ML represents a novel strategy imbued with unforeseeable potentialities.

For instance, we can constrain cosmological parameters and feedback processes comparing DM-related scaling relations from cosmological simulations with galaxy data, which has been envisioned within the CAMELS project (see, e.g., Villaescusa-Navarro et al. 2022), and has recently been shown to be feasible using classical statistical methods (e.g., Busillo et al. 2023). In this paper, we apply a random forest algorithm to predict central total mass and DM mass of galaxies from both simulations and observations. The Mass Estimate machine Learning Algorithm (MELA) is trained with simulation data to make predictions for the galaxies from observations that are compared with results from literature dynamical analyses.

The advantage of our novel method is that it is based on low-level photometric and kinematical information, including only aperture photometry and aperture kinematics. These are typical standardized data provided by photometric large sky surveys, for example, the CSST, VR-LSST, and Euclid, and spectroscopic surveys, such as fiber-aperture velocity dispersion (e.g., from DESI and 4MOST). If successful, this method can provide a significant advantage with respect to standard analysis tools generally based on much higher-level information in Jeans analysis, including accurate surface photometry analysis (Tortora et al. 2009, T+09 hereafter) and integral field spectroscopy (Cappellari 2008, 2020). More importantly, it provides physically motivated inferences because they are based on realistic cosmological simulations.

The other major novelty of the MELA project is that it is entirely data-driven. It provides, for the first time, a full application to real systems and a direct comparison of the predicted masses against classical dynamical methods on samples of early-type and late-type galaxies (Tortora et al. 2012; Zhu et al. 2023) and dwarf systems (Eftekhari et al. 2022) (see Sect. 2 for details).

The outline of the paper is as follows. In Sect. 2 we describe the data and the main physical quantities therein. In Sect. 3 we describe how MELA works and how to evaluate the performance of MELA’s predictions. In Sect. 4 we show the result of self-prediction and apply the trained MELA to real data. In Sect. 5 we discuss the robustness of the MELA and the possible reason for the errors. In Sect. 6 we draw conclusions and give future perspectives.

Throughout this work, we adopt a flat Universe with a ΛCDM model: (ΩΛ, Ωm, h) = (0.6911, 0.3089, 0.6774) based on Planck2015 (Planck Collaboration XIII 2016).

2. Simulation and observation data

In this section, we introduce the simulation datasets we want to use for the training and testing of the MELA and the different observational datasets we want to use as predictive samples. These latter are designated for testing only and are not utilized for model training. In fact, they are used for assessing the performance of the algorithm previously trained using the entire simulation dataset. As anticipated, one of the novelties of this paper is a direct application to dynamical samples to check if MELA can predict the mass content of galaxies consistently with standard dynamical analyses. For this reason, differently from our previous analysis, in vM+22, here we concentrate on the prediction of the central dark matter content of galaxies, namely the total mass Mtot(r1/2) and the dark matter within effective radius MDM(r1/2). In a future work, we will test the same methods against analyses based on extended datasets able to constraint more total dark matter quantities (e.g., based on planetary nebulae, see Napolitano et al. 2009).

The simulation data we use for the training of the machine learning algorithms is based on the IllustrisTNG simulation (N+19), a state-of-the-art magneto-hydrodynamic simulation.

As predictive samples, we consider datasets covering different mass ranges (from dwarf to massive ETG systems) and different dynamical approaches. In particular, we have collected dynamical masses of massive ETGs from Spheroids Panchromatic Investigation in Different Environmental Regions project (SPIDER; Tortora et al. 2012, T+12 hereafter) and combined analysis of the Dynamics and stellar Population for the MaNGA survey (MaNGA DynPop; Zhu et al. 2023, Z+23 hereafter), while we also use dwarf spheroidals from the SAMI Fornax Dwarf survey (Eftekhari et al. 2022, E+22 hereafter).

2.1. Features and targets: A necessary preamble

Before going into the details of the different datasets, we start by describing the physical quantities that we use throughout the paper, as they are differently defined in simulations and observations. This is an important semantic preamble to set the following discussion and motivate some of the choices we need to make when combining simulations and observation in a single analysis. “Observational realism” is a complex chapter of this comparison (see, e.g., Bottrell et al. 2019; Fortuni et al. 2023), which also involves the impact of inappropriate definitions of the observational-like parameters derived from simulations (see, e.g., Tang et al. 2021). Addressing these issues is beyond the scope of this paper, except for some relevant aspects concerning the measurement errors (see, e.g., Qiu et al. 2024, and Sect. 2.2.2). and will be fully addressed in future studies. However, here we need to discuss, and possibly quantify, all obvious mismatches of physical quantities in simulation and observation datasets and introduce some basic assumptions to align the two data types as much as possible.

In Table 1 we show the most important physical properties that we want to use as input of the machine learning algorithm (features) and the quantities we want to predict (targets), with their broad meaning in simulations and observations, face-to-face. We also add some accessory features, that are indirectly used for the target definitions. In particular, we remark the stellar mass inside the half-light radius, M⋆(r1/2) which we decided to exclude from the training/testing features because redundant with respect to the total stellar mass, M⋆, at least in simulations, where, by definition, M⋆(r1/2) = M⋆/2. Here below are some notes to save for the rest of the paper.

Definition of features and targets.

3D vs. 2D features and targets. Data dimensionality is a critical aspect of our study. On the one hand, simulations provide 3D quantities, inherently projection invariant. On the other hand, observational data are essentially 2D, derived from images; for example, they represent projection variant views of astronomical objects, meaning that some of their attributes can change depending on different observation angles. For “total” quantities (luminosity, stellar masses), the use of 2D or 3D does not make a real difference, but for sizes and partial quantities (r1/2, and stellar and total masses inside it) it does. Generally speaking, for real galaxies the dynamical masses (i.e., the ones coming from Jeans modeling or equivalent) can be easily modeled as projected, 2D, or de-projected, 3D, although this conversion comes along with some geometrical assumption (from spherical symmetry, e.g., Wolf et al. 2010; Tortora & Napolitano 2022, to axisymmetric, e.g., Z+23).

3D half-light (r1/2⋆) vs. half-mass (r1/2) radii. Half-light radii or effective radii are certainly the physical parameters that potentially carry the most complex systematics, due to their strong relation with galaxy masses and galaxy types (see, e.g., Shen et al. 2003). We stress in particular two of them: 1) the constant mass-to-light ratio, as in simulations the radius is computed on stellar mass particles; 2) 2D vs. 3D definition (see also above). However: 1) M/L gradients might have a little impact on the mass-size relation of galaxies with an average ratio of the mass weighted, Rm, with respect to the light weighted, Rl, that is of the order of Rm/Rl ∼ 0.6–0.7, from low redshift (see, e.g., Bernardi et al. 2023), up to about redshift 1 (e.g., Suess et al. 2019), although with a large scatter (and assuming uniform initial mass function inside galaxies); 2) the ratio between the 2D and the 3D half-light radii can be quantified to be ∼3/4 for pressure supported systems with a large variety of light distribution under spherical symmetry (see Wolf et al. 2010, W+10 hereafter), while it is basically bias-less for disks where the 3D radius can be obtained from simple thin disk deprojection. Putting these two arguments together we finally conclude that, as the M/L gradients are computed in real galaxies using 2D quantities (e.g., Bernardi et al. 2023, Eq. (4)), and being the 2D radii more compact than the 3D radii, these gradients are an upper limit for the equivalent 3D ones. Hence, the rm/rl (having used the r for 3D quantities) has to be closer to unity than the one measured on 2D gradients, making it reasonable to use the 3D half-mass radius (r1/2) in simulations as a good proxy of the half-light radius (r1/2⋆). In the following, we use the symbol r1/2 to indicate both ones, equivalently.

Predicting and comparing 3D targets. A consequence of the conclusion above is that we can use the 3D features of simulations to predict the 3D targets, and so we can for real galaxies if we use analogous 3D features and targets. These latter can be compared with the equivalent obtained from dynamical models, only under the conditions that the conversion of the 2D to 3D mass properties of real galaxies is free from systematics. Alternatively, to make use of more observation-like features, we should train on 2D features, which are not yet a standard product for hydro-simulations. We will address this in the forthcoming paper on this project.

Velocity dispersion. The velocity dispersion in simulations is defined over the full set of particles (i.e., DM, stellar, black hole, gas), which are distributed over a large range of radii. In observations, it is measured over a small aperture (Thomas et al. 2013; Napolitano et al. 2020) and possibly corrected with an empirical formula (e.g., Cappellari et al. 2006) to the effective radius, or directly derived at the r1/2 via integral field spectroscopy (e.g., Z+23). The question arises of how comparable these two quantities are. The Observed velocity dispersion of galaxies shows a strong negative slope generally confined within the central regions, up to a few effective radii (see, e.g., Gerhard et al. 2001; Coccato et al. 2009; Napolitano et al. 2009, 2011; Pulsoni et al. 2018). On the other hand, the softening length of the TNG100 (∼0.7 kpc h−1) likely suppresses the typical central peak of the velocity dispersion profile of galaxies. The net effect is that in mid-resolution simulations the overall σ, as in Table 1, is basically sensitive to the large radii flatter part of the velocity dispersion profile that is smaller than the one measured in the central galaxies (e.g., Pulsoni et al. 2018). This is expected to produce a systematic shift on the typical scaling relations involving σ, like the mass-velocity dispersion relation (e.g., Faber & Jackson 1976; Cappellari et al. 2013; Napolitano et al. 2020). This is shown in detail in Sect. 5.2. For dwarf galaxies, though, the typical velocity dispersion profiles look flatter at all radii (see E+22, Fig. 4), hence this effect might be mitigated and the σ from the simulations can be a realistic proxy of the real velocity dispersion (see also Sect. 5.2).

Dark matter in galaxies. This is naturally provided in the TNG100 catalog by the sum of all the DM particles inside a given 3D volume. In our case, this is the one enclosed in the effective radius, MDM(r1/2). For real galaxies, depending on the sample, it can be directly fitted assuming some form of dark matter halo (e.g., a Navarro et al. 1997 or Burkert 1995 profile), or, if only the total mass of the galaxy is inferred, by the equation MDM(r1/2) = Mdyn(r1/2)−M⋆(r1/2)– see Table 1. Either case, the underlying assumption is that the gas mass is negligible in the considered volume. This is somewhat reasonable for the regions inside r1/2 we want to consider in this analysis. However, this might have a nonnegligible impact on the comparison with the simulations. In these latter cases, if we can reasonably assume that Mdyn(r1/2) = Mtot(r1/2) = M⋆(r1/2) + MDM(r1/2) + Mgas(r1/2), the quantity Mdyn(r1/2) − M⋆(r1/2) equals, by definition,  . Hence, this newly defined mass comes from the fact that in real galaxies the albeit limited, gas mass contributes as dark baryons to the MDM(r1/2). Thus, when explicitly checking the predictions of MELA against the DM in real data, we should use

. Hence, this newly defined mass comes from the fact that in real galaxies the albeit limited, gas mass contributes as dark baryons to the MDM(r1/2). Thus, when explicitly checking the predictions of MELA against the DM in real data, we should use  as a target (see Appendix A). For convention, we call this the “augmented” dark matter, meaning that it includes all the unaccounted mass contributions that exceed the stellar mass of a galaxy (aka “missing mass”).

as a target (see Appendix A). For convention, we call this the “augmented” dark matter, meaning that it includes all the unaccounted mass contributions that exceed the stellar mass of a galaxy (aka “missing mass”).

2.2. TNG100 simulation for training and testing

IllustrisTNG (N+19) is a series of state-of-the-art magneto-hydrodynamic simulations using different box sizes: 50 Mpc h−1, 100 Mpc h−1, and 300 Mpc h−1. In this work, we use TNG100-1 (TNG100, for short), which is the highest resolution simulation with a volume of 106.5 Mpc3 and 10243 dark matter particles. The mass resolution of dark matter particles is 7.5 × 106 M⊙, while the mean mass resolution of baryon particles is 1.4 × 106 M⊙. The Plummer equivalent gravitational softening of the collisionless component in the comoving units at z = 0 is Rp = 1.0 kpc h−1 (see N+19, Table 1) or 0.74 kpc h−1 in the physical units at z = 0. The cosmological parameters are based on Planck2015 (see Sect. 1), which is also the cosmology we use for all the observed data throughout the paper. The hydrodynamical part of the simulation includes updated recipes for star formation and evolution, chemical enrichment, cooling, and feedbacks (Weinberger et al. 2016; Pillepich et al. 2018; Nelson et al. 2018). It also accounts for AGN feedback (Weinberger et al. 2016) and galactic winds model (Pillepich et al. 2018), mimicking supernovae feedback.

The IllustrisTNG snapshots and Group catalogs in different redshift bins, from z = 0 to z = 127, are publicly available2. In the following, to illustrate the main properties of the simulations, we will use a reference redshift window from 0 to 0.1. In particular, we only use the information of Subfind Groups, which contain groups of particles recognized as individual objects for which a series of physical quantities have been assigned as the integral of all particles belonging to the group. The main properties we are interested in are indicated as TNG in Table 1 and previously described in Sect. 2.1. In the redshift range z ∈ [0,0.1], they include 9 snapshots, corresponding to z = 0.00, 0.01, 0.02, 0.03, 0.05, 0.06, 0.07, 0.08, 0.10. The Subfind Group Catalog represents the basic dataset to train our machine learning tools and subsequently test the performances, using the known target values as ground truth. If the galaxy-like systems belonging to the TNG simulations are a fair representation of the real galaxies, we can expect that the ML tool trained on the TNG galaxy catalog can predict the target quantities, specifically dark and total mass, not only on the simulated test sample, but also on the real galaxies. In the next section, we detail the selections needed to choose realistic galaxy systems from the Subfind Group Catalog.

2.2.1. Selection and properties of the galaxy sample from TNG100

The galaxy dataset from the Subfind Group Catalog contains subhalos from friend-of-friend (FOF) and subfind algorithms, that represent galaxy-like groups, but not all of them are well-defined, realistic systems. To select physically meaningful galaxies, we use the following criteria:

-

SubhaloFlag=True. This selects subhalos with cosmological origin (i.e., they have not been produced by fragmentation of larger halos by baryonic processes). TNG provides a Flag for these spurious satellite systems3.

-

The stellar half-mass (or effective) radius and dark matter half-mass radius are larger than 2 times of the Plummer radius Rp = 0.74 kpc. This is to avoid that the internal properties of small galaxies are fully dominated by numerical softening.

-

Number of both stellar and dark matter particles is larger than N = 200. This is a further criterion to have robust total quantities based on sufficiently large particle statistics in galaxies. This corresponds to a lower total stellar mass limit of M⋆ = 108.3 M⊙, which also implies M⋆(r1/2) > 108.0 M⊙.

-

We finally assume that every subhalo is a single galaxy.

We divide this galaxy sample into passive/early-type galaxies (ETGs) and active/late-type galaxies (LTGs) using the specific star formation rate as the criterion. Following observational analyses (Paspaliaris et al. 2023), we adopt log sSFR/yr−1 < −11 for ETGs and log sSFR/yr−1 > −11 for LTGs. This is possibly a more robust selection than one based on pure the color-stellar mass cut (see, e.g., Pulsoni et al. 2020), which yet allows us a sharp separation of the red sequence galaxies from the blue cloud systems. In the absence of other relevant structural parameters in the TNG catalogs suitable for morphological (e.g., the n-index of the Sersic 1968 profile) or kinematical selection criteria (e.g., galaxy spin, see Rodriguez-Gomez et al. 2022), the sSFR criterion remains the best physical argument we can use to make the ETG/LTG separation.

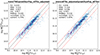

In Fig. 1 we show the correlations/distributions of some of the relevant properties (see Table 1) we will use in the rest of the paper as features and targets for these two classes. From the left panel, we clearly see the differences in the distributions of the ETGs and LTGs, which is also reflected in differences in the scaling relations, among the different quantities. We also remark the bimodal distribution of the ETGs, showing the presence of two populations of high-mass ETGs, at log M⋆(r1/2)/M⊙ ≳ 9.8, and “dwarf”, low-mass sample, at log M⋆(r1/2)/M⊙ ≲ 9.8, which is mirrored by the velocity dispersion distribution, which is also bimodal around log σ/km s−1 ∼ 1.9.

|

Fig. 1. Distribution of relevant features and targets as in Table 1: total mass inside the stellar half-mass radius, augmented dark matter mass inside stellar half-mass radius, half-mass radius, stellar mass in half-mass radius, velocity dispersion, total and dark matter mass in half-light radius. Left: galaxies are divided into ETGs and LTGs on the basis of their SFR. Right: ETGs are further divided into normal and dwarf ETGs based on the classification criteria outlined in Table 2. The normalized distribution of the features and targets is shown along the diagonal. Units are as in Table 1. This is the original data from TNG100 without considering mock measurement errors. To get a comparative picture, a fixed value was set for the different types of galaxies. We randomly get a 20 000 galaxy subsample from the full dataset and from the three galaxy types. |

“Normal” ETGs (nETGs) and “dwarf” ETGs (dETGs) are known to have rather different scaling relations and possibly also different formation mechanisms (Koleva et al. 2009) which might reflect differences in their dark matter properties. Unsurprisingly, we also observe similar bimodalities in the total and DM masses. We then define nETGs as ETGs having log M⋆(r1/2)/M⊙ > 9.8 and log σ/km s−1 > 1.9, and dETGs all the remaining ETGs. With these criteria, the LTGs, and n/dETGs are distributed as shown in the right panel of Fig. 1, where we can use the Pearson correlation coefficient ρ:

to quantify the linear relationship between different pairs of variables (i.e., the features and targets in Table 1). In Eq. (1), cov(y, x) represents the covariance of two variables, while the denominator σ(y) and σ(x) are the standard deviations of y and x. These values normalize the covariance, ensuring that ρ ranges between −1 (indicating a perfect negative linear relationship) and 1 (indicating a perfect positive linear relationship). From these correlation matrices, it is evident that the three classes have different distributions and scaling relations (see, e.g., mass-size relation, and the mass-σ relation). This is more clearly illustrated in the correlation heatmap presented in Fig. 2, where there are groups of physical parameters (i.e., M⋆, M⋆(r1/2), σ, MDM(r1/2), Mtot(r1/2)) that have a different level of correlation for the three distinct classes (three panels on the right). In particular, dwarfs show a looser correlations of the half-light radius, r1/2, with luminosity, mass, and σ, while the nETGs and LTG show tighter size-luminosity, mass-size relations, compatibly with observations (see also, Tang et al. 2021). Also looking at the target quantities, MDM(r1/2) and Mtot(r1/2), dETGs show shallower correlations with other parameters (e.g., luminosity, size, and stellar masses) with respect to nETGs and LTGs. When combined together, the full TNG galaxy sample (TNG_all) shows a correlation matrix (first panel on the left) which is very similar to the one of LTGs, which is the numerically dominant population with intermediate properties. We will come back to these correlations later, when interpreting the results of the mass predictions.

|

Fig. 2. Correlation heat map of the different TNG galaxy samples defined in Sect. 2.2 when not considering (upper row) and considering (bottom row) the mock measurement errors, as in Sect. 2.2.2. The correlation coefficients are calculated using the Pearson correlation coefficient (see Eq. (1)). |

Due to these different features in the correlations highlighted by the Figs. 1 and 2, we will compare the mass predictions obtained by considering these three classes (i.e., nETGs, dETGs, LTGs) as separated and joined together, as done in vM+22. This will allow us to check whether MELA can perform better on the individual classes rather than all galaxies together.

In Table 2 we summarize the definition of the three galaxy classes from the TNG simulation. The number we collected in the different redshift bins is used in the next analysis to predict the total mass and the dark mass for the observed samples.

Classification criteria for simulated and observed galaxy samples.

2.2.2. Mock measurement errors for TNG100 galaxies

The physical quantities extracted from simulation usually are provided with no error (although they are themselves the product of a measurement process) and they ideally represent “exact predictions” of theoretical models. Statistically speaking they can be assumed to be the true value of physical quantities that, in observed galaxies, come along with measurement uncertainties. These measurement errors need to be taken into account when doing predictions, because their net effect is to dilute the correlations seen in Fig. 1, making also the predictions of the target broadened. This is a very basic form of “observational realism”, discussed in Sect. 2.1.

To include the effect of observing measurements on the simulated quantities, we have assumed Gaussian errors with typical relative uncertainties of 10% for the features (i.e., g and r magnitudes, M⋆, M⋆(r1/2), σ, r1/2) and 15% for the targets (i.e., MDM(r1/2) and Mtot(r1/2)) to be consistent with typical uncertainties found for galaxy observations and dynamical samples in the galaxy centers (see, e.g., T+09, Z+23, Cappellari et al. 2013). Note that the adoption of errors on targets is not strictly necessary, under the assumption that the targets represent some ground truth properties of galaxies. However, our choice intends to conservatively account for the fact that targets are extracted from the simulation with a measurement process that brings some uncertainties. Finally, the mock measurements are obtained by randomly drawing the “measured” quantities from a Gaussian centered in their original (true) value and with standard deviation corresponding to the adopted relative errors. The new “measured” quantity is taken inside 3σ from the original “true” value. This step is needed to fully account for the intrinsic errors of the observed features in the training phase, which should reproduce the distribution of the observed features one wants to use to make the predictions of the chosen targets.

In Fig. 2 we finally report the correlation matrices for the whole sample and the three galaxy classes after having added the measurement errors, face-to-face with the same matrices before the measurement errors. As seen the correlations have not been dramatically affected, although we can see a decrease of the correlation coefficients of the order of ∼5% or smaller for TNG_all and ∼10% or smaller for the n/dETG and LTG classes.

2.3. Observational data: The predictive sample

As mentioned in the introduction of this section, we use a variety of galaxy mass catalogs based on different dynamical methods as a predictive sample. These include SPIDER, MaNGA DynPop a SAMI Fornax Dwarf survey that we briefly describe below in this section. Here we anticipate that according to the independent and identically distributed hypothesis in machine learning inferences, to have reliable ML predictions, we need to ensure that the feature and target distributions of the test sample are comparable with those of the training sample. To do that we apply to observational samples above the same selection criteria of the TNG classes, as summarized in Table 2. Here we see that, for observations, we add the further condition that the dynamical mass inside the effective radius is larger than the stellar mass inside the effective radius (i.e., Mdyn(r1/2) > M⋆(r1/2)). This is because mass estimators, due to the statistical errors, might sometimes bring to such unphysical results. We also remark that the r1/2 ≳ 1.5 kpc criterion, imposed by the softening length of the simulations, is quite restrictive for observations as there are massive ultra-compact galaxies (UCMGs, e.g., Tortora et al. 2016, 2018; Scognamiglio et al. 2020; Spiniello et al. 2021) and dwarf galaxies (Graham & Guzmán 2003), having sizes generally smaller than the threshold used above. If, on one hand, the UCMGs are rare in the local universe (Trujillo et al. 2014; Tortora et al. 2016) and this selection does not impact the predictive sample, on the other hand, given the mass-size relation of dETGs (e.g., E+22) r1/2 > 1.5 kpc corresponds to stellar masses of the order of M⋆/M⊙ ∼ 109 or higher, thus strongly impacting the accessible lower mass limit of observations. As we are interested in pushing the predictions of the MELA toward low stellar masses, then we decide to add a secondary dwarf predictive sample for which we use the r1/2 = 1 kpc as a lower size limit and use a separate analogous training sample to make predictions for it.

2.3.1. SPIDER ETGs

The Spheroid’s Panchromatic Investigation in Different Environmental Regimes (SPIDER) is a sample of 39 993 bright ETGs in the redshift range of 0.05–0.095, possessing SDSS optical photometry and optical spectroscopy. 5080 galaxies also have YJHK photometric from DR2 of UKIDSS-LAS (see La Barbera et al. 2010). ETGs are defined as bulge-dominated systems, with passive spectra in their centers. Following Bernardi et al. (2003), they select ETGs with eClass < 0 and fracDevr > 0.8, where the SDSS spectroscopic parameter eClass gives the spectral type of a galaxy, while the SDSS photometric parameter fracDevr measures the fraction of galaxy light that is better fitted by a de Vaucouleurs (rather than an exponential) law. Here we are interested in a subsample of this catalog for which Jeans analysis has been used to derive the dynamical mass inside the r1/2 from T+12. We note that the selections are very effective in removing late-type systems (see La Barbera et al. 2010), but do not allow a clear separation of E and S0 galaxy types. However, this has no significant impact on the Jeans analysis results, as discussed in T+12. The final SPIDER sample contains 4260 galaxies for which information about the quantities as in Table 1 are available from T+12, and that we briefly summarize here below:

-

g- and r-band photometry from SDSS-DR6;

-

Re: effective radius obtained from the Sérsic fit to the SDSS imaging in the K-band. We convert it to 3D by r1/2 = 1.35 × Re;

-

M⋆: total stellar mass Swindle et al. (2011), obtained by fitting synthetic stellar population models from Bruzual & Charlot (2003) from SDSS (optical) + UKIDS (NIR) using the software LePhare (Ilbert et al. 2006), assuming a extinction law (Cardelli et al. 1989) and Chabrier IMF;

-

σ and σe: respectively, SDSS fiber velocity dispersion and aperture corrected velocity dispersion within 1r1/2 following Cappellari et al. (2006);

-

Mdyn(r1/2) and MDM(r1/2) respectively, dynamical mass from the Jeans equation and dynamical minus stellar mass in the 3D effective radius (see T+12).

In Fig. 3 we compare the distribution of the galaxy features and targets of the nETG sample against the same quantities from SPIDER ETGs selected according to the all set of criteria above. From the distribution, we can see that the SPIDER sample nicely overlaps with most of the distribution of the nETGs, except for the velocity dispersion. This is expected, as discussed in Sect. 2.1. All other deviations from the ETG simulated sample of the observed sample should be tracked to the difference of the definitions highlighted in Table 1. As anticipated in Sects. 2.1 and 2.2.2, this matter is related to the “observational realism” of simulations. Here we want to check whether the application of uniform selection criteria, such as the one introduced above in this section, can provide reasonably realistic mass predictions, or whether we need physically motivated corrections to empirically align observations and simulations (see, e.g., Pillepich et al. 2018).

|

Fig. 3. Kernel density estimation (KDE) for each class of the dataset. Top row: KDE of the nETG dataset. Bottom row: KDE of the LTG and dETG dataset. The number of each class of datasets is indicated in Table 2. All the data points are within the x-axis limit. In the case of Fornax, an incompleteness of the smoothed estimate is evident due to the limited number of data points. |

2.3.2. MaNGA DynPop

Mapping Nearby Galaxies at APO (MaNGA4; Bundy et al. 2015) is a spectroscopic program included in the Sloan Digital Sky Survey, (SDSS), released in the final Data Release 17 (DR17). Unlike previous SDSS surveys based on fiber spectroscopy, MaNGA obtained 3D spectroscopy of ∼10 000 (10 k) nearby galaxies, hence providing two-dimensional maps of stellar velocity and velocity dispersion, mean stellar age, and star formation history for an unprecedented sample at z ∼ 0. As no preliminary selections on size, inclination, morphology, or environment were applied, MaNGA is a volume limited sample fully representative of the local universe galaxy population, eventually including all varieties of galaxies as ETGs, LTGs, and also irregular systems.

For our analysis, we are interested in the DynPop catalog (Z+23), combining the Jeans equation dynamical analysis with stellar population (Lu et al. 2023) for the full 10 k MaNGA sample5. The stellar dynamics is performed using the Jeans anisotropic modeling (JAM) method (Cappellari 2008, 2020), which was successfully adopted for extended analyses (e.g., Cappellari et al. 2013). The JAM method contains a final combination of eight different set-ups. Namely: two orientations of the velocity ellipsoid (cylindrically-aligned JAMcyl or spherically-aligned JAMsph) and four assumptions for the models’ dark versus luminous matter distribution: 1) mass-follows-light (e.g., Cappellari et al. 2012; Shetty et al. 2020), 2) free NFW dark halo (Navarro et al. 1996), 3) cosmologically-constrained NFW halo 4) generalized NFW dark halo (Wyithe et al. 2001) (i.e., using a free inner DM halo central slope). The catalog contains a series of parameters that we are interested in using in our analysis, and we briefly describe here below (see Z+23, their Appendix B):

-

M⋆: total stellar masses from K-correction fit for Sersic fluxes which is from the NSA catalog (Blanton & Roweis 2007; Blanton et al. 2011) with Chabrie IMF. In the DynPop catalog, they also provide the decomposition of the stellar mass from their total mass model.

-

r1/2: 3D radius of the sphere which encloses half the total luminosity, based on JAM.

-

σ: effective velocity dispersion within elliptical half-light isophote.

-

Mdyn(r1/2): dynamical masses derived via JAM.

-

“Qual” flag: this is a quality flag that classifies galaxies according to the “goodness” of the dynamical model fit. Qual = −1 means irregular galaxies; Qual = 0 no good fit for both velocity (V) and

maps; Qual = 1 means acceptable fit for the Vrms map. Qual = 2 means have a good fit to the Vrms but a bad fit to the v. Qual = 3 means that both Vrms and V are well fitted (see Z+23, Sect 5.1).

maps; Qual = 1 means acceptable fit for the Vrms map. Qual = 2 means have a good fit to the Vrms but a bad fit to the v. Qual = 3 means that both Vrms and V are well fitted (see Z+23, Sect 5.1).

For our test, we choose as reference model the JAMsph with the generalized NFW as a reference and use the related Qual flag to select the predictive sample. We start by selecting a sample with quality flag Qual ≥ 1 (i.e., good fit to either the velocity map, or the velocity dispersion map, or both) from the original sample of 10 296 galaxies. However, we will also report the MELA predictions for all galaxies that are not clearly irregular (i.e., Qual ≥ 0) in Appendix C. In the same Appendix, we also show the predictions by MELA_ALL as compared against all other JAM models. The Redshift range of the DynPop sample is 0.00–0.17, so for consistency with the training sample used for SPIDER, we further select only the z < 0.1 systems to start with.

Using Qual ≥ 1 and z < 0.1, we are left with 5737 galaxies. To separate ETGs from LTGs, we adopt the criteria proposed by Domínguez Sánchez et al. (2021) and consistently adopted by Z+23:

-

E: (PLTG < 0.5) and (T-Type < 0) and (PS0 < 0.5) and (VC = 1) and (VF = 0)

-

S0: (PLTG < 0.5) and (T-Type < 0) and (PS0 > 0.5) and (VC = 2) and (VF = 0)

-

S: (PLTG > 0.5) and (T-Type > 0) and (VC = 3) and (VF = 0)

where PLTG, PS0 and PLTG are machine learning probabilities available as SDSS DR17 value added catalogs6.

We finally fixed the E+S0 sample as the final DynPop ETG sample. According to Z+23, MANGA is not suitable to be effectively used to study dwarf galaxies, due to the low spectral resolution. Although some low-velocity dispersion systems are present in the sample, we decided to discard these “dwarf” systems. We finally show the features and target distribution in Fig. 3, and find also in this case a good overlap with both the nETG and LTGs from TNG. We also see a nice overlap of the DynPop ETG sample with the SPIDER sample, especially looking at the central velocity dispersion distribution.

For the DynPop sample, Z+23 reports the use of Planck15 cosmology.

2.3.3. SAMI-Fornax Dwarf Survey

As a reference observational data on dwarf galaxies, we use the recent dynamical sample from the SAMI-Fornax Dwarf Survey (DSAMI, for short), reported in E+22. DSAMI is an integral field, high-resolution R ∼ 5000 survey of dwarf galaxies in the Fornax Cluster. The surveys provide spectroscopical data for the largest sample of low-mass (107 − 108 M⊙) galaxies in a cluster to date. The full description of the sample and the spatially resolved stellar radial velocity and velocity dispersion maps, together with their specific stellar angular momentum are given in Scott et al. (2020), and E+22 provides, in Table 1 and 5, the following parameters that we can use as predictive samples:

-

M⋆: the stellar mass within the effective radius, defined by the formula provided by Taylor et al. (2011, Eq. (3)) with Chabrie IMF:

where Mr, e is the absolute magnitude inside an effective radius, assuming Ωm = 0.3, ΩΛ = 0.7, h = 0.7, as cosmology;

-

Re: the effective radius obtained from r-band GALFIT model (Venhola et al. 2018). We convert it to 3D by r1/2 = 1.33 × Re;

-

σe: the velocity dispersion inside the effective radius. Due to the flat dispersion profile, they use the velocity dispersion with an aperture of 15″diameter as a proxy of the σe;

-

Mdyn(r1/2) and MDM(r1/2): respectively, the inferences of the total dynamical mass (see details below) and dark matter inside r1/2. The former is obtained via a simple mass estimator calibrated on the spherical Jeans equation from W+10 with h = 0.702 from WMAP5 cosmology:

where the MDM(r1/2) is a 3D mass in the 3D half-light radius, although σe and Re are both projected, according to W+10.

According to W+10, Eq. (3) is a rather robust estimator that is little sensitive to orbital anisotropy and is valid if the projected velocity dispersion profile is fairly flat near the half-light radius. This is a good approximation for most of the observed dwarf kinematics in the E+22 sample, hence we expect it to provide fairly unbiased Mtot(r1/2) estimates.

The total number of galaxies, which matches the training sample limits, in terms of stellar mass and effective radius, is 15 and the distribution is also shown in Fig. 3.

To convert all features and targets on the same scale as the TNG data, we use a distance of 19.7 Mpc for the Fornax cluster, which corresponds to z ∼ 0.005 in Planck15 cosmology, and rescale all dynamical quantities from WMAP5 to this latter cosmology.

3. The Mass Estimate machine Learning Algorithm

In this section we describe the principle of the Mass Estimate machine Learning Algorithm (MELA) we want to develop in this paper. We first introduce the main architecture and training strategy and then the statistical indicators we will use to assess its performances.

3.1. Random forests

As a first model for MELA we want to use random forests (RF). This is a powerful method for ensemble learning (the idea of combining the outputs of multiple models through some kind of voting or averaging). In particular, it is suitable for the specific goal of predicting the dark matter properties of galaxies, starting from a list of observations, as we have seen in vM+22, where RF has been the algorithm always picked by the Tree-based Pipeline Optimization Tool (TPOT; Olson et al. 2016). RF makes use of decision trees and, more specifically, for regression problems, it is based on CART trees. Compared to a simple decision tree, RF results, based on averages from all the decision trees which is made, are more robust and less prone to overfit. We use the package sklearn.ensemble.RandomForestRegressor and keep the default structural parameters, with 100 trees, after having tested that the performances would not significantly change with the adoption of any variation around the default set-up. Finally, in order to make the results reproducible, we set the structure parameters random_state=1.

3.2. Training MELA

In this section, we outline the various MELA configurations we will utilize throughout the paper, each corresponding to different training samples. As previously mentioned, while the pipeline remains consistent and relies on the same features and targets, we plan to employ distinct training samples. These samples are anticipated to offer more specialized and consequently accurate predictions for individual classes. To differentiate between the various configurations, we will use the following MELA extensions:

-

MELA_ALL. The MELA trained using the full original TNG100 sample, with no classes. Training size: 339 504 galaxies.

-

MELA_NETG. The MELA trained using the “normal” ETG sample. Training size: 21 416 galaxies.

-

MELA_DW. The MELA trained using the “dwarf” ETG sample. Training size: 70 859 galaxies.

-

MELA_LTG. The MELA trained using the LTG sample. Training size: 247 229 galaxies.

For the self-prediction (in Sect. 4.1), we allocate 80% of the complete training dataset as a training set and the remaining 20% as a test set to assess the performance of MELA. However, when applying the MELA to real observations, we use the entire dataset for training.

Looking at the training sample sizes of the different MELAs above, we remark that they seem rather unbalanced, being the LTG sample the largest one in the TNG_all sample. In principle, being the number counts of the individual classes a realistic representation of a complete sample of galaxies, their true distributions can be considered as a “prior” which realistically describes the observed samples. However, one can expect that such unbalanced training samples can affect the relative performances of the different trained MELAs above. To check that, we will also adopt a more “balanced” training/testing approach to verify if this can impact the different MELAs predictions. In particular, in Sect. 4.1, we will use a sample consisting of 21 000 galaxies per class, which is randomly selected from the complete dataset of each respective class. This balanced sample is aligned with the less abundant test set among the three classes (i.e., the nETGs, with 21 416 entries).

In this case, the class samples are divided into 16 800 galaxies for training and 4200 for testing. We will compare both training approaches, referred to as “full-counts” and “balanced-counts” training samples, against the respective test samples.

3.3. ML evaluator metrics

To evaluate the performance of the MELA, in terms of accuracy and precision, we use four different statistical estimators (see symbol definitions at the end of this list):

-

The coefficient of determination R2:

-

The Mean Absolute Error (MAE):

-

The Mean Standard Error (MSE):

-

The Pearson correlation coefficient ρ, already introduced in Eq. (1), but re-defined here as:

-

The Median of Bias (MdBias), defined as:

-

The outlier fraction: the fraction of prediction exceeding ∼2σ errors of the typical mass estimates from dynamical analyses. In particular, we have found that this corresponds to ±0.3 dex for DynPop, being 0.15 dex about the average errors in that sample (see K+23). In case of log-normal errors, we expect a 5% outliers, as an acceptable outlier fraction.

In all equations above, N represents the total number of data points, while the variable y refers to the output of the MELA, which is also known as the target (hence, yi represents the value of the ith output), the subscripts “true” and “pred” indicate the true and predicted values respectively and  denotes the mean value of y. Furthermore, RSS indicates the residual sum of squares and TSS indicates the total sum of squares. R2 is a statistical measure used to assess the goodness of fit of a regression. By definition, R2 ranges from 0 to 1, with R2 = 1 meaning the perfect fit. However, in practical cases RSS can be larger than TSS, making R2 < 0. In these cases, the ML fails to make the prediction. It is important to note that R2 alone does not quantify the quality of a regression model; for example, it does not consider the complexity of the model, the significance of individual predictors, or the presence of overfitting. Therefore, R2 in used in conjunction with other metrics. MSE and MAE are both commonly used metrics for evaluating the performance of a regression model. They quantify the average magnitude of errors between the predicted values and the actual values. Both MSE and MAE are defined to be non-negative, with lower values indicating better performances of the ML tool. Finally, ρ describes the linear correlation between the true value and the predicted value. It ranges from −1 (perfect anti-correlation) to 1 (perfect correlation). For the purpose of our analysis, when comparing predictions with ground truth, the closer ρ and R2 are to 1 and the closer MAE and MSE to 0, the better the performances of the MELA are.

denotes the mean value of y. Furthermore, RSS indicates the residual sum of squares and TSS indicates the total sum of squares. R2 is a statistical measure used to assess the goodness of fit of a regression. By definition, R2 ranges from 0 to 1, with R2 = 1 meaning the perfect fit. However, in practical cases RSS can be larger than TSS, making R2 < 0. In these cases, the ML fails to make the prediction. It is important to note that R2 alone does not quantify the quality of a regression model; for example, it does not consider the complexity of the model, the significance of individual predictors, or the presence of overfitting. Therefore, R2 in used in conjunction with other metrics. MSE and MAE are both commonly used metrics for evaluating the performance of a regression model. They quantify the average magnitude of errors between the predicted values and the actual values. Both MSE and MAE are defined to be non-negative, with lower values indicating better performances of the ML tool. Finally, ρ describes the linear correlation between the true value and the predicted value. It ranges from −1 (perfect anti-correlation) to 1 (perfect correlation). For the purpose of our analysis, when comparing predictions with ground truth, the closer ρ and R2 are to 1 and the closer MAE and MSE to 0, the better the performances of the MELA are.

4. Results

In this section, we first evaluate the performance of the MELA to predict the total and the dark mass over a test sample derived by the TNG simulation. In our first analysis (see vM+22) we have demonstrated that ML can effectively predict the dark matter content of galaxies as a whole (i.e., without breaking these into different classes). Here, we want to check the performances of the MELA on the different galaxy types. We start by using test samples containing simulated galaxies from one of the three TNG100 classes and checking the predictions using the corresponding trained MELA (see Sect. 3.2) (i.e., the MELA_NETG, the MELA_DW, and the MELA_LTG) in turn, and compare these against the MELA_ALL trained over the full TNG sample. After having tested MELA which trained on the all features, we also checked the performance of the algorithm with different combinations of a smaller number of them. As discussed in vM+22 and in Sect. 1, some of the features reported in Table 1 might be redundant and bring little contribution to the accuracy of the predictions. Hence, we will check whether we can find a minimal sample of features that can provide accurate enough predictions, for each of the three galaxy classes.

4.1. Training and testing on TNG100: Self-prediction

In this section, we report the performances of MELA on the three test samples from the different classes of simulated TNG100 galaxies, using the full set of features: g, r, M⋆, r1/2, σ. Since the training and test samples are derived from the same simulated dataset, we dub this test “self-prediction”. For the sake of brevity, we illustrate here only the detailed results of the MELA trained with measurement errors included, while we will shortly report the results with no errors in Appendix B.

4.1.1. Self-prediction

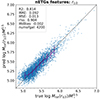

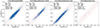

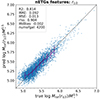

We start by showing the results by the “full-counts” training sample defined in Sect. 3.2. In Fig. 4, we show the predicted values of the two targets vs. ground truth. Accuracy-wise, we can clearly see that nETGs and LTGs have R2 and ρ both larger than 0.9 for the two targets, although for Mtot(r1/2) the indicators are systematically better than the MDM(r1/2). For the dETGs, the R2 is ∼0.75 and ∼0.83, while ρ ∼ 0.87 and ∼0.91, for MDM(r1/2) and Mtot(r1/2), respectively (i.e., smaller than the other two classes). Looking at the same figure, all predictions look quite nicely aligned to the 1-to-1 relation with a negligible number of outliers (< 2%) consistent with a log-normal scatter. We argue that the lower R2 and ρ, for the dETGs, come from a lower correlation in the plots, due to the smaller mass range covered by the dETG sample. The scatter, on the other hand, as measured by the MAE and MSE, is rather similar for all the three classes (MAE ∼ 0.07 − 0.09, MSE ∼ 0.007 − 0.014) regardless of the target, suggesting very similar performances of MELA for all classes. We also note the emergence of a systematic deviation (within a few percent) at logMtot(r1/2)/M⊙ < 9.3, due to some incompleteness effect on objects close to the low-mass limit.

|

Fig. 4. Self-prediction test using full features as indicated in Table 1, with the full-counts training sample incorporating added measurement errors, as described in Sect. 2.2.2. Top row: target is MDM(r1/2). Bottom row: target is Mtot(r1/2). The results without measurement errors are presented in Appendix B. The data is divided into 80% for training and 20% for testing. The x-axis represents the true values, while the y-axis represents the predicted values. “numofgal” is the number of the test set. The purple error bar represents the 16%, 50%, 84% percentiles as a function of Mtrue(r1/2), with a bin size of 0.2 dex. The red dashed line is ±0.30 dex (corresponding to ∼2σ errors, see text). Outliers are defined as the fraction of data outside the red dashed line. In the case of accurate predictions, the data points are expected to lie along the dotted 1-to-1 line. |

In vM+22 (see their Table 1), for TNG_all with target MDM(r1/2) they found R2 ∼ 0.98, ρ ∼ 0.99, MAE ∼ 0.04 and MSE ∼ 0.004 in the “joint analysis” (i.e., using all features). Our accuracy and overall scatter is obviously larger because we are now considering the measurement errors, which were not taken into account in vM+22. The inclusion of errors ultimately returns a more realistic forecast of the accuracy and scatter we might expect in real applications. In Appendix B, we will present our results without considering the measurement errors, to compare directly with what was done in vM+22, and show that these are in full agreement with the latter.

Next, we move to the results of the “balanced-counts” training sample, as shown in Fig. 5. Here we see that all self-predictions look almost unchanged, with all the statistical indicators remaining consistent with 1%, as seen by comparing the R2, MAE, MSE, and ρ values in the insets with the ones in Fig. 4. This shows significant stability of the MELAs with respect to the “prior” parameter distributions of the training samples. Most of all, the MELA_ALL performance remains insensitive to the relative balance of the three galaxy classes. This confirms the evidence that MELA can fully capture the diversity of the correlations of the three galaxy classes (nETG, dETGs, and LTGs) even if mixed together. To demonstrate that, in Fig. 6 we show the predictions of the Mtot(r1/2) for the same test sample of nETG, dETGs and LTGs, but using MELA_ALL for all classes. Compared to the same quantities predicted in Fig. 5 by MELA_NETG, MELA_DW and MELA_LTG, respectively, we find that the resulting R2 are almost indistinguishable for the three classes.

|

Fig. 5. Self-prediction test performed using the full set of features and a balanced-counts training sample, which includes measurement errors. The training-test sample has been adjusted to maintain an equal number of entries across all samples in Fig. 4 through random selection, aligning with the less populated class (nETGs). The training set consists of 80% of the randomly selected subsample (16 800 entries), while the remaining 20% (4200 entries) is allocated for testing. Top row: target is MDM(r1/2). Bottom row: target is Mtot(r1/2). |

|

Fig. 6. Self-prediction test of the MELA_ALL is performed using the full set of the features, and the Mtot(r1/2) as the target. As explained in Sect. 3.2, for this test the training utilizes balanced-counts training samples. These training samples comprise 21 000 × 80% = 16 800 galaxies for each class (i.e., dETGs, nETGs, LTGs), accompanied by 4200 galaxies for testing. The predictions of the entire TNG sample are presented as the self-prediction test in Fig. 5. |

This result seems rather surprising for two main reasons: 1) one could expect that the tilt of the different scaling relations in Fig. 1 should give more sensitivity to MELA to the different galaxy classes, in particular for the dETGs, showing the more deviating correlations in Fig. 1; 2) one would also expect that the different distributions of the galaxy observed quantities (features), in Fig. 3, should impact the prediction of MELA moving from one sample to the other. Instead, the result above seems to show that the MELA_ALL can use the combined information of all features from the different galaxy species, regardless of the specific correlations they have with the DM and the total mass, within the classes. Also, this result seems to show that MELA_ALL can correctly make predictions if the features and targets of a predictive sample are included in the dominion of the training sample, regardless of the detailed distribution of features and targets of the former of the two samples. We will return to this point in the discussion in Sect. 5.

As a final note of this section, one might guess that the equivalence of the performances of MELA_ALL and the customized MELAs can be a consequence of training over features (i.e., σ and M⋆) that are used to split the samples. First, this is true for the ETGs, for which we indeed use a condition on σ and M⋆ to separate the nETGs from the dETGs (see Table 2), but not for LTGs, which are selected only according to the sSFR. Second, as discussed above, the MELAs eventually learns from correlations among features and targets, and thresholds in the features only define the range of the correlations to use in training, which cannot tightly correlate with the mass of the individual galaxies. To check that, we conduct two tests. First, we compare the results obtained from MELA_ALL and MELA_ETG (where MELA was trained on the whole ETG sample) applying on the whole ETG sample (i.e., applying no selection based on the features used for the predictions). We find that the R2 values are nearly identical, meaning that MELA performs equally if any of the features used to split the sample is involved in the training. Second, we dig more into the details of the effect of the features in the splitting by predicting on the nETG, where we have used the σ and M⋆ in their selection, but predicting using the MELA_ETG above, trained on the whole nETG+dETG sample. Here we find, again, almost no change in the results, this also shows that the information used to split the sample is not used by the MELAs to predict.

4.1.2. Optimizing the features combination

One of the aims of this analysis is to find the optimal combination of the features needed to correctly predict the dark matter and/or the total mass of galaxies. In vM+22 we have grouped the canonical features one can collect from galaxy surveys into “Photometric” (including a series of broad optical and NIR bands), “Structural” (including the stellar mass and the r1/2), and “Kinematical” (including σ and a global circular velocity parameter), for a total of 14 features. We have shown that Structural and Photometric features are particularly effective in the prediction of MDM(r1/2), being typically R2 ∼ 0.88 − 0.94, and that the best predictions are found when using all groups of features (R2 ∼ 0.98 − 0.99). In vM+22 we did not try to optimize the feature selection, although we noticed that some groups of features might be more relevant than others in the feature importance analysis.

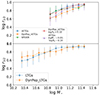

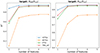

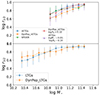

Here we want to check in detail the impact of the use of the individual features on the accuracy of the predictions. This is a heuristic “feature importance”, which is more oriented to accuracy optimization by avoiding redundant features that might add noise rather than effective predictive power. This becomes particularly important for real applications, where the predictions might suffer more from the feature noise introduced by measurement errors (see above). In Tables 3 and 4, we report the R2 estimator for MDM(r1/2) and Mtot(r1/2), respectively, obtained by changing the number of features considered and selecting the first ranked feature combination giving the highest accuracy among all possible combinations allowed for that particular number of features. The content of both tables is graphically summarized in Fig. 7, where we show the R2 as a function of the number of features in Tables 3 and 4, regardless the features.

|

Fig. 7. Accuracy as a function of the number of features for MDM(r1/2) and Mtot(r1/2), taking measurement errors into account. This figure is based on Tables 3 and 4. The results without considering measurement errors can be found in Appendix B. |

Accuracy as a function of the number of features for the MDM(r1/2), considering measurement errors.

Accuracy as a function of the number of features for the Mtot(r1/2), considering measurement errors.

The first thing to notice from Fig. 7 is that the accuracy (R2) of both the targets, MDM(r1/2) and Mtot(r1/2), reaches a “plateau” for all the galaxy groups with just 3 features, which eventually are the same for all classes and for both targets (i.e., r1/2M⋆ and σ), although the two highest ranked features can be different for the different classes and targets (see num = 2 rows in Tables 3 and 4). According to the same tables, the stellar mass is the primary feature in almost all cases except the MDM(r1/2) predictions of the dETGs, where σ is the primary parameter. Interestingly, while σ appears to be important for the dETGs for both targets, it seems to be less important for the nETGs, LTGs, and the full sample, TNG_all, where it starts contributing to the predictions only after the stellar mass and the effective radius. The result of TNG_all, in particular, seems to be consistent with vM+22, which also discussed the kinematics to have a feature importance in the DM predictions at the r1/2 lower than the “structural” parameters (including M⋆ and r1/2).

A second important result is that differently from the dETGs, which, at least for Mtot(r1/2), clearly need three features to reach the “accuracy plateau”, the nETGs and LTGs both seem to need only two features (i.e., the stellar mass and the r1/2) to reach the same plateau. This finding has interesting implications, which we need to explore further, in particular, on the real galaxies (see Sect. 4.2). For instance, this might be a reflection of the strong correlation of the M⋆ and the MDM(r1/2), which scores the highest in the correlation matrix in Fig. 2. However, this might be a partial explanation, as we notice that there is no direct connection between the feature ranking in Tables 3 and 4, with the correlation coefficients. For instance, for the TNG_all sample in Fig. 2, the second highest correlation of the MDM(r1/2) is σ, but this latter, as commented before, is the third feature kicking in the feature ranking. Finally, we stress, that in selecting the features bringing the highest gain in accuracy in Tables 3 and 4, in some cases the difference among features is rather small, meaning that some features are just as good as others to make accurate predictions. This becomes clear when moving to the features that score fourth or higher. On the other hand, for the features ranked below the third, the feature ranking is rather robust (i.e., the first of the second-ranked features provides a larger gain in accuracy with respect to other features).

An obvious conclusion of this “feature ranking” test is that the MELAs do not need all the features in Table 1 to accurately predict the central total and dark matter content of galaxies. From Tables 3 and 4 we can see that the combination of the 3 features [r1/2, M⋆, σ] is sufficient for all galaxy classes.

Using only these 3 features, we also notice that MELA_ALL reaches the highest accuracy for both targets (i.e., R2 ≳ 0.93 for the MDM(r1/2) and R2 ≳ 0.96 for the Mtot(r1/2)). This copes with the result found in Fig. 6 about the superiority of the performances of MELA_ALL with respect to customized MELAs. Based on these results, for the test on the real galaxies in Sect. 4.2, we decide: 1) to use MELA_ALL as a unique tool for all galaxy species, unless otherwise specified; 2) to use only [r1/2, M⋆, σ] as training/testing features.

Although these latter are standard physical products of imaging and spectroscopic surveys, it is still interesting to check the effective ability of the MELA_ALL to minimize the input information needed to make reliable predictions of the MDM(r1/2) and Mtot(r1/2), with respect to the customized MELA_NETG, MELA_LTG, MELA_DW. In Fig. 8 we show the predictions of MELA_ALL, trained on the “balanced-counts” sample using only r1/2 and M⋆ as features. We can see that the prediction of dwarf galaxies has strongly degraded with respect to the same predictions using the 3 features in Fig. 6 as the R2 is decreased by ≳19% (0.687 vs. 0.815), the MAE increased by ≳24% (0.104 vs. 0.084), and the MSE increased up to ∼73% (0.019 vs. 0.011) for dETGs. On the other hand, as suggested by Table 4, for the nETGs and LTGs the R2 decreases by less than 3% (0.956 vs. 0.963 for nETGs and 0.924 vs. 0.943 for LTGs) and the MAE and MSE increase by < 9% (0.074 vs. 0.068 for nETGs and 0.095 vs. 0.082 for LTGs) and < 16% (0.009 vs. 0.007 for nETGs and 0.014 vs. 0.011 for LTGs), which are all much lower than the dETGs. Finally, the TNG_all sample, despite keeping the least accuracy degradation (< 2% in R2, i.e., 0.967 vs. 0.978), shows the largest increase in scatter (MAE: 0.094 vs. 0.078 and MSE: 0.015 vs. 0.010). This allows us to conclude that, when using M⋆, r1/2 and σ as features, or even adding other features like the photometry, we can have a better accuracy using the MELA_ALL vs. customized MELAs for each class, while the customized MELAs work equally accurately if the number of features is suboptimal (e.g., using only M⋆ and r1/2, at least for nETGs and LTGs).

|

Fig. 8. Self-prediction test of the MELA_ALL with the target being Mtot(r1/2). This test uses the balanced-counts training sample and takes measurement errors into account. The test focuses on only two features: r1/2 and M⋆. |

Finally, following the same logic of feature optimization, in Sect. 4.3 we will explore other possible combinations of them, excluding some that are more difficult to measure (e.g., σ for dETGs) or more prone to systematics (e.g., stellar mass). To do that, we will test these combinations both on the TNG and the real datasets.

4.2. Prediction on real data

In this section, we can finally apply the MELA to the datasets introduced in Sect. 2. This is the first attempt we are aware of, a ML tool trained on simulations is applied to perform mass predictions of galaxies. The fundamental premise here is that simulations are based on complex physical processes, which serve as a physically motivated ground truth. Alternatively, we could use real mass estimates and observables (see, e.g., Sect. 5.1), but this would make MELA learn how to predict masses mimicking the process of dynamical modeling, including their assumptions and systematics. As discussed in Sect. 1 this is not our goal, as we want to rather provide an orthogonal method to use for comparison with standard tools.

As mentioned earlier, for the real galaxies, the obvious target to use is the Mtot(r1/2) as this is a rather standard diagnostic for classical dynamical analysis of galaxies. We have also discussed in Sect. 2.1 that, at least for the dynamical analysis of ETGs, the usual assumption is the absence of gas, which, instead, cannot be excluded in the simulations. This brought us to define the “augmented” dark mass,  , in Sect. 2.1, that will be tested in Appendix A. Here we anticipate that the line of arguments we want to use is that, if we can demonstrate that MELA is able to 1) correctly predict the total mass in galaxies, under the assumption that the dynamical analyses provide unbiased estimates of the galaxy total masses, and 2) also predict the augmented dark matter, then 3) we will deliberately conclude that also the DM estimates are correct, specifically in the context of the cosmological framework provided by the TNG100 simulation.

, in Sect. 2.1, that will be tested in Appendix A. Here we anticipate that the line of arguments we want to use is that, if we can demonstrate that MELA is able to 1) correctly predict the total mass in galaxies, under the assumption that the dynamical analyses provide unbiased estimates of the galaxy total masses, and 2) also predict the augmented dark matter, then 3) we will deliberately conclude that also the DM estimates are correct, specifically in the context of the cosmological framework provided by the TNG100 simulation.

As discussed in the Sect. 1, the combination of stellar masses, baryonic mass, and dark matter in a galaxy is the complex interplay of the cosmological parameters and the galaxy formation recipes, driving the star formation efficiency in galaxies. Hence, the fact that MELA can provide consistent predictions of the total mass of real galaxies is not a trivial result. For sure the predictions of both the Mtot(r1/2) and MDM(r1/2) are model-dependent by definition (i.e., those expected exclusively in the cosmology + feedback model of the TNG100). Hence, if this cosmology/baryon physics mix is different than the one beyond the dynamical inferences of the real sample (e.g., the combination of the choice of the cosmological parameters and assumptions on the dark matter properties in the models), we should not expect MELA to return predictions consistently with the dynamical models. As far as the cosmological parameter choice concerned, we have uniformed the units of the quantities (e.g., the distances and the stellar masses) that have a direct dependence on the cosmological parameters, by aligning the real datasets to the TNG cosmology (i.e., Planck2015, see Sect. 1). Hence, we can expect that most of the deviation of the MELA predictions from the classical mass estimates can be tracked either to the assumptions behind the mass estimators from the real sample side, or the combination of the (cosmological + feedback) model + observational realism, from the simulation side. We will return to this point in detail in Sect. 5.

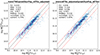

After this premise, we can now show the results on the real data. We have anticipated in Sect. 4.1.2 that for the application to the observed datasets, we can use the MELA_ALL trained using the three most important features [M⋆, r1/2, and σ]. In particular, we use the “full-counts” training set. The results are shown in Fig. 9.

|

Fig. 9. MELA_ALL predictions of the central total mass, Mtot(r1/2), for the real galaxy dynamical samples. The optimal feature combination (i.e., r1/2, M⋆ and σ) is used, as discussed in Sect. 4.1 and Table 4. Shown (from left to right) are predictions of the SPIDER sample; the DynPop/nETG; the DynPop/LTG samples; and the DSAMI sample. The dynamical model used as representative of the MaNGA Dynpop results is the JAMsph+generalized NFW profile (see Sect. 2.3.2). For the DSAMI sample, the red triangles represent the data points from the secondary test sample (1 kpc < r1/2 < 2Rp). The legend provides an overview of the statistical estimators for the different samples. |