| Issue |

A&A

Volume 691, November 2024

|

|

|---|---|---|

| Article Number | A348 | |

| Number of page(s) | 23 | |

| Section | Extragalactic astronomy | |

| DOI | https://doi.org/10.1051/0004-6361/202450975 | |

| Published online | 26 November 2024 | |

Constrained cosmological simulations of the Local Group using Bayesian hierarchical field-level inference

1

Kapteyn Astronomical Institute, University of Groningen, P.O Box 800 9700 AV, Groningen, The Netherlands

2

CNRS & Sorbonne Université, UMR 7095, Institut d’Astrophysique de Paris, 98 bis boulevard Arago, F-75014 Paris, France

3

Max-Planck-Institut für Astrophysik, Karl-Schwarzschild-Straße 1, 85748 Garching, Germany

4

The Oskar Klein Centre, Department of Physics, Stockholm University, Albanova University Center, SE 106 91 Stockholm, Sweden

⋆ Corresponding author; ewoudwempe@gmail.com

Received:

3

June

2024

Accepted:

1

October

2024

We present a novel approach based on Bayesian field-level inference that provides representative ΛCDM initial conditions for simulation of the Local Group (LG) of galaxies and its neighbourhood, constrained by present-day observations. We extended the Bayesian Origin Reconstruction from Galaxies (BORG) algorithm with a multi-resolution approach, allowing us to reach the smaller scales needed to apply the constraints. Our data model simultaneously accounts for observations of mass tracers within the dark haloes of the Milky Way (MW) and M31, for their observed separation and relative velocity, and for the quiet surrounding Hubble flow, represented by the positions and velocities of 31 galaxies at distances between one and four megaparsec. Our approach delivers representative posterior samples of ΛCDM realisations that are statistically and simultaneously consistent with all of these observations, leading to significantly tighter mass constraints than found if the individual datasets are considered separately. In particular, we estimate the virial masses of the MW and M31 to be log10(M200c/M⊙) = 12.07 ± 0.08 and 12.33 ± 0.10, respectively, their sum to be log10(ΣM200c/M⊙) = 12.52 ± 0.07, and the enclosed mass within spheres of radius R to be log10(M(R)/M⊙) = 12.71 ± 0.06 and 12.96 ± 0.08 for R = 1 Mpc and 3 Mpc, respectively. The M31-MW orbit is nearly radial for most of our ΛCDM realisations, and most of them feature a dark matter sheet aligning approximately with the supergalactic plane, despite the surrounding density field not being used explicitly as a constraint. High-resolution, high-fidelity resimulations from initial conditions identified using the approximate simulations of our inference scheme continue to satisfy the observational constraints, demonstrating a route to future high-resolution, full-physics ΛCDM simulations of ensembles of LG look-alikes, all of which closely mirror the observed properties of the real system and its immediate environment.

Key words: methods: numerical / galaxies: evolution / galaxies: formation / Local Group / dark matter

© The Authors 2024

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1. Introduction

Abundant and very detailed observational information is available for the Local Group (LG) of galaxies. It thus provides a unique laboratory to study the physics of galaxy formation, galactic dynamics, and even cosmology. For instance, the LG has a complete sample of dwarf galaxies down to low luminosity, and the sample’s properties have led to controversies such as the ‘missing satellite’ and ‘too-big-to-fail’ problems (Bullock & Boylan-Kolchin 2017, and references therein) which highlight apparent differences between the observed dwarf luminosity function and that predicted by cosmological simulations. This has driven refinements of galaxy formation modelling on small scales involving more realistic treatments of baryonic processes such as star formation and feedback, but it has also motivated the exploration of alternative particle physics candidates for dark matter (Sales et al. 2022, and references therein). Another observed peculiarity of the LG is the co-planar spatial and orbital configuration of the satellites surrounding the two major galaxies, which has led to debates on whether this can plausibly occur in a ΛCDM universe (Libeskind et al. 2005; Pawlowski 2018). Some authors have argued that this configuration is transient and of low statistical significance (Sawala et al. 2023a) and that the clustering of orbital planes may reflect coordinated infall of groups of satellites (Li & Helmi 2008; Taibi et al. 2024). The LG is also the only place where we can use detailed kinematic and chemical information for individual stars to deduce the detailed assembly history of a galaxy, an area in which substantial progress has been made since the latest data release of Gaia (Helmi 2020).

Cosmological simulations are central to addressing these problems, as they provide realistic models for the formation of structure, which allows us to evaluate observations in the context of a specific physical model such as the ΛCDM paradigm. To understand whether particular observed properties of our LG are consistent with this paradigm, and to explore its predictions for the formation history of LG galaxies, it is valuable to construct a representative ensemble of ΛCDM simulations tuned to match closely the well-observed properties of the two main galaxies and their environment.

Much effort has been made on modelling the formation of the LG, initially using two-body models (Kahn & Woltjer 1959; Gott & Thuan 1978; Mishra 1985), later using Numerical Action Methods (Peebles et al. 1989; Peebles 1990; Peebles & Tully 2013), and recently the community has developed several full cosmological simulation programmes aimed at the LG (some recent ones include Gottloeber et al. 2010; Fattahi et al. 2016; Sawala et al. 2016; Garrison-Kimmel et al. 2014, 2019; Libeskind et al. 2020; Sawala et al. 2022; McAlpine et al. 2022). However, obtaining a representative sample of LG analogues that closely match relevant observed properties such as the masses of the two main galaxies, their separation and relative velocity, as well as their somewhat larger-scale environment, has turned out to be challenging. Many studies have started with a cosmological simulation with unconstrained initial conditions, and have picked out LG analogues in the simulation volume (Li & White 2008; González et al. 2014; Fattahi et al. 2016; Carlesi et al. 2017; Zhai et al. 2020; Pillepich et al. 2024), but this approach has limited ability to match the LG at the present day because the number of analogues that one finds is directly related to the total parameter volume allowed by the selection criteria. Hence, tightening the criteria rapidly reduces the number of analogues found. This can be partially mitigated by utilising large volume simulations or by running many simulations with different random initial conditions to increase the volume surveyed, but finding a system that matches all desired properties of the LG accurately is not feasible. This can be seen in Table 1 which provides the number of LG analogues found by different studies, as well as the strictness of the selection criteria. For example, we note that, in the APOSTLE suite, only 12 LG analogues were found to satisfy relatively loose criteria within a (100 Mpc)3 simulation volume.

A summary of the selection requirements of some notable past Local Group simulations.

A more direct and potentially more powerful approach is to use constrained simulations, in which one reconstructs initial conditions given some present-day constraints. Two commonly applied methods exist. One is based on Wiener Filter reconstructions (Hoffman & Ribak 1991; Zaroubi et al. 1999), while the second and more recent is based on Bayesian inference, using Hamiltonian Monte Carlo (HMC) methods. Both have been very successful on large scales (Mathis et al. 2002; Courtois et al. 2012; Sorce et al. 2016; Tully et al. 2019; Jasche et al. 2015; Jasche & Lavaux 2019; Lavaux et al. 2019), reproducing cosmic large-scale structures that match in detail those observed. Bayesian forward modelling schemes are generally preferable because the Hoffman & Ribak (1991) method only exactly samples from the posterior distribution for constraints that are linear in the initial condition field. Bayesian forward modelling with HMC is more versatile in dealing with complex observational data and it can be extended to deal with non-linear scales.

So far, these techniques have only been applied with constraints based on datasets suited to constrain structure on a relatively large scale, such as galaxy redshift surveys, and peculiar velocity catalogues. They have never been applied to generate constrained simulations that match the properties of the LG and its immediate environment. For example, the 2019 analysis of 2M++ with BORG (Jasche & Lavaux 2019) constrains realisations of cosmic structure to match the observed galaxy distribution binned in (4 Mpc)3 voxels, and constrained simulations based on peculiar velocity datasets (e.g. Carlesi et al. 2016) have a similar or larger effective filter scale, because of the requirement that the velocities are well-described by linear theory. Both methods are thus still far from what is required for a constrained simulation of the LG.

Several recent programmes use these large-scale constrained simulations as a base. They then do trial-and-error runs to identify LG analogues with roughly the observed properties. This is done by first generating initial conditions using large-scale constraints. This sets the large-scale Fourier components of the initial density fields. The Fourier components on small scales remain unconstrained and are set at random. One then resimulates many different realisations and picks out those which produce a galaxy pair that is Local-Group-like. For example, in the Clues (Gottloeber et al. 2010; Carlesi et al. 2016; Sorce et al. 2016) and more recent Hestia simulations (Libeskind et al. 2020), one starts from large-scale initial conditions based on a Wiener Filter reconstruction of peculiar velocity data from the CosmicFlows-2 catalogue (Tully et al. 2013). Subsequently, for Hestia, 1000 realisations with randomised small-scale initial conditions are created, resulting in 13 realisations with a halo pair that matches their relatively coarse Local Group criteria, as summarised in Table 1.

For the Sibelius simulations, Sawala et al. (2022) go a step further, using for the large scale structure a realisation from the 2M++ galaxy count data-based reconstructions from BORG (Jasche & Lavaux 2019). They also randomised the small-scale unconstrained structure and found a few good matches, although they did not satisfy their full ‘wish list’ of LG analogue selection criteria, which is briefly listed in Table 1 and is more strict than that for the HESTIA simulations. To get higher-fidelity realisations, they refined promising sets of initial conditions by iteratively randomising smaller and smaller scales, at each stage picking the ‘best’ simulation and then refining even smaller scales. With some careful selection of promising candidates, this allowed them to obtain a small number of initial condition sets that match their strictest LG requirements (see Table 1). However, with such a hierarchical, manual approach, the results cannot be assumed to be an unbiased and fair sample of the posterior distribution of ΛCDM universes subject to the adopted LG constraints.

Such a sample of representative histories for the LG would however be extremely valuable. One could then infer the expected distribution of LG properties in a ΛCDM universe, conditional on its present-day configuration. For instance, one could check expectations for anisotropies in the satellite distribution (i.e. satellite planes), for the immediate environment of the LG, for the distribution of mass on various scales, for the inter-group and circum-group baryon distribution, and even for the detailed assembly history of the system.

In this work, we will use the Hamiltonian Monte Carlo (HMC) methods already used successfully on larger scales to generate constrained simulations of the LG (Jasche & Wandelt 2013; Lavaux & Jasche 2016; Jasche & Lavaux 2019). These methods come with some difficulties, however. Notably the HMC algorithm requires the availability of an efficient evaluation for the derivatives of the posterior probability. In other words, one needs to be able to back-propagate the gradient of the likelihood to the cosmological initial conditions. Additionally, the HMC requires generating many simulations. Keeping computational time feasible requires individual simulations to be efficient. Because of this, constrained simulations cannot be built directly on top of state-of-the-art N-body simulation codes such as Gadget-4 (Springel et al. 2021) or Swift (Schaller et al. 2024) without major modification. Instead, the relevant community has developed custom cosmological simulation codes with hand-coded derivatives, usually based on Lagrangian perturbation theory (Jasche & Wandelt 2013; Kitaura 2013; Wang et al. 2013), or using Particle Mesh (PM) gravity (Wang et al. 2014; Jasche & Lavaux 2019; Li et al. 2022). Particle mesh simulations are particularly attractive because they can be much improved and with marginal additional cost by utilising integration techniques informed by Lagrangian Perturbation theory, for example, COLA (Tassev et al. 2013), which is used in BORG, or FastPM (Feng et al. 2016), which is used by Modi et al. (2021). The existing single-mesh integrator in BORG is still, however, insufficiently accurate to achieve the resolution we require in a reasonable computation time, which is why we implement a zoom method in this paper.

This paper proceeds with Section 2, where the HMC methodology for finding a representative sample of LG initial conditions is described, together with the observational data on the LG that we will use to constrain our inference. Then, in Section 3, we present the results of this inference and the properties of the realistic and representative sample of Local Groups that it produces. In Section 4 we explore how the initial conditions can be used to perform resimulations with Gadget-4. In Section 5 we discuss how our results compare to previous studies, we highlight some of its limitations, and we sketch some future research directions. Finally, in Section 6 we conclude.

2. Methods and setup

For this work, we wish to obtain a fair statistical sample of ΛCDM simulations, all of which reproduce with high fidelity the main observed characteristics of the two largest LG galaxy haloes, as well as the smooth and weakly perturbed Hubble flow in their immediate environment. Since a simulation is determined fully by forward integration from its initial conditions, obtaining a fair sample of simulations is equivalent to obtaining a fair sample of initial condition sets conditional on well-established observational constraints on the present-day system. This motivates us to set up a Bayesian inference problem: we wish to sample the initial conditions, following a ΛCDM prior, conditional on a likelihood that describes our observational knowledge of the LG and its environment. The posterior initial conditions will then generate a fair statistical sample of simulations of LG analogues. To build this machinery, we split this section into three parts: in Section 2.1, we describe the inference process, which uses Hamiltonian Monte Carlo, in Section 2.2, we describe the forward model, which is the cosmological simulation from a Gaussian random initial density field to the present-day particle distribution, and in Section 2.3, we describe the likelihood we adopt to summarise the observational knowledge we have of the LG and its immediate environment. Figure 1 provides an overall summary of the process.

|

Fig. 1. Schematic diagram of our Hamiltonian Monte Carlo method. The procedure starts with a white noise initial condition field s, then uses a nested, double-mesh particle-mesh (PM) integrator to obtain the final particle phase-space coordinates (xfinal and vfinal). The likelihood is implemented in Python using the JAX package (Bradbury et al. 2018), which automatically implements the gradient. We compute the gradient of the likelihood and back-propagate it through the double-mesh integrator steps to the initial condition field. Using this gradient, we then do a Hamiltonian Monte Carlo (HMC) update on the initial condition field and iterate the whole procedure many times to obtain our sample of initial condition fields. |

2.1. Bayesian inference of large-scale structure formation

In cosmological simulations, the initial conditions on particles are created by Gaussian random numbers s, which, assuming a power spectrum and Lagrangian perturbation theory, are then used to determine the initial high-redshift particle positions and velocities (Angulo & Hahn 2022, and references therein). We wish to carry out Bayesian inference on the Gaussian white noise field that generates the initial conditions of our simulation, sampling from the posterior

where π is the prior probability, s indicates the Gaussian random field that generates the initial conditions, F(s) is the result of the forward model, that is, our particle-mesh simulations, and ℒ indicates the likelihood function which describes how likely the observed properties of the LG are, given the result of a simulation (see Section 2.3).

2.1.1. Hamiltonian Monte Carlo

For the inference, we utilise Hamiltonian Monte Carlo (HMC, Duane et al. 1987; Neal 1993), a sampling method that works well for high-dimensional problems and has been successfully used to make large-scale structure inference (Jasche & Wandelt 2013; Lavaux & Jasche 2016; Jasche & Lavaux 2019). The process is well-described in Brooks et al. (2011), and we summarise it briefly here. Conceptually, it means solving numerically the equations of motion for a fictitious physical system, where the potential is minus the log-posterior of the problem. In our case, we consider the motion of our initial condition field vector s, inside the potential defined by the log-posterior of our problem:

where p is the momentum and M is the mass matrix. Each iteration of the Hamiltonian Monte Carlo sampler consists of three steps. One first (step 1) integrates the equations of motion (Equations (3) and (4)) for a given number of steps Nsteps using a step size ϵ. The integrator must be symplectic. Then (step 2) after the integration, the proposed result is rejected or accepted based on a Metropolis-Hastings criterion:

and the new state becomes (sproposed, pproposed) upon acceptance, and (soriginal, −poriginal) upon rejection. And lastly (step 3), the momentum is refreshed

where 𝒩(μ = 0, Σ = M) is a multivariate Gaussian distribution with mean μ and covariance matrix Σ.

This gives the HMC algorithm several hyperparameters that must be chosen. We opt for a leapfrog scheme to integrate Hamilton’s equations of motion, with a fixed number of steps Nsteps, and where the step size drawn is uniformly between [0.9ϵmax, ϵmax]. We set the mass matrix M to be an identity matrix, and we set the momentum conservation ratio α to zero; that is, we do a full momentum refreshment each step.

The parameters ϵmax and Nsteps have to be tuned, and we tune them to achieve acceptance rates around 50 % to 70 %, while maximising the integration length per step – this is required to keep random-walk behaviour to a minimum (Brooks et al. 2011). Although adopting a different mass matrix can help sampling performance, it is not practical to find one, especially in high-dimensional problems like ours. Similarly, tuning α can also benefit sampling performance in some situations; we found no measurable improvement in some exploratory tests, so we kept it to zero. Lastly, we have tried replacing the leapfrog integrator with higher-order schemes as suggested by Hernández-Sánchez et al. (2021), but some cursory tests showed a simple leapfrog integration to perform the best for this problem. More complete testing is left for future work.

2.1.2. Two-grid sampling

In our procedure, we use a zoom setup, in which our Gaussian white noise field s is actually composed of two white noise fields for a low-resolution (LR) grid sLR and a high-resolution (HR) grid sHR. These are NLR3 and NHR3 grids with each element being a 𝒩(0, 1) standard Gaussian random variable. In our procedure, we jointly sample these fields.

2.2. The forward model

In our case, the forward model is the gravity simulation that takes in the Gaussian white noise fields s and outputs the particle positions and velocities at the present time. Four steps are involved. (i) We convolve the Gaussian white noise field s with (the square root of) the (appropriately scaled) power spectrum, in our case the linear matter power spectrum P(k) with the backscaling method (Angulo & Hahn 2022). This gets us the initial condition of the matter field at z = 63. (ii) We compute the Lagrangian displacement field given the generated Gaussian random field at z = 63 and interpolate this displacement onto our particle load that is initially placed on two cartesian grids. In particular, we place high-resolution particles at the centres of each voxel of the high-resolution grid and place low-resolution particles at the centres of each voxel in the low-resolution grid that is not in the high-resolution region. This results in 643 high-resolution particles and 643 − 163 low-resolution particles1. (iii) We perturb the positions and velocities using the Lagrangian displacement field to obtain the particle initial conditions at z = 63. (iv) We run a gravity simulation given these particles down to z = 0, for which we use a two-level particle-mesh method.

2.2.1. Particle mesh simulations

To compute particle forces, in the usual single-mesh particle mesh simulations (Hockney & Eastwood 1988), one also has to do four steps. (i) We compute the density field by depositing particles onto a mesh, distributing the mass of each particle on the nearest mesh cells with the piecewise linear Cloud-In-Cell (CIC) kernel. (ii) We obtain the potential field from this density field by solving the Poisson equation on a mesh. In practice, we convolve the density field with the Green’s function in Fourier space (this is denoted as Δ−1 because, on the mesh, this operation can be thought of as the inverse of the Laplacian). (iii) We compute a force field from the potential field by finite differentiation. (iv) We interpolate the forces onto the particles using a CIC kernel. When taking derivatives of the potential, which must be consistent with the choice of Green’s function, we use a 2nd-order finite-difference approximation of the gradient compatible with our treatment of the inverse Laplacian (see Hahn & Abel 2011, for an overview of alternative higher-order approximations). Using the forces, the equations of motion are then integrated in time using a leapfrog integrator. In this work, we build on the implementation existing in BORG, as described in Jasche & Lavaux (2019).

The computational effort of particle deposition onto a density grid scales with the number of particles, and the computational effort of the Fourier transform with the number of voxels 𝒪(Nlog N), where N = (L/Δx)3, with L the box size and Δx the voxel size. Thus, the computational effort is determined by the required box size, resolution and particle mass.

2.2.2. Zoom simulations

In practice, single-mesh simulations result in a rather limited dynamic range. This is why many improved algorithms have been proposed, for instance, tree-based codes, codes using the fast multipole method, and zoom methods. Here we will use a zoom method because we require high enough spatial resolution to resolve the haloes of the MW and M31 (which have virial radii of order 200 kpc) within a region large enough to avoid artefacts from imposed periodic boundary conditions. In our zoom simulations, a fixed-position high-resolution (HR) zoom region populated with high-resolution (i.e. low-mass) particles is embedded within a lower resolution (LR) but larger region populated with more massive particles.

The particular zoom method we employ is a relatively simple extension of the single-grid particle-mesh method. The forces are split into two parts: a long-range and a short-range part, where the long-range force is computed from the LR grid, and the short-range force is computed from the HR grid. The force on each particle i is

where FLR(x) is the tri-linearly interpolated force vector at position x of the LR region’s force field, and FHR(x) is the equivalent interpolated high-resolution force within the HR region.

To carry out the force splitting faithfully, we convolve the LR potential with a Gaussian filter,

where we set Asmth = 1.25, which is the default value when matching the PM and tree forces in Gadget-4 (Springel et al. 2021). We also convolve the HR potential field with a high-pass filter which is the complement of this Gaussian filter, such that at each frequency in k-space, the total power is preserved. Additionally, since some power is lost in the low-resolution force field as a result of CIC deposition and interpolation, we recover this power from the HR field. We also zero-pad the HR grid in order to achieve vacuum boundary conditions. We found that we require 16 cells of padding, corresponding to eight cells on each side, in order to have convergence on the final positions of the MW-M31 pair.

The final result is that our ‘effective’ gravitational potential – the potential from which the force fields FLR and FHR are calculated – is, in Fourier space

where δLR is the density field of the low-resolution region, deposited with the grid size of the low-resolution region, and Δ−1 is the inverse Laplacian. It is understood that KCIC,LR is set to be zero beyond the Nyquist frequency of the LR grid.

For the initial conditions, we consider two initial Gaussian random fields, ΦLR(k) and a ΦHR(k). These are both simply white noise fields convolved with the matter power spectrum with the appropriate linear scaling. From these we obtain the Lagrangian displacement fields ΨLR, i and ΨHR, i, similarly as the force fields, except that now the total of the squares of the two filters must sum to one (Equation 5 in Stopyra et al. 2021). This results in the displacement fields

To get the actual particle displacements, we do a CIC interpolation of the initial particle grids onto this grid.

Because we use this zoom simulation algorithm inside a Hamiltonian Monte Carlo sampler, we also need to derive its adjoint gradient. This calculation is analogous to Appendix C of Jasche & Lavaux (2019), with the inclusion of the different particle masses for the low- and high-resolution particles; this is just an extra constant factor on the mass deposition end. Additionally, the force adjoint gradient will now have contributions from both the LR and HR grids.

The parallelisation strategy is also similar to BORG. In the single-mesh particle-mesh code of the BORG framework, each MPI task handles a slab of the full simulation box as well as the particles in this slab. At each timestep, we transfer particles that move into a different slab to their new MPI task. This allows for simple parallelisation of the FFTs as well as the CIC assignment and interpolation. In our zoom algorithm, the process is similar, except that each MPI node owns both a slab of the LR box and a slab of the HR box.

2.3. The Local Group likelihood

The likelihood indicates how ‘Local-Group-like’ the final state of a simulation is, or, more precisely, it quantifies the level of consistency between the observational constraints and each particular simulated LG analogue, given the observational uncertainties. To define this likelihood, we first introduce the concept of filtered masses, positions and velocities in Section 2.3.1 as a simple means to extract robust and differentiable values for such quantities from the particle data of our simulation. Our total Local Group likelihood is

We include observational constraints on the masses and position of the two haloes (ℒmass and ℒposition, Section 2.3.2). We also constrain the relative MW-M31 velocity vector (ℒvelocity, Section 2.3.3). Finally, we require that the local Hubble flow as traced by individual galaxies in the Local Group’s immediate surroundings should be reproduced within the observational uncertainties (ℒflow, Section 2.3.4).

2.3.1. Filtered masses, positions and velocities

To be able to compare the output of our simulation to observations, we need to consider some quantities that are easy to measure both in simulations and through observation. We consider therefore the masses, positions and velocities of the objects of interest, averaged over some fixed filter. The filtered mass is defined as a function of position,

where G(Δr, σ) = e−Δr2/2σ2 is an isotropic Gaussian filter with standard deviation σ. Although it might seem desirable to use a tophat filter so that one can use observationally inferred enclosed masses, the result would not be differentiable with respect to the particle positions, which would make the gradients non-informative and reduce the HMC’s efficiency. In the case of particles at locations ri, ρ(r)≈∑imiδ(r − ri), so this becomes:

where mi is the mass of particle i. We also constrain the offset of the filtered centre of mass from the filter centre.

Lastly, we can define the filtered velocity, which is the average velocity within the filter:

2.3.2. Mass and position

Observational estimates of the masses of the Milky Way and Andromeda give rise to the mass likelihood ℒmass, which consists of the terms2

where MMW,obs is the observational estimate of the 100 kpc filtered mass of the Milky Way and MM31,obs that of M31. σMW,obs,rel and σMW,obs,rel are the associated relative errors. The filter size of 100 kpc was chosen because halo masses are well converged on this scale at our simulation resolution and because observational estimates on this scale have reasonably low uncertainty. The estimates we use come from the joint fitting of several observational tracers of the mass profiles of the Milky Way and Andromeda. The data used and assumptions made to obtain them are described in Appendix A, and the final values with associated uncertainties are summarised in Table 2. We note that the uncertainties are mainly the observational uncertainties, but we have also added another 5 % in quadrature to account for halo-to-halo scatter in the relation used to convert the mass found for our fitted contracted NFW profiles to the equivalent mass for a dark-matter-only halo (we follow the recipe of Cautun et al. 2020).

We use a heliocentric coordinate frame that is rotated to have M31 on the x-axis, that is xM31,obs = (780, 0, 0) kpc (our assumed distance to M31 is 780 kpc, see Appendix B). In this coordinate frame, xMW,obs ≈ ( − 3.9, −6.9, −1.8) kpc.

Our mass likelihood (Equations (16) and (17)) is insufficient on its own, because the observed filtered mass could be matched by an overly massive but substantially offset halo. We therefore introduce an additional likelihood term, ℒposition, that forces the filtered centre of mass to be close to the filter centre. Specifically, we constrain the offset in position to be consistent with

where we set the covariance matrix Σx,res to be isotropic with dispersion σ of 30 kpc per direction, which allows for some spread in the simulations but does not lead to significant bias in estimations of the mass.

2.3.3. Velocity likelihood

We also wish to use the relative velocity of the Milky Way and M31 as a constraint. In our setup, we assume the distance between the Milky Way and M31 to be known. Therefore we translate the measured proper motion vector with its uncertainties into a transverse velocity vector with associated uncertainties. This allows us to describe the likelihood ℒvelocity for the 3D relative velocity of the barycentres of the two haloes as a 3D multivariate Gaussian:

where Σmeas is the covariance matrix that incorporates the observational errors on the radial velocity and proper motions, and Σv,res is some additional uncertainty that we include because adopting overly precise values for the constraints would result in inefficient sampling. Moreover, in reality, M31’s 100-kpc filtered halo velocity may be offset from the galaxy’s velocity. We choose Σv,res to be isotropic:  , where

, where  is the identity matrix. We give the observational values adopted in Appendix B.

is the identity matrix. We give the observational values adopted in Appendix B.

Although it would, in principle, be better to compare the proper motions directly to the simulated data, this does not matter in practice: the positions of the two haloes are allowed a dispersion of 30 kpc, namely a ∼5 % scatter in the distance. The uncertainties on the transverse velocities due to proper motion uncertainties are, however, much larger.

2.3.4. Local galaxy flow likelihood

Lastly, we utilise peculiar velocity measurements of galaxies in the immediate environment of the LG. Such measurements have shown that the Hubble flow is particularly quiet in our surroundings (Sandage & Tammann 1975; Schlegel et al. 1994; Karachentsev et al. 2009; Aragon-Calvo et al. 2023). This has implications for the mass of the LG, as it shows that there is no large unresolved mass apart from the Milky Way and M31 in our neighbourhood and puts bounds on the possible total mass of the LG (Peñarrubia et al. 2014). This makes it an ideal constraint for our purposes because it naturally enforces a dynamically quiet environment around the MW and M31 without need for an explicit isolation criterion of the kind usually imposed when identifying LG analogues in simulations (Gottloeber et al. 2010; Libeskind et al. 2020; Sawala et al. 2022). In particular, we constrain the radial component of the velocity at the location of our sample of galaxies (which we describe below). Each galaxy gives us the following constraint for the flow likelihood ℒflow:

where vhelio is the simulation-derived velocity at the galaxy’s location converted to the heliocentric frame, vmeas is the measured heliocentric velocity, d is the distance, σd is the (linearised) uncertainty on the distance, σμ is the error on the distance modulus, and σint is some intrinsic scatter reflecting residual peculiar velocities. To compute the velocity in heliocentric frame vhelio, we evaluate the 3D filtered velocities at the locations of the galaxy in question, as well as the 3D filtered velocity at the location of our Milky Way (from Equation (15)). We compute from their difference a simulated Galactocentric velocity and then convert this to a heliocentric frame.

In order to compute filtered velocities for distant galaxies, we use a filter size of (250 kpc)×(d/Mpc), where d is the distance. The increasing filter size ensures that we have enough particles inside our filter, also at larger distances where we might only have a few particles because the corresponding Lagrangian region can be (partly) outside the zoom region.

We require the residual intrinsic scatter σint in our setup because we only put in the Milky Way and M31 as matter density constraints. Thus, many structures that contribute to the peculiar velocities of more distant galaxies are missing in our simulations. Some of these structures could, in principle, be included, at the cost of making our inference more complex. However, our simulations do not resolve lower mass structures and the observational data on known higher mass structures such as the M81, Centaurus and Maffei groups are uncertain; hence we prefer, for now, to ignore them. We adopt σint = 35 km s−1 since Peñarrubia et al. (2014) estimated  for the residual scatter around their spherical infall model, based on a very similar sample of galaxies.

for the residual scatter around their spherical infall model, based on a very similar sample of galaxies.

To build our sample, we join the galaxies from two sources: the catalogue of local volume galaxies (Karachentsev et al. 2013),3 and the CosmicFlows-1 catalogue (Tully et al. 2009),4 in order to get a complete and up-to-date sample which combines as many independent distance measurements per galaxy as possible. For measurement errors, we follow CosmicFlows-1, which assumes a distance modulus error of 0.2 for a TRGB-only measurement, 0.16 for a TRGB + Cepheid measurement, and similar for other distance measurement methods. In our sample, we include only isolated galaxies, because these are expected to trace well the underlying flow field. Galaxies that have an interaction with a nearby massive perturber would need to be modelled more carefully.

Additionally, we only include galaxies with relatively small distance errors to ensure a reliable estimate of the velocity at the galaxy’s position. To be specific, we use the following criteria to build our sample of galaxies:

-

The distance error must be less than 0.25 Mpc. Given the error estimates we have adopted, this limits the sample to be within

.

. -

There must not be a more luminous galaxy within 0.5 Mpc that has K < −15. The 2MASS K magnitude is used for this. If no K-band magnitude is measured, the estimate from Karachentsev et al. (2013) is used.

-

We exclude the data points for the MW and M31 because they are already treated.

-

We exclude the data point for M81, because of its interaction with M82 and NGC 3077. The three galaxies are strongly interacting and their velocities cover a range of more than 200 km s−1, so including them would require a more thorough dynamical analysis of the group.

-

To filter out satellites, we exclude any galaxy within 800 kpc of: The Milky Way, Andromeda, M81, Maffei 1 / IC 342, Centaurus A (NGC5128).

-

The measurement error on the velocity must be less than 20 km s−1. In practice, this cuts out one galaxy (ESO006-001) which has a radial velocity uncertainty of 58 km s−1.

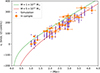

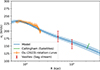

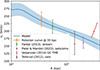

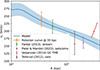

We are left with a sample of 31 galaxies. Their Hubble diagram is plotted in a Local-Group-centric reference frame in Figure 2, while their on-sky distribution is shown in Figure 3. We note that the spatial distribution of galaxies more distant than 2 Mpc follows the Supergalactic plane quite closely. We note that because our likelihood is conditioned on the flow velocity at the position of each galaxy, we should not be biased due to the inhomogeneity and incompleteness of the sky distribution of our sample. Analyses that assume spherical symmetry of the tracer populations and of the surrounding Hubble flow when determining quantities such as the LG mass and turnaround radius may be biased by such issues (see also Santos-Santos et al. 2024).

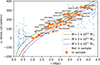

|

Fig. 2. The catalogue of galaxies used as flow tracers in this analysis, analogous to Fig. 11 in Peñarrubia et al. (2014). The x-axis is the Local-Group-centric distance, where the centre of the LG is the centre of mass of the Milky Way-M31 pair when assuming a mass ratio of MM31/MMW = 2. The y-axis is the radial velocity projected in the LG-centric frame, where the offset is computed by interpolating the velocity field along the line between the two galaxies linearly. The different lines indicate spherical Kepler-like velocity models of the recession velocity (Equation (9) of Peñarrubia et al. 2014). |

|

Fig. 3. Sky distribution in Supergalactic coordinates of the catalogue of the galaxies used as flow tracers in this analysis. Symbol type indicates distance from the LG’s barycentre, and colour indicates velocity with respect to the spherical model shown as a red line in Figure 2. Units are in Mpc and km s−1. The blue line shows the Galactic plane. |

2.3.5. Correcting masses for resolution effects

For our purposes, the main effect of low numerical resolution in our simulations is that haloes become less concentrated so that the mass within a Gaussian filter is biased low. This bias is quite systematic and can be well described by a simple power law:  , with some log-normal scatter σlnM. Hence, if we identify simulation parameters for which σlnM is small, we can adequately correct for the effect of lowered resolution. To this end, we ran unconstrained simulations similar to the one shown in Appendix C, but with varying spatial, time and mass resolution. We then cross-matched individual haloes in the BORG and Gadget simulations, and fit power-laws linking the halo masses in the two schemes, determining the mean relation and the scatter for these cross-matched haloes.

, with some log-normal scatter σlnM. Hence, if we identify simulation parameters for which σlnM is small, we can adequately correct for the effect of lowered resolution. To this end, we ran unconstrained simulations similar to the one shown in Appendix C, but with varying spatial, time and mass resolution. We then cross-matched individual haloes in the BORG and Gadget simulations, and fit power-laws linking the halo masses in the two schemes, determining the mean relation and the scatter for these cross-matched haloes.

2.3.6. Velocity reference frame

To compare observational data to our simulations using the velocity likelihoods above, we had to find the velocity offset between the real heliocentric frame and the simulation reference frame. This is done by assuming that the real Galactocentric frame coincides with the simulation reference frame in which the simulated Milky Way has a zero 100 kpc filtered velocity. However, in reality, there might be a difference between the 100 kpc filtered velocity and the velocity of the Galactic centre, and additionally, there is some uncertainty in the Solar Galactocentric velocity vector. We incorporate these uncertainties by marginalising over a possible offset velocity, voff which we assume to have zero mean and a 10 km s−1 isotropic scatter (see Appendix D). Since all our velocity likelihoods are multivariate normal distributions, this marginalisation can be done analytically. The derivation is shown in Appendix D.

3. Results

To present our results, we first describe the experimentation and reasoning underlying the particular simulation parameters we end up using. (Section 3.1). We then present some results derived from the analysis of our chains in Section 3.2, we quantify the resulting autocorrelation in Section 3.3, and we provide some noteworthy quantitative predictions in Section 3.4 and in Section 3.5.

3.1. Simulation parameters

Our set-up incorporates constraints from different aspects: model uncertainties, numerical accuracy, and resolution. We summarise here the considerations for each parameter.

In the first place, there is the log-likelihood itself, which we would like to represent the data as closely as possible. Ideally, we would like to match the positions of the Milky Way and M31, and their relative radial velocity to within the observational uncertainties. However, the measurement errors for these data are very small. This leads to large numerical inaccuracies in the HMC algorithm, in turn requiring very small timesteps in order to reach acceptable acceptance rates (we aim for 50 % to 70 %, which is optimal for Gaussian distributions, Brooks et al. 2011), resulting in greater autocorrelation lengths, and so substantially longer computation time to reach the same effective number of samples. To overcome this problem, in Section 2.3.2 and 2.3.3 we purposely degraded the precision of the constraints on halo positions and relative radial velocity to a level that is compatible with the accuracy of our simulation, taking into account its resolution and the lack of baryonic effects.

To tune the resolution and the particle masses, we require that simulations at high resolution carried out with Gadget match lower resolution runs carried out with our Zoom algorithm. In practice, we found that our filtered halo masses are most sensitive to lowering resolution. Therefore, we tune our resolution parameters to minimise runtime, while still being able to correct for the effect of low resolution on the masses. In practice, we choose parameters for which the uncertainty in the halo mass bias correction σ is below 10% (see Appendix C for the size and uncertainty of the correction for our preferred parameters).

We note that we verify in Section 4 that for our chosen spatial, mass and time resolutions, our final masses, positions and velocities are sufficiently accurate for our problem. The choice of resolution has multiple consequences and it is important to limit it for two reasons: higher resolution leads to more costly computations, but it also leads to a rougher probability landscape which impacts negatively the statistical performance of the HMC. One possible explanation for this might be that higher resolution in space and time allows for more complicated particle trajectories which are then less linear, making the problem harder to sample from.

The sizes of the low- and high-resolution regions were determined by requiring the outer box to be large enough to be able to fit the local Hubble flow while being small enough that the Lagrangian region at its centre does not move substantially. If the bulk flow were too large compared to the size of the high-resolution box, there would be substantial leakage of low-resolution particles, or of particles that have a major part of their history inside the low-resolution region, into the region of interest (the MW-M31 pair and its immediate surroundings). The high-resolution region must be large enough to allow some flexibility, yet small enough that a computationally affordable grid (1283 is currently feasible) can resolve the formation of the MW and M31 haloes.

The initial Gaussian white noise fields themselves are on a 643 grid for the LR field and a 803 grid for the HR field, due to the need for zero-padding. This gives our inference problem a total dimensionality of Ndim = 643 + 803 = 774 144. The particles themselves are placed at the centres of the voxels of two 643 grids. The forces are calculated on grids of twice this size, namely a 1283 grid and a 1603 grid respectively.

To integrate the equations of motion of our N-body simulations, we use the default scheme in BORG (Jasche & Lavaux 2019) for which timesteps are linearly spaced in the cosmological scale factor a.

The resulting numerical parameters are summarised in Table 3. With these settings, we then proceed to sample from our initial condition field s.

A summary of the simulation settings.

3.2. Markov chains

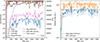

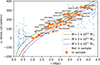

For the work presented in this paper, we have run in parallel 12 Markov chains. A trace plot of the different contributions to the posterior of one HMC chain is shown in the left panel of Figure 4. In this plot, the different terms that make up the likelihood are shown with different colours, namely the log-likelihood of the individual halo masses (red and purple, Equations (16) and (17)) and positions (orange and green, Equations (18) and (19)) for a 100 kpc filter, the 3D M31-MW relative velocity (brown, Equation (20)), and the total likelihood of the Hubble flow tracers (pink, Equation (21)). After burn-in these are expected to vary according to a chi-square distribution (scaled by −1/2) with one degree of freedom (masses), 3 degrees of freedom (positions and 3d-velocity), or 31 degrees of freedom (flow tracers, since we include 31 galaxies).

|

Fig. 4. Trace plots of an example chain, chain number 10. Left: the likelihood values of the observational constraints. The solid blue line is the total likelihood, which is the sum of all other solid lines that indicate components of the likelihood. The dashed lines are the prior on the initial white noise fields sLR and sHR. Right: The masses of the Milky Way and M31 along the chain. The greyed-out lines indicate values of the masses before applying the correction from Figure C.1. This particular chain was considered warmed up by sample 760 according to the criterion of Section 3.3. |

Also shown is the value of the prior log-likelihood (dashed blue and dashed orange), the χ2/2 of the initial Gaussian random fields that generate the initial conditions. During warm-up, the Gaussian random numbers that generate the initial conditions were set to start at zero for all voxels. This procedure is commonly used within BORG to speed up the warm-up process; we aim to improve it in future. Since this prior is just the sum of squares of Gaussian random numbers, after warm-up its value should follow a chi-square distribution (up to a division by two) hence have mean Ndim/2, where, in our case, Ndim/2 = 387 072. That is why the dashed lines in the left panel of Figure 4, which indicate the value of the prior, start at 0 and then converge around 387 000.

Figure 4 shows that a hierarchy exists, where small-scale constraints such as the individual halo properties warm up very quickly, followed by constraints on larger scales such as the MW-M31 velocity (brown) the local Hubble flow (pink), and finally the field as a whole (the dashed lines showing the prior). All the observational constraints get to within a few σ of their required observational values by about 200 HMC steps, the last being the flow tracer velocities (pink curve) which should scatter within a scaled χ2-distribution with a mean value half the number of galaxies we consider, so 31/2 = 15.5.

In the right panel of Figure 4, the simulation-derived masses are shown. We reach convergence within a few hundred samples, and the masses for both the Milky Way and M31 are biased slightly low, even after applying the Appendix C correction which accounts both for the low spatial and time resolution of the simulation, and for the fact that we determine masses for fixed filter positions which do not coincide exactly with the centres of the simulated haloes. In principle, one could avoid the problem of haloes being offset from the filter centre by first ‘finding’ the centre of the halo at each step, but this is not straightforward: one would have to make sure that the procedure stays differentiable, and also that the centre of mass varies continuously as a function of initial conditions. Failing to require these two properties would cause the log-likelihood to be discontinuous, resulting in poor sampling performance.

3.3. Autocorrelation analysis

In Figure 5 we show images of the final state of nine simulations spaced evenly along the chain of Figure 4. The present-day density field reflects directly the initial conditions, and one can see correlations in structure between neighbouring images in Figure 5; present-day density fields (and therefore the corresponding initial conditions) do not fully decorrelate between samples that are not spaced sufficiently far apart. For instance, in consecutive images, one can see large haloes repeating, some of which highlighted by coloured circles, shifted only by relatively small amounts. Some coherence between nearby samples is also visible in the behaviour of the flow tracer likelihood (the pink curve in Figure 4).

|

Fig. 5. Final density fields of the low-resolution box as chain 10 evolves. This chain has Feff = 411, so every Feff sample (going vertically down each column) can be considered as an independent sample. The circles highlight a few haloes that do not shift by much between consecutive samples, illustrating the substantial autocorrelation between samples separated by significantly less than Feff. We note that sample 0 (which occurs after one iteration of the HMC) is almost completely uniform because we start our chains from a uniform field. Visualisation here was done using pySPHViewer (Benitez-Llambay 2015). |

It is helpful to quantify correlation as a function of lag within a chain, which we choose to do by computing correlations between initial condition fields s. These decorrelate the slowest among all the quantities we have considered, so this is a conservative choice. We compute the autocorrelation along the chain of the vector si, representing both the HR and LR initial conditions, which we calculate for a lag t as

which is equivalent to the average fictitious time autocorrelation of the individual components of s. We use only samples after the chains are warmed up. Since the effective sample size is (Brooks et al. 2011)

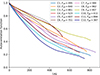

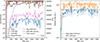

we can find the effective sample size through an estimate of ρt. One would ideally use directly estimated values for ρt; unfortunately, if t is too large, we are limited by the length of the chain. In practice, ρt has exponential behaviour, which is common for HMC where each step has a small integration length. Thus, for each chain, we fit an exponential curve, and from this fit, we can obtain a good estimate of ρt for all t. We show the correlation functions ρt for our different chains in Figure 6, giving also the Neff derived from exponential fits.

|

Fig. 6. Autocorrelation functions as computed using Equation (24) for our twelve different chains, each indicated by a different colour. |

The number of samples per effective sample, Feff, is

so to obtain independent samples, we can thin each chain with the corresponding Feff, taking samples separated by Feff, starting from the last sample in the chain. Proceeding in this way, we obtain 27 independent samples across all chains. In Figure 5, an example chain is shown, where one can verify by eye that samples separated by Feff = 411 appear fully decorrelated. This decorrelation number varies from chain to chain as can be seen seen in Figure 6.

Since the initial condition field decorrelates the slowest, we can use this to provide a simple criterion to indicate when the chain has warmed up. If the prior, (which started at zero) is above 98 % of its final value (this means that the power spectrum is within a few per cent of the cosmological expectation) we consider the chain to be sufficiently warmed-up.

For this paper, we create two sets of samples:

-

An ‘independent’ set of simulations, where each chain has been thinned by the factor Feff above, that is, first taking the sample at the end of the chain, then the sample Feff before that, then repeatedly stepping back by Feff until we reach the warm-up phase.

-

A ‘semi-independent’ set of simulations, which is the same, but thinned by a factor Feff/20 rather than Feff. Despite large scales being more correlated in nearby members of this set, quantities determined primarily by small-scale power, such as individual halo masses, are largely independent.

We believe that we are rather conservative in our estimate of the true effective number of samples: when visually inspecting a chain, one can see almost no correlation when considering two samples separated by Feff. We note that the inter-chain variation is similar to the intra-chain variation.

We note that, in any MCMC analysis, the posterior mean of any inferred quantity q has a corresponding error due to Monte Carlo integration. This error is  (Brooks et al. 2011). In practice, this error for Neff = 27, is of the order of 19 %. Adding in quadrature to the full posterior width σ(q) gives a total error of

(Brooks et al. 2011). In practice, this error for Neff = 27, is of the order of 19 %. Adding in quadrature to the full posterior width σ(q) gives a total error of  , a change of 2 % which barely changes the resulting uncertainty. A typical case in this work is the estimation of masses.

, a change of 2 % which barely changes the resulting uncertainty. A typical case in this work is the estimation of masses.

3.4. Posterior quantities for the individual galaxies

With a reasonable set of independent samples, we can make a variety of quantitative statements based on our chains. A few examples of ‘independent’ samples of the Local Group environment (each from a different chain) are shown in Figure 7. In every sample, the Milky Way and M31 lie within a connecting filament. On a larger scale, the LG lives in a relatively quiet, underdense, environment, in a wall-like structure that is approximately aligned with the Local Sheet: most structures live close to the xy-plane in Supergalactic Coordinates. This is a priori somewhat surprising, since we have not explicitly constrained the density field surrounding the LG, only the peculiar velocity field, which appears close to isotropic.

|

Fig. 7. Final density fields in simulation coordinates of evolution from four of our independent sets of initial conditions (each from a different chain). Each pane corresponds to a single set of initial conditions. Within each pane, the left column shows the full box and the right column the central 10 Mpc zoom box, while the two rows show orthogonal projections. The name at the top of each pane indicates the chain it came from (after C), and its sample index (after S). The visualisations are made using the Lagrangian Sheet density estimation method of the r3d package* (Powell & Abel 2015). The unit vectors of the Supergalactic reference frame are shown at the top right of each high-resolution panel. (* Since our two-grid initial condition layout is not a pure cubic grid, in particular, at the zoom-region boundary, we cannot use the recipe where every cube is divided into 6 tetrahedra as in e.g. Abel et al. (2012). Instead, we use a Delaunay tetrahedralisation to create the simplex tracers.) |

In Figure 8, we show posterior distributions for various quantities along our chains. These histograms indicate plausible values for quantities such as the filtered halo masses of the Milky Way and Andromeda, given our full set of constraints and our specific assumed ΛCDM model, whereas the dashed lines show the observationally based constraints that we adopted as priors for each quantity, as described in Section 2.3; for the filtered halo masses, these are already the result of Appendix A’s quasi-Bayesian analysis of observational data for halo mass tracers surrounding the two galaxies under a ΛCDM prior.

|

Fig. 8. Posterior distributions of LG properties from our Markov chains. In each panel, the histogram is of samples from our chains and therefore indicates the posterior distribution. The dashed lines are the injected observational constraints. The top left panel indicates the centre-of-mass position of the Milky Way, and the top middle panel M31. The right panel shows the relative velocity of the MW-M31 pair. The bottom left and middle panels indicate the 100 kpc filtered masses of the Milky Way and M31. The bottom right panel shows the total log-likelihood of the flow tracers, with on top the χ2-distribution (scaled by |

The centre-of-mass distributions of the two main haloes match well with the likelihood constraints we have put on their positions. In fact, they are less tail-heavy than the input, which is expected, because moving the centre of mass too far away would also impact other terms in the likelihood, in particular, the mass likelihood.

While the 100 kpc-filtered M31 mass matches the injected observational constraint quite well, with only a small downward bias, the filtered Milky Way mass is biased more substantially low. These biases are however mostly a consequence of the halo centres not aligning perfectly with the filter centres; the biases decrease to within a few per cent of the observational prior when re-centring the filters at the precise halo centre. Our chains imply virial mass estimates for the two haloes of log10(M200c/M⊙) = 12.07 ± 0.08 and 12.33 ± 0.10, for the MW and M31, respectively.5 This is very similar to the implied M200c’s we obtain from our NFW-like fitting to observation in Appendix A. It is important to note the small error bars resulting from the need to satisfy all observational constraints simultaneously. The fact that these masses are near the lower end of the range quoted in earlier studies is mainly a consequence of the Hubble flow constraint, which not only requires a relatively small total mass for the LG but also approximately determines its centre of mass, thereby implying a mass ratio for the two main galaxies (see Peñarrubia et al. 2014). With their simple spherical model, these authors found a mass ratio of  (mean and 16th/84th percentiles) whereas we find a mass ratio of

(mean and 16th/84th percentiles) whereas we find a mass ratio of  , similar to the value 0.58 ± 0.19 implied for this ratio by the analysis of Appendix A. These results are discussed further in Section 5.2 below.

, similar to the value 0.58 ± 0.19 implied for this ratio by the analysis of Appendix A. These results are discussed further in Section 5.2 below.

3.5. The environment and mass of the Local Group

Visual inspection of our chains shows that they all produce first-approach trajectories for the MW-M31 pair. Furthermore, in Figure 8, we find that orbits for the pair are almost perfectly radial, that is, along vx. The tangential components in the (α, δ) directions are (vα, vδ) = (12.7 ± 21.3, −6.7 ± 18.7) km s−1, while the velocity in the radial direction has a posterior of vx = ( − 102 ± 13) km s−1. We note that although the histograms showing the tangential velocity distributions from our chains are not centred on the observational constraints, these posteriors are statistically compatible with the observed tangential velocity. The posterior is approximately radial, and the Salomon et al. (2021) M31 proper motions that we have decided to use, which are based on Gaia EDR3 data, are ∼1σ off from a radial orbit in the R.A. direction, and ∼2σ in the Dec. direction. Independent measurements of M31’s transverse velocity, done with for example HST (van der Marel et al. 2012) or through modelling the dynamics of M31’s satellites (van der Marel & Guhathakurta 2008; Salomon et al. 2016) show significant scatter compared to the values of Salomon et al. (2021), and overall a radial orbit is well within the range spanned by the different measurements as can be seen from the comparison in Figure 6 of Salomon et al. (2021). The bias of our posterior distribution towards small tangential motion is likely a result of the constraints on the local Hubble flow that we have applied because a significantly non-radial orbit would require a nearby massive object in order to produce sufficient tidal torque to generate the orbital angular momentum. The presence of such an object is disfavoured by the quiet and at most weakly distorted Hubble expansion indicated by our flow tracers. We can see in Figure 9 that the velocities of our isolated galaxy catalogue are generally well matched by our simulations, with one or two possible exceptions (e.g. Tucana) which would be worth more detailed investigation.

|

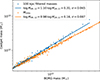

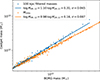

Fig. 9. Similar to Figure 2, but now with the purple points showing the line-of-sight velocities from our simulations at the 3D location of each galaxy. Each purple point is an independent sample from our chains, specifically, we plot the ‘independent’ set as defined in Section 3.3. |

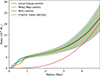

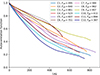

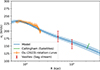

Inference on the mass profiles is shown in Figure 10. We find posterior distributions for the enclosed Local Group mass, M(< 1 Mpc) = (5.20 ± 0.67)×1012 M⊙ and M(< 2.5 Mpc) = (7.77 ± 1.27)×1012 M⊙, when measured from the LG barycentre6. Within the larger radius, the LG is only overdense by a factor of about 3.00 ± 0.49. By a radius of 4 Mpc, the distance of the most distant flow tracers we have used, this factor has dropped to 1.33 ± 0.35. If the Local Group mass is instead defined as the sum of the virial masses M200c of the two objects, we find  . It is interesting to compare these numbers to previous work. From the Timing Argument alone, Li & White (2008) found the sum of the two M200c values to be

. It is interesting to compare these numbers to previous work. From the Timing Argument alone, Li & White (2008) found the sum of the two M200c values to be  for a ΛCDM prior. This is substantially larger than our value (although consistent with it at about 1σ) reflecting the fact that the quiet local Hubble flow is not consistent with the upper end of their Timing Argument range. In contrast, using local Hubble flow observations alone, Peñarrubia et al. (2014) estimated the mass of the LG within about 0.8 Mpc to be M(< 0.8 Mpc) = (2.3 ± 0.7)×1012 M⊙, consistent only at about 2.8σ with the mass, M(< 0.8 M⊙) = (4.82 ± 0.58)×1012 M⊙, that we find; such a low mass is strongly disfavoured by the Timing Argument. Combining the two types of data, namely the MW’s and M31’s orbital and halo properties together with the quiet Hubble flow, leads to our own tighter constraints.

for a ΛCDM prior. This is substantially larger than our value (although consistent with it at about 1σ) reflecting the fact that the quiet local Hubble flow is not consistent with the upper end of their Timing Argument range. In contrast, using local Hubble flow observations alone, Peñarrubia et al. (2014) estimated the mass of the LG within about 0.8 Mpc to be M(< 0.8 Mpc) = (2.3 ± 0.7)×1012 M⊙, consistent only at about 2.8σ with the mass, M(< 0.8 M⊙) = (4.82 ± 0.58)×1012 M⊙, that we find; such a low mass is strongly disfavoured by the Timing Argument. Combining the two types of data, namely the MW’s and M31’s orbital and halo properties together with the quiet Hubble flow, leads to our own tighter constraints.

|

Fig. 10. Enclosed mass profiles in our simulations, centred on the Local Group’s centre of mass, on the Milky Way, and on M31. The lines indicate the posterior mean, and the shaded regions are the 1σ region of the ‘semi-independent’ sample set (see Section 3.3, we use this to avoid being affected by shot noise coming from thinning the chains). |

Finally, in the bottom right panel of Figure 8, we see that the distribution of total log-likelihood values for galaxy velocities in the Local Group’s immediate environment is consistent with the expected χ2-distribution. Since the uncertainties entering this estimate are dominated by the 35 km s−1 residual scatter assumed in our likelihood, the fact that we obtain fully acceptable χ2 values means that our ΛCDM simulations match the observational data as well as the simple spherical model of Peñarrubia et al. (2014).

4. Resimulations with Gadget-4

The simulations discussed so far are based on a zoom scheme with a relatively small grid size for the two nested meshes. This is optimised for computational speed but is relatively low-resolution. In particular, the spatial and time resolution are sub-optimal for studies of the small-scale structure and detailed temporal evolution of the LG galaxies. To enable such studies, it is necessary to carry out higher-resolution simulations from our initial conditions. However, our constraints were applied to simulations executed using the low-resolution BORG scheme, so it is necessary to see if the constraints are still satisfied when the resolution in space, time and mass is improved and high-resolution simulations are carried out with state-of-the-art software. Here we begin by using Gadget-4 (Springel et al. 2021) in a simple Tree-PM setting (non-zoom) but with the same initial particle masses, positions and velocities as in the corresponding BORG simulation. Hence, these runs test only the effect of reducing the timesteps and the softening to values comparable to those usually used in high-resolution calculations. The (comoving) force softenings that we adopt are 6 kpc/h for HR-particles and 24 kpc/h for LR-particles. This approximately follows the rule of thumb proposed by Power et al. (2003), namely  , which for a 1.5 × 1012 M⊙ halo with virial radius ∼250 kpc and particle mass 1.5 × 108 M⊙ leads to ∼10 kpc. Using the initial condition fields s that we found from our chains, we generate the particle’s initial positions and velocities using the technique described in Section 2, specifically Equation (10). We put into Gadget the particle masses, positions and velocities that the original BORG scheme generated at its starting redshift, z = 63.

, which for a 1.5 × 1012 M⊙ halo with virial radius ∼250 kpc and particle mass 1.5 × 108 M⊙ leads to ∼10 kpc. Using the initial condition fields s that we found from our chains, we generate the particle’s initial positions and velocities using the technique described in Section 2, specifically Equation (10). We put into Gadget the particle masses, positions and velocities that the original BORG scheme generated at its starting redshift, z = 63.

Four such resimulations are compared with the BORG originals in Figure 11 where the present-day particle distributions in the LG region are plotted on top of each other. Although the two integration schemes do produce similar LG analogues, there are sometimes noticeable offsets between the halo positions of the Milky Way and Andromeda. In these cases, the corresponding velocities are biased oppositely, suggesting that this difference affects mostly the phase of the orbit and not the overall properties. We discuss the amplitude of these differences in more detail below.

|

Fig. 11. LG region (a box of (2 Mpc)3 centred at the Milky Way) in some resimulations with Gadget-4. The blue dots indicate particles in the BORG simulations, and the orange dots indicate particles in the gadget re-simulations. Each panel indicates one simulation, with two projections being shown. |

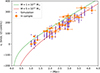

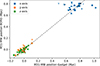

The filtered masses of the BORG run and the Gadget run match quite well after correcting the BORG masses following the prescription of Appendix C. This can be seen in Figure 12, where the corrected 100 kpc filtered masses of the Milky Way and M31 in the BORG simulation are compared with the corresponding masses in the Gadget re-runs. To be able to compare masses properly, we filter based on the actual halo centres, as determined by a shrinking-spheres procedure (Power et al. 2003) for both the Gadget and the BORG runs. The filtered mass ratios are generally close to one, with some small spread, which is, however, expected (about 6.5 % is expected, see Figure C.1).

|

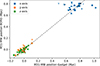

Fig. 12. Filtered mass ratios of the Gadget resimulations compared to BORG (after applying the correction from Appendix C). Each symbol indicates a different set of initial conditions. For five simulations, we also show the result with higher Gadget resolution (with particle masses 64× smaller, softening 16× smaller and power included in the initial conditions to 4× smaller scale) in darker colours. Points referring to simulations with the same initial conditions but different resolutions are connected with a blue line. |

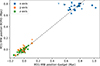

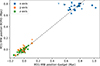

Positional offsets between the main haloes in the BORG-Gadget simulation pairs are typically at the level of 100 kpc and in a few realisations up to 300 kpc, as can be seen in Figure 13, where the three components of the MW-M31 position difference are shown. The peculiar velocity difference between the two haloes also differs somewhat between BORG and Gadget, as can be seen in Figure 14, where the symbols are the same as in Figure 13. We note that cases with a large relative position offset also have a large relative velocity offset, and furthermore, these offsets are strongly correlated: where Gadget gives larger separations, it gives a smaller relative velocity, and vice versa. Forward (or backward) integration of the offset Gadget simulations into their relatively near future (or past) would likely bring them into substantially better agreement with the observational constraints.

|

Fig. 13. Position differences of the M31 and MW analogues in the Gadget resimulations plotted against the same difference in the BORG simulations. The x-axis is the Sun-M31 axis, and y and z are R.A. and Dec. directions at the position of M31. As in Figure 12, pairs of symbols joined by lines refer to the five cases with an additional higher resolution Gadget resimulation. |

For five of the cases, we have also carried out simulations with substantially improved mass and spatial resolution. Specifically, for the HR particles these additional resimulations use a 64× smaller particle mass and a 16× smaller softening length; furthermore, they extend the power in the initial conditions to 4× higher spatial frequency7. This may be expected to add further random offsets in the evolutionary phase of the MW-M31 orbit on top of those found for our main set of resimulations. (See Sawala et al. (2022) for further discussion of the influence of initial conditions on phase offsets in the orbits of LG analogues.) We tested that the effects of the increase in mass resolution and of the extra small-scale power on the masses, positions and velocities of the two main haloes are relatively minor, as may be seen in Figures 12 to 14. Finally we show in Figure 15 how these various resolutions affect the end result; the increasing resolution and the addition of small-scale power allows us to model smaller-scale structures in our simulations.

|

Fig. 14. Peculiar velocity differences between the M31 and MW analogues in the Gadget resimulations plotted component by component against the same quantity for the BORG simulations. The x-axis is the Sun-M31 axis, and y and z are oriented orthogonally in directions of increasing R.A. and Dec. at the position of M31. As in Figure 12, pairs of symbols joined by lines refer to the five cases with an additional higher resolution Gadget resimulation. |

|

Fig. 15. Comparison of the density fields of the LG when integrating the same initial conditions (C6 S1530) with the BORG zoom particle-mesh scheme used in our Markov chains, with Gadget at the same mass resolution (particle mass of m = 1.5 × 108 M⊙ and softening of b = 10 kpc), and with Gadget with a higher resolution (m = 2.4 × 106 M⊙, b = 0.56 kpc). |

5. Discussion

In Table 1, we listed some major recent simulation studies of Local Group analogues in their observed cosmological context. These studies found their analogues by carrying out large numbers of ΛCDM simulations constrained to reproduce nearby large-scale structures (smoothed over scales of ∼5 Mpc) and then selecting the few that, by chance, produced systems approximately matching the observed LG. Because we use the observed properties of the LG and its environment as explicit constraints in our ΛCDM sampling, we match or surpass the requirements of the most precise previous programme, while including additional constraints, for example, requiring a match to the observed quiet Hubble flow in the environment of the LG out to 4 Mpc. Further constraints could easily be included, for example, on the spin orientation of the MW and M31 haloes, or on the properties of well-observed massive nearby groups such as M81 or Centaurus. Our current effective sample size, (∼27 quasi-independent realisations) is relatively small, but additional realisations can be generated simply by continuing the sampling chains as long as is needed. Although the resolution of these simulations is quite low, offsets when resimulating at higher resolution are relatively small.

5.1. Comparison to previous Local Group simulations

In comparison to the simulations listed in Table 1, our simulations generally enforce more precise constraints on MW and M31 properties such as halo masses, separation, and velocity. The one exception is the radial velocity constraint of Sibelius-‘Strict’ (Sawala et al. 2022). They require a relative radial velocity in the range 99 to 109 km s−1, whereas we adopt a Gaussian uncertainty of dispersion 14 km s−1. We have also not placed any constraints on the presence of a massive satellite like the LMC or M33, which was done when refining the selection of LG candidates in for example ELVIS (Garrison-Kimmel et al. 2014). For the Local Group’s environment, we constrained the (quiet) local Hubble flow using 31 observed flow tracers. Only APOSTLE (Fattahi et al. 2016) has a related constraint, which they put on the mean Hubble flow magnitude at 2.5 Mpc. We have placed no constraints on structure more distant than 4 Mpc (unlike Clues, Hestia and Sibelius Carlesi et al. 2016; Libeskind et al. 2020; Sawala et al. 2022). As a result, our simulations do not generally have a Virgo cluster analogue, although an appropriate large-scale tidal field at the Local Group’s position is enforced by our detailed fitting of the local (perturbed) Hubble flow. Finally, our current simulations are gravity-only/dark-matter-only; we intend to carry out higher resolution, full physics resimulations in future work.

5.2. The mass distribution in and around the Local Group

We use four classes of observations to constrain our analysis: dynamical tracers in the MW halo, dynamical tracers in the M31 halo, the relative 3D position and velocity of the MW/M31 pair, and the perturbed Hubble flow in the immediate environment of the LG. Much previous work has addressed each of these topics. Some of the authors of previous work directly or indirectly adopt a ΛCDM prior, but most prefer more idealised and less specific model assumptions. As a result, it is not easy to compare masses obtained by different methods and in different regions to decide whether they are compatible. In contrast, our procedure randomly samples realisations subject to all the constraints simultaneously, leading to consistent estimates of the mass in all regions of the LG and its environment under a ΛCDM prior.