| Issue |

A&A

Volume 645, January 2021

|

|

|---|---|---|

| Article Number | A27 | |

| Number of page(s) | 27 | |

| Section | Interstellar and circumstellar matter | |

| DOI | https://doi.org/10.1051/0004-6361/202037871 | |

| Published online | 23 December 2020 | |

Quantitative inference of the H2 column densities from 3 mm molecular emission: case study towards Orion B★

1

Laboratoire d’Astrophysique de Bordeaux, Univ. Bordeaux, CNRS, B18N, Allee Geoffroy Saint-Hilaire,

33615 Pessac,

France

e-mail: pierre.gratier@u-bordeaux.fr

2

IRAM,

300 rue de la Piscine,

38406 Saint Martin d’Hères,

France

3

LERMA, Observatoire de Paris, PSL Research University, CNRS, Sorbonne Universités,

75014 Paris, France

4

LERMA, Observatoire de Paris, PSL Research University, CNRS, Sorbonne Universités,

92190 Meudon, France

5

Aix-Marseille Univ, CNRS, Centrale Marseille, Institut Fresnel, Marseille,

France

6

Chalmers University of Technology, Department of Space, Earth and Environment,

412 93 Gothenburg, Sweden

7

University of Toulouse, IRIT/INP-ENSEEIHT, CNRS,

2 rue Charles Camichel, BP 7122,

31071 Toulouse cedex 7, France

8

Univ. Grenoble Alpes, Inria, CNRS, Grenoble INP, GIPSA-Lab,

Grenoble

38000,

France

9

Univ. Lille, CNRS, Centrale Lille, UMR 9189 - CRIStAL,

59651 Villeneuve d’Ascq, France

10

Instituto de Física Fundamental (CSIC). Calle Serrano 121,

28006

Madrid,

Spain

11

Instituto de Astrofísica, Pontificia Universidad Católica de Chile,

Av. Vicuña Mackenna 4860,

7820436 Macul,

Santiago, Chile

12

Institut de Recherche en Astrophysique et Planétologie (IRAP), Université Paul Sabatier,

Toulouse cedex 4, France

13

Laboratoire de Physique de l’Ecole normale supérieure, ENS, Université PSL, CNRS, Sorbonne Université, Université Paris- Diderot, Sorbonne Paris Cité,

Paris,

France

14

National Radio Astronomy Observatory,

520 Edgemont Road,

Charlottesville,

VA,

22903, USA

15

School of Physics and Astronomy, Cardiff University,

Queen’s buildings,

Cardiff CF24 3AA, UK

Received:

3

March

2020

Accepted:

25

August

2020

Context. Based on the finding that molecular hydrogen is unobservable in cold molecular clouds, the column density measurements of molecular gas currently rely either on dust emission observation in the far-infrared, which requires space telescopes, or on star counting, which is limited in angular resolution by the stellar density. The (sub)millimeter observations of numerous trace molecules can be effective using ground-based telescopes, but the relationship between the emission of one molecular line and the H2 column density is non-linear and sensitive to excitation conditions, optical depths, and abundance variations due to the underlying physico- chemistry.

Aims. We aim to use multi-molecule line emission to infer the H2 molecular column density from radio observations.

Methods. We propose a data-driven approach to determine the H2 gas column densities from radio molecular line observations. We use supervised machine-learning methods (random forest) on wide-field hyperspectral IRAM-30m observations of the Orion B molecular cloud to train a predictor of the H2 column density, using a limited set of molecular lines between 72 and 116 GHz as input, and the Herschel-based dust-derived column densities as “ground truth” output.

Results. For conditions similar to those of the Orion B molecular cloud, we obtained predictions of the H2 column density within a typical factor of 1.2 from the Herschel-based column density estimates. A global analysis of the contributions of the different lines to the predictions show that the most important lines are 13CO(1–0), 12CO(1–0), C18O(1–0), and HCO+(1–0). A detailed analysis distinguishing between diffuse, translucent, filamentary, and dense core conditions show that the importance of these four lines depends on the regime, and that it is recommended that the N2H+(1–0) and CH3OH(20–10) lines be added for the prediction of the H2 column density in dense core conditions.

Conclusions. This article opens a promising avenue for advancing direct inferencing of important physical parameters from the molecular line emission in the millimeter domain. The next step will be to attempt to infer several parameters simultaneously (e.g., the column density and far-UV illumination field) to further test the method.

Key words: methods: statistical / ISM: clouds / ISM: molecules

Data are only available at the CDS via anonymous ftp to cdsarc.u-strasbg.fr (130.79.128.5) or via http://cdsarc.u-strasbg.fr/viz-bin/cat/J/A+A/645/A27

© P. Gratier et al. 2020

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

1 Introduction

Atoms and molecules have long been thought to be versatile tracers of the cold neutral medium in the Universe, from high-redshift galaxies to star-forming regions and protoplanetary disks because their internal degrees of freedom bear a signature that reveals clues about the physical conditions of their environments. Atoms and molecules are affected by many processes: photoionization and photodissociation by far-UV photons, excitation by collisions with neutrals and electrons, radiative pumping of excited levels by far-UV or IR photons, gas phase chemical reactions, condensation on grains, solid state reactions in the formed ice, (non)-thermal desorption, etc. Moreover, this chemical activity is tightly coupled with gas dynamics. Chemistry affects the gas motions because (1) the ionization state controls the coupling to the magnetic field; and (2) the line radiation from molecules (mostly rotational lines) and atoms (fine structure lines in the far-IR) is the main cooling agent of theneutral gas over a broad range of astrophysical environments, controlling the equation of state and therefore affecting the dynamics. Conversely, the gas dynamics affects the chemistry because it produces steep and time-variable density and velocity gradients, which change the rates of molecule formation and destruction. Numerical models of interstellar clouds face the difficulty of combining sophisticated chemical codes (addressing the molecule formation and destruction processes) with turbulent gas dynamics. This is a tremendous challenge given the non-linearity of fluid dynamics, the rigidity of chemical reactions, and the wide range of time scales involved (Valdivia et al. 2017; Clark et al. 2019). It is, therefore, important to acquire self-consistent data sets that can be used as templates for this theoretical work and, at the same time, to document the diagnostic capabilities of molecular lines accurately.

The recent development of spectrometers in the (sub)-millimeter domain (e.g., IRAM-30m/EMIR, NOEMA, ALMA) opens new avenues to fulfill this goal. First, wide band spectrometers now allow us to simultaneously observe tens of lines instead of a single one along each line of sight. The first studies using these capabilities were sensitive (~ 3−8 mK) unbiased spectral surveys at 1, 2, and 3 mm targeting a few specific lines of sight (e.g., Horsehead WHISPER: Pety et al. 2012, TMC1: Gratier et al. 2016, ASAI: Lefloch et al. 2018). Firstly, these studies show the power of multi-line studies to constrain the physics and the chemistry of molecular clouds. Secondly, the increase in sensitivity now makes it possible to detect these lines over large areas (several square degrees), paving the way for an era of quasi systematic hyperspectral imaging in the millimeter domain. The ORION-B project (Outstanding Radio-Imaging of OrioN-B, co-PIs: J. Pety and M. Gerin) is a IRAM Large Project using the 30m telescope that aims to improve the understanding of the physical and chemical processes of the interstellar medium by mapping a large fraction of the Orion B molecular cloud (5 square degrees) with a typical resolution of 27″(~ 50 mpc at 400 pc, the typical distance to the Orion B cloud) and 200 kHz (or 0.6 km s−1) over the full 3 mm atmospheric band.

In an early study, Pety et al. (2017) showed how tracers of different optical depths like the CO isotopologues allow us to fully trace the molecular medium, from the diffuse envelope to the dense cores, while various chemical tracers can be used to reveal different environments. However, extracting the information contained in these multi-line observations requires powerful statistical tools. A clustering algorithm applied to the intensities of selected molecular lines revealed spatially continuous regions with similar molecular emission properties, corresponding to different regimes of volume density or far-UV illumination (Bron et al. 2018). In addition, a global principal component analysis of the line integrated brightnesses revealed that some combinations of lines are sensitive to the column density, the volume density, and the UV field (Gratier et al. 2017). In this paper, we go one step further by checking whether it would be possible to build a quantitative estimate of the H2 column density and if so, how that could be done, based on the molecular emission and valid over a large range of conditions. Indeed, this is a prerequisite to classify the interstellar medium into its different phases such as diffuse, translucent, and dense regimes (Pety et al. 2017), and to identify its underlying structure, in particular, its filamentary nature (André et al. 2010; Orkisz et al. 2019). Such a method could also be used to estimate the mass of the different (velocity-separated) components of a giant molecular cloud, for instance, the linear mass of the filaments relative to their more diffuse environment. The H2 column density is also required to compute molecular abundances from observed molecular column densities and compare these with the outputs of astrochemical codes.

To do this, we focus on supervised learning methods. Supervised learning is a general set of machine learning methods used to learn how to assign a class or infer the value of a given quantity from a set of measured observables. These methods need a training set for which we know both the measured features and the searched class or value. Here, we use: (1) the emission of selected spectral lines over a fraction of the observed field of view as input observables; and (2) the dust-traced column density as a proxy of the gas column density. Indeed, multi-wavelength observations of dust thermal emission in the submillimeter range and the subsequent fit of the spectral energy distribution is one of the most successful methods for deriving total column density maps of the interstellar medium. Between 2009 and 2015, the Herschel Observatory instruments PACS (70, 100, 160 μm) and SPIRE (250, 350, 500 μm) mapped a fraction of the sky with an angular resolution of ~40″ or better. In particular, large programmes have been dedicated to this task, for example, the HiGal survey mapping of the inner Galactic plane (68° > l > −70° and |b| < 1° Molinari et al. 2016), or the Gould Belt survey (André et al. 2010). However, since the end of the Herschel mission, and until its potential successor SPICA that could be launched in the 2030s, only SOFIA/HAWK+ is currently able to measure the far-IR dust emission. Ground-based or ballon-borne submillimeter telescopes will map the interstellar medium with even higher angular resolution but the lack of data at shorter wavelengths will make the estimation of the dust temperature highly uncertain. This means that dust temperatures would have to be approximated when deriving the dust-traced column density. This method will likely lead to systematic errors. Hence, devising an accurate method for estimating the H2 column density, which only relies on ground-based (sub-)millimeter facilities, is important.

This paper is structured as follows. Section 2 introduces the data sets and Sect. 3 formalizes the problem. Section 4 introduces our concepts and methods. Section 5 discusses how we applied them in practice. Section 6 compares the performances of different methods. Section 7 discusses which lines are the most important for inferring the H2 column density. Section 8 presents a discussion on whether the column density predictor can be used on noisier data or on data from sources more distant from the Sun than Orion. In this section, we also discuss how the method can be generalized to other physical parameters, such as the far-UV illumination. Section 9 presents our conclusions.

Spectral properties of the observed lines.

2 Data

2.1 Molecular emission from IRAM-30m observations

The acquisition and reduction of the molecular data set used in this study is presented in detail in Pety et al. (2017), but the field of view has been extended to the North and East by ~ 60%. In short, the data were acquired at the IRAM-30m telescope by the ORION-B project in only three frequency tunings: the first from 92.0 to 99.8 GHz (LSB band) and from 107.7 to 115.5 GHz (USB band); the second from 84.5 to 92.3 GHz (LSB band) and from 100.2 to 108.0 GHz (USB band); and the third from 71.0 to 78.8 GHz (LSB band) and from 86.7 to 94.4 GHz (USB band). The data were acquired from August 2013 to February 2015 for the two first tunings and in August 2016 for the third tuning.

The selection of the studied lines was performed based on the spectra averaged over the observed field of view. Table 1 lists the 18 selected lines and their associated tuning setup. A velocity interval of 80 km s−1 was extracted around each line and the spectral axis was resampled onto a common velocity grid. The systemic velocity of the source is set to 10.5 km s−1 and the channel spacing is set to 0.5 km s−1, which is the highest velocity resolution achieved for the 12CO (1− 0) line. This impliesthat the noise is more and more correlated from one channel to the next as the rest frequency of the line decreases.

The studied field of view covers 0.9° × 1.6° towards the Orion B molecular cloud part that contains the Horsehead nebula, and the H II regions NGC 2023, NGC 2024, IC 434, and IC 435. Compared to Pety et al. (2017), Gratier et al. (2017), Orkisz et al. (2017), Bron et al. (2018), it additionally comprises the northern molecular edge that contains the hummingbird filament studied in Orkisz et al. (2019). All the cubes were gridded onto the same spatial grid to ease the analysis. The projection center is located on the Horsehead at  . The maps are rotated counter-clockwise by 14° around this position. The angular resolution ranges from 22.5 to 35.6″. The position-position-velocity cubes of each line were smoothed to a common angular resolution of 40″ to avoid resolution effects during the analysis. This was done by convolution with a Gaussian kernel of width

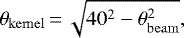

. The maps are rotated counter-clockwise by 14° around this position. The angular resolution ranges from 22.5 to 35.6″. The position-position-velocity cubes of each line were smoothed to a common angular resolution of 40″ to avoid resolution effects during the analysis. This was done by convolution with a Gaussian kernel of width  where θbeam is the telescope beam for each observed line in arcsec. A pixel size of 20″ was used to ensure Nyquist sampling and to avoid too strong correlations between pixels. At a distance of 400 pc (Menten et al. 2007; Zucker et al. 2019, 2020), the sampled linear scales range from ~80 mpc to ~ 11 pc.

where θbeam is the telescope beam for each observed line in arcsec. A pixel size of 20″ was used to ensure Nyquist sampling and to avoid too strong correlations between pixels. At a distance of 400 pc (Menten et al. 2007; Zucker et al. 2019, 2020), the sampled linear scales range from ~80 mpc to ~ 11 pc.

From the position-position-velocity cubes, we computed maps of both the peak temperature and the integrated intensity (moment 0)1. The peak temperature is just the maximum of the spectrum intensity over the 80 km s−1 velocity range. The integrated intensity is computed over a velocity window that is decided as follows. Starting from the peak intensity velocity, all adjacent channels whose intensity is larger than zero are added to the velocity window (Pety 1999). This process is iterated five times, each time starting from the next intensity maximum. Up to five velocity components may, hence, be present on each line of sight.

While the line integrated intensity can be used as a proxy for the column density of the species along the line of sight, at least for some column density interval, we also include the line peak temperature to take into account the possible effect of the excitation temperature on the relationship between the column density and the integrated intensity. As discussed by Pety et al. (2017), we expect variations of the gas temperature (and thus of the excitation temperature) across the targeted field of view because it is exposed to the intense far-UV illumination produced by young OB stars in the more or less embedded H II regions. For optically thick lines, the line peak temperature can be viewed as a proxy for the line excitation temperature where the brightness temperature approaches the excitation temperature. For an (faint) optically thin line, the map of peak temperature is proportional to the excitation temperature times the column density per velocity bin. Using both the line area and the peak temperature partly lifts the degeneracy between excitation and amount of gas along the line of sight (see e.g., the companion article by Roueff et al. 2020).

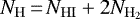

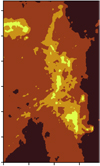

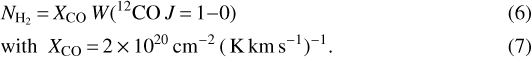

2.2 N(H2) column density derived from dust thermal emission observed with Herschel

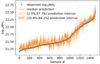

To get an independent measurement of the column density for the Orion B cloud, we use the dust continuum observations from the Herschel Gould Belt Survey (André et al. 2010; Schneider et al. 2013) and from the Planck satellite (Planck Collaboration I 2011). The fit of the spectral energy distribution by Lombardi et al. (2014) gives us access to the spatial distributions of the dust opacity at 850 μm and of the effective dust temperature. As in Pety et al. (2017), we converted τ850 μm to visual extinctions using AV = 2.7 × 104 τ850 mag, and we use NH∕AV = 1.8 × 1021 cm−2 mag−1 as conversion factor between visual extinction and hydrogen column density:  . Over the observed field of view, the column density of atomic hydrogen accounts for less than one visual magnitude of extinction (Pety et al. 2017). We thus choose to neglect this contribution in this study. Figure 1 shows the spatial distribution of this dust-traced column density.

. Over the observed field of view, the column density of atomic hydrogen accounts for less than one visual magnitude of extinction (Pety et al. 2017). We thus choose to neglect this contribution in this study. Figure 1 shows the spatial distribution of this dust-traced column density.

We do not claim that the dust-traced column density used here is a perfect measure of the underlying N(H2) column density. We just wish to check whether the molecular emission alone is able to predict this dust-traced column density. If this method is efficient, the next step will be to anchor it on additional sources of information (see Sect. 8.5).

|

Fig. 1 Spatial distribution of the dust-traced H2 column density derived from Herschel data (André et al. 2010; Lombardi et al. 2014). The dotted grid is used to define the training and test sets. The white square in the bottom right around the Horsehead region corresponds to the test set where the random forest predictions will be compared to the observations. This subset is never used during the training phase. Only the remainder of the map is used as the training set. |

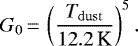

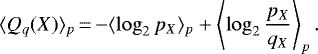

2.3 Information content

Figures 2 and 3 show the spatial distribution of the input variables (integrated intensities and peak temperatures of the lines) and target variable (the dust-traced H2 column density). Table 2 lists, among other properties, the minimum and maximum values of the peak temperature maps for the 18 selected lines, as well as the derived dynamic range computed as the ratio of the maximum over the minimum value. The dynamic range spans values between ~ 20 and ~ 700. The dynamic range of the column density is ~400.

The input and targeted variables have different noise properties. Indeed, the dust-traced H2 column density is derived at high signal-to-noise ratio (S/N) over the full field of view. We can safely assume that the targetedvariable is noiseless even though it may be affected by systematic biases in its derivation. On the contrary, large fractions of the field of view is measured at low S/N of the input variables. This is particularly clear on the maps of the peak temperature, which emphasize the noise pattern at S/N lower than 3. The noise pattern suggests that setups #1 and #2 were mainly observed through vertical scanning, while setup #3 was only observed through horizontal scanning. It also suggests non-negligible variations of the noise levels either because of the weather (mostly summer versus winter weather but also degrading weather during one observing session) or because of the large variation of the telescope elevation between the beginning and the end of an observing session.

3 Astrophysical goal: to determine whether it is possible to accurately predict the H2 column density based on molecular emission

Figure 10 of Pety et al. (2017) shows the joint distributions of the dust visual extinction (proportional to the dust-traced column density of matter along the line of sight,  ) and of the line integrated intensity (Wl) for a selection of the detected lines, l. This figure shows a clear monotonic relationship with low scatter between

) and of the line integrated intensity (Wl) for a selection of the detected lines, l. This figure shows a clear monotonic relationship with low scatter between  and Wl for most of the lines. On one hand, there often exists an interval of column densities for which the relationship between

and Wl for most of the lines. On one hand, there often exists an interval of column densities for which the relationship between  and Wl is linear to a good approximation for one line, but the column density interval depends on the line. On the other hand, these relationships are in general non-linear. This is clear when looking at the relationship between

and Wl is linear to a good approximation for one line, but the column density interval depends on the line. On the other hand, these relationships are in general non-linear. This is clear when looking at the relationship between  and

and  . The integrated intensity stays undetected at low column density and it saturates at high column density. This property is generic for any single molecular line, implying that setting up a predictor of the column density from a single line will always fail in some regime.

. The integrated intensity stays undetected at low column density and it saturates at high column density. This property is generic for any single molecular line, implying that setting up a predictor of the column density from a single line will always fail in some regime.

Taking into consideration that: (1) the relationships between  and Wl are monotonic; and (2) the interval of column densities over which

and Wl are monotonic; and (2) the interval of column densities over which  ∝ Wl to first order depends on the line, this opens up the interesting possibility that a joint analysis of the molecular lines could allow us to devise a predictor of the column density. Building on these empirical facts, Gratier et al. (2017) applied the simplest global analysis of the existing correlations in a multi-dimensional data set, that is, the principal component analysis. Figure 10 from Gratier et al. (2017) shows the joint histogram of the first principal component and the dust-traced column density. This histogram shows a tight correlation between these two quantities with a Spearman’s rank correlation of 0.90 over more than two orders of magnitude in column density, from 1021 to 1023 H2 cm−2. Moreover, this correlation does not saturate any more at either low or high column density. However, it is not exactly linear.

∝ Wl to first order depends on the line, this opens up the interesting possibility that a joint analysis of the molecular lines could allow us to devise a predictor of the column density. Building on these empirical facts, Gratier et al. (2017) applied the simplest global analysis of the existing correlations in a multi-dimensional data set, that is, the principal component analysis. Figure 10 from Gratier et al. (2017) shows the joint histogram of the first principal component and the dust-traced column density. This histogram shows a tight correlation between these two quantities with a Spearman’s rank correlation of 0.90 over more than two orders of magnitude in column density, from 1021 to 1023 H2 cm−2. Moreover, this correlation does not saturate any more at either low or high column density. However, it is not exactly linear.

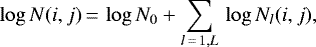

We now assume that there exists a non-linear continuous function F of the line intensities that predicts the dust-traced column density. Our goal in this article is to quantitatively estimate an approximation (noted f) of this function, F. This estimation will be affected by S/N issues since the line intensity measurements are limited by the sensitivity of the observations, that is, our measurements are generally done at an intermediate S/N. We can write our estimation problem as

(1)

(1)

where Tl, Wl is the vector of the peak temperatures and integrated intensities of lines l, and e represents the sum of all uncertainties. In this estimation problem, the measurements may also suffer from systematic biases. For instance, the dust-traced column density may underestimate the amount of matter along the line of sight when the emission from warm dust hides the emission from colder dust (Pagani et al. 2015). In the absence of a more quantitative knowledge of such systematic biases, they cannot be separated from the physical relationship in the estimation, f. They will therefore be de facto included in the function, f, which we try to recover.

Getting an accurate estimate of the H2 column density from molecular line emissions is a long-standing quest in the study of stellar formation. Methods followed the development of (sub)millimeter telescopes, receivers, and spectrometers. They can be divided into two main categories. The first category relies on an empirical linear relationship between the H2 column density and the integrated emission of the (1–0) line of the most abundant tracer of molecular gas, namely, 12CO. Bolatto et al. (2013) explain that this method, known as the XCO-factor method, relies on the fact that giant molecular clouds seem, on average, close to the virial equilibrium. The statistical nature of the basis for this method implies that it mostly works at relatively large linear scale (≳10−50 pc). Several studies (Leroy et al. 2011; Genzel et al. 2012) have shown that the XCO factor depends on the metallicity of the inter-stellar medium to take into account varying fraction of CO dark gas, i.e., H2 gas without enough dust to shield the destruction of CO from the surrounding far UV field.

The second category of methods invert a radiative transfer model to obtain the column density of the associated molecular species from the line intensities or observed spectra. Chemical models estimating the abundances relative to H2 are then used to infer  from the molecular column densities. This method is usually applied on the main CO isotopologues. In this category, Dickman (1978); Dickman et al. (1986) derived the mean 13CO abundance relative to H2. Frerking et al. (1982), Bachiller & Cernicharo (1986) and Cernicharo & Guelin (1987) expanded to other CO isotopologues, showing that the threshold for detecting C18O is higher than that for 12CO or 13CO. Goldsmith et al. (2008) and Pineda et al. (2010) used a modified version with a variable [CO]/[H2] abundance ratio deduced from the Black and van Dishoeck PDR models (see e.g., Visser et al. 2009). By comparing 12CO and 13CO (1–0) emission maps with dust extinction maps, Ripple et al. (2013) showed that the relationship between the 13CO column density and Av is non-linear, indicating variations of the 13CO abundance. Barnes et al. (2018) analyzed a recent large survey of the main CO isotopologues to determine a

from the molecular column densities. This method is usually applied on the main CO isotopologues. In this category, Dickman (1978); Dickman et al. (1986) derived the mean 13CO abundance relative to H2. Frerking et al. (1982), Bachiller & Cernicharo (1986) and Cernicharo & Guelin (1987) expanded to other CO isotopologues, showing that the threshold for detecting C18O is higher than that for 12CO or 13CO. Goldsmith et al. (2008) and Pineda et al. (2010) used a modified version with a variable [CO]/[H2] abundance ratio deduced from the Black and van Dishoeck PDR models (see e.g., Visser et al. 2009). By comparing 12CO and 13CO (1–0) emission maps with dust extinction maps, Ripple et al. (2013) showed that the relationship between the 13CO column density and Av is non-linear, indicating variations of the 13CO abundance. Barnes et al. (2018) analyzed a recent large survey of the main CO isotopologues to determine a  -dependent XCO conversion factor. Their analysis assumes that the excitation temperature is the same for the 12CO and 13CO lines, as well as a constant [13CO]/[12CO] abundance. These two assumptions are shown to be incorrect at least in the Orion B molecular cloud by Bron et al. (2018) and Roueff et al. (2020).

-dependent XCO conversion factor. Their analysis assumes that the excitation temperature is the same for the 12CO and 13CO lines, as well as a constant [13CO]/[12CO] abundance. These two assumptions are shown to be incorrect at least in the Orion B molecular cloud by Bron et al. (2018) and Roueff et al. (2020).

In summary, the first category pursues a a direct connection between the line intensity and the H2 column density, while the second category relies on the estimation of the species abundances. Beam filling factor may be an issue in the latter category if it changes the apparent abundance. The current study belongs to the first category.

|

Fig. 2 Spatial distribution of the line integrated intensity for some of the detected lines in the 3 mm band, plus the dust-traced far-UV illumination (bottom left panel) and the dust-traced H2 column density (top right corner). The color-scales are logarithmic to reveal the distribution of faint signal and positive noise. The maps are rotated counter-clockwise by 14 degrees from the RA/Dec J2000 reference frame. The spatial offsets are given in arcsecond from the projection center located at |

|

Fig. 3 Spatial distribution of the line peak temperature for some of the detected lines in the 3 mm band, plus the dust-traced far-UV illumination (bottom left panel) and the dust-traced H2 column density (top right corner). The color-scales are logarithmic to reveal the distribution of faint signal and positive noise. The maps are rotated counter-clockwise by 14 degrees from the RA/Dec J2000 reference frame. The spatial offsets are given in arcsecond from the projection center located at |

Observed spectral lines and dust-traced properties.

4 Principle: regression in machine learning

In this section, we briefly define the different machine-learning concepts that we use later in this article. It is mostly an intuitive presentation aimed at astronomers who don’t necessarily have a background in machine-learning methods. The main algorithm we use in this paper is called random forest, which was invented by Leo Breiman. Details of its theoretical basis can be found in Breiman (2001)2. An introduction can be found in Hastie et al. (2001, Chap. 15).

4.1 Supervised machine-learning method called regression

Trying to solve Eq. (1) for an approximation of F is a generic machine-learning class of problems known as regression. This approximation of F, which we note as f, is called the regression model. The quantity to be predicted is often called the “dependent” or “target” variable. In our case, it will be the dust-traced column density. The function variables (that is, the observables) are often called “features.” In our case, these will be the measured molecular integrated intensities and peak temperatures. Each line of sight (image pixel) constitutes one measurement of Eq. (1). The regression consists in finding an estimate of F based on the data set. It is a “supervised” method, meaning that it needs to be trained on a dataset for which the solution of the problem is known before the trained method is applied to other datasets.

4.2 Training set, test set, and quality of fit

We use a standard supervised learning workflow by first dividing the full data set into a “training set,” comprising the majority of the observed data on which the best internal parameters of the model are fitted, and a “test set,” which is not seen by the fitting or training algorithm. The quality of the fit is checked by computing the mean square error (MSE) between the value predicted by the model and the observed quantity. There are two kinds of MSE. On one hand, the training MSE is used to fit the model on the training set. On the other hand, the test MSE is computed to assess how the model behaves with regard to data it has not been trained on.

While the regression fit minimizes the training MSE, the final goal is to predict correct values for samples outside of the training set, that is, to minimize the “test” MSE. Small test and training MSE values are expected when the model function is estimated well. This indicates that the model can predict the dust-traced column density based on the values of the molecular intensities on data that have never been used during the fitting procedure. However, finding a test MSE that is much larger than the training MSE is a symptom of a problem known as “overfitting.” In this case, the estimated model function learned not only the searched underlying physics, but also the specifics of the data set measurements, in particular, the noise properties. This can occur, for instance, when the model complexity (i.e., the number of unknown parameters) is too large compared to the information content of the data.

4.3 Variance and bias of the model estimation

The accuracy of the fitted model, that is, achieving the minimum test MSE, always results from a trade-off between the varianceand the bias of the estimated model function (see Sect. 7.3 of Hastie et al. 2001 and Sect. 3.2 of Bishop 2006).

The variance refers to the amount of variation that would affect our estimate of F if the training data set was different. There are different causes of variability in the estimation of F. Firstly, the training set may cover only part of the relevant physical conditions. In our case, we could have failed to sample one of the different column density regimes well enough: either the diffuse or translucent gas, dense cores, etc. Secondly, the condition of the observations, for example, the S/N, can be different from one training set to another.

The bias refers to the fact that there can be a mismatch between the actual complexity of F and the complexity implied by a choice of function family. No matter the amount of data you have in your training sample, trying to fit a non-linear function by an hyper-plane will result in a bias.

Minimizing the test MSE requires selecting a supervised learning method that will find the best trade-off between the variance and the bias. Achieving a low bias but a high variance is easy: any model that goes through all the points of the training data set will do this; it is then completely dependent on the specific noise of the training set and, thus, it is overfitted. Conversely, achieving a low variance but a high bias is just as easy: using the mean of the training data set as model will give this result. The challenge is to find the optimal trade-off between the variance and the bias. As a function of model complexity, variance is minimized at zero complexity and bias is minimized at infinite complexity (or one that is large enough to be an interpolation).

4.4 Decreasing the regressor variance through bagging

Bagging is one of the two standard algorithms used to decrease the variance of a regression method. The other one, called boosting, is not treated in this article. Bagging is an abbreviation for bootstrap aggregating. It is thus related to the bootstrap method, which is often used in astrophysics to estimate random uncertainties in properties inferred from a given data set.

The bootstrap method randomly creates a large number of subsamples of the input data set. In this drawing, replacement is authorized, meaning that the same data point can be chosen multiple times. A regression is then carried out on each sub-sample and the predicted values are computed for each point of each subsample. The aggregation part computes the average of all the predictions. This average becomes the predicted model. It is intuitive to assume that the reduction of the variance comes from the averaging process. This, nevertheless, also assumes that the errors of individual models are uncorrelated. The bias is conserved in this algorithm. In particular, a low bias method will give a low-bias bagged method. A practical advantage of bagging is that it is easily parallelisable, implying short computation times.

4.5 Regression trees

Regression trees are a type of regression method that uses a recursive set of binary splitting on the values of the input variables to estimate thetarget variable. The choice of the split point is made to minimize a cost function. To put it simply, the regression tree poses a series of binary questions to the data, each question narrowing the possible prediction until the method gains enough confidence to assert that this prediction is the right one.

To explain in further detail, at one dimension, the training set is made of couples (xi, yi) that are linked by a to-be-estimated function, f, and the residuals, ei:

(2)

(2)

The function, f, is an approximation of the data in the form of a step function with steps of variable heights and lengths. To obtain it, the method explores all the potential ways to split the values of the x axis into two categories. For each potential split, it computes the MSE of the two resulting classes. It then chooses the split value that minimizes the average of the two MSE weighted by the number of elements in each class. The outcome of this process is a threshold value of x that splits the data set into two classes called tree branches. The decision point is a node of the tree. The process is then iterated in each branch leading to new nodes and branches. The process is stopped when the maximum depth of the tree is reached or a branch contains less than a given number of data points. The predicted value is then computed as the mean of the y values for these points. The first decision point is called the root node. By construction, it’s the one that reduces the final MSE the most. It is called the strongest predictor. A generalization to a multi-dimensional function is straightforward. All the dimensions are split one after the other and the first decision is made along the dimension that minimizes the weighted average of the MSE of the two resulting branches.

Regression trees have several advantages. They make no assumption, either on the basis of the functional form of the learned relationship or on the shape of the underlying probability density of the data set. They thus belong to the class of non-parametric methods. Regression trees are non-linear regressors by nature. They are easy to understand and, thus, to interpret. In particular, the relative importance of the features is easy to extract. Finally, they do not require any normalization nor centring of the data. This last point is a key advantage for us as Gratier et al. (2017) showed that normalization is difficult when the S/N is low for a number of features.

Regression trees, nevertheless, have several drawbacks. A large tree depth brings high flexibility and thus ensures a low bias, but it also makes these trees prone to overfitting. They are unstable, meaning that a small variation in the training set can lead to a completely different regression tree. This implies that their variance is large and that there is no guarantee that the outcome is the globally optimal regression tree. However, most of the drawbacks can be overcome by using a random forest method.

4.6 Random forest

A random forest can overcome the instability and high variance of a regression tree by averaging the predictions from many such trees that include two sources of randomness. First, the input data set is bootstrapped. However, introducing only this kind of randomness would produce highly correlated trees in case the data set contains several strong predictors (i.e., a split decision along a given dimension that largely decreases the MSE). Indeed, these strong predictors will be consistently chosen at the top levels of the tree. So, random forests introduce a second source of randomness at each decision point: instead of minimising the MSE along all the dimensions, it minimizes it along a random subset of the dimensions. Hence, a random forest is a regression method made of bagged regression trees (first kind of randomness) that are split on random subsets of features at each split (second kind of randomness). This not only reduces the variance but it also speeds up the computations because the introduction of randomness is done on both a subset of the data and a subset of the dimensions.

4.7 Model complexity versus interpretability

Later in thispaper, we show that linear regressors fall short in capturing all of the non-linearities of the relationships between molecular emission and N(H2) column density. This calls for a more flexible method. Neural networks are a well-known example of a method that performs well in complex machine learning tasks (see, e.g., Boucaud et al. 2020) but the interpretation of their output is usually difficult. Having an interpretable result is an important criterion for us as we aim to understand the properties of the interstellar medium. Firstly, we put more confidence in the predictions if the interpretation is physically and chemically sound. Secondly, the learned relationships between features and predicted values should provide meaningful insights into the physical and chemical properties of the molecular interstellar medium. Random forests represent a good compromise in complexity versus interpretability as they are able to learn non-linear relationships while keeping the properties that make physical interpretation possible and that allow us to understand a posteriori how the predictions have been obtained.

5 Application

The random forest implementation that we use is the RandomForestRegressor class from the sklearn python module (Pedregosa et al. 2011).

5.1 Quality of the regression and generalization: mean error and RMSE

We monitor the quality of the regressor and its generalization power by computing the mean error and the root mean square error (RMSE) either on the test or training sets. To do this, we compute the residuals, that is, the difference between the observed and predicted values of the column density for each pixel, and then we compute its mean and RMSE. The RMSE quantifies the distance between the observations and the predictions, while the mean error informs us on potential global biases of the regressors and predictors.

While the mean square error (MSE) is used in machine learning because it is simpler and, thus, makes it faster to compute as it does not involve the computation of the square root, the results we present from this point on use the root mean square error. This choice enables us to have values that can be directly compared to the predicted quantity and it gives to first order an estimation of the uncertainty on the predictions. Moreover, there is no loss of generality as the square root is a monotonous function.

5.2 Separation of the data into training and test sets

The molecular emission is spatially coherent over a large number of pixels for two reasons. First, Nyquist sampling implies that neighbor pixels are correlated. Second, the underlying physical properties of the molecular emission are spatially correlated across pixels. Standard methods to divide the data sets into training and test sets, which are based on random draws of the observations, would lead to two correlated sets, weakening the results obtained on the test set. Furthermore, choosing a test region where the physical and chemical properties are well-known is desirable to ease the interpretation of the regression results. Figure 1 shows our choice of training and test sets. We divide the observed region into 40 rectangles of 12.7′ × 13.3′ or 38 × 40 pixels.

The test set is chosen as the rectangle containing the Horsehead Nebula, which has been extensively studied (Pety et al. 2005, 2012; Goicoechea et al. 2006; Gerin et al. 2009; Guzmán et al. 2011, 2012; Gratier et al. 2013; Fuente et al. 2017) and is shown as a bold white rectangle in Fig. 1. Figure 4 zooms in into this test set in all tracers used in this study. For the first tuning setup of the IRAM-30 m data set, the noise increases by about a factor two on an horizontal band towards the southern edge of the test set. This is due to degrading weather conditions when observing this particular region. The Horsehead is a pillar that has been sculpted through photoionization of the Orion B molecular cloud by the O star σ-Ori located about 0.5 of a degree away or 3.5 pc. The Horsehead is thus surrounded by the IC 434 H II region that is not completely devoid of diffuse molecular emission either in the background or in the foreground. As a pillar, it contains two dense cores: one at the top of its head and the other one in its throat. These nevertheless exhibit lower column density than some of the dense cores in the NGC 2024 region. The Horsehead dense cores are surrounded by translucent or diffuse gas whose contact with the far-UV illuminationproduces several photo-dissociation regions. To the south of the pillar, there are a few isolated clumps that contain less column density and which are more illuminated in far-UV. The test set thus contains many different physical and chemical regimes. This region is never used during the training part. The 39 other rectangles are used to train the algorithm.

5.3 Considering whether the test set belongs to the same parameter space as the training set

Supervised learning methods are often biased when used to predict points outside the span of the training set. It is thus important to be able to check whether another data set (e.g., the test set) will belong to the same parameter space as the training set. We need to compute the likelihood that a point belongs to the probability distribution function (PDF) of the training set. To do this, we model this PDF with a sum of simple analytic functions (instead of e.g., using kernel density estimators) because this method is still tractable for high-dimensional data sets.

Gaussian mixture models (GMM, see Sect. 6.8 of Hastie et al. 2001 or Sect. 2.3.9 and 9.2 of Bishop 2006) are flexible methods that give a synthetic probabilistic description of a data set in terms of a finite sum of multidimensional Gaussian PDF. Here, we use the GaussianMixture class from sklearn to fit a sum of n 36-dimensional Gaussians to the training set. The number of Gaussian components in the mixture is a free parameter that we optimize as follows. For each n in  , we train a Gaussian Mixture Model on two-thirds of the training set, selected randomly. We then compute the mean likelihood, that is, the average of the values taken by the GMM for each of the points belonging to the third part not seen during training. This process is repeated three times to improve the estimation of the mean likelihood. The number n of Gaussians that maximizes the mean likelihood is then selected. We find that n = 10 is the optimal value. We also checked different kinds of constraints on the covariance matrix and we found that the best results are obtained when the covariance matrix is left unconstrained.

, we train a Gaussian Mixture Model on two-thirds of the training set, selected randomly. We then compute the mean likelihood, that is, the average of the values taken by the GMM for each of the points belonging to the third part not seen during training. This process is repeated three times to improve the estimation of the mean likelihood. The number n of Gaussians that maximizes the mean likelihood is then selected. We find that n = 10 is the optimal value. We also checked different kinds of constraints on the covariance matrix and we found that the best results are obtained when the covariance matrix is left unconstrained.

Once the Gaussian mixture model (i.e., the PDF consisting of the sum of the weighted individual 10 36-D Gaussians) is fitted to the training set, we compute the value that the Gaussian mixture PDF takes for each point of any data set. Figure 5 shows the histogram of the negative log2 -likelihood values for the training set, the test set, a random set following the GMM PDF, and a random set uniformly sampling the hypercube spanning the whole training set. This latter set is obtained by sampling independently each parameter from a uniform distribution between the minimum and maximum values that are defined in the third and fourth columns of Table 2, which list the minimum and maximum values of the peak temperature for each line. The top panel of this figure shows that the test andtraining sets are well related compared to the random sampling. When zooming on the negative log2 -likelihood range that only contains the test and training sets, the histograms are slightly different (the test-set histogram has a higher wing at low negative log2 -likelihood), but most of the points of both sets span the same range of negative log2 -likelihood.

Appendix A shows that the negative log2 -likelihood of a sample X with respect to a PDF f can be interpreted as the quantity of information, that is the number of bits necessary to encode X with f (up to the constant offset − log2Δ related to the resolution Δ of the quantification). Figure 5 can thus be interpreted as the histogram of the quantity of information necessary to encode the different sets. The mean cost for a uniformly drawn set of points (green histogram) is much larger than for the three others samples, by approximately 3500 bits, computed as the difference between the means of two histograms. The encoding of this set with the estimated Gaussian mixture is clearly not adapted. Conversely, the mean number of bits needed to encode the three other random sample is comparable, i.e., the mean costs in bits to encode the training set, the test set, or a set of points drawn from the GMM are similar. The Gaussian mixture is thus adapted for these three sets.

|

Fig. 4 Zoomof the spatial distribution of the integrated intensity (top) and peak temperature (bottom) towards the Horsehead nebula. |

|

Fig. 5 Probability distribution function of the value of the Gaussian mixture fitted on the training set, for each data point. The log2-likelihood shown on the horizontal axis can be interpreted as the number of bits (to within a constant value) required to encode each point of the sample. The blue, orange, green, and pink colors show the distributions for the training set, the test set, a set of points uniformly drawn in the same multi-dimensional space, and a set of points randomly drawn in the Gaussian Mixture, respectively. The vertical lines show the associated means of the negative log2 -likelihood. Bottom panel: zoom of the top one. |

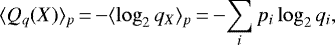

5.4 Optimization of the random forest regressor

Some algorithms have additional parameters, named hyper-parameters, which can be tuned to improve the quality of the regressor. In the case of random forests, the generalization performance can be optimized by tuning three hyper-parameters: (1) the maximum tree depth; (2) the fraction of features randomly chosen to train each node of each individual regression tree; and (3) the number of trees in the forest.

The values of these hyper-parameters are optimized to obtain the best generalization behavior of the predictor to previously unseen data. The goal is to have a predictor that is general enough to learn the complex non-linear relationship between observed features and the predicted quantity without learning the noise in the data set. The standard way of tuning the values of these hyper-parameters is to isolate a part of the training set as a validation set. We randomly put aside 4 out of 39 training rectangles as the validation set. The training procedure is repeated for different (fixed) values of the hyper-parameters. The best hyper-parameter values are then chosen as the ones that minimize the RMSE on the validation set. We also wish to maximize the amount of data used for training in case the validation set contains rare meaningful events (e.g., dense cores). To achieve this, random permutations of the validation sets are implemented and the hyper-parameters are chosen as the ones that are optimal over the average of the different validation sets. In our case, the best parameters are the ones that give the best average performance over ten such cross-validation draws.

We implemented a grid search to optimize these three hyper-parameters. Figure 6 shows the RMSE averaged over the ten cross-validation draws as a function of the three hyper-parameters as described in Sect. 5.2. The red cross shows the values of the hyper-parameters that minimize the RMSE: 300 for the number of trees, 32 for the tree maximum depth, and 30 randomly selected features (i.e., 75% of the total number of features). The optimization of the number of trees and maximum tree depth is particular because we expect that adding more trees monotonically increases the performance by reducing the variance. The most important piece of information here is that that the RMSE does not vary much when the number of trees is larger than 10, the maximum tree depth is larger than 16, and the fraction of randomly selected features is larger than 25%. This makes the overall random forest estimator robust to changes of these hyper-parameter values.

6 Comparison of the random forest prediction with two simpler methods

In order to show the power of the random forest predictor, we compare its performance with two other methods. We focus on the generalization performance of the predictors. This implies that we only check the prediction power on the test data set that has never been seen during the training phase.

|

Fig. 6 Variations of the root mean square error (RMSE) between the predicted and the observed |

6.1 Multi-linear regression with or without a non-linear processing of the line intensities

Firstly, a standard ordinary least square method gives us the minimal achievable regression accuracy. We use the LinearRegression class from sklearn with the keyword normalize=True to apply a standard pre-whitening, that is, subtracting the mean and dividing by the standard deviation.

Secondly, we compare to the result obtained by Gratier et al. (2017). In this study, the linear principal component analysis (PCA) was preceded by the application of a asinh function to the original data set:

(3)

(3)

where a is a constant cutoff. This non-linear transformation allowed us to take care of the specific properties of the histograms of the molecular emission. They are made of a Gaussian core around zero reflecting the noise properties and a power law tail that reflects the signal. The application of the asinh function had the double advantage of (i) applying a logarithm transform to the values above the asinh cutoff a to linearize the power law tail, while (ii) keeping the noise unchanged below the asinh cutoff a. This latter property allowed us to keep all the data set without noise clipping in the analysis. We use a common value of the asinh threshold a = 0.08 K or = 0.08 K km s−1 for the peak temperatures and integrated intensities, respectively, as in Gratier et al. (2017). After applying this transformation, we again use the LinearRegression class from sklearn with the keyword normalize=True as above.

6.2 Spatial distributions of the predictions and of the residuals

The first two columns of Fig. 7 show the spatial distribution of the observed and predicted column densities. The last column shows the ratios of the observed and predicted column densities for the three methods, on a logarithmic scale. It is thus equal to the difference between the logarithms of the predicted and observed column densities.

The residuals (right column of Fig. 7) indicate that all three regressions deliver column density predictions within a typical factor of two (i.e., ±0.3 dex). This means that it is indeed possible to predict the column density of the gas within a factor of two based only on the 3 mm line emission. However, the residuals between the predicted and observed values of  never look like random noise. This implies that the generalization of the column density predictor is imperfect in the three cases.

never look like random noise. This implies that the generalization of the column density predictor is imperfect in the three cases.

The comparison of the spatial distributions of the predicted column densities and the residuals for the three regression methods clearly shows that the linear regression is less successful than the other two non-linear methods. The left-right blue-red pattern indicates that the dense gas column density is clearly underestimated while the diffuse-translucent gas column density is overestimated for the linear predictor. The non-linear predictors perform better overall (lower contrast in the residuals).

The difference between the asinh pre-processing predictor and the random forest one is more subtle. The contrast of the residual image is slightly less pronounced for the random forest predictor. It does better than the asinh pre-processing in the dense cores, under the Horsehead muzzle, and in the diffuse-translucent gas above the Horsehead pillar. However, the back of the Horsehead (its mane) appears bluer in the residual maps of the random forest predictor.

6.3 Jointdistributions of the predicted and observed column density, and the histograms of their ratios

In order to quantitatively compare the three different methods, Fig. 8 shows the joint distributions of the predicted and observed column densities, as well as the histograms of their ratios. Table 3 lists the RMSE over the test set, the maximum root square error, as well as the mean of the ratios. These values here quantify the performance of the generalization of the method.

A linear regression gives a double-peaked histogram of the column density log-residuals (i.e., the base-10 logarithm of the ratio of the predicted over observed column density). This comes from the fact that the prediction overestimates the low column densities and underestimates the high column densities. The linear regression on the asinh of the intensities delivers a much better statistical agreement. Most of the predictions are close to the measured values even though there still is some scatter in particular at intermediate column densities. The joint histograms show that the asinh slightly underestimates the column density around 6.3 × 1021 cm−2 and slightly overestimates it above 1.6 × 1022 cm−2. The random forest predictor shows the least dispersion around the actual column density. All these properties translate into the fact that the histogram of the log-residuals is closest to a Gaussian for the random forest predictor.

These results are quantitatively confirmed by the values of the RMSE and the maximum absolute error listed in Table 3. The RMSE indicates that the predictors infer the column density within 20, 30, and 40% with a maximum error of a factor 1.8, 2.1, and 2.3, for the random forest, the asinh pre-processing, and the linear predictor, respectively. An additional piece of information is that all three methods over-estimate the column density by 20, 10, and 6% for the asinh pre-processing, the random forest and the linear predictors, respectively. This could be due to the fact that we tried to infer a positive quantity from noisy measurements where the centered noise sometimes hides the signal.

More quantitatively, we estimated the standard deviation on the mean error and MSE as

(4)

(4)

where N is the number of pixels in the test set, and var is the variance of the error, that is, the difference between the logarithm of the predicted and observed values. The values listed in Table 3 shows that the difference between the mean errors associated with the random forest, and the asinh pre-processing methods is much larger than the sum of the associated standard deviations. A similar result is obtained for the mean square errors of the two methods. These two results mean that the random forest method yields a significantly better prediction than the asinh pre-processing.

|

Fig. 7 Comparison of the generalization performances of three predictors. Top row: linear method. Middle row: linear method with a non-linear pre-processing. Bottom row: random forest. All results are computed on the Horsehead pillar, i.e., the test set. Left and middle columns: spatial distribution of the observed and predicted column density. Both images share the same color scale. Right column: ratio of the predicted column density over the observed one. The limits of the color scale correspond to a ratio interval from 1∕3 to 3. |

|

Fig. 8 Comparison of the generalization performances of three predictors. Top row: linear method. Middle row: linear method with a non-linear pre-processing. Bottom row: random forest. All results are computed on the Horsehead pillar, i.e., the test set. Left column: joint Probability Distribution Function (PDF) of the predicted column density and of the observed one. The contours are the PDF isocontours enclosing 25, 50, and 75% of the datapoints. Points whose density falls below these values are shown as black dots. The oblique lines have a slope of 1. They indicates ratio values of 1.0 (plain), 0.5 and 2.0 (dashed), 0.1 and 10.0 (dotted). Right column: histogram of the ratio of the predicted column density over the observed one on a logarithmic scale. The dotted lines show the Gaussian of same mean and width. |

6.4 Uncertainty

The fact that a random forest is an ensemble method can be leveraged to associate an uncertainty value to each predicted quantity. Atthe prediction step, the regression trees yield a set of 300 values and the mean of these values is the prediction of the random forest algorithm. It is thus possible to compute the value at a given percentile of the cumulative distribution of these 300 values. Specifically, we compute the values for the {2.3%, 15.9%, 84.1%, 97.7%} percentiles, which would correspond to 1 and 2σ uncertainty intervals for a Gaussian distribution.

Figure 9 compares the median prediction surrounded by the uncertainty intervals that comprise 68 and 95% of the trees with the column density of each pixel with the observed value. This confirms that column densities are well estimated between 2.5 × 1021 cm−2 and 1.6 × 1022 cm−2, and slightly over-estimated outside this interval. Nevertheless, the observed values are within the 95%–uncertainty interval for more than 90% of the test set.

The largest discrepancies between the observed and predicted values happen when the observed column density is lower than 2.5 × 1021 cm−2. In this range, the column densities are most often over-predicted for the three methods tested in this section. This could be related to the fact that 12CO (1–0) is overluminous in the diffuse gas, as already observed by Liszt & Pety (2012). Indeed, diffuse gas is actually present around the Horsehead pillar in the second velocity component between 2 and 8 km s−1, as mentioned in Pety et al. (2017). This behavior may have not been properly learned due to the lack of a clean example in the training set.

Statistical comparison of the performances of three regression methods on the test set (the Horsehead pillar).

|

Fig. 9 Median column density and quantile intervals for each pixel of the test set ordered by increasing observed column density. Theblue dots display the associated observed column densities. |

7 Contribution of the different lines to the performance of the predictor

In the previous section, we show that the random forest predictor is able to approximate the column density with a precision of 20% on data points that belong to the same parameter space as the training set. We now try to quantify the contribution of the different molecular lines to this result. This question can be answered on both the training and test sets, as they belong to similar distributions of the input observables and targeted physical quantity. The advantage of the training set is that it contains 40 times as many points. The advantage of the test set is that the spatial variations of the input and targeted variables show shapes that are easy to describe. We thus use the training set to obtain global trends and the test set to discuss finer trends.

7.1 Lines that contribute the most

A first interpretative tool is the quantification of the line importance, that is, how much each line contributes to the prediction of  . To do this, we first keep the value of the RMSE computed on the training set as a reference. We then randomly permute the values of the intensities for a given line, all other intensities remaining constant. We finally compute the RMSE on the prediction using this shuffled data set. The increase in RMSE is the importance associated with the line. This importance has the same unit as the predicted quantity and it measures how much the performance would be degraded when the species is replaced by a noise that keeps the shape of the probability distribution function. For each line of sight, we simultaneously shuffle the values of the peak intensities and of the integrated intensity values of a given molecular line to estimate the overall importance of this line. Moreover, we try to check whether all lines have a significant contribution. We thus added two random data sets as additional input features to check which lines bring in more information than plain noise. We used two different random data sets to check that the result is not biased by any given random drawing.

. To do this, we first keep the value of the RMSE computed on the training set as a reference. We then randomly permute the values of the intensities for a given line, all other intensities remaining constant. We finally compute the RMSE on the prediction using this shuffled data set. The increase in RMSE is the importance associated with the line. This importance has the same unit as the predicted quantity and it measures how much the performance would be degraded when the species is replaced by a noise that keeps the shape of the probability distribution function. For each line of sight, we simultaneously shuffle the values of the peak intensities and of the integrated intensity values of a given molecular line to estimate the overall importance of this line. Moreover, we try to check whether all lines have a significant contribution. We thus added two random data sets as additional input features to check which lines bring in more information than plain noise. We used two different random data sets to check that the result is not biased by any given random drawing.

The top panel of Fig. 10 shows the line importance by decreasing value for our data set. We first see that all lines bring more information than plain noise. Second, the (J = 1−0) line of 13CO, 12CO, C 18 O, HCO+, HNC, N2 H+, CCH, and the (J = 2−1) line of 12 CS have the largest line importance. Shuffling the 13CO samples increases the RMSE by ~0.22 dex (i.e., a factor 1.65 multiplying the reference factor of 1.2). The least important of these eight lines still increases the RMSE by 0.01 dex (or a factor 1.02), when shuffling its samples. Shuffling the data of any other line increases the RMSE by less than 0.01 dex.

Another way of visualising this effect is to build predictors with an increasing number of lines ordered by decreasing feature importance. The bottom panel of Fig. 10 clearly shows that adding more than the four of the most important lines only marginally increases the overall performance of the prediction. In other words, it seems that only four lines (the J = 1−0 line of the three main CO isotopologues, and HCO+) can predict  almost as well as when all the lines are included. We refine this statement further in Sect. 7.3. Adding more than the first eight lines even seems to bring no added value. This also shows that the random forest method is rather insensitive to the presence of “noisy” data in the input features.

almost as well as when all the lines are included. We refine this statement further in Sect. 7.3. Adding more than the first eight lines even seems to bring no added value. This also shows that the random forest method is rather insensitive to the presence of “noisy” data in the input features.

|

Fig. 10 Contribution of the different lines (both integrated intensity and temperature peak) to the quality of the random forest fit of the training data set. Noise #1 and 2 are two additional random sets of input data. Top: quantitative improvement of the quality of the fit (RMSE feature importance) for each available line. Bottom: evolution of the RMSE (filled circles) and maximum absolute error when each line is progressively added into the training phase in the order defined in the top panel. These results are computed on the training set. The RMSE values on each diagram are commensurate to log |

7.2 Where the lines contributes to the prediction

To confirm these quantitative measures, Fig. 11 shows the evolution of the spatial distribution of the predicted column density and of the associated residuals around the Horsehead pillar when building random forest predictors that are trained on an increasing number of lines ordered by their decreasing importance.

We see that the predictor trained only on the 13CO (1–0) line isable to recover the shape of the Horsehead pillar. This implies that this line contributes to differentiatethe column density between translucent and denser gas. Adding the contribution of the 12CO (1–0) line changes the residual maps mostly in the regions made of diffuse gas (red part on the right side). However, a predictor trained on these two lines alone provide a rather poor estimation of the column density in either the halo that surrounds the Horsehead pillar or the dense cores (e.g., the dense core at the top of the Horsehead or in its throat). Adding the C 18 O (1–0) line is important to improve the estimation within the denser parts (Horsehead spine and dense cores), while including the HCO+ (1–0) line improves the estimation of the column density in the far-UV-illuminated transition region between the translucent and denser gas. Completing the line sample with the HNC and N2 H+ (1–0) lines slightly changes the contrasts of the predicted images but this seems a second-order effect as the shape of the residuals does not change much when adding these lines. The most important change comes from the contribution of the N2 H+ (1–0) line tothe dense cores inside the Horsehead pillar.

Looking at the structure of regression trees, and random forests, it is possible to compute the contribution of each line, l, to the H2 column density. Indeed, it is possible to show3 that the predicted value of the column density at pixel (i, j) can be written as

(5)

(5)

where logN0 is the mean of the column density over the training data set, and logNl is the quantitative contribution of each line (either integrated line profile or peak temperature) to the predicted value of the column density at pixel (i, j). Figure 12 shows the spatial distribution of the contribution of the eight lines most important to predict the column density. The first striking result is that all contribution maps show well-behaved structures (with extremely few exceptions), even though all lines are not detected over the full field of view. This suggests again that the column density estimate is rather insensitive to noise.

The contribution maps also allow us to quantitatively refine the scenario above. They confirm that the 13CO, 12CO, C 18 O, and HCO+ (1–0) lines are the first-order corrections to the mean value of the column density. The integrated intensity of 13CO contributes the most to the predictor. Its contribution map shows that it is important over the whole area. It contributes positively where the column density is high and negatively in the most far-UV illuminated regions. This coincides with the visual impression that the contribution map recovers well the shape of the Horsehead pillar. This is expected because the 13CO line traces most of the gas without being too optically thick. The second biggest contributor is the 12CO (1–0) line. It also contributes positively where the gas is translucent (see e.g., the clumps south of the Horsehead pillar). It is almost neutral (white or light blue around the Horsehead) where the gas is diffuse and it contributes negatively (dark blue) where the H II region dominates. The overall visual impression is that the 12CO line is importantto predict the diffuse to translucent part of the column density along the line of sight. The next two main contributors are the HCO+ and C18O (1–0) lines. They contribute in two complementary physical regimes. The C 18 O line contributes mostly where the gas is dense with a positive contribution at the highest densities (Horsehead spine) and a negative contribution at lower densities (nose, mane, feet). Conversely, the HCO+ line contributes mostly on the photo-dissociation regions that surround more or less dense gas. The comparison of the contribution maps of the integrated intensity and peak temperature for these four lines shows that the integrated intensities contribute more to the estimation of the column density. The peak intensities provide second order corrections, sometimes of opposite signs (clearest on the C 18 O line contributions) to the prediction of  . This suggests that these two parameters indeed play different roles in the estimation of the column density.

. This suggests that these two parameters indeed play different roles in the estimation of the column density.

The next four most important lines are the J = 1−0 lines of HNC, N2H+, CCH, and the J = 2−1 line of 12CS. HNC, and N2H+COntribute mostly on the densest parts of the Horsehead pillar, that is, the dense cores and their surroundings. A striking feature is that the HNC peak temperature contributes one of the most important corrections in the places where the gas is dense, while its integrated intensity does not play a role in the prediction. This property is probably related to the detection pattern of this line in Fig. 4, which shows that the spatial distribution of the peak temperature is more contrasted or structured than the integrated line emission for the HNC line. Indeed, its integrated intensity varies between about 1 and 3 K km s−1 (it appears mostly red) everywhere it is detected in the horsehead pillar, while its peak temperature varies from about 0.3 to 2 K (its color varies from green to white) on the same region. Another striking feature is that the N2 H+ (1–0) peak temperature contribution map is structured even in regions where this line is not obviously detected. This probably means that the random forest has learned that a correction is needed when the N2 H+ line stays undetected. Finally, the CCH (1–0) and 12CS (2–1) lines contribute smaller corrections in photo-dissociation regions and UV-shielded dense gas, respectively. The peak temperatureand integrated intensity of both lines contribute corrections of similar magnitude.

|

Fig. 11 Evolution of the prediction of the column density when adding molecular tracers one by one during the training phase. Left and middle columns: spatial distribution of the observed and predicted column densities. Both images share the same color scale. Right column: ratio of the predicted column density over the observed one. The limits of the color scale correspond to a ratio interval from 1∕5 to 5. |

|

Fig. 12 Spatial distribution of the contribution of the integrated intensity and the peak temperature of the eight most important lines to the estimation of |

|

Fig. 13 Spatial distribution of the four following masks: 1 ≤ Av < 2 in black, 2 ≤ Av < 6 in brown, 6 ≤ Av < 15 in orange, and 15 ≤ Av in yellow. |

7.3 The physical regime each line contributes to

The line importance discussed in Sect. 7.1 is computed on the full training set that indifferently mixes all physical regimes. However, the contribution maps discussed in the previous section clearly showed that some lines are more important in certain particular physical regimes. We thus now ask whether some other lines are important for a given physical regime of column densities. Using the same categories as discussed in Pety et al. (2017), we compute the line importance on four subsets of the training set (see Fig. 13): 1 ≤ Av < 2 (diffuse gas: 14 377 pixels), 2 ≤ Av < 6 (translucent gas: 34 635 pixels), 6 ≤ Av < 15 (filaments: 8 723 pixels), and 15 ≤ Av (dense cores: 1 545 pixels). Figure 14 shows the line importance diagrams for the four different intervals of visual extinction.