| Issue |

A&A

Volume 699, July 2025

|

|

|---|---|---|

| Article Number | A350 | |

| Number of page(s) | 19 | |

| Section | Catalogs and data | |

| DOI | https://doi.org/10.1051/0004-6361/202554425 | |

| Published online | 22 July 2025 | |

HOLISMOKES

XVI. Lens search in HSC-PDR3 with a neural network committee and post-processing for false-positive removal

1

Dipartimento di Fisica, Università degli Studi di Milano,

via Celoria 16,

20133

Milano,

Italy

2

INAF – IASF Milano,

via A. Corti 12,

20133

Milano,

Italy

3

Aix-Marseille Université, CNRS, CNES, LAM,

Marseille,

France

4

Purple Mountain Observatory,

No. 10 Yuanhua Road, Nanjing,

Jiangsu

210033,

PR

China

5

Technical University of Munich, TUM School of Natural Sciences, Physics Department,

James-Franck-Straße 1,

85748

Garching,

Germany

6

Max-Planck-Institut für Astrophysik,

Karl-Schwarzschild Straße 1,

85748

Garching,

Germany

★ Corresponding author: stefan.schuldt@unimi.it

Received:

7

March

2025

Accepted:

19

June

2025

We have carried out a systematic search for galaxy-scale lenses exploiting multiband imaging data from the third public data release of the Hyper Suprime-Cam (HSC) survey with the focus on false-positive removal, after applying deep learning classifiers to all ~110 million sources with an i-Kron radius above 0."8 . To improve the performance, we tested the combination of multiple networks from our previous lens search projects and found the best performance by averaging the scores from five of our networks. Although this ensemble network leads already to a false-positive rate of ~0.01% at a true-positive rate (TPR) of 75% on known real lenses, we have elaborated techniques to further clean the network candidate list before visual inspection. In detail, we tested the rejection using SExtractor and the modeling network from HOLISMOKES IX, which resulted together in a candidate rejection of 29% without lowering the TPR. After the initial visual inspection stage to remove obvious non-lenses, 3408 lens candidates of the ~110 million parent sample remained. We carried out a comprehensive multistage visual inspection involving eight individuals and identified finally 95 grade A (average grade G ≥ 2.5) and 503 grade B (2.5> G ≥ 1.5) lens candidates, including 92 discoveries showing clear lensing features that are reported for the first time. This inspection also incorporated a novel environmental characterization using histograms of photometric redshifts. We publicly release the average grades, mass model predictions, and environment characterization of all visually inspected candidates, while including references for previously discovered systems, which makes this catalog one of the largest compilation of known lenses. The results demonstrate that (1) the combination of multiple networks enhances the selection performance and (2) both automated masking tools as well as modeling networks, which can be easily applied to hundreds of thousands of network candidates expected in the near future of wide-field imaging surveys, help reduce the number of false positives, which has been the main limitation in lens searches to date.

Key words: gravitational lensing: strong / methods: data analysis

© The Authors 2025

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1 Introduction

Strong gravitational lensing has emerged in recent decades as a powerful tool to probe galaxy evolution and cosmology. It allows us to obtain in a very precise way the total mass (i.e., baryonic and dark matter (DM)) of the galaxy or galaxy cluster acting as the lens (e.g., Bolton et al. 2008; Shu et al. 2017; Caminha et al. 2019) and, by assuming that mass follows light, we can disentangle the mass components and obtain unique insights into the DM distribution (e.g., Schuldt et al. 2019; Shajib et al. 2021; Wang et al. 2022) or DM substructure (e.g., Ertl et al. 2024; Lange et al. 2025; Enzi et al. 2025). Thanks to the lensing magnification, strong lensing also allows us to study high-redshift sources not visible otherwise (e.g., Shu et al. 2018; Vanzella et al. 2021; Meštrić et al. 2022; Stiavelli et al. 2023; Morishita et al. 2024).

In the case of a time-variable background object, such as a supernova (SN) or a quasar, time delays can be measured (e.g., Courbin et al. 2018; Millon et al. 2020) and exploited for competitive measurements of the value of the Hubble constant, H0 (Refsdal 1964). Given the rarity of SNe and strong lensing events, this time-delay cosmography (TDC) technique was mostly carried out with quasars (e.g., Wong et al. 2020; Acebron et al. 2022; Shajib et al. 2022; Acebron et al. 2024). To date only three strongly lensed SNe are known with time delays usable for a precise measurement of H0: SN Refsdal lensed by the cluster MACS J1149.5–2223 (e.g., Grillo et al. 2018; Kelly et al. 2023; Grillo et al. 2024), SN H0pe strongly lensed by the cluster PLCK G165.7+67.0 (Frye et al. 2024; Pascale et al. 2025), and SN Encore with SN Requiem, both lensed by the same cluster, MACS J0138–2155 (Rodney et al. 2021; Pierel et al. 2024; Granata et al. 2025; Ertl et al. 2025; Pierel et al., in prep.). To prepare a systematic search with current and upcoming wide-field imaging surveys, we initiated the Highly Optimized Lensing Investigations of Supernovae, Microlensing Objects, and Kinematics of Ellipticals and Spirals (HOLISMOKES, Suyu et al. 2020) program. As a precursor to the Legacy Survey of Space and Time (LSST) of the Vera C. Rubin Observatory (Ivezic et al. 2008; Ivezić et al. 2019), we are currently exploiting data from the Hyper Suprime-Cam (HSC), which are expected to be very similar.

In Cañameras et al. (2021, hereafter C21) we presented a residual neural network to search broadly for any static lens, and complemented this in Shu et al. (2022, hereafter S22) with deflectors at relatively high redshift (zd ≥ 0.6) to better cover the whole redshift range. Both projects relied on a single convolutional neural network (CNN) and targeted HSC images of the second public data release (PDR2). In this work, we combine multiple networks into an ensemble network, relax our restrictions on filter coverage to increase the observed sky area (requesting gri bands instead of grizy), and consider data from PDR3 with a slightly deeper and larger footprint.

While the resulting sample of network candidates from HSC is small enough for visual inspection, this will not be the case for LSST, Euclid (Laureijs et al. 2011), Roman (Spergel et al. 2015), and the Chinese Space Station Telescope (Gong et al. 2019), delivering more than a billion images, even with a citizen science approach (e.g., Holloway et al. 2024; Euclid Collaboration: Holloway et al. 2025; Euclid Collaboration: Walmsley et al. 2025). Consequently, we urgently need further automated ways to lower the false-positive rate (FPR) before an unavoidable visual inspection.

In this paper, we show our deep learning ensemble network and, following C21, apply it to cutouts of any object with an i-Kron radius above ![$\[0^{\prime\prime}_\cdot8\]$](/articles/aa/full_html/2025/07/aa54425-25/aa54425-25-eq2.png) that passes standardized HSC image quality flags (see C21 for details). We explore two different approaches to reject false positives: (1) we run SExtractor to reject images with artifacts or without a real astrophysical source in the cutout, followed by a exclusion through HSC-pixel flags, and (2), we run the residual neural network from Schuldt et al. (2023a, hereafter S23a) to reject systems based on the mass model predictions. Specifically, Sect. 2 presents the tested network committee and their performances on known real lenses. We then describe the two approaches of contaminant rejection in Sect. 3, before we carry out a visual inspection as described in Sect. 4 and present the newly discovered lens candidates. Finally, we give a summary and conclusion in Sect. 5. We exploit trained networks from C21, S22, and Cañameras et al. (2024, hereafter C24), and consequently also adopt a flat ΛCDM cosmology with Ωm = 1 − ΩΛ = 0.32 (Planck Collaboration VI 2020) and H0 = 72 km s−1 Mpc−1 (Bonvin et al. 2017).

that passes standardized HSC image quality flags (see C21 for details). We explore two different approaches to reject false positives: (1) we run SExtractor to reject images with artifacts or without a real astrophysical source in the cutout, followed by a exclusion through HSC-pixel flags, and (2), we run the residual neural network from Schuldt et al. (2023a, hereafter S23a) to reject systems based on the mass model predictions. Specifically, Sect. 2 presents the tested network committee and their performances on known real lenses. We then describe the two approaches of contaminant rejection in Sect. 3, before we carry out a visual inspection as described in Sect. 4 and present the newly discovered lens candidates. Finally, we give a summary and conclusion in Sect. 5. We exploit trained networks from C21, S22, and Cañameras et al. (2024, hereafter C24), and consequently also adopt a flat ΛCDM cosmology with Ωm = 1 − ΩΛ = 0.32 (Planck Collaboration VI 2020) and H0 = 72 km s−1 Mpc−1 (Bonvin et al. 2017).

2 Inference with network committees

We followed the approach described in C21 to classify the ~110 million galaxies from HSC Wide PDR3 with an i-band Kron radius of ![$\[\geq0^{\prime\prime}_\cdot8\]$](/articles/aa/full_html/2025/07/aa54425-25/aa54425-25-eq3.png) and optimize the contamination rate of the candidate strong lens sample. Based on test sets drawn from HSC Wide PDR2 images and designed to closely match a real classification setup, Cañameras et al. (2024) show that the purity and overall classification performance are significantly improved with committees of multiple neural networks (see also e.g., Andika et al. 2023). The highest gain is obtained when combining networks trained on different ground-truth datasets, with different prescriptions for the parameters’ distributions over the mock lenses used as positive examples. Taking the average or multiplication of output scores from networks that have little overlap in false positives due to their internal representations is best for improving the true-positive rate (TPR), defined as true-positives over all considered positives, at low FPR.

and optimize the contamination rate of the candidate strong lens sample. Based on test sets drawn from HSC Wide PDR2 images and designed to closely match a real classification setup, Cañameras et al. (2024) show that the purity and overall classification performance are significantly improved with committees of multiple neural networks (see also e.g., Andika et al. 2023). The highest gain is obtained when combining networks trained on different ground-truth datasets, with different prescriptions for the parameters’ distributions over the mock lenses used as positive examples. Taking the average or multiplication of output scores from networks that have little overlap in false positives due to their internal representations is best for improving the true-positive rate (TPR), defined as true-positives over all considered positives, at low FPR.

We investigated several combinations of neural networks chosen among the best performing ResNet and classical CNNs from C21, S22, and C24. All considered networks reached on their own a TPR of 40–60% at a FPR of 0.01% using 70 910 non-lens images from the COSMOS field. We refer to the original publications for more details such as their receiver operating characteristic curves.

After classifying the ~110 million HSC PDR3 image cutouts with these networks, we compared the TPR over three independent sets of confirmed and candidate strong lenses as a function of the total number of lens candidates predicted by the committee. This allowed us to find the optimal committee that maximizes the TPR at a given output sample size (i.e., corresponding to a fixed human inspection time). The first set of test lenses (set 1) includes the 1249 galaxy-scale grade A or B candidates from the SuGOHI papers1. The second set (set 2) focuses on 201 galaxy-scale grade A from SuGOHI, which are also included in set 1. The third set (set 3) corresponds to the cleaned set of 178 galaxy-scale grade A or B lens candidates used in C21 C24, which excludes visually identified systems with multiple lens galaxies, or significant perturbation from the environment.

We investigated various combinations of different networks by taking the average or the product of their individual network scores. As examples, the performance of combinations with two, three, four, and five networks are shown in Fig. 1. The best committee that maximizes the TPR in all three test sets at a fixed number of candidates was obtained by averaging the scores of five individual networks: the ResNet from C21, Classifier-1 from S22, and three additional ResNets from C24, as is illustrated in Fig. 2. We adopted a threshold on the average score of 0.55, which corresponds to 22393 strong-lens candidates, and a TPR of 51, 54, and 75% over test set 1,2, and 3, respectively. Since the TPR curves reach a plateau for all three of the test sets considered, decreasing the score threshold to include 50000 additional candidates would have improved the TPR by only ≃4–5%, which does not justify nearly tripling the human inspection time2. Having various test sets was important, so that we could check that the plateau in TPR is reached irrespective of the exact setup of the test set. Since set 3 best represents the galaxy-galaxy scale lenses we target with this network committee (e.g., set 3 is cleaned from group and cluster lenses), the network committee shows the best performance on this set as expected. Finding about 20 000 candidates among 110 million sources from the parent sample is consistent with the FPR in the range of 0.01–0.03% predicted in C24 for network committees, at a TPR of 75% evaluated among set 3.

The five networks are all from the same type of architecture and include residual neural network blocks (He et al. 2015). Specifically, the Classifier-1 from S22 is based on the CMU DeepLens package from Lanusse et al. (2018), while the other four networks are adapted from the ResNet-18 architecture. The networks were, however, trained on different ground-truth datasets. We introduce the general properties of the training sets and refer the reader to C21, S22, and C24 for further details.

For all five networks, the realistic mock lenses used as positive examples were simulated with the pipeline described in Schuldt et al. (2021, 2023b). Briefly, the pipeline paints lensed arcs on HSC images of massive luminous red galaxies (LRGs) using singular isothermal ellipsoid (SIE) lens mass profiles, and SIE parameter values inferred from SDSS spectroscopic redshifts and velocity-dispersion measurements. After the high-redshift background sources are drawn from the Hubble Ultra-Deep Field (HUDF, Inami et al. 2017), mock lensed arcs are computed with GLEE (Suyu & Halkola 2010; Suyu et al. 2012b), and convolved with the HSC point spread function, before coaddition with the lens galaxy cutout. Positive examples used to train the ResNet from C21 and Classifier-1 from S22 were drawn from the same parent set of mocks, with (i) a nearly uniform Einstein radius distribution between ![$\[0^{\prime\prime}_\cdot75\]$](/articles/aa/full_html/2025/07/aa54425-25/aa54425-25-eq4.png) and

and ![$\[2^{\prime\prime}_\cdot5\]$](/articles/aa/full_html/2025/07/aa54425-25/aa54425-25-eq5.png) , (ii) a boosted fraction of lens galaxies at z > 0.7 with respect to the parent SDSS sample, (iii) lensed images that have μ ≥ 5, and (iv) a minimal ratio of SNRbkg,min = 5 between the brightest arc pixel and the local sky background over the lens LRG cutout. The other three high-performing networks from C24 that are part of the committee were trained on mocks produced with a similar procedure, but with (i) a boosted fraction of red HUDF sources, (ii) a natural Einstein radius distribution between

, (ii) a boosted fraction of lens galaxies at z > 0.7 with respect to the parent SDSS sample, (iii) lensed images that have μ ≥ 5, and (iv) a minimal ratio of SNRbkg,min = 5 between the brightest arc pixel and the local sky background over the lens LRG cutout. The other three high-performing networks from C24 that are part of the committee were trained on mocks produced with a similar procedure, but with (i) a boosted fraction of red HUDF sources, (ii) a natural Einstein radius distribution between ![$\[0^{\prime\prime}_\cdot75\]$](/articles/aa/full_html/2025/07/aa54425-25/aa54425-25-eq6.png) and

and ![$\[2^{\prime\prime}_\cdot5\]$](/articles/aa/full_html/2025/07/aa54425-25/aa54425-25-eq7.png) instead of an uniform distribution, and (iii) no lower limit on μ and a balanced fraction of double and quad configurations. This corresponds to sets labeled L1, L4, and L6 in C24.

instead of an uniform distribution, and (iii) no lower limit on μ and a balanced fraction of double and quad configurations. This corresponds to sets labeled L1, L4, and L6 in C24.

In terms of negative examples, four out of the five networks include a mix of 33% spirals, 27% isolated LRGs without arcs, 6% groups, and 33% random galaxies over the HSC footprint, as is defined for set N1 in C24. The fifth network, namely Classifier-1 from S22, was primarily targeting high-redshift strong-lenses and trained on negative examples drawn from a parent sample with red (g − r) and (g − i) colors. All five networks were trained and validated on images in gri bands, such that we require only the availability of gri bands in HSC, while the image in z or y can be missing.

|

Fig. 1 True-positive rate (TPR), giving the ratio between true-positives and all positives, of different network committees (solid lines) measured on set 3, as a function of the number of lens candidates selected by each committee over the parent sample of 110 million sources. We consider here five different networks, whose individual curves are shown in light purple of various line styles. As examples, we show the performance of the ResNet trained on sets L4 and N1 from C24 (dash-dotted) and Classifier-1 from S22 (long-dotted). The solid gray line shows the performance when averaging the scores from these two networks, the green one when additionally including the ResNet trained on L7 and N1 (long dashed), the orange one when also including the baseline ResNet from C24 (dotted), and red when additionally using the network from C21 (dashed). We further show the TPR evolution with a solid dark blue line when multiplying the network scores from all five networks instead of averaging. |

|

Fig. 2 Evolution of the TPR, as measured over the three test sets of candidate and confirmed strong-lenses, as a function of the number of candidates selected by the neural networks. Solid curves show the results for the final committee of five networks trained on different ground-truth datasets. Light purple curves show the lower TPR obtained on set 3 for the five individual networks included in the committee: the ResNet from C21 (dashed curve), Classifier-1 from S22 (long-dotted curve), the baseline ResNet from C24 (dotted curve), a network trained on mocks with a natural θE distribution (dash-dotted curve), and a ResNet trained on balanced fraction of doubles and quads (long-dashed curve). The vertical gray line marks the final score threshold applied to select the list of strong-lens network candidates. Examples of score thresholds are marked for the evaluation of the best committee on set 3. |

3 Cleaning the output candidate list

Before conducting a visual inspection (see Sect. 4) to identify the high-quality strong lens candidates, the catalog of 22393 candidates was post-processed to remove obvious artifacts and non-lenses. Since prior to this work no larger samples of HSC images were flagged as images with artifacts or crowded fields, we could not include a larger fraction of them in the negative training set. This would have helped to lower the FP rate. However, it would have lowered the fraction of other types (such as LRGs, spirals, or ring galaxies; see C24) in the negative set that more closely resemble the lens images. For this post-processing, we applied mainly two criteria, as is detailed below. An overview table of the stages and resulting sample sizes is given in Table 1. While both techniques could have also been applied to the ~110 million parent sample and only the cleaned sample would have been classified by the network committee, it is significantly more efficient to first rank the full sample, and then further clean only the top candidates. This is mostly due to the longer runtime of the cleaning scripts than a network evaluation and because the second approach (see Sect. 3.2) requires in addition to the gri bands the z band observations which can then be downloaded only for the top ranked candidates rather than the full ~110 million parent sample.

Summary of the different selection stages with corresponding sample size, including rediscoveries.

|

Fig. 3 Examples of false-positive candidates from the network committee that were cleaned with post-processing scripts. A major fraction of contaminants correspond to crowded fields and/or cutouts with nonzero background emission in g, r, and/or i-band, which are rejected by SExtractor and HSC pixel-level flags (top row) or by the modeling network from S23a (bottom row). We show the average score of the network committee and the HSC ID in the top and bottom, respectively, of each panel, which is ~10″ on a side. We further show the predicted lens center as a cross with a length corresponding to the predicted uncertainty, and the Einstein radius as a solid green circle with predicted uncertainty bounds as dotted white lines. Some of the lower limits on the Einstein radii are near zero and are thus not visible. |

3.1 Cleaning using SExtractor and HSC pixel level flags

A substantial fraction of contaminants correspond to cutouts with residual background emission in one or all gri bands (see some examples in the top row of Fig. 3). These cutouts were identified using source masking and estimates of the sky background with SExtractor (Bertin & Arnouts 1996), leaving 19 820 candidates without loss of any test lens. This step was then refined with information from the pixel-level flags inferred by the HSC pipeline. We searched for optimal cuts to remove contaminants using the flags in gri bands over the 72×72 pixel images. First, we discarded cutouts that have >90% pixels with flags ≥512 and <1024, corresponding to pixels located near a bright object. This securely excluded cutouts affected by bright neighboring stars within a few tens of arcseconds. Secondly, we removed cutouts with >99% pixels flagged as “detected pixels”, which are more likely to correspond to crowded fields such as stellar clusters, or star-forming clumps within extended disks, than strong gravitational lenses. These two criteria decreased the sample to 18 712 candidates.

3.2 Cleaning using modeling CNN

In parallel, we applied the ResNet from S23a to all 22 393 network candidates. This modeling network is trained on realistic mock images created in a similar way to the ones used for training the committee network, and obtained a great performance in measuring the Einstein radii of lens systems when compared to glee_auto.py (Schuldt et al. 2023b), a code that relies on the well-tested lens modeling software GLEE (Suyu & Halkola 2010; Suyu et al. 2012a). The modeling network predicts the lens mass center, x and y, the lens mass ellipticity, ex and ey, and Einstein radius, θE, of a SIE profile as well as an external shear (γ1 and γ2), and the corresponding 1 σ uncertainties.

Since the modeling network is trained on solely lens images (i.e., not in combination with lens classification as in Andika et al. 2025), it is forced to provide reasonable model parameters (e.g., θE ∈[![$\[0^{\prime\prime}_\cdot5\]$](/articles/aa/full_html/2025/07/aa54425-25/aa54425-25-eq8.png) , 5″]) even if the given image is clearly not a lens. However, for such a given non-lens image, the modeling network is uncertain and the predicted errors can be significantly higher. Consequently, we can use this fact to reject false-positives from the classification network. To be conservative, we define the cuts here such that we do not exclude any lens candidate from our test set, as is shown in Fig. 4, which results in an additional rejection of 2794 systems. We show some examples of rejected candidates in the bottom row of Fig. 3.

, 5″]) even if the given image is clearly not a lens. However, for such a given non-lens image, the modeling network is uncertain and the predicted errors can be significantly higher. Consequently, we can use this fact to reject false-positives from the classification network. To be conservative, we define the cuts here such that we do not exclude any lens candidate from our test set, as is shown in Fig. 4, which results in an additional rejection of 2794 systems. We show some examples of rejected candidates in the bottom row of Fig. 3.

In sum, we rejected around 29% of the network candidates as false positives by keeping the same TPR on our test sample. This cleaning was performed through automated and fast scripts, and thus is also scalable for sample sizes expected from ongoing and upcoming wide-field imaging surveys that are two orders of magnitude higher. While excluding ~1/3 of the contaminants by keeping the same TPR is already a good improvement in the purity, we note that for significantly higher samples more stringent cuts can be applied to reject more contaminants, with the downside of possibly excluding some lenses.

4 Visual inspection

Before the visual inspection of the remaining network candidates from Sect. 3, we excluded duplicates among the network candidates. Although CNNs are known to be translation-invariant, we only excluded duplicates within two pixels when creating the 110 million parent sample to be very conservative. This ensured that we got network scores for all individual galaxies in the parent sample, and that we preserved cutouts centered on the actual lens galaxies. However, for the visual inspection, we removed duplicate cutouts within 2″. In order to preserve lines of sight with the most extended and most likely deflector galaxy in the center, we rank ordered the catalog entries by decreasing i-Kron radius and removed duplicates of lower rank. Excluding these duplicates reduces the human inspection time further, while the moderate cut of 2″ ensures that we will not shift any multiple image out of the inspected cutout, which would risk us missing the identification.

Furthermore, we excluded additional image stamps previously inspected as part of our HOLISMOKES strong lens searches in earlier HSC data releases (C21; S22; Schuldt et al. (2025), hereafter S25) to lower the need in human resources, as we grade the systems in a similar way and would therefore have similar resulting grades. We only kept 50 lenses (high-quality grade A or B lens candidates, which we simply refer to as “lenses” hereafter) and 50 non-lenses from C21 and S25 relying both on the same network, as well as 50 lenses and 50 non-lenses from S22 for comparison3. This resulted in a catalog of 11 874 candidates, including the 200 systems mentioned above, for visual inspection.

The visual inspection closely followed the procedure proposed by S25 and was conducted jointly with candidates presented by Andika et al. (2025). In short, we (1) carried out a calibration round using 200 systems inspected by all eight inspectors4 since we introduced several new features in the grading tool, as is detailed below. The actual grading then comprised (2) a binary classification (Sect. 4.1), a (3) four-grade inspection of four individuals, and (4) another four-grade inspection from the remaining inspectors, which additionally characterized the predicted mass model and the candidate environment (see Sect. 4.2). Finally, we obtained the average grade, G, from the eight individual grades collected for the interesting candidates (reported in Table 2). As previously, we use a three-panel image to show two different scalings of the gri bands, and, in contrast to previous inspections, an image of the riz bands to ease the identification of lensed quasars. While these image stamps have a size of 10″ × 10″, we additionally show 80″ × 80″ stamps in the same filters and scaling as in the smaller cutout.

|

Fig. 4 Normalized histograms of the mass model parameter predictions using the modeling network from S23a. The top row shows the predicted mass model parameter values, and the bottom row shows the corresponding 1 σ uncertainties. We show the 546 grade A and B lens candidates from C21 and S25 (blue), the visually rejected systems as non-lenses for comparison (orange), and those of the network candidates from our committee network of this work (green). The parameter ranges used to reject non-lenses are marked in red and defined by the highest and/or lowest uncertainty of the above-mentioned grade A and B lens sample (the small overlaps between the red regions and nonzero blue bins are merely due to the finite bin widths of the histograms). |

4.1 Binary inspection

We then split the catalog among ourselves, such that each system was inspected by two graders in a binary classification to rapidly exclude the majority of obvious non-lenses. As it was mentioned above, the catalog contains 200 systems from our previous searches, with 100 lenses and 100 non-lenses. Of the 100 lenses (or lens candidates), we recovered 98/100 with a previous grade of A or B and only missed two systems, namely systems HSC J222002+0605065 and HSC J090929 +010030 which previously obtained both an average grade of 1.6, while the lower limit for grade B is 1.5. Of the 100 non-lenses, we forwarded 59/100, which, however, is understandable as several of these galaxies previously obtained an average grade slightly below 1.5 and we aim to now forwarded all systems above a grade of 1 in the final grading scheme. Interestingly, we also forwarded 12/100 that previously had an average grade of 0. Since this first stage is graded conservatively to rule out obvious non-lenses and there is subsequently another round of inspection for refinement, this result for the 200 systems is overall very good.

4.2 Multi-class inspection

In the next round, four individuals inspected the remaining 3,408 systems from the binary classification. At this stage, each inspector provided one of four possible grades (0 corresponding to “no lens”, 1 to “possible lens”, 2 to “probable lens”, and 3 to “definite lens”) and voted in case the lens was significantly offset from the cutout center. In total, 309 systems obtained the “off-center” flag by at least one person, such that the lens center was subsequently corrected. A re-centering at this stage was crucial for the subsequent round of grading by the other four remaining graders, as we also showed in the central image the lens center and Einstein radius predicted by the ResNet from S23a (see bottom row of Fig. 3 for examples).

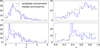

Based on the average grade, G, obtained from these four grades, we forwarded all systems with either G > 1 or G ≤ 1 but a standard deviation of the four grades above 0.75. Including those with a lower grade but a high discrepancy increases the forwarded sample significantly, but minimizes the possibility of missing any good lens candidate. This results in 686 candidates, including 160 systems that got shifted, which were inspected by the remaining four graders. In addition to the grading and “off-center” flag from the previous round, these four individuals were tasked with also indicating if the predicted lens center or Einstein radius is significantly mis-predicted. Furthermore, for each candidate, two histograms of photometric redshifts from the lens candidate environment, one up to z = 1 and the other up to z = 4, were shown in order to obtain a classification of the environment as well. As examples, the histograms of one system in an overdensity (bottom row) and one in the field (top row) are shown in Fig. 5. This allows us to assess if the predicted mass model, which we provide in Table 2 (see also Table A.1 for explanations), is reliable and to characterize the systems’ environment, while only very slightly increasing the visual inspection time.

|

Fig. 5 Histograms of the photometric redshifts within a box of 200″ on a side around two different lens candidates (top and bottom row) as examples. Such histograms, one in the range up to a redshift of 4 (left) and one up to a redshift of 1 (right), were shown during the visual inspection to ease the environment classification. The lens system in the top row has an environment similar to the median, whereas the system in the bottom row shows an overdensity at z ~ 0.4. |

4.3 Comparison to previous inspected candidates

As was mentioned above, we included 200 systems that we already inspected in previous works. From the 100 candidates that we previously excluded (i.e., that obtained an average grade of G < 1.5), only one system has now obtained an average grade above 1.5 and is consequently listed as a grade B candidate. From the sample of previously classified grade A and B lenses, we notice a tendency toward lower grades among most graders. While there were some changes compared to previous inspections, such as moving from PDR2 to PDR3 and showing two gri color images and one riz color image instead of three gri color images, we checked these aspects by directly comparing some inspected images from S25 and this work (see example images in Fig. D.1), and do not see this as a major source of bias. We also note that the applied scalings depend on the pixel values of the given cutout, such that a shifted image appears slightly different. Additionally, in S22 we focused on high-z lenses and applied slightly different scaling functions, but find this to be not a major reason. Furthermore, we speculate that this tendency may come from the relatively pure sample (i.e., a higher proportion of lenses) that we had after the binary inspection, but note that we also had in previous works a binary inspection cleaning carried out by a single inspector, resulting in a comparably pure sample. In contrast, we noticed that the 80″×80″ cutouts help in specific cases to reject non-lenses as the overall environment is visible. Finally, we remark that it is known (Rojas et al. 2023; Schuldt et al. 2025) that every grader has a different expectation and also the grades among a single inspector vary. Consequently, it might be simply that our expectations of an object qualifying as a lens candidate have slightly increased over time, possibly because of the increasing sample of lenses and the higher availability of high-resolution images (e.g., Euclid, see Acevedo Barroso et al. 2025; Nagam et al. 2025; Euclid Collaboration: Walmsley et al. 2025).

4.4 Final lens compilation

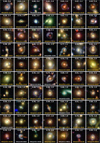

Despite the tendency toward lower grades, we adopt our traditional cuts to define grade A (3 ≥ G ≥ 2.5) and grade B (2.5 > G ≥ 1.5), and release in Table 2 the full catalog of visually inspected systems. This catalog includes jointly inspected systems that obtained a committee score of p ≥ 0.53 and were also detected by VariLens (Andika et al. 2025, noted in column 12 of the catalog), but we exclude any duplicates within <2″. Consequently, using the average grades, G, of all eight inspectors to obtain a stable average (see also Rojas et al. 2023; Schuldt et al. 2025), we found 42 grade A and 187 grade B lens candidates, which are listed in Table 2. Furthermore, the newly discovered grade A candidates are shown in Fig. 6, while the grade B systems are shown in Fig. 7. We further provide in this table all grade A and B lens candidates from C21 (marked in column 15, see Table A.1), S22 (marked in column 14), and S25 (marked in column 13) that we detected with our network committee as candidates but excluded from visual inspection (see also Sect. 4.2). In sum, we found with our network committee 95 grade A and 503 grade B lenses. From these systems, 506 systems (included not regraded systems) were already known, while we show in Figs. B.1 (grade A) and B.2 (grade B) those that we regraded in our inspection (either because they are in the small comparison sample, see Sect. 4.3, or because they were discovered by other work and not by C21, S22, or S25). This high recovery rate is expected, given the enormous lens search projects that already exploited HSC data and in particular our previous searches using individual networks that entered into our network committee now. However, we remind the reader that the new lens identification is only one aspect of this work.

|

Fig. 6 Color-image stamps (12″ × 12″; north is up and east is left) of identified grade-A lens candidates using HSC PDR3 gri multiband imaging data that are detected for the first time. At the top of each panel, we list the scores of the network committee, p, and the average visual inspection grade, G, of eight graders, where ≥2.5 corresponds to grade A. At the bottom, we list the candidate name. We further distinguish between candidates discovered jointly with Andika et al. (2025) using VariLens, appearing with blue frames, and fully new identifications with orange frames. All systems with their coordinates, and further details such as the lens environment and Einstein radii, are listed in Table 2. |

4.5 Discussion on the lens properties

We further classified the lens environment using photo-z histograms during the inspection by the second team (see Fig. 5 for examples), leading to an identification of 147 candidates in an overdensity (corresponding to at least two votes), of which 22 and 66 are ranked as grade A and B candidates, respectively. With the automated photo-z procedure to identify overdensities presented by S25, we identify 569 systems among the whole inspected sample, of which 18 and 70 are classified as grade A or B lens candidates, respectively (see also Table 1). While the automated procedure can be easily applied to large samples, the visual classification requires in particular human time that is highly limited. On the other hand, we visually identified several systems as being in an overdensity that were missed by the automated procedure due to missing z or y band observations required for the photo-z codes. In total, we find a good overlap between the two complementary procedures.

As was mentioned earlier, we further showed the network-predicted lens center and Einstein radius during final inspection. Since we found in the calibration round that the model network from Schuldt et al. (2023a) has difficulties in correctly predicting the model if the lens is not well centered, we mitigated this through a re-centering between the last two inspection stages. We overlay the predicted lens center and Einstein radius on the cutouts of our lens candidates in Appendix C. For the reported lens center coordinates in Table 2, we only found 9% of the lens candidates to have a relatively poor predicted lens center or Einstein radius, which might be because of a group- or cluster-scale lens not being suited for the modeling network or a remaining offset of the lens. We provide the predicted values of the seven mass model parameters with their corresponding 1 σ uncertainties in Table 2 (columns 16–29, see also Table A.1) as well as a flag (column 30) if the lens center and Einstein radius match the system reasonably (defined as two or more votes). This demonstrates once more the power of the modeling network and shows that the result can be used for further analysis.

While we applied in Sect. 3.2 relatively weak cuts on the model parameter uncertainties (see Fig. 4), we note that more stringent cuts are possible when the sample sizes become significantly larger. This would lead to an even higher fraction of systems that can be easily ruled out, while keeping most of the lens candidates.

5 Summary and conclusion

We have carried out a systematic search for strong gravitational lenses using the gri bands of the HSC Wide layer observations from the third public data release. For this, we tested the combination of different networks by averaging or multiplying their individual network scores. The best performance, 75% completeness on real lenses at a FPR of ~0.01%, was obtained by averaging the scores from five different networks.

While it would easily have been possible to visually inspect the resulting sample size, this will change with the next generation of wide-field imaging surveys, such that further cleaning is unavoidable. We tested two approaches, first using SExtractor and the HSC pixel-level flags to reject images with mostly nonzero background or image artifacts, and second, the ResNet modeling network from Schuldt et al. (2023a) as it predicts higher uncertainties for non-lenses. This lowered the contaminants by 29%, while not excluding any lens candidate from our previous identifications. Such post-processing scripts are expected to play a crucial role when two orders of magnitude more images need to be classified.

Thanks to a visual inspection of the cleaned network candidate list, we identified 95 grade A and 503 grade B candidates, including 506 previously known systems. We provide in Table 2 their coordinates together with their SIE and external shear parameters obtained from the ResNet presented by Schuldt et al. (2023a). During the visual inspection, we also showed for the first time the predicted lens center and Einstein radius, and characterized the reliability. We found that only ~9% of the candidates obtained a lens center or Einstein radius that is not well predicted, once the system is well centered. Moreover, we characterized their environment with two complementary approaches, either through a visual inspection of histograms showing the photo-z values of their surroundings, or the selection cuts elaborated by S25. In both cases, we find 88 grade A or B systems to be in a significantly overdense environment. In Table 2, which includes all visually inspected candidates with our obtained average grades and their characteristics as well as their previous discoveries, we are releasing one of the most complete catalogs of strong lensing systems so far.

Particularly in preparation for the ongoing and upcoming wide-field imaging surveys, the removal of false-positives of network classifiers will be a challenge, so the approaches presented here are expected to play a crucial role. Developing and testing fast and autonomous techniques to characterize and analyze the new systems, such as the ones tested here as well, will be a necessity.

Data availability

Table 2 is available at the CDS via anonymous ftp to cdsarc.cds.unistra.fr (130.79.128.5) or via https://cdsarc.cds.unistra.fr/viz-bin/cat/J/A+A/699/A350. It was further released through the HOLISMOKES collaboration webpage at www.holismokes.org and accordingly incorporated in the SuGOHI data base accessible through https://www-utap.phys.s.u-tokyo.ac.jp/~oguri/sugohi/.

Acknowledgements

We thank the anonymous referee for comments that helped to improve the clarity of the paper. S.S. has received funding from the European Union’s Horizon 2022 research and innovation programme under the Marie Skłodowska-Curie grant agreement No 101105167 – FASTIDIoUS. We acknowledge financial support through grants PRIN-MIUR 2017WSCC32 and 2020SKSTHZ. This project has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (LENSNOVA: grant agreement No 771776). This research is supported in part by the Excellence Cluster ORIGINS which is funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Germany’s Excellence Strategy – EXC-2094 – 390783311. This work uses the following software packages: Astropy (Astropy Collaboration 2013, 2018), matplotlib (Hunter 2007), NumPy (van der Walt et al. 2011; Harris et al. 2020), Python (Van Rossum & Drake 2009), Scipy (Virtanen et al. 2020).

Appendix A Detailed description of the released catalog of inspected lens candidates

With this publication, we have release the full catalog of inspected lens candidates. This catalog is introduced in Table 2 and electronically available at the HOLISMOKES webpage6, the SuGOHI database7, and CDS. The columns are described in Table A.1.

Appendix B Color-composite images of rediscovered lens candidates

In this section we present the color-composite image stamps of the lens candidates that obtained an average grade above 1.5 (i.e. grade A or B) during our visual inspection, but are already known in the literature. We note that the lens candidates discovered by C21, S22, and S25, which we excluded before visual inspection to lower the amount, are not shown.

|

Fig. B.1 Color-image stamps of rediscovered but again visually inspected grade-A lens candidates. Same format as Fig. 6. |

|

Fig. B.2 Color-image stamps of rediscovered but again visually inspected grade-B lens candidates. Same format as Fig. 6. |

Appendix C Color-composite images of lens candidates with their predicted mass model

In this section, we show the color-composite image stamps of the lens candidates in analogy to Figs. 6 and 7, as well as those in Sect. B. Contrary to previous figures, we show here the lens center and Einstein radius for each system predicted by the ResNet of Schuldt et al. (2023a). These models were also shown in one panel during the visual inspection to help the grader classify.

|

Fig. C.1 Color-image stamps of newly discovered grade A lenses, but showing the lens center and the Einstein radius as green circles predicted by the ResNet of Schuldt et al. (2023a). The predicted 1 σ uncertainty for the Einstein radii are shown with white circles. We mark those with a well predicted lens center and Einstein radius (see also column 30 of Table 2) by yellow names instead of white. Remaining format as Fig. 6. |

|

Fig. C.2 Color-image stamps of rediscovered but visually re-inspected grade-A lens candidates. Same format as Fig. C.1. |

|

Fig. C.4 Color-image stamps of rediscovered but visually re-inspected grade-B lens candidates. Same format as Fig. C.1. |

Appendix D Comparison between inspected images from S25 and this work

In this section, we show a representative sample of lens candidates that were graded in this work (see Sect. 4) and in S25. While we showed during grading three different scalings, we show here only one filter combination for simplicity.

|

Fig. D.1 Color-images (gri) shown for visual inspection in this work (top row) and in S25 (bottom row) to visualize the minor difference between PDR3 (this work) and PDR2 (S25) and differences in the scaling of the individual filters because of the different cutout centering. We further show the network score (top of each panel) and the HSC ID (bottom). |

References

- Acebron, A., Grillo, C., Bergamini, P., et al. 2022, A&A, 668, A142 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Acebron, A., Grillo, C., Suyu, S. H., et al. 2024, ApJ, 976, 110 [Google Scholar]

- Acevedo Barroso, J. A., O’Riordan, C. M., Clément, B., et al. 2025, A&A, 697, A14 [Google Scholar]

- Andika, I. T., Suyu, S. H., Cañameras, R., et al. 2023, A&A, 678, A103 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Andika, I. T., Schuldt, S., Suyu, S. H., et al. 2025, A&A, 694, A227 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Astropy Collaboration, (Robitaille, T. P. et al.) 2013, A&A, 558, A33 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Astropy Collaboration (Price-Whelan, A. M., et al.) 2018, AJ, 156, 123 [Google Scholar]

- Bertin, E., & Arnouts, S. 1996, A&AS, 117, 393 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Bolton, A. S., Burles, S., Schlegel, D. J., Eisenstein, D. J., & Brinkmann, J. 2004, AJ, 127, 1860 [NASA ADS] [CrossRef] [Google Scholar]

- Bolton, A. S., Treu, T., Koopmans, L. V. E., et al. 2008, ApJ, 684, 248 [NASA ADS] [CrossRef] [Google Scholar]

- Bonvin, V., Courbin, F., Suyu, S. H., et al. 2017, MNRAS, 465, 4914 [NASA ADS] [CrossRef] [Google Scholar]

- Cañameras, R., Schuldt, S., Suyu, S. H., et al. 2020, A&A, 644, A163 [Google Scholar]

- Cañameras, R., Schuldt, S., Shu, Y., et al. 2021, A&A, 653, L6 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Cañameras, R., Schuldt, S., Shu, Y., et al. 2024, A&A, 692, A72 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Cabanac, R. A., Alard, C., Dantel-Fort, M., et al. 2007, A&A, 461, 813 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Caminha, G. B., Rosati, P., Grillo, C., et al. 2019, A&A, 632, A36 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Cao, X., Li, R., Shu, Y., et al. 2020, MNRAS, 499, 3610 [NASA ADS] [CrossRef] [Google Scholar]

- Chan, J. H. H., Suyu, S. H., Sonnenfeld, A., et al. 2020, A&A, 636, A87 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Courbin, F., Bonvin, V., Buckley-Geer, E., et al. 2018, A&A, 609, A71 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Diehl, H. T., Buckley-Geer, E. J., Lindgren, K. A., et al. 2017, ApJS, 232, 15 [NASA ADS] [CrossRef] [Google Scholar]

- Enzi, W. J. R., Krawczyk, C. M., Ballard, D. J., & Collett, T. E. 2025, MNRAS, 540, 247 [Google Scholar]

- Ertl, S., Schuldt, S., Suyu, S. H., et al. 2024, A&A, 685, A15 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Ertl, S., Suyu, S. H., Schuldt, S., et al. 2025, arXiv e-prints [arXiv:2503.09718] [Google Scholar]

- Euclid Collaboration (Holloway, P., et al.) 2025, A&A, submitted [arXiv:2503.15328] [Google Scholar]

- Euclid Collaboration (Walmsley, M., et al.) 2025, A&A, submitted [arXiv:2503.15324] [Google Scholar]

- Frye, B. L., Pascale, M., Pierel, J., et al. 2024, ApJ, 961, 171 [NASA ADS] [CrossRef] [Google Scholar]

- Gavazzi, R., Marshall, P. J., Treu, T., & Sonnenfeld, A. 2014, ApJ, 785, 144 [Google Scholar]

- Gong, Y., Liu, X., Cao, Y., et al. 2019, ApJ, 883, 203 [NASA ADS] [CrossRef] [Google Scholar]

- Granata, G., Caminha, G. B., Ertl, S., et al. 2025, A&A, 697, A94 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Grespan, M., Thuruthipilly, H., Pollo, A., et al. 2024, A&A, 688, A34 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Grillo, C., Rosati, P., Suyu, S. H., et al. 2018, ApJ, 860, 94 [Google Scholar]

- Grillo, C., Pagano, L., Rosati, P., & Suyu, S. H. 2024, A&A, 684, L23 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Harris, C. R., Millman, K. J., van der Walt, S. J., et al. 2020, Nature, 585, 357 [NASA ADS] [CrossRef] [Google Scholar]

- He, K., Zhang, X., Ren, S., & Sun, J. 2015, Deep Residual Learning for Image Recognition [Google Scholar]

- Holloway, P., Marshall, P. J., Verma, A., et al. 2024, MNRAS, 530, 1297 [NASA ADS] [CrossRef] [Google Scholar]

- Holwerda, B. W., Baldry, I. K., Alpaslan, M., et al. 2015, MNRAS, 449, 4277 [NASA ADS] [CrossRef] [Google Scholar]

- Hsieh, B. C., & Yee, H. K. C. 2014, ApJ, 792, 102 [Google Scholar]

- Huang, X., Storfer, C., Ravi, V., et al. 2020, ApJ, 894, 78 [NASA ADS] [CrossRef] [Google Scholar]

- Huang, X., Storfer, C., Gu, A., et al. 2021, ApJ, 909, 27 [NASA ADS] [CrossRef] [Google Scholar]

- Hunter, J. D. 2007, Comp. Sci. Eng., 9, 90 [NASA ADS] [CrossRef] [Google Scholar]

- Inami, H., Bacon, R., Brinchmann, J., et al. 2017, A&A, 608, A2 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Ivezic, Z., Axelrod, T., Brandt, W. N., et al. 2008, Serbian Astron. J., 176, 1 [NASA ADS] [CrossRef] [Google Scholar]

- Ivezić, Ž., Kahn, S. M., Tyson, J. A., et al. 2019, ApJ, 873, 111 [Google Scholar]

- Jacobs, C., Glazebrook, K., Collett, T., More, A., & McCarthy, C. 2017, MNRAS, 471, 167 [Google Scholar]

- Jacobs, C., Collett, T., Glazebrook, K., et al. 2019, ApJS, 243, 17 [Google Scholar]

- Jaelani, A. T., More, A., Sonnenfeld, A., et al. 2020, MNRAS, 494, 3156 [NASA ADS] [CrossRef] [Google Scholar]

- Jaelani, A. T., More, A., Wong, K. C., et al. 2024, MNRAS, 535, 1625 [Google Scholar]

- Kelly, P. L., Rodney, S., Treu, T., et al. 2023, Science, 380, abh1322 [NASA ADS] [CrossRef] [Google Scholar]

- Lange, S. C., Amvrosiadis, A., Nightingale, J. W., et al. 2025, MNRAS, 539, 704 [Google Scholar]

- Lanusse, F., Ma, Q., Li, N., et al. 2018, MNRAS, 473, 3895 [Google Scholar]

- Laureijs, R., Amiaux, J., Arduini, S., et al. 2011, arXiv e-prints [arXiv:1110.3193] [Google Scholar]

- Li, R., Shu, Y., Su, J., et al. 2019, MNRAS, 482, 313 [NASA ADS] [CrossRef] [Google Scholar]

- Li, R., Napolitano, N. R., Tortora, C., et al. 2020, ApJ, 899, 30 [Google Scholar]

- Li, R., Napolitano, N. R., Spiniello, C., et al. 2021, ApJ, 923, 16 [NASA ADS] [CrossRef] [Google Scholar]

- Meštrić, U., Vanzella, E., Zanella, A., et al. 2022, MNRAS, 516, 3532 [CrossRef] [Google Scholar]

- Millon, M., Courbin, F., Bonvin, V., et al. 2020, A&A, 640, A105 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- More, A., Verma, A., Marshall, P. J., et al. 2016, MNRAS, 455, 1191 [NASA ADS] [CrossRef] [Google Scholar]

- Morishita, T., Stiavelli, M., Grillo, C., et al. 2024, ApJ, 971, 43 [NASA ADS] [CrossRef] [Google Scholar]

- Nagam, B. C., Acevedo Barroso, J. A., Wilde, J., et al. 2025, arXiv e-prints [arXiv:2502.09802] [Google Scholar]

- Paraficz, D., Courbin, F., Tramacere, A., et al. 2016, A&A, 592, A75 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Pascale, M., Frye, B. L., Pierel, J. D. R., et al. 2025, ApJ, 979, 13 [Google Scholar]

- Petrillo, C. E., Tortora, C., Vernardos, G., et al. 2019, MNRAS, 484, 3879 [Google Scholar]

- Pierel, J. D. R., Newman, A. B., Dhawan, S., et al. 2024, ApJ, 967, L37 [NASA ADS] [CrossRef] [Google Scholar]

- Planck Collaboration VI. et al. 2020, A&A, 641, A6 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Refsdal, S. 1964, MNRAS, 128, 307 [NASA ADS] [CrossRef] [Google Scholar]

- Rodney, S. A., Brammer, G. B., Pierel, J. D. R., et al. 2021, Nature Astronomy, 5, 1118 [Google Scholar]

- Rojas, K., Savary, E., Clément, B., et al. 2022, A&A, 668, A73 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Rojas, K., Collett, T. E., Ballard, D., et al. 2023, MNRAS, 523, 4413 [NASA ADS] [CrossRef] [Google Scholar]

- Savary, E., Rojas, K., Maus, M., et al. 2022, A&A, 666, A1 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Schuldt, S., Chirivì, G., Suyu, S. H., et al. 2019, A&A, 631, A40 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Schuldt, S., Suyu, S. H., Cañameras, R., et al. 2021, A&A, 651, A55 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Schuldt, S., Cañameras, R., Shu, Y., et al. 2023a, A&A, 671, A147 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Schuldt, S., Suyu, S. H., Cañameras, R., et al. 2023b, A&A, 673, A33 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Schuldt, S., Cañameras, R., Andika, I. T., et al. 2025, A&A, 693, A291 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Shajib, A. J., Treu, T., Birrer, S., & Sonnenfeld, A. 2021, MNRAS, 503, 2380 [Google Scholar]

- Shajib, A. J., Wong, K. C., Birrer, S., et al. 2022, A&A, 667, A123 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Shu, Y., Bolton, A. S., Kochanek, C. S., et al. 2016, ApJ, 824, 86 [NASA ADS] [CrossRef] [Google Scholar]

- Shu, Y., Brownstein, J. R., Bolton, A. S., et al. 2017, ApJ, 851, 48 [Google Scholar]

- Shu, Y., Marques-Chaves, R., Evans, N. W., & Pérez-Fournon, I. 2018, MNRAS, 481, L136 [Google Scholar]

- Shu, Y., Cañameras, R., Schuldt, S., et al. 2022, A&A, 662, A4 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Sonnenfeld, A., Chan, J. H. H., Shu, Y., et al. 2018, PASJ, 70, S29 [Google Scholar]

- Sonnenfeld, A., Verma, A., More, A., et al. 2020, A&A, 642, A148 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Spergel, D., Gehrels, N., Baltay, C., et al. 2015, arXiv e-prints [arXiv:1503.03757] [Google Scholar]

- Stein, G., Blaum, J., Harrington, P., Medan, T., & Lukić, Z. 2022, ApJ, 932, 107 [NASA ADS] [CrossRef] [Google Scholar]

- Stiavelli, M., Morishita, T., Chiaberge, M., et al. 2023, ApJ, 957, L18 [NASA ADS] [CrossRef] [Google Scholar]

- Storfer, C., Huang, X., Gu, A., et al. 2024, ApJS, 274, 16 [NASA ADS] [CrossRef] [Google Scholar]

- Suyu, S. H., & Halkola, A. 2010, A&A, 524, A94 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Suyu, S. H., Hensel, S. W., McKean, J. P., et al. 2012a, ApJ, 750, 10 [Google Scholar]

- Suyu, S. H., Treu, T., Blandford, R. D., et al. 2012b, arXiv e-prints [arXiv:1202.4459] [Google Scholar]

- Suyu, S. H., Huber, S., Cañameras, R., et al. 2020, A&A, 644, A162 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Talbot, M. S., Brownstein, J. R., Dawson, K. S., Kneib, J.-P., & Bautista, J. 2021, MNRAS, 502, 4617 [NASA ADS] [CrossRef] [Google Scholar]

- Tanaka, M., Coupon, J., Hsieh, B.-C., et al. 2018, PASJ, 70, S9 [Google Scholar]

- van der Walt, S., Colbert, S. C., & Varoquaux, G. 2011, Comp. Sci. Eng., 13, 22 [NASA ADS] [CrossRef] [Google Scholar]

- Van Rossum, G., & Drake, F. L. 2009, Python 3 Reference Manual (Scotts Valley, CA: CreateSpace) [Google Scholar]

- Vanzella, E., Caminha, G. B., Rosati, P., et al. 2021, A&A, 646, A57 [EDP Sciences] [Google Scholar]

- Virtanen, P., Gommers, R., Oliphant, T. E., et al. 2020, Nat. Methods, 17, 261 [Google Scholar]

- Wang, H., Cañameras, R., Caminha, G. B., et al. 2022, A&A, 668, A162 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Wong, K. C., Sonnenfeld, A., Chan, J. H. H., et al. 2018, ApJ, 867, 107 [Google Scholar]

- Wong, K. C., Suyu, S. H., Chen, G. C. F., et al. 2020, MNRAS, 498, 1420 [Google Scholar]

- Wong, K. C., Chan, J. H. H., Chao, D. C. Y., et al. 2022, PASJ, 74, 1209 [CrossRef] [Google Scholar]

- Zhong, F., Li, R., & Napolitano, N. R. 2022, Res. Astron. Astrophys., 22, 065014 [Google Scholar]

Using a threshold of p = 0.55, we obtained around 20 000 network candidates. Therefore, 50 000 additional systems would increase the system by a factor of ~2.5, leading to the specified cut on the network score. Since we recorded the annotation time during our visual inspection described in Sect. 4, we also estimated – a posteriori – the actual inspection time that would have been necessary for the additional sample. Here we obtained a factor ~3 of the additional time, and possibly ~30 additional lenses.

All Tables

Summary of the different selection stages with corresponding sample size, including rediscoveries.

All Figures

|

Fig. 1 True-positive rate (TPR), giving the ratio between true-positives and all positives, of different network committees (solid lines) measured on set 3, as a function of the number of lens candidates selected by each committee over the parent sample of 110 million sources. We consider here five different networks, whose individual curves are shown in light purple of various line styles. As examples, we show the performance of the ResNet trained on sets L4 and N1 from C24 (dash-dotted) and Classifier-1 from S22 (long-dotted). The solid gray line shows the performance when averaging the scores from these two networks, the green one when additionally including the ResNet trained on L7 and N1 (long dashed), the orange one when also including the baseline ResNet from C24 (dotted), and red when additionally using the network from C21 (dashed). We further show the TPR evolution with a solid dark blue line when multiplying the network scores from all five networks instead of averaging. |

| In the text | |

|

Fig. 2 Evolution of the TPR, as measured over the three test sets of candidate and confirmed strong-lenses, as a function of the number of candidates selected by the neural networks. Solid curves show the results for the final committee of five networks trained on different ground-truth datasets. Light purple curves show the lower TPR obtained on set 3 for the five individual networks included in the committee: the ResNet from C21 (dashed curve), Classifier-1 from S22 (long-dotted curve), the baseline ResNet from C24 (dotted curve), a network trained on mocks with a natural θE distribution (dash-dotted curve), and a ResNet trained on balanced fraction of doubles and quads (long-dashed curve). The vertical gray line marks the final score threshold applied to select the list of strong-lens network candidates. Examples of score thresholds are marked for the evaluation of the best committee on set 3. |

| In the text | |

|

Fig. 3 Examples of false-positive candidates from the network committee that were cleaned with post-processing scripts. A major fraction of contaminants correspond to crowded fields and/or cutouts with nonzero background emission in g, r, and/or i-band, which are rejected by SExtractor and HSC pixel-level flags (top row) or by the modeling network from S23a (bottom row). We show the average score of the network committee and the HSC ID in the top and bottom, respectively, of each panel, which is ~10″ on a side. We further show the predicted lens center as a cross with a length corresponding to the predicted uncertainty, and the Einstein radius as a solid green circle with predicted uncertainty bounds as dotted white lines. Some of the lower limits on the Einstein radii are near zero and are thus not visible. |

| In the text | |

|

Fig. 4 Normalized histograms of the mass model parameter predictions using the modeling network from S23a. The top row shows the predicted mass model parameter values, and the bottom row shows the corresponding 1 σ uncertainties. We show the 546 grade A and B lens candidates from C21 and S25 (blue), the visually rejected systems as non-lenses for comparison (orange), and those of the network candidates from our committee network of this work (green). The parameter ranges used to reject non-lenses are marked in red and defined by the highest and/or lowest uncertainty of the above-mentioned grade A and B lens sample (the small overlaps between the red regions and nonzero blue bins are merely due to the finite bin widths of the histograms). |

| In the text | |

|

Fig. 5 Histograms of the photometric redshifts within a box of 200″ on a side around two different lens candidates (top and bottom row) as examples. Such histograms, one in the range up to a redshift of 4 (left) and one up to a redshift of 1 (right), were shown during the visual inspection to ease the environment classification. The lens system in the top row has an environment similar to the median, whereas the system in the bottom row shows an overdensity at z ~ 0.4. |

| In the text | |

|

Fig. 6 Color-image stamps (12″ × 12″; north is up and east is left) of identified grade-A lens candidates using HSC PDR3 gri multiband imaging data that are detected for the first time. At the top of each panel, we list the scores of the network committee, p, and the average visual inspection grade, G, of eight graders, where ≥2.5 corresponds to grade A. At the bottom, we list the candidate name. We further distinguish between candidates discovered jointly with Andika et al. (2025) using VariLens, appearing with blue frames, and fully new identifications with orange frames. All systems with their coordinates, and further details such as the lens environment and Einstein radii, are listed in Table 2. |

| In the text | |

|

Fig. 7 Color-image stamps of newly identified grade-B lens candidates, in the same format as Fig. 6. |

| In the text | |

|

Fig. B.1 Color-image stamps of rediscovered but again visually inspected grade-A lens candidates. Same format as Fig. 6. |

| In the text | |

|

Fig. B.2 Color-image stamps of rediscovered but again visually inspected grade-B lens candidates. Same format as Fig. 6. |

| In the text | |

|

Fig. C.1 Color-image stamps of newly discovered grade A lenses, but showing the lens center and the Einstein radius as green circles predicted by the ResNet of Schuldt et al. (2023a). The predicted 1 σ uncertainty for the Einstein radii are shown with white circles. We mark those with a well predicted lens center and Einstein radius (see also column 30 of Table 2) by yellow names instead of white. Remaining format as Fig. 6. |

| In the text | |

|

Fig. C.2 Color-image stamps of rediscovered but visually re-inspected grade-A lens candidates. Same format as Fig. C.1. |

| In the text | |

|

Fig. C.3 Color-image stamps of newly discovered grade-B lens candidates. Same format as Fig. C.1. |

| In the text | |

|

Fig. C.4 Color-image stamps of rediscovered but visually re-inspected grade-B lens candidates. Same format as Fig. C.1. |

| In the text | |

|

Fig. D.1 Color-images (gri) shown for visual inspection in this work (top row) and in S25 (bottom row) to visualize the minor difference between PDR3 (this work) and PDR2 (S25) and differences in the scaling of the individual filters because of the different cutout centering. We further show the network score (top of each panel) and the HSC ID (bottom). |

| In the text | |

Current usage metrics show cumulative count of Article Views (full-text article views including HTML views, PDF and ePub downloads, according to the available data) and Abstracts Views on Vision4Press platform.

Data correspond to usage on the plateform after 2015. The current usage metrics is available 48-96 hours after online publication and is updated daily on week days.

Initial download of the metrics may take a while.