| Issue | A&A, Volume 691, November 2024 |
|---|---|
| Article Number | A109 |
| Number of page(s) | 27 |
| Section | Interstellar and circumstellar matter |
| DOI | https://doi.org/10.1051/0004-6361/202451588 |
| Published online | 11 November 2024 |

Quantifying the informativity of emission lines to infer physical conditions in giant molecular clouds

I. Application to model predictions

1 IRAM, 300 rue de la Piscine, 38406 Saint-Martin-d’Hères, France

2 LERMA, Observatoire de Paris, PSL Research University, CNRS, Sorbonne Universités, 92190 Meudon, France

3 Univ. Grenoble Alpes, Inria, CNRS, Grenoble INP, GIPSA-Lab, Grenoble 38000, France

4 Univ. Lille, CNRS, Centrale Lille, UMR 9189 – CRIStAL, 59651 Villeneuve d’Ascq, France

5 Université Paris Cité, CNRS, Astroparticule et Cosmologie, 75013 Paris, France

6 Univ. Toulon, Aix Marseille Univ., CNRS, IM2NP, Toulon, France

7 LERMA, Observatoire de Paris, PSL Research University, CNRS, Sorbonne Universités, 75014 Paris, France

8 Department of Earth, Environment, and Physics, Worcester State University, Worcester, MA 01602, USA

9 Center for Astrophysics | Harvard & Smithsonian, 60 Garden Street, Cambridge, MA 02138, USA

10 Instituto de Física Fundamental (CSIC), Calle Serrano 121, 28006 Madrid, Spain

11 Department of Astronomy, University of Florida, PO Box 112055, Gainesville, FL 32611, USA

12 Institut de Recherche en Astrophysique et Planétologie (IRAP), Université Paul Sabatier, Toulouse cedex 4, France

13 National Radio Astronomy Observatory, 520 Edgemont Road, Charlottesville, VA 22903, USA

14 Laboratoire d’Astrophysique de Bordeaux, Univ. Bordeaux, CNRS, B18N, Allée Geoffroy Saint-Hilaire, 33615 Pessac, France

15 Instituto de Astrofísica, Pontificia Universidad Católica de Chile, Av. Vicuña Mackenna 4860, 7820436 Macul, Santiago, Chile

16 Laboratoire de Physique de l’École normale supérieure, ENS, Université PSL, CNRS, Sorbonne Université, Université de Paris, Sorbonne Paris Cité, Paris, France

17 Jet Propulsion Laboratory, California Institute of Technology, 4800 Oak Grove Drive, Pasadena, CA 91109, USA

18 School of Physics and Astronomy, Cardiff University, Queen’s buildings, Cardiff CF24 3AA, UK

★★ Corresponding authors: einig@iram.fr; pierre.palud@obspm.fr

Received: 19 July 2024; Accepted: 18 September 2024

Context. Observations of ionic, atomic, or molecular lines are performed to improve our understanding of the interstellar medium (ISM). However, the potential of a line to constrain the physical conditions of the ISM is difficult to assess quantitatively, because of the complexity of the ISM physics. The situation is even more complex when trying to assess which combinations of lines are the most useful. Therefore, observation campaigns usually try to observe as many lines as possible for as much time as possible.

Aims. We have searched for a quantitative statistical criterion to evaluate the full constraining power of a (combination of) tracer(s) with respect to physical conditions. Our goal with such a criterion is twofold. First, we want to improve our understanding of the statistical relationships between ISM tracers and physical conditions. Secondly, by exploiting this criterion, we aim to propose a method that helps observers to make their observation proposals; for example, by choosing to observe the lines with the highest constraining power given limited resources and time.

Methods. We propose an approach based on information theory, in particular the concepts of conditional differential entropy and mutual information. The best (combination of) tracer(s) is obtained by comparing the mutual information between a physical parameter and different sets of lines. The presented analysis is independent of the choice of the estimation algorithm (e.g., neural network or χ2 minimization). We applied this method to simulations of radio molecular lines emitted by a photodissociation region similar to the Horsehead Nebula. In this simulated data, we considered the noise properties of a state-of-the-art single dish telescope such as the IRAM 30m telescope. We searched for the best lines to constrain the visual extinction, AVtot, or the ultraviolet illumination field, G0. We ran this search for different gas regimes, namely translucent gas, filamentary gas, and dense cores.

Results. The most informative lines change with the physical regime (e.g., cloud extinction). However, the determination of the optimal (combination of) line(s) to constrain a physical parameter such as the visual extinction depends not only on the radiative transfer of the lines and chemistry of the associated species, but also on the achieved mean signal-to-noise ratio. The short integration time of the CO isotopologue J = 1 − 0 lines already yields much information on the total column density for a large range of (AVtot, G0) space. The best set of lines to constrain the visual extinction does not necessarily combine the most informative individual lines. Precise constraints on the radiation field are more difficult to achieve with molecular lines. They require spectral lines emitted at the cloud surface (e.g., [CII] and [CI] lines).

Conclusions. This approach allows one to better explore the knowledge provided by ISM codes, and to guide future observation campaigns.

Key words: astrochemistry / methods: numerical / methods: statistical / ISM: clouds / ISM: lines and bands

© The Authors 2024

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1 Introduction

The effect of the feedback of a newborn star on its parent molecular cloud is to this day poorly understood. The newborn star overall dissipates the parent cloud, leading to a decrease in its star-forming capability. However, it also causes a local compression of the gas, which may trigger a gravitational collapse. Both spatially resolved observations of star-forming regions and refined numerical models are needed to better understand the physical phenomena involved. A difficulty for interstellar medium (ISM) studies is that observing many lines in the infrared or millimeter domains is expensive and can require several successive observations with different instrument settings. The use of statistical arguments to determine the most relevant tracer to observe in order to estimate a given physical parameter (e.g., the cloud visual extinction, the gas volume density, or the thermal pressure) appears to have received only limited attention from the ISM community. This work provides a general approach based on information theory to compare the information provided by different tracers and sets of tracers.

This paper is the first of a series of two on applications of information theory concepts to ISM studies. This paper has two goals. First, it aims to show that tools from information theory can be exploited to visualize and better understand the complex statistical relationships between physical conditions and noisy observations. Second, it aims to provide a tool to guide future observations in choosing the best lines to observe, and for how long, to accurately estimate physical parameters such as the gas column density (or visual extinction), the intensity of the incident UV field, and the thermal pressure. The results of such a study heavily depend on the signal-to-noise ratio (S/N) for each line; that is, on the instrument properties, on the integration time, and on the observed environment. To achieve these two goals, we defined a general method and applied it to data simulated with a fast, accurate emulation of the Meudon PDR code (Le Petit et al. 2006; Palud et al. 2023) and a realistic noise model. The proposed approach is applicable to any ISM model combined with any noise model. The next paper will use real data from the ORION-B Large Program (co-PIs: J. Pety & M. Gerin, Pety et al. 2017), with a focus on photodissociation regions (PDRs).

Selecting the most informative lines to estimate a physical parameter (e.g., visual extinction or gas volume density) is an instance of a machine learning problem called feature selection (Shalev-Shwartz & Ben-David 2014, chapter 25). A straightforward and common approach is to evaluate Pearson’s correlation coefficient between individual lines and individual physical parameters of interest. The lines with the highest correlation with a given physical parameter would then be selected. This method is common in ISM studies (see, e.g., Pety et al. 2017). However, it suffers from three main drawbacks. First, it is restricted to one-to-one relationships, while one might be interested in selecting multiple lines to predict multiple physical parameters at once. Second, it is restricted to linear relationships, and cannot fully capture nonlinear dependencies between lines and physical parameters. Third, by considering tracers individually, it neglects their complementarity – that is, the possibility for a group of lines to be more informative than any single emission line from the group – while such complementarities are already known and studied with line ratios or line combinations. For instance, Kaufman et al. (1999) studied line combinations and ratios in order to disentangle several physical parameters whose estimates would be degenerate with a single tracer.

Canonical correlation analysis (Härdle & Simar 2007) makes it possible to consider correlations between multiple lines and multiple physical parameters. It alleviates the one-to-one relationship restriction and enables one to account for many-to-many relationships, and thus to include line complementarities. This approach provides multiple correlation coefficients in the many-to-many case. The difficulty with this method is that ranking lines based on multiple correlation coefficients is not trivial. As is shown in the following, these coefficients can be combined into one number that is interpretable if both observed lines and physical parameters are normally distributed.

Predictor-dependent methods can address the linear and Gaussian limitations. Such methods rely on a regression model; for example, random forests or neural networks. The greedy selection algorithm (Shalev-Shwartz & Ben-David 2014, sect. 25.1) would iteratively select tracers to reduce the error of a type of regression model. Similarly, the greedy elimination method would iteratively remove tracers. For instance, Bron et al. (2021) applied numerous random forest regressions to predict ionization fraction using only one tracer at a time. Then, they defined the best tracers as those leading to the minimum sum of residual squares. Other statistical methods exploit specificities of a predictor class to explain the predictions of a model and remove unused features. For instance, Gratier et al. (2021) used feature importance from random forests to assess the predictive power of individual lines on the H2 column density. However, the tracer subsets obtained with these approaches heavily depend on the considered type of regression model.

Finally, explainable AI methods such as SHAP values (Lundberg & Lee 2017) can be used to understand a numerical model and identify its most important features. This kind of approach was already applied in ISM studies; for instance, in Heyl et al. (2023) and Ramos et al. (2024). However, this class of methods only addresses deterministic models, and is thus not able to handle noisy observations. Besides, it is limited to one-to-one relationships and scales poorly with the number of features. Some fast variants exist, such as Kernel SHAP (Lundberg & Lee 2017), but they require the features to be independent, an assumption that is strongly violated with ISM lines.

In this work, we propose to exploit entropy and mutual information (Cover & Thomas 2006, sect. 8.6). Mutual information has already been exploited in astrophysics tasks (see, e.g., Pandey & Sarkar 2017), although not in the ISM community to the best of our knowledge. It does not depend on the choice of a regression model, handles at once multiple lines and multiple physical parameters, does not assume any distribution for lines or physical parameters, and accounts for nonlinearities and line complementarities. The methodology proposed in this work can be adapted to other problems with the associated Python package called INFOVAR1, which stands for “informative variables.” The results in this paper are produced using a dedicated Python package2, which is based on INFOVAR and designed for the generation and the statistical analysis of synthetic line observations. All the scripts used to generate these results are freely available3.

Section 2 reviews the three information theory quantitative criteria our method builds upon, namely entropy, conditional entropy and mutual information. Section 2.7 formalizes the line selection problem and introduces an approximate solution that accounts for numerical uncertainties. Section 3 sets up an application of the proposed method to PDRs with the Meudon PDR code on IRAM’s EMIR instrument. Section 4 presents and analyzes global results of this application. Section 5 applies the line selection method to different environments. Section 6 provides some concluding remarks.

2 Information theory toolkit

This section reviews the information theory concepts that the proposed approach builds upon. We first define the considered physical model. Secondly, Shannon and differential entropies are introduced. Entropy is the building block of mutual information, which allows us to compare how informative subsets of lines are. Table 1 summarizes the information theory quantities to be introduced in Sections 2.4–2.6.

In a nutshell, the physical parameters and the line intensities are considered as dependent random variables. The entropy of physical parameters characterizes their distribution uncertainty before any measurements. The mutual information between a physical parameter and a set of line intensities quantifies the information gain on the physical parameter when observing line intensities. A high value of mutual information for a given line thus indicates that an observation would constrain well the inferred value of the physical parameter.

Table 1 Overview of the information theory quantities used in this work.

2.1 Physical model

A physical model links physical conditions θ with observables y by combining an ISM model f and an observation simulator 𝒜 that includes all sources of noise. In this work, we use it to generate a realistic set of (θ, y) pairs, called sets of physical models. We consider an ISM model f that predicts the true value $\mathbf{f}(\boldsymbol{\theta})=\left(f_{\ell}(\boldsymbol{\theta})\right)_{\ell=1}^{L}$ of L observables from a limited number of D ≲ 10 physical parameters $\boldsymbol{\theta}=\left(\theta_{d}\right)_{d=1}^{D}$. For instance, in its version 7 released in 2024, the Meudon PDR code (Le Petit et al. 2006) computes the integrated intensity of 5375 emission lines from the thermal pressure (or gas volume density), the intensity of the incident UV radiative field, the cloud visual extinction, the cosmic ray ionization rate, grain distribution properties, etc. The model f is assumed to simulate accurately the physics of the ISM. This means that for a given set of physical conditions θ and a line of index 1 ≤ ℓ ≤ L, the predicted value fℓ(θ) is considered to be the one a telescope would measure in the absence of noise. In the remainder of this work, the considered observables y are integrated intensities of emission lines associated with ionic, atomic or molecular quantum transitions. However, the approach we propose could be applied with any kind of observable, such as line ratios, raw line profiles or other summary values such as the line width or maximum value.

The noise, as well as other observational effects, are included through the observation simulator 𝒜. Observed integrated intensities $\mathbf{y}=\left(y_{\ell}\right)_{\ell=1}^{L}$ can thus be associated with physical conditions θ using

$$y_{\ell}=\mathcal{A}\left(f_{\ell}(\boldsymbol{\theta})\right). \tag{1}$$

This observation simulator can include, for instance, additive Gaussian noise for thermal effects or photon counting error, or multiplicative lognormal noise for calibration error. To model the uncertainties due to the noise, we resort to random variables denoted Θ and Y for physical conditions and observations, respectively. For instance, for a subset s of K ∈ {1,..., L} lines, the observation simulator in Eq. (1) defines a probability distribution on observation Y(s) for a physical condition Θ = θ. This random variable is fully described with a probability density function (PDF) π (·|θ), which is a function such that for any physical condition vector θ ∈ ℝ^D and observation y(s) ∈ ℝ^K, π (y(s)|θ) ≥ 0 and ∫ π(y(s)|θ) dy(s) = 1. Common probability distributions on multivariate random variables include the uniform distribution Unif(C) on a set C and the normal distribution 𝒩(μ, Σ), also called Gaussian distribution, with μ the mean of the distribution and Σ its covariance matrix. This paper will also resort to the lognormal distribution that corresponds to the exponential of a normally distributed random variable. In other words, if a random variable follows a lognormal distribution log 𝒩(μ, Σ), then its log follows a Gaussian distribution of parameters μ and Σ.
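As an illustration of the kind of observation simulator described above, the following minimal sketch combines a multiplicative lognormal calibration error with an additive Gaussian thermal noise. It is not the noise model used in this work: the function name `simulate_observation`, the order in which the two noise terms are applied, and all numerical values are assumptions chosen for illustration only.

```python
import numpy as np

def simulate_observation(f_theta, sigma_add, sigma_mult, rng):
    """Hypothetical observation simulator A applied to noise-free intensities.

    Assumed form: y = eps_mult * f(theta) + eps_add, with
    log(eps_mult) ~ N(0, sigma_mult^2) and eps_add ~ N(0, sigma_add^2).
    """
    f_theta = np.asarray(f_theta, dtype=float)
    # Multiplicative lognormal term (e.g., calibration error)
    calibration = np.exp(rng.normal(0.0, sigma_mult, size=f_theta.shape))
    # Additive Gaussian term (e.g., thermal noise)
    thermal = rng.normal(0.0, sigma_add, size=f_theta.shape)
    return calibration * f_theta + thermal

rng = np.random.default_rng(0)
f_theta = np.array([10.0, 1.0, 0.1])  # made-up noise-free integrated intensities
y = simulate_observation(f_theta, sigma_add=0.05, sigma_mult=np.log(1.1), rng=rng)
print(y)
```

With these made-up values, the faintest line is comparable to the additive noise level, so its observed value carries little information, whereas the brightest line is limited mainly by the multiplicative term.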

This work aims at determining the subset of K lines that best constrains the physical parameters Θ. We expect the most informative lines to differ depending on the type of physical regime. For instance, a line that can quickly become optically thick may be most informative on the visual extinction $A_{V}^{\text{tot}}$ in translucent or filamentary conditions, before it saturates. We thus define different types of regime, characterized by different priors π(θ), and determine the most informative subset of K emission lines in each of these regimes.

2.2 Two-dimensional illustrative example

We now introduce a simple synthetic example that will illustrate the information theory concepts defined below. We use the simplest case where a physical process, controlled by a physical parameter Θ, yields one value of Y per value of Θ. Sources of uncertainty such as the presence of noise or hidden control variables can however blur the relationship between Θ and Y. This implies that inferring the physical parameters from the observed quantity yields uncertain values. By representing Θ and Y as dependent random variables, the concepts of information theory allow us to quantify the uncertainty on the physical parameter Θ before and after measuring Y.

The distribution chosen to represent the couple (Θ, Y) is a two-dimensional lognormal distribution. Its parameters correspond to the mean vector and covariance matrix in the logarithmic scale. They are set to obtain unit expectations, a standard deviation such that a 1σ error corresponds to a factor of 1.3, and a ρ = 0.9 correlation coefficient in linear scale. Appendix A gathers details on the associated computations.
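The log-scale parameters of this example can be sketched as follows. The details are given in Appendix A, which is not reproduced here, so this block rests on two assumptions: that "a 1σ error corresponds to a factor of 1.3" means a log-scale standard deviation of ln 1.3, and that both marginals share the same parameters. The variable names are illustrative.

```python
import numpy as np

sigma_log = np.log(1.3)          # assumed: 1-sigma error = multiplicative factor 1.3
mu_log = -0.5 * sigma_log**2     # unit expectation: exp(mu + sigma^2 / 2) = 1
rho_lin = 0.9                    # correlation coefficient in linear scale

# For a bivariate lognormal with equal log-variances,
# rho_lin = (exp(rho_log * sigma^2) - 1) / (exp(sigma^2) - 1); invert for rho_log.
rho_log = np.log(1.0 + rho_lin * (np.exp(sigma_log**2) - 1.0)) / sigma_log**2

mean_log = np.array([mu_log, mu_log])
cov_log = sigma_log**2 * np.array([[1.0, rho_log], [rho_log, 1.0]])

# Monte Carlo check of the linear-scale moments
rng = np.random.default_rng(0)
samples = np.exp(rng.multivariate_normal(mean_log, cov_log, size=200_000))
sample_mean = samples.mean(axis=0)
sample_corr = np.corrcoef(samples.T)[0, 1]
print(rho_log, sample_mean, sample_corr)
```

Under these assumptions, the correlation in logarithmic scale is slightly larger than the requested linear-scale value of 0.9.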

The top panel of Fig. 1 shows the PDF of the joint distribution π(θ, y). The bottom panel compares the prior distribution π(θ) (i.e., the distribution of the physical parameter before any observation) with three conditional distributions π(θ|y) (i.e., each distribution of the physical parameter values consistent with one observed value Y = y). Each represented conditional distribution is tighter and has lighter tails than the prior distribution, which indicates that observing Y reduces the uncertainty on Θ. Besides, among the three considered observed values of Y, the lower ones lead to the tightest conditional distribution, and thus to lower uncertainty on Θ. The information theory concepts to be introduced in the next sections quantify this notion of uncertainty.

2.3 Entropy for discrete random variables

The notion of entropy was first introduced by Boltzmann and Gibbs in the 1870s as a measure of the disorder of a system. It plays a key role in the second law of thermodynamics, which establishes the irreversibility of the macroscopic evolution of an isolated particle system despite the reversibility of microscopic processes. In a large system where particles can only be in a finite set 𝒳 of Ω ≥ 1 states, the state of one particle can be modeled as a discrete random variable X. This random variable is fully described with a probability mass function, π; that is, a function such that for any state x ∈ 𝒳, π(x) ≥ 0 and ∑x∈𝒳 π(x) = 1. In this setting, π(x) is the probability for a particle to be in the state x. The entropy is then defined as (Wehrl 1978)

$$S=-k_B \sum_{x \in \mathcal{X}}[\ln \pi(x)] \pi(x), \tag{2}$$

with kB the Boltzmann constant.

In information theory, the entropy refers to that introduced in Shannon (1948). Informally, it measures the uncertainty or lack of information in a probability distribution. The entropy of a discrete random variable X is defined by (Cover & Thomas 2006, chapter 2)

$$H(X)=\mathbb{E}_X\left[-\log _2 \pi(X)\right]=-\sum_{x \in \mathcal{X}}\left[\log _2 \pi(x)\right] \pi(x). \tag{3}$$

The two definitions are equivalent up to the considered units. The base-2 logarithm in Eq. (3) leads to entropy values in bits.

The entropy is bounded and always non-negative. The entropy equals exactly 0 when π(x) = 1 for a single state x ∈ 𝒳 and 0 for all the others. In this first case, the probability distribution does not contain any uncertainty. For a particle system, this case corresponds to all particles being in the same state x. Conversely, both definitions are maximized with the uniform distribution; that is, when for all states x ∈ 𝒳, π(x) = 1/Ω. In this second case, the uncertainty is indeed maximum, in the sense that none of the states is favored. This uniform distribution limit corresponds to a macroscopic thermodynamic equilibrium, where Eq. (2) reduces to the well-known formula (often called the Boltzmann equation) S = kB ln Ω or, equivalently, Eq. (3) reduces to H(X) = log2 Ω.

Shannon used the entropy to prove that there exists a code that can compress the data for storage and transmission. Shannon not only proposed the algorithm, but also quantified the optimal performances that can be reached. In this context, Shannon entropy in base 2 corresponds to the average minimum length of a binary message to encode an information. A fundamental property of entropy, namely the additivity of independent sources of information, states that, for any couple of independent random variables X1, X2, H(X1, X2) = H(X1) + H(X2). In other words, the minimum length of a message containing two uncorrelated parts is the sum of the lengths required to encode each of the parts. More generally, the uncertainty of a couple of independent random variables is the sum of their individual uncertainties.
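The bounds of Eq. (3) and the additivity property can be checked numerically with a short sketch. The helper `shannon_entropy_bits` and the probability mass functions below are made up for illustration.

```python
import numpy as np

def shannon_entropy_bits(pmf):
    """Shannon entropy in bits of a probability mass function, as in Eq. (3)."""
    pmf = np.asarray(pmf, dtype=float)
    nonzero = pmf[pmf > 0]  # convention: 0 * log2(0) = 0
    return float(-np.sum(nonzero * np.log2(nonzero)))

omega = 8
h_certain = shannon_entropy_bits([1, 0, 0, 0, 0, 0, 0, 0])      # single possible state
h_uniform = shannon_entropy_bits(np.full(omega, 1.0 / omega))   # maximum: log2(omega)
h_skewed = shannon_entropy_bits([0.5, 0.25, 0.125, 0.125])      # in between

# Additivity: for independent X1 and X2, the joint pmf is the outer product
p1 = np.array([0.5, 0.5])
p2 = np.array([0.7, 0.2, 0.1])
h_joint = shannon_entropy_bits(np.outer(p1, p2))
h_sum = shannon_entropy_bits(p1) + shannon_entropy_bits(p2)
print(h_certain, h_uniform, h_skewed, h_joint, h_sum)
```

The uniform case returns log2 8 = 3 bits, and the joint entropy of the two independent variables matches the sum of their individual entropies up to floating-point error.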

Fig. 1 A simple synthetic example of a joint distribution on the couple (Θ, Y). Top: contour levels of the PDF of the joint distribution with lognormal marginals and a clear correlation. Three observed values are indicated with horizontal lines. Bottom: comparison of the distribution on Θ before any observation (prior, in dashed black) and for the three y values (conditional distributions, in colors).

2.4 Differential entropy for continuous random variables

As was introduced in Sect. 2.1, this work relies on continuous random variables, namely subsets of lines Y(s) ∈ ℝ^K and physical parameters Θ ∈ ℝ^D; for example, visual extinction or incident UV radiative field intensity. For continuous random variables, the information theory notion of entropy is generalized with the differential entropy (Cover & Thomas 2006, chapter 8):

$$h(\Theta)=\mathbb{E}_{\Theta}\left[-\log _2 \pi(\Theta)\right]=-\int\left[\log _2 \pi(\boldsymbol{\theta})\right] ~\pi(\boldsymbol{\theta}) ~\mathrm{d} \boldsymbol{\theta}, \tag{4}$$

with π(θ) the PDF of Θ. The differential entropy h (Θ) is the limit of the discrete entropy H of a quantized variable $\Theta^{\Delta}$, where Δ is a quantization step (Cover & Thomas 2006, theorem 8.3.1)

$$h(\Theta)=\lim _{\Delta \rightarrow 0} H\left(\Theta^{\Delta}\right)+\log _2 \Delta. \tag{5}$$

Unlike the finite case, the differential entropy can take negative values, as log2 Δ < 0 when Δ < 1. Table 2 lists the differential entropy formulae of a few common parametric distributions. For instance, the entropy of a Gaussian distribution only depends on its variance and not on its mean. The entropy of a uniform distribution on a compact set is the logarithm of the set volume.
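The limit in Eq. (5) can be illustrated numerically. The sketch below quantizes a one-dimensional zero-mean Gaussian with decreasing steps Δ and compares H(Θ^Δ) + log2 Δ with the closed-form Gaussian differential entropy, 0.5 log2(2πe σ²). The value of σ, the truncation at ±10σ, and the function name are arbitrary choices made for this illustration.

```python
import math
import numpy as np

def quantized_entropy_bits(sigma, delta, n_sigma=10.0):
    """Shannon entropy (bits) of a zero-mean Gaussian quantized with step delta."""
    n_bins = int(math.ceil(n_sigma * sigma / delta))
    edges = delta * np.arange(-n_bins, n_bins + 1)
    # Gaussian CDF at the bin edges, written with the error function
    cdf = np.array([0.5 * (1.0 + math.erf(e / (sigma * math.sqrt(2.0)))) for e in edges])
    p = np.diff(cdf)   # probability mass of each bin
    p = p[p > 0]       # drop empty bins in the far tails
    return float(-np.sum(p * np.log2(p)))

sigma = 0.25
h_exact = 0.5 * math.log2(2.0 * math.pi * math.e * sigma**2)

steps = (0.1, 0.01, 0.001)
discrete = {d: quantized_entropy_bits(sigma, d) for d in steps}
shifted = {d: discrete[d] + math.log2(d) for d in steps}
for d in steps:
    print(f"Delta={d}: H={discrete[d]:.3f} bits, "
          f"H+log2(Delta)={shifted[d]:.4f} bits, h={h_exact:.4f} bits")
```

The discrete entropy H(Θ^Δ) grows without bound as Δ decreases, while the shifted quantity converges to the differential entropy, which is why the latter can be negative.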

For the example from Sect. 2.2, using the lognormal formula from Table 2, the uncertainty on Θ before any observation is h (Θ) = 0.07 bits. This corresponds to the uncertainty contained in a uniform distribution on an interval of size $2^{0.07} = 1.05$, or in a Gaussian distribution of standard deviation σ = 0.25.
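These values can be reproduced with the standard closed-form differential entropy of a lognormal distribution, μ + 0.5 ln(2πe σ²) in nats, where μ and σ are the log-scale parameters. The sketch below assumes, as an interpretation of Sect. 2.2, that σ = ln 1.3 and that μ = −σ²/2 (unit expectation); the names are illustrative.

```python
import math

sigma_log = math.log(1.3)       # assumed log-scale standard deviation
mu_log = -0.5 * sigma_log**2    # unit expectation in linear scale

def lognormal_entropy_bits(mu, sigma):
    """Differential entropy of a 1D lognormal distribution, converted to bits."""
    return (mu + 0.5 * math.log(2.0 * math.pi * math.e * sigma**2)) / math.log(2.0)

h_theta = lognormal_entropy_bits(mu_log, sigma_log)

# Equivalent distributions carrying the same differential entropy
uniform_width = 2.0 ** h_theta                                       # h = log2(width)
gauss_sigma = 2.0 ** h_theta / math.sqrt(2.0 * math.pi * math.e)     # h = log2(sigma sqrt(2 pi e))
print(f"h = {h_theta:.2f} bits, width = {uniform_width:.2f}, sigma = {gauss_sigma:.2f}")
```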

The entropy can also be computed for couples of random variables. For instance, when considering the problem of inferring Θ from Y(s), we can now introduce the differential entropy on the couple (Θ, Y(s)) that is defined as

$$h\left(\Theta, Y^{(s)}\right)=\mathbb{E}_{\Theta, Y^{(s)}}\left[-\log _2 \pi\left(\Theta, Y^{(s)}\right)\right] \tag{6}$$

$$=-\int\left[\log _2 \pi\left(\boldsymbol{\theta}, \mathbf{y}^{(s)}\right)\right] \pi\left(\boldsymbol{\theta}, \mathbf{y}^{(s)}\right) \mathrm{d} \boldsymbol{\theta} \mathrm{~d} \mathbf{y}^{(s)}, \tag{7}$$

where π(θ, y(s)) is the joint PDF of the couple (Θ, Y(s)).

Table 2 Differential entropy for a few common distributions.

2.5 Conditional differential entropy: Effects of observations

Observations are performed in order to infer physical parameters Θ. In Sect. 2.1, we described observations that include noise. Observing a vector y(s) thus does not permit one to determine the physical conditions Θ with infinite precision. However, it can reduce the uncertainty on the physical parameters Θ.

The conditional differential entropy h (Θ| Y(s)) quantifies the expected uncertainty remaining on Θ when Y(s) is known; that is, after a future observation. It is defined as

$$h\left(\Theta \mid Y^{(s)}\right)=\mathbb{E}_{\Theta, Y^{(s)}}\left[-\log _2 \pi\left(\Theta \mid Y^{(s)}\right)\right] \tag{8}$$

$$=-\int\left[\log _2 \pi\left(\boldsymbol{\theta} \mid \mathbf{y}^{(s)}\right)\right] \pi\left(\boldsymbol{\theta}, \mathbf{y}^{(s)}\right) \mathrm{d} \boldsymbol{\theta} \mathrm{~d} \mathbf{y}^{(s)}. \tag{9}$$

The conditional differential entropy h (Θ | Y(s)) is a mean value characterizing all the possible joint realizations of the observations and the physical parameters. It is therefore not a function of a specific realization y(s) of the Y(s) random variable. Instead, it quantifies how a future observation y(s) of Y(s) would affect the uncertainty on the physical conditions Θ on average. This average is computed with respect to the joint distribution of physical parameters Θ and observations Y(s). The conditional differential entropy can thus be evaluated prior to any observation and estimation. It can be shown that

$$h\left(\Theta \mid Y^{(s)}\right)=h\left(\Theta, Y^{(s)}\right)-h\left(Y^{(s)}\right). \tag{10}$$

This means that the remaining uncertainty on Θ, once Y(s) is known, is the information jointly carried by both Θ and Y(s) minus the information brought by Y(s) alone. In other words, knowing Y(s) provides additional information to estimate Θ. This implies that the conditional differential entropy is always lower or equal to the differential entropy:

$$h\left(\Theta \mid Y^{(s)}\right) \leq h(\Theta). \tag{11}$$

This inequality becomes an equality if and only if Θ and Y(s) are independent. This can occur for instance in the low S/N regime, when additive noise completely dominates the line intensity. Conversely, if there exists a bijection between Θ and Y(s) (e.g., in the absence of noise and with a bijective f in Eq. (1)), then h (Θ|Y(s)) is equal to −∞.
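For completeness, Eqs. (10) and (11) follow in a few lines from the definitions above. The derivation below is the standard argument (see, e.g., Cover & Thomas 2006, chapter 8); it is given as a sketch and assumes that all the integrals involved are finite. It uses the factorization π(θ, y(s)) = π(θ | y(s)) π(y(s)) and the marginalization ∫ π(θ, y(s)) dθ = π(y(s)).

$$
\begin{aligned}
h\left(\Theta \mid Y^{(s)}\right)
&= -\int \left[\log_2 \frac{\pi\left(\boldsymbol{\theta}, \mathbf{y}^{(s)}\right)}{\pi\left(\mathbf{y}^{(s)}\right)}\right] \pi\left(\boldsymbol{\theta}, \mathbf{y}^{(s)}\right) \mathrm{d}\boldsymbol{\theta}\, \mathrm{d}\mathbf{y}^{(s)} \\
&= h\left(\Theta, Y^{(s)}\right) + \int \left[\log_2 \pi\left(\mathbf{y}^{(s)}\right)\right] \pi\left(\mathbf{y}^{(s)}\right) \mathrm{d}\mathbf{y}^{(s)} \\
&= h\left(\Theta, Y^{(s)}\right) - h\left(Y^{(s)}\right), \\
h(\Theta) - h\left(\Theta \mid Y^{(s)}\right)
&= \int \left[\log_2 \frac{\pi\left(\boldsymbol{\theta}, \mathbf{y}^{(s)}\right)}{\pi(\boldsymbol{\theta})\, \pi\left(\mathbf{y}^{(s)}\right)}\right] \pi\left(\boldsymbol{\theta}, \mathbf{y}^{(s)}\right) \mathrm{d}\boldsymbol{\theta}\, \mathrm{d}\mathbf{y}^{(s)} \geq 0 .
\end{aligned}
$$

The last integral is the Kullback-Leibler divergence between the joint distribution and the product of its marginals. It is non-negative and vanishes if and only if the two coincide, that is, if and only if Θ and Y(s) are independent.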

The example of Sect. 2.2 shows how different values of Y yield different uncertainties on Θ. The lower panel in Fig. 1 shows that, among the three observed y, lower values of y lead to a tighter distribution and thus to lower uncertainties on Θ. The remaining uncertainty on Θ is −2.01, −1.11, or −0.58 bits after observing y = 0.5, 1, or 1.5, respectively. The conditional differential entropy h (Θ | Y) averages over all possible observations y. Using Eq. (10) and the lognormal formulae from Table 2, in this case, h (Θ | Y) = h (Θ, Y) − h (Y) = −1.08 − 0.07 = −1.15 bits. The latter value is the mean uncertainty on Θ when observing Y, averaged over all possible values of Y.
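The numbers quoted for this example can be reproduced with a short sketch. It relies on an interpretation of Sect. 2.2 rather than on Appendix A itself: a log-scale standard deviation of ln 1.3, unit expectations, and a log-scale correlation chosen so that the linear-scale correlation is 0.9. Given Y = y, log Θ is then Gaussian with mean μ + ρ (log y − μ) and variance σ²(1 − ρ²), so that Θ | y is again lognormal. All names are illustrative.

```python
import math

sigma_log = math.log(1.3)
mu_log = -0.5 * sigma_log**2
rho_log = math.log(1.0 + 0.9 * (math.exp(sigma_log**2) - 1.0)) / sigma_log**2

def lognormal_entropy_bits(mu, sigma):
    """Differential entropy of a 1D lognormal distribution, in bits."""
    return (mu + 0.5 * math.log(2.0 * math.pi * math.e * sigma**2)) / math.log(2.0)

# Uncertainty on Theta after observing one specific value y
sigma_cond = sigma_log * math.sqrt(1.0 - rho_log**2)

def entropy_given_y(y):
    mu_cond = mu_log + rho_log * (math.log(y) - mu_log)
    return lognormal_entropy_bits(mu_cond, sigma_cond)

per_observation = {y: entropy_given_y(y) for y in (0.5, 1.0, 1.5)}

# Average over Y using Eq. (10): h(Theta | Y) = h(Theta, Y) - h(Y)
det_cov = sigma_log**4 * (1.0 - rho_log**2)
h_joint = (2.0 * mu_log
           + 0.5 * math.log((2.0 * math.pi * math.e) ** 2 * det_cov)) / math.log(2.0)
h_y = lognormal_entropy_bits(mu_log, sigma_log)
h_cond = h_joint - h_y

print(per_observation)
print(f"h(Theta,Y) = {h_joint:.2f}, h(Y) = {h_y:.2f}, h(Theta|Y) = {h_cond:.2f} bits")
```

Since the conditional entropy given y is linear in log y, its average over Y is obtained by replacing log y with its expectation μ, which gives the same value as Eq. (10).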

The differential entropy h (Θ | Y) is related to the error in estimating Θ from the Y data, and in particular to the root mean squared error. For instance, in an estimation procedure, decreasing the entropy by 1 bit improves the precision4 by a factor of two in the Gaussian case. Appendix B illustrates the notion of a difference of one bit between two probability distributions. An interpretation valid in the general case will be presented in the second paper of this series.

|

Fig. 2 Venn diagram representation of the differential entropy h (Θ) (and h (Y)), of the conditional differential entropy h (Θ|Y) (and h (Y|Θ)), and of the mutual information I (Θ, Y). |

2.6 Mutual information

The mutual information I (Θ, Y(s)) (Cover & Thomas 2006, sect. 8.6) is often preferred for a simpler interpretation. It quantifies the information on Θ that is gained by knowing Y(s):

![$\[I\left(\Theta, Y^{(s)}\right)=h(\Theta)-h\left(\Theta \mid Y^{(s)}\right).\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq22.png) (12)


Figure 2 shows a Venn diagram that illustrates the relationships between differential entropy, conditional differential entropy and mutual information. It illustrates Eqs. (10) and (12).

Mutual information is always non-negative, as implied by Eq. (11). A high mutual information indicates that knowing Y(s) considerably lowers the uncertainty on Θ. If we consider different distributions of a given physical parameter (e.g., corresponding to different physical regimes), represented by different random variables Θ, the mutual information values are delicate to compare as they depend on the initial uncertainty. Indeed, it is easier to provide information on the physical parameter if the latter is highly uncertain than if it is already precisely constrained.
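The dependence on the initial uncertainty can be illustrated with a toy case that is not the model used in this paper: a scalar Gaussian parameter Θ observed through Y = Θ + ε with independent Gaussian noise ε. In that case the mutual information has the closed form (1/2) log2(1 + σΘ²/σε²). The Python sketch below, with illustrative values and a hypothetical function name, compares a narrow and a broad prior at fixed noise.

```python
import math

def gaussian_channel_mi_bits(sigma_theta: float, sigma_noise: float) -> float:
    """I(Theta, Y) in bits for Y = Theta + noise, with independent Gaussian Theta and noise."""
    return 0.5 * math.log2(1.0 + (sigma_theta / sigma_noise) ** 2)

sigma_noise = 0.5  # same observation noise in both cases
mi_narrow = gaussian_channel_mi_bits(0.1, sigma_noise)  # parameter already well constrained
mi_broad = gaussian_channel_mi_bits(2.0, sigma_noise)   # parameter highly uncertain
print(f"narrow prior: {mi_narrow:.2f} bits, broad prior: {mi_broad:.2f} bits")
```

With the same observation noise, the broad prior yields a much larger mutual information, which is why values obtained for different priors should not be compared directly.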

The mutual information is invariant to invertible transformations of Θ or Y(s) separately. Its value is thus identical whether integrated intensities are considered in linear scale, logarithmic scale or with an asinh transformation as in Gratier et al. (2017). Conversely, non-bijective transformations result in a loss of information, and thus decrease the mutual information. For instance, an integrated intensity is obtained with a non-invertible integration of the associated line profile, and thus contains less information.

In the example from Sect. 2.2, the value of mutual information is I (Θ, Y) = 1.22 bits; that is, the difference between h (Θ) = 0.07 bits and h (Θ | Y) = −1.15 bits. This means that observing Y increases the information on Θ by 1.22 bits on average. Equivalently, observing Y improves the precision on Θ by a factor of 2^1.22 ≃ 2.3, on average.

2.7 Finding the lines that best constrain physical parameters

Constraining a physical parameter is commonly defined as reducing the uncertainty associated with it. In information theory, this uncertainty is quantified by the conditional entropy h (Θ | Y). The best subset sK of K lines for a given physical regime is then the solution of the discrete optimization problem

![$\[s_K=\underset{s \in \mathcal{S}_K}{\arg \min } ~h\left(\Theta \mid Y^{(s)}\right),\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq23.png) (13)


with SK the set of all possible subsets of K lines. Using the relationship h (Θ | Y(s)) = h (Θ) − I (Θ, Y(s)), the problem can be restated as maximizing mutual information such that an equivalent formulation is

![$\[s_K=\underset{s \in \mathcal{S}_K}{\arg \max } ~I\left(\Theta, Y^{(s)}\right).\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq24.png) (14)


This optimization problem is solved by comparing mutual information values for all subsets s ∈ SK. The entropy and mutual information values are heavily dependent on the choice of prior on the Θ distribution. Solving Eq. (14) requires the ability to evaluate the mutual information for each pair (Θ, Y(s)). In real-life applications, the shape of the distribution on (Θ, Y(s)) can be complex or unknown. In such cases, the mutual information does not have a simple closed-form expression, unlike the simple cases listed in Table 2. It then needs to be evaluated numerically with a Monte Carlo estimator ![$\[\widehat{I}_{N}\left(\Theta, Y^{(s)}\right)\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq25.png) from a set of N pairs (![$\[\boldsymbol{\theta}_{n}, \mathbf{y}_{n}^{(s)}\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq26.png)).
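The exhaustive search of Eq. (14) can be sketched in a few lines of Python. To keep the example self-contained, the mutual information is replaced here by a closed form valid only for a toy linear Gaussian model, Yℓ = aℓ Θ + noise; the line names, sensitivities aℓ and noise levels are hypothetical and serve only as an illustration.

```python
import itertools
import math

# Hypothetical (sensitivity a_l, noise std sigma_l) per line; illustrative values only.
lines = {
    "line_A": (1.0, 0.5),
    "line_B": (0.8, 0.2),
    "line_C": (0.3, 0.1),
    "line_D": (1.5, 2.0),
}
SIGMA_THETA = 1.0  # prior standard deviation of the scalar parameter Theta

def mutual_information_bits(subset):
    """Closed-form I(Theta, Y^(s)) for the toy model Y_l = a_l * Theta + Gaussian noise."""
    snr2 = sum((lines[name][0] / lines[name][1]) ** 2 for name in subset)
    return 0.5 * math.log2(1.0 + SIGMA_THETA**2 * snr2)

def best_subset(k):
    """Solve Eq. (14) by enumerating every subset of k lines."""
    return max(itertools.combinations(lines, k), key=mutual_information_bits)

best_pair = best_subset(2)
print(best_pair, round(mutual_information_bits(best_pair), 2))
```

In this toy model each line contributes independently, so the best pair is simply made of the two best individual lines. This does not hold in general, which is why all subsets are compared. The number of subsets grows combinatorially with K, so the enumeration is only practical for small K.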

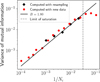

The Monte Carlo estimator ![$\[\widehat{I}_{N}\left(\Theta, Y^{(s)}\right)\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq27.png) considered in the remainder of this work is the “Kraskov estimator” (Kraskov et al. 2004). This estimator does not make assumptions on the shape of the joint distribution on Θ, Y(s). It can thus capture both linear and nonlinear relationships between lines Y(s) and physical parameters Θ. See Appendix C for more details on this estimator and the derivation of the associated error bars.
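For readers unfamiliar with this estimator, the sketch below gives a brute-force Python version of the first estimator of Kraskov et al. (2004), restricted to two scalar variables. It is a minimal illustration under our own simplifying choices (pure Python, O(N²) neighbor search, k = 3), not the implementation used in this work. It is checked on a correlated Gaussian pair, for which the exact mutual information is −(1/2) log2(1 − ρ²).

```python
import math
import random

EULER_GAMMA = 0.5772156649015329

def digamma_int(n: int) -> float:
    """Digamma function at a positive integer: psi(n) = -gamma + sum_{j=1}^{n-1} 1/j."""
    return -EULER_GAMMA + sum(1.0 / j for j in range(1, n))

def ksg_mutual_information_bits(x, y, k=3):
    """Kraskov et al. (2004) estimator (first variant) for two scalar samples."""
    n = len(x)
    total = 0.0
    for i in range(n):
        # Max-norm distances from point i to every other point in the joint space.
        dists = sorted(max(abs(x[i] - x[j]), abs(y[i] - y[j])) for j in range(n) if j != i)
        eps = dists[k - 1]  # distance to the k-th nearest neighbor
        # Count the points strictly closer than eps in each marginal space.
        n_x = sum(1 for j in range(n) if j != i and abs(x[i] - x[j]) < eps)
        n_y = sum(1 for j in range(n) if j != i and abs(y[i] - y[j]) < eps)
        total += digamma_int(n_x + 1) + digamma_int(n_y + 1)
    mi_nats = digamma_int(k) + digamma_int(n) - total / n
    return mi_nats / math.log(2.0)

rng = random.Random(0)
rho = 0.9
x = [rng.gauss(0.0, 1.0) for _ in range(400)]
y = [rho * xi + math.sqrt(1.0 - rho**2) * rng.gauss(0.0, 1.0) for xi in x]
mi_estimate = ksg_mutual_information_bits(x, y)
mi_exact = -0.5 * math.log2(1.0 - rho**2)
print(f"estimate: {mi_estimate:.2f} bits, exact: {mi_exact:.2f} bits")
```

For large N or multivariate Θ and Y(s), a tree-based neighbor search would be needed in place of the brute-force loops.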


The set of N pairs ![$\[\left(\boldsymbol{\theta}_{n}, \mathbf{y}_{n}^{(s)}\right)\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq28.png) can be made up of real observations or simulated observations. This paper considers simulated observations. The considered approach involves three steps: i) drawing N physical parameter vectors θn from a distribution π(θ), ii) evaluating the ISM model f on each physical parameter vector θn for all lines, iii) applying the noise model 𝒜 to obtain simulated noisy observations yn. In the second paper of this series, the method is applied to a set of real observations.
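The three steps can be sketched as follows in Python. The function `toy_model` is a made-up stand-in for the ISM model f (it is not the Meudon PDR code nor its emulator), the noise form anticipates the one described in Sect. 3.3.2, and the noise levels are illustrative assumptions.

```python
import math
import random

rng = random.Random(1)

def toy_model(log10_av: float, log10_g0: float) -> float:
    """Stand-in for the ISM model f (NOT the Meudon PDR code): one toy line intensity."""
    return 10.0 ** (0.8 * log10_av - 0.2 * log10_g0)

def simulate(n: int, sigma_a: float = 0.1, sigma_m: float = math.log(1.05)):
    """Return n pairs (theta_n, y_n) following steps i) to iii)."""
    pairs = []
    for _ in range(n):
        # i) draw theta_n from pi(theta); here loguniform on illustrative intervals
        theta = (rng.uniform(0.0, math.log10(40.0)), rng.uniform(0.0, 5.0))
        # ii) evaluate the model on theta_n
        intensity = toy_model(*theta)
        # iii) apply the noise model (multiplicative lognormal and additive Gaussian)
        y = rng.lognormvariate(0.0, sigma_m) * intensity + rng.gauss(0.0, sigma_a)
        pairs.append((theta, y))
    return pairs

dataset = simulate(1000)
```

The resulting list of pairs is the kind of input that a Monte Carlo estimator of the mutual information requires.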


3 Application to simulated photodissociation regions observed with IRAM 30m EMIR

Mutual information, introduced in Sect. 2, allows one to evaluate the constraining power of ionic, atomic and molecular lines. The general method presented in Sect. 2.7 allows one to determine which lines are the most informative to constrain the physical properties of an emitting object. This method can be applied to any astrophysical model that computes line intensities from a few input parameters; for example, radiative transfer codes simulating interstellar clouds, emission lines from protoplanetary disks, or stellar spectra synthesis models. It can also be applied to any other spectroscopic observations.

In this section, we introduce two synthetic cases of PDRs. In both cases, we resort to a fast and accurate emulator of the Meudon PDR code, and simulate noise using the characteristics of the EMIR receiver at the IRAM 30m. With these two cases, we shall show how mutual information can provide insights for ISM physics understanding, and apply the proposed line selection method. As the results of the proposed approach heavily depend on various aspects (e.g., the instrument properties, the integration time, or the observed environment), we depict these two cases in detail.

The Meudon PDR code is first presented along with a fast and accurate emulator. Then, the details of the generation of the sets of models are introduced, namely, the physical parameter distribution and the observation simulator. Overall, we consider two situations with distinct physical parameter distributions.

3.1 The Meudon PDR code

The Meudon PDR code5 (Le Petit et al. 2006) is a one-dimensional stationary code that simulates a PDR; that is, neutral interstellar gas illuminated with a stellar radiation field. It permits the investigation of the radiative feedback of a newborn star on its parent molecular cloud, but it can also be used to simulate a variety of other environments.

The user specifies physical conditions such as the thermal pressure, Pth, the intensity of the incoming UV radiation field, G0 (scaling factor applied to the Mathis et al. 1983 standard field), and the depth of the slab of gas expressed in visual extinctions, ![$\[A_{V}^{\text {tot }}\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq29.png) . The code then solves multiphysics coupled balance equations of radiative transfer, thermal balance, and chemistry for each point of an adaptive spatial grid of a one-dimensional slab of gas. First, the code solves the radiative transfer equation, considering absorption in the continuum by dust and in the lines of key atoms and molecules such as H and H2 (Goicoechea & Le Bourlot 2007). Then, from the specific intensity of the radiation field, it computes the gas and grain temperatures by solving the thermal balance. The code accounts for a large number of heating and cooling processes, in particular photoelectric and cosmic ray heating, and line cooling. Finally, the chemistry is solved, providing the densities of about 200 species at each position. About 3000 reactions are considered, both in the gas phase and on the grains. The chemical reaction network was built combining different sources including data from the KIDA database (Wakelam et al. 2012) and the UMIST database (McElroy et al. 2013) as well as data from articles. For key photoreactions, cross sections are taken from Heays et al. (2017) and from Ewine van Dishoeck’s photodissociation and photoionization database. The successive resolution of these three coupled aspects is iterated until a global stationary state is reached.


The code yields 1D spatial profiles of the density of many chemical species and of the temperature of both grains and gas as a function of depth in the PDR. From these spatial profiles, it also computes the line integrated intensities emerging from the cloud that can be compared to observations. As of version 7 (released in 2024), thousands of line intensities are predicted from species such as H2, HD, H2O, C+, C, CO, 13CO, C18O, 13C18O, SO, HCO+, OH, HCN, HNC, CH+, CN or CS. Although the Meudon PDR code was primarily designed for PDRs, it can also simulate the physics and chemistry of a wide variety of other environments such as diffuse clouds, nearby galaxies, damped Lyman alpha systems and circumstellar disks.

3.2 Neural network-based emulation of the model

The numerical estimation of the mutual information requires drawing thousands of physical parameters θn and evaluating the associated integrated intensities fℓ(θn) in order to achieve a satisfactory precision for line ranking (see, e.g., the experiment from Appendix C). A single full run of the Meudon PDR code is computationally intensive and typically lasts a few hours for one input vector θ. Generating such a large set of models with the original code would therefore be very slow. This is a recurrent limitation of comprehensive ISM models that has received a lot of attention recently. The most common solution is to derive a fast approximation of a heavy ISM code using an interpolation method (Galliano 2018; Wu et al. 2018; Ramambason et al. 2022), a machine learning algorithm (Bron et al. 2021; Smirnov-Pinchukov et al. 2022) or a neural network (de Mijolla et al. 2019; Holdship et al. 2021; Grassi et al. 2022; Palud et al. 2023).

In this work, we use the fast, light (memory-wise) and accurate neural network approximation of the Meudon PDR code proposed in Palud et al. (2023). This approximation is valid for ![$\[\log _{10} P_{\text {th }} \in[5,9], \log _{10} G_{0} \in[0,5], \log _{10} A_{V}^{\text {tot }} \in\left[0, \log _{10}(40)\right]\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq30.png). As neural networks can process multiple inputs at once in batches, the evaluation of 10^3 input vectors θ with this approximation lasts about 10 ms on a personal laptop. With the original code, performing that many evaluations would require about a week using high performance computing; that is, about 60 million times longer even with much more computing power. For the lines studied in this paper, the emulator results in an average error of about 3.5% on the validity intervals, which is three times lower than the average calibration error at the IRAM 30m. The error on mutual information values due to using the emulator instead of the original code is thus negligible. For this reason and to simplify notation in the remainder of this paper, we denote this neural network approximation by f.
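The quoted speed-up follows directly from the two durations. The following lines of Python reproduce the arithmetic, taking "about a week" as exactly seven days and "about 10 ms" as exactly 10 ms, which are rounding assumptions on our side.

```python
SECONDS_PER_WEEK = 7 * 24 * 3600  # about one week with the original code
EMULATOR_SECONDS = 10e-3          # about 10 ms with the emulator
speedup = SECONDS_PER_WEEK / EMULATOR_SECONDS
print(f"speed-up factor: {speedup:.2e}")
```

The ratio is close to 6 × 10^7, consistent with the factor of about 60 million given in the text.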


3.3 Generating sets of models

To demonstrate the power of the approach presented in Sect. 2.7, we apply it to a simulation of lines observed by the EMIR (Eight MIxer Receiver) heterodyne receiver. This receiver operates in the 3 mm, 2 mm, 1.3 mm and 0.9 mm bands at the IRAM 30m telescope (Carter et al. 2012). This application also includes the far infrared (FIR) [CI] 370 μm, [CI] 609 μm and [CII] 157 μm lines. These three lines are relevant for this application as their behavior is well understood within PDRs (Kaufman et al. 1999), especially their dependency on G0.

However, choosing which lines to include in the study is not the only critical choice. Indeed, the values of mutual information and therefore the result of the optimization problem heavily depend on the prior distribution π (θ) on the physical parameters – which, in particular, specifies the expected physical regime – and the observation simulator.

Summary of the parameter distribution for the two studied situations.

3.3.1 Physical regimes and distribution of parameters

The distribution, π(θ), on physical parameters represents the expected proportions of pixels in each physical regime within an observation. This distribution has a crucial influence on ISM model predictions and thus on the mutual information values and line ranking. It should therefore be carefully chosen. In this paper, we study two situations, summarized in Table 3.

First, we consider a loguniform distribution over the whole validity space of the emulated ISM model. As this option does not favor any physical regime, it is a common choice in ISM studies (see, e.g., Behrens et al. 2022; Blanc et al. 2015; Thomas et al. 2018; Holdship et al. 2018; Joblin et al. 2018). In other words, it assumes that all kinds of environments are equally likely, which is not the case in general in observed environments. However, choosing the distribution of maximal entropy on log ![$\[A_{V}^{\text {tot }}\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq33.png) and log G0 averages the informativity of the lines over different physical conditions without introducing any bias.


Second, we consider a physical environment similar to the Horsehead pillar. Real-life observations of molecular clouds such as Orion B (Pety et al. 2017) or OMC-1 (Goicoechea et al. 2019) typically contain more pixels corresponding to translucent gas than to dense cores. This is due to the fact that translucent gas fills a larger volume than dense cores in a galaxy. To incorporate this physical knowledge in our study, we fit a power law distribution on ![$\[A_{V}^{\text {tot }}\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq34.png) and G0 (Hennebelle & Falgarone 2012). The associated exponents are adjusted on ORION-B data, following the method described in Clauset et al. (2009).


For a given situation, one can choose to simulate observations only within a particular environment (e.g., translucent clouds with ![$\[3 \leq A_{V}^{\text {tot }} \leq 6\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq35.png)). This prior physical knowledge can then be used to refine the results. In practice, it is useful to integrate any available physical knowledge into the prior distribution of the parameters or into the observation simulator.
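Both kinds of prior can be sampled by inverse transform, as in the Python sketch below. The exponent `ALPHA_AV` is a hypothetical value chosen for illustration (the exponents fitted on ORION-B data are not reproduced here), and the restriction to a given environment is done by simple rejection.

```python
import math
import random

rng = random.Random(2)

def sample_loguniform(low: float, high: float) -> float:
    """Draw x such that log10(x) is uniform on [log10(low), log10(high)]."""
    return 10.0 ** rng.uniform(math.log10(low), math.log10(high))

def sample_power_law(low: float, high: float, alpha: float) -> float:
    """Draw x from p(x) proportional to x**(-alpha) on [low, high] (alpha != 1), by inverse CDF."""
    u = rng.random()
    a, b = low ** (1.0 - alpha), high ** (1.0 - alpha)
    return (a + u * (b - a)) ** (1.0 / (1.0 - alpha))

ALPHA_AV = 2.0  # hypothetical exponent, for illustration only
av_uniform = [sample_loguniform(1.0, 40.0) for _ in range(5000)]
av_power = [sample_power_law(1.0, 40.0, ALPHA_AV) for _ in range(5000)]
# Restrict the power law draws to translucent gas (3 <= AV <= 6) by rejection.
av_translucent = [av for av in av_power if 3.0 <= av <= 6.0]
```

With a decreasing power law, most draws fall at low visual extinction, which mimics the larger number of translucent pixels compared to dense cores.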


3.3.2 Observation simulator

Eq. (1) involves an abstract noise model 𝒜. In this experiment, the considered noise model combines two sources of noise for each of the considered lines: one additive Gaussian and one multiplicative lognormal. The additive noise corresponds to thermal noise, whereas the multiplicative noise corresponds to the calibration uncertainty. For all lines, we compute the integrated line intensity over a velocity range of 10 km s−1. Overall, for the nth element of the dataset (1 ≤ n ≤ N) and the ℓth line, the observation simulator reads

![$\[y_{n \ell}=\varepsilon_{n \ell}^{(m)} f_{\ell}\left(\boldsymbol{\theta}_n\right)+\varepsilon_{n \ell}^{(a)},\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq36.png) (15)


The standard deviation of the multiplicative noise, σm, was set so that a 1σ uncertainty interval corresponds to a given percentage for the calibration error. For instance, a 5% calibration error leads to σm = log(1.05). For EMIR lines, this percentage is assumed to be identical for the lines within the same band: 5% at 3 mm, 7.5% at 2 mm and 10% at both 1.3 mm and 0.9 mm. For the time being, the additive noise RMS levels ![$\[\sigma_{a, \ell}^{2}\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq38.png) are set according to the ORION-B Large Program observations (Einig et al. 2023). To do this, we resort to the IRAM 30m software that delivers the telescope sensitivity as a function of frequency. We consider standard weather conditions at Pico Veleta and set the integration time per pixel to 24 seconds. An increase in the integration time would amount to dividing the additive noise RMS σa,ℓ by the square root of the increase factor.
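The noise settings described above can be sketched in Python as follows. The calibration percentages are those quoted in the text; the assumption that the lognormal factor has a median of 1 (log-mean 0) is ours, as are the function names and the numerical values used in the example call.

```python
import math
import random

# Calibration errors per EMIR band, as quoted in the text.
CALIBRATION_ERROR = {"3mm": 0.05, "2mm": 0.075, "1.3mm": 0.10, "0.9mm": 0.10}

def sigma_m(band: str) -> float:
    """Log-space standard deviation of the multiplicative (calibration) noise."""
    return math.log(1.0 + CALIBRATION_ERROR[band])

def scaled_sigma_a(sigma_a: float, time_factor: float) -> float:
    """Additive RMS after multiplying the integration time by time_factor."""
    return sigma_a / math.sqrt(time_factor)

def observe(intensity: float, band: str, sigma_a: float, rng: random.Random) -> float:
    """One draw of Eq. (15); assumes the lognormal factor has median 1 (log-mean 0)."""
    eps_m = rng.lognormvariate(0.0, sigma_m(band))
    eps_a = rng.gauss(0.0, sigma_a)
    return eps_m * intensity + eps_a

rng = random.Random(3)
y = observe(intensity=2.0, band="3mm", sigma_a=0.1, rng=rng)
print(f"sigma_m at 3 mm: {sigma_m('3mm'):.4f}; RMS after 100x longer integration: {scaled_sigma_a(0.1, 100.0):.3f}")
```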


For FIR lines, we assume that the [CII] line is observed with SOFIA and has an additive noise RMS of 2.25 K per channel in addition to a 5% calibration error (Risacher et al. 2016; Pabst et al. 2017). We also assume that both [CI] lines are observed at Mount Fuji observatory with an RMS of 0.5 K and a 20% calibration error (Ikeda et al. 2002). For all lines, the integration range is assumed to be 10 km s−1.

Important observational effects such as the beam dilution or the cloud geometry are disregarded in Eq. (15). As a consequence, we propose an alternative observation simulator that accounts for such observational effects through a scaling factor, κ. This factor is assumed common to all lines such that

![$\[\forall 1 \leq \ell \leq L, \quad y_{n \ell}=\varepsilon_{n \ell}^{(m)} \kappa_n f_{\ell}\left(\boldsymbol{\theta}_n\right)+\varepsilon_{n \ell}^{(a)}.\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq39.png) (17)


Beam dilution decreases line intensities, while an edge-on geometry increases line intensities compared to a face-on orientation. Therefore, we consider that log10 κ follows a uniform distribution on [−0.5, 0.5], which seems realistic when looking at extended sources like Orion B. See Sheffer & Wolfire (2013) for a more thorough description of this scaling parameter. This approach of including these effects in the observation simulator is a first order approximation. In particular, the hypothesis of a shared κ among all lines is only valid for optically thin lines.
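A minimal Python sketch of the scaling factor is given below; the function names are ours. One value of κ is drawn per simulated pixel and applied to all the line intensities of that pixel before the noise is added.

```python
import random

rng = random.Random(4)

def sample_kappa() -> float:
    """Draw kappa with log10(kappa) uniform on [-0.5, 0.5]."""
    return 10.0 ** rng.uniform(-0.5, 0.5)

def scale_intensities(intensities, kappa):
    """Apply the same kappa to every line of one simulated pixel, as in Eq. (17)."""
    return [kappa * value for value in intensities]

kappas = [sample_kappa() for _ in range(10000)]
scaled = scale_intensities([1.0, 0.2, 5.0], kappas[0])  # illustrative intensities
```

The factor κ thus spans one decade in total, from about 0.32 to about 3.16.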

In the remainder of this work, unless explicitly specified, the considered observation simulator is Eq. (15) – without the κ term.

|

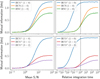

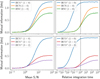

Fig. 3 Violin plots of the S/N of the spectral lines considered in this study, with the S/N defined as fℓ(θ)/σa,ℓ. The EMIR lines are displayed in blue on the left, while the [CI] and [CII] lines are shown in orange on the right. Top: S/N distributions for a loguniform distribution on the full validity intervals on the physical parameters. The considered line filter only keeps lines that have a 99% percentile S/N greater than 3. This threshold is indicated with the horizontal dashed black line, and the actual 99% percentile S/N is shown with a short black line for each line. Bottom: S/N distributions in an environment similar to the Horsehead pillar, for the same lines. The lines are ranked by decreasing median S/N, indicated in red. |

3.3.3 Considered lines

In the simulated observations, the intensity of some lines is completely dominated by the additive noise. The intensity of these lines is thus nearly independent of physical parameters Θ and has a near-zero mutual information with them. To avoid useless mutual information evaluations, we filter out uninformative lines based on their S/N. We thus only study lines that have an S/N greater than 3 for at least 1% of the full parameter space. In total, L = 36 lines are considered: 33 millimeter lines – with multiple lines in each of the four frequency bands – and the 3 lines from atomic and ionized carbon. For lines with hyperfine structure, the Meudon PDR code considers the transitions independently. To simplify our systematic comparison, only the brightest transition is retained. Summing the integrated intensities of all the transitions might lead to a more realistic approximation of the overall line.
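The S/N filter amounts to a percentile test, as in the Python sketch below: a line is kept if the 99th percentile of its S/N over the sampled parameter space exceeds 3. The two sets of intensities are synthetic, and the nearest-rank percentile is one possible convention among several.

```python
import math
import random

def percentile(values, q):
    """Nearest-rank percentile (q in [0, 100]) of a list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100.0 * len(ordered)))
    return ordered[rank - 1]

def keep_line(intensities, sigma_a, threshold=3.0, q=99.0):
    """Keep a line if the q-th percentile of its S/N, f_l(theta)/sigma_a, exceeds threshold."""
    return percentile([value / sigma_a for value in intensities], q) > threshold

rng = random.Random(5)
# Hypothetical intensities over the parameter space for a bright and a faint line.
bright = [10.0 ** rng.uniform(-3.0, 1.0) for _ in range(2000)]
faint = [10.0 ** rng.uniform(-5.0, -1.0) for _ in range(2000)]
samples = {"bright": bright, "faint": faint}
kept = {name: keep_line(values, sigma_a=0.1) for name, values in samples.items()}
print(kept)
```

Here the faint line never exceeds an S/N of 1 and is discarded, whereas the bright line passes the filter even though its S/N is very low over part of the parameter space.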

Figure 3 shows the distribution of S/N level across the considered parameter space for each of the L = 36 considered lines. These lines include the first three low-J transitions of 12CO, 13CO, C18O, the first four of HCO+, five of the first seven of 12CS, six lines of 12CN, two lines of HNC, three lines of HCN, and four lines of C2H. The first row contains S/N violin plots for a loguniform distribution on the validity intervals for the physical parameters θ. It shows that all the considered lines can have very low S/Ns for some regimes of the explored physical parameter space. Below an S/N of 1–2, signal becomes difficult to distinguish from noise. The second row contains S/N violin plots for a parameter space restricted to the range found in the Horsehead pillar. In this use case, the line S/Ns cover fewer orders of magnitude. For instance, in this case, the lines corresponding to the last 18 blue violin histograms have a very low S/N, and are thus unlikely to be informative. This shows that the subset of informative lines could be further reduced in this case. While dedicated filters could be applied for each use case, we maintain the same subset of L = 36 lines in all the studied use cases to simplify interpretations.

The considered noise properties of the EMIR receiver, of SOFIA, and of the Mt. Fuji observatory are not identical for all lines. For instance, Fig. 3 shows a similar range of S/N values for the ground state transitions of 12CO and 13CO. This might be surprising, since the ground state transition of 12CO is known to be brighter than that of 13CO (Pety et al. 2017). In this case, the additive noise standard deviation σa,ℓ of 12CO (1 − 0) is much larger than that of 13CO (1 − 0) because 12CO (1 − 0) is located on the upper limit of the band at 3 mm. This results in their comparable S/Ns. The same observation can be made for the [CII] line: although this line is usually much brighter than all the other considered lines, its S/N is close to 1 due to the considered noise properties of SOFIA. Appendix G provides the full list of considered lines and the associated noise characteristics.

4 Simulation results and general applications

In this section, we show general results and insights of our approach in the considered setting. To do so, we evaluate the mutual information between the integrated intensity of a few ISM tracers with either the visual extinction ![$\[A_{V}^{\text {tot }}\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq40.png) or the UV radiative field G0. First, we consider the impact of integration time, and thus of S/N, on the mutual information value. Second, we show how the mutual information between line intensities and ![$\[A_{V}^{\text {tot }}\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq41.png) or G0 changes with the values of ![$\[A_{V}^{\text {tot }}\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq42.png) and G0, in order to better understand the physical processes that control the informativity of these lines. Third, we illustrate how combining different lines can impact their mutual information with ![$\[A_{V}^{\text {tot }}\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq43.png).

The goal of this section is to demonstrate the potential of the approach and its consistency with already known results. Therefore, we restrict the analysis to two variables – for visualization purposes – and choose the two variables for which astrophysicists have the best intuition, namely the visual extinction ![$\[A_{V}^{\text {tot }}\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq44.png) and the UV field intensity G0. In particular, we do not present mutual information values for the thermal pressure, Pth, although the proposed approach and code can perform these computations. In addition, we restrict the experiment to univariate physical parameters as this greatly simplifies physical interpretations. In other words, we compute mutual information for only one physical parameter (![$\[A_{V}^{\text {tot }}\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq45.png) or G0) at a time, although the proposed approach and code can evaluate the mutual information for both ![$\[A_{V}^{\text {tot }}\]$](/articles/aa/full_html/2024/11/aa51588-24/aa51588-24-eq46.png) and G0 simultaneously. Analyzing less understood physical parameters such as the thermal pressure, Pth, or evaluating the mutual information for multiple physical parameters at once is left for future work.

4.1 Signal-to-noise ratio for a line to deliver its full physical potential

The mutual information I (Θ, Yℓ) between a line intensity Yℓ and a given physical parameter Θ not only depends on the intrinsic physical sensitivity of the line to the considered physical parameter, but also on the mean S/N of the studied observation. For a given line, the mean S/N is influenced by 1) the corresponding species and its quantum transition, 2) the physical conditions (e.g., kinetic temperature and volume density), and 3) the integration time with an observatory to reach a given noise level6.

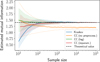

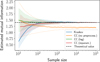

Figure 4 shows the influence of the mean S/N (left column) and the integration time (right column) on $I\left(A_{V}^{\text{tot}}, Y_{\ell}\right)$ for several transitions of HCO$^{+}$, HCN, and HNC. The considered distribution $\pi(\theta)$ on physical parameters is the one similar to the Horsehead Nebula (see Table 4), restricted to filamentary gas ($6 \leq A_{V}^{\text{tot}} \leq 12$). The dotted vertical line in the right column shows the typical integration time per pixel in the ORION-B dataset. For each line, the mutual information varies with mean S/N and time following an S-shape. Low S/N values lead to zero mutual information because the line intensity is dominated by additive noise. The inflection point of the S-curve is located at S/N about 3. A given line reaches its full informativity potential when the curve starts to saturate; for instance at S/N ~ 10 for all lines in this case. For large S/N, the mutual information converges to a value that depends on the line microphysical characteristics. This value is finite because each $A_{V}^{\text{tot}}$ value is combined with many values of thermal pressure and UV illumination.
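The S-shaped behaviour described above can be reproduced qualitatively with a toy model. The sketch below is only an illustration and is not the emission model or the estimator used in this work. It assumes a hypothetical linear "line intensity" that depends on a parameter of interest and on one nuisance parameter, additive Gaussian noise, a mean S/N defined as the mean noiseless intensity divided by the noise standard deviation, and a simple histogram (plug-in) estimator of mutual information. All names (`binned_mutual_information`, `toy_mi_vs_snr`) are introduced here for illustration.

```python
import numpy as np


def binned_mutual_information(x, y, bins=30):
    """Histogram (plug-in) estimate of I(X; Y) in bits."""
    joint, _, _ = np.histogram2d(x, y, bins=bins)
    p_xy = joint / joint.sum()
    p_x = p_xy.sum(axis=1, keepdims=True)   # marginal of x, shape (bins, 1)
    p_y = p_xy.sum(axis=0, keepdims=True)   # marginal of y, shape (1, bins)
    nonzero = p_xy > 0
    ratio = p_xy[nonzero] / (p_x @ p_y)[nonzero]
    return float(np.sum(p_xy[nonzero] * np.log2(ratio)))


def toy_mi_vs_snr(snr_values, n_samples=200_000, seed=0):
    """Mutual information between a toy parameter and a noisy toy intensity."""
    rng = np.random.default_rng(seed)
    log_av = rng.uniform(0.0, 1.0, n_samples)    # parameter of interest
    nuisance = rng.uniform(0.0, 1.0, n_samples)  # stand-in for pressure / UV field
    clean = log_av + 0.5 * nuisance              # hypothetical noiseless intensity
    results = []
    for snr in snr_values:
        sigma = clean.mean() / snr               # assumed definition of mean S/N
        noisy = clean + rng.normal(0.0, sigma, n_samples)
        results.append(binned_mutual_information(log_av, noisy))
    return np.array(results)


snr_grid = np.array([0.1, 1.0, 3.0, 10.0, 100.0])
mi_bits = toy_mi_vs_snr(snr_grid)
for snr, mi in zip(snr_grid, mi_bits):
    print(f"S/N = {snr:6.1f}  ->  I = {mi:.2f} bits")
```

In this toy, the mutual information is close to zero at low S/N and levels off at high S/N at a finite value, because the nuisance parameter keeps the intensity from determining the parameter of interest exactly. The location of the inflection point and the plateau value depend entirely on the assumed toy model.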

Using the proposed method, the integration time can be set to achieve a target mean S/N and mutual information. For instance, according to Fig. 4, $I\left(A_{V}^{\text{tot}}, Y_{\ell}\right)$ has already reached its maximum value for HCO$^{+}$ (1–0) in the filamentary gas part of the ORION-B dataset. An increase in the integration time would thus not increase the informativity of this line; in other words, it would not improve the precision of an estimation of $A_{V}^{\text{tot}}$ from HCO$^{+}$ (1–0). Conversely, a 100-fold increase in the integration time would improve the mutual information for the HCN (1–0) and HNC (1–0) lines by 0.7 and 0.5 bits, respectively, and would lead to maximum precision in an estimation of $A_{V}^{\text{tot}}$ with these lines. Higher energy transitions of HCO$^{+}$ could also be fully exploited with such an increase in the integration time. As a reference, the next generation of multibeam receivers currently foreseen in millimeter radio astronomy is expected to bring a 25-fold sensitivity improvement without increasing the integration time. The same figures of evolution of mutual information with the integration time for the 36 considered lines are available online$^{7}$. They also display results with respect to the intensity of the UV radiative field $G_{0}$ for translucent gas, filamentary gas and dense cores.
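To relate a change in integration time to a change in mean S/N, a minimal sketch is given below. It assumes the standard radiometer-like scaling in which the additive thermal noise decreases as the inverse square root of the integration time; any noise component that does not average down with time (for instance calibration uncertainty) is ignored. The function names are hypothetical.

```python
import math


def snr_gain_from_integration(time_factor: float) -> float:
    """S/N gain when the integration time is multiplied by `time_factor`.

    Assumes additive noise scaling as 1 / sqrt(integration time).
    """
    if time_factor <= 0:
        raise ValueError("time_factor must be positive")
    return math.sqrt(time_factor)


def time_factor_for_snr(current_snr: float, target_snr: float) -> float:
    """Factor by which the integration time must grow to reach `target_snr`."""
    if current_snr <= 0 or target_snr <= 0:
        raise ValueError("S/N values must be positive")
    return (target_snr / current_snr) ** 2


# A 100-fold increase in integration time gives a 10-fold S/N gain.
gain_100 = snr_gain_from_integration(100.0)

# Going from a (hypothetical) mean S/N of 1 to a mean S/N of 10.
needed_factor = time_factor_for_snr(1.0, 10.0)
print(gain_100, needed_factor)
```

Under this assumption, the 100-fold increase in integration time discussed above corresponds to a 10-fold increase in mean S/N for the additive noise component.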

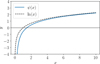

Figure 5 shows how $I\left(A_{V}^{\text{tot}}, Y_{\ell}\right)$ evolves with mean S/N for HCO$^{+}$ (1–0) in the Horsehead Nebula (see Table 4) in three physical subregimes: translucent, filamentary, and dense core gas. The inflection point of the S-shape curve occurs at an S/N of about 2, 5, and 10, respectively. Comparing the maximum value of mutual information for different regimes is hazardous here because the distribution of the $A_{V}^{\text{tot}}$ values (and thus the associated entropy) intrinsically depends on the studied physical regime. If a considered physical regime is broad, the mutual information between a given line and $A_{V}^{\text{tot}}$ is likely to be higher than for another more localized regime even if the line is a better tracer of $A_{V}^{\text{tot}}$ in the latter.

Fig. 4 Evolution of mutual information between the visual extinction

Summary of the considered use cases.

Fig. 5 Evolution of mutual information between the visual extinction

4.2 The physical regimes in which a given line is informative

In this section, we show how mutual information can provide insights into ISM physics. We showed in Fig. 5 that the mutual information between a physical parameter and a line intensity may significantly vary with the physical regime. The three large physical regimes used in the previous section were defined based on a priori astronomical knowledge. This may result in the omission of processes that occur in smaller and intermediate regimes. To overcome this issue, we introduce the notion of maps of the mutual information between a physical parameter (either $A_{V}^{\text{tot}}$ or $G_{0}$) and line intensities as a function of both $A_{V}^{\text{tot}}$ and $G_{0}$. To do this, we filter the $\left(\log_{10} A_{V}^{\text{tot}}, \log_{10} G_{0}\right)$ space with a sliding window of constant width, and consider a loguniform distribution for each parameter. This width corresponds to a factor of two for $A_{V}^{\text{tot}}$ and a factor of about 5.2 for $G_{0}$; that is, seven independent windows (without overlap) for each parameter. Then, we compute the mutual information between the line intensities, simulated with parameters in the sliding window, and either $A_{V}^{\text{tot}}$ or $G_{0}$. The additive noise in the simulated spectra corresponds to the integration time of the ORION-B observations; that is, 24 seconds per pixel. After describing the obtained maps of mutual information with $A_{V}^{\text{tot}}$ and $G_{0}$, we explain them with maps of line intensities $f_{\ell}(\theta)$.
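The sliding-window selection described above can be sketched as follows. This is an illustration only: the window widths and the number of independent windows are taken from the text, while the function names, the window centers, and the sample values are hypothetical. The parameter ranges of the actual grid are not assumed here; only the ratios spanned by seven adjacent windows are computed.

```python
import numpy as np

AV_FACTOR = 2.0   # window width for A_V^tot (from the text)
G0_FACTOR = 5.2   # approximate window width for G0 (from the text)
N_WINDOWS = 7     # independent windows per parameter (from the text)


def spanned_decades(factor, n_windows):
    """Number of decades covered by adjacent, non-overlapping windows."""
    return n_windows * np.log10(factor)


def window_mask(log_av, log_g0, log_av_center, log_g0_center):
    """Boolean mask of samples inside one window in (log10 A_V, log10 G0)."""
    half_av = 0.5 * np.log10(AV_FACTOR)
    half_g0 = 0.5 * np.log10(G0_FACTOR)
    inside_av = np.abs(log_av - log_av_center) <= half_av
    inside_g0 = np.abs(log_g0 - log_g0_center) <= half_g0
    return inside_av & inside_g0


# Seven windows of a factor of two span a factor of 2**7 = 128 in A_V^tot,
# and seven windows of a factor of about 5.2 span about five decades in G0.
av_decades = spanned_decades(AV_FACTOR, N_WINDOWS)
g0_decades = spanned_decades(G0_FACTOR, N_WINDOWS)

# Hypothetical samples and window center, for illustration only.
log_av_samples = np.array([0.0, 0.1, 0.5])
log_g0_samples = np.array([1.0, 1.0, 1.0])
mask = window_mask(log_av_samples, log_g0_samples, 0.0, 1.0)
print(av_decades, g0_decades, mask)
```

The mutual information would then be estimated from the simulated intensities of the selected samples only, and the window moved across the parameter space to build the map.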

Here, the values of mutual information can be compared from one value of the $\left(A_{V}^{\text{tot}}, G_{0}\right)$ space to another because the sampling of this space is regular and the size of the sliding window is kept fixed. For the same reasons, the values of mutual information can also be compared from one line to another at a constant value of $\left(A_{V}^{\text{tot}}, G_{0}\right)$. Similarly, for a given line and value of $\left(A_{V}^{\text{tot}}, G_{0}\right)$, $I\left(A_{V}^{\text{tot}}, Y_{\ell}\right)$ and $I\left(G_{0}, Y_{\ell}\right)$ can be compared.

The considered prior $\pi(\theta)$ for each parameter is always loguniform in this section. In this very special case, a mutual information value of 1 bit for one physical parameter may be interpreted as a division of the standard deviation on the estimation of $\log A_{V}^{\text{tot}}$ or $\log G_{0}$ by a factor of two. For instance, if the considered physical parameter is $G_{0}$ and its mutual information $I(\log G_{0}, y)$ with some line $y$ is 1 bit, then the standard deviation of the conditional distribution $\pi(\log G_{0} \mid y)$ is a factor of two lower than the one of the prior $\pi(\log G_{0})$. For more general prior distributions, this interpretation does not hold. The second paper of this series will provide an interpretation for the general case.
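The one-bit rule can be illustrated with a short calculation. The derivation below is a simplified sketch and not the argument of the paper: it assumes that the prior on $\Theta = \log G_{0}$ is uniform over an interval of width $W$, and additionally that every conditional distribution $\pi(\Theta \mid y)$ is uniform over an interval of the same width $w \leq W$. The symbols $W$, $w$, $\sigma_{\text{prior}}$, and $\sigma_{\text{post}}$ are introduced here for illustration.

```latex
\begin{align}
  h(\Theta) &= \log_2 W, \qquad
  h(\Theta \mid Y) = \log_2 w, \\
  I(\Theta, Y) &= h(\Theta) - h(\Theta \mid Y) = \log_2 \frac{W}{w}, \\
  \frac{\sigma_{\text{post}}}{\sigma_{\text{prior}}}
    &= \frac{w / \sqrt{12}}{W / \sqrt{12}} = 2^{-I(\Theta, Y)} .
\end{align}
```

Under these assumptions, $I(\Theta, Y) = 1$ bit gives $\sigma_{\text{post}} = \sigma_{\text{prior}} / 2$, and 2 bits would give a division by four.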

4.2.1 Relevance of individual ISM lines in constraining $A_{V}^{\text{tot}}$

We here wish to identify 1) which lines are the most relevant to estimate the visual extinction $A_{V}^{\text{tot}}$, and 2) in which part of the $\left(\log_{10} A_{V}^{\text{tot}}, \log_{10} G_{0}\right)$ space they are relevant. Figure 6 shows maps of mutual information between the intensity of 20 individual lines and $A_{V}^{\text{tot}}$. The size of the sliding window is shown in the $^{12}$CO map as a red rectangle while the range of the parameters within the Horsehead Nebula is represented with a white rectangle as a reference.

Among the presented lines, the most informative ones for estimating $A_{V}^{\text{tot}}$ on average are the lines of $^{13}$CO and C$^{18}$O followed by HCO$^{+}$. The lines of $^{12}$CO, HCN, $^{12}$CS, and [CI] are also informative but on more restricted regions of the $\left(A_{V}^{\text{tot}}, G_{0}\right)$ space. The J = 2–1 transitions have systematically lower mutual information with $A_{V}^{\text{tot}}$ than the J = 1–0 transitions, which is due to a lower mean S/N, as shown in Fig. 3.

The three CO isotopologues give high values of the mutual information for most of the $\left(A_{V}^{\text{tot}}, G_{0}\right)$ space. For translucent clouds, the first two $^{13}$CO lines are the most informative. For dense clouds (large $A_{V}^{\text{tot}}$), the first two $^{13}$CO and C$^{18}$O lines are the most informative. Finally, the fine structure [CI] lines and the ground state transition of $^{12}$CO have the highest mutual information values (even though these values are low) for the upper left corner, which corresponds to highly illuminated diffuse clouds.

Although the ground state transitions of HCN and HNC are among the most informative lines in the high-$A_{V}^{\text{tot}}$ low-$G_{0}$ regime, we might have expected them to be even more informative in this physical regime since they are used as tracers of the dense cores. Their relatively low informativity is explained by low mean S/N values. As was shown in Fig. 4, the integration time is too short to exploit the full potential of these lines.