| Issue |

A&A

Volume 631, November 2019

|

|

|---|---|---|

| Article Number | A160 | |

| Number of page(s) | 26 | |

| Section | Cosmology (including clusters of galaxies) | |

| DOI | https://doi.org/10.1051/0004-6361/201935912 | |

| Published online | 15 November 2019 | |

Cosmic shear covariance matrix in wCDM: Cosmology matters

1

Scottish Universities Physics Alliance, Institute for Astronomy, University of Edinburgh, Blackford Hill, UK

e-mail: jharno@roe.ac.uk

2

Department of Physics and Astronomy, University College London, Gower Street, London WC1E 6BT, UK

Received:

17

May

2019

Accepted:

17

September

2019

We present here the cosmo-SLICS, a new suite of simulations specially designed for the analysis of current and upcoming weak lensing data beyond the standard two-point cosmic shear. We sampled the [Ωm, σ8, h, w0] parameter space at 25 points organised in a Latin hyper-cube, spanning a range that contains most of the 2σ posterior distribution from ongoing lensing surveys. At each of these nodes we evolved a pair of N-body simulations in which the sampling variance is highly suppressed, and ray-traced the volumes 800 times to further increase the effective sky coverage. We extracted a lensing covariance matrix from these pseudo-independent light-cones and show that it closely matches a brute-force construction based on an ensemble of 800 truly independent N-body runs. More precisely, a Fisher analysis reveals that both methods yield marginalized two-dimensional constraints that vary by less than 6% in area, a result that holds under different survey specifications and that matches to within 15% the area obtained from an analytical covariance calculation. Extending this comparison with our 25 wCDM models, we probed the cosmology dependence of the lensing covariance directly from numerical simulations, reproducing remarkably well the Fisher results from the analytical models at most cosmologies. We demonstrate that varying the cosmology at which the covariance matrix is evaluated in the first place might have an order of magnitude greater impact on the parameter constraints than varying the choice of covariance estimation technique. We present a test case in which we generate fast predictions for both the lensing signal and its associated variance with a flexible Gaussian process regression emulator, achieving an accuracy of a few percent on the former and 10% on the latter.

Key words: gravitational lensing: weak / methods: numerical / dark matter / dark energy / large-scale structure of Universe

© ESO 2019

1. Introduction

Weak lensing has recently emerged as an accurate probe of cosmology, exploiting the high-quality photometric data recorded by dedicated surveys such as the Canada-France-Hawaii Telescope Lensing Survey1 (CFHTLenS hereafter), the Kilo Degree Survey2 (KiDS), the Dark Energy Survey3 (DES) and the Hyper Suprime-Cam Survey4 (HSC). These collaborations have developed a number of tools to model, extract and analyse the cosmic shear signal – the weak lensing distortions imprinted on the image of background galaxies by the foreground large scale structures (see Bartelmann & Schneider 2001; Kilbinger 2015, for reviews).

Given a catalogue of galaxies with shear and redshift estimates, there exist many ways to extract the lensing information that is required to constrain the underlying cosmological parameters that describe our Universe at its largest scales. The central approach adopted by the above-mentioned surveys starts with the measurement of a two-point summary statistics, either the configuration-space correlation function (as in Kilbinger et al. 2013; Hildebrandt et al. 2017, 2018; Troxel et al. 2018) or the Fourier-space power spectra (as in Liu et al. 2015a; Köhlinger et al. 2017; Hikage et al. 2019).

The motivations for choosing these statistics are multiple and compelling: the accuracy of the signal predictions is better than a percent over many scales (see e.g. Mead et al. 2015), while the effect of most known systematic effects can be either modelled, measured, mitigated, self-calibrated, or suppressed with simple cuts applied on the data vector. Examples of such effects include the secondary signal caused by the intrinsic alignment of galaxies (Joachimi et al. 2015; Kiessling et al. 2015; Kirk et al. 2015), the strong baryon feedback processes that modify the lensing signal at small and intermediate scales (Semboloni et al. 2011) or the relatively large uncertainty on the source redshift distribution and on the shape measurement. For a recent review of the many systematics that affect weak lensing measurements, see Mandelbaum (2018).

In the case of two-point functions, it has been possible to model or parameterise most of these effects in a way that allows for an efficient marginalisation, and therefore leads to a potentially unbiased estimation of the cosmological parameters (MacCrann et al. 2018). These statistics benefit from another key advantage, which is that there exist analytical calculations that describe the covariance of the signal (see, e.g., Scoccimarro & Frieman 1999; Takada & Jain 2009; Krause & Eifler 2017). In addition to its reduced computational cost compared to the simulation-based ensemble approach, this estimate is noise-free, providing a significant gain in stability during the inversion process that occurs within the cosmological inference segment of the analysis. For these reasons, the analytical approach stands out as a prime method for evaluating the statistical uncertainties in cosmic shear analyses (Hildebrandt et al. 2017, 2018; Hikage et al. 2019; Troxel et al. 2018). The caveat is that its accuracy is not well established, and comparisons with the ensemble approach yield discrepancies. Hildebrandt et al. (2017), for example, show that swapping the covariance matrix from a simulation-based to the analytic method shifts the cosmological results by more than 0.5σ. This clearly calls for further investigations in both methods, which have yet to come.

Although two-point functions are powerful and clean summary statistics, they do not capture all the cosmological information contained within the lensing data, and hence they are sub-optimal in that sense. The situation would be different if the matter distribution resembled a Gaussian random field, however gravity introduces a variety of non-Gaussian features that can only be captured by higher-order statistics. Accessing this additional information generally results in an improved constraining power on the cosmological parameters with the same data, as demonstrated in lensing data analyses based on alternative estimators such as the bispectrum (Fu et al. 2014), the peak count statistics (Liu et al. 2015a,b; Kacprzak et al. 2016; Martinet et al. 2018; Shan et al. 2018), the Minkowski functionals (Petri et al. 2015), clipped lensing (Giblin et al. 2018), the density-split lensing statistics (Brouwer et al. 2018; Gruen et al. 2018) or convolutional neural networks (Fluri et al. 2019). Recent studies further suggest that some of these new methods on their own could outperform the two-point cosmic shear at constraining the sum of neutrino masses, and further help in constraining many other parameters (notably Ωm and σ8) when analysed jointly with the two-point functions (Li et al. 2019; Liu & Madhavacheril 2019; Marques et al. 2019; Coulton et al. 2019). Moreover, there is growing evidence that some of these methods could be particularly helpful for probing modifications to the theory of General Relativity (see Liu et al. 2016; Peel et al. 2019, 2018, for modified gravity analyses with peak counts and machine learning methods). These are all compelling reasons to further refine such promising tools, but at the moment they are often regarded as immature alternatives to the standard two-point functions for a number of reasons.

Indeed, developing a new analysis strategy relies heavily on weak lensing numerical simulations for modelling the primary and secondary signals, for covariance estimation and for understanding the impact of residual systematics in the data. Furthermore, these simulations must meet a number of requirements: the redshift distribution of the mock source galaxies has to match that of the data; the noise properties must be closely reproduced; the cosmology coverage of the simulations must be wide enough for the likelihood analysis5; the overall accuracy in the non-linear growth of structure has to be sufficiently high to correctly model the physical scales involved in the measurement. For instance, the Dietrich & Hartlap (2010, DH10 hereafter) simulations were used a number of times (Kacprzak et al. 2016; Martinet et al. 2018; Giblin et al. 2018) and have been shown by the latest of these analyses to be only 5−10% accurate on the cosmic shear correlation functions, a level that is problematic given the increasing statistical power of lensing surveys. Other limitations such as the box size and the mass resolution must further be taken into account in the calibration, carefully understanding what parts of a given lensing estimator are affected by these. To illustrate this point, consider the DarkMatter simulation suite6 described in Matilla et al. (2017), where 5123 particles were evolved in volumes of 240 h−1 Mpc on the side (see Table 1 for more details on existing lensing simulation suites). Such a small box size significantly affects the measurement of shear correlation functions at the degree scale, but has negligible impact on the lensing power spectrum, peak counts or PDF count analyses. Understanding these properties is therefore an integral part of the development of new lensing estimators.

Ranges of the cosmological parameters varied in the cosmo-SLICS, compared to those of the MassiveNuS, the DH10 and the DarkMatter simulation suites.

In this paper we introduce a new suite of simulations, the cosmo-SLICS, which are primarily designed to calibrate novel weak lensing measurement statistics and enable competitive cosmological analyses with current weak lensing data. We followed the global numerical setup of the SLICS simulations7 (Harnois-Déraps et al. 2018, HD18 hereafter) in terms of volume and particle number, which accurately model the cosmic shear signal and covariance over a wide range of scales and are central to many CFHTLenS and KiDS data analyses (e.g. Joudaki et al. 2017, 2018; Hildebrandt et al. 2017; van Uitert et al. 2018; Amon et al. 2018; Giblin et al. 2018). We varied four cosmological parameters over a range informed by current constraints from weak lensing experiments: the matter density Ωm, a combination of the matter density and clumpiness  , the dark energy equation of state w0 and the reduced Hubble parameter h. We sampled this four-dimensional volume at 25 points organised in a Latin hyper-cube, and developed a general cosmic shear emulator based on Gaussian process regression, similar to the tool discussed in e.g. Schneider et al. (2008), Lawrence et al. (2010) and Liu et al. (2018), but in principle applicable to any statistics.

, the dark energy equation of state w0 and the reduced Hubble parameter h. We sampled this four-dimensional volume at 25 points organised in a Latin hyper-cube, and developed a general cosmic shear emulator based on Gaussian process regression, similar to the tool discussed in e.g. Schneider et al. (2008), Lawrence et al. (2010) and Liu et al. (2018), but in principle applicable to any statistics.

We show in the appendix that with as few as 25 nodes, the interpolation accuracy is at the percent level over the scales relevant to lensing analyses with two-point statistics, for most of the four-dimensional parameter volume. Our emulator is fast, flexible and easily interfaces with a Markov chain Monte Carlo sampler.

When calibrating an estimator with a small number of N-body simulations, one needs to consider the impact of sampling variance. This becomes an important issue especially when the measurement is sensitive to large angular scales that fluctuate the most. We suppressed this effect with a mode-cancellation technique that preserves Gaussianity in the initial density fields, unlike the method presented in Angulo & Pontzen (2016) that sacrifice this statistical property, but achieve a higher level of cancellation. Our approach has a significant advantage that becomes clear in the following use.

As a first application, we investigate the accuracy of a weak lensing covariance matrix estimated from the cosmo-SLICS, when compared to the results from 800 truly independent simulations. We revisit and reinforce the findings from Petri et al. (2016), according to which the lensing covariance matrix can be estimated from a reduced number of independent realisations. We discuss the reasons why this works so well with the cosmo-SLICS, and how this can be put to use. In particular, the smaller computational cost allows us to explore the cosmological dependence of the covariance matrices in a four-dimensional parameter space, eventually for any lensing estimator. The variations with cosmology are known to matter to some level, and its impact on the inferred cosmological parameters could lead to important biases if neglected (Eifler et al. 2009; van Uitert et al. 2018). A recent forecast by Kodwani et al. (2019) suggests that the impact on a LSST-like survey would be negligible provided that the fixed covariance is evaluated at the true cosmology, which is a priori unknown. Indeed, under assumption of Gaussian field, a Gaussian likelihood approximation with fixed covariance recovers the mode and second moments of the true likelihood, as shown by Carron (2013). The most accurate posterior with a Gaussian likelihood can therefore be obtained by choosing a covariance model that adopts the best-fit parameters. This can in practice be achieved by the iterative scheme of van Uitert et al. (2018), which observe a clear improvement on the accuracy of the cosmological constraints, however it requires either access to a cosmology-dependent covariance estimator, or to the matrix evaluated at the best-fit cosmology. So far this was only feasible with two-point analyses, however the simulations presented in this paper, combined with our flexible emulator, facilitate incorporating the full cosmological dependence of the covariance for arbitrary statistics into the parameter estimation.

In the context of the lensing power spectrum in a wCDM universe, we verify our covariance estimation against analytical predictions based on the halo model and find a reasonable match, although not for all cosmologies. We study the importance of these differences with Fisher forecasts, assuming different covariance matrix scenarios and different survey configurations. Notably, we investigate whether the impact on the parameter constraints is larger for variations in the cosmology with a fixed covariance estimator, or for variations in estimators at a fixed cosmology. This question is central for determining the next steps to take in the preparation of the lensing analyses for next generation surveys.

This document is structured as follow: we review in Sect. 2 the theoretical background and methods; in Sect. 3 we describe the construction and assess the accuracy of the numerical simulations; we present in Sect. 4 our comparison between different covariance matrix estimation techniques, and investigate their impact on cosmological parameter measurements; we discuss our results and conclude in Sect. 5. Further details on the simulations, the emulator and the analytical covariance matrix calculations can be found in the Appendices.

2. Theoretical background

In this section we present an overview of the background required to carry out these investigations. We first review the modelling aspect of the two-point functions and the corresponding covariance, then describe how these quantities are measured from numerical simulations, and finally we lay down the Fisher forecast formalism that we later use as a metric to measure the effect on cosmological parameter measurements of adopting (or not) a cosmology-dependent covariance matrix. Although our main science goal is to outgrow the two-point statistics, they nevertheless remain an excellent point of comparison that most experts can easily relate to. The method described here can be straightforwardly extended to any other lensing estimator, however we leave this for future work.

2.1. 2-point weak lensing model

The basic approach of two-point cosmic shear is that the cosmology dependence is captured by the matter power spectrum, P(k, z), which is therefore the fundamental quantity we attempt to measure. Many tools exist to compute P(k, z), including fit functions such as HALOFIT (Smith et al. 2003; Takahashi et al. 2012), emulators (Heitmann et al. 2014; Nishimichi et al. 2019), the halo model (Mead et al. 2015) or the reaction approach (Cataneo et al. 2019). The weak lensing power spectrum  is related to the matter power spectrum by8:

is related to the matter power spectrum by8:

where χH is the comoving distance to the horizon, ℓ = kχ and W(χ) is the lensing efficiency function for lenses at redshift z(χ), which depends on the source redshift distribution n(z) via:

Here H0 is the value of the Hubble parameter today, c is the speed of light in vacuum, and n(χ) = n(z)dχ/dz. The lensing power spectrum (Eq. (1)) is directly converted into the cosmic shear correlation function ξ±(ϑ) with:

where ϑ is the angular separation on the sky, and J0/4(x) are Bessel functions of the first kind. Equations (1)–(3) are quickly computed with line-of-sight integrators such as NICAEA9 or COSMOSIS10, and we refer to Kitching et al. (2017) and Kilbinger et al. (2017) for recent reviews on the accuracy of this lensing model.

2.2. 2-point weak lensing covariance

Essential to any analysis of the cosmic shear 2-point function is an estimate of the lensing power spectrum covariance matrix,  , that enters in the likelihood calculation from which the best fit cosmological parameters are extracted. This covariance matrix consists of three contributions, often written as:

, that enters in the likelihood calculation from which the best fit cosmological parameters are extracted. This covariance matrix consists of three contributions, often written as:

The first term on the right-hand side is referred to as the “Gaussian covariance”, which would be the only contribution if the matter field was Gaussian. It can be calculated as:

where  is evaluated from Eq. (1), σϵ characterizes the intrinsic shape noise (per component) of the galaxy sample,

is evaluated from Eq. (1), σϵ characterizes the intrinsic shape noise (per component) of the galaxy sample,  is the mean galaxy density of the source sample, and Nℓ is the number of independent multipoles being measured in a bin centred on ℓ and with a width Δℓ. The quantity Nℓ scales linearly with the area of the survey as 2Nℓ = (2ℓ+1)fskyΔℓ, fsky being the sky fraction defined as Asurvey/(4π). The term δℓℓ′ is the Kronecker delta function, and its role is to forbid any correlation between different multipoles, one of the key properties of the Gaussian term.

is the mean galaxy density of the source sample, and Nℓ is the number of independent multipoles being measured in a bin centred on ℓ and with a width Δℓ. The quantity Nℓ scales linearly with the area of the survey as 2Nℓ = (2ℓ+1)fskyΔℓ, fsky being the sky fraction defined as Asurvey/(4π). The term δℓℓ′ is the Kronecker delta function, and its role is to forbid any correlation between different multipoles, one of the key properties of the Gaussian term.

The second term of Eq. (4) is the “non-Gaussian connected term”, which introduces a coupling between the measurements at multipoles ℓ and ℓ′. This enhances the overall variance and further makes the off-diagonal elements non-zero, by an amount that depends on the parallel configurations of the connected trispectrum, Tκ(ℓ, −ℓ, ℓ′, −ℓ′), which can be computed analytically either from a halo-model approach (Takada & Jain 2009) or from perturbation theory (Scoccimarro & Frieman 1999). The  term is then given by:

term is then given by:

where A(ℓ) is the area of an annulus in multipole-space covering the bin centred on ℓ. The lensing trispectrum Tκ is computed in the Limber approximation from the three-dimensional matter trispectrum Tδ:

The last term in Eq. (4) is called the Super Sample Covariance (SSC) which describes the coupling of survey modes to background density fluctuations δb larger than the survey window M. It is evaluated as (Li et al. 2014; Takada & Hu 2013):

with k = ℓ/χ, k′=ℓ′/χ and z = z(χ). The term σb denotes the variance of super-survey modes for the mask ℳ, while the derivatives of the power spectrum can be estimated from e.g. separate universe simulations or fit functions to these results (Li et al. 2014; Barreira et al. 2018a), or from the halo model directly (Takada & Hu 2013). Note that to first order, this SSC term also scales with the inverse of the survey area.

In this paper we employ the halo model to compute the matter trispectrum and the response of the power spectrum to background modes, using the same implementation that was validated with numerical simulations in Hildebrandt et al. (2017) and van Uitert et al. (2018). Details of the code are provided in Appendix D. In order to match the simulations, we considered a survey area of 100 deg2 in these calculations, and the mask ℳ is assumed to be square. Beyond the SSC term, no survey boundary effects were incorporated in the model in this work.

2.3. 2-point measurements from simulations

Our main weak lensing simulation products consist of convergence κ-maps and galaxy catalogues that include positions, shear, convergence and redshift for every objects. The lensing power spectra  were estimated directly from the Fourier transform of κ-maps (see Sect. 3.4 for details about their constructions), as:

were estimated directly from the Fourier transform of κ-maps (see Sect. 3.4 for details about their constructions), as:

where the brackets refer to an angular averaging over the Fourier ring of radius ℓ. For both simulation measurements and model predictions, we adopted a log-space binning scheme, spanning the range [35 ≤ ℓ ≤ 104] with 20 bins. The lensing power spectrum covariance was computed from an ensemble of N measurements  , following:

, following:

This expression contains all at once the three terms from Eq. (4) with the caveat that the SSC term may not be fully captured due to the finite simulation volume; we present in Sect. 4 a comparison between the two approaches. The shear 2-point correlation functions  were extracted from our simulated galaxy catalogues with TREECORR (Jarvis et al. 2004), which basically measures:

were extracted from our simulated galaxy catalogues with TREECORR (Jarvis et al. 2004), which basically measures:

Here  are the tangential and cross components of the ellipticity measured from galaxy i, wi is a weight generally related to the shape quality and taken to be unity in this work, and the sums run over all galaxy pairs separated by an angle ϑ falling in the angular bin; the binning operator Δij = 1.0 in that case, otherwise it is set to zero. Following Hildebrandt et al. (2017), we computed the

are the tangential and cross components of the ellipticity measured from galaxy i, wi is a weight generally related to the shape quality and taken to be unity in this work, and the sums run over all galaxy pairs separated by an angle ϑ falling in the angular bin; the binning operator Δij = 1.0 in that case, otherwise it is set to zero. Following Hildebrandt et al. (2017), we computed the  in 9 logarithmically-spaced angular separation bins between 0.5 and 300 arcmin.

in 9 logarithmically-spaced angular separation bins between 0.5 and 300 arcmin.

2.4. Fisher forecasts

Given a survey specification, a theoretical model and a covariance matrix, we can estimate the constraints on four cosmological parameters by employing the Fisher matrix formalism. In particular, we are interested in measuring the impact on the constraints from different changes in the covariance matrix, either switching between estimator techniques at a fixed cosmology, or varying the input cosmology for a fixed estimator.

The Fisher matrix ℱαβ for parameters pα, β quantifies the curvature of the log-likelihood at its maximum and provides a lower bound on parameter constraints under the assumption that the posterior is well approximated by a Gaussian. We can construct our matrix ℱαβ from the derivative of the theoretical model  with respect to the cosmological parameter [pα, β]=[Ωm, σ8, h, w0], from the covariance matrix C, and from the derivative of the covariance matrix with respect to these cosmological parameters. Under the additional assumption that the underlying data is Gaussian distributed, we can write (Tegmark 1997):

with respect to the cosmological parameter [pα, β]=[Ωm, σ8, h, w0], from the covariance matrix C, and from the derivative of the covariance matrix with respect to these cosmological parameters. Under the additional assumption that the underlying data is Gaussian distributed, we can write (Tegmark 1997):

Carron (2013) argues that parameter-dependent covariance matrices are not suitable for Fisher forecasts, which are only accurate for Gaussian likelihoods with fixed covariance. In light of this, we neglected the second term of Eq. (12), which at the same time simplified the evaluation. Equipped with this tool, it is now straightforward to compare the impact of using  (Eq. (4)) or

(Eq. (4)) or  (Eq. (10)) in our Fisher forecast, and to investigate the effect of varying the input cosmology at which the covariance matrix is evaluated (and fixing that value, so the derivative of the covariance is still set to zero). Specifically, we monitored changes of the area of the Fisher ellipses, which we took as a metric of the global constraining power. This analysis was repeated with different configurations of the σϵ,

(Eq. (10)) in our Fisher forecast, and to investigate the effect of varying the input cosmology at which the covariance matrix is evaluated (and fixing that value, so the derivative of the covariance is still set to zero). Specifically, we monitored changes of the area of the Fisher ellipses, which we took as a metric of the global constraining power. This analysis was repeated with different configurations of the σϵ,  and Asurvey parameters, which we adjusted to construct covariance matrices that emulate the KiDS-1300, DES-Y5 and LSST-Y10 surveys. Whereas the analytic calculations can evaluate the terms at any specified area and noise levels, the simulations estimates had to be area-rescaled. This introduced a small error since technically the SSC term does not exactly scale that way, but the size of this error is negligible compared to other aspects of the calculations, especially for featureless square masks. In addition, we opted to implement the shape noise term in the simulations simply by adding its analytic contribution, which we obtained from evaluating

and Asurvey parameters, which we adjusted to construct covariance matrices that emulate the KiDS-1300, DES-Y5 and LSST-Y10 surveys. Whereas the analytic calculations can evaluate the terms at any specified area and noise levels, the simulations estimates had to be area-rescaled. This introduced a small error since technically the SSC term does not exactly scale that way, but the size of this error is negligible compared to other aspects of the calculations, especially for featureless square masks. In addition, we opted to implement the shape noise term in the simulations simply by adding its analytic contribution, which we obtained from evaluating  with Asurvey = 100 deg2. This includes both the pure shape noise term and the mixed term, obtained from Eq. (5). Overall, we computed the survey covariance as:

with Asurvey = 100 deg2. This includes both the pure shape noise term and the mixed term, obtained from Eq. (5). Overall, we computed the survey covariance as:

Having established our methods, we now turn to the description of the cosmo-SLICS numerical simulations from which we extracted our light-cone data and evaluated  .

.

3. Weak lensing simulations

There exists a number of ways to construct simulated light-cones for cosmic shear studies, and we adopted here the multiple-plane prescription detailed in Harnois-Déraps et al. (2012); this method was thoroughly tested to meet the accuracy requirements of ongoing weak lensing surveys (see, e.g., Heymans 2012; Hildebrandt et al. 2017). Briefly, the construction pipeline proceeds as follow: after the initial design for volume, particle number and cosmology was specified, an N-body code generated density snapshots at a series of redshifts, chosen to fill the past light-cone. Under the Born approximation, the mass planes were aligned and ray-traced at a pre-selected opening angle, pixel density and source redshifts. In our implementation, this post-processing routine constructed as many mass over-density, convergence and shear maps as the number of density checkpoints in the light-cone. Finally, galaxies were assigned positions and redshifts, and their lensing quantities were obtained by interpolating from the maps. We refer the reader to HD18 for more details on the implementation of this pipeline with the SLICS simulations, and focus hereafter on the new aspects specific to the cosmo-SLICS.

3.1. Choosing the cosmologies

The first part of the design consisted in identifying the parameter space that we wished to sample. Although a significant part of this paper focuses on power spectrum covariance matrices, the cosmo-SLICS have a broader range of applicability, and our primary science goal is, we recall, to provide the means to carry out alternative analyses of the current state-of-the-art weak lensing data, paving the way for LSST and Euclid. Cosmic shear is maximally sensitive to a particular combination of Ωm and σ8, often expressed as  , but also varies at some level with all other parameters. In particular, tomographic lensing analyses are sensitive to the growth of structures over cosmic time and hence probe the dark energy equation of state w0, a parameter that we wish to explore. Furthermore, because of recent claims of a tension in the measurements of the Hubble parameter between CMB and direct H0 probes (Riess et al. 2018; Bonvin et al. 2017; Planck Collaboration I 2019), we decided to vary h as well. In order to reduce the parameter space, we kept all other parameter fixed. More precisely, we fixed ns to 0.969, Ωb to 0.0473 thereby matching the SLICS input values, we ignored any possible evolution of the dark energy equation of state, and we assumed that all neutrinos are massless. In the end, we settled for modelling variations in [Ωm, S8, h, w0].

, but also varies at some level with all other parameters. In particular, tomographic lensing analyses are sensitive to the growth of structures over cosmic time and hence probe the dark energy equation of state w0, a parameter that we wish to explore. Furthermore, because of recent claims of a tension in the measurements of the Hubble parameter between CMB and direct H0 probes (Riess et al. 2018; Bonvin et al. 2017; Planck Collaboration I 2019), we decided to vary h as well. In order to reduce the parameter space, we kept all other parameter fixed. More precisely, we fixed ns to 0.969, Ωb to 0.0473 thereby matching the SLICS input values, we ignored any possible evolution of the dark energy equation of state, and we assumed that all neutrinos are massless. In the end, we settled for modelling variations in [Ωm, S8, h, w0].

We examined the current 2σ constraints from the KiDS-450 and DES-Y1 cosmic shear data11 (Hildebrandt et al. 2017; Troxel et al. 2018), which are both well bracketed by the range Ωm ∈ [0.10, 0.55] and S8 ∈ [0.60, 0.90]. This upper bound on S8 falls between the upper 1σ and the 2σ constraints from Planck, but this is not expected to cause any problems since the cosmo-SLICS are designed for lensing analyses. Constraints on the dark energy equation of state parameter from these lensing surveys allow for w0∈ [−2.5, −0.2]. This wide range of values is expected to change rapidly with the improvement of photometric redshifts, hence we restricted the sampling range to w0 ∈ [ − 2.0, −0.5]. This choice could impact the outskirts of the contours obtained from a likelihood analysis based on the cosmo-SLICS, however this should have no effect on the other parameters. Constraints on h from lensing alone are weak, with KiDS-450 allowing a wide range of values and hitting the prior limits, and DES-Y1 presenting no such results. We instead selected the region of h informed by the Type IA supernovae measurements from Riess et al. (2016). The 5σ values are close to h ∈ [0.64, 0.82], and we further extended the lower limit to 0.60 in order to avoid likelihood samplers from approaching the edge of the range too rapidly. A summary of our final parameter volume is presented in Table 1.

Inspired by the strategy of the Cosmic Emulator12 (Heitmann et al. 2014), we sampled this four-dimensional parameter space with a Latin hyper-cube13, and constructed an emulator to interpolate at any point within this range (see also Nishimichi et al. 2019; Knabenhans et al. 2019; Liu et al. 2018, for other examples relevant to cosmology). A Latin hyper-cube is an efficient sparse sampling algorithm designed to maximise the interpolation accuracy while minimising the node count (see Heitmann et al. 2014, and references therein for more details on the properties of these objects).

Given our finite computing resources, we had to compromise on the number of nodes, which ultimately reflects on the accuracy of the interpolation. We therefore quantify the interpolation error as follow: 1- we varied the number of nodes from 250 down to 50 and 25, then generated for each case a Latin hyper-cube that covered the parameter range summarised in Table 1; 2- we evaluated the ξ± theoretical predictions at these points and trained our emulator on the results (details about our emulator implementation, its accuracy and training strategy can be found in Appendix A); 3- we constructed a fine regular grid over the same range, and compared at each point the predictions from our emulator with the “true” predictions computed on the grid points; 4- we examined the fractional error and decided on whether our accuracy benchmark was reached, demanding an uncertainty no larger than 3%, which is smaller but comparable in size to the accuracy of the HALOFIT model itself. We also recall that the current uncertainty caused by photometric redshifts significantly exceeds this 3% threshold, and that the smaller scales are further affected by uncertainty about baryon feedback mechanisms, hence this interpolation error should be sub-dominant.

We present the fractional error in Fig. A.1 for the 25 nodes case; we achieve a 1−2% accuracy over most of the parameter range, which meets our accuracy requirement, and which we report as our fiducial interpolation error. We emphasise that this error size is not strictly applicable to all types of measurements, for instance the ξ+ interpolation becomes less accurate than that for angular scales larger than two degrees. Instead, this should be viewed as a representative error given an arbitrary lensing signal that varies in cosmology with similar strength as the ξ+ observable over the range 0.5 < ϑ < 72 arcmin.

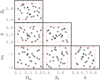

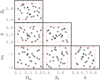

Increasing the node counts from 25 to 50 significantly reduces the size of the regions in parameter space where the accuracy exceeds 2%, which are now pushed to small pockets on the outskirts. Further inflating to 250 nodes moves the bulk of the accuracy below the 1% level. Since our current accuracy target is less strict, we therefore developed the cosmo-SLICS on 25 wCDM plus one ΛCDM nodes, but may complete the Latin hyper-cube with more nodes as in Rogers et al. (2019) in the future; the exact parameter values are listed in Table 2, and their two-dimensional projections are presented in Fig. 1.

|

Fig. 1. Cosmological parameters covered by the cosmo-SLICS. Our fiducial cosmology is depicted here with the “×” symbols. |

Cosmological parameters in the 25+1 cosmo-SLICS models, with S8 is defined as  .

.

3.2. Preparing the light-cones

Prior to running the N-body code, we needed to specify the box size, the particle count and redshift dumps of the projected mass maps, which must form contiguous light-cones along the line of sight. Following HD18, we fixed the simulation volume to Lbox = 505 h−1 Mpc on the side (note that h varies between models) and the particle count to Np = 15363, offering an excellent compromise between large scales coverage and small scales resolution. This set-up allows to estimate cosmic shear correlation functions beyond a degree and under the arc minute without significant impact from the two limitations above-mentioned, thereby covering most of the angular range that enter the KiDS analyses. By fixing the box size however, the number of redshift dumps up to zmax varies with cosmology due to differences in the redshift-distance conversion. We further split these volumes in halves along one of the Cartesian axis and randomly chose one of the six possibilities (three directions for the projections axis times two half-volume options) at every redshift dump. We finally aligned the resulting cuboids to form a long pencil, we worked out the comoving distance to the mid-plane of each of these cuboids, converted14 distances to redshift in the specified cosmology, and proceeded from redshift z = 0 until the back side of the last cuboid exceeds zmax, with zmax = 3.0. The list of redshifts found that way were then passed to the main N-body code which set out to produce particle dumps and mass sheets for each entry. The total number of redshift dumps ranges from 15 (for models-08 and -23) to 28 (for model-01).

3.3. Cosmological simulations with matched pairs

The N-body calculations were carried out with the gravity solver CUBEP3M (Harnois-Déraps et al. 2013) in a setup similar to that described in HD18, except for key modifications due to the wCDM nature of our runs. Dark matter particles were initially placed on a regular grid, then displaced using linear perturbation theory given an initial input power spectrum P(k, zi) and a Gaussian noise map, with zi = 120. Different cosmological models required distinct transfer functions T(k), obtained from running the Boltzmann code CAMB (Lewis et al. 2000) with the parameters values taken from Table 2. The initial power spectrum was then computed as P(k, zi) = Aσ8D2(zi)T(k)kns, where D(zi) is the linear growth factor, and the normalisation parameter Aσ8 is defined such that P(k, z = 0) has the σ8 value given by the model. The initial condition generator included with the public CUBEP3M release can only compute growth factors in ΛCDM cosmologies, hence we computed D(zi, Ωm, ΩΛ, w0) with NICAEA instead, then manually input the results in the generator.

Since the central goal of the cosmo-SLICS is to model the cosmological signal of novel weak lensing methods, it is important to ensure that the simulation sampling variance does not lead to mis-calibrations. Extra-large volume simulations can achieve this through spatial averaging, however these are expensive to run. Instead, we produced a pair of noise maps in which the sampling variance cancels almost completely, such that the mean of any estimator extracted from the pair will be very close to the true ensemble mean. We achieved this in a relatively simple way:

1. We generated a large number of initial conditions at our fiducial cosmology and extracted their power spectra P(k, zi);

2. We computed the mean power spectrum for all possible pair combinations and selected the pair whose mean was the closest to the theoretical predictions, allowing a maximum of 5% residuals;

3. We further demanded that neither of the members of a given pair is a noise outlier. What we mean by this is that the fluctuations in P(k, zi) must behave as expected from a Gaussian noise map and scatter evenly across the input power spectrum. Quantitatively, we required the fluctuations to cross the mean at almost every k-mode. This last requirement further prevented power leakage from large to small scales, which otherwise affects the late-time structure formation.

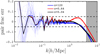

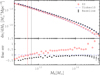

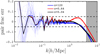

Figure 2 shows the fractional difference between the HALOFIT predictions (set to the horizontal line with zero y-intercept) and the mean initial P(k, zi) measured from our best pair (solid blue); other random pairs are also shown (thin dotted blue lines) and exhibit much larger variance. The drop at high k is caused by the finite mass resolution of our simulations; the grey zone indicates the scales where the departure is greater than 10% at redshift z = 0.0, which occurs at k = 4.0 h−1 Mpc. We used the same pair of noise maps in the initial conditions for our 25 wCDM cosmologies, further ensuring that the sample variance in P(k, zi) is exactly the same across models, and that differences are attributed solely to changes in the input cosmological parameters.

|

Fig. 2. Fractional difference between the mean of simulation pairs at the fiducial cosmology (i.e. model-FID) and the input theoretical model P(k), obtained with HALOFIT. Faint blue dotted lines show the results for a number of random pairs at the initial redshift zi = 120, while the thick blue line highlights the best pair. The sampling variance cancels to better than 5% also at z = 0.64 and 0.04, as demonstrated respectively by the red and black lines. The grey zone indicates the regime where the discrepancy exceeds 10%. |

After this initialisation step, the gravity solver evolved the particles until redshift zero, writing to disk the particles’ phase space and the projected densities at each snapshot. The background expansion subroutine of CUBEP3M has been adapted to allow for w0 ≠ −1 cosmologies by Taylor-expanding the FRW equation to third order in the time coordinate. The exact value of the particle mass depends on the volume and on the matter density, hence varies with h and Ωm, spanning the range [1.42, 7.63]×109 M⊙. The N-body computations were carried out on 256 compute nodes on the Cedar super computer hosted by Compute Canada, divided between 64 MPI tasks and further parallelised with 8 OPENMP threads; they ran for 30−70 h depending on the cosmology. After completion of every simulation, we computed the matter power spectra at every snapshot then erased the particle data to free up space for other runs15. The red and black lines in Fig. 2 show the fractional difference between the non-linear predictions from Takahashi et al. (2012) and the mean P(k) measured from the matched pair at lower redshifts. They demonstrate that the phase cancellation survives well the non-linear evolution.

One potential catch in our matched-pair method is that it is only calibrated against the two-point function, and there is no formal mathematical proof that the sampling variance cancels at the same level for higher order statistics. Evidence points in that direction however: in the initial conditions, the density fields follow Gaussian statistics, hence all the information is captured by the matter power spectrum. Minimising the variance about P(k) is thereby equivalent to minimising the variance about the cosmological information, irrespective of the measurement technique. The results of Villaescusa-Navarro et al. (2018) are encouraging and demonstrate that the matched-pair technique of Angulo & Pontzen (2016) introduces no noticeable bias on the matter-matter, matter-halo and halo-halo power spectra, nor on the halo mass function, void mass function and matter PDF. Additionally, some estimators reconnect with the two-point functions on large scales (e.g. shear clipping, as in Giblin et al. 2018), and for these we expect a significant noise cancellation as well.

3.4. Ray-tracing the light-cone

Closely following the methods of HD18, we constructed mass over-density, convergence and shear maps from the output of the N-body runs. Every light-cone map subtends 100 deg2 on the sky and is divided in 77452 pixels. For each redshift dump zl, we randomly chose one of the six projected density fields, we shifted its origin, then interpolated the result onto the light-cone grid to create a mass over-density map δ2D(θ, zl). We needed here to minimise a second source of sampling variance that arises from the choice of our observer’s position, and which we refer to as the “light-cone sampling variance”. This is distinct from the “Gaussian sampling variance” caused by drawing Fourier modes from a noise map in the initial condition generator. Since the number of mass planes required to reach a given redshift varies across cosmology models, there is an inevitable amount of residual light-cone sampling variance introduced in the δ2D(θ, zl) maps. We nevertheless reduced this by matching the origin-shift vectors and the choice of projection planes at the low-redshift end in our construction.

We computed convergence maps from a weighted sum over the mass planes:

where Δχl = Lbox/nc, nc = 3072 being our grid size. We used Eq. (14) to construct a series of κ(θ, zs) maps for which the source redshift distribution is given by n(z) = δ(z − zs), where zs corresponds to the redshift of the back plane of every projected sub-volume that make up the light-cone. Shear maps, γ1, 2(θ, zs), were obtained by filtering the convergence fields in Fourier space as described by Kaiser & Squires (1993). Our specific implementation of this transform makes use of the periodicity of the full simulation volume to eliminate the boundary effects into the light-cone, as detailed in Harnois-Déraps et al. (2012). Thereafter, any quantity (δ2D, κ, γ1, 2) required at an intermediate redshift (e.g. for a galaxy at coordinate θ and redshift zgal) can be interpolated from these series of maps. For both members of the matched pair and for every cosmological models, we repeated this ray-tracing algorithm with 400 different random shifts and rotations, thereby probing each cosmo-SLICS node 800 times, or total area of 80 000 deg2. We stored the maps for only 50 of these given their significant sizes, but provide galaxy catalogues for all others. These pseudo-independent light-cone maps and catalogues are the main cosmo-SLICS simulation products that we make available to the community.

3.5. Accuracy

3.5.1. Matter power spectrum

As we mentioned before, the calibration of a weak lensing signal can be affected by limitations in the simulations, more specifically by the accuracy of the non-linear evolution, by the finite resolution and by the finite box size. These systematic effects impact every estimator in a different way, and generally exhibit a scale and redshift dependence (see Harnois-Déraps & van Waerbeke 2015, for such a study on ξ± from the SLICS). In many cases however, one can estimate roughly the range of k-modes (or the ϑ values) that enters a given measurement, as in Fig. A1 of van Uitert et al. (2018), hence it is possible to construct an unbiased calibration by choosing only the data points for which the cosmo-SLICS are clean of these systematics. We observe from Fig. 2 that our fiducial cosmology run recovers the non-linear model to better than 2% up to k = 1.0 h−1 Mpc at all redshifts, then the agreement slowly degrades with increasing k-modes, crossing 5% at k = 2 − 3 h−1 Mpc and 10% at 4 − 6 h−1 Mpc, depending on redshift. This comparison is not necessary representative of the true resolution of the cosmo-SLICS, since the HALOFIT predictions themselves have an associated error. It is shown in Harnois-Déraps & van Waerbeke (2015) that the CUBEP3M simulations agree better with the Cosmic Emulator, extending the agreement up to higher k-modes. Unfortunately we cannot use this emulator as our baseline comparison since all of our wCDM nodes lie outside the allowed parameter range.

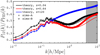

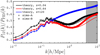

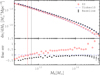

With regards to the growth of non-linear structure across redshifts and cosmologies, the accuracy of the simulations is cleanly inspected with ratios of power spectra, where the small residual sampling variance cancels exactly, owing to the fact that all pairs of N-body calculations originate from the same two noise maps. A comparison between the cosmo-SLICS measurements and the HALOFIT calculations therefore reveals the degree of agreement in a noise-free manner. We show in Fig. 3 a representative example, the ratio between the model-12 and model-FID power spectra, P12(k)/PFID(k). The different colours represent three redshifts, and the vertical offset is caused by differences in the linear growth factor. We observe an excellent match over a large range of scales for the two runs (labelled “sims-A” and “sims-B” in the figure). Some discrepancy is seen at small scales where HALOFIT and the cosmo-SLICS are only 5−8% accurate anyway. A more detailed comparison can be found in Appendix B, where for example we measure that beyond k = 2.0 h−1 Mpc, this ratio agrees to within 10% at z ∼ 0.6, and 5% at z ∼ 0.0. In summary, ratios from simulations are mostly within a few percent of the ratios from the predictions, but some larger departures are observed at low redshift in dark energy models where w0 ≪ −1.0, which we attribute to inaccuracies in the calibration of the Takahashi et al. (2012) predictions in that parameter space.

|

Fig. 3. Ratio between the power spectrum P(k, z) in model-12 and in model-FID (see Table 2). The lines show the predictions from HALOFIT, while the square and triangle symbols are measured from the pair of cosmo-SLICS N-body simulations. Upper (black), middle (red) and lower (blue) lines correspond to redshifts z = 0, 0.6 and 120, respectively. Other cosmologies are shown in Appendix B. |

3.5.2. Lensing 2-point functions

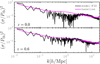

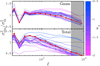

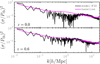

For the particular goal of testing the accuracy of the light-cone products, we examined the lensing power spectrum for each of the 800 pseudo-independent realisations described in Sect. 3.4, assuming a single source plane at zs ∼ 1.0. We present the  measurements from model-FID and model-12 in Fig. 4, compared to the predictions from NICAEA. The grey band identifies a relatively ambitious cut on the lensing data at ℓ = 5000; most forecasts (e.g. The LSST Dark Energy Science Collaboration 2018) are more conservative and reject the ℓ > 3000 multipoles. The agreement between simulations and theory is of the order of a few percent over most of the multipole range for these two cosmologies; the drop at high-ℓ is once again caused both by limitations in the simulation’s resolution and by inaccuracies in the non-linear predictions. Figure 5 next presents the ratio between these two models, and is therefore the light-cone equivalent of Fig. 3. The same trends are recovered, namely a generally good agreement at large scales, followed by an overshooting of a few percent compared to the theoretical models at smaller scales. This disagreement is a known source of uncertainty in the non-linear evolution of the matter power spectrum and hence must be included in the error budget in data analyses that include these scales. It is however sub-dominant compared the uncertainty on baryonic feedback over these same scales, which reaches up to 40%, depending on the hydrodynamical simulations (Semboloni et al. 2011; Harnois-Déraps et al. 2015; Mead et al. 2015; Chisari et al. 2018), and hence is not worrisome for lensing analyses that marginalise over the baryon effects. Ratios computed from other models are presented in Appendix B.

measurements from model-FID and model-12 in Fig. 4, compared to the predictions from NICAEA. The grey band identifies a relatively ambitious cut on the lensing data at ℓ = 5000; most forecasts (e.g. The LSST Dark Energy Science Collaboration 2018) are more conservative and reject the ℓ > 3000 multipoles. The agreement between simulations and theory is of the order of a few percent over most of the multipole range for these two cosmologies; the drop at high-ℓ is once again caused both by limitations in the simulation’s resolution and by inaccuracies in the non-linear predictions. Figure 5 next presents the ratio between these two models, and is therefore the light-cone equivalent of Fig. 3. The same trends are recovered, namely a generally good agreement at large scales, followed by an overshooting of a few percent compared to the theoretical models at smaller scales. This disagreement is a known source of uncertainty in the non-linear evolution of the matter power spectrum and hence must be included in the error budget in data analyses that include these scales. It is however sub-dominant compared the uncertainty on baryonic feedback over these same scales, which reaches up to 40%, depending on the hydrodynamical simulations (Semboloni et al. 2011; Harnois-Déraps et al. 2015; Mead et al. 2015; Chisari et al. 2018), and hence is not worrisome for lensing analyses that marginalise over the baryon effects. Ratios computed from other models are presented in Appendix B.

|

Fig. 4. Fractional difference between the |

|

Fig. 5. Ratio between the convergence power spectrum |

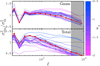

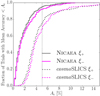

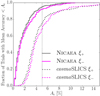

The accuracy of the shear 2-point correlation functions ξ±(ϑ) was next investigated, this time in a more realistic application of the cosmo-SLICS: we populated the simulated light cones with mock galaxies following a N(z) described by the KiDS+VIKING-450 lensing data (Hildebrandt et al. 2018, KV450 hereafter) and compared the mean value from each cosmological model with the theoretical predictions. The fractional difference, presented in Fig. 6, shows that for many models we recover an agreement of a few percent over most of the scales included in the KiDS-450 cosmic shear analysis (the other angular scales are in the grey regions). Some models exceed the 10% agreement marks, highlighting once again limitations in the HALOFIT calibration. This is discussed in greater detail in Appendix A.

|

Fig. 6. Fractional differences between the cosmo-SLICS measurements of ξ± for all models, averaged here across the 50 light-cones, and the corresponding theoretical predictions from NICAEA (with the HALOFIT calibration from Takahashi et al. 2012). The magenta line corresponds to the measurements from the fiducial cosmology, and the grey bands indicate angular scales we recommend to exclude from an emulator training on these simulations. Simulations and predictions are both constructed with the KV450 n(z) here, and we plot the error on the mean. |

4. Covariance matrices

As a first application of the cosmo-SLICS, we investigated the accuracy of the covariance matrix of the convergence power spectra constructed from the 800 light-cones (see Sect. 3.5.2). This enquiry was motivated by a recent study from Petri et al. (2016), where it is shown that a lensing covariance matrix estimated with pseudo-independent realisations could be as accurate as one estimated from truly independent simulations, leading to negligible biases on cosmological parameters constraints. Their results are based on a smaller simulation suite with degraded properties compared to the cosmo-SLICS or the SLICS: they use 200 independent N-body simulations with Lbox = 240 h−1 Mpc and Np = 5123, which they ray-trace up to 200 times each. The authors warn that their findings have to be revisited with better mocks before claiming that the method is robust, a verification we carry out in Sect. 4.1. We further validate the two estimators with the analytical calculations described in Sect. 2.2, then explore in Sect. 4.2 the impact of variations in cosmology on the covariance, and propagate the effect onto error contours about four cosmological parameters. Lastly, we demonstrate in Sect. 4.3 how our Gaussian process emulator can learn the cosmology dependence of these matrices and hence be used in an iterative algorithm similar to the analytical model strategy, but now based exclusively on numerical simulations.

4.1. Simulation-based vs. analytical model: a comparison

In this comparative study, we considered four lensing covariance matrix estimators:

1. Our “baseline” was constructed from 800 truly independent measurements of  extracted from the SLICS, with galaxy sources placed at zs = 1.0. We additionally estimated the uncertainty on that covariance from bootstrap resampling these 800 measurements 1000 times;

extracted from the SLICS, with galaxy sources placed at zs = 1.0. We additionally estimated the uncertainty on that covariance from bootstrap resampling these 800 measurements 1000 times;

2. We identified 14 pairs of simulations within the SLICS whose initial P(k, zi) also satisfy the matched-pair criteria described in Sect. 3.3 (e.g. their mean closely follows the solid blue line in Fig. 2). We resampled the underlying N-body simulations to produce 800 pseudo-independent  measurements and an associated covariance matrix for each of these 14 pairs. We refer to this method as the “matched SLICS” estimate, and treated the variance between the 14 matrices as the uncertainty on the technique;

measurements and an associated covariance matrix for each of these 14 pairs. We refer to this method as the “matched SLICS” estimate, and treated the variance between the 14 matrices as the uncertainty on the technique;

3. We estimated the covariance matrix from the 800 pseudo-independent power spectra extracted from the cosmo-SLICS. We assigned the same uncertainty on that method as on the matched-SLICS method (item 2 above), both being equivalent in their nature. In the fiducial cosmology, we refer to this method as the “model-FID” covariance estimate. We also estimated a matrix for the other 25 cosmological points, which we label “model-00”, “model-01” and so on;

4. At each of the 25+1 cosmologies sampled, we computed the analytic covariance model presented in Eqs. (4)–(8), keeping distinct the Gaussian, non-Gaussian and SSC terms.

We first examined for these four estimators the diagonal and the off-diagonal parts separately, then investigated the overall impact of their residual differences with a Fisher forecast about Ωm, S8, w0 and h. We began with an inspection of the noise-free case before including survey-specific shape noises, galaxy densities and sky coverage. Aside from assuming a global square footprint, we did not apply survey masks in this comparison. This would introduce an extra level of complexity in the comparison, which we would rather keep at a more fundamental level.

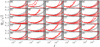

4.1.1. Diagonal elements

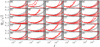

Even though the diagonal part of the covariance is generally the easiest to capture, we do not expect a perfect match between the simulation-based and the analytic methods since differences are already clear at the power spectrum level (see Fig. 4). We show in Fig. 7 the ratio between the variance estimated from the cosmo-SLICS and the analytical estimate, for all cosmologies and in the shape noise-free case, again assuming zs = 1. The baseline and matched SLICS methods closely follow the cosmo-SLICS hence are not shown here for clarity. We examined both the ratio between the Gaussian terms (upper panel, computed from Eq. (5)) and between the diagonal of the full covariance (lower panel), colour-coding the results as a function of w0. Departure from unity in this figure are caused by: 1- residual sampling variance (especially at low ℓ-modes); 2- pixelization of the simulations and slight differences in the ℓ-binning that impact the mode-count 3- resolution limits in the simulations and 4- potential inaccuracies in the theoretical models. We further observe that the high-ℓ mismatch is higher in  than in

than in  , which likely follows from the fact that the Gaussian term is only quadratic in

, which likely follows from the fact that the Gaussian term is only quadratic in  , whereas it is raised to a higher power inside the trispectrum, (to the third power, within first order perturbation theory); consequently the discrepancies observed in the

, whereas it is raised to a higher power inside the trispectrum, (to the third power, within first order perturbation theory); consequently the discrepancies observed in the  are expected to scale more rapidly in the latter case. Models with high and low w0 are shown with blue and magenta lines, respectively. While the Gaussian terms show no colour trend, there is a clear split in the full covariance ratios (lower panel), where blue lines are generally higher than magenta lines. Given that order 50% discrepancies are seen at almost all scales in some models, this points to major differences in the SSC terms, which consequently suggests differences in the halo-mass function. We confirmed this conclusion in Appendix B, where we show that the match in halo mass function degrades for cosmologies with dark energy w0 significantly different from −1.0.

are expected to scale more rapidly in the latter case. Models with high and low w0 are shown with blue and magenta lines, respectively. While the Gaussian terms show no colour trend, there is a clear split in the full covariance ratios (lower panel), where blue lines are generally higher than magenta lines. Given that order 50% discrepancies are seen at almost all scales in some models, this points to major differences in the SSC terms, which consequently suggests differences in the halo-mass function. We confirmed this conclusion in Appendix B, where we show that the match in halo mass function degrades for cosmologies with dark energy w0 significantly different from −1.0.

|

Fig. 7. Ratio between the variance of the shape noise-free lensing power spectrum estimated from the cosmo-SLICS simulations and that obtained from the analytical calculations. The upper panel is for the Gaussian |

Finally, when repeating the above comparison for different redshifts in the model-FID cosmology, we note that the agreement in the full variance improves at higher redshift, where non-linear evolution is less important.

We next investigated the relative departure from pure Gaussian statistics on the diagonal by dividing the full matrix by the Gaussian term. It is therefore convenient to define:

which we evaluated separately for the four methods described at the beginning of this section. The baseline measurement of ℛℓ is reported as the magenta squares in Fig. 8, and clearly captures the non-Gaussian features reported before (e.g. Takahashi et al. 2009, see their Fig. 1). In comparison, the purely Gaussian term  is shown with the thin solid line, which significantly underestimates the simulated variance for ℓ-modes larger than a few hundreds. The matched SLICS are shown with the blue upward triangles, and the cosmo-SLICS model-FID with the black downward triangles. At all scales, we recover an excellent match between these three simulation-based approaches. More precisely, the baseline and the model-FID agree to within 20%, corresponding to a 10% difference on the non-Gaussian part of the error bar about

is shown with the thin solid line, which significantly underestimates the simulated variance for ℓ-modes larger than a few hundreds. The matched SLICS are shown with the blue upward triangles, and the cosmo-SLICS model-FID with the black downward triangles. At all scales, we recover an excellent match between these three simulation-based approaches. More precisely, the baseline and the model-FID agree to within 20%, corresponding to a 10% difference on the non-Gaussian part of the error bar about  . We further examined the agreement with the analytical calculations of ℛℓ for three cases:

. We further examined the agreement with the analytical calculations of ℛℓ for three cases:  % SSC contribution, shown on Fig. 8 as the lower thick solid line; +75% SSC, shown with the thick dashed line; +100% SSC, shown with the upper thick solid line. All simulation-based estimates are bracketed by the two solid lines (except at a few noisy points, e.g. ℓ = 190), consistent with capturing most but not all of the SSC contribution. The k-modes smaller than 2π/Lbox are absent from the simulations and hence do not contribute to the measured SSC, which instead comes from the simulated volume that is not part of the light-cones (this conclusion was also reported in van Uitert et al. 2018, for the baseline estimate). The bottom panel of Fig. 8 compares the error on ℛℓ between the baseline and the model-FID methods, showing that our gain of a factor 400 in computation resources incurs a degradation in precision about ℛℓ by a factor of ∼2 − 3.

% SSC contribution, shown on Fig. 8 as the lower thick solid line; +75% SSC, shown with the thick dashed line; +100% SSC, shown with the upper thick solid line. All simulation-based estimates are bracketed by the two solid lines (except at a few noisy points, e.g. ℓ = 190), consistent with capturing most but not all of the SSC contribution. The k-modes smaller than 2π/Lbox are absent from the simulations and hence do not contribute to the measured SSC, which instead comes from the simulated volume that is not part of the light-cones (this conclusion was also reported in van Uitert et al. 2018, for the baseline estimate). The bottom panel of Fig. 8 compares the error on ℛℓ between the baseline and the model-FID methods, showing that our gain of a factor 400 in computation resources incurs a degradation in precision about ℛℓ by a factor of ∼2 − 3.

|

Fig. 8. Upper: ratio between the diagonal of the lensing power spectrum covariance matrices and the noise-free Gaussian term (i.e. Eq. (15)). We further divide this ratio by |

To frame this comparison in a broader context, we further add to the figure two cases where the shape noise has been included in the Gaussian term, following a KiDS-like (upper/left dotted red curve) and a LSST-like (lower/right) survey configuration (see Table 3 for the numerical specifics of these surveys). In the KiDS-like case, the diagonal is dominated by this noise component, which means that differences of order 10−20% in the non-Gaussian terms are negligible in the total error. In the LSST-like survey however, the shape noise is massively reduced and becomes mostly sub-dominant, meaning that differences between the covariance estimators are expected to have a larger impact.

Survey characteristics used in the analytical covariance calculations.

4.1.2. Off-diagonal elements

We next constructed and compared the four cross-correlation coefficient matrices, defined as  , which highlight the amplitude of the mode-coupling. The results are presented in Fig. 9, where we show slices through the matrices while holding one of the components fixed (ℓ′ = 115, 900 and 5000). From the upper to the lower panel, we present rℓ, 115, rℓ, 900 and rℓ, 5000, using the symbol convention of Fig. 8. We observe an excellent agreement between the simulation-based methods, which both appear to be consistent with capturing about 75% of the SSC contribution once compared with the analytic methods. These results correspond to the shape noise-free case and thereby provide the upper limit on the importance of these off-diagonal terms; the inclusion of shape noise significantly down-weights their overall contributions, further diluting the small differences between the estimators observed in Figs. 8 and 9.

, which highlight the amplitude of the mode-coupling. The results are presented in Fig. 9, where we show slices through the matrices while holding one of the components fixed (ℓ′ = 115, 900 and 5000). From the upper to the lower panel, we present rℓ, 115, rℓ, 900 and rℓ, 5000, using the symbol convention of Fig. 8. We observe an excellent agreement between the simulation-based methods, which both appear to be consistent with capturing about 75% of the SSC contribution once compared with the analytic methods. These results correspond to the shape noise-free case and thereby provide the upper limit on the importance of these off-diagonal terms; the inclusion of shape noise significantly down-weights their overall contributions, further diluting the small differences between the estimators observed in Figs. 8 and 9.

|

Fig. 9. Comparison between the cross-correlation coefficients measured from the baseline method (magenta squares), from the cosmo-SLICS (triangles) and from the analytic model with different amounts of SSC (thick and dashed lines). The spikes seen in these panels indicate the point of crossing with the diagonal, where rℓℓ′ ≡ 1.0 for ℓ = ℓ′. |

4.1.3. Fisher forecast

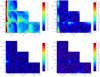

The four different methods agree qualitatively on most properties of the full covariance matrix, but differ in the details, exhibiting various noise levels and converging on coupling strengths that are at times slightly offset. Given that it is unclear which of these covariance estimates is the best, we sought to find out whether these differences matter for weak lensing data analyses. To answer this, we carried out a series of Fisher forecast analyses based on Eq. (12) in which we cycled through three of our four covariance matrix options (baseline, model-FID and analytic, but we dropped the matched SLICS for redundancy reasons) and examined the differences in the constraints on Ωm, σ8, w0 and h. We additionally fragmented the analytical case in its three components to further our insight on the relative importance of each term. We included multipoles in the range 35 < ℓ < 3000, inspired by the fiducial angular scale selection of the LSST Science Requirement Document (The LSST Dark Energy Science Collaboration 2018).

Starting with the analytic methods, the forecasted constraints from the Gaussian-only matrix are shown in Fig. 10 with the dashed-blue lines, the Gaussian+non-Gaussian case with the inner solid red lines, and the total covariance with the outer solid red line (these three lines are plotted in every panel, but overlap in most cases). In the first survey configuration (upper-left triangle plot), we assumed an area of 1300 deg2 with no shape noise. Our results are consistent with the findings of Barreira et al. (2018b), where it is demonstrated that the Gaussian and the SSC terms together capture most of the uncertainty about the cosmological parameters, whereas  contributes minimally. Adopting the area of the Fisher ellipses as a metric, neglecting the non-Gaussian term amounts to underestimating the areas by 5−7% only, except for the [σ8 − h] join contour where the change reaches 18%. Differences in survey geometry and data vectors can explain why we observe a sensitivity in this particular parameter plane while Barreira et al. (2018b) do not: their measurements, made with fine tomographic sampling, are more sensitive to the growth of structure, which translates into tighter constraints in general. The degeneracy direction of the [w0 − Ωm] is also flipped for the same reason. These conclusions about the relative non-importance of

contributes minimally. Adopting the area of the Fisher ellipses as a metric, neglecting the non-Gaussian term amounts to underestimating the areas by 5−7% only, except for the [σ8 − h] join contour where the change reaches 18%. Differences in survey geometry and data vectors can explain why we observe a sensitivity in this particular parameter plane while Barreira et al. (2018b) do not: their measurements, made with fine tomographic sampling, are more sensitive to the growth of structure, which translates into tighter constraints in general. The degeneracy direction of the [w0 − Ωm] is also flipped for the same reason. These conclusions about the relative non-importance of  cannot be generalised to all weak lensing measurement techniques, since some alternatives (e.g. peak statistics) may be more sensitive than

cannot be generalised to all weak lensing measurement techniques, since some alternatives (e.g. peak statistics) may be more sensitive than  to the non-Gaussian signal, and therefore might receive a larger contribution from the

to the non-Gaussian signal, and therefore might receive a larger contribution from the  term.

term.

|

Fig. 10. Measurement forecasts on cosmological parameters obtained with different estimates for the covariance matrix (shown with the different lines in the sub-panels), and for different survey properties. Curves show the 95.4% confidence intervals. In our LSST-Y10 configuration, and cycling through the panels starting from the uppermost, the |

The simulation-based methods are also shown on these plots; the baseline with the dashed black lines and the cosmo-SLICS results with the solid black lines. Although it is difficult to observe in the figure, the Fisher ellipses from these two methods differ by 10−15% in area; the baseline and the analytic estimates (assuming 100% SSC) differ by less than 7%, while the model-FID and the analytic method by less than 11%. Whether these apparently slight differences matter or not depends on the overall error budget of the measurement. In the KiDS-450 cosmic shear analysis for example, these changes were shown to be sub-dominant compared to the uncertainty associated with the photometric redshift estimation or with the baryon feedback models (Hildebrandt et al. 2017). This is bound to change as the statistical power of weak lensing surveys increases, and for this reason we repeated the forecasts with three survey configurations (summarised in Table 3).

First, we included shape noise and sky coverage in amounts that mimic the KiDS survey configuration defined in Table 3 (upper right triangle plot). In this case, the two simulation-based methods provide areas that differ by less than 6%, and by at most 15% with the analytical estimate. Second, we lowered the galaxy density but increased the area to emulate a DES-Y5 survey (lower left triangle). In that case, the baseline and the cosmo-SLICS methods agree to better than 4%, with a 10−16% match in area with the analytic method. We finally increased both the area and the density to generate a LSST Y10-like survey (lower right), in which case the match in areas between the two simulation estimates decreases to the 10% level, while preserving the agreement with the analytic model seen in the DES-Y5 set-up. In summary, when propagated into a Fisher forecast, the three covariance matrices predict cosmological constraints that agree well given their radically different estimation methods. One could then possibly interpret the scatter in area as an uncertainty on the error contours, sourced by systematic error on the covariance.

Once we move away from the two-point statistics however, the simulation-based methods are often the only option left. If we further wish to evaluate the covariance matrix at an arbitrary point in parameter space (i.e. at the best-fit cosmology given by the data), then cosmo-SLICS could be a prime estimation method, which we present next.

4.2. Dependence on cosmology

We have established in the last section that the lensing covariance matrix estimated from the model-FID is well suited for current  -based lensing analyses16, and possibly for upcoming experiments as well. Achieving this accuracy with only two independent N-body simulations opens up a new path to study the impact that variations in cosmology have on the lensing covariance and on the parameter constraints, regardless of the choice of weak lensing estimator. The matched-pair strategy presented in this work could play a key role, as there are no large ensembles required anymore: one simply needs to resample the cosmo-SLICS nodes (or other simulation pairs produced in a similar way) and to interpolate between the nodes to the desired cosmology, as suggested by Schneider et al. (2008).

-based lensing analyses16, and possibly for upcoming experiments as well. Achieving this accuracy with only two independent N-body simulations opens up a new path to study the impact that variations in cosmology have on the lensing covariance and on the parameter constraints, regardless of the choice of weak lensing estimator. The matched-pair strategy presented in this work could play a key role, as there are no large ensembles required anymore: one simply needs to resample the cosmo-SLICS nodes (or other simulation pairs produced in a similar way) and to interpolate between the nodes to the desired cosmology, as suggested by Schneider et al. (2008).

That being said, multiple studies suggest that varying the covariance matrix in a multivariate Gaussian likelihood is neither mathematically correct (e.g. Carron 2013) nor necessary (Kodwani et al. 2019), and that instead one should evaluate the matrix at the best fit cosmology and keep it fixed in the likelihood. This approach was adopted by van Uitert et al. (2018) who use the same analytic covariance model as ours in their analysis of the combined KiDS-450 × GAMA data. At the parameter inference stage, they first guess an initial cosmology at which the covariance matrix is evaluated, they next solve for the best fit cosmology given the data and that initial covariance matrix, they then update the covariance with these new parameters and recalculate a new best fit cosmology; convergence on the posterior distributions of the parameters is achieved after 2−3 iterations.

It seems however that a consensus on the subject has not been reached, considering that cosmology-dependent covariance matrices are utilised as a cross-check in the angular power spectrum analysis of the BOSS-DR12 data (Loureiro et al. 2019, see their Fig. 10), in the HSC-Y1 cosmic shear analysis (Hikage et al. 2019), or in the hybrid17 approach of the CFHTLenS cosmic shear analysis (Kilbinger et al. 2013). We do not intend to settle the issue here, but rather wish to enable this type of inquiries with simulation-based covariance estimators.

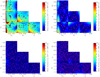

Besides deciding on whether to fix the covariance or let it vary within the likelihood sampling, anchoring the matrix (or converging) to different points in cosmology will have consequences on the parameter constraints, by an amount we need to quantify. We therefore examined in this section what happens to the Fisher forecast contours when we varied the cosmology at which the covariance matrix is fixed. We adopted the same data vector as in Sect. 4.1.3, and present the results at the 25 wCDM cosmologies from both the analytic model and the cosmo-SLICS estimator.