| Issue |

A&A

Volume 688, August 2024

|

|

|---|---|---|

| Article Number | A91 | |

| Number of page(s) | 23 | |

| Section | Interstellar and circumstellar matter | |

| DOI | https://doi.org/10.1051/0004-6361/202346499 | |

| Published online | 08 August 2024 | |

Description of turbulent dynamics in the interstellar medium: Multifractal microcanonical analysis

II. Sparse filtering of Herschel observation maps and visualization of filamentary structures at different length scales

1

INRIA, Geostat team,

200 rue de la Vieille Tour,

33405

Talence Cedex,

France

e-mail: hussein.yahia@inria.fr, arasheri@gmail.com

2

Laboratoire d’Astrophysique de Bordeaux,

CNRS UMR 5804, All. Geoffroy Saint-Hilaire,

33600

Pessac,

France

3

I. Physik. Institut, University of Cologne,

Zülpicher Str. 77,

50937

Cologne,

Germany

4

Université Paris-Saclay, Université Paris Cité, CEA, CNRS, AIM,

91191

Gif-sur-Yvette,

France

5

ICM CSIC, Pg. Marítim de la Barceloneta,

37, Ciutat Vella,

08003

Barcelona,

Spain

6

I2S (Innovative Imaging Solutions),

28-30 rue Jean Perrin,

33608

Pessac Cedex,

France

7

IIT Patna,

GVP2+7F7, Bihta, Dayalpur Daulatpur,

Patna,

Bihar

801106,

India

Received:

24

March

2023

Accepted:

16

May

2024

We present significant improvements to our previous work on noise reduction in Herschel observation maps by defining sparse filtering tools capable of handling, in a unified formalism, a significantly improved noise reduction as well as a deconvolution in order to reduce effects introduced by the limited instrumental response (beam). We implement greater flexibility by allowing a wider choice of parsimonious priors in the noise-reduction process. More precisely, we introduce a sparse filtering and deconvolution approach approach of type l2-lp, with p > 0 variable and apply it to a larger set of molecular clouds using Herschel 250 μm data in order to demonstrate their wide range of application. In the Herschel data, we are able to use this approach to highlight extremely fine filamentary structures and obtain singularity spectra that tend to show a significantly less log-normal behavior and a filamentary nature in the less dense regions. We also use high-resolution adaptive magneto-hydrodynamic simulation data to assess the quality of deconvolution in such a simulated beaming framework.

Key words: ISM: structure / turbulence / ISM: clouds / magnetohydrodynamics (MHD)

© The Authors 2024

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1 Introduction

Observations with the Herschel space observatory have highlighted the ubiquity of filamentary structures at different spatial scales in the molecular clouds of the Milky way (Arzoumanian et al. 2011; Hill et al. 2011; Schneider et al. 2012; Schisano et al. 2014; Cox et al. 2016), which raises the question of their supposed role in the star formation process (the literature on this subject is vast; see for instance (André et al. 2014; Hacar et al. 2023). Numerous studies of the dynamical and geometrical structure of filaments on cloud scales (from subparsec scales to scales of a few parsecs) reveal that gas accretion onto filaments and subsequent fragmentation set the initial conditions of star formation. However, the relative importance of turbulence, external pressure, magnetic fields, and gravity in filament formation and evolution is still being explored and strongly debated. There have been a number of proposals for how filaments, or more general dense structures, form. One scenario proposes that magnetized HI bubbles (triggered by supernova explosions) could trigger compression, which initiates mass accumulation along the magnetic field lines on the dense filaments (Inoue et al. 2009, 2018; Bonne et al. 2020b,a; Schneider et al. 2023). This could fit with the observed presence of large bubbles in the solar neighborhood (e.g., Zucker et al. 2023; Pineda et al. 2023). However, other simulations explain cloud formation with colliding large-scale flows of HI, where dense gas, often in filamentary structures, forms in shock-compressed layers (Koyama & Inutsuka 2000; Clarke et al. 2019; Dobbs et al. 2020). In parallel to this large-scale (over tens of parsecs) assembly and structuring of clouds, material flows via fainter filaments (striations) on smaller scales (parsecs) onto larger filaments. The densest ones are called ridges Schneider et al. (2010); Hennemann et al. (2012). In addition to converging flows as a possible mechanism for the formation of HI clouds at large scales (>10pc), there is vast literature on galaxy-scale mechanisms, such as disk instabilities (Kim & Ostriker 2001; Dobbs et al. 2008) and gas-arm interactions (Bonnell et al. 2006). New essential questions are therefore related to whether the different hierarchial levels are interconnected and whether or not certain physical processes dominate at the different scales. In line or continuum maps of any cloud, we observe this hierarchy and our objective is to provide measurements that can be used to quantify spatial structures and to link them to the underlying physical processes.

Yahia et al. (2021) and Robitaille et al. (2019) showed that to accurately access the multiscale properties of a Herschel observation map, it is necessary to work on a reduction of the noise present in the Herschel data. Such noise comes from the cosmic infrared background (CIB), the cosmic microwave background (CMB), and from the instrument itself, among other disturbances. Although the noise of the instrument may be of a fractal nature, the most important components of the noise come from CMB and CIB. The noise from the former can be seen as a Gaussian process to first approximation (Buchert et al. 2017), and tends to modify the shape of a singularity spectrum computed on an observation map, making it more symmetrical, thus concealing the statistics coming from the filamentary structures at small scales, and consequently misleading interpretation of a singularity spectrum in terms of the underlying dynamics. Indeed, a Gaussian process may be mono- or multifractal, and stationary or not; but in all cases it is characterized by second-order statistics only. In addition, the reduction of Gaussian noise makes it possible to visualize – via the geometric distribution of singularity exponents on an observation map – the extraordinary complexity of the spatial distribution of filamentary structures at small scales; that is, structures that are responsible for the non-log-normal character of the obtained singularity spectra. In Yahia et al. (2021), we solved the problem of noise reduction by considering a sparse noise-reduction algorithm that tends to eliminate Gaussian noise while keeping the coherent gradient information at small scales. This was done by solving the following l1 optimization problem:

![$\[\underset{\boldsymbol{s}_f}{\operatorname{argmin}}\left\|\boldsymbol{s}-\boldsymbol{s}_f\right\|_1+\lambda\left\|\nabla \boldsymbol{s}_f\right\|_1,\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq1.png) (1)

(1)

where s is the original (noisy) observation map, sf is the filtered (with noise reduction) computed map, and λ > 0 is a tuning parameter. The first term ∥s − sf∥1 guarantees that the filtered image is very similar to the original (data fitting term), while the second term (also known as prior), λ∥∇sf∥1, when minimized, promotes the sparsity of the filtered gradients (Bach et al. 2011). Different data-fitting terms can be introduced depending on the type of noise present in the data (Gaussian, Laplace, Poisson, etc.) (Getreuer 2012). This type of approach to the problem of noise reduction is a generalization of the total variation (TV)1 methods (Rodríguez & Wohlberg 2009) which have taken on great importance in image processing since the founding article (Rudin et al. 1992). In particular, sparse l1 and the TV scheme are applied in The Event Horizon Telescope Collaboration (The Event Horizon Telescope Collaboration 2019).

Here we present improvements to the Gaussian noise-reduction problem by considering other types of priors, which are more parameterizable and allow finer control over the preservation of filamentary structures. We demonstrate the spectacular improvements in visualization of these filamentary structures at different scales, thus exposing the complexity of the geometric organization of the interstellar medium (ISM) as accessible in the Herschel data, and study the quality of the singularity spectra obtained on filtered and debeamed data. Moreover, the filtering method taken in this study also includes beam reduction in a blind deconvolution formulation, which contributes, in a unified sparse approach, to both noise and beam reduction.

We begin by introducing the general model of deconvolution and noise reduction in Sec. 2. In Sec. 3, we then reiterate the differences in physical processes signed by singularity spectra, namely log-normal and log-Poisson, from our previous work (Yahia et al. 2021), and we demonstrate the superiority of our new algorithm over the Musca observation map. In Sec. 4, we present the magnetohydrodynamic simulation data used in this work, which serve as “ground truth” to evaluate the reduction of the beam effect and the deconvolution algorithm. Section 5 presents the results obtained on several Herschel observation maps; here we also show how the tools developed in this study make it possible to separate – in the Herschel data that we studied – the turbulence properties of dense regions containing protostars from the turbulence properties of regions that are more diffuse and filamentary. We end with a discussion of our results and the conclusions that we draw from this work.

2 Sparse approach to noise reduction and deconvolution

In this work, we focus on a formulation of noise reduction and debeaming as an inverse problem (Hansen 2010; Dupê et al. 2012; Parikh & Boyd 2014; Bertero et al. 2021). Inverse problems arise naturally in many areas of signal and image processing. In the present case, we consider an observational map s containing information perturbed by noise and beam effects; the original or true observation map is the ideal representation of the observed scene. Generally, the observation process is never perfect: there is uncertainty in the measurements, occurring as beam convolution, noise, and other degradation in the recorded observation map. The aim of digital image restoration is to recover an estimate of the original observational map from the degraded observations. The key to being able to solve this ill-posed inverse problem is proper incorporation of prior knowledge about the original observation map into the restoration process (Badri 2015). The many existing studies on interstellar clouds describe them as containing filamentary structures at different scales, which leads to favoring priors respecting sparse gradients.

Image deconvolution is the technique of computing a sharper reconstruction of a digital image from a blurred and noisy one based on a mathematical model of the blurring process (beam plus noise). In astrophysics, noise reduction and beam deconvolution must be performed without notable deterioration in the statistics of the dynamical system observed, which is a very challenging requirement. Image deconvolution is mainly divided into two categories: blind and nonblind deconvolution (Yin & Su 2021). Nonblind image deconvolution seeks an estimate of the true observational map assuming the blur is known. In contrast, blind image restoration tackles the much more difficult (but realistic) problem where the degradation is unknown. In our problem, we are dealing with nonblind image deblurring (NBID) where the observed image is modeled as the convolution of an underlying (sharp) image and a known blurring filter (the instrumental beam), often followed by additive noise.

The general, discrete model for linear degradation caused by beam and additive noise is formulated as:

![$\[\boldsymbol{y}(\boldsymbol{x})=\sum_{\boldsymbol{w} \in \mathcal{S}_{\mathbb{H}}} \mathbb{H}(\boldsymbol{x}, \boldsymbol{w}) s(\boldsymbol{w})+\boldsymbol{n}(\boldsymbol{x}),\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq2.png) (2)

(2)

where x = (x, y) ∈ Ω is a spatial location in Ω, the domain of the observation map s; y(x) is the observed image; ℍ(x, w) is the point spread function (PSF); n(x) is the noise; and Sℍ ⊂ ℝ2 is the support of the PSF. The additive noise process n(x) originates during the acquisition of the observation map. Common types of noise are electronic, photoelectric, quantization noise, and – most important in our case – noise coming from CIB, CMB, far distant background objects, and so on. The image-degradation model described by Eq. (2) is very commonly represented in terms of a matrix-vector formulation:

![$\[\boldsymbol{y}=\mathbf{H} \boldsymbol{s}+\boldsymbol{n} \text {, }\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq3.png) (3)

(3)

where H is a matrix representation of a convolution operator ℍ (PSF); if this convolution is periodic, H is then a (block) circulant matrix. In the present paper, the beam effect in the images is modeled in the matrix H. The goal is to recover s from the observational map y. In the inverse problem of Eq. (2), which is ill-conditioned, the maximum likelihood estimation (MLE) ŝ of s is defined by:

![$\[\hat{s}=\hat{s}(\boldsymbol{y})=\underset{\boldsymbol{s}}{\arg \max } \mathbf{~p}_{\boldsymbol{s} \mid \boldsymbol{y}}({\boldsymbol{s} {\mid} \boldsymbol{y}}),\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq4.png) (4)

(4)

where ps∣y(s∣y) is the conditional probability distribution of s knowing y. From this, we obtain

![$\[\begin{aligned}\hat{\boldsymbol{s}} & =\underset{\boldsymbol{s}}{\arg \max } \mathbf{~p}_{\boldsymbol{y} \mid \boldsymbol{s}}(\boldsymbol{y} {\mid} \boldsymbol{s}) \mathbf{p}_{\boldsymbol{s}}(\boldsymbol{s}) \\& =\underset{\boldsymbol{s}}{\arg \max } \mathbf{~p}_{\boldsymbol{n}}(\boldsymbol{y}-\mathbf{H} \boldsymbol{s}) \mathbf{p}_{\boldsymbol{s}}(\boldsymbol{s}).\end{aligned}\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq5.png) (5)

(5)

Taking logarithms in Eq. (5) leads to

![$\[\hat{\boldsymbol{s}}=\underset{\boldsymbol{s}}{\arg \max } \log \mathbf{p}_{\boldsymbol{n}}(\boldsymbol{y}-\mathbf{H} \boldsymbol{s})+\log \mathbf{p}_{\boldsymbol{s}}(\boldsymbol{s}),\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq6.png) (6)

(6)

where pn and ps denote the probability laws of the noise n and random variable s, respectively. When some assumption is made on ps, the MLE is called maximum a posteriori (MAP) estimation, and the MAP estimate takes the form of an optimization problem:

![$\[\hat{s}=\underset{\boldsymbol{s}}{\arg \min } \boldsymbol{~f}(\boldsymbol{s}, \boldsymbol{y}) \text {. }\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq7.png) (7)

(7)

In what follows, we assume that n is Gaussian, so that f(s, y) can be decomposed as a sum of two terms, the first one being an L2 norm and the MAP estimate of s given y is written as (Lorenz 2007):

![$\[\boldsymbol{f}(\boldsymbol{s, y})=\|\mathbf{H} s-\boldsymbol{y}\|^2+\lambda \phi(\boldsymbol{\mathcal{D} s}).\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq8.png) (8)

(8)

In this equation, the first term, ∥Hs − y∥2, is the data fidelity term. The second term, λϕ, is a regularization function that enforces prior knowledge about s into the solution. We note that ϕ corresponds to the entropy of the prior log ps(s). Depending on the assumption of the characteristic of the image or observational map, various ϕ can be chosen in the cost function. It must be noted that, for some interesting priors, ϕ may be neither differentiable nor convex; for example, choosing ![$\[\phi(\boldsymbol{s})=\|\boldsymbol{s}\|_p^p\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq9.png) with 0 < p < 1 (lp quasi-norm) defines a nonconvex prior. Consequently, one must use the most advanced optimization techniques in the numerical implementation of Eq. (7); for instance, the ones using the notion of a proximal operator, as described in Appendix A. Our observation of images is carried out in the gradient domain, which means that if 𝒟 denotes the discrete gradient operator, for simplicity we write ϕ(𝒟s) instead of ϕ(𝒟xs) + ϕ(𝒟ys), which are respectively the matrix form of the first-order gradients in both directions: dx = [1, −1] and dy = [1, −1]⊤.

with 0 < p < 1 (lp quasi-norm) defines a nonconvex prior. Consequently, one must use the most advanced optimization techniques in the numerical implementation of Eq. (7); for instance, the ones using the notion of a proximal operator, as described in Appendix A. Our observation of images is carried out in the gradient domain, which means that if 𝒟 denotes the discrete gradient operator, for simplicity we write ϕ(𝒟s) instead of ϕ(𝒟xs) + ϕ(𝒟ys), which are respectively the matrix form of the first-order gradients in both directions: dx = [1, −1] and dy = [1, −1]⊤.

In the remainder of this work, H is the PSF of the beam effect, and the choice of the function ϕ is explained by Eq. (B.2). Therefore, we solve the problem of deconvolution and noise reduction by minimizing the functional of Eq. (8), in which H and y(x) are the data of the problem, and the solution is the calculated minimum ŝ. When we set H = Id (identity matrix), then there is no deconvolution and the minimization of the functional of Eq. (8) corresponds to noise reduction only. Concerning the choice of the function ϕ, which is defined in Appendix B via potential functions ![$\[\varphi_q^p\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq14.png) applied to each coordinate:

applied to each coordinate:

![$\[\phi(\boldsymbol{u})=\sum_i \varphi_q^p\left(u_i\right),\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq15.png)

the chosen values of p and q are detailed in Appendix D. In most cases, q = −1 and p is in the interval [1.3, 1.7], which corresponds to the choice of a lp norm: ![$\[\phi(\boldsymbol{u})=\|\boldsymbol{u}\|_p^p\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq16.png) in the functional definition in Eq. (8). When this is the case, Eq. (8) defines two terms, the first being a l2 norm and the second a lp norm, hence the name l2-lp deconvolution algorithm in that case; this can be compared with Eq. (1), which is of l1-l1 type because of the presence of two l1 norms in its formulation. We show the optimization method used to solve the problem in Eq. (8) in Appendix A.

in the functional definition in Eq. (8). When this is the case, Eq. (8) defines two terms, the first being a l2 norm and the second a lp norm, hence the name l2-lp deconvolution algorithm in that case; this can be compared with Eq. (1), which is of l1-l1 type because of the presence of two l1 norms in its formulation. We show the optimization method used to solve the problem in Eq. (8) in Appendix A.

3 Analysis of an observation map

The results described in this study make use of the methodology detailed in Yahia et al. (2021), to which we refer the reader for further details.

Among the results obtained in our previous study, in particular in regards to the Musca observation map, is the finding of nonsymmetrical singularity spectra, and in particular nonparabolic spectra. We reiterate here that a log-normal process in ℝd has a parabolic singularity spectrum given by the formula:

![$\[D(\mathbf{h})=d-\frac{1}{2}\left(\frac{\mathbf{h}-\mathbf{h}_m}{\sigma_{\mathbf{h}}}\right)^2,\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq17.png) (9)

(9)

where hm and σh are the average and dispersion of the singularity exponents, respectively (see Yahia et al. 2021 for details). Each time one observes a nonsymmetrical singularity spectrum on a real observation map, they are faced with the problem of the physical interpretation – or explanation – of this result. As mentioned in our previous study, a log-normal process has a simple physical interpretation in terms of a multiplicative cascade of a particular type, as commonly invoked in many astrophysics articles and studies dealing with the turbulence of the interstellar medium (Tassis et al. 2010; Bron 2014; Corbelli et al. 2018; Mocz & Burkhart 2019; Mattsson 2020; Bellomi et al. 2020). In contrast, in regards to turbulence, there exists a multiplicative cascade-type phenomenology involving log-Poisson processes: the transfer of energy between scales is carried out via multifractal geometric structures much more complex than those corresponding to the log-normal model (She & Leveque 1994). Regarding turbulence in the ISM, although it may be logical to invoke this type of the nonlog-normal multiplicative cascade, we believe that this needs to be substantiated by observations, because there are many processes other than log-Poisson that have an asymmetric spectrum (e.g., log-α-stable processes, Renosh et al. 2015); notwithstanding that there is not yet any physical justification for the majority of these processes. Moreover, with regards to the nonlog-normal processes present in ISM turbulence, the role of specific physical phenomena (magnetic field, stellar feedback, etc.) with respect to the filamentary structures requires an in-depth study supported by observations.

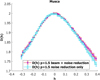

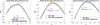

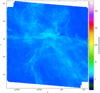

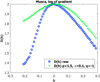

The left panel of Fig. 1 first shows the map of the singularity exponents of the l1-l1 filtered observation map, and the right panel shows the resulting singularity exponents after having applied the noise-reduction procedure explained in Sec. 2 with p = 1.5, q = −1, and λ = 0.1. Figure 2 shows three singularity spectra: that of the raw unfiltered Herschel data, the l1-l1 filtering result, and the filtering result with p = 1.5, q = −1, and λ = 0.1, which corresponds to l2-lp with p = 1.5. The comparison of the spectra on the most singular part, that is, the part corresponding to h < 0, illustrates the type of trade-off that can arise with this noise reduction approach by energy minimization: decreasing p favors the most singular structures, that is, the thinnest filaments, but at the expense of noise elimination, and therefore of their visualization. On the other hand, increasing p improves noise reduction, and therefore the visualization of filaments, but at the expense of a “correct” evaluation of the fractal dimension of these components. This is because the more p increases, the more the filaments tend to thicken. There is no “miracle solution” to this type of problem; hence the trade-off. Different values close to p can be chosen either for the visualization of the filaments hidden in the noise, or for the most exact calculation possible of the singularity spectrum. We note that the spectrum l2-lp nevertheless remains below that of the raw data for the values of p reinforcing the visualization of the filaments, which is the desired outcome. Figures 3 and 4 show the map of the singularity exponents of a subregion of the main filament with both algorithms; the extreme complexity of linear structures at lower scale is now becoming even more evident.

|

Fig. 1 Singularity exponents of the Herschel observation of Musca at 250 μm. Left: after noise reduction using the l1-l1 algorithm presented in Yahia et al. (2021). Right: after noise reduction, p = 1.5, q = −1, λ = 0.1. |

4 Experiments on magnetohydrodynamic simulation data

In this section, we study the results of the proposed approach applied to magnetohydrodynamic simulation data. Our objective in using these data is twofold:

To study the elimination of the beam effect using our approach on data with a “ground truth”.

To present high-spatial-resolution MHD data that will be explored further in future studies.

Synthetic column density maps of the 21 cm hydrogen line were generated from a set of magnetohydrodynamic turbulence simulations of nonisothermal atomic gas designed to statistically reproduce observations of nonself-gravitating cirrus clouds transiting from atomic to molecular gas (Scholtys et al., in prep.). The simulations were carried out with the RAMSES code (Teyssier 2002; Fromang et al. 2006) and use a high-order godunov scheme to compute fluxes at the interface between cells. The ideal MHD equations are solved with the HLLD Riemann solver (Miyoshi & Kusano 2005). The nullity of ∇ · B is guaranteed by the use of the constrained transport method. These simulations leverage the adaptive mesh refinement capabilities of RAMSES. With a coarse grid of 2563 cells, a cubic volume with side length L = 50 pc is refined based on two density thresholds up to effective resolutions of 5123 and 10243. The selected thresholds are n = 10 cm−3 and n = 20 cm−3. They were chosen in order to capture the phase transition from warm to cold atomic gas through thermal instability (Field 1965) while maximizing the resolution of the cold structures and enabling a large number of simulations to be performed. The density and temperature dependant cooling function implemented in RAMSES is based on the work by Wolfire et al. (Wolfire et al. 1995, 2003). The main heating contribution is from the photoelectric effect on small dust grains and polycyclic aromatic hydrocarbons and the cooling occurs through radiative recombination of electrons onto dust grains in addition to emission lines of CII, OI, and Lymanα photons following collisional excitation by electrons and hydrogen atoms.

All the simulations start off in typical warm neutral medium (WNM) conditions with static warm neutral gas with a density of n = 1 cm−3 and a temperature of T = 8000 K cm−3 with a uniform magnetic field along the x axis bx. A turbulent velocity field is then generated through forcing in Fourier space with a parabolic distribution on wavenumbers 1 < k < 3 centered on k = 2, which is half the size of the box L/2. Owing to this wavenumber distribution and to periodic boundary conditions, our simulations therefore model the subvolume of a turbulence cascade generated though energy injection on large scales. The turbulent forcing is continuously applied throughout the evolution of the simulations and takes the form of an acceleration field modeled by an Ornstein-Ulhenbeck stochastic process (Eswaran & Pope 1988; Schmidt et al. 2006, 2009; Saury et al. 2014). The forcing amplitude can be set with the parameter rms and regulates the velocity dispersion amplitude developed by the flow. The percentage of compressible modes or solenoidal modes can be selected through the input cf, where cf = 0 and cf = 1 mean purely solenoidal and purely compressible modes, respectively. As expected for turbulence driven in compressible gas, shearing motions and shocks will develop within the volume, and so locally the compressible fraction may differ from the large-scale driving applied with the forcing. The simulations are evolved until they reach a stationary state and at least three dynamical time steps tdyn = L/σ3D have elapsed, where σ3D represents the 3D turbulent velocity dispersion.

Two synthetic datasets created from the simulation outputs are 21 cm brightness temperature cubes and their corresponding column density maps. As realistic 21 cm spectra are required in the analysis done by Scholtys et al. (in prep.), self-absorption of the 21 cm line by cold gas in between the observer and the emitting cell is also accounted for; although the synthetic column density maps used here are still integrated from the brightness temperature profiles assuming an optically thin medium. The calculation of the brightness temperature assumes that along a line of sight, every individual cell is at local thermodynamic equilibrium (LTE) and contributes an amount of emission that is a function of its density, temperature, and velocity projection on the line of sight to the overall emission at velocity u. For neutral hydrogen gas at LTE, the thermal velocity distribution is a Maxwell-Boltzmann distribution with thermal velocity dispersion ![$\[\sigma_T=\sqrt{k_{\mathrm{B}} T / m_{\mathrm{H}}}\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq18.png) and the spinning temperature is equal to the kinetic temperature T (kB: Boltzmann constant, mH: mass of hydrogen). For a line of sight along the z axis, the optical depth dτ(x, y, z, u) at velocity u of a cell of density n(x, y, z), kinetic temperature T(x, y, z), physical size dz, and velocity υz(x, y, z) is given by:

and the spinning temperature is equal to the kinetic temperature T (kB: Boltzmann constant, mH: mass of hydrogen). For a line of sight along the z axis, the optical depth dτ(x, y, z, u) at velocity u of a cell of density n(x, y, z), kinetic temperature T(x, y, z), physical size dz, and velocity υz(x, y, z) is given by:

![$\[\mathrm{d} \tau(x, y, z, u)=\frac{n(x, y, z) \mathrm{d} z}{C~T(x, y, z)} \frac{e^{-\left(u-v_z(x, y, z)\right)^2 / \sigma_T^2}}{\sqrt{2 \pi} \sigma_T(x, y, z)}.\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq19.png) (10)

(10)

The constant C = 1.823 × 1018 cm−2(K km s−1)−1 is specific to the 21 cm line and incorporates the Einstein coefficient for spontaneous emission for this transition and the degeneracy of the associated energy levels. For a line of sight along the z axis, the complete brightness temperature spectra TB(x, y, u) are obtained through summation over each cell and weighing their emission by the absorption of the foreground cells at z′ < z:

![$\[T_{\mathrm{B}}(x, y, u)=\sum_z T(x, y, z)\left(1-e^{-\tau(x, y, z, u)}\right) e^-\left(\sum_{z^{\prime}<z} \tau\left(x, y, z^{\prime}, u\right)\right).\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq20.png) (11)

(11)

Real observations of a 21 cm emission spectrum seldom have an absorption counterpart to estimate the true column density. However, self-absorption corrections are expected to be small over the column density range of the simulations, which is Nhi ≤ 5 × 1020 cm−2 (Lee et al. 2015; Murray et al. 2018). Therefore, column density maps are generated under the optically thin approximation, with an integration of the brightness temperature over velocity:

![$\[N_{\mathrm{HI}}(x, y)=C \int T_{\mathrm{B}}(x, y, u) \mathrm{d} u.\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq21.png) (12)

(12)

The beam matrix applied to the MHD simulation NHI maps to replicate the instrumental smoothing is a 2D Gaussian kernel of FWHM = 2 pixels, or equivalently σ ≃ 0.849 pixels, because ![$\[F W H M=\sqrt{8 \ln 2} \sigma\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq22.png) . The resulting Gaussian kernel is a 7 × 7 matrix. Each file in the simulation dataset was generated in two versions: the first version with “no beam” (the original one, which may serve as “ground truth” in evaluating the quality of the beam reduction algorithm), and the second one, with beam effect (generated by convolving the original file with the kernel on which beam reduction is to be computed).

. The resulting Gaussian kernel is a 7 × 7 matrix. Each file in the simulation dataset was generated in two versions: the first version with “no beam” (the original one, which may serve as “ground truth” in evaluating the quality of the beam reduction algorithm), and the second one, with beam effect (generated by convolving the original file with the kernel on which beam reduction is to be computed).

All the files produced are HI column density maps at 21 cm. The average density is 1 cm−3. The values of the compressible fraction cf vary between 0.0 (only solenoidal modes) and 1.0 (only compressible modes), with intermediate values of 0.2, 0.5, and 0.8. The amplitude of the magnetic field in each dimension x, y, or z is expressed in multiples of 7.63 μG, where simulations with bx00 are hydrodynamic, and those with bx05, bx10, and bx20 have magnetic field components along x of 3.86, 7.63, and 15.26 μG, respectively. We also include simulation outputs at bx50 corresponding to bx = 38.2 μG, but it should be noted that this value is uncommon. Four different forcing amplitudes were included in the initial condition parameter space of the simulation suite performed by Scholtys et al. (in prep.), namely 9000, 18 000, 36000, and 72 000 (rms), although not all combinations of cf, bx, and rms have a corresponding simulation. Due to the complex interplay between the cooling, the turbulent velocity field, and the magnetic field, simulations with higher magnetic fields yield lower velocity dispersions than their low-bx counterparts. Therefore, simulations with bx00, cf = 0.5, and rms values of 9000, 18 000, and 36000 have 3D velocity dispersions of roughly 3.5, 5.2, and 8.7 km s−1, while runs with bx20, cf = 0.5 and rms values of 18 000, 36 000, and 72 000 produce 3D velocity dispersions of roughly 3.5, 5.2, and 8.7 km s−1.

We have at our disposal a set of stable simulation outputs: simulations are considered stable when the global properties of the simulation have reached a steady state, that is to say that their average values no longer change as a function of time, for at least 1.5–2 dynamic times; the properties considered are: the velocity dispersions according to each spatial component, the mass and volume fractions occupied by each of the phases, and the distribution of temperature and magnetic field as a function of density. This indicates that gas thermodynamics and the balance between turbulence forcing and dissipation has been well established. In this study, we tested our deconvolution algorithm on data with different characteristics in forcing, initial magnetic field, and velocity distribution, and we studied the notable differences obtained on their singularity spectra.

Three files used in the experiment are shown in Table 1. Each file name corresponds to three subfiles, one for each view (x, y, and z).

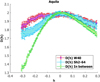

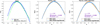

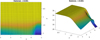

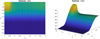

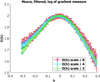

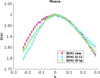

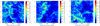

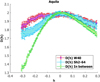

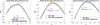

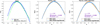

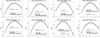

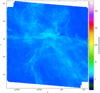

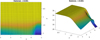

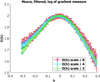

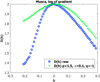

As a first example, let us consider fileA in each view (i.e., x, y, and z). There is no magnetic field in this case. The simulation outputs are displayed in Fig. 5. We reduce the beam effect using the methodology explained in Sec. 2 and compute the singularity spectra of each map, that is, for the “no beam”, “beam”, and with our beam-reduction algorithm (the latter is called “beam corrected” or “debeamed”). The methodology used to determine the values of the parameters p, q, and λ is detailed in Appendix D; as explained there, in order to obtain a prior in the form of an lp norm, we always take q = −1. Values of p and λ are p = 1.7, p = 1.5, p = 1.4 in x, y, and z, respectively, and λ = 0.1. In Fig. 6 we can see the performance of the deconvolution method evaluated on singularity spectra. In the left panel of Fig. 7, we show the three singularity spectra computed in the three views of data file fileA after beam reduction. The spectra are coincident, indicating the same turbulence statistics in the three directions of space. We note that the spectra are not symmetrical, and deviate notably from a log-normal behavior.

As a second example, we consider another data file, which differs only by a modified compressive fraction cf, the other parameters being the same. Figure 8 shows the resulting fileB in each view. Values of p and λ are p = 1.4, p = 1.4, and p = 1.35 in x, y, and z, respectively, and λ = 0.1. In Fig. 9 we note a similar performance in beam deconvolution. We note that the beam convolution has very little effect on the spectrum for the x view. The middle panel of Fig. 7 shows the three singularity spectra computed in the three views of data file fileB after beam reduction. There is a slight anisotropy in the z view. This anisotropy, which is not observed for the more solenoidal case (fileA), might be due to a slight excess of compressive effects (compact regions), which would appear randomly and would only be visible here for one of the chosen projections.

In a third example, we introduce an initial magnetic field and consider data fileC in the x, y, and z views (Fig. 10). The right panel of Fig. 7 shows the three singularity spectra computed in the three views of the data file after beam reduction. In this case, the anisotropy of the turbulence statistics is even more marked in the x direction. We see that in the simulations, the initial magnetic field clearly results in anisotropic turbulence. This complicates observations as we only see one direction, but the result is interesting, as it shows that pure hydrodynamics simulations might not represent all the observed singularity spectra equally well and that the magnetic field indeed influences the statistics of turbulence, as encoded in the singularity spectrum. More precisely, the curves show that, in compressive mode, the curves clearly converge towards close values when the magnetic field increases, whereas greater dispersion is observed in the more clearly solenoidal mode. Such a result is expected, and is confirmed by our analysis.

|

Fig. 3 Singularity exponents of the Herschel observation of Musca at 250 μm. Here we show a zoom onto a particular subregion. The noise reduction with the l1-l1 algorithm corresponds to Fig. 7 of Yahia et al. (2021). |

|

Fig. 4 Singularity exponents of the Herschel observation of Musca at 250 μm. Here we show a zoom onto a particular subregion: l2-lp noise reduction with p = 1.5, q = −1, λ = 0.1. The new noise-reduction algorithm exposes more filamentary structures. Here we show the same gray-level map as that in Fig. 3. |

Filenames given to the three simulation outputs.

|

Fig. 5 Simulation output fileA in the x, y, and z views (see Table 1 for an explanation of the filename syntax). |

|

Fig. 6 Beam reduction performed on simulation output fileA (see Table 1). In each image panel we show: the singularity spectrum of the data generated without any beam effect (called “no beam”, in red, and corresponding to a simulated “ground truth”), the singularity spectrum of the data generated with beam effect as described in the main text (2D Gaussian kernel of FWHM = 2 pixels, called “beam” in the graph, in green), and the singularity spectrum of the data after application of the proposed deconvolution algorithm (called “debeam”, in light blue). The left image panel shows the x view, filtered with p = 1.7 and λ = 0.1; the middle image panel displays the y view, filtered with p = 1.5 and λ = 0.1; and the right panel shows the z view, filtered with p = 1.4 and λ = 0.1. |

|

Fig. 7 Comparison between singularity spectra. Left: comparison of the singularity spectra of output fileA in the x, y, and z views, with p = 1.7 for x, p = 1.5 for y, p = 1.4 for z and λ = 0.1. The three spectra are coincident, indicating same turbulence statistics in the three directions of space. Middle: comparison of the singularity spectra of output fileB in the x, y, and z views, with p = 1.4 for x, p = 1.4 for y, p = 1.35 for z and λ = 0.1. There is anisotropy in the z-direction. Right: comparison of the singularity spectra of output fileC in the x, y, and z views, with p = 1.7 for x, p = 1.6 for y, p = 1.6 for z and λ = 0.1. There is anisotropy in the x-direction. |

|

Fig. 8 Simulation outputs fileB in the x, y, and z views (see Table 1 for an explanation of the filename syntax). |

|

Fig. 9 Beam reduction performed on simulation output fileB (see Table 1 and Fig. 6). The left image panel shows the x view, filtered with p = 1.4 and λ = 0.1; the middle image panel displays the y view, filtered with p = 1.4 and λ = 0.1; and the right panel shows the z view, filtered with p = 1.35 and λ = 0.1. |

|

Fig. 10 Simulation outputs fileC in the x, y, and z views (see Table 1 for an explanation of the filename syntax). |

5 Application to Herschel data

We considered observation maps from the Herschel Gould Belt Survey (André et al. 2010) acquired by the SPIRE instrument (Griffin et al. 2010) at a high spatial resolution and dynamical range. We focused on the 250 μm SPIRE images to study the ISM and their embedded stellar cores (Bontemps et al. 2010; Könyves et al. 2010; Men’shchikov et al. 2010). These data files are publicly available at the Gould Belt project web page2. They are very similar to the data that are also publicly available on the Herschel Science center archive.

In this study, we chose to examine four regions, which are described in the following subsections. We retake the dense but currently nonstar-forming filament Musca from our previous study. In addition, we aim to extend our study to other interstellar clouds: specifically, very diffuse clouds (Spider)3 without dense filaments, a nearby low-mass star-forming region (Taurus), and a nearby star-forming region hosting the impact of stellar feedback (Aquila). We also mention our goal to study a larger sample of star-forming regions in future work and to extend to high-mass star-forming regions. For this purpose, we seek to demonstrate the general character of the image processing methods introduced here and in our previous article, by showing that they apply without difficulty to a large number of types of observation maps. Not yet focusing on high-mass star-forming regions also makes sense because they are a very specific topic in the current debate of ISM evolution. In the numerical implementation of the model presented in Sec. 2, the beam kernel of the SPIRE instrument is a 20 × 20 matrix obtained by calibration on Neptune (Griffin et al. 2010; Vatchanov 2017).

5.1 Effect of beam reduction on the Musca observation map

On the Musca observation map, the effect of beam deconvolution is negligible compared to noise reduction. Indeed, the model for noise and beam reduction presented in Sec. 2 can be implemented using the beam kernel matrix H, which is defined in the case of the SPIRE instrument by the previously mentioned matrix obtained by calibration on Neptune. In this case, beam deconvolution and noise reduction are both operated, but we can also set H = Id (identity matrix), in which case only noise reduction is done. Figure 11 shows the resulting singularity spectrum in the case where p = 1.5, q = −1, and λ = 0.1, with and without beam deconvolution. The curves are very similar, indicating that, at least in the case of Musca, the beam effect is not a hurdle in the multifractal analysis of that map.

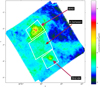

5.2 Aquila rift

The Aquila rift is a structure of 5° in length situated above the Galactic plane at l = 28° and located at an uncertain distance ranging from 240 to 500 pc (Comerón et al. 2022). As pointed out in Bontemps et al. (2010), Spitzer observations indicate the existence of an embedded cluster in the Aquila rift referred to as the Serpens South cluster, which belongs to the Serpens molecular cloud. Figure 12 displays the log of the flux intensity of the Aquila rift at 250 μm, with a focus on the HII region shown in Fig. 13. Figure 14 (top panel) shows the singularity exponents – corresponding to the local correlation measure of formula (B.7) in Yahia et al. (2021) – of the raw Aquila 250 μm (top panel), and the map after noise reduction with p = 1.5, q = −1, and λ = 0.1 (bottom panel). We note that there is more similarity between the two maps than we observed with the Musca map. This comes from the fact that we see less of the background noise for this region as the average column density of dust is larger towards Aquila than towards Musca and then dominates over the background. In the bottom panel of Fig. 14 we zoom onto the central part of the filtered Aquila map. The image of the singularity exponents reveals a similar complex web of smaller-scale filamentary structures, which extend the whole area in a spectacular way. In the left panel of Fig. 15, we see that the singularity spectra of the raw map and the filtered map agree on the most singular transitions of the signal (i.e., h ≤ 0), which confirms that there is less background noise. However, we observe that the two spectra depart from each other in the range h > 0. The two other image panels show the result of a log-normal fit of the singularity spectra D(h). The middle panel of Fig. 15 displays the result of the log-normal fit for the raw Aquila data, and the right image shows the log-normal fit for the filtered Aquila data with parameters p = 1.5, q = −1, and λ = 0.1.

Figure 16 displays the singularity spectra computed over W40, Sh2-64, and over the region between the two. Star-forming regions W40 and Sh2-64 depart clearly from the in-between region. This is confirmed by the log-normal fits of each region, with the star-forming regions being more log-normal as shown in Fig. 17. The range of h values used in generating Fig. 17 has been restricted to [−0.25, 0.3] because of the reduced size of the W40 and Sh2-64 subregions, and the comparison to a log-normal process is based on the residuals for the same reason. This behavior corresponds very well to what was observed in Musca with a clear tendency to show a more log-normal behavior in the densest regions (especially in the upper part of Musca, where we observe the formation of a protostar, as well as toward the crest of the filament) as discussed in Yahia et al. (2021). We thus observe a phenomenon similar to that observed on Musca: the regions containing the densest filaments have a more log-normal singularity spectrum than the less dense regions. The singularity spectrum of the region located between W40 and Sh2-64 (in green in Fig. 16) clearly shows a preponderance of filamentary regions of lower dimensions, a phenomenon also observed on Musca in the less dense regions.

|

Fig. 11 Beam effect on Musca. On the Musca map, the beam effect does not influence the computation of the singularity spectrum. |

|

Fig. 12 Aquila flux intensity map from Herschel at 250 μm. |

|

Fig. 13 Aquila flux intensity map from Herschel at 250 μm. We show a zoom onto one subregion where the flux intensity is more intense (W40 subregion). |

5.3 Taurus L1495

The Taurus star-forming region has been documented in a number of studies using the Herschel SPIRE and PACS data (e.g., Kirk et al. 2013; Palmeirim et al. 2013; Marsh et al. 2016; Roy et al. 2019). It has been noted that the star-forming filament B211, in a way very similar to Musca, reveals the presence of striations perpendicular to the filament and that these striations are generally oriented along the magnetic field direction (Palmeirim et al. 2013; Planck Collaboration Int. XXXIII 2016). Based on complementary kinematic observations, Palmeirim et al. (2013) and Shimajiri et al. (2019) proposed that this filament is gravitationally accreting background cloud material from the molecular cloud, whereas Bonne et al. (2020a) proposed the mass inflow might be mostly driven by magnetic field bending, which is similar to what was proposed for Musca.

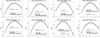

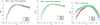

The top panel of Fig. 18 displays the image of the singularity exponents covering the whole SPIRE 250 μm observation map filtered with values p = 1.5, q = −1, and λ = 0.1, and the bottom panel shows a zoom onto the southern subregion. The complexity of small-scale filamentary structures is clearly visible. Figure 19 demonstrates, as in the Musca observation map, the positive effect of noise reduction (here seen quantitatively in the value of the residuals): elimination of the Gaussian noise, notably on the most negative singularity exponents (corresponding to small-scale filamentary structures), clears spurious log-normality in the spectrum not related to the dynamics of the medium, but coming from noise.

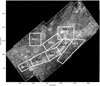

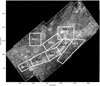

In Fig. 20 we define eight regions of interest (ROIs) in the Herschel SPIRE 250μm, some of them related to the regions highlighted in Hacar et al. (2013) and Li et al. (2021). The regions are chosen at various locations inside the main filament and outside of it, in some less dense regions where smaller-scale striations prevail. We select these regions because ROIs 3, 4, and 6 host protostars, ROIs 5 and 7 do not host protostars (yet), and ROIs 1,2, and 8 trace regions outside the dense gas. We now compare our results obtained with the computation of the singularity spectra on the noise-reduced observation maps with the results of Hacar et al. (2013) and Li et al. (2021). Figure 21 displays, for each region, the result of a log-normal fit of each singularity spectrum. Considering the residual ϵi of ROI i, we see that ROI 7 is the closest to log-normality, followed by ROI 6, ROI 4, ROI 3, and ROI 5. On the other hand, regions 1, 2, and 8 (which are outside of the areas studied by Hacar et al. 2013) depart clearly from the previous group; they are made up of filaments that seem to blend together less, which results in a greater number of isolated filaments contributing much less to the formation of stable zones. Indeed Kolmogorov’s log-normal model is associated with the presence of large chunks of nearly equal dissipation rates. The values of the error fit for regions 1, 2, and 8 indicate a stronger deviation from log-normality than other ROIs. Thus, the phenomenon observed for the ROIs 1, 2, and 8 is similar to that observed for the intermediate region between Sh2-64 and W40 in Aquila. (We reiterate that a residual is a root mean square error (RMSE) between singularity spectra computed on the data and a log-normal fit: smaller residuals imply a more log-normal behavior). We note that, in Fig. 21, the small size in pixels of these regions imposes that we restrict the interval of h values to [−0.25, 0.3] and we do not make use of the kurtosis because high statistical moments are difficult to interpret when there are few data (e.g., the common boundary between ROI4 and ROI5 is about 200 pixels). Figure 22 shows a comparison between the spectra of different regions. The left panel displays the singularity spectra of regions 1, 2, 3, and 7. We clearly see that regions 1 and 2 have similar spectra, while the spectra of regions 3 and 7 depart from the other two. The right panel shows the spectra of the regions 4, 5, 6, and 7, which lie on the main filament of Taurus. While regions 5 and 6 have close spectra, we note marked differences between these regions. The red circle on the left panel of the figure indicates a possible inflexion point in the spectrum of ROI 7. Although this is probably a second-order effect, it is questionable whether this observation can be compared with the findings of Li et al. (2021) of the existence of two regions driven by different dynamics: one subregion dominated by gravity and one by the magnetic field; however, as region ROI 7 is relatively small in pixel size, further confirmation is required in this regard. If confirmed, this would underline the usefulness of the singularity spectrum as a tool to detect underlying dynamics.

We also observe that ROIs 3, 4, 5, 6, and 7 (in the dense gas) are better fitted by log-normal spectra than ROIs 1, 2, and 8. It thus appears that log-normal behavior increases when probing the dense gas (![$\[n_{\mathrm{H}_2}\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq23.png) > 104 cm−3). This is in line with the conclusion we draw in the previous section for Aquila and with Musca results too. Clearly, the dense regions have similar spectra, which are more log-normal and less filamentary than those of the more diffuse gas regions (outside the dense structures). This may appear slightly contradictory, since the well-observed dense structure appears as a clear large-scale filament (such as in Musca). This suggests that, despite being a large-scale filament, the dense structure is – statistically on all scales – less filamentary than the less dense gas surrounding it and in particular the striation regions. The very low-intensity striations are probably the reason for these log-normal deviations and for the higher filamentary level in the spectra.

> 104 cm−3). This is in line with the conclusion we draw in the previous section for Aquila and with Musca results too. Clearly, the dense regions have similar spectra, which are more log-normal and less filamentary than those of the more diffuse gas regions (outside the dense structures). This may appear slightly contradictory, since the well-observed dense structure appears as a clear large-scale filament (such as in Musca). This suggests that, despite being a large-scale filament, the dense structure is – statistically on all scales – less filamentary than the less dense gas surrounding it and in particular the striation regions. The very low-intensity striations are probably the reason for these log-normal deviations and for the higher filamentary level in the spectra.

|

Fig. 14 Noise reduction on Aquila. Top panel: singularity exponents of the Herschel observation of Aquila at 250 μm. Left image: display of the singularity exponents of the raw, unfiltered map. Right image: after noise reduction, p = 1.5, q = −1, λ = 0.1. Bottom panel: zoom on subregion of the filtered image. |

|

Fig. 15 Aquila: singularity spectrum and noise reduction. Left: singularity spectra of Aquila observation map with and without noise reduction. Middle: log-normal fit with raw data. Right: log-normal fit after noise reduction. The filtered observation map is less Gaussian than the original raw data. |

|

Fig. 16 Singularity spectra computed over W40, Sh2-64 and in the area between the two. |

|

Fig. 17 In Aquila, subregions W40 and Sh2-64 are more log-normal than the region in between. |

|

Fig. 18 Noise reduction on Taurus L1495. Top panel: singularity exponents of the Herschel observation of Taurus L1495 at 250 μm after noise reduction: p = 1.5, q = −1, λ = 0.1. Bottom panel: zoom onto southern subregion. |

|

Fig. 19 Taurus: singularity spectrum and noise reduction. Left panel: singularity spectra of Taurus L1495 observation map with and without noise reduction. Middle panel: log-normal fit to raw data. Right panel: log-normal fit after noise reduction: p = 1.5, q = −1, λ = 0.1. |

|

Fig. 20 Regions of interest defined over the Taurus observation map. |

5.4 Spider

The Spider ISM was studied by the Planck Collaboration (Planck Collaboration XXIV 2011). Barriault et al. (2010) studied the transition from atomic to molecular gas in the Spider region, and indicate that the Spider region has very low column densities of only ~4 × 1020 cm−2 and shows no star-formation activity; and no molecular core was detected. Figure 23 shows the Herschel flux intensity map, and the top panel of Fig. 24 shows the singularity exponents on the raw observation map (left) and after noise reduction using p = 1.3, q = −1, and λ = 0.1. We note that proper filtering is required in such noise-dominated regions. The bottom panel of Fig. 24 is a zoom onto the central subregion of the filtered map. Again we note the ubiquity of filamentary structures at different scales. Figure 25 displays the singularity spectra obtained with and without noise reduction, and the result of a log-normal fit. We conclude that the Spider region is highly dominated by thinner filamentary structures, which cause its spectrum to strongly deviate from a log-normal behavior; this is again very much in line with previous results for Aquila, Musca, and Taurus, with a typical spectrum of diffuse gas, such as in ROI 1, 2, and 8 in Taurus for instance.

5.5 Dense regions with protostars versus less dense filamentary regions

Figure 26 shows one of the most important results of this study, and one that demonstrates the strength of this approach for studying the ISM: by drawing the singularity spectra of the various regions studied on the same graph, and by retaining dense regions containing protostars as well as diffuse regions rich in filaments of smaller scale, we clearly see the appearance of two classes defined by the most singular part of the spectra (i.e., defined by exponent values h ≤ 0): these correspond to the dense regions with protostars versus diffuse regions.

6 Conclusion and summary

In this study, we present significant improvements in our approach to tackling the problem of noise reduction in the Herschel data. We carried out a sparse filtering of the type l2-lp, which makes it possible to reduce the Gaussian noise while preserving the filamentary structures that appear to be universally observed in the turbulence of interstellar clouds. This article follows on from our previous study, which focuses on Musca only, where we introduced l1-l1 filtering. The new algorithm presented here allows noise reduction and deconvolution (to reduce the beam effect) in a single pass. The algorithm depends on several parameters, which control its convergence as well as the quality of the result. We propose a set of key values for these parameters – which are suitable for all the Herschel data that we have analyzed – and a trade-off between the sparsity term and the data fit in an optimization problem. This type of algorithm was very popular before the arrival of methods based on deep-learning. The latter would undoubtedly make it possible to obtain results of comparable quality (according for instance to Peak Signal-to-Noise Ratio (PSNR)), but it is preferable in our opinion, and in an astrophysical framework, to rely on configurable algorithms, even if, as in the present case, the choice of parameters in a nonlinear context, with few data and without a ground truth, is always difficult.

Among the results obtained, we first note that the beam effect seems to have a negligible influence on the calculation of singularity spectra, which is unlike the problem of noise reduction, a step absolutely necessary for obtaining singularity spectra that can be used in the context of determination of distinct turbulent phenomena.

The less dense regions observed in the studied ISM clouds are found with less log-normal and more filamentary singularity spectra than the densest regions. This may be put in perspective with the proposed scenario of dynamical interactions between two HI and low-density CO clouds at significantly different velocities as proposed for Musca in Bonne et al. (2020a), and in DR21/Cygnus X in Schneider et al. (2023) and Bonne et al. (2023).

We therefore conclude that the singularity spectra computed in a microcanonical formulation can be used to study the filamentary structure of the ISM with similar spectra for low-column-density regions almost devoid of CO gas and for dense structures (mostly CO) in the regions targeted so far (Musca, Aquila, Taurus, and Spider). These similar spectra indicate that low-intensity regions are much less log-normal and more filamentary than the dense regions.

We see an increased log-normality of the spectra in regions with higher intensity. The new results toward Aquila, Taurus, and Spider confirm our proposals of our previous article: we find many more filamentary spectra outside the dense regions (even the dense regions that are nevertheless filamentary to the eye). This suggests that the low-intensity ISM (the HI?) is very strongly filamentary; in any case, even more so than the dense filaments that we see clearly by visual inspection.

Then, in addition to the clear demonstration of filamentary structures at different scales in all our observation data, including in the least dense clouds, we were able to extend and generalize – thanks to an efficient Gaussian noise reduction – the possibility to calculate singularity spectra in distinct subregions. In the case of Taurus for example, we raise the question of obtained signatures of distinct turbulent processes. The positive results obtained here demonstrate the strength of our approach, and give us confidence in the path we have chosen to elucidate the different properties of the magnetohydrodynamic turbulence in interstellar clouds, and their relationships with the complex processes of star formation. The results presented here with real data reveal visible differences in the singularity spectra between dense areas containing cores, and less dense, more filamentary regions, which we interpret in terms of deviation from log-normality. Our future work concerning MHD simulations will focus on the influences of the magnetic field and gravity on the shape of the spectra.

|

Fig. 21 Fit of the singularity spectra for each ROI (observation map filtered with p = 1.6) by a log-normal spectrum. Top row: ROIs 1 to 4, bottom: ROIs 5 to 8. |

|

Fig. 22 Different turbulent dynamics in the ROIs. Left panel: regions 1, 2, 3, and 7. Right panel: regions 4, 5, 6, and 7. The red circle in the left panel is placed over a possible inflexion point in the spectrum, which indicates at least two different types of turbulent dynamics in ROI 7, a finding that can be compared to the results of Li et al. (2021). |

|

Fig. 23 Spider flux intensity map from Herschel at 250 μm. |

|

Fig. 24 Spider: singularity spectrum and noise reduction. Top panel: singularity exponents of the Herschel observation of Spider at 250 μm. Left: display of the singularity exponents of the raw, unfiltered map. Right: after noise reduction: p = 1.3, q = −1, λ = 0.1. Bottom panel: zoom onto subregion (filtered map). |

|

Fig. 25 Noise reduction on Spider. Top: singularity spectra of Spider observation map with and without noise reduction. Botton left: log-normal fit to raw data. Botton right: log-normal fit after noise reduction: p = 1.3, q = −1, λ = 0.1. |

|

Fig. 26 Dense regions with protostars vs diffuse regions. Left panel: definition of subregions Musca 1 and Musca 2. Right panel: left parts of singularity spectra (i.e., corresponding to h ≤ 0) clearly show two classes associated with diffuse regions and dense regions containing protostars. |

Acknowledgements

This work is supported by: 1) the GENESIS project (GENeration and Evolution of Structure in the ISm), via the french ANR and the german DFG through grant numbers ANR-16-CE92-0035-01 and DFG1591/2-1. N.S. and R.S. acknowledge support by the Collaborative Research Center 1601 subproject B2, funded by the DFG, German Research Foundation – 500700252 2) the INRIA InnovationLab Geostat-I2S (Innovative imaging solutions). A.R. was funded by a CIFRE PhD grant in the framework of the INRIA InnovationLab Geostat-I2S. The authors thank the anonymous referees for indications which improved the results.

Appendix A Solution method

The optimisation problem of eq. 8, that is,

![$\[\boldsymbol{\hat{s}}=\underset{\boldsymbol{s}}{\arg \min } \boldsymbol{f}(\boldsymbol{s}, \boldsymbol{y}) \text {, with } \boldsymbol{f}(\boldsymbol{s}, \boldsymbol{y})=\|\mathbf{H} \boldsymbol{s}-\boldsymbol{y}\|^2+\lambda \phi(\mathcal{D} \boldsymbol{s}) \text {, }\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq24.png)

The unconstrained optimization of A.1 is split and we introduce two variables s and u under the constraint that u = 𝒟s. As a result, the new problem is given by

![$\[(\hat{\boldsymbol{s}}, \hat{\boldsymbol{u}})=\underset{\boldsymbol{u}=\mathcal{D} \boldsymbol{s}}{\arg \min }~(\boldsymbol{l}(\boldsymbol{s})+\boldsymbol{m}(\boldsymbol{u})).\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq28.png) (A.3)

(A.3)

With the given constraint, it is clear that u = 𝒟s, and therefore the solutions of equation A.1 and A.3 have to be the same. This method is favored with respect to the latter unconstrained problem because it decomposes two functions, meaning that we can use different techniques for each one of them. A penalty function is added to A.3 to penalize the difference between 𝒟s and u:

![$\[(\hat{\boldsymbol{s}}, \hat{\boldsymbol{u}})=\underset{\boldsymbol{s}, \boldsymbol{u}}{\arg \min }\left(\boldsymbol{l}(\boldsymbol{s})+\boldsymbol{m}(\boldsymbol{u})+\frac{\beta}{2}\|\mathcal{D} \boldsymbol{s}-\boldsymbol{u}\|^2\right).\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq31.png) (A.4)

(A.4)

The constant parameter β controls the magnitude of the penalty function. It is important to note that the choice of β will affect the rate of convergence of the algorithm. As the value of β is increased, the constraint of 𝒟s = u is more aggressively enforced. However, as β becomes large, the problem becomes stiffer. The choice of β falls into the so-called Goldilocks Principle:

If β is too big then the algorithm becomes ill conditioned.

If β is too small then the algorithm may converge very slowly.

The proper value of β is always a trade-off between constraints 1 and 2. In this work, we take β constant: β = 256. A thorough study of the variable splitting technique is available in Wang et al. (2008). Going back to our particular problem, the splitting technique leads us to consider the constrained problem:

![$\[\underset{\boldsymbol{s}, \boldsymbol{u}}{\arg \min }~\|\mathbf{H} \boldsymbol{s}-\boldsymbol{y}\|^2+\lambda \phi(\boldsymbol{u}) \qquad \text { s.t. }\qquad \boldsymbol{u}=\mathcal{D} \boldsymbol{s},\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq33.png) (A.5)

(A.5)

which is then written in the unconstrained form, as explained above:

![$\[\underset{\boldsymbol{s}, \boldsymbol{u}}{\arg \min }~\|\mathbf{H} \boldsymbol{s}-\boldsymbol{y}\|^2+\lambda \phi(\boldsymbol{u})+\frac{\beta}{2}\|\mathcal{D} \boldsymbol{s}-\boldsymbol{u}\|^2.\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq34.png) (A.6)

(A.6)

The problem formulated in the form of eq. A.6 can be solved by an alternating minimization scheme, that is, by solving two subproblems iteratively (Badri 2015; Ghayem et al. 2018):

![$\[(S~1) ~\underset{\boldsymbol{u}}{\arg \min } ~\lambda \phi(\boldsymbol{u})+\frac{\beta}{2}\|\mathcal{D} \boldsymbol{s}-\boldsymbol{u}\|^2,\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq35.png) (A.7)

(A.7)

![$\[(S~2) \text { arg } \underset{\boldsymbol{s}}{\operatorname{ain}}\|\mathbf{H} \boldsymbol{s}-\boldsymbol{y}\|^2+\frac{\beta}{2}\|\mathcal{D} \boldsymbol{s}-\boldsymbol{u}\|^2 \text {. }\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq36.png) (A.8)

(A.8)

The algorithm decouples the objective function on variables u and s and minimizes on them independently. This is done with a u-minimization step (A.7) and an s-minimization step (A.8). Sub-problem (S2) is quadratic and is implemented efficiently using the Fourier transform as described below. On the other hand, in subproblem (S1), the most interesting priors for deconvolution and/or noise reduction are often neither differentiable nor convex. For this reason, the solution of eq. A.7 is calculated using shrinkage formulae for proximal operators, which we briefly describe now (the reader is referred to (Lorenz 2007; Rockafellar & Wets 2009; Parikh & Boyd 2014) for a more complete explanation).

The proximal operator of a proper4 and lower semicontinuous function ψ: domψ ⊂ ℝn →] − ∞, + ∞] is the set-valued function ![$\[\mathcal{P}(\psi): \mathbb{R}^n \rightarrow 2^{\mathbb{R}^n}\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq37.png) defined by (Rockafellar & Wets 2009):

defined by (Rockafellar & Wets 2009):

![$\[\mathcal{P}(\psi)(\mathbf{x})=\left\{ \operatorname{}_{\boldsymbol{u} \in \operatorname{dom}_\psi}^{\arg \min }\left(\frac{1}{2}\|\boldsymbol{x}-\boldsymbol{u}\|_2^2+\psi(\boldsymbol{u})\right)\right\},\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq38.png) (A.9)

(A.9)

where 2ℝn is the set of all subsets of ℝn (there can be many points u that realize a minimum, or no points at all). The subgradient of ψ is the subset of ℝn ∂ψ(x) = {y ∣ ψ(z) − ψ(x) ≥ yT · (z − x) ∀ z ∈ domψ}. When ψ is differentiable, the subgradient is the usual gradient vector, whereas when ψ is not differentiable, the subgradient may contain a whole continuum of values. For instance if ψ(x) = ∣x∣ (x ∈ ℝ), then ∂ψ(0) = [−1, +1]. A point x minimizes ψ if and only if 0 ∈ ∂ψ(x). When ψ is differentiable, this means ∇ψ(x) = 0. If ψ is convex, x is unique. It can be shown in general that ![$\[\mathcal{P}(\lambda \psi)(\mathbf{x}) \subset\left(I_{\mathbb{R}^n}+\lambda \partial \psi\right)^{-1}(\boldsymbol{x})\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq39.png) with equality when ψ is convex.

with equality when ψ is convex. ![$\[\left(I_{\mathbb{R}^n}+\lambda \partial \psi\right)^{-1}(\boldsymbol{x})\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq40.png) is understood to be the set

is understood to be the set ![$\[\left\{\mathbf{z} \mid \boldsymbol{x} \in\left(I_{\mathbb{R}^n}+\lambda \partial \psi\right)(\mathbf{z})\right\}\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq41.png) . It is then shown that a minimum of ψ is a fixed point of its proximal operator (Parikh & Boyd 2014). Consequently, finding a minimum of ψ can be implemented in the form of an iterative process applied on 𝒫(ψ): xk+1 = 𝒫(ψ)(xk), even when ψ is neither convex nor differentiable. When ψ is not convex, the iterative scheme may converge towards a local minimum of ψ. Then, since the second term

. It is then shown that a minimum of ψ is a fixed point of its proximal operator (Parikh & Boyd 2014). Consequently, finding a minimum of ψ can be implemented in the form of an iterative process applied on 𝒫(ψ): xk+1 = 𝒫(ψ)(xk), even when ψ is neither convex nor differentiable. When ψ is not convex, the iterative scheme may converge towards a local minimum of ψ. Then, since the second term ![$\[\frac{\beta}{2}\|\mathcal{D} \boldsymbol{s}-\boldsymbol{u}\|^2\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq44.png) of eq. A.7 is ≥ 0, subproblem (S1) is solved using the iteration procedure applied on the proximal operator 𝒫(λϕ). The potential function ϕ and its proximal operator are given in section B.

of eq. A.7 is ≥ 0, subproblem (S1) is solved using the iteration procedure applied on the proximal operator 𝒫(λϕ). The potential function ϕ and its proximal operator are given in section B.

For a fixed u, eq. A.8 is quadratic in s, and the minimizer s is calculated by taking the derivative of eq. A.8 and setting it to zero. We get:

![$\[\left(\mathcal{D}^T \mathcal{D}+\frac{2}{\beta} \mathbf{H}^T \mathbf{H}\right) \boldsymbol{s}=\mathcal{D}^T \boldsymbol{u}+\frac{2}{\beta} \mathbf{H}^T \boldsymbol{y},\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq46.png) (A.10)

(A.10)

with T denoting transposition. After splitting the discrete gradient operator we get:

![$\[\left(\mathcal{D}_x^T \mathcal{D}_x+\mathcal{D}_y^T \mathcal{D}_y+\frac{2}{\beta} \mathbf{H}^T \mathbf{H}\right) \boldsymbol{s}=\mathcal{D}_x^T u_x+\mathcal{D}_y^T u_y+\frac{2}{\beta} \mathbf{H}^T \boldsymbol{y}.\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq47.png) (A.11)

(A.11)

The Hessian matrix on the left-hand side of eq. A.10 can be diagonalized by 2D discrete Fourier transform ℱ. Using the convolution theorem of Fourier transforms, we find that s is equal to:

![$\[\mathcal{F}^{-1}\left(\frac{\overline{\mathcal{F}}\left(\mathcal{D}_x\right) \odot \mathcal{F}\left(u_x\right)+\overline{\mathcal{F}}\left(\mathcal{D}_y\right) \odot \mathcal{F}\left(u_y\right)+\left(\frac{2}{\beta}\right) \overline{\mathcal{F}}(\mathbf{H}) \odot \mathcal{F}(\boldsymbol{y})}{\overline{\mathcal{F}}\left(\mathcal{D}_x\right) \odot \mathcal{F}\left(\mathcal{D}_x\right)+\overline{\mathcal{F}}\left(\mathcal{D}_y\right) \odot \mathcal{F}\left(\mathcal{D}_y\right)+\left(\frac{2}{\beta}\right) \overline{\mathcal{F}}(\mathbf{H}) \odot \mathcal{F}(\mathbf{H})}\right),\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq48.png) (A.12)

(A.12)

where ℱ denotes the Fourier transform, “−” denotes complex conjugation and “⊙” component-wise multiplication; the division is also computed component-wise. An advantage of this formulation is that fast-Fourier transform can be used to solve eq. A.8 to reduce computational complexity. In the following section, we introduce the general regularization function, which is used in this paper for deconvolution.

Appendix B A generalized regularization function

We make use of potential functions introduced by (Gholami & Hosseini 2011), which are used as ϕ in eq. 8 and are flexible enough to include in their formulation the classical ℓp priors, the latter being those mainly used in sparse image processing. We consider a family of potential functions:

![$\[\varphi_q^p(\boldsymbol{x})=\left\{\begin{array}{cc}\frac{1}{q}\left(1-\left(|\boldsymbol{x}|^p+1\right)^{-q}\right) & q \neq 0 \\\log \left(|\boldsymbol{x}|^p+1\right) & q=0,\end{array}\right.\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq49.png) (B.1)

(B.1)

where x ∈ ℝ. Eq. B.1 defines a family of potential functions indexed by p and q, each with its own characteristics. Some different choices of p and q lead to some known functions as presented in Table B.1.

Potential functions and their corresponding expression according to the values of p and q.

Each potential function can be used as prior information in the second term of equation 8. For instance, nonconvex penalties can achieve better performance to promote sparsity (Lorenz 2007). As a standard example, we note that, according to the table shown, the function ![$\[\varphi_{-1}^p(\boldsymbol{x})=|\boldsymbol{x}|^p\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq53.png) and the potential function

and the potential function ![$\[\phi(\boldsymbol{u})=\|\boldsymbol{u}\|_p^p=\sum_i \varphi_{-1}^p\left(u_i\right)=\sum_i\left|u_i\right|^p\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq54.png) make use of the ℓp quasinorm when 0 < p < 1). In our case, and referring to eq. A.7, we make use of the following potential function:

make use of the ℓp quasinorm when 0 < p < 1). In our case, and referring to eq. A.7, we make use of the following potential function:

![$\[\phi(\boldsymbol{u})=\sum_i \varphi_q^p\left(u_i\right).\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq55.png) (B.2)

(B.2)

Then, according to theorem (2.1) of (Parikh & Boyd 2014), one has

![$\[\mathcal{P}(\phi)(\boldsymbol{x})=\left(\mathcal{P}\left(\varphi_q^p\right)\left(x_1\right), \ldots, \mathcal{P}\left(\varphi_q^p\right)\left(x_n\right)\right),\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq56.png) (B.3)

(B.3)

i.e., the computation of the proximal operator 𝒫(ϕ) can be done component-wise.

There are some cases where a closed formula of the proximal operator 𝒫(ϕ) can be derived. As an important example, let us consider the case ![$\[\varphi_{-1}^p\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq59.png) (which leads, as seen above, to the non-convex penalty ϕ(u) = ∥u∥p when 0 < p < 1). Then, according to theorem 3.2 of (Lorenz 2007), we have the following result:

(which leads, as seen above, to the non-convex penalty ϕ(u) = ∥u∥p when 0 < p < 1). Then, according to theorem 3.2 of (Lorenz 2007), we have the following result:

where (x)+ denotes the positive part of x (i.e., max(x, 0)) and the threshold value ![$\[\lambda_{\mathrm{eff}}=\frac{2-p}{2-2 p}(2(1-p))^{\frac{1}{2-p}}\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq63.png) . When 0 < p < 1, the function

. When 0 < p < 1, the function ![$\[\varphi_{-1}^p\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq64.png) is not convex and the proximal operator

is not convex and the proximal operator ![$\[\boldsymbol{\mathcal{P}}\left(\varphi_{-1}^p\right)\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq65.png) is multivalued at λeff. However, there is no such closed formula known for a general function

is multivalued at λeff. However, there is no such closed formula known for a general function ![$\[\left(\varphi_q^p\right)\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq66.png) . In (Gholami & Hosseini 2011), the authors propose the following approximation of

. In (Gholami & Hosseini 2011), the authors propose the following approximation of ![$\[\boldsymbol{\mathcal{P}}\left(\varphi_q^p\right)\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq67.png) , which is valid for 0 < p ≤ 2:

, which is valid for 0 < p ≤ 2:

![$\[\mathcal{Q}\left(\frac{\lambda}{\beta} \varphi_q^p\right)(\boldsymbol{x})=\left\{\begin{array}{cl}\left(1-\frac{\lambda p}{\beta}\left(\frac{|\boldsymbol{x}|^{p-2}}{\left(|\boldsymbol{x}|^p+1\right)^{q+1}}\right)\right)_{+} \boldsymbol{x} & \text { if }|\boldsymbol{x}|>\eta_{\frac{\lambda}{\beta}} \\0 & \text { otherwise },\end{array}\right.\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq68.png) (B.7)

(B.7)

where ![$\[\boldsymbol{\mathcal{Q}}\left(\frac{\lambda}{\beta} \varphi_q^p\right)\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq69.png) is the approximated proximal operator of the function

is the approximated proximal operator of the function ![$\[\frac{\lambda}{\beta} \varphi_q^p\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq70.png) , and the threshold value

, and the threshold value ![$\[\eta_{\frac{\lambda}{\beta}}\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq71.png) can be computed numerically. As shown in eq. B.7, the approximated proximal operator is given by a simple closed-form expression for values bigger than

can be computed numerically. As shown in eq. B.7, the approximated proximal operator is given by a simple closed-form expression for values bigger than ![$\[\eta_{\frac{\lambda}{\beta}}\]$](/articles/aa/full_html/2024/08/aa46499-23/aa46499-23-eq72.png) and set to zero if smaller. The proposed general regularization and its approximation induce sparsity in the sense that a number of values, depending on the strength of regularization, will be exactly equal to zero. For a visual demonstration, in Fig. B.1 we depict the shrinkage thresholding operators for the case of the ℓp quasi-norm, where we show a comparison with the exact numerical solution provided in (Lorenz 2007) (eq. B.6), which is displayed in Fig. B.1. The exact solution is represented by a solid line with its corresponding approximation shown as a dashed line. We point out that the accuracy of the approximation is very high.