| Issue |

A&A

Volume 686, June 2024

|

|

|---|---|---|

| Article Number | A89 | |

| Number of page(s) | 35 | |

| Section | Planets and planetary systems | |

| DOI | https://doi.org/10.1051/0004-6361/202347613 | |

| Published online | 31 May 2024 | |

How do wavelength correlations affect transmission spectra? Application of a new fast and flexible 2D Gaussian process framework to transiting exoplanet spectroscopy

1

School of Physics, Trinity College Dublin, University of Dublin,

Dublin 2,

Ireland

e-mail: fortunma@tcd.ie

2

Center for Computational Astrophysics, Flatiron Institute,

New York,

NY,

USA

3

School of Information and Physical Sciences, University of Newcastle,

Callaghan,

NSW,

Australia

4

Max Planck Institute for Astronomy,

Königstuhl 17,

69117

Heidelberg,

Germany

Received:

31

July

2023

Accepted:

22

February

2024

The use of Gaussian processes (GPs) is a common approach to account for correlated noise in exoplanet time series, particularly for transmission and emission spectroscopy. This analysis has typically been performed for each wavelength channel separately, with the retrieved uncertainties in the transmission spectrum assumed to be independent. However, the presence of noise correlated in wavelength could cause these uncertainties to be correlated, which could significantly affect the results of atmospheric retrievals. We present a method that uses a GP to model noise correlated in both wavelength and time simultaneously for the full spectroscopic dataset. To make this analysis computationally tractable, we introduce a new fast and flexible GP method that can analyse 2D datasets when the input points lie on a (potentially non-uniform) 2D grid – in our case a time by wavelength grid – and the kernel function has a Kronecker product structure. This simultaneously fits all light curves and enables the retrieval of the full covariance matrix of the transmission spectrum. Our new method can avoid the use of a ‘common-mode’ correction, which is known to produce an offset to the transmission spectrum. Through testing on synthetic datasets, we demonstrate that our new approach can reliably recover atmospheric features contaminated by noise correlated in time and wavelength. In contrast, fitting each spectroscopic light curve separately performed poorly when wavelength-correlated noise was present. It frequently underestimated the uncertainty of the scattering slope and overestimated the uncertainty in the strength of sharp absorption peaks in transmission spectra. Two archival VLT/FORS2 transit observations of WASP-31b were used to compare these approaches on real observations. Our method strongly constrained the presence of wavelength-correlated noise in both datasets, and significantly different constraints on atmospheric features such as the scattering slope and strength of sodium and potassium features were recovered.

Key words: methods: data analysis / methods: statistical / techniques: spectroscopic / planets and satellites: atmospheres / stars: individual: WASP-31

© The Authors 2024

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1 Introduction

Low-resolution transmission spectroscopy has been a powerful technique for probing the atmospheric composition of exoplanets ever since the first detection of an exoplanet atmosphere (Charbonneau et al. 2002). The technique relies upon observing an exoplanet transit – when an exoplanet appears to pass in front of its host star – and analysing the decrease in flux during the transit as a function of wavelength. The resulting transmission spectrum contains information about the planetary atmosphere (Seager & Sasselov 2000; Brown 2001).

The field of exoplanet atmospheres has entered a new era due to the recent launch of JWST. Early Release Science observations of WASP-39b using JWST NIRSpec’s PRISM mode have produced a 33σ detection of H2O in addition to strong detections of Na, CO, and CO2 (Rustamkulov et al. 2023) – far exceeding what had been achieved with previous ground-based and space-based observations (Nikolov et al. 2016; Sing et al. 2016). As the exoplanet community pushes JWST further to its limits towards smaller terrestrial planets (e.g. Greene et al. 2023; Zieba et al. 2023), the importance of careful treatment of systematics – astrophysical or instrumental – will become of increasing importance.

Gaussian processes (GPs) were introduced in Gibson et al. (2012b) to account for the uncertainty that correlated noise – also referred to as systematics – produces in the resulting transmission spectrum in a statistically robust way. GPs have been shown to provide more reliable estimates of uncertainties when compared to other common techniques such as linear basis models (Gibson 2014). This difference may help explain contradictory results in the field. For example, WASP-31b was reported to have a strong potassium signal at 4.2σ using data from the Hubble Space Telescope (HST; Sing et al. 2015), which were analysed using linear basis models. However, follow-up measurements in Gibson et al. (2017) with the FOcal Reducer and low dispersion Spectrograph (FORS2) on the Very Large Telescope (VLT; Appenzeller et al. 1998) found no evidence of potassium. High-resolution observations in Gibson et al. (2019) using the Ultraviolet and Visual Echelle Spectrograph (UVES) on the VLT as well as low-resolution observations from the Inamori-Magellan Areal Camera and Spectrograph (IMACS) on Magellan (McGruder et al. 2020) also failed to reproduce this detection. These results are more consistent with the re-analysis of the HST data using GPs which reduced the significance of the potassium signal to 2.5σ (Gibson et al. 2017), demonstrating the importance of careful treatment of systematics.

In addition to inconsistent detections of species, conflicting measurements of the slope of the transmission spectrum are also common (e.g. Sedaghati et al. 2017, 2021; Espinoza et al. 2019). A slope in the transmission spectrum can be caused by Rayleigh scattering or from scattering by aerosols including clouds or hazes in the atmosphere (Lecavelier Des Etangs et al. 2008) and it is therefore often referred to as a scattering slope. However, stellar activity can also produce an apparent scattering slope, which is typically used to explain these contradictory results (Espinoza et al. 2019; McCullough et al. 2014; Rackham et al. 2018). It is worth considering whether these effects could be caused by systematics because it is difficult to obtain direct evidence that stellar activity is the cause of these contradictions. This could be particularly relevant for measurements of extreme scattering slopes such as in May et al. (2020), where the authors note that a combination of atmospheric scattering and stellar activity still struggle to explain the observed slope.

One potential issue with current data analyses is that each transit depth in the transmission spectrum is fit separately and is assumed to have an independent uncertainty. Ih & Kempton (2021) studied the effect on retrieved atmospheric parameters if this assumption is incorrect and if transit depth uncertainties are correlated. The authors suggest that both instrumental and stellar systematics could cause correlations in transmission spectra uncertainties, which they showed could significantly impact atmospheric retrievals, but they did not provide a method for retrieving these correlations. Holmberg & Madhusudhan (2023) report the presence of wavelength-correlated systematics in observations of WASP-39b and WASP-96b using the Single Object Slitless Spectroscopy (SOSS) mode of JWST’s Near Infrared Imager and Slitless Spectrograph (NIRISS). They demonstrate why these systematics should result in correlated uncertainties in the transmission spectrum and made multiple simplifying assumptions to derive an approximate covariance matrix of the transmission spectrum, although their method cannot not account for both time and wavelength-correlated systematics.

In this work, we demonstrate a statistically robust way to account for the presence of both time-correlated and wavelength-correlated systematics and its use on both simulated datasets and to real transit observations of WASP-31b from VLT/FORS2 (originally analysed in Gibson et al. 2017). We model the noise present across the full dataset as a Gaussian process. By simultaneously fitting all spectroscopic light curves for transit depth, we also explored the joint posterior of all transit depths using Markov chain Monte Carlo (MCMC) and recovered the covariance matrix of the transmission spectrum. We present an efficient optimisation that can dramatically speed up the required log-likelihood calculation based on work in Saatchi (2011) and Rakitsch et al. (2013). We assume that the inputs to the kernel function of the GP lie on a 2D grid – such as a time by wavelength grid – and refer to this as a 2D GP. Intuitively this assumption is valid for many datasets that can be neatly arranged in a 2D grid, for example an image typically has a 2D grid structure where the inputs describing correlations could be chosen as the x and y coordinates of each pixel. It is not required that the grid is uniform; that is to say for transmission spectroscopy neither the time points nor wavelengths need to be uniformly separated. In contrast, we refer to GPs where the input(s) all lie along a single dimension such as time as 1D GPs. This includes the standard approach of fitting individual transit light curves using a GP.

One example where 2D GPs have already been used would be in radial velocity analysis where multiple parallel time series observations may be jointly fit with a GP (Rajpaul et al. 2015). In this case, time is one of the dimensions and we can consider the other dimension to consist of a small number (~3) of different observables stacked together such as radial velocity and any suitable activity indicators. This is in contrast to transmission spectroscopy where dozens or even hundreds of parallel time series may be observed simultaneously in the form of different wavelength channels and the number of observations in time may also be greater. Unfortunately, the algorithms developed for radial velocity either do not scale to the significantly larger datasets encountered in transmission spectroscopy (as noted in Barragán et al. 2022), or else they make strong assumptions about the form of correlations in both dimensions (Gordon et al. 2020; Delisle et al. 2022) which were not sufficient for our analysis of real observations.

In particular, Gordon et al. (2020) introduced a 2D GP method that could be applied to transmission spectroscopy and scales better than the method introduced here but with a much stronger assumption that the shape of the correlated noise is identical at different wavelengths but can change in amplitude. With weaker assumptions about the correlation in wavelength their method has worse scaling in the number of wavelength channels. The covariance matrix in the time dimension is also limited to celerite kernels as introduced in Foreman-Mackey et al. (2017). Similarly, the extension of the Gordon et al. (2020) method derived in Delisle et al. (2022) is still too limited to apply the kernel function used to fit the VLT/FORS2 data in this work. Our method has significant advantages for transmission spectroscopy as it is computationally tractable even when the length of both dimensions is of the order of hundreds of points and can be used with any general covariance matrices describing the noise in each dimension.

This paper is laid out as follows; Sec. 2 gives a brief introduction to Gaussian processes, the challenges of scaling them to two dimensional datasets and outlines the mathematics of the 2D GP method used in this paper, Sec. 3 compares our method to standard approaches on simulated datasets containing wavelength-correlated noise. Finally, Sec. 4 re-analyses archival VLT/FORS2 observations using this method and the discussion and conclusions are presented in Sects. 5 and 6.

The code developed for this work has been made available as a Python package called LUAS1. It is available on GitHub2 along with documentation and tutorials.

2 2D Gaussian processes for transmission spectroscopy

2.1 Introduction to 2D Gaussian processes

To fit our transit observations we first model the transit using a deterministic function. This function is typically referred to as the ‘mean function’ in the context of GPs and denoted by μ. This light curve model includes a transit depth parameter for each spectroscopic light curve, which we fit for to obtain our transmission spectrum (see Sec. 3.1 for more details on the light curve model). When using 2D GPs, we combine light curve models for each spectroscopic light curve to form our 2D mean function μ(λ, t, θ), which is a function of model parameters θ as well as the wavelength channels λ and the times of our flux observations t.

We model the noise in our observations as a Gaussian process. This means that if we take any two arbitrary input locations xi and xj out of the collection of points in a dataset D, we assume that the noise observed at these points follows a multivariate Gaussian distribution with the covariance given by a kernel function of our choosing. In the case of 1D GPs fitting a single transit light curve, the covariance might be described by the commonly used squared-exponential kernel as a function of the time separation of each data point, giving covariance matrix:

![$\[\mathbf{K}_{i j}=h^2 \exp \left(-\frac{\left|t_i-t_j\right|^2}{2 l_t^2}\right)+\sigma^2 \delta_{i j},\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq1.png) (1)

(1)

where h is the height scale or amplitude of the correlated noise, ti and tj are the times of xi and xj, lt is the length scale of the correlated noise in time and we have also included a white noise term with variance σ2 added to the diagonal (δij is the Kronecker delta). The choice of kernel function is problem-specific with a range of kernel functions to choose from (see Rasmussen & Williams 2006).

If we now consider fitting multiple spectroscopic light curves with a GP then any two flux measurements have both a time separation and a wavelength separation. We can combine correlations in both time and wavelength into a single kernel. If we choose the squared-exponential kernel for both dimensions and introduce a wavelength length scale lλ we get:

![$\[\mathbf{K}_{i j}=h^2 \exp \left(-\frac{\left|\lambda_i-\lambda_j\right|^2}{2 l_\lambda^2}\right) \exp \left(-\frac{\left|t_i-t_j\right|^2}{2 l_t^2}\right)+\sigma^2 \delta_{i j},\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq2.png) (2)

(2)

where λi and λj· are the wavelength values at locations xi and xj. We can also mix kernel functions, that is we could model time with the Matérn 3/2 kernel and wavelength with a squared-exponential kernel.

In general, the covariance matrix describing the noise in the observations K is a function of inputs λ and t and is a function of parameters such as h, lt, lλ and σ which are typically referred to as hyperparameters. We include both hyperparameters and light curve parameters in θ.

Overall, our dataset of flux observations y are modelled as a multivariate Gaussian distribution, with the likelihood (the probability of the data given our model) given by:

![$\[\p(D \mid \boldsymbol{\theta})=\boldsymbol{\mathcal{N}}(\boldsymbol{y} \mid \boldsymbol{\mu}(\boldsymbol{\lambda}, \boldsymbol{t}, \boldsymbol{\theta}), \mathbf{K}(\boldsymbol{\lambda}, \boldsymbol{t}, \boldsymbol{\theta}))\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq3.png) (3)

(3)

Taking the logarithm of this (to avoid numerical errors) we get the log-likelihood of our model:

![$\[\log (p(D \mid \boldsymbol{\theta}))=-\frac{1}{2} \boldsymbol{r}^T \mathbf{K}^{-1} \boldsymbol{r}-\frac{1}{2} \log |\mathbf{K}|-\frac{N}{2} \log (2 \pi),\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq4.png) (4)

(4)

where we have defined our residuals vector r = y – μ.

In accordance with Bayes’ theorem, we can add the logarithm of any prior probability to our log-likelihood to get the log-posterior of all the parameters3. This equation can then be used by inference methods such as Markov chain Monte Carlo (MCMC) to explore the marginal probability distributions of each parameter.

2.2 The computational cost of 2D Gaussian processes

The log-likelihood function in Eq. (4) can be used for analysing any general dataset including 1D or 2D datasets. However, the computational cost becomes prohibitive for large datasets. Assume we have flux observations measured at N different times and binned into M different wavelength channels. If we move from analysing individual light curves to all spectroscopic light curves simultaneously then the covariance matrix describing the correlation will go from an N × N matrix to an MN × MN matrix to describe correlations in both time and wavelength. Studying Eq. (4), it should be noted that it is necessary to invert the covariance matrix as well as calculate its determinant. The runtime of both of these computations scales as the cube of the number of data points in the most general case. We can write this scaling of runtime in ‘big ![$\[\boldsymbol{\mathcal{O}}\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq5.png) ’ notation as

’ notation as ![$\[\boldsymbol{\mathcal{O}}\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq6.png) (M3 N3). The covariance matrix K also requires storing M2 N2 entries in memory. The scaling in memory is therefore

(M3 N3). The covariance matrix K also requires storing M2 N2 entries in memory. The scaling in memory is therefore ![$\[\boldsymbol{\mathcal{O}}\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq7.png) (M2 N2). This poor scaling in both runtime and memory would make most transmission spectroscopy datasets unfeasible to analyse and is the motivation for introducing a new log-likelihood optimisation.

(M2 N2). This poor scaling in both runtime and memory would make most transmission spectroscopy datasets unfeasible to analyse and is the motivation for introducing a new log-likelihood optimisation.

The optimised GP method introduced in this work is based on Saatchi (2011) and Rakitsch et al. (2013) which both present exact methods of optimising the calculation of Eq. (4). Both methods assume that all inputs for the GP lie on a (potentially non-uniform) 2D grid. In the context of transmission spectroscopy this simply means that if wavelength and time are used as the two inputs then the same set of wavelength bands must be chosen for all points in time (but the wavelength bands do not need to be evenly separated). The grid must also be complete with no missing data, which means outliers at a specific time and wavelength cannot simply be removed from the dataset without either removing all other data points at that specific time or that particular wavelength. However, a common approach for dealing with non-Gaussian outliers has just been to replace them using an interpolating function (e.g. Gibson et al. 2017). This approach keeps the inputs on a complete grid and so this grid assumption likely satisfies most low-resolution transmission spectroscopy datasets.

Both methods differ in the range of kernel functions they can be used with. The Saatchi (2011) method is simpler and is described first, with the Rakitsch et al. (2013) method being a more general extension with a similar computational scaling.

2.3 Kronecker products

Some basics of Kronecker product algebra are useful to explain the optimisations used in this work. See Saatchi (2011) for more details, proofs of the results and how these results generalise to more than two dimensions.

The Kronecker product between two matrices may be written using the ⊗ symbol. It can be thought of as multiplying every element of the first matrix by every element in the second matrix with each multiplication producing its own term in the resulting matrix. For example:

![$\[\mathbf{A} \otimes \mathbf{B}=\left[\begin{array}{lll}a_{11} & a_{12} & a_{13} \\a_{21} & a_{22} & a_{23} \\a_{31} & a_{32} & a_{33}\end{array}\right] \otimes \mathbf{B}=\left[\begin{array}{lll}a_{11} \mathbf{B} & a_{12} \mathbf{B} & a_{13} \mathbf{B} \\a_{21} \mathbf{B} & a_{22} \mathbf{B} & a_{23} \mathbf{B} \\a_{31} \mathbf{B} & a_{32} \mathbf{B} & a_{33} \mathbf{B}\end{array}\right].\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq8.png) (5)

(5)

If A is M × M and B is N × N, then A ⊗ B is MN × MN.

The following equations hold:

![$\[\(\mathbf{A} \otimes \mathbf{B})(\mathbf{C} \otimes \mathbf{D})=\mathbf{A C} \otimes \mathbf{B D},\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq9.png) (6)

(6)

![$\[\mathbf{A} \otimes(\mathbf{B}+\mathbf{D})=\mathbf{A} \otimes \mathbf{B}+\mathbf{A} \otimes \mathbf{D},\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq10.png) (7)

(7)

![$\[\(\mathbf{A} \otimes \mathbf{B})^{-1}=\mathbf{A}^{-1} \otimes \mathbf{B}^{-1} \text {. }\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq11.png) (8)

(8)

Both methods presented assume the covariance matrix of the noise can be expressed using Kronecker products. The Saatchi (2011) method assumes the covariance matrix can be expressed as:

![$\[\mathbf{K}=\mathbf{K}_\lambda \otimes \mathbf{K}_t+\sigma^2 \mathbb{I},\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq12.png) (9)

(9)

where we have written the covariance matrix K as a Kronecker product of separate covariance matrices: Kλ constructed using a wavelength kernel function kλ(λ, λ′); and Kt constructed using the time kernel function kt(t, t′). It also assumes that white noise is constant across the full dataset with variance σ2 – a limitation of the Saatchi (2011) method. An equivalent way of stating this restriction is that the kernel function can be written as:

![$\[\k\left(\lambda_i, \lambda_j, t_i, t_j\right)=k_\lambda\left(\lambda_i, \lambda_j\right) k_t\left(t_i, t_j\right)+\sigma^2 \delta_{i j}.\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq13.png) (10)

(10)

In most transmission spectroscopy datasets, the amplitude of white noise varies significantly in wavelength across the dataset, making the Saatchi (2011) method highly restrictive. In contrast, the Rakitsch et al. (2013) method is valid for any covariance matrix that is the sum of two independent Kronecker products:

![$\[\mathbf{K}=\mathbf{K}_\lambda \otimes \mathbf{K}_t+\boldsymbol{\Sigma}_\lambda \otimes \boldsymbol{\Sigma}_t,\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq14.png) (11)

(11)

or equivalently any kernel function of the form:

![$\[\k\left(\lambda_i, \lambda_j, t_i, t_j\right)=k_{\lambda_1}\left(\lambda_i, \lambda_j\right) k_{t_1}\left(t_i, t_j\right)+k_{\lambda_2}\left(\lambda_i, \lambda_j\right) k_{t_2}\left(t_i, t_j\right).\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq15.png) (12)

(12)

This method is much more general with the second set of covariance matrices ∑λ and ∑t being able to account for white noise that varies in amplitude across the dataset. We can choose Kλ and Kt to include our correlated noise terms and ∑λ and ∑t to be diagonal matrices containing the white noise terms. This allows us to account for any correlated noise that is separable in wavelength and time as well as any white noise that is separable in wavelength and time. The white noise as a function of time and wavelength σ(λ, t) must be able to be written as

![$\[\sigma(\lambda, t)=\sigma(\lambda) \sigma(t).\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq16.png) (13)

(13)

However, there is no requirement that ∑λ and ∑λ be diagonal and the method works for any valid covariance matrices Kλ, Kt, ∑λ and ∑t.

Unlike the method introduced in Gordon et al. (2020) that uses a celerite kernel for the time covariance matrix and is limited to a single regression variable within that matrix, multiple regression variables may be used within any of these covariance matrices. Multiple regression variables are often used in transmission spectroscopy as it is argued they can provide extra information about how strongly correlated the noise may be for different flux observations. For example, the width of the spectral trace on the detector can change in time and a kernel function could be chosen where the correlation in the noise between two points is an explicit function of both the time-separation and difference in trace widths (e.g. Gibson et al. 2012a; Diamond-Lowe et al. 2020) which is not possible using a celerite kernel (Foreman-Mackey et al. 2017). Due to the kronecker product structure, any regression variables used must also lie on a 2D grid, which will be true if they vary in time but are constant in wavelength (or vice versa). One caveat is that multiple regression variables may be used with the Gordon et al. (2020) method by treating them as additional time series to be fit by the GP (see our discussion of Rajpaul et al. 2015 and Delisle et al. 2022 in Sect. 1).

2.4 Log-likelihood calculation for uniform white noise

The algorithms described in Chapter 5 of Saatchi (2011) allow for the calculation of Eq. (4) in ![$\[\boldsymbol{\mathcal{O}}\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq17.png) ((M + N)MN) time after an initial matrix decomposition step that scales as

((M + N)MN) time after an initial matrix decomposition step that scales as ![$\[\boldsymbol{\mathcal{O}}\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq18.png) (M3 + N3). This holds for any covariance matrix expressible in the form of Eq. (9).

(M3 + N3). This holds for any covariance matrix expressible in the form of Eq. (9).

To understand this method, first note that it takes advantage of Kronecker product structure to speed up matrix-vector products [A ⊗ B]c for an arbitrary MN long vector c using

![$\[\[\mathbf{A} \otimes \mathbf{B}] c=\operatorname{vec}\left(\mathbf{A C} \mathbf{B}^T\right),\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq19.png) (14)

(14)

where we reshape c into an M × N matrix with Cij = c(i−1)M + j and the vec() operator reverses this such that vec(C) = c.

As A ⊗ B is an MN × MN matrix, we would expect multiplication by a vector c to take ![$\[\boldsymbol{\mathcal{O}}\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq20.png) (M2 N2) operations to calculate in general. However, Eq. (14) takes

(M2 N2) operations to calculate in general. However, Eq. (14) takes ![$\[\boldsymbol{\mathcal{O}}\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq21.png) ((M + N)MN) operations. We also do not need to store the full matrix A ⊗ B in memory but instead just A and B separately, reducing the memory requirement from O(M2 N2) to

((M + N)MN) operations. We also do not need to store the full matrix A ⊗ B in memory but instead just A and B separately, reducing the memory requirement from O(M2 N2) to ![$\[\boldsymbol{\mathcal{O}}\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq22.png) (M2 + N2).

(M2 + N2).

We combine this with Eq. (8) and find that once we perform the ![$\[\boldsymbol{\mathcal{O}}\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq23.png) (M3 + N3) operations of computing K−1λ and K−1t then

(M3 + N3) operations of computing K−1λ and K−1t then

![$\[\mathbf{K}^{-1} \boldsymbol{r}=\left[\mathbf{K}_\lambda^{-1} \otimes \mathbf{K}_t^{-1}\right] \boldsymbol{r}\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq24.png) (15)

(15)

can be computed in O((M + N)MN) operations and using ![$\[\boldsymbol{\mathcal{O}}\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq25.png) (M2 + N2) memory.

(M2 + N2) memory.

While it would be sufficient to use any method such as Cholesky factorisation to compute K−1⊗ and K−1t, using the eigen-decomposition of each matrix permits white noise to be easily accounted for. We exploit a particular property of eigendecom-position that the addition of a constant to the diagonal of a matrix shifts the eigenvalues but leaves the eigenvectors unchanged. We denote the eigendecomposition of some matrix A as:

![$\[\mathbf{A}=\mathbf{Q}_{\mathbf{A}} \mathbf{\Lambda}_{\mathbf{A}} \mathbf{Q}_{\mathbf{A}}^T,\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq26.png) (16)

(16)

after which the inverse can be easily computed as:

![$\[\mathbf{A}^{-1}=\mathbf{Q}_{\mathbf{A}} \boldsymbol{\Lambda}_{\mathbf{A}}^{-1} \mathbf{Q}_{\mathbf{A}}^T \text {. }\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq27.png) (17)

(17)

For two matrices A and B it can be shown that

![$\[\(\mathbf{A} \otimes \mathbf{B})^{-1}=\left[\mathbf{Q}_{\mathbf{A}} \otimes \mathbf{Q}_{\mathbf{B}}\right]\left[\mathbf{\Lambda}_{\mathbf{A}} \otimes \mathbf{\Lambda}_{\mathbf{B}}\right]^{-1}\left[\mathbf{Q}_{\mathbf{A}}^T \otimes \mathbf{Q}_{\mathbf{B}}^T\right].\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq28.png) (18)

(18)

We can then add a white noise term to Eq. (18) simply by shifting the eigenvalues:

![$\[\begin{aligned}\mathbf{K}^{-1} \boldsymbol{r} & =\left(\mathbf{K}_\lambda \otimes \mathbf{K}_t+\sigma^2 \mathbb{I}_{M N}\right)^{-1} \boldsymbol{r} \\& =\left[\mathbf{Q}_\lambda \otimes \mathbf{Q}_t\right]\left[\boldsymbol{\Lambda}_\lambda \otimes \boldsymbol{\Lambda}_t+\sigma^2 \mathbb{I}_{M N}\right]^{-1}\left[\mathbf{Q}_\lambda^T \otimes \mathbf{Q}_t^T\right] \boldsymbol{r},\end{aligned}\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq29.png) (19)

(19)

where ![$\[\mathbb{I}_{MN}\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq30.png) represents the MN × MN identity matrix. We use Eq. (14) to multiply r by the eigenvector matrices Qλ and Qt. The middle term containing the product of eigenvalues is diagonal and so the inverse is easily calculated.

represents the MN × MN identity matrix. We use Eq. (14) to multiply r by the eigenvector matrices Qλ and Qt. The middle term containing the product of eigenvalues is diagonal and so the inverse is easily calculated.

Calculating log |K| can be performed in ![$\[\boldsymbol{\mathcal{O}}\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq31.png) (MN) time using:

(MN) time using:

![$\[\log |\mathbf{K}|=\sum_{i=1}^N \log \left(\left(\mathbf{\Lambda}_\lambda \otimes \mathbf{\Lambda}_t\right)_{i i}+\sigma^2\right).\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq32.png) (20)

(20)

2.5 Accounting for non-uniform white noise

The assumption of uniform white noise can be avoided by using a linear transformation presented in Rakitsch et al. (2013). Using this transformation, we can efficiently solve for the log-likelihood for any covariance matrix that is the sum of two Kronecker products – as described by Eq. (11).

First, we solve for the eigendecomposition of ∑λ and ∑t, notating this as follows:

![$\[\boldsymbol{\Sigma}_\lambda=\mathbf{Q}_{\mathbf{\Sigma}_\lambda} \boldsymbol{\Lambda}_{\boldsymbol{\Sigma}_\lambda} \mathbf{Q}_{\mathbf{\Sigma}_\lambda}^T,\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq33.png) (21)

(21)

![$\[\boldsymbol{\Sigma}_t=\mathbf{Q}_{\mathbf{\Sigma}_t} \boldsymbol{\Lambda}_{\boldsymbol{\Sigma}_t} \mathbf{Q}_{\boldsymbol{\Sigma}_t}^T.\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq34.png) (22)

(22)

In the case that ∑λ is diagonal then we simply have ∑λ = Λλ and ![$\[\mathbf{Q}_{\mathbf{\Sigma}_\lambda}=\mathbb{I}_N\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq35.png) (and similarly for ∑t).

(and similarly for ∑t).

We transform Kλ and Kt using these eigendecompositions:

![$\[\tilde{\mathbf{K}}_\lambda=\boldsymbol{\Lambda}_{\boldsymbol{\Sigma}_\lambda}^{-\frac{1}{2}} \mathbf{Q}_{\boldsymbol{\Sigma}_\lambda}^T \mathbf{K}_\lambda \mathbf{Q}_{\mathbf{\Sigma}_\lambda} \boldsymbol{\Lambda}_{\boldsymbol{\Sigma}_\lambda}^{-\frac{1}{2}},\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq36.png) (23)

(23)

![$\[\tilde{\mathbf{K}}_t=\mathbf{\Lambda}_{\mathbf{\Sigma}_t}^{-\frac{1}{2}} \mathbf{Q}_{\mathbf{\Sigma}_t}^T \mathbf{K}_t \mathbf{Q}_{\mathbf{\Sigma}_t} \mathbf{\Lambda}_{\mathbf{\Sigma}_t}^{-\frac{1}{2}}.\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq37.png) (24)

(24)

Doing this allows us to write our covariance matrix K as a product of three terms:

![$\[\mathbf{K}=\left[\mathbf{Q}_{\Sigma_\lambda} \boldsymbol{\Lambda}_{\mathbf{\Sigma}_\lambda}^{\frac{1}{2}} \otimes \mathbf{Q}_{\mathbf{\Sigma}_t} \boldsymbol{\Lambda}_{\mathbf{\Sigma}_t}^{\frac{1}{2}}\right]\left[\tilde{\mathbf{K}}_\lambda \otimes \tilde{\mathbf{K}}_t+\mathbb{I}_{\mathrm{MN}}\right]\left[\boldsymbol{\Lambda}_{\mathbf{\Sigma}_\lambda}^{\frac{1}{2}} \mathbf{Q}_{\mathbf{E}_\lambda}^T \otimes \boldsymbol{\Lambda}_{\mathbf{\Sigma}_t}^{\frac{1}{2}} \mathbf{Q}_{\mathbf{\Sigma}_t}^T\right].\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq38.png) (25)

(25)

Multiplying this out reproduces Eq. (11).

The inverse of this equation can be calculated to be:

![$\[\begin{aligned}\mathbf{K}^{-1}= & {\left[\mathbf{Q}_{\mathbf{\Sigma}_\lambda} \boldsymbol{\Lambda}_{\mathbf{\Sigma}_\lambda}^{-\frac{1}{2}} \otimes \mathbf{Q}_{\mathbf{\Sigma}_t} \boldsymbol{\Lambda}_{\mathbf{\Sigma}_t}^{-\frac{1}{2}}\right]\left[\tilde{\mathbf{K}}_\lambda \otimes \tilde{\mathbf{K}}_t+\mathbb{I}_{\mathrm{MN}}\right]^{-1} } \\& \times\left[\boldsymbol{\Lambda}_{\mathbf{\Sigma}_\lambda}^{-\frac{1}{2}} \mathbf{Q}_{\mathbf{\Sigma}_\lambda}^T \otimes \mathbf{\Lambda}_{\mathbf{\Sigma}_t}^{-\frac{1}{2}} \mathbf{Q}_{\mathbf{\Sigma}_t}^T\right].\end{aligned}\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq39.png) (26)

(26)

We can now solve K−1 r in three steps. K−1 is the product of three matrices, two of which are of the form A ⊗ B and so we can use Eq. (14). The middle matrix in this product is equivalent to Eq. (19) because we have a Kronecker product plus a constant added to the diagonal being multiplied by a vector. In this case, we need the eigendecomposition of the transformed covariance matrices ![$\[\tilde{\mathbf{K}}_\lambda\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq40.png) and

and ![$\[\tilde{\mathbf{K}}_t\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq41.png) and the ‘white noise’ term is set to σ = 1. The scaling has not changed significantly compared to the method with constant white noise although the eigendecomposition of four matrices instead of two is now required (although this is trivial if ∑λ and ∑t are diagonal).

and the ‘white noise’ term is set to σ = 1. The scaling has not changed significantly compared to the method with constant white noise although the eigendecomposition of four matrices instead of two is now required (although this is trivial if ∑λ and ∑t are diagonal).

log |K| is now computed as follows:

![$\[\log |\mathbf{K}|=\sum_{i=1}^{M N} \log \left(\left(\boldsymbol{\Lambda}_{\tilde{\mathbf{K}}_\lambda} \otimes \mathbf{\Lambda}_{\tilde{\mathbf{K}}_t}\right)_{i i}+1\right)+\log \left(\left(\boldsymbol{\Lambda}_{\boldsymbol{\Sigma}_\lambda} \otimes \mathbf{\Lambda}_{\mathbf{\Sigma}_t}\right)_{i i}\right).\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq42.png) (27)

(27)

The log-likelihood can now be solved for any covariance matrix that can be written in the form of Eq. (11) with an overall scaling of ![$\[\boldsymbol{\mathcal{O}}\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq43.png) (2M3 + 2N3 + MN(M + N)) operations and

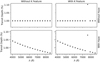

(2M3 + 2N3 + MN(M + N)) operations and ![$\[\boldsymbol{\mathcal{O}}\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq44.png) (M2 + N2) memory. Compared to a more general approach of performing Cholesky factorisation on the full MN × MN covariance matrix K, our optimised method provides greater than order of magnitude improvements in runtime for typical transmission spectroscopy datasets (shown in Sec. 4.5).

(M2 + N2) memory. Compared to a more general approach of performing Cholesky factorisation on the full MN × MN covariance matrix K, our optimised method provides greater than order of magnitude improvements in runtime for typical transmission spectroscopy datasets (shown in Sec. 4.5).

2.6 Efficient inference with large numbers of parameters

As multiple parameters are typically fit for each spectroscopic light curve, parameter inference can become computationally expensive when simultaneously fitting multiple light curves and a good choice of inference method may be required. Hamiltonian Monte Carlo (HMC) was chosen as it can scale significantly better when fitting large numbers of parameters compared to other more traditional MCMC methods such as Metropolis-Hastings or Affine-Invariant MCMC (Neal 2011). HMC can make use of the gradient of the log-likelihood to take longer trajectories through parameter space for each step of the MCMC compared to these other methods. Specifically, we use No U-Turn Sampling (NUTS) because it eliminates the need to hand-tune parameters while achieving a similar sampling efficiency to HMC (Hoffman & Gelman 2014).

To provide the gradients of the log-likelihood calculation for NUTS, the above algorithms were implemented in the Python package JAX (Bradbury et al. 2018). JAX has the capability of providing the values as well as the gradients of functions, often with minimal additional runtime cost. It has an implementation of NumPy that allows NumPy code to be converted into JAX code with limited alterations. It also allows the same code to be compiled to run on either a CPU or GPU – where GPUs may provide significant computational advantages. Novel analytic expressions (that were used in combination with JAX) for the gradients and Hessian of the log-likelihood were developed to aid numerical stability – as discussed in Appendices H and I – with numerical stability further discussed in Appendix J.

3 Testing on simulated data

As we do not know a priori if wavelength-correlated systematics are present in a real dataset, this could lead to challenging model selection problems deciding whether to fit the datasets with wavelength-independent 1D GPs or a 2D GP that can account for wavelength-correlated systematics. We will demonstrate using simulated data that 2D GPs can accurately account for both wavelength-independent and wavelength-correlated systematics. This avoids the need for model selection – which could become computationally intractable for large numbers of parameters and is also known to place a heavy reliance on the choice of priors4 (Llorente et al. 2023). For example, the 2D GP method can fit for a wavelength length scale lλ – with the log-likelihood varying significantly with lλ – while the 1D GP method does not. This would result in the favoured model (e.g. measured using the Bayesian evidence) being strongly dependent on the arbitrary choice of prior on lλ. This dependence on the choice of priors can be significantly reduced by avoiding model selection and instead marginalising over both wavelength-independent 1D GPs and wavelength-correlated 2D GPs. This can be performed simply by fitting with a 2D GP using a kernel such as from Eq. (2) and choosing a prior where lλ can go to small values where the 2D GP becomes equivalent to joint-fitting wavelength-independent 1D GPs. While the choice of prior on lλ will still affect how strongly the 1D and 2D GP methods are weighted, both methods are still marginalised over, reducing the importance of the choice of priors.

We aim to validate this approach by testing both methods across different sets of simulated data containing either wavelength-independent or wavelength-correlated systematics. We will show that 2D GPs can have an added benefit of sharing hyperparameters between light curves to better constrain systematics and improve accuracy. We also use these datasets to characterise how wavelength-correlated systematics may contaminate transmission spectra when fitting with 1D GPs.

Finally, we study how we can account for common-mode systematics – systematics that are constant in wavelength – using 2D GPs. Typically, these are accounted for using a separate common-mode correction step before fitting for the transmission spectrum (to be described in Sec. 3.9), we show that these systematics can be accounted for using a 2D GP while simultaneously retrieving the transmission spectrum.

To summarise, we will demonstrate four major points:

- (i)

Sharing hyperparameters between light curves can improve the reliability of results compared to individual 1D GP fits.

- (ii)

2D GPs can accurately account for wavelength-independent systematics by fitting for a wavelength length scale parameter.

- (iii)

When systematics are correlated in wavelength, 2D GPs can accurately retrieve transmission spectra while 1D GPs may retrieve erroneous results.

- (iv)

Common-mode systematics can be accounted for using 2D GPs without requiring a separate common-mode correction step.

Sections 3.1–3.5 will describe how the synthetic data were generated and analysed, with Sects. 3.6–3.9 explaining how the results demonstrate points (i), (ii), (iii) and (iv).

3.1 Light curve model

In this work, our light curve model is calculated using the equations of Mandel & Agol (2002) using quadratic limb-darkening parameters c1 and c2. The other parameters describing the light curve are the central transit time (T0), period (P), system scale (a/R*) and impact parameter (b) as well as the planet-to-star radius ratio (ρ = Rp/R*) we aim to measure. We chose a standard approach of using the transit depth (ρ2 = (Rp/R*)2) to fit each light curve (i.e. Rustamkulov et al. 2023; Alderson et al. 2023). We can also fit a linear baseline function to each light curve, which introduces the flux out-of-transit parameter (Foot) and a fit to the slope of the baseline flux (Tgrad).

Our mean function parameters therefore include five parameters that may be fit for each light curve independently (ρ2, c1, c2, Foot, Tgrad) and four parameters that are shared across all light curves (T0, P, a/R*, b). For the simulations, the transit depths ρ2 were the only mean function parameters being fit for to reduce runtimes for the large number of simulations (the other parameters were kept fixed to their injected values).

We note that the 1D GP method generated light curves using BATMAN (Kreidberg 2015) to fit the data while the 2D GP method used JAXOPLANET (Foreman-Mackey & Garcia 2023). Any differences in the light curves generated between the packages were orders of magnitude below the level of white noise being added. JAXOPLANET is a Python package that can generate transit light curves similar to BATMAN but is implemented in JAX. This allows for the calculation of gradients of the log-likelihood – as required to implement No U-Turn Sampling (NUTS).

3.2 Generating the exoplanet signal

Each simulated dataset contained 100 time points and 16 wavelength channels. The parameter ranges used for generating the exoplanet signal for all sets of simulations are included in Table 1. They are similar to the ranges chosen in Gibson (2014) and are designed to represent typical hot Jupiter transits.

The time range for these observations is [-0.1, 0.1] days sampled uniformly in time. For 100 time points this gives a cadence of approximately 3 min. This cadence is longer than many real observations – such as from VLT/FORS2 or JWST observations – but is still well-sampled. This was required to reduce runtimes and allow for the analysis of a large number of simulated datasets. The parameter ranges chosen resulted in the proportion of time spent in transit varying within the range [26%, 65%].

The wavelength range considered was [3850 Å, 8650 Å] which for 16 evenly separated wavelength bins gives a bin width of 300 Å. This wide wavelength range ensures light curves with a range of limb darkening parameters were included. Limb darkening parameters were calculated by first uniformly sampling a confirmed transiting exoplanet listed on the NASA Exoplanet Archive5 and selecting the listed host star parameters. These parameters were used to compute the limb darkening parameters using PyLDTk, allowing for a realistic range of stellar limb-darkening profiles (Parviainen & Aigrain 2015).

The synthetic transmission spectra were generated using a simple model with the aim of testing the retrieval of typical sharp and broad features within transmission spectra. The planet-to-star radius ratio was randomly drawn as described in Table 2. To study transmission spectra that may have a scattering slope or flat ‘grey’ cloud deck, there was a 50% chance of a Rayleigh-scattering slope being included and a 50% chance the spectrum was flat. The Rayleigh-scattering was implemented using Eq. (28) from Lecavelier Des Etangs et al. (2008):

![$\[\m=\frac{\mathrm{d} R_{\mathrm{p}} / R_*}{\mathrm{~d}(\ln \lambda)}=\frac{\alpha H}{R_*},\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq45.png) (28)

(28)

where m is the slope of the transmission spectrum, H is the scale height and α describes the strength of scattering (α = −4 for Rayleigh scattering).

For simplicity, m = 0 was used for a flat ‘cloudy’ model and m = −0.005 for Rayleigh scattering. For context, WASP-31b has a reported height scale of 1220 km and stellar radius of R* = 1.12 M⊙ (Sing et al. 2015; Anderson et al. 2011) which results in a predicted slope of m = −0.0063 for Rayleigh scattering.

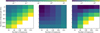

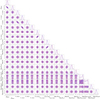

In order to compare how the different methods may constrain a sharp spectral feature such as a K feature (a sharp potassium absorption doublet at ~7681 Å), each dataset had a 50% probability of adding a change in radius ratio of Δρκ = 0.005 to the single wavelength bin which covers the wavelength range 7450 Å to 7750 Å. Overall this resulted in an even 25% probability of the spectrum being flat and featureless, hazy and featureless, flat with a K feature, or hazy with a K feature (see Fig. 1).

Mean function parameter ranges used for all simulations.

Transmission spectrum parameter ranges for all simulations.

|

Fig. 1 Four equally likely types of transmission spectra generated for a radius ratio of ρ = 0.1, showing the spectrum in transit depths. |

Parameter ranges used to generate systematics for Simulations 1–4.

3.3 Generating systematics

Five hundred synthetic transmission spectroscopy datasets (each containing 16 light curves) were generated for each of the four sets of simulations. All sets of simulations used the same transit signal model as described in Sec. 3.2. Parameter ranges used to generate systematics for Simulations 1–4 are included in Table 3. Systematics generated were multiplied by each of the transit models generated. We chose to multiply by the transit signal because many sources of systematics would be expected to be proportional to flux (i.e. from varying instrumental or atmospheric throughput).

For Simulation 1, noise was generated using Eq. (1) to build a covariance matrix and by taking random draws from it independently for each wavelength. These datasets therefore contained wavelength-independent systematics (equivalently this is the limit of Eq. (2) when lλ → 0). For Simulations 2 and 3, noise was generated using the kernel in Eq. (2) and both represent scenarios where wavelength-correlated systematics are present but with different ranges of wavelength length scales. Simulation 4 represents contamination from common-mode systematics – which are often encountered in real observations and are relevant to the VLT/FORS2 analysis in Sec. 4. For each simulated dataset, a single draw of correlated noise was generated using the kernel in Eq. (1) (with negligible white noise σ = 10−6 for numerical stability). All wavelength channels used this same draw of correlated noise so that it was constant in wavelength (which is the limit of Eq. (2) approached as lλ → ∞). White noise was then added to the full dataset from the parameter range in Table 3.

For examples of noise-contaminated light curves generated, three synthetic light curves from a Simulation 1 dataset are plotted in the left plot of Fig. 2, while all light curves from a Simulation 3 dataset are shown in the left plot of Fig. 5. Simulation 2 datasets would appear similar to Simulation 3 but with the systematics changing shape more significantly between different light curves. Simulation 4 datasets would have the same shape systematics in all light curves (but with different white noise values).

3.4 Inference methods

Each of the fitting methods were initialised with the true values used in the simulations. A global optimiser was then used to locate the best-fit values for the parameters being fit. MCMCs were initialised by perturbing them from this best-fit value and run until convergence was reached – as measured by the Gelman–Rubin statistic (Gelman & Rubin 1992) – or until a limit on the number of chains or samples was reached.

The 1D GP fits were performed using the GEEPEA (Gibson et al. 2012b) and INFERNO (Gibson et al. 2017) Python packages. GEEPEA was used for the implementation of the 1D GP with the Nelder-Mead simplex algorithm used to locate a best-fit value. Differential Evolution MCMC (DE-MC) was used to explore the posterior of each of the light curves using the implementation in INFERNO. Four parameters were fit for each MCMC: ρ2, h, lt and σ. One hundred independent chains were run in each MCMC with 200 burn-in steps and another 200 steps run for the chain. If any of the parameters had a GR statistic >1.01 then the chains were extended another 200 steps with this repeating up to a maximum chain length of 1000. This was sufficient to achieve formal convergence in over 99% of simulations performed.

In order to perform inference for the 2D GP fits, the log-likelihood calculation was implemented in JAX using the equations described in Sec. 2.5 and Appendix H. The Python package PYMC was used for its implementation of Limited-Memory BFGS for best-fits and NUTS for inference (Salvatier et al. 2016). Limited-Memory BFGS is a gradient-based optimisation method that can be used for models with large numbers (potentially thousands) of parameters (Liu & Nocedal 1989).

MCMC inference for the 2D GP method initially used two chains with 1000 burn-in steps each followed by another 1000 steps used for inference. Convergence was checked and if all parameters had not yet converged then another two chains were run. This was repeated until a maximum of ten chains were run. This was also sufficient to achieve convergence in over 99% of simulations performed.

For many MCMC methods, an approximation of the covariance matrix of the parameters being fit can be used as a transformation to reduce correlations between parameters and improve sampling efficiency. In NUTS, the so-called mass matrix performs this function (Hoffman & Gelman 2014). A Laplace approximation was performed to find an approximate covariance matrix, which requires calculating the Hessian of the log-posterior at the location of best-fit (see Appendix I). This was found to be significantly more efficient than using the samples from the burn-in period to approximate the covariance matrix.

To overcome a few other challenges in achieving convergence (see Appendix D for details), blocked Gibbs sampling (Jensen et al. 1995) was used to update some groups of parameters together. Slice sampling (Neal 2003) was also used if the time or wavelength length scales were within certain ranges close to a prior limit, as this could sometimes sample these parameters more efficiently than NUTS. Priors used for both 1D and 2D GP methods are described in Appendix C and were unchanged between Simulations 1–4.

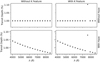

|

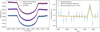

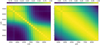

Fig. 2 Example of synthetic dataset analysed in Simulation 1 (containing wavelength-independent systematics) showing some simulated light curves (left) and recovered transmission spectrum (right). Left: the three shortest wavelength light curves in the dataset being fit by the transit model combined with a 2D GP noise model (the other 13 light curves were simultaneously fit but not plotted). Right: resulting transmission spectrum from the joint fit of all 16 light curves, with the three leftmost points corresponding to the light curves in the left plot. The recovered transmission spectrum was consistent with the injected spectrum ( |

3.5 Accuracy metrics

Multiple metrics were used to determine how accurately different methods could recover the injected transmission spectra. An example analysis of a single synthetic dataset from Simulation 1 is shown in Fig. 2, demonstrating both the fitting of the light curves and how the resulting transmission spectrum and atmospheric retrieval compares to the injected spectrum. We will study how accurately each method can extract the transmission spectrum in addition to the accuracy of the retrieved atmospheric parameters.

To measure the accuracy of the transmission spectra, we assumed that the uncertainty in the recovered transit depths was Gaussian. This implies that if we are accurately retrieving their mean and covariance, the reduced chi-squared ![$\[\chi_{\mathrm{r}}^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq47.png) of the injected values should be distributed as a

of the injected values should be distributed as a ![$\[\chi_{\mathrm{r}}^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq48.png) distribution with 16 degrees of freedom. We compute the

distribution with 16 degrees of freedom. We compute the ![$\[\chi_{\mathrm{r}}^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq49.png) of the injected transmission spectrum (accounting for covariance between transit depths) using:

of the injected transmission spectrum (accounting for covariance between transit depths) using:

![$\[\chi_{\mathrm{r}}^2=\left(\boldsymbol{\bar{\rho}}_{\text {ret }}^2-\boldsymbol{\rho}_{\text {inj }}^2\right)^T \mathbf{K}_{\boldsymbol{\rho}^2 \text {;ret }}^{-1}\left(\boldsymbol{\bar{\rho}}_{\text {ret }}^2-\boldsymbol{\rho}_{\text {inj }}^2\right) / M,\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq50.png) (29)

(29)

where ![$\[\bar{\rho}_{\text {ret }}^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq51.png) and

and ![$\[\mathbf{K}_{\rho^2; \text {ret }}\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq52.png) ;ret represent the retrieved mean and covariance of the transmission spectrum respectively (with ρ2 representing the transit depths).

;ret represent the retrieved mean and covariance of the transmission spectrum respectively (with ρ2 representing the transit depths). ![$\[\rho_{\mathrm{inj}}^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq53.png) represents the injected transmission spectrum and M is the number of light curves (M = 16 for all the simulations in this paper). As the 1D GP method fits each light curve independently, the covariance between all the transit depths is always assumed to be zero, resulting in the covariance matrices from the 1D GP method being diagonal. The 2D GP method will instead recover the full covariance matrix of transit depths from the MCMC.

represents the injected transmission spectrum and M is the number of light curves (M = 16 for all the simulations in this paper). As the 1D GP method fits each light curve independently, the covariance between all the transit depths is always assumed to be zero, resulting in the covariance matrices from the 1D GP method being diagonal. The 2D GP method will instead recover the full covariance matrix of transit depths from the MCMC.

Since a single synthetic dataset is not sufficient to study the accuracy of each method, we chose to generate 500 synthetic datasets for each set of simulations to provide a sufficient sample to test each method. By comparing how the resulting 500 ![$\[\chi_{\mathrm{r}}^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq54.png) values trace out the theoretical

values trace out the theoretical ![$\[\chi_{\mathrm{r}}^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq55.png) distribution, we can determine if a method is accurately recovering the uncertainty in the transmission spectrum. For M = 16 degrees of freedom,

distribution, we can determine if a method is accurately recovering the uncertainty in the transmission spectrum. For M = 16 degrees of freedom, ![$\[\chi_{\mathrm{r}}^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq56.png) has mean μ = 1 and variance σ2 = 2/M = 0.125. The mean

has mean μ = 1 and variance σ2 = 2/M = 0.125. The mean ![$\[\chi_{\mathrm{r}}^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq57.png) being significantly larger than one suggests our uncertainties are too small on average, while the mean being smaller than one suggests our uncertainties are too large on average. We should expect the sample variance of 500 samples from a

being significantly larger than one suggests our uncertainties are too small on average, while the mean being smaller than one suggests our uncertainties are too large on average. We should expect the sample variance of 500 samples from a ![$\[\chi_{\mathrm{r}}^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq58.png) distribution with 16 degrees of freedom to be distributed as 0.125 ± 0.009, if values diverge from this it may suggest the presence of outliers.

distribution with 16 degrees of freedom to be distributed as 0.125 ± 0.009, if values diverge from this it may suggest the presence of outliers.

We also performed one-sample Kolmogorov–Smirnov (K–S) tests on the distribution of recovered ![$\[\chi_{\mathrm{r}}^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq59.png) values, which is a method for testing if a set of samples follows a given distribution – in this case a

values, which is a method for testing if a set of samples follows a given distribution – in this case a ![$\[\chi_{\mathrm{r}}^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq60.png) distribution. It works by comparing the empirical cumulative distribution function (ECDF) of the samples to the cumulative distribution function (CDF) of the distribution chosen, returning the maximum deviation D from the desired CDF. The K-S statistic D for 500 samples and for a

distribution. It works by comparing the empirical cumulative distribution function (ECDF) of the samples to the cumulative distribution function (CDF) of the distribution chosen, returning the maximum deviation D from the desired CDF. The K-S statistic D for 500 samples and for a ![$\[\chi_{\mathrm{r}}^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq61.png) distribution with 16 degrees of freedom should have D < 0.060 at a p-value of 0.05 and D < 0.072 at a p-value of 0.01.

distribution with 16 degrees of freedom should have D < 0.060 at a p-value of 0.05 and D < 0.072 at a p-value of 0.01.

In addition to examining the ![$\[\chi_{\mathrm{r}}^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq62.png) values, we can also examine how accurately we can retrieve atmospheric features. For our simulations, there are three features of the injected transmission spectra: the radius ratio ρ = Rp/R*, slope m = d(Rp/R*)/d(ln λ) and change in radius ratio in the wavelength bin centred on the K feature Δρκ. For each simulation, these three features were determined by performing a simple atmospheric retrieval on the recovered transmission spectrum using the same atmospheric model, which was used to generate the injected spectra. We determined the mean and uncertainty for each of these features using an MCMC (also using the NUTS algorithm). We measured the number of standard deviations – or the Z-score – each prediction was away from the true injected value for all 500 synthetic datasets (used previously in e.g. Carter & Winn 2009; Gibson 2014; Ih & Kempton 2021). If we are robustly retrieving a given atmospheric parameter, we expect these 500 Z-scores to follow a Gaussian distribution with mean μ = 0 and variance σ2 = 1.

values, we can also examine how accurately we can retrieve atmospheric features. For our simulations, there are three features of the injected transmission spectra: the radius ratio ρ = Rp/R*, slope m = d(Rp/R*)/d(ln λ) and change in radius ratio in the wavelength bin centred on the K feature Δρκ. For each simulation, these three features were determined by performing a simple atmospheric retrieval on the recovered transmission spectrum using the same atmospheric model, which was used to generate the injected spectra. We determined the mean and uncertainty for each of these features using an MCMC (also using the NUTS algorithm). We measured the number of standard deviations – or the Z-score – each prediction was away from the true injected value for all 500 synthetic datasets (used previously in e.g. Carter & Winn 2009; Gibson 2014; Ih & Kempton 2021). If we are robustly retrieving a given atmospheric parameter, we expect these 500 Z-scores to follow a Gaussian distribution with mean μ = 0 and variance σ2 = 1.

If the mean Z-score is zero, it would demonstrate that the parameter is not biased towards larger or smaller values than the true values. The Z-score values having unit variance shows that the uncertainty estimates are accurate. If the variance is less than one then that suggests the error bars are too large on average which might result in a missed opportunity to detect an atmospheric feature. If the variance is greater than one then the error bars are too small on average, which could lead to false detections of signals.

If two methods are both shown to produce reliable uncertainties based on these metrics, then we can also study which method gives smaller uncertainties on the transmission spectrum. Some methods described can produce tighter constraints than others but at the cost of making stronger assumptions about the systematics in the data.

Tables of each accuracy metric for all methods tested are included in Appendix G.

3.6 Result (i): sharing hyperparameters can make constraints more reliable

Before fitting for wavelength-correlations, we first demonstrate that simply by joint-fitting spectroscopic light curves and sharing hyperparameters between light curves, we can more reliably constrain transmission spectra. This technique has already been demonstrated on ground-based observations of WASP-94Ab (Ahrer et al. 2022), but to examine reliability we use it on simulated datasets where the transmission spectrum is known.

For this section, we used the Simulation 1 data containing only wavelength-independent systematics. We first fit each synthetic dataset with individual 1D GPs that fit a separate transit depth ρ2, height scale h, time length scale lt and white noise amplitude σ to each individual light curve. The kernel in Eq. (1) was used for the GP (the same kernel used to generate the systematics). We compared this to joint-fitting all 16 spectroscopic light curves in each dataset, where we still fit for all 16 transit depths but used single shared values for h, lt and σ. This provides more information to constrain the values of the hyperparmeters and should produce better constraints than using wavelength-varying values. We note that this can be considered to be using a 2D GP as the kernel is a function of the two dimensions time and wavelength (the correlation in wavelength is given by the Kronecker delta ![$\[\delta_{\lambda_i \lambda_j}\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq63.png) ). We therefore performed this joint-fit identically to the 2D GP method described in Sec. 3.4 and used the same kernel function but fixed the wavelength length scale lλ to be negligible6. Comparing Eq. (1) and Eq. (2), it can be seen that both kernel functions are mathematically equivalent in the limit as lλ → 0. We therefore refer to this method as the Hybrid method as it shares the same kernel function as the 1D GP method but joint-fits light curves and can benefit from shared hyperparameters like the 2D GP method.

). We therefore performed this joint-fit identically to the 2D GP method described in Sec. 3.4 and used the same kernel function but fixed the wavelength length scale lλ to be negligible6. Comparing Eq. (1) and Eq. (2), it can be seen that both kernel functions are mathematically equivalent in the limit as lλ → 0. We therefore refer to this method as the Hybrid method as it shares the same kernel function as the 1D GP method but joint-fits light curves and can benefit from shared hyperparameters like the 2D GP method.

The Hybrid method had either equal or better performance compared to individual 1D GPs across every accuracy metric measured. For example, the distribution of ![$\[\chi_r^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq64.png) values for the 1D GPs had a mean of

values for the 1D GPs had a mean of ![$\[\bar{\chi}_r^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq65.png) = 1.35 ± 0.04, compared to

= 1.35 ± 0.04, compared to ![$\[\bar{\chi}_r^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq66.png) = 1.00 ± 0.02. Similarly, the Z-scores of all three atmospheric parameters studied had sample variances that were constrained to be greater than one for the 1D GP fits but consistent with one for the joint-fits.

= 1.00 ± 0.02. Similarly, the Z-scores of all three atmospheric parameters studied had sample variances that were constrained to be greater than one for the 1D GP fits but consistent with one for the joint-fits.

Our results suggest that 1D GPs underestimated the uncertainties of the transmission spectra on average, which may appear surprising given that the systematics in Simulation 1 were generated using 1D GPs with the exact same kernel function. The different results for the two methods appear to have been largely driven by a small number of outliers where individual light curves were fit to have much weaker systematics than was present. This did not happen with the joint-fits and since both methods have mathematically equivalent kernel functions, we interpret this result as the 1D GPs not having sufficient data to consistently fit the systematics. This could happen if an individual light curve happens to have systematics that have a similar shape to a transit dip, making it difficult to determine the amplitude of the systematics. The joint-fit can instead make use of more light curves to accurately constrain the systematics. We note that the 1D GP method had metrics consistent with ideal statistics for the 130 datasets with lt < 0.01 days, which is much shorter than the minimum transit duration of 0.052 days and therefore less likely to mimic a transit dip. We conclude that sharing hyperparameters can improve the reliability of data analyses by utilising more data to account for the systematics, verifying Result (i).

We note however that for real data we need to be careful with which parameters we assume are wavelength-independent. The amplitude of systematics and white noise could vary significantly across wavelength channels. For our VLT/FORS2 analysis in Sec. 4, we only assume that the length scales of correlated noise are the same across light curves, similar to Ahrer et al. (2022).

3.7 Result (ii): 2D GPs can account for wavelength-independent systematics

As stated at the beginning of Sect. 3, 2D GPs (with the kernel given in Eq. (2)) can be used to fit wavelength-independent systematics, meaning that there is no need to use model selection to determine whether to use 1D GPs or 2D GPs. We have already demonstrated that a 2D GP with a wavelength length scale fixed to negligible values (the Hybrid method) can accurately account for wavelength-independent systematics. For this test, we wanted to demonstrate that even if we fit for the wavelength length scale with a broad prior then we can retrieve similar results. This method has the benefit of avoiding the a priori assumption that systematics are wavelength-independent.

Our results showed no statistically significant difference in any accuracy metric measured between the Hybrid method and fitting for lλ with a 2D GP. Both methods showed ideal ![$\[\chi_r^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq67.png) statistics, based on both the mean and variance of the resulting

statistics, based on both the mean and variance of the resulting ![$\[\chi_r^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq68.png) distributions and the values of the K–S statistics. The Z-score of all retrieved atmospheric parameters were both consistent with the unit normal distribution. The average recovered transmission spectrum did have 2.4% larger uncertainties however so there may have been a minor loss in precision from the more conservative approach of fitting for the wavelength length scale.

distributions and the values of the K–S statistics. The Z-score of all retrieved atmospheric parameters were both consistent with the unit normal distribution. The average recovered transmission spectrum did have 2.4% larger uncertainties however so there may have been a minor loss in precision from the more conservative approach of fitting for the wavelength length scale.

We see that if systematics are wavelength-independent, a 2D GP can constrain the wavelength length scale to be sufficiently small to provide robust retrievals. This also has limited cost to the precision of the transmission spectrum compared to assuming the systematics are wavelength-independent a priori, confirming Result (ii).

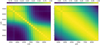

|

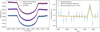

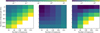

Fig. 3

|

3.8 Result (iii): 2D GPs can account for wavelength-correlated systematics

To study how accurately 1D GPs and 2D GPs can account for systematics correlated in both time and wavelength, we analysed Simulation 2 datasets that cover systematics correlated over wavelength length scales larger than one wavelength bin but smaller than half the wavelength range of the datasets. We fit all 500 datasets with 1D GPs and 2D GPs. However, in addition to having a different kernel to the 1D GP method, the 2D GP could also benefit from sharing hyperparameters (Result i). To isolate the effect of each of these differences, we also fit the Hybrid method to each dataset, which shares hyperparameters between light curves but uses a mathematically equivalent kernel to the 1D GP method.

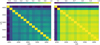

We found that only the 2D GP method had correctly distributed ![$\[\chi_r^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq74.png) values and Z-scores of atmospheric parameters with unit variance. The

values and Z-scores of atmospheric parameters with unit variance. The ![$\[\chi_r^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq75.png) distributions of each method are shown in Fig. 3. The Hybrid method produces a distribution of

distributions of each method are shown in Fig. 3. The Hybrid method produces a distribution of ![$\[\chi_{\mathrm{r}}^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq76.png) values with the correct mean but with greatly increased variance, while the 1D GP method performs similarly but with outliers producing a higher mean

values with the correct mean but with greatly increased variance, while the 1D GP method performs similarly but with outliers producing a higher mean ![$\[\chi_r^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq77.png) value (similar to Sec. 3.6). We interpret the result of the Hybrid method as demonstrating that the systematics in each individual light curve are being accurately described by the kernel function of the Hybrid method (resulting in the correct mean

value (similar to Sec. 3.6). We interpret the result of the Hybrid method as demonstrating that the systematics in each individual light curve are being accurately described by the kernel function of the Hybrid method (resulting in the correct mean ![$\[\chi_r^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq78.png) value) but the correlation between light curves is not being accounted for (increasing the variance of the

value) but the correlation between light curves is not being accounted for (increasing the variance of the ![$\[\chi_r^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq79.png) values). To understand this, consider fitting two light curves where it is incorrectly assumed that the systematics are independent, but the systematics are actually identical. If the systematics in one light curve are recovered with the correct systematics model but happen to result in a 2σ error in the measured transit depth, then the same measurement will occur in the other light curve. Both light curves analysed together would appear to result in two independent 2σ errors, resulting in a high

values). To understand this, consider fitting two light curves where it is incorrectly assumed that the systematics are independent, but the systematics are actually identical. If the systematics in one light curve are recovered with the correct systematics model but happen to result in a 2σ error in the measured transit depth, then the same measurement will occur in the other light curve. Both light curves analysed together would appear to result in two independent 2σ errors, resulting in a high ![$\[\chi_r^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq80.png) value. However, when we account for the fact that the two light curves are perfectly correlated, this is really only a single 2σ error with a smaller

value. However, when we account for the fact that the two light curves are perfectly correlated, this is really only a single 2σ error with a smaller ![$\[\chi_r^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq81.png) value. Similarly, when the error in transit depth is very small in one light curve (i.e. 0.1σ), it will be small in both light curves, resulting in a

value. Similarly, when the error in transit depth is very small in one light curve (i.e. 0.1σ), it will be small in both light curves, resulting in a ![$\[\chi_r^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq82.png) value that is too low. The effect of this is that the

value that is too low. The effect of this is that the ![$\[\chi_r^2\]$](/articles/aa/full_html/2024/06/aa47613-23/aa47613-23-eq83.png) distribution of two light curves can produce the correct mean but with increased variance when correlations between the light curves are not accounted for.

distribution of two light curves can produce the correct mean but with increased variance when correlations between the light curves are not accounted for.

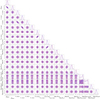

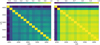

The 1D GP and Hybrid methods were found to poorly retrieve atmospheric parameters: on average the radius ratio and slope uncertainties were underestimated and the uncertainty in the strength of a K feature was overestimated. This is shown in Fig. 4, where it can be seen that the Z-scores have variance that is either too high or too low for the different features using these two methods. In contrast, the Z-scores for the 2D GP method are consistent with unit normal distributions.

To understand our interpretation of why the 1D GP kernel underestimates errors in broad features (such as the slope and radius ratio), but can overestimate errors for sharp features (such as the K feature), note that signals in data are most degenerate with systematics when both have similar length scales. For example, wavelength-independent systematics should be more degenerate with a sharp K feature compared to broader features. A slope in the transmission spectrum should be more degenerate with systematics which vary gradually in wavelength (i.e. with a long wavelength length scale). Both the 1D GP and Hybrid methods assume that the systematics are wavelength-independent, which could produce uncertainties that are too large when fitting the sharp K feature but too small for broader features. The 2D GP can constrain the systematics to have a longer wavelength length scale, which may increase the uncertainty in broader features while reducing uncertainty in sharp features. This result matches related work examining how different length scale correlations in exoplanet transmission or emission spectra affect atmospheric retrievals (Ih & Kempton 2021; Nasedkin et al. 2023) but it had yet to be demonstrated how these correlations can arise from wavelength-correlated systematics in the spectroscopic light curves.

3.9 Result (iv): 2D GPs can account for common-mode systematics