| Issue | A&A, Volume 655, November 2021 |
|---|---|
| Article Number | A44 |
| Number of page(s) | 21 |
| Section | Cosmology (including clusters of galaxies) |
| DOI | https://doi.org/10.1051/0004-6361/202141061 |
| Published online | 11 November 2021 |

Euclid preparation

XII. Optimizing the photometric sample of the Euclid survey for galaxy clustering and galaxy-galaxy lensing analyses

1

Institut de Ciencies de l’Espai (IEEC-CSIC), Campus UAB, Carrer de Can Magrans, s/n Cerdanyola del Vallés, 08193 Barcelona, Spain

e-mail: pocino@ice.csic.es

2

Institute of Space Sciences (ICE, CSIC), Campus UAB, Carrer de Can Magrans, s/n, 08193 Barcelona, Spain

3

Institut d’Estudis Espacials de Catalunya (IEEC), Carrer Gran Capitá 2-4, 08034 Barcelona, Spain

4

Department of Physics, The Ohio State University, Columbus, OH 43210, USA

5

Center for Cosmology and AstroParticle Physics, The Ohio State University, 191 West Woodruff Avenue, Columbus, OH 43210, USA

6

INFN-Sezione di Torino, Via P. Giuria 1, 10125 Torino, Italy

7

Dipartimento di Fisica, Universitá degli Studi di Torino, Via P. Giuria 1, 10125 Torino, Italy

8

INAF-Osservatorio Astrofisico di Torino, Via Osservatorio 20, 10025 Pino Torinese (TO), Italy

9

INAF-Osservatorio Astronomico di Roma, Via Frascati 33, 00078 Monteporzio Catone, Italy

10

AIM, CEA, CNRS, Université Paris-Saclay, Université de Paris, 91191 Gif-sur-Yvette, France

11

Mullard Space Science Laboratory, University College London, Holmbury St Mary, Dorking, Surrey RH5 6NT, UK

12

Université Paris-Saclay, CNRS, Institut d’astrophysique spatiale, 91405 Orsay, France

13

Instituto de Física Téorica UAM-CSIC, Campus de Cantoblanco, 28049 Madrid, Spain

14

School of Physics and Astronomy, Queen Mary University of London, Mile End Road, London E1 4NS, UK

15

Université St Joseph; UR EGFEM, Faculty of Sciences, Beirut, Lebanon

16

Institut de Recherche en Astrophysique et Planétologie (IRAP), Université de Toulouse, CNRS, UPS, CNES, 14 Av. Edouard Belin, 31400 Toulouse, France

17

INAF-Osservatorio Astronomico di Brera, Via Brera 28, 20122 Milano, Italy

18

Istituto Nazionale di Astrofisica (INAF) – Osservatorio di Astrofisica e Scienza dello Spazio (OAS), Via Gobetti 93/3, 40127 Bologna, Italy

19

IFPU, Institute for Fundamental Physics of the Universe, via Beirut 2, 34151 Trieste, Italy

20

SISSA, International School for Advanced Studies, Via Bonomea 265, 34136 Trieste TS, Italy

21

INFN, Sezione di Trieste, Via Valerio 2, 34127 Trieste TS, Italy

22

INAF-Osservatorio Astronomico di Trieste, Via G. B. Tiepolo 11, 34131 Trieste, Italy

23

Universidad de la Laguna, 38206 San Cristóbal de La Laguna, Tenerife, Spain

24

Instituto de Astrofísica de Canarias, Calle Vía Làctea s/n, 38204 San Cristóbal de la Laguna, Tenerife, Spain

25

INAF-Osservatorio di Astrofisica e Scienza dello Spazio di Bologna, Via Piero Gobetti 93/3, 40129 Bologna, Italy

26

Dipartimento di Fisica e Astronomia, Universitá di Bologna, Via Gobetti 93/2, 40129 Bologna, Italy

27

INFN-Sezione di Bologna, Viale Berti Pichat 6/2, 40127 Bologna, Italy

28

INAF-Osservatorio Astronomico di Padova, Via dell’Osservatorio 5, 35122 Padova, Italy

29

Universitäts-Sternwarte München, Fakultät für Physik, Ludwig-Maximilians-Universität München, Scheinerstrasse 1, 81679 München, Germany

30

Max Planck Institute for Extraterrestrial Physics, Giessenbachstr. 1, 85748 Garching, Germany

31

Université de Paris, CNRS, Astroparticule et Cosmologie, 75006 Paris, France

32

Department of Astronomy, University of Geneva, ch. d’Ecogia 16, 1290 Versoix, Switzerland

33

INFN-Sezione di Roma Tre, Via della Vasca Navale 84, 00146 Roma, Italy

34

Department of Mathematics and Physics, Roma Tre University, Via della Vasca Navale 84, 00146 Rome, Italy

35

INAF-Osservatorio Astronomico di Capodimonte, Via Moiariello 16, 80131 Napoli, Italy

36

Centro de Astrofísica da Universidade do Porto, Rua das Estrelas, 4150-762 Porto, Portugal

37

Instituto de Astrofísica e Ciências do Espaço, Universidade do Porto, CAUP, Rua das Estrelas, 4150-762 Porto, Portugal

38

INFN-Bologna, Via Irnerio 46, 40126 Bologna, Italy

39

Dipartimento di Fisica e Scienze della Terra, Universitá degli Studi di Ferrara, Via Giuseppe Saragat 1, 44122 Ferrara, Italy

40

INAF, Istituto di Radioastronomia, Via Piero Gobetti 101, 40129 Bologna, Italy

41

Université Côte d’Azur, Observatoire de la Côte d’Azur, CNRS, Laboratoire Lagrange, Bd de l’Observatoire, CS 34229, 06304 Nice cedex 4, France

42

Instituto de Astrofísica e Ciências do Espaço, Faculdade de Ciências, Universidade de Lisboa, Tapada da Ajuda, 1349-018 Lisboa, Portugal

43

Observatoire de Sauverny, Ecole Polytechnique Fédérale de Lausanne, 1290 Versoix, Switzerland

44

Department of Physics “E. Pancini”, University Federico II, Via Cinthia 6, 80126 Napoli, Italy

45

INFN section of Naples, Via Cinthia 6, 80126 Napoli, Italy

46

INAF-Osservatorio Astrofisico di Arcetri, Largo E. Fermi 5, 50125 Firenze, Italy

47

Centre National d’Etudes Spatiales, Toulouse, France

48

Institute for Astronomy, University of Edinburgh, Royal Observatory, Blackford Hill, Edinburgh EH9 3HJ, UK

49

Jodrell Bank Centre for Astrophysics, School of Physics and Astronomy, University of Manchester, Oxford Road, Manchester M13 9PL, UK

50

European Space Agency/ESRIN, Largo Galileo Galilei 1, 00044 Frascati, Roma, Italy

51

ESAC/ESA, Camino Bajo del Castillo, s/n., Urb. Villafranca del Castillo, 28692 Villanueva de la Cañada, Madrid, Spain

52

Univ Lyon, Univ Claude Bernard Lyon 1, CNRS/IN2P3, IP2I Lyon, UMR 5822, 69622 Villeurbanne, France

53

Aix-Marseille Univ, CNRS, CNES, LAM, Marseille, France

54

University of Lyon, UCB Lyon 1, CNRS/IN2P3, IUF, IP2I, Lyon, France

55

Departamento de Física, Faculdade de Ciências, Universidade de Lisboa, Edifício C8, Campo Grande, 1749-016 Lisboa, Portugal

56

Instituto de Astrofísica e Ciências do Espaço, Faculdade de Ciências, Universidade de Lisboa, Campo Grande, 1749-016 Lisboa, Portugal

57

Department of Physics, Oxford University, Keble Road, Oxford OX1 3RH, UK

58

INFN-Padova, Via Marzolo 8, 35131 Padova, Italy

59

Institute of Theoretical Astrophysics, University of Oslo, PO Box 1029 Blindern 0315 Oslo, Norway

60

School of Physics, HH Wills Physics Laboratory, University of Bristol, Tyndall Avenue, Bristol BS8 1TL, UK

61

INAF-IASF Milano, Via Alfonso Corti 12, 20133 Milano, Italy

62

Aix-Marseille Univ, CNRS/IN2P3, CPPM, Marseille, France

63

Department of Physics, PO Box 64 00014 University of Helsinki, Finland

64

Dipartimento di Fisica “Aldo Pontremoli”, Universitá degli Studi di Milano, Via Celoria 16, 20133 Milano, Italy

65

INFN-Sezione di Milano, Via Celoria 16, 20133 Milano, Italy

66

Jet Propulsion Laboratory, California Institute of Technology, 4800 Oak Grove Drive, Pasadena, CA 91109, USA

67

von Hoerner & Sulger GmbH, SchloßPlatz 8, 68723 Schwetzingen, Germany

68

Max-Planck-Institut für Astronomie, Königstuhl 17, 69117 Heidelberg, Germany

69

Department of Physics and Helsinki Institute of Physics, Gustaf Hällströmin katu 2, 00014 University of Helsinki, Finland

70

Université de Genève, Département de Physique Théorique and Centre for Astroparticle Physics, 24 quai Ernest-Ansermet, 1211 Genève 4, Switzerland

71

NOVA optical infrared instrumentation group at ASTRON, Oude Hoogeveensedijk 4, 7991PD Dwingeloo, The Netherlands

72

Argelander-Institut für Astronomie, Universität Bonn, Auf dem Hügel 71, 53121 Bonn, Germany

73

Institute for Computational Cosmology, Department of Physics, Durham University, South Road, Durham DH1 3LE, UK

74

Université de Paris, 75013 Paris, France

75

LERMA, Observatoire de Paris, PSL Research University, CNRS, Sorbonne Université, 75014 Paris, France

76

California Institute of Technology, 1200 E California Blvd, Pasadena, CA 91125, USA

77

INAF-IASF Bologna, Via Piero Gobetti 101, 40129 Bologna, Italy

78

Institut de Física d’Altes Energies (IFAE), The Barcelona Institute of Science and Technology, Campus UAB, 08193 Bellaterra (Barcelona), Spain

79

Institute of Cosmology and Gravitation, University of Portsmouth, Portsmouth PO1 3FX, UK

80

European Space Agency/ESTEC, Keplerlaan 1, 2201 AZ Noordwijk, The Netherlands

81

ICC&CEA, Department of Physics, Durham University, South Road, Durham DH1 3LE, UK

82

Department of Physics and Astronomy, University of Aarhus, Ny Munkegade 120, 8000 Aarhus C, Denmark

83

Perimeter Institute for Theoretical Physics, Waterloo, Ontario N2L 2Y5, Canada

84

Department of Physics and Astronomy, University of Waterloo, Waterloo, Ontario N2L 3G1, Canada

85

Centre for Astrophysics, University of Waterloo, Waterloo, Ontario N2L 3G1, Canada

86

Space Science Data Center, Italian Space Agency, via del Politecnico snc, 00133 Roma, Italy

87

Institute of Space Science, Bucharest 077125, Romania

88

Institute for Computational Science, University of Zurich, Winterthurerstrasse 190, 8057 Zurich, Switzerland

89

Dipartimento di Fisica e Astronomia “G.Galilei”, Universitá di Padova, Via Marzolo 8, 35131 Padova, Italy

90

Departamento de Física, FCFM, Universidad de Chile, Blanco Encalada 2008, Santiago, Chile

91

INFN-Sezione di Roma, Piazzale Aldo Moro, 2 – c/o Dipartimento di Fisica, Edificio G. Marconi, 00185 Roma, Italy

92

Institut d’Astrophysique de Paris, 98bis Boulevard Arago, 75014 Paris, France

93

Universidad Politécnica de Cartagena, Departamento de Electrónica y Tecnología de Computadoras, 30202 Cartagena, Spain

94

Kapteyn Astronomical Institute, University of Groningen, PO Box 800 9700 AV Groningen, The Netherlands

95

Department of Physics, PO Box 35 (YFL) 40014 University of Jyväskylä, Finland

96

Infrared Processing and Analysis Center, California Institute of Technology, Pasadena, CA 91125, USA

97

Department of Physics and Astronomy, University College London, Gower Street, London WC1E 6BT, UK

Received: 12 April 2021

Accepted: 5 July 2021

Photometric redshifts (photo-zs) are one of the main ingredients in the analysis of cosmological probes. Their accuracy particularly affects the results of the analyses of galaxy clustering with photometrically selected galaxies (GCph) and weak lensing. In the next decade, space missions such as Euclid will collect precise and accurate photometric measurements for millions of galaxies. These data should be complemented with upcoming ground-based observations to derive precise and accurate photo-zs. In this article we explore how the tomographic redshift binning and depth of ground-based observations will affect the cosmological constraints expected from the Euclid mission. We focus on GCph and extend the study to include galaxy-galaxy lensing (GGL). We add a layer of complexity to the analysis by simulating several realistic photo-z distributions based on the Euclid Consortium Flagship simulation and using a machine learning photo-z algorithm. We then use the Fisher matrix formalism together with these galaxy samples to study the cosmological constraining power as a function of redshift binning, survey depth, and photo-z accuracy. We find that bins with an equal width in redshift provide a higher figure of merit (FoM) than equipopulated bins and that increasing the number of redshift bins from ten to 13 improves the FoM by 35% and 15% for GCph and its combination with GGL, respectively. For GCph, an increase in the survey depth provides a higher FoM. However, when we include faint galaxies beyond the limit of the spectroscopic training data, the resulting FoM decreases because of the spurious photo-zs. When combining GCph and GGL, the number density of the sample, which is set by the survey depth, is the main factor driving the variations in the FoM. Adding galaxies at faint magnitudes and high redshift increases the FoM, even when they are beyond the spectroscopic limit, since the number density increase compensates for the photo-z degradation in this case. 
We conclude that there is more information that can be extracted beyond the nominal ten tomographic redshift bins of Euclid and that we should be cautious when adding faint galaxies into our sample since they can degrade the cosmological constraints.

Key words: galaxies: distances and redshifts / techniques: photometric / cosmological parameters / surveys

© ESO 2021

1. Introduction

The goal of Stage-IV dark energy surveys (Albrecht et al. 2006), such as Euclid (Laureijs et al. 2011) and the Vera C. Rubin Observatory Legacy Survey of Space and Time (Rubin-LSST; LSST Science Collaboration 2009), is to measure both the expansion rate of the Universe and the growth of structures up to redshift z ∼ 2 and beyond. These surveys will allow us to constrain a large variety of cosmological models using cosmological probes such as weak gravitational lensing (WL) and galaxy clustering. Stage-IV surveys can be classified into spectroscopic and photometric surveys, depending on whether the redshift of the observed objects is estimated with spectroscopy or using photometric techniques. The latter can provide measurements for many more objects than the former but at the expense of a degraded precision on the redshift estimates, given that photometric surveys observe through multi-band filters instead of observing the full spectral energy distribution, which requires more observational time. Because of this, galaxy clustering analyses are usually performed with data coming from spectroscopic surveys, while the data obtained from photometric surveys are generally used for WL analyses. However, given the current (and future) precision of our measurements, the signal we can extract from galaxy clustering analyses using photometric surveys is far from negligible (see e.g., Abbott et al. 2018; van Uitert et al. 2018; Euclid Collaboration 2020a; Tutusaus et al. 2020). Therefore, upcoming surveys can increase their constraining power if they optimize their photometric samples to include galaxy clustering studies in addition to WL analyses. The main aim of this work is to perform such an optimization study for the Euclid photometric sample.

The Euclid satellite will observe over a billion galaxies through an optical and three near-infrared broad bands. Given the specifications of the satellite, the combination of Euclid and ground-based surveys can enrich the science exploitation of both. On the one hand, the WL analysis of Euclid data requires accurate knowledge of the redshift distributions of the samples used for the analysis. Euclid photometric data alone cannot reach the necessary photometric redshift (photo-z) performance and additional ground-based data are required. On the other hand, Euclid will provide additional information to ground-based surveys, such as very precise shape measurements (thanks to the high spatial resolution achieved by observing from space, free of atmospheric distortions) and near-infrared spectroscopy. Euclid’s data will help ground-based surveys improve their deblending of faint objects and their photo-z estimates, which will boost their scientific outcome. Surveys where these synergies can be established include the Panoramic Survey Telescope and Rapid Response System (PanSTARRS; Chambers et al. 2016), the Canada-France Imaging Survey (CFIS; Ibata et al. 2017), the Hyper Suprime-Cam Subaru Strategic Program (HSC-SSP; Aihara et al. 2017), the Javalambre-Euclid Deep Imaging Survey (JEDIS), the Dark Energy Survey (DES; Dark Energy Survey Collaboration 2005), and Rubin-LSST (Ivezić et al. 2019). The latter is a Stage-IV experiment that is highly complementary to Euclid since it greatly overlaps in area, covers two Euclid deep fields, and reaches a faint photometric depth that will lead to better photo-z estimation (Rhodes et al. 2017; Capak et al. 2019). In this article we consider the addition of ground-based optical photometry to Euclid in order to assess the optimal photometric sample for galaxy clustering and galaxy-galaxy lensing (GGL) analyses.

The optimization of the sample of photometrically selected galaxies for galaxy clustering analyses has already been studied in the literature. Tanoglidis et al. (2019) focus their analysis on galaxy clustering for the first three years of DES data. Also for DES but including galaxy-galaxy lensing, Porredon et al. (2021) study lens galaxy sample selections based on magnitude cuts as a function of photo-z, balancing density and photo-z accuracy to optimize cosmological constraints in the wCDM space. Another example is the recent analysis of Eifler et al. (2021) on the Nancy Grace Roman Space Telescope (Spergel et al. 2015) High Latitude Survey (HLS), where the authors simulate and explore multi-probe strategies for dark energy and modified gravity to study observational systematics, such as photo-z. These studies show the importance of optimizing the galaxy sample for galaxy clustering analysis. We aim to perform a similar optimization for the Euclid mission. We note that there have also been several studies optimizing the spectroscopic sample for galaxy clustering analysis with Euclid (Samushia et al. 2011; Wang et al. 2010).

We want to optimize the Euclid sample of galaxies detected with photometric techniques by performing realistic forecasts of its cosmological performance and observing the improvement on the cosmological constraining power of different galaxy samples. When performing galaxy clustering analyses with a photometric sample there are several effects that need to be taken into account such as galaxy bias, photo-z uncertainties, or shot noise, among other effects. Here, we try to follow the procedures one would perform in a real data analysis when selecting the samples for the analysis. For that purpose, we use the Euclid Flagship simulation (Euclid Collaboration, in prep.; Potter et al. 2017). For a given expected limit of the photometric depth, we select the galaxies included within that magnitude limit and use a machine learning photo-z method to study the optimal way to split the catalog into subsamples for the analysis. We generate realistic redshift distributions, n(z), for the chosen subsamples and estimate their galaxy bias, b(z). We study the constraining power of these samples when we modify the number and width of the tomographic bins, and when we reduce the sample size by performing a series of cuts in magnitude.

The article is organized as follows. We present Euclid and ground-based surveys in Sects. 2 and 3, respectively. In Sect. 4, we introduce the Flagship simulation and describe how we create photometric samples with different selection criteria. We define the set of galaxy samples that will be used throughout the article and explain how we estimate the photometric redshifts. In Sect. 5, we detail the forecast formalism and we describe the cosmological model in Sect. 6. In Sect. 7, we present the results of the optimization when changing the number and type of tomographic bins, and we study the dependency of the cosmological constraints on photo-z quality and sample size. Finally, we present our conclusions in Sect. 8.

2. The Euclid survey

Euclid is a European Space Agency (ESA) M-class space mission due for launch in 2022. In the wide survey, it will cover over 15 000 deg² of the extra-galactic sky with the main aim of measuring the geometry of the Universe and the growth of structures up to redshift z ∼ 2 and beyond. Euclid will have two instruments on-board: a near-infrared spectro-photometer (Costille et al. 2018) and an imager at visible wavelengths (Cropper et al. 2018). The imager of Euclid, called VIS, will observe galaxies through an optical broad band, mVIS, covering a wavelength range between 540 and 900 nm, with a magnitude depth of 24.5 at 10σ for extended sources. The spectro-photometric instrument, called NISP, has three near-infrared bands, YJH, covering a wavelength range between 920 and 2000 nm (Racca et al. 2016, 2018). The nominal survey exposure is expected to reach a magnitude depth of 24 at 5σ for point sources. If we convert this depth to a 10σ detection level for extended sources we obtain a magnitude depth of about 23, which is the value we consider in Table 1. The deep survey will cover 40 deg² divided into three different fields: the Euclid Deep Field North and the Euclid Deep Field Fornax of 10 deg² each, and the Euclid Deep Field South of 20 deg² (Euclid Collaboration, in prep.). In these fields, the magnitude depth will be two magnitudes deeper than in the wide survey. With its two instruments, Euclid will perform both a spectroscopic and a photometric galaxy survey that will allow us to determine cosmological parameters using its three main cosmological probes: galaxy clustering with the spectroscopic sample (GCs), galaxy clustering with the photometric sample (GCph), and WL. We study how the selection of the galaxy sample that enters into the analysis can be optimized to provide the tightest cosmological constraints, focusing on the GCph analysis and its cross-correlation with WL, also called GGL.

Table 1. Limiting coadded depth magnitudes for extended sources at 10σ used in each sample.

3. Ground-based surveys

The single broad band VIS of Euclid cannot sample the spectral energy distribution in the optical range. Euclid will require complementary observations in the optical from ground-based surveys to provide the photometry needed to estimate accurate photometric redshifts and achieve the scientific goals of Euclid. Several ground-based surveys will be needed to cover all the observed area of Euclid, as Euclid covers both celestial hemispheres and those cannot be reached from a single observatory on Earth. The ground-based complementary data will not cover the Euclid footprint uniformly. It is very likely that there will be at least three distinct areas in terms of photometric data available. The southern hemisphere is expected to be covered with Rubin-LSST data, while the northern hemisphere will be covered with a combination of surveys such as CFIS, PanSTARRS, JEDIS, and HSC-SSP. In addition, some area north of the equator may also be covered by Rubin-LSST at a shallower depth than in the southern hemisphere. In this work we include simulated ground-based photometry that tries to encompass the range of possible ground-based depths that the Euclid analysis will have, from the deepest Rubin-LSST data to the shallower data from other surveys.

Rubin-LSST is expected to start operations in 2022 and over ten years it will observe over 20 000 deg² in the southern hemisphere with six optical bands, ugrizy, covering a wavelength range from 320 to 1050 nm. The idealized final magnitude depths of the coadded images for 5σ point sources are 26.1, 27.4, 27.5, 26.8, 26.1, and 24.9 for ugrizy, respectively, based on the Rubin-LSST design specifications (Ivezić et al. 2019). Among other scientific themes, Rubin-LSST has been designed to study dark matter and dark energy using WL, GCph, and supernovae as cosmological probes. The Rubin-LSST survey will provide the best photometry for Euclid-detected galaxies at the time that Euclid data become available.

Another suitable ground-based candidate to cover the optical and near-infrared range in the southern sky is the DES photometric survey. DES completed observations in 2019 after a six-year program. It covered 5000 deg² around the southern Galactic cap through five broad band filters, grizy, with wavelengths ranging from 400 to 1065 nm, and reaching redshifts up to 1.4 (Dark Energy Survey Collaboration 2016). The median coadded limiting magnitudes at 10σ in a 2″ diameter aperture are 24.3, 24.0, 23.3, and 22.6 for griz, respectively. These depths correspond to the published values of the first three years of observations (Sevilla-Noarbe et al. 2021).

4. Generating realistic photometric galaxy samples

The cosmological constraining power of Euclid will depend on the external data available as it will dictate the photo-z performance of the samples to be studied. In order to study the impact of the available photometry, we create six samples selected with different photometric depths. For each sample, we compute the photo-z estimates using machine learning techniques taking into account the expected spectroscopic redshift distribution of the training sample. We use these photo-z estimates to split each sample into tomographic bins for which we can compute their photo-z distributions and galaxy bias from the simulation. These n(z) and b(z) are then used to forecast the cosmological performance. In this section, we provide a detailed description of how we obtain the realistic photo-z estimates of the Euclid galaxies that are later used in the forecast. We first present the cosmological simulation used to extract the photometry and the galaxy distributions. We then explain how we generate realizations of the photometry for the simulated galaxies taking into account the expected depth of the Euclid and ground-based data. We finally present the method used to estimate the photo-z.
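The tomographic split mentioned above can be illustrated with a minimal sketch that contrasts the two binning schemes compared in this work: bins of equal width in redshift and equipopulated bins. The function names, the toy photo-z values, the redshift range, and the number of bins are hypothetical choices made for illustration only; they are not the configuration used in the forecasts.

```python
def equal_width_edges(z_min, z_max, n_bins):
    """Bin edges with the same width in redshift."""
    width = (z_max - z_min) / n_bins
    return [z_min + k * width for k in range(n_bins + 1)]


def equipopulated_edges(photo_z, n_bins):
    """Bin edges chosen so that each bin holds (nearly) the same number of galaxies."""
    z_sorted = sorted(photo_z)
    n = len(z_sorted)
    # Interior edges sit at the k/n_bins quantiles of the photo-z sample.
    interior = [z_sorted[(k * n) // n_bins] for k in range(1, n_bins)]
    return [z_sorted[0]] + interior + [z_sorted[-1]]


def assign_bins(photo_z, edges):
    """Tomographic bin index of each galaxy; the last bin is closed on the right."""
    n_bins = len(edges) - 1
    indices = []
    for z in photo_z:
        k = 0
        while k < n_bins - 1 and z >= edges[k + 1]:
            k += 1
        indices.append(k)
    return indices


def counts_per_bin(indices, n_bins):
    """Number of galaxies falling in each tomographic bin."""
    counts = [0] * n_bins
    for k in indices:
        counts[k] += 1
    return counts


# Toy photo-z sample (hypothetical): 1000 values skewed toward low redshift.
photo_z = [2.0 * (i / 999) ** 2 for i in range(1000)]
n_bins = 4

equal_counts = counts_per_bin(
    assign_bins(photo_z, equal_width_edges(0.0, 2.0, n_bins)), n_bins
)
equipop_counts = counts_per_bin(
    assign_bins(photo_z, equipopulated_edges(photo_z, n_bins)), n_bins
)
print("equal width  :", equal_counts)
print("equipopulated:", equipop_counts)
```

In this toy case the equal-width bins are very unevenly populated, whereas the equipopulated bins trade uniform shot noise for unequal redshift widths.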

4.1. The Flagship simulation

We consider the Flagship galaxy mock catalog of the Euclid Consortium (Euclid Collaboration, in prep.) to create the different samples. The catalog uses the Flagship N-body dark matter simulation (Potter et al. 2017). Dark matter halos are identified using ROCKSTAR (Behroozi et al. 2013) and are retained down to a mass of 2.4 × 10¹⁰ h⁻¹ M⊙, which corresponds to ten particles. Galaxies are assigned to dark matter halos using halo abundance matching (HAM) and halo occupation distribution (HOD) techniques. The cosmological model assumed in the simulation is a flat ΛCDM model with fiducial values Ωm = 0.319, Ωb = 0.049, ΩΛ = 0.681, σ8 = 0.83, ns = 0.96, h = 0.67. The N-body simulation ran in a 3.78 h⁻¹ Gpc box with particle mass mp = 2.398 × 10⁹ h⁻¹ M⊙. The galaxy mock generated has been calibrated using local observational constraints, such as the luminosity function from Blanton et al. (2003) and Blanton et al. (2005a) for the faintest galaxies, the galaxy clustering measurements as a function of luminosity and color from Zehavi et al. (2011), and the color-magnitude diagram as observed in the New York University Value-Added Galaxy Catalog (Blanton et al. 2005b). The catalog contains about 3.4 billion galaxies over 5000 deg² and extends up to redshift z = 2.3.

For this study, we select an area of 402 deg², which corresponds to galaxies within the range of right ascension 15° < α < 75° and declination 62° < δ < 90°. All the photometric galaxy distributions obtained in this patch are extrapolated to the 15 000 deg² of sky that Euclid is expected to observe. The selected area is large enough to minimize the impact of sample variance, but small enough to allow for the production of several galaxy samples in a reasonable amount of time. After the photometric uncertainty is added to the photometry of each galaxy, we perform a magnitude cut at mVIS < 25 that leads to a number density of about 41.5 galaxies per arcmin².
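As a quick consistency check of the numbers quoted above, the solid angle of a patch bounded in right ascension and declination is Δα (sin δmax − sin δmin). The short sketch below evaluates it for the quoted limits and derives the area scaling factor to 15 000 deg². The function and variable names are hypothetical, and the galaxy count is simple arithmetic on the quoted number density rather than a value taken from the catalog.

```python
import math


def patch_area_deg2(ra_min, ra_max, dec_min, dec_max):
    """Solid angle in square degrees of a patch bounded in RA and Dec (degrees)."""
    d_ra = math.radians(ra_max - ra_min)
    d_sin_dec = math.sin(math.radians(dec_max)) - math.sin(math.radians(dec_min))
    steradians = d_ra * d_sin_dec
    return steradians * (180.0 / math.pi) ** 2


area_deg2 = patch_area_deg2(15.0, 75.0, 62.0, 90.0)

# Number density after the mVIS < 25 cut, as quoted in the text.
density_per_arcmin2 = 41.5
galaxies_in_patch = density_per_arcmin2 * 3600.0 * area_deg2

# Factor by which counts in the patch are scaled to the full wide survey.
scale_factor = 15000.0 / area_deg2

print(f"patch area        : {area_deg2:.1f} deg^2")
print(f"galaxies in patch : {galaxies_in_patch:.2e}")
print(f"area scale factor : {scale_factor:.1f}")
```

The computed area agrees with the quoted 402 deg², and the patch holds roughly 6 × 10⁷ galaxies at the quoted density.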

4.2. Photometric depth

Each galaxy observation leads to a measured value of its magnitude and its associated error. The magnitude depth is usually given as the magnitude at which the median relative error has a particular value. In galaxy surveys it is customary to express the depth at a signal-to-noise of ten for extended objects, that is, when the value of the noise is one tenth of its signal. As explained in detail below, we generate realizations of the photometric errors for a given survey taking into account its magnitude depth and scaling the values of the errors at other magnitudes assuming background limited observations, that is, that the background signal dominates the contribution to the error.

We simulate four different photometric survey depths. Table 1 shows their magnitude limits. The first column corresponds to a combination of Euclid and ground-based photometric depth expected to be achieved in the southern hemisphere. We label this case as optimistic and it is the deepest case we study. The magnitude limits for the optical bands are for extended sources at 10σ, similar to those expected from Rubin-LSST (LSST Science Collaboration 2009). The values for Euclid correspond to a 10σ detection level for extended sources. In addition to the magnitude limits expected in the south, we also want to investigate how the cosmological constraints degrade as the depth is reduced. We investigate three other cases. First, a case where the depth in the optical bands is reduced by a factor of two in signal-to-noise ratio. The second column shows the magnitude limits for this case, where the optical bands are reduced by 0.75 magnitudes. This column represents a possible case where the Rubin-LSST data have a reduced depth in areas outside its main footprint. Secondly, we study a case where the limiting fluxes of Euclid are brightened by 0.75 magnitudes, shown in the third column. Lastly, we explore a case where the ground-based data are degraded by a factor of five in signal-to-noise but the Euclid space data remain at their nominal depth values. This broadly represents the depth that can be achieved from other ground-based data in the northern hemisphere.
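The correspondence between a signal-to-noise degradation and a shift of the limiting magnitude follows from the definition of magnitudes, Δm = 2.5 log10(factor), when the noise level is fixed. The sketch below (the function name is hypothetical) recovers the 0.75 magnitudes quoted for a factor of two; the value for a factor of five is computed here and is not quoted in the text.

```python
import math


def depth_shift_mag(snr_factor):
    """Magnitudes by which the limiting depth becomes shallower when the
    signal-to-noise ratio at fixed flux drops by `snr_factor`."""
    return 2.5 * math.log10(snr_factor)


shift_factor_two = depth_shift_mag(2.0)
shift_factor_five = depth_shift_mag(5.0)

print(f"factor 2 in S/N -> {shift_factor_two:.2f} mag")
print(f"factor 5 in S/N -> {shift_factor_five:.2f} mag")
```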

For each survey case, we generate a galaxy catalog drawn from the Flagship simulation. We assign observed magnitudes and errors with the following procedure. First, we compute the expected error for each galaxy, taking into account its magnitude in the Flagship catalog and the magnitude limit of the survey as given in Table 1. We assume that the observations are sky limited (the noise is dominated by the shot noise of the sky) and therefore we scale the ratio of the signal-to-noise between two galaxies i and j as the ratio of their fluxes

$$\frac{(S/N)_i}{(S/N)_j} = \frac{f_i}{f_j}, \tag{1}$$

where $f_i$ is the observed flux of galaxy i detected at signal-to-noise ratio $(S/N)_i$. The magnitude (flux) limits in Table 1 give us the fluxes corresponding to a signal-to-noise ratio of ten, $f_{10\sigma}$, and therefore we can compute the expected signal-to-noise at which a galaxy of a given magnitude is detected as

$$(S/N)_i = 10\,\frac{f_i}{f_{10\sigma}}. \tag{2}$$

Using the definition of signal-to-noise, $(S/N)_i = f_i/\Delta f_i$, we can compute the expected flux error for each galaxy as

$$\Delta f_i = \frac{f_i}{(S/N)_i} = \frac{f_{10\sigma}}{10}. \tag{3}$$

The fluxes in the Flagship catalog correspond to the real fluxes of each galaxy. Whenever we observe these galaxies in a given survey, we detect a realization of the real flux. For our study, we generate realizations of the observed fluxes $f_i^{\rm obs}$ for each survey as

$$f_i^{\rm obs} = f_i + N\,\Delta f_i, \tag{4}$$

where N is a random number from a normal distribution (zero mean, unit variance). We then assign errors to the resulting fluxes according to Eq. (3). Finally, the new fluxes and their assigned errors are converted into magnitudes and their respective magnitude errors.
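The procedure above can be sketched in a few lines, under stated assumptions. In the sky-limited regime the flux error is the same for every galaxy and equals one tenth of the 10σ limiting flux; the observed flux is the true flux plus a Gaussian draw scaled by that error. The function names, the arbitrary flux zero point, the first-order conversion of the flux error into a magnitude error, and the treatment of non-positive fluxes are all illustrative assumptions, not the pipeline used for the samples in this work.

```python
import math
import random


def flux_from_mag(mag):
    """Flux in arbitrary units (hypothetical zero point of 0 mag)."""
    return 10.0 ** (-0.4 * mag)


def mag_from_flux(flux):
    """Inverse of flux_from_mag."""
    return -2.5 * math.log10(flux)


def expected_snr(true_mag, mag_limit_10sigma):
    """Sky-limited signal-to-noise: ten times the flux ratio to the 10-sigma limit."""
    return 10.0 * flux_from_mag(true_mag) / flux_from_mag(mag_limit_10sigma)


def observe(true_mag, mag_limit_10sigma, rng):
    """Return one noisy (magnitude, magnitude error) realization, or None."""
    f_true = flux_from_mag(true_mag)
    # Flux error: one tenth of the 10-sigma limiting flux, independent of the galaxy.
    delta_f = flux_from_mag(mag_limit_10sigma) / 10.0
    # Observed flux: true flux plus a unit-variance Gaussian draw times the error.
    f_obs = f_true + rng.gauss(0.0, 1.0) * delta_f
    if f_obs <= 0.0:
        # Assumption: a non-positive flux has no defined magnitude and is dropped.
        return None
    mag_obs = mag_from_flux(f_obs)
    # Assumption: first-order error propagation, dm = (2.5 / ln 10) * df / f.
    mag_err = (2.5 / math.log(10.0)) * delta_f / f_obs
    return mag_obs, mag_err


rng = random.Random(42)
for true_mag in (22.0, 24.0, 24.5):
    print(true_mag, expected_snr(true_mag, 24.5), observe(true_mag, 24.5, rng))
```

A galaxy exactly at the limiting magnitude has an expected signal-to-noise of ten, which in this first-order approximation corresponds to a magnitude error of about 0.11.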

4.3. Samples

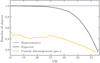

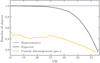

We estimate the expected cosmological constraints using the galaxy clustering analysis of tomographic bins defined with photo-z (see Sect. 5). The magnitude limit of a given sample will give us the galaxies that form the overall sample, while the photo-z algorithm will split that sample into tomographic bins and will provide an estimate of the redshift distributions within these tomographic bins. We can better understand the uncertainties in the method using simulations where we know the true redshift distributions. So far, we have defined four different samples based on the available photometry representing the four cases defined in Table 1. The photo-z performance depends on the photometric depth and the spectroscopic data available to train the method. Now, we generate study cases depending on the spectroscopic data available to train the photo-z. We use three different spectroscopic samples with different completeness profiles as a function of magnitude. First, we consider an idealized case where the spectroscopic training sample is a random subsample of the whole sample and thus it is fully representative (blue line in Fig. 1). Secondly, we consider a case where the spectroscopic sample completeness as a function of magnitude follows the expectations from spectrographs on 8-m class telescopes (Newman et al. 2015). This case is shown in black in Fig. 1. This is intended to mimic the spectroscopic incompleteness as a function of magnitude of surveys such as zCOSMOS (Lilly et al. 2007), VVDS (Le Févre et al. 2013), and DEEP2 (Newman et al. 2013) at least in its shape, although it may be optimistic in its normalization. Finally, we consider a case where the spectroscopic completeness is similar to that of the currently available spectroscopic surveys, such as those listed in Gschwend et al. (2018). We compute how the completeness in spectroscopic data as a function of redshift translates into completeness in mVIS (orange line in Fig. 1). These cases are explained in more detail later in this section. It is worth mentioning that we only consider galaxies and not stars in the samples under study. With the high spatial resolution of Euclid, the contamination in the sample due to stars is expected to be minimal. We have also assumed that the effects of Galactic extinction are corrected in the data reduction pipelines and therefore ignore Galactic extinction. These factors can be included in the future to add another layer of realism to the analysis.

Fig. 1. Fraction of simulated objects with successful spectroscopic redshift as a function of mVIS. The lines represent the completeness fraction of the spectroscopic training samples. The blue line corresponds to the fraction of objects for a random training subsample that is fully representative of the sample under study. In black we show an expectation of the spectroscopic completeness for future ground-based surveys in mVIS (see text). In orange we present the completeness of a training sample with an n(z) similar to the currently available spectroscopic data (see text). The number of objects included in each training set is not represented by the normalization of the different curves in this figure (see Fig. 2 for the redshift distributions). Although our photometric samples go up to mVIS = 25, we cut the spectroscopic training samples at mVIS < 24.5 because realistic redshifts have not been reliably determined beyond that magnitude limit yet.

We combine the four cases of photometric limits with the three cases of different spectroscopic data available to train the photo-z techniques to generate six galaxy samples for our study. With these six samples we aim to encompass a wide range of scenarios and to understand how the cosmological constraints vary depending on the sample available. These combinations of photometric limits and spectroscopic data are chosen to cover the most probable options that will be available with future data. We detail these six cases in the following subsections. Table 2 summarizes all the cases we consider. All our samples have galaxies down to a magnitude limit of mVIS = 25. For our shallower survey (fourth column in Table 1), galaxies near this mVIS selection limit have larger errors. It is also important to mention that in all cases we assume the magnitude limit in each band to be isotropic, that is, homogeneous on the sky. This will definitely not be the case for Euclid, since ground-based data will consist of a compilation of different surveys pointing at different regions of the sky, with different depths and systematic uncertainties. For instance, Rubin-LSST focuses on the southern hemisphere, while Euclid will also observe the northern one. A more detailed analysis taking into account the depth anisotropy of the ground-based data is left for future work. A possible approach would be to generate several sets of ground-based photometry according to the specific limitations of each ground-based instrument and region of the sky covered, in order to reproduce the expected anisotropy of the photometry. Then we would mix the different sets of ground-based photometry, add them to the Euclid photometry in order to determine the photometric redshifts, and redo the optimization analysis as performed in this article.

Cases under study.

4.3.1. Case 1: Optimistic

This case uses the deepest magnitude limit and a highly idealized spectroscopic training sample. The sample has magnitudes and errors generated as described in Sect. 4.2 with the Euclid and ground-based photometric depth limits shown in the first column of Table 1. The photo-z are estimated using a training set that is a complete and representative subsample in both redshift and magnitude of the whole sample.

4.3.2. Case 2: Fiducial

We take this case to be our fiducial sample. We use the deepest photometry as in the optimistic case 1, but the photo-z estimation now makes use of a training sample that has a completeness drop at faint magnitudes that resembles the incompleteness of spectroscopic surveys carried out with spectrographs in 8-m class telescopes such as Rubin-LSST (see Newman et al. 2015). We show the completeness drop in the spectroscopic training sample in Fig. 1 (black line). While the completeness as a function of magnitude is intended to be representative of current spectroscopic capabilities, we make the simplifying assumption that this incompleteness does not depend on any galaxy property except its magnitude. We therefore randomly subsample the whole distribution, taking into account only the probability of being selected based on the galaxy magnitude.
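The magnitude-only subsampling described above can be sketched as follows. This is a minimal illustration, not the pipeline used in the paper: the logistic completeness curve and its parameters `m_half` and `width` are hypothetical stand-ins for the black curve in Fig. 1, and the magnitudes are random toy values rather than Flagship galaxies.

```python
import numpy as np

def completeness(mag, m_half=23.5, width=0.4):
    """Hypothetical completeness curve: close to 1 for bright objects, dropping at faint magnitudes."""
    return 1.0 / (1.0 + np.exp((mag - m_half) / width))

def select_training_sample(mag, rng, mag_cut=24.5):
    """Keep each galaxy with probability completeness(mag); reject everything at or beyond mag_cut."""
    keep_prob = np.where(mag < mag_cut, completeness(mag), 0.0)
    return rng.random(mag.size) < keep_prob

rng = np.random.default_rng(0)
mag = rng.uniform(20.0, 25.0, size=200_000)  # toy m_VIS values, not Flagship
selected = select_training_sample(mag, rng)
```

Because the selection probability depends only on magnitude, any dependence on galaxy type or emission lines is ignored here, mirroring the simplifying assumption stated in the text.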

4.3.3. Case 3: Ground-based mid-depth photometry

We define another sample trained with the same spectroscopic training sample completeness as in the fiducial case but with shallower ground-based magnitude limits in the photometry. The ground-based magnitude limit is a factor of two shallower in signal-to-noise ratio than in cases 1–2. This corresponds to the second column in Table 1. This case is intended to represent areas on the sky between the celestial equator and low northern declinations where Rubin-LSST data at shallower depth may be available.

4.3.4. Case 4: Euclid mid-depth photometry

To explore the possibilities of available photometry, especially the importance of deep near-infrared photometry, we define a case in which both the Euclid and ground-based photometric depth is reduced by 0.75 magnitudes (third column in Table 1). The spectroscopic training sample completeness is the same as in cases 2 and 3.

4.3.5. Case 5: Ground-based shallow depth photometry

The complementary ground-based photometry expected to be available in the northern hemisphere is shallower than the magnitude limits used in our previous cases. We define a sample to roughly represent and cover this option by considering a ground-based flux limit 1.75 magnitudes brighter compared to our optimistic case (fourth column in Table 1). To compute the photo-z, we use a spectroscopic training set with the same completeness in mVIS as in cases 2, 3, and 4.

4.3.6. Case 6: Inhomogeneous spectroscopic sample

In this last sample, we want to study the case in which the spectroscopic training sample is very heterogeneous and composed of the combination of many surveys targeting galaxies with different selection criteria and with different spectroscopic facilities. We choose a spectroscopic training set that tries to model the n(z) of currently available spectroscopic data coming from surveys such as those listed in Gschwend et al. (2018). Given that some of these surveys have different color selection cuts and magnitude limit depths, the combined redshift distribution is not homogeneous and presents peaks and troughs, which cause strong biases in the photo-z estimation due to over- and under-represented galaxies at different redshift ranges (see e.g., Zhou et al. 2021). We want to remark that we only try to reproduce the n(z) of the overall spectroscopic sample. We do not try to gather this spectroscopic sample by applying the same selection criteria as the different surveys used. We consider that this is not necessary for our purposes as we are only interested in the overall trend induced by using an inhomogeneous spectroscopic training sample. We create the spectroscopic training sample by randomly selecting galaxies based on their redshift to reproduce the overall targeted redshift distribution. Given that the Flagship simulation area we are using (see Sect. 4.1) is smaller than the surveys sampling the nearby universe, our simulated spectroscopic training sample does not exactly reproduce the overall targeted redshift distribution at low redshifts. The resulting completeness as a function of the mVIS of this spectroscopic redshift sample can be seen in Fig. 1 (orange line). The modeled n(z) is shown in Fig. 2 (orange line). With this case, which is intended to represent the currently available data, we can draw a lower bound on the photo-z accuracy that can be expected for Euclid. In this case, we use the same photometric magnitude limits as in case 5.
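One simple way to select galaxies by redshift so that the kept sample follows a targeted n(z) is per-bin rejection sampling, sketched below. The function name, the binning, and the sinusoidal target distribution are assumptions made for illustration; the actual target in the paper is modeled on the surveys listed in Gschwend et al. (2018).

```python
import numpy as np

def subsample_to_target_nz(z, target_nz, edges, rng):
    """Keep galaxies with a per-bin probability so that the kept n(z) follows target_nz."""
    counts, _ = np.histogram(z, bins=edges)
    # Target-to-available ratio per redshift bin; bins with no galaxies get zero.
    ratio = np.divide(target_nz, counts, out=np.zeros(len(counts)), where=counts > 0)
    keep_prob = ratio / ratio.max()              # rescale so the largest probability is 1
    idx = np.clip(np.digitize(z, edges) - 1, 0, len(counts) - 1)
    return rng.random(z.size) < keep_prob[idx]

rng = np.random.default_rng(2)
z = rng.uniform(0.0, 2.0, 400_000)               # toy parent sample, not Flagship
edges = np.linspace(0.0, 2.0, 21)
centers = 0.5 * (edges[:-1] + edges[1:])
target_nz = 1.0 + 0.8 * np.sin(6.0 * centers)    # made-up n(z) with peaks and troughs
keep = subsample_to_target_nz(z, target_nz, edges, rng)
kept_nz, _ = np.histogram(z[keep], bins=edges)
```

If the parent sample has too few galaxies in some bin, as happens at low redshift for a small simulated area, the target shape cannot be reached there without lowering the acceptance probability everywhere else.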

|

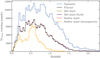

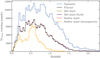

Fig. 2. True redshift distributions of the training samples used to run DNF in all six cases. The training samples include magnitudes brighter than mVIS = 24.5. The true redshift comes from the Flagship simulation. The four training samples with almost identical true redshift distributions have the same completeness drop in mVIS and only differ in the photometric quality. The numbers of training objects for the six samples are about 3.4 ⋅ 105, 1.8 ⋅ 105, 1.8 ⋅ 105, 1.8 ⋅ 105, 1.8 ⋅ 105, and 8.4 ⋅ 104 from top to bottom labels in the legend, respectively. |

The realism of our training samples is limited in the sense that we only try to reproduce the completeness in mVIS or the shape of the n(z) distribution. We do not take into account any dependence of the training samples on other characteristics such as galaxy type or the presence of emission lines, which would have an impact on the determination of the photo-z. The selection of specific galaxies, such as luminous red galaxies, to achieve a sample with better photo-z is used to increase the signal, for example in the galaxy clustering analysis in DES (see e.g., Rozo et al. 2016; Elvin-Poole et al. 2018). Normally, selecting a subsample with better photo-z performance implies reducing the number density, and one has to study the trade-off between both effects. We leave such a study to future work.

4.4. Photometric redshifts

The cosmological tomographic analysis of a photometric survey divides the whole sample into redshift bins selected with a photo-z technique. In our study, we want to follow as closely as possible the methodological steps that one would carry out in real surveys. For that purpose, we compute the photo-zs of all our study cases described in Table 2. We use the directional neighborhood fitting (DNF; De Vicente et al. 2016) training-based algorithm to obtain realistic photo-z estimates of our simulated galaxies. The exact choice of the machine learning training set method is not important for our analysis as most methods of this type perform similarly to the precision levels we are interested in (see e.g., Euclid Collaboration 2020b; Sánchez et al. 2014).

DNF estimates the photo-z of a galaxy based on its closeness in observable space to a set of training galaxies whose redshifts are known. The main feature of DNF is that the metric that defines the distance or closeness between objects is given by a directional neighborhood metric, which is the product of a Euclidean and an angular neighborhood metric. This metric ensures that neighboring objects are close in color and magnitude space. The algorithm fits a linear adjustment, a hyperplane, to the directional neighborhood of a galaxy to get an estimation of the photo-z. This photo-z estimate is called zmean, which is the average of the redshifts from the neighborhood. The residual of the fit is considered as the estimation of the photo-z error. In addition, DNF produces another photometric redshift estimate, zmc, which is a Monte Carlo draw from the nearest neighbor in the DNF metric for each object. Therefore, it can be considered as a one-point sampling of the photo-z probability density distribution. As such, it is not a good individual photo-z estimate of the object, but when all the estimates in a galaxy sample are stacked it can recover the overall probability density distribution of the sample (Rau et al. 2017). When working with tomographic bins, we classify the galaxies into different bins using their zmean and we obtain the photometric distribution, n(z), within each bin by stacking their zmc. This is an approach used by DES in analyzing their first year data results (e.g., Hoyle et al. 2018; Crocce et al. 2019; Camacho et al. 2019) providing redshift distributions that are validated with other independent assessment methods. Therefore, we define the n(z) by stacking the zmc estimator instead of the true redshift of the simulation to make the photo-z distribution close to what would be obtained in a real data analysis with the assurance that the method has been validated.
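The roles of the two estimates can be illustrated with a toy neighbor-based estimator. This sketch is not DNF: it swaps in a plain Euclidean metric and a simple neighborhood average in place of the directional metric and hyperplane fit, and the observables are invented functions of redshift. It only shows how a zmean-like value is used to assign galaxies to bins while a zmc-like value is stacked to estimate n(z) in each bin.

```python
import numpy as np

def knn_photoz(train_x, train_z, x, k=20):
    """Toy neighbor-based photo-z (Euclidean metric, NOT the DNF directional metric).

    Returns z_mean (average redshift of the k nearest training galaxies) and
    z_mc (redshift of the single nearest training galaxy).
    """
    # Squared Euclidean distances, shape (n_targets, n_train), without a 3D temporary.
    d2 = (x**2).sum(1)[:, None] + (train_x**2).sum(1)[None, :] - 2.0 * x @ train_x.T
    nn = np.argpartition(d2, k, axis=1)[:, :k]   # k nearest neighbors (unordered)
    z_mean = train_z[nn].mean(axis=1)
    z_mc = train_z[d2.argmin(axis=1)]
    return z_mean, z_mc

def tomographic_nz(z_mean, z_mc, bin_edges, nz_edges):
    """Assign galaxies to bins by z_mean; estimate each bin's n(z) by stacking z_mc."""
    which = np.digitize(z_mean, bin_edges) - 1
    return [np.histogram(z_mc[which == i], bins=nz_edges)[0]
            for i in range(len(bin_edges) - 1)]

# Toy data: three hypothetical "observables" that vary smoothly with redshift.
rng = np.random.default_rng(1)

def observables(z):
    return np.column_stack([z, np.sin(z), z**2]) + rng.normal(0.0, 0.05, (z.size, 3))

z_train = rng.uniform(0.0, 2.0, 3000)
z_true = rng.uniform(0.0, 2.0, 1000)
z_mean, z_mc = knn_photoz(observables(z_train), z_train, observables(z_true))
nz_per_bin = tomographic_nz(z_mean, z_mc, np.linspace(0.0, 2.0, 5), np.linspace(0.0, 2.0, 41))
```

The stacked zmc histograms extend beyond the zmean bin limits, which is the behavior one wants from an n(z) estimate that reflects photo-z scatter.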

We select a patch of sky of 3.35 deg$^2$ to create the samples to train DNF. These training samples have the magnitudes and errors computed with the same magnitude limits as the sample whose photo-z we want to compute (see Table 1). We generate three types of spectroscopic training samples. For all of them, we limit the spectroscopic training sample to galaxies brighter than mVIS = 24.5 as there are few objects whose redshift has been reliably determined beyond that magnitude limit. The spectroscopic training samples are described in Sect. 4.3.

The true redshift distributions of the spectroscopic training set used to train DNF for each of the sample cases considered here are shown in Fig. 2. In blue, we present the redshift distribution of case 1 with the first spectroscopic training sample, which is fully complete as a function of magnitude. We show in black the resulting N(z) of case 2. Cases 3–5 (olive, red and orange colors in Figs. 2 and 3) have the same training sample completeness as a function of magnitude. The drop in completeness at faint magnitudes translates into a decrease of objects at high redshift. Last, we present the resulting redshift distribution with the third spectroscopic training set in orange. Gathering multiple selection criteria from different spectroscopic surveys leads to an inhomogeneous redshift distribution for the spectroscopic training sample. In Fig. 3, we show the overall photo-z distributions of zmean (top panel) and zmc (bottom panel) values obtained for the full sample for each of the six cases. We see how an inhomogeneous N(z) in the training sample leads to an inhomogeneous distribution of the photo-z. We assign magnitude errors in each sample based on the limiting magnitude at 10σ, according to Table 1 and following Eq. (3). This leads to magnitude errors that change from one sample to another and differences in their corresponding photo-z distributions.

Fig. 3. Top: zmean photometric redshift distributions obtained with DNF for the six photometric samples up to mVIS = 25. The zmean photo-z estimate returned by DNF is the value resulting from the mean of the nearest-neighbor redshifts. Bottom: photometric redshift distributions obtained with DNF for the zmc statistic, which for each galaxy is a one-point sampling of the redshift probability distribution estimated from the nearest neighbor (see text for details). All samples have the same number density of 41.5 galaxies per arcmin$^2$.

The photo-zs obtained with DNF as a function of true redshift for the six samples up to mVIS < 24.5 are shown in Fig. 4. This figure gives us an indication of how the photo-z scatter decreases with deeper photometry. Photometric samples go up to mVIS = 25. However, we cut the spectroscopic training sample at mVIS = 24.5 to be more realistic. The lack of objects between 24.5 and 25.0 in the training sample forces the algorithm to extrapolate beyond that magnitude, and thus noisier photometric redshifts are obtained. In Fig. 4, we show galaxies only down to mVIS < 24.5 to reduce the noise and make the figure clearer.

Fig. 4. Scatter plot of both photometric redshifts given by DNF, zmean (top row) and zmc (bottom row), as a function of true redshift for all the samples described in Sect. 4.3 up to mVIS < 24.5. The σz of the photo-z for these samples at mVIS < 24.5 is, from left to right: 0.063, 0.049, 0.046, 0.036, 0.032, 0.029.

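To quantify the photo-z precision for the different samples, we use two typical metrics: the normalized median absolute deviation and the percentage of outliers. The former is defined as

$$\sigma_z = 1.4826 \cdot \mathrm{median}\left(\left|\Delta z - \mathrm{median}(\Delta z)\right|\right),$$

where

$$\Delta z = \frac{z_{\rm phot} - z_{\rm true}}{1 + z_{\rm true}}.$$

We consider outliers those objects with |Δz| > 0.15. In Table 3, we show the values obtained for these two metrics for each photometric sample.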
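As a hedged illustration, both metrics can be computed in a few lines. The sketch assumes the common convention in which the normalized median absolute deviation is 1.4826 times the median absolute deviation of Δz = (z_phot − z_true)/(1 + z_true); the function name and the toy catalog are hypothetical.

```python
import numpy as np

def photoz_metrics(z_phot, z_true, outlier_cut=0.15):
    """Return (sigma_NMAD, outlier fraction) for a set of photo-z estimates."""
    dz = (z_phot - z_true) / (1.0 + z_true)
    # 1.4826 rescales the median absolute deviation to a Gaussian standard deviation.
    sigma_nmad = 1.4826 * np.median(np.abs(dz - np.median(dz)))
    outlier_fraction = np.mean(np.abs(dz) > outlier_cut)
    return sigma_nmad, outlier_fraction

# Toy catalog: Gaussian scatter of 0.03 (1 + z), with no catastrophic outliers added.
rng = np.random.default_rng(3)
z_true = rng.uniform(0.0, 2.0, 100_000)
z_phot = z_true + 0.03 * (1.0 + z_true) * rng.normal(size=z_true.size)
sigma, f_out = photoz_metrics(z_phot, z_true)
```

For purely Gaussian scatter the returned sigma should be close to the input 0.03, which is the reason for the 1.4826 factor; the median-based estimate is much less sensitive to outliers than a standard deviation.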

5. Building forecasts for Euclid

So far, we have seen how the photometric depth and the spectroscopic training sample determine the overall redshift distributions of the resulting samples. We have selected six cases to cover a range of possible scenarios that we may encounter in the analysis of Euclid data complemented with ground-based surveys. Once the galaxy distributions for the photometric cases under study have been obtained, we want to propagate the photo-z accuracy in determining tomographic subsamples to the final constraints on the cosmological parameters in order to understand how to optimize the photometric sample for galaxy clustering analyses.

We follow the forecasting prescription presented in Euclid Collaboration (2020a, hereafter EC20). We consider the same Fisher matrix formalism and make use of the CosmoSIS7 code validated for Euclid specifications therein. Our observable is the tomographically binned projected angular power spectrum, Cij(ℓ), where ℓ denotes the angular multipole, and i, j stand for pairs of tomographic redshift bins. This formalism is the same for WL, galaxy clustering (with the photometric sample), and GGL with the only difference being the kernels used in the projection from the power spectrum of matter perturbations to the spherical harmonic-space observable. We focus on the GCph cosmological probe, as well as its combination with GGL. We consider auto- and cross-correlations between the photometric bins for GCph and the combination of probes. The projection to Cij(ℓ) is performed under the Limber, flat-sky, and spatially flat approximations (Kitching et al. 2017; Kilbinger et al. 2017; Taylor et al. 2018). We also ignore redshift-space distortions, magnification, and other relativistic effects (Deshpande et al. 2020). To minimize the impact of neglecting relativistic effects, more relevant at large scales, in our analysis we consider multipole scales from ℓ ≥ 10 to ℓ ≤ 750, which corresponds to the more conservative scenario in EC20.

When considering GGL, its power spectrum contains contributions from galaxy clustering and cosmic shear, but also from intrinsic galaxy alignments (IA). We assume the latter is caused by a change in galaxy ellipticity that is linear in the density field. Such modeling is appropriate for large scales (Troxel et al. 2018), similar to the ones considered in this analysis, but more complex models should be used for the very small scales (see e.g., Blazek et al. 2019; Fortuna et al. 2021). Under this linear assumption, we can define the density-intrinsic and intrinsic-intrinsic three-dimensional power spectra, $P_{\delta{\rm I}}$ and $P_{\rm II}$, respectively. They can be related to the density power spectrum $P_{\delta\delta}$ with $P_{\delta{\rm I}} = -A(z)P_{\delta\delta}$ and $P_{\rm II} = A(z)^2 P_{\delta\delta}$. We follow EC20 in parameterizing A as

$$A(z) = \frac{\mathcal{A}_{\rm IA}\,\mathcal{C}_{\rm IA}\,\Omega_{\rm m}\,\mathcal{F}_{\rm IA}(z)}{D(z)},$$

where $\mathcal{C}_{\rm IA}$ is a normalization parameter that we set as $\mathcal{C}_{\rm IA} = 0.0134$, $\Omega_{\rm m}$ is the matter density parameter, $D(z)$ is the growth factor, $\mathcal{F}_{\rm IA}(z)$ encodes the redshift dependence of the IA contribution, and $\mathcal{A}_{\rm IA}$ is a nuisance parameter fixing the amplitude of the IA contribution.

We model the redshift dependence of the IA contribution as

$$\mathcal{F}_{\rm IA}(z) = (1+z)^{\eta_{\rm IA}} \left[\frac{\langle L\rangle(z)}{L_\star(z)}\right]^{\beta_{\rm IA}},$$

with $\langle L\rangle(z)/L_\star(z)$ being the redshift-dependent ratio between the average source luminosity and the characteristic scale of the luminosity function (Hirata et al. 2007; Bridle & King 2007), and $\eta_{\rm IA}$ and $\beta_{\rm IA}$ being nuisance parameters. For a detailed explanation on IA modeling see Samuroff et al. (2019). We use the same ratio of luminosities for every galaxy sample. However, this ratio should in principle depend on the specific galaxy population. Since we select galaxies according to a mVIS cut and not according to a particular galaxy type, we expect that the luminosity ratio does not change significantly between galaxy samples and therefore use the same ratio for simplicity. We set the fiducial values for the intrinsic alignments nuisance parameters to

in agreement with the recent fit to the IA contribution in the Horizon-AGN simulation (Chisari et al. 2015), although the amplitude 𝒜IA might be smaller in practice (Fortuna et al. 2021).

When considering GCph and GGL, one of the primary sources of uncertainty is the relation between the galaxy distribution and the underlying total matter distribution, that is, the galaxy bias (Kaiser 1987). We consider a linear galaxy bias relating the galaxy density fluctuation to the matter density fluctuation with a simple linear relation

$$\delta_{\rm g}(k, z) = b(z)\,\delta_{\rm m}(k, z),$$

where we neglect any possible scale dependence. A linear bias approximation is sufficiently accurate for large scales (Abbott et al. 2018). However, when adding very small scales into the analysis, a more detailed modeling of the galaxy bias is required (see e.g., Sánchez et al. 2016). One of the approaches to this modeling is through perturbation theory, which introduces a nonlinear and nonlocal galaxy bias (Desjacques et al. 2018).

We consider a constant galaxy bias in each tomographic bin. We get their fiducial values by fitting the directly measured bias in Flagship to the function

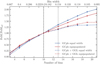

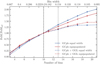

where A, B, and C are nuisance parameters. We select five subsamples with mVIS limiting magnitudes: 25, 24.5, 24, 23.5, and 23 from the Flagship galaxy sample. We compute the bias values as a function of redshift for each of these magnitude-limited subsamples using directly the true redshift of Flagship at redshifts 0.5, 1, 1.5, and 2. As an approximation, we use the same galaxy bias for each of the six photometric samples and change the fiducial values according to the magnitude limit cut. The obtained bias and fitted functions are shown in the left panel of Fig. 5. To fit the bias-redshift relation we choose to use all galaxy bias values computed with the Flagship simulation, although values at z = 2 are less reliable. The value of the bias at z = 1.5 falls outside the bias-redshift fit for the mVIS < 23 sample. However, we recomputed the bias fit neglecting the value at z = 2 and including the value at z = 1.5, and found no significant changes. We therefore keep the bias computed using the fits shown in Fig. 5.

Fig. 5. Left panel: galaxy bias as a function of redshift. Dots correspond to the measured values in the Flagship simulation for different magnitude cuts and the solid lines are a fit following Eq. (11). We plot with squares the bias values obtained for z = 2 to indicate that at that redshift there are few objects and thus the values are slightly less reliable. At mVIS < 23 there were not enough objects at z = 2 to compute the bias in Flagship. Right panel: ratio between the HSC bias, bHSC, from N20 and the Flagship bias for each magnitude-limited sample. To assess the 1σ uncertainty of bHSC along the redshift range, we generate a set of Gaussian random numbers for the free parameters α, b1, and b0 of bHSC with their values as mean and their errors as standard deviation. Then we evaluate bHSC in the redshift range for all the sets of free parameters previously generated. We pick the maximum and minimum bHSC at each redshift. This corresponds to the shaded regions.

To validate the bias obtained with Flagship, we compare our bias values to the ones obtained from the Hyper Suprime-Cam Subaru Strategic Program (HSC-SSP) data release 1 (DR1) by Nicola et al. (2020, N20 hereafter). The HSC survey has comparable survey depth and uses similar ground-based bands to the ones considered in this work. N20 fit galaxy bias on magnitude-limited galaxy samples down to i < 24.5. We compare their values to ours in the right panel of Fig. 5. We extrapolate their bias down to i < 25 for our faintest magnitude bin. Strictly speaking, we are comparing i-band magnitude-selected samples from N20 to our mVIS-band magnitude-selected samples. We have checked in Flagship that the bias values for both i-band and mVIS-band selected samples cut at the same magnitude limit do not change by more than 10% and therefore our comparison is meaningful. N20 assume that bias can be split into two separated terms of redshift and limiting magnitude, and define it as

where α is a variable that takes into account the inverse relation between the growth factor and galaxy bias. By fitting α and the magnitude-dependent term in a multistep weighted process they find

where b1 = −0.0624 ± 0.0070 and b0 = 0.8346 ± 0.161. For a detailed explanation see Sect. 4.6 in N20. We compute D(z) for our sample and use our mVIS magnitude cuts as mlim along with their fitted parameters to get a bias to compare. The ratio between the HSC bias, bHSC, and ours, b(z), is shown in the right panel of Fig. 5. In N20, they compute their bias up to redshift 1.25, so we have extrapolated their behavior to higher redshifts for the comparison at z > 1.25. The values of the bias in Flagship stay within 1σ of the HSC values, bHSC (shaded area in the right panel of Fig. 5), confirming that the bias values we use are consistent with the HSC observations.

We consider the same redshift distributions for both GCph and GGL. In practice, this is an oversimplification, since these two probes will probably apply different selection criteria when determining their samples. GGL for instance will give some importance to the shape measurements of the galaxies. But for the present Fisher matrix analysis, since we do not want to make assumptions on the shear measurement, we limit ourselves to use the same sample for both probes, as it was done in EC20.

6. Cosmological model

We optimize the photometric sample of Euclid considering the baseline cosmological model presented in EC20: a spatially flat Universe filled with cold dark matter and dark energy. We approximate the dark energy equation of state parameter with the CPL (Chevallier & Polarski 2001; Linder 2005) parameterization

$$w(z) = w_0 + w_a \frac{z}{1+z}.$$

The cosmological model is fully specified by the dark energy parameters, w0 and wa, the total matter and baryon density today, Ωm and Ωb, the dimensionless Hubble constant, h, the spectral index, ns, and the RMS of matter fluctuations on spheres of 8 h−1 Mpc radius, σ8. We assume a dynamically evolving, minimally-coupled scalar field, with sound speed equal to the speed of light and vanishing anisotropic stress as dark energy. Therefore, we neglect any dark energy perturbations in our analysis. We also allow the equation of state of dark energy to cross w(z) = − 1 using the Hu & Sawicki (2007) prescription.
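For reference, the CPL parameterization and the dark energy density evolution it implies can be written as a short sketch. It assumes the usual CPL form w(z) = w0 + wa z/(1 + z); the function names are hypothetical, and the default arguments simply correspond to a cosmological constant rather than being taken from this paper.

```python
import numpy as np

def w_cpl(z, w0=-1.0, wa=0.0):
    """CPL equation of state, w(z) = w0 + wa * z / (1 + z)."""
    z = np.asarray(z, dtype=float)
    return w0 + wa * z / (1.0 + z)

def de_density_ratio(z, w0=-1.0, wa=0.0):
    """rho_DE(z) / rho_DE(0) obtained by integrating 3 (1 + w) / (1 + z) for the CPL form."""
    z = np.asarray(z, dtype=float)
    return (1.0 + z) ** (3.0 * (1.0 + w0 + wa)) * np.exp(-3.0 * wa * z / (1.0 + z))

z_grid = np.linspace(0.0, 2.0, 5)
w_example = w_cpl(z_grid, w0=-0.9, wa=0.3)            # illustrative values only
rho_example = de_density_ratio(z_grid, w0=-0.9, wa=0.3)
```

With w0 = −1 and wa = 0 the density ratio is identically one, which recovers the cosmological constant case; w0 is the present-day value and w0 + wa the high-redshift limit.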

The fiducial values of the cosmological parameters are given by

Moreover, we fix the sum of neutrino masses to ∑mν = 0.06 eV. The linear growth factor depends on both redshift and scale when neutrinos are massive, but we follow EC20 in neglecting this effect, given the small fiducial value considered. Therefore, we compute the growth factor accounting for massive neutrinos, but neglect any scale dependence. The fiducial values used in this analysis are compatible with the fiducial cosmology of the Flagship simulation presented in Sect. 4.1 except for σ8. This can be explained by the fact that the Flagship simulation does not account for massive neutrinos and therefore considers a slightly larger value for σ8. However, since we are only extracting the galaxy bias and the galaxy distributions from Flagship and we are computing Fisher forecasts, this difference in the fiducial σ8 value does not have any impact on our results.

We quantify the performance of photometric galaxy samples in constraining cosmological parameters through the figure of merit (FoM), as defined in Albrecht et al. (2006) but with the parameterization defined in EC20. Our FoM is proportional to the inverse of the area of the error ellipse in the parameter plane of w0 and wa defined by the marginalized Fisher submatrix, $\tilde{F}_{w_0 w_a}$,

$$\mathrm{FoM} = \sqrt{\det\left(\tilde{F}_{w_0 w_a}\right)}. \tag{16}$$

We use the FoM defined above throughout this article. The higher the FoM value, the higher the cosmological constraining power.
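A minimal sketch of how such a FoM can be computed from a full Fisher matrix is given below, assuming the EC20 convention FoM = sqrt(det F̃), where F̃ is the 2×2 Fisher submatrix for (w0, wa) after marginalizing over all other parameters. The function name and the 3×3 example matrix are invented for illustration.

```python
import numpy as np

def figure_of_merit(fisher, i_w0, i_wa):
    """FoM = sqrt(det(F_tilde)), with F_tilde the marginalized (w0, wa) Fisher submatrix."""
    cov = np.linalg.inv(fisher)                # full parameter covariance
    idx = [i_w0, i_wa]
    cov_ww = cov[np.ix_(idx, idx)]             # taking the sub-block marginalizes over the rest
    fisher_ww = np.linalg.inv(cov_ww)          # marginalized 2x2 Fisher submatrix
    return np.sqrt(np.linalg.det(fisher_ww))

# Invented Fisher matrix for (w0, wa, one extra parameter).
fisher = np.array([[4.0, 1.0, 1.0],
                   [1.0, 9.0, 2.0],
                   [1.0, 2.0, 16.0]])
fom_marginalized = figure_of_merit(fisher, 0, 1)
fom_fixed = np.sqrt(np.linalg.det(fisher[:2, :2]))   # extra parameter held fixed
```

Marginalizing over a correlated parameter can only lower the FoM relative to holding that parameter fixed, which is why forecasts with fixed and free galaxy bias are not directly comparable.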

7. Results

In this section, we carry out a series of tests to optimize the sample selection for GCph analyses. We want to determine the best number and type of tomographic bins to constrain cosmological parameters. We explore the influence of the accuracy in the photo-z estimation and sample size in providing cosmological constraints. We split the data in tomographic redshift bins in order to have more control in the variations of sample size and photo-z accuracy to better understand their impact in constraining cosmological parameters. We use the FoM defined in Eq. (16) to quantify the constraining power on the cosmological parameters. In addition, we also compute the FoM when combining GCph with GGL, assuming the same photo-z sample, which implies the same photo-z binning and number density. When computing the cosmological constraining power for GCph + GGL, we marginalize over the galaxy bias of each tomographic bin and intrinsic alignment parameters, whereas for GCph alone the galaxy bias parameters are fixed to their fiducial values. The main reason for this choice is that, under the linear galaxy bias approximation, there is a large degeneracy between the galaxy bias and σ8. In this case, the Gaussianity assumption of the Fisher matrix approach breaks down and its constraints on the cosmological parameters are not reliable. Therefore, we fix the galaxy bias to break this degeneracy when considering GCph alone. When we combine GCph with GGL, the additional information brought by the latter is enough to break such degeneracy and constrain σ8 and the galaxy bias at the same time.

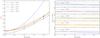

7.1. Optimizing the type and number of tomographic bins

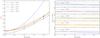

We bin galaxies into different numbers of redshift bins to study the impact of the number of redshift bins on the cosmological parameter inference. When we define redshift bins, we choose galaxies within the redshift range [0, 2] since the maximum lightcone outputs generated in Flagship are at z = 2.3 and we prefer to avoid working at the limit of the simulation. We check the effect of using bins with the same redshift width and bins with the same number of objects (equipopulated). We also see the difference when using only GCph or both GCph and GGL probes. This analysis is performed using our fiducial sample (case 2) up to mVIS < 24.5. We compute the FoM for all the cases mentioned and show the results in Fig. 6. The FoMs are normalized to their value at ten bins since this is the default number used to compute the forecasts in EC20. In Fig. 6, we do not directly compare the FoM between GCph and GCph + GGL since the assumptions used in the two cases are different (galaxy bias is fixed for GCph alone while it is free for GCph + GGL), so the constraining powers are not comparable. As a reference, for 13 bins with an equal width the FoM is 713 for GCph and 411 for the combination of probes.
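The two binning schemes compared here can be sketched as follows. The helper names and the toy redshift distribution are assumptions for illustration; only the redshift range [0, 2] and the choice of 13 bins come from the text.

```python
import numpy as np

def equal_width_edges(z_min, z_max, n_bins):
    """Bin edges with the same redshift width."""
    return np.linspace(z_min, z_max, n_bins + 1)

def equipopulated_edges(z, z_min, z_max, n_bins):
    """Bin edges placed at quantiles so each bin holds about the same number of objects."""
    z = z[(z >= z_min) & (z <= z_max)]
    edges = np.quantile(z, np.linspace(0.0, 1.0, n_bins + 1))
    edges[0], edges[-1] = z_min, z_max         # pin the outer edges to the chosen range
    return edges

rng = np.random.default_rng(4)
z_phot = 2.0 * rng.beta(2.0, 3.0, 130_000)     # toy photo-z values in [0, 2]
edges_width = equal_width_edges(0.0, 2.0, 13)
edges_pop = equipopulated_edges(z_phot, 0.0, 2.0, 13)
counts_width = np.histogram(z_phot, bins=edges_width)[0]
counts_pop = np.histogram(z_phot, bins=edges_pop)[0]
```

With 13 equal-width bins over [0, 2] each bin spans about 0.154 in redshift, while the equipopulated edges crowd together where the n(z) peaks and spread out in the tails.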

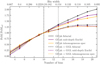

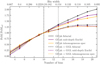

Fig. 6. FoM as a function of the number of bins for GCph (solid) and GCph + GGL (dashed), and for bins with the same redshift width (blue) and with the same number of objects (red). The redshift width of the bins when they have the same width is shown in the top x axis. The FoMs are normalized to the FoM at ten bins, FoMref, which corresponds to the specifications for the number of bins used to compute the forecasts in EC20 and denoted by a vertical-dashed line. A vertical-dotted line shows the 13 bins used as our fiducial choice.

As seen in Fig. 6, the general tendency of the FoM is to increase with the number of bins. EC20 used ten tomographic bins as their fiducial value. For bins with an equal width in redshift, the FoM increase when moving from ten to 13 (15, 17) bins is 35.4% (54.3%, 72.8%) and 15.4% (27.1%, 33.2%), for GCph only and for GCph + GGL, respectively. The FoM improvement we get from going to even more bins does not compensate for the increase in computational time needed for the analysis. This is especially true when using both probes, where we notice that the curve flattens, while in GCph the FoM continues to increase since the bias is fixed and thus the amount of information that can be extracted is larger than expected in practice. Moreover, our photo-z treatment may start to be too simplistic to realistically deal with too many photometric redshift bins. The FoM saturates with the increasing number of bins because it is not possible to extract more information on radial clustering when the width of the bins is smaller than the photo-z precision. At this limit, the uncertainty about which bin a particular galaxy belongs to is greatly increased. For GCph + GGL the curves flatten at a lower number of bins since systematic effects in the marginalization of galaxy bias and intrinsic alignment free parameters also affect the cosmological information that can be extracted. Therefore, we choose 13 to be our fiducial number of bins as a conservative choice.

In addition, we choose bins with an equal width in redshift as the optimal way of partitioning the sample since we observe that, overall, for GCph the FoM is larger in this case than in the equipopulated one. For 13 bins with an equal width the FoM is 713, while it is 547 for equipopulated bins (see footnote 8), which is an increase of 30%. For the GCph + GGL combined analysis, the FoM does not appreciably change between bins with an equal width and equipopulated ones; at 13 bins, which is the fiducial choice, the difference is negligible.
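The two binning schemes compared here can be sketched as follows. This is a minimal illustration on a toy redshift sample, not the catalogue used in the analysis; the function names are hypothetical, and the range 0 < z < 2 is an assumption (it reproduces the bin limits quoted in the caption of Fig. 10 for 13 bins).

```python
import numpy as np


def equal_width_edges(z_min, z_max, n_bins):
    """Edges of n_bins tomographic bins with the same redshift width."""
    return np.linspace(z_min, z_max, n_bins + 1)


def equipopulated_edges(z, n_bins):
    """Edges of n_bins bins holding (nearly) the same number of objects."""
    return np.quantile(z, np.linspace(0.0, 1.0, n_bins + 1))


# Toy redshift sample (hypothetical distribution, for illustration only).
rng = np.random.default_rng(0)
z_toy = rng.gamma(shape=3.0, scale=0.3, size=100_000)
z_toy = z_toy[(z_toy > 0.0) & (z_toy < 2.0)]

edges_w = equal_width_edges(0.0, 2.0, 13)
edges_n = equipopulated_edges(z_toy, 13)

# Objects per bin under each scheme.
counts_w, _ = np.histogram(z_toy, bins=edges_w)
counts_n, _ = np.histogram(z_toy, bins=edges_n)

print("equal-width edges:  ", np.round(edges_w, 2))
print("equipopulated edges:", np.round(edges_n, 2))
```

Equal-width bins leave few objects in the high-redshift bins, whereas equipopulated bins become very wide there; which effect dominates the FoM depends on the probe, as discussed above.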

To further confirm that the choice of increasing the fiducial number of bins is beneficial, we checked the evolution of the FoMs as a function of the number of bins for samples with worse photo-zs than the fiducial sample. In Fig. 7, we observe the same tendency as in Fig. 6. In this case, when moving from ten to 13 (15) bins, the FoM for GCph increases by 31% (45.5%) and 26.5% (41%) for the mid depth Euclid and the inhomogeneous spectroscopic sample, respectively. For GCph + GGL, the FoM increase is 14.3% (23.5%) and 15% (24%) for the same samples, respectively. The improvement of the FoM for samples with worse photo-zs is lower than for the fiducial sample, as expected.

|

Fig. 7. FoM as a function of the number of bins with an equal width for GCph (solid) and GCph + GGL (dashed) for the mid depth Euclid and the inhomogeneous spectroscopic sample in addition to the fiducial sample, as in Fig. 6. |

We choose to use the 13 bins with an equal width configuration to analyze the dependency of cosmological constraints on the photo-z quality and size of the sample. In Fig. 8, we show the redshift distributions for this configuration for our fiducial case 2 sample.

|

Fig. 8. Redshift distributions (zmc) of the 13 bins with an equal width for the fiducial sample (case 2). The top x-axis corresponds to the values of the redshift limits of the 13 bins with an equal width in redshift. The shaded regions indicate these limits. |

7.2. FoM dependency on photometric redshift quality and number density

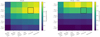

Another aspect we want to study is the effect of the trade-off between photo-z accuracy and number density on the constraining power of cosmological parameters. For that purpose, we take the six photometric samples defined in Sect. 4.3 and apply five cuts (25, 24.5, 24, 23.5, and 23) in mVIS to modify the sample size (leading to a number density of about 41, 29, 18, 12, and 9 galaxies per arcmin², respectively). Besides reducing the number density of the photometric samples, the cut in mVIS also affects the photo-z distribution and accuracy of the overall sample. A brighter magnitude cut, which eliminates the fainter galaxies, mostly removes galaxies with higher and thus less reliable redshifts. We compute the FoM for all the cases mentioned before and normalize them to the FoM of our fiducial (case 2) sample at mVIS < 24.5, for both GCph only and GCph + GGL. To help visualize the results, we present the resulting FoM in a grid format in Fig. 9 and the values themselves in Table 4. The configuration of tomographic bins used to perform the analysis is the optimum one found in the previous section, which is 13 bins with an equal width in redshift.
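To give a feeling for the number-density side of this trade-off, the sketch below converts the approximate densities quoted above into a Poisson shot-noise level, 1/n̄ with n̄ in galaxies per steradian, and compares each cut to the mVIS < 24.5 case. It uses the total density of the sample rather than the per-bin densities (which are not quoted here), so it is only an order-of-magnitude illustration; the function and variable names are hypothetical.

```python
import math

# Square arcminutes in one steradian.
ARCMIN2_PER_SR = (180.0 * 60.0 / math.pi) ** 2

# mVIS cuts and approximate total number densities quoted in the text.
cuts = [25.0, 24.5, 24.0, 23.5, 23.0]
density_arcmin2 = [41, 29, 18, 12, 9]


def shot_noise(n_arcmin2):
    """Poisson shot-noise level 1/n, with n converted to galaxies per steradian."""
    return 1.0 / (n_arcmin2 * ARCMIN2_PER_SR)


reference = shot_noise(29)  # density at the mVIS < 24.5 cut
for m_cut, n in zip(cuts, density_arcmin2):
    ratio = shot_noise(n) / reference
    print(f"mVIS < {m_cut:4.1f}: n = {n:2d} arcmin^-2, shot noise relative to 24.5 cut = {ratio:.2f}")
```

The shot noise alone always decreases with a fainter cut; whether the FoM follows depends on how the photo-z quality of the added galaxies behaves, which is what the grid in Fig. 9 explores.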

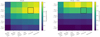

|

Fig. 9. FoM for the samples defined in Sect. 4.3 with different photo-z accuracy and sample size. The size has been reduced by performing a series of cuts in mVIS. The results are normalized to the FoM of the fiducial sample with mVIS < 24.5 (highlighted cell). The figures correspond to the results for using only GCph (left) and for combining it with GGL (right). |

First, we discuss the case of GCph alone. As seen in Fig. 9, in general, the FoM for GCph increases with deeper photometric data, which improves the photo-z performance (increasing along the x-axis in the figure). The FoM also increases with number density, determined by the magnitude limit imposed (increasing along the y-axis). We notice a larger increase in the FoM with sample size in those samples where the photo-z quality is better (e.g., the optimistic, fiducial, and mid depth ground-based photometry cases). In these cases, increasing the sample size by moving the mVIS cut from 23.5 to 24 and from 24 to 24.5 leads to an increase of the FoM of about 20%. Clearly, having a fainter magnitude cut results in larger samples that yield higher FoM values. This trend is in agreement with the results presented in Tanoglidis et al. (2019).

The trend of increasing FoM as we take fainter magnitude-limit cuts and increase the number density continues as long as the photo-z performance is not degraded. Once we push to faint magnitudes where there are no objects to train the photometric redshift algorithms, their performance degrades and the photometric redshift bins start to become wider. Many objects do not belong to the bins they are assigned to, and spurious cross-correlations between different bins appear. As a result, the strength of the cosmological signal is diminished and the FoM decreases. This effect can be seen in Fig. 9 for the GCph case (left panel), where we can appreciate a reduction in the FoM when we move from a magnitude-limited sample cut at mVIS < 24.5 (second row from the top) to a magnitude-limited sample cut at mVIS < 25.0 (top row). With this change, we are increasing the sample, but with galaxies that cannot be located in redshift as their photo-z cannot be calibrated. As a result, the clustering strength is diluted and some spurious cross-correlation signal appears, resulting in a decreased FoM compared to a shallower sample with better photo-zs.

To illustrate this effect, in Fig. 10 we show the redshift distribution of three tomographic bins for three samples: galaxies down to mVIS < 24.5, galaxies down to mVIS < 25, and galaxies only between 24.5 and 25. Galaxies with mVIS between 24.5 and 25 are mostly outside their tomographic bin, increasing the width of the distribution and diluting the signal. We conclude that the GCph probe is sensitive to the actual location of its tracer galaxies inside their tomographic bins. Both the photo-z performance and the number density are important contributing factors when performing cosmological inference with GCph. When pushing to faint magnitudes, there is no improvement from including galaxies that cannot be located in redshift.

|

Fig. 10. Redshift distributions (zmc) of three of the 13 tomographic bins selected with zmean corresponding to the shaded regions [0.31, 0.46) (blue), [0.92, 1.08) (green), and [1.54, 1.69) (red). For each bin, we plot objects with mVIS < 24.5 (solid), mVIS < 25.0 (dashed), and 24.5 < mVIS < 25.0 (dotted). The photometric sample used is the mid depth Euclid (case 4). |

Now, we discuss the case where we add GGL to GCph (right panel in Fig. 9). We observe that increasing the sample size (moving along the y-axis) has a more significant impact on the improvements of the FoM than the photo-z quality (changes along the x-axis). The greatest improvement, of about 50% for the best photo-z quality samples, takes place when going from mVIS < 24 to 24.5. The second largest improvement is of about 25–30% when adding objects from mVIS < 24.5 to 25. In the GGL case, source galaxies outside the tomographic bin of the lens galaxy contribute to the signal. The lensing kernel is quite extended in redshift and galaxies beyond the lens contribute to the signal with only a mild dependence on their precise redshift, making the photo-z performance less important compared to the GCph only case. On the other hand, the statistical nature of detecting the lensing signal makes the number density (and therefore the magnitude-limit cut) a more important factor in determining the GGL cosmological inference power.
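The weak dependence on source redshift can be seen from the standard lensing efficiency for a flat universe, written here as a reminder. The notation is an assumption made for this illustration and is not necessarily that of the forecast code: χ_l is the comoving distance to the lens and n_s(χ) the normalised source distribution.

```latex
% Lensing efficiency for a lens at comoving distance \chi_l (flat universe);
% n_s is the normalised source distribution (assumed notation).
q(\chi_l) \;\propto\; \chi_l \int_{\chi_l}^{\infty} \mathrm{d}\chi_s \, n_s(\chi_s)\,
\frac{\chi_s - \chi_l}{\chi_s}
```

The geometric factor (χ_s − χ_l)/χ_s varies slowly once the source is well behind the lens, so moving a source by a typical photo-z error changes its contribution only mildly, whereas every additional source behind the lens adds to the integral.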

In the FoM grid, we find a counter-intuitive behavior for some samples when combining GCph and GGL (Fig. 9 right panel). If we compare the mid depth and mid depth Euclid samples to the fiducial and optimistic samples at the same number density (along the x-axis), we find that the former pair gives better FoM constraints despite having larger photo-z scatter. This is counter-intuitive as fewer galaxies are properly located in redshift and still the FoM cosmological constraints are slightly better. As we mentioned before, whenever the photo-z performance degrades, more galaxies supposedly being in our tomographic bin belong to other bins. This effect can increase the effective number of sources for our lenses and thus boost the GGL signal. However, this is at the expense of reducing the cosmological constraining power of the GCph probe. The interplay between these two effects is difficult to gauge. The GGL increase appears slightly more prominent when pushing to fainter magnitude limits that produce a sizable increase in number density.

The representativeness of the training sample also determines the photo-z performance and thus the cosmological constraining power. For GCph, if we check the difference in FoM between our fiducial sample, trained with a spectroscopic sample that has a completeness drop at faint mVIS, and the same photometric sample trained with a fully representative training sample (optimistic sample), we see a gain of about 1–2% in the FoM. The spectroscopic incompleteness in this case is small and affects only faint magnitudes, so the effect on the FoM is also small. This difference greatly increases when we compare the FoM performance of shallower samples with higher incompleteness in the spectroscopic training sample. If we compare the shallow depth sample that was trained with a sample that has a completeness drop at faint mVIS magnitudes to the shallow depth inhomogeneous sample that was trained with a sample that is incomplete in the spectroscopic n(z), the difference between FoMs can be up to 25% for GCph and 39% for both probes combined.

Finally, we look at the difference due to the ground-based photometric depth. The difference between our fiducial and shallow depth cases may represent the change in depth to be achieved in the southern and northern hemispheres. For these cases, the difference in cosmological constraint power is about 10% at mVIS < 24.5 for GCph. This percentage reduces to 2% if we also consider GGL.

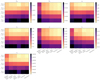

7.3. Impact on the cosmological parameters constraints

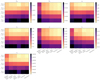

We further investigate the forecasts of the constraints on the cosmological parameters by looking at the parameter uncertainties, given by the square root of the diagonal elements of the inverse of the Fisher matrix. The uncertainties are computed for all the photometric samples defined in Sect. 4.3 and for the different sample sizes. For visual clarity, we present the results in grid form in Figs. 11 and 12.
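As a minimal sketch of this step, the marginalised uncertainties and a dark-energy FoM can be obtained from a Fisher matrix as shown below. The FoM expression assumes the convention of EC20, that is, the square root of the determinant of the marginalised (w0, wa) Fisher sub-matrix. The toy matrix, the parameter ordering, and the function names are hypothetical and chosen only so that the result can be checked by hand.

```python
import numpy as np


def marginalised_sigmas(fisher):
    """1-sigma marginalised uncertainties: sqrt of the diagonal of F^-1."""
    cov = np.linalg.inv(fisher)
    return np.sqrt(np.diag(cov))


def dark_energy_fom(fisher, i_w0, i_wa):
    """FoM = sqrt(det of the marginalised (w0, wa) Fisher sub-matrix),
    i.e. 1 / sqrt(det of the (w0, wa) block of the covariance)."""
    cov = np.linalg.inv(fisher)
    idx = [i_w0, i_wa]
    sub_cov = cov[np.ix_(idx, idx)]
    return 1.0 / np.sqrt(np.linalg.det(sub_cov))


# Toy diagonal Fisher matrix for three parameters (hypothetical numbers).
toy_fisher = np.diag([4.0, 25.0, 100.0])

sigmas = marginalised_sigmas(toy_fisher)      # [0.5, 0.2, 0.1]
fom = dark_energy_fom(toy_fisher, 0, 1)       # sqrt(4 * 25) = 10
print(sigmas, fom)
```

For a non-diagonal Fisher matrix the inversion mixes the parameters, which is why the marginalised uncertainties are generally larger than the conditional ones, 1/sqrt(F_ii).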

|

Fig. 11. Uncertainties of the cosmological parameters for all the cases considered in Sect. 7.2 for GCph. |

|

Fig. 12. Uncertainties of the cosmological parameters for all the cases considered in Sect. 7.2 for combined GCph and GGL. |

In Fig. 11, we show the uncertainties for the GCph probe. We can appreciate that, in general, the uncertainties have a similar behavior to the FoM, where the sample down to mVIS < 24.5 gives the highest FoM. However, there are parameters, such as Ωb, wa, and h, whose constraints do not degrade as much when going to the deeper mVIS < 25 sample.

In Fig. 12, we show the uncertainties of the cosmological parameters when we combine the GCph and GGL cosmological probes. Again, we see similar trends compared to the FoM case, but with minor changes in the behavior of how the uncertainties in some of the parameters vary. The addition of galaxies, increasing the survey depth, and the improvement of the photo-z performance produce lower uncertainties in the Ωb and h parameters. The reduction of the uncertainty obtained when considering the deepest mVIS < 25 case compared to the mVIS < 24.5 is minimal, though.

In addition to the values of the FoM and the uncertainties in the parameters, it is also informative to study the distribution of those uncertainties and the error contours in the determination of pairs of parameters. In Fig. 13, we present the confidence contour plots for our fiducial sample at mVIS < 24.5 and 23.5, to check how the number density affects the constraining power and compare them to our shallow sample at mVIS < 24.5, to see the impact of having a sample with shallower ground-based photometry. The contours for the GCph case are shown in the upper panel and the GCph and GGL case in the lower panel. For both probes we see that the fiducial sample gives the best constraints and the largest improvement is gained when the sample size increases. The increase in constraining power with sample size is more prominent in the GCph and GGL combined case in general and for the parameters that characterize dark energy, w0 and wa, in particular.
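For reference, a confidence contour for a pair of parameters follows from the 2×2 block of the marginalised covariance matrix. The sketch below assumes a Gaussian posterior, for which the contour enclosing a probability p in two dimensions corresponds to Δχ² = −2 ln(1 − p). The covariance values and the function name are hypothetical and are not taken from Fig. 13.

```python
import numpy as np


def confidence_ellipse(cov2, prob=0.683):
    """Semi-major axis, semi-minor axis and orientation (radians, measured
    from the first parameter axis) of the contour enclosing `prob` for a
    2D Gaussian with covariance cov2."""
    cov2 = np.asarray(cov2, dtype=float)
    vals, vecs = np.linalg.eigh(cov2)             # eigenvalues in ascending order
    scale = np.sqrt(-2.0 * np.log(1.0 - prob))    # sqrt(delta chi^2) for 2 parameters
    semi_minor, semi_major = scale * np.sqrt(vals)
    major_axis = vecs[:, 1]                       # eigenvector of the largest eigenvalue
    angle = np.arctan2(major_axis[1], major_axis[0])
    return semi_major, semi_minor, angle


# Hypothetical marginalised covariance of two parameters.
toy_cov = np.array([[0.04, 0.06],
                    [0.06, 0.25]])

a, b, theta = confidence_ellipse(toy_cov)
area = np.pi * a * b
print(f"semi-axes = ({a:.3f}, {b:.3f}), angle = {np.degrees(theta):.1f} deg, area = {area:.3f}")
```

The enclosed area scales with the square root of the determinant of the 2×2 covariance, so under the EC20 FoM convention a larger FoM corresponds to a proportionally smaller (w0, wa) contour.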

|