| Issue | A&A, Volume 605, September 2017 |
|---|---|
| Article Number | A9 |
| Number of page(s) | 23 |
| Section | Numerical methods and codes |
| DOI | https://doi.org/10.1051/0004-6361/201730587 |
| Published online | 01 September 2017 |

Inferring the photometric and size evolution of galaxies from image simulations

I. Method

Institut d’Astrophysique de Paris, CNRS, UMR 7095 et Sorbonne Universités, UPMC Univ. Paris 6, 98bis bd Arago, 75014 Paris, France

e-mail: lapparent@iap.fr

Received: 9 February 2017

Accepted: 15 April 2017

Context. Current constraints on models of galaxy evolution rely on morphometric catalogs extracted from multi-band photometric surveys. However, these catalogs are altered by selection effects that are difficult to model, that correlate in non-trivial ways, and that can lead to contradictory predictions if not taken into account carefully.

Aims. To address this issue, we have developed a new approach combining parametric Bayesian indirect likelihood (pBIL) techniques and empirical modeling with realistic image simulations that reproduce a large fraction of these selection effects. This allows us to perform a direct comparison between observed and simulated images and to infer robust constraints on model parameters.

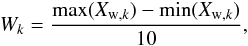

Methods. We use a semi-empirical forward model to generate a distribution of mock galaxies from a set of physical parameters. These galaxies are passed through an image simulator reproducing the instrumental characteristics of any survey and are then extracted in the same way as the observed data. The discrepancy between the simulated and observed data is quantified, and minimized with a custom sampling process based on adaptive Markov chain Monte Carlo methods.

Results. Using synthetic data matching most of the properties of a Canada-France-Hawaii Telescope Legacy Survey Deep field, we demonstrate the robustness and internal consistency of our approach by inferring the parameters governing the size and luminosity functions and their evolutions for different realistic populations of galaxies. We also compare the results of our approach with those obtained from the classical spectral energy distribution fitting and photometric redshift approach.

Conclusions. Our pipeline efficiently infers the luminosity and size distribution and evolution parameters with a very limited number of observables (three photometric bands). When compared to SED fitting based on the same set of observables, our method yields results that are more accurate and free from systematic biases.

Key words: galaxies: evolution / galaxies: bulges / galaxies: spiral / galaxies: luminosity function, mass function / galaxies: statistics / methods: numerical

© ESO, 2017

1. Introduction

During the last decades our understanding of galaxy formation and evolution has been largely shaped by the results of deep multicolor photometric surveys. We can now extract the spectro-photometric properties of millions of galaxies, over large volumes that cover more than ten billion years of cosmic history. Despite this wealth of data, we are still incapable of deriving strong constraints on the free parameters of current semi-analytic models that describe quantitatively how galaxies evolve in color, size, and shape from their high-redshift counterparts. The main reason is that, missing physical ingredients in our models aside, the galaxy catalogs derived from surveys are often incomplete.

First of all, surveys are limited in flux. Consequently, intrinsically faint sources tend to be under-represented because they are above the limiting magnitude only at small distances. This effect, called Malmquist bias (Malmquist 1920), introduces correlations between probably non-correlated variables, mainly distance and other parameters such as luminosity (e.g., Singal & Rajpurohit 2014). Additionally, some galaxies overlap and may be blended into single objects. Source confusion (Condon 1974), caused by unresolved faint sources blended by the point spread function, can act as a signal at the detection limit and also affects number counts in a non-trivial way. Moreover, source confusion affects background estimation by adding a non-uniform component to the background noise, which is correlated with the spatial distribution of unresolved sources (Helou & Beichman 1990). Statistical fluctuations in flux measurements give rise to the Eddington bias (Eddington 1913). As galaxy number counts increase as a power of the flux, there are more overestimated fluxes for faint sources than underestimated fluxes for bright sources. This results in a general increase in the number of sources detected at a given flux (Hasinger & Zamorani 2000; Loaring et al. 2005). Because of the cosmological dimming, the bolometric surface brightness of galaxies gets dimmer with increasing redshift proportionally to $(1 + z)^{-4}$ (Tolman & Richard 1934), which makes many faint extended sources undetectable. Finally, stellar contamination affects the bright end of the source counts (e.g., Pearson et al. 2014).

Apparent magnitudes in catalogs also have to be corrected for Galactic extinction (e.g., Schlegel et al. 1998), and to account for redshift effects, K-corrections (Hogg et al. 2002) that are sensitive to galaxy spectral type must be applied on the magnitudes of high-redshift galaxies (e.g., Ramos et al. 2011). Both corrections, however, are applied only after the sample is truncated at its flux limit, which causes biases at the survey limit. Inclination-dependent internal absorption from dust lanes in the disk of galaxies also tends to draw a fraction of edge-on spirals below the survey flux limit (e.g., Kautsch et al. 2006). Because of these various selection effects, that correlate in ways that are poorly understood, and that may be spatially variable over the field of view of the survey, observations undergo complex selection functions that are difficult to treat analytically, and the resulting catalogs tend to be biased towards intrinsically brighter, compact, and low dust content sources.

The determination of the luminosity function (LF) of galaxies, a fundamental tool for characterizing galaxy populations that is often used for constraining models of galactic evolution, is particularly sensitive to these biases. As input data, analyses use catalogs containing the photometric properties, such as apparent magnitudes, of a selected galaxy sample. LF estimation requires the knowledge of the absolute magnitude of the sources, which itself depends upon the determination of their redshift. The number density per luminosity bin can be determined by a variety of methods, parametric or non-parametric, described in detail in Binggeli et al. (1988), Willmer (1997), and Sheth (2007). The resulting distribution is usually fitted by a Schechter function (Schechter 1976), but other functions are sometimes required (e.g., Driver & Phillipps 1996; Blanton et al. 2005). The Schechter function is characterized by three parameters: φ∗ the normalization density, α the faint end slope, and M∗ a characteristic absolute magnitude. The LF at z ~ 0 is presently well constrained thanks to the analysis of high-resolution spectroscopic surveys, such as the 2dF Galaxy Redshift Survey (2dFGRS, Norberg et al. 2002) or the Sloan Digital Sky Survey (SDSS, Blanton et al. 2003). There is also clear evidence that the global LF evolves with redshift, and that the LFs for different populations of galaxies evolve differently (Lilly et al. 1995; Zucca et al. 2006).
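As an illustration of the three-parameter form mentioned above, the following minimal sketch evaluates the Schechter (1976) function per unit absolute magnitude, using its standard expression φ(M) = 0.4 ln(10) φ∗ x^(α+1) exp(−x) with x = 10^(0.4(M∗ − M)). The function name and the numerical parameter values are hypothetical and chosen for illustration only; they are not taken from this article.

```python
import math

def schechter_mag(M, phi_star, M_star, alpha):
    """Schechter (1976) luminosity function per unit absolute magnitude."""
    x = 10.0 ** (0.4 * (M_star - M))  # luminosity ratio L / L*
    return 0.4 * math.log(10.0) * phi_star * x ** (alpha + 1.0) * math.exp(-x)

# Hypothetical parameter values, for illustration only.
PHI_STAR, M_STAR, ALPHA = 5e-3, -20.0, -1.2

for M in (-22.0, -20.0, -18.0):
    # Exponential cut-off on the bright side, power law on the faint side.
    print(M, schechter_mag(M, PHI_STAR, M_STAR, ALPHA))
```

For α < −1 the density keeps rising towards faint magnitudes, while it drops exponentially for galaxies brighter than M∗.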

Measuring the LF evolution is nevertheless a challenge, as high-redshift galaxies are faint, and therefore generally unsuitable for spectroscopic redshift determination, which would require prohibitive exposure times. The current solution to this problem is to use the information contained within the fluxes of these sources in some broad-band filters, in order to estimate their redshift, known as photometric redshift. This procedure has a number of biases in its own right, because the precision of photometric redshifts relies on the templates and the training set used, assumed to be representative of the galaxy populations. These biases are described extensively in MacDonald & Bernstein (2010). In turn, redshift uncertainties typically result in an increase of the estimated number of low and high luminosity galaxies (Sheth 2007).

The forward-modeling approach to galaxy evolution.

The traditional approach when comparing the results of models to data is sometimes referred to as backward modeling (e.g., Marzke 1998; Taghizadeh-Popp et al. 2015). In this scheme, physical quantities are derived from the observed data, and are then compared with the physical quantities predicted from simulations, semi-analytical models (SAM), or semi-empirical models. A more reliable technique is the forward modeling approach: a distribution of modeled galaxies is passed through a virtual telescope with the whole observing process reproduced (filters, exposure time, telescope characteristics, seeing properties, as well as the cosmological and instrumental biases described above), and a direct comparison is made between simulated and observed datasets. The power of this approach comes from the fact that theory and observation are compared in the observational space: the same systematic errors and selection effects affect the simulated and observed data. Blaizot et al. (2005) were the first to introduce realistic mock telescope images from light cones generated by SAMs. Overzier et al. (2013) extended this idea by constructing synthetic images and catalogs from the Millennium Run cosmological simulation including detailed models of ground-based and space telescopes. More recently, Taghizadeh-Popp et al. (2015) used semi-empirical modeling to simulate Hubble Deep Field (HDF) images, from cutouts of real SDSS galaxies with modified sizes and fluxes, and compared them to observed HDF images. Here we make the case that forward modeling can be used to perform reliable inferences on the evolution of the galaxy luminosity and size functions.

Bayesian inference.

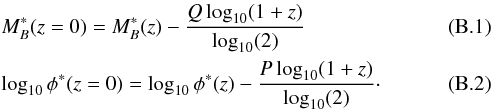

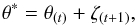

Standard Bayesian techniques provide a framework to address any statistical inference problem. The goal of Bayesian inference is to infer the posterior probability density function (PDF) of a set of model parameters θ, given some observed data $\mathcal{D}$. This probability can be derived using Bayes’ theorem:

$$P(\theta \,|\, \mathcal{D}) = \frac{P(\mathcal{D} \,|\, \theta)\, P(\theta)}{P(\mathcal{D})}, \tag{1}$$

where $P(\mathcal{D} \,|\, \theta)$ is also called the likelihood (or the likelihood function) of the data, which gives the probability of the data given the model, P(θ) is the prior, or the probability of the model with the parameters θ, and $P(\mathcal{D})$ is the evidence, which acts as a normalization constant and is usually ignored in inference problems. The posterior PDF is approximated either analytically or via the use of sampling techniques, such as Markov chain Monte Carlo (MCMC).
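To make the role of sampling concrete, here is a minimal sketch of a random-walk Metropolis sampler applied to a toy problem (inferring the mean of Gaussian data with a flat prior). The toy model, the function names, and all numerical settings are assumptions made for illustration; this is not the adaptive sampler used in this article.

```python
import math
import random

def log_posterior(theta, data, sigma=1.0):
    """Unnormalized log posterior: flat prior on [-10, 10], Gaussian likelihood."""
    if not -10.0 <= theta <= 10.0:
        return -math.inf
    return -0.5 * sum((d - theta) ** 2 for d in data) / sigma ** 2

def metropolis(log_post, theta0, n_steps, step, rng):
    """Random-walk Metropolis chain with a Gaussian proposal of width `step`."""
    chain = [theta0]
    lp = log_post(theta0)
    for _ in range(n_steps):
        proposal = chain[-1] + rng.gauss(0.0, step)
        lp_new = log_post(proposal)
        # Accept with probability min(1, ratio); the evidence cancels in the ratio.
        if rng.random() < math.exp(min(0.0, lp_new - lp)):
            chain.append(proposal)
            lp = lp_new
        else:
            chain.append(chain[-1])
    return chain

rng = random.Random(42)
data = [rng.gauss(2.0, 1.0) for _ in range(50)]           # toy "observed" data
chain = metropolis(lambda t: log_posterior(t, data), 0.0, 5000, 0.5, rng)
samples = chain[1000:]                                     # discard burn-in
posterior_mean = sum(samples) / len(samples)
data_mean = sum(data) / len(data)
print(posterior_mean, data_mean)
```

Because only ratios of posterior values enter the acceptance test, the evidence never needs to be computed, which is why it can be ignored for parameter inference.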

However, there are multiple cases where the likelihood is intractable or unknown, for mathematical or computational reasons, which renders classical Bayesian approaches unfeasible. In our case, it is the modeling of the selection effects that is impractical to include in the likelihood. To tackle this issue, a new class of methods, called “likelihood-free”, has been developed to infer posterior distributions without explicit computation of the likelihood.

Approximate Bayesian Computation.

One of the “likelihood-free” techniques is called Approximate Bayesian Computation (ABC), and was introduced in the seminal article of Pritchard et al. (1999) for population genetics. ABC is based on repeated simulations of datasets generated by a forward model, and replaces the likelihood estimation by a comparison between the observed and synthetic data. Its ability to perform inference under arbitrarily complex stochastic models, as well as its well established theoretical grounds, have led to its growing popularity in many fields, including ecology, epidemiology, and stereology (see Beaumont 2010, for an overview).

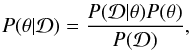

The classic ABC Rejection sampling algorithm, introduced in its modern form by Pritchard et al. (1999), is defined in Algorithm 1,

where ρ is a distance metric built between the simulated and observed datasets, usually based on some summary statistics η, which are parameters that maximize the information contained within the datasets (for example, normally distributed datasets can be characterized using the mean and standard deviation of the underlying Gaussian distribution), and ϵ is a user-defined tolerance level >0. Using the ABC algorithm with a good summary statistic and a small enough tolerance ultimately leads to a fair approximation of the posterior distribution (Sunnaker et al. 2013). The choices of ρ, η and ϵ are highly non-trivial though, and they constitute the fundamental difficulty in the application of ABC methods as they are problem-dependent (Marin et al. 2011). Moreover rejection sampling is notorious for its inherent inefficiency, as sampling directly from the prior distribution results in spending computing time simulating datasets in low-probability regions. Therefore, several classes of sampling algorithms have been developed to explore the parameter space more efficiently. Three of the most popular of them are outlined below.
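The rejection scheme described above can be sketched as follows. This is the textbook form of ABC rejection sampling (draw θ from the prior, simulate a dataset, keep θ if ρ ≤ ϵ), written here for a toy Gaussian model; the function signature, the toy simulator, the choice of the sample mean as summary statistic η, and the tolerance value are all assumptions made for illustration.

```python
import random
import statistics

def abc_rejection(observed, prior_sample, simulate, summary, distance,
                  eps, n_accept, rng):
    """Return n_accept parameter values whose simulations fall within eps of the data."""
    s_obs = summary(observed)
    accepted = []
    while len(accepted) < n_accept:
        theta = prior_sample(rng)              # draw from the prior
        simulated = simulate(theta, rng)       # run the forward model
        if distance(summary(simulated), s_obs) <= eps:
            accepted.append(theta)             # keep only close matches
    return accepted

rng = random.Random(1)
observed = [rng.gauss(1.5, 1.0) for _ in range(50)]   # toy "observed" data

posterior = abc_rejection(
    observed,
    prior_sample=lambda r: r.uniform(-5.0, 5.0),
    simulate=lambda theta, r: [r.gauss(theta, 1.0) for _ in range(50)],
    summary=statistics.fmean,                  # summary statistic: sample mean
    distance=lambda a, b: abs(a - b),          # distance between summaries
    eps=0.1,
    n_accept=100,
    rng=rng,
)
print(statistics.fmean(posterior), statistics.fmean(observed))
```

In this toy setup only a few percent of the prior draws are accepted, which illustrates the inefficiency of sampling directly from the prior.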

- In the ABC-MCMC algorithm (Marjoram et al. 2003), a point in the parameter space, called a particle, performs a random walk (defined by a proposal distribution or transition kernel) across the parameter space, and only moves if the dataset simulated with the proposed parameters better matches the observed dataset, until it converges to a stationary distribution. As in standard MCMC procedures, the efficiency of the algorithm is largely determined by the choice of the scale of the kernel.

- In the ABC Sequential Monte Carlo parallel algorithm (ABC-SMC, Toni et al. 2009), samples are drawn from the prior distribution until N particles are accepted, that is, those with a distance to the data ρ < ϵ0. All accepted particles are attributed a statistical weight ω0. The weighted particles then constitute an intermediate distribution from which another set of samples is drawn and perturbed with a fixed transition kernel, until N particles satisfy the acceptance criterion ρ < ϵ1, with ϵ1 < ϵ0. They are then weighted with ω1 and the process is repeated with a diminished tolerance at each step. After T iterations of this process, the particles are sampled from the approximate posterior distribution. The performance of ABC-SMC scales as N, where N is the number of particles. Different variations of ABC-SMC algorithms have been published, each with a different weighting scheme for particles.

- ABC Population Monte Carlo (ABC-PMC, Beaumont et al. 2009) is similar to ABC-SMC, but differs in its adaptive weighting scheme: its transition kernel is Gaussian and based on the variance of the accepted particles in the previous iteration. This scheme requires the fewest tuning parameters of the three algorithms discussed here (Turner & Van Zandt 2012). However, ABC-PMC is also more computationally costly than ABC-SMC, as its performance scales as $N^2$ (because of its adaptability).

The reader is referred to Csilléry et al. (2010), Marin et al. (2011), Turner & Van Zandt (2012), Sunnaker et al. (2013), and Gutmann & Corander (2016) for a set of historical, methodical, and theoretical reviews of this final approach, as well as a complete description of the algorithms mentioned above.

Parametric Bayesian indirect likelihood.

Another class of likelihood-free techniques is called parametric Bayesian indirect likelihood (pBIL). First proposed by Reeves & Pettitt (2005) and Gallant & McCulloch (2009), pBIL transforms the intractable likelihood of complex inference problems into a tractable one using an auxiliary parametric model that describes the simulated datasets generated by the forward model. In this scheme, the resulting auxiliary likelihood function quantifies the discrepancy between the observed and simulated data. It is used in Bayes’ theorem and the parameter space is explored using a user-defined sampling procedure, in an equivalent way to a classical Bayesian technique. While sharing similarities with the previous technique, pBIL is not an ABC method in the strict sense, as it does not require an appropriate choice of summary statistics and tolerance level to compare the observed and synthetic datasets. The accuracy of the inference in the pBIL scheme is determined by how well the auxiliary model describes the data (observed and simulated). The theoretical foundations of this scheme are described extensively in Drovandi et al. (2015).
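The idea of an auxiliary likelihood can be illustrated with a minimal sketch. Here a Gaussian is fitted to the simulated data and the observed data are scored under that fitted Gaussian; the resulting log-likelihood could then be passed to any sampler. The toy forward model, the Gaussian auxiliary model, and the function names are assumptions made for illustration and are not the auxiliary model used in this article.

```python
import math
import random
import statistics

def forward_model(theta, n, rng):
    """Toy stochastic simulator standing in for a real forward model."""
    return [rng.gauss(theta, 1.0) for _ in range(n)]

def auxiliary_loglike(observed, simulated):
    """Log-likelihood of the observed data under a Gaussian fitted to the simulation."""
    mu = statistics.fmean(simulated)
    var = statistics.pvariance(simulated, mu)
    n = len(observed)
    sq = sum((y - mu) ** 2 for y in observed)
    return -0.5 * n * math.log(2.0 * math.pi * var) - 0.5 * sq / var

rng = random.Random(7)
observed = forward_model(1.0, 200, rng)        # toy "observed" data, true theta = 1

for theta in (0.0, 1.0, 3.0):
    simulated = forward_model(theta, 2000, rng)
    # Larger values indicate a smaller discrepancy between simulation and data.
    print(theta, auxiliary_loglike(observed, simulated))
```

No tolerance level appears anywhere in this sketch: the quality of the inference depends only on how well the auxiliary model describes both datasets.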

Application of likelihood-free inference to astrophysics.

The application of likelihood-free methods to astrophysics is still rare, as noted by Cameron & Pettitt (2012) in their review. Only lately has the potential of such techniques been considered. Schafer & Freeman (2012) praised the use of likelihood-free inference in the context of quasar luminosity function estimation. Cameron & Pettitt (2012) explored the morphological transformation of high-redshift galaxies and derived strong constraints on the evolution of the merger rate in the early Universe using an ABC-SMC approach. Weyant et al. (2013) also used SMC for the estimation of cosmological parameters from type Ia supernovae samples, and could still provide robust results when the data was contaminated by type IIP supernovae. Robin et al. (2014) constrained the shape and formation period of the thick disk of the Milky Way using MCMC as their sampling scheme, based on photometric data from the SDSS and the Two Micron All Sky Survey (2MASS). Finally Hahn et al. (2017) demonstrate the feasibility of using ABC to constrain the relationship between galaxies and their dark matter halo. The recent birth of Python packages providing sampling algorithms in an ABC framework, such as astroABC (Jennings & Madigan 2017) and ELFI (Kangasrääsiö et al. 2016), which implement SMC methods, and COSMOABC (Ishida et al. 2015) which implements the PMC algorithm, will probably facilitate the rise of likelihood-free inference techniques in the astronomical community.

Outline of the article.

To the authors’ knowledge, no likelihood-free inference approaches have yet included telescope image simulation in their forward modeling pipeline, because of the difficulty in implementation as well as a prohibitive computational cost. Prototypical implementations in a cosmological context have, however, been tested by Akeret et al. (2015) on a Gaussian toy model for the calibration of image simulations. In the present article we propose a new technique that combines the forward modeling approach with sampling techniques in the pBIL framework. In that regard, we use a stochastic semi-empirical model of evolving galaxy populations coupled to an image simulator to generate realistic synthetic images. Simulated images go through the same source extraction process and data analysis pipeline as real images. The observed and synthetic data distributions are finally compared and used to infer the most probable models.

This article is organized as follows: Sects. 2 to 5 describe in detail the forward-modeling pipeline we propose, from model parameters to data analysis and sampling algorithm. Section 6 defines our convergence diagnostics. In Sect. 7, we demonstrate the validity, internal consistency and robustness of our approach by inferring the LF parameters and their evolution using one realization of our model as input data. We perform these tests in two situations: a configuration where the data is a mock Canada-France-Hawaii Telescope Legacy Survey (CFHTLS) Deep image containing two populations of ellipticals and lenticulars and late-type spirals, and where the parameters to infer are the evolving luminosity function parameters for each population (Sect. 7.4); and a configuration where the data is a mock CFHTLS Deep image with a single population of pure bulge elliptical galaxies, and in which the inference is performed on the evolving size and luminosity (Sect. 7.6). In Sect. 8, we compare the results of our forward modeling approach with those of the more traditional photometric redshift approach applied to the same situation. Finally, Sect. 9 provides suggestions to improve the speed and accuracy of this method.

Throughout this article, unless stated otherwise, we adopt the following cosmological parameters: H0 = 100 h km s$^{-1}$ Mpc$^{-1}$ with h = 1, Ωm = 0.3, ΩΛ = 0.7 (Spergel et al. 2003). Magnitudes are given in the AB system.

2. Model: from parameters to image generation

In order to infer the physical properties of galaxies from observed survey images without having to describe the complex selection effects the latter contain, we propose the following pipeline. We start from a set of physical input parameters, drawn from the prior distribution defined for each parameter. These parameters describe the luminosity and size distribution of the various populations of modeled galaxies. From this set of parameters, our forward model generates a catalog of galaxies modeled as the sum of their bulge and disk components, each with a different profile. The projected light profiles of the galaxies are determined by their inclination, the relative fraction of light contained within the bulge, and the galaxy redshift as well as the extinction of the bulge and disk components. The galaxies are randomly drawn from the luminosity function of their respective population. The catalog assumes that galaxies are randomly distributed on a virtual sky that includes the cosmological effects of an expanding universe with a cosmological constant. The survey image is simulated in every band covered by the observed survey, and reproduces all of its characteristics, such as filters transmission, exposure time, point spread function (PSF) model, and background noise model.

Then, a large number of “simulated” images are generated via an iterative process (a Markov chain) generating new sets of physical parameters at each iteration. Some basic flux and shape parameters are extracted in the same way from the observed and simulated images: after a pre-processing step (which is identical for observed and simulated data) where observables are decorrelated and their dynamic range reduced, the multidimensional distributions of simulated observables are directly compared to the observed distributions using a custom distance function on binned data.

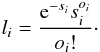

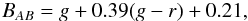

The chain moves through the parameter space towards regions of high likelihood, that is, regions that minimize the distance between the modeled and observed datasets. The pathway of the chain is finally analyzed to reconstruct the multidimensional posterior probability distribution and infer the sets of parameters that most likely reproduce the observed catalogs, as well as the correlations between these parameters. The main steps of this approach are detailed in the sections below, and the whole pipeline is sketched in Fig. 1 of this article.

Fig. 1 Summary of the workflow.

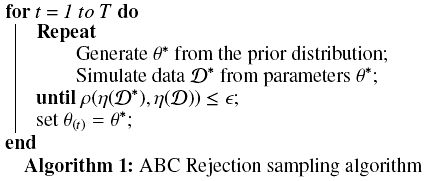

2.1. Physical parameters and source catalog generation

Artificial catalogs are generated with the Stuff package (Bertin 2009) in fields of a given size. Stuff relies on empirical scaling laws applied to a set of galaxy “types”, which it uses to draw galaxy samples with photometric properties computed in an arbitrary number of observation passbands. Each galaxy type is defined by its Schechter (1976) luminosity function parameters, its spectral energy distribution (SED), as well as the bulge-to-total luminosity ratio B/T and rest-frame extinction properties of each component of the galaxy through a “reference” passband.

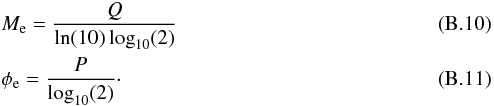

The photometry of simulated galaxies is based on the composite SED templates of Coleman et al. (1980) extended by Arnouts et al. (1999). Any of the six “E”, “S0”, “Sab”, “Sbc”, “Scd”, and “Irr” SEDs can be assigned to the bulge and disk components separately, for a given galaxy type. The version of Stuff used in this work does not allow the SEDs to evolve with redshift; instead, following Gabasch et al. (2004), galaxy evolution is modeled as a combination of density (Schechter’s φ∗) and luminosity (Schechter’s M∗) evolution with redshift z:

$$M^*(z) = M^*(0) + M_{\rm e} \ln(1 + z), \tag{2}$$

$$\phi^*(z) = \phi^*(0)\,(1 + z)^{\phi_{\rm e}}, \tag{3}$$

where Me and φe are constants. The reference filter (i.e. the filter where the LF is measured) is set to the g-band in the present article.
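A minimal sketch of this evolution model is given below. It assumes the Gabasch et al. (2004) style parametrization, M∗(z) = M∗(0) + Me ln(1 + z) and φ∗(z) = φ∗(0) (1 + z)^φe; the function names and the numerical values are hypothetical and serve only as an example.

```python
import math

def m_star(z, m_star_0, m_e):
    """Characteristic magnitude at redshift z (luminosity evolution)."""
    return m_star_0 + m_e * math.log(1.0 + z)

def phi_star(z, phi_star_0, phi_e):
    """Normalization density at redshift z (density evolution)."""
    return phi_star_0 * (1.0 + z) ** phi_e

# Hypothetical values, for illustration only.
for z in (0.0, 0.5, 1.0, 2.0):
    print(z, m_star(z, -20.0, -1.5), phi_star(z, 5e-3, -1.0))
```

With a negative Me, M∗ brightens towards high redshift, and with a negative φe the normalization density decreases.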

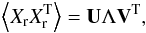

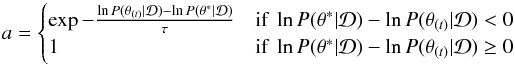

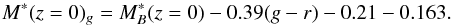

Fig. 2 Comparison between an observed survey image and a mock image generated by our model. On the left: a region of the CFHTLS D1 field (stack from the 85% best seeing exposures) built from the gri bands. On the right: a simulated image with Stuff+Skymaker with the same filters, exposure time, and telescope properties as the CFHTLS data. Both images are shown with the same color coding.

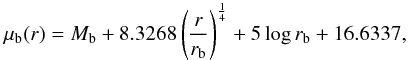

Bulges and elliptical galaxies have a de Vaucouleurs (1953) profile:

$$\mu_{\rm b}(r) = M_{\rm b} + 8.3268 \left(\frac{r}{r_{\rm b}}\right)^{1/4} + 5 \log r_{\rm b} + 16.6337, \tag{4}$$

where $\mu_{\rm b}(r)$ is the bulge surface brightness in mag pc$^{-2}$, $M_{\rm b} = M - 2.5 \log (B/T)$ is the absolute magnitude of the bulge component and $M$ the total absolute magnitude of the galaxy, both in the reference passband. As a projection of the fundamental plane, the average effective radius $\langle r_{\rm b} \rangle$ in pc follows an empirical relation we derive from the measurements of Binggeli et al. (1984):

$$\langle r_{\rm b} \rangle = \begin{cases} r_{\rm knee}\, 10^{-0.3 (M_{\rm b} - M_{\rm knee})} & \text{if } M_{\rm b} < M_{\rm knee}, \\ r_{\rm knee}\, 10^{-0.1 (M_{\rm b} - M_{\rm knee})} & \text{otherwise}, \end{cases} \tag{5}$$

where $r_{\rm knee} = 1.58\, h^{-1}$ kpc and $M_{\rm knee} = -20.5$. The intrinsic flattening $q$ of bulges follows a normal distribution with $\langle q \rangle = 0.65$ and $\sigma_q = 0.18$ (Sandage et al. 1970), which we convert to the apparent aspect-ratio $\sqrt{q^2 \sin^2 i + \cos^2 i}$, where $i$ is the inclination of the galaxy with respect to the line of sight.

Disks have an exponential profile:

$$\mu_{\rm d}(r) = M_{\rm d} + 1.8222 \left(\frac{r}{r_{\rm d}}\right) + 5 \log r_{\rm d} + 0.8710, \tag{6}$$

where $\mu_{\rm d}(r)$ is the disk surface brightness in mag pc$^{-2}$, $M_{\rm d} = M - 2.5 \log (1 - (B/T))$ is the absolute magnitude of the disk in the reference passband, and $r_{\rm d}$ the effective radius. Semi-analytical models where disks originate from the collapse of the baryonic content of dark-matter-dominated halos (Dalcanton et al. 1997; Mo et al. 1998) predict useful scaling relations. Assuming that light traces mass and that there is negligible transport of angular momentum during collapse, one finds $r_{\rm d} \propto \lambda L_{\rm d}^{-\beta}$, where $\lambda$ is the dimensionless spin parameter of the halo, $L_{\rm d} = 10^{-0.4 M_{\rm d}}$ the total disk luminosity, and $\beta \simeq -1/3$ (de Jong & Lacey 2000). The distribution of $\lambda$, as seen in N-body simulations, can well be described by a log-normal distribution (Warren et al. 1992), and is very weakly dependent on cosmological parameters (Steinmetz & Bartelmann 1995), hence the distribution of $r_{\rm d}$ at a given $M_{\rm d}$ should behave as:

$$n(r_{\rm d} | M_{\rm d}) \propto \frac{1}{r_{\rm d}} \exp \left[-\frac{\left(\ln (r_{\rm d}/r^*_{\rm d}) - 0.4\beta_{\rm d}(M_{\rm d}-M^*_{\rm d})\right)^2}{2\sigma^2_\lambda}\right]. \tag{7}$$

In de Jong & Lacey (2000), a convincing fit to I-band catalog data of late-type galaxies corrected for internal extinction is obtained, with $\beta_{\rm d} = -0.214$, $\sigma_\lambda = 0.36$, and best-fit values of $r^*_{\rm d}$ (in kpc) and $M^*_{\rm d}$ quoted for H0 = 65 km s$^{-1}$ Mpc$^{-1}$. Both bulge and disk effective radii are allowed to evolve (separately) with redshift z using simple $(1 + z)^{\gamma}$ scaling laws (see, e.g., Trujillo et al. 2006; Williams et al. 2010). The original values from Trujillo et al. (2006) are modified to those in Table 5 based on the Hubble Space Telescope Ultra Deep Field (UDF, Williams et al. 2010; Bertin, priv. comm.).
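Since Eq. (7) is a log-normal density in rd, drawing disk radii amounts to drawing ln rd from a normal distribution centered on ln r∗d + 0.4 βd (Md − M∗d) with dispersion σλ, and then applying the (1 + z)^γ scaling. The sketch below illustrates this; βd and σλ are the values quoted in the text, whereas the values of r∗d and M∗d, the value of γ, and the function name are hypothetical placeholders and are not taken from this article.

```python
import math
import random

BETA_D = -0.214        # from the text (de Jong & Lacey 2000 fit)
SIGMA_LAMBDA = 0.36    # from the text
R_STAR_KPC = 5.0       # hypothetical placeholder for r*_d
M_STAR_D = -22.0       # hypothetical placeholder for M*_d

def sample_disk_radius(m_d, z, gamma, rng):
    """Draw one disk effective radius (kpc) at disk magnitude m_d and redshift z."""
    # Eq. (7): ln r_d is normally distributed around this value.
    ln_median = math.log(R_STAR_KPC) + 0.4 * BETA_D * (m_d - M_STAR_D)
    r0 = math.exp(rng.gauss(ln_median, SIGMA_LAMBDA))
    # Simple power-law size evolution with redshift.
    return r0 * (1.0 + z) ** gamma

rng = random.Random(3)
radii = sorted(sample_disk_radius(M_STAR_D, 0.0, -1.0, rng) for _ in range(20001))
median_radius = radii[len(radii) // 2]
print(median_radius)   # close to R_STAR_KPC for m_d = M_STAR_D at z = 0
```

Because βd is negative, brighter disks (more negative Md) are drawn with larger median radii.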

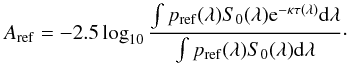

Internal extinction is applied (separately) to the bulge and disk SEDs S(λ) using the extinction law from Calzetti et al. (1994), extended to the UV and the IR assuming an LMC law (Charlot, priv. comm.):  (8)where S0(λ) is the face-on, unextincted SED and τ(λ) the uncalibrated extinction law. The normalization factor κ is computed by integrating the effect of extinction Aref, expressed in magnitudes, within the reference passband pref(λ):

(8)where S0(λ) is the face-on, unextincted SED and τ(λ) the uncalibrated extinction law. The normalization factor κ is computed by integrating the effect of extinction Aref, expressed in magnitudes, within the reference passband pref(λ):  (9)As the variation of τ(λ) is small within the reference passband, we take advantage of a second order Taylor expansion of both the exponential and the logarithm:

(9)As the variation of τ(λ) is small within the reference passband, we take advantage of a second order Taylor expansion of both the exponential and the logarithm:  with

with  (12)Solving the quadratic Eq. (11) we obtain:

(12)Solving the quadratic Eq. (11) we obtain:  (13)We adopt the parametrization of the extinction from the RC3 catalog (de Vaucouleurs et al. 1991):

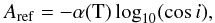

We adopt the parametrization of the extinction from the RC3 catalog (de Vaucouleurs et al. 1991):

[Eq. (14)]

where i is the disk inclination with respect to the line-of-sight, and α(T) (not to be confused with Schechter’s α) is a type-dependent “extinction coefficient” that quantifies the amount of extinction+diffusion in the blue passband. For simplicity we identify this passband with our reference g passband, although they do not exactly match. The extinction coefficient evolves with de Vaucouleurs (1959) revised morphological type as:

[Eq. (15)]

Stuff applies to SEDs the mean intergalactic extinction curve at the given redshift following Madau (1995) and Madau et al. (1996), using the list of Lyman wavelengths and absorption coefficients from the Xspec code (Arnaud 1996). Galaxies are Poisson distributed in 5 h-1 Mpc redshift slices from z = 20 to z = 0. For now the model does not include clustering properties, therefore the galaxy positions are uniformly distributed over the field of view. Ultimately Stuff generates a set of mock catalogs (one per filter) to be read by the image simulation software, containing source position, apparent magnitude, B/T, bulge and disk axis ratios and position angles, and redshift. We note that for consistency, we kept most of the default values applied by Stuff to scaling parameters, although many of them come from slightly outdated observational constraints dating back to the mid-2000s (and even earlier). This of course does not affect the conclusions of this paper.
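Returning to the normalization factor κ of the internal extinction law, the second order expansion described above can be sketched as follows. This is a hedged reading aid, not the published equations: it assumes that Eq. (8) is an exponential attenuation, that the extinction Aref is the magnitude difference between the extincted and unextincted SEDs integrated over pref(λ), and the weighted moments ⟨τ⟩ and ⟨τ²⟩ are notation introduced here for convenience.

```latex
\begin{align}
S(\lambda) &= S_0(\lambda)\,e^{-\kappa\,\tau(\lambda)}
  && \text{(assumed form of Eq. 8)} \\
A_{\rm ref} &= -2.5\,\log_{10}
  \frac{\int p_{\rm ref}(\lambda)\,S_0(\lambda)\,e^{-\kappa\,\tau(\lambda)}\,{\rm d}\lambda}
       {\int p_{\rm ref}(\lambda)\,S_0(\lambda)\,{\rm d}\lambda}
  && \text{(assumed form of Eq. 9)} \\
\langle \tau^{n} \rangle &\equiv
  \frac{\int p_{\rm ref}(\lambda)\,S_0(\lambda)\,\tau^{n}(\lambda)\,{\rm d}\lambda}
       {\int p_{\rm ref}(\lambda)\,S_0(\lambda)\,{\rm d}\lambda}
  && \text{(notation introduced here)} \\
A_{\rm ref} &\approx \frac{2.5}{\ln 10}
  \left[\kappa\,\langle\tau\rangle
  - \frac{\kappa^{2}}{2}\left(\langle\tau^{2}\rangle - \langle\tau\rangle^{2}\right)\right]
  && \text{(second order in } \kappa\text{)} \\
\kappa &\approx
  \frac{\langle\tau\rangle
  - \sqrt{\langle\tau\rangle^{2}
  - 0.8\,\ln 10\,\left(\langle\tau^{2}\rangle - \langle\tau\rangle^{2}\right) A_{\rm ref}}}
  {\langle\tau^{2}\rangle - \langle\tau\rangle^{2}}
\end{align}
```

The root with the minus sign is the one that reduces to the first order solution κ ≈ 0.4 ln 10 Aref / ⟨τ⟩ when the variance of τ(λ) across the passband goes to zero.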

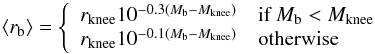

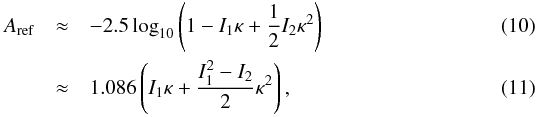

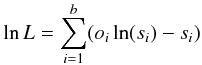

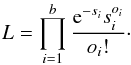

2.2. Image generation

Stuff catalogs are turned into images using the SkyMaker package (Bertin 2009). Briefly, SkyMaker renders simplified images of galaxy models as the sum of a Sérsic (1963) “bulge” and an exponential “disk” on a high resolution pixel grid. The models are convolved by a realistic PSF model generated internally, or derived from actual observations using the PSFEx tool (Bertin 2011a). Each convolved galaxy image – or point source for stars – is subsampled at the final image resolution using a Lanczos-3 kernel (Wolberg & George 1990) and placed on the pixel grid at its exact catalog coordinates. The next step involves large scale features: convolution by a PSF aureole (e.g., Racine 1996), addition of the sky background, and simulation of saturation features (bleed trails). Finally, photon (Poisson) and read-out (Gaussian) noise are added according to the characteristics of the instrument being simulated, and the data are converted to ADUs (analog-to-digital units). An example of a simulated deep survey field is shown in Fig. 2.

|

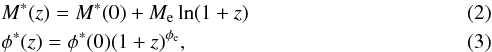

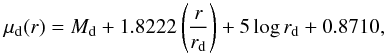

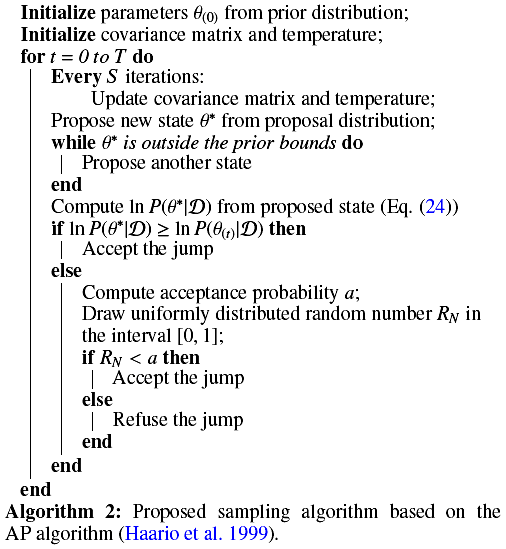

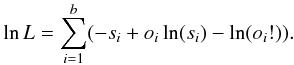

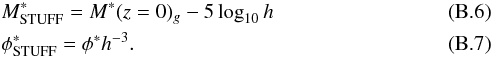

Fig. 3 Illustration of the parallelization process of our pipeline, described in detail in Sect. 3.2. Stuff generates a catalog, that is, a set of files containing the properties of simulated galaxies, such as inclination, bulge-to-disk ratio, apparent size, and luminosity. Each file lists the same galaxies in a different passband. The parallelization process is performed on two levels: first, the Stuff catalogs are split into sub-catalogs according to the positions of the sources on the image. These sub-catalogs are sent to the nodes of the computer cluster in all filters at the same time using the HTCondor framework. Each sub-catalog is then used to generate a multiband image corresponding to a fraction of the total field. This step is multiprocessed in order to generate the patches in every band simultaneously. SExtractor is then launched on every patch synchronously, also using multiprocessing. The source detection is done in one pre-defined band, and the photometry is done in every band. Finally, the SExtractor catalogs generated from all the patches are merged into one large catalog containing the photometric and size parameters of the extracted sources from the entire field. |

3. Compression of data: from source extraction to binning

3.1. Source extraction

The SExtractor package (Bertin & Arnouts 1996) produces photometric catalogs from astronomical images. Briefly, sources are detected in four main steps: first, a smooth model of the image background is computed and subtracted. Second, a convolution mask, acting as a matched filter, is applied to the background-subtracted image to improve the detection of faint sources. Third, a segmentation algorithm identifies connected regions of pixels with a surface brightness in the filtered image higher than the detection threshold. Finally, the same segmentation process is repeated at increasing threshold levels to separate partially blended sources that may share light at the lowest level.

Once a source has been detected, SExtractor performs a series of measurements according to a user-defined parameter list. This includes various position, shape, and flux estimates. For this work we rely on FLUX_AUTO photometry. FLUX_AUTO is based on Kron’s algorithm (Kron 1980) and gives reasonably robust photometric estimates for all types of galaxies. For object sizes we choose the half-light radius estimation provided by the FLUX_RADIUS parameter, which is the radius of the aperture that encloses half of the FLUX_AUTO source flux. We note that this size estimate includes the convolution of the galaxy light profile by the PSF. In order to retrieve properties such as color, SExtractor is run in the so-called double image mode, where detection is carried out in one image and measurements in another. By repeating source extraction with the same “detection image”, but with “measurement images” in different filters, we ensure that the photometry is performed in the exact same object footprints in all filters.

SExtractor flags all issues occurring during the detection and measurements processes. In this work, we consider only detections with a SExtractor FLAG parameter less than four, which excludes sources that are saturated or truncated by the frame boundaries.

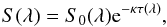

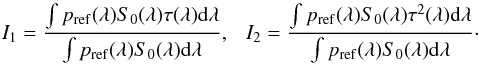

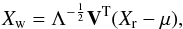

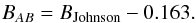

3.2. Parallelization

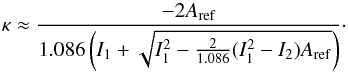

By construction, our sampling procedure based on MCMC (cf. Sect. 5) cannot be parallelized, because the knowledge of the (n − 1)th iteration is required to compute the nth iteration. We can, however, parallelize the process of source extraction and, most importantly, image simulation. In fact, we find in performance tests that the pipeline runtime is largely dominated by the image generation process (cf. Fig. 4), and that the image generation time scales linearly with the area of the simulated image. Simulating a single image per band containing all the sources for every iteration would make this problem computationally unfeasible in terms of execution time. In order to limit the runtime of an iteration, the image making step is therefore split into Nsub × Nf parallel small square patches, as illustrated in Fig. 3, where Nf is the number of filters fixed by the observed data and Nsub the user-defined number of patches per band. Both quantities must be chosen so that their product optimizes the resources used by the computing cluster.

We start with Nf input catalogs generated from the model, each containing a list of sources’ positions in a full-sized square field of size Lf, as well as their photometric and size properties. The sources are then filtered according to their spatial coordinates and dispatched to their corresponding patch. Each patch has a size $L_{\rm f}/\sqrt{N_{\rm sub}}$, where Nsub is a square number. In practice, the sources are extracted from a box 150 pixels wider than the patch size in order to include the objects outside the frame that partially affect the simulated image. All the sources of position (x,y) are within a patch of coordinate $(i,j) \in [0,\sqrt{N_{\rm sub}}-1] \times [0,\sqrt{N_{\rm sub}}-1]$ if $x \in [i \frac{L_{\rm f}}{\sqrt{N_{\rm sub}}}-150, (i+1) \frac{L_{\rm f}}{\sqrt{N_{\rm sub}}}+150]$ and $y \in [j \frac{L_{\rm f}}{\sqrt{N_{\rm sub}}}-150, (j+1) \frac{L_{\rm f}}{\sqrt{N_{\rm sub}}}+150]$.
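The dispatching rule above can be illustrated with a minimal Python sketch. The function name `dispatch_to_patches` and its arguments are hypothetical and are not taken from the pipeline; the only elements drawn from the text are the patch size Lf/√Nsub and the 150 pixel margin.

```python
import math

def dispatch_to_patches(sources, field_size, n_sub, margin=150.0):
    """Assign each (x, y) source to every patch whose padded box contains it.

    field_size plays the role of L_f and n_sub the role of N_sub (a square
    number). A source lying in the margin of several patches is copied to
    each of them, which mimics the overlap described in the text.
    """
    n_side = math.isqrt(n_sub)
    if n_side * n_side != n_sub:
        raise ValueError("n_sub must be a square number")
    patch_size = field_size / n_side
    patches = {(i, j): [] for i in range(n_side) for j in range(n_side)}
    for x, y in sources:
        for (i, j), members in patches.items():
            in_x = i * patch_size - margin <= x <= (i + 1) * patch_size + margin
            in_y = j * patch_size - margin <= y <= (j + 1) * patch_size + margin
            if in_x and in_y:
                members.append((x, y))
    return patches
```

With a 1000 pixel field split into four patches, a source at (510, 100) ends up in both patch (0, 0) and patch (1, 0), because it falls inside the margin of the former and the frame of the latter.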

|

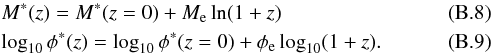

Fig. 4 Benchmarking of a full iteration of our pipeline, obtained with 50 realizations of the same iteration. An iteration starts with the Stuff catalog generation (here we consider a case where ~55 000 sources spread into two populations of galaxies are produced), and ends with the posterior density computation. The runtime of each subroutine called is analyzed in terms of the fraction of the total runtime of the iteration. In this scheme, the image simulation step clearly dominates the runtime, followed by the source extraction step and the HTCondor latencies. Source generation, pre-processing, binning and posterior density calculation (labeled lnP_CALC), however, account for a negligible fraction of the total runtime. |

As a result, all the sources are scattered through Nsub catalog files per band. We then use the HTCondor distributed job scheduler on our computing cluster to generate and analyze all the patches at the same time. The flexibility of HTCondor offers many advantages to a pipeline that requires distributed computing over long periods of time. Thanks to its dynamic framework, jobs can be checkpointed and resumed after being migrated if a node of the cluster becomes unavailable, and the scheduler provides efficient match-making between the required and the available resources. This framework also has its drawbacks, in the form of inherent and uncontrollable latencies when job input files are sent to the various nodes.

In our case, each job corresponds to a single patch, and the Nsub × Nf resulting catalogs serve as input files for the jobs. We found that HTCondor latencies represent between 7% and 50% of the run time of each iteration, as illustrated in Fig. 4 in the context of the application described below (cf. Sect. 7).

For each job, the image generation and source extraction procedures are multiprocessed: SkyMaker is first launched simultaneously in every band on the $L_{\rm f}/\sqrt{N_{\rm sub}}$-sized patch and, when all the images are available, SExtractor is launched in double image mode. HTCondor then waits until all jobs are completed. Finally, the catalog files generated from all the patches are merged into one, so that in fine, a single catalog file per band contains all the extracted sources.

3.3. Reduction of the dynamic ranges

Observables such as fluxes may have a large dynamic range that goes up to the saturation level of the chosen survey. This can be problematic for the binning process of our pipeline, in the sense that it will create many sparsely populated bins. We must therefore reduce the dynamic range of the photometric properties of the sources. We cannot simply use the log of the flux arrays, because the noise properties of background-subtracted images can provide faint objects with negative fluxes. We therefore use the following transform g(X), which has already been applied to model-fitting and machine learning applications (e.g., Bertin 2011b):

$$
X_{\rm r} = g(X) =
\begin{cases}
\kappa_{\rm c}\sigma \ln\left(1 + \frac{X}{\kappa_{\rm c}\sigma}\right) & \text{if } X \ge 0,\\
-\kappa_{\rm c}\sigma \ln\left(1 - \frac{X}{\kappa_{\rm c}\sigma}\right) & \text{otherwise,}
\end{cases}
\tag{16}
$$

where σ is the baseline standard deviation of X (i.e., the average lowest flux error), and κc a user-defined factor which can be chosen in the range from 1 to 100, typically. In all the test cases that we describe in Sect. 7, we set κc = 10. In practice we apply this compression to each dimension of the observable space, with a different value of σ for each observable. We separate the σ values into two categories for each kind of observable: σf for flux-related observables and σr for size-related ones. These values are affected by the galaxy populations in the observed field as well as the photometric properties of the field itself, such as the bands used and the noise properties. For fluxes and colors, a root mean square error estimate of the flux measurement is given by SExtractor: FLUXERR_AUTO. We set σf to the median value of the distribution of FLUXERR_AUTO values for the sources extracted from input data, and this operation is repeated on each filter. However, SExtractor provides no such error estimate for FLUX_RADIUS. For this kind of observable we rely on the distribution of FLUX_RADIUS of the extracted sources with respect to the corresponding FLUX_AUTO. For each passband, the value of σr is set to the approximate FLUX_RADIUS of the extracted sources’ distribution when FLUX_AUTO tends to 0. The exact values actually do not matter, because the same compression is applied on the observed and simulated data.
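As an illustration, the transform of Eq. (16) can be written in a few lines of NumPy. This is a minimal sketch: the function name `compress_dynamic_range` is hypothetical, and the two branches of the equation are merged by using the sign and absolute value of X, which is algebraically equivalent.

```python
import numpy as np

def compress_dynamic_range(x, sigma, kappa_c=10.0):
    """Symmetric logarithmic compression of Eq. (16).

    Behaves linearly for |x| << kappa_c * sigma and logarithmically beyond,
    and remains defined for the negative fluxes of faint sources.
    """
    x = np.asarray(x, dtype=float)
    scale = kappa_c * sigma
    # sign(x) * scale * ln(1 + |x| / scale) reproduces both branches.
    return np.sign(x) * scale * np.log1p(np.abs(x) / scale)
```

The transform is odd and monotonic, so the ordering of the sources along each observable is preserved and zero maps to zero.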

3.4. Decorrelation of the observables: whitening transformation

The choice of the nature and number of observables is a compromise between computational cost and informational content. In fact, memory limitations intrinsic to the computational cluster when binning observed and synthetic data (cf. Sect. 3.5) prevent us from using an arbitrary number of observables in the pipeline. Observables such as fluxes or magnitudes in different passbands also tend to be correlated with one another, as they originate from the same spectrum of a given galaxy from a given population. These correlations can be high if the passbands are too narrow, too close to each other, and not covering a large enough wavelength baseline. One must thoughtfully choose the appropriate set of filters a priori in order for the resulting set of observables to be able to disentangle the luminous properties of the different galaxy populations.

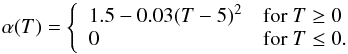

Strong correlations between input vector components can also make binning very inefficient, therefore an important pre-processing step is to decorrelate them. In that regard, we apply a linear transformation called principal component analysis whitening, or sphering (Friedman 1987; Hyvärinen et al. 2009; Shlens 2014; Kessy et al. 2017) to our reduced matrix of observables Xr of size p × Ns, where p is the number of observables and Ns is the number of sources. Principal component analysis (PCA) is an algorithm commonly used in the context of dimensionality reduction. Its goal is to find a set of orthogonal axes in a dataset called principal components that encapsulate most of the variance of the data. This can be performed via a singular values decomposition (SVD) of the covariance matrix of the data:

$$
\mathrm{Cov}(X_{\rm r}) = U \Lambda V^{T} \tag{17}
$$

where U and V are orthogonal matrices and Λ the diagonal matrix containing the non-negative singular values of the covariance matrix, sorted by descending order.

PCA whitening is the combination of two operations: rotation and scaling. First the dataset (previously centered around zero by subtracting the mean in each dimension) is projected along the principal components, which removes linear correlations, and then each dimension is scaled so that its variance equals one. The whitening transform can therefore be summarized by:

$$
X_{\rm w} = \Lambda^{-1/2} V^{T} (X_{\rm r} - \mu) \tag{18}
$$

where Xw is the whitened version of the observables matrix Xr and μ is the average matrix. The PCA whitening transformation results in a set of new variables that are uncorrelated and have unit variance (i.e., the covariance matrix of Xw is the identity matrix). During the chain iterations, the observed and simulated data are centered, rotated, and scaled in the same way to ensure that both distributions can be well superposed and compared (cf. Sect. 4).

In practice, the simulated data is whitened using the Λ, VT, and μ of the observed data. The number of principal components to keep is left to the choice of the user. Retaining only the components with the highest variance and therefore reducing the computational cost of the pipeline may be tempting. Nevertheless, subtle but important features can arise from low variance components, and deleting them comes at a price. In our application (cf. Sect. 7), we choose not to reduce the dimensionality of the problem.
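The whitening step can be sketched with NumPy as follows. The function names `fit_whitening` and `apply_whitening` are hypothetical, and the sketch assumes that the observables are stored as a p × Ns array, as in the text, and that no component is discarded.

```python
import numpy as np

def fit_whitening(x_obs):
    """Estimate the whitening parameters from the observed data only.

    x_obs has shape (p, n_sources): one row per observable.
    Returns the mean vector, the singular values and V^T of the covariance.
    """
    mu = x_obs.mean(axis=1, keepdims=True)
    cov = np.cov(x_obs)                    # rows are treated as variables
    _, lam, vt = np.linalg.svd(cov)        # singular values in descending order
    return mu, lam, vt

def apply_whitening(x, mu, lam, vt):
    """Center, rotate and rescale any dataset with the observed-data parameters."""
    return (vt @ (x - mu)) / np.sqrt(lam)[:, None]
```

Calling `fit_whitening` once on the observed data and then `apply_whitening` on both the observed and simulated data keeps the two datasets in the same frame. Only the observed data are guaranteed to end up with an identity covariance matrix.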

3.5. Binning in the observational space

It remains to quantify the similarity between the two multivariate datasets, one containing preprocessed observables from the observations and the other from a simulation. Following the idea of Robin et al. (2014) and Rybizki & Just (2015), who grouped their data representing stellar photometry into bins of magnitude and color, we choose to bin our datasets, considering the relative simplicity and advantageous computational cost of this method. However, binning comes with some inevitable drawbacks: the number of bins increases exponentially with the number of dimensions. For a fixed-size dataset, multivariate histograms are also sparser than their univariate counterparts and display more complex shapes. Finally the choice of the binning scheme can significantly influence the information content of the dataset, and that choice is not trivial in high-dimensional spaces (Cadez et al. 2002). This class of problems is known as “the curse of dimensionality” (Bellman 1972).

Several binning schemes have been developed, like the Freedman & Diaconis (1981) rule extended to several dimensions, Knuth’s rule (Knuth 2006), which uses Bayesian model selection to find the optimal number of bins, Hogg’s rule (Hogg 2008), or Bayesian blocks (Scargle et al. 2013). But all these rules face the curse of dimensionality as the number of observables becomes high. Alternatives to binning for density estimation can also be used and are discussed in Sect. 9.

In our specific case, the dimensionality of the observable space is determined by the number p of photometric and size parameters in every passband extracted from the survey images. We use ten bins of constant width per dimension throughout the article. More bins per dimension would lead to memory issues caused by the limitations of our computing cluster in the applications that we propose in Sect. 7.6. The bin width for dimension k ∈ [ 1,p ] in this scheme is therefore given by:

$$
\frac{\max(X_{{\rm w},k}) - \min(X_{{\rm w},k})}{10} \tag{19}
$$

where Xw,k is the pre-processed observables matrix for the observed data.

In this pipeline, the binning pattern is only computed once and for the observed data only. The same binning is then directly applied to the simulated data to ensure better execution speed and comparability between histograms. Because the number of counts per bin is directly affected by the model parameters that rule the number density of galaxies, such as φ∗ in our application (see Sect. 7), the resulting p-dimensional histograms are deliberately left unnormalized, which prevents a loss of information in the minimization of the distance between the synthetic and observed data.
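A minimal sketch of this binning scheme with NumPy is given below. The function names are hypothetical. One behavior is specific to this sketch and not stated in the text: simulated sources falling outside the range of the observed data are simply dropped by `numpy.histogramdd`.

```python
import numpy as np

def fit_bin_edges(x_obs_w, n_bins=10):
    """Constant-width bin edges per dimension, computed on the observed data only.

    x_obs_w has shape (p, n_sources); returns a list of p edge arrays.
    """
    return [np.linspace(row.min(), row.max(), n_bins + 1) for row in x_obs_w]

def bin_counts(x_w, edges):
    """Unnormalized p-dimensional histogram of a (p, n_sources) dataset."""
    counts, _ = np.histogramdd(x_w.T, bins=edges)
    return counts
```

The histogram holds 10^p cells, which makes the memory constraint mentioned above concrete: with p = 8 observables a dense array of 64-bit counts already occupies 0.8 GB.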

4. Comparison between simulated and observed data

Estimating the discrepancy between the observed and simulated binned datasets in high-dimensional space is highly non-trivial, as the choice of a good distance metric is problem dependent. The observables’ distributions may be multimodal and skewed, and many metrics rely on the assumption of normality. Others, such as the Kullback-Leibler divergence (Kullback & Leibler 1951) or the Jensen-Shannon distance (Lin 1991), cannot be used without estimating an analytical underlying PDF, which can be very computationally expensive in a high-dimensional observable space.

Here is a non-exhaustive list of non-parametric (i.e., distribution-free) distance metrics found in the literature that can be used on multivariate data in the ABC framework. A more complete review is available in Pardo & Menéndez (2006) and Palombo (2011); however, no study to quantify their relative power has been performed so far. These metrics include:

- The χ2 test (Chardy et al. 1976) is a simple and widely used way of determining whether observed frequencies are significantly different from expected frequencies. The main drawback of this approach is that χ2 test results are dependent on the binning choice (Aslan & Zech 2002). For example, Kurinsky & Sajina (2014) use the χ2 distance to compare color-color histograms.
- The Kolmogorov-Smirnov (KS) test (Chakravarti et al. 1967) estimates the maximum absolute difference between the empirical distribution functions (EDF) of two samples. A generalization of this test for multivariate data has been proposed (Justel et al. 1997). However, as there is no unique way of ordering data points to compute a distance between two EDF, it is not as reliable as the one-dimensional version without the help of resampling methods such as bootstrapping (Babu & Feigelson 2006).
- The Anderson-Darling (AD) test (Stephens 1974) is a modification of the KS test. This method uses a weight function that gives more weight to the tails of the distributions. It is therefore considered more sensitive than the KS test, but it also suffers from the same problems in the multivariate case.
- The Mahalanobis distance (Mahalanobis 1936) is similar to the Euclidean norm but has the advantage of taking into account the correlation structure of multivariate data. The Mahalanobis statistics, coupled with a univariate KS test, are used by Akeret et al. (2015) to compare photometric parameters for cosmological purposes. However, this distance only works for unimodal data distributions.
- The Bhattacharyya distance (Bhattacharyya 1946) is related to the Bhattacharyya coefficient, which measures the quantity of overlap between the two samples. It is considered more reliable than the Mahalanobis distance in the sense that its use is not limited to cases where the standard deviations of the distributions are identical.
- The Earth Mover’s distance (EMD; Rubner et al. 1998) is based on a solution to the Transportation problem. The distributions are represented by a user-defined set of clusters called signatures, where each cluster is described by its mean and by the fraction of the distribution encapsulated by it. The EMD is defined as the minimum cost of turning one signature into the other, the cost being linked to the distance between the two. A computationally fast approximate version of this distance using the Hilbert space-filling curve can be found in Bernton et al. (2017).

In the present article, we place ourselves within the pBIL framework to perform the inference process. In this context, the binning structure constructed in Sect. 3.5 and the assumption of a Poisson behavior of the number counts in each bin represent the auxiliary model that describes the data. The “auxiliary likelihood” derived from this structure is inspired from the maximum likelihood scheme of Cash (1979), a likelihood that has been used in previous studies like Robin et al. (2014), Bienayme et al. (1987), or Adye (1998):

[Eq. (20)]

where b is the total number of bins, si is the number count in bin i for the simulated data, and oi is the number count in bin i for the observed data. The underlying assumptions for this choice of auxiliary likelihood can be found in Appendix A.

In that scheme, as the logarithm of si is used, empty bins cause a problem. In order to avoid singularities, a constant small value (that we set to 1) is added to every bin up to the edges of the observables space. This process is done in both modeled and observed data so that it does not bias our results.
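To make the role of the empty bins concrete, here is a minimal sketch of a Poisson auxiliary log-likelihood over the binned counts. It is an assumption, not the published Eq. (20): the sketch uses the standard Poisson log-likelihood summed over bins, with the term that does not depend on the model (the logarithm of oi factorial) dropped, in the spirit of Cash (1979). The function name and the `offset` argument are hypothetical; the offset of 1 is the value quoted in the text.

```python
import numpy as np

def auxiliary_log_likelihood(sim_counts, obs_counts, offset=1.0):
    """Poisson log-likelihood of observed counts given simulated counts.

    The same constant offset is added to every bin of both histograms so
    that the logarithm of the simulated counts is always defined.
    The model-independent term -ln(o_i!) is omitted.
    """
    s = np.asarray(sim_counts, dtype=float) + offset
    o = np.asarray(obs_counts, dtype=float) + offset
    return float(np.sum(o * np.log(s) - s))
```

For a fixed observed histogram this quantity is largest when the simulated counts equal the observed counts bin by bin, and it stays finite even when a simulation leaves every bin empty.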

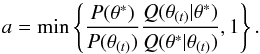

5. Sampling procedure: Adaptive Proposal algorithm

MCMC methods are a set of iterative processes which perform a random walk in the parameter space to approximate the posterior distribution with the help of Markov chains. A Markov chain is a sequence of random variables { θ(0),θ(1),θ(2),..., } in the parameter space (called states) that verifies the Markov property: the conditional distribution of θ(t + 1) given { θ(0),...,θ(t) } (called transition probability or kernel) only depends on θ(t). In other words, the probability distribution of the next state only depends on the current state.

After a period (whose length depends on the starting point and the random path taken by the chain) where the chain travels from low to high probability regions of the parameter space, the MCMC samples ultimately converge to a stationary distribution in such a way that the density of samples is proportional to the posterior PDF, also called target distribution. The portion of the chain which is not representative of the target distribution (i.e., the first iterations where the chain has not yet reached stationarity) is called burn-in, and is usually discarded from the analysis a posteriori. Well optimized MCMC methods provide an efficient tool to avoid wasting a lot of computing time sampling regions of very low probability. There is a great variety of MCMC algorithms, and the choice of a specific algorithm is problem-dependent. The reader is referred to Roberts & Rosenthal (2009) for a complete review of these methods.

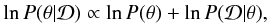

To estimate the posterior distribution P(θ | D) defined in Eq. (1) in a reasonable amount of time, one must explore the parameter space in a fast and efficient way. For our purposes, we designed a custom sampling procedure, described in Algorithm 2, based on the MCMC Adaptive Proposal (AP) algorithm (Haario et al. 1999), which is itself built upon the Metropolis-Hastings algorithm (Metropolis et al. 1953; Hastings 1970). The Metropolis-Hastings algorithm is one of the most general MCMC methods. In this algorithm, given a state θ(t) sampled from the target distribution P(θ), a proposed state θ∗ is generated using a user-defined transition kernel Q(θ∗ | θ(t)), which represents the probability of moving from θ(t) to θ∗. The proposition is accepted with probability:

$$
\min\left(1, \frac{P(\theta^{*})\,Q(\theta^{(t)} \mid \theta^{*})}{P(\theta^{(t)})\,Q(\theta^{*} \mid \theta^{(t)})}\right) \tag{21}
$$

If the proposed sample is accepted, then θ(t + 1) = θ∗ and the chain jumps to the new state. Otherwise, θ(t + 1) = θ(t).

The choice of the transition kernel Q(θ∗ | θ(t)) is crucial to guarantee the rapid convergence of the chain. We opt for the popular choice of a multivariate Normal distribution $\mathcal{N}(\theta^{(t)}, \Sigma)$ centered on the current state and with a covariance matrix Σ which determines the size and orientation of the jumps, so that:

$$
\theta^{*} = \theta^{(t)} + \zeta^{(t+1)} \tag{22}
$$

where ζ(t + 1) follows $\mathcal{N}(0, \Sigma)$.

A good way to assess convergence speed is to monitor the acceptance rate, that is, the fraction of accepted samples over previous iterations. The acceptance rate is mainly influenced by the covariance matrix of the transition kernel Σ. If the jump sizes are too high, the acceptance rate is too low, and the chain stays still for a large number of iterations. If the jump sizes are too small, the acceptance rate is very high but the chain needs a high number of iterations to move from one region of the parameter space to another. These situations are illustrated in Fig. 7. The desired acceptance rate depends on the target distribution, and there is no universal criterion for its optimization, but Roberts et al. (1997) proved that for any d-dimensional target distribution (with d ≥ 5) with independent and identically distributed (i.i.d.) components, optimal performance of the Random Walk Metropolis algorithm is attained for an asymptotic acceptance rate of 0.234.

As the modeling process is very time-consuming and the dimensionality of the problem may be high, we cannot afford to rely on trial and error to find the roughly optimal covariance matrix. We therefore opt for an adaptive MCMC scheme to limit user intervention as much as possible and achieve fast convergence. In the AP algorithm proposed by Haario et al. (1999), the covariance matrix of the Gaussian kernel Σ is tuned on-the-fly every fixed number of iterations using previously sampled states of the chain, and it therefore “learns” the target distribution covariance matrix. In our custom version of the algorithm, every S iterations the empirical covariance matrix from every different accepted state of the Nlast iterations is computed. We then add a fixed diagonal matrix whose elements, set to 10^-6, are very small relative to the empirical covariance matrix elements, to prevent it from becoming singular (Haario et al. 2001) while having little impact on the results (the extent of this impact presently remains an open question). The choice of S, also called the update frequency, is left to the user and weakly influences the performance of the algorithm, so we set it arbitrarily to 500. As for Nlast, we set it to 50 in order to minimize the chance of the covariance matrix being strongly influenced by a potential rapid evolution of the last few states.

In order to be able to converge in any case, a Markov chain must be ergodic. A stochastic process is said to be ergodic if its statistical properties can be retrieved from a sufficiently long random sample of the process. It is well known that adaptation can perturb ergodicity (see, e.g., Andrieu & Moulines 2006). In order to ensure that an adaptive sampling algorithm has the right ergodic properties, and hence converges to the right distribution, it must verify the Vanishing Adaptation condition: the level of adaptation must asymptotically depend less and less on previous states of the chain. Haario et al. (1999) showed that the AP algorithm is not ergodic in most cases. To tackle this issue, Haario et al. (2001) later released a revised version of their algorithm: the Adaptive Metropolis (AM) algorithm. In the AM algorithm, instead of using a fixed number of previous states, the covariance matrix of the proposal distribution is computed using all the previous states, which solves the ergodicity problem of the AP algorithm. However, we show in Sect. 7 that our custom implementation of the AP algorithm still yields robust results for our problem.

5.1. Prior

In any Bayesian inference problem, the choice of the prior distribution P(θ) is of crucial importance, because different prior choices can result in different posterior distributions from the same data. Without any information on what parameter values most probably explain our data, our default choice is an uninformative prior, that is, a multivariate continuous uniform distribution whose boundaries are chosen according to the limits currently given for each parameter in the literature. The uniform prior is defined as:

$$
P(\theta) =
\begin{cases}
\prod\limits_{i=1}^{N_p} \dfrac{1}{d_i - c_i} & \text{if } c_i \leq \theta_i \leq d_i \ \ \forall i \in [1, N_p], \\
0 & \text{otherwise},
\end{cases}
\tag{23}
$$

where $c_i$ and $d_i$ are the lower and upper limits of the PDF for parameter $i$, and $\theta = (\theta_1, \theta_2, \ldots, \theta_{N_p})$ is the vector of parameter values.

If more precise information is available on a given subset of parameters, a convolution with a more informative PDF (e.g., Normal, Beta, ...) can be performed, but in any case a finite interval is needed in order to provide the source generation software with realistic input parameters. In fact, an infinite interval can result in situations in which no galaxies are generated by the model, or conversely when too many galaxies are generated, which would dramatically increase the computing time.
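
For illustration, the logarithm of the bounded uniform prior described in this section can be written in a few lines. The function name `log_uniform_prior` and its arguments are assumptions made for this sketch, and working in log space anticipates the next section rather than reproducing the authors' code.

```python
import numpy as np

def log_uniform_prior(theta, lower, upper):
    """Log of a multivariate uniform prior with finite bounds.

    Returns -inf when any parameter lies outside its [lower, upper] interval,
    and minus the sum of the log interval widths otherwise.
    """
    theta, lower, upper = (np.asarray(a, dtype=float) for a in (theta, lower, upper))
    if np.any(theta < lower) or np.any(theta > upper):
        return -np.inf
    return float(-np.sum(np.log(upper - lower)))
```

Returning `-inf` outside the bounds means that such a proposal can never be accepted, which is one simple way of enforcing the finite interval required by the source generation software.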

5.2. Acceptance probability

In practice, one uses the ratio of the posterior density at the proposed and current states to measure the acceptance probability. More specifically, we use the difference between the logarithms of these quantities in order to avoid floating-point precision problems when dealing with very small probabilities. In log probability space, Bayes’ theorem (cf. Eq. (1)) becomes:

$$
\ln P(\theta \mid D) = \ln P(D \mid \theta) + \ln P(\theta) - \ln P(D),
\tag{24}
$$

where $D$ is the input data, $P(\theta \mid D)$ is the posterior, P(θ) is the prior defined in Sect. 5.1, and $P(D \mid \theta)$ is the auxiliary likelihood defined in Eq. (20). The evidence term $\ln P(D)$ does not depend on θ and therefore cancels in the difference between two states.

The target distribution can have a complex shape and, if no particular precaution is taken, our sampling algorithm is not immune to getting stuck in a local maximum of the likelihood. To tackle this issue, Kirkpatrick (1983) exploited the analogy between the way a heated metal cools and the search for the global optimum of a function. In the so-called simulated annealing algorithm, the acceptance probability a depends on a “temperature” parameter τ, initialized at a high value and slowly decreasing over the iterations. In this scheme, the higher the temperature, the more prone the algorithm is to accept large moves and to get away from a nearby local maximum:

$$
a = \min\left[1, \exp\left(\frac{\ln P(\theta^{*} \mid D) - \ln P(\theta^{(t)} \mid D)}{\tau}\right)\right],
\tag{25}
$$

where $\ln P(\theta^{(t)} \mid D)$ and $\ln P(\theta^{*} \mid D)$ are respectively the log of the posterior density at the current state (i.e., at iteration t) and at the proposed state. In other words, if a proposed state is more probable than the current one, it is accepted. Otherwise, it is accepted with probability a (defined in Eq. (25)). To perform the latter operation in practice, a uniformly distributed random number RN is drawn in the interval [0, 1]. If RN < a, the jump is accepted. As expected, for τ = 1, the acceptance probability is the same as that of the Metropolis-Hastings algorithm in Eq. (21) for the particular case of a symmetric proposal distribution, that is, when Q(θ(t) | θ∗) = Q(θ∗ | θ(t)).
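
The acceptance rule described above (always accept a more probable state, otherwise accept with a temperature-dependent probability) can be sketched as follows. The function name, its arguments, and the explicit form exp(Δ/τ) for the acceptance probability are assumptions of this example, where Δ is the log-posterior difference between the proposed and current states. They are chosen to reduce to the symmetric Metropolis-Hastings rule when τ = 1.

```python
import numpy as np

def accept_jump(log_post_current, log_post_proposed, tau, rng):
    """Temperature-dependent Metropolis acceptance test.

    log_post_current, log_post_proposed : log posterior densities
    tau : "temperature"; tau = 1 gives the usual Metropolis rule
    rng : a numpy.random.Generator used to draw the uniform number
    """
    delta = log_post_proposed - log_post_current
    if delta >= 0:
        # The proposed state is at least as probable: always accept.
        return True
    a = np.exp(delta / tau)        # acceptance probability, between 0 and 1
    return bool(rng.uniform() < a)
```

With a larger `tau`, the same drop in log posterior yields a larger acceptance probability, which is how a noisy estimate of the posterior can be prevented from trapping the chain.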

Because of the intrinsic stochasticity of our model, many realizations of the model at the same state θ(t) can lead to many different log-posterior values. Therefore, artificial local maxima of the target distribution appear, because each iteration relies on a single realization of the model. The simulated annealing algorithm was designed to find the global maximum of the target distribution without knowing the posterior distribution, and this requires lowering τ according to a user-defined scheme. Our goal is different: we need to freely explore the parameter space in order to estimate the full posterior distribution. The main constraint on τ is that it be comparable to the posterior density difference resulting from the jump. Here we define it as the root mean square (rms) scatter of the log-posterior values at the current state, as suggested by Mehrotra et al. (1997). In that scheme, a high noise level or a small difference between the proposed and the current state leads to a higher probability of jumping to the proposed state.

The temperature is computed every S iterations by running an empirically defined number of realizations $N_R$ of the model at the current state θ(t), storing every log-posterior value returned in a vector, and computing the standard deviation of the resulting distribution. In the application below, we find that 20 realizations are sufficient to give a reasonable estimate of the rms (cf. Fig. 8) and that the temperature quickly reaches a stationary distribution at a relatively low level, τ ≃ 30, after the first few $10^3$ iterations (cf. Fig. 9).
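
A minimal sketch of this temperature estimate is given below. It assumes that a callable returning one stochastic log-posterior realization per call is available. That callable, named `log_posterior_realization` here, is hypothetical and stands in for a full run of the simulation and extraction pipeline.

```python
import numpy as np

def estimate_temperature(log_posterior_realization, theta, n_realizations=20):
    """Standard deviation of repeated log-posterior evaluations at `theta`.

    log_posterior_realization : hypothetical callable, theta -> float, that
        returns a different value at each call because the model is stochastic
    n_realizations : number of model realizations (20 in the text)
    """
    values = np.array([log_posterior_realization(theta)
                       for _ in range(n_realizations)])
    # np.std uses the population convention (ddof=0) by default.
    return float(np.std(values))
```

Because each realization is a full model run, the number of realizations trades the accuracy of the temperature estimate against computing time.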

5.3. Initialization of the chain

The initial state θ(0) is drawn randomly from the prior distribution (see Sect. 5.1). The initial position only affects the speed of convergence: if the chain converges, the final distribution does not depend on it. The initial temperature is then computed from this state. As for the proposal distribution, it is initialized so that no direction in the parameter space is preferred by the sampling algorithm at first. The initial covariance matrix is therefore diagonal, with non-zero elements set to:

$$
C_{ii} = \frac{u_i - l_i}{E} \quad \forall i \in [1, N_p],
\tag{26}
$$

where $u_i$ and $l_i$ are respectively the upper and lower bounds of the prior distribution for parameter $i$, $N_p$ is the number of parameters, and $E$ is a value set empirically to 200 in order to ensure reasonable acceptance rates at the beginning of the chain. According to Haario et al. (1999), the adaptive nature of the algorithm implies that the choice of $E$ should not influence the output of the chain.
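
The initialization of the proposal covariance can be illustrated with the short sketch below, which builds a diagonal matrix from the prior bounds. The function and argument names are assumptions made for this example.

```python
import numpy as np

def initial_covariance(lower, upper, scale=200.0):
    """Diagonal starting covariance: (upper - lower) / scale on the diagonal.

    lower, upper : prior bounds for each parameter
    scale        : the empirical factor E of the text (200 by default)
    """
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    return np.diag((upper - lower) / scale)
```

For instance, a parameter with prior bounds 0 and 2 would start with a diagonal element of 0.01.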

6. Convergence diagnostics

The goal of an MCMC chain is to reach a stationary distribution that is supposed to be representative of the target distribution. Unfortunately there is no theoretical criterion for convergence: in other words it is impossible from a finite MCMC chain to assess convergence with certainty. Many convergence diagnostics have been developed (the reader can find an extensive review of those and a comparison of their relative performances in, e.g., Cowles & Carlin 1996), but these diagnostics can only tell if a chain has not converged. So in order to have confidence in the convergence of the chains, we must perform multiple diagnostics.

The first check is carried out by visual inspection of the trace plot for each parameter. Trace plots are used to diagnose poor mixing, that is, when the chain is highly autocorrelated, or slow sampling caused by too small a step size, which suggests that the majority of the MCMC output is not representative of the target distribution (see Fig. 7). We also use trace plots to estimate the length of the burn-in phase. The latter is determined by eye, as a rough estimate of the minimum number of iterations D necessary for all the parameters to reach a seemingly stationary distribution. We then discard the first D iterations, where D depends on the chain.

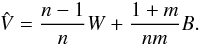

Finally, one of the most popular convergence diagnostics is a test proposed by Gelman & Rubin (1992). Given m chains $\theta^{j}_{(t)}$ (j = 1,...,m, with m ≥ 3 and typically ~10), each of length n after discarding burn-in (t = 1,...,n) and with different starting points, the test compares the variance between the mean values of the m chains, B, and the mean of the m within-chain variances, W:

$$
B = \frac{n}{m-1} \sum_{j=1}^{m} \left(\bar{\theta}^{j}_{\cdot} - \bar{\theta}_{\cdot\cdot}\right)^2,
\tag{27}
$$

$$
W = \frac{1}{m} \sum_{j=1}^{m} \left[ \frac{1}{n-1} \sum_{t=1}^{n} \left(\theta^{j}_{(t)} - \bar{\theta}^{j}_{\cdot}\right)^2 \right],
\tag{28}
$$

where $\bar{\theta}^{j}_{\cdot}$ is the mean value of chain j, and $\bar{\theta}_{\cdot\cdot}$ is the average value over the m chains.

An overestimate of the true marginal posterior variance is given by the unbiased estimator

$$
\hat{V} = \frac{n-1}{n}\, W + \frac{1}{n}\, B.
\tag{29}
$$

Finally, convergence is estimated using the potential scale reduction factor (PSRF) $\hat{R}$:

$$
\hat{R} = \sqrt{\frac{\hat{V}}{W}}.
\tag{30}
$$

Here we use the Gelman-Rubin diagnostic implemented in this form in the PyMC package (Patil et al. 2010) to perform our convergence tests, and we consider that convergence has been reached if $\hat{R}$ is sufficiently close to 1 for all model parameters (Brooks & Gelman 1998); otherwise, more iterations are performed until the criterion is met.
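
To make the diagnostic concrete, the sketch below computes the PSRF for one parameter with NumPy. It assumes the standard Gelman & Rubin (1992) estimator, in which the pooled variance is (n−1)/n times W plus B/n and the PSRF is the square root of its ratio to W. It is written independently of the PyMC routine mentioned in the text, and the function name is an assumption of this example.

```python
import numpy as np

def potential_scale_reduction(chains):
    """Gelman-Rubin PSRF for a single parameter.

    chains : array of shape (m, n), i.e. m chains of length n after burn-in
    """
    chains = np.asarray(chains, dtype=float)
    m, n = chains.shape
    chain_means = chains.mean(axis=1)
    # Between-chain variance: n times the sample variance of the chain means.
    B = n * chain_means.var(ddof=1)
    # Within-chain variance: mean of the m sample variances.
    W = chains.var(axis=1, ddof=1).mean()
    # Pooled estimate of the marginal posterior variance (assumed standard form).
    V_hat = (n - 1) / n * W + B / n
    return float(np.sqrt(V_hat / W))
```

Chains that sample the same distribution give a value close to 1, whereas chains stuck in different regions of the parameter space give a value well above 1.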

Imaging characteristics of the CFHTLS+WIRDS surveys used for SkyMaker.

Uniform prior boundaries for the parameters of the luminosity and size functions, and their evolution with redshift.

7. Application to a toy model

As a proof-of-concept of the method, we apply our pipeline to a selection of idealized cases, where the “observed” data is a synthetic image containing one or two populations of galaxies generated by a set of known input parameters of the Stuff model. Our goal is to infer the values of the input parameters in this framework.

7.1. Simulated survey characteristics

As data image, we choose to reproduce a full-sized stack of the CFHTLS Deep field (e.g., Cuillandre & Bertin 2006). The CFHTLS Wide and Deep fields offer carefully calibrated stacks with excellent image quality. Covering 155 deg² on the sky in total, the Wide field allows for a detailed study of the large-scale distribution of galaxies. As for the Deep field, which covers 4 deg² in total, it benefits from long exposure times (33 to 132 h), which ensure reliable statistical samples of different populations of bright galaxies up to z ~ 1. Each stack of the CFHTLS Deep field is a 19 354 × 19 354 pixel image covering 1 deg² on the sky. We simulate one stack of the Deep field in three bands: MegaCam u and i from the CFHTLS, and the WIRCam Ks infrared channel from the WIRCam Deep Survey (WIRDS), which covers part of the CFHTLS Deep fields. In accordance with CFHTLS product conventions, the image exposure time is normalized to one second and the AB magnitude zero-point is 30. The overall characteristics of the simulated images are summarized in Table 1.
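
As a worked illustration of these conventions, the sketch below converts an AB magnitude into a count rate for a zero-point of 30 and a one-second normalized exposure, and derives the pixel scale implied by the stack dimensions. It uses the usual relation between magnitude and flux, m = ZP − 2.5 log10(counts), and assumes that the stack is a square of 1 deg on a side. The function names are hypothetical.

```python
import math

ZERO_POINT = 30.0          # AB zero-point quoted in the text
STACK_SIDE_PIXELS = 19354  # pixels per side of one Deep stack

def ab_mag_to_count_rate(mag, zero_point=ZERO_POINT):
    """Counts per second for a source of AB magnitude `mag`,
    using m = zero_point - 2.5 * log10(counts)."""
    return 10.0 ** (-0.4 * (mag - zero_point))

def pixel_scale_arcsec(side_deg=1.0, side_pixels=STACK_SIDE_PIXELS):
    """Pixel scale in arcsec, assuming a square stack `side_deg` degrees wide."""
    return side_deg * 3600.0 / side_pixels
```

Under these assumptions, a source of magnitude 25 corresponds to 100 counts per second in the normalized image, and the pixel scale is close to 0.186 arcsec.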