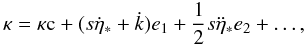

| Issue | A&A Volume 538, February 2012 |
|---|---|
| Article Number | A78 |
| Number of page(s) | 47 |
| Section | Astronomical instrumentation |
| DOI | https://doi.org/10.1051/0004-6361/201117905 |
| Published online | 08 February 2012 |

The astrometric core solution for the Gaia mission

Overview of models, algorithms, and software implementation

1 Lund Observatory, Lund University, Box 43, 22100 Lund, Sweden

e-mail: Lennart.Lindegren@astro.lu.se; David.Hobbs@astro.lu.se

2 European Space Agency (ESA), European Space Astronomy Centre (ESAC), PO Box (Apdo. de Correos) 78, 28691 Villanueva de la Cañada, Madrid, Spain

e-mail: Uwe.Lammers@sciops.esa.int; William.OMullane@sciops.esa.int; Jose.Hernandez@sciops.esa.int

3 Astronomisches Rechen-Institut, Zentrum für Astronomie der Universität Heidelberg, Mönchhofstr. 12–14, 69120 Heidelberg, Germany

e-mail: bastian@ari.uni-heidelberg.de

Received: 17 August 2011

Accepted: 25 November 2011

Context. The Gaia satellite will observe about one billion stars and other point-like sources. The astrometric core solution will determine the astrometric parameters (position, parallax, and proper motion) for a subset of these sources, using a global solution approach which must also include a large number of parameters for the satellite attitude and optical instrument. The accurate and efficient implementation of this solution is an extremely demanding task, but crucial for the outcome of the mission.

Aims. We aim to provide a comprehensive overview of the mathematical and physical models applicable to this solution, as well as its numerical and algorithmic framework.

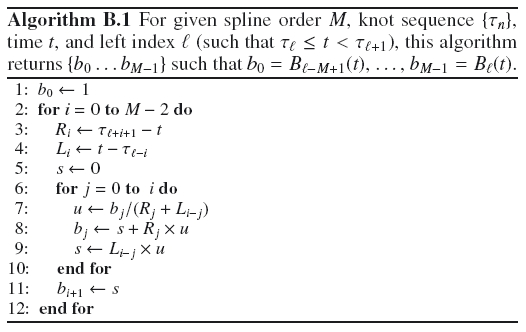

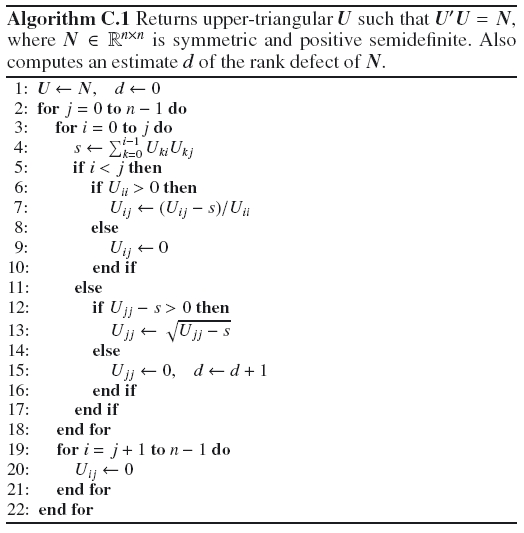

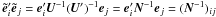

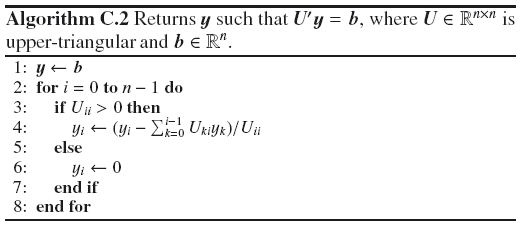

Methods. The astrometric core solution is a simultaneous least-squares estimation of about half a billion parameters, including the astrometric parameters for some 100 million well-behaved so-called primary sources. The global nature of the solution requires an iterative approach, which can be broken down into a small number of distinct processing blocks (source, attitude, calibration and global updating) and auxiliary processes (including the frame rotator and selection of primary sources). We describe each of these processes in some detail, formulate the underlying models, from which the observation equations are derived, and outline the adopted numerical solution methods with due consideration of robustness and the structure of the resulting system of equations. Appendices provide brief introductions to some important mathematical tools (quaternions and B-splines for the attitude representation, and a modified Cholesky algorithm for positive semidefinite problems) and discuss some complications expected in the real mission data.

Results. A complete software system called AGIS (Astrometric Global Iterative Solution) is being built according to the methods described in the paper. Based on simulated data for 2 million primary sources we present some initial results, demonstrating the basic mathematical and numerical validity of the approach and, by a reasonable extrapolation, its practical feasibility in terms of data management and computations for the real mission.

Key words: astrometry / methods: data analysis / methods: numerical / space vehicles: instruments

© ESO, 2012

1. Introduction

The space astrometry mission Gaia, planned to be launched by the European Space Agency (ESA) in 2013, will determine accurate astrometric data for about one billion objects in the magnitude range from 6 to 20. Accuracies of 8–25 micro-arcsec (μas) are typically expected for the trigonometric parallaxes, positions at mean epoch, and annual proper motions of simple (i.e., apparently single) stars down to 15th magnitude. The astrometric data are complemented by photometric and spectroscopic information collected with dedicated instruments on board the Gaia satellite. The mission will result in an astronomical database of unprecedented scope, accuracy and completeness becoming available to the scientific community around 2021.

The original interferometric concept for a successor mission to Hipparcos, called GAIA (Global Astrometric Interferometer for Astrophysics), was described by Lindegren & Perryman (1996) but has since evolved considerably by the incorporation of novel ideas (Høg 2008) and as a result of industrial studies conducted under ESA contracts (Perryman et al. 2001). The mission, now in the final integration phase with EADS Astrium as prime contractor, is no longer an interferometer but has retained the name Gaia, which is thus no acronym. For some brief but up-to-date overviews of the mission, see Lindegren et al. (2008) and Lindegren (2010). The scientific case is most comprehensively described in the proceedings of the conference The Three-Dimensional Universe with Gaia (Turon et al. 2005).

In parallel with the industrial development of the satellite, the Gaia Data Processing and Analysis Consortium (DPAC; Mignard et al. 2008) is charged with the task of developing and running a complete data processing system for analysing the satellite data and constructing the resulting database (“Gaia Catalogue”). This task is extremely difficult due to the large quantities of data involved, the complex relationships between different kinds of data (astrometric, photometric, spectroscopic) as well as between data collected at different epochs, the need for complex yet efficient software systems, and the interaction and sustained support of many individuals and groups over an extended period of time.

A fundamental part of the data processing task is the astrometric core solution, currently under development in DPAC’s Coordination Unit 3 (CU3), “Core Processing”. Mathematically, the astrometric core solution is a simultaneous determination of a very large number of unknowns representing three kinds of information: (i) the astrometric parameters for a subset of the observed stars, representing the astrometric reference frame; (ii) the instrument attitude, representing the accurate celestial pointing of the instrument axes in that reference frame as a function of time; and (iii) the geometric instrument calibration, representing the mapping from pixels on the CCD detectors to angular directions relative to the instrument axes. Although the astrometric core solution is only made for a subset of the stars, the resulting celestial reference frame, attitude and instrument calibration are fundamental inputs for the processing of all observations. Optionally, a fourth kind of unknowns, the global parameters, may be introduced to describe for example a hypothetical deviation from General Relativity.

We use the term “source” to denote any astronomical object that Gaia detects and observes as a separate entity. The vast majority of the Gaia sources are ordinary stars, many of them close binaries or the components of wide systems, but some are non-stellar (for example asteroids and quasars). Nearly everywhere in this paper, one can substitute “star” for “source” without distortion; however, for consistency with established practice in the Gaia community we use “source” throughout.

While the total number of distinct sources that will be observed by Gaia is estimated at slightly more than one billion, only a subset of them will be used in the astrometric core solution. This subset, known as the “primary sources”, is selected to be astrometrically well-behaved (see Sect. 6.2) and consists of (effectively) single stars and extragalactic sources (quasars and AGNs) that are sufficiently stable and point-like. We assume here that the number of primary sources is about 10⁸, i.e., roughly one tenth of the total number of objects, although in the end it is possible that an even larger number will be used.

In comparison with many other parts of the Gaia data processing, the astrometric core solution is in principle simple, mainly because it only uses a subset of the observations (namely, those of the primary sources), which can be accurately modelled in a relatively straightforward way. In practice, however, the problem is formidable: the total number of unknowns is of the order of 5 × 10⁸, the solution uses some 10¹¹ individual observations extracted from some 70 terabytes (TB) of raw satellite data, and the entangled nature of the data excludes a direct solution. A feasible approach has nevertheless been found, including a precise mathematical formulation, practical solution method, and efficient software implementation. It is the aim of this paper to provide a comprehensive overview of this approach.

|

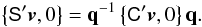

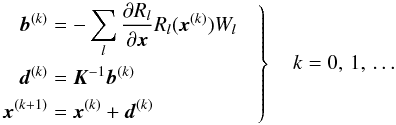

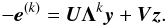

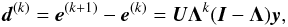

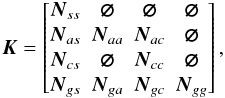

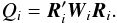

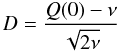

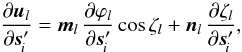

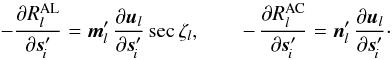

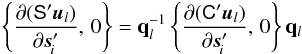

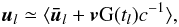

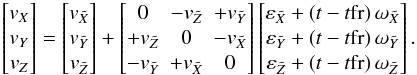

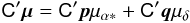

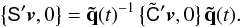

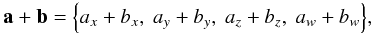

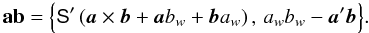

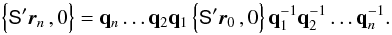

Fig. 1 Schematic representation of the main elements of the astrometric data analysis. In the shaded area is the mathematical model that allows to calculate the position of the stellar image in pixel coordinates, and hence the expected CCD data, for any given set of model parameters. To the right are the processes that fit this model to the observed CCD data by adjusting the parameters in the rectangular boxes along the middle. This paper is primarily concerned with the geometrical part of the analysis contained in the dashed box. However, a brief outline of the CCD data modelling and processing (bottom part of the diagram) is given in Sect. 3.5 and Appendix D.2. |

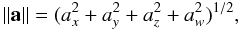

Concerning notation, we follow the usual convention of denoting all non-scalar entities (vectors, tensors, matrices, quaternions) by boldface characters. Lower-case bold italics (a) are used for vectors and one-dimensional column matrices; upper-case bold italics (A) usually denote two-dimensional matrices. Following Murray (1983), the prime (′) signifies both matrix transpose (a′, A′) and the scalar product of vectors; thus ∥a∥ = (a′a)^(1/2) defines the magnitude of the vector a as well as the Euclidean norm of the column matrix a. Angular brackets denote normalization to unit length, as in ⟨x⟩ = x ∥x∥⁻¹. In this notation, no special distinction is made between vectors as physical entities (also known as geometric, spatial or Euclidean vectors) on the one hand, and their numerical representations in some coordinate system as column matrices (also known as list vectors) on the other. Moreover, list vectors can of course have any dimension: a ∈ ℝⁿ. In the coordinate system whose axes are aligned with unit vectors x, y, and z, the components of the arbitrary vector a are given by ax = x′a, ay = y′a, and az = z′a; if the coordinate system is represented by the vector triad S = [x y z], these components are given by the column matrix S′a (cf. Appendix A in Murray 1983). This notation, although perhaps unfamiliar to many readers, provides a convenient and unambiguous framework for representing and transforming spatial vectors in different coordinate systems. For a vector-valued function f(x), ∂f/∂x′ denotes the dim(f) × dim(x) matrix whose (i,j)th element is ∂fi/∂xj. Quaternions follow their own algebra (see Appendix A for a brief introduction) and must not be confused with vectors/matrices; quaternions are therefore denoted by bold Roman characters (a). When taking a derivative with respect to the quaternion a, ∂x/∂a denotes the 4 × 1 matrix of derivatives with respect to the quaternion components; ∂x/∂a′ is the transposed matrix.

Tables of acronyms and variables are provided in Appendix E.
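
The vector notation can be illustrated numerically. The following is a minimal sketch in plain Python, not taken from the paper or from AGIS; the helper names `dot`, `norm`, `unit` and `components` are hypothetical and chosen only to mirror a′b, ∥a∥, ⟨a⟩ and S′a.

```python
import math

def dot(a, b):
    """Scalar product a'b of two list vectors of equal length."""
    return sum(ai * bi for ai, bi in zip(a, b))

def norm(a):
    """Euclidean norm ||a|| = (a'a)^(1/2)."""
    return math.sqrt(dot(a, a))

def unit(a):
    """Normalization <a> = a / ||a||."""
    n = norm(a)
    return [ai / n for ai in a]

def components(triad, a):
    """Column matrix S'a: components of a along the triad axes [x, y, z]."""
    return [dot(axis, a) for axis in triad]

# Illustrative triad S = [x y z], rotated by 90 degrees about the third axis
# of the coordinate system in which the vector a is given.
S = [[0.0, 1.0, 0.0], [-1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
a = [3.0, 4.0, 0.0]
a_in_S = components(S, a)   # components x'a, y'a, z'a
a_unit = unit(a)            # <a>
```

Because the triad is orthonormal, the components S′a have the same Euclidean norm as a itself.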

2. Outline of the approach

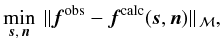

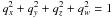

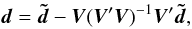

The astrometric principles for Gaia were outlined already in the Hipparcos Catalogue (ESA 1997, Vol. 3, Ch. 23) where, based on the accumulated experience of the Hipparcos mission, a view was cast to the future. The general principle of a global astrometric data analysis was succinctly formulated as the minimization problem:

$$\min_{\boldsymbol{s},\,\boldsymbol{n}} \; \left\| \boldsymbol{f}^{\rm obs} - \boldsymbol{f}^{\rm calc}(\boldsymbol{s},\boldsymbol{n}) \right\|_{\mathcal{M}} \tag{1}$$

with a slight change of notation to adapt to the present paper. Here s is the vector of unknowns (parameters) describing the barycentric motions of the ensemble of sources used in the astrometric solution, and n is a vector of “nuisance parameters” describing the instrument and other incidental factors which are not of direct interest for the astronomical problem but are nevertheless required for realistic modelling of the data. The observations are represented by the vector fobs which could for example contain the measured detector coordinates of all the stellar images at specific times. fcalc(s,n) is the observation model, e.g., the expected detector coordinates calculated as functions of the astrometric and nuisance parameters. The norm is calculated in a metric ℳ defined by the statistics of the data; in practice the minimization will correspond to a weighted least-squares solution with due consideration of robustness issues (see Sect. 3.6).

While Eq. (1) encapsulates the global approach to the data analysis problem, its actual implementation will of course depend strongly on the specific characteristics of the Gaia instrument and mission as well as on the practical constraints on the data processing task.
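
To make the structure of the minimization in Eq. (1) concrete, the following toy sketch fits one "source" parameter s and one "nuisance" parameter n simultaneously by weighted least squares. It is an illustration under strong simplifying assumptions (a linear model fcalc = s·x + n, a diagonal metric given by inverse variances, no robustness handling) and bears no relation to the actual AGIS implementation; the function name `weighted_lsq` and all data values are hypothetical.

```python
def weighted_lsq(x, f_obs, sigma):
    """Minimise sum_k ((f_obs[k] - (s*x[k] + n)) / sigma[k])**2 over (s, n)."""
    w = [1.0 / sk ** 2 for sk in sigma]  # statistical weights (diagonal metric)
    # Normal equations N [s, n]' = b for the linear model f_calc = s*x + n.
    n11 = sum(wk * xk * xk for wk, xk in zip(w, x))
    n12 = sum(wk * xk for wk, xk in zip(w, x))
    n22 = sum(w)
    b1 = sum(wk * xk * fk for wk, xk, fk in zip(w, x, f_obs))
    b2 = sum(wk * fk for wk, fk in zip(w, f_obs))
    # Solve the 2 x 2 system by explicit inversion.
    det = n11 * n22 - n12 * n12
    s = (n22 * b1 - n12 * b2) / det
    n = (n11 * b2 - n12 * b1) / det
    return s, n

# Noise-free synthetic observations generated with s = 2.0 and n = 0.5.
x = [0.0, 1.0, 2.0, 3.0]
f_obs = [2.0 * xk + 0.5 for xk in x]
sigma = [1.0, 0.5, 2.0, 1.0]
s_hat, n_hat = weighted_lsq(x, f_obs, sigma)
```

Even in this toy case the two kinds of parameters are coupled through the off-diagonal element of the normal matrix, which is why they have to be estimated together.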

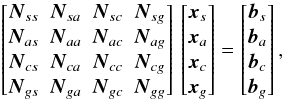

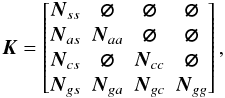

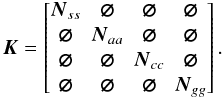

At the heart of the problem is the modelling of data represented by the function fcalc(s,n) in Eq. (1). This is schematically represented in the shaded part of the diagram in Fig. 1. It shows the main steps for calculating the expected CCD output in terms of the various parameters. The data processing, effecting the minimization in Eq. (1), is represented in the right part of the diagram. In subsequent sections we describe in some detail the main elements depicted in Fig. 1. The astrometric (source) parameters are represented by the vector s, while the nuisance parameters are of three kinds: the attitude parameters a, the geometric calibration parameters c, and the global parameters g. The observables f are represented by the pixel coordinates (κ,μ) of point source images on Gaia’s CCDs. (In the actual implementation of the approach, the minimization problem is formulated in terms of the field angles η, ζ rather than in the directly measured pixel coordinates κ, μ; see Sects. 3.3 and 3.4.)

The various elements of the astrometric solution are described in some detail in the subsequent sections. Section 3 provides the mathematical framework needed to model the Gaia observations and to set up the least-squares equations for the astrometric solution. In the interest of clarity and overview we omit in this description certain complications that need to be considered in the final data processing system; these are instead briefly discussed in Appendix D. In Sect. 4 we describe the iterative solution method in general terms, and then provide, in Sects. 5 and 6, the mathematical details of the most important procedures. Section 7 outlines the existing implementation of the solution and presents the results of a demonstration run based on simulated observations of about 2 million primary sources. Appendices A to C provide brief introductions to three mathematical tools that are particularly important for the subsequent development, namely the use of quaternions for representing the instantaneous satellite attitude, the B-spline formalism used to model the attitude as a function of time, and a modified Cholesky algorithm for the decomposition of positive semidefinite normal matrices.

3. Mathematical formulation of the basic observation model

3.1. Reference systems

The high astrometric accuracy aimed for with Gaia makes it necessary to use general relativity for modelling the data. This implies a precise and consistent formulation of the different reference systems used to describe the motion of the observer (Gaia), the motion of the observed object (source), the propagation of light from the source to the observer, and the transformations between these systems. The formulation adopted for Gaia (Klioner 2003, 2004) is based on the parametrized post-Newtonian (PPN) version of the relativistic framework adopted in 2000 by the International Astronomical Union (IAU); see Soffel et al. (2003). In this section only some key concepts from this formulation are introduced.

The orbit of Gaia and the light propagation from the source to Gaia are entirely modelled in the Barycentric Celestial Reference System (BCRS) whose spatial axes are aligned with the International Celestial Reference System (ICRS, Feissel & Mignard 1998). The associated time coordinate is the barycentric coordinate time (TCB). Throughout this paper, all time variables denoted t (with various subscripts) must be interpreted as TCB. The ephemerides of solar-system bodies (including the Sun and the Earth) are also expressed in this reference system. Even the motions of the stars and extragalactic objects are formally modelled in this system, although for practical reasons certain effects of the light-propagation time are conventionally ignored in this model (Sect. 3.2).

In order to model the rotation (attitude) of Gaia and the celestial direction of the light rays as observed by Gaia, it is expedient to introduce also a co-moving celestial reference system having its origin at the centre of mass of the satellite and a coordinate time equal to the proper time at Gaia. This is known as the Centre-of-Mass Reference System (CoMRS) and the associated time coordinate is Gaia Time (TG). Klioner (2004) demonstrates how the CoMRS can be constructed in the IAU 2000 framework in exact analogy with the Geocentric Celestial Reference System (GCRS), only for a massless particle (Gaia) instead of the Earth. Like the BCRS and GCRS, the CoMRS is kinematically non-rotating, and its spatial axes are aligned with the ICRS. The celestial reference system (either the CoMRS or the ICRS depending on whether the origin is at Gaia or the solar-system barycentre) will in the following be represented by the vector triad C = [ X Y Z ] , where X, Y, and Z are orthogonal unit vectors aligned with the axes of the celestial reference system. That is, X points towards the origin (α = δ = 0), Z towards the north celestial pole (δ = + 90°), and Y = Z × X points to (α = 90°,δ = 0).

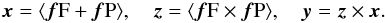

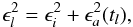

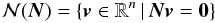

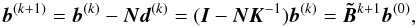

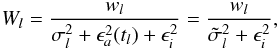

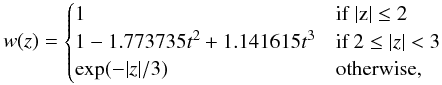

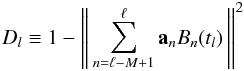

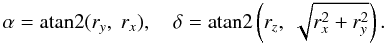

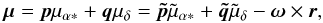

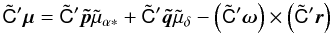

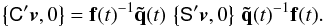

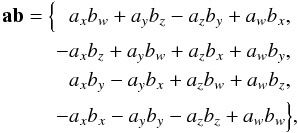

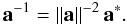

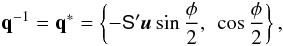

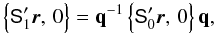

In the CoMRS the attitude of the satellite is a spatial rotation in three dimensions, and can therefore be described purely classically, for example using quaternions (Sect. 3.3 and Appendix A). The rotated reference system, aligned with the instrument axes, is known as the Scanning Reference System (SRS). Its spatial x, y, z axes (Fig. 2) are defined in terms of the two viewing directions of Gaia fP (in the centre of the preceding field of view, PFoV) and fF (in the centre of the following field of view, FFoV) as

$$\boldsymbol{x} = \langle \boldsymbol{f}_{\rm P} + \boldsymbol{f}_{\rm F} \rangle, \qquad \boldsymbol{z} = \langle \boldsymbol{f}_{\rm F} \times \boldsymbol{f}_{\rm P} \rangle, \qquad \boldsymbol{y} = \boldsymbol{z} \times \boldsymbol{x}. \tag{2}$$

(The precise definition of fP and fF is implicit in the geometric instrument model; see Sect. 3.4.) The SRS is represented by the vector triad S = [ x y z ] .
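
As an illustration, the sketch below constructs the SRS triad from two viewing directions. It assumes that Eq. (2) takes the form x = ⟨fP + fF⟩, z = ⟨fF × fP⟩, y = z × x, and places fP and fF symmetrically about the first axis of an arbitrary working frame, separated by the nominal basic angle of 106.5°. The function name `srs_triad` and the chosen working frame are hypothetical.

```python
import math

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def unit(a):
    n = math.sqrt(dot(a, a))
    return [ai / n for ai in a]

def srs_triad(f_p, f_f):
    """Return the SRS axes (x, y, z) from the two viewing directions."""
    x = unit([p + f for p, f in zip(f_p, f_f)])  # bisector of the two fields
    z = unit(cross(f_f, f_p))                    # normal to the plane of f_P, f_F
    y = cross(z, x)                              # completes the right-handed triad
    return x, y, z

# Viewing directions separated by the nominal basic angle (assumed geometry).
half = math.radians(106.5) / 2.0
f_p = [math.cos(half), math.sin(half), 0.0]
f_f = [math.cos(half), -math.sin(half), 0.0]

x, y, z = srs_triad(f_p, f_f)
basic_angle_deg = math.degrees(math.acos(dot(f_p, f_f)))
```

With this geometry the triad coincides with the axes of the working frame, and the angle recovered from arccos(fP′fF) equals the basic angle that was put in.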

For determining the orbit of Gaia and calibrating the on-board clock, it is also necessary to model the radio ranging and other ground-based observations of the Gaia spacecraft in the same relativistic framework. For this, we also need the GCRS. These aspects of the Gaia data processing are, however, not discussed in this paper.

3.2. Astrometric model

The astrometric model is a recipe for calculating the proper direction ui(t) to a source (i) at an arbitrary time of observation (t) in terms of its astrometric parameters si and various auxiliary data, assumed to be known with sufficient accuracy. The auxiliary data include an accurate barycentric ephemeris of the Gaia satellite, bG(t), including its coordinate velocity dbG/dt, and ephemerides of all other relevant solar-system bodies.

For the astrometric core solution every source is assumed to move with uniform space velocity relative to the solar-system barycentre. In the BCRS its spatial coordinates at time t∗ are therefore given by

$$\boldsymbol{b}_i(t_*) = \boldsymbol{b}_i(t_{\rm ep}) + (t_* - t_{\rm ep})\,\boldsymbol{v}_i, \tag{3}$$

where tep is an arbitrary reference epoch and bi(tep), vi define six kinematic parameters for the motion of the source. The six astrometric parameters in si are merely a transformation of the kinematic parameters into an equivalent set better suited for the analysis of the observations. The six astrometric parameters are:

- αi: the barycentric right ascension at the adopted reference epoch tep;
- δi: the barycentric declination at epoch tep;
- ϖi: the annual parallax at epoch tep;
- μα∗i = (dαi/dt) cos δi: the proper motion in right ascension at epoch tep;
- μδi = dδi/dt: the proper motion in declination at epoch tep;
- μri = vri ϖi/Au: the “radial proper motion” at epoch tep, i.e., the radial velocity of the source (vri) expressed in the same unit as the transverse components of proper motion (Au = astronomical unit).

As explained in Sect. 3.1, the astrometric parameters refer to the ICRS and the time coordinate used is TCB. The reference epoch tep is preferably chosen to be near the mid-time of the mission in order to minimize statistical correlations between the position and proper motion parameters.
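
The definition of the radial proper motion, μri = vri ϖi/Au, amounts to a unit conversion. The sketch below assumes the radial velocity is given in km s⁻¹ and the parallax in mas, so that expressing the astronomical unit in km yr s⁻¹ yields μri in mas yr⁻¹. The numerical value adopted for the astronomical unit, the use of the Julian year, and the example source are assumptions made for illustration only.

```python
# Assumed constants (not quoted in the paper).
AU_KM = 149597870.7                 # astronomical unit in km
JULIAN_YEAR_S = 365.25 * 86400.0    # Julian year in seconds

# Astronomical unit expressed in km yr / s (about 4.74047).
AU_KM_YR_PER_S = AU_KM / JULIAN_YEAR_S

def radial_proper_motion(v_r_km_s, parallax_mas):
    """Radial proper motion mu_r = v_r * parallax / A_u in mas/yr."""
    return v_r_km_s * parallax_mas / AU_KM_YR_PER_S

# Hypothetical nearby source: v_r = 20 km/s, parallax = 100 mas (distance 10 pc).
mu_r = radial_proper_motion(20.0, 100.0)
```

The result scales linearly with the parallax, which is why the term only matters for nearby sources with large radial velocities.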

The transformation between the kinematic and the astrometric parameters is non-trivial (Klioner 2003), mainly as a consequence of the practical need to neglect most of the light-propagation time t − t∗ between the emission of the light at the source (t∗) and its reception at Gaia (t). This interval is typically many years and its value, and rate of change (which depends on the radial velocity of the source), will in general not be known with sufficient accuracy to allow modelling of the motion of the source directly in terms of its kinematic parameters according to Eq. (3). The proper motion components μα ∗ i, μδi and radial velocity vri correspond to the “apparent” quantities discussed by Klioner (2003, Sect. 8).

The coordinate direction to the source at time t is calculated with the same “standard model” as was used for the reduction of the Hipparcos observations (ESA 1997, Vol. 1, Sect. 1.2.8), namely  (4)where the angular brackets signify vector length normalization, and [ pi qi ri ] is the “normal triad” of the source with respect to the ICRS (Murray 1983). In this triad, ri is the barycentric coordinate direction to the source at time tep, pi = ⟨ Z × ri ⟩ , and qi = ri × pi. The components of these unit vectors in the ICRS are given by the columns of the matrix

(4)where the angular brackets signify vector length normalization, and [ pi qi ri ] is the “normal triad” of the source with respect to the ICRS (Murray 1983). In this triad, ri is the barycentric coordinate direction to the source at time tep, pi = ⟨ Z × ri ⟩ , and qi = ri × pi. The components of these unit vectors in the ICRS are given by the columns of the matrix ![\begin{equation} \label{eq:normal-triad} \tens{C}'[\vec{p}_i~\vec{q}_i~\vec{r}_i] = \begin{bmatrix} -\sin\alpha_i &~ -\sin\delta_i\cos\alpha_i &~ \cos\delta_i\cos\alpha_i \\ \phantom{-}\cos\alpha_i &~ -\sin\delta_i\sin\alpha_i &~ \cos\delta_i\sin\alpha_i \\ 0 &~ \cos\delta_i &~ \sin\delta_i \end{bmatrix}. \end{equation}](/articles/aa/full_html/2012/02/aa17905-11/aa17905-11-eq83.png) (5)bG(t) is the barycentric position of Gaia at the time of observation, and Au the astronomical unit. tB is the barycentric time obtained by correcting the time of observation for the Römer delay; to sufficient accuracy it is given by

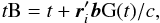

(5)bG(t) is the barycentric position of Gaia at the time of observation, and Au the astronomical unit. tB is the barycentric time obtained by correcting the time of observation for the Römer delay; to sufficient accuracy it is given by  (6)where c is the speed of light.

(6)where c is the speed of light.

In Eq. (4) the term containing μri accounts for the relative rate of change of the barycentric distance to the source. This term may produce secular variations of the proper motions and parallaxes of some nearby stars, which in principle allow their radial velocities to be determined astrometrically (Dravins et al. 1999). However, for the vast majority of these stars, μri can be more accurately calculated by combining the spectroscopically measured radial velocities with the astrometric parallaxes. Thus, although all six astrometric parameters are taken into account when computing the coordinate direction, usually only five of them are considered as unknowns in the astrometric solution.
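
The standard model of Eqs. (4) to (6) can be sketched in a few lines. The code below is an illustration only, written under the assumption that Eq. (4) has the usual Hipparcos form ⟨r + (tB − tep)(p μα∗ + q μδ + r μr) − ϖ bG/Au⟩ and that Eq. (6) reads tB = t + r′bG/c. The choice of units (radians, Julian years, au) and all function and constant names are hypothetical; relativistic effects are not included.

```python
import math

# Assumed constants: speed of light in au per Julian year.
AU_KM = 149597870.7
YEAR_S = 365.25 * 86400.0
C_AU_PER_YR = 299792.458 * YEAR_S / AU_KM

def normal_triad(alpha, delta):
    """Normal triad [p q r] in ICRS components, cf. Eq. (5); angles in rad."""
    sa, ca = math.sin(alpha), math.cos(alpha)
    sd, cd = math.sin(delta), math.cos(delta)
    p = [-sa, ca, 0.0]
    q = [-sd * ca, -sd * sa, cd]
    r = [cd * ca, cd * sa, sd]
    return p, q, r

def coordinate_direction(alpha, delta, parallax, mu_a, mu_d, mu_r, t, t_ep, b_g):
    """Unit coordinate direction at time t.

    Angles in rad, proper motions in rad/yr, times in yr, b_g in au
    (so that the astronomical unit A_u equals 1 in these units).
    """
    p, q, r = normal_triad(alpha, delta)
    # Roemer delay: barycentric time of the observation, cf. Eq. (6).
    t_b = t + sum(rk * bk for rk, bk in zip(r, b_g)) / C_AU_PER_YR
    dt = t_b - t_ep
    v = [r[k] + dt * (p[k] * mu_a + q[k] * mu_d + r[k] * mu_r)
         - parallax * b_g[k] for k in range(3)]
    n = math.sqrt(sum(vk * vk for vk in v))
    return [vk / n for vk in v]

# Hypothetical source at alpha = delta = 0 with a parallax of 100 mas and no
# proper motion, observed at t = t_ep from a point 1 au along the Y axis.
MAS = math.radians(1.0 / 3.6e6)
parallax = 100.0 * MAS
u = coordinate_direction(0.0, 0.0, parallax, 0.0, 0.0, 0.0,
                         t=0.0, t_ep=0.0, b_g=[0.0, 1.0, 0.0])
shift = math.atan2(-u[1], u[0])  # angular displacement opposite to b_g
```

In this configuration the source appears displaced by the parallax angle in the direction opposite to the observer's displacement from the barycentre.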

The standard model can be derived by considering the uniform motion of the source in a purely classical framework, using Euclidean coordinates and neglecting the light propagation time from the source to the observer (except for the Römer delay). To the accuracy of Gaia, relativistic and light-propagation effects are by no means negligible, but it can be shown that this model is nevertheless accurate enough to model the observations to sub-microarcsec accuracy. It is adopted for this purpose because it closely corresponds to the conventional interpretation of the astrometric parameters. However, when the astrometric parameters are to be interpreted in terms of the barycentric space velocity of the source, some of these effects may come into play (Lindegren & Dravins 2003).

The transformation from  to the observable (proper) direction ui(t) involves taking into account gravitational light deflection by solar-system bodies and the Lorentz transformation to the co-moving frame of the observer (stellar aberration); the relevant formulae are given by Klioner (2003). This transformation therefore depends also on the global parameters g, for example the PPN parameter γ which measures the strength of the gravitational light deflection. The calculation uses some auxiliary data, not subject to improvement by the solution, and which are here denoted h; normally they include for example the barycentric ephemerides of the Gaia satellite and of solar-system bodies, along with their masses. The complete transformation can therefore be written symbolically as:

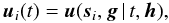

to the observable (proper) direction ui(t) involves taking into account gravitational light deflection by solar-system bodies and the Lorentz transformation to the co-moving frame of the observer (stellar aberration); the relevant formulae are given by Klioner (2003). This transformation therefore depends also on the global parameters g, for example the PPN parameter γ which measures the strength of the gravitational light deflection. The calculation uses some auxiliary data, not subject to improvement by the solution, and which are here denoted h; normally they include for example the barycentric ephemerides of the Gaia satellite and of solar-system bodies, along with their masses. The complete transformation can therefore be written symbolically as:  (7)where the vertical bar formally separates the (updatable) parameters si and g from the (fixed) given data t and h. Strictly speaking, the function u returns the coordinates of the proper direction in the CoMRS, that is the column matrix C′ui(t). The source parameter vector s is the concatenation of the sub-vectors si for all the primary sources.

(7)where the vertical bar formally separates the (updatable) parameters si and g from the (fixed) given data t and h. Strictly speaking, the function u returns the coordinates of the proper direction in the CoMRS, that is the column matrix C′ui(t). The source parameter vector s is the concatenation of the sub-vectors si for all the primary sources.

|

Fig. 2 The Scanning Reference System (SRS) [ x y z ] is defined by the viewing directions fP and fF according to Eq. (2). The instrument angles (ϕ,ζ) are the spherical coordinates in the SRS of the observed (proper) direction u towards the object. See Fig. 4 for further definition of the viewing directions. The field sizes are greatly exaggerated in this drawing; in reality the astrometrically useful field is 0.72° × 0.69° (along × across scan) for each viewing direction. The basic angle is Γc = arccos(fP′fF), nominally equal to 106.5°. |

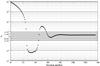

3.3. Attitude model

The attitude specifies the instantaneous orientation of the Gaia instrument in the celestial reference frame, that is the transformation from C = [X Y Z] (more precisely, the CoMRS) to S = [x y z] (Sect. 3.1) as a function of time. The spacecraft is controlled to follow a specific attitude as a function of time, for example the so-called Nominal Scanning Law (NSL; de Bruijne et al. 2010), designed to provide good coverage of the whole celestial sphere while satisfying a number of other requirements. The NSL is analytically defined for arbitrarily long time intervals by just a few free parameters.

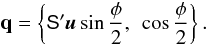

However, the actual attitude will deviate from the intended (nominal) scanning law by up to ~1 arcmin in all three axes, and these deviations vary on time scales from seconds to minutes depending on the level of physical perturbations and the characteristics of the real-time attitude measurements and control law (cf. Appendix D.4). The CCD integration time of (usually) 4.42 s means that the true (physical) attitude cannot be observed, but only a smoothed version of it, corresponding to the convolution of the physical attitude with the CCD exposure function (Appendix D.3). This “effective” attitude must however be a posteriori estimable, at any instant, to an accuracy compatible with the astrometric goals, i.e., at sub-mas level. For this purpose the effective attitude is modelled in terms of a finite number of attitude parameters, for which it is necessary to choose a suitable mathematical representation of the instantaneous transformation, as well as of its time dependence. For the Gaia data processing, we have chosen to use quaternions (Appendix A) for the former, and B-splines (Appendix B) for the latter representation.

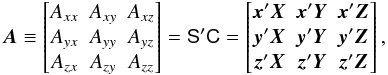

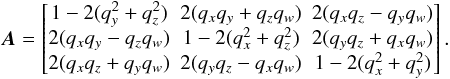

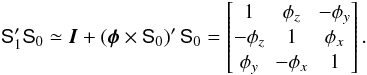

At any time the orientation of the SRS (S) with respect to the CoMRS (C) may be represented by the attitude matrix

$$\mathbf{A} = \mathsf{S}'\mathsf{C} = \begin{bmatrix} \mathbf{x}'\mathbf{X} & \mathbf{x}'\mathbf{Y} & \mathbf{x}'\mathbf{Z} \\ \mathbf{y}'\mathbf{X} & \mathbf{y}'\mathbf{Y} & \mathbf{y}'\mathbf{Z} \\ \mathbf{z}'\mathbf{X} & \mathbf{z}'\mathbf{Y} & \mathbf{z}'\mathbf{Z} \end{bmatrix} \tag{8}$$

which is a proper orthonormal matrix, AA′ = I, det(A) = +1. This is also called the direction cosine matrix, since the rows are the direction cosines of x, y and z in the CoMRS, and the columns are the direction cosines of X, Y and Z in the SRS.

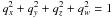

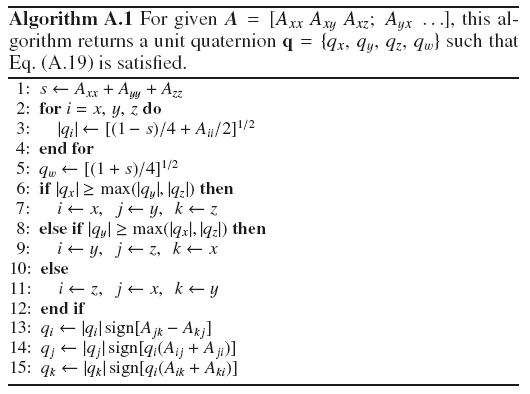

The orthonormality of A implies that the matrix elements satisfy six constraints, leaving three degrees of freedom for the attitude representation. Although one could parametrize each of the nine matrix elements as a continuous function of time, for example using splines, it would in practice not be possible to guarantee that the orthonormality constraints hold at all times. The adopted solution is to represent the instantaneous attitude by a unit quaternion q, which has only four components, {qx, qy, qz, qw}, satisfying the single constraint $q_x^2 + q_y^2 + q_z^2 + q_w^2 = 1$. This is easily enforced by normalization.

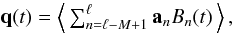

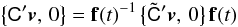

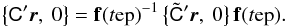

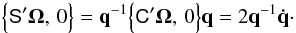

The attitude quaternion q(t) gives the rotation from the CoMRS (C) to the SRS (S). Using quaternions, their relation is defined by the transformation of the coordinates of an arbitrary vector v in the two reference systems,

$$\{\mathsf{S}'\mathbf{v},\,0\} = \mathbf{q}^{-1}\,\{\mathsf{C}'\mathbf{v},\,0\}\,\mathbf{q}. \tag{9}$$

In the terminology of Appendix A.3 this is a frame rotation of the vector from C to S. The inverse operation is {C′v, 0} = q {S′v, 0} q⁻¹.
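The frame rotation and its inverse can be illustrated with a few lines of Python. This is only a minimal sketch: it assumes that the quaternion product of Appendix A is the usual Hamilton product, stores the components in the order (x, y, z, w) used above, and uses function names (`qmul`, `frame_rotate`, etc.) that are purely illustrative and not part of any Gaia software.

```python
import math

def qmul(a, b):
    """Hamilton product of two quaternions stored as (x, y, z, w)."""
    ax, ay, az, aw = a
    bx, by, bz, bw = b
    return (
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
        aw * bw - ax * bx - ay * by - az * bz,
    )

def normalize(q):
    """Enforce the unit-length constraint by dividing by the norm."""
    norm = math.sqrt(sum(c * c for c in q))
    return tuple(c / norm for c in q)

def conjugate(q):
    """For a unit quaternion the conjugate equals the inverse."""
    x, y, z, w = q
    return (-x, -y, -z, w)

def frame_rotate(q, v_c):
    """Coordinates in S of a vector given in C: {S'v,0} = q^-1 {C'v,0} q."""
    x, y, z, _ = qmul(qmul(conjugate(q), (*v_c, 0.0)), q)
    return (x, y, z)

def inverse_frame_rotate(q, v_s):
    """Coordinates in C of a vector given in S: {C'v,0} = q {S'v,0} q^-1."""
    x, y, z, _ = qmul(qmul(q, (*v_s, 0.0)), conjugate(q))
    return (x, y, z)

# Example: S is C rotated by 90 degrees about Z, so the Y axis of C
# has coordinates (1, 0, 0) in S.
q = normalize((0.0, 0.0, 1.0, 1.0))
v_s = frame_rotate(q, (0.0, 1.0, 0.0))
```

Applying `inverse_frame_rotate(q, v_s)` recovers the original coordinates in C, which is a convenient sanity check on the sign conventions.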

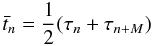

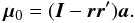

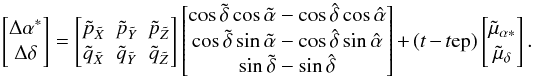

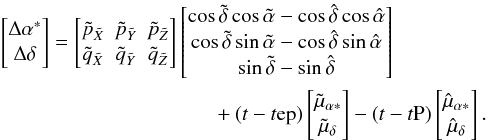

Using the B-spline representation in Appendix B, we have

$$\mathbf{q}(t) = \left\langle \sum_{n=\ell-M+1}^{\ell} \mathbf{a}_n B_n(t) \right\rangle \tag{10}$$

where $\mathbf{a}_n$ ($n = 0 \dots N-1$) are the coefficients of the B-splines $B_n(t)$ of order M defined on the knot sequence $\{\tau_k\}_{k=0}^{N+M-1}$. The function $B_n(t)$ is non-zero only for $\tau_n < t < \tau_{n+M}$, which is why the sum in Eq. (10) only extends over the M terms ending with n = ℓ. Here, ℓ is the so-called left index of t satisfying $\tau_\ell \le t < \tau_{\ell+1}$. The normalization operator ⟨ ⟩ guarantees that q(t) is a unit quaternion for any t in the interval $[\tau_{M-1}, \tau_N]$ over which the spline is completely defined. Although the coefficients $\mathbf{a}_n$ are formally quaternions, they are not of unit length. The attitude parameter vector a consists of the components of the whole set of quaternions $\mathbf{a}_n$.

Cubic splines (M = 4) are currently used in this attitude model. Each component of the quaternion (before the normalization in Eq. (10)) is then a piecewise cubic polynomial with continuous value, first, and second derivative for any t; the third derivative is discontinuous at the knots. When initializing the solution, it is possible to select any desired order of the spline. Using a higher order, such as M = 5 (quartic) or 6 (quintic), provides improved smoothness but also makes the spline fitting less local, and therefore more prone to undesirable oscillatory behaviour. The flexibility of the spline is in principle only governed by the number of degrees of freedom (that is, in practice, the knot frequency), and is therefore independent of the order. One should therefore not choose a higher order than is warranted by the smoothness of the actual effective attitude, which is difficult to model a priori (cf. Appendix D.4). Determining the optimal order and knot frequency may in the end only be possible through analysis of the real mission data.
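The evaluation of the normalized spline can be sketched as follows. The code is an illustration only, not the AGIS implementation: it uses the textbook Cox-de Boor recursion rather than any optimized scheme, assumes simple (non-repeated) knots, treats the validity interval as half-open, and the names `bspline_basis` and `attitude_quaternion` are hypothetical.

```python
import math
from bisect import bisect_right

def bspline_basis(n, order, t, knots):
    """Cox-de Boor recursion for B-spline number n of the given order
    (degree = order - 1); non-zero only between knots[n] and knots[n+order]."""
    if order == 1:
        return 1.0 if knots[n] <= t < knots[n + 1] else 0.0
    value = 0.0
    left_span = knots[n + order - 1] - knots[n]
    if left_span > 0.0:
        value += (t - knots[n]) / left_span * bspline_basis(n, order - 1, t, knots)
    right_span = knots[n + order] - knots[n + 1]
    if right_span > 0.0:
        value += (knots[n + order] - t) / right_span * bspline_basis(n + 1, order - 1, t, knots)
    return value

def attitude_quaternion(t, coeffs, knots, order=4):
    """Normalized spline value at time t.

    coeffs: N quaternion coefficients a_n as (x, y, z, w) tuples (not unit length).
    knots:  N + order increasing knot times.
    """
    n_coeffs = len(coeffs)
    if not knots[order - 1] <= t < knots[n_coeffs]:
        raise ValueError("t is outside the interval where the spline is defined")
    left = bisect_right(knots, t) - 1          # knots[left] <= t < knots[left + 1]
    total = [0.0, 0.0, 0.0, 0.0]
    for n in range(left - order + 1, left + 1):  # the `order` non-zero terms
        b = bspline_basis(n, order, t, knots)
        for i in range(4):
            total[i] += coeffs[n][i] * b
    norm = math.sqrt(sum(c * c for c in total))
    return tuple(c / norm for c in total)        # the <...> normalization

# Example with N = 6 cubic B-splines on 10 uniform knots (arbitrary values).
knots = [float(k) for k in range(10)]
coeffs = [(0.1 * i, 0.0, 0.2, 1.0) for i in range(6)]
q = attitude_quaternion(3.7, coeffs, knots)
```

Because only the M coefficients around the left index enter the sum, a change in one coefficient affects q(t) over at most M knot intervals, which is the locality property referred to in the text.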

Equation (7) returns the observed direction to the source relative to the celestial reference system, or C′u = [uX, uY, uZ]′. In order to compute the position of the image in the field of view, we need to express this direction in the Scanning Reference System, SRS (Sect. 3.1), or S′u = [ux, uy, uz]′. The required transformation is obtained by a frame rotation according to Eq. (9),

$$\{\mathsf{S}'\mathbf{u},\,0\} = \mathbf{q}^{-1}\,\{\mathsf{C}'\mathbf{u},\,0\}\,\mathbf{q}, \tag{11}$$

whereupon the instrument angles (ϕ, ζ) are obtained from

$$\mathsf{S}'\mathbf{u} \equiv \begin{bmatrix} u_x \\ u_y \\ u_z \end{bmatrix} = \begin{bmatrix} \cos\zeta\cos\varphi \\ \cos\zeta\sin\varphi \\ \sin\zeta \end{bmatrix} \quad\Leftrightarrow\quad \left\{\begin{array}{l} \varphi = \operatorname{atan2}\bigl(u_y,\,u_x\bigr) \\[3pt] \zeta = \operatorname{atan2}\Bigl(u_z,\,\sqrt{u_x^2+u_y^2}\,\Bigr) \end{array}\right. \tag{12}$$

(Fig. 2), where we adopt the convention that −π ≤ ϕ < π. The field index f and the along-scan field angle η are finally obtained as

$$f = \operatorname{sign}(\varphi), \qquad \eta = \varphi - f\,\Gamma_{\rm c}/2, \tag{13}$$

where the basic angle, Γc, is here a purely conventional value (as suggested by the subscript). The field-of-view limitations imply that |η| ≲ 0.5° and |ζ| ≲ 0.5° for any observation.

Given the time of observation t and the set of source parameters s, attitude parameters a, and global parameters g, the field index f and the field angles (η,ζ) can thus be computed by application of Eqs. (7), (10)–(13). The resulting computed field angles are concisely written η(t | s,a,g), ζ(t | s,a,g).
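The last steps of this chain, from the direction S′u to the field index and field angles, can be sketched as below. The sketch assumes that the field index is the sign of ϕ (f = +1 for the preceding and f = −1 for the following field of view) and that η = ϕ − fΓc/2; the function name `field_angles` is illustrative only.

```python
import math

GAMMA_C = math.radians(106.5)   # conventional basic angle (Fig. 2)

def field_angles(u_srs):
    """Return (f, eta, zeta) in radians for a unit vector given in the SRS."""
    ux, uy, uz = u_srs
    # math.atan2 returns values in (-pi, pi]; this differs from the adopted
    # convention -pi <= phi < pi only at phi = pi, far outside both fields.
    phi = math.atan2(uy, ux)
    zeta = math.atan2(uz, math.hypot(ux, uy))
    # Field index as the sign of phi; phi = 0 never occurs for a real
    # observation, so the choice made for that case is arbitrary.
    f = 1 if phi >= 0.0 else -1
    eta = phi - f * GAMMA_C / 2.0
    return f, eta, zeta

# Example: a direction 0.001 rad ahead of the preceding viewing direction
# and 0.002 rad above the scanning plane.
phi_in, zeta_in = GAMMA_C / 2.0 + 0.001, 0.002
u = (math.cos(zeta_in) * math.cos(phi_in),
     math.cos(zeta_in) * math.sin(phi_in),
     math.sin(zeta_in))
f, eta, zeta = field_angles(u)
```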

|

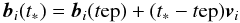

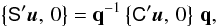

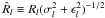

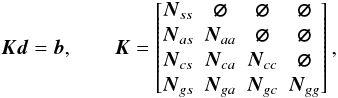

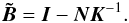

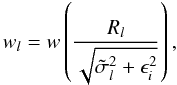

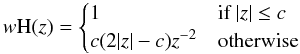

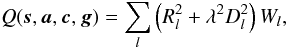

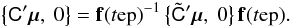

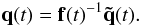

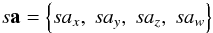

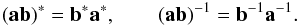

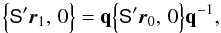

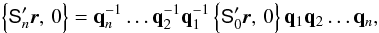

Fig. 3 Layout of the CCDs in Gaia’s focal plane. The star images move from right to left in the diagram. The along-scan (AL) and across-scan (AC) directions are indicated in the top left corner. The skymappers (SM1, SM2) provide source image detection, two-dimensional position estimation and field-of-view discrimination. The astrometric field (AF1–AF9) provides accurate AL measurements and (sometimes) AC positions. Additional CCDs used in the blue and red photometers (BP, RP), the radial-velocity spectrometer (RVS), for wavefront sensing (WFS) and basic-angle monitoring (BAM) are not further described in this paper. One of the rows (AF3) illustrates the system for labelling individual CCDs (AF3_1, etc.). The nominal paths of two star images, one in the preceding field of view (PFoV) and one in the following field of view (FFoV) are indicated. For purely technical reasons the origin of the field angles (η,ζ) corresponds to different physical locations on the CCDs in the two fields. The physical size of each CCD is 45 × 59 mm². |

3.4. Geometric instrument model

The geometric instrument model defines the precise layout of the CCDs in terms of the field angles (η,ζ). This layout depends on several different factors, including: the physical geometry of each CCD; its position and alignment in the focal-plane assembly; the optical system including its scale value, distortion and other aberrations; and the adopted (conventional) value of the basic angle Γc. Some of these (notably the optical distortion) are different in the two fields of view and may evolve slightly over the mission; on the other hand these variations are expected to be very smooth as a function of the field angles. Other factors, such as the detailed physical geometry of the pixel columns, may be extremely stable but possibly quite irregular on a small scale. As a result, the geometric calibration model must be able to accommodate both smooth and irregular patterns evolving on different time scales in the two fields of view.

In the following it should be kept in mind that the astrometric instrument of Gaia is optimised for one-dimensional measurements in the along-scan direction, i.e., of the field angle η, while the requirements in the perpendicular direction (ζ) are much relaxed. This feature of Gaia (and Hipparcos) is a consequence of fundamental considerations derived from the requirements to determine a global reference frame and absolute parallaxes (Lindegren & Bastian 2011). In principle the measurement accuracy in the ζ direction, as well as the corresponding instrument modelling and calibration accuracies, may be relaxed by up to a factor given by the inverse angular size of the field of view (Lindegren 2005), or almost two orders of magnitude for a field of 0.7°. In practice a ratio of the order of 10 may be achieved, in which case the across-scan measurement and calibration errors have a very marginal effect on the overall astrometric accuracy.

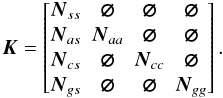

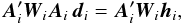

The focal plane of Gaia contains a total of 106 CCDs (Laborie et al. 2007), of which only the 14 CCDs in the skymappers (SM1 and SM2) and the 62 in the astrometric field (AF1 through AF9) are used for the astrometric solution (Fig. 3). The CCDs have 4500 pixel lines in the along-scan (AL, constant ζ, decreasing η) direction and 1966 pixel columns in the across-scan (AC, constant η, increasing ζ) direction. They are operated in the Time, Delay and Integrate (TDI) mode (also known as drift-scanning), an imaging technique well-known in astronomy from ground-based programmes such as the Sloan Digital Sky Survey (Gunn et al. 1998). Effectively, the charges are clocked along the CCD columns at the same (average) speed as the motion of the optical images, i.e., 60 arcsec s⁻¹ for Gaia. The exposure (integration) time is thus set by the time it takes the image to move across the CCD, or nominally T ≃ 4.42 s, if no gate is activated. At this exposure time the central pixels will be saturated for sources brighter than magnitude G ≃ 12 (see footnote 1). Gate activation, or “gating” for short, is the adopted method to obtain valid measurements of brighter sources. Gating temporarily inhibits charge transfer across a certain TDI line (row of pixels AC), thus effectively zeroing the charge image and reducing the exposure time in proportion to the number of TDI lines following the gate. A range of discrete exposure times is thus available, the shortest one, according to current planning, using only 16 TDI lines (15.7 ms). Gate selection is made by the on-board software based on the measured brightness of the source in relation to the calibrated full-well capacity of the relevant section of the CCD. Measurement errors and the spread in full-well capacities make the gate-activation thresholds somewhat fuzzy, and a given bright source is not necessarily always observed using the same gate. Moreover, a quasi-random selection of the observations of fainter sources will also be gated, viz., if they happen to be read out at about the same time, and on the same CCD, as a gated bright star. The skymappers are operated in a permanently gated mode, so that in practice only the last 2900 TDI lines are used in SM1 and SM2.
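The numbers quoted above are mutually consistent, as a few lines of arithmetic show. The sketch below uses only values stated in this paper (4500 TDI lines, T ≃ 4.42 s, the 10 μm AL pixel size of Sect. 3.5 and the 35 m focal length of this section); the variable and function names are illustrative.

```python
import math

T_FULL = 4.42            # s, exposure time with no gate activated
N_LINES = 4500           # TDI lines (AL pixel lines) per CCD
PIXEL_AL = 10e-6         # m, AL pixel size
FOCAL_LENGTH = 35.0      # m, nominal equivalent focal length

RAD_TO_ARCSEC = math.degrees(1.0) * 3600.0

tdi_period = T_FULL / N_LINES                        # s per TDI line

def exposure_time(active_lines):
    """Exposure time in seconds when only `active_lines` TDI lines integrate."""
    return active_lines * tdi_period

pixel_al_arcsec = PIXEL_AL / FOCAL_LENGTH * RAD_TO_ARCSEC
scan_rate = pixel_al_arcsec / tdi_period             # arcsec per second

shortest_gate_ms = exposure_time(16) * 1e3           # shortest gate, in ms
skymapper_s = exposure_time(2900)                    # permanently gated SM CCDs
```

With these inputs the TDI period is just under 1 ms, the shortest gate gives about 15.7 ms, and the implied image speed reproduces the 60 arcsec s⁻¹ scan rate.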

The skymappers are crucial for the real-time operation of the instrument, since they detect the sources as they enter the field of view, and allow determination of an approximate two-dimensional position of the images and (together with data from AF1) their speed across the CCDs. This information is used by the on-board attitude computer, in order to determine which CCD pixels should be read out and transmitted to ground (Sect. 3.5). The skymappers also make it possible to discriminate between the two viewing directions, since sources in the preceding field of view (PFoV) are only seen by SM1 and sources in the following field of view (FFoV) are only seen by SM2. In the astrometric field (as well as in BP, RP and RVS) the two fields of view are superposed.

Most observations acquired in the astrometric field (AF) are purely one-dimensional through the on-chip AC binning of data (Sect. 3.5). However, sources brighter than magnitude G ≃ 13 are always observed as two-dimensional images, providing accurate information about the AC pixel coordinate (μ) as well. Some randomly selected fainter images are also observed two-dimensionally (“Calibration Faint Stars”, CFS). The bright sources, CFS and SM data provide the AC information necessary for the on-ground three-axis attitude determination.

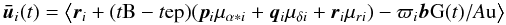

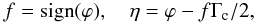

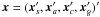

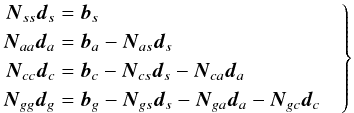

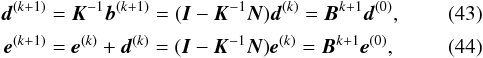

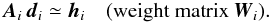

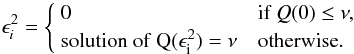

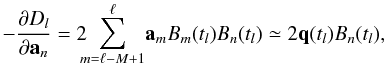

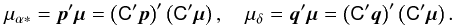

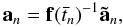

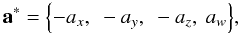

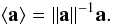

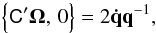

Because of the TDI mode of observation, AL irregularities of the pixel geometry are smeared out and need not be calibrated, and any measurement of the AL or AC position must effectively be referred back to an “observation time” half-way through the integration. Correspondingly, the pixel geometry can be represented by a fiducial “observation line” [η(μ), ζ(μ)] traced out in the field angles η, ζ as functions of the AC pixel coordinate μ (Fig. 4). The AC pixel coordinate is defined as a continuous variable with μ = 14 when the image is centred on the first pixel column, μ = 15 at the second pixel column, and so on until μ = 1979 at the last (1966th) pixel column. The offset by 13 pixels allows for the presence of pre-scan pixel data in some observations.

Nominally, the observation line corresponds to the (K/2)th pixel line projected backwards through the optical instrument onto the Scanning Reference System on the sky, where K is the number of active AL pixel lines in the observation (normally K = 4500; see footnote 2). For a gated observation K is much smaller and the observation line is therefore correspondingly displaced towards the CCD readout register. A separate set of geometrical calibration parameters is therefore needed for each gate being used. In the calibration updating (Sect. 5.3) all the calibration parameters are however solved together, with the overlap due to the fuzzy gate-activation thresholds providing the necessary connection between the different gates.

|

Fig. 4 Schematic illustration of how the field angles (η,ζ) are defined in terms of the CCD layout in Fig. 3. For simplicity only the projections of two CCDs, AF8_3 and AF8_4, into the Scanning Reference System (SRS) are shown (not to scale). The field angles have their origins at the respective viewing direction fP or fF (Fig. 2), which are defined in relation to the nominal centres of the CCDs (crosses); the actual configuration of the detectors is described by fiducial observation lines according to Eq. (15). The dashed curve shows the apparent path of a stellar image across the two fields of view. Its intersections with the observation lines define the instants of observations. |

Let n be an index identifying the different CCDs used for astrometry, i.e., one of the 76 CCDs in the SM and AF part of the focal plane. Furthermore, let g be a gate index such that g = 0 is used for non-gated observations and g = 1, ..., 12 for gated observations of progressively brighter sources. In each field of view (index f) the nominal observation lines $\left[\eta^0_{fng}(\mu),\,\zeta^0_{fng}(\mu)\right]$ could in principle be calculated from the nominal characteristics of the focal plane assembly (FPA) and ray tracing through the nominal optical system. However, since the nominal observation lines are only used as a reference for the calibration of the actual observation lines, a very simplistic transformation from linear coordinates to angles can be used without introducing approximation errors in the resulting calibration model. The nominal observation lines are therefore defined by the transformation

$$\left.\begin{aligned} \eta^0_{fng}(\mu) &\equiv \eta^0_{ng} = -Y_{\rm FPA}[n,g]/F \\ \zeta^0_{fng}(\mu) &= -\left(X_{\rm FPA}[n] - (\mu-\mu_{\rm c})\,p_{\rm AC} - X_{\rm FPA}^{\rm centre}[f]\right)/F \end{aligned}\right\} \tag{14}$$

where $X_{\rm FPA}$, $Y_{\rm FPA}$ are physical coordinates (in mm) in the focal plane (see footnote 3), $X_{\rm FPA}[n]$ is the physical AC coordinate of the nominal centre of the nth CCD, $Y_{\rm FPA}[n,g]$ the physical AL coordinate of the nominal observation line for gate g on the nth CCD, and $X_{\rm FPA}^{\rm centre}[f]$ the physical AC coordinate of the nominal field centre for field index f. $\mu_{\rm c} = 996.5$ is the AC pixel coordinate of the CCD centre, $p_{\rm AC} = 30$ μm the physical AC pixel size, and F = 35 m the nominal equivalent focal length of the telescope. While $\eta^0_{ng}$ is independent of f, in the AC direction the origins are offset by about ±221 arcsec between the two fields of view, corresponding to the physical coordinates $X_{\rm FPA}^{\rm centre}[f] = (-37.5\ \mathrm{mm})\,f$. It is emphasized that the nominal observation lines are purely conventional reference quantities, and need not be recomputed, e.g., once a more accurate estimate of F becomes available.
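A minimal sketch of the transformation in Eq. (14) is given below, mainly to make the units and signs explicit. The CCD coordinates passed to the function (`x_fpa_n`, `y_fpa_ng`) are hypothetical inputs, since the actual focal-plane layout values are not listed here; only μc, pAC, F and the 37.5 mm field-centre offset are taken from the text.

```python
import math

F_MM = 35_000.0          # nominal equivalent focal length in mm
P_AC_MM = 0.030          # AC pixel size in mm
MU_C = 996.5             # AC pixel coordinate of the CCD centre
RAD_TO_ARCSEC = math.degrees(1.0) * 3600.0

def x_fpa_centre(f):
    """Physical AC coordinate (mm) of the nominal field centre, f = +1 or -1."""
    return -37.5 * f

def nominal_observation_line(mu, f, x_fpa_n, y_fpa_ng):
    """Nominal field angles (eta0, zeta0) in radians according to Eq. (14).

    x_fpa_n:  AC coordinate (mm) of the nominal centre of CCD n (hypothetical input)
    y_fpa_ng: AL coordinate (mm) of the nominal observation line for gate g
              on CCD n (hypothetical input)
    """
    eta0 = -y_fpa_ng / F_MM
    zeta0 = -(x_fpa_n - (mu - MU_C) * P_AC_MM - x_fpa_centre(f)) / F_MM
    return eta0, zeta0

# The AC origin offset of each field of view, expressed as an angle.
origin_offset_arcsec = 37.5 / F_MM * RAD_TO_ARCSEC
```

The value of `origin_offset_arcsec` comes out at about 221 arcsec, in agreement with the offset quoted in the text, and μc = 996.5 is simply the midpoint of the range 14 ≤ μ ≤ 1979.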

Because of possible changes in the instrument during the mission, the actual observation lines will be functions of time. The time dependence is quantified by introducing independent sets of calibration parameters for successive, non-overlapping time intervals. Different groups of parameters may develop on different time scales, and the resulting formulation can be quite complex. For the sake of illustration, let us distinguish between three categories of geometric calibration parameters:

1. Large-scale AL calibration, Δη. This may (minutely but importantly) change due to thermal variations in the optics, the detectors, and their supporting mechanical structure. These variations could occur on short time scales (of the order of a day), and would in general be different in the two fields of view. They are modelled as low-order polynomials in the across-scan pixel coordinate μ.

2. Small-scale AL calibration, δη. This is mainly related to physical defects or irregularities in the CCDs themselves, for example “stitching errors” introduced by the photolithography process used to manufacture the CCDs. These irregularities are expected to be stable over very long time scales, possibly throughout the mission, and should be identical in both fields of view. They require a spatially detailed modelling, perhaps with a resolution of just one or a few AC pixels.

3. Large-scale AC calibration, Δζ. Although the physical origin is the same as for Δη, the AC components can be assumed to be constant on long time scales, since the calibration requirement in the AC direction is much more relaxed than in the AL direction. They are modelled as low-order polynomials in the field angles, separately in each field of view.

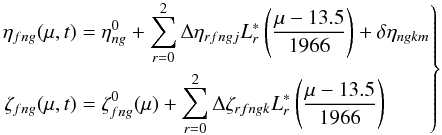

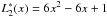

Let index j identify the “short” time intervals needed for the large-scale AL calibration, and index k identify the “long” time intervals applicable to the small-scale AL calibration and large-scale AC calibration. That is, an observation at time t belongs to some short time interval j and some long time interval k, where j and k are readily computed from the known t and the corresponding sequences of division times (see footnote 4). Assuming that quadratic polynomials in μ are sufficient for the large-scale calibrations, and that full AC pixel resolution is required for the small-scale AL calibration, the observation lines at time t are modelled as

$$\left.\begin{aligned} \eta_{fng}(\mu,t) &= \eta^0_{ng} + \sum_{r=0}^{2} \Delta\eta_{rfngj}\, L^*_r\!\left(\frac{\mu-13.5}{1966}\right) + \delta\eta_{ngkm} \\ \zeta_{fng}(\mu,t) &= \zeta^0_{fng}(\mu) + \sum_{r=0}^{2} \Delta\zeta_{rfngk}\, L^*_r\!\left(\frac{\mu-13.5}{1966}\right) \end{aligned}\right\} \tag{15}$$

where $L^*_r(x)$ is the shifted Legendre polynomial of degree r (orthogonal on [0, 1]), i.e., $L^*_0(x) = 1$, $L^*_1(x) = 2x - 1$, $L^*_2(x) = 6x^2 - 6x + 1$, etc. (the argument maps the AC extent of the CCD, 13.5 ≤ μ ≤ 1979.5, onto [0, 1]); $\Delta\eta_{rfngj}$ are the large-scale AL parameters, $\delta\eta_{ngkm}$ the small-scale AL parameters (with m = round(μ) the index of the nearest pixel column), and $\Delta\zeta_{rfngk}$ the large-scale AC parameters.
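For a single CCD, gate, field of view and time interval, the evaluation of such an observation line can be sketched as follows. The sketch assumes that the polynomial argument is (μ − 13.5)/1966, i.e. the AC extent of the CCD mapped onto [0, 1]; the function names and the dictionary used for the small-scale parameters are illustrative choices, not the actual data structures of the solution.

```python
import math

def shifted_legendre(r, x):
    """Shifted Legendre polynomial of degree r <= 2, orthogonal on [0, 1]."""
    if r == 0:
        return 1.0
    if r == 1:
        return 2.0 * x - 1.0
    if r == 2:
        return 6.0 * x * x - 6.0 * x + 1.0
    raise ValueError("only degrees 0, 1 and 2 are used in this sketch")

def normalized_mu(mu):
    """Map the AC pixel coordinate (13.5 <= mu <= 1979.5) onto [0, 1] (assumed)."""
    return (mu - 13.5) / 1966.0

def observation_line(mu, eta0, zeta0, d_eta, d_zeta, small_eta):
    """Field angles of the observation line at AC pixel coordinate mu.

    d_eta, d_zeta: three large-scale coefficients each (r = 0, 1, 2)
    small_eta:     dict mapping pixel-column index m to the small-scale term
    """
    x = normalized_mu(mu)
    m = math.floor(mu + 0.5)                 # index of the nearest pixel column
    eta = eta0 + sum(d_eta[r] * shifted_legendre(r, x) for r in range(3))
    eta += small_eta.get(m, 0.0)
    zeta = zeta0 + sum(d_zeta[r] * shifted_legendre(r, x) for r in range(3))
    return eta, zeta

# Example with arbitrary illustrative parameter values (radians).
eta, zeta = observation_line(
    mu=1000.2, eta0=0.0, zeta0=0.0,
    d_eta=(1e-6, 0.0, 0.0), d_zeta=(0.0, 2e-6, 0.0),
    small_eta={1000: 2e-7},
)
```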

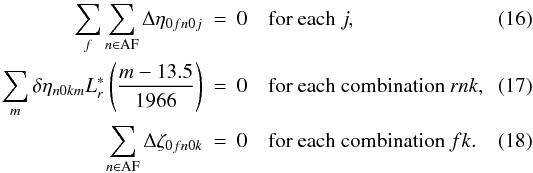

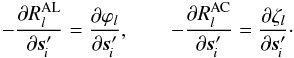

In order to render all the geometric calibration parameters uniquely determinable, a number of constraints are rigorously enforced by the astrometric solution. Effectively, they define the origins of the field angles, i.e., the viewing directions fP and fF, to coincide with the average nominal field angles of the CCD centres. The necessary constraints are:

$$\sum_{f}\,\sum_{n\in\mathrm{AF}} \Delta\eta_{0fn0j} = 0 \quad \text{for each } j \tag{16}$$

$$\sum_{m} \delta\eta_{n0km}\, L^*_r\!\left(\frac{m-13.5}{1966}\right) = 0 \quad \text{for each } r, n, k \tag{17}$$

$$\sum_{n\in\mathrm{AF}} \Delta\zeta_{0fn0k} = 0 \quad \text{for each } f, k \tag{18}$$

Note that g = 0 throughout in Eqs. (16)–(18). That the constraints are only defined in terms of the non-gated observations (g = 0) implies that the observation lines for the gated observations must be calibrated relative to the non-gated observations. This is possible since any given bright source will not always be observed with the same gate.

Constraint (16) effectively defines the zero point of the AL field angle η by requiring that the zeroth-order large-scale AL correction vanishes when averaged over the CCDs and between the two fields of view. Constraint (17) implies that the small-scale AL corrections δη do not have any components that could be described by Δη instead; it therefore ensures a unique division between the large-scale and small-scale components. Constraint (18) effectively defines the zero point of the AC field angle ζ by requiring that the zeroth-order large-scale AC correction vanishes, separately in each field of view, when averaged over the CCDs. The sums over n in Eqs. (16) and (18) are restricted to the CCDs in the astrometric field (AF), since the skymapper (SM) measurements are less accurate.

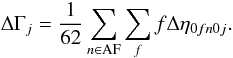

The basic angle Γc introduced in Sect. 3.3 is a fixed reference value, and any real variations of the angle between the two lines of sight will therefore show up as a variation in Δη with opposite signs in the two fields of view. The offset of the actual basic angle with respect to the conventional value Γc may be defined as the average difference of Δη between the two fields of view, where the average is computed over the astrometric CCDs, for gate g = 0, and over μ. Only the r = 0 terms survive the average over μ, since the higher-order shifted Legendre polynomials have zero mean on [0, 1]; the result for time period j is

$$\Delta\Gamma_j = \frac{1}{62} \sum_{n\in\mathrm{AF}} \left(\Delta\eta_{0,+1,n,0,j} - \Delta\eta_{0,-1,n,0,j}\right), \tag{19}$$

where the second index is the field index (f = +1 for the preceding and f = −1 for the following field of view). Although this is obviously a useful quantity to monitor, it does not appear as a parameter in the geometric calibration model. A number of additional quantities representing the mean scale offset, the mean field orientation, etc., can similarly be computed from the large-scale calibration parameters for the purpose of monitoring.
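As an illustration of how such a monitoring quantity follows from the calibration parameters, the sketch below averages the difference of the zeroth-order large-scale AL parameters between the two fields of view. It assumes the sign convention "preceding minus following" and takes the parameters as two plain lists, one value per astrometric-field CCD; both the convention and the function name are assumptions made for this example.

```python
def basic_angle_offset(d_eta0_preceding, d_eta0_following):
    """Mean difference of the r = 0, g = 0 large-scale AL parameters between
    the preceding and following fields, averaged over the AF CCDs.

    Both arguments hold one value per AF CCD for a single time interval j.
    """
    if len(d_eta0_preceding) != len(d_eta0_following):
        raise ValueError("need one value per CCD in each field of view")
    n_ccd = len(d_eta0_preceding)
    return sum(p - f for p, f in zip(d_eta0_preceding, d_eta0_following)) / n_ccd

# Example: a basic-angle change of 1 (arbitrary units) appears as +0.5 in
# the preceding and -0.5 in the following field on all 62 AF CCDs.
offset = basic_angle_offset([0.5] * 62, [-0.5] * 62)
```

In this example the function returns the full change of 1 unit, while the sum of the two fields (the quantity constrained by the zero-point condition on η) remains zero.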

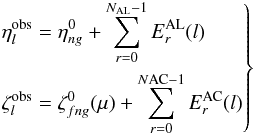

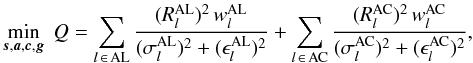

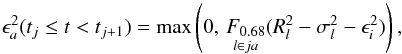

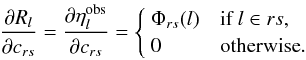

Equation (15) encapsulates a specific formulation of the geometric instrument model, with certain assumptions about the shape, dependencies, and time scales of possible variations. While this particular model is currently believed to be sufficient to describe the behaviour of the actual instrument to the required accuracy, it is very likely that modifications will be needed after a first analysis of the flight data. Moreover, in the course of the data analysis one may want to try out alternative models, or examine possible systematics resulting from the pre-processing (location estimator). In order to facilitate this, a much more flexible generic calibration model has been implemented. In the generic model, the “observed” field angles (representing the true observation lines) for any observation l are written

$$\eta_l^{\rm obs} = \eta^0_{ng} + \sum_{r=0}^{N_{\rm AL}-1} E_r^{\rm AL}(l), \qquad \zeta_l^{\rm obs} = \zeta^0_{fng}(\mu) + \sum_{r=0}^{N_{\rm AC}-1} E_r^{\rm AC}(l), \tag{20}$$

where each of the $E_r(l)$ (for brevity dropping superscript AL/AC) represents a basic calibration effect, being a linear combination of elemental calibration functions $\Phi_{rs}$:

$$E_r(l) = \sum_{s} c_{rs}\,\Phi_{rs}(l). \tag{21}$$

$N_{\rm AL}$ and $N_{\rm AC}$ are the number of effects considered along and across scan. The whole set of coefficients $c_{rs}$ forms the calibration vector c.

In the generic formulation, the multiple indices f, n, g and the variables μ and t are replaced by the single observation index l. This allows maximum flexibility in terms of how the calibration model is implemented in the software. The functions $\Phi_{rs}$ receive the observation index l, and it is assumed that this index suffices to derive from it all quantities needed to evaluate the calibration functions for this observation. Examples of quantities that can be derived from the observation index are: the FoV index f, the CCD and gate indices n and g, the AC pixel coordinate μ, time t, and any relevant astrometric, photometric or spectroscopic parameter of the source (magnitude, colour index, etc.). Intrinsically real-valued quantities such as t can be subdivided into non-overlapping intervals with different sets of calibration parameters applicable to each interval. The basic calibration model (15) can therefore be implemented as a particular instance of the generic model, with for example $L^*_r\bigl((\mu-13.5)/1966\bigr)$ (r = 0, 1, 2) constituting three of the calibration functions (with μ derived from l). Once the calibration functions have been coded, the entire calibration model can be conveniently specified (and changed) through an external configuration file alone using, e.g., XML structures.
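The structure of the generic model, effects built as linear combinations of elemental functions of the observation, can be sketched in a few lines. Everything here is illustrative: the `Observation` record stands in for whatever can be derived from the observation index l, the shifted Legendre functions in μ are assumed to take the argument (μ − 13.5)/1966, and none of the names correspond to the actual software.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple

@dataclass(frozen=True)
class Observation:
    """Quantities derivable from the observation index l (hypothetical record)."""
    f: int       # field-of-view index
    n: int       # CCD index
    g: int       # gate index
    mu: float    # AC pixel coordinate
    t: float     # observation time

CalFunction = Callable[[Observation], float]
Effect = Tuple[Sequence[float], Sequence[CalFunction]]   # (coefficients, functions)

def legendre_in_mu(r: int) -> CalFunction:
    """Elemental function: shifted Legendre polynomial of degree r in mu."""
    def phi(obs: Observation) -> float:
        x = (obs.mu - 13.5) / 1966.0
        return (1.0, 2.0 * x - 1.0, 6.0 * x * x - 6.0 * x + 1.0)[r]
    return phi

def effect_value(effect: Effect, obs: Observation) -> float:
    """One calibration effect: sum over s of c_rs * Phi_rs(l)."""
    coeffs, functions = effect
    return sum(c * phi(obs) for c, phi in zip(coeffs, functions))

def observed_angle(nominal: float, effects: Sequence[Effect], obs: Observation) -> float:
    """Nominal field angle plus the sum of all calibration effects."""
    return nominal + sum(effect_value(e, obs) for e in effects)

# Example: one large-scale effect with three coefficients (arbitrary values).
large_scale: Effect = ((1e-6, 2e-6, 3e-6), [legendre_in_mu(r) for r in range(3)])
obs = Observation(f=1, n=10, g=0, mu=1979.5, t=0.0)
eta_obs = observed_angle(0.0, [large_scale], obs)
```

Adding a diagnostic term, for instance one depending on source colour, would then only require one more elemental function and its coefficient, which is the kind of flexibility the configuration-driven approach is meant to provide.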

Some elemental calibration functions may be introduced for diagnostic purposes rather than actual calibration. An example of this is any function depending on the colour or magnitude of the source. The origin of such effects is briefly explained in Appendices D.1 and D.2. Magnitude- and colour-dependent variations of the instrument response are expected to be fully taken into account by the signal modelling on the CCD data level, as outlined in Sect. 3.5 (notably by the LSF and CDM calibrations), and should not show up in the astrometric solution. Thus, non-zero results for such “non-geometric” diagnostic calibration parameters indicate that the signal modelling should be improved. In the final solution the diagnostic calibration parameters should ideally be zero.

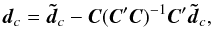

The parameters of the generic calibration model must satisfy a number of constraints similar to Eqs. (16)–(18). These can be cast in the general form

$$\mathbf{C}'\mathbf{c} = \mathbf{0}, \tag{22}$$

where the matrix C contains one column, with known coefficients, for each constraint. The columns are, by design, linearly independent.
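To make the meaning of the constraint concrete, the sketch below removes from a calibration vector c every component lying in the space spanned by the constraint columns, so that each column becomes orthogonal to the result. This orthogonal projection (via modified Gram-Schmidt) is shown only as one simple way to satisfy C′c = 0 and is not claimed to be the method used in the astrometric solution; all names are illustrative.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def orthonormal_basis(columns, tol=1e-12):
    """Modified Gram-Schmidt orthonormalization of the constraint columns."""
    basis = []
    for col in columns:
        v = list(col)
        for q in basis:
            p = dot(q, v)
            v = [vi - p * qi for vi, qi in zip(v, q)]
        norm = math.sqrt(dot(v, v))
        if norm < tol:
            raise ValueError("constraint columns are not linearly independent")
        basis.append([vi / norm for vi in v])
    return basis

def project_onto_constraints(c, columns):
    """Return the vector closest to c (in the least-squares sense) that is
    orthogonal to every constraint column."""
    result = list(c)
    for q in orthonormal_basis(columns):
        p = dot(q, result)
        result = [ci - p * qi for ci, qi in zip(result, q)]
    return result

# Example: two constraints on a four-parameter vector, requiring zero sum
# and zero linear trend (arbitrary illustrative values).
columns = [[1.0, 1.0, 1.0, 1.0], [0.0, 1.0, 2.0, 3.0]]
c_constrained = project_onto_constraints([1.0, 2.0, 0.0, 5.0], columns)
```

The requirement that the columns be linearly independent is what makes the orthonormalization well defined; a dependent column would be detected by the norm check.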

3.5. Signal (CCD data) model

As suggested in Fig. 1, the modelling of CCD data at the level of individual pixels (i.e., the photon counts) is not part of the geometrical model of the observations with which we are concerned in this paper. However, the processing of the photon counts, effectively by fitting the CCD data model, produces the “observations” that are the input to the astrometric core solution. In order to clarify the exact meaning of these observations we include here a brief overview of the signal model.

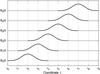

The pixel size, 10 μm ≃ 59 mas in the along-scan (AL) direction and 30 μm ≃ 177 mas in the across-scan (AC) direction, roughly matches the theoretical diffraction image for the 1.45 × 0.50 m² telescope pupil of Gaia (effective wavelength ~650 nm). Around each detected object, only a small rectangular window (typically 6–18 pixels long in the AL direction and 12 pixels wide in the AC direction) is actually read out and transmitted to the ground. Moreover, for most of the observations in the astrometric field (AF), on-chip binning in the serial register is used to sum the charges over the window in the AC direction. This effectively results in a one-dimensional image of 6–18 AL “samples”, where the signal (Nk) in each sample k is the sum of 12 AC pixels. The exact time tk when a sample was transferred to the serial register, expressed on the TCB scale, is in principle known from the time correlation of the on-board clock with ground-based time signals. Because of the known one-to-one relation between the TDI period counter k and tk, we may use k as a proxy for tk in subsequent calculations.
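The quoted angular pixel sizes follow directly from the nominal focal length F = 35 m given in Sect. 3.4, and can be compared with the simple diffraction scale λ/D for each pupil dimension. The worked arithmetic below is only an order-of-magnitude check; using λ/D as the measure of the diffraction image is an assumption of this example.

```latex
\begin{align*}
\text{AL pixel:}\quad & \frac{10\ \mu\mathrm{m}}{35\ \mathrm{m}} = 2.857\times10^{-7}\ \mathrm{rad} \simeq 58.9\ \mathrm{mas},
 & \frac{\lambda}{D_{\rm AL}} &= \frac{650\ \mathrm{nm}}{1.45\ \mathrm{m}} = 4.48\times10^{-7}\ \mathrm{rad} \simeq 92\ \mathrm{mas},\\[4pt]
\text{AC pixel:}\quad & \frac{30\ \mu\mathrm{m}}{35\ \mathrm{m}} = 8.571\times10^{-7}\ \mathrm{rad} \simeq 176.8\ \mathrm{mas},
 & \frac{\lambda}{D_{\rm AC}} &= \frac{650\ \mathrm{nm}}{0.50\ \mathrm{m}} = 1.30\times10^{-6}\ \mathrm{rad} \simeq 268\ \mathrm{mas}.
\end{align*}
```

In both directions the pixel thus spans roughly two thirds of λ/D, which is the sense in which the 1:3 pixel aspect ratio matches the elongated pupil.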

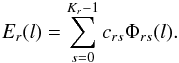

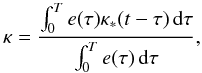

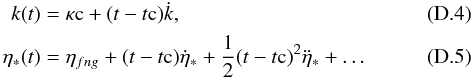

For single stars, and in the absence of the effects discussed in Appendix D.2, the sample values in the window are modelled as a stochastic variable (Poisson photon noise plus electronic readout noise) with expected value

$$\lambda_k \equiv \mathrm{E}(N_k) = \beta + \alpha L(k - \kappa) \tag{23}$$

where $\beta$, $\alpha$ and $\kappa$ are the so-called image parameters representing the (uniform) background level, the amplitude (or flux) of the source, and the AL location (pixel coordinate) of the image centroid. The continuous, non-negative function $L(x)$ is the Line Spread Function (LSF) appropriate for the observation. $L(x)$ depends, for example, on the spectrum of the source and on the position of the image in the focal plane. The (in general non-integer) pixel coordinate $\kappa$ is expressed on the same scale as the (integer) TDI index $k$, and may be translated to the equivalent TCB $t(\kappa)$ by means of the known relation between $k$ and $t_k$. The CCD observation time is defined as $t(\kappa - K/2)$, where $K$ is the number of TDI periods used for integrating the image (see Appendix D.3). Formally, the CCD observation time is the instant of time at which the centre of the source image passed across the CCD fiducial line halfway between the first and the last TDI line used in the integration (this will depend on the gating).
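The signal model described here, with expected sample values equal to a uniform background plus the source amplitude times the LSF evaluated at $k - \kappa$, can be sketched numerically as follows. This is a minimal illustration only: the Gaussian `lsf_gauss`, its width, and the grid-search estimator `fit_kappa` are assumptions made for the example and are not the LSF or the fitting procedure used in the actual processing.

```python
import numpy as np

def lsf_gauss(x, width=1.5):
    """Hypothetical LSF: unit-area Gaussian in pixel units (an assumption)."""
    return np.exp(-0.5 * (x / width) ** 2) / (width * np.sqrt(2.0 * np.pi))

def expected_counts(k, beta, alpha, kappa, lsf=lsf_gauss):
    """Expected sample values: beta + alpha * L(k - kappa)."""
    return beta + alpha * lsf(np.asarray(k, dtype=float) - kappa)

def fit_kappa(k, counts, beta, alpha, lsf=lsf_gauss, step=0.001):
    """Crude grid search for kappa with beta and alpha held fixed.

    Minimises the unweighted sum of squared differences between the
    model and the samples; a real fit would also estimate beta and
    alpha and would weight the samples by their noise.
    """
    k = np.asarray(k, dtype=float)
    counts = np.asarray(counts, dtype=float)
    grid = np.arange(k.min(), k.max() + step, step)
    model = beta + alpha * lsf(k[None, :] - grid[:, None])
    chi2 = np.sum((model - counts[None, :]) ** 2, axis=1)
    return grid[np.argmin(chi2)]

# Noise-free window of 12 AL samples with the centroid at pixel 5.3
k = np.arange(12)
samples = expected_counts(k, beta=10.0, alpha=1000.0, kappa=5.3)
kappa_hat = fit_kappa(k, samples, beta=10.0, alpha=1000.0)
```

The estimated `kappa_hat` would then be converted to an observation time through the known relation between the TDI index and TCB.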

Fitting the CCD signal model to the one-dimensional sample values $N_k$ thus gives, as the end result of observation $l$, an estimate of the AL pixel coordinate $\kappa$ of the image in the pixel stream, which is then transformed to the observation time $t_l$. The fitting procedure also provides an estimate of the uncertainty in $\kappa$, which can be expressed in angular measure as a formal standard deviation of the AL measurement, $\sigma_l^\text{AL}$. It is derived by error propagation through the fitted signal model, taking into account the dominant noise sources, photon noise and readout noise.

For some observations, AC information is also provided through two-dimensional sampling of the pixel window around the detected object. This applies to all SM observations, AF observations of bright (G ≲ 13) sources, and AF observations of Calibration Faint Stars (Sect. 3.4). The modelling of the two-dimensional images follows the same principles as outlined above for the one-dimensional (AL only) case, except that the LSF is replaced by a two-dimensional Point Spread Function (PSF) and that there is one more location parameter to estimate, namely the AC pixel coordinate $\mu$. The astrometric result in this case consists of the observation time $t_l$, the observed AC coordinate $\mu_l$, and their formal standard uncertainties $\sigma_l^\text{AL}$ and $\sigma_l^\text{AC}$ (both expressed as angles).

The estimation errors for different images are, for the subsequent analysis, assumed to be statistically independent (and therefore uncorrelated). This is a very good approximation to the extent that they only depend on the photon and readout noises. However, modelling errors at the various stages of the processing (in particular CTI effects in the signal modelling [Appendix D.3] and attitude irregularities [Appendix D.4]) are likely to introduce errors that are correlated at least over the nine AF observations in a field transit. The resulting correlations as such are not taken into account in the astrometric solution (i.e., the weight matrix of the least-squares equations is taken to be diagonal), but the sizes of the modelling errors are estimated, and are employed to reduce the statistical weights of the observations as described in Sect. 3.6. The AL and AC estimates of a given (two-dimensional) image are roughly independent at least in the limit of small optical aberrations.

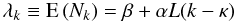

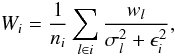

3.6. Synthesis model

By synthesis of the models described in the preceding sections, we are now in a position to formulate very precisely the core astrometric data analysis problem as outlined in Sect. 2. The unknowns are represented by the vectors $\vec{s}$, $\vec{a}$, $\vec{c}$, and $\vec{g}$ of respectively the source, attitude, calibration, and global parameters. For any observation $l$ the observed quantities are the observation time $t_l$ and, where applicable, the observed AC pixel coordinate $\mu_l$, with their formal uncertainties $\sigma_l^\text{AL}$, $\sigma_l^\text{AC}$. We then have the global minimization problem

$$\min_{\vec{s},\vec{a},\vec{c},\vec{g}} Q = \sum_{l\,\in\,\text{AL}} \frac{\left[R_l^\text{AL}(\vec{s},\vec{a},\vec{c},\vec{g})\right]^2}{(\sigma_l^\text{AL})^2 + (\epsilon_l^\text{AL})^2}\, w_l^\text{AL} + \sum_{l\,\in\,\text{AC}} \frac{\left[R_l^\text{AC}(\vec{s},\vec{a},\vec{c},\vec{g})\right]^2}{(\sigma_l^\text{AC})^2 + (\epsilon_l^\text{AC})^2}\, w_l^\text{AC} \tag{24}$$

where

$$R_l^\text{AL}(\vec{s},\vec{a},\vec{c},\vec{g}) = \eta_{fng}(\mu_l,t_l\,|\,\vec{c}) - \eta(t_l\,|\,\vec{s},\vec{a},\vec{g}), \tag{25}$$

$$R_l^\text{AC}(\vec{s},\vec{a},\vec{c},\vec{g}) = \zeta_{fng}(\mu_l,t_l\,|\,\vec{c}) - \zeta(t_l\,|\,\vec{s},\vec{a},\vec{g}) \tag{26}$$

are the residuals in the field angles, taken as functions of the unknowns⁵, and $l \in \text{AL}$ refers to observations with a valid AL component, etc. The applicable indices $f$, $n$, $g$ are of course known for every observation $l$. In Eq. (24), $\epsilon_l^\text{AL}$ and $\epsilon_l^\text{AC}$ represent all AL and AC error sources extraneous to the formal uncertainties; they include in particular modelling errors in the source behaviour (e.g., for unrecognized binaries), attitude and calibration, which have to be estimated as functions of time and source in the course of the data analysis process. $w_l^\text{AL}$ and $w_l^\text{AC}$ are weight factors, always in the range 0 to 1; for most observations they are equal to 1, but “bad” data (outliers) are assigned smaller weight factors. The determination of these factors is discussed in Sect. 5.1.2.

For the sake of conciseness we shall hereafter consider the AL and AC components of an observation to have separate observation indices l, so that for example Rl stands for either  or

or  , as the case may be. This allows the two sums in Eq. (24) to be contracted and written concisely as

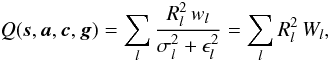

, as the case may be. This allows the two sums in Eq. (24) to be contracted and written concisely as  (27)where

(27)where  is the statistical weight of the observation.

is the statistical weight of the observation.

The excess noise $\epsilon_l$ represents modelling errors and should ideally be zero. However, it is unavoidable that some sources do not behave exactly according to the adopted astrometric model (Sect. 3.2), or that the attitude sometimes cannot be represented by the spline model in Sect. 3.3 to sufficient accuracy. The excess noise term in Eq. (27) is introduced to allow these cases to be handled in a reasonable way, i.e., by effectively reducing the statistical weight of the observations affected. It should be noted that these modelling errors are assumed to affect all the observations of a particular star, or all the observations in a given time interval. (By contrast, the downweighting factor $w_l$ is intended to take care of isolated outliers, for example due to a cosmic-ray hit in one of the CCD samples.) This is reflected in the way the excess noise is modelled as the sum of two components,

$$\epsilon_l^2 = \epsilon_i^2 + \epsilon_a^2(t_l) \tag{28}$$

where $\epsilon_i$ is the excess noise associated with source $i$ (if $l \in i$, that is, $l$ is an observation of source $i$), and $\epsilon_a(t)$ is the excess attitude noise, being a function of time. For a “good” primary source, we should have $\epsilon_i = 0$, and for a “good” stretch of attitude data we may have $\epsilon_a(t) = 0$. Calibration modelling errors are not explicitly introduced in Eq. (28), but could be regarded as a more or less constant part of the excess attitude noise. The estimation of $\epsilon_i$ is described in Sect. 5.1.2, and the estimation of $\epsilon_a(t)$ in Sect. 5.2.5.

Three separate functions are needed to describe the excess attitude noise, corresponding to AL observations, AC observations in the preceding field of view (ACP), and AC observations in the following field of view (ACF). We distinguish between these functions by letting the subscript $a$ in $\epsilon_a(t)$ stand for either AL, ACP or ACF.
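The effect of the excess noise and the downweighting factor on the statistical weight can be illustrated with a short sketch. It assumes that the source and attitude excess noises add in quadrature to the formal uncertainty, and that the weight is the downweighting factor divided by the total variance; the function names `statistical_weight` and `objective` are hypothetical and chosen only for this example.

```python
import numpy as np

def statistical_weight(sigma, eps_source, eps_attitude, w=1.0):
    """Statistical weight W_l = w_l / (sigma_l^2 + eps_i^2 + eps_a(t_l)^2).

    sigma        : formal uncertainty of the observation
    eps_source   : excess noise of the observed source (eps_i)
    eps_attitude : excess attitude noise at the observation time (eps_a)
    w            : downweighting factor in the range 0 to 1
    All noise terms must be in the same angular unit.
    """
    total_var = (np.asarray(sigma, dtype=float) ** 2
                 + np.asarray(eps_source, dtype=float) ** 2
                 + np.asarray(eps_attitude, dtype=float) ** 2)
    return np.asarray(w, dtype=float) / total_var

def objective(residuals, weights):
    """Weighted sum of squared residuals, Q = sum_l R_l^2 W_l."""
    return float(np.sum(np.asarray(weights) * np.asarray(residuals) ** 2))

# A well-behaved observation versus one of a source with excess noise
# equal to the formal uncertainty (values in microarcseconds).
W_good = statistical_weight(100.0, 0.0, 0.0)
W_noisy = statistical_weight(100.0, 100.0, 0.0)
```

In this example the excess source noise halves the weight of the observation, while an isolated outlier would instead be handled by setting `w` below 1.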

4. Solving the global minimization problem

Assuming $10^8$ primary sources, the number of unknowns in the global minimization problem, Eq. (24), is about $5 \times 10^8$ for the sources ($\vec{s}$), $4 \times 10^7$ for the attitude ($\vec{a}$, assuming a knot interval of 15 s for the 5 yr mission; cf. Sect. 5.2.1), $10^6$ for the calibration ($\vec{c}$), and less than 100 global parameters ($\vec{g}$). The number of elementary observations ($l$) considered is about $8 \times 10^{10}$. However, the size of the data set, and the large number of parameters, would not by themselves be a problem if the observations, or sources, could be processed sequentially. The difficulty is caused by the strong connectivity among the observations: each source is effectively observed relative to a large number of other sources simultaneously in the field of view, or in the complementary field of view some 106.5° away on the sky, linked together by the attitude and calibration models. The complexity of the astrometric solution in terms of the connectivity between the sources provided by the attitude modelling was analysed by Bombrun et al. (2010), who concluded that a direct solution is infeasible, by many orders of magnitude, with today’s computational capabilities. The study neglected the additional connectivity due to the calibration model, which makes the problem even more unrealistic to attack by a direct method. Note that this impossibility is not a defect, but a virtue of the mathematical system under consideration: it guarantees that a unique, coherent and completely independent global solution for the whole sky can be derived from the system.
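The orders of magnitude quoted above can be checked with simple arithmetic. The sketch below assumes five astrometric parameters per source (two for position, one for parallax, two for proper motion) and, as an assumption not stated in this section, four attitude parameters per knot corresponding to the components of a quaternion.

```python
SECONDS_PER_YEAR = 365.25 * 86400.0

n_primary = 1e8                 # primary sources
params_per_source = 5           # position (2), parallax (1), proper motion (2)
n_source = n_primary * params_per_source           # 5e8

mission_years = 5.0
knot_interval_s = 15.0
n_knots = mission_years * SECONDS_PER_YEAR / knot_interval_s
params_per_knot = 4             # assumption: four quaternion components per knot
n_attitude = n_knots * params_per_knot              # about 4.2e7

n_calibration = 1e6
n_global = 100                  # upper bound quoted in the text
n_unknowns = n_source + n_attitude + n_calibration + n_global

n_observations = 8e10
obs_per_unknown = n_observations / n_unknowns       # roughly 150
```

The source parameters dominate the count, which is consistent with the total of about half a billion unknowns.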

The natural alternative to a direct solution is to use some iterative method. This is in fact the standard way to deal with large, sparse systems of equations. The literature in the field is vast and a plethora of methods exist for various kinds of applications. However, the iterative method adopted for Gaia did not spring from a box of ready-made algorithms. Rather, it was developed over several years in parallel with the software system in which it could be implemented and tested. Originally based on an intuitive and somewhat simplistic approach, the algorithm has developed through a series of experiments, insights and improvements into a rigorous, efficient and well-understood procedure, completely adapted to its particular application. In this section we first describe the approach in broad outline, then provide the mathematical background for its better understanding and further development.

4.1. Outline of the iterative solution

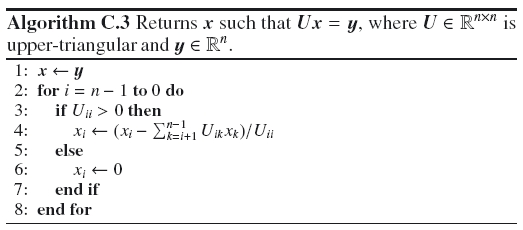

The numerical approach to the Gaia astrometry is a block-iterative least-squares solution, referred to as the Astrometric Global Iterative Solution (AGIS). In its simplest form, four blocks are evaluated in a cyclic sequence until convergence. The blocks map to the four different kinds of unknowns outlined in Sect. 3, namely:

- S: the source (star) update, in which the astrometric parameters $\vec{s}$ of the primary sources are improved;

- A: the attitude update, in which the attitude parameters $\vec{a}$ are improved;

- C: the calibration update, in which the calibration parameters $\vec{c}$ are improved;

- G: the global update, in which the global parameters $\vec{g}$ are improved.

The G block is optional, and will perhaps only be used in some of the final solutions, since the global parameters can normally be assumed to be known a priori to high accuracy.

The blocks must be iterated because each one of them needs data from the three other processes. For example, when computing the astrometric parameters in the S block, the attitude, calibration and global parameters are taken from the previous iteration. The resulting (updated) astrometric parameters are used the next time the A block is run, and so on. This iterative approach to the astrometric solution was proposed early on in the Hipparcos project as an alternative to the “three-step method” subsequently adopted for the original Hipparcos reductions; see ESA (1997, Vol. 3, p. 488) and references therein. Indeed, the later re-reduction of the Hipparcos raw data by van Leeuwen (2007) used a very similar iterative method, and yielded significantly improved results mainly by virtue of the much more elaborate attitude modelling implemented as part of the approach.
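The cyclic scheme, in which each block is updated with the other blocks held at their most recent values, can be illustrated on a small linear least-squares problem. The following is a toy sketch and not the AGIS implementation: the dense random design matrix, the block sizes, and the function name `block_iterative_lsq` are assumptions made for the example, and the update shown is a plain block Gauss-Seidel iteration.

```python
import numpy as np

def block_iterative_lsq(A, b, blocks, n_iter=200, tol=1e-12):
    """Cyclic block updates for the linear least-squares problem min |A x - b|^2.

    A      : (m, n) design matrix
    b      : (m,) vector of observations
    blocks : list of index arrays partitioning the n unknowns
    Returns the solution estimate and the number of iterations performed.
    """
    x = np.zeros(A.shape[1])
    n_done = 0
    for _ in range(n_iter):
        x_old = x.copy()
        for idx in blocks:
            # Residuals with all other blocks held at their current values
            r = b - A @ x + A[:, idx] @ x[idx]
            # Least-squares solution for this block alone
            x[idx] = np.linalg.lstsq(A[:, idx], r, rcond=None)[0]
        n_done += 1
        if np.max(np.abs(x - x_old)) < tol:
            break
    return x, n_done

# Toy problem with four blocks standing in for S, A, C and G
rng = np.random.default_rng(0)
A = rng.normal(size=(200, 8))
b = rng.normal(size=200)
blocks = [np.arange(0, 3), np.arange(3, 6), np.arange(6, 7), np.arange(7, 8)]

x_iter, n_done = block_iterative_lsq(A, b, blocks)
x_direct = np.linalg.lstsq(A, b, rcond=None)[0]
```

For this well-conditioned toy problem the iterated result agrees with the direct solution; in the real problem the blocks are strongly coupled, and the convergence behaviour cannot be taken for granted.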

While the block-iterative solution as outlined above is intuitive and appealing in its simplicity, it is from a mathematical standpoint not obvious that it must converge; and if it does indeed converge, it is not obvious how many iterations are required, whether the order of the blocks in each iteration matters, and whether the converged results do in fact constitute a solution to the global minimization problem. These are fundamental questions for the validity of the adopted iterative approach, and we therefore take some care in the following subsections to establish its theoretical foundations (Sects. 4.2–4.5). Section 5 then describes each of the S, A, C and G blocks in some detail. In addition to these blocks, separate processes are required for the alignment of the astrometric solution with the ICRS, the selection of primary sources, and the calculation of standard uncertainties; these auxiliary processes are discussed in Sect. 6.

4.2. Least-squares approach

Strictly speaking, Eq. (24) is not a least-squares problem, because of the weight factors $w_l^\text{AL}$, $w_l^\text{AC}$ (as well as the excess noises $\epsilon_l^\text{AL}$, $\epsilon_l^\text{AC}$), which depend on the AL and AC residuals and hence on the unknowns $(\vec{s},\vec{a},\vec{c},\vec{g})$. In Eq. (1) this dependence is formally included in the unspecified metric ℳ, which therefore is not simply a (weighted) Euclidean norm.

In principle, the minimization problem (24) can be solved by finding a point where the partial derivatives of the objective function Q with respect to all the unknowns are simultaneously zero. In practice, however, the partial derivatives are not computed completely rigorously, and the problem solved is therefore a slightly different one from what is outlined above. In order to understand precisely the approximations involved, it is necessary to consider how different kinds of non-linearities enter the problem.

The functions $\eta_{fng}(\mu,t\,|\,\vec{c})$ and $\zeta_{fng}(\mu,t\,|\,\vec{c})$ appearing in Eqs. (25), (26) are strictly linear in the calibration parameters $\vec{c}$, by virtue of the basic geometric calibration model in Eq. (15) or the generic model in Eqs. (20), (21). On the other hand, the functions $\eta(t\,|\,\vec{s},\vec{a},\vec{g})$ and $\zeta(t\,|\,\vec{s},\vec{a},\vec{g})$ are non-linear in $\vec{s}$, $\vec{a}$, and $\vec{g}$ due to the complex transformations involved (Sects. 3.2, 3.3). However, thanks to the data processing prior to the astrometric solution, the initial errors in these parameters are already so small that the corresponding errors in $\eta$ and $\zeta$ are only some 0.1 arcsec (~$10^{-6}$ rad). Second-order terms are therefore typically less than $10^{-12}$ rad ≃ 0.2 μas, that is, negligible in comparison with the noise of a single AL observation (some 100 μas). This means that the partial derivatives of the residuals $R_l^\text{AL}$ and