| Issue | A&A Volume 696, April 2025 | |
|---|---|---|
| Article Number | A55 | |
| Number of page(s) | 17 | |
| Section | Planets, planetary systems, and small bodies | |
| DOI | https://doi.org/10.1051/0004-6361/202452058 | |
| Published online | 02 April 2025 | |

Asteroid shape inversion with light curves using deep learning

1 School of Physics, Zhejiang University of Technology, Hangzhou 310023, China

2 Collaborative Innovation Center for Bio-Med Physics Information Technology of ZJUT, Zhejiang University of Technology, Hangzhou 310023, China

3 CAS Key Laboratory of Optical Astronomy, National Astronomical Observatories, Chinese Academy of Sciences, Beijing 100101, China

★ Corresponding author; tyj1970@163.com

Received: 30 August 2024

Accepted: 10 February 2025

Context. Asteroid shape inversion using photometric data has been a key area of study in planetary science and astronomical research. Specifically, researchers have focused on developing techniques to reconstruct 3D asteroid shapes from light curves. This process is crucial for gaining deeper insights into the formation and evolution of asteroids, as well as for planning human space missions. However, the current methods for asteroid shape inversion require extensive iterative calculations, making the process time-consuming and prone to becoming stuck in local optima. For missions that aim to make a close approach to an asteroid, a faster and more efficient method is urgently needed.

Aims. The goals of this work are to improve the precision, speed, and adaptability to sparse data in asteroid shape inversion and to support autonomous decision-making for shape inversion in space missions.

Methods. We directly established a mapping between photometric data and shape distribution through deep neural networks. In addition, we used 3D point clouds to represent asteroid shapes and utilized the deviation between the light curves of non-convex asteroids and their convex hulls to predict the concave areas of non-convex asteroids.

Results. With our approach, we eliminate the need for extensive iterative calculations, achieving millisecond-level inversion speed. We compared the results of different shape models using the Chamfer distance between traditional methods and ours and found that our method performs better, especially when handling special shapes. For the detection of concave areas on the convex hull, the intersection over union (IoU) of our predictions reached 0.89. We further validated this method using observational data from the Lowell Observatory to predict the convex shapes of the asteroids 3337 Miloš and 1289 Kutaïssi, and we conducted light curve fitting experiments. The experimental results demonstrated the robustness and adaptability of the method.

Conclusions. We propose a deep learning-based method for asteroid shape inversion using light curve data to reconstruct the convex hull of asteroids and predict concave areas on the convex hull of non-convex asteroids. Our deep learning model efficiently extracts features from input data through convolutional and transformer networks, learning the complex illumination relationships embedded in the light curve data, and enabling precise estimation of the three-dimensional point cloud representing asteroid shapes.

Key words: instrumentation: photometers / methods: data analysis / techniques: photometric / minor planets, asteroids: general

© The Authors 2025

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1 Introduction

Asteroids preserve information about the formation and evolution of the Solar System and harbor vast natural resources. In addition, some near-Earth asteroids pose a threat to Earth, making their exploration crucial (Harris & D’Abramo 2015). The OSIRIS-REx mission targeting the asteroid Bennu (Lauretta et al. 2017) and the Hayabusa2 mission targeting the asteroid Ryugu (Watanabe et al. 2019) have already returned their collected samples to Earth, and both spacecraft are continuing their extended missions to new asteroid targets. The Lucy mission, which is targeting the Trojan asteroids (Levison et al. 2021), has been launched and is not designed to return samples. The Tianwen-2 mission (Zhang et al. 2024), which is planned to launch in 2025, aims to explore the asteroid 1996 FG3 and asteroid 2016 HO3. For all of these missions, ground-based target selection and preliminary shape modeling are essential. However, traditional methods for shape reconstruction have high computational demands, leading to inefficiencies. When faced with the large volumes of data from modern sky surveys (e.g., Large Synoptic Survey Telescope, Ivezić et al. 2019), traditional iterative methods struggle to process the information effectively. Furthermore, traditional methods have an inherent uncertainty along the z-axis in the inversion results, which limits their accuracy, and they are prone to becoming stuck in local minima during the optimization process. To address these limitations, the development of deep learning-based algorithms presents a promising alternative. The new approach can significantly enhance inversion speed, improve processing efficiency, and ultimately increase the scientific return of the missions.

Asteroid shape inversion, which is crucial for planetary science research, often relies on photometric data – the easiest and most abundant source for estimating an asteroid’s physical characteristics, especially in the absence of high-resolution images. The brightness observed from an asteroid over a time series, known as the light curve, depends on the asteroid’s shape, spin state, and the scattering properties of its surface. This data is essential for improving impact risk assessment, supporting resource exploration, and ensuring successful navigation and landing missions. However, due to the effects of the atmosphere, Earth’s rotation, and the orbital path of asteroids, the effective observation window for ground-based telescopes is limited. While for a small number of larger asteroids, it is easier to obtain high signal-to-noise ratio data and high-resolution images, most asteroids have sparse historical photometric data, thus complicating data processing. Despite these challenges, photometric data remains invaluable for providing essential information for simulations, modeling, and further understanding of asteroid characteristics (Ďurech & Hanuš 2023; Ďurech et al. 2020).

Russell (1906) proposed that observing the light curve only during opposition geometry is insufficient to determine the shape of an asteroid. Nearly a century later, new paradigms and theories for asteroid light curve inversion were introduced and established by Kaasalainen et al. (1992a) and Kaasalainen et al. (1992b). The authors demonstrated that light curve data observed under different Sun-asteroid-observatory geometries could be used to estimate the Gaussian curvature of asteroids. Furthermore, they proposed preliminary numerical inversion methods for retrieving convex body models, which were successfully applied by Barucci et al. (1992) in the 3D shape inversion of asteroid (951) Gaspra. Ten years later, Kaasalainen & Torppa (2001) and Kaasalainen et al. (2001) established a mature and robust theory and methodology for asteroid shape inversion using convex shape models (which we refer to as KTM in this paper). The quality of the inversion heavily relies on the quality of the light curves – they should be fairly dense, cover the entire spin cycle, and be observed under different Sun-asteroid-observer geometries.

To exploit sparse light curve data, Carbognani et al. (2012) studied a simpler shape representation for asteroids during the inversion process with fewer parameters. Their studies on several asteroids indicated that the spin poles and periods recovered using triaxial ellipsoids were essentially consistent with those from the KTM solution with complex shapes. By integrating the Lommel-Seeliger surface scattering model, Muinonen & Lumme (2015) further advanced the ellipsoidal asteroid model. Following the same line of work, Muinonen et al. (2015) employed a Markov chain Monte Carlo (MCMC) analysis to describe the probability density function of the neighborhood region of the best-fitting parameters. Ďurech et al. (2010) established a three-dimensional model database website containing 16091 models of asteroids: Database of Asteroid Models from Inversion Techniques (DAMIT). The database also offers the convex model inversion program by Kaasalainen & Torppa (2001), which has now become one of the most popular inversion tools. Many of the models in the database are convex inversion models derived from sparse photometric data (Ďurech et al. 2010, 2020; Cellino et al. 2024).

Most of the shape models generated through light curve inversion are convex, primarily because the addition of convexity constraints during the inversion process can stabilize the solution. As noted by Kaasalainen & Torppa (2001), although asteroids typically possess concavities, their light curves can be approximated by those generated from convex shape models. Convex models serve as alternative solutions to non-convex models, which lack mathematical uniqueness and stability. Non-convex models are only necessary when dealing with high-quality light curves at high solar phase angles or disk-resolved data. Ďurech & Kaasalainen (2003) defined the concept of non-convexity, explored its relationship with solar phase angles, and noted that at high solar phase angles, the shadowing effects caused by non-convex models became more pronounced on the light curve. Bartczak & Dudziński (2018) developed the SAGE method based on genetic algorithms, which relaxes the typical convex assumption during the light curve inversion process. The SAGE method iteratively generates random shape and spin axis mutations, evaluating them at each step until a stable solution is found. This method is particularly useful when working with high-quality light curves at high solar phase angles or disk-resolved data, where non-convexity becomes more critical to accurately modeling asteroid shapes.

Kaasalainen (2011) proposed a weighting strategy to combine various types of observational data for asteroid shape, scattering parameters, rotation parameters, and albedo distribution inversion. Kaasalainen & Viikinkoski (2012) achieved multiple complementary data inversions. Subsequently, Carry et al. (2012) introduced Knitted Occultation, Adaptive-optics, and Lightcurve Analysis (KOALA), aiming to integrate light curve inversion with adaptive optics and stellar occultation. These methods were further developed by Viikinkoski et al. (2015), who proposed the All-Data Asteroid Modeling (ADAM) algorithm to combine various types of data. These methods involve not only optical photometric data but also infrared data, radar data, occultation data, interferometric data, adaptive optics data, and high-resolution imaging data. Martikainen et al. (2021) devised a method to extract reference absolute magnitudes and phase curves from Gaia data, enabling comparative analyses across hundreds of asteroids. Furthermore, Muinonen et al. (2022) introduced error models for various light curve categories and investigated the behavior of phase angles.

Asteroid shape inversion based on light curve data has long been treated as a type of non-convex optimization problem (Danilova et al. 2022), characterized by challenges such as multiple local minima arising from the mathematical complexity of the inversion process. Traditionally, this problem is tackled using deterministic initialization and numerical iterative minimization methods. Aiming to achieve a global solution, Chng et al. (2022) proposed a global optimization framework by embedding the spin pole and area vector determination module. While theoretically more effective, this approach nonetheless remains computationally intensive and is limited to convex body models, thus making it unsuitable for more complex non-convex shapes.

In recent years, there has been a trend towards treating shape estimation of space objects as a classification problem and employing deep learning methods to address it. Linares et al. (2020) trained a convolutional neural network (CNN) solely based on light curve data to classify space objects by type and included categories such as rocket bodies, payloads, and debris. Their method achieved an accuracy of 75% on real light curve test sets, demonstrating the feasibility of using neural networks to process light curve data. Allworth et al. (2021) demonstrated that transferring knowledge learned on simulated light curve data improved the performance of deep networks in shape classification tasks using real light curve data. However, the application of deep neural networks in asteroid light curve-related tasks has stalled at this point, with no further successful application of neural networks to three-dimensional shape inversion.

Advancements in 3D sensor technology have significantly propelled research within the 3D computer vision domain. Among the various 3D data formats, point clouds are particularly popular due to their ability to efficiently store detailed 3D shape information while consuming less memory than other formats such as voxel grids or 3D meshes. Nonetheless, the point cloud data captured by current 3D sensors often suffers from incompleteness and inaccuracies, stemming from challenges such as self-occlusion, light reflection, and the inherent limitations in sensor resolution. As a result, the task of reconstructing complete point clouds from partial and sparse datasets has become increasingly crucial and is now considered essential in this field. Researchers have explored various approaches within deep learning to address this challenge. Initial attempts in point cloud completion sought to adapt established methods from 2D completion tasks to 3D point clouds (Dai et al. 2017; Han et al. 2017; Sharma et al. 2016; Stutz & Geiger 2018; Nguyen et al. 2016), primarily through voxelization and 3D convolutions.

With the success of PointNet (Qi et al. 2017a) and PointNet++ (Qi et al. 2017b), directly processing 3D coordinates has become the mainstream technique for point cloud-based 3D analysis. This technology has been further applied to many pioneering works in point cloud completion, where encoder-decoder architectures are designed to generate complete point clouds (Achlioptas et al. 2018; Tchapmi et al. 2019; Groueix et al. 2018; Mandikal & Radhakrishnan 2019). These algorithms have proven to be particularly well-suited for tasks in autonomous driving, especially with data generated by LiDAR sensors (Yu et al. 2021; Zhou et al. 2022; Hao et al. 2023; Hartmann et al. 2024), as they excel at extracting both global and local features from sparse point cloud data. Additionally, earlier approaches that focused on voxelization and 3D convolutions for point cloud completion (Yuan et al. 2018; Huang et al. 2020) have laid the groundwork for the development of more sophisticated methods.

Point clouds are used to represent the surface shape of three-dimensional objects. A point cloud consists of coordinates (x, y, z), and the collection of these points forms the semantic features of a three-dimensional object. The approach of representing 3D shapes with point clouds has inspired us. In the task of three-dimensional shape inversion based on light curve data, the brightness recorded at each sampling point, along with the corresponding coordinates of the Sun and the observatory at each sampling point, forms an independent vector. The collection of many such vectors embeds information about the asteroid’s 3D shape features. We aim to leverage deep learning techniques to learn the shape information embedded in the brightness data while utilizing large datasets for training and learning. Compared to iterative optimization methods, regression-based prediction approaches can significantly improve the efficiency of shape inversion.

2 Preliminaries

2.1 Asteroid light model

We considered an asteroid in principal-axis rotation about its axis of maximum inertia and denoted the rotation period by T, the pole orientation in ecliptic longitude and latitude by (λ, β)r (J2000.0; here, r stands for transpose), and the rotational phase at a given epoch t0 by ϕ0. As to the asteroid shape, we incorporated either triaxial ellipsoidal shapes or general convex shapes.

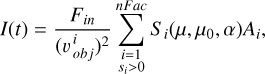

The observed brightness I of the asteroid at any given time t can be expressed as:

(1)

where Fin is the incident flux density and nFac is the number of surface facets into which the asteroid’s surface is divided; the expression also involves the distance from the asteroid to the observatory. The term S (μ, μ0, α) represents the surface scattering coefficient, and it is a function of t, where μ, μ0, and α correspond to the cosine of the angle between the observer’s direction and the asteroid’s surface normal, the cosine of the angle between the light source direction and the asteroid’s surface normal, and the phase angle, respectively. Here, si > 0 indicates that the ith facet is simultaneously illuminated by the Sun and visible from the observatory at time t, and Ai represents the area of the ith facet.
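The facet sum described for Eq. (1) can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `facet_brightness`, its argument layout, and the inverse-square distance scaling are not taken from the paper, and a facet is counted as lit and visible when both cosines μ and μ0 are positive, which holds for convex shapes only.

```python
import math

def dot(a, b):
    """Dot product of two 3-vectors given as sequences."""
    return sum(x * y for x, y in zip(a, b))

def facet_brightness(normals, areas, sun_dir, obs_dir, scatter,
                     f_in=1.0, dist=1.0):
    """Sum scattered light over facets that are both lit and visible.

    normals          : unit outward facet normals in the body frame
    areas            : facet areas A_i
    sun_dir, obs_dir : unit vectors toward the Sun and the observer
    scatter          : callable S(mu, mu0, alpha) (assumed interface)
    f_in, dist       : incident flux density and observer distance;
                       the 1/dist**2 scaling is an assumption
    """
    # Phase angle between the Sun and observer directions, in radians.
    alpha = math.acos(max(-1.0, min(1.0, dot(sun_dir, obs_dir))))
    total = 0.0
    for normal, area in zip(normals, areas):
        mu = dot(normal, obs_dir)    # cosine toward the observer
        mu0 = dot(normal, sun_dir)   # cosine toward the Sun
        if mu > 0.0 and mu0 > 0.0:   # lit and visible (convex case)
            total += scatter(mu, mu0, alpha) * area
    return f_in / dist ** 2 * total
```

For a non-convex body this visibility test is not sufficient, because facets can shadow or hide one another; a ray-casting check would be needed there.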

Scattering models serve to describe the statistical characteristics of how light is scattered, reflected, and absorbed by the microscopic structures on asteroid surfaces. Numerous scattering models exist today, and each is dependent on variables such as incidence angle, emission angle, phase angle, surface roughness, porosity, particle size, and other physical parameters (Rizos et al. 2021). Among the commonly used scattering models for asteroids are the LS-L (Lommel-Seeliger-Lambert) model introduced by Kaasalainen and the model developed by Hapke (Hapke 1963, 1966).

The Hapke model encompasses a range of physical characteristics, including the incidence angle, emission angle, average single-scattering albedo, scattering and extinction coefficients of the medium, detector sensitivity area, reflectance, the number of reflective particles per unit volume, average particle cross-sectional area, average path length of light attenuation within the medium, and surface roughness. This model also accounts for phenomena such as multiple scattering, mutual shadowing, opposition effects across different wavelengths, and anisotropic scattering. Due to its complexity, the Hapke model has been applied to the inversion of only a limited number of asteroids (Lee et al. 2021; Scheirich et al. 2010; Ďurech 2002; Pravec et al. 2014; Masoumzadeh et al. 2015; Hudson & Ostro 1998), with the light curve fitting residuals showing limited sensitivity to the model’s parameters. Moreover, extracting all surface scattering characteristics from optical photometric data remains a significant challenge (Li et al. 2015).

Kaasalainen & Torppa (2001) pointed out that the scattering model used for inversion needs to be sufficiently simple, as having fewer model parameters can enhance the stability of the inversion. The same applies to neural networks – fewer model parameters are more conducive to the network learning the shape features from light curve data. So, we adopted the linear combination of Lommel-Seeliger (LS) and Lambert (L) models (Kaasalainen et al. 2001) as the scattering law of the object, formulated as:

$$\begin{aligned} S\left(\mu, \mu_{0}, \alpha\right) &= f(\alpha)\left[S_{LS}\left(\mu, \mu_{0}\right)+c\, S_{L}\left(\mu, \mu_{0}\right)\right] \\ &= f(\alpha)\left[\frac{\mu \mu_{0}}{\mu+\mu_{0}}+c\, \mu \mu_{0}\right] \end{aligned} \tag{2}$$

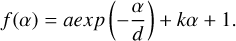

where f(α) is the phase function

$$f(\alpha) = a \exp\left(-\frac{\alpha}{d}\right) + k\alpha + 1 \tag{3}$$

and the terms a and d are the amplitude and scale length of the opposition effect, k is the overall slope of the phase curve, and c is a weight parameter.
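As a small worked example of the scattering law, the sketch below evaluates Eq. (2). It assumes the three-parameter exponential-linear phase function of Kaasalainen et al. (2001), f(α) = a exp(−α/d) + kα + 1, with α in radians. The function names and the parameter values in the usage note are illustrative choices, not values reported in this paper.

```python
import math

def phase_function(alpha, a, d, k):
    """Assumed phase function: opposition surge plus a linear slope."""
    return a * math.exp(-alpha / d) + k * alpha + 1.0

def ls_lambert(mu, mu0, alpha, a, d, k, c):
    """Lommel-Seeliger plus c-weighted Lambert scattering, as in Eq. (2).

    Returns 0 for facets that are unlit or not visible (mu or mu0 <= 0).
    """
    if mu <= 0.0 or mu0 <= 0.0:
        return 0.0
    lommel_seeliger = mu * mu0 / (mu + mu0)
    lambert = mu * mu0
    return phase_function(alpha, a, d, k) * (lommel_seeliger + c * lambert)
```

For example, at zero phase angle with normal incidence and emission (μ = μ0 = 1) and the illustrative values a = 0.5, d = 0.1, k = −0.5, c = 0.1, the function returns (0.5 + 1) × (0.5 + 0.1) = 0.9.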

2.2 Shape representation

In the field of computer vision, three-dimensional objects can be represented in various ways, most commonly as meshes (Catmull 1974), voxels (Lorensen & Cline 1998), and point clouds. The mesh encodes the geometry of three-dimensional objects based on the combination of vertices, edges, and faces, often using polygonal faces to represent the surface of three-dimensional objects. Triangular grids are one of the simplest types of grids and are commonly used for the convenient and rapid generation of three-dimensional surface representations, particularly in unstructured grids, where flexibility and efficiency are essential.

Voxels are the three-dimensional counterpart of pixels (likewise, pixels are the two-dimensional counterpart of voxels). Voxelization is the process of converting a continuous geometric object into a set of discrete voxels that closely approximate the object. Voxels can be viewed as cubes representing uniformly spaced samples on a three-dimensional grid, and they are commonly used in volume rendering, medical image processing, fluid dynamics simulation, and other fields, especially for dense three-dimensional data.

Point clouds are collections of data points in three-dimensional space, which can be used to describe the geometric shape of individual objects or entire scenes. Each point in a point cloud is defined by X, Y, and Z coordinates, representing the physical position of the point in three-dimensional space. Point clouds are commonly used in geographic information systems (GIS), three-dimensional modeling, virtual reality, autonomous driving, and other fields, especially for non-uniform or discrete three-dimensional data. Due to the lack of explicit topological information, point clouds offer greater flexibility in usage than traditional methods such as meshes or voxels.

We represent the three-dimensional shape of the asteroid as a collection of point clouds, denoted as P, which is a discrete representation of its underlying continuous surface. For traditional point cloud collections, each point represents only a three-dimensional coordinate, and its capability to express the three-dimensional shape depends on the resolution of the point cloud.

2.3 Inverse problem

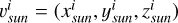

The inversion of asteroid shapes from light curves relies on the space-time relationships of the asteroid. At a certain observation moment ti, the brightness Ii of the asteroid is observed, and the direction vectors of the Sun and of the observatory in the asteroid’s body coordinate system are recorded. Given a direction vector in the ecliptic coordinate system, the corresponding vector in the body coordinate system centered on the asteroid is obtained through the transformation

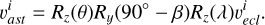

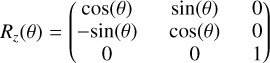

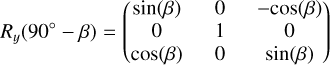

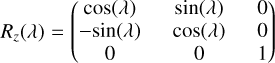

(4)

Here, Rz(θ), Ry(90°−β), and Rz(λ) are rotational matrices, where θ denotes the rotational phase angle

(5)

(6)

(7)

(8)

Here, λ represents the ecliptic longitude, β is the ecliptic latitude of the asteroid’s rotation axis, t0 denotes the initial time, the parameter θ0 represents the asteroid’s rotational phase at that moment, and T indicates the period of the asteroid’s rotation.
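The ecliptic-to-body transformation can be sketched as follows. The snippet is not the authors' code. It assumes the passive (frame-rotation) matrix convention used by Kaasalainen et al. (2001), a linear rotational phase θ = θ0 + 2π(t − t0)/T, and angles in radians; all function names are hypothetical.

```python
import math

def rot_z(angle):
    """Frame rotation about the z-axis (passive convention, assumed)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]]

def rot_y(angle):
    """Frame rotation about the y-axis (passive convention, assumed)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, -s], [0.0, 1.0, 0.0], [s, 0.0, c]]

def matvec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]

def ecliptic_to_body(r_ecl, lam, beta, theta0, t, t0, period):
    """Apply Rz(theta) Ry(90 deg - beta) Rz(lambda) to an ecliptic vector.

    lam, beta : ecliptic longitude and latitude of the spin pole (radians)
    theta0    : rotational phase at epoch t0 (radians)
    t, t0, period must share the same time unit.
    """
    theta = theta0 + 2.0 * math.pi * (t - t0) / period
    v = matvec(rot_z(lam), r_ecl)
    v = matvec(rot_y(math.pi / 2.0 - beta), v)
    return matvec(rot_z(theta), v)
```

A quick sanity check of the convention is that the pole direction (cos β cos λ, cos β sin λ, sin β) maps onto the body z-axis at every time t.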

Recording the brightness at time ti along with the normalized direction vectors of the Sun and the observatory in the asteroid’s body coordinate system yields a vector representation. We let the observation vector at time ti be denoted as  , where

, where  and

and  are the normalized direction vectors of the Sun and the observatory, respectively, in the asteroid’s body coordinate system. The collection of observation vectors at various historical moments forms a set

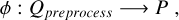

are the normalized direction vectors of the Sun and the observatory, respectively, in the asteroid’s body coordinate system. The collection of observation vectors at various historical moments forms a set  . To ensure that our inversion process only has shape as a variable, we processed Ii by assuming the phase function is known. Before inputting each point into the network, we divided Ii by the phase function f(α) as given in Equation (3). To further normalize Ii, we divided each Ii after the phase function correction by the average value of all Ii in the set Q. We refer to the observation vector set Q after this preprocessing as Qpreprocess.

. To ensure that our inversion process only has shape as a variable, we processed Ii by assuming the phase function is known. Before inputting each point into the network, we divided Ii by the phase function f(α) as given in Equation (3). To further normalize Ii, we divided each Ii after the phase function correction by the average value of all Ii in the set Q. We refer to the observation vector set Q after this preprocessing as Qpreprocess.
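The two preprocessing steps can be illustrated with a short sketch. It makes several assumptions that the text does not confirm: the phase function has the form f(α) = a exp(−α/d) + kα + 1, the phase angle is computed from the two unit direction vectors, and the average used for normalization is taken over the phase-corrected brightness values. The name `preprocess` and the tuple layout are hypothetical.

```python
import math

def preprocess(observations, a, d, k):
    """Phase-correct and mean-normalize brightness values.

    observations : list of (brightness, sun_vec, obs_vec) tuples, with
                   unit direction vectors in the asteroid body frame
    a, d, k      : phase-function parameters, assumed known
    Returns a new list of (normalized_brightness, sun_vec, obs_vec).
    """
    corrected = []
    for brightness, sun, obs in observations:
        cos_alpha = sum(s * o for s, o in zip(sun, obs))
        alpha = math.acos(max(-1.0, min(1.0, cos_alpha)))
        f_alpha = a * math.exp(-alpha / d) + k * alpha + 1.0
        corrected.append(brightness / f_alpha)   # remove phase dependence
    mean = sum(corrected) / len(corrected)
    return [(value / mean, sun, obs)
            for value, (_, sun, obs) in zip(corrected, observations)]
```

After this step the brightness values average to one, so the network input no longer depends on the absolute flux scale.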

At this point, we needed to establish a mapping relationship ϕ from the preprocessed observation set to the point cloud:

$$P = \phi\left(Q_{\mathrm{preprocess}}\right) \tag{9}$$

where P represents the point cloud set characterizing the three-dimensional shape of the asteroid. The construction of ϕ was implemented using a neural network. An illustration of our inversion method is presented in Fig. 1.

At the same time, we noticed that the area illuminated and observable on the asteroid during two independent observations can overlap, and this information is crucial for the inversion process. The occurrence of such area overlap is related to the shape of the asteroid and the direction of illumination. Such situations are likely to occur when two observation vectors in the asteroid’s body coordinate system are very close. This can happen both in observations from different historical periods and in continuous observations of the asteroid during a single night. After normalizing each observation vector, we could define a domain centered at the normalized point with a fixed radius r:

(10)

Here, the distance is the Euclidean distance between two normalized points. Due to the unknown shape of the asteroid, it is not possible to determine an accurate value of r in order to judge whether there is an overlap in the observed areas. Therefore, in our experiment, r was set to an empirical value of 0.1. In the subsequent feature extraction process, appropriate feature fusion was performed on this domain.
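The fixed-radius domain can be sketched as a simple neighbor query. The snippet assumes each observation is summarized by a single normalized vector (how that vector is built is not recoverable from the text here) and that the domain includes its boundary; `neighbors_within` is a hypothetical helper name.

```python
import math

def normalize(v):
    """Scale a vector to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in v))
    return tuple(x / norm for x in v)

def neighbors_within(points, center_index, r=0.1):
    """Indices of points whose Euclidean distance to the center is <= r.

    points : list of normalized vectors, one per observation
    r      : domain radius; 0.1 is the empirical value quoted in the text
    """
    center = points[center_index]
    return [j for j, p in enumerate(points) if math.dist(p, center) <= r]
```

The returned indices always include the center itself, so every observation has at least one member in its domain.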

3 Proposed method

3.1 Convex inversion

The goal of our proposed algorithm is to establish the mapping relationship as indicated in Equation (9). However, we must state first that the method is based on the known parameters of rotation axis direction, rotation period, and phase function.

As an attempt to establish this mapping relationship, we trained the neural network on a vast amount of simulated data. We provide a comprehensive overview of our methodology in this section.

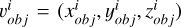

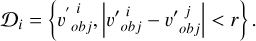

We made improvements to the PoinTr (Yu et al. 2021) algorithm framework. The network divides the prediction process of asteroid point clouds into two stages. The first stage is coarse point cloud prediction, which predicts the basic outline of the three-dimensional shape, and the second stage further refines the surface of the coarse point cloud.

Following the style of PoinTr (Yu et al. 2021), we used a simplified 1D-DGCNN (Wang et al. 2019) network for feature extraction of input vectors. We employed farthest point sampling (FPS) and the k-nearest neighbors (KNN) algorithm to extract local features and downsample them. Furthermore, a geometry-aware transformer module (Yu et al. 2021) was utilized for feature encoding. In this module, the multi-head self-attention and KNN query modules work in parallel, capturing both global information and local information of light curves. The output features pass through a fully connected layer and a max-pooling layer to obtain global features, and based on these features, the coarse point cloud of the three-dimensional shape is predicted. Subsequently, we input the coarse point cloud along with the encoded features into the decoding stage. The structural features of the coarse point cloud were decoded along with the features input into the network. Finally, we performed three layers of point cloud upsampling based on the UpSample transformer module proposed by Zhou et al. (2022). In this module, the coarse point cloud is processed along with the decoder outputs through two branches: one that preserves the original features and another that refines them using attention weights computed from local neighborhoods. These refined features were then used to upsample the point cloud while retaining the geometric structure and completing the shape based on the original light curve features. The refined point cloud was obtained as the final output. The network architecture is shown in Fig. 2.
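The two sampling primitives named above, FPS and KNN, are standard algorithms and can be sketched in pure Python. This is a generic illustration, not the network's implementation: in practice these steps run on feature tensors on the GPU, and the function names and the `start` argument are assumptions.

```python
import math

def farthest_point_sampling(points, m, start=0):
    """Pick m indices so that each new point is farthest from those chosen.

    points : list of coordinate tuples
    m      : number of samples, assumed to satisfy m <= len(points)
    start  : index of the first sample (arbitrary choice)
    """
    chosen = [start]
    # Distance from every point to its nearest already-chosen sample.
    min_dist = [math.dist(p, points[start]) for p in points]
    while len(chosen) < m:
        nxt = max(range(len(points)), key=lambda i: min_dist[i])
        chosen.append(nxt)
        for i, p in enumerate(points):
            min_dist[i] = min(min_dist[i], math.dist(p, points[nxt]))
    return chosen

def knn(points, query, k):
    """Indices of the k points nearest to the query, closest first."""
    order = sorted(range(len(points)),
                   key=lambda i: math.dist(points[i], query))
    return order[:k]
```

FPS gives a subset that covers the input evenly, and KNN then gathers a local neighborhood around each sampled point, which is how local features are typically grouped before downsampling.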

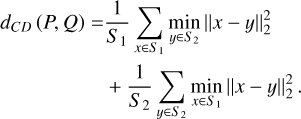

In the experiment, the Chamfer distance (CD) was employed as the loss function of the network to measure the distance between two unordered point sets, defined as follows:

(11)

where S1 and S2 represent two sets of point clouds, and x, y respectively denote the three-dimensional vectors of coordinates for the two sets of point clouds.
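A minimal sketch of a Chamfer distance between two unordered point sets is given below. Several variants are in common use (squared or unsquared distances, averaged or summed), and the text here does not show which one Eq. (11) adopts. The version below averages unsquared nearest-neighbor distances in both directions and should be read as one plausible choice.

```python
import math

def chamfer_distance(s1, s2):
    """Symmetric Chamfer distance between two point sets.

    For each point in one set, take the distance to its nearest
    neighbor in the other set, average, and sum both directions.
    The unsquared-distance variant is an assumption.
    """
    def one_sided(a, b):
        return sum(min(math.dist(x, y) for y in b) for x in a) / len(a)

    return one_sided(s1, s2) + one_sided(s2, s1)
```

This brute-force form costs O(|S1|·|S2|) distance evaluations, which is acceptable for a sketch; training code would normally use a batched GPU implementation.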

To ensure that both the generation of coarse point clouds and the progressive refinement approach closely approximate the real shape, the output of each point cloud at every stage was constrained using the CD loss. The coarse point cloud underwent a total of three upsampling steps before producing the final result. Therefore, the total loss function Lpc is

(12)

Here, Lcoarse represents the Chamfer loss of the coarse point cloud, Lc2f represents the Chamfer loss of the output point cloud after the first layer of upsampling, and Lfine represents the Chamfer loss of the final refined point cloud output.

Fig. 1 Illustration of our proposed inversion method. The top four panels show the schematic diagrams of different visible and illuminated facets of the asteroid under varying Sun-asteroid-Earth positional relationships in the asteroid’s body coordinate system. The blue point cloud represents the asteroid point cloud obtained from the inversion process.

3.2 Determination of concave areas

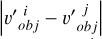

Kaasalainen et al. (1992a) pointed out that although asteroids typically have concavities, their light curves are approximately similar to those generated by convex shapes. Non-convex models serve as an alternative to convex models; however, their solutions lack mathematical uniqueness and stability unless supplemented by additional sources of shape information. By minimizing the photometric error, the shape we invert is very close to the convex hull of the non-convex asteroid. Devogèle et al. (2015) utilized this feature to propose a method for predicting concave areas by analyzing the local normal distribution of the model. However, their method does not use the photometric information of the preliminary prediction results further and can only detect large-scale concavities. Our aim is to develop a new method for detecting non-convex regions utilizing the photometric information from both the model and the convex hull, and analyzing their differences (even when the differences are very small under low solar phase angles or when the non-convexity is minimal), along with the corresponding positions of the Sun and the observation station in order to determine the non-convex regions.

Thus, we utilized the deviation between the light curve of the convex hull and the actual light curve, along with the illumination position information, to determine the concave areas and the degree of concavity. At this point, the input observation vectors are redefined and form a similar set, Q′. The quantities involved are the relative brightness of the convex hull, the relative brightness of the non-convex model, and the difference between the brightness generated by the convex hull simulation and the brightness of the non-convex model.

As our aim is for the information output by the neural network to express the non-convex regions on the convex hull, we performed some pre-processing on Q′ before feeding the data into the network. We employed the Fibonacci sphere sampling method (Brent 2013) to complete the normalized observation vectors. This ensures that the normalized observation points always form a spherical shape in space, with the photometric and illumination information for the supplemented points entirely replaced by zeros. These supplementary vectors are added to Q′ to form a new set, Q′′, which is then fed into the classification network based on PointNet++ (Qi et al. 2017b). The network classifies each vector and identifies which points represent the projection of the non-convex regions, as shown in Fig. 3. This projection method uniquely represents the concave areas within a 3D model, and our experiments demonstrated the reliability of this approach.
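The completion step can be sketched as follows, under stated assumptions. The golden-angle construction is a common way of generating a Fibonacci sphere; the rule used here for deciding where to add a supplementary point (no observed direction within `min_angle_deg`), the `(direction, features)` layout, and the names `fibonacci_sphere` and `complete_with_zeros` are hypothetical and are not taken from the pre-processing code of this work.

```python
import math

def fibonacci_sphere(n):
    """Return n roughly evenly spread unit vectors (golden-angle spiral)."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    pts = []
    for i in range(n):
        z = 1.0 - (2.0 * i + 1.0) / n          # evenly spaced heights in (-1, 1)
        r = math.sqrt(max(0.0, 1.0 - z * z))   # radius of the circle at height z
        theta = golden_angle * i
        pts.append((r * math.cos(theta), r * math.sin(theta), z))
    return pts

def complete_with_zeros(observations, n_sphere, min_angle_deg=10.0):
    """Add zero-feature vectors at sphere points that have no nearby observation.

    observations: list of (unit_direction, features) pairs (assumed layout).
    """
    cos_limit = math.cos(math.radians(min_angle_deg))
    n_feat = len(observations[0][1])
    completed = list(observations)
    for p in fibonacci_sphere(n_sphere):
        near = any(sum(a * b for a, b in zip(p, d)) > cos_limit
                   for d, _ in observations)
        if not near:
            # supplementary point: photometric/illumination features set to zero
            completed.append((p, (0.0,) * n_feat))
    return completed

observed = [((0.0, 0.0, 1.0), (0.8, 0.1))]
completed = complete_with_zeros(observed, 64)
```

The completed set always covers the whole sphere, so a point-wise classifier sees a closed spherical layout regardless of how sparse the actual observations are.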

Fig. 2 Network architecture. First, FPS is used to downsample the input light curve sampling points in order to determine the center points. Then, the nearest neighbor set corresponding to each center point is obtained with the KNN algorithm, and local features are extracted using a simplified 1D-DGCNN. Afterwards, positional embeddings are added to enhance spatial awareness. The enhanced features are encoded by a geometry-aware transformer module followed by global feature aggregation through max pooling. Finally, the coarse point cloud is refined into the output using a transformer decoder that integrates three up-sampling layers and skip connections.

Fig. 3 Method of predicting non-convex regions.

4 Results and discussion

4.1 Simulated light curve experiments

Due to the influence of an asteroid’s orbital motion and Earth’s orbital motion, each asteroid can only be observed during certain limited seasons. To ensure that the simulated observation conditions are realistic, we used the geometric position relationship between the Sun, the asteroid, and the observatory from actual data provided by the DAMIT website. Each asteroid’s historical observation positions are different, and by combining the 3D models of multiple asteroids with various actual observation positions, a vast number of light curves can be generated. We present one of them in Fig. 4. Essentially, this generation method assigns different orbital paths to each asteroid model. Since the simulation uses actual observation position data, the generated light curves better reflect real observation conditions, further enhancing the realism of the simulation method.

Table 1 Dataset distributions.

We used 3459 3D asteroid models to simulate light curves. For each 3D asteroid model, we selected the orbital information of 35 asteroids (the Sun-asteroid-Earth positional relationships) to simulate and generate light curve samples. Each light curve sample corresponds to a set of Qpreprocess, as mentioned in Section 2.3. The number of light curves within a sample depends on the number of historical light curves of the selected asteroid used to determine the Sun-asteroid-Earth positional relationships during the simulation, and it ranges from two to eight light curves per sample. In total, we generated 121 065 light curve samples, which were divided into training and testing sets at a ratio of approximately 10:1.

For the non-convex shapes, we added a conditional check for facet occlusion in the light curve simulation. Using a triangle intersection detection algorithm (Möller & Trumbore 2005), we traversed each facet to determine whether it was occluded by other facets in the direction of illumination and observation. We used the Blender software (Flavell 2011) to make 1360 non-convex models with different depression degrees and different depression positions; some of the non-convex 3D models are shown in Fig. 5. For each non-convex model and its convex hull model, we selected the orbital information of 20 asteroids and used the recorded Sun-asteroid-Earth positional relationships to simulate and generate light curve samples. We simulated 27 200 light curve samples for the non-convex model and its convex hull model under actual observation conditions. Because 3D models of non-convex asteroids are difficult to obtain, our dataset contains relatively few non-convex models. Since neural network training requires large amounts of data, we allocated more data to the training set, dividing the training and testing sets at a ratio of approximately 14:1. Specific dataset information is shown in Table 1.
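The occlusion check can be sketched with a standard Möller-Trumbore ray-triangle test. This is a minimal illustration rather than the simulation code of this work: the helper names, the tolerance `eps`, and the convention of casting a ray from a facet center toward the Sun or the observer are assumptions.

```python
def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def ray_hits_triangle(origin, direction, v0, v1, v2, eps=1e-9):
    """Moller-Trumbore test: does the ray origin + t*direction (t > 0) hit the triangle?"""
    e1, e2 = _sub(v1, v0), _sub(v2, v0)
    h = _cross(direction, e2)
    a = _dot(e1, h)
    if abs(a) < eps:            # ray parallel to the triangle plane
        return False
    f = 1.0 / a
    s = _sub(origin, v0)
    u = f * _dot(s, h)          # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return False
    q = _cross(s, e1)
    v = f * _dot(direction, q)  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return False
    t = f * _dot(e2, q)         # distance along the ray
    return t > eps

def facet_is_occluded(center, direction, triangles):
    """True if any triangle blocks the ray from a facet center along `direction`."""
    return any(ray_hits_triangle(center, direction, *tri) for tri in triangles)

tri = ((-1.0, -1.0, 1.0), (1.0, -1.0, 1.0), (0.0, 1.0, 1.0))
blocked = facet_is_occluded((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), [tri])
```

In a light curve simulation, a facet would contribute only if this test fails for both the illumination and the observation directions. The `t > eps` condition also prevents a facet from occluding itself when the ray starts on its own plane.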

The algorithms discussed in this paper were implemented in Python and trained on a laboratory server running Ubuntu 20.04.1. The network architecture was built using the PyTorch library, with deep learning acceleration provided by the NVIDIA CUDA library. The server is equipped with eight RTX A5000 GPUs (24 GB of video memory each), 512 GB of RAM, and two 10-core, 16-thread CPUs operating at 2.90 GHz. The models were trained and tested across these GPUs for a total of 400 epochs, using the AdamW optimizer with learning rate adjustments managed by the LambdaLR scheduler. The batch size was set to 64, and the initial learning rate was 0.001.

Fig. 4 Simulated light curve for a convex model.

Fig. 5 Part of the non-convex 3D model dataset.

4.2 Convex inversion results

To standardize the observation conditions, we used the actual observation conditions of asteroid 21689 (1999 RL38) as an example. In the KTM method, we fixed the pole axis and rotation period to the same values used in our approach, specifically λ = 242°, β = −55°, and a rotation period of 10.08457 hours. Figures 6–8 show the inversion results for spherical, rod-shaped, and disk-shaped 3D models, respectively. We note that all model images presented in this paper are aligned with the asteroid-centric coordinate system. We present the triangular mesh and 3D point cloud of the 3D models used for simulating the light curves, the triangular mesh and 3D point cloud predicted by our proposed inversion method, and the results predicted by the inversion algorithm of Kaasalainen & Torppa (2001), as shown in the figures.

Based on the above results, it is evident that the prediction model proposed in this study accurately captures the basic outline of the actual models. In contrast, the convex inversion algorithm (Kaasalainen et al. 2001) shows discrepancies, particularly with the disk-shaped 3D model, which may be due to the algorithm falling into local minima during optimization.

More importantly, our method uses regression to make its prediction, which greatly improves its computational efficiency. Our method takes only 0.56 s to predict a three-dimensional point cloud with 1024 vertices, as shown in Table 2.

During the experiment, we found that the prediction errors of the same 3D model varied significantly under different observation conditions. This variation is not only related to the number of sampling points but is also influenced by factors such as the distribution of sampling points. To investigate under what observation conditions of light curves our network can achieve better results, we defined a metric to quantify the sparsity of the light curve samples. It should be noted that this sparsity metric is specifically designed based on the size of the surface area covered by the observations. This metric takes into account the situation where certain regions have densely sampled points, leading to overlapping observations, and it also incorporates surface area weights to account for the different surface areas observed during a full rotation of the asteroid at different latitudes.

Table 2 Inversion performance comparison between KTM (Kaasalainen et al. 2001) and our method.

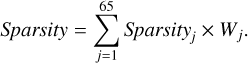

We divided the unit sphere’s latitude and longitude into equal intervals, partitioning it into a 128 × 65 grid, as shown in part a of Fig. 9. For each latitude direction j (a total of 65 directions), we calculated the ideal scattering area of each grid in that latitude direction, resulting in the vector $x_{\mathrm{ideal}}^{j}$:

$$x_{\mathrm{ideal}}^{j}=\left[x_{\mathrm{ideal}, 1,1}^{j}, x_{\mathrm{ideal}, 1,2}^{j}, x_{\mathrm{ideal}, 1,3}^{j}, \ldots, x_{\mathrm{ideal}, k, l}^{j}\right], \tag{13}$$

where $x_{\mathrm{ideal}, k, l}^{j}$ represents the scattering area of each grid:

$$x_{\mathrm{ideal}, k, l}^{j}=\max \left[\mathrm{Area}_{k, l}\left(\frac{\mu_{j, i}}{\mu_{j, i}+1}+c \mu_{j, i}\right)\right]. \tag{14}$$

We used the LS-L scattering law (Kaasalainen et al. 2001) to calculate the scattering area of each grid. The indices k and l denote the kth grid from the top and the lth grid counterclockwise, with k ∈ [1, 65] and l ∈ [1, 128]. The term μj,i represents the cosine of the angle between the direction defined by the jth latitude (from north to south) and the ith longitude (from west to east) and the surface normal vector of the grid (k, l), with i ∈ [1, 128]. When calculating the ideal scattering area in each latitude direction, we did not consider the influence of solar illumination, and we assumed that the cosine of the angle between the solar direction and the grid normal vector is always one. For each light curve sample, we first determined which of the 65 latitude divisions each sample point belongs to and then calculated the actual scattering area of each grid in each latitude direction j, resulting in the vector $x_{\mathrm{real}}^{j}$:

$$x_{\mathrm{real}}^{j}=\left[x_{\mathrm{real}, 1,1}^{j}, x_{\mathrm{real}, 1,2}^{j}, x_{\mathrm{real}, 1,3}^{j}, \ldots, x_{\mathrm{real}, k, l}^{j}\right]. \tag{15}$$
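The per-grid scattering term in Eq. (14) is the combined Lommel-Seeliger and Lambert law evaluated with the solar cosine fixed to one. The sketch below makes that reduction explicit. The function names and the default weight `c = 0.1` are assumptions for illustration only; the value of c used in this work is not restated here.

```python
def ls_l(mu, mu0, c=0.1):
    """Combined Lommel-Seeliger + Lambert term for viewing cosine mu and solar cosine mu0.

    c weights the Lambert part; 0.1 is an assumed placeholder value.
    """
    if mu <= 0.0 or mu0 <= 0.0:
        return 0.0  # facet not visible or not illuminated
    return mu * mu0 / (mu + mu0) + c * mu * mu0

def ideal_scattering_area(area, mu, c=0.1):
    """Ideal case of Eq. (14): solar cosine fixed to one, so the term is mu/(mu+1) + c*mu."""
    return area * ls_l(mu, 1.0, c)

value = ideal_scattering_area(2.0, 0.5)
```

For the actual scattering area of a light curve sample, the same law would be evaluated with the true solar cosine of each grid instead of one.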

Subsequently, we obtained the sparsity vector xrelative for each latitude direction j:

$$x_{\mathrm{relative}}^{j}=\left[\frac{x_{\mathrm{real}, 1,1}^{j}}{x_{\mathrm{ideal}, 1,1}^{j}}, \frac{x_{\mathrm{real}, 1,2}^{j}}{x_{\mathrm{ideal}, 1,2}^{j}}, \frac{x_{\mathrm{real}, 1,3}^{j}}{x_{\mathrm{ideal}, 1,3}^{j}}, \ldots, \frac{x_{\mathrm{real}, k, l}^{j}}{x_{\mathrm{ideal}, k, l}^{j}}\right]. \tag{16}$$

The sparsity for each latitude direction j can be defined as

$$\mathrm{Sparsity}_{j}(x)=l_{2}\left(x_{\mathrm{relative}}^{j}\right) \in[0,1], \tag{17}$$

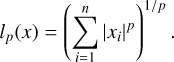

where the L-p norm of the vector x is given by

$$l_{p}(x)=\left(\sum_{i}\left|x_{i}\right|^{p}\right)^{1 / p}. \tag{18}$$

Here, p is a parameter used to calculate the norm of a vector. We calculated the total surface area Areaj of the grids in each latitude direction j, normalized this value, and used the normalized result as the area weight Wj:

$$W_{j}=\frac{\mathrm{Area}_{j}}{\sum_{j^{\prime}=1}^{65} \mathrm{Area}_{j^{\prime}}}. \tag{19}$$

Finally, our Sparsity is defined as

$$\mathrm{Sparsity}=\sum_{j=1}^{65} W_{j}\, \mathrm{Sparsity}_{j}(x). \tag{20}$$
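The aggregation from per-grid areas to a single sparsity value can be sketched as below. Two points are assumptions of this sketch rather than statements about the original implementation: the per-latitude norm is divided by the norm of an all-ones vector so that the value stays within [0, 1] as stated in Eq. (17), and grids with zero ideal area are given a ratio of zero. All function names are hypothetical.

```python
def lp_norm(x, p=2):
    """L-p norm of a sequence of numbers."""
    return sum(abs(v) ** p for v in x) ** (1.0 / p)

def band_weights(band_areas):
    """Normalize the total surface area of each latitude direction into weights W_j."""
    total = sum(band_areas)
    return [a / total for a in band_areas]

def sparsity(real, ideal, band_areas, p=2):
    """Area-weighted sparsity over latitude directions.

    real[j], ideal[j]: per-grid actual and ideal scattering areas for direction j.
    """
    result = 0.0
    for w, real_j, ideal_j in zip(band_weights(band_areas), real, ideal):
        relative = [r / i if i > 0.0 else 0.0 for r, i in zip(real_j, ideal_j)]
        # assumed rescaling so that full coverage gives exactly 1
        s_j = lp_norm(relative, p) / lp_norm([1.0] * len(relative), p)
        result += w * s_j
    return result

# Toy case with two latitude directions: the first fully covered, the second not observed.
value = sparsity(real=[[1.0, 2.0], [0.0, 0.0]],
                 ideal=[[1.0, 2.0], [4.0, 4.0]],
                 band_areas=[1.0, 3.0])
```

With this convention, a larger value means that the observations cover a larger fraction of the ideally observable scattering area.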

We calculated the sparsity of the light curve samples for 346 models in the test set using Equation (20). Then, using Equation (11), we calculated the CD between the inversion results and the ground truth as a measure of inversion accuracy error. For each model, we computed the Pearson correlation coefficient (Cohen et al. 2009) between the sparsity of its light curve samples and the CD between the inversion results and the ground truth, and plotted the distribution of the Pearson correlation coefficients, as shown in part a of Fig. 10.
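For reference, the Pearson correlation coefficient between two equally long series can be computed as in the following sketch. The function name `pearson` is hypothetical and the numbers in the example are purely illustrative, not values from the experiments.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    std_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    std_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (std_x * std_y)

# Illustrative values only: sparsity rising while the CD error falls.
sparsity_values = [0.2, 0.4, 0.6]
cd_values = [0.15, 0.13, 0.11]
r = pearson(sparsity_values, cd_values)
```

A coefficient close to −1 for a given model would mean that samples with larger sparsity values consistently yield a smaller CD.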

In Fig. 10, part a shows that the Pearson correlation coefficients are generally less than zero, indicating a negative correlation between sparsity and CD error. The distribution of Pearson correlation coefficients is relatively balanced. For some models, there is a strong correlation between sparsity and CD error, while for others, the correlation is weak. We consider that the sparsity definition using the sphere-based grid partitioning method may not be fair for certain models. Therefore, we introduced the model-based grid partitioning method, where the triangular facets of the 3D model are used to create the grid, as shown in part b of Fig. 9. The sparsity is then calculated using the same method. Using this revised approach, we computed the sparsity of the light curve samples for 346 models from the test set and plotted the distribution of the Pearson correlation coefficients between sparsity and CD error, as shown in part b of Fig. 10.

The results shown in Fig. 10 indicate that the sparsity calculated using the model-based grid partitioning method exhibits a stronger correlation with CD. In general, the greater the sparsity of the light curve samples, the higher the accuracy of the inverted model. Therefore, sparsity can be used as an indicator to assess the quality of light curve sample data and to optimize the trajectory design of near-Earth spacecraft and light curve sampling strategies. This indicator ensures that the collected data more effectively supports the light curve inversion process, thereby improving the accuracy of the generated 3D models of asteroids.

Fig. 6 Inversion results of spherical model. The models are divided into three groups. The first group represents the ground truth of the 3D model, displayed using both point cloud and facet representations. The second group shows the 3D model reconstructed using our method, where the point cloud is converted into triangular facets for easier comparison with the KTM method. The third group represents the 3D model reconstructed using the KTM method. All models are aligned with the asteroid-centric coordinate system for consistency and clarity in comparison.

Fig. 7 Inversion results of rod-shaped model. The models are divided into three groups. The first group represents the ground truth of the 3D model, displayed using both point cloud and facet representations. The second group shows the 3D model reconstructed using our method, where the point cloud is converted into triangular facets for easier comparison with the KTM method. The third group represents the 3D model reconstructed using the KTM method. All models are aligned with the asteroid-centric coordinate system for consistency and clarity in comparison.

Fig. 8 Inversion results of sheet-like model. The models are divided into three groups. The first group represents the ground truth of the 3D model, displayed using both point cloud and facet representations. The second group shows the 3D model reconstructed using our method, where the point cloud is converted into triangular facets for easier comparison with the KTM method. The third group represents the 3D model reconstructed using the KTM method. All models are aligned with the asteroid-centric coordinate system for consistency and clarity in comparison.

Fig. 9 Generation of the grids. Here a represents a sphere-based grid partitioning method, and b represents a model-based grid partitioning method.

4.3 Simulation of the process of the probe approaching an asteroid

During space missions exploring asteroids, as a probe approaches its target asteroid, the quality and quantity of the data collected increase. Theoretically, this can improve the inversion accuracy of the asteroid 3D model. Unlike traditional inversion methods, which require numerical re-optimization when encountering new data and can be time-consuming, our proposed method leverages its speed advantage to quickly utilize new data and provide a model that is more accurate than in the previous stage, enabling rapid on-board autonomous decision-making.

We used the flight trajectory data of OSIRIS-REx (NASA 2023) to simulate the spacecraft’s approach to the asteroid 101955 Bennu. First, we simulated three ground-based observations, one every two months. Each ground-based observation was designed to cover the duration of one complete rotation of the asteroid. Then, every two months after the launch of the probe, we simulated the probe switching on and observing the brightness of the asteroid over one rotation period, for a total of three times, as shown in the schematic diagram in Fig. 11. It can be seen from Fig. 12a that the inversion results in the first column, based on the ground observation data, deviate considerably from the actual shape. After the launch of the probe, when two light curves have been added, the inversion results (shown in the third column) more accurately reflect the basic contour of the real model. In general, as the amount of data increases during the simulation process, the inversion accuracy improves.

Additionally, we compared our method with the KTM method. In the case of the KTM method, we fixed the pole axis and rotation period to the same values used in our model, specifically λ = 45°, β = −88°, and a rotation period of 4.2975 hours. The inversion results using the KTM method are shown in Fig. 12b. We compared the inversion results of different methods at various stages, using the CD between the inverted models and the ground truth, as shown in Table 3. It can be observed that in Stages 2–4, although the number of light curve points increases, the CD between the models inverted by the KTM method and the ground truth no longer decreases, stabilizing around 137 × 10⁻³. In contrast, our method shows improvement, with the CD of the inverted models decreasing from 152 × 10⁻³ to 127 × 10⁻³ in Stages 3–4 and the accuracy of the model inverted in Stage 4 surpassing that of the KTM method. Our method demonstrates a clear trend of reduced error, and we expect that there is still potential for further improvement in accuracy as the data volume increases further.

Table 3 CD between the inverted model and the ground truth at different stages of the approach process.

Fig. 10 Distribution of the Pearson correlation coefficient between sparsity and CD. Panel a shows the distribution of the Pearson correlation coefficient between sparsity and CD calculated using the sphere-based grid partitioning method for sparsity. Panel b shows the distribution of the Pearson correlation coefficient between sparsity and CD calculated using the model-based grid partitioning method for sparsity.

Fig. 11 Schematic of the simulated approach detection. Using telescopes on the ground and optical payloads on the spacecraft after launch, the light curve data increases gradually during the approach process, starting with three light curves in Stage 1 and adding one additional light curve at each subsequent stage.

4.4 Real light curve experiments

The observational data were selected from the historical observation data of asteroids 3337 Miloš and 1289 Kutaïssi available on the DAMIT website (Ďurech et al. 2010). These data were obtained from the Lowell Observatory survey (Koehn et al. 2014) conducted between 1990 and 2012. The phase angle range for the observational data of asteroid 3337 Miloš is from 0.19° to 22.59°, and for asteroid 1289 Kutaïssi, it is from 0.31° to 21.61°. These two asteroids have extensive sampling points, and their historical photometric data have already been normalized, resulting in high-quality data. Therefore, the proposed deep learning network inversion model was used to predict the 3D shapes of these two asteroids, and the results were compared with those obtained using the Kaasalainen & Torppa (2001) inversion program code provided by the DAMIT website.

Figs. 13a and 13b show the inversion results for asteroids 3337 Miloš and 1289 Kutaïssi, respectively. From the figures, it can be observed that the prediction results of the two methods differ significantly. Due to a lack of ground truth, it is hard to determine which method gives better results. We conducted light curve fitting experiments on the 3D models inverted from asteroids 3337 Miloš and 1289 Kutaïssi using our method and compared them with the 3D models inverted using the method of Kaasalainen & Torppa (2001). Since the observed light curve data for these two asteroids span a long period, we used the phase angle as the horizontal axis to plot the graphs and examine the fitting results in the light curve fitting experiments. The light curve fitting results for asteroids 3337 Miloš and 1289 Kutaïssi obtained by our method and the KTM method are shown in Figs. A.1 and A.2. It can be seen that the models produced by our method and the KTM method yield similar results in light curve fitting. We calculated the mean absolute error in light curve fitting for the 3D models obtained by different methods, as shown in Table 4.
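The mean absolute error used to compare fits can be written compactly as follows. This is a generic sketch with a hypothetical function name and illustrative brightness values; it assumes that the observed and modelled relative brightness values are already matched point by point.

```python
def mean_absolute_error(observed, modelled):
    """Mean absolute difference between observed and modelled relative brightness."""
    if len(observed) != len(modelled):
        raise ValueError("both series must have the same length")
    return sum(abs(o - m) for o, m in zip(observed, modelled)) / len(observed)

# Illustrative values only, not data from the Lowell Observatory survey.
mae = mean_absolute_error([1.0, 0.9, 1.1], [1.0, 1.0, 1.0])
```

Because the brightness values are relative, the resulting error is dimensionless and can be compared directly between the two inversion methods for the same asteroid.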

Table 4 Mean absolute error in light curve fitting between KTM (Kaasalainen et al. 2001) and our method.

Fig. 12 Inversion results of simulated approach detection process for asteroid 101955 Bennu. Panel a: results using our method. Each row of images is labeled with the corresponding observation perspective. The first column shows the inversion results based on the ground observation light curve, while the second to fourth columns display the inversion results after progressively adding light curve data collected by the detector during the flight phase. The fifth column presents the actual 3D model of 101955 Bennu. Panel b: corresponding results using the KTM method.

Fig. 13 Convex inversion results using real light curve data. Panel a: results for asteroid 3337 Miloš. The top row shows the method of Kaasalainen et al. (2001), and the bottom row shows our method. Panel b: results for asteroid 1289 Kutaïssi. The real shapes are unknown due to a lack of close observations.

4.5 Convex hull inversion of non-convex model

The 3D models we used when training the network are convex, which is inconsistent with the actual three-dimensional shape of the asteroids. To verify the applicability of our proposed method to non-convex models, we simulated the light curves of non-convex 3D models based on the 433 Eros and 113 Lutetia asteroids, as shown in Figs. 14a and 14b. The results from the figures show that although we used the light curve data of the non-convex models, we are still able to invert a model that closely approximates the shape of the minimum circumscribed convex body of the non-convex model.

We conducted a light curve fitting experiment on our inversion results for 433 Eros and 113 Lutetia, and we computed the fitting errors. The average absolute error for the light curve fitting of 433 Eros is 0.0641, while for 113 Lutetia, the average absolute error is 0.0179. Some of the experimental results are presented in Figs. A.3 and A.4. It can be observed that for the small-area non-convex 3D model of 113 Lutetia, the light curve fitting results are quite good. However, for the large-area non-convex 3D model of 433 Eros, the fitting is slightly less accurate, though the shape of the inverted model still closely matches its minimum circumscribed convex body. These results can provide effective initial values for some numerical optimization algorithms, such as SAGE, thereby reducing inversion time.

For the current method of determining concave areas, the accuracy of the convex hulls that we inverted is not yet sufficient. The photometric differences that represent the shadowing effects of non-convex areas are still affected by the shape differences between the inverted convex hull and the true convex hull. As a result, we have not yet conducted non-convex region detection experiments on these models.

Fig. 14 Convex hull inversion results for non-convex asteroids. Panel a: asteroid 433 Eros. The top row shows the NEAR Shoemaker mission shape model (Zuber et al. 2000), the middle row shows its convex hull, and the bottom row shows our inversion result. Panel b: asteroid 113 Lutetia. The top row shows the shape model by Farnham (2013), the middle row shows its convex hull, and the bottom row shows our inversion result.

Fig. 15 Illustration of the Intersection over Union (IoU) (Qi et al. 2017a) calculation in the non-convex region prediction task.

4.6 Determination of concave areas results

Using the simulated light curve of the non-convex model under real observation conditions, we predicted the region of depression on the minimum circumscribed convex hull of the non-convex model. For the concave area discrimination network, we used the intersection over union (IoU) metric (Qi et al. 2017a) to evaluate the prediction results. A diagram illustrating the IoU is shown in Fig. 15. If the non-convex surface in the model is large, the IoU of the predicted non-convex region point cloud can reach 0.89. For smaller concave areas, the predicted region is slightly offset, and as shown in Fig. 16, its IoU is about 0.6.
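For a point-wise classification, the IoU reduces to a ratio of counts, as in the sketch below. The function name, the binary label convention (1 for a point projected from a non-convex region, 0 otherwise), and the convention of returning 1.0 when both label sets are empty are assumptions for illustration.

```python
def point_iou(predicted, truth):
    """IoU between predicted and true binary labels given per point."""
    intersection = sum(1 for p, t in zip(predicted, truth) if p and t)
    union = sum(1 for p, t in zip(predicted, truth) if p or t)
    return intersection / union if union else 1.0  # assumed convention for empty sets

# Toy labels for four points: one point is shared, three are flagged in total.
iou = point_iou([1, 1, 0, 0], [0, 1, 1, 0])
```

A predicted region of the right size that is slightly offset from the true region loses both intersection and gains union, which is why small concave areas are penalized more strongly by this metric.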

Fig. 16 Prediction results of non-convex areas under real observation conditions. The top shows the true non-convex model, and the bottom shows the prediction results on the convex hull, with the red color indicating the predicted concave area.

5 Conclusions

We have proposed an asteroid 3D shape inversion algorithm based on convolutional and transformer networks that establishes and learns the mapping relationship between asteroid photometry and geometric position coordinates in relation to the 3D point cloud distribution of the asteroid by using deep neural networks. On an RTX A5000 GPU, it can predict the 3D point cloud of the convex hull of an asteroid in just 0.56 s, compared to the 45-second runtime of traditional optimization algorithms, thus significantly enhancing inversion efficiency.

For non-convex asteroids, we proposed a method based on the convex hull to predict concave areas. Although this method has only been implemented in simulations so far, it still reveals the potential of deep learning technology in the inversion of non-convex three-dimensional shapes of asteroids. In the next step, we will attempt to apply the concave area prediction algorithm to real observational data. Whether using the Kaasalainen & Torppa (2001) method or our transformer-based approach to predict the shape of non-convex asteroids, there will be differences compared to their strict convex hull, which will manifest in the photometric data. This is particularly challenging for asteroids with small-scale non-convexity (Ďurech & Kaasalainen 2003). Once the concave areas are identified, the size and depth of the craters will be aspects that require our attention. If we can roughly determine the shape of a non-convex asteroid, the prediction of other physical properties, including mass, center of mass, and rotation axis, will become more accurate.

Acknowledgements

This research was funded by the National Science and Technology Major Project (2022ZD0117401) and the National Defense Science and Technology Innovation Special Zone Project Foundation of China grant number 19-163-21-TS-001-067-01.

Appendix A Additional figures

Fig. A.1 Light curve fitting results of the 3D models of asteroid 3337 Miloš obtained by KTM (Kaasalainen & Torppa 2001) and our method. The observational data were collected by the Lowell Observatory between October 12, 1990, and April 24, 2012. We indicate the time period of the observational data above each chart. The blue points represent the observational data, the green points represent the simulated brightness from the inverted model using the KTM, and the red points represent the simulated brightness from the inverted model using our method. The horizontal axis represents the phase angle, while the vertical axis represents the relative brightness.

Fig. A.2 Light curve fitting results of the 3D models of asteroid 1289 Kutaïssi obtained by KTM (Kaasalainen & Torppa 2001) and our method. The observational data were collected by the Lowell Observatory between November 10, 1998, and March 16, 2012. We indicate the time period of the observational data above each chart. The blue points represent the observational data, the green points represent the simulated brightness from the inverted model using the KTM, and the red points represent the simulated brightness from the inverted model using our method. The horizontal axis represents the phase angle, while the vertical axis represents the relative brightness.

Fig. A.3 Partial light curve fitting results of the 3D model of 433 Eros obtained by our method. The blue points represent the simulated observational data, while the red triangles represent the simulated brightness of the inverted models. The horizontal axis represents the phase of rotation, and the vertical axis represents the relative brightness.

Fig. A.4 Partial light curve fitting results of the 3D model of 113 Lutetia obtained by our method. The blue points represent the simulated observational data, while the red triangles represent the simulated brightness of the inverted models. The horizontal axis represents the phase of rotation, and the vertical axis represents the relative brightness.

References

- Achlioptas, P., Diamanti, O., Mitliagkas, I., & Guibas, L. 2018, in International conference on machine learning, PMLR, 40

- Allworth, J., Windrim, L., Bennett, J., & Bryson, M. 2021, Acta Astron., 181, 301

- Bartczak, P., & Dudziński, G. 2018, MNRAS, 473, 5050

- Barucci, M. A., Cellino, A., De Sanctis, C., et al. 1992, A&A, 266, 385

- Brent, R. P. 2013, Algorithms for Minimization Without Derivatives (USA: Courier Corporation)

- Carbognani, A., Tanga, P., Cellino, A., et al. 2012, Planet. Space Sci., 73, 80

- Carry, B., Kaasalainen, M., Merline, W. J., et al. 2012, Planet. Space Sci., 66, 200

- Catmull, E. E. 1974, A Subdivision Algorithm for Computer Display of Curved Surfaces (USA: The University of Utah)

- Cellino, A., Tanga, P., Muinonen, K., & Mignard, F. 2024, A&A, 687, A277

- Chng, C.-K., Sasdelli, M., & Chin, T.-J. 2022, MNRAS, 513, 311

- Cohen, I., Huang, Y., Chen, J., et al. 2009, Noise Reduction in Speech Processing (Berlin: Springer), 1

- Dai, A., Ruizhongtai Qi, C., & Nießner, M. 2017, in Proceedings of the IEEE conference on computer vision and pattern recognition, 5868

- Daly, R. T., Ernst, C. M., Barnouin, O. S., et al. 2023, Nature, 616, 443

- Danilova, M., Dvurechensky, P., Gasnikov, A., et al. 2022, in High-Dimensional Optimization and Probability: With a View Towards Data Science (Berlin: Springer), 79

- Devogèle, M., Rivet, J. P., Tanga, P., et al. 2015, MNRAS, 453, 2232

- Ďurech, J. 2002, Icarus, 159, 192

- Ďurech, J., & Hanuš, J. 2023, A&A, 675, A24

- Ďurech, J., & Kaasalainen, M. 2003, A&A, 404, 709

- Ďurech, J., Sidorin, V., & Kaasalainen, M. 2010, A&A, 513, A46

- Ďurech, J., Tonry, J., Erasmus, N., et al. 2020, A&A, 643, A59

- Farnham, J. 2013, NASA Planetary Data System (USA: NASA)

- Flavell, L. 2011, Beginning Blender: Open Source 3d Modeling, Animation, and Game Design (Berlin: Springer)

- Groueix, T., Fisher, M., Kim, V. G., Russell, B. C., & Aubry, M. 2018, in Proceedings of the IEEE conference on computer vision and pattern recognition, 216

- Han, X., Li, Z., Huang, H., Kalogerakis, E., & Yu, Y. 2017, in Proceedings of the IEEE international conference on computer vision, 85

- Hao, H., Jincheng, Y., Ling, Y., et al. 2023, Computers and Electronics in Agriculture, 205, 107560

- Hapke, B. W. 1963, J. Geophys. Res., 68, 4571

- Hapke, B. 1966, AJ, 71, 333

- Harris, A. W., & D’Abramo, G. 2015, Icarus, 257, 302

- Hartmann, J., Ernst, D., Neumann, I., et al. 2024, Journal of Applied Geodesy, 18, 613

- Huang, Z., Yu, Y., Xu, J., Ni, F., & Le, X. 2020, in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 7662

- Hudson, R. S., & Ostro, S. J. 1998, Icarus, 135, 451

- Hurley, N., & Rickard, S. 2009, IEEE Trans. Inform. Theor., 55, 4723

- Ivezić, Ž., Kahn, S. M., Tyson, J. A., et al. 2019, ApJ, 873, 111

- Kaasalainen, M. 2011, Inverse Problems and Imaging, 5, 37

- Kaasalainen, M., & Torppa, J. 2001, Icarus, 153, 24

- Kaasalainen, M., & Viikinkoski, M. 2012, A&A, 543, A97

- Kaasalainen, M., Lamberg, L., & Lumme, K. 1992a, A&A, 259, 333

- Kaasalainen, M., Lamberg, L., Lumme, K., & Bowell, E. 1992b, A&A, 259, 318

- Kaasalainen, M., Torppa, J., & Muinonen, K. 2001, Icarus, 153, 37

- Koehn, B. W., Bowell, E. G., Skiff, B. A., et al. 2014, Minor Planet Bull., 41, 286

- Lauretta, D. S., Balram-Knutson, S. S., Beshore, E., et al. 2017, Space Sci. Rev., 212, 925

- Lee, H.-J., Ďurech, J., Vokrouhlický, D., et al. 2021, AJ, 161, 112

- Levison, H. F., Olkin, C. B., Noll, K. S., et al. 2021, PSJ, 2, 171

- Li, J. Y., Helfenstein, P., Buratti, B., Takir, D., & Clark, B. E. 2015, in Asteroids IV, eds. P. Michel, F. E. DeMeo, & W. F. Bottke (Tucson: University of Arizona Press), 129

- Linares, R., Furfaro, R., & Reddy, V. 2020, J. Astron. Sci., 67, 1063

- Lorensen, W. E., & Cline, H. E. 1998, in Seminal Graphics: Pioneering Efforts that Shaped the Field (New York: ACM Press), 347

- Lu, X.-P., Zhang, Y.-X., Zhao, H.-B., Zheng, H., & Di, K.-C. 2024, ApJ, 961, 154

- Mandikal, P., & Radhakrishnan, V. B. 2019, in 2019 IEEE Winter Conference on Applications of Computer Vision (WACV) (USA: IEEE), 1052

- Martikainen, J., Muinonen, K., Penttilä, A., Cellino, A., & Wang, X. B. 2021, A&A, 649, A98

- Masoumzadeh, N., Boehnhardt, H., Li, J.-Y., & Vincent, J. B. 2015, Icarus, 257, 239

- Möller, T., & Trumbore, B. 2005, in ACM SIGGRAPH 2005 Courses (New York: ACM Press), 7

- Muinonen, K., & Lumme, K. 2015, A&A, 584, A23

- Muinonen, K., Wilkman, O., Cellino, A., Wang, X., & Wang, Y. 2015, Planet. Space Sci., 118, 227

- Muinonen, K., Torppa, J., Wang, X.-B., Cellino, A., & Penttilä, A. 2020, A&A, 642, A138

- Muinonen, K., Uvarova, E., Martikainen, J., et al. 2022, Front. Astron. Space Sci., 9, 821125

- NASA, P. D. S. 2023, in https://pds.nasa.gov/datasearch/subscription-service/SS-Release.shtml

- Nguyen, D. T., Hua, B.-S., Tran, K., Pham, Q.-H., & Yeung, S.-K. 2016, in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 5676

- Pravec, P., Scheirich, P., Ďurech, J., et al. 2014, Icarus, 233, 48

- Qi, C. R., Su, H., Mo, K., & Guibas, L. J. 2017a, in Proceedings of the IEEE conference on computer vision and pattern recognition, 652

- Qi, C. R., Yi, L., Su, H., & Guibas, L. J. 2017b, Advances in neural information processing systems, 30

- Rizos, J. L., Asensio-Ramos, A., Golish, D. R., et al. 2021, arXiv e-prints [arXiv:2106.01363]

- Russell, H. N. 1906, ApJ, 24, 1

- Scheirich, P., Ďurech, J., Pravec, P., et al. 2010, MAPS, 45, 1804

- Sharma, A., Grau, O., & Fritz, M. 2016, in Computer Vision-ECCV 2016 Workshops: Amsterdam, The Netherlands, October 8-10 and 15-16, 2016, Proceedings, Part III 14, Springer, 236

- Stutz, D., & Geiger, A. 2018, in Proceedings of the IEEE conference on computer vision and pattern recognition, 195

- Tchapmi, L. P., Kosaraju, V., Rezatofighi, H., Reid, I., & Savarese, S. 2019, in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 383

- Viikinkoski, M., Kaasalainen, M., & Ďurech, J. 2015, A&A, 576, A8

- Wang, Y., Sun, Y., Liu, Z., et al. 2019, ACM Transactions on Graphics, 38, 1

- Watanabe, S., Hirabayashi, M., Hirata, N., et al. 2019, Science, 364, 268

- Yu, X., Rao, Y., Wang, Z., et al. 2021, in Proceedings of the IEEE/CVF international conference on computer vision, 12498

- Yuan, W., Khot, T., Held, D., Mertz, C., & Hebert, M. 2018, in 2018 international conference on 3D vision (3DV) (USA: IEEE), 728

- Zhang, P. F., Li, Y., Zhang, G. Z., et al. 2024, Lunar Planet. Sci. Conf., 3040, 1845

- Zhou, H., Cao, Y., Chu, W., et al. 2022, in European Conference on Computer Vision (Berlin: Springer), 416

- Zuber, M. T., Smith, D. E., Cheng, A. F., et al. 2000, Science, 289, 2097

All Tables

Inversion performance comparison between KTM (Kaasalainen et al. 2001) and our method.

CD between the inverted model and the ground truth at different stages of the approach process.

Mean absolute error in light curve fitting between KTM (Kaasalainen et al. 2001) and our method.

All Figures

Fig. 1 Illustration of our proposed inversion method. The top four panels show the schematic diagrams of different visible and illuminated facets of the asteroid under varying Sun-asteroid-Earth positional relationships in the asteroid’s body coordinate system. The blue point cloud represents the asteroid point cloud obtained from the inversion process.

Fig. 2 Network architecture. First, FPS is used to downsample the input light curve sampling points in order to determine the center points. Then, the nearest neighbor set corresponding to each center point is obtained with the KNN algorithm, and local features are extracted using a simplified 1D-DGCNN. Afterwards, positional embeddings are added to enhance spatial awareness. The enhanced features are encoded by a geometry-aware transformer module followed by global feature aggregation through max pooling. Finally, the coarse point cloud is refined into the output using a transformer decoder that integrates three up-sampling layers and skip connections.
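
The first two steps named in the Fig. 2 caption (FPS downsampling followed by KNN grouping) can be illustrated with a minimal NumPy sketch. This is not the network's actual code: the function names `farthest_point_sampling` and `knn_groups`, the Euclidean metric, and the toy one-dimensional feature array are all assumptions made for illustration.

```python
import numpy as np

def farthest_point_sampling(points, n_centers, start=0):
    """Greedy FPS: repeatedly pick the sample farthest from those already chosen.

    `start` (index of the first center) is an illustrative assumption.
    """
    points = np.asarray(points, dtype=float)
    chosen = [start]
    # Distance from every sample to its nearest chosen center so far.
    min_dist = np.linalg.norm(points - points[start], axis=1)
    for _ in range(n_centers - 1):
        nxt = int(np.argmax(min_dist))
        chosen.append(nxt)
        dist_to_new = np.linalg.norm(points - points[nxt], axis=1)
        min_dist = np.minimum(min_dist, dist_to_new)
    return np.array(chosen)

def knn_groups(points, center_idx, k):
    """Indices of the k samples nearest to each center (the center included)."""
    points = np.asarray(points, dtype=float)
    centers = points[center_idx]
    # Pairwise distances, shape (n_centers, n_points).
    dist = np.linalg.norm(centers[:, None, :] - points[None, :, :], axis=2)
    return np.argsort(dist, axis=1)[:, :k]

# Toy input: five samples with a single (hypothetical) feature each.
samples = np.array([[0.0], [1.0], [2.0], [3.0], [10.0]])
centers = farthest_point_sampling(samples, n_centers=3)
groups = knn_groups(samples, centers[:2], k=2)
```

In a real light curve each sample would carry several features rather than one, but the selection logic is unchanged: FPS spreads the centers across the sampled data, and KNN gathers a local neighborhood around each center for feature extraction.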

Fig. 3 Method of predicting non-convex regions.

Fig. 4 Simulated light curve for a convex model.

Fig. 5 Part of the non-convex 3D model dataset.

Fig. 6 Inversion results for the spherical model. The models are divided into three groups. The first group represents the ground truth of the 3D model, displayed using both point cloud and facet representations. The second group shows the 3D model reconstructed using our method, where the point cloud is converted into triangular facets for easier comparison with the KTM method. The third group represents the 3D model reconstructed using the KTM method. All models are aligned with the asteroid-centric coordinate system for consistency and clarity in comparison.

Fig. 7 Inversion results for the rod-shaped model. The models are divided into three groups. The first group represents the ground truth of the 3D model, displayed using both point cloud and facet representations. The second group shows the 3D model reconstructed using our method, where the point cloud is converted into triangular facets for easier comparison with the KTM method. The third group represents the 3D model reconstructed using the KTM method. All models are aligned with the asteroid-centric coordinate system for consistency and clarity in comparison.

Fig. 8 Inversion results for the sheet-like model. The models are divided into three groups. The first group represents the ground truth of the 3D model, displayed using both point cloud and facet representations. The second group shows the 3D model reconstructed using our method, where the point cloud is converted into triangular facets for easier comparison with the KTM method. The third group represents the 3D model reconstructed using the KTM method. All models are aligned with the asteroid-centric coordinate system for consistency and clarity in comparison.

Fig. 9 Generation of the grids. Panel a: sphere-based grid partitioning method. Panel b: model-based grid partitioning method.

Fig. 10 Distribution of the Pearson correlation coefficient between sparsity and CD. Panel a: sparsity calculated using the sphere-based grid partitioning method. Panel b: sparsity calculated using the model-based grid partitioning method.

Fig. 11 Schematic of the simulated approach detection. Using telescopes on the ground and optical payloads on the spacecraft after launch, the amount of light curve data increases gradually during the approach process, starting with three light curves in Stage 1 and adding one light curve at each subsequent stage.

Fig. 12 Inversion results of simulated approach detection process for asteroid 101955 Bennu. Panel a: results using our method. Each row of images is labeled with the corresponding observation perspective. The first column shows the inversion results based on the ground observation light curve, while the second to fourth columns display the inversion results after progressively adding light curve data collected by the detector during the flight phase. The fifth column presents the actual 3D model of 101955 Bennu. Panel b: corresponding results using the KTM method.

Fig. 13 Convex inversion results using real light curve data. Panel a: results for asteroid 3337 Miloš. The top row shows the results of the method of Kaasalainen et al. (2001), and the bottom row shows the results of our method. Panel b: results for asteroid 1289 Kutaïssi. The real shapes are unknown due to a lack of close observations.

Fig. 14 Convex hull inversion results for non-convex asteroids. Panel a: asteroid 433 Eros. The top row shows the NEAR Shoemaker mission shape model (Zuber et al. 2000), the middle row shows its convex hull, and the bottom row shows our inversion result. Panel b: asteroid 113 Lutetia. The top row shows the shape model by Farnham (2013), the middle row shows its convex hull, and the bottom row shows our inversion result.

Fig. 15 Illustration of the Intersection over Union (IoU) (Qi et al. 2017a) calculation in the non-convex region prediction task.
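
As a rough illustration of the IoU named in the Fig. 15 caption, the sketch below computes the ratio of intersection to union for two boolean masks. It assumes that predicted and true concave regions are encoded as per-element (for example per-point or per-facet) boolean labels. That encoding, the function name `region_iou`, and the convention of returning 1.0 when both regions are empty are assumptions, not details taken from the paper.

```python
import numpy as np

def region_iou(pred_mask, true_mask):
    """Intersection over Union of two boolean region masks of equal length."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    union = np.logical_or(pred, true).sum()
    if union == 0:
        # Assumed convention: two empty regions agree perfectly.
        return 1.0
    intersection = np.logical_and(pred, true).sum()
    return float(intersection / union)

# Toy example: five elements, two shared by both regions, four in the union.
predicted = [1, 1, 0, 0, 1]
truth = [0, 1, 1, 0, 1]
score = region_iou(predicted, truth)
```

An IoU of 1 means the predicted and true regions coincide exactly, while 0 means they do not overlap at all.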
