| Issue | A&A Volume 646, February 2021 |
|---|---|
| Article Number | A24 |
| Number of page(s) | 21 |
| Section | Planets and planetary systems |
| DOI | https://doi.org/10.1051/0004-6361/201937308 |
| Published online | 02 February 2021 |

TRAP: a temporal systematics model for improved direct detection of exoplanets at small angular separations

1 Max-Planck-Institut für Astronomie, Königstuhl 17, 69117 Heidelberg, Germany

e-mail: samland@mpia.de

2 International Max Planck Research School for Astronomy and Cosmic Physics at the University of Heidelberg (IMPRS-HD), Heidelberg, Germany

3 Department of Astronomy, Stockholm University, Stockholm, Sweden

4 Center for Cosmology and Particle Physics, Department of Physics, New York University, 726 Broadway, New York, NY 10003, USA

5 Center for Data Science, New York University, 60 Fifth Ave, New York, NY 10011, USA

6 Flatiron Institute, Simons Foundation, 162 Fifth Ave, New York, NY 10010, USA

Received: 13 December 2019

Accepted: 23 November 2020

Context. High-contrast imaging surveys for exoplanet detection have shown that giant planets at large separations are rare. Thus, it is of paramount importance to push towards detections at smaller separations, which is the part of the parameter space containing the greatest number of planets. The performance of traditional methods for the post-processing of pupil-stabilized observations decreases at smaller separations due to the larger field-rotation required to displace a source on the detector in addition to the intrinsic difficulty of higher stellar contamination.

Aims. Our goal is to develop a method of extracting exoplanet signals, which improves performance at small angular separations.

Methods. A data-driven model of the temporal behavior of the systematics for each pixel can be created using reference pixels at different positions, on the condition that the underlying causes of the systematics are shared across multiple pixels, which is mostly true for the speckle pattern in high-contrast imaging. In our causal regression model, we simultaneously fit the model of a planet signal “transiting” over detector pixels and non-local reference light curves describing the shared temporal trends of the speckle pattern to find the best-fitting temporal model describing the signal.

Results. With our implementation of a spatially non-local, temporal systematics model, called TRAP, we show that it is possible to gain up to a factor of six in contrast at close separations (<3λ/D), as compared to a model based on spatial correlations between images displaced in time. We show that the temporal sampling has a large impact on the achievable contrast, with better temporal sampling resulting in significantly better contrasts. At short integration times (4 s) for the β Pic data, we increase the signal-to-noise ratio of the planet by a factor of four compared to the spatial systematics model. Finally, we show that the temporal model can be used on unaligned data that has only been dark- and flat-corrected, without the need for further pre-processing.

Key words: planets and satellites: detection / methods: data analysis / techniques: high angular resolution / techniques: image processing

© ESO 2021

1 Introduction

The field of direct observations of extrasolar planets has seen tremendous progress over the last ten years, both in terms of observational capabilities and of high-contrast imaging data analyses. This is particularly true given the recent advent of instruments dedicated to high-contrast observations such as SPHERE (Beuzit et al. 2019), GPI (Macintosh et al. 2014), and CHARIS (Groff et al. 2015), in addition to the development of more sophisticated observational strategies and post-processing algorithms, which have paved the way to making it a unique technique for the study of atmospheres and orbital characteristics of exoplanets directly. However, giant planets and substellar companions at large orbital separations (≳5–10 au) have been shown to be rare by multiple large direct imaging surveys (e.g., Brandt et al. 2014; Vigan et al. 2017, 2021; Nielsen et al. 2019). Pushing our detection capabilities towards smaller inner-working angles is therefore one of our primary goals, aimed at tapping into a parameter space regime in which planets are more abundant (Nielsen et al. 2019; Fernandes et al. 2019) and one that overlaps with indirect detection techniques, such as astrometric detections with Gaia (e.g., Casertano et al. 2008; Perryman et al. 2014) and long-term radial velocity trends (e.g., Crepp et al. 2012; Grandjean et al. 2019). Detecting planets is intrinsically more difficult the smaller the separation gets, because the speckle background is higher and fewer independent spatial elements are available for statistical evaluation of the significance of the detected signal(s). However, this intrinsic problem is further exacerbated because most algorithms used to model the stellar contamination obscuring the planet signal (the systematics) require strict exclusion criteria that determine which data can be used to construct a data-driven model of these systematics while preventing the incorporation of planet signal.

In this work, we present a novel algorithm designed to improve performance at small angular separations compared to conventional algorithms for pupil-tracking observations. Especially as coronagraphs become more powerful and allow us to probe smaller inner working angles (IWA1), the algorithmic performance will become more and more important. We achieve this goal by building a data-driven, temporal systematics model based on spatially non-local data. This model replaces the temporal exclusion criterion with a less restrictive spatial exclusion criterion and allows for the use of all frames in the observation sequence regardless of angular separation. This, in turn, allows us to make better use of the temporal sampling of the data and to create a systematics model that is sensitive to variations on all timescales sampled. We further employ a forward model of the companion signal, fitting both the systematics model and the planet model at the same time to avoid overfitting and biasing the detection.

In Sect. 2, we give an overview of systematics modeling in high-contrast imaging data, with a focus on the spatial systematics modeling approach that is traditionally used in pupil-tracking observations. In Sect. 3, we motivate our non-local, temporal systematics approach. Our causal regression model exploits the fact that pixels share similar underlying causes for their systematic temporal trends. We show how we can apply such a model to pupil-tracking, high-contrast imaging data. In Sect. 4, we discuss the data used to demonstrate the performance of our algorithm TRAP. Section 5 shows and compares the results we obtained. We end with a discussion and a future outlook on how the algorithm can be further improved in Sect. 6 and we provide a summary of our conclusions in Sect. 7.

2 Systematics modeling in pupil-tracking observations

The main challenge in high-contrast imaging is distinguishing a real astrophysical signal from the light of the central star, the so-called speckle halo. This stellar contamination is generally orders of magnitude brighter than a planetary companion object in raw data and furthermore, it can appear locally to be the same as a genuine point source.

In order to separate the astrophysically interesting signal2, such as a planet, from the systematic stellar-noise background, we need to model these systematics. Due to the complexity of the systematics, this is usually done using a data-driven approach, that is, by using the data itself as a basis for constructing the model. In order for this to work, there must be one or more distinguishing properties between the planetary signal and the contaminating systematics (the star’s light). These distinguishing properties are also referred to as diversities.

For exoplanet imaging, the primary diversity is spatial resolution, that is, a discernible difference in the position of the companion and the host-star signal on the sky. The most commonly used high-contrast imaging strategy enhances this distinguishing spatial property by inducing a further time-dependence of the measured companion signal by allowing the field-of-view (FoV) to rotate over the course of an observation sequence, while the speckle halo remains stationary. This mode of observation is called pupil-tracking mode and is the basis for algorithms such as angular differential imaging (ADI, Marois et al. 2006).

In this paper, we show that instead of building a spatial speckle pattern model for each image, we can build a temporal light-curve model for each pixel, which provides an alternative, novel way of reducing data taken in pupil-tracking mode.

Due to the field-of-view rotation, temporal variation is induced in the planetary signal as measured by the detector, thus creating a signal that varies in both space and time. Because there are two ways in which the planet signal differs from the stellar systematics, we have the freedom to build our systematics model on either the spatial correlations between images or the temporal correlations between pixel time series (light curves). A mixture model of both presents another possibility, which is not explored in this work.

The difference between the two pure approaches can be understood on the basis of whether we treat the data as being either: (a) a set of image vectors that are to be explained as a combination of other image vectors taken at other times (spatial-systematics model); or (b) a set of time-series vectors that are to be explained as a combination of other time-series vectors taken at other locations (temporal-systematics model). Before we go into detail about the proposed temporal systematics approach in Sect. 3, we first summarize the commonly used spatial-systematics approach and discuss its drawbacks.
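The two viewpoints (a) and (b) amount to reading the same data array along different axes. The following is a minimal sketch with a hypothetical toy cube; the array names and sizes are illustrative assumptions and are not taken from the TRAP implementation.

```python
import numpy as np

# Hypothetical toy cube: T frames of ny x nx pixels (values are arbitrary).
T, ny, nx = 5, 4, 3
cube = np.arange(T * ny * nx, dtype=float).reshape(T, ny, nx)

# Flatten the two spatial axes so that each pixel has one index (M = ny * nx).
M = ny * nx
data = cube.reshape(T, M)

# (a) Spatial view: one image vector of length M per frame.
image_vectors = data        # shape (T, M); row k is the image taken at t_k

# (b) Temporal view: one light curve of length T per pixel.
light_curves = data.T       # shape (M, T); row i is the time series of pixel x_i
```

A spatial-systematics model regresses rows of `image_vectors` against each other, while a temporal-systematics model regresses rows of `light_curves` against each other.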

2.1 State-of-the-art approaches

Following the invention of roll deconvolution (or roll angle subtraction) for space-based observatories (Müller & Weigelt 1985), classical ADI (Marois et al. 2006) for ground-based observatories using pupil-tracking, and its various evolutions, which initiated the employment of optimization and regression models in the form of a locally optimized combination of images (LOCI, e.g., Lafrenière et al. 2007; Pueyo et al. 2012; Marois et al. 2014; Wahhaj et al. 2015), virtually all papers and algorithms were focused on using the spatial-systematics approach. In this approach, the training data is typically taken from the same image region where the planet signal is located (as implied also by the naming of LOCI) and the spatial similarity between an individual image and the training set of images displaced in time is used to remove the quasi-static speckle pattern which covers the area where the planetary companion signal is located at that time. This is the case for most commonly used algorithms, regardless of the implementation details of the model construction. In some cases, the regression is not performed on the images themselves, but a representation of the image vectors in a lower dimensional space using dimensionality reduction techniques, such as a principal component analysis (PCA/KLIP, Amara & Quanz 2012; Soummer et al. 2012). Implementations in publicly available pipeline packages follow this trend, for instance, PynPoint (PCA, Amara & Quanz 2012; Stolker et al. 2019), KLIP (Soummer et al. 2012; Ruffio et al. 2017), VIP (Gomez Gonzalez et al. 2017). The ANDROMEDA algorithm is similarly based on a spatial model, using difference images to suppress the stable features in the speckle pattern (Cantalloube et al. 2015) together with a maximum-likelihood approach to model the expected companion signal in the difference images. Another recent approach, called patch covariance (PACO, Flasseur et al. 2018), is aimed at statistically distinguishing a spatial patch that is co-moving with a planet signal based on the properties of a stationary patch.

There are two notable exceptions to this spatial approach. The first is the wavelet-based temporal de-noising approach (Bonse et al. 2018), which, however, does not attempt to create a causal regression model of the systematics; instead, it applies a temporal filter to pre-condition the data before applying a spatial systematics approach. The second is the STIM-map approach (Pairet et al. 2019), which adjusts the detection map based on the temporal residuals left over after a spatial model is applied.

2.2 Spatial systematics models

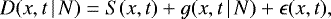

Let us assume we have a data cube, $\mathcal{D}$, with a time series of image data$^{3}$ of dimension $M \times T$, where M is the number of pixels and T the number of frames in the time series. For simplicity, we have x and t denote discretely sampled points in space and time in the notation, without further use of indices when the meaning is clear from the context. All data obtained with imaging instruments is discretely sampled. Any measurement d in the data $\mathcal{D}$ can be described in the functional form evaluated at discrete points:

$$
d(x, t) = S(x, t) + g(x, t \,|\, N) + \epsilon, \tag{1}
$$

where S is the planet signal contribution, g denotes the functional form of the systematics affecting this measurement, and ϵ is the stochastic noise (e.g., photon noise). Here, N is a stand-in for any hidden parameters that may be responsible for causing the systematics (e.g., wavefront phase residuals after adaptive optics (AO) correction, temperature, wind direction or speed).

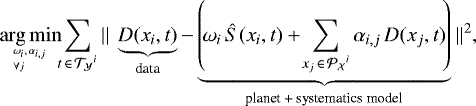

In a data-driven approach to modeling the systematics, our goal is to determine g(x, t | N) based on how g is expressed using other sets of data while being affected by the same underlying hidden parameters, N. If we could obtain a perfect model for g, then simply by subtracting it we could detect and determine the planet signal, S, up to a stochastic uncertainty term. However, we have to guarantee that we do not incorporate contributions from S into our systematics model, g, otherwise we will attenuate the signal of interest, an effect known as self-subtraction$^{4}$. For this reason, we divide the data into two subsets of measurements. Those that are affected by signal from a planet above a chosen threshold$^{5}$ are called $\mathcal{Y}$ and those that are not affected are called $\mathcal{X}$. The union of both sets represents all sampled position and time combinations, $\mathcal{D}$, and both sets are disjoint.

$$
\begin{split}
\mathcal{Y} &= \{(x, t) \in \mathcal{D} \,|\, S(x, t) > \text{thr}\}, \\
\mathcal{X} &= \{(x, t) \in \mathcal{D} \,|\, S(x, t) \leq \text{thr}\}, \\
\mathcal{X} &\cup \mathcal{Y} = \mathcal{D}, \quad \mathcal{X} \cap \mathcal{Y} = \emptyset.
\end{split} \tag{2}
$$
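The partition of the sampled (x, t) combinations into an affected and an unaffected set can be expressed as a pair of boolean masks. This is a minimal sketch, assuming a hypothetical array `S` that holds a planet signal model evaluated for every pixel and frame; the function name and the toy values are illustrative only.

```python
import numpy as np

def partition_by_signal(signal_model, thr):
    """Split all (x, t) samples into the affected set Y and unaffected set X.

    signal_model : array of shape (M, T) with the planet model S(x, t)
    thr          : threshold above which a sample counts as affected
    """
    in_Y = signal_model > thr      # samples with S(x, t) > thr
    in_X = ~in_Y                   # samples with S(x, t) <= thr
    return in_Y, in_X

# Toy model: 6 pixels, 4 frames, with a signal that moves across pixels.
S = np.zeros((6, 4))
S[1, 0] = 1.0
S[2, 1] = 0.8
S[2, 2] = 0.05                     # below threshold, so it stays in X
S[3, 3] = 1.0
in_Y, in_X = partition_by_signal(S, thr=0.1)
```

By construction the two masks are complementary, so their union covers all samples and their intersection is empty.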

The resulting notion is that g(x, t | N) for all $(x, t) \in \mathcal{Y}$ can be approximated using a combination of elements in $\mathcal{X}$ that are affected by the same underlying causes of systematics, N. The most direct approach to this type of problem is to assume a linear regression model. However, since the data itself is drawn from a multi-dimensional space (space and time), a choice has to be made as to the set of basis vectors within which the linear regression is performed. Traditionally, image vectors (or, more often, vectors of sub-image regions) have been used as the basis of the linear regression (e.g., all LOCI and PCA variants mentioned earlier on). For one-dimensional representations, we may use a simplified vector notation where, for example, image vectors can be denoted as $d_t(x)$, that is, as a data vector of pixel values for a set of positions x at the time t. The image space is an intuitive basis when we think of the speckle noise as a relatively stable (quasi-static) pattern that is part of the (coronagraphic) stellar point spread function (PSF). The PSF is intuitively thought of as a spatial construct.

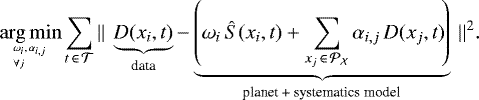

Let us now formulate, in general terms, the spatial approach as it is implemented in LOCI-like algorithms, in which we are trying to describe an image data vector in terms of other image vectors taken at a different time. We start by defining image regions $\mathcal{P}_{\mathcal{Y}}^{k}$ of pixels that are significantly affected by $S(x_i, t_k)$ at a given $t_k$ as subsets of the complete image space of all pixels,

$$
\mathcal{P}_{\mathcal{Y}}^{k} = \{ x_i \,|\, S(x_i, t_k) > \text{thr} \}, \quad k \in \{1, 2, \dots, T\}. \tag{3}
$$

This corresponds to the area in an image covered by the companion PSF above a certain flux threshold at a given time. Given that the signal position changes over time, we can now define sets of times $\mathcal{T}_{\mathcal{X}}^{k}$ in the observation sequence for which the planet signal does not affect this image region:

$$
\mathcal{T}_{\mathcal{X}}^{k} = \{ t_l \,|\, \mathcal{P}_{\mathcal{Y}}^{k} \cap \mathcal{P}_{\mathcal{Y}}^{l} = \emptyset \}, \quad k, l \in \{1, 2, \dots, T\}. \tag{4}
$$

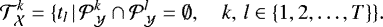

This is the set of all times at which the companion has moved far enough to not overlap with the area it occupied at a certain time. From these times we can build a linear model that does not incorporate planet signal. We can then say that the systematics and planet flux in a particular image region $\mathcal{P}_{\mathcal{Y}}^{k}$ and for any particular time $t_k$ can be estimated using a linear least squares regression solving

$$
d_{t_k}(x) = \omega_k \, \hat{S}_{t_k}(x) + \sum_{t_l \in \mathcal{T}_{\mathcal{X}}^{k}} \alpha_{k,l} \, d_{t_l}(x), \quad x \in \mathcal{P}_{\mathcal{Y}}^{k}, \tag{5}
$$

where $\omega_k$ corresponds to the contrast of the planet signal estimated at time $t_k$, $\alpha_{k,l}$ are the weight coefficients of the linear model at time $t_k$, and Ŝ is a model of the planet signal. In the spatial representation the planet model takes the form of an image of the companion PSF, $\hat{S}_{t_k}(x)$, with $x \in \mathcal{P}_{\mathcal{Y}}^{k}$. This approach assumes that the speckle pattern is either sufficiently stable (up to a scaling factor) or recurrent over the course of the observation (e.g., finite probability of jitter to revisit the same position, recurring Strehl ratio, or observing conditions in general). In other words, we assume we have enough measurements for $d_t(x \,|\, N)$ with $x \in \mathcal{P}_{\mathcal{Y}}^{k}$ and $t \in \mathcal{T}_{\mathcal{X}}^{k}$ to probe the underlying distributions of unobserved causal factors, N, to allow us to reconstruct a specific instance of the systematics function g. In the spatial representation g corresponds to the speckle pattern, $g_{t_k}(x \,|\, N)$, in the image region. To a large extent this assumption is well founded, as evidenced by the success of the LOCI and spatial PCA-based family of algorithms. The details of how the α-coefficients are optimized and how the planet contrast, ω, is finally estimated vary strongly depending on the implementation (e.g., Lafrenière et al. 2007; Pueyo et al. 2012; Marois et al. 2014; Wahhaj et al. 2015) and this is one of the main distinguishing factors between the large number of published algorithms. Here, two closely related problems are the problem of collinearity and the problem of overfitting of the planet signal. Considering that many of the image vectors used in the linear model share common features, one solution is to use regularization (e.g., damped LOCI, Pueyo et al. 2012). The most popular approach is to use a truncated set of principal component images instead of using the image vectors $d_{t_l}(x)$ directly as done above for Eq. (5). This representation in a lower dimensional space simultaneously reduces collinearity in the training data and prevents overfitting of the model.
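To make the spatial regression concrete, the following is a minimal, hedged sketch on synthetic one-dimensional "images". It is not the implementation of any published pipeline: the triangular companion profile, the two-mode speckle model, the noise level, and the function name `fit_contrast_spatial` are all assumptions made for illustration. The sketch selects reference frames whose companion footprint does not overlap the region of interest, builds a truncated principal-component basis from them, and fits the planet model and the systematics simultaneously.

```python
import numpy as np

rng = np.random.default_rng(1)
M, T = 100, 40                          # pixels per image, number of frames
x = np.arange(M)

def planet_image(center, half_width=8.0):
    """Toy companion PSF with compact support (triangular profile)."""
    return np.clip(1.0 - np.abs(x - center) / half_width, 0.0, None)

# Quasi-static speckles: two fixed spatial modes with drifting amplitudes.
modes = rng.normal(size=(2, M))
phase = np.linspace(0.0, 3.0, T)
amps = np.column_stack([1.0 + 0.2 * np.sin(phase), 0.5 + 0.2 * np.cos(phase)])

centers = np.linspace(10.0, 90.0, T)    # companion position in each frame
true_contrast = 0.3
planet = np.stack([planet_image(c) for c in centers])        # shape (T, M)
data = amps @ modes + true_contrast * planet + 0.01 * rng.normal(size=(T, M))

def fit_contrast_spatial(k, thr=0.0, n_comp=3):
    """Estimate the contrast in frame k with a spatial systematics model."""
    # Image region covered by the companion in frame k.
    region = planet[k] > thr
    # Reference frames: companion footprint does not overlap that region.
    refs = [l for l in range(T) if not np.any((planet[l] > thr) & region)]
    # Truncated principal-component images of the reference set.
    _, _, vt = np.linalg.svd(data[refs][:, region], full_matrices=False)
    basis = vt[:n_comp].T                                     # (n_pix, n_comp)
    # Simultaneous least-squares fit of planet model and systematics basis.
    design = np.column_stack([planet[k, region], basis])
    coeffs, *_ = np.linalg.lstsq(design, data[k, region], rcond=None)
    return coeffs[0], len(refs)

omega_hat, n_refs = fit_contrast_spatial(k=T // 2)
```

In this toy setup only part of the sequence qualifies as reference frames for a given target frame, which is the temporal exclusion that the spatial approach requires.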

The question of whether the planet signal is fitted simultaneously to the systematics model (as done in this work), fitted after subtracting a systematics model (as has been the case for most early works), or subtracted before fitting the systematics model (e.g., Galicher et al. 2011) is another key difference in various implementations. Additionally, implementations vary in how the geometry and size of the image regions are defined and, furthermore, they may use two differently defined regions based on whether the region is used for optimizing the α-coefficients (usually larger than the actual region of interest) or subtracting the model, which is another way of reducing overfitting. Lastly, we note that reference star differential imaging (RDI, e.g., Smith & Terrile 1984; Lafrenière et al. 2009; Gerard & Marois 2016; Ruane et al. 2017; Xuan et al. 2018; Bohn et al. 2020) is also based on the above-described spatial systematics model formalism, with the difference that the reference images are taken from observations of different host stars, which are consequently lacking the same planet signal contribution, S, by default. This paper is only concerned with individual pupil-stabilized observations.

2.3 Problems with spatial systematics models

The above-described assumptions impose a high requirement on the overall stability of the instrument and observing conditions. In modern instruments, a large part of the systematics can indeed be said to be quasi-static and correlated on timescales of minutes to hours (e.g., Milli et al. 2016; Goebel et al. 2018), making this approach applicable to these stellar-noise contributions.

However, the main drawback of the spatial modeling approach is the need to prevent the contamination of the systematics model with an astrophysically relevant signal by using a temporal exclusion criterion (usually defined as a protection angle). Its selection is a trade-off between excluding more frames to prevent self-subtraction and losing information related to the speckle evolution due to the exclusion of highly correlated frames close in time. As was already noted early on (Marois et al. 2006), this trade-off gets gradually worse the smaller the angular separation of a companion with respect to the central star is, because the same FoV rotation corresponds to smaller and smaller physical displacement on the detector between subsequent frames. At small separations, a large fraction of the observation sequence will be excluded from the training set (see Appendix A).
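The geometric origin of this trade-off can be illustrated with a short calculation. A source at separation r that rotates by an angle Δθ is displaced along a chord of length 2 r sin(Δθ/2), so the rotation needed for a fixed displacement grows as the separation shrinks. The sketch below assumes separations and displacements expressed in units of λ/D, a uniform field-rotation rate, and a target frame in the middle of the sequence; the function names and the default displacement of 0.5 λ/D are hypothetical choices, not values from this paper.

```python
import numpy as np

def protection_angle_deg(separation, min_displacement=0.5):
    """Field rotation (deg) needed to move a source by `min_displacement`.

    Both arguments share the same unit (assumed here: lambda/D).
    The chord length for a rotation dtheta at radius r is 2 r sin(dtheta / 2).
    """
    ratio = min_displacement / (2.0 * separation)
    if ratio > 1.0:
        return float("inf")             # displacement can never be reached
    return float(np.degrees(2.0 * np.arcsin(ratio)))

def excluded_fraction(separation, total_rotation_deg, min_displacement=0.5):
    """Fraction of the sequence excluded around a mid-sequence frame,
    assuming a uniform rotation rate (an idealization)."""
    angle = protection_angle_deg(separation, min_displacement)
    return min(1.0, 2.0 * angle / total_rotation_deg)

# Compare a close-in and a wide separation for the same total field rotation.
close_in = excluded_fraction(2.0, total_rotation_deg=40.0)
wide = excluded_fraction(10.0, total_rotation_deg=40.0)
```

Under these assumptions the excluded fraction at the smaller separation is several times larger than at the wider one, in line with the argument above.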

At small separations, these exclusion criteria cover time spans of the order of the linear decorrelation timescale for relatively stable instrumental speckles (Milli et al. 2016; Goebel et al. 2018). Even at large separations, the temporal exclusion will still be on the order of minutes. This means that using a spatial approach makes it intrinsically difficult to model the turbulence-induced, short-lived speckles with an exponential decay timescale of a few seconds (τ = 3.5 s, Milli et al. 2016). A sudden change in the overall conditions or the state of the instrument is likewise difficult to account for. Traditionally, obtaining simultaneous training data for modeling the systematics requires spectral differential imaging (SDI, Rosenthal et al. 1996; Racine et al. 1999) or polarimetric differential imaging (PDI, Kuhn et al. 2001).

In this work, however, we demonstrate that it is possible to apply a causal, temporal systematics model to monochromatic pupil-tracking observations, using simultaneous but non-local reference data. This allows us to avoid the harsh temporal exclusion criterion used for preventing self-subtraction by introducing a less strict spatial exclusion criterion of pixels affected by signal, which is solely determined by the total FoV rotation, independent of the angular separation between the star and exoplanet.

3 TRAP: a causal, temporal systematics model for high-contrast imaging

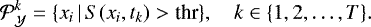

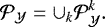

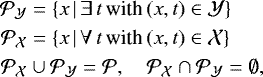

Analogous to the spatial approach as described in Eqs. (3)–(5), we can define the temporal approach in which all image vectors at a specific time become time series vectors at a specific location. This is done by first constructing a subset of times at which a specific pixel, $x_i$, is affected by planetary signal and then constructing a reference set of other pixels, $x_j$, which, in the same subset of times, do not contain planet signal. This would be a one-to-one translation of LOCI to a temporal approach, one that is local in time rather than space. Let us first construct the set of pixels affected by the planet signal at any point in time t of the observation sequence:

$$
\mathcal{P}_{\mathcal{Y}} = \{ x_i \,|\, \exists \, t : S(x_i, t) > \text{thr} \}. \tag{6}
$$

For each affected pixel, $x_i \in \mathcal{P}_{\mathcal{Y}}$, we construct a training set of unaffected other pixels $\mathcal{P}_{\mathcal{X}}^{i}$:

$$
\begin{aligned}
\mathcal{T}_{\mathcal{Y}}^{i} &= \{ t \,|\, S(x_i, t) > \text{thr} \}, & \forall x_i \in \mathcal{P}_{\mathcal{Y}}, \\
\mathcal{P}_{\mathcal{X}}^{i} &= \{ x_j \,|\, \mathcal{T}_{\mathcal{Y}}^{i} \cap \mathcal{T}_{\mathcal{Y}}^{j} = \emptyset \}, & i, \, j \in \{1, 2, \dots, M\},
\end{aligned} \tag{7}
$$
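The set constructions for the temporal approach translate directly into index operations on a boolean array. The sketch below assumes a hypothetical array `signal_model` of shape (M, T) holding the planet model for each pixel and frame; the function name `temporal_sets` and the toy values are illustrative and not part of the original work.

```python
import numpy as np

def temporal_sets(signal_model, thr):
    """Build the pixel and time index sets of the temporal approach.

    signal_model : array of shape (M, T) with the planet model S(x_i, t)
    Returns (P_Y, T_Y, P_X), where T_Y and P_X are dicts keyed by pixel index.
    """
    affected = signal_model > thr                      # (M, T) boolean
    # Pixels affected by planet signal at any time.
    P_Y = [int(i) for i in np.flatnonzero(affected.any(axis=1))]
    # Times at which each affected pixel sees the planet.
    T_Y = {i: np.flatnonzero(affected[i]) for i in P_Y}
    # Reference pixels: never affected at the same times as pixel i.
    P_X = {i: np.flatnonzero(~(affected & affected[i]).any(axis=1)) for i in P_Y}
    return P_Y, T_Y, P_X

# Toy model: 5 pixels, 4 frames.
S = np.zeros((5, 4))
S[1, [0, 1]] = 1.0      # pixel 1 affected at t = 0, 1
S[2, [1, 2]] = 1.0      # pixel 2 affected at t = 1, 2
S[3, 3] = 1.0           # pixel 3 affected at t = 3
P_Y, T_Y, P_X = temporal_sets(S, thr=0.1)
```

In this toy case pixel 2 is excluded from the reference set of pixel 1 because both are affected at t = 1, whereas pixel 3 remains usable.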

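and subsequently, we can build our model similar to Eq. (5) for any $x_i \in \mathcal{P}_{\mathcal{Y}}$:

$$
d_{x_i}(t) = \omega_i \, \hat{S}_{x_i}(t) + \sum_{x_j \in \mathcal{P}_{\mathcal{X}}^{i}} \alpha_{i,j} \, d_{x_j}(t), \quad t \in \mathcal{T}_{\mathcal{Y}}^{i}, \tag{8}
$$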

where the data to be fitted takes the form of a (partial) time series (light curve), $d_{x_i}(t)$, of one pixel, $x_i \in \mathcal{P}_{\mathcal{Y}}$, for all times, $t \in \mathcal{T}_{\mathcal{Y}}^{i}$, at which the pixel is affected by a planet signal above a certain threshold. In the temporal representation the planet model takes the form of a positive transit light curve $\hat{S}_{x_i}(t)$ for $t \in \mathcal{T}_{\mathcal{Y}}^{i}$. The systematics g in the temporal representation correspond to temporal trends, $g_{x_i}(t \,|\, N)$, with $t \in \mathcal{T}_{\mathcal{Y}}^{i}$ and are reconstructed in a basis set of reference time series, $d_{x_j}(t)$, that are unaffected by planet signal during the same time. In the literature, this process is usually referred to as a de-trending of light curves. The fitting of the systematics model can therefore be achieved in a mathematically analogous way to the spatial model when swapping the spatial and temporal axes. However, in our implementation, we loosen the restriction on temporal locality, such that we always look at the complete time series and are left with only two relevant sets: the set of pixels that are at any point in time affected by planet signal, $\mathcal{P}_{\mathcal{Y}}$, and those that are at no point affected

$$
\mathcal{P}_{\mathcal{X}} = \{ x_j \,|\, x_j \notin \mathcal{P}_{\mathcal{Y}} \}, \tag{9}
$$

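and the model is defined over all times, $t \in \{t_1, \dots, t_T\}$, such that for any $x_i \in \mathcal{P}_{\mathcal{Y}}$, Eq. (8) becomes:

$$
d_{x_i}(t) = \omega_i \, \hat{S}_{x_i}(t) + \sum_{x_j \in \mathcal{P}_{\mathcal{X}}} \alpha_{i,j} \, d_{x_j}(t). \tag{10}
$$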
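A minimal sketch of such a temporal regression on synthetic light curves is given here. It is an illustration under stated assumptions, not the TRAP code: the four "hidden causes", the Gaussian-shaped transit model, the noise level, the use of principal-component light curves as regressors, and the function name `fit_contrast_temporal` are all hypothetical choices. The reference pixels share the hidden causes with the target pixel but never contain planet signal, and the planet model is fitted simultaneously with the systematics basis over the full time series.

```python
import numpy as np

rng = np.random.default_rng(2)
T, n_ref = 200, 30                      # frames, number of reference pixels
t = np.linspace(0.0, 1.0, T)

# Hidden causes shared by all pixels (toy stand-ins for, e.g., Strehl drifts).
hidden = np.column_stack([np.sin(7 * t), np.cos(3 * t), t, np.ones(T)])

# Reference light curves: respond to the hidden causes, contain no planet.
ref_curves = hidden @ rng.normal(size=(4, n_ref))
ref_curves += 0.01 * rng.normal(size=(T, n_ref))

# Target pixel: its own response to the same causes plus a positive "transit".
transit = np.exp(-0.5 * ((t - 0.5) / 0.05) ** 2)      # planet model light curve
true_contrast = 0.2
target = hidden @ rng.normal(size=4) + true_contrast * transit
target += 0.01 * rng.normal(size=T)

def fit_contrast_temporal(target, ref_curves, planet_model, n_comp=6):
    """Fit planet model and temporal systematics basis simultaneously."""
    # Principal-component light curves of the reference set (temporal basis).
    u, _, _ = np.linalg.svd(ref_curves, full_matrices=False)
    basis = u[:, :n_comp]                               # shape (T, n_comp)
    design = np.column_stack([planet_model, basis])
    coeffs, *_ = np.linalg.lstsq(design, target, rcond=None)
    residual = target - design @ coeffs
    return coeffs[0], residual

omega_hat, residual = fit_contrast_temporal(target, ref_curves, transit)
```

Because every frame contributes to the fit, no temporal exclusion is needed; only the choice of reference pixels has to avoid planet signal.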

In an intuitive sense, it may not be obvious why we can use non-local time series to create a systematics model for the time series of a specific pixel, unless we consider the causal structure of the systematics that confounds the signal in the data. While pixels physically separated enough on the detector do not directly “talk to each other” and are, at first order, independent measuring devices, they are influenced by the same underlying causes that generate the systematics in the first place. At the most basic level, the primary cause of systematics, that is, the contamination by the stellar signal, is the (coronagraphic) PSF of the star itself which changes with observing conditions and changes in the instrument. Of course, depending on the detailed underlying cause, the area of shared influence can be spatially different; for example, wind driven halo effects (Cantalloube et al. 2018, 2020) cannot be statistically inferred from reference pixels unaffected by the same underlying cause.

A detailed mathematical description of modeling systematics in time series data based on shared underlying causes can be found in Schölkopf et al. (2016), giving the statistical background and justification for Eqs. (8) and (10). The above-mentioned work shows that by using a regression model of a sufficiently large number of “half-siblings,” that is, measurements that do not share the signal but the same causal relationship with the systematics, $d_{x_j}(t)$, of one pixel, $x_i$, we can reconstruct a specific instance of the systematics function, $g_{x_i}(t \,|\, N)$. Furthermore, we can include a model of the planet signal itself in the regression, such that the overall regression explains the data in $\mathcal{Y}$ up to a stochastic noise term (see Eq. (1)), which remains the fundamental limit of the data. This fundamental limit is a combination of photon noise, detector read-out noise, and flat fielding uncertainties. In practice, we will still be limited by imperfections in the systematics model because only a finite number of regressor light curves are available and some causes of systematics may not be perfectly shared among them.

The mathematical idea of using a causal time-series regression model, as described above, has been employed very successfully for transit observation using the Kepler spacecraft (e.g., Wang et al. 2016a). The benefit of simultaneously including a forward model of the expected transit shape in the regression model has been demonstrated in detail in Foreman-Mackey et al. (2015).
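To make the idea concrete, the following minimal Python sketch applies a half-sibling regression to synthetic light curves. It is an illustration under stated assumptions, not the TRAP implementation: the two hidden causes, the Gaussian-shaped planet light curve, the noise level, and all variable names are invented for the example, and an ordinary least-squares fit stands in for the full model.

```python
import numpy as np

rng = np.random.default_rng(0)
T, R = 200, 20                      # frames and reference pixels (toy values)

# Two hidden causes (hypothetical, e.g., seeing and a slow drift) shared by all pixels.
t = np.linspace(0.0, 1.0, T)
causes = np.stack([np.sin(8 * t), t**2], axis=1)             # shape (T, 2)

# Reference light curves: shared causes with pixel-specific gains plus noise,
# but no planet signal ("half-siblings" of the target pixel).
refs = causes @ rng.normal(size=(2, R)) + 0.01 * rng.normal(size=(T, R))

# Target pixel: same causes plus a planet signal passing over the pixel.
planet = np.exp(-0.5 * ((t - 0.5) / 0.05) ** 2)               # model light curve
true_amp = 0.5
target = causes @ np.array([1.5, -0.7]) + true_amp * planet
target += 0.01 * rng.normal(size=T)

# Simultaneous fit: reference light curves, constant offset, and planet model
# are all columns of one design matrix.
A = np.column_stack([refs, np.ones(T), planet])
coeffs, *_ = np.linalg.lstsq(A, target, rcond=None)
fit_amp = coeffs[-1]                                          # planet amplitude
```

Because the reference light curves carry the shared trends but no planet signal, the fitted amplitude `fit_amp` should land close to the injected `true_amp` in this toy setup.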

Implementation and application to high-contrast imaging data

The situation is very similar for high-contrast imaging data. The causes of systematics, with the exception of detector artifacts (e.g., bad, warm, or dead pixels, flat field), usually influence either the image globally (e.g., most atmospheric effects, Strehl ratio variations), or a significant region of the detector (e.g., wind-driven halo, (mis-)alignment of the coronagraph). Even slowly evolving changes in the quasi-static speckle pattern caused by the instrument usually are not strictly confined to one region of the detector (e.g., speckle patterns can and do indeed display point symmetries).

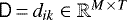

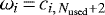

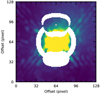

A detailed study of the objectively optimal choice of reference pixels to capture most shared underlying systematic causes is beyond the scope of this work, but multiple heuristic choices can be made, namely: (1) a preference for pixels at a similar separation (same underlying modified Rician speckle intensity distribution, Marois et al. 2008); (2) pixels at the same position angle inwards and outwards of the reduction area (position angle-dependent effects, e.g., wind-driven halo, Cantalloube et al. 2018, 2020); (3) inclusion of all pixels analogous to the reduction region but on the opposite side of the star (point-symmetric features arising from the nature of phase aberrations, see, e.g., the small-phase approximation, Perrin et al. 2003; Ren et al. 2012; Dou et al. 2015); and (4) exclusion of all pixels affected by the planet signal. Varying the regressor-selection parameters around these heuristics does not significantly impact our final results. Figure 1 shows an example of the reference pixel (regressor) selection geometry chosen in this work for an assumed companion that passes north of the host star at the midpoint of the observation. The annulus used has a width of 7 pixels and the PSF used includes the first Airy ring and has a diameter of 21 pixels.
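A selection along these lines can be sketched with boolean masks. The Python example below is a hedged illustration only: the function name `select_reference_pixels`, its parameters, and the toy geometry are assumptions made for this example, and heuristic (2) is omitted for brevity. It implements an annulus at similar separation (1), the point-symmetric counterpart of the affected region (3), and the exclusion of affected pixels (4).

```python
import numpy as np

def select_reference_pixels(affected, center, planet_sep, annulus_width=7):
    """Boolean mask of candidate reference pixels for one planet position.

    affected      : 2D bool array, True where planet signal is present at any time.
    center        : (y, x) position of the star in pixels.
    planet_sep    : separation of the assumed planet in pixels.
    annulus_width : width in pixels of the annulus at similar separation.
    """
    yy, xx = np.indices(affected.shape)
    sep = np.hypot(yy - center[0], xx - center[1])

    # (1) Pixels at a similar separation to the assumed planet.
    annulus = np.abs(sep - planet_sep) <= annulus_width / 2

    # (3) Point-symmetric counterpart of the affected region about the star.
    mirrored = np.zeros_like(affected)
    ys, xs = np.nonzero(affected)
    my = np.rint(2 * center[0] - ys).astype(int)
    mx = np.rint(2 * center[1] - xs).astype(int)
    inside = (my >= 0) & (my < affected.shape[0]) & (mx >= 0) & (mx < affected.shape[1])
    mirrored[my[inside], mx[inside]] = True

    # (4) Never use pixels that see planet signal at any time.
    return (annulus | mirrored) & ~affected

# Toy usage: a 21 x 21 pixel affected region on one side of the star.
affected = np.zeros((65, 65), dtype=bool)
affected[43:64, 22:43] = True
reference = select_reference_pixels(affected, center=(32, 32), planet_sep=21.0)
```

The light curves of the pixels flagged in `reference` would then form the columns of the reference set used for the systematics model.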

In our implementation6, called the Temporal Reference Analysis of Planets (TRAP), we use: (a) a linear model with a quadratic objective function (assuming Gaussian uncertainties7); (b) the principal components of the regressor pixel light curves, not the pixels themselves (principal component regression); (c) a fit of each affected pixel individually instead of all affected pixels at the same time for computational reasons; (d) a fit of the planet model and the systematics model simultaneously in order to prevent overfitting, similar to the approach by Foreman-Mackey et al. (2015). The above choice of using principal components reduces the collinearity of the regressors used for the systematics model and transforms our model onto an orthogonal basis. It also allows us to forgo regularization in favor of truncating the principal components after a certain number. Furthermore, we do not use any spatial pre-filtering of the data as is commonly done in many implementations to remove structures correlated on larger scales than the expected planet signal from the data (e.g., as done in Cantalloube et al. 2015; Ruffio et al. 2017). We also do not pre-select or discard any frames as bad frames in this study.

Given a data cube of the observation sequence,  , where M is the number of pixels and T is the number of frames in the time series, as well as the known parallactic angles for each frame, we know the temporal evolution of a companion signal’s position. The algorithm is applied as follows for one assumed relative position θ (ΔRA, ΔDEC) of a companion point source on-sky and its corresponding trajectory on the detector:


- 1.

We generate a forward model of the planet signal at an assumed position, θ, for the time series by embedding the model PSF in an empty data cube at the appropriate parallactic angle for each exposure to obtain the set of pixels  affected at any point in time during the observation. Conversely, all pixels,  , not affected at any time by planet signal are potential reference pixels.

Additionally, we obtain the corresponding planet model light curve  for every  . We use the unsaturated PSFs obtained without the coronagraph directly before or after the sequence, but artificially induced satellite spots could also be used. The PSF has been adjusted to the exposure time and filter of the science exposures and is set to zero beyond the first Airy ring.

- 2.

Instead of using all time series from unaffected pixels,  , to build the systematics model, we construct the training set with additional desired constraints  as previously described (see Fig. 1). The number of reference pixels in the reference set is called R.

- 3.

For each affected pixel, xi:

(a) We want to simultaneously optimize the coefficients of our systematics and planet model.

We first show how this is done starting from Eq. (10), which uses the reference light curves from the data directly. The minimization problem can be solved as a system of linear equations of the form:

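$$\left[\mathbf{A}_{\theta,i}^{T}\,\mathbf{C}_i^{-1}\,\mathbf{A}_{\theta,i}\right]\vec{c}_i = \left[\mathbf{A}_{\theta,i}^{T}\,\mathbf{C}_i^{-1}\,\vec{d}_{x_i}\right] \tag{11}$$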

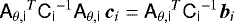

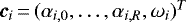

because Eq. (10) is linear in ωi and αi,j. Aθ,i is the design matrix, also called regressor matrix, containing the light-curve vectors constituting the terms of the linear model to be fitted. Here,  is the vector of model coefficients,

is the vector of model coefficients,  is the light-curve data vector of pixel, xi, that is to be fitted, and Ci is the covariance associated with the data vector to be fitted. The uncertainties of the time series are in general heteroscedastic (not uniform in time), but uncorrelated, such that the off-diagonal elements are zero.

is the light-curve data vector of pixel, xi, that is to be fitted, and Ci is the covariance associated with the data vector to be fitted. The uncertainties of the time series are in general heteroscedastic (not uniform in time), but uncorrelated, such that the off-diagonal elements are zero.

(b) The design matrix, Aθ,i, consists of columns containing the additive terms of the overall model we want to fit. As such, the part of the design matrix for Eq. (10) that describes only the systematics model consists of columns that contain the reference light curves, dkj, where k indexes the T frames and j indexes the R reference pixels (see Eq. (12)). The same basis vectors describing the systematics model are used for all pixels xi for a given planet position θ.

Since this would only account for the systematics, we add two additional columns, one containing a constant offset to be fitted and one containing the light curve vector,  , describing the companion signal at pixel, xi, over time8. This (preliminary) design matrix Aθ,i looks as follows:


$$\mathbf{A}_{\theta, i;\, kj} = \begin{cases} d_{kj}, & \text{if}\ j \leq R\\[3pt] 1, & \text{if}\ j = R+1 \\[3pt] \hat{S}_{k,i}, & \text{if}\ j = R+2 \end{cases} \: \in \mathbb{R}^{T\,{\times}\,(R+2)}. \tag{12}$$

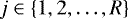

(c) However, this results in an underdetermined and highly collinear problem because there are typically more reference light curves than there are samples in time (R ≫ T) and the light curves share many common features. Therefore, some form of regularization or dimensionality reduction is required. We opt for representing them in a lower dimensional space using the principal components of the light curves instead of the reference light curves themselves. This is implemented using a singular value decomposition (SVD)9. We first center the data robustly by subtracting the temporal median of each light curve dkj. This centered data matrix can then be factorized into U ΣSVD Vᵀ, where U corresponds to a T × T matrix, ΣSVD corresponds to a T × R diagonal matrix with non-negative real numbers, and Vᵀ corresponds to an R × R matrix. Because the data dkj are real, U and Vᵀ are real and orthonormal matrices. In the following context, we use U, the columns of which are called left-singular vectors and form an orthonormal basis in which the light curves, dkj, can be described. They are ordered in decreasing order of explained variance. We truncate the number of components to be fitted to remove spurious signals from the systematics model and reduce overfitting. The number of components used in the algorithm is specified by the fraction, f, of the maximum number of components, Nmax = T, such that Nused = f Nmax with f ∈ [0, 1] and  . Nused is always rounded to the closest integer for any chosen f. The fraction of components, f, is a user parameter that can be set. The regressors for the temporal systematics are then given by Ukl, where l ≤ Nused (see Eq. (13)). The final design matrix is:

$$\widetilde{\mathbf{A}}_{\theta,i;\, kl} = \begin{cases} \mathbf{U}_{kl}, & \text{if}\ l \leq f \, N_{\mathrm{max}}\\[3pt] 1, & \text{if}\ l = f \, N_{\mathrm{max}}+1 \\[3pt] \hat{S}_{k,i}, & \text{if}\ l = f \, N_{\mathrm{max}}+2 \end{cases} \: \in \mathbb{R}^{T\,{\times}\,(f \, N_{\mathrm{max}}+2)}. \tag{13}$$

(d) The vectors of the design matrix are mostly orthogonal and we can robustly invert the equation analogously to Eq. (11) using linear algebra operations to obtain the vector of model coefficients and their associated uncertainty. The coefficients and their associated covariances are given by:

|

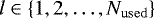

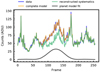

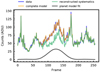

Fig. 2 Example for Eq. (11), showing the first five principal component light curves and the planet model. The constant offset term ( |

![\begin{equation*} \begin{split} \vec{c}_i &= [\widetilde{\tens{A}}_{\theta,i}^T \, \tens{C_i}^{-1} \, \widetilde{\tens{A}}_{\theta, i}]^{-1}\, [\widetilde{\tens{A}}_{\theta,i}^T \,\tens{C_i}^{-1} \, \vec{d}_{x_i}] \\ \tens{\Sigma}_{i} &= [\widetilde{\tens{A}}_{\theta,i}^T \, \tens{C_i}^{-1} \, \widetilde{\tens{A}}_{\theta,i}]^{-1}. \end{split}\end{equation*}](/articles/aa/full_html/2021/02/aa37308-19/aa37308-19-eq67.png) (14)

(14)

In our current implementation, the covariance matrix of the data, C, is assumed to be diagonal, that is, the data are treated as uncorrelated in time (zero off-diagonal elements), as the residuals after fitting the model are approximately white. The variances of the coefficients are therefore given by:

(15)

The results shown throughout the paper assume identically distributed data uncertainties. However, known uncertainties for each pixel (e.g., photon noise, read-noise) can be passed to the routine, which is generally recommended if the variance of the input data is known. Taking into account photon noise puts less weight on data with higher speckle noise (e.g., pixels closer to the star, located on the spider, frames taken under worse conditions), further increasing the robustness of the reduction. The elements  and

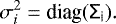

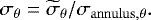

and  correspond to the contrast of the companion and its respective variance by construction. A graphical example for the system of equations in Eq. (11) to be solved is shown in Fig. 2. The result of the fit of the time series are shown in Fig. 3. The above example is for the central pixel of the signal-affected detector area shown in Fig. 1, injected with a relatively bright signal (10−4 contrast) for demonstration purposes.

correspond to the contrast of the companion and its respective variance by construction. A graphical example for the system of equations in Eq. (11) to be solved is shown in Fig. 2. The result of the fit of the time series are shown in Fig. 3. The above example is for the central pixel of the signal-affected detector area shown in Fig. 1, injected with a relatively bright signal (10−4 contrast) for demonstration purposes.

- 4.

After obtaining ωi and  for all  , we remove significant outliers, that is, pixels for which the median of the residual vector deviates from the rest by more than a set threshold (three robust standard deviations in our implementation). This usually removes a low single-digit number of pixels (<10), consistent with the number of anomalous (e.g., bad or cosmic ray affected) pixels that are expected to be present in an area the size of a typical reduction region. The incurred information loss is minimal. We call this new subset of pixels:  .

- 5.

Finally, we take the average of the planet contrast coefficients weighted by their respective uncertainties over all remaining pixels:

(16)

Fig. 3 Result of the fit for the pixel shown in Fig. 2, with the reconstructed model for the time series with and without including the coefficient ωi describing the amplitude of the companion signal.

to obtain a single contrast and preliminary uncertainty value for this on-sky position θ. Equation (16) assumes the pixel residuals to be uncorrelated in space, which, in practice, is not the case. The preliminary uncertainty,  , needs to be scaled with an empirical normalization factor, the derivation of which is discussed below, to account for simplified assumptions about the covariance structure of the data and spatial correlations between the residuals.
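Steps 3 to 5 can be sketched compactly in Python. The example below is a minimal illustration under stated assumptions rather than the TRAP code itself: the function names `fit_pixel` and `combine_pixels` and their parameters are hypothetical, the outlier rejection of step 4 is omitted, and the combination of pixels is assumed to be an inverse-variance weighted mean.

```python
import numpy as np

def fit_pixel(d_i, s_i, refs, f=0.3, var_i=None):
    """Fit one pixel light curve with PCA systematics, offset, and planet model.

    d_i   : (T,) data light curve of the affected pixel.
    s_i   : (T,) planet model light curve for that pixel.
    refs  : (T, R) reference light curves (one column per reference pixel).
    f     : fraction of the T principal components to keep.
    var_i : (T,) data variances; identical unit variances are assumed if None.
    Returns the planet coefficient and its variance.
    """
    T = d_i.size
    # Robust centering with the temporal median, then SVD of the T x R matrix.
    centered = refs - np.median(refs, axis=0)
    U, _, _ = np.linalg.svd(centered, full_matrices=False)
    n_used = int(round(f * T))
    # Design matrix as in Eq. (13): components, constant offset, planet model.
    A = np.column_stack([U[:, :n_used], np.ones(T), s_i])
    w = np.ones(T) if var_i is None else 1.0 / var_i   # diagonal of C^-1
    # Eq. (14): Sigma = (A^T C^-1 A)^-1 and c = Sigma A^T C^-1 d.
    Sigma = np.linalg.inv(A.T @ (w[:, None] * A))
    c = Sigma @ (A.T @ (w * d_i))
    return c[-1], Sigma[-1, -1]


def combine_pixels(amps, variances):
    """Inverse-variance weighted mean of per-pixel contrasts and its variance."""
    weights = 1.0 / np.asarray(variances)
    mean = np.sum(weights * np.asarray(amps)) / np.sum(weights)
    return mean, 1.0 / np.sum(weights)
```

Calling `fit_pixel` once per affected pixel and passing the collected coefficients and variances to `combine_pixels` yields one contrast and one preliminary variance for the assumed position θ. With unit variances the returned variance is only meaningful in a relative sense, which is one reason an empirical normalization is still needed.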

Iterating over a grid of possible positions10 allows us to construct a contrast and pseudo-uncertainty map, that is, the contrast ωθ and its preliminary uncertainty  given the positions of the assumed companion relative to the central star. From these we construct a pseudo-signal-to-noise (S/N) map. The above uncertainties are computed under the simplified assumption that all measurements are Gaussian and independent, which may not accurately reflect the reality of high-contrast imaging data. Multiple studies have shown that residuals after post-processing with high-contrast imaging pipelines, while they may be significantly whitened, are not strictly Gaussian and independent (Marois et al. 2008; Cantalloube et al. 2015; Pairet et al. 2019). The most direct solution would be to account for these effects in the likelihood function used, but in practice, this has proven to be challenging since the uncertainty distributions are heteroscedastic and depend on the observing conditions, instrument, location in the image, and time. Therefore, we apply the same solution to this problem as in ANDROMEDA (Cantalloube et al. 2015), and, subsequently, in FMMF (forward model matched filter, see Ruffio et al. 2017) and we empirically normalize the pseudo-S/N map using the azimuthal robust standard deviation of the S/N map itself computed in three-pixel-wide annuli as a function of separation. We denote these final uncertainty values as:

(17)

Any reference to S/N in this work refers to the normalized S/N ωθ ∕σθ. This normalized S/N map is also referred to as the detection map. Any a priori known companion objects or background star sources will be masked out when deriving the normalization to avoid biasing the result.
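The empirical normalization can be illustrated as follows. This Python sketch is an assumption-laden example, not the pipeline's routine: the function name `normalize_snr_map` and its arguments are hypothetical, and the robust standard deviation is taken to be the scaled median absolute deviation (MAD), which is one common choice.

```python
import numpy as np

def normalize_snr_map(contrast, sigma, center, width=3, mask=None):
    """Empirically normalize a pseudo-S/N map in annuli of the given width.

    contrast, sigma : 2D maps of contrast and preliminary uncertainty.
    center          : (y, x) position of the star in the maps.
    mask            : optional bool array, True for pixels to exclude from the
                      statistics (e.g., known companions or background stars).
    Returns the normalized S/N map and the rescaled uncertainty map.
    """
    snr = contrast / sigma
    yy, xx = np.indices(snr.shape)
    sep = np.hypot(yy - center[0], xx - center[1])
    use = np.isfinite(snr) if mask is None else np.isfinite(snr) & ~mask

    factor = np.full(snr.shape, np.nan)
    for r_in in np.arange(0.0, sep.max() + width, width):
        ring = (sep >= r_in) & (sep < r_in + width)
        values = snr[ring & use]
        if values.size < 3:
            continue  # too few pixels for a meaningful estimate
        # Robust standard deviation from the median absolute deviation.
        mad = np.median(np.abs(values - np.median(values)))
        factor[ring] = 1.4826 * mad
    return snr / factor, sigma * factor
```

Masked pixels are excluded from the statistics but are still rescaled with the factor of their annulus, so a known companion keeps its place in the detection map without biasing the normalization.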

Fig. 1 Example of reference pixel selection for one assumed planet position. The signal here is assumed to move through the position (Δx, Δy) ≈ (0, 28) pixel above the star at the midpoint of the observation sequence. This position is marked by a pink cross and represents the center of the reduction area,

Observing log.

Fig. 4 Contour map of median combined image of pre-processed 51 Eridani data cube. Left panel: data in linear scaled brightness bins, right panel: logarithmic scaling.

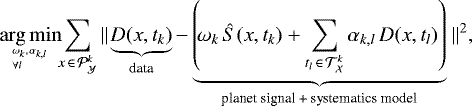

4 Datasets used for demonstration

We tested our TRAP algorithm on two real datasets obtained with the extreme-AO (SAXO; Fusco et al. 2014) fed infrared dual-band imager (IRDIS; Dohlen et al. 2008) of SPHERE (Beuzit et al. 2019) at the VLT. Both datasets were obtained as part of the SHINE (SpHere INfrared survey for Exoplanets, Chauvin et al. 2017; Vigan et al. 2021) large survey. The observations were obtained in pupil-tracking mode and used the apodized Lyot coronagraph (Soummer 2005) with a focal-mask diameter of 185 milli-arcsec. The two datasets are described in Table 1, and Figs. 4 and 5 show the pre-processed temporal median image for the 51 Eridani and β Pictoris observations, respectively.

Both datasets were pre-reduced using the SPHERE Data Center pipeline (Delorme et al. 2017), which uses the Data Reduction and Handling software (DRH, v0.15.0, Pavlov et al. 2008) and additional custom routines. It corrects for detector effects (dark, flat, bad pixels) and instrument distortion, in addition to aligning the frames and calibrating the relative flux.

The first test case is the directly imaged planet in the 51 Eridani system (Macintosh et al. 2015). The data we use were previously published in Samland et al. (2017). This dataset was obtained in the K1 and K2 bands simultaneously, but we focus our discussion on the K1 channel in which the companion is visible. The sequence was not taken under ideal conditions. It exhibits common problems encountered in high-contrast imaging, such as a strong wind-driven halo, as seen in Fig. 4. These effects make for a realistic test case, and allow us to demonstrate that the algorithm performs well under adverse and changing conditions.

The second test case uses IRDIS H2 data of the β Pic system (Lagrange et al. 2009) published in Lagrange et al. (2019) taken in continuous satellite spot mode, that is, using sine-wave modulations on the deformable mirror to induce four satellite spots in all frames of the sequence that can be used to determine the center accurately. This also permits a measurement of the satellite spot amplitude variations as a proxy for the planet PSF model’s flux modulation over time. The purpose behind including this dataset is two-fold. Firstly, the exposure time is relatively short for an IRDIS sequence (4 s), which allows us to test our time-domain-based algorithm on a dataset with better temporal sampling, approaching the half-life of fast-decaying speckles (τ = 3.5 s), such that multiple correlation timescales are not averaged. Secondly, datasets taken in continuous satellite spot mode do not use detector-stage dithering, which is generally used for IRDIS coronagraphic sequences. We will use this non-dithered dataset to test our algorithm directly on the non-aligned data cube (only dark and flat corrected). This is only possible in a modeling framework that does not optimize the local spatial similarity between training and test sets, which is the case for our temporal approach. We demonstrate that we can skip bad pixel interpolation and shifting steps by including the shift in the forward model of the planet signal, and exclude the stationary bad pixels from our training and test sets altogether. This makes the propagation of all uncertainties throughout the complete data reduction feasible because the data does not need to be resampled or interpolated. We also account for SPHERE’s anamorphism in the relative position of the companion model. We further demonstrate the possibility of including the satellite spot modulations in the forward model to reduce a systematic bias of the photometric calibration.

Fig. 5 Contour map of median combined image of pre-processed β Pic data cube. Left panel: data in linear scaled brightness bins, right panel: logarithmic scaling.

5 Results

We performed a direct comparison of results between TRAP and the official IDL implementation of the ANDROMEDA algorithm (Cantalloube et al. 2015). It is always challenging to directly compare a new approach with existing pipelines due to numerous differences in implementation, a wide range of possible reduction parameters, test data taken in various conditions and observing modes, as well as differences in statistical evaluation of the outputs. We note that no comparison will ever be complete. For our work, we have chosen ANDROMEDA as the most viable representation of the spatial systematics modeling class of algorithms, because both TRAP and ANDROMEDA implement a likelihood-based forward modeling approach of the companion signal. This allows us to directly evaluate and compare the outputs in a fair way, such that differences in implementation do not impact the statistical interpretation of the results. Additionally, ANDROMEDA has been shown to compare well with other established PCA and LOCI-based pipelines (e.g., Cantalloube et al. 2015; Samland et al. 2017).

For our tests and comparisons, we use the exact same normalization method for the detection map and contrast curve computation for both TRAP and ANDROMEDA outputs. For the computation of the empirical normalization and the contrast curves, we mask the position of the planet with a mask size radius of rmask = 15 pixels in the output maps (~180 mas).

We show the results for TRAP for a range of principal component fractions, f (see Eq. (13)). Our representative choice is f = 0.3, which neither significantly underfits nor overfits either dataset in our reductions. The impact of f is explored in more detail in Sect. 5.1.2. For ANDROMEDA, the representative choice of protection angle (minimum angular displacement of companion signal between two frames) is δ = 0.5 λ∕D, which shows good performance for extreme-AO SPHERE data and was the final parameter choice used for the spectro-photometric analysis of 51 Eri b (Samland et al. 2017). The contrast curve for δ = 1.0 λ∕D is included to demonstrate the impact of the protection angle on contrast performance at small angular separations. Contrast curves for ANDROMEDA using δ = 0.3, 0.5, 1.0 λ∕D for both datasets are shown in Appendix B for completeness.

Photometry and S/N for unbinned 51 Eri b data.

5.1 51 Eridani b: centrally aligned data cube

5.1.1 Comparison to ANDROMEDA

The detection maps for both TRAP and ANDROMEDA are shown in Fig. 6. The first thing we note is that the remaining structures in the detection map using TRAP are smoother and less correlated on spatial scales of 1 λ∕D. Besides 51 Eri b, we do not detect any other signal with >5 σ significance. Table 2 shows a summary of the obtained photometry for 51 Eri b using TRAP and ANDROMEDA.

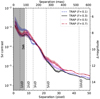

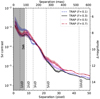

Figure 7 shows the contrast curve for both reductions (left panel) and the factor gained in contrast by using TRAP compared to ANDROMEDA (right panel). The ANDROMEDA results have been obtained using two different values for the protection angle, δ = 0.5 λ∕D and δ = 1.0 λ∕D. Because both algorithms obtain a 2D detection map from the forward model grid of positions, not only can we determine the median detection limit at a given separation, but also the azimuthal distribution of detection contrasts. Thus, in addition to the median, we plot the 68% range as shaded regions reflecting the variability of the detection contrast along the azimuth. This is another important figure of merit to evaluate the performance of the algorithms.
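The azimuthal statistics behind such a contrast curve can be computed directly from a 2D uncertainty map. The Python sketch below is illustrative only: the function name `contrast_curve`, the bin width, and the 5σ threshold passed as `n_sigma` are assumptions made for this example.

```python
import numpy as np

def contrast_curve(sigma_map, center, n_sigma=5.0, bin_width=1.0):
    """Median and 16-84% range of the n-sigma detection limit vs. separation.

    sigma_map : 2D map of normalized contrast uncertainties.
    center    : (y, x) position of the star in the map.
    Returns an array with columns (separation, p16, median, p84).
    """
    yy, xx = np.indices(sigma_map.shape)
    sep = np.hypot(yy - center[0], xx - center[1])
    limit = n_sigma * sigma_map
    edges = np.arange(0.0, sep.max() + bin_width, bin_width)
    rows = []
    for r_in, r_out in zip(edges[:-1], edges[1:]):
        values = limit[(sep >= r_in) & (sep < r_out) & np.isfinite(limit)]
        if values.size == 0:
            continue
        p16, p50, p84 = np.percentile(values, [16, 50, 84])
        rows.append((0.5 * (r_in + r_out), p16, p50, p84))
    return np.array(rows)
```

For two reductions evaluated on the same grid, the gain factor shown in the right panel corresponds to the ratio of the two median columns, for example `curve_ref[:, 2] / curve_new[:, 2]` for hypothetical arrays returned by this function.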

The detection limit obtained with TRAP is consistently lower than that of ANDROMEDA for separations up to about 10 λ∕D, at which point the results of TRAP and ANDROMEDA converge to the same background value. At small separations, in particular, we can clearly see a gain in contrast due to the absence of a protection angle in our reduction. The ANDROMEDA curves cut off at about 1λ∕D and 2λ∕D, respectively, because no reference data that fulfill the exclusion criterion exist (see Fig. A.1, ~ 60° elevation). The relative gain in contrast with respect to the spatial model (ANDROMEDA) is significant, with ramifications for the detectability of close-in planets. It can be as high as a factor of six at 2λ∕D for a protection angle of 1λ∕D and four at a separation of 1λ∕D for a protection angle of 0.5λ∕D.

The diminishing gain in contrast performance with separation and subsequent agreement with ANDROMEDA at about 10λ∕D, is consistent with our expectation that the limiting factor of insufficient FoV rotation for the spatial model ceases to be important at larger separation. We note that in general the azimuthal variation of the contrast at each specific separation bin is consistently and significantly smaller for our model. This reflects the visual impression of a smoother detection map overall. This difference in azimuthal variation is especially noticeable at separations >350 mas, where the influence of the wind-driven halo declines (Fig. 4).

We performed detailed tests with injected point sources to rule out that TRAP biases the retrieved S/N to any significant degree, meaning that any bias is small compared to the derived statistical uncertainties. These tests and their results are described in Appendix C.

Fig. 6 Contour map of normalized detection maps obtained with TRAP (f = 0.3) and ANDROMEDA (δ = 0.5λ∕D) for 51 Eri b. These maps must not be confused with a derotated and stacked image. They represent the forward model result for a given relative planet position on the sky (ΔRA, ΔDec), that is, the conditional flux of a point-source predicted by the forward model given a relative position, corresponding to a trajectory over the detector (all pixels affected during the observation sequence). The dark wings around the detection are not a result of self-subtraction, but purely a result of the fixed forward model position with respect to the real signal.

Fig. 7 Comparison between the contrast obtained with TRAP and two ANDROMEDA reductions for 51 Eri using the same input data for the K1 band. Here, TRAP was run with 30% of available principal components, whereas the two ANDROMEDA reductions correspond to a protection angle of δ = 0.5 λ∕D and δ = 1.0 λ∕D. Separations below the inner-working angle of the coronagraph are shaded and should only be interpreted relative to each other, not in terms of absolute contrast, because the impact of coronagraphic signal transmission is not included in the forward model of either pipeline. Left: shaded areas around the lines correspond to the 16–84% percentile intervals of contrast values at a given separation. Right: factor in contrast gained using TRAP.

Fig. 8 Contrast curves obtained with TRAP for 51 Eri when different fractions of the maximum number of principal components are used. Figure description is analogous to that of Fig. 7.

5.1.2 Changing the number of principal components

To confirm the reliability of our results, we performed the same data reduction with different complexities of the systematics model. We test the impact of the fraction, f, of the maximum number of components, Nmax = Nframes (see Eq. (13)). Figure 8 shows the contrast curve for the same data as used in Fig. 7, with f = 0.1, 0.3, 0.5, 0.7. Firstly, we note the absence of a trend towards “better” detection limits with an increasing number of principal components, that is, with more complex systematics models. In fact, the contrast gets worse for large fractions. While a simultaneous fit of the planet signal and systematics models counteracts overfitting, when the complexity of the systematics model is increased beyond a certain point, the planet model component becomes less constraining, resulting in a larger scatter of the planet contrast prediction. Secondly, the value of f = 0.3 provides good results irrespective of separation, meaning that we do not have to make a significant distinction in the complexity of our temporal model depending on separation. Models that are not sufficiently complex (f = 0.1) result in a slightly worse performance at close separation, which could be related to the presence of the strong wind-driven halo and effects from the (mis)alignment of the coronagraph with the star in addition to the quasi-static speckle pattern.

In spatial models, we may have to choose a different model complexity based on the separation to try and compensate for the self-subtraction effects (resulting from the use of smaller protection angles) by using a less complex model, but also because we have a variable number of spatial modes to reconstruct, depending on the separation and reduction area. However, for our temporal approach the existence of a model complexity that fits well globally is in agreement with our expectations. The number of frames available for training does not depend on separation because we do not have a temporal exclusion criterion. Additionally, a speckle lifetime analysis performed by Milli et al. (2016) for SPHERE did not show a strong separation dependence for the linearly decreasing speckle correlations on timescales of tens of minutes. The fast-evolving and exponentially decaying correlations (τ ~ 3.5 s) that are likely to be caused by turbulence internal to the instrument did show a slight separation dependence, but our integration times are too long and the effects too small for them to be visible.

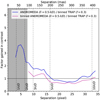

5.1.3 Changing the temporal sampling

For a temporal systematics model, it is highly probable that the performance of the algorithm scales with temporal sampling. In Fig. 9, we repeat our reduction with the same dataset binned down by a factor of four (from 16 s to 64 s exposures). We again plot the contrast of TRAP reductions with varying principal component fractions, f, and ANDROMEDA with the standard setting of δ = 0.5 λ∕D (left panel) for the same binned data. We also plot the factor gained in contrast compared to ANDROMEDA (right panel). We can still see an improvement at small separations, but the advantage of using a temporal model drops off faster, and it may even perform worse at large separations. From ~5 λ∕D outward, we do not see any improvement, and the improvement at small separations is smaller. This is consistent with our expectation that temporal models are able to capture more systematic variations at a finer time sampling. If the time sampling is poor, the causal relationships in the systematics get averaged out and become more difficult to model.

Figure 10 shows the advantage in contrast when using the unbinned (16 s exposure) compared to the binned (64 s exposure) data. We see that temporally binning the data has an adverse effect on the contrast over almost the whole range of separations. Over separations between 0.5 and 10 λ∕D, we see an average 40% improvement in contrast by using the faster temporal sampling. We note that both of the sampling rates shown here are too coarse to model the short-lived speckle regime, and we expect further improvement by reducing the exposure time by another factor of four or more (≤ 4 s), as shown in the next section.
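The temporal binning used for this comparison can be sketched as a simple average over consecutive frames. The helper `bin_frames` below is a hypothetical illustration, assuming a data cube of shape (n_frames, ny, nx) and that leftover frames at the end of the sequence are dropped; it is not taken from the TRAP code.

```python
import numpy as np

def bin_frames(cube, factor):
    """Average every `factor` consecutive frames of a (n_frames, ny, nx) cube."""
    n_binned = cube.shape[0] // factor
    # Drop frames at the end that do not fill a complete bin
    trimmed = cube[: n_binned * factor]
    # Group frames into (n_binned, factor, ny, nx) and average within each group
    return trimmed.reshape(n_binned, factor, *cube.shape[1:]).mean(axis=1)
```

For the 51 Eri sequence, `bin_frames(cube, 4)` would turn 256 frames of 16 s into 64 frames with an effective exposure of 64 s. The parallactic angles would need to be averaged in the same way so that the planet model stays consistent with the binned frames.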

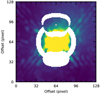

5.2 β Pic: continuous satellite spot data with short integrations

A comparison between the detection maps obtained with TRAP and ANDROMEDA is shown in Fig. 11. For both reductions, we used the same pre-reduced and aligned input data cubes and the reduction parameters were the same as for the above 51 Eri data (f = 0.3 for TRAP and δ = 0.5 λ∕D for ANDROMEDA). We focus our discussion on one of the two channels (H2). The color scaling differs to account for the difference in the S/N of the detection. With an S/N of 40, the TRAP detection is about four times higher than in ANDROMEDA. The S/N in H3 (not shown here) is even slightly higher because the contrast to the host star is more favorable at these wavelengths. Table 3 shows a summary of the photometry results obtained for all reductions of β Pic b discussed in this section.

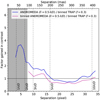

Figure 12 shows the obtained contrast analogous to Fig. 7. Qualitatively, we see the same effects as for 51 Eri: an increasingly significant contrast improvement at small separations from using a temporal model. We see an even more pronounced reduction in azimuthal variation in contrast, that is, the “width” of the contrast curve. For this data, we additionally see a baseline increase in performance between 50 and 200% at larger separations (> 3 λ∕D) that we attribute to the better temporal sampling. To confirm this hypothesis, we have binned down the data by a factor of 16 to obtain one-minute exposures. The detection map obtained with TRAP is shown in Fig. 13 and the contrast compared to the unbinned ANDROMEDA reduction is shown in Fig. 14. The planet signal is detected with an S/N of about 13, only slightly better than the S/N obtained with ANDROMEDA on the unbinned data. The obtained detection contrast curve is also comparable to ANDROMEDA at this separation. We therefore attribute the S/N improvement by a factor of four to the fact that our algorithm is capable of taking into account the bulk of short-timescale variations. We note that at the separation of β Pic b for the epoch of the observation (~6 λ∕D), the exclusion time for spatial models is on the order of minutes even for very small protection angles (δ = 0.3 λ∕D) and above ten minutes for the standard setting of δ = 0.5 λ∕D (see Fig. A.1), which is much longer than the exposure time.

|

Fig. 9 Same as Fig. 7, but every four frames of the 51 Eri b coronagraphic data are temporally binned to achieve a DIT of 64 s. Figure description is analogous to that of Fig. 7. |

|

Fig. 10 Effect of using faster temporal sampling with a DIT of 16 s (unbinned) compared to slower sampling (longer averaging) with a DIT of 64 s (binned) on the contrast obtained with TRAP. Figure description is analogous to that of Fig. 7. |

Table 3 Photometry and S/N for β Pic b.

|

Fig. 11 Contour map of normalized detection maps obtained with TRAP (f = 0.3) and ANDROMEDA (δ = 0.5 λ∕D) for β Pic. These maps must not be confused with a derotated and stacked image. They represent the forward model result for a given relative planet position on the sky (ΔRA, ΔDec), i.e., the conditional flux of a point source predicted by the forward model given a relative position, corresponding to a trajectory over the detector (all pixels affected during the observation sequence). |

|

Fig. 12 Comparison between the contrast obtained with TRAP and ANDROMEDA reductions for β Pic using the same input data for the H2 band. TRAP has been run with 10, 30, and 50% of available principal components, whereas the ANDROMEDA reduction corresponds to a protection angle of δ = 0.5 λ∕D. Separations below the inner-working angle of the coronagraph are shaded and should only be interpreted relative to each other, not in terms of absolute contrast, because the impact of coronagraphic signal transmission is not included in the forward model of either pipeline. Left: shaded areas around the lines correspond to the 16–84% percentile interval of contrast values at a given separation. Right: contrast gained by using TRAP. |

5.3 Applying the algorithm to unaligned data

As we are using a non-local, temporal systematics model and are not attempting to reconstruct a spatial model for how the speckles “look,” we can forego aligning the data and run the algorithm on minimally pre-reduced (background- and flat-corrected) unaligned data. To demonstrate this, we measure the center based on the satellite spots for each frame of the β Pic data and use this varying center position to construct the light-curve model for the planet, that is, we do not shift the frames; instead, we shift the forward model for our planet. We also apply the anamorphism correction for SPHERE to the relative position of the planet by reducing the relative separation of the model by 0.6% in the y-direction (Maire et al. 2016) for each frame, instead of stretching the images. We also modulate the contrast of the planet model by the satellite spot amplitude variation measured for each frame. The result is shown in Fig. 15, with the aligned data on the left and the unaligned data on the right. We note that the S/N of the detection is virtually the same. There are only slight differences in the residual structures. We do see a blob above the position of β Pic b that edges above 5 σ in the reduction on unaligned data. It is difficult to evaluate the veracity of these structures due to the presence of the disk structure in the β Pic system.
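The idea of shifting the forward model instead of the frames can be sketched as follows. This is an illustrative example only: the function `planet_track`, its arguments, and in particular the angle convention (detector angle equal to sky position angle minus parallactic angle, measured from +y towards −x, with no additional instrument offsets) are assumptions and are not taken from the TRAP code.

```python
import numpy as np

def planet_track(centers_xy, parallactic_deg, separation_pix,
                 position_angle_deg, spot_amplitudes, anamorphism_y=0.006):
    """Per-frame planet position and relative brightness on unaligned frames.

    centers_xy      : (n_frames, 2) star center measured from satellite spots
    parallactic_deg : (n_frames,) parallactic angle of each frame
    spot_amplitudes : (n_frames,) satellite spot amplitude of each frame
    """
    centers_xy = np.asarray(centers_xy, dtype=float)
    # Assumed convention: field rotation moves the planet by the parallactic angle
    theta = np.deg2rad(position_angle_deg - np.asarray(parallactic_deg, dtype=float))
    dx = -separation_pix * np.sin(theta)
    dy = separation_pix * np.cos(theta)
    # Anamorphism: shrink the model offset by 0.6% in y instead of stretching images
    dy = dy * (1.0 - anamorphism_y)
    # Shift the model to the measured center of each frame; frames stay untouched
    positions = centers_xy + np.column_stack([dx, dy])
    # Modulate the planet brightness by the relative satellite spot amplitude
    amplitudes = np.asarray(spot_amplitudes, dtype=float)
    modulation = amplitudes / amplitudes.mean()
    return positions, modulation
```

The returned positions would then be used to place the PSF model in each frame when building the planet light curves, and the modulation factors would scale the model flux frame by frame.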

In Fig. 16, we show the contrast curves for: (1) aligned data without planet brightness modulation; (2) aligned data with planet brightness modulation; and (3) unaligned data with planet brightness modulation. The step of taking into account the brightness modulation derived from the satellite spots does not have a noticeable impact on the contrast limits. It does have a minimal impact on the derived contrast of β Pic b as it reduces the flux calibration bias incurred by assuming an average or median contrast for the planet flux in the planet model, instead of a more realistic distribution. In the case of this observation, the scatter of brightness variation is roughly Gaussian with a variability of ~6% centered on the mean of the satellite spot brightness, without a large systematic trend. Taking this variation into account becomes more important as the conditions become more unstable and when an overall trend is present, for example, a trend that results from clouds reducing atmospheric transmission during part of the observation.

A bigger difference can be seen in the reduction of the unaligned data. The contrast appears to be worse in the innermost region covered by the coronagraphic mask, but slightly better outside of 3λ∕D. The S/N of β Pic b, again, is virtually the same as in the aligned case, but astrometry and photometry are slightly altered.

|

Fig. 13 Contour map of normalized detection maps obtained with TRAP (f = 0.3) on binned data of β Pic (16× binning, 64 s exposures). Figure description is analogous to that of Fig. 7. |

|

Fig. 14 Contrast ratio between the TRAP (f = 0.3) reduction on temporally binned data (16× binning, 64 s exposures) and the ANDROMEDA reduction of the same binned data, as well as unbinned data, of β Pic. Figure description is analogous to that of Fig. 7. |

5.4 Computational performance

The computational time needed to reduce the 51 Eri dataset (256 frames) at one wavelength up to a separation of ~45 pixels (~550 mas) for standard parameters (f = 0.3, PSF stamp size 21 × 21 pixels), while including the variance on the data, is about 120 min on a single core of a laptop (Intel Core i7 vPro, 8th Gen; 16 GB memory). The computational time can be roughly halved by using a PSF stamp of half the size for determining the reduction area (excluding the first Airy ring). This only has a minor impact on the overall performance of the algorithm. Reducing f similarly reduces the computational time, which is important for large data sets with thousands of frames. The algorithm is parallelized on the level of fitting the model contrast for a given position, such that the grid of positions to explore is divided among the available cores. In the current version of the code, using four cores reduces the time needed to about 40 min, that is, by a factor of three rather than four, due to inefficiencies in memory sharing. We expect a nearly linear relation with the number of cores after improvements to the code’s parallelization architecture.
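As a small worked example of these numbers, the sketch below divides a grid of trial positions among cores and computes the parallel efficiency implied by the quoted run times. The helper names `split_grid` and `parallel_efficiency` are hypothetical, and the even split via `numpy.array_split` is an assumption about how the work could be divided, not a description of the actual code.

```python
import numpy as np

def split_grid(positions, n_cores):
    """Divide the grid of trial planet positions into one chunk per core."""
    return np.array_split(positions, n_cores)

def parallel_efficiency(t_single, t_parallel, n_cores):
    """Return the speedup and the fraction of ideal linear scaling achieved."""
    speedup = t_single / t_parallel
    return speedup, speedup / n_cores

# Run times quoted in the text: 120 min on one core, about 40 min on four cores
speedup, efficiency = parallel_efficiency(120.0, 40.0, 4)
print(f"speedup {speedup:.1f}x, efficiency {efficiency:.0%}")
```

With the quoted times, the speedup is a factor of three on four cores, that is, 75% of ideal linear scaling.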

5.4.1 Scaling with number of frames

It is noteworthy that our algorithm’s computational speed scales better with the number of frames in the observation sequence than traditional spatial approaches that include a protection angle. The absence of a temporal exclusion criterion means that the principal component decomposition has to be performed only once for one assumed companion position, instead of having a separate training set for each frame in the sequence. The only increase in computational time stems from the need to decompose a larger matrix once per model position and to subsequently solve a larger system of linear equations for each pixel. Having tested the computational time for different temporal binning factors, we note that it scales with the number of frames as a power law with an index between one (linear) and two (quadratic), that is, doubling the number of frames more than doubles the computational time.
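A power-law index of this kind can be estimated from run times measured at different binning factors with a straight-line fit in log-log space. The sketch below is a generic illustration; the function name `power_law_index` is hypothetical, and any timings passed to it would have to come from actual measurements.

```python
import numpy as np

def power_law_index(n_frames, runtimes):
    """Slope of log(runtime) versus log(n_frames), i.e. the power-law index."""
    slope, _ = np.polyfit(np.log(n_frames), np.log(runtimes), 1)
    return slope
```

For an index p, doubling the number of frames multiplies the run time by 2^p, which lies between 2 and 4 for any index between one and two.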

|

Fig. 15 Contour map of normalized detection maps obtained with TRAP (f = 0.3) on aligned and unaligned data for β Pic. These maps must not be confused with a derotated and stacked image. They represent the forward model result for a given relative planet position on the sky (ΔRA, ΔDec), i.e., the conditional flux of a point source predicted by the forward model given a relative position, corresponding to a trajectory over the detector (all pixels affected during the observation sequence). |

|

Fig. 16 Left: comparison between the contrast for β Pic obtained with TRAP on: (1) aligned data not taking into account the brightness modulation; (2) same but taking into account the brightness modulation; and (3) unaligned data with the brightness modulation. TRAP was run with 30% of available principal components. The shaded areas around the lines correspond to the 16–84% percentile interval of contrast values at a given separation. Right: contrast gain compared to aligned data without including amplitude variations (baseline reduction). Figure description is analogous to that of Fig. 7. |

5.4.2 Scaling with the outer-working angle