| Issue |

A&A

Volume 693, January 2025

|

|

|---|---|---|

| Article Number | A256 | |

| Number of page(s) | 27 | |

| Section | Numerical methods and codes | |

| DOI | https://doi.org/10.1051/0004-6361/202451073 | |

| Published online | 23 January 2025 | |

Stellar parameter prediction and spectral simulation using machine learning

A systematic comparison of methods with HARPS observational data★

1

Department of Cybernetics, Czech Technical University in Prague,

Czech Republic

2

European Southern Observatory,

Karl-Schwarzschild-Str. 2,

85748

Garching,

Germany

★★ Corresponding author; cvrcevo1@fel.cvut.cz

Received:

12

June

2024

Accepted:

4

November

2024

Aims. We applied machine learning to the entire data history of ESO’s High Accuracy Radial Velocity Planet Searcher (HARPS) instrument. Our primary goal was to recover the physical properties of the observed objects, with a secondary emphasis on simulating spectra. We systematically investigated the impact of various factors on the accuracy and fidelity of the results, including the use of simulated data, the effect of varying amounts of real training data, network architectures, and learning paradigms.

Methods. Our approach integrates supervised and unsupervised learning techniques within autoencoder frameworks. Our methodology leverages an existing simulation model that utilizes a library of existing stellar spectra in which the emerging flux is computed from first principles rooted in physics and a HARPS instrument model to generate simulated spectra comparable to observational data. We trained standard and variational autoencoders on HARPS data to predict spectral parameters and generate spectra. Convolutional and residual architectures were compared, and we decomposed autoencoders in order to assess component impacts.

Results. Our models excel at predicting spectral parameters and compressing real spectra, and they achieved a mean prediction error of ~50 K for effective temperatures, making them relevant for most astrophysical applications. Furthermore, the models predict metallicity ([M/H]) and surface gravity (log g) with an accuracy of ~0.03 dex and ~0.04 dex, respectively, underscoring their broad applicability in astrophysical research. Moreover, the models can generate new spectra that closely mimic actual observations, enriching traditional simulation techniques. Our variational autoencoder-based models achieve short processing times: 779.6 ms on a CPU and 3.97 ms on a GPU. These results demonstrate the benefits of integrating high-quality data with advanced model architectures, as it significantly enhances the scope and accuracy of spectroscopic analysis. With an accuracy comparable to the best classical analysis method but requiring a fraction of the computation time, our methods are particularly suitable for high-throughput observations such as massive spectroscopic surveys and large archival studies.

Key words: methods: data analysis / methods: statistical / techniques: spectroscopic / stars: fundamental parameters / stars: statistics

Based on data obtained from the ESO Science Archive Facility with DOI(s): https://doi.eso.org/10.18727/archive/33

© The Authors 2025

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1 Introduction

Deriving reliable source parameters from very large sets of spectra has been rapidly gaining importance as the amount of such data massively increases, for example, through dedicated observational campaigns and/or data piling up in public archives. Perhaps the most notable case is the analysis of spectra from the ESA Gaia mission, as it has provided the largest homogeneously observed stellar spectral sample to date (470 million stars with spectra in Data Release 3; Recio-Blanco et al. 2023).

Manual analyses of such large datasets are “de facto” impossible, and automatic techniques need to take their place. As with any data analysis, it is imperative that the associated uncertainties, random and systematic, as well as the limitations of the methodologies are thoroughly understood and quantified so that the results can be reliably used, including by researchers who have not participated in their generation. Also, the methods need to be computationally efficient in order to cope with the correspondingly large data volumes in terms of the number of individual spectra and their sheer size. This, of course, is in addition to the need to deliver precision and accuracy that are competitive with the best results available in the literature.

In this research, we use machine learning (ML) to interpret spectral data, focusing on key physical and observational parameters as our primary interest. These spectral parameters include temperature, surface gravity, radial velocity, metallicity, airmass, and barycentric Earth radial velocity. Within our ML framework, these spectral parameters are treated as “labels,” and they are the outcomes our model is designed to predict.

We chose the public catalog of observations from the HARPS instrument at the ESO La Silla Observatory for our study. This dataset is accessible through the ESO Science Archive1 and is continually updated as the instrument remains in active operation. As per the nature of the instrument, the targets are stars in the solar neighborhood mostly originally observed with the intention of searching for planets around them. Our study does not focus on this particular aspect but instead on the determination of the physical parameters of the stars. The sample is particularly well suited for this since given the relatively small distance from Earth to the targets, they have been well studied in the literature, and thus there is an extensive set of physical parameters that can be used as labels to train the networks with. Also, the spectra themselves are extremely rich in information. Due to the high spectral resolution and broad wavelength coverage of HARPS, a normal stellar spectrum displays hundreds of features (absorption lines).

Machine learning techniques have experienced a rise in popularity in the past several years, being promising tools for analyzing astronomical spectra (as well as other types of astronomical data). Once trained, the methods can be applied to large datasets, usually in a computationally efficient way. Many trained models exist, but their inner workings are not always clear, so they frequently resemble black boxes. Moreover, distinct techniques are frequently utilized for specific datasets, which complicates the disentanglement of their individual roles and impacts.

Typically, ML algorithms offer a large set of customizable options that must be tuned to get optimal results. This can be a confusing and daunting task. Here, we have pursued a systematic approach by comparing different ML algorithms and setups and applying them to the same dataset. Our goal is to find a set of principles regarding how to navigate through these options and propose a practical methodology that can be applied to other similar investigations. To achieve this goal, we aim to provide objective and reproducible results, tracing the effects of the input assumptions and setup choices on the output by exploring a representative set of techniques and ML parameters. Crucially, these techniques and parameters include the number of labels available for training. Since labels may not always be copiously accessible, they will be, in many cases, the limiting factor to the attainable accuracy.

Our primary objective is to predict stellar parameters, a task we call “label prediction.” Our secondary objective is to generate realistic synthetic spectra, a task we call “ML simulation,” to clearly distinguish from the traditional physics-based simulations based on solving the transfer equations in the stellar atmospheres. We are interested in solving these tasks jointly with models that can harness multiple sources of information: the data itself, in order to circumvent the challenging task of obtaining enough reliable labels for supervised learning; catalog data, to form a semantically meaningful latent space directly; and synthetic data with uniform distributions of spectral parameters, which can serve as an alternative strategy to deal with a lack of labels.

We work under the assumption that label prediction and ML simulation are related tasks. Therefore, a joint model capable of performing both tasks simultaneously could outperform models that specialize in these tasks separately. Lastly, we hypothesize that the model can benefit from the additional information provided by the synthetic data, which can be used to regularize the model and improve its generalization capabilities.

The paper is organized as follows. Section 2 presents the problem and explains in detail the methods used. Section 3 covers the data, its augmentation, our learning approach, and the metrics employed. Section 4 presents the experimental results, while Sect. 5 offers a discussion of these results. Finally, Sect. 6 summarizes the conclusions of the paper and explores possible future research directions.

2 Machine learning methods

This section provides a concise overview of the ML models and methods we employ to achieve our objectives, as well as alternative, state-of-the-art models. We begin by defining fundamental ML terminology and mathematical notation relevant to our experiments. Next, we clarify our objectives by defining the inference tasks for label prediction and ML simulation. Subsequently, we formalize the learning problem associated with these inference tasks. Finally, we introduce specific ML models that we use to address the inference tasks.

2.1 Machine learning preliminaries

In this section we explain the relevant concepts and terminology that are essential to understand the following text. We also investigate the application of ML techniques for label prediction and ML simulations. We specifically examine the use of interpretable unsupervised models and how to combine them with supervision to enhance performance.

We start with classic “supervised learning” (Murphy 2022, p. 1) as a basic framework to extract labels from spectral data. This involves the application of an ML model learned from data annotated by experts, which can then be used to predict parameters for previously unseen data. Supervised learning has seen wide-ranging applications in astronomy, such as galaxy morphology classification (Cavanagh et al. 2021), star-galaxy separation (Muyskens et al. 2022), and transient detection and classification (Mahabal et al. 2017). These works often employ methods such as random forests and neural networks. An overview of supervised learning in astronomy is presented in (Baron 2019).

The estimation of physical parameters from spectra has been done in the literature using supervised learning methods, employing linear regression (Ness et al. 2015), neural networks (Fabbro et al. 2018; Leung & Bovy 2019), or a combination of classic synthetic models of spectra and simple neural networks (Ting et al. 2019). However, typical supervised approach is inherently limited by the amount and quality of annotated data. Data annotation is often tedious and time-consuming, and it is not always possible to obtain reliable labels (Gray & Kaur 2019).

“Unsupervised learning” methods (Murphy 2022, p. 14) are appealing due to their ability to discover inner structures or patterns in data without relying on labels. These learned representations attempt to capture essential data features and can be beneficial in various tasks, such as clustering or reconstruction. Although representations may capture the underlying patterns of the data, there is no guarantee that they align with our human understanding or are easily interpretable in specific contexts, such as spectral parameters (Gray & Kaur 2019; Sedaghat et al. 2021).

Autoencoders (AEs) are a key technique in unsupervised learning that focus on learning a low-dimensional representation of high-dimensional input data (Murphy 2022, p. 675). AEs consist of two parts: an encoder and a decoder. The encoding process involves passing the data through a “bottleneck” a middle part of the network where the data are transformed into a “latent representation.”

To ensure that the latent representation is useful and the network does not learn merely an identity mapping, some form of regularization must be applied to the bottleneck. In this paper, we achieve compression of the input by choosing a bottleneck that is much smaller than the input (bottleneck autoencoder; Murphy 2022, p. 667).

The latent representation is crucial for data reconstruction and analysis, as it encodes essential underlying features for these tasks. The space composed of all possible latent representations is called the “latent space.” The decoding process attempts to reconstruct the input from its latent representation, which is the learning objective of the AEs. AEs determine the optimal latent space without any supervision, and the resulting latent space is optimal vis-à-vis the reconstruction of the input. This process does not require any labeled data at any step. AEs are used for denoising (Murphy 2022, p. 677), dimensionality reduction, and data compression (Murphy 2022, p. 653).

However, the latent space of AEs poses several challenges. One key issue, as mentioned, is the lack of a guarantee to extract meaningful variations in the data (Leeb et al. 2022). This is informally known as interpretability since the meaningfulness of latent space is subjective with respect to the target application. Additionally, the deterministic nature of AEs, where each input corresponds to a single point in the latent space, poses risks of overfitting and limits the model’s generalization capabilities to new, unseen data (Kingma & Welling 2014).

The concept of “disentangled representation” is a possible formalization of interpretability. Disentangled representation refers to the ability of a model to autonomously and distinctly represent the fundamental statistical factors of the data (Locatello et al. 2020). In the context of this paper, statistical factors refer to various independent characteristics of celestial objects, such as their luminosity, temperature, chemical composition, or velocity. A model that learns a disentangled representation can represent each of these astronomical factors independently.

For a model to perform well on new data (beyond interpolation), the decoder must correctly interpret the cause-and-effect relationships of individual factors on the resulting spectrum (Montero et al. 2022). Determining causality from purely observational data is generally an unsolved problem that requires strong assumptions (Peters et al. 2017, pp. 44–62). The process of learning causality can be simplified when we have access to physical simulations, which would allow us to model and test causal relationships more effectively (Peters et al. 2017, pp. 118–120).

Variational autoencoders (VAEs; Kingma & Welling 2014) represent a promising category of models capable of achieving a disentangled latent space. This ability stems from the way VAEs conceptualize and manage the latent space. Unlike traditional AEs, which generate a single latent representation for each input (such as a spectrum), VAEs produce a distribution over the latent space for the same input. We provide more details of the two basic VAEs variants in Appendix C.

Variational autoencoders have some associated training challenges. They require careful tuning of the hyperparameters to ensure the latent space is properly utilized and the model does not collapse to a trivial solution (posterior collapse; Murphy 2023, pp. 796–797). Other well-known issues are blurry reconstructions that are caused by over-regularized latent space (Murphy 2023, pp. 787–788).

Independent studies have employed VAEs for HARPS (Mayor et al. 2003) and SDSS (Abazajian et al. 2009) spectra to self-learn an appropriate representation, thereby attempting to eliminate the need for annotation entirely (Portillo et al. 2020; Sedaghat et al. 2021). Although it was shown to be empirically possible to obtain some spectral parameters (Sedaghat et al. 2021), it is not clear how to obtain all of them or control which ones are obtained.

The large-scale study conducted by Locatello et al. (2020) proves that it is not feasible to achieve unsupervised disentanglement learning without making implicit assumptions that are influenced by ML models, data, and the training approach. These assumptions, commonly referred to as “inductive bias” in the field of ML (Gordon & Desjardins 1995), are challenging to manage due to their implicit nature. Therefore, to successfully acquire disentangled representations in practical situations, it is crucial to obtain access to high quality data, establish suitable distributions that accurately model the underlying structure and complexity of the data, and use labels at least for the model validation (Dittadi et al. 2021).

In light of these challenges with unsupervised learning, “semi-supervised learning,” which combines the advantages of both supervised and unsupervised learning, is a promising approach. It is beneficial when the amount of labeled data are limited or labeling is costly, but the unlabeled data are abundant (Murphy 2022, p. 634). Semi-supervision utilizes all data to overcome the lack of labels. However, semi-supervised learning in astronomy can be challenging due to unbalanced datasets (where classes are unevenly represented, often with a significant imbalance in the number of instances per class), covariate shifts, and a lack of reliably labeled data (Slijepcevic et al. 2022).

Semi-supervised2 AEs incorporate label prediction on top of reconstruction to improve their performance (Le et al. 2018). The same principle can be applied to VAEs (Kingma et al. 2014) to obtain semi-supervised VAEs. There are many variants; for example, Le et al. (2018) uses a pair of decoders – one for input reconstruction and another for label prediction. Alternatively, Kingma et al. (2014) (model M2) employs two encoders: one to provide an unsupervised bottleneck and the other to handle label prediction. The semi-supervised approach allows us to jointly solve the label prediction and ML simulation tasks.

However, not all architectural choices lead to the same outcomes. For instance, the approach of Le et al. (2018) does not use predicted labels for reconstruction, meaning that the reconstruction error is not back-propagated to influence the predictions. In contrast, architectures that incorporate predicted labels during the reconstruction process allow the reconstruction error to inform and refine the label predictions through backpropagation, thus potentially improving prediction accuracy.

Concentrating only on label prediction, we considered simulation-based inference (SBI; Cranmer et al. 2020). SBI utilizes existing simulations to infer parameters without relying on annotated data. SBI methods either examine a grid of parameters (Cranmer et al. 2020) or iteratively improve initial estimates of parameters, such as in Markov chain Monte Carlo (MCMC) methods (Miller et al. 2020). Modern approaches to SBI (Cranmer et al. 2020) employ machine learning techniques, like normalizing flows (NFs; Rezende & Mohamed 2015), for precise density estimation and to increase the speed. However, training NFs or conditional NFs, is challenging due to the high dimensions of HARPS spectra. Another issue is the significant differences between synthetic and observed data.

We prefer VAEs because they offer an efficient and flexible approach to modeling high-dimensional data, such as HARPS spectra, with a simpler and faster alternative to traditional SBI. By leveraging semi-supervised VAEs, we can directly obtain posterior distributions when the variational distribution is flexible enough to match the target distribution, which is ideal for cases with high-dimensional observations, low-dimensional parameter spaces, and unimodal posteriors. Additionally, VAEs have the capability to discover new parameters and integrate both known and unknown factors into simulations, enhancing their accuracy and adaptability. This allows for quick sampling of candidate solutions and significantly reduces computational demands. Moreover, VAEs can serve as a preprocessing step to reduce dimensionality, optimizing computational efficiency for downstream tasks, including those that use NFs for modeling more complex and multi-modal distributions.

We considered other generative models for ML simulation, such as diffusion models (Sohl-Dickstein et al. 2015), NFs (Rezende & Mohamed 2015), and generative adversarial networks (GANs; Goodfellow et al. 2014). These powerful ML models project a vector of Gaussians into the simulations. If desired, these models can condition the output on spectral parameters to provide greater control over the simulations. Their flexibility allows them to adapt to different types of data and applications, providing a robust framework for generating realistic simulations across various domains. However, these models have several limitations that make them less suitable for our specific ML simulation task.

These models intentionally function as black boxes, where the connection between the latent space and the generated simulation is hidden. As a result, we obtain simulations with variations without understanding their origin. This is acceptable in domains where explicit parametrization of the target domain is not possible or practical, such as in natural scenes for computer vision. However, in spectroscopy applications, it is desirable to control ML simulations with known or discovered parameters. Introducing variations into simulations merely to create an illusion of realism, without understanding the underlying processes, is meaningless in our context. Additionally, training these models demands a large amount of computational resources to achieve good results.

A practical example of a GAN application is CYCLE-STARNET (O’Briain et al. 2021), which combines GANs and autoencoders to transform synthetic spectra into realistic ones and vice versa. The method introduces two latent spaces: one shared between synthetic and real data and one specific to real observations. By learning mappings between these spaces, CYCLE-STARNET can enhance the realism of synthetic spectra and facilitate the transformation of observed spectra back into the synthetic domain. The learning process is unsupervised, and the method does not provide label prediction.

In this study, we chose supervised VAEs for the ML simulation task because they facilitate explicit representation of stellar parameters. VAEs can create a structured latent space that combines both known and unknown factors. This allows us to obtain both the simulated spectrum and the parameters that were used. Unlike other generative models, AEs and VAEs directly learn how to project the structured latent space to observations, making this approach deterministic and aligning with practical scientific requirements where we aim to minimize random variances in the output. Furthermore, VAEs are significantly faster to train and can handle larger data dimensions than diffusion models or NFs. Finally, as demonstrated in Rombach et al. (2022), compressing data with AEs is necessary to make diffusion models with high-dimensional data feasible. This demonstrates that scalable compression techniques, such as AEs or VAEs, are still highly relevant for these models.

We enrich the training data with simulated data to create a balanced dataset with reliable labels. We mix these synthetic data with real data to minimize the impact of covariate shifts. We also explore splitting the latent space in a supervised (label-informed) and an unsupervised part, so as to ensure that the model learns the correct labels where possible, while utilizing disentangled methods for its unsupervised parts.

We identified a gap in the literature concerning the semantic correctness of ML simulations, specifically whether individual labels in these simulations behave according to theoretical expectations (causality). In this study, we suggest modifications to existing methods and novel metrics to explore and solve this problem.

2.2 Semi-supervised latent space

Semi-supervised latent space ℬ is a tool for representing input spectra that is influenced by both labels and the spectra themselves. Within the latent representation b ∈ ℬ, “factors” specifically denote the set of independent core characteristics or attributes that have been abstracted from higher-dimensional input spectra s. We further divide the latent representation into two components: “label-informed factors” ![$\[\hat{\mathbf{l}}^{\mathrm{LIF}}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq1.png) and “unknown factors” u, that is,

and “unknown factors” u, that is, ![$\[\mathbf{b}=\left(\hat{\mathbf{l}}^{\mathrm{LIF}}, \mathbf{u}\right)\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq2.png) . The label-informed factors

. The label-informed factors ![$\[\hat{\mathbf{l}}^{\mathrm{LIF}}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq3.png) are supervised by known spectra parameters l from a catalog, where l undergoes a normalization process to ensure a consistent scale and distribution across the dataset. This ensures that both the label-informed factors and the original labels are consistently scaled, enhancing the ability of our machine learning models to learn and generalize from the data effectively. In this study, the symbol ˆ denotes prediction.

are supervised by known spectra parameters l from a catalog, where l undergoes a normalization process to ensure a consistent scale and distribution across the dataset. This ensures that both the label-informed factors and the original labels are consistently scaled, enhancing the ability of our machine learning models to learn and generalize from the data effectively. In this study, the symbol ˆ denotes prediction.

The normalization procedure adjusts label to follow a standard normal distribution. Specifically, the normalization and scaling of the kth label are defined as

![$\[\underline{l}^{k}=\frac{l^{k}-\mu^{k}}{\sigma^{k}} \quad \text{and} \quad l_{\text {scale }}^{k}=\frac{l^{k}}{\sigma^{k}},\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq4.png) (1)

(1)

where μk and σk are the mean and standard deviation of the kth label across the dataset, respectively. The scaling operation enables the addition of unnormalized labels with normalized labels by first scaling the unnormalized labels. In this study, ![$\[\mathbf{\underline{l}}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq5.png) denotes normalized labels and lscale denotes scaled labels.

denotes normalized labels and lscale denotes scaled labels.

The unsupervised part, unknown factors u, represents undetermined spectral parameters and other statistically relevant features. Working in tandem with the supervised label-informed factors, these unknown factors help create a more comprehensive and informative representation of the latent space. This holistic approach allows us to uncover hidden patterns and relationships within the spectral data, leading to more accurate ML simulation and label prediction as shown in Sect. 4.

We use the term “label-aware” when talking about models that utilize labels during the training process, regardless of whether the approach is fully supervised or semi-supervised. Later, we assess the impact of the labels by comparing label-aware and unsupervised models.

2.3 Inference tasks

In ML, an inference task refers to a specific problem that we aim to solve. This includes stating the assumed deployment condition, in other words, what data the model will receive and what output it should produce. Specifying and formalizing the inference tasks is crucial for designing the ML models and selecting appropriate loss functions for learning the models.

We assume there is a dataset in the form ![$\[\left\{\mathbf{s}_{i}, \mathbf{l}_{i}, \mathbf{u}_{i}\right\}_{i=1}^{D}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq6.png) . Here, D is the number of samples in the dataset,

. Here, D is the number of samples in the dataset, ![$\[\mathbf{s}_{i} \in \mathbb{R}^{N}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq7.png) represents an observed spectrum, N is the number of pixels in a spectrum,

represents an observed spectrum, N is the number of pixels in a spectrum, ![$\[\mathbf{l}_{i} \in \mathbb{R}^{K}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq8.png) denotes the associated labels, where each label corresponds to a known spectral parameter such as temperature or airmass, K denotes the number of labels,

denotes the associated labels, where each label corresponds to a known spectral parameter such as temperature or airmass, K denotes the number of labels, ![$\[\mathbf{u}_{i} \in \mathbb{R}^{L}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq9.png) denotes unknown factors, where each factor corresponds to an undetermined spectra parameter, and L is the number of assumed unknown factors. The wavelength vector is constant across all samples, and therefore, the spectrum si is represented just by the flux vector.

denotes unknown factors, where each factor corresponds to an undetermined spectra parameter, and L is the number of assumed unknown factors. The wavelength vector is constant across all samples, and therefore, the spectrum si is represented just by the flux vector.

In this work, the spectra are standardized by dividing each spectrum by its median value, equivalent to the approach in Sedaghat et al. (2021). This process is formally defined as

![$\[\mathbf{s} \leftarrow \frac{\mathbf{s}}{\operatorname{median}(\mathbf{s})},\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq10.png) (2)

(2)

where median(s) computes the median flux value across all pixels in the spectrum. This step is fundamental for our ML models, ensuring that the spectral data are prepared consistently for all samples. In this study, we always use the standardized spectra.

The true generative process M is unknown and complex, involving both known and unknown factors. We formalize this as a generative process M : (l, u) → s, which maps known and undetermined parameters to the observed spectrum. Traditional simulations omit u and only map l to s.

Our prime inference task aims to reverse the generative process M and make label prediction ![$\[\hat{\mathbf{l}}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq11.png) . Our secondary inference task aims to model the generative process M, including the unknown factors. We call our secondary task ML simulation, to better distinguish it from the traditional simulations approach using the applicable physical laws. We seek M such that if they obtain latent representation b = (

. Our secondary inference task aims to model the generative process M, including the unknown factors. We call our secondary task ML simulation, to better distinguish it from the traditional simulations approach using the applicable physical laws. We seek M such that if they obtain latent representation b = (![$\[\underline{\mathbf{l}}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq12.png) , u) for a particular spectrum s, intervening on a factor in b should result in changes to

, u) for a particular spectrum s, intervening on a factor in b should result in changes to ![$\[\hat{\mathbf{s}}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq13.png) according to the semantics of that factor. For example, modifying a factor associated with radial velocity should produce a Doppler shift in the stellar lines and no other effect.

according to the semantics of that factor. For example, modifying a factor associated with radial velocity should produce a Doppler shift in the stellar lines and no other effect.

2.4 Learning problem statement

Once the inference tasks have been defined, we can formalize the learning problem. Learning is the process of obtaining a model that solves the defined inference tasks. This involves selecting appropriate ML models, defining a loss function that penalizes discrepancies between the model outputs and the actual observations, and partitioning the data to facilitate training, validation, and testing of the model. An ML model is completely described by its parameters and hyperparameters. For neural networks, ML hyperparameters – such as architecture, learning rate, batch size, and optimization methods – externally characterize the model and are either not directly related to the loss function or we want to keep them constant during training. ML parameters, which include the weights and biases within the model, determine the neural network’s output and directly influence the loss function.

During training, the ML parameters are fitted to the training data using backpropagation (Murphy 2022, p. 434), which computes the gradient of the loss function with respect to the ML parameters. This gradient is then used for non-linear optimization. Since ML hyperparameters do not contribute to the loss function gradient, we have to experiment with different combinations of ML hyperparameters to optimize the model’s performance. During “model selection,” we train a model for each combination of ML hyperparameters and validate each model by evaluating the loss function (or some other metric) on the validation data. We select the model with the best performance among all other models. Optionally, during testing, we evaluate the selected model on the testing data to obtain an unbiased estimate of the model’s expected performance.

We aim to train ML models that approximate M and M−1 using the dataset ![$\[\left\{\mathbf{s}_{i}, \mathbf{l}_{i}, \mathbf{u}_{i}\right\}_{i=1}^{D}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq14.png) . We have chosen the encoderdecoder architecture Bengio et al. (2013) as the suitable ML model. Encoders map high-dimensional input (spectra) to low-dimensional output (labels), effectively approximating M−1. Decoders, conversely, map low-dimensional input (labels) back to high-dimensional output (spectra), serving as a suitable approximation of M.

. We have chosen the encoderdecoder architecture Bengio et al. (2013) as the suitable ML model. Encoders map high-dimensional input (spectra) to low-dimensional output (labels), effectively approximating M−1. Decoders, conversely, map low-dimensional input (labels) back to high-dimensional output (spectra), serving as a suitable approximation of M.

We train the encoder model either in isolation using a supervised approach, or in conjunction with the decoder using a semi-supervised or unsupervised approach. Semi-supervised learning enables us to leverage labeled and unlabeled data, potentially enhancing performance. Moreover, the secondary inference objective of the ML simulation cannot be optimally achieved through supervised learning alone, since, by definition, we cannot supervise unknown factors u.

All of our models minimize the loss functions that represent the disparity between the model output and the actual observations. Next, we briefly discuss progressively more complex models for the inference tasks and the corresponding learning loss functions.

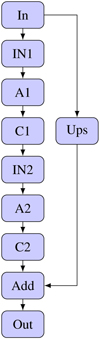

2.5 Machine learning models

Here, we present the ML models that target our inference tasks as described in Sect. 2.3. We begin with encoders and decoders as they are the foundational elements of the subsequent models. Beyond serving as foundational elements, an individual decoder can be used for ML simulation tasks, whereas an individual encoder is useful for label prediction. Building upon these, we develop both semi-supervised and unsupervised AEs. This section concludes with a description of semi-supervised VAEs and their derived methods. All AE-based methods are capable of jointly addressing label prediction and ML simulation tasks. The source code, including model implementations, data preprocessing, and training scripts, can be accessed online3.

2.5.1 Encoders

Encoders are part of AEs and the basic model that can provide label prediction. We employ convolutional neural networks (CNNs) that stack layers of convolutions along with differentiable non-linear activation functions to process an input spectrum. The CNN output is processed by a single fully connected layer to learn the encoding ![$\[q_{\phi}^{c}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq15.png) from spectrum s to latent representation b. The CNN architecture is based on the encoder proposed in Sedaghat et al. (2021), and a detailed description is given in Table B.1. We can achieve label prediction by ignoring u(u = 0).

from spectrum s to latent representation b. The CNN architecture is based on the encoder proposed in Sedaghat et al. (2021), and a detailed description is given in Table B.1. We can achieve label prediction by ignoring u(u = 0).

We defined the loss function for label prediction using encoders as the mean absolute label difference:

![$\[L_{\text {lab }}(\phi)=\mathbb{E}_{(\mathbf{s}, \mathbf{l}) \sim p_{\mathcal{D}}}\left[\frac{1}{K} \sum_{k=1}^{K}\left|\underline{l}^{k}-q_{\phi}^{c, k}(\mathbf{s})\right|\right],\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq16.png) (3)

(3)

where ![$\[\mathbb{E}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq17.png) is expected value, lk is the kth label (ground truth),

is expected value, lk is the kth label (ground truth), ![$\[q_{\phi}^{c, k}(\mathbf{s})\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq18.png) is the predicted value for kth label,

is the predicted value for kth label, ![$\[p_{\mathcal{D}}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq19.png) is the distribution of the spectra s and associated catalog values l, and K is the number of labels for each spectrum. For instance, in the case of HARPS observational spectra, the distribution is empirical, based on the actual data samples we have. We sample from this distribution by randomly selecting a spectrum.

is the distribution of the spectra s and associated catalog values l, and K is the number of labels for each spectrum. For instance, in the case of HARPS observational spectra, the distribution is empirical, based on the actual data samples we have. We sample from this distribution by randomly selecting a spectrum.

The effectiveness of training encoder in isolation depends on the availability of learning data with sufficient variability in all factors. However, in our data set, only 1498 unique spectra are fully annotated (as described in Sect. 3). Most samples have incomplete annotations, and many spectra lack annotations altogether. Furthermore, corrupted, incorrect, or mislabeled data can negatively impact the supervised learning process.

Therefore, we investigate the incorporation of unsupervised methods to improve the accuracy of label prediction. A key ingredient in learning directly from spectra is the ability to reverse the encoding process through the use of decoders.

2.5.2 Decoders

Decoders are part of AEs and the basic model that provides ML simulation. In our decoder architecture pθ, we have used residual network (ResNet; He et al. 2016), as shown in Table B.3, or CNN, as illustrated in Table B.2 In either case, our objective is to learn the ML parameters θ. The loss function for the ML simulation is the mean reconstruction error:

![$\[L_{\mathrm{sim}}(\theta)=\mathbb{E}_{\left(\mathbf{(\mathbf{s, l })} \sim p_{\mathcal{D}}\right.}\left[\frac{1}{N} \sum_{j}^{N}\left|s^{j}-p_{\theta}^{j}(\underline{\mathbf{l}})\right|\right],\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq20.png) (4)

(4)

where sj represents the flux at pixel j of spectrum ![$\[\mathbf{s}, ~p_{\theta}^{j}(\underline{\mathbf{l}})\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq21.png) is the predicted flux at pixel j for labels l and N is the total number of pixels in the spectrum.

is the predicted flux at pixel j for labels l and N is the total number of pixels in the spectrum.

A potential downside of CNN architectures is that each layer processes only the output of its preceding layer. While ResNet mitigates this by incorporating short-range skip connections across two layers, this strategy may still be suboptimal. As data pass through deeper layers, the input labels or bottleneck representations may become diluted, leading to a loss of important information. To address this, we considered alternative architectures, including skip-VAEs (Dieng et al. 2019), DenseNet (Huang et al. 2017), and FiLM layers (Perez et al. 2018), which can allow labels to influence any layer. Nevertheless, in this work, we have focused on CNN and ResNet architectures, deferring exploration of these alternatives to future research.

2.5.3 Autoencoders and downstream learning

Autoencoders are a type of machine learning architecture that enables unsupervised learning directly from data. An AE is constructed by connecting an encoder to a decoder. It does not require labels because it feeds the encoder’s output directly into the decoder to achieve reconstruction. AEs are trained to minimize the reconstruction loss between the input and the reconstructed output.

Reconstruction loss is a measure of fidelity, quantifying how closely the reconstructed output matches the input. It also reflects the efficiency of the bottleneck, ensuring that it retains essential features for reconstruction. The reconstruction loss is again defined as the mean absolute difference across all pixels:

![$\[L_{\mathrm{rec}}(\theta, \phi)=\mathbb{E}_{\mathbf{s} \sim p_{\mathcal{D}}}\left[\frac{1}{N} \sum_{j}^{N}\left|s^{j}-p_{\theta}^{j}\left(q_{\phi}^{c}(\mathbf{s})\right)\right|\right].\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq22.png) (5)

(5)

Here, ![$\[p_{\theta}^{j}(q_{\phi}^{c}(\mathbf{s}))\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq23.png) is the AE’s prediction for the same pixel. Optimizing this loss over (θ, ϕ), the AE is trained to effectively capture the salient features of the spectral data necessary for reconstruction. The resulting latent representation might be practical for new tasks, such as label prediction, since it is much easier to process small latent representation instead of the full spectrum.

is the AE’s prediction for the same pixel. Optimizing this loss over (θ, ϕ), the AE is trained to effectively capture the salient features of the spectral data necessary for reconstruction. The resulting latent representation might be practical for new tasks, such as label prediction, since it is much easier to process small latent representation instead of the full spectrum.

Secondary tasks that utilize compressed representations from an AE are called downstream tasks. The typical deployment scenario occurs when we have abundant high-dimensional unlabeled data, of which only a small subset is labeled, and we aim to predict the labels. The workflow consists of two steps: first, learning an AE using the unlabeled data. This allows us to map high-dimensional data to a lower-dimensional space. Second, we use the low-dimensional representations (such as spectra) from the labeled subset to learn label prediction. There is no guarantee that an AE will learn a latent space useful for the target downstream task. This is influenced by the choice of the AE’s architecture, optimization techniques, and the properties of the data and labels. Therefore, the downstream task, which is usually the main objective, can serve as a criterion for model selection.

Our downstream tasks are label prediction and ML simulation. We chose linear regression for label prediction for two reasons. First, we are interested in AEs that provide a meaningful, disentangled latent space that can be straightforwardly translated into labels. A more complex model might recover labels from an entangled latent space, which has no clear connection to the labels. Second, since our second inference task is ML simulation, we need to map the labels back to the latent representation, which is then mapped to a spectrum. Complex models with multiple layers and non-linearities would be challenging to invert.

We used the following linear regression to map latent representation b to labels l:

![$\[\hat{\mathbf{l}}=\sum_{k=1}^{B} w^{k} \mathbf{b}^{k}+w^{k},\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq24.png) (6)

(6)

where w are weights defining the linear regression, ![$\[\hat{\mathbf{l}}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq25.png) are predicted labels, and B is the size of the bottleneck. The model was trained using ordinary least squares linear regression.

are predicted labels, and B is the size of the bottleneck. The model was trained using ordinary least squares linear regression.

The downstream learning represents a two-step approach that links unsupervised preprocessing and supervised learning for label prediction or ML simulation. Next, we investigate the semi-supervised methodology where we use a single training phase that combines spectra and labels.

2.5.4 Semi-supervised autoencoder

We can trivially add supervision to the unsupervised AE, thus obtaining semi-supervised AE. Similarly to the supervision in Sect. 2.5.1, we use the known labels l to supervise the label-informed factors ![$\[\hat{\mathbf{l}}^{\mathrm{LIF}}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq26.png) . Hence, we have label-informed factors that are influenced by both unsupervised and supervised objectives. In addition, we allow the unknown factors u to learn statistically meaningful information not provided by the known labels l. We achieve this by expanding the loss function in Eq. (5) while preserving the architecture of unsupervised AEs.

. Hence, we have label-informed factors that are influenced by both unsupervised and supervised objectives. In addition, we allow the unknown factors u to learn statistically meaningful information not provided by the known labels l. We achieve this by expanding the loss function in Eq. (5) while preserving the architecture of unsupervised AEs.

The integration of unsupervised and supervised learning objectives is captured by the following loss function, which combines the reconstruction loss Lrec from Eq. (5) for unsupervised learning with the label loss Llab from Eq. (3) from supervised learning:

![$\[L_{\mathrm{AE}}(\theta, \phi)=L_{\mathrm{rec}}(\theta, \phi)+\lambda_{\text {lab}} L_{\text {lab}}(\phi),\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq27.png) (7)

(7)

where λlab is a hyperparameter that allows balancing between reconstruction and label loss.

By design, the supervised portion ![$\[\hat{\mathbf{l}}^{\mathrm{LIF}}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq28.png) of the latent representation b is meaningful and disentangled. However, the unsupervised portion u faces the same problems as latent representation in AEs: a lack of interpretability and entanglement. As a result, unsupervised nodes can become entangled with supervised nodes in the bottleneck. This is especially problematic for ML simulations, as the unsupervised nodes can interfere with the role of the supervised nodes during the simulation.

of the latent representation b is meaningful and disentangled. However, the unsupervised portion u faces the same problems as latent representation in AEs: a lack of interpretability and entanglement. As a result, unsupervised nodes can become entangled with supervised nodes in the bottleneck. This is especially problematic for ML simulations, as the unsupervised nodes can interfere with the role of the supervised nodes during the simulation.

As a solution, we investigated methods to regularize the bottleneck to achieve disentanglement of the unsupervised nodes. By imposing suitable constraints on the bottleneck, the model can maintain the integrity of the supervised nodes while ensuring that the unsupervised nodes capture information not contained in the known labels. Additionally, disentangling the unsupervised nodes allows them to be integrated into the ML simulation because we can easily sample from independent unsupervised nodes.

2.5.5 Semi-supervised variational autoencoder

Variational autoencoders are suitable for learning disentangled latent representations. The VAE is a probabilistic variant of AEs adept at learning disentangled latent representations. The key difference between VAEs and AEs lies in treating the latent representation as a distribution over the latent space, versus a single latent representation. We employ the β-VAE, a modification of the classic VAE. This adaptation introduces a ML hyperparameter ![$\[\lambda_{\mathbb{K L}}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq29.png) that enables a flexible balance between reconstruction quality and the penalization of the latent representation distribution, thus facilitating a more controlled disentanglement of features.

that enables a flexible balance between reconstruction quality and the penalization of the latent representation distribution, thus facilitating a more controlled disentanglement of features.

The loss function of the β-VAE is

![$\[L_{\beta-\mathrm{VAE}(\mathrm{U})}\left(\theta, \phi, \lambda_{\mathbb{K L}}\right)=L_{\mathrm{rec}}(\theta, \phi)-\lambda_{\mathbb{K L}} \mathbb{E}_{\mathbf{s} \sim p_{\mathcal{D}}}\left[D_{\mathbb{K L}}\left(q_{\phi}^{p}(\mathbf{b} \mid \mathbf{s}) \| p_{b}(\mathbf{b})\right)\right],\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq30.png) (8)

(8)

where ![$\[p_{\mathcal{D}}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq31.png) is a data distribution,

is a data distribution, ![$\[q_{\phi}^{p}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq32.png) is a probabilistic encoder that maps spectra s to a distribution over latent space

is a probabilistic encoder that maps spectra s to a distribution over latent space ![$\[\mathbf{b} \sim q_{\phi}^{p}(\cdot \mid \mathbf{s}), p_{b}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq33.png) is the spherical normal distribution that represents the implicitly disentangled prior over the latent space,

is the spherical normal distribution that represents the implicitly disentangled prior over the latent space, ![$\[\lambda_{\mathbb{K L}}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq34.png) is the weight placed on the Kullback-Leibler (KL) term (called β in Higgins et al. 2017), and

is the weight placed on the Kullback-Leibler (KL) term (called β in Higgins et al. 2017), and ![$\[D_{\mathbb{K L}}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq35.png) is the KL divergence described in Eq. (C.2). The equation reflects how the β-VAE’s objective function, derived from the original VAE, balances reconstruction accuracy and the latent space’s regularization. Further insights into the original β-VAE objective and its relation to our implementation can be found in Appendix C.1.

is the KL divergence described in Eq. (C.2). The equation reflects how the β-VAE’s objective function, derived from the original VAE, balances reconstruction accuracy and the latent space’s regularization. Further insights into the original β-VAE objective and its relation to our implementation can be found in Appendix C.1.

To achieve supervision, we added the label loss to the objective in Eq. (8):

![$\[\begin{align*}L_{\beta-\mathrm{VAE}}(\theta, \phi, \lambda_{\mathbb{KL}})= & L_{\beta-\mathrm{VAE}~(\mathrm{U})}(\theta, \phi, \lambda_{\mathbb{KL}})\\& +\lambda_{\mathrm{lab}} \mathbb{E}_{(\mathbf{s}, \mathbf{l}) \sim p_{\mathcal{D}}}\left[\frac{1}{K} \sum_{k=1}^{K}\left|l_{-k}-b_{k}(\mathbf{s})\right|\right],\end{align*}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq36.png) (9)

(9)

where ![$\[\mathbf{b}(\mathbf{s}) \sim q_{\phi}^{p}(\cdot \mid \mathbf{s})\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq37.png) denotes a vector sampled from

denotes a vector sampled from ![$\[q_{\phi}^{p}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq38.png) given the input s, and bk(s) represents the k-th element of that vector. This sampling strategy incorporates the stochastic nature of

given the input s, and bk(s) represents the k-th element of that vector. This sampling strategy incorporates the stochastic nature of ![$\[q_{\phi}^{p}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq39.png) into the supervised learning framework.

into the supervised learning framework.

An inherent challenge associated with the β-VAE framework is posterior collapse, where the latent representation fails to capture meaningful information from the input (Murphy 2023, pp. 796–797). This results in underutilization of nodes, effectively reducing the size of the bottleneck.

To address the problem of posterior collapse in β-VAEs, we adopt a mutual information-based method known as the Informational Maximizing Variational Autoencoder (InfoVAE; Zhao et al. 2019). This approach balances the disentanglement and informativeness of the latent representation. The corresponding loss function for unsupervised InfoVAE (U) is defined as

![$\[\begin{align*}L_{\mathrm{InfoVAE}(\mathrm{U})}(\theta, \phi & \left., \lambda_{\mathrm{MI}}, \lambda_{\mathrm{MMD}}\right)=L_{\mathrm{rec}}(\theta, \phi)\\& -\left(1-\lambda_{\mathrm{MI}}\right) \mathbb{E}_{\mathbf{s} \sim p_{\mathcal{D}}} D_{\mathbb{KL}}\left(q_{\phi}^{p}(\mathbf{b} \mid \mathbf{s}) \| p_{b}(\mathbf{b})\right)\\& -\left(\lambda_{\mathrm{MI}}+\lambda_{\mathrm{MMD}}-1\right) D_{\mathbb{KL}}\left(q_{\phi}^{p}(\mathbf{b}) \| p_{b}(\mathbf{b})\right),\end{align*}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq40.png) (10)

(10)

where a higher λMI means s and b have higher mutual information, and higher λMMD brings ![$\[q_{\phi}^{p}(\mathbf{b})\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq41.png) closer to the priors pb(b).

closer to the priors pb(b).

The term 1 − λMI serves a purpose similar to ![$\[\lambda_{\mathbb{KL}}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq42.png) in the original β-VAE model. The primary distinction between β-VAE (U) and InfoVAE (U) lies in the term

in the original β-VAE model. The primary distinction between β-VAE (U) and InfoVAE (U) lies in the term ![$\[D_{\mathbb{K L}}\left(q_{\phi}^{p}(\mathbf{b}) {\mid} p_{b}(\mathbf{b})\right)\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq43.png) and the addition of extra ML hyperparameter. InfoVAE (U) allows us to balance disentanglement, reconstruction, and informativeness. For a more in-depth understanding and computational specifics of the InfoVAE loss function, please refer to Appendix C.2.

and the addition of extra ML hyperparameter. InfoVAE (U) allows us to balance disentanglement, reconstruction, and informativeness. For a more in-depth understanding and computational specifics of the InfoVAE loss function, please refer to Appendix C.2.

By adding the label loss, we obtain the loss function for supervised InfoVAE:

![$\[\begin{align*}L_{\mathrm{InfoVAE}}\left(\theta, \phi, \lambda_{\mathrm{MI}}, \lambda_{\mathrm{MMD}}\right)= & L_{\mathrm{InfoVAE}~(\mathrm{U})}\left(\theta, \phi, \lambda_{\mathrm{MI}}, \lambda_{\mathrm{MMD}}\right)\\& +\lambda_{\mathrm{lab}} \mathbb{E}_{(\mathbf{s,l}) \sim p_{\mathcal{D}}}\left[\frac{1}{K} \sum_{k=1}^{K}\left|\underline{l}_{k}-b_{k}(\mathbf{s})\right|\right].\end{align*}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq44.png) (11)

(11)

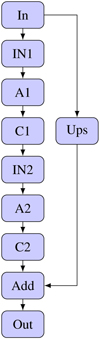

This final formulation, InfoVAE, integrates the strengths of InfoVAE with supervised learning elements. The complete visualization is shown in Fig. B.1.

3 Application of machine learning to spectra

In this section we describe the results of applying the methods introduced in Sect. 2 to the HARPS spectra described below in Sect. 3.1. We used a simulated dataset to cover various combinations of spectral parameters. These simulations are obtained from synthetic spectral energy distributions (SEDs) computed from the Kurucz (Kurucz 2005) stellar atmosphere models, processed through the instrument’s Exposure Time Calculator (ETC; Boffin et al. 2020). The ETC simulates atmospheric effects and those of the measurement apparatus, including the telescope and the HARPS instrument itself. To further increase the variability of the data, we propose transformation strategies to generate new data on the fly from the simulated data. This approach, known as data augmentation in ML terminology, is typically used to improve training by effectively increasing the quantity of data. These datasets aim to encompass a comprehensive range of astrophysical scenarios.

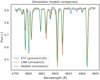

Next, we describe our approach to train and optimize the models. Then, we present the metrics used to evaluate the quality of label prediction. We evaluate the quality of ML simulation using three metrics, each targeting different properties. We use the standard reconstruction error to investigate the faithfulness of the reconstruction. Our two generative metrics are designed to measure how well an ML model grasps the cause-and-effect relationship between the spectral parameters and the output spectrum. Finally, we discuss our approach to model selection.

3.1 Data

In this section we describe both the use of real spectra from the HARPS instrument and our methodology for generating simulated spectra to enrich our dataset. This dual approach broadens our capabilities for both training and evaluating our models. The summary of the datasets we are using is in Table 1.

The selected dataset is comprised of HARPS observations ranging from October 24, 2003, to March 12, 2020 (the instrument is still in active operation, so more observations are being added regularly). Through the ESO Science Archive, we have access to 267361 fully reduced 1-dimensional (1D) HARPS spectra, consisting of flux as a function of wavelength. The processing that went from raw data to products is described in the online documentation4. About 2% of the raw science files failed processing into 1D spectra and are therefore not included in our analysis. Each spectrum has a spectral resolving power of R = λ/Δλ ≃ 115 000, and covers a spectral range from 380 to 690 nm, with a 3 nm gap in the middle.

Our machine learning experiments necessitate verified labels, which we consider the “ground truth.” We have two sources for the physical parameters used as labels: (1) the TESS Input Catalog (TIC, Stassun & et al. 2019), as already used by Sedaghat et al. (2021), serves as the source of our labels for the real data; and (2) the set of spectral parameters used to generate the ETC dataset. ETC labels are the most reliable with absolute control and consistency.

The TESS Input Catalog (and HARPS dataset) exhibits bias that is shown in Fig. 1, where each point represents a single observation. The data in this figure has already been filtered to remove underrepresented labels, as described below. The top histogram shows the distribution of the effective temperature, while the right histogram shows the distribution of the surface gravity. The color of the points represents metallicity, with black points indicating missing metallicity data. The figure shows that the main sequence is well represented, while other regions are underrepresented.

Therefore, we have filtered out spectra with labels that are significantly underrepresented in the catalog. Specifically, we have removed spectra with temperatures below 3000 K and above 11 000 K, metallicities below −1.2 dex and above 0.4 dex, and surface gravities below 3.5 dex and above 5 dex. This filtering was a practical necessity for model training since these ranges contain less than 2% of the samples, making it difficult to achieve reliable training, validation, and testing. This limits the model’s ability to generalize to these edge cases. We intend to address these limitation in future work through proper uncertainty quantification methods that would allow the model to express reduced confidence when making predictions near or beyond these boundaries.

We prepared the simulated data in two stages. First, we generated the intrinsic Spectral Energy Distribution emerging from the stars (“SED dataset”) by employing the ATLAS9 software (Kurucz 2005), facilitated by the use of Autokur (Mucciarelli 2019) to generate a dense grid that samples the stellar parameter space covered by the real data. This is driven by the following stellar parameters: effective temperature, surface gravity, and the chemical composition of the star. We fixed the microturbulent velocity at 2 km s−1, because this number is rarely available in catalogs for an individual spectrum.

To generate stellar parameters for our SED dataset, we sampled from uniform distributions with the following ranges: effective temperature from 3000 K to 11 000 K, surface gravity from 3.5 dex to 5.0 dex, and metallicity from −1.2 dex to 0.4. This approach ensures that our simulated data cover the same parameter space as the filtered HARPS dataset, allowing for direct comparisons while also providing more uniform coverage of underrepresented regions.

In the final step, we used the ETC (Boffin et al. 2020) to create a simulated dataset that more closely resembles the real HARPS dataset by including in the SEDs generated above the effects of the observing process, such as the imprints of the telescope, the instrument, and the Earth’s atmosphere. The spectral parameters are the magnitude, atmospheric water vapor, airmass, fractional lunar illumination, seeing, and exposure time. When referring to the ETC data in the text, we implicitly mean the combination of SED and ETC data. Full technical details are provided in Appendix A. In total, we generated 44 000 simulated HARPS spectra.

We utilize ETC data for pre-training, regularization, and transfer learning. We can generate an almost arbitrary combination of spectral parameters in the desired quantity. Although having more data is generally beneficial, combining data from multiple sources can be challenging and does not guarantee positive outcomes. Therefore, we investigate the impact of mixing ETC and HARPS data on model performance through several experiments, specifically examining the effect of adding simulated data to the real one.

The labels are further categorized as “intrinsic” (temperature, metallicity, and surface gravity) and “extrinsic” (radial velocity, airmass, and barycentric Earth radial velocity-BERV). The overview of labels availability for HARPS is presented in Table 2.

Each model can use either the observational HARPS dataset, the ETC dataset, or a mixture of both. We split the HARPS dataset into 90% data for training, 5% data for validation, and 5% for testing. Furthermore, some of our experiments utilized only 1% of the available labels for the real HARPS dataset. This is done to test and quantify how the accuracy and fidelity of the label prediction depend on the availability of input labels, which may be scarce in real-life scenarios.

For the ETC dataset, we generated separate datasets for training (42 000 samples), validation (1000 samples), and testing (1000 samples). The labels were sampled independently, each chosen randomly from a uniform distribution.

To assess the generative properties of models, we construct a generative ETC dataset. In this context, a generative dataset is one specifically designed to understand how isolated changes in certain labels affect the behavior and output of ML models. The dataset is organized into subsets, with each subset dedicated to exploring the variations of a single specific label.

The assembly process includes several steps. First, we generate a set of c core samples. Each core sample acts as a baseline configuration where all labels are set to uniformly sampled random values. Next, for each core sample, we systematically alter one of f labels and create v variations. In these variations, only the target label is altered from its baseline value in the core sample, while the other labels remain unchanged.

As a result of this process, the final dataset contains c · f · v samples, representing an exploration of how changes in each label affect the generative properties of the models. This dataset provides the basis for a detailed evaluation of the individual impact of each label.

Datasets used.

|

Fig. 1 Distribution of effective temperature, surface gravity, and metallicity in the HARPS dataset. |

HARPS label availability.

3.2 Data augmentation

As detailed in Appendix A, our data collection method allows for the generation of synthetic spectra with arbitrary radial velocities without further reliance on the ETC tool. This allows us to randomly alter radial velocities during training with minimal impact on performance, a technique known as “data augmentation” in ML. Our augmentation reduces the likelihood of memorization, since no single spectrum is repeated with the same radial velocity. Therefore, the augmentation encourages the model to disentangle the radial velocity more effectively. For this study, we uniformly sample radial velocities between −100 and 100 km/s. However, this method does have drawbacks, including slower I/O and increased memory demands per individual spectrum.

Our second strategy for augmentation focuses on annotation. We know that ETC spectra can be fully described by l, and u is relevant only for real spectra. We complied with this by allowing ETC spectra to utilize u, but penalize its usage to encourage the model to prefer representations that do not rely on u for ETC data. This penalization is implemented through a supervised loss, where we supervise u to be equal to the zero vector.

As a consequence, the ML model uses u only for real data. This might help with disentanglement as any attempt by the ML model to entangle unsupervised nodes with supervised will be penalized for ETC data. Furthermore, this strategy calibrates u as zero is connected to simulation data, while values different from zero inform about deviations from the simulated data.

Both strategies target the simulated data. The first strategy is specific to radial velocity and increases the variability of the simulated data without impacting the real data, that is, we could achieve the same effect by simply sampling more simulated data. The second strategy involves weak supervision of the unsupervised nodes u for simulated data during training. Consequently, the treatment of the real data is affected as well. Therefore, the second strategy is more general.

3.3 Model training and optimization

In this section we describe the process of implementing and training the various models. The models we focus on include stand-alone encoders, stand-alone decoders, AEs, β-VAEs, and infoVAEs, as detailed in Sect. 2. All models that include an encoder use the CNN encoder as specified in Table B.1. The decoding process is implemented by a CNN, detailed in Table B.2, or a ResNet decoder as outlined in Table B.3. All models were implemented and trained using the PyTorch Lightning framework (Falcon & The PyTorch Lightning team 2019).

We aim to select the highest possible value for the learning rate, as it speeds up the training. However, a learning rate that is too high results in spectral parameter divergence during training due to the vanishing or exploding gradient (Murphy 2022, p. 443). We set the learning rate to 10−4 for models that use CNN in encoder or decoder configurations. A higher learning rate of 10−3 is acceptable for models exclusively based on ResNet due to their enhanced stability. Thus, we can learn ResNet models significantly faster.

The training phase utilizes an Adam optimizer (Kingma & Ba 2015), a stochastic gradient descent variant, to efficiently manage backpropagation (Murphy 2022, p. 434) and update of ML parameters. Informally, the Adam optimizer can be seen to be adaptively modifying the learning rate based on the optimization process of the ML parameters. This optimizer includes a pair of ML hyperparameters, set to (β1, β2) = (0.9, 0.999). These ML hyperparameters help balance the influence of recent and past gradients. For more details on the Adam optimizer, see Kingma & Ba (2015). The Adam optimizer is a common and often default choice. Since we did not encounter any problems, exploring further alternatives was not worthwhile.

The standard practice in ML training is to monitor a metric that is evaluated on the validation dataset and stop training once the metric starts increasing; this practice is called “early stopping.” We initially chose the label prediction error as our stopping metric due to its relevance and quick evaluation. However, despite many days of training, we never observed the label prediction error increase; instead, it oscillated randomly while continuing to converge, resembling the double descent phenomenon for training epochs (Nakkiran et al. 2021). This unpredictable oscillation made setting an early stopping rule difficult.

As a result, we decided to train each model for a fixed 1000 epochs, where a single epoch is a complete pass through the dataset. An epoch is composed of numerous mini-batches, with the batch size indicating the number of spectra processed in each mini-batch. Given the extensive scale of our models and data, we opted for relatively small batch size, setting it to 32 spectra per batch. For additional details on optimizers and general machine learning concepts, readers may refer to Goodfellow et al. (2016); Murphy (2022).

3.4 Label prediction error

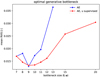

Our primary objective is focused on label prediction. We aim to produce results that closely match the labels provided by the catalog, for which we need to assess how successful our ML models are. The most straightforward approach is plotting the distribution of the errors. For visualization of error distributions, we use kernel density estimation (KDE) with bandwidth set according to Scott’s rule (Scott 1992), as shown in Fig. 2. This visualization is useful for qualitative analysis but does not provide a single numerical value that summarizes the model’s performance for the label prediction.

We achieved this by using the mean absolute error (MAE) between the predicted and the unnormalized ground truth labels:

![$\[\operatorname{MAE}(\{\mathbf{s}_{i}, \mathbf{l}_{i}, \hat{\mathbf{l}}_{i}\}_{i=1}^{D}, k)=\frac{1}{D} \sum_{i=1}^{D}\left|\mathbf{l}_{i}^{k}-\hat{\mathbf{l}}_{i}^{k}\right|.\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq45.png) (12)

(12)

The set ![$\[\left\{\mathbf{s}_{i}, \mathbf{l}_{i}\right\}_{i=1}^{D}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq46.png) denotes the testing dataset, where D is its size. The set

denotes the testing dataset, where D is its size. The set ![$\[\{\hat{\mathbf{l}}_i\}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq47.png) corresponds to the labels predicted by a ML model. The index k specifies the element of the label vector for which the MAE is computed. For the purpose of error analysis, we consider

corresponds to the labels predicted by a ML model. The index k specifies the element of the label vector for which the MAE is computed. For the purpose of error analysis, we consider ![$\[\hat{\mathbf{I}}^{\mathrm{LIF}}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq48.png) as the autoencoder’s predictions for labels

as the autoencoder’s predictions for labels ![$\[(\hat{\mathbf{\underline{l}}}_{i}=\hat{\mathbf{l}}_{i}^{\mathrm{LIF}})\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq49.png) . The MAE provides a single number summarizing the overall performance of a model for a given label with index k. However, since different labels have different units, it is not possible to compare the MAE values across different labels.

. The MAE provides a single number summarizing the overall performance of a model for a given label with index k. However, since different labels have different units, it is not possible to compare the MAE values across different labels.

To compare the performance across different labels, we use the normalized MAE (NMAE). Compared to MAE, we preceded the computation with a normalization step:

![$\[\operatorname{NMAE}(\{\mathbf{s}_{i}, \mathbf{l}_{i}, \hat{\mathbf{l}}_{i}\}_{i=1}^{D}, k)=\frac{1}{D} \sum_{i=1}^{D} \frac{|\mathbf{l}_{i}^{k}-\hat{\mathbf{l}}_{i}^{k}|}{\sigma_{k}},\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq50.png) (13)

(13)

where σk is the standard deviation of the ground truth labels for the k-th label. This modification enables us to compare performance across different labels, and we utilize it to analyze groups of labels.

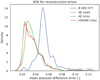

3.5 Reconstruction error

Reconstruction error is instrumental in evaluating the model’s ability to compress data while preserving key features. Additionally, this metric helps determine the effectiveness of our models in the ML simulation task.

Measuring reconstruction quality across the entire spectrum, including both the continuum and the spectral lines, presents a challenge due to the relative rarity of line pixels compared to continuum pixels. This difference can lead to misidentifying spectral lines as outliers.

An ideal metric would accurately capture deviations in both the continuum and spectral lines while being robust to outliers. However, our experiments with traditional data reconstruction metrics reveal a trade-off: either we fail to fit the continuum adequately (while capturing lines and outliers) or we overfit the continuum and miss both outliers and lines. Therefore, we chose MAE′ as the balanced metric:

![$\[\operatorname{MAE}^{\prime}(\{\mathbf{s}_{i}, \hat{\mathbf{s}}_{i}\}_{i=1}^{D})=\frac{1}{D N} \sum_{i=1}^{D} \sum_{j=1}^{N}\left|\mathbf{s}_{i}^{j}-\hat{\mathbf{s}}_{i}^{j}\right|,\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq51.png) (14)

(14)

where N is the number of pixels in the spectrum, ![$\[\mathbf{s}_{i}^{j}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq52.png) is the j-th pixel of the i-th spectrum, and

is the j-th pixel of the i-th spectrum, and ![$\[\hat{\mathbf{s}}_{i}^{j}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq53.png) is the corresponding prediction. It is well known that the absolute difference metric in MAE′ is robust to outliers (Murphy 2022, p. 399). We experimentally observed that it balances being too robust (insensitive to lines) and not robust enough (sensitive to artifacts).

is the corresponding prediction. It is well known that the absolute difference metric in MAE′ is robust to outliers (Murphy 2022, p. 399). We experimentally observed that it balances being too robust (insensitive to lines) and not robust enough (sensitive to artifacts).

3.6 Generative metrics

Generative metrics are an integral part of the evaluation process for models in ML simulation tasks. The true generative process involves cause-and-effect relationships between the spectral parameters and the resulting spectrum. However, the learned ML model is not required to replicate these relationships. It might introduce dependencies between supervised and unsupervised nodes, favor unsupervised nodes for reconstruction, or even ignore supervised nodes. For example, an ML model could learn to link effective temperature with some of its unsupervised nodes. Simply adjusting the supervised node that directly represents effective temperature will not be enough for correct simulation. This is because the model has spread the influence of effective temperature across multiple nodes, complicating how changes in temperature affect the predicted spectrum.

The mapping of labels to spectra and the measurement of reconstruction error cannot identify any of these issues. Therefore, we need metrics that specifically evaluate the model’s ability to simulate spectra based on interventions in individual labels, thus accurately measuring the cause-and-effect relationships between isolated spectral parameters and the resulting spectrum.

Our chosen approach is inspired by the latent space traversal (Chen et al. 2016), which is a recognized method for qualitative evaluation of generative properties. In latent space traversal, we manually modify the latent representation and observe the effects on the output spectrum. For example, we can alter the node responsible for the radial velocity and observe a Doppler shift in the output spectrum, provided the node captures the essence of radial velocity. This approach provides insight into the connection between labels and spectra. However, the challenge lies in quantifying this intuition.

Quantification of latent space traversal can be achieved by automatically intervening in the spectral parameters and measuring the reconstruction error against the true generative process, denoted as M. To facilitate this, we introduce a do operator to represent interventions on specific spectral parameters:

![$\[\mathbf{b}_{i}^{\prime}=\operatorname{do}\left(\mathbf{b}_{i}, \Delta l_{\text {scaled}}^{k}, k\right),\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq54.png) (15)

(15)

where bi denotes the latent representation of the i-th spectrum by some encoder q, k refers to the index of the node that is associated with the intervened spectral parameter, and ![$\[\Delta l_{\text {scaled }}^{k}\]$](/articles/aa/full_html/2025/01/aa51073-24/aa51073-24-eq55.png) is the intervention value for the spectral parameter (scaled using Eq. (1)). The operation do(bi,