| Issue |

A&A

Volume 693, January 2025

|

|

|---|---|---|

| Article Number | A18 | |

| Number of page(s) | 18 | |

| Section | Numerical methods and codes | |

| DOI | https://doi.org/10.1051/0004-6361/202450421 | |

| Published online | 24 December 2024 | |

Subsweep: Extensions to the sweep method for radiative transfer

1

Universität Heidelberg, Zentrum für Astronomie, Institut für Theoretische Astrophysik,

Albert-Ueberle-Str. 2,

69120

Heidelberg,

Germany

2

Sorbonne Université, Institut D’Astrophysique de Paris,

98 bis Boulevard Arago,

75014

Paris,

France

3

Universität Heidelberg, Interdisziplinäres Zentrum für Wissenschaftliches Rechnen,

Im Neuenheimer Feld 225,

69120

Heidelberg,

Germany

★ Corresponding author; toni.peter@uni-heidelberg.de

Received:

17

April

2024

Accepted:

19

October

2024

We introduce the radiative transfer postprocessing code SUBSWEEP. The code is based on the method of transport sweeps, in which the exact solution to the scattering-less radiative transfer equation is computed in a single pass through the entire computational grid. The radiative transfer module is coupled to radiation chemistry, and chemical compositions as well as temperatures of the cells are evolved according to photon fluxes computed during radiative transfer. SUBSWEEP extends the method of transport sweeps by incorporating sub-timesteps in a hierarchy of partial sweeps of the grid. This alleviates the need for a low, global timestep and as a result SUBSWEEP is able to drastically reduce the amount of computation required for accurate integration of the coupled radiation chemistry equations. We succesfully apply the code to a number of physical tests such as the expansion of HII regions, the formation of shadows behind dense objects, and its behavior in the presence of periodic boundary conditions.

Key words: methods: numerical / HII regions / dark ages, reionization, first stars

© The Authors 2024

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1 Introduction

Reionization is the process in which the universe shifts from being fully neutral to being almost completely ionized everywhere. This is an important part of the transition from the primordial, homogeneous universe to the present-day universe which is full of heterogeneous, complex structures (see for example Wise 2019; Loeb & Barkana 2001; Zaroubi 2013). We want to understand reionization by performing a set of simulations in which radiative transfer and radiation chemistry are evolved in large cosmological simulations such as the Illustris-TNG simulations (Nelson et al. 2015, 2018, 2019; Naiman et al. 2018; Springel et al. 2018; Marinacci et al. 2018; Pillepich et al. 2018, 2019). Ideally, one would like to model reionization “on-the- fly” in such simulations, that is at the same time as the rest of the galaxy formation physics, since radiative feedback from reionization is thought to affect galaxy formation (Shapiro et al. 2004). Moreover, the intergalactic medium reacts to the passage of reionization fronts possibly resulting in the disruption of filaments (D’Aloisio et al. 2020).

However, the computational cost for doing so in a large, high-resolution simulation can be extremely large (Gnedin & Madau 2022). There is therefore considerable interest in finding efficient ways to model reionization in a post-processing step that can be applied to such simulations. While such an approach misses the recoupling of radiative feedback to hydrodynamics, it does allow us to compare the results of the simulation with and without radiative feedback without having to perform an additional, expensive hydrodynamics simulation.

Here, we introduce a new code, SUBSWEEP, which is designed to solve radiative transfer and radiation chemistry on large inputs, such as those from cosmological simulations, and which is therefore ideally suited for this application.

Radiative transfer is the physical theory that describes the propagation of radiation and its interactions with matter, such as absorption and scattering (Mihalas & Weibel-Mihalas 1999). There are multiple reasons why radiative transfer is a particularly challenging numerical problem, beginning with the high dimensionality of the quantity of interest: specific intensity, which depends on three spatial, two directional, one temporal, and one frequency dimension leading to a total of seven dimensions. In addition, the radiative transfer equation can be seen as an elliptical equation in optically thick media and as a hyperbolic equation in optically thin media, making it particularly difficult to select a single solution method that works well across the entire parameter space. Furthermore, we are primarily interested in physical scenarios in which the properties of the medium in which the radiation is transported change due to the influence of the radiation. At the same time, the radiation transport itself is dependent on the properties of the underlying medium (such as emissivity and opacity), calling for a solution to the coupled equations of radiative transfer and radiation chemistry.

Moment-based methods, where one solves the moments of the radiative transfer equation with some approximate closure relation, as opposed to the full radiative transfer equation, are a leading class of methods. This approximation can lead to drastically improved performance while decreasing the overall accuracy of the result. All moment-based methods need to choose a closure relation, which is typically given in terms of an expression for the Eddington tensor. One of the first examples of a moment-based method is the flux-limited diffusion approach (Levermore & Pomraning 1981; Whitehouse & Bate 2004) in which one assumes that the intensity varies sufficiently slowly in time and space that the radiative transfer equation becomes an effective diffusion law for the photon fluxes, and introduces a flux limiter to ensure that the signal speed of the radiation field remains lower than the speed of light.

Flux-limited diffusion has been successfully applied in astrophysics (for example Krumholz et al. 2007a; Boss 2008), but its main drawback is its diffusive nature which results in a lack of proper shadow formation (see for example Hayes & Norman 2003).

Another example of a moment-based method is the optically thin variable Eddington tensor method in which the Eddington tensor is computed under the assumption that all lines of sight to the sources in the simulation are optically thin (Gnedin & Abel 2001). While efficient, this algorithm is applicable only to a comparatively narrow range of problems.

The M1 method is another moment-based method based on the M1 closure relation (Aubert & Teyssier 2008; Kannan et al. 2019). While it is comparatively fast, it suffers from numerical problems inherent to moment-based methods, such as the two- beam instability (Rosdahl et al. 2013).

Finally, a closure relation can also be derived by explicitly computing the Eddington tensor using a ray-tracing approach (for example Hayes & Norman 2003; Finlator et al. 2018). Such methods are extremely accurate but are also typically far too slow to apply to high resolution simulations (e.g. the Finlator et al. 2018 calculation solved the radiative transfer problem on a coarse 643 grid, a factor of ∼20 000 smaller than the effective resolution of even the smallest TNG simulation).

Another class of methods is given by Monte Carlo approaches, in which radiation is represented by individual photon packets (Oxley & Woolfson 2003; Dullemond et al. 2012). For each source of radiation, packets are created by sampling over the direction of the emitted radiation (and its frequency, in multi-frequency versions of this approach) according to an appropriate probability distribution. The packets are then propagated through the gas and allowed to interact with it. These interactions are also treated probabilistically: the optical depth of a given fluid element can be used to derive the probability that a photon packet that encounters it will be absorbed. Scattering can be treated in a similar fashion. A primary advantage of these methods is that their accuracy is determined by the number of emitted photon packets, making it easily tunable. Inherent to the nature of the method is statistical noise, with a signal-to-noise ratio that scales as  , where n is the number of photon packets. However, an important disadvantage of this type of method is that it is difficult to parallelize when applied to very large simulations. For small simulations, the optimal parallelization strategy is typically to duplicate the computational domain on each processor and to split up the work by sharing the total number of photon packages evenly between the processors. Provided that the problem is small enough to all this, this approach provides close to linear scaling up to 100s or 1000s of cores (see for example Robitaille 2011). However, once the problem becomes too big to allow the whole computational domain to be held in the memory available to a single processor, this simple strategy is no longer possible, and efficient parallelization of the algorithm becomes much harder. Moreover, the cost of this approach necessarily scales with the number of sources in the simulation, which can quickly become a problem for cosmological simulations containing millions of galaxies.

, where n is the number of photon packets. However, an important disadvantage of this type of method is that it is difficult to parallelize when applied to very large simulations. For small simulations, the optimal parallelization strategy is typically to duplicate the computational domain on each processor and to split up the work by sharing the total number of photon packages evenly between the processors. Provided that the problem is small enough to all this, this approach provides close to linear scaling up to 100s or 1000s of cores (see for example Robitaille 2011). However, once the problem becomes too big to allow the whole computational domain to be held in the memory available to a single processor, this simple strategy is no longer possible, and efficient parallelization of the algorithm becomes much harder. Moreover, the cost of this approach necessarily scales with the number of sources in the simulation, which can quickly become a problem for cosmological simulations containing millions of galaxies.

In Peter et al. (2023), we introduced the sweep method and its implementation in AREPO, SWEEP, which is a very efficient way of computing the exact solution to the scattering-less radiative transfer equation in parallel based on the concept of transport sweeps (Koch et al. 1991; Zeyao & Lianxiang 2004). Transport sweeps are a subclass of discrete ordinate methods, in which the radiative transfer equation is solved simply by discretizing it in all of the available variables (time, space, frequency, and angle). During a sweep, scattering is assumed to be negligible, such that the radiative transfer equations for different angles decouple (note that scattering can still be computed correctly by reintroducing it as a source for subsequent sweep iterations). In order to solve the resulting equations efficiently, the algorithm computes an ad-hoc topological sorting of the grid with respect to the direction of the sweep, resulting in a method that computes the exact solution to the equation in a single pass through the grid. We have found the resulting method to be very performant and accurate at the same time.

Our previous implementation of the sweep method within the cosmological simulation code AREPO (Springel 2010), SWEEP (Peter et al. 2023), worked well on medium sized inputs, but a major problem was the fact that it could only perform global operations in which radiative transfer is performed on the entire box, before a global chemistry update is performed. This limitation makes large runs prohibitively expensive since the need for a small timestep in one of the cells of the entire simulation will imply a small global timestep everywhere.

In this paper, we introduce the standalone radiative transfer postprocessing code SUBSWEEP, which deals with this problem by introducing a substepping procedure, similar to the subtimestepping in modern hydrodynamical codes. This method works by assigning grid cells individual timesteps, which are chosen from a power-of-two hierarchy and adapted to the local, physical timescales of processes relevant to radiative transfer. Transport sweeps are then performed according to this timestep assignment in a physically consistent way, resulting in a computation in which cells with very low desired timesteps can be evolved accurately without sacrificing performance by strictly adhering to a global, low timestep.

We also find that this new method drastically alleviates the computational cost of incorporating periodic boundary conditions, one of the main challenges for the initial version of the sweep algorithm. Previously, periodic boundary conditions were implemented by source iteration: photon fluxes leaving the simulation box are introduced as a source term for a subsequent sweep, until convergence is reached. In the new substepping approach, we use the concept of warmstarting: periodic source terms from the previous iteration are used as a guess for the new timestep. By applying this concept also to the sub-timestep sweeps, we find that sufficient accuracy for our applications is reached without performing any additional source iterations.

This paper is structured as follows. In Sect. 2, we discuss the implementation details of SUBSWEEP, with a particular focus on the spatial domain decomposition (Sect. 2.2) and the construction of the Voronoi grid (Sect. 2.3). We also focus on radiative transfer in general and the sweep method in particular (Sect. 2.4) before we introduce the substepping approach (Sect. 2.5). Section 2 ends with the details of our radiation chemistry solver (Sect. 2.9). Afterwards, we perform a number of tests of our code (Sect. 3), showing the physical accuracy of the results in an R-Type expansion test in the normal case (Sect. 3.1) and the case of the expansion happening across a periodic boundary (Sect. 3.2). We also perform a test to study the shadowing behavior of the code (Sect. 3.3). We assess the performance of the substepping method by performing a one-dimensional R-Type expansion for a large range of parameters (Sect. 3.4) and perform a brief series of tests for the radiation chemistry solver (Sect. 3.6). Finally, we conclude this paper and discuss future extensions of the code as well as possible applications in Sect. 4.

2 Methods

2.1 General structure of the code

In this paper we discuss the SUBSWEEP simulation code1 which is a standalone code for post-processing large cosmological simulations. Currently, the code works with outputs of AREPO simulations, but extensions for output formats of other simulation codes are possible. The code requires data specifying the coordinates, temperatures and chemical compositions of the cells. Source terms can either be explicitly specified by the user or will be computed from a set of source cells (such as star particles in the case of AREPO) which also needs to be present in the inputs. It will then distribute the data onto the desired number of cores (which we briefly discuss in Sect. 2.2), construct a the Voronoi grid (discussed in Sect. 2.3) and solve the radiative transfer equation coupled to radiation chemistry and write out the intermediate and final results of the computation. Documentation for the usage of the code is available alongside the source code. The code is written with a particular focus on the postprocessing of high-redshift cosmological simulations and reionization, but extensions incorporating present-day chemistry into the code are straightforward.

2.2 Domain decomposition

In order to run our code in parallel, we have to distribute the available data over multiple cores. We choose to use a simplified version of a standard Peano-Hilbert space-filling curve approach to spatial domain decompositioning which we will briefly describe in the following.

The problem that the domain decomposition tries to solve is to distribute the particles onto the n cores 1… n in such a way that the total runtime of the program is minimized. Since this is a very difficult optimization problem to solve in general, we make it more concrete by defining two primary goals of the domain decomposition. The first goal is the minimization of the load imbalance  where the load L on core i can be defined in a variety of ways, which we will discuss later.

where the load L on core i can be defined in a variety of ways, which we will discuss later.

The second goal is to keep the total time spent communicating as low as possible. This requirement is almost equivalent to minimizing the surface area of the intersection between the domains, because shared interfaces are where communication needs to take place in order to solve them.

A third priority that is specific to transport sweep algorithms is that even if goals 1 and 2 are fulfilled optimally, the resulting sweep can still be slow if the cells are arranged in such a way that not all cores can work simultaneously due to the task dependencies that need to be fulfilled (for more discussion, see Peter et al. 2023).

For structured grids, a domain decomposition that optimizes the parallel performance of the transport sweep is given by the Koch-Baker-Alcouffe algorithm (Baker & Koch 1998; Koch et al. 1991). For unstructured grids, optimizing the performance by the domain decomposition is difficult in general, which has been discussed in detail (Vermaak et al. 2020; Adams et al. 2020).

Here, we will briefly discuss our implementation of a well- known approach based on space-filling curves that can solve requirements 1 and 2 simultaneously. For now, we find that even though we do not optimize explicitly for the third goal, that is the sweep scheduling, the resulting performance is good enough for our purposes.

In our case, a space-filling curve is given by a mapping f and its inverse f−1 between a one-dimensional interval and all the possible floating point positions in the three-dimensional simulation box with side length L,

![$f:\left[ {{c_{\min }},{c_{\max }}} \right] \to {[0,L]^3},$](/articles/aa/full_html/2025/01/aa50421-24/aa50421-24-eq3.png) (1)

(1)

![${f^{ - 1}}:{[0,L]^3} \to \left[ {{c_{\min }},{c_{\max }}} \right],$](/articles/aa/full_html/2025/01/aa50421-24/aa50421-24-eq4.png) (2)

(2)

where cmin and cmax are the minimum and maximum values of the domain of the space filling curve respectively. We call f (r) the key of a particle at position r. The basic idea of a domain decomposition using such a space filling curve is to move the three-dimensional optimization problem of distributing a set of points {pj є [0, L]3} onto n cores 1… n to a more tractable, onedimensional problem. In our case, this one-dimensional problem is the problem of finding cut-offs si for i = 1… n − 1 so that the load imbalance is minimized if each core i gets assigned the points {pj | si−1 < f−1(pj) < si}, where we take s0 = cmin and sn = cmax. If the space-filling curve is chosen such that it maps close-by points on the interval [cmin, cmax] to close-by points in three dimensional space, the resulting distribution of points will form reasonably compact domains. A common choice for such a curve is the Hilbert curve.

In order to execute the domain decomposition using the Hilbert curve, we require the load function Li(c1, c2) which computes the total load of the particles on core i between the keys c1 and c2. Here, we assume that the load can be computed as a sum over the load for each particle.

1: procedure FIND si

2: Initial guess:

3: for d ← 1, dmax do

4: For each rank k, compute Lk(si−1, si).

5: Compute  via a global sum.

via a global sum.

6: if L = Llocal then return si

7: else if L < Ldesired then

8:

9: else if L > Llocal then

10:

return si

In order to find the distributions of the cut-offs si we proceed as follows:

For each core, compute the keys for all local particles and sort them, so that computing the load function Li(c 1, c2) becomes a cheap operation for any keys c1 , c2 .

Compute the total load of the entire simulation

(via a global sum).

(via a global sum).Compute the desired load on each core as

.

.Using this, compute the cut-offs si, starting with s1 using the parallel search described in Algorithm 1.

2.3 Construction of the Voronoi grid

In order to perform the sweep algorithm over a set of points, we need to construct a mesh, so that we can determine the connectivity of cells. In order to avoid any additional numerical artifacts, we decided to use a similar mesh to the one that was used in the code which generated the outputs which we are trying to post-process using SUBSWEEP. Since we are mostly interested in postprocessing simulation outputs of AREPO, we choose to use a Voronoi grid, which is the mesh that AREPO is based on.

There are many different algorithms for constructing Voronoi grids. For simplicity, the one here is based closely on the method described in Springel (2010). The method is based on incremental insertion (Bowyer 1981; Watson 1981), extended to allow construction of the grid for a point set distributed onto multiple cores.

2.3.1 Construction of the local Delaunay triangulation

The Voronoi grid is constructed from its dual, the Delaunay triangulation. The serial incremental insertion algorithm for constructing the Delaunay triangulation proceeds as follows: Given a set of N mesh-generating points {pi | 1 ≤ i ≤ N}, begin with an all-encompassing tetrahedron, that is, one that is large enough to contain all points pi . Now, for every point p, locate the tetrahedron in the triangulation which contains p. How exactly this is done in a performant way is described in Sect. 2.3.2. Using p, we split the tetrahedron containing p into 4 new tetrahedra. After the split, the resulting triangulation is not necessarily Delaunay. In order to restore the Delaunay property, we begin by putting each of the 4 newly formed tetrahedra on a stack. For each tetrahedron t in the stack, we find the face F which is opposite of p in t. We then find the tetrahedron t′ which is on the other side of F, and locate the point p′ which is opposite of F within t′ . If p′ is contained in the circumcircle around t, then the face F violates the Delaunay criterion and needs to be removed. To do so we perform a flip orientation on the two tetrahedra t and t′ which will result in a number of new tetrahedra, each of which will now have to be checked for the Delauny property, so we put them on the stack as well. Once the stack is empty, the Delaunay property has been restored again and we can begin inserting the next point.

The flip operation between two tetrahedra t and t′ , their shared face F and the two points p and p′ opposite of F in each of the tetrahedra respectively works as follows: Compute the intersection point q of the face F with the line between p and p′ . If q lies inside F, we perform a 2-to-3-flip, in which the two tetrahedra are replaced by three. If the intersection point lies outside one of the edges of F, we take into account the neighboring tetrahedron along that edge and perform the opposite operation – a 3-to-2 flip – in which the three tetrahedra are converted to two. If the intersection point lies outside two edges, the flip can be skipped. It can be shown (Edelsbrunner & Shah 1996) that flipping the remaining violating edges will restore the Delaunay property. For more information on this procedure see Springel (2010).

2.3.2 Point location

While inserting a point p into the triangulation, we need to locate the tetrahedron containing p. This is performed by the simple “jump and walk” method. The method works by using a priority queue q. We initialize q as containing only the last tetrahedron that was inserted into the triangulation. Now we iteratively take the highest priority tetrahedron t out of the queue. If t contains p, return t. If t does not contain p, we add all the neighboring tetra- hedra of t to q with their priority determined by their distance to p (so that tetrahedra closest to p are searched first). The method performs best if the order of the points inserted into the triangulation is such that two points inserted after another are also at similar positions (which in turn makes the initial guess better).

In order to achieve this, we begin the construction by sorting all points according to their Peano-Hilbert key.

2.3.3 Parallel Delaunay construction

In principle, we would like to construct the global Delaunay triangulation Tglobal on all of the points in the entire simulation. In practice, we are limited to those points that are available on each core. All we can do is to construct a local triangulation Tlocal over all of the local points. The goal of the triangulation is to provide connection information and in order for it to be useful, this connection information has to be consistent with what the other cores see. It is clear that in order to do so and preserve the Delaunay property we need to import points that lie on other cores, which we call halo points. More precisely, we want to construct Tlocal in such a way that it is consistent with Tglobal, in the sense that for every local point p, the set of tetrahe- dra {t| t є Tlocal, p ∈ t} is the same as {t| t ∈ Tglobal, p ∈ t}. Note that this requirement does not extend to halo points, allowing us to stop importing additional halo points once all local points are consistent in the above sense.

Here, we will describe the algorithm for the halo search. The goal here is to import every necessary halo point in order to reach a consistent local triangulation, while importing as few as possible superfluous points in order to speed up the grid generation and keep memory overhead as low as possible. The basic idea is that a tetrahedron t is consistent with the global triangulation Tglobal iff we have imported the set of all points {p | p ∈ C(t)} from all other cores, where C(t) is the circumcircle of the tetrahedron. To do so, we begin by constructing the Delaunay triangulation of all local points  . Initially, we flag every tetrahedron in the triangulation as “undecided" and then iterate on the following process:

. Initially, we flag every tetrahedron in the triangulation as “undecided" and then iterate on the following process:

For every undecided tetrahedron t, we compute the circumcircle C(t) = (c, r) with center c and radius r. Given the circumcircle C(t), we compute the search radius r′. Search other cores for all points p that are within r′ distance of c which we have not imported locally yet. If there is no such point anywhere (which means we have imported all points that could be contained in the circumcircle of the tetrahedron), flag the tetrahedron as “decided”. Otherwise, we add all points p to the list of newly imported points. Now, we construct  by inserting the set of all newly imported points into

by inserting the set of all newly imported points into  . We flag any newly created tetrahedron which contains a local point as undecided.

. We flag any newly created tetrahedron which contains a local point as undecided.

Here, the search radius r′ is computed as follows: If the radius of the circumcircle r is smaller than the average expected size of a Voronoi tetrahedron  then r′ = r. Otherwise, we use

then r′ = r. Otherwise, we use  , unless we have previously performed a radius search for this tetrahedron before, in which case we use

, unless we have previously performed a radius search for this tetrahedron before, in which case we use  where α > 1 is a free parameter. This is done because in the first few iterations of the triangulation, very large tetrahedra tend to form because we are not yet aware of the presence of very nearby points on other cores. If we blindly performed a radius search with the radius of the circumcircle r, we might unnecessarily import a large number of points from other cores. However, if the triangulation should contain this large tetrahedron, the exponentially increasing search radius will ensure that we perform a search with the proper radius within a reasonable number of iterations.

where α > 1 is a free parameter. This is done because in the first few iterations of the triangulation, very large tetrahedra tend to form because we are not yet aware of the presence of very nearby points on other cores. If we blindly performed a radius search with the radius of the circumcircle r, we might unnecessarily import a large number of points from other cores. However, if the triangulation should contain this large tetrahedron, the exponentially increasing search radius will ensure that we perform a search with the proper radius within a reasonable number of iterations.

If periodic boundaries are desired, the same procedure described above, which imports halo points from other ranks can also import periodic haloes (points that represent a point shifted by a multiple of the box size along one or more axes) both from other cores and from the set of local points. This can be done by modifying the radius search such that it takes periodic images of points into account. Since constructing the distributed triangulation requires many radius searches, we need to perform the radius search quickly. To do so, we construct a standard Oct-tree on the set of all local points which reduces point search to a 𝒪(n log n) operation.

2.3.4 Degeneracies

Another difficulty in creating Delaunay triangulation is how to deal with degenerate cases and those that are close to being degenerate. One solution to this problem is to perform all operations in arbitrary precision arithmetic. However, this will reduce the performance of the code drastically. In SUBSWEEP we take an approach similar to the one in Springel (2010) where we perform the critical checks (such as the checks that ask whether a point is contained in a tetrahedron or whether a tetrahedron is positively oriented) in floating point arithmetic first. If the result of the floating point operation is at risk of being qualitatively wrong due to numerical round-off errors, we perform it again in arbitrary precision arithmetic. In the current code, we do not deal with truly degenerate cases (for example, a point lying exactly on a face of a tetrahedron) because we find them to be extremely rare in practice, however, it is possible to extend the method to account for degeneracies. For more information on this procedure, we refer to Springel (2010).

2.4 The sweep algorithm

We introduced the sweep algorithm in the context of astrophysics in Peter et al. (2023). Here, we will briefly recap the basics of the algorithm in order to explain the required fundamentals for understanding the extensions we will introduce in later sections.

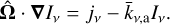

Given the specific radiative intensity  , with frequency v, spatial position r, time t, and solid angle

, with frequency v, spatial position r, time t, and solid angle  given in units of W−2 sr−1 Hz−1 , the general radiative transfer equation (RTE) reads (Rybicki & Lightman 1985)

given in units of W−2 sr−1 Hz−1 , the general radiative transfer equation (RTE) reads (Rybicki & Lightman 1985)

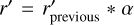

(3)

(3)

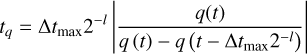

In the case of SUBSWEEP, we assume that scattering terms are negligible (see Peter et al. 2023 for more details) and make the infinite speed of light assumption, so that we obtain

(4)

(4)

We note here that the infinite speed of light assumption is indeed quite a strong assumption in cosmological contexts, where light-crossing times of large intergalactic structures can quickly become relevant, so that assuming an infinite speed of light can cause ionization fronts to be affected by a change in the source population more quickly than they would in reality. However, this only has a minor impact on the overall evolution of the system. For more discussion of the impact of an infinite speed of light in a cosmological context, see for example Leong et al. (2023).

The sweep method is a discrete ordinate method, which means that it solves the RTE by discretizing it in every variable, that is in space, time, angle and frequency.

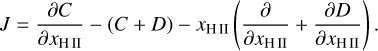

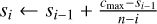

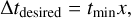

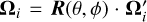

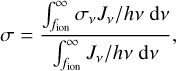

Equation (4) can be intuitively understood using the illustration in Fig. 1, which shows the processes affecting a small volume of space. Sources of radiation in this volume are through incoming radiation from cells to the left (orange arrows), the source term j directly (green arrow). Radiation from the cell is either absorbed (blue arrow) or leaves the cell towards the right (red arrows). The neighboring cells therefore fall into two categories. Cells upwind of the cell along Ω (orange arrows) need to have their solution computed before this cell, since we require the incoming fluxes from those cells to solve the local problem. Cells downwind of the cell require the outgoing fluxes of the local solution in order to be solved.

The crucial idea of the sweep method is that it finds a topological sorting of the partial order induced by the upwind- downwind relation, such that the exact solution to the (scatteringless) RTE can be obtained in ndir passes through the grid, where ndir is the number of bins into which we choose discretize the angular directions. It is crucial that the upwind-downwind relation is transitive, so that it is a partial order (which is equivalent to there being no cycles in the directed graph induced by the order), because otherwise a topological sorting of the cells does not exist. We have shown in Peter et al. (2023) that this is always true for such an ordering induced by a Voronoi grid, which is the only type of grid that we are going to work with in this paper. It should be noted that the ordering is trivially acyclic for Euclidean grids or grids generated by adaptive mesh refinement, so that the sweep algorithm can also be used in other types of grid that are widely used in astrophysics.

1: initialize task queue q ← {}

2: for all Ω and all cells c in grid do

3: count number of required upwind fluxes n(c, Ω) ← u(t)

4: if n(c, Ω) = 0 then add task (c, Ω) to q

5: while q not empty do

6: get first task t = (c, Ω) from q

7: solve t using upwind fluxes

8: for downwind neighbor cd in d(t) do

9: reduce missing upwind flux count n(cd, Ω) by 1.

10: if n(cd, Ω) = 0 then add task (cd, Ω) to q.

The single-core sweep algorithm is described in Algorithm 2. In order to find the topological sorting, the sweep algorithm starts the computation by computing an upwind count for each direction and each cell which is simply the number of cells upwind of the cell in the given direction. The idea is to keep track of the set of all (cell, direction) pairs which can currently be solved, which are those whose upwind neighbors have already been solved. Whenever we solve a task, we reduce the upwind count of all its downwind dependencies by 1. If the upwind count of this dependency is now zero, we put this dependency into the task queue. Once the queue is empty, we have solved all tasks and have obtained the solution to the RTE. The grid being acyclic guarantees that this algorithm always terminates.

1: initialize task queue q ← {)

2: initialize send queues for each processor i holding downwind neighbors of any of the cells in the domain of the current processor: si ← {}

3: for all Ω and all cells c in grid do

4: count number of required upwind fluxes n(c, Ω) ← u(t)

5: if n(c, Ω) = 0 then add task (c, Ω) to q

6: while any cell unsolved or any si not empty do

7: for each incoming message (flux f along Ω into cell c)

do

8: reduce missing upwind flux count n(c, Ω) by 1.

9: if n(c, Ω) = 0 then add task (c, Ω) to q.

10: nsolved = 0

11: while q nonempty and nsolved < Nmax do

12: get first task t = (c, Ω) from q

13: solve t using upwind fluxes

14: nsolved += 1

15: for downwind neighbor cd in d(t) do

16: if cd is remote cell on processor i then

17: add flux to send queue si

18: else

19: reduce missing upwind flux count n(cd, Ω) by 1.

20: if n(cd, Ω) = 0 then add task (cd, Ω) to q.

21: send all messages in si

In order to perform the algorithm described in Algorithm 2 in parallel on many cores with a spatial domain decomposition, a number of modifications need to be made to the algorithm. The basic idea of the algorithm does not change in the parallelized version of the code. The main difference is that we need to take task dependencies between different cores into account. Previously, having solved a task meant that we could simply reduce the upwind count of all its downwind dependencies by one. Now, the downwind dependencies of a task might be on a different core. In this case, we send a message to that core consisting of the outgoing fluxes of the local cell, the ID of the downwind cell, and the direction of the task. As soon as the other core receives that message, it will reduce the upwind count for the corresponding cell by one (and add it to the solve queue if the upwind count is 0 at this point). In order to improve performance, messages are not sent immediately, since sending lots of small messages tends to increase communication overhead and reduce performance as a result. The opposite strategy of sending messages only after all tasks that are solvable locally have been solved also comes with performance drawbacks, since it can cause long waiting times on neighboring cores who cannot perform any work before receiving new incoming fluxes. In practice, we use an intermediate approach, where we solve at most Nmax local tasks before new messages are sent and received. Here, Nmax is a free parameter and the two extremes are recovered for Nmax = 1 and Nmax = ∞, respectively. In order for this parallel algorithm to terminate, it is crucial that all cores agree on the connections between their local cells. If this is not the case, cores can end up waiting for incoming messages that will never be sent, causing infinite deadlocks. The property that all cores agree on the interfaces between their boundary cells is ensured by the particular way in which grid construction is performed by the algorithm described in Sect. 2.3.3.

|

Fig. 1 Illustration of the radiative processes described by Eq. (4) for a single grid cell. The processes are: Incoming (orange) and outgoing (red) radiation, sources (green), absorption (blue). |

2.5 Substepping

2.5.1 Motivation

The main problem with the sweep algorithm above is that the entire grid operates on the same timestep. This is not a problem for the RTE alone, since, given our assumption of infinite speed of light, it is independent of time and therefore reaches a steady-state solution immediately. However, we are interested in solutions of the RTE coupled to the radiation chemistry equations, which are manifestly time-dependent.

In practice, a large fraction of the cells in the simulation are either fully ionized or fully neutral and have settled into an equilibrium where their chemistry update could be performed on long timesteps. However, cells along ionization fronts require comparatively short timesteps in order to accurately integrate both the RTE and the chemical rate equations. With the previous sweep algorithm, the only option was to use a low value for the global timestep, which in turn means that solving the system for the desired amount of time requires a higher number of sweeps.

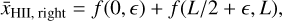

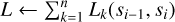

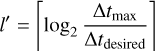

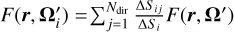

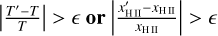

In the following, we will introduce a modification to the sweep algorithm which allows cells to perform sub-timesteps, if required. Effectively, this solves the global timestep problem by letting cells choose their desired timestep. In the following, we will explain how this algorithm works in practice. An illustration of the algorithm is shown in Fig. 2.

2.5.2 Timestep levels

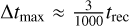

In order to do so, we introduce n different timestep levels. Each cell c is assigned to a timestep level l(c). During a full timestep Δtmax, a cell at timestep level 0 receives one update for a full timestep Δtmax. Cells at level l receive 2l updates with a timestep of 2−lΔtmax.

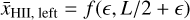

At the end of each full timestep, each cell re-computes its desired timestep. To do so, we begin by computing the three timescales

tT , the timescale on which the temperature T changes,

, the timescale on which the ionized hydrogen fraction xH II changes,

, the timescale on which the ionized hydrogen fraction xH II changes,tF, the timescale on which the photon flux F changes.

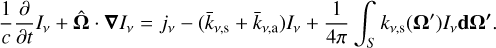

Each individual timescale tq for the corresponding quantity q (where q is one of T, xH II, and F) is computed as

(5)

(5)

where q(t − Δtmax2−l) and q(t) refer to the values of q in the previous partial sweep and the current one respectively and l is the current timestep level of the cell. Using these individual timescales, we define the minimum tmin as

![${t_{\min }} = \min \left[ {{t_{{x_{{\rm{HII}}}}}},{t_T},{t_F}} \right].$](/articles/aa/full_html/2025/01/aa50421-24/aa50421-24-eq23.png) (6)

(6)

Finally, the timescale tmin is used to compute the desired timestep Δtdesired for the cell as

(7)

(7)

where x ∈ (0,1] is a dimensionless free parameter which controls the accuracy of the integration. Given tdesired , we determine the timestep level l′ of the cell for the next full timestep as

(8)

(8)

for the entire next full timestep. In order to keep a fixed number of levels n, values of l′ > n − 1 are reduced to n − 1 and values of l′ < 0 are increased to zero. Modifications of this method where cells can change their timestep level in the middle of a full timestep are possible, but for reasons of simplicity, we have not implemented them at this point.

|

Fig. 2 Illustration of the sweep substepping procedure. The rectangles represent cells, with the color of the cell indicating how far the cell has been integrated. A fully blue cell has not been integrated at all, while a green cell has been integrated for a total of Δtmax. Arrows represent fluxes going into the cell which are computed during the sweep steps. Black arrows denote normal fluxes, while orange arrows represent boundary terms for the sweep. Each row represents either a sweep (denoted by S) or a chemistry update (denoted by C) at the corresponding level l. The last row represents the final state with each cell having been fully integrated. |

2.5.3 The algorithm

Given the distribution of the cells onto the n timestep levels 0… n − 1, we introduce the following terminology: A “partial sweep” at level l, or l-sweep is a sweep of all the cells which are at level l or higher. During a l-sweep, we call cells “active” if their timestep level l′ fulfills l′ ≥ l, that is if they are involved in the l-sweep. A “full sweep” is the procedure by which the system is integrated for a full timestep Δtmax and consists of 1 0-sweep, 1 1-sweeps, 2 2-sweeps, 4 3-sweeps, . . . , and 2n−2 (n − 1)-sweeps. The order in which they are performed is illustrated in Fig. 2.

Since only the cells at levels larger or equal than l participate in an l-sweep, we need to decide how to treat the incoming fluxes from cells which are at levels below l. In this method, the fluxes of all cells at timestep levels 0… l − 1 are kept constant and effectively treated as boundary conditions for the cells at higher levels. During the l-sweep, we compute a flux correction for each cell that has at least one active upwind neighbor, since these are the only cells for which the fluxes change during this partial sweep. For these cells, the new outgoing flux  is computed as

is computed as

(9)

(9)

where Fout is the value of the outgoing flux before the partial sweep, Fin is the incoming flux term before the partial sweep,  is the incoming flux in this partial sweep and f is the function that computes the outgoing fluxes given the incoming fluxes and crucially depends on the chemical composition which might change during a chemistry update. This function depends on the implementation of the chemistry, and its exact form in our hydrogen-only chemistry implementation will be discussed in Sect. 2.9. Outgoing fluxes of a cell are either used directly as input into other local cells, or communicated to other cores, as in the original sweep algorithm without substepping. Flux corrections are applied to cells whether or not the target cell itself is active.

is the incoming flux in this partial sweep and f is the function that computes the outgoing fluxes given the incoming fluxes and crucially depends on the chemical composition which might change during a chemistry update. This function depends on the implementation of the chemistry, and its exact form in our hydrogen-only chemistry implementation will be discussed in Sect. 2.9. Outgoing fluxes of a cell are either used directly as input into other local cells, or communicated to other cores, as in the original sweep algorithm without substepping. Flux corrections are applied to cells whether or not the target cell itself is active.

Once the l-sweep is finished, all cells c have their chemistry updated by 2−l(c)Δtmax. This means that at this moment, cells on higher levels (and therefore shorter timesteps) have experienced “less" time, than those on lower levels. This will be corrected by performing additional partial sweeps on the higher levels, so that at the end of a full sweep, each particle has been integrated for exactly Δtmax . It should also be noted that consistency is guaranteed in the sense that the order in which the partial sweeps are performed guarantees that for any given partial sweep, all active cells have experienced the same amount of time. After every full sweep, the cells are moved onto their new timestep level, according to their desired timestep (see Eq. (8)). Crucially, after the timestep levels have been updated, each core communicates the new timestep level of each of its boundary cells to the neighboring cores. This is important because all of the cores have to agree on which cells are active at each level. If they do not agree on this, one of the cores will expect incoming fluxes over the interface shared by the two cells while the other will not send those fluxes, resulting in a deadlock of the partial sweep.

2.6 Wind up

At the beginning of the simulation, we do not know how to distribute the cells onto the timestep levels, since we have no prior data on the timescales at which their relevant quantities will change. If we had to guess the timestep level of any cell, the only reasonable choice we can make in order to not violate the timestep criterion of any single cell, is to place all cells in the highest level (the lowest timestep). However, performing a full sweep in this setup would require a total of 2n+1 − 1 sweeps of the entire grid, an extremely expensive operation. In order to avoid this, we compute the timescales of each cell by placing each cell in level n − 1 and beginning with a (n − 1)-sweep, that is a sweep at the smallest allowed timestep (2−(n−1)Δtmax). We then allow each cell to move one level down, if its desired timestep is large enough, and perform a n − 2 sweep, and so on. In the end, we have performed n partial sweeps and have simulated a total time of  . In order to align the time intervals with multiples of Δtmax, we perform one more partial sweep of all cells with timestep 2−(n−1)Δtmax which will bring the total simulated time to Δtmax . From now on, every timestep will be performed by a full sweep, which totals Δtmax.

. In order to align the time intervals with multiples of Δtmax, we perform one more partial sweep of all cells with timestep 2−(n−1)Δtmax which will bring the total simulated time to Δtmax . From now on, every timestep will be performed by a full sweep, which totals Δtmax.

2.7 Periodic boundary conditions

Periodic boundary conditions are important in order to study cosmological volumes of space self-consistently, by allowing effects from the matter outside of the simulation box to be approximately modeled by the contents of the simulation box itself. In the case of radiative transfer, this means re-introducing photons that escape the box on one side to the mirrored position on the opposite side.

In Sect. 2.3.3, we discussed how periodic boundaries are taken into consideration during mesh construction. This means that each cell at the boundary of the box knows the location of its periodic neighbors. There is no obvious, self-consistent way of re-introducing outgoing photons within a single sweep. However, we can make use of the source iteration algorithm and treat fluxes that leave the boundaries of the simulation box as source terms for the next iteration of the algorithm. Each iteration then approximates the true periodic source terms until convergence is reached. However, applying this approach to a full sweep has the obvious drawback that every iteration takes exactly as long as the original sweep. Since a full sweep over the grid is an expensive operation, repeating it a number of times in order to reach an acceptable level of convergence can quickly become infeasible.

In Peter et al. (2023) we discussed the concept of warmstarting, where the resulting fluxes from previous sweeps are re-introduced in the next iteration in order to speed up convergence. Moreover, warmstarting integrates extremely well with the sub-timestepping approach introduced in SUBSWEEP. To do so, we use the outgoing periodic fluxes of every partial sweep as incoming fluxes into the corresponding cells for the next partial sweep. This has a number of benefits. Primarily, it changes the algorithm so that it does not require a global cost (re-running the full sweep) in order to fix an often local problem (convergence of the periodic fluxes in the cells with the most activity). Instead, the algorithm naturally adapts itself to the local requirements and decreases the timestep in cells with particularly bad convergence behavior with respect to periodic boundary conditions. It should be noted that this happens without requiring any timestep criterion specific to periodic boundary conditions: cells that have not converged to their true periodic fluxes will automatically reduce the timestep, since that derives (among other things) from the rate of change in the flux terms, as shown in Eq. (6). This combination of warmstarting and substepping has proven so effective that we have chosen not to implement any global iteration on levels of full sweeps in SUBSWEEP.

2.8 Rotations

As discussed for the original SWEEP implementation in Peter et al. (2023), we perform rotations of the directional bins between transport sweeps, similar to the approach described in Krumholz et al. (2007b), in order to smooth out the effect that the discretization of the directional bins has on the result. The preferential directions introduced by this discretization can easily lead to very apparent star-shaped artifacts in the hydrogen ionization fraction around strong sources.

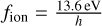

In SUBSWEEP, we keep this approach to smoothing out preferential directions. Here, remapping the flux corrections from one timestep to the next becomes important. As in the original implementation, the directions Ωi are rotated to new directions  where R(θ, ϕ) is the rotation matrix for the spherical coordinate-angles θ and ϕ. The angles are chosen from a uniform distribution of θ ∈ [0, π], ϕ ∈ [0, 2π]. Remapping of the fluxes onto the new angular bins is then done via

where R(θ, ϕ) is the rotation matrix for the spherical coordinate-angles θ and ϕ. The angles are chosen from a uniform distribution of θ ∈ [0, π], ϕ ∈ [0, 2π]. Remapping of the fluxes onto the new angular bins is then done via  where Ndir is the number of directional bins, the interpolation coefficients ΔSij are given by the solid angle that Ωi and Ωj share, and ΔSi is the solid angle corresponding to any direction Ωi.

where Ndir is the number of directional bins, the interpolation coefficients ΔSij are given by the solid angle that Ωi and Ωj share, and ΔSi is the solid angle corresponding to any direction Ωi.

These rotations are performed only after every full sweep and not after partial sweeps. It is possible in principle to rotate the bins also after every partial sweep, but doing so can have a very strong, discontinuous effect on the convergence timescale of some cells. In order to safely incorporate sub-timestep rotations into the substepping approach, we think it is necessary to introduce the ability for cells to change their desired timestep during partial sweeps, not only during full sweeps. Therefore, we have chosen not to introduce this additional complexity to the algorithm.

The drawback of this choice is that if the full-sweep timestep Δtmax is chosen to be large compared to the timescales at which ionization fronts move a large amount of cells (which is desirable in order to fully take advantage of the substepping approach), artifacts due to preferential directions can be visible. In order to avoid these artifacts, the full-sweep timestep has to be decreased, increasing computation time.

2.9 Radiation chemistry

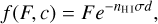

The implementation of the radiation chemistry in our code follows Rosdahl et al. (2013). In its current form, the code only treats the ionization, heating, and cooling of hydrogen in a primordial gas. We assume zero helium in our code. However, extensions to incorporate helium or more complex chemical networks are possible and intended in the structure of the code. This includes adding more frequency bins for the radiative transfer. In the current form, we use one frequency bin which incorporates all photons with frequencies v ≥ fion, where  .

.

The chemical state of a cell c is described by the state vector: U = (T, xH II) alongside its (constant) density p. The first thing that the implementation of the chemistry needs to provide is the function f (F, c) discussed in Sect. 2.5. This function computes the outgoing photon flux of a cell c given the incoming flux F which depends on the chemical state U of the cell. For our hydrogen-only chemistry, this function is given by

(10)

(10)

where nH I is the density of neutral hydrogen,  is the approximate size of the cell (V is the volume of the cell), and σ is the photon-number weighted average cross section of ionizing photons, defined as

is the approximate size of the cell (V is the volume of the cell), and σ is the photon-number weighted average cross section of ionizing photons, defined as

(11)

(11)

where Jν is the underlying spectrum of the source population. In principle we could be more consistent in our choice of cell size by computing the effective length of the cell along the given direction Ω of the sweep, but we did not do so in order to keep this as simple as possible.

1: procedure UPDATE(ΔT)

2: Remember initial state Uinit ← (T, xH II)

3: Compute T′ ← TEMPERATUREUPDATE(Δt).

4: Compute  ← IONIZATIONFRACTIONUPDATE(Δt).

← IONIZATIONFRACTIONUPDATE(Δt).

5: if  then

then

6: U ← Uinit

7: UPDATE(Δ T/2)

8: UPDATE(Δ T/2)

9: return

10: else

11: T ← T′

12:

The basic chemistry update of a cell, given the incoming photon flux F of photons above 13.6 eV proceeds as in Algorithm 4. The temperature update is performed first, which means that the ionization fraction will be updated semi- implicitly using the new values of the temperature.

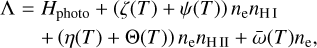

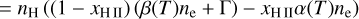

The temperature update is performed by solving the equation

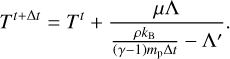

(12)

(12)

where mp is the mass of the proton, µ is the average mass of the particles in the gas in units of mp , γ is the adiabatic index, kB is the Boltzmann constant, ρ is the mass density of the gas and Λ is the total combined heating, and cooling term. In our case, we assume that the gas consists only of hydrogen, so that  where xH II is the hydrogen ionization fraction.

where xH II is the hydrogen ionization fraction.

Λ is given by a sum of the photo-heating term and the sum of all cooling processes,

(13)

(13)

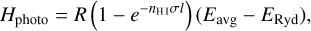

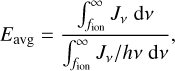

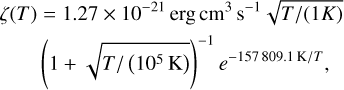

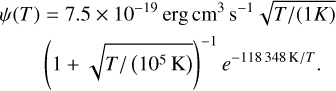

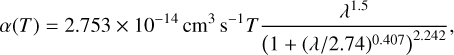

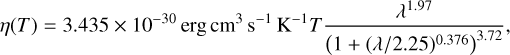

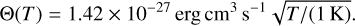

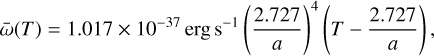

where Hphoto describes photo-heating, ne, nH I and nH II are the (number-)density of electrons, neutral hydrogen and ionized hydrogen respectively and the other terms describe cooling due to collisional ionization ζ(T), collisional excitation ψ(T), recombination η(T), Bremsstrahlung Θ(T), and Compton cooling  . We use the on-the-spot approximation in which we assume that every case A recombination (that is, recombination to the ground state) will emit a photon which is immediately reabsorbed by the surrounding neutral atoms so that it results in no additional recombination. Therefore η(T ) denotes the cooling rate of case B recombination only.

. We use the on-the-spot approximation in which we assume that every case A recombination (that is, recombination to the ground state) will emit a photon which is immediately reabsorbed by the surrounding neutral atoms so that it results in no additional recombination. Therefore η(T ) denotes the cooling rate of case B recombination only.

In order to prevent instabilities related to the stiffness of the equations, we solve Eq. (12) by updating the temperature via a semi-implicit formulation given by

(14)

(14)

Here,  is the derivative of the total heating rate with respect to temperature.

is the derivative of the total heating rate with respect to temperature.

It should be noted, that while higher order methods could be used, we chose the above method for its simplicity and consistency with the approach developed by Rosdahl et al. (2013). While this simple solver might suffer from excessive amounts of substepping if it were applied to a more complicated chemical network, we find that it performs well in our simple hydrogen-only network for the range of temperatures and ionization fractions that we are interested in.

The full expression for all the heating and cooling terms is given in Appendix A.

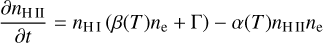

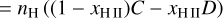

The equation describing the evolution of nH II is given by

(15)

(15)

(16)

(16)

(17)

(17)

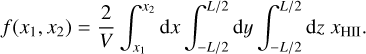

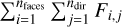

where β(T) is the electron collisional ionization rate, α(T) is the case-B recombination rate, C and D are the creation and destruction terms, Γ is the photoionization rate, which is computed as  , where nfaces is the number of neighboring faces of the cell, ndir is the number of discrete directions, and Fi,j is the incoming photon flux from a given neighbor in the given direction, where Fi,j = 0 if the neighbor is downwind in the given direction.

, where nfaces is the number of neighboring faces of the cell, ndir is the number of discrete directions, and Fi,j is the incoming photon flux from a given neighbor in the given direction, where Fi,j = 0 if the neighbor is downwind in the given direction.

Within a timestep, the ionization fraction is updated according to the semi-implicit formulation given by

(18)

(18)

2.10 Photon conservation

A critical property of radiative transfer algorithms is their ability to conserve the amount of photons, meaning that the number of photons injected through sources should be equal to the number of photons that are either lost in photochemical reactions or leave the simulation box at the edge of the system (in a system without periodic boundary conditions). The sweep algorithm described above is not manifestly photon-conserving, for two reasons.

The first reason is that the algorithm performs sub-timesteps. A higher-timestep cell will have its incoming photon flux determined by an earlier value of a neighbouring lower-timestep cell, which is not fully consistent with the value that the lower- timestep region has in its subsequent updates.

The second reason for photon non-conservation is that the sweep algorithm itself only uses an estimate of the number of photons which are going to be absorbed as a result of the photochemistry. This estimate is given by Eq. (10) in terms of the photon flux. The actual number of photons which are going to be absorbed as a result of the photochemistry is only known after the chemistry step (after solving Eq. (15)) and the two values may differ. For example, the amount of photons which can be absorbed within any given cell should be limited by the number of atoms available for ionization. However, the initial estimate for the flux reduction in Eq. (10) does not take this into account. As a result, the algorithm might severely overestimate the fraction of photons absorbed in the cell, resulting in lost photons.

An alternative to this split between the initial estimate and the actual computation would be to perform a chemistry update of the cell immediately upon encountering it during a partial sweep. However, there are multiple problems with this approach. The first is that it would increase the number of needed chemistry updates, since an update would have to be performed once per sweep direction instead of only once after all directional sweeps have finished. The second problem is that it introduces an artificial asymmetry - each sweep direction would encounter the cell in a different chemical state. To make matters worse, for the parallel sweep algorithm, this is not merely an artificial asymmetry but a non-deterministic one, since the order in which the sweep directions encounter the cell is dependent on the timings of the inter-processor communication and may differ from one simulation run to the next. These reasons make performing a chemistry update for each sweep direction individually undesirable.

In order to address the latter cause of photon nonconservation, one approach is to limit the amount of photons that may be absorbed within any given cell by the amount of available atoms Natoms that could be ionized by the photons. However, there is an immediate problem with this approach, namely that there are multiple sweep directions - each sweep would still be able to absorb as many photons as there are atoms, overestimating the number of absorbed photons by a factor of Ndir . Alternatively, one could go even further and limit the maximum amount of absorbed photons to a fraction Natoms /Ndir, to reduce this factor. However, this approach also has its drawback, since it may severely underestimate the amount of absorbed photons, leading to a widening of ionization fronts and increasing the velocity at which ionization fronts move through the medium.

We choose the former of these two approaches, that is, limiting the number of absorbed photons to Natoms for each individual sweep direction. While this does not completely solve the problem of non-conservation, it can limit the amount of lost photons in extreme cases, without any of the unphysical effects that imposing a stronger limit would cause.

3 Tests

3.1 R-type expansion of an HII region

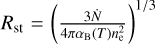

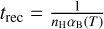

Here we study the R-type expansion of an HII region. Strömgren (1939) showed that a point source in a medium consisting of hydrogen with uniform density will eventually create a spherical HII region with radius  where

where  is the rate of ionizing photons emitted from the source, αB is the temperature-dependent case B recombination coefficient, and ne is the electron density. If we assume that the gas inside the ionized region is fully ionized then ne is equal to the number density of H nuclei, nH , and we find that the time evolution of the system is given by

is the rate of ionizing photons emitted from the source, αB is the temperature-dependent case B recombination coefficient, and ne is the electron density. If we assume that the gas inside the ionized region is fully ionized then ne is equal to the number density of H nuclei, nH , and we find that the time evolution of the system is given by

(20)

(20)

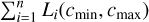

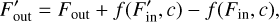

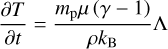

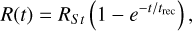

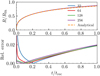

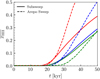

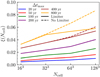

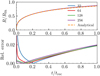

where the recombination time trec is given by  . This test is set up in exactly the same way as described by Jaura et al. (2018) and Baczynski et al. (2015), as well as the corresponding test in Peter et al. (2023). We use a cubic simulation box with L = 12.8 kpc filled with hydrogen with a homogeneous density of nH = 1 × 10−3 cm−3 which is assumed to be fully neutral in the beginning. In the center of the box, we place a single source which emits ionizing photons (of energy E > 13.6 eV) at a rate of N = 1 × 1049 s−1. During the test, we disable photo-heating and all cooling terms, keep the temperature at T = 100 K everywhere, and fix the case B recombination coefficient to αB = 2.59 × 10−13 cm3 s−1. We perform this test for a number of different resolutions, Ncell = 323, 643, 1283, 2563. The top panel of Fig. 3 shows the numerical result for the radius of the ionized bubble as a function of time, compared to the analytical expression given by Eq. (20). In the bottom panel, the relative error between the simulation result and the analytical prediction is shown. The radius of the ionized bubble is defined as the radius r at which a small spherical shell with radius r has an average ionization of

. This test is set up in exactly the same way as described by Jaura et al. (2018) and Baczynski et al. (2015), as well as the corresponding test in Peter et al. (2023). We use a cubic simulation box with L = 12.8 kpc filled with hydrogen with a homogeneous density of nH = 1 × 10−3 cm−3 which is assumed to be fully neutral in the beginning. In the center of the box, we place a single source which emits ionizing photons (of energy E > 13.6 eV) at a rate of N = 1 × 1049 s−1. During the test, we disable photo-heating and all cooling terms, keep the temperature at T = 100 K everywhere, and fix the case B recombination coefficient to αB = 2.59 × 10−13 cm3 s−1. We perform this test for a number of different resolutions, Ncell = 323, 643, 1283, 2563. The top panel of Fig. 3 shows the numerical result for the radius of the ionized bubble as a function of time, compared to the analytical expression given by Eq. (20). In the bottom panel, the relative error between the simulation result and the analytical prediction is shown. The radius of the ionized bubble is defined as the radius r at which a small spherical shell with radius r has an average ionization of  . For more details regarding the computation of this value, see Peter et al. (2023).

. For more details regarding the computation of this value, see Peter et al. (2023).

The runs at all resolutions follow the analytical prediction closely with relative errors of below 2% for all values the initial phase of the expansion where t < 0.1trec. It should be noted that the general trend is that the error increases with increasing resolution, a result that we have also seen in the SWEEP implementation of AREPO. In the following, we give two possible reasons for this counterintuitive result. First of all, we note that the analytical prediction assumes a perfectly sharp boundary, something that is clearly not the case in the numerical solution to the problem. The thickness w of the ionization front (defined as the distance between the point at which the average ionized fraction is 10% and the point at which it is 90%) that we find in our simulation is roughly w = 1.0 kpc at the lowest resolution (323 particles) and decreases slightly to about w = 0.7 kpc at the highest resolution (2563 particles). We note that this is very close to the analytical prediction of w = 0.74 kpc for the same test given in Iliev et al. (2006). This means that the value of the radius depends strongly on its definition, so that small deviations in the radius are not necessarily meaningful.

The second reason for the slight reduction in accuracy with increasing resolution might be related to the issue of photon nonconservation discussed in Sect. 2.10. The effects explained there might reasonably lead to something like the observed result. In order to understand this better, we study the quantitative effect of photon-non-conservation in Sect. 3.5.

|

Fig. 3 Results of the R-type expansion test. Top panel: the value of the radius of the ionized bubble at the center of the simulation box, in units of the Strömgren radius RSt , plotted as a function of time (normalized by the recombination time trec). Different lines represent different resolutions 323 (blue), 643 (red), 1283 (green), 2563 (purple) with the orange, dashed line representing the analytical prediction given by Eq. (20). Bottom panel: the relative error |R(t) − Rr(t)| /Rr(t) between the analytical prediction R(t) and the numerical results Rr(t) as a function of t/trec. |

|

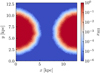

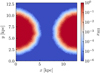

Fig. 4 Slice through the box in the plane z = 6.4 kpc at t = 20 Myr. The color shows different values of the ionization fraction with blue being neutral and red being ionized. |

3.2 Test of periodic boundary conditions

In order to test the behavior of the algorithm in setups with periodic boundary conditions, we perform a test similar to the one in Peter et al. (2023). In this test we perform a R-type expansion of an HII region as in the previous section (Sect. 3.1). The difference between the two simulations is that in this test, rather than placing the source in the center of the box, we place it right at the boundary in the x-direction at position r = (0,6.4,6.4) kpc. A slice through the box illustrating the setup of the test is shown in Fig. 4.

In the simulation there is no cell at exactly that position, so the source term will be introduced into a cell that is slightly to the right (at positive x) of r, namely at r = (є, 6.4,6.4) kpc, where є > 0 is small. Since the source is placed so close to the edge of the simulation box at x = 0, any photons originating at the source with a direction to the left must first pass through the (periodic) boundary before they re-enter from the other side and begin having an effect on the gas. Since the only symmetry breaking element in this setup is the simulation box itself, an accurate solver should produce a reasonably symmetric result, up to the precision determined by the resolution of the simulation. If photons exiting the boundary are not re-introduced on the other side consistently, we will notice a lack of ionization in the right side of the box, compared to the left side.

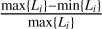

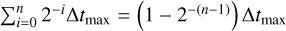

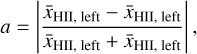

In order to quantify how well our solver deals with periodic boundary conditions, we compute the asymmetry a, defined as the relative difference between the average ionization fraction in the left side of the box and the right side of the box given by

(21)

(21)

where the (volume-)averaged ionization fractions  are defined as

are defined as

That is, the two averages are computed over the left- and righthalves of the simulation box as seen from the source at є, which corresponds to the left- and right- halves of the box except for the small є-sized sliver on the left.

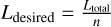

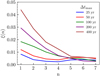

From our implementation of periodic boundary conditions, we can expect that smaller timesteps will achieve more accurate results than larger timesteps, since the initial estimate of the photon fluxes is given simply by the fluxes from the previous timestep: if the timestep itself is small, the prediction will be more accurate. However, the main goal of this test is not just to test the behavior of the solver with respect to the timestep, but to check whether allowing the solver to perform sub-timesteps has a positive effect on the accuracy. In order to test this, we perform the simulation setup described above for a variety of timesteps and different numbers of sub-timestep levels n and compute the periodic asymmetry given by Eq. (21).

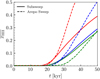

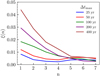

In Fig. 5 we show the asymmetry a as a function of the number of timestep levels n for different values of the maximum timestep Δtmax. Initially, it should be noted that the asymmetry is already quite small, with values below a < 0.4% even at a timestep of Δtmax = 400 kyr, which corresponds to  (the recombination time in this test is the same as in Sect. 3.1). Despite this, we still see the expected overall trend, which is that the asymmetry decreases as the timestep decreases, in approximately linear fashion. Moreover, allowing sub-timestepping to use more levels also decreases the asymmetry, with a clear downwards trend for n ≤ 5. At n = 6 and n = 7 the asymmetry increases temporarily. While this may initially seem worrying, the magnitude of the asymmetry is already below the values that we can reasonably expect to resolve given the relatively low resolution of this test. We also note that the numerical result of this test is quite strongly dependent on the exact value of є - while changing it slightly does not change the overall trend, it does change the absolute values of the asymmetry, especially for values below a < 0.001.

(the recombination time in this test is the same as in Sect. 3.1). Despite this, we still see the expected overall trend, which is that the asymmetry decreases as the timestep decreases, in approximately linear fashion. Moreover, allowing sub-timestepping to use more levels also decreases the asymmetry, with a clear downwards trend for n ≤ 5. At n = 6 and n = 7 the asymmetry increases temporarily. While this may initially seem worrying, the magnitude of the asymmetry is already below the values that we can reasonably expect to resolve given the relatively low resolution of this test. We also note that the numerical result of this test is quite strongly dependent on the exact value of є - while changing it slightly does not change the overall trend, it does change the absolute values of the asymmetry, especially for values below a < 0.001.

|

Fig. 5 Asymmetry of the average ionization fraction. The asymmetry (see Eq. (21)) is shown as a function of the number of timestep levels used for the test. The different lines depict different values for the maximum timestep used. |

3.3 Shadowing behavior behind an overdense clump

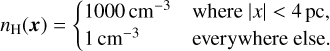

Due to the radial symmetry of the R-type expansion shown in Sect. 3.1, it does not probe the directional properties of the radiative transfer itself very well. To do so, we perform the following test which is also identical to the setup in Jaura et al. (2018) and Baczynski et al. (2015). We study the formation of a shadow behind an overdense clump. We first set up a box of length L = 32 pc. The box is filled with hydrogen with a spatially varying density with

(22)

(22)

We place two point sources at r1 = (−14, 0, 0) pc and r2 = (0, −14, 0) pc, which emit photons at a rate of N = 1.61 × 1048 s−1. An analysis of this test, which includes hydrodynamics and discusses the temperature, pressure, and density response has been performed by Jaura et al. (2018).

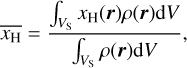

In Fig. 6, we show the rate of photons passing through the cell in units of cm−3s−1 in a slice through the simulation box along the x−y plane for different times (columns) and resolutions (rows). We find that the algorithm will correctly form a shadow behind the overdense clump. However, due to numerical diffusion, the shadow does not follow the theoretically expected form exactly. Because of this, the region behind the overdense clump will slowly begin to ionize. In order to quantify the shadowing behavior we calculate the mass-averaged fraction of ionized hydrogen in the volume of the shadow. The volume is given by the intersection of two (infinitely extended) cones, with their tips at r1 and r2 respectively and their base determined by the great circle lying in the overdense clump. The overdense clump itself is excluded from the volume. In the 2D slice shown in Fig. 6, this volume VS corresponds to the area between the black dashed circle and the black lines. The average ionization fraction in the shadow region  is given by

is given by

(23)

(23)

where xH(r) is the abundance of ionized hydrogen at position r and ρ(r) denotes the mass density at position r.

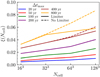

In Fig. 7,  is shown as a function of time. The ionization fraction begins to increase at t ≈ 20 kyr, demonstrating that the sweep algorithm does not form a perfect shadow. The shadowing behavior improves going from lower resolution to higher resolution. This is in line with the explanation that the protrusion of the ionization front into the shadow is due to numerical diffusion, since higher resolutions decrease the effect of numerical diffusion. We also find that for low resolutions (323 , 643), SUBSWEEP forms a better shadow than the SWEEP, especially at late times, while SWEEP performs better in the high resolution case 1283 . It might be surprising that there are different results at all, considering the fact that the two implementations use the same algorithm for the radiation transport. This is due to the fact that the chemistry updates are performed differently. The AREPO implementation, SWEEP will perform a radiation chemistry update for each time a directional sweep encounters a cell. In SUBSWEEP, cell abundances are fixed until the end of the sub-timestep and therefore remain the same for each directional sweep. This can result in different behavior at the ionization front.