| Issue |

A&A

Volume 691, November 2024

|

|

|---|---|---|

| Article Number | A319 | |

| Number of page(s) | 28 | |

| Section | Cosmology (including clusters of galaxies) | |

| DOI | https://doi.org/10.1051/0004-6361/202450617 | |

| Published online | 25 November 2024 | |

Euclid preparation

LIII. LensMC, weak lensing cosmic shear measurement with forward modelling and Markov Chain Monte Carlo sampling

1

Institute for Astronomy, University of Edinburgh,

Royal Observatory, Blackford Hill,

Edinburgh

EH9 3HJ,

UK

2

Department of Physics, Oxford University,

Keble Road,

Oxford

OX1 3RH,

UK

3

Jodrell Bank Centre for Astrophysics, Department of Physics and Astronomy, University of Manchester,

Oxford Road,

Manchester

M13 9PL,

UK

4

Mullard Space Science Laboratory, University College London,

Holmbury St Mary, Dorking,

Surrey

RH5 6NT,

UK

5

Aix-Marseille Université, CNRS, CNES, LAM,

Marseille,

France

6

Universität Innsbruck, Institut für Astro- und Teilchenphysik,

Technikerstr. 25/8,

6020

Innsbruck,

Austria

7

Universität Bonn, Argelander-Institut für Astronomie,

Auf dem Hügel 71,

53121

Bonn,

Germany

8

Université Paris-Saclay, CNRS, Institut d’astrophysique spatiale,

91405

Orsay,

France

9

Institute of Cosmology and Gravitation, University of Portsmouth,

Portsmouth

PO1 3FX,

UK

10

INAF-Osservatorio Astronomico di Brera,

Via Brera 28,

20122

Milano,

Italy

11

INAF-Osservatorio di Astrofisica e Scienza dello Spazio di Bologna,

Via Piero Gobetti 93/3,

40129

Bologna,

Italy

12

Dipartimento di Fisica e Astronomia, Università di Bologna,

Via Gobetti 93/2,

40129

Bologna,

Italy

13

INFN-Sezione di Bologna,

Viale Berti Pichat 6/2,

40127

Bologna,

Italy

14

Max Planck Institute for Extraterrestrial Physics,

Giessenbachstr. 1,

85748

Garching,

Germany

15

Universitäts-Sternwarte München, Fakultät für Physik, Ludwig-Maximilians-Universität München,

Scheinerstrasse 1,

81679

München,

Germany

16

INAF – Osservatorio Astrofisico di Torino,

Via Osservatorio 20,

10025

Pino Torinese (TO),

Italy

17

Dipartimento di Fisica, Università di Genova,

Via Dodecaneso 33,

16146,

Genova,

Italy

18

INFN-Sezione di Genova,

Via Dodecaneso 33,

16146

Genova,

Italy

19

Department of Physics “E. Pancini”, University Federico II,

Via Cinthia 6,

80126

Napoli,

Italy

20

INAF – Osservatorio Astronomico di Capodimonte,

Via Moiariello 16,

80131

Napoli,

Italy

21

INFN section of Naples,

Via Cinthia 6,

80126

Napoli,

Italy

22

Instituto de Astrofísica e Ciências do Espaço, Universidade do Porto, CAUP,

Rua das Estrelas,

4150-762

Porto,

Portugal

23

Dipartimento di Fisica, Università degli Studi di Torino,

Via P. Giuria 1,

10125

Torino,

Italy

24

INFN-Sezione di Torino,

Via P. Giuria 1,

10125

Torino,

Italy

25

INAF – IASF Milano,

Via Alfonso Corti 12,

20133

Milano,

Italy

26

INAF-Osservatorio Astronomico di Roma,

Via Frascati 33,

00078

Monteporzio Catone,

Italy

27

INFN – Sezione di Roma,

Piazzale Aldo Moro 2, c/o Dipartimento di Fisica, Edificio G. Marconi,

00185

Roma,

Italy

28

Institut de Física d’Altes Energies (IFAE), The Barcelona Institute of Science and Technology,

Campus UAB,

08193

Bellaterra (Barcelona),

Spain

29

Port d’Informació Científica,

Campus UAB, C. Albareda s/n,

08193

Bellaterra (Barcelona),

Spain

30

Institute for Theoretical Particle Physics and Cosmology (TTK), RWTH Aachen University,

52056

Aachen,

Germany

31

Institute of Space Sciences (ICE, CSIC),

Campus UAB, Carrer de Can Magrans, s/n,

08193

Barcelona,

Spain

32

Institut d’Estudis Espacials de Catalunya (IEEC), Edifici RDIT,

Campus UPC,

08860

Castelldefels,

Barcelona,

Spain

33

Dipartimento di Fisica e Astronomia “Augusto Righi” – Alma Mater Studiorum Università di Bologna,

Viale Berti Pichat 6/2,

40127

Bologna,

Italy

34

European Space Agency/ESRIN,

Largo Galileo Galilei 1,

00044

Frascati, Roma,

Italy

35

ESAC/ESA,

Camino Bajo del Castillo, s/n., Urb. Villafranca del Castillo,

28692

Villanueva de la Cañada,

Madrid,

Spain

36

Université Claude Bernard Lyon 1, CNRS/IN2P3, IP2I Lyon,

UMR 5822,

Villeurbanne,

69100

France

37

Institute of Physics, Laboratory of Astrophysics, Ecole Polytechnique Fédérale de Lausanne (EPFL),

Observatoire de Sauverny,

1290

Versoix,

Switzerland

38

UCB Lyon 1, CNRS/IN2P3, IUF, IP2I Lyon,

4 rue Enrico Fermi,

69622

Villeurbanne,

France

39

Departamento de Física, Faculdade de Ciências, Universidade de Lisboa,

Edifício C8, Campo Grande,

1749-016

Lisboa,

Portugal

40

Instituto de Astrofísica e Ciências do Espaço, Faculdade de Ciências, Universidade de Lisboa,

Campo Grande,

1749-016

Lisboa,

Portugal

41

Department of Astronomy, University of Geneva,

ch. d’Ecogia 16,

1290

Versoix,

Switzerland

42

INAF-Istituto di Astrofisica e Planetologia Spaziali,

via del Fosso del Cavaliere, 100,

00100

Roma,

Italy

43

Université Paris-Saclay, Université Paris Cité, CEA, CNRS, AIM,

91191

Gif-sur-Yvette,

France

44

Institut de Ciencies de l’Espai (IEEC-CSIC),

Campus UAB, Carrer de Can Magrans, s/n Cerdanyola del Vallés,

08193

Barcelona,

Spain

45

INAF – Osservatorio Astronomico di Trieste,

Via G. B. Tiepolo 11,

34143

Trieste,

Italy

46

Istituto Nazionale di Fisica Nucleare, Sezione di Bologna,

Via Irnerio 46,

40126

Bologna,

Italy

47

INAF – Osservatorio Astronomico di Padova,

Via dell'Osservatorio 5,

35122

Padova,

Italy

48

Institute of Theoretical Astrophysics, University of Oslo,

PO Box 1029

Blindern,

0315

Oslo,

Norway

49

Higgs Centre for Theoretical Physics, School of Physics and Astronomy, The University of Edinburgh,

Edinburgh

EH9 3FD,

UK

50

Jet Propulsion Laboratory, California Institute of Technology,

4800 Oak Grove Drive,

Pasadena,

CA

91109,

USA

51

von Hoerner & Sulger GmbH,

Schlossplatz 8,

68723

Schwetzingen,

Germany

52

Technical University of Denmark,

Elektrovej 327, 2800 Kgs.

Lyngby,

Denmark

53

Cosmic Dawn Center (DAWN),

Denmark

54

Institut d’Astrophysique de Paris, UMR 7095, CNRS, and Sorbonne Université,

98 bis boulevard Arago,

75014

Paris,

France

55

Max-Planck-Institut für Astronomie,

Königstuhl 17,

69117

Heidelberg,

Germany

56

Department of Physics and Helsinki Institute of Physics,

Gustaf Hällströmin katu 2,

00014 University of Helsinki,

Finland

57

Aix-Marseille Université, CNRS/IN2P3, CPPM,

Marseille,

France

58

AIM, CEA, CNRS, Université Paris-Saclay, Université de Paris,

91191

Gif-sur-Yvette,

France

59

Leiden Observatory, Leiden University,

Einsteinweg 55,

2333 CC

Leiden,

The Netherlands

60

Université de Genève, Département de Physique Théorique and Centre for Astroparticle Physics,

24 quai Ernest-Ansermet,

1211

Genève 4,

Switzerland

61

Department of Physics,

PO Box 64,

00014 University of Helsinki,

Finland

62

Helsinki Institute of Physics,

Gustaf Hällströmin katu 2, University of Helsinki,

Helsinki,

Finland

63

NOVA optical infrared instrumentation group at ASTRON,

Oude Hoogeveensedijk 4,

7991PD,

Dwingeloo,

The Netherlands

64

Dipartimento di Fisica “Aldo Pontremoli”, Università degli Studi di Milano,

Via Celoria 16,

20133

Milano,

Italy

65

INFN – Sezione di Milano,

Via Celoria 16,

20133

Milano,

Italy

66

Dipartimento di Fisica e Astronomia “Augusto Righi” – Alma Mater Studiorum Università di Bologna,

via Piero Gobetti 93/2,

40129

Bologna,

Italy

67

Department of Physics, Institute for Computational Cosmology, Durham University,

South Road,

DH1 3LE,

UK

68

Université Côte d’Azur, Observatoire de la Côte d’Azur, CNRS, Laboratoire Lagrange,

Bd de l’Observatoire, CS 34229,

06304

Nice cedex 4,

France

69

Université Paris Cité, CNRS,

Astroparticule et Cosmologie,

75013

Paris,

France

70

University of Applied Sciences and Arts of Northwestern Switzerland, School of Engineering,

5210

Windisch,

Switzerland

71

European Space Agency/ESTEC,

Keplerlaan 1,

2201 AZ

Noordwijk,

The Netherlands

72

School of Mathematics, Statistics and Physics, Newcastle University,

Herschel Building,

Newcastle-upon-Tyne

NE1 7RU,

UK

73

Department of Physics and Astronomy, University of Aarhus,

Ny Munkegade 120,

8000

Aarhus C,

Denmark

74

Waterloo Centre for Astrophysics, University of Waterloo,

Waterloo,

Ontario

N2L 3G1,

Canada

75

Department of Physics and Astronomy, University of Waterloo,

Waterloo,

Ontario

N2L 3G1,

Canada

76

Perimeter Institute for Theoretical Physics,

Waterloo,

Ontario

N2L 2Y5,

Canada

77

Université Paris-Saclay, Université Paris Cité, CEA, CNRS, Astrophysique, Instrumentation et Modélisation Paris-Saclay,

91191

Gif-sur-Yvette,

France

78

Space Science Data Center, Italian Space Agency,

via del Politecnico snc,

00133

Roma,

Italy

79

Centre National d’Etudes Spatiales – Centre spatial de Toulouse,

18 avenue Edouard Belin,

31401

Toulouse Cedex 9,

France

80

Institute of Space Science, Str. Atomistilor,

nr. 409 Măgurele,

Ilfov

077125,

Romania

81

Instituto de Astrofísica de Canarias,

Calle Vía Láctea s/n,

38204,

San Cristóbal de La Laguna,

Tenerife,

Spain

82

Departamento de Astrofísica, Universidad de La Laguna,

38206,

La Laguna,

Tenerife,

Spain

83

Dipartimento di Fisica e Astronomia “G. Galilei”, Università di Padova,

Via Marzolo 8,

35131

Padova,

Italy

84

INFN – Padova,

Via Marzolo 8,

35131

Padova,

Italy

85

Departamento de Física, FCFM, Universidad de Chile,

Blanco Encalada 2008,

Santiago,

Chile

86

Satlantis, University Science Park,

Sede Bld 48940,

Leioa-Bilbao,

Spain

87

Centro de Investigaciones Energéticas, Medioambientales y Tecnológicas (CIEMAT),

Avenida Complutense 40,

28040

Madrid,

Spain

88

Instituto de Astrofísica e Ciências do Espaço, Faculdade de Ciências, Universidade de Lisboa,

Tapada da Ajuda,

1349-018

Lisboa,

Portugal

89

Universidad Politécnica de Cartagena, Departamento de Electrónica y Tecnología de Computadoras,

Plaza del Hospital 1,

30202

Cartagena,

Spain

90

Institut de Recherche en Astrophysique et Planétologie (IRAP), Université de Toulouse, CNRS, UPS, CNES,

14 Av. Edouard Belin,

31400

Toulouse,

France

91

Kapteyn Astronomical Institute, University of Groningen,

PO Box 800,

9700

AV

Groningen,

The Netherlands

92

INFN – Bologna,

Via Irnerio 46,

40126

Bologna,

Italy

93

Infrared Processing and Analysis Center, California Institute of Technology,

Pasadena,

CA

91125,

USA

94

IFPU, Institute for Fundamental Physics of the Universe,

via Beirut 2,

34151

Trieste,

Italy

95

INAF, Istituto di Radioastronomia,

Via Piero Gobetti 101,

40129

Bologna,

Italy

96

Department of Mathematics and Physics E. De Giorgi, University of Salento,

Via per Arnesano, CP-I93,

73100

Lecce,

Italy

97

INAF-Sezione di Lecce, c/o Dipartimento Matematica e Fisica,

Via per Arnesano,

73100,

Lecce,

Italy

98

INFN, Sezione di Lecce,

Via per Arnesano, CP-193,

73100

Lecce,

Italy

99

Institut für Theoretische Physik, University of Heidelberg,

Philosophenweg 16,

69120

Heidelberg,

Germany

100

Université St Joseph; Faculty of Sciences,

Beirut,

Lebanon

101

Institut d’Astrophysique de Paris,

98bis Boulevard Arago,

75014

Paris,

France

102

Junia, EPA department,

41 Bd Vauban,

59800

Lille,

France

103

SISSA, International School for Advanced Studies,

Via Bonomea 265,

34136

Trieste TS,

Italy

104

INFN, Sezione di Trieste,

Via Valerio 2,

34127

Trieste TS,

Italy

105

ICSC – Centro Nazionale di Ricerca in High Performance Computing, Big Data e Quantum Computing,

Via Magnanelli 2,

Bologna,

Italy

106

Instituto de Física Teórica UAM-CSIC,

Campus de Cantoblanco,

28049

Madrid,

Spain

107

CERCA/ISO, Department of Physics, Case Western Reserve University,

10900 Euclid Avenue,

Cleveland,

OH

44106,

USA

108

Laboratoire Univers et Théorie, Observatoire de Paris, Université PSL, Université Paris Cité, CNRS,

92190

Meudon,

France

109

Dipartimento di Fisica e Scienze della Terra, Università degli Studi di Ferrara,

Via Giuseppe Saragat 1,

44122

Ferrara,

Italy

110

Istituto Nazionale di Fisica Nucleare, Sezione di Ferrara,

Via Giuseppe Saragat 1,

44122

Ferrara,

Italy

111

Dipartimento di Fisica – Sezione di Astronomia, Università di Trieste,

Via Tiepolo 11,

34131

Trieste,

Italy

112

NASA Ames Research Center,

Moffett Field,

CA

94035,

USA

113

Kavli Institute for Particle Astrophysics & Cosmology (KIPAC), Stanford University,

Stanford,

CA

94305,

USA

114

Bay Area Environmental Research Institute,

Moffett Field,

CA

94035,

USA

115

Minnesota Institute for Astrophysics, University of Minnesota,

116 Church St SE,

Minneapolis,

MN

55455,

USA

116

Institute Lorentz, Leiden University,

Niels Bohrweg 2,

2333 CA

Leiden,

The Netherlands

117

Institute for Astronomy, University of Hawaii,

2680 Woodlawn Drive,

Honolulu,

HI

96822,

USA

118

Department of Physics & Astronomy, University of California Irvine,

Irvine

CA

92697,

USA

119

Department of Astronomy & Physics and Institute for Computational Astrophysics, Saint Mary’s University,

923 Robie Street, Halifax,

Nova Scotia

B3H 3C3,

Canada

120

Departamento Física Aplicada, Universidad Politécnica de Cartagena,

Campus Muralla del Mar,

30202

Cartagena,

Murcia,

Spain

121

Department of Computer Science, Aalto University,

PO Box 15400,

Espoo,

00 076,

Finland

122

Ruhr University Bochum, Faculty of Physics and Astronomy, Astronomical Institute (AIRUB), German Centre for Cosmological Lensing (GCCL),

44780

Bochum,

Germany

123

Université Paris-Saclay, CNRS/IN2P3, IJCLab,

91405

Orsay,

France

124

Univ. Grenoble Alpes, CNRS, Grenoble INP, LPSC-IN2P3,

53, Avenue des Martyrs,

38000

Grenoble,

France

125

Department of Physics and Astronomy,

Vesilinnantie 5,

20014 University of Turku,

Finland

126

Serco for European Space Agency (ESA), Camino bajo del Castillo, s/n, Urbanizacion Villafranca del Castillo,

Villanueva de la Cañada,

28692

Madrid,

Spain

127

ARC Centre of Excellence for Dark Matter Particle Physics,

Melbourne,

Australia

128

Centre for Astrophysics & Supercomputing, Swinburne University of Technology,

Victoria

3122,

Australia

129

W.M. Keck Observatory,

65-1120 Mamalahoa Hwy,

Kamuela,

HI,

USA

130

Department of Physics and Astronomy, University of the Western Cape,

Bellville,

Cape Town,

7535,

South Africa

131

Dipartimento di Fisica, Sapienza Università di Roma,

Piazzale Aldo Moro 2,

00185

Roma,

Italy

132

Centro de Astrofísica da Universidade do Porto,

Rua das Estrelas,

4150-762

Porto,

Portugal

133

Zentrum für Astronomie, Universität Heidelberg,

Philosophenweg 12,

69120

Heidelberg,

Germany

134

Dipartimento di Fisica, Università di Roma Tor Vergata,

Via della Ricerca Scientifica 1,

Roma,

Italy

135

INFN, Sezione di Roma 2,

Via della Ricerca Scientifica 1,

Roma,

Italy

136

Department of Astrophysics, University of Zurich,

Winterthurerstrasse 190,

8057

Zurich,

Switzerland

137

Department of Astrophysical Sciences, Peyton Hall, Princeton University,

Princeton,

NJ

08544,

USA

138

Niels Bohr Institute, University of Copenhagen,

Jagtvej 128,

2200

Copenhagen,

Denmark

★ Corresponding author; giuseppe.congedo@ed.ac.uk

Received:

6

May

2024

Accepted:

17

September

2024

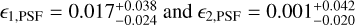

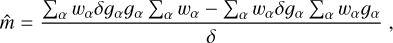

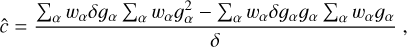

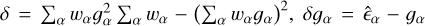

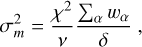

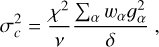

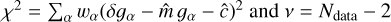

LENSMC is a weak lensing shear measurement method developed for Euclid and Stage-IV surveys. It is based on forward modelling in order to deal with convolution by a point spread function (PSF) with comparable size to many galaxies, sampling the posterior distribution of galaxy parameters via Markov chain Monte Carlo, and marginalisation over nuisance parameters for each of the 1.5 billion galaxies observed by Euclid. We quantified the scientific performance through high-fidelity images based on the Euclid Flagship simulations and emulation of the Euclid VIS images, realistic clustering with a mean surface number density of 250 arcmin−2 (IE < 29.5) for galaxies, and 6 arcmin−2 (IE < 26) for stars, and a diffraction-limited chromatic PSF with a full width at half maximum of 0′.′2 and spatial variation across the field of view. LENSMC measured objects with a density of 90 arcmin−2 (IE < 26.5) in 4500 deg2. The total shear bias was broken down into measurement (our main focus here) and selection effects (which will be addressed in future work). We found measurement multiplicative and additive biases of m1 = (−3.6 ± 0.2) × 10−3, m2 = (−4.3 ± 0.2) × 10−3, c1 = (−1.78 ± 0.03) × 10−4, and c2 = (0.09 ± 0.03) × 10−4; a large detection bias with a multiplicative component of 1.2 × 10−2 and an additive component of −3 × 10−4; and a measurement PSF leakage of α1 = (−9 ± 3) × 10−4 and α2 = (2 ± 3) × 10−4. When model bias is suppressed, the obtained measurement biases are close to Euclid requirement and largely dominated by undetected faint galaxies (−5 × 10−3). Although significant, model bias will be straightforward to calibrate given its weak sensitivity on galaxy morphology parameters. LENSMC is publicly available at gitlab.com/gcongedo/LensMC.

Key words: gravitational lensing: weak / methods: data analysis / cosmology: observations

© The Authors 2024

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1 Introduction

Weak gravitational lensing by large-scale structure is a mature cosmological tool to measure the distribution of dark matter and study dark energy through its evolution with redshift (Schneider 2006; Kilbinger 2015; Mandelbaum 2018). Weak lensing is particularly sensitive to modifications of the theory of gravity and the emergence of physics beyond the concordance Λ-cold dark matter model, which affect the clustering of dark matter (Amendola et al. 2018).

Galaxy surveys from the ground, such as the Dark Energy Survey (DES; Abbott et al. 2018b), the Kilo Degree Survey (KiDS; Kuijken et al. 2015), and the Hyper Suprime-Cam survey (HSC; Aihara et al. 2017), are now achieving constraints on the dark matter sector (primarily in the Ωm−σ8 parameter space and their combination S8) to a few percent (Abbott et al. 2018a; Hildebrandt et al. 2017; Hikage et al. 2019; Amon et al. 2022a; Secco et al. 2022; Dalal et al. 2023; Li et al. 2023d). After extensive consistency checks and sensitivity studies, recent lensing measurements from galaxy surveys are shown to be broadly in agreement with each other but in mild tension with the Planck satellite at the 2σ level or greater (Planck Collaboration VI 2020; Joudaki et al. 2020; Amon et al. 2022b; Heymans et al. 2021; Asgari et al. 2021; Loureiro et al. 2022) – this includes the latest joint analysis of DES and KiDS (Abbott et al. 2023).

In the coming years, galaxy surveys will enter a new regime of area, depth, and image quality. The space-based Euclid telescope (survey area of 14 000 deg2 , full width at half maximum (FWHM) resolution of 0′.′2, depth of 24.5, IE+YEJEHE filters, see Laureijs et al. 2011; Cropper et al. 2016; Euclid Collaboration 2022b, 2024d); the planned space-based Roman telescope (1700 deg2, 0′.′2, 26.5, YJH+F184, see Spergel et al. 2015; Akeson et al. 2019); and the ground-based Rubin Observatory Legacy Survey of Space and Time (LSST; 18 000 deg2, 0′.′7, 27.5, ugrizy, see Ivezić et al. 2019) will substantially increase the number of observable galaxies compared to current surveys. The systematic observation of a billion galaxies or more across one- third of the visible sky will then be possible for the first time. The combined effect of improved survey area and angular resolution will be an enhanced ability to probe both the large and small scales via weak lensing and galaxy clustering, allowing us to constrain cosmological models and dark energy to percentlevel precision (Mandelbaum et al. 2018; Euclid Collaboration 2020a), or even an order of magnitude better when combined with data from Planck (Euclid Collaboration 2022a).

With the dramatic improvement in precision that will be achieved in the coming years, experiments are now focussing on understanding the accuracy of their analyses (see, e.g., Euclid Collaboration 2020b). Along with theory uncertainties, the cosmic shear measurement and redshift estimation are the most challenging aspects of any large-scale weak lensing surveys. The concern of this paper is on cosmic shear measurement, which provides the necessary data for the weak lensing cosmological analysis (Kilbinger 2015). In order to achieve an order of one- percent precision on the dark energy equation of state, a billion galaxies or more with median redshift around one need to be observed. This observation has to be carried out consistently so the same shape measurement procedure is applied to all objects. This measurement has to be conducted with outstanding accuracy to satisfy the stringent requirement of 2 × 10−3 and 3 × 10−4on the measured multiplicative and additive shear biases that were set in the early development phase of weak lensing space telescopes (Massey et al. 2012; Cropper et al. 2013).

Throughout the past years, a number of shear measurement methods were developed, tested on data challenges, and applied to real data. These can be categorised into two main classes: non-parametric and parametric. Among the non-parametric is Kaiser-Squires-Broadhurst (KSB; Kaiser et al. 1995; Hoekstra et al. 1998), which is based on weighted moments of image data. Because of its simplicity, these estimators were used for the very first attempts at measuring cosmic shear in the early 2000s. These methods are fast and can be quickly calibrated, but they are sensitive to effects that need to be characterised. With better precision, it later became clear that more effects needed to be factored in, particularly a realistic PSF and the sensitivity of bias on the PSF ellipticity, known as leakage. Parametric methods, based on forward modelling and model fitting, soon appeared to be better suited to accurately incorporating such real data features building on solid statistical grounds. In recent years, a more systematic use of model fitting techniques was observed across all major lensing surveys. The Bayesian-inspired shape method lensfit (Miller et al. 2007; Kitching et al. 2008; Miller et al. 2013) was extremely successful, first in the Canada-France Hawaii Telescope Lensing Survey (CFHTLenS; Heymans et al. 2012) and more recently in KiDS. This method is based on forward modelling and marginalisation over galaxy nuisance parameters. A similar method is IM3SHAPE (Zuntz et al. 2013), which is a maximum likelihood estimator based again on analytic forward modelling that was applied to DES. While many real-data effects, including the PSF, are accounted for and can be directly built in parametric methods, any in-built correction clearly introduces extra computational overhead, as the parameter probability distribution needs to be sampled accurately.

While lensing measurements became more precise over time, accuracy also needed to be examined more carefully. Methods were compared in data challenges and were run on common simulations with increasing realism, such as in the Shear TEsting Programme (STEP; Heymans et al. 2006; Massey et al. 2007) and the Gravitational LEnsing Accuracy Testing (GREAT; Bridle et al. 2010; Kitching et al. 2012; Mandelbaum et al. 2015). With no method outshining in absolute terms, and methods being better at some aspects of the measurement but worse at others, it became evident that some form of calibration was still necessary. More than ever before, the field has become reliant on galaxy simulations. Sophisticated high-fidelity simulations now need to reproduce the realism of actual observations as close as possible so all biases from detection, measurement, and selection can be fully captured (Fenech Conti et al. 2017; Kannawadi et al. 2019; Euclid Collaboration 2019; MacCrann et al. 2021; Li et al. 2023a,b). Calibration naturally raises the question about how sensitive results are to the assumptions that are made in simulations (Hoekstra et al. 2017), or how large these simulations need to be to meet the desired precision (Pujol et al. 2019; Jansen et al. 2024). Other methods now rely on some form of calibration that is directly built in the measurement process. Galaxy images used in the calibration were simulated internally, inferred from real data, or obtained through a combination of the two methods. Metacalibration (Sheldon & Huff 2017; Huff & Mandelbaum 2017) derives internal estimates of the sensitivity of the ellipticity estimator to input shears and was extremely successful on DES Year 3 (Gatti et al. 2021); Bayesian Fourier Domain (BFD; Bernstein & Armstrong 2014; Bernstein et al. 2016) estimates the Taylor coefficients of the galaxy likelihood expanded over shear with information about moments measured from calibration fields; a similar implementation to BFD uses forward modelling (Sheldon 2014); the KiDS selfcalibration (Fenech Conti et al. 2017) derives internal estimates of the ellipticity bias from noise-free galaxy images; MomentsML relies on simulated images to train shear-predicting artificial neural networks (Tewes et al. 2019). Because many selection biases happen before the shear measurement introduces its own bias, the field has gradually become more aware that those will probably be the limiting factor in future lensing surveys. Further work around Metacalibration led to Metadetection to address the issue (Sheldon et al. 2020). An application of the method to Euclid- like simulations showed that while selection biases may exceed requirements, the outlook is still positive, with demonstrated success at handling detection and blending biases (Hoekstra 2021; Hoekstra et al. 2021; Melchior et al. 2021). Furthermore, the recent Anacal method aims to correct measurement and selection biases via analytic differentiation (Li et al. 2023c).

The impact of neighbours to lensing measurements has also become one of the most important issues that current and future surveys will need to address. In space, the large number density of detected galaxies of about 30 arcmin−2 (IE < 24.5) is compensated by a good image resolution, so the impact of neighbours may not be as bad as from the ground. In fact, due to the worse resolution on the ground, the impact of neighbours is serious, affecting 60% of the sample (Bosch et al. 2017; Arcelin et al. 2021). DES Year 1 results (Samuroff et al. 2017) showed that the total neighbour bias can be as large as 9% (reaching 80% at a close distance to the neighbour, if uncorrected). Moreover, the impact of ‘unrecognised’ blends (undetected neighbours) on the S8 parameter from simulated Rubin Year 1 data can be as large as 15% (Nourbakhsh et al. 2022). Cuts to the catalogue to remove those objects can reduce the total bias to 1% (reaching 30% at a close distance to the neighbour), however at the cost of reducing the effective number density by 30% and leaving a residual bias on S8 of 2σ. While Metacalibration is extremely successful in a few idealised cases (e.g., isolated galaxies) and Metadetection in the handling of blending and detection bias, a suite of advanced simulations were required for the tomographic calibration of DES Year 3 (MacCrann et al. 2021). These simulations show that an external calibration is still required, as the unresolved neighbour introduces a correlation between the two galaxies at different redshift, plus the Metacalibration shear responses will be biased by the presence of the neighbour itself. Therefore, calibration simulations are necessary to correctly capture neighbour bias and the interplay between shear and redshift. However, these simulations assume uniform random distributions of galaxies that were re-weighted to mimic clustering, which raises the question as to whether the inferred bias is likely underestimated. The most recent simulations by KiDS have realistic clustering of N -body simulations, mimic a number of measurement effects, and address the shear-redshift interplay (Li et al. 2023a). With a larger number density, it is expected that the situation may be more serious in future surveys.

In this paper, we present our advanced shear measurement method LENSMC specifically developed for Euclid that builds on the knowledge and success of ground-based measurement at handling real data effects. Similarly to lensfit, it adopts a mean estimator. Contrary to lensfit, it does not marginalise over nuisance parameters with numerical approximations. With full flexibility in the choice of the prior, all the marginalisation in LENSMC is performed by Markov chain Monte Carlo (MCMC) sampling for individual galaxies or jointly across groups of neighbouring galaxies. While IM3SHAPE returns the maximum of the likelihood estimated via the Levenberg-Marquardt algorithm, LENSMC employs a combination of large-scale and small-scale algorithms (such as conjugate gradient and simplex methods) to estimate a suitable initial guess for the MCMC sampling, thus requiring no information about the model derivatives and dramatically reducing the sensitivity on the initial guess (which is assumed to always be the same for all galaxies). The galaxy models in LENSMC are rendered directly in the Fourier space; hence only a single Fourier transform is required. A recent profile-fitting method, The Farmer, has also drawn attention (Weaver et al. 2023). This method is a maximum likelihood estimator whose initial guesses of position and shape are provided by the detection method. It includes a decision tree based on χ2 values to classify objects on their likely type and provides error estimates via Cramer-Rao bounds. Preliminary results are encouraging; however, the method has not been tested on full space-based cosmic shear accuracy yet.

Accurate cosmic shear measurement requires controlling the bias from a number of sources. Key examples include source clustering, faint objects, neighbours, PSF leakage, astrometry, image artefacts, and cosmic rays. Additionally, any forwardmodelling method is plagued by potential model bias. One of the main sources of model bias was addressed in this work. In summary, LENSMC employs: (i) forward modelling to deal with Euclid image undersampling and convolution by a PSF with comparable size to many galaxies; (ii) joint measuring object groups to correctly handle bias due to neighbours; (iii) masking out objects belonging to different groups; (iv) MCMC sampling to sample the posterior in a multi-dimensional parameter space, calculate weights, and correctly marginalise ellipticity over nuisance parameters and other objects in the same group. We particularly focussed on the realism of our simulations, including clustering, stars, object detection, the handling of neighbours due to high number density, and the use of realistic undersampled chromatic PSF images with spatial variation across the field of view. We did not include further real data effects such as non-linearities or cosmic rays, as these will be addressed separately. Also we assumed the same broadband PSF as obtained from a spectral energy distribution (SED) of an SBc-type galaxy at a redshift of one in both simulations and measurements.

Sect. 2 introduces our method and the practical advantages in addressing real data problems. Our forward-modelling method is sufficiently fast to analyse the typical data volume of Stage-IV surveys and can be applied to the complexity of Euclid measurements, including undersampled data and a complex PSF while accounting for the full degrees of freedom in the galaxy modelling. Additionally, it allows for the proper handling of resolved neighbours by joint measurement and masking of more distant galaxies, stars, and artefacts in the images. Sect. 3 describes the simulations used for our intensive testing of the method. The images are fiducial realisations of the Euclid VIS detector (Euclid Collaboration 2024b), and galaxies were rendered based on N -body simulations with full variability of the morphological properties (Potter et al. 2017; Euclid Collaboration 2024a). All galaxies were convolved with a realistic pre-flight PSF model with full spatial variation, but the chromatic variability was ignored. Sect. 4 presents the main results of this testing. When model bias, chromaticity, and selection biases are suppressed, the obtained biases are close to the Euclid requirement. This measurement bias is largely dominated by undetected faint galaxies in the images. The bias was also found to be stable and mostly insensitive to the many effects in the simulations. As the Euclid analysis will also need to correct for other artefacts in the images, the residual bias will be straightforward to calibrate through image simulations. Once we included the model bias by allowing the full variability in the galaxy models, the overall bias became significant. However, since the sensitivity is weak (the derivative of the bias with respect to the assumptions made in the simulations appears negligible), it will then be straightforward to also calibrate the model bias through image simulations. Sect. 5 discusses the main findings and draws the conclusions of our work.

2 Method

The main physical quantity of interest in weak lensing is the reduced cosmic shear (Kilbinger 2015),

(1)

(1)

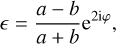

where κ and γ are convergence and shear (both related to the gravitational potential), and g ≈ γ in the weak lensing regime. The related observable in weak lensing is the ellipticity of a galaxy,

(2)

(2)

where a and b are, respectively, the semi-major and semi-minor axes,1 φ ∈ [0, π) is the orientation angle of the galaxy, and |є| ≤ 1. The effect of weak lensing is to distort the ellipticity of a source galaxy, ϵs, by the canonical transformation (Seitz & Schneider 1997),

(3)

(3)

where all spin-2 quantities are expressed in complex notation (e.g., є = є1 + i єշ, where є1 quantifies the distortion along x and y, and є2 along the coordinate axes rotated by π/4). As it is customary in weak lensing, we will refer to ϵs as the intrinsic ellipticity of the source galaxy, and ϵ as the lensed or observed ellipticity. The ellipticity in Eq. (3) is a point estimate for shear in that information on the underlying cosmic shear can be derived in a statistical sense as a sample average, g = 〈є〉, which holds whenever the distribution of orientation angles is uniform, for example, when there are no astrophysical intrinsic alignments (Joachimi et al. 2015) or shear dependent selection effects.

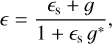

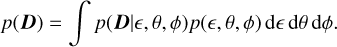

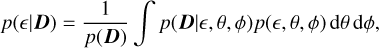

In weak lensing measurements we infer the reduced shear through sample averages. In this work, we use the ellipticity as a point estimator for shear and the problem of measuring ellipticity can be formulated fully in Bayesian terms. Suppose we have a pixel data vector, D, and a model for the galaxy brightness distribution, I = I(є, θ, ϕ), as a function of ellipticity, є, intrinsic nuisance parameters, θ, and linear nuisance parameters, ϕ2. Thanks to Bayes’ theorem, we can define a joint posterior as follows:

(4)

(4)

where p(D|є,θ,ϕ) is the likelihood, p(є,θ,ϕ) is the prior on ellipticity and nuisance parameters, and p(D) is the evidence or marginal likelihood,

(5)

(5)

We can then construct the ellipticity marginal posterior:

(6)

(6)

marginalising out the nuisance parameters. Common choices of estimators are the maximum likelihood or maximum posterior probability, but these are usually biased if the distribution is not Gaussian. However, the bias can be predicted in simple cases of low dimensionality or when the probability function is fully analytic (Cox & Snell 1968; Hall & Taylor 2017). Another option that was successful in ground-based surveys (Miller et al. 2013) is to set the estimator to the mean of the posterior distribution,

(7)

(7)

We adopt this definition, as it has some useful properties:

as the nuisance parameters are marginalised out, their impact on the ellipticity estimator via their correlation is mitigated;

overfitting3 is inherently reduced as we pick an average representative of all possible realisations that are statistically equivalent;

following on from the previous point, we expect the mean estimator to be, in general, less biased than the maximum estimators;

the mean of the distribution can be estimated through MCMC sampling techniques (see Sect. 2.3); such estimators satisfy the central limit theorem and therefore converge to the true mean.

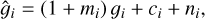

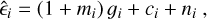

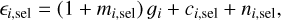

We will discuss more about those points later in this section. Whatever choice is made, any estimator can be seen as a nonlinear mapping between D and ϵ. Therefore even if D were to be Gaussian distributed, the estimator will not, hence leading to a fundamental bias in the measurement, which is commonly referred to as noise bias (Melchior & Viola 2012; Refregier et al. 2012; Viola et al. 2014). Moreover, as the shear is estimated through a sample average over a population of galaxies with varying morphological properties and complex selections, the properties of the shear bias will be different from that of galaxy ellipticity. Assuming shear is small, it is customary in the field to model the shear bias on each component with a linear model (Guzik & Bernstein 2005; Huterer et al. 2006; Heymans et al. 2006),

(8)

(8)

where mi and ci are the multiplicative and additive biases for the i-th component, gi is the true shear, ĝi is an estimate of it, and ni is the corresponding statistical noise. The transformation in Eq. (8) should in principle have mi replaced by a matrix mij to model any potential cross-talk between shear components. Alternatively, it could be rewritten as a spin-2 equation (Kitching & Deshpande 2022),

(9)

(9)

However, generalising upon previous work, m0 and m4 are now spin 0 and 4 complex numbers acting on spin-2 shear fields. Physically, this added flexibility allows for complete modemixing: m0 models a dilation and rotation of the true shear, whereas m4 models a reflection around the axis determined by its phase. We defer the application of this approach to future work. Multiplicative terms can be induced by, for example, non- Gaussianities in the posterior (skewness at first order), caused by, for example, pixel noise and a small galaxy size relative to the PSF. Additive terms are due to anisotropies induced by, for example, the PSF and its spatial variability across the field of view. This effect is referred to as PSF leakage and is defined by the dependence of ci on the PSF ellipticity ϵPSF,i (see, e.g. Gatti et al. 2021, and references therein),

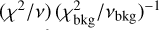

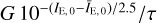

(10)

(10)

We focussed primarily on the c dependence on ϵPSF, as the m dependence is negligible as long as the PSF is stable (i.e., the variation in PSF size is within a percent level). Earlier studies (Massey et al. 2012; Cropper et al. 2013) set out requirements for space-based missions on m and c based on a top-down error breakdown from cosmology to two-point statistics. For Euclid, the requirement is on the statistical error on bias, σm < 2 × 10−3 and σc < 3 × 10−4. That is roughly equivalent to saying that a shear of 1% should be measured with a fractional accuracy and precision of 0.2%. We note that the requirement is an order of magnitude more stringent than current ground-based experiments (Hildebrandt et al. 2017). The detailed breakdown of the total budget on m and c into various error terms (Cropper et al. 2013) suggests we can set the required statistical error on the bias due to the measurement alone to σm < 5 × 10−4 and σc < 5 × 10−5. Therefore, in order to measure |g| ≈ 0.03 with a residual post-calibration multiplicative bias smaller than σm, one will need at least  galaxies4, where σє ≈ 0.3 is the ‘shape noise’, that is, the standard deviation of the per-component intrinsic ellipticity distribution. Obviously measurement noise and intrinsic scatter in the morphological properties will also need to be factored in. A ballpark estimate for the Euclid requirement on PSF leakage that we will be using as benchmark in our analysis is σα ≲ σc / |δєPSF|, where |δєPSF| ≈ 0.1 is the order of magnitude (absolute) variation in PSF ellipticity across the field of view, which yields σα < 1 × 10−3 if we assume a budget of σc < 1 × 10−4. This derivation may be too conservative, as a full propagation of the errors and biases through to cosmological parameters is demonstrated to be able to capture the spatial pattern imprinted by the PSF and other effects (Euclid Collaboration 2020b). Other surveys implemented other solutions such a first-order expansion on PSF ellipticity and PSF model residuals in KiDS (Heymans et al. 2006; Giblin et al. 2021) or the angular correlations between PSF ellipticity and size implemented in the rho statistics in DES (Jarvis et al. 2020).

galaxies4, where σє ≈ 0.3 is the ‘shape noise’, that is, the standard deviation of the per-component intrinsic ellipticity distribution. Obviously measurement noise and intrinsic scatter in the morphological properties will also need to be factored in. A ballpark estimate for the Euclid requirement on PSF leakage that we will be using as benchmark in our analysis is σα ≲ σc / |δєPSF|, where |δєPSF| ≈ 0.1 is the order of magnitude (absolute) variation in PSF ellipticity across the field of view, which yields σα < 1 × 10−3 if we assume a budget of σc < 1 × 10−4. This derivation may be too conservative, as a full propagation of the errors and biases through to cosmological parameters is demonstrated to be able to capture the spatial pattern imprinted by the PSF and other effects (Euclid Collaboration 2020b). Other surveys implemented other solutions such a first-order expansion on PSF ellipticity and PSF model residuals in KiDS (Heymans et al. 2006; Giblin et al. 2021) or the angular correlations between PSF ellipticity and size implemented in the rho statistics in DES (Jarvis et al. 2020).

In the next subsections we address the key elements of the LENSMC measurement method: galaxy modelling, PSF convolution, likelihood, sampling, and a further discussion about handling real data effects. We emphasise the role of joint measurement of objects to address neighbour bias, which is a concern for current and upcoming surveys, and also our MCMC strategy to sample a multi-dimensional parameter space and marginalise each lensing target over all nuisance parameters and other objects.

2.1 PSF-convolved galaxy models

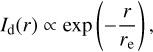

We assumed 2D-projected galaxy models as a mixture of two circular Sérsic profiles (Sérsic 1963). The disc component is

(11)

(11)

and the bulge component is

![${I_{\rm{b}}}(r) \propto \exp \left[ { - {a_{\rm{b}}}{{\left( {{r \over {{r_{\rm{h}}}}}} \right)}^{{1 \over {{n_{\rm{b}}}}}}}} \right],$](/articles/aa/full_html/2024/11/aa50617-24/aa50617-24-eq13.png) (12)

(12)

where r is the distance from the centre, re is the exponential scale length of the disc, rh is the bulge half-light radius, nb = 1, and ab ≈ 2 nb − 0.331 (Peng et al. 2002). The bulge Sérsic index was fixed to 1 based on recent multi-wavelength observations of the Hubble CANDELS/GOODS-South field (Welikala et al., in prep.)5, while bulge profiles with nb = 4 (de Vaucouleurs) are instead more typical for early-type galaxies at low redshift. Bot profiles were normalised so that their integral is 1. In the mea surement, re plays the role of object size parameter, and we fixe the bulge-to-disc scale length ratio to rh/re = 0.15 based on the same Hubble Space Telescope measurements (Welikala et al., i prep.). Finally, we imposed a hard cut-off on the surface bright ness profile at rmax/re = 4.5 since observations indicate that galaxies have truncated surface brightness distributions (Van de Kruit & Searle 1981, 1982). The parameters nb, rmax/re, and rh/re are assumptions made in the modelling that can potentially lea to large biases in presence of a mismatch in the assumed Sérsi index compared to simulations (Simon & Schneider 2017). W stress that our choice of fixed values are based on recent observa tions, and the model bias due to incorrect assumptions are ofte intertwined with the particular simulation setup and its complex ity. A detailed investigation of sensitivity of the calibration t bulge parameters is presented later in this paper.

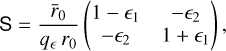

The Sérsic model introduced above is an isotropic profil with zero ellipticity. To make it anisotropic (i.e., elliptical wit ellipticity є = є1 + i є2, we used the following distortion matrix:

(13)

(13)

where  is the scale factor necessary to scale a model of size

is the scale factor necessary to scale a model of size  to the desired size r0. Because observed galaxy shapes are a 2D visual projection of an intrinsically 3D distribution, we introduce the additional scale factor, qє = 1 −|є|, to make the profile semi-major axis invariant under ellipticity transformation.6 Discs and bulges typically show different intrinsic ellipticity. As discs will be observed more elliptical if edge-on, their ellipticity is primarily driven by inclination angle. In contrast, bulges are spheroids that are almost invariant under inclination, so they will appear more circular. In the measurement, we still applied the same ellipticity to both components as part of our 2D modelling, but we are aware that a positive ellipticity gradient from the intrinsically 3D distribution would induce a bias if not fully captured (Bernstein 2010). Our ellipticity estimate will therefore be a proxy of the inclination angle, especially for disc-dominated galaxies. Any residual ellipticity gradient, if significant, will have to be addressed separately as part of the calibration.

to the desired size r0. Because observed galaxy shapes are a 2D visual projection of an intrinsically 3D distribution, we introduce the additional scale factor, qє = 1 −|є|, to make the profile semi-major axis invariant under ellipticity transformation.6 Discs and bulges typically show different intrinsic ellipticity. As discs will be observed more elliptical if edge-on, their ellipticity is primarily driven by inclination angle. In contrast, bulges are spheroids that are almost invariant under inclination, so they will appear more circular. In the measurement, we still applied the same ellipticity to both components as part of our 2D modelling, but we are aware that a positive ellipticity gradient from the intrinsically 3D distribution would induce a bias if not fully captured (Bernstein 2010). Our ellipticity estimate will therefore be a proxy of the inclination angle, especially for disc-dominated galaxies. Any residual ellipticity gradient, if significant, will have to be addressed separately as part of the calibration.

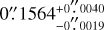

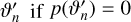

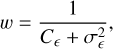

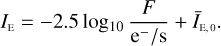

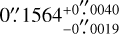

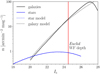

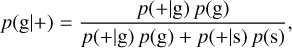

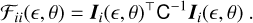

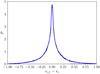

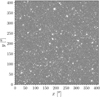

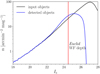

The Euclid telescope, optical elements, and detector introduce distortions of the input galaxy brightness distribution, which must be corrected. The effect is mostly convolutive, which tends to blur the galaxy image further. An example of a typical PSF image for a space-based telescope like Euclid is given in Fig. 1. The PSF is: (i) close to being diffraction limited; (ii) undersampled due to the half width being comparable with the pixel size; (iii) chromatic due to the integration over a wide range of wavelengths in the VIS filter; (iv) SED dependent due to integration being weighted by a combination of galaxy bulge and disc SEDs7; (v) spatially variant across the field of view due to optical distortions at the exit pupil; and (vi) epoch variant due to varying Solar aspect angle throughout the mission inducing thermal distortion on the optics. A comprehensive study of the modelling will be presented elsewhere (Duncan et al., in prep.). A smaller contribution also comes from the CCD pixel response function, which models the response of the detector pixel as an integrated measure of the incoming flux illuminating individual pixels. In forward modelling, we included all the convolutive effects due to the telescope PSF and CCD, individually for bulge, disc, and for each image exposure. Colour gradients originate from incorrectly using the total galaxy SED when generating the PSF image, while the bulge and disc will have naturally different SEDs (Semboloni et al. 2013; Er et al. 2018). Using individually PSF-convolved model components may help control colour gradients, if individual SEDs were available. However, the impact of colour dependence and gradients on our analysis was not addressed here since we assumed the same broadband PSF as obtained from an SED of an SBc-type galaxy at a redshift of one in both simulations and measurements. Further non-linear distortions, such as in the case of charge transfer inefficiency (CTI, Rhodes et al. 2010) and the brighter-fatter effect (BFE, Antilogus et al. 2014), are typically corrected for at the image pre-processing stage, but residuals could still affect the shear measurement (Massey et al. 2014; Israel et al. 2015), which we did not include here.

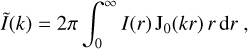

Galaxy modelling for large-volume surveys like Euclid requires fast and efficient rendering of the images. All operations described so far are best suited to work in the Fourier space. We adopted a similar approach to galsim (Rowe et al. 2015). Consider the generic profile I(r), which could be either Eq. (11) or (12). Because of its isotropy, the 2D Fourier transform is the 1D Hankel transform,

(14)

(14)

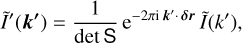

where k is the Fourier frequency (sampled on an oversampled grid) and J0 is the Bessel function of the first kind. We call I(k) the template model which any profile with arbitrary choice of parameter values (ellipticity, size, and position offset) can be derived from8. To render a galaxy profile with parameters є1, є2, and re, we applied the inverse distortion matrix S−1 to coordinates in Fourier space, so anisotropic coordinates are now defined by k′= S−1 k .A position shift by δr = (δx, δy) from the centre9 is equivalent to the phase k′·• δr. The sheared-stretched- shifted model becomes

(15)

(15)

where  is the template calculated at the sheared-stretched Fourier mode k′. Since isotropy is lost through the operation above,

is the template calculated at the sheared-stretched Fourier mode k′. Since isotropy is lost through the operation above,  is no longer a Hankel transform but a full Fourier transform, which is a function of the vector k′ . We alleviate the problem of undersampling by calculating the PSF and galaxy model on coordinates with a common oversampling factor of three. Finally, the oversampled models for PSF and galaxy is multiplied together, the convolved model is downsampled by the same factor to the actual pixel scale10, and the downsampled convolved model is inverse fast Fourier transformed to real space. We applied the operations of shear and stretch to the template bank described in Appendix A to get any object of arbitrary ellipticity and size before the convolution with the PSF takes place as previously discussed.

is no longer a Hankel transform but a full Fourier transform, which is a function of the vector k′ . We alleviate the problem of undersampling by calculating the PSF and galaxy model on coordinates with a common oversampling factor of three. Finally, the oversampled models for PSF and galaxy is multiplied together, the convolved model is downsampled by the same factor to the actual pixel scale10, and the downsampled convolved model is inverse fast Fourier transformed to real space. We applied the operations of shear and stretch to the template bank described in Appendix A to get any object of arbitrary ellipticity and size before the convolution with the PSF takes place as previously discussed.

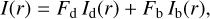

The final galaxy model is a linear mixture of PSF-convolved co-centred components. We label the profiles with a subscript ‘d’ for disc and ‘b’ bulge:

(16)

(16)

where Fd and Fb are disc and bulge fluxes, and F = Fd + Fb is the total flux. If B/T is the bulge fraction, then the fluxes are also defined by Fd = F(1 − B/T) and Fb = F B/T.

To summarise, given pre-computed template models for disc and bulge, we can generate a galaxy model with a desired ellipticity, size, and position by carrying out all the operations with simple algebra in Fourier space on oversampled coordinates, and then take one Fourier transform each time at the end. This is sufficiently fast for an intensive, repeated calculation of the same model with varying realisations of galaxy parameters (ellipticity, size, position offset, and fluxes). However, we kept nb, rmax/re, and rh /re fixed in our modelling as allowing too much freedom would induce strong degeneracies between parameters and complicate the measurement substantially. We address the model bias sensitivity in Sect. 4.4.

|

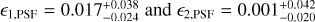

Fig. 1 Example of a Euclid chromatic PSF for an assumed SED of a SBc-type galaxy at a redshift of one. The flux in the image was rescaled to its maximum value and oversampled by a factor of three with respect to the native VIS pixel size of 0′.′1. Diffraction spikes are clearly visible at a significant distance from the centre despite the blurring due to the chromaticity. The full width at half maximum, including its variation across the field of view, is |

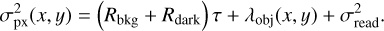

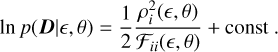

2.2 Likelihood

Suppose we have multi-exposure image data vectors D = {Dexp} 11. We wish to estimate the model, I = {Iexp}, that best represents the available exposures. The model I = I(є,θ,ϕ) is a function of ellipticity є = (є1, є2), nuisance parameters θ = (re, δx, δy),12 and linear flux nuisance parameters ϕ = (Fd, Fb).

Assuming Gaussian data13, the likelihood can be written as

![$\ln p(D\mid ,\theta ,\phi ) = - {1 \over 2}{[D - I(,\theta ,\phi )]^ \top }{{\rm{C}}^{ - 1}}[D - I(,\theta ,\phi )] + {\rm{const}},$](/articles/aa/full_html/2024/11/aa50617-24/aa50617-24-eq24.png) (17)

(17)

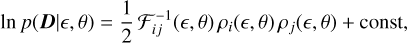

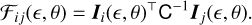

where C is the noise covariance matrix usually estimated from the data as a block diagonal matrix, and the normalisation constant, 1/2 ln[(2π)λ det C] (λ is the dimensionality), is ignored. The noise is intrinsically non-stationary since various noise sources (such as the Poisson noise14 from the objects in the image) vary spatially. Because the model is linear in the component fluxes, I = Fd Id + Fb Ib, it is straightforward to integrate over the fluxes, ϕ = (Fd, Fb), and we have the following marginalised likelihood,

(18)

(18)

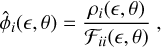

where i indexes the model component, ρi (є, θ) = D⊤C−1 Ii(є,θ) is a 2 × 1 vector, Ii = дI/дFi (i = d, b), and 𝓕ij is the 2 × 2 Fisher matrix,

(19)

(19)

We note that because the right-hand side of Eq. (18) is quadratic in ρi and 𝓕ij is positive definite, we find In p(D|є, θ) > 0. A full derivation of the marginalised likelihood, including edge cases and implementation, can be found in Appendix B. The dimensionality of the problem has now been reduced from 7 free parameters to 5: ellipticity, size, and position offsets15.

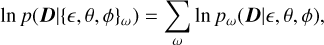

Forward modelling provides solid grounds for a further generalisation to measuring multiple objects jointly, especially if they are observed within a short angular separation such as for neighbours. We label each likelihood with the index ω running through the objects being jointly measured, In pω(D|є, θ, ϕ). The joint likelihood is then

(20)

(20)

where {ϵ, θ, ϕ}ω is the set of all parameters for all the objects being measured. In the above equation we assumed the independence of the individual likelihoods. This is a fair assumption since close neighbours will very often be so due to random visual alignment. Consequently, those galaxies will be at different redshift and will have different shear. A much smaller fraction will include tidal interaction. In this case, the galaxies will be at the same redshift and have the same shear. It it then expected to have some degree of correlation between the individual likelihoods. In more extreme but much rarer cases, the galaxies will be tidally interacting, and therefore our Sèrsic modelling would break down entirely as we did not include any extra correlation term. Despite affecting a very small fraction of objects, dedicated simulations would be required to assess the impact on shear bias. Also, it is worth noting that we need to be careful with the marginalisation of the individual likelihoods. The main issue lies in the marginalised likelihood of Eq. (18). This relies on calculating ρi(є, θ) for the various model components. However, when multiple objects are present in the same neighbourhood, this quantity will effectively introduce correlation between the likelihoods of the two objects. Therefore, the statistical independence required to multiply likelihoods together will not be ensured. We verified in testing that not marginalising individual likelihoods is indeed the correct approach to the problem. The joint likelihood is defined in a 7 × N-dimensional parameter space, where N is the number of objects being measured jointly, with N = 2 being a typical number found in testing. For increased stability, we first optimised the likelihood for fluxes and positions offsets, and then also for ellipticity and sizes. This proved to be very robust as opposed to iterating over individual objects after masking neighbours to achieve a reliable initial guess (Drlica-Wagner et al. 2018). One key benefit of MCMC is that it marginalises the ellipticity of an object over all remaining nuisance parameters, which include object nuisance parameters as well as the parameters of the other objects included in the joint sampling (see Sect. 2.3).

Our prior is based on enforcing hard bounds on all parameters: 0 < |є| < 1 given that the modulus of ellipticity in Eq. (2) cannot exceed 1; 0 ≤ re ≤ 2″, where the upper bound is based on observations made in the Hubble Deep Fields; position offsets are restricted to ± 0′.′3 since the accuracy to which detections are made is typically sub-pixel; and fluxes are positive. A more informative prior could be derived from real observations in the future. A summary of all parameters, being free, fixed or derived, is presented in Table 1.

Summary of free, fixed, and derived parameters in the modelling.

2.3 Massive Markov chain Monte Carlo sampling

Shear measurement poses serious difficulties in identifying the best strategy to sample the posterior probability distribution of Eq. (4), assuming the likelihood of Eq. (18) or (20).

Since the lensing sample is very broad in morphological properties, it will contain both low and high S/N objects, whose posterior probability distribution can be either very broad or very narrow; hence any sampling strategy must be robust to this variability.

If N is the number of objects being measured jointly to mitigate the neighbour bias, the dimensionality of the problem is 7 × N (with N being typically 2 and rarely 3 or 4); sampling must then be resilient to the large dimensionality, and provide marginalisation and error estimations with minimum overheads.

The shape of the distribution is a strong function of object parameters, such as ellipticity and size, and therefore it varies significantly across the sample; without prior knowledge of the physical properties of each galaxy, any sampling method must run in a consistent, robust way.

Given the large sample size of order 109 galaxies, sampling the posterior is a computational challenge, so a trade-off between method complexity, runtime, and access to computing resources must be identified.

The sampling must be completely automated, without human supervision, and no fine tuning of sampling parameters and options is allowed.

Considering all these challenges, our priority must be the average convergence property of the sampler. The best strategy identified is MCMC, which allowed us to sample the posterior generated from the marginalised likelihood of Eq. (18) for an individual object, or the joint likelihood of Eq. (20) for a group of objects in an efficient and consistent way, for all 109 objects in the sample (hence the adjective ‘massive’). More importantly, MCMC seems to be the best choice to tackle neighbours, particularly as an estimate can be found in a high dimensional parameter space. It is worth noting that another key benefit of MCMC sampling is that it is both a maximisation and sampling procedure. The maximisation happens during the burn-in phase where the sampler tries to reach the region of higher probability. The actual sampling happens in the later stage of the chain after the burnin phase. The marginalisation over nuisance and error estimation are then natural by-products with no extra overhead. This implies that not only can ellipticity estimates be marginalised over object nuisance parameters, but also over other object parameters in the joint group, hence minimising the impact of neighbours in the final ellipticity estimate.

When searching for such an algorithm that could potentially suit our needs, we considered a number of potential candidates that are widely used in cosmology and other fields (MacKay 2002). The development of various sampling methods is primarily driven by the quest to achieve lower auto-correlation and higher acceptance rate (Hastings 1970; Swendsen & Wang 1986; Skilling 2006; Goodman & Weare 2010; Foreman-Mackey et al. 2013; Betancourt 2017; Karamanis & Beutler 2021; Lemos et al. 2022). Although appealing, all these methods do suffer from increased complexity, which is the limiting factor in large-scale applications, where ‘large’ in this context implies runs repeated over a billion times. Even for the most sophisticated methods, it is often realised that a good initial guess is the key for good sampling of the posterior.

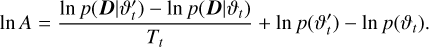

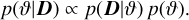

For shear measurement on the scale of large galaxy surveys, there is not much room for sampling complexity. The method has to be light enough and yet robust to all the posteriors that need to be sampled. Furthermore, the likelihood runtime limits the maximum number of samples that can be drawn for each galaxy without having an overall impact on the survey analysis runtime. The likelihood runtime is mostly dominated by the model component generation. The measurement is dominated by sampling the posterior, plus some additional pre-processing, therefore to limit the galaxy runtime to around a few seconds per galaxy, the MCMC sampling must be sparse. This is considered acceptable as we are interested in accurate shear estimates, which are found by averaging over ellipticity measurements. We chose an improved version of the Metropolis-Hastings algorithm, which was modified in two ways: (i) it incorporates some of the ideas of parallel tempering (Swendsen & Wang 1986; Sambridge 2013), so the parameter space can be sampled on a larger scale initially, and then on the smaller scale; (ii) it updates the proposal distribution during the burn-in phase, automatically tuning it to find a good match with the target distribution. Let ϑ be the generic vector of all parameters. As explained in Sect. 2.2, this is ϑ = (є, θ) for individual galaxy measurement or ϑ = (є, θ, ϕ) when jointly measuring groups of galaxies. Ignoring the evidence since our method is invariant to it, the Bayes’ theorem gives us

(21)

(21)

The parameter vector at the current iteration step is denoted with ϑt, where t = 0,… is the MCMC sample index. We also define a tempering function, Tt , as a function of t. This acts as a Boltzmann temperature and its expression will be defined later in the text. When the temperature is high, Tt >> 1, sampling from the posterior is equivalent to sampling globally from the prior. When the temperature is gradually reduced, as in annealing, Tt → 1, we begin sampling directly from the posterior. We define such a tempering function for application during the burnin phase only, and make sure Tt = 1 for the final part of the chain where we will take sample from. The method goes as follows.

At t, draw a new sample

‘ from the proposal distribution

‘ from the proposal distribution  ; here we assume a symmetric Gaussian proposal with mean ϑt and a pre-defined diagonal covariance of 10−4 on all parameters (in units of arcsec for size and position offsets).

; here we assume a symmetric Gaussian proposal with mean ϑt and a pre-defined diagonal covariance of 10−4 on all parameters (in units of arcsec for size and position offsets).Accept or reject

with probability A, that is, draw u from the uniform distribution on [0,1] and accept

with probability A, that is, draw u from the uniform distribution on [0,1] and accept  if u < min(1, A); to speed things up and avoid calculating the likelihood outside the prior, we immediately reject

if u < min(1, A); to speed things up and avoid calculating the likelihood outside the prior, we immediately reject  .

.

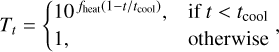

For consistency, all posteriors are sampled from an initial guess that is a circular galaxy of mean size, re = 0″.3, and zero offset from the nominal position in the sky. If we were to run any MCMC method from this point onward we would end up with varying autocorrelation time depending on how far the actual galaxy is from the initial guess; therefore, we would need to wait longer for very elliptical, small, or large galaxies. To improve the convergence of the chains within a smaller number of iterations, we achieved a better initial guess by running an initial maximisation of the posterior before the actual MCMC. We ran the conjugate-gradient search method (Powell 1964) restricted to only 100 function evaluations, and then a downhill simplex search (Nelder & Mead 1965). The burn-in phase of the MCMC starts right afterwards. During this phase the temperature is gradually lowered to one. We adopted the following power law cooling scheme (Cornish & Porter 2007),

(23)

(23)

where ƒheat = 10 is the heat factor and tcool = 100 is coolingdown sample index. The parameter tcool represents the number of samples it takes for the tempering function to become one. We note that  and

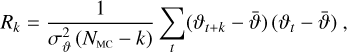

and  . We begin with a diagonal Gaussian proposal of width 0.01 on all parameters, which is then recalculated from the chains every 100 samples and rescaled by the factor 2.4 λ−1/2, with λ being the number of parameters (Dunkley et al. 2005). The burn-in phase lasts for a total of 500 samples, which is long enough for the tempering function to become 1, the proposal covariance to be recalculated 5 times, and the chain to stabilise and reach the high probability region (well before we start accumulating the final chain samples). The final phase lasts for an additional NMC = 200 samples. Again, this is plenty to estimate both the mean and covariance of the chains with enough precision, but we recognise that sampling noise may still be non-negligible.

. We begin with a diagonal Gaussian proposal of width 0.01 on all parameters, which is then recalculated from the chains every 100 samples and rescaled by the factor 2.4 λ−1/2, with λ being the number of parameters (Dunkley et al. 2005). The burn-in phase lasts for a total of 500 samples, which is long enough for the tempering function to become 1, the proposal covariance to be recalculated 5 times, and the chain to stabilise and reach the high probability region (well before we start accumulating the final chain samples). The final phase lasts for an additional NMC = 200 samples. Again, this is plenty to estimate both the mean and covariance of the chains with enough precision, but we recognise that sampling noise may still be non-negligible.

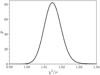

A good quantitative way to test the convergence of the chains is to investigate their auto-correlation. We do so for a variety of galaxies and results are shown in Appendix C. A less quantitative way is to verify that the acceptance rate is within the expected range. We also increased the final 200 samples up by a factor of five, without noticing any significant difference in the shear results. For further verification, we compared the method with our implementation of affine invariant (Goodman & Weare 2010) and parallel tempering (Swendsen & Wang 1986; Sambridge 2013)16. While these methods produce better ellipticity chains, they did not show any significant advantage in recovering shear, but increased complexity and therefore runtime overhead.

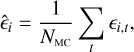

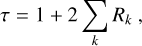

Once the samples are drawn from the distribution function, calculating the mean and width of the marginalised distribution becomes straightforward. Our point estimate for the ellipticity component marginalised over nuisance is the mean of the chain after the burn-in phase,

(24)

(24)

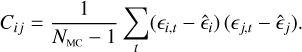

where i = 1, 2, and є1,t and є2,t are the two ellipticity chains. The marginalised ellipticity covariance matrix is

(25)

(25)

We calculated the averaged per-component variance (ignoring negligible covariance between components),

(26)

(26)

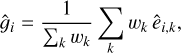

and chose to define the galaxy shear weight by

(27)

(27)

where σє is the assumed shape noise (i.e., the width of the 1D intrinsic ellipticity distribution), ideally estimated in tomographic bins from deeper measurements. In this modelling, as Cє quantifies the noise level in the data, faint galaxies will be automatically downweighted as opposed to very bright galaxies that will always have a maximum finite weight. Additionally, we note the negligible sensitivity to the choice of the 1/2 factor in Cє 17. The MCMC provides a convenient and efficient way to calculate both the mean and width of the ellipticity posterior at no extra computational cost. The weights can then be used to define sample averages, such as in one-point estimates:

(28)

(28)

where k indexes the galaxies in the lensing catalogue. The generalisation to two-point estimates is straightforward. Please note that any choice of weight leads to shear bias due to correlation with the measured ellipticity, and this is tested in Sect. 4.2.

We also implemented the self-calibration of ellipticity proposed by Fenech Conti et al. (2017)18 via importance sampling and likelihood ratio while checking the quality of the sampling weights (Wraith et al. 2009) without finding strong evidence of improvement. As results will be dominated by other larger effects, we leave out further discussion from this paper.

2.4 Handling real data

Handling real data requires being careful with additional aspects of the measurement. For instance, throughout our discussion we proposed that our sampling strategy is best suited to handle the presence of neighbours, that is, resolved objects19 close to the lensing targets. However, the situation is complicated by the fact that there is more variety in real data as the brightness distribution of an object can be contaminated in different ways depending on the nature of the nearby objects:

neighbours (resolved galaxies or stars);

blends (unresolved galaxies or stars);

any other contamination (cosmic rays, transients, or ghosts).

Each case leads to a particular type of bias, and therefore we deal with close objects in two ways. First, neighbours are grouped with a friend-of-friend algorithm to a maximum angular separation of rfriend = 1″. If the separation is too small, the objects will be mostly measured in isolation; therefore, they will still be affected by the neighbours due to improper masking. If the separation is too large, the groups will begin to be unmanageable in size, but the benefit in controlling the neighbour bias will be negligible. We found that 1″ is a good trade-off between measuring N close neighbours jointly within a default postage stamp size of 38″.4, and the non-negligible overhead in sampling a 7 × N dimensional posterior. The joint analysis of object groups also gave us a good control of neighbour bias, leading to a correction of m ≈ −7 × 10−4 as it will be shown later in the paper20. Second, the segmentation maps and masks that are usually provided with the data (Bertin et al. 2020; Kümmel et al. 2020) were combined in a binary map and passed on to the likelihood to mask out objects in different groups. Detector artefacts or cosmic rays were also masked out in a similar way. In this case, to be even more conservative, we further dilated the masks by one pixel so most of contamination bias should be taken care of. But masking also helps partially with blends because objects that are false negatives according to the detection strategy may sometimes be true positives according to the masking procedure and would therefore be masked out. Blending with faint galaxies were demonstrated to be relevant when trying to calibrate methods that are particularly sensitive to the problem (Euclid Collaboration 2019). We demonstrate that, to some extent, this is also the case in our simulations where we measured objects deeper than the Euclid nominal survey depth, as we will show in the next section.

Real images have a background level that needs to be subtracted. LENSMC uses the background estimates and noise maps that the Euclid processing provides, but residual local background variations are subtracted at the individual object group level. This is implemented by post-masking median subtraction. Likewise, the standard deviation of the background noise is estimated after masking. We measured galaxies and stars with the same model described earlier in this section. We found that good star-galaxy separation is based on selecting objects whose measured size is greater than the PSF size. This method fits well with the joint measurement of groups, however at the price of rejecting faint galaxies that would nevertheless have a negligible weight or be hard to distinguish from faint stars. More details will be given in Sect. 4.