| Issue |

A&A

Volume 681, January 2024

|

|

|---|---|---|

| Article Number | A86 | |

| Number of page(s) | 21 | |

| Section | Numerical methods and codes | |

| DOI | https://doi.org/10.1051/0004-6361/202347118 | |

| Published online | 19 January 2024 | |

Scalable stellar evolution forecasting

Deep learning emulation versus hierarchical nearest-neighbor interpolation

1

Heidelberger Institut für Theoretische Studien,

Schloss-Wolfsbrunnenweg 35,

69118

Heidelberg,

Germany

e-mail: kiril.maltsev@h-its.org

2

Zentrum für Astronomie der Universität Heidelberg, Institut für Theoretische Astrophysik,

Philosophenweg 12,

69120

Heidelberg,

Germany

3

Zentrum für Astronomie der Universität Heidelberg, Astronomisches Rechen-Institut,

Mönchhofstr. 12–14,

69120

Heidelberg,

Germany

4

Department of Physics and Astronomy, Michigan State University,

East Lansing,

MI

48824,

USA

5

Department of Computational Mathematics, Science, and Engineering, Michigan State University,

East Lansing,

MI

48824,

USA

6

Machine Learning Research Lab, Volkswagen AG,

Munich,

Germany

7

Faculty of Informatics, Eötvös Loránd University,

Budapest,

Hungary

Received:

7

June

2023

Accepted:

5

October

2023

Many astrophysical applications require efficient yet reliable forecasts of stellar evolution tracks. One example is population synthesis, which generates forward predictions of models for comparison with observations. The majority of state-of-the-art rapid population synthesis methods are based on analytic fitting formulae to stellar evolution tracks that are computationally cheap to sample statistically over a continuous parameter range. The computational costs of running detailed stellar evolution codes, such as MESA, over wide and densely sampled parameter grids are prohibitive, while stellar-age based interpolation in-between sparsely sampled grid points leads to intolerably large systematic prediction errors. In this work, we provide two solutions for automated interpolation methods that offer satisfactory trade-off points between cost-efficiency and accuracy. We construct a timescale-adapted evolutionary coordinate and use it in a two-step interpolation scheme that traces the evolution of stars from zero age main sequence all the way to the end of core helium burning while covering a mass range from 0.65 to 300 M⊙. The feedforward neural network regression model (first solution) that we train to predict stellar surface variables can make millions of predictions, sufficiently accurate over the entire parameter space, within tens of seconds on a 4-core CPU. The hierarchical nearest-neighbor interpolation algorithm (second solution) that we hard-code to the same end achieves even higher predictive accuracy, the same algorithm remains applicable to all stellar variables evolved over time, but it is two orders of magnitude slower. Our methodological framework is demonstrated to work on the MESA ISOCHRONES AND STELLAR TRACKS (Choi et al. 2016) data set, but is independent of the input stellar catalog. Finally, we discuss the prospective applications of these methods and provide guidelines for generalizing them to higher dimensional parameter spaces.

Key words: stars: evolution / stars: fundamental parameters / catalogs / time / methods: numerical / methods: statistical

© The Authors 2024

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1 Introduction

Several fields of astrophysics require fast and cost-efficient predictive models of stellar evolution for their deployment at scale. These include stellar population synthesis, N-body dynamics models of stellar clusters (e.g., Kamlah et al. 2022), iterative optimization-based stellar parameter estimation methods (e.g., Bazot et al. 2012), and large-scale galactic and cosmic evolution simulations (e.g., Springel et al. 2018) that require a stellar sub-grid physics.

For example, the BONN STELLAR ASTROPHYSICS INTERFACE (BONNSAI; Schneider et al. 2014) is a Bayesian framework that allows for the testing of stellar evolution models and (if the test is passed) to infer fundamental stellar model parameters given the observational data. Determination of fundamental stellar parameters that best match the observation requires costly iterative optimization procedures, such as Markov chain Monte Carlo nested sampling techniques, which need a large number of evaluations over a quasi-continuous parameter space for convergence to the best-fit model. In order to reduce systematic estimation errors, BONNSAI requires a stellar parameter grid to be as dense as possible.

However, there are costly computational demands arising from the traditional method of running a detailed stellar evolution code over a dense rectilinear grid in a stellar parameter space: for a fixed grid spacing, the number of stellar tracks to evolve scales to the power of the dimensionality of the fundamental stellar parameter space. The most important parameters of single star evolution are the: age, τ; initial mass, Mini, at the zero age main sequence (ZAMS); initial metallicity, Zini; and initial rotation velocity, υini. For stars of Mini > 8 M⊙, the binary interaction effects become increasingly important: 71% of all O-stars interact with a companion and for over half of them, this takes place during the main sequence evolution (Sana et al. 2012). Therefore, in order to evolve massive stars, the parameter space needs be expanded to cover eight dimensions (τ1,Mini,1, Mini,2, υini,1, υini,2, Zini, Pini, ϵ) in general, where Pini is the initial period, ϵ the eccentricity of the binary orbit, and τ1 ≃ τ2 to a good approximation.

MODULES FOR EXPERIMENT IN STELLAR ASTROPHYSICS (MESA; Paxton et al. 2011) is an example of a detailed one-dimensional (1D) stellar evolution code with a modular structure, which allows us to update the adopted physics when generating stellar evolution tracks; for instance, the equation of state, the mass loss recipe, and the opacity tables. When evolving stars numerically over a wide and densely sampled parameter grid with MESA, there are two main computational challenges: 1) the computational cost associated with running the code over the large grid size and 2) the numerical instabilities. To overcome the latter, substantial manual effort is required to push a simulation past failure points by reconfiguring the code and by checking for unphysical results. The manual action mainly involves the adaptation of spatial mesh refinement and time step control strategy, as well as of the error tolerance thresholds in stellar model computation, to make sure the solvers converge over each evolutionary phase within a reasonable computation time.

The problem of prohibitive computational costs has been addressed in three different ways: 1) the stellar evolution tracks have been approximated by analytic fitting formulae; 2) the output of detailed stellar evolution codes over a discrete parameter grid has been interpolated; and 3) cost-efficient surrogate models of stellar evolution have been constructed. Below, we summarize these main approaches.

The SINGLE STAR EVOLUTION (SSE) package (Hurley et al. 2000) consists of analytic stellar evolution track formulae predicting stellar luminosity, radius and core mass as functions of the age, mass and metallicity of the star. Separate formulae are applied to each evolutionary phase and the duration of each phase is estimated from physical conditions. Along with analytical expressions from stellar evolution theory, the SSE package was obtained by fitting polynomials to the set of stellar tracks by Pols et al. (1998). The fitting formulae method has been extended to predict the evolution of binary systems by including analytical prescriptions for mass transfer, mass accretion, common-envelope evolution, collisions, supernova kicks, angular momentum loss mechanisms and tides (Hurley et al. 2002). At present, the fitting formulae are often used in connection with rapid binary population synthesis codes, for example COMPACT OBJECT MERGERS: POPULATION ASTROPHYSICS & STATISTICS (COMPAS; Riley et al. 2022), and stellar N-body dynamics codes. However, there are two main drawbacks: 1) the fixed (rather than modular) input physics and 2) the limited set of predicted output variables, which (depending on the astro-physical application) may be not all the variables of interest. A re-derivation of analytic fitting formulae for a new set of stellar tracks is non-trivial (Church et al. 2009; Tanikawa et al. 2020). overall, the analytic approach is not sustainable, since it would need to be reiterated after each update in stellar input physics.

The interpolation of tracks pre-computed by a detailed code is an alternative to analytic fitting. Brott et al. (2011) interpolate stellar variables in a (Mini, υini, τ) parameter space. For each stellar age, the two nearest neighbors (from above and from below) in initial mass are selected first, and then, for each of the two initial masses, the two nearest neighbors in initial rotational velocity are chosen. The values of stellar evolution variables, at each stellar age, are computed from these four neighboring grid points by a sequence of linear interpolations in the sampled parameter space. The scope of the interpolation method is restricted to the main sequence evolution of stars. instead of the stellar age, the fractional main sequence lifetime is used as interpolation variable.

Following a different approach to interpolation of stellar tracks, the METHOD OF INTERPOLATION for SINGLE STAR EVOLUTION code (METISSE; Agrawal et al. 2020) takes as its input a discrete single-star parameter grid and uses interpolation by a piece-wise cubic function to generate new stellar tracks in-between the sampled initial mass grid points at fixed metallicity. The parameter space covers the initial mass range from 0.5 to 50 M⊙, and stars are evolved up to the late stages beyond core helium burning. instead of stellar age, the interpolation scheme uses a uniform basis known as EQUIVALENT EVOLUTIONARY POINTS (EEP; Dotter 2016) to model the evolutionary tracks. The EEP coordinate quantifies the evolutionary stage of a star based on physical conditions, derived from numerical values of evolutionary variables (e.g., depletion of central hydrogen mass fraction to a threshold value), which are readily identifiable for different evolutionary tracks. For any given stellar age, an isochrone is constructed by identifying which EEP coordinate values are valid for that age as function of Mini. For each fixed EEP value, an ordered Mini – τ relation is constructed over the available grid points and interpolated over. In a second step, Mini is used as independent variable to obtain stellar properties by another round of interpolation. Reliable and fast stellar track interpolation with the EEP method has originally been demonstrated upon MESA ISOCHRONES AND STELLAR TRACKS (MIST; Choi et al. 2016), a catalog of stellar evolution tracks over a grid space covering the age, initial mass and initial metallicity parameters. METISSE is a more general alternative to SSE, because it may take any single star grid (at fixed initial metallicity), produced as output of a detailed stellar evolution code, as its input; namely, it is not tied to specific input physics adopted to generate the stellar tracks.

Apart from METISSE, there are the COMBINE (Kruckow et al. 2018), SEVN (Iorio et al. 2023, in its latest version), and POSYDON (Fragos et al. 2023) population synthesis codes that interpolate grids of detailed single or binary evolution simulations. Interpolation in COMBINE is based on the method of Brott et al. (2011) while in SEVN, single star evolution is divided into sub-phases analogous to the EEP method, and interpolation is performed over each sub-phase using a fractional time coordinate relative to duration of each sub-phase. Evolution of the binary companion and interaction effects are approximated using analytic fitting formulae. Since the procedure to construct the uniform EEP basis cannot be trivially automatized, the pre-processing steps to identify EEPs, to define appropriate interpolation functions and also to down-sample the stellar evolution catalog to reduce memory costs need to be re-iterated after each stellar grid update (see, e.g., the TRACKCRUNCHER preprocessing modules, Iorio et al. 2023, in the context of SEVN).

In contrast, POSYDON interpolates output of detailed binary evolution simulations with MESA. The EEP-based interpolation method is not directly applicable to binary evolution tracks, because EEPs must be strictly ordered a priori while binary interaction, which can set on at any time, may change their order. Therefore, in POSYDON interpolation needs to be preceded by classification of binary evolution phase and separate interpolation schemes are to be applied over each of them.

Finally, the third way is to build a prediction-making tool that allows for the replacement of the output of cost-intensive detailed up-to-date stellar evolution code such MESA with a cost-efficient imitation model (emulator or surrogate) of the original. Emulation, or surrogate modeling, is a pragmatic but reliable reproduction of the output generated by an expensive computer experiment. The predictive surrogate model is constructed by training a supervised machine learning (ML) algorithm on a stellar evolution tracks data base pre-computed with the original code over a discrete parameter grid. A well-trained model will not only efficiently reproduce stellar tracks at the parameter grid points it has seen during training, but it will be capable of generating accurate predictions of tracks in-between the grid points, thanks to the capability to generalize it acquired by training. Once constructed, the emulator can be used as a package to generate predictions of stellar variables of interest, instead of running the original detailed stellar evolution code such as MESA over a quasi-continuous parameter range or storing the catalog data in computer memory for interpolation. Using the emulator package saves energy costs, speeds up generation of output predictions over a dense grid by several orders of magnitude and reduces human effort of running models. The speed-up is owed to the efficiency of input-to-output mapping by machine learning algorithms. The disadvantage is the introduction of prediction errors by the trained model, which reproduces stellar tracks with a finite precision. Therefore, when training machine learning models, the main task is to achieve reliable generalization over the parameter space with a prediction inaccuracy of stellar variables of interest that is tolerable for inference and astrophysical applications.

Surrogate modeling of stellar evolution has yet not been explored extensively at widths of the parameter range necessary for more general applicability. Li et al. (2022) used a Gaussian process regression (GPR) to emulate stellar tracks in a five-dimensional (5D) parameter space, but the initial mass range covered by the predictive models is restricted to the solar-mass neighborhood Mini ∊ (0.8, 1.2) M⊙ and to evolutionary sequences from the Hayashi line onward through the main sequence up to the base of the red giant branch. Also, GPR-based emulators have been used, for example, for parameter space exploration of state-of-art rapid binary population synthesis codes such as COMPAS (Barrett et al. 2017; Taylor & Gerosa 2018). Due to the data set size limitation for the applicability of GPR, it is not the ideal tool for emulating a large stellar model grid. Thus, we seek for other ML based models instead. The feedforward neural network algorithm proved itself as promising in previous surrogate modeling works, for instance: Scutt et al. (2023) emulated 25 stellar output variables (classic photometric variables, asteroseismic quantities, and radial and dipole mode frequencies) over a (Mini, Zini) grid space of stars in or near the δ Scuti instability strip using neural networks, along with a principal component analysis to reduce the output dimension to nine. Lyttle et al. (2021) emulated five variables of red dwarfs, sunlike stars, and subgiants in a 5D input parameter space. While these are high-dimensional problems that have been successfully addressed by neural networks, aspects that the problem settings have in common include: the mass range considered is relatively narrow, Mini ∈ (1.3, 2.2) M⊙ and Mini ∈ (0.8, 1.2) M⊙, respectively; and the evolutionary sequences cover the pre-main sequence and only part of the main sequence, or main sequence and subgiant phase, respectively. More widely in context of stellar astrophysics, supervised machine learning has been applied to solve the inverse problem of mapping observables to models. For example, a variant of the random forest regression model (Bellinger et al. 2016) and invertible neural networks (Ksoll et al. 2020) have been trained to predict fundamental stellar parameters in a high-dimensional parameter space given a set of observational variables. Again, however, the predictive models were restricted to an initial mass range and evolutionary sequences of stars narrower (e.g., main sequence evolution of Mini ∊ (0.7, 1.6) M⊙ stars in Bellinger et al. 2016) than those presented in this work, where we consider an initial mass range from red dwarfs to very massive stars evolved from the ZAMS up to the end of core helium burning.

In this work, we provide two proof-of-concept solutions of automated single star interpolation schemes over a wide parameter span, which (in contrast to the EEP-based interpolation method) do not require mapping out points of interest in stellar parameter space; this is because they are constructed based on a timescale-adapted evolutionary coordinate that we introduce, whose computation can be easily automated. Using the latter for constructing more general interpolation models has a potential applicability to larger parameter spaces, such as those found in stellar binaries. The first solution we develop is a surrogate model of stellar evolution, constructed with supervised machine learning. The second is a stellar-catalog-based hierarchical nearest-neighbor interpolation (HNNI) method. These feature two different trade-off points between efficiency and accuracy of predictions: depending on astrophysical application, either the one or the other is preferable.

This paper is organized as follows. In Sect. 2, we describe the methods common to both interpolation scheme solutions that we have developed: the regression problem that is addressed, the data base used for constructing predictive models, the timescale-adapted evolutionary coordinate (which is used as the primary interpolation variable), and the performance scores that assess the quality of the predictions. In Sect. 3, we outline how the two interpolation scheme solutions are set up. For the surrogate model, we report on the choice of loss function, the selection of machine learning model class, and its hyperparameter optimization. For the interpolation-based solution, we explain how HNNI works and how it differs from interpolation models from previous work. In Sect. 4, we present our results, obtained with both the supervised machine learning and the HNNI. The paper is concluded in Sect. 5 with a summary of results, limitations, and an outlook on possible future developments.

2 Methods

In Sect. 2.1, we define the problem which is addressed by two different predictive frameworks (surrogate modeling of stellar evolution and catalog-based hierarchical nearest neighbor interpolation) and we motivate the two-step approach to fitting stellar evolution tracks. In Sect. 2.2, the timescale-adapted evolutionary coordinate is introduced, which we used to set up reliable predictive frameworks in the two-step interpolation scheme. In Sect. 2.3, the methods to prepare the data base are described: a nonlinear sampling density segmentation of the initial mass parameter space and a data augmentation routine for the core helium burning phase. This data base is used as catalog for interpolation of tracks by HNNI and as training data for constructing surrogate models. Finally, Section 2.4 outlines how we evaluate predictive performance of our models based on error metrics.

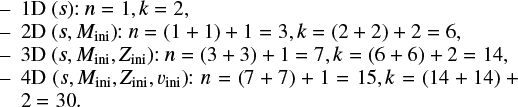

2.1 Regression problem formulation

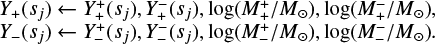

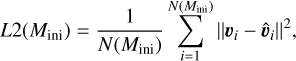

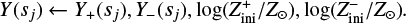

In 1D stellar evolution codes such as MESA, stellar evolution is modeled as a deterministic initial value problem and observ-ables are predicted by cost-intensive numerical time integration of differential equations. Instead, we formulated the prediction of observables as a regression problem, which is to be addressed by supervised machine learning or by catalog-based interpolation. In a regression problem, the goal is to predict output target variables from input regressor variables. But in the surrogate modeling case, the data-driven approach is used to learn the mapping, instead of programming the rules that map the input to the output. We constrained the problem to predicting three stellar surface observables, namely, log-scaled luminosity, YL = log L/L⊙; effective temperature, YT = log Teff/K; and surface gravity, Yg = log 𝑔/[cm s−2]. These are the target variables to be predicted for a given input of age, τ, and initial mass, Mini, of an isolated non-rotating single star, at a fixed solar-like initial metallicity Zini = Z⊙.

Stars evolve on different timescales, depending on the evolutionary phase they undergo, on their masses as well as on other stellar parameters. Therefore, stellar track fitting across different evolutionary phases and initial masses is a temporal multiscale problem. We confirm the conclusion of Li et al. (2022), namely, that the naive approach of training a machine learning surrogate model fML: (τ, Mini) ↦ Y to predict the observable Y, by operating directly on (scaled) age, τ, does not result in accurate enough predictions of the post-main sequence evolution (see Fig. A.1 for an illustration). Instead, we set up a two-step interpolation scheme:

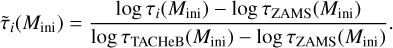

Step 1 (age proxy fit) f1: (log τ, log Mini) ↦ s,

Step 2 (observables fit) f2: (s, log Mini) ↦ (YL, YT, Yg).

Here, the evolution of stellar surface variables is modeled as function of a timescale-adapted evolutionary coordinate s (an age proxy) instead of the age, τ (step 2). The transition from stellar age to the age proxy is accomplished by a second predictive model (step 1).

We find that the fits of the post-main sequence evolutionary stages resulting from this two-step interpolation scheme are orders of magnitude more accurate, as assessed by standard statistical performance scores, than the direct naive fit. We take the logarithm of initial mass values, in order to exploit the approximate mass-luminosity power law relation, which is a linear variable dependence in log-log space.

2.2 The timescale-adapted evolutionary coordinate

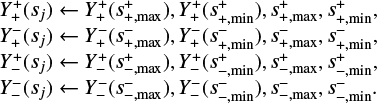

The method of using a timescale-adapted evolutionary coordinate, or age proxy, instead of the age variable for fitting stellar evolution tracks has been explored before in stellar astrophysics (e.g., Jørgensen & Lindegren 2005; Li et al. 2022). The motivation for this re-parametrization is to reduce timescale variability. Stellar age at computation step, i,

is a monotonically increasing function which grows cumulatively at an adaptive step size, δtj, after each step j = 1,…, i of numerical time integration of the differential equations describing stellar structure and evolution. The age proxy variable,

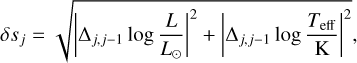

is constructed analogously, but here δsj is the increment in the star’s Euclidean displacement in a diagram spanned by a set of its physical variables, obtained after the numerical time integration step j = 1,…, i. For a parametric form of δs, Jørgensen & Lindegren (2005) used the ansatz

where Δj,j−1X = Xj − Xj−1. By construction, this age proxy measures the increase in Euclidean path length of a star along its evolutionary track in the Hertzsprung–Russell (HR) diagram. More recently, Li et al. (2022) suggested another prescription

![$\delta {s_j} = {\left( {{{\left| {{\Delta _{{\rm{j}},{\rm{j}} - 1}}\log {g \over {\left[ {{\rm{cm}}{{\rm{s}}^{ - 2}}} \right]}}} \right|}^2} + {{\left| {{\Delta _{{\rm{j}},{\rm{j}} - 1}}\log {{{T_{{\rm{eff}}}}} \over {\rm{K}}}} \right|}^2}} \right)^c},$](/articles/aa/full_html/2024/01/aa47118-23/aa47118-23-eq4.png)

which they tailored to their problem formulation and parameter range. Their age proxy measures the displacement of the star in the Kiel diagram to the power of a parameter, c. After experimentation, they conclude that c = 0.18 yields the most uniform distribution of the data they trained their models on. At the same time, the authors report fit inaccuracies at transition regions between consecutive evolutionary phases and over the fast ascension of the red giant branch. Over these phases (in contrast to the MS evolution) target variables change rapidly in time and vary unsteadily even as function of the age proxy. To cure this problem, we re-defined the timescale-adapted evolutionary coordinate by an altered prescription, whose effect is to not only smooth out transitions in-between stellar phases, but, additionally, to resolve the CHeB phase in a way that allows for reliable stellar track fitting; this is done by keeping the resolution of variability on the same numerical age proxy scale as the previous two phases. To get there, we found a promising approach in returning to the original formulation by Jørgensen & Lindegren (2005), but extending it by a third variable that spans another dimension of the diagram, in which the Euclidean path length is calculated:

![$\delta {\mathop s\limits^ _j} = \sqrt {{{\left| {{\Delta _{{\rm{j}},{\rm{j}} - 1}}\log {L \over {{L_ \odot }}}} \right|}^2} + {{\left| {{\Delta _{{\rm{j}},{\rm{j}} - 1}}\log {{{T_{{\rm{eff}}}}} \over {\rm{K}}}} \right|}^2} + {{\left| {{\Delta _{{\rm{j}},{\rm{j}} - 1}}\log {{{\rho _c}} \over {\left[ {{\rm{g}}{\rm{c}}{{\rm{m}}^{ - 3}}} \right]}}} \right|}^2}} .$](/articles/aa/full_html/2024/01/aa47118-23/aa47118-23-eq5.png)

The motivation for introducing another variable into the computational prescription of the path length stems from the fact that during the stable CHeB, stars hardly displace in the HR diagram, although their nuclear composition and hydrodynamic properties undergo substantial changes. In order to adjust the path length prescription, we therefore sought for a suitable stellar-core-related variable. After experimental tests, we found that adding the log-scaled core density log ρc/[g cm−3] has the desirable effect of casting the variability of all target variables of interest onto a unified numerical scale across the three consecutive phases MS, RGB, CHeB, and across the wide initial mass range that we work with1.

We normalize the age proxy of each initial mass to the range (0, 1). The star is on the ZAMS when s = 0, while s = 1, when the star has reached the end of core helium burning2.

2.3 Data base

Stellar evolution catalog. Here, we use MIST (Choi et al. 2016) as an example data set upon which we formulate and demonstrate our method, as well as train and validate our predictive models. However, the method we develop is general and not specific to the MIST data set. We restrict the scope of the ages of stars to the evolutionary sequence from ZAMS to the terminal age of core helium burning (TACHeB), which is expected to account for ≃99% of stellar observations (excluding compact object sequences). The initial mass parameter range, from 0.65 to 300 M⊙, is chosen as the entire initial mass span available in the MIST data set, over which stars are evolved through all three consecutive phases main sequence (MS), red giant branch (RGB), and core helium burning (CHeB). The wide initial mass range and, at the same time, the inclusion of the red giant as well as core helium burning phases have not been explored in previous work of stellar evolution surrogate modeling. We acknowledge that the 2D input parameter space is small compared to the size of the eight-dimensional (8D) parameter space required for general cost-efficient binary star modeling. We see our work as a first step toward a large-scale enterprise of stellar evolution surrogate modeling and of hierarchical interpolation in high-dimensional parameter space over wide parameter ranges, however, as a layout of basic methodology toward this end.

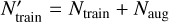

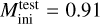

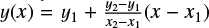

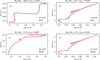

CHeB data augmentation. The MIST data set is generated with the MESA code, which by default outputs more stellar evolution models than what is included in the MIST data set for each Mini-dependent track. The number of models per track is ~500, with ~250 models on the MS, ~150 on the RGB before ignition of helium burning in the core, and ~100 for the CHeB phase. While the MIST data set includes phase labels for each stellar model, the predictive models that we build are not exposed to this information. All the input information they are exposed to is the value of the age (proxy) and of initial mass of the star. While in the MIST data set, the CHeB phase is the least sampled among these three, it is the phase most difficult to fit. In particular, the helium flashes of low-mass stars, blue loops of upper main-sequence stars, and fast timescale dynamics of Wolf-Rayet stars during CHeB pose a challenge to fitting. To increase weight and accuracy of interpolation fits during the CHeB phase, we use local nearest-neighbor 1D linear interpolation of the training data (not of the test data) along the age proxy axis (for the step 2 fit) or along the scaled age axis (for the step 1 fit) during this phase. The net effect is an artificial increase in the CHeB training data by insertion of a sample in-between each pair of age proxy neighbors. Despite simplicity of this methodological step, we find the predictive performance of our best-fit models to be boosted by around half an order of magnitude decline in the mean squared error over the validation data (to which CHeB data augmentation is not applied) after switching on CHeB data augmentation of the training data. In Fig. 1, the data pre-processing consisting of age proxy re-parametrization, normalization, and CHeB data augmentation is illustrated based on the example of the Sun-like stellar model.

Parameter space grid sampling. A recommended standard routine for a homogeneous sampling of the parameter space that produces the data for training surrogate models is LATIN HYPERCUBE SAMPLING (LHS; McKay et al. 1979). This is an efficient alternative to random uniform and rectilinear sampling methods for achieving homogeneity. Random sampling introduces sampling voids by consequence of statistical random clumping effects, while dense rectilinear sampling is too expensive in many problem settings. However, since the stellar evolution dependence on the initial mass parameter is strongly non-linear, a homogeneous population of parameter space is not the optimal sampling scheme. We work with the pre-computed MIST data set for which a segmented parameter sampling density across the initial mass range has already been pre-determined by the makers of the catalog, based on physics-informed considerations.

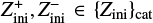

In order to reach a high accuracy level of stellar track forecasts that is necessary for a general-purpose stellar evolution emulator across the entire initial mass range, we found that we need to locally increase the initial mass sampling. In practice, we increased the initial mass sampling in those parameter space sub-regions, where the fit quality was found to be worst, while we kept the MIST stock sampling intact, where the local fit accuracy was found to be satisfactory (see Fig. 2 and Table 1 for a summary). For generating the additional stellar tracks, we used the MIST Web Interpolator3, which works by applying the EEP-based method referred to in Sect. 1. Our finding is that the final sampling required to reach the predictive accuracy goal varies substantially, depending on sub-region of parameter space: a least δMini/M⊙ = 0.01 between Mini/M⊙ ∈ (1.16, 1.5) and a largest δMini/M⊙ = 25 between Mini/M⊙ ∈ (150, 300). For the Mini/M⊙ ∈ (0.65, 0.9) interval, we double the sampling to correct for a systematic under-representation of red dwarfs in the stock MIST catalog as compared to the adjacent initial mass intervals. For the Mini/M⊙ ∈ (1.16, 1.5) interval, we double the sampling rate mainly because of complexity of shape changes in HR diagrams due to the helium flashes. In the interval Mini/M⊙ ∈ (1.5, 40), we hardly increase the sampling, except at transitions in-between neighbouring sampling segments at different rates, in order to smooth out transitions. The biggest increase in this range is within the interval Mini/M⊙ ∈ (8, 21). We stress that our densest sampling region (the solar neighborhood initial mass range) is the same as in Li et al. (2022), in Bellinger et al. (2016) and in Lyttle et al. (2021), while at the same time our surrogate models evolve the stars further, up to end of CHeB, and cover a much wider initial mass range. Scutt et al. (2023) adopt a sampling of δMini = 0.02 M⊙ over the range Mini/M⊙ ∈ (1.3, 2.2), comparable to ours.

At the high-mass end, the relative increase in sampling is greatest within the interval Mini/M⊙ ∈ (40, 70), where the increment step size δMini/M⊙ was augmented from 5 to 0.625. We suspect that numerical challenges are the reason for unexpectedly sharp, peculiarly shaped changes in HR diagrams. Nevertheless, for the proof-of-concept, we assume that MIST offers a perfect data set, even when we suspect that it may be not.

Naturally, the denser the grid sampling, the more accurate are the forecasts of surrogate models. We stress that depending on minimal performance benchmarks (as quantified by error scores) of a specific astrophysical application, the initial mass sampling required to reach that benchmark can be significantly sparser.

With the initial-mass parameter space sampling as described above, the total size Ntot of the data set amounts to 139 016. Shuffling it, we do a uniform random split of the Ntot into 85 % training (Ntrain) and 15 % validation (Nval) data sets. To the Ntrain data, we applied a CHeB data augmentation, which yields additional Naug = 32 143 samples, such that the expanded training data set is of size  . This is the final data set on which we train different classes of surrogate models (for the first solution) or which we use as the catalog for interpolation (for the second solution).

. This is the final data set on which we train different classes of surrogate models (for the first solution) or which we use as the catalog for interpolation (for the second solution).

|

Fig. 1 Luminosity series of a Sun-like star from the ZAMS up to TACHeB parametrized as function of stellar age, τ, (a) versus of the timescale-adapted evolutionary coordinate, |

|

Fig. 2 Original initial mass sampling in the MIST catalog (in blue) and the locally increased sampling (in red) that we used for training the surrogate models. The stock MIST catalog contains 177 solar metallicity stellar evolution tracks within the initial mass range (0.65, 300) M⊙. For our purposes, we expanded it to 274 to achieve the desired quality of predictive accuracy necessary for a general-purpose stellar evolution emulator. |

Summary of the initial mass sampling density segmentation before (δ0Mini) and after (δMini) expanding the stock MIST data set.

2.4 Performance evaluation

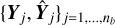

Validation and test data. We used two schemes to evaluate performance of predictive models: the first (model validation) based on the validation data set, and the second (model testing) based on the test data set. The validation data consists of randomly selected grid points over the input domain (initial masses and evolutionary phases of stars). It is representative, since it has similar statistical properties as the training data. In contrast, for model testing, we aim to assess the trained model’s capability to predict entire stellar tracks from ZAMS up to TACHeB for initial masses unseen during training. We choose this method of model testing since it is of main interest to obtain a predictive model that is capable of accurate interpolation over the space of fundamental stellar parameters. Only then can the traditional method of running expensive simulations over densely sampled grids be replaced by a surrogate model capable of sufficiently accurate generalization. As test data, we prepared another set of stellar tracks at 16 initial mass grid points,  , = {0.91 1.51, 2.41, 4.1, 8.25, 16.25, 21.5, 31.5, 41, 51, 61, 83.75, 103.75, 155, 262.5, 295), which we held back from training. These were chosen at half of the grid step in the respective region of parameter space. This choice is motivated by the aim to test predictive accuracy at parameter space points that are farthest away from training grid points, where we likely probe the worst cases of complete stellar track predictions4.

, = {0.91 1.51, 2.41, 4.1, 8.25, 16.25, 21.5, 31.5, 41, 51, 61, 83.75, 103.75, 155, 262.5, 295), which we held back from training. These were chosen at half of the grid step in the respective region of parameter space. This choice is motivated by the aim to test predictive accuracy at parameter space points that are farthest away from training grid points, where we likely probe the worst cases of complete stellar track predictions4.

Performance scores. A crucial ingredient for the optimization procedure of an automated interpolation method is a set of appropriately designed scores that quantify performance in a physically meaningful and numerically appropriate manner. Only with an adequately defined quantitative performance scoring, the automated interpolation scheme can be scaled up to higher dimensional fundamental stellar parameter spaces, which become too large for visual inspection based performance evaluation for comparing the predicted against the held-back test tracks.

For model validation on the validation data set, we look at residuals for each observable independently and at measures of overall predictive performance. A residual is a signed prediction error,  , of a given prediction-label pair

, of a given prediction-label pair  , and we evaluate it for each of the surface variables. We consider the following error scores that retain the physical significance of residuals: the mean residual

, and we evaluate it for each of the surface variables. We consider the following error scores that retain the physical significance of residuals: the mean residual  , the most extremal under-prediction

, the most extremal under-prediction  , and the most extremal over-prediction

, and the most extremal over-prediction  If ϵ+ and ϵ− are close enough to zero over the entire set of validation data grid points, then there is no need to further stratify the performance evaluation5. Additionally, we use the following error scores to quantify overall predictive performance across the three surface variables: the Mean Squared Error (MSE) and the Mean Absolute Error (MAE). These scores are calculated from the squared residuals and from the absolute residuals, respectively, by taking the average variable by variable and over the three surface variables. We choose the MSE and the MAE, because these are standard choices for evaluating point forecasts generated by statistical learning models, but the physical significance is largely lost by averaging across surface variables.

If ϵ+ and ϵ− are close enough to zero over the entire set of validation data grid points, then there is no need to further stratify the performance evaluation5. Additionally, we use the following error scores to quantify overall predictive performance across the three surface variables: the Mean Squared Error (MSE) and the Mean Absolute Error (MAE). These scores are calculated from the squared residuals and from the absolute residuals, respectively, by taking the average variable by variable and over the three surface variables. We choose the MSE and the MAE, because these are standard choices for evaluating point forecasts generated by statistical learning models, but the physical significance is largely lost by averaging across surface variables.

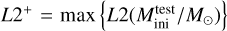

For model testing on the held-back test tracks at the 16 Mini grid points stated above, we define and use the following error scores based on HR and Kiel diagrams:  and

and  . For a single track in HR or in Kiel diagram at a particular initial mass, Mini, the L2 score measures the cumulative deviation between predicted track and held-back track,

. For a single track in HR or in Kiel diagram at a particular initial mass, Mini, the L2 score measures the cumulative deviation between predicted track and held-back track,

computed as the mean squared Euclidean distance in a 2D plane of target variable pairs: υi = (log Li, log Teff,i) for the HR diagram and υi = (log 𝑔i,log Teff,i) for the Kiel diagram. This measurement agrees reasonably well with the visual assessment of how closely a predicted track aligns with the true test track. As summary measures of predictive performance on the test data, we take the maximum L2 measure,  among the 16 initial masses of the test set, for each type of diagram, namely,

among the 16 initial masses of the test set, for each type of diagram, namely,  and

and  .

.

3 Interpolation scheme solutions

In this section, we describe the methodology behind the development of the two solutions to cost-efficient stellar evolution forecasting over continuous parameter spaces. For the construction of a stellar evolution emulator with supervised machine learning, we treat the selection of the surrogate model class in Sect. 3.1. Then, we discuss loss function choice (Sect. 3.2), and outline our training and hyperparameter optimization methods to obtain the best-fit model (Sect. 3.3), which is a feedforward neural network. The hierarchical nearest-neighbor interpolation method is subject of Sect. 3.4.

3.1 Model selection

There are different surrogate model class candidates available for tackling the regression problem defined in Sect. 2.1. For selection of statistical learning algorithms, the following three requirements apply in our problem case: 1) applicability to a large data set (N > 150k), 2) multiple output6, and 3) fast computational speed in forecast generation, for applicability of the surrogate model at scale. Below, we discuss a number of available options, and justify our choices.

Choice of statistical learning model. GPR has been considered the standard model choice for emulation tasks (Sacks et al. 1989). However, because of memory limitations, the default implementation of global GPR is not applicable to large training data sets. While there are approaches to improve the scalability of GPR, we did not opt for GPR-based emulators for reasons discussed in Appendix C. Instead, we tested the performance of a number of regression models that satisfy the aforementioned constraints. After a series of manual tests, we found a satisfactory starting performance with the k-nearest neighbors (Fix & Hodges 1989), random forest (Ho 1995), and feedforward neural network (Ivakhnenko & Lapa 1967; Rumelhart et al. 1985) regression models classes, all of which are efficient statistical learning algorithms that qualify as scalable predictive models with multiple outputs. Among them, in order to identify which model class is the best choice for the construction of a sufficiently accurate surrogate model, we performed a hyperparameter optimization of each of these three to cross-compare their performance, as assessed by the scores defined in Sect. 2.4. We performed hyperparameter optimization of k-nearest neighbors (KNN) and random forest (RF) regression models by a grid search, with a sampling of numerical hyperparameters over a log scale, and carried out a model selection based on three-fold cross-validation. For the feedforward neural network (ffNN) model, which has a much larger space of options for hyperparameter choices, we determined a preliminary best-fit hyperparameter configuration after training hundreds of models over a high-dimensional, but coarsely sampled hyperparameter grid. We then took it as a starting configuration, which we further optimized in terms of the hyperparameter selection over a series of manual experiments. The result is that a manually tuned feedforward neural network (ffNN) outperforms KNN and RF models that have been optimized through a grid search, as assessed by the majority of error metrics defined above (see Table 3). The KNN and RF best-fit models therefore serve us primarily as benchmarks for ffNN performance.

Deep learning models. ffNN is one out of many available deep learning architectures. We opt for a ffNN architecture because in our regression problem, the input is a vector of fixed dimension. To discriminate, we did not train a recurrent neural network based architecture, which is the model class of choice if the input is a sequence of variable length; nor did we choose a convolutional neural network architecture, which is the model class of choice if the input is a higher dimensional topological data array. A motivation for choosing a ffNN architecture is the established theoretical result that a ffNN with a number of hidden layers ≥1 is capable of universal function approximation (Hornik et al. 1989).

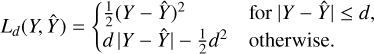

3.2 Choice of loss function

Choosing a loss function appropriate to the problem is a crucial step because it defines the training goal for supervised machine learning. During the optimization of a ffNN, its trainable parameters are iteratively updated, after each batch, to minimize the loss score. Choosing one error score over another is a trade-off to compromise which type of error is least tolerable against other types of errors. Common choices of scoring rules (for a more detailed reference on scoring rules for point forecast evaluation, see Gneiting 2011) for model training as well as for point forecast evaluation are the MAE and MSE. Other choices include the mean squared logarithmic error (MSLE) and the mean absolute percentage error (MAPE). For our problem case, the loss function selection was guided by the following considerations.

MAPE is not the appropriate loss function since, for instance, changes in log-scaled luminosity of massive stars in HR diagram happen on a smaller relative numerical scale than for low-mass stars and prediction errors in that range would therefore hardly be penalized. Furthermore, we chose not to opt for MAPE for additional reasons that are outlined in Tofallis (2015). When choosing MSLE as loss function, we observed an inefficient learning procedure, with an overly slow decline of MSE, MAE, and our physical performance scores over the validation data. However, we also found neither MAE nor MSE to be optimal choices for our problem. Using MAE allowed the mean averaged error scores to remain low but admitted considerable prediction outliers. Conversely, using MSE reproduced the global shape of the distribution of values of the target variables, but predictions of stellar tracks were often not precise enough locally, and overfitting occurred at epochs much earlier than when minimizing MAE. Instead, we opted for the Huber loss (Huber 1964), which seeks a trade-off between MAE and MSE minimization. It penalizes MSE-like for small prediction errors, and MAE-like for large prediction errors, using the parameter d for the transition threshold (for a recent discussion and generalization, see Taggart 2022):

During supervised learning, the Huber loss  issues a penalty for each point prediction error, given the prediction,

issues a penalty for each point prediction error, given the prediction,  , by the surrogate model and the true label, Y, it is compared against. When training deep learning models to predict multiple output, the mean Huber loss is computed as the average across target variables, that is, over the set of labels and over multiple output predictions

, by the surrogate model and the true label, Y, it is compared against. When training deep learning models to predict multiple output, the mean Huber loss is computed as the average across target variables, that is, over the set of labels and over multiple output predictions  that are obtained from one randomly sampled data batch of size nb. We find our best results, as assessed by the physically meaningful performance scores outlined in Sect. 2.4, with d = 0.75. Once a desired target value of the validation loss score is set, which goes in hand with low enough physical performance scores over the validation data, what is left is to seek a suitably configured deep learning model that reaches this target value7.

that are obtained from one randomly sampled data batch of size nb. We find our best results, as assessed by the physically meaningful performance scores outlined in Sect. 2.4, with d = 0.75. Once a desired target value of the validation loss score is set, which goes in hand with low enough physical performance scores over the validation data, what is left is to seek a suitably configured deep learning model that reaches this target value7.

3.3 Hyperparameter optimization

There are two types of hyperparameters that ought to be optimized when constructing ffNN-based emulators: the architecture and learning hyperparameters. The most important architecture hyperparameters are: the number of layers, number of neurons per layer, choice of activation function, and the kernel initialization. The typical important learning hyperparameters are: the learning rate, batch size, choice of optimizer, and the choice of regularization method. There are three different ways to optimize hyperparameters: first, by manual ffNN learning engineering; second, by automated brute-force search methods (for instance, grid or random search); third, by sophisticated search algorithms (e.g., Bayesian optimization or genetic evolutionary search). We opt for manual ffNN learning engineering instead of automated searches, because for deep learning models, the optimal stage when (i.e., at which epoch8) to stop training cannot be faithfully decided a priori, and it requires a careful consideration of numerical criteria for stopping training if models are optimized in an automated pipeline. Most reliably, it is determined a posteriori by inspection of the fluctuating training and validation data loss curve declines during the runtime. Then, we continue training so long as the degree of overfitting is tolerable. We consider the overfitting to be tolerable so long as the validation loss – even though it may be decaying slower than the training loss at advanced learning stages (i.e. at large epoch numbers) – has not reached the flattening plateau stage, nor started to increase.

Best-fit model. For theoretical considerations regarding hyperparameter tuning and the selection criteria we used, the reader is referred to Appendix D. In practice, we found a successful hyperparameter tuning strategy (guided by Goodfellow et al. 2017) with the following configurations (see Table 2 for a summary). First, a symmetric many-layer (6 hidden layers) architecture with a moderate number of neurons per layer (128), rectified linear unit (ReLU; Hahnloser et al. 2000) activation, Glorot uniform (GU; Glorot & Bengio 2010) kernel initialization, and layer normalization (LN; Ba et al. 2016) regularization after each layer. Layer normalization counteracts overfitting while the eight-layer architecture with 128 neurons per hidden layer yields a large enough model capacity to prevent underfitting by over-parametrization. Second, long-term training (~ 70k epochs) at relatively small (512) batch size. Observation of the degree of fluctuation of the loss curves is a means to assess exploration of the high-dimensional trainable parameter space spanned by the biases and by the weighted connections between neurons from neighboring layers in each backpropagation step. The small batch size (as compared to the size of  ) adds stochasticity to the learning, and thereby ensures enough exploration, which is aimed to prevent an early flattening of the validation loss curve. Third, a learning rate schedule of slow exponential decay in the Adam optimizer (Kingma & Ba 2014): starting with a large enough initial learning rate lri = 10−3 (to accelerate the gradient descent at beginning stages of learning) and decreasing the learning rate down to a final lrf ~ 5 × 10−6 toward the end of training (in order to target global rather local minima in the value space of trainable network parameters). The slow gradual decrease is aimed to improve on subtle prediction errors.

) adds stochasticity to the learning, and thereby ensures enough exploration, which is aimed to prevent an early flattening of the validation loss curve. Third, a learning rate schedule of slow exponential decay in the Adam optimizer (Kingma & Ba 2014): starting with a large enough initial learning rate lri = 10−3 (to accelerate the gradient descent at beginning stages of learning) and decreasing the learning rate down to a final lrf ~ 5 × 10−6 toward the end of training (in order to target global rather local minima in the value space of trainable network parameters). The slow gradual decrease is aimed to improve on subtle prediction errors.

Summary of loss function choice, architecture and learning hyperparameters adopted for training our best-fit ffNN model, compared to those adopted by Scutt et al. (2023).

3.4 Hierarchical nearest-neighbor interpolation

In this section, we present a second method to solve the problem by a HNNI scheme. Our construction of the HNNI algorithm was partly motivated by an attempt to customize the operation of the KNN algorithm to our problem setting. In KNN, the nearest neighbors are selected based on a pre-defined distance metric (e.g., Euclidean or Manhattan) over the input parameter space, without treating the regressor dimensions apart from one another. The key principle behind the HNNI method is to select the nearest available grid points (from above and from below) in each parameter space direction to the location in parameter space at which the interpolation prediction is to be made; and then to apply a 1D interpolation prescription subsequently in each parameter space direction according to a hierarchical order of parameters. Our method works similarly to Brott et al. (2011) in that it performs a sequence of linear interpolations separately in each parameter space direction according to a hierarchical ordering of stellar variables, but different from it in that it uses a timescale-adapted evolutionary coordinate, instead of fractional age, as the primary interpolation variable. We thereby show that the method is applicable not only to the MS evolution, but to a sequence of evolutionary phases. In this regard, our method is analogous to Agrawal et al. (2020) in that it uses an adapted evolutionary coordinate to trace the evolution of stars across phases, but we use a prescription for it that allows for automatization of its computation.

We prepare the data set for generating predictions with HNNI under exactly the same conditions as in the supervised machine learning case. The  is now used as a catalog data base, upon which the hierarchical nearest neighbor interpolation is performed, instead of serving as the training data for fitting a surrogate model. The HNNI method requires continued access to the pre-computed stellar evolutionary tracks catalog. The HNNI method is applied separately to each of the three surface variables YL, Yt, and Yg, for obtaining point forecasts at unseen locations in parameter space.

is now used as a catalog data base, upon which the hierarchical nearest neighbor interpolation is performed, instead of serving as the training data for fitting a surrogate model. The HNNI method requires continued access to the pre-computed stellar evolutionary tracks catalog. The HNNI method is applied separately to each of the three surface variables YL, Yt, and Yg, for obtaining point forecasts at unseen locations in parameter space.

As will be shown in Sect. 4.1, the HNNI is applicable reliably over the entire initial mass range and over all three evolutionary phases, including the transitions in between them, without the need to map out points of interest for that purpose. The level of predictive accuracy of HNNI is achieved for two main reasons. First, HNNI operates on local parameter space regions immediate to the test location at which a prediction is to be made. Predictions are calculated by an interpolation scheme that treats different dimensions apart from one another. This stands in contrast to the way ffNN, RF, and KNN operate. RF and f£NN take the global properties of the input parameter space into account to find their own rules for making predictions. Comprehension of global patterns can be a great benefit in some problem settings, but irrelevant in others. Similarly to HNNI, KNN also operates on local environments but does not take hierarchy relations among input parameters into account. Second, HNNI uses the normalized timescale-adapted evolutionary coordinate s as primary interpolation variable, without which the interpolation scheme would not produce accurate results. By virtue of using the latter, interpolation-based predictions at transitions between evolutionary phases are mostly accurate because meanwhile values of stellar log-scaled luminosity, effective temperature, or core density variables change drastically. Therefore, the path length increment δs, which is computed from absolute increments in these variables, increases significantly, resulting in a higher resolution along the age proxy axis of the transition stages between evolutionary phases.

Given the initial mass parameter space sampling used in this work, a linear interpolator was sufficient for making accurate forecasts. More generally, for each parameter space dimension, a different (e.g., a quadratic or cubic polynomial) functional could be applied instead.

For clarity, we outline the pseudo-code of HNNI in a 3D (s, Mini, Zini) single star parameter space in Appendix B. We believe that the HNNI method, in its basic principle, is applicable to those higher dimensional parameter spaces that allow for a sequential ordering of the parameters in importance of their effect on the shape of resulting stellar evolutionary tracks.

|

Fig. 3 Validation data results for the ffNN-based stellar evolution emulator. The histograms and summary statistics of the residuals |

4 Results

In this section, the prediction results, obtained with the deep learning surrogate model and with the HNNI algorithm, are analyzed. We treat the observables fit (step 2) first (Sect. 4.1) because it yields the physically meaningful outcome: the prediction of stellar evolution variables and tracks. Therefore, in our two-step interpolation scheme, the observables fit needs to reach a satisfactory level of accuracy first, which can be assessed physically, before approaching the age proxy fit (step 1). Then, the performance baseline for the age proxy fit is set by the condition that the predictive accuracy of the integral two-step interpolation scheme is maintained on the same order of magnitude, as assessed by the scores. We analyze the step 1 fit in Sect. 4.2.

4.1 Observables fit

4.1.1 Deep learning emulation

Validation data. The performance assessment on the validation data is presented in Fig. 3 by histograms of the residuals and by the summary statistics, defined in Sect. 2.4, individually for each of the three predicted surface variables. If we assume that the prediction errors of YL, YT, and Yg were scored over the same numerical scale, then the following conclusions could be made. The mean residual, in absolute value, is largest for log 𝑔 and lowest for log L, while the most extremal overprediction and underprediction are obtained for the log 𝑔 target variable. All three mean residuals take on low numerical values on the order of 10−4 or 10−5. These error scores are comparable to those found with the best-fit neural network model of Scutt et al. (2023; 8 × 10−4 dex on log L and 2 × 10−4 dex on log Teff), who address a similar regression problem. Since  is negative for log L but positive for log Teff and log 𝑔, the deep learning emulator tends to over-predict the first, but to underpredict the latter two. The most extreme prediction outliers are on the order of 10−1 or 10−2 in absolute value, namely, up to three orders of magnitude larger than the mean residuals. To better characterize the distribution of errors, we therefore computed an additional score, σϵ, which is the standard deviation of the residuals over each target variable. It is a measure of the spread of the prediction errors around the mean residual error, which we find to be on the order of 10−3 for each of the three target variables.

is negative for log L but positive for log Teff and log 𝑔, the deep learning emulator tends to over-predict the first, but to underpredict the latter two. The most extreme prediction outliers are on the order of 10−1 or 10−2 in absolute value, namely, up to three orders of magnitude larger than the mean residuals. To better characterize the distribution of errors, we therefore computed an additional score, σϵ, which is the standard deviation of the residuals over each target variable. It is a measure of the spread of the prediction errors around the mean residual error, which we find to be on the order of 10−3 for each of the three target variables.

Comparison to observational uncertainties. It is of interest to compare the mean residual errors on the target variables to the typical uncertainties from observations of stars. For stellar bolometric luminosity, the relative error is on the order of δL/L ∝ 0.01 for Gaia observations of solar-like stars (Creevey et al. 2023), which translates into δ log  log e ∝ 0.004. For surface gravity, with δ log 𝑔/[cm ⋅ s−2] ∝ 0.1 (see, e.g., Ryabchikova et al. 2016), it is comparatively large. For effective temperature of low-mass stars, the observational error is on the order of δTeff/K ∝ 50-100 depending on stellar class and spectral method (Ryabchikova et al. 2016). For massive stars, the observational uncertainty on the classical observables typically ranges between δ log L/L⊙ = 0.1, δTeff ∝ 500−2000 K and δ log 𝑔/ [cm s−2] ∝ 0.1-0.2 (Schneider et al. 2018b,a).

log e ∝ 0.004. For surface gravity, with δ log 𝑔/[cm ⋅ s−2] ∝ 0.1 (see, e.g., Ryabchikova et al. 2016), it is comparatively large. For effective temperature of low-mass stars, the observational error is on the order of δTeff/K ∝ 50-100 depending on stellar class and spectral method (Ryabchikova et al. 2016). For massive stars, the observational uncertainty on the classical observables typically ranges between δ log L/L⊙ = 0.1, δTeff ∝ 500−2000 K and δ log 𝑔/ [cm s−2] ∝ 0.1-0.2 (Schneider et al. 2018b,a).

In sum, the mean residual errors on all three target variables are smaller than the typical observational errors on the same log-scaled quantities. In the case of ϵL and ϵg, the mean residual errors (note: not only these, but also the expected spreads σϵ) are smaller by one to three orders of magnitude depending on statistical score. This means that the prediction errors from the emulator are greater than the observational uncertainties only when the prediction errors belong to the tail of their integral empirical histogram, which comprises cases that are statistically rare. For ϵT, the histogram of linear-scaled residual errors,  , yields a mean residual error of ≃8.3 K, an expected spread of ≃85 K, a worst overprediction outlier of ≃1385 K and a worst underprediction outlier of ≃2885 K in absolute values. The expected spread is smaller than the observational uncertainty δTeff/K but of a similar order of magnitude. Therefore, inference on effective temperature of low-mass stars using the emulator is (based on the assumption of the aforementioned observational uncertainties) least reliable, out of the three surface variables, in a practical setting.

, yields a mean residual error of ≃8.3 K, an expected spread of ≃85 K, a worst overprediction outlier of ≃1385 K and a worst underprediction outlier of ≃2885 K in absolute values. The expected spread is smaller than the observational uncertainty δTeff/K but of a similar order of magnitude. Therefore, inference on effective temperature of low-mass stars using the emulator is (based on the assumption of the aforementioned observational uncertainties) least reliable, out of the three surface variables, in a practical setting.

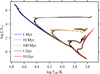

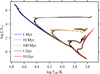

Test data. For model testing on the test data, in order to predict evolutionary tracks in the HR diagram, we compute the values of target variables, log Li, log 𝑔i, and log Teff,i at the evolutionary coordinate grid points,  , contained in the held-back series for each test initial mass

, contained in the held-back series for each test initial mass  . We then plot pairs of predicted target variables against one another to obtain the predicted tracks in the HR and in the Kiel diagram, respectively. These can now be compared with the test data held-back tracks in the diagrams. As shown in Fig. 4, the shape of the stellar tracks is reproduced by the deep learning surrogate models across the entire initial mass range. For a closer inspection of the predictive quality, Fig. 5 displays the best and worst predictions, respectively, of stellar evolution tracks in the HR diagram at unseen test data initial mass grid points. The biggest deviation between predicted and held-back test stellar track is observed at the low-mass end (worst fit for

. We then plot pairs of predicted target variables against one another to obtain the predicted tracks in the HR and in the Kiel diagram, respectively. These can now be compared with the test data held-back tracks in the diagrams. As shown in Fig. 4, the shape of the stellar tracks is reproduced by the deep learning surrogate models across the entire initial mass range. For a closer inspection of the predictive quality, Fig. 5 displays the best and worst predictions, respectively, of stellar evolution tracks in the HR diagram at unseen test data initial mass grid points. The biggest deviation between predicted and held-back test stellar track is observed at the low-mass end (worst fit for  M⊙). There are two main reasons for this. First, as low-mass stars displace in the HR diagram from ZAMS up to TACHeB, they cover a larger spread in value range of log-scaled luminosity than higher mass stars, due to the stretched-out (in the HR diagram) ascension of the red giant branch. Second, the main contribution to cumulative deviation of predicted to the actual test track for low-mass stars arises during the unstable core helium burning, the sequence of short-lived helium flashes. The helium flashes introduce the most prompt transition in both the log L and the log Teff variables. Since these are physically uncertain from the modeling perspective, it therefore is not as important to obtain high accuracy prediction of flashes compared to other parts of the stellar evolution track. We evaluate our state-of-art worst fit as satisfactory, since the more reliable (from the modeling perspective) evolution before and after the flashes is well reproduced by the surrogate model: the evolution up to the tip of the RGB and the stable core helium burning after electron degeneracy in the core is lifted.

M⊙). There are two main reasons for this. First, as low-mass stars displace in the HR diagram from ZAMS up to TACHeB, they cover a larger spread in value range of log-scaled luminosity than higher mass stars, due to the stretched-out (in the HR diagram) ascension of the red giant branch. Second, the main contribution to cumulative deviation of predicted to the actual test track for low-mass stars arises during the unstable core helium burning, the sequence of short-lived helium flashes. The helium flashes introduce the most prompt transition in both the log L and the log Teff variables. Since these are physically uncertain from the modeling perspective, it therefore is not as important to obtain high accuracy prediction of flashes compared to other parts of the stellar evolution track. We evaluate our state-of-art worst fit as satisfactory, since the more reliable (from the modeling perspective) evolution before and after the flashes is well reproduced by the surrogate model: the evolution up to the tip of the RGB and the stable core helium burning after electron degeneracy in the core is lifted.

|

Fig. 4 Test data results, comparing the true (left) and the ffNN-predicted (right) stellar evolutionary tracks in HR (top) and Kiel (bottom) diagrams, over the entire set of our test initial masses |

|

Fig. 5 Test data results, showing the best (left) and the worst (right) predictions of stellar evolutionary tracks, as assessed by the L2 measure, in the HR diagram, for unseen test initial masses, by the trained ffNN model (top) and by the HNNI algorithm (bottom). For comparison, the original held-back tracks are underlain. |

4.1.2 HNNI

Stellar track predictions in the HR and Kiel diagram are obtained in the same way as described above for the deep learning case. The performance of the HNNI predictive model is assessed using the same data bases, procedures, and metrics as the supervised machine learning models. The outcome is that over the validation and test data, HNNI even outperforms the deep learning method in accuracy of predictions (although not significantly) as is measured by the majority of statistical scores (see Table 3). Over the test data, the HNNI method yields accurate predictions of stellar evolutionary tracks across the entire initial mass range and over all three evolutionary phases, including the fast-timescale transition regions. For illustration, Fig.5 shows the best and worst fit of a stellar track in HR diagram over the test data. The HNNI and deep learning models agree on the worst fit for  M⊙ for reasons explained above. In the HNNI case, the worst fit is resolved at higher accuracy than in the deep learning case, with a deviation from the test track that is marginal throughout, except during the helium flashes.

M⊙ for reasons explained above. In the HNNI case, the worst fit is resolved at higher accuracy than in the deep learning case, with a deviation from the test track that is marginal throughout, except during the helium flashes.

Furthermore, the HNNI scheme allows us to predict any stellar evolution variable of interest we tested, by virtue of the same algorithmic prescription for interpolation (see Fig. A.3 for prediction of stellar-core related variables for unseen test data initial masses). In contrast, by the current setting, the ffNN predicts only those three surface variables which it has been trained upon, as set by the regression problem defined in Sect. 2.1. In principle, a predictive framework with a large number of time-evolved variables could also be achieved with a ffNN emulator in two different ways. By the first way, the dimension of the output would need to be expanded to match the total number of stellar evolution variables of interest. For example, the values of six stellar variables would be produced as output of the 6 neurons in the outermost layer of the ffNN. However, optimizing such a model by a single globally defined loss score is cumbersome (for a discussion, see Appendix E). By the second way, a separate ffNN model with univariate output would need be trained to predict each additional stellar variable of interest. This is the more promising approach out of the two, but requires the construction of a separate hyperparameter-optimized model for each output variable.

4.1.3 Method comparison

The two methods for stellar evolutionary track forecasting (deep learning emulation versus HNNI) that we develop lie at different trade-off points between cost-efficiency and accuracy of the forecasts. To summarize, the advantages of HNNI are as follows. First, the quality of predictions is reliable, with HNNI even outperforming our best-fit deep learning model. Second, all evolved stellar variables (i.e., not only log Li, log Teff,i log ɡi, whose prediction has numerically been evaluated for comparison with output of the surrogate model) are covered by the same interpolation prescription. Third, HNNI works as a sustainable out-of-the-box solution method. In contrast to the supervised machine learning approach, there is no need to re-iterate the training and optimization of a predictive model each time another stellar tracks data base is used as the catalog being accessed by the algorithm.

The disadvantages of HNNI are as follows. First, continued access to the catalog data base is required, which, depending on the size of parameter space, sampling density and dimension of the problem, is typically of ~GB size. Second, the computing time to generate predictions is significantly slower compared to the speed of the surrogate model. For the comparison, we have computed scaling relations on a 4-core CPU (see Fig. 6): on such a machine, it takes around 40 seconds to generate one million point predictions of all the three surface variables, spread randomly across the evolutionary phases and the initial mass range, with ffNN, while making the same number of predictions takes around 3 h 13 min for HNNI (the computing time scales down linearly with the number of cores that are used to generate the predictions). Third, the extension to higher dimensional parameter space is not straightforward. Depending on the set of stellar parameters, a hierarchical relation may not always be identifiable. Moreover, in a high-dimensional parameter space, the required number of subsequent ID interpolations becomes large (see the discussion in Appendix B). Thereby, prediction-generation is slowed down further.

In contrast, advantages of the supervised machine learning method are as follows. First, casting predictions is fast, namely, two orders of magnitude faster (in seconds) to generate than with HNNI. Second, trained surrogate models are handy: a predictive ffNN model is of file size ~3 MB. Third, the supervised machine learning approach is very general: the extension to higher dimensions (in contrast to HNNI) does not require any hierarchical ordering of regressor variables, nor does casting predictions face any significant increase in computing time with increasing dimension.

The disadvantages of the method are as follows. First, the optimization of deep learning models is a more entailed task than a hard-coding adjustment of HNNI. Second, minimizing a single global loss score during model training does not guarantee locally accurate fit results consistently over the entire parameter space (see Appendix E for a discussion thereof and proposed solutions). Third, the scaling of ffNN output with the number of target variables either comes under sacrifice of predictive accuracy (in the multiple output case) or implies considerably more development effort (in the single output case).

|

Fig. 6 Cost-efficiency of forecast generation. Given our problem size and software implementation of HNNI (see Appendix B for an outline of the pseudo-code), the computing time scaling relation t(N) ∝ N with the number, N, of multiple output predictions is around 360 times larger for HNNI compared to that of the ffNN. |

4.2 Age proxy fit

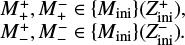

The series of age proxy values from s = 0 (ZAMS) to s = 1 (TACHeB) are not known for initial masses over which no stellar evolution tracks have been pre-computed, since s is calculated from the log L, log Teff, and log ρc time series which are then not available at those initial mass grid points. Both our methods for predicting stellar evolution tracks rely on the timescale-adapted evolutionary coordinate, s, which we use to re-parametrize the evolution of stars. Many astrophysical applications, however, require an indication of the stellar ages; for instance, drawing model isochrones into observed color-magnitude diagrams. We therefore construct another duet of interpolation methods (with HNNI and with supervised machine learning) that map the age τ onto the value of a star’s timescale-adapted evolutionary coordinate s(log τ, log Mini) ∊ (0, 1) over a continuous initial mass range, in order to accomplish the two-step interpolation scheme as defined in Sect. 2.1. Time counting in the MIST data set starts with the pre-MS phase. Therefore, the values of ages at ZAMS, τZAMS(Mini), quantify its duration. Instead of τ, we use a scaled age variable  for the age proxy fit with both the HNNI and the supervised ML methods:

for the age proxy fit with both the HNNI and the supervised ML methods:

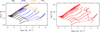

To obtain back the actual non-normalized age values (in units of years), the supply of the ZAMS log τZAMS(Mini) and the TACHeB log τTACHeB(Mini) functions is needed. The τZAMS (Mini) values are available from the MIST data set at the discretely sampled initial mass grid points. In order to be able to predict ZAMS and TACHeB ages of stars over a continuous Mini range, we fit a Gaussian process regression model to the discretely sampled catalog ZAMS and TACHeB grid points (see Fig. 7a), respectively.

|

Fig. 7 GPR fits of the log τZAMS(log Mini/M⊙) and log τTACHeB(log Mini/M⊙) relations (a), and the scatter plots of the age proxy predictions ŝ(log τ, log Mini) against the validation data, stest, obtained with the HNNI (b) and KNN (c) methods, for performance comparison. |

|

Fig. 8 Best ((a) and (c)) and worst ((b) and (d)) fits of age proxy tracks for unseen test initial masses, with the HNNI and KNN methods, respectively. |

4.2.1 HNNI

The HNNI routine for the age proxy fit operates in the same way as outlined in Sect. 3.4, with the sole difference that the primary regressor variable now is  (instead of the age proxy used in step 2), while s is itself the target variable of the fit. As Fig. 7b shows, HNNI predicts the values of the age proxy reliably throughout evolution of stars from s = 0 up to s = 1 over the validation data set. The mean residual error