| Issue |

A&A

Volume 656, December 2021

|

|

|---|---|---|

| Article Number | A54 | |

| Number of page(s) | 28 | |

| Section | Numerical methods and codes | |

| DOI | https://doi.org/10.1051/0004-6361/202141446 | |

| Published online | 06 December 2021 | |

Auto-RSM: An automated parameter-selection algorithm for the RSM map exoplanet detection algorithm

1

STAR Institute, Université de Liège, Allée du Six Août 19c, 4000 Liège, Belgium

e-mail: carl-henrik.dahlqvist@uliege.be

2

Aix Marseille Univ, CNRS, CNES, LAM, Marseille, France

Received:

31

May

2021

Accepted:

16

September

2021

Context. Most of the high-contrast imaging (HCI) data-processing techniques used over the last 15 years have relied on the angular differential imaging (ADI) observing strategy, along with subtraction of a reference point spread function (PSF) to generate exoplanet detection maps. Recently, a new algorithm called regime switching model (RSM) map has been proposed to take advantage of these numerous PSF-subtraction techniques; RSM uses several of these techniques to generate a single probability map. Selection of the optimal parameters for these PSF-subtraction techniques as well as for the RSM map is not straightforward, is time consuming, and can be biased by assumptions made as to the underlying data set.

Aims. We propose a novel optimisation procedure that can be applied to each of the PSF-subtraction techniques alone, or to the entire RSM framework.

Methods. The optimisation procedure consists of three main steps: (i) definition of the optimal set of parameters for the PSF-subtraction techniques using the contrast as performance metric, (ii) optimisation of the RSM algorithm, and (iii) selection of the optimal set of PSF-subtraction techniques and ADI sequences used to generate the final RSM probability map.

Results. The optimisation procedure is applied to the data sets of the exoplanet imaging data challenge, which provides tools to compare the performance of HCI data-processing techniques. The data sets consist of ADI sequences obtained with three state-of-the-art HCI instruments: SPHERE, NIRC2, and LMIRCam. The results of our analysis demonstrate the interest of the proposed optimisation procedure, with better performance metrics compared to the earlier version of RSM, as well as to other HCI data-processing techniques.

Key words: methods: data analysis / techniques: image processing / techniques: high angular resolution / planets and satellites: detection

© ESO 2021

1. Introduction

In the study of exoplanets, direct imaging provides information that is complementary to indirect detection techniques, allowing wider orbits to be explored in greater detail, thus giving access to photometric and spectral features of young massive self-luminous companions. High-contrast imaging (HCI) is however one of the most challenging exoplanet detection techniques, as it requires large telescopes, advanced adaptive optics, coronagraphs, and sophisticated data processing to disentangle the faint planetary signal from the bright host star. Besides the large flux ratio between the companion and the host star, HCI techniques have to deal with residual noise due to quasi-static speckles, which originate from the optical train of the instruments or from uncorrected atmospheric turbulence.

In the last decade, the field of HCI has been very active and a large number of data processing techniques have been developed to detect and characterise planetary candidates. The most common approach combines the angular differential imaging (ADI, Marois et al. 2006) observing strategy with subtraction of a reference point spread function (PSF). While the ADI observing strategy relies on angular diversity to better average out quasi-static speckles, subtraction of a reference PSF increases the sensitivity of HCI by modelling and subtracting quasi-static speckles from the original set of frames. The most popular PSF-subtraction methods include ADI median-subtraction, locally optimised combination of images (LOCI, Lafreniere et al. 2007), principal component analysis (PCA/KLIP, Soummer et al. 2012; Amara & Quanz 2012), non-negative matrix factorisation (NMF, Ren et al. 2018), and the local low rank plus sparse plus Gaussian decomposition (LLSG, Gonzalez et al. 2016). Other algorithms such as ANDROMEDA (Cantalloube et al. 2015), KLIP FMMF (Pueyo 2016; Ruffio et al. 2017), PACO (Flasseur et al. 2018), and TRAP (Samland et al. 2021) exploit the inverse problem approach, which, for HCI, consists in tracking a model of the expected planetary signal in the set of frames included in the ADI sequence. All these methods rely on signal-to-noise ratio (S/N) maps to detect planetary candidates. These S/N maps are computed in a separate step for PSF-subtraction methods (using algorithms such as those described in Mawet et al. 2014; Bottom et al. 2017; Pairet et al. 2019), or are generated as a by-product of the algorithm for inverse problem approaches. Recently, a new PSF-subtraction-based approach was proposed to take better advantage of the numerous existing PSF-subtraction techniques. The regime-switching model (RSM) map (Dahlqvist et al. 2020) replaces the estimation of the S/N map by the computation of a probability map generated using one or several PSF-subtraction techniques. The most recent version of the RSM map (Dahlqvist et al. 2021) accommodates up to six different PSF-subtraction techniques, including two techniques relying on the forward modelling of an off-axis point source PSF.

The use of one or several PSF-subtraction techniques to generate a S/N map or a probability map via the RSM algorithm, requires the definition of multiple parameters specific to each method and potentially varying from one ADI sequence to another. The selected set of parameters can have dramatic effects on the final detection map, both in terms of noise and algorithm throughput. The selection of the optimal set of parameters is usually done manually, which requires time and can lead to bias, as the definition of the optimality of the set is driven by the ability of the user to properly analyse the generated detection maps. The complexity of the optimal parameter selection can be an obstacle to the use of some HCI data-processing techniques, and can lead to unreliable results. This also makes it difficult to properly compare the performance of HCI data-processing techniques, as their performance is parameter-driven to a large extent, and therefore depends on subjective choices made by the user. To mitigate these issues in the context of the RSM framework, we propose an optimisation procedure called auto-RSM to automatically select the best set of parameters for the PSF-subtraction techniques, as well as for the RSM algorithm itself. To our knowledge, such an extensive optimisation procedure has not yet been proposed in the HCI literature, although some earlier works have already partly addressed the question of parameter optimisation. Approaches such as S/N-based optimisation of the number of components for PCA (Gomez Gonzalez et al. 2017) or direct optimisation of the non-linear S/N function (Thompson & Marois 2021) focus on a single PSF-subtraction technique, whereas here we proposed a more generic framework applicable to most PSF-subtraction techniques.

The proposed optimisation framework can be divided into three main steps: (i) selection of the optimal set of parameters for the different PSF-subtraction techniques (and ADI sequences) via Bayesian optimisation, (ii) optimal parametrisation of the RSM algorithm, and (iii) selection of the optimal set of PSF-subtraction techniques (and ADI sequences) to be considered for the computation of the final RSM probability map. This last step is motivated by the fact that, when relying on multiple PSF-subtraction techniques and multiple ADI sequences, some sequences may be noisier while some methods may better cope with the noise independently of their parametrisation. Special attention should therefore be paid to the choice of the cubes of residuals generating the final probability map. The optimisation step for the PSF-subtraction technique parameters is not limited to auto-RSM, and can be performed separately if a S/N map is preferred to the RSM probability map. In addition to the development of auto-RSM, we therefore propose a variant of the algorithm adapted to the use of S/N maps instead of RSM probability maps. Auto-S/N relies on the first step of the auto-RSM algorithm, and on a modified selection framework allowing the optimal combination of multiple S/N maps.

The performance of both optimisation procedures is assessed using the exoplanet imaging data challenge (EIDC), which regroups ADI sequences generated by three state-of-the-art HCI instruments: SPHERE, NIRC2, and LMIRCam. The aim of the EIDC initiative is to provide tools, i.e., the data sets and performance metrics, to properly compare the various HCI data processing techniques that have been developed recently. The EIDC first phase, which ended in October 2020, regrouped and compared the results from 23 different submissions for the ADI subchallenge (Cantalloube et al. 2020), making it a great tool to assess the performance of a new approach.

The remainder of this paper is organised as follows. Section 2 describes the procedure used to optimise the parameters of the PSF-subtraction techniques. In Sect. 3, we introduce the RSM map framework and present the next two steps of the auto-RSM framework: the optimal RSM parameter selection, and the selection of the optimal set of cubes of residuals used to generate the final probability map. Section 4 is devoted to the performance assessment of the optimisation procedure along with the comparison of different versions of the optimisation procedure. Finally, Sect. 5 concludes this work.

2. PSF-subtraction techniques optimisation

The proposed optimisation procedure relies on the concept of inverted parallactic angles, which have already been used in the HCI literature (e.g., Gomez Gonzalez et al. 2018; Pairet et al. 2019). The sign of the parallactic angles used to de-rotate the ADI sequence is flipped, blurring any planetary signal while preserving the noise temporal correlation and statistical properties. The use of ADI sequences with flipped parallactic angles should allow us to avoid biases due to the contribution from potential planetary signals during the optimisation process. Although the inverted parallactic angles approach allows us to blur planetary signals, it is not immune to potential bright artefacts, which implies that particular attention needs to be paid to the elimination of outliers from the computed optimal parameters.

As mentioned in Sect. 1, the RSM map relies on one or several PSF-subtraction techniques to generate a final probability map. Six different PSF-subtraction techniques are currently used with the RSM map: LOCI, annular PCA (APCA, Gomez Gonzalez et al. 2017), KLIP, NMF, LLSG, and forward-model versions of KLIP and LOCI (see Dahlqvist et al. 2021, for more details). Each PSF-subtraction technique is characterised by its own set of parameters, which strongly affect the quality of the reference PSF modelling. Table 1 presents the parameters that we have identified as the most relevant for the optimisation of the six considered PSF-subtraction techniques. Other parameters, such as the annulus width, were tested during the auto-RSM development, but were discarded from the optimisation framework as their influence was found to be smaller or because of other practical considerations.

Set of parameters selected for the optimisation of the six considered PSF-subtraction techniques.

2.1. Definition of the loss function

Parameter optimisation requires the definition of a loss function f, which provides, for a given set of parameters p, an outcome f(p) that can be maximised or minimised. In the case of reference PSF modelling, the loss function should quantify the ability of the PSF-subtraction technique to remove the residual noise contained in the ADI sequence and to identify potential planetary companions. The definition of the achievable planet/star flux ratio or contrast, for a given detection significance, is therefore a good candidate to measure the PSF-subtraction technique performance. In the context of HCI, the contrast is defined as follows (Jensen-Clem et al. 2017):

The contrast is usually defined at a 5 σ level which implies factor = 5 with noise = σ. As the parameter optimisation is done for a single ADI sequence at a time, the stellar aperture photometry does not impact the optimisation process and is therefore irrelevant and set to 1. We rely on the procedure of Mawet et al. (2014) illustrated in Fig. 1 to determine the noise annulus-wise. For a given annulus and for an aperture centred on the pixel of interest, with a diameter equal to the full width at half maximum (FWHM) of the PSF, the noise is computed by considering the standard deviation of the fluxes in all the non-overlapping apertures (one FWHM in diameter each) included in this annulus. The number of apertures being relatively small for small angular separations, the procedure implements a small statistics correction, relying on a Student t-test to correct the multiplicative factor for the noise.

|

Fig. 1. Estimation of the noise via the annulus-wise procedure proposed by Mawet et al. (2014). The dotted white circles indicate the apertures whose flux is used for the noise computation, while the red circular region is centred on the pixel for which the noise needs to be estimated. |

The throughput quantifies the attenuation of the planetary signal due to reference PSF subtraction. In practice, the throughput is estimated by injecting a fake companion at a predefined position and computing the ratio between the injected aperture flux and the recovered aperture flux after the reference PSF subtraction. Contrast curves can be computed by averaging the sensitivity limit in terms of planet-to-star contrast obtained by injecting several fake companions at different position angles for a series of angular separations. Relying on several azimuthal positions and averaging the associated contrasts reduces the impact of the residual speckles on the estimated contrast. We follow this approach, but instead of injecting individual fake companions separately to compute the average contrast, we inject several fake companions at once, which drastically reduces the computation time. We impose a minimum separation of one FWHM between the apertures containing the fake companions, and a maximum of eight fake companions per annulus, in order to limit potential cross-talk between the injected fake companions. This safety distance, as well as the small intensity of the injected fake companions1, provides a good approximation of the average contrast (see Appendix C for a comparison between sequential and multiple injections) while limiting the computation time, which is crucial here as parameter optimisation requires a large number of contrast estimations. The loss function computation may be summarised as follows:

-

Reference PSF estimation using the selected post-processing technique and set of parameters;

-

Reference PSF subtraction from the original ADI sequence, de-rotation of the cube of residuals, and median combination of the resulting frames;

-

Computation of the fluxes for the entire set of apertures within the selected annulus in the median-combined frame obtained in step 2, and estimation of the noise relying on a Student t-test;

-

Injection of fake companions at the selected set of azimuths with flux value defined as five times the noise computed in step 3;

-

Computation of the cube of residuals for the ADI sequence containing the fake companions and median combination;

-

Computation of the throughputs by comparing the aperture flux of the injected companions to that of the retrieved companions after PSF subtraction (difference between final frame of step 5 and step 2);

-

Estimation of the contrasts via Eq. (1) and computation of the average contrast.

2.2. Parameter selection via Bayesian optimisation

The NMF and LLSG PSF-subtraction techniques have integer parameters that are, in practice, restricted to a small range of possible values. One can therefore easily select their optimal parameters by going through their entire parameter space, and simply applying steps 1–7 to compute the contrast for each set of parameters. The optimal set of parameters is the one that minimises the contrast. However, for the other PSF-subtraction techniques, part of the parameter space is continuous, which prevents exploration of the entire parameter space. A more advanced minimisation algorithm is therefore needed. The derivatives and convexity properties of our loss function are unfortunately unknown. However, it is expected that our loss function, i.e. the function describing the evolution of the annulus-wise contrast in terms of the selected parameters, is non-convex and most probably non-linear. This implies that we cannot rely on mainstream minimisation approaches (e.g., Newton-Conjugate-Gradient algorithm or Nelder-Mead Simplex algorithm). In addition, evaluating the annulus-wise contrast is expensive, because of the numerous steps involved in its estimation. We therefore cannot simply rely on Monte Carlo simulation or random searches to explore the parameter space.

Considering all these constraints, we decided to rely on Bayesian optimisation to select the optimal set of parameters for the remaining PSF-subtraction techniques. Bayesian optimisation is a powerful strategy to limit the number of loss function evaluations needed to reach an extremum (see, e.g., Mockus et al. 1978; Jones et al. 1998). This strategy belongs to a class of algorithms called sequential model-based optimisation (SMBO) algorithms. This class of algorithms uses previous observations of the loss function to determine the position of the next point inside the parameter space to be evaluated. It is called Bayesian optimisation because it relies on Bayes’ theorem to define the posterior probability of the loss function, on which the sampling strategy is based. Bayes’ theorem states that the posterior probability associated with a model, given a set of observations, is proportional to the likelihood of the observations given the model, multiplied by the prior probability of the model:

where f is the loss function to optimise and 𝒪1 : t = {p1 : t,f(p1 : t)} is the set of observations of the loss function, with p1 : t being the set of tested points (see Appendix A for a summary of all the mathematical notions used throughout the paper). In the case of Bayesian optimisation, we assume a Gaussian likelihood with noise, as follows:

where 𝒪t = f(pt)+ϵ with  .

.

Regarding the prior distribution for our loss function, Mockus (1994) proposed relying on a Gaussian process (GP) prior as this induces a posterior distribution over the loss function that is analytically tractable2. A GP is the generalisation of a Gaussian distribution to a function, replacing the distribution over random variables by a distribution over functions. A GP is fully characterised by its mean function m(p), and its covariance function K, where

The GP process can be seen as a function that returns the mean and variance of a Gaussian distribution over the possible values of f at p, instead of returning a scalar f(p). We make the assumption that the prior mean is the zero function m(p) = 0 and we select a commonly used covariance function, the squared exponential function:

where l is the length scale of the kernel.

Having documented the posterior probability computation for our loss function, we need to define a sampling strategy. Bayesian optimisation relies on an acquisition function to define how to sample the parameter space. This function is based on the current knowledge of the loss function, i.e. the posterior probability. The acquisition function is a function of the posterior distribution over the loss function f, which provides a performance metric for all new sets of parameters. The set of parameters with the highest performance is then chosen as the next point of the parameter space to be sampled. A popular acquisition function is the expected improvement (EI, Mockus et al. 1978; Jones et al. 1998) which is defined as follows:

where 𝔼 is the expected value and  is the current optimal set of parameters. We see that in the case of Bayesian optimisation, we look for the maximum value of the loss function. As we are trying to minimise the contrast for a given set of parameters, we simply define our loss function f(p) as the inverse of the contrast averaged over the selected set of azimuths (see Sect. 2.1).

is the current optimal set of parameters. We see that in the case of Bayesian optimisation, we look for the maximum value of the loss function. As we are trying to minimise the contrast for a given set of parameters, we simply define our loss function f(p) as the inverse of the contrast averaged over the selected set of azimuths (see Sect. 2.1).

An interesting feature of the EI is that it can be evaluated analytically under the GP model, yielding (see Appendix B for more details about the derivation of these expressions)

where Φ(Z) and ϕ(Z) are respectively the cumulative distribution and probability density function of the Gaussian distribution, μ(pt + 1) and σ(pt + 1) are the mean and variance of the Gaussian posterior distribution, and ![$ Z=\left[ \mu(\boldsymbol{p}_{t+1})-f(\widehat{\boldsymbol{p}})\right] /\sigma(\boldsymbol{p}_{t+1}) $](/articles/aa/full_html/2021/12/aa41446-21/aa41446-21-eq10.gif) . We see from this last expression that the EI is high either when the expected value of the loss μ(p) is larger than the maximum value of the loss function

. We see from this last expression that the EI is high either when the expected value of the loss μ(p) is larger than the maximum value of the loss function  or when the uncertainty σ(pt + 1) around the selected set of parameters pt + 1 is high. The EI approach aims to minimise the number of function evaluations by performing a trade-off between exploitation and exploration at each step. The EI exploits the existing set of observations by favouring the region where the expected value of f(pt + 1) is high, while it also explores unknown regions where the uncertainty associated with the loss function is high.

or when the uncertainty σ(pt + 1) around the selected set of parameters pt + 1 is high. The EI approach aims to minimise the number of function evaluations by performing a trade-off between exploitation and exploration at each step. The EI exploits the existing set of observations by favouring the region where the expected value of f(pt + 1) is high, while it also explores unknown regions where the uncertainty associated with the loss function is high.

Bayesian optimisation starts with the initialisation of the posterior probability by estimating the loss function for several sets of parameters via random search in the parameter space. Once this initial population of observations is computed, the rest of the algorithm can be summarised as follows.

-

Based on the GP model, use random search to find the pt + 1 that maximises the EI, pt + 1 = argmax[EI(pt + 1)];

-

Compute the contrast for the new set of parameters pt + 1;

-

Update the posterior expectation of the contrast function using the GP model (see Appendix B);

-

Repeat the previous steps for a given number of iterations.

The number of random searches to compute the initial GP and the number of iterations for the Bayesian optimisation depend on the size of the parameter space associated with the considered PSF-subtraction techniques. A specific number of random searches and iterations are therefore selected for each PSF-subtraction technique. At the end of the Bayesian optimisation, the minimal average contrast for a given annulus a and PSF-subtraction technique m is stored in a matrix element Ca, m, along with the set of parameters p in another matrix Pa, m.

This first step of the auto-RSM algorithm may be used outside the RSM framework, allowing the production of S/N maps based on the cubes of residuals generated by optimised PSF-subtraction techniques. A S/N-based version of the auto-RSM framework called auto-S/N has been developed and is presented in Appendix D. Auto-S/N optimally combines S/N maps computed from the cubes of residuals generated by the optimised PSF-subtraction techniques, relying on the same greedy approach as for auto-RSM (see Sect. 3.3). The performance of auto-S/N is assessed in Appendix D.2 using the same metrics as for auto-RSM (see Sect. 4). The lower performance of auto-S/N implies that auto-RSM should preferred, despite its longer computation time, although the two approaches can be complementary to some extent.

3. RSM map optimisation

3.1. RSM map principles

The RSM algorithm relies on a two-state Markov chain to model the pixel intensity evolution inside one or multiple de-rotated cubes of residuals generated by PSF subtraction techniques using ADI or SDI observing strategies. The cubes of residuals are treated annulus-wise to account for the radial evolution of the residual speckle noise statistics. For each angular separation a, a residual time-series, xia, is built by vectorising the set of patches centred on the annulus of radius a, first along the time axis and then the spatial axis. The index ia ∈ {1, …, T × La} gives the position of the considered patches within the cube of residuals, where La and T are respectively the number of pixels in the annulus of radius a and the number of frames in the residuals cube. In the case of multiple PSF-subtraction techniques and ADI sequences of the same object, T is defined as the sum of the number of frames per cube multiplied by the number of considered PSF-subtraction techniques.

The set of patches xia is described by a probability-weighted sum of the outcomes of two regimes: a noise regime, Sia = 0, where xia is described by the statistics of the quasi-static speckle residuals contained in the annulus; and a planetary regime, Sia = 1, where xia is described by both the residual noise and a planetary signal model3. The two regimes are characterised by the following equations:

where μ is the mean of the quasi-static speckle residuals, εs, ia is their time and space varying part, which is characterised by the quasi-static speckle residuals statistics, and β and m provide the flux and a model of the planetary signal, respectively. The parameter Fia = {0,1} is a realisation of a two-state Markov chain which implies that the probability of being in regime s at index ia will depend on the probability at the previous step, ia − 1. This allows us to better disentangle a planetary signal from bright speckle by providing a short-term memory to the model. The set of patches xia are described via the probability-weighted sum of the values generated by Eq. (8).

The probability of being in the regime s at index ia, defined as ξs, ia, depends on the probability at the previous step, ia − 1, on the likelihood of being currently in a given regime, ηs, ia, and on the transition probability between the regimes given by the matrix, pq, s. The probability ξs, ia is given by:

where  is a normalisation factor and ηs, ia is the likelihood associated with the regime s, which is given for each patch, ia, in the Gaussian case by

is a normalisation factor and ηs, ia is the likelihood associated with the regime s, which is given for each patch, ia, in the Gaussian case by

where σ is the noise standard deviation (see Sect. 3.2.2 for more details about its estimation), θ gives the size in pixels of the planet model, m, and n is the pixel index within the patch.

As the RSM algorithm relies on a two-state Markov chain, the computation of the probabilities requires the use of an iterative procedure because of the dependence of the probabilities on past observations. Once the probabilities of being in the two regimes have been computed for every pixel of every annulus, the probability of being in the planetary regime, ξ1, ia, is averaged along the time axis to generate the final probability map. A detailed description of the different steps of the RSM algorithm may be found in Dahlqvist et al. (2020). The use of forward-modelled PSFs, as well as the estimation of the probabilities via a forward-backward approach replacing the forward approach presented here, are documented in Dahlqvist et al. (2021).

3.2. Parameter selection for the RSM map

Following the optimisation of the PSF-subtraction techniques to be used in the RSM model (Sect. 2), the next step is to consider the parametrisation of the RSM algorithm itself. The use of the RSM algorithm requires the definition of four main parameters. These parameters are (i) the crop-size θ for the planetary model m, (ii) the definition of the region of the cube of residuals considered for the computation of the noise properties, whose estimation can be done (iii) empirically or via best fit, and (iv) the method used to compute the intensity of the potential planetary candidate β. When defining the flux parameter β as a multiple of the noise standard deviation, an additional parameter δ has to be used to determine how far into the noise distribution tail we are looking for potential planetary candidates.

An optimal set of parameters for the RSM algorithm is computed separately for each PSF-subtraction technique, and is based on a performance metric computed using the generated RSM map. We do not rely on multiple simultaneous injections of fake companions at different azimuths, as done previously, as the RSM approach assumes a single planetary signal per annulus. Injecting the fake companions sequentially would largely increase the computation time. We therefore define, annulus-wise, a single median position in terms of noise intensity, common to all PSF-subtraction techniques. This allows a fair comparison between the PSF-subtraction techniques when selecting the best set of likelihood cubes to generate the final RSM map in the last step of the auto-RSM framework. The determination of this median position starts with de-rotation of the original ADI sequence and the median-combination of the resulting set of frames. We then compute the flux of every aperture contained in the selected annulus, each aperture centre being separated by a single pixel in contrast with the approach of Mawet et al. (2014), where the apertures centre are separated by one FWHM. We define the fake companion injection position as the centre of the aperture for which the flux is the median of all the apertures fluxes. We decided to compute this median-flux position in the original ADI sequence, as the median-flux position inside the PSF-subtracted final frame differs from one PSF subtraction technique to the other, although a single common position is required for the final step of the auto-RSM algorithm. Regarding the contrast used for the optimisation of the RSM map parameters, for each PSF-subtraction technique, we select the average contrast Ca, m obtained with the optimal set of parameters. Here, we make the assumption that taking the median-flux position and the average contrast should provide a balanced optimised parametrisation that works for brighter as well as fainter planetary signals.

The performance metric used for the RSM algorithm optimisation is then defined as the peak probability in a circular aperture with a diameter of one FWHM centred on the position of the injected fake companion in the final RSM map divided by the maximum probability observed in the remaining part of the annulus of width equal to one FWHM. This allows us to account for potential bright speckles within the probability map as well as for the intensity of the planetary signal. Having defined the loss function used for the RSM parameter selection, we now consider the different parameters that should be optimised.

3.2.1. Crop size

The crop size θ is one of the parameters affecting the final probability map the most. This is especially true when relying on forward-model versions of the PSF-subtraction techniques, where a larger crop size should be considered to take advantage of the modelling of the negative side lobes appearing on either side of the planetary signal peak, which are due to self-subtraction associated with PSF-subtraction techniques. Self-subtraction depends on the relative position of the planetary candidate compared to the host star, with stronger self-subtraction at small angular separations and almost no self-subtraction at large angular separations. Indeed, the apparent movement of the planetary candidate increases linearly with the distance to the host star as the parallactic angles remain fixed but the radius increases. Larger apparent movement between two frames goes along with reduced self-subtraction. This implies that the optimal crop size for forward-modelled PSF should decrease with angular separation, as the negative side lobes appearing on either side of the planetary signal peak are replaced by noise. The selection of the optimal crop size should account for this effect as well as the range of parallactic angles, which is specific to each data set and also affects self-subtraction patterns. For PSF-subtraction techniques relying on the off-axis PSF to model the planetary signal, we consider a smaller range of crop sizes, as we do not take into account the distortion due to reference PSF subtraction. A maximum size of one FWHM is considered when relying on off-axis PSFs compared to the two FWHMs used for foward-model PSF-subtraction techniques. The definition of a proper crop size is nevertheless still important, because considering the shape of the PSF peak should help in disentangling planetary signals from speckle noise.

3.2.2. Parametrisation of the noise distribution

One of the corner stones of the RSM algorithm is the proper definition of the likelihood function associated with every patch contained in a given annulus. Four potential noise distribution functions are considered to compute these likelihoods, namely the Gaussian and the Laplacian distribution, the Huber loss (Pairet et al. 2019), and a hybrid distribution built as a weighted sum of Gaussian and Laplacian distributions (Dahlqvist et al. 2020). The first two noise distribution functions require estimation of the noise mean and variance, whereas the other two require additional parameters. Selection of the optimal distribution is done automatically within the RSM algorithm via a best-fit approach. However, the estimation of the parameters characterising the residual noise distribution function necessitates proper definition of the set of pixels to be considered. Different approaches are tested in auto-RSM to determine the most relevant set of pixels inside the cube of residuals. We have selected five possible ways to evaluate the noise properties:

-

Spatio-temporal estimation: The set of pixels incorporates the pixels inside the selected annulus4 for all the frames contained in the cube of residuals (see ‘Spatio-temporal’ in Fig. 2). The distribution function parameters depend solely on the radial distance a (μa and

).

). -

Frame-based estimation: The set of pixels incorporates the pixels of a given frame inside the selected annulus (see ‘Frame’ in Fig. 2). The distribution function parameters depend on both the radial distance a and the time-frame t (μa, t and

.

. -

Frame with mask-based estimation: The set of pixels incorporates the pixels of a given frame inside the selected annulus, apart from a region with a diameter of one FWHM centred on the pixels for which the likelihood is estimated (see ‘Frame with mask’ in Fig. 2). The distribution function parameters depend on both the radial distance a and the pixels index ia (μa, ia and

).

). -

Segment with mask-based estimation: The set of pixels incorporates the pixels of all frames inside a section (of length equal to three FWHMs) of the selected annulus, apart from a region with a diameter of one FWHM centred on the pixels for which the likelihood is estimated (see ‘Segment with mask’ in Fig. 2). The distribution function parameters depend on both the radial distance a and the pixels index ia (μa, ia and

).

). -

Temporal estimation: The last method is inspired by the approach developed in Flasseur et al. (2018). This approach relies on the cube of residuals before de-rotation. For a given patch inside the selected annulus, the pixels selected for computation of the distribution function parameters are the ones sharing the same position within the cube of residuals before de-rotation but taken at different times (see ‘Temporal’ in Fig. 2). All the frames except for the frame containing the selected patch are therefore considered. The distribution function parameters depend on both the radial distance a and the pixels index ia (μa, ia and

).

).

|

Fig. 2. Graphical representation of the estimation of residual noise properties using the five proposed approaches. The red circle/point indicates the pixel for which the likelihood is estimated. White and blue circles encompass the set of pixels used for computation of the noise properties. White circles indicate that the entire set of frames from the derotated cube are used for the computation, while blue circles indicate that the estimation is done frame-wise. Black circles define a mask, i.e. pixels that are not considered in the estimation. |

The use of these different methods allows us to investigate which part of the neighbourhood around the patch is relevant in order to correctly estimate the noise profile. This explains the wide variety of proposed methods both in terms of temporal and spatial position.

Depending on the region selected to compute the noise properties, a specific noise distribution function and parametrisation can be selected for a single patch, a single frame, or the entire set of frames and patches contained in the considered annulus. The estimation of noise distribution parameters can be done empirically or via best fit. The choice between empirical estimation and estimation via best fit represents an additional parameter to be considered during the RSM parameter optimisation.

3.2.3. Estimation of the planetary intensity

Two different methods were proposed to compute the planetary intensity parameter β (Dahlqvist et al. 2020, 2021). The first one relies on an additional parameter δ to define the expected position of the potential planetary signal intensity in the noise distribution. The intensity parameter β is defined as δ multiplied by the estimated noise standard deviation (Dahlqvist et al. 2020). A set of δ is tested and the optimal one is selected via maximisation of the total likelihood associated with a given angular distance (see Eq. (10)). In the case of auto-RSM, this last step is removed and the optimal δ is selected during the auto-RSM optimisation process. Preliminary tests have shown that the optimisation of δ using the auto-RSM performance metric can significantly reduce the background noise in the RSM probability map compared to the total likelihood-based optimisation proposed in Dahlqvist et al. (2020), while leaving planetary signals almost unaffected for δ ≤ 5.

The second approach relies on Gaussian maximum likelihood to define a pixel-wise intensity (Dahlqvist et al. 2021). The estimation of the intensity parameter β via Gaussian maximum likelihood requires the computation of a frame-wise standard deviation. The expression for the pixel-wise intensity is

with σj being the noise standard deviation,  the observed patch, and mj the planet model for frame j. Frame-wise computation of the standard deviation implies that the mean and variance computation described in the previous section should be performed via the frame-based estimation, the frame with mask-based estimation, or the temporal estimation, while for the other intensity computation methods, all five approaches can be used.

the observed patch, and mj the planet model for frame j. Frame-wise computation of the standard deviation implies that the mean and variance computation described in the previous section should be performed via the frame-based estimation, the frame with mask-based estimation, or the temporal estimation, while for the other intensity computation methods, all five approaches can be used.

3.2.4. Sequential parameter optimisation

While optimisation of the PSF-subtraction techniques is done in a single step, optimisation of the RSM parameters is done partly sequentially. The estimation of the RSM map is indeed much slower than the estimation of the contrast. Depending on the region considered for the estimation of the noise properties, the computation time can further increase, especially when relying on the frame with mask-based estimation or the temporal estimation, preventing optimisation of all the parameters in a single step. The selection of the optimal region to compute the noise properties is therefore treated separately. The selection of the optimal set of RSM parameters starts with computation of the RSM map performance metric for the two methods used to determine the intensity parameter β using the frame-based estimation of the noise properties5. For both methods, a separate performance metric is estimated for the selected range of crop sizes, but also for the selected range of δ for the method defining the intensity as a multiple of the noise standard deviation. The selection of the optimal value for all three parameters (i.e. δ, the crop size θ and the method to compute the intensity) is performed by comparing the obtained RSM performance metric. The faster computation of the RSM map when relying on the frame-based estimation of the noise properties allows optimisation of these three parameters in a single step. The next step involves the selection of the optimal region for estimation of the noise properties. Depending on the method selected to compute the intensity, a reduced set of regions may be considered. Optimisation of the RSM parameters ends by determining whether the noise properties are optimally computed empirically or via a best fit.

3.3. Optimal combination of the likelihood cubes

Having optimised the parameters of the PSF-subtraction techniques as well as the ones of the RSM algorithm, we are now left with a series of optimal cubes of likelihoods. One of the most interesting features of the RSM framework is its ability to use several cubes of residuals generated with different PSF-subtraction techniques to maximise the planetary signal, while minimising the residual speckle noise. The RSM algorithm takes advantage of the diversity of noise structures in the different cubes of residuals. This diversity is reflected in the noise probability distribution but also in the repartition of maxima and minima in the different speckle fields. By taking both aspects into account, the RSM algorithm is able to better average out the noise and improve the ratio between potential planetary signals and the residual speckle noise.

Despite optimisation of the parameters, some PSF-subtraction techniques may be less suited to generating a clean cube of residuals for some data sets. Redundancies in the information contained in several cubes of residuals may also degrade the performance of the RSM map by increasing the relative importance of some speckles. When dealing with several ADI sequences of the same object, some sequences can also be much noisier depending on the observing conditions. All these elements necessitate proper selection of the likelihood cubes used to generate the optimal final RSM map. We propose the investigation of two possible approaches to select the set of likelihood cubes used for computation of the final probability map, a bottom-up approach and a top-down approach, making use of a greedy selection framework.

As the RSM algorithm relies on spatio-temporal series of likelihoods to compute annulus-wise probabilities (see Eq. (9)), we start by defining the set of available series of likelihoods for a given radius a by Ya =  [0,Nsequence],m∈[0,Ntechnique]}. The

[0,Nsequence],m∈[0,Ntechnique]}. The  time-series corresponds to the set of likelihoods ηs, ia given in the Gaussian case by Eq. (10), generated for the cube c with the PSF-subtraction techniques m for all pixel indices ia of the annulus a. This last step of auto-RSM is used to define a subset Za ⊂ Ya regrouping the series of likelihoods maximising the performance metric of the RSM probability map for annulus a. This selection step shares the same performance metric as the RSM parameters optimisation step. To compute the performance metric, a single fake companion injection is used for each annulus for the entire set of PSF-subtraction techniques6. The selected set of time-series of likelihoods Za are then concatenated to form a single time-series per annulus and used to compute the probabilities via Eq. (9). The RSM performance metric, estimated based on these probabilities, allows us to select the optimal set Za.

time-series corresponds to the set of likelihoods ηs, ia given in the Gaussian case by Eq. (10), generated for the cube c with the PSF-subtraction techniques m for all pixel indices ia of the annulus a. This last step of auto-RSM is used to define a subset Za ⊂ Ya regrouping the series of likelihoods maximising the performance metric of the RSM probability map for annulus a. This selection step shares the same performance metric as the RSM parameters optimisation step. To compute the performance metric, a single fake companion injection is used for each annulus for the entire set of PSF-subtraction techniques6. The selected set of time-series of likelihoods Za are then concatenated to form a single time-series per annulus and used to compute the probabilities via Eq. (9). The RSM performance metric, estimated based on these probabilities, allows us to select the optimal set Za.

3.3.1. Bottom-up approach

When relying on a bottom-up approach, the iterative selection algorithm starts with an empty set Za. At each iteration, the series of likelihoods  that leads to the highest performance metric increase is added to the set Za. The procedure is repeated until no additional series of likelihoods leads to an increase in the performance metric. The bottom-up greedy selection algorithm can be summarised by the following steps.

that leads to the highest performance metric increase is added to the set Za. The procedure is repeated until no additional series of likelihoods leads to an increase in the performance metric. The bottom-up greedy selection algorithm can be summarised by the following steps.

-

For each series of likelihood contained in Ya, compute the corresponding RSM map performance metric using the set of series of likelihoods

for annulus a.

for annulus a. -

At each iteration, select the series of likelihoods providing the largest incremental performance metric increase and include the considered series of likelihoods

in the set of selected series Za. Remove from Ya the selected series

in the set of selected series Za. Remove from Ya the selected series  , as well as any other series included in Ya that did not lead to an increase in the performance metric.

, as well as any other series included in Ya that did not lead to an increase in the performance metric. -

Repeat the previous two steps until Ya is empty.

3.3.2. Top-down approach

In contrast with the bottom-up approach, the top-down iterative selection algorithm starts with a set Za = Ya and relies on pruning steps to reduce the number of series of likelihoods included in Za until an optimum is reached. The steps of the top-down greedy selection algorithm are the following.

-

For each series of likelihood contained in Za, compute the RSM map performance metric corresponding to the set of series of likelihoods

for annulus a.

for annulus a. -

At each iteration, select the series of likelihoods providing the largest incremental performance metric increase and remove the considered series of likelihoods

from the set of selected series Za.

from the set of selected series Za. -

Repeat the two previous steps until no more incremental performance metric decrease can be observed.

Pseudo codes of both approaches are provided in Tables E.1 and E.2. The potential redundancies in the information contained in different cubes of likelihoods, as well as the iterative procedure used by the RSM algorithm to generate the final probability map, mean that the set of series of likelihoods are not truly independent, which prevents us from finding the global optimum while using a greedy approach. However, these bottom-up and top-down greedy selection algorithms provide a good approximation of the global optimum in a reasonable amount of time.

3.4. Practical implementation

After having presented the different steps of the proposed optimisation framework for the RSM map algorithm, these steps can now be merged into a single optimisation procedure, which is implemented in the PyRSM package7. Two different modes of this optimisation procedure are proposed: the full-frame mode and the annular mode. The two modes share a common structure but their output dependence on the angular separation is different. In the full-frame mode, there is no dependence between the optimal set of parameters and the angular separation to the host star, with a single set of parameters being used for every annulus. In the annular mode, the frames are divided into successive annuli of pre-defined width, and a set of optimal parameters is defined for each annulus. As the noise distribution and parameters evolve with the angular separation, this second mode accommodates possible evolutions of the optimal parametrisation with the angular separation to the host star. Figure 3 provides a graphical representation of the two different modes in the case of optimisation of the FOV minimum rotation used for the annular PCA estimation.

|

Fig. 3. Graphical representation of the optimisation of the FOV minimal rotation for the annular PCA with the two modes of the auto-RSM algorithm for the SPHERE 1 data set of the EIDC. The full-frame version (illustrated in the bottom left corner) considers a set of annuli of width equal to one FWHM to provide a single set of optimal parameters. The annular version (top left) considers successive annuli of width equal to one FWHM to provide annulus-wise sets of optimal parameters. |

As illustrated in Fig. 3, in both cases the frames are divided into successive annuli of one FWHM8 in width. The red dotted circles represent the centres of the selected annuli on which the apertures for the optimisation of the PSF-subtraction techniques are centred, and for which probabilities are computed to optimise the parameters of the RSM algorithm and select the optimal set Za. We do not consider all angular separations but a subset of them separated by one FWHM, as we expect a slow evolution of the parameters. This should give a good representation of the evolution of the parameters or a good overview in the case of the full-frame mode, while reducing the computation time9.

In the case of the full-frame mode, we consider a subset of the annuli of the annular mode, with an increasing distance between the selected annuli as we move away from the host star. This allows us to increase the relative weight of small angular separations, the noisier region being located near the host star, and reduce the estimation time. The proposed annulus selection rule for the full-frame mode can be summarised as

where Δa is the separation between two successive annuli used in the optimisation procedure, and amax is the largest annulus to be considered in the RSM map computation. Selection of the optimal parameter set for the PSF-subtraction techniques in the full-frame mode is achieved by comparing the normalised contrasts generated with the different tested parametrisations summed over the selected angular separations. We start by computing contrasts for a common set of parametrisations10 for each considered angular separation. For a given angular separation, the median of the obtained contrasts is then computed and used to normalise all the contrasts. The normalised contrasts are finally summed over the selected angular separations provided by Eq. (12) for each considered parametrisation. The optimal set of parameters is then the one that minimises the summed normalised contrast11. As the contrast decreases with the angular separation, the normalisation allows a proper summation of the contrasts generated at the different angular distances. A similar approach is used for optimisation of the RSM algorithm and selection of the optimal likelihoods, although no normalisation is required according to the definition of the performance metric. As regards the annular mode, no normalisation is required as the optimisation is done separately for each selected annulus.

The complete auto-RSM optimisation procedures for the two considered modes are summarised in Tables E.3 and E.4. As can be seen from both tables, the optimisation procedures can be divided into four main steps, (i) optimisation of the PSF-subtraction techniques, (ii) optimisation of the RSM algorithm, (iii) optimal combination of models and sequences, and (iv) computation of the final RSM probability map (respectively the opti_model, opti_RSM, opti_combination, and opti_map function of the PyRSM class). In both modes we include the estimation of a background noise threshold for every annulus, by taking, for each annulus, the maximum probability observed in the map generated with the reversed parallactic angles. Following subtraction of the angular separation-dependent thresholds, we set all negative probabilities to zero to generate the final map. The threshold subtraction should help to reduce the noise, especially near the host star where most residual speckles are observed. However, these thresholds should not be used as detection thresholds, as the noise statistics properties of the original ADI sequence are not exactly equivalent to the ADI sequence with sign-flipped parallactic angles.

Considering the existence of potential bright artefacts in the map generated with the reversed parallactic angles, we rely on a Hampel filter and a polynomial fit to smooth the radial evolution of the thresholds in the full-frame mode. As the parametrisation of the RSM algorithm has a large impact on planetary signals and background noise levels, we do not apply the threshold fit for the annular mode, as the RSM parametrisation evolves with the angular separations. However, we do apply a smoothing procedure for the parameters of the PSF-subtraction techniques by applying a moving average after a Hampel filter. This helps in smoothing potential discontinuities between annuli in the set of optimal parameters and provides a more consistent final probability map. As computation of the residual cubes is done annulus-wise12, we need a single set of parameters13 for a number of angular separations equal to the width of the annulus. However, the RSM map computation requires the definition of a set of parameters for every considered angular separation. A radial basis multiquadric function (RBF) is used to perform an interpolation (Hardy 1971) of the RSM optimal parameters for the annular mode to provide a set of parameters for each angular distance.

4. Performance assessment

4.1. Description of the data sets

As mentioned in the introduction, we base our performance analysis on the data set of the EIDC ADI subchallenge (Cantalloube et al. 2020). This data set regroups nine ADI sequences, three for each considered HCI instrument, namely VLT/SPHERE- IRDIS (Beuzit et al. 2019), Keck/NIRC2 (Serabyn et al. 2017), and LBT/LMIRCam (Skrutskie et al. 2010). The ADI sequences were obtained in H2-band for SPHERE and Lp-band for the two other instruments. For each ADI sequence, a set of four fits files is provided: the temporal cube of images, the parallactic angles variation corrected from true north, a non-coronagraphic or non-saturated PSF of the instrument, and the pixel scale of the detector. The ADI sequences are pre-reduced using the dedicated pre-processing pipeline for the three instruments (more details about the reduction are provided by Cantalloube et al. 2020).

As the LMIRCam ADI sequences regroup between 3219 and 4838 frames, we relied on moving averages to reduce this number to around 250 frames in order to limit the computation time. The reduction of the number of frames starts with the de-rotation of the original cube of images using the parallactic angle variations provided. A moving average is then applied on the de-rotated cubes along the time axis with a window and step size of 20 frames for the LMIRCam sequence 1 and 3, and 15 frames for the LMIRCam sequence 2. The same is done on the set of parallactic angle variations. The inverse reduced parallactic angle variations are then used to re-rotate the resulting ADI sequences. In addition to reducing the computation time, the moving average allows part of the noise to be averaged out in advance. More details about the nine ADI sequences are provided in Table F.1.

To assess the performance of the HCI data-processing techniques, fake companions were injected by the EIDC organisers using the VIP package (Gomez Gonzalez et al. 2017). Between 0 and 5 point sources were injected into each ADI sequence for a total of 20 planetary signals within the entire EIDC ADI subchallenge data set. These point sources were injected using the opposite parallactic angles, avoiding any interference with potential existing planetary signals while keeping the speckle noise statistics14. The separation, the azimuth, and the contrast of the injected fake companions were chosen randomly. The contrasts range between 2σ and 8σ based on a contrast curve computed with the regular annular PCA implemented in the VIP package (Gomez Gonzalez et al. 2017), which is referred to as the ‘baseline’ in the performance analysis presented in Cantalloube et al. (2020). The detection maps of the baseline consist in S/N maps computed using the approach of Mawet et al. (2014). The detection maps generated with the baseline approach are used in our model comparison.

4.2. Performance metrics

The performance assessment of HCI data-processing techniques is done via the definition of a classification problem, counting detections and non-detections on a grid of FWHM-sized apertures applied to the detection maps. A true positive (TP) is defined as a value above the threshold provided by the user along with the S/N or probability maps within the FWHM aperture centred on the position of the injected fake companion. Any values above the provided threshold that are not in the set of apertures containing injected fake companions are considered as false positives (FPs). The false negatives (FNs) regroup all the non-detections at the position of injected fake companions, while the true negatives (TNs) are the non-detections at any other position. Different performance metrics are computed using these four categories:

-

True positive rate:

-

False positive rate:

-

False discovery rate:

-

F1 score:

In addition to the F1 score computed at the pre-defined threshold, we follow the same approach as in Cantalloube et al. (2020) and also consider the area under the curve (AUC) for the TPR, FPR, and FDR as a function of the threshold to classify the different versions of the proposed optimisation procedure. The AUCs of the TPR, FPR, and FDR are preferred to the values of these latter at the provided threshold, as this allows us to mitigate the arbitrariness of the threshold selection by considering their evolution for a range of thresholds. The AUC of the TPR should be as close as possible to 1 and the AUCs of the FPR and FDR as close as possible to zero. The F1 score being the harmonic mean of the recall and precision of the classification problem, it ranges between 0 and 1, with values close to 1 being favoured (perfect recall and precision).

4.3. Results

We have now all the elements to apply the auto-RSM optimisation procedure described in Sects. 2 and 3 to the nine selected ADI sequences. Only PSF-subtraction techniques relying on an off-axis PSF (and not on forward models) are considered during the optimisation procedure in order to reduce the computation time, considering the large set of ADI sequences on which the method is tested, and the numerous parametrisations of the auto-RSM algorithm that we consider. This also allows a fair comparison with the results of the RSM algorithm already used in Cantalloube et al. (2020), which relied only on the PSF-subtraction techniques based on off-axis PSFs. We note however that the PyRSM Python package also accommodates the use of a forward-model version of KLIP and LOCI, where a parameter defines the maximum angular separation above which the forward model is no longer considered. This allows us to take advantage of the higher performance of forward-modeled PSF-subtraction techniques at small angular separations, while limiting their impact on the computation time at larger separations.

For the Bayesian optimisation of APCA and LOCI, the contrast is computed for respectively 80 and 60 points of the parameter space to initialise the Gaussian process, while 60 iterations of the minimum-expectation Bayesian optimisation are used to determined the optimal set of parameters. The smaller number of points for the initialisation of LOCI comes from its smaller set of parameters compared to APCA. The number of points for the initialisation and the number of iterations have been chosen to ensure the convergence to the global optimum15. The ranges of possible values that have been selected to define the parameter space for the PSF-subtraction optimisation are shown in Table G.1. Most ADI sequences share a common range of possible values. However, differences may be found in the definition of the parameter space boundaries for the NIRC2 ADI sequences due to the reduced number of frames.

4.3.1. Full-frame and annular auto-RSM parametrisation

Regarding the parameters of the full-frame version of auto-RSM, the set of selected annuli is truncated at amax = 10λ/D to favour small angular separations during the optimisation, the region close to the host star being more noisy16. The order of the polynomial fit of the annular threshold is set to three in order to limit the impact of small artefacts appearing in the RSM map generated with inverted parallactic angles, while keeping the main characteristics of the angular evolution of the noise. The full-frame (FF) auto-RSM was tested with three different parametrisations, allowing the comparison between the bottom-up (BU) and top-down (TD) selection of the optimal cubes of likelihoods, as well as the comparison between the forward (F) and forward-backward (FB) approaches to compute the final probabilities.

The annular version of auto-RSM requires the definition of two additional parameters: the window sizes for the Hampel filter and the moving average used to smooth the PSF-subtraction parameters (see Table E.4). The window sizes are respectively equal to 3 and 5, and the window is centred on the angular distance for which the filtered value or the moving average is computed. Two different flavours of the annular auto-RSM were tested, one relying on the annular framework for the optimisation of the entire set of parameters (A), and one using the annular framework for the PSF-subtraction parametrisation and the full-frame framework for the RSM parametrisation and the selection of the optimal set of cubes of likelihoods. The hybrid approach mixing full-frame and annular frameworks (AFF) aims to reduce the angular variability of the background residual probabilities, which are mainly affected by the parametrisation of the RSM model.

4.3.2. Performance metric computation and model comparison

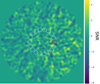

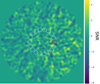

Having presented the five tested parametrisations of the full-frame and annular auto-RSM, we now turn to the estimation of the detection maps and the computation of the performance metrics, which will allow us to rank these parametrisations and compare them with both the original RSM algorithm and the baseline presented in Cantalloube et al. (2020). All parametrisations of the auto-RSM were applied to the nine data sets of the EIDC. Figure 4 presents the detection maps generated with the full-frame auto-RSM using the bottom-up greedy algorithm to select the optimal set of cubes of likelihoods and the forward approach to compute the probabilities (auto-RSM FF_BU_F). The detection maps for all five parametrisations of the auto-RSM are provided in Appendix H. As can be seen from Fig. 4, the contrast between detected targets and background residual probabilities is very high compared to standard S/N maps, demonstrating the ability of the proposed approach to easily disentangle planetary signals from residual speckles and ease the selection of a detection threshold. As an illustration, the ratio between the peak probability (or S/N) of the target and the mean of the background probabilities (or S/Ns) in the detection map of the SPHERE 1 data set is larger than 3000 for the auto-RSM FF_BU_F, and only 2 for the baseline17.

|

Fig. 4. Detection maps corresponding to the nine data sets of the EIDC, generated with the full-frame version of auto-RSM using the bottom-up approach for the selection of the optimal set of cubes of likelihoods, as well as the forward approach for the computation of the probabilities. The yellow circles are centred on the true position of the detected targets (TP) and the red circles give the true positions of FNs. |

Following the EIDC procedure, a single threshold was selected for all data sets, for each parametrisation of the auto-RSM. This threshold allows estimation of the F1 score. As mentioned in Sect. 4.2, in addition to the F1 score, the AUCs of the TPR, FPR, and FDR are also computed. Figure 5 illustrates the computation of the different performance metrics for the nine data sets, relying on the detection maps generated with the auto-RSM FF_BU_F. The TPR, FPR, and FDR are computed for different threshold values ranging from zero to twice the selected threshold. The AUCs of the TPR, FPR, and FDR are computed in this interval. Apart from the NIRC2 data sets and LMIRCam-2, the AUC of the FDR is very small for the remaining data sets compared to the baseline. The AUC of the FPR is close to zero for all data sets, especially for the SPHERE data sets for which the AUC values are below the considered 0.001 limit.

|

Fig. 5. True positive rate (green), false discovery rate (red) and false positive rate (dash-dotted blue line) computed for a range of thresholds varying from zero to twice the selected threshold (represented by a dotted vertical line). These curves are computed for the nine data sets of the EIDC, relying on the detection maps estimated with the full-frame version of auto-RSM using the bottom-up approach for the selection of the optimal set of cubes of likelihoods, as well as the original forward approach for the computation of the probabilities. The green line representing the TPR should be as close as possible to 1 for the entire range of thresholds, while the red and dash-dotted blue line representing respectively the FDR and the FPR, should be as close as possible to zero. |

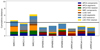

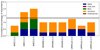

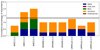

Having illustrated the computation of the performance metrics for the different data sets, we now consider aggregated results to compare the performance of the five auto-RSM parametrisations with the baseline and RSM algorithm submission to the EIDC. The different rankings for the four considered performance metrics are shown in Fig. 6. The light, medium, and dark colours correspond to the three instruments, with the VLT/SPHERE-IRDIS, Keck/NIRC2, and LBT/LMIRCam data sets, respectively. Figure 6 highlights the fact that the RSM-based approaches largely outperform the baseline with much higher F1 scores, a much larger AUC of the TPR, and much lower AUCs of the FDR and FPR. Regarding the five considered auto-RSM parametrisations, they all present a smaller F1 score compared to the RSM algorithm parametrised manually, except for the auto-RSM FF_BU_F, which performs slightly better. However, when considering the other performance metrics, the auto-RSM approach seems to perform better in most cases, especially when considering false positives. These results demonstrate the ability of the auto-RSM approach to better cope with residual speckle noise, while maintaining a high detection rate. This is a key element in reducing arbitrariness in the selection of the detection threshold. The selection of a detection threshold is indeed often a complex task, especially when relying on S/N maps, as the noise probability distribution is often non-Gaussian.

|

Fig. 6. Ranking of the different parametrisations of the full-frame and annular versions of the auto-RSM along the original RSM and baseline presented in Cantalloube et al. (2020). Panel a provides the ranking based on the F1 score obtained at the selected threshold. Panel b gives the ranking based on the AUC of the TPR. Panel c gives the ranking based on the AUC of the FPR, while panel d provides the ranking based on the AUC of the FDR. FF stands for full-frame, A for annular, AFF for annular full-frame (annular approach used to optimise the PSF-subtraction parameters and full-frame approach used for the RSM parameter optimisation and the selection of the optimal set of cubes of likelihoods), and BU, TD, F, and FB as explained in Sect. 4.3.1. The light, medium, and dark colours correspond to VLT/SPHERE-IRDIS, Keck/NIRC2, and LBT/LMIRCam data sets, respectively. |

Looking in more detail at the five parametrisations of the auto-RSM, we see clearly that the auto-RSM FF_BU_F leads to the best performance metrics in most cases, and should therefore be favoured for detection when using the auto-RSM approach. The results for the annular and hybrid annular full-frame auto-RSM seem to demonstrate that considering the radial evolution of the optimal parameters does not lead to a significant improvement in performance. The slightly degraded performance of the annular mode can be explained by the fact that the auto-RSM optimisation relies on the inverted parallactic angle approach. The noise structure being similar but not equivalent when inverting the parallactic angles, the annular optimisation is more affected by local differences in the noise structure. These local differences in the noise structure prevent the algorithm from improving the overall performance. Considering the longer computation time required for the annular auto-RSM, and its performance, the full-frame version should clearly be preferred.

As regards the difference between the bottom-up and top-down approaches, the better results obtained with the bottom-up approach may be explained by its ability to select the cube of likelihoods in the right order. Indeed, the probability associated with a planetary signal increases along the temporal axis when computing the RSM detection map. This probability increases faster and stays high for longer when selecting first the cube of likelihoods providing the highest probability ratio between injected fake companion peak probability and background residual probabilities. Sorting the cubes of likelihoods in descending order of quality leads to a higher average probability for the planetary signal, while it should not affect the probability associated with residual noise.

In addition to the automated selection of the optimal parameters, the results obtained with the auto-RSM FF_BU_F show a clear performance improvement compared to the set of RSM detection maps originally submitted to the EIDC (Cantalloube et al. 2020). We observe an overall reduction of 76% and 33% compared to the RSM submission for the AUC of the TPR and the AUC of the FDR, respectively, as well as an increase of 19% and 2% for the AUC of the TPR and the F1 score, respectively.

4.4. Commonalities in optimal parametrisations

The proposed auto-RSM optimisation procedure is relatively time consuming even when relying on the full-frame mode, which may potentially preclude its use for very large surveys. In this section, we therefore investigate the possibility of using a smaller set of ADI sequences to generate an optimal parametrisation, which could then be applied to a larger set of ADI sequences. This requires a homogeneity in the sets of optimal parameters selected for different ADI sequences generated by a given HCI instrument, as surveys generally consider multiple observation sequences generated by a single HCI instrument. Sources of heterogeneities in the parametrisation of ADI sequences for a common instrument can originate from the number of frames, the observing conditions, the parallactic angle range, or the target position in the sky.

As the EIDC data set contains multiple ADI sequences generated with different instruments under different observing conditions and with different characteristics (see Table F.1 for the frames number, FOV rotation), it should allow us to estimate the homogeneity of the parametrisations for a common instrument, and potential heterogeneity between instruments. As the full-frame version of the optimisation algorithm provides the best performance, we rely on the set of parameters generated by this mode to conduct our analysis. We define a heterogeneity metric, which differs for the PSF-subtraction techniques and the RSM algorithm. We use the distance between parametrisations as a metric with which to gauge the performance of the PSF-subtraction techniques. This distance is defined as the difference between the optimal values of all the parameters (see Table I.1) for each pair of ADI sequences. For each parameter, we normalise the absolute value of the distance between the two considered ADI sequences with the mean of the two optimal values used to compute the distance. This allows proper comparison of the relative weight of the different parameters. For the RSM parametrisation, most parameters are non-numerical and we therefore replace the notion of distance by the notion of similarity. For a given pair of ADI sequences, as a metric for each parameter we use the percentage of dissimilarity within the entire set of PSF-subtraction techniques, i.e. the number of PSF-subtraction techniques for which the parameter values are different divided by the total number of PSF-subtraction techniques.

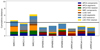

Figure 7 shows the cumulative normalised distances for every pair of ADI sequences within each instrument, along with the relative weight of all the parameters. The black line gives the mean distance computed based on the 36 possible pairs of ADI sequences. A cumulative distance below the back line indicates a higher homogeneity of the parameters for the considered pair of ADI sequences. As can be seen from Fig. 7, the ADI sequences generated by the SPHERE and LMIRCam instruments seem to be characterised by a relatively homogeneous set of parameters, which implies that a common set of parameters could be defined and used for larger surveys. The larger heterogeneity for the NIRC2 samples, which seems to be mainly driven by the NIRC2-2 sequence, may be explained by the ADI sequence characteristics (see Table I.1), or by differences in terms observing conditions.

|

Fig. 7. Normalised distances between PSF-subtraction-technique parameter sets for nine pairs of ADI sequences. The different coloured bars provide the contribution of the different parameters to the cumulative normalised distance. The considered pairs of ADI sequences are generated by the same instrument. The black horizontal line represents the normalised distances averaged over the 36 possible pairs of ADI sequences. |

Considering now the parametrisation of the RSM algorithm, the results presented in Fig. 8, demonstrate again the larger parametric heterogeneity for the NIRC2 samples, with the NIRC2-3 sequence affecting the dissimilarity measures the most. This confirms the particularity of the NIRC2-3 sequence, which seems to have a different noise structure compared to the other ADI sequences. The crop size of 3 pixels as well as the use of a best-fit approach to estimate the noise properties are common to all ADI sequences (see Table I.1). The heterogeneity is mainly driven by the definition of the region used for computation of the noise properties, which tends to demonstrate the advantage of considering multiple approaches for estimation of the noise properties. We finally consider the set of selected PSF-subtraction techniques used to generate the final RSM map when relying on the full-frame bottom-up auto-RSM. We see from Table 2 that the SPHERE ADI sequences share the same set of PSF-subtraction techniques while the set is different for the other ADI sequences.

|

Fig. 8. Percentage of dissimilarity between RSM parameter sets for nine pairs of ADI sequences. The different coloured bars provide the contribution of the different parameters to the cumulative dissimilarity. The selected pairs of ADI sequences are generated by the same instrument. The black horizontal line represents the percentage of dissimilarity averaged over the 36 possible pairs of ADI sequences. |

Selected PSF-subtraction techniques for the computation of the final RSM-detection map for the nine ADI sequences in the case of the full-frame version of the auto-RSM procedure using the bottom-up approach.