| Issue |

A&A

Volume 649, May 2021

|

|

|---|---|---|

| Article Number | A38 | |

| Number of page(s) | 26 | |

| Section | Numerical methods and codes | |

| DOI | https://doi.org/10.1051/0004-6361/202038419 | |

| Published online | 07 May 2021 | |

DAWIS: a detection algorithm with wavelets for intracluster light studies

1

Sorbonne Université, CNRS, UMR 7095, Institut d’Astrophysique de Paris, 98bis Bd Arago, 75014 Paris, France

e-mail: ellien@iap.fr

2

Observatoire de la Côte d’Azur, BP 4229, 06304 Nice Cedex 4, France

3

Aix-Marseille Univ., CNRS, CNES, LAM, Marseille, France

4

UFRJ, Observatório do Valongo, Rio de Janeiro, RJ, Brazil

5

Independent Researcher, Telschowstr. 16, Garching, Germany

6

Independent Researcher, Est. Caetano Monteiro 2201/65, Niterói, Brazil

Received:

14

May

2020

Accepted:

11

January

2021

Context. Large numbers of deep optical images will be available in the near future, allowing statistically significant studies of low surface brightness structures such as intracluster light (ICL) in galaxy clusters. The detection of these structures requires efficient algorithms dedicated to this task, which traditional methods find difficult to solve.

Aims. We present our new detection algorithm with wavelets for intracluster light studies (DAWIS), which we developed and optimized for the detection of low surface brightness sources in images, in particular (but not limited to) ICL.

Methods. DAWIS follows a multiresolution vision based on wavelet representation to detect sources. It is embedded in an iterative procedure called synthesis-by-analysis approach to restore the unmasked light distribution of these sources with very good quality. The algorithm is built so that sources can be classified based on criteria depending on the analysis goal. We present the case of ICL detection and the measurement of ICL fractions. We test the efficiency of DAWIS on 270 mock images of galaxy clusters with various ICL profiles and compare its efficiency to more traditional ICL detection methods such as the surface brightness threshold method. We also run DAWIS on a real galaxy cluster image, and compare the output to results obtained with previous multiscale analysis algorithms.

Results. We find in simulations that DAWIS is on average able to separate galaxy light from ICL more efficiently, and to detect a greater quantity of ICL flux because of the way sky background noise is treated. We also show that the ICL fraction, a metric used on a regular basis to characterize ICL, is subject to several measurement biases on galaxies and ICL fluxes. In the real galaxy cluster image, DAWIS detects a faint and extended source with an absolute magnitude two orders brighter than previous multiscale methods.

Key words: galaxies: clusters: general / methods: data analysis / techniques: image processing

© A. Ellien et al. 2021

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

1. Introduction

Low surface brightness (LSB) science will improve in this new decade with the launch of several large observational programs. The Vera Rubin Observatory Large Synoptic Survey Telescope (LSST; Ivezić et al. 2019), a ground-based system featuring an 8.4 m primary mirror, will lead a ten-year survey on a 18 000 deg2 sky area, reaching a foreseen limiting depth of μg = 31 mag arcsec−2. In space, the Euclid mission will perform three deep-field programs in the visible (VIS) broad band (R + I + Z) covering 40 deg2 in total, with a conservatively estimated limiting magnitude of μVIS = 26.5 mag arcsec−2. New missions such as the MESSIER surveyor (Valls-Gabaud & MESSIER Collaboration 2017), a space telescope optimized specifically for LSB imaging in the UV and the visible wavelengths, are also planned for the upcoming years.

These new programs will complement current and past LSB surveys (of which we only give a nonexhaustive review here). Small telescopes optimized for LSB imaging such as the Dragonfly Telephoto Array (Abraham & van Dokkum 2014) or the Burrell Schmidt Telescope (Mihos et al. 2017) are obtaining good results from the ground, reaching limiting depths of μg = 29.5 mag arcsec−2. These small telescopes take advantage of the minimization of artificial contamination sources in a field in which other instruments not originally dedicated to this type of studies are strongly disadvantaged in this regard, such as the MegaCam instrument on the Canada France Hawaii Telescope (CFHT). This last instrument has nevertheless achieved its share of surveys as its limiting depth has been pushed by constraining instrumental contamination effects through refined observational strategies and reduction softwares. The CFHT Legacy Survey (Gwyn 2012) is a good example, as it allowed detecting LSB structures such as tidal streams (Atkinson et al. 2013), followed later by the Next Generation Virgo Cluster Survey (Ferrarese et al. 2012), a survey dedicated to deep imaging of the Virgo cluster. Next in line is the ongoing Ultraviolet Near-Infrared Optical Northern Survey (UNIONS; Ibata et al. 2017), which features images processed with the Elixir-LSB pipeline (Duc et al. 2011) and reaches a limiting depth of μr = 28.3 mag arcsec−2 in the r band on a wide sky area of ∼5000 deg2.

As these ground-based instruments are still limited by the atmosphere, surveys for capturing LSB features have also been led from space with the Hubble Space Telescope (HST), the prime example being the HST Ultra Deep Field (Beckwith et al. 2006). However, the small field of view (FoV) of the HST does not allow probing large spatial extents, leading to different scientific goals such as the study of distant objects that are smaller on the projected sky plane (typically high-redshift galaxies or galaxy clusters). The ongoing Beyond Ultra-deep Frontier Field And Legacy Observations survey (BUFFALO; Steinhardt et al. 2020), next in line to the Hubble Frontier Field (HFF; Lotz et al. 2017), follows this trend and targets six massive galaxy clusters with redshifts in the range 0.3 < z < 0.6 with very deep imaging.

Compared to the ongoing surveys, which are all limited in their own ways, the new generation of telescopes will bring an unprecedented amount of data to exploit. As a subfield of LSB science, the detection and analysis of intracluster light (ICL) will be strongly be affected because one of the primary requirements of studying this faint feature of galaxy clusters in the visible bands is the gathering of deep images. However, the state of research in this field is currently not well defined because there is no consensus on a strict definition of ICL in astronomical images, nor on the best method for detecting it. This leads to a variety of studies on this subject that can be barely compared in view of the large discrepancies implied by the methods that are used (for more details, see the review by Montes 2019). The same can be said about surface brightness depth and the method for computing detection limits from the sky background in images, as explained by Mihos (2019). Before the large upcoming data sets can be efficiently used, a much needed analysis of the currently used detection method properties should be made.

Because part of this new challenge in LSB astronomy is purely technical (processing great numbers of images is often expensive in computing time), new algorithms with an emphasis on efficiency need to be developed to analyze images and to capture the useful information they contain. This is an ideal time to (re)explore concepts from signal and image analysis and adapt them to LSB astronomy. With this in mind, we developed DAWIS, an algorithm optimized for the detection of LSB sources that is highly parallelized and is to be run on large samples of images.

Such a new algorithm needs to be tested on simulations and compared with previous detection methods. To run the tests, we created images of simulated galaxy clusters and ICL using the Galsim package (Rowe et al. 2015). These images only reproduce the photometric aspect of galaxy clusters and cannot be used to draw conclusions on the properties of ICL. However, their content is a known value, which allowed us to estimate the efficiency of DAWIS and of previous methods for detecting ICL. We were also able to constrain the different biases and contamination effects that occur when these methods are applied.

This paper is organized as follows. In Sect. 2 we give some context on the various detection methods that are used to detect ICL in deep images and different effects that limit and contaminate this detection. In Sect. 3 we present the technicalities of DAWIS and the core of the algorithm. In Sect. 4 we describe our simulated mock images of galaxy clusters with ICL with the modeling package Galsim. In Sect. 5 we apply the different detection methods to the simulations and present the results. In Sect. 6 we run DAWIS on real data and compare the results with previous works. In Sect. 7 we discuss the results and the performances of the methods for simulations and real data. We assume a standard ΛCDM cosmology with Ωm = 0.3, ΩΛ = 0.7 and H0 = 70 km s−1 Mpc−1.

2. Overview of ICL detection methods

Behind the term intracluster light detection several choices hide, starting with the way images are acquired (space-based versus ground-based, survey strategies with or without image dithering, long exposures or stacks of short ones), data reduction (depending on the properties of the instrument), and finally, how the useful information is separated from noise and contamination sources (sky background estimation, separation of ICL from galaxies and foreground objects, point spread function (PSF) wings, scattered light). We refrain from addressing all these effects at once, but consider the most fundamental aspect of ICL detection: separating the ICL component from galaxy luminosity distributions and taking the sky background noise into account.

A large variety of methods have been used to separate ICL from bright sources in astronomical images. We group them roughly into three categories: the surface brightness threshold (SBT) methods, the profile-fitting (PF) methods, and the multiscale analysis (usually making use of wavelet bases) methods. The literature knows as many procedures for detecting the ICL as there are papers studying it. This classification therefore simplifies the larger picture, but is probably a good start for characterizing and evaluating this great variety of approaches and ICL definitions.

2.1. Surface brightness threshold methods

The first method is the SBT method, which consists of applying a predefined surface brightness threshold to the image in order to demarcate the ICL from the galaxy luminosity profiles. The most common threshold value is given by the Holmberg radius (defined by the isophote μB = 26.5 mag arcsec−2; Holmberg 1958), which delimits the geometric size of a galaxy in optical images at first order. This threshold has been used in different cases either to mask the cluster galaxies (the threshold in this case defines the limit of the galaxy extension), or simply to separate the outskirts of the brightest cluster galaxy (BCG) luminosity profile from the ICL. In any case, the threshold acts as a decision operator, attributing pixels either to galaxies or to ICL.

This method is quick to implement and has been used in several works (Krick et al. 2006; Krick & Bernstein 2007; Burke et al. 2012; DeMaio et al. 2018; Ko & Jee 2018; Montes & Trujillo 2018, e.g.). Different values for the threshold have been tested (Feldmeier et al. 2002, 2004), resulting in large discrepancies for the results obtained in observational data (Kluge et al. 2020). From the N-body and hydrodynamical simulation side, studies have shown similar results (Rudick et al. 2011; Tang et al. 2018). Depending on the choice of the person performing the study, different SBT values have been tested, with values ranging from μSBT ∼ 23 to μSBT ∼ 27 mag arcsec−2 in the V band, which once again showed strong discrepancies between them. However, because it is difficult to define ICL even in simulations, authors have proposed that different definitions would naturally lead to very different results, without specifying which result is the most appropriate.

2.2. Profile-fitting methods

Intensity PF methods consist of fitting analytical functions to the intensity distribution of the galaxies or to the ICL. The first use of this approach was made by fitting de Vaucouleurs (dV) profiles to the inner intensity distribution of BCGs before excesses of light in the outskirts were detected that were attributed to ICL (Uson et al. 1991; Scheick & Kuhn 1994; Feldmeier et al. 2002; Zibetti et al. 2005). Because the BCG and ICL intensity distributions are smoothly blended, several studies have tried to characterize the BCG+ICL intensity distribution using a single-Sérsic profile (Krick et al. 2006; Krick & Bernstein 2007). Other authors have tried to distinguish the two distributions by fitting sums of profiles, such as double Sérsic (Seigar et al. 2007; Durret et al. 2019; Kluge et al. 2020), double exponential profiles (Gonzalez et al. 2005), or composite profiles (Donzelli et al. 2011). In some cases, different analytical functions can correctly fit the same distribution (Puchwein et al. 2010), which complicates the physical interpretation of such results. Most notably, recent works have sown that the decomposition of the BCG+ICL distribution into separate luminosity profiles is likely unphysical (Remus et al. 2017; Kluge et al. 2020).

Fitting algorithms such as Galfit usually decompose galaxy intensity profiles into two components (bulge plus disk), and allow modeling the radial distribution with Sérsic profiles, while the angular distribution is controlled by trigonometric functions. This allows fitting a great diversity of intensity profiles, as long as the features they present are not too sharp. Most galaxy intensity profiles can therefore be fit with such methods. For complex objects such as strongly interacting galaxies, a high level of interactivity is required, because in these cases the user needs to manually adjust the parameters involved in the fitting procedure. This makes this method difficult to automatize fully, which is a downside when many galaxies are present in the FoV (but not impossible, as shown in Morishita et al. 2017). Additionally, the blending of galaxy intensity profiles in the high-density regions of galaxy clusters is another problem for this type of approach.

Recently, more sophisticated fitting algorithms have been developed, most notably, CICLE (Jiménez-Teja & Benítez 2012; Jiménez-Teja & Dupke 2016). CICLE models galaxy luminosity profiles with Chebyshev Fourier (CHEFs) functions. These forms are implemented into a fitting pipeline using outputs (position and size of the object) from the SExtractor image analysis software (Bertin & Arnouts 1996) because a subjective origin needs to be set for the basis function when each galaxy is modeled. While this fitting method is very strong in accurately modeling the surface brightness distribution of detected objects, it is therefore still sensitive to the detection performances of SExtractor or other detection methods that were used beforehand.

2.3. Wavelets and multiscale image analysis methods

Another approach to the detection of ICL (and generically of faint and extended sources among much brighter objects in astronomical images) has been the use of multiscale wavelet-based algorithms. Isotropic wavelet bases such as the B3-spline scaling function and its associated wavelet transform was used for the first time in an astronomical context by Slezak et al. (1994) to detect the large intracluster medium halo in X-ray images of galaxy clusters. Bijaoui & Rué (1995) then devised a powerful multiresolution vision model to analyze the three-dimensional (3D) data set of wavelet coefficients generated by an isotropic wavelet transform. The related procedure allows detecting significant structures in the 2D wavelet domains, identifying objects in this 3D wavelet space, and restoring the denoised luminosity distribution of these detected structures. This approach enables the detection of extended sources, and can therefore be adapted to the detection of ICL in optical images of galaxy clusters, as demonstrated by Adami et al. (2005). Another known implementation has been the OV_WAV package, developed in 2003 at Observatório do Valongo (OV-UFRJ, Rio de Janeiro) by Daniel Epitácio Pereira and Carlos Rabaça. This IDL package has been used in several works to detect diffuse light in galaxy groups or clusters (Da Rocha & Mendes de Oliveira 2005; Da Rocha et al. 2008; Guennou et al. 2012; Adami et al. 2013). More recently, another implementation of the multiresolution vision model has been developed to process astronomical MHz radio images, adding another deconvolution step and describing the problem within the modern framework of sparse representation (the MORESANE radio astronomical image analysis algorithm; Dabbech et al. 2015). We describe this type of approach and its mathematical background in depth below by compiling and standardizing the various information contained in previous articles.

2.3.1. Choosing a suitable representation space

Beyond the applied algorithm itself, the efficiency of this approach is tightly connected to the mathematical space used to represent the information content of the signal. One way to carry out the detection of the ICL is then to find a new space that highlights the low surface brightness and large spatial extent of the ICL, which facilitates distinguishing it from other astronomical sources.

The mathematical space in which the signal is initially represented (hereafter the direct space) may not be the most efficient for the pursued goal of our our analysis. This can be the case in particular when the information of interest is strongly mixed with other (in that case) components. Identifying a new representation space (and consequently the set of basis functions generating this space) for the data is then of uttermost importance for the final result of the processing. The transform of the initial signal from the direct space to this new space is obtained through inner products with the set of basis functions, defining what is called a projection. Because detecting the ICL in direct space is a difficult task with traditional methods, a more suitable representation space can be sought to facilitate it.

Many basis functions with different regularity properties are available and can define a basis, orthogonal or not. Consequently, as many representation spaces exist. The choice of which basis to use then depends on the signal characteristics that are to be strengthened according to the analysis goals. A generic approach for this choice is the notion of sparsity: an adequate function basis separates the signal of interest from the rest by concentrating the useful information in a few high-valued coefficients while spreading the noise and worthless components to many coefficients with low values. While a sparse representation gathers the relevant information, it may simplify it because features that are very different from the basis functions are lost.

In our case, the typical image of a galaxy cluster in visible bands can roughly be hierarchically decomposed into several circular or elliptical components with different characteristic sizes and intensities: the brightest sources in the FoV are usually PSF-like Milky Way stars and foreground galaxies, then galaxies at the cluster redshift, and finally, faint more distant galaxies, and even fainter extended sources such as the ICL. All are superimposed on a spatially slowly varying (instrumental or not) sky background.

Because their shapes when projected onto the sky are elliptical, a relevant first approach to describing these sources is to use isotropic functions that are azimuthally invariant. Because these sources may have very different characteristic sizes (or spatial frequencies), the function basis also needs to capture localized information (high frequencies) as well as mean behaviors related to the information averaged over a given region of the image (low frequencies). The transform associated with such a basis is called a multiscale transform. It enables studying the signal at varying scales: the transform at small scales gives access to the thinnest and local features of the signal, while its transform at large scales captures its overall behavior. As the analysis goal here is the detection of any feature of interest, an analysis that is invariant under translations would be preferred here. The inner products of the signal with the basis functions then do not depend on the position of the information in the signal, meaning that there is no need to set a subjective origin for the transform, as is the case for shapelets (Refregier 2003).

In our case, an interesting class of functions is the wavelet family. The first- (admissibility condition) and second-order moments of wavelets are equal to zero, making them contrast detectors in the simplest form. An example of such a function is the Morlet wavelet, which is basically a cosine weighted by a Gaussian. A wavelet basis is built by shifting and dilating the same wavelet function (the so-called mother wavelet), and the associated transform is called a wavelet transform. In contrast to the Fourier basis, which gives the most accurate frequency information in an infinite temporal signal in exchange of the loss of date information, wavelet transforms are part of the multiscale transform family. They therefore allow a time-frequency representation because different frequency scales are locally explored by different dilated and translated versions of the same mother wavelet function, providing thereby a date information for each analyzed sample from the whole data set.

The 2D isotropic wavelet bases satisfy the criteria listed above and are consequently adequate at first order to study astronomical images, specifically, images with bright localized sources (the signature in the image of objects such as stars or galaxies) and large diffuse sources (the signature of objects such as intracluster light halos). On the other hand, 2D isotropic wavelet bases are certainly not suitable for detection in image features that are strongly anisotropic or have very sharp edges, such as elongated rectangles or lines (e.g., the PSF spikes around stars, cosmic rays, satellite trails, or tidal debris around galaxies). Any such transform acts as a measure of similarity between the set of wavelets and the studied features. Making use of an isotropic filter implies loose information related to anisotropies, if any. A popular example of a 2D isotropic wavelet function is the normalized second derivative of the Gaussian, nicknamed the Mexican hat (MH) because the shape resembles a sombrero, when used as a 2D kernel: a disk of positive values surrounded by an annulus of negative ones, the integral of which is normalized to zero.

2.3.2. Discretization and multiresolution approach

In order to use wavelet transforms to analyze images, they need to be implemented into algorithms and the continuous theoretical functions along the scales and image axes need to be discretized. A discrete set of functions is built, which may constitute what is called a frame according to the chosen discretization scheme for wavelets with sufficient regularity properties. This discretization is not without consequence because part of the information contained in the continuous function might be lost. An upper limit for the loss of information for a given set of frame bounds, that is, a discretization scheme, can be computed (Daubechies 1990). This loss is small for the MH function when a dyadic scheme and two voices per octave are considered1.

A problem with the MH function though is its extended spatial support – the fact that its profile extends to infinity. It is in practice numerically impossible to compute the exact theoretical transform as approximations need to be done at the edges. Consequently, a widely used MH-like function is the B3-spline wavelet, which is also isotropic and translation invariant with a controlled loss of information when using dyadic scales, but with the prime advantage of having a compact support making the transform computable without any approximation.

A major breakthrough for the understanding and efficient implementation of wavelets into algorithms resulted from the multiresolution theory of Mallat (1989), showing that the set of wavelet functions are no more than a hierarchy or cascades of filters, also known as filter banks in the domain of applying signal processing. Within this framework, the mother wavelets are defined by means of a scaling function, which acts as a low-pass filter. This mother wavelet function in fact appears to be the difference between this scaling function and a normalized version of it dilated by a factor 2 in size. For instance, the MH is the difference between two differently scaled Gaussian functions, and the B3-spline wavelet is the difference between two differently scaled B3-spline functions.

The link between some classes of wavelets (e.g., B3-spline) and filter banks is also expressed through a dilation equation: the scaling function at scale 2 can be expressed as a linear combination of these scaling functions at scale 1. This is true for the continuous basis and for the dyadically discretized version of it. Therefore an image can be iteratively convolved with dilated (and decimated) B3-spline functions using a dyadic scheme, in this way building a set of increasingly coarser approximations of the initial 2D signal. The difference between two successive approximation levels then gives the wavelet coefficients related to this scale range. These coefficients can be viewed as a measure of the information difference between the coarser and thinner approximation, or in other words, of the details in the image with typical sizes within these two scales (cf. bandpass filter).

This iterative approach, which makes use of the so-called à trous algorithm from Holschneider et al. (1989, cf. spatial decimation of the low-pass filters), is much faster than using convolutions with filters of increasing supports to compute the transform. It does not rely on numeric integrals, but benefits from a simplified filtering operator based on simple multiplications and additions. There are different versions of this algorithm depending on the analysis goal. For the 2D decimated wavelet transform, the size in pixels of each smoothed image is divided by four with respect to the previous level of approximation (and so are the number of associated wavelet coefficients). This leads to a pyramidal representation that is well suited to encode the features of the image at a given level with different sizes in a sparse way when these features and the scaling functions match well. An undecimated version has also been proposed, which allows retaining precise spatial information because all the wavelet planes have the same size as the original image. This undecimated wavelet transform with the à trous algorithm and the B3-spline scaling function basis is central to the multiresolution vision model of Bijaoui & Rué (1995), a basic conceptual framework for denoising or source-detection algorithms.

2.3.3. Analysis and restoration

Besides the Haar wavelet, Daubechies has proved (see, e.g., Daubechies 1992) that a wavelet basis cannot simultaneously have a compact support, be isotropic, and be orthogonal. Because the B3-spline wavelet basis has a compact support and is isotropic, its associated representation space is not orthogonal: a source with a single characteristic size in the image is then not to be seen as a set of wavelet coefficients with high values at one single scale, but will have non-null wavelet coefficients at several successive scales. An analysis of the wavelet coefficients is then needed along the spatial axes and the scale axis to link these coefficients and correctly characterize the associated source in the wavelet domain. For the spatial analysis, the undecimated à trous algorithm of Holschneider et al. (1989), first used in the astronomical context in Slezak et al. (1990), and also known as the isotropic undecimated wavelet fransform (IUWT, Starck & Fadili 2007), is easier to use because the various wavelet planes have the same size as the original image.

We briefly explain the properties of the IUWT below, and a rigorous definition is given in Sect. 3.4. According to a dyadic scheme, the image is separated into several scales that exhibit sources with the same characteristic size: the first few high-frequency scales contain compact sources (small-scale details), while the low-frequency scales contain extended sources (large-scale details). Although not perfect because objects in the original image are spread through several sources at different scales, the IUWT is a sparse representation of the initial data. Providing that the noise affecting the data is white, objects in the direct space indeed generate wavelet coefficients with much higher values than those related to the noise-dominated pixels at any except for the smallest scale.

The fact that the IUWT is sparse makes the detection of any faint but extended source much easier in the wavelet domain than in the direct space, especially at the large scales relevant for the ICL component. A hard thresholding of the wavelet coefficients is therefore an efficient way to denoise the data and detect objects or structures, for instance. To do so, the significant wavelet coefficients need to be selected scale by scale and then need to be grouped into connected domains (see Sect. 3.5). Restoring an image in the direct space for a single detected object is slightly more difficult: an interscale analysis must be performed to build interscale trees using the spatial and scale positions of each significant domain, and various constraints can be applied when these trees are built or pruned. The information from a pruned interscale tree can then be used to restore (or reconstruct) the associated object intensity distribution in direct space.

Following the method described in Bijaoui & Rué (1995), only the wavelet coefficients of the domain are used in which the interscale maximum of a tree is located (i.e., the region with the highest value within the tree, hence with the highest information content), and from every region linked to it at smaller scales. This pruning of the interscale trees ensures that the restoration algorithm has access to enough information to compute a satisfying solution, and that the retained information does belong to the same structure in the direct image. However, this pruning discards the information from domains at lower spatial frequencies than the wavelet scale of the interscale maximum. For a source with an intensity profile with an inner core that is much brighter than its outskirts, only the bright core is therefore reconstructed and most of the outskirts are missed. Faint sources near bright ones are therefore correctly processed only when an analysis with such a pruning is performed at least twice.

The restoration step was applied to each tree individually and can be viewed as an inverse problem that yields an iterative estimation process (see Sect. 3.1). Several solutions to such optimization problems have been proposed in the literature, such as conjugate gradient methods or the Landweber scheme (Starck et al. 1998), based on positivity and other regularization constraints. In a more straightforward way, these estimation algorithms aim to reproduce the direct space intensity profile of the detected object by adding and subtracting different elements from a wavelet basis, and using information from the interscale tree. In this paper, the wavelet basis used for the restoration step is usually the same as the basis used for the analysis (the B3-spline wavelet basis).

There are several remarks to make on this overall method. The first is that it is parameter prior-free because there is no need to specify a profile for the objects that are reconstructed, in contrast to usual fitting methods. However, we recall that a choice is made through the wavelet basis that is used for the analysis and the restoration.

Astronomical sources cannot be represented by a single wavelet function, but rather by linear combinations of elements from the same wavelet basis. This implies a selection (made through the estimation algorithm) of the elements of the basis function that lead to the best representation. This selection is performed to minimize the difference in shape between the source intensity distribution and the pattern in the direct space that is linked to the set of wavelet functions that are used to model it. However, this selection is almost always suboptimal, and will generically result in artifacts in the restored profile. In the best-case scenario, the amplitude of these artifacts is very low compared to the other source distribution attributes. Sometimes, however, the iterative process fails to compensate for this difference (and may even amplify it in the worst-case scenario of strongly overlapping objects with high surface brightness), and artifacts can then be significant. Because of the nature of the wavelet pattern, which is a disk of positive pixels surrounded by an annulus of negative ones, these artifacts, if any, in our case take the form of spurious rings around restored sources. Likewise, choosing an isotropic vision model also leads to slight morphological biases on the reconstruction of anisotropic objects, typically galaxies with high ellipticities for which the solution has the same integrated flux but which have a more circular light profile.

2.3.4. Implementation and limitations

A wavelet-based multiscale approach like this was first used by Adami et al. (2005) to detect a large-scale diffuse component within the Coma galaxy cluster. The IDL package OV_WAV is another implementation of this multiresolution approach, and was used to detect diffuse sources in astronomical images of galaxy groups (Da Rocha & Mendes de Oliveira 2005). The wavelet representation allowed the authors to detect extended sources down to a signal-to-noise ratio (S/N) ∼ 0.1 per pixel, which was enough to characterize the intragroup light (IGL) of HCG 15, HCG 35, and HCG 51 (Da Rocha et al. 2008).

Even with fast à trous algorithms, this analysis procedure is computationally time expensive, mainly because of the interscale analysis and the object reconstructions. In addition, a problem met by this approach is the false detections due to statistical fluctuations of the noise. As previously mentioned, the noise is dominant in the high-frequency wavelet scales, and packs of noise pixels with high values can be detected as sources and have their own interscale tree. This results in the reconstruction of incorrect detections in the high-frequency scales, which increases the computing time. The authors of OV_WAV used various ways of thresholding their wavelet scales in order to limit the false detections and applied higher thresholds for the high-frequency scales, but they did not completely solve the problem.

Running OV_WAV on an image allows detecting most of the bright sources and reconstructing them properly up to a very high precision. All the reconstructed sources are then concatenated into a single image: the full reconstructed image of the original field. A residual image can then be computed by subtracting the reconstructed image from the original. Because of the various reconstruction factors described in Sect. 2.3.3, low surface brightness features can be missed. Adami et al. (2005) had the idea of running the algorithm a second time, but on the residual image, in order to detect outer galaxy halos and other more diffuse structures. While better results are obtained in this way, the overall performance of this iterative approach is still determined by the intrinsic quality of the restored intensity distribution for the detected source. This is especially the case for strongly peaked and bright sources because any high-value residual left from the first pass could then be detected as a significant structure in the second pass, once again hiding faint sources that are superimposed or close to it. Ellien et al. (2019) chose this approach for a beta version of DAWIS, where the same algorithm was run three times in a row to correctly detect and model every galaxy in the image. When the ICL is not detected with the wavelet algorithm after this procedure, it is possible as a fast alternative to detect it in the final residual image by applying an appropriate standard sky background threshold. In this case, the wavelet analysis acted as a simple modeling tool for galaxies, analogous to PF methods. A fully iterative procedure with this type of wavelet algorithm is always difficult to apply because of its computational cost, but it appears to be the best way to significantly improve the overall quality of the analysis (especially with regard to object restoration), and to thoroughly detect ICL in the wavelet space.

In parallel to the detection of ICL, this multiscale approach has been adapted to different types of data, where the scientific goals are similar (e.g., detecting faint and extended sources hidden by bright and compact sources). Most notably, Dabbech et al. (2015) proposed the algorithm MORESANE (model reconstruction by synthesis-analysis estimators), developed for processing radio images of galaxy clusters taking the complex PSF of radio interferometers into account. As already said, this algorithm makes use of the multiresolution vision model of Bijaoui & Rué (1995), embedded in an iterative procedure generalizing and upgrading the process implied by the earlier works of Adami et al. (2005) on ICL. This allows solving most of the problems described in the previous paragraphs. Dabbech et al. (2015) also provided a description of the overall procedure and algorithm in terms of sparse representation, called synthesis-by-analysis approach. We decided to use this latest version of multiscale image-analysis procedure as a starting point to upgrade this class of methods for detecting ICL. We propose here our own version of this strategy, optimized for computation time and for optical images. We presented it in the next section.

3. DAWIS

In this section we present the operating structure of DAWIS. While many notions addressed here are already well known, we still detail them with the global understanding of the algorithm in mind. The comparison of the ICL detection performance is presented in Sect. 4. We use the following notations: matrices are denoted by bold uppercase letters (e.g., A with a transpose A⊤), vectors by bold lowercase letters (e.g., v). A component of row index i and column index j is given by Ai, j. A vector component of index i is given by vi. Vectors are all column vectors, and row vectors are denoted as transposes of column vectors (e.g., v⊤). Vector subsets and matrix columns or rows are denoted by top or bottom indexes with parentheses (e.g., v(j) or v(j)).

3.1. Inverse problem and sparse representations

When a signal from observed data is modeled, the solution of this inverse problem may not be unique. To solve this so-called ill-posed problem, a penalty term must be introduced in the mathematical equation that describes it so that a particular solution can be selected. This solution must satisfy this added criterion, thereby leading to an optimization problem. We consider the generic equation

where y ∈ ℝM is the measured signal, x ∈ ℝN is the initial signal, ℋ : ℝN → ℝM is a known (or approximately known) degradation operator, and n ∈ ℝM is an additive noise. This structure can be used to represent many problems in image and signal processing such as denoising with ℋ = I or deconvolution with ℋ an impulse response (i.e., the PSF for a focused optical system). Recovering the initial signal from the observed (sub-)set of data is an inverse problem that can be solved with a penalized estimation process, written as

where ℛ : ℝN → ℝ+ is the penalization function and λ ∈ ℝ+ is the regularization parameter. One widely used constraint is the Tikhonov regularization, which may rely on difference operators, for instance, to promote a smooth solution or the identity matrix to give preference to solutions with small ℓp norms2. Sparsity of the solution for a given representation space can also be enforced. To do so, the penalized function ℛ is then a measure 𝒮 of the sparsity of the solution when projected onto the basis defining this new space, that is, after applying a transform γ to the solution so that the penalty term is written as ℛ(x′) = 𝒮(γ) with γ = γ(x′). This transform γ is usually chosen to be a linear operator. The normalized matrix aggregating the new basis functions as columns is commonly referred to as a dictionary, and each column or vector of it is then an element that is also called an atom.

A natural choice for the function 𝒮 is the ℓp norm with 0 < p < 1 to favor sparsity. Case p = 0 related to support minimization is usually untractable because it is highly nonconvex, hence an NP-hard problem; case p = 1 corresponds to the tightest convex relaxation to this problem, which may still not be easy to solve efficiently when the dimension is high. The use of dictionaries (cf. composite features) in combination with ℓ1 norm gave rise to several minimization algorithms with many variants to determine the best approximation of x by the elements of the dictionary, such as the method of frame (Daubechies 1988), the basis pursuit scheme (Chen et al. 2001), or the compressive sensing (Donoho 2006). Faster than convex optimization but lacking uniformity, a greedy method such as (orthogonal) matching pursuit (Mallat 1993), which is conceptually easier to implement, is also a suitable and efficient algorithm to solve the task.

Recovery of the sparse (or compressible) signal x is guaranteed providing that the correlation between any two elements of the dictionary is small (as measured by the mutual coherence indicator or the K-restricted isometry constant) and the number of measurements is large enough (Candes et al. 2006). In case of overcomplete and redundant dictionaries, the successful restoration of the signal relies on the use of a prior as is often the case for solving many inverse problems, with the maximum a posteriori (MAP) estimator, for instance. The prior we are interested in is the sparsity of the solution we are looking for, and it can be introduced following two approaches that are closely related but not equivalent for such redundant dictionaries, as studied by Elad et al. (2007).

The first approach relies on an analysis-based prior. The signal x characterized by its inner products with all the atoms of a dictionary A is assumed to be sparse for this dictionary, that is, γa = A⊤x with γa the sparse representation of x. To be efficient, this approach must involve priors on the signal for selecting adequate dictionaries such as wavelet-based ones for nearly isotropic sources in astronomical images, as was implemented in the previous algorithm OV_WAV. Given ℓ1(x) = ∑i|xi|, Eq. (2) becomes

The second approach is sparse synthesis, where the signal to be restored, x, is assumed to be a linear combination of a few atoms from a dictionary, so that x = Sγs, where γs is the sparse representation of x and S is the synthesis dictionary (not to be confused with the measure of sparsity 𝒮). This leads to the solution of the inverse problem as

For redundant dictionaries, solutions for analysis or synthesis priors are different. As far as the authors know, no general results on their practical comparison are available for usual transforms, even for the ℓ1 norm case. However, the analysis approach may be more robust than the synthesis approach because it does not require the signal to be expressed as a linear combination of atoms of a given dictionary. It is clear that a synthesis approach with dictionaries including too few atoms leads to a rough restoration and that the number of unknowns for large dictionaries is computationally expensive and often prohibitive.

3.2. DAWIS: A synthesis-by-analysis approach

We chose a hybrid approach for DAWIS, the analysis-by-synthesis method first developed for processing radio astronomy images and explicitly implemented in the MORESANE algorithm (Dabbech et al. 2015). The principle is to model an image as a linear combination of synthesis atoms that are learned iteratively through analysis-based priors. The previous algorithm, OV_WAV, already follows this path implicitly because (i) it makes use of wavelet dictionaries to reconstruct images according to an analysis approach where objects are detected and restored subject to the wavelet coefficient values, and (ii) it sometimes has to be run iteratively two or three times to obtain better results, which is the beginning of a synthesis approach with the successive restored images as synthesis atoms. However, applying a formal analysis-by-synthesis method allows us to solve most of the problems met by the OV_WAV algorithm while keeping the advantages of an analysis based on wavelet atoms for the detection of low surface brightness features.

This approach makes DAWIS (like MORESANE) a very versatile tool with a great range of applications, as the nature of the signal of interest and of the degradation operator are defined by the person performing the analysis. In the work presented here, for example, the signal to be recovered is the ICL, and the degradation operator ℋ can then be seen as effects coming from instrumental (scattered light, PSF) and physical (blending of astronomical sources, contamination by diffuse halos, etc.) origins. However, our goal of using DAWIS here is not to cope with instrumental effects (this type of degradation must therefore have been dealt with before), but to focus on the detection of diffuse and low surface brightness features (e.g., detecting signal where standard detection methods fail) and source separation (e.g., separating the ICL from the galaxies based on nonarbitrary parameters). The operating mode of DAWIS is therefore conditioned by these specific analysis goals. We stress the fact that other applications are possible (also see Sect. 3.10 for more details).

3.3. DAWIS: A semi-greedy algorithm

The synthesis-by-analysis approach implemented in MORESANE by Dabbech et al. (2015) and in DAWIS by us is conceptually reminiscent of a matching pursuit algorithm (Mallat 1993) where the atoms of the dictionary are constructed with orthogonal projections of the signal on time-frequency functions. At each iteration the best correlated projection is kept as an atom for the synthesis dictionary, and retrieved from the signal before the same process is applied to the residual. This method is efficient in determining atoms that characterize the signal well because its core strategy is to minimize at each iteration a residual from the computation of the inner products of the underconstruction solution with all the atoms of the analysis dictionary, hence a so-called greedy method, which has the main disadvantage to be time consuming. A well-known greedy algorithm is CLEAN, which uses a set of Diracs and a PSF to deconvolve the image of a field of stars represented by intensity peaks. The algorithm assesses a Dirac function to each peak with a spatial position in the image and an amplitude, which makes it sparse by nature because the observed field is expressed with a few positive coefficients. The sky image is recovered with the convolution of the set of Diracs by the PSF, which can be seen as the product of coordinates by a dictionary with one single atom.

A problem when detecting sources in astronomical images is blending: a faint source aside a much brighter source is partially hidden by the bright source, especially if the latter is also larger. In CLEAN, an iterative process controlled by an empirical factor called the CLEAN factor is introduced to address this issue. The highest detected peak in the image is first considered, and instead of totally removing it from the image before computing and processing the new highest peak, only a fraction of it given by this CLEAN factor is subtracted. By doing so, the risk of accidentally removing faint blended sources is decreased, hence allowing a better deconvolution of the image.

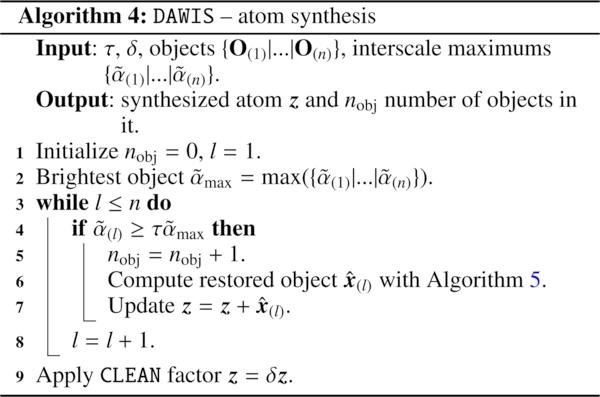

In DAWIS the brightest source within the image is detected at each iteration in the wavelet space before it is restored and removed from the image. Likewise, a very bright object in the image (such as a foreground star) translates into wavelet coefficients with very high values that strongly dominate this representation space (even at low frequency scales), and contaminates low surface brightness features. Removing this bright structure first before detecting fainter sources allows a better recovery of the objects than in the previous process used in the OV_WAV algorithm. Similarly to MORESANE, we also introduced a parameter δ ∈ [0, 1] in DAWIS that is equivalent to the CLEAN factor so that only a fraction of the reconstructed object is actually removed from the image. This also limits the appearance of artifacts.

The downside of this approach is its slowness. An analysis in which sources are processed one by one is far too time-expensive computationally. We therefore decided for DAWIS to implement the semi-greedy method of MORESANE. The reconstructed atom at each iteration is not composed of a single source, but of a set of sources with similar characteristic sizes and intensities. This set is defined by a parameter τ ∈ [0.1], setting a threshold relative to the brightest structure in the image.

3.4. DAWIS: the B3-spline wavelet as analysis dictionary

We described above that we used an analysis-based method to obtain the synthesized atoms of S. As ICL is believed to have an isotropic or quasi-isotropic shape, we chose as adequate dictionary the well-known B3-spline wavelet dictionary, with w = A⊤x, where w is the vector of the wavelet coefficients. The choice of this symmetric and compact mother wavelet also grants a very efficient way to compute these coefficients using the IUWT, for which there is no need to compute the product between A⊤ and x. Benefiting from the so-called à trous algorithm (Holschneider et al. 1989), the original image x is smoothed consecutively J times using an adaptive B3-spline kernel, giving J coarse versions of x, with c(j) ∈ ℝN being the version at the scale j. The vector w can then be written as the concatenation of J + 1 vectors w(j) ∈ ℝN such as w = {w(1)|…|w(j)|…|w(J)|c(J)}, where w(j) = c(j + 1) − c(j) (Mallat 1989; Shensa 1992). Each vector w(j) basically represents the details of two consecutive smoothed levels, and the components of these vectors are called the wavelet coefficients. An image of size N pixels gives a maximum number of scales J ≤ log2(N)−1.

3.5. DAWIS: Noise filtering and multiresolution support

Determining which wavelet coefficients are of interest (filtering step) requires knowing how the noise in the direct space translates into the wavelet space. Because the wavelet transform is linear, the noise statistics remain the same. To be able to select wavelet coefficients using a simple thresholding method involving Gaussian statistics, we need to ensure such a Gaussian distribution for the noise in the wavelet space. For this purpose, DAWIS makes use of a variance stabilization transform. Considering an image x with a combination of Gaussian noise and Poissonian noise, DAWIS involves the generalized Anscombe transform 𝒜 : ℝN → ℝN (Anscombe 1948), which is given by

with g being the gain of the detector, and μ and σ are the mean and standard deviation of the Poissonian-Gaussian noise in the original image, respectively, computed here with a bisection-like method. The result  is an image with a Gaussian noise of σ = 1, which has a very nice behavior in the wavelet space. The à trous algorithm can then be applied to the output image to create the stabilized wavelet coefficient vectors

is an image with a Gaussian noise of σ = 1, which has a very nice behavior in the wavelet space. The à trous algorithm can then be applied to the output image to create the stabilized wavelet coefficient vectors  .

.

The statistically significant pixels are then selected at each scale using a thresholding method, and a multiscale support is identified (Bijaoui & Rué 1995), which is given a scalar operator 𝒯, a vector M = {M(1)|…|M(j)|…|M(J)} with M(j) ∈ ℝN such as

The computation of the multiscale support fills two objectives. First, it acts as a ℓ0 sparsification of the analysis coefficients, discarding small (nonsignificant) wavelet coefficients and indicating the position of interesting features. Second, it allows us to very easily translate this acquired knowledge from the variance-stabilized wavelet space into the nonstabilized space (e.g., the vector w generated by A⊤x), where the actual object identification is made.

Because the noise in the initial image is considered to be spatially uncorrelated (which is not always the case in reality) and therefore generates wavelet coefficients with high values only at the first two high-frequency scales, a first relevant approach is to estimate the standard deviation of the noise and its mean at these two first scales. The IUWT being a linear transform, the rms values can then be extrapolated to the higher wavelet scales where (i) the noise becomes highly correlated as a result of the large size of the related filters, and (ii) the mean source size increases, leaving increasingly fewer background pixels to estimate the noise statistics.

The threshold operator applied to the wavelet coefficients 𝒯 can take many forms. DAWIS implements the usual hard threshold operator, which operates as

The threshold t applied to each  is different, such as t = kσ(j), with σ(j) being the standard deviation of the noise of

is different, such as t = kσ(j), with σ(j) being the standard deviation of the noise of  and k a constant usually chosen to be 3 or 5 according to the chosen probability for false alarms. Other formulations for 𝒯 can be used, such as the soft threshold operator (Mallat 2008) or the combined evidence operator used in OV_WAV (Da Rocha & Mendes de Oliveira 2005).

and k a constant usually chosen to be 3 or 5 according to the chosen probability for false alarms. Other formulations for 𝒯 can be used, such as the soft threshold operator (Mallat 2008) or the combined evidence operator used in OV_WAV (Da Rocha & Mendes de Oliveira 2005).

3.6. DAWIS: Object identification through interscale connectivity

We first repeat that a source in the original image generates significant wavelet coefficients at several successive scales for a nonorthogonal transform. Consequently, an analysis along the scale axis has to be performed in order to identify the set of wavelet coefficients related to this source. To this end, DAWIS once again follows the recipe first proposed by Bijaoui & Rué (1995) and also implemented in MORESANE. It relies on the construction of interscale trees.

We denote with α the set of significant wavelet coefficients of w. The location of these coefficients is given by the multiscale support M defined in Sect. 3.5. When this mask is applied, a classical segmentation procedure is first performed to group these coefficients into regions (e.g., domains) of connected pixels. α can then be written as a concatenation of vectors α(j) so that α = {α(1)|…|α(j)|…|α(J)} with α(j) ⊂ w(j), each α(j) being composed of a set of domains d with different sizes. As usual, each significant region is characterized by the location and amplitude of its coefficient with the highest value, allowing us to define a local maximum. Let  be this local maximum value for the region d(1) ⊂ α(j). Region d(1) is then defined as linked to a region d(2) ⊂ α(j+1) when

be this local maximum value for the region d(1) ⊂ α(j). Region d(1) is then defined as linked to a region d(2) ⊂ α(j+1) when  belongs to d(2). Finally, by testing this connectivity for each region d ⊂ α, interscale trees are built throughout the whole segmented wavelet space.

belongs to d(2). Finally, by testing this connectivity for each region d ⊂ α, interscale trees are built throughout the whole segmented wavelet space.

With this procedure, an object O is then defined by the concatenation of K connected regions in the wavelet space d(k) such as O = {d(1)|…|d(k)|…|d(K)}. A region is linked to at most one other region at the next lower frequency scale, but can be linked to several regions at the next higher frequency scale. One criterion must be satisfied to consider that such a tree is related to a genuine object in the direct image, and not an artifact. An interscale tree must include at least three regions that are linked at three successive scales.

3.7. DAWIS: Object reconstruction

Applying the procedure summarized in the previous section, each object in direct space is therefore related to an interscale tree of significant wavelet coefficients. Beyond the structure of the tree itself, the amount of information about this object is distributed throughout the linked regions and can be measured by the value of their maxima once normalized. Because wavelets act as a contrast detector, the average values of the wavelet coefficient amplitudes tend to be indeed lower at small scales than at large scales in astronomical images, thereby creating an implicit bias when wavelet coefficient values at different scales are compared. As a normalization factor for each scale j, DAWIS uses the standard deviation at this scale j of the IUWT of a Gaussian white-noise image with unit variance, denoted  . For an object O, this results in the normalized vector

. For an object O, this results in the normalized vector  of size K, with given d(k) ⊂ α(j),

of size K, with given d(k) ⊂ α(j),  .

.

The region d(k) ⊂ O in the tree that contains most of the information about the object is especially relevant for the information content. This region of maximum information is the interscale maximum. It is denoted by an index kobj and a scale jobj such as

This interscale maximum gives an easy way to characterize an object O because the parameter jobj provides its characteristic size 2jobj. Moreover, the parameter  giving its normalized intensity is also used by DAWIS to compare it to other objects and rank them for the restoration step. As indicated in Sect. 3.3, a parameter τ defines a threshold relative to the brightest identified object, which has the highest parameter

giving its normalized intensity is also used by DAWIS to compare it to other objects and rank them for the restoration step. As indicated in Sect. 3.3, a parameter τ defines a threshold relative to the brightest identified object, which has the highest parameter  , denoted

, denoted  . Only objects for which

. Only objects for which  are restored at a given iteration of the processing and are included in the associated dictionary atom.

are restored at a given iteration of the processing and are included in the associated dictionary atom.

Objects are reconstructed individually, using their specific support Mspec giving the location in w of every significant coefficient belonging to O. Here DAWIS also strictly follows the procedure of Bijaoui & Rué (1995) and that regions at scales j higher than jobj are discarded for the restoration. This restoration is a direct application of Eq. (2), where positivity of the solution is used as a regularization term, with y = Mspecw and ℋ = MspecA⊤. DAWIS uses the conjugate gradient algorithm from Bijaoui & Rué (1995), which makes use of the adjoint operator †A of the analysis dictionary A (see their article for an explicit definition of this operator). This algorithm iteratively finds the solution  , which is the reconstructed object. This conjugate gradient version algorithm makes use of the Fletcher-Reeves step size β (Fletcher & Reeves 1964). This process is applied to all objects before they are concatenated into a single restored image z ∈ ℝN such as

, which is the reconstructed object. This conjugate gradient version algorithm makes use of the Fletcher-Reeves step size β (Fletcher & Reeves 1964). This process is applied to all objects before they are concatenated into a single restored image z ∈ ℝN such as  .

.

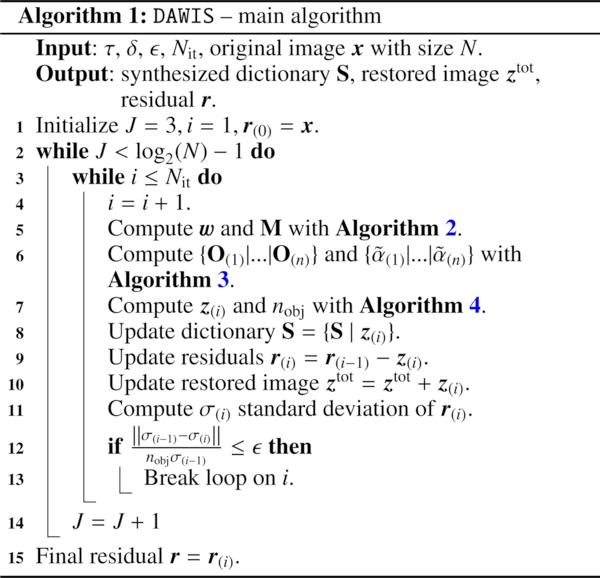

3.8. DAWIS: Architecture of the algorithm

We present the general architecture of DAWIS in the form of a simplified pseudo-code in this section: Algorithms 1 and 4 summarize the synthesis-by-analysis approach as explained in Sects. 3.2 and 3.3, while Algorithms 2, 3, and 5 describe the wavelet-atom-based analysis as described in Sects. 3.4–3.7. For the operating mode of DAWIS, which is iterative, some parameters have to be defined to control the convergence of the algorithm.

To ensure that the algorithm correctly peels the image starting by the bright sources, we imposed an upper scale J so that the brightest detected object at each iteration cannot have an interscale maximum  at scale jmax > J. This also decreases the computation time because there is no need to perform the wavelet and interscale analyzes for all scales for the first few iterations. The upper scale is initialized at J = 3 because an interscale tree needs domains that are connected at least at three successive scales to be considered as related to an object.

at scale jmax > J. This also decreases the computation time because there is no need to perform the wavelet and interscale analyzes for all scales for the first few iterations. The upper scale is initialized at J = 3 because an interscale tree needs domains that are connected at least at three successive scales to be considered as related to an object.

The main convergence parameter is defined as  and is computed from the variation of the standard deviation of the residual at each iteration i. However, the nature of the synthesis atom for different iterations can induce instability for this convergence parameter. One atom can indeed be composed of many bright objects, which means strong variation in the standard deviation, and another of a single faint object, hence a low variation in the standard deviation, which might break the loop while there are still sources in the residual. Therefore we normalized this convergence parameter by the number of objects nobj to stabilize it. When the value of this parameter decreases below a threshold ϵ, the value of the upper scale J is increased by one, so that larger sources can be processed by the algorithm. A hard limit Nit is also given to the algorithm to restrict the number of possible iterations.

and is computed from the variation of the standard deviation of the residual at each iteration i. However, the nature of the synthesis atom for different iterations can induce instability for this convergence parameter. One atom can indeed be composed of many bright objects, which means strong variation in the standard deviation, and another of a single faint object, hence a low variation in the standard deviation, which might break the loop while there are still sources in the residual. Therefore we normalized this convergence parameter by the number of objects nobj to stabilize it. When the value of this parameter decreases below a threshold ϵ, the value of the upper scale J is increased by one, so that larger sources can be processed by the algorithm. A hard limit Nit is also given to the algorithm to restrict the number of possible iterations.

3.9. DAWIS: implementation and parallelization

DAWIS is implemented with an emphasis on modularity (e.g., a set of modules that can be moved or replaced by new versions) because the algorithm can still be upgraded to increase the quality of the method or modified according to new analysis goals. We chose to write the main layer of modules in Python because it is a very widely used versatile and accessible open-source language. However, this versatility has a cost in terms of numerical performance, which led us to support the Python modules by Fortran 90 codes where the main numerical computations are done.

Input: Maximum scale J, residual r.

Output: wavelet coefficients w and multiscale support M.

-

Apply Anscombe transform

.

. -

Compute

with à trous algorithm for scale j = 1 to J.

with à trous algorithm for scale j = 1 to J. -

Apply hard threshold

.

. -

Compute multiscale support M from

.

. -

Compute w = A⊤r with à trous algorithm for scale j = 1 to J.

As explained in Sect. 2.3.4, one of the main limitations of wavelet-based algorithms is computation time, which prevents the application of previous packages such as OV_WAV to large samples of images or to very large images. Great effort has been made with DAWIS to parallelize the algorithm. This is not straightforward because the main algorithm is iterative, which means that only the content of one iteration can be sped up. Therefore we parallelized the modules inside an iteration. When large data arrays are worked (which is typically the case when the wavelet data cube and the multiresolution support are computed with Algorithm 2 and when the multiscale analysis is performed with Algorithm 3), the Fortran modules are parallelized in shared memory using OpenMP3. Conversely, we use the new Python package Ray4 to distribute processes when we work on many small arrays (the restorations of numerous objects to compute the associated synthesis atom are independent from each other and can be distributed, such as Algorithms 4 and 5).

We display here a central processing unit (CPU) computing-time scaling test on mock data for both types of parallelizations. For the shared memory parallelization, the test was set on an image of size 4096 × 4096 pixels (giving a wavelet data cube of 4096 × 4096 × 10 wavelet coefficients). Algorithms 2 and 3 were run on the image first serially (one CPU), and then with progressively increasing the number of CPUs. As shown in Fig. 1, the gain of computing time is high when the number of CPUs is increased from 1 to 16: it changes from a computing time of ∼40 min to a computing time of ∼6 min. However, these modules do not scale linearly with the number of CPUs, and the gain in computing time is rather negligible for 32 and more CPUs, where the computing time converges toward a value of ∼4 min.

|

Fig. 1. CPU computing-time scaling test for the two types of parallelization used in DAWIS. The scale for the computing time is logarithmic. This gain in time is achieved for the modules inside one main iteration of the algorithm, and does not represent the total CPU computing time of DAWIS (see the text for more details). |

For the distributed memory parallelization, the test was run on 1000 copies of the same object array of size 128 × 128 pixels. Algorithms 4 and 5 were run on the arrays, and the resulting CPU computing times are also displayed in Fig. 1. Similar to the shared-memory parallelization, the gain in CPU computing time is most effective when the number of CPUs is increased to 16, for which the computing time changes from ∼25 min to ∼2 min. It is rather negligible with higher numbers of CPUs, however, and converges toward a computing time of ∼2 min. The objects that are to be restored are not always of size 128 × 128 pixels, and the number of objects that is to be restored can also differ from 1000. In some cases, the object sizes might even be a large fraction of the image. In these cases, however, there are rarely more than one or two objects that need to be restored. In our experience, the computation time of an iteration with one or two large objects that are to be restored does not differ very much from the computation time of an iteration in which many small objects are restored.

This simple test is not representative of the performance of the complete algorithm. Because of the greedy nature of Algorithm 1, the CPU computing time of DAWIS largely depends on the content of the image. An image that contains complex structures will always take longer to process than an image with very simple shapes because more main iterations are needed to accurately model these complex structures (in our experience, the number of main iterations ranges from a hundred to a few hundred, depending on the image). Additionally, the length of a main iteration also greatly depends on the content of the image. For example, the algorithm will sometimes choose to restore only one very bright source. In this case, the parallelization in distributed memory is of no use because only one object is to be restored. Nevertheless, these parallelization processes ensure that the complete CPU computing time of DAWIS does not disproportionately increase in case of ‘heavy’ main iterations.

3.10. Astrophysical priors on object selection

We showed in Sect. 3.2 that the columns of the final synthesized dictionary S are atoms that are no more than the restored images zi at each iteration i. Then, the restoration ztot of the whole original image is given by the sum of all these atoms such as ztot = ∑iz(i). The generic operating mode of DAWIS therefore produces a fully (denoised) restored image every time the algorithm is run. However, in this way, a large part of the information recovered by the sparse synthesis-by-analysis method is not used because all synthesized atoms are concatenated into the same image in which the information of individual spatial frequencies or characteristic sizes is no longer accessible. However, depending on the analysis goals, the subsets of atoms of S alone might be of interest, or even more specifically, alternative synthesized dictionaries might have to be compiled, the atoms of which would be selected differently throughout the synthesis-by-analysis procedure. To allow for these possibilities, a discrimination operator 𝒟 was applied to the detected objects before we constructed the associated synthesis atoms such as  . We do not present a rigorous definition of such an operator here because it can take many forms and use different properties to distinguish objects depending on the desired goal.

. We do not present a rigorous definition of such an operator here because it can take many forms and use different properties to distinguish objects depending on the desired goal.

In this paper, the main goal is to detect the bright components characterizing ICL. The discrimination operator 𝒟 then becomes a way of classifying sources as ICL-type structures, denoted  , and to extract them from components associated with galaxies. A very simple way of doing so is to consider jobj for each object and to use this parameter as a constraint because the characteristic size of galaxies is not the same as the characteristic size of any structural element of the ICL. The dictionary atom zICL can then be built in parallel of z such that

, and to extract them from components associated with galaxies. A very simple way of doing so is to consider jobj for each object and to use this parameter as a constraint because the characteristic size of galaxies is not the same as the characteristic size of any structural element of the ICL. The dictionary atom zICL can then be built in parallel of z such that  . This atom is then added to the ICL-synthesized dictionary SICL, and a fully restored ICL image can be computed by summing all its atoms. A distinction based on the spatial position of the interscale maximum kobj can also be applied because atoms describing galaxies belonging to a galaxy cluster would also be considered (a catalog of the cluster member positions is needed in this case) for ICL fraction studies, for example, or again to ensure that atoms associated with ICL are well centered on the galaxy cluster. More complete discrimination operators can be developed based on morphological properties of sources in the wavelet scales, for instance, granularity (i.e., the number of regions linked to an interscale maximum), depth of the interscale tree (i.e., the number of scales composing the tree), or the color of the restored object (when several bands are available).

. This atom is then added to the ICL-synthesized dictionary SICL, and a fully restored ICL image can be computed by summing all its atoms. A distinction based on the spatial position of the interscale maximum kobj can also be applied because atoms describing galaxies belonging to a galaxy cluster would also be considered (a catalog of the cluster member positions is needed in this case) for ICL fraction studies, for example, or again to ensure that atoms associated with ICL are well centered on the galaxy cluster. More complete discrimination operators can be developed based on morphological properties of sources in the wavelet scales, for instance, granularity (i.e., the number of regions linked to an interscale maximum), depth of the interscale tree (i.e., the number of scales composing the tree), or the color of the restored object (when several bands are available).

4. Simulations

A new detection algorithm such as DAWIS must be tested and compared to more traditional methods. For this purpose, we took an image analysis approach and created monochromatic mock images of galaxy clusters simulated with the Galsim package (Rowe et al. 2015), emulating the photometric aspect of galaxy clusters (galaxies + ICL) and the properties of the CFHT wide-field camera MegaCam. The choice of MegaCam was made to prepare upcoming ground-based surveys such as the UNIONS survey (Ibata et al. 2017). However, the same approach can (and should in the future) be applied to simulations of HST images, for example.

From an astrophysics point of view, this approach is not the most realistic because the simulated images contain only light profiles and an artificial background. However, it allows a complete control over the different included components and allowed us to compare different detection methods and their performances in different situations. Even if they are more realistic, it is not possible to repeat this with N-body or hydrodynamical simulations because the way in which ICL is defined in those simulations is also subject to debate (Rudick et al. 2011; Tang et al. 2018).

4.1. Photometric calibration

We retrieved the MegaCam properties on the MegaPrime website5. The MegaCam-type images are set with a pixel scale s = 0.187 arcsec pixel−1, a detector gain g = 1.62e−/ADU, an exposure time texp = 3600 seconds, and a readout noise level σreadout = 5e−. We calibrated the photometry for the r band with a zeropoint ZP = 26.22, and set the sky surface brightness μsky to the corresponding average dark sky value, which is μsky = 21.3 mag arcsec−2. The sky background level in ADU/pix is then given by

The sky background in our images was simulated using the Galsim function ‘CCDNoise(sky_level =  , gain = g, read_noise = σreadout)’, which generates a spatially flat noise composed of Gaussian readout noise plus Poissonian noise.

, gain = g, read_noise = σreadout)’, which generates a spatially flat noise composed of Gaussian readout noise plus Poissonian noise.

We neither tried to model the MegaCam PSF and the associated complex scattered light halos in a refined way nor to measure the effect of its extended wings, and we did not include any spatial variation or anisotropy to it. We therefore chose a constant value for the seeing. We modeled the PSF with a Moffat profile using the Galsim function ‘galsim.Moffat’ with parameters β = 4.765 and a half-light radius of 0.7″, which is a generic value for the MegaCam seeing.

4.2. Creating catalogs

We first created a galaxy cluster catalog. Because the vast majority of ICL detections in the literature was made at low redshift (z < 0.5, with a few exceptions such as Adami et al. 2005; Burke et al. 2012; Guennou et al. 2012; Ko & Jee 2018), we picked three redshift values such as z ∈ [0.1, 0.3, 0.5] in which ten different galaxy clusters per bin were simulated. This choice of redshift values was made to study the effect of cosmological dimming on the ICL detection for each detection method. While the number of clusters per bin seems at first fairly low, different parameter spaces for each cluster ICL profiles were explored by multiplying the number of processed images to several hundred and allowing us to lead a statistically significant study.

A Navarro-Frenk-White (NFW; Navarro et al. 1997) dark matter (DM) gravitational potential was simulated at the center of an image for each cluster. The mass of the potential was set to an average value of 1015 M⊙, and its concentration followed the N-body simulation concentrations of Klypin et al. (2016). Recent works have shown that the spatial distribution of ICL should follow the concentration of the DM halo (Montes & Trujillo 2019). This was not the case in our simulations, and we did not explore the effects of the mass and concentration parameters on our results. Our goal was to mimic the photometric aspect of galaxy clusters and their ICL, and not to determine the physical properties of their gravitational potential or the effect of these parameters on ICL.