| Issue | A&A, Volume 580, August 2015 |
|---|---|
| Article Number | A48 |
| Number of page(s) | 17 |
| Section | Numerical methods and codes |
| DOI | https://doi.org/10.1051/0004-6361/201425274 |
| Published online | 29 July 2015 |

The 3D MHD code GOEMHD3 for astrophysical plasmas with large Reynolds numbers

Code description, verification, and computational performance⋆

1 Max Planck Institute for Solar System Research, 37077 Göttingen, Germany

e-mail: skala@mps.mpg.de

2 Astronomical Institute of Czech Academy of Sciences, 25165 Ondřejov, Czech Republic

3 Rechenzentrum (RZG) der Max Planck Gesellschaft, Garching, Germany

4 University J. E. Purkinje, 40096 Ústí nad Labem, Czech Republic

Received: 4 November 2014

Accepted: 21 April 2015

Context. The numerical simulation of turbulence and flows in almost ideal astrophysical plasmas with large Reynolds numbers motivates the implementation of magnetohydrodynamical (MHD) computer codes with low resistivity. They need to be computationally efficient and scale well with large numbers of CPU cores, allow obtaining a high grid resolution over large simulation domains, and be easily and modularly extensible, for instance, to new initial and boundary conditions.

Aims. Our aims are the implementation, optimization, and verification of a computationally efficient, highly scalable, and easily extensible low-dissipative MHD simulation code for the numerical investigation of the dynamics of astrophysical plasmas with large Reynolds numbers in three dimensions (3D).

Methods. The new GOEMHD3 code discretizes the ideal part of the MHD equations using a fast and efficient leap-frog scheme that is second-order accurate in space and time and whose initial and boundary conditions can easily be modified. For the investigation of diffusive and dissipative processes the corresponding terms are discretized by a DuFort-Frankel scheme. To always fulfill the Courant-Friedrichs-Lewy stability criterion, the time step of the code is adapted dynamically. Numerically induced local oscillations are suppressed by explicit, externally controlled diffusion terms. Non-equidistant grids are implemented, which enhance the spatial resolution where needed. GOEMHD3 is parallelized based on the hybrid MPI-OpenMP programming paradigm, adopting a standard two-dimensional domain-decomposition approach.

Results. The ideal part of the equation solver is verified by performing numerical tests of the evolution of the well-understood Kelvin-Helmholtz instability and of Orszag-Tang vortices. The accuracy of solving the (resistive) induction equation is tested by simulating the decay of a cylindrical current column. Furthermore, we show that the computational performance of the code scales very efficiently with the number of processors up to tens of thousands of CPU cores. This excellent scalability of the code was obtained by simulating the 3D evolution of the solar corona above an active region (NOAA AR1249) for which GOEMHD3 revealed the energy distribution in the solar atmosphere in response to the energy influx from the chromosphere through the transition region, taking into account the weak Joule current dissipation and viscosity in the almost dissipationless solar corona.

Conclusions. The new massively parallel simulation code GOEMHD3 enables efficient and fast simulations of almost ideal astrophysical plasma flows with large Reynolds numbers, well resolved and on huge grids covering large domains. Its abilities are verified by a comprehensive set of tests of ideal and weakly dissipative plasma phenomena. The high-resolution ($2048^3$ grid points) simulation of a large part of the solar corona above an observed active region proves the excellent parallel scalability of the code up to more than 30 000 processor cores.

Key words: magnetohydrodynamics (MHD) / Sun: corona / Sun: magnetic fields

A movie associated to Fig. 21 is available in electronic form at http://www.aanda.org

© ESO, 2015

1. Introduction

For most astrophysical plasmas the viscosity and current dissipation (resistivity) are negligibly small, that is, astrophysical plasmas are nearly ideal, almost dissipationless, and hence, for relevant processes and scales, the characteristic Reynolds and Lundquist numbers are very large. This requires specific approaches to correctly take into account turbulence and different types of ideal and non-ideal interactions in the plasma flows such as shock waves, dynamo action, and magnetic reconnection (Birn & Priest 2007). Fortunately, improvements in computer technology as well as the development of efficient algorithms allow increasingly realistic numerical simulations of the underlying space plasma processes (Büchner et al. 2003). For the proper numerical description of nearly dissipationless astrophysical plasmas, for example, of magnetic reconnection (Büchner 2007a) and dynamo action, one needs to employ schemes with negligible numerical diffusion for magnetohydrodynamical (MHD) as well as kinetic plasma descriptions (Elkina & Büchner 2006). The schemes should be as simple as possible so that they can run quickly and efficiently. Moreover, to ensure flexibility concerning the particular physics problem under consideration, they should allow an easy modification of initial and boundary conditions as well as the simple addition and adjustment of physics modules. For this purpose, the serial second-order-accurate MHD simulation code LINMOD3D had been developed, to name just one. It was successfully applied to study the magnetic coupling between the solar photosphere and corona based on multiwavelength observations (Büchner et al. 2004b), to investigate the heating of the transition region of the solar atmosphere (Büchner et al. 2004a), and the acceleration of the fast solar wind by magnetic reconnection (Büchner & Nikutowski 2005a). 
It was also used to physically consistently describe the evolution of the solar chromospheric and coronal magnetic fields (Büchner & Nikutowski 2005b) and to compare solar reconnection with spacecraft telescope observations (Büchner 2007b), the electric currents around EUV bright points (Santos et al. 2008a), the role of magnetic null points in the solar corona (Santos et al. 2011b), and the triggering of flare eruptions (Santos et al. 2011a). Other typical applications of LINMOD3D were the investigation of the relative importance of compressional heating and current dissipation for the formation of coronal X-ray bright points (Javadi et al. 2011) and of the role of the helicity evolution for the dynamics of active regions (Yang et al. 2013). To investigate stronger magnetic field gradients in larger regions of the solar atmosphere, however, an enhanced spatial resolution is required. To a certain degree this was possible using the OpenMP parallelized code MPSCORONA3D, which can be run on large shared-memory parallel computing resources, to investigate the influence of the resistivity model on the solar coronal heating (Adamson et al. 2013), for instance.

Further challenging problems, however, such as the development and feedback of turbulence, high-resolution simulations of large spatial domains, the investigation of turbulent astrophysical plasmas with very large Reynolds numbers, and the consideration of subgrid-scale turbulence for large-scale plasma phenomena, require a much larger number of CPU cores than shared-memory systems can provide. Hence, message passing interface (MPI) parallelized MHD codes such as ATHENA¹, BATS-R-US², BIFROST, ENZO³, or PENCIL⁴ have to be used; these codes run on distributed-memory computers. The PENCIL code is accurate to sixth order in space and to third order in time. It uses centered spatial derivatives and a Runge-Kutta time-integration scheme. ENZO is a hybrid (MHD + N-body) code with adaptive mesh refinement, which uses a third-order piecewise parabolic method (Colella & Woodward 1984) with a two-shock approximate Riemann solver. ATHENA allows a static mesh refinement, implementing a higher order scheme and using a Godunov method on several different grid geometries (Cartesian, cylindrical). It employs third-order cell reconstructions and a Roe solver, Riemann solvers, and a split corner-transport upwind scheme (Colella 1990; Stone et al. 2008) with a constrained-transport method (Evans & Hawley 1988; Stone & Gardiner 2009). The BIFROST code is accurate to sixth order in space and to third order in time (Gudiksen et al. 2011). The code BATS-R-US solves the 3D MHD equations in finite-volume form using numerical methods related to Roe's approximate Riemann solver. It uses an adaptive grid composed of rectangular blocks arranged in varying degrees of spatial refinement levels. We note that all these codes are of an accuracy higher than second order. As a result, every time step is numerically expensive, and changes or modifications of initial and boundary conditions, for instance, require quite some effort. Conversely, second-order-accurate schemes are based on simpler numerics and efficient solvers. They are generally far easier to implement, modify, and parallelize, for example when different types of initial and boundary conditions are introduced. On modern computer architectures the desired numerical accuracy can rather easily and computationally cheaply be achieved by enhancing the grid resolution. This motivated us to base our new code GOEMHD3 on a simple second-order-accurate scheme that is relatively straightforward to implement and parallelize, which facilitates modification and extension. GOEMHD3 runs quickly and efficiently on different distributed-memory computers, from standard PC clusters to high-performance-computing (HPC) systems like the Hydra cluster of the Max Planck Society at the computing center (RZG) in Garching, Germany. To demonstrate the reach and limits of the code, GOEMHD3 was tested on standard problems and by simulating the response of the strongly height-stratified solar atmosphere based on photospheric observations using a large number of CPU cores.

In Sect. 2 we describe the basic equations that are solved by the code (Sect. 2.1), together with their discretization and numerical implementation (Sect. 2.2). In Sect. 2.3 we describe the hybrid MPI-OpenMP parallelization of GOEMHD3. The performance of the code was tested with respect to different ideal and non-ideal plasma processes (Sect. 3). All tests were carried out using the same 3D code. For quasi-2D simulations the number of grid points in the invariant direction was reduced to four, the minimum value required by the discretization scheme. Section 3.1 presents a test of the hydrodynamic part of the code by simulating the well-posed problem of a Kelvin-Helmholtz velocity shear instability using the methodology developed by McNally et al. (2012), because it was also applied to test higher-order codes such as PENCIL, ATHENA, and ENZO. In Sect. 3.2 the ideal MHD limit is tested by simulating vortices according to Orszag & Tang (1979). In the past, Kelvin-Helmholtz instability and Orszag-Tang vortex tests have also been used to verify total-variation-diminishing schemes (Ryu et al. 1995). The possibility of numerical oscillations caused by the finite-difference discretization was investigated as in Wu (2007). To verify the explicit consideration of dissipative processes by GOEMHD3, a current decay test was performed by suppressing other terms in the equations (Sect. 3.3). The effective numerical dissipation rate of the new code was assessed by a set of 1D Harris-like current sheet (e.g., Kliem et al. 2000) simulations (Sect. 3.4) and by a fully 3D application with solar-corona physics (Sect. 4.5). Section 4 presents an application of GOEMHD3 to the evolution of the solar corona in response to changing conditions at the lower boundary according to the photospheric plasma and magnetic field evolution, and we document the computational performance of the code. The paper is summarized and conclusions for the use of GOEMHD3 are drawn in Sect. 5.

2. Basic equations and numerical implementation

2.1. Resistive MHD equations

For a compressible, isotropic plasma, the resistive MHD equations in dimensionless form read

$$
\begin{align}
\frac{\partial\rho}{\partial t}+\nabla\cdot(\rho\boldsymbol u) &= \chi \nabla^2 \rho, \tag{1}\\
\frac{\partial(\rho \boldsymbol u)}{\partial t}+\nabla\cdot\left[\rho\boldsymbol u\boldsymbol u+\frac{1}{2}(p+B^2)\boldsymbol I-\boldsymbol B\boldsymbol B\right] &= -\nu\rho(\boldsymbol{u}-\boldsymbol{u}_0)+\chi\nabla^2 \rho\boldsymbol{u}, \tag{2}\\
\frac{\partial \boldsymbol B}{\partial t} &= \nabla\times(\boldsymbol u\times \boldsymbol B)-(\nabla \eta)\times\boldsymbol j+\eta\nabla^2 \boldsymbol B, \tag{3}\\
\frac{\partial h}{\partial t}+\nabla\cdot h\boldsymbol{u} &= \frac{(\gamma-1)}{\gamma h^{\gamma-1}}\eta\boldsymbol{j}^2+\chi \nabla^2 h, \tag{4}
\end{align}
$$

where the symbols ρ, u, h, and B denote the primary variables, density, velocity, specific entropy of the plasma, and the magnetic field, respectively. The symbol I is the 3 × 3 identity matrix. The resistivity of the plasma is given by η and the collision coefficient ν accounts for the coupling of the plasma to a neutral gas moved around with a velocity $\boldsymbol{u}_0$. The system of equations is closed by an equation of state. The entropy h is expressed via the scalar pressure p as $p = 2h^{\gamma}$. When the entropy is used as a variable instead of the internal energy (here adiabatic conditions are assumed, i.e., a ratio of the specific heats γ = 5/3), then Eq. (4) shows that in contrast to the internal energy, the entropy is conserved in the absence of Joule and viscous heating. Ampere's law j = ∇ × B allows eliminating the current density j. The terms proportional to χ in Eqs. (1), (2), and (4) are added for technical reasons, as explained in the next section (Sect. 2.2).

The variables are rendered dimensionless by choosing typical values for a length scale $L_0$, a normalizing density $\rho_0$, and a magnetic field strength $B_0$. To normalize the remaining variables and parameters, the following definitions are used: $p_0$ for a typical (magnetic) pressure, $u_{A0} = B_0/\sqrt{\mu_0\rho_0}$ for a typical (Alfvén) velocity, and $\tau_{A0}$ for the Alfvén crossing time over a distance $L_0$, that is, $\tau_{A0} = L_0/u_{A0}$. The current density is normalized by $j_0 = B_0/(\mu_0 L_0)$, the resistivity by $\eta_0 = \mu_0 L_0 u_{A0}$, and the energy by $W_0$. For simulations of the solar atmosphere, typical numerical values are $L_0 = 5000$ km, $\rho_0 = 2 \times 10^{15}$ m$^{-3}$, and $B_0 = 10^{-3}$ T, which yields $p_0 = 0.7958$ Pa, $u_{A0} = 487.7$ km s$^{-1}$, $\tau_{A0} = 10.25$ s, $j_0 = 1.59 \times 10^{-4}$ A m$^{-2}$, $\eta_0 = 3.06 \times 10^{6}$ Ω m, and $W_0 = 1.99 \times 10^{13}$ J for the normalizing energy.
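As a quick consistency check, most of the quoted normalization values can be recomputed from $L_0$, $\rho_0$, and $B_0$. The sketch below is only illustrative and rests on assumptions that are not stated in the text: SI units, a pure proton plasma (so that the mass density is the quoted number density times the proton mass), and $p_0 = B_0^2/\mu_0$, which is the choice that reproduces the quoted 0.7958 Pa. All variable names are hypothetical, and the normalizing energy is left out because its defining expression is not available here.

```python
import math

MU0 = 4.0e-7 * math.pi   # vacuum permeability [H/m]
M_P = 1.67262192e-27     # proton mass [kg]; assumes a pure proton plasma

L0 = 5.0e6               # length scale [m], i.e. 5000 km
n0 = 2.0e15              # normalizing number density [m^-3]
B0 = 1.0e-3              # magnetic field strength [T]

rho0 = n0 * M_P                      # mass density [kg/m^3]
p0 = B0**2 / MU0                     # assumed pressure unit [Pa]
uA0 = B0 / math.sqrt(MU0 * rho0)     # Alfven speed [m/s]
tauA0 = L0 / uA0                     # Alfven crossing time [s]
j0 = B0 / (MU0 * L0)                 # current density unit [A/m^2]
eta0 = MU0 * L0 * uA0                # resistivity unit [Ohm m]

print(f"p0    = {p0:.4f} Pa")
print(f"uA0   = {uA0 / 1e3:.1f} km/s")
print(f"tauA0 = {tauA0:.2f} s")
print(f"j0    = {j0:.3e} A/m^2")
print(f"eta0  = {eta0:.3e} Ohm m")
```

Under these assumptions the computed values agree with the numbers quoted above to the printed precision.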

2.2. Numerical implementation

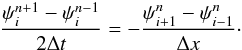

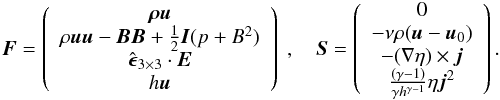

The resistive MHD equations (Eqs. (1)–(4)) are discretized on a 3D Cartesian grid employing a combination of a time-explicit leap-frog, a Lax, and a DuFort-Frankel finite-difference scheme (see Press et al. 2007). For the conservative, homogeneous part of the MHD equations, we adopted a second-order accurate leap-frog discretization scheme, Eq. (5). A first-order Lax method is used to start the integration from initial conditions, that is, to compute $\psi^{n}$ from the given initial values $\psi^{n-1}$, or upon a change of the time step Δt (see below).
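The interplay of the Lax start-up step and the leap-frog update can be illustrated on a much simpler model problem. The following is a minimal sketch, not the GOEMHD3 implementation: it assumes the 1D linear advection equation ∂ψ/∂t + c ∂ψ/∂x = 0 on a uniform periodic grid, and the function names and the Courant number `nu = c*dt/dx` are hypothetical.

```python
import numpy as np

def lax_step(psi, nu):
    """First-order Lax step, used once to obtain the second time level."""
    right, left = np.roll(psi, -1), np.roll(psi, 1)
    return 0.5 * (right + left) - 0.5 * nu * (right - left)

def leapfrog_step(psi_old, psi_now, nu):
    """Second-order leap-frog step: level n+1 from levels n-1 and n."""
    right, left = np.roll(psi_now, -1), np.roll(psi_now, 1)
    return psi_old - nu * (right - left)

def advect(psi0, nu, n_steps):
    """Advance psi0 by n_steps: one Lax step, then leap-frog steps."""
    psi_old = psi0.copy()
    psi_now = lax_step(psi_old, nu)
    for _ in range(n_steps - 1):
        psi_old, psi_now = psi_now, leapfrog_step(psi_old, psi_now, nu)
    return psi_now

n = 64
x = np.arange(n) / n
psi0 = np.sin(2.0 * np.pi * x)
nu = 0.5                      # Courant number c*dt/dx
n_steps = 128                 # 128 * 0.5 / 64 = 1 domain crossing for c = 1
psi_end = advect(psi0, nu, n_steps)
max_error = np.max(np.abs(psi_end - psi0))
print("max deviation after one period:", max_error)
```

After one full period the sine wave returns close to its initial state; the remaining deviation in this toy setup comes mainly from the dispersive phase error of the centered differences and from the single Lax step, not from amplitude damping.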

The advantage of the leap-frog scheme lies in its low numerical dissipation: in the derivation of the scheme all even derivative terms cancel out in the expansion, and a von Neumann stability analysis shows that there is no amplitude dissipation for the linearized system of MHD equations. The full nonlinear system in principle exhibits finite dissipation rates, corresponding to additional nonlinear terms in the von Neumann stability analysis. As we show in Sects. 3.4 and 4.5, the effective numerical dissipation rates found with GOEMHD3 are sufficiently low to enable simulations of almost ideal dissipationless magneto-fluids with very high Reynolds number (Re ~ $10^{10}$).

The disadvantage of the leap-frog scheme is that it is prone to generating oscillations. When such numerical oscillations arise, they must be damped by a locally switched-on diffusivity, for example, which prevents a steepening of gradients beyond those resolved by the grid. This also prevents mesh-drift instabilities of staggered leap-frog schemes that arise because odd and even mesh points are decoupled (see, e.g., Press et al. 2007 and Yee 1966).

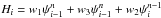

This general oscillation-damping diffusion is explicitly introduced via terms proportional to $\chi\nabla^2\rho$, $\chi\nabla^2\rho\boldsymbol{u}$, and $\chi\nabla^2 h$ in the right-hand sides of Eqs. (1), (2), and (4). Finite χ are switched on only when necessary for damping, as explained below. Hence, although a leap-frog scheme is by construction dissipationless (at least in the linear regime), we combined it with an explicit, externally controllable diffusion necessary to avoid oscillations. This in the end makes the scheme dissipative, but in a controlled way. To maintain second-order accuracy, the dissipative terms are discretized by a DuFort-Frankel scheme, which is also used to discretize the diffusion term in the induction Eq. (3):

$$
\psi_i^{n+1}=\psi_i^{n-1}+2\Delta t \left[w_1 \psi_{i-1}^n+w_3 \psi_{i+1}^n+\frac{1}{2}w_2\left( \psi_i^{n-1}+\psi_i^{n+1}\right)\right]. \tag{6}
$$

Here, $w_1$, $w_2$, and $w_3$ are the coefficients necessary for calculating the second-order derivatives on the nonequidistant mesh. The left grid spacing is denoted by $\Delta x_l = x_i - x_{i-1}$, the right by $\Delta x_r = x_{i+1} - x_i$, and the total by $\Delta x = \Delta x_l + \Delta x_r$.
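To make the role of the three weights concrete, the sketch below uses the standard three-point second-derivative coefficients for a nonequidistant mesh, $w_1 = 2/(\Delta x_l \Delta x)$, $w_2 = -2/(\Delta x_l \Delta x_r)$, and $w_3 = 2/(\Delta x_r \Delta x)$. These expressions are an assumption (the textbook choice), not a quotation of the code, and both function names are hypothetical. The update function simply solves Eq. (6) for $\psi_i^{n+1}$, which is possible without a matrix inversion because the unknown appears only at grid point i.

```python
def second_derivative_weights(x_left, x_mid, x_right):
    """Standard three-point weights for d2/dx2 on a nonuniform mesh (assumed)."""
    dxl = x_mid - x_left
    dxr = x_right - x_mid
    dx = dxl + dxr
    w1 = 2.0 / (dxl * dx)      # weight of psi_{i-1}
    w2 = -2.0 / (dxl * dxr)    # weight of psi_i
    w3 = 2.0 / (dxr * dx)      # weight of psi_{i+1}
    return w1, w2, w3

def dufort_frankel_update(psi_old_i, psi_now_left, psi_now_right, weights, dt):
    """Solve Eq. (6) for psi_i^{n+1}; psi_i^n itself is not needed."""
    w1, w2, w3 = weights
    rhs = psi_old_i * (1.0 + dt * w2) \
        + 2.0 * dt * (w1 * psi_now_left + w3 * psi_now_right)
    return rhs / (1.0 - dt * w2)

# Three unevenly spaced points
xl, xm, xr = 0.0, 0.1, 0.4
w = second_derivative_weights(xl, xm, xr)

# The weights differentiate a parabola exactly: d2(x^2)/dx2 = 2
d2_parabola = w[0] * xl**2 + w[1] * xm**2 + w[2] * xr**2

# A linear profile is a steady state of the diffusion equation
psi_new = dufort_frankel_update(xm, xl, xr, w, dt=0.05)
print(d2_parabola, psi_new)
```

Because $w_2$ is negative, the denominator $1 - \Delta t\, w_2$ is always larger than one, which is the origin of the favorable stability behavior of the DuFort-Frankel discretization.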

By combining the leap-frog (Eq. (5)) and the DuFort-Frankel (Eq. (6)) discretization schemes, we obtain

$$
\psi^{n+1}=\psi^{n-1}+\lambda\left[S^n + \sum_i \left( \chi_i H_i - {\rm d}x_i F_i^n\right) \right], \tag{7}
$$

with the fluxes $F_i$ and the source terms $S$ given by Eq. (8). The diffusion term is denoted by $H_i$, $\epsilon_{ijk}$ is the permutation pseudo-tensor, E = −u × B is the convection electric field, and ψ represents any one of the plasma variables ρ, ρu, B, and h. Equation (7) further uses the abbreviations $\lambda$, ${\rm d}t = 2\Delta t$, and ${\rm d}x_i$, and the index i represents the x, y, and z directions.

Note that two terms of the source vector S are not treated exactly according to this scheme: as a result of the staggered nature of the leap-frog scheme, the values of h at time level n are not available in the pressure equation. Similarly, for the induction equation, the gradient of the resistivity is needed at time level n. While in the former case, hn can simply be approximated by averaging over the neighboring grid points, now the gradient ∇ηn is extrapolated from the previous time level n−1, assuming that the arising numerical error is small for a resistivity that is reasonably smoothed both in space and in time. The resistivity is smoothed in time if a time-dependent resistivity model is used. GOEMHD3 is meant to describe collisionless astrophysical plasmas of the solar corona, for instance, where resistivity is physically caused by micro-turbulence. Since it is not possible to describe kinetic processes like micro-turbulence in the framework of an MHD fluid-model, different types of switch-on resistivity models are implemented in GOEMHD3 to mimic a kinetic-scale current dissipation at the macro scales. The criteria controlling the switch-on of resistivity usually localize the resistivity increase. This allows reaching the observed magnetic reconnection rates, for example. Current dissipation is expected to be most prominent in regions of enhanced current densities where the use of smooth resistivity models is appropriate.

As noted before, the numerical oscillations are damped by switching on diffusion. As soon as the value of ψ exhibits two or more local extrema (either maxima or minima) in any of the three coordinate directions, the diffusion coefficient χ is given a finite value at the given grid point and its next neighbors; as an example, we chose χ ≃ $10^{-2}$. The three directions (x, y, and z) are considered separately, and the diffusion term is switched on locally only in the direction in which at least two extrema are found.
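The switch-on criterion can be sketched for a single coordinate direction as follows. This is a hedged illustration only: the width of the window in which extrema are counted is not specified in the text, so a window of three candidate points around each grid point is assumed here, and the function name `switch_on_diffusion` and the parameter `chi0` are hypothetical.

```python
import numpy as np

def switch_on_diffusion(psi, chi0=1e-2):
    """Return a per-point diffusion coefficient for one coordinate direction.

    A point j is a local extremum if the differences to its left and
    right neighbours have opposite signs. If two or more extrema occur
    among the points i-1, i, i+1 (assumed window), chi is set to chi0 at
    point i and at its next neighbours.
    """
    psi = np.asarray(psi, dtype=float)
    diff = np.diff(psi)
    is_extremum = np.zeros(psi.size, dtype=bool)
    is_extremum[1:-1] = diff[:-1] * diff[1:] < 0.0

    chi = np.zeros_like(psi)
    for i in range(2, psi.size - 2):
        if is_extremum[i - 1:i + 2].sum() >= 2:
            chi[i - 1:i + 2] = chi0
    return chi

# A smooth ramp needs no damping ...
ramp = np.linspace(0.0, 1.0, 20)
chi_smooth = switch_on_diffusion(ramp)

# ... while a grid-scale zigzag on top of it does.
zigzag = ramp.copy()
zigzag[10] += 0.5
zigzag[11] -= 0.5
chi_zigzag = switch_on_diffusion(zigzag)
print(np.nonzero(chi_zigzag)[0])
```

In a 3D code the same test would be applied independently along x, y, and z, so that diffusion acts only in the direction in which the oscillation is detected.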

For solar applications it is possible to start GOEMHD3 with initially force-free magnetic fields. These magnetic fields are obtained by a numerical extrapolation of the observed photospheric magnetic field. To improve the accuracy for strong initial magnetic fields, the current density is evaluated by calculating $\boldsymbol{j} = \nabla \times (\boldsymbol{B}-\boldsymbol{B}_{\rm init})$, that is, for a field from which the initial magnetic field $\boldsymbol{B}_{\rm init}$ is subtracted. This reduces the error arising from the discretization of the magnetic field. In this case, the current density is explicitly used to solve the momentum equation, which has the form

$$
\frac{\partial\rho \boldsymbol u}{\partial t}+\nabla\cdot\left[\rho\boldsymbol u\boldsymbol u+\frac{1}{2}p\boldsymbol I \right] - \boldsymbol j \times \boldsymbol B = -\nu\rho(\boldsymbol{u}-\boldsymbol{u}_0)+\chi \nabla^2 \rho\boldsymbol{u}. \tag{9}
$$

For this case, GOEMHD3 can alternatively solve the momentum equation Eq. (9) instead of Eq. (2).

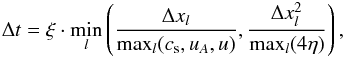

Time step control.

The time-explicit discretization entails a time-step limit according to the Courant-Friedrichs-Lewy (CFL) condition, which basically requires that during a time step no information is propagated beyond a single cell of the numerical grid. To this end, the minimum value of the sound, Alfvén, and fluid crossing times, and similarly for the resistive time scale, is determined for every grid cell (Eq. (10)), using the local values of the sound speed, the Alfvén speed, and the macroscopic velocity u = |u| at the grid position l. Typically, a value of 0.2 is chosen for the constant safety factor ξ ∈ (0,1).
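A time-step estimate of this kind can be sketched as below. The exact form of Eq. (10) is not reproduced here, so the sketch assumes the simplest reading of the description: per cell, the smallest of the three crossing times Δx/c and of a resistive time Δx²/η, minimized over the grid and multiplied by the safety factor ξ. The function name, the argument names, and the omission of any numerical prefactor in the resistive time are assumptions.

```python
import numpy as np

def cfl_time_step(dx, c_sound, v_alfven, u, eta, xi=0.2):
    """Global time step from per-cell crossing and resistive times (sketch)."""
    tiny = 1e-300  # guards against division by zero in quiescent cells
    # min(dx/c_s, dx/v_A, dx/|u|) equals dx divided by the fastest speed
    fastest = np.maximum(np.maximum(c_sound, v_alfven),
                         np.maximum(np.abs(u), tiny))
    t_cross = dx / fastest
    t_resistive = dx**2 / np.maximum(eta, tiny)
    return xi * min(t_cross.min(), t_resistive.min())

# Two cells with different spacing and different limiting speeds
dx = np.array([0.1, 0.2])
c_sound = np.array([1.0, 1.0])
v_alfven = np.array([2.0, 0.5])
u = np.array([0.0, -4.0])
eta = np.array([1e-3, 1e-3])

dt = cfl_time_step(dx, c_sound, v_alfven, u, eta)
print(dt)
```

In a domain-decomposed run, the minimum over all cells is the one quantity that requires a global reduction across processes.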

In our simulations the time step Δt was changed only after several (usually at least about ten) time steps, which avoids interleaving a necessary Lax integration step too frequently and hence compromising the overall second-order accuracy of the leap-frog scheme. To prevent an unlimited decrease of the time step, limiting values of at least 10% of the initial values of the density and 1% of the entropy h, for example, and $u < 3u_A$ were enforced. The values at the corresponding grid points were reset to the corresponding cut-off value, and the values at the surrounding grid points were smoothed by averaging over the neighboring grid points.

Divergence cleaning.

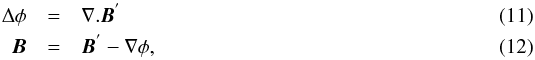

As a result of discretization errors, unphysical finite divergences of the magnetic field may arise. To remove such finite values of ∇B,weapplied the following cleaning method that solves a Poisson equation for the magnetic potential φ:  where B′ is the magnetic field with a finite divergence, and B is the cleaned magnetic field. With central differences dx = 1/(xi + 1−xi−1), and similarly for the other coordinate directions, which are suppressed here for brevity, the Poisson equation Eq. (11) is discretized as

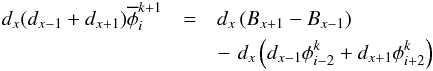

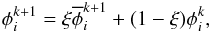

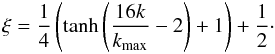

where B′ is the magnetic field with a finite divergence, and B is the cleaned magnetic field. With central differences dx = 1/(xi + 1−xi−1), and similarly for the other coordinate directions, which are suppressed here for brevity, the Poisson equation Eq. (11) is discretized as  (13)and is solved with a simple fix-point iteration, where k denotes the iteration step. For faster convergence we used a standard relaxation method,

(13)and is solved with a simple fix-point iteration, where k denotes the iteration step. For faster convergence we used a standard relaxation method,  (14)where the relaxation coefficient ξ depends on the iteration k as

(14)where the relaxation coefficient ξ depends on the iteration k as  (15)

(15)
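The idea of the cleaning step can be illustrated with a generic projection method. The sketch below is not the GOEMHD3 discretization: it assumes the common convention ∇²φ = ∇·B′ followed by B = B′ − ∇φ, a uniform periodic 2D grid, a compact five-point Laplacian, and a damped Jacobi fixed-point iteration with a constant relaxation coefficient `omega` (the iteration-dependent coefficient of the text is not reproduced). All function names are hypothetical.

```python
import numpy as np

def ddx(f, h):
    """Central difference along axis 0 on a periodic grid."""
    return (np.roll(f, -1, axis=0) - np.roll(f, 1, axis=0)) / (2.0 * h)

def ddy(f, h):
    """Central difference along axis 1 on a periodic grid."""
    return (np.roll(f, -1, axis=1) - np.roll(f, 1, axis=1)) / (2.0 * h)

def divergence(bx, by, h):
    return ddx(bx, h) + ddy(by, h)

def clean_divergence(bx, by, h, n_iter=1000, omega=0.8):
    """Projection cleaning: relax laplace(phi) = div(B'), return B' - grad(phi)."""
    rhs = divergence(bx, by, h)
    phi = np.zeros_like(rhs)
    for _ in range(n_iter):
        neighbours = (np.roll(phi, 1, axis=0) + np.roll(phi, -1, axis=0)
                      + np.roll(phi, 1, axis=1) + np.roll(phi, -1, axis=1))
        phi_jacobi = 0.25 * (neighbours - h * h * rhs)
        phi = (1.0 - omega) * phi + omega * phi_jacobi   # damped update
    return bx - ddx(phi, h), by - ddy(phi, h)

n = 32
h = 1.0 / n
x, y = np.meshgrid(np.arange(n) * h, np.arange(n) * h, indexing="ij")
# Uniform field plus a pure gradient part, i.e. a field with finite divergence
bx = 1.0 + 2.0 * np.pi * np.cos(2.0 * np.pi * x) * np.cos(2.0 * np.pi * y)
by = -2.0 * np.pi * np.sin(2.0 * np.pi * x) * np.sin(2.0 * np.pi * y)

div_before = np.abs(divergence(bx, by, h)).max()
bx_clean, by_clean = clean_divergence(bx, by, h)
div_after = np.abs(divergence(bx_clean, by_clean, h)).max()
print(div_before, div_after)
```

In this toy setup the divergence drops by roughly two orders of magnitude but not to round-off, because the compact Laplacian and the central-difference divergence and gradient operators are consistent only up to truncation error.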

2.3. Hybrid MPI-OpenMP parallelization

The time-explicit discretization scheme described above can be straightforwardly parallelized using a domain decomposition approach and introducing halo regions (ghost zones) of width 1, corresponding to an effective stencil length of 3 in each of the coordinate directions. Accordingly, only next-neighbor communication and a single global reduction operation (for computing the time step, cf. Eq. (10)) are necessary for exchanging data between the domains. To be specific, GOEMHD3 employs a 2D domain decomposition in the y-z plane with width-1 halo exchange, using MPI. Within the individual pencil-shaped domains, a shared-memory parallelization is implemented using OpenMP. The hybrid MPI-OpenMP approach first integrates smoothly with the existing structure of the serial code, and second, thanks to a very efficient OpenMP parallelization within the domains, allows using a sufficiently large number of processor cores, given typical sizes of the numerical grid ranging between $256^3$ and $2048^3$ points. In addition, the hybrid parallelization helps to maximize the size (i.e., volume in physical space) of the individual MPI domains, and hence to minimize the surface-to-volume ratio. The latter translates into a lower communication-to-computation ratio and hence relatively shorter communication times, and the former accounts for longer MPI messages and hence decreases communication overhead (latency). Our parallelization assumes the individual MPI domains to be of equal size (but not necessarily with a square cross section in the y-z plane). This greatly facilitates the technical handling of the extrapolations required by so-called line-symmetric side-boundary conditions (Otto et al. 2007), which are often employed in realistic solar corona simulations. As a side effect, this restriction a priori avoids load-imbalances due to an otherwise non-uniform distribution of the processor workload.
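The surface-to-volume argument can be made quantitative with a small estimate. The sketch below counts, for one pencil-shaped domain of a y-z decomposition, the width-1 halo cells on its four side faces relative to its interior cells (edge cells are ignored). The process grids in the example are hypothetical: they compare roughly 32 768 cores used as plain MPI ranks with the same core count used as 2048 ranks of 16 OpenMP threads each, and are not taken from the measurements reported in the paper.

```python
def halo_to_volume_ratio(grid, py, pz):
    """Halo cells per interior cell for one pencil of a y-z decomposition."""
    nx, ny, nz = grid
    if ny % py or nz % pz:
        raise ValueError("grid must divide evenly into equal-sized domains")
    ly, lz = ny // py, nz // pz          # local extent in y and z
    interior = nx * ly * lz
    halo = 2 * nx * (ly + lz)            # two faces each in y and in z
    return halo / interior

grid = (2048, 2048, 2048)
plain_mpi = halo_to_volume_ratio(grid, 128, 256)   # 32768 ranks
hybrid = halo_to_volume_ratio(grid, 32, 64)        # 2048 ranks x 16 threads
print(plain_mpi, hybrid)
```

With these assumed layouts the hybrid decomposition exchanges four times fewer halo cells per interior cell, and each message is four times longer, which is the effect described in the paragraph above.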

Overall, as we demonstrate below (cf. Sect. 4.3), GOEMHD3 achieves very good parallel efficiency over a wide range of processor counts and sizes of the numerical grid, with the hybrid parallelization outperforming a plain MPI-based strategy at high core counts.

3. Test problems

To assess the stability, the convergence properties, and the numerical accuracy of the new GOEMHD3 code, we simulated the standard test problems of the Kelvin-Helmholtz instability and the Orszag-Tang vortex, performed a test (Skála & Bárta 2012) of the resistive MHD properties of the code, estimated the effective numerical dissipation in the nonlinear regime using a Harris-like current sheet, and compared our results with numerical and analytical reference solutions. All tests are 2D problems in the space coordinates. To perform these 2D simulations with our 3D code, the x-direction was considered invariant, and the numerical grid in this direction covered four points (minimum required by the code).

3.1. Kelvin-Helmholtz instability

The properties of the hydrodynamic limit of the GOEMHD3 code were verified by simulating the nonlinear evolution of the Kelvin-Helmholtz instability (KHI) in two dimensions. This is a well-known standard test of numerical schemes that solve the equations of hydrodynamics (see, e.g., McNally et al. 2012). The KHI is caused by a velocity shear. At its nonlinear stage, it leads to the formation of large-scale vortices. The time evolution of the size and growth rate of the vortices can be followed and compared with reference solutions obtained by other numerical schemes. We verified GOEMHD3 by closely following McNally et al. (2012). These authors established a standard methodology for the KHI test and published, and provided to us, their fiducial reference solutions, which were obtained with the PENCIL code on high-resolution grids with up to $4096^2$ grid points. To avoid problems of resolving sharp discontinuities that arise in some numerical schemes, McNally et al. (2012) proposed a test setup with smooth initial conditions as introduced by Robertson et al. (2010). For the two spatial coordinates 0 < y < 1 and 0 < z < 1, the initial conditions are therefore

$$
\zeta=\left\{ \begin{array}{ll}
\zeta_1-\zeta_m \exp\left( \frac{z-1/4}{L}\right) & \mathrm{if}\,1/4>z\geq0 \\[1mm]
\zeta_2+\zeta_m \exp\left( \frac{1/4-z}{L}\right) & \mathrm{if}\,1/2>z\geq1/4 \\[1mm]
\zeta_2+\zeta_m \exp\left( \frac{z-3/4}{L}\right) & \mathrm{if}\,3/4>z\geq1/2 \\[1mm]
\zeta_1-\zeta_m \exp\left( \frac{3/4-z}{L}\right) & \mathrm{if}\,1>z\geq3/4
\end{array} \right. , \tag{16}
$$

where ζ either denotes the density ρ or the velocity $u_y$, and $\zeta_m = (\zeta_1 - \zeta_2)/2$, which makes the profile continuous at z = 1/4 and z = 3/4. To trigger the instability, a small perturbation $u_z = 0.01\sin(4\pi y)$ was imposed on the velocity in the z-direction, while the initial pressure was assumed to be uniform in space: p = 5. Periodic boundary conditions were imposed. According to the stability requirements of our code, we imposed diffusion quantified by a coefficient $\chi = 4 \times 10^{-5}$ in Eqs. (1), (2), and (4).
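The smooth initial profile of Eq. (16) is straightforward to set up. The following sketch assumes $\zeta_m = (\zeta_1 - \zeta_2)/2$, the choice for which the four branches join continuously, and uses the parameter values ρ₁ = 1, ρ₂ = 2, u₁ = 0.5, u₂ = −0.5, and L = 0.025 of the McNally et al. (2012) setup; these numbers are not given in the text above and should be read as assumptions, as should the function name `khi_profile`.

```python
import numpy as np

def khi_profile(z, zeta1, zeta2, L=0.025):
    """Smooth shear-layer profile of Eq. (16) for 0 <= z < 1."""
    z = np.asarray(z, dtype=float)
    zeta_m = 0.5 * (zeta1 - zeta2)       # assumed: makes the profile continuous
    b1 = zeta1 - zeta_m * np.exp((z - 0.25) / L)
    b2 = zeta2 + zeta_m * np.exp((0.25 - z) / L)
    b3 = zeta2 + zeta_m * np.exp((z - 0.75) / L)
    b4 = zeta1 - zeta_m * np.exp((0.75 - z) / L)
    return np.where(z < 0.25, b1,
           np.where(z < 0.5, b2,
           np.where(z < 0.75, b3, b4)))

n = 256
y, z = np.meshgrid(np.arange(n) / n, np.arange(n) / n, indexing="ij")

rho = khi_profile(z, 1.0, 2.0)            # density
u_y = khi_profile(z, 0.5, -0.5)           # shear flow along y
u_z = 0.01 * np.sin(4.0 * np.pi * y)      # seed perturbation
p = np.full_like(rho, 5.0)                # uniform initial pressure

print(rho.min(), rho.max(), float(khi_profile(0.25, 1.0, 2.0)))
```

The density then varies smoothly between about 1 and 2 across two shear layers of width L centered at z = 1/4 and z = 3/4, so that no discontinuity has to be resolved by the grid.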

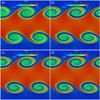

Figure 1 shows snapshots of the fluid density at time t = 2.5 as computed by GOEMHD3 at numerical resolutions ranging from 128² (panel a) to 1024² (panel d) grid points. The familiar Kelvin-Helmholtz patterns are clearly recognizable and compare qualitatively well with published structures (e.g., Robertson et al. 2010; Springel 2010). For lower resolutions we observe somewhat smoother edges of the primary Kelvin-Helmholtz billows, which is due to the higher effective numerical diffusivity caused by the smoothing scheme of GOEMHD3 (cf. Sect. 2). For the higher resolutions of 512² (panel c) and 1024² (panel d), secondary billows develop in the primary billows. As McNally et al. (2012) pointed out, these secondary billows are artifacts caused by numerical grid noise.

Fig. 1 Color-coded mass density, ρ(y,z), at time t = 2.5 for the Kelvin-Helmholtz test problem. Panels a)–d) show the GOEMHD3 results for a numerical resolution of 128², 256², 512², and 1024², respectively.

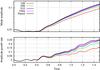

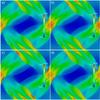

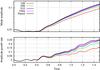

For a quantitative verification of GOEMHD3, we computed the time evolution of different variables introduced and defined by McNally et al. (2012). First we calculated the y-velocity mode amplitude Ay according to Eqs. (6) to (9) in McNally et al. (2012), its growth rate Ȧy, and the spatial maximum of the kinetic energy density of the motion in the y-direction, Ey. We furthermore calculated the relative error of the GOEMHD3 results with respect to the reference solution of McNally et al. (2012), who used the PENCIL code with a grid resolution of 4096² points. Finally, we calculated convergence quantities as defined by Roache (1998) for GOEMHD3. The results of these calculations are shown in Figs. 2–5.

|

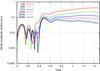

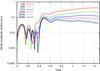

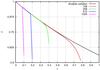

Fig. 2 Time evolution of the y-velocity mode amplitude Ay (top panel) and of its growth rate Ȧy (bottom panel) in the course of the Kelvin-Helmholtz test. The results obtained by GOEMHD3 for different spatial resolutions are color-coded according to the legend. The black line corresponds to the result obtained by a PENCIL code run using a grid resolution of 40962 (McNally et al. 2012). |

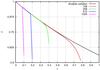

First, Fig. 2 shows that both the y-velocity mode amplitude Ay of the KHI (upper panel) and its growth rate (lower panel) converge well with increasing numerical resolution of GOEMHD3. They also agree very well overall with the reference solution obtained with the PENCIL code. A closer look reveals, however, that while the initial evolution of Ay closely resembles the reference solution at high as well as at lower resolution, at later times a resolution of at least 512² is needed to match the PENCIL code results. The velocity mode growth rate Ȧy and the maximum of the kinetic energy density of the motion in the y-direction Ey behave similarly, as Fig. 3 shows. While GOEMHD3 initially follows the reference solution at all tested resolutions, at later times the GOEMHD3 results at lower resolutions fall slightly below the result obtained by the PENCIL code.

Fig. 3 Time evolution of the highest kinetic energy density

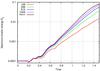

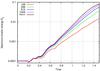

Furthermore, we benchmarked GOEMHD3 by comparing it with the KHI test results obtained by the PENCIL code for the same initial conditions. We quantified the comparison by calculating the relative error |εA| of the mode amplitude $A_y^{\rm G}$ obtained by GOEMHD3 with respect to the corresponding values $A_y^{\rm P}$ obtained by a PENCIL code run with 4096² grid points, which serves as the reference:

$$
|\varepsilon_A| = \left| \frac{A_y^{\rm G} - A_y^{\rm P}}{A_y^{\rm P}} \right| . \tag{17}
$$

For the whole time evolution of the KHI until t = 1.5 (the last value available from McNally et al. 2012), Fig. 4 shows the relative errors of the GOEMHD3 results compared to the benchmark solution that was obtained with the PENCIL code using 4096² grid points. The relative error decreases from 30% if GOEMHD3 uses 128² grid points to less than 4% if GOEMHD3 uses the same resolution of 4096² grid points as the PENCIL code. This is a very good result given that GOEMHD3 uses a numerically much less expensive second-order accurate scheme compared to the sixth-order scheme used in the PENCIL code.
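
As a small illustration of this comparison, the relative error of a mode-amplitude time series with respect to a reference series can be computed as follows. This is a sketch, not part of GOEMHD3 or PENCIL. It assumes the relative error has the form |(A_test − A_ref)/A_ref| and that both series are sampled at the same output times. The function name and the sample numbers are hypothetical.

```python
def relative_error(a_test, a_ref):
    """Pointwise relative error |(a_test - a_ref) / a_ref| of two time series.

    Both sequences must be sampled at the same output times.
    """
    if len(a_test) != len(a_ref):
        raise ValueError("series must have the same length")
    errors = []
    for test_value, ref_value in zip(a_test, a_ref):
        if ref_value == 0.0:
            raise ValueError("reference amplitude must be nonzero")
        errors.append(abs((test_value - ref_value) / ref_value))
    return errors

# Hypothetical amplitudes at three output times (not data from the paper).
reference = [0.010, 0.020, 0.040]
coarse_run = [0.011, 0.019, 0.030]
worst_case = max(relative_error(coarse_run, reference))
```

Taking the maximum over time gives a single conservative number per resolution, which is convenient when several runs are compared.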

Fig. 4 Time evolution of the relative error |εA| of the mode amplitude obtained for the Kelvin-Helmholtz test using GOEMHD3 compared to those obtained by the PENCIL code (McNally et al. 2012) for different numbers of grid points (color coded).

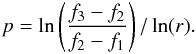

Now, we investigate how the mode amplitude converges with increasing mesh resolution and establish its uncertainty. The convergence assessment is based on the generalized Richardson extrapolation method, which allows extracting the convergence rate from simulations performed at three different grid resolutions with a constant refinement ratio (Roache 1998):

$$
p = \ln\left( \frac{f_3 - f_2}{f_2 - f_1} \right) \Big/ \ln r . \tag{18}
$$

Here, r = 2 is the mesh refinement ratio and f1, f2, and f3 are the mode amplitudes for the fine, medium, and coarse mesh, respectively. From the convergence rate p we can calculate the grid convergence index (GCI, Roache 1998), which indicates the uncertainty that remains because of the finite grid resolution:

$$
\mathrm{GCI} = F_s \frac{|\varepsilon|}{r^p - 1} , \tag{19}
$$

where ε = (f2 − f1)/f1 is a relative error and Fs = 1.25 is a safety factor. Following Roache (1998), we used this value for grid convergence studies that compare three or more different resolutions. Figure 5 shows the time evolution of the grid convergence rate for the mode amplitude (upper panel) and the GCI corresponding to the finest resolution (lower panel). The convergence order of the GOEMHD3 runs lies in the range 0.8 to 1.5. A convergence order p of about 1.5 for GOEMHD3, a second-order accurate code, is a very good result compared to convergence orders of about 2 obtained by higher (e.g., sixth-) order accurate schemes like PENCIL. At the same time, the mode amplitude uncertainty GCI for the highest resolution always remains lower than 0.008.
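
The convergence analysis can be sketched as follows. This is a minimal sketch that assumes the standard forms given by Roache (1998), p = ln[(f3 − f2)/(f2 − f1)]/ln r and GCI = Fs |ε|/(r^p − 1) with ε = (f2 − f1)/f1. The function names are hypothetical, and the sample values are synthetic rather than simulation output.

```python
import math

def convergence_order(f_fine, f_medium, f_coarse, r=2.0):
    """Observed order p from three solutions with constant refinement ratio r."""
    if f_medium == f_fine:
        raise ValueError("fine and medium solutions coincide")
    ratio = (f_coarse - f_medium) / (f_medium - f_fine)
    if ratio <= 0.0:
        # The three solutions do not converge monotonically; p is undefined.
        raise ValueError("non-monotonic convergence")
    return math.log(ratio) / math.log(r)

def grid_convergence_index(f_fine, f_medium, p, r=2.0, safety=1.25):
    """GCI of the fine-grid solution, with safety factor Fs = 1.25 by default."""
    eps = (f_medium - f_fine) / f_fine
    return safety * abs(eps) / (r ** p - 1.0)

# Synthetic test data: f(h) = 1 + 0.01 h^2 at h = 1, 2, 4 (exactly second order).
f1, f2, f3 = 1.01, 1.04, 1.16
p = convergence_order(f1, f2, f3)
gci = grid_convergence_index(f1, f2, p)
```

For the synthetic data the recovered order is 2 up to rounding, which is a quick check that the three solutions are passed in the fine, medium, coarse order the formula expects.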

Fig. 5 Time evolution of the grid convergence rate (top panel) of the mode amplitude in dependence on the spatial resolution given in the legend and of the grid convergence index GCI (bottom panel) of the mode amplitude uncertainty for the highest resolution.

The differences between the results obtained by GOEMHD3 and PENCIL at later times originate from the different role of diffusivity in the codes. The leap-frog scheme implemented in GOEMHD3 is intrinsically non-diffusive, and initially the code does not switch on explicit diffusion either, because no strong gradients are present that would cause numerical oscillations. Hence the initial (linear) phase of the Kelvin-Helmholtz instability is well described by GOEMHD3, since it does not need additional smoothing at this stage. However, secondary billows develop earlier in the GOEMHD3 KHI test simulation than in PENCIL code simulations (see Fig. 1 and also Fig. 12 of McNally et al. 2012). This is due to the explicit diffusion that GOEMHD3 switches on to smooth the steep gradients that develop during the turbulent phase of the KHI. As a result, in the initial phase, when it is not diffusive at all, GOEMHD3 shows the same Kelvin-Helmholtz instability growth as the reference even though it is only second-order accurate. Later, however, at the nonlinear stage of the KHI, the explicit diffusion used in GOEMHD3 for smoothing exceeds the diffusion level of the sixth-order accurate PENCIL code.

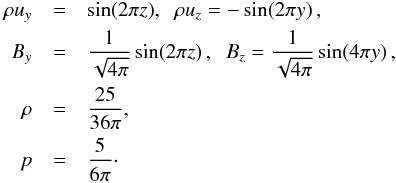

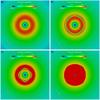

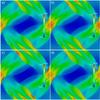

3.2. Orszag-Tang test

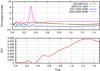

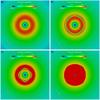

The ideal-MHD limit of the GOEMHD3 code was tested by simulating an Orszag-Tang vortex setup in two dimensions (Orszag & Tang 1979). The initial state consists of periodic velocity and magnetic fields, a constant mass density, and a pressure distribution, as specified by Eq. (20). Hence both the velocity and magnetic fields contain X-points, where the fields vanish. In the y-direction the modal structure of the magnetic field differs from the velocity field structure. The simulation box size is [−0.5, 0.5] × [−0.5, 0.5]. All boundary conditions are periodic. The coefficients χ of the smoothing diffusion terms are chosen to be 2 × 10⁻⁴, 1 × 10⁻⁴, 5 × 10⁻⁵, and 2 × 10⁻⁵ for meshes with 128², 256², 512², and 1024² grid points, respectively. As expected, GOEMHD3 reproduces purely growing vortices including sharp gradients, with structures and dynamics that resemble the results obtained by Ryu et al. (1995) and Dai & Woodward (1998). To show an example, Fig. 6 depicts the mass density distribution at t = 0.25. Panels a–d of Fig. 6 correspond to mesh resolutions of 128², 256², 512², and 1024² grid points, respectively. Low-density regions are color-coded in blue, higher density values are plotted in red. Similar structures containing sharp gradients (shocks) were also obtained by ATHENA 4.2, for instance⁵, and by our least-squares finite element code (Skála & Bárta 2012). Note that, owing to its flux-conservative discretization scheme, GOEMHD3 is able to accurately reproduce the position of shock fronts (cf. Fig. 7).

Fig. 6 Mass density distribution obtained at t = 0.25 for the 2D Orszag-Tang test. Panels a)–d) correspond to a mesh resolution of 128², 256², 512², and 1024² grid points, respectively.

Fig. 7 Thermal plasma pressure (panels a), e)), magnetic pressure (panels b), f)), vorticity ∇ × u (panels c), g)) and current density ∇ × B (panels d), h)) obtained for the 2D Orszag-Tang test by GOEMHD3 (top row) and ATHENA 4.2 (bottom row) for a grid resolution of 256² at t = 0.5.

The convergence properties of GOEMHD3 are illustrated by the relative difference ερ = (ρ2 − ρ1)/ρ1 between the mass density distributions obtained from runs with lower and higher mesh resolution. Here ρ1 corresponds to the mass density distribution obtained for the higher mesh resolution and ρ2 to that of the coarser grid. In particular, Fig. 8 shows the spatial distribution of the relative differences obtained at t = 0.5 for runs with doubled numbers of grid points: from 128² to 256², from 256² to 512², and from 512² to 1024² in panels a) to c), respectively. Figure 8 shows that the largest relative differences ερ of the mass density are localized in regions of strong gradients (shock fronts), while they do not extend into regions of smooth flows.
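
A pointwise comparison of this kind can be sketched as follows. The text does not state how the two grids are matched. The sketch assumes nested grids, in which every second point of the finer grid coincides with a point of the coarser grid, and evaluates |ερ| on the coarse grid. The function name and the tiny sample arrays are hypothetical.

```python
def relative_difference(rho_fine, rho_coarse):
    """|(rho_coarse - rho_fine) / rho_fine| evaluated at the coarse grid points.

    Assumption: the fine grid has twice as many points per direction and its
    points with even indices coincide with the coarse grid points.
    """
    n_rows = len(rho_coarse)
    if len(rho_fine) != 2 * n_rows:
        raise ValueError("fine grid must have twice as many rows")
    result = []
    for j in range(n_rows):
        n_cols = len(rho_coarse[j])
        if len(rho_fine[2 * j]) != 2 * n_cols:
            raise ValueError("fine grid must have twice as many columns")
        row = []
        for k in range(n_cols):
            fine_value = rho_fine[2 * j][2 * k]
            row.append(abs((rho_coarse[j][k] - fine_value) / fine_value))
        result.append(row)
    return result

# Hypothetical 2 x 2 coarse and 4 x 4 fine density fields.
coarse = [[1.0, 2.0],
          [3.0, 4.0]]
fine = [[1.0, 1.5, 2.0, 1.5],
        [1.5, 2.0, 2.5, 2.0],
        [2.0, 2.5, 4.0, 2.5],
        [1.5, 2.0, 2.5, 2.0]]
eps_rho = relative_difference(fine, coarse)
```

If the grids are not nested (for example, for cell-centered data), the fine solution would first have to be averaged or interpolated onto the coarse points, which this sketch does not attempt.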

Fig. 8 Spatial distribution of the relative difference |ερ| of the mass density obtained by GOEMHD3 simulating the Orszag-Tang vortex problem comparing the results for three different mesh resolutions of a) 128²–256²; b) 256²–512²; and c) 512²–1024² grid points.

To directly compare the Orszag-Tang test of GOEMHD3 with the results of another astrophysical MHD code, we ran the same test using the ATHENA code in its version 4.2. For this, we employed the same setup as described before, a Courant safety constant C = 0.5, and a resolution of 256² grid points.

Figure 7 compares the Orszag-Tang test simulation results of GOEMHD3 (top row) with those obtained with the ATHENA 4.2 code (bottom row). The figure depicts the two-dimensional spatial distribution of the thermal pressure (panels a, e), of the magnetic pressure (panels b, f), of the vorticity ∇ × u (panels c, g), and of the current density proportional to ∇ × B (panels d, h) obtained at t = 0.50 for a mesh resolution of 256² grid points. Figure 7 clearly shows that the thermal pressure (panels a and e) and the magnetic pressure (panels b and f) are anticorrelated everywhere except in the post-shock flows. The comparison shows that the numerically much less expensive code GOEMHD3 reproduces the ATHENA results and only leaves slightly shallower gradients, because weak diffusion is added to smooth gradients and keep the simulation stable.

As discussed above, GOEMHD3 switches on a finite diffusion to smooth numerically caused oscillations that may arise as a result of using a leap-frog discretization scheme. In addition, GOEMHD3 limits mass density and pressure to externally prescribed minimum values to avoid excessively high information propagation speeds (sound and Alfvén), which would require very short time steps to fulfill the Courant-Friedrichs-Lewy condition. At the same time, the values of mass density and pressure in the neighboring zones of the grid are locally smoothed towards the minimum value. Of course, the limiting parameters have to be chosen carefully to avoid numerically caused local changes of thermal and kinetic energy.

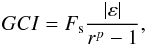

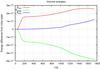

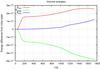

The resulting properties of GOEMHD3 concerning total energy conservation are documented in Fig. 9, which shows the resolution-dependent time evolution of the total energy (upper panel) and of the relative deviation from the conserved energy (lower panel). GOEMHD3 simulations were performed with resolutions of 128² (red line), 256² (green line), 512² (blue line), and 1024² (magenta line) grid points. The black line overlaid in the upper panel corresponds to the volume-integrated total energy value of 0.0697, which was obtained with the ATHENA code on a mesh of 1024² grid points (the energy density on the ATHENA mesh was rescaled from a surface density to a volume density to make it comparable with the 3D GOEMHD3 simulation).

The colored curves show the resolution-dependent amount of energy dissipation of GOEMHD3, in contrast with the ATHENA code simulations, which conserve energy perfectly by construction of the numerical scheme. As expected, Fig. 9 shows that the energy loss in GOEMHD3 simulations can easily be reduced by increasing the numerical resolution.

Fig. 9 Time evolution of the simulated total energy (upper panel) and its relative deviation from its conserved value (lower panel) as obtained by the 2D Orszag-Tang test using GOEMHD3 in dependence on the mesh resolution of 128² (red), 256² (green), 512² (blue), and 1024² (magenta) grid points. The black line overlaid in the upper panel corresponds to the volume energy density of 0.0697 rescaled from the surface density that was obtained by the ATHENA code run for 1024² grid points.

3.3. Resistive decay of a cylindrical current

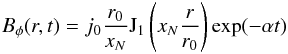

GOEMHD3 was developed to simulate current-carrying astrophysical plasmas taking into account current dissipation. To describe solar flares, for example, magnetic reconnection has to be simulated, which requires resistivity. For this, a locally increased resistivity is commonly assumed that is switched on, for example, once the current density exceeds a macroscopic threshold. After this, the resistivity grows linearly or nonlinearly with the current density (see, e.g., Adamson et al. 2013). For this purpose, GOEMHD3 contains modules for spatial and also temporal smoothing of the resistivity, which keeps the simulations stable. To test the ability of GOEMHD3 to correctly describe the behavior of a resistive magneto-plasma, we applied different models of resistivity and compared the simulation results with analytical predictions where possible. In particular, we applied a test setup simulating the resistive decay of a cylindrical current column in two spatial dimensions, for which analytical solutions exist in certain limits (Skála & Bárta 2012).

Initially, at t = 0, a cylindrical current was set up using an azimuthal magnetic field B = (0, Bφ, 0), which is given by

$$
B_\varphi = \frac{j_0 r_0}{x_N} J_1\left( x_N \frac{r}{r_0} \right) \tag{21}
$$

in the internal region (r ≤ r0) and by

$$
B_\varphi = \frac{j_0 r_0^2}{x_N r} J_1(x_N) \tag{22}
$$

in the outer region (r > r0). Here j0 = 1 is the amplitude of the current density on the axis of the cylinder, r0 = 1 is the radius of the current column, Jl(x) denotes the Bessel function of order l, and xN ≈ 2.40 is the first root of J0(x). The decrement (current decay rate) α is defined as α = η(xN/r0)². The pressure was chosen to be uniform (p = 1) in the whole domain, and the density was set to a very high uniform value (ρ = 10³²) that effectively sets the plasma at rest. Then the system of MHD Eqs. (1)–(4) reduces to the induction Eq. (3), which in special cases can be solved analytically. For the GOEMHD3 test simulations the computational domain was chosen as [−2.5, 2.5] × [−2.5, 2.5], and open boundary conditions were applied in the y and z directions. Periodic boundary conditions were used in the invariant (x-) direction.

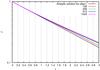

We simulated the consequences of resistivity for the evolution of electrical currents by using GOEMHD3 considering the decay of a current column in response to two different resistivity models – a sharp step-function-like and a smooth change of the resistivity.

Step function model of resistivity.

In this model the resistivity was set to η = 0.1 in the internal region, while in the outer region it was set to zero. For this step-function resistivity distribution, Skála & Bárta (2012) found an analytic solution of the induction equation describing the time-dependent evolution of the magnetic field and current in the cylinder. According to this solution, the current decays exponentially, and an infinitesimally thin current ring is induced around the resistive region according to

$$
j_x(r,t) = j_0 J_0\left( x_N \frac{r}{r_0} \right) \exp(-\alpha t) + \frac{j_0}{2\pi x_N} J_1(x_N) \left[ 1 - \exp(-\alpha t) \right] \delta(r - r_0) , \tag{23}
$$

where δ(x) is the Dirac delta function. As a result of the discretization of the equations, the current ring does not have the shape of a Dirac delta function but a finite width, which in our case extends over two grid points, while the magnitude of the current inside this ring is finite.
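
The analytic reference curve for the current density on the axis follows directly from Eq. (23): at r = 0 the ring term does not contribute and J0(0) = 1, so that jx(0, t) = j0 exp(−αt) with α = η(xN/r0)². The following sketch evaluates this using only the Python standard library. The power-series Bessel function, the bisection root search, and all function names are illustrative choices, not part of GOEMHD3.

```python
import math

def bessel_j0(x, terms=30):
    """Power series of J0(x); adequate for the small arguments used here."""
    total, term = 0.0, 1.0
    q = -(x * x) / 4.0
    for k in range(terms):
        total += term
        term *= q / ((k + 1) ** 2)   # next term: q^(k+1) / ((k+1)!)^2
    return total

def first_root_of_j0(lo=2.0, hi=3.0, iterations=60):
    """Bisection for the first zero of J0, which lies between 2 and 3."""
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if bessel_j0(lo) * bessel_j0(mid) <= 0.0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)

def interior_current(r, t, j0=1.0, r0=1.0, eta=0.1):
    """First term of Eq. (23): current density inside the column (r < r0)."""
    x_n = first_root_of_j0()
    alpha = eta * (x_n / r0) ** 2          # decay rate alpha = eta (x_N / r0)^2
    return j0 * bessel_j0(x_n * r / r0) * math.exp(-alpha * t)

X_N = first_root_of_j0()
ALPHA = 0.1 * X_N ** 2                      # eta = 0.1, r0 = 1 as in the text
central_current_at_t1 = interior_current(0.0, 1.0)
```

With the parameters quoted in the text (η = 0.1, r0 = 1), the decay rate evaluates to α ≈ 0.578, so the central current density drops by a factor of e after a time of about 1.73 in code units.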

Fig. 10 GOEMHD3 simulation of the time evolution of the current density jx at the center of a current cylinder assuming a step-function change of the resistivity. Colored lines correspond to different mesh resolutions employed for the simulations. The solid black line corresponds to the analytic solution given by Eq. (23).

Fig. 11 Magnetic field strength |B| in the y-z-plane at time t = 0.1τA for the step-function-like change of the resistivity changing with mesh resolution. Panels a)–d) correspond to a resolution of 128², 256², 512², and 1024² grid points, respectively.

Figure 10 shows that, initially, the decay of the current density in the center closely follows the time evolution of the analytic solution (Eq. (23)), while a sharp drop to zero is observed at later times depending on the numerical resolution of the grid. The drop of the current density at the center of the column is steeper and occurs earlier the finer the grid resolution is. This is due to a numerical instability that starts at the sharp edge of the resistive cylinder and propagates toward its center. The growth rate and propagation speed of this instability increase with the grid resolution, as illustrated in Fig. 11. The figure shows the magnetic field strength |B| in the y-z-plane at time t = 0.1τA for four different mesh resolutions corresponding to 128², 256², 512², and 1024² grid points. This dependence on resolution strongly indicates that the instability is of numerical origin and is caused by the sharp resistivity jump in this model. To verify this hypothesis, we also tested another model in which the resistivity changes not by a step-function-like jump, but smoothly, as is typically encountered in astrophysical applications.

Smooth change of resistivity model.

Indeed, GOEMHD3 is meant to treat collisionless astrophysical plasmas, like that of the solar corona, by a fluid approach, while the resistivity (like other transport properties) is due to micro-turbulence, which is not described by the MHD equations. As a good compromise, smoothly changing resistivity models are often assumed to include this microphysics-based phenomenon in the fluid description. Smoothly changing switch-on models of resistivity are well suited to mimic the consequences of kinetic-scale processes. To test the influence of a resistivity changing smoothly in space and time, we used the same setup as described in the previous paragraph and only replaced the step-like jump function by a smooth resistivity change according to Eq. (24), where now η0 = 0.1 and σ is a steepness parameter. Figure 12 shows the results obtained for σ = 32. It indicates that a smooth resistivity change immediately solves the problem of oscillatory instabilities arising for a step-function-like resistivity change. As there is no analytical solution known for the smooth switch-on resistivity, we also show in Fig. 12 (by the black line) the analytical prediction obtained for a step-function-like change of the resistivity. The simulated current decay is very similar to the analytically predicted decay for the step-function-like jump of the resistivity. The slight deviation of the curves from the predicted decay at later times might be due to the lower resistivity values that arise in the smooth model at the edge of the resistive cylinder (r → r0) compared to those typical for the step-function model. We note that the steepness parameter σ = 32 in Eq. (24) allowed a stable simulation of the current decay already for the relatively coarse mesh resolution of 128² grid points, as shown in Fig. 12. In additional test runs (not shown here) we examined the stability of the simulations for even steeper resistivity changes and found that GOEMHD3 can easily cope with changes characterized by steepness parameters of 64, 128, and higher, as long as the grid resolution is increased accordingly.

Finally, we conclude that by testing different models of changing resistivity, we were able to demonstrate that GOEMHD3 can simulate the consequences of localized resistive dissipation with sufficient accuracy, provided the changes are not step-function-like.

Fig. 12 GOEMHD3 simulation of the time evolution of the current density jx at the center of a current cylinder assuming a smooth change of the resistivity. Colored lines correspond to different mesh resolutions employed for the simulations. Note that, since no analytical solution exists for this problem, the solid black line still corresponds to the analytic solution for a step-function-like change of the resistivity as given by Eq. (23), as in Fig. 10.

3.4. Harris current sheet

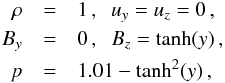

To assess the effective numerical dissipation rate for the leap-frog scheme in the nonlinear regime, we simulated a Harris-like current sheet in the framework of an ideal plasma (see, e.g., Kliem et al. 2000). The size of the simulation box was set to [−10.0, 10.0] × [−0.6, 0.6] with an open boundary in the y-direction and periodic boundary conditions in the z-direction. The initial conditions are given by Eq. (25), and the physical resistivity is η = 0.

|

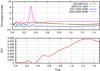

Fig. 13 Time evolution of the relative deviation | ΔBz/ tanh(ym) | of the magnetic field from the analytical prediction (top) and derived effective numerical resistivity ηn (bottom) at position (ym,zm) = (−0.5493,0) for the simulation of a Harris-like current sheet. Results for different spatial resolutions (number of grid points in y direction) are color-coded according to the legend. The high-frequency oscillations are caused by the mesh drift instability of the staggered leap-frog scheme, which is not explicitly damped in this simulation setup (cf. Sect. 2.2). |

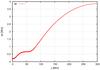

We measured the time variation of the magnetic field, Bz, at the point (ym,zm) = (−0.5493,0), where the field attains half of its maximum magnitude. The effective numerical resistivity of the discretization scheme can then be estimated by

$$
\eta_n = \frac{\Delta B_z}{\Delta t} \left( \frac{\partial^2 B_z}{\partial y^2} \right)^{-1} , \tag{26}
$$

where ΔBz = Bz(ym,zm) − tanh(ym) is the difference between the numerical and the analytical solution for the magnetic field, Δt represents the time of the measurement, and the second derivative of Bz is approximated by a standard finite-difference representation. Figure 13 shows the time evolution of the numerical dissipation rate, ηn, of the code for different values of the mesh resolution in the y direction (a constant number of eight grid points was used in the invariant z direction). The relative numerical error of Bz and hence the estimate of the numerical resistivity settle at very low values, for instance, ηn ≃ 10⁻¹⁴ and |ΔBz/tanh(ym)| ≃ 5 × 10⁻¹¹ for the simulation with 256 × 8 grid points.
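
The estimate can be sketched as follows. This is a sketch under the assumption that the estimator has the form ηn = (ΔBz/Δt)/(∂²Bz/∂y²), which follows from the one-dimensional induction equation ∂Bz/∂t = η ∂²Bz/∂y². The function names are hypothetical, and the values of ΔBz and Δt in the example are placeholders, not measurements from the paper.

```python
import math

def second_derivative(f, y, h):
    """Standard three-point central difference for f''(y)."""
    return (f(y + h) - 2.0 * f(y) + f(y - h)) / (h * h)

def numerical_resistivity(delta_bz, delta_t, d2bz_dy2):
    """Effective resistivity from the field drift over the elapsed time."""
    return (delta_bz / delta_t) / d2bz_dy2

# Measurement point: tanh(y_m) = -1/2, i.e. half of the maximum field magnitude.
Y_M = -math.atanh(0.5)                       # approximately -0.5493

# Curvature of the analytic profile B_z = tanh(y) at y_m.
CURVATURE = second_derivative(math.tanh, Y_M, 1.0e-3)

# Placeholder drift and elapsed time, for illustration only.
eta_n = numerical_resistivity(3.0e-11, 100.0, CURVATURE)
```

At ym the analytic curvature is −2 tanh(ym)[1 − tanh²(ym)] = 0.75, so the finite-difference value can be checked directly against this number before it is used in the estimate.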

We conclude that the residual intrinsic numerical dissipation of the discretization scheme is negligible compared with the physical resistivities and explicit numerical stabilization measures that typically apply in simulations with GOEMHD3. We complement this idealized 1D test below by estimates for the effective numerical dissipation rate obtained in fully 3D simulations of an eruptive solar region (see Sect. 4.5).

Fig. 14 Grid spacing dz in the z-direction in the simulation of AR 1429, where z = 0 is the photosphere. The finer spacing in the bottom part better samples the transition region with its steep gradients in density and temperature.

4. Three-dimensional simulations of the solar corona with GOEMHD3

To demonstrate the applicability of the GOEMHD3 code to realistic, 3D simulations of weakly collisional astrophysical plasmas at high Reynolds numbers and to assess the computational performance of the code, we simulated the evolution of the solar corona above an active region. Being able to simulate such scenarios, where a number of important dynamical processes are still not well understood, has in fact been the main motivation for developing GOEMHD3. As we show below, GOEMHD3 allows us to numerically tackle such problems with significantly higher numerical resolution and accuracy than was possible with its predecessor codes.

4.1. Physical context

We chose for this demonstration the solar corona above active region NOAA AR 1429 in March 2012. This active region is well known for the many prominent phenomena it produced, such as strong plasma heating, particle acceleration, and eruptions. Many of them took place during the two weeks between March 2 and 15, 2012, making AR 1429 one of the most active regions of solar cycle 24. They were observed in detail, for instance, with the AIA instrument onboard NASA’s Solar Dynamics Observatory (SDO; see, e.g., Inoue et al. 2014; van Driel-Gesztelyi et al. 2014; Möstl et al. 2013). Very sensitive information about the acceleration of relativistic (MeV) electrons, for example, is provided by 30 THz radio waves. Kaufmann et al. (2013) studied the 30 THz emission with a new imaging instrument operating at El Leoncito, examining the role of the continuum below the temperature minimum. For the M8 class flare on March 13, 2012, for example, they found a very clear 30 THz signature, much cleaner than white-light observations are able to provide. Another important source of information about solar activity is the dynamic spectra of solar proton emissions. The PAMELA experiment, for instance, measures the spectra of strongly accelerated protons over a wide energy range. Martucci et al. (2014) analyzed the observed energetic proton spectra for four eruptions of AR 1429. The authors interpreted the eruptions as an indication of first-order Fermi acceleration, that is, of a mirroring of the protons between dynamically evolving plasma clouds in the corona above AR 1429. Changes in the chemistry of the Earth’s atmosphere after the impact of the energetic solar protons emitted by AR 1429 were studied by von Clarmann et al. (2013). These authors used the MIPAS spectrometer onboard the now defunct European environmental satellite ENVISAT to measure temperature and trace gas profiles in the Earth’s atmosphere. They found that the solar-particle event produced by AR 1429 was among the 12 largest such events, also known as proton storms, in 50 years. These and further observations of AR 1429 indicate that very efficient energy conversion processes took place in the corona.

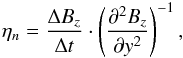

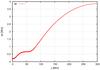

4.2. Initial and boundary conditions

We started the simulation with initial conditions derived in accordance with observations of AR 1429 on March 7, 2012, when an X5.4 flare eruption took place at 00:02 UT at heliographic coordinates N18E31. To describe the evolution of the corona before the eruption, we initialized the simulation using photospheric magnetic field observations obtained on March 6 at 23:35 UT. Figure 16 shows the line-of-sight (LOS) component of the photospheric magnetic field of AR 1429 obtained at this time by the HMI instrument onboard the SDO spacecraft in a field of view of 300 × 300 arcsec². This field of view covers an area of 217.5 × 217.5 Mm², which we chose as the lower boundary of the simulation box. Before it was used for the extrapolation into 3D, the LOS magnetic field was preprocessed by flux balancing and by removing small-scale structures and fields close to the boundary. In particular, a spatial 2D Fourier filtering of the magnetic field data was applied to remove short-wavelength modes with wave numbers greater than 16, which correspond to structures that do not reach above the transition region into the corona. The Fourier-filtered magnetic fields were flux balanced and extrapolated into the third dimension according to the MHD box boundary conditions derived by Otto et al. (2007). The resulting initial magnetic field is depicted in Fig. 17. For the height of the simulation box we chose 300 Mm. The simulation grid spacing in the x and y directions is homogeneous, with the mesh sampled by 258² grid points. After filtering out all modes with wave numbers larger than 16, this grid resolves all magnetic field structures sufficiently well. In the height (z) direction we also used 258 grid points, but these were distributed nonuniformly to better resolve the lower part of the corona, that is, the transition region and chromosphere. Figure 14 shows the height-dependent grid spacing (dz) used.
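The preprocessing described above (low-pass Fourier filtering and flux balancing) can be sketched in a few lines of Python. This is only an illustration and not the authors' pipeline: the function name `preprocess_magnetogram` is hypothetical, "wave number" is interpreted here as the integer mode number per box length along each axis, and flux balancing is implemented simply by removing the mean field. The removal of fields close to the boundary is not modeled.

```python
import numpy as np

def preprocess_magnetogram(b_los, k_max=16):
    """Low-pass filter a LOS magnetogram and balance its net flux (sketch)."""
    ny, nx = b_los.shape
    b_hat = np.fft.fft2(b_los)
    # integer mode numbers (cycles per box length) along each axis
    ky = np.fft.fftfreq(ny, d=1.0 / ny)
    kx = np.fft.fftfreq(nx, d=1.0 / nx)
    keep = (np.abs(ky)[:, None] <= k_max) & (np.abs(kx)[None, :] <= k_max)
    b_hat[~keep] = 0.0     # discard short-wavelength modes
    b_hat[0, 0] = 0.0      # zero mean field, i.e. zero net flux (assumed method)
    return np.fft.ifft2(b_hat).real

# Synthetic test field: offset + mode 3 along x + mode 40 along y
n = 258
s = np.arange(n) / n
field = 2.0 + np.sin(2 * np.pi * 3 * s)[None, :] + 0.5 * np.sin(2 * np.pi * 40 * s)[:, None]
filtered = preprocess_magnetogram(field)
expected = np.broadcast_to(np.sin(2 * np.pi * 3 * s)[None, :], (n, n))
print(np.abs(filtered - expected).max())
```

For the synthetic field, only the mode-3 component survives: the mode-40 component lies above the cutoff and the constant offset is removed by the flux balancing.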

The initial density distribution was prescribed such that the chromospheric density is 500 times higher than the density in the corona, according to

$$\rho(z)=\frac{\rho_{\rm ch}}{2}\left[1-\tanh\left(2\left(z-z_0\right)\right)\right] +\rho_{\rm co}, \tag{27}$$

where ρch and ρco are the chromospheric and coronal densities. We note that the normalizing density was ρ0 = 2 × 10¹⁵ m⁻³. The transition region was initially localized around z0 = 3, which corresponds to 15 Mm. The initial thermal pressure p = 0.01p0 = 0.7957 Pa was homogeneous throughout the whole simulation domain, meaning that gravity effects were neglected. According to the ideal gas law, T = p/(kB N), this determines the temperature height profile. The initial density and temperature height profiles are depicted in Fig. 15. The initial coronal temperature is on the order of 10⁶ K. The initial plasma velocity is zero everywhere in the corona, but finite in the chromosphere.

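As a rough illustration of Eq. (27) and of the temperature profile implied by a uniform pressure, the following Python sketch evaluates both in normalized units. Several choices are assumptions for the example only: a smooth tanh step is used for the transition, the coronal density is set to `rho_co = 1`, and `rho_ch` is chosen so that the ratio of the asymptotic densities is exactly 500. Because the text does not give ρco in physical units, the temperature is returned relative to its coronal value rather than in kelvin.

```python
import numpy as np

Z0 = 3.0  # transition-region height in normalized units (15 Mm), from the text

def density(z, rho_co=1.0, ratio=500.0):
    """Density step profile; rho_co and the exact ratio handling are assumptions."""
    rho_ch = (ratio - 1.0) * rho_co   # so that rho(-inf) / rho(+inf) = ratio
    return 0.5 * rho_ch * (1.0 - np.tanh(2.0 * (z - Z0))) + rho_co

def relative_temperature(z, rho_co=1.0, ratio=500.0):
    """T(z) / T_corona for uniform pressure: ideal gas law gives T ~ 1 / rho."""
    return rho_co / density(z, rho_co, ratio)

# Bottom of the box, transition height, and top of the box (300 Mm = 60 units)
z = np.array([0.0, Z0, 60.0])
rho = density(z)
t_rel = relative_temperature(z)
print(rho, t_rel)
```

With these assumptions, the density at the bottom of the box is close to 500 times the coronal value and the temperature there is correspondingly about 500 times lower than in the corona.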

Fig. 15 Initial height profiles of density and temperature in the simulation of AR 1429. The chromospheric density is 500 times higher than the density in the corona. The transition region is initially localized around z0 = 3, which corresponds to 15 Mm.

Fig. 16 Magnetogram of active region 1429 on March 6, 2012, as taken by HMI onboard SDO.

|

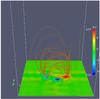

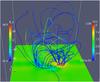

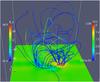

Fig. 17 Initial structure of the magnetic field in the parallel scaling tests. The field is current-free and is extrapolated from the 2D magnetogram of AR1429 by the Fourier method. The evolution is triggered by a divergence-free velocity vortex located in the positive magnetic footpoint. |

For the sides of the simulation box, the boundary conditions were set according to the MHD-equation-compatible line symmetry conditions derived by Otto et al. (2007). The top boundary was open, except for the magnetic field component normal to the boundary, which was obtained from the source-freeness condition ∇·B = 0. The bottom boundary of the simulation box was open for entropy and magnetic fluxes.


The coronal plasma was driven via a coupling to the neutral gas below the transition region. The neutral gas was driven in accordance with the observed photospheric motion. First, the plasma flow velocities were inferred from photospheric magnetic field observations according to Santos et al. (2008b). To avoid emerging and submerging magnetic fluxes, the motion pattern was then modeled by divergence-free vortices given by

$$\boldsymbol{u}_0=\nabla\times\left[\frac{\phi_0}{\cosh\left(\frac{x-y+c_0}{l_0}\right)\cosh\left(\frac{x+y+d_0}{l_1}\right)}\right]\hat{\boldsymbol{z}}. \tag{28}$$

The parameters determining the strength and localization of the vortex motion were chosen in accordance with observations. In the simulated case the magnetic fluxes rotate around footpoints given by the set of parameters φ0 = 0.1, c0 = 9, d0 = −49, l0 = 2, and l1 = −2. The strength of the plasma driving by the neutral gas decreases with height above the photosphere. This decrease is controlled by a height-dependent coupling term in the momentum equation, Eq. (2) (or Eq. (9)). The height-dependent collision coefficient is defined as

$$\nu(z)=\frac{\nu_0}{2}\left[1-\tanh(20(z-z_{\rm c}))\right]. \tag{29}$$

For the simulated case a good approximation for the coupling coefficient is ν0 = 3, with zc = 0.25 (or 1.25 Mm) as the characteristic height above which the coupling (and, therefore, the photospherically caused plasma driving) vanishes.
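The driver of Eqs. (28) and (29) can be sketched in Python as follows. This is an illustration rather than the GOEMHD3 implementation: the function names are hypothetical, the derivatives are taken with `numpy.gradient` instead of the code's own stencils, and the horizontal box is assumed to span 0 to 43.5 normalized units (217.5 Mm at 5 Mm per unit, as implied by z0 = 3 corresponding to 15 Mm) with the origin at a box corner.

```python
import numpy as np

# Parameters quoted in the text (normalized units)
PHI0, C0, D0, L0, L1 = 0.1, 9.0, -49.0, 2.0, -2.0
NU0, ZC = 3.0, 0.25

def stream_function(x, y):
    """Scalar in the square brackets of Eq. (28)."""
    return PHI0 / (np.cosh((x - y + C0) / L0) * np.cosh((x + y + D0) / L1))

def vortex_velocity(x, y):
    """u0 = curl(psi z_hat) = (d psi/dy, -d psi/dx) on a uniform grid.
    x and y are 1D coordinate arrays; results are indexed [ix, iy]."""
    xx, yy = np.meshgrid(x, y, indexing="ij")
    psi = stream_function(xx, yy)
    dpsi_dx, dpsi_dy = np.gradient(psi, x[1] - x[0], y[1] - y[0])
    return dpsi_dy, -dpsi_dx

def coupling(z):
    """Height-dependent collision coefficient of Eq. (29)."""
    return 0.5 * NU0 * (1.0 - np.tanh(20.0 * (z - ZC)))

# Assumed horizontal extent and resolution (see lead-in)
x = np.linspace(0.0, 43.5, 258)
y = np.linspace(0.0, 43.5, 258)
ux, uy = vortex_velocity(x, y)
dx, dy = x[1] - x[0], y[1] - y[0]
div = np.gradient(ux, dx, axis=0) + np.gradient(uy, dy, axis=1)
max_div = np.abs(div).max()
print(max_div, coupling(0.0), coupling(1.0))
```

Because the velocity is the curl of a scalar stream function, its discrete divergence vanishes to round-off here, which is the property that prevents flux emergence or submergence. With the quoted parameters the stream function peaks at (x, y) = (20, 29), and the coupling drops from about ν0 at z = 0 to essentially zero by z = 1.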

4.3. Computational performance of GOEMHD3

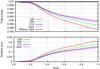

Employing the physical setup (i.e., initial and boundary conditions) described in the previous subsection, the parallel scalability and efficiency of the GOEMHD3 code were assessed across a wide range of CPU-core counts and for different sizes of the numerical mesh. The benchmarks were performed on Hydra, the high-performance-computing system of the Max Planck Society, which is operated by its computing center, the RZG. Hydra is an IBM iDataPlex cluster based on Intel Xeon E5-2680v2 Ivy Bridge processors (two CPU sockets per node, ten cores per CPU socket, operated at 2.8 GHz) and an InfiniBand FDR 14 network. Hydra’s largest partition with a fully nonblocking interconnect comprises 36 000 cores (1800 nodes). For the benchmarks, Intel’s FORTRAN compiler (version 13.1) and runtime were used together with the IBM parallel environment (version 1.3) on top of the Linux (SLES11) operating system.

Fig. 18 Computing time per time step (open circles) as a function of the number of CPU cores. Two MPI tasks, each spawning ten OpenMP threads that are assigned to the ten cores of a CPU socket, were used on each of the two-socket nodes, i.e., the total number of MPI tasks is ten times smaller than the number of CPU cores given on the abscissa. Different colors correspond to different sizes of the numerical grid. Dashed inclined lines indicate ideal strong scalability for a given grid size. Two sets of measurements in which both the number of grid points and the number of processor cores were increased by a factor of 2³ from left to right are marked by filled circles. The horizontal dotted lines are a reference for ideal weak scalability. The diamond symbols correspond to additional runs that employed a plain MPI parallelization (OpenMP switched off), i.e., the number of MPI tasks equals the number of cores.

Figure 18 provides an overview of the parallel performance of GOEMHD3, using the execution time for a single time step⁶ as a metric. Four different grid sizes were considered: grids with 256³ cells (black), 512³ cells (red), 1024³ cells (green), and 2048³ cells (blue). The figure demonstrates the very good overall strong scalability of the code, that is, the reduction of the computing time for a fixed grid size with an increasing number of CPU cores (compare the measured runtimes plotted as circles with the dashed lines of the same color that indicate ideal scalability). For example, the parallel efficiency is at the 80% level for the 1024³ grid on 2560 cores (128 nodes) when compared to the baseline performance on 160 cores (8 nodes). Simulations with a 2048³ grid can be performed with a parallel efficiency of 80% on more than 10 000 cores.

Increasing the number of grid points by a factor of 8 (from 256³ to 512³, or from 512³ to 1024³) and at the same time using eight times as many CPU cores, the computing time remains almost constant (compare the two sets of filled circles with the corresponding horizontal dotted lines in Fig. 18). This demonstrates the very good weak scalability of GOEMHD3, given that the complexity of the algorithm scales linearly with the number of grid points.
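The two scalability metrics used above can be made explicit with a small Python sketch. The function names are hypothetical, and the timings in the example are invented placeholders chosen to give a round number; they are not measurements from the benchmarks. The core counts correspond to 8 and 128 nodes with 20 cores each.

```python
def strong_scaling_efficiency(t_base, cores_base, t, cores):
    """Fixed problem size: ideal runtime scales as 1 / cores."""
    return (t_base * cores_base) / (t * cores)

def weak_scaling_efficiency(t_base, t):
    """Problem size grows with the core count: ideal runtime stays constant."""
    return t_base / t

# Hypothetical seconds per time step, NOT values from the paper
t_160, t_2560 = 4.0, 0.3125
eff_strong = strong_scaling_efficiency(t_160, 160, t_2560, 2560)
eff_weak = weak_scaling_efficiency(1.00, 1.05)
print(eff_strong, eff_weak)
```

In this made-up example a 16-fold increase in cores yields a 12.8-fold speed-up, which corresponds to a strong-scaling efficiency of 80%.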

The deviations from the ideal (strong) scaling curves that become apparent at high core counts are due to the relatively larger fraction of time spent in the MPI communication (halo exchange) between the domains. For example, for the 1024³ grid, the percentage of communication amounts to 30% for 10 000 cores and increases to about 50% at 36 000 cores. For a given number of cores, the communication-time share is larger for smaller grids (visible as a stronger deviation from ideal scalability in Fig. 18). The latter observation underlines the benefit of making the MPI domains as large as possible, which is enabled by our hybrid MPI-OpenMP parallelization approach (cf. Sect. 2.3). Moreover, a comparison with runs in which the OpenMP parallelization was switched off and the compute nodes were densely populated with MPI tasks (one MPI task per core) makes the advantages of the hybrid MPI-OpenMP over a plain MPI parallelization immediately apparent. The smaller size of the MPI domains in the plain MPI runs (diamond symbols in Fig. 18) accounts for a higher communication-to-computation ratio and a larger number of smaller MPI messages. Accordingly, the communication times increase by about 75%, resulting in total runtimes that are 15–30% longer than those of the hybrid version using the same number of cores. It is crucial for the hybrid approach to achieve a close-to-perfect parallel efficiency of the OpenMP parallelization within the MPI domains so as not to jeopardize the performance advantages of the more efficient communication. Additional benchmarks have shown that GOEMHD3 indeed achieves OpenMP efficiencies close to 100% up to the maximum number of cores a single CPU socket provides (ten cores on our benchmark platform), but, as a result of the effects of NUMA⁷ and limited memory bandwidth, not beyond.
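Why larger MPI domains reduce the relative communication cost can be illustrated with a simple surface-to-volume estimate in Python. This is a geometric sketch under stated assumptions, not a model of the measured timings: the function name is hypothetical, a one-cell-wide halo is assumed, and the grid is assumed to be split along two directions into `py` × `pz` subdomains that each keep the full extent in the third direction. It ignores message latency and network bandwidth, so it is not expected to reproduce the 75% figure quoted above.

```python
def halo_to_interior_ratio(n, py, pz, halo=1):
    """Halo cells per owned cell for an n^3 grid split into py x pz subdomains."""
    ly, lz = n / py, n / pz            # local extents in the decomposed directions
    owned = n * ly * lz                # cells owned by one subdomain
    ghost = n * 2 * halo * (ly + lz)   # halo layers on the four decomposed faces
    return ghost / owned

# 1024^3 grid on 2560 cores: hybrid (256 tasks x 10 threads) vs plain MPI
# (2560 tasks); the task layouts 16 x 16 and 64 x 40 are assumed for illustration
hybrid = halo_to_interior_ratio(1024, 16, 16)
plain = halo_to_interior_ratio(1024, 64, 40)
print(hybrid, plain)
```

In this estimate the plain MPI layout exchanges roughly three times as many halo cells per owned cell as the hybrid layout, in line with the qualitative argument of the text.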

Overall, GOEMHD3 achieves a floating-point performance of about 1 GFlop/s per core, which is about 5% of the theoretical peak performance of the Intel Xeon E5-2680v2 CPU. Floating-point efficiencies in this range are commonly considered reasonable for this class of finite-difference schemes.
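The quoted fraction of peak performance can be checked with a short calculation. The sketch assumes the nominal clock of 2.8 GHz stated for the benchmark system (no turbo boost) and eight double-precision floating-point operations per cycle and core, which is the AVX peak of the Ivy Bridge microarchitecture (one 4-wide addition and one 4-wide multiplication per cycle).

```python
CLOCK_GHZ = 2.8        # nominal core clock, from the text
FLOPS_PER_CYCLE = 8    # double-precision AVX peak per core (assumption, see lead-in)

peak_gflops = CLOCK_GHZ * FLOPS_PER_CYCLE   # theoretical peak per core
achieved_gflops = 1.0                       # about 1 GFlop/s per core, from the text
fraction = achieved_gflops / peak_gflops
print(f"peak = {peak_gflops:.1f} GFlop/s, fraction = {100 * fraction:.1f}%")
```

Under these assumptions the peak is 22.4 GFlop/s per core, so 1 GFlop/s corresponds to roughly 4.5% of peak, consistent with the "about 5%" stated above.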