| Issue | A&A Volume 655, November 2021 |
|---|---|
| Article Number | A54 |
| Number of page(s) | 28 |
| Section | Cosmology (including clusters of galaxies) |
| DOI | https://doi.org/10.1051/0004-6361/202040140 |
| Published online | 19 November 2021 |

Theoretical and numerical perspectives on cosmic distance averages

1 Aix Marseille Univ., CNRS, CNES, LAM, Marseille, France
e-mail: michel-andres.breton@lam.fr

2 Instituto de Física Teórica UAM-CSIC, Universidad Autónoma de Madrid, Cantoblanco, 28049 Madrid, Spain
e-mail: pierre.fleury@uam.es

Received: 16 December 2020 / Accepted: 15 August 2021

The interpretation of cosmological observations relies on a notion of an average Universe, which is usually considered as the homogeneous and isotropic Friedmann-Lemaître-Robertson-Walker (FLRW) model. However, inhomogeneities may statistically bias the observational averages with respect to FLRW, notably for distance measurements, due to a number of effects such as gravitational lensing and redshift perturbations. In this article, we review the main known theoretical results on average distance measures in cosmology, based on second-order perturbation theory, and we fill in some of their gaps. We then comprehensively test these theoretical predictions against ray tracing in a high-resolution dark-matter N-body simulation. This method allows us to describe the effect of small-scale inhomogeneities deep into the non-linear regime of structure formation on light propagation up to z = 10. We find that numerical results are in remarkably good agreement with theoretical predictions in the limit of super-sample variance. No unexpectedly large bias originates from very small scales, whose effect is fully encoded in the non-linear power spectrum. Specifically, the directional average of the inverse amplification and the source-averaged amplification are compatible with unity; the change in area of surfaces of constant cosmic time is compatible with zero; the biases on other distance measures, which can reach slightly less than 1% at high redshift, are well understood. As a side product, we also confront the predictions of the recent finite-beam formalism with numerical data and find excellent agreement.

Key words: large-scale structure of Universe / distance scale / cosmology: theory / methods: numerical

© M.-A. Breton and P. Fleury 2021

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


1. Introduction

On very large scales, our Universe seems to be well described by a spatially homogeneous and isotropic Friedmann-Lemaître-Robertson-Walker (FLRW) model (Green & Wald 2014). This model allows us to predict the dynamics of cosmic expansion as a function of the Universe’s content, and of the laws of gravitation. Furthermore, the FLRW model constitutes a rather efficient framework to interpret the observation of remote light sources; in particular, it provides the relation between their redshift z and their angular or luminosity distance D.

The distance-redshift relation D(z) is prominent in cosmology, as it is involved in the interpretation of various observables. Its first derivative today defines the Hubble-Lemaître constant, dD/dz|0 = c/H0, whose exact value is still subject to a lively debate (Planck Collaboration VI 2020; Riess et al. 2019; Wong et al. 2019; Freedman et al. 2019). More generally, D(z) constitutes the essence of the Hubble diagram of type-Ia supernovae (SNe, Scolnic et al. 2018; Abbott et al. 2019), which historically revealed the acceleration of cosmic expansion (Perlmutter & Aldering 1998; Riess et al. 1998), as well as the Hubble diagram of gravitational-wave standard sirens in the near future (Holz & Hughes 2005; Caprini & Tamanini 2016). The D(z) relation is also essential in the analysis of the anisotropies of the cosmic microwave background (CMB, Planck Collaboration VI 2020), or in the baryon-acoustic oscillation signal observed in galaxy, Lyman-α or quasar surveys (Alam et al. 2021), because it converts the observed angular size of the sound horizon θ* into a physical distance rs = D(z*)θ* that may be predicted by theory.
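The background relation D̄(z) that all of these analyses rely on can be computed with a few lines of code. The following is a minimal sketch that assumes a spatially flat ΛCDM model without radiation, using the illustrative values H0 = 70 km s⁻¹ Mpc⁻¹ and Ωm = 0.3. These values are hypothetical and are not the cosmology used in the article. The sketch checks numerically that the slope of D(z) at z → 0 is c/H0, and that the luminosity and area distances obey D_L = (1 + z)² D_A.

```python
import numpy as np

C_KM_S = 299_792.458  # speed of light in km/s


def comoving_distance(z, h0=70.0, omega_m=0.3, n=4096):
    """Comoving distance (Mpc) in a flat LCDM model without radiation.

    h0 [km/s/Mpc] and omega_m are illustrative values, not the article's cosmology.
    The integral of H0/H(z') over [0, z] uses the composite trapezoidal rule.
    """
    zz = np.linspace(0.0, z, n)
    inv_e = 1.0 / np.sqrt(omega_m * (1.0 + zz) ** 3 + (1.0 - omega_m))  # H0 / H(z')
    dz = zz[1] - zz[0]
    integral = dz * (inv_e.sum() - 0.5 * (inv_e[0] + inv_e[-1]))
    return (C_KM_S / h0) * float(integral)


def angular_distance(z, **kw):
    """Area (angular-diameter) distance in flat FLRW: D_A = chi / (1 + z)."""
    return comoving_distance(z, **kw) / (1.0 + z)


def luminosity_distance(z, **kw):
    """Luminosity distance in flat FLRW: D_L = (1 + z) chi."""
    return (1.0 + z) * comoving_distance(z, **kw)


# The slope of D(z) at z -> 0 approaches c/H0 (about 4283 Mpc for H0 = 70).
print(angular_distance(1e-4) / 1e-4, C_KM_S / 70.0)
```

Radiation is neglected here, so the sketch should not be used as-is near the last-scattering redshift z* ≈ 1100.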

In the actual inhomogeneous Universe, however, the D(z) relation is affected by various effects. One is gravitational lensing (Schneider et al. 1992), which tends to focus and distort light beams, thereby changing the apparent size and brightness of light sources. Another is the peculiar velocities of the sources and of the observer, which correct the observed redshift via the Doppler effect (Hui & Greene 2006; Davis et al. 2011). Such effects make D(z) line-of-sight dependent, but it is generally assumed that the FLRW prediction is recovered on average.

The fundamental question of whether the average D(z) is the same as the D(z) of the average Universe dates back more than 50 years. At that time, Zel'dovich (1964) and Feynman (in a colloquium given at Caltech the same year)¹ suggested the following: if the Universe is lumpy, then a typical light beam should mostly propagate through under-dense regions, and thereby be de-focussed with respect to FLRW; this should imply that D(z) is actually biased up. Many developments and counter-arguments followed from that seminal idea; we refer the interested reader to the introduction of Kaiser & Peacock (2016, hereafter KP16) and to the comprehensive review by Helbig (2020) for details.

In that debate, a significant step was made by Weinberg (1976). He showed that in a Universe sparsely filled with point masses, the average flux ∝ ⟨1/D²(z)⟩ is the same as if the matter in those lenses were homogeneously distributed in space. Importantly, Weinberg's calculation was made at first order in the small projected density of the lenses². As such, it also implies that ⟨D(z)⟩ is unaffected by inhomogeneities at that order, because the difference between ⟨1/D²⟩ and 1/⟨D⟩² only appears at second order. Weinberg nevertheless conjectured, on the basis of flux conservation, that the invariance of ⟨1/D²(z)⟩ may be exact and hold for any matter distribution. As noted by Ellis et al. (1998), this general flux-conservation argument is, in fact, incomplete. It implicitly assumes that the area of surfaces of constant redshift is unaffected by inhomogeneities, which is a mere reformulation of the whole problem.
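The statement that ⟨1/D²⟩ and 1/⟨D⟩² differ only at second order can be illustrated numerically. The following is a minimal sketch under a hypothetical assumption: the magnification μ = (D̄/D)² follows a lognormal distribution with unit mean. This makes the average flux exactly unbiased, as in Weinberg's result. Expanding μ^(-1/2) around unity then predicts that the mean distance is biased up by about (3/8) Var(μ).

```python
import numpy as np


def distance_bias(sigma, n=1_000_000, seed=1):
    """Toy Monte Carlo: an unbiased mean flux still implies a biased mean distance.

    Hypothetical model: mu = (Dbar/D)^2 is lognormal with <mu> = 1 exactly, mimicking
    Weinberg's result for <1/D^2>. Returns (<mu>, <D>/Dbar, second-order prediction).
    """
    rng = np.random.default_rng(seed)
    mu = np.exp(sigma * rng.standard_normal(n) - 0.5 * sigma**2)  # lognormal, E[mu] = 1
    d_over_dbar = mu ** -0.5                                      # D / Dbar per line of sight
    var_mu = np.exp(sigma**2) - 1.0                               # exact variance of mu
    # Expanding mu^(-1/2) = 1 - dmu/2 + 3 dmu^2/8 + ... with <dmu> = 0:
    second_order = 1.0 + 3.0 / 8.0 * var_mu
    return mu.mean(), d_over_dbar.mean(), second_order


mean_mu, mean_d, prediction = distance_bias(0.1)
print(mean_mu, mean_d, prediction)  # <mu> close to 1, <D>/Dbar exceeds 1 by about 0.4%
```

For this particular distribution the exact result is ⟨D⟩/D̄ = exp(3σ²/8). The second-order formula matches it up to terms of order σ⁴.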

For a long period of time, all this discussion remained mostly centred on the observation of individual sources, with the aim of predicting possible biases on the Hubble diagram; it became a somewhat marginal topic from the end of the 1980s, presumably because the precision of cosmological measurements was not sufficient to be sensitive to the expected biases on ⟨D(z)⟩. Interest in that matter was nevertheless revived by Clarkson et al. (2014), who made the rather surprising claim that lensing affects the distance to the last-scattering surface (LSS) at percent level, which would be dramatic for the standard interpretation of the CMB. This claim was then retracted by (almost) the same team in Bonvin et al. (2015a), who with KP16 clarified that: (i) the average distance to the LSS is not relevant to the standard CMB analysis; and (ii) one must distinguish between the concepts of directional averaging, source-averaging, or ensemble-averaging, which may yield different results (Bonvin et al. 2015b). Such considerations on cosmological averages were actually elaborating on an earlier work by Kibble & Lieu (2005).

In the end, for the CMB just as for the Hubble diagram, the whole problem boils down to the validity of Weinberg's conjecture. This conjecture states that the area of the LSS, A*, or the area of constant-redshift surfaces, A(z), is not significantly affected by inhomogeneities. KP16 undertook the difficult task of explicitly checking this conjecture in the framework of second-order cosmological perturbation theory. With a rather intuitive approach, KP16 identified several key effects, such as the shortening of the radius reached by rays due to their deflection, or the increase in A* due to its wrinkles. They eventually concluded that A* cannot be biased by more than a part in a million. They also identified that the structures responsible for such a bias are rather large, of the order of 50 h⁻¹ Mpc.

Most of the theoretical work depicted above was done using cosmological perturbation theory on an FLRW background (see also Sasaki 1987; Bonvin et al. 2006; Ben-Dayan et al. 2012; Umeh et al. 2014; Yoo & Scaccabarozzi 2016). However, this theoretical framework is not guaranteed to provide a good representation of the Universe, as it does not access the highly non-linear regime of structure formation. That is why one may prefer to rely on numerical simulations and ray-tracing methods, in order to accurately describe the propagation of light in a realistic picture of the cosmos.

As a first step, a significant research endeavour was dedicated to ray tracing and distance measurements in cosmological toy models. These include Swiss-cheese models (Brouzakis et al. 2007, 2008; Marra et al. 2008; Biswas & Notari 2008; Vanderveld et al. 2008; Valkenburg 2009; Clifton & Zuntz 2009; Bolejko 2009, 2011; Bolejko & Célérier 2010; Szybka 2011; Flanagan et al. 2013; Fleury et al. 2013; Fleury 2014; Troxel et al. 2014; Peel et al. 2014; Lavinto & Räsänen 2015; Koksbang 2017, 2019a,b, 2020a), plane-parallel Universes (Di Dio et al. 2012), and lattice models (Clifton & Ferreira 2009a,b, 2011; Clifton et al. 2012; Liu 2015; Bruneton & Larena 2013; Bentivegna et al. 2017; Sanghai et al. 2017; Koksbang 2020b). These works generally agreed with the relevant theoretical predictions.

Using N-body simulations, Odderskov et al. (2016) showed that at low redshift (z < 0.1), the averaged luminosity distance is very close to its value in an FLRW background. Within the field of numerical relativity, Giblin et al. (2016) showed that ⟨log D⟩ (or the averaged magnitude) was not affected by inhomogeneities, at least up to z = 1.5. However, both studies used simulations with rather low resolution, which might be subject to large variance and therefore could not highlight second-order effects.

More recently, Adamek et al. (2019) used the general-relativistic simulation code gevolution (Adamek et al. 2016), together with accurate ray tracing, to find the null geodesics between sources and observer and to produce realistic halo catalogues. They found that when averaging over sources, ⟨1/D²(z)⟩ is very close to its value in a homogeneous Universe up to z = 3, thereby confirming Weinberg's conjecture, while ⟨D(z)⟩ is slightly biased, as expected. Although it has high resolution, gevolution remains a particle-mesh code without adaptive-mesh refinement, and thus cannot access very small scales.

In the present article, we propose a short theoretical review and a detailed numerical study of the bias in the distance-redshift relation with respect to the standard FLRW prediction. The theory part builds upon KP16 and fills minor conceptual gaps therein. In the main, numerical, part, we use a high-resolution N-body simulation from the 'Raygal' suite. We propagate photons along null geodesics to infer distance measures, accounting for gravitational lensing and redshift perturbations. Taking advantage of very large statistics and a wide redshift range (up to z = 10), we investigate the different averaging procedures and study the statistics of the related observables. Furthermore, we numerically estimate the area bias depending on the choice of light-cone slicing.

The article is organised as follows. Section 2 presents the formalism for light propagation and the bias on statistical quantities with respect to the homogeneous case, depending on the averaging procedure; we connect these notions to the area of slices of the light cone. The numerical simulation, ray-tracing methods, and analysis techniques are presented in Sect. 3, while the results are given in Sect. 4. We conclude in Sect. 5.

Notation and conventions. Greek indices (μ, ν, …) run from 0 to 3 and Latin indices (i, j, …) from 1 to 3. Bold symbols denote Euclidean two-dimensional or three-dimensional vectors, and matrices. Over-barred symbols denote quantities computed in a homogeneous-isotropic FLRW model. We adopt units in which the speed of light is unity, c = 1.

2. Theory

This section gathers a number of already-established theoretical results about light propagation in the inhomogeneous Universe, as well as a few novel elements, such as the distinction between lensing magnification and amplification and its interpretation. We shall focus on the statistical averages of distance measures, and how they relate to the area of light-cone slices.

2.1. Light propagation in a perturbed FLRW Universe

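We consider a cosmological space-time described by a spatially flat FLRW model with scalar perturbations. In the Newtonian gauge, the associated line element reads

$$\mathrm{d}s^2 = a^2(\eta)\left[-(1+2\phi)\,\mathrm{d}\eta^2 + (1-2\phi)\,\delta_{ij}\,\mathrm{d}x^i\,\mathrm{d}x^j\right], \tag{1}$$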

where η denotes the conformal time (hereafter simply referred to as time), xⁱ are comoving coordinates, a(η) is the scale factor describing cosmic expansion, and ϕ is the Bardeen potential (Bardeen 1980) caused by inhomogeneities in the matter density field. We assume that anisotropic stress is negligible, so that this potential is unique. Except in the vicinity of compact objects, ϕ ≪ 1 can be treated as a perturbation. The time at the observation event (here and now) is denoted η₀, where the scale factor is conventionally set to unity, a₀ = a(η₀) = 1.

Light propagates along null geodesics of the space-time geometry. In the absence of perturbations (that is, for ϕ = 0), such geodesics are straight lines in comoving coordinates, travelled with unit coordinate speed. In the presence of perturbations, light rays are bent and the coordinate speed of light effectively varies (Schneider et al. 1992). These effects are encoded in the null geodesic equation $k^\nu \nabla_\nu k^\mu = 0$, with $k^\mu \equiv \mathrm{d}x^\mu/\mathrm{d}\lambda$, where λ denotes a past-oriented affine parameter along the light ray. The temporal and spatial components of the geodesic equation read

where ℋ ≡ a⁻¹ da/dη is the conformal expansion rate. Equation (2) governs the evolution of light's frequency in the cosmic frame; combined with Eq. (2), Eq. (3) describes light bending.

2.2. Gravitational lensing

Light bending implies that the images of light sources are displaced and distorted when seen through the inhomogeneous Universe. Let θ denote the position of such an image of a point source, and β its FLRW counterpart, that is, the position where the image would be seen in the absence of cosmological perturbations. It is customary to refer to β as the source position.

2.2.1. Geometric distortions of infinitesimal images

The distortions of an infinitesimal image are then fully encoded in the Jacobi matrix 𝒜 of the mapping θ ↦ β, also called the distortion matrix. This matrix may be parameterised as

where κ, γ = γ₁ + iγ₂, and ω are respectively called the convergence, the complex shear, and the rotation. As a rule of thumb, κ and γ are typically first order in cosmological perturbations, while ω is second order (see for example Fleury 2015, Sect. 2.3.2).

We define the signed geometric magnification of an image as

$$\mu \equiv \frac{1}{\det\mathcal{A}} . \tag{5}$$

By definition of the determinant of a matrix, its absolute value |μ| = d²θ/d²β is the ratio of the angular size of an infinitesimal image, d²θ, to the angular size of the underlying source, d²β.

As indicated by its name and definition, the signed magnification of an image can be either positive or negative, which indicates its orientation relative to the source. An image at θ is said to have positive parity if μ(θ) > 0, and negative parity otherwise. In a Universe made of transparent lenses, any source has an odd total number 2n + 1 of images, with n ≥ 0 images of negative parity and n + 1 images of positive parity (Burke 1981; Schneider et al. 1992).
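The link between the distortion matrix, the signed magnification, and the parity can be made concrete. The following is a small sketch that assumes the common parameterisation 𝒜 = [[1 − κ − γ₁, −γ₂ + ω], [−γ₂ − ω, 1 − κ + γ₁]]. The sign convention for ω is an assumption. However, det 𝒜 = (1 − κ)² − |γ|² + ω² does not depend on that convention.

```python
import numpy as np


def distortion_matrix(kappa, gamma1, gamma2, omega=0.0):
    """Jacobi matrix d(beta)/d(theta) in an assumed standard parameterisation.

    The sign convention for the rotation omega is an assumption; det A does not depend on it.
    """
    return np.array([[1.0 - kappa - gamma1, -gamma2 + omega],
                     [-gamma2 - omega, 1.0 - kappa + gamma1]])


def signed_magnification(kappa, gamma1, gamma2, omega=0.0):
    """mu = 1 / det A = 1 / [(1 - kappa)^2 - |gamma|^2 + omega^2]."""
    return 1.0 / ((1.0 - kappa) ** 2 - (gamma1**2 + gamma2**2) + omega**2)


def parity(mu):
    """Image parity from the sign of the signed magnification."""
    return "positive" if mu > 0 else "negative"


# Weak lensing: mu ~ 1 + 2 kappa at first order, with positive parity.
print(signed_magnification(0.01, 0.005, 0.0))
# A strong shear, |gamma| > 1 - kappa, flips the parity of the image.
print(parity(signed_magnification(0.1, 1.2, 0.0)))
```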

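The total geometric magnification of a source β is the sum of the absolute magnifications of its 2n + 1 images θᵢ(β),

$$\mu_{\rm tot}(\boldsymbol{\beta}) \equiv \sum_{i=1}^{2n+1} \left|\mu[\boldsymbol{\theta}_i(\boldsymbol{\beta})]\right| . \tag{6}$$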

It represents the total increase in the apparent size of a source relative to its unlensed counterpart.

2.2.2. Geometric-magnification integrals

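In a transparent Universe, the map θ ↦ β(θ), which associates each image with its source, is a well-defined surjective function from 𝕊² onto 𝕊². In other words, any image has one and only one source, and every source has at least one image. These properties imply

$$\int_{\mathbb{S}^2} \frac{\mathrm{d}^2\theta}{\mu(\boldsymbol{\theta})} = 4\pi, \tag{7}$$

$$\int_{\mathbb{S}^2} \mathrm{d}^2\beta\;\mu_{\rm tot}(\boldsymbol{\beta}) = 4\pi, \tag{8}$$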

which we refer to as the geometric-magnification integrals.

We note that in the absence of multiple imaging, θ ↦ β(θ) is a diffeomorphism of 𝕊², so that Eqs. (7) and (8) are merely changes of variables in an integral. The true interest of the magnification integrals is that they hold even in the presence of strong lensing and multiple images.

The total magnification integral (8) is the full generalisation of the result found by Weinberg (1976) at linear order and with point lenses. To the best of our knowledge, it was first formulated by Wucknitz (2008). The proof goes as follows. For each source element d²β, d²θ_tot = μ_tot(β) d²β is the total solid angle occupied by the associated images. As one sums over d²β, the image sphere gets progressively covered. On the one hand, the whole sphere is eventually covered, because any image has a source – for any θ, there is always a corresponding β. On the other hand, every image point θ is covered only once, because an image cannot have more than one source.

The inverse-magnification integral (7) can be found in Kibble & Lieu (2005). Its proof relies on the relative number of positive- and negative-parity images, mentioned in Sect. 2.2.1. For each element d²θ of the image sphere, d²β = |μ⁻¹(θ)| d²θ is the corresponding solid angle in the source sphere. As one sums over d²θ, the entire source sphere is covered, again because every source has at least one image. Multiple imaging implies, however, that some regions of the source sphere may be covered several times. When this occurs, a source β always has 2n + 1 images θᵢ(β), n of which have negative parity. Their signed contributions therefore cancel in pairs, except for one:

$$\sum_{i=1}^{2n+1} \frac{\mathrm{d}^2\theta_i}{\mu(\boldsymbol{\theta}_i)} = \sum_{i=1}^{2n+1} \operatorname{sgn}[\mu(\boldsymbol{\theta}_i)]\,\mathrm{d}^2\beta = [(n+1) - n]\,\mathrm{d}^2\beta = \mathrm{d}^2\beta . \tag{9}$$

Therefore, each source element is eventually covered once and only once, which leads to Eq. (7). As pointed out by KP16, although the inverse-magnification integral is correct, it is of little practical interest, because the parity of an image is difficult to observe.
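The inverse-magnification integral can be checked on a simple toy model. The following is a minimal sketch that assumes a hypothetical axisymmetric lens map on the sphere, β(θ) = θ + A sin 2θ in polar angle. This is not a physical lens model. For A > 1/2, the map folds over itself, so some sources have three images, the middle one with negative parity. The signed directional average of μ⁻¹ nevertheless remains unity, as stated in Eq. (7). The average of |μ⁻¹| exceeds unity, because part of the source sphere is covered three times. Counting the sign changes of β(θ) − β_s recovers the odd number of images.

```python
import numpy as np


def toy_beta(theta, amp=0.8):
    """Hypothetical axisymmetric lens map on the sphere (polar angles): theta -> beta.

    beta(0) = 0 and beta(pi) = pi; for amp > 1/2 the map folds over itself, so some
    sources have three images, the middle one with d(beta)/d(theta) < 0 (negative parity).
    """
    return theta + amp * np.sin(2.0 * theta)


def toy_dbeta(theta, amp=0.8):
    """Derivative d(beta)/d(theta) of the toy map."""
    return 1.0 + 2.0 * amp * np.cos(2.0 * theta)


def trapezoid(y, x):
    """Composite trapezoidal rule (written out to avoid NumPy version differences)."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))


def inverse_magnification_averages(amp=0.8, n=200_001):
    """Return (<1/mu>_d, <|1/mu|>_d) over the image sphere for the toy map.

    For an axisymmetric map, 1/mu = sin(beta) beta'(theta) / sin(theta); with
    d^2theta = sin(theta) dtheta dphi, the phi integral gives a factor 2 pi.
    """
    theta = np.linspace(0.0, np.pi, n)
    integrand = np.sin(toy_beta(theta, amp)) * toy_dbeta(theta, amp)  # sin(theta) / mu
    signed = 2.0 * np.pi * trapezoid(integrand, theta) / (4.0 * np.pi)
    absolute = 2.0 * np.pi * trapezoid(np.abs(integrand), theta) / (4.0 * np.pi)
    return signed, absolute


def count_images(beta_s, amp=0.8, n=200_001):
    """Number of images of a source at polar angle beta_s (sign changes of beta - beta_s)."""
    theta = np.linspace(0.0, np.pi, n)
    diff = toy_beta(theta, amp) - beta_s
    return int(np.count_nonzero(np.sign(diff[1:]) != np.sign(diff[:-1])))


print(inverse_magnification_averages())  # signed average ~ 1, absolute average > 1
print(count_images(1.5), count_images(0.3))  # a triply imaged and a singly imaged source
```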

2.2.3. Observable magnification: shift and tilt corrections

The geometric magnification μ = ±d²θ/d²β is a well-defined theoretical notion, but it is not the most observationally relevant one. This is because d²β represents the coordinate solid angle associated with an image, rather than the unlensed apparent size d²θ̄ of its source. There are two reasons why these quantities differ, namely the 'shift' and the 'tilt', which we elaborate on below.

Observable magnification. We consider an infinitesimal source at redshift z with physical area d²A. Let d²θ be the apparent size of an image of that source, and d²θ̄ its unlensed counterpart, that is, the solid angle under which d²A would be seen at the same redshift in FLRW. The observable magnification is defined as

$$\tilde{\mu} \equiv \pm\frac{\mathrm{d}^2\theta}{\mathrm{d}^2\bar{\theta}}, \tag{10}$$

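where the ± sign indicates the image parity. By definition, the absolute observable magnification thus quantifies the change in the area distance $D_{\rm A}$ to an image due to cosmological perturbations,

$$|\tilde{\mu}(z,\boldsymbol{\theta})| = \left[\frac{\bar{D}_{\rm A}(z)}{D_{\rm A}(z,\boldsymbol{\theta})}\right]^2 . \tag{11}$$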

We note that the above relies on a notion of area distance associated with individual images θ.

Shift and tilt. We now relate the observable magnification μ̃ to the geometric magnification μ. For simplicity, we identify the source with an infinitesimal patch of the surface of constant redshift. However, the results obtained in this paragraph are much more general; in particular, we refer the reader to Appendix A for an alternative approach based on a spherical source.

Let d²β be the coordinate solid angle covered by the source. Multiplying and dividing the expression (11) of μ̃ by d²θ/d²β gives

$$|\tilde{\mu}| = |\mu|\,\frac{\mathrm{d}^2\bar{A}}{\mathrm{d}^2 A}, \tag{12}$$

where d²Ā is the physical area subtended by the coordinate solid angle d²β in the absence of perturbations.

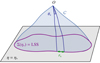

As illustrated in Fig. 1,  differs from d2A for two reasons. First, for a given redshift z, the time and radial position of the source event are not necessarily the same in the background

differs from d2A for two reasons. First, for a given redshift z, the time and radial position of the source event are not necessarily the same in the background  as in the perturbed Universe (η, r); the coordinates of that event are shifted. We call d2A⊥ the area sub-tended by d2β at the shifted event; we have

as in the perturbed Universe (η, r); the coordinates of that event are shifted. We call d2A⊥ the area sub-tended by d2β at the shifted event; we have

|

Fig. 1. Illustrating the difference between the geometrical magnification μ = d2θ/d2β and the observable magnification |

Second, because of light deflection, the orientation of the source is tilted by an angle ι with respect to how it would be seen in FLRW. Because they are subtended by the same solid angle d²β, the tilted area d²A is larger than its untilted counterpart, d²A⊥ = d²A cos ι.

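Summarising, the observable and geometrical magnifications are related as

$$|\tilde{\mu}| = |\mu| \left[\frac{a(\bar{\eta})\,\bar{r}}{a(\eta)\,r}\right]^2 \cos\iota . \tag{14}$$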

We generally expect the shift to be the main driver of the difference between μ and μ̃, because the tilt correction, 1 − cos ι ≈ ι²/2, is a second-order quantity. Specifically, in the numerical results discussed in Sect. 4.1, the effect of the tilt will always be sub-dominant; it will be precisely quantified in Sect. 4.3.

Physical origin of the shift. While μ is a pure-lensing quantity, μ̃ depends on other phenomena, such as time delays, Sachs-Wolfe (SW) and integrated Sachs-Wolfe (ISW) effects, or peculiar velocities. Peculiar velocities in particular may lead to significant differences between μ(z) and μ̃(z) at low redshift. Consider, for instance, a source with a centripetal peculiar velocity with respect to the observer. Its redshift is then smaller than that of a comoving source at the same position. Thus, for a given redshift z, its comoving distance must be slightly larger than the one that it would have if it were comoving, r > r̄. Because the source event belongs to the observer's past light cone, this also means that it happens slightly earlier, η < η̄. At low z, this typically results in a(η) r > a(η̄) r̄, implying that μ̃ < μ. The conclusion would be the opposite if the peculiar velocity were centrifugal.
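The word 'typically' in the low-redshift argument above can be illustrated in a toy background. The following sketch assumes an Einstein-de Sitter model, a(η) = (η/η₀)², in units where η₀ = 1. It also assumes a hypothetical displacement dr of the source event along the past light cone at fixed redshift. It combines the shift factor [a(η̄) r̄/(a(η) r)]² with the tilt factor cos ι. In this model a(η) r ∝ (1 − r)² r grows with r only for r < η₀/3, that is, for z < 1.25. A source pushed slightly further away therefore has μ̃ < μ at low z, and μ̃ > μ beyond that redshift.

```python
import numpy as np


def scale_factor_eds(eta, eta0=1.0):
    """Einstein-de Sitter scale factor in conformal time, a = (eta / eta0)^2 (toy background)."""
    return (eta / eta0) ** 2


def abs_mu_tilde_over_mu(r_bar, dr, iota=0.0, eta0=1.0):
    """|mu_tilde| / |mu| = cos(iota) * [a(eta_bar) r_bar / (a(eta) r)]^2.

    Hypothetical set-up: the background source event sits at comoving radius r_bar on
    the past light cone, eta_bar = eta0 - r_bar; the perturbed event at the same
    redshift is shifted to r = r_bar + dr, eta = eta0 - r (still on the light cone).
    """
    r = r_bar + dr
    shift = (scale_factor_eds(eta0 - r_bar, eta0) * r_bar) / (scale_factor_eds(eta0 - r, eta0) * r)
    return np.cos(iota) * shift**2


# A source pushed slightly further away (dr > 0), as for a centripetal peculiar velocity:
for r_bar in (0.1, 0.2, 0.5, 0.7):
    z = 1.0 / scale_factor_eds(1.0 - r_bar) - 1.0
    print(f"z = {z:.2f}: |mu_tilde|/|mu| = {abs_mu_tilde_over_mu(r_bar, 1e-3):.5f}")
```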

To be more specific, at first order in the peculiar velocities of the source, $\boldsymbol{v}_{\rm s}$, and of the observer, $\boldsymbol{v}_{\rm o}$, the shift³ reads (Kaiser 1987; Sasaki 1987)

where β is the unit vector in the background direction of the source. The 1/(ℋr) term in Eq. (15) shows that the shift may reach large values for sources at small distances.

The quantity in Eq. (15) is sometimes called 'Doppler convergence' (Bonvin 2008; Bolejko et al. 2013; Bacon et al. 2014), although it is unrelated to lensing. This name and notation originate from the fact that we may define an observable distortion matrix 𝒜̃, which is to the distortion matrix 𝒜 what μ̃ is to μ. Namely, if

$$\tilde{\mathcal{A}} \equiv \frac{\mathcal{D}}{\bar{D}_{\rm A}(z)}, \tag{16}$$

where 𝒟 is the Jacobi matrix of the Sachs formalism (for example Fleury 2015, Sect. 2.2), then μ̃ = 1/det 𝒜̃. We may then introduce a convergence-shear decomposition of 𝒜̃ similar to Eq. (4), thereby defining an observable convergence, shear, and rotation. The Doppler convergence is an important contribution to this observable convergence.

Fixing other parameters. In the above, we have defined the observable magnification μ̃ at fixed redshift. This choice was made for concreteness, but the definition of μ̃ could be adapted if we were to fix another parameter, such as the comoving radius, the emission time, or the affine parameter. A little intellectual challenge would consist in determining which light-cone slicing may ensure […]. To our knowledge, there is currently no answer to that particular question.

2.2.4. Amplification and luminosity distance

Small or remote sources, such as SNe or quasars, are generally unresolved by telescopes. In that context, the key observable is the observed flux, that is, the total power received from the source per unit of telescope area, rather than the apparent size of images. We may define the amplification of a source β at z as the ratio of the observed flux S(z, β) to its unlensed counterpart S̄(z). By virtue of Etherington's reciprocity law (Etherington 1933), and assuming a transparent Universe, the amplification is nothing but the total observable magnification,

$$\frac{S(z,\boldsymbol{\beta})}{\bar{S}(z)} = \tilde{\mu}_{\rm tot}(z,\boldsymbol{\beta}) \equiv \sum_{i=1}^{2n+1} \left|\tilde{\mu}[z,\boldsymbol{\theta}_i(\boldsymbol{\beta})]\right| . \tag{17}$$

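By definition of the luminosity distance $D_{\rm L}$, we also have

$$\tilde{\mu}_{\rm tot}(z,\boldsymbol{\beta}) = \left[\frac{\bar{D}_{\rm L}(z)}{D_{\rm L}(z,\boldsymbol{\beta})}\right]^2 . \tag{18}$$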

This could seem to be at odds with Eq. (11) and the well-known distance-duality relation $D_{\rm L} = (1+z)^2 D_{\rm A}$. This apparent paradox is due to the fact that we have defined $D_{\rm A}$ for a single image, while $D_{\rm L}$ accounts for all the images of a given source. The two approaches are reconciled if we consistently distinguish between image-based and source-based definitions. For example, we could define the area distance of a multiply imaged source, $D_{\rm A}(z,\boldsymbol{\beta})$, from the total apparent area occupied by all its images. In that case, $[\bar{D}_{\rm A}(z)/D_{\rm A}(z,\boldsymbol{\beta})]^2 = \tilde{\mu}_{\rm tot}(z,\boldsymbol{\beta})$, consistent with distance duality.

Finally, we note that Eq. (17) is only valid if one compares the background and perturbed fluxes at the same redshift z. Had we compared the two situations at, for instance, fixed affine parameter, the background and perturbed redshifts would have differed. This would have affected the fluxes through the energy and the reception rate of individual photons.
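The distinction between image-based and source-based distances can be made concrete with a few numbers. The following is a minimal sketch that uses hypothetical values for D̄_A, z, and the observable magnifications of three images (two of positive parity and one of negative parity). Distance duality holds between D_L and the source-based area distance built from μ̃_tot. It does not hold between D_L and the area distance of any single image.

```python
import math


def image_area_distance(dbar_a, mu_image):
    """Area distance to a single image, from |mu_tilde| = (Dbar_A / D_A)^2."""
    return dbar_a / math.sqrt(abs(mu_image))


def source_distances(dbar_a, z, image_mus):
    """Source-based distances of a multiply imaged source.

    The amplification is mu_tot = sum |mu_tilde_i|; D_L follows from
    (Dbar_L / D_L)^2 = mu_tot with Dbar_L = (1 + z)^2 Dbar_A, and the source-based
    area distance from (Dbar_A / D_A)^2 = mu_tot.
    """
    mu_tot = sum(abs(m) for m in image_mus)
    d_l = (1.0 + z) ** 2 * dbar_a / math.sqrt(mu_tot)
    d_a_source = dbar_a / math.sqrt(mu_tot)
    return mu_tot, d_l, d_a_source


# Hypothetical source at z = 1 with three images (parities +, -, +).
mu_tot, d_l, d_a = source_distances(1000.0, 1.0, [3.0, -1.5, 0.5])
print(d_l / d_a)                               # (1 + z)^2 = 4: duality holds per source
print(d_l / image_area_distance(1000.0, 3.0))  # not 4 for an individual image
```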

2.3. Averaging in cosmology

The interpretation of cosmological observations, and their confrontation with theoretical predictions, involve various notions of averaging, which are non-trivially related in the presence of gravitational lensing. We review here the relevant definitions and properties of cosmological averages, elaborating on Bonvin et al. (2015b), Kaiser & Peacock (2016), Fleury et al. (2017a).

Importantly, from now on we shall neglect multiple imaging, except where explicitly stated otherwise. Thus, the lens mapping θ ↦ β is assumed to be a diffeomorphism of 𝕊², and the resulting magnifications are positive. In that context, there is no longer any difference between signed, absolute, and total magnifications. We may also treat observable magnification and amplification as synonyms, both denoted μ̃. This assumption is justified by the relatively rare occurrence of strong lensing from a cosmological perspective, and by the considerable simplification that it brings to the discussions of this section.

2.3.1. Directional averaging

Let X(θ) be an observable in the direction θ on the observer's celestial sphere, such as the temperature anisotropies of the cosmic microwave background, or the apparent surface density of galaxies. Directional averaging ⟨…⟩_d corresponds to a statistical average of X(θ) in which all the observation directions θ have the same statistical weight; the average is thus weighted by the image solid angle d²θ,

$$\langle X\rangle_{\rm d} \equiv \frac{1}{4\pi}\int_{\mathbb{S}^2} \mathrm{d}^2\theta\; X(\boldsymbol{\theta}) . \tag{19}$$

One may ask how lensing affects directional averages, in particular for distance measurements. We first note that, by virtue of Eq. (7), the directional average of the inverse geometric magnification is unity,

$$\langle \mu^{-1}\rangle_{\rm d} = 1 . \tag{20}$$

This property is exact and applies to any slicing of the light cone. However, as pointed out in Sect. 2.2.3, Eq. (20) has little observational relevance, because the quantity that is actually observable is μ̃, which differs from μ by the shift and tilt described in Fig. 1. Despite that concern, we may still conclude that

$$\langle \tilde{\mu}^{-1}\rangle_{\rm d} \approx 1 \tag{21}$$

in the limit where the tilt/shift corrections are sub-dominant compared to the most relevant gravitational-lensing effects.
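Directional averaging weights each direction by its solid angle. The following is a minimal sketch of ⟨X⟩_d computed as a midpoint-rule quadrature on a regular (θ, φ) grid. This grid is a simple stand-in, not the pixelisation used in the article. The sin θ factor is what makes every direction count equally. With it, ⟨cos²θ⟩_d = 1/3. A naive average over the grid points would instead give about 1/2.

```python
import numpy as np


def directional_average(func, n_theta=400, n_phi=800):
    """<X>_d = (1/4pi) * integral of X over the sphere, each direction weighted by d^2theta.

    Midpoint rule on a regular (theta, phi) grid; the solid-angle element is
    sin(theta) dtheta dphi. func(theta, phi) must accept NumPy arrays.
    """
    dtheta = np.pi / n_theta
    dphi = 2.0 * np.pi / n_phi
    theta = (np.arange(n_theta) + 0.5) * dtheta
    phi = (np.arange(n_phi) + 0.5) * dphi
    th, ph = np.meshgrid(theta, phi, indexing="ij")
    solid_angle = np.sin(th) * dtheta * dphi
    return float(np.sum(func(th, ph) * solid_angle) / (4.0 * np.pi))


# Solid-angle weighting gives <cos^2 theta>_d = 1/3 ...
print(directional_average(lambda th, ph: np.cos(th) ** 2))
# ... whereas a naive mean over the polar grid points gives about 1/2.
th_grid = (np.arange(400) + 0.5) * np.pi / 400
print(np.mean(np.cos(th_grid) ** 2))
```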

Unlike Eq. (20), the accuracy of Eq. (21) depends on which parameter is fixed in the definition of μ̃. For instance, Kibble & Lieu (2005) argued that Eq. (21) was accurate for sources at fixed affine parameter λ; this was checked numerically with ray tracing in post-Newtonian cosmological modelling (Sanghai et al. 2017). However, we shall see in Sect. 4.2.5 that the use of the affine parameter is quite risky at very high redshift. If instead the redshift is kept fixed, then significant departures from Eq. (21) are expected at low z due to peculiar velocities.

2.3.2. Source-averaging and areal averaging

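We now consider an observable Y that is associated with a specific population of sources, such as the distance to type-Ia supernovae or the Lyman-α absorption in quasar spectra. The natural averaging procedure associated with such an observable is called source averaging, $\langle\dots\rangle_{\rm s}$, and is defined as

$$\langle Y\rangle_{\rm s} \equiv \frac{1}{N}\sum_{i=1}^{N}Y_i = \int\mathrm{d}^2\theta\;\frac{1}{N}\frac{\mathrm{d}^2N}{\mathrm{d}^2\theta}\,Y(\theta), \tag{22}$$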

where N denotes the number of observed sources, and in the second equality we took the continuous limit. The difference with directional averaging is that the sky is not necessarily homogeneously sampled. Clearly, if the sources are not homogeneously distributed in the Universe, then their projected density $N^{-1}\mathrm{d}^2N/\mathrm{d}^2\theta$ tends to favour some regions of the sky over others, thereby breaking the apparent statistical isotropy.

But even if sources are homogeneously distributed in space, gravitational lensing implies that they do not evenly sample the observer’s sky. Indeed, lensing tends to make light beams ‘avoid’ over-dense regions of the Universe, thereby favouring under-dense regions in source-averages. This specific effect may be captured in the notion of areal averaging. For example, if all the sources are observed at the same redshift z, we may define the areal average of Y as

$$\langle Y\rangle_{\rm A} \equiv \frac{1}{A(z)}\int_{\Sigma(z)}\mathrm{d}^2A\;Y, \tag{23}$$

with Σ(z) the surface of constant redshift z and A(z) its total proper area. The definition must be adapted if the sources are observed on other slices of the light cone, for instance all at the same emission time η or affine parameter λ.

Using the area distance, defined by $\mathrm{d}^2A=D_{\rm A}^2\,\mathrm{d}^2\theta$ with $D_{\rm A}^2=\bar D_{\rm A}^2\,\tilde\mu^{-1}$, we may convert areal averages into directional averages as follows,

$$\langle Y\rangle_{\rm A} = \frac{1}{A(z)}\int\mathrm{d}^2\theta\;D_{\rm A}^2\,Y = \frac{\langle\tilde\mu^{-1}Y\rangle_{\rm d}}{\langle\tilde\mu^{-1}\rangle_{\rm d}}, \tag{24}$$

from which we immediately conclude, substituting $Y=\tilde\mu$, that

$$\langle\tilde\mu\rangle_{\rm A} = \frac{1}{\langle\tilde\mu^{-1}\rangle_{\rm d}}\approx 1 \tag{25}$$

by virtue of Eq. (21). Areal averaging exactly coincides with source-averaging if the sources are homogeneously distributed on Σ(z), because then the number of observed sources scales as the area that they occupy, so that $\langle Y\rangle_{\rm s}=\langle Y\rangle_{\rm A}$. If not, corrections arise from the correlation between the fluctuations of the source density and the amplification; further corrections, such as redshift-space distortions, must also be accounted for if the sources are observed in redshift bins (Fleury et al. 2017a; Fanizza et al. 2020). Such discrepancies between source-averaging and areal averaging typically remain below 10⁻⁵, and hence they may be neglected. Combining this approximation with Eq. (25) then yields

$$\langle\tilde\mu\rangle_{\rm s}\approx 1. \tag{26}$$

Equation (26) was shown to be accurate at the 10⁻³ level up to z = 3 by Adamek et al. (2019).
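The weighting rules behind the conversion between areal and directional averages, and hence Eq. (25), fit in a few lines of NumPy. In this sketch, each pixel of an equal-area pixelisation carries the observed amplification (`mu` in the code, standing for $\tilde\mu$) and is weighted either by its proper area, d²A ∝ $\tilde\mu^{-1}$ d²θ, for areal averaging, or by its source count for source-averaging. The pixel values and function names are hypothetical.

```python
import numpy as np

def directional_average(x):
    """<X>_d: every (equal-area) pixel of the observed sky has the same weight."""
    return np.mean(x)

def source_average(y, counts):
    """<Y>_s: each pixel is weighted by the number of sources observed in it."""
    return np.sum(counts * y) / np.sum(counts)

def areal_average(y, inv_mu):
    """<Y>_A: each pixel is weighted by the proper area it subtends, d^2A ∝ mu^{-1} d^2theta."""
    return source_average(y, inv_mu)

# Hypothetical per-pixel inverse amplifications on a tiny four-pixel sky.
inv_mu = np.array([0.9, 1.05, 1.1, 0.95])
mu = 1.0 / inv_mu

print(areal_average(mu, inv_mu), 1.0 / directional_average(inv_mu))  # identical
# Sources spread homogeneously over the surface: counts proportional to area.
counts = 1000 * inv_mu
print(source_average(mu, counts), areal_average(mu, inv_mu))          # identical
```

The first printed pair reproduces $\langle\tilde\mu\rangle_{\rm A}=1/\langle\tilde\mu^{-1}\rangle_{\rm d}$ exactly; the second shows that source- and areal averages coincide when source counts are proportional to area.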

2.3.3. Ensemble averaging and cosmic variance

We shall close this discussion with the notion of ensemble averaging. Within the standard lore, we envisage all cosmological structures as originating from quantum fluctuations in the primordial Universe (Peter & Uzan 2013). From that point of view, ϕ(η, x) is a particular realisation of an intrinsically stochastic field, which is believed to be initially Gaussian. In that framework, the ensemble average of any field Z(η, x) that depends on ϕ, which we may simply denote as ⟨Z(η, x)⟩, would be its expectation value over an infinite number of realisations of the Universe.

Contrary to directional, areal, or source-averages, ensemble-averaging is thus a strictly theoretical procedure, which is nevertheless used in any cosmological prediction. Ensemble averaging may be connected to other averaging procedures via the ergodicity principle. Which observational average is mimicked by ensemble averaging then depends on which quantities are kept fixed when making multiple realisations of the Universe, as illustrated in Fig. 2. For example, if the observed direction of light θ and the redshift z are kept fixed, then we get directional averaging,

$$\langle Z(\theta,z)\rangle = \langle Z(z)\rangle_{\rm d}, \tag{27}$$

Fig. 2. The correspondence between ensemble averaging and observational averaging procedures depends on which quantity is kept fixed.

because every θ is effectively given the same statistical weight. In this scenario, the source position β may change from one cosmic realisation to another. An alternative scenario consists, conversely, in fixing β while allowing θ to vary from one realisation to another; this yields

The above must be divided by $\langle\mu^{-1}\rangle_{\rm d}$ if the directional average is taken over only a fraction of the sky. Other possibilities would consist in fixing another parameter than the redshift, such as time or the affine parameter, which would correspond to averaging across other slices of the light cone.

Importantly, ergodicity is sensible only if the region of the Universe over which an observational averaging is performed is statistically homogeneous. In other words, there should not be super-sample inhomogeneity modes. Such an assumption is not satisfied in the standard lore, which predicts inhomogeneity modes at all scales. Thus, any observational average is subject to an irreducible source of uncertainty, called cosmic variance. Equations (27) and (28) only hold up to cosmic variance.

2.4. Biased distance measurements

Equations (21) and (26) show that only very specific quantities are (almost) unbiased by cosmic inhomogeneities; in particular, most distance measurements happen to be biased. We describe here the nature and amplitude of these biases.

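We introduce for convenience the dimensionless distance

$$d(z) \equiv \frac{D_{\rm A}(z)}{\bar D_{\rm A}(z)} = \frac{D_{\rm L}(z)}{\bar D_{\rm L}(z)} = \tilde\mu^{-1/2}(z). \tag{29}$$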

Because d is a non-linear function of $\tilde\mu$, it exhibits a statistical bias for both directional and source-averaging. For directional averaging, we may expand d at second order in $\tilde\mu^{-1}-1$ and use Eq. (21) to get

$$\langle d\rangle_{\rm d}\approx 1-\frac{1}{8}\left\langle\left(\tilde\mu^{-1}-1\right)^2\right\rangle_{\rm d}. \tag{30}$$

Similarly, for areal or source-averaging we may expand d in terms of $\tilde\mu-1$, which, together with Eq. (26), yields

$$\langle d\rangle_{\rm s}\approx 1+\frac{3}{8}\left\langle\left(\tilde\mu-1\right)^2\right\rangle_{\rm s}. \tag{31}$$

The biases appearing in Eqs. (30) and (31) are not independent, and are usually expressed in terms of the convergence. Indeed, if the amplification is expressed in terms of some convergence and shear similarly to Eq. (5), that is, $\tilde\mu=[(1-\tilde\kappa)^2-|\tilde\gamma|^2]^{-1}$, then at second order in $\tilde\kappa$ and $\tilde\gamma$,

$$\left(\tilde\mu^{-1}-1\right)^2\approx\left(\tilde\mu-1\right)^2\approx 4\tilde\kappa^2. \tag{32}$$

Since $\tilde\kappa^2$ is a second-order quantity, the difference between its directional, source-, or ensemble-average is of higher order, and hence it does not matter much which averaging procedure is considered when substituting Eq. (32) into Eqs. (30) and (31). Furthermore, if we again neglect the shift and tilt corrections⁴ and write $\tilde\kappa\approx\kappa$, then we simply have

$$\langle d\rangle_{\rm d}\approx 1-\frac{1}{2}\langle\kappa^2\rangle, \tag{33}$$

$$\langle d\rangle_{\rm s}\approx 1+\frac{3}{2}\langle\kappa^2\rangle. \tag{34}$$

If Eq. (34) is applied at the redshift of the CMB, z* ≈ 1100, and κ(z) is computed from linear perturbation theory, then the corresponding bias reaches the percent level. This is how Clarkson et al. (2014) concluded that the standard analysis of the CMB, which does not account for such a bias, might be flawed. That conclusion was shown to be incorrect by Bonvin et al. (2015a) and Kaiser & Peacock (2016), because the analysis of the CMB is in fact not sensitive to $\langle d(z_*)\rangle_{\rm s}$. However, supernova cosmology is. In supernova surveys, it is customary to use the distance modulus rather than the luminosity distance as a distance measure; its perturbation due to inhomogeneities reads $\Delta m=5\log_{10}d$, and hence its source-averaged bias is

$$\langle\Delta m\rangle_{\rm s}\approx\frac{5}{\ln 10}\,\langle\kappa^2\rangle. \tag{35}$$

For z < 2, this bias remains below 10⁻³ and hence is negligible in current SN surveys, except for reconstructions of the evolution of the dark-energy equation of state (Fleury et al. 2017a). It would be easily removed if next-generation surveys used $D_{\rm L}^{-2}$, which is unbiased by virtue of Eq. (26), instead of magnitude as a distance indicator.
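The size of the biases in Eqs. (30), (31), and (34), and of the magnitude bias, can be checked on a toy lensing map. Expanding d = $\tilde\mu^{-1/2}$ to second order in κ and γ, and using ⟨|γ|²⟩ = ⟨κ²⟩ (exact on a periodic flat map), one obtains ⟨d⟩_d ≈ 1 − ⟨κ²⟩/2, ⟨d⟩_s ≈ 1 + 3⟨κ²⟩/2, and ⟨Δm⟩_s ≈ (5/ln 10)⟨κ²⟩ ≈ 2.17⟨κ²⟩; these coefficients are our own derivation under the toy assumptions. The sketch below (hypothetical names and parameters, shift and tilt ignored, sources homogeneous in the source plane so that each pixel carries the weight μ⁻¹) compares them with the exact non-linear averages.

```python
import numpy as np

def toy_maps(n=256, kappa_rms=0.01, smoothing=6.0, seed=7):
    """Hypothetical periodic flat-sky toy: Gaussian convergence plus Kaiser-Squires shear."""
    rng = np.random.default_rng(seed)
    k1 = 2 * np.pi * np.fft.fftfreq(n)[:, None]
    k2 = 2 * np.pi * np.fft.fftfreq(n)[None, :]
    ksq = k1**2 + k2**2
    kap_k = np.fft.fft2(rng.standard_normal((n, n))) * np.exp(-0.5 * ksq * smoothing**2)
    kap_k[0, 0] = 0.0                       # zero-mean convergence
    kap_k[n // 2, :] = 0.0                  # drop Nyquist modes
    kap_k[:, n // 2] = 0.0
    ksq[0, 0] = 1.0                         # avoid 0/0 at k = 0
    fields_k = (kap_k, (k1**2 - k2**2) / ksq * kap_k, 2 * k1 * k2 / ksq * kap_k)
    maps = [np.fft.ifft2(f).real for f in fields_k]
    scale = kappa_rms / maps[0].std()
    return [m * scale for m in maps]

kappa, g1, g2 = toy_maps()
inv_mu = (1 - kappa) ** 2 - g1**2 - g2**2   # mu^{-1} per pixel (shift and tilt ignored)
d = np.sqrt(inv_mu)                          # dimensionless distance d = mu^{-1/2}
kappa2_mean = np.mean(kappa**2)

def src_avg(y):
    """Source average for sources homogeneous in the source plane: weight mu^{-1}."""
    return np.sum(inv_mu * y) / np.sum(inv_mu)

ratio_dir = (d.mean() - 1) / kappa2_mean             # second-order estimate: -1/2
ratio_src = (src_avg(d) - 1) / kappa2_mean           # second-order estimate: +3/2
ratio_mag = src_avg(5 * np.log10(d)) / kappa2_mean   # second-order estimate: 5/ln 10
print(ratio_dir, ratio_src, ratio_mag)
```

Each printed ratio should lie close to its second-order estimate; the residual differences come from third-order terms, whose relative size is of order the rms convergence.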

We finally note that all the above only holds in a transparent Universe. If this assumption is relaxed, then distance measurements may be further biased by selection effects. For instance, in a Universe made of opaque matter lumps, observed light beams do not evenly sample the density field – they experience an effectively under-dense Universe. This results in an effective de-focussing of light, as originally described by Zel’dovich (1964) and later generalised by Dashevskii & Slysh (1966) and Dyer & Roeder (1974) on the basis of Einstein-Straus Swiss-cheese models (Kantowski 1969; Fleury 2014). The resulting bias on luminosity distance measurements typically reaches 10% at z = 1 for very lumpy models (Fleury et al. 2013). In Okamura & Futamase (2009), the authors made an attempt to determine the fraction of such opaque lumps based on the halo model of Sheth & Tormen (1999). To date, however, there is no compelling evidence of any large effect of opaque lumps on distance measurements in our Universe (Helbig 2020).

2.5. Reformulation: the area of light-cone slices

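We may now rephrase the average-amplification rules (21) and (26) in terms of the area of light-cone slices, such as surfaces of constant redshift. We consider for instance the directional average of the inverse amplification:

$$\langle\tilde\mu^{-1}(z)\rangle_{\rm d} = \frac{1}{4\pi}\int\mathrm{d}^2\theta\;\frac{D_{\rm A}^2(z,\theta)}{\bar D_{\rm A}^2(z)} = \frac{A(z)}{\bar A(z)}, \tag{36}$$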

where in the last equality we introduced the area A(z) of the surface of constant redshift, Σ(z), as well as its background counterpart $\bar A(z)=4\pi\bar D_{\rm A}^2(z)$. Equation (36) thus tells us that $\langle\tilde\mu^{-1}\rangle_{\rm d}\approx 1$ would be equivalent to $A(z)\approx\bar A(z)$, meaning that the area of iso-z surfaces is mostly unaffected by inhomogeneities.

Although it may seem quite natural, the last equality of Eq. (36) is, in fact, not obvious. In the background FLRW space-time, $\bar\Sigma(z)$ is a sphere (in comoving coordinates) at constant cosmic time; hence its proper area is clearly $\bar A(z)=4\pi\bar D_{\rm A}^2(z)$. But things are less clear in the inhomogeneous Universe, where Σ(z) is wrinkly and is not confined to a constant-time hypersurface. The definition of its proper area A(z) then raises several questions: whether it is unique, whether it is frame-dependent, and how it relates to the angular distance. We propose to clarify these subtleties below.

2.5.1. Surfaces of constant redshift and their area

Surfaces of constant redshift, Σ(z), correspond to a particular slicing of the light cone 𝒞 of the observation event O, as illustrated in Fig. 3. We note that this slicing is generally not performed at constant time, η(z)≠ const. This last property raises the question of how to actually define the proper area of Σ(z).

Fig. 3. The surface of constant redshift, Σ(z) (red), is a particular slicing of the light cone 𝒞 (blue) that is not contained in constant-time hyper-surfaces (grey). Light rays are represented as straight lines for simplicity.

Let d²x be the element of Σ(z) subtended by the solid angle d²θ at O. For causality reasons, d²x must be space-like; thus, there exists a frame in which d²x is strictly spatial. We shall call it the ‘natural frame’⁵ of d²x, and define the area $\mathrm{d}^2A_z$ of d²x in that frame. Applying that construction to all elements d²x of Σ(z) and integrating over them then defines its total area A(z).

Now that we have defined the area of an iso-z surface, we shall see how it relates to the angular distance $D_{\rm A}(z)$. For that purpose, we note that d²x is orthogonal to the direction of light propagation in its natural frame. We shall now prove this point. We may see 𝒞 as the hyper-surface defined by all the events that are in phase with O, for a spherical wave converging at the observer. If w denotes the phase of that wave and $k_\mu=\partial_\mu w$ is the associated wave four-vector, then any displacement $\mathrm{d}x^\mu$ across 𝒞 satisfies $k_\mu\,\mathrm{d}x^\mu=\mathrm{d}w=0$. This applies, in particular, to any $\mathrm{d}x^\mu\in\mathrm{d}^2x\subset\Sigma(z)\subset\mathcal{C}$. In the natural frame of d²x, this four-dimensional orthogonality becomes three-dimensional because $\mathrm{d}x^0=0$; in other words, $\boldsymbol{k}\cdot\mathrm{d}\boldsymbol{x}=0$, where $\boldsymbol{k}$ is the wave-vector in the natural frame.

The spatial orthogonality between $\boldsymbol{k}$ and d²x implies that d²x forms a Sachs screen space in its natural frame. Thus, $\mathrm{d}^2A_z$ is not only the proper area of d²x, but also the cross-sectional area of the light beam subtended by d²θ in that frame. By virtue of Sachs’ shadow theorem (Sachs 1961, see also Sect. 2.1.2 of Fleury 2015), the area of a beam is independent of the frame in which it is evaluated, as long as it is projected on a Sachs screen. This unique notion of a beam’s cross-sectional area then defines the angular distance according to

$$\mathrm{d}^2A_z = D_{\rm A}^2(z)\,\mathrm{d}^2\theta. \tag{37}$$

This confirms that the area of Σ(z) is indeed related to the angular distance as $A(z)=\int\mathrm{d}^2\theta\,D_{\rm A}^2(z,\theta)$, thereby validating the last equality of Eq. (36).

We finally note that the above reasoning actually applies to any slice of the light cone. In other words, for any parameter p such that the iso-p surface Σ(p) is space-like (p may stand, for instance, for the affine parameter λ, time η, the comoving radius r, etc.), the area of the element d²x subtended by the solid angle d²θ reads $\mathrm{d}^2A_p=D_{\rm A}^2(p,\theta)\,\mathrm{d}^2\theta$, and the total area of the iso-p surface is

$$A(p) = \int\mathrm{d}^2\theta\;D_{\rm A}^2(p,\theta). \tag{38}$$

2.5.2. The photon-flux conservation argument

In the spirit of the second part of Weinberg (1976), we may also connect the area-averaged amplification to A(z) on the basis of photon conservation. Let $F_0$ be the total number of photons received per unit time by an observer. If the photon number is conserved, then the same photons crossed Σ(z) at a rate $F_z=(1+z)F_0$, where the (1 + z) factor accounts for time dilation. Importantly, the latter relation holds regardless of the geometry of Σ(z); in other words, $F_z=\bar F_z$ for a given $F_0$.

Now, the photon flux may be written as

where n is the outgoing normal to Σ(z), J is the photon flux density vector, and in the second equality we used that, in its natural frame, n is aligned with k and hence with J. Since J = Π/(ℏω), where Π is the Poynting vector, we conclude that $J=\bar J\,\tilde\mu$, where $\bar J$ is the photon flux density on the background surface $\bar\Sigma(z)$. Combining this with $F_z=\bar F_z$ then yields

$$\langle\tilde\mu\rangle_{\rm A} = \frac{\bar A(z)}{A(z)}. \tag{40}$$

Therefore, Eq. (25) is equivalent to stating that inhomogeneities do not affect the area of iso-z surfaces. We stress again that Weinberg’s photon-flux-conservation argument does not imply that $\langle\tilde\mu\rangle_{\rm A}=1$, but rather shows that such an equality is equivalent to $A(z)=\bar A(z)$.

2.5.3. CMB and the area of the last-scattering surface

Hitherto, our discussion has focused on surfaces of constant redshift because of their connection with observational averages. The archetypal application would be the analysis of the Hubble diagram in an inhomogeneous Universe, which involves the source-averaged distance modulus. However, if we are more interested in the analysis of the CMB than in SNe, then the more relevant surface is the last-scattering surface (LSS), with area A*.

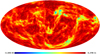

The area of the LSS is indeed a relevant quantity. As illustrated in Fig. 4, A* essentially drives how many sound horizons $r_{\rm s}$ the observer may count in the CMB, and hence their average angular size θ*, which is one of the main direct CMB observables. The problem is then to estimate to what extent A* is affected by the inhomogeneities of our Universe. Following KP16, we shall approximate the LSS as a surface of constant time⁶, Σ(η*). By virtue of Eq. (38) for p = η*, we have $A_*=\bar A_*\,\langle\tilde\mu^{-1}(\eta_*)\rangle_{\rm d}\approx\bar A_*$, where the departure from strict equality stems from the difference between $\tilde\mu(\eta_*)$ and μ(η*), that is, from the small shift and tilt effects emphasised in Sect. 2.2.3.

Fig. 4. Last-scattering surface, approximated as a constant-time slice Σ(η*) of the light cone 𝒞. Its area is connected to the number of sound horizons $r_{\rm s}$ that appear on the observer’s CMB, and hence to their average apparent size θ*.

From a pedagogical point of view, surfaces of constant time Σ(η) are very attractive because their natural frame (in the sense of Sect. 2.5.1) is the comoving frame. Indeed, for any displacement $\mathrm{d}x^\mu\in\Sigma(\eta)$ we have by definition $\mathrm{d}x^0=0$ in comoving coordinates. Because of this, the shift and tilt corrections to the area A* of the LSS can be made particularly explicit. Expressing A* as the area of a polar surface, we have indeed

where $r_*(\beta)$ is the comoving radial coordinate of the point of the LSS with angular coordinates β, which is generally shifted with respect to its background counterpart $\bar r_*=\eta_0-\eta_*$. The angle ι*(β) is the tilt between the normal to the LSS and the radial direction; it encodes the wrinkles of the LSS, which tend to increase its area. Recall that β denotes the ‘true’ angular position of a point of the LSS, not to be confused with the direction θ in which that point would be observed.

In order to get a theoretical prediction for A*, we may expand Eq. (41) at second order in cosmological perturbations, and assume ergodicity to turn integrations over β into ensemble averages (see Sect. 2.3.3). This yields

with $\delta r_*\equiv r_*-\bar r_*$.
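For orientation, here is a sketch of that second-order expansion. It assumes that Eq. (41) has the standard polar-surface form $A_*=a^2(\eta_*)\int\mathrm{d}^2\beta\;r_*^2(\beta)/\cos\iota_*(\beta)$, with background value $\bar A_*=4\pi a^2(\eta_*)\,\bar r_*^2$; it is our own expansion and need not match the published form of the result term by term. Writing $r_*=\bar r_*+\delta r_*$, using $1/\cos\iota_*\approx 1+\iota_*^2/2$, and replacing $(4\pi)^{-1}\int\mathrm{d}^2\beta$ by an ensemble average:

$$
\begin{aligned}
\frac{r_*^2}{\cos\iota_*} &\approx \bar r_*^2\left[1+\frac{2\,\delta r_*}{\bar r_*}+\frac{\delta r_*^2}{\bar r_*^2}+\frac{\iota_*^2}{2}\right],\\
\frac{A_*}{\bar A_*} &\approx 1+\frac{2\langle\delta r_*\rangle}{\bar r_*}+\frac{\langle\delta r_*^2\rangle}{\bar r_*^2}+\frac{\langle\iota_*^2\rangle}{2}.
\end{aligned}
$$

Since the ensemble average of a first-order quantity vanishes, ⟨δr*⟩ starts at second order, so all three correction terms contribute at the same order; this is why the shift must be computed beyond linear order.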

KP16 proposed a quite intuitive analysis of the shift term, δr*; we shall paraphrase their idea here, while further details and minor corrections are given in Appendix C. The first effect of inhomogeneities is the presence of the gravitational potential ϕ, which changes the effective (coordinate) speed of light as

As a consequence, during a fixed travel time η0 − η, the comoving distance travelled⁷, s, is slightly changed: s(η) = η0 − η + δs(η). At the LSS, this reads

where x(η) is the photon trajectory connecting the observer to the point β of the LSS. We note that this is nothing but the usual Shapiro time delay seen from a different point of view.

Second, because of gravitational lensing, light rays are wiggly, and hence the comoving radius r that they reach after travelling a comoving distance s is slightly smaller than s. At the LSS we may write $r_*=s_*+\delta r_{\rm geo}$, with

at second order in perturbations, and where ι is the angle made between the instantaneous photon propagation direction and the axis spanned by β. It coincides with ι* at the LSS.

When both effects (time delay and wiggles) are taken into account, the radial shift of the LSS with respect to its background counterpart reads

We note that δs* is first-order, while $\delta r_{\rm geo}$ is second-order in cosmological perturbations. It is thus essential, for consistency, to go beyond the Born approximation when evaluating δs*. Because of that hierarchy, δs* may also be considered the main driver of the wrinkles ι* of the LSS.
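The geometric part of the shift can be illustrated with a two-dimensional toy ray. Assuming that the second-order expression for the geometric shift takes the form $\delta r_{\rm geo}\approx-\tfrac{1}{2}\int_0^{s_*}\iota^2\,\mathrm{d}s$ (each step of length ds projects onto the observer-endpoint axis as ds cos ι ≈ ds(1 − ι²/2)), the sketch below builds a ray from small, hypothetical wiggles of its propagation angle, measures the exact radius it reaches, and compares the shortfall with that second-order integral. Function and variable names are illustrative.

```python
import numpy as np

def radial_reach(phi, ds):
    """Straight-step 2D ray: phi[i] is the propagation angle on step i (length ds).
    Returns the radius reached by the ray and the angles iota between each step
    and the observer-endpoint axis."""
    x = ds * np.sum(np.cos(phi))
    y = ds * np.sum(np.sin(phi))
    iota = phi - np.arctan2(y, x)
    return np.hypot(x, y), iota

n_steps = 10_000
ds = 1.0 / n_steps
s = (np.arange(n_steps) + 0.5) * ds
# Hypothetical small wiggles of the propagation direction, standing in for lensing.
phi = 0.01 * np.sin(6 * np.pi * s) + 0.005 * np.cos(14 * np.pi * s + 0.3)

r, iota = radial_reach(phi, ds)
path_length = n_steps * ds
delta_r_geo = r - path_length                 # exact radial shortfall of the wiggly ray
second_order = -0.5 * np.sum(iota**2) * ds    # -(1/2) * integral of iota^2 ds
print(delta_r_geo, second_order)
```

The two numbers agree to a relative accuracy of order ι², which illustrates why this term is second-order while the time-delay shift δs* is first-order.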

Once the ensemble average is taken, Eq. (43) yields

where $P_\phi$ denotes the power spectrum of the gravitational potential. More details can be found in Appendix C. Equation (48) agrees with Eq. (A.44) of KP16, albeit obtained via a slightly different path.

2.6. Summary and goal of the remainder of this article

Inhomogeneities may bias cosmological observations, notably via the effect of gravitational lensing on distance measurements. Biases depend on the notion of averaging that is involved. By virtue of the inverse-magnification integral $\langle\mu^{-1}\rangle_{\rm d}=1$, some specific observables are expected to be almost unbiased: $\langle d^2(z)\rangle_{\rm d}\approx 1/\langle d^{-2}(z)\rangle_{\rm s}\approx 1$. Other combinations of d, such as the magnitude, generally exhibit a potentially much larger bias, on the order of ⟨κ²⟩. Departures of $\langle d^2(z)\rangle_{\rm d}$ from exactly 1 stem from $\tilde\mu\neq\mu$ and may be interpreted as being due to shifts and tilts of iso-z surfaces with respect to their background counterpart. Apart from these shift and tilt effects, the area of iso-z surfaces is unaffected by inhomogeneities. An equivalent reasoning may be applied to other slices of the light cone, such as surfaces of constant time, whose area is relevant for CMB observations.

In the remainder of this article, we propose to numerically evaluate: (i) the accuracy of $\langle d^2(z)\rangle_{\rm d}\approx 1/\langle d^{-2}(z)\rangle_{\rm s}\approx 1$; (ii) the amplitude of the $\mathcal{O}(\langle\kappa^2\rangle)$ bias on other observables; (iii) the performance of the prediction (48) for the area of iso-η surfaces. Our investigation will be based on accurate ray tracing in a high-resolution N-body simulation, so as to fully capture non-linear effects, which are difficult to control in a pure-theory approach.

3. Numerical methods

In this section, we present the numerical set-up and the various tools that are used to obtain the results reported in Sect. 4.

3.1. Simulation

We use the N-body code RAMSES (Teyssier 2002; Guillet & Teyssier 2011) with dark matter (DM) only. RAMSES uses a Particle-Mesh with Adaptive-Mesh-Refinement (PM-AMR) method, which computes the evolution of the gravitational potential and density field from particles and gravity cells. AMR allows one to probe high-density regions and hence the highly non-linear regime of structure formation.

The simulation box has a comoving side length of 2625 h⁻¹ Mpc and contains 4096³ particles, in a ΛCDM cosmology with WMAP-7 best-fit parameters (Komatsu et al. 2011), namely h = 0.72, total-matter density $\Omega_{\rm m}=0.25733$, baryon density $\Omega_{\rm b}=0.04356$, radiation density $\Omega_{\rm r}=8.076\times10^{-5}$, spectral index $n_{\rm s}=0.963$, and power-spectrum normalisation $\sigma_8=0.801$. The corresponding DM-particle mass is 1.88 × 10¹⁰ h⁻¹ M⊙. The initial power spectrum is computed with CAMB (Lewis et al. 2000). Initial conditions are generated using a 2LPT version of MPGRAFIC (Prunet et al. 2008) to avoid transients (Scoccimarro 1998), which allows us to start the simulation at z = 46.
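As a consistency check of the quoted particle mass: for a box of side L containing N³ particles at mean density $\Omega_{\rm m}\rho_{\rm c}$, each particle has mass $m_{\rm p}=\Omega_{\rm m}\rho_{\rm c}L^3/N^3$, with $\rho_{\rm c}=3H_0^2/(8\pi G)$ the critical density. The short sketch below evaluates it with standard values of G, the megaparsec, and the solar mass (our choice of constants, not taken from the text); using the total-matter density reproduces the quoted 1.88 × 10¹⁰ h⁻¹ M⊙.

```python
import math

G_SI = 6.67430e-11              # m^3 kg^-1 s^-2 (CODATA 2018)
MPC_M = 3.0856775814913673e22   # metres per megaparsec
M_SUN_KG = 1.98841e30           # approximate solar mass in kg

def critical_density_h2():
    """Critical density 3 H0^2 / (8 pi G) in h^2 M_sun Mpc^-3 (H0 = 100 h km/s/Mpc)."""
    h0_si = 100e3 / MPC_M                          # 100 km/s/Mpc in s^-1
    rho_si = 3 * h0_si**2 / (8 * math.pi * G_SI)   # kg m^-3
    return rho_si * MPC_M**3 / M_SUN_KG

def particle_mass(box_mpc_h, n_side, omega_m):
    """Mass per particle, in h^-1 M_sun, for a box of side box_mpc_h (h^-1 Mpc)."""
    return omega_m * critical_density_h2() * box_mpc_h**3 / n_side**3

m_p = particle_mass(2625.0, 4096, 0.25733)
print(f"rho_c = {critical_density_h2():.4e} h^2 M_sun/Mpc^3")
print(f"m_p   = {m_p:.3e} h^-1 M_sun")   # about 1.88e10, as quoted above
```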

Fidler et al. (2015, 2016) showed that Newtonian N-body simulations, such as the one used in this article, yield physical quantities computed in the so-called N-body gauge. In principle, a small relativistic correction must be applied to translate such results into the Newtonian gauge (Chisari & Zaldarriaga 2011). We choose to neglect these corrections, and hence we identify the coordinates and the gravitational potential computed from the simulation with the coordinates and metric perturbation ϕ in Eq. (1).

3.2. Light cones

To produce light cones from our simulation we use the onion-shell method (Fosalba et al. 2008; Teyssier et al. 2009). At each synchronisation (coarse) time step of the simulation, we output a thin spherical shell whose mean radius is the comoving distance to a central observer at the snapshot time. The shells contain all the required information about the particles (positions and velocities) and about the grid cells (gravitational potential and acceleration). Furthermore, the shells are produced with a non-zero thickness, in the sense that every spatial cell appears at different times, which allows us to compute time derivatives.

We produce three different light cones for a given observer at the centre of the simulation, which correspond to three different depths and sky coverages. The simulation box size allows us to build a full-sky cone up to a radius equal to half the box length, corresponding to z ≲ 0.5. Going further would imply that some parts of the cone would repeat due to the periodic boundary conditions of the simulation. Such replication effects are suppressed by reducing the angular width of the light cone beyond z ≈ 0.5. An intermediate narrow cone is built up to z = 2 with a sky coverage of 2500 deg², while our deep narrow cone goes up to z = 10, covering 400 deg². The cones are oriented so that light rays do not cross the same structures at different times.
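The statement that a full-sky cone of radius equal to half the box length reaches z ≲ 0.5 can be checked by inverting the comoving distance-redshift relation for the quoted cosmology. This sketch assumes a flat ΛCDM background with the $\Omega_{\rm m}$ and $\Omega_{\rm r}$ given above ($\Omega_\Lambda$ fixed by flatness), integrates 1/E(z) with the trapezoidal rule, and bisects for the redshift at 2625/2 h⁻¹ Mpc; the function names are illustrative.

```python
import numpy as np

C_KM_S = 299792.458                   # speed of light in km/s
OMEGA_M, OMEGA_R = 0.25733, 8.076e-5
OMEGA_L = 1.0 - OMEGA_M - OMEGA_R     # flatness assumed

def comoving_distance(z, n=4001):
    """Comoving distance in h^-1 Mpc for flat LCDM with radiation (trapezoidal rule)."""
    zz = np.linspace(0.0, z, n)
    inv_e = 1.0 / np.sqrt(OMEGA_R * (1 + zz) ** 4 + OMEGA_M * (1 + zz) ** 3 + OMEGA_L)
    integral = np.sum(0.5 * (inv_e[1:] + inv_e[:-1]) * np.diff(zz))
    return C_KM_S / 100.0 * integral  # c / H0 = 2997.9 h^-1 Mpc

def redshift_at_distance(chi, lo=0.0, hi=20.0, tol=1e-8):
    """Invert comoving_distance by bisection (it increases monotonically with z)."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if comoving_distance(mid) < chi:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

z_full_sky = redshift_at_distance(2625.0 / 2)
print(f"full-sky cone limit: z = {z_full_sky:.3f}")
```

For these parameters the result lies just below z = 0.5, consistent with the full-sky limit quoted above.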

Haloes on the light cones are identified using the parallel Friends-of-Friends (PFoF) code (Roy et al. 2014) with linking length b = 0.2 and at least 100 DM particles. A halo’s position is defined from its centre of mass, while its velocity is defined as the mean velocity of the particles that it contains. The properties of the DM haloes of the present simulation have been studied in the appendix of Corasaniti et al. (2018).

We choose to model neither the haloes’ intrinsic luminosity, nor the luminosity threshold for their detection by the observer. Thus, the results presented in this article exploit all the available data within the redshift ranges of interest.

3.3. 3D relativistic ray tracing

Observables are extracted from the light cones using a fully relativistic ray-tracing procedure based on the MAGRATHEA library (Reverdy 2014). Ray tracing is performed backwards, that is, towards the past, starting from the observation event, where λ = 0. Initial conditions are fixed by the observation direction n and by setting $k^0=1$. This means that the affine parameter coincides with conformal time at O. The observer is chosen to be comoving, meaning that its peculiar velocity is set to zero, $v_o=0$. This implies that $k^i\propto n^i$, where the proportionality factor is such that $k^\mu k_\mu=0$.
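The normalisation of the initial wave four-vector can be made explicit. This sketch assumes that the metric of Eq. (1) is the scalar-perturbed FLRW metric $\mathrm{d}s^2=a^2[-(1+2\phi)\,\mathrm{d}\eta^2+(1-2\phi)\,\delta_{ij}\mathrm{d}x^i\mathrm{d}x^j]$, so that the null condition fixes $|k|=k^0[(1+2\phi)/(1-2\phi)]^{1/2}$; the sign convention used for backward integration (whether $k^i$ points along n or −n) depends on the code and is not modelled. Function names and the potential value are hypothetical.

```python
import numpy as np

def initial_wave_vector(direction, phi_obs):
    """Hypothetical helper: k^mu at the observer with k^0 = 1 and k^i = c n^i, where
    c > 0 makes k null for the assumed metric
    ds^2 = a^2 [-(1 + 2 phi) d eta^2 + (1 - 2 phi) delta_ij dx^i dx^j]."""
    n = np.asarray(direction, dtype=float)
    n = n / np.linalg.norm(n)
    c = np.sqrt((1 + 2 * phi_obs) / (1 - 2 * phi_obs))
    return np.concatenate(([1.0], c * n))

def metric_norm(k, phi):
    """g_{mu nu} k^mu k^nu divided by a^2, for the same assumed metric."""
    return -(1 + 2 * phi) * k[0] ** 2 + (1 - 2 * phi) * np.dot(k[1:], k[1:])

phi_obs = -1e-5                      # hypothetical potential at the observer
k = initial_wave_vector([1.0, 2.0, -0.5], phi_obs)
print(k, metric_norm(k, phi_obs))    # the norm vanishes up to round-off
```

The overall factor a² drops out of the null condition, which is why the sketch works with $g_{\mu\nu}/a^2$.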

From these initial conditions, the geodesic Eqs. (2) and (3) are integrated numerically with a fourth-order Runge-Kutta integrator. Specifically, photon trajectories are computed within the 3D AMR structure with four steps per AMR cell. Since the underlying N-body code uses a Triangular Shaped Cloud (TSC) interpolation scheme, we use an inverse TSC to estimate the gravitational potential and acceleration at the exact position of a photon. Using another interpolation method may lead to inconsistencies, such as self-accelerating particles.

The 3D TSC scheme requires 27 cells with the same refinement level to interpolate the value of a field at a position x. In practice, we start with the finest level, that is, the level of the smallest cell that contains x; if there are fewer than 27 neighbouring cells with the same refinement level, then we try again with the next coarser level, and so on.
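The 27-cell TSC interpolation described above can be sketched on a single uniform, periodic grid. This is not the MAGRATHEA implementation: the AMR octree and the fallback to coarser levels are not modelled, and the function names are hypothetical. The one-dimensional TSC kernel gives the weights ½(½ − d)², ¾ − d², and ½(½ + d)² to the three nearest cells, where d is the offset from the nearest cell centre, and the 3D weight is their product.

```python
import numpy as np

def tsc_weights_1d(d):
    """TSC weights of the cells at offsets -1, 0, +1 from the nearest cell centre,
    for a point at signed distance d (|d| <= 1/2, in cell units) from that centre."""
    return np.array([0.5 * (0.5 - d) ** 2, 0.75 - d**2, 0.5 * (0.5 + d) ** 2])

def tsc_interpolate(field, pos):
    """Interpolate a periodic 3D grid (cell centres at integer coordinates) at pos
    using the 3 x 3 x 3 = 27 neighbouring cells."""
    pos = np.asarray(pos, dtype=float)
    centre = np.rint(pos).astype(int)
    wx, wy, wz = (tsc_weights_1d(di) for di in pos - centre)
    shape = np.array(field.shape)
    value = 0.0
    for a, ox in enumerate((-1, 0, 1)):
        for b, oy in enumerate((-1, 0, 1)):
            for c, oz in enumerate((-1, 0, 1)):
                i, j, k = (centre + (ox, oy, oz)) % shape   # periodic wrapping
                value += wx[a] * wy[b] * wz[c] * field[i, j, k]
    return value

# Usage: TSC reproduces a linear field exactly away from the periodic boundary.
ix, iy, iz = np.indices((16, 16, 16))
field = 2.0 + 0.5 * ix - 0.25 * iy + 1.0 * iz
print(tsc_interpolate(field, (5.3, 7.8, 9.45)))   # 2 + 2.65 - 1.95 + 9.45, up to round-off
```

Because the TSC weights sum to one and have first moment d, the scheme reproduces linear fields exactly, which the usage line illustrates.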

We stop the ray tracing if (and only if) that operation is impossible even at the coarsest level, which means that the ray has reached the limits of our numerical background light cones (described in Sect. 3.2) and that no more data are available to pursue its propagation. Importantly, we save all the information about every integration step of each ray’s trajectory. Besides, rays are traced irrespective of the structures that they encounter; in other words, matter is assumed to be transparent.

3.4. Infinitesimal beams

The most common way to numerically evaluate the distortion matrix 𝒜 is based on the multi-plane lensing formalism (Blandford & Narayan 1986), where the matter distribution near the line of sight (LOS) is projected onto various planes, which are then treated as thin lenses (Jain et al. 2000; Hilbert et al. 2009). Here we want to fully exploit the 3D information of the RAMSES AMR octree. For that purpose, a first option consists in integrating the projected Hessian matrix $\nabla_a\nabla_b\phi$ of the gravitational potential along the actual trajectories of light rays,

where $r_{\rm s}$ is the comoving distance to the source, a, b take the values 1, 2, and the two-dimensional gradient $\nabla_a$ is transverse to n (to the LOS). In practice, the 3D Hessian $\partial_i\partial_j\phi$ is computed on the mesh, and then converted into spherical coordinates to extract its angular (transverse) part $\nabla_a\nabla_b\phi$.

We shall refer to this approach as the ‘infinitesimal-beam method’, because it describes the distortions of an infinitesimal light source. We note that this way of computing 𝒜 is comparable to the method used in RAY-RAMSES (Barreira et al. 2016), except that here $\nabla_a\nabla_b\phi$ is evaluated on the actual ray trajectory rather than on the background trajectory. In other words, we do not resort to the Born approximation.
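A sketch of the infinitesimal-beam integral, assuming the standard flat-space weak-field form in units with c = 1, $\mathcal{A}_{ab}=\delta_{ab}-2\int_0^{r_{\rm s}}\mathrm{d}r\,\frac{r(r_{\rm s}-r)}{r_{\rm s}}\,\partial_a\partial_b\phi$, with $\partial_a\partial_b\phi$ the comoving transverse Hessian sampled along the ray. The kernel and prefactor are our assumption about the normalisation of the integral above, and the constant Hessian in the usage example is hypothetical; for it, the integral is analytic, $\mathcal{A}=\mathbb{1}-(r_{\rm s}^2/3)\,H$, which provides a check.

```python
import numpy as np

def distortion_matrix(r, hessian, r_s):
    """Infinitesimal-beam sketch (units c = 1):
    A_ab = delta_ab - 2 * integral_0^{r_s} dr [r (r_s - r) / r_s] H_ab(r),
    where H_ab is the comoving transverse Hessian of phi sampled at radii r
    (shape (n, 2, 2)); the integral uses the trapezoidal rule."""
    kernel = r * (r_s - r) / r_s
    f = kernel[:, None, None] * hessian
    integral = np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(r)[:, None, None], axis=0)
    return np.eye(2) - 2.0 * integral

r_s = 1000.0                                          # hypothetical source distance
r = np.linspace(0.0, r_s, 20001)
h_const = np.array([[2e-8, 5e-9], [5e-9, -1e-8]])    # hypothetical constant Hessian
A = distortion_matrix(r, np.broadcast_to(h_const, (r.size, 2, 2)), r_s)
kappa = 1.0 - 0.5 * np.trace(A)                       # A = [[1-k-g1, -g2], [-g2, 1-k+g1]]
gamma1 = 0.5 * (A[1, 1] - A[0, 0])
print(A)
print(kappa, gamma1)   # analytic: A = I - (r_s^2 / 3) H for a constant Hessian
```

In the ray-tracing code the Hessian would be interpolated at each integration step along the actual (non-Born) trajectory rather than held constant.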

3.5. Ray bundles and finite-beam effect

The second option to compute the distortion matrix 𝒜 is based on a bundle of rays (at least three), which may be seen as a finite light beam subtended by an extended light source (Fluke et al. 1999; Fluke & Lasky 2011). In that ‘ray-bundle method’, each ray is accompanied by four auxiliary rays making an angle ε with the central one, as depicted in Fig. 5. The components of 𝒜 are then computed from finite coordinate differences between the rays, rather than from gradients.

Fig. 5. Ray-bundle method. A central light ray (dotted line) is accompanied by four auxiliary rays A, B, C, D (red solid lines). Each auxiliary ray makes an angle ε at O with respect to the central ray. The distortion matrix is estimated by comparing the relative positions of the auxiliary rays in a plane orthogonal to the line of sight θ.

More precisely, our method goes as follows. First, stop the central ray when the relevant parameter (such as redshift, time, or comoving distance) has reached the desired value; this defines the fiducial source event S. Second, stop the auxiliary rays at the same affine parameter λ as the central ray at S. This criterion is arbitrary, and other possibilities are implemented in the code. Third, project the relative positions of the auxiliary rays onto some source plane. For simplicity, we chose it to be orthogonal to the LOS⁸ θ. This defines the transverse separation ξ between the auxiliary rays and the central one.

Our estimator of the distortion matrix is then motivated by the fact that two rays separated by a small Δθ at O should have angular coordinates differing by $\Delta\beta\approx\mathcal{A}\,\Delta\theta$,

where r is the radial position where the central ray was stopped, and e1, e2 are unit vectors defining the initial separation of the auxiliary rays with respect to the central ray.
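The finite-difference idea behind the ray-bundle estimator can be sketched as follows. We assume central differences between opposite auxiliary rays launched at ±ε e₁ and ±ε e₂, with the transverse separations ξ divided by r to convert them into angles; the estimator in the text may pair the rays differently (for instance with one-sided differences), and the mapping of the labels A, B, C, D onto ±e₁, ±e₂ is not specified here. All names are hypothetical.

```python
import numpy as np

def bundle_distortion(xi_p1, xi_m1, xi_p2, xi_m2, r, eps):
    """Central-difference estimate of the 2x2 distortion matrix from four auxiliary rays
    launched at +eps e1, -eps e1, +eps e2, -eps e2 around the central ray.
    xi_*: transverse separation (2-vector) of each auxiliary ray from the central ray on
    the source plane at radial distance r; eps is the opening angle in radians."""
    col1 = (np.asarray(xi_p1) - np.asarray(xi_m1)) / (2.0 * r * eps)
    col2 = (np.asarray(xi_p2) - np.asarray(xi_m2)) / (2.0 * r * eps)
    return np.column_stack([col1, col2])

# Usage with a hypothetical distortion matrix and a linear mapping xi = r A (delta theta).
A_true = np.array([[0.98, -0.01], [-0.01, 1.02]])
r, eps = 2000.0, 1e-3
e1, e2 = np.array([1.0, 0.0]), np.array([0.0, 1.0])
xi = {name: r * A_true @ (sign * eps * e) for name, sign, e in
      (("p1", 1, e1), ("m1", -1, e1), ("p2", 1, e2), ("m2", -1, e2))}
A_est = bundle_distortion(xi["p1"], xi["m1"], xi["p2"], xi["m2"], r, eps)
print(A_est)   # recovers A_true up to round-off for this linear toy
```

For a purely linear mapping the estimate is exact; for real rays, the difference between 𝒜 and the bundle estimate is precisely the finite-beam effect discussed below.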

We note that the choices made in steps 2 and 3 induce a spurious tilt in our estimate of the distortion matrix. We have checked that the effect of this tilt is negligible in all the results involving the ray-bundle estimator in this article. The specific analysis of the tilt in Sect. 3.8, which requires better accuracy, will rely on a different method.

The finite separation of the rays in the bundle method may cause some discrepancies with the infinitesimal-beam approach. Those may be quantified using the finite-beam formalism developed by Fleury et al. (2017b, 2019a,b)⁹. In particular, the finite-beam corrections to the angular power spectrum of convergence, $P_\kappa$, and shear, $P_\gamma$, are found to read

where Pκ,γ(ℓ; 0) denote the power spectra computed with the infinitesimal-beam approach described in Sect. 3.4.

The complete derivation of Eqs. (52) and (53) is provided in Appendix B; it relies on the weak-lensing, flat-sky, and Limber approximations. We note that Eqs. (52) and (53) differ from the results highlighted in Fleury et al. (2019a, Eqs. (120) and (121)), because they correspond to different beam geometries. The latter were computed from the distortions of circular beams, while the former correspond to square-shaped beams as depicted in Fig. 5.
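A one-dimensional toy model shows why finite separations damp power at ℓ ≳ ε⁻¹. Consider a single Fourier mode of multipole ℓ and a central finite difference with half-step ε, the separation between the central ray and each auxiliary ray. The finite difference returns the exact derivative multiplied by sin(ℓε)/(ℓε), so the corresponding power is multiplied by the square of that factor. The Python sketch below checks this numerically. It is only a flat-sky illustration and is not Eqs. (52) and (53), which account for the two-dimensional square geometry of the beam.

```python
import math

ARCMIN = math.pi / (180.0 * 60.0)  # one arcminute in radians


def finite_difference_response(ell, eps, x=0.3):
    """Ratio of the central finite difference (half-step eps) of sin(ell*x)
    to its exact derivative ell*cos(ell*x)."""
    fd = (math.sin(ell * (x + eps)) - math.sin(ell * (x - eps))) / (2.0 * eps)
    return fd / (ell * math.cos(ell * x))


def sinc_damping(ell, eps):
    """Closed form of the same ratio: sin(ell*eps) / (ell*eps)."""
    u = ell * eps
    return 1.0 if u == 0 else math.sin(u) / u


eps = 0.35 * ARCMIN
for ell in (100, 1000, 5000, 10000):
    t = sinc_damping(ell, eps)
    print(f"ell = {ell:5d}: amplitude factor {t:.4f}, power factor {t * t:.4f}")
```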

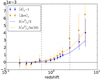

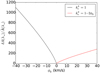

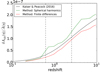

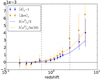

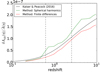

Figure 6 compares the predictions of Eqs. (52) and (53) with ray tracing. Three different beam semi-apertures are considered, ε = 35 arcmin, 3.5 arcmin, and 0.35 arcmin, to which we may add ε = 0, corresponding to infinitesimal beams. For each nonzero value of ε, we compute the convergence and shear using the ray-bundle method at z = 1.95 on the intermediate narrow light cone, for LOS dictated by HEALPIX (Górski et al. 2005). Power spectra are extracted using POLSPICE (Szapudi et al. 2001; Chon et al. 2004), which correctly accounts for the angular selection function associated with the narrow cone’s geometry.


Fig. 6. Finite-beam corrections to the angular power spectrum of convergence (top panel) and shear (bottom panel) at z = 1.95, for different semi-aperture sizes. Black lines indicate the theoretical predictions of Eqs. (52) and (53), while coloured lines indicate ray-tracing results.

Power-spectrum estimates from HEALPIX turn out to be robust up to ℓ ≈ nside.¹⁰ Since finite-beam effects typically kick in from ℓ ∼ ε⁻¹, we set nside = 4096 for ε = 35 arcmin and ε = 3.5 arcmin, and nside = 8192 for the smallest beam size, ε = 0.35 arcmin, so as to ensure that the power spectra are reliable at the scales of interest.
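The multipoles involved follow from converting the semi-apertures to radians. The short Python sketch below evaluates ℓ ∼ ε⁻¹ for each beam. For reference, it also gives the typical HEALPIX pixel size for the chosen nside, using the standard HEALPIX property that a map has 12 nside² pixels of equal area. The numbers are orders of magnitude, not sharp thresholds.

```python
import math

ARCMIN = math.pi / (180.0 * 60.0)  # one arcminute in radians


def ell_finite_beam(eps_arcmin):
    """Order-of-magnitude multipole ell ~ 1/eps at which finite-beam effects appear."""
    return 1.0 / (eps_arcmin * ARCMIN)


def healpix_pixel_size_arcmin(nside):
    """Square root of the area of one of the 12 * nside**2 equal-area HEALPIX pixels."""
    return math.sqrt(4.0 * math.pi / (12 * nside ** 2)) / ARCMIN


for eps, nside in ((35.0, 4096), (3.5, 4096), (0.35, 8192)):
    print(f"eps = {eps:5.2f} arcmin: ell ~ {ell_finite_beam(eps):7.1f}; "
          f"nside = {nside}, pixel ~ {healpix_pixel_size_arcmin(nside):.2f} arcmin")
```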

The excellent agreement between Eqs. (52) and (53) and ray tracing, shown in Fig. 6, is the first numerical evidence of the accuracy of the finite-beam formalism. It confirms that the finite-beam corrections that may arise in the present work are well understood and under control. In particular, the damping of Pκ(ℓ; ε) and Pγ(ℓ; ε) is expected to slightly reduce the variance of convergence and shear, which are involved in distance biases. Such effects will not change the conclusions of our analysis.

Unless otherwise stated, in the remainder of this article we set ε = 0.35 arcmin. Smaller beam sizes are excluded because they would probe scales below the resolution of the simulation.

3.6. Producing observables for statistical averages

We now turn to the generation of observables, for the purpose of computing statistical averages. As seen in Sect. 2, directional averaging and source averaging are distinct operations for which different numerical techniques must be applied.

3.6.1. HEALPIX maps for directional averages

Directional averaging consists of assigning equal weights to all directions of the observer’s sky. This condition is easily satisfied by dividing the sky into pixels of equal area, which is precisely what HEALPIX does. In order to estimate the directional average ⟨X⟩d of an observable X, we thus shoot a ray bundle in each direction θ dictated by HEALPIX, compute X(θ), and take the average of these values.
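Equal pixel areas are what make the plain arithmetic mean a valid directional average. The pure-Python sketch below, which has no HEALPIX dependency, contrasts this with a hypothetical equiangular (ϑ, φ) grid. On such a grid the same plain mean is biased because polar pixels are over-represented, and weighting each pixel by sin ϑ restores the directional average. The test field X = cos²ϑ, whose directional average is 1/3, is an arbitrary choice.

```python
import math


def plain_mean(values):
    """Directional average on an equal-area pixelisation such as HEALPIX:
    every pixel, hence every direction, carries the same weight."""
    return sum(values) / len(values)


def latlon_grid(n_theta, n_phi):
    """Pixel centres (theta, phi) of an equiangular grid, whose pixels are not equal-area."""
    return [((i + 0.5) * math.pi / n_theta, (j + 0.5) * 2.0 * math.pi / n_phi)
            for i in range(n_theta) for j in range(n_phi)]


def weighted_directional_average(f, grid):
    """Directional average on the equiangular grid: each pixel is weighted by
    sin(theta), which is proportional to its solid angle."""
    num = sum(math.sin(t) * f(t, p) for t, p in grid)
    den = sum(math.sin(t) for t, _ in grid)
    return num / den


def cos2_field(theta, phi):
    """X = cos^2(theta); its exact directional average over the sphere is 1/3."""
    return math.cos(theta) ** 2


grid = latlon_grid(200, 16)
naive = plain_mean([cos2_field(t, p) for t, p in grid])  # over-weights the poles
correct = weighted_directional_average(cos2_field, grid)
print(f"plain mean on lat-lon grid: {naive:.4f}, weighted: {correct:.4f}")
```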

3.6.2. Halo catalogues for source averaging

Source averaging gives the same statistical weight to each source on the observer’s light cone. Computing source averages thus requires producing a source catalogue and determining the null geodesic that connects each source to the observer.

In this work, sources are identified with the DM haloes, which are extracted from the simulation as described in Sect. 3.2. Geodesics are identified following Breton et al. (2019) (see also Adamek et al. 2019). In a nutshell, a photon is shot towards the comoving direction of a source. Due to gravitational lensing, the photon generally misses the source, so the LOS must be corrected and the operation iterated until convergence at the source (for an illustration, see Fig. 1 in Breton et al. 2019). This procedure eventually yields the full light trajectory for each source, as well as its observed position. We note that we do not account for multiple images of the same source: the geodesic-finding algorithm stops as soon as one valid ray is found.
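The iterative correction can be illustrated with a flat-sky toy lens equation, β = θ − α(θ). The Python sketch below is not the algorithm of Breton et al. (2019), which integrates full null geodesics through the simulation. It only mimics the logic: shoot a ray, measure by how much it misses the source, correct the direction, and stop at the first converged solution. The cored deflection profile and its parameters are hypothetical, chosen weak enough for this simple fixed-point iteration to converge.

```python
import math


def toy_deflection(theta, strength=2e-6, core=0.01):
    """Hypothetical deflection angle (radians) of a cored, point-like lens at the origin."""
    norm = theta[0] ** 2 + theta[1] ** 2 + core ** 2
    return (strength * theta[0] / norm, strength * theta[1] / norm)


def source_position(theta):
    """Flat-sky lens equation beta = theta - alpha(theta)."""
    ax, ay = toy_deflection(theta)
    return (theta[0] - ax, theta[1] - ay)


def find_observed_direction(beta_source, tol=1e-12, max_iter=100):
    """Shoot a ray, measure how far it lands from the source, correct the direction,
    and repeat; returns the first converged direction (other images are ignored)."""
    theta = beta_source  # first guess: the unlensed direction of the source
    for _ in range(max_iter):
        bx, by = source_position(theta)
        miss = (beta_source[0] - bx, beta_source[1] - by)
        if math.hypot(*miss) < tol:
            return theta
        theta = (theta[0] + miss[0], theta[1] + miss[1])
    raise RuntimeError("ray did not converge on the source")


theta_obs = find_observed_direction((0.005, 0.002))
print(theta_obs)  # slightly farther from the lens than the source direction
```

The update θ ← θ + (β_source − β(θ)) converges here because the toy deflection varies slowly. Stronger lenses would call for a more robust root finder.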

From the N-body code we also know the gravitational potential and velocity of each source in the catalogue. These data notably allow us to accurately compute the redshift, accounting for all the special- and general-relativistic effects at first order in the metric perturbation. The quantitative features of the mocks¹¹ used in this work are summarised in Table 1.

Three light cones are used for the present work.

3.7. Surfaces on the light cone

In this article, we consider various ways to slice the observer’s past light cone, depending on which parameter is fixed, namely surfaces of constant redshift (iso-z), constant time (iso-η), constant comoving distance travelled (iso-s), and constant affine parameter (iso-λ). In the background FLRW model, all these surfaces are spherical and correspond to each other through specific one-to-one relations. Background quantities are denoted with an over-bar; for instance, z̄(η) is the background redshift on the background iso-η surface. In practice, we determine these background relations by shooting a single ray in the simulation with ϕ = 0.
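In the background, these one-to-one relations follow from a radial null geodesic in FLRW. The Python sketch below stands in for the single unperturbed ray shot through the simulation. It computes the background comoving distance s̄(z) = c ∫₀^z dz′/H(z′) for a flat ΛCDM model with radiation neglected. The values of H0 and Ωm are placeholders, not the parameters of the simulation. Since ds = c dη along a radial null ray, the background iso-η and iso-s surfaces coincide, with η̄ = η̄o − s̄/c.

```python
import math

C_KM_S = 299792.458  # speed of light in km/s


def hubble(z, h0=70.0, omega_m=0.3):
    """Flat LCDM expansion rate H(z) in km/s/Mpc, radiation neglected (placeholder parameters)."""
    return h0 * math.sqrt(omega_m * (1.0 + z) ** 3 + 1.0 - omega_m)


def comoving_distance(z, n=1000, **cosmo):
    """Background comoving distance in Mpc, c * int_0^z dz'/H(z'), by Simpson's rule."""
    if z == 0.0:
        return 0.0
    n += n % 2  # Simpson's rule needs an even number of intervals
    dz = z / n
    total = 0.0
    for i in range(n + 1):
        weight = 1 if i in (0, n) else (4 if i % 2 else 2)
        total += weight / hubble(i * dz, **cosmo)
    return C_KM_S * total * dz / 3.0


# Background relation z -> s (and hence z -> eta, since c * (eta_o - eta) = s).
for z in (0.5, 1.0, 1.95, 10.0):
    print(f"z = {z:5.2f}  ->  s = {comoving_distance(z):8.1f} Mpc")
```

For an Einstein-de Sitter model (Ωm = 1), the integral has the closed form 2c/H0 [1 − (1 + z)^(−1/2)], which provides a convenient check of the quadrature.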

In the inhomogeneous case, the surfaces are determined by shooting rays in directions θ set by HEALPIX. Since all the properties of the ray and its location are saved at each integration step, it is straightforward to determine the perturbed surfaces, such as the iso-η surfaces x(η), as well as the values of all the other parameters across these surfaces. The comoving distance travelled s is computed at each integration step according to $s_{i+1} = s_i + |x_{i+1} - x_i|$.
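Because every integration step is stored, a perturbed surface can be located along each ray by interpolating between the saved steps. The Python sketch below accumulates the travelled comoving distance with the rule above. It then finds where a saved, monotonic parameter (for instance η or s) reaches a target value. Linear interpolation between steps is an assumption of this sketch and need not match the scheme used in the code.

```python
import math


def travelled_distance(positions):
    """Cumulative comoving distance along a ray: s_{i+1} = s_i + |x_{i+1} - x_i|."""
    s = [0.0]
    for prev, cur in zip(positions, positions[1:]):
        s.append(s[-1] + math.dist(prev, cur))
    return s


def crossing_point(param, positions, target):
    """Position where a monotonic parameter saved at each step first reaches target,
    by linear interpolation between steps; None if the ray never reaches it."""
    for i in range(len(param) - 1):
        p0, p1 = param[i], param[i + 1]
        if p0 != p1 and (p0 - target) * (p1 - target) <= 0.0:
            f = (target - p0) / (p1 - p0)
            return tuple(a + f * (b - a) for a, b in zip(positions[i], positions[i + 1]))
    return None


# Usage: a ray bent once; locate its point on the iso-s surface s = 1.5.
ray = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)]
s = travelled_distance(ray)             # [0.0, 1.0, 2.0]
print(crossing_point(s, ray, 1.5))      # (1.0, 0.5, 0.0)
```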

Subtleties arise in the case of iso-z surfaces. The significant contribution of peculiar velocities to the observed redshift raises two issues. First, since velocities are only defined for particles, interpolation on the grid is necessary to estimate z at each time step. For that purpose, we use a TSC interpolation of all the DM particles within the redshift range of interest, plus a buffer zone. Second, a light ray may meet the same redshift multiple times during its propagation. In other words, the function λ ↦ z(λ, θ) is not one-to-one in the inhomogeneous Universe, so iso-z surfaces are not uniquely defined. In this work we restrict the analysis to the two extremal iso-z surfaces. These are the closest to the observer, $\{x[r_{\min}(z,\theta),\theta]\}$, and the farthest from the observer, $\{x[r_{\max}(z,\theta),\theta]\}$. We denote these surfaces Σ−(z) and Σ+(z), respectively.
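When z is not monotonic along a ray, the two extremal surfaces can be found by collecting every crossing of the target redshift and keeping the closest and farthest ones. The Python sketch below does this for one ray sampled at discrete radii, with linear interpolation between samples (an assumption of the sketch). The redshift profile in the usage example is made up to display a non-monotonic z.

```python
def extremal_crossings(r, z, z_target):
    """Closest and farthest radii at which a ray crosses z_target.

    r, z -- radial positions and redshifts saved along one ray (z need not be monotonic)
    Returns (r_min, r_max), or None if the ray never reaches z_target.
    """
    hits = []
    for i in range(len(z) - 1):
        z0, z1 = z[i], z[i + 1]
        if z0 != z1 and min(z0, z1) <= z_target <= max(z0, z1):
            f = (z_target - z0) / (z1 - z0)  # linear interpolation within the step
            hits.append(r[i] + f * (r[i + 1] - r[i]))
    return (min(hits), max(hits)) if hits else None


# Made-up profile: a peculiar-velocity bump makes z decrease between r = 1 and r = 2.
r_steps = [0.0, 1.0, 2.0, 3.0, 4.0]
z_steps = [0.0, 0.5, 0.4, 0.6, 0.8]
print(extremal_crossings(r_steps, z_steps, 0.45))  # approximately (0.9, 2.25)
```

Here the target redshift is crossed three times (at r = 0.9, 1.5, and 2.25). Only the first crossing contributes to Σ−(z) and only the last to Σ+(z).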

3.8. Computing the area of wrinkly iso-η surfaces

In order to check the theoretical predictions of Sect. 2.5.3 regarding the area of the LSS, and more generally of the iso-η surfaces, we need to numerically evaluate the expression

where β denotes the ‘true’ position of a point of the iso-η surface, as opposed to the direction θ in which it would be observed. Such a computation thus requires the numerical determination of r(η, β) and its gradient ∂r/∂β.
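A geometric illustration shows why the gradient term matters. Consider a surface described by r(β) in flat comoving space, ignoring metric perturbations. Its area element is $\mathrm{d}A = r\,(r^2 + |\nabla r|^2)^{1/2}\,\mathrm{d}\Omega$, where ∇ is the angular gradient on the unit sphere. We do not claim that Eq. (54) coincides with this expression term by term. The Python sketch below checks the formula on an axisymmetric test case: a sphere of radius R whose centre is displaced by d from the observer. Its area must be 4πR², even though r depends on direction, while dropping the gradient term gives 4πR² − 4πd²/3. The gradient is evaluated by central finite differences and the integral by the midpoint rule, both choices of this sketch.

```python
import math


def displaced_sphere_radius(theta, big_r=1.0, d=0.3):
    """Distance from the observer to a sphere of radius big_r centred at distance d < big_r
    along the polar axis, in direction theta (axisymmetric test surface)."""
    return d * math.cos(theta) + math.sqrt(big_r ** 2 - (d * math.sin(theta)) ** 2)


def surface_area(radius, n_theta=4000, h=1e-5):
    """Area of an axisymmetric surface r(theta):
    A = 2*pi * int r * sqrt(r^2 + (dr/dtheta)^2) * sin(theta) dtheta."""
    dtheta = math.pi / n_theta
    total = 0.0
    for i in range(n_theta):
        t = (i + 0.5) * dtheta
        r = radius(t)
        dr = (radius(t + h) - radius(t - h)) / (2.0 * h)  # finite-difference gradient
        total += r * math.sqrt(r * r + dr * dr) * math.sin(t)
    return 2.0 * math.pi * total * dtheta


def naive_area(radius, n_theta=4000):
    """Same integral without the gradient term, i.e. int r^2 dOmega."""
    dtheta = math.pi / n_theta
    return 2.0 * math.pi * dtheta * sum(
        radius((i + 0.5) * dtheta) ** 2 * math.sin((i + 0.5) * dtheta) for i in range(n_theta))


print(surface_area(displaced_sphere_radius) / (4 * math.pi))  # close to 1 (R = 1)
print(naive_area(displaced_sphere_radius) / (4 * math.pi))    # close to 1 - d**2/3 = 0.97
```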

In practice, however, we have more direct access to r(η, θ), because the iso-η surface is determined by ray shooting (see Sect. 3.7), which yields r(η, θ) and β(η, θ) for each θ of a HEALPIX map. One could in principle compute r(η, β) by finding the null geodesics between the observer and the direction β at each iso-η surface, but this procedure would be computationally expensive. Another option consists in directly building a lower-resolution HEALPIX β-map, such that in each pixel r(η, β) is the average of the r(η, θ) for which β(η, θ) falls into that pixel.
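The binning option can be sketched as follows in Python. A flat square grid of side `pixel_size` stands in for the coarse HEALPIX β-map, which is a simplification of this sketch. Each ray contributes its r(η, θ) to the pixel that contains its true position β(η, θ). Pixels that receive no ray stay empty, which is presumably one reason for building the β-map at a lower resolution than the θ-map.

```python
import math
from collections import defaultdict


def beta_map(samples, pixel_size):
    """Average r over all rays whose true position beta falls in each pixel.

    samples    -- iterable of (beta_x, beta_y, r) triples, one per ray shot at theta
    pixel_size -- side of the (hypothetical) square pixels, same units as beta
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for bx, by, r in samples:
        key = (math.floor(bx / pixel_size), math.floor(by / pixel_size))
        sums[key] += r
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}


rays = [(0.1, 0.1, 10.0), (0.2, 0.3, 12.0), (1.5, 0.2, 20.0)]
print(beta_map(rays, pixel_size=1.0))  # {(0, 0): 11.0, (1, 0): 20.0}
```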

An even cheaper possibility consists in using the fact that the conversion between θ and β is dictated by lensing quantities, which we do compute for each ray. We shall adopt this method here. Specifically, in terms of θ, Eq. (54) reads

The quantities r, 𝒜, μ are indeed evaluated in each direction θ of our HEALPIX maps, thereby making the computation of A(η) much easier. In fact, the corrections due to the presence of μ and 𝒜, that is, the difference between integrating over θ or β, turn out to be very small – about 1% of  ; these corrections could thus be neglected in first approximation.


We use two different methods to compute the gradient ∂r/∂θ from the map r(η, θ), so as to better control numerical artefacts. The first is the ‘finite-differences’ method, where we estimate derivatives from finite differences between neighbouring pixels. The second is the ‘spherical-harmonics’ method, where we first decompose the map into spherical harmonics, $r(\eta,\theta) = \sum_{\ell,m} r_{\ell m}(\eta)\,Y_{\ell m}(\theta)$, and then compute gradients from the gradients of the spherical harmonics. The same procedure is applied to the mask (with zero padding) so as to normalise the gradient of the original map. We use healpy routines (Zonca et al. 2019).

In practice, the spherical-harmonics method requires smoothing beforehand to ensure that we recover the initial map through the operation map → $r_{\ell m}$ → map. The smoothing scale must be as small as possible but larger than the pixel size; we thus adopt a Gaussian beam with FWHM = 5 arcmin. Although smoothing is not needed in the finite-difference method, we apply it there as well so that the results of both methods are comparable.
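For reference, a Gaussian beam of given FWHM has standard deviation σ = FWHM/√(8 ln 2). Its harmonic transfer function is exp[−ℓ(ℓ+1)σ²/2], which is the form returned by healpy's `gauss_beam`. The plain-Python sketch below evaluates both for the 5 arcmin beam adopted here, to indicate from which multipoles the smoothing becomes significant.

```python
import math

ARCMIN = math.pi / (180.0 * 60.0)  # one arcminute in radians


def fwhm_to_sigma(fwhm):
    """Standard deviation of a Gaussian with the given full width at half maximum."""
    return fwhm / math.sqrt(8.0 * math.log(2.0))


def gaussian_beam_window(ell, fwhm_rad):
    """Harmonic transfer function b_ell = exp(-ell(ell+1) sigma^2 / 2) of a Gaussian beam."""
    sigma = fwhm_to_sigma(fwhm_rad)
    return math.exp(-0.5 * ell * (ell + 1) * sigma ** 2)


fwhm = 5.0 * ARCMIN
print(f"sigma = {fwhm_to_sigma(fwhm) / ARCMIN:.3f} arcmin")
for ell in (500, 1000, 2000, 4000):
    print(ell, round(gaussian_beam_window(ell, fwhm), 3))
```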

3.9. Uncertainties on numerical averages

When computing the (source or directional) average ⟨X⟩ of an observable X from mock data, the result generally differs from theoretical predictions; in other words, the ergodicity principle is not exactly satisfied. This may happen for two reasons: First, the number of mock observations in the sample is finite; this leads to a Poisson uncertainty on the estimation of any average quantity. Second, there may be super-sample inhomogeneity modes (Hui & Greene 2006), which may bias the estimator of ⟨X⟩. This is particularly relevant to the mock data extracted from the narrow cones, which may be, for example, slightly over-dense or under-dense with respect to the simulation box.

We shall account for this uncertainty by adding error bars on any numerical average presented in the next section. The size of such error bars, that is, the uncertainty σ on ⟨X⟩, is computed as

In Eq. (57), the first term represents the Poisson uncertainty due to the finite sample size. It involves the multipoles of the angular power spectrum of X and the number N of mock observations, that is, the number of pixels in the map for directional averages, or the number of sources for source averages. When N ≫ 1, this first term may be neglected.
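The order of magnitude of the Poisson term is easy to check. For an isotropic field on the sphere, the one-point variance is $\mathrm{Var}(X) = \sum_\ell (2\ell+1)\,C_\ell/(4\pi)$, with C_ℓ the angular power spectrum (notation of this sketch). The uncertainty on a mean over N independent samples then scales as [Var(X)/N]^{1/2}. The Python sketch below uses this generic estimate with a made-up spectrum. We do not claim that it reproduces the first term of Eq. (57) exactly.

```python
import math


def one_point_variance(cl):
    """Variance of an isotropic field on the sphere from its angular power spectrum cl[ell]."""
    return sum((2 * ell + 1) * c / (4.0 * math.pi) for ell, c in enumerate(cl))


def poisson_sigma(cl, n_samples):
    """Standard error of the mean of n_samples independent draws of the field."""
    return math.sqrt(one_point_variance(cl) / n_samples)


# Made-up spectrum with no monopole and a one-point variance of 3.
toy_cl = [0.0] + [4.0 * math.pi / (2 * ell + 1) for ell in (1, 2, 3)]
for n in (10**4, 10**6, 3 * 10**6):
    print(n, poisson_sigma(toy_cl, n))
```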

The second term in Eq. (57) is the super-sample variance. This contribution may be understood as a generalisation of the cosmic variance mentioned in Sect. 2.3.3. Suppose that we compute the directional average of X within a cone of half-angle α at the observer,

with θ = (ϑ, φ), and where

is the cone’s window function. We note that $\langle X\rangle_{\alpha=\pi} = \langle X\rangle_{\rm d}$ by definition. The average $\langle X\rangle_\alpha$ is a random variable, because it depends on the actual orientation of the cone; its variance is the super-sample variance that we are looking for,

Decomposing the observable X and the window function W in spherical harmonics, for which

where Pℓ are Legendre polynomials, we find

In the full-sky limit (α = π), the uncertainty on $\langle X\rangle_\alpha$ is, as expected, dictated by the monopole only.
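For an azimuthally symmetric cap, the window-function multipoles have a standard closed form. For ℓ ≥ 1, the integral of P_ℓ over cos ϑ ∈ [cos α, 1] equals [P_{ℓ−1}(cos α) − P_{ℓ+1}(cos α)]/(2ℓ+1). The Python sketch below uses it to evaluate the variance of the cap average of an isotropic field with angular power spectrum C_ℓ, assuming the window is normalised by the solid angle of the cap. This is our own rendering of the general result, and we do not claim it matches the expression of this section term by term. It does reproduce the two expected limits: only the monopole survives for α = π, while for α → 0 one recovers the one-point variance Σ_ℓ (2ℓ+1) C_ℓ/(4π).

```python
import math


def legendre_values(lmax, x):
    """P_0(x), ..., P_{lmax+1}(x) from Bonnet's recursion."""
    p = [1.0, x]
    for n in range(1, lmax + 1):
        p.append(((2 * n + 1) * x * p[n] - n * p[n - 1]) / (n + 1))
    return p


def cap_average_variance(cl, alpha):
    """Variance of the average of an isotropic field with angular power spectrum cl[ell]
    within a cap of half-angle alpha (0 < alpha <= pi), normalised by the cap's solid angle."""
    c = math.cos(alpha)
    p = legendre_values(len(cl) - 1, c)
    var = 0.0
    for ell, c_ell in enumerate(cl):
        # Integral of P_ell over cos(theta) in [c, 1]
        integral = 1.0 - c if ell == 0 else (p[ell - 1] - p[ell + 1]) / (2 * ell + 1)
        # (integral / (1 - c)) is the cap average of P_ell
        var += (2 * ell + 1) / (4.0 * math.pi) * c_ell * (integral / (1.0 - c)) ** 2
    return var


toy_cl = [2.0, 1.0, 0.5, 0.25]  # made-up spectrum
for alpha_deg in (1.0, 10.0, 90.0, 180.0):
    print(alpha_deg, cap_average_variance(toy_cl, math.radians(alpha_deg)))
```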

3.10. Variance within a finite simulation box

The variance derived in Sect. 3.9 depends on the angular power spectrum of X, which ultimately depends on the matter density power spectrum P(k). As such, it would seem natural to use the information from all the wavelengths available in P(k). However, there is a subtlety when estimating the variance from N-body simulations. These are usually cubic boxes with periodic boundary conditions, in which the mean density contrast vanishes by construction. This means that, unlike in the real Universe, there can be no inhomogeneity modes with wavelengths larger than the box itself.

To mimic this effect, Gelb & Bertschinger (1994) imposed a cut-off in the matter power spectrum at $k_{\min} = 2\pi/L$, with L the comoving size of the box. This approach has been widely studied, either for 3D statistics (Bagla & Ray 2005; Power & Knebe 2006) or for 2D weak-lensing analyses (Harnois-Déraps & van Waerbeke 2015). One may go further by convolving the power spectrum with the appropriate cubic window function in real space (Pen 1997; Sirko 2005); we found that this last correction was negligible because our box is large enough.
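The effect of the cut-off on angular statistics can be gauged with the Limber correspondence ℓ + 1/2 ≈ kχ between a 3D wavenumber and the multipole it projects onto at comoving distance χ. The Python sketch below computes k_min = 2π/L, applies the sharp cut-off to an arbitrary power spectrum, and estimates below which multipole a shell at distance χ loses power. The box size and distance in the usage lines are placeholders, not the values of this simulation.

```python
import math


def k_min(box_size):
    """Fundamental wavenumber of a periodic box of comoving side box_size."""
    return 2.0 * math.pi / box_size


def truncate_power(pk, box_size):
    """Return P(k) with all modes longer than the box set to zero (sharp cut-off)."""
    kmin = k_min(box_size)
    return lambda k: pk(k) if k >= kmin else 0.0


def limber_ell(k, chi):
    """Multipole probed by wavenumber k at comoving distance chi (Limber: ell + 1/2 = k chi)."""
    return k * chi - 0.5


# Placeholder numbers: a 2000 Mpc/h box and a shell at chi = 3000 Mpc/h.
L, chi = 2000.0, 3000.0
print(f"k_min = {k_min(L):.5f} h/Mpc -> power missing below ell ~ {limber_ell(k_min(L), chi):.1f}")
```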

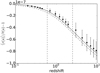

Finite-box corrections effectively change the low-k behaviour of the power spectrum of any quantity that depends on the gravitational potential or on the density contrast. As a consequence, the angular power spectrum of any related observable X is modified at low ℓ compared to its theoretical prediction in an infinite Universe. Depending on the shape of