| Issue | A&A, Volume 575, March 2015 |
|---|---|
| Article Number | A74 |
| Number of page(s) | 29 |
| Section | Extragalactic astronomy |
| DOI | https://doi.org/10.1051/0004-6361/201425017 |
| Published online | 26 February 2015 |

Online material

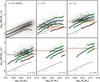

Appendix A: The UVJ selection

To further test the reliability of the UVJ selection technique, we have separately stacked the galaxies classified as quiescent. The result is presented in Fig. A.1. On this plot we show where the quiescent galaxies would lie on the SFR–M∗ plane if all their IR luminosity came from star formation. This assumption is certainly wrong, because in these massive galaxies dust is mostly heated by old stars, so the SFR we derive is actually an upper limit on the true star formation activity of these galaxies. However, even with this naive assumption, the derived SFRs are an order of magnitude lower than those of the star-forming sample. We also observe that the effective dust temperature, inferred from the wavelength at which the FIR emission peaks, is lower, as expected if dust is indeed mainly heated by less massive stars.

Fig. A.1

Same as Fig. 16, this time also showing the location of UVJ passive galaxies. In each panel, the blue line shows the average stacked SFR (Sect. 4.2), and the green lines above and below show the 1σ dispersion obtained with scatter stacking. The orange horizontal line shows the detection limit of Herschel in SFR. The red line shows the stacked SFR of UVJ passive galaxies, naively assuming that all the IR light comes from star formation. This is a conservative upper limit, since in these galaxies dust is predominantly heated by old stars, and the effective dust temperature inferred from the FIR SED is much colder than for actively star-forming galaxies of comparable mass.

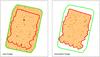

Appendix B: Tests of our methods on simulated images

To test all of these procedures, we build a set of simulated images. We design these to be as close as possible to the real images in a statistical sense, i.e., the same photometric and confusion noise, and the same number counts.

To do so, we start from our observed H-band catalogs, knowing redshifts and stellar masses for all the galaxies. Using our results from stacking Herschel images, we can attribute an SFR to each of these galaxies. We then add a random amount of star formation, following a log-normal distribution of dispersion 0.3 dex. We also put 2% of our sources in starburst mode, where their SFR is increased by 0.6 dex. Next, we assign an FIR SED to each galaxy following the observed trends with redshift (no mass dependence) and excess SFR (Magnelli et al. 2014). Starburst galaxies are also given warmer SEDs.
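The SFR assignment described above can be illustrated with a short Python sketch. It is not the code used for the simulations: the function name `simulate_sfr` and its parameters are hypothetical, and we assume here that starbursts are drawn at random and receive the 0.6 dex boost on top of the log-normal scatter, which the text does not specify.

```python
import numpy as np

def simulate_sfr(sfr_ms, scatter_dex=0.3, starburst_frac=0.02,
                 starburst_boost_dex=0.6, rng=None):
    """Scatter SFRs around an input value and flag a starburst subset.

    sfr_ms : per-galaxy SFR attributed from the stacking results.
    Returns the simulated SFRs and a boolean starburst mask.
    """
    rng = np.random.default_rng() if rng is None else rng
    sfr_ms = np.asarray(sfr_ms, dtype=float)
    # Log-normal scatter: Gaussian in log10(SFR) with the given dispersion.
    log_sfr = np.log10(sfr_ms) + rng.normal(0.0, scatter_dex, sfr_ms.shape)
    # Assumption: starbursts are picked at random, independently of mass
    # and redshift, and are boosted on top of the scatter.
    is_starburst = rng.random(sfr_ms.shape) < starburst_frac
    log_sfr[is_starburst] += starburst_boost_dex
    return 10.0**log_sfr, is_starburst
```

Calling `simulate_sfr(sfr_from_stacking)` once per realization, with a fresh random state, would give a different SFR realization for each simulated catalog.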

From these simulated source catalogs, we generate a list of fluxes in all Herschel bands. Given noise maps (either modeled from rms maps assuming Gaussian noise, or constructed from the difference between observing blocks), we build simulated images by placing each source as a PSF centered on its sky position, with a Gaussian uncertainty of 0.45″ and a maximum offset of 0.9″. We randomly reposition the sources inside the fields using uniform distributions in right ascension and declination, to probe multiple realizations of confusion. These simulated images have pixel distributions, or P(D) plots, very close to those of the observed images, and are thus good tools to study our methods. An example is shown in Fig. B.1 for the GOODS–South field at 100 μm.

We produce 400 sets of simulated catalogs and images, each with a different realization of photometric noise, confusion noise and SFR. We then run our full stacking procedure on each, using the same setup as for the real images (i.e., using the same redshift and mass bins), to test the reliability of our flux extraction and the accuracy of the reported errors.

Fig. B.1

Real Herschel PACS 100 μm image (left) and one of our simulations (right). The green region shows the extent of the PACS coverage, while the red region shows the Hubble ACS coverage, i.e., the extent of our input catalog. The two images are shown here with the same color bar.

Fig. B.2

Comparison of measured stacked flux densities from the simulated images with the real flux densities that were put into the 100 μm map (the other wavelengths behave the same). The stacked sources were binned in redshift and mass using exactly the same bins as those that were used to analyze the real images. Left: mean stacked flux densities, right: median stacked flux densities. Each point shows the median Soutput/Sinput among all the 400 realizations, while error bars show the 16th and 84th percentiles of the distribution. Filled circles indicate measurements that are individually significant at >5σ on average, i.e., those we would actually use, while open circles indicate measurements at <5σ to illustrate the trend. On each plot, gray circles show the values obtained with the other method (i.e., median and mean) for the sake of direct comparison. It is clear that mean fluxes are more noisy, while median fluxes exhibit a systematic bias.

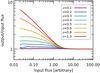

Appendix B.1: Mean and median stacked fluxes

For each of the 400 realizations we compare the measured flux densities using both mean and median stacking to the expected mean and median flux densities, respectively. The results are shown in Fig. B.2 for the PACS 100 μm band. The other bands show similar behavior.

Although less noisy, median fluxes are biased toward higher values (at most by a factor of 2 here). This is because the median is not a linear operation, so it is not true in general that ⟨a + b⟩ = ⟨a⟩ + ⟨b⟩, where ⟨.⟩ denotes the median. In particular, this means that if we compute the median of our noisy stacked image and subtract the median value of the noise, we do not exactly recover the median flux density. We will call this effect the noise bias in what follows. White et al. (2007) show that this bias arises when: 1) the S/N of stacked sources is low; and 2) the distribution of flux is skewed toward either faint or bright sources. The latter is indeed true in our simulations, since we used a log-normal distribution for the SFR. Correcting for this effect is not trivial, as it requires knowledge of the real flux distribution. Indeed, Fig. B.3 shows the amplitude of this bias for different log-normal flux dispersions, the highest dispersions producing the highest biases. White et al. (2007) argue that the median stacked flux is still a useful quantity, since it is actually a good measure of the mean of the distribution, but this is only true in the limit of low S/N. In their first example, a double normal distribution, the measured median reaches the true mean for S/N < 0.1, but correctly measures the true median for S/N > 3.
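The noise bias can be reproduced with a small Monte Carlo experiment in the spirit of Fig. B.3. The sketch below is only an illustration under stated assumptions: the function name `median_stack_bias` is hypothetical, the noise is Gaussian with σnoise = 1, and we assume that the quoted S/N refers to the true median flux of the log-normal distribution.

```python
import numpy as np

def median_stack_bias(snr, scatter_dex=0.3, n=200_000, rng=None):
    """Ratio of the noise-subtracted measured median to the true median.

    Fluxes are log-normal with true median `snr`, in units where the
    Gaussian noise has sigma = 1.
    """
    rng = np.random.default_rng() if rng is None else rng
    flux = snr * 10.0**rng.normal(0.0, scatter_dex, n)
    noise = rng.normal(0.0, 1.0, n)
    # Median of the noisy values, minus the median of the noise alone.
    measured = np.median(flux + noise) - np.median(noise)
    return measured / np.median(flux)

if __name__ == "__main__":
    rng = np.random.default_rng(42)
    for snr in (0.1, 0.4, 1.0, 3.0, 10.0):
        print(snr, median_stack_bias(snr, rng=rng))
```

With these assumptions the ratio should approach the mean-to-median ratio of the input distribution at low S/N and unity at high S/N, and increasing `scatter_dex` should increase the low-S/N bias.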

Of course these values depend on the distribution itself, as is shown in Fig. B.3. In particular, for a log-normal distribution with 0.3 dex scatter, the mean is reached for S/N < 0.4, and the median for S/N > 3. Theoretically, the difference between the mean and the median for a log-normal distribution is $\ln(10)\,\sigma^{2}/2$ dex, where σ is the dispersion in dex. In our simulations, the typical 100 μm flux dispersion within a stacking bin is ~0.45 ± 0.1 dex, which yields a factor of $10^{\ln(10)\,\sigma^{2}/2} \approx 1.7$ for σ = 0.45 dex, in agreement with the maximum observed bias.

To see how this affects the measured LIR in practice, we list in Table B.1 the ratio of the median to mean measured LIR in each stacked bin, as measured on the real images. We showed in Sect. 4.4 that the dispersion in LIR is about 0.3 dex. Therefore, assuming a log-normal distribution, we would theoretically expect the ratio of the median to mean LIR to be close to 0.78. It is, however, clear from Table B.1 that this is not the case in practice: the median is usually (but not always) much closer to the mean than expected for a noiseless situation. Therefore, the median stacked fluxes are often not measuring the median fluxes or the mean fluxes, but something in between. Since correcting for this bias requires assumptions on the flux distribution, we prefer (when possible) to use the more noisy but unbiased mean fluxes for this study.
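For reference, the expected noiseless ratio can be worked out explicitly. The short derivation below is a sketch that assumes an exactly log-normal distribution with dispersion σ expressed in dex; the symbols μ and s are introduced here for illustration only.

```latex
% log10(L) ~ N(mu, sigma^2), i.e. ln(L) has dispersion s = ln(10) * sigma
\begin{align}
  \mathrm{median}(L) &= 10^{\mu}, \qquad
  \mathrm{mean}(L) = 10^{\mu}\, e^{s^{2}/2}, \qquad s = \ln(10)\,\sigma \\
  \log_{10}\frac{\mathrm{mean}}{\mathrm{median}}
    &= \frac{s^{2}}{2\ln(10)} = \frac{\ln(10)\,\sigma^{2}}{2} \\
  \sigma = 0.3~\mathrm{dex}:\quad
  \frac{\ln(10)\times 0.09}{2} &\simeq 0.104~\mathrm{dex}
  \;\Rightarrow\;
  \frac{\mathrm{median}}{\mathrm{mean}} = 10^{-0.104} \simeq 0.79
\end{align}
```

This is the value of roughly 0.78 to 0.79 against which the measured ratios of Table B.1 are compared.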

Table B.1

Ratio of the LIR values obtained from median and mean stacking using the same sample on the real Herschel images.

Appendix B.2: Clustering correction

Among our 400 random realizations, the measured mean fluxes do not show any systematic bias. However, these simulations do not take the flux boosting caused by the physical clustering of sources into account, because we assigned random positions to the sources in our catalog. To test the effect of clustering, we generate a new set of 200 simulations, this time using the real optical positions of the sources and only varying the photometric noise and the SFRs of the sources.

If galaxies are significantly clustered in the image, then the measured fluxes will be boosted by the amount of light from clustered galaxies that falls inside the beam. Since the beam size here is almost a linear function of the wavelength, we expect SPIRE bands to be more affected than PACS bands. Since the same beam at different redshifts corresponds to different proper distances, low redshift measurements (z< 0.5) should be less affected. However, because of the flatness of the relation between redshift and proper distance for z > 0.5, this should not have a strong impact for most of our sample. Indeed, we do not observe any significant trend with redshift in our simulations. No trend was found with stellar mass either, hence we averaged the clustering signal over all stacked bins for a given band, and report the average measured boost in Table B.2 (“method A”) along with the 16th and 84th percentiles. Although we limited this analysis to fluxes measured at better than 5σ, the scatter in the measured bias is compatible with being only caused by uncertainties in flux extraction.

Fig. B.3

Monte Carlo analysis showing evidence for a systematic bias in median stacking. These values have been obtained by computing medians of log-normally distributed values in the presence of Gaussian noise of fixed amplitude (σnoise = 1 in these arbitrary flux units, so that the input flux is also the S/N).

Table B.2

Clustering bias in simulated Herschel images.

Although negligible in PACS, this effect can reach 30% in SPIRE 500 μm data. Here we correct for this bias by simply deboosting the real measured fluxes by the factors listed in Table B.2, band by band. The net effect on the total measured LIR is reported in Table B.3.

By construction, these corrections are specific to our flux extraction method. By limiting the fitting area to pixels where the PSF relative amplitude is larger than 10%, we absorb part of the large scale clustering into the background level. If we were to use the full PSF to measure the fluxes, we would measure a larger clustering signal (see Sect. 3.2). We have re-extracted all the fluxes by fitting the full PSF, and we indeed measure larger biases. These are tabulated in Table B.2 as “method B”. An alternative to PSF fitting that is less affected by clustering consists of setting the mean of the flux map to zero before stacking and then only using the central pixel of the stacked cutout (Béthermin et al. 2012). Because of clustering, the effective PSF of the stacked sources will be broadened, and using the real PSF to fit this effective PSF will result in some additional boosting. Therefore, by only using the central pixel, one can get rid of this effect. We show in Table B.2 as “method C” how the figures change using this alternative method. Indeed the measured boosting is smaller than when using the full PSF, and is consistent with that reported by Béthermin et al. (2015), but our method is even less affected thanks to the use of a local background.

Appendix B.3: Error estimates

Fig. B.4

True error σ on the stacked flux measurements as a function of the instrumental white noise level σinst. that is put on the image (here normalized to a “PSF” noise in mJy, i.e., the error on the flux measurement of a point source in the absence of confusion). We generated multiple simulations of the 250 μm maps using varying levels of white noise, and compute σ from the difference between the measured fluxes and their expected values. Left: evolution of the average total noise per source

We now study the reliability of our error estimates on the stacked fluxes. We compute the difference between the observed and input flux for each realization, ΔS. We then compute the median ⟨ΔS⟩, which is essentially the value plotted in Fig. B.2, i.e., it is nonzero mostly for median stacked fluxes. We subtract this median difference from ΔS, and compute the scatter σ of the resulting quantity using median absolute deviation, i.e., σ ≡ 1.48 × MAD(ΔS − ⟨ΔS⟩). We show in Fig. B.5 the histograms of (ΔS − ⟨ΔS⟩) /σ for the mean and median stacked PACS 100 μm fluxes in each stacked bin. By construction, these distributions are well described by a Gaussian of width unity (black curve).
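The robust scatter defined above is straightforward to compute. The following is a minimal sketch, with a hypothetical helper name `robust_scatter`, taking the array of ΔS values for one stacked bin over all realizations.

```python
import numpy as np

def robust_scatter(delta_s):
    """sigma = 1.48 * MAD(dS - <dS>), where <.> denotes the median."""
    delta_s = np.asarray(delta_s, dtype=float)
    # Remove the median offset (the bias shown in Fig. B.2).
    centered = delta_s - np.median(delta_s)
    # The centered values have zero median, so the MAD is simply the
    # median of their absolute values.
    mad = np.median(np.abs(centered))
    return 1.48 * mad
```

The factor 1.48 converts the MAD into an equivalent Gaussian standard deviation, so `(delta_s - np.median(delta_s)) / robust_scatter(delta_s)` gives the normalized quantity whose histogram is compared to a unit-width Gaussian.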

We have two error estimates at our disposal. The first, σIMG, is obtained by measuring the rms of the residual image (after the stacked fluxes have been fitted and subtracted), and multiplying this value by the PSF error factor (see Eq. (4)). The second, σBS, is obtained by bootstrapping, i.e., repeatedly stacking half of the parent sample and measuring the standard deviation of the resulting flux distribution (again, see Sect. 3.2). Each of these methods provides a different estimate of the error on the flux measurement, and we want to test their accuracy.
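The bootstrap estimate can be sketched as follows. This is a simplified illustration, not the procedure of Sect. 3.2: it operates on one flux value per source rather than on image cutouts, the function name `bootstrap_error` is hypothetical, and we assume that each half sample is drawn without replacement and that no further rescaling is applied.

```python
import numpy as np

def bootstrap_error(values, stack=np.mean, n_boot=100, rng=None):
    """Scatter of the stacked value over random half samples.

    values : one measurement per source (a stand-in for image cutouts).
    stack  : stacking operator, e.g. np.mean or np.median.
    """
    rng = np.random.default_rng() if rng is None else rng
    values = np.asarray(values, dtype=float)
    half = len(values) // 2
    stacked = np.empty(n_boot)
    for i in range(n_boot):
        # Assumption: half of the parent sample, drawn without replacement.
        subset = rng.choice(values, size=half, replace=False)
        stacked[i] = stack(subset)
    return np.std(stacked)
```

For mean stacking of independent values, half samples drawn without replacement have a scatter close to the standard error of the full-sample mean, which is why no rescaling is applied in this sketch. Passing `stack=np.median` gives the corresponding estimate for median stacking.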

In Fig. B.5, we show as red and blue lines the predicted error distribution according to σIMG and σBS, respectively. When the predicted distribution is too narrow or too broad compared to the observed distribution (black curve), this means that the estimated error is respectively too low or too high.

For median stacked fluxes, it appears that σBS is accurate in all cases. It tends to slightly overestimate the true error on some occasions, but not by a large amount. On the other hand, σIMG dramatically underestimates the error when the measured S/N of stacked sources is high (or the number of stacked sources is low).

The situation for mean stacked fluxes is quite different. The behavior of σIMG is the same, but σBS shows the opposite trend, i.e., it underestimates the error at low S/N and high number of stacked sources. This may be caused by the fact that bootstrapping will almost always produce the same confusion noise, since it uses the same sources. The reason why this issue does not arise for median stacked fluxes might be that the median naturally filters out bright neighbors, hence reducing the impact of confusion noise.

The results are the same for the PACS 70 and 160 μm bands. Therefore, keeping the maximum error between σIMG and σBS ensures that one has an accurate error measurement in all cases for the PACS bands.

The SPIRE fluxes on the other hand show a substantially different behavior. We reproduce the same figures in Fig. B.6, this time for the SPIRE 350 μm band. Here, and except for the highest mass bin, the errors are systematically underestimated by a factor of ~1.7, regardless of the estimator used. We therefore use this factor to correct all our measured SPIRE errors in these bins.
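The resulting error recipe can be summarized in a few lines of Python. This is a sketch under assumptions: the band labels and the function name `adopted_error` are hypothetical, and we assume that the factor of 1.7 is applied to the larger of the two estimates, which the text does not state explicitly.

```python
# Hypothetical band labels, used for illustration only.
SPIRE_BANDS = {"spire250", "spire350", "spire500"}

def adopted_error(sigma_img, sigma_bs, band,
                  highest_mass_bin=False, spire_factor=1.7):
    """Combine the two error estimates for one stacked measurement."""
    # Keep the larger of the image-based and bootstrap errors.
    sigma = max(sigma_img, sigma_bs)
    # Assumption: the SPIRE correction multiplies that maximum, and is
    # skipped in the highest mass bin, where errors are not underestimated.
    if band in SPIRE_BANDS and not highest_mass_bin:
        sigma *= spire_factor
    return sigma
```

For example, `adopted_error(1.0, 2.0, "spire350")` inflates the larger estimate by the SPIRE factor, while the same call with a PACS label would return it unchanged.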

We believe this underestimation of the error is an effect of confusion noise. Indeed, it is clear when looking at the stacked maps at these wavelengths (e.g., Fig. 5) that there is a substantial amount of large scale noise coming from the contribution of the neighboring bright sources. The main issue with this noise is that it is spatially correlated. This violates one of the assumptions that were made when deriving the error estimation of Eq. (4), which may thus give wrong results. The reason why only the SPIRE bands are affected is because the noise budget here is (by design) completely dominated by confusion. This is clear from Fig. B.4 (left): when putting little to no instrumental noise σinst on the simulated maps, the total error σtot on the flux measurements is completely dominated by the confusion noise σconf (blue line), and it is only by adding instrumental noise of at least 10 mJy (i.e., ten times more than what is present in the real maps) that the image becomes noise dominated. By fitting

$$\sigma_{\rm tot}^{2} = \sigma_{\rm inst}^{2} + \sigma_{\rm conf}^{2} \qquad \mathrm{(B.1)}$$

we obtain σconf = 4.6 mJy. This value depends on the model we used to generate the simulated fluxes, but it is in relatively good agreement with already published estimates from the literature (e.g., Nguyen et al. 2010, who predict σconf = 6 mJy).
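A fit of this kind can be sketched in Python, assuming that the instrumental and confusion terms add in quadrature to give the total error. The function name `fit_confusion_noise` is hypothetical, and the unweighted least-squares fit in σ² is an illustrative choice rather than the procedure actually used.

```python
import numpy as np

def fit_confusion_noise(sigma_inst, sigma_tot):
    """Fit sigma_tot^2 = sigma_inst^2 + sigma_conf^2 for sigma_conf.

    Assumes the two noise terms add in quadrature. With a fixed unit
    slope in sigma^2, the least-squares solution for the constant term
    is the mean of (sigma_tot^2 - sigma_inst^2).
    """
    sigma_inst = np.asarray(sigma_inst, dtype=float)
    sigma_tot = np.asarray(sigma_tot, dtype=float)
    conf_sq = np.mean(sigma_tot**2 - sigma_inst**2)
    # Guard against a slightly negative estimate caused by noise.
    return np.sqrt(max(conf_sq, 0.0))
```

Here `sigma_inst` would hold the white noise levels injected in the simulated maps and `sigma_tot` the corresponding measured total errors per source.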

We then show in Fig. B.4 (right) that the error underestimation in the SPIRE bands, here quantified by the ratio σ/σIMG, goes away when the image is clearly noise dominated, meaning that this issue is indeed caused by confusion and the properties of the noise that it generates.

Fig. B.5

Normalized distribution of (ΔS − ⟨ΔS⟩) /σ of the mean (top) and median (bottom) stacked PACS 100 μm fluxes in each stacked bin. The black, blue, and red curves show Gaussians of width 1, σBS/σ and σIMG/σ, respectively. The estimation of the true S/N of the flux measurement is displayed in dark red, while the average number of stacked sources is shown in dark blue.

Fig. B.6

Same as Fig. B.5 for SPIRE 350 μm.

© ESO, 2015
