| Issue | A&A, Volume 699, July 2025 |
|---|---|
| Article Number | A96 |
| Number of page(s) | 15 |
| Section | Astronomical instrumentation |
| DOI | https://doi.org/10.1051/0004-6361/202554824 |
| Published online | 04 July 2025 |

A novel approach to optimizing the image cleaning performance of Imaging Atmospheric Cherenkov Telescopes: Application to a time-based cleaning for H.E.S.S.

1 Friedrich-Alexander-Universität Erlangen-Nürnberg, Erlangen Centre for Astroparticle Physics, Nikolaus-Fiebiger-Str. 2, 91058 Erlangen, Germany

2 Max-Planck-Institut für Kernphysik, Saupfercheckweg 1, 69117 Heidelberg, Germany

★ Corresponding author: jelena.celic@fau.de

Received: 28 March 2025

Accepted: 13 May 2025

The Imaging Atmospheric Cherenkov Telescope (IACT) technique is essential for gamma-ray astronomy, but it suffers from performance degradation due to night sky background (NSB) noise. This degradation is mitigated through image-cleaning procedures. This study introduces a time-based cleaning method for the High Energy Stereoscopic System using CT5 in monoscopic mode, and it presents an optimization workflow for image-cleaning algorithms to enhance telescope sensitivity while minimizing systematic biases. Unlike previous optimization efforts, we do not use first-order metrics such as image size retention; instead, our pipeline focuses on the final sensitivity improvement and its systematic susceptibility to NSB. We evaluate three methods (tail-cut cleaning and two variants of time-based cleaning, TIME3D and TIME4D) and identify the best-cut configurations for two cases: optimal overall sensitivity and minimal energy threshold. The TIME3D method achieves a ~15% improvement compared to standard tail-cut cleaning for E < 300 GeV, with a ~200% improvement for the first energy bin (36.5 GeV < E < 64.9 GeV), providing more stable performance across a wider energy range by preserving more signal. The TIME4D method achieves an approximately 20% improvement at low energies due to superior NSB noise suppression, allowing enhanced capability to detect sources at the lowest energies. We demonstrate that first-order estimates of cleaning performance, such as image size retention or NSB pixel reduction, cannot provide a complete picture of the expected final sensitivity. Beyond expanding the effective area at low energies, sensitivity improvement requires precise event reconstruction, including improved energy and directional accuracy. Enhanced gamma-hadron separation and optimized pre-selection cuts further boost sensitivity. The proposed pipeline fully explores this, providing a fair and robust comparison between different cleaning methods. 
The method is general and can be applied to other IACT systems, such as the Very Energetic Radiation Imaging Telescope Array System and the Major Atmospheric Gamma-Ray Imaging Cherenkov Telescopes. By advancing data-driven image cleaning, this study lays the groundwork for detecting faint astrophysical sources and deepening our understanding of high-energy cosmic phenomena.

Key words: astroparticle physics / techniques: image processing

© The Authors 2025

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1 Introduction

The Imaging Atmospheric Cherenkov Telescope (IACT) technique, first demonstrated by Whipple in 1989, has proven extremely successful in detecting gamma rays in the energy range from 100 GeV to several tens of TeV (Weekes et al. 1989). Over the past decades, modern IACT systems, such as the High Energy Stereoscopic System (H.E.S.S.) (Aharonian et al. 2006), Major Atmospheric Gamma-Ray Imaging Cherenkov Telescopes (MAGIC) (Aleksić et al. 2012), and the Very Energetic Radiation Imaging Telescope Array System (VERITAS) (Holder et al. 2006) have revolutionized our understanding of energetic processes in the Universe (Funk 2015). The next-generation instrument is the Cherenkov Telescope Array Observatory (CTAO), which features significantly enhanced hardware and software capabilities. With a sensitivity up to ten times greater than that of existing instruments, CTAO is expected to enable groundbreaking discoveries (The CTA consortium 2019).

The detection principle of IACTs is based on observing gamma rays indirectly through extensive air showers. Secondary charged particles traveling faster than the speed of light in the atmosphere emit light in the form of Cherenkov radiation, which is collected by the telescope mirrors, forming an image of the air shower (Weekes 2005). From these images, it is possible to reconstruct the essential properties of the primary particle, such as its energy, direction, and species.

However, Cherenkov images are affected by noise induced by the night sky background (NSB). Even on the darkest nights, faint diffuse light from various sources (such as night airglow, zodiacal light, or diffuse light from unresolved stars) contributes to the NSB (Leinert et al. 1998; Preuss et al. 2002). These components vary with time, location, and observing conditions, making the NSB a dynamic and complex phenomenon. In addition, electronic and detector noise further contaminates the recorded images. This combination of noise sources introduces unwanted information into the event images, creating noise pixels that bias both image parameterization and the reconstruction of event properties.

To maximize the air shower signal while minimizing NSB contamination, robust image-cleaning techniques are essential for accurate data analysis. Traditional methods apply a pixel amplitude threshold to eliminate low-intensity pixels (Punch 1994; Konopelko et al. 1996), or use an island-cleaning technique to exclude isolated clusters (Bond et al. 2003), in order to retain Cherenkov light while reducing the number of noise pixels. With the development of advanced detector hardware, experiments such as MAGIC (Aliu et al. 2009), VERITAS (Maier & Holder 2018), and CTAO (Shayduk 2013) have explored a different approach by incorporating timing information from the signal. The motivation for using timing information lies in the correlated temporal patterns of shower pixels, which contrast with the random temporal nature of NSB noise. By analyzing both spatial and temporal patterns, these techniques are expected to suppress NSB noise more effectively while preserving the Cherenkov signal. This approach is particularly advantageous for detecting low-energy, faint showers, ultimately lowering the system’s energy threshold by retaining more low-energy events.

To address these challenges, we introduce a novel time-based cleaning technique developed for the H.E.S.S. system, as time information can now be exploited due to the last camera upgrade. Additionally, we propose a new workflow for optimizing image cleaning performance. The primary goal of this workflow is to improve the final detector sensitivity while making the system less susceptible to NSB fluctuations. Although the workflow is demonstrated using the H.E.S.S. system, it is designed to be applied to any IACT system, providing a robust and flexible solution for investigating current and future image-cleaning methods.

2 Dataset description and image-cleaning algorithms

The High Energy Stereoscopic System (H.E.S.S.) is an array of IACTs located in Namibia. The array initially comprised four telescopes, each with a 12-meter-diameter mirror (CT1 to CT4), which have been operating since 2003. In 2012, a larger telescope was added with a 28-meter-diameter mirror in the center (CT5), which operates with a monoscopic trigger mode (van Eldik et al. 2016). In this work, we use Monte Carlo (MC) simulations of gamma-ray events recorded with CT5, equipped with the FlashCam (FC) camera (Bi et al. 2021). Air shower simulations were generated with the CORSIKA (COsmic Ray SImulations for KAscade) (Heck et al. 1998) software package. Simulations of the telescope response were performed using the simtel_array (Bernlöhr 2008) software, using the most recent configuration files (Leuschner et al. 2023). The simulated MC gamma-ray spectrum follows a power law with an index of -2 to maintain high statistical rates at higher energy. Using simulations, we can obtain additional information about the true Cherenkov signal - specifically, the pixels associated with the event signal - which will be used later in the analysis. To provide a realistic background, we use real observational data with masks for known gamma-ray sources and bright stars, rather than MC proton simulations. This approach minimizes discrepancies between Monte Carlo and real data (Parsons & Schoorlemmer 2019; Pastor-Gutiérrez et al. 2021), while also limiting the significant computational cost associated with generating sufficiently large proton datasets for high-statistics analyses. Simulations were performed under representative conditions of a 20 degree zenith, a 0 degree azimuth, and a 0.5 degree offset. Background observations with zeniths around 20 degrees were chosen. Calibration and data processing were performed using the H.E.S.S. analysis program (HAP), one of the current state-of-the-art tools for processing data from the H.E.S.S. telescopes. 
The FC pulse reconstruction, fully described in Pühlhofer et al. (2022), provides the signal amplitude in photoelectrons (p.e.) and the peak times in nanoseconds for each pixel. The latter is considered to be the arrival time of the Cherenkov photons.

Once the signal in each of the pixels is reconstructed, image cleaning is performed. The default image-cleaning method in HAP is tail-cut cleaning. In this work, we introduce a new approach, time-based cleaning for H.E.S.S. - designed to improve the separation of signal from noise by leveraging the timing characteristics of Cherenkov light. Cleaning differences are most impactful for events with low signal intensities, which arise from low-energy showers or large impact distances. While this affects all telescope types to some extent, the lowest energies - where intensities are most frequently near the cleaning threshold - are primarily accessible with CT5. In a stereo analysis, it is likely that one telescope will have a large impact distance, and thus a low signal. Nevertheless, the telescopes with the largest signal tend to dominate the reconstruction, diminishing the improved cleaning effect in the others. For these reasons, in this work we focus on a monoscopic analysis using CT5 FC, where the benefits of improved cleaning are more pronounced and directly traceable in the reconstruction performance. The following sections provide a detailed description of the two cleaning algorithms. Figure 1 illustrates the behavior of each algorithm, showing the remaining light - referred to in this work as size - after cleaning, for an example event.

2.1 Tail-cut cleaning

The standard image-cleaning technique used by H.E.S.S. is the two-threshold cleaning method, commonly referred to as tail-cut cleaning (Aharonian et al. 2006). This method is favored for its effectiveness, simplicity, and computational efficiency. The cleaning process is executed in two stages, each applying specific thresholds to the pixel data. The noise level in each pixel is approximated by a Gaussian distribution with a mean of 0 p.e. and a standard deviation of σnoise. For observations under average Galactic NSB conditions with CT5 FC, a typical value is σgalactic NSB = 1.65 p.e. (Leuschner 2024). In the current configuration, pixels with a signal below 3σnoise are removed in a pre-cleaning step. In the second stage, pixels with a signal above a specified threshold (e.g., 5 p.e.) are retained only if they are adjacent to a pixel exceeding a higher threshold (e.g., 10 p.e.). This condition is bidirectional: pixels above the higher threshold are retained only if they are adjacent to a pixel with a signal above the lower threshold. This ensures that only regions with a significant signal are preserved, while isolated pixels, which are likely to be spurious signals or noise, are removed. Some cleaning configurations require multiple neighboring pixels to exceed the threshold, rather than just a single one. The default tail-cut cleaning configurations for CT5 are typically 0916NN2 and 0714NN2. In these configurations, the first threshold is 9 p.e. or 7 p.e., the second is 16 p.e. or 14 p.e., and a minimum of two neighboring pixels (NN2) is required for a pixel to be retained. Thus, the usual tail-cut cleaning can be defined by four input parameters: nnoise, thr1, thr2, and NN.
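The two-stage logic described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the HAP implementation: the function name `tailcut_clean`, the adjacency list `neighbors`, and the choice to apply the neighbor requirement NN to both directions of the threshold condition are hypothetical.

```python
def tailcut_clean(amplitudes, neighbors, thr_low, thr_high, nn=1,
                  n_noise=3.0, sigma_noise=1.65):
    """Return the set of pixel indices kept by a two-threshold cleaning.

    amplitudes : list of pixel amplitudes in p.e.
    neighbors  : neighbors[i] is the list of pixels adjacent to pixel i
                 (hypothetical camera geometry supplied by the caller)
    """
    # Pre-cleaning: drop every pixel below n_noise * sigma_noise.
    alive = {i for i, a in enumerate(amplitudes) if a >= n_noise * sigma_noise}
    above_low = {i for i in alive if amplitudes[i] > thr_low}
    above_high = {i for i in alive if amplitudes[i] > thr_high}

    kept = set()
    for i in above_low:
        n_high = sum(1 for j in neighbors[i] if j in above_high)
        n_low = sum(1 for j in neighbors[i] if j in above_low)
        # Low-threshold pixel next to >= nn high-threshold pixels, or
        # high-threshold pixel next to >= nn low-threshold pixels.
        if n_high >= nn or (i in above_high and n_low >= nn):
            kept.add(i)
    return kept


# Toy example: a one-dimensional strip of seven pixels.
amps = [2, 8, 17, 8, 1, 20, 3]
nbrs = [[j for j in (i - 1, i + 1) if 0 <= j < len(amps)] for i in range(len(amps))]
print(tailcut_clean(amps, nbrs, thr_low=7, thr_high=14, nn=1))
```

In the toy strip, the bright pixel 5 is removed because none of its neighbors passes the lower threshold, which is the behavior that suppresses isolated noise pixels.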

2.2 Time-based cleaning using DBSCAN

Traditional image-cleaning methods in IACTs, such as tail-cut cleaning, rely solely on pixel amplitudes to suppress background noise. Although effective in removing isolated noise pixels, these methods often fail to preserve the full extent of air shower images, particularly at lower energies. Low-energy and/or large-impact showers are characterized by faint Cherenkov light emissions, making them highly susceptible to removal by aggressive threshold cuts (Bond et al. 2003). This leads to a significant loss of potentially valuable gamma-ray events, ultimately raising the energy threshold of the telescope. To overcome these limitations, time-based cleaning introduces an additional layer of information: the temporal evolution of the Cherenkov signal. The fundamental advantage of this approach is that shower pixels exhibit strong time correlations, whereas noise from the NSB follows a more random temporal distribution. By incorporating the arrival time of Cherenkov photons, time-based cleaning can distinguish real shower pixels from NSB-induced noise. By relaxing the amplitude cuts, more of the shower structure can be preserved while still effectively suppressing background contamination.

The time-based cleaning algorithm implemented in the HAP software uses density-based spatial clustering of applications with noise (DBSCAN; Ester et al. 1996) to enhance the identification of clusters in IACT shower images. A detailed description of the implementation and first-order optimization of the input cleaning parameters can be found in Steinmaßl (2023). The optimized parameters derived in Steinmaßl (2023) are the current standard for CT5 FC and are labeled here as TIME3D_STD1 and TIME3D_STD2; these are listed in Table 1. The DBSCAN algorithm is an efficient clustering method that operates without predefined cluster counts, making it particularly suited for IACT images. Hadronic showers often produce multiple clusters due to their interaction patterns, whereas gamma-ray showers typically result in a single, concentrated cluster. The algorithm’s ability to classify unclustered points as noise further aids in isolating relevant data from background pixels. Before running DBSCAN, a pre-cut on intensity is applied, which can be either a fixed intensity threshold (nhard) or based on the noise level (nnoise × σnoise), similar to the pre-cleaning step of tail-cut cleaning. After pre-cleaning, DBSCAN is used to classify the remaining pixels. In general, the algorithm defines clusters based on two parameters: neighborhood distance ∊ and minimum points (minPts). Using these parameters, it classifies the points as core points (those with at least minPts within ∊), density-reachable points (within ∊ of a core point), or noise points (neither core nor density-reachable). Core points and their density-reachable neighbors are grouped to form clusters, while noise points are removed.

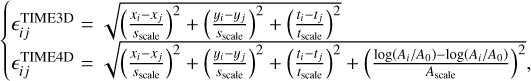

The distance ∊ is defined as a dimensionless, scaled distance in an N-dimensional space. Two definitions are used: the first considers the pixel peak time and spatial position in the camera, resulting in a three-dimensional distance, and is labeled as TIME3D. The second adds the amplitude in the pixel as the fourth dimension by considering the logarithm of the ratio between the pixel amplitude and that of the brightest pixel; this is referred to as TIME4D. The distance between two pixels, ∊ij, can then be expressed as

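$$\epsilon_{ij} = \sqrt{\left(\frac{x_i - x_j}{s_{\rm scale}}\right)^2 + \left(\frac{y_i - y_j}{s_{\rm scale}}\right)^2 + \left(\frac{t_i - t_j}{t_{\rm scale}}\right)^2 + \left(\frac{\log(A_i/A_0) - \log(A_j/A_0)}{A_{\rm scale}}\right)^2} \tag{1}$$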

where xi and yi are the spatial positions in the camera for pixel i, ti is its peak time, Ai is its amplitude, and A0 is the amplitude of the brightest pixel. The spatial, time, and amplitude scales (sscale, tscale, and Ascale) are input parameters of the cleaning, similar to the p.e. thresholds for tail-cuts. Thus, five input parameters (nhard, nnoise, minPts, sscale, tscale) must be optimized for TIME3D and six (additionally Ascale) for TIME4D. Other possibilities for including amplitude information, such as those proposed by Escañuela Nieves et al. (2025), were not investigated in this work. To optimize computation, DBSCAN was implemented within the HAP framework using a k-d tree to precompute distances, thus reducing processing time.
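The scaled distance and the core/density-reachable/noise classification described above can be sketched as follows. This is an illustrative pure-Python version, not the HAP code: it uses a brute-force O(N²) neighbor search instead of a k-d tree, and the function names, the base-10 logarithm, and the default neighborhood radius of 1 in scaled units are assumptions. Pixels are expected to have passed the intensity pre-cut, so all amplitudes are positive.

```python
import math


def scaled_distance(p, q, s_scale, t_scale, a_scale=None, a_max=1.0):
    """Dimensionless distance between two pixels given as (x, y, t, A).

    With a_scale=None only position and time are used (TIME3D-like);
    otherwise a log-amplitude term is added (TIME4D-like).
    """
    d2 = ((p[0] - q[0]) / s_scale) ** 2 + ((p[1] - q[1]) / s_scale) ** 2
    d2 += ((p[2] - q[2]) / t_scale) ** 2
    if a_scale is not None:
        # Assumption: base-10 logarithm of the amplitude ratio to the brightest pixel.
        log_ratio = math.log10(p[3] / a_max) - math.log10(q[3] / a_max)
        d2 += (log_ratio / a_scale) ** 2
    return math.sqrt(d2)


def dbscan_keep(pixels, s_scale, t_scale, a_scale=None, eps=1.0, min_pts=3):
    """Return indices of pixels that are core or density-reachable points."""
    if not pixels:
        return set()
    a_max = max(p[3] for p in pixels)
    n = len(pixels)
    # Neighborhood of each pixel (includes the pixel itself, as in standard DBSCAN).
    near = [
        [j for j in range(n)
         if scaled_distance(pixels[i], pixels[j], s_scale, t_scale, a_scale, a_max) <= eps]
        for i in range(n)
    ]
    core = {i for i in range(n) if len(near[i]) >= min_pts}
    kept = set()
    for i in core:
        kept.update(near[i])  # core point plus everything within eps of it
    return kept


# Four compact, time-correlated pixels and one isolated late pixel (x, y, t, A).
pixels = [(0, 0, 10.0, 20), (1, 0, 10.5, 15), (0, 1, 11.0, 12),
          (5, 5, 30.0, 6), (1, 1, 10.2, 18)]
print(dbscan_keep(pixels, s_scale=1.5, t_scale=2.0, min_pts=3))
```

Because only the surviving pixel set is needed for cleaning, the sketch skips the cluster labelling step of a full DBSCAN implementation.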

Fig. 1 An example MC gamma event seen before and after image cleaning with tail-cut and time-based algorithms. The amplitude (upper panel) and the peak time (lower panel) in each pixel of the FC are shown as color scales. In the cleaned images, blue pixels indicate shower pixels associated with the Cherenkov signal, i.e., at least 1 p.e. comes from Cherenkov photons.

Table 1 Standard time-based cleaning parameter combinations of CT5 FC.

Fig. 2 Left: average size retention, determined from pixels containing the shower signals. Right: average NSB survival rate calculated from pixels not containing the shower signal. Both panels show different tail-cut threshold combinations. Red and purple circles indicate the two default configurations. All combinations shown use the same pre-cleaning (3σnoise) as in the default configurations. Arrows indicate the direction of expected performance gain.

3 Optimization workflow

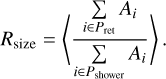

The primary goal of image cleaning is to preserve as much shower-related information as possible while simultaneously reducing noise levels. However, a trade-off must be found. Figure 2a shows the effect of varying thresholds in the tailcut cleaning algorithm on the average remaining light (size) retention, calculated as follows:

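$$R_{\rm size} = \left\langle \frac{\sum_{i \in P_{\rm ret}} A_i}{\sum_{i \in P_{\rm shower}} A_i} \right\rangle \tag{2}$$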

Here, Rsize denotes the average size retention, i.e., the fraction of shower-associated light that survives cleaning. Pshower is the set of all pixels associated with the shower, as determined from Monte Carlo TruePE information, while Pret ⊂ Pshower is the subset of those pixels that are retained after image cleaning. The variable Ai denotes the reconstructed amplitude (in photoelectrons) of pixel i, including contributions from NSB and detector effects. The angle brackets 〈·〉 indicate an average over all MC gamma-ray events.
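As a concrete illustration of the definitions above, the size retention can be computed per event and then averaged. The sketch below assumes that the average is taken over per-event ratios; the function name and the event tuple layout are hypothetical.

```python
def size_retention(events):
    """Average fraction of shower light that survives cleaning.

    events: iterable of (amplitudes, shower_pixels, kept_pixels), where
    shower_pixels comes from MC truth and kept_pixels from the cleaning.
    """
    ratios = []
    for amplitudes, shower, kept in events:
        total = sum(amplitudes[i] for i in shower)
        if total <= 0:
            continue  # no shower light in this event, ratio undefined
        retained = sum(amplitudes[i] for i in shower if i in kept)
        ratios.append(retained / total)
    return sum(ratios) / len(ratios)


events = [
    ([10, 5, 5], {0, 1, 2}, {0}),   # half of the shower light is kept
    ([4, 4], {0, 1}, {0, 1}),       # all of the shower light is kept
]
print(size_retention(events))
```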

The quantity is evaluated on shower-only pixels, which can be extracted from the simulations. The results indicate that as the thresholds increase, the size retention decreases. This means that higher thresholds result in more of the shower’s information being removed. Conversely, as thresholds are lowered, the number of NSB clusters increases, as shown in Figure 2b, where the average NSB survival rate rises significantly for lower thresholds. Finding the right balance between size retention and NSB cleaning efficiency is crucial to developing an optimal cleaning algorithm. For this reason, the optimization of image-cleaning methods remains a complex challenge. Previous studies aimed at improving image cleaning for IACTs (Shayduk 2013; Steinmaßl 2023; Kherlakian 2023) have often focused on maximizing size retention, minimizing the number of surviving NSB clusters, or a combination of both. Although these are useful considerations, estimating the impact of image cleaning on the final sensitivity of the experiment is computationally expensive. Sensitivity depends on a complex interplay between preserving the gamma-ray signal, minimizing noise contamination, and maintaining accurate event reconstruction. Moreover, size thresholds for preselection are typically optimized for specific cleaning algorithms, which may not translate well across different methods, complicating direct comparisons. Full reconstruction, separation training, and the instrument response function (IRF) generation must be performed for each cleaning using high statistics (see Unbehaun et al. 2025 for a detailed description of the process). For that reason, it is not feasible to repeat the process for hundreds or thousands of possible input cleaning parameters in order to find a global optimum. In response to this challenge, we established a new pipeline to efficiently explore cleaning configurations with respect to their impact on telescope sensitivity rather than on separate metrics. This approach provides a fair and robust method for comparing different cleaning algorithms.

The optimization framework developed in this study provides a generalized and data-driven approach for improving image cleaning in IACTs and consists of the following steps. Candidate parameter sets are selected from the image-cleaning parameter space. The number of candidates is then reduced through a multistep process. First, an NSB susceptibility limit is applied, calculated from nominal and high NSB gamma simulations according to Eq. (3). For each candidate, the event size distribution of MC gamma and background events is generated and the shapes of both distributions are characterized using the fit function in Eq. (4). Candidates with similar behavior are then clustered using the K-means algorithm, and cluster representatives are determined as those closest to the cluster centers.

For all cluster representatives, the final sensitivity is calculated and the sensitivity improvement, ξ (see Eq. (5)), is evaluated for both performance and detection criteria. Candidates susceptible to NSB fluctuations, exceeding a fake cluster rate of 1%, or with an effective area ratio test κ above 1.1, are discarded. The optimal candidate is identified as the one with the highest sensitivity improvement (high ξ) and the greatest robustness to NSB fluctuations (κ=1). The first step in the proposed pipeline is to explore a wide range of input parameters for each of the three cleaning algorithms tested.

Rather than performing a regular grid search, parameter values were randomly sampled to enable a more diverse and comprehensive exploration of the parameter space while significantly reducing the computational cost to a manageable level. The chosen parameter space limits are listed in Tables 2 and 3. Establishing a broad coverage of the multidimensional parameter space increases the likelihood of identifying optimal configurations under various conditions. A parameter-space reduction strategy, described in the following section, was applied to remove less promising cleaning parameter configurations (hereafter referred to as candidates) and to focus the workflow on a smaller set of high-potential candidates. This approach enables the pipeline to prioritize sensitivity over individual metrics, providing a comprehensive and efficient manner of identifying the best cleaning methods and respective input parameters.
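Random sampling of a bounded parameter space, as opposed to a regular grid, can be sketched as below. The parameter names follow the text, but the limits in the example are placeholders chosen for illustration only and are not the values of Tables 2 and 3; the function name and the uniform sampling distribution are likewise assumptions.

```python
import random


def sample_candidates(space, n, seed=0):
    """Draw n random candidates from a dict of {name: (low, high)} limits.

    Integer limits yield integer draws (e.g. minPts); float limits yield
    uniform float draws (e.g. time or spatial scales).
    """
    rng = random.Random(seed)
    candidates = []
    for _ in range(n):
        cand = {}
        for name, (low, high) in space.items():
            if isinstance(low, int) and isinstance(high, int):
                cand[name] = rng.randint(low, high)   # inclusive bounds
            else:
                cand[name] = rng.uniform(low, high)
        candidates.append(cand)
    return candidates


# Placeholder limits, for illustration only.
space = {"n_noise": (2.0, 4.0), "min_pts": (2, 6), "t_scale": (0.5, 3.0)}
for cand in sample_candidates(space, 3):
    print(cand)
```

With a fixed number of draws, random sampling covers each individual parameter with as many distinct values as there are candidates, whereas a grid of the same size covers each axis with only a few.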

Table 2 Chosen parameter spaces for testing the tail-cut cleaning algorithm.

Table 3 Chosen parameter spaces for TIME3D and TIME4D.

Fig. 3 Distribution of differences in gamma efficiency for two NSB levels for the three tested cleaning algorithms: TIME3D (light blue), TIME4D (blue), and tail-cut (purple). The dashed line indicates the chosen upper limit (6.2%). The discarded candidates, outside of the grey area, correspond to 13.4% of all tested candidates.

3.1 Parameter-space reduction

3.1.1 NSB susceptibility as a pre-selection condition

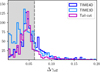

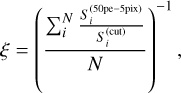

The first step in restricting the parameter space is to evaluate the susceptibility of each candidate to different NSB levels. This aspect is crucial because the response of the experiment must remain stable under varying NSB conditions, which can differ significantly depending on observation time, conditions, and pointings. A boosted decision tree (BDT) model with a size-dependent cut, as presented in Unbehaun et al. (2025), was used. The BDT was trained to distinguish between MC gamma simulations under nominal NSB conditions and background data. For each candidate, the trained model was applied independently to MC simulations with both the nominal and elevated NSB levels. The NSB susceptibility was then estimated by the difference in gamma efficiency between the two NSB levels,

(3)


The calculation was repeated one hundred times for each candidate, and the average susceptibility was determined. This repetition was necessary because the analysis relied on low-statistics datasets (≈50000 events) to reduce CPU time costs.
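The averaging over repetitions can be illustrated with a simplified sketch. Several things here are assumptions rather than the procedure of the paper: each repetition is emulated by a bootstrap resample of classifier scores, a single score cut replaces the size-dependent cut, the difference is taken as nominal minus high NSB, and all function names are hypothetical.

```python
import random
import statistics


def gamma_efficiency(scores, cut):
    """Fraction of gamma-ray events whose classifier score passes the cut."""
    return sum(s > cut for s in scores) / len(scores)


def nsb_susceptibility(scores_nominal, scores_high, cut, n_rep=100, seed=0):
    """Mean difference in gamma efficiency (nominal minus high NSB).

    Each repetition resamples both score lists with replacement, which
    averages out the statistical scatter of small datasets.
    """
    rng = random.Random(seed)
    diffs = []
    for _ in range(n_rep):
        nominal = rng.choices(scores_nominal, k=len(scores_nominal))
        high = rng.choices(scores_high, k=len(scores_high))
        diffs.append(gamma_efficiency(nominal, cut) - gamma_efficiency(high, cut))
    return statistics.mean(diffs)


# Toy scores: every nominal event passes, half of the high-NSB events pass.
nominal = [0.9] * 100
high = [0.9] * 50 + [0.1] * 50
print(nsb_susceptibility(nominal, high, cut=0.5))
```

A candidate would then be compared against the chosen upper limit on the averaged difference.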

Figure 3 shows the distribution of NSB susceptibility. A value for Δγeff of 6.2% was set as an upper limit, which is 10% higher than the value for the standard 0714NN2 tail-cut cleaning. Applying this threshold resulted in 13.4% of all tested candidate configurations being discarded. The total number of candidates per cleaning algorithm that survived this first step is listed in Table 4.

3.1.2 Clustering of the size distribution shape parameters

After filtering the NSB-susceptible candidates, the second step in the optimization pipeline is to determine the size distributions of the cleaned events. Each cleaning method yields a unique size distribution that depends on its image cleaning settings and affects gamma rays and background data events differently. The distributions of both signal and background events are important. The effects on the former are reflected in the effective area (the energy-dependent area over which gamma rays are detected after all analysis cuts, reflecting both detection efficiency and reconstruction performance), as well as in the energy and angular resolution (defined as the 68% containment radius of the reconstructed gamma-ray direction around the true source position, indicating directional precision) after reconstruction. The effect on the latter, which is often neglected in previous studies, directly impacts the gamma-hadron separation power. Specifically, a cleaning method that removes the differences between the gamma and hadron images will perform very poorly in terms of sensitivity.

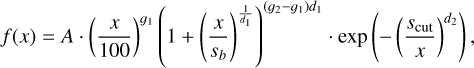

We propose an empirical fit function to describe the overall shape of the size distributions for gamma rays and background separately, using seven free parameters:

(4)


where A, g1, g2, d1, d2, sb, and scut are free parameters, and x and f (x) denote the bin center and content of the image size histogram. Figure 4 shows the distribution and the resulting fitted functions for several example cleanings.

The seven fit parameters for gamma MC and seven for background data capture key aspects of the cleaned distributions, such as the total number of events remaining after cleaning, break points, and size thresholds. Cleanings with similar fitted parameters are therefore expected to result in similar experimental performances (e.g., sensitivity). Thus, to classify similar candidates, a clustering method was adopted. A K-means clustering algorithm (Ikotun et al. 2023) was trained for each cleaning method, resulting in ten clusters for each cleaning, as shown in Fig. 5.

For visualization purposes only, a principal component analysis (PCA) (Maćkiewicz & Ratajczak 1993) was applied to obtain two representative linear combinations of the 14-D size parameter space. Figure 5 illustrates the ten cluster regions for each cleaning algorithm.

The candidate closest to each cluster center was chosen as representative of that cluster. These ten candidates serve as typical exemplars of their groups and were selected for further sensitivity evaluation. In addition to the cluster representatives, a further 30 candidates (ten from each tested cleaning algorithm) were randomly selected to allow a more detailed examination of the parameter space. A total of 64 candidates, including the 30 cluster representatives (e.g., TIME3D_1 to TIME3D_10), the 30 random selections (e.g., TIME3D_11 to TIME3D_20), and the four default cleanings (0916NN2, 0714NN2, TIME3D_STD1, TIME3D_STD2), are included in the next step of this workflow. This clustering approach not only reduces the tested parameter space to a manageable number, but also preserves diversity in the cleaning configurations.
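The clustering and the choice of representatives can be sketched with a small K-means implementation. This is an illustration, not the pipeline code: the function names are hypothetical, the initialization is a plain random draw of k points, and in practice the 14 fit parameters would likely need to be standardized before clustering so that no single parameter dominates the Euclidean distance.

```python
import math
import random


def kmeans(points, k, n_iter=50, seed=0):
    """Plain Lloyd's algorithm; returns (centers, labels)."""
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(points, k)]

    def assign():
        return [min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points]

    for _ in range(n_iter):
        labels = assign()
        new_centers = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            # Keep the old center if a cluster happens to be empty.
            new_centers.append([sum(col) / len(members) for col in zip(*members)]
                               if members else centers[c])
        if new_centers == centers:
            break  # converged
        centers = new_centers
    return centers, assign()


def representatives(points, centers, labels):
    """Index of the point closest to each cluster center."""
    reps = []
    for c, center in enumerate(centers):
        members = [i for i, lab in enumerate(labels) if lab == c]
        if members:
            reps.append(min(members, key=lambda i: math.dist(points[i], center)))
    return reps


# Two well-separated toy groups in a two-dimensional parameter space.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centers, labels = kmeans(points, k=2)
print(sorted(representatives(points, centers, labels)))
```

Selecting an actual candidate near each center, rather than the center itself, guarantees that every representative corresponds to a cleaning configuration that was really tested.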

Fig. 4 Left: size distribution for gamma MC and off-data events, with corresponding fitted function for the default tail-cut candidate 0916NN2. Right: fitted function for gamma MC (solid line) and off-data (dashed line) for different example cleanings (shown in different colors).

Table 4 Number of candidates before and after application of the NSB susceptibility cut.

3.2 Performance evaluation

Gamma-ray energy and direction reconstruction as well as gamma-hadron separation were performed for all 64 candidates according to the procedure of Unbehaun et al. (2025), which introduced several improvements to the low-energy end of CT5 mono performance.

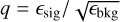

The training procedure, implemented within the HAP framework, follows the steps outlined below (further details can be found in Unbehaun et al. 2025). First, preselection cuts are applied, requiring an image size above 50 p.e. and more than five pixels. Next, gamma-ray reconstruction training is performed using neural networks that are trained to flip images and reconstruct both direction and energy. A gamma-hadron separation is achieved by training a BDT with gamma MC and off-data events. A size-dependent BDT cut is then optimized to maximize the q-factor, defined as $Q = \epsilon_\gamma / \sqrt{\epsilon_{\rm bkg}}$ (the gamma-ray efficiency divided by the square root of the background efficiency), and is subsequently smoothed.
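A cut scan that maximizes the q-factor can be sketched as follows, assuming the common definition of Q as the gamma-ray efficiency divided by the square root of the background efficiency. The function name and the toy scores are hypothetical; in a size-dependent scheme the scan would be repeated in each image size bin before smoothing the resulting cut values.

```python
import math


def best_bdt_cut(gamma_scores, bkg_scores, cuts):
    """Return (cut, q) maximizing Q = eff_gamma / sqrt(eff_bkg)."""
    best_cut, best_q = None, 0.0
    for cut in cuts:
        eff_gamma = sum(s >= cut for s in gamma_scores) / len(gamma_scores)
        eff_bkg = sum(s >= cut for s in bkg_scores) / len(bkg_scores)
        if eff_bkg == 0:
            continue  # Q is undefined when no background event survives
        q = eff_gamma / math.sqrt(eff_bkg)
        if q > best_q:
            best_cut, best_q = cut, q
    return best_cut, best_q


gamma_scores = [0.9, 0.8, 0.7, 0.6]
bkg_scores = [0.1, 0.2, 0.3, 0.75]
print(best_bdt_cut(gamma_scores, bkg_scores, cuts=[0.0, 0.5, 0.72]))
```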

Fig. 5 Results of K-means clustering for three different cleaning algorithms: TIME3D, TIME4D, and tail-cut. Ten cluster regions are estimated for each algorithm. This approach reduces the parameter space to ten per algorithm, with each cluster treated as a single point. For visualization, PCA is performed and the 14-dimensional parameter space is reduced to two components (Component 1 and Component 2).

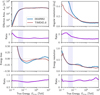

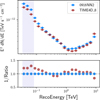

3.2.1 Improvement in sensitivity and optimization of preselection cuts

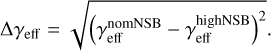

Training performance at larger sizes is independent of whether smaller images are included, which justifies the use of very loose preselection cuts prior to training. However, the final performance is strongly dependent on these cuts. Loose cuts allow for a lower energy threshold as more faint images are retained. However, high-energy events with large impact parameters also result in faint images, which are harder to reconstruct. As a result, looser preselection cuts deteriorate performance across the whole energy range. Consequently, the final preselection cuts are optimized by estimating the improvement in sensitivity with respect to the loosest case, i.e., 50 p.e. and five pixels. Similarly to Hassan et al. (2017), we evaluate this improvement, denoted ξ, as

(5)


where Si denotes the differential sensitivity in energy bin i. All sensitivity calculations are performed assuming point-like gamma-ray sources, in line with standard IACT analysis procedures. For each candidate, we consider two criteria.

The performance criterion is evaluated by calculating ξ for bins between 50 GeV and 500 GeV. This evaluates the broad-energy performance of the cleaning in both spectral and spatial analyses, in which good sensitivity is required throughout the whole energy range. The upper limit of 500 GeV is chosen because stereoscopic analyses are expected to perform better above this energy. The detection criterion is evaluated by calculating ξ for bins between 36.5 GeV and 86.5 GeV. This evaluates the capability of detecting faint fluxes at low energies. Extending the analysis to lower energies results in reduced spectral resolution over the entire energy range. Therefore, this criterion focuses primarily on being able to detect faint sources, such as transients, at the expense of reconstruction accuracy for these events.

|

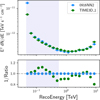

Fig. 6 Effective area for representative cleaning candidates calculated from nominal (full lines) and high NSB simulations (dashed lines). Horizontal dashed lines indicate the energy threshold, defined as the energy at which the effective area is 10% of its maximum. The bottom panel shows the ratio between the nominal and high NSB cases. Both example time-cleaning candidates illustrate extreme cases of overestimating or underestimating the signal efficiency at higher NSB rates; both are discarded according to the set limit. |

3.2.2 Fake cluster rate

As a consistency check, we estimated the fake cluster rate for all 64 candidates. We cleaned pure NSB simulations at xnsb times the realistic NSB rate, varying the scaling factor xnsb, and estimated the number of images kept after cleaning. The realistic NSB rate is defined as the mean of the Gaussian distribution fitted to all observations, excluding extreme NSB conditions. Candidates with a fake cluster rate above 1% in any of the realistic NSB simulation scenarios considered (xnsb = 1.0 and xnsb = 1.645) were discarded. Twenty-four candidates were affected by this limit, which resulted in 36.9% of all tested candidates being rejected. This selection ensured that the cleaning algorithms did not retain images without any gamma-ray-induced signal and, in particular, did not retain secondary noise clusters in the gamma images.
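The rejection rule can be sketched as follows. This is a minimal illustration: treating an image as "kept" when at least one pixel survives cleaning is an assumption, and the function names and survivor counts are hypothetical.

```python
def fake_cluster_rate(n_pixels_kept_per_image):
    """Fraction of pure-NSB images in which at least one pixel survives cleaning."""
    if not n_pixels_kept_per_image:
        raise ValueError("need at least one simulated image")
    survived = sum(1 for n in n_pixels_kept_per_image if n > 0)
    return survived / len(n_pixels_kept_per_image)

def passes_fake_cluster_cut(kept_by_scaling, max_rate=0.01):
    """True if the rate does not exceed max_rate for every NSB scaling factor."""
    return all(
        fake_cluster_rate(kept) <= max_rate for kept in kept_by_scaling.values()
    )

# Hypothetical surviving-pixel counts for 1000 pure-NSB images per scaling factor
candidate_a = {1.0: [0] * 998 + [4, 5], 1.645: [0] * 992 + [4] * 8}
candidate_b = {1.0: [0] * 995 + [4] * 5, 1.645: [0] * 970 + [4] * 30}

a_ok = passes_fake_cluster_cut(candidate_a)  # rates 0.2% and 0.8%
b_ok = passes_fake_cluster_cut(candidate_b)  # rates 0.5% and 3.0%
```

Requiring the limit to hold for every scaling factor mirrors the "in any of the scenarios" wording: a single NSB level above 1% is enough to discard a candidate.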

Fig. 7 Sensitivity improvement for candidates with better performance than the default tail-cut cleaning (0916NN2) in the performance range (50 GeV to 500 GeV) and the detection range (36.5 GeV to 86.5 GeV). Candidates that fail the fake cluster rate and/or the average effective area ratio criteria are shown as lighter colors. Blue, green, and orange bars represent candidates for tail-cut, TIME3D, and TIME4D, respectively.

3.2.3 Effective area ratio between nominal and high NSB simulations

To quantify the impact of NSB on performance, we computed the effective areas, including the optimized preselection cuts and gamma-hadron separation, as a function of the MC energy for both nominal and high NSB simulations for all 64 candidates. Figure 6 presents the effective areas for a representative candidate from each cleaning method, each optimized using its respective detection cut configuration. For NSB-susceptible cleaning candidates, the differences between the two NSB levels can vary in both directions, resulting in either the retention of a fake signal (orange) or misclassification of the signal as background (red). We calculated the average ratio (κ) between the effective areas for the nominal and high NSB cases for energy bins between the energy threshold and 1 TeV. The threshold is defined as the energy for which the effective area is 10% of its maximum and 1 TeV is chosen as it represents the primary energy regime for CT5-only operations. To keep NSB systematics for changing NSB levels below 10%, the ratio should not exceed 1.1.
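A minimal sketch of the κ computation is given below. It assumes the ratio is taken as nominal over high NSB and averaged arithmetically over bins; the function names and the effective-area values are hypothetical.

```python
def energy_threshold(energies, aeff, fraction=0.10):
    """Lowest energy at which the effective area reaches `fraction` of its maximum."""
    limit = fraction * max(aeff)
    for e, a in zip(energies, aeff):
        if a >= limit:
            return e
    raise ValueError("effective area never reaches the requested fraction")

def mean_area_ratio(energies, aeff_nominal, aeff_high_nsb, e_max=1000.0):
    """Average nominal / high-NSB effective-area ratio from threshold to e_max."""
    e_thr = energy_threshold(energies, aeff_nominal)
    ratios = [
        nom / high
        for e, nom, high in zip(energies, aeff_nominal, aeff_high_nsb)
        if e_thr <= e <= e_max and high > 0
    ]
    if not ratios:
        raise ValueError("no usable energy bins between threshold and e_max")
    return sum(ratios) / len(ratios)

# Hypothetical effective areas (m^2) on a coarse energy grid (GeV)
energies_gev = [30.0, 50.0, 100.0, 300.0, 1000.0, 3000.0]
aeff_nominal = [1.0e2, 2.0e3, 1.2e4, 5.0e4, 8.0e4, 1.0e5]
aeff_high_nsb = [5.0e1, 1.5e3, 9.6e3, 5.0e4, 8.0e4, 1.0e5]

e_thr = energy_threshold(energies_gev, aeff_nominal)
kappa = mean_area_ratio(energies_gev, aeff_nominal, aeff_high_nsb)
passes_nsb_limit = kappa <= 1.1  # limit quoted in the text
```

Here the threshold is taken from the nominal simulation, which is also an assumption; only the upper limit of 1.1 stated in the text is checked.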

Fig. 8 Average improvement in sensitivity, ξ, versus average effective area ratio, κ, for the best candidates. The optimal region is as close as possible to κ of 1 and at large values of ξ.

|

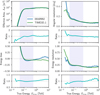

Fig. 9 Comparison of the instrument response functions (effective area, angular resolution, energy bias, and energy resolution) between the current default tail-cut cleaning and the best candidate for the performance criterion, TIME3D_1.The blue shaded region indicates the energy range of interest for the performance criterion. |

Table 5 Preselection cuts and input parameters for the best candidate, and best preselection cuts for standard tail-cut cleaning for each criterion.

4 Results

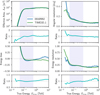

The application of the optimization pipeline yields the optimized sensitivity for each of the 64 candidates from the three image-cleaning algorithms for IACTs: TIME3D, TIME4D, and the conventional tail-cut method. Figure 7 compares the representative candidates based on their improvement in sensitivity, ξ, under both the performance and detection criteria. Only those representatives that demonstrate better performance than the reference cleaning (0916NN2) are shown. Many candidates with large improvements are discarded due to the fake cluster rate and/or the average effective area ratio tests, i.e., they are too susceptible to NSB. These candidates are shown in transparent colors. As expected, it is easier to achieve apparently better performance when NSB susceptibility is not properly controlled. Figure 8 shows the average effective area ratio for the remaining candidates, i.e., those with ξ > 1 that pass the NSB susceptibility requirements. An optimal candidate presents an average effective area ratio κ of 1 and as large a ξ as possible. Consequently, the best candidates are TIME3D_1 for performance and TIME4D_8 for detection. Their parameters and preselection cuts, as well as the best preselection cuts for 0916NN2 in each criterion, are listed in Table 5. A comparison of the Hillas parameters is provided in Appendix A.

4.1 Impact on instrument response functions

A closer examination of the instrument response functions (IRFs) provides important insights into the key differences that lead to improved performance among the new candidates. We evaluate performance after gamma-hadron separation in terms of four key metrics: effective area, angular resolution, energy bias, and energy resolution. While these quantities are derived from the IRF, they are not IRFs themselves. The IRFs describe the transformation between true and reconstructed gamma-ray properties and are factorized into components such as the effective collection area, the point spread function (PSF), and energy dispersion. From these, we extract scalar performance metrics: for example, angular resolution is defined as the 68% containment of the PSF, and energy resolution as the 68% width of the reconstructed-to-true energy ratio distribution.
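The two scalar metrics can be sketched with a simple quantile helper. Interpreting the "68% width" as half of the central 68% interval is an assumption, as are the function names and the evenly spaced toy samples.

```python
import math

def quantile(values, q):
    """Linear-interpolation quantile of a non-empty sequence, with 0 <= q <= 1."""
    data = sorted(values)
    pos = q * (len(data) - 1)
    lo = math.floor(pos)
    hi = min(lo + 1, len(data) - 1)
    return data[lo] + (pos - lo) * (data[hi] - data[lo])

def angular_resolution(offsets_deg):
    """68% containment radius of the true-to-reconstructed angular offsets."""
    return quantile(offsets_deg, 0.68)

def energy_resolution(e_reco, e_true):
    """Half-width of the central 68% interval of E_reco / E_true (assumed definition)."""
    ratios = [r / t for r, t in zip(e_reco, e_true)]
    return 0.5 * (quantile(ratios, 0.84) - quantile(ratios, 0.16))

# Toy samples: offsets from 0 to 1 deg, energy ratios from 0.5 to 1.5
offsets = [i / 100 for i in range(101)]
e_true = [1.0] * 101
e_reco = [0.5 + i / 100 for i in range(101)]

psf68 = angular_resolution(offsets)
e_res = energy_resolution(e_reco, e_true)
```

In practice these quantities would be evaluated separately in each bin of true energy, after gamma-hadron separation.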

|

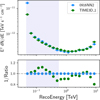

Fig. 10 Differential sensitivity comparison between the current default tail-cut cleaning candidate and the best candidate for the performance criterion, TIME3D_1. The upper panel shows the curves, while the lower panel displays the inverse ratio (i.e., values larger than 1 denote a smaller, and thus improved, sensitivity). The first flux point for TIME3D_1 at E = 48.6 TeV corresponds to an improvement of 200% and is omitted from the lower panel for clarity. The blue shaded region indicates the energy range of interest for the performance criterion. |

4.1.1 Best candidate for the performance criterion

Figure 9 shows a comparison of the IRFs of TIME3D_1 and 0916NN2. The effective area slightly increases at lower energy ranges, suggesting improved detection capability for low-energy gamma rays. Additionally, TIME3D_1 improves direction reconstruction, as indicated by the angular resolution. This improvement is important for maintaining more signal events. Energy reconstruction remains similar, with a reduced bias at the lowest energies but slightly worse energy resolution. Figure 10 provides a detailed view of the sensitivity improvements for TIME3D_1. It reveals a significant enhancement, particularly at the first flux point, where a factor of two improvement is observed, and for energies up to 300 GeV, where a 10-15% improvement is expected. These gains are primarily driven by its improved angular resolution and greater gamma-ray retention. In the energy range around 1 TeV, TIME3D_1 shows slightly worse sensitivity than 0916NN2. However, in this energy range, the smaller telescopes provide better sensitivity, and CT5 in monoscopic mode becomes less critical.

|

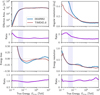

Fig. 11 Comparison of the instrument response functions (effective area, angular resolution, energy bias, and energy resolution) between the current default tail-cut cleaning and the best candidate for the detection criterion, TIME4D_8. The blue shaded region indicates the energy range of interest for the detection criterion. |

4.1.2 Best candidate for the detection criterion

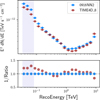

Time-based cleaning methods such as TIME3D_1 outperform the default cleaning approach in terms of event reconstruction accuracy, supporting their effectiveness in mitigating noise while preserving critical shower information. However, when the primary goal is detection optimization, different considerations apply. Figure 11 shows the IRFs for the standard cleaning 0916NN2 with preselection cuts optimized for detection (50 p.e., five pixels) and the best detection candidate, TIME4D_8, using preselection cuts of 95 p.e. and seven pixels. As discussed previously, an improved detection range can be achieved for a given cleaning method simply by loosening its preselection cuts. This can be seen in the effective area, which is slightly larger for 0916NN2 at the lowest energies. However, such loosening leads to poorer reconstruction performance. An optimal cleaning for the detection criterion should ensure that the reconstruction is not significantly degraded by loosening the preselection cuts. This becomes clear in the angular and energy reconstructions. TIME4D_8 maintains a much better angular resolution and energy bias compared to 0916NN2, while still reaching a similar energy threshold. The resulting sensitivities are shown in Fig. 12. The time-cleaning candidate yields an approximate 20% improvement in sensitivity at low energies compared to the standard tail-cut cleaning.

The choice between the two best candidates, TIME3D_1 and TIME4D_8, depends on the specific scientific objectives. If the goal is to maximize overall sensitivity across all energies, such as in spectral and spatial studies, TIME3D_1 provides the best solution. However, for optimizing low-energy event detection, such as the search for transients or faint sources, TIME4D_8 is more advantageous due to its superior noise suppression and enhanced event reconstruction at low energies.

5 Conclusion

This study introduces both a new time-based cleaning technique for H.E.S.S. and a comprehensive optimization pipeline to evaluate the image cleaning performance of IACTs. The method presented here accounts for correlations in gamma-ray induced air showers in both the pixel amplitude and timing information. We explored two options: TIME3D, which clusters pixel position and time; and TIME4D, which adds an extra dimension based on pixel amplitude relative to the brightest pixel. By systematically evaluating and refining the cleaning configurations of three algorithms (the tail-cut method, TIME3D, and TIME4D), this work addresses the challenge of balancing signal retention and noise suppression to improve overall sensitivity. Unlike traditional optimization approaches that focus on isolated metrics such as size retention or noise reduction, our method integrates multiple factors to ensure holistic performance improvement, focusing on sensitivity gain. Key innovations include an NSB susceptibility assessment using a BDT model to identify stable cleaning configurations under varying NSB conditions; an efficient parameter space exploration using K-means clustering to systematically group and evaluate diverse cleaning parameter sets while reducing computational costs; and a sensitivity-driven optimization approach that prioritizes the impact on key instrument response functions, including gamma-hadron separation, energy reconstruction, and effective area.

We demonstrate that time-based cleaning techniques significantly outperform the conventional tail-cut method. We explored two optimization criteria. For overall improvement over the entire energy range, the best candidate was identified using the TIME3D method. It provides stable performance across a broad energy range, effectively balancing NSB suppression and signal retention, making it particularly useful for general-purpose gamma-ray analyses where uniform sensitivity is required. To improve the detection of faint sources at the lowest energies, TIME4D provides the best candidate. It achieves an approximately 20% improvement in sensitivity at low energies by more aggressively filtering NSB noise while preserving enough shower structure for precise reconstruction. This enables looser preselection cuts while maintaining reasonable reconstruction resolution.

A key insight from this work is that simply expanding the effective area at low energies, for example by relaxing preselection cuts, does not necessarily lead to improved sensitivity. While this may increase the event rate, it can also severely degrade the reconstruction quality, particularly for direction and energy. Because sensitivity is computed under the assumption of point-like gamma-ray sources, accurate angular reconstruction is critical for separating signal from background. In this context, precise event reconstruction, especially in terms of angular resolution and gamma-hadron separation, becomes a dominant factor in achieving performance gains. For instance, the improved noise suppression of TIME4D enables significantly enhanced sensitivity, despite slightly higher energy thresholds. This highlights the importance of considering all aspects of performance, beyond simple metrics such as image size retention, when optimizing image-cleaning strategies.

Although validated here for H.E.S.S., this methodology is directly applicable to both current and future IACTs, such as VERITAS, MAGIC, and CTAO. Similar approaches can also be extended to other high-energy astrophysics experiments. By refining image-cleaning strategies, this work enhances our ability to detect faint astrophysical sources and, consequently, to better understand the most energetic phenomena in the Universe. Furthermore, the results highlight the necessity of tailoring image-cleaning algorithms to specific observational goals.

Fig. 12 Comparison of the differential sensitivity for the current default tail-cut cleaning candidate and the best candidate for the detection criterion, TIME4D_8. The upper panel shows the curves, while the lower panel shows the inverse of the ratio (i.e., values greater than 1 denote a smaller, and thus improved, sensitivity). The blue shaded region indicates the energy range of interest for the detection criterion.

Acknowledgements

We thank the H.E.S.S. Collaboration for providing the simulated data, common analysis tools, and valuable comments on this work.

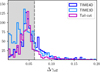

Appendix A Distribution of Hillas parameters

We show the distributions of the Hillas variables. Depending on the cleaning candidate and preselection cut configuration, approximately 70 000 to 130 000 background events are used, taken from observations in which known gamma-ray sources are excluded. All simulations were performed for a zenith angle of 20 deg, an azimuth of 0 deg, and an offset angle of 0.5 deg, and the simulated spectrum with an index of -2 is re-weighted to -2.5. The distributions are normalized to 1, so that the difference in event numbers is not visible; the y-axis of each plot therefore shows the normalized number of entries in each bin. The upper panel always compares the default cleaning with TIME3D_1 after applying the optimized preselection cuts for the performance criterion from Table 5; the lower panel always compares the default cleaning with TIME4D_8 after applying the cuts for the detection criterion. The solid lines show the distributions of the MC gamma simulations, and the dashed lines those of the real background data.
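The spectral re-weighting and the normalization can be sketched as follows. This is an illustration only: the reference energy of 1 TeV, the function names, and the toy events are assumptions, and the normalization is taken to mean that the bin contents sum to 1.

```python
def reweight(energy_tev, simulated_index=-2.0, target_index=-2.5, e_ref_tev=1.0):
    """Per-event weight turning an E**simulated_index spectrum into E**target_index."""
    return (energy_tev / e_ref_tev) ** (target_index - simulated_index)

def normalised_histogram(values, weights, edges):
    """Weighted histogram whose bin contents sum to 1 (values outside edges are dropped)."""
    counts = [0.0] * (len(edges) - 1)
    for v, w in zip(values, weights):
        for i in range(len(counts)):
            if edges[i] <= v < edges[i + 1]:
                counts[i] += w
                break
    total = sum(counts)
    return [c / total for c in counts] if total > 0 else counts

# Toy events: true energies (TeV) and a Hillas-like parameter per event
true_energies = [0.25, 1.0, 4.0]
hillas_width = [0.05, 0.15, 0.15]

weights = [reweight(e) for e in true_energies]  # proportional to E**-0.5
hist = normalised_histogram(hillas_width, weights, [0.0, 0.1, 0.2])
```

Because the histogram is normalized afterwards, the choice of reference energy only rescales all weights by a common factor and does not change the plotted shape.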

Fig. A.1 Size distribution of 0916NN2, TIME3D_1 and TIME4D_8.

Fig. A.2 Number of remaining pixels distribution of 0916NN2, TIME3D_1 and TIME4D_8.

Fig. A.3 Hillas width distribution of 0916NN2, TIME3D_1 and TIME4D_8.

Fig. A.4 Hillas length distribution of 0916NN2, TIME3D_1 and TIME4D_8.

Fig. A.5 Hillas skewness distribution of 0916NN2, TIME3D_1 and TIME4D_8.

Fig. A.6 Hillas kurtosis distribution of 0916NN2, TIME3D_1 and TIME4D_8.

Fig. A.7 Density distribution of 0916NN2, TIME3D_1 and TIME4D_8.

Fig. A.8 Hillas length over size distribution of 0916NN2, TIME3D_1 and TIME4D_8.

References

- Aharonian, F., Akhperjanian, A. G., Bazer-Bachi, A. R., et al. 2006, A&A, 457, 899

- Aleksić, J., Alvarez, E. A., Antonelli, L. A., et al. 2012, Astropart. Phys., 35, 435

- Aliu, E., Anderhub, H., Antonelli, L., et al. 2009, Astropart. Phys., 30, 293

- Bernlöhr, K. 2008, Astropart. Phys., 30, 149

- Bi, B., Barcelo, M., Bauer, C. W., et al. 2021, PoS, ICRC2021, 743

- Bond, I., Hillas, A., & Bradbury, S. 2003, Astropart. Phys., 20, 311

- Escañuela Nieves, C., Werner, F., & Hinton, J. 2025, Astropart. Phys., 167, 103078

- Ester, M., Kriegel, H.-P., Sander, J., & Xu, X. 1996, in Proceedings of the Second International Conference on Knowledge Discovery and Data Mining, KDD’96 (AAAI Press), 226

- Funk, S. 2015, Annu. Rev. Nucl. Part. Sci., 65, 245

- Hassan, T., Arrabito, L., Bernlöhr, K., et al. 2017, Astropart. Phys., 93, 76

- Heck, D., Knapp, J., Capdevielle, J. N., Schatz, G., & Thouw, T. 1998, CORSIKA: a Monte Carlo code to simulate extensive air showers (Hannover: TIB)

- Holder, J., Atkins, R. W., Badran, H. M., et al. 2006, Astropart. Phys., 25, 391

- Ikotun, A. M., Ezugwu, A. E., Abualigah, L., Abuhaija, B., & Heming, J. 2023, Inform. Sci., 622, 178

- Kherlakian, M. 2023, PoS, ICRC2023, 588

- Konopelko, A., Aharonian, F., Akhperjanian, A., et al. 1996, Astropart. Phys., 4, 199

- Leinert, C., Bowyer, S., Haikala, L. K., et al. 1998, A&AS, 127, 1

- Leuschner, F. 2024, PhD thesis, Universität Tübingen, Germany

- Leuschner, F., Schäfer, J., Steinmassl, S., et al. 2023, PoS, Gamma2022, 231

- Maier, G., & Holder, J. 2018, PoS, ICRC2017, 747

- Maćkiewicz, A., & Ratajczak, W. 1993, Comput. Geosci., 19, 303

- Parsons, R. D., & Schoorlemmer, H. 2019, Phys. Rev. D, 100, 023010

- Pastor-Gutiérrez, A., Schoorlemmer, H., Parsons, R. D., & Schmelling, M. 2021, Eur. Phys. J. C, 81

- Preuss, S., Hermann, G., Hofmann, W., & Kohnle, A. 2002, Nucl. Instrum. Meth. A, 481, 229

- Punch, M. 1994, in International Workshop Towards a Major Atmospheric Cerenkov Detector-III for TeV Astro/Particle Physics

- Pühlhofer, G., Bernlöhr, K., Bi, B., et al. 2022, arXiv e-prints [arXiv:2108.02596]

- Shayduk, M., & Consortium, C. 2013, arXiv e-prints [arXiv:1307.4939]

- Steinmaßl, S. F. 2023, PhD thesis, Heidelberg University, Germany

- The CTA consortium 2019, Science with the Cherenkov Telescope Array (World Scientific)

- Unbehaun, T., Lang, R. G., Baruah, A. D., et al. 2025, A&A, 694, A162

- van Eldik, C., Holler, M., Berge, D., et al. 2016, PoS, ICRC2015, 847

- Weekes, T. C. 2005, arXiv e-prints [arXiv:astro-ph/0508253]

- Weekes, T. C., Cawley, M. F., Fegan, D. J., et al. 1989, ApJ, 342, 379

