| Issue |

A&A

Volume 684, April 2024

|

|

|---|---|---|

| Article Number | A2 | |

| Number of page(s) | 31 | |

| Section | Numerical methods and codes | |

| DOI | https://doi.org/10.1051/0004-6361/202347304 | |

| Published online | 29 March 2024 | |

Efficient simulation of stochastic interactions among representative Monte Carlo particles★

1

Institute of Theoretical Astrophysics, Center for Astronomy (ZAH), Ruprecht-Karls-Universität Heidelberg, Albert-Ueberle-Str. 2, 69120 Heidelberg, Germany

e-mail: moritz.beutel@ziti.uni-heidelberg.de

2

Institute of Computer Engineering (ZITI), Ruprecht-Karls-Universität Heidelberg, Im Neuenheimer Feld 368, 69120 Heidelberg, Germany

Received:

27

June

2023

Accepted:

20

December

2023

Context. Interaction processes between discrete particles are often modelled with stochastic methods such as the Representative Particle Monte Carlo (RPMC) method which simulate mutual interactions (e.g. chemical reactions, collisions, gravitational stirring) only for a representative subset of n particles instead of all N particles in the system. However, in the traditionally employed computational scheme the memory requirements and the simulation runtime scale quadratically with the number of representative particles.

Aims. We want to develop a computational scheme that has significantly lower memory requirements and computational costs than the traditional scheme, so that highly resolved simulations with stochastic processes such as the RPMC method become feasible.

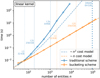

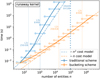

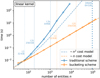

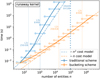

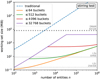

Results. In this paper we propose the bucketing scheme, a hybrid sampling scheme that groups similar particles together and combines rejection sampling with a coarsened variant of the traditional discrete inverse transform sampling. For a v-partite bucket grouping, the storage requirements scale with n and v2, and the computational cost per fixed time increment scales with n ⋅ v, both thus being much less sensitive to the number of representative particles n. Extensive performance testing demonstrates the higher efficiency and the favourable scaling characteristics of the bucketing scheme compared to the traditional approach, while being statistically equivalent and not introducing any new requirements or approximations. With this improvement, the RPMC method can be efficiently applied not only with very high resolution but also in scenarios where the number of representative particles increases over time, and the simulation of high-frequency interactions (such as gravitational stirring) as a Monte Carlo process becomes viable.

Key words: accretion, accretion disks / methods: numerical / methods: statistical / planets and satellites: formation / protoplanetary disks

The source code for this publication is available at https://github.com/mbeutel/buckets-src.

© The Authors 2024

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1 Introduction

Studying the emergent dynamics of large discrete systems in numerical simulations is a common challenge in physics. The independent entities that constitute these systems (e.g. molecules in chemical reaction processes, as well as dust grains and pebbles in planetary formation processes) are often so numerous that a direct simulation is neither practically possible nor desirable. For example, an Earth-like planet might be formed by the coagulation of ~ 1040 dust grains. The vast number of particles exceeds the capabilities of every conceivable computer; also, we are not interested in the individual fates of every single dust grain but rather in the evolution of their mass statistics.

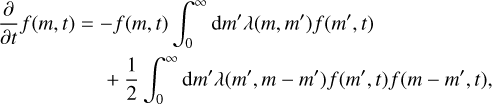

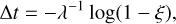

A traditional method to model discrete systems is to view them as continuous systems whose statistical properties resemble those of the discrete systems on certain scales. A continuous surrogate model might then be governed by a set of differential equations that could be solved analytically or numerically. For example, coagulation processes have traditionally been modelled by a coagulation equation. Given a continuous particle mass distribution function f(m, t), the Smoluchowski coagulation equation (Smoluchowski 1916) is an integrodifferential population balance equation that describes the change of the number of particles f (m, t)dm in an infinitesimal mass bin m ∈ [m, m + dm]:

(1)

(1)

where the first term accounts for the loss of particles that grow to larger masses through coagulation, and the second term comprises the particles newly formed by the coagulation of lighter particles. The Smoluchowski equation can be solved numerically with grid-based methods by representing a finite number of particle mass distribution samples with finite mass bins [m, m + δm] (e.g. Weidenschilling 1980; Tanaka et al. 2005; Dullemond & Dominik 2005; Brauer et al. 2008). The resolution of the grid can be improved by reducing the width of mass bins δm, which increases the number of bins required. However, grid-based methods suffer from the so-called curse of dimensionality in multidimensional parameter spaces. In the example of protoplan-etary growth, a model that associates particles only with a mass is a drastic simplification of the planet formation process; a more realistic treatment would have to consider the influence of additional properties such as root mean square (rms) particle velocity v or porous particle volume V. In the continuous approach, the particle mass distribution f (m; t) would therefore become a multidimensional particle number distribution f (m, υ, V; t), and the multidimensional equivalent of the Smoluchowski equation (Eq. (1)) would have to integrate over all dimensions of the state space. To solve the equation numerically with a three-dimensional parameter space, the particle number distribution would then have to be discretised on a three-dimensional grid. For every grid cell, evaluating the right-hand side amounts to a three-dimensional summation; for a grid of size 𝒩m × 𝒩υ × 𝒩V, this requires (𝒩m × 𝒩υ × 𝒩V)2 arithmetic operations per timestep, where 𝒩m × 𝒩υ, and 𝒩V represent the number of grid cells in the dimensions of mass, rms velocity, and porosity. Such a simulation becomes extremely expensive if all dimensions are meant to be resolved. However, a lot of the work done is unnecessary: the state space often is not fully occupied because its dimensions are not entirely uncorrelated; for instance, more massive bodies tend to be less porous due to gravitational compaction, and particles of similar mass tend to have similar rms velocities due to the quasi-thermal diffusive effects of viscous stirring and dynamical friction. The excessive cost of higher-dimensional grids was expounded in, for instance, Okuzumi et al. (2009), whose Eq. (3) gives an extended Smoluchowski equation comprising an additional dimension (porous particle volume V). The authors subsequently argued (in their Sect. 2.2) that adding a dimension with N bins would make the evaluation of the right-hand side of the Smoluchowski equation more expensive by a factor of 𝒩2, and therefore infeasible. Thus, instead of extending the dimensionality of their grid, they devised a dynamic-average approximation for the new parameter, tracking only the average porous volume V for every mass bin rather than resolving the porosity distribution.

Instead of simulating a discrete system as a whole or replacing it with continuous surrogate system, the evolution of the system can also be approximated by selecting a relatively small number of representative entities whose trajectories through state space are then followed, and by estimating the statistical properties of the entire system from the statistics of these representative entities. As an example, protoplanetary growth by coagulation has been simulated with a Monte Carlo method (Ormel & Spaans 2008; Zsom & Dullemond 2008; Ormel et al. 2010). The accuracy of the particle mass distribution inferred from a representative set of particles can then be improved by increasing the number of representative entities simulated. Compared to continuous models, representative sampling approaches have the advantage that they sample the state space sparsely. Because the state space value of every representative particle is physically realised, no computational effort is wasted on non-occupied parts of the state space. Also, a mass-weighted sampling automatically increases the sampling resolution in densely populated regions of the state space.

Although the sparsity of the state space sampling makes representative entity methods more viable for the simulation of higher-dimensional state spaces, this computational advantage is somewhat diminished by the quadratic number of possible interactions. For an ensemble of n representative entities, there are n2 possible types of interactions that must be considered. A Monte Carlo method that simulates individual interactions must therefore know the mutual rates of interaction between all entities represented by any two representative entities in the system. In the computational scheme traditionally employed for such a simulation (Zsom & Dullemond 2008, Sect. 2.1), all n2 interaction rates between entities j and k are computed and stored. They must be updated as the properties of the entities change over the course of the simulation. For every entity that undergoes a change, 2n interaction rates have to be recomputed, making the representative method too demanding to model processes with both a high resolution and frequent interaction (Ormel et al. 2010, Sect. 2.6).

The companion paper to this work, Beutel & Dullemond (2023), amended the Representative Particle Monte Carlo (RPMC) method originally conceived by Zsom & Dullemond (2008). By its construction, the original RPMC method had the limitation that the number of particles Nj represented by each representative particle j needed to be much greater than unity, Nj ⪢ 1, which implied that the method could not be used to simulate runaway growth processes where individual particles accumulate the bulk of the available mass. To overcome this limitation, the companion paper introduced a distinction between ‘many-particles swarms’ and ‘few-particles swarms’ (a swarm being the ensemble of physical particles represented by a given representative particle). The particle regime threshold Nth, which is a simulation parameter with a typical value of ‘a few’ (in the companion paper, Nth = 1 and Nth = 10 were used), separates many-particles swarms from few-particles swarms. The extended method then establishes different interaction regimes: the interaction between many-particles swarms is said to operate in the ‘many-particles regime’ and proceeds exactly as in the original RPMC method, whereas interactions between a few-particles swarm and a many-particles swarm or between few-particles swarms, which are subsumed under the ‘few-particles regime’, follow a different procedure under which coagulation leads to mass transfer between swarms. This is essential for representative bodies which outgrow the total mass of their swarm.

To minimise the statistical error and allow individual growth, a many-particles swarm j is split up into Nth individual self-representing particles once its particle count Nj falls below the particle regime threshold Nth. New representative particles are then added to the simulation, and the representative particle of the swarm is thus replaced with Nj representative particles representing only themselves. As a consequence, a few-particles swarm k always has a particle count Nk = 1 , although we note that this is not strictly required by the method, and the splitting of swarms may be waived if, other than for the runaway growth scenario, individual representation is not considered necessary.

Numerical methods similar to the representative Monte Carlo methods of Zsom & Dullemond (2008), Ormel & Spaans (2008), and Beutel & Dullemond (2023) have emerged in other fields. In particular, the ‘super-droplet’ method of Shima et al. (2009) has gained traction in theoretical research on cloud and ice microphysics (e.g. Brdar & Seifert 2018; Grabowski et al. 2019). The coalescence of super-droplets (cf. Shima et al. 2009, Sect. 4.1.4) is similar to the few-particles regime of the extended RPMC method but with particle counts Nk (ξk in the notation of Shima et al. 2009) not necessarily equal to 1. The super-droplet method was found to generate correct results for several test cases by Unterstrasser et al. (2017).

As a consequence of the initial weight distribution chosen, Shima et al. (2009) had to use very large numbers (n ~ 217.221) of super-droplets in their simulations. As with the RPMC method, the cost of the traditional computational scheme scales quadratically with the number of super-droplets, which was found to be prohibitively expensive. Shima et al. (2009) therefore proposed a sub-sampling scheme where, in a given time interval, only ~ n/2 randomly chosen interaction pairs with an up-scaled interaction rate are considered rather than the full set of ~n2 interaction pairs. At the expense of additional statistical noise due to the sparse sampling of the matrix of possible interactions, the computational cost of this sub-sampling scheme therefore scales with n rather than n2. The sub-sampling scheme, often referred to as ‘linear sampling’ by subsequent publications, was not employed in the study of Unterstrasser et al. (2017), however, it was used by Dziekan & Pawlowska (2017), who meticulously verified that it did not adversely affect their simulation outcomes.

This paper proposes a novel computational scheme that significantly reduces the cost for simulating representative entity methods without introducing a statistical coarsening or requiring approximations. Taking advantage of the fact that entities with similar properties often have similar interaction rates, we group similar particles in ‘buckets’ and then compute and update bucket-bucket interaction rates, which are obtained as upper bounds for the enclosed entity-entity interaction rates by virtue of interval arithmetic. The interacting entities are then chosen with rejection sampling. The proposed scheme is an optimisation in the sense that it does not affect the statistical outcome of the simulation: no additional inaccuracies are introduced, and the stochastic process simulated is exactly equivalent to a simulation using the traditional computational scheme.

The computational scheme presented here is key to the improvement of the Representative Particle Monte Carlo (RPMC) method proposed in the companion paper (Beutel & Dullemond 2023), which splits up swarms as their particle count falls below the particle number threshold Nth, and which thus dispensed with a core property of the original RPMC method, namely that the number of representative particles in the simulation shall remain constant. A growing number of representative particles is very costly with the traditional computational scheme where the computational effort scales quadratically with the number of representative particles. With the computational scheme presented in this work, the Nth representative particles emerging from a split swarm, whose statistical properties are initially identical and tend to remain similar, are not excessively more expensive to simulate than a single representative particle representing the Nth particles, making the performance impact of the many-few transition manageable.

In Sect. 2, we define a general stochastic process and discuss the traditional computational scheme for simulating it, and we establish a cost model for its storage and computation requirements. In Sect. 3, we introduce the bucketing scheme, a new sampling scheme for implementing a stochastic process. We prove that it is equivalent to the traditional sampling scheme, and we derive a detailed cost model demonstrating that the computational demands no longer scale with n2. The efficient computation of interaction rates is discussed in Sect. 4. Interactions in a spatially resolved physical system are often modelled with a maximal radius of interaction; in Sect. 5 we introduce sub-buckets to efficiently account for the fact that particles far apart cannot interact. Section 6 verifies our cost model by numerically studying the scalability of the scheme with a set of test problems, and then explores the conditions under which the different contributing terms of the cost model become dominant. Some practical limitations of the proposed scheme are discussed in Sect. 7.

2 Simulating a stochastic process

Consider an ensemble of n entities that interact through a discrete physical process (e.g. by colliding with each other). Each entity k is characterised by a vector of (possibly statistical) properties qk ∈ ℚ, where ℚ is the space of properties. What constitutes an entity is purposefully left unspecified: it might be a physical body, a stochastic representative of a swarm of physical bodies, a surrogate object representing the consolidated influence of some physical effect, etc.

We model interactions as instantaneous events: a given interaction is assumed to occur at a precise time t and may instantaneously alter the properties of the entities involved. For example, if two colliding bodies j, k with masses mj, mk ‘hit and stick’, then at some precise time t the mass of one body instantaneously changes to mj + mk, while the other body ceases to exist as a separate entity.

2.1 Simulating a Poisson point process

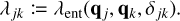

Let us assume that the interaction rate λjk of two entities j, k, in units of time−1, is given by some function of the properties of entities j and k and the Kronecker delta δjk,

(2)

(2)

The third argument of this entity interaction rate function λent(q, q′, δ) can be used to distinguish entity self-interaction from interaction of different entities. We emphasise that the interaction rate function has no explicit time dependency. Therefore, if for a given duration the properties qj, qk of entities j and k do not change, the interaction of the entities j, k during that time interval is a homogeneous Poisson point process. We also note that λent(q, q′, δ) need not be commutative in the first two arguments q, q′, or equivalently, that λjk need not be the same as λkj. For instance, interaction rates are asymmetric for the RPMC method (Zsom & Dullemond 2008; Beutel & Dullemond 2023), which we refer to throughout this work.

The arrival time ∆t of the next event in a Poisson point process with a given interaction rate λ follows the exponential distribution characterised by the probability distribution function

![$p({\rm{\Delta }}t) = \lambda \exp [ - \lambda {\rm{\Delta }}t].$](/articles/aa/full_html/2024/04/aa47304-23/aa47304-23-eq3.png) (3)

(3)

Using inverse transform sampling1, we can determine a random arrival time by computing

(4)

(4)

where ξ is a uniform random number drawn from the interval [0,1).

2.2 Simulating a compound Poisson process

An ensemble of n entities comprises n2 interaction processes, each of which can be considered a Poisson point process. Because an interaction of two entities j, k may change the properties qj and qk, it may affect the interaction rates of the entities j and k with any other entity i ∈ {1,…, n}. A Poisson point process is not homogeneous if interaction rates can suffer intermittent changes. It follows that the sampling method of Eq. (4) is applicable only if interactions are simulated in global order as a compound Poisson process, and that all affected interaction rates must be recomputed after an event has occurred.

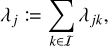

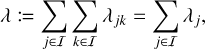

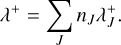

A scheme for simulating interactions in an ensemble of n entities in global order has first been proposed by Gillespie (1975). We now give a brief summary of this scheme. First, let λj be the cumulative interaction rate of entity j with any other entity in the ensemble,

(5)

(5)

and let λ be the global rate of interactions,

(6)

(6)

where we abbreviate the set of all entity indices as

(7)

(7)

The arrival time of the next interaction can be sampled as per Eq. (4). We then draw random indices j and k from the discrete distribution defined by the joint probability

(8)

(8)

is the chance that entity j is involved in the interaction event, and

(10)

(10)

is the chance that, given such an interaction, it is entity k which interacts with entity j. We then advance the system by the time increment ∆t and carry out the interaction between entities j and k, allowing their properties to be altered. The interaction may also effectuate the creation of new entities (e.g. when colliding bodies fragment to smaller pieces) or the annihilation of entities (e.g. when colliding particles ‘stick’ and thus merge). Therefore, the global interaction rate λ has to be recomputed after an interaction.

2.3 Incremental updates

A routine that directly computes the global interaction rate λ for an ensemble of n entities entails n2 evaluations of the entity interaction rate function λent (q, q′,δ), which may be prohibitively expensive for large ensembles.

An interaction of entity j with entity k may inflict changes on the two entities involved, wherefore the global interaction rate may change and must be recomputed. In Zsom & Dullemond (2008, Sect. 2.1), a way of alleviating the cost of recomputing λ was described. The authors observed that a change to the properties of entity j can only affect the interaction rates λji,λij∀i ∈ {1, … n}. Therefore, one can store the entirety of pair-wise interaction rates λjk in a two-dimensional array and the cumulative interaction rates λj, obtained as the column sum of the λjk array, in a one-dimensional array. Then, when the properties of entity jchange during an interaction, only a row and a column in the λjk array have to be recomputed, and the λj array can be updated cumulatively. At the expense of a memory buffer holding (n2 + n) interaction rates, the interaction rates can thus be updated with only (2n − 1) evaluations of the interaction rate function.

2.4 Discrete inverse transform sampling

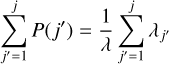

Knowing the exact values for the global interaction rate λ, the cumulative interaction rates λj, and the individual interaction rates λjk, we can determine the time interval until the next event At by sampling the exponential distribution with Eq. (4). To find the indices j and k of the entities interacting, we can then employ discrete inverse transform sampling, a discrete variant of the inverse transform sampling scheme also described by Gillespie (1975): we first draw a uniformly distributed random number ξ ∈ [0, 1), and then we compute the partial sum

(11)

(11)

consecutively for indices j = 1,…, n, stopping and picking the first index j for which

(12)

(12)

where P(j) was defined in Eq. (9), and likewise for index k and the probability P(k| j) defined in Eq. (10). With precomputed values for λj and λjk available, this operation is a simple cumulative summation of an array, taking n summation steps on average.

2.5 Example: Physical bodies

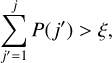

How the entity interaction rate function λent(q, q′ ,δ) should be defined depends on what is understood by an entity. As an example, we might identify entities with individual physical bodies that interact through collision. The raw collision rate λ(q, q′) is the rate (in units of time−1) at which two bodies with properties q, q are expected to collide. It is necessarily commutative, λ(q, q′ ) = λ(q′, q). If the particles are distributed homogeneously and isotropically in a volume V, the stochastic collision rate λ(q, q′) can be written as

(13)

(13)

where σ(q, q′) is the collision cross-section and |∆v(q, q′)| the average relative velocity between particles with properties q, q′. To account for the fact that a particle cannot collide with itself, and to avoid double counting of mutual interactions, the entity interaction rate function λent(q, q′, δ) would then be defined as

(14)

(14)

2.6 Example: Representative particles

Alternatively, an entity j might be identified with both a representative particle and an associated swarm of Nj particles, with the properties of representative particle and swarm both captured in qj. In that case, an entity can interact with itself (a representative body can collide with another particle from the swarm which its entity is associated with), and the raw interaction rate λ(q, q′) is not commutative: representative particle jmay be more likely to collide with a particle from swarm k than representative particle k is to collide with a particle from swarm j.

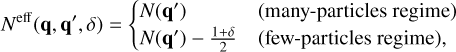

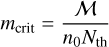

The extended RPMC method introduced in the companion paper (Beutel & Dullemond 2023) distinguishes between many-particles swarms and few-particles swarms, where a swarm is a many-particles swarm if its number of particles exceeds the particle number threshold Nth. The effective swarm particle count is thus defined according to the interaction regime,

(15)

(15)

where N(qk) ≡ Nk is the number of swarm particles represented by entity k, and where an interaction between entities with properties q and q′ is said to operate in the many-particles regime if both swarms are many-particles swarms, N(q) > Nth and N(q′) > Nth, and in the few-particles regime otherwise. For the RPMC method, the entity interaction rate function is then defined as

![${\lambda _{{\rm{ent }}}}\left( {{\bf{q}},{{\bf{q}}^\prime },\delta } \right): = {N^{{\rm{eff }}}}\left( {{\bf{q}},{{\bf{q}}^\prime },\delta } \right)\lambda \left( {{\bf{q}},{{\bf{q}}^\prime }} \right).]$](/articles/aa/full_html/2024/04/aa47304-23/aa47304-23-eq16.png) (16)

(16)

In the few-particles regime, double counting is compensated and self-interaction is suppressed for interactions between representative particles but not for interactions between a representative and a non-representative particle. In particular, because Nth ≥ 1, Eq. (16) degenerates to Eq. (14) for N(q′) = 1.

2.7 Incorporating external effects

In many physical codes, multiple types of interactions have to be considered, and hence a given process of stochastic interactions needs to be interweaved with other processes of stochastic or deterministic nature such as direct N-body interaction or hydro-dynamic processes. Entity properties may then undergo changes originating in physical processes which are modelled by other parts of the simulation and hence not represented as discrete events in the stochastic process at hand. Computing incremental updates after every stochastic event then no longer suffices as all interaction rates can be subject to external changes at all times. As an example, consider an ensemble of particles in quasi-kinetic motion which populate a volume V also occupied by a gas. The gas exerts a drag force on the particles, thus slowing them down and reducing the average relative velocity |∆v(q, q′)|, and thereby decreasing their mutual interaction rates over time as per Eq. (13).

As a simple means of incorporating external effects in a stochastic simulation, one could accumulate and coalesce external changes to entity properties and defer the recomputation of interaction rates until they exceed some absolute or relative change threshold. When the properties of an entity are changed by more than a given relative threshold, the interaction rates for the entity are updated.

2.8 Cost model

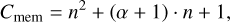

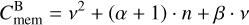

The memory requirements of this sampling scheme amount to approximately Cmem floating-point values:

(17)

(17)

where α = dim ℚ is the number of floating-point values needed to store the properties of an entity. For the sampling process, we need to store n2 pair-wise interaction rates and n + 1 cumulative interaction rates.

To model the computational complexity, we first define some elementary computational costs:

Cop, the cost of performing an elementary arithmetic operation (e.g. adding two numbers);

Crand, the cost of generating a random number distributed uniformly in [0,1);

Cλ, the cost of evaluating the entity interaction rate function λent(q, q′,δ);

Caction, the cost of simulating an interaction between two given entities.

We are aware that, on a modern superscalar system, one cannot reason about the cost of elementary arithmetic operations without also considering how computations are executed and how memory is accessed. With some effort, the computational performance of a system with arithmetic vector units can far exceed the performance predicted by a simplistic linear cost model, and the cost of memory accesses may be anything from completely marginalised through caches, speculative execution, and other means of hiding latency to throttling the computational power through limited bandwidth or inefficient memory access patterns. Therefore, the cost units above are not meant to be identified with specific quantities (such as ‘10 CPU cycles’). Instead, our cost model aims to predict the scaling behaviour of the computational scheme. We use the cost units to identify which operations scale with n2 or with n, from which we can infer which individual costs would be most worthwhile to reduce.

To initialise the simulation, all pair-wise interaction rates between entities must be computed and summed up columnwise. The cost of initialisation therefore is

(18)

(18)

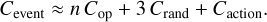

To sample and simulate an interaction event, we need to draw three uniform random numbers, traverse up to n floating-point numbers two times during the sampling procedure described in Sect. 2.4, and then simulate the interaction itself. Assuming that, on average, half of the n interaction rates need to be traversed during sampling, this amounts to a per-event cost of

(19)

(19)

When the properties of an entity have changed – that is, when the entity was subject to an interaction, or when the cumulative changes of external effects have exceeded a given threshold –, all interaction rates involving the entity need to be recomputed. Executed as an iterative update, this has a cost of

(20)

(20)

To estimate how the number of interactions scales with the number of entities n, we need to make further assumptions on the structure of the entity interaction rate function λent(q, q′,δ). Let us consider the case of representative entities in Eq. (16) and assume that the number of particles represented by an entity is large, Nk ⪢ 1 ∀k. Then, we approximate the entity interaction rate function as

(21)

(21)

By definition, the global interaction rate (Eq. (6)) then is

(22)

(22)

where 〈xjk〉jk is the quantity xjk averaged over all combinations of entities j, k. In this picture, a certain number of N physical particles is represented by n representative particles each associated with an entity. From  we infer

we infer

(23)

(23)

and because λ(qj, qk) should not depend on Nj or Nk, we argue that the mean value 〈Nkλ(qj, qk)〉jk must be proportional to n−1. Assuming an invariant number of physical particles N, we therefore obtain an approximate scaling behaviour of λ ∝ n, or equivalently

(24)

(24)

with some average raw collision rate  .

.

To consider the cost of changes imposed by external effects as sketched in Sect. 2.7, we assume that the average rate of updates per entity triggered by external effects is quantified by  , independent of the number of entities. The global average rate of external updates is therefore

, independent of the number of entities. The global average rate of external updates is therefore

(25)

(25)

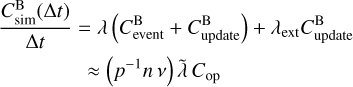

The simulation cost rate - the cost of simulating an ensemble of n entities for a given time interval ∆t divided by ∆t – then is

![$\matrix{ {} & {{{{C_{{\rm{sim}}}}({\rm{\Delta }}t)} \over {{\rm{\Delta }}t}} = \lambda \left( {{C_{{\rm{event}}}} + {C_{{\rm{update}}}}} \right) + {\lambda _{{\rm{ext}}}}{C_{{\rm{update}}}}} \cr \approx & {{n^2}\left[ {\tilde \lambda \left( {3{C_{{\rm{op}}}} + 2{C_\lambda }} \right) + {{\tilde \lambda }_{{\rm{ext}}}}\left( {2{C_{{\rm{op}}}} + 2{C_\lambda }} \right)} \right]} \cr {} & { + n\tilde \lambda \left( {3{C_{{\rm{rand}}}} + {C_{{\rm{action}}}}} \right).} \cr } $](/articles/aa/full_html/2024/04/aa47304-23/aa47304-23-eq29.png) (26)

(26)

Despite being a vast improvement over direct summation, the incremental updating scheme is still an impediment to larger-scale simulations as its costs for a fixed time increment scale with n2.

3 An improved sampling scheme

In this part we devise a new scheme for computing interaction rates that avoids the n2 proportionality of the costs for storage and computation entailed by the traditional sampling scheme. Using rejection sampling, a bucketing scheme, and interval arithmetic, we develop a generally applicable method for efficiently simulating large ensembles of representative particles.

In the traditional sampling scheme described in Sect. 2, the dominant cost is the computation and storage of the entity interaction rates λjk. Not only does this cost scale with n2, but the interaction rate may also be expensive to compute by itself, for instance in a realistic physical collision model. We therefore strive to evaluate the interaction rate function as seldom as possible.

Exact values for λ, λj, and λjk are required to draw random samples with the discrete inverse transform sampling scheme described in Sect. 2.4. Because we would like to reduce the number of evaluations of the interaction rate function, we need to consider alternative sampling schemes.

3.1 Rejection sampling

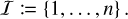

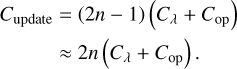

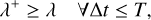

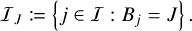

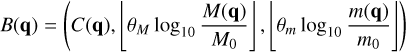

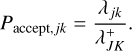

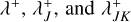

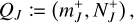

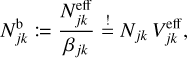

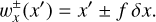

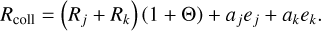

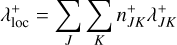

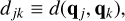

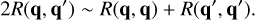

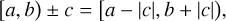

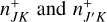

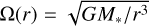

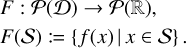

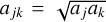

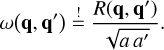

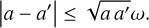

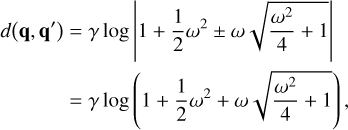

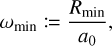

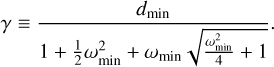

The method of rejection sampling, which is illustrated in Fig. 1, can be used to generate event times for a non-homogeneous Poisson point process if an upper bound for the interaction rate λ is known, cf. Ross (2014, Sect. 11.5.1). Let us assume we can obtain an upper bound

(27)

(27)

where t is the current time and T is the timescale on which external changes to the system need to be considered. We can then sample a potential event at time t + ∆t by sampling a time interval ∆t from the exponential distribution given by Eq. (4) but using λ+ instead of λ. If ∆t > T, no event occurs during the timescale T; we then update the system by applying the external operators, compute a new timescale T′ from the external process and a new upper bound λ′+, and update

(28)

(28)

(29)

(29)

(30)

(30)

(31)

(31)

Once a small enough time interval has been sampled, ∆t ≤ T, we compute the exact interaction rate λ at time t + ∆t and choose to accept the sampled event time with a probability of

(32)

(32)

Regardless of acceptance, we update the times as

(33)

(33)

(34)

(34)

If the event time was accepted, we simulate an interaction by determining the indices j and k of the interacting entities, and then repeat the process from the beginning.

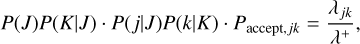

This sampling scheme can also be applied to the compound Poisson process of n entities. To this end, we take λ to be the global interaction rate in Eq. (6). After an event has been accepted, the indices of the entities interacting are chosen with discrete inverse transform sampling of the joint probability given in Eq. (8). The probability that an interaction between entities j and k occurs at time t + ∆t is thus

(35)

(35)

The proposed scheme still requires us to compute all interaction rates λjk and the global interaction rate λ at time t + ∆t in order to determine whether to accept the sampled event time ∆t and to sample the indices j and k, and therefore retains the performance characteristics of the sampling scheme for a homogeneous Poisson process. But as we demonstrate in this paper, the computational cost can be significantly reduced by flexible and more granular use of upper bounds.

|

Fig. 1 Schematic illustration of rejection sampling. Given a continuous probability distribution function p(x) (solid red line) defined on some domain D ≡ [x−, x+], a single p-distributed sample |

|

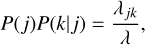

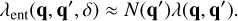

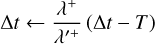

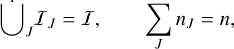

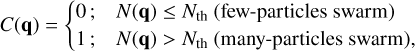

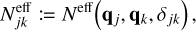

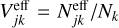

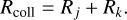

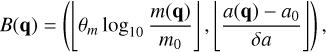

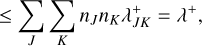

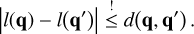

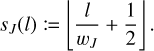

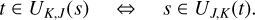

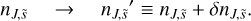

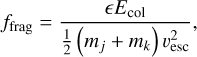

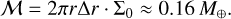

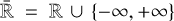

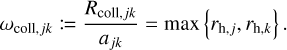

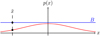

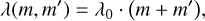

Fig. 2 Schematic illustration of bucketing. Rejection sampling as per Fig. 1 is inefficient if the upper bound B significantly overestimates the probability distribution function p(x), necessitating a large number of evaluations of p(x) per accepted sample. To increase efficiency, the domain D can be divided into disjoint subdomains D1,…,Dv (shaded with different colours), henceforth referred to as ‘buckets’. Then, separate upper bounds B1,…,Bv (solid coloured lines) can be estimated for the individual buckets. To sample a value |

3.2 Buckets

Rejection sampling is an efficient sampling scheme only if the upper bound λ+ is sufficiently close to the true interaction rate λ: the more the upper bound overestimates the interaction rate, the more sampled events have to be rejected, nevertheless each incurring an evaluation of the entity interaction rate function λent(q, q′, δ).

In order to increase the efficiency of rejection sampling, we introduce the ‘bucketing scheme’, the concept of which is explained schematically in Fig. 2. The basic idea is to group similar entities in ‘buckets’, then to compute upper bounds of the interaction rates between buckets of entities. To group entities in buckets, we first need a bucketing criterion, that is, a function B : ℚ → 𝕁 that maps a vector of entity properties q to an element B(q) in some discrete ordered set 𝕁. In simpler words, for any given entity j the function B(qj) tells which bucket this entity is in. Each bucket is uniquely identified by an element J ∈ ∈, henceforth referred to as the label of the bucket. A convenient choice for a label would be a d-dimensional vector of integers, ∈ ≡ ℤd, which can be ordered lexicographically.

With Bj we denote the label of the bucket which entity j is currently in, which we initialise as

(36)

(36)

We refer to the set of entity indices associated with a bucket with label J ∈ ℤ as

(37)

(37)

In other words, 𝔗J is the index set of entities that are in bucket J. The number of entities in a bucket is denoted

(38)

(38)

consistent with n = #𝔗, where #S refers to the cardinality of some set S. Buckets are necessarily disjoint,

(39)

(39)

meaning that each entity belongs to one, and only one, bucket. The set of occupied bucket labels, that is, the labels of the buckets which contain at least one entity, is

(40)

(40)

the number of which is referred to as

(41)

(41)

Because the bucketing scheme samples the space of bucket labels 𝕁 sparsely, the cost of storage and computational effort is a function of v.

|

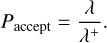

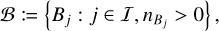

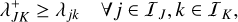

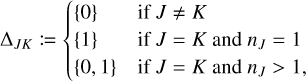

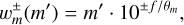

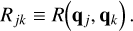

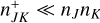

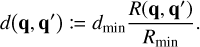

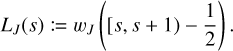

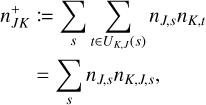

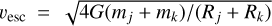

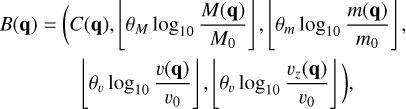

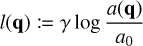

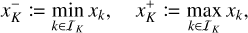

Fig. 3 Schematic illustration of the mass bucketing criterion in Eq. (42). The orbs represent particles of different mass, visually represented as surface area, which are grouped into seven buckets. |

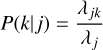

3.3 Example: Representative particles with mass

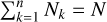

Pursuing the example given in Sect. 2.6 further, we identify entities with representative particles. We also assume that each representative particle j has a mass mj = m(qj), and that the collision rate λ(qj, qk) is correlated with the masses mj and mk. The bucketing criterion is therefore chosen to be mass-dependent. A typical physical scenario studied with an RPMC coagulation simulation covers a dynamic range of several orders of magnitude in particle mass, hence a logarithmic dependency on mass is appropriate. A simple yet effective one-dimensional bucketing criterion thus is

(42)

(42)

for some reference mass m0, where m(q) is the mass of a representative particle with properties q, and where the expression  denotes the floor function. The bucket density θm is a simulation parameter which specifies how many buckets per mass decade should be used, thus indirectly controlling the number of occupied buckets v. The bucketing criterion in Eq. (42) is visualised schematically in Fig. 3.

denotes the floor function. The bucket density θm is a simulation parameter which specifies how many buckets per mass decade should be used, thus indirectly controlling the number of occupied buckets v. The bucketing criterion in Eq. (42) is visualised schematically in Fig. 3.

As explained in Sect. 2.6, for the extended RPMC method, particle–swarm interaction rates differ between the different interaction regimes (the many-particles regime and the few-particles regime). Therefore, it is reasonable to also use the swarm multiplicity as part of the bucketing criterion. Another change brought about by the extended RPMC method is that swarm masses are allowed to vary. In the original definition of the method, the same fraction of the total mass had been assigned to each swarm. The interaction rate λjk has a strong dependency on the number of particles represented, as seen in the (near-)proportionality to N(q′) in Eq. (16), and therefore also depends on the swarm mass to which the number of particles relates as N(q) = M(q)/m(q), where M(q) refers to the total mass represented by the swarm. For this reason, we also want to have the swarm mass be part of the bucketing criterion.

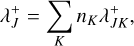

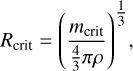

For the extended RPMC method we therefore propose the following three-dimensional bucketing criterion:

(43)

(43)

with M0 = 𝓜/n the average swarm mass, 𝓜 the total mass of particles in the system, and the bucket density θM a simulation parameter specifying the number of buckets to use per decade of swarm masses. The classifier C(q) is defined as

(44)

(44)

with Nth the particle regime threshold.

The traditional RPMC method always employs an equal-mass sampling, Mi = M0 ∀i ∈ 𝔗, which stays unaltered over the course of the simulation, and it entirely operates in the many-particles regime, Ni ≫ 1 ∀i ∈ 𝔗, and hence Ni > Nth ∀i ∈ 𝔗. Therefore, the first and second components of Eq. (43) would always evaluate to 1 and  , rendering this bucketing criterion equivalent to Eq. (42) in this case.

, rendering this bucketing criterion equivalent to Eq. (42) in this case.

3.4 Sampling an event

Let us assume that, given a bucket J and its constituent entities j ∈ 𝔗J, we can compute its bucket properties, which we denote as QJ; and that, given two buckets J, K, and their associated bucket properties QJ, QK, we can compute an upper bound  for the interaction rates λjk between any entities j, k associated with these buckets,

for the interaction rates λjk between any entities j, k associated with these buckets,

(45)

(45)

Bucket properties and the computation of bucket-bucket interaction rate bounds remain unspecified here but are discussed in detail in Sect. 4.

We then define the upper bounds of the cumulative interaction rates for all buckets J,

(47)

(47)

and the upper bound of the global interaction rate

(48)

(48)

Inserting Eqs. (46) and (47) into Eq. (48) proves that λ+ indeed constitutes an upper bound to the global rate of interactions λ (Eq. (6)):

(49)

(49)

Using the upper bounds λ+ and  , we can now implement the rejection sampling scheme of Sect. 3.1 more efficiently by interchanging the acceptance decision and the selection ofentity indices j, k:

, we can now implement the rejection sampling scheme of Sect. 3.1 more efficiently by interchanging the acceptance decision and the selection ofentity indices j, k:

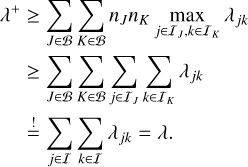

1. First, we randomly choose bucket indices J, K with a probability

(50)

(50)

2. using discrete inverse transform sampling as per Sect. 2.4.2. We then randomly choose entity indices j∈ 𝔗J, k ∈ 𝔗K with the probability

(51)

(51)

3. which is independent of j and k, amounting to uniform or unweighted sampling. 3. Then we compute the interaction rate λjk of the entities chosen. The interaction event is then accepted with a probability of

(52)

(52)

Combining all three probabilities, we obtain the selection probability

(53)

(53)

which we find identical to the combined sampling probability Pjk in Eq. (35), thereby proving that this selection process is equivalent.

3.5 The bucketing algorithm

We now describe how a general compound Poisson process as defined in Sect. 2.2 can be simulated with the bucketing scheme. The proposed algorithm consists of the following steps:

- 1.

Initialise simulation.

- 1.i.

Distribute all entities j to buckets. To this end, for every entity j determine the entity bucket label Bj ← B(qj). Assemble a list of occupied buckets and, for every occupied bucket, a list of the entities in it.

- 1.ii.

For all occupied buckets J ∈ ∈, compute the bucket properties QJ, which are a function of the properties qj of the entities in the bucket, j ∈ 𝔗J.

- 1.iii.

For all pairs of buckets J, K ∈ 𝓑, compute bucket-bucket interaction rate upper bounds

.

. - 1.iv.

Compute the upper bounds for the cumulative bucket interaction rate bound

for all occupied buckets J ∈ 𝓑 by summation of

for all occupied buckets J ∈ 𝓑 by summation of  , and compute the upper bound of the global interaction rate λ+ by summation of all

, and compute the upper bound of the global interaction rate λ+ by summation of all

- 1.i.

- 2.

Sample an event.

- 2.i.

Sample an event interarrival time ∆t with Eq. (4) using λ+ as the interaction rate.

- 2.ii.

If the time exceeds the timescale of external updates, ∆t > T, apply the external updates, execute Step 3 for every entity whose properties underwent significant changes during the external update, then adjust ∆t, t, T as per Eqs. ((28)–(31)), then start over with Step 2.i.

- 2.iii.

It can be assumed henceforth that Δt ≤ T: The sampled tentative interaction event may occur within the external updating timescale. Thus, using discrete inverse transform sampling with the stored interaction rate bounds

, randomly choose the buckets J, K ∈ 𝔅 which contain the entities possibly determined to undergo an interaction event.

, randomly choose the buckets J, K ∈ 𝔅 which contain the entities possibly determined to undergo an interaction event. - 2.iv.

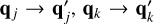

Using uniform sampling, randomly choose a pair of entities j ∈ 𝔗, k ∈ 𝔗K.

- 2.v.

Compute the interaction rate λjk. Accept the event with probability Paccept,jk (Eq. (52)).

Advance the system time by the event interarrival time, t ← t + Δt (Eq. (33)).

- 2.vi.

If the event was accepted, simulate the interaction between entities j and k, thereby possibly altering the entity properties

and then execute Step 3 for all entities whose properties have changed (none, j, k, or both j and k).

and then execute Step 3 for all entities whose properties have changed (none, j, k, or both j and k). - 2.vii.

Continue with Step 2.i.

- 2.i.

- 3.

Subroutine: update a given entity j.

- 3.i.

Determine the old and new bucket labels J := Bj, J′ := B′j. Move the entity to the new bucket if J′ ≠ J.

- 3.ii.

For bucket J′, update (if necessary) or recompute (if due) the bucket properties QJ′.

Section 4.4 precisely defines what ‘updating’ means and how we decide whether updating suffices or the bucket properties need to be recomputed.

- 3.iii.

If the bucket properties were recomputed, or if the updating step caused an alteration of the bucket properties QJ′ of the new bucket J′, recompute the bucket-bucket interaction rate upper bounds

for all occupied buckets K ∈ 𝔅.

for all occupied buckets K ∈ 𝔅. - 3.iv.

For all occupied buckets K ∈ 𝔅, update the cumulative interaction rate bounds

incrementally to account for any changes to

incrementally to account for any changes to  and recompute λ+ by summation of all

and recompute λ+ by summation of all  .

.

- 3.i.

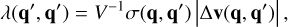

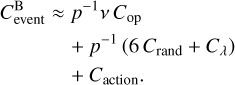

3.6 Data structures

The efficiency of the algorithm presented in Sect. 3.5 is bounded by the efficiency of its various mapping and enumeration steps. The data structures chosen to represent the simulation state are thus of critical importance.

In our reference implementation, we represent the simulation state with the following data structures:

An array (qj)j∈l of length n which holds the entity properties for all entities j ∈ 𝔗.

The upper bound for the total interaction rate λ+.

-

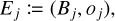

An array (DJ)J∈𝔅 of length v which for every occupied bucket J ∈ 𝔅 stores a tuple

(54)

(54)holding the bucket label J, the bucket properties QJ, an array of entities 𝔗J, an array of bucket–bucket interaction data upper bounds

, and the cumulative bucket interaction rate upper bound

, and the cumulative bucket interaction rate upper bound  .

.The order of the entity indices in the 𝔗J arrays is arbitrary. The order of the (DJ)J∈𝔅 array is arbitrary as well but must coincide with the order of the arrays of bucket-bucket interaction data upper bounds

for all J ∈ 𝔅.

for all J ∈ 𝔅. -

An array (J, bJ)J∈𝔅 of length v which for every occupied bucket J ∈ 𝔅 stores the index bJ of the corresponding element in the (DJ)J∈𝔅 array.

The array is ordered by J.

-

An array (Ej)j∈𝔗 of length n which for every entity j ∈ 𝔗 stores a tuple

(55)

(55)holding the bucket labels Bj and the offsets oj at which the entity indices are stored in the array held by

.

.

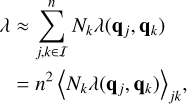

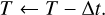

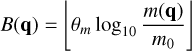

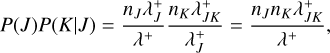

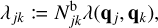

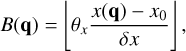

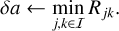

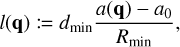

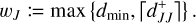

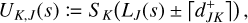

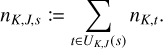

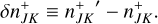

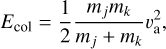

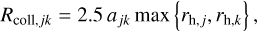

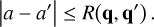

The relations between the arrays (DJ)J∈𝔅, (J, bJ)J∈𝔅, and (Ej)j∈𝔗 are exemplified in Fig. 4. With these data structures, the bucketing algorithm can be implemented very efficiently, as we shall explore in the next section.

Although a particular choice of data structures is discussed here, the bucketing algorithm could of course be implemented differently. For example, instead of storing an array of entries 𝔗J for every bucket J ∈ 𝔅, all entries could be stored in a single contiguous array ordered by bucket index, thereby reducing the amount of memory reallocations required while increasing the cost of moving entities between buckets and the cost of adding or removing buckets.

3.7 Cost model

In the following we estimate the memory requirement and the computational cost of simulating a compound Poisson process with the bucketing scheme when implemented with the choice of data structures described in Sect. 3.6.

The bucket properties QJ and the computation of upper bounds for bucket–bucket interaction rates are discussed in Sect. 4. For now, without specifying them any further, we only make two assumptions for the purpose of assessing the cost of the simulation. Firstly, given a bucket J ∈ 𝔅 holding nJ entities, we assume that the bucket properties QJ can be computed by traversal of the entity properties qj of all entities in the bucket j ∈ ?J, and therefore with nJ computational steps. Secondly, given the bucket properties QJ, QK for two buckets J, K ∈ 𝔅, we assume that an upper bound for the bucket–bucket interaction rate  can be computed in constant time, that is, with computational effort independent of the number of entities held by buckets J and K.

can be computed in constant time, that is, with computational effort independent of the number of entities held by buckets J and K.

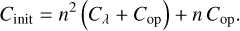

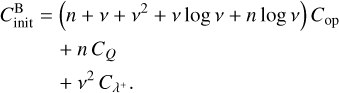

In the bucketing scheme, the same n vectors of entity properties qj as in the traditional sampling scheme need to be tracked, but in addition, only v2 upper bounds of bucket–bucket interaction rates as well as v elements of per-bucket data such as bucket properties and cumulative interaction rate upper bounds must be stored, amounting to an approximate memory requirement of

(56)

(56)

floating-point values, where α = dim ℚ again is the number of floating-point values needed to represent the properties qj of some entity j, and β represents the number of floating-point values required to store the properties QJ of some bucket J.

In addition to the elementary computational costs defined in Sect. 2.8, we define the following additional costs specific to the bucketing scheme:

CQ, the cost of updating the set of bucket properties QJ for a given bucket J ∈ 𝔅 to account for an entity being added to or removed from the bucket;

, the cost of computing an upper bound for the bucket-bucket interaction rate from two sets of bucket properties Q, Q′.

, the cost of computing an upper bound for the bucket-bucket interaction rate from two sets of bucket properties Q, Q′.

The proposed algorithm of the bucketing scheme employs dynamically sized data structures. The runtime cost and memory overhead of dynamic memory allocation is neglected in the following discussion.

To set up a simulation, we first traverse the list of entities j ∈ 𝔗, evaluate the bucketing criterion B(q) to determine the corresponding bucket label Bj ← B(qj) for every entity, store the label in the Ej array, and store the list of all bucket labels in the sorted array of tuples (J, bJ)J∈𝔅. This can be done with amortised (n + v log v) operations by accumulating and subsequently sorting occupied bucket labels. For every occupied bucket we allocate an entry in the DJ array which we refer to with the bJ index. We then iterate through the list of entities j ∈ 𝔗 a second time, look up the bucket labels Bj in the Ej array, locate the bucket in the (J, bJ) array with binary search, and append the entity to the bucket’s list of entity indices  , storing the entity index position in oj, which entails an average number of n log v steps. With every bucket having available a list of the entities in it, we can now iterate through the array of buckets and compute and store the bucket properties QJ for every bucket J ∈ 𝔅 at a total cost of ΣJ nJ CQ = nCQ. Finally, we iterate over all pairs of buckets J, K ∈ 𝔅 and compute and append the bucket–bucket interaction rate upper bounds

, storing the entity index position in oj, which entails an average number of n log v steps. With every bucket having available a list of the entities in it, we can now iterate through the array of buckets and compute and store the bucket properties QJ for every bucket J ∈ 𝔅 at a total cost of ΣJ nJ CQ = nCQ. Finally, we iterate over all pairs of buckets J, K ∈ 𝔅 and compute and append the bucket–bucket interaction rate upper bounds  to the array

to the array  of bucket J, which has a cost of

of bucket J, which has a cost of  . With v2 arithmetic operations, we also compute the cumulative bucket interaction rate upper bounds

. With v2 arithmetic operations, we also compute the cumulative bucket interaction rate upper bounds  and the total interaction rate upper bound λ+. The total cost of initialising the simulation thus amounts to

and the total interaction rate upper bound λ+. The total cost of initialising the simulation thus amounts to

(57)

(57)

Sampling an event candidate requires drawing one uniform random number for choosing an interarrival time, two random numbers and v additions on average for determining a pair of buckets, and another two random numbers for choosing entity indices. An event candidate can be accepted or rejected, which is decided by computing the interaction rate between the entities sampled to determine the acceptance probability (Eq. (52)) and by choosing whether to accept by drawing another random number. Let us assume that the average probability of acceptance is p ∈ (0,1]; then, for one event to be simulated, p−1 candidates have to be drawn on average, and the cost of sampling an event therefore is

(58)

(58)

When the properties qj of an entity j are changed either by an interaction or by external events, the bucket properties QJ of the corresponding bucket J = Bj, all bucket–ucket interaction rate bounds involving bucket J, and all cumulative bucket interaction rate bounds need to be updated. Locating the bucket of entity j in the sorted array of tuples (J, bJ)J∈𝔅 with binary search requires log v comparisons on average, and updating the upper bound for the interaction rate λ+ requires recomputing and summing up an average number of v bucket–ucket interaction rate bounds, amounting to an approximated cost of

(59)

(59)

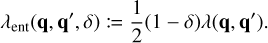

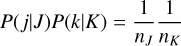

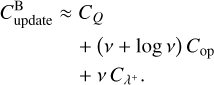

Entities sometimes need to be moved to another bucket, and as a consequence, buckets sometimes need to be added and removed. With the data structures adopted for our reference implementation, either operation can be performed in amortised 𝒪(1) steps with a manoeuvre sketched in Fig. 5 which we refer to as ‘castling’ because of its vague resemblance of the eponymous chess move.

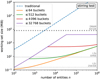

Following the reasoning in Sect. 2.8, we estimate the simulation cost rate as

(60)

(60)

![$\eqalign{ & \approx \left( {{p^{ - 1}}nv} \right)\tilde \lambda {C_{{\rm{op}}}} \cr & \,\,\, + \left( {{p^{ - 1}}n} \right)\tilde \lambda \left( {6{C_{{\rm{rand }}}} + {C_\lambda }} \right) \cr & \,\,\, + (nv)\left[ {\tilde \lambda + {{\tilde \lambda }_{{\rm{ext }}}}} \right]\left( {{C_{{\lambda ^ + }}} + {C_{{\rm{op}}}}} \right) \cr & \,\,\, + n\left[ {\tilde \lambda \left( {{C_Q} + {C_{{\rm{action }}}}} \right) + {{\tilde \lambda }_{{\rm{ext }}}}{C_Q}} \right], \cr} $](/articles/aa/full_html/2024/04/aa47304-23/aa47304-23-eq97.png) (61)

(61)

where a (n log v) ![$\left[ {\tilde \lambda + {{\tilde \lambda }_{{\rm{ext}}}}} \right]{C_{{\rm{op}}}}$](/articles/aa/full_html/2024/04/aa47304-23/aa47304-23-eq98.png) term has been neglected.

term has been neglected.

The cost estimate is more complex than the estimate given for the traditional scheme in Eq. (26), but it is evident that no term scales with n2 or with n log n. However, we observe that, because buckets are allocated sparsely, there cannot be more occupied buckets than entities,

(62)

(62)

and thus n v is an upper bound to v2: the cost rate of the bucketing scheme thus scales at least quadratically with the number of buckets, just as the cost rate of the traditional implementation scales quadratically with the number of entities.

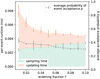

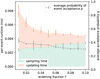

The average probability of acceptance p quantifies the efficiency of the bucketing scheme. For an inefficient bucketing scheme, p is very small, and hence p−1 is large, allowing the first two terms in Eq. (61), which comprise the cost of rejection sampling, to dominate the simulation cost rate. Conversely, if the bucketing scheme is efficient, p, and thus p−1 as well, approaches unity, allowing the latter two terms in Eq. (61) to dominate. If we adjust the balance between rejection sampling and updating by refining or coarsening the bucketing scheme, or by making the bucketing scheme slightly hysteretic (cf. Sect. 4.5), then we can achieve better overall performance, as studied in Sect. 6.3.5.

|

Fig. 4 Example illustrating the data structures used to implement the bucketing scheme as described in Sect. 3.6. This example comprises an ensemble of n = 13 entities partitioned in v = 4 buckets, with the bucket labels being designated as A, B, C, D, ordered lexicographically, and using 0-based numeric indices for all other indexing purposes. Entity indices are printed in normal type. The indices of the array entries at which the entity indices are stored in their associated buckets are printed in italic type. Element referral is indicated by dashed lines. Some elements of the DJ tuples were omitted in the visual representation. |

|

Fig. 5 Example demonstrating how to move an entity to a different bucket in 𝒪(1) steps. To move entity 4 from bucket A to bucket B, append an element to the list of entities in bucket B, and move the last entity in bucket A to the previous location of entity 4. Only two elements in the array (Ej)j∈𝔗 have to be updated. Element movement is symbolised with solid lines, and element referral is indicated by dashed lines. Addition and removal of entries to and from arrays is indicated with boxes of green and red colour, respectively. |

4 Computing interaction rate bounds

In our description of the bucketing algorithm in Sect. 3.5, two aspects had remained unspecified: the nature and purpose of the bucket properties QJ, and the computation of upper bounds for bucket-bucket interaction rates  from bucket properties QJ, QK of two buckets J, K ∈ 𝔅.

from bucket properties QJ, QK of two buckets J, K ∈ 𝔅.

The definition of bucket-bucket interaction rate upper bounds given in Eq. (46) suggests that bounds could be computed as

(63)

(63)

for two given buckets J, K ∈ 𝔅. Naïvely, this would require the evaluation of the entity–entity interaction rate λ jk for all pairs of entities j ∈ 𝔗J and k ∈ 𝔗K, and therefore nJ ⋅ nK evaluations of the entity interaction rate function λent(q, q′, δ). Because, for v occupied buckets, a total number of v2 bucket-bucket interaction rate upper bounds need to be computed, and because ΣJ nJ = n, this amounts to n2 evaluations of λent(q, q′, δ), which would be prohibitively expensive. But Eq. (63) can be evaluated much more efficiently, as we shall now demonstrate with an example.

4.1 Example: Linear kernel

with some constant λ0 leads to a special case of the Smoluchowski equation (Eq. (1)) for which the equation can be solved analytically (e.g. Ohtsuki et al. 1990), and which is hence often used to verify numerical methods for the simulation of coagulation processes. In a representative particle simulation where all swarms are many-particle swarms, Nk ≫ 1 ∀k ∈ 𝔗, the particle–swarm interaction rate λjk would then be

(65)

(65)

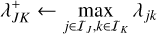

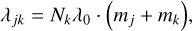

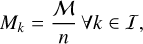

as per Eq. (16). Inserting this expression into Eq. (63), we find that a bucket–bucket interaction rate bound can be computed as a function of bucket-specific upper bounds,

![$\eqalign{& \lambda _{JK}^ + \leftarrow \mathop {\max }\limits_{j \in {I_J},k \in {I_K}} {\lambda _0}\left( {{N_k}{m_j} + {M_k}} \right) \cr & \,\,\,\,\,\,\,\,\,\,\matrix{ { = {\lambda _0}\left[ {\mathop {\max }\limits_{k \in {I_K}} {N_k}\mathop {\max }\limits_{j \in {I_J}} {m_j} + {M \over n}} \right]} \hfill \cr { = {\lambda _0}\left[ {N_K^ + m_J^ + + {M \over n}} \right],} \hfill \cr } \cr} $](/articles/aa/full_html/2024/04/aa47304-23/aa47304-23-eq104.png) (66)

(66)

where we used the swarm mass Mk = Nkmk, assumed an equal-mass sampling where every swarm holds the same fraction of the total mass ℳ,

(67)

(67)

refers to the bucket-specific lower and upper bounds  of some property xk for entities k in a bucket K. Computing a bucket-specific upper bound only requires traversal of all entities in the bucket, and hence nJ operations for a bucket J, or n operations for all buckets. In the case of the linear kernel, we could therefore define the bucket properties as a tuple

of some property xk for entities k in a bucket K. Computing a bucket-specific upper bound only requires traversal of all entities in the bucket, and hence nJ operations for a bucket J, or n operations for all buckets. In the case of the linear kernel, we could therefore define the bucket properties as a tuple

(69)

(69)

which for any bucket J can be computed with nJ operations. Subsequently, given the bucket properties QJ, QK of two buckets J and K, a bucket–bucket interaction rate upper bound  can be computed directly as per Eq. (66), thereby meeting the complexity requirements stated in Sect. 3.7. We note that the upper bound given by Eq. (66) is optimal: it is an exact upper bound on the particle–swarm interaction rates λjk for j ∈ 𝔗J, k ∈ 𝔗K, or in other words, no better bound exists. This quality is difficult to retain except in the simplest of cases.

can be computed directly as per Eq. (66), thereby meeting the complexity requirements stated in Sect. 3.7. We note that the upper bound given by Eq. (66) is optimal: it is an exact upper bound on the particle–swarm interaction rates λjk for j ∈ 𝔗J, k ∈ 𝔗K, or in other words, no better bound exists. This quality is difficult to retain except in the simplest of cases.

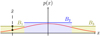

Results for an RPMC simulation of coagulation with the linear kernel using the bucketing scheme are shown in Fig. 6. The analytical solution is given as a reference.

|

Fig. 6 Analytical solutions and RPMC simulations for the coagulation test with the linear kernel (Eq. (64)) using the bucketing scheme. The RPMC simulation runs use n = 1024 and n = 65 536 representative particles, respectively. |

4.2 Example: Runaway kernel

As another example, we consider the runaway kernel, a test kernel modelled after the gravitational focussing effect (cf. e.g. Armitage 2007, Sect. III.B.l) which was introduced in the companion paper Beutel & Dullemond (2023, Sect. 6.2) for the purpose of studying the swarm regime transition:

![$\lambda \left( {m,{m^\prime }} \right) = {\lambda _0}\max {\left( {m,{m^\prime }} \right)^{2/3}}\left[ {1 + {{\left( {{{\max \left( {m,{m^\prime }} \right)} \over {{m_{{\rm{th }}}}}}} \right)}^{2/3}}} \right],$](/articles/aa/full_html/2024/04/aa47304-23/aa47304-23-eq110.png) (70)

(70)

where λ0 is some constant and is a threshold mass.

As with the linear kernel, an upper bound of the bucket–bucket interaction rate can be given in terms of bucket-specific bounds,

![$\lambda _{JK}^ + \leftarrow {\lambda _0}N_K^ + {\left( {m_{J,K}^ + } \right)^{2/3}}\left[ {1 + {{\left( {{{m_{J,K}^ + } \over {{m_{{\rm{th}}}}}}} \right)}^{2/3}}} \right],$](/articles/aa/full_html/2024/04/aa47304-23/aa47304-23-eq111.png) (71)

(71)

where we used the abbreviation  , the sub-multiplicativity of the maximum norm,

, the sub-multiplicativity of the maximum norm,

(72)

(72)

for sequences of positive semi-definite quantities (ai)i, (bi)i, and the monotonicity of exponentiation. Although this upper bound is no longer exact2, it can be obtained directly from bucket properties

(73)

(73)

and thus also meets the complexity requirements stated in Sect. 3.7.

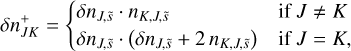

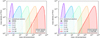

Results for an RPMC simulation of coagulation with the runaway kernel using the bucketing scheme are shown in Fig. 7. The runaway kernel is shown in Fig. 8 along with the emerging entity interaction rate λent(q,q′,δ). For comparison, two snapshots from the RPMC simulation are given in Fig. 9. In both figures, a discontinuity can be observed as swarms Nk are split up once their swarm particle count Nk no longer exceeds the particle regime threshold Nth. Self-representing particles, that is, representative particles k with a swarm particle count of Nk = 1, cannot interact with their own swarm, hence their self-interaction rate is λkk = 0, as seen in the lower left panel of Fig. 9. Indices are grouped by buckets, and the corresponding bucket–bucket interaction rate bounds  are shown in the right panels of Fig. 9.

are shown in the right panels of Fig. 9.

4.3 General interaction rate bounds

We demonstrated how to efficiently compute upper bounds for the bucket–bucket interaction rates for the examples given in Sects. 4.1 and 4.2 by defining a set of bucket-specific upper bounds as bucket properties, inserting the respective interaction rate function into Eq. (63), and rewriting the upper-bound estimate as a function of the bucket properties. The generalisation of this approach leads to the concept of interval arithmetic, which is briefly introduced in Appendix A along with interval notation such as [x] = [x−, x+] and the Fundamental Theorem of Interval Arithmetic. For a more thorough introduction to interval arithmetic, refer to Hickey et al. (2001); Moore et al. (2009); Alefeld & Herzberger (2012).

By virtue of the Fundamental Theorem of Interval Arithmetic, we can obtain an interval extension of the linear kernel (Eq. (64)),

![$\Lambda \left( {[m],\left[ {{m^\prime }} \right]} \right) = {\lambda _0} \cdot \left( {[m] + \left[ {{m^\prime }} \right]} \right),$](/articles/aa/full_html/2024/04/aa47304-23/aa47304-23-eq116.png) (74)

(74)

and likewise of the runaway kernel (Eq. (70)),

![$\eqalign{ & \Lambda \left( {[m],\left[ {{m^\prime }} \right]} \right) = {\lambda _0} \cdot {\left( {{\mathop{\rm Max}\nolimits} \left( {[m],\left[ {{m^\prime }} \right]} \right)} \right)^{2/3}} \cr & \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\, \times \left[ {1 + {{\left( {{{{\mathop{\rm Max}\nolimits} \left( {[m],\left[ {{m^\prime }} \right]} \right)} \over {{m_{{\rm{th }}}}}}} \right)}^{2/3}}} \right], \cr} $](/articles/aa/full_html/2024/04/aa47304-23/aa47304-23-eq117.png) (75)

(75)

where the exact interval extension of the max function is given by

![${\mathop{\rm Max}\nolimits} ([x],[y]) = \left[ {\max \left( {{x^ - },{y^ - }} \right),\max \left( {{x^ + },{y^ + }} \right)} \right].$](/articles/aa/full_html/2024/04/aa47304-23/aa47304-23-eq118.png) (76)

(76)

Likewise, for the RPMC method with any raw collision rate function λ(q, q′) with an interval extension Λ([q], [q′]), an interval extension of the entity interaction rate function λent(q, q′,δ) in Eq. (16) can be obtained as

![${\Lambda _{{\rm{ent}}}}\left( {[{\bf{q}}],\left[ {{{\bf{q}}^\prime }} \right],\Delta } \right): = {N^{{\rm{eff}}}}\left( {[{\bf{q}}],\left[ {{{\bf{q}}^\prime }} \right],\Delta } \right)\Lambda \left( {[{\bf{q}}],\left[ {{{\bf{q}}^\prime }} \right]} \right),$](/articles/aa/full_html/2024/04/aa47304-23/aa47304-23-eq119.png) (77)

(77)

where [q] denotes a vector of intervals, and where Δ ⊆ {0,1}, Δ ≠ 0 is a set containing 1 if the interaction can be an entity self-interaction and 0 if the interaction can be an interaction between different entities (and may thus contain both 0 and 1). Equivalently, we might state

![$\left[ {{\lambda _{JK}}} \right] = \left[ {N_{JK}^{{\rm{eff}}}} \right]\Lambda \left( {\left[ {{{\bf{q}}_J}} \right],\left[ {{{\bf{q}}_K}} \right]} \right),$](/articles/aa/full_html/2024/04/aa47304-23/aa47304-23-eq120.png) (78)

(78)

where we abbreviate the effective swarm particle count and its interval extension as

(79)

(79)

![$\left[ {N_{JK}^{{\rm{eff}}}} \right]: = {N^{{\rm{eff}}}}\left( {\left[ {{{\bf{q}}_J}} \right],\left[ {{{\bf{q}}_K}} \right],{\Delta _{JK}}} \right),$](/articles/aa/full_html/2024/04/aa47304-23/aa47304-23-eq122.png) (80)

(80)

and where the set-valued self-interaction indicator ΔJK, the set extension of δjk, is given by

(81)

(81)

in accordance with the definition of Δ given above: considering that we know only the buckets J, K which hold the interacting entities, we know that the interaction cannot be an entity self-interaction if the interacting entities are in different buckets, and that it is necessarily an entity self-interaction if both are in the same bucket and if that bucket only holds one entity. Otherwise, both self-interaction and non-self-interaction are possible.

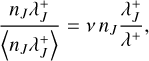

In Beutel & Dullemond (2023), the notion of ‘boosting’ was introduced as a means of grouping together multiple similar events, and the boosted interaction rate was defined as

(82)

(82)

where βjk, indicating the number of events grouped together, is the boost factor for an interaction of representative particle j with swarm k, and with the boosted swarm multiplicity factor  defined as

defined as

(83)

(83)

with the boosted swarm particle count Njk = Nk/βjk and the effective swarm particle count correction factor  . A large value of βjk counteracts a large number of swarm particles Nk, and hence an interval extension of λjk can be obtained using the algebraically favourable rendering of Eq. (83),

. A large value of βjk counteracts a large number of swarm particles Nk, and hence an interval extension of λjk can be obtained using the algebraically favourable rendering of Eq. (83),

![$\left[ {{\lambda _{JK}}} \right]: = \left[ {{N_{JK}}} \right] \cdot \left[ {V_{JK}^{{\rm{eff}}}} \right] \cdot \Lambda \left( {\left[ {{{\bf{q}}_J}} \right],\left[ {{{\bf{q}}_K}} \right]} \right).$](/articles/aa/full_html/2024/04/aa47304-23/aa47304-23-eq129.png) (84)

(84)

Here, the interval extensions of [NJK] and ![$\left[ {V_{JK}^{{\rm{eff}}}} \right]$](/articles/aa/full_html/2024/04/aa47304-23/aa47304-23-eq130.png) are obtained by composition as per the Fundamental Theorem of Interval Arithmetic, following the paradigm proposed by Beutel & Strzodka (2023) to obtain interval extensions of piecewise-defined functions such as Neff(q, q′, δ).

are obtained by composition as per the Fundamental Theorem of Interval Arithmetic, following the paradigm proposed by Beutel & Strzodka (2023) to obtain interval extensions of piecewise-defined functions such as Neff(q, q′, δ).

We thus define the bucket properties QJ for a bucket J as a vector of intervals of property bounds,

![${Q_J}: = \left[ {{{\bf{q}}_J}} \right].$](/articles/aa/full_html/2024/04/aa47304-23/aa47304-23-eq131.png) (85)

(85)

We note, however, that it may be useful, and allow for tighter results in the interval extension of the interaction rate function, if we augment QJ with intervals of bounds of additional derived properties, as done in Eq. (69) for the linear kernel where we had defined the bucket properties QJ to include bounds for the particle property mj as well as the derived property Nj = (M/n)/mj.

|

Fig. 7 RPMC simulations for the coagulation test with the runaway kernel (Eq. (70)) using the bucketing scheme. The RPMC simulation runs use n = 2048 and n = 65 536 representative particles, respectively, and a particle regime threshold of Nth = 10. The dimensionless threshold mass of mth = 107, marking the point where runaway growth ensues, is indicated by the dotted red horizontal lines, and the dashed orange lines indicates the representative particle mass M/(nNth) at which swarms are split up into individual particles. The plots show time series of histograms of the mass-weighted particle number density with bin counts colour-encoded on a logarithmic scale. The runaway particle is shown separately (red curve). Results have been averaged over 10 runs; the shaded surroundings of the red curve indicate the error bounds of the runaway particle mass. |

|

Fig. 8 Raw interaction rate λ(q, q′) and entity interaction rate λent(q, q′, δ) for the runaway kernel (Eq. (70)). Parameters are: λ0 = 10−10, threshold mass mth = 107, total mass ℳ = 1011, n = 2048 representative particles, particle regime threshold Nth = 10. Many-particles swarms (N(q) > Nth) are assumed to have homogeneous swarm mass M(q) = ℳ/n, whereas few-particles swarms (N(q) ≤ Nth) are assumed to have been split up to represent only themselves, N(q) = 1, and thus have swarm mass M(q) = m(q); hence the discontinuity at mcrit. |

|

Fig. 9 Snapshots of the entity interaction rates λjk and corresponding bucket-bucket interaction rate bounds |

4.4 Updating bucket properties

We computed and stored an initial set of bucket property bounds QJ = [qJ] for all occupied buckets J ∈ 𝔅 as the Cartesian product of element–wise intervals

![$\left[ {{{\bf{q}}_J}} \right] \equiv \left[ {{q_{J,1}}} \right] \times \cdots \times \left[ {{q_{J,d}}} \right],$](/articles/aa/full_html/2024/04/aa47304-23/aa47304-23-eq132.png) (86)

(86)

![$\left[ {{q_{J,s}}} \right] \equiv \left[ {q_{J,s}^ - ,q_{J,s}^ + } \right] \leftarrow \left[ {\mathop {\min }\limits_{j \in {I_J}} {q_{j,s}},\mathop {\max }\limits_{j \in {I_J}} {q_{j,s}}} \right],$](/articles/aa/full_html/2024/04/aa47304-23/aa47304-23-eq133.png) (87)

(87)

where s denotes the component index of the d-dimensional property vector q. We sometimes abuse notation by employing the quantity itself as a placeholder for its index or its component, for instance writing qj,m instead of and use the component and the quantity interchangeably, mj = m(qj) = qj,m. For example, if entity properties consisted of a mass m and a charge c, the property vector would be qj = (mj, Cj); index s = m would refer to the mass dimension and index s ≡ c would refer to the charge dimension.

During simulation, an interaction between entities j and k usually changes some of their properties qj, qk. When the properties of an entity j change, the bucket property bounds [qJ], [qJ′] of the entity’s old and new buckets J and J′ (which may be identical) may also change. This is not always the case, though. As an example, consider a bucket with label A which contains three particles with masses m1 = 1 g, m2 = 3 g, and m3 = 7g. Presume that particle 2 suffers an interaction that grows its mass to  . Then the bucket property bounds for bucket A does not change; the minimum and maximum mass of any particle in the bucket are still 1 g and 7 g. In this case, the interaction rate bounds, which are a function of the bucket property bounds (Eq. (77) in the case of the RPMC method), do not change either and thus need not be recomputed in Step 3.iii, and no updates need to be performed in Step 3.iv of the bucketing algorithm.

. Then the bucket property bounds for bucket A does not change; the minimum and maximum mass of any particle in the bucket are still 1 g and 7 g. In this case, the interaction rate bounds, which are a function of the bucket property bounds (Eq. (77) in the case of the RPMC method), do not change either and thus need not be recomputed in Step 3.iii, and no updates need to be performed in Step 3.iv of the bucketing algorithm.

Now let us instead assume that particle 3 undergoes a collision and grows to mass  . If the bucketing criterion B(q) still places particle 3 in the same bucket A, its bucket property bounds have to expand given that the maximum particle mass in the bucket is now 12 g; subsequently, the bucket interaction rate bounds for bucket A need to be recomputed in Step 3.iii, and the cumulative bucket interaction rate bounds need to be updated in Step 3.iv. Expanding the bucket property bounds is an operation with constant complexity, entailing only a min and max function call per bucket property, and thus is of negligible cost compared to the subsequent recomputation of interaction rate bounds and updating of cumulative interaction rate bounds.