| Issue | A&A Volume 626, June 2019 |
|---|---|
| Article Number | A49 |
| Number of page(s) | 18 |
| Section | Extragalactic astronomy |
| DOI | https://doi.org/10.1051/0004-6361/201935355 |
| Published online | 12 June 2019 |

Identifying galaxy mergers in observations and simulations with deep learning

1 SRON Netherlands Institute for Space Research, Landleven 12, 9747 AD Groningen, The Netherlands

e-mail: w.j.pearson@sron.nl

2 Kapteyn Astronomical Institute, University of Groningen, Postbus 800, 9700 AV Groningen, The Netherlands

3 Leiden Observatory, Leiden University, PO Box 9513, 2300 RA Leiden, The Netherlands

Received: 25 February 2019 / Accepted: 10 May 2019

Context. Mergers are an important aspect of galaxy formation and evolution. With large upcoming surveys, such as Euclid and LSST, accurate techniques that are fast and efficient are needed to identify galaxy mergers for further study.

Aims. We aim to test whether deep learning techniques can be used to reproduce the visual classification of observations and the physical classification of simulations, and to highlight any differences between these two classifications. As one of the main difficulties of merger studies is the lack of a truth sample, we can use our method to test biases in visually identified merger catalogues.

Methods. We developed a convolutional neural network architecture and trained it in two ways: one with observations from SDSS and one with simulated galaxies from EAGLE, processed to mimic the SDSS observations. The SDSS images were also classified by the simulation trained network and the EAGLE images classified by the observation trained network.

Results. The observationally trained network achieves an accuracy of 91.5% while the simulation trained network achieves 65.2% on the visually classified SDSS and physically classified EAGLE images respectively. Classifying the SDSS images with the simulation trained network was less successful, only achieving an accuracy of 64.6%, while classifying the EAGLE images with the observation network was very poor, achieving an accuracy of only 53.0% with preferential assignment to the non-merger classification. This suggests that most of the simulated mergers do not have conspicuous merger features and visually identified merger catalogues from observations are incomplete and biased towards certain merger types.

Conclusions. The networks trained and tested with the same data perform the best, with observations performing better than simulations, a result of the observational sample being biased towards conspicuous mergers. Classifying SDSS observations with the simulation trained network has proven to work, providing tantalising prospects for using simulation trained networks for galaxy identification in large surveys.

Key words: galaxies: interactions / techniques: image processing / methods: data analysis / methods: numerical

© ESO 2019

1. Introduction

Galaxy-galaxy mergers are of fundamental importance to our current understanding of how galaxies form and evolve in cold dark matter cosmology (e.g. Conselice 2014). Dark matter halos and their baryonic counterparts merge under hierarchical growth to form the universe that we see today (Somerville & Davé 2015). Mergers play an important role in many aspects of galaxy evolution such as galaxy mass assembly, morphological transformation and growth of the central black hole (e.g. Johnston et al. 1996; Naab & Burkert 2003; Hopkins et al. 2006; Bell et al. 2008; Guo & White 2008; Genel et al. 2009). In addition, galaxy mergers are believed to be the triggering mechanism of some of the brightest infrared objects known: (ultra) luminous infrared galaxies (Sanders & Mirabel 1996). With bright infrared emission often comes high star formation rates (SFRs), hence a prevailing interpretation from early merger works is that most mergers go through a starburst phase (e.g. Joseph & Wright 1985; Schweizer 2005).

Recent studies are beginning to dismantle the claim that all galaxy mergers are starbursts. In a study of 1500 galaxies, within 45 Mpc of our own, Knapen et al. (2015) have found that the increase in SFR in merging galaxies is at most a factor of two, with the majority of galaxies showing no evidence of an increase in SFR, or even showing evidence of mergers quenching the star formation. Galaxy mergers do still cause starbursts and a higher fraction of starbursts are mergers than starbursting non-mergers (Luo et al. 2014; Knapen & Cisternas 2015; Cortijo-Ferrero et al. 2017). Claims about the importance of mergers depend critically on our ability to recognise galaxy interactions. A method to reliably identify complete merger samples among a large number of galaxies is clearly needed.

Existing automated techniques for detecting mergers include selecting close galaxy pairs or selecting morphologically disturbed galaxies. The close pair method finds pairs of galaxies that are close, both on the sky and in redshift (e.g. Barton et al. 2000; Patton et al. 2002; Lambas et al. 2003; Lin et al. 2004; De Propris et al. 2005). This method requires highly complete spectroscopic observations and, as a result, is observationally expensive. It can also be contaminated by flybys (Sinha & Holley-Bockelmann 2012; Lang et al. 2014). Selecting morphologically disturbed galaxies using quantitative measurements of non-parametric morphological statistics, such as the Gini coefficient, the second-order moment of the brightest 20 percent of the light (Lotz et al. 2004) and the CAS system (e.g. Bershady et al. 2000; Conselice et al. 2000, 2003; Wu et al. 2001), aims to detect disturbances such as strong asymmetries, double nuclei or tidal tails. This method relies on high-quality, high-resolution imaging to detect these features beyond the local universe and has a high percentage of misclassifications (>20%), especially at high redshift (Huertas-Company et al. 2015). There is also the option to classify galaxies through visual inspection. However, visual classifications are hard to reproduce and time consuming. Large crowd-sourced efforts, such as Galaxy Zoo (Lintott et al. 2008), are not scalable to the sizes of the data sets expected from upcoming surveys. Visual identification can also suffer from low accuracy and incompleteness (Huertas-Company et al. 2015).

Deep learning techniques have the potential to revolutionise galaxy classification. Once properly trained, the neural networks used in deep learning can classify thousands of galaxies in a fraction of the time it would take a human, or team of humans, to classify the same objects. The use of deep learning for galaxy classification was brought to wider attention after Galaxy Zoo led a competition on the Kaggle platform, known as The Galaxy Challenge, to develop a machine learning algorithm to replicate the human classification of the Sloan Digital Sky Survey (SDSS; York et al. 2000) images. This competition was won by Dieleman et al. (2015) using a deep neural network, the architecture of which has formed the basis for subsequent deep learning algorithms for galaxy classification (e.g. Huertas-Company et al. 2015; Petrillo et al. 2017). More recently, deep learning has been applied to SDSS images from Galaxy Zoo to classify objects as merging or non-merging systems using transfer learning, that is, taking a pre-trained network and retraining the output layer to classify images into a different set of classes (Ackermann et al. 2018). There has also been work using deep learning techniques to identify mergers and tidal features in optical data from the Canada-France-Hawaii Telescope Legacy Survey (Gwyn 2012; Walmsley et al. 2019). These techniques will have an important application in classifying galaxies in large, upcoming surveys, such as the Large Synoptic Survey Telescope (LSST; LSST Science Collaboration 2009) or Euclid (Laureijs et al. 2011).

In this work, we aim to develop a neural network architecture and independently train it with two different training sets. This will result in a trained neural network that can identify visually classified mergers from the SDSS data as well as one that can identify physically classified mergers from the Evolution and Assembly of GaLaxies and their Environments (EAGLE) hydrodynamical cosmological simulation (Schaye et al. 2015). Once trained, the networks will be cross applied: SDSS images through the EAGLE trained network and images of simulated galaxies from EAGLE through the SDSS trained network. Visually identified merger catalogues constructed from surveys, such as the SDSS, are biased towards mergers that produce conspicuous features but cosmological simulations include a wide variety of merging galaxies with different mass ratios, gas fractions, environments, orbital parameters etc. Therefore, through training our neural network separately with visual classifications of real observations, physical classifications in simulations and the cross-applications of the two, we can better understand any potential biases in observations and identify problems in simulations in terms of reproducing realistic merger properties.

The paper is structured as follows: Sect. 2 describes the data sets used, Sect. 3 covers the neural networks, Sect. 4 provides the results and discussion and Sect. 5 the concluding remarks. Where necessary, the Wilkinson Microwave Anisotropy Probe year 7 (WMAP7) cosmology (Komatsu et al. 2011; Larson et al. 2011) is followed, with $\Omega_\mathrm{M} = 0.272$, $\Omega_\Lambda = 0.728$ and $H_0 = 70.4\ \mathrm{km\,s^{-1}\,Mpc^{-1}}$.
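As a worked example of the WMAP7 cosmology, the sketch below converts angular size to physical size at the redshifts used in this work. It assumes a flat ΛCDM model with radiation neglected and the standard SDSS imaging pixel scale of 0.396 arcsec, which is not stated in the text; 64 such pixels give the 25.3 arcsec quoted for the cut-outs in Fig. 1. The function names are our own, illustrative choices.

```python
import math

# WMAP7 parameters quoted in the text; flatness is assumed and radiation neglected.
H0 = 70.4              # km s^-1 Mpc^-1
OMEGA_M = 0.272
OMEGA_L = 0.728
C_KM_S = 299792.458    # speed of light in km s^-1
ARCSEC_PER_RAD = 180.0 * 3600.0 / math.pi
SDSS_PIXEL_ARCSEC = 0.396  # SDSS imaging pixel scale (assumption, not stated in the text)


def e_of_z(z):
    """Dimensionless Hubble parameter E(z) = H(z) / H0 for flat LambdaCDM."""
    return math.sqrt(OMEGA_M * (1.0 + z) ** 3 + OMEGA_L)


def comoving_distance_mpc(z, steps=1000):
    """Line-of-sight comoving distance, integrating 1/E(z) with Simpson's rule."""
    steps += steps % 2  # Simpson's rule needs an even number of intervals
    h = z / steps
    total = 1.0 / e_of_z(0.0) + 1.0 / e_of_z(z)
    for i in range(1, steps):
        total += (4.0 if i % 2 else 2.0) / e_of_z(i * h)
    return (C_KM_S / H0) * total * h / 3.0


def kpc_per_arcsec(z):
    """Proper physical size (kpc) subtended by one arcsec at redshift z."""
    d_a_mpc = comoving_distance_mpc(z) / (1.0 + z)  # angular diameter distance
    return d_a_mpc * 1000.0 / ARCSEC_PER_RAD


cutout_arcsec = 64 * SDSS_PIXEL_ARCSEC  # central crop used for training
for z in (0.005, 0.05, 0.1):  # spans the redshift range of the Darg et al. catalogue
    scale = kpc_per_arcsec(z)
    print(f"z = {z}: {scale:.3f} kpc/arcsec; 64-pixel crop spans {cutout_arcsec * scale:.1f} kpc")
```

At z = 0.1 the scale is roughly 1.8 kpc per arcsec, so the same 64 × 64 pixel crop covers a much larger physical region for the most distant galaxies than for the nearest ones.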

2. Image data

2.1. SDSS images

To train the neural network, a large number of images of merging and non-merging systems are required. For training the observational network, we create our merger and non-merger samples by following Ackermann et al. (2018) and combining the Darg et al. (2010a,b) merger catalogue with non-merging systems. The Darg et al. (2010a,b) catalogue contains 3003 merging systems, selected by visually rechecking all Galaxy Zoo objects with spectroscopic redshifts between 0.005 and 0.1 for which the fraction of people who classified the object as merging was greater than 0.4. As a result of this thorough visual classification, the Darg et al. (2010a,b) catalogue is likely to be conservative, mainly containing galaxies with obvious signs of merging, that is, two (or more) clearly interacting galaxies or obviously morphologically disturbed systems, and may miss more subtle mergers. SDSS spectra were only taken for objects with apparent magnitude r < 17.77, corresponding to an absolute magnitude r < −20.55 at z = 0.1, which results in an effective mass limit of ≈10¹⁰ M⊙ at z = 0.1 (Darg et al. 2010b). For the non-merging systems, we generated a catalogue of all SDSS objects with spectroscopic redshifts in the same range as the Darg et al. (2010a,b) catalogue and a fraction of people who classified the object as merging in Galaxy Zoo of less than 0.2, and then randomly selected 3003 of these to form the sample. As we also require spectroscopic redshifts, the non-merger sample has the same effective mass limit of ≈10¹⁰ M⊙.
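The non-merger selection can be sketched as a filter followed by a random draw of one non-merger per merger. The record layout (keys `objid`, `z_spec`, `p_merger`) is hypothetical and stands in for whatever the SDSS DR7 and Galaxy Zoo query returns; treating the redshift bounds as inclusive is also our assumption. The visual re-check that defines the Darg et al. merger sample cannot be coded and is not represented here.

```python
import random

Z_MIN, Z_MAX = 0.005, 0.1   # spectroscopic redshift range of Darg et al. (2010a,b)
P_MERGER_MAX = 0.2          # non-mergers: Galaxy Zoo merger vote fraction below 0.2


def select_non_mergers(galaxies, n_mergers, seed=42):
    """Randomly draw one non-merger per merger from the eligible pool.

    `galaxies` is a list of dicts with hypothetical keys 'objid', 'z_spec'
    (spectroscopic redshift) and 'p_merger' (fraction of Galaxy Zoo
    classifiers who voted 'merger').
    """
    pool = [g for g in galaxies
            if Z_MIN <= g["z_spec"] <= Z_MAX and g["p_merger"] < P_MERGER_MAX]
    if len(pool) < n_mergers:
        raise ValueError(f"only {len(pool)} eligible non-mergers for {n_mergers} mergers")
    # Sampling without replacement, seeded so the selection is reproducible.
    return random.Random(seed).sample(pool, n_mergers)


# Toy catalogue standing in for the real SDSS DR7 / Galaxy Zoo query.
toy = [{"objid": i, "z_spec": 0.001 * i, "p_merger": (i % 10) / 10} for i in range(200)]
print(len(select_non_mergers(toy, 10)))
```

Drawing exactly as many non-mergers as there are mergers gives the balanced two-class sample that the network is later trained on.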

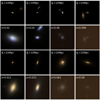

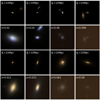

Cut-outs of the merging and non-merging objects were then requested from the SDSS jpeg cut-out server for data release 7 (DR7) to create 6006 images of 256 × 256 pixels each, with the g, r and i bands as the blue, green and red colour channels respectively. SDSS images created this way use a modified version of the Lupton et al. (2004) non-linear colour normalisation. These images were then cropped to the central 64 × 64 pixels to reduce memory requirements during training. Larger image sizes were tested but showed no clear improvement over 64 × 64 pixel images. Examples of the central 64 × 64 pixels of merging and non-merging SDSS galaxies are given in Fig. 1.

Fig. 1. Examples of the central 64 × 64 pixels of SDSS galaxy images, with the g, r and i bands shown as blue, green and red respectively, corresponding to an angular size of 25.3 × 25.3 arcsec. Top row: merging galaxies from the Darg et al. (2010a,b) catalogue; bottom row: non-merging galaxies.

The SFR and stellar mass (M⋆) for the SDSS objects were taken from the MPA-JHU catalogue; the M⋆ values were derived following the techniques of Kauffmann et al. (2003) and Salim et al. (2007), while the SFRs are based on the Brinchmann et al. (2004) catalogue. The redshifts and ugriz magnitudes come from SDSS DR7. Darg et al. (2010b) find that 54% of their mergers are major mergers, defined as having a ratio of the masses of the merging galaxies between 1/3 and 3.

2.2. EAGLE images

For the simulation network, simulated gri images from EAGLE were used (Crain et al. 2015; McAlpine et al. 2016). These single channel images were generated using the py-SPHviewer code (Benítez-Llambay 2017). EAGLE star particles were treated as individual simple stellar populations in the imaging, assuming a Chabrier (2003) initial mass function. The luminosity of particles in each band was obtained using the Bruzual & Charlot (2003) population synthesis model via their zero-age mass, current age and smoothed particle hydrodynamics metallicity. Particle smoothing lengths were calculated based on the 64th nearest neighbour, as described in Trayford et al. (2017). We note that attenuation by dust was not accounted for, with these primary images functioning as maps of pure stellar emissivity.
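The smoothing-length definition can be illustrated with a brute-force nearest-neighbour search. This is only a sketch of the definition, not the py-SPHviewer or Trayford et al. (2017) implementation: we assume the smoothing length equals the distance to the k-th nearest other particle, with no extra scaling factor, and the O(N²) memory use means a k-d tree would be used in practice.

```python
import numpy as np


def smoothing_lengths(positions, k=64):
    """Distance from each particle to its k-th nearest neighbour (brute force).

    `positions` is an (N, 3) array. The particle itself is not counted as a
    neighbour, which is an assumption about how the 64th neighbour is defined.
    """
    pos = np.asarray(positions, dtype=float)
    if k >= len(pos):
        raise ValueError("k must be smaller than the number of particles")
    diff = pos[:, None, :] - pos[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    # After partitioning each row, index 0 holds the zero self-distance,
    # so index k holds the distance to the k-th nearest other particle.
    return np.partition(dist, k, axis=1)[:, k]


rng = np.random.default_rng(0)
particles = rng.normal(size=(500, 3))  # toy star-particle positions
h = smoothing_lengths(particles)
print(h.min(), h.max())  # dense centre -> small h, sparse outskirts -> large h
```

Because each particle's light is spread over a kernel of this size, particles in sparse outer regions are smoothed over larger areas than those in dense cores.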

To provide enough galaxies to adequately train a neural network, EAGLE galaxies from simulation snapshots with redshifts below 1.0 were used. Objects with M⋆ greater than 10¹⁰ M⊙ were selected, while the merging partner in the merging systems must have M⋆ larger than 10⁹ M⊙. The merging partner must also have more than 10% of the M⋆ of the primary galaxy. Galaxies were deemed to have merged when they are tracked as two galaxies in one simulation snapshot and as one galaxy in the following snapshot in the EAGLE merger trees catalogue (Qu et al. 2017). This prevents the inclusion of chance flybys, which might be selected as mergers if the EAGLE galaxies were selected based on proximity. Systems that are projected to merge, using a closing velocity extrapolation, within the next 0.3 Gyr (pre-merger), or that are projected to have merged, again using a closing velocity extrapolation of the progenitors, within the last 0.25 Gyr (post-merger) were selected, along with a number of non-merging systems, and gri band images were created of these systems. Springel et al. (2005) have shown that the effects of a merger are visible for approximately 0.25 Gyr after the merger event, while the pre-merger stage is much longer. However, we chose to make the pre- and post-merger periods approximately equal, as tests conducted with longer pre-merger times showed no improvement (see the discussion in Sect. 4.2). We note, however, that the merger timing may suffer from imprecision as a result of the coarse time resolution of the EAGLE simulation, that is, the time between snapshots, which becomes coarser at lower simulation redshift. Each galaxy was imaged at an assumed distance of 10 Mpc; each image contains all material within 100 kpc of the centre of the target galaxy and is 256 × 256 pixels, where 256 pixels corresponds to a physical size of 60 kpc.
There are 537 pre-merger, 339 post-merger and 335 non-merging systems, each with six random projections to increase the size of the training set. Each of the six projections is treated as an individual galaxy, resulting in 3222 pre-merger, 2034 post-merger and 2010 non-merging galaxy images for training. The pre-mergers and post-mergers were combined to form the merger class, keeping the pre-merger image if the same galaxy appears in both sets.
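The mass cuts, time windows and merging of the pre- and post-merger sets can be summarised as a small classification function. This is a sketch under stated assumptions: the `System` record and its signed `time_to_merger` field are hypothetical stand-ins for the EAGLE merger-tree and closing-velocity information, and both the exclusion of systems whose partner is too small and the use of `None` to mark "no merger" are our own simplifications of how non-mergers were chosen.

```python
from dataclasses import dataclass
from typing import Optional

PRE_WINDOW_GYR = 0.3     # pre-merger: projected to merge within the next 0.3 Gyr
POST_WINDOW_GYR = 0.25   # post-merger: projected to have merged within the last 0.25 Gyr
MIN_PRIMARY_MASS = 1e10  # M_sun
MIN_PARTNER_MASS = 1e9   # M_sun
MIN_MASS_FRACTION = 0.1  # partner must exceed 10% of the primary's stellar mass
N_PROJECTIONS = 6


@dataclass
class System:
    galaxy_id: int                   # hypothetical identifier of the primary galaxy
    primary_mass: float              # stellar mass, M_sun
    partner_mass: float              # stellar mass of the merging partner, M_sun
    time_to_merger: Optional[float]  # Gyr; > 0 before, < 0 after, None if no merger


def classify(s: System) -> Optional[str]:
    """Return 'pre-merger', 'post-merger', 'non-merger', or None if excluded."""
    if s.primary_mass <= MIN_PRIMARY_MASS:
        return None
    if s.time_to_merger is None:
        return "non-merger"
    if s.partner_mass <= max(MIN_PARTNER_MASS, MIN_MASS_FRACTION * s.primary_mass):
        return None  # partner too small: excluded here (an assumption)
    if 0.0 <= s.time_to_merger <= PRE_WINDOW_GYR:
        return "pre-merger"
    if -POST_WINDOW_GYR <= s.time_to_merger < 0.0:
        return "post-merger"
    return None  # merger lies outside both time windows


def merger_class(pre_ids, post_ids):
    """Combine pre- and post-mergers, keeping the pre-merger image for duplicates."""
    merged = dict.fromkeys(pre_ids, "pre-merger")
    for gid in post_ids:
        merged.setdefault(gid, "post-merger")
    return merged


# Six projections per system turn 537, 339 and 335 systems into the image counts above.
print([n * N_PROJECTIONS for n in (537, 339, 335)])
```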

To make the raw EAGLE images look like SDSS images (processed EAGLE images), a number of operations were performed. For each projection of each system, a redshift was randomly chosen from the redshifts of the objects in the Darg et al. (2010a,b) catalogue and the surface brightness of the galaxy was corrected to match this redshift. The image was also re-binned by interpolation with the python reproject package (Robitaille 2018) so that the physical resolution of the EAGLE image matches the physical resolution of an SDSS galaxy at the selected redshift. The resulting apparent r-band magnitudes are less than 17.77 for all but 58 of the 10 134 galaxy projections, meaning that the brightness of the simulated galaxies is consistent with that of the observed SDSS galaxies. Once the surface brightness and physical resolution corrections were completed, the observed SDSS point spread functions (PSFs) for the gri bands were created using the stand-alone PSF tool and the simulated images were convolved with these PSFs. The EAGLE galaxies were then injected into real SDSS images to add realistic noise. Finally, red, green, blue (RGB) images were generated from the gri bands using a modified Lupton et al. (2004) non-linear colour normalisation, to closely match the way the SDSS RGB images are made. A brief comparison using a simple linear colour scaling to generate the EAGLE images is discussed in Appendix A.
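The final colour-mapping step can be illustrated with a simplified version of the Lupton et al. (2004) asinh scheme. This is not the modified SDSS implementation: the `stretch` and `q` values are arbitrary, negative flux is simply clipped, and astropy's `astropy.visualization.make_lupton_rgb` offers a fuller implementation. The key property shown is that all three bands are multiplied by the same factor, so band ratios (colours) are preserved while bright regions are compressed.

```python
import numpy as np


def lupton_rgb(i_band, r_band, g_band, stretch=0.5, q=8.0):
    """Combine i, r, g flux images into an RGB array (red=i, green=r, blue=g).

    Simplified Lupton et al. (2004) asinh scaling: the stretch is computed from
    the mean intensity I and every band is multiplied by the same factor
    asinh(q * I / stretch) / (q * I), so band ratios are preserved.
    `stretch` and `q` are arbitrary illustrative values.
    """
    rgb = np.stack([i_band, r_band, g_band], axis=-1).astype(float)
    rgb = np.clip(rgb, 0.0, None)  # drop negative (noise) flux for this sketch
    intensity = rgb.mean(axis=-1)
    with np.errstate(divide="ignore", invalid="ignore"):
        factor = np.arcsinh(q * intensity / stretch) / (q * intensity)
    factor = np.where(intensity > 0.0, factor, 0.0)  # empty pixels stay black
    rgb *= factor[..., None]
    # Where any channel exceeds 1, scale all three down together to keep the hue.
    peak = rgb.max(axis=-1, keepdims=True)
    rgb = np.where(peak > 1.0, rgb / np.maximum(peak, 1.0), rgb)
    return np.clip(rgb, 0.0, 1.0)


faint = lupton_rgb(np.full((4, 4), 2.0), np.full((4, 4), 1.0), np.zeros((4, 4)))
print(faint[0, 0])  # red is twice green, blue is zero
```

For faint pixels the asinh factor is nearly constant, so the mapping is close to linear; for bright pixels it grows only logarithmically, which keeps galaxy cores from saturating while faint tidal features remain visible.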

To obtain real SDSS noise, the positions of all known SDSS DR7 objects in three SDSS images were collected. The noise images were generated by offsetting from the position of each object in these images by a random distance between 6.329 and 18.986 arcsec (that is, between 0.25 and 0.75 times the average separation of SDSS objects) in a random direction in the RA-Dec plane. Then 256 × 256 pixel cut-outs, centred on these offset positions, were made and used as noise in the EAGLE images. The code used to make the EAGLE images SDSS-like and to obtain the noise cut-outs can be downloaded from GitHub, while examples of the raw and processed EAGLE images can be found in Fig. 2.
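The offsetting step can be sketched as follows. The function names are hypothetical; the sketch uses a flat-sky approximation (dividing the RA offset by cos Dec) and draws the offset distance uniformly in radius, which is our assumption since the text only specifies a "random distance". Cut-outs that would extend beyond the parent image are rejected.

```python
import numpy as np

MIN_OFFSET_ARCSEC = 6.329   # 0.25 x the average separation of SDSS objects
MAX_OFFSET_ARCSEC = 18.986  # 0.75 x the average separation of SDSS objects


def noise_positions(ra_deg, dec_deg, rng):
    """Offset each catalogued object by a random distance and direction.

    Flat-sky approximation: the RA offset is divided by cos(Dec).
    The distance is drawn uniformly between the two limits (an assumption).
    """
    ra = np.asarray(ra_deg, float)
    dec = np.asarray(dec_deg, float)
    r = rng.uniform(MIN_OFFSET_ARCSEC, MAX_OFFSET_ARCSEC, size=ra.shape) / 3600.0
    theta = rng.uniform(0.0, 2.0 * np.pi, size=ra.shape)  # random position angle
    new_dec = dec + r * np.sin(theta)
    new_ra = ra + r * np.cos(theta) / np.cos(np.radians(dec))
    return new_ra % 360.0, new_dec


def cutout(image, x, y, size=256):
    """Square cut-out centred on pixel (x, y); None if it would leave the image."""
    half = size // 2
    x0, y0 = int(round(x)) - half, int(round(y)) - half
    if x0 < 0 or y0 < 0 or x0 + size > image.shape[1] or y0 + size > image.shape[0]:
        return None
    return image[y0:y0 + size, x0:x0 + size]


rng = np.random.default_rng(1)
print(noise_positions([150.0, 150.1], [2.0, 2.1], rng))
```

Offsetting by a fraction of the typical object separation lands the cut-out centre in a region that usually contains only sky and noise, while still keeping the realistic density of neighbouring sources.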

Fig. 2. Examples of the raw (first and third rows, linear colour scaling) and processed (second and fourth rows, Lupton et al. (2004) non-linear colour scaling) EAGLE images for merging (first and second rows) and non-merging (third and fourth rows) systems. The raw images shown are 128 × 128 pixels and imaged at 10 Mpc, corresponding to a physical size of 30 × 30 kpc or an angular size of 621 × 621 arcsec, while the processed images are 64 × 64 pixel images corresponding to an angular size of 25.3 × 25.3 arcsec. The redshifts are those that the EAGLE images have been projected to.

The M⋆, SFR, ugriz absolute magnitudes, galaxy asymmetry, merger mass ratio and time to or since the merger event of the EAGLE galaxies are taken from the simulation. For the merging systems, M⋆ and SFR are calculated for the merger remnant. The galaxy asymmetry is the 3D asymmetry, calculated as described in Trayford et al. (2019): uniform bins of solid angle are created about the galaxy centre and the M⋆ within each bin is summed. The asymmetry is then the sum of the absolute mass differences between diametrically opposed bins, divided by the total M⋆, so that a higher asymmetry value indicates a more asymmetric galaxy. Only a minority of the EAGLE galaxies, 34%, are major mergers, that is, mergers with a mass ratio between 1/3 and 3.
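The 3D asymmetry can be sketched with numpy. Equal-solid-angle bins are built here from uniform bins in cos θ and φ, which is our assumption; Trayford et al. (2019) may use a different tessellation and bin count. Summing over the full grid counts every opposed pair twice, hence the factor of one half, so a perfectly point-symmetric galaxy scores 0 and all mass on one side scores 1.

```python
import numpy as np


def asymmetry_3d(positions, masses, n_cos=8, n_phi=16):
    """Sum of |M_bin - M_opposite_bin| over opposed pairs, divided by total mass.

    `positions` are (N, 3) offsets of star particles from the galaxy centre.
    Bins are uniform in cos(theta) and phi, so each has equal solid angle.
    """
    if n_phi % 2:
        raise ValueError("n_phi must be even so every bin has an opposite")
    pos = np.asarray(positions, float)
    m = np.asarray(masses, float)
    r = np.linalg.norm(pos, axis=1)
    keep = r > 0  # a particle exactly at the centre has no direction
    pos, m, r = pos[keep], m[keep], r[keep]
    cos_t = np.clip(pos[:, 2] / r, -1.0, 1.0)
    phi = np.arctan2(pos[:, 1], pos[:, 0]) % (2.0 * np.pi)
    i = np.minimum(((cos_t + 1.0) / 2.0 * n_cos).astype(int), n_cos - 1)
    j = np.minimum((phi / (2.0 * np.pi) * n_phi).astype(int), n_phi - 1)
    grid = np.zeros((n_cos, n_phi))
    np.add.at(grid, (i, j), m)  # stellar mass summed in each solid-angle bin
    # The bin diametrically opposite (i, j) is (n_cos - 1 - i, j + n_phi / 2).
    opposite = np.roll(grid[::-1, :], n_phi // 2, axis=1)
    return 0.5 * np.abs(grid - opposite).sum() / m.sum()


rng = np.random.default_rng(0)
half = rng.normal(size=(500, 3))
symmetric = np.vstack([half, -half])  # every particle has an opposite twin
print(asymmetry_3d(symmetric, np.ones(len(symmetric))))
```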

3. Deep learning

3.1. Convolutional neural networks

Deep learning neural networks are a type of machine learning that aims to loosely mimic how a biological system processes information, using a series of layers of non-linear mathematical operations, known as neurons, each with its own weight and bias values. Here we use a type of deep learning network known as a convolutional neural network (CNN). The lower layers of a CNN are known as convolutional layers and contain a defined number of kernels that are convolved with the output of the layer below. The kernels are groups of neurons that are smaller in width and height than the input, so they must be moved across the input, performing the necessary matrix multiplications on the region of the input they cover at each step. The higher layers of a CNN are one-dimensional and fully connected, that is, every neuron is connected to every neuron in the layer below. The dimensionality of the network can be reduced by applying pooling layers. This type of layer groups its inputs and passes the maximum or average value of each group on to the next layer. When placed after an image input or a convolutional layer, this grouping is done in the two-dimensional height-width plane and not in the depth/colour direction, reducing the spatial size but not the depth. Each neuron in the kernels or fully connected layers of a network has an activation function that scales the result passed to higher layers, or forces the output to a certain value, depending on the value passed into the activation function and the activation function used. In the supervised learning used here, the weights and biases of the neurons are trained by passing a large number of classified images through the network, such that the output classifications converge on the known input classifications. A thorough description of how CNNs work is beyond the scope of this paper; further information on CNNs can be found in LeCun et al. (1998).
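The three building blocks described above (a kernel scanned across the input, an activation and a pooling layer) can be illustrated on a single-channel toy image with plain numpy. This sketch is for intuition only: a real convolutional layer has many kernels, sums over all input channels, adds a bias and learns its kernel weights, whereas the kernel here is fixed by hand. As in CNN libraries, the "convolution" is computed as a cross-correlation.

```python
import numpy as np


def conv2d_same(image, kernel):
    """Single-channel 2D cross-correlation with stride 1 and "same" zero padding.

    The output has the input's height and width; as in TensorFlow, any odd
    padding pixel goes at the bottom/right.
    """
    kh, kw = kernel.shape
    pad_h, pad_w = kh - 1, kw - 1
    padded = np.pad(image, ((pad_h // 2, pad_h - pad_h // 2),
                            (pad_w // 2, pad_w - pad_w // 2)))
    out = np.empty(image.shape, dtype=float)
    for y in range(image.shape[0]):          # move the kernel across the input...
        for x in range(image.shape[1]):
            out[y, x] = np.sum(padded[y:y + kh, x:x + kw] * kernel)  # ...multiply and sum
    return out


def relu(x):
    """Rectified linear unit: max(x, 0) element-wise."""
    return np.maximum(x, 0.0)


def max_pool_2x2(x):
    """Keep the maximum of each non-overlapping 2x2 block (height and width halve)."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    return x[:h, :w].reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


image = np.arange(16, dtype=float).reshape(4, 4)
kernel = np.array([[0.0, 0.0, 0.0],
                   [0.0, 1.0, -1.0],      # responds to a drop in value to the right
                   [0.0, 0.0, 0.0]])
features = max_pool_2x2(relu(conv2d_same(image, kernel)))
print(features)
```

In this toy case the kernel only responds at the right-hand edge, where the image meets the zero padding; after ReLU and pooling, the 4 × 4 input is reduced to a 2 × 2 map that keeps only that response.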

When discussing neural networks, some terms are used whose definitions may differ from what is expected or be unfamiliar. Also, concepts have a number of different names. To prevent confusion, terms used in this paper are defined in Table 1, taking a positive result to mean a merger and a negative result to mean a non-merger.

Terms used when describing the performance of neural networks.
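Table 1 is not reproduced in this text, so the sketch below uses the standard confusion-matrix definitions of these terms, which we assume match Table 1, with a merger as the positive class. As a consistency check we use TP = 276, FP = 27, TN = 273 and FN = 24. The FP and FN counts are those given in Sect. 4.1; TP and TN are our own back-calculation from the recall and specificity quoted there, not values copied from Table 3.

```python
def confusion_stats(tp, fp, tn, fn):
    """Standard binary-classification statistics (positive = merger)."""
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "recall": tp / (tp + fn),         # fraction of true mergers recovered
        "precision": tp / (tp + fp),      # fraction of predicted mergers that are real
        "specificity": tn / (tn + fp),    # fraction of true non-mergers recovered
        "npv": tn / (tn + fn),            # negative predictive value
        "fall_out": fp / (fp + tn),       # false positive rate = 1 - specificity
    }


for name, value in confusion_stats(tp=276, fp=27, tn=273, fn=24).items():
    print(f"{name:12s} {value:.3f}")
```

With these counts the function reproduces the test-set accuracy, recall, precision, specificity and NPV reported for the observation network (0.915, 0.920, 0.911, 0.910 and 0.919).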

3.2. Architecture

The CNN used in this work was built using the Tensorflow framework (Abadi et al. 2015). As the task we are attempting to complete is similar to that of The Galaxy Challenge, we based our network on the winning Dieleman et al. (2015) architecture, with some modifications. The input image was 64 × 64 pixels with three colour channels. We then applied a series of four two-dimensional convolutional layers with 32, 64, 128 and 128 kernels of 6 × 6, 5 × 5, 3 × 3 and 3 × 3 pixels for the first, second, third and fourth layers, respectively. The stride of the kernels (how far each kernel is moved as it scans the input) was set to 1 pixel for all layers, and the zero padding was set to "same", padding each edge of the image evenly with zeros where required. Batch normalisation (Ioffe & Szegedy 2015) was applied after each layer, normalising the layer outputs over each mini-batch to zero mean and unit variance before a learned rescaling, and we used rectified linear units (ReLU; Nair & Hinton 2010) for activation. ReLU returns max(x, 0) when passed x. Dropout (Srivastava et al. 2014) was also applied after each activation to help reduce overfitting, with a dropout rate of 0.2, randomly setting the output of neurons to zero 20% of the time during training. The outputs of the first, second and fourth convolutional layers had a 2 × 2 pixel max-pooling applied to reduce dimensionality. After the fourth convolutional layer, we used two one-dimensional, fully connected layers of 2048 neurons, again applying ReLU activation, batch normalisation and dropout. The output layer has two neurons, one for each class, and uses a softmax output. For training, validation and testing samples with an equal number of each class, as used here, the softmax output provides probabilities for each class in the interval [0, 1] that sum to one; that is, softmax maps its un-normalised inputs onto a probability distribution over the output classes.
Thus there is one output that can be considered the probability that the input image is of a merging system and one output that can be considered the probability that it is of a non-merging galaxy. In this paper, we use the output for the merger class; with our binary classification, the non-merger output carries the same information, as it equals 1 − (merger class output). The full network can be seen in Table 2. The loss of the network was determined using softmax cross entropy and was optimised using the Adam algorithm (Kingma & Ba 2015). A learning rate (which controls how quickly the weights and biases in the network can change) of 5 × 10⁻⁵ was used, as it resulted in a more accurate network.
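The layer sizes above determine how the tensor shape shrinks through the network and how many weights each layer holds. The pure-Python sketch below tabulates both under our reading of the text: "same" padding with stride 1 keeps height and width, each 2 × 2 max-pool halves them, and the output of the fourth convolutional layer is flattened before the first fully connected layer. Batch-normalisation parameters are omitted and dropout has none, so the totals are approximate and are not taken from Table 2.

```python
# (number of kernels, kernel size, 2x2 max-pool after this layer?) as listed in the text
CONV_LAYERS = [(32, 6, True), (64, 5, True), (128, 3, False), (128, 3, True)]
DENSE_LAYERS = [2048, 2048]
N_CLASSES = 2


def describe(input_size=64, channels=3):
    """Return (layer name, output shape after any pooling, weights + biases) rows."""
    size, depth, rows = input_size, channels, []
    for n_kernels, k, pool in CONV_LAYERS:
        params = k * k * depth * n_kernels + n_kernels  # kernel weights + one bias per kernel
        depth = n_kernels          # 'same' padding, stride 1: height and width unchanged
        if pool:
            size //= 2             # 2x2 max-pooling halves height and width
        rows.append((f"conv {k}x{k}x{n_kernels}", (size, size, depth), params))
    width = size * size * depth    # flatten before the fully connected layers
    for n in DENSE_LAYERS + [N_CLASSES]:
        rows.append((f"dense {n}", (n,), width * n + n))
        width = n
    return rows


for name, shape, params in describe():
    print(f"{name:14s} output {str(shape):15s} params {params:,}")
print("total", sum(p for _, _, p in describe()))
```

The count is dominated by the first fully connected layer, which connects the flattened 8 × 8 × 128 feature map to 2048 neurons; the convolutional layers themselves hold comparatively few weights.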

Architecture of the CNN.

3.3. Training, validation and testing

If there was an unequal number of images in the two classes, the larger class was reduced by randomly removing images until the classes were the same size. The images were then divided into three groups: 80% were used for training, 10% for validation and 10% for testing. The training set was used to train the network, while the validation set was used to monitor how well the network was performing as training progressed. Each network was trained for 200 epochs (one epoch being a single pass of every training image through the network), and the epoch with simultaneously the highest accuracy and lowest loss on the validation set was selected for use. Training for 200 epochs was sufficient, as by this point the validation loss had begun to increase: the network starts to over-train, learning the training set itself rather than the features in the training set. The testing set was used once, and once only, to test the performance of the network deemed to be the best from the validation. Testing images were not used for validation to prevent accidental training on the test data set. To reduce sensitivity to galaxy orientation, the images were also augmented as they were loaded for training (and only for training) by randomly rotating them by 0°, 90°, 180° or 270°. We also cropped the images to the central 64 × 64 pixels and scaled them globally between zero and one, preserving the relative flux densities. The code used to create, train, validate and test the networks can be downloaded from GitHub.
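The balancing, splitting and augmentation steps can be sketched with numpy. Several details are our assumptions rather than statements from the text: the split is a simple random shuffle (not stratified by class), the identifiers passed in are hypothetical, and "scaled globally between zero and one" is implemented as division by the maximum over all pixels and channels, which preserves flux ratios for the non-negative 8-bit images used here.

```python
import numpy as np


def balance_and_split(mergers, non_mergers, rng):
    """Randomly trim the larger class, then split 80/10/10 into train/val/test.

    Returns three lists of (identifier, label) pairs, label 1 = merger.
    """
    n = min(len(mergers), len(non_mergers))
    keep_m = rng.choice(len(mergers), n, replace=False)
    keep_n = rng.choice(len(non_mergers), n, replace=False)
    items = [(mergers[i], 1) for i in keep_m] + [(non_mergers[i], 0) for i in keep_n]
    items = [items[i] for i in rng.permutation(len(items))]  # shuffle before splitting
    n_train, n_val = int(0.8 * len(items)), int(0.1 * len(items))
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


def preprocess(image, size=64, train=False, rng=None):
    """Crop the central size x size pixels, scale to [0, 1] and, for training
    only, rotate by a random multiple of 90 degrees."""
    h, w = image.shape[:2]
    y0, x0 = (h - size) // 2, (w - size) // 2
    img = image[y0:y0 + size, x0:x0 + size].astype(float)
    peak = img.max()
    # One global factor for all three channels keeps the relative fluxes between bands.
    img = img / peak if peak > 0 else np.zeros_like(img)
    if train:
        img = np.rot90(img, k=int(rng.integers(4)), axes=(0, 1))  # rotate in the image plane
    return img


rng = np.random.default_rng(0)
train, val, test = balance_and_split(list(range(3222)), list(range(5000, 7010)), rng)
print(len(train), len(val), len(test))
```

Applying the random rotation only when images are loaded for training means the validation and test sets are always seen in their original orientation, so their statistics are not affected by the augmentation.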

4. Results and discussion

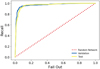

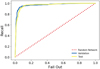

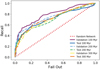

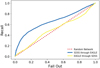

We used the receiver operating characteristic (ROC) curve to determine how well the network has performed for a binary classification. The ROC curve is a plot of the recall against fall-out (see Table 1 for definitions) with each point along the curve corresponding to a different value for the output (threshold) above which an input image is considered to be of a merging system. Higher recall and lower fall-out means a better threshold while the (0,0) and (1,1) positions correspond to assigning all objects to the non-merger and merger classes respectively. The threshold with recall and fall-out closest to the (1,0) position, calculated as least squared difference, is the preferred threshold for splitting mergers from non-mergers. Also, the area under the ROC curve is unity for an infallible network, and close to unity for good networks, while a truly random network will have an area of 0.5.
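The ROC analysis described above can be sketched in numpy: sweep the threshold over every distinct network output, record recall and fall-out, pick the threshold whose point lies closest to perfect classification (recall 1, fall-out 0) in squared distance, and integrate the curve with the trapezium rule. The function names are our own, and the loop favours clarity over speed.

```python
import numpy as np


def roc_curve(labels, scores):
    """Fall-out and recall for every distinct threshold (merger if score >= threshold)."""
    labels = np.asarray(labels, bool)
    scores = np.asarray(scores, float)
    # Descending thresholds: +inf gives (0, 0); the lowest score gives (1, 1).
    thresholds = np.concatenate(([np.inf], np.unique(scores)[::-1]))
    recall = np.array([(scores[labels] >= t).mean() for t in thresholds])
    fall_out = np.array([(scores[~labels] >= t).mean() for t in thresholds])
    return fall_out, recall, thresholds


def best_threshold(fall_out, recall, thresholds):
    """Threshold whose ROC point is closest (least squares) to recall 1, fall-out 0."""
    d2 = fall_out ** 2 + (1.0 - recall) ** 2
    return thresholds[np.argmin(d2)]


def auc(fall_out, recall):
    """Area under the ROC curve by the trapezium rule."""
    return float(np.sum(np.diff(fall_out) * (recall[1:] + recall[:-1]) / 2.0))


labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.2, 0.1]  # network merger outputs for a toy sample
fo, rc, th = roc_curve(labels, scores)
print(auc(fo, rc), best_threshold(fo, rc, th))
```

For these perfectly separated toy scores the area is 1 and any threshold between 0.3 and 0.7 would separate the classes; the function returns 0.7, the first threshold to reach the ideal corner.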

The two-sample Kolmogorov–Smirnov test (KS-test; Smirnov 1939) is also used to compare the distributions of correctly and incorrectly identified objects, to see whether they are likely to be sampled from the same distribution. The null hypothesis that the two distributions are the same is rejected at significance level α if the KS-test statistic, $D_{N,M}$, satisfies

$$D_{N,M} > c(\alpha)\sqrt{\frac{n+m}{nm}},$$

where n and m are the sizes of samples N and M and $c(\alpha) = \sqrt{-\ln(\alpha/2)/2}$. We adopt c(α) = 1.224, which corresponds to α = 0.10 (for α = 0.05, c(α) ≈ 1.358).
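The two-sample KS comparison can be sketched directly from the definition: evaluate both empirical cumulative distributions at every pooled data point and take the largest absolute difference, then compare it with the critical value c(α)√((n+m)/(nm)). Evaluating c(α) = √(−ln(α/2)/2) gives 1.224 for α = 0.10 and 1.358 for α = 0.05. The function names are illustrative; `scipy.stats.ks_2samp` provides an established implementation of the statistic with p-values.

```python
import math

import numpy as np


def ks_statistic(a, b):
    """Two-sample KS statistic D = max |F_a(x) - F_b(x)| over the pooled data."""
    a, b = np.sort(np.asarray(a, float)), np.sort(np.asarray(b, float))
    grid = np.concatenate([a, b])
    cdf_a = np.searchsorted(a, grid, side="right") / len(a)  # empirical CDF of a
    cdf_b = np.searchsorted(b, grid, side="right") / len(b)  # empirical CDF of b
    return float(np.max(np.abs(cdf_a - cdf_b)))


def c_alpha(alpha):
    """Large-sample coefficient c(alpha) = sqrt(-ln(alpha / 2) / 2)."""
    return math.sqrt(-math.log(alpha / 2.0) / 2.0)


def ks_critical(n, m, alpha):
    """Critical value above which the two samples are judged to differ."""
    return c_alpha(alpha) * math.sqrt((n + m) / (n * m))


rng = np.random.default_rng(0)
correct = rng.normal(10.5, 0.5, 549)    # toy stellar masses of correctly classified objects
incorrect = rng.normal(10.5, 0.5, 51)   # toy stellar masses of misclassified objects
d = ks_statistic(correct, incorrect)
print(d, ks_critical(549, 51, 0.10), d > ks_critical(549, 51, 0.10))
```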

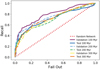

4.1. Observation trained network

The 97th epoch of the network trained with SDSS images (observation network) was used. At validation, this epoch has an accuracy (see Table 1 for definition) of 0.932 when cutting at a threshold of 0.5 to separate mergers from non-mergers. Using the validation set, we plot the ROC curve for this network in blue in Fig. 3. This curve has an area of 0.966 and provides an ideal cut threshold of 0.57. At this threshold, the accuracy on the validation set increases to 0.935. To determine the true accuracy of the network, we perform the same analysis for the test data set. The area under the ROC curve, shown in Fig. 3 in yellow, remains constant at 0.966. With the threshold set at 0.57, the final accuracy of the network is 0.915, with recall, precision, specificity and NPV of 0.920, 0.911, 0.910 and 0.919 respectively (see Table 1 for definitions). It is possible to increase the accuracy, and other cut-dependent statistics, by changing the cut threshold for the test set. However, this risks accidentally using the test set for training and thus not giving a true representation of the network's performance.

Fig. 3. ROC curve for the observation network used on visually classified SDSS images at validation (blue) and testing (yellow). The area under each curve is 0.966. The dashed red line shows the position of a truly random network.

Our results can be compared to those of Ackermann et al. (2018), who performed a similar study using the same Darg et al. (2010a,b) merger catalogue. Ackermann et al. (2018) obtain a recall of 0.96, a precision of 0.97 and an area under the ROC curve of 0.9922. All these values are slightly larger than those we find, demonstrating that their network performs somewhat better. However, there are some differences between the two studies. The architecture of the CNN used here is different from that used by Ackermann et al. (2018), who use the Xception architecture (Chollet 2017) and perform transfer learning: using a network pre-trained on the non-astronomical ImageNet images (Deng et al. 2009) and then continuing to train it using the merger and non-merger images. The non-merger set is also different, with Ackermann et al. (2018) using 10 000 non-merging galaxies as opposed to our 3003. We use an equal number of mergers and non-mergers to prevent accidental bias against the class with fewer images. Finally, Ackermann et al. (2018) do not change the cut threshold from 0.5 to improve the recall or precision, suggesting that these values could be improved further.

Another study, by Walmsley et al. (2019), trains a CNN on data from the Canada-France-Hawaii Telescope Legacy Survey (CFHTLS; Gwyn 2012). Here, they aim to identify galaxies with tidal features, which are likely due to galaxy interactions. The performance of our SDSS trained network is much better than that of the CFHTLS network: we achieve recall of 0.920 while Walmsley et al. (2019) achieve 0.760. However, the differences in data set and network architecture will have an effect on the results.

To determine if certain physical properties of the galaxies are the cause of the misclassification, the specific SFR (SFR/M⋆, sSFR), M⋆, redshift and ugriz band magnitudes of the misclassified objects have been compared to their correctly classified counterparts. This will allow us to determine if, for example, all of the high mass, non-mergers have been classified as mergers. There are no trends in any of these properties: the distribution of the misclassified objects is the same as the distribution for the correctly classified objects. The confusion matrix, showing the number of TP, FP, TN and FN, for the SDSS images classified by the observation network can be found in Table 3 while the KS-test statistics comparing the distributions of correctly and incorrectly identified galaxies with the physical properties can be found in Table 4. See Table 1 for definitions of TP, FP, TN and FN.

Confusion matrix for SDSS images classified by the observation network.

KS-test statistic, $D_{N,M}$, and the critical value, $c(\alpha)\sqrt{(n+m)/(nm)}$, for the SDSS images classified by the observation network.

The images of the misclassified objects have also been visually inspected. Over half of the FP objects (16 of 27) have a close chance projection or a second galaxy projected into the disc of the primary galaxy, possibly fooling the network into believing that the two galaxies are merging. Four further galaxies fill the entire 64 × 64 pixel image, two of which also have a chance projection of a second galaxy into the disc of the primary galaxy. For six of the FP, there is no clear reason why they are misclassified: they appear to be isolated galaxies without signs of morphological disturbance. The final FP is a large grand design spiral that has been identified off centre in the original 256 × 256 pixel image. When cropped, the image contains only the arms of the spiral that appear like a disturbed system. Examples of the FP are shown in Figs. 4a–d. However, and unsurprisingly with so few misclassified objects, galaxies that are visually similar to the FP have also been correctly identified, as seen in Figs. 4e–h.

Fig. 4. Examples of FP galaxies from the observation network for (a) a chance projection, (b) a galaxy filling the image, (c) a galaxy filling the image with a chance projection and (d) an isolated, non-interacting galaxy. Panels e to h: TN galaxies that are visually similar to those shown in (a) to (d).

For the FN objects, six of the 24 have a merging companion that is either outside the 64 × 64 pixel image or on the very edge, indicating that a larger image may reduce the FN rate. The remaining images show a clear morphological disturbance or a clear merger companion. It is possible that these companions are being identified by the network as chance projections, especially the companions that are almost point-like in the image. Examples of the FN are shown in Figs. 5a–d. As with the FP objects, there are also TP examples that are visually similar to the FN galaxies, presented in Figs. 5e–h.

Fig. 5. Examples of FN galaxies from the observation network for (a) a galaxy with its merging companion outside the image, (b) a galaxy with its merging companion on the edge of the image, (c) a merging system and (d) a merging system where the minor galaxy is almost point-like. Panels e to h: TP galaxies that are visually similar to those shown in (a) to (d).

4.2. Simulation trained network

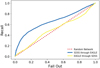

The 26th epoch of the network trained with EAGLE images (simulation network) was used. This epoch has a validation accuracy of 0.672 when a threshold of 0.5 is used to separate the merger and non-merger classifications. Using the validation set, we plot the ROC curve for this network in dot-dashed yellow in Fig. 6. This curve has an area of 0.710 and provides an ideal cut threshold of 0.46. At this threshold, the validation accuracy decreases to 0.644. To determine the true accuracy of the network, we perform the same analysis on the test data set. The area under the ROC curve, the dot-dashed orange curve in Fig. 6, increases to 0.726. With the threshold set at 0.46, the final accuracy of the network is 0.674, with recall, precision, specificity and NPV of 0.657, 0.680, 0.692 and 0.668, respectively.
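The ideal cut threshold is read off the ROC curve. Assuming, as stated for the partially processed networks later in this section, that it is the point whose fall-out and recall lie closest to (0, 1), a minimal pure-Python sketch is given below. It is illustrative only: scores at or above the threshold are assumed to be assigned the merger class, and the area is computed with the trapezium rule.

```python
import math


def roc_points(labels, scores):
    """(threshold, fall-out, recall) for every distinct score; label 1 = merger."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    points = [(math.inf, 0.0, 0.0)]  # threshold above every score: nothing is a merger
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if s >= t and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= t and y == 0)
        points.append((t, fp / n_neg, tp / n_pos))
    return points


def roc_auc(points):
    """Area under the ROC curve by the trapezium rule (fall-out increases along the list)."""
    return sum((x1 - x0) * (y0 + y1) / 2.0
               for (_, x0, y0), (_, x1, y1) in zip(points, points[1:]))


def best_threshold(points):
    """Threshold whose (fall-out, recall) point lies closest to the ideal corner (0, 1)."""
    return min(points[1:], key=lambda p: math.hypot(p[1], 1.0 - p[2]))[0]


# Hypothetical network outputs for four objects
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
pts = roc_points(labels, scores)
```

When two points are equally close to (0, 1), `min` keeps the first, i.e. the higher threshold.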

Fig. 6. ROC curve for the simulation networks at validation (purple, light blue, yellow) and testing (dark blue, green, orange) for 100 Myr (solid), 200 Myr (dashed) and 300 Myr (dot-dashed) from the merger event. The areas under the curves can be found in Table 5. The dashed red line shows the position of a truly random network.

The lower accuracy of the simulation trained network relative to the observation trained network (discussed in Sect. 4.1) is a result of the difference in the training sample. The SDSS merger sample has been thoroughly checked to verify that there are visible indications of a merger, as can be seen in the examples in Fig. 1. The EAGLE merger sample, however, contains physically classified mergers in the simulation without visual inspection to check whether there are any obvious signs of merging. As such, the EAGLE merger sample includes a wide variety of merger types (in terms of their mass ratios, orbital parameters, gas fractions, etc.), and hence some of the mergers are bound to have inconspicuous merging signs and will therefore be harder to discern, resulting in a lower accuracy. We have visually checked the merging galaxies misclassified by the network and confirm that most EAGLE mergers are indeed not as conspicuous as the ones in the SDSS catalogue, where the mergers from Darg et al. (2010a,b) have been selected to be conspicuous.

If the time before and after the merger event is decreased, the accuracy of the network increases. Performing the same analysis as with the full EAGLE data set, we find that using galaxies that are within 200 Myr of the merger event results in a network that has a test accuracy of 0.644 at a cut threshold of 0.40 in the 31st epoch. Using galaxies that are within 100 Myr of the merger event, the network has a test accuracy of 0.652 at a cut threshold of 0.39 in the 52nd epoch. The full statistics for these two networks can be found in Table 5 and the ROC curves can be found in Fig. 6. As the 100 Myr network has the largest area under the ROC curve, the majority of the remainder of the paper focuses on the 100 Myr network when discussing the simulation network. The confusion matrix for the 100 Myr network can be found in Table 6.

Statistics for the SDSS and the 100 Myr, 200 Myr and 300 Myr EAGLE trained networks at testing.

Confusion matrix for EAGLE images classified by the simulation network.

A similar study has been performed by Snyder et al. (2019) using simulated galaxy images from the Illustris simulation (Vogelsberger et al. 2014), although their technique is somewhat different. Snyder et al. (2019) train Random Forests using non-parametric morphology statistics, such as concentration, asymmetry, Gini and M20, as inputs, with these statistics derived from Illustris galaxies processed to look like Hubble Space Telescope images. They select galaxies that will merge, or have merged, within 250 Myr. The recall of Snyder et al. (2019) is slightly higher than that of this work (≈0.70 compared to our 0.632), but their precision is much lower (≈0.30 compared to 0.658). Comparing the Snyder et al. (2019) results to our 300 Myr trained network, a fairer comparison, shows similar results: Snyder et al. (2019) have higher recall, ≈0.70 compared to 0.657, but lower precision, ≈0.30 compared to 0.680.

As the galaxies are generated from a simulation, we know the physical properties of these systems. As with the SDSS objects, we can compare the physical properties of the galaxies that are correctly and incorrectly identified. KS-test statistics comparing the distributions of correctly and incorrectly identified galaxies with the physical properties can be found in Table 7.

KS-test statistic, $D_{N,M}$, and the critical value for the EAGLE images classified by the simulation network.

Many FN objects appear to have low simulation snapshot redshifts when compared to the TP, see Fig. 7a, potentially a result of coarser time resolution of the simulation at low redshift. We note that the simulation snapshot redshift is different from the redshift used when making the EAGLE galaxies look like SDSS images. For the TN and FP populations, higher snapshot redshifts have a higher fraction of FP sources relative to the TN, see Fig. 7b. This suggests that simulated non-mergers in the local universe look different from simulated non-mergers in the higher-z universe.
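Statements such as "higher snapshot redshifts have a higher fraction of FP sources relative to the TN" amount to a misclassification fraction per bin of a physical property. A minimal sketch of that bookkeeping follows; the bin edges and values are hypothetical and only illustrate the calculation behind histograms such as Fig. 7.

```python
def misclassified_fraction(values, is_correct, edges):
    """Fraction of objects in each bin [edges[i], edges[i+1]) that were misclassified.

    Bins that contain no objects return None.
    """
    fractions = []
    for lo, hi in zip(edges, edges[1:]):
        flags = [ok for v, ok in zip(values, is_correct) if lo <= v < hi]
        if not flags:
            fractions.append(None)
        else:
            fractions.append(sum(1 for ok in flags if not ok) / len(flags))
    return fractions


# Hypothetical snapshot redshifts of non-mergers and whether each was a TN (True) or FP (False)
z_snap = [0.1, 0.2, 0.6, 0.7, 0.8]
is_tn = [True, True, False, True, False]
fp_fraction = misclassified_fraction(z_snap, is_tn, edges=[0.0, 0.5, 1.0])
```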

Fig. 7. Distributions for the correctly (blue) and incorrectly (orange) identified EAGLE objects in the simulation network for (a) mergers and (b) non-mergers as a function of simulation snapshot redshift. Merging objects with low snapshot redshifts are disproportionately assigned a non-merger classification while non-merging objects with high simulation redshifts are often seen as mergers.

The asymmetry of the non-merger population also has an effect: non-merging objects with higher asymmetry are preferentially being identified as merging systems. It is worth noting that the time to/from the merger event does not appear directly correlated with the asymmetry of the galaxy.

In M⋆, there is a slight trend for the low mass, merging systems to be identified as non-mergers, although the non-merging galaxies are typically slightly lower mass than the merging systems, so this is not unexpected. For sSFR, there is a split, with low sSFR merging systems preferentially assigned the non-merger classification and high sSFR non-merging galaxies preferentially identified as mergers, as shown in Fig. 8.

Fig. 8. Distribution of the correctly (green) and incorrectly (brown) identified EAGLE (a) mergers and (b) non-mergers from the simulation network as a function of EAGLE sSFR. Merging galaxies with low sSFR are often misclassified as non-merging while high sSFR non-mergers are often identified as mergers.

The apparent magnitude of the simulated galaxy after redshift projection has the largest effect on correct identification of all the parameters investigated. For the merging systems, faint objects are preferentially classified as non-merging systems, while bright non-mergers are more likely to be misclassified as mergers. An example of this in the g-band is presented in Fig. 9. These misclassifications are likely a result of the merging systems being brighter, on average, than the non-merging systems: faint mergers resemble the bulk of the non-merger population, while brighter non-mergers fall in the magnitude range occupied by most of the merging systems, hence the high misclassification rate for non-merging systems at these magnitudes.

Fig. 9. Distributions for the correctly (purple) and incorrectly (yellow) identified objects for (a) mergers and (b) non-mergers as a function of g-band magnitude for EAGLE galaxies classified by the simulation network. Faint mergers are preferentially classified as non-mergers while the distribution of misclassified non-mergers is at intermediate magnitudes.

As with the SDSS images, the misclassified EAGLE images have also been visually inspected. The majority of the FP galaxies, 43 of 66, contain a chance projection generated when the real SDSS noise is added. Three FP galaxies have a projected galaxy that is much brighter than the EAGLE galaxy, resulting in the EAGLE galaxy becoming extremely faint in the image and (almost) impossible to see by eye. There are also correctly identified galaxies that suffer from the same image suppression, suggesting that this issue is not the sole cause of the misclassification. Of the remaining FP, three are at a low projection redshift, resulting in the features and inhomogeneities of the galaxy appearing as morphological disturbances, although, again, there are examples of these low projection redshift galaxies that have been correctly identified as non-merging. The other objects show no signs of asymmetry or morphological disturbances. Examples of these galaxies can be found in Figs. 10a–d, while visually similar TN galaxies can be found in Figs. 10e–h.

Fig. 10. Examples of FP EAGLE galaxies from the simulation network for (a) a chance projection, (b) a galaxy where the chance projection from the SDSS noise has resulted in the EAGLE galaxy appearing faint in the image, (c) a galaxy at low projection redshift and (d) an isolated, non-interacting galaxy. Panels e to h: TN galaxies that are visually similar to those shown in (a) to (d).

For the FN objects, nine of the 74 have a bright chance projection from the added SDSS noise that makes the EAGLE galaxy (almost) impossible to see in the image. As these types of images are present in the FN, FP, TP and TN, it is unlikely that the bright counterpart is causing the misclassifications. Twenty-one FNs do not appear morphologically disturbed or asymmetric. This is likely a result of the PSF convolution and redshift re-projection smoothing out the visual merger indicators, resulting in what appears to be a single, smooth galaxy. The remaining objects do have clearly identifiable merger counterparts or asymmetry. Examples of these galaxies can be found in Figs. 11a–d. As with the EAGLE FP, Figs. 11e–h show that there are also examples of visually similar galaxies that have been correctly identified by the simulation network.

Fig. 11. Examples of FN EAGLE galaxies from the simulation network for (a) a galaxy where the chance projection from the SDSS noise has resulted in the EAGLE galaxy appearing faint in the image, (b) a merging system that appears as a single, smooth galaxy, (c) a galaxy with a clearly identifiable counterpart and (d) an asymmetric galaxy. Panels e to h: TP galaxies that are visually similar to those shown in (a) to (d).

The CNN architecture was also trained on simulation images that had only been partially processed to look like SDSS images. This allows us to determine whether a specific part of the process is responsible for the lower accuracy of the simulation network with respect to the observation network. For this, we use EAGLE galaxies that are within 100 Myr of the merger event and perform one of the following processes: convolve the EAGLE image with the SDSS PSF (C), inject the EAGLE image into the real SDSS noise (N), match the EAGLE resolution to that of the SDSS images (R), adjust the EAGLE magnitude to be the correct apparent magnitude for a chosen redshift (Z), or a combination of three of these (CNR, CNZ, CRZ, NRZ). We also train the network on EAGLE images that have not been processed. As with training on SDSS or fully processed EAGLE images, the epoch with simultaneously the lowest validation loss and highest validation accuracy is chosen, and the cut threshold with fall-out and recall closest to (0,1) is used. The statistics are then calculated for the test set and are presented in Table 8. Individually, C and N do not notably change the accuracy of the trained network, remaining within one percentage point of the accuracy of the unprocessed EAGLE images (87%). R and Z each cause a small reduction in accuracy, to 82%, as does the combination CNR. CNZ, CRZ and NRZ all show more significant reductions. Of these three, CRZ has the smallest effect, reducing the accuracy to 78%, while CNZ and NRZ reduce it to 72% and 68%, respectively. This suggests that correcting the apparent magnitude has the largest effect, especially when coupled with the inclusion of background noise. This is possibly because, when changing from absolute to apparent magnitude, the fainter objects become harder to discern from the background once injected into the real SDSS noise.
We note, however, that only 58 of the original 10 134 processed EAGLE images have an apparent r-band magnitude greater than the limit applied to the SDSS images.
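The Z step converts an absolute magnitude into the apparent magnitude the galaxy would have at its assigned redshift, $m = M + 5\log_{10}(d_L/10\,\mathrm{pc})$. The sketch below assumes a flat ΛCDM cosmology with $H_0 = 70$ km s⁻¹ Mpc⁻¹ and $\Omega_m = 0.3$ and ignores K-corrections. These are illustrative assumptions, not necessarily the choices made when processing the EAGLE images.

```python
import math

C_KM_S = 299792.458  # speed of light [km/s]


def luminosity_distance_mpc(z, h0=70.0, omega_m=0.3, steps=1000):
    """Luminosity distance [Mpc] in flat LambdaCDM (assumed parameters, Simpson's rule)."""
    if z <= 0:
        raise ValueError("z must be positive")
    omega_l = 1.0 - omega_m

    def inv_e(zp):
        # 1 / E(z) for a flat universe with matter and a cosmological constant
        return 1.0 / math.sqrt(omega_m * (1.0 + zp) ** 3 + omega_l)

    dz = z / steps  # steps must be even for composite Simpson's rule
    total = inv_e(0.0) + inv_e(z)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * inv_e(i * dz)
    comoving_mpc = (C_KM_S / h0) * total * dz / 3.0
    return (1.0 + z) * comoving_mpc


def apparent_magnitude(abs_mag, z, **cosmology):
    """m = M + 5 log10(d_L / 10 pc); no K-correction is applied (an assumption)."""
    d_l_pc = luminosity_distance_mpc(z, **cosmology) * 1.0e6
    return abs_mag + 5.0 * math.log10(d_l_pc / 10.0)
```

Under these assumptions, a galaxy with M = −21 placed at z = 0.1 would appear at m ≈ 17.3.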

Statistics for the network trained with partially processed EAGLE images at testing.

4.3. Cross application of the networks

Here we passed the images through the other network; that is, we passed all 6006 SDSS images through the simulation network and all 4020 EAGLE images through the observation network. For this, we used the same cut threshold as when passing the “correct” images through each network. This was done so that we can understand any biases and incompleteness in the two data sets. For example, the visually classified mergers from the SDSS data consist only of certain types of mergers with conspicuous merging signs, such as two massive galaxies obviously interacting with strong tidal features. However, the EAGLE simulation contains a much more complete merger sample. So, one would expect the neural network trained with the visually classified SDSS merger sample to perform poorly on simulated images of EAGLE mergers. We also perform the cross application so that any SDSS objects classified as merging systems in the visual classification but not identified by the simulation trained network can be identified; these can help improve our understanding of the limitations of simulations so that they become more representative of the real universe in future developments.

4.3.1. EAGLE images through the observation network

Passing all the EAGLE images through the observation network resulted in an accuracy of 0.530, at first glance only slightly better than random assignment. Precision and NPV are similarly close to random at 0.541 and 0.523. However, recall is low, at 0.387, and specificity is high, at 0.673, demonstrating that the network preferentially assigns objects to the non-merger class, with each predicted class containing just over half correctly identified objects. As is to be expected, the area under the ROC curve is close to 0.5, at 0.502, depicted in yellow in Fig. 12. The confusion matrix can be found in Table 9.
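A quick arithmetic check shows how a low recall and a high specificity translate into a preference for the non-merger class. Assuming, for illustration only, that the 4020 EAGLE images are split evenly between mergers and non-mergers (so that $TP = \mathrm{recall}\,N/2$ and $FP = (1-\mathrm{specificity})\,N/2$), the fraction of all images assigned the merger class is

```latex
f_{\mathrm{merger}} = \frac{TP + FP}{TP + FP + TN + FN}
  = \frac{\mathrm{recall} + (1 - \mathrm{specificity})}{2}
  = \frac{0.387 + (1 - 0.673)}{2} \approx 0.357 ,
```

well below the one half expected from a classifier with no class preference on a balanced sample.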

Fig. 12. ROC curve for the SDSS images classified by the simulation network (blue) and the EAGLE images classified by the observation network (yellow). The area under the curve for the EAGLE images through the observation network is 0.502, while the area for the SDSS images through the simulation network is 0.689. The dashed red line shows the position of a truly random network.

Confusion matrix for EAGLE images classified by the observation network.

As before, the physical properties of the EAGLE images can be examined to determine whether they affect the classification by the network; a brief summary can be seen in the KS-test results in Table 10. One property that shows an obvious split between correct and incorrect assignment is the redshift of projection. Merging objects with high projection redshifts are preferentially classified as non-merger systems (Fig. 13a), while non-merging objects with low projection redshifts are classified as merging systems (Fig. 13b). The distribution of redshifts used to re-project the EAGLE galaxies is nearly identical to the SDSS distribution, as the redshifts used to re-project the galaxies were drawn randomly from the redshifts of the SDSS observations. Thus this effect is not a result of a mismatch in the redshift distributions between observations and simulations. The misclassification of mergers at high redshift may arise while matching the physical resolution (that is, kpc per pixel) of the EAGLE images to the SDSS images. At high redshift, this could result in a loss of the finer detail that would be expected in merging systems, resulting in these systems being classified as non-mergers. The main misclassification of non-merging systems happens at low projection redshift. The physical resolution of the EAGLE images matches the physical resolution of the SDSS images at z ≈ 0.03. Objects assigned a redshift lower than this value are increased in physical resolution using bicubic interpolation. This interpolation may create artefacts that appear, to the CNN, like features of merging systems. Alternatively, it is possible that at low redshifts the individual particles of the simulation detectably disturb the light profile of the galaxies, resulting in misclassification.
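The resolution matching described above can be illustrated by computing the physical size of an SDSS pixel (0.396 arcsec) at each projection redshift and the resampling factor needed for an EAGLE image whose physical resolution matches SDSS at z ≈ 0.03. The flat ΛCDM parameters ($H_0 = 70$ km s⁻¹ Mpc⁻¹, $\Omega_m = 0.3$) are assumptions for illustration. A factor above one means the EAGLE image must be upsampled, which the text does with bicubic interpolation; this sketch only computes the factor.

```python
import math

C_KM_S = 299792.458                        # speed of light [km/s]
SDSS_PIXEL_ARCSEC = 0.396                  # SDSS imaging pixel scale
ARCSEC_TO_RAD = math.pi / (180.0 * 3600.0)


def comoving_distance_mpc(z, h0=70.0, omega_m=0.3, steps=1000):
    """Line-of-sight comoving distance [Mpc] in flat LambdaCDM (Simpson's rule, even steps)."""
    omega_l = 1.0 - omega_m

    def inv_e(zp):
        return 1.0 / math.sqrt(omega_m * (1.0 + zp) ** 3 + omega_l)

    dz = z / steps
    total = inv_e(0.0) + inv_e(z)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * inv_e(i * dz)
    return (C_KM_S / h0) * total * dz / 3.0


def kpc_per_pixel(z, pixel_arcsec=SDSS_PIXEL_ARCSEC):
    """Physical size of one pixel at redshift z, via the angular diameter distance."""
    if z <= 0:
        raise ValueError("z must be positive")
    d_a_kpc = comoving_distance_mpc(z) / (1.0 + z) * 1.0e3
    return d_a_kpc * pixel_arcsec * ARCSEC_TO_RAD


def zoom_factor(z, z_match=0.03):
    """Resampling factor for an image whose kpc/pixel equals SDSS at z_match.

    > 1: upsample (z < z_match); < 1: downsample (z > z_match).
    """
    return kpc_per_pixel(z_match) / kpc_per_pixel(z)
```

Under these assumptions, an object re-projected to z = 0.01 would need to be enlarged by roughly a factor of three, which is where interpolation artefacts would be most likely.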

KS-test statistic, $D_{N,M}$, and the critical value for the EAGLE images classified by the observation network.

Fig. 13. Distributions for the correctly (blue) and incorrectly (orange) identified EAGLE objects for (a) mergers and (b) non-mergers as a function of redshift used for projection after being classified by the observation network. High redshift, merging systems are preferentially classified as non-merging while low redshift non-merging systems are preferentially classified as merging.

There is also a trend with the mass ratio of the merging systems. Although the TP and FN do not split into two distinct distributions, the low mass ratio merger systems, that is major mergers, are more often misclassified as non-merging galaxies. This is the opposite of what would be expected: minor mergers should be misclassified more often, as the disturbances caused by the smaller galaxy would be less obvious. Similarly, low mass mergers have a slight preference to be assigned the non-merger class and vice versa, although again this is unsurprising as the merger sample is typically higher mass than the non-merger sample for the EAGLE galaxies. The mass ranges for the EAGLE and SDSS data are not quite comparable: while the SDSS data have an effective mass limit of 10¹⁰ M⊙ at z = 0.1, there are lower mass galaxies in the sample, whereas the mass limit for EAGLE is 10¹⁰ M⊙ at all projected redshifts. For sSFR, high sSFR merging galaxies are often misclassified as non-mergers, while there is no obvious misclassification trend for the non-merging galaxies.

As with the EAGLE images through the simulation network, the apparent magnitude of the object has the largest effect on the correct classification. Like the simulation network, the observation network also identifies faint, merging systems as non-merging systems and identifies bright, non-merging systems as merging systems. This is true for all five of the ugriz bands. An example in the g-band is presented in Fig. 14. This is consistent with the results seen with the projected redshift above and is likely a result of the re-projection to the projection redshift.

Fig. 14. Distributions for the correctly (purple) and incorrectly (yellow) identified EAGLE objects for (a) mergers and (b) non-mergers as a function of g-band magnitude after being classified by the observation network. Faint, merging systems are preferentially classified as non-merging while bright non-merging systems are preferentially classified as merging.

It is also likely that the more complete classification of the EAGLE galaxies is causing the low accuracy. The SDSS classifications are for objects that are clearly, visually merging systems, while the EAGLE classifications also include systems that are not obviously merging. Thus, the observation trained network has not been trained to identify merging systems that are not obviously, visually merging and hence assigns these objects the non-merger classification, increasing the number of FN.

Visual inspection of a sub-sample of the FP shows that the majority of these objects (≈64%) appear to be isolated, non-interacting systems. A further ≈18% of the objects have a close chance projection that may be mistaken for a merging partner by the CNN. Approximately 8% of the FP objects are galaxies that have been projected into a larger angular size than the original, raw image from EAGLE. This often results in the internal structure of the galaxy being expanded, which could appear to the network as morphological disturbances or multiple galaxies. The remaining objects have a bright chance projection in the SDSS noise and, as a result, are (almost) impossible to see in the image. Examples of these galaxies can be found in Figs. 15a–d. With accuracy, precision and NPV all being almost equal to 0.5, it is unsurprising to find visually similar galaxies that have been correctly identified; these are shown in Figs. 15e–h.

Fig. 15. Examples of EAGLE FP galaxies (a to d) from the observation network for (a) an isolated, non-interacting galaxy, (b) a chance projection, (c) a galaxy at low projection redshift and (d) a galaxy where the chance projection from the SDSS noise has resulted in the EAGLE galaxy appearing faint in the image. Panels e to h: TN galaxies that are visually similar to those shown in (a) to (d).

Images of the FN are more useful for understanding why the EAGLE images are poorly classified by the observation network. In an inspected sub-sample, nearly half (≈46%) appear to be a single object. This suggests that these objects have had the visible signatures of merging suppressed while being processed to look like SDSS images, likely by the re-projection and PSF matching, or that these mergers are not obvious even without the processing steps. It could also be that, because the viewing angle is chosen randomly, the merging companion is hidden behind the galaxy it is merging with and so cannot be seen in the image. Of the remaining objects, ≈41% had at least one counterpart, either from the simulation or a random projection from the SDSS noise, that could potentially be merging with the central galaxy, and ≈5% were unambiguously merging systems. As with the FP, there are a number of images whose simulated galaxies have been suppressed by bright chance projections from the SDSS noise. Example FN galaxies can be found in Figs. 16a–d and their visually similar but correctly identified counterparts in Figs. 16e–h.

Fig. 16. Examples of EAGLE FN galaxies (a to d) from the observation network for (a) an apparent single object, (b) a galaxy with a counterpart, either a merger counterpart from EAGLE or a chance projection from the SDSS noise, (c) an unambiguous merger and (d) a galaxy where the chance projection from the SDSS noise has resulted in the EAGLE galaxy appearing faint in the image. Panels e to h: TP galaxies that are visually similar to those shown in (a) to (d).

There are limitations to the cross application due to the selection of the EAGLE galaxies. To increase the number of galaxies available to train the simulation network, we use merging and non-merging galaxies with simulation redshifts out to z = 1 and re-project them to redshifts between 0.005 and 0.1. However, EAGLE galaxies at z = 1 have approximately half the radii of galaxies at z = 0, while the radii of EAGLE galaxies are similar to those observed in the real universe (Furlong et al. 2017). As a result, images of the EAGLE galaxies can contain an object up to two times too small to be comparable in size to the observations, which may hamper the observation network’s ability to correctly identify merging and non-merging systems. If this limitation were reduced, or removed entirely, the results of this cross application could improve.

4.3.2. SDSS images through the simulation network

Passing all the SDSS images through the simulation network was more successful than passing all the EAGLE images through the observation network. While still not as good as SDSS images through the observation network, the SDSS images classified by the simulation network had an accuracy of 0.646. Like the EAGLE images through the observation network, the SDSS images through the simulation network have a preference towards the non-merger assignment, demonstrated by a low recall of 0.467 and high specificity of 0.825. The area under the ROC curve is 0.658, see the blue line in Fig. 12. The statistics for the cross application of the networks can be found in Table 11. The confusion matrix, showing the number of correctly and incorrectly identified objects, can be found in Table 12.

Statistics for the EAGLE images classified by the observation network and the SDSS images classified by the simulation network.

Confusion matrix for SDSS images classified by the simulation network.

As with the SDSS images identified by the observation network, we can examine the estimated physical parameters of the SDSS images that were classified by the simulation network. The KS-test statistics comparing the distributions of correctly and incorrectly identified galaxies with the physical properties can be found in Table 13. There is an obvious split in the distributions of M⋆ for correctly and incorrectly identified objects: the high mass merging objects are preferentially assigned the non-merger classification, while the intermediate mass non-merging objects are preferentially assigned the merger classification. That no low mass mergers are assigned the non-merger class is reassuring, as there are no low mass non-merging objects. This split may arise from the training sample having non-merging systems that are preferentially high mass and merging objects that are preferentially intermediate and low mass. A similar, but opposite, split is seen with sSFR: low sSFR mergers are identified as non-mergers, as seen in Fig. 17a, while high sSFR non-mergers have a higher misclassification rate than low sSFR non-mergers, as seen in Fig. 17b. This suggests that the EAGLE images of merging systems may preferentially show boosted sSFR.

KS-test statistic, $D_{N,M}$, and the critical value for the SDSS images classified by the simulation network.

Fig. 17. Distributions for the correctly (green) and incorrectly (brown) identified SDSS objects for (a) mergers and (b) non-mergers as a function of sSFR after being classified by the simulation network. Low sSFR merging systems are preferentially classified as non-merging while high sSFR non-merging systems have a higher misclassification rate than low sSFR non-mergers.

The trend of the ugriz band magnitudes of the SDSS images is also interesting. As the band becomes more red, from g through to z, the distributions of correctly and incorrectly identified objects become more and more split, as can be seen by the increasing KS-test statistic in Table 13. Thus, as the band becomes redder, more and more bright mergers are classified as non-mergers while the faint objects are correctly classified more often. Similarly, as the bands become redder, the distribution of incorrectly identified non-mergers moves to the fainter end. An example of the z-band magnitude distribution is shown in Fig. 18. The trend that is seen for misclassification in the merging systems is the opposite of the effect seen in the EAGLE test set when classified by the simulation network.

Fig. 18. Distributions for the correctly (purple) and incorrectly (yellow) identified SDSS objects after being classified by the simulation network for (a) mergers and (b) non-mergers as a function of z-band magnitude. Bright mergers are preferentially classified as non-mergers while the distribution of misclassified non-mergers is skewed towards the faint end of the distribution. This trend becomes less pronounced as the bands become more blue, from z to u-band.

A sub-sample of FP has been visually inspected. About 42% of the FP have at least one other galaxy that lies close to the primary galaxy but is not visually interacting with it. These secondary galaxies are likely being identified as merging companions of the primary, or they are possibly merging systems that appear in simulations but are not identified as such in Galaxy Zoo. A further ≈43% are unambiguous, non-interacting, isolated galaxies. This is possibly a result of many merging systems in the EAGLE training set visually looking like single, undisturbed galaxies. However, this does not exclude these galaxies from being true mergers, as the EAGLE training set should be more complete than the SDSS images. Approximately 8% of objects show signs of asymmetry or morphological disturbances. As with the misidentified chance projections, this may be a result of the strict selection of merging SDSS systems ignoring these galaxies while the more complete selection from EAGLE identifies them as mergers. The remaining galaxies contain a non-physical artefact, typically a single-pixel-wide black line through the galaxy; however, a number of TN have similar artefacts, so this is unlikely to be causing the misclassification. Example FP galaxies can be found in Figs. 19a–d and the visually similar TN in Figs. 19e–h.

Fig. 19. Examples of SDSS FP galaxies from the simulation network for (a) a galaxy with a close (in projection) companion, (b) a non-interacting, isolated galaxy, (c) a galaxy showing asymmetry or morphological disturbance and (d) a galaxy with a non-physical artefact within the image. Panels e to h: TN galaxies that are visually similar to those shown in (a) to (d).

Alongside the 526 FP, there are 1600 FN, of which we visually examined a sub-sample. The majority of these systems (≈79%) clearly show two interacting galaxies, which may be a result of the network identifying these as chance projections. A further ≈7% show clear evidence of morphological disturbances or asymmetry. Approximately 14% of the FN have their counterpart on the edge of or outside the image cut-out. This suggests, as for the observation network, that a larger cut-out may help identify these objects. Examples of these objects can be found in Figs. 20a–d and examples of TP galaxies that look similar in Figs. 20e–h.
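The 526 FP and 1600 FN can be checked against the accuracy, recall and specificity quoted for this cross application. The sketch below defines the metrics used throughout this section from the confusion-matrix entries. TP and TN are not given in this section; they are inferred here by assuming that the 6006 SDSS images are split evenly (3003 mergers and 3003 non-mergers), an assumption under which the quoted accuracy, recall and specificity are reproduced.

```python
def classification_metrics(tp, fp, tn, fn):
    """Metrics from confusion-matrix counts, with mergers as the positive class."""
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "recall": tp / (tp + fn),        # fraction of true mergers recovered
        "precision": tp / (tp + fp),     # fraction of predicted mergers that are mergers
        "specificity": tn / (tn + fp),   # fraction of true non-mergers recovered
        "npv": tn / (tn + fn),           # fraction of predicted non-mergers that are non-mergers
    }


# FP and FN are quoted in the text; TP and TN are inferred assuming an even class split
n_mergers = n_non_mergers = 6006 // 2
fp, fn = 526, 1600
tp, tn = n_mergers - fn, n_non_mergers - fp
stats = classification_metrics(tp, fp, tn, fn)
```

The same function also returns precision and NPV for these inferred counts, but those values rest on the even-split assumption rather than on numbers reported here.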

Fig. 20. Examples of SDSS FN galaxies from the simulation network for (a) a galaxy with a clear merging counterpart, (b) a clearly disturbed system, (c) a galaxy whose merger companion is outside of the 64 × 64 pixel image and (d) the larger 256 × 256 pixel image showing the merger companion outside of panel (c). Panels e to h: TP galaxies that are visually similar to those shown in (a) to (d).

As mentioned in Sect. 4.3.1, the size of the galaxies from EAGLE may be affecting the results. Re-projecting the galaxies from high snapshot redshifts to lower redshifts can make the EAGLE galaxies too small by up to a factor of two in the most extreme cases (Furlong et al. 2017). As the simulation network is trained on these apparently smaller galaxies, it may have difficulty correctly identifying the larger SDSS galaxies. As before, if this limitation were reduced or removed, the results may improve.

4.4. Differences in network merger identification

To further examine the differences between the observation and simulation networks, we examined the features that each network uses to identify a merging galaxy. For images that were correctly identified by both networks, a 12 × 12 pixel area was made black by setting its RGB values to zero. This region was moved across the image in steps of one pixel in both the x and y directions, generating 2704 images with different 12 × 12 pixel areas masked. For each masked image, the output for the merger class was recorded. To determine which regions of the image have the greatest effect on the classification, we generated a heat map for each object in which each pixel is the average of the merger class outputs of all images where that pixel is masked, as in the example in Figs. 21c and f.
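The masking procedure above is a form of occlusion sensitivity mapping. The following is a minimal NumPy sketch of it, not the authors' code. It assumes a hypothetical callable `predict_merger` that takes a batch of images of shape (N, H, W, 3) and returns the merger-class output for each. The sketch slides the square until it touches the far edges, so for a 64 × 64 cut-out it evaluates 53 × 53 masked copies; the authors' exact border handling may differ from this.

```python
import numpy as np


def occlusion_heat_map(image, predict_merger, mask_size=12):
    """Occlusion heat map for one RGB cut-out (illustrative sketch).

    image: float array of shape (H, W, 3).
    predict_merger: hypothetical callable mapping a batch of shape
        (N, H, W, 3) to merger-class outputs of shape (N,).
    Returns an (H, W) map in which each pixel is the mean merger output
    over all masked copies whose black square covers that pixel.
    """
    h, w = image.shape[:2]
    total = np.zeros((h, w))   # running sum of merger outputs per pixel
    counts = np.zeros((h, w))  # number of masked copies covering each pixel
    # Slide the square in one-pixel steps in x and y.
    for y in range(h - mask_size + 1):
        for x in range(w - mask_size + 1):
            masked = image.copy()
            masked[y:y + mask_size, x:x + mask_size, :] = 0.0  # RGB set to zero
            out = float(np.asarray(predict_merger(masked[np.newaxis]))[0])
            total[y:y + mask_size, x:x + mask_size] += out
            counts[y:y + mask_size, x:x + mask_size] += 1
    # With one-pixel steps every pixel is covered at least once.
    return total / counts
```

With a Keras classifier, `predict_merger` could be something like `lambda b: model.predict(b, verbose=0)[:, merger_index]`, where `merger_index` (the position of the merger class in the output) is an assumption. Calling the model once per masked copy keeps the sketch simple. Stacking all masked copies into one batch would be much faster in practice.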

Fig. 21. Heat maps demonstrating how the observation (top row) and simulation (bottom row) networks detect an example merging SDSS system. Panels a and d: original image of the galaxy being classified. Panels b and e: regions that most affect the merger classification. Panels c and f: heat maps in which darker colours indicate a greater effect on the classification (lower merger class output). Panels b and e are created by stretching the heat map between zero and one and multiplying it with the original image.
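The caption's stretch-and-multiply step for panels b and e can be sketched as follows. The sketch assumes the heat map is an (H, W) NumPy array and the image an (H, W, 3) array, and the function name is hypothetical. It is a literal reading of the caption: a linear min-max stretch to [0, 1], broadcast over the three colour channels. Any further colour mapping used for the published figures is not assumed.

```python
import numpy as np


def overlay_heat_map(image, heat_map):
    """Stretch an (H, W) heat map to [0, 1] and multiply it into an (H, W, 3) image."""
    lo, hi = float(heat_map.min()), float(heat_map.max())
    if hi > lo:
        stretched = (heat_map - lo) / (hi - lo)  # linear min-max stretch
    else:
        stretched = np.ones_like(heat_map, dtype=float)  # flat map: keep image as is
    # Broadcast the single-channel weights over the three colour channels.
    return image * stretched[..., np.newaxis]
```

Under this reading, pixels whose masking lowered the merger output the most are stretched to zero and so appear darkest in the product.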

As can be seen for the example SDSS galaxy in Fig. 21, both networks require the secondary galaxy to correctly identify the system as merging. However, as Fig. 21f shows clearly, the simulation network is also affected by the edges of the primary galaxy, the more diffuse regions, and uses these to help determine the classification. This is likely a result of two properties of the EAGLE merging galaxies used to train the simulation network. First, they include systems that are further in time from the merger event and so have settled somewhat. Second, some EAGLE images are aligned such that the secondary galaxy lies along the line of sight to the primary galaxy, requiring closer examination of the primary galaxy to find the perturbations that mark it as a merger. As a result, the simulation network is more sensitive to small changes in the profile of the diffuse material of a galaxy and is more easily fooled into providing an incorrect classification.

A similar distinction between how the observation and simulation networks function is seen when performing the same examination with the EAGLE galaxies. The simulation network is more easily fooled than the observation network by masking pixels closer to the nucleus of the primary galaxy. However, both networks can be fooled, with many galaxy images requiring the background noise to be unmasked to generate a correct classification. This indicates that the EAGLE galaxies are not a true recreation of the SDSS images, which partially explains the poor performance of the cross-application.

There is also a small minority of galaxies for which the observation and simulation networks need opposite pixels to correctly identify the system as merging. For example, the simulation network may not identify the system as merging if the primary galaxy is masked but the secondary is visible, while the observation network may not identify the system correctly if the secondary is masked but the primary is visible, as shown in Fig. 22.

Fig. 22. Heat maps demonstrating how the observation (top row) and simulation (bottom row) networks detect an example merging EAGLE system. Panels a and d: original image of the galaxy being classified. Panels b and e: regions that most affect the merger classification. Panels c and f: heat maps in which darker colours indicate a greater effect on the classification (lower merger class output). Panels b and e are created by stretching the heat map between zero and one and multiplying it with the original image.

5. Conclusions

Training and applying a CNN on SDSS images has been successful, achieving an accuracy of 91.5%. This clearly demonstrates that CNNs can be used to reproduce visual classification. There is no clear indication, from the physical or observable parameters, of a specific type of object being incorrectly identified. Training and applying a CNN on the EAGLE images was also somewhat successful, with an accuracy of 65.2% when trained using mergers that will occur, or have occurred, within 100 Myr of the image snapshot. Using a longer time between the image snapshot and the merger reduces the accuracy of the network. This relatively low accuracy suggests that some EAGLE mergers do not have visible merging features that can be picked up by the CNN. The incorrectly identified mergers are primarily at low simulation snapshot redshifts and faint apparent magnitudes. The combination of real noise added to the EAGLE images and the conversion from absolute to apparent magnitude also reduces the effectiveness of the CNN, which demonstrates the importance of image quality (in terms of, for example, signal-to-noise and resolution) in merger identification. Within the image, chance projections result in a large number of non-merging galaxies being identified as mergers.
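The 100 Myr criterion amounts to a time-window labelling rule. The following is a minimal sketch of that rule under stated assumptions: each simulated galaxy carries a list of its merger times, all times are cosmic times in Myr, and the window is symmetric about the snapshot. The function name is hypothetical, and the full label construction in this work may involve further criteria that are not reproduced here.

```python
from typing import Iterable


def merger_label(t_snap_myr: float, merger_times_myr: Iterable[float],
                 window_myr: float = 100.0) -> int:
    """Binary training label for one simulated galaxy at one snapshot.

    t_snap_myr: cosmic time of the image snapshot (Myr).
    merger_times_myr: cosmic times (Myr) of the galaxy's merger events,
        past or future relative to the snapshot.
    Returns 1 (merger) if any event lies within window_myr before or after
    the snapshot, otherwise 0 (non-merger).
    """
    return int(any(abs(t - t_snap_myr) <= window_myr for t in merger_times_myr))
```

Widening `window_myr` labels more galaxies as mergers, including systems further from the merger event. This is the kind of change that reduces the accuracy of the network, as described above.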

Examining the features of the merging galaxies that result in correct classifications, we find that the EAGLE trained network is more sensitive to features in the diffuse part of the galaxy (likely tidal structures and disturbances), as well as to the presence of a close companion. The SDSS trained network, however, focuses primarily on the presence of a close companion galaxy.

The lower accuracy of the EAGLE trained network is most likely a result of the difference in the training sample. The SDSS merger sample has been selected to contain conspicuous mergers and so the features of a merger are more easily identified but will miss subtler mergers. Meanwhile, the EAGLE sample has fewer conspicuous mergers but should be more complete (including mergers with a wide range of mass ratios, gas fractions, viewing angles, environments, orbital parameters, etc.), resulting in less obvious merger features, in pixel space, that are harder for a CNN to recognise.

Passing the SDSS images through the EAGLE trained network has proven to work, albeit with only 64.6% accuracy. This relatively low accuracy appears to be a result of high mass or low sSFR objects being identified as non-mergers and low mass or high sSFR objects being identified as mergers. This could suggest that simulations show evidence of high sSFR in the merging systems when this may not necessarily be true. However, the EAGLE trained network may also be identifying merging systems that the visual classification missed. The EAGLE classification will be more complete, as we know which systems are merging, and so the EAGLE trained network may be identifying objects in the SDSS images that have been missed by the less complete, but more visually obvious, SDSS classification. The result may be a lower specificity (that is, a smaller fraction of non-mergers being correctly identified) when the SDSS classifications are used as the truth, when in fact the EAGLE trained network is correctly identifying merging systems missed by the human visual classification. However, the relatively low recall (the fraction of mergers correctly identified) suggests that EAGLE has relatively few conspicuous mergers.
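Accuracy, recall and specificity all follow from the four confusion-matrix counts. The minimal sketch below uses only the definitions given in the text. The function name is hypothetical, and the counts in the usage note are toy numbers, not results from this work.

```python
def classification_scores(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Accuracy, recall and specificity for a binary merger classifier."""
    return {
        # Fraction of all objects classified correctly.
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        # Recall: fraction of true mergers the network recovers.
        "recall": tp / (tp + fn),
        # Specificity: fraction of true non-mergers the network recovers.
        "specificity": tn / (tn + fp),
    }
```

With toy counts `tp=80, fp=30, tn=70, fn=20`, the scores are accuracy 0.75, recall 0.8 and specificity 0.7. Suppose 10 of those 30 FP were in fact genuine mergers missed by the visual classification. They would then count as TP (`tp=90, fp=20`), and the specificity would rise to about 0.78. This illustrates how an incomplete truth sample can depress the measured specificity.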

This offers a tantalising prospect for large upcoming surveys, such as LSST and Euclid. It is possible to train a CNN with images from simulations and apply it to observations of galaxies from the real universe. At present, the simulation trained network could be used to generate a set of galaxy merger candidates, which would need to be checked by a human expert, for use in training an observation network. However, with further refinement of the training images from simulations, it is not beyond the realm of possibility to reduce the need for an observation training set and apply a simulation trained CNN directly to images from an entire survey, massively speeding up identification.

Passing the EAGLE images through the SDSS trained network was unsuccessful, with the network preferentially assigning objects to the non-merger class. This suggests that some EAGLE mergers are not representative of the SDSS selected mergers. However, this appears to be primarily due to the way the galaxies were re-projected to their assigned redshifts, so it may not be that the EAGLE mergers themselves fail to look like observable mergers. The mergers in EAGLE are also less conspicuous than those in the SDSS training set, so the observation network has not been trained to identify these less obvious merger events, resulting in a large number of EAGLE mergers being identified as non-mergers.

Future improvements to the simulated galaxies would be to increase the mass resolution, which can affect the appearance of galaxies and galaxy mergers (Sparre et al. 2015; Torrey et al. 2015; Trayford et al. 2015; Sparre & Springel 2016), and to match the stellar mass distributions exactly to those of the observations. Increasing the time resolution, for example by using the EAGLE snipshots⁹ instead of snapshots, should also provide improvement, as should improving the estimates of the time to or since the merger event by tracing when the central black holes merge. It would also be informative to include the effects of dust attenuation.

As has been shown in this work, chance projections are a major problem in merger classification. In future work, we could train the network to recognise chance projections better by constructing training samples of galaxies that appear close together on the sky but are physically far apart. Another area for future improvement is a more refined merger classification system, rather than a binary classification of mergers versus non-mergers: for example, with separate classes for early mergers, late mergers, minor mergers, major mergers, etc.
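A chance-projection training sample of this kind could be drawn from a catalogue with spectroscopic redshifts by keeping pairs that are close on the sky but widely separated along the line of sight. The sketch below is not part of this work. Several choices in it are hypothetical and only illustrative: the 30 arcsec separation limit, the 1000 km s⁻¹ velocity limit, the dictionary layout of a galaxy record, and the function names. The velocity difference uses the standard approximation Δv ≈ cΔz/(1 + z̄).

```python
import math

C_KMS = 299792.458  # speed of light in km/s


def angular_separation_arcsec(ra1, dec1, ra2, dec2):
    """Great-circle separation via the haversine formula; inputs in degrees."""
    ra1, dec1, ra2, dec2 = map(math.radians, (ra1, dec1, ra2, dec2))
    h = (math.sin((dec2 - dec1) / 2) ** 2
         + math.cos(dec1) * math.cos(dec2) * math.sin((ra2 - ra1) / 2) ** 2)
    # Clamp to guard against rounding pushing the argument above 1.
    return math.degrees(2 * math.asin(min(1.0, math.sqrt(h)))) * 3600.0


def is_chance_projection(g1, g2, max_sep_arcsec=30.0, min_dv_kms=1000.0):
    """Close in projection but far apart in redshift (thresholds hypothetical).

    g1, g2: dicts with keys "ra", "dec" (degrees) and "z" (spectroscopic).
    """
    sep = angular_separation_arcsec(g1["ra"], g1["dec"], g2["ra"], g2["dec"])
    z_mean = 0.5 * (g1["z"] + g2["z"])
    dv = C_KMS * abs(g1["z"] - g2["z"]) / (1.0 + z_mean)  # rest-frame km/s
    return sep <= max_sep_arcsec and dv >= min_dv_kms
```

Pairs passing this test could then be cut out and labelled as non-mergers, so that a network sees examples of projected companions with no physical association.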

Acknowledgments

We would like to thank the anonymous referee for their thoughtful comments that have improved the quality of this paper. We would like to thank S. Ellison and L. Bignone for helpful discussions that have improved this paper. Funding for the SDSS and SDSS-II has been provided by the Alfred P. Sloan Foundation, the Participating Institutions, the National Science Foundation, the US Department of Energy, the National Aeronautics and Space Administration, the Japanese Monbukagakusho, the Max Planck Society, and the Higher Education Funding Council for England. The SDSS Web Site is http://www.sdss.org/. The SDSS is managed by the Astrophysical Research Consortium for the Participating Institutions. The Participating Institutions are the American Museum of Natural History, Astrophysical Institute Potsdam, University of Basel, University of Cambridge, Case Western Reserve University, University of Chicago, Drexel University, Fermilab, the Institute for Advanced Study, the Japan Participation Group, Johns Hopkins University, the Joint Institute for Nuclear Astrophysics, the Kavli Institute for Particle Astrophysics and Cosmology, the Korean Scientist Group, the Chinese Academy of Sciences (LAMOST), Los Alamos National Laboratory, the Max-Planck-Institute for Astronomy (MPIA), the Max-Planck-Institute for Astrophysics (MPA), New Mexico State University, Ohio State University, University of Pittsburgh, University of Portsmouth, Princeton University, the United States Naval Observatory, and the University of Washington.

References

- Abadi, M., Agarwal, A., Barham, P., et al. 2015, TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems, software available from https://www.tensorflow.org

- Ackermann, S., Schawinski, K., Zhang, C., Weigel, A. K., & Turp, M. D. 2018, MNRAS, 479, 415

- Barton, E. J., Geller, M. J., & Kenyon, S. J. 2000, ApJ, 530, 660

- Bell, E. F., Zucker, D. B., Belokurov, V., et al. 2008, ApJ, 680, 295

- Benítez-Llambay, A. 2017, Astrophysics Source Code Library [record ascl:1712.003]

- Bershady, M. A., Jangren, A., & Conselice, C. J. 2000, AJ, 119, 2645

- Brinchmann, J., Charlot, S., White, S. D. M., et al. 2004, MNRAS, 351, 1151

- Bruzual, G., & Charlot, S. 2003, MNRAS, 344, 1000

- Chabrier, G. 2003, PASP, 115, 763

- Chollet, F. 2017, IEEE Conference on Computer Vision and Pattern Recognition

- Conselice, C. J. 2014, ARA&A, 52, 291

- Conselice, C. J., Bershady, M. A., & Jangren, A. 2000, ApJ, 529, 886

- Conselice, C. J., Bershady, M. A., Dickinson, M., & Papovich, C. 2003, AJ, 126, 1183

- Cortijo-Ferrero, C., González Delgado, R. M., Pérez, E., et al. 2017, A&A, 607, A70

- Crain, R. A., Schaye, J., Bower, R. G., et al. 2015, MNRAS, 450, 1937

- Darg, D. W., Kaviraj, S., Lintott, C. J., et al. 2010a, MNRAS, 401, 1552

- Darg, D. W., Kaviraj, S., Lintott, C. J., et al. 2010b, MNRAS, 401, 1043

- De Propris, R., Liske, J., Driver, S. P., Allen, P. D., & Cross, N. J. G. 2005, AJ, 130, 1516

- Deng, J., Dong, W., Socher, R., et al. 2009, IEEE Conference on Computer Vision and Pattern Recognition

- Dieleman, S., Willett, K. W., & Dambre, J. 2015, MNRAS, 450, 1441

- Furlong, M., Bower, R. G., Crain, R. A., et al. 2017, MNRAS, 465, 722

- Genel, S., Genzel, R., Bouché, N., Naab, T., & Sternberg, A. 2009, ApJ, 701, 2002