| Issue |

A&A

Volume 619, November 2018

|

|

|---|---|---|

| Article Number | A7 | |

| Number of page(s) | 11 | |

| Section | Numerical methods and codes | |

| DOI | https://doi.org/10.1051/0004-6361/201833139 | |

| Published online | 05 November 2018 | |

Spatial likelihood analysis for MAGIC telescope data

From instrument response modelling to spectral extraction

1

Max-Planck-Institut für Physik, Föhringer Ring 6, 80805 Munich, Germany

e-mail Ievgen.Vovk@mpp.mpg.de; strzys@mpp.mpg.de; fruck@mpp.mpg.de

2

Technische Universität München, Physik-Department, James-Franck-Str. 1, 85748, Garching

Received:

30

March

2018

Accepted:

31

May

2018

Context. The increase in sensitivity of Imaging Atmospheric Cherenkov Telescopes (IACTs) has lead to numerous detections of extended γ-ray sources at TeV energies, sometimes of sizes comparable to the instrument’s field of view. This creates a demand for advanced and flexible data analysis methods that are able to extract source information using the photon counts in the entire field of view.

Aims. We present a new software package, “SkyPrism”, aimed at performing 2D (3D if energy is considered) fits of IACT data that possibly contain multiple and extended sources. The fits are based on sky images binned in energy. Although the development of this package was focused on the analysis of data collected with the MAGIC telescopes, it can further be adapted to other instruments, such as the future Cherenkov Telescope Array.

Methods. We have developed a set of tools that in addition to sky images (count maps) compute the instrument response functions of MAGIC (effective exposure throughout the field of view, point spread function, energy resolution, and background shape) based on the input data, Monte Carlo simulations, and the pointing track of the telescopes. With this information, the package can perform a simultaneous maximum likelihood fit of source models of arbitrary morphology to the sky images providing energy spectra, detection significances, and upper limits.

Results. We demonstrate that the SkyPrism tool accurately reconstructs the MAGIC point spread function, on- and off-axis performance as well as the underlying background. We further show that for a point source analysis with the MAGIC default observational settings, SkyPrism gives results compatible with those of the standard tools while being more flexible and widely applicable.

Key words: gamma rays: general / methods: data analysis / techniques: image processing

© ESO 2018

1. Introduction

Imaging Atmospheric Cherenkov Telescopes (IACTs) observe astrophysical sources through the detection of optical light from extended atmospheric showers (EASs), which are induced by γ-rays of energies ≳10 GeV. These observations are usually performed in the presence of a relatively high background from cosmic-ray (CR) initiated EASs, which significantly outnumber the signal counts. Despite advances in background suppression techniques (e.g. Albert et al. 2008), a non-negligible part of CR events survive the event selection criteria and form an irreducible, γ-like background throughout the entire field of view (FoV) of an IACT.

Traditionally, this remaining background is managed by defining a source region and (possibly several) background control regions (Berge et al. 2007). The source signal is extracted as the difference in the counts from the source and background area. Given the simplicity of this approach, it is also commonly used to extract the spectral information of astrophysical sources.

Such an “aperture photometry” method is also adopted in the standard data analysis routines of the MAGIC collaboration, operating one of the modern IACTs, located at the Canary island of La Palma, Spain (Baixeras 2003; Aleksić et al. 2016a,b). Despite being successful at analysing point sources, this method has two drawbacks when analysing extended astrophysical objects:

-

The shape of the source/background subtraction regions must be adapted to the real source shape, otherwise it results in excessive background counts in the source region and consequently a loss of sensitivity.

-

The signal of overlapping sources or source components, whether nearby point-like or extended, cannot be easily distinguished.

The steady increase in sensitivity, due to improved technology or new instruments such as the upcoming Cherenkov Telescope Array (CTA; Acharya et al. 2013), and thereby the detection of even fainter sources and source components, further intensifies the need for alternative analytical approaches.

A way to overcome these issues is using a 2D spacial modelling of the measured sky signal: by applying the known instrument response to an assumed source model, one can obtain an image of the model as it would be seen by the telescope. This model, together with the estimated background map, is fitted to the measured sky image to estimate the most likely flux of the model sources in the observed sky region. When combined with the fitting of the spectral energy distribution (SED) of the sources, this procedure becomes a 3D fit in the “x-y-energy” phase space.

This approach has successfully been applied for the spacebased EGRET (Mattox et al. 1996) and Fermi-LAT1 missions. Furthermore, it is considered for the analysis of CTA data in software packages such as CTools (Knödlseder et al. 2016) or Gammapy2 (Deil et al. 2017).

We present a software suite, named SkyPrism, that implements such 2(3)D modelling for the MAGIC telescope data, including the required computation of a background model and instrument response functions (IRFs). The IRFs are based on Monte Carlo (MC) simulations and generated during runtime according to the observations; no radial detector symmetry is assumed. We also present the results of validation tests ensuring the accuracy of the calculations performed by the package tools. In the summary we discuss how the routines of this package can further be transferred to the analysis of CTA data.

2. MAGIC SkyPrism package

The MAGIC SkyPrism package consists of a set of C++/ROOT- and Python-based tools that build on the standard MAGIC data analysis package MARS (Zanin et al. 2013). It focuses on the analysis of the entire telescope FoV simultaneously and is designed to work with the 2D images of the sky, possibly binned in energy.

For consistency, all SkyPrism tools (except for the fitting routines) share a single configuration file containing information of the energy binning, data cuts, and the size and binning of the sky image that will be used for the sky, background, and exposure maps. The programs generating the IRFs from the data files, for compatibility reasons, are coded in C++/ROOT, while the routines performing the signal extraction via a likelihood fit are written in Python. We describe the implementation of each of these tools in detail below.

2.1. Sky map

Energy-binned raw sky images, also called ON maps, are 2D histograms in celestial coordinates of all events passing selection criteria. The likelihood analysis routines of SkyPrism will finally fit the model to these ON maps. The events are read from ROOT-based event lists (Antcheva et al. 2009) that are intermediate products of the MAGIC standard data reduction chain of MARS, subjected to selection criteria. They typically consist of the so-called Hadronness cut as well as a lower limit in terms of image size (recorded light yield) in each telescope. The Hadronness parameter of an event is the result of the application of /hadron separation random forests (Albert et al. 2008; Breiman 2001). The same selection criteria as for the ON map are also applied for the events used to generate the instrument responses and background map.

2.2. Pointing history sampling of events for computing the IRFs

The development of an EAS of a given primary particle type and energy, leaving statistical fluctuations aside, depends primarily on the atmospheric depth in the shower direction and the orientation of the shower axis relative to the Earth’s magnetic field. Since for IACTs the atmosphere is an integral part of the detector, the performance of the telescope depends on its pointing direction in the horizontal coordinate system (azimuth/zenith). To compute accurate background maps and IRFs, the pointing directions during the observation time need to be considered. The telescope pointings are weighted with the time spent in the corresponding direction and binned into an Az/Zd histogram, the so-called pointing history, with the zenith distance (Zd) binned in terms of cos(Zd).

To estimate the IRFs of the MAGIC telescopes (also needed in the aperture photometry approach), the MAGIC collaboration generates MC simulated event sets, in which the simulated events are distributed across the entire sky. Out of this event population, the events located around the centre of a filled pointing history bin are sampled to generate the IRFs. The size of the acceptance box around the pointing bin centres can be chosen by the user and by default is the same as the bin size of the pointing history. The method for the background map generation deviates as it requires sampling from measured events, as explained in Sect. 2.3.

2.3. Background map

For weak or extended sources, data collected with IACTs are strongly dominated by an irreducible background (Maier & Knapp 2007; Sobczyńska 2009, 2015; Sitarek et al. 2018) that cannot be separated, as mentioned in Sect. 1. Therefore, the distribution of background events in the observed sky-patch must be known with high accuracy to keep systematic uncertainties low. Such background counts are caused by the fraction of hadronic CRs surviving the event selection cuts and electron-induced showers, which like γ-ray showers are purely electromagnetic.

Their distribution in the observed sky region can be expected to resemble the camera response to an isotropic flux of γ-ray events. For long exposures, however, the background skymap deviates from the simulated γ-ray exposure. This may result from discrepancies between data and simulations such as dead pixels, stars in the FoV, imperfect flat-fielding of the camera, or a class of hadronic CRs surviving the cuts, but still having an acceptance distribution different to the one of γ-rays. Hence, the background needs to be computed from measurements.

This background measurement must be performed under the same observational conditions and follow the same pointing history as the ON data. Such so-called ON–OFF observations are an established mode of observation for IACTs, but are only rarely used because of the additional time needed for the OFF observations that is then lost for the ON source observations.

For sources that are point-like or have limited extension (<1/2 FoV), the established mode of observation is the so-called wobble mode (Fomin et al. 1994), where the telescope points in an alternating way to coordinates at a fixed offset around the object. In this way, ON and OFF measurements can be performed simultaneously since the background can be obtained from another part of the camera of similar acceptance as the source position; the alternation cancels out possible inhomogeneities in the camera halves.

We have implemented three different methods to reconstruct a background exposure model for the whole camera from wobble-mode observations. Two of these methods are reimplementations of established techniques (called wobble and blind map; Moralejo et al. 2009) used in MARS to generate sky maps. The third method (exclusion map) is an advancement of the concept regarding generalisation and flexibility. All three methods follow the same basic scheme:

-

All events passing the selection criteria along the pointing track of the telescopes are binned in horizontal coordinates and grouped by wobble pointings.

-

A 2D histogram binned in camera coordinates is filled for each wobble and pointing history bin.

-

For each pointing history bin, the program constructs one camera exposure model out of the different wobble pointings.

-

The background model in celestial coordinates is populated by random sampling from the corresponding camera exposure models and by applying the correct coordinate rotations and observation time weights along the pointing track.

The methods differ in the way the camera exposures for each wobble pointing are combined into a single assumed source-free background camera exposure model (step 3). The available options illustrated in Fig. 1 are:

|

Fig. 1. Illustration of the different methods for the construction of a background camera exposure model from wobble observations (here one wobble pair). The source position and extension is shown as red point, ellipse, or stripe. The blue shading marks bins excluded from the background map reconstruction. |

-

Wobble map: the single camera exposures are divided into halves, such that the nominal source position (centre of the wobble setup) is contained in one half, and the normal vector on the separating line is pointing away from this coordinate. The combined background camera exposure model is obtained by normalising and summing the source-free halves. This method can only be applied if the γ-ray emission is confined to a circle around the nominal source position with a radius corresponding to the wobble offset.

-

Blind map: the single camera exposures are normalised by the exposure times. From these, the combined background camera exposure is obtained using the median values in each pixel. The median automatically suppresses too large counts from possible sources. This method has the advantage that no prior knowledge about the distribution of γ-ray sources inside the FoV is needed. However, if only two wobbles are used, the blind map by construction underestimates the background, although MARS routines contain a bias correction to compensate for this effect. The presence of strong sources will also inevitably lead to an upward-bias of the background model in the corresponding regions, causing a systematic flux underestimation for these objects.

-

Exclusion map: here the analyser must specify regions containing known or expected sources, which can have circular or line shape. These regions are then excluded from the computation of the median, as described in the previous method. Special care is required in order not to exclude too large regions, as this might leave no remaining options for the background extraction for certain camera bins.

2.4. Point spread function

IACTs, like all imaging systems, have a finite angular resolution described by the point spread function (PSF). It is defined by the extension of an idealised point-like object, placed at infinity, in the final reconstructed image. Accordingly, the PSF can be seen as the probability function  for reconstructing a detected event in the bin αβ instead of i j corresponding to its original arrival direction.

for reconstructing a detected event in the bin αβ instead of i j corresponding to its original arrival direction.

For MC simulated events, the reconstructed and original arrival direction (as reconstructed by a perfect instrument) is known, so that the angular response can be estimated. MC events are sampled around the track of the source in the horizontal coordinate system, as explained in Sect. 2.2. For each event, the vector between the reconstructed and original arrival direction in camera coordinates is computed. This vector is finally rotated into the equatorial coordinate system according to Appendix A. The sum of all events will describe the shape of a point source observed along the track of the target.

Since the number of MC events is limited, the simulated shape of the PSF is noisy and the PSF is better modelled by a smooth, analytical function. For MAGIC, a 2D double Gaussian (Aleksić et al. 2016b) or a 2D King (Vela et al. 2018) function were found to describe the PSF well. Because of the lower number of free parameters, this analysis used the King function:

where σ sets the angular scale of the resulting profile and γ determines the weight of the tails. To allow for an asymmetry of the MAGIC PSF, the distance variable r is defined as follows:

where ø is a positional angle and ɛ is the asymmetry parameter, which accounts for the non-symmetry of the MAGIC system (which has only two telescopes; Aleksić et al. 2016b; Vela et al. 2018) as well as the influence of the Earth’s magnetic field. The King function is fitted to the simulated point-source shape and finally evaluated on a sufficiently large grid around the camera centre.

2.5. Exposure map

The effective area of a telescope corresponds to the size of an ideal detector (with a 100% detection efficiency) recording the same number of events as the real instrument. It can be defined as the product of the physical detector size and the detector efficiency εDet. For IACTs, it is usually estimated with MC simulations, where the detection efficiency is a ratio of the detected events to the simulated ones:

where Ndet is the number of detected MC events, Nsim the number of originally simulated events, and rsim is the maximum simulated distance between the impact point of the shower axis and the telescope (impact parameter), playing the role of an assumed physical detector size.

The Cherenkov light density in the EAS depends on the energy of the primary particle resulting in an energy dependence of the detection efficiency, and thus the collection area. Additionally, the limited extension of the EAS and the size of MAGIC trigger area (smaller than the camera size; it has a radius of 1.17° vs. 1.75°; Aleksić et al. 2016a) lead to the drop of the camera acceptance towards its outer regions, resulting in an non-uniform camera response to γ-ray sources at different distances from the centre of the FoV. All these effects are reproduced in the MAGIC MC simulations.

To correctly estimate the MAGIC detection efficiency from those MC for a specified observation, we follow the procedure outlined in Sect. 2.2, but instead of filling the pointing history just with time weights, the effective observation time T eff for each pointing bin is estimated. This is the sum of the durations of all observational runs in that pointing bin, excluding possible gaps of more than 2 s and correcting for the instrument dead time.

For each pointing bin, the program samples events from the MC set and transforms them into the skymap: the program computes the difference between the simulated pointing direction for the telescope and the arrival direction of the event. This vector is added to the equatorial coordinates of the pointing bin (see also Appendix A). Each event is additionally reweighted according to the assumed source spectrum with the weights w(E) = F(E)/M(E), where F(E) is the source spectrum and M(E) is the energy spectrum used for the MC generation. Owing to the limited size of the MC sample, the statistical noise in the obtained maps can exceed the tolerance level of ~5% required for the accuracy of subsequent source analysis. To overcome this noise, we fit the efficiency model with a modified Gaussian function:

This parametrisation correctly reproduces the change of the MAGIC detection efficiency throughout the FoV for a wide range of energies, encompassing those that are usually selected for data analysis. To perform the fit, we assumed that the number of simulated nij and detected kij events in each camera pixel (i j) follows the binomial distribution

The best-fit is obtained by minimising the log-likelihood function

where we have dropped the term  as it does not depend on the function of interest

as it does not depend on the function of interest  . The minimiser used is Minuit23 provided by ROOT, which estimates the parameters of the modified Gaussian model and their uncertainties.

. The minimiser used is Minuit23 provided by ROOT, which estimates the parameters of the modified Gaussian model and their uncertainties.

The efficiency models obtained this way were converted to the collection area via Eq. (3), and by multiplication with the estimated effective time Teff, to exposure models. This procedure was repeated for each energy bin that was selected for analysis in the SkyPrism settings.

To estimate the uncertainties of the camera efficiency models, resulting from the fit, we simulated a number (usually 100) of representations of the model parameters (A, ø, s1, s1, σx, and σy). The program constructs the parameter sets by drawing random numbers from a multivariate Gaussian distribution and combining them with the covariance matrix from the best fits. The efficiency models for each of these simulations are stored and can be supplied to the spectral fitting routines of SkyPrism for propagating the fit uncertainties to the flux and spectral parameters estimation.

2.6. Energy dispersion

As well as the reconstructed arrival direction of an event, the reconstructed energy is affected by the detector response and the reconstruction procedure. An event whose energy falls into a true energy bin k may be incorrectly classified into a reconstructed energy bin l. This transfer of events between the energy bins can be described by a 2D matrix Dkl, called the energy migration or dispersion matrix:

where  is the measured number of event counts and Creal (Ek) the count distribution as emitted by the source. For convenience, the migration matrix is normalised along the reconstructed energy axis E′

is the measured number of event counts and Creal (Ek) the count distribution as emitted by the source. For convenience, the migration matrix is normalised along the reconstructed energy axis E′

which is equivalent to assuming that an event with true energy E will be with 100% probability contained in the  set.

set.

The energy matrix for a given observation is generated from MC events selected and weighted in the same way as the PSF construction. For the PSF and exposure program, the user can switch between generating the IRFs in E or E′. By default, SkyPrism uses a wider energy range in true energy than that in reconstructed energy, specified by the user. This accounts for the possible spill-over of the events from energy bins outside the analysed energy range to those used in the analysis. By extending the energy range in E down to  and up to

and up to  , we ensure that the lowest and highest true energy bins contribute no more than 10% to the flux in the E′ range. Furthermore, the number of bins in E is increased compared to E′ to allow for an accurate flux reconstruction when changing the spectral parameters during the maximal likelihood fit and to account for possible sharp spectral features such as cut-offs or bumps. By default, the binning in the true energy is chosen to approximately match the energy resolution of the MAGIC telescopes.

, we ensure that the lowest and highest true energy bins contribute no more than 10% to the flux in the E′ range. Furthermore, the number of bins in E is increased compared to E′ to allow for an accurate flux reconstruction when changing the spectral parameters during the maximal likelihood fit and to account for possible sharp spectral features such as cut-offs or bumps. By default, the binning in the true energy is chosen to approximately match the energy resolution of the MAGIC telescopes.

2.7. Likelihood fitting of the sky maps

The extraction of the information of the observed sources, such as the flux and the extension, is performed by a set of Python4 routines, which can be accessed from a user script. In this way, the fit and analysis procedure can be adapted to the user’s needs and can easily be extended beyond the foreseen functionality presented here.

The fitting procedure combines background, PSF, energy migration matrix and exposure information obtained in the previous steps to provide the maximum likelihood estimate of the fluxes of the sources specified by the user. Internally, IRFs (e.g. exposure and PSF) are represented as 2D images for each of the energy bins where the analysis takes place; they are prepared by the tools described above and loaded at the moment of fitting. The source images are prepared during runtime based on the information, provided in the “source model”:

-

for point sources, the corresponding image contains one filled skymap bin at the specified source position;

-

for extended sources, the image is taken either from a predefined FITS5 (Wells et al. 1981) file or a 2D array filled by the user inside the analysis script.

Such source maps are further interpolated to exactly match the pixel grid of the sky, background, and exposure maps. In each energy bin, the best-fit estimate of the source fluxes is obtained by maximising the Poissonian likelihood of the observed number of counts given the source model and background:

are the measured counts in each pixel of the sky map,

are the measured counts in each pixel of the sky map,  the model source (p denotes the source number in the model), Eij the exposure maps, and Bαβ the background maps, while

the model source (p denotes the source number in the model), Eij the exposure maps, and Bαβ the background maps, while  is the PSF model, mapping an (i, j) pixel to (α, β). Computationally the program minimises the negative log-likelihood − ln(L) using iminuit6. Following the Wilks theorem (Wilks 1938), the uncertainties on the obtained fluxes are computed as deviations of the log-likelihood from its best value by

is the PSF model, mapping an (i, j) pixel to (α, β). Computationally the program minimises the negative log-likelihood − ln(L) using iminuit6. Following the Wilks theorem (Wilks 1938), the uncertainties on the obtained fluxes are computed as deviations of the log-likelihood from its best value by  (since there is only one parameter of interest: flux), where is a desired confidence quantile. Additionally, we have also implemented a Markov chain Monte Carlo (MCMC) sampling procedure, based on the emcee7 (Foreman-Mackey et al. 2013) library, which allows a more accurate computation of non-symmetric error bars in case of strong correlations between the fit parameters (e.g. if overlapping extended sources are specified in the model).

(since there is only one parameter of interest: flux), where is a desired confidence quantile. Additionally, we have also implemented a Markov chain Monte Carlo (MCMC) sampling procedure, based on the emcee7 (Foreman-Mackey et al. 2013) library, which allows a more accurate computation of non-symmetric error bars in case of strong correlations between the fit parameters (e.g. if overlapping extended sources are specified in the model).

In addition to the fit of individual source fluxes, the implemented procedure also allows for the source positions to be fitted. For this, the supplied source models  are shifted with respect to the originally specified position; the amounts of the shifts are optimised with the rest of the parameters during the fit. More sophisticated source position/extension scans can be easily implemented in the user analysis script by altering the source model and repeating the fit of the already loaded data.

are shifted with respect to the originally specified position; the amounts of the shifts are optimised with the rest of the parameters during the fit. More sophisticated source position/extension scans can be easily implemented in the user analysis script by altering the source model and repeating the fit of the already loaded data.

2.8. Likelihood fitting considering the energy migration

In addition to fitting of the individual data bins in reconstructed energy, the SkyPrism Python library can also fit in the true energy space via a forward-folding procedure. In this case, the assumed source spectrum is integrated in all true energy bins Ek, multiplied with the exposure, and convolved with the PSF corresponding to them. Through a multiplication with the energy migration matrix, the predicted counts of each model component in the reconstructed energy bin  are obtained. The sum of all components together with the background image (which is always constructed as a function of the estimated energy E′, as it comes directly from the data) leads to the model counts in E′:

are obtained. The sum of all components together with the background image (which is always constructed as a function of the estimated energy E′, as it comes directly from the data) leads to the model counts in E′:

The likelihood function in this case is defined as a product of all E′ bins in Eq. (10), which is then optimised with respect to the model parameters through the same routines as described in Sect. 2.7.

As opposed to the fits in terms of the estimated energy Eq. (9), the reconstruction of the separate flux points in the true energy space is not a well-defined procedure. It requires assumptions of the source spectral shape between the points (or, equivalently, inside the energy bins, defined in the true energy space). In SkyPrism such differential flux points are interpreted as the nodes of the source spectral model, which uses a linear interpolation of the inter-node energies in the log E − log dN/dE space. This is equivalent to a broken power law with multiple energy break. If the user chooses such a fit during the run time, SkyPrism will use such spectral models for all the sources in Eq. (11) and perform the fit as usual. In this case, the energies of the desired data points (nodes) can be chosen freely.

The output of this fit can be used for instance to assess the validity of the chosen source spectral model. We would like to underline, however, that the resulting data points (along with their uncertainties) may be strongly correlated and cannot be used as independent measurements in third-party analyses.

3. Validation on MAGIC data

To ensure that the SkyPrism tools and routines work as expected, we have performed a series of tests for every part of the package. For the tests, we employed the standard MAGIC MC simulations, which cover all the low-level data reductions and compare the tool outputs with (a) real data and (b) results of the standard MAGIC data analysis with MARS.

3.1. Background map

As the normalisation of the background can be refined during the maximum likelihood fit, the background model, described in detail in Sect. 2.3, only needs to match the real background distribution in shape. In order to estimate a possible mismatch, we have performed

-

a comparison of the model with the isotropic background, obtained through a simplified simulation of a typical MAGIC observation;

-

a comparison of the obtained model with the sky map for an observation of an empty region of sky.

The simulation has the advantage of eliminating possible small variations of the real instrument performance, providing a consistency check of the procedure itself, whereas the comparison with real data demonstrates the overall performance with all the imperfections included.

For the comparison with the simulated events, we took the MAGIC track on the sky during one of the low zenith angle observations (Zd < 35°) and simulated a various number of events isotropically distributed over the FoV along the track. The number of simulated events matches what is usually recorded with the typical analysis criteria in the energy range above 100 GeV with exposure times of 3–30 h. These events were supplied to the blind map procedure of SkyPrism, with no modifications to the reconstruction settings.

A comparison of the simulated event distribution with the reconstructed background, obtained in this way, is given in Fig. 2. The left panel of Fig. 2 shows the radial distribution of the simulated events with respect to the reconstructed background as a function of the offset from the pointing centre. The performance of the reconstruction method degrades towards the edges of the field of view, where the MAGIC collection area is lower and fewer events are recorded. Still, spurious background variations, resulting from the SkyPrism methods, do not exceed ~5% even for short exposures (~10 h). For longer exposures of more than 10 h, variations are in general less than 2–3% in the 3.5 deg wide FoV. Similar conclusions can be drawn from the right panel of Fig. 2, which depicts the reconstructed background variations as a function of the polar angle with respect to the observation pointing centre.

|

Fig. 2. Comparison of a simulated sky map with no source and a background profile, reconstructed from it with SkyPrism. We also show a similar profile, obtained from the real empty field data with the BlindMap method of the SkyPrism analysis. Left panel: off-centre distribution. Right panel: positional angle distribution; the zero-point is arbitrarily set to a horizontal direction. |

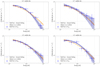

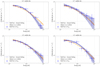

Additionally, we tested the different background models available within SkyPrism by comparing them to the ON sky map of an empty sky region observed for 50 h with four wobble pointings. For this purpose, the background model was scaled to match the ON sky map in terms of the median and subtracted from the latter. The residuals were examined in terms of relative flux (residual counts over the background) and test statistic (TS) value. The TS was constructed by sampling 500 times from the background model, using Poissonian random numbers in each pixel. We smoothed the map with a kernel of approximately the size of the PSF to obtain the TS values, roughly equivalent to putative point sources in the FoV. After smoothing, the residuals of the background-subtracted simulations very closely follow a Gaussian distribution around zero in each pixel. We thus define the TS as the local deviation in units of the Gaussian σ. The results of this test are shown in Fig. 3. All three background estimation methods show a similar performance.

|

Fig. 3. Background methods tested with an empty sky region at Zd < 35°. Top to bottom panels: Wobble map, blind map, and excluded region map. For the excluded region map, the ignored regions (a stripe and two circles) are shaded. The PSF after convolution with a smoothing kernel is depicted in terms of 39% and 68% containment contours. Left panels: ratio of the residuals (skymap-reconstructed background) to the skymap. The blue and red contours indicate ±5% relative flux boundary. Centre panels: test statistic maps computed for the residual counts in the left panels. Right panels: distribution of the test statistic values (blue histogram) compared to the expected normal distribution (red curve). See Sects. 2.3 and 3.1 for details. |

The distribution of the TS values matches the normal distribution well enough to not lead to the detections of spurious sources in the image. This can be seen from the fitted σmap values, given in each TS histogram plot of Fig. 3. These values are approximately 1.1 ± 0.02, indicating a slight excess in addition to the statistical fluctuations. This excess is likely due to a small shape discrepancy between the background model and the observed empty field. Assuming that the statistical error σstat and systematic uncertainty σsys of the model are both normally distributed, we can quantify the contribution from the systematic component as  . This yields σsys ≈ 0.46 in units of standard deviation of the simulated background maps. This latter is ≈1.8% of the background flux, suggesting that the systematic uncertainty of the SkyPrism background maps is ~1%, which is comparable to the quoted systematics of the MAGIC telescopes (Aleksić et al. 2016b).

. This yields σsys ≈ 0.46 in units of standard deviation of the simulated background maps. This latter is ≈1.8% of the background flux, suggesting that the systematic uncertainty of the SkyPrism background maps is ~1%, which is comparable to the quoted systematics of the MAGIC telescopes (Aleksić et al. 2016b).

It should be noted that the statistical uncertainty of the constructed background model depends significantly on the event selection criteria. For relaxed cuts and a wide energy range, similar to those used here, the relative statistical background uncertainty would scale with the observation time as  .

.

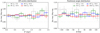

3.2. Point spread function

As mentioned in Sect. 2.4, the MAGIC PSF in general is not circularly symmetric. This instrumental effect is covered by the MAGIC MCs, but not considered in the standard MAGIC analysis. Instead, the standard MAGIC spectrum extraction tool collects the signal from a circular region around the source, whose extension is usually chosen to contain a significant fraction (≳75%) of the signal. In this way, the positional angle dependence of the PSF has a minor impact on the reconstructed source fluxes in most of the applications of the standard MAGIC analysis chain.

For a 2D image analysis of MAGIC data, this effect cannot be neglected. For certain observational configurations it would result in noticeable residuals, and consequently, in a possible bias in the fit, especially in crowded regions. To ensure that the effect is properly reconstructed with the SkyPrism tools, we performed a 2D fit of the reconstructed PSF profiles with the King function (see Sect. 2.4 for details), which we compared to the real data.

For this comparison we used a recent MAGIC observation of Mrk 421 at low and medium zenith angles in the energy range from 100 GeV to 10 TeV. Since the PSF calculation procedure, described in Sect. 2.4, selects only a subsample of available MC events (so that it matches the azimuth and zenith angle distributions of the observations), the number of MC events at the edges of the used energy range is limited. We therefore dropped the highest (lowest) energy bin for the low (medium) zenith range analysis in order to avoid issues related to the low statistics limit.

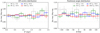

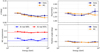

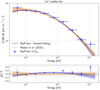

In Figs. 4 and 5 we show the comparison of the King function fit results to the low and medium zenith angle data sample as well as to the corresponding MC PSF profile, computed with SkyPrism. It is evident that the MC estimated PSF is sharper than the real data profile; the relative event containment difference is ~10%, estimated at the angular distance, where the measured event containment is 68%. At larger distances, corresponding to 95% containment of the real data, the MC data mismatch is reduced to ≾5%. This is comparable to the results obtained in Aleksić et al. (2016b).

|

Fig. 4. Comparison of the MC PSF to the real data in the 0°−35° zenith angle range, performed in terms of the King function fit. Top left panel: σ parameter of the King function, which defines the spacial scale. Top right panel: asymmetry ɛ of the fitted profile. Bottom left panel: MC event containment, computed at the radial distance corresponding to the 68% (95%) data containment radius. Bottom right panel: positional angle ø of the non-symmetric PSF extension. |

|

Fig. 5. Comparison of the MC PSF to the real data in the 35°−50° zenith angle range, performed in terms of the King function fit. The panels are the same as in Fig. 4 |

The excessive peakedness of the core of the MAGIC MC PSF has been demonstrated earlier (Aleksić et al. 2016b). In practice, an additional systematic random component of ~0.02° should be added in order to compensate for the difference of MC to the data (Aleksić et al. 2016b). The addition of 0.02° smearing to the MC PSF results in ≾5% residuals in the 2D data-to-model comparison plots, shown in Fig. 6, throughout the entire PSF extension.

|

Fig. 6. PSF model validation on Mrk421 data taken at zenith angles <30° in two energy ranges: 80 GeV–320 GeV (top panels) and 320 GeV–1280 GeV (bottom panels). Left panels: source images with the 68% and 95% level contours of the corresponding PSF model; centre panels: PSF model with the 68% and 95% containment contours of the sky map. Right panels: residuals after subtracting the normalized PSF model from the sky image. |

Certain small differences between the MC estimated PSF and real data, apparent in the right panels in Figs. 4 and 5, play a second-order role when the MAGIC mispointing is considered. We have checked that the largest deviation in asymmetry for the 0°−35° zenith angle (Fig. 4) range comes from the low number of counts in the source image in the energy bin 2.1–4.6 TeV. At larger zenith angles in Fig. 5, the difference in MC and real PSF asymmetries in 215–464 GeV bin appears significant because only very few MC events survive the selection cuts, which results in a significant underestimation of the corresponding error bars.

Overall, the comparison above demonstrates a reasonable agreement between the MC PSF model, computed with the presented software, and real data.

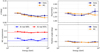

3.3. Exposure map

As described in Sect. 2.5, the exposure and effective area primarily depend on the energy. For a point source, our programs should reproduce the same energy dependence of the effective area as the standard analysis. As the analysis approach is different, we need to reproduce the aperture photometry approach for estimating the effective area: we cut a circular aperture out of the effective area map and averaged the effective area inside by weighting each bin according to the PSF distribution. In this way, we obtained the effective area curve for a point source inside a certain area. The size and the data set used were the same as in Aleksić et al. (2016b).

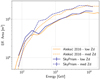

Figure 7 shows the comparison of the SkyPrism result with those from Aleksić et al. (2016b) for two different zenith distance ranges, low (Zd < 30°) and medium (30° < Zd < 45°). With higher energy, the effective area increases as showers more distant from the telescopes can be recorded. The curves obtained with both methods agree for all energies within 20%. The discrepancy may well result from the difference in the approaches as they can just be made compatible to a certain extent. Furthermore, the SkyPrism analysis uses MC events distributed over the whole camera, whereas the analysis from Aleksić et al. (2016b) uses the simulation of a point source at an offset of 0.4°.

|

Fig. 7. Effective area vs. energy as estimated with the SkyPrism package compared to the standard MAGIC analysis from Aleksić et al. (2016b). |

As our tools should further compute the effective area correctly across the entire FoV, we validated the estimated off-axis performance. Aleksić et al. (2016b) measured the rate from the Crab Nebula depending on the offset pointing distance from the source to determine the off-axis acceptance. The measured rate and the effective area off-axis dependence differ only by a single factor, given by the integral spectrum of the source above a considered threshold energy. Since this factor is a constant (for steady sources such as the Crab Nebula), the measured rate and the effective area have the same dependency on the off-axis angle.

We computed the effective area for the off-axis data sets of the rate measurement. The effective area was extracted for each off-axis angle separately in the same way as for the energy/effective area comparison. We multiplied the effective area at each offset with the integral spectrum extracted with SkyPrism at 0.4° offset to obtain the expected rate. Figure 8 shows that the effective area estimates agree with the measurements in nearly all bins within the error bars, and the relative difference is less than 20% except for 1.8 deg offset. The MAGIC MC simulation include events up to 1.5 deg offset, hence at 1.8 deg we need to rely on the extrapolation from the fit to the exposure model, and thereby the SkyPrism estimation is approximately correct.

|

Fig. 8. Crab Nebula count rate as a function of the off-axis angle. Orange points correspond to Aleksić et al. (2016b), and the blue points denote the estimates from the SkyPrism exposure model, obtained for the same data and selection cuts. Bottom panel: the relative difference between the two data sets at each offset angle. |

Considering the known differences between SkyPrism and the standard MARS analysis, as well as the limited FoV in the MC simulations, the estimations and measurements agree in the two approaches. Hence, the SkyPrism package is able to correctly compute the effective area across the MAGIC FoV.

3.4. Source flux estimation through the likelihood fit

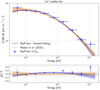

A final test of the overall performance of the SkyPrism package and its image fitting routines in particular is to reproduce the spectrum of a well-measured source. It is natural to use the Crab Nebula, given that it is one of the brightest steady sources in the TeV energy band and is widely used as a calibration object in the IACT community. Here we used the MAGIC Crab Nebula observations at low (0°−30°) zenith angle.

The fit setup for this test includes the PSF, γ-ray exposure, and background model, estimated with SkyPrism, and employs the MAGIC data in the energy range from 60 GeV to 10 TeV. The fitted model includes the point source at the Crab Nebula position and an isotropic background.

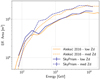

The resulting spectrum, presented in Fig. 9, does not show any significant deviations from the reference spectrum derived by MAGIC (Aleksić et al. 2016b). Although the accuracy of the off-axis SkyPrism performance estimate was demonstrated in Sect. 3.3, we also performed an additional analysis of the larger offset observations of the Crab Nebula at 0.2°, 0.7°, 1.0°, and 1.4° (compared to the standard MAGIC 0.4°). These tests, presented in Appendix B, show the same level of agreement between the standard MAGIC analysis and SkyPrism results, although the amount of data is not sufficient to obtain statistical uncertainties of ≾10% over the entire energy range. Overall, this asserts the validity of SkyPrism calculations.

|

Fig. 9. SED of the Crab Nebula obtained by processing the data set used by Aleksić et al. (2016b) with the full SkyPrism tool chain. The spectral fit is obtained via the forward folding procedure, whereas the data points are the results of the individual fits in each Eest bin. The dark blue error bars of the data points are the statistical error, and the light blue error bars indicate the uncertainties from the exposure model. The obtained results are also compared to the Crab Nebula SED from the same publication in terms of the relative flux difference (ΔF/F.) |

4. Summary

We have presented a new IACT data analysis package SkyPrism, optimised for MAGIC data. The package includes several routines that estimate the telescope response (as a function of the FoV and photon energy) from on MC simulations. The estimated response is computed individually for each observation, based on the telescope pointing distribution during the corresponding data taking to ensure that it describes the telescope performance accurately.

We have performed extensive tests of the key ingredients of a data analysis: the PSF and γ-ray exposure, background model, and fitting procedure. Altogether, we demonstrate an agreement with the standard MAGIC analysis package, MARS (Zanin et al. 2013), at the level ≾10% in terms of the reconstructed source flux. The accuracy of the SkyPrism analysis does not degrade with the offset from the telescope camera centre, which enables a proper reconstruction of extended sources fluxes over the entire FoV.

With SkyPrism, it is possible for the first time to analyse MAGIC data of extended sources of arbitrary morphology. The program can also compute multiple, overlapping point sources in the instrument FoV, as well as combine several data sets that cover large sky regions in a convenient way. Although the structure of SkyPrism is different from CTools (Knödlseder et al. 2016) and Gammapy (Deil et al. 2017), its parts can be further optimised for the data analysis of different IACTs, such as the next-generation CTA.

Within the MAGIC collaboration, SkyPrism will be distributed together with the future standard software releases; being open-source, it is also available from the authors upon request.

Acknowledgments

The authors would like to thank the MAGIC Collaboration for support and providing the data and the MARS software. Particularly, we would like to thank Julian Sitarek for providing us the results of the MAGIC performance paper and valuable discussion throughout the preparation of this work. The work of Ievgen Vovk is supported by the Swiss National Science Foundation grant P2GEP2_151815.

References

- Acharya, B. S., Actis, M., Aghajani, T., et al. 2013, Astropart. Phys., 43, 3 [Google Scholar]

- Albert, J., Aliu, E., Anderhub, H., et al. 2008, NIMPA, 588, 424 [Google Scholar]

- Aleksić, J., Ansoldi, S., Antonelli, L. A., et al. 2016a, Astropart. Phys., 72, 61 [NASA ADS] [CrossRef] [Google Scholar]

- Aleksić, J., Ansoldi, S., Antonelli, L. A., et al. 2016b, Astropart. Phys., 72, 76 [NASA ADS] [CrossRef] [Google Scholar]

- Antcheva, I., Ballintijn, M., Bellenot, B., et al. 2009, Comput. Phys. Commun., 180, 2499 [Google Scholar]

- Baixeras, C. 2003, Nucl. Phys. B Proc. Suppl., 114, 247 [NASA ADS] [CrossRef] [Google Scholar]

- Berge, D., Funk, S., & Hinton, J. 2007, A&A, 466, 1219 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Breiman, L. 2001, Mach. Learn., 45, 5 [CrossRef] [Google Scholar]

- Deil, C., Zanin, R., Lefaucheur, J., et al. 2017, ICRC, 35, 766 [Google Scholar]

- Fomin, V. P., Stepanian, A. A., Lamb, R. C., et al. 1994, Astropart. Phys., 2, 137 [NASA ADS] [CrossRef] [Google Scholar]

- Foreman-Mackey, D., Hogg, D. W., Lang, D., & Goodman, J. 2013, PASP, 125, 306 [CrossRef] [Google Scholar]

- Knödlseder, J., Mayer, M., Deil, C., et al. 2016, A&A, 593, A1 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Maier, G., & Knapp, J. 2007, Astropart. Phys., 28, 72 [NASA ADS] [CrossRef] [Google Scholar]

- Mattox, J. R., Bertsch, D. L., Chiang, J., et al. 1996, ApJ, 461, 396 [NASA ADS] [CrossRef] [Google Scholar]

- Moralejo, A., Gaug, M., Carmona, E., et al. 2009, ArXiv e-prints [arXiv: 0907.0943] [Google Scholar]

- Sitarek, J., Sobczyńska, D., Szanecki, M., et al. 2018, Astropart. Phys., 97, 1 [NASA ADS] [CrossRef] [Google Scholar]

- Sobczyńska, D. 2009, J. Phys. G Nucl. Phys., 36, 125201 [NASA ADS] [CrossRef] [Google Scholar]

- Sobczyńska, D. 2015, J. Phys. G Nucl. Phys., 42, 095201 [NASA ADS] [CrossRef] [Google Scholar]

- Vela, P. D., Stamerra, A., Neronov, A., et al. 2018, Astropart. Phys., 98, 1 [NASA ADS] [CrossRef] [Google Scholar]

- Wells, D. C., Greisen, E. W., & Harten, R. H. 1981, A&AS, 44, 363 [NASA ADS] [Google Scholar]

- Wilks, S. S. 1938, Ann. Math. Statist., 9, 60 [Google Scholar]

- Zanin, R., Carmona, E., Sitarek, J., Colin, P., & Frantzen, K. 2013, in Proc. 33st ICRC [Google Scholar]

Appendix A: Transformation from azimuthal to equatorial coordinates

While the instrument response depends on the horizontal coordinate system, the emission of astrophysical sources and the background depend on the skyfield, which is preferably defined in a celestial coordinate system such as the equatorial coordinate system.

For usual observations, the horizontal and equatorial coordinates for the centre of the MAGIC camera are recorded, so that the time dependence of the transformation can be factored out. The sampled IRFs can be transformed from horizontal into equatorial coordinates through a rotation by an angle β, which can be computed using the so-called navigational triangle: it is formed by the pointing position, the celestial north pole, and the zenith. As the azimuth ;, the latitude of the observatory ø (b = 90° − ø), and the zenith distance of the pointing a are known, the rotation angle can be calculated through

with c being the distance between the pointing and the celestial north pole.

Appendix B: Source spectra estimation at different camera offset angles

As a demonstration of the performance of the SkyPrism tools for non-standard source positions with respect to the camera centre, here we also show additional SEDs from larger offset observations of the Crab Nebula, namely at at 0.2°, 0.7°, 1.0°, and 1.4° (MAGIC standard off-set is 0.4°). Similarly to the analysis, presented in Sect. 3.4, we used the PSF, γ-ray exposure, and background models, constructed with SkyPrism for each of the observations individually. The fitted source model again included just the Crab Nebula point source with the addition of the isotropic background. The obtained spectra are shown in Fig. B.1 and demonstrate a reasonable agreement between SkyPrism and standard MAGIC analysis. The data sets are comparably small, which increases statistical uncertainties.

|

Fig. B.1. SED of the Crab Nebula obtained by processing data with non-standard wobble offsets that has also been used by Aleksić et al. (2016b). The solid error bars indicate statistical errors, while the lighter coloured, wider error bars were obtained by also propagating the uncertainties from the constructed exposure model. Because of the short observation times of the data sets, the uncertainties of the fitted exposure map can be larger than the statistical error of the data points. |

All Figures

|

Fig. 1. Illustration of the different methods for the construction of a background camera exposure model from wobble observations (here one wobble pair). The source position and extension is shown as red point, ellipse, or stripe. The blue shading marks bins excluded from the background map reconstruction. |

| In the text | |

|

Fig. 2. Comparison of a simulated sky map with no source and a background profile, reconstructed from it with SkyPrism. We also show a similar profile, obtained from the real empty field data with the BlindMap method of the SkyPrism analysis. Left panel: off-centre distribution. Right panel: positional angle distribution; the zero-point is arbitrarily set to a horizontal direction. |

| In the text | |

|

Fig. 3. Background methods tested with an empty sky region at Zd < 35°. Top to bottom panels: Wobble map, blind map, and excluded region map. For the excluded region map, the ignored regions (a stripe and two circles) are shaded. The PSF after convolution with a smoothing kernel is depicted in terms of 39% and 68% containment contours. Left panels: ratio of the residuals (skymap-reconstructed background) to the skymap. The blue and red contours indicate ±5% relative flux boundary. Centre panels: test statistic maps computed for the residual counts in the left panels. Right panels: distribution of the test statistic values (blue histogram) compared to the expected normal distribution (red curve). See Sects. 2.3 and 3.1 for details. |

| In the text | |

|

Fig. 4. Comparison of the MC PSF to the real data in the 0°−35° zenith angle range, performed in terms of the King function fit. Top left panel: σ parameter of the King function, which defines the spacial scale. Top right panel: asymmetry ɛ of the fitted profile. Bottom left panel: MC event containment, computed at the radial distance corresponding to the 68% (95%) data containment radius. Bottom right panel: positional angle ø of the non-symmetric PSF extension. |

| In the text | |

|

Fig. 5. Comparison of the MC PSF to the real data in the 35°−50° zenith angle range, performed in terms of the King function fit. The panels are the same as in Fig. 4 |

| In the text | |

|

Fig. 6. PSF model validation on Mrk421 data taken at zenith angles <30° in two energy ranges: 80 GeV–320 GeV (top panels) and 320 GeV–1280 GeV (bottom panels). Left panels: source images with the 68% and 95% level contours of the corresponding PSF model; centre panels: PSF model with the 68% and 95% containment contours of the sky map. Right panels: residuals after subtracting the normalized PSF model from the sky image. |

| In the text | |

|

Fig. 7. Effective area vs. energy as estimated with the SkyPrism package compared to the standard MAGIC analysis from Aleksić et al. (2016b). |

| In the text | |

|

Fig. 8. Crab Nebula count rate as a function of the off-axis angle. Orange points correspond to Aleksić et al. (2016b), and the blue points denote the estimates from the SkyPrism exposure model, obtained for the same data and selection cuts. Bottom panel: the relative difference between the two data sets at each offset angle. |

| In the text | |

|

Fig. 9. SED of the Crab Nebula obtained by processing the data set used by Aleksić et al. (2016b) with the full SkyPrism tool chain. The spectral fit is obtained via the forward folding procedure, whereas the data points are the results of the individual fits in each Eest bin. The dark blue error bars of the data points are the statistical error, and the light blue error bars indicate the uncertainties from the exposure model. The obtained results are also compared to the Crab Nebula SED from the same publication in terms of the relative flux difference (ΔF/F.) |

| In the text | |

|

Fig. B.1. SED of the Crab Nebula obtained by processing data with non-standard wobble offsets that has also been used by Aleksić et al. (2016b). The solid error bars indicate statistical errors, while the lighter coloured, wider error bars were obtained by also propagating the uncertainties from the constructed exposure model. Because of the short observation times of the data sets, the uncertainties of the fitted exposure map can be larger than the statistical error of the data points. |

| In the text | |

Current usage metrics show cumulative count of Article Views (full-text article views including HTML views, PDF and ePub downloads, according to the available data) and Abstracts Views on Vision4Press platform.

Data correspond to usage on the plateform after 2015. The current usage metrics is available 48-96 hours after online publication and is updated daily on week days.

Initial download of the metrics may take a while.