| Issue |

A&A

Volume 557, September 2013

|

|

|---|---|---|

| Article Number | A143 | |

| Number of page(s) | 15 | |

| Section | Numerical methods and codes | |

| DOI | https://doi.org/10.1051/0004-6361/201321742 | |

| Published online | 25 September 2013 | |

PORTA: A three-dimensional multilevel radiative transfer code for modeling the intensity and polarization of spectral lines with massively parallel computers

1 Astronomical Institute ASCR, v.v.i., Ondřejov, Czech Republic

e-mail: jiri.stepan@asu.cas.cz

2 Instituto de Astrofísica de Canarias, vía Láctea s/n, 38205 La Laguna, Tenerife, Spain

e-mail: jtb@iac.es

3 Departamento de Astrofísica, Universidad de La Laguna (ULL), 38206 La Laguna, Tenerife, Spain

4 Consejo Superior de Investigaciones Científicas, Spain

Received: 19 April 2013

Accepted: 17 July 2013

The interpretation of the intensity and polarization of the spectral line radiation produced in the atmosphere of the Sun and of other stars requires solving a radiative transfer problem that can be very complex, especially when the main interest lies in modeling the spectral line polarization produced by scattering processes and the Hanle and Zeeman effects. One of the difficulties is that the plasma of a stellar atmosphere can be highly inhomogeneous and dynamic, which implies the need to solve the non-equilibrium problem of the generation and transfer of polarized radiation in realistic three-dimensional (3D) stellar atmospheric models. Here we present PORTA, an efficient multilevel radiative transfer code we have developed for the simulation of the spectral line polarization caused by scattering processes and the Hanle and Zeeman effects in 3D models of stellar atmospheres. The numerical method of solution is based on the non-linear multigrid iterative method and on a novel short-characteristics formal solver of the Stokes-vector transfer equation which uses monotonic Bézier interpolation. Therefore, with PORTA the computing time needed to obtain at each spatial grid point the self-consistent values of the atomic density matrix (which quantifies the excitation state of the atomic system) scales linearly with the total number of grid points. Another crucial feature of PORTA is its parallelization strategy, which allows us to speed up the numerical solution of complicated 3D problems by several orders of magnitude with respect to sequential radiative transfer approaches, given its excellent linear scaling with the number of available processors. The PORTA code can also be conveniently applied to solve the simpler 3D radiative transfer problem of unpolarized radiation in multilevel systems.

Key words: line: formation / magnetic fields / methods: numerical / polarization / radiative transfer

© ESO, 2013

1. Introduction

This paper describes a computer program we have developed for solving, in three-dimensional (3D) models of stellar atmospheres, the problem of the generation and transfer of spectral line polarization taking into account anisotropic radiation pumping and the Hanle and Zeeman effects in multilevel systems. The numerical method of solution is based on a highly convergent iterative method, whose convergence rate is insensitive to the grid size, and on an accurate short-characteristics formal solver of the Stokes-vector transfer equation that uses monotonic Bézier interpolation. A key feature of our multilevel code called PORTA (POlarized Radiative TrAnsfer) is its parallelization strategy, which allows us to speed up the numerical solution of complicated 3D problems by several orders of magnitude with respect to sequential radiative transfer approaches.

The multilevel radiative transfer problem currently solved by PORTA is the so-called non-local thermodynamic equilibrum (LTE) problem of the 2nd kind (Landi Degl’Innocenti & Landolfi 2004, hereafter LL04; see also Trujillo Bueno 2009), where the phenomenon of scattering in a spectral line is described as the temporal succession of statistically-independent events of absorption and re-emission (complete frequency redistribution, or CRD). This is a formidable numerical problem that implies calculating, at each spatial grid point of the (generally magnetized) 3D stellar atmosphere model under consideration, the values of the multipolar components of the atomic density matrix corresponding to each atomic level of total angular momentum J. These  elements, with K = 0,...,2J and Q = −K,...,K, quantify the overall population of each level J (

elements, with K = 0,...,2J and Q = −K,...,K, quantify the overall population of each level J ( ), the population imbalances between its magnetic sublevels (

), the population imbalances between its magnetic sublevels ( , with K > 0), and the quantum coherence between each pair of them (

, with K > 0), and the quantum coherence between each pair of them ( , with K > 0 and Q ≠ 0). The values of these density-matrix elements have to be consistent with the intensity, polarization, and symmetry properties of the incident radiation field generated within the medium. Finding these density-matrix values requires solving jointly the radiative transfer (RT) equations for the Stokes parameters (I(ν,Ω) = (I,Q,U,V)T, with ν and Ω the frequency and direction of propagation of the radiation beam under consideration) and the statistical equilibrium equations (SEE) for the

, with K > 0 and Q ≠ 0). The values of these density-matrix elements have to be consistent with the intensity, polarization, and symmetry properties of the incident radiation field generated within the medium. Finding these density-matrix values requires solving jointly the radiative transfer (RT) equations for the Stokes parameters (I(ν,Ω) = (I,Q,U,V)T, with ν and Ω the frequency and direction of propagation of the radiation beam under consideration) and the statistical equilibrium equations (SEE) for the  elements. These

elements. These  elements, at each spatial grid point of the 3D atmospheric model and for each level J of the considered atomic model, provide a complete description of the excitation of each level J. As a result, the radiative transfer coefficients (i.e., the emission vector and the propagation matrix of the Stokes-vector transfer equation) corresponding to each line transition depend on the

elements, at each spatial grid point of the 3D atmospheric model and for each level J of the considered atomic model, provide a complete description of the excitation of each level J. As a result, the radiative transfer coefficients (i.e., the emission vector and the propagation matrix of the Stokes-vector transfer equation) corresponding to each line transition depend on the  values of the upper (J = Ju) and lower (J = Jl) line levels. Once the self-consistent

values of the upper (J = Ju) and lower (J = Jl) line levels. Once the self-consistent  values are obtained at each point within the medium, PORTA solves the Stokes-vector transfer equation to obtain the emergent Stokes profiles for any desired line transition and line of sight. Obviously, this last step is computationally cheap compared with the time needed to obtain the self-consistent solution for the

values are obtained at each point within the medium, PORTA solves the Stokes-vector transfer equation to obtain the emergent Stokes profiles for any desired line transition and line of sight. Obviously, this last step is computationally cheap compared with the time needed to obtain the self-consistent solution for the  elements.

elements.

When polarization phenomena are neglected the only non-zero density-matrix element is  (proportional to the overall population of each J level) and the only non-zero Stokes parameter is the specific intensity I(ν,Ω) for each of the radiative transitions in the model atom under consideration. This non-LTE problem of the 1st kind (e.g., Mihalas 1978) is a particular case of the above-mentioned non-LTE problem of the 2nd kind. In other words, the 3D multilevel radiative transfer code described here can also be applied to solve the standard non-LTE multilevel problem on which much of today’s quantitative stellar spectroscopy is based. For this reason, PORTA provides options for spectropolarimetry and for spectroscopy (see Appendix B).

(proportional to the overall population of each J level) and the only non-zero Stokes parameter is the specific intensity I(ν,Ω) for each of the radiative transitions in the model atom under consideration. This non-LTE problem of the 1st kind (e.g., Mihalas 1978) is a particular case of the above-mentioned non-LTE problem of the 2nd kind. In other words, the 3D multilevel radiative transfer code described here can also be applied to solve the standard non-LTE multilevel problem on which much of today’s quantitative stellar spectroscopy is based. For this reason, PORTA provides options for spectropolarimetry and for spectroscopy (see Appendix B).

An overview on 3D radiative transfer codes for unpolarized radiation can be found in Carlsson (2008). Information on numerical methods for the transfer of spectral line polarization can be found in some reviews (e.g., Trujillo Bueno 2003; Nagendra & Sampoorna 2009) and research papers (e.g., Rees et al. 1989; Paletou & Faurobert-Scholl 1998; Manso Sainz & Trujillo Bueno 1999, 2003, 2011; Sampoorna & Trujillo Bueno 2010; Anusha et al 2011). To the best of our knowledge this is the first time that a computer program suitable for massively parallel computers has been developed to solve the multilevel problem of the generation and transfer of spectral line polarization resulting from scattering processes and the Hanle and Zeeman effects in 3D stellar atmosphere models. The problem of the generation and transfer of spectral line polarization with partial frequency redistribution (PRD) is being increasingly considered in the literature (e.g., Sampoorna et al. 2010; Belluzzi et al. 2012), but assuming relatively simple model atoms suitable only for some resonance lines.

After presenting in Sect. 2 the formulation of the RT problem, in Sect. 3 we explain our formal solver of the 3D Stokes-vector transfer equation, which is based on Auer’s (2003) suggestion of monotonic Bézier interpolation within the framework of the short-characteristics approach. An additional important point is the parallelization strategy we have developed for taking advantage of massively parallel computers, which we detail in Sect. 4 following our explanation of the formal solver. The iterative method we have implemented, explained in Sect. 5, is based on the non-linear multigrid method for radiative transfer applications proposed by Fabiani Bendicho et al. (1997). We present useful benchmarks and comparisons of the multigrid iterative option of our code with another option based on the Jacobian iterative method on which one-dimensional (1D) multilevel codes for solving the non-LTE problem of the 2nd kind are based (e.g., Manso Sainz & Trujillo Bueno 2003; Štěpán & Trujillo Bueno 2011). Finally, in Sect. 6 we present our conclusions with a view to future research.

We have already applied PORTA to investigate the intensity and linear polarization of some strong chromospheric lines in a model of the extended solar atmosphere resulting from state-of-the-art 3D magneto-hydrodynamic (MHD) simulations (e.g., Štěpán et al. 2012). However, for the benchmarks presented in this paper, whose aim is a detailed description of PORTA, we have found it more suitable to choose the 3D model atmosphere and the five-level atomic model detailed in Appendix A. The software and hardware tools are summarized in Appendix B.

2. The radiative transfer problem

The multilevel non-LTE problem considered here for the generation and transfer of spectral line polarization is that outlined in Sect. 3 of Štěpán & Trujillo Bueno (2011), where we assumed one-dimensional (1D), plane-parallel atmospheric models (see also Manso Sainz & Trujillo Bueno 2003), while the aim here is to describe the computer program we have developed for solving in Cartesian coordinates the same multilevel radiative transfer problem but in three-dimensional (3D) stellar atmospheric models. As shown below, the development of a robust multilevel 3D code is not simply an incremental step with respect to the 1D case, given the need to develop and implement an accurate 3D formal solver, a highly convergent iterative scheme based on multiple grids, and a suitable parallelization strategy to take advantage of today’s massively parallel computers.

A detailed presentation of all the physics and relevant equations necessary to understand the radiation transfer problem solved in this paper can be found in Chap. 7 of LL04. Our aim here is to solve jointly the Stokes-vector transfer equation (corresponding to each radiative transition in the model atom under consideration) and the statistical equilibrium and conservation equations for the multipolar components of the atomic density matrix (corresponding to each level J). We take into account the possibility of quantum coherence (or interference) between pairs of magnetic sublevels pertaining to any given J level, but neglect quantum interference between sublevels pertaining to different J levels. Neglecting J-state interference is a very suitable approximation for modeling the line-core polarization, which is where the Hanle effect in most solar spectral lines operates (see Belluzzi & Trujillo Bueno 2011). In the absence of J-state interference, the general number of  unknowns for each level J is (2J + 1)2, at each spatial grid point. We note that in the unpolarized case there is only one unknown associated to each J level (i.e.,

unknowns for each level J is (2J + 1)2, at each spatial grid point. We note that in the unpolarized case there is only one unknown associated to each J level (i.e.,  ).

).

In this paper we focus on the multilevel model atom (see Sects. 7.3 and 7.13c of LL04), in which quantum interference between sublevels pertaining to different J levels are neglected. However, it is important to note that the same iterative method, formal solver, and the overall logical structure of our code PORTA are very suitable for solving the same type of problem but considering other model atoms and/or magnetic field regimes (see Chap. 7 of LL04).

The emission vector and the propagation matrix of the Stokes-vector transfer equation depend on the local values of the  elements (with K = 0,...,2J and Q = −K,...,K) of the upper (i) and lower (j) line levels (see Sect. 7.2.b in LL04). Given an estimation of these

elements (with K = 0,...,2J and Q = −K,...,K) of the upper (i) and lower (j) line levels (see Sect. 7.2.b in LL04). Given an estimation of these  elements for each J level at all spatial grid points, the formal solution of the Stokes-vector transfer equation for each radiative transition allows us to obtain the ensuing Stokes parameters at each spatial point within the medium, for each discretized frequency and ray direction. After angle and frequency integration one can obtain the radiation field tensors

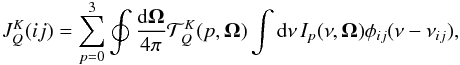

elements for each J level at all spatial grid points, the formal solution of the Stokes-vector transfer equation for each radiative transition allows us to obtain the ensuing Stokes parameters at each spatial point within the medium, for each discretized frequency and ray direction. After angle and frequency integration one can obtain the radiation field tensors  (1)where φij is the line absorption profile and

(1)where φij is the line absorption profile and  the spherical irreducible tensors given in Table 5.6 of LL04, and (I0,I1,I2,I3)T ≡ (I,Q,U,V)T is the so-called Stokes vector. These radiation field tensors, defined for K = 0,1,2 and Q = −K,...,K, specify the symmetry properties of the radiation field that illuminates each point within the medium. These quantities are of fundamental importance because they determine the radiative rates that enter the statistical equilibrium equations (see Sect. 7.2.a in LL04). After the discretization of the spatial dependence these equations can be expressed as

the spherical irreducible tensors given in Table 5.6 of LL04, and (I0,I1,I2,I3)T ≡ (I,Q,U,V)T is the so-called Stokes vector. These radiation field tensors, defined for K = 0,1,2 and Q = −K,...,K, specify the symmetry properties of the radiation field that illuminates each point within the medium. These quantities are of fundamental importance because they determine the radiative rates that enter the statistical equilibrium equations (see Sect. 7.2.a in LL04). After the discretization of the spatial dependence these equations can be expressed as  (2)where ℒl is a block-diagonal matrix formed by

(2)where ℒl is a block-diagonal matrix formed by  submatrices,

submatrices,  being the number of points of the spatial grid of resolution level l (the larger the positive integer number l the finer the grid). NL × NL is the size of each submatrix, NL being the total number of

being the number of points of the spatial grid of resolution level l (the larger the positive integer number l the finer the grid). NL × NL is the size of each submatrix, NL being the total number of  unknowns at each spatial grid point. The length of the vector ρl of

unknowns at each spatial grid point. The length of the vector ρl of  unknowns and of the known vector fl is

unknowns and of the known vector fl is  . The coefficients of the block-diagonal matrix ℒl depend on the collisional rates, which depend on the local values of the thermodynamical variables, and on the radiative rates, which depend on the radiation field tensors

. The coefficients of the block-diagonal matrix ℒl depend on the collisional rates, which depend on the local values of the thermodynamical variables, and on the radiative rates, which depend on the radiation field tensors  , whose computation requires solving the Stokes-vector transfer equation for each radiative transition i → j. Since the radiative transfer coefficients depend on the

, whose computation requires solving the Stokes-vector transfer equation for each radiative transition i → j. Since the radiative transfer coefficients depend on the  unknowns the problem is non-linear, in addition to non-local.

unknowns the problem is non-linear, in addition to non-local.

To solve this type of problem we need a fast and accurate formal solver of the Stokes-vector transfer equation and a suitable iterative method capable of finding rapidly the density matrix elements ρl these that Eq. (2) is satisfied when the radiation field tensors, which appear in the block-diagonal matrix ℒl, are calculated from such ρl elements via the solution of the Stokes-vector transfer equation. The 1D multilevel code described in Appendix A of Štěpán & Trujillo Bueno (2011) is based on the DELOPAR formal solver proposed by Trujillo Bueno (2003) and on a Jacobian iterative scheme, similar to that applied by Manso Sainz & Trujillo Bueno (2003), but generalized to the case of overlapping transitions.

We turn now to explain the new formal solver we have developed for 3D Cartesian grids that is based on monotonic Bézier interpolation.

3. BESSER: Monotonic Bézier formal solver of the Stokes-vector transfer equation

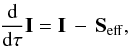

The transfer equation for the Stokes vector I = (I,Q,U,V)T can be written (e.g., Rees et al. 1989; Trujillo Bueno 2003)  (3)where τ is the optical distance along the ray under consideration (dτ = −ηI ds, with s the geometrical distance along the ray and ηI the diagonal element of the 4 × 4 propagation matrix K), Seff = S − K′I being K′ = K/ηI − 1 (where 1 is the unit matrix and S = ϵ/ηI, with ϵ = (ϵI,ϵQ,ϵU,ϵV)T the emission vector resulting from spontaneous emission events). The formal solution of this equation is

(3)where τ is the optical distance along the ray under consideration (dτ = −ηI ds, with s the geometrical distance along the ray and ηI the diagonal element of the 4 × 4 propagation matrix K), Seff = S − K′I being K′ = K/ηI − 1 (where 1 is the unit matrix and S = ϵ/ηI, with ϵ = (ϵI,ϵQ,ϵU,ϵV)T the emission vector resulting from spontaneous emission events). The formal solution of this equation is ![\begin{eqnarray} \vec I_{\rm O}=\vec I_{\rm M} {\rm e}^{-\tau_{\rm MO}}+\int_0^{\tau_{\rm MO}} {\rm d} t\, \left[\vec S(t)-\vec K'(t)\vec I(t)\right]{\rm e}^{-t}, \label{eq:fsint} \end{eqnarray}](/articles/aa/full_html/2013/09/aa21742-13/aa21742-13-eq51.png) (4)where the ray or pencil of radiation of frequency ν propagates along the direction Ω, from the upwind point M (where the Stokes vector IM is assumed to be known) towards the spatial point O of interest (where the Stokes vector IO is sought), and t is the optical path along the ray (measured from O to M; see Fig. 1).

(4)where the ray or pencil of radiation of frequency ν propagates along the direction Ω, from the upwind point M (where the Stokes vector IM is assumed to be known) towards the spatial point O of interest (where the Stokes vector IO is sought), and t is the optical path along the ray (measured from O to M; see Fig. 1).

The numerical solution of Eq. (4) allows us to obtain, from the current estimates of the emission vector ϵ and propagation matrix K, the Stokes parameters at each spatial grid point O within the 3D medium, for all discretized radiation frequencies and directions. We note that the unpolarized version of Eq. (4) can be easily obtained by taking IO → IO, IM → IM, S(t) → S(t), and K′(t) → 0.

3.1. The short characteristics method

|

Fig. 1 Short-characteristics in a three-dimensional Cartesian rectilinear grid. |

The short-characteristics (SC) method was proposed by Kunasz & Auer (1988) to solve the unpolarized version of Eq. (4) for the specific intensity (see also Auer & Paletou 1994; Auer et al. 1994; Fabiani Bendicho & Trujillo Bueno 1999). Consider three consecutive spatial points M, O, and P along the ray under consideration, with M the upwind point, P the downwind point, and O the point where the Stokes I parameter is being sought, for the given frequency and ray direction (see Fig. 1). The aim of the original SC method is to solve the unpolarized version of Eq. (4) along the MO segment in order to compute the specific intensity I(ν,Ω). The original SC method is based on the approximation of parabolic interpolation of the source function S(t) between the upwind point M of the short characteristics, the grid point O, and the downwind point P (see Fig. 1). In 2D and 3D grids, the upwind and downwind points of the SC do not generally coincide with any spatial grid node and the radiation transfer quantities (i.e., the emission and absorption coefficients) have to be interpolated from the nearby 9-point (biquadratic case) or 4-point (bilinear case) stencils of the discrete grid points. In the unpolarized and polarized options of PORTA both biquadratic and bilinear interpolation are implemented. Bilinear interpolation is sufficient in the fine grids of today’s MHD models. The upwind specific intensity or the Stokes vector IM need to be interpolated from the same grid nodes. Proper topological ordering of the grid points is therefore necessary for every direction of the short characteristics. We note that the intersection points M and P may be located on a vertical plane of the grid instead of a horizontal one.

|

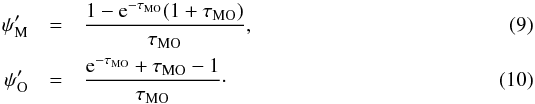

Fig. 2 Parabolic and BESSER interpolation using the three successive points M, O, and P. Dotted line: parabolic interpolation may create a spurious extremum between points M and O. Solid line: interpolation using our BESSER method with continuous derivative at point O. The control points of the intervals, whose y-coordinates are denoted by cM and cP, define tangents to the Bézier splines in their endpoints. The x-coordinates of the control points are located at the center of the corresponding intervals. |

The DELO method proposed by Rees et al. (1989) can be considered a possible generalization of the scalar SC method to the radiative transfer problem of polarized radiation. That formal solver of the Stokes-vector transfer equation is, however, based on linear interpolation of the source function Seff between points M and O. Trujillo Bueno (2003) demonstrated that significantly more accurate solutions can be obtained by using instead a formal solver he calls DELOPAR, which is based on the choice in Eq. (4) of parabolic interpolation for S (between points M, O, and P) and linear interpolation for K′I (between points M and O). He showed that with DELOPAR the accuracy of the self-consistent solution rapidly improves as the spatial resolution level l of the spatial grid is increased. The first version of the computer program NICOLE of Socas Navarro et al. (2000), for the synthesis and inversion of Stokes profiles induced by the Zeeman effect, used DELOPAR as the formal solver.

In smooth and/or suitably discretized model atmospheres, the DELOPAR method provides accurate results. However, in the presence of abrupt changes in the physical properties of the atmospheric model, the parabolic interpolation suffers from non-monotonic interpolation between otherwise monotonic sequences of discrete points. Such spurious extrema of the interpolant decrease the accuracy of the solution and can also lead to unrealistic or even unphysical Stokes parameters at the grid point O under consideration (see the dotted line in Fig. 2). In addition, the parabolic interpolation may occasionally lead to the divergence of the whole numerical solution.

To overcome these difficulties, Auer (2003) suggested an interpolation based on the use of monotonic Bézier splines. Some formal solvers based on this idea have already been implemented (Koesterke et al. 2008; Hayek 2008; Štěpán & Trujillo Bueno 2012; Holzreuter & Solanki 2012; de la Cruz Rodríguez & Piskunov 2013). In this section, we describe in detail the accurate formal solver we have developed, pointing out a significant difference with the original proposal of Auer (2003). We call it BESSER (BEzier Spline SolvER).

3.2. Monotonic spline interpolation with continuous first derivative

Following Auer (2003), our BESSER algorithm is based on the use of piecewise monotonic quadratic Bézier splines. The control points of the splines can be used to preserve monotonicity of the interpolant, because the interpolant is contained in an envelope defined by the tangents of the spline in its endpoints and of the control point which is located in the intersection of these tangents (see Fig. 2). As shown below, we achieve a smooth connection of the Bézier splines in the central point O by imposing a continuous first derivative of the interpolant. This improvement over the original treatment of Auer (2003) leads to a symmetrical interpolation independently of the choice of the interpolation direction (MOP for one direction of the ray propagation or POM for the opposite direction of the ray). An additional attractive feature is that our BESSER method always provides reliable values for the diagonal of the Λ-operator, i.e., in the interval [0,1), used in methods based on the Jacobi iteration.

|

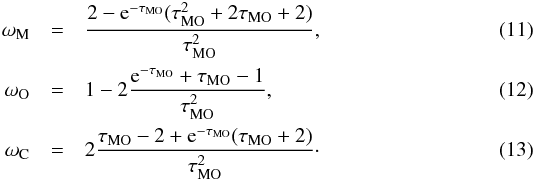

Fig. 3 Treatment of an overshoot in the downwind interval OP by three different methods. Black solid line: BESSER implementation with continuous derivative at point O and the cP overshoot correction of the control point. Crosses: piecewise monotonic quadratic Bézier spline interpolation. Solid gray line: parabolic interpolation. We note that the piecewise monotonic quadratic Bézier interpolation coincides with the parabolic interpolation in the MO segment because the overshoot in the OP interval does not affect the upwind interpolation between M and O. |

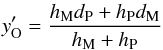

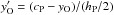

Given a quantity y (e.g., the source function) defined at three successive points xM, xO, and xP, we use two quadratic Bézier splines to interpolate y between points M and O and between points O and P (see Fig. 2). First, we look for an optimal interpolation in the interval MO. For the sake of simplicity, we parametrize the x-coordinate in this interval by a dimensionless parameter u = (x − xM)/hM, where hM = xO − xM. The Bézier spline in the interval MO is a parabola passing through points M and O. The derivatives at such points are defined by the position of the control point whose y-coordinate is cM (see Fig. 2). The equation for such a spline reads (Auer 2003) ![\begin{eqnarray} y(u)=(1-u)^2y_{\rm M} + 2u(1-u)c_{\rm M} + u^2y_{\rm O},\qquad u\in[0,1]. \label{eq:beziers} \end{eqnarray}](/articles/aa/full_html/2013/09/aa21742-13/aa21742-13-eq81.png) (5)Similarly, one can define a Bézier spline between points O and P by doing the formal changes yM → yO, yO → yP, and u = (x − xO)/hP, where hP = xP − xO; the y-coordinate of the ensuing control point is denoted by cP (see Fig. 2).

(5)Similarly, one can define a Bézier spline between points O and P by doing the formal changes yM → yO, yO → yP, and u = (x − xO)/hP, where hP = xP − xO; the y-coordinate of the ensuing control point is denoted by cP (see Fig. 2).

We look for the values of cM and cP that satisfy the following conditions: (1) if the sequence yM, yO, yP is monotonic, then the interpolation is monotonic in the whole interval [xM,xP]; (2) if the sequence of yi values is not monotonic, then the interpolant has the only local extremum at O; and (3) the first derivative of the interpolant at point O should be continuous. The ensuing algorithm proceeds as follows:

-

1.

Calculate the quantities dM = (yO − yM)/hM, dP = (yP − yO)/hP.

-

2.

If the sequence yM, yO, yP is not monotonic (i.e., if dMdP ≤ 0), then set cM = cP = yO and exit the algorithm. The derivative of the splines at point O is equal to zero, leading to a local extremum at the central point.

-

3.

Estimate the derivative at point O,

(6)(see Eq. (7) of Auer 2003, and references therein). This derivative is equal to that provided at the same point by parabolic interpolation among points M, O, and P. Moreover, in contrast with Eq. (12) of Auer (2003), it is an expression that relates y′ (e.g., the source function derivative) linearly with the y-values (e.g., with the source function values).

(6)(see Eq. (7) of Auer 2003, and references therein). This derivative is equal to that provided at the same point by parabolic interpolation among points M, O, and P. Moreover, in contrast with Eq. (12) of Auer (2003), it is an expression that relates y′ (e.g., the source function derivative) linearly with the y-values (e.g., with the source function values). -

4.

Calculate the initial positions of the control points,

, and

, and  1.

1. -

5.

Check that min(yM,yO) ≤ cM ≤ max(yM,yO). If the condition is satisfied, then go to step 7, otherwise continue with step 6.

-

6.

If the condition in step 5 is not satisfied, then there is an overshoot of the interpolant in the interval MO. Set cM = yM, so that the first derivative at M is equal to zero and the overshoot is corrected. Since the value of cP is not of interest for the formal solution between M and O, exit the algorithm.

-

7.

Check if min(yO,yP) ≤ cP ≤ max(yO,yP). If this condition is not satisfied, then set cP = yP so that the overshoot in the interval OP is corrected.

-

8.

Calculate the new derivative at O,

, using the corrected value of cP calculated in step 7.

, using the corrected value of cP calculated in step 7. -

9.

Calculate a new cM value to keep the derivative at O smooth. It is easy to realize that this change cannot produce an overshoot in the MO interval, hence the solution remains monotonic with a continuous derivative.

Steps 8 and 9 of the above-mentioned algorithm, dealing with correction of the overshoots in the downwind interval followed by modification of the cM upwind control point value, are not part of the original algorithm of Auer (2003) in which the derivative at point O can be discontinuous. We have found that it is suitable to guarantee the smoothness of the derivative, and this can be done with only a small increase in the computing time with respect to the DELOPAR method. Our BESSER interpolation is stable; that is, the interpolant varies smoothly with smooth changes of the M, O, and P points. No abrupt changes of the splines occur that could negatively affect the stability of the iterative method.

In contrast to some other formal solvers based on the idea of quadratic Bézier splines (e.g., one of the two Bezier methods discussed by de la Cruz Rodríguez & Piskunov 2013), our BESSER algorithm guarantees that a monotonic sequence of the MOP points leads to a monotonic interpolant in all situations. This fact is of critical importance in 2D and 3D grids in which τMO and τOP may differ significantly because of unpredictable intersections of the grid planes, especially if periodic boundary conditions are considered. Such large differences often lead to overshoots, unless treated by BESSER or a similarly suitable strategy. Formal solvers based on cubic Bezier splines (e.g., de la Cruz Rodríguez & Piskunov 2013) could be developed to preserve the continuity of  , but they may fail to accurately interpolate quadratic functions even in fine grids when using Auer’s (2003) Eq. (12) for

, but they may fail to accurately interpolate quadratic functions even in fine grids when using Auer’s (2003) Eq. (12) for  (see Sect. 3.4).

(see Sect. 3.4).

An alternative formal solver, which uses cubic Hermite splines, has been presented by Ibgui et al. (2013). However, the way of fixing the derivatives at the end points M and P of the SC to the values corresponding to the linear interpolation case may cause loss of accuracy of the formal solver.

3.3. Formal solution of the vectorial radiative transfer equation with BESSER

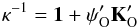

Taylor expansion of the ωM,O,P coefficients for small optical path intervals.

The application of the Bézier interpolation for calculating the formal solution of Eq. (4) proceeds as follows. We assume that the Stokes components of the vectorial source function S(t) vary, between points M and O, according to Eq. (5) with the control points calculated using the BESSER algorithm described in the previous section. The term K′(t)I(t) is assumed to change linearly in the same interval, as in the DELOPAR method. The integral in Eq. (4) can then be evaluated analytically and the Stokes parameters at point O can be expressed in the form (see Trujillo Bueno 2003, for details of an analogous derivation using parabolic interpolation of S) ![\begin{eqnarray} \vec\kappa^{-1}\vec I_{\rm O}=\left[{\rm e}^{-\tau_{\rm MO}}-\psi_{\rm M}'\vec K_{\rm M}' \right]\vec I_{\rm M} +\omega_{\rm M}\vec S_{\rm M}+\omega_{\rm O}\vec S_{\rm O}+\omega_{\rm C}\vec c_{\rm M}, \label{eq:fsbez} \end{eqnarray}](/articles/aa/full_html/2013/09/aa21742-13/aa21742-13-eq118.png) (7)where

(7)where  (8)is a 4 × 4 matrix and 1 is the unit matrix. Multiplying Eq. (7) by κ gives the desired vector of Stokes parameters IO at point O. The coefficients

(8)is a 4 × 4 matrix and 1 is the unit matrix. Multiplying Eq. (7) by κ gives the desired vector of Stokes parameters IO at point O. The coefficients  and

and  are the usual coefficients resulting from linear interpolation,

are the usual coefficients resulting from linear interpolation,  Using the substitutions hM = τMO and u = 1 − t/τMO in Eq. (5), one obtains for the ωi coefficients the expressions

Using the substitutions hM = τMO and u = 1 − t/τMO in Eq. (5), one obtains for the ωi coefficients the expressions  It is important to note that the accuracy of these expressions decreases as τMO ≪ 1, due to the limited precision of the floating point computer arithmetics. Therefore, for small upwind optical paths we use instead the Taylor expansion of such expressions calculated at τMO = 0 (see Table 1).

It is important to note that the accuracy of these expressions decreases as τMO ≪ 1, due to the limited precision of the floating point computer arithmetics. Therefore, for small upwind optical paths we use instead the Taylor expansion of such expressions calculated at τMO = 0 (see Table 1).

An important quantity used in Jacobi-based iterative methods for the solution of non-LTE problems is the diagonal of the monochromatic Λ operator at the point O under consideration,  . It can be easily calculated from Eq. (7) for IO, by setting IM = SM = SP = (0,0,0,0)T and SO = (1,0,0,0)T. It follows that

. It can be easily calculated from Eq. (7) for IO, by setting IM = SM = SP = (0,0,0,0)T and SO = (1,0,0,0)T. It follows that  . Given that in this case the source function has a local maximum at point O, we have cM = 1, and we finally arrive at

. Given that in this case the source function has a local maximum at point O, we have cM = 1, and we finally arrive at  (14)In contrast to the familiar parabolic solvers, no information about the interpolation coefficients in the preceding point is needed to determine the diagonal of the Λ operator at the point O under consideration. It is easy to show that

(14)In contrast to the familiar parabolic solvers, no information about the interpolation coefficients in the preceding point is needed to determine the diagonal of the Λ operator at the point O under consideration. It is easy to show that  , which is an important condition for the stability of the iterative method used to solve any non-LTE problem. This is particularly important for solving three-dimensional problems in which the upwind point M does not generally coincide with any grid node.

, which is an important condition for the stability of the iterative method used to solve any non-LTE problem. This is particularly important for solving three-dimensional problems in which the upwind point M does not generally coincide with any grid node.

We have found by numerical experimentation that the time needed to perform one Jacobi iteration using our BESSER formal solver is only ≲ 1% slower than when using instead DELOPAR, because of the need to determine the cM and cP values of the control points following the algorithm described in Sect. 3.2. The computation of κ and κ-1 (see Eq. (8)), the calculation of the transfer coefficients, and their interpolation in the upwind and downwind points takes most of the computing time per iterative step.

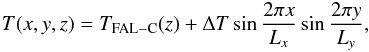

It is also important to note that the convergence rate of multilevel iterative methods using BESSER as formal solver is virtually identical to that achieved using DELOPAR (see an example of the Jacobi method in Fig. 4).

If the atmospheric model used is sufficiently smooth and no abrupt changes of the source function are present, the accuracy of BESSER and DELOPAR are virtually identical. This is not surprising because both formal solvers produce identical results in the absence of overshoots.

|

Fig. 4 Each pair of curves shows, for a spatial grid of given resolution, the maximum relative change versus the iteration number using the Jacobi method with DELOPAR (dotted lines) and with BESSER (solid lines). We note that the finer the grid the slower the convergence rate, but that for any given spatial resolution both formal solvers give the same convergence rate. |

3.4. Accuracy of the BESSER formal solver

|

Fig. 5 Variation along the ray direction of the source function (Eq. (15)) and of the corresponding specific intensity (Eq. (17)) calculated analytically. |

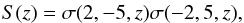

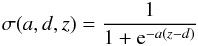

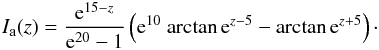

To demonstrate the accuracy of our BESSER formal solver we consider the RT problem of an arbitrary ray propagating in an infinite medium having constant opacity and a source function variation along the ray direction given by the expression (for the sake of simplicity we consider the unpolarized case)  (15)where the sigmoid function σ reads

(15)where the sigmoid function σ reads  (16)and z is the geometrical distance along the ray, measured in units of the length scale for which Δz = Δτ, with Δz the grid spacing and Δτ the ensuing optical distance. As shown by the solid line in Fig. 5, which corresponds to d = 5 in Eq. (16), the source function exponentially rises around z = −d, reaches its maximum value around z = 0, and then exponentially decreases around z = d. Assuming Ia( − ∞) = 0, the analytical solution of the radiative transfer equation for the specific intensity propagating towards positive z values is (see the dotted line in Fig. 5)

(16)and z is the geometrical distance along the ray, measured in units of the length scale for which Δz = Δτ, with Δz the grid spacing and Δτ the ensuing optical distance. As shown by the solid line in Fig. 5, which corresponds to d = 5 in Eq. (16), the source function exponentially rises around z = −d, reaches its maximum value around z = 0, and then exponentially decreases around z = d. Assuming Ia( − ∞) = 0, the analytical solution of the radiative transfer equation for the specific intensity propagating towards positive z values is (see the dotted line in Fig. 5)  (17)We have calculated numerically the specific intensity for the above-mentioned one-ray problem by solving the radiative transfer equation in several spatial grids of increasing resolution and using various formal solvers. Our aim is to compare the accuracy of our BESSER formal solver with other short-characteristics methods. To this end, we use the analytical solution given by Eq. (17) to compute the maximum true error

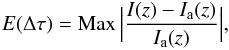

(17)We have calculated numerically the specific intensity for the above-mentioned one-ray problem by solving the radiative transfer equation in several spatial grids of increasing resolution and using various formal solvers. Our aim is to compare the accuracy of our BESSER formal solver with other short-characteristics methods. To this end, we use the analytical solution given by Eq. (17) to compute the maximum true error  (18)among all the spatial points along the ray for which the solution has been obtained (i.e., − 12 ≤ z ≤ 12)2.

(18)among all the spatial points along the ray for which the solution has been obtained (i.e., − 12 ≤ z ≤ 12)2.

|

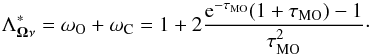

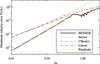

Fig. 6 Maximum relative true error E(Δτ) calculated as a function of the uniform grid spacing Δτ, using different formal solvers. Solid line: our BESSER method. Dotted line: quadratic Bézier with the derivative at point O calculated using the expression given by Fritsch & Butland (1984) (see also Eq. (12) of Auer 2003). Dashed line: as in the previous method, but applying the cM overshoot correction (see Eq. (11) of Auer 2003). Dashed-dotted line: standard SC method with linear interpolation. Three-dotted-dashed line: standard SC method with parabolic interpolation. |

The formal solvers we have applied are listed in the caption of Fig. 6, which gives E(Δτ) as a function of the Δτ of the grid spacing. Surprisingly, the worst performance is that corresponding to the Bézier formal solver based on the central-point derivative  , calculated using the weighted harmonic mean derivatives of Fritsch & Butland (1984) and ignoring the overshoot test in the upwind interval (Bezier, dotted line). If the correction to the upwind overshoot is applied, the method performs much better, at least in the coarsest grids in Fig. 6 (CBezier, dashed line). In finer grids, however, the accuracy is still lower than that of BESSER and even than that provided by the standard SC method with parabolic interpolation (dashed-three-dotted line). The reason is that the estimation of the central-point derivative

, calculated using the weighted harmonic mean derivatives of Fritsch & Butland (1984) and ignoring the overshoot test in the upwind interval (Bezier, dotted line). If the correction to the upwind overshoot is applied, the method performs much better, at least in the coarsest grids in Fig. 6 (CBezier, dashed line). In finer grids, however, the accuracy is still lower than that of BESSER and even than that provided by the standard SC method with parabolic interpolation (dashed-three-dotted line). The reason is that the estimation of the central-point derivative  provided by Fritsch & Butland (1984) (see also Eq. (12) of Auer 2003) generally does not allow the second-order polynomials to be interpolated exactly3.

provided by Fritsch & Butland (1984) (see also Eq. (12) of Auer 2003) generally does not allow the second-order polynomials to be interpolated exactly3.

In the coarsest grids in Fig. 6, the maximum true error depends not only on the grid spacing but also on the particular position of the grid points with respect to the z = 0 position of the source function maximum. Consequently, in the region in Fig. 6 corresponding to the coarsest grids we observed an oscillatory behavior of the maximum true error. However, the overall variation of the error with Δτ remains the same independent of the particular location of the grid nodes.

4. Parallelization using the Snake Algorithm

The slowest part in the numerical computations needed for solving a non-LTE radiative transfer problem is the formal solution of the radiative transfer equation because the number of floating point operations needed to compute the radiation field at all the spatial grid points far exceeds the number of operations needed to solve the SEEs. In particular, an accurate modeling of the spectral line polarization produced by anisotropic radiation pumping (scattering line polarization) and its modification by the Hanle effect requires the use of very fine frequency and direction quadratures that increase the computing time of the formal solution.

The formal solution for computing a single Stokes parameter at any given grid point for any given frequency and ray direction typically takes about 1 μs on today’s workstations. It is easy to estimate that one formal solution for computing the four Stokes parameters in a 3D grid with 5003 points, 100 radiation frequencies, and 160 discrete directions will take about 90 days on the same computer. The full non-LTE solution requiring one hundred Jacobi iterations would take 25 years.

The use of powerful methods for multilevel radiative transfer applications, such as the non-linear multigrid method proposed by Fabiani Bendicho et al. (1997), is necessary but not sufficient for doing multilevel radiative transfer calculations in realistic 3D atmospheric models resulting from state-of-the-art MHD simulations. To this end, we need to use massively parallel computers, which requires a suitable parallelization of the RT code. In this section, we describe a novel algorithm for performing the numerical formal solution using multiple CPU cores. As shown below, simultaneous parallelization via domain decomposition and parallelization in the frequency domain results in a very efficient radiative transfer code that shows an optimal scaling with the number of CPU cores.

4.1. Domain decomposition

The computer memory needed tp store large model grids exceeds the capacity of the computing nodes of today’s supercomputers by at least one order of magnitude. The capacity of computers will continue to increase in the future, but the same will happen with the scale and resolution of the MHD models. It is therefore necessary to reduce the memory demands per CPU core. This can be achieved through the technique of domain decomposition, by means of which different parts of the model grid are treated simultaneously (in parallel), each running on a different CPU core. This task is non-trivial in radiative transfer because of the need to use a well-defined sequence of grid points. This complicates the treatment of radiative transfer in generally decomposed grids where different parts are to be solved simultaneously. A possible solution to this problem comes from the fact that the non-LTE problem needs to be solved by iteration and the radiation field at the domain boundaries can be fixed in every iteration using the radiation field calculated in the previous iteration. After a sufficient number of iterations, a self-consistent solution to the problem is eventually found. The disadvantage of this approach is that fixing the boundary radiation field of the domains reduces the information flow between the domains, which leads to a scaling of the algorithm proportional to P2/3, with P the number of CPU cores (Hayek 2008). In Sect. 4.4 we discuss the advantages and disadvantages of our approach.

Given a Cartesian grid with NxNyNz discrete points, we only divide it along the z-axis into a consecutive sequence of L domains  , ℓ = 1,...,L (see Fig. 7, which shows a 2D instead of a 3D grid, for simplicity). The horizontal extension of each domain

, ℓ = 1,...,L (see Fig. 7, which shows a 2D instead of a 3D grid, for simplicity). The horizontal extension of each domain  is always the same, and identical to that corresponding to a serial solution of the same non-LTE problem. Each of these domains is treated by one or more CPU cores in parallel with others according to the algorithm described in the following section. The boundary layer

is always the same, and identical to that corresponding to a serial solution of the same non-LTE problem. Each of these domains is treated by one or more CPU cores in parallel with others according to the algorithm described in the following section. The boundary layer  of the successive domains

of the successive domains  and

and  has to be taken into account in each of the domains. Ghost layers have to be included in both domains if the formal solver of the transfer equation is of parabolic accuracy and/or the multigrid method is used. This ghost layer is needed to calculate the radiation transfer coefficients at the downwind point P (see Fig. 1) when point O is in the boundary layer

has to be taken into account in each of the domains. Ghost layers have to be included in both domains if the formal solver of the transfer equation is of parabolic accuracy and/or the multigrid method is used. This ghost layer is needed to calculate the radiation transfer coefficients at the downwind point P (see Fig. 1) when point O is in the boundary layer  . We note that if the interpolation of the upwind and downwind radiation transfer coefficients is bilinear instead of biquadratic, only one ghost layer is needed for each of these domains. This is usually a good approximation given the spatial fineness of today’s MHD models. Given that the boundary layer that is common to each pair of domains has to be treated twice, the number Nℓ of z-points per domain is equal to (Nz − 1)/L + 1 (assuming that L is such that (Nz − 1)/L is an integer).

. We note that if the interpolation of the upwind and downwind radiation transfer coefficients is bilinear instead of biquadratic, only one ghost layer is needed for each of these domains. This is usually a good approximation given the spatial fineness of today’s MHD models. Given that the boundary layer that is common to each pair of domains has to be treated twice, the number Nℓ of z-points per domain is equal to (Nz − 1)/L + 1 (assuming that L is such that (Nz − 1)/L is an integer).

|

Fig. 7 Domain decomposition in the z-axis, with Nℓ denoting the number of discrete heights within domain |

As shown below, it is possible to divide the z-axis into a large number of intervals without any serious effect on the efficiency. In the numerical experiments discussed below, we have used values as small as (Nz − 1)/L + 1 = 6. For a sufficient number of computing nodes, it follows that the memory requirements per domain scales as O(NxNy). Given the large spatial extension of the 3D stellar atmospheric models that result from today’s MHD simulations (e.g., Leenaarts et al. 2012), the domain decomposition strategy described above is very suitable for reducing to reasonable values the memory requirements per computing core.

4.2. 3D formal solution in the domain-decomposed models: the Snake Algorithm

|

Fig. 8 Clarification of the Snake Algorithm (SA) using an example of a formal solution with five radiation frequencies νj = 1,...,5 and six directions Ωi = 1,...,6, running in a domain-decomposed grid with six domains. We note that only the solution for rays Ωz > 0 is shown in this figure. See the main text for details. |

In contrast to the usual 3D domain decomposition technique, it is possible to fulfill the requirement of a topologically sorted grid without the need to iterate the boundary conditions. For reasons that will become obvious below, we call it the Snake Algorithm (SA). It proceeds as follows.

The formal solution of the RT equation allows us to obtain, at each spatial grid point (ix,iy,iz) of domain  , the Stokes parameters for all the discretized directions and radiation frequencies (Ωi,νj). The total number of these points is NΩNν, where NΩ denotes the number of ray directions and Nν the number of radiation frequencies. Without loss of generality, let us consider the formal solution of the RT equation in domain

, the Stokes parameters for all the discretized directions and radiation frequencies (Ωi,νj). The total number of these points is NΩNν, where NΩ denotes the number of ray directions and Nν the number of radiation frequencies. Without loss of generality, let us consider the formal solution of the RT equation in domain  for the directions Ωi having Ωz > 0 (i.e., for rays propagating along directions of increasing z, from the lower boundary of

for the directions Ωi having Ωz > 0 (i.e., for rays propagating along directions of increasing z, from the lower boundary of  to the upper boundary of

to the upper boundary of  ).

).

For (Ω1,ν1), we solve the RT equation starting at the lower boundary  and proceeding upwards to the domain boundary layer

and proceeding upwards to the domain boundary layer  (see Fig. 7). If the atmospheric model assumes periodic boundary conditions in the horizontal (x,y) directions, we take them into account following the strategy of Auer et al. (1994). Since the domain is not decomposed in the horizontal directions, in each of the domains our algorithm works exactly as it does in the serial solution.

(see Fig. 7). If the atmospheric model assumes periodic boundary conditions in the horizontal (x,y) directions, we take them into account following the strategy of Auer et al. (1994). Since the domain is not decomposed in the horizontal directions, in each of the domains our algorithm works exactly as it does in the serial solution.

Once the radiation field for (Ω1,ν1) is known at the last plane  of the domain, we start the process responsible for doing the formal solution in the next domain

of the domain, we start the process responsible for doing the formal solution in the next domain  . In addition to the Stokes parameters, the

. In addition to the Stokes parameters, the  value has to be provided to the next domain. At this point, the process

value has to be provided to the next domain. At this point, the process  starts solving the radiative transfer equation for (Ω1,ν2), beginning again at the lower boundary. After reaching the

starts solving the radiative transfer equation for (Ω1,ν2), beginning again at the lower boundary. After reaching the  plane, the radiative data are provided to the

plane, the radiative data are provided to the  domain and the solution continues with (Ω1,ν3). These steps are repeated until the radiation transfer equation is solved for all the discrete frequencies. Then, it continues in an analogous way with (Ω2,ν1) and for all the directions with Ωz > 0.

domain and the solution continues with (Ω1,ν3). These steps are repeated until the radiation transfer equation is solved for all the discrete frequencies. Then, it continues in an analogous way with (Ω2,ν1) and for all the directions with Ωz > 0.

The solution in domain  proceeds in an exactly analogous way. After receiving the radiation data (Ω1,ν1) from domain

proceeds in an exactly analogous way. After receiving the radiation data (Ω1,ν1) from domain  , the RT equation is solved in planes

, the RT equation is solved in planes  ,

,  , etc., up to the layer

, etc., up to the layer  , from which the resulting radiation field and

, from which the resulting radiation field and  are propagated to the grid points

are propagated to the grid points  of domain

of domain  . At a given time, each domain solves the RT equation for different (Ωi,νj), such that the difference between two successive processes (domains) is just one step in the discrete space of directions and frequencies. The outgoing radiation from one domain becomes the incoming radiation for the following domain. The resulting snake of length L clambers half of the parameter space of directions Ωz > 0 and, after this is finished, it proceeds back in an analogous way by solving the radiative transfer problem for all Ωz < 0 directions.

. At a given time, each domain solves the RT equation for different (Ωi,νj), such that the difference between two successive processes (domains) is just one step in the discrete space of directions and frequencies. The outgoing radiation from one domain becomes the incoming radiation for the following domain. The resulting snake of length L clambers half of the parameter space of directions Ωz > 0 and, after this is finished, it proceeds back in an analogous way by solving the radiative transfer problem for all Ωz < 0 directions.

Figure 8 visualizes the whole process using as an example a formal solution with five radiation frequencies νj = 1,...,5 and six directions Ωi = 1,...,6, running in a domain-decomposed grid with six domains, each of which is indicated by a numbered rectangle. Each step of the solution in every domain corresponds to a formal solution of the RT equation for one direction and one frequency. In the next step, the snake of processes moves by one (Ωi,νj) point and the radiation field data are passed between the successive domains. In this example, every single process solves the RT problem in the dedicated domain which contains N/6 grid nodes. These processes parse the discrete space of directions and frequencies in the well-defined order indicated in the figure, until the whole direction-frequency space is passed through by all the processes. At the beginning and at the end of the formal solution, some of the processes are inactive, waiting for other processes to finish their work. The total number of time steps of the formal solution is 35 for the 30 direction-frequency points, which implies a speedup factor 30 × 6/35 ≈ 5.1 with respect to the serial solution. This can be easily verified by using Eq. (24) with NΩ = 12 (see below).

If the domains have the same or similar Nℓ values (which is easy to achieve in practice), each process (i.e., each domain) solves only the fraction 1/L of the whole radiation transfer problem. This leads, in principle, to an almost linear scaling with the number of spatial domains.

For practical reasons (i.e., optimization in the treatment of line absorption profiles, because it is not practical to store them for every grid point and ray direction), the line absorption profiles are obtained by interpolation, using a pre-calculated database created at the beginning of the non-LTE solution. This implies some significant reduction of memory and computing time. After calculating a line profile from the database (where the profiles are normalized to unit area), one may need to renormalize it using the chosen frequency quadrature (e.g., in the presence of Doppler shift gradients caused by macroscopic plasma motions). This only needs to be done once per direction if the loop over frequencies is the inner loop because the normalization factor only depends on direction. In summary, it is more convenient if the directions are in the outer loop and the frequencies in the inner loop of the algorithm, so that our snake parallelization strategy proceeds row by row as indicated in Fig. 8, instead of column by column.

Concerning the implementation of the algorithm, it is important to note that it is crucial to use non-blocking routines to propagate the radiation data between the successive domains. In other words, the RT calculation in domain  proceeds by solving the next (Ωi,νj) point immediately after the lower-boundary radiation data from domain

proceeds by solving the next (Ωi,νj) point immediately after the lower-boundary radiation data from domain  arrives. It does not have to wait for

arrives. It does not have to wait for  to retrieve the (Ωi,νj − 1) data. Consequently, the snake in Fig. 8 can temporarily become split, with two successive processes ℓ and ℓ + 1 processing non-subsequent points in the discrete Ω × ν space (see Fig. 8). If this does not happen, the computing performance can decrease significantly because a significant amount of time is spent waiting for the synchronization of the whole grid.

to retrieve the (Ωi,νj − 1) data. Consequently, the snake in Fig. 8 can temporarily become split, with two successive processes ℓ and ℓ + 1 processing non-subsequent points in the discrete Ω × ν space (see Fig. 8). If this does not happen, the computing performance can decrease significantly because a significant amount of time is spent waiting for the synchronization of the whole grid.

4.2.1. Scaling of the algorithm

In the serial solution, the computing time needed for the formal solution of the RT equation is proportional to the total number of spatial grid points  , the number of directions NΩ, and the number of radiation frequencies Nν. We shall denote this computing time by

, the number of directions NΩ, and the number of radiation frequencies Nν. We shall denote this computing time by  (19)with α a constant of proportionality. In the domain-decomposed parallel solution (still assuming that the first half of the integrations is performed along the directions having Ωz > 0), the duration of the full formal solution in the whole grid is equal to the time interval between the first (Ω1,ν1) and the last (ΩNΩ,νNν) ray integrations in the domain

(19)with α a constant of proportionality. In the domain-decomposed parallel solution (still assuming that the first half of the integrations is performed along the directions having Ωz > 0), the duration of the full formal solution in the whole grid is equal to the time interval between the first (Ω1,ν1) and the last (ΩNΩ,νNν) ray integrations in the domain  .

.

|

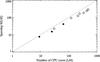

Fig. 9 Speedup S(L) of the solution of the RT equation due to domain decomposition with the Snake Algorithm. The number of CPU cores on the horizontal axis is equal to the number L of spatial domains. The diagonal dotted line indicates the theoretical curve of linear scaling. The scaling of the algorithm is almost a linear function of the number of domains. The small departure from linearity is mainly due to the cost of the inter-process communication. |

Given that the number of grid nodes in domain  is equal to

is equal to  , the time spent solving the first half of the rays in domain

, the time spent solving the first half of the rays in domain  is

is  (20)The process responsible for domain

(20)The process responsible for domain  is waiting for the last upward ray (ΩNΩ/2,νNν) to propagate through L − 1 domains to the upper grid boundary zNz. Its duration equals

is waiting for the last upward ray (ΩNΩ/2,νNν) to propagate through L − 1 domains to the upper grid boundary zNz. Its duration equals  (21)The same time tb it taken by the first downward ray (ΩNΩ/2 + 1,ν1) to propagate from

(21)The same time tb it taken by the first downward ray (ΩNΩ/2 + 1,ν1) to propagate from  to the upper boundary of

to the upper boundary of  . The computing time needed for the solution of the NΩ/2 downward rays in the

. The computing time needed for the solution of the NΩ/2 downward rays in the  domain is, again, equal to ta.

domain is, again, equal to ta.

The duration of the full formal solution in the L-decomposed grid is, therefore, equal to TL = 2(ta + tb). It follows from the equations above that ![\begin{eqnarray} T_L = \alpha\frac {\mathcal {N}}L\left[ N_\Omega N_\nu+2(L-1) \right]\cdot \label{eq:parLtime} \end{eqnarray}](/articles/aa/full_html/2013/09/aa21742-13/aa21742-13-eq234.png) (22)We define the speedup of the parallel solution with respect to the serial solution as

(22)We define the speedup of the parallel solution with respect to the serial solution as  (23)which, using Eqs. (19) and (22), is equal to

(23)which, using Eqs. (19) and (22), is equal to  (24)where

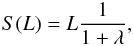

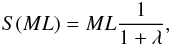

(24)where  (25)If λ ≪ 1, then the speedup in the formal solution is practically linear with the number of domains L. This is equivalent to saying that NΩNν ≫ L, i.e., to a situation in which the number of direction-frequency points is much larger than the number of domains. Given that in the transfer of polarized radiation the typical orders of magnitude of the relevant quantities are NΩ ~ 102, Nν ~ 103, and L ~ 102, we obtain λ ~ 10-3. It is easy to see from Eq. (24) that SA always accelerates the solution if NΩ > 2 and L > 1.

(25)If λ ≪ 1, then the speedup in the formal solution is practically linear with the number of domains L. This is equivalent to saying that NΩNν ≫ L, i.e., to a situation in which the number of direction-frequency points is much larger than the number of domains. Given that in the transfer of polarized radiation the typical orders of magnitude of the relevant quantities are NΩ ~ 102, Nν ~ 103, and L ~ 102, we obtain λ ~ 10-3. It is easy to see from Eq. (24) that SA always accelerates the solution if NΩ > 2 and L > 1.

4.3. Parallelization in the radiation frequencies

|

Fig. 10 Snake Algorithm applied to the problem in Fig. 8 with L = 3 and M = 5. Here, every process has a single dedicated radiation frequency, i.e., Nm = 1. Given the small number of directions and frequencies in this illustrative example, we have λ = 2/3 and the speedup S(LM) = 9. See the text for details. |

The radiation frequencies νi = 1,...,Nν can be grouped into M intervals, each containing the Nm = Nν/M discrete frequencies4. The Snake Algorithm can be applied in parallel to each of these frequency blocks. The only difference with respect to the algorithm described in Sect. 4.2 is that the solution in the spatial domains  is only performed in a sub-space of (Ωi,νj) in which

is only performed in a sub-space of (Ωi,νj) in which  . Since this can be done in parallel for all the M blocks, a significant reduction of the solution time can be achieved (see Fig. 10).

. Since this can be done in parallel for all the M blocks, a significant reduction of the solution time can be achieved (see Fig. 10).

The domain and frequency decomposition parallelization strategies described above are performed independently of each other, in the sense that there is no need of communication between the processes treating different frequency intervals m during the formal solution. The radiation field tensors  and the

and the  operator needed for the solution of the statistical equilibrium equations are only partially integrated over the line absorption profiles during the formal solution and, at the end of the whole formal solution process, are summed over the frequency intervals and synchronized among them. The time cost of this operation is negligible with respect to the time demands of the formal solution. Thanks to this orthogonality of the two independent parallelizations, it is possible to achieve a multiplicative effect of both speedup factors.

operator needed for the solution of the statistical equilibrium equations are only partially integrated over the line absorption profiles during the formal solution and, at the end of the whole formal solution process, are summed over the frequency intervals and synchronized among them. The time cost of this operation is negligible with respect to the time demands of the formal solution. Thanks to this orthogonality of the two independent parallelizations, it is possible to achieve a multiplicative effect of both speedup factors.

4.3.1. Scaling of the algorithm

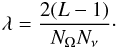

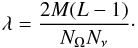

A reduction in the number of frequencies in every domain by a factor of 1/M gives a new solution time TML which is obtained after the replacement Nν → Nν/M in Eq. (22). The speedup then follows as  (26)where we now have

(26)where we now have  (27)When λ ≪ 1 the scaling of the algorithm is virtually linear with the total number of CPU cores, P = ML. As an example, we assume a large 3D model atmosphere and a supercomputer with 104 CPUs which allows for parallelization with L = 100 and M = 100. Assuming NΩ = 200 and Nν = 1000, we have λ ≈ 0.1 and a speedup S(ML) ≈ 0.9 × 104.

(27)When λ ≪ 1 the scaling of the algorithm is virtually linear with the total number of CPU cores, P = ML. As an example, we assume a large 3D model atmosphere and a supercomputer with 104 CPUs which allows for parallelization with L = 100 and M = 100. Assuming NΩ = 200 and Nν = 1000, we have λ ≈ 0.1 and a speedup S(ML) ≈ 0.9 × 104.

If ML ≫ 1, then Eq. (26) can be approximated by the expression ![\begin{eqnarray} S(ML) \approx \left[\frac 1{ML} + \frac 2{N_\Omega N_\nu}\right]^{-1}, \end{eqnarray}](/articles/aa/full_html/2013/09/aa21742-13/aa21742-13-eq273.png) (28)which immediately shows how the speedup factor depends on both the fineness of the quadratures and the number of available CPU cores. It follows that it is only possible to decrease the computing time by an additional factor of 1/2 when using more cores than

(28)which immediately shows how the speedup factor depends on both the fineness of the quadratures and the number of available CPU cores. It follows that it is only possible to decrease the computing time by an additional factor of 1/2 when using more cores than  (29)Given that typically NΩNν ~ 105, the power of today’s supercomputing facilities can be effectively exploited. Not surprisingly, (ML)optimal corresponds to a situation in which each frequency is treated by a unique frequency band and the number of spatial domains is equal to one half of the number of rays (see Eq. (25) for Nν = 1).

(29)Given that typically NΩNν ~ 105, the power of today’s supercomputing facilities can be effectively exploited. Not surprisingly, (ML)optimal corresponds to a situation in which each frequency is treated by a unique frequency band and the number of spatial domains is equal to one half of the number of rays (see Eq. (25) for Nν = 1).

4.4. Advantages and disadvantages of the Snake Algorithm

The advantages and disadvantages of SA can be summarized as follows.

Disadvantages:

-

1.

It is only possible to decompose the grid so that L < Nz.

-

2.

If NxNy becomes too large, the memory requirements can be difficult to fulfill in very large atmospheric models. In the particular case of the five-level Ca ii model atom considered in this paper, a conservative memory limit of 2 GB per core would be reached for models with NxNy ≈ 15002.

-

3.

If the number of ray directions and frequencies is low, i.e., λ ≳ 1 in Eq. (27), the scaling properties of SA are sub-optimal. However, this situation is unlikely in realistic models.

-

4.

It is not trivial to implement the Gauss-Seidel iterative method of Trujillo Bueno & Fabiani Bendicho (1995) for the smoothing part of the non-linear multigrid iteration (see Sect. 5).

-

5.

There is always an overhead of communication and synchronization between the domains. This overhead can become as large as 20 % of the whole formal solution computing time for cases with Nz/L approaching unity, but the overhead is usually about 10 % with the hardware we have been using (see Appendix B). The relative importance of the cost of the inter-process communication increases with the number of domains, leading to a minor departure from linear scaling in the parallel solution.

Advantages:

-

1.

The accuracy of the solution is identical to the serial solution.The following two points follow directly from this fact.

-

2.

The total number of iterations needed to solve the non-LTE problem is equal to the serial solution and the convergence rate is not deteriorated as in domain-decomposition methods with iterative boundary conditions.

-

3.

There are no discontinuities of the errors at the boundaries of domains due to iterative boundary conditions. The error remains smooth and the application of the non-linear multigrid method is not affected by the presence of such numerical problems.

-

4.

It is easy to use a partially or fully converged solution (stored in a disk file) as an initialization of a different non-LTE computation without having to worry about the radiation field at the domain boundaries5. For instance, this is useful for obtaining the solution in a given 3D thermal model, but for different magnetic field choices.

-

5.

The scaling properties of the algorithm are almost linear with the number of CPU cores P, until P becomes comparable to NΩNν, i.e., for all cases of practical interest.

-

6.

Thanks to the multiplicative effect of the two independent parallelization strategies (i.e., domain decomposition and parallelization in radiation frequencies), large-scale supercomputing facilities can be used with a significant improvement in the solution time.

-

7.

The algorithm is relatively easy to implement in practice. Existing serial RT codes can be generalized using the SA without a very serious programming effort.

|

Fig. 11 Speedup S(M) of the formal solution of the RT equation due to parallelization in radiation frequencies in a single spatial domain (L = 1). |

|

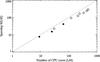

Fig. 12 Speedup S(LM) of the formal solution of the RT equation due to simultaneous parallelization in radiation frequencies and domain decomposition. Filled circles: data calculated for various values of L and M using the OCAS cluster. Diamonds: data calculated using the LaPalma supercomputer. We note that because of non-optimal spatial and frequency decompositions (i.e., not all the CPU cores treat exactly the same number of grid points and/or frequencies), small oscillations around the nearly linear trend appear. The speedup in both data sets is normalized to the serial time T1 corresponding to each of the used computers. |

5. The non-linear multigrid method

As mentioned in Sect. 2 we need a fast iterative method capable of finding rapidly the density matrix elements ρl such that Eq. (2) is satisfied when the radiative rates, which appear in the block-diagonal matrix ℒl of Eq. (2), are calculated through the solution of the Stokes-vector transfer equation when using at each spatial point the emission vector and the propagation matrix corresponding to these ρl values. A suitable method is the Jacobian iterative scheme described in Manso Sainz & Trujillo Bueno (2003) and in Appendix A of Štěpán & Trujillo Bueno (2011). As with other operator splitting methods, with this Jacobi-based iterative method the convergence rate is the slower the finer the spatial grid, so that the computing time needed to obtain the self-consistent solution scales in general as  (where

(where  is the total number of spatial grid points). In order to solve complicated 3D problems in very fine grids it is convenient to apply an iterative method whose convergence rate is insensitive to the grid size, so that the computing time needed to obtain the self-consistent solution scales as

is the total number of spatial grid points). In order to solve complicated 3D problems in very fine grids it is convenient to apply an iterative method whose convergence rate is insensitive to the grid size, so that the computing time needed to obtain the self-consistent solution scales as  . This method is called the non-linear multigrid method (e.g., Hackbush 1985) whose application to multilevel radiative transfer without polarization has been described in great detail by Fabiani Bendicho et al. (1997)6. Here we provide only a brief overview of the non-linear multigrid (MG) method with emphasis on the details related to our generalization to the polarized case and the parallelization strategy via domain decomposition.

. This method is called the non-linear multigrid method (e.g., Hackbush 1985) whose application to multilevel radiative transfer without polarization has been described in great detail by Fabiani Bendicho et al. (1997)6. Here we provide only a brief overview of the non-linear multigrid (MG) method with emphasis on the details related to our generalization to the polarized case and the parallelization strategy via domain decomposition.

5.1. Brief overview of the non-linear MG method

As mentioned above, the aim is to find the ρl vector of density matrix values, defined in the desired fine grid of resolution level l, such that Eq. (2) is satisfied as explained above. We assume that we have a fine grid estimate  such that the residual

such that the residual  (30)is smooth7. We would like to obtain a fine-grid correction Δρl such that the new estimate

(30)is smooth7. We would like to obtain a fine-grid correction Δρl such that the new estimate  satisfies Eq. (2):

satisfies Eq. (2):  (31)Given that the problem is non-linear (i.e., the operator ℒl depends on

(31)Given that the problem is non-linear (i.e., the operator ℒl depends on  ) we need to make an approximation to ℒl in order to obtain a better estimate

) we need to make an approximation to ℒl in order to obtain a better estimate  .

.

With the Jacobian method we simplify the operator ℒl in the same grid of resolution level l; we do this by choosing the diagonal of the Λ operator. With the non-linear MG method we approximate the operator ℒl by forming a suitable approximation in a coarser grid of level l − 1; that is, we coarsify rather than simplify. In order to understand how we coarsify we note first that Eqs. (30) and (31) imply that  (32)The residual rl was assumed to be smooth, so we can map the left-hand side of Eq. (32) to a coarser grid of level l − 1 to obtain the coarse-grid equation (cf. Fabiani Bendicho et al. 1997):

(32)The residual rl was assumed to be smooth, so we can map the left-hand side of Eq. (32) to a coarser grid of level l − 1 to obtain the coarse-grid equation (cf. Fabiani Bendicho et al. 1997):  (33)where the linear operator ℛ is a fine-to-coarse or restriction operator whose application to

(33)where the linear operator ℛ is a fine-to-coarse or restriction operator whose application to  and to rl allow us to obtain directly the rhs terms. Therefore, the system of Eq. (33) is formally identical to the original system of Eq. (2), but formulated in a grid of level l − 1. After solving it with the Jacobian method explained in Appendix A of Štěpán & Trujillo Bueno (2011) we obtain the coarse-grid correction (CGC)