| Issue |

A&A

Volume 699, July 2025

|

|

|---|---|---|

| Article Number | A170 | |

| Number of page(s) | 8 | |

| Section | Numerical methods and codes | |

| DOI | https://doi.org/10.1051/0004-6361/202451822 | |

| Published online | 07 July 2025 | |

An adaptive symplectic integrator for gravitational dynamics

1

School of Aeronautics and Astronautics, Shenzhen Campus of Sun Yat-sen University, Shenzhen,

Guangdong

518107,

China

2

Shenzhen Key Laboratory of Intelligent Microsatellite Constellation, Shenzhen Campus of Sun Yat-sen University, Shenzhen,

Guangdong

518107,

China

3

School of Mechanics and Aerospace Engineering, State Key Laboratory of Structural Analysis, Optimization and CAE Software for Industrial Equipment, Dalian University of Technology, Dalian,

Liaoning

116081,

China

⋆ Corresponding author: liuxd36@mail.sysu.edu.cn

Received:

7

August

2024

Accepted:

3

June

2025

This paper presents an adaptive symplectic integrator, SQQ-PTQ, developed on the basis of the fixed-step symplectic integrator SQQ. To mitigate the Runge phenomenon, SQQ-PTQ employs Chebyshev interpolation for approximating the action, enhancing both the precision and stability of the interpolation. In addition, to reduce the computational cost of evaluating interpolation functions, SQQ-PTQ introduces a projection method that improves the efficiency of these computations. A key feature of SQQ-PTQ is its use of the time transformation to implement an adaptive time step. To address the challenge of computing complicated Jacobian matrices attributed to the time transformation, SQQ-PTQ adopts a quasi-Newton method based on Broyden’s method. This strategy accelerates the solution of nonlinear equations, thereby improving the overall computational performance. The effectiveness and robustness of SQQ-PTQ are demonstrated via three numerical experiments. In particular, SQQ-PTQ demonstrates adaptability in handling close-encounter problems. Moreover, during long-term integrations, SQQ-PTQ maintains the energy conservation, further confirming its advantages as a symplectic algorithm.

Key words: methods: numerical

© The Authors 2025

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1 Introduction

Symplecticity is characteristic of Hamiltonian systems and numerical results obtained by symplectic integrators maintain long-term stability compared to nonsymplectic integrators. Thus, symplectic integrators have proven to be powerful tools in studies of gravitational dynamics (Vogelaere 1900; Ruth 1983; Feng 1985; Wang et al. 2021).

An important approach to constructing symplectic integrators is based on the use of generating functions (Channell & Scovel 1990; Miesbach & Pesch 1992; Chen et al. 2003; Makazaga & Murua 2009). Gao et al. (2012) constructed a high-order and fixed-step symplectic integrator SQQ for Hamiltonian systems based on the principle of least action and generating function. In the acronym SQQ, S denotes the symplectic nature of the integrator, while the two Qs indicate that generalized coordinates are used as independent variables at both sides in the generating function (Gao et al. 2012). Overall, SQQ is a general-purpose integrator, and has been applied to trajectory planning (Peng et al. 2023), kinodynamic planning, optimal control of cable-driven tensegrity manipulators (Song et al. 2020), and multibody dynamics problems (Peng et al. 2019, 2020; Li et al. 2021). The numerical results demonstrate that SQQ exhibits high accuracy and effective energy conservation behavior.

However, in the numerical computation of multi-body gravitational problems, traditional fixed-step symplectic algorithms face two primary challenges. The first challenge arises from the computational efficiency issues caused by the multi-scale nature of time in such systems. For systems with strong variations in interaction, such as high-eccentricity or hyperbolic Kepler problems, the fixed-step symplectic integrator becomes inefficient. In contrast, adaptive time-step integrators can handle these scenarios effectively by employing smaller time steps in rapidly changing regions and larger time steps in slowly varying regions (Dehnen & Read 2011; Springel 2005). Unfortunately, traditional step-size control strategies destroy the long-term conservation properties of symplectic integrators (Preto & Tremaine 1999; Emel’yanenko 2007; Blanes & Iserles 2012).

One approach to constructing adaptive time-step symplectic integrators involves the application of time transformation, which decouples the time step from the integration step (Hairer 1997; Mikkola & Tanikawa 1999; Preto & Tremaine 1999; Wang et al. 2020; Wang & Nitadori 2020). However, time transformation often leads to a non-separable Hamiltonian system, which, in turn, transforms an explicit algorithm into an implicit one (Hairer 1997; Huang & Leimkuhler 1997). In general, implicit algorithms require solutions to nonlinear equations. Due to the complexity introduced by the time-transformed Hamiltonian, computing the Jacobian matrix for solving this nonlinear system can become computationally expensive. This leads to the second challenge: how to efficiently solve the nonlinear equations at each time step. To address this challenge, we employed a quasi-Newton method based on Broyden’s method (Broyden 1965) to accelerate the inversion of the Jacobian matrix, which has been widely used in many research fields (e.g. Bulín & Hajžman 2021; Chen et al. 2022; Sherman & Morrison 1950). Broyden’s method iteratively updates an approximation to the Jacobian inverse during the process of solving the nonlinear equations. By avoiding the explicit computation of the Jacobian matrix, this approach significantly reduces computational costs and improves efficiency.

To address the two important challenges mentioned above, our proposed method combines the time transformation and the quasi-Newton approach, offering a robust framework for efficient and accurate simulations in multi-body gravitational problems. This paper is organized as follows. Section 2 provides the fundamental theory of SQQ based on Chebyshev interpolation method. Section 3 presents the numerical implementation, which includes three parts. The first part introduces a projection method that accelerates the computation of interpolation functions and their derivatives without requiring matrix inversion. The second part introduces the concept of the time transformation and discusses the selection of the step-size control function. The last part presents an efficient approach for solving nonlinear equations. Finally, Section 4 includes several numerical examples to demonstrate the effectiveness of the proposed method.

2 The symplectic algorithm based on Generating Function

This section gives a brief introduction to SQQ (for more details, readers are referred to Gao et al. 2012). The action of a conservative system is the integral of the Lagrangian function over time

![$\[S=\int_{t_a}^{t_b}\left[\mathbf{p}^T \dot{\mathbf{q}}-H(\mathbf{q}, \mathbf{p})\right] d t,\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq1.png) (1)

(1)

where q is the generalized displacement, ![$\[\dot{\mathbf{q}}\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq2.png) is the generalized velocity, and p is the generalized momentum. The principle of least action is given by

is the generalized velocity, and p is the generalized momentum. The principle of least action is given by

![$\[\begin{aligned}\delta S= & \int_{t_a}^{t_b}\left[(\delta \dot{\mathbf{q}})^T \mathbf{p}-(\delta \mathbf{q})^T \frac{\partial H}{\partial \mathbf{q}}\right] d t+\int_{t_a}^{t_b}(\delta \mathbf{p})^T\left(\dot{\mathbf{q}}-\frac{\partial H}{\partial \mathbf{p}}\right) d t \\= & \int_{t_a}^{t_b}(\delta \mathbf{q})^T\left[-\dot{\mathbf{p}}-\frac{\partial H}{\partial \mathbf{q}}\right] d t+\int_{t_a}^{t_b}(\delta \mathbf{p})^T\left(\dot{\mathbf{q}}-\frac{\partial H}{\partial \mathbf{p}}\right) d t \\& +\left.\mathbf{p}^T \delta \mathbf{q}\right|_{t_a} ^{t_b}=0.\end{aligned}\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq3.png) (2)

(2)

Equation (2) shows that if the Hamiltonian canonical differential equation is satisfied within the time step, the action will satisfy the following equation,

![$\[\delta S=\mathbf{p}^T\left(t_b\right) \mathrm{d} \mathbf{q}\left(t_b\right)-\mathbf{p}^T\left(t_a\right) \mathrm{d} \mathbf{q}\left(t_a\right).\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq4.png) (3)

(3)

The physical meaning of Eq. (3) is that the action, S, depends only on the generalized displacement at both ends of the time interval [ta, tb]. In particular, S is a function of q (ta) and q (tb). From Eq. (3), we get

![$\[\mathbf{p}\left(t_a\right)=-\frac{\partial S\left(\mathbf{q}\left(t_a\right), \mathbf{q}\left(t_b\right)\right)}{\partial \mathbf{q}\left(t_a\right)}, \quad \mathbf{p}\left(t_b\right)=\frac{\partial S\left(\mathbf{q}\left(t_a\right), \mathbf{q}\left(t_b\right)\right)}{\partial \mathbf{q}\left(t_b\right)}.\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq5.png) (4)

(4)

Once the generating function is determined, the generalized displacement q (tb) and the generalized momentum p(tb) can be calculated according to Eq. (4). In this context, SQQ employs the Lagrange interpolation with equidistant nodes and then the Gaussian quadrature is used to calculate the approximate value of S. However, a higher order interpolation with equidistant nodes often leads to the Runge phenomenon. To avoid this phenomenon, we used the Chebyshev interpolation in this work (Cai & Song 2012; Lanczos 1938).

The generalized coordinate and momentum vectors as functions of time, t, are expressed as:

![$\[\mathbf{q}(t)=\sum_{k=0}^m M_k(t) \mathbf{q}_k, \quad \mathbf{p}(t)=\sum_{k=0}^n N_k(t) \mathbf{p}_k,\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq6.png) (5)

(5)

where Mk(t) and Nk(t) are the interpolation basis functions, while qk and pk are defined as:

![$\[\mathbf{q}_k=\left\{q_k^1, q_k^2, \cdots, q_k^d\right\}^{\mathrm{T}}, \quad k=0,1, \cdots, m,\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq7.png) (6)

(6)

![$\[\mathbf{p}_k=\left\{p_k^1, p_k^2, \cdots, p_k^d\right\}^{\mathrm{T}}, \quad k=0,1, \cdots, n,\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq8.png) (7)

(7)

with d representing the degrees of freedom (DOF) of the system. Here, m and n denote the number of interpolation points for the generalized displacement and generalized momentum, respectively.

By substituting Eq. (5) into Eq. (1) and then combining it with Eq. (4), we obtain

![$\[\begin{aligned}& \frac{\partial S}{\partial \mathbf{q}_0}+\mathbf{p}_0=0, \\& \frac{\partial S}{\partial \mathbf{q}_i}=0, \quad i=1,2, \cdots, m-1, \\& \frac{\partial S}{\partial \mathbf{p}_i}=0, \quad i=0,1, \cdots, n.\end{aligned}\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq9.png) (8)

(8)

Generally, Eq. (8) is composed of nonlinear algebraic equations. Due to the introduction of the step-size control function in this paper (see Section 3.2), the analytical derivation and calculation of the Jacobian matrix become complicated and challenging. This paper employs a quasi-Newton method and accelerates the iterative computation using Broyden’s method (see Section 3.3).

3 Numerical implementation

3.1 Efficient computation of interpolation functions using the projection method

To solve Eq. (8), the action S was approximated using Gaussian integration, which requires the calculation of Gaussian points and their corresponding interpolation functions, Mk(t) and Nk(t), at each time step. Since this process involves matrix inversion, it is computationally expensive. However, because the number of interpolation points remains constant during the computation, these functions do not need to be recomputed at each step. Instead, they can be efficiently determined using a projection method. This section will illustrate this method by using Nk(t) and ![$\[\dot{N}_{k}(t)\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq11.png) as examples.

as examples.

Thus, we let Gaussian integral points and their weights in the interval [−1, 1] be

![$\[\hat{\xi}_j, \hat{\omega}_j, \quad j=1,2, \cdots, g,\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq12.png) (10)

(10)

where g represents the number of Gaussian quadrature points, and ![$\[\hat{N}\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq13.png) and

and ![$\[\hat{\dot{N}}\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq14.png) are the interpolation function and its derivative, respectively. In any time interval [ta, tb] (0 ≤ ta < tb), the Gaussian integral points and their weights can be obtained as follows:

are the interpolation function and its derivative, respectively. In any time interval [ta, tb] (0 ≤ ta < tb), the Gaussian integral points and their weights can be obtained as follows:

![$\[\xi_j=t_a+\frac{\hat{\xi}_j+1}{2} L, \quad \omega_j=\frac{L}{2} \hat{\omega}_j, \quad j=1,2, \cdots, g,\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq15.png) (11)

(11)

where L = tb − ta. As illustrated in Fig. 1, ![$\[\hat{\xi}\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq16.png) and

and ![$\[\xi(\xi \neq \hat{\xi})\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq17.png) denote the Gaussian quadrature points on the intervals [−1, 1] and [ta, tb], respectively. These points share equivalent “length coordinates” denoted by λ1 and λ2, which represent the proportion of the interval’s total length occupied by a given coordinate point. Consequently, the interpolation function within the interval [ta, tb] can be expressed as

denote the Gaussian quadrature points on the intervals [−1, 1] and [ta, tb], respectively. These points share equivalent “length coordinates” denoted by λ1 and λ2, which represent the proportion of the interval’s total length occupied by a given coordinate point. Consequently, the interpolation function within the interval [ta, tb] can be expressed as

![$\[\boldsymbol{N}\left(\xi_j\right)=\hat{\boldsymbol{N}}\left(\hat{\xi}_j\right), \quad j=1,2, \cdots, g,\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq18.png) (12)

(12)

Taking the derivative of both sides of the Eq. (12), we obtain

![$\[\boldsymbol{\dot{N}}\left(\xi_j\right)=\frac{2}{L} \boldsymbol{\hat{\dot{N}}}\left(\hat{\xi}_j\right), \quad j=1,2, \cdots, g.\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq20.png) (13)

(13)

Clearly, it is only necessary to compute ![$\[\hat{N}\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq21.png) and

and ![$\[\hat{\dot{N}}\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq22.png) , and then use the Eqs. (12) and (13) to obtain N and

, and then use the Eqs. (12) and (13) to obtain N and ![$\[\dot{\boldsymbol{N}}\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq23.png) in any time interval. The variables

in any time interval. The variables ![$\[\hat{\boldsymbol{N}}\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq24.png) and

and ![$\[\hat{\dot{\boldsymbol{N}}}\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq25.png) can be calculated using the following equations

can be calculated using the following equations

![$\[\hat{\boldsymbol{N}}(t)=\boldsymbol{G}^{-1} \boldsymbol{p}(t), \quad \dot{\boldsymbol{N}}(t)=\boldsymbol{G}^{-1} \dot{\boldsymbol{p}}(t),\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq26.png) (14)

(14)

and t1, t2, . . . , tn are the Chebyshev interpolation points on the interval [−1, 1].

In this paper, a warm-up procedure is used to compute ![$\[\hat{N}(\hat{\xi})\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq28.png) and

and ![$\[\hat{\dot{N}}(\hat{\xi})\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq29.png) in advance. Vectors N (ξ) and

in advance. Vectors N (ξ) and ![$\[\dot{\boldsymbol{N}}(\xi)\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq30.png) are calculated at each step by using Eqs. (12) and (13), which reduces the computational costs of the matrix inversion. Similarly, this approach is also applied to compute M(ξ) and

are calculated at each step by using Eqs. (12) and (13), which reduces the computational costs of the matrix inversion. Similarly, this approach is also applied to compute M(ξ) and ![$\[\dot{\boldsymbol{M}}(\xi)\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq31.png) .

.

|

Fig. 1 Illustration of the length coordinates for the time intervals [−1, 1] and [ta, tb]. Although the horizontal coordinates of |

3.2 Time transformation

The time transformation t ↔ τ can be employed to implement variable step sizes. Integrating the original system with a variable step size Δt is equivalent to integrating the transformed system with a constant step size, Δτ. The relationship between the transformed time, τ, and the original time, t, is given by

![$\[\frac{\mathrm{d} t}{\mathrm{~d} \tau}=\sigma(\mathbf{p}, \mathbf{q}),\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq32.png) (16)

(16)

where σ > 0 is the step size control function. To ensure that the transformed system remains Hamiltonian, the Darboux–Sundman transformation (Nacozy 1977) is expressed as follows:

![$\[K=\sigma\left(H-H_0\right).\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq33.png) (17)

(17)

Here, H is the Hamiltonian of the original system, K is the new Hamiltonian system, and H0 = H(p0, q0) is the initial energy of the system. For the N-body problem, the Hamiltonian system is generally separable. However, introducing the time transformation makes the system nonseparable. As a result, explicit methods become implicit, requiring the solution of nonlinear equations. Furthermore, due to the time transformation, the Jacobian matrix of the new Hamiltonian system becomes more complicated, which significantly increases the computational cost if solving nonlinear equations using Newton’s method. An efficient quasi-Newton method based on Broyden’s approach for solving nonlinear equations is presented in Section 3.3.

In some cases, appropriate step size control functions σ(p, q) are known a priori. For example, Budd & Piggott (2003) proposed an effective step size control function for the two-body problem:

![$\[\sigma_1(\mathbf{p}, \mathbf{q})=\|\mathbf{q}\|^\alpha,\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq34.png) (18)

(18)

where α = 2 or 1.5. Smaller steps are taken when the bodies are close to each other. In the N-body problem, close encounters can lead to a significant increase in velocity, when the total energy of the system is primarily dominated by kinetic energy and a smaller time step should be chosen in such cases. Therefore, it is natural to use the following step size control function (Huang & Leimkuhler 1997; Hairer et al. 2006):

![$\[\sigma_2(\mathbf{q})=\left[\left(H_0-U(\mathbf{q})\right)+\nabla U(\mathbf{q})^T M^{-1} \nabla U(\mathbf{q})\right]^{-1 / 2},\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq35.png) (19)

(19)

where U(q) is the potential energy. Equation (19) ensures that the step size adapts naturally to the dynamic properties of the system. To prevent the step size from becoming excessively small or large, and upper and lower bounds are introduced (Huang & Leimkuhler 1997). The step size function used in this paper is defined as:

![$\[\sigma(p, q)=\frac{\sqrt{\sigma_2^2+a^2}}{\frac{1}{b} \sqrt{\sigma_2^2+a^2}+1},\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq36.png) (20)

(20)

where 0 < a ≪ 1 ≪ b. This formulation ensures that σ(p, q) is constrained within the range defined by the lower bound a and the upper bound b. In this paper, σ(p, q) is adopted as the time step function with a = 10−6 and b = 102.

3.3 An efficient quasi-Newton method based on Broyden’s method

We let F : Rd → Rd be a vector valued function, defined by Eq. (8). Then, the Newton method for solving the nonlinear equations F(x) = 0 can be expressed as follows:

![$\[\mathbf{x}_{k+1}=\mathbf{x}_k-\mathbf{J}_k^{-1} \mathbf{F}\left(\mathbf{x}_k\right),\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq37.png) (21)

(21)

where k is the iteration index. In each iteration of solving the nonlinear equations, the Jacobian matrix, Jk, and its inverse, ![$\[\mathbf{J}_{k}^{-1}\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq38.png) , are typically required. However, deriving the Jacobian analytically becomes highly complex due to the time transformation applied to the Hamiltonian. A common method to approximate the Jacobian is numerical differentiation. While effective, this approach can be computationally expensive, especially when evaluating the nonlinear function, F, involves Gaussian quadrature.

, are typically required. However, deriving the Jacobian analytically becomes highly complex due to the time transformation applied to the Hamiltonian. A common method to approximate the Jacobian is numerical differentiation. While effective, this approach can be computationally expensive, especially when evaluating the nonlinear function, F, involves Gaussian quadrature.

To accelerate the computation, we employ the quasi-Newton method instead of standard Newton method. Specifically, we used Broyden’s method, which iteratively updates the inverse of the Jacobian matrix without explicitly computing the Jacobian itself. This allowed us to significantly reduce the computational overhead while maintaining reasonable convergence properties.

Following by Broyden’s method, the inverse Jacobian at iteration k + 1 was updated using information from the previous iteration, k, according to the following update formula:

![$\[\mathbf{J}_{k+1}^{-1}=\mathbf{J}_k^{-1}+\frac{\left(\mathbf{s}_k-\mathbf{J}_k^{-1} \mathbf{y}_k\right) \mathbf{s}_{\mathrm{k}}^{\top} \mathbf{J}_k^{-1}}{\mathbf{s}_k^{\top} \mathbf{J}_k^{-1} \mathbf{y}_k},\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq39.png) (22)

(22)

where sk = xk − xk−1 and yk = F(xk) − F(xk−1). This formula provides an efficient method for updating ![$\[\mathbf{J}_{k}^{-1}\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq40.png) , without requiring a direct recalculation of the Jacobian matrix. This is especially beneficial in cases where F(x) involves computationally intensive procedures, such as Gaussian integration. The pseudocode used in this study for the quasi-Newton method based on the Broyden method, is given in Algorithm 1, presented below.

, without requiring a direct recalculation of the Jacobian matrix. This is especially beneficial in cases where F(x) involves computationally intensive procedures, such as Gaussian integration. The pseudocode used in this study for the quasi-Newton method based on the Broyden method, is given in Algorithm 1, presented below.

Require: x0 (initial guess), ![$\[\mathbf{J}_{0}^{-1}\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq41.png) (initial inverse of the Jacobian matrix), ε (convergence criterion), kmax (maximum number of iterations)

(initial inverse of the Jacobian matrix), ε (convergence criterion), kmax (maximum number of iterations)

Ensure: xk (solution)

1: Initialize k ← 0, ek ← 1,

2: while ek > ε and k < kmax do,

3: Evaluate nonlinear function: F(xk),

4: Update the solution: ![$\[\mathbf{x}_{k+1} \leftarrow \mathbf{x}_{k}-\mathbf{J}_{k}^{-1} \mathbf{F}\left(\mathbf{x}_{k}\right)\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq42.png) ,

,

5: Update the inverse Jacobian ![$\[\mathbf{J}_{k+1}^{-1}\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq43.png) using the Broyden update formula (Eq. (22)),

using the Broyden update formula (Eq. (22)),

6: Compute the relative error: ![$\[e_{k+1} \leftarrow \frac{\left\|\mathbf{x}_{k+1}-\mathbf{x}_{k}\right\|}{\left\|\mathbf{x}_{k}\right\|}\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq44.png) ,

,

7: Increment the iteration counter: k ← k + 1,

8: end while,

9: return xk.

Throughout the run of Algorithm 1, the solution from the previous time step xk is utilized as the initial guess x0 for the subsequent time step. Similarly, the inverse Jacobian, ![$\[\mathbf{J}_{0}^{-1}\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq45.png) , is initialized by the inverse Jacobian,

, is initialized by the inverse Jacobian, ![$\[\mathbf{J}_{k}^{-1}\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq46.png) , from the prior converged time step. For the first time step, the Jacobian J0 is approximated numerically using the difference quotient method.

, from the prior converged time step. For the first time step, the Jacobian J0 is approximated numerically using the difference quotient method.

Nonlinear equations can also be solved by using other solvers such as the tensor method. The tensor method introduces an additional tensor term in the iteration to capture the higher-order nonlinear behavior (Schnabel & Frank 1984). Compared to quasi-Newton methods (e.g. Broyden’s method, as used in this work), the tensor method comes with higher computational costs (Nocedal & Wright 2006) and is generally more difficult to implement due to the introduction of the additional tensor term.

However, one notable problem of the quasi-Newton method is its potentially slower convergence compared to the standard Newton iteration (Broyden 1970). This issue can often be mitigated by employing smaller step sizes, which improve the stability and accuracy of the iterations while maintaining the efficiency of the quasi-Newton approach (Bulín & Hajžman 2021; Nocedal & Wright 2006).

|

Fig. 2 Evolution of the energy error for SQQ-P and SQQ. Here, e = 0.5, and m and n denote the number of interpolation points for the generalized displacement and generalized momentum, respectively. |

4 Numerical example

To evaluate the performance of the proposed integration methods, we present three numerical examples. Before delving into the examples, we summarize the key characteristics of the integrators involved.

The original SQQ uses equidistant interpolation nodes and solves nonlinear equations with the Newton method. Five extensions of SQQ are evaluated in this Section: SQQ-P, SQQ-PN, SQQ-PQ, SQQ-PTN, and SQQ-PTQ. The suffix “T” denotes the use of the time transformation, as defined in Eq. (20), “P” refers to the projection method for efficient interpolation, while “Q” and “N” indicate the use of quasi-Newton and Newton methods for solving nonlinear equations, respectively. The characteristics of these methods are summarized in Table 1. For consistency, the convergence threshold, ε, for solving nonlinear equations is set to 1 × 10−12 for all examples. All numerical computations were performed in MATLAB on a platform equipped with a 13th Generation Intel(R) Core(TM) i7-13700 processor and 32 GB of RAM.

4.1 The Kepler problem

The Kepler problem with the Hamiltonian is given by

![$\[H=\frac{1}{2}\left(p_1^2+p_2^2\right)-\frac{1}{r}, \quad r=\sqrt{q_1^2+q_2^2},\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq47.png) (23)

(23)

where the initial conditions are

![$\[\mathbf{q}=\left[\begin{array}{c}1-e \\0\end{array}\right], \quad \mathbf{p}=\left[\begin{array}{c}0 \\\sqrt{\frac{1+e}{1-e}}\end{array}\right],\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq48.png) (24)

(24)

and e represents the eccentricity of the orbit. The period of this system is 2π.

To evaluate the efficiency of the projection method, e = 0.5 is selected as the test parameter. Simulations of 500 orbital periods are performed using both the SQQ and SQQ-P.

Figure 2 illustrates the evolutions of the energy error for SQQ and SQQ-P. When the number of interpolation points is relatively small (i.e., m < 15 and n < 15), the Runge phenomenon does not occur. In this example, the evolutions of the energy error for SQQ and SQQ-P are nearly identical, exhibiting oscillatory behavior within a bounded range, which is typical for symplectic methods. This demonstrates that the projection method employed in this study provides an accurate computation of the interpolation function. As the number of interpolation points increases (i.e., m = 18 and n = 18), SQQ exhibits a growth in energy error over time. This behavior arises from the excessive number of interpolation points, which triggers the Runge phenomenon. As a result, the interpolated action, S, may become inaccurate. In contrast, SQQ-P, which uses Chebyshev interpolation points, effectively suppresses the Runge phenomenon. As a result, the energy error for SQQ-P continues to oscillate within a bounded range, as shown in Fig. 2. This highlights the robustness of Chebyshev interpolation in maintaining the stability of the computed action.

Table 2 shows the time step sizes and CPU times for the various simulations. As the number of interpolation points increases, the CPU time ratio decreases (the CPU time ratio is defined as the ratio of SQQ-P’s CPU time to that of SQQ). This happens because the increase in the number of interpolation points introduces additional Gaussian nodes, significantly raising the computational cost of evaluating the interpolation function during each time step. In contrast, SQQ-P employs the projection method proposed in this study, thereby enhancing the efficiency.

The Kepler problem becomes challenging for traditional fixed-step algorithms as the eccentricity approaches to 1. We perform two numerical experiments for eccentricities of e = 0.9 and 0.99, with parameters Δτ = 0.01, m = n = 3, and a total integration time of 500 orbital periods. Fig. 3 illustrates the evolution of the energy error for SQQ-PTQ with these large eccentricities. Fig. 4 shows the corresponding phase space trajectories of q and p. It can be seen that, even over long-term integration, the phase-space structure remains nearly intact, which indicates that SQQ-PTQ effectively preserves the Hamiltonian structure.

Table 3 presents the CPU times of SQQ-PTN and SQQ-PTQ for solving the Kepler problem with high eccentricities. SQQ-PTQ exhibits an improvement in efficiency over SQQ-PTN, with the latter requiring about 2–3 times of the CPU time at e = 0.9 and e = 0.99, respectively.

Summary of characteristics for different integrators.

CPU time comparison between SQQ and SQQ-P methods.

|

Fig. 3 Evolution of the energy error for SQQ-PTQ for the Kepler problem with high eccentricities. |

Performance comparison of SQQ-PTN and SQQ-PTQ for the Kepler problem at high eccentricities.

4.2 Three-body problem

For problems involving close encounters, any unreasonable time step will lead to increased errors or calculation failure, so an appropriate time step is necessary to accurately capture such events. The three-body problem with possible close encounters is a good option for assessing the performance of the adaptive time step strategy of integrators. Recently, Liao et al. (2022) employed machine learning techniques to discover some novel periodic solutions for the three-body problem. In this section, we use one specific periodic configuration from their study to test SQQ-PTQ’s ability to automatically select the time step. The orbital and physical parameters of the three bodies, as given in Liao et al. (2022), are as follows:

![$\[\begin{array}{lll}\mathbf{q}_1=\left[\begin{array}{ll}-0.2227,~0\end{array}\right]^{\mathrm{T}}, & \dot{\mathbf{q}}_1=[0,~1.7813]^{\mathrm{T}}, & m_1=0.9, \\\mathbf{q}_2=\left[\begin{array}{ll}1,~0] ^ \mathrm{~T}\end{array},\right. & \dot{\mathbf{q}}_2=[0,~0.4150]^{\mathrm{T}}, & m_2=0.85, \\\mathbf{q}_3=\left[\begin{array}{ll}0,~0] ^\mathrm{~T}\end{array},\right. & \dot{\mathbf{q}}_3=[0,~-1.9559]^{\mathrm{T}}, & m_3=1.\end{array}\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq49.png) (25)

(25)

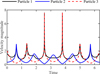

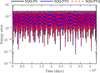

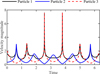

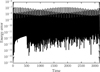

The period of the system is T = 6.3509, and the gravitational constant G is normalized to 1. We simulated 500 orbital periods of this system using the SQQ-PTQ. Figure 5 shows the trajectories of three particles, where particles 1 and 3 experience two close encounters within one period. These encounters occur at approximately 0.4T (dashed box a), and at 0.6T (dashed box b). For both encounters, the closest distance between particles 1 and 3 is approximately 0.014. Figure 6 shows the magnitude of the velocities of the three particles (during the first period). The velocities of particles 1 and 3 exhibit two peaks within one period, due to their close encounters, leading to significant velocity changes for both particles. These two close encounters cause the maximum velocities of particles 1 and 3 to be 10 and 34 times of their respective minimum velocities. The evolution of the energy error exhibits stable oscillations as shown in Fig. 7. Furthermore, the phase space trajectories shown in Fig. 8 maintain a well-preserved structure throughout long-term integration, indicating that SQQ-PTQ effectively preserves the symplectic property.

We also use the MATLAB built-in general-purpose integrator ODE45 (with relative error tolerance set to 1 × 10−10) and SQQ-PTN to simulate this system for 500 orbital periods. Table 4 lists the computational time and maximum energy errors for the three integrators. The only difference between SQQ-PTQ and SQQ-PTN lies in the method used to solve the nonlinear equations, which explains why their maximum energy errors are nearly identical. The CPU time of SQQ-PTQ is less than one-third of that of SQQ-PTN. This indicates that the quasi-Newton method accelerates the computation without introducing any additional errors.

|

Fig. 4 Phase space trajectories over 500 orbital periods for the Kepler problem with different eccentricities. |

Comparison of the performances between SQQ-PTN, SQQ-PTQ, and ODE45 (with relative error tolerance set to 1 × 10−10) in the three-body problem.

|

Fig. 5 Trajectories of particles in the three-body problem, with dashed boxes a and b indicating the locations of two close encounters. Box a corresponds to a close encounter occurring at approximately 0.4T, and box b corresponds to the second close encounter occurring at approximately 0.6T, where T denotes the orbital period. The two subplots below provide zoomed-in views, showing the positions and velocity directions of particles 1 and 3. For both encounters, the closest distance between particles 1 and 3 is approximately 0.014. |

4.3 Outer Solar System

As the final example, we consider the outer Solar System problem, which includes the Sun, Jupiter, Saturn, Uranus, Neptune, and Pluto. The outer Solar System has multiple degrees of freedom. Its clear structure and stable orbits over long timescales make it suitable for evaluating the integrator’s robustness, stability, and computational efficiency. For a detailed description of the masses and orbital parameters of the planets, we refer to Hairer et al. (2006).

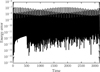

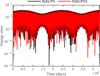

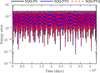

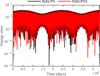

We perform simulations over 100 Jupiter orbits using three integrators: SQQ-PN, SQQ-PTN, and SQQ-PTQ. Figure 9 illustrates the evolution of energy error over time. It is worth mentioning that the evolutions of the energy error for SQQ-PTQ and SQQ-PTN are nearly indistinguishable. This is consistent with the phenomenon of the three-body problem mentioned in Section 4.2, because the only difference between SQQ-PTQ and SQQ-PTN lies in the method used to solve the nonlinear equations. Table 5 presents a summary of the CPU time and maximum energy errors for the three integrators. It can be seen that SQQ-PTN has the longest computational time, which is roughly six times that of SQQ-PTQ. This is primarily due to the time transformation, which increases the computational cost of the Jacobian matrix in each iteration. SQQ-PN, which does not use the time transformation, exhibits moderate computational efficiency. In contrast, SQQ-PTQ achieves the highest efficiency by using a quasi-Newton method based on Broyden’s method, avoiding the direct computation of the Jacobian matrix. Even compared to SQQ-PN, SQQ-PTQ requires only 50% of the computational time.

To further evaluate the long-term integration stability of SQQ-PTQ, we performed simulations over 10000 Jupiter orbital periods using both SQQ-PTQ and SQQ-PN. Figure 10 shows that the evolutions of the energy error for both SQQ-PN and SQQ-PTQ oscillate within a bounded range. Table 6 shows the CPU time and maximum energy error of the integration with different integrators. The efficiency of SQQ-PTQ is higher than that of SQQ-PN, even though SQQ-PTQ includes the time transformation. This demonstrates the effectiveness and robustness of combining the time transformation with the quasi-Newton method.

|

Fig. 6 Evolution of the velocity magnitudes of the three particles over one period in the three-body problem. |

|

Fig. 7 Evolution of the energy error for the integration of the three-body problem by SQQ-PTQ. |

|

Fig. 8 Phase space trajectories of particles in the three-body problem over 500 orbital periods. |

Maximum energy error and CPU times for the integration of the outer Solar System by SQQ-PN, SQQ-PTN, SQQ-PTQ, and ODE45 (relative error tolerance 1 × 10−8), over 100 Jupiter orbital periods.

|

Fig. 9 Evolutions of the energy error for the integration of the outer Solar System by SQQ-PN, SQQ-PTN, and SQQ-PTQ, with a total integration time of 100 Jupiter orbital periods. |

|

Fig. 10 Evolutions of the energy error for the integration of the outer Solar System by SQQ-PN and SQQ-PTQ, with a total integration time of 10000 Jupiter orbital periods. |

Maximum energy error and CPU times for the integration of the outer Solar System by SQQ-PN, SQQ-PTQ and ODE45 (with relative error tolerance set to 1 × 10−8), with a total integration time of 10 000 Jupiter orbital periods.

5 Conclusion

This paper introduces an adaptive symplectic integrator SQQ-PTQ, which builds on the fixed-step integrator SQQ. By combining the time transformation with a quasi-Newton method, SQQ-PTQ improves both computational efficiency and applicability.

A key feature of SQQ-PTQ is its use of time transformation to achieve adaptive step sizing. This allows SQQ-PTQ to adjust the step size in gravitational multi-body problems on different timescales. To overcome the challenges of computing Jacobian matrices, SQQ-PTQ employs a quasi-Newton method based on Broyden’s method. This approach significantly accelerates the solution of nonlinear equations, enhancing the overall computational performance.

The effectiveness and robustness of SQQ-PTQ are demonstrated through three numerical experiments. In particular, SQQ-PTQ demonstrates adaptability when handling close-encounter problems. Furthermore, over long-term integrations, SQQ-PTQ maintains energy conservation, further confirming its characteristics as a symplectic algorithm.

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Nos. 12311530055 and 12472048), the Shenzhen Science and Technology Program (Grant Nos. ZDSYS20210623091808026), and by High-performance Computing Public Platform (Shenzhen Campus) of SUN YAT-SEN UNIVERSITY.

References

- Blanes, S., & Iserles, A. 2012, Celest. Mech. Dyn. Astron., 114, 297 [Google Scholar]

- Broyden, C. G. 1965, Math. Comput., 19, 577 [CrossRef] [Google Scholar]

- Broyden, C. G. 1970, Comput. J., 12, 393 [Google Scholar]

- Budd, C., & Piggott, M. 2003, Handbook of Numerical Analysis (Amsterdam: North-Holland Publishing Co.) [Google Scholar]

- Bulín, R., & Hajžman, M. 2021, Nonlinear Dyn., 103, 2475 [Google Scholar]

- Cai, Q., & Song, L. 2012, in 2012 IEEE International Conference on Information Science and Technology, IEEE, 745 [Google Scholar]

- Channell, P. J., & Scovel, C. 1990, Nonlinearity, 3, 231 [Google Scholar]

- Chen, J.-B., Guo, H.-Y., & Wu, K. 2003, J. Math. Phys., 44, 1688 [Google Scholar]

- Chen, Y., Li, Y., Huang, Y., Li, M., & Liu, Y. 2022, Appl. Math. Model., 106, 742 [Google Scholar]

- Dehnen, W., & Read, J. I. 2011, Eur. Phys. J. Plus, 126, 1 [Google Scholar]

- Emel’yanenko, V. V. 2007, Celest. Mech. Dyn. Astron., 98, 191 [CrossRef] [Google Scholar]

- Feng, K. 1985, Proceedings of the 1984 Beijing Symposium on Differential Geometry and Differential Equations: Computation of Partial Differential Equations (Science Press) [Google Scholar]

- Gao, Q., Tan, S., Zhang, H., & Zhong, W. 2012, Int. J. Numer. Methods Eng., 89, 438 [Google Scholar]

- Hairer, E. 1997, Appl. Numer. Math., 25, 219 [Google Scholar]

- Hairer, E., Wanner, G., & Lubich, C. 2006, Geometric Numerical Integration, 2nd edn. (Berlin, Heidelberg: Springer) [Google Scholar]

- Huang, W., & Leimkuhler, B. 1997, SIAM J. Sci. Comput., 18, 239 [Google Scholar]

- Lanczos, C. 1938, J. Math. Phys., 17, 123 [Google Scholar]

- Li, F., Peng, H., Yang, H., & Kan, Z. 2021, Nonlinear Dyn., 106, 2919 [Google Scholar]

- Liao, S., Li, X., & Yang, Y. 2022, New Astron., 96, 101850 [Google Scholar]

- Makazaga, J., & Murua, A. 2009, Numer. Math., 113, 631 [Google Scholar]

- Miesbach, S., & Pesch, H. J. 1992, Numer. Math., 61, 501 [Google Scholar]

- Mikkola, S., & Tanikawa, K. 1999, MNRAS, 310, 745 [NASA ADS] [CrossRef] [Google Scholar]

- Nacozy, P. 1977, Celest. Mech., 16, 309 [Google Scholar]

- Nocedal, J., & Wright, S. J. 2006, Numerical Optimization (Springer) [Google Scholar]

- Peng, H., Li, F., Liu, J., & Ju, Z. 2019, IEEE Trans. Ind. Electron., 67, 3819 [Google Scholar]

- Peng, H., Song, N., & Kan, Z. 2020, Multibody Syst. Dyn., 49, 119 [Google Scholar]

- Peng, H., Shi, B., Song, J., & Wang, X. 2023, Appl. Math. Model., 114, 205 [Google Scholar]

- Preto, M., & Tremaine, S. 1999, Astron. J., 118, 2532 [Google Scholar]

- Ruth, R. D. 1983, IEEE Trans. Nucl. Sci., 30, 2669 [Google Scholar]

- Schnabel, R. B., & Frank, P. D. 1984, SIAM J. Numer. Anal., 21, 815 [Google Scholar]

- Sherman, J., & Morrison, W. J. 1950, Ann. Math. Stat., 21, 124 [CrossRef] [Google Scholar]

- Song, N., Peng, H., Kan, Z., & Chen, B. 2020, Nonlinear Dyn., 102, 1375 [Google Scholar]

- Springel, V. 2005, MNRAS, 364, 1105 [Google Scholar]

- Vogelaere, R. D. 1900, Methods of Integration which Preserve the Contact Transformation Property of the Hamilton Equations, Tech. rep., University of Notre Dame [Google Scholar]

- Wang, L., & Nitadori, K. 2020, MNRAS, 497, 4384 [Google Scholar]

- Wang, L., Nitadori, K., & Makino, J. 2020, MNRAS, 493, 3398 [NASA ADS] [CrossRef] [Google Scholar]

- Wang, Y., Sun, W., Liu, F., & Wu, X. 2021, AJ, 907, 66 [Google Scholar]

All Tables

Performance comparison of SQQ-PTN and SQQ-PTQ for the Kepler problem at high eccentricities.

Comparison of the performances between SQQ-PTN, SQQ-PTQ, and ODE45 (with relative error tolerance set to 1 × 10−10) in the three-body problem.

Maximum energy error and CPU times for the integration of the outer Solar System by SQQ-PN, SQQ-PTN, SQQ-PTQ, and ODE45 (relative error tolerance 1 × 10−8), over 100 Jupiter orbital periods.

Maximum energy error and CPU times for the integration of the outer Solar System by SQQ-PN, SQQ-PTQ and ODE45 (with relative error tolerance set to 1 × 10−8), with a total integration time of 10 000 Jupiter orbital periods.

All Figures

|

Fig. 1 Illustration of the length coordinates for the time intervals [−1, 1] and [ta, tb]. Although the horizontal coordinates of |

| In the text | |

|

Fig. 2 Evolution of the energy error for SQQ-P and SQQ. Here, e = 0.5, and m and n denote the number of interpolation points for the generalized displacement and generalized momentum, respectively. |

| In the text | |

|

Fig. 3 Evolution of the energy error for SQQ-PTQ for the Kepler problem with high eccentricities. |

| In the text | |

|

Fig. 4 Phase space trajectories over 500 orbital periods for the Kepler problem with different eccentricities. |

| In the text | |

|

Fig. 5 Trajectories of particles in the three-body problem, with dashed boxes a and b indicating the locations of two close encounters. Box a corresponds to a close encounter occurring at approximately 0.4T, and box b corresponds to the second close encounter occurring at approximately 0.6T, where T denotes the orbital period. The two subplots below provide zoomed-in views, showing the positions and velocity directions of particles 1 and 3. For both encounters, the closest distance between particles 1 and 3 is approximately 0.014. |

| In the text | |

|

Fig. 6 Evolution of the velocity magnitudes of the three particles over one period in the three-body problem. |

| In the text | |

|

Fig. 7 Evolution of the energy error for the integration of the three-body problem by SQQ-PTQ. |

| In the text | |

|

Fig. 8 Phase space trajectories of particles in the three-body problem over 500 orbital periods. |

| In the text | |

|

Fig. 9 Evolutions of the energy error for the integration of the outer Solar System by SQQ-PN, SQQ-PTN, and SQQ-PTQ, with a total integration time of 100 Jupiter orbital periods. |

| In the text | |

|

Fig. 10 Evolutions of the energy error for the integration of the outer Solar System by SQQ-PN and SQQ-PTQ, with a total integration time of 10000 Jupiter orbital periods. |

| In the text | |

Current usage metrics show cumulative count of Article Views (full-text article views including HTML views, PDF and ePub downloads, according to the available data) and Abstracts Views on Vision4Press platform.

Data correspond to usage on the plateform after 2015. The current usage metrics is available 48-96 hours after online publication and is updated daily on week days.

Initial download of the metrics may take a while.

![$\[\mathbf{p}_n=\frac{\partial S}{\partial \mathbf{q}_m}.\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq10.png)

![$\[\boldsymbol{G}=\left[\begin{array}{lllll}1 & t_1 & t_1^2 & \cdots & t_1^{n-1} \\1 & t_2 & t_2^2 & \cdots & t_2^{n-1} \\\vdots & \cdots & \cdots & \cdots & \vdots \\\vdots & \cdots & \cdots & \cdots & \vdots \\1 & t_n & t_n^2 & \cdots & t_n^{n-1}\end{array}\right], \boldsymbol{p}=\left[\begin{array}{l}1 \\t \\t^2 \\\vdots \\t^n\end{array}\right], \dot{\boldsymbol{p}}=\left[\begin{array}{l}0 \\1 \\2 t \\\vdots \\n t^{n-1}\end{array}\right]\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq27.png)

![$\[\hat{\xi}\]$](/articles/aa/full_html/2025/07/aa51822-24/aa51822-24-eq19.png)