| Issue | A&A Volume 689, September 2024 |
|---|---|
| Article Number | A289 |
| Number of page(s) | 12 |
| Section | Numerical methods and codes |
| DOI | https://doi.org/10.1051/0004-6361/202449475 |
| Published online | 19 September 2024 |

ATAT: Astronomical Transformer for time series and Tabular data

1. Department of Computer Science, Universidad de Concepción, Concepción, Chile
2. Center for Data and Artificial Intelligence, Universidad de Concepción, Edmundo Larenas 310, Concepción, Chile
3. Millennium Institute of Astrophysics (MAS), Nuncio Monseñor Sotero Sanz 100, Of. 104, Providencia, Santiago, Chile
4. Center for Mathematical Modeling (CMM), Universidad de Chile, Beauchef 851, Santiago 8320000, Chile
5. Department of Electrical Engineering, Universidad de Chile, Av. Tupper 2007, Santiago 8320000, Chile
6. Data Observatory Foundation, Eliodoro Yáñez 2990, oficina A5, Santiago, Chile
7. Data and Artificial Intelligence Initiative (ID&IA), University of Chile, Santiago, Chile
8. Institute of Astronomy (IvS), Department of Physics and Astronomy, KU Leuven, Celestijnenlaan 200D, 3001 Leuven, Belgium
9. Instituto de Informática, Facultad de Ciencias de la Ingeniería, Universidad Austral de Chile, General Lagos 2086, Valdivia, Chile
10. European Southern Observatory, Karl-Schwarzschild-Strasse 2, 85748 Garching bei München, Germany
11. Instituto de Física y Astronomía, Universidad de Valparaíso, Av. Gran Bretaña 1111, Playa Ancha, Casilla 5030, Chile
12. Instituto de Astrofísica, Pontificia Universidad Católica de Chile, Av. Vicuña Mackenna 4860, 7820436 Macul, Santiago, Chile
13. Centro de Astroingeniería, Pontificia Universidad Católica de Chile, Av. Vicuña Mackenna 4860, 7820436 Macul, Santiago, Chile

Received: 2 February 2024 / Accepted: 9 June 2024

Context. The advent of next-generation survey instruments, such as the Vera C. Rubin Observatory and its Legacy Survey of Space and Time (LSST), is opening a window for new research in time-domain astronomy. The Extended LSST Astronomical Time-Series Classification Challenge (ELAsTiCC) was created to test the capacity of brokers to deal with a simulated LSST stream.

Aims. Our aim is to develop a next-generation model for the classification of variable astronomical objects. We describe ATAT, the Astronomical Transformer for time series And Tabular data, a classification model conceived by the ALeRCE alert broker to classify light curves from next-generation alert streams. ATAT was tested in production during the first round of the ELAsTiCC campaigns.

Methods. ATAT consists of two transformer models that encode light curves and features using novel time modulation and quantile feature tokenizer mechanisms, respectively. ATAT was trained on different combinations of light curves, metadata, and features calculated over the light curves. We compare ATAT against the current ALeRCE classifier, a balanced hierarchical random forest (BHRF) trained on human-engineered features derived from light curves and metadata.

Results. When trained on light curves and metadata, ATAT achieves a macro F1 score of 82.9 ± 0.4 in 20 classes, outperforming the BHRF model trained on 429 features, which achieves a macro F1 score of 79.4 ± 0.1.

Conclusions. The use of transformer multimodal architectures, combining light curves and tabular data, opens new possibilities for classifying alerts from a new generation of large etendue telescopes, such as the Vera C. Rubin Observatory, in real-world brokering scenarios.

Key words: methods: data analysis / methods: statistical / surveys / supernovae: general / stars: variables: general

© The Authors 2024

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1 Introduction

A new generation of synoptic telescopes is carrying out data-intensive observation campaigns. An emblematic example is the Vera C. Rubin Observatory and its Legacy Survey of Space and Time (LSST) (Ivezic et al. 2019). Starting in 2025, the Rubin Observatory will generate an average of ten million alerts and ~20 TB of raw data every night. The massive data stream of LSST is to be distributed to community brokers¹ that will be in charge of ingesting, processing, and serving the annotated alerts to the astronomical community. Collaboration between astronomers, computer scientists, statisticians, and engineers is key to addressing the growing challenges of astronomical big data (Borne 2010; Huijse et al. 2014).

Automatic data processing based on feature engineering (FE; e.g., Nun et al. 2017) and machine learning (ML), including deep learning (DL), has been applied extensively in astronomy, for example to light curve and image-based classification (e.g., Dieleman et al. 2015; Cabrera-Vives et al. 2016, 2017; Carrasco-Davis et al. 2021; Russeil et al. 2022), clustering (Mackenzie et al. 2016; Astorga et al. 2018), physical parameter estimation (Förster et al. 2018; Sánchez et al. 2021; Villar 2022), and outlier detection (Nun et al. 2016; Pruzhinskaya et al. 2019; Sánchez-Sáez et al. 2021a; Ishida et al. 2021; Pérez-Carrasco et al. 2023; Perez-Carrasco et al. 2023). The Vera C. Rubin Community Brokers, ALeRCE (Förster et al. 2021), AMPEL (Nordin et al. 2019), ANTARES (Matheson et al. 2021), BABAMUL, Fink (Möller et al. 2021), Lasair (Smith et al. 2019), and Pitt-Google², are processing or will process massive amounts of data, annotating them with cross-matches, ML model predictions, and/or other information that is distributed to the community. These scientific products allow astronomers to study transient and variable objects in almost real time, or offline for a systematic analysis of large numbers of objects. To enable real-time use, ML models must be integrated into a complex infrastructure that allows the accurate, rapid, and scalable evaluation of tens of thousands of alerts received every minute (Narayan et al. 2018; Rodriguez-Mancini et al. 2022; Cabrera-Vives et al. 2022). In the past, the most common choices were decision tree-based ensembles (e.g., random forest, RF, or light gradient boosting machine, LightGBM): models with high predictive performance but high resource usage due to the FE step. Several efforts have applied faster DL-based approaches to the classification of astronomical time series (e.g., recurrent neural networks, RNNs; Charnock & Moss 2017; Naul et al. 2018; Carrasco-Davis et al. 2019; Muthukrishna et al. 2019; Becker et al. 2020; Gómez et al. 2020; Jamal & Bloom 2020; Donoso-Oliva et al. 2021). These approaches usually focus on a specific type of object, for example variable stars (Naul et al. 2018; Becker et al. 2020; Jamal & Bloom 2020) or transients (Charnock & Moss 2017; Muthukrishna et al. 2019; Möller & de Boissière 2019; Fraga et al. 2024). Until this work, ALeRCE had not been able to outperform tree-based ensembles (Boone 2019; Hložek et al. 2023; Neira et al. 2020; Sánchez-Sáez et al. 2021b; Sánchez-Sáez, P. et al. 2023) with DL approaches for real-time alert classification across a broad taxonomy that simultaneously encompasses transient, stochastic, and periodic variable objects.

More recently, multi-head attention (MHA; Vaswani et al. 2017) and transformers have emerged as promising time series encoders in astronomy (Allam & McEwen 2024; Pimentel et al. 2023; Donoso-Oliva et al. 2023; Moreno-Cartagena et al. 2023). These models are faster than RNNs because they process all elements of the input in parallel rather than sequentially. However, none of these attention-based models has explored training on multiple data sources (time series, metadata, and human-engineered features) simultaneously.

The astronomical community has made great efforts to create realistic scenarios for testing ML models (Hložek et al. 2023), but none of these has considered an end-to-end ML pipeline (i.e., from data ingestion to the ML model’s outputs). The recent Extended LSST Astronomical Time-Series Classification Challenge (ELAsTiCC³,⁴; see Section 2.1) has provided a unique opportunity to test broker pipelines and ML models in production. ELAsTiCC is a challenge created by the Dark Energy Science Collaboration (DESC) that simulates LSST-like astronomical alerts, with the goal of connecting the LSST project, the brokers, and DESC by testing end-to-end pipelines in real time. To this end, ELAsTiCC started an official data stream on September 28, 2022. ELAsTiCC also provided a dataset for training ML models.

In this work we present the methods that ALeRCE used for the first round of ELAsTiCC. We propose ATAT, an Astronomical Transformer for time series And Tabular data, a model based on a transformer architecture. ATAT is trained with the dataset provided by ELAsTiCC prior to the start of the real-time infrastructure challenge and is implemented as an end-to-end pipeline within the ALeRCE broker (Förster et al. 2021). ATAT can use time series information together with all the available metadata and/or features obtained from other preprocessing steps (see Figure 1). In summary, our contributions are the following:

- A new state-of-the-art transformer model, ATAT, that encodes multivariate, variable-length, and irregularly sampled light curves in combination with metadata and/or extracted features.
- A thorough comparison on the ELAsTiCC dataset between ATAT and an RF-based baseline (historically the most competitive ALeRCE model when applied to real data streams).

The code for replicating our experiments is publicly available at https://github.com/alercebroker/ATAT. Our code uses the publicly available ELAsTiCC dataset⁵.

Fig. 1 Diagram of ATAT, which consists of two branches: 1) at the top, a transformer to process light curves (matrices x, t, and M) and 2) at the bottom, a transformer to process tabular data (matrix f). The two information sources are processed by time modulation (TM) and quantile feature tokenizers (QFT), respectively (white rectangles). In both cases the result of this processing is a sequence. A learnable token is then added as the first element of each sequence. These sequences are processed by the transformer architectures Tlc (light curves) and Ttab (tabular data). Finally, the processed token is transformed linearly and used for label prediction.

Fig. 2 ELAsTiCC dataset class histogram. In (a), the original taxonomy class distribution is shown. In (b), the taxonomy class distribution selected by the Rubin Observatory community brokers is shown. We use the SN-like/Other class to include SNe IIb.

2 ELAsTiCC overview and machine learning approaches

2.1 ELAsTiCC

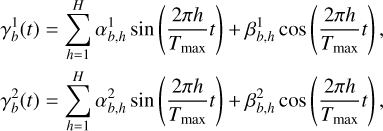

The ELAsTiCC dataset contains 1,845,146 light curves in six bands (ugrizY) of simulated astronomical objects from 32 classes, as shown in Figure 2a. This work uses the training dataset from the first ELAsTiCC campaign. We adopt the taxonomy used by the ELAsTiCC brokers, except that the “SN-like/Other” class includes only SNe IIb (see Figure 2b).

We consider 64 attributes from the metadata provided in the ELAsTiCC alert stream. These attributes include the best heliocentric redshift; the Milky Way color excess E(B − V) (and its error); and, for the first and second host-galaxy matches, the ellipticity, the magnitude in each band (and its error), the transient–host separation, the radius, the photometric redshift (and its error), the deciles of the estimated photometric redshift probability density function, and the spectroscopic redshift if available (and its error).

We split the ELAsTiCC dataset into training, validation, and test sets. The test set contains 1000 light curves of each class, ensuring a balanced representation. The remaining data are divided into five stratified folds, from which we train five separate models (the same folds are used for all neural networks and for the random forest). In each training iteration, one fold serves as the validation set for hyperparameter tuning and early stopping. All metrics presented in this paper are computed on the test set.
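The split described above can be sketched as follows. This is a minimal illustration assuming integer object indices and string class labels; the function names `balanced_test_and_folds` and `train_val_split` are hypothetical, and the shuffling and round-robin dealing into folds are our assumptions, not necessarily how the ALeRCE code builds its folds.

```python
import random
from collections import defaultdict


def balanced_test_and_folds(labels, n_test_per_class=1000, n_folds=5, seed=0):
    """Return (test_indices, folds) for a list of class labels.

    The test set takes n_test_per_class objects from every class; the rest
    are shuffled and dealt round-robin into n_folds stratified folds.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    test, folds = [], [[] for _ in range(n_folds)]
    for label in sorted(by_class):
        idxs = by_class[label][:]
        rng.shuffle(idxs)
        test.extend(idxs[:n_test_per_class])
        # Dealing round-robin keeps each class's share nearly equal across folds.
        for i, idx in enumerate(idxs[n_test_per_class:]):
            folds[i % n_folds].append(idx)
    return sorted(test), [sorted(f) for f in folds]


def train_val_split(folds, k):
    """Fold k is used for validation/early stopping; the others for training."""
    train = [idx for j, fold in enumerate(folds) if j != k for idx in fold]
    return train, folds[k]
```

With the setting in the text, `n_test_per_class=1000` and `n_folds=5`; looping `k` over the five folds yields the five separately trained models.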

Additionally, we modified the ELAsTiCC dataset by discarding information that is not available in the ELAsTiCC alert stream. For this purpose, we use the PHOTFLAG⁶ key to select only non-saturated data. For each light curve, we consider alerts and forced photometry points from 30 days before the first alert (i.e., the forced photometry provided with the first alert) up to the last detection. Non-detections after the last detection are discarded.
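A sketch of this window selection, assuming each point already carries a detection flag and a saturation flag decoded from PHOTFLAG. The `Point` container, its field names, and the `saturated` flag are hypothetical; the PHOTFLAG bit layout is not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class Point:
    mjd: float        # observation time in days
    flux: float       # difference flux
    flux_err: float   # flux error
    detected: bool    # True for alert detections, False otherwise
    saturated: bool   # hypothetical flag decoded from PHOTFLAG


def trim_light_curve(points, first_alert_mjd, lookback_days=30.0):
    """Keep non-saturated points from lookback_days before the first alert
    up to and including the last (non-saturated) detection."""
    detection_times = [p.mjd for p in points if p.detected and not p.saturated]
    if not detection_times:
        return []
    start, end = first_alert_mjd - lookback_days, max(detection_times)
    return [p for p in points if not p.saturated and start <= p.mjd <= end]
```

Points after the last detection fall outside `[start, end]` and are therefore dropped, matching the rule that trailing non-detections are discarded.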

2.2 ATAT

Here we describe our proposed transformer-based model, ATAT, and the techniques developed to process time series information (light curves) and tabular data (metadata and/or processed features). For the rest of the paper, we call these models ATAT variants, since different input combinations can be used.

For each astronomical source, we consider two types of data: the light curve, and tabular data composed of static metadata (e.g., host galaxy redshifts, if any) and features calculated from the light curve (e.g., the period of a periodic source). For a particular source, an observation $j$ in band $b$ of its light curve is described by the observation time $t_{j,b}$ and by the photometric data $x_{j,b} = (\mu_{j,b}, \sigma_{j,b})$, where $\mu_{j,b}$ is the difference flux⁷ and $\sigma_{j,b}$ the flux error. Not all light curves have the same number of observations. To represent them in the model input, we use fixed-size light curves with the length of the longest light curve in the dataset (65 observations) and apply zero padding, that is, we set both $x_{j,b}$ and $t_{j,b}$ to zero for all positions after the last observation in each band. Likewise, not all bands are observed simultaneously; this is represented by setting $\mu_{j,b}$ and $\sigma_{j,b}$ to zero for the unobserved bands of observation $j$. To mask attention for these unobserved values, we use a binary mask $M_{j,b}$ such that $M_{j,b} = 1$ if observation $j$ is observed in band $b$, and $M_{j,b} = 0$ otherwise (Vaswani et al. 2017; Devlin et al. 2019). For each source, the tabular data consist of $K$ features $f_k$, $k \in \{1, \dots, K\}$, which, as explained above, may be metadata or features calculated from the light curves.
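The padding and masking scheme can be illustrated with plain Python lists, assuming the per-band observations are already time-sorted. `pad_light_curve`, the band order, and the `(t, mu, sigma)` tuple layout are hypothetical; a production model would build the same arrays as tensors. The arrays are indexed `[j][b]`, with `j` running over the (at most `max_len`) observations of each band.

```python
BANDS = "ugrizY"  # band order assumed for this sketch


def pad_light_curve(obs_by_band, max_len):
    """Return zero-padded x, t and binary mask M, each indexed [j][b].

    obs_by_band maps a band letter to a time-sorted list of
    (t, mu, sigma) tuples. Positions without an observation keep
    x = (0, 0), t = 0 and M = 0.
    """
    n_bands = len(BANDS)
    x = [[(0.0, 0.0)] * n_bands for _ in range(max_len)]
    t = [[0.0] * n_bands for _ in range(max_len)]
    mask = [[0] * n_bands for _ in range(max_len)]
    for b, band in enumerate(BANDS):
        obs = obs_by_band.get(band, [])
        if len(obs) > max_len:
            raise ValueError(f"band {band} has more than {max_len} observations")
        for j, (t_jb, mu, sigma) in enumerate(obs):
            x[j][b] = (mu, sigma)
            t[j][b] = t_jb
            mask[j][b] = 1
    return x, t, mask
```

For example, `pad_light_curve({"g": [(0.0, 1.0, 0.1)]}, 65)` yields 65 rows in which only `M[0][1]` (g band) equals 1.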

As a first step, time series and tabular data are processed using time modulation (TM) and a quantile feature tokenizer (QFT), respectively. These steps return sequences that can be used as inputs for common transformer architectures. Figure 1 shows a general scheme of ATAT. Its hyperparameters are further specified in Section 2.2.5. We note that larger models showed better performance, but we limited their size to reduce the memory requirements in production. For the rest of the paper we denote a linear layer as LL.

2.2.1 Time modulation

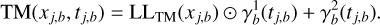

Time modulation incorporates the time information of observation $j$ in band $b$, $t_{j,b}$, into the difference flux $\mu_{j,b}$ and flux error $\sigma_{j,b}$. Previous works have successfully applied TM in attention models, using processes similar to positional encoding (Vaswani et al. 2017). We constructed a variant of the time modulation proposed by Pimentel et al. (2023), which is based on a Fourier decomposition. For each observation $j$ and band $b$ of the light curve, we apply a linear transformation to the input vector, mapping $x_{j,b} = (\mu_{j,b}, \sigma_{j,b})$ to a vector $\mathrm{LL}_{\mathrm{TM}}(x_{j,b})$ of dimension $E_{\mathrm{TM}}$⁸. We modulate this vector through an element-wise product with the output of a vector function $\gamma_1$ and add the output of a second vector function $\gamma_2$:

$$
\mathrm{TM}(x_{j,b}, t_{j,b}) = \mathrm{LL}_{\mathrm{TM}}(x_{j,b}) \odot \gamma_1(t_{j,b}) + \gamma_2(t_{j,b}). \tag{1}
$$

We define the functions $\gamma_1$ and $\gamma_2$ as Fourier series,

$$
\gamma_i(t) = \sum_{h=1}^{H} \left[ \alpha_{i,h} \sin\!\left(\frac{2\pi h t}{T_{\max}}\right) + \beta_{i,h} \cos\!\left(\frac{2\pi h t}{T_{\max}}\right) \right], \quad i \in \{1, 2\}, \tag{2}
$$

where $T_{\max}$ is a hyperparameter set larger than the time span of the longest light curve in the dataset, $H$ is the number of harmonics in the Fourier series, and $\alpha_{i,h}, \beta_{i,h} \in \mathbb{R}^{E_{\mathrm{TM}}}$ are learnable Fourier coefficients. In this work, $t$ is the number of days since the first forced photometry point in the light curve.

Equation (1) applies a linear transformation to $x_{j,b}$, expanding its dimension, and then applies a time-dependent scale and bias, both given by Fourier series in $t_{j,b}$ (Eq. (2)). For large $H$, a Fourier series is expressive enough to represent a wide range of functions of time over $[0, T_{\max}]$. Equation (1) is applied separately to each band, and the output vectors are then concatenated along the sequence dimension. Consequently, the output of TM for a light curve is a matrix of dimension $(L \cdot B) \times E_{\mathrm{TM}}$, where $B$ is the number of bands and $L$ is the maximum number of observations in any band over all light curves in the dataset.
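A minimal sketch of Eqs. (1) and (2) for a single band, written with plain lists so it runs without a deep learning framework. The parameters are randomly initialized instead of learned, the class and attribute names are hypothetical, and the small defaults (`e_tm=8`, `n_harmonics=4`) only keep the example readable (Section 2.2.5 uses T_max = 1500 days and H = 64). A full model would apply this to each band separately; whether parameters are shared across bands is not specified here.

```python
import math
import random


class TimeModulation:
    """TM(x, t) = LL_TM(x) * gamma_1(t) + gamma_2(t), element-wise.

    gamma_i(t) = sum_h alpha[i][h] sin(2 pi h t / T_max)
                       + beta[i][h] cos(2 pi h t / T_max).
    """

    def __init__(self, e_tm=8, n_harmonics=4, t_max=1500.0, seed=0):
        rng = random.Random(seed)
        self.e_tm, self.h, self.t_max = e_tm, n_harmonics, t_max
        # LL_TM: linear layer from (mu, sigma) to e_tm dimensions.
        self.w = [[rng.gauss(0, 1) for _ in range(2)] for _ in range(e_tm)]
        self.b = [rng.gauss(0, 1) for _ in range(e_tm)]

        def coeffs():
            # Indexed [i][h][e]: function i, harmonic h, output dimension e.
            return [[[rng.gauss(0, 0.1) for _ in range(e_tm)]
                     for _ in range(n_harmonics)] for _ in range(2)]

        self.alpha, self.beta = coeffs(), coeffs()

    def linear(self, x):
        mu, sigma = x
        return [wr[0] * mu + wr[1] * sigma + br for wr, br in zip(self.w, self.b)]

    def gamma(self, i, t):
        out = [0.0] * self.e_tm
        for h in range(1, self.h + 1):
            angle = 2.0 * math.pi * h * t / self.t_max
            s, c = math.sin(angle), math.cos(angle)
            a, bcoef = self.alpha[i][h - 1], self.beta[i][h - 1]
            for e in range(self.e_tm):
                out[e] += a[e] * s + bcoef[e] * c
        return out

    def __call__(self, x, t):
        lin, g1, g2 = self.linear(x), self.gamma(0, t), self.gamma(1, t)
        return [l * s + o for l, s, o in zip(lin, g1, g2)]
```

Because every term has period T_max, `gamma(i, t)` and `gamma(i, t + T_max)` coincide; this is why T_max is chosen larger than the longest time span, so that distinct times within a light curve are not aliased onto each other.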

2.2.2 Quantile feature tokenizer

Tabular data in ELAsTiCC may include processed light curve features, static metadata, or a concatenation of both. We process these data before feeding them into a transformer. We call this process the quantile feature tokenizer (QFT), and it comprises two steps. First, a quantile transformation⁹ $\mathrm{QT}_k(f_k)$ is applied to each feature $f_k$ of the tabular data of each object. This normalizes features with complex (e.g., skewed) distributions and helps the predictive model perform better. Second, an affine transformation is used to vectorize each scalar value in the tabular data:

$$
\mathrm{QFT}_k(f_k) = \mathrm{QT}_k(f_k) \cdot W_k + b_k, \tag{3}
$$

where $\cdot$ denotes matrix multiplication (here, of the scalar $\mathrm{QT}_k(f_k)$ by a vector), $k$ is the feature index, and $W_k$ and $b_k$ are vectors of learnable parameters of dimension $E_{\mathrm{QFT}}$. In other words, the $k$-th scalar feature $f_k$ is transformed by $\mathrm{QT}_k$ and then vectorized by multiplying it by $W_k$ and adding $b_k$. A different transformation is applied to each feature of the tabular data. The output dimension $E_{\mathrm{QFT}}$ is a hyperparameter. This methodology is similar to that described in Gorishniy et al. (2021), but we additionally apply to each feature a quantile transformation that is fitted before training the model.
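The two QFT steps can be sketched as below. As an assumption, `QT_k` is approximated by the empirical cumulative distribution function of feature k on the training set, mapping values to [0, 1]; a library implementation such as scikit-learn's `QuantileTransformer` (which interpolates between quantiles and can also target a normal distribution) would be a typical choice, but the exact transformer behind footnote 9 is not reproduced here. `W_k` and `b_k` are random rather than learned, and the class name is hypothetical.

```python
import bisect
import random


class QuantileFeatureTokenizer:
    """Sketch of Eq. (3): token_k = QT_k(f_k) * W_k + b_k."""

    def __init__(self, train_rows, e_qft=4, seed=0):
        rng = random.Random(seed)
        n_features = len(train_rows[0])
        # Sorted training values of each feature define its empirical CDF.
        self.sorted_cols = [sorted(row[k] for row in train_rows)
                            for k in range(n_features)]
        # One (W_k, b_k) pair per feature: each feature gets its own transform.
        self.W = [[rng.gauss(0, 1) for _ in range(e_qft)] for _ in range(n_features)]
        self.b = [[rng.gauss(0, 1) for _ in range(e_qft)] for _ in range(n_features)]

    def quantile(self, k, value):
        """Fraction of training values of feature k that are <= value."""
        col = self.sorted_cols[k]
        return bisect.bisect_right(col, value) / len(col)

    def __call__(self, row):
        tokens = []
        for k, f_k in enumerate(row):
            q = self.quantile(k, f_k)
            tokens.append([q * w + bb for w, bb in zip(self.W[k], self.b[k])])
        return tokens  # K tokens, each of dimension e_qft
```

Because the quantile step depends only on ranks, a heavily skewed feature (for instance one spanning 10² to 10⁹) is mapped to the same bounded range as a well-behaved one before vectorization.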

2.2.3 Transformers

The transformer architecture is based on Bidirectional Encoder Representations from Transformers (BERT; Devlin et al. 2019) and Vision Transformers (Dosovitskiy et al. 2021), which process their inputs as sequences of tokens. Each layer consists of a multi-head attention (MHA) step and a fully connected feed-forward (FF) neural network step, each with a skip connection.

The transformer architecture used in this work can be described as follows. Each layer $l$ receives as input the output of layer $l-1$:

$$
\tilde{h}_l = \mathrm{MHA}(h_{l-1}) + h_{l-1}, \tag{4}
$$

$$
h_l = \mathrm{FF}(\tilde{h}_l) + \tilde{h}_l, \tag{5}
$$

where $l \in \{1, \dots, n_{\mathrm{layers}}\}$, with $n_{\mathrm{layers}}$ the number of layers of the transformer; $h_0$ is the input sequence; $h_l$ is the output of layer $l$; and $\tilde{h}_l$ is the output of the MHA step, which includes a skip connection. $\tilde{h}_l$ serves as input to a feed-forward network with a skip connection that outputs $h_l$. The relevant transformer hyperparameters include the number of heads $n_{\mathrm{heads}}$ and the embedding dimensionality $E_T$, which are specified in Section 2.2.5. Additionally, we use a learnable classification token of dimension $E_T$ that is concatenated at the beginning of the input sequence; after the transformer, the representation of this token is fed into an output layer that performs the classification task. $E_T$ equals the dimension of the transformer input, that is, $E_{\mathrm{TM}}$ for the light curve transformer and $E_{\mathrm{QFT}}$ for the tabular data transformer.
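The layer structure of Eqs. (4) and (5), together with the attention mask and the classification token, can be sketched as follows. This is a simplified illustration under explicit assumptions: the query, key, and value projections of MHA are taken as the identity (a real MHA learns them, plus an output projection), the default FF is an element-wise GELU placeholder rather than the two-layer network of Section 2.2.5, and dropout is omitted. Function names are hypothetical.

```python
import math


def masked_self_attention(h, mask, n_heads):
    """Multi-head scaled dot-product self-attention with a key mask.

    h is a list of n token vectors of dimension d (d divisible by n_heads);
    mask[j] = 1 for real tokens and 0 for padded/unobserved ones.
    Q, K and V are the identity here (an assumption to keep the sketch short).
    """
    n, d = len(h), len(h[0])
    dh = d // n_heads
    out = [[0.0] * d for _ in range(n)]
    for head in range(n_heads):
        lo, hi = head * dh, (head + 1) * dh
        for i in range(n):
            q = h[i][lo:hi]
            scores = []
            for j in range(n):
                if mask[j]:
                    k = h[j][lo:hi]
                    scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(dh))
                else:
                    scores.append(float("-inf"))  # masked key: zero weight
            top = max(scores)
            exps = [math.exp(s - top) for s in scores]
            total = sum(exps)
            for j in range(n):
                w = exps[j] / total
                for e in range(lo, hi):
                    out[i][e] += w * h[j][e]
    return out


def gelu(x):
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def transformer_layer(h_prev, mask, n_heads, ff):
    """Eq. (4): h_tilde = MHA(h_prev) + h_prev; Eq. (5): h = FF(h_tilde) + h_tilde."""
    attn = masked_self_attention(h_prev, mask, n_heads)
    h_tilde = [[a + x for a, x in zip(ra, rx)] for ra, rx in zip(attn, h_prev)]
    return [[f + x for f, x in zip(ff(row), row)] for row in h_tilde]


def encode(tokens, token_mask, cls_token, n_layers=3, n_heads=4, ff=None):
    """Prepend the classification token, run n_layers layers, return its output."""
    if ff is None:
        ff = lambda row: [gelu(v) for v in row]  # placeholder for the 2-layer FF
    h = [list(cls_token)] + [list(t) for t in tokens]
    mask = [1] + list(token_mask)  # the classification token is never masked
    for _ in range(n_layers):
        h = transformer_layer(h, mask, n_heads, ff)
    return h[0]
```

Because masked keys receive a score of −∞, their softmax weight is exactly zero, so padded or unobserved positions cannot influence the classification token.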

When only a single data source is considered (e.g., only light curve data), we take the first element of the transformer’s output sequence, and apply a linear layer plus a softmax activation function. When two data sources are considered, two transformers Tlc and Ttab are used to process light curve and tabular data information, respectively. The first output elements of the two sequences are concatenated, and a multilayer perceptron and a softmax activation function are applied to produce the label prediction (see Figure 1).
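For the two-branch case, the fusion head can be sketched as follows: the two processed classification tokens are concatenated, passed through a small multilayer perceptron, and normalized with a softmax. The hidden-layer width, the ReLU activation, and the random weights are assumptions for illustration; the text does not specify the MLP's exact configuration, and the function names are hypothetical.

```python
import math
import random


def softmax(z):
    top = max(z)
    exps = [math.exp(v - top) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def dense(x, weights, bias):
    """Linear layer: weights has one row per output unit."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def fusion_head(cls_lc, cls_tab, params):
    """Concatenate the two class tokens, apply a 2-layer MLP and a softmax."""
    z = list(cls_lc) + list(cls_tab)
    hidden = [max(0.0, v) for v in dense(z, params["W1"], params["b1"])]  # ReLU (assumed)
    return softmax(dense(hidden, params["W2"], params["b2"]))


def random_params(d_in, d_hidden, n_classes, seed=0):
    """Random (untrained) MLP weights, for illustration only."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

    return {"W1": mat(d_hidden, d_in), "b1": [0.0] * d_hidden,
            "W2": mat(n_classes, d_hidden), "b2": [0.0] * n_classes}
```

In the single-source case the same `softmax(dense(...))` call would be applied directly to one classification token, matching the linear layer plus softmax described above.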

2.2.4 Masked temporal augmentation

To improve early classification performance, we train ATAT on light curves truncated at a randomly selected time. During training, a day $t^* \in \{8, 128, 2048\}$ is randomly selected for each light curve, and the values of the mask $M$ corresponding to times $t > t^*$ are set to zero. Choosing $t^* = 2048$ is equivalent to using the complete light curves, since no light curve in the dataset spans that long. The cutoff times are drawn from a small discrete set because the features cannot feasibly be computed at arbitrary times. Hereafter, we refer to this augmentation method as masked temporal augmentation (MTA). Training on truncated light curves is a standard procedure that has been used in the past (Möller & de Boissière 2019; Donoso-Oliva et al. 2021; Gagliano et al. 2023).
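MTA only touches the mask, which can be sketched as below. We assume that the cutoff `t_star` is compared with the same time values `t` stored in the padded arrays; the function name and the optional `t_star` and `rng` arguments are hypothetical conveniences for testing.

```python
import random

CUTOFFS = (8, 128, 2048)  # candidate cutoff days t*, as in the text


def mask_temporal_augmentation(t, mask, t_star=None, rng=random):
    """Zero the mask wherever t > t*, sampling t* from CUTOFFS if not given.

    t and mask are L x B nested lists from the padding step. A new mask is
    returned (the input is not modified), together with the t* used.
    """
    if t_star is None:
        t_star = rng.choice(CUTOFFS)
    new_mask = [[m if tj <= t_star else 0 for tj, m in zip(t_row, m_row)]
                for t_row, m_row in zip(t, mask)]
    return new_mask, t_star
```

Padded positions already have a zero mask, so they stay masked regardless of `t_star`. For the tabular branch, the features would instead be precomputed at the same three cutoffs, which is why the cutoff set is discrete.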

2.2.5 Implementation details

ATAT variants are evaluated every 20 000 iterations, and early stopping with a patience of three evaluations is used. Models are trained with the Adam optimizer (Kingma & Ba 2015), a learning rate of $2 \times 10^{-4}$, and a batch size of 256 until early stopping is triggered. We use class-balanced batches. The fully connected neural network of Eq. (5) consists of two layers: the first uses a Gaussian error linear unit (GELU; Hendrycks & Gimpel 2016) activation, while the second is a linear layer. For both $T_{\mathrm{lc}}$ and $T_{\mathrm{tab}}$, $n_{\mathrm{heads}} = 4$ and $n_{\mathrm{layers}} = 3$. For $T_{\mathrm{lc}}$/$T_{\mathrm{tab}}$, all input and output dimensions of linear layers are 48/32, with the exception of the hidden layers of FF (Eq. (5)), which are 96/64. We note that this implies that $E_{\mathrm{TM}} = 48 \cdot 4 = 192$ and $E_{\mathrm{TT}} = 36 \cdot 4 = 144$. We selected $T_{\max} = 1500$ days and $H = 64$. We used a dropout rate of 0.2 during training. All NaN, inf, and −inf values in features and metadata are replaced by −9999.
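Two small pieces of this training setup, the replacement of non-finite values and the patience-based early stopping rule, can be sketched in plain Python. We assume the monitored validation score is higher-is-better (e.g., macro F1); the text does not state which quantity is monitored. Function names are hypothetical.

```python
import math

FILL_VALUE = -9999.0


def fill_non_finite(values, fill=FILL_VALUE):
    """Replace NaN, inf and -inf with a sentinel value."""
    return [v if math.isfinite(v) else fill for v in values]


def early_stopping_step(scores, patience=3):
    """Index of the evaluation at which training stops.

    scores[i] is the validation score (higher is better) at the i-th
    evaluation, e.g. one evaluation every 20 000 iterations. Training stops
    after `patience` consecutive evaluations without improvement; if that
    never happens, the index of the last evaluation is returned.
    """
    best, since_best = -math.inf, 0
    for i, score in enumerate(scores):
        if score > best:
            best, since_best = score, 0
        else:
            since_best += 1
            if since_best >= patience:
                return i
    return len(scores) - 1
```

With evaluations every 20 000 iterations, stopping at evaluation index 4 corresponds to 5 × 20 000 = 100 000 training iterations.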

2.3 Features

The ELAsTiCC dataset has six bands and its light curves contain difference fluxes. In comparison, the ZTF alert stream (Bellm et al. 2018; Graham et al. 2019) classified by the original ALeRCE balanced hierarchical random forest model has only two fully public bands and provides light curves in difference magnitudes. To account for these differences, we modified some of the original features from Sánchez-Sáez et al. (2021b). All light curve-based features were modified to use fluxes as input instead of magnitudes. The supernova parametric model (SPM) from Sánchez-Sáez et al. (2021b) was modified to better handle the six available bands and the additional redshift and Milky Way dust extinction information. The fluxes were scaled using the available redshift information and the WMAP5 cosmological model (Komatsu et al. 2009; Astropy Collaboration 2022), and corrected for Milky Way extinction using the extinction information and the model from O’Donnell (1994). This means that some of the metadata was used in the computation of features. We removed some features from Sánchez-Sáez et al. (2021b) that were not simulated by ELAsTiCC, for example the star-galaxy score from the ZTF stream and the color information from ALL-WISE. The coordinates of the objects are not used because they were not simulated realistically for each astrophysical class. We ended up with a total of 429 features: 69 features per band (414 over the six bands) plus 15 multi-band features (e.g., colors, multi-band periodograms). These engineered features are also used for ATAT in Section 3. In Appendix A we give a comprehensive list of the modified features.
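The two physical ingredients of the flux rescaling can be illustrated as follows. The text does not give the exact scaling formula, so this is only a sketch of the standard quantities involved: a flat ΛCDM luminosity distance with WMAP5-like parameters (radiation and neutrinos ignored, so values differ slightly from Astropy's `WMAP5.luminosity_distance`) and the usual extinction correction F · 10^(0.4 A). The per-band extinction `a_band` would come from E(B − V) and the O'Donnell (1994) law, whose coefficients are not reproduced here. Constant and function names are hypothetical.

```python
import math

C_KM_S = 299792.458  # speed of light in km/s
H0 = 70.2            # km/s/Mpc, WMAP5-like value (assumed)
OM0 = 0.277          # matter density, WMAP5-like value (assumed)


def luminosity_distance_mpc(z, n_steps=1000):
    """Luminosity distance in Mpc for a flat LambdaCDM model.

    Integrates 1/E(z') from 0 to z with Simpson's rule (n_steps must be
    even); radiation and massive neutrinos are ignored.
    """
    if z <= 0:
        return 0.0
    ode0 = 1.0 - OM0

    def inv_e(zz):
        return 1.0 / math.sqrt(OM0 * (1.0 + zz) ** 3 + ode0)

    step = z / n_steps
    total = inv_e(0.0) + inv_e(z)
    for i in range(1, n_steps):
        total += (4.0 if i % 2 else 2.0) * inv_e(i * step)
    comoving = (C_KM_S / H0) * total * step / 3.0
    return (1.0 + z) * comoving


def deredden_flux(flux, a_band):
    """Undo Milky Way extinction of a_band magnitudes: F * 10**(0.4 * A)."""
    return flux * 10.0 ** (0.4 * a_band)
```

At z = 0.1 this gives roughly 460 Mpc, and an extinction of 2.5 mag corresponds to a flux correction factor of exactly 10.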

We calculated features for all light curves with a total of more than five points across all bands. Since we are using forced photometry, the source with the fewest observations has 11 points. Consequently, we calculated features for all objects.

2.4 Balanced hierarchical random forest

We compare our transformer models against the BHRF model of Sánchez-Sáez et al. (2021b) adapted for the ELAsTiCC dataset. This section describes the differences between the original BHRF and its ELAsTiCC adaptation.

The original BHRF described in Sánchez-Sáez et al. (2021b) is composed of four balanced random forest models (Chen et al. 2004) arranged in a hierarchical structure. The top model classifies each light curve into transient, stochastic, and periodic classes. Each of the three groups is then further classified by its own balanced random forest model. In the current paper, the transient group includes the following classes: calcium-rich transients (CART), SNe Iax (Iax), SNe 91bg-like (91bg), SNe Ia (Ia), SNe Ib/c (Ib/c), SNe II (II), SNe IIb (SN-like/Other), superluminous SNe (SLSN), pair instability SNe (PISN), tidal disruption events (TDE), intermediate luminosity optical transients (ILOT), and kilonovae (KN). The stochastic group includes the following classes: M-dwarf flares, dwarf novae, active galactic nuclei (AGN), and gravitational microlensing events (uLens). The periodic group includes the following classes: Delta Scuti, RR Lyrae, Cepheid, and eclipsing binaries.
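The hierarchical structure can be sketched as a taxonomy dictionary plus a combination rule. We assume, for illustration, that the final class probability is the product of the top-level group probability and the within-group probability; the class label strings and the function name are hypothetical.

```python
TAXONOMY = {
    "transient": ["CART", "Iax", "91bg", "Ia", "Ib/c", "II", "SN-like/Other",
                  "SLSN", "PISN", "TDE", "ILOT", "KN"],
    "stochastic": ["M-dwarf Flare", "Dwarf Novae", "AGN", "uLens"],
    "periodic": ["Delta Scuti", "RR Lyrae", "Cepheid", "EB"],
}


def combine_hierarchical(p_group, p_within):
    """P(class) = P(group) * P(class | group) for every class in TAXONOMY.

    p_group maps group -> probability from the top-level forest;
    p_within maps group -> {class: probability} from that group's forest.
    """
    probs = {}
    for group, classes in TAXONOMY.items():
        for cls in classes:
            probs[cls] = p_group[group] * p_within[group][cls]
    return probs
```

The three groups together cover the 12 + 4 + 4 = 20 classes of the broker taxonomy, and if each forest outputs normalized probabilities, the combined probabilities also sum to one.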

During training, the balanced random forest models draw bootstrap samples from the minority class and sample with replacement the same number of samples from the other classes for each tree. To be consistent with ATAT, the balanced random forest models were trained using light curves trimmed to 8, 128, and 2048 days. We sampled 15 000, 9000, and 3900 light curves from each class to train each tree of the transient, periodic, and stochastic balanced random forests, respectively. We note that all data were fed to the model during training, but each tree only uses a randomly sampled balanced subset of the data. We performed a hyperparameter grid search over the split criterion (Gini impurity, entropy), the number of trees (10, 100, 350, 500), and the maximum depth (10, 100, and no maximum depth). The best combination of hyperparameters in terms of the mean macro F1 score over predictions for the full light curves was obtained for an entropy split criterion, 350 trees, and a maximum depth of 100. We also calculated the feature importance of the random forest and used only 100 features for each forest, which led to a statistically similar macro F1 score (within one standard deviation). These decisions were made to reduce the final size of the model in order to facilitate deployment.
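The per-tree balanced sampling and the size of the hyperparameter grid can be sketched as below. This illustrates the sampling rule described above under our own naming; off-the-shelf implementations such as imbalanced-learn's `BalancedRandomForestClassifier` exist, but we do not claim this is what was used.

```python
import random
from collections import defaultdict

# Draws per class for each tree, as given in the text.
SAMPLES_PER_CLASS = {"transient": 15000, "periodic": 9000, "stochastic": 3900}

# Hyperparameter grid explored for each balanced random forest.
GRID = {"criterion": ["gini", "entropy"],
        "n_estimators": [10, 100, 350, 500],
        "max_depth": [10, 100, None]}  # None = no maximum depth


def balanced_bootstrap(labels, n_per_class, rng):
    """Training indices for one tree: n_per_class draws with replacement per class."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    sample = []
    for label in sorted(by_class):
        sample.extend(rng.choices(by_class[label], k=n_per_class))
    return sample


def n_grid_combinations(grid=GRID):
    """Number of hyperparameter combinations in a full grid search."""
    n = 1
    for values in grid.values():
        n *= len(values)
    return n
```

Sampling with replacement lets a class with only a handful of objects still contribute `n_per_class` draws to every tree, which is what keeps each tree balanced; the full grid has 2 × 4 × 3 = 24 combinations.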

|

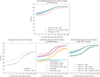

Fig. 3 F1 score vs. time since first alert for a selection of models. We show the better performing ATAT variants and the RF-based baseline (a), the light curve only ATAT variants (b), the tabular data only ATAT variants (c), and the combined light curve and tabular data ATAT variants (d). LC, MD, and Features refer to models that are optimized using the light curve, metadata, and feature information, respectively. Models can use more than one information source, e.g., LC + MD + Features. the dotted lines refer to models that are optimized with MTA (see Section 2.2.4). |

3 Results

3.1 Comparison between ATAT and BHRF

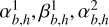

We calculated measures of classification performance by averaging predictions for each object. Figure 3a shows the test-set F1 score of two selected ATAT variants using different data sources with MTA, and of the BHRF, as a function of the number of days after the first alert. The evaluation starts on the day of the first detection, and the light curve length increases in powers of two until reaching the maximum duration of the longest light curve, which spans 1104 days. These ATAT variants outperform the BHRF model for all light curve lengths, especially for shorter light curves. We further compare these models by measuring the F1 score, recall, and precision on a per-class basis, as shown in Table 1. The labels indicate whether the models were trained using the light curve data (LC), metadata (MD), engineered features (Features), or combinations thereof. MD are the data that accompany the alerts (e.g., host galaxy redshifts), while features are extracted from the light curves (e.g., period). The ATAT variants surpass the BHRF in 16 out of 20 classes. In particular, the ATAT variant based on LC and MD performs better in the SN subclasses, whereas the ATAT variants that use all data sources obtain better scores in the periodic subclasses. Three of the four classes in which the BHRF achieves a higher F1 score than ATAT, namely KN, CART, and M-dwarf flares, are also those with the fewest examples in the dataset (see Figure 2). This may be explained by the differences in the class-balancing strategies: the BHRF is more robust to overfitting in the minority classes. The BHRF model also outperforms ATAT in terms of F1 score for eclipsing binaries (EBs), primarily because ATAT achieves a relatively low precision for this class. As illustrated in Figure 4, about 10% of the M-dwarf flares are classified as EBs by ATAT, which can be explained by the low support of M-dwarf flares in our dataset.
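The per-class and macro-averaged scores discussed here follow the standard definitions, which can be computed from a confusion matrix as sketched below. The function names are hypothetical; the paper reports these scores as percentages.

```python
def per_class_metrics(cm):
    """(precision, recall, F1) for each class of a confusion matrix.

    cm[i][j] counts objects of true class i predicted as class j.
    """
    n = len(cm)
    metrics = []
    for k in range(n):
        tp = cm[k][k]
        predicted = sum(cm[i][k] for i in range(n))  # column sum
        actual = sum(cm[k])                          # row sum
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics.append((precision, recall, f1))
    return metrics


def macro_f1(cm):
    """Unweighted mean of the per-class F1 scores (every class counts equally)."""
    scores = [f1 for _, _, f1 in per_class_metrics(cm)]
    return sum(scores) / len(scores)
```

Because the macro average weights all classes equally, a low F1 in a rare class such as KN lowers the score as much as a low F1 in a common class; low precision for one class (e.g., EBs receiving misclassified M-dwarf flares) shows up through that class's column sum.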

Table 1. Classification performance of the models in this work.

3.2 Classification performance of ATAT variants

To explore the influence of the number and type of data sources on the classification performance, 11 ATAT variants are compared in Figures 3b–d. In all panels, the dashed lines correspond to the cases where the MTA strategy is used.

Figure 3b shows the performance of the ATAT variants using only the light curve as input, with and without the MTA strategy. The MTA strategy significantly improves the classifier performance at early times; performance saturates at about 128 days and improves only marginally thereafter. This could be related to the majority of the classes in the dataset being transients and to the absence of variable objects with longer timescales (e.g., Miras and other LPVs).

Figure 3c shows the performance of the ATAT variants trained using only tabular data. This includes metadata from the first alert and features that are a function of the available light curve data, to which a strategy similar to MTA can also be applied. We note that the feature-based model (orange line) outperforms the LC-based model (gray line in Figure 3b). The performance of the feature-based model also increases considerably when metadata are incorporated (purple line), and with MTA it even outperforms the RF-based baseline (red line) after ~32 days. Before 32 days, the RF model outperforms all ATAT models that use only tabular data. The ATAT variant that uses only MD (cyan line) outperforms the ATAT variant that uses only features for light curves shorter than ~8 days. The MTA strategy applied to feature computation improves early classification performance in all cases.

Figure 3d shows the performance of four ATAT variants trained with both the light curves and the metadata, with and without features, and with and without the MTA strategy. This figure suggests a low synergy between the light curve and feature data and that ATAT can extract the most relevant class information using only the light curve and metadata. Moreover, a comparison with Figure 3b suggests a high synergy between the light curve and metadata, where adding metadata yields a performance improvement between 20% and 30% depending on the length of the light curves. The ATAT variant that uses all information sources (blue solid line) without the MTA strategy has a worse performance for light curves shorter than 128 days than the model that uses only light curve and metadata information (green solid line). When the MTA strategy is applied, the model that uses all the data sources is only marginally superior after ~32 days. Before ~32 days, not using features leads to a higher macro F1 score.

In summary, models combining light curve and metadata information yield the highest performance (highest synergy). When the MTA strategy is used, adding features to ATAT only marginally improves the macro F1 score computed over the full-length light curves. Additionally, applying the MTA strategy is always beneficial for early classification in the models that use light curves and/or feature data. The two ATAT variants that use light curves plus metadata and MTA shown in Figure 3a were put into production within the pipeline that processes the ELAsTiCC stream.

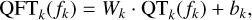

Figure 4 shows the confusion matrices of (a) the ATAT variant that uses LC, metadata, and MTA; (b) the ATAT variant that uses LC, features, metadata, and MTA; and (c) the RF-based baseline. These results were obtained by evaluating the light curves at their maximum length. The ATAT (LC + MD + Features + MTA) model outperformed the RF in 15 out of 20 classes in the dataset. In particular, ATAT performs better in all the SNe subclasses, namely Iax, 91bg, Ia, Ib/c, II, SLSN, PISN, and SN-like/Other. This is especially noticeable for types Ib/c and II, where the difference in recall is 26% and 23%, respectively. For the other transient types, noticeable differences between the models arise in both directions. For example, the RF-based baseline outperforms ATAT by 13% and 16% for KN and CART, respectively. The former model confused these classes mainly with SNe types Ib/c and Iax. The baseline is also 10% better at detecting ILOT. ATAT confuses this class mainly with TDEs, whereas the baseline does not show this confusion. On the other hand, ATAT outperforms the baseline by 16% and 13% for TDE and uLens, respectively. Cataclysmic types also show interesting differences between the models. For example, ATAT outperforms the baseline by 10% for the Dwarf-novae class. On the other hand, the RF-based baseline outperforms the ATAT model by 10% for M-dwarf flares. The latter model has 9% confusion between this class and the EB periodic subtype, whereas the baseline does not show this confusion. Finally, the confusion matrices show that both models achieve almost perfect detection of the periodic variable star classes and of the stochastic AGN class, with the proposed model being marginally superior to the baseline. It is worth noting that three of the four classes where the RF-based baseline outperforms ATAT, namely KN, CART, and M-dwarf flares, are also those with the fewest examples in the dataset (see Figure 2).
This suggests that the RF may cope better with highly class-imbalanced datasets than the transformer-based approach. Additional data-augmentation strategies may be required to improve the performance of ATAT in these data-scarce classes. The ATAT variant that does not use features (LC + MD + MTA) performs similarly to the variant that uses them (LC + MD + Features + MTA), except for periodic classes, where the feature-based variant is consistently better.
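The per-class recall differences quoted above are read from the diagonal of row-normalized confusion matrices, while the F1 scores in this work are macro averages over classes. The sketch below shows both computations under the assumption that `cm[i, j]` counts objects of true class i predicted as class j; the function names are hypothetical. Because macro F1 averages the per-class F1 values, it is generally not equal to the harmonic mean of macro precision and macro recall.

```python
import numpy as np


def row_normalize(cm):
    """Row-normalized confusion matrix: entry [i, j] is the fraction of class-i
    objects predicted as class j, so the diagonal holds the per-class recall."""
    cm = np.asarray(cm, dtype=float)
    return cm / cm.sum(axis=1, keepdims=True)


def macro_scores(cm):
    """Macro-averaged precision, recall and F1 from a confusion matrix of counts."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    with np.errstate(divide="ignore", invalid="ignore"):
        recall = np.where(cm.sum(axis=1) > 0, tp / cm.sum(axis=1), 0.0)
        precision = np.where(cm.sum(axis=0) > 0, tp / cm.sum(axis=0), 0.0)
        f1 = np.where(precision + recall > 0,
                      2 * precision * recall / (precision + recall), 0.0)
    # Macro averaging gives every class the same weight, regardless of its size.
    return precision.mean(), recall.mean(), f1.mean()


cm = np.array([[8, 2],
               [1, 9]])
print(np.diag(row_normalize(cm)))   # per-class recall
print(macro_scores(cm))
```

Equal class weights are what make small classes such as KN or CART matter as much as the SNe Ia in the macro scores.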


Fig. 4 Confusion matrices of two ATAT variants and the random forest (RF) baseline. ATAT (LC + MD + MTA) has an F1 score of 82.6%, ATAT (LC + MD + Features + MTA) has an F1 score of 83.5%, and RF (MD + Features) has an F1 score of 79.4%.

Table 2 Ablation study F1 scores.

3.3 Ablation study

In this section we test the different design choices introduced in ATAT. Table 2 shows the F1 scores (mean and standard deviation) for combinations of positional encodings (PE) and of tokenizers used in the feature transformer for the metadata. For the PE we consider our proposed TM, the original fixed sinusoidal PE (Vaswani et al. 2017), and not using the light curves at all (hence, no light curve transformer). For the tabular data transformer we consider our proposed QFT, the original feature tokenizer of Gorishniy et al. (2021), and using no tabular metadata. As tabular data we use the metadata but not the features, given that the F1 scores with and without features differ by no more than 2σ, as shown in Figure 3. All experiments include the MTA described in Section 2.2.4. The combination of TM and QFT outperforms all other combinations. Using our proposed TM increases the F1 score significantly (by over 5%) compared to the sinusoidal PE, while our proposed QFT increases the performance of our model by over 2%.
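For reference, the fixed sinusoidal baseline in this ablation can be written as the encoding of Vaswani et al. (2017) evaluated at the irregular observation times rather than at integer sequence positions. The sketch below assumes that substitution and an additive combination with a linear flux embedding; the flux weights are random placeholders for learned parameters, and this is not the TM module, whose learnable form is defined earlier in the paper.

```python
import numpy as np


def sinusoidal_time_encoding(times, d_model, base=10000.0):
    """Fixed sinusoidal encoding (Vaswani et al. 2017) evaluated at continuous
    observation times instead of integer sequence positions."""
    if d_model % 2:
        raise ValueError("d_model must be even")
    times = np.asarray(times, dtype=float)[:, None]     # (T, 1)
    i = np.arange(d_model // 2)[None, :]                 # (1, d_model/2)
    angles = times / base ** (2 * i / d_model)           # (T, d_model/2)
    pe = np.empty((times.shape[0], d_model))
    pe[:, 0::2] = np.sin(angles)                         # even dimensions: sine
    pe[:, 1::2] = np.cos(angles)                         # odd dimensions: cosine
    return pe


rng = np.random.default_rng(0)
times = np.array([0.0, 1.5, 30.2, 31.0])   # irregular observation times (days)
flux = np.array([0.1, 2.3, 5.0, 4.7])
d_model = 8
w_flux = rng.normal(size=d_model)          # placeholder for a learned flux embedding
tokens = flux[:, None] * w_flux + sinusoidal_time_encoding(times, d_model)
print(tokens.shape)
```

The frequencies here are fixed in advance, which is the property the ablation contrasts with the learnable TM.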

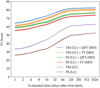

Figure 5 shows the performance of the models as a function of the days since the first alert for each combination of PE and tokenizer. This figure shows the superiority of TM and QFT over models that use a fixed sinusoidal PE or a non-quantile tabular data tokenizer. We conclude that our proposed modifications to the light curve and tabular data transformers contribute significantly to the classification of the astronomical data streams from the ELAsTiCC challenge.
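The tabular side of this comparison can be illustrated as follows. The feature tokenizer of Gorishniy et al. (2021) maps each numerical feature x_j to a token x_j W_j + b_j. Reading the name QFT literally, a plausible sketch is to apply an empirical quantile transform to each feature before that affine map; this is our assumption for illustration, not a transcription of the QFT definition given earlier in the paper. `QuantileFeatureTokenizer` is a hypothetical class and its weights are random placeholders. One practical effect of the quantile step is that heavy-tailed features are mapped into a bounded range.

```python
import numpy as np


class QuantileFeatureTokenizer:
    """Hypothetical sketch: empirical-quantile transform per feature, followed by
    a per-feature affine embedding (feature tokenizer of Gorishniy et al. 2021)."""

    def __init__(self, n_features, d_token, seed=0):
        rng = np.random.default_rng(seed)
        # Random placeholders for weights that would be learned during training.
        self.weight = rng.normal(size=(n_features, d_token))
        self.bias = rng.normal(size=(n_features, d_token))
        self.sorted_train = None

    def fit(self, x_train):
        # Keep the sorted training values of every feature (column).
        self.sorted_train = np.sort(np.asarray(x_train, dtype=float), axis=0)
        return self

    def quantiles(self, x):
        # Empirical CDF: fraction of training values <= x, per feature, in [0, 1].
        x = np.asarray(x, dtype=float)
        n = self.sorted_train.shape[0]
        q = np.empty_like(x)
        for j in range(x.shape[1]):
            q[:, j] = np.searchsorted(self.sorted_train[:, j], x[:, j], side="right") / n
        return q

    def __call__(self, x):
        q = self.quantiles(x)                               # (batch, n_features)
        return q[:, :, None] * self.weight + self.bias      # (batch, n_features, d_token)


# The second feature is heavy-tailed; after the transform both lie in [0, 1].
x_train = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0], [4.0, 1e6]])
qft = QuantileFeatureTokenizer(n_features=2, d_token=4).fit(x_train)
x_new = np.array([[2.5, 5e5]])
print(qft.quantiles(x_new))
print(qft(x_new).shape)
```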


Fig. 5 Ablation study in time. We compare combinations of our proposed time modulation (TM) and quantile feature tokenizer (QFT) relative to a fixed sinusoid (FS) positional encoding (PE) and non-quantile feature tokenizer (FT). The combination of TM and QFT outperforms the other combinations of PEs and tabular tokenizers regardless of the time at which the models are evaluated.

3.4 Computational time performance

Table 3 shows the average computational time to predict the class of a single light curve¹⁰ with the selected ATAT variants and the RF-based baseline. The table also shows the average time per light curve needed to compute the complete set of engineered features. We note that only the RF-based baseline and ATAT (LC + MD + Features + MTA) require features to be computed. Because LSST is expected to emit millions of alerts per night, evaluating inference times in batches is essential for assessing the practical feasibility of our approach. Inference times for ATAT on the GPU are shown both for a batch of 2000 light curves and for one light curve at a time (averaged over 20 000 batches of one light curve each).
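The difference between batched and one-at-a-time timing can be illustrated with a toy model. In the sketch below, a hypothetical linear-plus-softmax classifier over 20 classes stands in for ATAT, and it runs on the CPU with NumPy rather than on a GPU; the total wall time is divided by the number of light curves, so per-call overheads are amortized in the batched case. The predictions must not depend on how the input is batched, which the usage below checks.

```python
import time

import numpy as np

rng = np.random.default_rng(0)
W = rng.normal(size=(64, 20))  # hypothetical stand-in model: 64 inputs -> 20 class scores


def predict(batch):
    """Toy classifier: linear layer followed by a softmax over 20 classes."""
    logits = batch @ W
    logits -= logits.max(axis=1, keepdims=True)   # numerical stability
    p = np.exp(logits)
    return p / p.sum(axis=1, keepdims=True)


def time_per_light_curve(x, batch_size):
    """Run `predict` in batches; return all outputs and the mean wall time per light curve."""
    start = time.perf_counter()
    outputs = [predict(x[i:i + batch_size]) for i in range(0, len(x), batch_size)]
    elapsed = time.perf_counter() - start
    return np.concatenate(outputs), elapsed / len(x)


x = rng.normal(size=(2000, 64))
probs_batched, t_batched = time_per_light_curve(x, batch_size=2000)
probs_single, t_single = time_per_light_curve(x, batch_size=1)
print(f"batch of 2000: {t_batched:.2e} s/LC; one at a time: {t_single:.2e} s/LC")
```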

From Table 3, we observe that the computational time required to perform inference with any of the models is negligible compared with the time required to compute features. Hence, in total, the ATAT (LC + MD + MTA) variant is orders of magnitude faster than the RF-based baseline and the feature-based ATAT variant. This makes the LC + MD + MTA variant a very attractive trade-off, reducing computational time by 99.75% with only a 0.3% decrease in F1 score. We note, however, that some classes, e.g., periodic variables, are more affected when features are excluded. As future work we plan to explore which subsets of features are most synergistic with the ATAT (LC + MD + MTA) variant. The best trade-off may also need to be reevaluated, as future surveys such as LSST are expected to include some features (e.g., period) in the alert stream.
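The 99.75% reduction quoted here is consistent with the roughly 400-fold speed-up quoted in Sect. 4: if a fraction r of the total computational time is removed, the remaining time is a fraction 1 − r of the original, so

$$
\frac{t_{\mathrm{before}}}{t_{\mathrm{after}}} = \frac{1}{1-r} = \frac{1}{1-0.9975} = \frac{1}{0.0025} = 400 .
$$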

Table 3 Comparison of computational time performance.

4 Discussion and conclusions

We presented the models used by the ALeRCE team during the first round of ELAsTiCC. We introduced ATAT, a novel transformer-based deep learning model that combines time series and tabular data. The proposed model was developed for the ELAsTiCC challenge, which simulates an LSST-like stream with the objective of testing end-to-end alert stream pipelines. We were able to evaluate both classification and infrastructure performance metrics on the training set provided by ELAsTiCC. Our model was put into production within the ALeRCE broker in preparation for the real-time classification of the LSST alert stream.

Our results show that, on the ELAsTiCC dataset, ATAT outperforms a balanced hierarchical RF model similar to the current ALeRCE light curve classifier. This RF obtains macro precision, recall, and F1 scores of 0.777, 0.782, and 0.772, respectively, while ATAT achieves 0.841, 0.827, and 0.825 when using light curves, metadata, and features calculated over the light curves. Furthermore, if only the light curves and metadata are used, ATAT achieves 0.838, 0.825, and 0.823 for the same metrics and, when performing inference in batches of 2000 light curves, is about 400 times faster than the RF, since it does not require feature computation. Importantly, our work suggests that it is possible to classify light curves without human-engineered features with no significant loss in performance, and it highlights the importance of including metadata such as the properties of the host galaxy (e.g., Förster et al. 2022). These results may be improved for data-scarce classes through class weighting and/or additional data-augmentation strategies (Boone 2019). We plan to explore these alternatives in the future.

The metrics presented in this work, for example in Table 1 and Figure 3, are representative of the dataset provided by ELAsTiCC for preparing machine learning models before the end-to-end challenge. The ELAsTiCC simulated data may not be representative of the real LSST alert stream, which may result in performance metrics different from those reported here. To address these differences, we suggest applying fine-tuning and domain adaptation techniques.

ATAT has proven to be competitive against feature-based tree ensembles in a large, complex, multi-class alert light curve classification setting that includes very different variability classes. Transformer-based models represent a paradigm shift, and we believe that more astronomical applications based on these models will be developed. In particular, ATAT opens the door to further multimodal applications, for example adding a third branch for the image stamps to the architecture shown in Figure 1.

Acknowledgements

The authors acknowledge support from the National Agency for Research and Development (ANID) grants: Millennium Science Initiative ICN12_009 and AIM23-0001, BASAL Center of Mathematical Modeling Grant PAI AFB-170001 and FB210005 (NA, IRJ, FF, JA, AMMA, AA), BASAL projects ACE210002 and FB210003 (AB, MC), FONDEQUIP Patagón supercomputer of Universidad Austral de Chile EQM180042 (NA, GCV, PH), FONDECYT Iniciación 11191130 (GCV), FONDECYT Regular 1200710 (FF), FONDECYT Regular 1211374 (PH), FONDECYT Regular 1220829 (PAE), FONDECYT Regular 1231367 (MC) and infrastructure fund QUIMAL190012. We acknowledge support from REUNA Chile. This work has been possible thanks to the use of Amazon Web Services (AWS) credits managed by the Data Observatory. ELAsTiCC is a project of the U.S. Department of Energy-supported Dark Energy Science Collaboration. ELAsTiCC used resources of the National Energy Research Scientific Computing Center (NERSC), a U.S. Department of Energy Office of Science User Facility located at Lawrence Berkeley National Laboratory. We are grateful to the team that created the public ELAsTiCC challenge: Gautham Narayan, Alex Gagliano, Alex Malz, Catarina Alves, Deep Chatterjee, Emille Ishida, Heather Kelly, John Franklin Crenshaw, Konstantin Malanchev, Laura Salo, Maria Vincenzi, Martine Lokken, Qifeng Cheng, Rahul Biswas, Renée Hložek, Rick Kessler, Robert Knop, Ved Shah Gautam.

Appendix A Processed features details

To extract color information from the difference light curves, in each band we take the absolute value of the flux and compute its 90th percentile. Following the band order ugrizY, we then divide the 90th percentile of each band by that of the next band. To avoid dividing by zero, we add 1 to the denominator.
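A minimal sketch of these color features, assuming the difference fluxes of one object are given as a dictionary keyed by band name; the function names, the `"u/g"`-style keys, and returning NaN for a band without observations are our assumptions, not part of the described pipeline.

```python
import numpy as np

BANDS = "ugrizY"


def p90_abs_flux(fluxes):
    """90th percentile of |flux|; NaN if the band has no observations (assumption)."""
    fluxes = np.abs(np.asarray(fluxes, dtype=float))
    return np.percentile(fluxes, 90) if fluxes.size else np.nan


def color_ratios(flux_by_band):
    """Ratios between consecutive bands (u/g, g/r, r/i, i/z, z/Y) of the 90th
    percentile of |difference flux|, with 1 added to the denominator."""
    p90 = {b: p90_abs_flux(flux_by_band.get(b, [])) for b in BANDS}
    return {f"{a}/{b}": p90[a] / (p90[b] + 1.0) for a, b in zip(BANDS[:-1], BANDS[1:])}


# Toy object with constant fluxes per band; note that the sign is discarded.
fx = {b: np.full(10, v) for b, v in zip(BANDS, [-4.0, 1.0, 3.0, 0.0, 2.0, 5.0])}
print(color_ratios(fx))
```

Adding 1 rather than a tiny epsilon keeps the ratio bounded when a band has near-zero flux, at the price of making the ratio depend on the flux units.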

Most of the differences in the features we use concern the extraction of information from supernova-like light curves. The following supernova features are computed for each band:

positive_fraction: fraction of observations with a positive flux value.

dflux_first_det_band: difference between the flux of the first detection (in any band) and the flux of the last non-detection (in the selected band) just before the first detection.

dflux_non_det_band: same as dflux_first_det_band, but instead of using the last non-detection before the first detection, we take all the non-detections (in the selected band) before the first detection and compute their median flux. This median is then subtracted from the flux of the first detection (in any band).

last_flux_before_band: flux of the last non-detection (in the selected band) before the first detection (in any band).

max_flux_before_band: maximum flux of the non-detections (in the selected band) before the first detection (in any band).

max_flux_after_band: maximum flux of the non-detections (in the selected band) after the first detection (in any band).

median_flux_before_band: median flux of the non-detections (in the selected band) before the first detection (in any band).

median_flux_after_band: median flux of the non-detections (in the selected band) after the first detection (in any band).

n_non_det_before_band: number of non-detections (in the selected band) before the first detection (in any band).

n_non_det_after_band: number of non-detections (in the selected band) after the first detection (in any band).

Since we did not know whether the ELAsTiCC stream would indicate if observations were alerts or forced photometry (i.e., whether the signal was strong enough compared with the noise), for the supernova features we considered an observation to be a detection if the absolute value of its difference flux was at least 3 times its observation error.
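A minimal per-band sketch of the supernova features listed above follows. It assumes arrays of times, difference fluxes, errors, and band labels covering all observations of one object, the 3σ detection rule just described, and that positive_fraction is taken over all observations in the selected band. `supernova_features` is a hypothetical helper; the ALeRCE implementation may treat edge cases differently (here, an object without detections returns None and empty sets give NaN).

```python
import numpy as np


def supernova_features(t, flux, err, band, selected_band, n_sigma=3.0):
    """Per-band supernova features for one object (hypothetical helper)."""
    t, flux, err = (np.asarray(a, dtype=float) for a in (t, flux, err))
    band = np.asarray(band)
    detected = np.abs(flux) >= n_sigma * err          # detection rule from the text
    if not detected.any():
        return None
    first = np.argmin(np.where(detected, t, np.inf))   # first detection, any band
    t0, flux0 = t[first], flux[first]
    in_band = band == selected_band
    before = in_band & ~detected & (t < t0)            # non-detections before t0
    after = in_band & ~detected & (t > t0)             # non-detections after t0

    def stat(fn, mask):
        return float(fn(flux[mask])) if mask.any() else np.nan

    last_before = float(flux[before][np.argmax(t[before])]) if before.any() else np.nan
    median_before = stat(np.median, before)
    return {
        "positive_fraction": stat(lambda f: np.mean(f > 0), in_band),
        "dflux_first_det_band": flux0 - last_before,
        "dflux_non_det_band": flux0 - median_before,
        "last_flux_before_band": last_before,
        "max_flux_before_band": stat(np.max, before),
        "max_flux_after_band": stat(np.max, after),
        "median_flux_before_band": median_before,
        "median_flux_after_band": stat(np.median, after),
        "n_non_det_before_band": int(before.sum()),
        "n_non_det_after_band": int(after.sum()),
    }


t = [0, 1, 2, 3, 4, 5, 6]
band = ["g", "r", "g", "r", "g", "g", "r"]
flux = [0.5, 0.2, -0.3, 10.0, 12.0, 0.1, 8.0]
err = [1.0] * 7
feats = supernova_features(t, flux, err, band, "g")   # first detection: t = 3 in r
print(feats)
```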

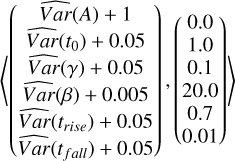

For the Supernova Parametric Model (SPM; Sánchez-Sáez et al. 2021b), one SPM model per band was fitted to the data, but the optimization was performed simultaneously for all bands, penalizing the dispersion of the parameters across bands. The extra term added to the cost function is

(A.1)


where the variances are estimated over the different bands and the coefficients were found experimentally. The original SPM code was modified to avoid numerical instabilities. To speed up the optimization, the gradient of the cost function is computed using the JAX library.¹¹ The initial guess and the bounds for the parameter optimization were tuned to the range of values in the ELAsTiCC dataset.
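Equation (A.1) itself is not reproduced in this version of the text. As an illustration of the idea only, a penalty of the generic form Σ_p c_p σ_p², where σ_p² is the variance of SPM parameter p across bands and c_p its coefficient (our notation, not necessarily that of Eq. A.1), can be sketched as below. The coefficients are placeholders, the population variance is our choice, and the function names are hypothetical. The sketch uses NumPy and only evaluates the cost; the same expressions written with `jax.numpy` could be differentiated with `jax.grad`, in line with the use of JAX described above.

```python
import numpy as np


def band_dispersion_penalty(params, coeffs):
    """Weighted sum of the across-band variances of each model parameter.

    params: (n_bands, n_params) array, one row of SPM parameters per band.
    coeffs: (n_params,) non-negative weights; placeholders here, since the
            paper's coefficients were tuned experimentally.
    """
    params = np.asarray(params, dtype=float)
    variances = params.var(axis=0)            # population variance over bands
    return float(np.dot(np.asarray(coeffs, dtype=float), variances))


def total_cost(per_band_losses, params, coeffs):
    """Joint objective: sum of per-band fit losses plus the dispersion penalty."""
    return float(np.sum(per_band_losses)) + band_dispersion_penalty(params, coeffs)


params = np.array([[1.0, 2.0],    # band 1: (parameter A, parameter B)
                   [3.0, 2.0]])   # band 2
coeffs = [0.5, 10.0]
print(band_dispersion_penalty(params, coeffs), total_cost([0.2, 0.3], params, coeffs))
```

Identical parameters in every band incur no penalty, so the term only pulls the per-band fits toward each other when the data allow it.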

References

- Allam, T., & McEwen, J. D. 2024, RASTI, 3, 209

- Astorga, N., Huijse, P., Estévez, P. A., & Förster, F. 2018, in 2018 International Joint Conference on Neural Networks (IJCNN), 1

- Astropy Collaboration (Price-Whelan, A. M., et al.) 2022, ApJ, 935, 167

- Becker, I., Pichara, K., Catelan, M., et al. 2020, MNRAS, 493, 2981

- Bellm, E. C., Kulkarni, S. R., Graham, M. J., et al. 2018, PASP, 131, 018002

- Boone, K. 2019, AJ, 158, 257

- Borne, K. D. 2010, Earth Sci. Informatics, 3, 5

- Cabrera-Vives, G., Reyes, I., Förster, F., Estévez, P. A., & Maureira, J.-C. 2016, in 2016 International Joint Conference on Neural Networks (IJCNN) (IEEE), 251

- Cabrera-Vives, G., Reyes, I., Förster, F., Estévez, P. A., & Maureira, J.-C. 2017, ApJ, 836, 97

- Cabrera-Vives, G., Li, Z., Rainer, A., et al. 2022, in International Conference on Product-Focused Software Process Improvement (Springer), 21

- Carrasco-Davis, R., Cabrera-Vives, G., Förster, F., et al. 2019, PASP, 131, 108006

- Carrasco-Davis, R., Reyes, E., Valenzuela, C., et al. 2021, AJ, 162, 231

- Charnock, T., & Moss, A. 2017, ApJ, 837, L28

- Chen, C., Liaw, A., Breiman, L., et al. 2004, Univ. Calif. Berkeley, 110, 24

- Devlin, J., Chang, M.-W., Lee, K., & Toutanova, K. 2019, arXiv e-prints [arXiv:1810.04805]

- Dieleman, S., Willett, K. W., & Dambre, J. 2015, MNRAS, 450, 1441

- Donoso-Oliva, C., Cabrera-Vives, G., Protopapas, P., Carrasco-Davis, R., & Estévez, P. A. 2021, MNRAS, 505, 6069

- Donoso-Oliva, C., Becker, I., Protopapas, P., et al. 2023, A&A, 670, A54

- Dosovitskiy, A., Beyer, L., Kolesnikov, A., et al. 2021, ICLR

- Förster, F., Moriya, T., Maureira, J., et al. 2018, Nat. Astron., 2, 808

- Förster, F., Muñoz Arancibia, A. M., Reyes-Jainaga, I., et al. 2022, AJ, 164, 195

- Fraga, B. M. O., Bom, C. R., Santos, A., et al. 2024, A&A submitted [arXiv:2404.08798]

- Förster, F., Cabrera-Vives, G., Castillo-Navarrete, E., et al. 2021, AJ, 161, 242

- Gagliano, A., Contardo, G., Foreman-Mackey, D., Malz, A. I., & Aleo, P. D. 2023, ApJ, 954, 6

- Gómez, C., Neira, M., Hernández Hoyos, M., Arbeláez, P., & Forero-Romero, J. E. 2020, MNRAS, 499, 3130

- Gorishniy, Y., Rubachev, I., Khrulkov, V., & Babenko, A. 2021, in Advances in Neural Information Processing Systems, 34, eds. M. Ranzato, A. Beygelzimer, Y. Dauphin, P. Liang, & J. W. Vaughan (Curran Associates, Inc.), 18932

- Graham, M. J., Kulkarni, S., Bellm, E. C., et al. 2019, PASP, 131, 078001

- Hendrycks, D., & Gimpel, K. 2016, arXiv e-prints [arXiv:1606.08415]

- Hložek, R., Malz, A., Ponder, K., et al. 2023, ApJS, 267, 25

- Huijse, P., Estevez, P. A., Protopapas, P., Principe, J. C., & Zegers, P. 2014, IEEE Computat. Intell. Mag., 9, 27

- Ishida, E., Mondon, F., Sreejith, S., et al. 2021, A&A, 650, A195

- Ivezic, Ž., Kahn, S. M., Tyson, J. A., et al. 2019, ApJ, 873, 111

- Jamal, S., & Bloom, J. S. 2020, ApJS, 250, 30

- Kingma, D., & Ba, J. 2015, in International Conference on Learning Representations (ICLR), San Diego, CA, USA

- Komatsu, E., Dunkley, J., Nolta, M., et al. 2009, ApJS, 180, 330

- Mackenzie, C., Pichara, K., & Protopapas, P. 2016, ApJ, 820

- Matheson, T., Stubens, C., Wolf, N., et al. 2021, AJ, 161, 107

- Möller, A., Peloton, J., Ishida, E. E. O., et al. 2021, MNRAS, 501, 3272

- Moreno-Cartagena, D. A., Cabrera-Vives, G., Protopapas, P., et al. 2023, in Machine Learning for Astrophysics. Workshop at the Fortieth International Conference on Machine Learning (ICML 2023), 23

- Muthukrishna, D., Narayan, G., Mandel, K. S., Biswas, R., & Hložek, R. 2019, PASP, 131, 118002

- Möller, A., & de Boissière, T. 2019, MNRAS, 491, 4277

- Narayan, G., Zaidi, T., Soraisam, M. D., et al. 2018, ApJS, 236, 9

- Naul, B., Bloom, J., Perez, F., & van der Walt, S. 2018, Nat. Astron., 2, 151

- Neira, M., Gómez, C., Suárez-Pérez, J. F., et al. 2020, ApJS, 250, 11

- Nordin, J., Brinnel, V., van Santen, J., et al. 2019, A&A, 631, A147

- Nun, I., Protopapas, P., Sim, B., & Chen, W. 2016, AJ, 152, 71

- Nun, I., Protopapas, P., Sim, B., et al. 2017, Astrophysics Source Code Library [record ascl:1711.017]

- O’Donnell, J. E. 1994, ApJ, 422, 158

- Pérez-Carrasco, M., Cabrera-Vives, G., Hernández-Garcia, L., et al. 2023, in Machine Learning for Astrophysics. Workshop at the Fortieth International Conference on Machine Learning (ICML 2023), 23

- Perez-Carrasco, M., Cabrera-Vives, G., Hernandez-García, L., et al. 2023, AJ, 166, 151

- Pimentel, Ó., Estévez, P. A., & Forster, F. 2023, AJ, 165, 18

- Pruzhinskaya, M. V., Malanchev, K. L., Kornilov, M. V., et al. 2019, MNRAS, 489, 3591

- Rodriguez-Mancini, D., Li, Z., Valenzuela, C., Cabrera-Vives, G., & Förster, F. 2022, IEEE Softw., 39, 28

- Russeil, E., Ishida, E. E. O., Le Montagner, R., Peloton, J., & Moller, A. 2022, [arXiv:2211.10987]

- Sánchez, A., Cabrera, G., Huijse, P., & Förster, F. 2022, in Machine Learning and the Physical Sciences Workshop, 35th Conference on Neural Information Processing Systems (NeurIPS)

- Sánchez-Sáez, P., Lira, H., Martí, L., et al. 2021a, AJ, 162, 206

- Sánchez-Sáez, P., Reyes, I., Valenzuela, C., et al. 2021b, AJ, 161, 141

- Sánchez-Sáez, P., Arredondo, J., Bayo, A., et al. 2023, A&A, 675, A195

- Smith, K. W., Williams, R. D., Young, D. R., et al. 2019, RNAAS, 3, 26

- Vaswani, A., Shazeer, N., Parmar, N., et al. 2017, in Advances in Neural Information Processing Systems, 30, eds. I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, & R. Garnett (Curran Associates, Inc.)

- Villar, V. A. 2022, arXiv e-prints [arXiv:2211.04480]


All Figures


Fig. 1 Diagram of ATAT, which consists of two branches: 1) at the top, a transformer to process light curves (matrices x, t, and M) and 2) at the bottom, a transformer to process tabular data (matrix f). The information sources are processed by time modulation (TM) and quantile feature tokenizers (QFT), respectively (white rectangles). In both cases the result of this processing is a sequence. Subsequently, a learnable token is added as the first element of each sequence. These sequences are processed by the transformer architectures T_lc (light curves) and T_tab (tabular data). Finally, the processed token is transformed linearly and used for label prediction.


Fig. 2 ELAsTiCC dataset class histogram. In (a), the original taxonomy class distribution is shown. In (b), the taxonomy class distribution selected by Vera Rubin’s brokers is shown. We use the SN-like/Other class to include SNe IIb.


Fig. 3 F1 score vs. time since first alert for a selection of models. We show the best-performing ATAT variants and the RF-based baseline (a), the light-curve-only ATAT variants (b), the tabular-data-only ATAT variants (c), and the combined light curve and tabular data ATAT variants (d). LC, MD, and Features refer to models that are optimized using the light curve, metadata, and feature information, respectively. Models can use more than one information source, e.g., LC + MD + Features. The dotted lines refer to models that are optimized with MTA (see Section 2.2.4).
