Fig. 2

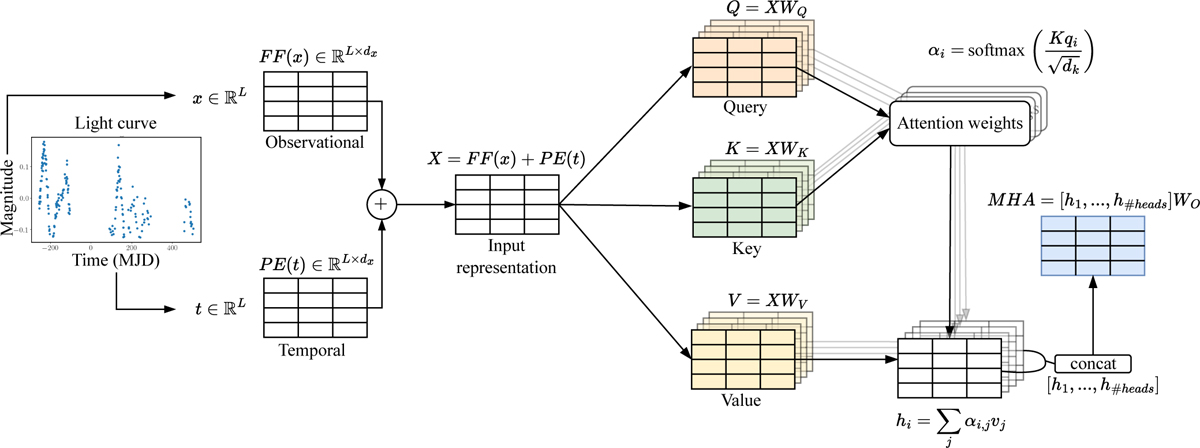

Astromer’s multi-head attention mechanism. The light curve consists of magnitudes and timestamps (in MJD). The magnitudes are processed by a feed-forward (FF) neural network, while the timestamp of each observation is encoded by the positional encoder. The two representations are summed to form the input representation, which is fed into the attention mechanism. There, it is projected into query (Q), key (K), and value (V) matrices using learned weight matrices. The attention weights (α_i) are computed by applying a softmax function to the scaled dot product of the query and key vectors. The multi-head attention output is the concatenation of the attention heads [h_1, ..., h_#heads], transformed by a learned weight matrix. This transformed output then serves as the input representation for the next multi-head attention layer.
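The attention step described in the caption can be sketched in a few lines of plain Python. This is a minimal illustration of generic scaled dot-product multi-head attention, not Astromer's actual implementation: the dimensions, the random weights, and the helper names (`matmul`, `attention_head`, `multi_head_attention`, `random_matrix`) are assumptions made for the example, and the input `x` is a random stand-in for the summed magnitude and timestamp representations.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention_head(x, w_q, w_k, w_v):
    """Scaled dot-product attention for one head; returns (output, weights)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of K
    scores = matmul(q, k_t)
    # Each row of weights holds the attention weights of one observation.
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights

def multi_head_attention(x, heads, w_o):
    """Concatenate the per-head outputs and apply the output projection."""
    outputs = [attention_head(x, *h)[0] for h in heads]
    concat = [sum((out[i] for out in outputs), []) for i in range(len(x))]
    return matmul(concat, w_o)

def random_matrix(rng, rows, cols):
    """Hypothetical stand-in for learned weights."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

# Assumed toy sizes: 5 observations, model width 8, 2 heads of width 4.
rng = random.Random(0)
seq_len, d_model, n_heads, d_head = 5, 8, 2, 4
x = random_matrix(rng, seq_len, d_model)  # stand-in for FF(magnitudes) + PE(timestamps)
heads = [tuple(random_matrix(rng, d_model, d_head) for _ in range(3))
         for _ in range(n_heads)]
w_o = random_matrix(rng, n_heads * d_head, d_model)

out = multi_head_attention(x, heads, w_o)
```

Because the output projection maps the concatenated heads back to the model width, `out` has the same shape as `x`, which is what allows it to serve as the input to the next attention layer.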
