| Issue |

A&A

Volume 522, November 2010

|

|

|---|---|---|

| Article Number | A70 | |

| Number of page(s) | 22 | |

| Section | Numerical methods and codes | |

| DOI | https://doi.org/10.1051/0004-6361/200913890 | |

| Published online | 04 November 2010 | |

MYRIAD: a new N-body code for simulations of star clusters

1

Department of PhysicsAristotle University of Thessaloniki,

54124

Thessaloniki,

Greece

e-mail: simos@astro.auth.gr

2

Theoretical Astrophysics, Eberhard-Karls University of Tübingen,

72076

Tübingen,

Germany

Received:

16

December

2009

Accepted:

13

June

2010

Aims. We present a new C++ code for collisional N-body simulations of star clusters.

Methods. The code uses the Hermite fourth-order scheme with block time steps, to advance the particles in time, while the forces and neighboring particles are computed using the GRAPE-6 board. Special treatment is used for close encounters, and binary or multiple subsystems that form either dynamically or exist in the initial configuration. The structure of the code is modular and allows the appropriate treatment of more physical phenomena, such as stellar and binary evolution, stellar collisions, and evolution of close black-hole binaries. Moreover, it can be easily modified so that the part of the code that uses GRAPE-6, could be replaced by another module that uses other accelerating-hardware such as the graphics processing units (GPUs).

Results. Appropriate choice of the free parameters give a good accuracy and speed for simulations of star clusters up to and beyond core collapse. Simulations of Plummer models consisting of equal-mass stars reached core collapse at t ≃ 17 half-mass relaxation times, which compares very well with existing results, while the cumulative relative error in the energy remained below 10-3. Comparisons with published results of other codes for the time of core collapse for different initial conditions, show excellent agreement. Simulations of King models with an initial mass-function, similar to those found in the literature, reached core collapse at t ≃ 0.17 ± 0.05 half-mass relaxation times, which is slightly earlier than expected from previous work. Finally, the code accuracy becomes comparable to and even better than the accuracy of existing codes, when a number of close binary systems is dynamically created in a simulation. This is because of the high accuracy of the method that is used for close binary and multiple subsystems.

Conclusions. The code can be used for evolving star clusters containing equal-mass stars or star clusters with an initial mass function (IMF) containing an intermediate mass black hole (IMBH) at the center and/or a fraction of primordial binaries, which are systems of particular astrophysical interest.

Key words: gravitation / globular clusters: general / galaxies: star clusters: general / methods: numerical

© ESO, 2010

1. Introduction

Systems of numerous individual members interacting with each other are found on both small and large scales. Gas molecules interact with each other by means of electrostatic van der Waals forces, the same forces are responsible for the interactions and collisions of amino-acids that lead to the construction of protein molecules. On the other hand, planets and planetesimals interact with both each other and the central star in a planetary system, with the gravitational force. The gravitational force is also the interaction force between members of greater systems such as open or globular star-clusters, galaxies, and groups of galaxies.

N-body codes provide one way of simulating systems like those mentioned above. Those codes require calculations of all the forces between all the individual particles at every integration time step. This means that at every time-step, a total of N(N − 1) ≈ N2 force calculations should be made, where N is the number of interacting particles. From that, it is easy to conclude that N-body codes have time complexity O(N2) and require fast computers or even special purpose hardware, such as GRAPE systems, to simulate realistically large systems. This is why the evolution of N-body codes that could integrate these systems in a reasonable time, is closely connected to the hardware evolution.

The first attempt to perform a simulation, albeit without the use of a computer, but instead a system of 37 light bulbs represending two interacting model galaxies, was made by Holmberg (1941). The first computer N-body simulations were developed by von Hoerner (1960, 1963) using initially 16 and later 25 particles. Later, as simulation methods and computers became faster, the number of particles increased to 100 (Aarseth 1963) and 250 (Aarseth 1968). Even in these early attempts some of the physical processes that occur in true star clusters, such as mass segregation and formation of hard binaries, were found in the simulations, but simulating systems closer to realistic star clusters required improvements in both software, by developing efficient algorithms, and hardware.

A significant step in the evolution of N-body algorithms was the introduction of individual time steps: all particles do not share the same time step, but each one of them has its own, depending on its motion. In block-time-step schemes (McMillan 1985), the time steps are quantized, usually as powers of 2, permitting blocks of particles to be updated simultaneously. This idea made the algorithms faster, because only the necessary calculations need to be made for a given accuracy. Today all sophisticated N-body codes such as Starlab1 (Portegies Zwart et al. 2001; Hut 2003), φGRAPE (Harfst et al. 2008), and Aarseth’s nbody2 series (nbody4, nbody6, nbody6++ (Aarseth 1999a,b; Spurzem 1999)) use block time steps. Another milestone in the history of N-body simulations was the introduction of special purpose hardware, responsible only for the calculation of the gravitational forces. The GRAPE series of computers (Makino et al. 1997, 2003a) were designed to perform only this type of calculation. The next step was GRAPE-DR3 (Makino 2008), which is a more general purpose hardware specialized for N-body codes which operates faster than a normal CPU. Simulations of realistically large star systems can be made using a single GRAPE machine attached to a fast host-computer. Today, GPUs are slowly replacing CPUs and the older GRAPEs in N-body simulations (Portegies Zwart et al. 2007a; Belleman et al. 2008; Hamada & Iitaka 2007), because they are faster and considerably cheaper, than either CPUs or GRAPEs.

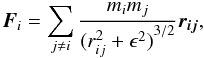

In this paper, we assess the limitations of N-body codes with today’s software and hardware power. First of all, N-body simulations are eithercollisionless or collisional. In collisionless simulations, the gravitational law is modified in close encounters to avoid collisions of particles and disencourage the dynamical formation of hard binary systems. The modified gravitational force acting on a single particle i due to all other particles is calculated to be:  (1)where ϵ is the so-called softening parameter. The presence of close binary systems slows down the simulations, thus when handling large numbers of particles, one prefers to use collisionless simulations.

(1)where ϵ is the so-called softening parameter. The presence of close binary systems slows down the simulations, thus when handling large numbers of particles, one prefers to use collisionless simulations.

In simulations of collisional systems, the minimum number of stars that can be modeled is much lower and depends strongly on the fraction of stars that belong to binary systems in the initial configuration (primordial binaries). This is because the evolution of binary systems requires special treatment, including small time steps. Baumgardt et al. (2005) presented the results of simulations of unequal-mass star clusters containing up to N = 131072 stars and an intermediate-mass black hole (IMBH) at the center. Those simulations were oparated for 12 Gyr and no primordial binaries were included. In Portegies Zwart et al. (2007b), the results of simulations of star clusters with a total of N = 144179 stars including 10% primordial binaries were presented. The simulations were run for 100 Myrs. From the above numbers, it is easy to conclude that although it is not possible to simulate a typical galaxy with all its stars (N ≈ 108 − 109), a simulation of a typical globular cluster (N ≈ 105 − 106) for timescales of the order of some Gyr is possible, with today’s computing power. If primordial binaries are not included, the evolution of a star cluster with up to 105 stars up to and beyond core collapse is possible, using a GRAPE-6 machine attached to a fast host-computer. In the near future, using new algorithms and the coming generations of hardware, it should become possible to simulate the dense and massive centers of galaxies (N ≈ 106 − 107).

During the past few years, N-body simulations have provided a realistic tool for explaining the dynamics and structure of star clusters. Portegies Zwart et al. (1999) and Portegies Zwart & McMillan (2003) explained one way of forming IMBH in some globular clusters, by means of the process of runaway collisions of stars. Baumgardt et al. (2005) used detailed N-body simulations to discuss the structure of a globular cluster containing an IMBH, while Gualandris & Merritt (2008) discussed the possibility of the ejection of supermassive black holes from galactic centers. Kupi et al. (2006) and Amaro-Seoane et al. (2009) used post-Newtonian effects on their N-body simulations to study the dynamics and the gravitational radiation from IMBH-binaries in star clusters. Finally, Baumgardt et al. (2008) discussed the effects of primordial mass segregation on the evolution of globular clusters.

Here we introduce Myriad4, a new C++ N-body code for collisional and collisionless simulations. The code is loosely based on the instructive on-line books found in The Art of Computational Science website5. It has many of the features of existing codes, such as the use of block time steps, the Hermite 4th order integrator, GRAPE-6 support, and special treatment for binary and multiple sub-systems, but introduces a new structure and some new ideas especially in using GRAPE-6 to find neighboring stars and perturbers of binary systems.

These ideas and a description of the basic structure of the code are presented in Sect. 2. In Sect. 2.2, the detailed steps of the code are described. More attention is given in describing the method for dynamical evolution of binary or multiple sub-systems that may appear in a simulation, in Sect. 2.2.3. In Sect. 3 we compare our preliminary results with the results of other codes. In the final two sections, some of the future directions of this code are presented with a discussion about the source of numerical errors and the CPU time needed for the simulations. The code uses the so-called N-body units, which, together with some basic equations for calculating the physical parameters of a star cluster are given in Appendix A.

2. The new code

2.1. Code structure

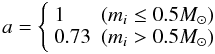

|

Fig. 1 Class hierarchy in Myriad. A class cluster is a set of several block classes. A block contains a variable number of particle and binary classes. A binary is a set of two or more particle classes. A class particle contains information (mass, position, velocity etc.) for a single star and functions that act on them. The class cluster contains information about the system as a whole (total mass, energy, half-mass radius, core radius etc.) and the required functions for calculating them. |

|

Fig. 2 Simplified graphical representation of Myriad. The arrows show the flow of the data in the code. Boxes represent input or output datafiles or outside applications, while circles represent sets of code-functions. Myriad applications are those inside the large dashed box. The other boxes and circles refer to satellite programs that create initial data or make animations from Myriad-output. |

The core of Myriad6 uses C++ classes to store and manipulate data. The simplest or lower-level class is the class particle, which contains all necessary information about a single star7 (mass, radius, position, velocity, acceleration etc.). The class particle also contains a set of functions that act on a star following its evolution in time, finding its neighbors, its time step and all the required data for the star itself. A set of particle classes that share the same time step at a given time, forms a higher-level class, the class block. As with the class particle, for the class block there is a set of functions that act on both the class itself and its particle members.

Finally, the complete set of block classes (it might only be one if all stars evolve with the same time step) forms a cluster, which is the highest-level class. The cluster-functions, act on all particle classes of the system, and mainly finding information about the cluster of stars itself (total mass, center of mass, escapers, binaries etc.). There is also another class that is a member of a class block, the class binary, which consists of two or more particle classes representing stars that lie close to each other in space. The binary class has the required functions for the initialization of a binary or multiple star-system, its evolution in time, and its termination, when necessary, and also returns the required data back to the cluster.

Figure 1 shows the hierarchy of classes in the code described above. Figure 2 is a simplified graphical representation of Myriad. Initial data are provided to Myriad from a single data file. This initial data files can be constructed by the Starlab package. The main output of Myriad are snapshots of the evolving system at every output time step dtout and information about the whole cluster (total mass, core radius, half-mass radius etc.) at every diagnostic time step dtdiag.

|

Fig. 3 Schematic data flow between the CPU and the GRAPE-6 for the Hermite 4th-order integrator. See text for description. |

2.2. Integration method

The integration method used in Myriad is the Hermite 4th order (H4) (Makino & Aarseth 1992) predictor-corrector scheme (PEC: Predict-Evaluate force-Correct) with block time steps. Accelerations and their derivatives (jerks) are computed using GRAPE-6. Binaries, close encounters, and multiple sub-systems that require small time steps are detected using GRAPE-6 and evolved using the time-symmetric Hermite 4th order integrator (Kokubo et al. 1998), where the prediction (P) step is the same as in the simple H4, but the force evaluation (E) and correction (C) steps are repeated 3 times (P(EC)3).

For each block i, tic is the current time of its particle classes, dti is the time step, and tif = tic + dti is the time after a single update.

The integration procedure consists of the following steps (the time complexity of the slowest steps is displayed in parenthesis):

-

1.

We define the universal current timetc and the time after the step is completed tf to be equal to the time and time forward of block 0, the block containing stars with the minimum time step, respectively

(2)

(2) (3)

(3) -

2.

We determine which blocks have time forward equal to tf and label them as requiring update.

-

3.

We update each labeled block to its time forward. To update a single block with m members (N being the total number of stars):

-

(a)

If binary8 classes exist, update them to tf To update a binary class with k members:

-

i.

We determine the time step dtb for the P(EC)3 integrator.

-

ii.

We predict the position and velocity of each member (O(k)).

-

iii.

We calculate the forces between binary-members on the CPU (O(k2)).

-

iv.

We calculate the perturbation forces from p neighboring stars on the CPU (O(kp)).

-

v.

We then correct the position and velocity of each member (O(k)). Processes (iii) to (v) are repeated n = 3 times.

-

vi.

We update the current time tc = tc + dtb of the binary.

-

vii.

We continue from (i) until tc equals tf.

-

(b)

We predict the positions and velocities of all the particles of theblock, including normal stars and “virtual” stars that representthe centers of mass of binary or multiple sub-systems(O(m)).

-

(c)

We evaluate the accelerations and their derivatives for all the particles of the block using the GRAPE-6 hardware (O(mlog N)).

-

(d)

We find the new time step for each particle of the block. This will be used for the next update (O(m)).

-

(e)

We check for encounters between single stars or binaries.

-

(f)

We correct the positions and velocities of all the particles of the block (O(m)).

-

(g)

If an encounter is detected in step (e), we construct the binary, and replace its members with a “virtual” star represending their center of mass.

-

(h)

We check the binary classes. If a binary is terminated, replace its center of mass in the N-body code, with its former members and erase it.

-

(i)

We then send the corrected values to GRAPE-6 memory.

-

4.

We move particles between block classes according to theirnew time step.

-

5.

We update tc and tf for each updated block.

-

6.

We continue with step 1.

From the above, it is obvious that the speed of the code depends on:

-

1.

The total number of stars N.

-

2.

The number of block classes and the average number of stars per block.

-

3.

The average number p of stars responsible for significant perturbations on a binary system (perturbers).

Figure 3 shows how data flows between the CPU and the GRAPE-6 and which parts of the calculation are performed on the CPU and the GRAPE-6. Initially positions and velocities, and estimates of both the accelerations and the jerks of all particles are stored in GRAPE-6 memory (This procedure is omitted in the graph). Then, a prediction is made for a set of i-particles using the CPU. The predicted values and the time of each particle is sent to the GRAPE-6 buffer, which directly sends them to the internal predictor of the GRAPE-6. The internal predictor takes the data for the rest j-particles from the GRAPE-6 memory and predicts their positions and velocities at the time of the i-particles. The GRAPE-6 then computes the acceleration, its derivative (jerk), and the nearest neighbors for the i-particles and returns them to the corrector operating on the CPU. The corrector calculates the corrected values of position and velocity for the i-particles and sends the new data to the GRAPE-6 memory. A new set of i-particles then, is sent to the predictor and the procedure continues until all particles are evolved to the expected time. Data flow between the CPU and the GRAPE-6 occurs three times per time step. The most time-consuming calculation, the calculation of the gravitational forces (O(Nlog (N))), performed on the GRAPE-6. The rest of the calculations are of order O(N). If the force-calculation were to be performed on the CPU, then it would be far more time-expensive as it would scale as O(N2).

2.2.1. Block time steps

|

Fig. 4 Distribution of equal mass particles in different time steps (powers of 2). Aarseth’s criterion is used for the time step with η = 0.01. A small number of particles lies in time step 2-14. This is the smallest allowed time step for the N-body code. Stars with this time step are candidates for close encounters or virtual particles representing the centers of mass of binary or multiple sub-systems |

The use of block time steps have proven to be an ideal method for N-body simulations. The advantage of block time steps over constant (shared) time steps for all particles is that a smaller number of operations is needed to achieve a given accuracy. The advantage over individual time steps is that particles are monitored with time in groups and not individually. The implementation of block steps is ideal with GRAPE-6, because it lessens the communication time between the host and GRAPE-6. In individual time-step algorithms, a single particle is sent to GRAPE-6 everytime, while in block time step algorithms, groups of particles, that share the same time step are sent simultaneously to GRAPE-6 for force evaluation.

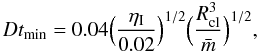

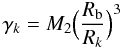

At t = 0, all particles, unless there are binaries in the initial configuration, share the same time step, which is the smallest time step allowed by the N-body code. This time step is given by (Aarseth 2003)  (4)where

(4)where  is the mean mass of the system, ηI the initial accuracy parameter with typical value 0.01, and Rcl is the close encounter distance

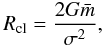

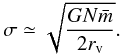

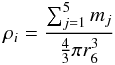

is the mean mass of the system, ηI the initial accuracy parameter with typical value 0.01, and Rcl is the close encounter distance  (5)where G is the gravitational constant, σ is the rms velocity dispersion given by

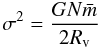

(5)where G is the gravitational constant, σ is the rms velocity dispersion given by  (6)at equilibrium, N is the number of stars, and RV is the virial radius of the cluster

(6)at equilibrium, N is the number of stars, and RV is the virial radius of the cluster  (7)where U is the total potential energy.

(7)where U is the total potential energy.

The time step Dtmin is rounded to the closest power of 2 and the resulting Δtmin is the time step of block 0 and the particles that belong to it.

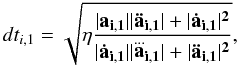

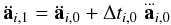

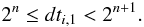

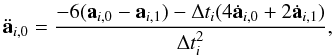

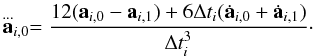

Each time a particle i is updated, its next time step is calculated according to Aarseth’s criterion (Aarseth 2003) (8)where η is the accuracy parameter with a typical value 0.01, and ai,1, ȧi,1,

(8)where η is the accuracy parameter with a typical value 0.01, and ai,1, ȧi,1,  , and

, and  are the acceleration of particle i and its time derivatives, calculated in next section. Subscript “1” refers to the values of the parameters at the end of the time step (final values), while subscript “0” refers to the values at the beginning (initial values).

are the acceleration of particle i and its time derivatives, calculated in next section. Subscript “1” refers to the values of the parameters at the end of the time step (final values), while subscript “0” refers to the values at the beginning (initial values).

The second and third time derivatives of the acceleration at the end of the time step are given by  (9)and

(9)and  (10)where Δti,0 is the previous time step of particle i.

(10)where Δti,0 is the previous time step of particle i.

Time steps are quantized according to the rule Δti,1 = 2n, where n is a negative integer defined in such a way that  (11)In this way, all particles have time steps that are powers of 2. All particles with the same time step are grouped in a block so that they are updated simultaneously. The time step of “virtual” particles replacing binaries or multiple systems (see below) is set to be Δtmin. In addition, Δtmin is the time step of particles that are relatively close to and approaching each other rapidly. To improve the efficiency of the code, every new time step cannot be longer than 2 times its previous value, jumps to higher time steps with step greater than 2 are not allowed.

(11)In this way, all particles have time steps that are powers of 2. All particles with the same time step are grouped in a block so that they are updated simultaneously. The time step of “virtual” particles replacing binaries or multiple systems (see below) is set to be Δtmin. In addition, Δtmin is the time step of particles that are relatively close to and approaching each other rapidly. To improve the efficiency of the code, every new time step cannot be longer than 2 times its previous value, jumps to higher time steps with step greater than 2 are not allowed.

The diagram of Fig. 4 shows an example of a particle distribution in different time steps in a cluster of 16 384 (214) stars.

2.2.2. The Hermite 4th order predictor-corrector scheme

The H4 scheme is the integrator used in many N-body codes. The most important feature of this integrator is that it needs only one calculation of the acceleration and its derivatives (force calculation) in every time step. This makes H4 superior to other popular integrators of the same accuracy, which require more than one force calculations per time step. A Runge-Kutta 4th order algorithm evaluates the force three times every time step, which makes this integrator much slower than H4 and consequently inappropriate for N-body simulations with large number of particles.

Another reason for choosing the H4 integrator is that GRAPE-6 is specifically designed for use with this kind of integrator. This is because GRAPE-6 returns the acceleration and its first derivative for each particle, which are the only data H4 needs. One may use a higher order integrator of the Hermite family (Nitadori & Makino 2008), but this would require extra calculations to be made of higher order derivatives of the acceleration on the CPU. Higher accuracy, also is not necessary for this kind of problem.

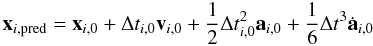

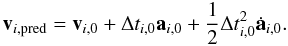

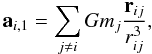

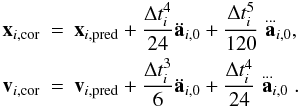

The integration steps for advancing a particle forward in time with the H4 scheme are given in the following lines (see also Makino & Aarseth 1992).

We assume that ti,0 is the current time of the particle, ti,1 = ti,0 + Δti,0 is the time after a step is taken, and Δti,0 is the current time step.

-

1.

We predict the position and velocity ofthe particle using already known data:

(12)

(12) (13)This operation has a time complexity of O(1).

(13)This operation has a time complexity of O(1). -

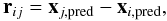

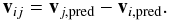

2.

We evaluate the acceleration ai and its derivative (jerk)

of the particle. To achieve this, we have first to predict the positions and velocities of all the other particles to time tf. The acceleration and jerk are evaluated on either the GRAPE-6 or the CPU using

of the particle. To achieve this, we have first to predict the positions and velocities of all the other particles to time tf. The acceleration and jerk are evaluated on either the GRAPE-6 or the CPU using  (14)

(14)![\begin{eqnarray} \dot{\textbf{a}}_{i,1} = \sum_{j \neq i} G m_j \left[ \frac{ \textbf{v}_{ij} }{r_{ij}^3} - \frac{3 (\textbf{v}_{ij} \cdotp \textbf{r}_{ij}) \textbf{r}_{ij}} {r_{ij}^5} \right] \label{a_dot}, \end{eqnarray}](/articles/aa/full_html/2010/14/aa13890-09/aa13890-09-eq84.png) (15)where

(15)where  (16)

(16) (17)If softening ϵ is included in the calculations (for collisionless simulations), then the denominators of Eqs. (14) and (15) are replaced with

(17)If softening ϵ is included in the calculations (for collisionless simulations), then the denominators of Eqs. (14) and (15) are replaced with  and

and  , respectively. We also, evaluate numerically higher order derivatives of the acceleration using

, respectively. We also, evaluate numerically higher order derivatives of the acceleration using

Fig. 5 Representation of Hermite 4th order with block time steps. In the figure, a set of 7 particles distributed in 3 time-blocks is shown. See text for description.

(18)

(18) (19)The evaluation of ai,1 and

(19)The evaluation of ai,1 and  has time complexity O(N), when it is performed on the CPU and O(log N), when it is performed on the GRAPE-6. The other calculations have complexity O(1).

has time complexity O(N), when it is performed on the CPU and O(log N), when it is performed on the GRAPE-6. The other calculations have complexity O(1).

-

3.

We correct the position and velocity of the particle using higher order terms

(20)The complexity of this operation is O(1).

(20)The complexity of this operation is O(1).

Figure 5 shows how particles distributed in 3 time-blocks are updated using the H4 scheme. We note that in a typical star cluster, the number of time-blocks usually used is 10–15. All particles lie initially at the bottom, where t = 0. Initially block 0 contains 3 particles, while blocks 1 and 2 each contain 2 particles. A black dot represents a particle after the correction step (a straight blue arrow represents the correction step), while a red dot represents a particle after a prediction step (a curved red arrow represents the prediction step). Force evaluation is necessary for every particle, only before a correction step, i.e., force evaluation happens for a particle only when it is black. For the force evaluation of a particle, a prediction of all other particles to the current time of the particle is needed. It is obvious that particles in block 0 are considered for predict-evaluate-and-correct every dt. Particles of block 1 predict every dt, but subjet to evaluate-and-correct every 2dt. Finally, particles of block 2 predict every dt and evaluate-and-correct every 4dt. The curved brown arrows show how particles are allowed to move between blocks depending on the time step calculated using Eq. (8). A jump to a higher block is allowed only if it doubles the time step of a particle (particle 3 jumps from block 0 to block 1). In contrast, a jump to any lower block is allowed if necessary (particle 6 jumps immediately from block 2 to block 0).

2.2.3. Binaries and multiples

During the evolution of the system, close encounters between stars may occur. Close binaries also may be formed dynamically, after three-body encounters. Those subsystems require a small time step to be integrated accurately in time and this time step may be much shorter than the shorterst time step Δtmin used in the N-body code. This means that this subsystem has to be specially treated to ensure that it evolves in time with the required accuracy and speed. The special treatment used for close encounters, binaries, and small multiple systems is described in the following lines.

The time-symmetric Hermite 4th order integrator (Kokubo et al. 1998) is the numerical integrator for binaries, multiples, and close encounters. This integrator is more accurate than the simple H4 integrator with the penalty of being a few times slower. Time-symmetry is achieved by applying the force evaluation-correction (EC) steps n times, after the prediction (P(EC)n) and by appropriate choice of the time step. The value n = 3 is used in the code because this is sufficient to achieve time-symmetry. Time-symmetry is responsible for transforming the H4 scheme into a symplectic integrator, which is more appropriate for the time evolution of small systems such as binaries. Symplectic integrators (Kinoshita et al. 1990) conserve the characteristics of the integrated system (energy, semimajor axis, eccentricity, period) with high accuracy, paying of course the penalty of time. In reality, when using symplectic integrators the energy of the integrated system is bounded and changes periodically with time. In contrast, non-symplectic integrators are faster, but the energy of the integrated system increases monotonically with time. A brief description of the integration method for binaries and multiple sub-systems is given at the beginning of Sect. 2.2, between items (i) and (vii).

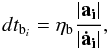

The choice of the time step for the (P(EC)3) integrator is important to preserve time-symmetry. The time step at each time t must be such that if the time were to be reversed and the particles move backwards, the time step choice would remain the same. We followed the logic of the algorithm presented in Kaplan et al. (2005) for the choice of the time step. According to this algorithm, the time step is only allowed to either increase or decrease by a factor of 2 or remain the same with respect to its last value and an increase in the time step is allowed only at even times. In this way, the time step remains symmetric and quantized as a power of 2. The current time is characterized as either even or odd with respect to the last time step taken by the system. We apply this algorithm using as a time step criterion the shortest among the time steps dtbi of the members of the binary  (21)where ηb is a parameter to control the accuracy, and ai and ȧi are the acceleration and its derivative for particle i, respectively.

(21)where ηb is a parameter to control the accuracy, and ai and ȧi are the acceleration and its derivative for particle i, respectively.

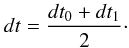

At each time t, we begin by finding whether t is even or odd with respect to the last time step, Δtold, taken. If t is odd, we maintain the same time step and by using Δtold we determine the time step at both the beginning dt0 and the end dt1 of that time step and find their average  (22)We constrain the time step used Δtold to be shorter than dt and longer than dt/2. If it satisfies this condition, then the chosen time step is the one to use in the integration. If not, then the time step must be halved. In the case of even time, we follow the same procedure, testing whether the doubling of the previous time step satisfies the criterion of being smaller than dt and greater than dt/2, where dt is given by Eq. (22). If the criterion is satisfied, then 2Δtold is the time step for the next step. If not, then we follow the same logic using Δtold as a test time step and follow exactly the same steps as if t was odd.

(22)We constrain the time step used Δtold to be shorter than dt and longer than dt/2. If it satisfies this condition, then the chosen time step is the one to use in the integration. If not, then the time step must be halved. In the case of even time, we follow the same procedure, testing whether the doubling of the previous time step satisfies the criterion of being smaller than dt and greater than dt/2, where dt is given by Eq. (22). If the criterion is satisfied, then 2Δtold is the time step for the next step. If not, then we follow the same logic using Δtold as a test time step and follow exactly the same steps as if t was odd.

For this algorithm to work properly, an appropriate choice for ηb must be made. This choice needs to be small enough for dt not to change by more than a factor of 2 with respect to its previous value. In addition, ηb is responsible for providing a smooth transition to the time step from the N-body code to the binary module of the code, to ensure that a particle that has either just joined a binary system or just left it does not change its time step by more than a factor of 2. This is required because major changes in the time step introduce significant errors in the integration. To guarantee this behavior, ηb is calculated for every binary at the time of its formation. To do this, we determine the particle i with the smallest value of |ai|/|ȧi| and using Eq. (21) we determine ηb by requiring that dt = Dtmin/2, which is given by Eq. (4) and is the smallest time step of the N-body code; it is also the last time step taken by all the future members of the binary or the next time step for all the particles that leave a binary. In the case of a binary with more than 2 members, we use a shared time step for all its members. To avoid major changes in the time step criterion, we use an even smaller value for ηb. The problem here is that if a single particle escapes for example from a triple system, leaving behind a close binary system, the time step of the escaping particle is forced to jump to Dtmin even if it was much smaller when the particle was still a member of the triple. This necessary change in the time step may introduce errors in the integration. To solve this problem, an individual time step must be used for multiple subsystems. In this way, all members of the binary would have their own time steps and an escaping particle would increase its time step by just a factor of 2.

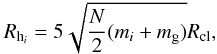

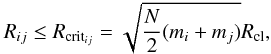

Finding the binary systems in an N-body system is a slow procedure with time complexity O(N2), unless all particle classes are members of a linked list, as in a binary search tree, or GRAPE-6 is used for acceleration. Here we use the neighbor-module of GRAPE-6 to search more rapidly for binaries and close encounters. Each star i with mass mi is associated with a distance  (23)where mg is the mass of the most massive star of the system, N the total number of stars, and Rcl is given by Eq. (5). During the calculation of the force for the star i, this distance is sent to the neighbor-module of GRAPE-6, which returns the identities of the stars that lie in distances r ≤ Rhi from the star i. Those stars are considered as “neighbors” of the star i. Then, a search between the neighbors is performed to find out whether one or more of them is about to have a close encounter or to form a close binary system with star i. The criterion for a close encounter between the star i and its neighbor j is

(23)where mg is the mass of the most massive star of the system, N the total number of stars, and Rcl is given by Eq. (5). During the calculation of the force for the star i, this distance is sent to the neighbor-module of GRAPE-6, which returns the identities of the stars that lie in distances r ≤ Rhi from the star i. Those stars are considered as “neighbors” of the star i. Then, a search between the neighbors is performed to find out whether one or more of them is about to have a close encounter or to form a close binary system with star i. The criterion for a close encounter between the star i and its neighbor j is  (24)

(24) (25)where Rij is the separation between stars i and j, mi, and mj their masses, N is the total number of stars, and Vij is the relative velocity of the two stars. The choice of the distance Rcritij comes from our finding that in an equal-mass system, if the distance between two stars is more than Rcl, then they can be integrated accurately using the H4 integrator with a time step greater than or equal to Dtmin, given by Eq. (4), which is the shortest time step allowed by the block time-step scheme. If the separation is smaller than Rcl, then a smaller time step is needed, so integrating the two particles in time with acceptable accuracy, needs some special treatment. The factor

(25)where Rij is the separation between stars i and j, mi, and mj their masses, N is the total number of stars, and Vij is the relative velocity of the two stars. The choice of the distance Rcritij comes from our finding that in an equal-mass system, if the distance between two stars is more than Rcl, then they can be integrated accurately using the H4 integrator with a time step greater than or equal to Dtmin, given by Eq. (4), which is the shortest time step allowed by the block time-step scheme. If the separation is smaller than Rcl, then a smaller time step is needed, so integrating the two particles in time with acceptable accuracy, needs some special treatment. The factor  in Eq. (24) is used to generalize the criterion for a non-equal-mass system. Finally, the distance Rhi that is sent to GRAPE-6 during the calculation of the force for star i, is simply 5 times the critical distance between this star and the most massive star of the system, to ensure that every close encounter between two stars is detected.

in Eq. (24) is used to generalize the criterion for a non-equal-mass system. Finally, the distance Rhi that is sent to GRAPE-6 during the calculation of the force for star i, is simply 5 times the critical distance between this star and the most massive star of the system, to ensure that every close encounter between two stars is detected.

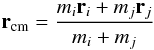

If the above criteria are fulfilled, a binary with the two particle classes as members is created. The two particle classes are removed from the N-body system and replaced by a new particle that corresponds to a “virtual” star represending the center of mass of the removed stars. This “virtual” star has mass  (26)and is placed at position

(26)and is placed at position  (27)with velocity

(27)with velocity  (28)and behaves as if it were a normal star in the rest-frame of the N-body system.

(28)and behaves as if it were a normal star in the rest-frame of the N-body system.

A third star k at distance Rk from the center of mass of the binary, becomes a member of the binary transforming it to a triple system, if one of the criteria below is satisfied:

-

1.

In all cases, if the distance of the star k from at least one of the members of the binary is smaller than 2/3Rcl

-

2.

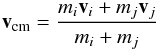

If the relative motion of the two stars is a simple close encounter (e > 1, where e the eccentricity of the system), when

(29)where

M is the total mass of the binary and

mk the mass of the third star.

(29)where

M is the total mass of the binary and

mk the mass of the third star.

-

3.

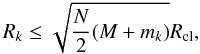

If the relative motion of the two stars is a close binary (e < 1), then the dimensionless perturbation γk, acting on the binary from star k

(30)becomes

greater than a critical value, i.e.,

(30)becomes

greater than a critical value, i.e.,

(31)

(31)

, where M is given by Eq. (26),

, where M is given by Eq. (26),  is the mean mass of the system, Rb is the size of the binary defined to be either its semimajor axis for hard binaries (i.e., binaries of binding energy greater than the average energy of a particle) or the distance R between its members in the case of soft binaries (i.e., binaries with binding energy lower than the average energy of a particle).

is the mean mass of the system, Rb is the size of the binary defined to be either its semimajor axis for hard binaries (i.e., binaries of binding energy greater than the average energy of a particle) or the distance R between its members in the case of soft binaries (i.e., binaries with binding energy lower than the average energy of a particle).

The same criteria are used when a fourth particle comes close enough to a triple system. The critical value of γ for soft binaries is γcrit = 0.125. For hard binaries, we use the value γcrit = 0.015625. In an equal-mass system, according to Eq. (30), the first value corresponds to a distance Rk = 2 Rb for the third star, while the second to a distance Rk = 4 Rb. This means that in an equal-mass system, a third star would become a member of a soft binary when it lies at a distance twice the size of the binary. If the binary is hard, the critical distance is 4 times the size of the binary.

Another way of forming multiple sub-systems with number of stars n ≥ 4 is when two binaries or multiples approach each other. When the distance between their centers of mass becomes smaller than three times the sum of their sizes, then the two binaries merge forming a larger system where all particles interact strongly with each other. The size of a binary is defined to be its semimajor axis, for hard binaries or the distance between its members, for soft binaries.

Termination of a triple, quadruple, or higher order sub-system occurs when the following three criteria are met:

-

1.

When the dimensionless perturbation γj acting on the particle-member of the sub-system j on the inner binary, becomes less than γcrit

(32)

(32) -

2.

The relative velocity of particle j, vj, with respect to the inner binary is positive, i.e., particle j moves outwards.

-

3.

The distance of particle j from any of the other particles/members of the multiple system, is greater than Rcl.

The so-called “inner binary” is the hardest double sub-system inside the multiple (the one with the greatest binding energy).

In some rare cases, two particles-members of a multiple system with number of stars n ≥ 4 are removed together from the system and form a close binary.

Termination of a simple binary occurs when the distance between its members becomes greater than Rcl. Hard binaries are not terminated, unless another particle becomes a member and interacts strongly with their members. The outcome of these interactions may be a softer or harder binary, a collision, or even a dissolved system.

Collisions:

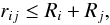

Another rare case for termination of a binary is a collision between its members. A collision between two stars occurs when their distance rij (33)where Ri, Rj the radii of the two stars.

(33)where Ri, Rj the radii of the two stars.

The stellar radius Ri for a main sequence star is given by  (34)where mi is the mass of the star, R⊙ is the radius, and M⊙ is the mass of the sun. The parameter a depends on the mass mi (Bowers & Deeming 1984) such that

(34)where mi is the mass of the star, R⊙ is the radius, and M⊙ is the mass of the sun. The parameter a depends on the mass mi (Bowers & Deeming 1984) such that  (35)If the star is a black hole, then Ri corresponds to its Schwarchild radius

(35)If the star is a black hole, then Ri corresponds to its Schwarchild radius  (36)where c is the speed of light.

(36)where c is the speed of light.

Perturbers:

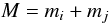

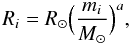

The internal motion of a binary or multiple sub-system is controlled by the internal forces acting between its member stars. External perturbations from stars passing close to the center of mass of the sub-system are also included. A star k is considered as a perturber of a binary system if the dimensionless perturbation γk, acting from the star to the binary, is greater than a critical value  (37)A typical value of 10-7 is used for γpert, but this value is usually modified to limit the total number of perturbers for a binary system according to the total number of stars. During the force calculation, the external perturbations for every star-member of a binary or multiple system are calculated and added to their internal values. The perturbation in the acceleration ai and first derivative

(37)A typical value of 10-7 is used for γpert, but this value is usually modified to limit the total number of perturbers for a binary system according to the total number of stars. During the force calculation, the external perturbations for every star-member of a binary or multiple system are calculated and added to their internal values. The perturbation in the acceleration ai and first derivative  acting on a star i, a member of a binary or multiple system, caused by an external star j are calculated using

acting on a star i, a member of a binary or multiple system, caused by an external star j are calculated using  (38)

(38) (39)where acm,j is the contribution of particle j to the acceleration of the center of mass,

(39)where acm,j is the contribution of particle j to the acceleration of the center of mass,  is the contribution of particle j to the first derivative of the acceleration of the center of mass, and ai,j and

is the contribution of particle j to the first derivative of the acceleration of the center of mass, and ai,j and  are the direct contributions of particle j to the total acceleration and jerk of particle i, respectively.

are the direct contributions of particle j to the total acceleration and jerk of particle i, respectively.

Identifying the perturbers of a binary or multiple sub-system is done by using the neighbor-module of GRAPE-6. During the force calculation for an “virtual” star i, a distance  (40)is sent to the neighbor-module of GRAPE-6. Where mg is the mass of the most massive star in the system, mi the mass of the “virtual” star, i.e., the total mass of the binary or multiple subsystem, and d the size of the subsystem i.e. the maximum distance between its members. To avoid the case of overflow of the neighbor lists of GRAPE-6, each “virtual” star is sent alone to GRAPE-6, after cleaning the previous neighbor lists from GRAPE-6 memory. To avoid overflow and limit the number of perturbers per binary system in systems with tens of thousands of stars, Rpi is also modified to ensure that ~100 neighbors are returned. This is achieved by preventing Rpi becoming greater than 2.5 × r6, where r6 is the distance of the sixth nearest neighbor of star i, used to find the core radius of the cluster, as we see below, and 2.5 × r6 is an estimate of the distance of the 100th nearest neighbor of star i, assuming that the density around star i is constant and unchanged from the time of the last calculation of r6. This means that the calculation of the core radius should be repeated relatively often, to estimate the true number density around each star at any time. This calculation is usually iterated a few times every crossing time, which is defined to be

(40)is sent to the neighbor-module of GRAPE-6. Where mg is the mass of the most massive star in the system, mi the mass of the “virtual” star, i.e., the total mass of the binary or multiple subsystem, and d the size of the subsystem i.e. the maximum distance between its members. To avoid the case of overflow of the neighbor lists of GRAPE-6, each “virtual” star is sent alone to GRAPE-6, after cleaning the previous neighbor lists from GRAPE-6 memory. To avoid overflow and limit the number of perturbers per binary system in systems with tens of thousands of stars, Rpi is also modified to ensure that ~100 neighbors are returned. This is achieved by preventing Rpi becoming greater than 2.5 × r6, where r6 is the distance of the sixth nearest neighbor of star i, used to find the core radius of the cluster, as we see below, and 2.5 × r6 is an estimate of the distance of the 100th nearest neighbor of star i, assuming that the density around star i is constant and unchanged from the time of the last calculation of r6. This means that the calculation of the core radius should be repeated relatively often, to estimate the true number density around each star at any time. This calculation is usually iterated a few times every crossing time, which is defined to be  (41)as described in Appendix A. With the above restrictions, the GRAPE-6 memory does not overflow and GRAPE-6 returns the identities of the “neighbors” of star i, which are the perturbers of the corresponding binary system. Nevertheless, if an overflow occurs, then the perturbers are found after searching among all the stars of the system and the result is used to avoid future overflows.

(41)as described in Appendix A. With the above restrictions, the GRAPE-6 memory does not overflow and GRAPE-6 returns the identities of the “neighbors” of star i, which are the perturbers of the corresponding binary system. Nevertheless, if an overflow occurs, then the perturbers are found after searching among all the stars of the system and the result is used to avoid future overflows.

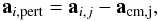

|

Fig. 6 Perturbers. Stars with γ ≥ γpert are considered as perturbers of a binary system. Those are the stars represented as empty (red) circles in the above image. The filled (black) circles represent the members of the binary or multiple sub-system. If the dimensionless perturbation γ, given by Eq. (30), becomes greater than γcrit, then the star is included into the binary or multiple sub-system. More stars could be added forming a multiple system with up to ~10 stars. Those systems are usually dispersed quickly. On the other hand, a star with γ becoming smaller than γpert is no longer a perturber. Those are the stars represented with filled (blue) squares. For an equal mass system, we typically set γpert ~ 10-7 and γcrit = 0.125 if the inner binary is considered as “soft”, while γcrit = 0.015625 is assumed if it is considered as “hard”. |

Figure 6 shows schematically the distribution of perturbers and non-perturbers for a close binary system.

We note here a couple of definitions used regularly in the remainter of the paper. In all simulations we refer to as escapers, the star clusters that are assumed to be isolated i.e., for which no external galactic field exists. Stars that are moving outwards and reach a distance two times the initial size of the cluster, are considered as escapers from the system. When a star escapes, the energy of the system is reinitialized. In addition we refer to as hard binary a close binary system whose energy per unit mass h < − 1 or whose semimajor axis a < Rh(mi + mj)/2, where mi and mj the masses of its members and Rh its half-mass radius. In an equal mass system, the limit for a becomes a < Rh/N, where N is the number of stars.

3. Results and performance

We measure the accuracy and performance of Myriad by using it to evolve different systems in time. The most simple tests are those where an equal-mass Plummer sphere (Plummer 1911) is used as an initial configuration. We evolve systems with different numbers of stars and measure the accuracy and the speed of Myriad. As a measure of the accuracy of the code, we use the relative error in the total energy, after evolving the systems for one N-body time unit. The speed of the code is measured by the CPU time required for the simulations. We also performed simulations of systems with equal-mass stars distributed in a Plummer sphere and systems with different initial mass functions distributed in either a Plummer sphere or according to a King density profile (King 1966), up to core collapse. In these simulations, we measure the time of core collapse and compare the results with other codes and with results found in the literature.

In all simulations, the stars are considered as interacting point particles. The real dimensions of a star are taken into account only when it collides with another star. Stellar evolution, stellar mass-loss, and binary evolution are also not included. If close black-hole binaries are formed, they evolve using purely Newtonian dynamics, without any post-Newtonian terms in the equations. Finally, the effects of the Galactic tidal forces on the star clusters are ignored i.e., star clusters are assumed to be isolated. Initial data in all simulations were provided by the Starlab software environment.

The host computer used for these tests is an AMD Athlon(tm) 64 Processor 3500+ operating at 2.2 GHz with 2GB of RAM, connected to a 32-chip GRAPE-6 board and running Fedore Core 1 GNU/Linux with the 2.4.22 kernel.

3.1. Accuracy

There are many different sources of errors in an N-body code. Two of them, which can be easily controlled, are the choice of integration scheme and its order. Here, as mentioned earlier, the integrator is 4th order. If the time step were constant and common for all stars, then the errors would scale as O(dt4). In codes that use block time steps, such as Myriad, the parameter that controls the error is the accuracy parameter η is used in the time-step criterion of Eq. (8). Another source of errors, which is clear when small values of η are chosen, is the accuracy of the hardware, both CPU and GRAPE-6. Myriad uses double precision for all calculations on the CPU, while the accuracy of the calculation of the accelerations and derivatives is controlled by GRAPE-6 (Makino et al. 2003b).

|

Fig. 7 Cumulative relative energy error as a function of η for a Plummer model with N = 8192 (top left), N = 16384 (top right), N = 32768 (bottom left), and N = 65536 (bottom right). The integrations ended at t = 1 N-body units. |

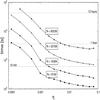

We performed a series of experiments using Myriad to evolve equal-mass Plummer models, with different particle numbers N from t = 0 to t = 1 N-body time units. For all the integrations, we recorded the cumulative relative energy error (ΔE/E = (Et = 1 − Et = 0)/E0) and studied its dependence on the accuracy parameter η and particle number N. For this study, we varied the accuracy parameter η from 10-4 to 0.2 and the particle number from 8192( ≡ 8K) to 65536( ≡ 64K), where 1K ≡ 1024. The results are shown in Fig. 7.

For small values of η (η ≤ 5 × 10-3), the relative energy error is almost constant. This is because for those values of η the integration error is small and the total error is dominated by the hardware precision. For values of the accuracy parameter η ≥ 5 × 10-3, the error increases with η as expected. When η ≥ 0.1, the errors are too large, so these choices of accuracy parameter are inappropriate for simulations. The typical choices for the accuracy parameter are (0.001 ≤ η ≤ 0.01) and the relative energy error between these limits is ΔE/E0 ≤ 10-9 for N ≤ 16 K and ΔE/E0 ≤ 10-7 for greater values of the particle number N.

The dependence of the error on the particle number N is weak and evident only at the lower values of η. For greater values of N, the relative energy error saturates to slightly smaller limits.

3.2. Performance

The elapsed time T for a simulation depends on the accuracy parameter η and particle number N. The elapsed time (wall-clock time) as a function of η and N for the simulations of Fig. 7 is shown in Fig. 8. As expected, T grows with smaller η and greater N. We note that smaller η indicates that shorter time steps are used on average.

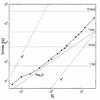

To study the speed of Myriad, we performed a set of integrations of equal-mass Plummer models with different particle numbers N from t = 0 to t = 1 N-body time units. For all simulations, we recorded the wall-clock time as a function of N and the results are shown in Fig. 9, where N is chosen to be between 512( = 29) and 262144( = 218). The accuracy parameter used for all simulations was η = 0.01. In the same figure, the slopes of N2, N3, and Nlog 10N are shown for comparison.

The complete set of calculations inside Myriad has a time complexity of O(Nlog N). There is only one calculation that has a time complexity O(mN), where 1 ≤ m ≤ N is the average number of neighbors per particle returned by GRAPE-6 or, if GRAPE-6 returns an overflow, calculated by the CPU. This is the calculation of the core radius of the star cluster. This calculation is repeated every diagnostic time step dtdiag. For the experiments of Fig. 9, this calculation took place only once, before t = 1. As the number of stars increases, the calculation of the core radius takes a comparable amount of time to the integration time. This is why initially the slope in Fig. 9 is similar to the slope of Nlog N, but as N increases, it tends to more closely resemble the slope of N2. We note that all stars at t = 0 begin with the shortest time step Dtmin and then, as time passes by, they are slowly distributed in all the available time blocks. Because of this, the simulation initially operates more slowly. We note that primordial binary systems were not included in all runs, while no close binary systems were dynamically formed until t = 1 N-body time units. At later times, dynamically formed binary systems would force the simulation to run slower.

|

Fig. 8 Wall-clock elapsed time as a function of N and η for the simulations of Fig. 7. All simulations ended at t = 1 in N-body units. |

|

Fig. 9 Wall-clock elapsed time as a function of the number of stars N. All simulations ended at t = 1 in N-body units. The accuracy parameter η had the same value 0.01 in all simulations. The slopes of O(N3), O(N2), and O(Nlog N) are also shown for comparison. |

3.3. Binaries and multiples

|

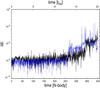

Fig. 10 Cumulative energy error (ΔE/E0 = (Et − E0)/E0) as a function of time for the first 10 periods for the simulations of equal-mass binary systems with 4 different eccentricities. The eccentricities of the binaries are e = 0.19 (red line), e = 0.51 (green dashed line), e = 0.75 (blue dotted line), and e = 0.91 (cyan dashed-dotted line). |

|

Fig. 11 Variation in the time step used for the evolution of the binary systems of Fig. 10 during 2 periods of each binary. The time step parameter ηb is chosen so that the error in all the binaries is of the same order. |

|

Fig. 13 Cumulative energy error (ΔE/E0 = (Et − E0)/E0) as a function of time for 105 periods for the simulation of a binary system with eccentricity e = 0.89 and mass ratio mheavy/mlight = 15. |

|

Fig. 14 The trajectory of the two stars in the simulation of Fig. 13. The two stars are orbiting around their common center of mass, which is located at O(0,0). |

|

Fig. 15 Cumulative energy error (ΔE/E0 = (Et − E0)/E0) as a function of time for the Pythagorean three-body system. |

|

Fig. 16 Trajectories of the particles in the simulation of the Pythagorean three-body system. The initial positions of the particles are marked with black circles. |

Before testing Myriad in simulations of clusters up to core collapse, we investigated the accuracy of the the code in handling close binaries and multiples. We recall that the algorithm used in the binary module of Myriad is the time-symmetric Hermite 4th order scheme described in Sect. 2.2.3 and note that the force calculation is done on the CPU.

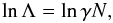

Figure 10 shows the cumulative relative error in the energy for the first 10 periods of binaries consisting of equal mass particles and having different eccentricities. The choice of the accuracy parameter ηb in the time step calculation and the maximum allowed time step are such that for all simulations the initial energy errors are of the same order. The number of time steps per period for the binary with eccentricity e = 0.19 is 720, for the binary with e = 0.51 is 2200, for the binary with e = 0.75 is 2200, and for the binary with e = 0.91 is 4300. Figure 11 shows how the time step changes according to our time step criterion, during the first 2 periods of each of the binaries. As shown in the figure, the time step is quantized as a power of 2. Finally, the cumulative energy error for evolving the binaries for 105 periods is shown in Fig. 12. For a clearer visual result, we have printed a fraction of the points in the figure. It is obvious that the algorithm used for the dynamical evolution of close binary systems is time-symmetric and preserves the energy since there is no linear growth in the energy errors. The energy error oscillates between a lower and higher value. The lower error is observed during the apastron passage, while the higher value is observed during the periastron passage. We would have the same behavior for the energy error even if we decrease the number of steps per period for the simulations. The error would be greater but still have an upper limit and no linear growth with time.

Figure 13 shows the cumulative relative energy error in the case of a binary with unequal mass members (mass ratio = 15) and eccentricity e = 0.89. We followed the simulation for 105 periods. The energy error does not grow linearly with time, but instead follows some kind of random walk between some limits. This result shows that the binary module of Myriad is capable of evolving binaries with high mass ratios and high eccentricities for a long period without significant energy errors. The trajectories of the two particles around their common center of mass for the full simulation of 105 periods are shown in Fig. 14.

We finally tested the performance of Myriad in handling triple systems by evolving the well known Pythagorean three-body system. This system consists of three different masses initially located at rest at the corners of a right triangle whose sides have lengths 3, 4, and 5. Each of the three masses is located in such a way that its mass is equal to the length of the opposite side. Mass m1 = 3 is opposite the side of length 3, m2 = 4 is opposite the side of length 4 and m3 = 5 is opposite the side of length 5. The three masses interact strongly with each other, and their trajectories after some time are shown in Fig. 16. The final result is a hard binary consisting of particles with masses m3 = 5 and m2 = 4 and an escaping particle. The evolution of the cumulative relative energy error for the simulation is shown in Fig. 15. We note the two “jumps” in the energy error. These “jumps” occur when two of the particles come very close to each other and as a result more time steps are needed to perform an accurate integration. We used a constant accuracy parameter ηb = 0.0001. The cumulative relative energy error at t = 100 N-body units is 2.23776 × 10-8.

3.3.1. Choice of the parameters

In Myriad, assuming that a good choice for the accuracy parameter η is 0.01 and adjusting the accuracy parameter for binary evolution ηb so that there is a smooth transition between the binary module and the Hermite integrator, there is another free parameter that balances between speed and accuracy in collisional simulations up to core collapse. This is the number of perturbers for every binary or multiple subsystem controlled by the critical dimmensionless perturbation γpert. This parameter is discussed in Sect. 2.2.3 (Eq. (37)). For the rest of this work, we choose γpert = 10-7, which is small enough to ensure that the errors introduced into the simulations by replacing two or more particles that lie close to each other, with their center of mass, are not significant. Smaller values of γpert would slightly increase accuracy, but the speed of the code would be lowered significantly, since the forces of the perturbers on the members of a binary are computed on the CPU and not on the GRAPE-6. Finally, if the value of γpert is responsible for large numbers of perturbers per binary system, this value is increased so that no more than 100 perturbers per binary are used.

3.4. Core collapse

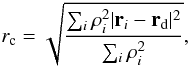

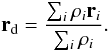

The evolution of a star cluster up to and beyond core collapse is one of the challenges for any N-body code. During core collapse, the density at the center reaches its maximum value, while the core radius, defined by the equation  (42)which is described in Appendix A, reaches its minimum value. Figures 23 and 17 clearly show this behavior as a star cluster of 1024 equal-mass stars evolves beyond core collapse. As the cluster evolves, close binary or multiple systems are formed and interact with single stars or other binary or multiple systems. Those interactions become more frequent and violent as the cluster approaches core collapse, because the density of stars around the center of the cluster reaches its maximum. All of these encounters have to be resolved with an acceptable accuracy. In addition, some stars approach the outer bounds of the system and consequently escape the system.

(42)which is described in Appendix A, reaches its minimum value. Figures 23 and 17 clearly show this behavior as a star cluster of 1024 equal-mass stars evolves beyond core collapse. As the cluster evolves, close binary or multiple systems are formed and interact with single stars or other binary or multiple systems. Those interactions become more frequent and violent as the cluster approaches core collapse, because the density of stars around the center of the cluster reaches its maximum. All of these encounters have to be resolved with an acceptable accuracy. In addition, some stars approach the outer bounds of the system and consequently escape the system.

We tested the behavior of Myriad in evolving star clusters up to core collapse and compared the results with those produced by Starlab or found in the literature. For these tests, we used 3 different initial configurations. We initially used an equal-mass Plummer model and studied the time of core collapse and the evolution of the error in the total energy. The same simulation with similar parameters was performed using Starlab for comparison. The next test was the evolution of a star cluster with an initial mass function up to core collapse. The stars were again distributed initially according to a Plummer density profile. Finally, we performed several simulations of star clusters with an initial mass function of a broad range of masses, distributed initially according to a King density profile. The result of the latter simulations was a measure of the core collapse time (tcc) as a function of the half-mass relaxation time (trlx). These results were compared with existing results found in the literature.

3.4.1. Equal-mass plummer models

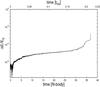

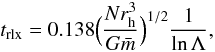

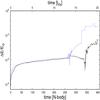

We performed a simulation of an equal-mass Plummer model up to core collapse to test the stability, accuracy, and speed of Myriad. Figure 17 shows the evolution of the Lagrangian radii that corresponds to fractions of the total mass of the system and the evolution of the core radius of a system, which was initially an equal-mass Plummer model with N = 1024 stars. It is obvious that the core radius and the smallest Lagrangian radii reach a minimum at tcc ≃ 340 N-body time units, when core collapse occurs. From equation

The core collapse times for different initial models.

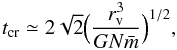

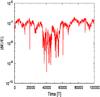

(43)which is described in Appendix A, we find that for this system the initial half-mass relaxation time is trlx = 19.92 N-body time units, so tcc ≃ 17trlx, which is close to what is expected from Eq. (A.15) and consistent with the results of other codes presented in Table 1 of Freitag & Benz (2001) and Anders et al. (2009), where a detailed comparison between the two major N-body codes Starlab and NBODY4 is presented. In Fig. 18, we compare the results of Myriad and Starlab for the evolution of selected Lagrangian radii of the same system. The small differences in the Lagrangian radii computed by the two codes, may be caused by the difference in the escaper-removal criterion and small differences in the time-step allocation criteria. Because of these differences, the simulation performed with Myriad ended with 983 stars remaining in the system, while that completed with Starlab contained 973. Figure 19 shows the evolution of the instantaneous energy error δE for this simulation. In the same figure, the simulation error of Starlab is presented for comparison. The error is initially small and unaffected by close encounters and binary systems. It grows slowly with time, as expected for the H4 integrator. As the system approaches core collapse, the interactions at the center become more frequent and violent and the energy error is contolled by them. Hard binaries form and their interactions with single stars or other binaries introduce another source of error. After core collapse, the average error becomes smaller, but some peaks, due to strong encounters between hard binaries, continue to appear.

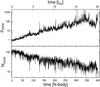

(43)which is described in Appendix A, we find that for this system the initial half-mass relaxation time is trlx = 19.92 N-body time units, so tcc ≃ 17trlx, which is close to what is expected from Eq. (A.15) and consistent with the results of other codes presented in Table 1 of Freitag & Benz (2001) and Anders et al. (2009), where a detailed comparison between the two major N-body codes Starlab and NBODY4 is presented. In Fig. 18, we compare the results of Myriad and Starlab for the evolution of selected Lagrangian radii of the same system. The small differences in the Lagrangian radii computed by the two codes, may be caused by the difference in the escaper-removal criterion and small differences in the time-step allocation criteria. Because of these differences, the simulation performed with Myriad ended with 983 stars remaining in the system, while that completed with Starlab contained 973. Figure 19 shows the evolution of the instantaneous energy error δE for this simulation. In the same figure, the simulation error of Starlab is presented for comparison. The error is initially small and unaffected by close encounters and binary systems. It grows slowly with time, as expected for the H4 integrator. As the system approaches core collapse, the interactions at the center become more frequent and violent and the energy error is contolled by them. Hard binaries form and their interactions with single stars or other binaries introduce another source of error. After core collapse, the average error becomes smaller, but some peaks, due to strong encounters between hard binaries, continue to appear.

The comparison between Myriad and Starlab is also shown in Fig. 20, where the respective relative energy error ratio Myriad/Starlab is presented. The error of Myriad at any time step is smaller by 300 times than the error of Starlab, while most of the time the error of Starlab is greater. After t ~ 250 and until core collapse, the error of Myriad becomes several times smaller than that of Starlab. After core collapse, both Starlab and Myriad have large instantaneous errors and their ratio scatter from 10-5 to 300. Figure 21 shows the cumulative relative energy error for the simulation as it evolves with time. The same error of Starlab again is presented for comparison. It is obvious that before core collapse the two codes show identical behavior and after core collapse the error of Myriad remains smaller than that of Starlab.

The simulation ended at t ≃ 402 N-body time units. In Fig. 22, the CPU times of Myriad and Starlab are compared. For Myriad, the total CPU time and the CPU time spent in force calculation and binary evolution are shown. Before core collapse, the CPU time of Myriad is constantly greater than that of Starlab. This is because of the small differences between the two codes in the time step criterion, which on average causes Myriad to store more particles in small time-step blocks. A search for neighbors also occurs every time these particles are updated, which slows down the simulation significantly. The CPU time of the Myriad simulation until core collapse is about T ≃ 1.7 h, while the same time for Starlab is about T ≃ 1 h. After core collapse, the CPU time of Starlab becomes longer because of the formation of hard binaries. Hard binaries lead to an increase in the CPU time of Myriad cause it to finish in about T ≃ 8 h. The simulation done with Starlab, with similar parameters as those used in Myriad, took about 13 h, spending most of the time in the post-collapse part. Finally, in Fig. 23 we show the evolution of the mass density and the number of stars at the core. As expected, the core density increases with time and reaches a maximum at the time of core collapse. On the other hand, the number of stars at the core drops with time and reaches a minimum value at core collapse.

|

Fig. 17 Core radius (heavy black line) and Langrangian radii containing 90%, 80%, 70%, 60%, 50%, 40%, 30%, 20%, 10%, 5%, and 3% (blue dashed lines from top to bottom) of the total mass. The half mass radius is indicated by a heavier dashed line. Core collapse is reached at tcc ≃ 340 N-body time units or tcc ≃ 17trlx. |

|

Fig. 18 Lagrangian radii containing from top to bottom 90%, 50%, 10%, and 1% of the total mass. The continuous lines are the results produced by Myriad, while the blue dashed lines are produced using Starlab. |

|

Fig. 19 Error in the energy per quarter of the time unit (δE = E(t) − E(t − 0.25)) as it evolves in time for the simulation of an equal-mass Plummer model of 1024 stars. The simulation ended at t ≃ 402 N-body time units. The dashed line is the energy error of Starlab when it was used for evolving the same system. Before core collapse, the energy errors are identical. |

3.4.2. Plummer models with a Salpeter initial mass function

A simulation of a Plummer model with N = 16384 stars was performed assuming a (Salpeter 1955) initial mass function (IMF) and limiting masses of mlower = 0.5 M⊙ and mupper = 5 M⊙. In Fig. 24 we show the evolution of the Lagrangian radii and core radius of the system. In Fig. 25, we illustrate the evolution of the error in the total energy of the system for this simulation. Core collapse is reached at t ≃ 2.2trlx, which is much earlier than expected for an equal-mass system, given empirically by Eq. (A.15). This is expected because heavier stars tend to sink to the core faster and because of that core collapse occurs earlier. The time taken for a star of mass m to sink to the center from a circular orbit at r ≫ rc is given by (Portegies Zwart & McMillan 2003)  (44)According to Eq. (44), the most massive stars of the above system would sink to the center in a time of about ts ≃ 0.73trlx. Until t = 2.2trlx, most of the massive stars are located close to the center and this leads to the observed core collapse of the cluster.

(44)According to Eq. (44), the most massive stars of the above system would sink to the center in a time of about ts ≃ 0.73trlx. Until t = 2.2trlx, most of the massive stars are located close to the center and this leads to the observed core collapse of the cluster.

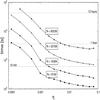

3.4.3. King models with an initial mass function

We performed simulations of star clusters with initial density distribution of a W0 = 6 King model (King 1966) up to core collapse. We used a (Scalo 1986) initial mass function (IMF) with lower and upper limits of 0.1 M⊙ and 100 M⊙, respectively. We varied the number of stars from N = 6122 to N = 12288 and found the core collapse time tcc as a fraction of the half mass relaxation time trlx of the systems. The systems chosen are similar to those used in Portegies Zwart & McMillan (2003), where the simulations were performed using the Starlab package including stellar mass loss from stellar evolution. The results are presented in Table 1. As the core collapse time we consider the time of the creation of the first dynamically formed hard binary system in agreement with Portegies Zwart & McMillan (2003). We performed 18 simulations and our result for the core collapse time is tcc ≃ (0.17 ± 0.05)trlx . The result is slightly smaller than Portegies Zwart & McMillan (2003), which is tcc ≃ (0.19 ± 0.08)trlx . This difference in results may be due to the lack of stellar mass loss and stellar evolution in Myriad which, according to Portegies Zwart & McMillan (2003), tends to delay core collapse.

The evolution of Lagrange radii and core radius in one of the simulations containing N = 6122 stars is shown in Fig. 26. For a clearer visual result, we have applied a cubic spline interpolation to the core radius. The cumulative error in the energy for the same simulation is presented in Fig. 27. In Figs. 28 and 29, we present the same results for one of the simulations containing N = 12288 stars. The cumulative energy error in all simulations remained below 10-3.

|

Fig. 20 Respective energy error ratio Myriad/Starlab as a function of time. |

4. Discussion

Sources of energy errors:

There are unavoidable numerical errors in all N-body simulations. Here we discuss the source of numerical errors in simulations with Myriad and also how the total CPU time is divided between the different parts of the code.