| Issue |

A&A

Volume 690, October 2024

|

|

|---|---|---|

| Article Number | A12 | |

| Number of page(s) | 17 | |

| Section | Numerical methods and codes | |

| DOI | https://doi.org/10.1051/0004-6361/202449963 | |

| Published online | 25 September 2024 | |

Jacobian-free Newton-Krylov method for multilevel nonlocal thermal equilibrium radiative transfer problems

Institute for Solar Physics, Dept. of Astronomy, Stockholm University, AlbaNova University Centre,

106 91

Stockholm,

Sweden

Received:

13

March

2024

Accepted:

5

August

2024

Context. The calculation of the emerging radiation from a model atmosphere requires knowledge of the emissivity and absorption coefficients, which are proportional to the atomic level population densities of the levels involved in each transition. Due to the intricate interdependence of the radiation field and the physical state of the atoms, iterative methods are required in order to calculate the atomic level population densities. A variety of different methods have been proposed to solve this problem, which is known as the nonlocal thermodynamical equilibrium (NLTE) problem.

Aims. Our goal is to develop an efficient and rapidly converging method to solve the NLTE problem under the assumption of statistical equilibrium. In particular, we explore whether the Jacobian-Free Newton-Krylov (JFNK) method can be used. This method does not require an explicit construction of the Jacobian matrix because it estimates the new correction with the Krylov-subspace method.

Methods. We implemented an NLTE radiative transfer code with overlapping bound-bound and bound-free transitions. This solved the statistical equilibrium equations using a JFNK method, assuming a depth-stratified plane-parallel atmosphere. As a reference, we also implemented the Rybicki & Hummer (1992) method based on linearization and operator splitting.

Results. Our tests with the Fontenla, Avrett and Loeser C model atmosphere (FAL-C) and two different six-level Ca II and H I atoms show that the JFNK method can converge faster than our reference case by up to a factor 2. This number is evaluated in terms of the total number of evaluations of the formal solution of the radiative transfer equation for all frequencies and directions. This method can also reach a lower residual error compared to the reference case.

Conclusions. The JFNK method we developed poses a new alternative to solving the NLTE problem. Because it is not based on operator splitting with a local approximate operator, it can improve the convergence of the NLTE problem in highly scattering cases. One major advantage of this method is that it is expected to allow for a direct implementation of more complex problems, such as overlapping transitions from different active atoms, charge conservation, or a more efficient treatment of partial redistribution, without having to explicitly linearize the equations.

Key words: line: profiles / radiative transfer / methods: numerical / Sun: atmosphere

© The Authors 2024

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1 Introduction

The statistical equilibrium equations describe the radiative and collisional transitions between the different levels of a model atom (see, e.g., Hubeny & Mihalas 2014). When the collisional terms dominate the rate equations, the assumption of local ther-modynamical equilibrium (LTE) is usually adequate, and the atomic level population densities (hereafter population densities) can be obtained analytically using the Saha-Boltzmann equations. When the radiative terms become relevant, however, the radiation field greatly influences the population densities (NLTE). Because of this cross-dependence of the population densities with the radiation field, the NLTE problem must be solved iteratively in order to make them consistent with each other. Moreover, the nonlocality of the radiation field increases the complexity of the problem because all grid cells must be solved simultaneously.

Early attempts solved the rate equations using Lambda iteration, which is based on the fixed-point iteration method (see, e.g., Hubeny & Mihalas 2014). However, this scheme has very poor convergence properties and cannot be used in practice. Auer & Mihalas (1969) proposed a complete linearization method (of the second-order transfer equation) to solve the structure and radiation emerging from static stellar atmospheres. In their implementation, physical accuracy was neglected to make the problem computationally tractable, but it inspired future developments in the field (see below). A different approach, introduced by Rybicki (1972), used the core saturation approximation to eliminate passive photon scatterings in the line core, while only keeping the much more efficient scatterings in the line wings. The latter improved the conditioning of the rate equations, allowing traditional Lambda iteration to converge in a reasonable (but large) number of iterations.

The most successful methods for solving the statistical equilibrium equations are based on the operator splitting technique (Cannon 1973) combined with a linearization of the problem (e.g., Auer & Mihalas 1969; Scharmer & Carlsson 1985; Rybicki & Hummer 1992). Scharmer & Carlsson (1985) linearized the first-order radiative transfer equation and the rate equations with respect to the population densities until they were able to derive a linear system to estimate a correction. (Rybicki & Hummer 1992, RH92 hereafter) followed a slightly different approach and replaced some of the quantities that depend on the population densities with the value from the previous iteration. The fundamental difference between these two methods is that the complete linearization method of Scharmer & Carlsson (1985) is a minimization method of the error in the rate equations, whereas the RH92 method is closer to the fixed-point iteration method, but uses the operator-splitting technique to drive the solution. Furthermore, the complete linearization method of Scharmer & Carlsson (1985) operates on the source function, whereas the RH92 method operates on the emissivity, allowing for a simpler treatment of overlapping (active) transitions and partial redistribution effects (Uitenbroek 2001; Leenaarts et al. 2012; Sukhorukov & Leenaarts 2017).

The performance of these methods is largely determined by the choice of the approximate operator. The simplest block-diagonal (local) operator (Olson et al. 1986) decouples the explicit dependence of the rate equations with respect to space, and it requires very little storage and operations, making it a great choice for multidimensional problems (e.g., Leenaarts & Carlsson 2009; Amarsi et al. 2018). However, information about the nonlocal contribution to the intensity is neglected, as is its origin. A better prediction of the mean intensity can be attained by using the single-point quadrature global operator (Scharmer 1981; Scharmer & Nordlund 1982), which was greatly inspired by the Eddington-Barbier approximation (Milne 1921; Eddington 1926; Barbier 1943). Although it only requires one coefficient per ray and direction (two, when linear interpolation is used), the equations are again spatially coupled, and they must be solved together. Schemes using the global operator generally converge in fewer iterations than those using the local operator. Several codes with implementations of the complete linearization (Carlsson 1986; Hubeny & Lites 1995) and RH92 (Uitenbroek 2001; Leenaarts & Carlsson 2009; Pereira & Uitenbroek 2015; Socas-Navarro et al. 2015; Amarsi et al. 2018; Milić & van Noort 2018; Osborne & Milić 2021) methods have been extensively used by the solar and stellar communities.

A common way of solving nonlinear systems of equations is the Newton-Raphson method (Raphson 1690; Newton 1736). The main limitation to applying it to the statistical equilibrium equations is the expensive calculation of the Jacobian matrix that is required in each iteration. In this paper, we propose to use a modification of the Newton-Raphson method known as the Jacobian-free Newton-Krylov method (Knoll & Keyes 2004), to solve the radiative transfer problem. In this method, the Jaco-bian matrices are neither inverted nor built or stored. Instead, an iterative inversion solver based on Krylov subspaces (Krylov 1931) is used to estimate the Newton-Raphson correction to the unknowns. This method has already proved to be efficient in several fields, such as hydrodynamics or neutron scattering problems. Compared to the method of Scharmer & Carlsson (1985), our method does not require any explicit linearization of the rate and radiative transfer equations, and it does not use the operator splitting.

In Sect. 2, we introduce the numerical problem under consideration and the proposed numerical method for its resolution. In Sect. 3, we discuss our results, and in Sect. 4 we summarize our conclusions and discuss potentially interesting developments for future studies.

2 Problem and methods

2.1 Mathematical description of the problem

2.1.1 Theory

We adopt the notation used in Uitenbroek (2001) to express the statistical equilibrium equations. We furthermore assume a plane-parallel geometry hereafter. In all equations, unless mentioned otherwise, lower indices refer to atomic levels and upper indices refer to depth points within the atmosphere. The RH92 notation elegantly unifies the expressions for bound-bound and bound-free transitions, allowing for a very clean implementation of the rate equations. For a bound-bound transition between a lower level i and upper level j, we can define

(1)

(1)

(2)

(2)

(3)

(3)

where Aji, Bji, and Bij are the Einstein coefficients, and ϕij and ψij are the line absorption and emission profiles. ν is the frequency, and µ is the line-of-sight angle cosine. Similarly, for a bound-free transition, we can define

(4)

(4)

(5)

(5)

(6)

(6)

where αij is the photoionization cross-section, ne is the electron density, and Φij(T) is the Saha-Boltzmann function evaluated at temperature T,

(7)

(7)

where ɡ denotes the level statistical weight and E the level energy, and me is the mass of the electron. In practice, these expressions can be further simplified using the Einstein relations between coefficients, so that we obtain

(8)

(8)

(9)

(9)

For bound-bound transitions, assuming complete-redistribution of scattered photons, ɡij = ɡi/ɡj. For bound-free transitions,

(10)

(10)

where the asterisk-superscript denotes the LTE atomic level population.

We can now write the rate equations as a function of Vij, regardless of whether we consider bound-bound or bound-free transitions. We recall the rate equation for the atomic level i at depth index k,

(11)

(11)

where  is the population density of the atomic level i at depth index k.

is the population density of the atomic level i at depth index k.  are the collisional and radiative rate coeffi-cients of the transition i → j at depth index k with

are the collisional and radiative rate coeffi-cients of the transition i → j at depth index k with  . The radiative rate coefficients can be expressed as a double integral over the angle and frequency of the intensity (Uitenbroek 2001),

. The radiative rate coefficients can be expressed as a double integral over the angle and frequency of the intensity (Uitenbroek 2001),

(12)

(12)

![$R_{ji}^k = {1 \over 2}\mathop \smallint \limits_{ - 1}^1 d\mu \mathop \smallint \limits_0^\infty {{dv} \over {hv}}\left[ {\left( {{{2h{v^3}} \over {{c^2}}}} \right) + I_{\mu v}^k(n)} \right]g_{ij}^kV_{ij}^k\quad i < j,$](/articles/aa/full_html/2024/10/aa49963-24/aa49963-24-eq16.png) (13)

(13)

for a plane-parallel atmosphere. The expressions for  and

and  are given in Eqs. (1)–(10) for bound-bound and bound-free transitions. The last component

are given in Eqs. (1)–(10) for bound-bound and bound-free transitions. The last component  is the intensity in the direction µ at a frequency ν and at the depth index k. The vector n contains the population densities with the chosen structure

is the intensity in the direction µ at a frequency ν and at the depth index k. The vector n contains the population densities with the chosen structure  , where Nz and Nℓ are the number of depth points and active atomic levels, respectively. While all the other quantities do not depend on the population densities, the intensity involves them all in a nonlinear and nonlocal fashion through the radiative transfer equation (RTE),

, where Nz and Nℓ are the number of depth points and active atomic levels, respectively. While all the other quantities do not depend on the population densities, the intensity involves them all in a nonlinear and nonlocal fashion through the radiative transfer equation (RTE),

(14)

(14)

(15)

(15)

where Sµv = ηµv/χµv is the source function, χµv and ηµv are the total opacity and emissivity, respectively, which can be calculated through

(16)

(16)

(17)

(17)

where the subscript c refers to the background continuum contribution, and sca indicates the background-scattering contribution, which are assumed to be independent with respect to the active population densities. The mean intensity Jν can be computed using

(18)

(18)

The presence of Jν in the scattering term of Eq. (17) might cause the calculations to become more complex because it depends on the intensity, which in turn depends on opacities and emissivi-ties. Because these scattering terms do not originate from active transitions of the atom, however, we use a previous estimation of the mean intensity  instead of Jν in Eq. (17). The optical thickness τµv is obtained by integrating the opacity over depth,

instead of Jν in Eq. (17). The optical thickness τµv is obtained by integrating the opacity over depth,

(19)

(19)

Equation (11) describes a system of Nℓ × Nz equations and variables  in which Nz equations are redundant. Therefore, we replace one rate equation by a particle conservation equation per depth-point,

in which Nz equations are redundant. Therefore, we replace one rate equation by a particle conservation equation per depth-point,

(20)

(20)

where  is the total atom density at the depth index k and is kept constant. The replacement was made on the most populated atomic level at each depth point for numerical stability purposes.

is the total atom density at the depth index k and is kept constant. The replacement was made on the most populated atomic level at each depth point for numerical stability purposes.

Finally, the system of equations was reformulated as

(21)

(21)

for radiative rate equations, and

(22)

(22)

for mass conservation equations. Altogether, Eqs. (21)–(22) form a residual vector F(n) with the same dimension as n. Solving the system of equations for the vector of population densities n is therefore equivalent to finding the root of the residual vector F. The calculation of F for a given atmosphere and population densities is detailed in algorithm 1. The residual vector is the central part of the solving process and constitutes the main computational cost of it. Thus, we evaluated the performance of a solver by the number of computation calculations of F (hereafter calls) needed to solve the problem to a given precision.

2.1.2 Discretization of the RTE

When the radiative rates are computed, the angular and frequency integrals are in practice discretized according to quadrature schemes and yield quadrature coefficients (ωµ, ων) for each set (µ, ν). Equations (14) and (15) are discretized along the depth axis, and the involved integrals can be calculated assuming a depth-dependent profile for Sµv. This profile is usually taken as simple piece-wise polynomial functions. We considered piece-wise linear functions (Olson & Kunasz 1987), which yielded

(23)

(23)

(24)

(24)

where the coefficients ak and bk are given in Appendix A.1, and

(25)

(25)

is the optical thickness of the slab.

At the top of the atmosphere, we assumed that there is no incoming radiation. The deepest point in the atmosphere was assumed to be thermalized so that the intensity at this location is the Planck function Bν at the local temperature T,

(26)

(26)

(27)

(27)

The angular integrals were evaluated using a Gauss-Legendre quadrature defined in ]0,1[. At each depth index k, the incoming and outgoing rays were considered in the calculation of the mean intensity J. The number of quadrature points is a parameter set by the user. We normally ran with five quadrature points with two rays per angle.

2.2 Newton-Raphson method

2.2.1 Basics

The system of nonlinear equations F(n) = 0 for the vector n may be solved through several numerical iterative methods. The Newton-Raphson method is one of the simplest and most powerful ones (e.g., Press et al. 2002). When n(p) is the estimate of the solution on the pth iteration, the next iterate n(p+1) is sought such that F(n(p+1)) = 0. When we further define δn(p) = n(p+1) − n(p) as the pth incremental, the Newton-Raphson method relies on a linearization of F(n(p+1)),

(28)

(28)

where we have introduced the Jacobian matrix JF associated with the residual vector F and evaluated at n(p). A possible representation of JF is given in Fig. 1. Solving the latter linear system for δn(p) yields

(29)

(29)

from which we may compute the next iterate n(p+1). This new estimate is a priori not a solution to F(n) = 0, although the iterative process ensures a quadratic convergence to a solution in the best cases (e.g., Dennis & Schnabel 1996). The initial guess n(0) might be given, for instance, by the LTE or the radiation-free predictions of the population densities. The linearization introduced by the Newton-Raphson method only consists of a mean to solve the raw statistical equations, whereas RH92 solves a linearized approximated version of the problem.

2.2.2 Limitations of the Newton-Raphson method

We note that δn(p) might also lead to a poorer estimation of the solution. This behavior can occur when the correction δn(p) lies beyond the domain of linearity of the residual vector around the evaluation vector n(p). A simple way to overcome this behavior is to limit the incremental vector with a dampening factor α and try α = 1,0.5,0.25,… until ||F(n(p) + αδn(p))|| < ||F(n(p))||. This procedure is the simplest of the so-called line-search methods, although more elaborated ones exist (see Dennis & Schnabel 1996, for instance).

A second problem deals with the possibility to produce solution estimates with negative entries. While mathematically correct, a solution estimate with negative population densities is physically incorrect, and the solver may even overflow when solving the RTE. A possible solution to prevent negative entries consists of limiting the correction at each depth independently.

A third and inherent problem of the Newton-Raphson method deals with the quality of the initial guess. The initial guess is usually an important factor in the method convergence rate or even the failure of the method. The method does not converge or ventures outside the domain of definition of F when a poor starting point is given. The method may also be trapped in a local minimum of the residual vector, which can be difficult to spot. Several tools such as continuation methods can be used to build a robust solver based on the Newton-Raphson method. More details are given in Knoll & Keyes (2004).

The Newton-Raphson requires the knowledge of the inverse of a Jacobian matrix  at each iterative step. Several issues can potentially arise when these quantities are computed, and we list them below.

at each iterative step. Several issues can potentially arise when these quantities are computed, and we list them below.

Implementation: most problems do not have an analytical expression for JF, and an approximation needs to be given (e.g., by finite differences). The convergence is therefore likely to be less than quadratic. In the worst case, the method may fail when the approximation is too coarse.

Storage: for large problems, storing JF can be problematic.

Time consumption: inversion of JF quickly becomes time-consuming as the size of the problem increases, considering traditional inversion routines such as Gauss-Jordan elimination. The computation of the full Jacobian might also be expensive.

The radiative transfer problem we considered disqualifies a classical Newton-Raphson method mainly because of the computational cost derived from building of JF, even when using analytical expressions. The Newton-Raphson method therefore requires the information of the Jacobian matrices without building them or only building them partially, in order to keep an efficient solver. The next sections detail a way to bypass this thorny problem.

|

Fig. 1 Jacobian matrix structure for a Nℓ-level atom problem. JF is a (NℓNz) × (NℓNz) matrix that contains the derivatives of the residual vector components with respect to the population densities. This matrix can be considered as a Nz × Nz block matrix, where each block Jkℓ is a Nℓ × Nℓ matrix. Jkℓ stores the derivatives of F at depth index k with respect to the population densities at depth index ℓ. |

2.3 Iterative inversion: Krylov methods

Equation (29) is equivalent to a linear system of the form Ax = b, where A = JF(n(p)), x = δn(p) and b = −F(n(p)). This linear system can be solved for x without inverting A using iterative approaches such as Krylov methods. In short, Krylov methods are used to solve large linear systems through projections onto a Krylov subspace Ks,

where r0 = b − Ax0 is the initial residual vector built from the initial guess x0. Since x is meant to represent a Newton-Raphson correction, a typical initial guess would be zero and thus r0 = b (we considered that no correction is required initially). Another initial condition might be given by the solution to Px0 = b, where P is a preconditioner (Sect. 2.4.3). Then, the solution is estimated as

(30)

(30)

where the set of vectors (q1,…, qs) is a basis of Ks and (ĸ1,…, ĸs) are the corresponding coordinates. The purpose of a Krylov method is therefore to construct a basis of Ks, and then to determine the corresponding scalars ĸi through projection methods. This construction is made iteratively in s iterations (one iteration per basis vector). Each iteration adds a new component to the solution estimate. The solution is sought such that ‖r‖2 = ‖b − Ax‖2 < δ‖r0‖2, where δ is a relative tolerance set by the user. We note that the given tolerance might be achieved in fewer than s iterations.

The size of the Krylov subspace is related to the precision of the solution that can be achieved, the latter also depending on the method employed. If s is too small, the desired tolerance level might not be achieved. On the other hand, when s is chosen to be equal to the size of A, a Krylov method will eventually converge to the exact solution in theory. In practice, round-off and truncation errors will limit the maximum precision that can be expected. From the definition of Ks, we note that only matrix-vector products are required in these methods, which is the keystone of Sect. 2.4.

A plethora of Krylov methods has been developed over the past decades, among which two popular techniques and their respective variants are broadly used in various physics problems. We briefly describe them below.

The generalized minimal residual method (GMRES) is usually based on the Arnoldi process or Householder transforms to produce orthonormalized bases. It was first developed in Saad & Schultz (1986) as an improvement and an extension to nonsymmetric matrices of the MINRES method developed by Paige & Saunders (1975). It is a very versatile linear system solver.

The bi-conjugate gradient stabilized (BiCGSTAB) is based on the Lanczos bi-orthogonalization procedure, which generates nonorthogonal bases. It was first developed in van der Vorst (1992) as a variant of the biconjugate gradient method (BiCG).

Both methods can be used with any invertible matrix. GMRES requires one matrix-vector product per iteration, whereas two are needed with BiCGSTAB, but the latter requires less memory than GMRES. This is especially true whenever the number of iterations required for convergence is large because BiCGSTAB uses a constant amount of memory per iteration and GMRES does not. The most crucial feature of GMRES is a strict decrease of the residual norm ‖r‖2 throughout the iterative process, whereas the convergence behavior of BiCGSTAB is less regular (van der Vorst 1992).

2.4 Jacobian-free Newton-Krylov methods

2.4.1 Setup

We still did not address the problem of the Jacobian matrix estimation and the potential high computational cost it represents. Fortunately, Krylov methods applied to the Newton-Raphson iteration (Eq. (29)) only require the action of the Jacobian matrix JF onto a generic vector v (see Sect. 2.3). The operation JF(n(p))v can be approximated using finite differences (e.g., Knoll & Keyes 2004),

(31)

(31)

(32)

(32)

(33)

(33)

where є is the difference step. These schemes do not use the Jacobian matrix, but rather extra calls of F, which is a huge computational and storage gain especially for large problems, but at the cost of precision. This is the keystone of Jacobian-free Newton-Krylov solvers (hereafter JFNK). These methods were first presented by Knoll & Keyes (2004). Since the residual vector F(n(p)) is already computed and passed to the Krylov solver, first-order schemes (forward and backward) only require one fresh call of F. In comparison, the second-order scheme (central) requires two fresh calls of F, which is a major drawback. In our problem, every evaluation of F translates into solving the RTE for all rays at all frequencies for a given n. We also note that the finite-differences calculations in Eqs. (31)–(33) do not estimate the individual elements of the Jacobian matrix, but are instead used to directly estimate the matrix-vector product.

Since the Newton-Raphson method is iterative, the matrix-vector product estimates are only needed to be accurate enough to guarantee convergence. This is the main reason why the vast majority of JFNK solvers use first-order schemes rather than higher-order ones (Knoll & Keyes 2004). In practice, round-off and truncation errors may occur, and an optimal choice of є is hard to find. The first source of error is caused by the finite arithmetic precision of computers, and the second source of error is due to the limited accuracy of the scheme. Several empirical choices for є are further detailed in Knoll & Keyes (2004) to minimize both sources of error.

2.4.2 Augmentation of numerical precision with complex numbers

For the considered problem, the components of n(p) cover a wide range of values. For instance, considering a two-level hydrogen atom in a FAL-C atmosphere (Fontenla et al. 1993), the LTE population densities cover the range 104 up to 1017 cm−3. This leads to high values of є considering the empirical choices. A rescaling of the densities is possible to keep reasonable є values. However, the large span of densities remains problematic within the residual vector F itself and leads to round-off errors. Thus, the usual numerical derivative schemes are not suited for the given problem. Fortunately, there is an alternative scheme that uses complex numbers to increase the precision and dynamic range of the calculations, which is far less affected by round-off errors or substractive cancellations (e.g., Kan et al. 2022; Martins & Ning 2021),

![${{\bf{J}}_F}\left( {{n^{(p)}}} \right)v = {{\left[ {F\left( {{n^{(p)}} + iv} \right)} \right]} \over } + O\left( {{^2}} \right)$](/articles/aa/full_html/2024/10/aa49963-24/aa49963-24-eq47.png) (34)

(34)

where i is the imaginary unit. This special scheme only requires one fresh call of F and is second-order accurate as the central difference scheme. It requires setting up the main routines for complex arithmetic operations, however. We used the linear piece-wise source function scheme described in Sect. 2.1 to integrate the RTE along rays, for which the modifications are straightforward. The remaining truncation error can be greatly attenuated by selecting a tiny є value. Martins & Ning (2021) recommend a typical value є ~ 10−200 for double-precision functions. The imaginary part is only used for the Krylov solver, otherwise only the (unperturbed) real part is considered. Since the imaginary part is typically very small compared to the real part, equations that involve the perturbations should be linearized (e.g., by computing the source function). This prevents introducing undesired arithmetic errors. Figure 2 points out a typical accuracy issue in the computation of the Jacobian matrices when using traditional schemes. The complex scheme alone can provide accurate estimates of Jacobian matrices for our given NLTE problem. For this sole reason, we used the complex scheme in our JFNK solvers.

|

Fig. 2 Jacobian matrix estimation error for the three-level Ca II setup, evaluated at the LTE populations and for the different schemes. The first-order scheme is the forward one. The difference steps є1 and є2 are common choices (Knoll & Keyes 2004) and read |

2.4.3 Preconditioning

Although we have introduced an accurate method to evaluate the matrix-vector products JF(n(p))v, the Jacobian matrix may potentially be ill-conditioned. Consequently, the Krylov solver may require many iterations to converge to the desired tolerance because of the high condition number of JF(n(p)). Therefore, we propose preconditioning the Krylov solver in order to increase its efficiency. The preconditioning process consists of using a preconditioning matrix P (the preconditioner) such that JF(n(p))P−1 (right preconditioning) or P−1 JF(n(p)) (left preconditioning) has a lower condition number than JF(n(p)). The system to be solved by the Krylov solver is no longer given by Eq. (29) but rather by

(35)

(35)

for right preconditioning and by

(36)

(36)

for left preconditioning. The preconditioned system is expected to be solved in fewer iterations than the original system. Equation (35) is solved for y = Pδn(p) first then for δn(p) If the right preconditioning is used, the matrix-vector product scheme (Eq. (34)) can be adapted for Eq. (35) and reads

![${{\bf{J}}_F}\left( {{n^{(p)}}} \right)\left( {{{\bf{P}}^{ - 1}}v} \right) = {{\Im \left[ {F\left( {{n^{(p)}} + i\left( {{{\bf{P}}^{ - 1}}v} \right)} \right)} \right]} \over } + O\left( {{^2}} \right).$](/articles/aa/full_html/2024/10/aa49963-24/aa49963-24-eq52.png) (37)

(37)

If the left preconditioning is chosen, Eq. (34) is used directly, and then the result is left-multiplied by P−1. The second member passed to the Krylov solver in this case is −P−1F(n(p)). In our JFNK solvers, we used the left preconditioning because the right preconditioning involves an additional inversion step. Because the preconditioner is meant to improve the overall performance of the inversion process, P should be easy to calculate and invert. A commonly used algebraic preconditioner is given by the diagonal or block diagonal of the matrix that is to be inverted (easy inversion and matrix-vector multiplication). This simple matrix is also known as a Jacobi (or block-Jacobi) preconditioner. This choice is particularly interesting in our case because the block diagonal of JF(n(p)) is as costly to compute as a call of F (see Sects. 2.1 and 2.5.2). We note, however, that other physics-based preconditioners could be very relevant for our case, as presented in Janett et al. (2024) for solving linear problems. The calculation of such a preconditioner can be performed when calculating F(n(p)), thus reusing most of the variables that were already computed to obtain the residual vector. Algorithm 2 illustrates the final JFNK solving routine.

2.5 Analytical Jacobian matrix

2.5.1 Derivation

In this part, we derive the expressions of the Jacobian matrix elements as a function of the population densities. In principle, these equations could be used to compute the fully analytical Jacobian matrix. While the calculation of the full Jacobian is expensive, it is at least important to detail these derivations for preconditioning purposes (Sect. 2.4). A more general derivation is provided in Milić & van Noort (2017) for derivatives according to any atmospheric parameter. The Jacobian element  can be calculated as follows:

can be calculated as follows:

(38)

(38)

unless  is a particle conservation equation, in which case the Jacobian element is simply given by

is a particle conservation equation, in which case the Jacobian element is simply given by

(39)

(39)

where we used the fact that the population densities  are considered to be independent variables and that

are considered to be independent variables and that  is kept constant, and therefore,

is kept constant, and therefore,

(40)

(40)

In Eq. (38), the collisional coefficients do not depend on the population densities. Applying the chain rule to Eq. (38) yields

(41)

(41)

by noting that the derivative of the radiative rates only involves the derivative of the intensity  with respect to

with respect to  . The extinction profile within each coefficient

. The extinction profile within each coefficient  is considered to be independent with respect to the population densities. Then, we can expand the derivative and further write

is considered to be independent with respect to the population densities. Then, we can expand the derivative and further write

(45)

(45)

because the intensity, through the RTE, is only a function of the optical depth and the source function. We further develop Eq. (45) and write

(46)

(46)

(47)

(47)

so that Eq. (45) therefore reduces to

(50)

(50)

Equation(50) consists of a linear contribution of the intensity and a summation of nonlinear cross terms due to the background scattering. Both derivatives involving  depend on the scheme chosen to solve the RTE. Since we used the linear piece-wise source function scheme detailed in Sect. 2.1, the corresponding expressions for the derivatives are

depend on the scheme chosen to solve the RTE. Since we used the linear piece-wise source function scheme detailed in Sect. 2.1, the corresponding expressions for the derivatives are

(51)

(51)

(52)

(52)

for outgoing rays (µ > 0), and

(53)

(53)

(54)

(54)

for ingoing rays (µ < 0), where the expressions of the different involved coefficients are given in Appendix A.2. These coefficients can be constructed from the variables used when solving the RTE to save computational time. The boundary conditions for this scheme (Sect. 2.1) imply that

(55)

(55)

(56)

(56)

for each value of ℓ. It can be shown that Eqs. (51)–(54) define two upper (µ > 0) and two lower (µ < 0) triangular matrices,

(57)

(57)

for µ < 0. These expressions are valid for internal depth points. The matrices related to the derivatives with respect to the emis-sivities are obtained by using the associated coefficients. These matrices can be understood as Jacobian matrices of the intensity with respect to opacities and emissivities.

The last part to detail deals with the derivative of the mean intensity term. Through its definition, we note that this quantity involves derivatives of the intensity with respect to the population densities. In brief, the full expansion of Eq. (50) consists of an intricate self-consistent but linear system of the derivatives of the intensity with respect to the population densities. It is possible, however, to find a solution to this system that can be written as

(59)

(59)

where the coefficients rp and the solution derivation can be found in Appendix B. In practice, the background-scattering contribution to the derivatives is usually very weak and can therefore be neglected.

2.5.2 Preconditioning of JFNK with the analytical Jacobian matrix

Preconditioning the JFNK solver is troublesome when the analytical derivation of the Jacobian matrix is used. It is expensive to compute Eq. (50) when the interest is in the Jacobi pre-conditioner alone, because all off-diagonal terms need to be calculated. This issue arises from the background-scattering contribution, and we therefore have to deal with the following in order to obtain the preconditioner:

The summation in Eq. (50) involves all cross terms (k ≠ p terms).

The presence of the mean intensity in the scattering term also involves all cross terms (Sect. 2.5).

Therefore, only an approximate Jacobi preconditioner can be used at the cost of precision and potentially convergence rate of the Krylov solver. We give three simple solutions to overcome both problems and still provide a preconditioner that dramatically improves the convergence properties of the Krylov solver:

The very first solution consists of disregarding the background-scattering contribution. In this case, Eq. (50) becomes for k = ℓ

(60)

(60)-

The second solution consists of discarding all of the cross terms to only keep the local one. The preconditioner further reduces to a local operator when we use

![$\left( {{{\partial I_{\mu \nu }^k} \over {\partial n_j^k}}} \right) = \beta _j^k\left( {{{\partial I_{\mu \nu }^k} \over {\partial \chi _{\mu \nu }^k}}} \right) + \left[ {\gamma _j^k + \chi _{{\rm{sca}}}^k{{\left( {{{\partial J_v^k} \over {\partial n_j^k}}} \right)}^\dag }} \right]\left( {{{\partial I_{\mu \nu }^k} \over {\partial \eta _{\mu \nu }^k}}} \right),$](/articles/aa/full_html/2024/10/aa49963-24/aa49963-24-eq84.png) (61)

(61)where a previous estimate of the derivative of the mean intensity is used instead of the current one. This estimate is easily computed for the next iteration by an integration of Eq. (61) over all angles. This quantity can be initialized to zero (zero radiation initial guess) or the mean intensity given by the LTE solution can be calculated.

-

The last solution, which is the least constraining but requires additional operations, uses the solution given by Eq. (59) while only considering the local terms. The corresponding operator then has

![$\left( {{{\partial I_{\mu v}^k} \over {\partial n_j^k}}} \right) = \beta _j^k\left( {{{\partial I_{\mu \nu }^k} \over {\partial \chi _{\mu \nu }^k}}} \right) + \left[ {\gamma _j^k + \chi _{{\rm{sca}}}^k{r_k}} \right]\left( {{{\partial I_{\mu \nu }^k} \over {\partial \eta _{\mu \nu }^k}}} \right),$](/articles/aa/full_html/2024/10/aa49963-24/aa49963-24-eq85.png) (62)

(62)

A preconditioner that follows one of the three solutions only requires the calculation of ℓ = k terms in Eq. (50), which are only built from the coefficients  , and

, and  given in Appendix A.2. Furthermore, it can be calculated in a comparable number of operations as the residual vector F. In our JFNK solvers, we use the first solution to calculate the preconditioner since the scattering terms are negligible in Eq. (59) compared to the other contributions, at least with the setups we considered (Sect. 3.1).

given in Appendix A.2. Furthermore, it can be calculated in a comparable number of operations as the residual vector F. In our JFNK solvers, we use the first solution to calculate the preconditioner since the scattering terms are negligible in Eq. (59) compared to the other contributions, at least with the setups we considered (Sect. 3.1).

3 Results and discussion

We have ported a simplified version of the excellent RH code (Uitenbroek 2001) to Python. The latter solves the statistical equilibrium equations using the RH92 method, but with the possibility of including partial redistribution effects of scattered photons (PRD). This Python version does not include PRD effects, and it is significantly slower than the C-version of RH. It is implemented using modern programming constructions, however, such as classes and inheritance, which has been extremely useful in the implementation of our proof-of-concept JFNK solver because we were able to reuse most of the atom structures, opacity calculation routines, and formal solvers of the transport equation. We used the RH92 method as a reference in order to evaluate the performance of our JFNK method. We analyzed the convergence properties of different schemes and therefore did not include Ng-acceleration in our calculations (Ng 1974), which is implemented in the RH code.

3.1 Setup

All the results presented in this paper were computed using a FAL-C model atmosphere of the solar photosphere, chromosphere, and transition region (Fontenla et al. 1993), which consists of 82 depths points covering the interval τ500 = [10−10,23], where τ500 is the optical depth at λ = 500 nm. Figure 3 depicts the stratifications of the gas temperature, electron density, and total hydrogen density. The atmosphere does not have a native line-of-sight velocity profile.

Three different atomic setups were used, consisting of HI and Can. The different transitions are listed in Table 1. Each setup also included collisional and bound-free transitions (Shull & van Steenberg 1982; Burgess & Chidichimo 1983; Arnaud & Rothenflug 1985). The absorption and emission profiles of each line at each location were modeled with the Voigt function, which depends on the Doppler width and the damping parameter. The latter includes radiative, Stark, linear Stark (in calculations with H atoms), and van der Waals broadening. The angular integrals were discretized using a five-point Gauss-Legendre quadrature.

We performed our calculations using the Newton-Raphson, two JFNK (using GMRES and BiCGSTAB , respectively) and the RH92 methods. Both JFNK solvers systematically used the analytical Jacobi left preconditioner (Sects. 2.4.3 and 2.5.2). All solvers required an exit condition that defined a good convergence. In our case, we kept track of the residual norm ||F||∞ and of the population relative change norm . Unless mentioned otherwise, we assumed that a method has converged when

. Unless mentioned otherwise, we assumed that a method has converged when  . This condition was sufficient most of the time, although we also imposed a minimal drop of ||F||∞ by two magnitudes to avoid premature exits.

. This condition was sufficient most of the time, although we also imposed a minimal drop of ||F||∞ by two magnitudes to avoid premature exits.

Summary of the different atom setups.

|

Fig. 3 FAL-C atmosphere. The temperature and total hydrogen and electron densities are shown. The height dimension origin (z = 0) corresponds tO τ500 = 1. |

3.2 Krylov solver tolerance impact

The Krylov solver that is internally used in the JFNK method can generally have a number of parameters that must be chosen for a given run (e.g., the size of the subspace or the convergence criteria). In the case of simple solvers such as GMRES or BiCGSTAB, this set of parameters reduces to the accuracy to which the solution is desired. This single parameter steers the behavior of the JFNK method and its convergence properties. Thus, we investigated the impact of the Krylov solver accuracy on the stability and the efficiency of the JFNK method.

Our first test was to assess the ability of a JFNK solver to match the Newton-Raphson solution. We would expect minimal differences between the two solvers as the accuracy of the Krylov solver increases. Figure 4 shows the convergence properties of our solvers in the case of the six-level HI atom setup. Several accuracy levels are displayed to highlight the evolution of the discrepancies between the different methods. The Newton-Raphson solver outperforms JFNK solvers in every situation. By truncating the precision of the correction provided by the Krylov solver, each JFNK iteration becomes less accurate, usually leading to additional iterations (compared to the standard Newton-Raphson case) in order to achieve a given convergence level in the population densities. The differences between the two methods decrease when the precision of the Krylov solver is substantially increased. Both JFNK solvers display similar results and converge to the same solution. They show the same behavior as the Newton-Raphson solver when rtol ~ 10−4, which validates the implementation of our solvers.

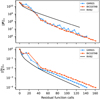

A second chart is presented in Fig. 5 and directly compares our iterative solvers with the RH92 solver. In the JFNK method, we would expect a range of tolerances for which the method is optimal (with respect to the residual function calls):

Smaller tolerances result in more precise estimates of the inverse of the Jacobian. The JFNK method therefore requires fewer Newton-Raphson iterations, but the overall number of calls to F is nonetheless higher because the additional accuracy is not effective enough in the convergence of the JFNK method.

Higher tolerances result in coarse estimates of the inverse of the Jacobian. Even though the number of calls to F is reduced, Newton-Raphson iterations usually yield poorer corrections. Therefore, the JFNK method requires additional Newton-Raphson iterations to converge. Overall, the solver requires more calls to F.

The optimal range thus consists of a trade-off between the accuracy of the inverse of the Jacobian and the calls to F needed to obtain them.

We note that overly coarse estimates of the inverse of the Jacobian can yield an unstable behavior throughout Newton-Raphson iterations. We found a few situations in which this feature can be helpful to escape potential local minima of the residual vector, and the method can even converge in fewer calls to F. These inaccurate corrections will probably not lead to the convergence of the JFNK method, however. For the sake of stability, we recommend to use a Krylov relative tolerance smaller than ~10−2.

Figure 5 (top) highlights for the three-level Ca II setup an optimal range spanning from rtol ~ 3 × 10−4 to ~3 × 10−2. In this range, the JFNK (GMRES) solver outperforms the RH92 solver, whereas the JFNK (BiCGSTAB) solver outperforms the latter in a much narrower range. It is also possible to witness the irregular convergence behavior of the JFNK (BiCGSTAB) solver because the corresponding curve is less smooth than that of the JFNK (GMRES) solver. In the case of a six-level setup (Fig. 5 bottom), there is no such optimal range. Instead, the number of residual vector calls decreases almost monotonically with the Krylov solver tolerance. Both JFNK solvers outperform the RH92 solver for Krylov relative tolerances higher than ~3 × 10−3 (GMRES) and ~1 × 10−2 (BiCGSTAB). The JFNK (GMRES) solver has always proven to outperform the JFNK (BiCGSTAB) solver for most Krylov tolerances and various setups, and it outperforms the RH92 solver in a wider range of Krylov tolerances than the BiCGSTAB counterpart. The residual vector calls from the JFNK / Newton-Raphson solvers and the iterations of the RH92 solver are different, however. Therefore, Fig. 5 only makes sense when the two are comparable in operations or execution time, which is the case for our setups (see Sect. 3.3). Thus, the Jacobi preconditioner allows the JFNK method to be more efficient than the RH92 method when it is used optimally. The preconditioner is less efficient for a setup consisting of many frequencies, however, such as the six-level Ca II or HI ones. Moreover, the lack of an optimal range of Krylov tolerances indicates that the Jacobi preconditioner is not enough for setups like this. This statement also includes every setup with strong scattering.

|

Fig. 4 Comparison of the Newton-Raphson method with JFNK routines for the six-level HI setup (zero radiation initial guess). As the Krylov solver relative tolerance rtol becomes small, the discrepancy between the different methods reduces to truncation and round-off errors. |

|

Fig. 5 Residual vector calls required for convergence as a function of the Krylov solver relative tolerance. Top: Three-level Ca II setup. Bottom: Six-level Ca II setup. Both setups use the LTE initial condition. |

3.3 Performance of the solver

Table 2 shows the average execution time per call to F (or equiv-alently, for the RH92 solver) for different setups. The pure call to F is always slightly faster to execute by the JFNK solvers than the RH92 solver equivalent because the RH92 solver method itself requires the computation of cross-coupling terms and the remaining rate matrix elements in order to update the population densities. On the other hand, computing the residual vector and updating the preconditioner (JFNK) requires approximately twice the time of a pure F call (by a JFNK solver), which was expected. The preconditioner update call, while more time-consuming than the RH92 solver equivalent, is only performed once per Newton-Raphson iteration. The main contribution to the execution time is due to the Krylov solver calls, and therefore, to pure residual vector estimates. As a result, it can be shown that the JFNK solver calls, as implemented in our proof-of-concept code, do require slightly less time on average than RH92 calls, even for extremely suboptimal Krylov tolerances.

In the following part, we compare the quality of the solutions provided by JFNK solvers with the reference RH92 case. The convergence condition is usually given by a sufficiently small change in the population densities. For this purpose, we used the JFNK residual norm ||F||∞ as the metric because it is derived from the raw equations we attempted to solve, although the RH92 solver is not designed to minimize the raw residual norm. Moreover, the residual norm might stall when using RH92 because of the deepest layers of the atmosphere. The medium indeed becomes strongly collisional, and therefore, the radiative contribution and the changes that may occur during the solving process do not have a significant impact. Nevertheless, we disregarded these layers in the estimation of the residual norm because they are close to LTE. In the following, the residual norm is evaluated considering only the first 50 points (z > 700 km) of our atmosphere, where the chromosphere is located. A very large error in the RH92 curve is obtained when this is neglected, and the error is mostly driven by deeper layers where LTE should hold. This behavior was absent in the JFNK calculations.

Figures 6 and 7 show this clamped residual norm as well as the population change norm for the Ca II and the H I setups, respectively. It is clear that the RH92 solver displays a lower convergence rate for the largest part of the solving process. JFNK solvers, on the other hand, are outperformed at the beginning before the convergence rate surpasses that of RH92 (Ca II) or equals the latter (H I). As an outcome, JFNK solvers can perform better than the RH92 solver, especially when the initial guess is close enough to the solution. Moreover, the two figures show that the solution provided by the JFNK solvers is one hundred times more precise than that of RH92. We recall that the success condition is dictated by the population change norm and not by the residual norm.

The size of the population change norm is a poor criterion for convergence. Figure 8 shows that the maximum error in the rate equations for the JFNK solver is lower than in the RH92 case for the same correction size. The JFNK solver achieves a lower absolute error in the residual norm than RH92 for any given convergence condition set on the size of the population change norm. This is expected because the size of the correction per iteration is affected by the efficiency of the solver: For example, in the extreme case of a traditional lambda iteration, this leads to very small corrections with a very large error in the rate equations (i.e., residual norm), whereas in an accelerated lambda iteration, the convergence is comparatively more efficient.

Finally, we provide in Figs. 9-10 the spectra of the six-level Ca II and six-level H I setups, respectively. In both cases, the emerging spectra predicted by the RH92 and the JFNK solvers are essentially identical. We note that the additional accuracy of the solution provided by a JFNK solver does not yield noticeable changes in the emerging intensity, and we can therefore decide to terminate the solving process earlier and still output a similar result.

Average execution time per call for the JFNK and the RH92 solvers.

|

Fig. 6 Residual and population change norms during the solving process of the six-level Ca II setup. The initial population densities are the LTE ones. Both JFNK solvers used a Krylov relative tolerance of 10−2. Each cross marker corresponds to a Newton-Raphson iteration. |

3.4 Calculations with velocity gradients

In this section, we show that the JFNK solver can properly handle nonstatic atmospheres with velocity gradients as a function of depth. We modified the FAL-C model atmosphere by introducing a sharp velocity gradient around z = 1000 km, corresponding to the lower chromosphere. Velocity gradients can be problematic when the velocity jump between consecutive grid cells is larger than approximately one-third of the Doppler width (Ibgui et al. 2013). Under these circumstances, the discretization of the RTE can lead to artifacts in the intensity.

In order to avoid numerical artifacts in the calculation of the intensity, we performed a depth optimization by placing more points where the gradients in temperature, density, optical depth or line-of-sight velocity are large. All quantities were interpolated to the new depth grid by linear interpolation. The total number of depth points was kept equal to that in the original model. This method is essentially an extension of the depth-optimization included in the Multi code (Carlsson 1986), which now also accounts for the presence of velocity gradients. The upper left panel in Fig. 11 illustrates the artificial velocity gradient represented in the optimized grid.

When the velocity gradient is properly sampled with sufficient depth points, there is no fundamental reason why any of the algorithms would perform very differently than in the static case. Our convergence plots in Fig. 11 show a very similar behavior than those in Fig. 6 for the Ca II atom. After a few iterations, the residual norm ||F||∞ is lower than in the RH92 curve, whereas the population change norm ||δn/n||∞ is larger. After approximately 80 iterations, the RH92 method has achieved a convergence (in the residual norm) that is similar to that of the GMRES after 50 iterations.

The emerging intensity spectrum now shows strong asymmetries around the core of all chromospheric lines, which become progressively more blueshifted by the presence of the positive velocity gradient at the base of the chromosphere. The Ca II H&K lines (3968 Å and 3934 Å) show the well-known enhancement of one of the k2 peaks (in the case of the red wing) because the blueshifted line profile in the core frequencies leaves an opacity gap in the red wing of the line where photons can escape more efficiently compared to the static case (Scharmer 1984).

|

Fig. 7 Residual and population change norms during the solving process of the six-level HI setup. The initial population densities are the zero radiation initial guess ones. Both JFNK solvers used a Krylov relative tolerance of 10−2. Each cross marker corresponds to a Newton-Raphson iteration. |

|

Fig. 8 Residual norm of the rate equations (six-level HI setup) as a function of the population change norm for the JFNK (blue) and RH92 (red) schemes. The Krylov relative tolerance was set to 10−2. The population densities were initialized using the zero radiation approximation. Each solver was run in order to achieve several Newton relative tolerances in the population change norm (e.g., 10−1, 10−2). We then recorded the final residual and population change norms. |

3.5 Prospects

The proposed JFNK method can be upgraded for a better efficiency, and there are several ways of doing so. The external Newton-Raphson update does not leave much space for improvement, but it might be interesting to implement a continuation or a line-search method to potentially reduce the number of Newton-Raphson iterations. A nice survey of continuation methods is given in Allgower & Georg (1993). A potentially simple but robust modification would be to implement a hybrid solver mixing the RH92 and the JFNK solvers. Starting with a few RH92 iterations before switching to JFNK iterations would allow this hybrid solver to avoid the usual Newton method deficiencies, as well as providing a better initial guess for the Newton-based solver. This behavior was encountered when attempting to solve the problem for instance with the six-level HI setup starting with the LTE population densities. The performance of our JFNK solvers otherwise reduces to how efficient the Krylov solver can be, and this is therefore dictated by the quality of the preconditioner and the solver itself.

The Jacobi preconditioner we used has proven to be relatively inefficient in several of our setups, and therefore, it could be improved. in our implementation, the local preconditioner is a block-diagonal matrix. When it multiplies the Jacobian, it destroys the nonlocal derivatives in the left-hand side of Eq. (36), and therefore, it has a similar effect as the adoption of a local approximate operator in the RH92 method. However, we recall that the preconditioner should be kept to be easily invertible and calculable, thus leaving a narrow space for improvement. To do this, we provide two possible routes to calculate a more suitable preconditioner. The first option deals with the single-point quadrature approximation of the RTE from Scharmer & Carlsson (1985). An approximation of this kind could greatly simplify the calculations of the nonlocal part of the Jacobian matrices and therefore might provide a more accurate preconditioner than the Jacobi one. The second option is more related to the JFNK formalism and is presented in Chen & Shen (2006) and deals with an adaptive preconditioning technique. in brief, we can take advantage of the matrix vector products calculated by a Krylov solver to iteratively update the preconditioner. it also allows us to computate a nonlocal contribution to the preconditioner.

Another upgrade that can be implemented deals with the initial guess provided to the JFNK solver. in this paper, we used two possible initial guesses, which are the LTE and the zero radiation ones. However, there might be other possibilities more suited for a Newton-based method applied to the radiative transfer problem such as the JFNK one. For instance, the population densities can be initialized with those derived from the escape probability theory (e.g., Hubeny & Mihalas 2014; Judge 2017).

We only considered 1D plane-parallel NLTE problems here. The extension to 3D geometry could be possible with some considerations. First, 3D radiative transfer codes are usually domain-decomposed for parallelization purposes (Leenaarts & Carlsson 2009), where each processor or machine only has access to the properties of the atmosphere, opacities, emissiv-ities and population densities within one subdomain. In order to implement the inner Krylov solver, we would need to collect all population densities from all subdomains and keep the vector basis of the Krylov subspace in one manager task. The key part is the evaluation of Eq. (34), which applies a perturbation to the population densities over the entire domain. The manager would need to propagate the relevant perturbed population densities to each subdomain, but the calculation of J can be made in the same domain-decomposed way. The cost is one additional communication from the manager to the worker tasks per Krylov iteration. At current memory standards in high-performance computing centers, this approach should be reasonable.

|

Fig. 9 Ca II (six-level) spectrum. In black, we plot the output from the RH92 solver. In blue, we plot the output from the JFNK solver (GMRES) with a Krylov relative tolerance of 10−2. |

|

Fig. 10 Six-level HI spectrum. In black, we plot the output from the RH92 solver. In blue, we plot the output from the JFNK solver (GMRES) with a Krylov relative tolerance of 10−2. |

4 Conclusion

We presented a Jacobian-free Newton-Krylov method for solving the multilevel NLTE radiative transfer problem assuming statistical equilibrium. Our implementation showed a similar convergence as the Newton-Raphson method, without ever building the full Jacobian matrix explicitly. As a benchmark, we solved the NLTE problem assuming plane-parallel geometry and the FAL-C model for a three-level Ca II as well as six- level Ca II and H I atoms, which have been commonly used in solar physics applications. We showed that our solver can converge faster than other methods based on linearization, such as RH92. The improvement in the convergence rate depends on the atom, but it usually reaches a factor 1.5–2 in the best cases. The latter is evaluated in terms of the number of formal solutions needed to converge the problem. However, we note that the JFNK formal solutions are faster because no cross-term summations are required compared to RH92. The downside of our method is that it relies on an appropriate election of the convergence tolerance for the Krylov inner solver. Our sensitivity study seems to indicate that an optimal performance can be attained when the tolerance is set in the range of 10−3–10−2.

In order to increment the accuracy of the Newton-Raphson correction per iteration, we augmented the precision of the formal solver using complex numbers. This change was required given the enormous dynamic range of the atomic level population densities from the photosphere to the transition region.

Compared to other studies that used Krylov-subspace methods to iteratively solve the linear two-level atom problem (e.g., Hubeny & Burrows 2007; Anusha et al. 2009; Benedusi et al. 2021, 2022), our method handles multilevel nonlinear problems. Because the Jacobian matrix does not need to be explicitly computed in each iteration, this method becomes particularly interesting for more complex problems, which we briefly discuss hereafter as future prospects.

The more obvious application relates to problems where partial redistribution effects of scattered photons are important. While several efficient solutions are available for the two-level atom problem (e.g., Scharmer 1983; Paletou & Auer 1995), similar methods for multilevel problems have important limitations. For example, Hubeny & Lites (1995) presented a PRD method based on the complete linearization approach of Scharmer & Carlsson (1985), which does not consider overlapping active transitions. Uitenbroek (2001) overcame that limitation by using the RH92 formalism and performing two iterative cycles, separating the correction to the atomic level population densities and the correction to the emissivity profile. The method converges, but it requires several evaluations of the redistribution integral per iteration. The method presented in this paper shows great potential to accelerate the convergence of PRD problems because it does not require any explicit linearization of the problem.

Another extension could be the inclusion of charge conservation when H atoms are solved. The idea would be to add another conservation equation and update the electron density in each iteration because the ionization of H is usually dominant in the chromosphere. Previous studies have included these corrections, but needed to perform Newton-Raphson iterations due to the nonlinear dependences of the Saha equation and the rate equations on the electron density (e.g., Leenaarts et al. 2007; Bjørgen 2019). Since we did not perform any explicit linearization of the rate equations or the transfer equation and we already used Newton-Raphson iterations, the inclusion of charge conservation could be very efficient and relatively straightforward.

|

Fig. 11 Velocity gradient convergence test for the different solvers. Top left panel: line-of-sight velocity profile used for the test. Top right panel: Associated convergence plot for the six-level Ca II setup with a Krylov relative tolerance of 10−2 and initial LTE population densities. All the solvers required more iterations to converge than in the case shown in Fig. 6. Bottom panel: converged spectrum including the velocity gradient for RH92 (black) and JFNK (GMRES) (blue). A JFNK (GMRES) velocity-free reference spectrum (dashed black) is also shown. A blueshift clearly occurs near the line center due to the positive velocity gradient at the base of the chromosphere, resulting in very asymmetric output lines. |

Acknowledgements

We are very thankful to the referee for his/her constructive suggestions and careful evaluation of our manuscript. The Institute for Solar Physics is supported by a grant for research infrastructures of national importance from the Swedish Research Council (registration number 2021-00169). JL acknowledges financial support from the Swedish Research council (VR, project number 2022-03535). No animals were harmed in the making of this manuscript. This project has been funded by the European Union through the European Research Council (ERC) under the Horizon 2020 research and innovation program (SUNMAG, grant agreement 759548) and the Horizon Europe program (MAGHEAT, grant agreement 101088184). Part of our computations were enabled by resources provided by the National Academic Infrastructure for Supercomputing in Sweden (NAISS), partially funded by the Swedish Research Council through grant agreement no. 2022-06725, at the PDC Center for High Performance Computing, KTH Royal Institute of Technology (project numbers NAISS 2023/1-15 and NAISS 2024/1-14).

Appendix A Discretization coefficients

A.1 Piecewise linear RTE

A.2 Derivatives of the intensity

For outgoing rays (µ > 0),

![$a_\chi ^k = - {{S_{\mu v}^k} \over {\chi _{\mu v}^k}}{a^k} + {{\left| {{z_{k + 1}} - {z_k}} \right|} \over {2\mu }}\left[ {{e^{ - \Delta \tau _{\mu \nu }^k}}\left( {S_{\mu \nu }^{k + 1} - I_{\mu \nu }^{k + 1}} \right) - {{S_{\mu \nu }^{k + 1} - S_{\mu v}^k} \over {\Delta \tau _{\mu \nu }^k}}{b^k}} \right]$](/articles/aa/full_html/2024/10/aa49963-24/aa49963-24-eq92.png) (A.2)

(A.2)

![$b_\chi ^k = - {{S_{\mu \nu }^{k + 1}} \over {\chi _{\mu \nu }^{k + 1}}}{b^k} + {{\left| {{z_{k + 1}} - {z_k}} \right|} \over {2\mu }}\left[ {{e^{ - \Delta \tau _{\mu v}^k}}\left( {S_{\mu \nu }^{k + 1} - I_{\mu v}^{k + 1}} \right) - {{S_{\mu \nu }^{k + 1} - S_{\mu v}^k} \over {\Delta \tau _{\mu v}^k}}{b^k}} \right]$](/articles/aa/full_html/2024/10/aa49963-24/aa49963-24-eq93.png) (A.3)

(A.3)

(A.4)

(A.4)

(A.5)

(A.5)

when k < Nz − 1; otherwise, one can set the coefficients to zero. For ingoing rays (µ < 0),

![$c_\chi ^k = - {{S_{\mu v}^k} \over {\chi _{\mu \nu }^k}}{a^{k - 1}} + {{\left| {{z_{k - 1}} - {z_k}} \right|} \over {2\mu }}\left[ {{e^{ - \Delta {\tau _{\mu \nu }} - 1}}\left( {S_{\mu \nu }^{k - 1} - I_{\mu v}^{k - 1}} \right) - {{S_{\mu \nu }^{k - 1} - S_{\mu v}^k} \over {\Delta \tau _{\mu \nu }^{k - 1}}}{b^{k - 1}}} \right]$](/articles/aa/full_html/2024/10/aa49963-24/aa49963-24-eq96.png) (A.6)

(A.6)

![$d_\chi ^k = - {{S_{\mu \nu }^{k - 1}} \over {\chi _{\mu \nu }^{k - 1}}}{b^{k - 1}} + {{\left| {{z_{k - 1}} - {z_k}} \right|} \over {2\mu }}\left[ {{e^{ - \Delta \tau _{\mu \nu }^{k - 1}}}\left( {S_{\mu \nu }^{k - 1} - I_{\mu \nu }^{k - 1}} \right) - {{S_{\mu \nu }^{k - 1} - S_{\mu v}^k} \over {\Delta \tau _{\mu \nu }^{k - 1}}}{b^{k - 1}}} \right]$](/articles/aa/full_html/2024/10/aa49963-24/aa49963-24-eq97.png) (A.7)

(A.7)

(A.8)

(A.8)

(A.9)

(A.9)

when k > 1; otherwise, one can set the coefficients to zero.

Appendix B Full Jacobian with background scattering terms

Let us recall Eq. 50 with different indices

(B.1)

(B.1)

If we develop the mean intensity term using the angular quadrature scheme with weights ωµ, one can write

(B.2)

(B.2)

Going any further requires simplifying the notations to keep as much clarity as possible. Let us define the following quantities

(B.3)

(B.3)

(B.4)

(B.4)

(B.5)

(B.5)

Here we have omitted the indices r, ℓ, and ν. From this point on, we no longer mention nor write these indices; however, it should be noted that the final solution should be computed for them as well. Equation B.2 can now be expressed as

(B.6)

(B.6)

In this equation, the unknowns one is seeking are the coefficients Mij. Equation B.6 can also be expressed as

(B.7)

(B.7)

where xi = (Mi1,…, MiNµ)τ, bi = (Bi1,…, BiNµ)τ, Ω = (ω1,…, ωNµ)τ, and Qi is the matrix associated with the coefficients Qikl. The vector ep is the pth canonical basis vector. At this point, the unknowns are gathered into the vectors xi . It should be mentioned that if the background scattering is ignored, xi = bi and the solution is therefore simple. Otherwise, let us dot product Eq. B.7 with Ω to obtain

(B.8)

(B.8)

where ri = (xi, Ω), bΩ,i = (bi, Ω),and Aij = [QiΩ]j. The equation can also be written using matrix notations to obtain

(B.9)

(B.9)

where I is the identity matrix. This is a simple linear system of unknown r for which the solution is

(B.10)

(B.10)

but can be simplified into

(B.11)

(B.11)

if the background scattering is weak. Introducing this result into Eq. B.7 gives the final solution

(B.12)

(B.12)

and if the background scattering is weak,

(B.13)

(B.13)

References

- Allgower, E. L., & Georg, K. 1993, Acta Numerica, 2, 1 [NASA ADS] [CrossRef] [Google Scholar]

- Amarsi, A. M., Nordlander, T., Barklem, P. S., et al. 2018, A&A, 615, A139 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Anusha, L. S., Nagendra, K. N., Paletou, F., & Léger, L. 2009, ApJ, 704, 661 [NASA ADS] [CrossRef] [Google Scholar]

- Arnaud, M., & Rothenflug, R. 1985, A&AS, 60, 425 [NASA ADS] [Google Scholar]

- Auer, L. H., & Mihalas, D. 1969, ApJ, 158, 641 [NASA ADS] [CrossRef] [Google Scholar]

- Barbier, D. 1943, Ann. Astrophys., 6, 113 [NASA ADS] [Google Scholar]

- Benedusi, P., Janett, G., Belluzzi, L., & Krause, R. 2021, A&A, 655, A88 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Benedusi, P., Janett, G., Riva, G., Belluzzi, L., & Krause, R. 2022, A&A, 664, A197 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Bjørgen, J. P. 2019, PhD thesis, Stockholm University [Google Scholar]

- Burgess, A., & Chidichimo, M. C. 1983, MNRAS, 203, 1269 [NASA ADS] [CrossRef] [Google Scholar]

- Cannon, C. J. 1973, ApJ, 185, 621 [Google Scholar]

- Carlsson, M. 1986, Uppsala Astronomical Observatory Reports, 33 [Google Scholar]

- Chen, Y., & Shen, C. 2006, IEEE Trans. Power Syst., 21, 1096 [NASA ADS] [CrossRef] [Google Scholar]

- Dennis, J. E., & Schnabel, R. B. 1996, Numerical Methods for Unconstrained Optimization and Nonlinear Equations (Society for Industrial and Applied Mathematics) [CrossRef] [Google Scholar]

- Eddington, A. S. 1926, The Internal Constitution of the Stars [Google Scholar]

- Fontenla, J. M., Avrett, E. H., & Loeser, R. 1993, ApJ, 406, 319 [Google Scholar]

- Hubeny, I., & Burrows, A. 2007, ApJ, 659, 1458 [CrossRef] [Google Scholar]

- Hubeny, I., & Lites, B. W. 1995, ApJ, 455, 376 [NASA ADS] [CrossRef] [Google Scholar]

- Hubeny, I., & Mihalas, D. 2014, Theory of Stellar Atmospheres: An Introduction to Astrophysical Non-equilibrium Quantitative Spectroscopic Analysis, Princeton Series in Astrophysics (Princeton University Press) [Google Scholar]

- Ibgui, L., Hubeny, I., Lanz, T., & Stehlé, C. 2013, A&A, 549, A126 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Janett, G., Benedusi, P., & Riva, F. 2024, A&A, 682, A68 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Judge, P. G. 2017, ApJ, 851, 5 [NASA ADS] [CrossRef] [Google Scholar]

- Kan, Z., Song, N., Peng, H., & Chen, B. 2022, J. Computat. Appl. Math., 399, 113732 [CrossRef] [Google Scholar]

- Knoll, D., & Keyes, D. 2004, J. Computat. Phys., 193, 357 [CrossRef] [Google Scholar]

- Krylov, A. N. 1931, Izv. Akad. Nauk SSSR Ser. Fiz.-Mat, 4, 491 [Google Scholar]

- Leenaarts, J., & Carlsson, M. 2009, in Astronomical Society of the Pacific Conference Series, 415, The Second Hinode Science Meeting: Beyond Discovery-Toward Understanding, eds. B. Lites, M. Cheung, T. Magara, J. Mariska, & K. Reeves, 87 [NASA ADS] [Google Scholar]

- Leenaarts, J., Carlsson, M., Hansteen, V., & Rutten, R. J. 2007, A&A, 473, 625 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Leenaarts, J., Pereira, T., & Uitenbroek, H. 2012, A&A, 543, A109 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Martins, J. R. R. A., & Ning, A. 2021, Engineering Design Optimization (Cambridge University Press) [CrossRef] [Google Scholar]

- Milić, I., & van Noort, M. 2017, A&A, 601, A100 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Milić, I., & van Noort, M. 2018, A&A, 617, A24 [Google Scholar]

- Milne, E. A. 1921, MNRAS, 81, 361 [Google Scholar]

- Newton, I. 1736, The Method of Fluxions and Infinite Series: With Its Application to the Geometry of Curve-lines. By … Sir Isaac Newton, … Translated from the Author’s Latin Original Not Yet Made Publick. To which is Sub-join’d, a Perpetual Comment Upon the Whole Work, … By John Colson, … (Henry Woodfall; and sold by John Nourse) [Google Scholar]

- Ng, K. C. 1974, J. Chem. Phys., 61, 2680 [NASA ADS] [CrossRef] [Google Scholar]

- Olson, G. L., & Kunasz, P. B. 1987, J. Quant. Spec. Radiat. Transf., 38, 325 [NASA ADS] [CrossRef] [Google Scholar]

- Olson, G. L., Auer, L. H., & Buchler, J. R. 1986, J. Quant. Spec. Radiat. Transf., 35, 431 [NASA ADS] [CrossRef] [Google Scholar]

- Osborne, C. M. J., & Milic, I. 2021, ApJ, 917, 14 [NASA ADS] [CrossRef] [Google Scholar]

- Paige, C. C., & Saunders, M. A. 1975, SIAM J. Numer. Anal., 12, 617 [NASA ADS] [CrossRef] [Google Scholar]

- Paletou, F., & Auer, L. H. 1995, A&A, 297, 771 [NASA ADS] [Google Scholar]

- Pereira, T. M. D., & Uitenbroek, H. 2015, A&A, 574, A3 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Press, W. H., Teukolsky, S. A., Vetterling, W. T., & Flannery, B. P. 2002, Numerical recipes in C++ : the art of scientific computing [Google Scholar]

- Raphson, J. 1690, Analysis Aequationum Universalis Seu Ad Aequationes Alge-braicas Resolvendas Methodus Generalis, & Expedita, Ex Nova Infinitarum Serierum Methodo, Deducta Ac Demonstrata [Google Scholar]

- Rybicki, G. B. 1972, in Line Formation in the Presence of Magnetic Fields, 145 [Google Scholar]

- Rybicki, G. B., & Hummer, D. G. 1992, A&A, 262, 209 [NASA ADS] [Google Scholar]

- Saad, Y., & Schultz, M. H. 1986, SIAM J. Sci. Statist. Comput., 7, 856 [CrossRef] [MathSciNet] [Google Scholar]

- Scharmer, G. B. 1981, ApJ, 249, 720 [Google Scholar]

- Scharmer, G. B. 1983, A&A, 117, 83 [NASA ADS] [Google Scholar]

- Scharmer, G. B. 1984, in Methods in Radiative Transfer, 173 [Google Scholar]

- Scharmer, G., & Carlsson, M. 1985, J. Computat. Phys., 59, 56 [NASA ADS] [CrossRef] [Google Scholar]

- Scharmer, G. B., & Nordlund, Å. 1982, Stockholms Observatoriums Reports, 19 [Google Scholar]

- Shull, J. M., & van Steenberg, M. 1982, ApJS, 48, 95 [NASA ADS] [CrossRef] [Google Scholar]

- Socas-Navarro, H., de la Cruz Rodriguez, J., Asensio Ramos, A., Trujillo Bueno, J., & Ruiz Cobo, B. 2015, A&A, 577, A7 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Sukhorukov, A. V., & Leenaarts, J. 2017, A&A, 597, A46 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Uitenbroek, H. 2001, ApJ, 557, 389 [Google Scholar]

- van der Vorst, H. A. 1992, SIAM J. Sci. Statist. Comput., 13, 631 [CrossRef] [Google Scholar]

All Tables

All Figures

|

Fig. 1 Jacobian matrix structure for a Nℓ-level atom problem. JF is a (NℓNz) × (NℓNz) matrix that contains the derivatives of the residual vector components with respect to the population densities. This matrix can be considered as a Nz × Nz block matrix, where each block Jkℓ is a Nℓ × Nℓ matrix. Jkℓ stores the derivatives of F at depth index k with respect to the population densities at depth index ℓ. |

| In the text | |

|

Fig. 2 Jacobian matrix estimation error for the three-level Ca II setup, evaluated at the LTE populations and for the different schemes. The first-order scheme is the forward one. The difference steps є1 and є2 are common choices (Knoll & Keyes 2004) and read |

| In the text | |

|

Fig. 3 FAL-C atmosphere. The temperature and total hydrogen and electron densities are shown. The height dimension origin (z = 0) corresponds tO τ500 = 1. |

| In the text | |

|

Fig. 4 Comparison of the Newton-Raphson method with JFNK routines for the six-level HI setup (zero radiation initial guess). As the Krylov solver relative tolerance rtol becomes small, the discrepancy between the different methods reduces to truncation and round-off errors. |

| In the text | |

|

Fig. 5 Residual vector calls required for convergence as a function of the Krylov solver relative tolerance. Top: Three-level Ca II setup. Bottom: Six-level Ca II setup. Both setups use the LTE initial condition. |

| In the text | |

|