| Issue |

A&A

Volume 678, October 2023

|

|

|---|---|---|

| Article Number | A165 | |

| Number of page(s) | 8 | |

| Section | Numerical methods and codes | |

| DOI | https://doi.org/10.1051/0004-6361/202346717 | |

| Published online | 20 October 2023 | |

Improved cosmic microwave background (de-)lensing using general spherical harmonic transforms

1

Max-Planck Institut für Astrophysik,

Karl-Schwarzschild-Str. 1,

85748

Garching,

Germany

2

Université de Genève, Département de Physique Théorique et CAP,

24 Quai Ansermet,

1211

Genève 4,

Switzerland

e-mail: sebastian.belkner@unige.ch

Received:

20

April

2023

Accepted:

28

July

2023

Deep cosmic microwave background polarization experiments allow a very precise internal reconstruction of the gravitational lensing signal in principle. For this aim, likelihood-based or Bayesian methods are typically necessary, where very large numbers of lensing and delensing remappings on the sphere are sometimes required before satisfactory convergence. We discuss here an optimized piece of numerical code in some detail that is able to efficiently perform both the lensing operation and its adjoint (closely related to delensing) to arbitrary accuracy, using nonuniform fast Fourier transform technology. Where applicable, we find that the code outperforms current widespread software by a very wide margin. It is able to produce high-resolution maps that are accurate enough for next-generation cosmic microwave background experiments on the timescale of seconds on a modern laptop. The adjoint operation performs similarly well and removes the need for the computation of inverse deflection fields. This publicly available code enables de facto efficient spherical harmonic transforms on completely arbitrary grids, and it might be applied in other areas as well.

Key words: cosmic background radiation

© The Authors 2023

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1 Introduction

Weak gravitational lensing by large-scale structure affects radiation from the cosmic microwave background (CMB) in subtle but important ways (Lewis & Challinor 2006) by distorting and smoothing the primordial near isotropic two-point statistics and introducing a large trispectrum that can now easily be detected with very high significance (Planck Collaboration VIII 2020; Carron et al. 2022; Qu et al. 2023). Extraction of the signal is now an important piece of the science case of many CMB experiments (Abazajian et al. 2016; Challinor et al. 2018; Ade et al. 2019) because the lensing potential power spectrum, which probes the formation of structure at high redshift, is thought to be a particularly clean probe of the neutrino mass scale (Lesgourgues & Pastor 2006; Hall & Challinor 2012; Allison et al. 2015). Another reason is that removal of the lensing signal (delensing) from the CMB polarization B-mode (Zaldarriaga & Seljak 1998; Knox & Song 2002; Kesden et al. 2002) is now compulsory in order to place the best constraints on a primordial background of gravitational waves from inflation (Ade et al. 2021; Tristram et al. 2022).

It has long been known that deep high-resolution observations of the CMB polarization in principle allow an extremely good internal reconstruction of the lensing signal (Hirata & Seljak 2003b). Recent years have seen works trying to achieve this goal of capturing a signal-to-noise ratio that is as high as possible in realistic experimental configurations (Carron & Lewis 2017; Millea et al. 2019, 2021; Millea & Seljak 2022; Legrand & Carron 2022, 2023; Aurlien et al. 2023), and some attempts were made on data as well (Adachi et al. 2020; Millea et al. 2021). These methods have in common that they use a likelihood model of the lensing signal, which allowed them to outperform the now standard quadratic estimators (Hu & Okamoto 2002; Okamoto & Hu 2003; Maniyar et al. 2021), which are limited by the amount of lensing B-mode power in the data.

These likelihood-based methods form the main motivation for this work. They are typically much more expensive than current quadratic estimator analysis: Properly modeling the subtle, ~2 arcmin remapping effect of gravitational lensing or delensing on CMB maps requires working at high resolution, and these operations must be performed many times before convergence. Numerically speaking, harmonic transforms of data distributed on the sphere are significantly costlier than in flat space. Only a handful of works were so far able to run such optimized reconstructions on large sky fractions and take the sky curvature into account (Aurlien et al. 2023; Legrand & Carron 2022, 2023).

In order to be slightly more concrete, we consider for example the problem of recovering the unlensed CMB from data and an estimate of the lensing deflection. The unlensed CMB likelihood conditioned on the lensing map is Gaussian, but with a covariance that is anisotropic owing to the deflection. If no further approximation is made, recovery of the delensed CMB will involve the inverse covariance (Wiener-filtering), which today can only be performed with iterative methods such as a conjugate gradient, and needs approximately ten iterations to converge. Each iteration requires two remapping operations (one forward operation, and the second operation is the adjoint operation). The construction of optimal lensing mass maps internally from the CMB proceeds by iteratively applying these delensing steps and measuring residual lensing (Hirata & Seljak 2003a,b), and at best, it requires about approximately ten delensing iterations. Hence (again, in the absence of approximations), it seems difficult to reconstruct a single best-lensing mass map with fewer than approximately 200 remapping operations. Sampling methods (Millea et al. 2019, 2021) are in principle orders of magnitude costlier still.

For these reasons, we have developed an optimized piece of code that is able to perform the deflection operation and its adjoint (the operations are properly defined below) efficiently. While several pieces of software are publicly available to perform the forward-deflection operation, we find that our implementation outperforms them by far, and the adjoint operation (equally as important for likelihood-based methods) appears to be new.

|

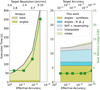

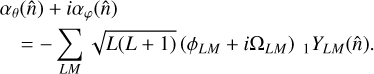

Fig. 1 Lensing geometry and notation near the north pole. The sky curvature is suppressed for clarity. The deflection vector |

2 Lensing and delensing the cosmic microwave background

To a very good approximation that is valid for next-generation CMB experiments such as CMB-S41 (Abazajian et al. 2016), the effect of gravitational lensing is that of a remapping of points on the sphere. The observed CMB intensity signal at position ft’ is related to that of an unlensed position by the relation

where  is located at a distance α from

is located at a distance α from  along the great circle generated by the deflection field α. Figure 1 shows the geometry and our notation. In polarization, and more generally, for any spin-weighted field s𝒯, there is an additional phase factor that is sourced by the change in the local basis between the deflected and undeflected position (hence mostly relevant only near the poles; Challinor & Chon 2002). This may be written

along the great circle generated by the deflection field α. Figure 1 shows the geometry and our notation. In polarization, and more generally, for any spin-weighted field s𝒯, there is an additional phase factor that is sourced by the change in the local basis between the deflected and undeflected position (hence mostly relevant only near the poles; Challinor & Chon 2002). This may be written

where χ = β − β in Fig. 1. We are primarily interested in efficient implementations of both the deflection operator, 𝒟α, which from a band-limited set of harmonic modes results in the lensed map on some arbitrary locations, or pixels,  and of its adjoint

and of its adjoint  .

.

The forward operation can be written explicitly (for spin-0 fields) as

and is thus closely related to the problem of finding an efficient forward spherical harmonic transform to an irregular grid. The adjoint takes a map as input, together with a set of deflected coordinates, to produce harmonic coefficients as follows (again, here for a spin-0 field):

for |m| ≤ ℓ ≤ ℓmax. In the most general situation, the points  and

and  are completely arbitrary, such that the code presented here forms in fact a spherical harmonic transform (SHT) pair that works on any pixelization of the sphere.

are completely arbitrary, such that the code presented here forms in fact a spherical harmonic transform (SHT) pair that works on any pixelization of the sphere.

In situations like those encountered in CMB lensing, the points  cover the sphere according to a reasonable sampling scheme (e.g., a Healpix; Górski et al. 2005 or Gauss-Legendre grid), and

cover the sphere according to a reasonable sampling scheme (e.g., a Healpix; Górski et al. 2005 or Gauss-Legendre grid), and  are the deflected coordinates given by Fig. 1. When quadrature weights are added to Eq. (4), the sum becomes an approximation to an integral over the observed coordinate,

are the deflected coordinates given by Fig. 1. When quadrature weights are added to Eq. (4), the sum becomes an approximation to an integral over the observed coordinate,

This is different to the operation inverse to Eq. (3), (delensing), as we discuss now.

If the remapping of the sphere is invertible (which is always the case in the weak-lensing regime), we can perform a variable transformation to the unlensed coordinate  and obtain

and obtain

where  is the Jacobian (magnification) determinant of the lensing remapping.

is the Jacobian (magnification) determinant of the lensing remapping.

Equation (6) now has the form of a standard SHT of  , where

, where  is matched to

is matched to  as in Fig. 1 (hence, Tlen/A is first delensed, and then mapped back to harmonic space). The choice of an isolatitude grid for

as in Fig. 1 (hence, Tlen/A is first delensed, and then mapped back to harmonic space). The choice of an isolatitude grid for  provides one way to calculate this integral quickly with a standard backward SHT (Aurlien et al. 2023; Legrand & Carron 2022, 2023) implemented 𝒟† in this way. However, significant overhead can remain with this method because it requires calculating the inverse deflection angles

provides one way to calculate this integral quickly with a standard backward SHT (Aurlien et al. 2023; Legrand & Carron 2022, 2023) implemented 𝒟† in this way. However, significant overhead can remain with this method because it requires calculating the inverse deflection angles  on this grid. In a standard situation, the angles

on this grid. In a standard situation, the angles  are easily obtained from a standard SHT of the deflection field on an isolatitude grid sampling the observed coordinate

are easily obtained from a standard SHT of the deflection field on an isolatitude grid sampling the observed coordinate  , which does not provide

, which does not provide  when the unlensed coordinate

when the unlensed coordinate  itself is sampled on such a grid. Moreover, usage of Eq. (6) requires the additional calculation of the magnification determinant, which has the cost of several forward SHTs (see Appendix B). The algorithm presented here bypasses this additional work and drops the requirement of an invertible deflection field.

itself is sampled on such a grid. Moreover, usage of Eq. (6) requires the additional calculation of the magnification determinant, which has the cost of several forward SHTs (see Appendix B). The algorithm presented here bypasses this additional work and drops the requirement of an invertible deflection field.

For spin-weighted fields, the situation is almost identical. The harmonic space coefficients are split into a gradient (Gℓm) and a curl term (Cℓm), and the deflection operation is defined through

for s > 0. The sign is not consistent with the spin-0 case to accommodate for the most prevalent conventions in the community. This creates a complex map of spin weight s, whose complex conjugate can be referred to with the subscript −s.

The adjoint takes this complex map as input and calculates the two sets of coefficients

which are decomposed as usual into gradient and curl modes,

The phase χ is quite specific to CMB lensing applications and is absent in the general-purpose interpolation routines. The relation between the adjoint and inverse is unchanged from the case of spin-0 fields.

3 Implementation

Of the many implementations of the forward operation that were tested over the years, our approach is closest to that of Basak et al. (2008). The fundamentals are quite straightforward: the key point is that on the sphere parameterized by co-latitude θ and longitude φ, a band-limited function can be exactly written as a two-dimensional discrete Fourier series in θ and φ. While any function on the sphere is naturally periodic in the longitude coordinate, we must artificially extend the θ range to [0, 2π) to obtain a doubly periodic function. We can then apply nonuniform fast Fourier transform (NUFFT) techniques (Barnett et al. 2019) to this function to perform the desired interpolation.

The main steps of the forward operations can be summarized as follows:

synthesis: Synthesis of the map from

on a rectangular equidistant grid with nθ ≥ ℓmax + 2 and nφ ≥ 2ℓmax + 2 points with a standard SHT, with one isolatitude ring on each pole. Both dimensions are chosen in a way to make FFTs of lengths nφ and 2nθ − 2 efficient; in addition, nφ must be an even number to enable the subsequent doubling step. For a standard CMB lensing application requiring ℓmax ~ 5θθ0, this corresponds to a sampling resolution close to 2 arcmin. The asymptotic complexity is

on a rectangular equidistant grid with nθ ≥ ℓmax + 2 and nφ ≥ 2ℓmax + 2 points with a standard SHT, with one isolatitude ring on each pole. Both dimensions are chosen in a way to make FFTs of lengths nφ and 2nθ − 2 efficient; in addition, nφ must be an even number to enable the subsequent doubling step. For a standard CMB lensing application requiring ℓmax ~ 5θθ0, this corresponds to a sampling resolution close to 2 arcmin. The asymptotic complexity is  .

.doubling: Doubling the θ-range from [0, π] to [0, 2π), by creating a (2nθ − 2, nφ) map, with the original map in the first half, and mirrored image θ → 2π − θ and φ → φ + π in the second half. In the case of odd spin values, the mirrored image also takes a minus sign.

FFT: Going to Fourier space with the help of a standard forward 2D FFT. These coefficients by construction contain all information necessary to evaluate the map perfectly at any location. The asymptotic complexity is

.

.NUFFT: Finally, from these Fourier coefficients and the coordinates

, interpolation proper, performed with a uniform-to-nonuniform (or type 2) NUFFT. The asymptotic complexity is

, interpolation proper, performed with a uniform-to-nonuniform (or type 2) NUFFT. The asymptotic complexity is  , where npoints is the number of points on the irregular grid.

, where npoints is the number of points on the irregular grid.

This is the only step that incurs inaccuracies beyond those introduced by the finite precision of floating-point arithmatics. These inaccuracies are controlled by a user-specified parameter e that was described in some detail in Arras et al. (2021).

The first three steps given here construct the 2D Fourier coefficients of the doubled-sphere representation of the same map from the spherical harmonic coefficients. Our approach to performing this (synthesis-doubling-FFT) starts with an SHT (synthesis). Consistent with the given asymptotic complexities, we find that this typically dominates the execution time overall. There are alternative approaches, however by manipulating Wigner small-d matrices, we can build a more explicit representation of the relation between spherical and 2D Fourier harmonics that can be implemented via a well-behaved three-term recurrence formula without any Fourier transforms and with a similar  theoretical complexity. This is how Huffenberger & Wandelt (2010) implemented their SHTs, for example, and how Basak et al. (2008) implemented their CMB remapping. In place of a Legendre transform for each θ coordinate, a Legendre transform is required only at the equator, but one spin-weighted transform is required for each spin between 0 and ℓmax. In our case, previous measurements (see, e.g., Sect. 2 of Galloway et al. 2023) showed that it was difficult to bring this recursion to speeds on CPUs that were comparable to the highly optimized standard Legendre transforms derived from the libsharp library (Reinecke & Seljebotn 2013) we are using. While we cannot exclude that there is some room for improvements provided the recursion can be optimized in a similar fashion (or, possibly, on GPUs), these are likely to be minor.

theoretical complexity. This is how Huffenberger & Wandelt (2010) implemented their SHTs, for example, and how Basak et al. (2008) implemented their CMB remapping. In place of a Legendre transform for each θ coordinate, a Legendre transform is required only at the equator, but one spin-weighted transform is required for each spin between 0 and ℓmax. In our case, previous measurements (see, e.g., Sect. 2 of Galloway et al. 2023) showed that it was difficult to bring this recursion to speeds on CPUs that were comparable to the highly optimized standard Legendre transforms derived from the libsharp library (Reinecke & Seljebotn 2013) we are using. While we cannot exclude that there is some room for improvements provided the recursion can be optimized in a similar fashion (or, possibly, on GPUs), these are likely to be minor.

For the adjoint operation, the steps naturally go backwards (the individual complexities stay unchanged):

NUFFT: From the input map and deflected coordinates, we perform a nonuniform-to-uniform (or type 1) NUFFT, resulting in the 2D FFT Fourier coefficients.

FFT: We remap them to position space using a standard backward FFT on the same doubled Fourier sphere of shape (2nθ - 2, nφ) as for the forward operation.

undoubling: The doubling of the Fourier sphere is undone by adding its mirror image to (or, for odd spins, subtracting from) the part [0, π].

adjoint synthesis: We perform a standard adjoint SHT on this new map of shape (nθ, nφ). This gives us the desired spherical harmonic coefficients.

Most of these steps are well established2 in the astrophysical community or can be understood intuitively, with the possible exception of the NUFFT, whose purpose and structure we therefore outline (for more theoretical and technical details, see Potts et al. 2001; Greengard & Lee 2004; Barnett et al. 2019). When given the Fourier coefficients of an n-dimensional function, it is trivial to obtain the function values on a regular grid in real space, in (almost) linear time. This is achieved using the fast Fourier transform, potentially after zero-padding the Fourier coefficients to increase the grid resolution. If the function values are required at irregularly spaced positions, however, this is not possible, and naive calculation of the Fourier series at each point is typically prohibitively slow in practice. One mathematically correct approach is to perform an FFT to a regular grid and then convolve these points with a sine kernel centered on the desired locations (sine-interpolation). This is just as prohibitive, but approximate solutions can be found by choosing an alternative and more suitable convolution kernel. In particular, a kernel with compact support will be chosen (in our case, it takes the form of Eq. (29) of Arras et al. 2021), and we divide the Fourier coefficients of the function by the Fourier coefficients of the kernel. This is the deconvolution step, and is followed by zero-padding the Fourier coefficients which increases the size of each dimension by a factor of roughly 1.2 to 2, and perform an FFT of the resulting array. At last comes the convolution step: for each irregularly spaced point, we perform a sum over its neighborhood and weight it by the kernel function.

Depending on the chosen kernel shape, zero-padding factor, and kernel support, it is possible to achieve accuracies close to machine precision; tuning the algorithm for higher tolerances is also possible and will improve the run time considerably. Good kernels compromise between the conflicting properties of being fast to evaluate and having small support and a quickly decaying Fourier transform. We used the variations of the kernel proposed in Barnett et al. (2019), as discussed in Arras et al. (2021).

Because all steps mentioned above are linear operations, the adjoint of the described NUFFT is obtained by executing the adjoint steps in reverse order, which is the nonuniform-to-uniform, or type 1, NUFFT.

Finally, for CMB-lensing applications, the unlensed angles  can easily be gained from the representation

can easily be gained from the representation

where at each point, the deflection vector is obtained from the spin-1 transform of the gradient (ϕ) and curl (Ω, if present) lensing potentials,

Here, we follow the convention of using capital letters LM to refer to the spherical harmonic coefficients of the lensing potential.

4 Benchmark

This section discusses the computational cost, the scaling with threading, and memory usage of the forward and adjoint operation, and it compares our work to a few publicly available implementations of the forward operation, such as LensPix3 (Lewis 2005), lenspyx4 (Planck Collaboration VIII 2020), pixell5 (Naess et al. 2021), and Flints6 (Lavaux & Wandelt 2010).

A typical task in the CMB lensing context is to compute lensed CMB spherical harmonic coefficients ( ) starting from unlensed ones (

) starting from unlensed ones ( ). This can be achieved by first performing a forward remapping onto a suitable pixelization of the sphere, and computing the spherical harmonics coefficients by a standard backward SHT. The adjoint of this entire operation is first built out of a forward SHT, followed by the adjoint remapping. Key parameters impacting the execution time are the band limit

). This can be achieved by first performing a forward remapping onto a suitable pixelization of the sphere, and computing the spherical harmonics coefficients by a standard backward SHT. The adjoint of this entire operation is first built out of a forward SHT, followed by the adjoint remapping. Key parameters impacting the execution time are the band limit  of

of  and the requested maximum multipole

and the requested maximum multipole  of the lensed CMB. In the applications that motivated this work,

of the lensed CMB. In the applications that motivated this work,  is at most the multipole above which the information on the lensing signal becomes negligible. For example, Planck reconstructions (Planck Collaboration VIII 2020; Carron et al. 2022) used

is at most the multipole above which the information on the lensing signal becomes negligible. For example, Planck reconstructions (Planck Collaboration VIII 2020; Carron et al. 2022) used  , and the recent ACT results (Madhavacheril et al. 2023)

, and the recent ACT results (Madhavacheril et al. 2023)  . For a very deep future polarization experiment such as the CMB-S4 deep survey (Abazajian et al. 2016), this is closer to

. For a very deep future polarization experiment such as the CMB-S4 deep survey (Abazajian et al. 2016), this is closer to  , a number we use often as reference in this section. Then

, a number we use often as reference in this section. Then  must typically be taken slightly higher than

must typically be taken slightly higher than  in order to account for the mode mixing by lensing.

in order to account for the mode mixing by lensing.

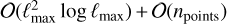

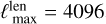

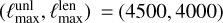

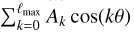

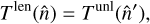

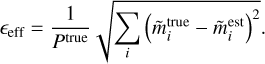

When lensed spherical harmonic coefficients are built in this way, another important parameter is the intermediate grid pixelization. Because the lensed CMB is not band limited, there is no exact quadrature rule, and this choice can strongly impact the accuracy of the recovered  at high multipoles. We show in Fig. 2 the execution time of these tasks for the forward (upper panel) and adjoint (lower panel) cases as a function of the number of threads (strong scaling). We picked

at high multipoles. We show in Fig. 2 the execution time of these tasks for the forward (upper panel) and adjoint (lower panel) cases as a function of the number of threads (strong scaling). We picked  ,

,  , and a longitude-thinned Gauss-Legendre grid with 6144 rings as intermediate grid (~5 × 107 pixels). This is a conservative configuration that in our experience allows a robust and accurate lensing reconstruction for very deep stage IV CMB observations. This was performed on a CSCS Piz Daint XC50 compute node7, with 12 physical cores. Results on more recent processors can sometimes be up to twice as fast in our experience. The top panel shows the result for the synthesis operation, while the lower panels shows the adjoint of it, and we see that each operation scales almost perfectly. We have excluded the cost of building the undeflected angles from the timing, which we briefly discuss below, as is suitable in the context of optimal lensing reconstruction, where many maps are deflected with the same set of angles. The accuracy of the interpolation was set to 10-5, which value is good enough for most purposes, as also discussed in more detail below. The interpolation is very efficient. Only a minor part of the total time is dominated by the pair of SHTs that is involved.

, and a longitude-thinned Gauss-Legendre grid with 6144 rings as intermediate grid (~5 × 107 pixels). This is a conservative configuration that in our experience allows a robust and accurate lensing reconstruction for very deep stage IV CMB observations. This was performed on a CSCS Piz Daint XC50 compute node7, with 12 physical cores. Results on more recent processors can sometimes be up to twice as fast in our experience. The top panel shows the result for the synthesis operation, while the lower panels shows the adjoint of it, and we see that each operation scales almost perfectly. We have excluded the cost of building the undeflected angles from the timing, which we briefly discuss below, as is suitable in the context of optimal lensing reconstruction, where many maps are deflected with the same set of angles. The accuracy of the interpolation was set to 10-5, which value is good enough for most purposes, as also discussed in more detail below. The interpolation is very efficient. Only a minor part of the total time is dominated by the pair of SHTs that is involved.

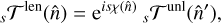

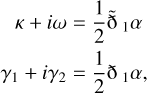

We now discuss the accuracy and memory usage of our implementation. Our code uses simple heuristics to allow the user to choose a target-relative accuracy, ϵtarget, of at least 10−13. For the forward operation, schematically  , we calculated a map-level effective relative accuracy, ϵeff, as follows: We took the difference between the true and estimated lensed maps,

, we calculated a map-level effective relative accuracy, ϵeff, as follows: We took the difference between the true and estimated lensed maps,  ,

,  , respectively, and normalized by the total power of the true lensed maps,

, respectively, and normalized by the total power of the true lensed maps,

Here,  , and the sum index i run over pixels as well as the map components for nonzero spin (Q and U in polarization). The maps

, and the sum index i run over pixels as well as the map components for nonzero spin (Q and U in polarization). The maps  were determined by a brute-force approach: We remapped the unlensed map to the desired grid by calculating Eq. (3) explicitly. As this can be fairly expensive, we calculated 105 exactly lensed pixels at most for a few numbers of isolatitude rings close to the equator.

were determined by a brute-force approach: We remapped the unlensed map to the desired grid by calculating Eq. (3) explicitly. As this can be fairly expensive, we calculated 105 exactly lensed pixels at most for a few numbers of isolatitude rings close to the equator.

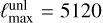

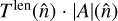

The right panel in Fig. 3 shows the computational cost of the lensing routine for spin-2 fields at  , using four CPUs, mapping onto a Healpix pixelization with Nside = 2048, as a function of ϵeff (bottom axis) and ϵtarget (top axis). The effective accuracy is typically slightly higher than requested, except in the vicinity of 10−13. This is not a problem of the NUFFT interpolation, however, but rather of the true SHT calculations themselves, which lose several digits in accuracy at this band limit. The diagram shows the split of the total computational cost into its most relevant steps described earlier. The SHT steps for the angle and doubled Fourier sphere always dominate the cost in this configuration, and the choice of accuracy has a fairly minor impact. If relevant, the SHTs can be performed with a gradient-only setting for nonzero spin fields (an implementation specific to the case of a vanishing curl component), further reducing the computational cost of these tasks by about 25%. The scale of the peak memory usage is indicated on the right y-axis of the right panel, and is shown as connected green square data points. The timings, memory, and accuracy calculations were made for a set of five analyses, and their standard deviation is negligible.

, using four CPUs, mapping onto a Healpix pixelization with Nside = 2048, as a function of ϵeff (bottom axis) and ϵtarget (top axis). The effective accuracy is typically slightly higher than requested, except in the vicinity of 10−13. This is not a problem of the NUFFT interpolation, however, but rather of the true SHT calculations themselves, which lose several digits in accuracy at this band limit. The diagram shows the split of the total computational cost into its most relevant steps described earlier. The SHT steps for the angle and doubled Fourier sphere always dominate the cost in this configuration, and the choice of accuracy has a fairly minor impact. If relevant, the SHTs can be performed with a gradient-only setting for nonzero spin fields (an implementation specific to the case of a vanishing curl component), further reducing the computational cost of these tasks by about 25%. The scale of the peak memory usage is indicated on the right y-axis of the right panel, and is shown as connected green square data points. The timings, memory, and accuracy calculations were made for a set of five analyses, and their standard deviation is negligible.

The left panel shows the corresponding results for a code using a popular interpolation scheme, lenspyx. This code (just as LensPix and pixell) uses bicubic spline interpolation on a intermediate grid obtained by cylindrical projection. The resolution of this grid then essentially sets the accuracy of the result and memory usage. While these interpolation schemes are perfectly fine for the purpose of producing a set of lensed CMB maps, they have strong limitations for more intensive tasks, or when higher accuracies are imperative. For low target resolutions of about 1.4 arcmin, the new implementation speeds up the execution time approximately 7 times for a similarly effective accuracy of about ϵeff ≈ 10−2. This increases to a speed-up of 30 times for an effective accuracy of 10−5. Higher accuracies are almost not manageable for lenspyx in both time and memory, whereas the new implementation can easily reach accuracies as low as 10−12.

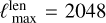

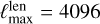

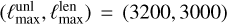

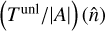

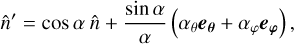

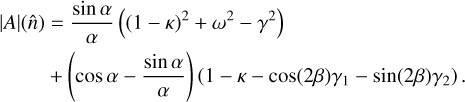

Figure 4 shows the execution time and peak memory usage of the new implementation for various Nside and  configurations and a target accuracy of ϵtarget = 10−13. The memory usage for the most challenging benchmarks is still below 64 GB, and we would like to note that the memory consumption is only slightly larger than the memory needed for the three maps (the deflection angles) for the respective Nside.

configurations and a target accuracy of ϵtarget = 10−13. The memory usage for the most challenging benchmarks is still below 64 GB, and we would like to note that the memory consumption is only slightly larger than the memory needed for the three maps (the deflection angles) for the respective Nside.

We now compare our results to other implementations that benchmark the spin-0 forward operation. The effective accuracy was calculated as in the above case, replacing the data by the spin-0 true and estimated temperature maps. For LensPix and Flints, we estimated the costs by using the natively C-implemented lensing routine, and with OpenMP support and four CPUs. We measured the tasks directly related to lensing, such as the calculation of deflection angles, the deflection, the interpolation, and rotation. To farily compare all implementations, we did not cache any calculations in Flints, even though they are available. For pixell, we used the full-sky lensing routine implemented in Python, and measured the costs within Python.

Almost all implementations provide an accuracy parameter in form of the intermediate grid pixel resolution. We chose them to provide approximately comparable results.

For Flints, LensPix, and lenspyx, we generated input CMB and lensing potential realizations and the true and estimated lensed maps on a Healpix grid. For pixell, the full-sky lensing routine was evaluated onto a CAR geometry, and we calculated the effective accuracy using our own CAR geometry to calculate the true lensed map.

We used  = 4096 and an HPC machine with four CPUs and 60 GB of memory. To reduce memory usage, the data for LensPix were split into sets and were calculated individually.

= 4096 and an HPC machine with four CPUs and 60 GB of memory. To reduce memory usage, the data for LensPix were split into sets and were calculated individually.

Table 1 summarizes the computational costs and memory usage for the pixel resolution and effective accuracy. LensPix and Flints are well-established algorithms and perform the task in reasonable time and for very reasonable memory usage. LensPix allows us to control the intermediate grid pixel resolution, whereas with Flints, this can be indirectly controlled by the choice of Nside. If needed, Flints execution time could be reduced by caching some of the calculations, making them available for repeated runs. pixell supports splitting of the full sky data into bands, which can be used to reduce the memory usage, if needed. The algorithm in this paper, shown in the bottom row, is vastly more efficient both in execution time and memory consumption, even more so in the high-accuracy regime.

Finally, we comment more specifically on the relevance of this code for a maximum a posteriori lensing reconstruction (Hirata & Seljak 2003b; Carron & Lewis 2017). In these methods, the reconstruction proceeds by finding an approximate solution to the Wiener-filtered delensed CMB using a conjugate gradient solver at each iteration that involves applying the forward and adjoint operation. As mentioned earlier, this typically involves several hundred such operations. The benefits for a reconstruction strategy like this are twofold. Faster operations directly speed up the lensing reconstruction, and accurate operations can prevent the conjugate gradient solver from building up errors or showing instability.

We saw this explicitly by testing the full-sky lensing reconstruction with CMB-S4-like settings  , a polarization noise level of

, a polarization noise level of  arcmin, and 4 CPUs), reconstructing the lensing potential using polarization-only maps, using both lenspyx, and the new implementation for the forward and adjoint operations. With lenspyx, we observed that a target resolution of about 1.0 arcmin is beneficial for a stable reconstruction of the very largest modes of the lensing potential in experimental configurations like these, resulting in execution times for a single adjoint operation of about 3 min. This is significantly longer than for the new implementation, for which we find execution times of only 8 s for better accuracy. Analogously, we find for the execution time of a typical full-iteration step of a lensing reconstruction ~25 min, or ~4 min, respectively, and the speed-up is even larger for lower ℓmax analyses (~24min vs. ~2 for (

arcmin, and 4 CPUs), reconstructing the lensing potential using polarization-only maps, using both lenspyx, and the new implementation for the forward and adjoint operations. With lenspyx, we observed that a target resolution of about 1.0 arcmin is beneficial for a stable reconstruction of the very largest modes of the lensing potential in experimental configurations like these, resulting in execution times for a single adjoint operation of about 3 min. This is significantly longer than for the new implementation, for which we find execution times of only 8 s for better accuracy. Analogously, we find for the execution time of a typical full-iteration step of a lensing reconstruction ~25 min, or ~4 min, respectively, and the speed-up is even larger for lower ℓmax analyses (~24min vs. ~2 for ( ). It is worth mentioning that with lenspyx at this resolution, outliers can occasionally occur. They ca take more tha 1 h or do not co verge at all. This is directly due to the lower accuracy, in particular very close to the poles, where the bicubic spline method is less accurate. This has not bee observed with the new implementation, whose error map is uniform across the entire sphere. We observed something similar for temperature-only reconstruction, where an effective relative accuracy of 10−7 generally appears to be required for a successful convergence of the lensing-map search on the very largest scales. This accuracy is accessible only with the implementation discussed here.

). It is worth mentioning that with lenspyx at this resolution, outliers can occasionally occur. They ca take more tha 1 h or do not co verge at all. This is directly due to the lower accuracy, in particular very close to the poles, where the bicubic spline method is less accurate. This has not bee observed with the new implementation, whose error map is uniform across the entire sphere. We observed something similar for temperature-only reconstruction, where an effective relative accuracy of 10−7 generally appears to be required for a successful convergence of the lensing-map search on the very largest scales. This accuracy is accessible only with the implementation discussed here.

|

Fig. 2 Execution time of different transforms as a function of number of threads used for the calculation. Upper panel: scaling of a single forward-lensing execution time, producing lensed spherical harmonic coefficients from their unlensed counterparts. The solid blue line shows the total time of producing |

|

Fig. 3 Full-sky forward operation execution times for spin-2 fields of our implementation compared with lenspyx, which uses a popular bicubic spline interpolation technique. The top panels show lenspyx (left) and the new implementation (right) as a function of the effective relative accuracy for ℓmax = 4096, producing lensed CMB maps in a Healpix geometry with Nside = 2048. The effective accuracy is calculated for a few isolatitude rings close to the equator. All calculations were made with four CPUs. We indicate the execution time (shaded areas, left y-axes), peak memory usage (green data points, right y-axis), and the accuracy parameters (target resolution, target accuracy) at the top x-axes. For lenspyx, we divided the full sky map into two bands to reduce the memory consumption. The differently colored areas show the main steps of the operation. The execution time and memory consumption both grows rapidly for lenspyx because it requires high-resolution grids for an accurate interpolation. |

spin-0, ℓmax = 4096, and Nside = 2048 (if applicable) forward-operation execution time and memory consumption for different implementations on the full sky, using 4 CPUs.

|

Fig. 4 Benchmark of the execution time and peak memory consumption for a wide range of Healpix resolution Nside and ℓmax, for a target accuracy of 10−13, and for our new implementation. The cell highlighted in magenta is the configuration we used for the accuracy benchmark in Fig. 3. The execution time ranges from 0.55 s (Nside = 512, ℓmax = 1024) to 336 s (Nside = 8192, ℓmax = 12 288), while the memory consumption increases from 0.4 GB to 51 GB. The memory consumption closely follows the memory needed to store the Healpix maps of that particular size. |

5 Conclusion

We have described an optimized implementation of the spherical transform pair of an arbitrary spin-weight that can be used on any pixelization of the sphere, such as regular grids distorted by CMB lensing. A C++ implementation and comprehensive Python front-end is available, together with the low-level algorithms (FFT, NUFFT, and SHT), under the terms of the GNU General Public License and named DUCC8. The code is written with the goal of portability and does not depend on external libraries. It supports multithreading via the C++ standard threading functionality and will make use of CPU vector instructions on x86 and ARM hardware, if the compiler supports the respective language extensions. The Python interface is kept deliberately general and flexible to allow use in the widest possible range of scientific applications. As a consequence, parts of the interface are somewhat complex and are perhaps best used by higher-level more application-specific packages to hide unnecessary details from the end user.

For users interested in applications specific to CMB lensing, the lenspyx9 Python package has been updated in this spirit to include these developments and provide additional wrappers to these routines. This results in an improvent of some orders of magnitude in execution time and accuracy over currently publicly available tools.

Acknowledgements

We thank Guilhem Lavaux, Antony Lewis, and Mathew Madhavacheril for discussions on Flints, LensPix and pixell respectively. J.C. and S.B. acknowledge support from a SNSF Eccellenza Professorial Fellowship (No. 186879). This work was supported by a grant from the Swiss National Supercomputing Centre (CSCS) under project ID s1203.

Appendix A Map analysis with a minimal number of rings

In the text above, we regularly use equiangular spherical grids with ℓmax + 2 rings (first and last ring located at the poles) to represent functions with a band limit of ℓmax (inclusive). This number of rings may sound insufficient to fully represent a function with the given band limit because the minimum number of rings required for an accurate map analysis via a quadrature rule on this layout is 2ℓmax + 2 (Clenshaw-Curtis quadrature).

However, we can again make use of the double Fourier sphere technique that was introduced in Sect. 3, that is, we can follow a meridian from the north pole to the south pole, and then back again on the opposite side. A full meridian like this has 2ℓmax + 2 points in total. Because we assumed the function on the sphere to be band limited, the θ-dependent function along each of these full meridians is the sum of associated Legendre polynomials of degrees up to ℓmax in cos(θ). In other words, it can be expressed as a Fourier series  . This function in turn is completely determined by 2ℓmax + 1 equidistant samples in the range θ = [0; 2π), that is, one less than we actually have. The same is true in azimuthal direction, where we also have at least 2ℓmax + 2 pixels on each ring.

. This function in turn is completely determined by 2ℓmax + 1 equidistant samples in the range θ = [0; 2π), that is, one less than we actually have. The same is true in azimuthal direction, where we also have at least 2ℓmax + 2 pixels on each ring.

As a consequence, the actual function value at any θ and φ can be obtained using a combination of fast Fourier transforms and phase-shifting factors, or (in an approximate fashion) via NUFFT. One way of extracting the spherical harmonic coefficients from this map would therefore be by first computing the function values at a shifted set of isolatitude rings located exactly between the existing ones, increasing the number of rings to 2ℓmax + 3. This is then followed by applying the appropriate Clenshaw-Curtis quadrature weights to the full set of rings. Finally, running an adjoint spherical harmonic synthesis operation on the full set of weighted rings gives the desired result.

It is even possible to shift the newly generated rings back to the original positions after weighting, which again reduces the number of rings in the adjoint synthesis operation to ℓmax + 2. This speeds up the SHT considerably.

Appendix B Adjoint and inverse lensing

In the most general situation, invertibility of the deflection field is not necessarily always achieved at all points in a likelihood search under nonideal conditions, where lensing estimators can react strongly to signatures of anisotropies unrelated to lensing. When the lensing deflection is invertible, the inverse lensing operation can still be useful. To this end, we can use the same adjoint operation 𝒟†, but with input  instead of

instead of  . Eq. (6) in the main text shows that the result then is

. Eq. (6) in the main text shows that the result then is  .

.

The magnification determinant may be obtained as follows: With 1α = αθ + iαφ as in Eq. (11), let the convergence (κ), field rotation (ω) and shears (γ) be

where ð and  are the spin raising and lowering operators (see the first appendix of Lewis et al. (2002) for a discussion in the context of the CMB). It holds

are the spin raising and lowering operators (see the first appendix of Lewis et al. (2002) for a discussion in the context of the CMB). It holds

All of these quantities can be computed from the harmonic coefficients of the deflection field with the help of spin-weighted spherical harmonic transforms.

Informally, ignoring technical issues of band limits, quadrature weights, and so on, we may write

where δD is the Dirac delta. Similarly, the operator 𝒟†𝒟 produces the spherical harmonic coefficients of  from those of Tunl.

from those of Tunl.

References

- Abazajian, K. N., Adshead, P., Ahmed, Z., et al. 2016 ArXiv e-prints [arXiv:1618.82743] [Google Scholar]

- Adachi, S., Aguilar Faúndez, M. A. O., Akiba, Y., et al. 2020, Phys. Rev. Lett., 124, 131301 [NASA ADS] [CrossRef] [Google Scholar]

- Ade, P., Aguirre, J., Ahmed, Z., et al. 2019, JCAP, 02, 056 [CrossRef] [Google Scholar]

- Ade, P. A. R., Ahmed, Z., Amiri, M., et al. 2021, Phys. Rev. Lett., 127, 151301 [NASA ADS] [CrossRef] [Google Scholar]

- Allison, R., Caucal, P., Calabrese, E., Dunkley, J., & Louis, T. 2015, Phys. Rev.D, 92, 123535 [NASA ADS] [CrossRef] [Google Scholar]

- Arras, P., Reinecke, M., Westermann, R., & Enßlin, T. A. 2021, A & A, 646, A58 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Aurlien, R., Remazeilles, M., Belkner, S., et al. 2023, JCAP, 06, 034 [CrossRef] [Google Scholar]

- Barnett, A. H., Magland, J., & af Klinteberg, L. 2019, SIAM J. Sci. Comput., 41, C479 [Google Scholar]

- Basak, S., Prunet, S., & Benabed, K. 2008, in 12th Marcel Grossmann Meetingon General Relativity, 2213 [Google Scholar]

- Carron, J., & Lewis, A. 2017, Phys. Rev. D, 96, 063510 [NASA ADS] [CrossRef] [Google Scholar]

- Carron, J., Mirmelstein, M., & Lewis, A. 2022, JCAP, 09, 039 [CrossRef] [Google Scholar]

- Challinor, A., & Chon, G. 2002, Phys. Rev. D, 66, 127301 [NASA ADS] [CrossRef] [Google Scholar]

- Challinor, A., Allison, R., Carron, J., et al. 2018, JCAP, 04, 018 [CrossRef] [Google Scholar]

- Galloway, M., Reinecke, M., Andersen, K. J., et al. 2023, A & A, 675, A8 [CrossRef] [EDP Sciences] [Google Scholar]

- Górski, K. M., Hivon, E., Banday, A. J., et al. 2005, ApJ, 622, 759 [Google Scholar]

- Greengard, L., & Lee, J.-Y. 2004, SIAM Rev., 46, 443 [NASA ADS] [CrossRef] [Google Scholar]

- Hall, A. C., & Challinor, A. 2012, MNRAS, 425, 1170 [NASA ADS] [CrossRef] [Google Scholar]

- Hirata, C. M., & Seljak, U. 2003a, Phys. Rev., D67, 043001 [NASA ADS] [Google Scholar]

- Hirata, C. M., & Seljak, U. 2003b, Phys. Rev., D68, 083002 [NASA ADS] [Google Scholar]

- Hu, W., & Okamoto, T. 2002, ApJ, 574, 566 [CrossRef] [Google Scholar]

- Huffenberger, K. M., & Wandelt, B. D. 2010, ApJS, 189, 255 [NASA ADS] [CrossRef] [Google Scholar]

- Kesden, M., Cooray, A., & Kamionkowski, M. 2002, Phys. Rev. Lett., 89, 011304 [NASA ADS] [CrossRef] [Google Scholar]

- Knox, L., & Song, Y.-S. 2002, Phys. Rev. Lett., 89, 011303 [NASA ADS] [CrossRef] [Google Scholar]

- Lavaux, G., & Wandelt, B. D. 2010, ApJS, 191, 32 [NASA ADS] [CrossRef] [Google Scholar]

- Legrand, L., & Carron, J. 2022, Phys. Rev. D, 105, 123519 [NASA ADS] [CrossRef] [Google Scholar]

- Legrand, L., & Carron, J. 2023 ArXiv e-prints [arXiv:2384.82584] [Google Scholar]

- Lesgourgues, J., & Pastor, S. 2006, Phys. Rept., 429, 307 [NASA ADS] [CrossRef] [Google Scholar]

- Lewis, A. 2005, Phys. Rev., D71, 083008 [NASA ADS] [Google Scholar]

- Lewis, A., & Challinor, A. 2006, Phys. Rept., 429, 1 [Google Scholar]

- Lewis, A., Challinor, A., & Turok, N. 2002, Phys. Rev. D, 65, 023505 [Google Scholar]

- Madhavacheril, M. S., Qu, F. J., Sherwin, B. D., et al. 2023 ArXiv e-prints [arXiv:2304.05203] [Google Scholar]

- Maniyar, A. S., Ali-Haïmoud, Y., Carron, J., Lewis, A., & Madhavacheril, M. S. 2021, Phys. Rev. D, 103, 083524 [NASA ADS] [CrossRef] [Google Scholar]

- Millea, M., & Seljak, U. 2022, Phys. Rev. D, 105, 103531 [NASA ADS] [CrossRef] [Google Scholar]

- Millea, M., Anderes, E., & Wandelt, B. D. 2019, Phys. Rev. D, 100, 023509 [NASA ADS] [CrossRef] [Google Scholar]

- Millea, M., Daley, C. M., Chou, T.-L., et al. 2021, ApJ, 922, 259 [NASA ADS] [CrossRef] [Google Scholar]

- Naess, S., Madhavacheril, M., & Hasselfield, M. 2021, Astrophysics Source Code Library [record ascl:2102.003] [Google Scholar]

- Okamoto, T., & Hu, W. 2003, Phys. Rev., D67, 083002 [NASA ADS] [Google Scholar]

- Planck Collaboration VIII. 2020, A & A, 641, A8 [NASA ADS] [CrossRef] [EDP Sciences] [Google Scholar]

- Potts, D., Steidl, G., & Tasche, M. 2001, Fast Fourier Transforms for Nonequi-spaced Data: A Tutorial, eds. J. J. Benedetto, & P. J. S. G. Ferreira (Boston, MA: Birkhäuser Boston), 247 [Google Scholar]

- Qu, F. J., Sherwin, B. D., Madhavacheril, M. S., et al. 2023 ArXiv e-prints [arXiv:2384.85282] [Google Scholar]

- Reinecke, M., & Seljebotn, D. S. 2013, A & A, 554, A112 [CrossRef] [EDP Sciences] [Google Scholar]

- Tristram, M., Banday, A. J., Górski, K. M., et al. 2022, Phys. Rev. D, 105, 083524 [Google Scholar]

- Zaldarriaga, M., & Seljak, U. 1998, Phys. Rev. D, 58, 023003 [Google Scholar]

Fast Fourier transforms are handled with code derived from the pocketFFT library (https://gitlab.mpcdf.mpg.de/mtr/pocketfft), which in turn is a descendant of FFTPACK (https://netlib.org/fftpack/), which was heavily modified for an improved accuracy and performance.

The standard ring-based spherical harmonic transforms are derived from the libsharp (Reinecke & Seljebotn 2013) library (https://gitlab.mpcdf.mpg.de/mtr/libsharp).

All Tables

spin-0, ℓmax = 4096, and Nside = 2048 (if applicable) forward-operation execution time and memory consumption for different implementations on the full sky, using 4 CPUs.

All Figures

|

Fig. 1 Lensing geometry and notation near the north pole. The sky curvature is suppressed for clarity. The deflection vector |

| In the text | |

|

Fig. 2 Execution time of different transforms as a function of number of threads used for the calculation. Upper panel: scaling of a single forward-lensing execution time, producing lensed spherical harmonic coefficients from their unlensed counterparts. The solid blue line shows the total time of producing |

| In the text | |

|

Fig. 3 Full-sky forward operation execution times for spin-2 fields of our implementation compared with lenspyx, which uses a popular bicubic spline interpolation technique. The top panels show lenspyx (left) and the new implementation (right) as a function of the effective relative accuracy for ℓmax = 4096, producing lensed CMB maps in a Healpix geometry with Nside = 2048. The effective accuracy is calculated for a few isolatitude rings close to the equator. All calculations were made with four CPUs. We indicate the execution time (shaded areas, left y-axes), peak memory usage (green data points, right y-axis), and the accuracy parameters (target resolution, target accuracy) at the top x-axes. For lenspyx, we divided the full sky map into two bands to reduce the memory consumption. The differently colored areas show the main steps of the operation. The execution time and memory consumption both grows rapidly for lenspyx because it requires high-resolution grids for an accurate interpolation. |

| In the text | |

|

Fig. 4 Benchmark of the execution time and peak memory consumption for a wide range of Healpix resolution Nside and ℓmax, for a target accuracy of 10−13, and for our new implementation. The cell highlighted in magenta is the configuration we used for the accuracy benchmark in Fig. 3. The execution time ranges from 0.55 s (Nside = 512, ℓmax = 1024) to 336 s (Nside = 8192, ℓmax = 12 288), while the memory consumption increases from 0.4 GB to 51 GB. The memory consumption closely follows the memory needed to store the Healpix maps of that particular size. |

| In the text | |

Current usage metrics show cumulative count of Article Views (full-text article views including HTML views, PDF and ePub downloads, according to the available data) and Abstracts Views on Vision4Press platform.

Data correspond to usage on the plateform after 2015. The current usage metrics is available 48-96 hours after online publication and is updated daily on week days.

Initial download of the metrics may take a while.

![$ {\left[ {{{\cal D}_\alpha }{T^{{\rm{unl}}}}} \right]_i} \equiv \sum\limits_{ = 0}^{{_{\max }}} {\sum\limits_{m = - }^ {T_{m}^{{\rm{unl}}}{Y_{m}}\left( {{{\hat n'}_i}} \right)\,\,\,\,\,\,\,\,\left( {{\rm{spin - 0}}} \right)} } $](/articles/aa/full_html/2023/10/aa46717-23/aa46717-23-eq11.png)

![$ {\left[ {{\cal D}_\alpha ^\dag {T^{{\rm{len}}}}} \right]_{m}} \equiv \sum\limits_i {{T^{{\rm{len}}}}\left( {{{\hat n}_i}} \right)Y_{m}^ * \left( {{{\hat n'}_i}} \right)} \,\,\,\,\,\,\left( {{\rm{spin - 0}}} \right) $](/articles/aa/full_html/2023/10/aa46717-23/aa46717-23-eq12.png)

![$ {\left[ {{\cal D}_\alpha ^\dag {T^{{\rm{len}}}}} \right]_{Lm}} \sim \int {{d_2}n{T^{{\rm{len}}}}\left( {\hat n} \right)Y_{Lm}^ * \left( {\hat n'} \right)} \,\,\,\,\,\,\,\,\,\left( {{\rm{spin - 0}}} \right) $](/articles/aa/full_html/2023/10/aa46717-23/aa46717-23-eq17.png)

![$ {\left[ {{\cal D}_\alpha ^\dag {T^{{\rm{len}}}}} \right]_{Lm}} \sim \int {{d^2}n'} \left( {{{{T^{{\rm{len}}}}\left( {\hat n} \right)} \over {\left| {A\left( {\hat n} \right)} \right|}}} \right)Y_{Lm}^ * \left( {\hat n'} \right)\,\,\,\,\,\,\,\,\,\left( {{\rm{spin - 0}}} \right) $](/articles/aa/full_html/2023/10/aa46717-23/aa46717-23-eq19.png)

![$ _s{\left[ {{{\cal D}_\alpha }} \right]_i} = - {e^{is\chi \left( {\hat n'} \right)}}\sum\limits_{L = 0}^{L\max } {\sum\limits_{m = - L}^L {\left( {{G_{Lm}} + i{C_{Lm}}} \right)} \,{\,_s}{Y_{Lm}}\left( {{{\hat n'}_i}} \right)} $](/articles/aa/full_html/2023/10/aa46717-23/aa46717-23-eq30.png)

![$ _{ \pm s}{\left[ {{\cal D}_\alpha ^\dag } \right]_{Lm}} = \sum\limits_i {{e^{ \mp is\chi \left( {{{\hat n}_i}} \right)}}_{ \pm s}\left( {{{\hat n}_i}} \right){\,_{ \pm s}}Y_{Lm}^ * \left( {{{\hat n'}_i}} \right)} , $](/articles/aa/full_html/2023/10/aa46717-23/aa46717-23-eq31.png)

![$ \matrix{ { - {1 \over 2}\left( {_s{{\left[ {{\cal D}_\alpha ^\dag } \right]}_{Lm}} + {{\left( { - 1} \right)}^s}_{ - s}{{\left[ {{\cal D}_\alpha ^\dag } \right]}_{Lm}}} \right)\,\,\,\,\left( {{G_{Lm}}} \right)} \hfill \cr { - {1 \over {2i}}\left( {_s{{\left[ {{\cal D}_\alpha ^\dag } \right]}_{Lm}} - {{\left( { - 1} \right)}^s}_{ - s}{{\left[ {{\cal D}_\alpha ^\dag } \right]}_{Lm}}} \right)\,\,\,\left( {{G_{Lm}}} \right)} \hfill \cr } $](/articles/aa/full_html/2023/10/aa46717-23/aa46717-23-eq32.png)

![$ \left[ {{{\cal D}_\alpha }{\cal D}_\alpha ^\dag } \right]\left( {{{\hat n}_i},{{\hat n}_j}} \right) \sim {{{\delta ^D}\left( {{{\hat n}_i} - {{\hat n}_j}} \right)} \over {\left| {A\left( {{{\hat n}_i}} \right)} \right|}},\,\,\,\,\left( {{\bf{\alpha }}\,{\rm{invertible}}} \right), $](/articles/aa/full_html/2023/10/aa46717-23/aa46717-23-eq77.png)