Fig. 4

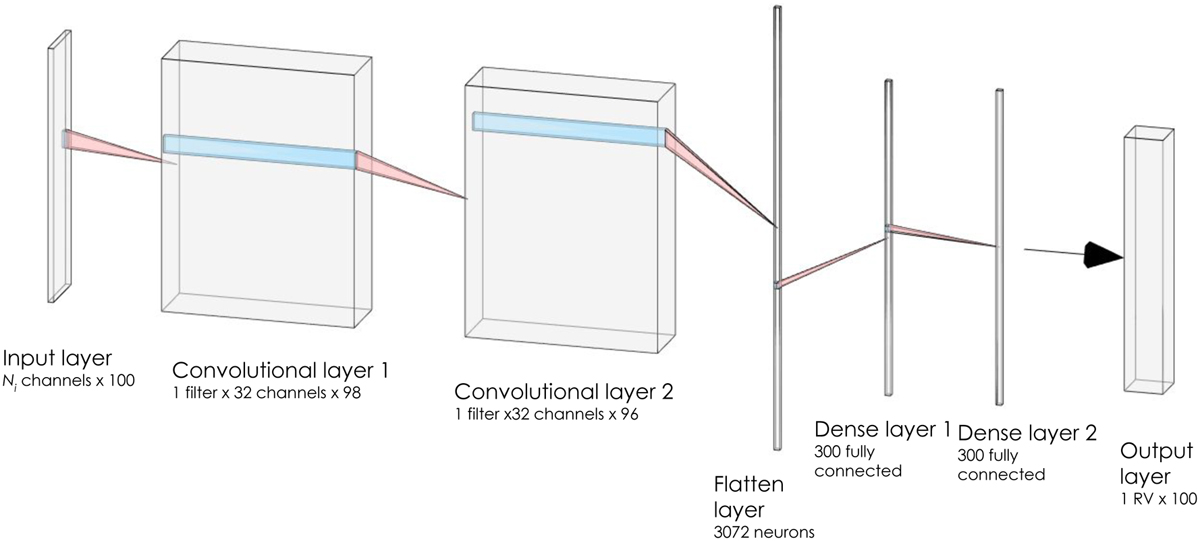

Neural network architecture used throughout this study. The input layer consists of 100 measurements of Ni activity indicators, and the output layer (or label) is the corresponding 100 RVs. The first two hidden layers are convolutional layers, each with 32 kernels applied to three neighbouring neurons. After the second convolutional layer, the outputs of all neurons are flattened into a single vector, and three subsequent fully connected layers transform this vector into the final RV prediction. The response functions of all the neurons are rectified linear units (ReLUs), which outperform other non-linear functions in terms of convergence speed and computational time. As discussed in the text, small modifications to this architecture do not significantly change the obtained results.
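The layer sequence described in the caption can be traced with a minimal NumPy forward pass. This is only a sketch under stated assumptions, not the authors' implementation: the number of activity indicators (`n_indicators`), the widths of the fully connected layers (`hidden`), the zero padding that keeps the length at 100, the random weight initialisation, and the linear (non-ReLU) final output are all hypothetical choices that the caption does not specify.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def conv1d_same(x, w, b):
    """1D convolution over three neighbouring points.

    x: (length, c_in), w: (3, c_in, c_out), b: (c_out,).
    Zero padding (an assumption) keeps the output length equal to the input length.
    """
    k = w.shape[0]
    pad = k // 2
    xp = np.pad(x, ((pad, pad), (0, 0)))
    # windows[l] holds the k neighbouring rows centred on position l
    windows = np.stack([xp[i:i + x.shape[0]] for i in range(k)], axis=1)
    return np.einsum("lkc,kco->lo", windows, w) + b

def init_params(rng, n_indicators=3, n_kernels=32, hidden=(128, 128), n_points=100):
    """Random weights; all sizes except 32 kernels and 100 points are hypothetical."""
    def dense(n_in, n_out):
        return rng.normal(0.0, np.sqrt(2.0 / n_in), (n_in, n_out)), np.zeros(n_out)
    def conv(c_in, c_out):
        return rng.normal(0.0, np.sqrt(2.0 / (3 * c_in)), (3, c_in, c_out)), np.zeros(c_out)
    flat = n_points * n_kernels
    return {
        "conv1": conv(n_indicators, n_kernels),
        "conv2": conv(n_kernels, n_kernels),
        "fc1": dense(flat, hidden[0]),
        "fc2": dense(hidden[0], hidden[1]),
        "fc3": dense(hidden[1], n_points),
    }

def forward(params, x):
    """x: (100, n_indicators) activity indicators -> (100,) predicted RVs."""
    h = relu(conv1d_same(x, *params["conv1"]))   # (100, 32)
    h = relu(conv1d_same(h, *params["conv2"]))   # (100, 32)
    h = h.reshape(-1)                            # flattened vector, 100 * 32 = 3200
    h = relu(h @ params["fc1"][0] + params["fc1"][1])
    h = relu(h @ params["fc2"][0] + params["fc2"][1])
    # Final layer left linear (assumption) so that negative RVs can be represented
    return h @ params["fc3"][0] + params["fc3"][1]

rng = np.random.default_rng(0)
params = init_params(rng)
x = rng.normal(size=(100, 3))   # synthetic stand-in for the activity indicators
rv_pred = forward(params, x)
print(rv_pred.shape)            # (100,)
```

With these assumed sizes, the flattened vector after the second convolutional layer has 3200 entries, so the first fully connected layer holds most of the weights in the network.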
