| Issue | A&A Volume 664, August 2022 | |
|---|---|---|
| Article Number | A52 | |
| Number of page(s) | 13 | |
| Section | Astronomical instrumentation | |
| DOI | https://doi.org/10.1051/0004-6361/202243107 | |
| Published online | 09 August 2022 | |

Large Interferometer For Exoplanets (LIFE)

IV. Ideal kernel-nulling array architectures for a space-based mid-infrared nulling interferometer

Research School of Astronomy and Astrophysics, College of Science, Australian National University, 2611 Canberra, Australia

e-mail: jonah.hansen@anu.edu.au

Received: 13 January 2022

Accepted: 30 May 2022

Aims. Optical interferometry from space for the purpose of detecting and characterising exoplanets is seeing a revival, specifically from missions such as the proposed Large Interferometer For Exoplanets (LIFE). A default assumption since the design studies of Darwin and TPF-I has been that the Emma X-array configuration is the optimal architecture for this goal. Here, we examine whether new advances in the field of nulling interferometry, such as the concept of kernel-nulling, challenge this assumption.

Methods. We develop a tool designed to derive the photon-limited signal-to-noise ratio of a large sample of simulated planets for different architecture configurations and beam combination schemes. We simulate four basic configurations: the double Bracewell/X-array, and three kernel-nullers with three, four, and five telescopes respectively.

Results. We find that a configuration of five telescopes in a pentagonal shape, using a five-aperture kernel-nulling scheme, outperforms the X-array design in both search (finding more planets) and characterisation (obtaining better signal, faster) when the total collecting area is conserved. This is especially the case when trying to detect Earth twins (temperate, rocky planets in the habitable zone), showing a 23% yield increase over the X-array. On average, we find that a five-telescope design receives 1.2 times more signal than the X-array design.

Conclusions. With the results of this simulation, we conclude that the Emma X-array configuration may not be the best choice of architecture for the upcoming LIFE mission, and that a five-telescope design utilising kernel-nulling concepts will likely provide better scientific return for the same collecting area, provided that technical solutions for the required achromatic phase shifts can be implemented.

Key words: telescopes / instrumentation: interferometers / techniques: interferometric / infrared: planetary systems / methods: numerical / planets and satellites: terrestrial planets

© J. T. Hansen et al. 2022

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe-to-Open model. Subscribe to A&A to support open access publication.

1 Introduction

Optical interferometry from space remains one of the key technologies that promises to bring an unprecedented look into high angular resolution astrophysics. This is particularly true in the booming field of exoplanet detection and characterisation; the Voyage 2050 plan of the European Space Agency (ESA; Voyage 2050 Senior Committee 2021) recently recommended that the study of temperate exoplanets and their atmospheres in the mid-infrared (MIR) be considered for a large-scale mission, provided it can be proven to be technologically feasible. The Large Interferometer For Exoplanets (LIFE) initiative (Quanz et al. 2022) was developed to achieve this goal, using a space-based MIR nulling interferometer to find and characterise temperate exoplanets around stars that would otherwise be too challenging or infeasible for other techniques such as single-aperture coronagraphy or transit spectroscopy.

The notion of using a space-based nulling interferometer to look for planets is not new. It was first proposed by Bracewell (1978), and then further developed by Léger et al. (1995) and Angel & Woolf (1997) among others, leading to two simultaneous concept studies of large scale missions by ESA (the Darwin mission) and NASA (the Terrestrial Planet Finder – Interferometer (TPF-I) mission). Unfortunately for those excited by the prospect of a space-based interferometer, both missions were dropped by their corresponding agencies due to a combination of funding issues, technical challenges, and lack of scientific understanding of the underlying exoplanet population.

These concerns have been tackled substantially in the recent decade. Exoplanet space missions such as Kepler (Borucki et al. 2010), TESS (Ricker et al. 2015) and CHEOPS (Broeg et al. 2013), and radial velocity surveys on instruments including HIRES (Vogt et al. 1994) and HARPS (Mayor et al. 2003), have provided the community with a vast trove of knowledge concerning exoplanet demographics (e.g. Petigura et al. 2018; Fulton & Petigura 2018; Berger et al. 2020; Hansen et al. 2021). On the technical side, missions such as PROBA-3 (Loreggia et al. 2018) and Pyxis (Hansen & Ireland 2020, Hansen et al., in prep.) aim to demonstrate formation-flight control at the level required for interferometry, while the Nulling Interferometry Cryogenic Experiment (NICE; Gheorghe et al. 2020) aims to demonstrate MIR nulling interferometry at the sensitivity required for a space mission under cryogenic conditions. Other developments, such as in MIR photonics (Kenchington Goldsmith et al. 2017; Gretzinger et al. 2019) and MIR detectors (Cabrera et al. 2020), have also progressed to the point where a space-based MIR interferometer is significantly less technically challenging.

In this light, we wish to revisit the studies into array architecture that were conducted during the Darwin/TPF-I era, and identify which architecture design (with how many telescopes) is best suited to both detecting and characterising exoplanets. Since the initial trade-off studies (Lay et al. 2005), the assumed architecture of an optical or MIR nulling interferometer has been the Emma X-array configuration: four collecting telescopes in a rectangle formation that reflect light to an out-of-plane beam combiner. Functionally, this design acts as two Bracewell interferometers with a π/2 phase chop between them. However, new developments in the theory of nulling interferometry beam combination, particularly that of kernel-nulling (Martinache & Ireland 2018; Laugier et al. 2020), allow other configurations to obtain the same robustness as the X-array, and so the X-array may no longer be the best configuration for the purposes of the proposed LIFE mission.

Kernel-nulling is a generalised concept that allows the beam combination of multiple telescopes to be robust to second-order piston and optical path delay (OPD) errors. The X-array described above, with the ± π/2 phase chop (sine-chop) between the two nulled arms, is equivalent to a kernel nuller that is signal-to-noise optimal at a single wavelength, thus offering similar robustness against piston errors (Velusamy et al. 2003; Lay 2004). The advantages of kernel-nullers (or equivalently the sine-chopped X-array) also extend to the removal of symmetric background sources such as local zodiacal light and exozodiacal light (Defrère et al. 2010) due to their asymmetric responses. This symmetric source suppression was the reason that the sine-chop was favoured over the cosine-chop (chopping between 0 and π) in the original investigations of the double Bracewell (Velusamy et al. 2003). These benefits lead us to postulate that all competitive nullers for exoplanet detection must be kernel-nullers, or alternatively offer the same benefits even if they are not labelled as such (e.g. the double Bracewell/X-array).

Here, we build a simulator that identifies the signal-to-noise ratio (S/N) of a simulated population of planets for a given telescope array architecture, to identify which configuration is best suited for both the detection of an exoplanet (where the orbital position of a planet may not be known) and the characterisation of an exoplanet’s atmosphere (where the orbital position of a planet is known). Four architectures are compared: the default sine-chopped X-array configuration and three kernel-nullers with three, four, and five telescopes respectively. To compare these configurations, we base our analysis on the relative merits of the transmission maps associated with each architecture. We highlight here that we only consider the photon-noise-limited case, with instrumental errors discussed in a follow-up paper (Hansen et al. 2022).

2 Model Implementation

In our model, we adopt a similar spectral range to that of the initial LIFE study (Quanz et al. 2022, hereafter labelled LIFE1), spanning 4−19 μm. We assume Nλ = 50 spectral channels, giving a spectral bandpass of 0.3 μm per channel.
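As a quick check of the channel arithmetic above, the following minimal sketch builds the spectral grid. Equal-width channels are an assumption of this sketch (the text only quotes the range, the number of channels, and the bandpass), and the variable names are illustrative.

```python
import numpy as np

# Values quoted in the text: 4-19 micron range, 50 spectral channels.
LAMBDA_MIN_UM = 4.0
LAMBDA_MAX_UM = 19.0
N_CHANNELS = 50

# Assumption: channels are equally wide in wavelength.
edges_um = np.linspace(LAMBDA_MIN_UM, LAMBDA_MAX_UM, N_CHANNELS + 1)
centres_um = 0.5 * (edges_um[:-1] + edges_um[1:])
widths_um = np.diff(edges_um)
```

With these inputs every channel is (19 − 4)/50 = 0.3 μm wide, matching the bandpass stated above.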

2.1 Star and Planet Populations

To draw our population of planets, we use the P-Pop simulator tool1, as found in Kammerer & Quanz (2018). This tool uses Monte Carlo Markov chains to draw a random population of planets with varying orbital and physical parameters around a set input catalogue of stars. We chose to use the LIFE star catalogue (version 3), as described in LIFE1. This catalogue contains main-sequence stars primarily of spectral types F through M within 20 pc without close binaries; histograms of the stellar properties in the input catalogue can be found in Fig. A.1 of LIFE1. The underlying planet population was drawn using results from NASA’s ExoPaG SAG13 (Kopparapu et al. 2018), with planetary radii spanning between 0.5 and 14.3 R⊕ (the lower and upper bounds of atmosphere-retaining planets covered in Kopparapu et al. 2018) and periods between 0.5 and 500 days. As in LIFE1, binaries with a separation greater than 50 AU are treated as single stars, and all planets are assumed to have circular orbits. We run the P-Pop simulator ten times to produce ten universes of potential planetary parameters, in order for our calculation of S/N detection rates to be robust. We also highlight to the reader that the underlying population used in this paper is different to the one used in LIFE1, and that comparisons between the planet detection yields in these works must be made with caution.

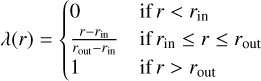

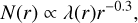

To create our stellar and planetary photometry, we approximate the star as a blackbody radiator and integrate the Planck function over the spectral channels. For the planet, we consider two contributions: thermal and reflected radiation. The thermal radiation is generated by approximating the planet as a blackbody, and utilising the effective temperature and radius generated from P-Pop. For the effective temperature, we note that P-Pop uses a random number for the Bond albedo in the range AB ∈ [0,0.8). The reflected radiation, on the other hand, is the host star’s blackbody spectrum scaled by the semi-major axis and albedo of the planet. We assume the reflected spectral radiance is related to the host star by

(1)

where Ag is the mid-infrared geometric albedo from P-Pop (Ag ∈ [0,0.1)), fp is the Lambertian reflectance, Rp is the radius of the planet, and a is the semi-major axis. We note here, however, that typically the reflected light component of an exoplanet’s flux is negligible when compared to the thermal radiation in the MIR wavelength regime.
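The photometry described above can be sketched as follows. This is a minimal illustration, not the simulator used in this work: the function names are hypothetical, the Earth-Sun analogue at 10 pc is an illustrative input, the value fp = 1/π is a placeholder, and the reflected term assumes the standard contrast scaling Ag fp (Rp/a)² applied to the stellar flux.

```python
import numpy as np

H = 6.62607015e-34    # Planck constant [J s]
C = 2.99792458e8      # speed of light [m s^-1]
K_B = 1.380649e-23    # Boltzmann constant [J K^-1]

def photon_radiance(wavelength_m, temperature_k):
    """Blackbody spectral photon radiance [photons s^-1 m^-2 sr^-1 m^-1]."""
    x = H * C / (wavelength_m * K_B * temperature_k)
    return 2.0 * C / wavelength_m**4 / np.expm1(x)

def channel_flux(edges_m, temperature_k, radius_m, distance_m, n_sub=64):
    """Photon flux [photons s^-1 m^-2] per channel from a blackbody sphere."""
    solid_angle = np.pi * (radius_m / distance_m) ** 2
    flux = np.empty(len(edges_m) - 1)
    for i in range(len(flux)):
        lam = np.linspace(edges_m[i], edges_m[i + 1], n_sub)
        rad = photon_radiance(lam, temperature_k)
        # Trapezoidal integration of the radiance across the channel.
        flux[i] = solid_angle * np.sum(0.5 * (rad[1:] + rad[:-1]) * np.diff(lam))
    return flux

# Hypothetical Earth-Sun analogue at 10 pc (illustrative values only).
PC, AU = 3.0857e16, 1.496e11
edges_m = np.linspace(4e-6, 19e-6, 51)
star = channel_flux(edges_m, 5780.0, 6.957e8, 10 * PC)
thermal = channel_flux(edges_m, 255.0, 6.371e6, 10 * PC)

# Assumed form of the reflected term: A_g * f_p * (R_p / a)^2 times the
# stellar flux; A_G and F_P are placeholder values for this sketch.
A_G, F_P = 0.1, 1.0 / np.pi
reflected = A_G * F_P * (6.371e6 / AU) ** 2 * star
```

For this analogue the reflected flux near 10 μm is well below one per cent of the thermal flux, in line with the remark that reflected light is typically negligible in the MIR.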

2.2 Architectures

In this work, we define two specific modes for the array: search and characterisation. The search mode is where the array is optimised for a single, predefined radius around a star (nominally the habitable zone, HZ) with the aim of detecting new planets. The array spins, and any modulated signal can then be detected as a planet. It should be noted that the planet is almost certainly not in the optimised location of the array. For our purposes, we consider ideal signal extraction, with a single planet only. We also assume the array rotates an integer number of symmetry angles (e.g. 72 degrees for the five-telescope array) during an observation. For the characterisation mode, we assume we know the angular position of the planet we are characterising and optimise the array for that position. As such, no array rotation is required. This stage focuses on obtaining the highest signal of the planet possible.

We consider four architectures to compare in this analysis: the X-array design based on the double Bracewell configuration (the default choice inherited from the Darwin/TPF-I trade studies; Lay et al. 2005), and then three sets of kernel-nullers based on the work of Martinache & Ireland (2018) and Laugier et al. (2020), using three, four, and five telescopes respectively. We assume that the beam combining spacecraft is out of the plane of the collector spacecraft (the ‘Emma’ configuration) so that any two-dimensional geometry can be realised.

To obtain the responses of each architecture, we create a transmission map of each telescope configuration normalised by the flux per telescope. We first must define a reference baseline to optimise the array around. We chose to use the shortest baseline in any of the configurations (that is, adjacent telescopes). If we wish to maximise the response of the interferometer at a given angular separation from the nulled centre δ, the baseline should be calculated as

$$ B = \Gamma_B \frac{\lambda_B}{\delta}, \tag{2} $$

where λB is a reference wavelength (that is, a wavelength for which the array is optimised) and ΓB is an architecture-dependent scale factor. This factor must be configured for each different architecture as well as for each nulled output; that is, the array may be optimised for only a single nulled output.
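As a worked example of this scaling, the sketch below evaluates the baseline B = ΓB λB/δ, which is the relation implied by expressing the optimised separation as ΓB in units of λB/B. The 10 μm reference wavelength and the 0.1 arcsec separation are hypothetical inputs chosen for illustration, and `optimal_baseline` is an illustrative name.

```python
import numpy as np

ARCSEC_TO_RAD = np.pi / (180.0 * 3600.0)

def optimal_baseline(gamma_b, ref_wavelength_m, separation_rad):
    """Shortest baseline [m] that places the response peak at separation_rad."""
    return gamma_b * ref_wavelength_m / separation_rad

# Hypothetical inputs: Gamma_B = 0.59, a 10 micron reference wavelength
# (assumed here), and a target separation of 0.1 arcsec.
baseline_m = optimal_baseline(0.59, 10e-6, 0.1 * ARCSEC_TO_RAD)
```

For these inputs the baseline comes out at roughly 12 m; halving the target separation doubles the required baseline.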

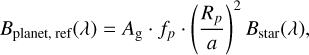

The on-sky intensity response is given by

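$$ R_l(\boldsymbol{\alpha}) = \left| \sum_k M_{k,l}\, e^{2\pi i\, \boldsymbol{u}_k \cdot \boldsymbol{\alpha}} \right|^2, \tag{3} $$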

where Mk,l is the transfer matrix of the beam combiner, taking k telescopes and turning them into l outputs; uk are the locations of the telescopes in units of wavelength; and α is the angular on-sky coordinate.

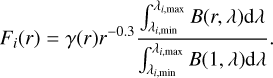

The responses of the different architectures are thus defined by their telescope coordinates uk and their transfer matrix Mk,l. Each architecture will produce a different number of robust observables (NK), which are generated from linear combinations of the response maps Rl(α) (Martinache & Ireland 2018). It should be noted that we make the approximation that the electric field is represented by a scalar, and we are not considering systematic instrumental effects. We provide, however, some technical requirements on phase stability that will be discussed in Sect. 3.1, and we will examine systematic instrumental effects in a follow-up publication (Hansen et al. 2022). For the following discussion, all configuration diagrams and response maps can be found in Fig. 1.
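The on-sky response can be evaluated numerically along the following lines. This is a sketch under stated assumptions: the transfer matrix is stored with one row per output and one column per telescope, unit-amplitude fields are assumed at each telescope, and the two-telescope Bracewell combiner is used only as a minimal check (it is not one of the four architectures compared here). The name `response_maps` is illustrative.

```python
import numpy as np

def response_maps(transfer_matrix, positions_wl, alpha):
    """Intensity response of every combiner output.

    transfer_matrix: (n_outputs, n_telescopes) complex array (rows = outputs).
    positions_wl:    (n_telescopes, 2) telescope positions in wavelengths.
    alpha:           (..., 2) on-sky angular offsets in radians.
    Returns an array of shape (n_outputs, ...).
    """
    phases = 2.0 * np.pi * np.tensordot(alpha, positions_wl.T, axes=1)
    fields = np.exp(1j * phases)                       # (..., n_telescopes)
    outputs = np.tensordot(fields, transfer_matrix.T, axes=1)
    return np.moveaxis(np.abs(outputs) ** 2, -1, 0)

# Two-telescope Bracewell nuller as a minimal check.
b_wl = 1.0e6                                           # baseline in wavelengths
bracewell = np.array([[1, 1], [1, -1]]) / np.sqrt(2)
positions = np.array([[0.5 * b_wl, 0.0], [-0.5 * b_wl, 0.0]])
alpha = np.array([[0.0, 0.0], [0.5 / b_wl, 0.0]])      # on-axis, first fringe peak
bright, null = response_maps(bracewell, positions, alpha)
```

On axis all of the light (two telescope fluxes) appears in the bright output and none in the nulled output; at an offset of λ/2B the situation is reversed.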

2.2.1 X-array

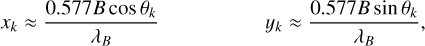

We first consider the default assumed architecture of the LIFE mission (as described in LIFE1), and that of the Darwin and TPF-I trade studies (Lay 2004; Lay et al. 2005): the X-array, or double Bracewell. This design consists of four telescopes in a rectangle, with a shorter ‘nulling’ baseline (defined here as B) and a longer ‘imaging’ baseline (defined through the rectangle ratio c, such that the length is cB). The pairs of telescopes along each nulling baseline are combined with a π phase shift along one of the inputs. Then, the two nulled outputs are combined with a π/2 phase chop. A diagram of the arrangement is in Fig. 1.A.a.

We simply define the Cartesian positions of the telescopes as

$$ x_k = \left[0.5B, -0.5B, -0.5B, 0.5B\right], \tag{4} $$

$$ y_k = \left[0.5cB, 0.5cB, -0.5cB, -0.5cB\right]. \tag{5} $$

The transfer matrix of the system can be written as

$$ \mathbf{M}_{k,l} = \frac{1}{2}\begin{bmatrix} \sqrt{2} & \sqrt{2} & 0 & 0 \\ 1 & -1 & i & -i \\ i & -i & 1 & -1 \\ 0 & 0 & \sqrt{2} & \sqrt{2} \end{bmatrix}, \tag{6} $$

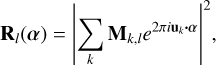

thus providing us with l = 4 outputs. We can see from the matrix that the responses R0 and R3 contain the majority of the starlight, and the other two phase-chopped nulled outputs (R1 and R2) contain the planet signal. A single robust observable is simply given by the difference in intensities of these two outputs (NK = 1). The observable, and its associated transmission map, are thus:

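$$ K_1(\boldsymbol{\alpha}) = R_1(\boldsymbol{\alpha}) - R_2(\boldsymbol{\alpha}), \qquad T_1(\boldsymbol{\alpha}) = \frac{1}{2}\left[R_1(\boldsymbol{\alpha}) + R_2(\boldsymbol{\alpha})\right]. \tag{7} $$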

We note here that, in practice, when considering radially symmetric emission sources such as stellar or exozodiacal leakage, the two response maps are equivalent and so the transmission map can be functionally written as either $R_1(\boldsymbol{\alpha})$ or $R_2(\boldsymbol{\alpha})$. This is not true for all orientations of edge-on disks, and hence we define the transmission as the average of the two responses.
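The X-array observable can be checked numerically with the sketch below. It assumes the telescope ordering (x, y) = (0.5B, 0.5cB), (−0.5B, 0.5cB), (−0.5B, −0.5cB), (0.5B, −0.5cB), so that each nulled pair shares a y coordinate, takes the kernel as R1 − R2 and the transmission as their average, and works in sky coordinates scaled to λ/B. The function name `xarray_maps` is illustrative.

```python
import numpy as np

def xarray_maps(ax, ay, c=6.0):
    """Kernel and transmission maps of the X-array.

    ax, ay: sky offsets along the nulling and imaging baselines, in units
    of lambda/B. Returns (K, T) in units of single-telescope flux.
    """
    # Nulled rows of Eq. (6); the two bright rows are not needed here.
    nulled = 0.5 * np.array([[1, -1, 1j, -1j], [1j, -1j, 1, -1]])
    # Assumed ordering: telescopes 1-2 and 3-4 form the two nulling pairs.
    tx = np.array([0.5, -0.5, -0.5, 0.5])
    ty = c * np.array([0.5, 0.5, -0.5, -0.5])
    phase = 2.0 * np.pi * (np.multiply.outer(ax, tx) + np.multiply.outer(ay, ty))
    r = np.abs(np.exp(1j * phase) @ nulled.T) ** 2
    return r[..., 0] - r[..., 1], 0.5 * (r[..., 0] + r[..., 1])

rng = np.random.default_rng(0)
ax, ay = rng.uniform(-2, 2, size=(2, 1000))
kernel, transmission = xarray_maps(ax, ay)
# Closed form for this ordering, used here only as a cross-check.
closed_form = -4.0 * np.sin(np.pi * ax) ** 2 * np.sin(2.0 * np.pi * 6.0 * ay)
```

For this ordering the kernel reduces to K = −4 sin²(πx) sin(2πcy), with x and y in units of λ/B, which makes both the anti-symmetry of the map and its peak amplitude of four telescope fluxes explicit.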

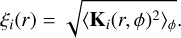

To identify the baseline scale factor ΓB that provides maximum sensitivity, we calculated the modulation efficiency of the array as a function of radial position in units of λ/B. The modulation efficiency ξ, as defined in Dannert et al. (2022) and described in Lay (2004), is a measurement of the signal response that a source should generate as the array is rotated. This is essentially a RMS average over azimuthal angles, given as a function of radius by Dannert et al. (2022):

$$ \xi(r) = \sqrt{\left\langle K(r, \phi)^2 \right\rangle_\phi}. \tag{8} $$

We note, however, that in this work we normalise the modulation efficiency by the flux per telescope rather than the total flux.

Assuming a rectangle ratio of c = 6 (that is, the imaging baseline is six times the size of the nulling baseline, as suggested by Lay 2006), we plot the modulation efficiency as a function of radius in Fig. 1.A.b. The position that gives a maximum efficiency indicates that the baseline scale factor should be ΓB = 0.59. A map of the observable, in units of λB/B, is shown in Fig. 1.A.c, along with a circle highlighting the radial separation that has the highest modulation efficiency.
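The search for the baseline scale factor can be sketched as below: the X-array kernel is sampled on rings of radius r (in units of λ/B), the RMS over azimuth is taken as the modulation efficiency, and the radius of the maximum is read off. The telescope ordering, the radial search range of 0.05 to 1.5, and the function names are assumptions of this sketch.

```python
import numpy as np

def xarray_kernel(x, y, c=6.0):
    """Kernel map K = R1 - R2 for sky offsets (x, y) in units of lambda/B."""
    nulled = 0.5 * np.array([[1, -1, 1j, -1j], [1j, -1j, 1, -1]])
    tx = np.array([0.5, -0.5, -0.5, 0.5])
    ty = c * np.array([0.5, 0.5, -0.5, -0.5])
    phase = 2.0 * np.pi * (np.multiply.outer(x, tx) + np.multiply.outer(y, ty))
    r = np.abs(np.exp(1j * phase) @ nulled.T) ** 2
    return r[..., 0] - r[..., 1]

def modulation_efficiency(radii, n_phi=720, c=6.0):
    """RMS of the kernel map over azimuth, for each radius in lambda/B."""
    phi = np.linspace(0.0, 2.0 * np.pi, n_phi, endpoint=False)
    rr, pp = np.meshgrid(radii, phi, indexing="ij")
    kernel = xarray_kernel(rr * np.cos(pp), rr * np.sin(pp), c)
    return np.sqrt(np.mean(kernel**2, axis=1))

# Assumed search range; the peak location sets the baseline scale factor.
radii = np.arange(0.05, 1.5, 0.005)
xi = modulation_efficiency(radii)
gamma_b = radii[np.argmax(xi)]
```

For c = 6 the maximum found this way should land close to the ΓB = 0.59 quoted above; because the efficiency here is in units of single-telescope flux, it cannot exceed four.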

2.2.2 Kernel-3 Nuller

The Kernel-3 nuller arrangement is simply three telescopes in an equilateral triangle. This is similar to what was studied in the Darwin era (Karlsson et al. 2004), although the beam combination scheme is different and is instead based on a kernel-nuller (see Sect. 5.1 in Laugier et al. 2020).

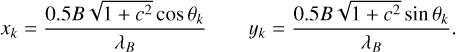

We can consider the Cartesian coordinates of the telescopes to be

$$ x_k = \frac{B}{\sqrt{3}} \cos\theta_k, \qquad y_k = \frac{B}{\sqrt{3}} \sin\theta_k, \tag{9} $$

where $\theta = \left[0, \frac{2\pi}{3}, \frac{4\pi}{3}\right]$ and B is the baseline of adjacent spacecraft. A schematic of the design is shown in Fig. 1.B.a.

The nulling transfer matrix is:

$$ \mathbf{M}_{k,l} = \frac{1}{\sqrt{3}}\begin{bmatrix} 1 & 1 & 1 \\ 1 & e^{\frac{2\pi i}{3}} & e^{\frac{4\pi i}{3}} \\ 1 & e^{\frac{4\pi i}{3}} & e^{\frac{2\pi i}{3}} \end{bmatrix}. \tag{10} $$

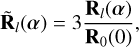

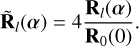

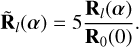

We note that there is a single kernel-null here (NK = 1), given by the difference in the last two outputs, with the starlight going into the first output. We first normalise the transmission to flux per telescope,

(11)

and the kernel-null response (and associated transmission map) is given by

(12)

As with the X-array design, through calculating the modulation efficiency for different radii plotted in Fig. 1.B.b, we identified that the baseline scale factor for this configuration should be ΓB = 0.666. A map of the kernel-null response is shown in Fig. 1.B.c.

Fig. 1 Telescope configurations (sub-figures a), modulation efficiency curves as a function of radial position for each kernel (sub-figures b), and kernel maps (sub-figures c) for each of the simulated architectures (X-array (A), Kernel-3 (B), Kernel-4 (C), and three different scalings of the Kernel-5 (D-F)). The dashed line on the modulation efficiency plots, and the circle on the kernel maps, correspond to the angular separation that the array is optimised for, and defines the value of ΓB. In general, this corresponds to the angular separation with the highest modulation efficiency at the reference wavelength. Angular position in these plots is given in units of λB/B, and the transmission is given in units of single telescope flux.

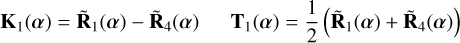

2.2.3 Kernel-4 Nuller

The Kernel-4 nuller is the most interesting of the architectures, as it directly competes with the X-array design in terms of number of telescopes. Instead of having only two nulled outputs, however, this design produces three kernel-null observables (NK = 3) through the use of an extra optical mixing stage in the beam combination process (Martinache & Ireland 2018), potentially providing more information for signal demodulation. Furthermore, this design only has one bright output and so should make more use of the planet flux. Conversely, as the signal is split between multiple outputs, there will be less signal per output than for the X-array.

This design requires non-redundant baselines, and so rectangular geometries will not be appropriate. Instead, a right-angled kite design was chosen due to its ability to be parameterised around a circle. The kite is defined by two parameters, as with the X-array design: the short baseline (B) and the ratio of the longer side to the shorter side (c). A schematic is in Fig. 1.C.a.

The azimuthal angular positions of the telescopes can be derived through the geometry of the right kite, yielding

$$ \theta = \left[\frac{\pi}{2} - \beta, \frac{\pi}{2}, \frac{\pi}{2} + \beta, \frac{3\pi}{2}\right], \qquad \beta = 2\tan^{-1}\left(\frac{1}{c}\right), \tag{13} $$

and Cartesian coordinates of

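$$ x_k = \frac{B}{2}\sqrt{1 + c^2}\,\cos\theta_k, \qquad y_k = \frac{B}{2}\sqrt{1 + c^2}\,\sin\theta_k. \tag{14} $$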

The beam combination transfer matrix, as given in Martinache & Ireland (2018), is

$$ \mathbf{M}_{k,l} = \frac{1}{4}\begin{bmatrix} 2 & 2 & 2 & 2 \\ 1 + e^{i\theta} & 1 - e^{i\theta} & -1 + e^{i\theta} & -1 - e^{i\theta} \\ 1 - e^{-i\theta} & -1 - e^{-i\theta} & 1 + e^{-i\theta} & -1 + e^{-i\theta} \\ 1 + e^{i\theta} & 1 - e^{i\theta} & -1 - e^{i\theta} & -1 + e^{i\theta} \\ 1 - e^{-i\theta} & -1 - e^{-i\theta} & -1 + e^{-i\theta} & 1 + e^{-i\theta} \\ 1 + e^{i\theta} & -1 - e^{i\theta} & 1 - e^{i\theta} & -1 + e^{i\theta} \\ 1 - e^{-i\theta} & -1 + e^{-i\theta} & -1 - e^{-i\theta} & 1 + e^{-i\theta} \end{bmatrix}, \tag{15} $$

where θ is the optical mixing angle, set to $\pi/2$. We note that there are four inputs and seven outputs in this design, accounting for the one bright output and three pairs of nulled outputs. Again, we normalise the output to be in terms of telescope flux:

(16)

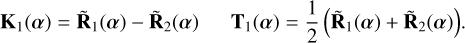

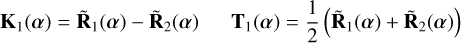

The kernel-null responses are the difference between the adjacent null responses, and are listed with their associated transmission maps below:

(17)

(18)

(19)

Finally, as with the previous architectures, we identified the baseline scale factor through an analysis of the modulation efficiency as a function of radius (Fig. 1.C.b). In particular, we found that the baseline scale factor is ΓB = 0.4 when the kite has a ratio of c = 1.69. This ratio was chosen as the three kernel-null outputs have maxima in roughly the same place. In fact, due to the symmetry of the kite, kernels 1 and 3 are anti-symmetrical and have the exact same radial RMS response. This can be seen in the plots of the kernel-nulls in Fig. 1.C.c.
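Two properties of the Kernel-4 setup can be verified with a short sketch: that the seven-output combiner conserves flux and sends no on-axis starlight to the six nulled outputs, and that the circle parameterisation gives a kite with sides B and cB. The matrix rows below follow the pairing structure of Martinache & Ireland (2018) as understood here, with each nulled pair related by conjugation; the circle radius (B/2)√(1 + c²) is derived from the right-angle geometry. Function names are illustrative.

```python
import numpy as np

def kernel4_matrix(theta=np.pi / 2):
    """Seven-output combiner: one bright row followed by three nulled pairs."""
    p, m = np.exp(1j * theta), np.exp(-1j * theta)
    rows = [
        [2, 2, 2, 2],
        [1 + p, 1 - p, -1 + p, -1 - p],
        [1 - m, -1 - m, 1 + m, -1 + m],
        [1 + p, 1 - p, -1 - p, -1 + p],
        [1 - m, -1 - m, -1 + m, 1 + m],
        [1 + p, -1 - p, 1 - p, -1 + p],
        [1 - m, -1 + m, -1 - m, 1 + m],
    ]
    return 0.25 * np.array(rows, dtype=complex)

def kite_positions(baseline, c):
    """Right-kite telescope positions on a circle (short side = baseline)."""
    beta = 2.0 * np.arctan(1.0 / c)
    theta = np.array([np.pi / 2 - beta, np.pi / 2, np.pi / 2 + beta, 3 * np.pi / 2])
    radius = 0.5 * baseline * np.sqrt(1.0 + c**2)
    return radius * np.column_stack([np.cos(theta), np.sin(theta)])

matrix = kernel4_matrix()
positions = kite_positions(1.0, 1.69)
# Side lengths going around the kite: two short sides, then two long sides.
sides = np.linalg.norm(positions - np.roll(positions, -1, axis=0), axis=1)
```

The columns of the matrix are orthonormal, so the total output flux equals the total input flux, and each nulled row sums to zero, which is the condition for rejecting an on-axis point source.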

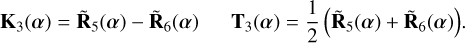

2.2.4 Kernel-5 Nuller

The final architecture we consider is the Kernel-5 nuller; five telescopes arranged in a regular pentagonal configuration, shown in Figs. 1.D.a–F.a. The positions of the telescopes are given by

$$ x_k = \frac{B}{2\sin(\pi/5)} \cos\theta_k, \qquad y_k = \frac{B}{2\sin(\pi/5)} \sin\theta_k, \tag{20} $$

where again B is the separation between adjacent spacecraft (that is, the short baseline), and $\theta = \left[0, \frac{2\pi}{5}, \frac{4\pi}{5}, \frac{6\pi}{5}, \frac{8\pi}{5}\right]$.

The transfer matrix for this beam combiner is extrapolated from that of the Kernel-3 nuller:

$$ \mathbf{M}_{k,l} = \frac{1}{\sqrt{5}}\begin{bmatrix} 1 & 1 & 1 & 1 & 1 \\ 1 & e^{\frac{2\pi i}{5}} & e^{\frac{4\pi i}{5}} & e^{\frac{6\pi i}{5}} & e^{\frac{8\pi i}{5}} \\ 1 & e^{\frac{4\pi i}{5}} & e^{\frac{8\pi i}{5}} & e^{\frac{2\pi i}{5}} & e^{\frac{6\pi i}{5}} \\ 1 & e^{\frac{6\pi i}{5}} & e^{\frac{2\pi i}{5}} & e^{\frac{8\pi i}{5}} & e^{\frac{4\pi i}{5}} \\ 1 & e^{\frac{8\pi i}{5}} & e^{\frac{6\pi i}{5}} & e^{\frac{4\pi i}{5}} & e^{\frac{2\pi i}{5}} \end{bmatrix}. \tag{21} $$

Here, we have one bright output and two pairs of nulled outputs that can produce kernel-nulls (NK = 2). Again, we normalise the outputs to that of one telescope flux:

(22)

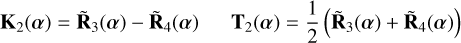

The two kernel-nulls and their associated transmission maps are given by

(23)

(24)

Now, after the same modulation efficiency analysis, it was found that there were maximum peaks at different places for each of the two kernel-nulls. Due to this, we simulated three different versions of the Kernel-5 nuller with different values for the baseline scaling. These values are ΓB = 0.66, 1.03, and 1.68, and are shown overlaid on the modulation efficiency curves in Figs. 1.D.b–F.b respectively. In the forthcoming analysis, these arrangements will be distinguished through the value of their baseline scale factor. The kernel maps of each of these scaled versions of the Kernel-5 array are found in Figs. 1.D.c–F.c.
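The Kernel-3 and Kernel-5 combiners share the same discrete-Fourier structure, so both can be sketched with one routine. The sketch assumes that each kernel-null is the difference of a complex-conjugate pair of nulled rows (rows l and n − l), that the telescopes sit on a regular polygon of circumradius B/(2 sin(π/n)), and that sky coordinates are scaled to λ/B. Function names are illustrative.

```python
import numpy as np

def dft_nuller(n):
    """n x n combiner: bright first row, remaining rows are nulled outputs."""
    idx = np.arange(n)
    return np.exp(2j * np.pi * np.outer(idx, idx) / n) / np.sqrt(n)

def polygon_positions(n, baseline):
    """Regular n-gon with adjacent-telescope separation equal to baseline."""
    radius = baseline / (2.0 * np.sin(np.pi / n))
    angles = 2.0 * np.pi * np.arange(n) / n
    return radius * np.column_stack([np.cos(angles), np.sin(angles)])

def kernel_maps(n, alpha_wl):
    """Kernel-nulls for sky offsets alpha_wl of shape (m, 2), in lambda/B."""
    fields = np.exp(2j * np.pi * alpha_wl @ polygon_positions(n, 1.0).T)
    resp = np.abs(fields @ dft_nuller(n).T) ** 2       # (m, n) output intensities
    # Assumed pairing: rows l and n - l are complex conjugates of each other.
    pairs = [(l, n - l) for l in range(1, (n + 1) // 2)]
    return np.stack([resp[:, a] - resp[:, b] for a, b in pairs])

alpha = np.random.default_rng(1).uniform(-3, 3, size=(500, 2))
k5 = kernel_maps(5, alpha)   # two kernel-nulls
k3 = kernel_maps(3, alpha)   # one kernel-null
```

With this pairing the maps are anti-symmetric under α → −α and vanish on axis, and their amplitude is bounded by the number of telescopes.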

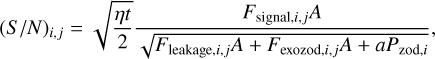

2.3 Signal and Noise Sources

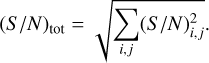

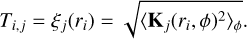

To determine whether a planet is detectable, or to determine the extent by which a planet can be characterised in a certain amount of time, we use the S/N metric. In our analysis, we are assuming we are photon-noise limited and hence only consider photon-noise sources. Considering fringe-tracking noise would require a model of the power-spectrum noise in the servo loop, which is highly dependent on the architecture and beyond the scope of this paper, although it will be briefly considered in the discussion. The sources we include are: stellar leakage, the starlight that seeps past the central null; zodiacal light, thermal emission of dust particles in our solar system; and exozodiacal light, the equivalent of zodiacal light but from the target planet’s host star. The S/N of each ith spectral channel and jth kernel-null can be calculated using

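$$ \mathrm{S/N}_{i,j} = \sqrt{\frac{\eta t}{2}}\, \frac{A\, F_{\mathrm{signal},i,j}}{\sqrt{A\left(F_{\mathrm{leakage},i,j} + F_{\mathrm{exozod},i,j}\right) + a P_{\mathrm{zod},i}}}, \tag{25} $$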

where η is the throughput of the interferometer, t is the exposure time, A is the telescope area, Fsignal,i,j is the planet flux, Fleakage,i,j is the stellar leakage flux, Fexozod,i,j is the exozodiacal light flux, and Pzod,i is the zodiacal power. Each of these flux values is defined in the following sections. The parameter a is a scaling factor for the zodiacal light of the Kernel-4 kite configuration; due to the extra split, the zodiacal light is reduced by a factor of two. Hence a = 0.5 for the Kernel-4, and a = 1 for the other configurations. The zodiacal light is a background source and is thus not dependent on the telescope area. The factor of $\sqrt{2}$ included in the calculation stems from the use of the difference of two nulled maps for the signal. We then combine the separate spectral channel S/Ns and different kernel map S/Ns through Lay (2004):

$$ \mathrm{S/N}_{\mathrm{tot}} = \sqrt{\sum_{i,j} \mathrm{S/N}_{i,j}^2}. \tag{26} $$

Before addressing the separate noise sources used in the simulation, we mention briefly the monochromatic sensitivity of these configurations at a known star-planet separation and position angle. In the cases of the X-array, Kernel-3, and Kernel-5, there are spatial locations where the kernel map has a transmission amplitude equal to the number of telescopes. This means that in the case of known star-planet separation, for a single wavelength, all the planet light comes out of one output, and the background is split between all outputs. In turn this means that, for the same total collecting area, they have identical S/N in the cases of zodiacal and photon noise. This is a maximum S/N in the case of a background-limited chopped measurement. Hence the main differences between these architectures come from their relative polychromatic responses (and modulation efficiencies in the cases of array rotation and planet detection), and their sensitivity to different noise sources, notably the depth of the null with regards to stellar leakage. For the Kernel-4, due to the transmission maxima of one output never occurring at the minima of other outputs, the maximum S/N is 8% lower than the X-array’s theoretical maximum with four telescopes. As an aside, if no nulling was required and a single telescope of the same collecting area was used in an angular chopping mode, it would also have the same S/N (albeit with a slightly different architecture, as two neighbouring sky positions would be simultaneously recorded while chopping).
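The photon-limited S/N bookkeeping described in this section can be sketched as follows. The per-channel expression is an assumed form built from the quantities defined above (signal photons over the square root of the background photons, with a factor of √2 for differencing two nulled outputs, and with the planet's own shot noise neglected); the quadrature sum over channels and kernels follows the combination rule stated in the text. All names and numerical inputs are hypothetical.

```python
import numpy as np

def snr_channel(signal, leakage, exozodi, zodi_power, area,
                throughput, exposure, zodi_scale=1.0):
    """Photon-limited S/N for one spectral channel and one kernel-null.

    Fluxes are photon rates per unit collecting area; zodi_power is a
    photon rate that does not scale with telescope area. zodi_scale is
    the factor a (0.5 for the Kernel-4, 1 otherwise).
    """
    background = area * (leakage + exozodi) + zodi_scale * zodi_power
    # Assumed: sqrt(2) penalty from differencing two nulled outputs.
    return np.sqrt(throughput * exposure) * area * signal / np.sqrt(2.0 * background)

def snr_total(snr_values):
    """Combine per-channel, per-kernel S/N values in quadrature."""
    return np.sqrt(np.sum(np.square(snr_values)))

# Hypothetical numbers, for illustration only.
snr_short = snr_channel(1.0, 5.0, 2.0, 40.0, area=3.0, throughput=0.05, exposure=100.0)
snr_long = snr_channel(1.0, 5.0, 2.0, 40.0, area=3.0, throughput=0.05, exposure=400.0)
combined = snr_total(np.array([3.0, 4.0]))
```

In this form the S/N grows as the square root of the exposure time, so quadrupling the exposure doubles the S/N, and two measurements with S/N of 3 and 4 combine to a total of 5.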

2.3.1 Planet Signal

The signal of the planet depends on which mode of observation we are using: search or characterisation. We recall that the search mode is where the array is optimised for a single point around a star and made to rotate so that any planet signal is modulated. We choose the angle at which the array is optimised as the centre of the HZ. We calculate the HZ distances using the parameterisation of Kopparapu et al. (2013), with the coefficients for the outer edge being ‘early Mars’ and the inner edge being ‘recent Venus’. Specifically, we have that the stellar flux at the inner edge and outer edge of the HZ are given by

(27)

(28)

where T is the effective temperature of the star minus the Sun’s temperature (T = Teff − 5780 K) and

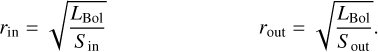

We scale the stellar flux using the bolometric luminosity of the star, LBol, to obtain the radius:

(29)

Finally, we adopt the mean of this range and divide by the stellar distance to obtain the angular position of the centre of the HZ. This position is used to set the baseline of the array, which in turn creates the response maps (as in Sect. 2.2). We then take the angular separation of the planet, ri, scaled by the central wavelength of each channel, λi, and average the kernel-null observable map over azimuthal angles (akin to the modulation efficiency). Specifically, the planet transmission in the kernel-nulled transmission map Kj, j ∈ [1, ..., NK], averaged over angles ϕ is

(30)

(30)

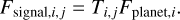

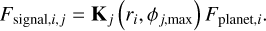

The planet signal is then just the transmission multiplied by the planet flux (consisting of both thermal and reflected contributions) in the different i ∈ [1,Nλ] spectral channels:

(31)

(31)

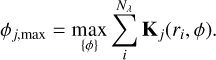
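The search-mode signal described above can be sketched as below. This is only a minimal illustration: the azimuthal average is taken here as a root mean square (an assumption, since the exact form of the average is not recoverable from the text), the map coordinates are assumed to be in units of λB/B, and `linear_map` is a toy stand-in for a real kernel map. All function names are hypothetical.

```python
import numpy as np

def azimuthal_rms(kernel_map, r, n_phi=360):
    """RMS of a kernel map over azimuth at map radius r (assumed averaging)."""
    phi = np.linspace(0.0, 2.0 * np.pi, n_phi, endpoint=False)
    values = kernel_map(r * np.cos(phi), r * np.sin(phi))
    return np.sqrt(np.mean(values**2))

def planet_signal(kernel_map, sep, wavelengths, ref_wavelength, planet_flux):
    """Per-channel planet signal: averaged transmission times planet flux.

    sep is the planet separation in units of lambda_B / B at the reference
    wavelength; the same sky position maps to a smaller radius at longer
    wavelengths.
    """
    radii = sep * ref_wavelength / np.asarray(wavelengths)
    trans = np.array([azimuthal_rms(kernel_map, r) for r in radii])
    return trans * np.asarray(planet_flux)

def linear_map(x, y):
    """Toy kernel map used only to exercise the code."""
    return x

signal = planet_signal(linear_map, 1.0, [10e-6, 20e-6], 10e-6, [2.0, 2.0])
```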

For the characterisation mode, we assume we know precisely where the planet under investigation is. Hence, the optimised angular separation is the same as the angular separation of the planet at the characterisation wavelength. To identify the best azimuthal angle of the array to ensure the highest signal, we find the azimuthal angle that gives the maximum summed transmission over wavelengths:

(32)

This angle is then used to calculate the wavelength-dependent transmission of the planet, which, when multiplied by the planet flux, gives the signal:

(33)
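A minimal sketch of the azimuth selection in characterisation mode is given below, assuming the kernel map can be evaluated on arbitrary coordinates. The function names and the toy map are hypothetical; the search simply evaluates the map on a grid of azimuths, sums over wavelength channels, and keeps the angle with the largest sum.

```python
import numpy as np

def best_azimuth(kernel_map, radii, n_phi=720):
    """Azimuth maximising the transmission summed over wavelength channels.

    radii holds the planet's map radius in each channel. Returns the best
    angle (rad) and the per-channel transmission at that angle.
    """
    phi = np.linspace(0.0, 2.0 * np.pi, n_phi, endpoint=False)
    r = np.asarray(radii)[:, None]                          # (n_lambda, 1)
    trans = kernel_map(r * np.cos(phi), r * np.sin(phi))    # (n_lambda, n_phi)
    idx = np.argmax(trans.sum(axis=0))
    return phi[idx], trans[:, idx]

def linear_map(x, y):
    """Toy kernel map used only to exercise the code."""
    return x + 0.0 * y

phi_best, trans_best = best_azimuth(linear_map, [1.0, 0.5])
planet_flux = np.array([2.0, 2.0])        # placeholder fluxes
signal = trans_best * planet_flux
```

A real kernel map can take negative values, so an implementation may prefer to maximise the absolute value of the summed transmission; the sketch follows the wording of the text.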

2.3.2 Stellar Leakage

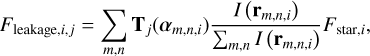

Stellar leakage is the light from the star that ‘leaks’ into the nulled outputs. The stellar leakage flux is the summed grid of the stellar flux (for each spectral channel i) multiplied by a normalised limb darkening law I(r) and the jth transmission function:

(34)

(34)

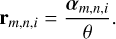

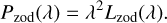

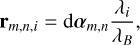

where rm,n,i is the linear coordinate in units of stellar radius θ given by

(35)

(35)

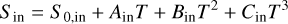

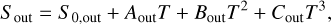

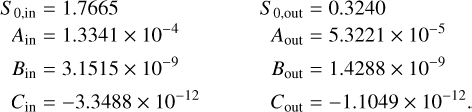

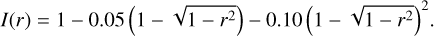

We use a standard second-order limb darkening law where r is the radius from the centre of the transmission map (in units of stellar radius). Coefficients are from Claret & Bloemen (2011), using the Spitzer 8 μm filter and assuming a typical K dwarf with T = 5000 K, log(g)= 4.5, and [Fe/H] = 0:

(36)
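The leakage sum can be illustrated with the sketch below, for a single spectral channel. It assumes the common quadratic form of a second-order limb darkening law, I(μ) = 1 − a(1 − μ) − b(1 − μ)², with μ = √(1 − r²); the coefficient values used in the usage lines are placeholders rather than the Claret & Bloemen (2011) values, and the function names are hypothetical.

```python
import numpy as np

def limb_darkening(r, a, b):
    """Quadratic limb darkening, zero outside the disc (r in stellar radii)."""
    mu = np.sqrt(np.clip(1.0 - r**2, 0.0, None))
    profile = 1.0 - a * (1.0 - mu) - b * (1.0 - mu) ** 2
    return np.where(r <= 1.0, profile, 0.0)

def stellar_leakage(star_flux, transmission, theta_star, a, b, n=201):
    """Stellar flux leaking through one transmission map.

    transmission(x, y) takes angular offsets in the same units as theta_star.
    """
    axis = np.linspace(-theta_star, theta_star, n)
    x, y = np.meshgrid(axis, axis)
    weights = limb_darkening(np.hypot(x, y) / theta_star, a, b)
    weights /= weights.sum()          # normalise: the disc sums to one
    return star_flux * np.sum(weights * transmission(x, y))

# Placeholder coefficients and a toy second-order null (assumptions)
leak = stellar_leakage(100.0, lambda x, y: x**2, 1e-3, 0.1, 0.1)
```

With a transmission of unity everywhere, the function returns the full stellar flux, which is a convenient sanity check on the normalisation.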

2.3.3 Zodiacal Light

Zodiacal light is the primary background source for space telescopes, being the light reflected (and thermally radiated) from dust in the solar system. To calculate this, we use the James Webb Space Telescope (JWST) background calculator2, as this telescope operates in the same wavelength range.

To begin with, we find the zodiacal light spectral radiance in the direction of the star’s celestial coordinates. The JWST calculator returns the spectral radiance expected over the course of a year (this is due to the different position of the sun with respect to the telescope). Due to the Emma array type design, with the beam combiner out of the plane of the collector telescopes, we assume that the interferometer can only look in an anti-solar angle from 45 to 90 degrees. On average, this corresponds roughly to the 30th percentile of the yearly distribution, and so we adopt this radiance.

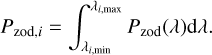

We note that, assuming the background over the field of view is isotropic, the solid angle subtended by the telescope PSF is proportional to

$$ \Omega \propto \frac{\lambda^2}{A}, \qquad (37) $$

where A is the telescope aperture area. Hence we can convert the spectral radiance of the zodiacal light to spectral power (ph/s/m) by multiplying by the square of the wavelength:

(38)

We then calculate the background for each ith spectral channel by integrating over wavelength:

(39)
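The two steps above (multiplying the radiance by λ² and integrating over each channel) might look like the following sketch. It assumes the radiance has already been converted to photon units per unit wavelength (ph/s/m²/sr/m); the conversion from the calculator's native units is not shown, and the function name and channel edges are hypothetical.

```python
import numpy as np

def zodiacal_background(wavelengths, radiance, channel_edges):
    """Background (ph/s) per channel from lambda^2 * radiance, trapezoidal rule."""
    wavelengths = np.asarray(wavelengths)
    power = wavelengths**2 * np.asarray(radiance)   # spectral power (ph/s/m)
    out = []
    for lo, hi in zip(channel_edges[:-1], channel_edges[1:]):
        sel = (wavelengths >= lo) & (wavelengths <= hi)
        lam, p = wavelengths[sel], power[sel]
        out.append(np.sum(0.5 * (p[1:] + p[:-1]) * np.diff(lam)))
    return np.array(out)

# Flat placeholder radiance over 4-19 micron, split into two channels
lam = np.linspace(4e-6, 19e-6, 15001)
bg = zodiacal_background(lam, np.ones_like(lam), [4e-6, 10e-6, 19e-6])
```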

2.3.4 Exozodiacal Light

Exozodiacal light is the equivalent of zodiacal light in other planetary systems. To calculate this, we scale the local zodiacal background by a number of ‘exozodis’, calculated through P-Pop from the distributions in Ertel et al. (2020). One exozodi is equivalent to the zodiacal light in our own solar system. We assume the exozodiacal background is not clumpy and is distributed face on (with an inclination of zero). These assumptions may not be realistic, but further analysis of the complications of exozodiacal light is beyond the scope of this work. We point the reader to Defrère et al. (2010) for a better treatment of this background source.

We start by calculating the local zodiacal spectral radiance as seen looking at the ecliptic pole using the JWST calculator as before with the zodiacal background calculation. Unlike before, we take the minimum value over the course of the year. We then integrate over each spectral channel, giving us the radiance in each channel:

(40)

(40)

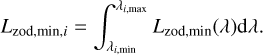

Now, we convert our angular sky grid αm,n into a linear grid with units of AU, again scaling by the channel’s central wavelength:

(41)

(41)

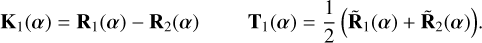

where d is the distance to the star in AU and αm,n is the angular coordinate in radians. We overlay the radial surface density distribution of zodiacal dust, which is assumed to scale with heliocentric distance as a power law with exponent 0.34 (Kennedy et al. 2015). We also factor in a density depletion factor λ(r), as proposed by Stenborg et al. (2021), to account for the depletion of dust as the heliocentric distance decreases. That is,

(42)

(42)

for rin = 3 R⊙, rout = 19 R⊙.

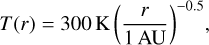

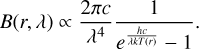

We then calculate the flux distribution through the Planck function. The temperature distribution of the dust is scaled by

(44)

assuming the dust at 1 AU is at 300 K. Assuming blackbody radiation, the dust flux scales as

(45)

The flux distribution then, normalised at a radial distance of 1 AU for each spectral channel, is

(46)

To get the scaled flux distribution, we multiply by the local scale factor at 1 AU, given by:

(47)

where z is the number of exozodis (from P-Pop). The factor of two arises from looking through two halves of the exozodiacal disk (as opposed to only looking through half of the local disk). Finally, we calculate the exozodiacal flux in each channel by multiplying the dust distribution by the jth transmission map (Tj), the solid angle of each pixel (Ω), and the local scale factor, and then summing over the grid:

(48)
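The chain of steps for the exozodiacal flux can be sketched roughly as follows. Several ingredients are assumptions made for illustration only: the dust temperature is taken to fall as r^-0.5 from 300 K at 1 AU, the surface density as r^-0.34, the inner depletion factor is omitted, and all function and parameter names are hypothetical. Pixels at r = 0 would need to be masked before use.

```python
import numpy as np

H = 6.62607015e-34   # Planck constant (J s)
C = 2.99792458e8     # speed of light (m/s)
KB = 1.380649e-23    # Boltzmann constant (J/K)

def planck(wavelength, temp):
    """Blackbody spectral radiance B_lambda (W m^-2 sr^-1 m^-1)."""
    x = H * C / (wavelength * KB * temp)
    return 2.0 * H * C**2 / wavelength**5 / np.expm1(x)

def dust_profile(r_au, wavelength, t_1au=300.0, temp_exp=-0.5, dens_exp=-0.34):
    """Relative dust brightness, normalised to 1 at r = 1 AU (assumed scalings)."""
    temp = t_1au * r_au**temp_exp
    return r_au**dens_exp * planck(wavelength, temp) / planck(wavelength, t_1au)

def exozodi_flux(r_au, transmission, pixel_solid_angle, local_radiance, z, wavelength):
    """Grid sum of dust profile times transmission, scaled by 2 * z * local radiance."""
    scale = 2.0 * z * local_radiance   # factor of two: both halves of the disc
    return np.sum(dust_profile(r_au, wavelength) * transmission) * pixel_solid_angle * scale

# Single-pixel toy grid at 1 AU with placeholder values
flux = exozodi_flux(np.array([[1.0]]), np.array([[1.0]]), 1.0, 3.0, 2.0, 10e-6)
```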

3 Results and Discussion

3.1 Search Phase

We begin by showing the number of detected exoplanets in the HZ for each of the six architectures, and also over three different reference wavelengths (λB = 10, 15, and 18 μm). We define an exoplanet as being detected when the total S/N over all the wavelength channels is greater than seven, the same threshold as in LIFE1. Due to the technical challenges and physical restrictions that are imposed on a space interferometer, we set a limit to the possible baselines available. First, no two spacecraft can form a baseline shorter than 5 m, otherwise they run the risk of colliding with each other. Second, the baselines cannot extend beyond 600 m; this is where formation flying metrology may become more difficult and is the number used in the initial LIFE estimates (LIFE1). For any configurations outside of these limits, we set the S/N to be zero.
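The detection rule and baseline limits above can be expressed as a short sketch. Combining the per-channel S/N values in quadrature is an assumption made here for illustration, and the function names are hypothetical.

```python
import numpy as np

def total_snr(channel_snr, baselines_m, min_baseline=5.0, max_baseline=600.0):
    """Total S/N over all channels, set to zero if any baseline is out of range."""
    b = np.asarray(baselines_m, dtype=float)
    if b.min() < min_baseline or b.max() > max_baseline:
        return 0.0
    # Quadrature sum over wavelength channels (assumed combination rule)
    return float(np.sqrt(np.sum(np.square(channel_snr))))

def is_detected(channel_snr, baselines_m, threshold=7.0):
    """A planet counts as detected when the total S/N exceeds the threshold."""
    return total_snr(channel_snr, baselines_m) > threshold

print(is_detected([5.0, 5.0], [10.0, 100.0]))
```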

Unlike in LIFE1, we do not optimise integration time for our target list; instead we derive our estimates assuming a five-hour integration time for every target. This number was chosen as a balance between the average integration times used in previous studies (15-35 h in LIFE1, 10 h in Kammerer & Quanz 2018) and avoiding detection saturation of the targets within 20 pc. We emphasise that, due to the difference in integration times, combined with the different underlying planet population along with a different treatment of the zodiacal and exozodiacal light, direct comparison of our estimates with the estimates in LIFE1 is not straightforward and should be treated with care. The purpose of this study is not the raw count of exoplanets detected, but rather the relative performance between architectures.

We assume an optical throughput of the interferometer of 5% and scale each collector’s diameter such that the array as a whole has the same total area equal to four 2 m diameter telescopes. In other words, the Kernel-3 nuller will have larger collectors, while the Kernel-5 nuller will have smaller collectors; this allows a fair comparison by removing to first order the effect that architectures with more apertures have a larger total collecting surface. We divide the number of detections by the number of simulated universes to obtain the average count for one universe’s worth of planets. The plots are shown in Fig. 2.
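As a worked example of the area conservation, the collector diameter for an N-telescope array follows from requiring N collectors to match the area of four 2 m apertures. The helper name below is hypothetical.

```python
import math

def collector_diameter(n_telescopes, ref_count=4, ref_diameter=2.0):
    """Diameter (m) giving n_telescopes the same total area as the reference array."""
    return ref_diameter * math.sqrt(ref_count / n_telescopes)

for n in (3, 4, 5):
    print(n, round(collector_diameter(n), 3))
```

Under this scaling, the Kernel-3 collectors come out at roughly 2.31 m and the Kernel-5 collectors at roughly 1.79 m.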

We can see that the Kernel-5 nullers, particularly those with a larger scale factor, perform the best in detecting HZ planets over all three reference wavelengths. The difference is most stark with λB = 10 μm, where the Kernel-5 nuller detects approximately 50% more planets than the X-array design. This advantage is then lowered at λB = 18 μm, where the X-array detects only slightly fewer planets than the Kernel-5 (with ΓB = 1.03). Generally most detections over all the architectures occur when λB = 18 μm, and so we restrict our analysis in the remaining sections to this reference wavelength. We also note here that our results echo those of Quanz et al. (2022); reference wavelengths between 15 and 20 μm produce similar yields for the X-array configuration, but substantially less at lower wavelengths.

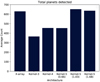

We show in Fig. 3 the total planet yield of each of the architectures. We see again that the Kernel-5 architecture, particularly with a larger scaled baseline, detects substantially more planets than most of the architectures, although the X-array design provides a comparable yield.

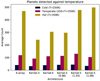

We also split up the exoplanet sample into a few categories. In Fig. 4, we split the detected sample into radii larger than and smaller than 1.5 R⊕. This value is used due to it being the point that is thought to separate rocky, super-Earths from gaseous sub-Neptunes (Rogers 2015; Chen & Kipping 2017). Hence, this can be used as a rough metric of the composition of the planet. Interestingly, we see that the X-array detects mostly gaseous planets (more so than any of the other architectures), while the Kernel-5 nuller detects far more rocky planets (60% more than the X-array at γB = 1.03 and 80% more at γB = 1.68).

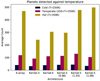

We also split the planets into temperature bins: cold planets at <250 K, temperate planets between 250 and 350 K, and hot planets with T > 350 K. Histograms of the detected count of planets are shown in Fig. 5. We see a pattern similar to the total exoplanets emerging for the numerous hot exoplanets, with the larger baseline Kernel-5 nullers and the X-array finding many more than the others. The other two subsets show only slight differences between configurations, although the Kernel-5 nuller with γB = 1.03 detects marginally more temperate exoplanets.

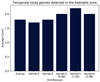

Finally, we show an amalgamation of Figs. 2, 4, and 5 to investigate how each architecture responds to Earth twins: temperate, rocky planets in the HZ. This is shown in Fig. 6. Here, we see that the architectures perform similarly, with all of them detecting between one and two Earth twins (again, assuming a five-hour integration time). That being said, the Kernel-5 nuller at ΓB = 1.03 has a 23% detection increase over the X-array configuration, with an average of 2.2 Earth twins detected.

In Fig. 7, we plot S/N as a function of wavelength for two detectable planets in the HZ of their host stars: a rocky super-Earth around an M-dwarf (D = 1.8 pc, Tp = 300 K, Rp = 1.3 R⊕, z = 3.2 zodis), and a gaseous Neptune-type planet around a K-dwarf (D = 4.9 pc, Tp = 260 K, Rp = 3.9 R⊕, z = 0.14 zodis). The advantages of the Kernel-5 nuller are quite apparent: at shorter wavelengths, the S/N is higher than for other architectures due to a deeper fourth-order null (Guyon et al. 2013) reducing the stellar leakage (especially for the K dwarf), while the complex rotationally symmetric pattern allows for more transmission peaks as a function of wavelength than the other architectures. This results in a more consistent response as a function of wavelength and a higher overall S/N. We also note that, in the search phase, a random planet will have 50% of its light going out of the bright ports in the X-array configuration, while losing only 20% of its light to the bright output for the Kernel-5 nuller.

Taken with the previous plots, it is quite apparent that when it comes to searching for exoplanets, particularly rocky, temperate planets in the HZ, the Kernel-5 nuller performs the best, and especially when the baseline is scaled by ΓB = 1.03.

We briefly consider that, for detectable planets that have a maximum S/N at short wavelengths (4 μm), the zodiacal light contribution is at a minimum; at most, four orders of magnitude below the stellar leakage. As phase variation due to fringe tracking errors is linked to fluctuations in the null depth, and by extension stellar leakage, we can estimate the RMS fringe tracking errors needed to be photon-limited:

$$ \left\langle \phi^2 \right\rangle F_{\rm star} < \max \left[ \frac{P_{\rm zodiacal}}{A}, F_{\rm leakage} \right]. \qquad (49) $$

At worst, therefore, we find that fringe tracking should be better than approximately 9 nm for an M-type star, and 2.5 nm for a G-type star, in order to remain photon-limited by zodiacal light, assuming an aperture diameter of 2 m. For a space-based interferometer, this should be an achievable target.
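To make the use of Eq. (49) concrete, the sketch below solves the inequality for the RMS phase and converts it to a path-length error at a given wavelength. The input fluxes are placeholder numbers chosen only to exercise the arithmetic; they are not values from the simulation and do not reproduce the 9 nm and 2.5 nm figures. The function names are hypothetical.

```python
import math

def max_rms_phase(f_star, p_zodiacal, area, f_leakage):
    """Largest RMS phase error (rad) satisfying <phi^2> F_star < max[P_zod / A, F_leak]."""
    return math.sqrt(max(p_zodiacal / area, f_leakage) / f_star)

def phase_to_opd_nm(phase_rad, wavelength_um):
    """Convert a phase error (rad) to an optical path difference in nm."""
    return phase_rad * wavelength_um * 1e3 / (2.0 * math.pi)

# Placeholder fluxes per unit area (assumptions), 2 m diameter aperture
area = math.pi * 1.0**2
phi = max_rms_phase(f_star=1e8, p_zodiacal=1e2, area=area, f_leakage=1e4)
opd = phase_to_opd_nm(phi, 4.0)
print(f"phase < {phi:.3f} rad, OPD < {opd:.2f} nm at 4 micron")
```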

Fig. 2 Number of planets detected in the habitable zone (HZ) for each architecture, given as an average of the number of detections in each of the ten simulated universes. Each sub-figure shows the detections for three different reference wavelengths (λB).

Fig. 3 Total number of planets detected for each nulling architecture, for a reference wavelength of 18 μm.

Fig. 4 Number of planets detected for each nulling architecture, subdivided between two radii bins centred around 1.5 R⊕, for a reference wavelength of 18 μm. This also acts as a proxy between rocky and gaseous planets.

Fig. 5 Number of planets detected for each nulling architecture, subdivided between hot, temperate, and cold temperature bins, for a reference wavelength of 18 μm.

Fig. 6 Number of Earth twins detected for each nulling architecture using a reference wavelength of 18 μm. An Earth twin is defined as being rocky (R < 1.5 R⊕), temperate (250 < T < 350 K) and in the HZ.

Fig. 7 S/N as a function of wavelength for the different architectures, taken for two example HZ planets.

3.2 Characterisation Phase

The other major component to a LIFE-type space interferometer mission is the characterisation phase, meaning the observation of a known planet for a long enough period of time to receive a spectrum and possibly detect biosignatures (that is, ‘the presence of a gas or other feature that may be indicative of a biological agent’; Schwieterman et al. 2018). Hence the number of planets detectable is not the key parameter here, but rather which architectures produce a better S/N for a given amount of time. Conversely, the best architecture will provide quality spectra in shorter exposure times than the others, allowing for more targets to be observed. In the following discussion we keep the same basic setup from the search phase: a throughput of 5%, a five-hour integration time, conservation of the total collecting area, and a reference wavelength of 18 μm. The planet is now chosen to lie at the maximum of the transmission map (see Sect. 2.3.1).

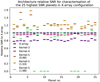

In Fig. 8, we show the relative S/N of the 25 HZ planets with the highest signal in the X-array configuration for the six different architectures. Hence this plot should be inherently biased towards the X-array design. What we see instead is that the Kernel-5 nuller, particularly with ΓB = 0.66 and 1.03, has a consistently higher S/N. Over the 25 planets, we find that these two Kernel-5 configurations on average achieve an S/N 1.2 times higher than the X-array, sometimes reaching as high as 1.6. Conversely, the Kernel-4 nuller performs marginally worse than the X-array (a similar result to the search phase) and the Kernel-3 nuller is one of the worst performing. We note that the points at zero relative S/N are caused by the configuration having a baseline outside of the 5-600 m range; this applies particularly to the Kernel-5 nullers with large baseline scale factors.
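To translate an S/N ratio into observing time, one can use the fact that, in the photon-limited regime assumed throughout, S/N grows as the square root of the integration time. The short sketch below (with a hypothetical helper name) shows the time needed to reach the same S/N as the X-array for a given S/N gain.

```python
def relative_integration_time(snr_gain):
    """Time needed to reach a fixed S/N, relative to the reference architecture.

    Assumes photon-limited noise, so that S/N scales as sqrt(time).
    """
    return 1.0 / snr_gain**2

for gain in (1.2, 1.6):
    print(gain, round(relative_integration_time(gain), 2))
```

Under this assumption, an average gain of 1.2 corresponds to roughly 70% of the X-array integration time for the same spectral quality.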

We also plot the relative S/N compared to the X-array design as a function of stellar distance of a planet in the middle of the HZ of stars of three different spectral types. This is shown in Fig. 9. Each simulated star had parameters based on an archetypal star of that spectral type: a G2V star based on the Sun, a K2V star based on Epsilon Eridani, and an M5V star based on Proxima Centauri. The dashed lines in the plot indicate when the configuration’s baseline moves outside of the acceptable range.

It is evident that these plots echo those of Fig. 8, in that the Kernel-5 nuller consistently produces a better S/N than the X-array. Interestingly though, the Kernel-5 variant with ΓB = 0.66 is significantly better than ΓB = 1.03 at all distances, and especially with M-type stars where the baseline is still short enough to probe stars as far out as 15 pc. It is also better quantitatively, exhibiting 1.3 times the S/N of the X-array design on average, though this falters for stars closer than 5 pc. Based on this, it seems that the ΓB = 0.66 Kernel-5 nuller is the best architecture for characterisation, and by a substantial margin.

One potential reason for this is the ‘zoomed-in’ nature of the transmission map for a smaller scale factor; this produces a greater S/N across the wavelength-dependent angular positions when the planet is at the point of maximum transmission, compared to a ‘zoomed-out’ map with larger radial variance. This same feature is a disadvantage in search mode: if the planet is in a transmission minimum, it may be in the same minimum at more wavelength-dependent positions compared to an architecture with a larger scale factor. Nevertheless, as ΓB is simply a baseline scaling factor, it would be trivial to implement a control such that a Kernel-5 nuller uses a longer ΓB = 1.03 during the search phase and a shorter ΓB = 0.66 during characterisation.

It is also worth noting that the Kernel-5 nuller’s two kernel maps combined contain more spatial information than the single chopped output of the X-array. This would allow the spectrum extraction algorithm (such as the one posited by Dannert et al. 2022), in the almost certain case of a multi-planetary system, to more easily distinguish between the fluxes of multiple planets and increase the constraints of the exoplanet’s astrophysical properties.

Finally, we comment here that we also performed a similar analysis with seven telescopes arranged in a heptagonal configuration. We found that it performed ~15% worse in detection than the Kernel-5 nuller, but ~15% better in characterisation, likely due to the presence of a sixth-order null. However, due to the great increase in complexity, both in terms of optical design as well as management of spacecraft (and the meagre improvement), we did not pursue this line of research further. We hypothesise that the characterisation will continue to improve by increasing the number of telescopes (particularly with odd numbers in order to have a simpler optical design and access to higher order nulls) up to a point where stellar leakage is no longer dominant in the wavelength range. Despite this, the additional design complexity will likely counteract this advantage.

Fig. 8 S/Ns for each architecture, relative to the X-array configuration, of the planets in the HZ with the 25 highest S/Ns when observing with the X-array design.

Fig. 9 S/Ns for each architecture, relative to the X-array configuration, of a planet in the middle of the HZ of its host star as a function of stellar distance. Plotted for three stars with archetypal stellar types.

4 Conclusions

With optical space interferometry once again holding potential as a future mission (in the form of LIFE), it is vital to take a critical look at the assumptions made in the past and adapt them to work in the future. Through our simulations, we find that through the use of a kernel-nulling beam combiner and when conserving the total collecting area, an architecture consisting of five telescopes in a regular pentagonal configuration provides better scientific return than the X-array design inherited from the Darwin and TPF-I trade studies. This holds true for both search and characterisation:

In search mode, with a reference wavelength of λB = 18 μm, the Kernel-5 nuller with a baseline scale factor of ΓB = 1.03 would detect 23% more Earth twins (temperate, rocky and in the HZ) than the X-array. It also finds considerably more rocky planets, HZ planets and total planets than the other architectures.

In characterisation mode, again with a reference wavelength of λB = 18 μm, the Kernel-5 nuller with a baseline scale factor of ΓB = 0.66 has on average an S/N between 1.2 and 1.3 times greater than the X-array. This holds for planets around GKM stars, as well as at a majority of stellar distances.

The fact that search and characterisation modes favour different baseline scale lengths is not a problem in this study, as this scaling factor can be changed in real time depending on the mode in which the interferometer is operating.

Hence, we recommend that future studies and simulations based around a large, exoplanet-hunting, optical space interferometry mission, such as LIFE, consider adopting a Kernel-5 nulling architecture as the basis of the design. There may also be further benefits to this architecture in terms of redundancy; the failure of one of the five collecting spacecraft may not result in the failure of the mission as a whole. Both this and realistic instrumental noise will be addressed in a follow-up article (Hansen et al. 2022).

We note here two small caveats to the recommendation of the Kernel-5 architecture. The first is the additional complexity of having one more spacecraft, though this may be of benefit due to the added redundancy of an extra telescope. Second, and arguably more importantly, implementing the Kernel-5 nuller requires a range of achromatic phase shifts that deviate from the standard π and π/2 phase shifts used in the X-array. A potential implementation of such a beam combination scheme in bulk optics will also be discussed in a follow-up article (Hansen et al. 2022). In principle, photonics may be able to provide an arbitrary achromatic phase shift, but this has yet to be successfully demonstrated at 10 microns and under cryogenic conditions.

The resurrection of optical space interferometry as a tool for exoplanet science holds extreme potential in revolutionising the field and providing humanity with the possible first signs of life on another world. Simultaneously looking back at the past of Darwin and TPF-I, learning from both the achievements and failures made in that era, while also looking forwards at future technologies and applying new research collaboratively, is likely the only way that this dream from decades ago may one day see the faint light of planets far, far away.

Acknowledgements

This project has made use of P-Pop. This research was supported by the ANU Futures scheme and by the Australian Government through the Australian Research Council’s Discovery Projects funding scheme (project DP200102383). The JWST Background program was written by Jane Rigby (GSFC, Jane.Rigby@nasa.gov) and Klaus Pontoppidan (STScI, pontoppi@stsci.edu). The associated background cache was prepared by Wayne Kinzel at STScI, and is the same as used by the JWST Exposure Time Calculator. We also greatly thank Jens Kammerer, Sascha Quanz and the rest of the LIFE team for helpful discussions. The code used to generate these simulations can be found publicly at https://github.com/JonahHansen/LifeTechSim.

References

- Angel, J. R. P., & Woolf, N. J. 1997, ApJ, 475, 373

- Berger, T. A., Huber, D., Gaidos, E., van Saders, J. L., & Weiss, L. M. 2020, AJ, 160, 108

- Borucki, W. J., Koch, D., Basri, G., et al. 2010, Science, 327, 977

- Bracewell, R. N. 1978, Nature, 274, 780

- Broeg, C., Fortier, A., Ehrenreich, D., et al. 2013, Eur. Phys. J. Web Conf., 47, 03005

- Cabrera, M. S., McMurtry, C. W., Forrest, W. J., et al. 2020, J. Astron. Teles. Instrum. Syst., 6, 011004

- Chen, J., & Kipping, D. 2017, ApJ, 834, 17

- Claret, A., & Bloemen, S. 2011, A&A, 529, A75

- Dannert, F., Ottiger, M., Quanz, S. P., et al. 2022, A&A, 664, A22

- Defrère, D., Absil, O., den Hartog, R., Hanot, C., & Stark, C. 2010, A&A, 509, A9

- Ertel, S., Defrère, D., Hinz, P., et al. 2020, AJ, 159, 177

- Fulton, B. J., & Petigura, E. A. 2018, AJ, 156, 264

- Gheorghe, A. A., Glauser, A. M., Ergenzinger, K., et al. 2020, SPIE Conf. Ser., 11446, 114462N

- Gretzinger, T., Gross, S., Arriola, A., & Withford, M. J. 2019, Opt. Express, 27, 8626

- Guyon, O., Mennesson, B., Serabyn, E., & Martin, S. 2013, PASP, 125, 951

- Hansen, J. T., Casagrande, L., Ireland, M. J., & Lin, J. 2021, MNRAS, 501, 5309

- Hansen, J. T., Ireland, M. J., Laugier, R., & the LIFE collaboration 2022, A&A, submitted [arXiv:2204.12291]

- Hansen, J. T., & Ireland, M. J. 2020, PASA, 37, e019

- Kammerer, J., & Quanz, S. P. 2018, A&A, 609, A4

- Karlsson, A. L., Wallner, O., Perdigues Armengol, J. M., & Absil, O. 2004, SPIE Conf. Ser., 5491, 831

- Kenchington Goldsmith, H.-D., Cvetojevic, N., Ireland, M., & Madden, S. 2017, Opt. Express, 25, 3038

- Kennedy, G. M., Wyatt, M. C., Bailey, V., et al. 2015, ApJS, 216, 23

- Kopparapu, R. K., Ramirez, R., Kasting, J. F., et al. 2013, ApJ, 765, 131

- Kopparapu, R. K., Hébrard, E., Belikov, R., et al. 2018, ApJ, 856, 122

- Laugier, R., Cvetojevic, N., & Martinache, F. 2020, A&A, 642, A202

- Lay, O. P. 2004, Appl. Opt., 43, 6100

- Lay, O. P. 2006, SPIE Conf. Ser., 6268, 62681A

- Lay, O. P., Gunter, S. M., Hamlin, L. A., et al. 2005, SPIE Conf. Ser., 5905, 8

- Léger, A., Puget, J. J., Mariotti, J. M., Rouan, D., & Schneider, J. 1995, Space Sci. Rev., 74, 163

- Loreggia, D., Fineschi, S., Bemporad, A., et al. 2018, SPIE, 10695, 1069503

- Martinache, F., & Ireland, M. J. 2018, A&A, 619, A87

- Mayor, M., Pepe, F., Queloz, D., et al. 2003, The Messenger, 114, 20

- Petigura, E. A., Marcy, G. W., Winn, J. N., et al. 2018, AJ, 155, 89

- Quanz, S. P., Ottiger, M., Fontanet, E., et al. 2022, A&A, 664, A21

- Ricker, G. R., Winn, J. N., Vanderspek, R., et al. 2015, J. Astron. Teles. Instrum. Syst., 1, 014003

- Rogers, L. A. 2015, ApJ, 801, 41

- Schwieterman, E. W., Kiang, N. Y., Parenteau, M. N., et al. 2018, Astrobiology, 18, 663

- Stenborg, G., Howard, R. A., Hess, P., & Gallagher, B. 2021, A&A, 650, A28

- Velusamy, T., Angel, R. P., Eatchel, A., Tenerelli, D., & Woolf, N. J. 2003, ESA SP, 539, 631

- Vogt, S. S., Allen, S. L., Bigelow, B. C., et al. 1994, SPIE Conf. Ser., 2198, 362

- Voyage 2050 Senior Committee. 2021, Voyage 2050 - Final Recommendations from the Voyage 2050 Senior Committee

Fig. 1 Telescope configurations (sub-figures a), modulation efficiency curves as a function of radial position for each kernel (sub-figures b), and kernel maps (sub-figures c) for each of the simulated architectures (X-array (A), Kernel-3 (B), Kernel-4 (C), and three different scalings of the Kernel-5 (D-F)). The dashed line on the modulation efficiency plots, and the circle on the kernel maps, correspond to the angular separation that the array is optimised for, and defines the value of ΓB. In general, this corresponds to the angular separation with the highest modulation efficiency at the reference wavelength. Angular position in these plots is given in units of λB/B, and the transmission is given in units of single telescope flux.
