| Issue |

A&A

Volume 604, August 2017

|

|

|---|---|---|

| Article Number | A109 | |

| Number of page(s) | 18 | |

| Section | Cosmology (including clusters of galaxies) | |

| DOI | https://doi.org/10.1051/0004-6361/201629591 | |

| Published online | 22 August 2017 | |

Weak-lensing shear estimates with general adaptive moments, and studies of bias by pixellation, PSF distortions, and noise

Argelander-Institut für Astronomie, Universität Bonn, Auf dem Hügel 71, 53121 Bonn, Germany

e-mail: psimon@astro.uni-bonn.de

Received: 26 August 2016

Accepted: 18 May 2017

In weak gravitational lensing, weighted quadrupole moments of the brightness profile in galaxy images are a common way to estimate gravitational shear. We have employed general adaptive moments (GLAM ) to study causes of shear bias on a fundamental level and for a practical definition of an image ellipticity. The GLAM ellipticity has useful properties for any chosen weight profile: the weighted ellipticity is identical to that of isophotes of elliptical images, and in absence of noise and pixellation it is always an unbiased estimator of reduced shear. We show that moment-based techniques, adaptive or unweighted, are similar to a model-based approach in the sense that they can be seen as imperfect fit of an elliptical profile to the image. Due to residuals in the fit, moment-based estimates of ellipticities are prone to underfitting bias when inferred from observed images. The estimation is fundamentally limited mainly by pixellation which destroys information on the original, pre-seeing image. We give an optimised estimator for the pre-seeing GLAM ellipticity and quantify its bias for noise-free images. To deal with images where pixel noise is prominent, we consider a Bayesian approach to infer GLAM ellipticity where, similar to the noise-free case, the ellipticity posterior can be inconsistent with the true ellipticity if we do not properly account for our ignorance about fit residuals. This underfitting bias, quantified in the paper, does not vary with the overall noise level but changes with the pre-seeing brightness profile and the correlation or heterogeneity of pixel noise over the image. Furthermore, when inferring a constant ellipticity or, more relevantly, constant shear from a source sample with a distribution of intrinsic properties (sizes, centroid positions, intrinsic shapes), an additional, now noise-dependent bias arises towards low signal-to-noise if incorrect prior densities for the intrinsic properties are used. We discuss the origin of this prior bias. With regard to a fully-Bayesian lensing analysis, we point out that passing tests with source samples subject to constant shear may not be sufficient for an analysis of sources with varying shear.

Key words: gravitational lensing: weak / methods: data analysis / methods: statistical

© ESO, 2017

1. Introduction

Over the past decade measurements of distortions of galaxy images by the gravitational lensing effect have developed into an important, independent tool for cosmologists to study the large-scale distribution of matter in the Universe and its expansion history (recent reviews: Schneider 2006; Munshi et al. 2008; Hoekstra & Jain 2008; Massey et al. 2010; Kilbinger 2015). These studies exploit the magnification and shear of galaxy light bundles by the tidal gravitational field of intervening matter. The shear gives rise to a detectable coherent distortion pattern in the observed galaxy shapes. The distortions are usually weak, only of the order of a few per cent of the unlensed shape of a typical galaxy image. Therefore, the key to successfully devising gravitational shear as cosmological tool is accurate measurements of the shapes of many, mostly faint and hardly resolved galaxy images.

There has been a boost of interest in methods of shape measurements in anticipation of the gravitational lensing analysis of the upcoming next generation of wide-field imaging surveys (e.g. Euclid; Laureijs et al. 2011). Despite being sufficient for contemporary surveys, current methodologies are not quite at the required level of accuracy to fully do justice to the quantity of cosmological information we expect from future lensing surveys (Heymans et al. 2006; Massey et al. 2007). The challenge all methodologies face is that observable, noisy (post-seeing) galaxy images are modifications of the actual (pre-seeing) image owing to instrumental and possible atmospheric effects. Post-seeing galaxy images are subject to pixellation as well as instrumental noise, sky noise, photon noise, and random overlapping with very faint objects (Kitching et al. 2012; Hoekstra et al. 2015). In addition, galaxies are not intrinsically circular such that their ellipticities are noisy estimators of the cosmic distortion. Current theoretical work consequently focuses on sources of bias in shape measurements, such as pixel-noise bias, shape-noise bias, underfitting bias, colour gradients, or several selection biases (Hirata & Seljak 2003; Hirata et al. 2004; Mandelbaum et al. 2005; Melchior et al. 2010; Viola et al. 2011; Melchior & Viola 2012; Kacprzak et al. 2012; Massey et al. 2013; Semboloni et al. 2013).

One major source of bias is the pixel-noise bias or simply noise bias hereafter. This bias can at least partly be blamed on the usage of point estimates of galaxy shapes in a statistical analysis, that is single-value estimators of galaxy ellipticities (Refregier et al. 2012). This begs the question of whether it is feasible to eradicate noise bias by means of a more careful treatment of the statistical uncertainties in the measurement of galaxy ellipticities within a fully Bayesian framework. Indeed recent advances in image processing for weak gravitational lensing strongly support this idea, at least for the inference of constant shear (Sheldon 2014; Bernstein & Armstrong 2014, BA14 hereafter; Bernstein et al. 2016, BAKM16 hereafter). In contrast, the contemporary philosophy with point estimates is to perform elaborate, time-consuming calibrations of biased estimators by means of simulated images; the calibration accuracy is, additionally, only as good as the realism of simulated images (e.g. Hoekstra et al. 2015). It is the case that code implementations of non-Bayesian techniques are typically an order of magnitude or more faster than Bayesian codes which could be a decisive factor for upcoming surveys.

Here we take a new look into possible causes of bias in shear measurements on a fundamental level. To this end, we examine, step-by-step with increasing complexity, a fully-Bayesian lensing analysis based on weighted brightness moments of galaxy images (Gelman et al. 2013; MacKay 2003). While the method in BA14 and BAKM16 is set in Fourier space, we work with moments in angular space which has benefits in the case of correlated noise or missing pixels in realistic images. Moment-based methods as ours are non-parametric; this means they are free from assumptions about the galaxy brightness profile. They hence appear to be advantageous for reducing bias, but nonetheless the specific choice of the adaptive weight for the moments is known to produce bias (Viola et al. 2014; Voigt & Bridle 2010). The origin of this problem, which principally also affects unweighted moments, becomes obvious in our formalism. We define as practical measure of galaxy shape a generalization of the impractical ellipticity ϵ expressed in terms of unweighted moments (Kaiser et al. 1995; Seitz & Schneider 1997, SS97 hereafter). Being Bayesian, our measurement of ellipticity results in a Monte-Carlo sample of the probability distribution function (PDF) of ϵ which should be propagated in a fully Bayesian analysis. That is: we do not devise point estimators in order to ideally stay clear of noise bias. This overall approach of general adaptive moments (GLAM ) is inspired by and comparable to Bernstein & Jarvis (2002) apart from the Bayesian framework and some technical differences: (i) for any adaptive weight, the perfectly measured GLAM ellipticity is an unbiased estimator of gravitational shear unaffected by shape-noise bias; (ii) the adaptive weight may have a non-Gaussian radial profile; (iii) our inference of the pre-seeing ellipticity is realised as forward-fitting of elliptical profiles (so-called templates), that is we do not determine the brightness moments of the post-seeing image and correct them to estimate the pre-seeing moments (cf. Hirata & Seljak 2003; Mandelbaum et al. 2005).

As a disclaimer, the GLAM methodology outlined here is prone to bias, even where a fully Bayesian analysis can be realised, and, at this stage, its performance is behind that of other techniques. The aim of this paper is to elucidate causes of bias, instead of proposing a new technique that is competitive to existing techniques. However, these findings are also relevant for other methodologies because model-based or moment-based approaches are linked to GLAM .

For this paper, we exclude bias from practically relevant factors: the insufficient knowledge of the PSF or noise properties, blending of images, and the selection of source galaxies (see e.g. Heymans et al. 2006; Hartlap et al. 2011; Dawson et al. 2016). We focus on the core of the problem of shape measurements which is the inference of pre-seeing ellipticities from images whose full information on the brightness profile have been lost by instrumental limitations.

The paper is laid out as follows. In Sect. 2, we introduce the formalism of GLAM for a practical definition of ellipticity with convenient transformation properties under the action of gravitational shear. We also analytically investigate the limits of measuring the pre-seeing ellipticity from a noise-free but both PSF-convolved and pixellated image. In Sect. 3, we construct a statistical model for the GLAM ellipticity of noisy post-seeing images. We then study the impact of inconsistencies in the posterior model of ellipticity in three, increasingly complex scenarios. First, we analyse with independent exposures of the same pre-seeing image the ellipticity bias due to a misspecified likelihood in the posterior (underfitting bias). Second, we consider samples of noisy images with the same ellipticity but distributions of intrinsic properties such as sizes or centroid positions. Here a new contribution to the ellipticity bias emerges if the prior densities of intrinsic properties are incorrectly specified (prior bias). Third in Sect. 4, we perform numerical experiments with samples of noisy galaxy images of random intrinsic shapes that are subject to constant shear. With these samples we study the impact of inconsistent ellipticity posteriors on shear constraints (shear bias). We also outline details on our technique to Monte-Carlo sample ellipticity or shear posteriors. We discuss our results and possible improvements of the GLAM approach in Sect. 5.

2. General adaptive moments

2.1. Definition

Let I(x) be the light distribution in a galaxy image of infinite resolution and without noise where x is the position on the sky. A common way of defining a (complex) ellipticity of a galaxy image uses the quadrupole moments,  (1)of I(x) relative to a centroid position,

(1)of I(x) relative to a centroid position,  (2)of the image (e.g. Bartelmann & Schneider 2001). For real applications, the quadrupole moments are soundly defined only if they involve a weight, decreasing with separation from the centroid position X0, because galaxies are not isolated so that the normalisation

(2)of the image (e.g. Bartelmann & Schneider 2001). For real applications, the quadrupole moments are soundly defined only if they involve a weight, decreasing with separation from the centroid position X0, because galaxies are not isolated so that the normalisation  and the brightness moments diverge. Hirata & Seljak (2003, H03 hereafter) address the divergence problems by realising an adaptive weighting scheme by minimising the error functional, sometimes dubbed the energy functional,

and the brightness moments diverge. Hirata & Seljak (2003, H03 hereafter) address the divergence problems by realising an adaptive weighting scheme by minimising the error functional, sometimes dubbed the energy functional, ![\begin{equation} \label{eq:functional} E(\vec{p}|I)= \frac{1}{2} \int\d^2x\; \Big[I(\vec{x})-Af(\rho)\Big]^2, \end{equation}](/articles/aa/full_html/2017/08/aa29591-16/aa29591-16-eq8.png) (3)with the quadratic form

(3)with the quadratic form  (4)and the second-order tensor

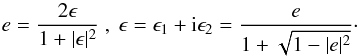

(4)and the second-order tensor  (5)The tensor M is expressed in terms of the complex ellipticity e = e1 + ie2 and the size T of the image; f(ρ) is a weight function that HS03 chose to be a Gaussian weight f(ρ) = e− ρ/ 2. In comparison to HS03, we have slightly changed the definition of ρ for convenience: here we use ρ instead of ρ2. The set p = (A,x0,M), comprises a set of six parameters on which the functional E depends for a given galaxy image I.

(5)The tensor M is expressed in terms of the complex ellipticity e = e1 + ie2 and the size T of the image; f(ρ) is a weight function that HS03 chose to be a Gaussian weight f(ρ) = e− ρ/ 2. In comparison to HS03, we have slightly changed the definition of ρ for convenience: here we use ρ instead of ρ2. The set p = (A,x0,M), comprises a set of six parameters on which the functional E depends for a given galaxy image I.

Frequently another definition of complex ellipticity, the ϵ-ellipticity, is more convenient (sometimes also known as the third flattening). It arises if we write ρ in the form  (6)where V is symmetric. Obviously, we have V2 = M or

(6)where V is symmetric. Obviously, we have V2 = M or  . By writing V in the form

. By writing V in the form  (7)we see that V2 = M implies 2T = t2 (1 + | ϵ | 2), and

(7)we see that V2 = M implies 2T = t2 (1 + | ϵ | 2), and  (8)We henceforth use ϵ as parametrisation of M because ϵ is an unbiased estimator of reduced shear in the absence of a PSF and pixellation (SS97). Conversely, the ellipticity e has to be calibrated with the distribution of unsheared ellipticities which poses another possible source of bias in a lensing analysis (H03).

(8)We henceforth use ϵ as parametrisation of M because ϵ is an unbiased estimator of reduced shear in the absence of a PSF and pixellation (SS97). Conversely, the ellipticity e has to be calibrated with the distribution of unsheared ellipticities which poses another possible source of bias in a lensing analysis (H03).

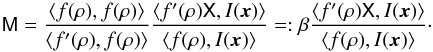

As generally derived in Appendix A, the parameters p at the minimum of (3) are:  (9)and

(9)and  (10)where

(10)where  is a constant. These equations are derived by HS03 for a Gaussian f(ρ), for which we have f′(ρ): = df/ dρ = − f/ 2. This shows that the best-fitting f(ρ) has the same centroid and, up to a scalar factor, the same second moment M = V2 as the with f′(ρ) adaptively weighted image I(x). This is basically also noted in Lewis (2009) where it is argued that the least-square fit of any sheared model to a pre-seeing image I(x) provides an unbiased estimate of the shear, even if it fits poorly.

is a constant. These equations are derived by HS03 for a Gaussian f(ρ), for which we have f′(ρ): = df/ dρ = − f/ 2. This shows that the best-fitting f(ρ) has the same centroid and, up to a scalar factor, the same second moment M = V2 as the with f′(ρ) adaptively weighted image I(x). This is basically also noted in Lewis (2009) where it is argued that the least-square fit of any sheared model to a pre-seeing image I(x) provides an unbiased estimate of the shear, even if it fits poorly.

In this system of equations, the centroid x0 and tensor M are implicitly defined because both f(ρ) and f′(ρ) on the right-hand-side are functions of the unknowns x0 and M: the weights adapt to the position, size, and shape of the image. Equations (9) and (10) therefore need to be solved iteratively. The iteration should be started at a point which is close to the final solution. Such a starting point could be obtained by using the image position, determined by the image detection software, as initial value for x0, and tensor M determined from a circular weight function with the same functional form as f. Nevertheless, there is no guarantee that the solution of this set of equations is unique. In fact, for images with two brightness peaks one might suspect that there are multiple local minima in p of the functional E. This may occur, for instance, in the case of blended images. One standard solution to this particular problem is to identify blends and to remove these images from the shear catalogue. Alternatively we could in principle try to fit two template profiles to the observed image, that is by adding a second profile E2(p2 | I) to the functional (3) and by minimising the new functional with respect to the parameter sets p and p2 of both profiles simultaneously.

2.2. Interpretation

If an image I(x) has confocal elliptical isophotes, with the same ellipticity for all isophotes, one can define the ellipticity of the image uniquely by the ellipticity of the isophotes. In this case, the ellipticity ϵ defined by the minimum of (3) coincides with the ellipticity of the isophotes for any weight f. We show this property in the following.

Assume that the brightness profile I(x) is constant on confocal ellipses so that we can write I(x) = S(ζ) where ζ: = (x − xc)TB-2(x − xc). Here xc denotes the centre of the image, and the matrix elements of B describe the size and the shape of the image, in the same way as we discussed for the matrix V before. The function S(ζ) describes the radial brightness profile of the image. We start by writing (9) in the form  (11)and introduce the transformed position vector z = B-1(x − xc), or x = Bz + xc. Then the previous equation becomes

(11)and introduce the transformed position vector z = B-1(x − xc), or x = Bz + xc. Then the previous equation becomes  (12)where in terms of z the quadratic form ρ is

(12)where in terms of z the quadratic form ρ is  (13)From these equations, we can see that x0 = xc is the solution of (12) since then ρ is an even function of z, S is an even function of z, whereas the term in the parenthesis of (12) is odd, and the integral vanishes due to symmetry reasons. Thus we found that our adaptive moments approach yields the correct centre of the image.

(13)From these equations, we can see that x0 = xc is the solution of (12) since then ρ is an even function of z, S is an even function of z, whereas the term in the parenthesis of (12) is odd, and the integral vanishes due to symmetry reasons. Thus we found that our adaptive moments approach yields the correct centre of the image.

Next, we rewrite (10) in the form  (14)where β, see Eq. (10), is a constant factor (see Appendix A.3). We again used the transformation from x to z = B-1(x − xc) and employed the fact that x0 = xc. Accordingly, we have ρ = zTBV-2Bz. We now show that the solution of (14) is given by V = λB with λ being a scalar factor. Using this Ansatz, we get ρ = λ-2 | z | 2, and (14) can be written, after multiplying from the left and from the right by B-1, as

(14)where β, see Eq. (10), is a constant factor (see Appendix A.3). We again used the transformation from x to z = B-1(x − xc) and employed the fact that x0 = xc. Accordingly, we have ρ = zTBV-2Bz. We now show that the solution of (14) is given by V = λB with λ being a scalar factor. Using this Ansatz, we get ρ = λ-2 | z | 2, and (14) can be written, after multiplying from the left and from the right by B-1, as  (15)Since both S and f′ in the numerator depend solely on | z | 2, the tensor on the right hand side is proportional to the unit tensor 1, and (15) becomes a scalar equation for the scalar λ,

(15)Since both S and f′ in the numerator depend solely on | z | 2, the tensor on the right hand side is proportional to the unit tensor 1, and (15) becomes a scalar equation for the scalar λ,  (16)whose solution depends on the brightness profile S and the chosen weight function f. However, the fact that B differs from V only by the scalar factor λ implies that the derived ellipticity ϵ of V is the same as that of the elliptical image. Therefore we have shown that the approach of adapted moments recovers the true ellipticity with elliptical isophotes for any radial weight function f.

(16)whose solution depends on the brightness profile S and the chosen weight function f. However, the fact that B differs from V only by the scalar factor λ implies that the derived ellipticity ϵ of V is the same as that of the elliptical image. Therefore we have shown that the approach of adapted moments recovers the true ellipticity with elliptical isophotes for any radial weight function f.

For a general brightness profile of the image, the interpretation of the GLAM ellipticity ϵ is less clear, and the ellipticity generally depends on the weight f. Nevertheless, ϵ is uniquely defined as long as a minimum of the functional (3) can be found. More importantly, for any weight f the GLAM ellipticity obeys the same simple transformation law under the action of gravitational shear, as shown in the following section.

2.3. Transformation under shear

We now consider the effect of a shear γ = γ1 + iγ2 and convergence κ on the GLAM ellipticity ϵ (Bartelmann & Schneider 2001). For an image with no noise, no pixellation, and no PSF convolution the ellipticity ϵ should be an unbiased estimate of the reduced shear g = g1 + ig2 = γ (1 − κ)-1. This is clearly true for sources that intrinsically have circular isophotes since the isophotes of the sheared images are confocal ellipses with an ellipticity ϵ = g. The minimum of (3) is hence at g by means of the preceding discussion.

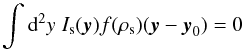

For general images, let ϵs = ϵs,1 + iϵs,2 be the complex ellipticity of the image in the source plane and ϵ = ϵ1 + iϵ2 its complex ellipticity in the lens plane. We show now that for any brightness profile and template f(ρ), GLAM ellipticities have the extremely useful property to transform under the action of a reduced shear according to  (17)This is exactly the well-known transformation obtained from unweighted moments (SS97). To show this generally for GLAM , let Is(y) be the surface brightness of an image in the source plane with source plane coordinates y. The centroid y0 and moment tensor Ms of Is are defined by the minimum of (3) or, alternatively, by the analog of Eqs. (9) and (10) through

(17)This is exactly the well-known transformation obtained from unweighted moments (SS97). To show this generally for GLAM , let Is(y) be the surface brightness of an image in the source plane with source plane coordinates y. The centroid y0 and moment tensor Ms of Is are defined by the minimum of (3) or, alternatively, by the analog of Eqs. (9) and (10) through  (18)and

(18)and  (19)with

(19)with  (20)The ellipticity ϵs is given by

(20)The ellipticity ϵs is given by  (21)We now shear the image Is. The shear and the convergence are assumed to be constant over the extent of the image, that is lens plane positions x are linearly mapped onto source plane positions y by virtue of

(21)We now shear the image Is. The shear and the convergence are assumed to be constant over the extent of the image, that is lens plane positions x are linearly mapped onto source plane positions y by virtue of  where

where  (22)xc and yc are such that the point xc is mapped onto the point yc by the lens equation, and both are chosen to be the central points around which the lens equation is linearised. We then find for the centroid y0 in the source plane

(22)xc and yc are such that the point xc is mapped onto the point yc by the lens equation, and both are chosen to be the central points around which the lens equation is linearised. We then find for the centroid y0 in the source plane  (23)where

(23)where  . This then yields for (20)

. This then yields for (20)  (24)In the next step, the expression (18) for the source centre can be rewritten by transforming to the image coordinates and using the conservation of surface brightness Is(y(x)) = I(x):

(24)In the next step, the expression (18) for the source centre can be rewritten by transforming to the image coordinates and using the conservation of surface brightness Is(y(x)) = I(x):  (25)With the same transformation, we rewrite the moment tensor (19) as

(25)With the same transformation, we rewrite the moment tensor (19) as  (26)On the other hand, minimising the functional (3) for the surface brightness I(x) in the lens plane yields the expressions (9) and (10) for x0 and M, respectively. We then see that Eqs. (25), (26) and (9), (10) agree with each other, if we set

(26)On the other hand, minimising the functional (3) for the surface brightness I(x) in the lens plane yields the expressions (9) and (10) for x0 and M, respectively. We then see that Eqs. (25), (26) and (9), (10) agree with each other, if we set  (27)for which ρs = ρ. In particular, this shows that the centre x0 of the image is mapped onto the centre y0 of the source.

(27)for which ρs = ρ. In particular, this shows that the centre x0 of the image is mapped onto the centre y0 of the source.

The relation  (28)can be rewritten in terms of the square roots of the matrix M as

(28)can be rewritten in terms of the square roots of the matrix M as  (29)where

(29)where  is uniquely defined by requiring that for the symmetric, positive-definite matrix Ms, Vs is symmetric and positive-definite. Although both V and

is uniquely defined by requiring that for the symmetric, positive-definite matrix Ms, Vs is symmetric and positive-definite. Although both V and  are symmetric,

are symmetric,  is in general not. Therefore Vs cannot be readily read off from (29). Instead, we use a rotation matrix

is in general not. Therefore Vs cannot be readily read off from (29). Instead, we use a rotation matrix  (30)to write

(30)to write ![\begin{equation} \mat{V}_{\rm s}^2 = {\cal A}\mat{V}\mat{R}^{-1}(\varphi)\mat{R}(\varphi)\mat{V}{\cal A} = [\mat{R}(\varphi)\mat{V}{\cal A}]^{\rm T}[\mat{R}(\varphi)\mat{V}{\cal A}]. \end{equation}](/articles/aa/full_html/2017/08/aa29591-16/aa29591-16-eq102.png) (31)If we now choose ϕ to be such that

(31)If we now choose ϕ to be such that  is symmetric, then

is symmetric, then  . After a bit of algebra, we find that the rotation angle ϕ is given through

. After a bit of algebra, we find that the rotation angle ϕ is given through  (32)and we obtain as final result Vs as in (21) with

(32)and we obtain as final result Vs as in (21) with  (33)The inverse of (33) is given by

(33)The inverse of (33) is given by  (34)We recover for the GLAM ellipticity ϵ exactly the transformation law of unweighted moments (SS97). The GLAM ellipticity ϵ is therefore an unbiased estimator of the reduced shear g along the line-of-sight of the galaxy, and there is no need to determine unweighted moments.

(34)We recover for the GLAM ellipticity ϵ exactly the transformation law of unweighted moments (SS97). The GLAM ellipticity ϵ is therefore an unbiased estimator of the reduced shear g along the line-of-sight of the galaxy, and there is no need to determine unweighted moments.

As a side remark, the transformation between ϵ and ϵs is a linear conformal mapping from the unit circle onto the unit circle, and from the origin of the ϵ-plane onto the point − g in the ϵs-plane. If | g | > 1, then g has to be replaced by 1 /g∗ in Eq. (34), but we shall not be concerned here with this situation in the strong lensing regime.

2.4. Point spread function and pixellation

We have defined the GLAM ellipticity ϵ of an image I(x) relative to an adaptive weight f(ρ). This definition is idealised in the sense that it assumes an infinite angular resolution and the absence of any atmospheric or instrumental distortion of the image. Equally important, it ignores pixel noise. In this section, we move one step further to discuss the recovery of the original ϵ of an image after it has been convolved with a PSF and pixellated. The problem of properly dealing with noise in the image is discussed subsequently.

Let Ipre(x) be the original image prior to a PSF convolution and pixellation. This we call the “pre-seeing” image. Likewise, by the vector Ipost of Npix values we denote the “post-seeing” image that has been subject to a convolution with a PSF and pixellation. For mathematical convenience, we further assume that Ipre(x) is binned on a fine auxiliary grid with N ≫ Npix pixels of solid angle Ω. We list these pixel values as vector Ipre. The approximation of Ipre(x) by the vector Ipre becomes arbitrarily accurate for N → ∞. Therefore we express the post-seeing image Ipost = LIpre by the linear transformation matrix L applied to the pre-seeing image Ipre. The matrix L with N × Npix elements combines the effect of a (linear) PSF convolution and pixellation. Similarly, we bin the template f(ρ) in the pre-seeing frame to the grid of Ipre, and we denote the binned template by the vector fρ; as usual, the quadratic form ρ is here a function of the variables (x0,ϵ,t), Eq. (6). The GLAM parameters ppre of the pre-seeing image are given by the minimum of E(p | Ipre), or approximately by  (35)For the recovery of the pre-seeing ellipticity ϵ, the practical challenge is to derive the pre-seeing parameters ppre from the observed image Ipost in the post-seeing frame. For this task, we assume that the transformation L is exactly known. We note that a linear mapping L is an approximation here; we ignore the nonlinear effects in the detector (Plazas et al. 2014; Gruen et al. 2015; Niemi et al. 2015; Melchior et al. 2015).

(35)For the recovery of the pre-seeing ellipticity ϵ, the practical challenge is to derive the pre-seeing parameters ppre from the observed image Ipost in the post-seeing frame. For this task, we assume that the transformation L is exactly known. We note that a linear mapping L is an approximation here; we ignore the nonlinear effects in the detector (Plazas et al. 2014; Gruen et al. 2015; Niemi et al. 2015; Melchior et al. 2015).

For a start, imagine a trivial case where no information is lost by going from Ipre to Ipost. We express this case by a transformation L that can be inverted, which means that N = Npix and L is regular. We then obtain ppre by minimising Epre(p | L-1Ipost) with respect to p: we map Ipost to the pre-seeing frame and analyse Ipre = L-1Ipost there. This is equivalent to minimising the form (Ipost − ALfρ)T(LLT)-1(Ipost − ALfρ) in the post-seeing frame.

|

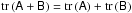

Fig. 1 Examples of GLAM templates in the pre-seeing frame, fρ (top left), and the post-seeing frame, Lfρ (other panels); the templates are Gaussian radial profiles with f(ρ) = e− ρ/ 2. The bottom left panel simulates only pixellation, whereas the right column also shows the impact of a PSF, indicated in the top right corner, without (top) and with pixellation (bottom). |

For realistic problems where L-1 does not exist, because N ≫ Npix, this trivial case at least suggests to determine the minimum ppost of the new functional  (36)as estimator of ppre. This way we are setting up an estimator by forward-fitting the template fρ to the image in the post-seeing frame with the matrix U being a metric for the goodness of the fit. Clearly, should L-1 exist we recover (35) only by adopting U = (LLT)-1. So we could equivalently obtain ppre, without bias, by fitting Lfρ to the observed image Ipost in this case. However, realistically L is singular: the recovery of ppre from (36) can only be done approximately. Then we could at least find an optimal metric to minimise the bias. We return to this point shortly. In any case, the metric has to be positive-definite and symmetric such that always Epost ≥ 0. We note that the moments at the minimum of (36) are related but not identical to the adaptive moments in the post-seeing frame. To obtain the latter we would fit a pixellated fρ with U = 1 to Ipost. The bottom and top right images in Fig. 1 display examples of post-seeing templates that are fitted to a post-seeing image to estimate ppre with the functional (36).

(36)as estimator of ppre. This way we are setting up an estimator by forward-fitting the template fρ to the image in the post-seeing frame with the matrix U being a metric for the goodness of the fit. Clearly, should L-1 exist we recover (35) only by adopting U = (LLT)-1. So we could equivalently obtain ppre, without bias, by fitting Lfρ to the observed image Ipost in this case. However, realistically L is singular: the recovery of ppre from (36) can only be done approximately. Then we could at least find an optimal metric to minimise the bias. We return to this point shortly. In any case, the metric has to be positive-definite and symmetric such that always Epost ≥ 0. We note that the moments at the minimum of (36) are related but not identical to the adaptive moments in the post-seeing frame. To obtain the latter we would fit a pixellated fρ with U = 1 to Ipost. The bottom and top right images in Fig. 1 display examples of post-seeing templates that are fitted to a post-seeing image to estimate ppre with the functional (36).

For singular L, the minimum of the functional yields an unbiased ppre for any U if

-

1.

Ipre(x) has confocal elliptical isophotes with the radial brightness profile S(x);

-

2.

and if we choose f(ρ) = S(ρ) as GLAM template;

-

3.

and if Epost has only one minimum (non-degenerate).

To explain, due to 1. and 2. we find a vanishing residual  (37)at the minimum of Epre and consequently Epre(ppre | Ipre) = 0. At the same time for any metric U, we also have Epost(ppre | Ipost) = 0 because Ipost − ALfρ = LRpre = 0 for p = ppre. Because of the lower bound Epost ≥ 0, these parameters ppre have to coincide with a minimum of Epost and hence indeed ppost = ppre. We note that the previous argument already holds for the weaker condition LRpre = 0 so that a mismatch between Ipre and the template at ppre produces no bias if the mapped residuals vanish in the post-seeeing frame. In addition, if this is the only minimum of Epost then the estimator ppost is also uniquely defined (condition 3). An extreme example of a violation of condition 3 is the degenerate case Npix = 1: the observed image consists of only one pixel. Then every parameter set (x0,ϵ,t) produces Epost = 0, if A is chosen correspondingly. But this should be a rare case because it is not expected to occur for Npix> 6, thus for images that span over more pixels than GLAM parameters.

(37)at the minimum of Epre and consequently Epre(ppre | Ipre) = 0. At the same time for any metric U, we also have Epost(ppre | Ipost) = 0 because Ipost − ALfρ = LRpre = 0 for p = ppre. Because of the lower bound Epost ≥ 0, these parameters ppre have to coincide with a minimum of Epost and hence indeed ppost = ppre. We note that the previous argument already holds for the weaker condition LRpre = 0 so that a mismatch between Ipre and the template at ppre produces no bias if the mapped residuals vanish in the post-seeeing frame. In addition, if this is the only minimum of Epost then the estimator ppost is also uniquely defined (condition 3). An extreme example of a violation of condition 3 is the degenerate case Npix = 1: the observed image consists of only one pixel. Then every parameter set (x0,ϵ,t) produces Epost = 0, if A is chosen correspondingly. But this should be a rare case because it is not expected to occur for Npix> 6, thus for images that span over more pixels than GLAM parameters.

A realistic pre-seeing image is neither elliptical nor is our chosen template f(ρ) likely to perfectly fit the radial light profile of the image, even if it were elliptical. This mismatch produces a bias in p only if L is singular, and the magnitude of the bias scales with the residual of the template fit in the pre-seeing frame. To see this, let  be the best-fitting template Afρ with parameters ppre. The residual of the template fit in the pre-seeing frame is

be the best-fitting template Afρ with parameters ppre. The residual of the template fit in the pre-seeing frame is  . In the vicinity of ppre, we express the linear change of Afρ for small δp by its gradient at ppre,

. In the vicinity of ppre, we express the linear change of Afρ for small δp by its gradient at ppre,  (38)where

(38)where  (39)Each column Gi of the matrix G denotes the change of Afρ with respect to pi. Therefore, close to ppre we find the Taylor expansion

(39)Each column Gi of the matrix G denotes the change of Afρ with respect to pi. Therefore, close to ppre we find the Taylor expansion  (40)Furthermore, since ppre is a local minimum of Epre, Eq. (35), we find at the minimum the necessary condition

(40)Furthermore, since ppre is a local minimum of Epre, Eq. (35), we find at the minimum the necessary condition  (41)This means that the residual Rres is orthogonal to every Gi. Now let

(41)This means that the residual Rres is orthogonal to every Gi. Now let  be the residual mapped to the post-seeing frame. Then (36) in the vicinity to ppre is approximately

be the residual mapped to the post-seeing frame. Then (36) in the vicinity to ppre is approximately  (42)which we obtain by plugging (40) into (36). This approximation is good if the bias is small, by which we mean that the minimum ppost of Epost(p | Ipost) is close to ppre. As shown in Aitken (1934) in the context of minimum-variance estimators, the first-order bias δpmin = ppost − ppre that minimises (42) is then given by

(42)which we obtain by plugging (40) into (36). This approximation is good if the bias is small, by which we mean that the minimum ppost of Epost(p | Ipost) is close to ppre. As shown in Aitken (1934) in the context of minimum-variance estimators, the first-order bias δpmin = ppost − ppre that minimises (42) is then given by  (43)with UL: = LTUL. This reiterates that the bias δpmin always vanishes either for vanishing residuals Rpre = 0, or if L-1 exists and we choose U = (LLT)-1 as metric. The latter follows from Eq. (43) with UL = 1 and Eq. (41). We note that δpmin does not change if we multiply U by a scalar λ ≠ 0. Thus any metric λU generates as much bias as U.

(43)with UL: = LTUL. This reiterates that the bias δpmin always vanishes either for vanishing residuals Rpre = 0, or if L-1 exists and we choose U = (LLT)-1 as metric. The latter follows from Eq. (43) with UL = 1 and Eq. (41). We note that δpmin does not change if we multiply U by a scalar λ ≠ 0. Thus any metric λU generates as much bias as U.

With regard to an optimal metric U, we conclude from Eq. (43) and GTRpre = 0 that we can minimise the bias by the choice of U for which UL ≈ 1, or if ∥ LTUL − 1 ∥ 2 is minimal with respect to the Frobenius norm ∥ Q ∥ 2 = tr(QQT). This optimised choice of U corresponds to the so-called pseudo-inverse (LLT)+ of LLT (Press et al. 1992). The pseudo-inverse is the normal inverse of LLT if the latter is regular.

A practical computation of U = (LLT)+ could be attained by choosing a set of orthonormal basis function bi in the pre-seeing frame, or approximately a finite number Nbase of basis functions that sufficiently describes images in the pre-seeing frame. For every basis function, one then computes the images Lbi and the matrix  (44)The pseudo-inverse of this matrix is the metric in the post-seeing frame.

(44)The pseudo-inverse of this matrix is the metric in the post-seeing frame.

Specifically, for images that are only pixellated the matrix LLT is diagonal. To see this, consider pixellations that map points ei in the pre-seeing frame to a single pixels e′(ei) in the post-seeing frame, or Lei = e′(ei); both ei and e′(ei) are unit vectors from the standard bases in the two frames. According to (44), the matrix LLT = ∑ ie′(ei) [ e′(ei) ] T is then always diagonal, typically proportional to the unit matrix, and easily inverted to obtain the optimal metric U. Unfortunately, this U only makes the linear-order bias (43) vanish while we still can have a higher-order bias because of the singular L.

2.5. Similarity between model-based and moment-based techniques

Through the formalism in the previous sections it becomes evident that there is no fundamental difference between model-based techniques and those involving adaptive moments. Model-based techniques perform fits of model profiles to the observed image, whereas moment-based ellipticities with the adaptive weight f′(ρ) are equivalently obtained from the image by fitting the ellipticial profile f(ρ) to the image. This requires, however, the existence of a unique minimum of the functional E(p | I) which we assume throughout the paper. We note that the similarity also extends to the special case of unweighted moments in Eqs. (1) and (2) which are in principle obtained by fitting f(ρ) = ρ since f′(ρ) = 1 in this case.

Nonetheless one crucial difference to a model-fitting technique is that a fit of f(ρ) does not assume a perfect match to I(x): the functional E(p | I) needs to have a minimum, but the fit is allowed to have residuals Rpre, this means E(p | I) ≠ 0 at the minimum. As shown, for the estimator based on (36) this may cause bias, Eq. (43), but only when analysing post-seeing images hence for L ≠ 1. In practice, different methodologies to estimate the pre-seeing moment-based ellipticity certainly use different approaches. Our choice of a forward-fitting estimator (36) is very specific but is optimal in the sense that it is always unbiased for LRpre = 0 or regular L. Yet other options are conceivable. For instance, we could estimate moments in the post-seeing frame first and try to map those to the pre-seeing frame (e.g. H03 or Melchior et al. 2011, which aim at unweighted moments). It is then unclear which weight is effectively applied in the pre-seeing frame. Therefore the expression Eq. (43) for the bias is strictly applicable only to our estimator and adaptive moments. But it seems plausible that the bias of estimators of pre-seeing moments generally depends on the residual Rpre since the brightness moments x0,i and Mij are the solutions to a best-fit of elliptical templates.

In the literature the problem of bias due to residuals in model fits is known as model bias or underfitting bias (Zuntz et al. 2013; Bernstein 2010). Consequently, moment-based techniques are as prone to underfitting bias as model-based methodologies.

3. Statistical inference of ellipticity

Realistic galaxy images I are superimposed by instrumental noise δI. Therefore the pre-seeing GLAM ellipticity can only be inferred statistically with uncertainties, and it is, according to the foregoing discussion, subject to underfitting bias. For a statistical model of the ellipticity ϵ, we exploit the previous conclusions according to which the ellipticity of I(x) for the adaptive weight f′(ρ) is equivalent to ϵ of the best-fitting template f(ρ). This renders the inference of ϵ a standard forward-fit of a model ALf(ρ) to I.

We consider post-seeing images I with Gaussian noise δI, that means images I = Ipost + δI. The covariance of the noise is N = ⟨ δIδIT ⟩, while Ipost = LIpre is the noise-free image in the post-seeing frame. A Gaussian noise model is a fair assumption for faint galaxies in the sky-limited regime (Miller et al. 2007). Possible sources of noise are: read-out noise, sky noise, photon noise, or faint objects that blend with the galaxy image. If an approximate Gaussian model is not applicable, the following model of the likelihood has to be modified accordingly.

The statistical model of noise are given by the likelihood ℒ(I | p) of an image I = LIpre + δI given the GLAM parameters p. We aim at a Bayesian analysis for which we additionally quantify our prior knowledge on parameters by the PDF Pp(p). We combine likelihood and prior to produce the marginal posterior  (45)of ellipticity by integrating out the nuisance parameters (x0,A,t); the constant normalisation of the posterior is irrelevant for this paper but we assume that the posterior is proper (it can be normalised). Our choice for the numerical experiments in this study is a uniform prior Pp(p) for positive sizes t and amplitudes A, ellipticities | ϵ | < 1, and centroid positions x0 inside the thumbnail image. As known from previous Bayesian approaches to shear analyses, the choice of the prior affects the consistency of the ellipticity posteriors (see, e.g. BA14). The origin of the prior-dependence will become clear in Sect. 3.3.

(45)of ellipticity by integrating out the nuisance parameters (x0,A,t); the constant normalisation of the posterior is irrelevant for this paper but we assume that the posterior is proper (it can be normalised). Our choice for the numerical experiments in this study is a uniform prior Pp(p) for positive sizes t and amplitudes A, ellipticities | ϵ | < 1, and centroid positions x0 inside the thumbnail image. As known from previous Bayesian approaches to shear analyses, the choice of the prior affects the consistency of the ellipticity posteriors (see, e.g. BA14). The origin of the prior-dependence will become clear in Sect. 3.3.

With regard to notation, we occasionally have to draw random numbers or vectors of random numbers x from a PDF P(x) or a conditional density P(x | y). We denote this by the shorthand x ~ P(x) and x ~ P(x | y), respectively. As common in statistical notation, distinct conditional probability functions may use the same symbol, as for instance the symbol P in P(x | y) and P(y | x).

3.1. Caveat of point estimates

|

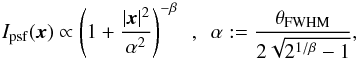

Fig. 2 Toy-model demonstration of a maximum-likelihood estimator (red), a maximum-likelihood estimator with first-order bias correction (green), and an estimator exploiting the full posterior (blue). Data points display the estimator average (y-axis) over 106 data points at varying signal-to-noise levels (x-axis). The true value to be estimated is x = 1. The panels show different signal-to-noise regimes; − nppt denotes y = 1 − n/ 103. |

The bias in a lensing analysis is not only affected by how we statistically infer galaxy shapes but also how we process the statistical information later on. To demonstrate in this context the disadvantage of point estimators in comparison to a fully Bayesian treatment, we consider here a simplistic nonlinear toy model. This model has one parameter x and one single observable y = x3 + n that is subject to noise n. By n ~ N(0,σ) we draw random noise from a Gaussian distribution N(0,σ) with mean zero and variance σ. From the data y, we statistically infer the original value of x. Towards this goal we consider the (log-)likelihood of y given x which is − 2lnℒ(y | x) = (y − x3)2σ-2 + const.

A maximum likelihood estimator of x is given by xest = y1 / 3, the maximum of ℒ(y | x). We determine the bias of xest as function of signal-to-noise ratio (S/N) x/σ by averaging the estimates of Nreal = 106 independent realisations of y. The averages and the standard errors are plotted as red line in Fig. 2. Clearly, xest is increasingly biased low towards lower S/N levels. In the context of lensing, this would be noise bias. As an improvement we then correct the bias by employing the first-order correction in Refregier et al. (2012) for each realisation of y. As seen in the figure, this correction indeed reduces the systematic error, but nevertheless breaks down for S/N ≲ 3.

On the other hand in a fully Bayesian analysis, we obtain constraints on x that are consistent with the true value for any S/N. For this purpose, we make Nreal independent identically distributed realisations (i.i.d.) yi and combine their posterior densities Ppost(x | yi) ∝ ℒ(yi | x) Pprior(x) by multiplying the likelihoods; we adopt a uniform (improper) prior Pprior(x) = const. This gives us for y = (y1,...,yNreal), up to a normalisation constant, the combined posterior  (46)As expected due to the asymptotic normality of posteriors (under regularity conditions), for i.i.d. experiments yi the product density is well approximated by a Gaussian N(x0,σx) and is consistent with the true value x (van der Vaart 1998). We plot values of x0 and σx in Fig. 2 as blue data points.

(46)As expected due to the asymptotic normality of posteriors (under regularity conditions), for i.i.d. experiments yi the product density is well approximated by a Gaussian N(x0,σx) and is consistent with the true value x (van der Vaart 1998). We plot values of x0 and σx in Fig. 2 as blue data points.

In conclusion, keeping the full statistical information Ppost(x | y) in the inference of x yields consistent constraints over the entire S/N range probed: the noise bias vanishes. Also note that the Bayesian approach has not substantially increased the error σx compared to the error of the point estimator (relative sizes of error bars); both approaches have similar efficiency.

3.2. Likelihood model and underfitting bias

Inspired by the foregoing Bayesian toy model that is free of noise bias, we set up a Bayesian approach for GLAM ellipticities. To construct a likelihood for a GLAM fit in the pre-seeing frame, we first consider, similar to Sect. 2.4, the trivial case where L is regular. This is straightforward since we can map the noisy image I → L-1I back to the pre-seeing frame and determine the noise residual for given pre-seeing p,  (47)The inverse noise covariance in the pre-seeing frame is LTN-1L. The logarithmic likelihood of δIpre(p) in the Gaussian case is thus

(47)The inverse noise covariance in the pre-seeing frame is LTN-1L. The logarithmic likelihood of δIpre(p) in the Gaussian case is thus  (48)where “const.” expresses the normalisation of the likelihood. Therefore, we can equivalently write the pre-seeing fit in terms of available post-seeing quantities. We note here that the transform LRpre of the (unknown) pre-seeing residual is for singular L and Rpre ≠ 0 not equal to the post-seeing residual Rpost, which we defined in a least-square fit of ALfρ to Ipost (see Sect. 2.4).

(48)where “const.” expresses the normalisation of the likelihood. Therefore, we can equivalently write the pre-seeing fit in terms of available post-seeing quantities. We note here that the transform LRpre of the (unknown) pre-seeing residual is for singular L and Rpre ≠ 0 not equal to the post-seeing residual Rpost, which we defined in a least-square fit of ALfρ to Ipost (see Sect. 2.4).

In reality, L is singular so the previous steps cannot be applied. Nevertheless, Eq. (48) is the correctly specified likelihood of a forward fit if the model m(p): = Afρ + Rpre perfectly describes the brightness profile Ipre for some parameters ptrue. Therefore we expect no inconsistencies for singular L as long as the correct Rpre can be given. Since Rpre is unknown, however, we investigate in the following the impact of a misspecified likelihood that does not properly account for our ignorance in the pre-seeing residuals. We do this by assuming Rpre ≡ 0 and employing  (49)Obviously, this is a reasonable approximation of (48) if ∥ LRpre ∥ ≪ ∥ I ∥ so that residuals are only relevant if they are not small when compared to the image in the post-seeing frame. It also follows from Eq. (48) that for LRpre = 0 the likelihood is correctly specified even if Rpre ≠ 0 and L being singular, similar to the noise-free case. More generally we discuss later in Sect. 5 how we could modify the likelihood ℒ(I | p) to factor in our insufficient knowledge about Rpre. Until then the approximation (49) introduces underfitting bias into the likelihood model, making it inconsistent with the true ppre.

(49)Obviously, this is a reasonable approximation of (48) if ∥ LRpre ∥ ≪ ∥ I ∥ so that residuals are only relevant if they are not small when compared to the image in the post-seeing frame. It also follows from Eq. (48) that for LRpre = 0 the likelihood is correctly specified even if Rpre ≠ 0 and L being singular, similar to the noise-free case. More generally we discuss later in Sect. 5 how we could modify the likelihood ℒ(I | p) to factor in our insufficient knowledge about Rpre. Until then the approximation (49) introduces underfitting bias into the likelihood model, making it inconsistent with the true ppre.

To show the inconsistency, we proceed as in the foregoing section on the toy model. We consider a series of n i.i.d. realisations Ii of the same image Ipost and combine their likelihoods ℒ(Ii | p) for a given p into the joint (product) likelihood  (50)and we work out its limit for n → ∞. The joint likelihood can be written as

(50)and we work out its limit for n → ∞. The joint likelihood can be written as  (51)Here we have made use of the properties of the trace of matrices, namely its linearity

(51)Here we have made use of the properties of the trace of matrices, namely its linearity  and that

and that  . For large n, we can employ the asymptotic expressions

. For large n, we can employ the asymptotic expressions  (52)to the following effect:

(52)to the following effect:  (53)Thus the joint likelihood peaks for n → ∞ at the minimum ppost of ∥ Ipost − ALfρ ∥ N. This is equivalent to the location of the minimum of Epost(p | Ipost), Eq. (36), with metric U = N-1. Consequently, as in the noise-free case, we find an inconsistent likelihood if the residual Rpre is non-vanishing and if LTN-1L is not proportional to the unity matrix. We additionally expect a smaller bias δp = ppost − ppre for smaller levels of residuals1.

(53)Thus the joint likelihood peaks for n → ∞ at the minimum ppost of ∥ Ipost − ALfρ ∥ N. This is equivalent to the location of the minimum of Epost(p | Ipost), Eq. (36), with metric U = N-1. Consequently, as in the noise-free case, we find an inconsistent likelihood if the residual Rpre is non-vanishing and if LTN-1L is not proportional to the unity matrix. We additionally expect a smaller bias δp = ppost − ppre for smaller levels of residuals1.

With regards to the noise dependence of underfitting bias, we find that δp does not change if we increase the overall level of noise in the image I. This can be seen by scaling the noise covariance N → λ N with a scalar λ. Any value λ> 0 results in the same minimum location for Epost(p | Ipost) so that δp is independent of λ: there is no noise bias.

Moreover, the bias δp of a misspecified likelihood seems to depend on our specific assumption of a Gaussian model for the likelihood. It can be argued on the basis of general theorems on consistency and asymptotic normality of posteriors, however, that for n → ∞ we obtain the same results for other likelihood models under certain regularity conditions (van der Vaart 1998; Appendix B in Gelman et al. 2013). The latter requires continuous likelihoods that are identifiable, hence ℒ(I | p1) ≠ ℒ(I | p2) for p1 ≠ p2, and that the true ptrue is not at the boundary of the domain of all parameters p. This is stricter than our previous assumptions for the Gaussian model where we needed only a unique global maximum of the likelihood ℒ(Ipost | p). It could therefore be that a unique maximum is not sufficient for non-Gaussian likelihoods.

3.3. Prior bias

The foregoing section discusses the consistency of the likelihood of a single image I. We can interpret the analysis also in a different way: if we actually had n independent exposures Ii of the same pre-seeing image, then combining the information in all exposures results in a posterior  that is consistent with ppost for a uniform prior Pp(p) = 1. As discussed in van der Vaart (1998), the more general Bernstein-von Mises theorem additionally shows that under regularity conditions the choice of the prior is even irrelevant provided it sets prior mass around ppost (Cromwell’s rule). This might suggest that for a correctly specified likelihood ℒ(Ii | p), a fully Bayesian approach for the consistent measurement of ϵ might be found that is independent of the specifics of the prior and has no noise bias in the sense of Sect. 3.2. This is wrong as shown in the following.

that is consistent with ppost for a uniform prior Pp(p) = 1. As discussed in van der Vaart (1998), the more general Bernstein-von Mises theorem additionally shows that under regularity conditions the choice of the prior is even irrelevant provided it sets prior mass around ppost (Cromwell’s rule). This might suggest that for a correctly specified likelihood ℒ(Ii | p), a fully Bayesian approach for the consistent measurement of ϵ might be found that is independent of the specifics of the prior and has no noise bias in the sense of Sect. 3.2. This is wrong as shown in the following.

Namely, in contrast to the previous simplistic scenario, sources in a lensing survey have varying values of p: they are intrinsically different. For more realism, we therefore assume now i = 1...n pre-seeing images that, on the one hand, shall have different centroid positions x0,i, sizes ti, amplitudes Ai but, on the other hand, have identical ellipticities ϵ. Our goal in this experiment is to infer ϵ from independent image realisations Ii = Ipost,i + δIi by marginalising over the 4n nuisance parameters qi = (x0,i,ti,Ai). This experiment is similar to the standard test for shear measurements where a set of different pre-seeing images is considered whose realisations Ii are subject to the same amount of shear (e.g. Bridle et al. 2010). As a matter of fact, the inference of constant shear from an ensemble of images would just result in 2n additional nuisance parameters for the intrinsic shapes with essentially the same following calculations.

Let Pq(qi) be the prior for the four nuisance parameters qi of the ith image and Pϵ(ϵ) = 1 a uniform prior for ϵ. We combine the GLAM parameters in pi: = (qi,ϵ), and we assume that all images have the same prior density and that the noise covariance N applies to all images. The marginal posterior of ϵ is then the integral  (54)with

(54)with  being a normalization constant.

being a normalization constant.

The product of the likelihood densities inside the integral is given by  (55)Here we have taken into account that the GLAM parameters partly differ, indicated by the additional index in Ai and fρ,i. This is different in Eq. (53) where we take the product of full likelihoods in p-space without marginalization. In the limit of n → ∞, we find in addition to the relations (52) that

(55)Here we have taken into account that the GLAM parameters partly differ, indicated by the additional index in Ai and fρ,i. This is different in Eq. (53) where we take the product of full likelihoods in p-space without marginalization. In the limit of n → ∞, we find in addition to the relations (52) that  (56)because δIi is uncorrelated to ALfρ,i so that

(56)because δIi is uncorrelated to ALfρ,i so that  vanishes on average for many δIi. Therefore, for the asymptotic statistic we can replace all Ii by Ipost,i in Eq. (55) to obtain

vanishes on average for many δIi. Therefore, for the asymptotic statistic we can replace all Ii by Ipost,i in Eq. (55) to obtain  (57)and, as a result, for Eq. (54)

(57)and, as a result, for Eq. (54)  (58)where Pϵ(ϵ | Ipost,i) is the marginal ellipticity posterior for the ith noise-free image.

(58)where Pϵ(ϵ | Ipost,i) is the marginal ellipticity posterior for the ith noise-free image.

The limit (58) has the interesting consequence that the consistency with ppost of the marginal posterior depends on the specific choice of the prior density Pq(qi). To show this, consider one particular case in which, for simplicity, all pre-seeing images are identical such that Ipost,i ≡ Ipost. Then, according to (58), the ellipticity posterior converges in distribution to [ Pϵ(ϵ | Ipost) ] n which for n → ∞ peaks at the global maximum of Pϵ(ϵ | Ipost). It is then easy to see that we can always change the position of this maximum by varying the prior density in Pϵ(ϵ | Ipost). In particular, even if the likelihoods are correctly specified, we generally find an inconsistent marginal posterior depending on the prior. A similar argument can be made if Ipost,i ≠ Ipost,j for i ≠ j.

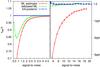

|

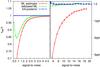

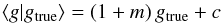

Fig. 3 Prior bias in the marginal posterior Pϵ(ϵ | I1,...,In) as function of S/N ν for different galaxy sizes rh (in arcsec). The posterior assumes a uniform prior. Shown is the error δϵ = | ϵ − ϵtrue | of the inferred ϵ for a true ϵtrue = 0.3 as obtained by combining the marginal posteriors of 5 × 103 exposures of the same galaxy with random centroid positions. The pixel size 0.1 arcsec equals the PSF size (Moffat). Galaxy profiles and GLAM templates have a Sérsic profile with n = 2: there is no underfitting. |

For a concrete example, we perform for Fig. 3 a simulated analysis of 5 × 103 noisy images with ϵtrue = 0.3. All pre-seeing images are identical to one particular template Afρ (Sérsic profile with n = 2). Therefore we have a correctly specified likelihood model and no underfitting. We adopt a uniform prior Pq(q) (and Pϵ(ϵ)). The details on the simulated images and their analysis are given in Sect. 4. For each data point, we plot the mean and variance of the marginal posterior Pϵ(ϵ | I1,...,In) relative to the true ellipticity ϵtrue as function of S/N ν and for different image sizes rh. Evidently, the bias δϵ increases for smaller ν thereby producing a noise-dependent bias which is not present when analysing the consistency of ℒ(I | p) of individual images as in Sect. 3.2.

The sensitivity of the posterior to the prior implies that consistency (for a correctly specified likelihood) could be regained by choosing an appropriate prior density Pq(qi). On the other hand, the dependence on the prior runs against the conventional wisdom that the prior should become asymptotically irrelevant for n → ∞ as the joint likelihood starts dominating the information on inferred parameters. Indeed, general theorems show this under certain regularity conditions (see e.g. discussion in Chap. 4 of Gelman et al. 2013). These conditions are, however, not given here because the total number of model parameters is not fixed but rather increases linearly with n due to a new set of nuisance parameters qi for every new galaxy image Ii. The observed breakdown of consistency is the result. A trivial (but practically useless) prior to fix the problem is one that puts all prior mass at the true values of the nuisance parameters which essentially leaves only ϵ as free model parameter. Likewise, an analysis with sources that knowingly have the same values for the nuisance parameters also yields consistent constraints; this is exactly what is done in Sect. 3.2. A non-trivial solution, as reported by BA14, is to use “correct priors” for the nuisance parameters that are equal to the actual distribution of q in the sample. On the downside, this raises the practical problem of obtaining correct priors from observational data.

In summary, for an incorrect prior of q, such as our uniform prior, we find a noise-dependent bias in the inferred ellipticity despite a fully Bayesian approach and a correctly specified likelihood. We henceforth call this bias “prior bias”. This emphasises the difference to the noise bias found in the context of point estimators.

4. Simulated shear bias

We have classified two possible sources of bias in our Bayesian implementation of adaptive moments: underfitting bias and prior bias. In this section, we study their impact on the inference of reduced shear g by using samples of mock images with varying S/N, galaxy sizes, and galaxy brightness profiles. In contrast to our previous experiments, the images in each sample have random intrinsic shapes but are all subject to the same amount of shear, and we perform numerical experiments to quantify the bias. Moreover, this section outlines practical details of a sampling technique for the posteriors of GLAM ellipticities and the reduced-shear, which is of interest for future applications (Sects. 4.4 and 4.5).

The PSF shall be exactly known for these experiments. For a large shear bias, we choose a relatively small PSF size and galaxy images that are not much larger than a few pixels. According to the discussion in Sect. 2.4, underfitting bias is mainly a result of pixellation which mathematically cannot be inverted. We note that a larger PSF size would reduce the underfitting bias since it spreads out images over more image pixels. Overall the values for shear bias presented here are larger than what is typically found in realistic surveys (e.g. Zuntz et al. 2013). We start this section with a summary of our simulation specifications.

4.1. Point-spread function

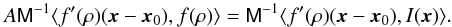

If not stated otherwise, our simulated postage stamps consist of 20 × 20 pixels (squares), of which one pixel has a size of 0.1′′; the simulated galaxies are relatively small with a half-light radius rh of only a few times the pixel size (0.15′′ ≤ rh ≤ 0.3′′). Additionally, we adopt an isotropic Moffat PSF,  (59)with the full width half maximum (FWHM) of θFWHM = 0.1′′ and β = 5 (Moffat 1969).

(59)with the full width half maximum (FWHM) of θFWHM = 0.1′′ and β = 5 (Moffat 1969).

4.2. GLAM template profiles

As GLAM templates we employ truncated Sérsic-like profiles with index n, ![\begin{equation} \label{eq:sersic} f(\rho)= \exp{\left(-\left[\frac{\rho}{\rho_0}\right]^{\frac{1}{2n}}\right)}\, h(\!\sqrt{\rho})~;~ h(x):=\frac{1}{\e^{5(x-3)}+1} \end{equation}](/articles/aa/full_html/2017/08/aa29591-16/aa29591-16-eq332.png) (60)and

(60)and  (61)(Capaccioli 1989). To avoid a numerical bias due to aliasing at the edges of the grid during the Fourier transformation steps in the sampling code, we have introduced the auxiliary function h(x) that smoothly cuts off the Sérsic profile beyond ρ ≈ 9. If f(ρ) were the radial light profile of an elliptical galaxy, the truncation would be located at about three half-light radii.

(61)(Capaccioli 1989). To avoid a numerical bias due to aliasing at the edges of the grid during the Fourier transformation steps in the sampling code, we have introduced the auxiliary function h(x) that smoothly cuts off the Sérsic profile beyond ρ ≈ 9. If f(ρ) were the radial light profile of an elliptical galaxy, the truncation would be located at about three half-light radii.

We use template profiles with n = 2 throughout. This index n falls between the values of n used for the model galaxies and thus is a good compromise to minimise the fit residual and thereby the underfitting bias.

4.3. Mock galaxy images

We generate postage stamps of mock galaxy images with varying half-light radii rh, radial light profiles, and signal-to-noise ratios ν. To this end, we utilise the code that computes the post-seeing GLAM templates (Appendix B). We consider pre-seeing images of galaxies with elliptical isophotes of three kinds of light profiles, Eq. (60): (1) exponential profiles with Sérsic index n = 1 (EXP; exponential), (2) de-Vaucouleur profiles with n = 4 (DEV; bulge-like) and (3) galaxies with profiles n = 2 that match the profile of the GLAM template (TMP; template-like). TMP galaxies hence cannot produce underfitting bias.

We devise uncorrelated Gaussian noise in the simulation of postage stamps. To determine the RMS variance σrms of the pixel noise for a given ν, let fi be the flux inside image pixels that is free of noise and ftot = ∑ ifi the total flux. From this, we compute a half-light flux threshold fth defined such that the integrated flux fhl = ∑ fi ≥ fthfi above the threshold is fhl = ftot/ 2 or as close as possible to this value. The pixels i with fi ≥ fth are defined to be within the half-light radius of the image; their number is Nhl: = ∑ fi ≥ fth; the integrated noise variance within the half-light radius is  . The signal-to-noise ratio within the half-light radius is therefore

. The signal-to-noise ratio within the half-light radius is therefore  , or

, or  (62)Figure 4 depicts four examples with added noise for different ν.

(62)Figure 4 depicts four examples with added noise for different ν.

|

Fig. 4 Examples of simulated images of galaxies with random ellipticities. Signal-to-noise ratios from left to right and top to bottom: ν = 10, 20, 40, and 200. The radial light profile of the pre-seeing images is exponential with rh = 0.2′′; the pixel size is 0.1′′. The FWHM of the PSF is indicated by the circle in the top right corners. |

To simulate galaxy images with intrinsic ellipticities that are sheared by g, we make a random realisation of an intrinsic ellipticity ϵs and compute the pre-seeing ellipticity with (17). As PDF for the intrinsic ellipticities we assume a bivariate Gaussian with a variance of σϵ = 0.3 for each ellipticity component; we truncate the PDF beyond | ϵs | ≥ 1.

In realistic applications, centroid positions of galaxy images are random within an image pixel, so we average all our following results for bias over subpixel offsets within the quadratic solid angle of one pixel at the centre of the image. This means: in an sample of mock images, every image has a different subpixel position which is chosen randomly from a uniform distribution. In this averaging process, care has to be taken to perform an even sampling of subpixel offsets. To ensure this with a finite number of random points in all our following tests, we employ a subrandom Sobol sequence in two dimensions for the random centroid offsets (Press et al. 1992).

4.4. Monte-Carlo sampling of ellipticity posterior

For practical applications of a Bayesian GLAM analysis, we produce a Monte Carlo sample of the posterior Pϵ(ϵ | I), Eq. (45) with the approximate likelihood ℒ(I | p) in Eq. (49). This sample consists of a set (ϵi,wi) of 1 ≤ i ≤ Nreal pairs of values ϵi and wi, which determine the sampling position ϵi and a sampling weight wi. For this paper, we use Nreal = 50 sampling points. In contrast to a lensing analysis with single-valued point estimators of ellipticity, a Bayesian analysis employs the sample (ϵi,wi) of each galaxy. To attain the sample (ϵi,wi) we invoke the importance sampling technique (e.g. Marshall 1956; Kilbinger et al. 2010).

For this technique, we define an approximation Q(p) of Pp(p | I), the so-called importance distribution function, from which we draw a random sample pi. The ellipticity component ϵi of pi is then assigned the weight wi = Pp(pi | I) Q(pi)-1 which we normalise to ∑ iwi = 1 afterwards. As importance function, we use a multivariate Gaussian with mean at the maximum pml of the likelihood ℒ(I | p) and a covariance defined by the inverse Fisher matrix F-1 at the maximum (Fisher 1935; Tegmark et al. 1997). More implementation details are given in Appendix B.

If Q(p) is too different from Pp(p | I), the sample will be dominated by few points with large weights, usually due to sampling points in the tail of the posterior for which the importance function predicts a too low probability, this means wi becomes large. This is indicated by a small effective number  of points compared to Nreal. This can produce a poor convergence and thus extreme outliers in the analysis that merely appear to have tight constrains on ϵ. Typically affected by this are images that are both small compared to the pixel size and low in signal-to-noise. These images tend to exhibit posteriors that allow parameter solutions with small sizes t compared to the true t or high eccentricities | ϵ | ≳ 0.7, giving the posterior a complex, distinctly non-Gaussian shape. In future applications, this problem can be addressed by finding a better model of the importance function.

of points compared to Nreal. This can produce a poor convergence and thus extreme outliers in the analysis that merely appear to have tight constrains on ϵ. Typically affected by this are images that are both small compared to the pixel size and low in signal-to-noise. These images tend to exhibit posteriors that allow parameter solutions with small sizes t compared to the true t or high eccentricities | ϵ | ≳ 0.7, giving the posterior a complex, distinctly non-Gaussian shape. In future applications, this problem can be addressed by finding a better model of the importance function.

For the scope of this paper, we address this problem at the expense of computation time by increasing the number of sampling points and by resampling. This means: for Monte-Carlo samples with Neff<Nreal/ 2, we draw more samples from the importance distribution until Neff ≥ Nreal/ 2 in the expanded sample. By an additional rule, we stop this process if the expanded sample size becomes too large and reaches 10 × Nreal. This case is indicative of a failure of the importance sampling. If this happens, we switch to a time-consuming standard Metropolis algorithm to sample the posterior with 103 points after 102 preliminary burn-in steps (Metropolis et al. 1953). This technique does not assume a particular shape for the posterior but performs a random walk through the p space, thereby producing a correlated sample along a Monte-Carlo Markov chain. As symmetric proposal distribution for this algorithm, we adopt a multivariate Gaussian with covariance 1.5 × F-1; all points of the chain have equal weight wi; the starting point of the chain is pml. Finally, in the resampling phase, we bootstrap the expanded or Metropolis sample by randomly drawing Nreal points from it with probability wi (with replacement). All selected data points are given the equal weights  in the final catalogue.

in the final catalogue.

4.5. Posterior density of reduced shear

For the inference of g, we convert the ellipticity posterior Pϵ(ϵ | I) into a posterior Pg(g | I) of shear. To this end, let us first determine the Pg(g | ϵ) for an exactly known ϵ. We express our uncertainty on the intrinsic ellipticity ϵs by the prior Ps(ϵs), and Pg(g) is our a-priori information on g. The values of g and ϵs shall be statistically independent, by which we mean that Psg(ϵs,g) = Ps(ϵs) Pg(g)2. Applying a marginalization over ϵs and then Bayes’ rule, we find  (63)or, equivalently,

(63)or, equivalently,  By

By  we denote the normalisation of Pg(g | ϵ),

we denote the normalisation of Pg(g | ϵ),  (66)The normalisation

(66)The normalisation  only depends on the modulus of ϵ for isotropic priors for symmetry reasons. The integration over the Dirac delta function δD(x) uses Eq. (17). The determinant | d2ϵs/ d2ϵ |, the Jacobian of the linear conformal mapping ϵs(g,ϵ), is a function of ϵ and g. For the weak lensing regime | g | ≤ 1 of interest, this is

only depends on the modulus of ϵ for isotropic priors for symmetry reasons. The integration over the Dirac delta function δD(x) uses Eq. (17). The determinant | d2ϵs/ d2ϵ |, the Jacobian of the linear conformal mapping ϵs(g,ϵ), is a function of ϵ and g. For the weak lensing regime | g | ≤ 1 of interest, this is  (67)(Geiger & Schneider 1998). To now account for the measurement error of ϵ in the shear posterior, we marginalise Pg(g | ϵ), Eq. (65), over ϵ with Pϵ(ϵ | I) as error distribution,

(67)(Geiger & Schneider 1998). To now account for the measurement error of ϵ in the shear posterior, we marginalise Pg(g | ϵ), Eq. (65), over ϵ with Pϵ(ϵ | I) as error distribution,  (68)In our Monte Carlo scheme, we sample the ellipticity posterior of every galaxy i through (ϵij,wij) ~ Pϵ(ϵ | Ii) by 1 ≤ j ≤ Nreal values ϵij of ellipticity and statistical weight wij. To convert this sample to an approximation of the g posterior, we replace Pϵ(ϵ | Ii) inside the integral (68) by the point distribution ΣjwijδD(ϵ − ϵij),

(68)In our Monte Carlo scheme, we sample the ellipticity posterior of every galaxy i through (ϵij,wij) ~ Pϵ(ϵ | Ii) by 1 ≤ j ≤ Nreal values ϵij of ellipticity and statistical weight wij. To convert this sample to an approximation of the g posterior, we replace Pϵ(ϵ | Ii) inside the integral (68) by the point distribution ΣjwijδD(ϵ − ϵij),  (69)For the following, we adopt a uniform prior for g, which means Pg(g) ∝ H(1 − | g |). For reasons discussed in Sect. 3.3, the prior on the nuisance ϵs presumably has to be equal to the distribution of intrinsic shapes for a consistent shear posterior. To investigate the sensitivity of shear bias as to Ps(ϵs) we therefore use two types of priors: the correct prior, which is the true intrinsic-shape distribution in Sect. 4.3, or a uniform prior H(1 − | ϵs |). For each prior Ps(ϵs), we numerically compute

(69)For the following, we adopt a uniform prior for g, which means Pg(g) ∝ H(1 − | g |). For reasons discussed in Sect. 3.3, the prior on the nuisance ϵs presumably has to be equal to the distribution of intrinsic shapes for a consistent shear posterior. To investigate the sensitivity of shear bias as to Ps(ϵs) we therefore use two types of priors: the correct prior, which is the true intrinsic-shape distribution in Sect. 4.3, or a uniform prior H(1 − | ϵs |). For each prior Ps(ϵs), we numerically compute  for different ϵ once and interpolate between them later on in (69). We finally combine the posteriors Pg(g | Ii) of all images Ii in the sample by multiplying posterior values on a g-grid. For this, we apply (69) to every galaxy in the sample independently.

for different ϵ once and interpolate between them later on in (69). We finally combine the posteriors Pg(g | Ii) of all images Ii in the sample by multiplying posterior values on a g-grid. For this, we apply (69) to every galaxy in the sample independently.

4.6. Results

|

Fig. 5 Plots of the multiplicative bias m for simulated images, based on Eq. (69), for two different priors Ps(ϵs) (filled data points: correct prior; open data points: uniform prior). Different styles for the data points indicate different image sizes rh, see key inside figure, while colours vary with galaxy type: GLAM template (TMP; red); exponential (EXP; green); bulge-like (DEV; blue). Data points for rh = 0.3′′, same galaxy type, and same prior are connected by dotted lines to guide the eye. The prior for galaxy sizes t, amplitudes A, and centroids x0 is uniform giving rise to noise-dependent prior bias; the constant offset of EXP and DEV is due to underfitting. A square image pixel has the size 0.1′′, which also equals the PSF size. Results for m for a larger PSF size can be found in Table 1. |

For each experiment, we consider a sample of statistically independent galaxy images  that are subject to the same reduced shear g but have random intrinsic ellipticities ϵs. To infer g from

that are subject to the same reduced shear g but have random intrinsic ellipticities ϵs. To infer g from  in a fully Bayesian manner, we compute the posteriors Pg(g | Ii) of g for every image Ii separately with Eq. (69) and then combine all posteriors by the product