| Issue |

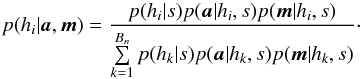

A&A

Volume 597, January 2017

|

|

|---|---|---|

| Article Number | A89 | |

| Number of page(s) | 28 | |

| Section | Numerical methods and codes | |

| DOI | https://doi.org/10.1051/0004-6361/201629219 | |

| Published online | 10 January 2017 | |

Probabilistic multi-catalogue positional cross-match

1 Observatoire astronomique de Strasbourg, Université de Strasbourg, CNRS, UMR 7550, 11 rue de l’Université, 67000 Strasbourg, France

e-mail: francois-xavier.pineau@astro.unistra.fr

2 IFCA (CS-IC-UC), Avenida de los Castros, 39005 Santander, Spain

3 Department of Physics & Astronomy, University of Leicester, Leicester, LEI 7RH, UK

4 Leibniz-Institut für Astrophysik Potsdam (AIP), An der Sternwarte 16, 14482 Potsdam, Germany

5 Max-Planck Institute for Solar System Research, Justus-von-Liebig-Weg 3, 37077 Göttingen, Germany

Received: 30 June 2016

Accepted: 23 August 2016

Context. Catalogue cross-correlation is essential to building large sets of multi-wavelength data, whether it be to study the properties of populations of astrophysical objects or to build reference catalogues (or timeseries) from survey observations. Nevertheless, resorting to automated processes with limited sets of information available on large numbers of sources detected at different epochs with various filters and instruments inevitably leads to spurious associations. We need both statistical criteria to select detections to be merged as unique sources, and statistical indicators helping in achieving compromises between completeness and reliability of selected associations.

Aims. We lay the foundations of a statistical framework for multi-catalogue cross-correlation and cross-identification based on explicit simplified catalogue models. A proper identification process should rely on both astrometric and photometric data. Under some conditions, the astrometric part and the photometric part can be processed separately and merged a posteriori to provide a single global probability of identification. The present paper addresses almost exclusively the astrometrical part and specifies the proper probabilities to be merged with photometric likelihoods.

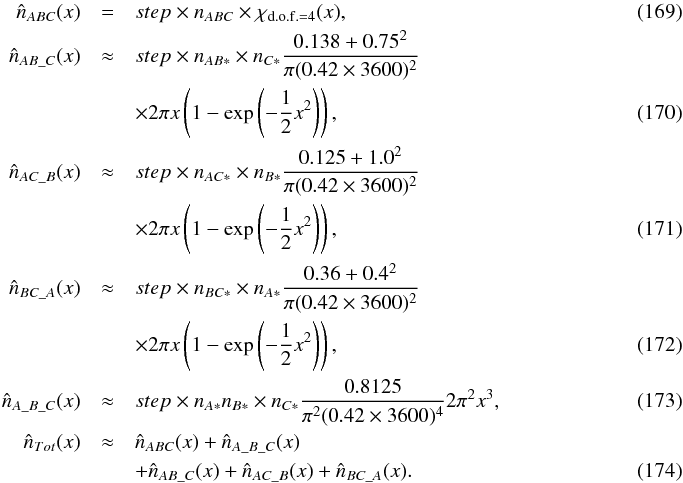

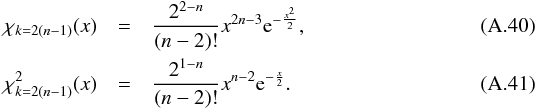

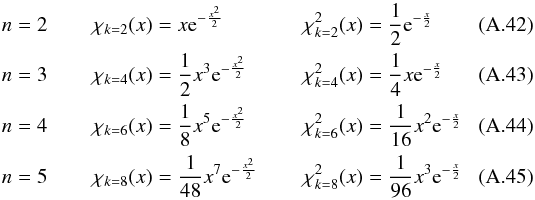

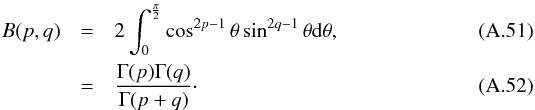

Methods. To select matching candidates in n catalogues, we used the Chi (or, indifferently, the Chi-square) test with 2(n−1) degrees of freedom. We thus call this cross-match a χ-match. In order to use Bayes’ formula, we considered exhaustive sets of hypotheses based on combinatorial analysis. The volume of the χ-test domain of acceptance – a 2(n−1)-dimensional acceptance ellipsoid – is used to estimate the expected numbers of spurious associations. We derived priors for those numbers using a frequentist approach relying on simple geometrical considerations. Likelihoods are based on standard Rayleigh, χ and Poisson distributions that we normalized over the χ-test acceptance domain. We validated our theoretical results by generating and cross-matching synthetic catalogues.

Results. The results we obtain do not depend on the order used to cross-correlate the catalogues. We applied the formalism described in the present paper to build the multi-wavelength catalogues used for the science cases of the Astronomical Resource Cross-matching for High Energy Studies (ARCHES) project. Our cross-matching engine is publicly available through a multi-purpose web interface. In a longer term, we plan to integrate this tool into the CDS XMatch Service.

Key words: methods: data analysis / methods: statistical / catalogs / astrometry

© ESO, 2017

1. Introduction

The development of new detectors with high throughput over large areas has revolutionized observational astronomy during recent decades. These technological advances, aided by a considerable increase of computing power, have opened the way to outstanding ground-based and space-borne all-sky or very large area imaging projects (e.g. the 2MASS Skrutskie et al. 2006; Cutri et al. 2003; SDSS Ahn et al. 2012, 2013; and WISE Wright et al. 2010; Cutri et al. 2014, surveys). These surveys have provided an essential astrometric and photometric reference frame and the first true digital maps of the entire sky.

As an illustration of this flood of data, the number of catalogue entries in the VizieR service at the Centre de Données astronomiques de Strasbourg (CDS) which was about 500 million in 1999 has reached almost 18 billion as on February 2016. At the 2020 horizon, European space missions such as Gaia and Euclid together with the Large Synoptic Survey Telescope (LSST) will provide a several-fold increase in the number of catalogued optical objects while providing measurements of exquisite astrometric and photometric quality.

This exponentially increasing flow of high quality multi-wavelength data has radically altered the way astronomers now design observing strategies and tackle scientific issues. The former paradigm, mostly focusing on a single wavelength range, has in many cases evolved towards a systematic fully multi-wavelength study. In fact, modelling the spectral energy distributions over the widest range of frequencies, spanning from radio to the highest energy gamma-rays has been instrumental in understanding the physics of stars and galaxies.

Many well designed and useful tools have been developed worldwide concurrently with the emergence of the virtual observatory. Most if not all of these tools can handle and process multi-band images and catalogues. When assembling spectral energy distributions using surveys obtained at very different wavelengths and with discrepant spatial resolution, one of the most acute problems is to find the correct counterpart across the various bands. Several tools such as TOPCAT (Taylor 2005) or the CDS XMatch Service (Pineau et al. 2011a; Boch et al. 2012) offer basic cross-matching facilities. However, none of the publicly available tools handles the statistics inherent to the cross-matching process in a fully coherent manner. A standard method for a dependable and robust association of a physical source to instances of it in different catalogues (cross-identification) and in diverse spectral ranges is still absent.

The pressing need for a multi-catalogue probabilistic cross-matching tool was one of the strong motivations of the FP7-Space European program ARCHES (Motch et al. 2016)1. Designing a cross-matching tool able to process, in a single pass, a theoretically unlimited number of catalogues, while computing probabilities of associations for all catalogue configurations, using the background of sources, positional errors and eventually introducing priors on the expected shape of the spectral energy distribution is one of the most important outcomes of the project. A preliminary description of this algorithm was presented in Pineau et al. (2015). Although ARCHES was originally focusing on the cross-matching of XMM-Newton sources, the algorithms developed in this context are clearly applicable to any combination of catalogues and energy bands (see for example Mingo et al. 2016).

2. Going beyond the two-catalogue case

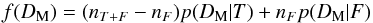

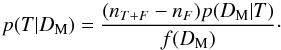

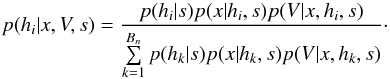

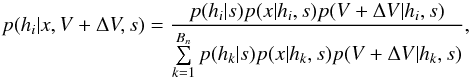

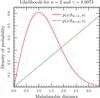

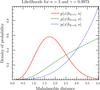

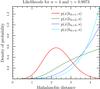

Computing probabilities of identifications when cross-correlating two catalogues in a given area can be quite straightforward (provided the area is small enough so that the density of sources can be considered more or less constant, but large enough to provide sufficient statistics). For each possible pair of sources (one from each catalogue), we compute the distance normalized by positional errors (called normalized distance, σ-distance, χ-distance or more generally in this paper Mahalanobis distance DM). Then we build the histogram of the number of associations per bin of DM. This histogram is the sum of two components (see Fig. 2): the “real” or “true” associations (T) for which the distribution p(DM | T) follows a Rayleigh distribution; the spurious or “false” associations, for which the distribution p(DM | F) follows a linear (Poisson) distribution. Knowing these two distributions and the total number of associations (nT + F), we may fit the histogram with the function  (1)to estimate the number of spurious associations (nF) and thus the number of good matches (nT = nT + F−nF). Hence, we are able to attribute to an association with a given normalized distance the probability of being a good match:

(1)to estimate the number of spurious associations (nF) and thus the number of good matches (nT = nT + F−nF). Hence, we are able to attribute to an association with a given normalized distance the probability of being a good match:  (2)Dividing both the numerator and the denominator by nT + F we recognize Bayes’ formula considering (nT + F−nF) /nT + F as the prior p(T) and considering either nF/nT + F as the prior p(F) or f(DM) = nT + Fp(DM).

(2)Dividing both the numerator and the denominator by nT + F we recognize Bayes’ formula considering (nT + F−nF) /nT + F as the prior p(T) and considering either nF/nT + F as the prior p(F) or f(DM) = nT + Fp(DM).

The present paper basically extends this simple approach to more than two catalogues. Instead of fitting histograms to find the number of spurious associations, we directly compute them from the input catalogues data and from geometrical considerations.

Previously, Budavári & Szalay (2008) developed a multi-catalogue cross-match. For a given set of n sources from n distinct catalogues, they compute a “Bayes’ factor” based on both astrometric and photometric data. The “Bayes’ factor” is then used as a score: a pre-defined threshold on its value is applied to select or reject the given set of n sources. We discuss the astrometric part of Budavári & Szalay (2008) “Bayes’ factor” and compare it to our selection criterion in Sects. 5.6 and 6.1.

Throughout the present paper we consider a set of n catalogues. We use a Chi-square criterion based on individual elliptical positional errors to select, in these catalogues, sets of associations containing at most one source per catalogue. We call this selection a χ-match. We then compute probabilities for each set of associations. To compute probabilities, we consider only the result set in which each set of associations contains exactly n sources (one per catalogue, see below for partial matches). For people familiar with databases, it can be seen as the result of inner joins, joining successively each catalogue using a Chi-square criteria. The probabilities we then compute are only based on positional coincidences. Although we show how it is possible to add likelihoods based on photometric considerations, the computation of such photometric likelihoods is beyond the scope of this paper.

As the result of a χ-match, two distinct sets of associations may have sources in common: a source having a large positional error in one catalogue may for example be associated to several sources with smaller errors in another catalogue. We do not take into account in our probabilities the “one-to-several” and the “one-to-one” associations paradigms defined in Fioc (2014): it becomes far too complex when dealing with a generic number of catalogues and it is not that simple when a source may be blended, etc. We use a several-to-several-(to-several-...) paradigm. In other words, we compute probabilities for a set of associations regardless of the fact that a source in the set can be in other sets of associations. So a same detection in one catalogue may have very high probabilities of associations with several (sets of) candidates in the other catalogues. We think it is the responsibility of the photometric part to disentangle such cases.

Requiring one candidate per catalogue for each set of associations (i.e. each tuple) is somewhat restrictive. But, if one or several catalogues do not contain any candidates for a tuple, then we compute the probabilities from the cross-match of the subset of catalogues providing one candidate to that tuple. Those probabilities are computed independently of the “full” n catalogues probabilities. For example, if we cross-match three catalogues and if a set of associations (a tuple) contains one source per catalogues (A, B and C), then we will compute five probabilities: one for each possible configuration (ABC, AB_C, A_BC, AC_B and A_B_C in which the underscore “_” separates the catalogue entries associated to different actual sources, see Sect. 6.2.2). Now, if one source from A has a candidate in B and no candidate in C, we will compute only two probabilities (AB and A_B, see Sect. 6.2.1) considering only the result of the cross-match of A with B. Likewise for A and C only and for B and C only. These four cross-matches will yield eleven distinct probabilities. It is possible to deal with “missing” detections when computing photometrically based likelihoods (taking into account limit fluxes, ...) but it is not the case in the astrometric part of this work.

When χ-matching n catalogues, the number of hypotheses to be tested, and thus the number of probabilities to be computed for a given set of associations, increases dramatically with n. This number is 203 for 6 catalogues and reaches 877 for seven catalogues (see Table 2 in Sect. 6.2.4). To be able to compute probabilities when χ-matching more than seven catalogues we may start by merging catalogues for which the probability of making spurious associations is very low (e.g. catalogues of similar wavelength and similar astrometric accuracy), and handle the merged catalogue as a single input catalogue.

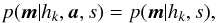

In Sect. 3 we lay down the assumptions we use to work on a simplified problem. We then (Sect. 4) define the notations and the standards used throughout the paper and link them to the standards adopted in a few catalogues. We then describe in detail the candidate selection criterion (Sect. 5) before providing (Sect. 6) an exhaustive list of all hypotheses we have to account for to apply Bayes’ formula. In Sect. 5 we also show how the “Bayesian cross-match” of Budavári & Szalay (2008) may be interpreted as an inhomogeneous χ-match. Then (Sect. 7) we show how it is possible to estimate the rates of spurious associations and hence “priors”. In Sect. 8 we compute an integral which is related to the probability the selection criterion has to select a set of n sources for a given hypothesis. This integral is crucial to compute likelihoods defined in Sect. 9 and to normalize likelihoods in Sect. 10. Finally, after showing how to introduce the photometric data into the probabilities (Sect. 11), and before concluding (Sect. 14), we explain the tests we carried out on synthetic catalogues in Sect. 12. Since this paper is long and technical, we put a summary of the steps to follow to perform a probabilistic χ-match in Sect. 13.

3. Simplifying assumptions

Cross-correlating catalogues taking into account an accurate model of the sky on one hand, and the effects and biases due to the catalogue building process on the other hand is a daunting task. To make progress towards this objective, we have to start by making simplifying assumptions.

First of all, we assume that there are no systematic offsets between the positions of each possible pair of catalogues. It means that the positions are accurate (no bias). We also assume that positional errors provided in catalogues are trustworthy. It means that they are neither overestimated nor underestimated: for instance, no systematic have to be quadratically added or removed. The first point supposes an accurate astrometric calibration of all catalogues. This is somewhat the “dog chasing its tail” problem since a proper astrometric calibration should be based on secure identifications, themselves based on... cross-identification! Ideally the astrometric calibration and the cross-identification should be performed simultaneously in an iterative process. It will not be developed here but we point out that the present work can be used to calibrate astrometrically n catalogues at the same time from one reference catalogue, taking into account all possible associations in all possible catalogue sub-sets. However, carrying out careful identification of primary or secondary astrometric standards is only important when the density of bright astrometric references is very low, typically in deep small field exposures. Reliable cross-identification is also crucial when the wavelength band of the image to calibrate differs widely from that of the astrometric reference image. In most large scale surveys such as 2MASS (Skrutskie et al. 2006) or SDSS (Pier et al. 2003) the density of bright Tycho-2 (Høg et al. 2000) or UCAC (Zacharias et al. 2004) astrometric reference stars is high enough to ensure an excellent overall calibration without any ambiguity in the associations.

Although the idealized vision of an immutable and static sky is long gone, we ignore proper motions in this analysis. There are at least two ways of taking them into account: either we may force associations to include at least one source from a catalogue containing measured proper motions; or we may try to fit proper motions during the cross-match process. In this last case, if a set of n sources detected at different epochs in n distinct catalogues does not satisfy the candidate selection defined in Sect. 5.2, we may make the hypothesis that they nonetheless are from a same source but having a proper motion. We can then estimate the proper motion and the associated error based on positions, (Gaussian) positional errors and epochs (see Appendix B). From the n observed positions and associated errors and from the n theoretical estimated positions and associated errors we can compute a Mahalanobis distance which follows a χ distribution with 2(n−2) degrees of freedom. Similarly to the candidate selection criterion in Sect. 5.2 we can then reject the hypothesis “same source with proper motion” if the Mahalanobis distance is larger than a given threshold.

We neglect clustering effects. We suppose that in a given area Ω, source properties are homogeneous. This implies that the local density of sources, the positional error distributions and the associations priors (probabilities of true associations that in principle depend on the astrophysical nature of the sources and on the limiting flux) are uniform over the sky area considered. As usual we have to face the following dilemma: on the one hand, the larger the area Ω, the better the statistic; on the other hand, the larger the area Ω, the less probable the uniform density, errors distributions and priors hypothesis. In the ARCHES project, for instance, we grouped the individual XMM-Newton EPIC fields of view of ≈0.126 deg2 each into installments of homogeneous exposure times and galactic latitude so as to ensure as much uniformity as possible. Each installment contained on the order of several hundred sources.

Finally, we neglect blending. If two sources are separated in one catalogue and blended in the other one, the position of the blended source will be something like the photocentre of the two sources. Either the blended source will not match any of the two distinct sources, or only one of the two distinct sources will match, the match likely being in the tail of the Rayleigh distribution, possibly leading to a low probability of identification. It will then not be problematic to consider the match as spurious since the observed flux is contaminated by the flux of the nearby source. Finally, if the positional accuracy of the blended source is well below that of the distinct sources, both distinct sources will match the blended source, leading to a non-unique association requiring further investigations to be disentangled.

4. Notations and links with catalogues

4.1. Notations

This article uses almost exclusively the notations defined in the ISO 80000-2:2009(E) international standard. Exceptionally we waive the notation detA for determinant and replace it by the equivalent but more compact notation | A |.

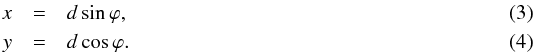

We consider n catalogues defined on a common surface of area Ω. We assume that each catalogue source has individual elliptical positional errors defined by a bivariate normal (or binormal) distribution. For this, we assimilate locally the surface of the sphere to its zenithal (or azimuthal) equidistant projection (see ARC projection in Calabretta & Greisen 2002), that is to its local Euclidean tangent plane. In this frame, the position of a point at distance d arcsec from the origin O (the tangent point) and having a position angle ϕ (east of north) is simply  This approximation is acceptable since typical positional errors, distances and surfaces locally considered are small.

This approximation is acceptable since typical positional errors, distances and surfaces locally considered are small.

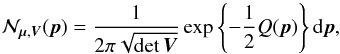

We note  the binormal probability density function (p.d.f.) representing the position of a source S and its associated uncertainty:

the binormal probability density function (p.d.f.) representing the position of a source S and its associated uncertainty:  (5)with

(5)with

-

μ = (μx,μy)⊺ the position of the source S provided in a catalogue, that is the mean of the binormal distribution;

-

V the provided variance-covariance – also simply called covariance – matrix which defines the error on the source position;

-

p = (x,y)⊺ any given two-dimensional position;

-

Q(p) the quadratic form Q(p) = (p−μ)⊺V-1(p−μ), that is the square of the weighted distance between a given position p and the position of the source S

where

where

-

σx is the standard deviation along the x-axis (i.e. the east axis);

-

σy is the standard deviation along the y-axis (i.e. the north axis);

-

ρ the correlation factor between σx and σy;

-

-

the determinant of V;

the determinant of V; -

dp = dxdy.

A covariance matrix V represents a 1σ ellipse. The “real” position of the source S has ≈39% chances to be located inside this 1σ-ellipse. It must not be confused with the 1-dimensional 1σ-segment which contains a real “value” with a probability of ≈68%.

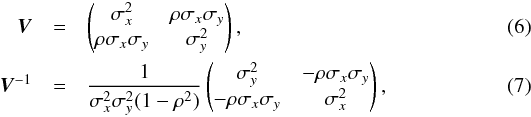

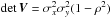

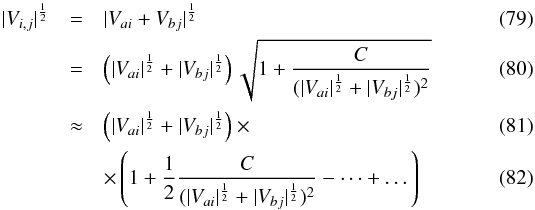

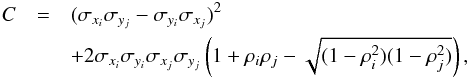

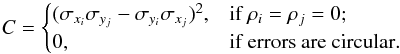

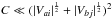

4.2. Classical positional errors in catalogues

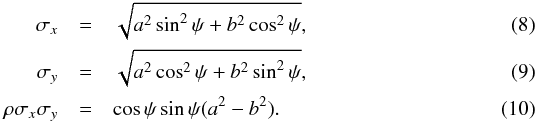

In astronomical catalogues like the 2MASS All-Sky Catalog of Point Sources (2MASS-PSC, Cutri et al. 2003) positional errors are described by three parameters defining the 1σ positional uncertainty ellipse2: err_maj or a the semi-major axis, err_min or b the semi-minor axis, and err_ang or ψ the positional angle (east of north) of the semi-major axis. We give the formula to transform the ellipse into a covariance matrix (see Appendix A.2 of Pineau et al. 2011b and footnote 11 in Fioc 2014):  In the AllWISE catalogue (Cutri et al. 2014), the coefficients of the covariance matrix are (almost) directly available. Instead of providing the unitless correlation factor ρ or the covariance ρσxσy (in arcsec2), the authors chose to provide the co-sigma (σαδ) because, as they state3, the latter is in the same units as the other uncertainties. We thus have

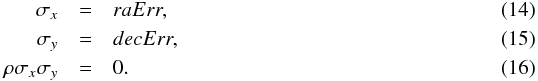

In the AllWISE catalogue (Cutri et al. 2014), the coefficients of the covariance matrix are (almost) directly available. Instead of providing the unitless correlation factor ρ or the covariance ρσxσy (in arcsec2), the authors chose to provide the co-sigma (σαδ) because, as they state3, the latter is in the same units as the other uncertainties. We thus have  In catalogues like the Sloan Digital Sky Survey since its eighth data release (SDSS-DR8, Aihara et al. 2011) positional errors contain two terms: the error on RA (raErr) and the error on Dec (decErr). In this case the parameters of the covariance matrix are simply

In catalogues like the Sloan Digital Sky Survey since its eighth data release (SDSS-DR8, Aihara et al. 2011) positional errors contain two terms: the error on RA (raErr) and the error on Dec (decErr). In this case the parameters of the covariance matrix are simply  In catalogues like the XMM catalogues (e.g. the 3XMM-DR5, Rosen et al. 2016) a single error is provided. Ideally, one would like to have access to the two one-dimensional errors, even if their respective values are often very close. The column named radecErr is the total error, so the quadratic sum of the two computed (but not provided) 1-dimensional errors, one computed on RA and one computed on Dec. If one uses σx = radecErr and σy = radecErr, the total error will be

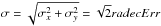

In catalogues like the XMM catalogues (e.g. the 3XMM-DR5, Rosen et al. 2016) a single error is provided. Ideally, one would like to have access to the two one-dimensional errors, even if their respective values are often very close. The column named radecErr is the total error, so the quadratic sum of the two computed (but not provided) 1-dimensional errors, one computed on RA and one computed on Dec. If one uses σx = radecErr and σy = radecErr, the total error will be  instead of radecErr. In output of the astrometric calibration process, the XMM pipeline provides a systematic error sysErrCC which is quadratically added to radecErr to compute the “total radial position uncertainty”4posErr. As for radecErr, we must divide posErr by

instead of radecErr. In output of the astrometric calibration process, the XMM pipeline provides a systematic error sysErrCC which is quadratically added to radecErr to compute the “total radial position uncertainty”4posErr. As for radecErr, we must divide posErr by  to obtain the 1-dimensional error. The appropriate errors to be used (including a systematic) are then

to obtain the 1-dimensional error. The appropriate errors to be used (including a systematic) are then  The factor

The factor  has not been taken into account in Pineau et al. (2011b). It partly explains why the fit of the curve in the right panel of Fig. 3 mentioned in Sect. 5 of this paper does not lead to a Rayleigh scale parameter equal to 1.

has not been taken into account in Pineau et al. (2011b). It partly explains why the fit of the curve in the right panel of Fig. 3 mentioned in Sect. 5 of this paper does not lead to a Rayleigh scale parameter equal to 1.

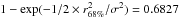

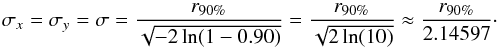

Similarly to the XMM case, the error posErr provided in the GALEX All-Sky Survey Source Catalog (GASC) catalogue5 (which also includes the systematic) is a “total radial error”. It is thus the Rayleigh parameter σ which is the quadratic sum of two one-dimensional errors. As for XMM, the appropriate errors to be used are  In catalogues like the ROSAT All-Sky Bright Source Catalogue (1RXS, Voges et al. 1999) the error provided is the radius of the cone containing the real position of a source with a probability of ≈68.269% (the 1 dimensional 1σ). Authors like Rutledge et al. (2003; given the details provided in Voges et al. 1999, Sect. 3.3.3) call this radius the 1σ-radius. We note it r68%. But, in the Rayleigh distribution, the scale parameter σ is defined such that the cone of radius r = σ contains the real position with a probability 100 × (1−exp(−1/2)) ≈ 39.347%. Adjusting such that

In catalogues like the ROSAT All-Sky Bright Source Catalogue (1RXS, Voges et al. 1999) the error provided is the radius of the cone containing the real position of a source with a probability of ≈68.269% (the 1 dimensional 1σ). Authors like Rutledge et al. (2003; given the details provided in Voges et al. 1999, Sect. 3.3.3) call this radius the 1σ-radius. We note it r68%. But, in the Rayleigh distribution, the scale parameter σ is defined such that the cone of radius r = σ contains the real position with a probability 100 × (1−exp(−1/2)) ≈ 39.347%. Adjusting such that  leads to

leads to  (21)Similarly if the provided error is the radius of the cone containing the real position with a probability of 90% (e.g. in the WGACAT, White et al. 2000)

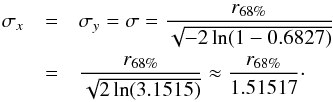

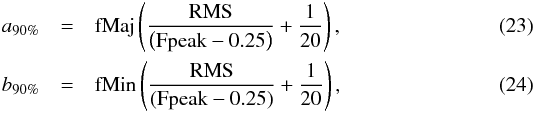

(21)Similarly if the provided error is the radius of the cone containing the real position with a probability of 90% (e.g. in the WGACAT, White et al. 2000)  (22)The description (White et al. 1997) and the on-line documentation6 of the FIRST catalogue (Helfand et al. 2015a,b) provide an “empirical expression” to compute the semi-major and semi-minor axis of the 90% positional accuracy associated to each source:

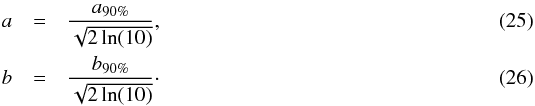

(22)The description (White et al. 1997) and the on-line documentation6 of the FIRST catalogue (Helfand et al. 2015a,b) provide an “empirical expression” to compute the semi-major and semi-minor axis of the 90% positional accuracy associated to each source:  in which fMaj (fMin) is the major (minor) axis of the fitted FWHM, RMS “is a local noise estimate at the source position” and Fpeak is the peak flux density. The position angle ψ of the accuracy equals the fitted FWHM angle fPA. We first obtain the 1σ accuracy ellipse by resizing the 90% ellipse axes divinding them by the same factor as for the WGACAT (i.e.

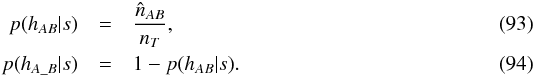

in which fMaj (fMin) is the major (minor) axis of the fitted FWHM, RMS “is a local noise estimate at the source position” and Fpeak is the peak flux density. The position angle ψ of the accuracy equals the fitted FWHM angle fPA. We first obtain the 1σ accuracy ellipse by resizing the 90% ellipse axes divinding them by the same factor as for the WGACAT (i.e.  )

)  After possibly adding systematics, the variance-covariance matrix is obtained applying the equations used for the 2MASS catalogue.

After possibly adding systematics, the variance-covariance matrix is obtained applying the equations used for the 2MASS catalogue.

Errors in catalogues like the Guide Star Catalog Version 2.3.27 (Lasker et al. 2007, GSC2.3) should not be used in the framework of this paper. As stated in Table 3 of Lasker et al. (2008): these astrometric and photometric errors are not formal statistical uncertainties but a raw and conservative estimate to be used for telescope operations.

Table 1 summarizes the transformation of catalogues positional errors into the coefficients of covariance matrices V.

Summary of the transformations of positional errors provided in various astronomical catalogues into the coefficients of error covariance matrices (before adding quadratically possible systematics).

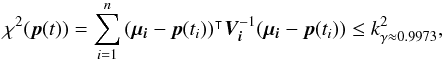

5. Candidates selection: the χ-match

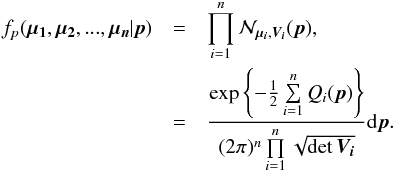

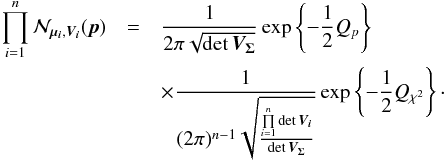

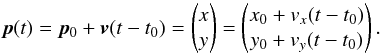

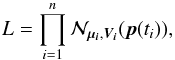

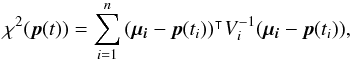

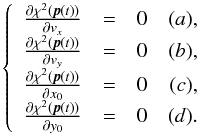

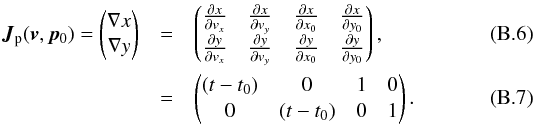

We make the hypothesis that n sources from n distinct catalogues are n independent detections of a same real source. With p the unknown position of the real source and μi the observed position of detection i, the probability for the n detections to be located at the observed positions is expressed by the joint density function:  (27)

(27)

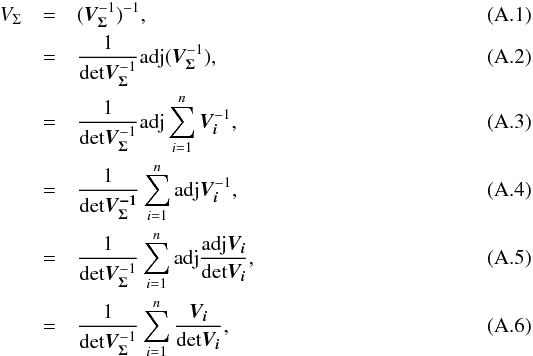

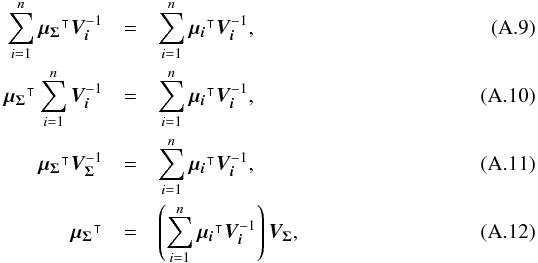

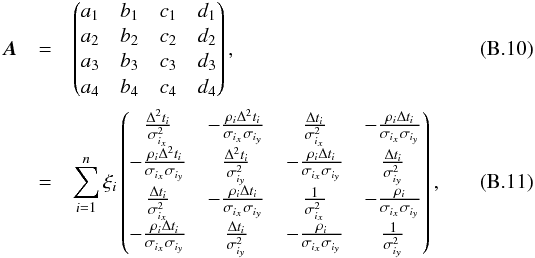

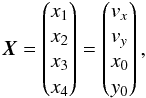

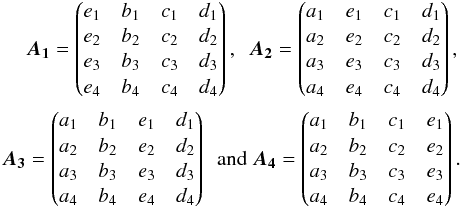

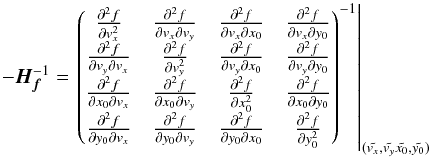

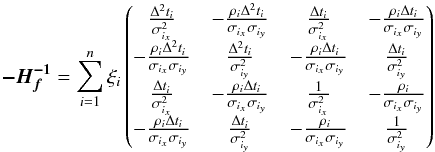

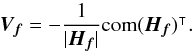

5.1. Estimation of the real position given n observations

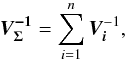

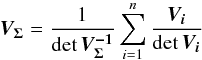

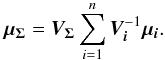

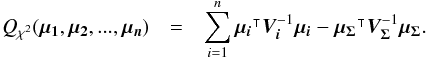

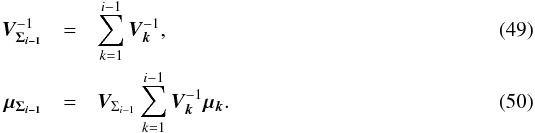

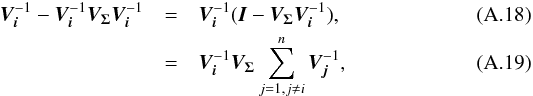

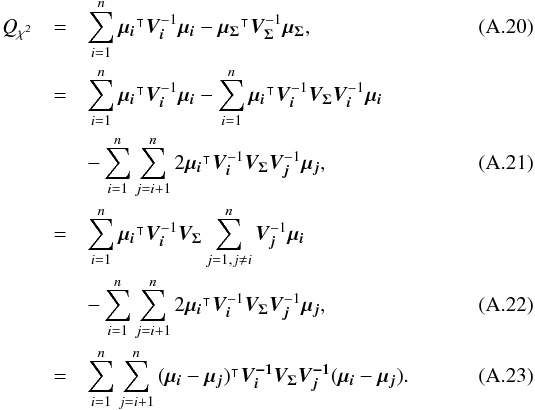

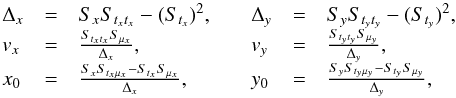

We introduce the notations μΣ and VΣ for the weighted mean position of the n sources and its associated error respectively. The inverse of the covariance matrix VΣ is  (28)leading to (see demonstration in Sect. A.1)

(28)leading to (see demonstration in Sect. A.1)  (29)which is used in the weighted mean position expression

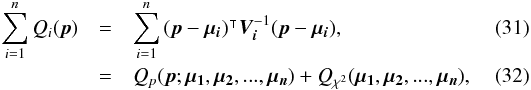

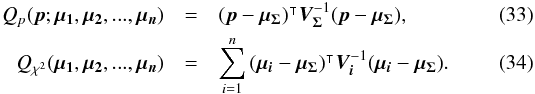

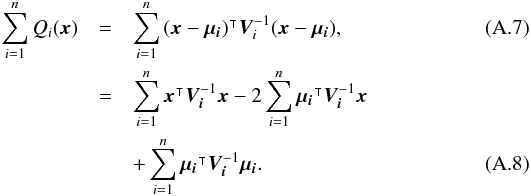

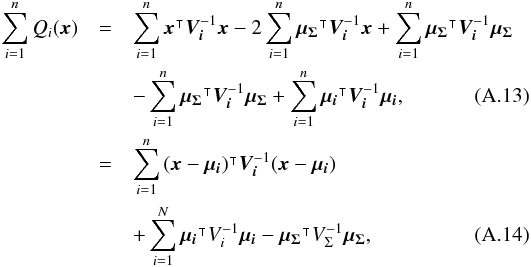

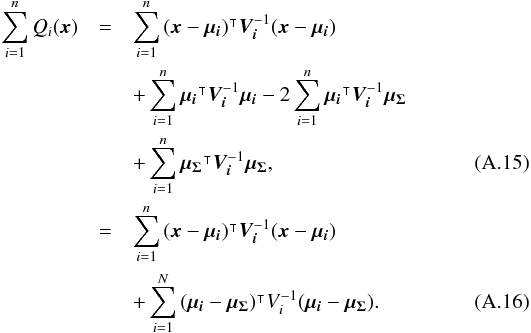

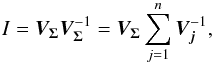

(29)which is used in the weighted mean position expression  (30)Using both the weighted mean position and its error, the sum of quadratics in Eq. (27) can be divided into two parts and written as (see demonstration Sect. A.2)

(30)Using both the weighted mean position and its error, the sum of quadratics in Eq. (27) can be divided into two parts and written as (see demonstration Sect. A.2) with

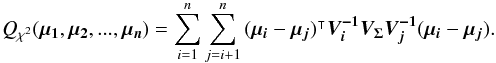

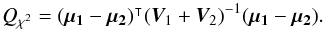

with  In the case of two catalogues the latter term can be written as in Eq. (51). Moreover, if both covariances are null, it takes the simple and common form

In the case of two catalogues the latter term can be written as in Eq. (51). Moreover, if both covariances are null, it takes the simple and common form  (35)Back to the general case, the term Qχ2 can also be put in the more computationally efficient form (only one loop over i)

(35)Back to the general case, the term Qχ2 can also be put in the more computationally efficient form (only one loop over i)  (36)From those formulae, it appears that the weighted mean position (Eq. (30)) is the maximum likelihood estimator of the “true” position of the source: the second term (Qχ2) is constant with respect to p so the maximum of the likelihood function ℒ(p;μ1,μ2,...,μn) = fp(μ1,μ2,...,μn | p) is obtained when the first term (Qp) is null, so when p = μΣ. The error on this estimate is simply VΣ, the inverse of the Hessian of the likelihood function.

(36)From those formulae, it appears that the weighted mean position (Eq. (30)) is the maximum likelihood estimator of the “true” position of the source: the second term (Qχ2) is constant with respect to p so the maximum of the likelihood function ℒ(p;μ1,μ2,...,μn) = fp(μ1,μ2,...,μn | p) is obtained when the first term (Qp) is null, so when p = μΣ. The error on this estimate is simply VΣ, the inverse of the Hessian of the likelihood function.

5.2. Candidates selection criterion

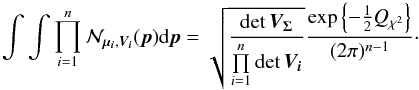

For the candidate selection, we are interested in the probability the n sources have to be located at the same position. Let’s first rewrite Eq. (27) to exhibit a product of a binormal distribution by another multi-dimensional normal law:  (37)When integrating Eq. (37) over all possible positions (i.e. over p) the first term integrates to 1, since it is the p.d.f. of a normal law in p, so we obtain

(37)When integrating Eq. (37) over all possible positions (i.e. over p) the first term integrates to 1, since it is the p.d.f. of a normal law in p, so we obtain  (38)We are supposed to integrate on the surface of the unit sphere. But the errors being small, we consider the infinity being at a relatively close distance, before effects of the sphere curvature become non-negligible.

(38)We are supposed to integrate on the surface of the unit sphere. But the errors being small, we consider the infinity being at a relatively close distance, before effects of the sphere curvature become non-negligible.

In the previous equation, only the Qχ2 term remains. It can also be written (see demonstration Sect. A.3)  (39)Equation (38) is equivalent to P(D | H) in Budavári & Szalay (2008) and Eq. (39) – multiplied by the

(39)Equation (38) is equivalent to P(D | H) in Budavári & Szalay (2008) and Eq. (39) – multiplied by the  factor in the exponential (Eq. (38)) – is the generalization for elliptical errors of Eq. (B12) in Budavári & Szalay (2008). In practice, we never use Eq. (38) since the number of terms to be computed increases with O(n(n−1)/2) while it increases with O(n) in Eq. (36) or in its iterative form (see Eq. (48) in Sect. 5.3). We use here the big O notation, to be read as “the order of”.

factor in the exponential (Eq. (38)) – is the generalization for elliptical errors of Eq. (B12) in Budavári & Szalay (2008). In practice, we never use Eq. (38) since the number of terms to be computed increases with O(n(n−1)/2) while it increases with O(n) in Eq. (36) or in its iterative form (see Eq. (48) in Sect. 5.3). We use here the big O notation, to be read as “the order of”.

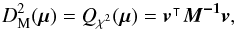

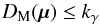

We can see Eq. (34) as the result of a 2n-dimensional weighted least squares in which the model is the “real” position of the source and the solution is μΣ (by similarity with Eq. (31)). Putting all positional errors matrices in a 2n × 2n block diagonal matrix M, Qχ2 is the square of the Mahalanobis distance  defined by

defined by  (40)

(40) (41)which follows in our particular case a χ2 distribution with 2(n−1) degrees of freedom, or equivalently, (n−1)χ2 distributions with two degrees of freedom. Equation (40) is probably the Mahalanobis distance mentioned without giving its expression in Adorf et al. (2006).

(41)which follows in our particular case a χ2 distribution with 2(n−1) degrees of freedom, or equivalently, (n−1)χ2 distributions with two degrees of freedom. Equation (40) is probably the Mahalanobis distance mentioned without giving its expression in Adorf et al. (2006).

If  follows a

follows a  distribution, then its square root, the distance DM(μ), follows a χd.o.f. = 2(n−1) distribution.

distribution, then its square root, the distance DM(μ), follows a χd.o.f. = 2(n−1) distribution.

We perform a statistical hypothesis test on a set of n sources, defining the null hyothesis H0 as follows: all sources in the set are detections of the same “real” source. The alternative hypothesis H1 would thus be: not all sources in the set are detections of the same “real” source; in other words the set of n sources contains at least one spurious source; or, expressed differently, the n sources are n observations of at least two distinct real sources. We adopt Fisher’s approach, that is we will reject the null hypothesis if, the null hypothesis being true, the observed data is significantly unlikely.

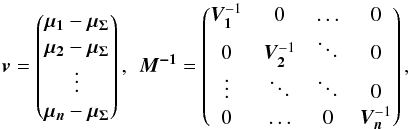

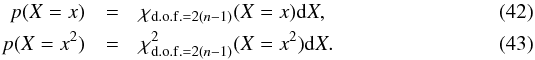

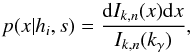

From now on, we indifferently write x or DM the Mahalanobis distance. Assuming the null hypothesis is true, the “theoretical” probability we had to get the actual computed (square of) Mahalanobis distance is given by a Chi(-square) distribution with 2(n−1) degrees of freedom:  The probability we had to get an actual computed (square of) Mahalanobis distance less than or equal to a given threshold (or critical value)

The probability we had to get an actual computed (square of) Mahalanobis distance less than or equal to a given threshold (or critical value)  is given by the value of the cumulative distribution function of a the Chi(-square) at the given threshold

is given by the value of the cumulative distribution function of a the Chi(-square) at the given threshold  (44)We can indifferently work on x with the χ distribution or on x2 with the χ2 distribution. The threshold kγ we obtain on x is simply the square root of the threshold

(44)We can indifferently work on x with the χ distribution or on x2 with the χ2 distribution. The threshold kγ we obtain on x is simply the square root of the threshold  we obtain on x2. Although we find the Chi test more natural in the present case, most astronomers are familiar with the Chi-square test.

we obtain on x2. Although we find the Chi test more natural in the present case, most astronomers are familiar with the Chi-square test.

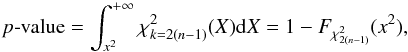

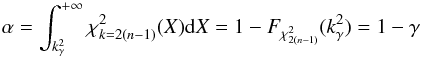

In the framework of statistical hypothesis tests, it is the complementary cumulative distribution (or tail distribution) function which is usually used by defining the p-value  (45)and a significance level α defined by

(45)and a significance level α defined by  (46)is fixed. The null hypothesis is then rejected if p - value <α. In the Neyman-Pearson framework α is the type I error, or the false positive rate, that is the probability the null hypothesis has to be rejected (positive rejection test) while it is true (wrong/false decision). In our case we fix γ (hereafter called completeness), the fraction of real associations we “theoretically” select over all real associations. The candidates selection criterion, or fail of rejection criterion, we use is then

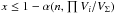

(46)is fixed. The null hypothesis is then rejected if p - value <α. In the Neyman-Pearson framework α is the type I error, or the false positive rate, that is the probability the null hypothesis has to be rejected (positive rejection test) while it is true (wrong/false decision). In our case we fix γ (hereafter called completeness), the fraction of real associations we “theoretically” select over all real associations. The candidates selection criterion, or fail of rejection criterion, we use is then  (47)in which

(47)in which  or, equivalently,

or, equivalently,  . This inequality is equivalent to p - value <α. It is important to write “fail of rejection” since nothing proves that if Eq. (47) is satisfied the null hypothesis is true: at this point the selected set of sources is nothing else than a set of candidates. Nevertheless we do call region of acceptance the set of DM(μ) values satisfying Eq. (47). This region of acceptance will be useful to define the domain of integration used to normalize likelihoods when computing probabilities for each hypothesis from Sect. 7. Its volume (see e.g. Eq. (64)) is the volume of the 2n-ellipsoid defined by M (see Eq. (40)) divided by the error ellipse associated to the weighted mean position μΣ and defined by VΣ (it thus is a volume in a 2(n−1) space).

. This inequality is equivalent to p - value <α. It is important to write “fail of rejection” since nothing proves that if Eq. (47) is satisfied the null hypothesis is true: at this point the selected set of sources is nothing else than a set of candidates. Nevertheless we do call region of acceptance the set of DM(μ) values satisfying Eq. (47). This region of acceptance will be useful to define the domain of integration used to normalize likelihoods when computing probabilities for each hypothesis from Sect. 7. Its volume (see e.g. Eq. (64)) is the volume of the 2n-ellipsoid defined by M (see Eq. (40)) divided by the error ellipse associated to the weighted mean position μΣ and defined by VΣ (it thus is a volume in a 2(n−1) space).

In practice, the value  is computed numerically using Newton’s method to solve

is computed numerically using Newton’s method to solve  . The initial guess we use is the approximate value returned by Eq. (A.3) of Inglot (2010).

. The initial guess we use is the approximate value returned by Eq. (A.3) of Inglot (2010).

The value of γ we fix is independent of n, the number of candidates. In practice we often set this input parameter to γ = 0.9973. In one dimension this value leads to kγ = 3, that is the famous 3σ rule. It means that for 10 000 real associations in a dataset, we theoretically miss 27 of them by applying the candidate selection criterion. From now on we call this cross-correlation a χγ-match, or simply a χ-match.

In the particular case of two catalogues DM(μ) follows a χ distribution with 2 degrees of freedom – that is a Rayleigh distribution – and kγ = 0.9973 = 3.443935. This latter value is used in the two-catalogues χ-match of Pineau et al. (2011b).

5.3. Iterative form: catalogue by catalogue

Somewhat similarly to the Bayes factor in Budavári & Szalay (2008, Sect. 6) it is noteworthy that Qχ2 can be computed iteratively, summing (n−1) successive χ2 with two degrees of freedom computed from (n−1) successive two-catalogues cross-matches.

After each iteration, the new position to be used for the next cross-match is the weighted mean of all already matched positions and the new associated error is the error on this weighted mean. The strict equality between Eq. (48) and the non iterative form, for example Eq. (34), proves that the result is independent of the successive cross-matches order.

The maximum number of cross-matches to be performed must be known in advance in order to put an upper limit on kγ since it depends on the degree of freedom of the total χ2. The iteration formula is simply  (48)in which

(48)in which  We find it from the 2-catalogues case, for which (see Sect. A.4)

We find it from the 2-catalogues case, for which (see Sect. A.4)  (51)We can demonstrate by direct calculation that

(51)We can demonstrate by direct calculation that  (52)and so, iteratively, we find the general expression

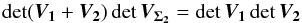

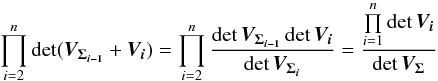

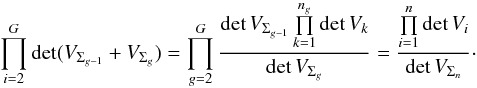

(52)and so, iteratively, we find the general expression  (53)which is consistent with Eq. (38). The volume of the acceptance region of the statistical hypothesis test is the volume of a 2(n−1) dimensional ellipsoid. More precisely, it is the product of the previous equation Eq. (53) by the volume of a 2(n−1)-sphere of radius kγ. This will be crucial when computing the rate of spurious associations.

(53)which is consistent with Eq. (38). The volume of the acceptance region of the statistical hypothesis test is the volume of a 2(n−1) dimensional ellipsoid. More precisely, it is the product of the previous equation Eq. (53) by the volume of a 2(n−1)-sphere of radius kγ. This will be crucial when computing the rate of spurious associations.

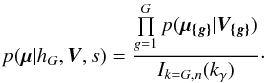

5.4. Iterative form: by groups of catalogues

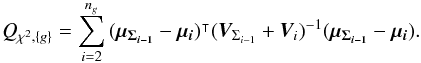

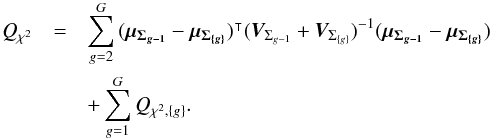

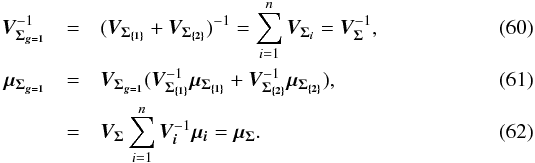

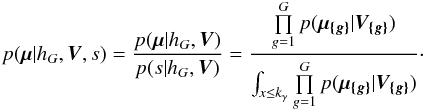

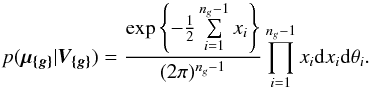

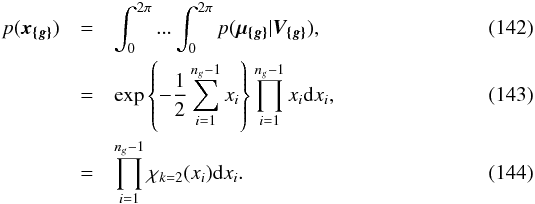

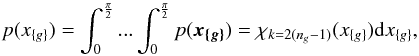

Instead of iterating over catalogues one by one, we can also perform G sub-cross-matches, each associating ng distinct sources such that  . We note Qχ2, { g } the square of the Mahalanobis distance associated with the group g:

. We note Qχ2, { g } the square of the Mahalanobis distance associated with the group g:  (54)We show that we can compute Qχ2 iteratively from the G weighted mean positions μΣ{g} and their associated errors

(54)We show that we can compute Qχ2 iteratively from the G weighted mean positions μΣ{g} and their associated errors  . The square of the Mahalanobis distance can be written

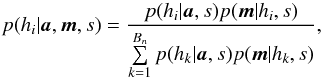

. The square of the Mahalanobis distance can be written  (55)In other words, the square of the Mahalanobis distance is the sum of the square of the intra-group Mahalanobis distances plus the inter-group iterative one. With k being an index defined inside each of the G groups { g }

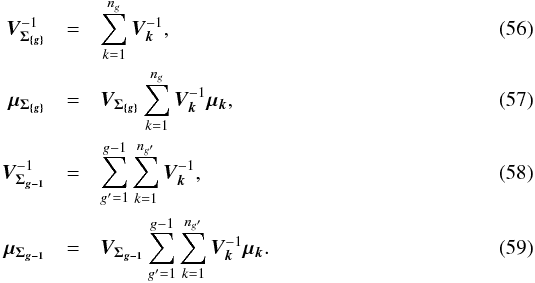

(55)In other words, the square of the Mahalanobis distance is the sum of the square of the intra-group Mahalanobis distances plus the inter-group iterative one. With k being an index defined inside each of the G groups { g } In fact, it is a straightforward generalization of the G = 2 groups case for which

In fact, it is a straightforward generalization of the G = 2 groups case for which  Here again,

Here again,  (63)Again, kγ depends on the number of degrees of freedom of the total χ2, thus on the total number of cross-correlated tables. It means that to be complete, all sub-cross-correlations must use the candidate selection threshold kγ(2(n−1)) computed from the total number of tables instead of kγ(2(ng−1)) computed from the number of tables in a group.

(63)Again, kγ depends on the number of degrees of freedom of the total χ2, thus on the total number of cross-correlated tables. It means that to be complete, all sub-cross-correlations must use the candidate selection threshold kγ(2(n−1)) computed from the total number of tables instead of kγ(2(ng−1)) computed from the number of tables in a group.

5.5. Summary and Interpretation

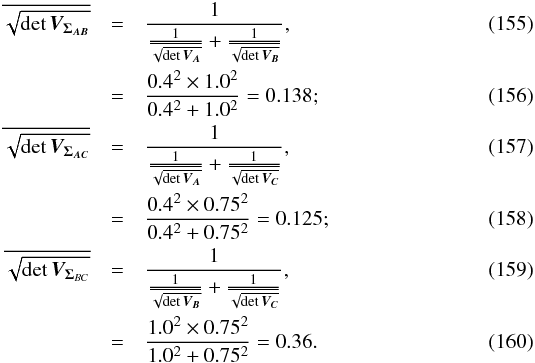

Equations (34), (36), (39), (40), (48) and (55) are all equivalent and they lead to the same value, that is to the same squared Mahalanobis distance. All sources are retained as possible candidates if Eq. (47) is verified, so if the Mahalanobis distance is smaller or equal to kγ. This threshold is the inverse of the cumulative χ distribution function at the chosen completeness γ, for 2(n−1) degrees of freedom.

As this criterion is no other than a χ-test criterion (or χ2-test criterion if we work on squared Mahalanobis distances) we call the result of such a criterion a χ-match.

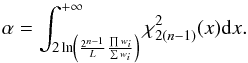

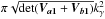

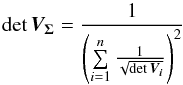

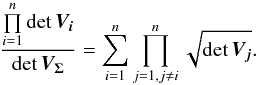

The χ-match criterion defines a region of acceptance which is a 2(n−1)-ellipsoid of radius kγ. Its volume is computed from Eq. (53): ![\begin{equation} \mathcal{V}_n(k_\gamma) = \left[\frac{\prod\limits_{i=1}\det\bm{V_i}}{\det\bm{V_\Sigma}} \right]^{1/2} \frac{\pi^{n-1}k_\gamma^{2(n-1)}}{(n-1)!}, \label{eq:ellvolume} \end{equation}](/articles/aa/full_html/2017/01/aa29219-16/aa29219-16-eq187.png) (64)with

(64)with  the volume of a 2(n−1)-sphere of radius kγ. It will be later used to compute the expected number of spurious associations.

the volume of a 2(n−1)-sphere of radius kγ. It will be later used to compute the expected number of spurious associations.

5.6. Comment on the “Bayesian cross-match” of Budavári & Szalay (2008)

We mention in Sect. 6.1 what appears to be a conceptual problem in calling B (Eq. (65)) a Bayes factor for more than two catalogues in the astrometrical part of Budavári & Szalay (2008).

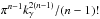

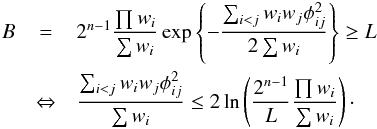

Performing a cross-match by fixing a lower limit L on the “Bayes factor” B defined in Eq. (18) of Budavári & Szalay (2008) is no other than performing a χ-match with a significance level which depends both on the number of sources n and on the volume of the 2(n−1)-ellipsoid of radius 1. In fact, using the factor B of Budavári & Szalay (2008) in which wi is the inverse of the cirular error on the position of the source i and φij is the angular distance between sources i and j, we have the equivalence  (65)We showed that the quantity on the left side of the inequality is equal to Eq. (39) in the present paper and thus follows a χ2 distribution for “real” associations. It means that the “Bayesian” candidate selection criterion B ≥ L is equivalent to a χ2 test having a significance level equal to

(65)We showed that the quantity on the left side of the inequality is equal to Eq. (39) in the present paper and thus follows a χ2 distribution for “real” associations. It means that the “Bayesian” candidate selection criterion B ≥ L is equivalent to a χ2 test having a significance level equal to  (66)The larger the volume of the 2(n−1)-ellipsoid of radius 1 (∝

(66)The larger the volume of the 2(n−1)-ellipsoid of radius 1 (∝ ), the more “real” associations are missed and the less spurious associations are retrieved. We could replace the criterion B ≥ L by

), the more “real” associations are missed and the less spurious associations are retrieved. We could replace the criterion B ≥ L by  . This is somewhere between the fixed radius cone search and the fixed significance level χ-match. The rate of missed “real” associations is not homogeneous but depends on the positional errors. Only if positional errors are constant in all catalogues, then the B ≥ L constraint becomes equal to the χ-match which is equal to a fixed radius cross-match.

. This is somewhere between the fixed radius cone search and the fixed significance level χ-match. The rate of missed “real” associations is not homogeneous but depends on the positional errors. Only if positional errors are constant in all catalogues, then the B ≥ L constraint becomes equal to the χ-match which is equal to a fixed radius cross-match.

6. Hypotheses from combinatorial considerations

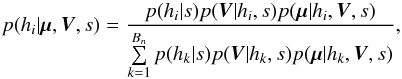

A χ-match output is made of sets of associations, each set of associations containing one source per catalogue. For each set of associations we want to compute the probability all sources of the set have to come from a same actual source. In this section, especially in Sect. 6.2 we make explicit the sets { hi } of hypotheses we have to formulate to compute probabilities of identification when cross-correlating n catalogues.

6.1. Generalities

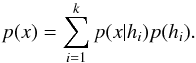

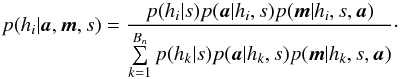

Given a set { hk } of pairwise disjoint hypotheses whose union is the entire set of possibilities, the law of total probabilities for an observable x is  (67)Leading to Bayes’ theorem

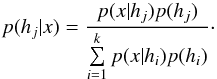

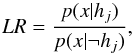

(67)Leading to Bayes’ theorem  (68)We stress that Bayes’ factor (also called likelihood ratio) is defined only in cases involving two and only two hypotheses

(68)We stress that Bayes’ factor (also called likelihood ratio) is defined only in cases involving two and only two hypotheses  (69)and is used when no trustworthy priors p(h1) and p(h2) are available. We can transform any set of pairwise disjoint hypotheses into two disjoint hypotheses. In this case, using the negation notation ¬

(69)and is used when no trustworthy priors p(h1) and p(h2) are available. We can transform any set of pairwise disjoint hypotheses into two disjoint hypotheses. In this case, using the negation notation ¬ (70)with

(70)with  (71)and

(71)and  (72)Such a likelihood ratio (Eq. (70)) is not interesting since it is not only computed from likelihoods, but also from priors.

(72)Such a likelihood ratio (Eq. (70)) is not interesting since it is not only computed from likelihoods, but also from priors.

The term B(H | K) in Budavári & Szalay (2008, Eq. (8)) is improperly called Bayes factor when dealing with more than two catalogues. As a matter of fact, the union of the two hypotheses – all sources are from the same real source and each sources is from a distinct real source – is only a subset of all possibilities so the law of total probabilities and hence Bayes’ formula are not valid.

In Pineau et al. (2011b) the term LR(r) in Eq. (9) is also improperly called likelihood ratio since a likelihood is a probability density function and so integrates to 1 over its domain of definition. It is obviously not the case of dp(r | spur) in Eq. (8). The built quantity is related to the ratio between the probability the association has to be “real” over the probability it has to be spurious, but formally it is not a likelihood ratio. The very same “abuse of term” is made in Wolstencroft et al. (1986; who, moreover, adds a prior in the likelihood ratio), in Rutledge et al. (2000), Brusa et al. (2007) and probably other publications.

6.2. Possible combinations and the Bell number

Let’s suppose we have selected one set of n distinct sources from n different catalogues, one source per catalogue. Those n sources possibly are n detections of k distinct real sources, with k ∈ [1,n]. The case k = 1 corresponds to the situation where all sources are n observations of the same real source and the case k = n corresponds to the situation where there are n distinct real sources detected independently, one in each catalogue.

We call A the source from catalogue number one, B the source from catalogue number two and so on.

6.2.1. Two-catalogues case: two hypotheses

The classical two-catalogues case is trivial. We formulate only two hypotheses:

-

AB, the match is a real match, the two sources are two observations of a same real source, that is k = 1;

-

A_B, the match is spurious, the two sources are two observations of two different real sources, that is k = 2.

6.2.2. Three-catalogues case: five hypotheses

For three sources A, B and C from three different catalogues, we formulate five hypotheses:

-

ABC, all three sources come from a same real source, that is k = 1;

-

AB_C, A and B are from a same real source and C is from a different real source, that is k = 2;

-

AC_B, A and C are from a same real source and B is from a different real source, that is k = 2;

-

A_BC, B and C are from a same real source and A is from a different real source, that is k = 2;

-

A_B_C, all three sources are from three different real sources, that is k = 3.

6.2.3. Four-catalogues case: 15 hypotheses

For four sources A, B, C and D we have to formulate 15 hypotheses:

-

ABCD, when k = 1;

-

ABC_D, ABD_C, ACD_B and BCD_A, but also

-

AB_CD, AC_BD and AD_BC for k = 2;

-

AB_C_D, AC_B_D, AD_B_C, BC_A_D, BD_A_C and DC_A_B when k = 3;

-

A_B_C_D when k = 4.

6.2.4. n-catalogues case: Bell number of hypotheses

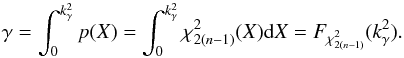

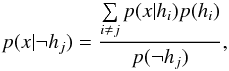

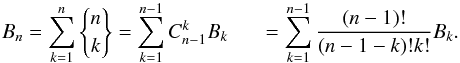

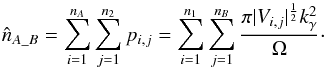

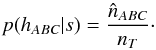

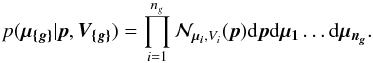

We now generalize to n catalogues. For each possible value of k, the number of ways the set of n sources can be partitioned into k non-empty subsets – each subset correspond to a real source – is given by the Stirling number of the second kind denoted  . The total number of hypotheses to be formulated is equal to the Bell number. The Bell number counts the number of partitions of a set and is given by

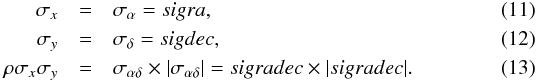

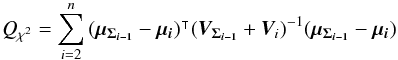

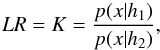

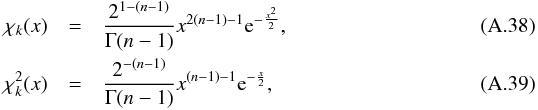

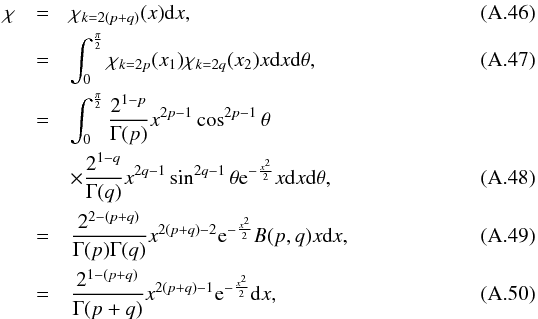

. The total number of hypotheses to be formulated is equal to the Bell number. The Bell number counts the number of partitions of a set and is given by  (73)Its seven first values are provided in Table 2 and a graphic illustration representing all possible partitions for five catalogues is provided in Fig. 18.

(73)Its seven first values are provided in Table 2 and a graphic illustration representing all possible partitions for five catalogues is provided in Fig. 18.

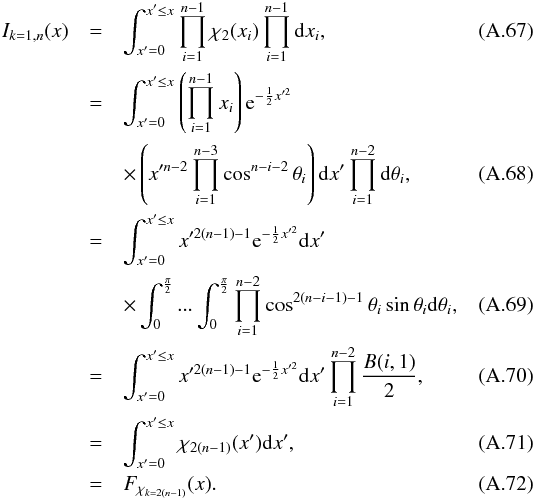

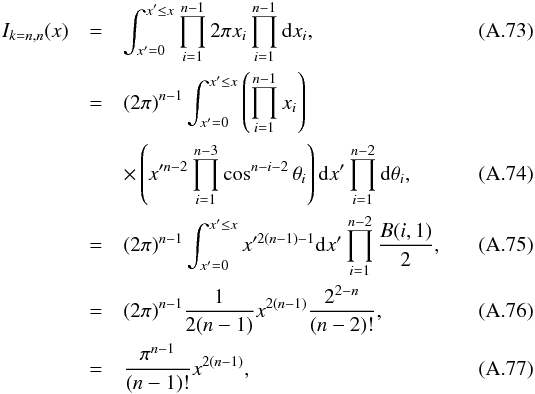

Values of the seven first Bell numbers.

|

Fig. 1 The 52 partitions of a set with n = 5 elements. Each partition corresponds to one hypothesis for five distinct sources from five distinct catalogues. Left: k = 5, the five sources are from five distinct real sources. Right: k = 1, the five sources are from a same real source. (Tilman Piesk – CC BY 3.0 – modified – link in footnote). |

We face a combinatorial explosion of the number of hypotheses to be tested when increasing the number of catalogues. Although the theoretical developments presented here deal with any number of catalogues, the exhaustive analysis may be in practice limited to a few catalogues (n< 10).

Hereafter we note hi the hypothesis number i, we explicit it with letters for example hAB, and we note hk = i an hypothesis in which n observed sources are associated to i real sources.

7. Frequentist estimation of spurious associations rates and priors

We have defined a candidate selection criterion to perform χ-matches. We recall that we note x the Mahalanobis distance, and we note s the “event” x ≤ kγ, that is a given set of sources satisfies the selection criterion.

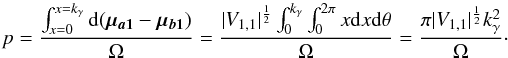

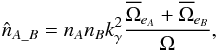

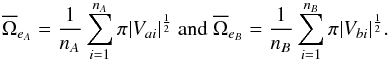

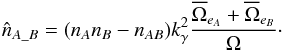

In a first step we want to estimate the number of “fully spurious” associations we would expect to find in a χ-match output and derive the prior p(hk = n | s) from this estimate. By “fully spurious” we mean that each candidate from each catalogue is actually associated with a different “real” source. A good such estimate is simply the mean sky area of the test acceptance region (see Eq. (64)) over all possible sources of all catalogues, multiplied by the number of sources in one of the catalogues and by the density of sources in the other ones. Written differently for n catalogues of ni sources each, on a common surface area Ω, it leads to an estimated number of spurious associations  equals to:

equals to: ![\begin{equation} \hat{n}_{\Omega_{\rm spur}} = \frac{\pi^{n-1}k_\gamma^{2(n-1)}}{(n-1)!\Omega^{n-1}} \sum\limits_{i_1=1}^{n_1} \sum\limits_{i_2=1}^{n_2} ... \sum\limits_{i_n=1}^{n_n} \left[\frac{\prod\limits_{j=1}^n \det \bm{V_{i_j}}}{\det \bm{V_\Sigma}}\right]^{1/2}\cdot \label{eq:nfullyspur} \end{equation}](/articles/aa/full_html/2017/01/aa29219-16/aa29219-16-eq254.png) (74)Or, having histograms or more generally discretized positional error distributions:

(74)Or, having histograms or more generally discretized positional error distributions: ![\begin{equation} \hat{n}_{\Omega_{\rm spur}} = \frac{\pi^{n-1}k_\gamma^{2(n-1)}}{(n-1)!\Omega^{n-1}} \sum\limits_{b_1=1}^{N_1} \sum\limits_{b_2=1}^{N_2} ... \sum\limits_{b_n=1}^{N_n} \prod\limits_{k=1}^n c_{b_k}\left[\frac{\prod\limits_{j=1}^n \det \bm{V_{b_j}}}{\det \bm{V_\Sigma}}\right]^{1/2}, \label{eq:nfullyspurdisc} \end{equation}](/articles/aa/full_html/2017/01/aa29219-16/aa29219-16-eq255.png) (75)in which Nk are the numbers of bins in histograms – or number of points in a discrete distribution– and cbk are number of counts in given bins of a histogram. The number of counts may be replaced by the value of the discrete distribution (or weight wbk) times the number of elements: cbk = nkwbk.

(75)in which Nk are the numbers of bins in histograms – or number of points in a discrete distribution– and cbk are number of counts in given bins of a histogram. The number of counts may be replaced by the value of the discrete distribution (or weight wbk) times the number of elements: cbk = nkwbk.

To perform quick estimations using only a one dimensional error histogram per catalogue, we approximate elliptical errors by circular errors of same surface area.

The remainder of this section explains how we can compute priors from the rate of “fully spurious” associations and the number of associations found in all possible sub-cross-matches.

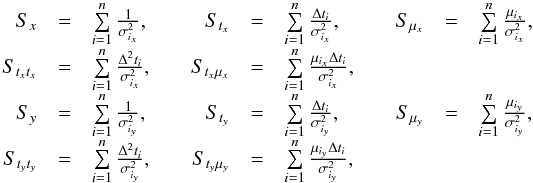

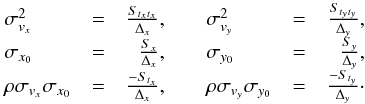

7.1. Case of two catalogues

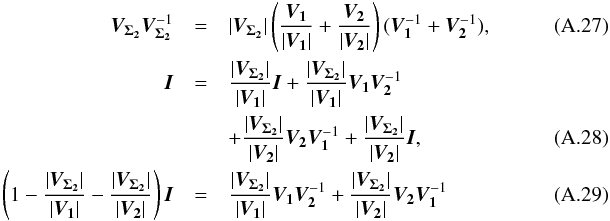

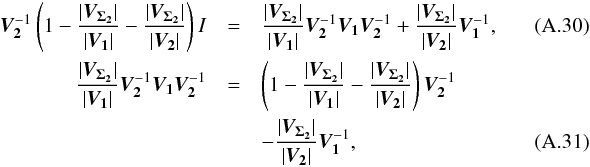

Let’s suppose that we have two catalogues A and B and each catalogue contains only one source in the common surface area Ω. We note μa1, Va1 and μb1, Vb1 the position of the source and associated covariance matrix in A and B respectively. If we fix the position μa1 of the first source, the second source will be associated with the first one by a χγ-match if Eq. (47) is satisfied. So if the second source is located in an ellipse of surface area  centred around the position of the first source. We temporarily waive the ISO 80000-2 notation detM and replace it by the equivalent and more compact notation | M |. We also replace Va1 + Vb1 by V1,1 to rewrite the last term in the pithier form

centred around the position of the first source. We temporarily waive the ISO 80000-2 notation detM and replace it by the equivalent and more compact notation | M |. We also replace Va1 + Vb1 by V1,1 to rewrite the last term in the pithier form  . We now suppose that both sources are unrelated and that μa1 and μb1 are uniformly distributed in Ω. Then, neglecting border effects, the probability that the two sources are associated by chance when performing a χγ-match is given by the ratio of the acceptance ellipse to the total surface area Ω:

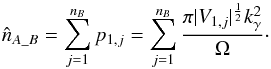

. We now suppose that both sources are unrelated and that μa1 and μb1 are uniformly distributed in Ω. Then, neglecting border effects, the probability that the two sources are associated by chance when performing a χγ-match is given by the ratio of the acceptance ellipse to the total surface area Ω:  (76)We now suppose that the second catalogue contains nB sources uniformly distributed in Ω. And if all of them are unrelated to the source of the first catalogue, then the estimated number of spurious associations is simply the sum of the previous probability over the nB sources of the second catalogue

(76)We now suppose that the second catalogue contains nB sources uniformly distributed in Ω. And if all of them are unrelated to the source of the first catalogue, then the estimated number of spurious associations is simply the sum of the previous probability over the nB sources of the second catalogue  (77)We now suppose that the first catalogue contains nA sources also uniformly distributed in Ω, all unrelated to catalogue B sources. Still neglecting border effects, the estimated number of spurious associations is simply the sum of the previous estimation over all catalogue A sources

(77)We now suppose that the first catalogue contains nA sources also uniformly distributed in Ω, all unrelated to catalogue B sources. Still neglecting border effects, the estimated number of spurious associations is simply the sum of the previous estimation over all catalogue A sources  (78)In practice, evaluating this quantity can be time-consuming since we have to compute and sum nA × nB terms. Fortunately, we can evaluate it exactly for circular errors and approximately for elliptical errors computing only nA + nB terms. In fact

(78)In practice, evaluating this quantity can be time-consuming since we have to compute and sum nA × nB terms. Fortunately, we can evaluate it exactly for circular errors and approximately for elliptical errors computing only nA + nB terms. In fact  in which

in which  (83)and thus

(83)and thus  (84)For ordinary ellipses, that is ellipses having a position angle different from 0 and π/ 2, the approximation is valid if

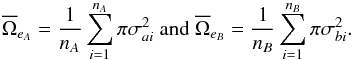

(84)For ordinary ellipses, that is ellipses having a position angle different from 0 and π/ 2, the approximation is valid if  . In the particular case of circular errors, Eq. (78) becomes

. In the particular case of circular errors, Eq. (78) becomes  (85)in which

(85)in which  and

and  are the mean surface area of all positional error ellipses in catalogues A and B respectively:

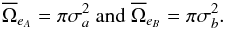

are the mean surface area of all positional error ellipses in catalogues A and B respectively:  (86)For simple circular errors σai and σbi, this reduces to

(86)For simple circular errors σai and σbi, this reduces to  (87)If errors are constant for all sources in each catalogue, this reduces to

(87)If errors are constant for all sources in each catalogue, this reduces to  (88)These estimates based on geometrical considerations have the advantage of being very fast to compute.

(88)These estimates based on geometrical considerations have the advantage of being very fast to compute.

Theoretically, we should remove from the double summation in Eq. (78) the pairs (i,j) which are real associations. We have no mean to do this since we do not known in advance the result of the cross-identification. Fortunately this effect is negligible in common cases. Indeed, if the result of the cross-match of the two catalogues contains nAB real associations – that is sources of both catalogues from a same real source – and supposing that the positional error distribution of sources having a counterpart is similar to the global error distribution we should modify Eq. (85) by (89)In practice this estimate will tend to be overestimated since the distribution of sources in a catalogue cannot be uniform because of the limited angular resolution preventing the detection of very close sources in a same image. This effect is usually deemed to be of negligible importance. However one can detect its presence in particular circumstances. For instance, if the actual counterpart is located in the wings of a much brighter nearby source it may not be detected. This effect probably accounts for the presence of a fraction of the stellar identifications in high Galactic latitude X-ray surveys, in particular those with a much higher Fx/Fopt flux ratios and harder X-ray spectra than normal for active coronae in which cases a faint AGN may be the correct identification (Watson 2012; Menzel et al. 2016). One way to account for this effect and to limit the overestimation is to remove from the surface area Ω small areas around each source. The value of those areas depends for example on the source brightness. In addition, again because of the angular resolution: for real associations in catalogues having similar positional errors, the chance a source has to be also associated with a spurious source is low. More precisely, the start of the Poisson distribution will be truncated. In extreme cases in which the Poisson distribution is truncated for x<kγ, meaning that sources in a real association cannot be part of a spurious association, we should remove those sources from the estimate

(89)In practice this estimate will tend to be overestimated since the distribution of sources in a catalogue cannot be uniform because of the limited angular resolution preventing the detection of very close sources in a same image. This effect is usually deemed to be of negligible importance. However one can detect its presence in particular circumstances. For instance, if the actual counterpart is located in the wings of a much brighter nearby source it may not be detected. This effect probably accounts for the presence of a fraction of the stellar identifications in high Galactic latitude X-ray surveys, in particular those with a much higher Fx/Fopt flux ratios and harder X-ray spectra than normal for active coronae in which cases a faint AGN may be the correct identification (Watson 2012; Menzel et al. 2016). One way to account for this effect and to limit the overestimation is to remove from the surface area Ω small areas around each source. The value of those areas depends for example on the source brightness. In addition, again because of the angular resolution: for real associations in catalogues having similar positional errors, the chance a source has to be also associated with a spurious source is low. More precisely, the start of the Poisson distribution will be truncated. In extreme cases in which the Poisson distribution is truncated for x<kγ, meaning that sources in a real association cannot be part of a spurious association, we should remove those sources from the estimate  . We thus have to rewrite the previous equation Eq. (89) as

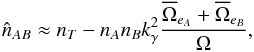

. We thus have to rewrite the previous equation Eq. (89) as  (90)Knowing the total number of associations, nT, resulting from the χ-match, we can estimate from Eq. (89) the number of spurious associations, and thus the number of real associations is estimated by

(90)Knowing the total number of associations, nT, resulting from the χ-match, we can estimate from Eq. (89) the number of spurious associations, and thus the number of real associations is estimated by  (91)If mean error ellipses in both catalogues are very small compared to the total surface area – that is

(91)If mean error ellipses in both catalogues are very small compared to the total surface area – that is  – we can use the approximation

– we can use the approximation  (92)which is equivalent to using directly equation Eq. (85), that is without taking care of removing real associations.

(92)which is equivalent to using directly equation Eq. (85), that is without taking care of removing real associations.  is but an estimate and nothing prevents it from being negative due to count statistics in cross-matches with very few real associations and a lot of spurious associations. In practice, we have to define a lower limit such as

is but an estimate and nothing prevents it from being negative due to count statistics in cross-matches with very few real associations and a lot of spurious associations. In practice, we have to define a lower limit such as  .

.

Hence we can estimate the priors in the sample of associations satisfying the selection criterion (s)  After a first two-catalogues cross-match, we may compare the expected histogram of detVi,j for spurious associations with the same histogram obtained from all associations. We may then derive the estimated distribution of this quantity (detVi,j) for “real” associations and compute the two likelihoods p(detVi,j | hAB,s) and p(detVi,j | hA_B,s).

After a first two-catalogues cross-match, we may compare the expected histogram of detVi,j for spurious associations with the same histogram obtained from all associations. We may then derive the estimated distribution of this quantity (detVi,j) for “real” associations and compute the two likelihoods p(detVi,j | hAB,s) and p(detVi,j | hA_B,s).

Similarly we may build the histograms of the quantity detVΣ for both the spurious and the “real” associations. This quantity is the determinant of the covariance matrix – that is the positional error – associated with the weigthed mean positions. We proceeded likewise in Pineau et al. (2011b) using the “likelihood ratio” (see our comment on the abuse of term likelihood ratio) quantity instead of positional uncertainties.

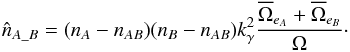

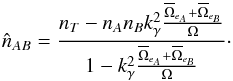

7.2. Case of three catalogues

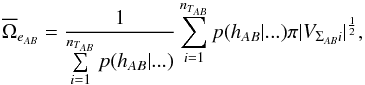

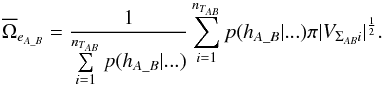

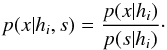

We recall that for 3 catalogues, the output contains five components (see Sect. 6.2.2): ABC, AB_C, A_BC, AC_B, A_B_C. We would like to estimate the number of spurious associations, that is the number of associations in the four components other than ABC. To do so, we need to perform the three two-catalogue cross-matches A with B, A with C and B with C. We are thus able to estimate nAB and nA_B, nAC and nA_C and finally nBC and nB_C respectively. To compute nAB_C, we proceed like in the previous section considering the two catalogues AB and C. AB is the result of the χ-match of A with B: the positions in catalogue AB are the weighted mean positions (μΣ, Eq. (30)) of associated A and B sources and the associated errors (or covariance matrix) are given by VΣ (Eq. (29)). The only difference with the two-catalogues case is that for the first catalogue (AB) we replace the simple mean elliptical error surface  over the nTAB entries by the weighted mean accounting for the probabilities the AB associations have to be “real” (i.e. not spurious)

over the nTAB entries by the weighted mean accounting for the probabilities the AB associations have to be “real” (i.e. not spurious)  (95)in which p(hAB | ...) is the probability the association has to be a real association knowing some parameters (“...”), and VΣABi is the covariance matrix of the error on the weighted mean position i (see Sect. 5.3, particulary Eq. (49)). Such a probability will be computed in the next sections. We then compute

(95)in which p(hAB | ...) is the probability the association has to be a real association knowing some parameters (“...”), and VΣABi is the covariance matrix of the error on the weighted mean position i (see Sect. 5.3, particulary Eq. (49)). Such a probability will be computed in the next sections. We then compute  and derive

and derive  like in the two catalogues case replacing A by AB and B by C. Similarly to

like in the two catalogues case replacing A by AB and B by C. Similarly to  , we can estimate

, we can estimate  and

and  .

.

We now want to estimate nA_B_C, with a result which is independent from the cross-correlation order. Although we may use Eq. (74), it is possibly time consuming. Another solution is to use its discretized form Eq. (75). To do so quickly at the cost of an approximation we may circularize the errors by replacing the coefficients of the covariance matrix V by a single error equal to  and setting the correlation (or covariance) parameter equal to zero. It means that the new covariance matrix is diagonal and both diagonal elements are equal to

and setting the correlation (or covariance) parameter equal to zero. It means that the new covariance matrix is diagonal and both diagonal elements are equal to  . We choose this value to preserve the surface area of the two-dimensional error since the determinant (∝area) of the circular error equals the determinant (∝area) of the ellipse. This approximation is the same as the one made in the previous section. For each catalogue we then make the histogram of

. We choose this value to preserve the surface area of the two-dimensional error since the determinant (∝area) of the circular error equals the determinant (∝area) of the ellipse. This approximation is the same as the one made in the previous section. For each catalogue we then make the histogram of  values using steps of for example one mas and we apply Eq. (75). In this case – circular errors – we simplify the equation using

values using steps of for example one mas and we apply Eq. (75). In this case – circular errors – we simplify the equation using  (96)and thus

(96)and thus  (97)We do not use this last form but give it for comparison with the denominator of Eq. (17) in Budavári & Szalay (2008).

(97)We do not use this last form but give it for comparison with the denominator of Eq. (17) in Budavári & Szalay (2008).

Another option is to compute the number of “fully” spurious associations three times by following what was done in the previous section (and in the beginning of this section), but computing  instead of

instead of  . Similarly to Eq. (95):

. Similarly to Eq. (95):  (98)Computing

(98)Computing  we derive

we derive  . Similarly we can compute

. Similarly we can compute  and

and  and estimate the number of fully spurious associations taking the mean of

and estimate the number of fully spurious associations taking the mean of  ,

,  and

and  .

.

Having the estimated number of associations being part of the components AB_C, AC_B, A_BC and A_B_C plus knowing the total number of associations nT, we are able to estimate  and to compute the priors, for example

and to compute the priors, for example  (99)

(99)

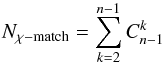

7.3. Case of n catalogues

We can easily generalise the previous section using recursion. For n = 4 catalogues, we estimate the number of associations in component A_B_C_D knowing the number of associations in the result of the four-catalogue χ-match and estimating recursively (from the three-catalogue χ-matches) the number of associations in the 14 other components (AB_C_D, ...). So for n catalogues, the total number of distinct (sub)-cross-matches to be performed to compute all priors recursively is  (100)in which terms

(100)in which terms  are the binomial coefficients (n−1) ! /(k ! (n−1−k) !). For five catalogues, Nχ−match = 26 and for six catalogues, Nχ−match = 57.

are the binomial coefficients (n−1) ! /(k ! (n−1−k) !). For five catalogues, Nχ−match = 26 and for six catalogues, Nχ−match = 57.

8. Probability of being χ-matched under hypothesis hi

In this section we compute p(s | hi), the probability that n sources from n distinct catalogues have to satisfy the candidate selection criteria under hypothesis hi. We will show in section Sect. 9 that the p.d.f. of the Mahalanobis distance for χ-match associations under hypothesis hi is the p.d.f. of the Mahalanobis distance without applying the candidate selection criteria, normalized by the probability p(s | hi) we compute in this section:  (101)We show here that p(s | hi) is proportional to the integral we note Ihi,n(kγ) (see Eq. (107)) which is independent of positional uncertainties and which also plays a role in Sect. 10. We will see also that p(s | hi) and Ihi,n(kγ) can be simplified to p(s | hk) and Ik,n(kγ) respectively, that is the probability n sources from n distinct catalogues have to satisfy the candidate selection criteria knowing they are actually associated with k distinct real sources.

(101)We show here that p(s | hi) is proportional to the integral we note Ihi,n(kγ) (see Eq. (107)) which is independent of positional uncertainties and which also plays a role in Sect. 10. We will see also that p(s | hi) and Ihi,n(kγ) can be simplified to p(s | hk) and Ik,n(kγ) respectively, that is the probability n sources from n distinct catalogues have to satisfy the candidate selection criteria knowing they are actually associated with k distinct real sources.

If k = 1, that is all sources are from a same real source, we – logically – find p(s | hk = 1) = γ, the cumulative χ distribution function evaluated at the threshold kγ.

If k = n, all sources are spurious, we – also logically (see Eq. (74)) – find for Ik = n,n(kγ) the volume of a 2(n−1)-dimensional sphere of radius kγ, and p(s | hk = n) equals the volume of the 2(n−1)-dimensional ellipsoid defined by the test acceptance region divided by the common χ-match surface area raised to the power of the number of χ-matches (i.e. n−1).

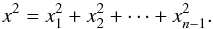

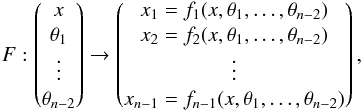

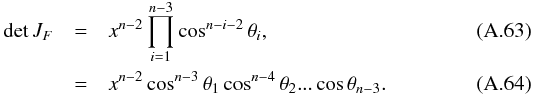

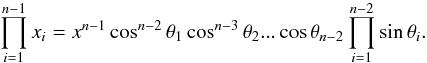

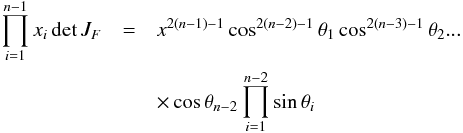

We note x the total Mahalanobis distance, that is the square root of Eq. (36). The vectorial form x = (x1,x2,...xn−1) denotes the n−1 terms, also Mahalanobis distances, which are summed in the catalogue by catalogue iterative form Eq. (48). We rewrite this equation with the new notations  (102)So x is the radius of an hypersphere in the n−1 successive Mahalanobis distances space. The relation between x and x of dimension n−1 is the polar transformation F:Rn−1 → Rn−1, (x1,x2,...,xn−1) = F(x,θ1,...,θn−2),

(102)So x is the radius of an hypersphere in the n−1 successive Mahalanobis distances space. The relation between x and x of dimension n−1 is the polar transformation F:Rn−1 → Rn−1, (x1,x2,...,xn−1) = F(x,θ1,...,θn−2),  (103)with

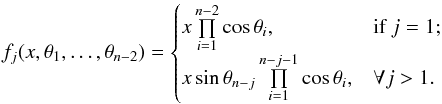

(103)with  (104)The associated differential transform is

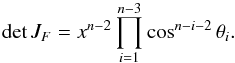

(104)The associated differential transform is  (105)with JF the determinant of the Jacobian of F which is for example computed in Stuart & Ord (1994, Chap. II “Exact sampling distributions”, p. 375):

(105)with JF the determinant of the Jacobian of F which is for example computed in Stuart & Ord (1994, Chap. II “Exact sampling distributions”, p. 375):  (106)We now define the Ik,n(kγ) integral which will be crucial in the next sections

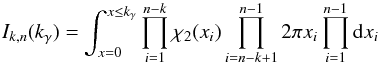

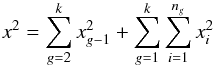

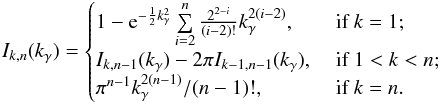

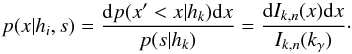

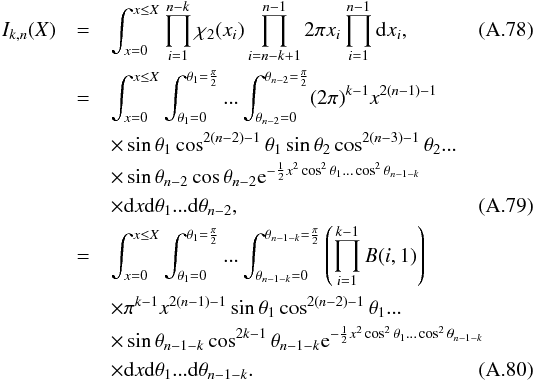

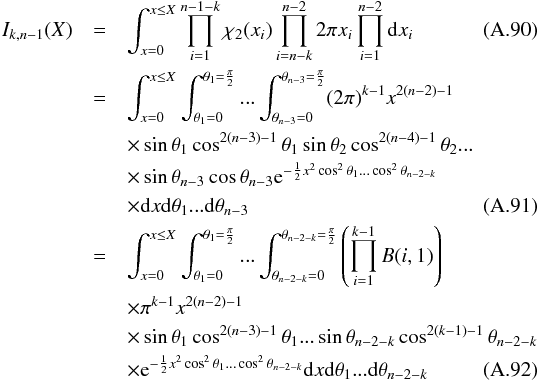

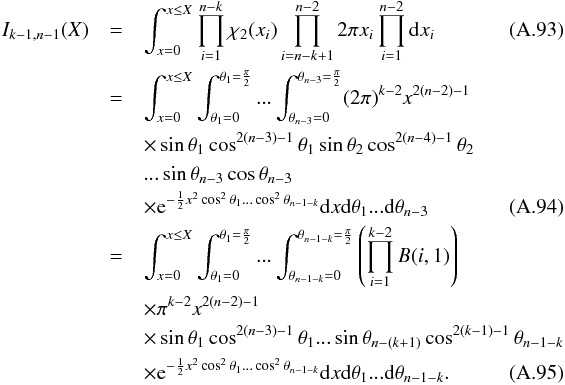

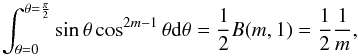

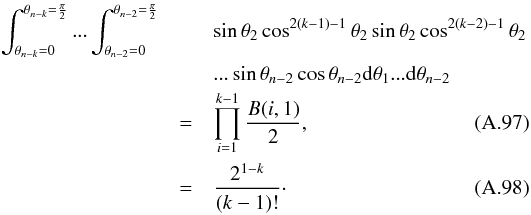

(106)We now define the Ik,n(kγ) integral which will be crucial in the next sections  (107)in which k denotes the hypothetical number of real sources and so ranges from 1 to n. Written this way, the integral is simpler than the equivalent form: