| Issue |

A&A

Volume 501, Number 3, July III 2009

|

|

|---|---|---|

| Page(s) | 925 - 932 | |

| Section | Extragalactic astronomy | |

| DOI | https://doi.org/10.1051/0004-6361/200911814 | |

| Published online | 27 May 2009 | |

Multiwavelength periodicity study of Markarian 501

C. Rödig - T. Burkart - O. Elbracht - F. Spanier

Lehrstuhl für Astronomie, Universität Würzburg, Am Hubland, 97074 Würzburg, Germany

Received 9 February 2009 / Accepted 27 April 2009

Abstract

Context. Active galactic nuclei (AGN) are highly variable emitters of electromagnetic waves from the radio to the gamma-ray regime. This variability may be periodic, which in turn could be the signature of a binary black hole. Systems of black holes are strong emitters of gravitational waves whose amplitude depends on the binary orbital parameters such as the component mass, the orbital semi-major-axis and eccentricity.

Aims. It is our aim to prove the existence of periodicity of the AGN Markarian 501 from several observations in different wavelengths. A simultaneous periodicity in different wavelengths provides evidence of bound binary black holes in the core of AGN.

Methods. Existing data sets from observations by Whipple, SWIFT, RXTE, and MAGIC have been analysed with the Lomb-Scargle method, the epoch-folding technique and the SigSpec software.

Results. Our analysis shows a 72-day period, which could not be seen in previous works due to the limited length of observations. This does not contradict a 23-day period that can be derived as a higher harmonic from the 72-day period.

Key words: galaxies: active - BL Lacertae objects: individual: Mrk 501 - X-rays: galaxies - gamma rays: observations

1 Introduction

Active galactic nuclei (AGN) have been a class of most interesting astronomical sources for a long time, mainly for their high variability on time scales from years down to minutes. Nowadays these variations may be observed from radio up to gamma-rays (>10 TeV). In most cases the variation in the AGN do not show signs of periodicity but an unpredictable switching between quiescent and active states and flaring behaviour. The explanation of such behaviour requires several different approaches in order to explain the very different time scales. Most of the models make use of the source size (the black hole radius) for the minimum time scales or the accretion efficiency and outer scales for the maximum time scales.

Some sources show a periodicity of the order of several tens of days in the optical, X-ray, or TeV data like Mrk 421, Mrk 501, 3C66A, and PKS 2155-304 (Lainela et al. 1999; Osone & Teshima 2001; Hayashida et al. 1998; Kranich et al. 2001), whereas the long-term optical light curves from classical sources such as BL Lacertae, ON 231, 3C273, OJ 287, PKS 0735+178, 3C345 and AO 0235+16 usually suggest (observed) time scales of several years (e.g. Sillanpaa et al. 1988; Raiteri et al. 2001; Fan et al. 1997). Models invoked to describe aperiodic variability do not give rise to explanations for periodic variations. It is therefore necessary to develop new models. One of these models is the binary black hole (BBH) model, which is explained in detail in the next section. It is worth noting that the origin of these quasi periodic objects (QPOs) may be related to the instabilities of (optically thin) accretion disks (cf. e.g. Fan et al. (2008) and citations within). We do not want to stress this point but concentrate on the BBH model to explain the variability.

The existence of BBHs seems plausible since the host galaxies of most AGN are elliptical galaxies that originate from galaxy mergers. The study of BBHs and their interactions become of primary importance from both the astrophysical and theoretical points of view now that the first gravitational wave detectors have started operating; gravitational waves, while certainly extremely weak, are also extremely pervasive and thus a novel and very promising way of exploring the universe. For a review of BBHs the reader is referred to Komossa (2003).

BBHs have already been detected (Hudson et al. 2006; Romero et al. 2003; Sudou 2003; Sillanpaa 1998), but most sources are far too distant to be separated into radio or X-rays (for a detailed discussion of the emission mechanisms see (Bogdanovic et al. 2008). By using a multi-messenger technique (combined electromagnetic and gravitational wave astronomy), it should be possible to identify periodic AGN as BBH systems by means of their gravitational wave signal, on the one hand, while on the other, possible targets for gravitational wave telescopes could easily be identified by X-ray and gamma-astronomy.

The aim of this paper is to present a complete data analysis of

Markarian 501 in different wavelengths searching for periodicity. This will allow for restricting possible black hole masses and the distance between the two black holes. Taking the model of Rieger & Mannheim (2000) into account, where the smaller companion is emitting a jet with Lorentz factor ![]() towards the observer, we expect periodic behaviour over the complete AGN spectrum.

towards the observer, we expect periodic behaviour over the complete AGN spectrum.

This paper is organised as follows. In Sect. 2 we review a model used to explain the variability and present the equations used to determine the parameters of the binary system under study. Then in Sect. 3 the data we used and the experiments are described. In Sect. 4 we describe methodology for data analysis, and in 5 we present the results of the analysis, discuss our findings and draw some conclusions.

2 The binary black hole model

As discussed above, the presence of close supermassive binary black hole (SMBBH) systems has been repeatedly invoked as plausible source for a number of observational findings in blazar-type AGN, ranging from misalignment and precession of jets to helical trajectories and quasi-periodic variability (cf. e.g. Komossa 2006).

What makes the BBH model a unique interpretation is that it facilitates facts otherwise not possible: (1.) it is based on quite general arguments for bottom-up structure formation; (2.) it incorporates helical jet trajectories observed in many sources; (3.) it provides a reasonable explanation for long term periodic variability; (4.) it can explain, to some extend, quasi-periodic variability on different time-scales in different energy bands (e.g. Rieger 2007).

The nearby TeV blazar Mrk 501

(z = 0.033) attracted attention in 1997, when the source underwent a phase of high activity becoming the brightest source in the sky at TeV energies.

Recent contributions like Rieger & Mannheim (2001,2000) have proposed a possible periodicity of

![]() days, during the 1997 high state of Mrk 501, which could be related to the orbital motion in a SMBBH system. The proposed model

relies on the following assumptions: (1.) the observed periodicity is associated with a relativistically moving feature in the jet (e.g. knot); (2.) due to the orbital motion of the jet-emitting (secondary) BH, the trajectory of the outward moving knot is expected to be a long drawn helix. The orbital motion is thus regarded as the underlying driving power for periodicity; (3.) the jet, which dominates the observed emission, is formed by the less massive BH; (4.) the observed periodicity arises due to a Doppler-shifted flux modulation which is caused by a slight change of the inclination angle with respect to the line of sight.

days, during the 1997 high state of Mrk 501, which could be related to the orbital motion in a SMBBH system. The proposed model

relies on the following assumptions: (1.) the observed periodicity is associated with a relativistically moving feature in the jet (e.g. knot); (2.) due to the orbital motion of the jet-emitting (secondary) BH, the trajectory of the outward moving knot is expected to be a long drawn helix. The orbital motion is thus regarded as the underlying driving power for periodicity; (3.) the jet, which dominates the observed emission, is formed by the less massive BH; (4.) the observed periodicity arises due to a Doppler-shifted flux modulation which is caused by a slight change of the inclination angle with respect to the line of sight.

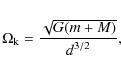

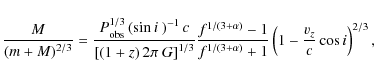

Following Rieger & Mannheim (2000, hereinafter RM), we assume

that the observed signal periodicity has a geometrical origin, being a consequence of a Doppler-shifted modulation. It is therefore possible to relate the observed signal period

![]() to the Keplerian orbital period

to the Keplerian orbital period

by the equation (cf. Roland et al. 1994; Camenzind & Krockenberger 1992)

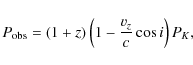

where vz is the typical jet velocity assumed to be

For a resolved emission region (e.g. a blob of plasma) with spectral index ![]() ,

the spectral flux modulation by Doppler boosting can be written as

,

the spectral flux modulation by Doppler boosting can be written as

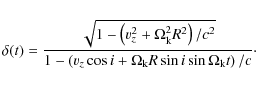

where

![\begin{displaymath}\delta(t) = \frac{1}{\gamma_{\rm b} \left[ 1 - \beta_{\rm b} \cos \theta(t)\right]},

\end{displaymath}](/articles/aa/full_html/2009/27/aa11814-09/img21.png) |

(4) |

where

A periodically changing viewing angle may thus naturally lead to a periodicity in the observed lightcurves even for an intrinsically constant flux.

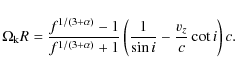

Depending on the position of the jet-emitting BH along its orbit, the Doppler factor has two extremal values, so that one obtains the condition

where

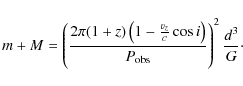

Finally, by using the definition of the Keplerian orbital period Eq. (1) and noting that

and from Eq. (2) we get

To be able to determine the primary and secondary black hole masses m and M by solving simultaneously Eqs. (8) and (9) we have to provide an additional assumption or a third equation. RM obtained the binary separation d by equating the time-scale for gravitation radiation with the gas dynamical time-scale (Begelman et al. 1980). We have not adopted this condition since it is somewhat arbitrary to assume

As outlined, we can determine the orbital parameters of the system in case of a perfectly circular orbit. Notably, the assumption of a perfectly circular orbit is simplifying the problem to some extend. It is true that the orbits tend to circularise due to gravitational radiation, but this happens within a time-scale of the same order of magnitude as the merging time-scale (Fitchett 1987; Peters & Mathews 1963). Therefore it is possible that the constituting black holes of a binary system are still on eccentric orbits, in which case the method by RM will be only an approximation to the real situation. Recently De Paolis et al. (2003,2002) extended the model of RM to elliptical orbits including the eccentricity e, which reduces to the circular model in case of ![]() .

In case of an eccentric orbit a degeneracy in the solutions appears making it impossible to determine the orbital parameters in a unique fashion. As pointed out further by De Paolis et al. (2002) the degeneracy can only be solved by the detection of a gravitational wave signal.

.

In case of an eccentric orbit a degeneracy in the solutions appears making it impossible to determine the orbital parameters in a unique fashion. As pointed out further by De Paolis et al. (2002) the degeneracy can only be solved by the detection of a gravitational wave signal.

3 Data

Data was taken from a variety of different sources, earthbound telescopes like MAGIC or WHIPPLE, but also from satellite experiments like RXTE and SWIFT. In contrast to any previous studies on this source, we explicitly excluded the time of the flare in 1997, so as to determine whether a periodicity would still be present in the quiescent state of Mrk 501. We didn't make use of any raw data material, but only used data that was background-corrected and processed by the respective collaborations, to which we refer for more details. Therefore the units of the plots are arbitrary, a fact that doesn't cause any problems for our analysis as we are not interested in any absolute flux values of the source.

3.1 RXTE

The Rossi X-ray Timing Explorer (RXTE) is an orbital X-ray telescope for the observation of black holes, neutron stars, pulsars and X-ray binaries. The RXTE has three instruments on board: the Proportional Counter Array (PCA, 2-60 keV), the High-Energy X-ray Timing Experiment (HEXTE, 15-250 keV) and the so-called All Sky Monitor (ASM, 2-10 keV). The PCA is an array of five proportional counters with a time resolution of

![]() and an angular of

and an angular of ![]() FWHM, the energy resolution amounts to 18% at 6 keV. For HEXTE the time sampling is

FWHM, the energy resolution amounts to 18% at 6 keV. For HEXTE the time sampling is ![]() s with a field of view of

s with a field of view of ![]() FWHM at an energy resolution of 15% at 60 keV and the ASM consists of three wide-angle cameras which scan 80% of the sky every 90 mn (RXTE 2009; Jahoda et al. 1996).

FWHM at an energy resolution of 15% at 60 keV and the ASM consists of three wide-angle cameras which scan 80% of the sky every 90 mn (RXTE 2009; Jahoda et al. 1996).

The data from RXTE we used was gathered by the ASM from March 1998 until December 2008 and a sample from the whole data set can be seen in Fig. 1 (in arbitrary units).

We are only plotting a subset to show the actual sampling structure of the RXTE data, firstly because the data is extremely plentiful (it contains 3697 data points) and secondly to have a direct comparison to the SWIFT data in Fig. 3. The data shows a rms of

![]() with an average signal-to-noise ratio of 1.22.

with an average signal-to-noise ratio of 1.22.

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig1.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg36.png) |

Figure 1: Subset of the whole available ASM data on Mrk 501. The sampling is not equidistant and the data points clearly differ significantly from zero. |

| Open with DEXTER | |

3.2 SWIFT

The SWIFT satellite is part of the SWIFT Gamma-Ray Burst Mission planned and executed by NASA dedicated to the study of GRBs and their afterglows. It consists of three instruments, the Burst Alert Telescope (BAT) which detects gamma-rays in a range from 15 to 150 keV with a field of view of about 2 ![]() and computes their position on the sky with arc-minute positional accuracy. After the detection of a GRB by the BAT, its image and spectrum can be observed with the X-ray Telescope (XRT, 300 eV-10 keV) and the Ultraviolet/Optical Telescope (UVOT, 170-650 nm) with an arc-second positional accuracy (Swift 2009b; Barthelmy & Swift Team 2003).

and computes their position on the sky with arc-minute positional accuracy. After the detection of a GRB by the BAT, its image and spectrum can be observed with the X-ray Telescope (XRT, 300 eV-10 keV) and the Ultraviolet/Optical Telescope (UVOT, 170-650 nm) with an arc-second positional accuracy (Swift 2009b; Barthelmy & Swift Team 2003).

The data that was used can be obtained from a database at the website of the NASA (Swift 2008). We made use of data from February 2005 to September 2008. As can be seen in Fig. 2, the lightcurve (in arbitrary units) provided by the SWIFT satellite looks very much like background noise. Especially when comparing the size of the error-bars to the amplitude of the signal in the subset in Fig. 3, common sense implies that this lightcurve should be regarded with caution. The subset was chosen around the maximum amplitude measured

within the whole sample and the same time-span was then plotted for RXTE in Fig. 1 for comparison. The rms of the flux

of the whole data sample is

![]() with an average signal-to-noise ratio of only 0.570. As a result of this, we consider the data from SWIFT merely as an indicator, but not as solid evidence that seriously influences the outcome of the hypothesis tests.

with an average signal-to-noise ratio of only 0.570. As a result of this, we consider the data from SWIFT merely as an indicator, but not as solid evidence that seriously influences the outcome of the hypothesis tests.

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig2.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg39.png) |

Figure 2: Complete available data for SWIFT containing 1153 data points with error bars. Background has already been subtracted before data retrieval (for details see Swift 2009a). |

| Open with DEXTER | |

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig3.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg40.png) |

Figure 3:

Subset of SWIFT data around the maximum amplitude in order to show the single points more clearly. The highest peak lies 12.8 |

| Open with DEXTER | |

3.3 MAGIC

MAGIC is an Imaging Air Cherenkov Telescope (IACT) situated at the Roque de los Muchachos on one of the Canary Islands, La Palma. It has an active mirror surface of 236 m2 and a hexagonal camera with a diameter of 1.05 m consisting of 576 photomultipliers. The

trigger and analysis threshold are 50-70 GeV and the upper detection limit is 30 TeV, for further details on the MAGIC telescope see Petry & The MAGIC Telescope Collaboration (1999). The data (in arbitrary units) we used was collected from May to July 2005 in the energy range of 150 GeV to 10 TeV (Albert et al. 2007) and can be seen in Fig. 4. It contains of 24 data points with a rms of

![]() and an average signal-to-noise ratio of 11.0.

and an average signal-to-noise ratio of 11.0.

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig4.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg42.png) |

Figure 4: Measured high-energy data from MAGIC in 2005, the set contains 24 data points. |

| Open with DEXTER | |

3.4 WHIPPLE

In the early 1980s, the 10 m Whipple Telescope was built at the Fred Lawrence Whipple Observatory in southern Arizona. It is still fully operational and is nowadays used for long-term monitoring of some interesting astrophysical sources in the energy

range from 100 Gev to 10 TeV. The data (in arbitrary units) of Mrk501 we used was taken from March 2006 to March 2008 and is preliminary (WHIPPLE 2008). It shows a rms of

![]() and an average signal-to-noise ratio of 2.45 (see Fig. 5).

and an average signal-to-noise ratio of 2.45 (see Fig. 5).

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig5.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg44.png) |

Figure 5: Preliminary high-energy data measured by WHIPPLE from 2006 to 2008, the set contains 33 data points. |

| Open with DEXTER | |

4 Analysis technique

In observational sciences the observer can often not completely control the time of the observations, but must simply accept a certain dictated set of observation times ti. In order to process unevenly sampled data, simple Fourier-transformations cannot be used, but must be replaced by a more elaborate analysis. There are several ways of how to tackle this problem. In our work we made use of three different methods. These we selected by the criterion of being mutually fairly orthogonal so as to reduce systematic errors. In the following paragraphs two of them will be outlined briefly, the third was gratefully accepted from the Dept. for Astroseismology of the University of Vienna.

4.1 Lomb-scargle and epoch folding

4.1.1 Lomb-scargle

A full derivation of the Lomb normalised periodogram (spectral power as a function of angular frequency

![]() may be found in Lomb (1976) and Scargle (1982). The algorithm transforms a discretely and unevenly sampled set of data points into a quasi-continuous function in Fourier space, essentially by applying a

may be found in Lomb (1976) and Scargle (1982). The algorithm transforms a discretely and unevenly sampled set of data points into a quasi-continuous function in Fourier space, essentially by applying a ![]() -test on an equivalent to linear least square fitting to the model

-test on an equivalent to linear least square fitting to the model

| (10) |

In order to detect low frequency signals, the oversampling method (Press et al. 2003) is usually considered. However, spurious effects are thus introduced and particular attention is necessary in analysing the resulting periodogram (see also Vaughan 2005). An ill-chosen value of the oversampling parameter is found to produce artificial oscillations in the periodogram that can easily be interpreted wrongly. We used the oversampling method and in Sect. 4.3 describe means of controlling these oscillations and we depict a way of statistically evaluating them as one of the major contributions to the uncertainty of an identified period.

4.1.2 Epoch folding

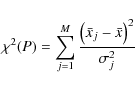

Following Leahy et al. (1983), the epoch-folding statistic is defined as

|

(11) |

where M denotes the number of phase bins and

where the Ai are the degrees of freedom in the fit. We also tried higher derivatives or higher powers of trigonometric functions, however the formula Eq. (12) proved to yield the best results.

4.2 SigSpec

This software (for details see Reegen 2007) presents a combination of the techniques mentioned above, and has many more other features in addition. One aspect that needs to be mentioned is that where the Lomb-Scargle Periodogram is presented as a

continuous function of the period, SigSpec only provides discrete values of those periods, that are above a certain threshold-level of spectral significance. The latter is the inverse False-Alarm-Probability

![]() scaled logarithmically (specified significance threshold y and number of independent frequencies M). The conversion of a chosen threshold for maximum spectral significance into its corresponding ``individual'' spectral significance threshold for accepting detected peaks is very similar to Scargle (1982) and can be found in Reegen (2007). SigSpec cannot deal with coloured noise, however it is possible to choose different spectral significance thresholds for different frequency regions (Reegen 2007).

scaled logarithmically (specified significance threshold y and number of independent frequencies M). The conversion of a chosen threshold for maximum spectral significance into its corresponding ``individual'' spectral significance threshold for accepting detected peaks is very similar to Scargle (1982) and can be found in Reegen (2007). SigSpec cannot deal with coloured noise, however it is possible to choose different spectral significance thresholds for different frequency regions (Reegen 2007).

4.3 Random-noise-modelling and Random-sampling

In order to handle the uncertainties of the results we used two approaches as follows. One way of testing the significance of the resulting Fourier

![]() Lomb-Scargle-transformation is ``Random-sampling'': we randomly choose a certain number P of data points from the original data set thus creating n subsets, transform all of them (using one of the three algorithms at a time) individually and add the thus acquired n Fourier-sets together so to form one single periodogram. P was chosen to be 2/3 of the original length of the lightcurve so as to guarantee sufficient degrees of freedom for permutation whereas at the same time not forfeiting the low frequency detection for the short data sets, which gets the more difficult the shorter the subset becomes.

In order to facilitate proper summation of the results, the transformations of the n subsets need to have the same frequency resolution and frequency window so one really sums up the function at equal points. If the resulting periodogram still shows appreciably high

Lomb-Scargle-transformation is ``Random-sampling'': we randomly choose a certain number P of data points from the original data set thus creating n subsets, transform all of them (using one of the three algorithms at a time) individually and add the thus acquired n Fourier-sets together so to form one single periodogram. P was chosen to be 2/3 of the original length of the lightcurve so as to guarantee sufficient degrees of freedom for permutation whereas at the same time not forfeiting the low frequency detection for the short data sets, which gets the more difficult the shorter the subset becomes.

In order to facilitate proper summation of the results, the transformations of the n subsets need to have the same frequency resolution and frequency window so one really sums up the function at equal points. If the resulting periodogram still shows appreciably high ![]() values, it can be deduced that the corresponding periods are actually physically relevant. If otherwise the peaks become increasingly broadened, it is rather likely that they do not correspond to a physically relevant period but stem from noise and/or

aliasing effects.

values, it can be deduced that the corresponding periods are actually physically relevant. If otherwise the peaks become increasingly broadened, it is rather likely that they do not correspond to a physically relevant period but stem from noise and/or

aliasing effects.

Another method is ``Random-noise-modelling'': We take the data points xi and their error-bars ![]() ,

create a uniformly distributed random number

,

create a uniformly distributed random number

![]() and add

and add ![]() to the corresponding xi. The idea behind this is to weigh the influence of a bad signal-to-noise ratio. If a data set has good signal-to-noise ratio, the random-noise-modelling will scarcely effect the resulting periodogram at all as the interval

to the corresponding xi. The idea behind this is to weigh the influence of a bad signal-to-noise ratio. If a data set has good signal-to-noise ratio, the random-noise-modelling will scarcely effect the resulting periodogram at all as the interval ![]()

![]() is small and therefore the new set, created by the Random-noise-modelling is almost identical to the original one. If, however, the

is small and therefore the new set, created by the Random-noise-modelling is almost identical to the original one. If, however, the ![]() are large, i.e. the data has a bad signal-to-noise ratio, the new set will differ significantly from the old and it is to be expected that the period-analysis will yield different results. So we have found a way of assigning tendentially larger uncertainties to the results from data sets of bad quality. It might be argued that the distribution of the

are large, i.e. the data has a bad signal-to-noise ratio, the new set will differ significantly from the old and it is to be expected that the period-analysis will yield different results. So we have found a way of assigning tendentially larger uncertainties to the results from data sets of bad quality. It might be argued that the distribution of the ![]() should be Gaussian, other than uniform, however, we preferred the uniform distribution as it leads to larger differences of the new sets and the originals and gives therefore a larger uncertainty in the final results, which we are otherwise underestimating. The variance of the new set is statistically converging towards that of the original as for each data-point xi the

should be Gaussian, other than uniform, however, we preferred the uniform distribution as it leads to larger differences of the new sets and the originals and gives therefore a larger uncertainty in the final results, which we are otherwise underestimating. The variance of the new set is statistically converging towards that of the original as for each data-point xi the ![]() and from that the

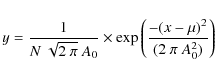

and from that the ![]() is calculated. The distribution of the variance is given in Fig. 6. This graph shows that the distribution of the variance, and thus of the rms of the simulated lightcurves, is gaussianly distributed around the original variance. We fitted with the formula

is calculated. The distribution of the variance is given in Fig. 6. This graph shows that the distribution of the variance, and thus of the rms of the simulated lightcurves, is gaussianly distributed around the original variance. We fitted with the formula

|

(13) |

where

Throughout the analysis we took n = 3 representative samples, each.

The results are accumulated (= added up) onto one final periodogram, which provides the means of determining the significance level ![]() of the whole analysis. If now the

periodogram resulting from the summation should exhibit its highest peak at places where the underlying single curves show nothing but a forest of little noise peaks, we deduce that the corresponding period is merely due to noise and has no physical meaning whatsoever. This effect is mainly observed for very small periods (P < 10 days), whereas for higher periods the displacement of the large peaks along the period-axis dominate.

of the whole analysis. If now the

periodogram resulting from the summation should exhibit its highest peak at places where the underlying single curves show nothing but a forest of little noise peaks, we deduce that the corresponding period is merely due to noise and has no physical meaning whatsoever. This effect is mainly observed for very small periods (P < 10 days), whereas for higher periods the displacement of the large peaks along the period-axis dominate.

Both random-methods were applied to all data sets independently, i.e. the original sets were taken, for each of them we created 3 sets using the Random-noise-modelling and (again from the original) 3 subsets using the random-sampling. The analysis of the data and its random models was carried out respecting all three numerical methods (see above). The simulated light curves are treated on equal footing as the original set, no weighing is applied, nor are the curves mixed. Only the very final periodograms (Fig. 7) present the sum of all 7, individually analysed, lightcurves for each experiment. As shown in Fig. 8 the Lomb-technique for high oversampling parameters may display oscillations, which appear to be Gaussian-shaped and more or less symmetrical around some central period. We believe these oscillations to be a mere artifact due to choosing the oversampling parameter slightly too big, a choice which is however necessary in order to resolve larger periods at all. Therefore we chose to fit enveloping Gaussian curves onto these structures. The ![]() levels were extracted from

the HWHM value of the envelope, that was fitted onto the final (accumulated and normalised) periodograms.

levels were extracted from

the HWHM value of the envelope, that was fitted onto the final (accumulated and normalised) periodograms.

For the SigSpec tool, however, this formula is not applicable and also the method of fitting the envelope is generally underestimating the uncertainty. According to Kataoka (2008) the normalised power spectral density exhibits a power-law

![]() for low (pink) frequencies, and the transformations were consequently corrected thus (``pink corrected''). In order to avoid spurious signatures from gaps, the data sets were each examined for their sampling structure so as to treat peaks arising around the positions of recurring data-gaps with additional caution.

for low (pink) frequencies, and the transformations were consequently corrected thus (``pink corrected''). In order to avoid spurious signatures from gaps, the data sets were each examined for their sampling structure so as to treat peaks arising around the positions of recurring data-gaps with additional caution.

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig6.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg63.png) |

Figure 6: Histogram of the variance of 300 random-noise-modelling samples and the one-parameter fit onto a Gaussian-distribution yielding a correlation coefficient of 0.975010. |

| Open with DEXTER | |

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig7.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg64.png) |

Figure 7: Pink-corrected Lomb-Scargle periodogram for the RXTE data: 1998-2008 of Mrk 501. This final periodogram is the sum of the periodograms of the original lightcurve and the simulated lightcurves. The dashed graph is the periodogram of the original data set. |

| Open with DEXTER | |

5 Results and discussion

In this section we present the results of our analysis methods applied to the various data sets available for Markarian 501. First of all we show the periodograms calculated from the data (Figs. 7, 8), then we present the results of the hypothesis tests (in Figs. 11-14) and summarise all this in Table 1.

In Figs. 7 and 8 we show the typical signatures of the fundamental frequency in RXTE and the first harmonic in WHIPPLE, respectively. The periodogram of the preliminary WHIPPLE data was produced using a very high oversampling parameter, so as to resolve the long periods; however the data set is too short for the Lomb-Scargle algorithm to produce reliable results. In addition the Epoch-Folding-phase diagram in Fig. 9 shows the signature of the 72.6 day-period to be clearly sinusoidal. The overall hypothesis tests for both the first and second harmonic of

![]() days are shown in Figs. 11 - 14. The hypothesis-tests are evaluated according to standard statistics using two-sided tests on the specified confidence level.

The points with their error-bars are the identified periods with their 1-

days are shown in Figs. 11 - 14. The hypothesis-tests are evaluated according to standard statistics using two-sided tests on the specified confidence level.

The points with their error-bars are the identified periods with their 1-![]() -uncertainties whereas the boxes show the area in which the hypothesis can be accepted, if overlapping. We find, that the 23-day period can be accepted on a 5%-level for the SigSpec results (cf. Fig. 12) and on a

-uncertainties whereas the boxes show the area in which the hypothesis can be accepted, if overlapping. We find, that the 23-day period can be accepted on a 5%-level for the SigSpec results (cf. Fig. 12) and on a ![]() -level for the Lomb-Scargle results (cf. Fig. 11) when all four experiments, that we consider significant, are compared. That means, that we accept a hypothesis even if the SWIFT results indicate the contrary (see Sect. 3 for discussion). For the Lomb-Scargle analysis of the 36-day period only the data-sets of sufficient length could be considered due to the fact that the detection of low frequencies requires longer time spans in the original data. Figure 13 shows that a period of

-level for the Lomb-Scargle results (cf. Fig. 11) when all four experiments, that we consider significant, are compared. That means, that we accept a hypothesis even if the SWIFT results indicate the contrary (see Sect. 3 for discussion). For the Lomb-Scargle analysis of the 36-day period only the data-sets of sufficient length could be considered due to the fact that the detection of low frequencies requires longer time spans in the original data. Figure 13 shows that a period of

![]() days can be considered present in the two data sets on a

days can be considered present in the two data sets on a ![]() -level. In Fig. 14 we show that on a

-level. In Fig. 14 we show that on a ![]() -level, the first harmonic, 36 days, as identified by the SigSpec algorithm is still consistent within the 1-

-level, the first harmonic, 36 days, as identified by the SigSpec algorithm is still consistent within the 1-![]() uncertainties of the fundamental period,

uncertainties of the fundamental period,

![]() days, even though we probably underestimated the uncertainties in our method for this algorithm. The phase diagram in Fig. 9 shows that of the 4-parameter fit onto Eq. (12) yields 0.621577 for the correlation coefficient, with an rms per cent error of 0.18285 for the parameter

A2 = 0.0865454, which corresponds to a period of 72.6 days via the fundamental relation

days, even though we probably underestimated the uncertainties in our method for this algorithm. The phase diagram in Fig. 9 shows that of the 4-parameter fit onto Eq. (12) yields 0.621577 for the correlation coefficient, with an rms per cent error of 0.18285 for the parameter

A2 = 0.0865454, which corresponds to a period of 72.6 days via the fundamental relation

![]() .

We interpret this high correlation coefficient as a clear sign the periodicity itself to be of sinusoidal shape.

.

We interpret this high correlation coefficient as a clear sign the periodicity itself to be of sinusoidal shape.

The approach to look for the harmonics of 72 days is motivated chiefly by Figs. 7 and 9 for this period dominates over all other structures. However, the ASM data is the only set, plentiful and long enough so the algorithms can detect and identify a period clearly. Given the fact that all other samples are dominated either by noise or gaps it is of great importance to seek for the persistent appearance of the harmonics throughout all sets, even though a single detection of a period in one sole set would for itself scarcely be considered significant.

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig8.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg70.png) |

Figure 8: Pink-corrected Lomb-Scargle periodogram for the preliminary WHIPPLE data 2006-2008 of Mrk 501. This final periodogram was produced using a very high oversampling parameter so as to resolve long periods in this short data set. Thus the influence of artifacts dominates for periods larger than 30 days. |

| Open with DEXTER | |

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig9.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg72.png) |

Figure 9:

Phase diagram of the period = 72.6 day in the RXTE 1998-2008 data: The Signature is clearly that of a sinusoidal signal. |

| Open with DEXTER | |

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig10}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg73.png) |

Figure 10:

Required mass dependence for a BBH system in Mrk 501. The dotted and dot-dashed lines show the upper and lower limits of the binary mass estimation derived from the

|

| Open with DEXTER | |

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig11.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg74.png) |

Figure 11:

The two sided-hypothesis test of whether the 23-day period can be accepted within the |

| Open with DEXTER | |

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig12.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg75.png) |

Figure 12:

The two sided-hypothesis test of the 23-day period for the SigSpec algorithm: The SWIFT data does not support the hypothesis, however, we very much doubt the quality of that data set and consider the hypothesis as valid on the |

| Open with DEXTER | |

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig13.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg76.png) |

Figure 13:

The two sided-hypothesis test of the 36-day period for the Lomb-Scargle algorithm: the frequencies of the first harmonic are consistent on the |

| Open with DEXTER | |

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig14.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg77.png) |

Figure 14:

The two sided-hypothesis test of 36-day period for the SigSpec algorithm: This period can be found to be persistent in the data on a |

| Open with DEXTER | |

Table 1:

The ![]() - significance levels of the corresponding hypothesis tests brought face to face with the identified 72 day period and its higher harmonics.

- significance levels of the corresponding hypothesis tests brought face to face with the identified 72 day period and its higher harmonics.

This study allows for a new limit on black hole masses in the

possible BBH system in Mrk 501. These limits are in

accordance with measurements with the total black hole mass derived

with other techniques. For Mrk 501 the observed data corresponds to f=8 (where

![]() is the observed maximum to minimum ratio of the spectral flux density) and a TeV spectral index of

is the observed maximum to minimum ratio of the spectral flux density) and a TeV spectral index of

![]() (cf. Aharonian et al. 1999). Together with our proposed periodicity of 72 days we can determine the masses of the constituting black holes of the binary system by simultaneously solving Eqs. (8) and (9). The results are shown in Table 2, where a total mass of

(cf. Aharonian et al. 1999). Together with our proposed periodicity of 72 days we can determine the masses of the constituting black holes of the binary system by simultaneously solving Eqs. (8) and (9). The results are shown in Table 2, where a total mass of

![]() (Woo et al. 2005) and a TeV spectral index of

(Woo et al. 2005) and a TeV spectral index of

![]() have been applied for the calculations. In Fig. 10 we show the limiting black hole masses for the large as well for the small black hole. These are compared for the new found 72-day period and the previously found 23-day period.

have been applied for the calculations. In Fig. 10 we show the limiting black hole masses for the large as well for the small black hole. These are compared for the new found 72-day period and the previously found 23-day period.

The obtained value for the separation of the BBH obviously changes in time as a consequence of gravitational radiation. In Fig. 10 we show the limiting black hole masses for the large as well for the small black hole. These are compared for the new found 72-day period and the previously found 23-day period.

Table 2:

The maximum masses of the constituting black holes, the separation d and the intrinsic orbital period for the inclination angles

![]() with bulk Lorentz factor

with bulk Lorentz factor

![]() .

.

Our analysis shows two main results: Even for the quiescent state of the source Mrk501 we find that on the one hand the well-established 23-day period (Osone 2006) is still undefeated, while on the other a 72-day period is upcoming. These results are not in contradiction since the 23- and also the 36-day periods can, within the uncertainties, be regarded as the second and first harmonic of the

![]() -day period, respectively. Figure 7 clearly identifies the 72 days, together with the persistent appearance of the first and second harmonics in the data sets (save for SWIFT, see discussion above). It is not fully clear how robust the applied methods are by themselves, however the combination of different approaches showing concordant results provides a solid argument in favour of the SMBBH model.

-day period, respectively. Figure 7 clearly identifies the 72 days, together with the persistent appearance of the first and second harmonics in the data sets (save for SWIFT, see discussion above). It is not fully clear how robust the applied methods are by themselves, however the combination of different approaches showing concordant results provides a solid argument in favour of the SMBBH model.

This finding has possible consequences for our model of Mrk 501: It allows for a new limit on the black hole masses in the source's possible BBH system. A limit which is firstly in accordance with measurements of the total black hole mass as derived from other techniques and secondly allows for a higher ratio

![]() between the total mass of the binary and the mass of the companion BH. A scenario which is much more likely to exhibit the helical structure as proposed by Rieger & Mannheim (2000).

between the total mass of the binary and the mass of the companion BH. A scenario which is much more likely to exhibit the helical structure as proposed by Rieger & Mannheim (2000).

There is a reason why this has not yet been found: Short data sets are not able to show the 72-day feature since their length has to be well above these 72 days or aliasing will smear out this feature.

Regarding the physical model there is now, with a multi-wavelength analysis, more reason to support the BBH model, but still long time observations, especially in very high energies, are needed to prove our results.

The results of this study have an impact on X-ray, gamma-ray and gravitational wave astronomy: With evidence of multi-wavelength periodic variability, gravitational wave telescopes may help to tackle the problem of the origin of such variations and also aid in restricting the parameters of these sources.

Acknowledgements

The authors would like to thank the anonymous referee for his detailed comments, which greatly helped improving the paper.

C.R. thanks Mr Piet Reegen for supplying her with the source of his SIGSPEC - code. C.R. acknowledges support from the Max-Weber-foundation, O. E. is grateful for funding from the Elite Network of Bavaria. T.B. thanks the Deutsches Zentrum für Luft- und Raumfahrt and the LISA-Germany collaboration for funding. This research has made use of data obtained through the High Energy Astrophysics Science Archive Research Center Online Service, provided by the NASA/Goddard Space Flight Center.

References

- Aharonian, F. A., Akhperjanian, A. G., & Barrio, J. A., et al. 1999, A&A, 349, 11 [NASA ADS] (In the text)

- Albert, J., Aliu, E., & Anderhub, H., et al. 2007, ApJ, 669, 862 [NASA ADS] [CrossRef] (In the text)

- Barthelmy, S. D., & Swift Team 2003, in BA&AS, 35, BA&AS, 767

- Begelman, M. C., Blandford, R. D., & Rees, M. J. 1980, Nature, 287, 307 [NASA ADS] [CrossRef] (In the text)

- Bogdanovic, T., Smith, B. D., Sigurdsson, S., & Eracleous, M. 2008, ApJS, 174, 455 [NASA ADS] [CrossRef] (In the text)

- Camenzind, M., & Krockenberger, M. 1992, A&A, 255, 59 [NASA ADS]

- De Paolis, F., Ingrosso, G., & Nucita, A. A. 2002, A&A, 388, 470 [NASA ADS] [CrossRef] [EDP Sciences]

- De Paolis, F., Ingrosso, G., & Nucita, A. A., et al. 2003, A&A, 410, 741 [NASA ADS] [CrossRef] [EDP Sciences]

- Fan, J. H., Rieger, F. M., Hua, T. X., et al. 2008, Astrop. Phys., 28, 508 [NASA ADS] [CrossRef] (In the text)

- Fan, J. H., Xie, G. Z., Lin, R. G., et al. 1997, A&AS, 125, 525 [NASA ADS] [CrossRef] [EDP Sciences]

- Fitchett, M. 1987, MNRAS, 224, 567 [NASA ADS]

- Hayashida, N., Hirasawa, H., & Ishikawa, F., et al. 1998, ApJ, 504, L71 [NASA ADS] [CrossRef]

- Hudson, D. S., Reiprich, T. H., & Clarke, T. E., et al. 2006, A&A, 453, 433 [NASA ADS] [CrossRef] [EDP Sciences]

- Jahoda, K., Swank, J. H., Giles, A. B., et al. 1996, in SPIE Conf. Ser. 2808, ed. O. H. Siegmund, & M. A. Gummin, 59

- Kataoka, J. 2008, in Blazar Variability across the Electromagnetic Spectrum (In the text)

- Komossa, S. 2003, in The Astrophysics of Gravitational Wave Sources, ed. J. M. Centrella, AIP Conf. Ser., 686, 161 (In the text)

- Komossa, S. 2006, Mem. Soc. Astron. Ital., 77, 733 [NASA ADS] (In the text)

- Kranich, D., The HEGRA Collaboration, de Jager, O., Kestel, M., & Lorenz, E. 2001, in International Cosmic Ray Conference, 7, 2630

- Lainela, M., Takalo, L. O., Sillanpää, A., et al. 1999, ApJ, 521, 561 [NASA ADS] [CrossRef]

- Larsson, S. 1996, A&AS, 117, 197 [NASA ADS] [CrossRef] [EDP Sciences]

- Leahy, D. A., Darbro, W., & Elsner, R. F., et al. 1983, ApJ, 266, 160 [NASA ADS] [CrossRef] (In the text)

- Lomb, N. R. 1976, Ap&SS, 39, 447 [NASA ADS] [CrossRef] (In the text)

- Mannheim, K., Westerhoff, S., & Meyer, H., et al. 1996, A&A, 315, 77 [NASA ADS]

- Osone, S. 2006, Astrop. Phys., 26, 209 [NASA ADS] [CrossRef] (In the text)

- Osone, S., & Teshima, M. 2001, in International Cosmic Ray Conference, International Cosmic Ray Conference, 7, 2695

- Peters, P. C., & Mathews, J. 1963, Phys. Rev., 131, 435 [NASA ADS] [CrossRef]

- Petry, D. 1999, The MAGIC Telescope Collaboration, A&AS, 138, 601 (In the text)

- Press, W. H., Teukolsky, S. A., & Vettering, W. T., et al. 2003, Eur. J. Phys., 24, 329 [CrossRef] (In the text)

- Raiteri, C. M., Villata, M., Aller, H. D., et al. 2001, A&A, 377, 396 [NASA ADS] [CrossRef] [EDP Sciences]

- Reegen, P. 2007, A&A, 467, 1353 [NASA ADS] [CrossRef] [EDP Sciences] (In the text)

- Rieger, F. M. 2007, Ap&SS, 309, 271 [NASA ADS] [CrossRef] (In the text)

- Rieger, F. M., & Mannheim, K. 2000, A&A, 359, 948 [NASA ADS] (In the text)

- Rieger, F. M., & Mannheim, K. 2001, in AIP Conf. Ser. 558, ed. F. A. Aharonian, & H. J. Völk, 716

- Roland, J., Teyssier, R., & Roos, N. 1994, A&A, 290, 357 [NASA ADS]

- Romero, G. E., Fan, J.-H., & Nuza, S. E. 2003, Chin. J. Astron. Astrophys., 3, 513 [NASA ADS] [CrossRef]

- RXTE. 2009, http://heasarc.gsfc.nasa.gov/docs/xte/xhp_geninfo.html [Online; accessed 02-February-2009]

- Scargle, J. D. 1982, ApJ, 263, 835 [NASA ADS] [CrossRef] (In the text)

- Schwarzenberg-Czerny, A. 1997, ApJ, 489, 941 [NASA ADS] [CrossRef]

- Sillanpaa, A. K. 1998, in 19th Texas Symposium on Relativistic Astrophysics and Cosmology, ed. J. Paul, T. Montmerle, & E. Aubourg

- Sillanpaa, A., Haarala, S., Valtonen, M. J., Sundelius, B., & Byrd, G. G. 1988, ApJ, 325, 628 [NASA ADS] [CrossRef]

- Spada, M. 1999, Astrop. Phys., 11, 59 [NASA ADS] [CrossRef] (In the text)

- Spada, M., Salvati, M., & Pacini, F. 1999, ApJ, 511, 136 [NASA ADS] [CrossRef]

- Sudou, H. 2003, Astronomical Herald, 96, 656 [NASA ADS]

- Swift 2008, http://heasarc.gsfc.nasa.gov/docs/swift/swiftsc.html [Online; accessed 01-December-2008] (In the text)

- Swift 2009a, http://xte.mit.edu/ASM_lc.html [Online; accessed 02-February-2009] (In the text)

- Swift 2009b, http://swift.gsfc.nasa.gov/docs/swift/about_swift/ [Online; accessed 02-February-2009]

- Vaughan, S. 2005, A&A, 431, 391 [NASA ADS] [CrossRef] [EDP Sciences] (In the text)

- Whipple 2008, http://veritas.sao.arizona.edu/content/blogsection/6/40/ [Online; accessed 06-August-2008] (In the text)

- Woo, J.-H., Urry, C. M., & van der Marel, R. P., et al. 2005, ApJ, 631, 762 [NASA ADS] [CrossRef] (In the text)

All Tables

Table 1:

The ![]() - significance levels of the corresponding hypothesis tests brought face to face with the identified 72 day period and its higher harmonics.

- significance levels of the corresponding hypothesis tests brought face to face with the identified 72 day period and its higher harmonics.

Table 2:

The maximum masses of the constituting black holes, the separation d and the intrinsic orbital period for the inclination angles

![]() with bulk Lorentz factor

with bulk Lorentz factor

![]() .

.

All Figures

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig1.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg36.png) |

Figure 1: Subset of the whole available ASM data on Mrk 501. The sampling is not equidistant and the data points clearly differ significantly from zero. |

| Open with DEXTER | |

| In the text | |

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig2.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg39.png) |

Figure 2: Complete available data for SWIFT containing 1153 data points with error bars. Background has already been subtracted before data retrieval (for details see Swift 2009a). |

| Open with DEXTER | |

| In the text | |

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig3.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg40.png) |

Figure 3:

Subset of SWIFT data around the maximum amplitude in order to show the single points more clearly. The highest peak lies 12.8 |

| Open with DEXTER | |

| In the text | |

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig4.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg42.png) |

Figure 4: Measured high-energy data from MAGIC in 2005, the set contains 24 data points. |

| Open with DEXTER | |

| In the text | |

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig5.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg44.png) |

Figure 5: Preliminary high-energy data measured by WHIPPLE from 2006 to 2008, the set contains 33 data points. |

| Open with DEXTER | |

| In the text | |

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig6.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg63.png) |

Figure 6: Histogram of the variance of 300 random-noise-modelling samples and the one-parameter fit onto a Gaussian-distribution yielding a correlation coefficient of 0.975010. |

| Open with DEXTER | |

| In the text | |

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig7.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg64.png) |

Figure 7: Pink-corrected Lomb-Scargle periodogram for the RXTE data: 1998-2008 of Mrk 501. This final periodogram is the sum of the periodograms of the original lightcurve and the simulated lightcurves. The dashed graph is the periodogram of the original data set. |

| Open with DEXTER | |

| In the text | |

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig8.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg70.png) |

Figure 8: Pink-corrected Lomb-Scargle periodogram for the preliminary WHIPPLE data 2006-2008 of Mrk 501. This final periodogram was produced using a very high oversampling parameter so as to resolve long periods in this short data set. Thus the influence of artifacts dominates for periods larger than 30 days. |

| Open with DEXTER | |

| In the text | |

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig9.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg72.png) |

Figure 9:

Phase diagram of the period = 72.6 day in the RXTE 1998-2008 data: The Signature is clearly that of a sinusoidal signal. |

| Open with DEXTER | |

| In the text | |

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig10}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg73.png) |

Figure 10:

Required mass dependence for a BBH system in Mrk 501. The dotted and dot-dashed lines show the upper and lower limits of the binary mass estimation derived from the

|

| Open with DEXTER | |

| In the text | |

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig11.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg74.png) |

Figure 11:

The two sided-hypothesis test of whether the 23-day period can be accepted within the |

| Open with DEXTER | |

| In the text | |

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig12.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg75.png) |

Figure 12:

The two sided-hypothesis test of the 23-day period for the SigSpec algorithm: The SWIFT data does not support the hypothesis, however, we very much doubt the quality of that data set and consider the hypothesis as valid on the |

| Open with DEXTER | |

| In the text | |

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig13.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg76.png) |

Figure 13:

The two sided-hypothesis test of the 36-day period for the Lomb-Scargle algorithm: the frequencies of the first harmonic are consistent on the |

| Open with DEXTER | |

| In the text | |

![\begin{figure}

\par\includegraphics[angle=-90,width=7cm,clip]{11814fig14.eps}

\end{figure}](/articles/aa/full_html/2009/27/aa11814-09/Timg77.png) |

Figure 14:

The two sided-hypothesis test of 36-day period for the SigSpec algorithm: This period can be found to be persistent in the data on a |

| Open with DEXTER | |

| In the text | |

Copyright ESO 2009

Current usage metrics show cumulative count of Article Views (full-text article views including HTML views, PDF and ePub downloads, according to the available data) and Abstracts Views on Vision4Press platform.

Data correspond to usage on the plateform after 2015. The current usage metrics is available 48-96 hours after online publication and is updated daily on week days.

Initial download of the metrics may take a while.